The effects of neuron morphology on graph theoretic measures of network connectivity: the analysis of a two-level statistical model

- 1Computational Neuroscience Group, Department of Signal Processing, Tampere University of Technology, Tampere, Finland

- 2Psychosis Research Centre, Institute of Clinical Medicine, University of Oslo, Oslo, Norway

We developed a two-level statistical model that addresses the question of how properties of neurite morphology shape the large-scale network connectivity. We adopted a low-dimensional statistical description of neurites. From the neurite model description we derived the expected number of synapses, node degree, and the effective radius, the maximal distance between two neurons expected to form at least one synapse. We related these quantities to the network connectivity described using standard measures from graph theory, such as motif counts, clustering coefficient, minimal path length, and small-world coefficient. These measures are used in a neuroscience context to study phenomena from synaptic connectivity in the small neuronal networks to large scale functional connectivity in the cortex. For these measures we provide analytical solutions that clearly relate different model properties. Neurites that sparsely cover space lead to a small effective radius. If the effective radius is small compared to the overall neuron size the obtained networks share similarities with the uniform random networks as each neuron connects to a small number of distant neurons. Large neurites with densely packed branches lead to a large effective radius. If this effective radius is large compared to the neuron size, the obtained networks have many local connections. In between these extremes, the networks maximize the variability of connection repertoires. The presented approach connects the properties of neuron morphology with large scale network properties without requiring heavy simulations with many model parameters. The two-steps procedure provides an easier interpretation of the role of each modeled parameter. The model is flexible and each of its components can be further expanded. We identified a range of model parameters that maximizes variability in network connectivity, the property that might affect network capacity to exhibit different dynamical regimes.

1. Introduction

We analyze how the low-resolution properties of single neuron morphology constrain the connectivity within a large population of neurons. We develop a two-level framework that includes details of single cell morphology while allowing the analysis of large populations of neurons as well as the derivation of compact analytical expressions for most of the considered aspects of morphology and connectivity. The presented framework can further be extended to take into account additional aspects of neuronal morphology and additional properties of connectivity.

In this work, single neurons and neurites are modeled statistically. Each axon and each dendrite is represented by a single neurite field, the probability distribution that describes the density of the neurite branches within a limited area of the neurite. This way each neuron consists of one neurite field for the dendrite, one for the axon, and the parameter that determines the average distance between the dendrite and axon centers. The adopted neurite field model is discussed in the literature. The studies in Snider et al. (2010) and Teeter and Stevens (2011) propose a universal method to describe different neuronal types based on the description of neurite fields of dendrites. A study in Cuntz (2012) demonstrates how realistic neuronal morphologies arise when dendrite segments, distributed according to Snider et al. (2010), get connected using the optimal wiring principle. In van Pelt and van Ooyen (2013) the realism of the obtained synaptic distributions and connectivity probabilities was tested for neurons modeled using density fields.

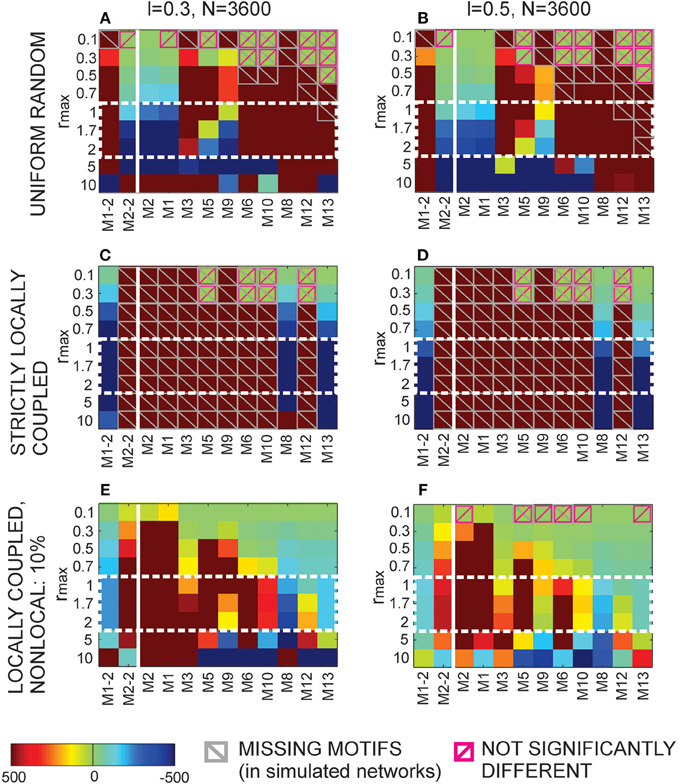

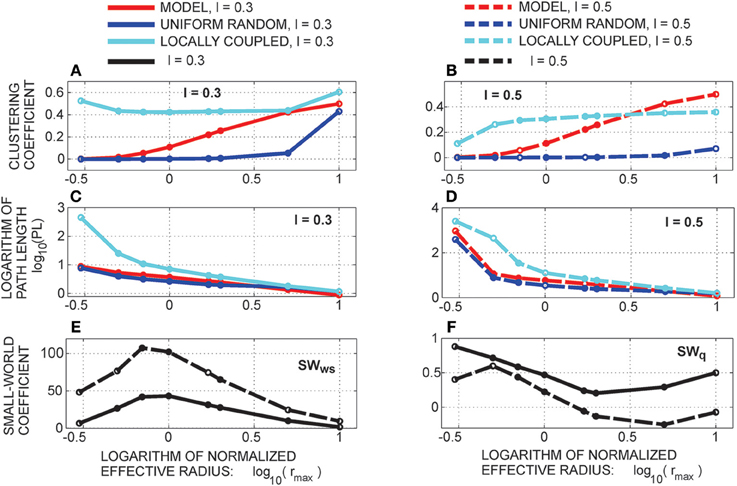

The use of graph theoretic measures to quantify neuronal connectivity is a methodology adopted from the classical studies of network theory. In various studies and different contexts, it has been demonstrated how such measures can distinguish between functionally different network types. The methodology has been applied to very different networks, from computer networks to social networks, and from gene regulatory networks to neuroanatomy (Boccaletti et al., 2006). Theoretical studies, on the other hand, focus on analysis of generic networks of coupled oscillators demonstrating how statistical properties of network connectivity change the overall dynamics of the complex system. A particularly interesting question in such studies is the search for connectivity that optimizes some aspects of network functionality. Some commonly addressed concepts include small-world networks that minimize the average distance between network nodes while maximizing the cooperation across the node neighborhood. Another concept is the scale-free network that installs system dynamics on the edge between order and disorder, thus maximizing the repertoire of dynamical regimes that a system can exhibit as well as the information diversity in the system (Boccaletti et al., 2006; Mäki-Marttunen et al., 2011). Small-world networks were first introduced in Watts and Strogatz (1998), and then addressed in other studies, also in the neuroscience context (Boccaletti et al., 2006; Herzog et al., 2007; Kriener et al., 2009; Voges et al., 2010; Sporns, 2011; McAssey et al., 2014). They were often examined in the context of the large-scale recordings of whole-brain activity, or the anatomical large-scale connectivity between brain regions (Sporns, 2011). For the smaller-scale networks of individual neurons it is relatively difficult to estimate the small-world property as it requires tracking the synaptic connectivity between neurons in large populations (particularly in order to estimate path lengths). Most of the studies present in the literature examine theoretical concepts through mathematical models, or analyze functional connectivity estimated from recordings. In our previous study, we examined a large repertoire of connectivity measures aiming to find a consistent descriptor of connectivity that has implications on network dynamics (Mäki-Marttunen et al., 2013). Two measures were distinguished, the clustering coefficient for networks with binary distribution of node degrees, and maximal eigenvalue for networks with more variability in the in-degree distribution.

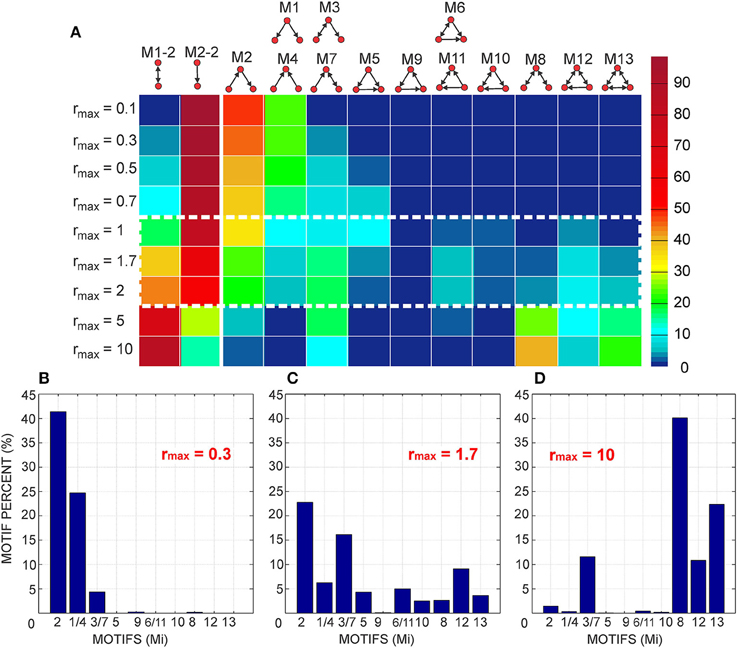

In this study, we primarily focus on the estimation of motif counts (Milo et al., 2002). Motifs represent minimal networks with structured connectivity and are as such suitable for experimental studies. In three previous studies, the non-random distribution of motifs was demonstrated in small networks of pyramidal cells (Song et al., 2005; Perin et al., 2011), and also in networks of interneurons (Rieubland et al., 2014). The implications of these non-random features of connectivity are yet to be explained. Using a theoretical model we derived closed-form expressions for motif counts that do not depend on the network size, but only on the average density of neurons. In addition, the clustering coefficient, that was already found to significantly affect the network activity (Mäki-Marttunen et al., 2013), can be straightforwardly computed from motif counts, as demonstrated in what follows.

A relatively large part of the paper is dedicated to analytical approach to solving the considered two-level model as well as the obtained closed-form solutions. Understanding different levels of organization in neuronal systems and the interaction between those levels is a frequently discussed issue in computational neuroscience literature (Frégnac et al., 2007; Deco et al., 2008). Even the detailed single-level models can become computationally exhaustive and complex, and combining them into multilevel models leads to an explosion in complexity that can obscure the interactions between particular model components. A suggested alternative is the mean-field approximation of each level before linking it to higher-levels of organization (Deco et al., 2008; Sompolinsky, 2014). The presented study complies with this methodology. We first analyze the level of neurons in order to derive simple properties relevant for the network level in the model. In this way, the dimensionality of that level is compressed, which provides the possibility of deriving simpler expressions for the second level characteristics.

Several approximations were adopted when constructing the model of this paper. The neurite structure is described statistically and the fine details of neurite structure are lost. The fine patterns of synaptic distribution are also averaged out. The organization of neurons in the space is chosen to be simple and corresponds to cell cultures more so than to the cortical tissue. Finally, the activity-dependent synaptic reorganization is not considered in this study. Synapses are formed solely based on geometry, and the obtained connectivity corresponds more to potential connectivity as defined in Stepanyants and Chklovskii (2005). In the discussion, we will address some relevant properties of neuronal systems that are not part of the model, and propose a way to incorporate them in the presented framework.

The main result of this study are the analytical expressions for several frequently addressed network measures, including motif counts, clustering coefficient, and path length between network nodes. We particularly addressed motif counts, as they represent the smallest possible networks with structured connectivity. As they capture only the local properties of connectivity, they can be measured experimentally, as demonstrated in Song et al. (2005), Perin et al. (2011), and Rieubland et al. (2014). In addition, the clustering coefficient can be straightforwardly computed from motif counts. From the clustering coefficient and path length, we computed small-world coefficients using two definitions from the literature (Watts and Strogatz, 1998; Telesford et al., 2011). The addressed connectivity measures depend on several model parameters. Some of the parameters contribute as multiplicative constants, while others show non-linear relations to the considered measures. The most interesting parameter is the ratio between the effective radius of a neurite and the distance between the axon and dendrite centers of the same neuron. The effective radius is the maximal distance that permits a connection between two neurons. Depending on this ratio, a network can have a connectivity similar to uniform random, or similar to locally coupled network. The most interesting situations are in between these two extremes, where the network increases variability in its connectivity repertoire.

2. Methods

To address the principal goal of this study, in other words, to analyze how neuronal morphology can affects connectivity in large networks, we constructed a two-level model. The first level specifies the anatomic properties of each neurite statistically, by defining a probability distribution of neurite branches. The probability distribution is non-zero only within a limited area, the support of neurite distribution. This low-resolution description of neurites was already analyzed in several studies (Snider et al., 2010; Teeter and Stevens, 2011; van Pelt and van Ooyen, 2013). It depends on a small number of parameters, four for the two-dimensional neurites, and is suitable for the analysis of large-scale network connectivity. The second level defines the properties of the neuronal population. In order to emphasize neuron morphology we selected the simplest network model, a two-dimensional virtually infinite-size network with a uniform distribution of neurons. Every pair of sufficiently close axon-dendrite branches forms synapses, the number of synapses is proportional to the axon-dendrite overlap (Peters' rule, Peters and Feldman, 1976; Peters et al., 1991). The obtained synapses correspond to potential connectivity as defined in Stepanyants and Chklovskii (2005). Activity dependent synapse formation and pruning was not considered in this study, although it has been shown to play an important role in remodeling synaptic patterns. Including the activity-dependent mechanisms would require a dynamical model with a more complex synapse formation rule, eventually also described statistically. Activity-induced modifications of neurite distribution might also be considered. In this study, we wanted to analyze a simpler model where the role of morphology was emphasized, as it is the most stable among several properties that shape the connectivity in large networks. The concepts presented here can be combined with models of other relevant mechanisms, including the models of network activity, e.g., the one described in Mäki-Marttunen et al. (2013).

The first part of Methods Section gives a detailed description of the analyzed model. The second part presents the analysis of neurite distribution and shows how its properties determine first-order connectivity statistics under the adopted synapse connectivity rule. In the third part, we present closed-form analytical expressions for the two network measures and an iterative method to obtain another measure frequently addressed in the literature (Sporns, 2011).

2.1. Model Description

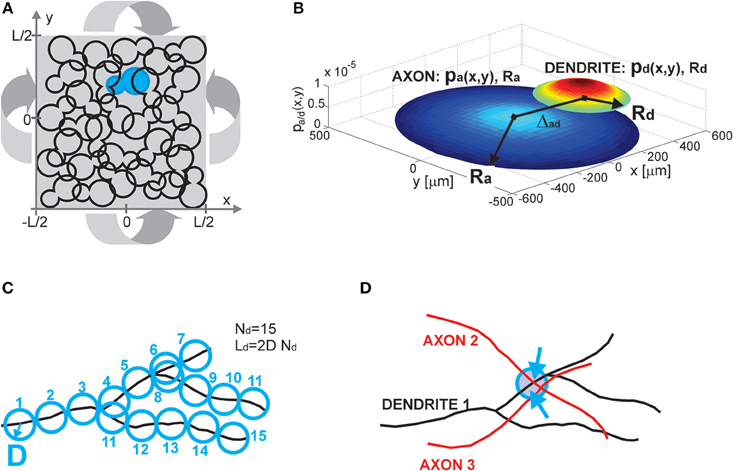

The model consists of several components, including a neuronal population description, single neuron and single neurite description, and the rule for establishing contacts between neurons (i.e., potential synapses). All these components are illustrated in Figure 1.

Figure 1. (A) Population of neurons. Each neuron is illustrated as an 8-shaped surface (to make it more visible, one such surface is colored blue). The population of neurons is homogenous, all of the neurons have identical properties and they are randomly oriented. The population lays in the planar space of the size L × L. The dimension L is chosen to be much bigger than the size of the neurons. To avoid boundary conditions, the plane is projected on a torus (indicated by four arrows). (B) Neuron and neurite models. The axon (a) and dendrite (d) are modeled as density distributions pa/d(x,y) on a limited circular support with radii Ra/d. The axon and dendrite centers are at a distance Δad. The example in (B) shows the neurites modeled as truncated Gaussians with the parameters: (axon) Ra = 500 μm, σa = 0.9 Ra, (dendrite) Rd = 200 μm, σd = 0.7 Rd, and the distance between neurite centers Δad = 400 μm. The x and y axes are in [μm], the z axis shows the value of density distribution for the given coordinates (x, y). (C) Neurite segments and density fields. Each neurite is divided into segments of length 2D, and a circle of radius D can be circumscribed around the middle of the segment. For a dendrite with Nd segments, the total dendrite length is Ld = 2DNd. Neurite distribution describes the probability of finding individual segments within the neurite support. It is derived by superimposing many neurites of the same type. (D) Potential synapse formation rule. An axon-dendrite pair can form a synapse if an axon segment crosses the near neighborhood of a dendrite segment, the near neighborhood is a circle of radius D circumscribed around the dendrite segment (blue circle in the figure). The dendrite segment can form at most one synapse with the considered axon, but it can at the same time form a synapse with every other axon that crosses its near neighborhood (a dendrite segment with two synapses shown in the figure, the two arrows indicate synapse positions).

2.1.1. Population of Neurons (Figure 1A)

Neurons are distributed randomly in the two-dimensional space of the size L × L, where L is chosen to be much bigger than the neuron size, thus making the space around each neuron virtually infinite. The population of neurons is homogeneous, all of the neurons have identical properties and they are randomly oriented in space. The neurons are uniformly distributed in space with the density equal to , i.e., a square of the size l × l contains on average one neuron, which gives a total of  neurons. To avoid boundary conditions, the edges of the surface are wrapped to form a torus and provide virtually infinite space (which is illustrated in Figure 1A). The model corresponds to the arrangement of neurons in dissociated neuronal cultures. A model of the cortical tissue, on the other hand, requires a non-uniform arrangement of neurons that should follow the distribution of the considered cell types across layers. In addition, the non-random orientation of neurons could be imposed.

neurons. To avoid boundary conditions, the edges of the surface are wrapped to form a torus and provide virtually infinite space (which is illustrated in Figure 1A). The model corresponds to the arrangement of neurons in dissociated neuronal cultures. A model of the cortical tissue, on the other hand, requires a non-uniform arrangement of neurons that should follow the distribution of the considered cell types across layers. In addition, the non-random orientation of neurons could be imposed.

2.1.2. Neuron and Neurite Models (Figure 1B)

All of the neurons in the model are identical and consist of two neurite fields, one for the (basal) dendrite and one for the axon. The dendrite is centered in the soma and the axon center is at a distance Δad from the soma. For the uniform distribution of somata and the random orientation of axons, the distribution of axon centers becomes equal to the one of somata. The neurites are modeled statistically, as a distribution of neurite segments on a finite area, the distribution support. In this study, we considered circular supports with a radius Ra for axons and Rd for dendrites, where Ra ≥ Rd. We analyzed cases with uniform and truncated Gaussian distributions of neurites, described by density functions pa(x, y) for axons and pd(x, y) for dendrites. The expression for the uniform distribution is given by Equation (1) and for the truncated Gaussian by Equation (2), with parameters (xa/d, ya/d)—the coordinates of the axon and dendrite centers, σd, σa—the variances along both axes.

are the normalization coefficients that compensate for the cut off part of Gaussians. The presented results can be extended to more general forms of density distributions and elliptic distribution supports.

2.1.3. Neurite Segments and Density Fields (Figure 1C)

We introduce the maximal number of neurite segments, Na for axons and Nd for dendrites, for two reasons. First, this concept allows us to compute the expected number of synapses between an axon-dendrite pair, which is an important first step in the derivation of the considered connectivity measures. Second, it connects the individual neurites with the statistical description of neurite fields, which is illustrated in Figure 1C. Each neurite is discretized into segments of length 2D. In what follows we will call D the unit length of a neurite, so each neurite segment is two units long. If the total length of a neurite is La/d, then La/d = 2DNa/d. The neurite field describes the probability of finding every neurite segment inside the neurite support, and it can be obtained by superimposing many neurites. We assume that the dendrite center coincides with the soma center as we represent all dendrite branches with the same density field.

2.1.4. Potential Synapse Formation Rule (Figure 1D)

We adopted a simple rule that forms synapses between a pair of neurons independently from other neurons in the population, the number of obtained synapses is proportional to the overlap between the two neurites (Peters' rule, Peters and Feldman, 1976; Peters et al., 1991). Consider a dendrite-axon pair, for each dendrite segment we examine its near neighborhood, a ball of radius D centered in the segment center (delineated with a blue circle in Figure 1D). If there is any axon segment present in this ball, the potential synapse between these segments is established. If there is more than one axon segment, only one, randomly selected, of them will form a potential synapse with the dendrite segment. Consequently, every dendrite segment can form at most one potential synapse with the considered axon, but it can simultaneously form potential synapses with other axons that cross its near neighborhood. In the example in Figure 1D, the near neighborhood of a dendrite segment is crossed by two axons and two potential synapses are formed (the blue arrows indicate positions of the potential synapses). This is a rather mild constraint on the number of synapses and in a large population of neurons the number of synapses per neurite can become unrealistically high. Still, it is a reasonable assumption when analyzing potential connectivity, as we are interested in estimating the number of all possible contact places, which is much bigger than the number of actually formed synapses. Alternative rules that take into account all the available segments from all the proximal axons can also be defined.

2.2. The Methodology Used to Analyze Neurites: Connectivity between Axon-dendrite Pairs

2.2.1. Expected Number of Synapses per Neurite

From the neurite description and the adopted synapse formation rule we derived the expression for the expected number of synapses per neurite (S, Equation 3). The details of the derivation of the expression are given in the Supplementary Material 1. The same expression was already proposed in the literature to estimate the number of synapses from neurite density fields (Peters et al., 1991; Liley and Wright, 1994; van Pelt and van Ooyen, 2013). In van Pelt and van Ooyen (2013), an equivalent equation was derived using less strict assumptions about the distribution of axonal field than the one adopted in our study.

Replacing the expressions for neurite field distributions into this equation gives the final formula for the expected number of synapses

Here, is the average neurite radius, Δ is the distance between the considered axon-dendrite pair of two proximal neurons, is the normalized distance between the axon-dendrite pair, is the asymmetry index that accounts for the different size of the axons and dendrites, and M is the set of parameters that determine the distribution of neurite segments. M is an empty set for a uniform distribution and M = {σ, kσ} for the considered case of truncated Gaussian distribution. Here, is the normalized dendrite distribution variance, and is the ratio between the dendrite and axon variances. In what follows, the function ϕ(ρ, η, M) will be called distance-dependent expected number of synapses as it describes the dependency between the expected number of synapses and the axon-dendrite distance. This function can be evaluated analytically for the uniform distribution and numerically for the truncated Gaussians, all relevant derivations are given in Supplementary Material 1 and the function is further discussed in Results Section. The only requirement for this function is to be reversible, at least partially. Similarly, the function ρ2ϕ(ρ, η, M) will be called size-dependent expected number of synapses as it describes the dependency on the average neurite size.

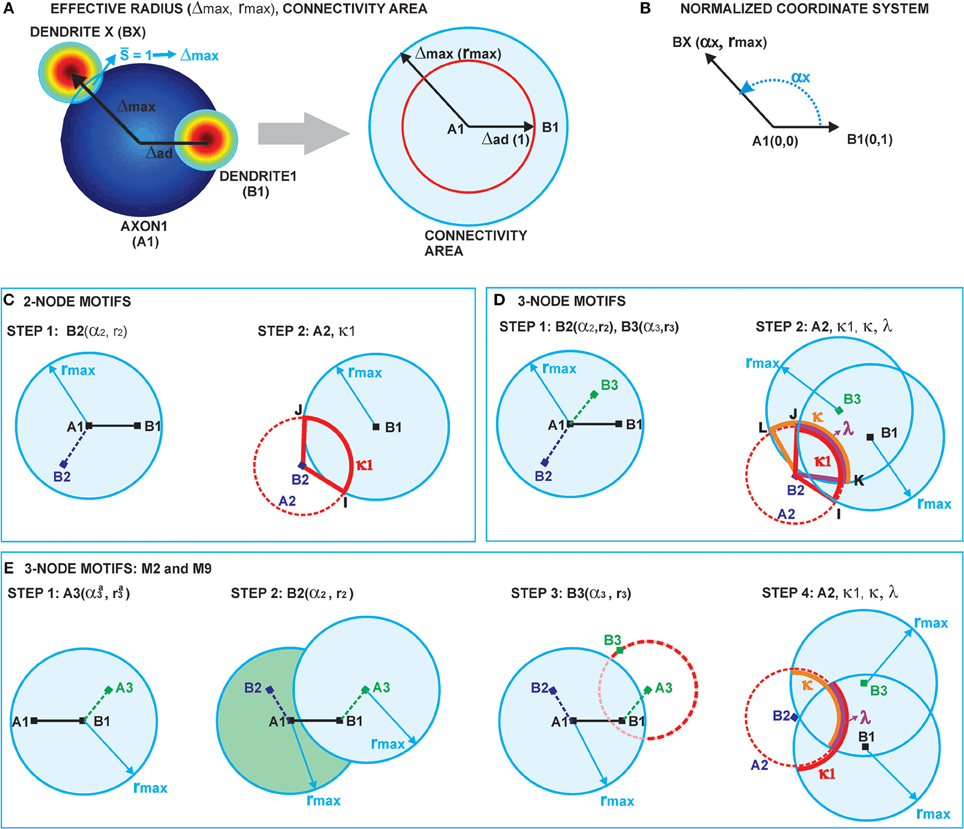

2.2.2. Computation of Node Degree and Effective Radius from Neurite Field Distributions (Figure 2A)

Two neurons are expected to connect if their axon-dendrite pair has S ≥ 1. The expected number of synapses depends on the model parameters (Na, Nd, D, Δ, R) and the normalized parameters ρ, η, and M. First we fix all the parameters except Δ (and ρ), and then we find the maximal axon-to-dendrite distance Δmax (and ρmax) which satisfies the condition S ≥ 1. This maximal distance is called the effective radius of a neurite and its computation is illustrated in Figure 2A. The circle centered in the neurite with the radius equal to the effective radius is called the connectivity area. The effective radius integrates the properties of both, the axon and the dendrite, and is consequently equal for both types of neurites. Once it is computed, it simplifies the analysis of network connectivity. Every neuron can be represented as two circles of radius Δmax with the distance between the circle centers being Δad. Different network connectivity measures are computed from the intersection of pairs of circles for several neurons.

Figure 2. (A) Definition of the effective radius and the connectivity area. The effective radius is the distance between an axon center A1 and a dendrite center BX that satisfies the condition S(Δ) = 1. Every point within the connectivity area of A1 is at a distance smaller than Δmax from A1. (B) Normalized coordinate system. The polar coordinate system is fixed to the representative neuron N1 defined by its axon center A1 and dendrite center B1. The coordinate center is in the axon center A1, and the coordinate axis goes from A1 to the dendrite center B1. The angular coordinate is measured counterclockwise from the coordinate axis. All radial coordinates are normalized, i.e., divided by Δad, so that B1 has coordinates (0, 1) and BX coordinates (αX, rmax), where . (C) 2-Node motif counts. The panel illustrates two steps in the computation of the expected numbers of 2-node motifs. In the first step, the position of the dendrite center B2 is chosen within the connectivity area of axon A1. In the second step, axon A2 is chosen on the circle of radius 1 around B2 (the red dashed line). The function κ1 gives the probability that A2 falls within the connectivity area of B1, κ1 is determined by the angle between points B2, I and J. (D) 3-Node motif counts. In the first step, the positions of two dendrite centers, B2 and B3, are chosen within the connectivity area of axon A1. The second step defines the position of axon center A2, placed on the circle of radius 1 around B2 (the dashed red line). Intersections of this circle with the connectivity areas of dendrites B1 and B3 define functions κ1 (the red line), κ (the orange line), and λ (the purple line), which are determined by the angles ∠B2IJ, ∠B2KL, and ∠B2JK, respectively. The expected number of motifs for all three-node motifs can be computed considering different positions of A2 with respect to the connectivity areas of B1 and B3, and as a combination of functions κ1, κ, and λ. (E) M2 and M9 counts: Computation of the expected numbers of M2 and M9 requires additional steps. In the first step, the axon center A3 is chosen within the connectivity area of B1. In the second step, the dendrite center B2 is chosen in the connectivity area of A1 but outside the connectivity area of A3 (dark green area). In the third step, the dendrite center B3 is chosen on the circle of radius 1 around A3 (the dashed red line), but outside of the connectivity area of A1 (unshaded part of the dashed red line). The fourth step is identical as the second step in (D).

The function ϕ(·) has to be invertible with respect to the first argument. Here, ϕ−1(x, η, M) means the inverse of ϕ with respect to argument x and with η and M considered as constants. In case of uniform distribution, the function ϕ is monotonic without discontinuities only for η ≤ ρ < 1. The analysis of this case, shown in Results Section, confirms that the general conclusions still apply.

Finally, the node degree, equal for all the neurons, can be computed as a function of the effective radius. The average number of output connections for a neuron is equal to the average number of dendrite centers within the connectivity area of its axon

2.2.3. Constraints on Model Parameters

So far, no constraints on model parameters were imposed, but obviously a random choice in the 8-dimensional space {D, Na, Nd, R, η, ρ, σ, kσ} can lead to unrealistic morphologies. In this work, we will not search for biologically realistic parameters using reconstructed neurons or detailed simulations of neurites, e.g., using NETMORPH toolbox (Koene et al., 2009). This will be addressed in our future work. Here, we only give a set of weak conditions necessary for having feasible morphologies.

Condition 1: Upper bound for the number of neurite segments. Figure 1C illustrates the discretization of neurites into segments of length 2D. A circle of radius D is circumscribed around each such segment. As shown in Figure 1C, these circles overlap only immediately after their branching points. As we assume that D is small compared to the average segment between two branching points, we can also assume a small number of overlapping circles compared to the total number of circles covering a neurite. If, in addition, we assume that the number of neurite segments should not be too dense, and that the neurites tend to avoid self-intersections, we derive the following upper bound for the number of neurite segments:

Right sides of the equations give the approximate number of circles of radius D inside the neurite of radius Rd/a. For the truncated Gaussian we have an additional relation:

If we replace the parameters (Ra, Rd, σa, σd) with the normalized parameters (R, η, σ, kσ) the relation becomes:

The function is derived in the Supplementary Material (see Supplementary Material 1, derivation of Equation 4) for the upper bound of Na. The relation for Nd follows from the same analysis when switching the roles of dendrites and axons.

Condition 2: Weak lower bound for the number of neurite segments. Each neurite should have at least one connected straight fiber. If the neurite radius is Rd/a, the fiber length should be at least 2Rd/a. Clearly, a better approximation for a single fiber would be elliptic support with a longer diagonal equal to Ra/d and a shorter one much smaller than Ra/d. But, if we only consider the circular support of neurites, as it is done in this study, the single fiber of length 2Rd/a is approximated with a circle of the radius Rd/a. Therefore, we have

Condition 3: Connected network. In order to have a connected network the following relation between the model parameters has to hold:

Condition 4: The inverse of function ϕ. The model parameters should be in the range of values where the inverse of ϕ exists:

Condition 5: Upper bound for the expected number of synapses. As each dendrite segment accommodates at most one synapse with a proximal axon, the upper bound of S can be estimated as the total number of circles of the radius D that can be placed inside the axon-dendrite intersection area:

In cases when the number of neurite segments is much smaller than the neurite radius this upper bound allows more than one synapse per neurite segment, so a more strict constraint should be imposed:

2.3. The Methodology Used to Analyze Networks: Statistical Measures of Network Connectivity

We analyze network connectivity by computing standard statistical measures, such as motifs, clustering coefficient, harmonic path length, and two versions of small-world coefficient. Most of the section is dedicated to motifs, and the expression for clustering coefficient directly follows from it. The harmonic path length is computed using an iterative procedure. Small-world coefficients are adopted from the literature (Watts and Strogatz, 1998; Telesford et al., 2011) and will only be described in brief. We compute the connectivity measures for one fixed cell, the neuron N1, which is the representative of all the neurons in the homogeneous population. We consider all the other neurons (N2, N3, …, Nk) that can form different connectivity patterns with N1.

2.3.1. Coordinate System and Normalization (Figure 2B)

The polar coordinate system is fixed to the neuron N1, with the axon center A1 and the dendrite center B1. The center of the coordinate system is in A1 and the coordinate axis follows the direction from A1 to B1. The angular coordinate is measured counterclockwise with respect to the coordinate axis and takes values α ϵ [− π, π]. The radial coordinates are normalized, i.e., divided by Δad, so that B1 has the coordinates (0, 1), and a dendrite center BX on the edge of connectivity area has the coordinates 1. Figure 2B illustrates the described coordinate system2.

2.3.2. Notation

The symbol  Rx(X) is used to denote “a ball” or “a circular neighborhood.” The subscript indicates the normalized radius, and the center of the ball is given between brackets. If the center X has the coordinates (αX, rX), the ball

Rx(X) is used to denote “a ball” or “a circular neighborhood.” The subscript indicates the normalized radius, and the center of the ball is given between brackets. If the center X has the coordinates (αX, rX), the ball  Rx (X(αx, Rx)) is a set of all points X(α, r) such that

Rx (X(αx, Rx)) is a set of all points X(α, r) such that

This notation is also used to mark the connectivity area of a neurite, for example  rmax(A) is the connectivity area of an axon centered in A. If we replace the inequality in the expression above with an equality the expression corresponds to the edge of the ball, the circle

rmax(A) is the connectivity area of an axon centered in A. If we replace the inequality in the expression above with an equality the expression corresponds to the edge of the ball, the circle  Rx(X).

Rx(X).

2.3.3. Expected Number of Two-Node Motifs (Figure 2C)

Figure 2C illustrates the two-step method for computation of two-node motifs. We consider two connected two-node motifs, i.e., whether two neurons have a unidirectional (N1 → N2) or a bidirectional (N1 ↔ N2) connection. For the bidirectional motif we will use the notation M1 − 2, and for the unidirectional the notation M2 − 2. In the first step (the left side of Figure 2C), the position of the dendrite center B2 is chosen inside the connectivity area of axon A1 which, according to the definition of the connectivity area, results in the connection N1 → N2. From the model definition, the axon-dendrite distance in a neuron is fixed to Δad (1 in the normalized coordinate system) and the orientation of the neuron is random in the 2D space. Therefore, for the fixed B2 the axon center A2 can take any position on the circle of radius 1 centered in B2, C1(B2), with equal probability. This circle is shown as a red dashed line on the right side of Figure 2C. Given the set of possible positions of A2, we can compute the probability that A2 falls inside the connectivity area of B1, which would give a bidirectional connection between the two neurons. This probability is proportional to the part of the circle C1(B2) that falls inside the connectivity area around B1 (highlighted in Figure 2C), and is also described by the function κ1. If A2 is outside the connectivity area of B1, the resulting motif will be the unidirectional connection N1 → N2.

From this analysis we can estimate the probability that neuron N2 forms a unidirectional or a bidirectional motif with the neuron N1. To compute the expected number of two-node motifs for N1 we should consider all the possible positions of B2 (and consequently A2) within the connectivity area of A1, which is done by integrating over all the coordinates B2(α2, r2) inside the ball  rmax(A1). In addition, the expression obtained for the motif M2 − 2 is multiplied by two as we should consider two directions of the connection, N1 → N2 and N2 → N1. The obtained expected numbers of motifs are given by the following expressions:

rmax(A1). In addition, the expression obtained for the motif M2 − 2 is multiplied by two as we should consider two directions of the connection, N1 → N2 and N2 → N1. The obtained expected numbers of motifs are given by the following expressions:

If the effective radius is larger than the axon-dendrite distance in a neuron (Δmax > Δad) the dendrite center B1 falls inside the connectivity area of its axon A1. In the considered model, the dendrite centers coincide with the somata and, in general case, they should not be dimensionless. We neglect the finite size of the somata assuming it to be much smaller than the size of the neurite field and the connectivity area. If the somata are not negligible, a correction needs to be applied in order to exclude possibility that some dendrite center overlaps with B1. The correction coefficients for all 2-node and 3-node motifs are given in Supplementary Material 2.

2.3.4. The Definition of κ1 and κ

The function κ1 describes the probability that A2 falls inside the connectivity area of B1,  rmax(B1), and is proportional to the intersection between this connectivity area and the circle C1(B2). The intersection is determined by the angle ∠B2IJ shown in Figure 2C, this angle is entirely determined by the coordinates of the dendrite centers B1(0, 1) and B2(α2, r2). Similarly, we can define a more general function κ if we replace B1 with some other dendrite center B3(α3, r3) with arbitrarily chosen coordinates. This way we have κ1(α2, r2) = κ(α2, r2, 0, 1)3. The function κ is shown by the orange line in Figure 2D, and it is equal to the angle ∠B2KL shown in the same panel

rmax(B1), and is proportional to the intersection between this connectivity area and the circle C1(B2). The intersection is determined by the angle ∠B2IJ shown in Figure 2C, this angle is entirely determined by the coordinates of the dendrite centers B1(0, 1) and B2(α2, r2). Similarly, we can define a more general function κ if we replace B1 with some other dendrite center B3(α3, r3) with arbitrarily chosen coordinates. This way we have κ1(α2, r2) = κ(α2, r2, 0, 1)3. The function κ is shown by the orange line in Figure 2D, and it is equal to the angle ∠B2KL shown in the same panel

One special case has to be considered when defining κ′. If the distance between the dendrite centers is smaller or equal to Δmax − Δad, i.e., if the circle  1(B2) entirely belongs to the connectivity area of the other dendrite, the function κ′(·) becomes complex as its argument becomes larger than 1. However, the intersection angle in this case is 2π. This special case is taken into account in the final definition of κ(·):

1(B2) entirely belongs to the connectivity area of the other dendrite, the function κ′(·) becomes complex as its argument becomes larger than 1. However, the intersection angle in this case is 2π. This special case is taken into account in the final definition of κ(·):

2.3.5. Three-Node Connectivity Patterns (Figure 2D)

Figure 2D describes the two-step procedure needed to evaluate the expected number of the majority of three-node motifs. In the first step, two dendrite centers B2 and B3 are placed inside the connectivity area of the axon A1, which ensures the connections from N1 to N2 and N3. In the second step, the position of the axon center A2 is chosen on the circle  1(B2) around the dendrite center B2. The intersections of this circle with the connectivity areas around B1 and B3 determine possible connectivity patterns between the three neurons, and the lengths of these intersections are proportional to the probabilities of the connectivity patterns.

1(B2) around the dendrite center B2. The intersections of this circle with the connectivity areas around B1 and B3 determine possible connectivity patterns between the three neurons, and the lengths of these intersections are proportional to the probabilities of the connectivity patterns.

The intersection  1(B2) ∩

1(B2) ∩  rmax(B1) defines the function κ1, as in the case of 2-node motifs, which corresponds to the angle ∠B2IJ in Figure 2D and is colored red. The intersection

rmax(B1) defines the function κ1, as in the case of 2-node motifs, which corresponds to the angle ∠B2IJ in Figure 2D and is colored red. The intersection  1(B2) ∩

1(B2) ∩  rmax(B3) defines the function κ, a generalization of κ1, which is shown in orange in Figure 2D and corresponds to the angle ∠B2KL. If the circle and both connectivity areas intersect, the function λ is non-zero. This is shown in purple in Figure 2D and corresponds to the angle ∠B2KJ.

rmax(B3) defines the function κ, a generalization of κ1, which is shown in orange in Figure 2D and corresponds to the angle ∠B2KL. If the circle and both connectivity areas intersect, the function λ is non-zero. This is shown in purple in Figure 2D and corresponds to the angle ∠B2KJ.

If A2 falls inside the connectivity area around B1, but outside of the connectivity area around B3, the neuron N2 will have a bidirectional connection with N1 but no connection toward N3 (although, it is possible that it receives a connection from N3). The probability for this is proportional to the function (κ1 − λ). If A2 falls inside the connectivity area of B3, but outside the one of B1, the neuron N2 receives a unidirectional connection from N1, and also forms the connection with N3 (which might be unidirectional or bidirectional, depending on the position of axon A3). Finally, if A2 falls within the intersection between two connectivity areas, neuron N1 has a bidirectional connection with N2 and at least a unidirectional connection to N3.

The same analysis is repeated for the intersections between the circle  1(B3), which defines the possible positions of the axon center A3, and the connectivity areas around B1 and B2. This gives the probabilities for the remaining connections. Finally, the following probabilities correspond to the connectivity patterns between the three neurons:

1(B3), which defines the possible positions of the axon center A3, and the connectivity areas around B1 and B2. This gives the probabilities for the remaining connections. Finally, the following probabilities correspond to the connectivity patterns between the three neurons:

The expressions on the right are divided by 2π, as the full circle corresponds to the probability 1.

2.3.6. Definition of λ

The first step is to find the angular coordinates of the intersection points between the circle C1(B2) and the edges of the two connectivity areas,  rmax(B1) and

rmax(B1) and  rmax(B3). These points are indicated as I, J, K, and L in Figure 2D. The same is done for the intersections between C1(B3) and the edges of connectivity areas around B1 and B2. The following list summarizes these angles:

rmax(B3). These points are indicated as I, J, K, and L in Figure 2D. The same is done for the intersections between C1(B3) and the edges of connectivity areas around B1 and B2. The following list summarizes these angles:

The angles ϕ211,2 and ϕ311,2 always exist as the corresponding intersections exist for every B2 and B3 inside the connectivity area of A1. The intersections ϕ231,2, ϕ321,2 exist when rmax ≥ 1, but for rmax < 1 an additional condition for the coordinates of B2 and B3 has to be imposed.

The function λ depends on the length of the arc between these angles, which is independent of the choice of the reference coordinate system. The simplest equations are obtained if we translate the coordinate system from A1 to B2, then rotate it to have the coordinate axis in the direction from B2 to B1. The new coordinate center is B2, while B1 maintains the zero angular coordinate. The first translation requires the following coordinate transform

The second rotation is done by subtracting the angular coordinate of B1 in the translated system, equal to τ(0, 1, α2, r2), from all other angles. The relations between the original coordinates and the coordinates in the translated-then-rotated system are:

Function τ updates the angular coordinates after the translation of the coordinate system to (α2, r2). In the new coordinate system the intersecting angles between C1(B2) and Brmax(B3) are given as

All the relevant intersection angles are:

Obtaining the length of the intersection arc from these angles requires considering each possible mutual position of the three angles. This problem was solved using the following procedure. The four angles were sorted from smallest to largest into a vector of angles (α2, r2, α3, r3). The sorted angles parcel the circle C1(B2) into four arcs. For each arc we evaluated the distance between its middle point and the two centers B1 and B3. If both distances are smaller than rmax, it indicates that the whole segment belongs to the intersection area Brmax(B1) ∩ Brmax(B3). All the segments that passed this test were summed up to obtain the function λ′(α2, r2, α3, r3). This function is non-zero when all three circles intersect. If dendrites B1 and B3 do not overlap, the function is zero. The function can be expressed as

The function h(·) is the Heaviside function, equal to one if the argument is positive and equal to zero otherwise. The variables d1i, j and d3i, j are distances from the middle points of the four arcs to the dendrite centers B1 and B3, respectively. The variables ϕi are the sorted angles from the vector .

If  1(B2) does not intersect with dendrite B1 or B3, the function λ′ is not defined, and the extension of the definition given by Equation (15) is needed. The first case in the list corresponds to the situation when all three circles intersect and the length of the intersection angle is between 0 and 2π. When ∥B2B3∥ ≤ rmax − 1 the circle

1(B2) does not intersect with dendrite B1 or B3, the function λ′ is not defined, and the extension of the definition given by Equation (15) is needed. The first case in the list corresponds to the situation when all three circles intersect and the length of the intersection angle is between 0 and 2π. When ∥B2B3∥ ≤ rmax − 1 the circle  1(B2) is inside

1(B2) is inside  rmax(B3) and λ = 2π. On the contrary, when ∥B2B3∥ ≤ 1 − rmax, the area

rmax(B3) and λ = 2π. On the contrary, when ∥B2B3∥ ≤ 1 − rmax, the area  rmax(B3) is inside

rmax(B3) is inside  1(B2) and the function is zero. It is also zero when ∥B2B3 ∥ ≥ 1 + rmax, i.e., when the circle and the area are missing each other.

1(B2) and the function is zero. It is also zero when ∥B2B3 ∥ ≥ 1 + rmax, i.e., when the circle and the area are missing each other.

2.3.7. Minimal Set of Connectivity Patterns Needed to Describe Three-Node Motifs, the Definition of Central Node in a Connectivity Pattern

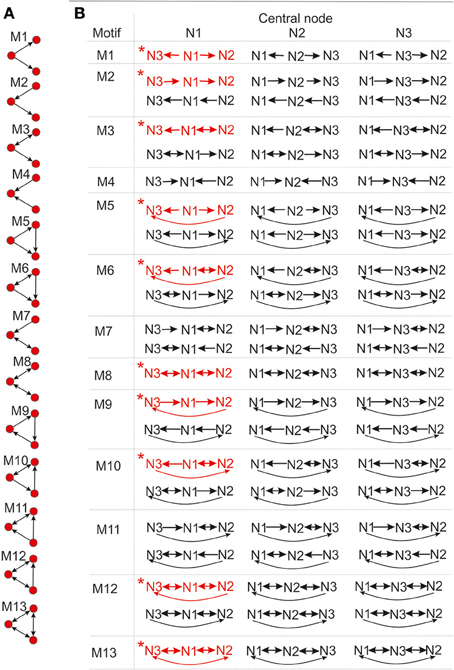

To compute the expected numbers of three-node motifs one has to analyze all the possible connectivity patterns between the three neurons N1, N2, and N3, each represented by two circular connectivity areas, one for the dendrite and one the for axon. Figure 3A shows the standard schematic representation of the 3-node motifs (Milo et al., 2002), and Figure 3B shows all the possible connectivity patterns between N1, N2, and N3 that correspond to each of the motifs4. We will demonstrate how this full list of patterns can be reduced to 10 representative ones, sufficient to compute the expected counts for all the motifs. These 10 patterns are shown in red in the table and are also marked with the star symbol. The choice of the patterns is somewhat arbitrary and an alternative set can also be adopted, which should not affect the obtained expected numbers of motifs. Reduction to the minimal set of patterns also ensures that each pattern is counted only once.

Figure 3. The schematic representation of all possible 3-node motifs. (A) The standard representation of motifs (Milo et al., 2002). (B) All the possible connectivity patterns between the three (fixed) nodes. Columns 1,2,3 in the table correspond to the nodes N1,N2,N3 being the central node in the connectivity pattern. The complete list of 3-node connectivity patterns can be reduced to the 10 representative patterns (highlighted in red), and only these 10 patterns are considered in computations of the expected motif counts.

First, we need to introduce the notion of central node n the motif, suppose it is N1. If N1 is central to the motifs M1, M3, M5, M6, M8, M10, M12, and M13, both dendrite centers B2 and B3 belong to the connectivity area of axon A1, i.e., N3 ← N1 → N2 has to be included in the connectivity pattern. If N1 is central to the motifs M4, M7, and M11, the situation is inverse, both axon centers A2 and A3 have to belong to the connectivity area of dendrite B1, i.e., N3 → N1 ← N2 has to be included in the pattern. If N1 is central to the motifs M2 and M9, the neuron N1 is on the path from N3 to N2, i.e., N3 → N1 → N2 has to be part of the pattern.

The definition of the central node for the three groups of motifs is chosen to emphasize the similarities between the connectivity patterns and to enable selection of the minimal set of patterns. For example, the central node for M11 can be defined the same way as for M1, but the adopted definition emphasizes the similarity between M11 and M6. Following the definition of the central node, all of the patterns are divided into three sets, shown as three columns in Figure 3B. Column i contains connectivity patterns where neuron Ni represents the central node. Since all of the neurons in the network have the same properties, the motif counts do not depend on the choice of the central node. Therefore, for counting all the motifs that include the neuron N1, it is sufficient to count the motifs where N1 is central and multiply the obtained counts with a coefficient.

Motifs M1, M4, M8, and M13 have one possible pattern with N1 as the central node, M2, M3, M5, M6, M7, M9, and M10 have two, M11 and M12 have four, but only two should be considered as the other two are repeated in columns two and three. If we further analyze the pairs of patterns that appear in column one, it is evident that one of them can be obtained from the other by switching the positions of N2 and N3. Therefore, it is sufficient to consider only one of them, irrelevant which one is chosen (here, we selected the first one). The reason is the following: in order to create patterns from the first group the dendrite centers B2 and B3 have to be inside the connectivity area of axon A1. To compute all the motif counts, we have to consider every possible position of B2 and B3 within  rmax(A1). Consequently, both choices of coordinates B2 = (αa, ra), B3 = (αb, rb) and B2 = (αb, rb), B2 = (αa, ra) are considered, as well as both connectivity patterns that correspond to a certain motif. It can also happen that B2 = B3 or B2 = B1 or B3 = B1, but the number of such examples is negligible, as shown in Supplementary Material 2. To count all the occurrences of M2 and M9, we put one dendrite center (B2 or B3) in the connectivity area of axon A1, and one axon center (A3 or A2) in the connectivity area of dendrite B1. Regardless of the neuron numeration, this is sufficient to take into account every appearance of these two motifs.

rmax(A1). Consequently, both choices of coordinates B2 = (αa, ra), B3 = (αb, rb) and B2 = (αb, rb), B2 = (αa, ra) are considered, as well as both connectivity patterns that correspond to a certain motif. It can also happen that B2 = B3 or B2 = B1 or B3 = B1, but the number of such examples is negligible, as shown in Supplementary Material 2. To count all the occurrences of M2 and M9, we put one dendrite center (B2 or B3) in the connectivity area of axon A1, and one axon center (A3 or A2) in the connectivity area of dendrite B1. Regardless of the neuron numeration, this is sufficient to take into account every appearance of these two motifs.

Next, consider motifs M1 and M4. One of them is obtained from another by switching the orientation of all the connections. This is equivalent to exchanging dendrites and axons, if motif M1 requires B2 and B3 inside the connectivity area of A1, then motif M4 requires A2 and A3 inside the connectivity area of B1. Connectivity areas of dendrites and axons are equal, which means that counts for M1 and M4 must be equal,  (M1) =

(M1) =  (M4). The same holds for motifs M3 and M7, and also for M6 and M11. Consequently, M4, M7, and M11 do not need to be considered separately. This completes the search for the minimal set of patterns that are shown in red in Figure 3B.

(M4). The same holds for motifs M3 and M7, and also for M6 and M11. Consequently, M4, M7, and M11 do not need to be considered separately. This completes the search for the minimal set of patterns that are shown in red in Figure 3B.

Once the counts for the 10 representative patterns are computed, the final motif counts are obtained by multiplying them with the following coefficients: 3 for M2, M3, M5, M7, M10, and M12, 1.5 for M1, M4, M6, M8, and M11, 1 for M9, 0.5 for M13. The first set of motifs is multiplied by 3 in order to take into account three possible choices of the central node. There is no need to take into account two different patterns for each central node because that is already accounted for by considering all the possible coordinates of B2 and B3, as described in a previous paragraph. Motifs M1, M4, M8 are multiplied by , because each central node corresponds to only one pattern. Consequently, the procedure that takes into account all possible positions of B2 and B3 leads to counting every pattern twice. Closer inspection of the patterns for M6, M10, and M11 shows that each pattern in the table in Figure 3B repeats twice, e.g., for M6, pattern 1 for N1 as the central node is equal to pattern 2 for N2 as the central node. If we multiply the motif counts for central node N1 by 3, in order to take into account other choices of central nodes, we actually consider each pattern twice. So the counts should be additionally divided by 2. Next, motifs M9 and M13 are circular and any choice of the central node gives the same pattern. So there is no need to multiply the counts obtained for N1 by 3. In addition, M13 has only one pattern that corresponds to N1 as the central node, so the count should be additionally divided by 2.

2.3.8. The Expected Number of Motifs M1, M3, M5, M6, M8, M10, M12, and M13

The expressions for the expected number of 3-node motifs are obtained by combining Equations (14) with the procedure for computing the expected number of 2-node motifs. Equations (14) give probabilities for different types of connections from N2 to N1 and N3, and also from N3 to N1 and N2. The probability for each connectivity pattern from Figure 3 is obtained by multiplying the probability of the appropriate connection from N2 to N1 and N3 with the probability of the connection from N3 to N1 and N2. These probabilities are defined for any pair of coordinates of B2 and B3. In order to form any of the listed motifs, B2 and B3 have to be inside the connectivity area of A1, which defines the range of their coordinates: in the coordinate system fixed to A1, the angular coordinates α2 and α3 take all the possible values and the radial coordinates r2 and r3 have to be smaller than rmax. Similarly, as in the case of 2-node motifs we should integrate the expressions for the probabilities of connectivity patterns over all the possible coordinates for both B2 and B3, i.e., over two pairs of coordinates. This results in a quadruple integral, and the coefficient in front of the integral is the square of the coefficient obtained for the 2-node motifs.

The following expression (Equation 16) gives the expected number of the representative connectivity patterns for the motifs from this group. The total motif counts are obtained by multiplying them with the coefficients given in the previous section.

The expression  Mi corresponds to the motif Mi, and depends on the function ni(α2, r2, α3, r3):

Mi corresponds to the motif Mi, and depends on the function ni(α2, r2, α3, r3):

From the definition of κ, κ1, and λ, all the functions ni have discontinuities and therefore cannot be integrated straightforwardly. The problem was solved by dividing the entire domain of integration into sub-domains where the functions are continuous. Then, the integration was performed for each sub-domain and the total motif count is obtained by summing up all of the obtained values. The details are presented in Supplementary Material 2.

2.3.9. The Expected Number of Motifs M4, M7, M11

From the previous discussion, these values are equal to the expected number of motifs M1, M3, and M6, respectively.

2.3.10. The Expected Number of Motifs M2 and M9 (Figure 2E)

The computations for motifs M2 and M9 require a four-step procedure illustrated in Figure 2E. First, the axon center A3, given by coordinates (αa3, ra3), is chosen inside the connectivity area of dendrite B1. Next, the dendrite center B2 with coordinates (α2, r2) is chosen inside the connectivity area of A1, but outside of the connectivity area of A3 (the dark green area in Figure 2E). This results in the connectivity pattern N3 → N1 → N2, a necessary condition for both motifs M2 and M9. In the third step, the dendrite center B3(α3, r3) is chosen on the circle  1(A3), but outside the connectivity area of A1, i.e., in the domain

1(A3), but outside the connectivity area of A1, i.e., in the domain  (B3) =

(B3) =  1(A3) \

1(A3) \  rmax(A1). This way, the bidirectional connection between N1 and N3 is avoided. If

rmax(A1). This way, the bidirectional connection between N1 and N3 is avoided. If  1(A3) entirely belongs to the connectivity area of A1, motifs M2 and M9 are impossible. Therefore, an additional condition for the coordinates of A3 is: ra3 > rmax − 1. In the final step, axon A2 is chosen on the circle

1(A3) entirely belongs to the connectivity area of A1, motifs M2 and M9 are impossible. Therefore, an additional condition for the coordinates of A3 is: ra3 > rmax − 1. In the final step, axon A2 is chosen on the circle  1(B2). Same as before, the intersection between this circle and the connectivity areas of B1 and B3 defines the probabilities to form motifs M2 and M9. These probabilities are expressed using functions κ1, κ, and λ. Motif M2 emerges if A2 falls outside of both connectivity areas, while M9 emerges if A2 falls inside the connectivity area of B3, but outside the one of B1.

1(B2). Same as before, the intersection between this circle and the connectivity areas of B1 and B3 defines the probabilities to form motifs M2 and M9. These probabilities are expressed using functions κ1, κ, and λ. Motif M2 emerges if A2 falls outside of both connectivity areas, while M9 emerges if A2 falls inside the connectivity area of B3, but outside the one of B1.

The expected numbers of motifs M2 and M9 are computed similarly as before. The probabilities of the representative connectivity patterns are integrated for all possible positions of A3 and B2. In addition, we have to take into account all the positions of B3, which adds the fifth integral to the equations. The easiest way to evaluate this innermost integral is by translating the coordinate system from A1 to A3, to simplify expressions for the coordinates of B3 in  (B3) =

(B3) =  1(A3) \

1(A3) \  rmax(A1). The outer quadruple integral is evaluated in the coordinate system of A1. The obtained expressions for the expected number of motif counts are:

rmax(A1). The outer quadruple integral is evaluated in the coordinate system of A1. The obtained expressions for the expected number of motif counts are:

2.3.11. Clustering Coefficient (CC)

Clustering coefficient quantifies the density of connections in the local neighborhood of each network node. The percent of connected neighbors is estimated for each network node, and the average over all nodes represents the clustering coefficient (Watts and Strogatz, 1998; Boccaletti et al., 2006). A global measure related to the clustering coefficient is transitivity (Watts and Strogatz, 1998; Boccaletti et al., 2006) which estimates the number of triangles among all the connected triplets in a network. Here, we consider a simple case of identical neurons (network nodes) uniformly distributed in a planar space without boundaries. The clustering coefficient of the resulting network is identical to the local clustering coefficient of each node. Similarly, the global transitivity measure reduces to the measure evaluated for a single node. We employ one possible extension of the original clustering coefficient (for undirected networks) to the case of directed networks (Boccaletti et al., 2006; Sporns, 2011; Telesford et al., 2011; Mäki-Marttunen et al., 2013):

The expression holds for a network of  nodes where each node has nneighbors neighbors. The values Mij describe the presence or absence of a connection between nodes i and j, Mij = 1 if a connection from i to j exists and Mij = 0 otherwise. This equation can be re-written as a linear combination of motif counts. We can group all pairs of neighbors of node N1 according to the motif they form. The number of pairs in each group is equal to the corresponding motif count. Each motif count should be multiplied with the coefficient determined by the product from the summation above. Clearly, if a motif has two unconnected nodes (like M1 or M2) the coefficient is zero. For M5 and M9 the coefficient is 1, for M6, M10, M11 it is 2, for M12 it is 4, and for M13 it is 8. From the previous derivations, the expected motif counts are given by the values 3

nodes where each node has nneighbors neighbors. The values Mij describe the presence or absence of a connection between nodes i and j, Mij = 1 if a connection from i to j exists and Mij = 0 otherwise. This equation can be re-written as a linear combination of motif counts. We can group all pairs of neighbors of node N1 according to the motif they form. The number of pairs in each group is equal to the corresponding motif count. Each motif count should be multiplied with the coefficient determined by the product from the summation above. Clearly, if a motif has two unconnected nodes (like M1 or M2) the coefficient is zero. For M5 and M9 the coefficient is 1, for M6, M10, M11 it is 2, for M12 it is 4, and for M13 it is 8. From the previous derivations, the expected motif counts are given by the values 3 M5 for M5, 1.5

M5 for M5, 1.5 M6 for M6,

M6 for M6,  M9 for M9, 3

M9 for M9, 3 M10 for M10, 1.5

M10 for M10, 1.5 M11 for M11, 3

M11 for M11, 3 M12 for M12, 0.5

M12 for M12, 0.5 M13 for M13. The number of neighbors can be expressed using the expected 2-node motif counts, nneighbors =

M13 for M13. The number of neighbors can be expressed using the expected 2-node motif counts, nneighbors =  M1−2 +

M1−2 +  M2−2, as the sum of unidirected and bidirected connections that start or end in N1. The equation for the expected clustering coefficient becomes

M2−2, as the sum of unidirected and bidirected connections that start or end in N1. The equation for the expected clustering coefficient becomes

2.3.12. Path Length

The path length PLij from neuron Ni to neuron Nj is equal to the minimal number of edges on a traversable path between them. If the neurons are unconnected then PLi, j = ∞. If PLi, j = k > 1, no direct connection between the two neurons exists. Instead, the path from one of them to the other goes through k−1 other neurons. We compute the harmonic path length (Watts and Strogatz, 1998; Boccaletti et al., 2006; Mäki-Marttunen et al., 2011), the harmonic mean over the shortest path lengths for all the pairs of neurons in the network. In the population of identical, randomly oriented and uniformly distributed neurons, this coefficient becomes equal to the harmonic path length computed for one fixed neuron, for example neuron N1, as follows

Instead of computing the harmonic mean we use an equivalent expression for the expected harmonic path length

There, P(PL = k) is the probability that the shortest path from N1 to some other node goes through k direct edges, i.e., through k−1 other nodes. For sufficiently large networks, the mean converges toward the expected value, which should hold for the considered model. In the derivations that follow, all the coordinates are expressed in the coordinate system fixed to neuron N1, as it was described before. In this coordinate system, the path length from N1 to a specific neuron NX depends only on the radial but not on the angular coordinate of NX, so we can fix the angular coordinate to αX = 0 and consider only the neurons along the coordinate axis.

The probability of the shortest path length P(PL = k) is computed using the following expression

where the integration is done over the radial coordinate rX. The integrated function is the joint distribution of path length and radial coordinate. The joint distribution is expressed as the product of the shortest path length distribution conditioned on the radial coordinate and the probability that a dendrite center has such radial coordinate. The probability of having a radial coordinate rX is simply expressed as the number of dendrite centers within a ring with the radius rX divided by the total number of neurons  . The path length distribution conditioned on the radial coordinate is expressed using another conditional probability, P(PL ≤ k | rX). For the fixed radial coordinate, this probability shows how likely is that the shortest path length of the considered neuron does not exceed k.

. The path length distribution conditioned on the radial coordinate is expressed using another conditional probability, P(PL ≤ k | rX). For the fixed radial coordinate, this probability shows how likely is that the shortest path length of the considered neuron does not exceed k.

The last conditional probability is obtained from the following analysis. Consider a neuron NX and fix its dendrite center to rX. If it has the shortest path length at most k, then there must be one other neuron that connects to NX, i.e., that has its axon center within the connectivity area of the dendrite BX, and that has the shortest path length no bigger than k−1. Clearly, this is opposite to the statement that every neuron either does not connect to NX or has the shortest path length bigger than k−1. If we express this formally, as probabilities of the described events, and consider all neurons independent on each other we can write the conditional probability as

The last equation depends on the assisting function ν(k − 1|rX). If we consider one particular neuron with the fixed coordinates, the probability that it connects to NX and has the path length at most equal to k−1 is described by ν(k − 1|rX). Finally, this function can be expressed as a function of the conditional probability

The expressions for the conditional probability and the ν-function form a pair of iterative equations that should be computed for all feasible values of k. The definition of connectivity area gives the initial condition for these equations

The obtained expressions are different from the methodology used for motif counts or clustering coefficient. The harmonic path length represents a global measure of network structure and consequently depends on the total number of neurons in the population. The equations derived here are carefully analyzed in Supplementary Material 3. Every step in the presented procedure is illustrated. An equivalent model is simulated and the results from the theoretical model (from this section) and the simulated model are shown alongside.

2.3.13. The Small-World Coefficient

The clustering coefficient and the shortest path length are sufficient for the computation of the small-world coefficient. We consider two different definitions. The classical definition of the small-world coefficient (Watts and Strogatz, 1998) is the following:

Here, the clustering coefficient CC and the shortest path length PL of the considered network are compared to those of a uniform random network. In a small-world network, the clustering coefficient should be relatively high, similarly to the situation in lattice networks, while the path length should be short, similarly to the case of uniform random networks. Therefore, the SW coefficient should be close to one for the uniform random networks and much bigger than one for the small-world networks.

Additionally, we consider another definition from the literature introduced in Telesford et al. (2011) that compares a network with both, uniform random and locally coupled networks

For a network similar to the uniform random one, the first factor should be close to one while the second factor becomes very small as uniform random networks have a much smaller clustering coefficient than locally coupled networks. Therefore, SWq is positive and close to one. For a network similar to a locally coupled network, the first factor is small, as the PL of such networks is much larger than in random networks, while the second factor is close to one. The coefficient SWq is negative and close to minus one. In case of small-world networks, both the first and the second factor are close to one and SWq is close to zero.

2.3.14. Locally Coupled Networks

The locally coupled networks are generated to correspond to the extreme situation in our model, the overlapping axon and dendrite centers (Δad = 0). The number of  nodes is uniformly distributed in the two-dimensional space (of size L × L) with the density equal as before (i.e., equal ). The two-dimensional space is projected on a torus to avoid boundary conditions. The number of nodes is sufficiently bigger than the maximal considered node degree. A node is connected to every other node inside its connectivity area, which gives the node degree according to Equation (6). A network generated this way has only bi-directional connections and can express only motifs M2-1, M8, and M13, we call it “strictly locally coupled network.” To increase variability in motif counts and still maintain the properties of a locally coupled network, we removed 10% randomly selected connections and established them with the nearest neurons outside the connectivity area, we refer to it as “locally coupled network with 10% of non-local connections.”

nodes is uniformly distributed in the two-dimensional space (of size L × L) with the density equal as before (i.e., equal ). The two-dimensional space is projected on a torus to avoid boundary conditions. The number of nodes is sufficiently bigger than the maximal considered node degree. A node is connected to every other node inside its connectivity area, which gives the node degree according to Equation (6). A network generated this way has only bi-directional connections and can express only motifs M2-1, M8, and M13, we call it “strictly locally coupled network.” To increase variability in motif counts and still maintain the properties of a locally coupled network, we removed 10% randomly selected connections and established them with the nearest neurons outside the connectivity area, we refer to it as “locally coupled network with 10% of non-local connections.”

2.3.15. Uniform Random Networks

These networks are generated in a standard way. Each connection is set with the probability  independently on other connections. Clearly, the finite size of these networks raises some issues. In the analyzed model networks, the total number of nodes is explicitly considered only when computing path length through the network. The network is considered virtually infinite. There is no boundary conditions and each node has an equal number of available neighbors. In the locally coupled networks, as described above, a comparable model is provided by choosing a large enough network and projecting it on a torus. In uniform random networks, the problem is somewhat more difficult because the network size determines probability of connection, the parameter that affects all considered network measures. In the results presented here, we fix the network size and the probability of connection solely varies with the node degree.

independently on other connections. Clearly, the finite size of these networks raises some issues. In the analyzed model networks, the total number of nodes is explicitly considered only when computing path length through the network. The network is considered virtually infinite. There is no boundary conditions and each node has an equal number of available neighbors. In the locally coupled networks, as described above, a comparable model is provided by choosing a large enough network and projecting it on a torus. In uniform random networks, the problem is somewhat more difficult because the network size determines probability of connection, the parameter that affects all considered network measures. In the results presented here, we fix the network size and the probability of connection solely varies with the node degree.

3. Results

The results of the model analysis are divided into two parts, similarly to the model description. In the first part, the properties of neurite morphology are related to the connectivity between pairs of neurons. Quantitative measures such as the expected number of synapses, the effective radius of the connectivity area, and the node degree are derived as functions of the neurite model parameters. In the second part, the concept derived in the first step, the effective radius, is related to the typical measures used to quantify connectivity in networks, motif counts, clustering coefficient, path length, and small-world coefficient. This way we divided the initial question, how the properties of neurite morphology affect connectivity in large networks, into two easier goals that better explain the role of different aspects of the model.

3.1. The Expected Number of Synapses

In this section, we show how the expected number of synapses S depends on the neuron model parameters. We give the general expression for this dependency in Methods Section by Equation (4). The derivation of S is given in Supplementary Material 1. We consider the neurites with circular support, i.e., with neurite segments distributed inside the circle of radius Ra for axons and Rd for dendrites, and with one of the two forms of distributions, uniform or truncated Gaussian. The truncated Gaussians have equal variances along the two dimensions and the zero cross-covariance, the cases that simplify computations. Neurite distributions are described by the parameter set M, which is an empty set for the uniform distribution and contains normalized parameters M = {σ, kσ} for the truncated Gaussians. The presented methodology can be applied in more general situations, for neurites with elliptic support and a general form of truncated Gaussian distribution.

According to Equation (4), S depends linearly on the number of axon and dendrite segments, Na and Nd, and also on the square of the unit length D. It has a non-trivial dependency on the axon-dendrite distance Δ, on the average neurite size R, and on their ratio, the normalized axon-dendrite distance ρ. In addition, it depends on the asymmetry index η, the parameter that quantifies asymmetry between the size of axons and dendrites. This parameter takes values from the interval η ϵ [0, 1], for η = 0 the dendrite and axon radii are the same (Ra = Rd), and for η → 1 the axons are much bigger than dendrites (Ra >> Rd). In the considered model, the axons are always bigger than the dendrites. Finally, S depends on the neurite density distributions and the set of normalized parameters M.

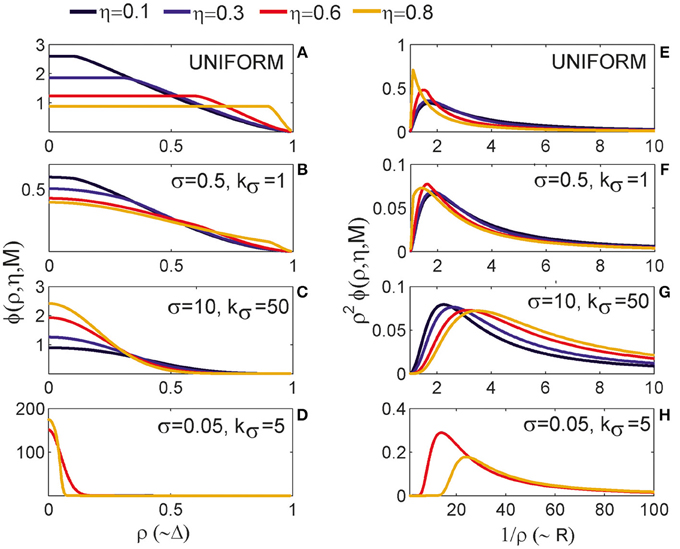

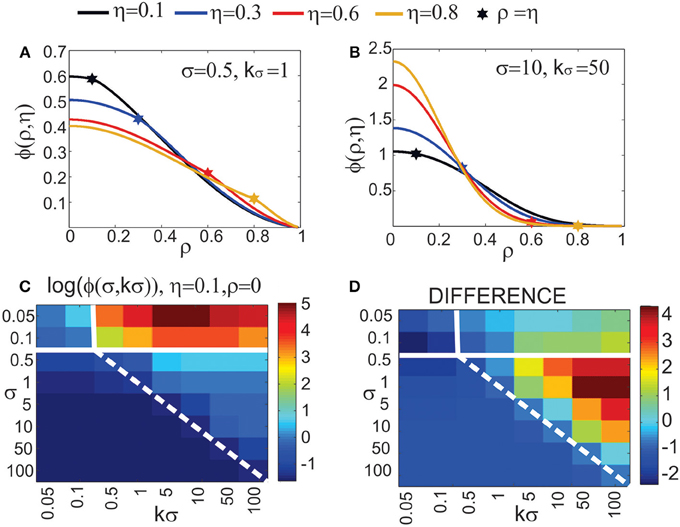

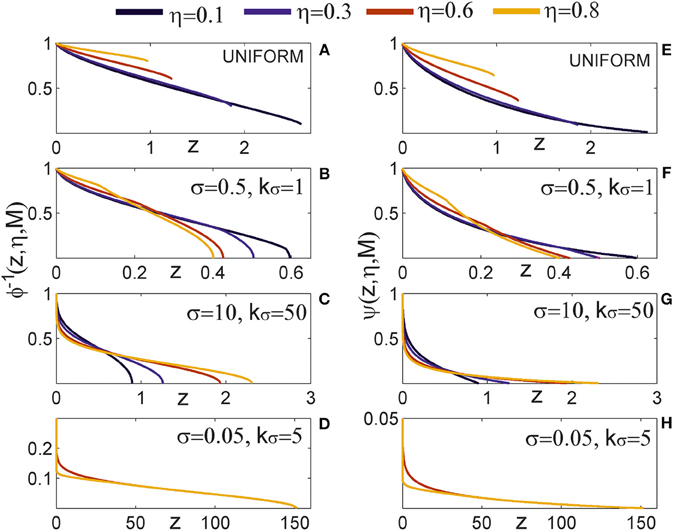

3.1.1. The Expected Number of Synapses as a Function of Axon-Dendrite Distance (Figures 4A–D)

We first show how S depends on the axon-dendrite distance and on the normalized axon-dendrite distance by fixing all the other parameters. This way the expected number of synapses becomes proportional to the function ϕ(ρ, η, M), consequently called the distance-dependent expected number of synapses. This is illustrated in the left column in Figures 4A–D. Different panels correspond to different distributions of neurite segments, which are indicated on each panel along with the distribution parameters. The x-axis in Figures 4A–D shows the axon-dendrite distance (Δ ∈ [0, Ra + Rd]) and the normalized axon dendrite distance (ρ ∈ [0, 1]). Four different cases in each panel correspond to different values of the asymmetry index (values for the asymmetry index and the color code are indicated in Figure 4).

Figure 4. Expected number of synapses. Left column: Distance-dependent expected number of synapses [ϕ(ρ, η, M)] as a function of the axon-dendrite distance (Δ), and consequently the normalized axon-dendrite distance (ρ). When all the parameters but Δ are fixed this function is equal to the expected number of synapses up to a multiplicative constant. Right column: Size-dependent expected number of synapses [ρ2ϕ(ρ, η, M)] as function of the average neurite radius R and the inverse of the normalized axon-dendrite distance (1/ρ) which is proportional to the average neurite radius. When all the parameters but the average neurite radius are fixed this function describes the expected number of synapses up to a multiplicative constant. The functions are shown for four values of the asymmetry index (η), dark blue, η = 0.1; blue, η = 0.3; red, η = 0.6; yellow, η = 0.8. (A,E) are obtained for the uniformly distributed axon and dendrite segments. (B–D, F–H) show three typical examples obtained for the truncated Gaussian distribution of neurite segments, and the normalized parameters of the distribution are indicated on each panel.

Figure 4A illustrates the expected number of synapses obtained when both the axon and dendrite have uniform distribution of neurite segments. In this case, the function is determined solely by the overlap between neurites, i.e., by the parameters that determine the overlap, the (normalized) axon-dendrite distance and the average neurite size. For ρ ≤ η, i.e., Δ ≤ Ra − Rd, the dendrite is entirely inside the axon and the expected number of synapses is maximal. As the axon-dendrite distance increases further, the overlap between the two neurites decreases until it vanishes for ρ > 1, i.e., for Δ > Ra + Rd.