The Body Action Coding System II: muscle activations during the perception and expression of emotion

- 1 Brain and Emotion Laboratory, Department of Medical and Clinical Psychology, Tilburg University, Tilburg, Netherlands

- 2 Department of Cognitive Neuropsychology, Tilburg University, Tilburg, Netherlands

- 3 Brain and Emotion Laboratory, Faculty of Psychology and Neuroscience, Maastricht University, Maastricht, Netherlands

Research into the expression and perception of emotions has mostly focused on facial expressions. Recently, body postures have become increasingly important in research, but knowledge of muscle activity during the perception or expression of emotion is lacking. The current study continues the development of a Body Action Coding System (BACS), which was initiated in a previous study that described the involvement of muscles in the neck, shoulders and arms during the expression of fear and anger. The current study expands the BACS by assessing the activity patterns of three additional muscles. Surface electromyography was recorded from muscles in the neck (upper trapezius descendens), forearms (extensor carpi ulnaris), lower back (erector spinae longissimus) and calves (peroneus longus) during active expression and passive viewing of fearful and angry body expressions. The muscles in the forearm were strongly active for anger expression and to a lesser extent for fear expression. In contrast, muscles in the calves were recruited slightly more for fearful expressions. It was also found that muscles automatically responded to the perception of emotion, without any overt movement. The observer's forearms responded to the perception of fear, while the muscles used for leaning backwards were activated when faced with an angry adversary. Lastly, the calf responded immediately when a fearful person was seen, but more slowly to anger. There is increasing interest in developing systems that are able to create or recognize emotional body language for the development of avatars, robots, and online environments. To that end, multiple coding systems have been developed that can either interpret or create bodily expressions based on static postures, motion capture data or videos. However, the BACS is the first coding system based on muscle activity.

Introduction

Faces, bodies, and voices are the major sources of social and emotional information. There is a vast amount of research on how humans perceive and express facial expressions. Part of this work resulted in the Facial Action Coding System (FACS), which describes in detail which muscles are recruited for emotional expressions (Ekman and Friesen, 1978). Using the FACS and electromyography (EMG) recordings, many studies have examined conscious and unconscious facial responses to emotional faces (Dimberg, 1990; see Hess and Fischer, 2013 for a review). In the last decade it has become increasingly clear that bodily expressions are an equally valid means of communicating emotional information (de Gelder et al., 2004; de Gelder, 2009). Bodily expressions, more so than facial expressions, quickly activate cortical networks involved in action preparation, action understanding and biological motion (Kret et al., 2011; de Gelder, 2013), especially for negative or threatening emotions (de Gelder et al., 2004). For example, the perception of an angry or fearful person activates a brain network that facilitates the perception and execution of action, including the (pre)motor areas and the cerebellum (Grezes et al., 2007; Pichon et al., 2008, 2009, 2012). This corroborates the idea that in daily life, expressing emotion with the body is an automatic, reflex-like behavior that is often triggered as soon as a response to something in the environment is required (Frijda, 2010). In line with this theory, transcranial magnetic stimulation (TMS) studies found motor facilitation of the muscles used in an observed movement (Fadiga et al., 2005), as well as increased corticospinal excitability in response to fearful faces (Schutter et al., 2008) and emotional bodily expressions (Borgomaneri et al., 2012). In addition, Coombes et al. (2006, 2009) found increased force production and motor evoked potentials of finger and wrist extensors following a negative stimulus. Studies in clinical settings have found that psychological factors, such as fear of pain, influence the activation patterns of back muscles in patients with chronic lower back pain (Watson et al., 1997; Geisser et al., 2004). Additionally, muscle tone in the legs differs when people imagine themselves in a painful situation (Lelard et al., 2013). These results raised the question of whether automatic and covert muscle responses to emotion are limited to the face, or whether such activations can also be found in body muscles. To answer that question, the role of muscles in the neck, shoulders and arms during the expression and perception of anger and fear was recently assessed using EMG (Huis in ‘t Veld et al., 2014). It was found that covert responses of muscles in the body can indeed be measured: muscles in the neck and arms showed distinctly different response patterns to the perception of fearful as opposed to angry bodily expressions. However, to assess the underlying mechanisms of these activations, it is necessary to know what role these muscles play in the execution of emotion. The study by Huis in ‘t Veld et al. (2014) was the starting point of the Body Action Coding System (BACS), which aims to describe not only which muscles are used for emotional bodily expressions, but also which muscles respond covertly to the perception of emotion.

Understanding the role of the body in emotional expression is becoming increasingly important, with growing interest in developing systems for gaming (Savva and Bianchi-Berthouze, 2012; Zacharatos, 2013), robots (Castellano et al., 2013; Mccoll and Nejat, 2014), touchscreen interfaces (Gao et al., 2012), teaching (Mayer and Dapra, 2012), and public speaking training (Nguyen et al., 2013). To that end, multiple coding systems have been developed that can either interpret or create bodily expressions based on static postures, motion capture data or videos (see Zeng et al., 2009; Calvo and D'mello, 2010; Karg et al., 2013; Kleinsmith and Bianchi-Berthouze, 2013 for extensive reviews). However, no system yet exists that is based on muscle involvement and EMG measurements. Such a system would enable research on automatic responses without overt movement and would allow natural emotional expressions to be built from biologically valid movement data. The current study continues the work on the BACS by, first, assessing the role of muscles in the lower back, forearms and calves in the expression of fear and anger and, second, determining whether covert responses can be measured in these muscles during the passive viewing of emotion.

Materials and Methods

The stimuli described below, the experimental procedure, EMG data acquisition, processing procedures and statistical analyses are similar to what is reported in the previously mentioned BACS I article (Huis in ‘t Veld et al., 2014).

Participants

Forty-eight undergraduates of Tilburg University participated in exchange for course credit. Participants read and signed an informed consent form and completed a screening form to assess physical, psychological, and neurological health. The study was approved by the Maastricht University ethical committee. The following participants were excluded from analysis: one suffered from hypermobility, one from fibromyalgia, four used medication, three were left-handed, and one did not adhere to the instructions. The data of three participants were of overall low quality, and the sessions of five participants were aborted by the researcher: two felt uncomfortable standing still (which made them dizzy), two felt uncomfortable in the electrically shielded room (finding it oppressive), and one felt unwell. Three participants did not adhere to the dress code, which prevented measurement of their calf muscles; all other muscles were measured in these participants. The final sample therefore consisted of 30 healthy right-handed individuals between 18 and 24 years old, 12 males (mean age = 21.2, SD = 2.2) and 18 females (mean age = 19.5, SD = 2.2), with normal or corrected-to-normal vision.

Stimulus Materials and Procedure

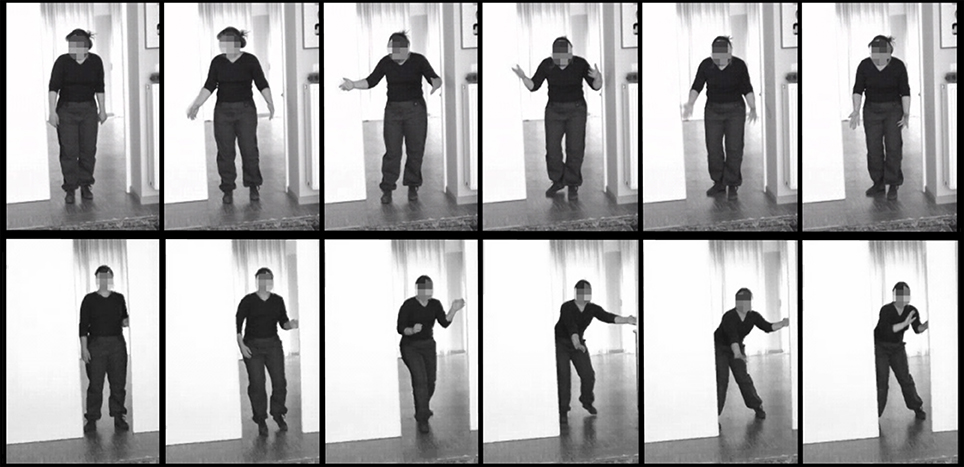

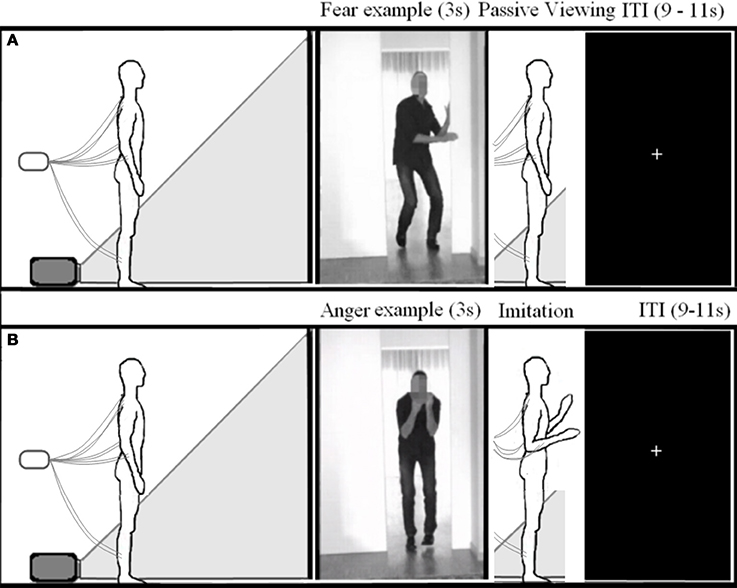

The experiments consisted of two emotion conditions, Fear and Anger. Twenty-four 3000-ms videos were used, in which an actor opens a door and then reacts fearfully (12 videos) or angrily (12 videos) (see Figure 1). These stimuli have been used in other studies (Grezes et al., 2007; Pichon et al., 2008, 2009, 2012) and are well recognized and controlled for movement and emotional intensity. The face of the actor was blurred. The videos were projected life-size on the wall in front of the participant, who was standing upright. In total there were 72 randomly presented trials, 36 in the Anger condition and 36 in the Fear condition, with an inter-trial interval (ITI) between 9 and 11 s. During the ITI, a black screen was shown with a white fixation cross at the chest height of the actor in the stimulus. The experiment was divided into two blocks of 36 trials with a break in between. The same procedure was used in both experiments.
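The trial structure described above can be sketched as a small randomization script. This is an illustrative sketch, not the presentation software used in the study: the video identifiers are hypothetical, each of the 24 videos is assumed to be shown three times (72 trials / 24 videos), the ITI is assumed to be drawn uniformly between 9 and 11 s, and the 72 shuffled trials are simply split into two blocks of 36, so the Fear/Anger balance within each block is not enforced.

```python
import random

# Hypothetical video identifiers: 12 fearful and 12 angry clips.
VIDEOS = [("Fear", i) for i in range(1, 13)] + [("Anger", i) for i in range(1, 13)]
REPEATS = 3               # assumption: 72 trials / 24 videos = 3 presentations each
ITI_RANGE = (9.0, 11.0)   # inter-trial interval in seconds
BLOCK_SIZE = 36


def build_schedule(seed=None):
    """Return two blocks, each a list of (emotion, video_id, iti_seconds) tuples."""
    rng = random.Random(seed)
    trials = VIDEOS * REPEATS
    rng.shuffle(trials)
    # Attach a jittered ITI to every trial (uniform distribution is an assumption).
    trials = [(emotion, video, rng.uniform(*ITI_RANGE)) for emotion, video in trials]
    return [trials[i:i + BLOCK_SIZE] for i in range(0, len(trials), BLOCK_SIZE)]


blocks = build_schedule(seed=1)
for number, block in enumerate(blocks, start=1):
    n_fear = sum(1 for emotion, _, _ in block if emotion == "Fear")
    print(f"block {number}: {len(block)} trials, {n_fear} Fear")
```

If within-block balance were required, each block could instead be built from its own shuffled set of 18 Fear and 18 Anger trials.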

Experiment 1: Passive Viewing

The participants were instructed to view all the videos while maintaining an upright posture with the head facing forward, feet positioned 20–30 cm apart, squared but relaxed shoulders and arms hanging loosely next to the body. They were asked to stand as still as possible while keeping a relaxed stance, and to minimize unnecessary movements, such as moving the head, shifting stance, or tugging at hair or clothing.

Experiment 2: Imitation

The participants were told that they would see the same videos as in experiment 1 and were instructed to mimic the emotional reaction of the actor as convincingly as possible, using their whole body. They first viewed the whole video in the same stance as in experiment 1 and, after the offset of each video, imitated the emotional movement of the actor and then returned to their starting position and posture (see Figure 2). This was first practiced with the experimenter to ensure that the participants understood the procedure. Experiment 2 always followed experiment 1. The order of the experiments was not counterbalanced, to prevent habituation to the videos and to keep the participants naïve to the purpose of the study during the passive viewing experiment.

Figure 2. Experimental setup. Schematic overview of the experimental setup for experiment 1 (A) and 2 (B).

Electrophysiological Recordings and Analyses

The recordings took place in a dimly lit and electrically shielded room. Bipolar EMG recordings were made from the upper trapezius descendens (neck), the extensor carpi ulnaris (the wrist extensors in the dorsal posterior forearm), the erector spinae longissimus (lower back), and the peroneus longus (calf). The erector spinae longissimus extends the trunk and is activated by leaning backwards or by returning to an upright position after flexion. The peroneus longus is used in eversion of the foot, plantar flexion of the ankle, and pushing off with the foot. The location of each electrode pair for the trapezius, erector spinae longissimus and peroneus longus was carefully established according to the SENIAM recommendations (Hermens and Freriks, 1997). To measure extensor carpi ulnaris activity, the electrodes were placed on the bulky mass of the muscle, on a line between the olecranon process of the ulna and the wrist, approximately 6 cm from the olecranon process. A schematic overview of muscle and electrode locations can be found in Figures 5 and 6; see the SENIAM recommendations for exact electrode placements (Hermens and Freriks, 1997). The electrode sites were cleaned with alcohol, and flat-type active electrodes (2 mm diameter) filled with conductive gel were placed on each muscle with an inter-electrode distance of 20 mm. Two electrodes placed on the C7 vertebra of the participant served as reference (Common Mode Sense) and ground (Driven Right Leg) electrodes. EMG data were digitized at a rate of 2048 Hz (BioSemi ActiveTwo, Amsterdam, Netherlands). To reduce the participants' awareness of the aim of the study, they were told that the electrodes measured heart rate and skin conductance. The researcher could see and hear the participant through a camera and an intercom.

The data were processed offline using BrainVision Analyzer 2.0 (Brain Products). Eight channels, one for each recorded muscle on each side of the body, were created by subtracting the two electrodes of each pair. These channels were filtered with a band-pass filter (20–500 Hz, 48 dB/octave) and a notch filter (50 Hz, 48 dB/octave). The signal can be contaminated by the electrocardiographic (ECG) signal. If this ECG contamination is stronger in one electrode of a pair than in the other, subtraction does not remove it. This is usually the case in the lower back recordings, as one electrode is closer to the heart than the other. In these cases, an independent component analysis was performed, which decomposes the data into a set of independent components, after which the EMG signal was rebuilt without the ECG component (Mak et al., 2010; Taelman et al., 2011). The data were then rectified, smoothed with a 50 Hz low-pass filter (48 dB/octave) and segmented into 10-s epochs, each including a 1-s pre-stimulus baseline. The epochs were visually inspected, and outlier trials were manually removed per channel and condition. Trials with movement or artifacts in the pre-stimulus baseline and trials with overt movement during stimulus presentation were rejected. The remaining trials of each channel were averaged, low-pass filtered at 9 Hz, downsampled to 20 Hz and exported.
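A rough open-source equivalent of this processing chain is sketched below, assuming NumPy and SciPy. It is not a reproduction of the BrainVision Analyzer routines: the function names are hypothetical, the 4th-order Butterworth filters applied forward and backward (`sosfiltfilt`, zero phase, roughly 48 dB/octave) and the notch quality factor are assumptions, a 10-s epoch of 1 s baseline plus 9 s post-onset is assumed, and the ICA-based ECG removal and manual trial rejection are omitted.

```python
from math import gcd

import numpy as np
from scipy import signal

FS = 2048  # sampling rate in Hz, as reported for the BioSemi ActiveTwo recordings


def bipolar(electrode_a, electrode_b):
    """Derive one bipolar EMG channel by subtracting the two electrodes of a pair."""
    return np.asarray(electrode_a, dtype=float) - np.asarray(electrode_b, dtype=float)


def preprocess(emg, fs=FS):
    """Band-pass (20-500 Hz), notch (50 Hz), rectify and smooth (50 Hz) one channel.

    Assumption: 4th-order Butterworth filters run forward and backward, which gives
    zero phase and roughly a 48 dB/octave roll-off; the notch Q is arbitrary.
    """
    band = signal.butter(4, [20, 500], btype="bandpass", fs=fs, output="sos")
    x = signal.sosfiltfilt(band, emg)
    b, a = signal.iirnotch(50.0, Q=30.0, fs=fs)
    x = signal.filtfilt(b, a, x)
    x = np.abs(x)  # full-wave rectification
    smooth = signal.butter(4, 50, btype="lowpass", fs=fs, output="sos")
    return signal.sosfiltfilt(smooth, x)


def epoch_average(envelope, onsets, fs=FS, pre_s=1.0, post_s=9.0):
    """Average epochs running from pre_s before to post_s after each onset (in samples)."""
    pre, post = int(pre_s * fs), int(post_s * fs)
    epochs = np.stack([envelope[o - pre:o + post] for o in onsets])
    return epochs.mean(axis=0)


def smooth_and_downsample(average, fs=FS, target_fs=20):
    """Low-pass the trial average at 9 Hz, then resample it to target_fs."""
    lowpass = signal.butter(4, 9, btype="lowpass", fs=fs, output="sos")
    x = signal.sosfiltfilt(lowpass, average)
    g = gcd(target_fs, fs)
    return signal.resample_poly(x, target_fs // g, fs // g)  # 2048 Hz -> 20 Hz


# Usage on synthetic noise standing in for one electrode pair (60 s of data).
rng = np.random.default_rng(0)
raw = bipolar(rng.normal(size=60 * FS), rng.normal(size=60 * FS))
envelope = preprocess(raw)
average = epoch_average(envelope, onsets=[5 * FS, 20 * FS, 35 * FS])
exported = smooth_and_downsample(average)  # 10 s at 20 Hz -> 200 samples
```

Because 2048 Hz is not an integer multiple of 20 Hz, the sketch uses polyphase resampling (up by 5, down by 512) rather than simple decimation.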

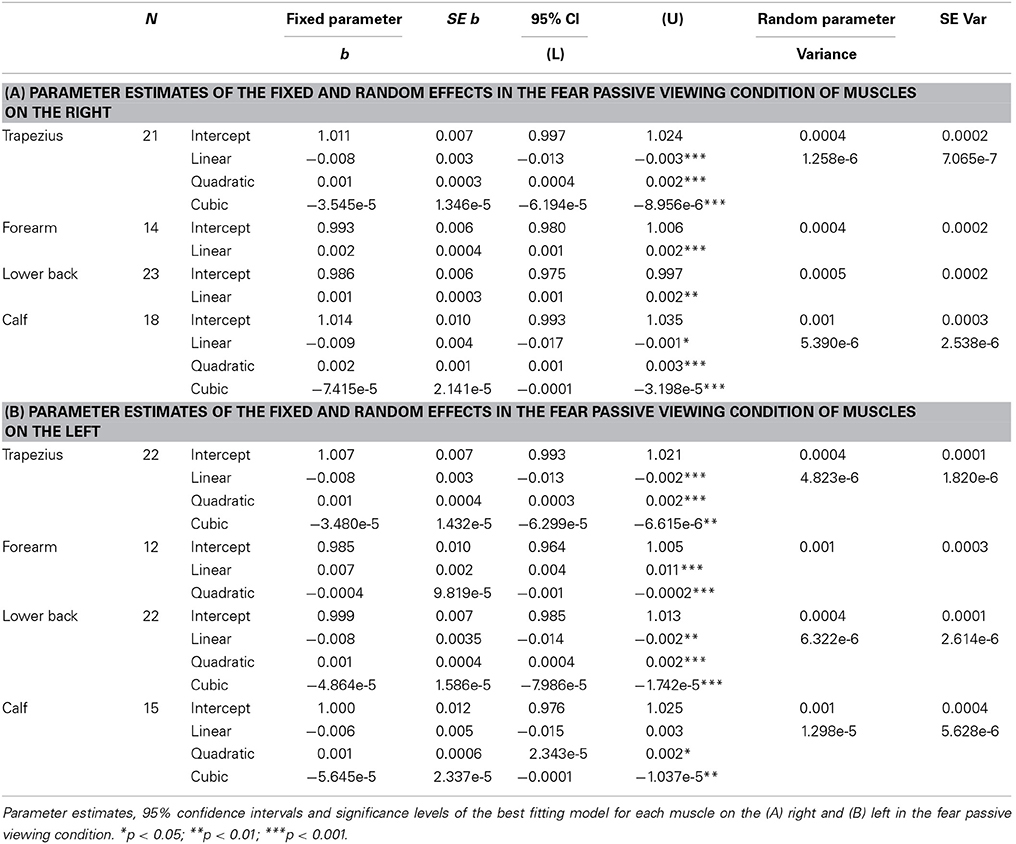

Statistical Analyses

Only channels with at least 30 valid trials per emotion condition were kept for analysis. To allow comparison between participants, the data were normalized by expressing EMG activity as a proportion of baseline for every muscle and emotion. This proportion was calculated by dividing the average activity in each 500-ms bin by the average activity in the 1000-ms pre-stimulus baseline, during which the participant stood in a relaxed stance without any stimulus presentation. A value of one signifies no change, whereas values below one signify deactivation and values above one signify activation. This resulted in normalized EMG magnitudes at 16 time points (8 s) in the passive viewing condition and 18 time points (9 s) in the active imitation condition, of which the first six time points (3 s) fall within stimulus presentation. To assess the shape and significance of these EMG time courses for each muscle's response to each emotion, multilevel growth models were fitted. These models were built step by step, starting with a simple linear model with a fixed intercept and slope, to which quadratic and cubic effects of time and random intercepts and slopes were added. Each step was tested by comparing the change in −2 log likelihood against a chi-square distribution. For a more detailed explanation and justification of this method, see Bagiella et al. (2000) and Huis in ‘t Veld et al. (2014). For the passive viewing experiment, all 16 time points were modeled, including the six time points during stimulus presentation. For the imitation condition, only the period after the participants started moving was modeled.
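The baseline normalization and the nested-model comparison can be sketched as follows, assuming NumPy, SciPy and the 20 Hz exported averages described above. `normalize_to_baseline` and `lr_test` are hypothetical helper names, and the trace is assumed to begin with the 1-s baseline. When the added parameter is a variance component (a random intercept or slope), the plain chi-square reference is known to be conservative, because the variance lies on the boundary of its parameter space.

```python
import numpy as np
from scipy.stats import chi2

FS_OUT = 20  # Hz, sampling rate of the exported trial averages


def normalize_to_baseline(trace, fs=FS_OUT, baseline_s=1.0, bin_s=0.5):
    """Mean EMG per post-onset bin divided by the mean of the pre-stimulus baseline.

    1 = no change, < 1 = deactivation, > 1 = activation.
    Assumes `trace` starts with the baseline, followed by post-onset samples.
    """
    trace = np.asarray(trace, dtype=float)
    n_base = int(round(baseline_s * fs))
    n_bin = int(round(bin_s * fs))
    baseline = trace[:n_base].mean()
    post = trace[n_base:]
    n_bins = len(post) // n_bin  # drop an incomplete final bin, if any
    bins = post[:n_bins * n_bin].reshape(n_bins, n_bin).mean(axis=1)
    return bins / baseline


def lr_test(neg2ll_simple, neg2ll_complex, df_added):
    """Likelihood-ratio test of nested models from their -2 log likelihoods."""
    stat = neg2ll_simple - neg2ll_complex
    return stat, chi2.sf(stat, df_added)


# 1 s baseline + 8 s post-onset at 20 Hz -> 16 normalized time points.
trace = np.concatenate([np.ones(20), np.full(160, 1.5)])
print(normalize_to_baseline(trace))  # sixteen values of 1.5 (50 % above baseline)
print(lr_test(100.0, 96.0, 1))       # statistic 4.0, p of about 0.046
```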

Results

Expression of Anger

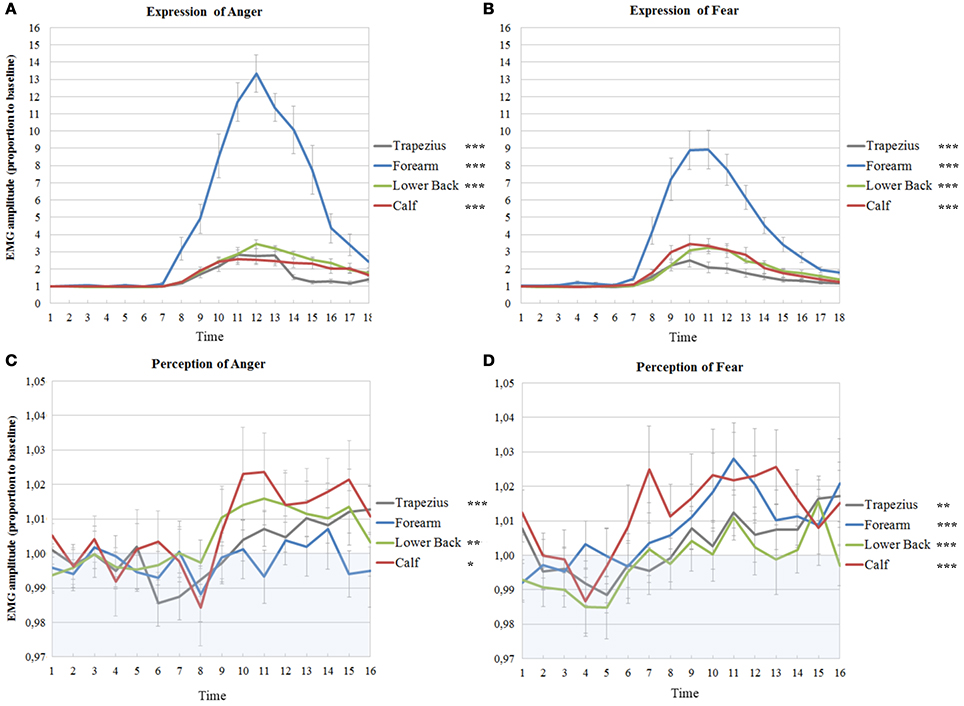

The first hypothesis pertains to the question of which muscles are used in the active expression of fearful and angry emotion and thus, the results from experiment 2 will be presented first.

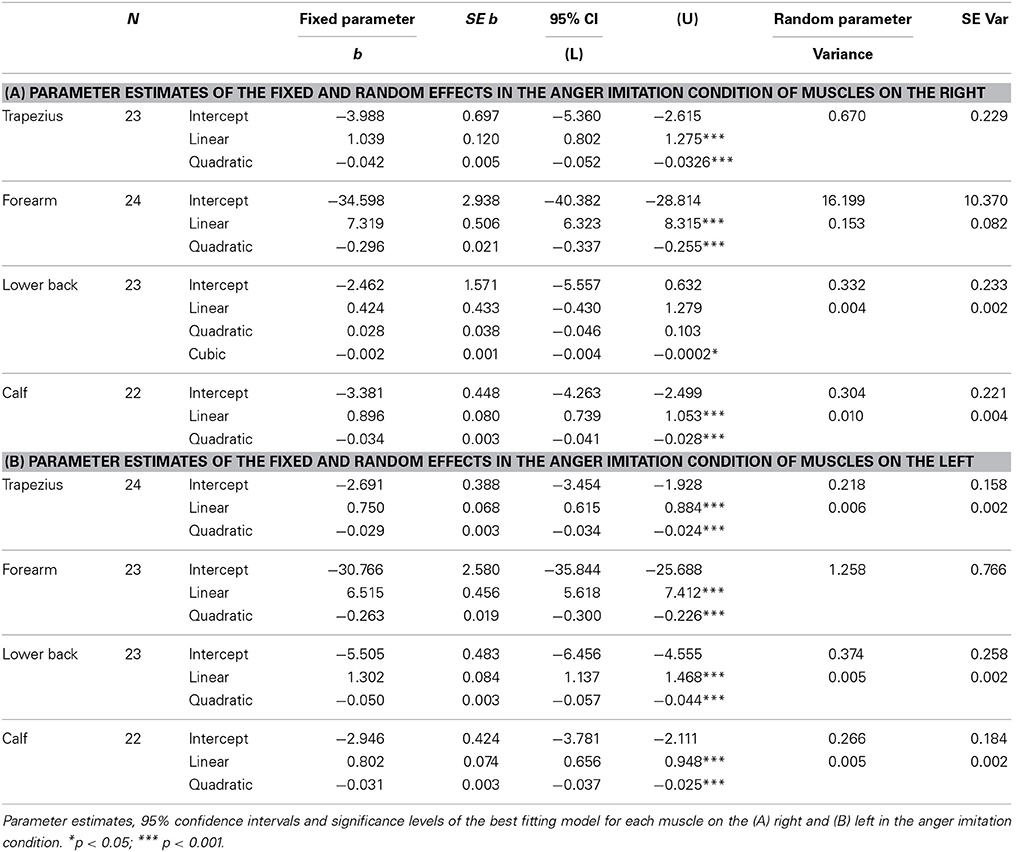

All models of time courses of EMG activity in the active anger condition for the muscles on the right benefited from the inclusion of random intercepts, even though the parameters themselves were not always significant (trapezius; Wald Z = 2.93, p = 0.003, forearm; Wald Z = 1.56, p > 0.05, lower back; Wald Z = 1.42, p > 0.05, calf; Wald Z = 1.38, p > 0.05). The EMG time courses of the right trapezius, the right forearm and the right calf were best described by a model with a fixed quadratic effect of time [trapezius; F(1, 255) = 72.78, p < 0.001, forearm; F(1, 252) = 205.23, p < 0.001, calf; F(1, 237) = 116.22, p < 0.001], with an additional random effect of time for the forearm (Wald Z = 1.86, p = 0.06) and the calf (Wald Z = 2.59, p = 0.01). A model with a fixed cubic effect of time [F(1, 252) = 4.51, p = 0.035] and a random effect of time (Wald Z = 2.04, p = 0.04) best fitted the EMG time course of the right lower back (see Table 1A and Figure 3A).
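The step-up modelling behind these results can be illustrated with the `mixedlm` function of statsmodels, here on simulated data rather than the study's recordings. This is a sketch under stated assumptions: the simulated quadratic time course, the rescaling of time to 0–1 and the use of maximum likelihood (`reml=False`, needed when comparing models that differ in fixed effects) are illustrative choices, and the original analyses may have been run in other software. The `groups` argument gives each participant a random intercept; `re_formula="~time"` additionally lets the effect of time vary across participants.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf


def simulate_time_courses(n_subjects=20, n_times=16, seed=0):
    """Hypothetical normalized EMG with participant-specific intercepts and slopes."""
    rng = np.random.default_rng(seed)
    rows = []
    for subject in range(n_subjects):
        intercept_dev = rng.normal(0.0, 0.05)  # random intercept
        slope_dev = rng.normal(0.0, 0.10)      # random slope of time
        for k in range(n_times):
            t = k / (n_times - 1)  # time rescaled to 0..1
            emg = (1.0 + intercept_dev + (0.8 + slope_dev) * t
                   - 0.9 * t ** 2 + rng.normal(0.0, 0.03))
            rows.append({"subject": subject, "time": t, "emg": emg})
    return pd.DataFrame(rows)


df = simulate_time_courses()

# Step 1: fixed linear effect of time, random intercept per participant.
linear = smf.mixedlm("emg ~ time", df, groups=df["subject"]).fit(reml=False)
# Step 2: add a fixed quadratic effect of time.
quadratic = smf.mixedlm("emg ~ time + I(time ** 2)", df,
                        groups=df["subject"]).fit(reml=False)
# Step 3: also let the linear effect of time vary across participants.
quadratic_rs = smf.mixedlm("emg ~ time + I(time ** 2)", df, groups=df["subject"],
                           re_formula="~time").fit(reml=False)

# Change in -2 log likelihood at each step, to be compared with a chi-square.
delta_quadratic = -2 * linear.llf - (-2 * quadratic.llf)
delta_random_slope = -2 * quadratic.llf - (-2 * quadratic_rs.llf)
print(f"quadratic term: {delta_quadratic:.1f}, random slope: {delta_random_slope:.1f}")
```

Cubic terms would be added the same way (`+ I(time ** 3)`), keeping each new model only if the drop in −2 log likelihood is significant.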

Figure 3. Muscle activations in experiment 1 and 2 on the right side of the body. EMG amplitudes and standard errors of the mean of muscles on the right during active imitation of anger (A) and fear (B) and during passive viewing of anger (C) and fear (D). The first six time points represent EMG activity during stimulus presentation. Activity below one (in blue area) indicates deactivation as compared to baseline. Significance levels of the fixed effect of time; ***p < 0.001, **p < 0.01, *p < 0.05.

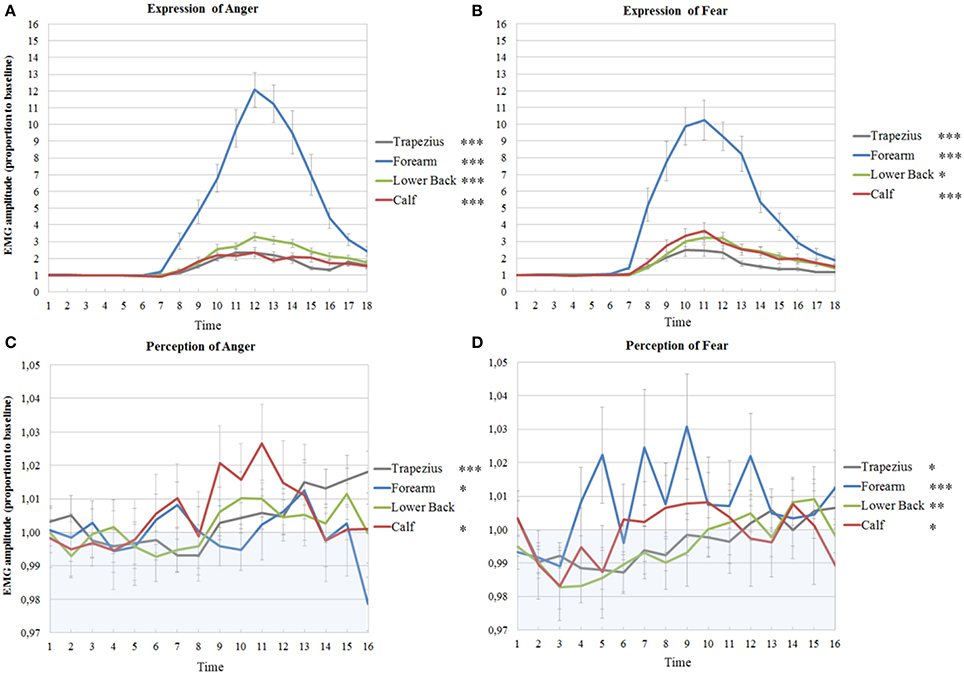

On the left, the random intercepts did not reach significance but inclusion did improve the models (trapezius; Wald Z = 1.38, p > 0.05, forearm; Wald Z = 1.65, p > 0.05, lower back; Wald Z = 1.45, p > 0.05, calf; Wald Z = 1.45, p > 0.05). Trapezius, forearm, lower back and calf EMG time courses were best described by models with a fixed quadratic effect of time [trapezius; F(1, 249) = 111.69, p < 0.001, forearm; F(1, 271) = 194.97, p < 0.001, lower back; F(1, 245) = 216.19, p < 0.001; calf; F(1, 237) = 108.40, p < 0.001] and with an additional random effect of time (trapezius; Wald Z = 2.48, p = 0.013, lower back; Wald Z = 1.99, p = 0.047, calf; Wald Z = 2.23, p = 0.025) (see Table 1B and Figure 4A).

Figure 4. Muscle activations in experiment 1 and 2 on the left side of the body. EMG amplitudes and standard errors of the mean of muscles on the left during active imitation of anger (A) and fear (B) and during passive viewing of anger (C) and fear (D). The first six time points represent EMG activity during stimulus presentation. Activity below one (in blue area) indicates deactivation as compared to baseline. Significance levels of the fixed effect of time; ***p < 0.001, **p < 0.01, *p < 0.05.

Expression of Fear

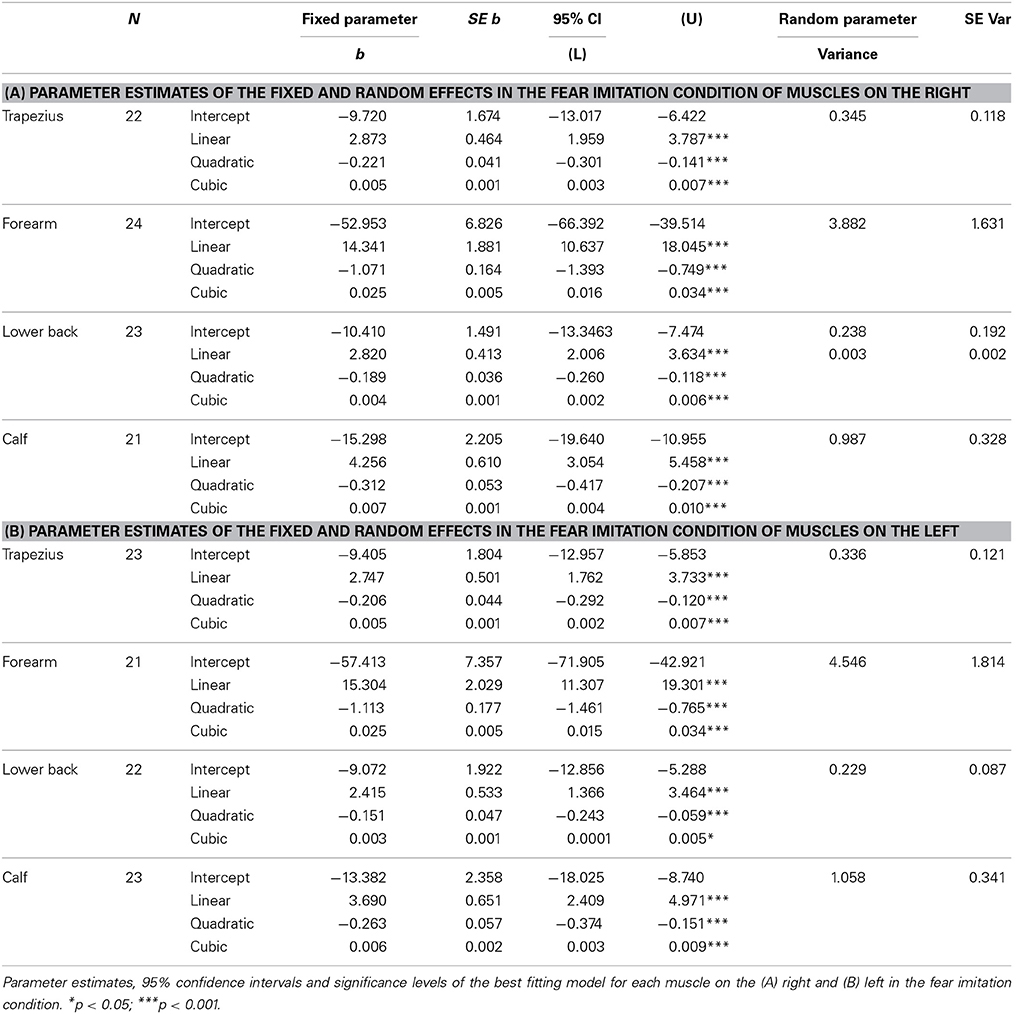

Random intercepts were also included in all models on the right (trapezius; Wald Z = 2.93, p = 0.003, forearm; Wald Z = 2.38, p = 0.017, lower back; Wald Z = 1.24, p > 0.05, calf; Wald Z = 3.01, p = 0.003). EMG activity of the right trapezius, forearm and calf followed a fixed cubic trend, unlike the quadratic trends found for anger expression [trapezius; F(1, 253) = 22.01, p < 0.001, forearm; F(1, 265) = 29.63, p < 0.001, calf; F(1, 246) = 22.69, p < 0.001]. Activity in the lower back also followed a fixed cubic effect of time [F(1, 248) = 14.50, p < 0.001], with slopes varying across participants (Wald Z = 1.86, p = 0.06), as was also found for anger expression (see Table 2A and Figure 3B).

On the left, the intercepts varied across participants in all models (trapezius; Wald Z = 2.77, p = 0.006, forearm; Wald Z = 2.51, p = 0.012, lower back; Wald Z = 2.65, p = 0.008, calf; Wald Z = 3.10, p = 0.002). Furthermore, the time courses were similar to those on the right side, with cubic effects of time [trapezius; F(1, 262) = 15.60, p < 0.001, forearm; F(1, 239) = 25.61, p < 0.001, lower back; F(1, 253) = 4.44, p = 0.036, calf; F(1, 269) = 13.41, p < 0.001] (see Table 2B and Figure 4B).

Passive Viewing of Angry Expressions

It was hypothesized that the muscles involved in the active expression of emotion also show activation during passive viewing of these emotions. The results of experiment 1 are shown below.

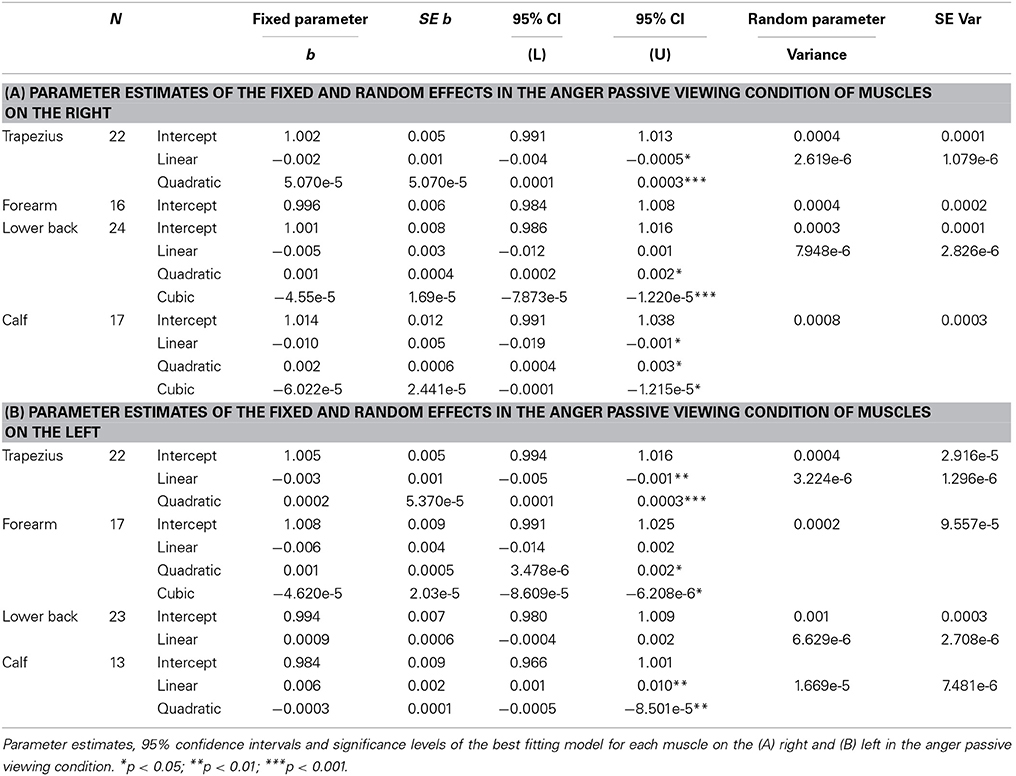

The intercepts of all time courses of muscles on the right in response to anger showed significant variance across participants (trapezius; Wald Z = 2.72, p = 0.007, forearm; Wald Z = 2.60, p = 0.009, lower back; Wald Z = 2.22, p = 0.026, calf; Wald Z = 2.72, p = 0.007). Furthermore, the EMG time course of the right trapezius was best described by a model with a fixed quadratic effect of time [F(1, 307) = 16.62, p < 0.001] in which the effect of time was also allowed to vary (Wald Z = 2.43, p = 0.015). Even though the intercepts of the forearm time courses varied significantly across participants, no significant effects of time were found for this muscle. The activity of the lower back followed a cubic trend [F(1, 336) = 7.23, p = 0.008], and the best fitting model also allowed the effect of time to vary (Wald Z = 2.81, p = 0.005). Finally, a fixed cubic effect of time [F(1, 253) = 6.09, p = 0.014] was found for the time course of the calf muscle (see Table 3A and Figure 3C).

On the left side as well, the intercepts of most time courses, with the exception of the calf, showed significant variance across participants (trapezius; Wald Z = 2.63, p = 0.009, forearm; Wald Z = 2.50, p = 0.013, lower back; Wald Z = 2.74, p = 0.006). The model that best fit the right trapezius also fit the left trapezius best, with a fixed quadratic effect of time [F(1, 308) = 18.80, p < 0.001] and a random effect of time (Wald Z = 2.49, p = 0.013). In contrast to the lack of response on the right, the left wrist extensors responded slightly but significantly to the perception of anger [fixed cubic effect of time; F(1, 254) = 5.18, p = 0.024]. In the left lower back, a more complex model was found, with a significant random, but not fixed, effect of time (Wald Z = 2.45, p = 0.014) and a significant covariance between intercepts and slopes (−0.80, Wald Z = −8.60, p < 0.001), indicating that slopes decrease as intercepts increase. Finally, a fixed quadratic effect of time [F(1, 182) = 7.77, p = 0.006], with the effect of time also allowed to vary (Wald Z = 2.23, p = 0.026), fit the calf data best (see Table 3B and Figure 4C).

Passive Viewing of Fearful Expressions

In response to fear, the intercepts of all time courses of muscles on the right also significantly varied across participants (trapezius; Wald Z = 2.65, p = 0.008, forearm; Wald Z = 2.37, p = 0.018, lower back; Wald Z = 3.01, p = 0.003, calf; Wald Z = 2.34, p = 0.02). A different model was found for the right trapezius in response to fear than to anger; the best fitting model included a fixed cubic effect of time [F(1, 294) = 6.94, p = 0.009] where the effect of time was also allowed to vary (Wald Z = 1.78, p = 0.07). The right forearm did respond significantly to the perception of fear, in contrast to the lack of response to anger, with a fixed linear effect of time [F(1, 208) = 19.96, p < 0.001]. The time course of the right lower back indicates a slight response to fear with a simple fixed linear effect of time [F(1, 340) = 16.27, p < 0.001]. Similar to the response to anger, a model with a fixed cubic effect of time [F(1, 252) = 11.99, p = 0.001] but with an additional random effect of time (Wald Z = 2.12, p = 0.034) fit the time course of the calf (see Table 4A and Figure 3D).

On the left side of the body, the intercepts of all models also varied significantly (trapezius; Wald Z = 2.56, p = 0.011, forearm; Wald Z = 2.36, p = 0.018, lower back; Wald Z = 2.20, p = 0.028, calf; Wald Z = 2.29, p = 0.022). EMG amplitudes of the left trapezius followed the same pattern as on the right, with a fixed cubic effect of time [F(1, 308) = 5.90, p = 0.016] with varying slopes across participants (Wald Z = 2.65, p = 0.008). The left forearm also significantly responded to fear, with a fixed cubic effect of time [F(1, 183) = 14.17, p < 0.001] but this model only fit 12 participants. One participant was removed from the analyses on the time courses of the left lower back in response to fear, due to a severely deviant time course. The resulting model included a cubic fixed effect [F(1, 301) = 9.4, p = 0.002] and a random effect of time (Wald Z = 2.42, p = 0.016). Finally, the same model as found in the right calf was found in the left calf in response to fear, with a fixed cubic effect of time [F(1, 202) = 5.83, p = 0.017] but with an additional random effect of time (Wald Z = 2.31, p = 0.021) (see Table 4B and Figure 4D).

Discussion

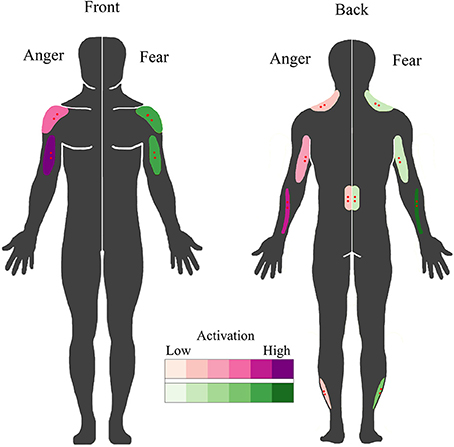

The objective of this study was to extend the BACS (Huis in ‘t Veld et al., 2014) by assessing the involvement of muscles in the forearms, the lower back and the calves in angry and fearful bodily expressions and, additionally, to determine whether these muscles also respond to the observer's passive perception of emotion. The wrist extensors in the forearm (extensor carpi ulnaris) were found to be very strongly involved in angry and, to a lesser extent, fearful movements. Muscles in the lower back (erector spinae longissimus) were activated equally during the expression of fear and anger, while the muscles in the calf (peroneus longus) were recruited slightly more for the expression of fear. For the muscles in the neck (upper trapezius descendens), the results from BACS I were replicated, with almost overlapping time courses for fear expression and a similar, but slightly delayed, activation pattern for anger expression. When these results are combined with those of BACS I, unique patterns of muscle activity can be extracted for angry versus fearful movements. The biceps were the most important muscle for anger expression, followed by the forearm, then the shoulder and lastly the triceps. In contrast, fearful expressions were marked by forearm activity, followed by equally strong involvement of the shoulders and biceps, while triceps activity was quite low (see Figure 5). Considering the function of these muscles, these findings are in line with previous descriptions of angry and fearful movements, such as raising the forearms to the trunk or stretching the arms downward with the palms held up (anger) and lifting the arms with the hands held up protectively with the palms outward (fear) (De Meijer, 1989; Wallbott, 1998; Sawada et al., 2003; Coulson, 2004; Kleinsmith et al., 2006; Kessous et al., 2010; Dael et al., 2012b). See Figure 1 for examples of these movements in the stimuli. Furthermore, similar postures have been found to correspond to higher arousal ratings (Kleinsmith et al., 2011).

Figure 5. BACS of emotional expression. Schematic overview of the muscles involved in overt fearful and angry emotional expression. Muscles involved in the expression of anger are plotted on the left in purple; those involved in fear expression are plotted on the right in green. Higher color intensity means a greater involvement. Electrode locations are shown in red.

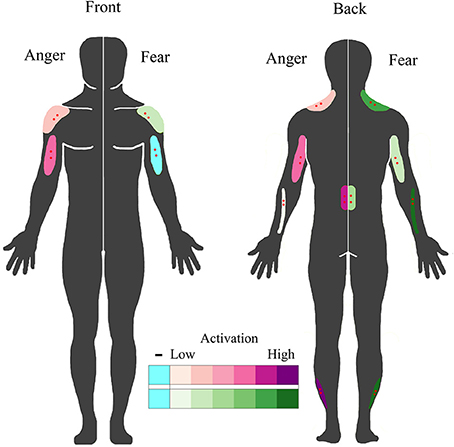

The second aim of the study was to assess automatic muscle activations in response to the perception of emotion in others, without any overt movement. The trapezius showed a response very similar to that found previously (Huis in ‘t Veld et al., 2014), both in its time course (quadratic for anger and cubic for fear) and in amplitude. The wrist extensors, the most active muscles during fear expression, responded quite strongly only to the perception of fear in others. In contrast, the perception of an angry expression caused a sudden activation of a lower back muscle that is normally used for leaning backwards. The muscles in the calf were strongly activated in response to both fear and anger, but with very different time patterns. Whereas the calf responds to an angry person only after the angry movement has been directed at the observer (i.e., after stimulus presentation), it responds immediately when a fearful person is seen (during stimulus presentation). As the peroneus longus aids in pushing off with the foot in order to walk or run, this pattern may not be surprising: it may be imperative to immediately avoid whatever presumably made the other person fearful. In contrast, responding to anger is most relevant when the anger is directed at the observer (Grezes et al., 2013). Fearful bodily expressions very quickly engage action preparation networks and adaptive response strategies, even without visual awareness or attention (Tamietto et al., 2007, 2009; Pichon et al., 2012). A similar interaction between the self-relevance of a perceived emotion and the response is found for eye gaze direction and emotion recognition: angry facial expressions are easier to recognize with direct eye contact, whereas the opposite is often true for fear (Wieser and Brosch, 2012).
In addition, the finding that some of the largest covert activations occurred in the calves, wrist extensors and lower back is partly in line with previous studies that showed differential activity of these muscles in response to negative stimuli (Coombes et al., 2006, 2009) or to pain or fear of pain (Watson et al., 1997; Geisser et al., 2004; Lelard et al., 2013). Similarly, previous studies have found that it is easier to take a step toward a pleasant stimulus and away from an unpleasant one (Chen and Bargh, 1999; Marsh et al., 2005). Furthermore, as the lower back muscle is involved in moving the torso, its activation might be related to approach-avoidance tendencies (Azevedo et al., 2005; Horslen and Carpenter, 2011; Eerland et al., 2012). In short, these results, taken together with those described in BACS I, indicate that responses to fear and anger can indeed be distinguished by calf, lower back, trapezius, forearm and biceps (de)activation (see Figure 6).

Figure 6. BACS of emotional perception. Schematic overview of muscles that covertly respond to the perception of fearful and angry emotional bodily expressions. Muscles that responded to the perception of anger are plotted on the left in purple; those that responded to the perception of fear are plotted on the right in green. Higher color intensity means larger responses, blue indicates deactivation. Electrode locations are shown in red.

Furthermore, our findings indicate that, in contrast to emotional mimicry theories based on facial expressions (Dimberg, 1982), not all muscles in the body simply mimic observed behavior. Some muscles that are very actively involved in movement, like the biceps (Huis in ‘t Veld et al., 2014) and the wrist extensors, activate in response to the perception of the emotion in whose expression they are most strongly involved. In contrast, postural muscles, such as those in the lower back, neck and calves, are not very active during movement, and their covert responses seem more reactive. This reaction might be enhanced by the interactive nature of the videos, in contrast to the more traditional facial EMG studies in which photographs are shown. Further indications that these processes may be more complex than mimicry or motor mapping are the findings that facial muscle responses also occur without conscious awareness (Tamietto et al., 2009) and in response to non-facial stimuli such as bodies and voices (Hietanen et al., 1998; Bradley and Lang, 2000; Magnee et al., 2007; Grezes et al., 2013; Kret et al., 2013). Furthermore, most muscles deactivate during the actual perception of another person's emotional behavior (during stimulus presentation). These deactivations may reflect the suppression of specific cells in the primary motor cortex during action observation, possibly via the supplementary motor area, to prevent imitation (Bien et al., 2009; Kraskov et al., 2009; Mukamel et al., 2010; Vigneswaran et al., 2013). Stamos et al. (2010) found that action observation inhibited metabolic activity in the area of the spinal cord that contains the forelimb innervations in macaques. In fact, studies of posture have shown that the body can freeze upon the perception of aversive and arousing stimuli (Facchinetti et al., 2006; Roelofs et al., 2010; Stins et al., 2011; Lelard et al., 2013).
Other recent studies also support the view that it may be more likely that unconscious emotional muscle responses reflect complex interactive and reactive processes (see Hess and Fischer, 2013 for a review).

The BACS now describes seven muscles during the expression and perception of anger and fear. To generalize the BACS, it is important to assess the responses of these muscles in relation to other emotions and to a neutral condition. As described in Huis in ‘t Veld et al. (2014), the stimuli used in the present study did not include neutral stimuli containing the same amount of movement as their emotional counterparts. It was decided to first expand the BACS by exploring additional muscles rather than additional emotions, as unique muscle activity patterns are difficult to establish with only four muscles. A follow-up experiment, including freely expressed emotions and two control conditions, is currently in preparation. Furthermore, future studies will relate the EMG signal to descriptions of the expressed movement in time and 3D space by combining EMG and motion capture techniques. Similar experiments in which participants imitate fixed expressions from video might be improved by also using other coding systems such as the Body Action and Posture coding system (BAP; Dael et al., 2012a) or AutoBAP (Velloso et al., 2013). Performing principal component analyses (Bosco, 2010) or calculating which muscles co-contract during the expression of which emotions (Kellis et al., 2003) may be suitable methods for statistically determining which action units feature in which emotional expressions. Combining these techniques may provide a more complete picture of the specific dynamics of body language and the important contributions of bodily muscles.
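As a rough illustration of the two analyses mentioned above, the following minimal sketch computes an overlap-based co-contraction index for a pair of muscles and a principal component analysis across muscles. It assumes that each muscle's signal has already been rectified, smoothed into a linear envelope and amplitude-normalized. The function names and the (samples × muscles) array layout are hypothetical, and the co-contraction formula is one common formulation, not necessarily the exact index used by Kellis et al. (2003).

```python
import numpy as np


def co_contraction_index(env_a, env_b):
    """Overlap-based co-contraction index (%) for two EMG envelopes.

    Assumes both envelopes are rectified, smoothed, amplitude-normalized
    and sampled at the same rate. With a uniform sampling interval the
    interval cancels out, so plain sums can stand in for integrals:
        CCI = 2 * sum(min(a, b)) / (sum(a) + sum(b)) * 100
    """
    a = np.asarray(env_a, dtype=float)
    b = np.asarray(env_b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("envelopes must have the same length")
    overlap = np.minimum(a, b).sum()  # area shared by both muscles
    total = a.sum() + b.sum()         # combined area of both muscles
    return 0.0 if total == 0 else 200.0 * overlap / total


def emg_pca(envelopes):
    """PCA across muscles via singular value decomposition.

    envelopes: array of shape (n_samples, n_muscles), one column per
    muscle (hypothetical layout). Returns (components, explained_ratio);
    each row of `components` holds one component's loadings per muscle.
    """
    x = np.asarray(envelopes, dtype=float)
    x = x - x.mean(axis=0)  # center each muscle's envelope
    _, s, vt = np.linalg.svd(x, full_matrices=False)
    var = s ** 2            # variance captured per component (unscaled)
    return vt, var / var.sum()


if __name__ == "__main__":
    # Synthetic envelopes for illustration only; not data from this study.
    rng = np.random.default_rng(0)
    t = np.linspace(0.0, 1.0, 500)
    burst = np.exp(-((t - 0.5) ** 2) / 0.01)
    wrist = burst + 0.05 * rng.random(t.size)
    biceps = 0.6 * burst + 0.05 * rng.random(t.size)
    calf = 0.05 * rng.random(t.size)

    print("CCI wrist/biceps: %.1f%%" % co_contraction_index(wrist, biceps))
    comps, ratio = emg_pca(np.column_stack([wrist, biceps, calf]))
    print("variance explained:", np.round(ratio, 3))
```

In a BACS-style analysis, a high index for a pair of muscles during, say, anger trials would suggest that they co-contract for that emotion, and muscles with large loadings on the same component would be candidates for a shared action unit; both readings are hypotheses to test, not conclusions this sketch can deliver.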

Although the different techniques and their respective coding systems each highlight a different aspect of emotional bodily expressions, one advantage of surface EMG is its relative ease of use and the availability of affordable recording systems. The action units in the arms and shoulders, such as the wrist extensors, biceps, triceps and anterior deltoids, are easy to locate and measure and provide very strong movement signals. Comparing their activity patterns with each other makes it possible to discriminate between angry and fearful movements. In addition, the electrode locations of these action units are convenient for wireless electrodes or electrodes embedded in a shirt, which makes them well suited to non-laboratory settings such as classrooms, hospitals or virtual reality environments.

Most importantly, only EMG can measure responses that are invisible to the naked eye. Future studies assessing these covert activations are encouraged to take the following issues into account. First, the number of trials should be sufficiently high, as some muscles, like the wrist extensor and calf muscles, were quite sensitive to outliers. Second, EMG activations were measured best from muscles on the right side of the body. Affective state information derived from automatic, non-conscious muscle activity patterns may serve as input for the previously mentioned human-computer or human-robot interfaces, for example gaming consoles or online learning environments, to improve user interaction. Virtual reality in particular may prove more effective than video presentation for inducing emotion naturally, or even for creating social interactions, in an immersive but still controlled setting. Furthermore, the BACS can be used in the same way as the FACS (Ekman and Friesen, 1978), for example in the study of emotional expression and contagious responses in different cultures (Jack et al., 2012), autism (Rozga et al., 2013), schizophrenia (Sestito et al., 2013), borderline personality disorder (Matzke et al., 2014), aggression or behavioral disorders (Bons et al., 2013; Deschamps et al., 2014), or unconscious pain-related behavior (van der Hulst et al., 2010; Aung et al., 2013), to name just a few.
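With few trials, a single artifact-laden trial can dominate a condition mean. The following minimal sketch shows one generic safeguard: excluding trials by a median-absolute-deviation (modified z-score) criterion before averaging. It is an illustrative assumption, not the outlier procedure used in this study; the function name `robust_trial_average` is hypothetical, and the 3.5 threshold is a conventional cut-off for this score.

```python
import numpy as np


def robust_trial_average(trial_values, z_thresh=3.5):
    """Average per-trial EMG responses after excluding outlying trials.

    trial_values: one baseline-corrected EMG value per trial (hypothetical
    input). A trial is excluded when its modified z-score,
    0.6745 * |x - median| / MAD, exceeds z_thresh.
    Returns (average_of_kept_trials, kept_mask).
    """
    x = np.asarray(trial_values, dtype=float)
    med = np.median(x)
    mad = np.median(np.abs(x - med))  # median absolute deviation
    if mad == 0:
        # The modified z-score is undefined when MAD is zero, so keep all trials.
        keep = np.ones(x.shape, dtype=bool)
    else:
        z = 0.6745 * np.abs(x - med) / mad
        keep = z <= z_thresh
    return x[keep].mean(), keep


if __name__ == "__main__":
    # Five made-up trials, one of them an artifact-like outlier.
    few = [1.0, 1.1, 0.9, 1.0, 10.0]
    print("plain mean:", np.mean(few))
    avg, kept = robust_trial_average(few)
    print("robust mean:", avg, "kept:", kept)
```

Collecting more trials remains the more fundamental remedy, since the median and MAD themselves become unstable when only a handful of trials are available.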

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by the National Initiative Brain & Cognition (056-22-011); the EU project TANGO (FP7-ICT-2007-0 FET-Open, project number 249858) and the European Research Council under the European Union's Seventh Framework Programme (ERC) (FP7/2007-2013, agreement number 295673).

References

Aung, M. S. H., Romera-Paredes, B., Singh, A., Lim, S., Kanakam, N., De Williams, A. C., et al. (2013). “Getting RID of pain-related behaviour to improve social and self perception: a technology-based perspective,” in 14th International Workshop on Image Analysis for Multimedia Interactive Services (WIAMIS) (Paris: IEEE), 1–4. doi: 10.1109/WIAMIS.2013.6616167

Azevedo, T. M., Volchan, E., Imbiriba, L. A., Rodrigues, E. C., Oliveira, J. M., Oliveira, L. F., et al. (2005). A freezing-like posture to pictures of mutilation. Psychophysiology 42, 255–260. doi: 10.1111/j.1469-8986.2005.00287.x

Bagiella, E., Sloan, R. P., and Heitjan, D. F. (2000). Mixed-effects models in psychophysiology. Psychophysiology 37, 13–20. doi: 10.1111/1469-8986.3710013

Bien, N., Roebroeck, A., Goebel, R., and Sack, A. T. (2009). The brain's intention to imitate: the neurobiology of intentional versus automatic imitation. Cereb. Cortex 19, 2338–2351. doi: 10.1093/cercor/bhn251

Bons, D., Van Den Broek, E., Scheepers, F., Herpers, P., Rommelse, N., and Buitelaar, J. K. (2013). Motor, emotional, and cognitive empathy in children and adolescents with autism spectrum disorder and conduct disorder. J. Abnorm. Child Psychol. 41, 425–443. doi: 10.1007/s10802-012-9689-5

Borgomaneri, S., Gazzola, V., and Avenanti, A. (2012). Motor mapping of implied actions during perception of emotional body language. Brain Stimul. 5, 70–76. doi: 10.1016/j.brs.2012.03.011

Bosco, G. (2010). Principal component analysis of electromyographic signals: an overview. Open Rehabil. J. 3, 127–131. doi: 10.2174/1874943701003010127

Bradley, M. M., and Lang, P. J. (2000). Affective reactions to acoustic stimuli. Psychophysiology 37, 204–215. doi: 10.1111/1469-8986.3720204

Calvo, R. A., and D'Mello, S. (2010). Affect detection: an interdisciplinary review of models, methods, and their applications. IEEE Trans. Affect. Comput. 1, 18–37. doi: 10.1109/T-AFFC.2010.1

Castellano, G., Leite, I., Pereira, A., Martinho, C., Paiva, A., and McOwan, P. W. (2013). Multimodal affect modeling and recognition for empathic robot companions. Int. J. Hum. Robot. 10:1350010. doi: 10.1142/S0219843613500102

Chen, M., and Bargh, J. A. (1999). Consequences of automatic evaluation: immediate behavioral predispositions to approach or avoid the stimulus. Pers. Soc. Psychol. B 25, 215–224. doi: 10.1177/0146167299025002007

Coombes, S. A., Cauraugh, J. H., and Janelle, C. M. (2006). Emotion and movement: activation of defensive circuitry alters the magnitude of a sustained muscle contraction. Neurosci. Lett. 396, 192–196. doi: 10.1016/j.neulet.2005.11.048

Coombes, S. A., Tandonnet, C., Fujiyama, H., Janelle, C. M., Cauraugh, J. H., and Summers, J. J. (2009). Emotion and motor preparation: a transcranial magnetic stimulation study of corticospinal motor tract excitability. Cogn. Affect. Behav. Neurosci. 9, 380–388. doi: 10.3758/CABN.9.4.380

Coulson, M. (2004). Attributing emotion to static body postures: recognition accuracy, confusions, and viewpoint dependence. J. Nonverbal Behav. 28, 117–139. doi: 10.1023/B:JONB.0000023655.25550.be

Dael, N., Mortillaro, M., and Scherer, K. R. (2012a). The body action and posture coding system (BAP): development and reliability. J. Nonverbal Behav. 36, 97–121. doi: 10.1007/s10919-012-0130-0

Dael, N., Mortillaro, M., and Scherer, K. R. (2012b). Emotion expression in body action and posture. Emotion 12, 1085–1101. doi: 10.1037/a0025737

de Gelder, B. (2009). Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philos. Trans. R. Soc. Lond. B Biol. Sci. 364, 3475–3484. doi: 10.1098/rstb.2009.0190

de Gelder, B. (2013). “From body perception to action preparation: a distributed neural system for viewing bodily expressions of emotion,” in People Watching: Social, Perceptual, and Neurophysiological Studies of Body Perception, eds M. Shiffrar and K. Johnson (New York, NY: Oxford University Press), 350–368.

de Gelder, B., Snyder, J., Greve, D., Gerard, G., and Hadjikhani, N. (2004). Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc. Natl. Acad. Sci. U.S.A. 101, 16701–16706. doi: 10.1073/pnas.0407042101

De Meijer, M. (1989). The contribution of general features of body movement to the attribution of emotions. J. Nonverbal Behav. 13, 247–268. doi: 10.1007/BF00990296

Deschamps, P., Munsters, N., Kenemans, L., Schutter, D., and Matthys, W. (2014). Facial mimicry in 6-7 year old children with disruptive behavior disorder and ADHD. PLoS ONE 9:e84965. doi: 10.1371/journal.pone.0084965

Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology 19, 643–647. doi: 10.1111/j.1469-8986.1982.tb02516.x

Dimberg, U. (1990). Facial electromyography and emotional reactions. Psychophysiology 27, 481–494. doi: 10.1111/j.1469-8986.1990.tb01962.x

Eerland, A., Guadalupe, T. M., Franken, I. H. A., and Zwaan, R. A. (2012). Posture as index for approach-avoidance behavior. PLoS ONE 7:e31291. doi: 10.1371/journal.pone.0031291

Ekman, P., and Friesen, W. V. (1978). Facial Action Coding System (FACS): A Technique for the Measurement of Facial Action. Palo Alto, CA: Consulting Psychologists Press.

Facchinetti, L. D., Imbiriba, L. A., Azevedo, T. M., Vargas, C. D., and Volchan, E. (2006). Postural modulation induced by pictures depicting prosocial or dangerous contexts. Neurosci. Lett. 410, 52–56. doi: 10.1016/j.neulet.2006.09.063

Fadiga, L., Craighero, L., and Olivier, E. (2005). Human motor cortex excitability during the perception of others' action. Curr. Opin. Neurobiol. 15, 213–218. doi: 10.1016/j.conb.2005.03.013

Frijda, N. H. (2010). Impulsive action and motivation. Biol. Psychol. 84, 570–579. doi: 10.1016/j.biopsycho.2010.01.005

Gao, Y., Bianchi-Berthouze, N., and Meng, H. Y. (2012). What does touch tell us about emotions in touchscreen-based gameplay? ACM Trans. Comput. Hum. Int. 19:31. doi: 10.1145/2395131.2395138

Geisser, M. E., Haig, A. J., Wallbom, A. S., and Wiggert, E. A. (2004). Pain-related fear, lumbar flexion, and dynamic EMG among persons with chronic musculoskeletal low back pain. Clin. J. Pain 20, 61–69. doi: 10.1097/00002508-200403000-00001

Grezes, J., Philip, L., Chadwick, M., Dezecache, G., Soussignan, R., and Conty, L. (2013). Self-relevance appraisal influences facial reactions to emotional body expressions. PLoS ONE 8:e55885. doi: 10.1371/journal.pone.0055885

Grezes, J., Pichon, S., and de Gelder, B. (2007). Perceiving fear in dynamic body expressions. Neuroimage 35, 959–967. doi: 10.1016/j.neuroimage.2006.11.030

Hermens, H. J., and Freriks, B. (eds.). (1997). The State of the Art on Sensors and Sensor Placement Procedures for Surface Electromyography: A Proposal for Sensor Placement Procedures. Enschede: Roessingh Research and Development.

Hess, U., and Fischer, A. (2013). Emotional mimicry as social regulation. Pers. Soc. Psychol. Rev. 17, 142–157. doi: 10.1177/1088868312472607

Hietanen, J. K., Surakka, V., and Linnankoski, I. (1998). Facial electromyographic responses to vocal affect expressions. Psychophysiology 35, 530–536. doi: 10.1017/S0048577298970445

Horslen, B. C., and Carpenter, M. G. (2011). Arousal, valence and their relative effects on postural control. Exp. Brain Res. 215, 27–34. doi: 10.1007/s00221-011-2867-9

Huis in ‘t Veld, E. M. J., Van Boxtel, G. J. M., and de Gelder, B. (2014). The body action coding system I: muscle activations during the perception and expression of emotion. Soc. Neurosci. 9, 249–264. doi: 10.1080/17470919.2014.890668

Jack, R. E., Garrod, O. G. B., Yu, H., Caldara, R., and Schyns, P. G. (2012). Facial expressions of emotions are not culturally universal. Proc. Natl. Acad. Sci. U.S.A. 109, 7241–7244. doi: 10.1073/pnas.1200155109

Karg, M., Samadani, A. A., Gorbet, R., Kuhnlenz, K., Hoey, J., and Kulic, D. (2013). Body movements for affective expression: a survey of automatic recognition and generation. IEEE Trans. Affect. Comput. 4, 341–359. doi: 10.1109/T-AFFC.2013.29

Kellis, E., Arabatzi, F., and Papadopoulos, C. (2003). Muscle co-activation around the knee in drop jumping using the co-contraction index. J. Electromyogr. Kinesiol. 13, 229–238. doi: 10.1016/S1050-6411(03)00020-8

Kessous, L., Castellano, G., and Caridakis, G. (2010). Multimodal emotion recognition in speech-based interaction using facial expression, body gesture and acoustic analysis. J. Multimodal User Interfaces 3, 33–48. doi: 10.1007/s12193-009-0025-5

Kleinsmith, A., and Bianchi-Berthouze, N. (2013). Affective body expression perception and recognition: a survey. IEEE Trans. Affect. Comput. 4, 15–33. doi: 10.1109/T-AFFC.2012.16

Kleinsmith, A., Bianchi-Berthouze, N., and Steed, A. (2011). Automatic recognition of non-acted affective postures. IEEE Trans. Syst. Man Cybern. B Cybern. 41, 1027–1038. doi: 10.1109/TSMCB.2010.2103557

Kleinsmith, A., De Silva, P. R., and Bianchi-Berthouze, N. (2006). Cross-cultural differences in recognizing affect from body posture. Interact. Comput. 18, 1371–1389. doi: 10.1016/j.intcom.2006.04.003

Kraskov, A., Dancause, N., Quallo, M. M., Shepherd, S., and Lemon, R. N. (2009). Corticospinal neurons in macaque ventral premotor cortex with mirror properties: a potential mechanism for action suppression? Neuron 64, 922–930. doi: 10.1016/j.neuron.2009.12.010

Kret, M. E., Pichon, S., Grezes, J., and de Gelder, B. (2011). Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. Neuroimage 54, 1755–1762. doi: 10.1016/j.neuroimage.2010.08.012

Kret, M. E., Stekelenburg, J., Roelofs, K., and de Gelder, B. (2013). Perception of face and body expressions using electromyography, pupillometry and gaze measures. Front. Psychol. 4:28. doi: 10.3389/fpsyg.2013.00028

Lelard, T., Montalan, B., Morel, M. F., Krystkowiak, P., Ahmaidi, S., Godefroy, O., et al. (2013). Postural correlates with painful situations. Front. Hum. Neurosci. 7:4. doi: 10.3389/fnhum.2013.00004

Magnee, M. J. C. M., Stekelenburg, J. J., Kemner, C., and de Gelder, B. (2007). Similar facial electromyographic responses to faces, voices, and body expressions. Neuroreport 18, 369–372. doi: 10.1097/WNR.0b013e32801776e6

Mak, J. N., Hu, Y., and Luk, K. D. (2010). An automated ECG-artifact removal method for trunk muscle surface EMG recordings. Med. Eng. Phys. 32, 840–848. doi: 10.1016/j.medengphy.2010.05.007

Marsh, A. A., Ambady, N., and Kleck, R. E. (2005). The effects of fear and anger facial expressions on approach- and avoidance-related behaviors. Emotion 5, 119–124. doi: 10.1037/1528-3542.5.1.119

Matzke, B., Herpertz, S. C., Berger, C., Fleischer, M., and Domes, G. (2014). Facial reactions during emotion recognition in borderline personality disorder: a facial electromyography study. Psychopathology 47, 101–110. doi: 10.1159/000351122

Mayer, R. E., and Dapra, C. S. (2012). An embodiment effect in computer-based learning with animated pedagogical agents. J. Exp. Psychol. Appl. 18, 239–252. doi: 10.1037/a0028616

McColl, D., and Nejat, G. (2014). Recognizing emotional body language displayed by a human-like social robot. Int. J. Soc. Robot. 6, 261–280. doi: 10.1007/s12369-013-0226-7

Mukamel, R., Ekstrom, A. D., Kaplan, J., Iacoboni, M., and Fried, I. (2010). Single-neuron responses in humans during execution and observation of actions. Curr. Biol. 20, 750–756. doi: 10.1016/j.cub.2010.02.045

Nguyen, A., Chen, W., and Rauterberg, G. W. M. (2013). “Online feedback system for public speakers,” in IEEE Symposium on E-Learning, E-Management and E-Services (IS3e) (Piscataway, NJ: IEEE Service Center), 1–5.

Pichon, S., de Gelder, B., and Grezes, J. (2008). Emotional modulation of visual and motor areas by dynamic body expressions of anger. Soc. Neurosci. 3, 199–212. doi: 10.1080/17470910701394368

Pichon, S., de Gelder, B., and Grezes, J. (2009). Two different faces of threat. Comparing the neural systems for recognizing fear and anger in dynamic body expressions. Neuroimage 47, 1873–1883. doi: 10.1016/j.neuroimage.2009.03.084

Pichon, S., de Gelder, B., and Grezes, J. (2012). Threat prompts defensive brain responses independently of attentional control. Cereb. Cortex 22, 274–285. doi: 10.1093/cercor/bhr060

Roelofs, K., Hagenaars, M. A., and Stins, J. (2010). Facing freeze: social threat induces bodily freeze in humans. Psychol. Sci. 21, 1575–1581. doi: 10.1177/0956797610384746

Rozga, A., King, T. Z., Vuduc, R. W., and Robins, D. L. (2013). Undifferentiated facial electromyography responses to dynamic, audio-visual emotion displays in individuals with autism spectrum disorders. Dev. Sci. 16, 499–514. doi: 10.1111/desc.12062

Savva, N., and Bianchi-Berthouze, N. (2012). “Automatic recognition of affective body movement in a video game scenario,” in International Conference on Intelligent Technologies for Interactive Entertainment (INTETAIN), eds A. Camurri, C. Costa, and G. Volpe (Genoa: Springer), 149–158.

Sawada, M., Suda, K., and Ishii, M. (2003). Expression of emotions in dance: relation between arm movement characteristics and emotion. Percept. Mot. Skills 97, 697–708. doi: 10.2466/pms.2003.97.3.697

Schutter, D. J., Hofman, D., and van Honk, J. (2008). Fearful faces selectively increase corticospinal motor tract excitability: a transcranial magnetic stimulation study. Psychophysiology 45, 345–348. doi: 10.1111/j.1469-8986.2007.00635.x

Sestito, M., Umilta, M. A., De Paola, G., Fortunati, R., Raballo, A., Leuci, E., et al. (2013). Facial reactions in response to dynamic emotional stimuli in different modalities in patients suffering from schizophrenia: a behavioral and EMG study. Front. Hum. Neurosci. 7:368. doi: 10.3389/fnhum.2013.00368

Stamos, A. V., Savaki, H. E., and Raos, V. (2010). The spinal substrate of the suppression of action during action observation. J. Neurosci. 30, 11605–11611. doi: 10.1523/JNEUROSCI.2067-10.2010

Stins, J. F., Roelofs, K., Villan, J., Kooijman, K., Hagenaars, M. A., and Beek, P. J. (2011). Walk to me when I smile, step back when I'm angry: emotional faces modulate whole-body approach-avoidance behaviors. Exp. Brain Res. 212, 603–611. doi: 10.1007/s00221-011-2767-z

Taelman, J., Mijovic, B., Van Huffel, S., Devuyst, S., and Dutoit, T. (2011). “ECG artifact removal from surface EMG signals by combining empirical mode decomposition and independent component analysis,” in International Conference on Bio-inspired Systems and Signal Processing (Rome), 421–424.

Tamietto, M., Castelli, L., Vighetti, S., Perozzo, P., Geminiani, G., Weiskrantz, L., et al. (2009). Unseen facial and bodily expressions trigger fast emotional reactions. Proc. Natl. Acad. Sci. U.S.A. 106, 17661–17666. doi: 10.1073/pnas.0908994106

Tamietto, M., Geminiani, G., Genero, R., and de Gelder, B. (2007). Seeing fearful body language overcomes attentional deficits in patients with neglect. J. Cogn. Neurosci. 19, 445–454. doi: 10.1162/jocn.2007.19.3.445

van der Hulst, M., Vollenbroek-Hutten, M. M., Rietman, J. S., Schaake, L., Groothuis-Oudshoorn, K. G., and Hermens, H. J. (2010). Back muscle activation patterns in chronic low back pain during walking: a “guarding” hypothesis. Clin. J. Pain 26, 30–37. doi: 10.1097/AJP.0b013e3181b40eca

Velloso, E., Bulling, A., and Gellersen, H. (2013). “AutoBAP: automatic coding of body action and posture units from wearable sensors,” in Humaine Association Conference on Affective Computing and Intelligent Interaction (Los Alamitos, CA: IEEE Computer Society), 135–140.

Vigneswaran, G., Philipp, R., Lemon, R. N., and Kraskov, A. (2013). M1 corticospinal mirror neurons and their role in movement suppression during action observation. Curr. Biol. 23, 236–243. doi: 10.1016/j.cub.2012.12.006

Watson, P. J., Booker, C. K., and Main, C. J. (1997). Evidence for the role of psychological factors in abnormal paraspinal activity in patients with chronic low back pain. J. Musculoskelet. Pain 5, 41–56. doi: 10.1300/J094v05n04_05

Wieser, M. J., and Brosch, T. (2012). Faces in context: a review and systematization of contextual influences on affective face processing. Front. Psychol. 3:471. doi: 10.3389/fpsyg.2012.00471

Zacharatos, H. (2013). “Affect recognition during active game playing based on posture skeleton data,” in 8th International Conference on Computer Graphics Theory and Applications (Barcelona).

Keywords: surface EMG, body, emotion, coding, movement, muscles, perception, expression

Citation: Huis In ‘t Veld EMJ, van Boxtel GJM and de Gelder B (2014) The Body Action Coding System II: muscle activations during the perception and expression of emotion. Front. Behav. Neurosci. 8:330. doi: 10.3389/fnbeh.2014.00330

Received: 02 June 2014; Accepted: 03 September 2014;

Published online: 23 September 2014.

Edited by:

Jack Van Honk, Utrecht University, Netherlands

Reviewed by:

Silvio Ionta, University Hospital Center (CHUV) and University of Lausanne (UNIL), Switzerland

Thierry Lelard, lnfp, France

Nadia Berthouze, University College London, UK

Andrea Kleinsmith, University of Florida, USA

Copyright © 2014 Huis In ‘t Veld, van Boxtel and de Gelder. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Beatrice de Gelder, Brain and Emotion Laboratory, Faculty of Psychology and Neuroscience, Maastricht University, Oxfordlaan 55, Maastricht, 6229 EV, Netherlands e-mail: b.degelder@maastrichtuniversity.nl
