Neural and sympathetic activity associated with exploration in decision-making: further evidence for involvement of insula

- 1Department of Psychology, Nagoya University, Nagoya, Japan

- 2Department of Psychiatry and Neurosciences, Hiroshima University, Hiroshima, Japan

- 3Human Technology Research Institute, National Institute of Advanced Industrial Science and Technology, Tsukuba, Japan

- 4Chubu Ryogo Center, Kizawa Memorial Hospital, Minokamo, Japan

We previously reported that sympathetic activity was associated with exploration in decision-making indexed by entropy, which is a concept in information theory and indexes randomness of choices or the degree of deviation from sticking to recent experiences of gains and losses, and that activation of the anterior insula mediated this association. The current study aims to replicate and to expand these findings in a situation where contingency between options and outcomes is manipulated. Sixteen participants performed a stochastic decision-making task in which we manipulated a condition with low uncertainty of gain/loss (contingent-reward condition) and a condition with high uncertainty of gain/loss (random-reward condition). Regional cerebral blood flow was measured by 15O-water positron emission tomography (PET), and cardiovascular parameters and catecholamine in the peripheral blood were measured, during the task. In the contingent-reward condition, norepinephrine as an index of sympathetic activity was positively correlated with entropy indicating exploration in decision-making. Norepinephrine was negatively correlated with neural activity in the right posterior insula, rostral anterior cingulate cortex, and dorsal pons, suggesting neural bases for detecting changes of bodily states. Furthermore, right anterior insular activity was negatively correlated with entropy, suggesting influences on exploration in decision-making. By contrast, in the random-reward condition, entropy correlated with activity in the dorsolateral prefrontal and parietal cortices but not with sympathetic activity. These findings suggest that influences of sympathetic activity on exploration in decision-making and its underlying neural mechanisms might be dependent on the degree of uncertainty of situations.

Introduction

Electrophysiological (Denburg et al., 2006; Yen et al., 2012), pharmacological (Rogers et al., 2004), and human lesion (Bechara et al., 1999; Gläscher et al., 2012) studies have verified the notion that activity of the sympathetic nervous system can affect decision-making (Bechara et al., 2000; Bechara and Damasio, 2005). The insula has been identified as a pivotal brain region for this phenomenon, because it receives all bodily inputs, including peripheral sympathetic activity, and is thought to form an integrated representation of bodily states (Craig, 2009; Critchley, 2009). Furthermore, as the insula, especially its anterior portion, has tight connections with cognition- and emotion-related brain regions such as the prefrontal cortex, amygdala, and anterior cingulate cortex (ACC) (Augustine, 1996), it has been proposed that bodily states including sympathetic activity can modulate decision-making through changes in insular activity (Damasio, 1994).

Nevertheless, there remains a controversy about a direct role of sympathetic activity in decision-making (Dunn et al., 2006; Rolls, 2014), partly because sympathetic responses are too slow to affect decision-making instantaneously (Nieuwenhuis et al., 2010). Considering the kinetics of sympathetic nerves, it is reasonable to hypothesize that sympathetic activity might affect tonic states or modes of decision-making over relatively long time-scales, rather than a specific decision at a given moment. We previously tested this possibility by examining the effects of sympathetic activity on the dimension of exploitation and exploration, as an aspect of the tonic states of decision-making in stochastic reversal learning (Ohira et al., 2013). Exploitation is a strategy of sticking to the option that has delivered reward with the highest probability, and thus has the greatest utility. Exploration, on the other hand, is a strategy of seeking new and previously unexplored options, and thus means deviation from exploitation. While exploitation is more adaptive in a stable environment, organisms have to adopt exploration in an unstable environment. In this sense, exploitation and exploration are in a trade-off relationship, and the balance between these two strategies is critical for the survival of animals and humans.

On the basis of previous studies (Lee et al., 2004; Seo and Lee, 2008; Baek et al., 2013; Takahashi et al., 2013, 2014), we quantified the degree of exploration by using entropy, which is a concept in information theory (Shannon, 1948). Specifically, we calculated the conditional entropy representing the degree to which choices of options depended on the immediately previous outcome. Larger values of entropy mean that the decision-making strategy deviates more from a fixed pattern that depends only on the immediately previous outcome, and is thus more exploratory. The state on which entropy was conditioned was the outcome of the immediately previous trial. This choice was based on a previous finding in humans that the outcome of the immediately previous trial, as a history of reinforcement, explained a large portion of subsequent decision-making, while the influence of outcomes in older trials decayed exponentially in a stochastic learning task (Katahira et al., 2011). Another index of exploration is the probability of choosing an optimal option on the basis of expected values calculated in computational reinforcement learning models (Daw et al., 2006; Badre et al., 2012). While this parameter, which is sometimes called “inverse temperature,” is usually sensitive and can vary dynamically from trial to trial as learning progresses, entropy represents more tonic states of randomness of choices over relatively large numbers of trials. Therefore, we adopted entropy as an index of exploration because we aimed to elucidate influences of sympathetic activity on tonic aspects of decision-making as described above.

Our results (Ohira et al., 2013) showed that an increase of epinephrine in the peripheral blood as an index of sympathetic activity was associated with larger values of entropy indicating greater tendency of exploration. The increase of epinephrine was positively correlated with brain activity in the right anterior insula, dorsal ACC, and dorsal pons [near the locus coeruleus (LC)]. Furthermore, activity in the anterior insula mediated this correlation between epinephrine and entropy. In this study, the association of sympathetic responses and exploration was found only after introduction of the reversal of the association between options and outcomes, but not during the initial learning stage before the reversal. This suggests that the effects of sympathetic activity were not fixed, but were tuned based on evaluation of situations. To our knowledge, this was the first report of an association between peripheral sympathetic responses and exploration in decision-making, and its underlying neural mechanisms. Apparently, further evidence is needed to support the findings.

Therefore, the present study aimed to replicate and extend our previous findings (Ohira et al., 2013) by examining whether the association of neural and sympathetic activity with exploration in decision-making can be modulated by uncertainty, which is one of the important factors in decision-making. For this purpose, we report results of new analyses of an available dataset from our research project in which behavioral, 15O-water positron emission tomography (PET), EEG, cardiovascular, neuroendocrine, and immune parameters were measured during a stochastic decision-making task. In that task, we manipulated the degree of contingency between options and outcomes (monetary gains and losses) to examine how associations between brain and autonomic activity during decision-making vary with the uncertainty of the situation. In a condition with lower uncertainty, called the contingent-reward condition, an advantageous option, compared to a disadvantageous option, was associated with monetary gains at a higher probability and with monetary losses at a lower probability. In another condition with higher uncertainty, called the random-reward condition, gains and losses were delivered randomly for both stimuli. Thus, the situation was substantially stochastic and participants could not learn the contingency. One merit of using this dataset is that the involvement of brain regions well known to relate to decision-making, including the anterior cingulate, orbitofrontal, and dorsolateral prefrontal cortices (ACC, OFC, and DLPFC, respectively) and the dorsal striatum, during the stochastic decision-making task has been clarified and published elsewhere (Ohira et al., 2009, 2010). Compared with the contingent-reward condition, the OFC, DLPFC, and dorsal striatum were dominantly activated in the random-reward condition, where participants had to continue to seek contingency between options and outcomes.

Specifically, the novelty of the present article is to examine whether functional associations between sympathetic activity, its neural representation, and exploration in decision-making indexed by entropy vary with uncertainty in decision-making. For this aim, we analyzed a correlation matrix between exploration indexed by entropy, regional cerebral blood flow (rCBF) measured by 15O-water PET, catecholamine (epinephrine and norepinephrine) in peripheral blood, and cardiovascular indices: heart rate (HR), mean blood pressure (MBP), total peripheral resistance (TPR), and heart rate variability (HRV) representing vagal (parasympathetic) activity (Sayers, 1973). Because we have repeatedly reported that cardiovascular, endocrine, and immune responses are down-regulated in a highly uncertain situation of stochastic decision-making (Kimura et al., 2007; Ohira et al., 2009, 2010), we expected that the association between sympathetic activity, exploration, and underlying brain activity would be observed more dominantly in the contingent-reward condition, and that such associations would be weakened in the random-reward condition.

Methods

Participants

Sixteen healthy right-handed Japanese male undergraduate and graduate students who had no past history of psychiatric and neurological illness were recruited (M ± SD; 21.69 ± 2.25 years). They gave written informed consent in accordance with the Declaration of Helsinki. The present study was approved by the Ethics Committee of Kizawa Memorial Hospital.

Task and Procedure

Stochastic decision-making task

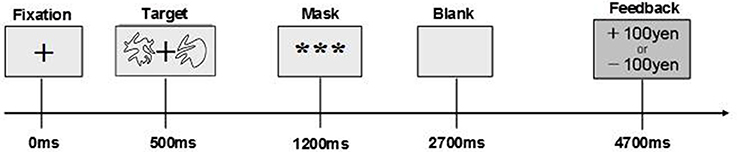

The timeline of a trial of the stochastic decision-making task performed by participants is shown in Figure 1. Following presentation of a cross-hair as a fixation point, two abstract line drawings were presented for 700 ms on the left and right sides of the fixation point. The drawings were selected from the set of Novel Shapes, which were validated for levels of verbalization, association, and simplicity (Endo et al., 2001). Participants chose one of the two stimuli by pressing a key within 700 ms. After that, a feedback signal indicating a gain of 100 Japanese Yen (JPY) or a loss of 100 JPY was presented. If participants did not choose a stimulus within 700 ms, they lost 100 JPY. In the contingent-reward condition, one stimulus (advantageous stimulus) led to gain at a probability of 70% and to loss at a probability of 30%, and the other stimulus (disadvantageous stimulus) was linked with gain and loss at reversed probabilities (30% gain and 70% loss). By contrast, in the random-reward condition, both stimuli were linked with gain and loss at probabilities of 50%. In this condition, the advantageous stimulus was operationally defined as a stimulus that was randomly selected by the experimenters. The verbal instruction to participants was that this task was a gamble on each trial. In addition, they were told that the amount of money paid for participation in the experiment would be increased or decreased according to their performance in the task. Furthermore, we set a control condition for subtraction analyses of PET (data shown in Ohira et al., 2010). The task in the control condition was identical to that in the other two conditions, except that the computer made a decision on each trial, and participants pressed the key that the computer indicated. In all conditions, the sides of the stimuli (left vs. right) were randomized, so the task involved object learning rather than spatial learning. The same pair of stimuli was presented throughout the blocks of each condition.
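The outcome contingencies described above can be sketched as a small simulation. This is a minimal illustration under stated assumptions, not the software used in the study: the names `GAIN_PROB`, `run_block`, and `summarize` are hypothetical, a missed response is coded as `None`, and the block length of 40 trials is taken from the experimental procedure.

```python
import random

# Probability of a gain for the (advantageous, disadvantageous) stimulus.
GAIN_PROB = {
    "contingent": (0.7, 0.3),  # contingent-reward condition
    "random": (0.5, 0.5),      # random-reward condition
}
STAKE_JPY = 100
TRIALS_PER_BLOCK = 40


def run_block(condition, choose, rng):
    """Simulate one block.

    `choose(history)` is a hypothetical policy returning True to pick the
    advantageous stimulus, False to pick the other one, or None when no
    key is pressed in time (which counts as a loss).
    """
    p_adv, p_dis = GAIN_PROB[condition]
    history = []
    for _ in range(TRIALS_PER_BLOCK):
        picked_adv = choose(history)
        if picked_adv is None:
            gain = False
        else:
            gain = rng.random() < (p_adv if picked_adv else p_dis)
        history.append((picked_adv, gain))
    return history


def summarize(history):
    """Return (response bias, reward acquisition, net payoff in JPY)."""
    n = len(history)
    response_bias = sum(1 for picked, _ in history if picked) / n
    reward_acquisition = sum(1 for _, gain in history if gain) / n
    net_jpy = sum(STAKE_JPY if gain else -STAKE_JPY for _, gain in history)
    return response_bias, reward_acquisition, net_jpy
```

For example, `summarize(run_block("contingent", lambda h: True, random.Random(0)))` describes a simulated participant who always picks the advantageous stimulus.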

Experimental procedure

Participants performed eight blocks of the decision-making task. Three blocks were for the contingent-reward condition, three blocks were for the random-reward condition, and two blocks were for the control condition. Each block lasted for 4 min, with an 11-min interval from the previous block, and contained 40 trials. Each experimental condition consisted of three consecutive blocks, and the order of the contingent-reward condition and the random-reward condition was counterbalanced between participants. In both the contingent-reward condition and the random-reward condition, the advantageous and disadvantageous stimuli were counterbalanced between participants, and the same stimulus served as the advantageous stimulus in all blocks for a given participant. Blocks for the control condition were placed in the 1st and 5th positions, such that each control block was followed by either the blocks of the contingent-reward condition or the blocks of the random-reward condition. The contingency between stimuli and outcomes in each control block was matched to that in the following experimental blocks; i.e., 70:30% gain/loss mapping to stimuli in one control block and 50:50% gain/loss mapping to stimuli in the other control block. Participants were told that gain and loss in the control condition would also influence the money paid for participation.

PET scanning to collect rCBF data was conducted in each block. Cardiovascular parameters (MBP, HR, and TPR) were measured for 2 min before each block as baseline and for 4 min during the task. For measurement of plasma catecholamine (epinephrine and norepinephrine), blood samples were taken using a heparinized 22-gauge butterfly catheter placed in the antecubital vein of the right forearm, for 1 min just before the baseline period of measurement of cardiovascular parameters and for the last 1 min of each block. Finally, participants were remunerated. Although participants were told that their payment would depend on their performance, all participants were paid 15,000 JPY (140 USD) for participation.

Behavioral Indices

Task performance was evaluated with two behavioral indices: response bias and reward acquisition. Response bias is the rate of choosing the advantageous stimulus. Reward acquisition was defined as the rate of obtaining a gain regardless of whether the advantageous or disadvantageous stimulus was chosen. Following our previous study (Ohira et al., 2013), Shannon's (1948) entropy was calculated from participants' decisions as an index of exploration. First, we determined the conditional probability of an action (a) under a state (S). Here, the action is either a choice of the same stimulus that was chosen in the previous trial or a choice of the other stimulus that was not chosen in the previous trial (Stay or Shift). The state is the outcome (gain or loss) of the previous trial. Thus, the conditional probability P(a|S) is calculated as follows:
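A form consistent with the definitions that follow, assuming that the constant c is added to the count of every action (additive smoothing; this exact form is an assumption rather than something the text here confirms), is:

$$P(a \mid S) = \frac{\mathrm{Num}(a \mid S) + c}{\sum_{k}\bigl(\mathrm{Num}(k \mid S) + c\bigr)}$$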

where Num(a|S) is the number of Stay or Shift (a) under a state S, and Num(k|S) is the number of total choices k under a state S. The constant c was introduced to stabilize the calculated probability, and was fixed to 1 here. Therefore, four conditional probabilities were calculated: (1) Stay (choice of the same stimulus chosen in the previous trial) when gain was given in the previous trial, (2) Stay when loss was given in the previous trial, (3) Shift (choice of different stimulus not chosen in the previous trial) when gain was given in the previous trial, and (4) Shift when loss was given in the previous trial. Then, entropy H was estimated as follows:
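A form consistent with the description that follows, assuming base-2 logarithms so that H reaches 1 for random choice between two actions, is:

$$H = -\frac{1}{N}\sum_{S}\sum_{a} P(a \mid S)\,\log_{2} P(a \mid S)$$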

where N is the number of states S. The value of entropy H was standardized to range from 0 to 1 by dividing by N (here, N = 2). Thus, entropy calculated by this formula reflects the degree of deviation from dependence of a choice on the outcome of the previous trial. If a participant chooses the same stimulus in all trials, regardless of whether it is advantageous or disadvantageous, H will be at its minimum (approaching 0, although H will not reach 0 because of the constant c). If a participant always chooses the same stimulus as in the previous trial when a gain was given in the previous trial and shifts the choice when a loss was given in the previous trial (Win-Stay, Lose-Shift), H will also be at its minimum. These patterns of decision-making can be regarded as fixed strategies, independent of the task performance reflected by response bias and reward acquisition. Conversely, if a participant chooses a stimulus totally independently of the outcome of the previous trial in all trials (random choice), entropy H will be at its maximum (approaching 1). Response bias, reward acquisition, and entropy were determined for each block of the contingent-reward and random-reward conditions.
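The entropy index described above can be sketched in a few lines. This is an illustration under assumptions, not the authors' analysis code: the function name `conditional_entropy` is hypothetical, the constant c is assumed to be added to each of the two action counts, logarithms are assumed to be base 2, and trials without a response are assumed to have been removed beforehand.

```python
import math


def conditional_entropy(choices, outcomes, c=1.0):
    """Entropy of Stay/Shift given the previous outcome, scaled to 0..1.

    choices:  stimulus chosen on each trial (any hashable labels)
    outcomes: "gain" or "loss" on each trial
    c:        stabilizing constant added to every count (must be > 0)
    """
    counts = {
        "gain": {"stay": 0, "shift": 0},
        "loss": {"stay": 0, "shift": 0},
    }
    # The state is the outcome of trial t-1; the action is observed on trial t.
    for prev in range(len(choices) - 1):
        action = "stay" if choices[prev + 1] == choices[prev] else "shift"
        counts[outcomes[prev]][action] += 1

    h = 0.0
    for by_action in counts.values():
        denom = sum(n + c for n in by_action.values())
        for n in by_action.values():
            p = (n + c) / denom
            h -= p * math.log2(p)
    return h / len(counts)  # divide by the number of states (N = 2)
```

Under these assumptions, a Win-Stay, Lose-Shift sequence and a sequence that never changes stimulus both give low values, whereas a sequence with equal numbers of Stay and Shift after each outcome gives a value of 1.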

Autonomic Indices

Cardiovascular responses

We recorded MBP and HR by using the finger cuff of a Portapres Model 2 (Finapres Medical Systems Inc., Amsterdam, The Netherlands) which was attached to the third finger of the dominant arm of each participant. HR was also measured and analyzed by using photoplethysmography using the Portapres at a sampling rate of 200 Hz, and the Beatfast software using a model flow. TPR was obtained by analyzing the sampled arterial pressure waveforms with the Beatfast software. Mean values of MBP, HR, and TPR were calculated for 2 min just before the task as baseline and during 4 min of the task in each block, for analyses.

We further measured components of HRV on the basis of HR data as indices of sympathetic and parasympathetic activity. Similar to other cardiovascular indexes, HRV was analyzed for 2 min just before the task for baseline and 4 min during the task in each block. First, the tachogram data on interbeat-intervals were re-sampled at 4 Hz to obtain equidistant time-series values. Then, a power spectral density was obtained by a fast Fourier transformation. The data were linearly detrended and filtered through a rectangular window. The integral of the power spectrum was measured in a low-frequency band (LF, 0.04–0.15 Hz) and a high-frequency band (HF, 0.15–0.4 Hz). Herein, we report the absolute value of HF power as an index of parasympathetic activity. For statistical analyses, we examined the LF and HF component expressed as natural logarithm values of the percentages of LF power and HF power of the total power in the spectrum (Perini et al., 2000). We then calculated the ratio of LF to HF (LF/HF), which reflects the sympatho-vagal balance (relative increase of sympathetic activity to parasympathetic activity) (Task Force of the European Society of Cardiology, The North American Society of Pacing and Electrophysiology, 1996).
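The spectral steps described above (resampling at 4 Hz, linear detrending, a rectangular window, and integration over the LF and HF bands) can be sketched as follows. This is a rough illustration under assumptions, not the pipeline used in the study: the function names are hypothetical, a plain discrete Fourier transform evaluated only at the bins inside each band stands in for the fast Fourier transformation, and the logarithmic transformation of percentage power is omitted.

```python
import cmath
import math


def resample_linear(times, values, fs=4.0):
    """Linearly interpolate an unevenly sampled series onto an fs-Hz grid."""
    n = int((times[-1] - times[0]) * fs) + 1
    out, j = [], 0
    for i in range(n):
        t = times[0] + i / fs
        while j < len(times) - 2 and times[j + 1] < t:
            j += 1
        w = (t - times[j]) / (times[j + 1] - times[j])
        out.append(values[j] + w * (values[j + 1] - values[j]))
    return out


def detrend_linear(x):
    """Remove the least-squares straight line from x."""
    n = len(x)
    t_mean = (n - 1) / 2
    x_mean = sum(x) / n
    sxx = sum((i - t_mean) ** 2 for i in range(n))
    sxy = sum((i - t_mean) * (v - x_mean) for i, v in enumerate(x))
    slope = sxy / sxx
    return [v - (x_mean + slope * (i - t_mean)) for i, v in enumerate(x)]


def band_power(x, fs, lo, hi):
    """Sum periodogram power for frequencies lo <= f < hi.

    No taper is applied, which corresponds to a rectangular window.
    """
    n = len(x)
    power = 0.0
    for k in range(1, n // 2):
        if lo <= k * fs / n < hi:
            coef = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                       for i, v in enumerate(x))
            power += 2 * abs(coef) ** 2 / (n * n)
    return power


def lf_hf(beat_times, ibis, fs=4.0):
    """Return (LF power, HF power, LF/HF) for an interbeat-interval series."""
    x = detrend_linear(resample_linear(beat_times, ibis, fs))
    lf = band_power(x, fs, 0.04, 0.15)
    hf = band_power(x, fs, 0.15, 0.40)
    return lf, hf, lf / hf  # assumes HF power is non-zero
```

Here `beat_times` are the times of successive beats in seconds and `ibis` are the corresponding interbeat intervals; a series modulated at a respiratory frequency such as 0.25 Hz would be expected to show most of its power in the HF band.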

Catecholamine

Blood samples were anticoagulated with ethylenediamine tetra-acetate, chilled, and centrifuged. Then the plasma was removed and frozen at −80°C for storage until the analysis. Epinephrine and norepinephrine in plasma were measured by using high performance liquid chromatography. Alumina was used for extraction, and the recovery rate for all amines, as evaluated with a dihydroxybenzylamine standard, was between 60 and 70%. The intra-assay coefficient of variation was less than 5% for measurement of epinephrine and the inter-assay variations were less than 6% for measurement of norepinephrine.

Statistical Analyses for Behavioral and Autonomic Indices

We performed two-way (Condition [contingent-reward vs. random-reward] × Block [1, 2, 3]) repeated-measures analyses of variance (ANOVAs) for response bias, reward acquisition, and entropy, separately. Three-way (Condition [contingent-reward vs. random-reward] × Period [baseline vs. task] × Block [1, 2, 3]) repeated-measures ANOVAs were performed for autonomic data (MBP, HR, TPR, epinephrine, norepinephrine, the LF/HF ratio of HRV, and the HF component of HRV). The Greenhouse-Geisser epsilon correction factor, ε (Jennings and Wood, 1976), was used where necessary. When significant interactions were found by ANOVAs, post-hoc analyses using Tukey's test (p < 0.05) were performed to detect combinations of data points which showed significant differences.

Next, to explore relational structures within the behavioral and autonomic indices, correlations within the behavioral indices (response bias, reward acquisition, and entropy) and change scores of autonomic indices (MBP, HR, TPR, epinephrine, norepinephrine, the LF/HF ratio of HRV, and the HF component of HRV) were examined. Furthermore, we performed step-wise regression analyses by using change scores of autonomic indices (MBP, HR, TPR, epinephrine, norepinephrine, the LF/HF ratio of HRV, and the HF component of HRV) as independent variables in the contingent-reward condition and random-reward condition, separately, to examine the effects of sympathetic and parasympathetic parameters on entropy. To calculate the change scores of the autonomic indices, the value of each index at baseline was first subtracted from its value during the task in each block. The subtracted values were then averaged across the three blocks for each index, in both the contingent-reward condition and the random-reward condition, and these means were used for the regression analyses.
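The change-score computation and a simple correlation can be sketched as follows. This is a minimal illustration, assuming one baseline value and one task value per block; the function names `change_score` and `pearson_r` are hypothetical, and any numbers passed to them in examples are made up rather than taken from the study.

```python
from statistics import mean


def change_score(baseline_by_block, task_by_block):
    """Mean over blocks of (task value - baseline value) for one index."""
    if len(baseline_by_block) != len(task_by_block):
        raise ValueError("baseline and task need one value per block")
    return mean(t - b for b, t in zip(baseline_by_block, task_by_block))


def pearson_r(x, y):
    """Pearson correlation between two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5
```

One change score per participant and condition would then be paired with that participant's entropy, for example `pearson_r(norepinephrine_changes, entropies)`, where both argument names are placeholders for per-participant lists.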

Neuroimaging by PET

Image acquisition

The distribution of rCBF was measured in each block by using a PET scanner (General Electric Advance NXi) in a high-sensitivity three-dimensional mode. A venous catheter for administering the tracer was inserted in an antecubital fossa vein in the left forearm of each participant. The participant's head was fixed in an inflatable plastic head-holder that prevented head movement. Then, a transmission scan using a rotating 68germanium pin source was completed for 10 min. A 370-MBq bolus injection was started 60 s after initiation of each block. Scanning was started 30 s after initiation of the bolus injection and continued for 60 s. The integrated radioactivity accumulated during the scanning was measured as the index of rCBF. Eight scans were performed for each participant, with a 15-min interval between successive scans to allow clearance of radioactivity. A Hanning filter was used to reconstruct images into 35 planes with 4.5 mm thickness and a resolution of 2 × 2 mm (full width at half maximum).

Image processing and analyses

We used SPM 99 (Friston et al., 1995) implemented in Matlab (v. 5.3, The Mathworks Inc., Sherborn, MA, USA) for spatial preprocessing and statistical analyses of PET images. First, the images were realigned by using sinc interpolation to remove artifacts. Then, the images were transformed into a standard stereotactic space. After that, the images were corrected for whole brain global blood flow by proportional scaling and smoothed using a Gaussian kernel to a final in-plane resolution of 8 mm at full width at half maximum.

Brain activation during the contingent-reward and random-reward conditions has been previously reported (Ohira et al., 2010). Because the main interest of the current study was to examine brain regions that showed activity synchronized with autonomic activity and that mediated the association between autonomic activity and exploration in decision-making, correlation maps were composed separately for the contingent-reward condition and the random-reward condition. First, correlations between rCBF and the autonomic indices (MBP, HR, TPR, epinephrine, norepinephrine, the LF/HF ratio of HRV, and the HF component of HRV) that showed a significant contribution to entropy in the regression analyses described above were examined in both conditions. Change scores of the autonomic indices were used as covariates for the correlation analyses of PET images. Although whole-brain activation was examined and reported (see Tables 4, 5) for the correlation analyses, we focused on the prefrontal, limbic, and striatal areas for interpretation, as we had found neural activity and neuro-autonomic associations in such regions during similar decision-making tasks (Ohira et al., 2009, 2010, 2011, 2013). Next, we examined correlations between rCBF and entropy in the contingent-reward and random-reward conditions, separately. For all correlation analyses, we adopted a statistical threshold of p < 0.001 (uncorrected) and K > 10. This threshold is relatively liberal by current standards. However, it was chosen to balance the risks of type-1 and type-2 errors (Lieberman and Cunningham, 2009) in a PET study with limited statistical power compared to fMRI studies.

Results

Behavioral Data

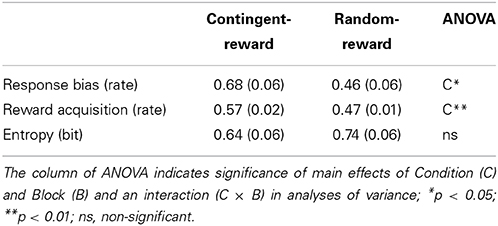

Means (Ms) and standard errors (SEs) of response bias, reward acquisition, and entropy are shown in Table 1. A main effect of Condition for response bias [F(1, 15) = 7.10, p < 0.05, η2p = 0.32] was significant, but neither a main effect of Block nor an interaction of Condition and Block was significant (F < 1.78), for response bias. Naturally, reward acquisition showed similar results as response bias, namely, a significant main effect of Condition [F(1, 15) = 13.49, p < 0.01, η2p= 0.47]. No significant effect was obtained on entropy (F < 1.00, ns.).

Entropy was not correlated with response bias and reward acquisition in either condition [see Table 3; r(14) < 0.17, ns.], indicating that entropy is independent of performance of the decision-making task.

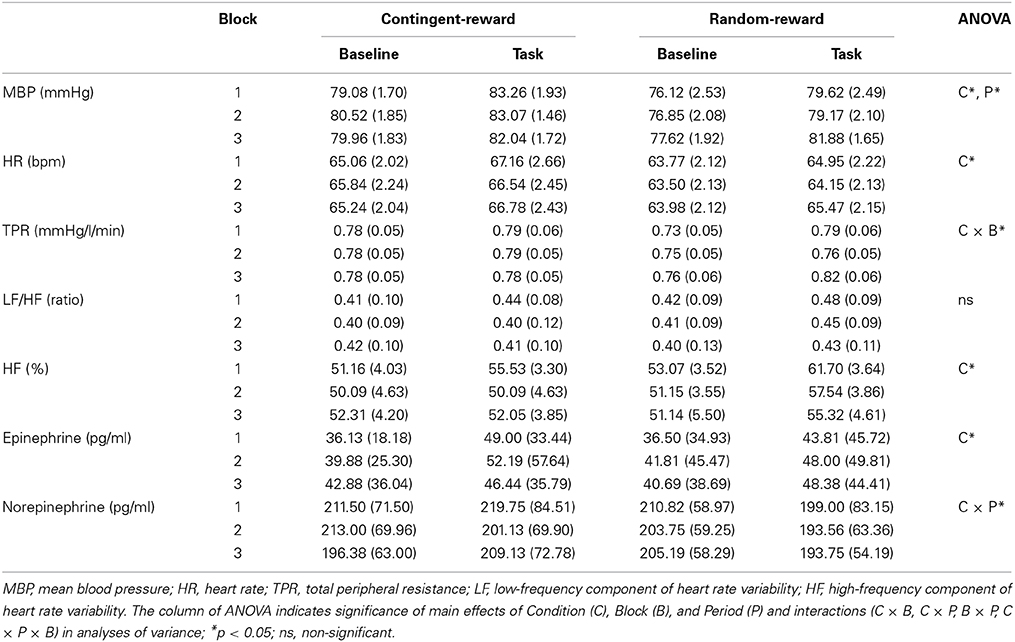

Autonomic Data

Ms and SEs of autonomic indices in each condition are shown in Table 2. For MBP, ANOVA showed significant main effects of Condition and Period [F(1, 15) = 6.18, p < 0.05, η2p = 0.29; F(1, 15) = 37.58, p < 0.001, η2p = 0.71], suggesting that MBP in the contingent-reward condition was higher than that in the random-reward condition, and that MBP elevated during the task compared to the baseline. For HR, a significant main effect of Condition was shown [F(1, 15) = 6.92, p < 0.05, η2p = 0.32], indicating that HR was higher in the contingent-reward condition compared with that in the random-reward condition. TPR showed a significant interaction of Condition and Block [F(1, 15) = 4.59, p < 0.05, η2p = 0.23], indicating that TPR was markedly increased in the random-reward condition but not in the contingent-reward condition, during the third block. A significant main effect of Condition in the HF component of HRV [F(1, 15) = 5.63, p < 0.05, η2p = 0.27] was also observed, suggesting that parasympathetic activity was more enhanced in the random-reward condition compared with that in the contingent-reward condition. The LF/HF ratio of HRV showed no significant effects in either condition.

For catecholamine, epinephrine showed a significant main effect of Condition [F(1, 15) = 6.10, p < 0.05, η2p = 0.29], indicating that overall concentration of epinephrine was higher in the contingent-reward condition compared with that in the random-reward condition. For norepinephrine, a significant interaction of Condition and Period was observed [F(1, 15) = 5.55, p < 0.05, η2p = 0.27]. Further it was indicated that norepinephrine concentration did not change between baseline and task periods in the contingent-reward condition, while it was reduced during the task period in the random-reward condition.

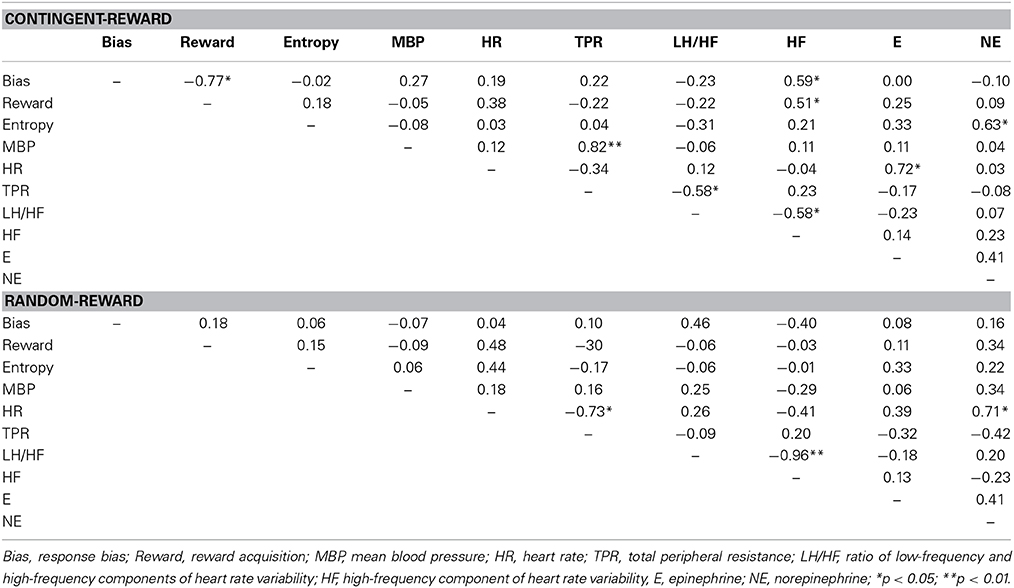

Associations of Autonomic Activity and Decision-Making

Table 3 shows the correlations within behavioral and autonomic indices in both conditions. In the contingent-reward condition, entropy was positively correlated with changes of norepinephrine, while response bias and reward acquisition were positively correlated with the HF component of HRV. MBP and TPR were positively correlated, suggesting sympathetic activity. The HF component of HRV and the LF/HF ratio of HRV were negatively correlated, suggesting that these parasympathetic and sympathetic indices worked in opposition to each other. Conversely, in the random-reward condition, no significant relations were found between autonomic indices and behavioral indices. In this condition, HR was correlated positively with norepinephrine and negatively with TPR. The HF component of HRV and the LF/HF ratio of HRV were also negatively correlated in this condition.

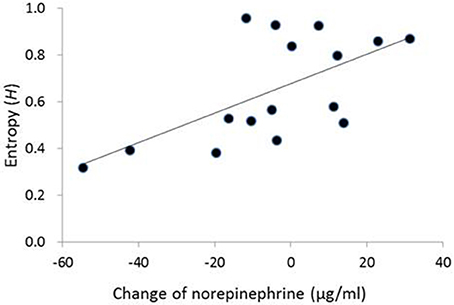

In the contingent-reward condition, the step-wise regression analysis on entropy yielded a significant model [adjusted R2 = 0.44, F(2,13) = 6.94, p < 0.01], including norepinephrine and the LF/HF ratio of HRV as independent variables. The analysis also revealed that the change of norepinephrine as an index of sympathetic activity (β = 0.65, p < 0.05), but not the LF/HF ratio of HRV, significantly and positively contributed to entropy (Figure 2). Conversely, in the random-reward condition, the regression model was not significant [F(7,8) = 0.53, ns.].

Figure 2. Correlation between change of norepinephrine and entropy in decision-making in the contingent-reward condition. No correlation between change of norepinephrine and entropy was observed in the random-reward condition. The vertical axis of the graph represents change of norepinephrine between before and after blocks of the task (i.e., positive/negative values mean increase/decrease of norepinephrine from the baseline in each block).

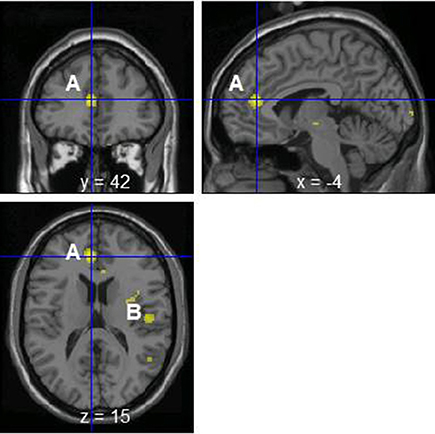

PET Data

The change of norepinephrine showed significant negative correlations with rCBF in brain regions including the parahippocampal gyrus, cerebellum, rostral ACC, right posterior insula, prefrontal cortex, globus pallidus, thalamus, putamen, and postcentral gyrus in the contingent-reward condition (Figure 3 and Table 4), while rCBF in the random-reward condition showed no significant correlations with norepinephrine in the frontal, limbic, and striatum regions. As already reported (Ohira et al., 2010), the HF component of HRV as an index of cardiovagal inhibitory control was positively correlated with rCBF in the rostral ACC and right DLPFC in the random-reward condition, but not in the contingent-reward condition. Other autonomic indices showed no significant correlations in either condition.

Figure 3. Significant negative correlations between regional cerebral blood flow and change of norepinephrine in the contingent-reward condition. A, Rostral anterior cingulate cortex; B, posterior insula.

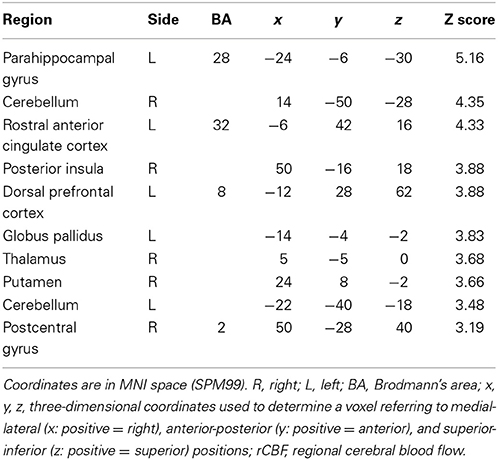

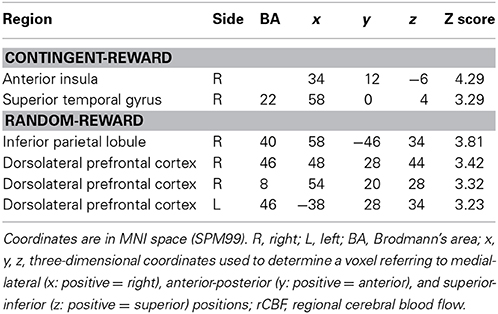

Table 4. Significant negative correlations between rCBF and norepinephrine in contingent-reward condition.

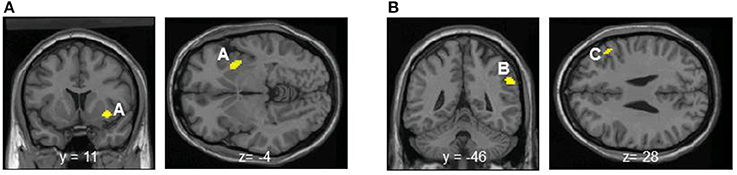

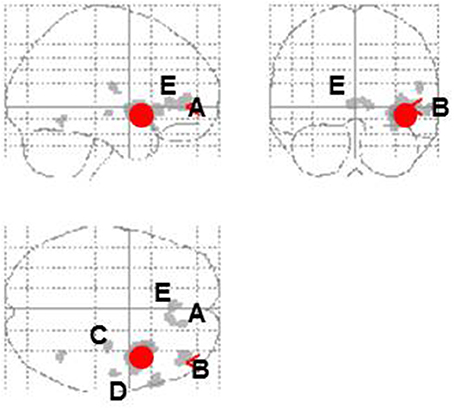

Entropy showed significant negative correlations with rCBF in the right anterior insula and superior temporal gyrus in the contingent-reward condition (Figure 4A and Table 5). In the random-reward condition, entropy was positively correlated with rCBF in the right inferior parietal lobule and bilateral DLPFC (Figure 4B and Table 5). Functional connectivity between brain regions related to exploration in the contingent-reward condition was examined by a further whole-brain correlation analysis using, as a seed, rCBF values from the cluster showing the highest correlation with entropy (the right anterior insula). Activity in the right anterior insula was positively correlated with activity in several regions in the right hemisphere, including the ventrolateral prefrontal cortex (VLPFC: BA47, x = 60, y = 22, z = −4, Z = 4.11), lateral prefrontal cortex (LPFC: BA46, x = 38, y = 48, z = 8, Z = 4.08), putamen (x = 30, y = −18, z = −4, Z = 3.98), posterior insula (x = 32, y = −12, z = 18, Z = 3.81), and rostral ACC (x = 8, y = 32, z = 4, Z = 3.56) (Figure 5).

Figure 4. (A) Significant negative correlations between regional cerebral blood flow and entropy in decision-making in the contingent-reward condition. A, Anterior insula. (B) Significant positive correlations between regional cerebral blood flow and entropy in decision-making in the random-reward condition. B, Inferior parietal lobule; C, dorsolateral prefrontal cortex.

Table 5. Significant negative correlations between rCBF and entropy in the contingent-reward condition and positive correlations between rCBF and entropy in the random-reward condition.

Figure 5. Correlational activity between the right anterior insula (red circle) and other brain regions in the contingent-reward condition. A, Ventrolateral prefrontal cortex; B, lateral prefrontal cortex; C, putamen; D, posterior insula; E, rostral anterior cingulate cortex.

Correlations between sympathetic activity, rCBF, and entropy described above were examined separately in each condition. Due to the small sample size, formal statistical tests did not show any significant differences in these correlations between the contingent-reward condition and the random-reward condition. Therefore, the results of the present study must be interpreted with caution.
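To illustrate why a sample of 16 limits the power to detect a difference between two correlations, the following sketch applies Fisher's r-to-z transformation. The text does not specify which test was actually used, so this is an assumption made for illustration; the test shown treats the two correlations as independent, and a test for dependent correlations would be more appropriate if the same participants contributed to both conditions. The function name and the correlation values are hypothetical and are not results from this study.

```python
import math
from statistics import NormalDist

def fisher_z_difference(r1, n1, r2, n2):
    """Two-sided test of H0: rho1 == rho2 for two independent correlations."""
    z1, z2 = math.atanh(r1), math.atanh(r2)           # Fisher r-to-z transform
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))   # standard error of z1 - z2
    z = (z1 - z2) / se
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))        # two-sided p value
    return z, p

# Hypothetical correlations (r = 0.5 vs. r = 0.0), each based on n = 16
z, p = fisher_z_difference(0.5, 16, 0.0, 16)
print(f"z = {z:.2f}, p = {p:.2f}")
```

With these hypothetical inputs the statistic is about 1.40 and the two-sided p value is about 0.16, so even a fairly large difference between correlations would not reach conventional significance at this sample size.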

Discussion

As predicted, sympathetic activity indexed by changes of norepinephrine was linked with exploration in decision-making represented by entropy. Activity of brain regions including the insula was associated with the correlation between sympathetic activity and exploration, in the contingent-reward condition where an appropriate option was stochastically determined and thus uncertainty in decision-making was relatively low. However, in the random-reward condition where uncertainty in decision-making was extremely high, exploration in decision-making was not linked with sympathetic activity but with brain activity in the DLPFC and inferior parietal lobule. These findings suggest that the linkage between sympathetic activity and decision-making might be, at least partly, dependent on the degree of uncertainty of a situation. Probabilities of response bias and reward acquisition were matched to the contingencies between options and outcomes both in the contingent-reward condition and in the random-reward condition (approximately 70 and 50%, respectively, see Table 1), suggesting validity of experimental manipulation in this study. Values of entropy in the two conditions of this study were consistent with those in our previous study where a similar decision-making task was used (Ohira et al., 2013), suggesting reliability of this index of exploration.

Only catecholamine, and no other index of sympathetic activity (the LF/HF component of HRV, MBP, HR, and TPR), predicted entropy in the contingent-reward condition. This suggests that signals of peripheral sympathetic activity affecting exploration are conveyed to the brain mainly via the neurochemical route including the afferent vagus nerve expressing β-adrenergic receptors, NTS, LC-norepinephrine system, and basal forebrain cholinergic system, as proposed by several researchers (e.g., Williams and McGaugh, 1993; Cahill and McGaugh, 1998; Clayton and Williams, 2000; Cahill and Alkire, 2003; Berntson et al., 2003, 2011), while the somatosensory signals driven by cardiovascular responses might play relatively minor roles in modulation of exploration. In addition, catecholamine and other sympathetic indices did not affect response bias or reward acquisition, suggesting that sympathetic activity is associated with exploration in decision-making, but not with currently appropriate strategies (exploitation).

Neither catecholamine nor cardiovascular indices were associated with entropy in the random-reward condition, where sympathetic activity was generally attenuated. This attenuation of sympathetic activity in such a highly uncertain condition has been reported in our previous studies (Kimura et al., 2007; Ohira et al., 2009, 2010), and corresponds to a typical physiological coping style in a stressful situation that is difficult to control and in which individuals experience insufficient resources (Blascovich et al., 1999; Keay and Bandler, 2001). Attenuation of cardiovascular activity in the random-reward condition suggests that energy expenditure is limited by reducing the allocation of energy to ongoing behaviors that have become inappropriate, and that the saved energy is allocated to attention and cognitive processes in order to find a more appropriate way of coping. This result suggests that autonomic responses accompanying decision-making are under top-down regulation on the basis of appraisal of the current situation (Ohira et al., 2010; Studer and Clark, 2011; Stankovic et al., 2014). It should be noted that the average value of entropy was maintained at a high level in the random-reward condition (see Table 1), suggesting that participants did not abandon efforts for the task and did not just adopt simple strategies of decision-making (e.g., choice of the same option in all trials), even in the random-reward condition.

Changes of norepinephrine, but not those of epinephrine, specifically correlated with entropy in the contingent-reward condition of the present study, while epinephrine but not norepinephrine correlated with entropy in our previous study (Ohira et al., 2013). Although the reasons for this difference are not clear, it is possible that the correlation between changes of norepinephrine and entropy in the contingent-reward condition of the present study was produced mainly by a decrease in norepinephrine level. Figure 2 shows that a decrease of norepinephrine from baseline (below the "0" level) was associated with lower values of entropy. These results suggest that some participants showed a reduction of sympathetic activity that accompanied the progress and establishment of learning about the contingency between options and outcomes. A decrease of norepinephrine might sensitively reflect such a reduction of sympathetic activity, while the concentrations of epinephrine in this study were maintained at high levels (see Table 2). Norepinephrine is the primary transmitter of sympathetic nerves, while epinephrine is a secondary product that is synthesized and secreted in the adrenal medulla. Thus, norepinephrine might have higher temporal reactivity than epinephrine because levels of norepinephrine (but not epinephrine) are mainly modulated by the norepinephrine transporter, which enables rapid termination of responses (Schroeder and Jordan, 2012). Furthermore, the rate of metabolism is higher for norepinephrine than for epinephrine (Eisenhofer and Finberg, 1994).

The changes of norepinephrine in the contingent-reward condition were negatively correlated with rCBF in brain regions including the right insula and ACC, as well as limbic and striatal regions such as the parahippocampal gyrus, thalamus, globus pallidus, and putamen, which have tight connections with the insula (Augustine, 1996). Neural activity in these brain regions has been repeatedly reported in relation to bodily responses such as skin conductance responses (Critchley et al., 2002), inflammation induced by vaccination (Harrison et al., 2009), interoceptive awareness (Pollatos et al., 2007), and the increase of epinephrine in reversal learning (Ohira et al., 2013). In addition, the brain regions in which activity correlated with norepinephrine changes in the present study are included in the neural network whose resting-state functional connectivity showed synchronization with skin conductance as an index of sympathetic activity (Fan et al., 2012). As the insula and ACC are the top-level centers of the ascending pathways of information flow from the body to the brain, including changes of catecholamine, mainly via the afferent vagus nerve, brain norepinephrine system, and basal forebrain cholinergic system (Berntson et al., 2003, 2011), our data provide additional evidence for the role of the insula and ACC in producing neural representations of bodily states (Craig, 2009; Critchley, 2009).

Nevertheless, the negative correlation between changes of norepinephrine and rCBF in the insula and the ACC observed in the present study seems to contradict our previous finding that changes of epinephrine were positively correlated with rCBF in those brain regions (Ohira et al., 2013). This discrepancy can be interpreted by considering that the insula does not respond just linearly to inputs of peripheral bodily signals, but might work as a "comparator." Seth (2013) and colleagues (Seth et al., 2012) argued that the insula can detect a mismatch (prediction error) between predicted bodily responses calculated by an inner model and actual inputs of bodily responses. The greater the mismatch between predicted and actual bodily responses, the greater the insular activity should be. The findings of the present study and our previous study (Ohira et al., 2013) seem consistent with this notion; specifically, we speculate that the insula and the connected neural network detected a positive prediction error (an increase of bodily responses relative to the current adaptation level) in the previous study and a negative prediction error (a decrease of bodily responses relative to the current adaptation level) in the present study. The negative correlation between the explorative tendency in decision-making indexed by entropy and rCBF in regions including the insula also seems to support this concept. Namely, activity of the "comparator" neural network including the anterior and posterior portions of the insula, driven by detection of a decrease of sympathetic activity relative to the current adaptation level, might lead to a reduction of the explorative tendency in decision-making. The positive correlations between activity in the right anterior insula and other brain regions such as the VLPFC, LPFC, rostral ACC, and striatum suggest that the prediction error detected in the insula might serve to modulate activity in the frontal-striatal neural network that is directly involved in decision-making (e.g., van Leijenhorst et al., 2006; Eshel et al., 2007; Costa and Averbeck, 2013). Additionally, the detected prediction error can be utilized to tune the strategy of decision-making along the dimension of exploration and exploitation (e.g., Daw et al., 2006; Frank et al., 2009; Sallet and Rushworth, 2009).
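The comparator account can be stated schematically as follows. This is a simplified illustration under our own notation, not a model fitted to the data: $b_{\mathrm{actual}}$ denotes the incoming bodily signal, $b_{\mathrm{predicted}}$ the level predicted from the current adaptation level, $\delta$ the interoceptive prediction error, and $A_{\mathrm{insula}}$ insular activity. All of these symbols are assumptions introduced here for illustration.

$$
\delta = b_{\mathrm{actual}} - b_{\mathrm{predicted}}, \qquad A_{\mathrm{insula}} \propto |\delta|
$$

Under this scheme, when $\delta > 0$ (bodily responses rising above the predicted level), $A_{\mathrm{insula}}$ increases as $b_{\mathrm{actual}}$ increases, which would appear as a positive correlation between the bodily index and rCBF. When $\delta < 0$ (bodily responses falling below the predicted level), $A_{\mathrm{insula}}$ increases as $b_{\mathrm{actual}}$ decreases, which would appear as a negative correlation. The same mechanism could therefore produce correlations of opposite sign in the two studies.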

Exploration in decision-making indexed by entropy was positively correlated with rCBF in the bilateral DLPFC and inferior parietal lobule, but not with rCBF in the insula, in the random-reward condition. This result is consistent with previous findings showing that the prefrontal and parietal neural network is involved in exploration in several decision-making tasks (Daw et al., 2006; Sallet and Rushworth, 2009; Costa and Averbeck, 2013). We previously reported higher activation of the DLPFC in the random-reward condition than in the contingent-reward condition (Ohira et al., 2010). The DLPFC is involved in working memory, executive control, and top-down control over the flow of information processing (Seo et al., 2007). Thus, the DLPFC might be recruited more strongly during decision-making in a highly uncertain situation that requires continuous seeking for hidden rules on the basis of memory of one's own past actions and their outcomes. Such cognitive functions may lead to exploratory seeking for an appropriate strategy of decision-making in the uncertain situation. Furthermore, the right DLPFC plays a critical role in the inhibitory control of superficially seductive options (Fecteau et al., 2007). This function likely contributes to exploration by inhibiting simple adherence to recent gains. A neuroimaging study using 15O-PET showed that the left side of the DLPFC is critical for generation of randomness of behavioral sequences (Jahanshahi et al., 2000), and the causality of this notion was verified in a study using transcranial magnetic stimulation (Jahanshahi and Dirnberger, 1999). This function may also support exploration by avoiding simple behavioral patterns such as thoughtless repeats of previous choices or the Win-stay Lose-shift strategy. In addition, it has been suggested that the inferior parietal lobule works as an interface between frontal areas where values of options are calculated and the control of motor output (Daw et al., 2006). Activity in such a frontal-parietal network was also shown to correlate with the amount of information that participants gathered before committing to a decision (Furl and Averbeck, 2011). In contrast to the findings in the contingent-reward condition, sympathetic indices including norepinephrine showed no correlations with exploration in decision-making or with activity of brain regions including the insula in the random-reward condition. The positive correlation between the HF component of HRV as an index of cardiovagal activity and rCBF in the rostral ACC and right DLPFC in this condition suggests that physiological responses are under inhibitory control on the basis of evaluation of the current situation in the frontal neural network (Thayer et al., 2012). Such neural processes likely canceled the effects of sympathetic activity on exploration in the random-reward condition.
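To make concrete why stereotyped patterns such as Win-stay Lose-shift correspond to low exploration, the sketch below computes a conditional Shannon entropy of the current choice given the previous choice and its outcome. This is an assumption made for illustration: the formula may differ from the entropy index defined in the Methods, and the function name, the two-option coding (0/1), and the simulated sequences are hypothetical.

```python
import math
import random
from collections import Counter

def conditional_entropy(choices, outcomes):
    """H(choice_t | choice_{t-1}, outcome_{t-1}) in bits."""
    contexts = list(zip(choices[:-1], outcomes[:-1]))  # (previous choice, previous outcome)
    current = choices[1:]
    joint = Counter(zip(contexts, current))            # counts of (context, current choice)
    context_counts = Counter(contexts)                 # counts of each context
    n = len(current)
    h = 0.0
    for (ctx, _), count in joint.items():
        p_joint = count / n                            # P(context, choice)
        p_cond = count / context_counts[ctx]           # P(choice | context)
        h -= p_joint * math.log2(p_cond)
    return h

# Simulated sequences only (not data from the study)
rng = random.Random(0)
outcomes = [rng.randint(0, 1) for _ in range(200)]     # 1 = gain, 0 = loss

# Win-stay Lose-shift: repeat the choice after a gain, switch after a loss
wsls = [0]
for o in outcomes[:-1]:
    wsls.append(wsls[-1] if o == 1 else 1 - wsls[-1])

# Choices drawn at random, independent of the preceding trial
random_choices = [rng.randint(0, 1) for _ in range(200)]

print(conditional_entropy(wsls, outcomes))
print(conditional_entropy(random_choices, outcomes))
```

A pure Win-stay Lose-shift sequence is fully determined by the preceding trial, so its conditional entropy is zero, whereas choices that ignore the preceding trial approach the maximum of 1 bit for two options.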

It is well known that activity of dopamine neurons in the midbrain-striatum neural circuit is greatest when uncertainty of reward delivery is highest (Fiorillo et al., 2003). This classical finding is consistent with the result of our previous study (Ohira et al., 2010) showing that activation of the dorsal striatum, which is a main target area of projection of midbrain dopamine neurons, was higher in the random-reward condition (higher uncertainty) than in the contingent-reward condition (lower uncertainty). On the other hand, entropy showed no correlation with activation of the midbrain-striatum dopamine circuit in either condition in this study. Taken together, while activity in dopamine neurons might be involved in coding and evaluation of uncertainty in decision-making, the neural networks including the insula and DLPFC might be involved in modulation of exploration in decision-making on the basis of such coding and evaluation of uncertainty.

Some limitations of the present study should be noted. First, as the sample size of this study was small and participants were all male, the generalizability of the findings should be further examined. Second, neuroimaging using PET has limited temporal resolution compared to fMRI. Also, as PET studies are largely correlative and we used relatively liberal statistical standards, the causality of these findings should be interpreted cautiously. Third, the decision-making task used in the present study was a minimally simple one with only two alternative options. Tasks with multiple alternative options, like the task used in the study by Daw et al. (2006), might be more useful for characterizing the dynamics of exploration in detail. Nevertheless, we replicated our previous finding that sympathetic activity correlates with exploration in decision-making indexed by entropy, and that this association between sympathetic activity and exploration can be at least partly mediated by insular activity. We also expanded this notion by showing that functions of such a brain-body circuit affecting exploration can vary according to the degree of uncertainty of a situation in decision-making. As a source of inconsistency in the relationship between sympathetic activity and decision-making (Dunn et al., 2006; Rolls, 2014), it has been shown that individual differences in sensitivity to one's own sympathetic activity (interoception) can moderate the relationship (Sokol-Hessner et al., 2014; Wölk et al., 2014). The present study suggests that uncertainty of the decision-making situation might be an additional moderator of the relationship.

Author Contributions

Hideki Ohira, Naho Ichikawa, and Kenta Kimura contributed to study design. Naho Ichikawa and Kenta Kimura contributed to measurements and analyses of behavioral and autonomic data. Seisuke Fukuyama was responsible for data acquisition in neuroimaging using PET under the supervision of Jun Shinoda and Jitsuhiro Yamada. Hideki Ohira interpreted the data with the help of Naho Ichikawa and Kenta Kimura, and wrote the manuscript. All authors approved the final version of the paper.

Funding

This study was supported by a Grant-in-Aid for Scientific Research of the Japan Society for the Promotion of Science (No. 16330136) and a Grant-in-Aid for Scientific Research on Innovative Areas (Research in a Proposed Research Area) 2010 (No. 4102-21119003) from the Ministry of Education, Culture, Sports, Science and Technology, Japan.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Augustine, J. R. (1996). Circuitry and functional aspects of the insular lobe in primates including humans. Brain Res. Brain Res. Rev. 22, 229–244. doi: 10.1016/S0165-0173(96)00011-2

Badre, D., Doll, B. B., Long, N. M., and Frank, M. J. (2012). Rostrolateral prefrontal cortex and individual differences in uncertainty-driven exploration. Neuron 73, 595–607. doi: 10.1016/j.neuron.2011.12.025

Baek, K., Kim, Y. T., Kim, M., Choi, Y., Lee, M., Lee, K., et al. (2013). Response randomization of one- and two-person rock-paper-scissors games in individuals with schizophrenia. Psychiatry Res. 207, 158–163. doi: 10.1016/j.psychres.2012.09.003

Bechara, A., and Damasio, A. (2005). The somatic marker hypothesis: a neural theory of economic decision. Games Econ. Behav. 52, 336–369. doi: 10.1016/j.geb.2004.06.010

Bechara, A., Damasio, H., Damasio, A. R., and Lee, G. P. (1999). Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J. Neurosci. 19, 5473–5481.

Bechara, A., Tranel, D., and Damasio, H. (2000). Characterization of the decision-making deficit of patients with ventromedial prefrontal cortex lesions. Brain 123, 2189–2202. doi: 10.1093/brain/123.11.2189

Berntson, G. G., Norman, G. J., Bechara, A., Bruss, J., Tranel, D., and Cacioppo, J. T. (2011). The insula and evaluative processes. Psychol. Sci. 22, 80–86. doi: 10.1177/0956797610391097

Berntson, G. G., Sarter, M., and Cacioppo, J. T. (2003). Ascending visceral regulation of cortical affective information processing. Eur. J. Neurosci. 18, 2103–2109. doi: 10.1046/j.1460-9568.2003.02967.x

Blascovich, J., Mendes, W. B., Hunter, S. B., and Salomon, K. (1999). Social “facilitation” as challenge and threat. J. Pers. Soc. Psychol. 77, 68–77. doi: 10.1037/0022-3514.77.1.68

Cahill, L., and Alkire, M. T. (2003). Epinephrine enhancement of human memory consolidation: interaction with arousal at encoding. Neurobiol. Learn. Mem. 79, 194–198. doi: 10.1016/S1074-7427(02)00036-9

Cahill, L., and McGaugh, J. L. (1998). Mechanisms of emotional arousal and lasting declarative memory. Trends Neurosci. 21, 294–299. doi: 10.1016/S0166-2236(97)01214-9

Clayton, E. C., and Williams, C. L. (2000). Adrenergic activation of the nucleus tractus solitarius potentiates amygdala norepinephrine release and enhances retention performance in emotionally arousing and spatial memory tasks. Behav. Brain Res. 112, 151–158. doi: 10.1016/S0166-4328(00)00178-9

Costa, V. D., and Averbeck, B. B. (2013). Frontal-parietal and limbic-striatal activity underlies information sampling in the best choice problem. Cereb. Cortex. doi: 10.1093/cercor/bht286. [Epub ahead of print].

Craig, A. D. (2009). How do you feel—now? The anterior insula and human awareness. Nat. Rev. Neurosci. 10, 59–70. doi: 10.1038/nrn2555

Critchley, H. D. (2009). Psychophysiology of neural, cognitive and affective integration: fMRI and autonomic indicants. Int. J. Psychophysiol. 73, 88–94. doi: 10.1016/j.ijpsycho.2009.01.012

Critchley, H. D., Mathias, C. J., and Dolan, R. J. (2002). Fear conditioning in humans: the influence of awareness and autonomic arousal on functional neuroanatomy. Neuron 33, 653–663. doi: 10.1016/S0896-6273(02)00588-3

Damasio, A. R. (1994). Descartes' Error: Emotion, Reason, and the Human Brain. New York, NY: Putnam.

Daw, N. D., O'Doherty, J. P., Dayan, P., Seymour, B., and Dolan, R. J. (2006). Cortical substrates for exploratory decisions in humans. Nature 441, 876–879. doi: 10.1038/nature04766

Denburg, N. L., Recknor, E. C., Bechara, A., and Tranel, D. (2006). Psychophysiological anticipation of positive outcomes promotes advantageous decision-making in normal older persons. Int. J. Psychophysiol. 61, 19–25. doi: 10.1016/j.ijpsycho.2005.10.021

Dunn, B. D., Dalgleish, T., and Lawrence, A. D. (2006). The somatic marker hypothesis: a critical evaluation. Neurosci. Biobehav. Rev. 30, 239–271. doi: 10.1016/j.neubiorev.2005.07.001

Eisenhofer, G., and Finberg, J. P. (1994). Different metabolism of norepinephrine and epinephrine by catechol-O-methyltransferase and monoamine oxidase in rats. J. Pharmacol. Exp. Ther. 268, 1242–1251.

Endo, N., Saiki, J., and Saito, H. (2001). Determinants of occurrence of negative priming for novel shapes with matching paradigm. Jpn. J. Psychol. 72, 204–212. doi: 10.4992/jjpsy.72.204

Eshel, N., Nelson, E. E., Blair, R. J., Pine, D. S., and Ernst, M. (2007). Neural substrates of choice selection in adults and adolescents: development of the ventrolateral prefrontal and anterior cingulate cortices. Neuropsychologia 45, 1270–1279. doi: 10.1016/j.neuropsychologia.2006.10.004

Fan, J., Xu, P., Van Dam, N. T., Eilam-Stock, T., Gu, X., Luo, Y. J., et al. (2012). Spontaneous brain activity relates to autonomic arousal. J. Neurosci. 32, 11176–11186. doi: 10.1523/JNEUROSCI.1172-12.2012

Fecteau, S., Knoch, D., Fregni, F., Sultani, N., Boggio, P., and Pascual-Leone, A. (2007). Diminishing risk-taking behavior by modulating activity in the prefrontal cortex: a direct current stimulation study. J. Neurosci. 27, 12500–12505. doi: 10.1523/JNEUROSCI.3283-07.2007

Fiorillo, C. D., Tobler, P. N., and Schultz, W. (2003). Discrete coding of reward probability and uncertainty by dopamine neurons. Science 299, 1898–1902. doi: 10.1126/science.1077349

Frank, M. J., Doll, B. B., Oas-Terpstra, J., and Moreno, F. (2009). Prefrontal and striatal dopaminergic genes predict individual differences in exploration and exploitation. Nat. Neurosci. 12, 1062–1068. doi: 10.1038/nn.2342

Friston, K. J., Holmes, A. P., Worsley, K. J., Poline, J. B., Frith, C. D., and Frackowiak, R. S. J. (1995). SPMs in functional imaging: a general linear approach. Hum. Brain Mapp. 1, 214–220.

Furl, N., and Averbeck, B. B. (2011). Parietal cortex and insula relate to evidence seeking relevant to reward-related decisions. J. Neurosci. 31, 17572–17582. doi: 10.1523/JNEUROSCI.4236-11.2011

Gläscher, J., Adolphs, R., Damasio, H., Bechara, A., Rudrauf, D., Calamia, M., et al. (2012). Lesion mapping of cognitive control and value-based decision making in the prefrontal cortex. Proc. Natl. Acad. Sci. U.S.A. 109, 14681–14686. doi: 10.1073/pnas.1206608109

Harrison, N. A., Brydon, L., Walker, C., Gray, M. A., Steptoe, A., Dolan, R. J., et al. (2009). Neural origins of human sickness in interoceptive responses to inflammation. Biol. Psychiatry 66, 415–422. doi: 10.1016/j.biopsych.2009.03.007

Jahanshahi, M., and Dirnberger, G. (1999). The left dorsolateral prefrontal cortex and random generation of responses: studies with transcranial magnetic stimulation. Neuropsychologia 37, 181–190. doi: 10.1016/S0028-3932(98)00092-X

Jahanshahi, M., Dirnberger, G., Fuller, R., and Frith, C. D. (2000). The role of the dorsolateral prefrontal cortex in random number generation: a study with positron emission tomography. Neuroimage 12, 713–725. doi: 10.1006/nimg.2000.0647

Jennings, K. R., and Wood, C. C. (1976). The E-adjustment procedure for repeated measures analysis of variance. Psychophysiology 13, 277–278.

Katahira, K., Fujimura, T., Okanoya, K., and Okada, M. (2011). Decision-making based on emotional images. Front. Psychol. 2:311. doi: 10.3389/fpsyg.2011.00311

Keay, K. A., and Bandler, R. (2001). Parallel circuits mediating distinct emotional coping reactions to different types of stress. Neurosci. Biobehav. Rev. 25, 669–678. doi: 10.1016/S0149-7634(01)00049-5

Kimura, K., Ohira, H., Isowa, T., Matsunaga, M., and Murashima, S. (2007). Regulation of lymphocytes redistribution via autonomic nervous activity during stochastic learning. Brain Behav. Immun. 21, 921–934. doi: 10.1016/j.bbi.2007.03.004

Lee, D., Conroy, M. L., McGreevy, B. P., and Barraclough, D. J. (2004). Reinforcement learning and decision making in monkeys during a competitive game. Brain Res. Cogn. Brain Res. 22, 45–58. doi: 10.1016/j.cogbrainres.2004.07.007

Lieberman, M. D., and Cunningham, W. A. (2009). Type I and Type II error concerns in fMRI research: re-balancing the scale. Soc. Cogn. Affect. Neurosci. 4, 423–428. doi: 10.1093/scan/nsp052

Nieuwenhuis, S., De Geus, E. J., and Aston-Jones, G. (2010). The anatomical and functional relationship between the P3 and autonomic components of the orienting response. Psychophysiology 48, 162–175. doi: 10.1111/j.1469-8986.2010.01057.x

Ohira, H., Fukuyama, S., Kimura, K., Nomura, M., Isowa, T., Ichikawa, N., et al. (2009). Regulation of natural killer cell redistribution by prefrontal cortex during stochastic learning. Neuroimage 47, 897–907. doi: 10.1016/j.neuroimage.2009.04.088

Ohira, H., Ichikawa, N., Nomura, M., Isowa, T., Kimura, K., Kanayama, N., et al. (2010). Brain and autonomic association accompanying stochastic decision-making. Neuroimage 49, 1024–1037. doi: 10.1016/j.neuroimage.2009.07.060

Ohira, H., Matsunaga, M., Kimura, K., Murakami, H., Osumi, T., Isowa, T., et al. (2011). Chronic stress modulates neural and cardiovascular responses during reversal learning. Neuroscience 193, 193–204. doi: 10.1016/j.neuroscience.2011.07.014

Ohira, H., Matsunaga, M., Murakami, H., Osumi, T., Fukuyama, S., Shinoda, J., et al. (2013). Neural mechanisms mediating association of sympathetic activity and exploration in decision-making. Neuroscience 246, 362–374. doi: 10.1016/j.neuroscience.2013.04.050

Perini, R., Milesi, S., Fisher, N. M., Pendergast, D. R., and Veicsteinas, A. (2000). Heart rate variability during dynamic exercise in elderly males and females. Eur. J. Appl. Physiol. 82, 8–15. doi: 10.1007/s004210050645

Pollatos, O., Gramann, K., and Schandry, R. (2007). Neural systems connecting interoceptive awareness and feelings. Hum. Brain Mapp. 28, 9–18. doi: 10.1002/hbm.20258

Rogers, R. D., Lancaster, M., Wakeley, J., and Bhagwagar, Z. (2004). Effects of beta-adrenoceptor blockade on components of human decision-making. Psychopharmacology 172, 157–164. doi: 10.1007/s00213-003-1641-5

Sallet, J., and Rushworth, M. F. (2009). Should I stay or should I go: genetic bases for uncertainty-driven exploration. Nat. Neurosci. 12, 963–965. doi: 10.1038/nn0809-963

Sayers, B. M. (1973). Analysis of heart rate variability. Ergonomics 16, 17–32. doi: 10.1080/00140137308924479

Schroeder, C., and Jordan, J. (2012). Norepinephrine transporter function and human cardiovascular disease. Am. J. Physiol. Heart Circ. Physiol. 303, H1273–H1282. doi: 10.1152/ajpheart.00492.2012

Seo, H., Barraclough, D. J., and Lee, D. (2007). Dynamic signals related to choices and outcomes in the dorsolateral prefrontal cortex. Cereb. Cortex 17(Suppl. 1), i110–i117. doi: 10.1093/cercor/bhm064

Seo, H., and Lee, D. (2008). Cortical mechanisms for reinforcement learning in competitive games. Philos. Trans. R. Soc. Lond. B Biol. Sci. 363, 3845–3857. doi: 10.1098/rstb.2008.0158

Seth, A. K. (2013). Interoceptive inference, emotion, and the embodied self. Trends Cogn. Sci. 17, 565–573. doi: 10.1016/j.tics.2013.09.007

Seth, A. K., Suzuki, K., and Critchley, H. D. (2012). An interoceptive predictive coding model of conscious presence. Front. Psychol. 2:395. doi: 10.3389/fpsyg.2011.00395

Shannon, C. E. (1948). A mathematical theory of communication. Bell Sys. Tech. J. 27, 379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x

Sokol-Hessner, P., Hartley, C. A., Hamilton, J. R., and Phelps, E. A. (2014). Interoceptive ability predicts aversion to losses. Cogn. Emot. doi: 10.1080/02699931.2014.925426. [Epub ahead of print].

Stankovic, A., Fairchild, G., Aitken, M. R., and Clark, L. (2014). Effects of psychosocial stress on psychophysiological activity during risky decision-making in male adolescents. Int. J. Psychophysiol. 93, 22–29. doi: 10.1016/j.ijpsycho.2013.11.001

Studer, B., and Clark, L. (2011). Place your bets: psychophysiological correlates of decision-making under risk. Cogn. Affect. Behav. Neurosci. 11, 144–158. doi: 10.3758/s13415-011-0025-2

Takahashi, H., Saito, C., Okada, H., and Omori, T. (2013). An investigation of social factors related to online mentalizing in a human-robot competitive game. Jpn. Psychol. Res. 55, 144–153. doi: 10.1111/jpr.12007

Takahashi, H., Terada, K., Morita, T., Suzuki, S., Haji, T., Kozima, H., et al. (2014). Different impressions of other agents obtained through social interaction uniquely modulate dorsal and ventral pathway activities in the social human brain. Cortex 58, 289–300. doi: 10.1016/j.cortex.2014.03.011

Thayer, J. F., Ahs, F., Fredrikson, M., Sollers, J. J. 3rd., and Wager, T. D. (2012). A meta-analysis of heart rate variability and neuroimaging studies: implications for heart rate variability as a marker of stress and health. Neurosci. Biobehav. Rev. 36, 747–756. doi: 10.1016/j.neubiorev.2011.11.009

van Leijenhorst, L., Crone, E. A., and Bunge, S. A. (2006). Neural correlates of developmental differences in risk estimation and feedback processing. Neuropsychologia 44, 2158–2170. doi: 10.1016/j.neuropsychologia.2006.02.002

Williams, C. L., and McGaugh, J. L. (1993). Reversible lesions of the nucleus of the solitary tract attenuate the memory-modulating effects of posttraining epinephrine. Behav. Neurosci. 107, 955–962. doi: 10.1037/0735-7044.107.6.955

Wölk, J., Sütterlin, S., Koch, S., Vögele, C., and Schulz, S. M. (2014). Enhanced cardiac perception predicts impaired performance in the Iowa Gambling Task in patients with panic disorder. Brain Behav. 4, 238–246. doi: 10.1002/brb3.206

Yen, N. S., Chou, I. C., Chung, H. K., and Chen, K. H. (2012). The interaction between expected values and risk levels in a modified Iowa gambling task. Biol. Psychol. 91, 232–237. doi: 10.1016/j.biopsycho.2012.06.008

Keywords: decision-making, exploration, entropy, positron emission tomography (PET), sympathetic activity

Citation: Ohira H, Ichikawa N, Kimura K, Fukuyama S, Shinoda J and Yamada J (2014) Neural and sympathetic activity associated with exploration in decision-making: further evidence for involvement of insula. Front. Behav. Neurosci. 8:381. doi: 10.3389/fnbeh.2014.00381

Received: 10 July 2014; Accepted: 16 October 2014; Published online: 10 November 2014.

Edited by: Angela Roberts, University of Cambridge, UK

Copyright © 2014 Ohira, Ichikawa, Kimura, Fukuyama, Shinoda and Yamada. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hideki Ohira, Department of Psychology, Nagoya University, Furo-cho, Chikusa-ku, Nagoya 464-8601, Japan. e-mail: ohira@lit.nagoya-u.ac.jp
