A novel behavioral paradigm to assess multisensory processing in mice

- 1Multisensory Research Laboratory, Neuroscience Program, Vanderbilt University, Nashville, TN, USA

- 2Neuroscience Program, Vanderbilt University, Nashville, TN, USA

- 3Computer Software Engineering Department, MED Associates Inc., St. Albans, VT, USA

- 4Murine Neurobehavior Core, Vanderbilt University, Nashville, TN, USA

- 5Center for Autism and the Developing Brain, and Department of Psychiatry, Sackler Institute for Developmental Psychobiology, Columbia University, New York, NY, USA

- 6Department of Hearing and Speech Sciences, Vanderbilt University, Nashville, TN, USA

- 7Department of Psychology, Vanderbilt University, Nashville, TN, USA

- 8Department of Psychiatry, Vanderbilt University, Nashville, TN, USA

Human psychophysical and animal behavioral studies have illustrated the benefits that can be conferred from having information available from multiple senses. Given the central role of multisensory integration for perceptual and cognitive function, it is important to design behavioral paradigms for animal models to provide mechanistic insights into the neural bases of these multisensory processes. Prior studies have focused on large mammals, yet the mouse offers a host of advantages, most importantly the wealth of available genetic manipulations relevant to human disease. To begin to employ this model species for multisensory research it is necessary to first establish and validate a robust behavioral assay for the mouse. Two common mouse strains (C57BL/6J and 129S6/SvEv) were first trained to respond to unisensory (visual and auditory) stimuli separately. Once trained, performance with paired audiovisual stimuli was then examined with a focus on response accuracy and behavioral gain. Stimulus durations varied from 50 ms to 1 s in order to modulate the effectiveness of the stimuli and to determine if the well-established “principle of inverse effectiveness” held in this model. Response accuracy in the multisensory condition was greater than for either unisensory condition for all stimulus durations, with significant gains observed at the 300 ms and 100 ms durations. Main effects of stimulus duration, stimulus modality and a significant interaction between these factors were observed. The greatest behavioral gain was seen for the 100 ms duration condition, with a trend observed that as the stimuli became less effective, larger behavioral gains were observed upon their pairing (i.e., inverse effectiveness). 
These results are the first to validate the mouse as a species that shows demonstrable behavioral facilitations under multisensory conditions and provide a platform for future mechanistically directed studies to examine the neural bases of multisensory integration.

Introduction

We live in a world composed of a multitude of competing stimuli delivered through a number of different sensory modalities. The appropriate filtering, segregation and integration of this information is integral to navigating the world properly and to creating a unified perceptual representation. Having information available from multiple sensory modalities often results in substantial behavioral and perceptual benefits (Stein and Meredith, 1993; Murray and Wallace, 2011; Stein, 2012). For example, it has been shown that in noisy environments, seeing and hearing an individual speak can greatly enhance speech perception and comprehension relative to the audible signal alone (Sumby and Pollack, 1954). In addition, responses have been shown to be both faster and more accurate under combined modality circumstances (Calvert et al., 2004; Stevenson et al., 2014a). Numerous other examples of such multisensory-mediated benefits have been established (Calvert and Thesen, 2004; Stein and Stanford, 2008), and serve to reinforce the utility of multisensory processing in facilitating behavioral responses and in constructing our perceptual view of the world. Furthermore, emerging evidence points to altered multisensory processing in a number of human clinical conditions, including autism and schizophrenia, reinforcing the importance of having a better mechanistic understanding of multisensory function (Foss-Feig et al., 2010; Kwakye et al., 2011; Marco et al., 2011; Cascio et al., 2012; Martin et al., 2013; Stevenson et al., 2014b).

Numerous animal model and human imaging studies have explored the neural mechanisms that underpin multisensory processing (Stein and Meredith, 1993; Calvert and Thesen, 2004; Calvert et al., 2004; Stein and Stanford, 2008; Murray and Wallace, 2011; Stein, 2012; Stevenson et al., 2014a). These studies have highlighted the neural operations performed by individual multisensory neurons and networks, demonstrating the importance of stimulus properties such as space, time and effectiveness in determining the final product of a multisensory pairing (Meredith and Stein, 1985, 1986a,b; Meredith et al., 1987; Wallace and Stein, 2007; Royal et al., 2009; Stevenson et al., 2012; Ghose and Wallace, 2014). In addition, an increasing emphasis is now being placed on detailing how neuronal responses relate to behavioral outcomes under multisensory circumstances (Wilkinson et al., 1996; Stein et al., 2002; Burnett et al., 2004; Hirokawa et al., 2008).

Historically, these multisensory studies have focused on large mammalian models such as the cat and monkey, given the similarities in their sensory systems to humans and the ease with which both neural responses and behavior can be measured. With the advent of molecular genetic manipulations in mouse models and their applicability to human disease, however, there is a growing need to better understand sensory and multisensory function in these lower mammals. As highlighted above, this has become very germane of late as evidence grows for the presence of sensory and multisensory deficits in clinical disorders (Iarocci and Mcdonald, 2006; Kern et al., 2007; Smith and Bennetto, 2007; Cascio, 2010; Keane et al., 2010; Russo et al., 2010; Marco et al., 2011; Brandwein et al., 2012; Foxe et al., 2013; Stevenson et al., 2013, 2014c; Wallace and Stevenson, 2014).

In addition to molecular genetic manipulations such as knock-ins or knock-outs of genes associated with human disease, the rodent offers additional practical advantages spurred by the development of optogenetic methods to study causal relations in brain circuits (Fenno et al., 2011; McDevitt et al., 2014). Application of such tools to multisensory questions could be of great utility in developing a better mechanistic understanding of how the integrative features of multisensory neurons and networks arise, and how neuronal and network properties relate to behavior.

For these reasons (and others), recent studies have begun to focus on examining multisensory processes in rodent models (Sakata et al., 2004; Gleiss and Kayser, 2012; Raposo et al., 2012; Carandini and Churchland, 2013; Olcese et al., 2013; Sheppard et al., 2013; Sieben et al., 2013; Gogolla et al., 2014; Hishida et al., 2014). For practical reasons, this work has initially focused on the rat, and has established strong neural-behavioral links in this species (Tees, 1999; Komura et al., 2005; Hirokawa et al., 2008, 2011). However, the mouse remains the primary model for molecular genetic and optogenetic manipulations, where limited knowledge concerning multisensory function still exists.

The current study represents the first of its kind to systematically examine unisensory (i.e., auditory alone, visual alone) and multisensory (i.e., paired audiovisual) behavioral function in mice. The core objective of these experiments was to determine if multisensory processing is conserved in the mouse and similar to the features observed in larger animal models. Our ultimate objective is that this behavioral paradigm, in conjunction with neurophysiological methods, could then be used to detail the neural bases of multisensory function. The establishment of such links would then allow for the application of powerful genetic, pharmacologic and optogenetic tools to evaluate questions of mechanistic relevance. Finally, further studies may assess multisensory processing in mouse models of disease/disorder along with determining the underlying systems that may be atypical under these multisensory conditions.

Materials and Methods

Animals

Nine male mice of the C57BL/6J and 129S6/SvEv inbred strains were obtained from the Jackson Laboratory (Bar Harbor, ME, USA) and Taconic (Hudson, NY, USA), respectively. All animals were 4 weeks of age and housed in the Vanderbilt Murine Neurobehavioral Core with one cage mate. Mice were on a 12-h light/dark cycle and given water ad libitum. For the first week, at 5 weeks of age, mice were given food ad libitum and handled daily to acclimate to the experimenters and facility. Since the proposed behavioral task requires mice to make a decision in order to obtain a food reward, animals were placed on a food-restricted diet. Mice were given food ad libitum only on weekends (non-testing days) and had free access to food for 4 h every weekday; this level of food restriction was reached gradually over a 2-week period before behavioral training began. Liquid vanilla Ensure (Abbott Laboratories, Abbott Park, IL, USA) was given in home cages for 60 min for 2 days before operant training began to expose animals to the reward. Body weights were recorded weekly, and if an animal lost 20% of its initial weight, it would be excluded from the study until it had regained enough weight to participate based on this criterion. All experiments and protocols were approved by the Institutional Animal Care and Use Committee at Vanderbilt University.

Equipment

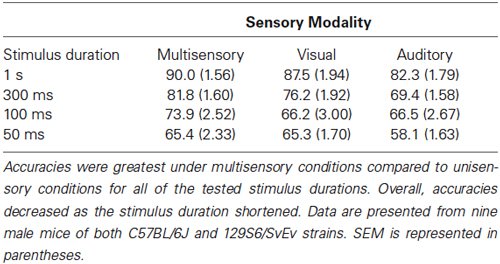

Mice were placed in adapted mouse operant chambers (Med Associates Inc, St. Albans, VT, USA) that measured 7.0” L × 6.0” W × 7.25” H and were contained in sound attenuating cubicles. The chamber contained three nose poke holes with infrared sensors on the front wall, a house light, fan and clicker positioned on the rear wall and a mounted camera placed on the ceiling of the cubicle above the chamber. A section of metal mesh replaced the standard chamber plate above the central hole, and a 3” × 3” horn tweeter (Pyle Pro Audio, Brooklyn, NY, USA) was located behind the mesh section (Figure 1A). LED lights were contained within the left and right nose poke holes and emitted a standard intensity of 1 lux, characteristic of the operant chamber. To minimize the small possibility that outside light might enter the chambers, training and testing experiments were conducted in dim red light. Auditory stimuli consisted of either white noise or an 8 kHz tone played at 85 dB SPL, which were measured and calibrated using a SoundTrack LxT2 sound level meter (Larson Davis, Provo, UT, USA). To ensure that sounds from one operant chamber could not be heard in another, sound levels were measured in each chamber while auditory stimuli were played in the remaining chambers, and only non-significant levels were observed. All rewards were presented in the central nose poke hole and consisted of 0.1 cc of liquid vanilla Ensure dispensed by an automated dipper.

Figure 1. Behavioral task schematic. (A) Diagram of operant chamber during the presentation of an audiovisual stimulus (represented by the yellow color within the nose poke hole, where the LED was positioned, and by the active speaker). (B) Schematic representation of the trial sequence and timing. The label “variable” represents the amount of time that elapses until the animal decides to initiate a new trial by performing a nose poke in the central hole.

Behavioral Task

Initial training

On the first day of training, mice were acclimated to the operant chamber. A reward was given in the central nose poke hole every 60 s for 1 h to demonstrate the location where the reward would be dispensed. In the next step of training, only the right nose poke hole was active. Mice were trained to respond in the right nose poke hole, which then resulted in a 500 ms visual stimulus presentation in the same location immediately followed by an Ensure reward. Once the number of responses was greater for the right vs. left nose poke hole for two consecutive days, the left nose poke hole was activated and the right was inactivated. The initial training and reversal each lasted 4–5 days to meet the above criterion. This phase demonstrated that responding to either nose poke hole could result in a reward and was an early exposure to a sensory stimulus being paired with a reward.

Unisensory training

In the next step of the behavioral task, mice were presented with a visual light stimulus in either the left or right nose poke hole. The animal had 5 s to make a decision once the stimulus was presented, and a correct trial occurred when the animal responded to the nose poke hole where the visual stimulus was presented. A correct response resulted in an additional 5 s time period for the animal to collect the reward. In this training phase, mice also learned to initiate a new trial by nose poking in the central hole. After a correct response and a reward was obtained, there was a 2 s “wait” period where any response would not result in the start of a new trial, in order to minimize accidental or impulsive responses. After 2 s had passed, mice could then initiate a new trial at any time by nose poking in the central hole. Once a trial was initiated, a clicker (50 ms duration) would signal that a stimulus would be presented shortly, and, after a 1 s delay, a light stimulus appeared again either in the left or right nose poke hole in a pseudorandom order. Every incorrect trial resulted in a timeout, with the house light illuminating the chamber for 5 s, and no further responses could be made during this time period. In addition to timeouts for incorrect responses, timeouts could occur if the animal responded too early, before a stimulus was presented (<1 s), and if an animal waited too long (>5 s) before making a response (Figure 1B). As the mice became more accurate, the duration of the light stimulus was gradually reduced from 4 s to 2 s. For each training session mice completed a total of 100 trials (50 per side) for up to 90 min. Once the visual task was completed successfully for two consecutive days using a 65% correct response rate criterion, mice progressed to the auditory component of the task. In the auditory task, either white noise or an 8 kHz tone at a constant 85 dB was played individually. 
Mice were trained to associate the tone with responding to the left nose poke hole and white noise with responding to the right nose poke hole. The trial description, behavioral sessions and criterion to advance to the next stage of the paradigm were the same as described in the visual component of the task.
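The trial rules described above can be summarized in a short sketch. This is an illustration only, not the Med-PC IV program used in the study: the function name, the outcome labels, and the convention of measuring latency from stimulus onset (negative values meaning a poke during the 1 s pre-stimulus delay) are assumptions introduced here.

```python
from typing import Optional

# Response window after stimulus onset, taken from the task description.
RESPONSE_WINDOW_S = 5.0


def classify_trial(stimulus_side: str,
                   response_side: Optional[str],
                   latency_from_onset_s: Optional[float]) -> str:
    """Label a single trial (hypothetical helper, not the authors' code).

    latency_from_onset_s is the time of the first side poke relative to
    stimulus onset; a negative value means the animal responded during
    the 1 s delay that precedes the stimulus.
    """
    if response_side is None or latency_from_onset_s is None:
        return "omission"      # no response at all: timeout
    if latency_from_onset_s < 0:
        return "early"         # responded before the stimulus: timeout
    if latency_from_onset_s > RESPONSE_WINDOW_S:
        return "omission"      # waited longer than 5 s: timeout
    if response_side == stimulus_side:
        return "correct"       # reward is made available
    return "incorrect"         # wrong side: timeout with house light


print(classify_trial("left", "left", 1.2))
print(classify_trial("left", "right", 0.8))
```

Under this labeling, only the "correct" outcome leads to a reward; every other label corresponds to one of the timeout conditions in the text.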

Multisensory testing

After the visual and auditory training components of the task were completed, mice advanced to the behavioral testing phase where multisensory processing was evaluated. For multisensory trials only congruent/paired audiovisual trials were presented. Based on the variability of stimulus duration presentations in the multisensory rodent literature, we pragmatically selected a variety of stimulus durations. Unisensory and multisensory processing was evaluated for 5 days at each of the selected durations and proceeded by gradually shortening the durations. Therefore, mice were initially tested on 1 s presentations of visual alone, auditory alone and paired audiovisual stimuli for 5 days, and this was then evaluated at 300 ms, 100 ms and 50 ms stimulus durations. In these behavioral sessions, mice completed 150 trials (50 per condition presented in a pseudorandom order) lasting up to 90 min per testing day.
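As an illustration of a testing session with this structure, the sketch below builds a 150-trial list with 50 trials per condition and shuffles it. The text does not specify how the pseudorandom order was generated, so the plain seeded shuffle, the function name and the condition labels are assumptions made for this example.

```python
import random
from collections import Counter


def make_session(trials_per_condition: int = 50, seed: int = 0) -> list:
    """Return a shuffled list of condition labels for one testing session.

    A simple seeded shuffle is assumed here; the original Med-PC IV
    program may have used a different pseudorandomization scheme.
    """
    conditions = ("visual", "auditory", "audiovisual")
    trials = [c for c in conditions for _ in range(trials_per_condition)]
    random.Random(seed).shuffle(trials)   # reproducible for a given seed
    return trials


session = make_session()
print(len(session), Counter(session))
```

A seeded generator is used so that a given session order can be reproduced when the behavioral data are analyzed later.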

Data Analysis

All behavioral experiments were created utilizing customized Med-PC IV programs (Med Associates Inc.). Behavioral accuracies in the initial training phase were evaluated by comparing the responses to the left vs. right nose poke hole (and vice versa during reversal learning) using two-tailed t tests. Accuracies measured for visual and auditory training sessions were calculated as percent correct, with a 65% correct response rate for two consecutive days required to progress to the multisensory testing phase. Percent gain was calculated as (mean number of correct multisensory trials − mean number of correct best unisensory trials) / (mean number of correct best unisensory trials) × 100. Accuracy was calculated as correct trials / (correct trials + incorrect trials [misses only]). Prism 6 (Graphpad Software Inc, La Jolla, CA, USA) was used to perform all statistical analyses. Two-way analyses of variance (ANOVAs) with repeated measures and Tukey’s multiple comparisons tests were utilized for all experiments unless otherwise specified. Means and standard errors of the mean are presented.
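The two summary measures defined above can be written compactly as follows. This is a minimal sketch with hypothetical function names and made-up input values; it is not the analysis code used in the study, and the example numbers are not data from the experiments.

```python
def accuracy(correct: int, incorrect: int) -> float:
    """Percent correct: correct / (correct + incorrect) x 100."""
    return 100.0 * correct / (correct + incorrect)


def percent_gain(multisensory_correct: float,
                 visual_correct: float,
                 auditory_correct: float) -> float:
    """Multisensory gain relative to the best unisensory condition."""
    best_unisensory = max(visual_correct, auditory_correct)
    return 100.0 * (multisensory_correct - best_unisensory) / best_unisensory


# Illustrative values only (not taken from the study):
print(accuracy(correct=40, incorrect=10))       # 80.0
print(percent_gain(44.0, 40.0, 35.0))           # 10.0
```

Because the gain is referenced to the better of the two unisensory conditions, a positive value indicates performance beyond what the more effective single modality achieves alone.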

Results

Behavioral Performance for Unisensory (Visual Alone, Auditory Alone) Training

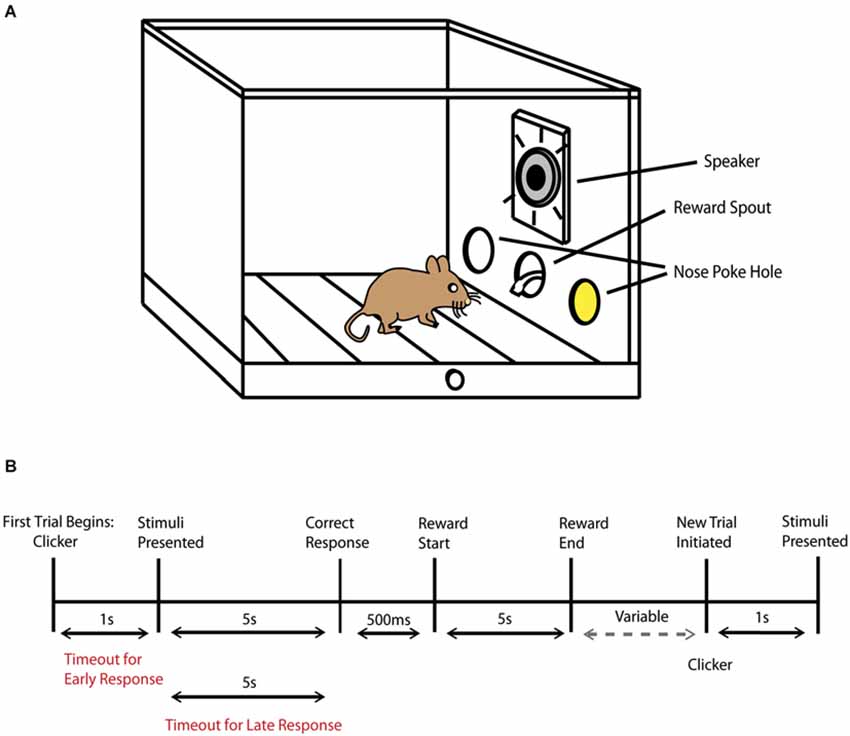

Mice were trained to identify both visual and auditory stimuli on separate and independent tasks. Each training session for these unisensory tasks consisted of 100 trials that occurred once daily. Criterion performance occurred when mice achieved 65% correct performance for two consecutive days. Mice first completed the visual training component of the behavioral task. Once animals reached criterion, they then advanced to the auditory training component. Using this criterion, mice completed the visual task with a final accuracy of 77.3% ± 1.8% and completed the auditory task with a final accuracy of 70.1% ± 1.1%. A paired t test revealed significant differences between visual and auditory unisensory behavioral performance upon achievement of criterion (p = 0.0002; Figure 2). Substantial differences were noted in the time it took the mice to learn the two unisensory tasks. Whereas mice completed the visual task in 12.5 ± 0.95 days, it took 35.1 ± 4.55 days to complete the auditory task. Finally, unpaired t tests revealed no significant differences between mouse strains for behavioral accuracies for either visual training (p = 0.62) or for auditory training (p = 0.29).
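The criterion rule (65% correct on two consecutive days) can be expressed as a small helper that returns the training day on which criterion is met. The function name is hypothetical, and treating exactly 65% as passing is an assumption, since the text does not state whether the threshold is inclusive.

```python
from typing import Optional, Sequence


def days_to_criterion(daily_percent_correct: Sequence[float],
                      threshold: float = 65.0,
                      consecutive: int = 2) -> Optional[int]:
    """Return the 1-based day on which criterion is reached, or None.

    Criterion: `consecutive` days in a row at or above `threshold`
    percent correct (inclusive threshold is an assumption).
    """
    run = 0
    for day, pct in enumerate(daily_percent_correct, start=1):
        run = run + 1 if pct >= threshold else 0
        if run == consecutive:
            return day
    return None


# Illustrative sequence only (not data from the study):
print(days_to_criterion([50, 66, 60, 70, 68]))   # 5
```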

Figure 2. Criterion performance on unisensory tasks. Average behavioral accuracies for visual only (blue) and auditory only (red) training conditions for two consecutive days once animals had reached 65% correct criterion performance. A significant difference (p = 0.0002) in behavioral performance was found when comparing visual and auditory performance across animals. Error bars represent SEM.

Unisensory and Multisensory Behavioral Performance as a Function of Stimulus Duration

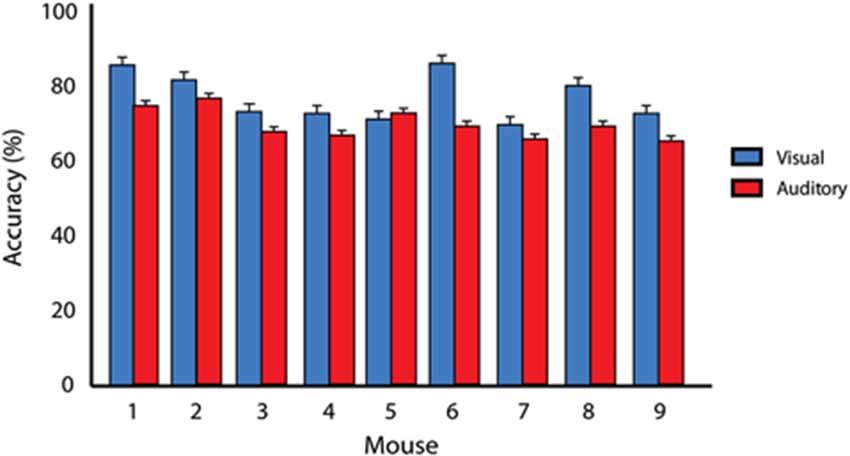

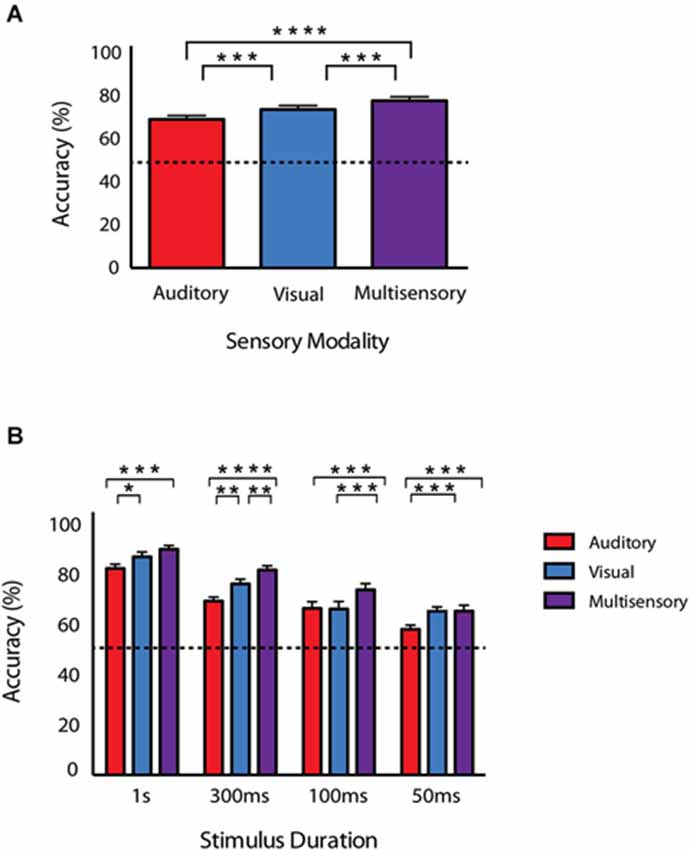

Once animals had achieved criterion performance for each of the unisensory tasks, they then performed a paired audiovisual version of the task. Furthermore, in order to modulate the effectiveness of the unisensory stimuli, the duration was varied. Mice were initially tested on the longest duration condition (i.e., 1 s) in response to visual, auditory and multisensory stimuli for 5 days. Following this, performance was then evaluated at durations of 300 ms, 100 ms and 50 ms. Collapsing across all durations, behavioral accuracies under multisensory conditions were greater than for visual or auditory only conditions (Figure 3A). Overall accuracy for these collapsed conditions was as follows: multisensory, 77.8% ± 1.83%; visual, 73.7% ± 1.82%; and auditory, 69.1% ± 1.75%. A repeated measures one-way ANOVA was used to evaluate accuracy as a function of sensory modality and revealed a significant difference (p < 0.0001; F(1.907,66.75) = 39.88). Using Sidak’s multiple comparison post hoc test, we found significant differences between the multisensory and visual conditions (p < 0.001), multisensory and auditory conditions (p < 0.0001) and the visual and auditory conditions (p < 0.001). Next, a repeated measures two-way ANOVA with a Tukey’s multiple comparisons post hoc test was used to compare response accuracy for unisensory and multisensory conditions across the different stimulus durations (Figure 3B). Main effects of stimulus duration (p < 0.0001; F(3,32) = 31.75), sensory modality (p < 0.0001; F(2,64) = 46.65) and a significant stimulus duration × sensory modality interaction effect (p = 0.0125; F(6,64) = 2.981) were observed. Table 1 shows response accuracy for each sensory modality and duration. When examined on a duration-by-duration basis, response accuracies under multisensory conditions were consistently greater than under either unisensory condition.

Figure 3. Behavioral accuracy for auditory, visual and audiovisual conditions across stimulus durations. (A) Accuracy for each of the conditions collapsed across all stimulus durations. (B) Accuracies as a function of sensory modality and duration. Note that response accuracy was greatest for multisensory compared to unisensory conditions across all of the tested durations. Data are presented from nine male mice of both C57BL/6J and 129S6/SvEv strains. Dotted line represents 50% accuracy or chance level. Error bars represent SEM. The significant levels are as follows: (* = p < 0.05, ** = p < 0.01, *** = p < 0.001, **** = p < 0.0001).

We were also interested in assessing any effects of inbred strain. Using a repeated measures two-way ANOVA, a main effect of sensory modality was observed at every stimulus duration across both strains (1 s: p = 0.004; 300 ms: p = 0.001; 100 ms: p = 0.001; 50 ms: p = 0.006). However, no significant main effect of mouse strain was observed at any stimulus duration (1 s: p = 0.084; 300 ms: p = 0.29; 100 ms: p = 0.97; 50 ms: p = 0.061).

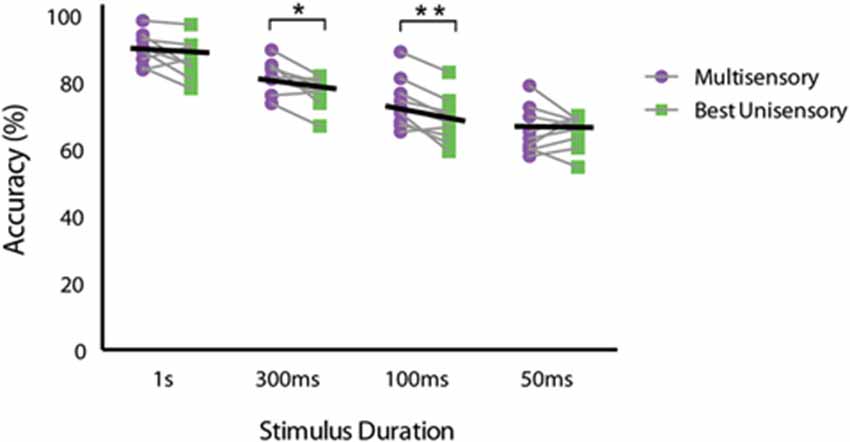

Evaluating Multisensory Gain

In order to measure the degree of facilitation attributable to having information available from both senses, we calculated multisensory gain by utilizing the equation (average multisensory correct trials − average best unisensory correct trials)/(average best unisensory correct trials) × 100 (Meredith and Stein, 1983). The greatest multisensory gain was seen for the 100 ms duration stimuli, with animals exhibiting a greater than 11% gain in performance. Using this calculation, we found multisensory gain at each of the tested stimulus durations (average gain at 1 s = 3.40%, 300 ms = 7.40%, 100 ms = 11.15%, and 50 ms = 0.10%). A similar pattern of gain was found for both mouse strains. To further evaluate multisensory gain, we then compared the original behavioral performance data for the multisensory and the best unisensory conditions for each individual mouse across all stimulus durations. A repeated measures two-way ANOVA with factors of stimulus duration and sensory modality with a Sidak’s multiple comparisons post hoc test was used. Significant main effects of stimulus duration (p < 0.0001; F(3,24) = 40.1) and sensory modality were observed (p = 0.0073; F(1,8) = 12.72), but no significant stimulus duration × sensory modality interaction (p = 0.068; F(3,24) = 2.70) was observed (Figure 4). Utilizing the Sidak’s multiple comparison post hoc test, we found significant differences between the multisensory and best unisensory conditions at the 300 ms (p < 0.05) and the 100 ms stimulus condition (p < 0.01). Overall, multisensory gain was found to gradually increase as stimulus duration was shortened, with gain increasing up to a maximum at stimulus durations of 100 ms. Multisensory gain was observed to be significantly different from zero at the 300 ms and 100 ms conditions. Somewhat surprisingly, however, little gain was seen at the shortest duration (i.e., 50 ms), even though animals were performing above chance levels on unisensory trials. 
One possible explanation for this lack of effect at this shortest duration is the mismatch in performance between the visual and auditory trials. In a Bayesian framework, differences in performance between the two unisensory conditions would be expected to yield little gain because of the differences in reliability of the different sensory channels (here with vision being more reliable) (Deneve and Pouget, 2004; Beierholm et al., 2007; Murray and Wallace, 2011).
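The reliability argument can be made concrete with the standard maximum-likelihood cue-combination model. This is a textbook formulation offered here as an illustration, not an analysis performed in this study; the symbols (unisensory estimates $\hat{s}_V$, $\hat{s}_A$ with variances $\sigma_V^2$, $\sigma_A^2$) are introduced for this sketch only.

```latex
\hat{s}_{AV} = w_V \hat{s}_V + w_A \hat{s}_A,
\qquad
w_V = \frac{1/\sigma_V^2}{1/\sigma_V^2 + 1/\sigma_A^2},
\qquad
w_A = 1 - w_V

\sigma_{AV}^2 = \frac{\sigma_V^2 \, \sigma_A^2}{\sigma_V^2 + \sigma_A^2}
\;\le\; \min\left(\sigma_V^2, \sigma_A^2\right)

% Equal reliabilities: \sigma_A^2 = \sigma_V^2
%   gives \sigma_{AV}^2 = 0.5\,\sigma_V^2  (large benefit)
% Mismatched reliabilities: \sigma_A^2 = 9\,\sigma_V^2
%   gives \sigma_{AV}^2 = 0.9\,\sigma_V^2  (small benefit)
```

Under this model the combined estimate is never worse than the better single cue, but the improvement shrinks toward zero as one modality becomes much less reliable than the other, which is consistent with the small gain expected when visual performance substantially exceeds auditory performance.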

Figure 4. Performance differences between multisensory and best unisensory conditions for individual animals. Multisensory and the best unisensory performance accuracies were averaged separately for each mouse across the 5 days of testing for each stimulus duration. Black lines represent the group average performance under multisensory and the best unisensory conditions. Note the descending slope of these lines, which is most apparent for the 300 ms and 100 ms duration conditions. Data are presented from nine male mice of both C57BL/6J and 129S6/SvEv strains. The significant levels are as follows: (* = p < 0.05, ** = p < 0.01).

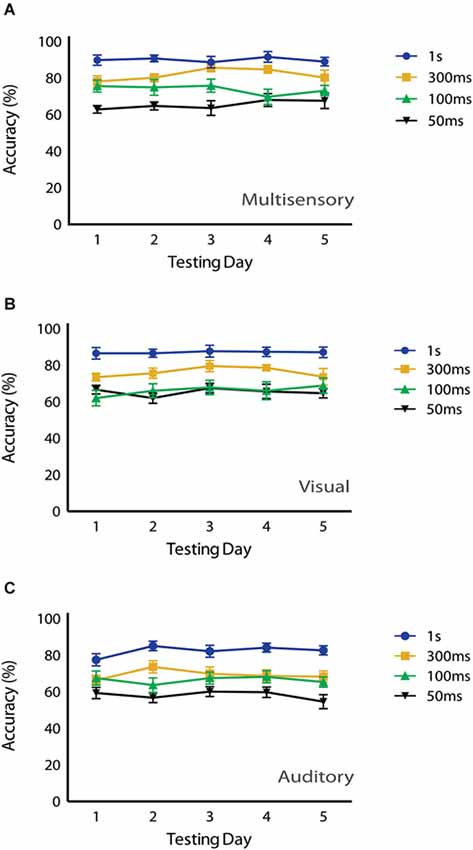

Effects of Testing Day

Lastly, in an attempt to determine if the potential novelty of the multisensory stimuli had any effect on behavioral performance, we evaluated accuracy as a function of testing day. Repeated measures two-way ANOVAs with Tukey’s multiple comparisons post hoc tests were used to compare behavioral accuracy for multisensory, visual and auditory conditions across testing days (Figure 5). For multisensory conditions, a significant main effect of stimulus duration (p < 0.0001; F(3,32) = 26.65) was observed, but neither a significant main effect of testing day (p = 0.846; F(4,128) = 0.346) nor a significant interaction effect (p = 0.465; F(12,128) = 0.987) was observed. This pattern was also found for both the visual and auditory conditions. For the visual condition a significant main effect of stimulus duration (p < 0.0001; F(3,32) = 21.49) was observed, yet neither a significant main effect of testing day (p = 0.381; F(4,128) = 1.056) nor a significant interaction effect (p = 0.901; F(12,128) = 0.502) was observed. Finally, for the auditory condition a significant main effect of stimulus duration (p < 0.0001; F(3,32) = 26.03) was found, but neither a significant main effect of testing day (p = 0.514; F(4,128) = 0.822) nor a significant interaction effect (p = 0.619; F(12,128) = 0.831) was observed. Overall, we found that accuracies were consistent across the testing days, and there were no differences in performance levels for visual, auditory or audiovisual stimuli across the days of testing.

Figure 5. Effects of testing day on behavioral performance. No significant main effects of testing day were observed under (A) multisensory (p = 0.846), (B) visual (p = 0.381) or (C) auditory conditions (p = 0.514) using a repeated measures 2-way ANOVA (Tukey’s test). Data are presented from nine male mice of both C57BL/6J and 129S6/SvEv strains. Error bars represent SEM.

Discussion

The current study is the first to evaluate behavioral performance under multisensory conditions in the mouse. A variety of studies have evaluated various facets of either visual or auditory behavioral function in mice (Pinto and Enroth-Cugell, 2000; Prusky et al., 2000; Klink and Klump, 2004; Radziwon et al., 2009; Busse et al., 2011; Jaramillo and Zador, 2014), yet none have focused on determining the behavioral effects when these stimuli are combined. Overall, we found that mice were more accurate at identifying paired audiovisual stimuli compared to either visual or auditory stimuli alone across all of the tested stimulus durations, with significant gains observed at the 300 ms and 100 ms durations. As a general rule, behavioral accuracy decreased as the stimulus duration was shortened down to the 100 ms duration, after which we believe that the stimulus duration was sufficiently short to be close to threshold detection levels. We suggest that this duration effect is in accordance with inverse effectiveness, a key concept in the multisensory literature, and complements a host of similar findings in human, monkey, cat, and rat model systems (Meredith and Stein, 1986b; Cappe et al., 2010; Murray and Wallace, 2011; Ohshiro et al., 2011; Gleiss and Kayser, 2012). Inverse effectiveness states that as the effectiveness of the unisensory stimuli decreases, greater behavioral benefits can be observed when these stimuli are combined compared to when the individual (visual or auditory) stimulus is presented alone (Meredith and Stein, 1986b). Although effectiveness in the larger animal models has been typically manipulated via changes in stimulus intensity, in our current study we were able to show corresponding effects in the mouse through manipulations of stimulus duration.

One key limitation of the current study is that we only manipulated one dimension of the sensory stimuli (i.e., duration). In the rat, stimulus intensity has been modulated to examine multisensory function (Gleiss and Kayser, 2012), and we hope to extend our studies into the domain of intensity in the future. Furthermore, due to practical constraints associated with the operant chambers, auditory stimuli were delivered from a single (central) spatial location, thus placing the visual and auditory stimuli somewhat out of spatial correspondence. Future work will add a second spatially congruent speaker to the operant chamber. These optimizations of the stimulus structure will likely reveal even larger multisensory interactions than those revealed in the current study, which we believe to be a conservative estimate of the potential gains in performance. However, we believe that the differences in multisensory gain by using either one or two speakers for this specific task would be minimal. The reasoning is that in this task we used a fairly loud auditory stimulus (85 dB) from a centrally located speaker in a standard operant chamber that is 7.0” L × 6.0” W × 7.25” H, thus making the stimulus highly effective. Future work will indeed move toward the use of two speakers so that we can begin to manipulate stimulus intensity in a parametric manner, and thus move toward better examining the spatial and temporal aspects of the observed multisensory gain. Another potential caveat to these findings is the role of attention to the unisensory (visual or auditory) stimuli during this task. A variety of studies have demonstrated a relationship between (multi)sensory processing and attention (Stein et al., 1995; Spence and Driver, 2004; Talsma et al., 2010). In the current design it is difficult to control for the differential allocation of attention to one modality or the other, yet such attentional biases are likely. 
Nonetheless, the pattern of behavioral response argues against a fixed strategy of attending to one of the stimulus modalities, suggesting that there were some attentional resources deployed toward both modalities. Regardless of the distribution of attention, the presence of multisensory gain suggests that even a stimulus in an unattended modality can modulate performance in the attended modality. Future work may also include reversing the training order, where animals would first complete the auditory training followed by visual training before testing under multisensory conditions. In addition, this study focused on the performance under congruent/paired audiovisual trials; however future studies could examine cognitive flexibility or set shifting by utilizing incongruent audiovisual trials. An interesting variant of this task would be to train animals under only one sensory modality condition (e.g., auditory) and then pair this with a separate irrelevant sensory stimulus (e.g., visual) (Lovelace et al., 2003). Finally, future studies may focus on other sensory domains (e.g., tactile, olfaction) that may be more relevant or salient to mice.

A number of recent behavioral studies characterizing multisensory processing in the rat are highly relevant to these results (Raposo et al., 2012, 2014; Carandini and Churchland, 2013; Sheppard et al., 2013). These studies have demonstrated that behavioral gains can be observed under multisensory conditions similar to those found in larger animal models, and the most recent of these studies have evaluated the underlying circuits that may be crucial for audiovisual integration in the rat (Brett-Green et al., 2003; Komura et al., 2005; Hirokawa et al., 2008, 2011). Therefore, with the foundation established for this behavioral paradigm, we believe numerous future studies could be pursued focused on evaluating and linking mechanistic function with the associated behavior under multisensory conditions in the mouse model. More specifically, we believe that the current work will serve as the springboard for identifying the neurobiological substrates and circuits that support these behavioral effects. Classical studies focused on the deep layers of the superior colliculus (SC) have found this to be a watershed structure for the convergence and integration of information from vision, audition and touch (Meredith and Stein, 1983, 1986a,b; Meredith et al., 1987). Further studies demonstrated that lesions to the deeper (i.e., multisensory) layers of the SC cause not only a diminished neuronal response under multisensory conditions, but also result in a dramatic reduction in the associated behavioral benefits (Burnett et al., 2002, 2004, 2007). Thus, one likely substrate for the multisensory behavioral effects shown here is the SC, given its central role in audiovisual detection and localization (Hirokawa et al., 2011). 
In addition, work in the cat model has shown that this SC-mediated integration is heavily dependent upon convergent cortical inputs that appear to gate the integrative features of SC neurons (Wallace et al., 1992; Wallace and Stein, 1994; Wilkinson et al., 1996; Jiang et al., 2002). With the use of neurophysiological and neuroimaging methods, similar corticotectal circuits have been described in the rat, highlighting the conservation of this circuit organization in smaller animal models (Brett-Green et al., 2003; Wallace et al., 2004; Menzel and Barth, 2005; Rodgers et al., 2008; Sanganahalli et al., 2009; Sieben et al., 2013). Specifically, selective deactivation of a higher-order cortical region (V2L) resulted in a severe disruption in behavioral performance when responding to audiovisual stimuli (Hirokawa et al., 2008). Of greatest interest to this study, a variety of recent studies have focused on determining the brain regions and circuits critical for multisensory processing in the mouse model, although none of these studies has examined the behavioral response to multisensory stimuli (Hunt et al., 2006; Cohen et al., 2011; Laramée et al., 2011; Charbonneau et al., 2012; Olcese et al., 2013; Gogolla et al., 2014; Reig and Silberberg, 2014). Whether such a cortical dependency exists in the mouse model is a focus of future inquiry, and possible targets for investigating multisensory input therefore include the SC and the cortical region V2L. These mechanistically driven studies would then take advantage of the utility of the mouse model by using both genetic and optogenetic techniques to evaluate the underlying neural mechanisms of multisensory processing.

Another avenue of future research is to evaluate and further characterize mouse models of diseases and disorders with known (multi)sensory processing deficits in the human population. Most importantly, the use of mouse models allows powerful genetic, pharmacologic and optogenetic tools, which are not readily available for larger animal models, to be applied to questions of mechanistic relevance. Two clinical conditions with known (multi)sensory dysfunction are schizophrenia and autism (de Gelder et al., 2003, 2005; Behrendt and Young, 2004; Dakin and Frith, 2005; Iarocci and Mcdonald, 2006; Minshew and Hobson, 2008; de Jong et al., 2009; Grossman et al., 2009; Javitt, 2009; Marco et al., 2011; Cascio et al., 2012; O’Connor, 2012; Martin et al., 2013; Wallace and Stevenson, 2014). Recently, there has been an increased focus on linking these behavioral findings with possible neural correlates to gain a better understanding of the atypical (multi)sensory processing observed in these clinical populations (Russo et al., 2010; Brandwein et al., 2012; Stekelenburg et al., 2013). Numerous genetic mouse models of clinical disorders such as autism and schizophrenia have shown behavioral deficits and altered neural connectivity (Silverman et al., 2010; Provenzano et al., 2012; Hida et al., 2013; Karl, 2013; Lipina and Roder, 2014); however, behavioral studies of multisensory function have not yet been reported in these animals. At the neuronal level, a recent study demonstrated multisensory processing differences between wild type mice and mouse models of autism and showed the potential to ameliorate these effects with pharmacologic manipulations (Gogolla et al., 2014). This approach has enormous potential to reveal mechanistic contributions of altered multisensory function to these disease states.
The use of our behavioral paradigm, along with pharmacologic or optogenetic techniques, could then allow for the assessment of novel therapeutic approaches that may link altered neural mechanisms to the resultant atypical behavior. Finally, these types of studies offer great promise as a translational bridge linking genetic, phenotypic and neural factors, in an effort to elucidate the contribution of altered sensory function to disorders such as autism and schizophrenia.

Overall, this study has shown that multisensory processing is conserved in the mouse model by demonstrating behavioral benefits similar to those observed in numerous larger animal models. We believe that the design of the first behavioral paradigm to assess multisensory function in the mouse opens a host of future research opportunities. This type of behavioral task will allow for a variety of mechanistically driven studies focused on the neural underpinnings of multisensory processing, in addition to studies dedicated to evaluating these circuits in models of clinical disorders with known (multi)sensory impairments.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Dr. Randy Barrett and LeAnne Kurela for discussions about project design and Walter Lee for technical assistance. This work was supported by Vanderbilt University Institutional Funding and 5T32MH018921-24: Development of Psychopathology: From Brain and Behavioral Science to Intervention. This work was performed in part through the use of the Murine Neurobehavior Core lab at the Vanderbilt University Medical Center, which is supported in part by P30HD1505.

References

Behrendt, R.-P., and Young, C. (2004). Hallucinations in schizophrenia, sensory impairment and brain disease: a unifying model. Behav. Brain Sci. 27, 771–787. doi: 10.1017/s0140525x04000184

Beierholm, U., Shams, L., Ma, W. J., and Koerding, K. (2007). “Comparing Bayesian models for multisensory cue combination without mandatory integration,” in Advances in Neural Information Processing Systems, eds J. C. Platt, D. Koller, Y. Singer and S. T. Roweis (Red Hook, NY: Curran Associates, Inc.), 81–88.

Brandwein, A. B., Foxe, J. J., Butler, J. S., Russo, N. N., Altschuler, T. S., Gomes, H., et al. (2012). The development of multisensory integration in high-functioning autism: high-density electrical mapping and psychophysical measures reveal impairments in the processing of audiovisual inputs. Cereb. Cortex 23, 1329–1341. doi: 10.1093/cercor/bhs109

Brett-Green, B., Fifková, E., Larue, D. T., Winer, J. A., and Barth, D. S. (2003). A multisensory zone in rat parietotemporal cortex: intra- and extracellular physiology and thalamocortical connections. J. Comp. Neurol. 460, 223–237. doi: 10.1002/cne.10637

Burnett, L. R., Henkel, C. K., Stein, B. E., and Wallace, M. T. (2002). A loss of multisensory orientation behaviors is associated with the loss of parvalbumin-immunoreactive neurons in the superior colliculus. FASEB J. 16, A735.

Burnett, L. R., Stein, B. E., Chaponis, D., and Wallace, M. T. (2004). Superior colliculus lesions preferentially disrupt multisensory orientation. Neuroscience 124, 535–547. doi: 10.1016/j.neuroscience.2003.12.026

Burnett, L. R., Stein, B. E., Perrault, T. J., and Wallace, M. T. (2007). Excitotoxic lesions of the superior colliculus preferentially impact multisensory neurons and multisensory integration. Exp. Brain Res. 179, 325–338. doi: 10.1007/s00221-006-0789-8

Busse, L., Ayaz, A., Dhruv, N. T., Katzner, S., Saleem, A. B., Schölvinck, M. L., et al. (2011). The detection of visual contrast in the behaving mouse. J. Neurosci. 31, 11351–11361. doi: 10.1523/jneurosci.6689-10.2011

Calvert, G., Spence, C., and Stein, B. E. (2004). The Handbook of Multisensory Processes. Cambridge, MA: MIT press.

Calvert, G. A., and Thesen, T. (2004). Multisensory integration: methodological approaches and emerging principles in the human brain. J. Physiol. Paris 98, 191–205. doi: 10.1016/j.jphysparis.2004.03.018

Cappe, C., Murray, M. M., Barone, P., and Rouiller, E. M. (2010). Multisensory facilitation of behavior in monkeys: effects of stimulus intensity. J. Cogn. Neurosci. 22, 2850–2863. doi: 10.1162/jocn.2010.21423

Carandini, M., and Churchland, A. K. (2013). Probing perceptual decisions in rodents. Nat. Neurosci. 16, 824–831. doi: 10.1038/nn.3410

Cascio, C. J. (2010). Somatosensory processing in neurodevelopmental disorders. J. Neurodev. Disord. 2, 62–69. doi: 10.1007/s11689-010-9046-3

Cascio, C. J., Foss-Feig, J. H., Burnette, C. P., Heacock, J. L., and Cosby, A. A. (2012). The rubber hand illusion in children with autism spectrum disorders: delayed influence of combined tactile and visual input on proprioception. Autism 16, 406–419. doi: 10.1177/1362361311430404

Charbonneau, V., Laramée, M. E., Boucher, V., Bronchti, G., and Boire, D. (2012). Cortical and subcortical projections to primary visual cortex in anophthalmic, enucleated and sighted mice. Eur. J. Neurosci. 36, 2949–2963. doi: 10.1111/j.1460-9568.2012.08215.x

Cohen, L., Rothschild, G., and Mizrahi, A. (2011). Multisensory integration of natural odors and sounds in the auditory cortex. Neuron 72, 357–369. doi: 10.1016/j.neuron.2011.08.019

Dakin, S., and Frith, U. (2005). Vagaries of visual perception in autism. Neuron 48, 497–507. doi: 10.1016/j.neuron.2005.10.018

de Gelder, B., Vroomen, J., Annen, L., Masthof, E., and Hodiamont, P. (2003). Audio-visual integration in schizophrenia. Schizophr. Res. 59, 211–218. doi: 10.1016/s0920-9964(01)00344-9

de Gelder, B., Vroomen, J., de Jong, S. J., Masthoff, E. D., Trompenaars, F. J., and Hodiamont, P. (2005). Multisensory integration of emotional faces and voices in schizophrenics. Schizophr. Res. 72, 195–203. doi: 10.1016/j.schres.2004.02.013

de Jong, J., Hodiamont, P., Van Den Stock, J., and de Gelder, B. (2009). Audiovisual emotion recognition in schizophrenia: reduced integration of facial and vocal affect. Schizophr. Res. 107, 286–293. doi: 10.1016/j.schres.2008.10.001

Deneve, S., and Pouget, A. (2004). Bayesian multisensory integration and cross-modal spatial links. J. Physiol. Paris 98, 249–258. doi: 10.1016/j.jphysparis.2004.03.011

Fenno, L., Yizhar, O., and Deisseroth, K. (2011). The development and application of optogenetics. Annu. Rev. Neurosci. 34, 389–412. doi: 10.1146/annurev-neuro-061010-113817

Foss-Feig, J. H., Kwakye, L. D., Cascio, C. J., Burnette, C. P., Kadivar, H., Stone, W. L., et al. (2010). An extended multisensory temporal binding window in autism spectrum disorders. Exp. Brain Res. 203, 381–389. doi: 10.1007/s00221-010-2240-4

Foxe, J. J., Molholm, S., Del Bene, V. A., Frey, H.-P., Russo, N. N., Blanco, D., et al. (2013). Severe multisensory speech integration deficits in high-functioning school-aged children with autism spectrum disorder (ASD) and their resolution during early adolescence. Cereb. Cortex doi: 10.1093/cercor/bht213. [Epub ahead of print].

Ghose, D., and Wallace, M. (2014). Heterogeneity in the spatial receptive field architecture of multisensory neurons of the superior colliculus and its effects on multisensory integration. Neuroscience 256, 147–162. doi: 10.1016/j.neuroscience.2013.10.044

Gleiss, S., and Kayser, C. (2012). Audio-visual detection benefits in the rat. PLoS One 7:e45677. doi: 10.1371/journal.pone.0045677

Gogolla, N., Takesian, A. E., Feng, G., Fagiolini, M., and Hensch, T. K. (2014). Sensory integration in mouse insular cortex reflects GABA circuit maturation. Neuron 83, 894–905. doi: 10.1016/j.neuron.2014.06.033

Grossman, R. B., Schneps, M. H., and Tager-Flusberg, H. (2009). Slipped lips: onset asynchrony detection of auditory-visual language in autism. J. Child Psychol. Psychiatry 50, 491–497. doi: 10.1111/j.1469-7610.2008.02002.x

Hida, H., Mouri, A., and Noda, Y. (2013). Behavioral phenotypes in schizophrenic animal models with multiple combinations of genetic and environmental factors. J. Pharmacol. Sci. 121, 185–191. doi: 10.1254/jphs.12r15cp

Hirokawa, J., Bosch, M., Sakata, S., Sakurai, Y., and Yamamori, T. (2008). Functional role of the secondary visual cortex in multisensory facilitation in rats. Neuroscience 153, 1402–1417. doi: 10.1016/j.neuroscience.2008.01.011

Hirokawa, J., Sadakane, O., Sakata, S., Bosch, M., Sakurai, Y., and Yamamori, T. (2011). Multisensory information facilitates reaction speed by enlarging activity difference between superior colliculus hemispheres in rats. PLoS One 6:e25283. doi: 10.1371/journal.pone.0025283

Hishida, R., Kudoh, M., and Shibuki, K. (2014). Multimodal cortical sensory pathways revealed by sequential transcranial electrical stimulation in mice. Neurosci. Res. 87, 49–55. doi: 10.1016/j.neures.2014.07.004

Hunt, D. L., Yamoah, E. N., and Krubitzer, L. (2006). Multisensory plasticity in congenitally deaf mice: how are cortical areas functionally specified? Neuroscience 139, 1507–1524. doi: 10.1016/j.neuroscience.2006.01.023

Iarocci, G., and Mcdonald, J. (2006). Sensory integration and the perceptual experience of persons with autism. J. Autism Dev. Disord. 36, 77–90. doi: 10.1007/s10803-005-0044-3

Jaramillo, S., and Zador, A. M. (2014). Mice and rats achieve similar levels of performance in an adaptive decision-making task. Front. Syst. Neurosci. 8:173. doi: 10.3389/fnsys.2014.00173

Javitt, D. C. (2009). Sensory processing in schizophrenia: neither simple nor intact. Schizophr. Bull. 35, 1059–1064. doi: 10.1093/schbul/sbp110

Jiang, W., Jiang, H., and Stein, B. E. (2002). Two corticotectal areas facilitate multisensory orientation behavior. J. Cogn. Neurosci. 14, 1240–1255. doi: 10.1162/089892902760807230

Karl, T. (2013). Neuregulin 1: a prime candidate for research into gene-environment interactions in schizophrenia? Insights from genetic rodent models. Front. Behav. Neurosci. 7:106. doi: 10.3389/fnbeh.2013.00106

Keane, B. P., Rosenthal, O., Chun, N. H., and Shams, L. (2010). Audiovisual integration in high functioning adults with autism. Res. Autism Spectr. Disord. 4, 276–289. doi: 10.1016/j.rasd.2009.09.015

Kern, J. K., Trivedi, M. H., Grannemann, B. D., Garver, C. R., Johnson, D. G., Andrews, A. A., et al. (2007). Sensory correlations in autism. Autism 11, 123–134. doi: 10.1177/1362361307075702

Klink, K. B., and Klump, G. M. (2004). Duration discrimination in the mouse (Mus musculus). J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 190, 1039–1046. doi: 10.1007/s00359-004-0561-0

Komura, Y., Tamura, R., Uwano, T., Nishijo, H., and Ono, T. (2005). Auditory thalamus integrates visual inputs into behavioral gains. Nat. Neurosci. 8, 1203–1209. doi: 10.1038/nn1528

Kwakye, L. D., Foss-Feig, J. H., Cascio, C. J., Stone, W. L., and Wallace, M. T. (2011). Altered auditory and multisensory temporal processing in autism spectrum disorders. Front. Integr. Neurosci. 4:129. doi: 10.3389/fnint.2010.00129

Laramée, M. E., Kurotani, T., Rockland, K. S., Bronchti, G., and Boire, D. (2011). Indirect pathway between the primary auditory and visual cortices through layer V pyramidal neurons in V2L in mouse and the effects of bilateral enucleation. Eur. J. Neurosci. 34, 65–78. doi: 10.1111/j.1460-9568.2011.07732.x

Lipina, T. V., and Roder, J. C. (2014). Disrupted-In-Schizophrenia-1 (DISC1) interactome and mental disorders: impact of mouse models. Neurosci. Biobehav. Rev. 45C, 271–294. doi: 10.1016/j.neubiorev.2014.07.001

Lovelace, C. T., Stein, B. E., and Wallace, M. T. (2003). An irrelevant light enhances auditory detection in humans: a psychophysical analysis of multisensory integration in stimulus detection. Brain Res. Cogn. Brain Res. 17, 447–453. doi: 10.1016/s0926-6410(03)00160-5

Marco, E. J., Hinkley, L. B., Hill, S. S., and Nagarajan, S. S. (2011). Sensory processing in autism: a review of neurophysiologic findings. Pediatr. Res. 69, 48R–54R. doi: 10.1203/PDR.0b013e3182130c54

Martin, B., Giersch, A., Huron, C., and Van Wassenhove, V. (2013). Temporal event structure and timing in schizophrenia: preserved binding in a longer “now”. Neuropsychologia 51, 358–371. doi: 10.1016/j.neuropsychologia.2012.07.002

McDevitt, R. A., Reed, S. J., and Britt, J. P. (2014). Optogenetics in preclinical neuroscience and psychiatry research: recent insights and potential applications. Neuropsychiatr. Dis. Treat. 10, 1369–1379. doi: 10.2147/ndt.s45896

Menzel, R. R., and Barth, D. S. (2005). Multisensory and secondary somatosensory cortex in the rat. Cereb. Cortex 15, 1690–1696. doi: 10.1093/cercor/bhi045

Meredith, M. A., Nemitz, J. W., and Stein, B. E. (1987). Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors. J. Neurosci. 7, 3215–3229.

Meredith, M. A., and Stein, B. E. (1983). Interactions among converging sensory inputs in the superior colliculus. Science 221, 389–391. doi: 10.1126/science.6867718

Meredith, M. A., and Stein, B. E. (1985). Descending efferents from the superior colliculus relay integrated multisensory information. Science 227, 657–659. doi: 10.1126/science.3969558

Meredith, M. A., and Stein, B. E. (1986a). Spatial factors determine the activity of multisensory neurons in cat superior colliculus. Brain Res. 365, 350–354. doi: 10.1016/0006-8993(86)91648-3

Meredith, M. A., and Stein, B. E. (1986b). Visual, auditory and somatosensory convergence on cells in superior colliculus results in multisensory integration. J. Neurophysiol. 56, 640–662.

Minshew, N. J., and Hobson, J. A. (2008). Sensory sensitivities and performance on sensory perceptual tasks in high-functioning individuals with autism. J. Autism Dev. Disord. 38, 1485–1498. doi: 10.1007/s10803-007-0528-4

Murray, M. M., and Wallace, M. T. (2011). The Neural Bases of Multisensory Processes. Boca Raton, FL: CRC Press.

O’Connor, K. (2012). Auditory processing in autism spectrum disorder: a review. Neurosci. Biobehav. Rev. 36, 836–854. doi: 10.1016/j.neubiorev.2011.11.008

Ohshiro, T., Angelaki, D. E., and DeAngelis, G. C. (2011). A normalization model of multisensory integration. Nat. Neurosci. 14, 775–782. doi: 10.1038/nn.2815

Olcese, U., Iurilli, G., and Medini, P. (2013). Cellular and synaptic architecture of multisensory integration in the mouse neocortex. Neuron 79, 579–593. doi: 10.1016/j.neuron.2013.06.010

Pinto, L. H., and Enroth-Cugell, C. (2000). Tests of the mouse visual system. Mamm. Genome 11, 531–536. doi: 10.1007/s003350010102

Provenzano, G., Zunino, G., Genovesi, S., Sgadó, P., and Bozzi, Y. (2012). Mutant mouse models of autism spectrum disorders. Dis. Markers 33, 225–239. doi: 10.3233/DMA-2012-0917

Prusky, G. T., West, P. W., and Douglas, R. M. (2000). Behavioral assessment of visual acuity in mice and rats. Vision Res. 40, 2201–2209. doi: 10.1016/s0042-6989(00)00081-x

Radziwon, K. E., June, K. M., Stolzberg, D. J., Xu-Friedman, M. A., Salvi, R. J., and Dent, M. L. (2009). Behaviorally measured audiograms and gap detection thresholds in CBA/CaJ mice. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 195, 961–969. doi: 10.1007/s00359-009-0472-1

Raposo, D., Kaufman, M. T., and Churchland, A. K. (2014). A category-free neural population supports evolving demands during decision-making. Nat. Neurosci. 17, 1784–1792. doi: 10.1038/nn.3865

Raposo, D., Sheppard, J. P., Schrater, P. R., and Churchland, A. K. (2012). Multisensory decision-making in rats and humans. J. Neurosci. 32, 3726–3735. doi: 10.1523/JNEUROSCI.4998-11.2012

Reig, R., and Silberberg, G. (2014). Multisensory integration in the mouse striatum. Neuron 83, 1200–1212. doi: 10.1016/j.neuron.2014.07.033

Rodgers, K. M., Benison, A. M., Klein, A., and Barth, D. S. (2008). Auditory, somatosensory and multisensory insular cortex in the rat. Cereb. Cortex 18, 2941–2951. doi: 10.1093/cercor/bhn054

Royal, D. W., Carriere, B. N., and Wallace, M. T. (2009). Spatiotemporal architecture of cortical receptive fields and its impact on multisensory interactions. Exp. Brain Res. 198, 127–136. doi: 10.1007/s00221-009-1772-y

Russo, N., Foxe, J. J., Brandwein, A. B., Altschuler, T., Gomes, H., and Molholm, S. (2010). Multisensory processing in children with autism: high-density electrical mapping of auditory-somatosensory integration. Autism Res. 3, 253–267. doi: 10.1002/aur.152

Sakata, S., Yamamori, T., and Sakurai, Y. (2004). Behavioral studies of auditory-visual spatial recognition and integration in rats. Exp. Brain Res. 159, 409–417. doi: 10.1007/s00221-004-1962-6

Sanganahalli, B. G., Bailey, C. J., Herman, P., and Hyder, F. (2009). “Tactile and non-tactile sensory paradigms for fMRI and neurophysiologic studies in rodents,” in Dynamic Brain Imaging, ed F. Hyder (New York, NY: Springer), 213–242.

Sheppard, J. P., Raposo, D., and Churchland, A. K. (2013). Dynamic weighting of multisensory stimuli shapes decision-making in rats and humans. J. Vis. 13:9. doi: 10.1167/13.12.9

Sieben, K., Röder, B., and Hanganu-Opatz, I. L. (2013). Oscillatory entrainment of primary somatosensory cortex encodes visual control of tactile processing. J. Neurosci. 33, 5736–5749. doi: 10.1523/jneurosci.4432-12.2013

Silverman, J. L., Yang, M., Lord, C., and Crawley, J. N. (2010). Behavioural phenotyping assays for mouse models of autism. Nat. Rev. Neurosci. 11, 490–502. doi: 10.1038/nrn2851

Smith, E. G., and Bennetto, L. (2007). Audiovisual speech integration and lipreading in autism. J. Child Psychol. Psychiatry 48, 813–821. doi: 10.1111/j.1469-7610.2007.01766.x

Spence, C., and Driver, J. (2004). Crossmodal Space and Crossmodal Attention. Oxford: Oxford University Press.

Stein, B. E., and Stanford, T. R. (2008). Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266. doi: 10.1038/nrn2331

Stein, B. E., Wallace, M. T., and Meredith, M. A. (1995). “Neural mechanisms mediating attention and orientation to multisensory cues,” in The Cognitive Neurosciences, eds B. E. Stein, M. T. Wallace, M. A. Meredith, and M. S. Gazzaniga (Cambridge, MA: MIT Press), 683–702.

Stein, B. E., Wallace, M. W., Stanford, T. R., and Jiang, W. (2002). Cortex governs multisensory integration in the midbrain. Neuroscientist 8, 306–314. doi: 10.1177/107385840200800406

Stekelenburg, J. J., Maes, J. P., Van Gool, A. R., Sitskoorn, M., and Vroomen, J. (2013). Deficient multisensory integration in schizophrenia: an event-related potential study. Schizophr. Res. 147, 253–261. doi: 10.1016/j.schres.2013.04.038

Stevenson, R. A., Fister, J. K., Barnett, Z. P., Nidiffer, A. R., and Wallace, M. T. (2012). Interactions between the spatial and temporal stimulus factors that influence multisensory integration in human performance. Exp. Brain Res. 219, 121–137. doi: 10.1007/s00221-012-3072-1

Stevenson, R. A., Ghose, D., Fister, J. K., Sarko, D. K., Altieri, N. A., Nidiffer, A. R., et al. (2014a). Identifying and quantifying multisensory integration: a tutorial review. Brain Topogr. 27, 707–730. doi: 10.1007/s10548-014-0365-7

Stevenson, R. A., Siemann, J. K., Schneider, B. C., Eberly, H. E., Woynaroski, T. G., Camarata, S. M., et al. (2014b). Multisensory temporal integration in autism spectrum disorders. J. Neurosci. 34, 691–697. doi: 10.1523/JNEUROSCI.3615-13.2014

Stevenson, R., Siemann, J., Woynaroski, T., Schneider, B., Eberly, H., Camarata, S., et al. (2013). Brief report: arrested development of audiovisual speech perception in autism spectrum disorders. J. Autism Dev. Disord. 44, 1470–1477. doi: 10.1007/s10803-013-1992-7

Stevenson, R. A., Siemann, J. K., Woynaroski, T. G., Schneider, B. C., Eberly, H. E., Camarata, S. M., et al. (2014c). Evidence for diminished multisensory integration in autism spectrum disorders. J. Autism Dev. Disord. 44, 3161–3167. doi: 10.1007/s10803-014-2179-6

Sumby, W. H., and Pollack, I. (1954). Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 26, 212–215. doi: 10.1121/1.1907309

Talsma, D., Senkowski, D., Soto-Faraco, S., and Woldorff, M. G. (2010). The multifaceted interplay between attention and multisensory integration. Trends Cogn. Sci. 14, 400–410. doi: 10.1016/j.tics.2010.06.008

Tees, R. C. (1999). The effects of posterior parietal and posterior temporal cortical lesions on multimodal spatial and nonspatial competencies in rats. Behav. Brain Res. 106, 55–73. doi: 10.1016/s0166-4328(99)00092-3

Wallace, M. T., Meredith, M. A., and Stein, B. E. (1992). Integration of multiple sensory modalities in cat cortex. Exp. Brain Res. 91, 484–488. doi: 10.1007/bf00227844

Wallace, M. T., Ramachandran, R., and Stein, B. E. (2004). A revised view of sensory cortical parcellation. Proc. Natl. Acad. Sci. U S A 101, 2167–2172. doi: 10.1073/pnas.0305697101

Wallace, M. T., and Stein, B. E. (1994). Cross-Modal synthesis in the midbrain depends on input from cortex. J. Neurophysiol. 71, 429–432.

Wallace, M. T., and Stein, B. E. (2007). Early experience determines how the senses will interact. J. Neurophysiol. 97, 921–926. doi: 10.1152/jn.00497.2006

Wallace, M. T., and Stevenson, R. A. (2014). The construct of the multisensory temporal binding window and its dysregulation in developmental disabilities. Neuropsychologia 64C, 105–123. doi: 10.1016/j.neuropsychologia.2014.08.005

Wilkinson, L. K., Meredith, M. A., and Stein, B. E. (1996). The role of anterior ectosylvian cortex in cross-modality orientation and approach behavior. Exp. Brain Res. 112, 1–10. doi: 10.1007/bf00227172

Keywords: multisensory integration, mouse behavior, operant conditioning, visual processing, auditory processing, mouse models

Citation: Siemann JK, Muller CL, Bamberger G, Allison JD, Veenstra-VanderWeele J and Wallace MT (2015) A novel behavioral paradigm to assess multisensory processing in mice. Front. Behav. Neurosci. 8:456. doi: 10.3389/fnbeh.2014.00456

Received: 24 October 2014; Accepted: 19 December 2014;

Published online: 12 January 2015.

Edited by:

Adam Kepecs, Cold Spring Harbor Laboratory, USA

Reviewed by:

Gidon Felsen, University of Colorado School of Medicine, USA

Anne Churchland, Cold Spring Harbor Laboratory, USA

Copyright © 2015 Siemann, Muller, Bamberger, Allison, Veenstra-VanderWeele and Wallace. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution and reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Justin K. Siemann, Multisensory Research Laboratory, Neuroscience Program, Vanderbilt University, 7110 MRB III BioScience Bldg., 465 21st Avenue South, Nashville, TN 37232, USA e-mail: justin.k.siemann@vanderbilt.edu
