Neuronal encoding of object and distance information: a model simulation study on naturalistic optic flow processing

- Department of Neurobiology and Center of Excellence “Cognitive Interaction Technology”, Bielefeld University, Bielefeld, Germany

We developed a model of the input circuitry of the FD1 cell, an identified motion-sensitive interneuron in the blowfly's visual system. The model circuit successfully reproduces the FD1 cell's most conspicuous property: its larger responses to objects than to spatially extended patterns. The model circuit also mimics the time-dependent responses of FD1 to dynamically complex naturalistic stimuli, shaped by the blowfly's saccadic flight and gaze strategy: the FD1 responses are enhanced when, as a consequence of self-motion, a nearby object crosses the receptive field during intersaccadic intervals. Moreover, on virtual test flights in a three-dimensional environment with systematically modified environmental patterns, the model predicts that pronounced pattern-dependent fluctuations are superimposed on these object-induced responses. Hence, the FD1 cell is predicted not to detect unambiguously objects defined by the spatial layout of the environment, but also to be sensitive to objects distinguished by textural features. These ambiguous detection abilities suggest that information about objects, irrespective of the features by which the objects are defined, is encoded by a population of cells, with the FD1 cell presumably playing a prominent role in such an ensemble.

Introduction

Retinal image displacements are elicited when a moving object crosses the visual field (“object motion”). However, even if the outside world is stationary the retinal images are in continuous flow when the animal moves about in the environment. The resulting optic flow patterns are a rich source of information about the path and speed of locomotion, as well as the layout of the environment (Gibson, 1979; Koenderink, 1986; Dahmen et al., 1997; Lappe, 2000; Eckert and Zeil, 2001). During self-motion, visual motion cues may provide the world with a third dimension. When an animal passes or approaches a nearby object, the object appears to move faster than its background. Object detection based on such relative motion cues is thought to be particularly relevant in fast flying insects, since they generate a pronounced optic flow on their eyes and have hardly any other means to gain spatial information (e.g., Srinivasan, 1993). Accordingly, several insect species, ranging from flies to bees and hawkmoths, have been shown to use relative motion very efficiently to detect objects, to infer their distance and to respond to them adequately in different contexts, ranging from landing to spatial navigation. Thereby, they mainly use relative motion information at the edges of objects (Lehrer et al., 1988; Srinivasan et al., 1989; Lehrer and Srinivasan, 1993; Kimmerle et al., 1996; Kern et al., 1997; Kimmerle and Egelhaaf, 2000a; Dittmar et al., 2010).

However, spatial information can only be inferred from optic flow components that are induced by translational movements of the animal. The typical flight strategy of flies and other insects, which subdivides flights into saccades, i.e., phases of fast turns, and intersaccadic intervals, i.e., phases of approximately straight flight, has been proposed to be part of an active vision strategy that facilitates extracting information about the three-dimensional structure of the environment (Collett and Land, 1975; Wagner, 1986; Schilstra and van Hateren, 1999; van Hateren and Schilstra, 1999; Tammero and Dickinson, 2002; Braun et al., 2010; Boeddeker et al., 2010; Geurten et al., 2010).

Among insects, flies are the group for which most is known about how optic flow information and, in particular, object information based on motion cues is represented and processed at the neural level. In the third visual neuropile of flies, the lobula plate, an ensemble of about 60 large individually identified neurons, the lobula plate tangential cells (LPTCs), play a prominent role in this context. Most LPTCs integrate signals from several hundreds of retinotopically arranged motion-sensitive input elements, i.e., the elementary motion detectors. Several LPTCs synaptically interact, in addition, with other LPTCs in the ipsi- and contralateral visual system. As a consequence, each LPTC responds best to a characteristic optic flow pattern, as induced during particular types of self-motion (for review, see Hausen, 1984; Egelhaaf, 2006, 2009; Borst et al., 2010). One class of these cells, which have been termed figure detection cells (FD cells; Egelhaaf, 1985b), differs from other LPTCs in its sensitivity to objects: FD cells respond most strongly if an object moves across their receptive field. Their response decreases when the object is spatially extended beyond a certain size (Egelhaaf, 1985b). Other LPTCs, such as the H1 cell or the HSE cell (see below), respond more strongly with increasing object size (Hausen, 1982; Egelhaaf, 1985a). This distinct property of FD cells led to the functional interpretation that they mediate object-induced behavior, such as fixation or landing responses. This functional interpretation might be qualified by the fact that FD cells, though they respond best to objects, also respond, to some extent, to extended stimulus patterns. This complication becomes particularly obvious when they are not stimulated with simple objects of varying size moving at a constant velocity, but with spatially and dynamically more complex stimuli that approximate, to some extent, the complex optic flow pattern as seen by flies moving in three-dimensional environments (Kimmerle and Egelhaaf, 2000b; Liang et al., 2012).

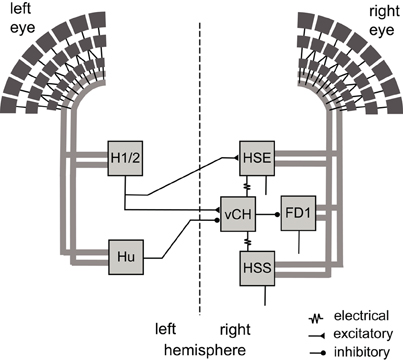

The issue of object specificity and its potential functional significance in object-induced behavior is approached in this study by model simulations of the most thoroughly analyzed FD cell, the FD1 cell (Egelhaaf, 1985b; Kimmerle et al., 2000; Kimmerle and Egelhaaf, 2000a,b; Liang et al., 2012). The analyzed network is formed by the FD1 cell and its presynaptic elements in the lobula plate. The FD1 cell integrates motion signals provided by retinotopic input elements in the frontal visual field. Its preference for moving objects over extended textures is achieved by an inhibitory GABAergic input from the vCH cell (Warzecha et al., 1993). The vCH cell is an LPTC that receives input from various other identified LPTCs, i.e., excitation from the ipsilateral HSE and HSS cells, as well as from the contralateral H1 and H2 cells, and inhibition from the contralateral Hu cell (Figure 1; Hausen, 1976, 1984; Eckert and Dvorak, 1983; Egelhaaf et al., 1993; Haag and Borst, 2001; Krapp et al., 2001; Spalthoff et al., 2010; Hennig et al., 2011).

Figure 1. Wiring sketch of the FD1 cell input circuit. Motion-sensitive elements of the right FD1 circuit that have a horizontally preferred direction. The FD1 cell and most of its presynaptic elements presumably receive retinotopic motion input (thick gray lines) from large parts of one eye. The right vCH cell inhibits the FD1 cell and receives itself excitatory and inhibitory input from motion sensitive LPTCs of both brain hemispheres. The left H1 and left H2 excite the right vCH cell, whereas the left Hu cell inhibits it. The right HSE cell and the right HSS cell are electrically coupled to the right vCH cell. FD1, HSE, and HSS are output neurons of the optic lobe, whereas H1, H2, Hu, and vCH connect exclusively to other LPTCs.

The preference of the FD1 cell for objects has already been modeled in several studies (Egelhaaf, 1985c; Borst and Egelhaaf, 1993; Hennig et al., 2008). However, none of these studies tried to mimic the cell's characteristic properties during naturalistic stimulation where objects and background move on the eyes depending not only on the three-dimensional layout of the environment, but also on the peculiar dynamics of the flies' self-motion. These studies rather targeted object-related response properties with highly simplified models and experimenter-designed stimuli.

In the present account, we developed a model of the FD1 cell and its input circuit that was optimized by an automatic and stochastic procedure on the basis of neural responses of the FD1 cell and its presynaptic elements to artificial and naturalistic stimulus scenarios used, thus far, in electrophysiological experiments. Naturalistic stimulus conditions are distinguished by their characteristic dynamics resulting from the saccadic flight and gaze strategy of flies. LPTCs other than FD1 have been shown to provide spatial information, in particular, during the intersaccadic translatory motion phases (Kern et al., 2005; Karmeier et al., 2006). Therefore, we expected object-induced responses in the FD1 cells, especially during the intersaccadic intervals. Based on a previous study that characterized and modeled the presynaptic elements of the FD1 cell (Hennig et al., 2011), the model of the FD1 circuit developed here mimics, in particular, the responses of the biological FD1 cell to naturalistic optic flow, as characterized in a parallel experimental study (Liang et al., 2012). We then challenged the model circuit with novel behavioral situations in order to test hypotheses about the function of the FD1 cell as an object detector.

Materials and Methods

Model

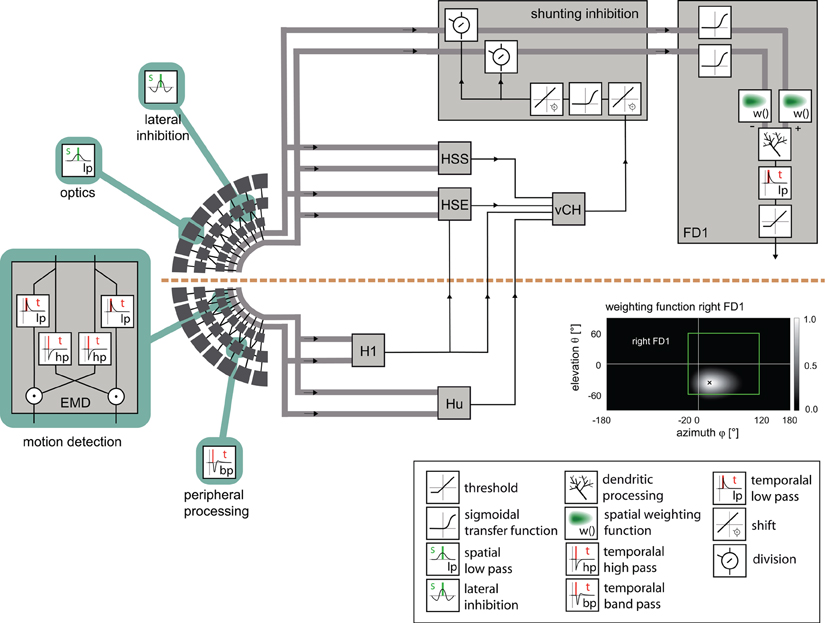

The model of the fly's visual motion pathway comprises the optics of the eyes, the peripheral processing stages of the visual system, local motion detection, the spatial pooling of arrays of local motion detectors by LPTCs, and the interaction between those LPTCs that are elements of the input circuitry of the FD1 cell (Figure 1). These different processing stages are organized into individual modules. As a first approximation, the flow of information is exclusively feed-forward. The individual time steps correspond to 1 ms. Model parameter values were obtained either from previous studies or were optimized as free model parameters in an automatic optimization process (see below).

Eye model and peripheral processing

Retinal images reconstructed from a free-flight trajectory and a 3D-model of the corresponding environment are spatially convolved with a Gaussian low-pass filter (σ = 2°). The filtered signals provide the input to the model photoreceptors, which are equally spaced at 2° in elevation and azimuth. The field of view of the left eye covers an elevation range from 60° above to 60° below the horizon, and extends horizontally from −20° in the contralateral field of view to +120° in the ipsilateral visual field (green rectangle in the inset at the bottom of Figure 2). The field of view of the right eye is mirror symmetric to the left one. For simplicity, the photoreceptors are arranged in a rectangular grid of 60 by 69 elements, which thus deviates in its details from the fly's roughly hexagonal ommatidial lattice (Exner and Hardie, 1989; Land, 1997; Petrowitz et al., 2000).

Figure 2. Model of the visual motion pathway of the fly from the eyes to the spatial integration in the lobula plate. A spatial low-pass filter approximated by a two-dimensional Gaussian function (inset) accounts for the optic properties of the ommatidia. The peripheral processing is approximated by an array of temporal band-pass filters (indicated by the impulse response of the filter) followed by a lateral inhibition, together providing the input to an array of elementary motion detectors (EMD) sensitive to horizontal motion. Each EMD is subdivided into two mirror-symmetric subunits with oppositely preferred directions, each consisting of a temporal high-pass filter, a low-pass filter and a multiplication stage. The retinotopic motion information of the half detectors with the same preferred direction is lumped into one channel (broad gray lines). The motion information conveyed by the channels is spatially integrated by the model FD1 and by model cells presynaptic to the vCH cell. The models of the H1, HSE, HSS, Hu, and vCH cells are as developed and tested in a previous study (Hennig et al., 2011). The retinotopic motion information is shunted before it reaches the FD1 cell (box “shunting inhibition”). The shunting is accomplished by a division by the vCH cell signal. The transmission is characterized by a half-wave rectification and a sigmoidal transmission function. Before the spatial signals are integrated by the model FD1 (box “FD1”), the excitatory and the inhibitory input channels are individually transmitted via a sigmoidal transmission function and weighted according to the spatial sensitivity of the respective FD1 cell (inset “weighting function right FD1”). The retinotopic signals are spatially integrated by means of an electrical equivalent circuit of a one-compartment passive membrane patch. One channel controls the inhibitory, the other the excitatory conductances of the integrating element. The integrated signals of all elements are temporally low-pass filtered to account for time constants of the cell. Additionally, the model FD1 is characterized by a threshold, because as a spiking element, it cannot convey negative signals. Inset box at bottom right: explanation of symbols referring to the computations in the circuit.

The peripheral processing module combines the properties of the photoreceptors and second-order neurons in the fly visual system to a temporal band-pass filter. The filter properties are approximated on the basis of experimental data and adjusted to the luminance conditions of the electrophysiological experiments on which the model simulations are based (Juusola et al., 1995; Lindemann et al., 2005). To enhance the edge contrast of the retinal images, a weak lateral inhibition between neighboring retinal input channels was implemented as a convolution with a 3 × 3 matrix:

Lateral inhibition between retinotopic elements is a common mechanism for contrast enhancement in biological visual systems and has also been proposed for second-order neurons in the fly visual system (Laughlin and Osorio, 1989).

Elementary motion detection

Elementary motion detection is based on an elaborated correlation-type motion detector with an arithmetic multiplication of a low-pass filtered signal of a peripheral processing module and a high-pass filtered signal of a horizontally neighboring module (Figure 2) (Borst et al., 2003). The time constants are set to τlp = 10 ms for the low-pass filter and to τhp = 60 ms for the high-pass filter. These parameters were estimated in a previous study (Lindemann et al., 2005). The detector consists of two half-detectors, i.e., mirror-symmetric subunits with oppositely preferred directions. The corresponding half-detectors each form a retinotopic grid and are used as the input into the following model stages. For simplicity, the model does not contain contrast or luminance normalization. This appears to be justified for our current purposes as we analyzed the simulated neural responses only for a given luminance level and did not vary the pattern statistics. All the modules up to the level of elementary motion detection, except the lateral inhibition, are identical to the model of Lindemann et al. (2005).
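The half-detector computation described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function names, the first-order exponential discretization of the filters, and the construction of the high-pass filter as "signal minus low-pass" are assumptions. Only the time constants (10 ms, 60 ms), the 1 ms time step, and the multiplication of a low-pass filtered signal with the high-pass filtered signal of the neighboring input are taken from the text.

```python
def low_pass(signal, tau_ms, dt_ms=1.0):
    """First-order low-pass filter, discretised at dt_ms (assumed discretisation)."""
    alpha = dt_ms / (tau_ms + dt_ms)
    out, y = [], signal[0]          # start at the first sample to limit the transient
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out


def high_pass(signal, tau_ms, dt_ms=1.0):
    """First-order high-pass filter, built as signal minus its low-pass version."""
    return [x - l for x, l in zip(signal, low_pass(signal, tau_ms, dt_ms))]


def half_detectors(left, right, tau_lp=10.0, tau_hp=60.0):
    """Return the two half-detector outputs for two horizontally neighbouring inputs.

    emd_plus  prefers motion from `left` to `right`,
    emd_minus prefers motion from `right` to `left`.
    The two channels are kept separate, as in the model described in the text.
    """
    lp_l, lp_r = low_pass(left, tau_lp), low_pass(right, tau_lp)
    hp_l, hp_r = high_pass(left, tau_hp), high_pass(right, tau_hp)
    emd_plus = [a * b for a, b in zip(lp_l, hp_r)]
    emd_minus = [a * b for a, b in zip(lp_r, hp_l)]
    return emd_plus, emd_minus
```

For a sinusoidal input that reaches `left` before `right`, the time-averaged `emd_plus` exceeds the time-averaged `emd_minus`; swapping the two inputs swaps the two outputs.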

Presynaptic elements of FD1

The vCH cell inhibits the FD1 cell and gives the FD1 cell its preference for objects. The vCH cell and its binocular integration of visual information were analyzed in a preceding model study (Hennig et al., 2011). This model vCH and its presynaptic elements were complemented by the lateral inhibition stage in the periphery, readjusted and taken for the current FD1 study.

Synaptic transmission

The synaptic transmission characteristic between the elementary movement detectors and the FD1 cell is implemented as a sigmoid function:

where α describes the slope of the sigmoid, χ the level of saturation and β the operating range of the synapse modeled. A rectification stage prevents the output values from falling below zero.

Spatial sensitivities of LPTCs

Heterogeneous dendritic branching of the LPTCs and synapse densities lead to receptive fields with characteristic sensitivity distributions (Hausen, 1984). The model takes these into account by using a two-dimensional Gaussian weighting function. The distribution is horizontally asymmetric, i.e., the angular width on the left is not equal to that on the right. The sensitivity distribution of the model FD1 is shown in the inset of Figure 2. The sensitivity for a given retinal position is defined as follows:

where θ denotes the elevation and φ the azimuth. θC and φC are the center of the weight field. σθ is the angular width of the distribution in elevation. σφ_r and σφ_l are the azimuthal angular widths on the right and left, respectively. The same weighting function is used for the inhibitory and excitatory inputs from the half-detectors. The different parameters are adjusted to approximate the different LPTCs' receptive field characteristics.
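A horizontally asymmetric Gaussian sensitivity of this kind could be written as follows. The sketch assumes a separable Gaussian with a peak value of 1 and the conventional factor 1/2 in the exponent; the exact normalization used in the model may differ. The function name and the convention that azimuth increases toward the right are also assumptions.

```python
import math


def weight(theta, phi, theta_c, phi_c, sigma_theta, sigma_phi_l, sigma_phi_r):
    """Asymmetric 2-D Gaussian sensitivity at elevation theta and azimuth phi (degrees)."""
    # Choose the azimuthal width according to the side of the centre
    # (assumes azimuth increases toward the right).
    sigma_phi = sigma_phi_r if phi >= phi_c else sigma_phi_l
    d_theta = (theta - theta_c) / sigma_theta
    d_phi = (phi - phi_c) / sigma_phi
    return math.exp(-0.5 * (d_theta ** 2 + d_phi ** 2))
```

With `sigma_phi_l=10` and `sigma_phi_r=30`, for example, the sensitivity falls off much faster 10° to the left of the center than 10° to the right of it.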

Shunting inhibition

The inhibition of the local input elements of the FD1 cell is presumably presynaptic and accomplished by the vCH cell (Warzecha et al., 1993; Hennig et al., 2008). The model approximates shunting of the array of the half-detectors by a division (Koch, 1999):

where emd is the movement detector output signal to be shunted. vCH is the axonal vCH signal shifted by shiftch. synvCH( ) is the same type of transfer function as that used for the synapse between FD1 and its retinotopic input (see above). shiftch_fd is an additional shift to prevent division by small values. All parameters, including those of synvCH( ), were optimized.
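A divisive shunting stage built from these ingredients might look like the sketch below. How the two shifts enter is an assumption here: `shift_ch` is added to the vCH signal before the transfer function, and `shift_ch_fd` is added to the denominator. The transfer function is passed in as a callable, and `example_syn` is only a hypothetical logistic stand-in for it, not the model's actual synaptic characteristic.

```python
import math


def shunt(emd, vch, syn_vch, shift_ch, shift_ch_fd):
    """Divide one half-detector output by the transformed vCH signal.

    Assumes syn_vch returns non-negative values and shift_ch_fd > 0,
    so that the denominator stays away from zero.
    """
    return emd / (syn_vch(vch + shift_ch) + shift_ch_fd)


def example_syn(x, alpha=1.0, beta=0.0, chi=1.0):
    """Hypothetical logistic transfer function used only for illustration."""
    return chi / (1.0 + math.exp(-alpha * (x - beta)))
```

A stronger vCH signal increases the denominator and therefore attenuates the detector output, while `shift_ch_fd` bounds the gain when the vCH signal is weak.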

Spatial integration

The dendritic integration of retinotopic motion signals by the FD1 cell, as well as of the other LPTCs that receive such input and are presynaptic to FD1 (Hennig et al., 2011), is approximated using an electrical equivalent circuit of a one-compartmental passive membrane patch. The resulting membrane potential is given by:

g− and g+ denote the total conductances of the inhibitory and excitatory synapses, respectively, and g0 denotes the leak conductance.

The excitatory and inhibitory conductances are controlled by the shunted outputs of the two half-detectors of local movement detectors. Ei and Ee are the corresponding reversal potentials with Ee set to 1. The resting potential E0 of the cell is set to zero. The leak conductance g0 of the element is arbitrarily set to 1. All other conductances are thus to be interpreted relative to the leak conductance. g− and g+ are calculated as the weighted output of synaptic transfer functions. Capacitive properties of the cell membrane are approximated by a temporal low-pass filter after the dendritic integration (see Lindemann et al., 2005).

The excitatory conductance g+ is controlled by the shunted outputs of the half-detectors emd+ at the corresponding grid locations with a preferred direction from front to back. In order to obtain the excitatory conductance g+, the half-detector outputs are transformed by the synaptic transfer function syn+ (Equation 1) before being weighted by the cells' sensitivity distribution wFD1:

where n and m denote the position in the retinotopic grid. The inhibitory conductance g− is controlled accordingly by the second set of half-detectors emd−:

The parameters of the synaptic transfer functions and the weight function are free parameters of the model. The parameters of the inhibitory and excitatory channels are independent.
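The spatial integration step can be illustrated as follows. The sketch assumes the standard steady-state expression for a one-compartment passive membrane patch, V = (g+·Ee + g−·Ei + g0·E0) / (g+ + g− + g0), and computes each conductance as a weighted sum of transformed half-detector outputs over the retinotopic grid. The function names are hypothetical, and the inhibitory reversal potential is left as a required argument because its value is not stated here.

```python
def conductance(emd_grid, weight_grid, syn):
    """Weighted sum over the retinotopic grid of transformed half-detector outputs."""
    return sum(
        w * syn(e)
        for emd_row, w_row in zip(emd_grid, weight_grid)
        for e, w in zip(emd_row, w_row)
    )


def membrane_potential(g_exc, g_inh, e_inh, e_exc=1.0, g_leak=1.0, e_rest=0.0):
    """Steady-state potential of a one-compartment passive membrane patch.

    Defaults follow the text: Ee = 1, E0 = 0, g0 = 1.
    """
    numerator = g_exc * e_exc + g_inh * e_inh + g_leak * e_rest
    return numerator / (g_exc + g_inh + g_leak)
```

Because the conductances also appear in the denominator, the potential saturates toward Ee for strong excitation rather than growing linearly with the summed input.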

Data on FD1 responses came from extracellular recordings made in a parallel study (Liang et al., 2012). Because such recordings register only spike activity, a spike threshold was incorporated into the model FD1:

Stimuli for the Model Simulations

Naturalistic stimulation

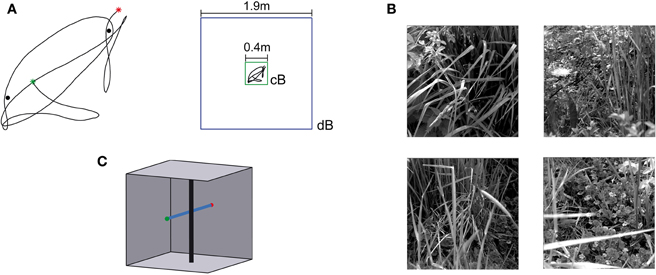

Naturalistic stimulation was based on a flight trajectory of a freely flying fly (Figure 3A, left). The position and the orientation of the head of blowflies flying in an arena of 0.4 × 0.4 × 0.4 m³ were recorded using magnetic fields driving search coils attached to the flies (van Hateren and Schilstra, 1999; Schilstra and van Hateren, 1999). The trajectory has a duration of 3.45 s. The side walls of the flight arena were covered with images of herbage (Figure 3B). The visual stimulus encountered by the fly during the flight could be reconstructed from the head trajectories, because the fly's compound eyes are fixed in its head and the visual interior of the cube was known. This visual stimulus sequence (close background condition, cB) represented one of the four stimulus conditions tested. The other stimulus conditions are based on virtual manipulations of the original flight arena. It was virtually enlarged to 1.9 × 1.9 × 1.9 m³, including the wall patterns, while the flight trajectory was left the same (distant background condition, dB; Figure 3A, right). Furthermore, two vertical cylinders with a diameter of 5 mm were placed in both the original and the enlarged flight arena near the trajectory (Figure 3A). In accordance with the corresponding electrophysiological experiments (Liang et al., 2012), the cylinders were covered with a blurred random dot pattern in the FD1 simulations and with a uniform grey pattern in the HSE and vCH simulations. In the condition with close background and objects (cBO), the cylinders extended from the bottom to the top of the arena. In the distant background condition with objects (dBO), the height of the cylinders was scaled with the arena in the HSE and vCH simulations; in the FD1 simulations, the cylinders were kept at their original height, again in accordance with the corresponding experiments. The location of the cylinders relative to the trajectory was not changed.

Figure 3. Flight trajectory and stimulus conditions. (A) Left: Projection of the natural trajectory (black line) onto the horizontal plane. The green dot tags the starting point, and the red dot, the end point of the trajectory. Two objects were placed near the trajectory for the environmental condition “with objects.” The black dots mark the locations of the objects. Right: a cube with an edge length of 0.4 m was used (green square) for the environmental condition with close background (cB), and for the distant background (dB) environment, the edge length was 1.9 m (blue square). The trajectory shown in the center of the squares (black line) was the same for both conditions and is shown to scale. (B) Images used to texture the cube walls. Each wall was textured with a different image. The textures were placed on the walls in different orders to change the textural condition of the scene. (C) Sketch of the “object” test flight and environment. The black vertical cylinder indicates the object, and the blue line, the trajectory. The green dot tags the starting point, and the red dot, the end point. The wall texture is not shown.

Dependence on object size

To analyze the size dependence of the model FD1 cell responses, vertical cylinders were moved back and forth within its ipsilateral visual field from a frontal position at 0° to a fronto-lateral position at 45°. The cylinders had retinal sizes of 5°, 10°, 15°, and 20°, respectively, and were covered by a blurred random dot pattern. The cylinders were moved within the original flight arena. The model fly was positioned at its center. Responses to whole-field motion were determined as a reference by 45° clockwise and counterclockwise rotations of the arena around the model fly.

Test flight “object”

In the test flight with an object in the arena, the model fly flew on an artificial straight trajectory in the middle of a cube parallel to the side walls and to the floor of the original arena (Figure 3C). A vertical cylinder was placed at a distance of 20 mm on the right of the flight trajectory. The cylinder had a height of 370 mm and a diameter of 4 mm. The object was textured with a section of a wall texture. The flights were performed at two velocities (0.5 m/s and 1.0 m/s) with and without the cylinder. Four different texture conditions were tested by exchanging the patterns on the different arena walls via four sequential 90° rotations of the flight arena.

Test flight “texture dependence”

To test for the texture dependence of the model cells' responses, the model fly was placed in the middle of a cylindrical flight arena. The arena had a diameter of 120 mm and rotated at a constant speed of 360°/s around its vertical axis. The model fly could not see the top or bottom of the drum. Four texture conditions were tested. One of the wall textures of the original flight arena was used for each condition. We extended each image horizontally by its mirrored version to avoid a distinct local border.

Optimization

For the optimization process, a 10 s training stimulus sequence was composed of several stimulus sections. The first 4 s of the sequence consisted of one of the four size dependence stimuli (motion of the 10° cylinder) as well as whole-field motion. Then four sections followed that consisted of the optic flow sequences as perceived during a virtual flight under the four environmental conditions (cB, cBO, dB, and dBO). Each section had a duration of 1.5 s and contained most flight sections in which an object moved across the receptive field of the right FD1 cell under the cBO and dBO conditions; for the cB and dB conditions the corresponding sections of the virtual flight sequences were used. Each of the four 1.5 s optimization flight sections comprised only part of the corresponding naturalistic image sequence (duration: 3.45 s) that was tested afterwards. Moreover, only one object size was included in the size dependence stimuli for optimization, whereas three additional object sizes were used for testing.

The root mean square difference drms was chosen as a quantitative measure of the similarity between the physiological data p(t) available from the parallel studies (Hennig et al., 2011; Liang et al., 2012) and the model data ms(t):

The model, as described above, does not contain all the latencies of the nervous system. To correct for this fact, we determined the optimal time shift between the model and neuronal signals by cross-correlation. Since the excitatory reversal potential was set arbitrarily to 1 (see above), the model response had to be rescaled before comparison with the physiological responses. This was done by determining the factor f that scales the model response to the corresponding neuronal response with the smallest drms. Since the model is not analytically accessible, an automatic method was applied for parameter optimization. As it is convenient for continuous, non-linear, multimodal, and analytically non-accessible functions, the automatic stochastic optimization method “Differential Evolution” was chosen (Storn and Price, 1997; Price, 1999).
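The comparison between model and neuronal responses can be sketched as below, assuming both traces are sampled at the same rate and have equal length. The function names are hypothetical. The scale factor is obtained in closed form as the least-squares solution, which minimizes the root mean square difference for a given alignment; the unnormalized cross-correlation over non-negative lags is a simplification.

```python
import math


def best_shift(p, m, max_shift):
    """Lag (in samples) of p relative to m that maximises their cross-correlation."""
    def xcorr(lag):
        return sum(p[t + lag] * m[t] for t in range(len(m) - lag))
    return max(range(max_shift + 1), key=xcorr)


def best_scale(p, m):
    """Least-squares factor f that minimises the RMS difference between p and f*m."""
    denom = sum(x * x for x in m)
    return sum(a * b for a, b in zip(p, m)) / denom if denom else 0.0


def d_rms(p, m):
    """Root mean square difference between two equally long traces."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, m)) / len(p))


def similarity(p, m, max_shift=50):
    """Return (d_rms, lag, f) after aligning and rescaling the model trace m to p."""
    lag = best_shift(p, m, max_shift)
    p_aligned, m_aligned = p[lag:], m[:len(m) - lag]
    f = best_scale(p_aligned, m_aligned)
    return d_rms(p_aligned, [f * x for x in m_aligned]), lag, f
```

If the physiological trace is an exact delayed and scaled copy of the model trace, `similarity` recovers the delay and the scale factor and returns a difference of zero.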

The parameters of the search algorithm were adjusted to the current optimization task in preliminary tests (scaling factor f = 0.6; crossover constant CR = 0.9). Since Differential Evolution is a stochastic optimization method, finding the global optimum is not guaranteed, as the search may get stuck in a local optimum. Therefore, the procedure was repeated 25 times for each model with random starting values. Only the best solutions in terms of the similarity function drms were used in further analysis (for details, see Hennig et al., 2011).
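For readers unfamiliar with Differential Evolution, the following sketch shows the widely used DE/rand/1/bin scheme with the scaling factor and crossover constant quoted above, wrapped in a loop of random restarts. Which DE variant, population size, and number of generations were actually used is not stated here, so those choices, the bound clipping, and all function names are assumptions.

```python
import random


def differential_evolution(cost, bounds, pop_size=20, generations=200,
                           f_scale=0.6, cr=0.9, rng=None):
    """Minimise cost(x) with a DE/rand/1/bin scheme; returns (best_x, best_cost)."""
    rng = rng or random.Random()
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    costs = [cost(x) for x in pop]
    for _ in range(generations):
        new_pop, new_costs = list(pop), list(costs)
        for i in range(pop_size):
            # Three distinct population members, all different from the target i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # at least one gene comes from the mutant
            trial = []
            for j in range(dim):
                if j == j_rand or rng.random() < cr:
                    v = pop[a][j] + f_scale * (pop[b][j] - pop[c][j])
                    lo, hi = bounds[j]
                    v = min(max(v, lo), hi)  # clip to the search bounds (assumption)
                else:
                    v = pop[i][j]
                trial.append(v)
            trial_cost = cost(trial)
            if trial_cost <= costs[i]:  # greedy selection against the target
                new_pop[i], new_costs[i] = trial, trial_cost
        pop, costs = new_pop, new_costs
    best = min(range(pop_size), key=costs.__getitem__)
    return pop[best], costs[best]


def optimise_with_restarts(cost, bounds, restarts=25, seed=0):
    """Repeat the search from independent random starts and keep the best run."""
    runs = [differential_evolution(cost, bounds, rng=random.Random(seed + k))
            for k in range(restarts)]
    return min(runs, key=lambda run: run[1])
```

In the setting described in the text, `cost` would run the model with a candidate parameter vector and return drms against the physiological data; here any function of a parameter list can be used.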

Results

We analyzed the functional properties of the FD1 cell by a model approach. The model was optimized by an automatic and stochastic optimization process in order to mimic a wide range of properties of the FD1 cell as characterized in experimental studies (Egelhaaf, 1985b; Liang et al., 2012). The optic flow sequence used for optimization consisted of five sections. The first aimed to elicit large FD1 responses to object motion (width of object 10°) and small responses to background motion. The other sections targeted object-dependent responses under naturalistic flight conditions. The model was stimulated with sections of two optic flow sequences, as seen on the natural flight trajectory in a small flight arena (close background) without and with object, respectively (conditions cB and cBO). The last two sections were based on the same section of the flight trajectory, however, with the optic flow determined for the large flight arena (distant background, conditions dB and dBO; Figure 3A). The performance of the model under naturalistic stimulus conditions was assessed on the basis of the complete flight sequences. For testing the size dependence of the model cells, three further object widths that had not been used for optimization were employed. Moreover, the model was tested on several novel stimulus scenarios to further examine the functional properties of FD1 cells.

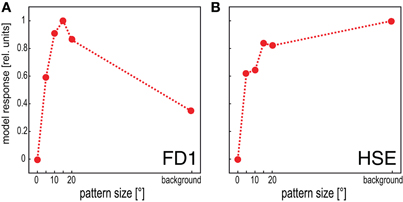

The model FD1 is able to mimic the most prominent property of FD1 cells, i.e., their characteristic size dependence: a small object elicits strong responses, whereas a spatially-extended motion stimulus elicits only moderate responses (Figure 4A; Egelhaaf, 1985b). This property distinguishes the FD1 cell and its model counterpart from the HSE cell and the corresponding models which are not only sensitive to objects, but respond with a similar strength to spatially-extended motion stimuli (Figure 4B; Egelhaaf and Borst, 1993).

Figure 4. Dependence of the mean response of the model FD1 and the model HSE on pattern size. Vertical cylinders with different diameters were moved within the receptive fields of the model cells to determine the responses to pattern size. The background response was obtained by moving the entire background around the model fly. (A) The model FD1 reached its largest mean response for objects with a limited extent. Motion of the entire background led to smaller responses. (B) The model HSE reached large responses for objects. However, its responses were largest when it was stimulated by motion of the entire background.

Responses to Naturalistic Stimulation

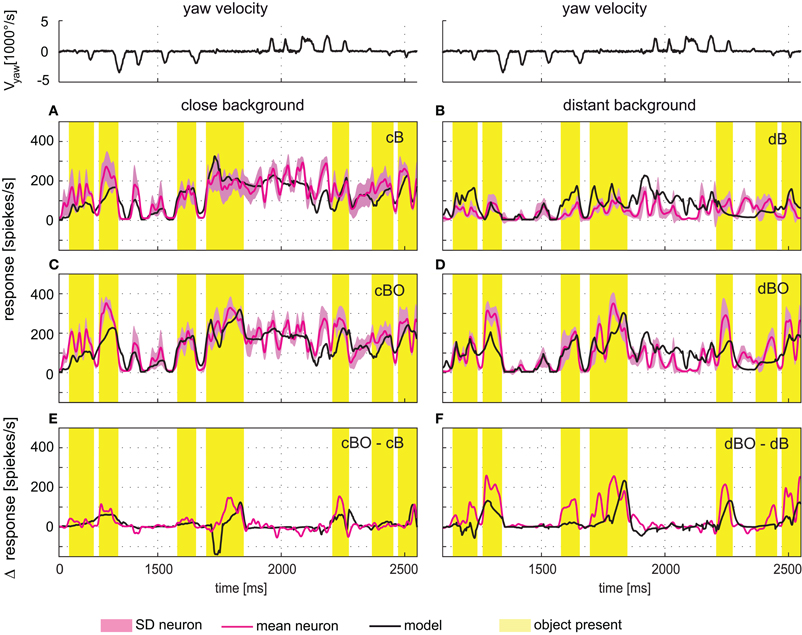

The time courses of model and cell responses to the naturalistic stimulus sequences are similar (Figure 5). Considerable sections of the response traces are within the biological cell's range of variability (e.g., Figure 5A around 1800 ms); other sections, however, show clear differences (e.g., Figure 5A around 2200 ms). A similar model performance is achieved for stimulations based on modified versions of the original stimulus sequence, i.e., after objects had been inserted into the flight arena and/or the flight arena had been virtually enlarged (Figures 5B–D). Most importantly, the electrophysiologically established object-induced response increments of the FD1 cell are also mimicked by the model during stimulation with dynamically complex naturalistic optic flow sequences. Both the physiological and model FD1 responses are larger when the object moves in the preferred direction within the excitatory receptive field than when the object is not present (Figures 5E,F).

Figure 5. Responses to naturalistic optic flow. The angular yaw velocity of the head determined during a section of natural flight is plotted against time for the flight sequence used for optimization of model parameters (top traces). The flight behavior can be divided into saccades, i.e., short phases of fast turns, and intervals primarily dominated by straight flight. (A–D) Experimentally measured response time course of the FD1 cell (red, ± SD light red) and simulated response of model FD1 (black). The yellow bars mark intervals with an object within the FD1 cell's receptive field. For comparison, the yellow marked intervals are also shown for those environmental conditions without objects. (A) Responses of model and cell for the close background condition without object (cB). (B) Responses of model and cell for the distant background condition without object (dB). (C) Responses of model and cell for the close background condition with object (cBO). (D) Responses of model and cell for the distant background condition with object (dBO). (E) Difference between the responses obtained under the cBO and cB conditions. (F) Difference between the responses of the dBO and dB conditions. Model response differences are plotted in black, and cell response differences in red. Experimental data were collected in a parallel study (Liang et al., 2012).

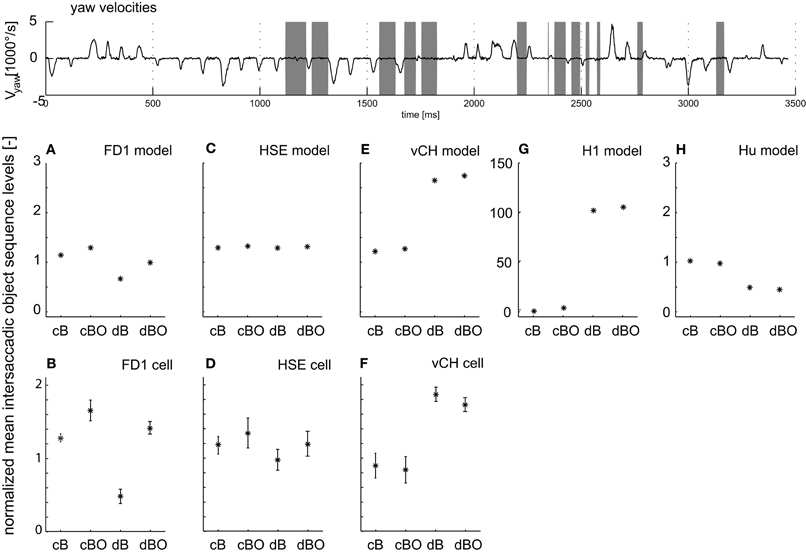

Such object- and distance-dependent responses are expected to be particularly pronounced during the translatory movements in intersaccadic intervals. Therefore, we took a closer look at object-induced response changes during intersaccadic flight intervals with the close and distant backgrounds, as well as with and without objects (cB, cBO, dB, and dBO), and compared the averaged intersaccadic responses of the cell and the model (gray time intervals in Figure 6 top trace). For the response analysis, the intersaccadic intervals were shifted by 22.5 ms to account for the delay in the fly's visual system. The averaged responses were normalized to the mean of all intersaccadic responses for the close background condition without object (cB). The FD1 cell and its presynaptic elements, i.e., the vCH, HSE, and H1 cells, showed very different intersaccadic response characteristics for the different conditions.
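The averaging procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used for the study: the function names, the data layout (a list of sample times in ms, one response trace per condition, and a list of interval boundaries) and the choice to normalize over the same set of intervals are hypothetical. Only the 22.5 ms shift and the normalization to the cB condition are taken from the text.

```python
from statistics import mean

DELAY_MS = 22.5  # latency shift stated in the text


def intersaccadic_means(times_ms, response, intervals_ms, delay_ms=DELAY_MS):
    """Mean response within each intersaccadic interval, shifted by delay_ms.

    times_ms and response are equally long sequences (hypothetical layout);
    intervals_ms is a list of (start, stop) pairs in stimulus time.
    """
    means = []
    for start, stop in intervals_ms:
        lo, hi = start + delay_ms, stop + delay_ms
        samples = [r for t, r in zip(times_ms, response) if lo <= t < hi]
        if samples:  # skip intervals not covered by the recording
            means.append(mean(samples))
    return means


def normalized_levels(responses_by_condition, times_ms, intervals_ms, reference="cB"):
    """Average intersaccadic level per condition, relative to the reference condition."""
    levels = {
        cond: mean(intersaccadic_means(times_ms, resp, intervals_ms))
        for cond, resp in responses_by_condition.items()
    }
    ref = levels[reference]
    return {cond: level / ref for cond, level in levels.items()}
```

With this convention the reference condition cB has a level of 1 by construction, and a value above 1 for cBO corresponds to an object-induced response increment.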

Figure 6. Intersaccadic response levels. The angular yaw velocity of the head plotted against time for the naturalistic flight sequence used for parameter optimization (top trace). Intersaccadic intervals with an object in the receptive field of the FD1 cell are marked by gray bars. Intersaccadic responses of model cells (middle diagrams) and biological cells (bottom diagrams) averaged over these intersaccadic intervals for all environmental conditions. The responses are normalized to the mean intersaccadic responses determined for the environmental condition with close background and without objects (cB). (A, B) FD1, (C, D) HSE, (E, F) vCH, (G) H1, and (H) Hu. Error bars shown for experimental data represent standard deviations across cells; experimental details are in Liang et al. (2012). Note: The experimental HS responses were not only taken from HSE, but—since the responses were not systematically different under the stimulus conditions tested—also from HSS and HSN; details are given in Liang et al. (2012).

FD1.

Both the model and the biological FD1 are sensitive in a similar way, despite quantitative differences, to the presence of objects and to background distance. In good accordance with the above analyses, an object increases the response independently of the background distance (Figures 6A,B; compare cB vs. cBO and dB vs. dBO). Without an object, a close background elicits larger responses than a distant background. This difference decreases once an object moves into the receptive field, since then the responses are dominated by object motion.

HSE.

Despite sharing the same preferred motion direction with FD1, the HSE cell responds differently to modifications of the 3D structure of the flight arena. The object has only little impact on the response for both background conditions (Figure 6C). The increase in background distance (cB vs. dB and cBO vs. dBO) leads to a slight drop in the response level with overlapping standard deviations. Here again, the model mimics the properties of the cell (Figures 6C,D).

vCH.

Both the vCH model and the biological cell show a similar dependence of the intersaccadic responses on background distance and the presence of an object. The presence of objects has only a small impact (Figures 6E,F; compare cB with cBO and dB with dBO), whereas background distance affects the responses considerably. The responses and, thus, vCH's inhibition of FD1 increase considerably when the arena is enlarged. This finding may be surprising at first sight, because vCH gets ipsilateral excitatory input from the HSE cell, which itself reacts with a slight response decrease when the distance to the background increases (Figures 6C,D). The response levels of excitatory contralateral input elements of vCH can explain this difference between vCH and HSE.

H1.

The H1 cell reaches a higher intersaccadic response level when the background is distant (Figure 6G) and passes this response increase on to vCH. The preferred direction of H1 is from back to front; it is inhibited by front-to-back motion. Consequently, its absolute intersaccadic responses are very small if the background is close, since the optic flow is then mainly from front to back. With a large distance to the background, the inhibition of H1 becomes small as a consequence of the much reduced retinal velocities resulting from front-to-back translatory motion. Our model simulation revealed that under these conditions, the residual rotations in the intersaccadic intervals overcome, to some extent, the inhibiting impact of the forward translation, and lead to large H1 and, thus, large vCH responses (van Hateren et al., 2005).
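The geometric argument behind this explanation can be illustrated with a small worked example. It relies only on the standard optic flow relation that the translational component of retinal velocity scales with speed divided by distance, whereas the rotational component is independent of distance. The function name and all numerical values (forward speed, residual yaw velocity, wall distances) are hypothetical and chosen for illustration only; they are not parameters of the model. The sketch further assumes a residual turn toward the side of the eye considered.

```python
import math


def back_to_front_flow(speed_m_s, yaw_rad_s, distance_m, azimuth_deg=90.0):
    """Signed retinal velocity (rad/s) of a stationary point; positive = back-to-front.

    Forward translation moves the point from front to back at
    (speed / distance) * sin(azimuth); a yaw turn toward the side of the
    point moves it from back to front at the yaw rate, whatever its distance.
    """
    translational = speed_m_s / distance_m * math.sin(math.radians(azimuth_deg))
    return yaw_rad_s - translational


# Hypothetical values: 1 m/s forward speed, 2 rad/s residual yaw velocity.
close_wall = back_to_front_flow(1.0, 2.0, distance_m=0.2)    # net front-to-back flow
distant_wall = back_to_front_flow(1.0, 2.0, distance_m=1.0)  # net back-to-front flow
```

In this toy setting, the same residual rotation is masked by translational flow when the wall is close, but dominates when the wall is distant, which is the sign change that would release a back-to-front selective cell such as H1 from inhibition.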

Hu.

The Hu cell, as an inhibitory input element of vCH, contributes to the large vCH response levels if the background is distant. Hu has a preferred direction from front to back. In comparison with the close background conditions, the smaller overall optic flow under the distant background conditions leads to lower intersaccadic response levels (Figure 6H). As a consequence, vCH is then less inhibited, contributing to its large response amplitude.

Object and texture preference of the circuit elements

The FD1 model reflects the characteristic properties of the biological FD1 cell for a wide variety of experimentally tested conditions and, in particular, for naturalistic flight conditions. We now tested the model with a novel protocol by systematically varying the background texture during straight flight sequences to investigate in more detail the object specificity of the FD1 cell. We used straight translational flights to approximate the intersaccadic flight intervals, which are thought to be most important for gathering information about the 3D-structure of the environment (see above).

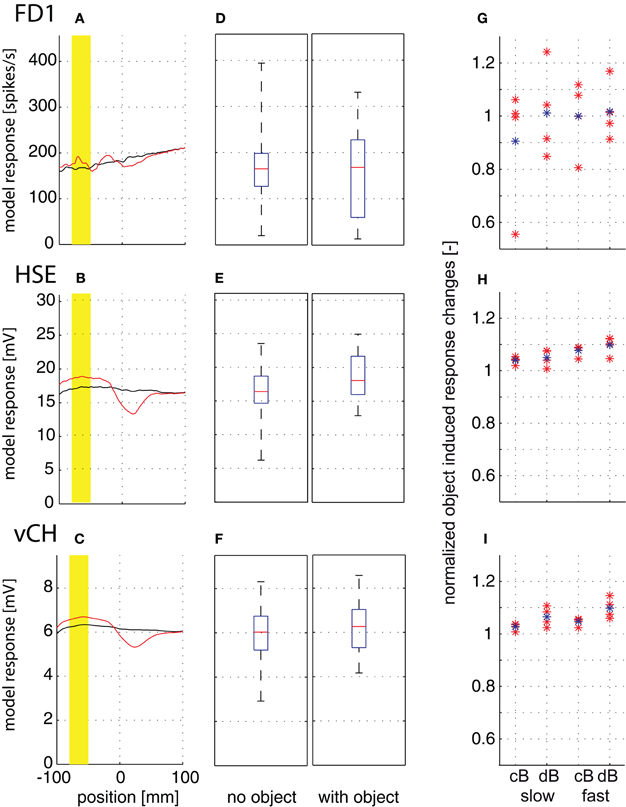

If the FD1 cell plays a dominant role in object detection, it is expected to indicate the presence of an object by an increase in its response amplitude. To test this hypothesis, we let the model pass an object on a straight flight trajectory at two velocities (0.5 m/s and 1.0 m/s). A vertical cylindrical object was placed at a small distance from the flight trajectory. The object was textured with a section of the wall texture. The flights were repeated for all four virtual arena conditions (cB, cBO, dB, and dBO) and with different wall textures. We addressed the following questions. Do responses with and without the object differ? Is it possible to infer the existence of an object unambiguously from the model response? How are the responses of other cells of the circuit related to those of FD1?

The response amplitudes of all model cells tested depend on the position along the movement trajectory, and change if there is an object close to the trajectory (Figures 7A–C; yellow mark indicates a 30 mm interval while the object moves through the center of the cells' receptive fields). The response traces with the object are complex: the response amplitudes are larger than those without the object when the object passes the cells' receptive field center, but may be smaller when the object moves in the rear part of the visual field. The object-induced responses of the different model cells were averaged over the marked 30 mm interval in two ways for quantification. (1) To assess whether an object induces larger responses than the background, irrespective of object velocity and background distance and texture, we calculated the median, the quartiles, and the ranges of the responses across all flight conditions without and with the object. The response ranges with and without the object are very broad and overlap considerably for all cell types and in particular for FD1 (Figures 7D–F). This finding indicates that it is hardly possible to infer unambiguous information about a nearby object from the responses of FD1 and of its presynaptic elements across environmental conditions. (2) We determined the object-induced response changes separately for the slow and fast translation velocities, the two arena sizes and the four pattern conditions (Figures 7G–I) to assess whether response changes evoked by a nearby object depend on the environmental conditions. The mean object-induced responses were normalized to the corresponding mean responses generated without the object. All cell types showed object-induced response changes. Surprisingly, they appeared to be more pronounced in HSE and vCH than in FD1, at least for the conditions tested. Object-induced response increments in HSE and vCH may increase slightly with background distance (Figures 7H,I). 
It is most obvious, however, that the FD1 responses in particular depend strongly on the wall texture (Figure 7G). This texture dependence obliterates any potential object preference. The pattern dependence of HSE and vCH responses was much smaller than that of FD1 (Figures 7H,I). We can conclude that none of the cells analyzed, and in particular not the FD1 cell, unambiguously signals a nearby object. Rather, the responses, especially those of FD1, seem to be greatly affected by the textural properties of the environment.
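The two quantifications described above can be sketched as follows. This is a minimal illustration, not the analysis code of the study: the function names are hypothetical, the quartile convention is an arbitrary choice, and the object-induced response change is assumed here to be the ratio of the mean responses with and without the object, since the text only states that the former were normalized to the latter.

```python
from statistics import mean, quantiles


def five_number_summary(values):
    """Range, quartiles, and median of responses pooled across flight conditions."""
    q1, med, q3 = quantiles(values, n=4, method="inclusive")
    return {"min": min(values), "q1": q1, "median": med, "q3": q3, "max": max(values)}


def ranges_overlap(with_object, without_object):
    """True if the response ranges with and without the object overlap."""
    return (min(with_object) <= max(without_object)
            and min(without_object) <= max(with_object))


def object_induced_change(with_object, without_object):
    """Mean response with the object relative to the mean response without it.

    Assumption: a ratio, so that values above 1 indicate a response increment.
    """
    return mean(with_object) / mean(without_object)
```

Overlapping ranges across conditions mean that a single response level cannot be assigned unambiguously to the presence or absence of the object, even if the ratio is above 1 for each individual condition.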

Figure 7. Object-induced response changes. Responses to straight flight sequences. The fly crossed the virtual flight arena parallel to and at equal distance from the side walls and the floor. A vertical cylindrical object (4 mm) was placed at a small distance from the flight trajectory. (A–C) Position-dependent response traces of FD1 (A), HSE (B), and vCH (C) while passing the object at 1 m/s in the small arena (cB) for one texture condition. Response traces without object are plotted in black, and those with object in red. Position 0 is defined as the fly's position on the trajectory with the object at 90° in the lateral visual field. The yellow bar marks a flight interval of 30 mm length during which the object moved through the most sensitive part of the cells' receptive fields. (D–F) Median, quartiles, and range of the responses with object (left) and without object (right), averaged over the 30 mm interval and taken across the two velocities, the four textures and both arena sizes, to assess whether the object leads to larger responses than the background across a wide range of conditions. Model responses of FD1 (D), HSE (E), and vCH (F). (G–I) Object-induced response changes at a velocity of 0.5 m/s (left) and 1 m/s (right), averaged over the 30 mm interval and normalized to the corresponding mean responses in the same interval without object. The object-induced response changes of FD1 (G), HSE (H), and vCH (I) are given separately for each texture condition (red dots) and averaged over all texture conditions (blue dots).

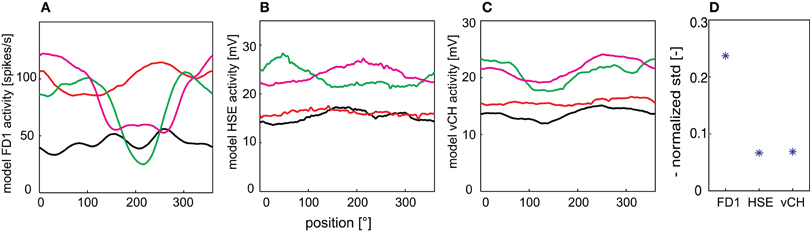

To analyze the texture dependence of the elements of the FD1 network systematically, we isolated the texture influence from the influence of objects, edges and looming walls by placing the model in the center of a cylindrical arena which was covered with the same textures as those used for the walls in the other simulations. We horizontally extended each texture by its mirrored version to prevent edges from changing the local texture statistics. The FD1 responses were characterized by an extreme pattern dependence: they differed not only between differently patterned drums, but also with drum position (Figure 8A). HSE and vCH also revealed pattern dependences, but at a considerably lower level (Figures 8B,C). To quantify this pattern dependence, we calculated the mean normalized standard deviation of the position-dependent fluctuations of the time-dependent responses to the different textures (Figure 8D). As expected from the position-dependent response traces, the relative pattern-dependent fluctuations were also much larger for the FD1 model cell than for its two presynaptic elements.
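The fluctuation measure can be sketched in a few lines. This is an illustration under assumptions rather than the authors' implementation: the function names are hypothetical, the standard deviation of each response trace is assumed to be normalized by the mean of that trace (which must be positive), and the population standard deviation is used because the text does not specify the estimator.

```python
from statistics import mean, pstdev


def normalized_sd(trace):
    """Standard deviation of a response trace relative to its mean level."""
    return pstdev(trace) / mean(trace)


def mean_normalized_sd(traces_by_texture):
    """Normalized standard deviation averaged over all texture conditions."""
    return mean(normalized_sd(trace) for trace in traces_by_texture.values())
```

A cell whose response stays constant while the drum rotates yields a value of 0; the larger the value, the more strongly the response fluctuates with the pattern relative to its mean level.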

Figure 8. Pattern-dependent response fluctuations. Responses of the FD1 (A), HSE (B), and vCH (C) model to a drum rotating at a velocity of 360°/s around the model. (D) The normalized standard deviation, averaged over the four different texture conditions, is given for all model cells to quantify the fluctuations of the model responses.

Discussion

We developed a model of the FD1 cell, an identified motion-sensitive interneuron in the blowfly's visual system, and of its major presynaptic elements. These presynaptic elements are responsible for the most characteristic property of the FD1 cell: its larger responses to objects than to spatially extended patterns (Egelhaaf, 1985a,b,c; Warzecha et al., 1993; Hennig et al., 2008). Even the responses to dynamically complex naturalistic stimuli, shaped by the saccadic flight and gaze strategy of blowflies, are enhanced when an object crosses the receptive field during intersaccadic intervals compared to the same stimuli without an object (Liang et al., 2012). Our model of the FD1 circuit shares all these properties. However, it also revealed that the object-induced response increments, especially in the FD1 cell, are superimposed by pronounced pattern-dependent fluctuations. Thus, at first sight, this texture dependence may raise doubts about the ability of FD1 cells to signal the existence of nearby objects in textured environments.

Predictive power for naturalistic stimulation conditions

None of the former modeling studies on FD1 tried to mimic the cell's characteristic properties during naturalistic stimulation. They rather targeted object-related features with highly simplified models and artificial stimulation (Egelhaaf, 1985c; Borst and Egelhaaf, 1993; Hennig et al., 2008). In contrast, we used more naturalistic stimulation based on the blowfly's flight behavior and, in particular, took into account the typical dynamical properties of the saccadic flight and gaze strategy. Moreover, our model was less abstract than the previous ones: it takes into account the inhibition of FD1 via the vCH cell, as well as major parts of its experimentally-established input circuitry, including the complex receptive fields and synaptic interactions of the cells involved.

Our model FD1 shares major properties of its biological counterpart for artificial as well as for naturalistic stimulation. Despite differences between the details of the time course of the responses to naturalistic optic flow of the model and the biological FD1 cell, as well as of its presynaptic elements, the model circuit is well able to mimic the distinguishing qualitative features of the average intersaccadic responses of all cell types analyzed. These features include object-induced response increments, as well as the dependence of the responses on the three-dimensional layout of the environment (Figure 6).

The current model of the FD1 circuit has one major limitation, since it was adjusted only to the luminance and contrast conditions of the experiments that led to the neural data used to adjust the model parameters (Lindemann et al., 2005; Hennig et al., 2011; Liang et al., 2012). Before using the model as a sensory module in a comprehensive fly model operating under a broader range of environmental conditions, it needs to also account for the nonlinear contrast processing and adaptive processes in the peripheral visual system (Laughlin and Hardie, 1978; Laughlin, 1989; French et al., 1993). Models of peripheral visual information processing were implemented in previous studies simulating the responses to patterns with a natural range of luminance and contrast (van Hateren and Snippe, 2001; Mah et al., 2008). When integrated into models of LPTCs or of cells which are sensitive to extremely small objects (STMDs), they enabled these models to perform under a wide range of luminance and contrast conditions (Egelhaaf and Borst, 1989; Shoemaker et al., 2005; Wiederman et al., 2008; Brinkworth et al., 2008; Brinkworth and O'Carroll, 2009; Wiederman et al., 2010; Meyer et al., 2011).

Object detection and distance coding

In previous studies, the preference of FD1 for objects led to the interpretation that this cell may be able to detect objects even if they had the same texture as their background and could only be discriminated by relative motion cues (Egelhaaf, 1985a,c; Kimmerle et al., 1997; Kimmerle and Egelhaaf, 2000a; Kimmerle et al., 2000). Further studies revealed that the inhibitory input of the FD1 cell provided by the vCH cell is, in fact, capable of producing this object preference (Borst and Egelhaaf, 1993; Warzecha et al., 1993; Hennig et al., 2008). On this basis, it has been generally concluded that the FD1 cells play a role in detecting stationary objects which might be used as landing sites (Kimmerle et al., 1996) or which are obstacles in the fly's flight path. Such stationary objects might be detected by the FD1 cell, because during locomotion their retinal images move relative to that of the background. A study of Higgins and Pant (2004) showed that an inhibitory network similar to the input circuit of the FD1 cell is also able to mediate target tracking. However, it is very unlikely that the FD1 cell is involved in tracking moving targets (see below).

Since object detection on the basis of relative motion cues during translatory locomotion depends, to a large extent, on both the distance of the animal to the object and to the background and, thus, on discontinuities in the spatial layout of the environment, it is not surprising that the intersaccadic neural and model responses are distance-dependent. Owing to the peculiar increase in intersaccadic response amplitude of the vCH cell, the FD1 cell responds much less to background motion and, thus, is able to discriminate an object better when the background is distant than when it is close.

Despite the pattern dependence of the model responses, the three-dimensional structure of the environment, including objects, influences the FD1 cell, as well as the other elements of the network, though in quite different ways. The intersaccadic response level revealed clear object-induced effects in different environments, but the response level also depends on the distance to the background (Figure 6). In contrast to the responses of its presynaptic elements, the FD1 responses strongly depend on both background distance and the presence of objects. FD1 responds with large amplitudes if the background or an object is close. This might hint at a distance encoding independent of object size. However, the pronounced texture dependence of the FD1 response will presumably prevent an unambiguous performance in distance encoding.

This ambiguity has been further corroborated by testing the model with targeted translational flights. Under these conditions, the model FD1 does not respond with a clear activity increase to an object close to a straight trajectory. In our model simulations, even the HSE and vCH showed an object-induced response increment, although these do not show preferences for objects when their responses to an object and a spatially extended pattern are compared (Figure 4). We could show that one reason for the ambiguous object detection properties of the different cell types is the characteristic pattern dependence of their responses. These findings raise doubt about the FD1 cell representing an unambiguous detector of spatially salient objects.

Pattern dependent response fluctuations

Environmental texture strongly affects the responses of the model FD1 cell (Figure 8). Object-induced response changes that are induced by relative motion cues resulting from discontinuities in the spatial layout of the environment may be much less conspicuous than changes in the time course of the responses induced by textural features. This also holds true for other elements of the network. The texture-induced response changes of HSE and vCH are much smaller than those of the FD1 cell, but they might still be stronger than the changes induced by spatially salient objects.

Pronounced texture-dependent response fluctuations have long been known to occur in fly LPTCs. If a textured image moves at a constant velocity across the receptive field of LPTCs or of models mimicking their properties, the response amplitude is usually not constant, but may modulate over time in a pattern-dependent fashion (Figure 8; Egelhaaf et al., 1989; Shoemaker et al., 2005; Rajesh et al., 2006; Brinkworth and O'Carroll, 2009; Meyer et al., 2011; O'Carroll et al., 2011). Because of these modulations, the time course of pattern velocity cannot easily be inferred from such neuronal signals. Therefore, these modulations have been referred to in some studies as “pattern noise,” because they deteriorate the neuron's ability to provide unambiguous velocity information (Shoemaker et al., 2005; Rajesh et al., 2006; Brinkworth and O'Carroll, 2009; O'Carroll et al., 2011). This limitation of representing unambiguous velocity information is also reflected in the limited ability of the FD1 cell to detect an object based on relative motion cues, as characterized in the present study.

Why are the pattern-dependent response fluctuations more prominent in FD1 than in the other LPTCs that are part of its input circuitry? The excitatory receptive field of FD1 is smaller than that of the inhibitory vCH and slightly shifted in its sensitivity maximum (Egelhaaf, 1985b; Egelhaaf et al., 1993; Krapp et al., 2001). The shift between the sensitivity maxima of the two cells leads to a phase shift of the response to pattern elements moving through the receptive fields. Moreover, the larger receptive field of vCH leads to stronger blurring of the pattern-dependent response fluctuations than in the FD1 cell. This blurring is further enhanced by the dendrodendritic interactions between the vCH and the HSE cells (Cuntz et al., 2003; Hennig et al., 2008). Thus, the mechanism which accounts for the FD1 cell being more sensitive to objects than to spatially extended patterns is also responsible for its sensitivity to the textural properties of the environment.

This finding extends the conclusion drawn in a recent modeling study with respect to pattern-dependent response fluctuations of LPTCs, such as HSE, that just spatially pool the outputs of local movement detectors (Meyer et al., 2011): large receptive fields blur pattern-dependent response fluctuations and, thus, improve the quality of velocity signals; however, they do this at the expense of the locatability of these signals in the visual field. Hence, if motion signals, e.g., originating from an object, need to be localized by a neuron, its receptive field should be sufficiently small; then, however, velocity coding is only poor and the signal provides local pattern information. This trade-off indicates that the size and geometry of receptive fields should be adjusted according to the particular task of the motion-sensitive neuron: they should be large if good velocity signals are required, but should be relatively small if motion-dependent pattern information is required that can be localized in the visual field (Meyer et al., 2011). Note that for a neuron that is to encode spatial information on the basis of optic flow elicited during translatory self-motion, good velocity signals are essential and, thus, a large receptive field is beneficial. However, a neuron such as FD1 that is destined by its input circuitry to represent object information can hardly have a very large receptive field. Therefore, it is almost inevitable that it also reveals pronounced pattern-dependent fluctuations in its responses.
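The trade-off between blurring and locatability can be made concrete with a toy simulation. It is a deliberately simplified sketch and not the model used in this study: local movement detector outputs are replaced by a hypothetical random signal with one value per degree of azimuth, and a receptive field is approximated by uniform (boxcar) pooling over neighbouring positions. All names and numbers are assumptions chosen for illustration.

```python
import random
from statistics import mean, pstdev


def pooled_trace(local_signal, width):
    """Circular boxcar pooling of local signals over `width` neighbouring positions."""
    n = len(local_signal)
    return [sum(local_signal[(i + k) % n] for k in range(width)) / width
            for i in range(n)]


def relative_fluctuation(trace):
    """Standard deviation of a trace relative to its mean level."""
    return pstdev(trace) / mean(trace)


rng = random.Random(0)  # fixed seed so that the example is reproducible
# Hypothetical pattern-dependent local signals, one value per degree of azimuth.
local = [1.0 + rng.uniform(-0.5, 0.5) for _ in range(360)]

small_field = pooled_trace(local, 10)  # 10 degree wide pooling window
large_field = pooled_trace(local, 90)  # 90 degree wide pooling window

# A single strongly activated position, standing in for a small object.
object_only = [0.0] * 360
object_only[180] = 9.0
peak_small = max(pooled_trace(object_only, 10))
peak_large = max(pooled_trace(object_only, 90))
```

In this sketch the wide pooling window yields the smoother trace, i.e., the smaller relative fluctuation, but it also dilutes the contribution of the single activated position far more than the narrow window does, which mirrors the argument that a cell cannot combine a smooth velocity signal with a well-localized object signal.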

Object induced behavior and potential functional significance of the FD1 circuit

Are such pattern-dependent response fluctuations inevitably a disadvantage for a neuron that is meant to represent object information? The answer to this question depends strongly on how “object information” is defined. In most experiments on object detection in flies and, in particular, on the FD1 cell, the discontinuities in the three-dimensional layout of the environment, such as a nearby structure that leads to relative motion cues on the retina during locomotion, have been regarded as objects. However, if we also regard any pronounced textural discontinuity in the environment as defining an object that can somehow be distinguished from other parts of the environment, texture-dependent response modulations of an FD1 cell also reflect object information. In such a conceptual framework, these kinds of object responses could also be of functional significance for behavioral control. In other words, the input circuit of the FD1 cell could then be interpreted as a means to enhance the sensitivity of the cell to all kinds of spatial discontinuities in the environment: discontinuities in the three-dimensional layout, and also in pattern properties.

Nonetheless, it is hardly possible to infer from the FD1 response the nature of the environmental discontinuity detected and, therefore, of the object that is somehow signaled by the cell, since a given response level cannot be interpreted unambiguously and may result from different types of environmental features. This problem might be resolved by population coding. The ensemble of cells involved in object-dependent behavior presumably includes cells in addition to FD1. Other LPTCs that reside in the blowfly lobula plate also respond preferentially to objects, but have different preferred motion directions and receptive field properties (Egelhaaf, 1985b; Gauck and Borst, 1999). Still other LPTCs, such as HSE, have been proposed to encode translational motion and, thus, information about spatial parameters during the intersaccadic intervals (Kern et al., 2005; Karmeier et al., 2006). None of them seems on its own to provide unambiguous information about any environmental parameter. Hence, for most situations in the life of a fly, an ensemble of neurons might be essential to encode information about objects in cluttered surroundings.

In most cases when a collision with objects in a fly's natural environment needs to be avoided, the objects are stationary and may vary tremendously in size and shape. The same is true for objects that serve as landing sites. In contrast, when hunting prey or chasing after a potential mate, insects are required to detect and pursue extremely small moving objects. In the visual system of dragonflies and several fly species, specialized neurons are thought to play a role in such tasks (Olberg, 1981, 1986; Gilbert and Strausfeld, 1991; Strausfeld, 1991; Wachenfeld, 1994; Nordström et al., 2006; Nordström and O'Carroll, 2006; Barnett et al., 2007; Geurten et al., 2007; Trischler et al., 2007). These neurons differ tremendously from the FD1 cell: they are highly selective to objects that are smaller than the interommatidial angle, even if they move in front of a cluttered background. These properties presumably play a role in predatory behavior or in the chasing of mates (Collett and Land, 1975; Wehrhahn, 1979; Wehrhahn et al., 1982; Zeil, 1983; Olberg et al., 2000; Boeddeker et al., 2003; Trischler et al., 2010). Pursuit, especially in the case of mating behavior, is thought to be mediated by male-specific visual neurons sensitive to small targets (Gilbert and Strausfeld, 1991; Strausfeld, 1991; Wachenfeld, 1994; Trischler et al., 2007), but not by the FD1 cell, which has been characterized in females and is not sufficiently sensitive to extremely small objects.

Conclusions

Our model FD1 circuit is similar in its structure to its biological counterpart and mimics its characteristic response properties, which led in previous studies to the conclusion that the FD1 cell represents a kind of object detector. Systematic variations of the three-dimensional environment in virtual test flights of a model fly suggest that neither FD1 nor other cells of its presynaptic network are able to unambiguously detect objects, i.e., objects that are defined by discontinuities in the three-dimensional layout of the environment and, thus, move relative to their background on the retina of a translating animal. Rather, the FD1 responses are also affected by the textural features of the surroundings. Whether the FD1 cell is a detector for more general objects encompassing, for example, objects defined by spatial discontinuities, as well as by textural features, remains to be analyzed. However, the different response characteristics and the ambiguous detection abilities of each single cell of the circuit analyzed suggest an encoding of information about objects, irrespective of the features by which they are defined, by a population of cells. The FD1 cell presumably plays a prominent role in such a cell ensemble because of its ability to respond to objects defined by spatial and textural discontinuities.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We are grateful to Diana Rien for critically reading drafts of the paper and making helpful suggestions, to Jens Lindemann and Ralf Möller for contributing software, and to Hans van Hateren, Jochen Heitwerth, Pei Liang, and Roland Kern for providing experimental data. This study was supported by the Deutsche Forschungsgemeinschaft (DFG).

References

Barnett, P. D., Nordström, K., and O'Carroll, D. C. (2007). Retinotopic organization of small-field-target-detecting neurons in the insect visual system. Curr. Biol. 17, 569–578.

Boeddeker, N., Dittmar, L., Stürzl, W., and Egelhaaf, M. (2010). The fine structure of honeybee head and body yaw movements in a homing task. Proc. R. Soc. B 277, 1899–1906.

Boeddeker, N., Kern, R., and Egelhaaf, M. (2003). Chasing a dummy target: smooth pursuit and velocity control in male blowflies. Proc. R. Soc. Lond. B 270, 393–399.

Borst, A., and Egelhaaf, M. (1993). “Processing of synaptic signals in fly visual interneurons selectively responsive to small moving objects,” in Brain Theory – Spatio-temporal Aspects of Brain Function, eds A. Aertsen and W. Seelen (Amsterdam: Elsevier), 47–66.

Borst, A., Reisenman, C., and Haag, J. (2003). Adaptation of response transients in fly motion vision. II: Model studies. Vis. Res. 43, 1309–1322.

Braun, E., Geurten, B., and Egelhaaf, M. (2010). Identifying prototypical components in behaviour using clustering algorithms. PLoS One 5:e9361. doi: 10.1371/journal.pone.0009361

Brinkworth, R. S. A., Mah, E.-L., Gray, J. P., and O'Carroll, D. C. (2008). Photoreceptor processing improves salience facilitating small target detection in cluttered scenes. J. Vis. 8, 1–17.

Brinkworth, R. S. A., and O'Carroll, D. C. (2009). Robust models for optic flow coding in natural scenes inspired by insect biology. PLoS Comput. Biol. 5:e1000555. doi: 10.1371/journal.pcbi.1000555

Collett, T., and Land, M. (1975). Visual control of flight behaviour in the hoverfly Syritta pipiens L. J. Comp. Physiol. 99, 1–66.

Cuntz, H., Haag, J., and Borst, A. (2003). Neural image processing by dendritic networks. Proc. Natl. Acad. Sci. U.S.A. 100, 11082–11085.

Dahmen, H. J., Wüst, R. M., and Zeil, J. (1997). “Extracting egomotion parameters from optic flow: principal limits for animals and machines,” in From Living Eyes to Seeing Machines, eds M. V. Srinivasan and S. Venkatesh (Oxford, NY: Oxford University Press), 174–198.

Dittmar, L., Stürzl, W., Baird, E., Boeddeker, N., and Egelhaaf, M. (2010). Goal seeking in honeybees: matching of optic flow snapshots? J. Exp. Biol. 213, 2913–2923.

Eckert, H., and Dvorak, D. R. (1983). The centrifugal horizontal cells in the lobula plate of the blowfly, Phaenicia sericata. J. Insect Physiol. 29, 547–560.

Eckert, M. P., and Zeil, J. (2001). “Towards an ecology of motion vision,” in Motion Vision: Computational, Neural, and Ecological Constraints, eds J. M. Zanker and J. Zeil (Berlin, Heidelberg, New York: Springer), 333–369.

Egelhaaf, M. (1985a). On the neuronal basis of figure-ground discrimination by relative motion in the visual system of the fly. I. Behavioural constraints imposed on the neuronal network and the role of the optomotor system. Biol. Cybern. 52, 123–140.

Egelhaaf, M. (1985b). On the neuronal basis of figure-ground discrimination by relative motion in the visual system of the fly. II. Figure-detection cells, a new class of visual interneurones. Biol. Cybern. 52, 195–209.

Egelhaaf, M. (1985c). On the neuronal basis of figure-ground discrimination by relative motion in the visual system of the fly. III. Possible input circuitries and behavioural significance of the FD-cells. Biol. Cybern. 52, 267–280.

Egelhaaf, M. (2006). “The neural computation of visual motion information,” in Invertebrate Vision, eds E. Warrant and D. E. Nilsson (Cambridge, MA: Cambridge University Press), 399–461.

Egelhaaf, M., and Borst, A. (1989). Transient and steady-state response properties of movement detectors. J. Opt. Soc. Am. A 6, 116–127.

Egelhaaf, M., and Borst, A. (1993). Motion computation and visual orientation in flies. Comp. Biochem. Physiol. Comp. Physiol. 104, 659–673.

Egelhaaf, M., Borst, A., and Reichardt, W. (1989). Computational structure of a biological motion-detection system as revealed by local detector analysis in the fly's nervous system. J. Opt. Soc. Am. A 6, 1070–1087.

Egelhaaf, M., Borst, A., Warzecha, A. K., Flecks, S., and Wildemann, A. (1993). Neural circuit tuning fly visual neurons to motion of small objects. II. Input organization of inhibitory circuit elements revealed by electrophysiological and optical recording techniques. J. Neurophysiol. 69, 340–351.

Exner, S., and Hardie, R. (1989). The Physiology of the Compound Eyes of Insects and Crustaceans: A Study. Berlin, Heidelberg, New York: Springer-Verlag.

French, A. S., Korenberg, M. J., Järvilehto, M., Kouvalainen, E., Juusola, M., and Weckström, M. (1993). The dynamic nonlinear behavior of fly photoreceptors evoked by a wide range of light intensities. Biophys. J. 65, 832–839.

Gauck, V., and Borst, A. (1999). Spatial response properties of contralateral inhibited lobula plate tangential cells in the fly visual system. J. Comp. Neurol. 406, 51–71.

Geurten, B., Kern, R., Braun, E., and Egelhaaf, M. (2010). A syntax of hoverfly flight prototypes. J. Exp. Biol. 213, 2461–2475.

Geurten, B. R. H., Nordström, K., Sprayberry, J. D. H., Bolzon, D. M., and O'Carroll, D. C. (2007). Neural mechanisms underlying target detection in a dragonfly centrifugal neuron. J. Exp. Biol. 210, 3277–3284.

Gilbert, C., and Strausfeld, N. J. (1991). The functional organization of male-specific visual neurons in flies. J. Comp. Physiol. A 169, 395–411.

Haag, J., and Borst, A. (2001). Recurrent network interactions underlying flow-field selectivity of visual interneurons. J. Neurosci. 21, 5685–5692.

Hausen, K. (1976). Functional characterization and anatomical identification of motion sensitive neurons in the lobula plate of the blowfly Calliphora erythrocephala. Z. Naturforsch. 31c, 629–633.

Hausen, K. (1982). Motion sensitive interneurons in the optomotor system of the fly. II. The horizontal cells: receptive field organization and response characteristics. Biol. Cybern. 46, 67–79.

Hausen, K. (1984). “The lobula-complex of the fly: structure, function and significance in visual behaviour,” in Photoreception and Vision in Invertebrates, ed M. A. Ali (New York: Plenum Press), 523–559.

Hennig, P., Kern, R., and Egelhaaf, M. (2011). Binocular integration of visual information: a model study on naturalistic optic flow processing. Front. Neural Circuits 5:4. doi: 10.3389/fncir.2011.00004

Hennig, P., Möller, R., and Egelhaaf, M. (2008). Distributed dendritic processing facilitates object detection: a computational analysis on the visual system of the fly. PLoS One 3:e3092. doi: 10.1371/journal.pone.0003092

Higgins, C. M., and Pant, V. (2004). An elaborated model of fly small-target tracking. Biol. Cybern. 91, 417–428.

Juusola, M., Weckström, M., Uusitalo, R. O., Korenberg, M. J., and French, A. S. (1995). Nonlinear models of the first synapse in the light-adapted fly retina. J. Neurophysiol. 74, 2538–2547.

Karmeier, K., van Hateren, J. H., Kern, R., and Egelhaaf, M. (2006). Encoding of naturalistic optic flow by a population of blowfly motion-sensitive neurons. J. Neurophysiol. 96, 1602–1614.

Kern, R., Egelhaaf, M., and Srinivasan, M. V. (1997). Edge detection by landing honeybees: behavioural analysis and model simulations of the underlying mechanism. Vis. Res. 37, 2103–2117.

Kern, R., van Hateren, J. H., Michaelis, C., Lindemann, J. P., and Egelhaaf, M. (2005). Function of a fly motion-sensitive neuron matches eye movements during free flight. PLoS Biol. 3:e171. doi: 10.1371/journal.pbio.0030171

Kimmerle, B., Eickermann, J., and Egelhaaf, M. (2000). Object fixation by the blowfly during tethered flight in a simulated three-dimensional environment. J. Exp. Biol. 203, 1723–1732.

Kimmerle, B., and Egelhaaf, M. (2000a). Detection of object motion by a fly neuron during simulated flight. J. Comp. Physiol. A 186, 21–31.

Kimmerle, B., and Egelhaaf, M. (2000b). Performance of fly visual interneurons during object fixation. J. Neurosci. 20, 6256–6266.

Kimmerle, B., Srinivasan, M., and Egelhaaf, M. (1996). Object detection by relative motion in freely flying flies. Naturwiss 83, 380–381.

Kimmerle, B., Warzecha, A., and Egelhaaf, M. (1997). Object detection in the fly during simulated translatory flight. J. Comp. Physiol. A 181, 247–255.

Krapp, H. G., Hengstenberg, R., and Egelhaaf, M. (2001). Binocular contributions to optic flow processing in the fly visual system. J. Neurophysiol. 85, 724–734.

Lappe, M. (ed). (2000). Neuronal Processing of Optic Flow. San Diego, San Francisco, New York: Academic Press.

Laughlin, S., and Hardie, R. (1978). Common strategies for light adaptation in the peripheral visual systems of fly and dragonfly. J. Comp. Physiol. A 128, 319–340.

Laughlin, S., and Osorio, D. (1989). Mechanisms for neural signal enhancement in the blowfly compound eye. J. Exp. Biol. 144, 113–146.

Lehrer, M., and Srinivasan, M. (1993). Object detection by honeybees: why do they land on edges? J. Comp. Physiol. 173, 23–32.

Lehrer, M., Srinivasan, M., Zhang, S., and Horridge, G. (1988). Motion cues provide the bee's visual world with a third dimension. Nature 332, 356–357.

Liang, P., Heitwerth, J., Kern, R., Kurtz, R., and Egelhaaf, M. (2012). Visual object detection and distance encoding in three-dimensional environments by a neuronal circuit of the blowfly. J. Neurophysiol. (in press)

Lindemann, J. P., Kern, R., van Hateren, J. H., Ritter, H., and Egelhaaf, M. (2005). On the computations analyzing natural optic flow: quantitative model analysis of the blowfly motion vision pathway. J. Neurosci. 25, 6435–6448.

Mah, E. L., Brinkworth, R. S., and O'Carroll, D. C. (2008). Implementation of an elaborated neuromorphic model of a biological photoreceptor. Biol. Cybern. 98, 357–369.

Meyer, G. H., Lindemann, J. P., and Egelhaaf, M. (2011). Pattern-dependent response modulations in motion-sensitive visual interneurons – a model study. PLoS One 6:e21488. doi: 10.1371/journal.pone.0021488

Nordström, K., Barnett, P. D., and O'Carroll, D. (2006). Insect detection of small targets moving in visual clutter. PLoS Biol. 4:e54. doi: 10.1371/journal.pbio.0040054

Nordström, K., and O'Carroll, D. C. (2006). Small object detection neurons in female hoverflies. Proc. Biol. Sci. 273, 1211–1216.

O'Carroll, D. C., Barnett, P. D., and Nordström, K. (2011). Local and global responses of insect motion detectors to the spatial structure of natural scenes. J. Vis. 11, 1–17.

Olberg, R. M. (1981). Object- and self-movement detectors in the ventral nerve cord of the dragonfly. J. Comp. Physiol. 141, 327–334.

Olberg, R. M. (1986). Identified target-selective visual interneurons descending from the dragonfly brain. J. Comp. Physiol. 159, 827–840.

Olberg, R. M., Worthington, A. H., and Venator, K. R. (2000). Prey pursuit and interception in dragonflies. J. Comp. Physiol. A 186, 155–162.

Petrowitz, R., Dahmen, H., Egelhaaf, M., and Krapp, H. G. (2000). Arrangement of optical axes and spatial resolution in the compound eye of the female blowfly Calliphora. J. Comp. Physiol. A 186, 737–746.

Price, K. V. (1999). “An introduction to differential evolution,” in New Ideas in Optimization, eds D. Corne, M. Dorigo, F. Glover, D. Dasgupta, and P. Moscato (Maidenhead, UK: McGraw-Hill Ltd.), 79–108.

Rajesh, S., Rainsford, T., Brinkworth, R. S., Abbott, D., and O'Carroll, D. C. (2006). Implementation of saturation for modelling pattern noise using naturalistic stimuli. Proc. SPIE 6414, 641424.

Schilstra, C., and van Hateren, J. H. (1999). Blowfly flight and optic flow. I. Thorax kinematics and flight dynamics. J. Exp. Biol. 202, 1481–1490.

Shoemaker, P. A., O'Carroll, D. C., and Straw, A. D. (2005). Velocity constancy and models for wide-field visual motion detection in insects. Biol. Cybern. 93, 275–287.

Spalthoff, C., Egelhaaf, M., Tinnefeld, P., and Kurtz, R. (2010). Localized direction selective responses in the dendrites of visual interneurons of the fly. BMC Biol. 8, 36.

Srinivasan, M. (1993). “How insects infer range from visual motion,” in Visual Motion and its Role in the Stabilization of Gaze, eds F. A. Miles and J. Wallman (Amsterdam: Elsevier Science Publisher B.V), 139–156.

Srinivasan, M. V., Lehrer, M., Zhang, S. W., and Horridge, G. A. (1989). How honeybees measure their distance from objects of unknown size. J. Comp. Physiol. A 165, 605–613.

Storn, R., and Price, K. (1997). Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces. J. Global Optim. 11, 341–359.

Strausfeld, N. (1991). Structural organization of male-specific visual neurons in calliphorid optic lobes. J. Comp. Physiol. A 169, 379–393.

Trischler, C., Boeddeker, N., and Egelhaaf, M. (2007). Characterisation of a blowfly male-specific neuron using behaviourally generated visual stimuli. J. Comp. Physiol. A 193, 559–572.

Trischler, C., Kern, R., and Egelhaaf, M. (2010). Chasing behaviour and optomotor following in free-flying male blowflies: flight performance and interactions of the underlying control systems. Front. Behav. Neurosci. 4:20. doi: 10.3389/fnbeh.2010.00020

Tammero, L. F., and Dickinson, M. H. (2002). The influence of visual landscape on the free flight behavior of the fruit fly Drosophila melanogaster. J. Exp. Biol. 205, 327–343.

van Hateren, J. H., and Schilstra, C. (1999). Blowfly flight and optic flow. II. Head movements during flight. J. Exp. Biol. 202, 1491–1500.

van Hateren, J. H., and Snippe, H. P. (2001). Information theoretical evaluation of parametric models of gain control in blowfly photoreceptor cells. Vis. Res. 41, 1851–1865.

van Hateren, J. H., Kern, R., Schwerdtfeger, G., and Egelhaaf, M. (2005). Function and coding in the blowfly H1 neuron during naturalistic optic flow. J. Neurosci. 25, 4343–4352.

Wachenfeld, A. (1994). Elektrophysiologische Untersuchungen und funktionelle Charakterisierung männchenspezifischer visueller Interneurone in der Schmeißfliege Calliphora erythrocephala (Meig.). Ph.D. dissertation, Universität Köln.

Wagner, H. (1986). Flight performance and visual control of flight of the free-flying housefly (Musca domestica). I. Organization of the flight motor. Phil. Trans. R. Soc. Lond. B 312, 527–551.

Warzecha, A.-K., Egelhaaf, M., and Borst, A. (1993). Neural circuit tuning fly visual interneurons to motion of small objects. I. Dissection of the circuit by pharmacological and photoinactivation techniques. J. Neurophysiol. 69, 329–339.

Wehrhahn, C. (1979). Sex-specific differences in the chasing behaviour of houseflies (Musca). Biol. Cybern. 32, 239–241.

Wehrhahn, C., Poggio, T., and Bülthoff, H. (1982). Tracking and chasing in houseflies (Musca). Biol. Cybern. 45, 123–130.

Wiederman, S. D., Brinkworth, R. S., and O'Carroll, D. C. (2010). Performance of a bio-inspired model for the robust detection of moving targets in high dynamic range natural scenes. J. Comput. Theor. Nanosci. 7, 911–920.

Wiederman, S. D., Shoemaker, P. A., and O'Carroll, D. C. (2008). A model for the detection of moving targets in visual clutter inspired by insect physiology. PLoS One 3:e2784. doi: 10.1371/journal.pone.0002784

Keywords: object detection, modeling, network interactions, motion vision

Citation: Hennig P and Egelhaaf M (2012) Neuronal encoding of object and distance information: a model simulation study on naturalistic optic flow processing. Front. Neural Circuits 6:14. doi: 10.3389/fncir.2012.00014

Received: 27 October 2011; Paper pending published: 24 November 2011;

Accepted: 05 March 2012; Published online: 21 March 2012.

Edited by:

Paul S. Katz, Georgia State University, USA

Copyright: © 2012 Hennig and Egelhaaf. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Martin Egelhaaf, Department of Neurobiology and Center of Excellence “Cognitive Interaction Technology”, Bielefeld University, Universitätsstraße 25, D-33615 Bielefeld, Germany. e-mail: martin.egelhaaf@uni-bielefeld.de