Design principles of the sparse coding network and the role of “sister cells” in the olfactory system of Drosophila

- 1State Key Laboratory of Cognitive Neuroscience and Learning & IDG/McGovern Institute for Brain Research, Beijing Normal University, Beijing, China

- 2School of Automation Science and Engineering, South China University of Technology, Guangzhou, China

- 3Center for Collaboration and Innovation in Brain and Learning Sciences, Beijing Normal University, Beijing, China

Sensory systems face the challenge of representing sensory inputs in a way that allows easy readout of sensory information by higher brain areas. In the olfactory system of the fly Drosophila melanogaster, projection neurons (PNs) of the antennal lobe (AL) convert a dense activation of glomeruli into a sparse, high-dimensional firing pattern of Kenyon cells (KCs) in the mushroom body (MB). Here we investigate the design principles of the olfactory system of Drosophila with regard to its capability to discriminate odor quality from the MB representation and its robustness to different types of noise. We focus on understanding the role of highly correlated homotypic projection neurons (“sister cells”) found in the glomeruli of flies. These cells are coupled by gap junctions and receive almost identical sensory inputs, but target different KCs in the MB at random. We show that sister cells might play a crucial role in increasing the robustness of the MB odor representation to noise. Computationally, sister cells thus might help the system to improve its generalization capabilities in the face of noise without impairing the discriminability of odor quality.

1. Introduction

Sparse coding is a common computational strategy in neural systems (Olshausen and Field, 2004; Barak et al., 2013). For instance, it was shown that maximizing sparseness in model simulations results in the emergence of receptive fields that are strikingly similar in structure to those found in the visual system of primates (Olshausen, 1996; Olshausen and Field, 1997). Moreover, sparse codes were suggested to be important for memory [in the hippocampal CA3 region (Thompson and Best, 1989)], the auditory system (DeWeese et al., 2003), or the vocal tract of songbirds (Hahnloser et al., 2002). In insects, olfactory representations in the mushroom body (MB) by Kenyon cells (KCs) are also sparse (Perez-Orive et al., 2002; Heisenberg, 2003; Huerta et al., 2004; Jortner et al., 2007; Wessnitzer et al., 2007; Turner et al., 2008).

Sparse codes help to separate or decorrelate similar sensory input patterns, so that the discrimination of distinct sensory inputs becomes easier for a subsequent neural system processing sensory information. However, the capacity becomes limited in very sparse representations. For instance, in the sparsest possible code, where the binary activation of a single neuron out of N represents a distinct sensory input, at most N representations can be distinguished. As a consequence, very sparse codes become sensitive to noise, because a random activation is easily misinterpreted as another odor quality. Thus, the ability to generalize to noisy sensory inputs can be poor for very sparse codes.

To overcome some of these limitations, sparse codes in neural systems are typically combined with divergent projections from lower sensory areas to higher areas to increase capacity through the number of neurons. For instance, in the human visual system, the ratio of LGN to V1 cells is 1:40 (Wandell, 1995). Similarly, the ratio of the number of glomeruli in the antennal lobe (AL) to the number of KCs in the mushroom body (MB) is also 1:40 (Hallem and Carlson, 2006). However, while increasing the number of neurons increases the capacity of sparse representations, additional mechanisms might have to be implemented to improve the generalization capabilities of the network to noisy sensory inputs.

One way to investigate these constraints in a model network is to test whether the MB activation patterns change in response to noise and to compute a measure of whether two patterns can generally be discriminated for a given sparsity and connection structure. A previous study examined the constraints of the connection structure on discriminability in the locust olfactory system (García-Sanchez and Huerta, 2003). The authors proposed an interesting mathematical framework and found a number of constraints on the design of the AL to MB projection in locusts. We here adapt and expand this framework to the particularities of the olfactory system of the fly Drosophila melanogaster to derive its constraints on good discriminability of odor qualities, and further use the network model to investigate the robustness of the olfactory system to noise.

In flies, when stimulated with a particular odor, a number of different types of olfactory receptor neurons (ORNs) are activated (Hallem and Carlson, 2006). Because axons from ORNs converge into just one dedicated glomerulus in AL for each receptor type (Wilson and Mainen, 2006), a given odor will activate a certain number of glomeruli. Although there exist mechanisms for decorrelation of the ORN activity patterns to different odors, such as lateral processing and divisive normalization (Olsen and Wilson, 2008; Olsen et al., 2010), an odor representation in the antennal lobe can still be regarded as relatively dense. Typically, a rather large proportion of neurons fire when processing a single odor quality. It was found that 30–50% of glomeruli are activated per odor (Hallem and Carlson, 2006). In contrast, when projection neurons (PNs) relay sensory inputs from the glomeruli to the KCs of the mushroom body (Wilson and Mainen, 2006), only a small percentage of the ca. 2000 (Turner et al., 2008; Aso et al., 2009) KCs are active, resulting in a sparse representation of odor quality in MB.

While the general architecture of the olfactory system of the fly is similar to that of the locust, there also exist important differences. First, in flies the number of glomeruli is relatively small [50 compared to e.g., 830 in locust (Leitch and Laurent, 1996; Chou et al., 2010)]. More importantly, in contrast to the locust, each glomerulus in flies contains on average about 3 [2–5 (Stocker et al., 1990)] homotypic PNs that show almost identical activity patterns caused by shared input and gap junction coupling (Kazama and Wilson, 2009; Huang et al., 2010; Yaksi and Wilson, 2010). Because of their high correlation, we here call these PNs “sister cells.” Despite almost identical firing behavior, these homotypic PNs project randomly to different target KCs in the mushroom body (Masuda-Nakagawa et al., 2005; Kazama and Wilson, 2009). Similar types of sister cells have been characterized in other species such as frogs (Chen et al., 2009) and mice (Dhawale et al., 2010; Padmanabhan and Urban, 2010; Tan et al., 2010). However, their function in general, or their usefulness to each individual species in particular, remains unclear.

We here put forward the hypothesis that sister cells in flies help the olfactory system to increase the robustness to noise and therefore help to stabilize the odor representation of the KCs in MB. We show that strong gap junction coupling between sister cells is crucial for their influence on the robustness of the system. Depending on the assumed noise strength and tolerance thresholds of the system, we find that even a few sister cells per glomerulus increase the system's robustness to noise considerably.

By calculating the probability of the expected similarity of representations of distinct odors in MB, we further derived analytical equations for the discrimination capabilities without the explicit use of classifiers or readouts, and give constraints on the connectivity for a given sparseness level in MB.

2. Results

The Results section is structured as follows. After introducing our network model of the olfactory system of Drosophila, we first investigate the robustness of the MB odor representation to noise and highlight the role of sister cells. Then we analyze the constraints on network parameters that result from requiring a good discrimination ability of odors in MB.

2.1. Network Model

During stimulation by an odor, a number of different types of olfactory receptor neurons (ORNs) are activated. Because axons from ORNs of a particular type converge into just one dedicated glomerulus in the antennal lobe (Wilson and Mainen, 2006), a given odor will activate a certain number of glomeruli. In our mathematical derivation, we assume that activity levels of glomeruli are binary: they are either activated by an odor or not. In the simulations of Section 4.3, we consider graded neural responses.

In each of the NG glomeruli, M highly correlated PNs (sister cells) receive the ORN input and project to several of the NK KCs in the mushroom body. We assume that all sister cells of a glomerulus respond identically to an odor, but may project to different KCs. The projections target KCs randomly (Masuda-Nakagawa et al., 2005; Kazama and Wilson, 2009; Caron et al., 2013) and independently, with a connection probability pc. Thus the probability that a KC receives C synaptic connections is given by the binomial distribution

$$P(C) = \binom{N_G M}{C}\, p_c^{C}\, (1 - p_c)^{N_G M - C}. \tag{1}$$

Therefore, each KC receives on average 〈C〉 = pcNGM synaptic connections from PNs.
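The connection statistics can be checked numerically. The following is a minimal sketch, assuming that each of the NG·M PNs connects to a given KC independently with probability pc; the function names are illustrative and not taken from the original study, and the parameter values are those quoted in the figure captions.

```python
from math import comb

def connection_count_pmf(c, n_glomeruli, n_sisters, p_c):
    """Probability that a KC receives exactly c connections from the PN pool."""
    n_pn = n_glomeruli * n_sisters  # total number of PNs, N_G * M
    return comb(n_pn, c) * p_c**c * (1.0 - p_c) ** (n_pn - c)

def mean_connections(n_glomeruli, n_sisters, p_c):
    """Mean of the connection-count distribution, summed explicitly."""
    n_pn = n_glomeruli * n_sisters
    return sum(c * connection_count_pmf(c, n_glomeruli, n_sisters, p_c)
               for c in range(n_pn + 1))

# Example values from the figure captions: N_G = 50, M = 3, <C> = 10.
N_G, M, MEAN_C = 50, 3, 10
p_c = MEAN_C / (N_G * M)
print(mean_connections(N_G, M, p_c))  # numerically close to p_c * N_G * M
```

Summing the distribution explicitly, rather than using the closed-form mean, makes it easy to confirm that the chosen pc reproduces the intended average connectivity.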

Due to divisive normalization by the total amount of ORN input, it was found that the total activity of all PNs to a given odor is approximately constant (Olsen and Wilson, 2008; Olsen et al., 2010; Luo et al., 2010). In the case of binary activation levels, constant total PN activity amounts to a constant number of activated glomeruli for a given odor. We thus assume that each odor activates exactly A glomeruli.

Following an early study investigating the olfactory system of locusts (García-Sanchez and Huerta, 2003; Huerta et al., 2004), we emphasize the analysis of the anatomical structure of the olfactory system and therefore simplify dynamical aspects. All PNs project their activation state to their targeted KCs. If the number of synaptic inputs reaches a firing threshold θ, a KC will fire. For simplicity, we assume that a KC has only one of two states: quiescent (0) or firing (1).

With these assumptions, we can write the response yi of the ith KC in the following form [McCulloch-Pitts neuron (McCulloch and Pitts, 1943)]:

$$y_i = \Theta\Big(\sum_{j} w_{ij}\, x_j - \theta\Big), \tag{2}$$

where Θ(·) is the Heaviside step function (with Θ(0) = 1, so that a KC fires when its input reaches θ) and xj ∈ {0, 1} denotes the jth PN's response state to an odorant stimulus. In our framework, a connection either exists, wij = 1, or does not, wij = 0. If a KC receives C connections, then ∑j wij = C.
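The model described so far can be sketched in a few lines. This is an illustration under the stated assumptions (binary PN states, independent random connections, a common threshold); the helper names, the random seed, and the threshold value of 8 are hypothetical choices for the example, not values from the original study.

```python
import random

def odor_pattern(n_glomeruli, n_sisters, n_active, rng):
    """Binary PN activity: all M sister cells of each active glomerulus fire."""
    active = set(rng.sample(range(n_glomeruli), n_active))
    return [1 if g in active else 0
            for g in range(n_glomeruli) for _ in range(n_sisters)]

def random_connectivity(n_kc, n_pn, p_c, rng):
    """w[i][j] = 1 with probability p_c, independently for every KC-PN pair."""
    return [[1 if rng.random() < p_c else 0 for _ in range(n_pn)]
            for _ in range(n_kc)]

def kc_response(w, x, theta):
    """Threshold units: KC i fires if its summed active input reaches theta."""
    return [1 if sum(wij * xj for wij, xj in zip(row, x)) >= theta else 0
            for row in w]

rng = random.Random(0)  # fixed seed, only for reproducibility of the example
N_G, M, A, N_K, MEAN_C, THETA = 50, 3, 20, 2000, 10, 8  # THETA is illustrative
x = odor_pattern(N_G, M, A, rng)
w = random_connectivity(N_K, N_G * M, MEAN_C / (N_G * M), rng)
y = kc_response(w, x, THETA)
print(sum(x), sum(y) / N_K)  # number of active PNs and fraction of active KCs
```

Representing the sister cells as consecutive entries of the PN vector keeps the glomerular structure implicit, so the KC layer only ever sees a flat binary input vector.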

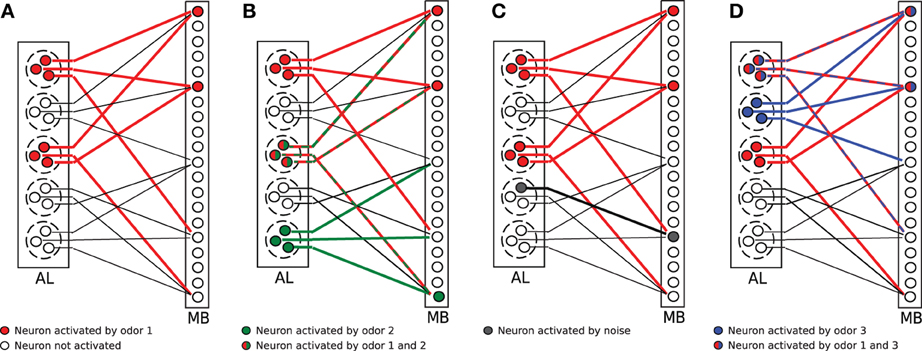

The model network is illustrated in Figure 1A. In the cartoon, A = 2 glomeruli (dashed circles) of the antennal lobe (AL) are activated by an odor. Thus, all sister cells (here M = 3) of the activated glomeruli switch to the firing state (red circles). All sister cells project randomly into the mushroom body (MB). For illustration purposes, each KC receives exactly C = 3 connections here (solid lines; connections are only plotted for every fifth KC). The firing threshold in Figure 1 is arbitrarily set to θ = 2, so that those KCs which receive two or more activated connections (red lines) activate in turn, resulting in a corresponding odor representation in MB (red circles in MB).

Figure 1. Illustration of the olfactory network structure. (A) An odorant pattern is represented by A randomly activated glomeruli in AL (red circles indicate activated PNs). All sister cells in activated glomeruli project to random KCs in the mushroom body (MB). The firing threshold of KCs is set to θ = 2 in this example. Two KCs get activated (red circles). (B) Two odors (red and green activations) overlap in the glomeruli activation, but the representation in MB might still be distinct. (C) Extrinsic noise is modeled as random activation of silent PNs (gray circle in AL). This synaptic input noise might induce a change in the activity pattern of KCs (gray circle in MB). (D) Faithful information transmission. Two odors (blue and red) might differ in glomeruli activation, but this information is lost in MB because the same selection of KCs are activated by chance.

2.2. Firing Probability of Kenyon Cells

We start by deriving the KC firing probability from combinatorial arguments. Since all incoming connections originate from randomly chosen PNs, the number of synaptic inputs received by a KC is identically distributed for all KCs and independent across KCs.

According to our assumptions, there are exactly MA active PNs in the AL from which a KC could receive input. For a KC to receive exactly n active presynaptic inputs, it must be connected to exactly n of the MA active PNs. Because there are $\binom{MA}{n}$ ways to choose these n connections among the MA cells and the probability for a connection is pc = 〈C〉/(NGM), we can write the synaptic input distribution p(n) as the binomial distribution

$$p(n) = \binom{MA}{n}\, p_c^{n}\, (1 - p_c)^{MA - n}. \tag{3}$$

In other words, p(n) is the probability of a KC having exactly n activated presynaptic neurons during presentation of an arbitrary odor. Since each activated presynaptic neuron delivers an input of size 1 to the postsynaptic neuron and inputs are simply added in our neuron model (see Eq. 2), p(n) describes the probability of a KC to have exactly n synaptic inputs in response to an odor.

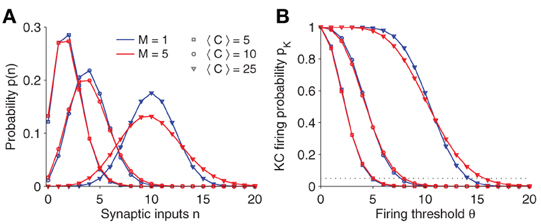

Figure 2A plots the synaptic input distribution p(n) for a number of parameter settings. Naturally, if the average number of connections 〈C〉 is increased, the mean synaptic input increases as well. Note, however, that even if 〈C〉 is fixed, the shape of the distribution broadens slightly for increasing number of sister cells M.
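The broadening with M at fixed 〈C〉 can be reproduced directly from the binomial input distribution. The sketch below assumes p(n) is binomial with MA trials and success probability pc = 〈C〉/(NGM); the function names are illustrative.

```python
from math import comb

def synaptic_input_pmf(n, n_sisters, n_active, mean_conn, n_glomeruli):
    """p(n): probability that a KC has exactly n active presynaptic PNs."""
    p_c = mean_conn / (n_glomeruli * n_sisters)
    n_active_pn = n_sisters * n_active  # M * A active PNs
    return comb(n_active_pn, n) * p_c**n * (1.0 - p_c) ** (n_active_pn - n)

def mean_and_variance(n_sisters, n_active, mean_conn, n_glomeruli):
    """Mean and variance of p(n), computed by explicit summation."""
    n_active_pn = n_sisters * n_active
    pmf = [synaptic_input_pmf(n, n_sisters, n_active, mean_conn, n_glomeruli)
           for n in range(n_active_pn + 1)]
    mean = sum(n * p for n, p in enumerate(pmf))
    var = sum((n - mean) ** 2 * p for n, p in enumerate(pmf))
    return mean, var

# N_G = 50, A = 20, <C> = 10 as in the Figure 2 caption; M is varied.
for m in (1, 3, 5):
    print(m, mean_and_variance(m, 20, 10, 50))
```

With these parameters the mean stays at A〈C〉/NG for every M, while the variance, MA·pc(1 − pc), grows slightly because pc shrinks as M increases.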

Figure 2. (A) Synaptic input distribution p(n). The distribution shifts and gets broader for increasing connectivity (〈C〉). Note that in the case of multiple sister cells per glomerulus (red lines; M = 5) and fixed connectivity 〈C〉, its variance increases as well. The binomial distribution approaches a Gaussian for larger 〈C〉. (B) KC firing probability pK vs. firing threshold θ. Note that the slope changes for different parameters. The threshold θ is usually set so that the KC firing probability is fixed at 0.05 (dotted line). Parameters: NG = 50, A = 20.

In response to synaptic inputs, a KC will activate if the amount of input reaches the threshold θ. Therefore, the KC firing probability pK is given as

$$p_K = \sum_{n=\theta}^{MA} p(n). \tag{4}$$

See Figure 2B for a plot of pK(θ). Generally, the firing threshold should be set large enough to ensure a low firing probability and a sparse activation pattern in KCs (dashed line in Figure 2B corresponds to pK = 0.05).
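The choice of threshold for a target sparseness can be sketched as follows. The code assumes that a KC fires when its input count n is at least θ and that p(n) is the binomial input distribution; `threshold_for_sparseness` is a hypothetical helper that returns the smallest integer threshold whose firing probability does not exceed the target.

```python
from math import comb

def kc_firing_probability(theta, n_sisters, n_active, mean_conn, n_glomeruli):
    """p_K: probability that the number of active inputs is at least theta."""
    p_c = mean_conn / (n_glomeruli * n_sisters)
    n_active_pn = n_sisters * n_active
    return sum(comb(n_active_pn, n) * p_c**n * (1.0 - p_c) ** (n_active_pn - n)
               for n in range(max(theta, 0), n_active_pn + 1))

def threshold_for_sparseness(target, n_sisters, n_active, mean_conn, n_glomeruli):
    """Smallest integer theta with firing probability <= target."""
    theta = 0
    while kc_firing_probability(theta, n_sisters, n_active,
                                mean_conn, n_glomeruli) > target:
        theta += 1
    return theta

# M = 3, A = 20, <C> = 10, N_G = 50; target sparseness p_K = 0.05.
theta = threshold_for_sparseness(0.05, 3, 20, 10, 50)
print(theta, kc_firing_probability(theta, 3, 20, 10, 50))
```

Because θ is an integer, the target firing probability can only be met approximately, which is why the text speaks of pK ≈ 0.05.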

2.3. Robustness to Intrinsic Noise

Noise is ubiquitous in neural systems. If the firing probability is very low in the MB to achieve a sparse representation of odors, a noise induced change of the firing state of even a few neurons might have a large impact. We wondered how the odor representation of KCs is affected by noise and whether the sister cells could promote robustness to some forms of noise.

In the following two sections, we consider two types of noise, intrinsic and extrinsic noise, respectively. Intrinsic noise refers to an accidental perturbation of an internal parameter, for instance a change in the firing mechanism. Extrinsic noise refers to fluctuations of the synaptic inputs. To be able to compare noise effects for different parameter values we will assume that the KC firing probability is given and maintained while changing parameters.

Let us first consider intrinsic noise. We model intrinsic noise by perturbing the firing threshold of a KC. If the firing threshold is reduced by Δθ, a KC is more likely to fire. The noise-induced change of the firing probability Δpintr−K is given by summing up the probabilities of the additional synaptic inputs n = θ − Δθ,…,θ − 1 which result in a spike after perturbation:

$$\Delta p_K^{\mathrm{intr}-} = \sum_{n=\theta-\Delta\theta}^{\theta-1} p(n). \tag{5}$$

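Analogously, if the threshold is increased by an amount Δθ, the firing probability of KCs is lowered, because the inputs n = θ,…,θ + Δθ − 1 no longer result in a spike. The magnitude of this change is

$$\Delta p_K^{\mathrm{intr}+} = \sum_{n=\theta}^{\theta+\Delta\theta-1} p(n). \tag{6}$$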
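Both threshold perturbations can be evaluated exactly from the binomial input distribution. The following sketch assumes the firing rule n ≥ θ; the function names and the example threshold θ = 8 are illustrative, not taken from the original study.

```python
from math import comb

def input_pmf(n, n_active_pn, p_c):
    """Binomial probability of exactly n active inputs (0 outside the support)."""
    if n < 0 or n > n_active_pn:
        return 0.0
    return comb(n_active_pn, n) * p_c**n * (1.0 - p_c) ** (n_active_pn - n)

def firing_probability(theta, n_active_pn, p_c):
    """Probability that the input count is at least theta."""
    return sum(input_pmf(n, n_active_pn, p_c)
               for n in range(max(theta, 0), n_active_pn + 1))

def gain_when_threshold_lowered(theta, d_theta, n_active_pn, p_c):
    """Extra firing probability from inputs n = theta - d_theta, ..., theta - 1."""
    return sum(input_pmf(n, n_active_pn, p_c)
               for n in range(theta - d_theta, theta))

def loss_when_threshold_raised(theta, d_theta, n_active_pn, p_c):
    """Lost firing probability from inputs n = theta, ..., theta + d_theta - 1."""
    return sum(input_pmf(n, n_active_pn, p_c)
               for n in range(theta, theta + d_theta))

# M * A = 60 active PNs, p_c = 10 / 150; theta = 8 is an illustrative value.
print(gain_when_threshold_lowered(8, 1, 60, 10 / 150),
      loss_when_threshold_raised(8, 1, 60, 10 / 150))
```

Each quantity is simply the difference between the firing probabilities at the original and the shifted threshold, which gives a convenient consistency check.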

To get a better intuition of the involvement of parameters in the intrinsic noise, we approximate the binomial distribution p(n) by a Gaussian distribution N(μ,σ) with the same mean, μ = MApc, and variance, σ2 = MApc(1 − pc) (which is a good approximation for larger 〈C〉, see Figure 2A). For Eq. 5 one finds (Eq. 6 can be approximated in an analogous manner):

with synaptic connection probability pc = 〈C〉/(MNG). When the sum in Eq. 5 is approximated by an integral, we find

where the firing threshold in Eq. 10 was set in terms of the mean and the variance of the (assumed Gaussian) synaptic input distribution θ = μ + α σ with α > 0 to ensure constant mean activity of the KCs. If Δθ is small, we can approximate the integral in Eq. 10 and find

by using the definition Eq. 8, abbreviating a term related to the mean activity, , and setting c ≡ 〈C〉/NG.

Assuming pc < 0.5 and a fixed KC firing probability, Eq. 12 shows that the variability decreases if the connection probability pc is increased. Similarly, increasing the number of connections reduces the variability (see Figure 3B; crosses).

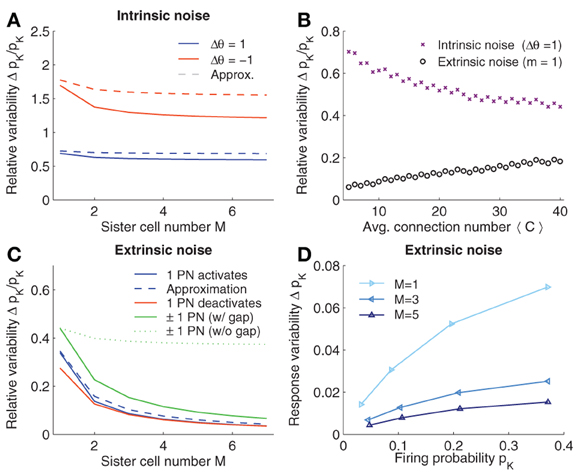

Figure 3. Robustness to noise. (A) Intrinsic noise modeled by varying the firing threshold of KCs by Δθ. The relative change in firing probability of KCs is plotted vs. the number of sister cells M. The Gaussian approximation (Eq. 10) is shown as dashed lines. (B) Relative noise-induced variability as a function of the average number of connections 〈C〉. Varying 〈C〉 has opposite effects for extrinsic (m = 1, see panel C) and intrinsic noise. (C) Extrinsic noise. The effect of activating a single quiescent PN (blue curve) or deactivating an active PN (red curve) on the firing probability of KCs. The dashed curve shows the Gaussian approximation Eq. 20. General extrinsic noise, where some PNs activate and others deactivate simultaneously, is shown in green. If gap junctions are included (that is, the noise strength remains constant with M, i.e., m1 = 1 and m2 = 1), the induced KC variability is reduced quickly (solid line), whereas if gap junctions are not considered (and thus the noise strength grows with M, i.e., m1 = M, m2 = M), the variability decreases only slightly (dotted line). (D) Variability of pK induced by extrinsic noise (m1 = 1 and m2 = 1) depends on pK. Parameters (if not varied): NG = 50, A = 20, 〈C〉 = 10, M = 3, and pK ≈ 0.05.

Interestingly, a higher number of sister cells M per glomerulus reduces the effect of intrinsic noise. Relating the case of M sister cells to the case without sister cells (M = 1), the relative reduction of Δpintr−K is $\sqrt{(1-c)/(1-c/M)}$ (assuming constant 〈C〉; see Eq. 13). This reduction is moderate and saturates for large M. With c = 〈C〉/NG = 10/50 = 1/5, the relative change in Δpintr−K becomes $\sqrt{4M/(5M-1)}$, which goes to $\sqrt{4/5} \approx 0.89$ for large M. Thus, while increasing the number of sister cells reduces the effect of intrinsic noise, the improvement relative to the case without sister cells does not exceed about 10%.
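The saturation with M can be illustrated with a short calculation. The sketch below rests on an assumption that follows from the Gaussian approximation at fixed KC firing probability: the intrinsic noise effect scales as 1/σ, with σ2 = MApc(1 − pc) = Ac(1 − c/M) and c = 〈C〉/NG. The function name is illustrative.

```python
from math import sqrt

def intrinsic_noise_ratio(n_sisters, c):
    """Noise effect with M sister cells relative to M = 1 (Gaussian sketch).

    Assumes the effect scales as 1 / sigma with sigma^2 = A * c * (1 - c / M),
    so the ratio is sigma(M = 1) / sigma(M).
    """
    return sqrt((1.0 - c) / (1.0 - c / n_sisters))

c = 10 / 50  # <C> / N_G for <C> = 10 and N_G = 50
for m in (1, 2, 3, 5, 10, 100):
    print(m, round(intrinsic_noise_ratio(m, c), 4))
```

Under this assumption the ratio falls from 1 toward √(1 − c) as M grows, so most of the (modest) benefit is already obtained with the first few sister cells.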

In Figure 3A, the noise-induced variability of the firing probability is plotted for varying M. For better comparison, we fixed the average number of synaptic inputs 〈C〉. The noise reduction with increasing M is slightly higher than the 10% estimated from the approximation (Eq. 12). However, the effect of sister cells on intrinsic noise remains small and saturates as predicted.

Note that we modeled intrinsic noise by changing the firing threshold in a single KC and looked at the change of its firing probability. How frequently such threshold fluctuations occur in individual cells of the large population of KCs in MB is not known. However, the qualitative behavior and the effect of parameters on robustness to noise should not change when regarding the whole population rather than a single cell.

2.4. Robustness to Extrinsic Noise

Extrinsic noise in our model describes the situation when synaptic inputs to KCs have been perturbed: noise changes the activation level of some PNs. Since synaptic inputs might differ due to noise, KCs in turn might change their firing (see Figure 1C for an illustration).

To compute the effect of extrinsic noise, we randomly assign m quiescent PNs in the antennal lobe to be active. We will later analyze the case where both possibilities, activation of quiescent PNs and deactivation of active PNs, are present.

How would the activation of m quiescent PNs affect the firing of a KC? To answer this, one has to calculate the probability p(l|m) that l of the m randomly activated PNs project to a given KC. This problem is the same as for Eq. 3, and the probability is given by a binomial distribution

$$p(l \mid m) = \binom{m}{l}\, p_c^{l}\, (1 - p_c)^{m - l}. \tag{14}$$

Having l noise inputs to the KC increases its total synaptic input from n to n + l. However, the firing probability is affected only if these additional noise inputs push a previously silent KC above threshold, i.e., n < θ and n + l ≥ θ. Taking this into account, one finds

$$\Delta p_K^{\mathrm{extr}+} = \sum_{l=1}^{m} p(l \mid m) \sum_{n=\theta-l}^{\theta-1} p(n). \tag{15}$$
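The effect of activating m quiescent PNs can be computed exactly by combining the two binomial distributions. The sketch below assumes that the m noise-activated PNs connect to a KC with the same independent probability pc as all other PNs; the function names and the example values θ = 8 and m = 2 are illustrative.

```python
from math import comb

def binom_pmf(k, n, p):
    """Binomial probability of k successes in n trials (0 outside the support)."""
    if k < 0 or k > n:
        return 0.0
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)

def firing_probability(theta, n_active_pn, p_c):
    """Probability that the input count is at least theta."""
    return sum(binom_pmf(n, n_active_pn, p_c)
               for n in range(max(theta, 0), n_active_pn + 1))

def extrinsic_noise_gain(theta, m_noise, n_active_pn, p_c):
    """Increase in KC firing probability when m_noise quiescent PNs turn on."""
    total = 0.0
    for l in range(1, m_noise + 1):
        # inputs n that were below threshold but reach it with l extra inputs
        crossing = sum(binom_pmf(n, n_active_pn, p_c)
                       for n in range(theta - l, theta))
        total += binom_pmf(l, m_noise, p_c) * crossing
    return total

# M * A = 60 active PNs, p_c = 10 / 150; theta = 8 and m = 2 are illustrative.
print(extrinsic_noise_gain(8, 2, 60, 10 / 150))
```

Since the sum of two independent binomial counts with the same pc is again binomial, the result must equal the firing probability with MA + m active PNs minus the one with MA active PNs, which provides an independent check.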

Analogous to the case of intrinsic noise, we approximate Eq. 15 to get a better intuition of the involvement of parameters. Using again a Gaussian distribution to approximate p(n), and setting (analogous to above) θ = μ + ασ, one first finds

Now suppose that m quiescent PNs are activated as a result of a noise perturbation. This will cause the synaptic input distribution to change slightly as the total amount of active PNs is increased, i.e., MA → MA + m. Hence,

Computing the difference between the firing probability with and without noise yields the change of the firing probability caused by extrinsic noise. Using Eq. 16

with . Since m is small, the integral can again be approximated resulting in

with the same c ≡ 〈C〉/NG and γ as defined above.

Note first that the noise perturbation grows with the mean activity of the KCs (see also Figure 3D). From Eq. 22, we further find that, in contrast to intrinsic noise, reducing—instead of increasing—the average input connection 〈C〉 (or pc) results in a more robust noise response, if the firing activity in KCs is maintained (see Figure 3B).

Introducing sister cells, on the other hand, again has a positive effect on the robustness to noise. If we assume for the moment that m stays constant when M is changed (see Section 2.5), keep 〈C〉 constant, and further assume A » m (so that MA/(MA + m) ≈ 1), we find that the change of Δpextr+K with M, relative to the case without sister cells, is given by $\frac{1}{M}\sqrt{(1-c)/(1-c/M)}$, which is approximately 1/M as c is relatively small (c = 1/5). Thus, the improvement of the robustness to noise is considerable with increasing number of sister cells and, in contrast to the case of intrinsic noise, does not saturate for larger M.

So far we have analyzed the case where m quiescent PNs activate in response to noise. In a similar manner we can derive the noise-induced probability change when noise causes previously active PNs to turn off, and when both cases are combined (see Methods Section 4.2). The qualitative results are similar. The change in noise-induced firing probability when activating or deactivating a single PN is plotted in Figure 3C for different numbers of sister cells M. The approximation with a Gaussian, Eq. 20 (dashed line), is close to the exact values (solid lines). The effect of sister cells on the robustness of the system to extrinsic noise is considerable: the noise-induced variations of the firing probability are approximately halved when M is doubled.

In conclusion, our results suggest that incorporating highly correlated sister cells into glomeruli enhances the robustness of the firing pattern in the mushroom body to both extrinsic and intrinsic noise. The connectivity structure, on the other hand, has opposite effects on the two noise types.

We further tested in simulations whether our theoretically derived results on the robustness to noise also apply when the assumption of binary activation in AL is relaxed to allow graded firing rate responses (see Methods Section 4.3). We found that the qualitative behavior of the reduction of noise with increasing number of sister cells as well as the effect of connectivity are very similar (compare to Figure 8).

2.5. Strong Gap Junction Coupling Between Sister Cells Reduces the Extrinsic Noise

We found in the last section that the effects of extrinsic noise are reduced approximately by a factor of 1/M if sister cells are introduced.

In doing so, we tacitly assumed that the number of PNs affected by the noise stays constant at m. However, if the number of sister cells is changed, the total number of PNs is implicitly multiplied by M because additional PNs are introduced. In a larger pool of PNs, more cells can be expected to be affected by noise; in fact, m should be a certain fraction f of the total number of PNs, thus m = fMNG.

Thus m should be enlarged when adding sister cells. However, as described in the introduction, sister cells are highly correlated with each other by gap junction coupling. What would be the effect of noise on a system of strongly coupled sister cells?

To analyze this, we built a simple rate model of M mutually connected sister cells and compared the variance of each cell's output rate to the variance of an injected independent Gaussian noise. As described in the following, we show that the variance of the noise is reduced by a factor of 1/M for strong mutual coupling. Thus, gap junctions effectively reduce the risk of a sister cell accidentally changing its state.

We investigate the effect of the electrical gap-junction coupling of sister cells on extrinsic (added) noise. Within one glomerulus, we consider a group of M neurons receiving random external inputs with and without gap junctions between each other. In a stochastic differential equation formulation (Øksendal, 2003), the dynamics of an ith neuron's firing rate ri can be written as

where τ is the time constant of the neuronal firing rate, Ii is the input that is applied to the neuron, and f is the neuron's IO-function, whose concrete shape is not important for the discussion here. The strength of the gap-junction coupling between neurons is given by w and, for simplicity, assumed equal for all neurons. Extrinsic noise is modeled as a Gaussian process with zero mean and standard deviation σ. In Eq. 24, ξi is thus a Gaussian white noise variable with zero mean and unit variance.

In this rate model, we describe gap junctions as depending on the neuronal firing rate difference between neurons. This is a reasonable assumption because gap junctions are typically modeled as resistive connections dependent on the difference of somatic voltage between connected cells (e.g., Moortgat et al., 2000).

In the following, we compute the mean and the variance of the stochastic differential equation for the rate of the coupled neurons. Reorganizing the neural dynamics Eq. 24 into matrix form, we have

where r is a vector of length M and W is an M-dimensional Gaussian noise term (zero mean and identity covariance matrix). The input term is bi = f(Ii) and the matrix U is defined as

where 1 is the vector of only ones and IM the identity matrix.

We note that Eq. 25 is a system of (inhomogeneous) linear stochastic differential equations (SDE) with independent Gaussian noise terms (having zero mean), symmetric U, and time-independent U and b. The evolution of the mean and variance of such a system of linear SDEs is well known (Øksendal, 2003; Jimenez, 2012) and the stationary solution for the mean and co-variance matrix of Eq. 25 is given by

Since the matrix U is a rank-1 update of a diagonal matrix, it turns out that its inverse can be written as

Altogether, we thus find for the variance

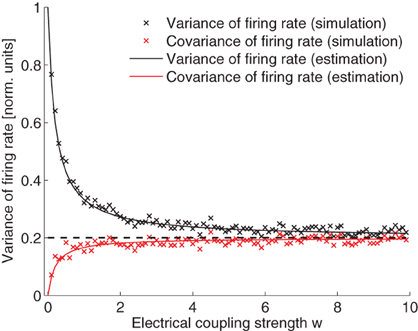

From Eq. 29, we can see that, when there is no electrical coupling, w = 0, the variance of the neuronal firing rate is σ2/(2τ) and the covariance of the neuronal firing rates is 0. When electrical coupling is extremely large, w → ∞, all variance and covariance terms become equal and are given by σ2/(2Mτ). Note that the variance is thus reduced to exactly 1/M of its uncoupled value as a result of electrical coupling. The variance and covariance terms are compared in Figure 4. The simulated values agree very well with the theoretical calculations.
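The two limits quoted above can be reproduced with a small numerical sketch. It assumes one particular form of the coupled rate model, τ dri/dt = −ri + f(Ii) + w ∑j (rj − ri) + σ ξi, which is an assumption chosen because it yields the stated limits; the original equations may use different conventions. Under this assumption the coupling matrix is U = (1 + Mw) IM − w 1 1ᵀ and the stationary covariance is σ2/(2τ) U⁻¹. All function names are illustrative.

```python
def stationary_covariance(n_sisters, w, sigma, tau):
    """Variance and covariance of the rates in the assumed coupled model."""
    scale = sigma**2 / (2.0 * tau)
    variance = scale * (1.0 + w) / (1.0 + n_sisters * w)
    covariance = scale * w / (1.0 + n_sisters * w)
    return variance, covariance

def inverse_check(n_sisters, w):
    """Largest deviation of U @ U_inv from the identity matrix."""
    m = n_sisters
    # U = (1 + M w) I - w 1 1^T (assumed form, see lead-in)
    u = [[(1.0 + m * w - w) if i == j else -w for j in range(m)]
         for i in range(m)]
    # Candidate inverse: (I + w 1 1^T) / (1 + M w)
    u_inv = [[((1.0 + w) if i == j else w) / (1.0 + m * w) for j in range(m)]
             for i in range(m)]
    worst = 0.0
    for i in range(m):
        for j in range(m):
            entry = sum(u[i][k] * u_inv[k][j] for k in range(m))
            worst = max(worst, abs(entry - (1.0 if i == j else 0.0)))
    return worst

# M = 5, sigma = 0.2, tau = 10 as in the Figure 4 caption; w is varied.
for w in (0.0, 0.1, 1.0, 10.0, 1000.0):
    print(w, stationary_covariance(5, w, 0.2, 10.0))
```

For w = 0 the variance equals σ2/(2τ) with zero covariance, and for large w both approach σ2/(2Mτ), matching the limits stated in the text.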

Figure 4. Variance and covariance of the neuronal firing rate as a function of the electrical coupling strength. Values are normalized by the expected variance without coupling, that is σ2/(2τ). Simulation and theoretical estimation show that the variance decays with coupling strength while covariance increases. In the limit of large coupling, variance and covariance are both equal to 1/M of the magnitude of the variance when there is no electrical coupling (dashed line). Parameters (since the variables can be scaled arbitrarily the units are omitted): I = 5; M = 5; σ = 0.2; τ = 10. IO-function f(x) = α x + β with α = 3, β = 5.

Taken together, the number of noise-activated PNs m indeed stays constant if M is increased, because the gap-junction coupling reduces the likelihood of noise-induced changes by a factor of 1/M. In consequence, the number of accidentally activated PNs, m, no longer depends on M. In this sense, our calculation in the previous section indeed modeled extrinsic noise in a realistic setting.

Note that if one increased the number of PNs M-fold (while keeping the fraction of activated glomeruli constant), but without having highly correlated sister cells in the system, the reduction of extrinsic noise would be much less effective, since the probability of having a noise event would grow with the number of PNs, i.e., m ∝ M. This situation is plotted in Figure 3C for extrinsic noise with both silencing and activation events (dotted green line). Including sister cells, on the other hand, means that m is constant (regardless of M), so that the relative change in the firing probability of KCs decreases much more rapidly (Figure 3C; solid green line).
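The contrast between the two scenarios can be illustrated with a rough calculation. The sketch assumes the small-m Gaussian picture at fixed KC firing probability, in which the extrinsic noise effect is proportional to m·pc/σ with pc = c/M, σ2 = Ac(1 − c/M), and c = 〈C〉/NG. Applying it to m ∝ M stretches the small-m assumption and is meant only qualitatively. The function name and the `coupled` flag are illustrative.

```python
from math import sqrt

def extrinsic_noise_ratio(n_sisters, c, coupled):
    """Extrinsic noise effect relative to M = 1 under the Gaussian sketch.

    coupled=True  : m stays constant (strong gap-junction coupling).
    coupled=False : m grows in proportion to M (uncorrelated extra PNs).
    """
    width = sqrt((1.0 - c) / (1.0 - c / n_sisters))  # sigma(M = 1) / sigma(M)
    m_scale = 1.0 if coupled else float(n_sisters)   # relative size of m
    return m_scale * width / n_sisters               # p_c scales as 1 / M

c = 10 / 50  # <C> / N_G
for m in (1, 2, 3, 5):
    print(m,
          round(extrinsic_noise_ratio(m, c, coupled=True), 3),
          round(extrinsic_noise_ratio(m, c, coupled=False), 3))
```

In this sketch the coupled case falls off roughly as 1/M, whereas the uncoupled case only benefits from the slight broadening of the input distribution, in line with the qualitative difference described for the solid and dotted green lines.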

2.6. Sparse Codes Reduce Overlaps in MB

In the previous sections, we examined the robustness to noise. From a computational perspective, robustness of the MB odor representation to noise improves the performance of a hypothetical readout of the sensory information. For instance, if a higher brain area has to decide whether two noisy patterns in MB result from an activation to the same odor, a more noise robust KC activation would make this generalization task easier.

Another mechanism to increase the generalization capabilities while maintaining discrimination performance is to require that odor representations do not share too many common activations for different odors. For instance, if two odor representations were required to differ by the activation of at least three neurons, an incoming odor pattern with an accidental change in a single neuron would still be correctly classified (assuming that the margin of the classifier is set accordingly). In contrast, if two odor representations differed by only one neuron, a pattern with an accidental change in a single neuron could instead be misclassified.

In this sense it should thus be beneficial to reduce the risk of large overlaps of odor representations when designing the olfactory system. In divergent projections, such as the AL to MB projection, it is well known that patterns in MB become generally less overlapping if activations are sparse. To investigate this property in our framework in a quantitative way, we compute the exact probability of finding shared neurons in two odor representations in MB for a given amount of shared activations in AL. Thus, we ask: for individual activation patterns, if we have a certain overlap in AL, what is the expected overlap in MB?

Assume that $X^1 = (x^1_1, \ldots, x^1_{MN_G})$ and $X^2 = (x^2_1, \ldots, x^2_{MN_G})$ are two {0, 1}-vectors with MNG elements representing the PN activation patterns in response to two odors. Each odor activates exactly A glomeruli (thus MA PNs). However, some of the activated glomeruli might be identical (Wilson and Mainen, 2006), that is, both odors potentially have an overlapping representation in AL. This situation is illustrated in Figure 1B (green and red circles). Let o be the number of commonly activated glomeruli, so that the number of commonly activated PNs is $X^1 \cdot X^2 = Mo$. Since $|X^1| = |X^2| = MA$ PNs are always activated, the overlap normalized by the number of activated PNs is

$$ov_{AL} = \frac{X^1 \cdot X^2}{MA} = \frac{o}{A}. \tag{30}$$

The PNs project to the KCs, which in turn fire according to their synaptic input from the PNs. Let $Y^1 = (y^1_1, \ldots, y^1_{N_K})$ and $Y^2 = (y^2_1, \ldots, y^2_{N_K})$ be the activation patterns corresponding to X1 and X2 in MB. Note that Y1 and Y2 are {0, 1}-vectors with NK elements (the number of KCs). On average, according to the firing probability of KCs (pK; see Eq. 4), odors will activate pKNK neurons in the mushroom body.

We ask how large the induced overlap of two odor representations in MB will be on average if the overlap between the two odor representations in AL is known to be o.

Since the connectivity structure is independent for each KC, it is enough to consider a single KC. The probability of having a common activation of a single KC for both odors given an overlap o of their AL representations is p(y1 = y2 = 1|o). Thus, NK p(y1 = y2 = 1|o) is the expected number of shared KCs in the representation of the two odors in MB. Since pKNK is the expected number of activated KCs in response to any odor, the (normalized) overlap in MB for a given AL overlap o is

$$\mathrm{ov}_{MB}(o) = \frac{N_K\, p(y^1 = y^2 = 1 \mid o)}{p_K N_K} = \frac{p(y^1 = y^2 = 1 \mid o)}{p_K}.$$

The probability p(y1 = y2 = 1|o) can be calculated exactly using a combinatorial approach. We give the derivation in Methods Section 4.1 (Eq. 39).

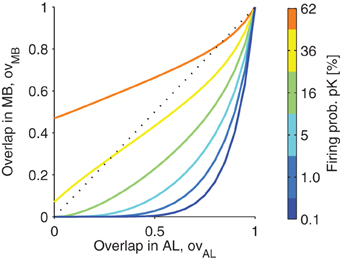

In Figure 5, the overlap of the representations in MB is plotted as a function of the overlap in AL. Note that the function is highly sub-linear, showing that the overlap of representations is reduced as long as the firing in MB is sparse (low firing probability pK; colors in Figure 5).

Figure 5. The projection from antennal lobe to the mushroom body reduces the overlap of two odor representations for sparse codes. Overlap in the mushroom body ovMB is plotted as a function of the overlap of representations in the antennal lobe ovAL. Colors indicate different levels of KC firing probability pK (related to the sparseness of the MB representations). Note that for low pK even highly overlapping representations in the AL are very likely to be non-overlapping in MB. However, for higher firing probabilities pK, this effect diminishes. Parameters: M = 3, NG = 50, A = 20, 〈C〉 = 10 (Note that with these parameters the firing probability in the AL is 40%).
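The qualitative shape of this relation can be checked with a small Monte Carlo simulation. The sketch below is not the exact combinatorial calculation: it assumes that every KC connects to every PN independently with probability 〈C〉/(MNG), uses an illustrative threshold θ = 7 and NK = 2000, and normalizes by the observed rather than the expected number of active KCs. The function name `simulated_mb_overlap` is hypothetical.

```python
import random


def simulated_mb_overlap(o, M=3, NG=50, A=20, mean_C=10, theta=7, NK=2000, seed=0):
    """Monte Carlo estimate of the normalized MB overlap for an AL overlap of o glomeruli."""
    if not max(0, 2 * A - NG) <= o <= A:
        raise ValueError("o must allow two odors with A glomeruli each")
    rng = random.Random(seed)
    p_connect = mean_C / (M * NG)        # assumed independent connection probability
    odor1 = set(range(A))                # glomeruli activated by odor 1
    odor2 = set(range(o)) | set(range(A, 2 * A - o))   # shares exactly o glomeruli
    fired1 = fired2 = fired_both = 0
    for _ in range(NK):
        n1 = n2 = 0                      # active synaptic inputs under each odor
        for g in range(NG):
            for _sister in range(M):     # sister cells connect independently
                if rng.random() < p_connect:
                    n1 += g in odor1
                    n2 += g in odor2
        y1, y2 = n1 >= theta, n2 >= theta
        fired1 += y1
        fired2 += y2
        fired_both += y1 and y2
    mean_fired = (fired1 + fired2) / 2
    return fired_both / mean_fired if mean_fired else float("nan")


# AL overlap o/A against the simulated MB overlap.
for o in (0, 10, 20):
    print(o / 20, round(simulated_mb_overlap(o), 3))
```

Lowering `theta` makes the KC code denser, which in this sketch should move the simulated curve closer to the diagonal.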

2.7. Probability of Having Distinct Odor Representations in MB

A challenge for the olfactory system is to ensure that sensory information can still be read out from the MB after projecting the AL odor representations to the MB. In particular, information about odor identity should not be lost. For instance, consider the situation illustrated in Figure 1D. Two odors, odor 1 (red) and odor 2 (blue), have different representations in AL, X1 and X2, with X1 ≠ X2. However, there is a chance that, due to the particular structure of the connections, the corresponding activation patterns in the mushroom body, say Y1 and Y2, happen to be identical, i.e., Y1 = Y2. This situation results in the loss of the information about odor identity, since the two odors cannot be distinguished based on the KC activation pattern alone, even if the decoding were perfect.

In fact, a hypothetical decoder of the odor identity from the MB representation should usually generalize across patterns that are very similar. If a classification is to be made whether Y1 and Y2 are representations of different odors or noisy versions of the same odor, classifiers would usually make a decision based on the similarity of Y1 and Y2. If one defines the similarity by counting the number of KCs responding differently, i.e., D(Y1, Y2) ≡ |Y1 − Y2|², the classifier might decide that the odors are different if at least k KCs respond differently, D(Y1, Y2) ≥ k; otherwise the classifier might assume that the odors are identical and that the minor difference between Y1 and Y2 is a result of noise.
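As a small illustration of this decision rule, the following sketch computes D for binary patterns and applies the threshold k. The eight-KC patterns and the function names are assumptions chosen for the example only.

```python
def distance(y1, y2):
    """D(Y1, Y2) = |Y1 - Y2|^2; for binary patterns, the number of KCs responding differently."""
    return sum((a - b) ** 2 for a, b in zip(y1, y2))


def judged_different(y1, y2, k):
    """Readout rule described in the text: report distinct odors if and only if D >= k."""
    return distance(y1, y2) >= k


y_ref = (1, 0, 0, 1, 0, 1, 0, 0)      # toy KC pattern
y_noisy = (1, 0, 0, 1, 0, 0, 0, 0)    # the same pattern with one KC dropped out
y_other = (0, 1, 0, 1, 0, 0, 1, 0)    # four KCs respond differently

print(judged_different(y_ref, y_noisy, k=3), judged_different(y_ref, y_other, k=3))
```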

How k should be chosen depends on the distribution of odors that have to be detected as well as on the classifier used for distinguishing odor representations in MB, and is thus unknown. However, to enable the readout of the information from the MB representation, the olfactory system should be designed to minimize the probability that AL activations to distinct odors, X1 ≠ X2, result in MB representations that are very similar, e.g., D(Y1, Y2) < k.

Such faithful transmission of the odor identity sets constraints on the design of the network, e.g., on the sparsity of the MB activation and on the connectivity structure (〈C〉). For example, if the firing threshold θ is small or the number of input connections is large, many KCs fire regardless of the pattern in AL. Thus, MB would lose its selectivity to odors: the probability of having two similar KC patterns in response to two distinct odors in AL becomes large. Conversely, if the firing is too sparse, KCs may not respond at all, regardless of the odor, and the information about the odor identity would equally be lost.
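How the firing threshold and the connectivity jointly set the KC firing level can be sketched numerically. The snippet assumes that each KC connects to each of the MNG PNs independently with probability 〈C〉/(MNG), so that the number of active inputs is binomially distributed over the MA active PNs; this is an assumption of the sketch, and the function name `kc_firing_probability` is hypothetical.

```python
from math import comb


def kc_firing_probability(theta, mean_C, M=3, NG=50, A=20):
    """P(number of active inputs >= theta) under independent random connectivity."""
    c = mean_C / (M * NG)     # assumed per-PN connection probability
    n_active = M * A          # PNs activated by any odor
    return sum(comb(n_active, n) * c ** n * (1 - c) ** (n_active - n)
               for n in range(max(theta, 0), n_active + 1))


# A low threshold makes nearly all KCs fire; a high threshold silences them.
for theta in (1, 4, 7, 10):
    print(theta, round(kc_firing_probability(theta, mean_C=10), 4))
```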

In the following, we compute the probability that two distinct patterns in AL, X1 ≠ X2, result in MB activations, Y1 and Y2, that are far apart, D(Y1, Y2) ≥ k. This probability p(D(Y1, Y2) ≥ k|X1 ≠ X2) is equal to 1 minus the probability that the MB activations are very similar:

$$p\big(D(Y^1, Y^2) \geq k \mid X^1 \neq X^2\big) = 1 - p\big(D(Y^1, Y^2) < k \mid X^1 \neq X^2\big).$$

Because exactly A glomeruli in the AL are activated in response to an odor, and since each glomerulus contains M sister cells (with identical responses), the condition X1 ≠ X2 is equivalent to requiring that the number of commonly activated glomeruli is less than A. We call these glomeruli the “overlapping” glomeruli between X1 and X2 and write p(o) for the probability of having o overlaps. The probability of similar MB activations is then obtained by averaging p(D(Y1, Y2) < k|o) over all overlaps o < A, weighted by p(o).
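Under the definitions above, the law of total probability suggests one form this decomposition can take. The expression below is a reconstruction under that assumption (the condition X1 ≠ X2 is imposed by restricting the sum to o < A and renormalizing p(o)); it is offered as a reading consistent with the later reference to Eq. 33, not as a verified transcription:

```latex
p\big(D(Y^1, Y^2) < k \mid X^1 \neq X^2\big)
  = \frac{\sum_{o=0}^{A-1} p\big(D(Y^1, Y^2) < k \mid o\big)\, p(o)}
         {\sum_{o=0}^{A-1} p(o)}
```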

The probability p(o) can be computed as follows. An odor activates A glomeruli. There exist $\binom{N_G}{A}$ ways to select those out of the altogether NG glomeruli. Assume that o of the A glomeruli are shared between the two odors. Since there are $\binom{A}{o}\binom{N_G - A}{A - o}$ ways to choose exactly o common glomeruli and A − o non-shared glomeruli, the probability of finding exactly o commonly activated glomeruli in both odors is therefore

$$p(o) = \frac{\binom{A}{o}\binom{N_G - A}{A - o}}{\binom{N_G}{A}}.$$
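This counting argument describes a hypergeometric distribution, which can be evaluated directly. The sketch assumes that the second odor activates A glomeruli chosen uniformly at random; the function name `overlap_probability` and the default values NG = 50, A = 20 (taken from the figure parameters) are choices made for the example.

```python
from math import comb


def overlap_probability(o, NG=50, A=20):
    """Probability that two odors, each activating A of NG glomeruli, share exactly o."""
    if o < 0 or o > A:
        return 0.0
    return comb(A, o) * comb(NG - A, A - o) / comb(NG, A)


p = [overlap_probability(o) for o in range(21)]
mean_overlap = sum(o * q for o, q in enumerate(p))
# Total probability, expected number of shared glomeruli, and P(identical AL patterns).
print(sum(p), mean_overlap, p[20])
```

The expected overlap equals A²/NG, and the probability that two random odors have identical AL patterns, p(A), is negligible for these parameters.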

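Because each KC receives uncorrelated synaptic connections from PNs, the probability p(D(Y1, Y2) < k|o) in Eq. 33 can be further decomposed in terms of individual KCs. Each of the NK KCs responds identically to both odors with probability p(y1 = y2|o), independently of the others, so that the number of differently responding KCs is binomially distributed:

$$p\big(D(Y^1, Y^2) < k \mid o\big) = \sum_{j=0}^{k-1} \binom{N_K}{j} \big[1 - p(y^1 = y^2 \mid o)\big]^{j}\, p(y^1 = y^2 \mid o)^{N_K - j}.$$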

It is thus enough to compute the probability that one KC responds identically to both odors, p(y1 = y2|o). This probability is given by adding the probability that both odors evoke firing, y1 = y2 = 1, and the probability that neither odor evokes firing, y1 = y2 = 0. Hence

$$p(y^1 = y^2 \mid o) = p(y^1 = 1, y^2 = 1 \mid o) + p(y^1 = 0, y^2 = 0 \mid o).$$

In Methods Section 4.1, we show how p(y1 = 1, y2 = 1|o) can be calculated. p(y1 = 0, y2 = 0|o) is computed analogously (by changing the range of summation in Eq. 42 accordingly).

Taken together, we have found an expression for the probability that two odor representations differ by at least k KC activations in MB, conditioned on whether they are distinct in AL, namely p(D(Y1, Y2) ≥ k|X1 ≠ X2).
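The full chain from AL overlap to the probability of similar MB patterns can be sketched in a few functions. Several assumptions are made here and should be read as such: connections are drawn independently with probability 〈C〉/(MNG), which lets the per-KC probabilities be written with independent binomials instead of the combinatorial sums of the Methods; the condition X1 ≠ X2 is imposed by renormalizing p(o) over o < A; and all function names are hypothetical.

```python
from math import comb, exp, lgamma, log


def binom_pmf(j, n, p):
    return comb(n, j) * p ** j * (1 - p) ** (n - j)


def prob_kc_differs(o, theta, c, M, A):
    """P(y1 != y2 | o) for one KC with independent connections of probability c."""
    shared, own = M * o, M * (A - o)
    total = 0.0
    for wc in range(shared + 1):
        need = max(theta - wc, 0)    # inputs still missing after the shared ones
        # probability that the odor-specific inputs lift the KC to threshold
        t = 1.0 if need == 0 else sum(binom_pmf(w, own, c) for w in range(need, own + 1))
        total += binom_pmf(wc, shared, c) * 2 * t * (1 - t)
    return total


def prob_distance_below(k, q_diff, NK):
    """P(D < k) for D ~ Binomial(NK, q_diff), evaluated in log space."""
    if q_diff <= 0.0:
        return 1.0 if k > 0 else 0.0
    if q_diff >= 1.0:
        return 1.0 if k > NK else 0.0
    log_terms = (lgamma(NK + 1) - lgamma(j + 1) - lgamma(NK - j + 1)
                 + j * log(q_diff) + (NK - j) * log(1 - q_diff)
                 for j in range(min(k, NK + 1)))
    return min(1.0, sum(exp(t) for t in log_terms))


def p_loss(k, theta, mean_C, M=3, NG=50, A=20, NK=2000):
    """p(D(Y1, Y2) < k | X1 != X2) under the assumptions stated above."""
    c = mean_C / (M * NG)
    weights = [comb(A, o) * comb(NG - A, A - o) / comb(NG, A) for o in range(A)]
    loss = sum(w * prob_distance_below(k, prob_kc_differs(o, theta, c, M, A), NK)
               for o, w in enumerate(weights))
    return loss / sum(weights)


for theta in (1, 5, 9, 13):
    print(theta, p_loss(k=50, theta=theta, mean_C=10))
```

Scanning `theta` and `mean_C` on a grid with this function gives a rough way to explore where the probability of similar MB patterns is low; it is not guaranteed to reproduce the published contours.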

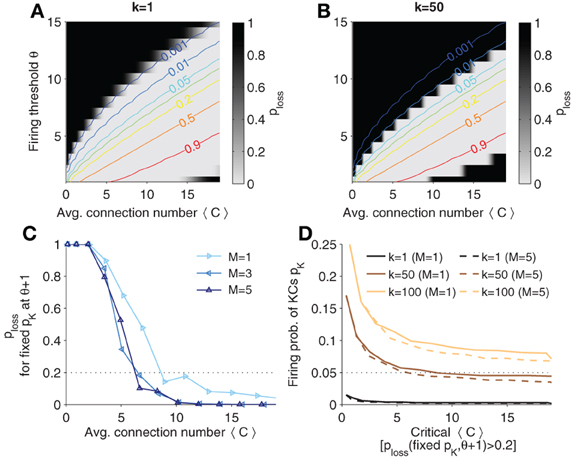

In Figures 6A,B, the probability of having two similar patterns in MB when the AL patterns are different, p(D(Y1, Y2) < k|X1 ≠ X2) (termed ploss, “probability of information loss,” in the following), is shown in contour plots for two different thresholds k. Lines of constant KC firing probability are plotted in color code. Note that ploss is high (dark areas) if the firing threshold is chosen too large (KC firing is too sparse). Analogously, if firing is too strong, ploss is high again.

Figure 6. Probability that distinct odor patterns in AL project to MB patterns less than k apart, ploss = p(D(Y1, Y2) < k|X1 ≠ X2). (A,B) Contour plots of ploss for k = 1 and k = 50, respectively. Colored lines are (smoothed) isolines of constant firing probability pK. (C) Probability ploss evaluated at isolines of constant pK = 0.05 (as indicated in B; here k = 50), but with θ increased by 1. Note that the probability increases above 0.2 if 〈C〉 is too low (dotted line). (D) Sparsest allowable code. Plotted is the critical 〈C〉 against levels of firing probability pK for different k and M. A firing probability below the indicated lines would result in a high ploss (when θ is varied by one). The critical 〈C〉 is determined as the point where ploss crosses the threshold (as exemplified in C). Colors show different values of k. Sister cells have little effect on the maximal allowable sparseness (compare solid to dashed lines). Parameters: NK = 2000, NG = 50, A = 20.

If one gradually decreases the firing threshold for a fixed average number of synaptic inputs 〈C〉, one reaches an area where the probability of information loss quickly vanishes (white areas) and remains near zero. In this area the network can accomplish faithful information processing in the sense that distinct patterns in AL are distinct in MB (from the viewpoint of a readout) with high probability.

The probability p(D(Y1, Y2) ≥ k|X1 ≠ X2) yields constraints if one has requirements on the discriminability of odors in the MB representation. If the probability is high, distinct odor activations in AL are likely to project to dissimilar representations in MB, so that a classifier could discriminate odors well. The larger k, the more dissimilar the MB representations are required to be, and the more easily a classifier can discriminate between odor patterns. On the other hand, for larger k generalization capabilities improve as well, because noisy versions of the odor pattern in MB would generalize to the same odor if fewer than k KCs fire differently. From Figure 6B we see that the higher value k = 50 shifts the probability landscape (as compared to k = 1 in Figure 6A), and this sets different constraints on sparseness.

In the previous section, we showed that sparse codes separate patterns. What is the sparsest code allowed? To quantify the sparsest possible firing rate for a given k, we fixed pK and looked at ploss for the corresponding θ and 〈C〉. Intuitively, the sparsest code will be on the edge of the white area in Figure 6B. For instance, the line of pK = 0.05 is very close to the border (see Figure 6B). However, if the code is too sparse, then ploss will change dramatically for a small perturbation in, e.g., θ. To find the sparsest code, we thus evaluated ploss on the line of constant pK with the corresponding θ increased by one. This probability is plotted for different sister cell numbers in Figure 6C (with pK = 0.05 and k = 50). One notes that the sparsest allowable code depends on the connectivity as well. We define the sparsest allowable code as the one where the probability ploss crosses a threshold, ploss = 0.2 (see dotted line in Figure 6C). For instance, pK = 0.05 is the sparsest allowable firing probability when 〈C〉 ≈ 5, with little dependence on the sister cell number. In other words, if pK = 0.05, the average number of connections cannot be below 〈C〉 ≈ 5 if the system has to ensure discriminability with k = 50.

Figure 6D shows how k affects the highest allowable sparsity level as well as the minimal connectivity 〈C〉 (estimated as described in Figure 6C). If k is increased, denser codes are required. For instance, if 〈C〉 = 10 and it is required that at least k = 100 KCs fire differently for distinct odors, the firing probability pK should be at least 0.1 to ensure that ploss is low.

3. Discussion

3.1. Sister Cells Increase Robustness

We have built a feed-forward network model to understand the organizational principles of the Drosophila olfactory system. By analyzing the structure of the network and the effect of intrinsic and extrinsic noise, we found that homotypic projection neurons (referred to as sister cells in this paper) are particularly helpful in promoting the robustness of the KCs' sparse code to extrinsic noise. Extrinsic noise is here modeled as random activation or inactivation of projection neurons perturbing the mushroom body's odor representation. While increasing the sheer number of PNs used for the odor representation in AL increases the robustness to noise as well, it turns out that inserting sister cells is much more efficient, as the noise vanishes with 1/M (M being the number of sister cells per glomerulus). The crucial mechanism is the strong gap-junction coupling of sister cells, which increases their correlation and therefore reduces the probability of accidental activation or inactivation.

Although the strength of noise the system has to cope with, as well as the level of noise which is still tolerable in the MB representation, is unknown, our model suggests that a few sister cells per glomerulus (e.g., 4–5) might be sufficient given the strong 1/M noise dependence. This result is consistent with the experimental literature, where typically 2–5 highly correlated sister cells are found per glomerulus in Drosophila (Stocker et al., 1990).

Other species might use strategies other than sister cells to tackle noise. For instance, in locusts the number of PNs is large [≈830 (Leitch and Laurent, 1996)] and they seem to lack sister cells similar to those of flies (Martin et al., 2011). As mentioned, increasing the sheer number of PNs indeed increases robustness to noise, although to a lesser degree. Therefore, additional mechanisms might be necessary to reduce noise in the locust. Indeed, it has been reported that a wide-field interneuron in the locust MB can improve the robustness of the sparse code via feedback modulation (Papadopoulou et al., 2011), suggesting that the locust olfactory system might have a different strategy.

Although evidence for sister cells in other insects remains scarce, cells anatomically similar to the sister cells of Drosophila have been described in other species. For instance, in the frog, similar gap-junction coupled cells have been found in glomeruli (coherent mitral/tufted cells) (Chen et al., 2009). Interestingly, each glomerulus had only a few such cells (2–7), comparable to the situation in flies, suggesting that these cells might have a similar functional role in the reduction of extrinsic noise. Another example is mitral cells in mice, which are also anatomically comparable to sister cells in Drosophila, as they receive inputs from the same glomeruli and project to a structure analogous to the KCs (Dhawale et al., 2010; Padmanabhan and Urban, 2010; Tan et al., 2010). However, in contrast to Drosophila, where sister cells receive almost identical feed-forward input from ORNs and are additionally electrically coupled, mitral cells in mice have an intrinsic biophysical diversity (Padmanabhan and Urban, 2010) and are embedded into an inhibitory network causing more complex temporal dynamics (Dhawale et al., 2010; Tan et al., 2010). Thus, since mitral cells seem not to be correlated as strongly as Drosophila sister cells, their functional role in the olfactory system of mice is likely to be different and not directly comparable to that of sister cells in Drosophila.

Variability due to intrinsic noise, on the other hand, is less dependent on the number of sister cells. Although variability is reduced when introducing sister cells (mainly because of a slight broadening of the synaptic input distribution), the effect saturates quickly with increasing sister cell number. We thus conclude that strategies other than increasing the sister cell number might be employed to cope with intrinsic noise.

Interestingly, the average number of connections a KC receives, 〈C〉, has opposite influences on intrinsic and extrinsic noise. In principle, weighing the importance of both types of noise against each other would thus allow one to find an optimal value for the connectivity number. However, the strengths and importance weightings of the respective noise types, as well as potential other biological constraints on the connectivity structure, are unknown, so that calculating the “most robust” connectivity number remains elusive. Nevertheless, different species are potentially exposed to different degrees of noise and thus might optimize the connectivity number according to their requirements. For instance, in contrast to the fly, it was found that in the locust KCs receive inputs from almost 50% of the PNs (Jortner et al., 2007). This high connectivity would favor the reduction of intrinsic over extrinsic noise. One might speculate that the locust, with its greater number of neurons in the olfactory system (Leitch and Laurent, 1996), is more challenged by intrinsic noise than by extrinsic noise and thus might have evolved a high connectivity.

3.2. Computational Aspects

A well-accepted concept from machine learning and reservoir computing is that a divergent projection enables a system to improve its computational capabilities, as representations are re-coded into a higher dimensional space where they become well separated, so that the extraction of information often requires less sophisticated read-outs, e.g., linear instead of non-linear classifiers (Maass et al., 2002; Bishop and Nasrabadi, 2006; Jaeger, 2007; Barak et al., 2013). Accordingly, in our model of the olfactory system, the divergent projection helps to separate AL representations when projected into the MB: we show that for sparse KC firing the number of shared activations in two odors is reduced (see Figure 5).

Taking the connectivity constraints of the Drosophila olfactory system into account, such as a random, sparse, and purely excitatory projection, we further derived the probability that distinct input patterns (in AL) have similar representations in MB (the “reservoir”). This probability is at the core of a hypothetical read-out trying to discriminate between two odors: the parameter k regulates how far separated distinct representations in MB have to be. Any classifier of the neural responses will in some sense rely on the distance to distinguish responses to two odor patterns. Thus, higher k potentially improves the discrimination capabilities. We found that requiring a large separation of odor patterns puts constraints on the sparseness of the representation. Codes that are too sparse will not provide the required distance. For instance, if fewer than k/2 neurons are activated by each of two odors, the number of differently activated neurons is necessarily lower than k, so that the two odor patterns become too close. Furthermore, the lowest allowable firing probability also constrains the connectivity: having too few input connections will not allow the system to achieve the firing level required for a stable odor representation.

If one assumes a very low firing probability pK, as suggested by recent evidence (Campbell et al., 2013), for instance pK = 0.05, one can attempt to make a prediction on the minimal number of connections 〈C〉. Although this critical number of connections depends on the discrimination requirements (parameter k), we find that, e.g., for k = 50 (that is, 2.5% of the KCs have to be different for distinct odors) the average number of connections cannot be chosen smaller than 〈C〉 ≈ 6–8 (depending somewhat on the number of sister cells; see Figure 6C). This value agrees with the experimental literature, where 10 inputs per KC (i.e., each KC receives connections from about 6.6% of the PNs) were measured (Turner et al., 2008), suggesting that the olfactory system might operate close to the maximally allowed sparsity level.

It is well known that discrimination ability trades off with generalization capability (Barak et al., 2013). In this sense, a larger k also allows for better generalization, because nearby patterns in MB could be assigned as noisy versions of the same odor. Apart from this viewpoint of a classifier extracting the information from the output network alone, other strategies for better generalization capabilities might be implemented in neural systems. Here we put forward a new hypothesis of how better generalization capabilities might be achieved in the specific case of Drosophila: by the insertion of highly correlated sister cells into glomeruli to enhance noise robustness already at the level of the inputs.

3.3. Assumption of the Model Framework

We analyzed the design principles of the AL to MB projection in Drosophila. Our mathematical model is similar to the mathematical framework developed previously for analyzing the olfactory network of locusts (García-Sanchez and Huerta, 2003). While we build on the earlier work, some crucial aspects of the Drosophila network differ from those of the locust. In particular, lateral inhibition between glomeruli ensures that the total activity of PNs in response to any odor is roughly constant in flies (Luo et al., 2010; Olsen et al., 2010). Regarding the number of activated glomeruli as fixed (the parameter A) thus allowed us to derive analytical expressions and approximations, in contrast to the mainly numerical evaluation of the earlier study in the locust (García-Sanchez and Huerta, 2003). Moreover, we here develop methods for investigating robustness to noise and focus on the special role of sister cells in the fly, which are absent in the locust.

Our network model makes several assumptions. First, we assumed that the sparse coding in MB is mainly determined by feedforward synaptic input and not, for instance, by recurrent processing in MB. Experimental evidence suggests that this assumption is biologically plausible, because the influence of (potential) recurrent interactions seems to be weak (Turner et al., 2008). A recent study reporting that the KC firing activity can be described by a linear combination of PN inputs together with a threshold mechanism points in the same direction (Li et al., 2013).

Second, sister cells in our model project independently to KCs in MB, which seems to be well supported by experimental data (Masuda-Nakagawa et al., 2005; Kazama and Wilson, 2009; Caron et al., 2013).

Third, the neuron model consists of simple binary neurons and thus neglects any graded activity (firing rate) or temporal structure of the odor representation. In simulations, we found that implementing graded activity in projection neurons does not influence the qualitative results. Moreover, experimental evidence suggests that temporal dynamics do not seem to play a prominent role in the olfactory coding of flies, in contrast to, e.g., the locust, where spatio-temporal dynamics might be an important aspect of the code (Wehr and Laurent, 1996; Mazor and Laurent, 2005). Thus, at least for Drosophila, our approach of coincident spatial coding seems reasonable.

Fourth, we used a fixed number of activated glomeruli to model the AL activity pattern in response to odorant input. This is reasonable because of the effect of a divisive normalization operation in AL, which effectively equalizes the total activity of PNs regardless of odorant identity and concentration. In reality, the number of activated glomeruli may vary somewhat in response to odorant input. However, this is not likely to affect the main results of our study.

Finally, we did not consider a possible variation of the number of sister cells for some selected glomeruli, as suggested experimentally (Stocker et al., 1990). However, a variation in the number of sister cells per glomerulus would not change our qualitative results. The model would predict that a variation in the number of sister cells per glomerulus indicates a disproportionate sensitivity to noise for those glomeruli having more sister cells.

4. Methods

4.1. Derivation of the KC Firing Probability for Given Overlap o

Here we derive the probability p(y1 = 1, y2 = 1|o) that a KC fires in response to each of two odors that share exactly o activated glomeruli in AL.

To fire in response to both odors, a KC has to receive enough synaptic inputs to reach the threshold θ for both odors. Since the input connections are random, one can decompose

$$p(y^1 = 1, y^2 = 1 \mid o) = \sum_{C} p(y^1 = 1, y^2 = 1 \mid o, C)\, p(C),$$

where the connection structure p(C) is given by the binomial distribution Eq. 1.

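We can decompose further when considering that a KC receives exactly wc synaptic inputs from the Mo commonly activated PNs of X1 and X2:

$$p(y^1 = 1, y^2 = 1 \mid o, C) = \sum_{w_c} p(y^1 = 1, y^2 = 1 \mid o, w_c, C)\, p(w_c \mid o, C). \tag{39}$$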

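The probability p(wc|o, C) can be calculated as follows. Assume that the KC receives C connections from random PNs. There exist $\binom{MN_G}{C}$ ways to connect to C of the altogether MNG PNs. Since there are $\binom{Mo}{w_c}\binom{MN_G - Mo}{C - w_c}$ ways in which exactly wc of the C connections come from the overlapping glomeruli and the other C − wc connections come from the remaining MNG − Mo PNs, one can write

$$p(w_c \mid o, C) = \frac{\binom{Mo}{w_c}\binom{MN_G - Mo}{C - w_c}}{\binom{MN_G}{C}}.$$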

Note that p(wc|o, C) follows a hypergeometric distribution, which describes the probability of wc successes in C draws without replacement from a population of size MNG containing Mo successes.

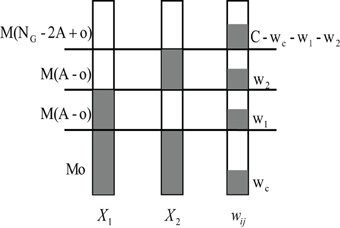

We now look more closely at the probability p(y1 = 1, y2 = 1|o, wc, C) in Eq. 39. Apart from the wc active synaptic inputs from the shared glomeruli, the KC still gets other inputs from active glomeruli that are not shared. Assume that it receives w1 of the altogether C inputs from the M(A − o) other activated PNs of odor 1, w2 inputs from the M(A − o) other activated PNs of odor 2, and the remaining C − wc − w1 − w2 input connections from the non-activated PNs. We can then compute the probability p(w1, w2|o, wc, C) as (compare to Figure 7):

$$p(w_1, w_2 \mid o, w_c, C) = \frac{\binom{M(A - o)}{w_1}\binom{M(A - o)}{w_2}\binom{M(N_G - 2A + o)}{C - w_c - w_1 - w_2}}{\binom{M(N_G - o)}{C - w_c}}.$$

Figure 7. Schematic diagram for calculating the joint probability p(w1, w2|o, wc, C). Suppose two input patterns X1 and X2 have o overlapping activated glomeruli, and the ith KC connects to wc PNs within these Mo shared PNs. The KC connects altogether to C PNs. It thus additionally receives w1 and w2 inputs from the M(A − o) non-overlapping but activated PNs of patterns X1 and X2, respectively. Finally, C − wc − w1 − w2 connections come from non-activated PNs.

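Finally, p(y1 = 1, y2 = 1|o, wc, C) can be computed by adding the probabilities of all cases where the amount of input reaches the threshold for both odors, namely wc + w1 ≥ θ and wc + w2 ≥ θ:

$$p(y^1 = 1, y^2 = 1 \mid o, w_c, C) = \sum_{w_1 \geq \theta - w_c}\ \sum_{w_2 \geq \theta - w_c} p(w_1, w_2 \mid o, w_c, C). \tag{42}$$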

Taken together we have derived the probability p(y1 = 1, y2 = 1|o).
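The nested decomposition of this section (sum over C, then over wc, then over w1 and w2) can be evaluated numerically as sketched below. The sketch assumes that p(C) is binomial with connection probability 〈C〉/(MNG), takes the counting of the odor-specific and non-activated PNs from the description above, and skips values of C whose probability falls below a small tolerance `tol`. The function name `p_both_fire` and the tolerance are choices made for this example.

```python
from math import comb


def p_both_fire(o, theta, mean_C, M=3, NG=50, A=20, tol=1e-12):
    """p(y1 = 1, y2 = 1 | o) via the sums over C, wc, w1 and w2."""
    n_pn = M * NG
    c = mean_C / n_pn                    # assumed binomial connection probability
    shared = M * o                       # PNs activated by both odors
    own = M * (A - o)                    # PNs activated by only one of the odors
    silent = n_pn - shared - 2 * own     # PNs activated by neither odor
    if silent < 0:
        raise ValueError("overlap o is too small for these NG and A")
    total = 0.0
    for C in range(n_pn + 1):
        p_C = comb(n_pn, C) * c ** C * (1 - c) ** (n_pn - C)
        if p_C < tol:                    # negligible connectivity values
            continue
        for wc in range(min(C, shared) + 1):
            # hypergeometric probability of wc connections to shared PNs
            p_wc = comb(shared, wc) * comb(n_pn - shared, C - wc) / comb(n_pn, C)
            if p_wc == 0:
                continue
            rest = C - wc                # connections to non-shared PNs
            lo = max(theta - wc, 0)      # inputs still needed to reach threshold
            ways = 0
            for w1 in range(lo, min(own, rest) + 1):
                for w2 in range(lo, min(own, rest - w1) + 1):
                    ways += (comb(own, w1) * comb(own, w2)
                             * comb(silent, rest - w1 - w2))
            total += p_C * p_wc * ways / comb(n_pn - shared, rest)
    return total


print(p_both_fire(o=10, theta=7, mean_C=10))
```

Under the independent-connection assumption, the result can be cross-checked against a product of independent binomial tail probabilities, which is a useful sanity test for the combinatorial sums.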

4.2. Response Variability to General Extrinsic Noise

In the main text we considered only the case in which m previously quiescent PNs are activated as a result of noise. However, in principle, previously activated cells might also become silent because of the noise perturbation. Here we derive this more general case of extrinsic noise.

Assume that m1 active PNs become silent and m2 quiescent PNs become active in response to a noise perturbation. We calculate the resulting change in the firing probability of a KC. Suppose a KC receives n active inputs without noise. The probability that its input is reduced by n1 when m1 PNs are silenced is given by the chance that n1 of the m1 silenced cells are among the n cells connected to the KC (hypergeometric distribution).

If m2 quiescent neurons become activated, the probability of a KC receiving n2 additional synaptic inputs from these additionally activated PNs follows a binomial distribution.

Finally, to obtain the total noise-induced change in firing probability $\Delta p_K^{\mathrm{extr}}$, we have to add the probability of the cases in which n < θ before but n − n1 + n2 ≥ θ after the perturbation (more firing) and the probability of the cases in which n ≥ θ before and n − n1 + n2 < θ after the perturbation (less firing).

Note that in our case the noise-induced silencing and activation of cells are independent, i.e., p(n1, n2|n) = p(n1|n)p(n2).
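The general case can be sketched numerically as follows. The explicit forms used here are reconstructions from the verbal description and should be read as assumptions: n is taken to be binomial over the MA active PNs with connection probability 〈C〉/(MNG), n1 is hypergeometric (m1 silenced PNs drawn from the MA active ones, n of which are connected), and n2 is binomial over the m2 newly activated PNs. The function names are hypothetical.

```python
from math import comb


def binom_pmf(j, n, p):
    return comb(n, j) * p ** j * (1 - p) ** (n - j)


def extrinsic_flip_probability(m1, m2, theta, mean_C, M=3, NG=50, A=20):
    """Probability that a KC changes its output when m1 PNs are silenced and m2 activated."""
    c = mean_C / (M * NG)       # assumed per-PN connection probability
    n_active = M * A
    if not 0 <= m1 <= n_active or not 0 <= m2 <= M * (NG - A):
        raise ValueError("m1 or m2 outside the available PN pools")
    total = 0.0
    for n in range(n_active + 1):                 # active inputs before noise
        p_n = binom_pmf(n, n_active, c)
        for n1 in range(min(n, m1) + 1):          # inputs lost by silencing
            p_n1 = (comb(n, n1) * comb(n_active - n, m1 - n1)
                    / comb(n_active, m1))
            if p_n1 == 0:
                continue
            for n2 in range(m2 + 1):              # inputs gained by activation
                after = n - n1 + n2
                # count both "more firing" and "less firing" transitions
                if (n >= theta) != (after >= theta):
                    total += p_n * p_n1 * binom_pmf(n2, m2, c)
    return total


for m in (0, 3, 6):
    print(m, extrinsic_flip_probability(m1=m, m2=m, theta=7, mean_C=10))
```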

4.3. Simulation of Graded Responses in AL

As we have only considered binary neurons in the main text for mathematical simplicity, we here analyze in a simulation whether the results generalize to neurons with graded activities.

Using binary neurons is a simplification, as PNs respond with a certain firing rate when presented with an odor. In this section, we test whether graded activities change the main results on noise robustness derived for binary cells in the main text.

Each odor is represented by a subset of A activated glomeruli. We assume that non-activated PNs are silent, i.e., their firing rate is 0. Considering that the total sum of rates of all activated PNs in response to an odor stimulation is approximately constant [because of lateral divisive normalization (Luo et al., 2010)], we set the firing rate xi of an activated PN of the ith glomerulus to

$$x_i = R\,\frac{\xi_i}{\sum_j \xi_j},$$

where ξi is a random number drawn from a binomial distribution (with parameters N and p), and R is a parameter determining the response range of the PNs. The normalization by the sum of all ξi ensures that the total activity in response to an odor is constant and equal to MR. Note that all sister cells have the same activation level. The connectivity structure from AL to MB is identical to that described in the main text.
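A graded AL pattern of this kind can be generated as in the following sketch. It assumes the normalized form xi = R ξi / Σj ξj with the sum running over the activated glomeruli, which is the reading under which the total PN activity equals MR; the value R = 1.0, the seed, and the function name `graded_pn_rates` are illustrative assumptions.

```python
import random


def graded_pn_rates(active_glomeruli, M=3, NG=50, N=100, p=0.3, R=1.0, rng=None):
    """Firing rates of all M*NG PNs for one odor, with identical rates for sister cells."""
    rng = rng or random.Random(0)
    # xi ~ Binomial(N, p) for each activated glomerulus
    xi = {g: sum(rng.random() < p for _ in range(N)) for g in active_glomeruli}
    total = sum(xi.values())
    if total == 0:
        raise ValueError("all sampled activations are zero; resample")
    rates = []
    for g in range(NG):
        x = R * xi[g] / total if g in xi else 0.0   # silent if not activated
        rates.extend([x] * M)                       # M sister cells share the rate
    return rates


rates = graded_pn_rates(range(20))
print(len(rates), sum(rates))
```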

As a result of noise, response patterns potentially change. To quantify this change, we define the response dissimilarity

of a KC pattern Y ≠ 0 with its noise-perturbed version Y′. Note that E is zero if Y′ = Y and is smaller than 1 if Y′ differs by less than the number of activated KCs in Y. In the numerical simulations, we randomly apply 1000 input patterns and calculate the response of KCs with and without noise. Then we calculate the sample mean and standard error of the dissimilarities E. When varying parameters (such as M and 〈C〉), we hold the firing probability of KCs fixed to 0.05, as done in the case of binary neurons.
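One dissimilarity measure with the stated properties is the number of KCs that respond differently divided by the number of activated KCs in Y. The sketch below uses this form as an assumption, together with the sample mean and standard error described above; the function names are hypothetical.

```python
from statistics import mean, stdev


def dissimilarity(y, y_noisy):
    """Assumed form of E: differing KCs divided by the number of active KCs in Y."""
    active = sum(y)
    if active == 0:
        raise ValueError("Y must contain at least one active KC")
    return sum(a != b for a, b in zip(y, y_noisy)) / active


def mean_and_sem(values):
    """Sample mean and standard error of the mean (needs at least two values)."""
    return mean(values), stdev(values) / len(values) ** 0.5


# Toy example: one of two active KCs is lost under noise.
print(dissimilarity((1, 1, 0, 0), (1, 0, 0, 0)))
print(mean_and_sem([0.0, 0.5, 1.0]))
```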

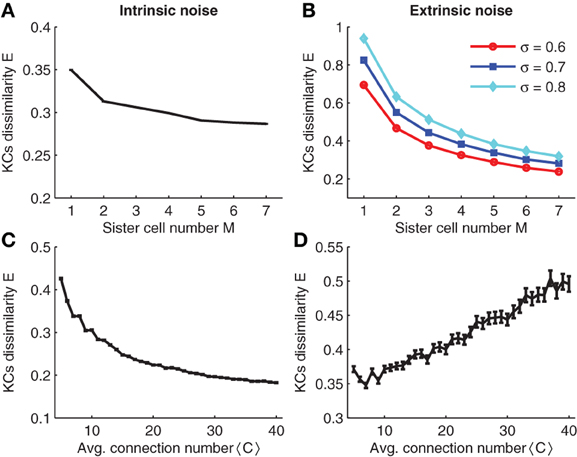

4.3.1. Intrinsic noise

In the simulations, intrinsic noise is a perturbation of the KC firing threshold, i.e., θ → θ + Δθ where Δθ is a Gaussian random number with zero mean and standard deviation σintr. The result of the simulation is plotted in Figure 8A. Note that for different sister cell numbers M, the variability decays in a similar manner as for the binary case (compare Figures 8A and 3A).
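The threshold perturbation can be sketched as follows. It is assumed here that each KC draws its own independent Δθ; the toy input distribution, the threshold of 150, and the function name `flipped_fraction` are illustrative and do not come from the simulations. The returned quantity is the fraction of all KCs that change their output, which differs from the dissimilarity E in its normalization.

```python
import random


def flipped_fraction(inputs, theta, sigma_intr, rng):
    """Fraction of KCs whose binary output changes when each threshold is jittered."""
    flipped = 0
    for s in inputs:
        noisy_theta = theta + rng.gauss(0.0, sigma_intr)   # theta -> theta + delta_theta
        flipped += (s >= theta) != (s >= noisy_theta)
    return flipped / len(inputs)


rng = random.Random(1)
# Hypothetical summed synaptic inputs of 2000 KCs (toy numbers).
inputs = [rng.gauss(100.0, 30.0) for _ in range(2000)]
for sigma in (0.0, 10.0, 40.0):
    print(sigma, flipped_fraction(inputs, theta=150.0, sigma_intr=sigma, rng=rng))
```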

Figure 8. (A,C) Effect of intrinsic noise on KC dissimilarity with respect to sister cell number and average input connection number. (B,D) Effect of extrinsic noise on KC dissimilarity with respect to sister cell number and average input connection number. Parameters: NG = 50, A = 20, N = 100, p = 0.3, pK ≈ 0.05, σintr = 40, σextr = 0.6 in (D), 〈C〉 = 10 in (A and C), and M = 3 in (B,D).

4.3.2. Extrinsic noise

We next tested the effect of extrinsic noise on KC firing variability. Extrinsic noise is introduced by adding Gaussian noise fluctuations to the activity of PNs. That is, the activity in the ith glomerulus in response to an odor stimulus is changed to , where ηi is a Gaussian random number (zero mean and unit variance). Note that the standard deviation of the extrinsic noise term is linearly dependent on the mean firing activity ξi to ensure a constant coefficient of variation. Furthermore, the term ensures that the variation of the noise decreases in the correct manner if strong gap-junction couplings are considered (see Section 2.5).

Simulation results are shown in Figure 8B. Note that sister cells promote the robustness to extrinsic noise in a similar manner as shown for binary neurons (compare to Figure 3B). Analogous to the case of binary neurons, increasing the average number of connections 〈C〉 has opposite effects on intrinsic and extrinsic noise: larger 〈C〉 reduces intrinsic noise while smaller 〈C〉 enhances robustness to extrinsic noise (compare Figures 8C, D).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work is supported by the National Foundation of Natural Science of China (No. 91132702, No. 31261160495, No. 91120305, No. 31261160495), the National High-Tech R&D Program of China (863 Program No. 2012AA011601), the Program for Changjiang Scholars of China, and the High Level Talent Project of Guangdong Province, China.

References

Aso, Y., Grübel, K., Busch, S., Friedrich, A. B., Siwanowicz, I., and Tanimoto, H. (2009). The mushroom body of adult Drosophila characterized by GAL4 drivers. J. Neurogenet. 23, 156–172. doi: 10.1080/01677060802471718

Barak, O., Rigotti, M., and Fusi, S. (2013). The sparseness of mixed selectivity neurons controls the generalization–discrimination trade-off. J. Neurosci. 33, 3844–3856. doi: 10.1523/JNEUROSCI.2753-12.2013

Bishop, C. M., and Nasrabadi, N. M. (2006). Pattern Recognition and Machine Learning. Vol. 1. New York, NY: Springer.

Campbell, R. A., Honegger, K. S., Qin, H., Li, W., Demir, E., and Turner, G. C. (2013). Imaging a population code for odor identity in the Drosophila mushroom body. J. Neurosci. 33, 10568–10581. doi: 10.1523/JNEUROSCI.0682-12.2013

Caron, S. J., Ruta, V., Abbott, L., and Axel, R. (2013). Random convergence of olfactory inputs in the Drosophila mushroom body. Nature 497, 113–117. doi: 10.1038/nature12063

Chen, T.-W., Lin, B.-J., and Schild, D. (2009). Odor coding by modules of coherent mitral/tufted cells in the vertebrate olfactory bulb. Proc. Natl. Acad. Sci. U.S.A. 106, 2401–2406. doi: 10.1073/pnas.0810151106

Chou, Y.-H., Spletter, M. L., Yaksi, E., Leong, J. C., Wilson, R. I., and Luo, L. (2010). Diversity and wiring variability of olfactory local interneurons in the Drosophila antennal lobe. Nat. Neurosci. 13, 439–449. doi: 10.1038/nn.2489

DeWeese, M. R., Wehr, M., and Zador, A. M. (2003). Binary spiking in auditory cortex. J. Neurosci. 23, 7940–7949.

Dhawale, A. K., Hagiwara, A., Bhalla, U. S., Murthy, V. N., and Albeanu, D. F. (2010). Non-redundant odor coding by sister mitral cells revealed by light addressable glomeruli in the mouse. Nat. Neurosci. 13, 1404–1412. doi: 10.1038/nn.2673

García-Sanchez, M., and Huerta, R. (2003). Design parameters of the fan-out phase of sensory systems. J. Comput. Neurosci. 15, 5–17. doi: 10.1023/A:1024460700856

Hahnloser, R. H., Kozhevnikov, A. A., and Fee, M. S. (2002). An ultra-sparse code underlies the generation of neural sequences in a songbird. Nature 419, 65–70. doi: 10.1038/nature00974

Hallem, E., and Carlson, J. (2006). Coding of odors by a receptor repertoire. Cell 125, 143–160. doi: 10.1016/j.cell.2006.01.050

Heisenberg, M. (2003). Mushroom body memoir: from maps to models. Nat. Rev. Neurosci. 4, 266–275. doi: 10.1038/nrn1074

Huang, J., Zhang, W., Qiao, W., Hu, A., and Wang, Z. (2010). Functional connectivity and selective odor responses of excitatory local interneurons in Drosophila antennal lobe. Neuron 67, 1021–1033. doi: 10.1016/j.neuron.2010.08.025

Huerta, R., Nowotny, T., García-Sanchez, M., Abarbanel, H., and Rabinovich, M. (2004). Learning classification in the olfactory system of insects. Neural Comput. 16, 1601–1640. doi: 10.1162/089976604774201613

Jimenez, J. C. (2012). Simplified formulas for the mean and variance of linear stochastic differential equations. eprint: arXiv:1207.5067

Jortner, R. A., Farivar, S. S., and Laurent, G. (2007). A simple connectivity scheme for sparse coding in an olfactory system. J. Neurosci. 27, 1659–1669. doi: 10.1523/JNEUROSCI.4171-06.2007

Kazama, H., and Wilson, R. I. (2009). Origins of correlated activity in an olfactory circuit. Nat. Neurosci. 12, 1136–1144. doi: 10.1038/nn.2376

Leitch, B., and Laurent, G. (1996). GABAergic synapses in the antennal lobe and mushroom body of the locust olfactory system. J. Comp. Neurol. 372, 487–514.

Li, H., Li, Y., Lei, Z., Wang, K., and Guo, A. (2013). Transformation of odor selectivity from projection neurons to single mushroom body neurons mapped with dual-color calcium imaging. Proc. Natl. Acad. Sci. U.S.A. 110, 12084–12089. doi: 10.1073/pnas.1305857110

Luo, S. X., Axel, R., and Abbott, L. (2010). Generating sparse and selective third-order responses in the olfactory system of the fly. Proc. Natl. Acad. Sci. U.S.A. 107, 10713–10718. doi: 10.1073/pnas.1005635107

Maass, W., Natschläger, T., and Markram, H. (2002). Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560. doi: 10.1162/089976602760407955

Martin, J. P., Beyerlein, A., Dacks, A. M., Reisenman, C. E., Riffell, J. A., Lei, H., and Hildebrand, J. G. (2011). The neurobiology of insect olfaction: sensory processing in a comparative context. Prog. Neurobiol. 95, 427–447. doi: 10.1016/j.pneurobio.2011.09.007

Masuda-Nakagawa, L. M., Tanaka, N. K., and O'Kane, C. J. (2005). Stereotypic and random patterns of connectivity in the larval mushroom body calyx of Drosophila. Proc. Natl. Acad. Sci. U.S.A. 102, 19027–19032. doi: 10.1073/pnas.0509643102

Mazor, O., and Laurent, G. (2005). Transient dynamics versus fixed points in odor representations by locust antennal lobe projection neurons. Neuron 48, 661–673. doi: 10.1016/j.neuron.2005.09.032

McCulloch, W. S., and Pitts, W. (1943). A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 5, 115–133. doi: 10.1007/BF02478259

Moortgat, K. T., Bullock, T. H., and Sejnowski, T. J. (2000). Precision of the pacemaker nucleus in a weakly electric fish: network versus cellular influences. J. Neurophysiol. 83, 971–983.

Øksendal, B. (2003). Stochastic Differential Equations. Berlin; Heidelberg: Springer. doi: 10.1007/978-3-642-14394-6

Olsen, S. R., Bhandawat, V., and Wilson, R. I. (2010). Divisive normalization in olfactory population codes. Neuron 66, 287–299. doi: 10.1016/j.neuron.2010.04.009

Olsen, S. R., and Wilson, R. I. (2008). Lateral presynaptic inhibition mediates gain control in an olfactory circuit. Nature 452, 956–960. doi: 10.1038/nature06864

Olshausen, B. A. (1996). Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 381, 607–609. doi: 10.1038/381607a0

Olshausen, B. A., and Field, D. J. (1997). Sparse coding with an overcomplete basis set: a strategy employed by V1? Vis. Res. 37, 3311–3326. doi: 10.1016/S0042-6989(97)00169-7

Olshausen, B. A., and Field, D. J. (2004). Sparse coding of sensory inputs. Curr. Opin. Neurobiol. 14, 481–487. doi: 10.1016/j.conb.2004.07.007

Padmanabhan, K., and Urban, N. N. (2010). Intrinsic biophysical diversity decorrelates neuronal firing while increasing information content. Nat. Neurosci. 13, 1276–1282. doi: 10.1038/nn.2630

Papadopoulou, M., Cassenaer, S., Nowotny, T., and Laurent, G. (2011). Normalization for sparse encoding of odors by a wide-field interneuron. Science 332, 721–725. doi: 10.1126/science.1201835

Perez-Orive, J., Mazor, O., Turner, G. C., Cassenaer, S., Wilson, R. I., and Laurent, G. (2002). Oscillations and sparsening of odor representations in the mushroom body. Science 297, 359–365. doi: 10.1126/science.1070502

Stocker, R., Lienhard, M., Borst, A., and Fischbach, K. (1990). Neuronal architecture of the antennal lobe in Drosophila melanogaster. Cell Tissue Res. 262, 9–34. doi: 10.1007/BF00327741

Tan, J., Savigner, A., Ma, M., and Luo, M. (2010). Odor information processing by the olfactory bulb analyzed in gene-targeted mice. Neuron 65, 912–926. doi: 10.1016/j.neuron.2010.02.011

Thompson, L., and Best, P. (1989). Place cells and silent cells in the hippocampus of freely-behaving rats. J. Neurosci. 9, 2382–2390.

Turner, G., Bazhenov, M., and Laurent, G. (2008). Olfactory representations by Drosophila mushroom body neurons. J. Neurophysiol. 99, 734–746. doi: 10.1152/jn.01283.2007

Wehr, M., and Laurent, G. (1996). Odour encoding by temporal sequences of firing in oscillating neural assemblies. Nature 384, 162–166. doi: 10.1038/384162a0

Wessnitzer, J., Webb, B., and Smith, D. (2007). “A model of non-elemental associative learning in the mushroom body neuropil of the insect brain,” in Adaptive and Natural Computing Algorithms (Springer), 488–497.

Wilson, R., and Mainen, Z. (2006). Early events in olfactory processing. Annu. Rev. Neurosci. 29, 163–201. doi: 10.1146/annurev.neuro.29.051605.112950

Keywords: Drosophila olfactory system, homotypical projection neurons, noise robustness, mushroom body, divergent projection, sparse coding

Citation: Zhang D, Li Y, Wu S and Rasch MJ (2013) Design principles of the sparse coding network and the role of “sister cells” in the olfactory system of Drosophila. Front. Comput. Neurosci. 7:141. doi: 10.3389/fncom.2013.00141

Received: 03 June 2013; Accepted: 30 September 2013;

Published online: 23 October 2013.

Edited by:

Rava A. Da Silveira, Ecole Normale Supérieure, France

Reviewed by:

Venkatesh N. Murthy, Harvard University, USA

James E. Fitzgerald, Harvard University, USA

Copyright © 2013 Zhang, Li, Wu and Rasch. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Si Wu and Malte J. Rasch, State Key Laboratory of Cognitive Neuroscience and Learning & IDG/McGovern Institute for Brain Research, Beijing Normal University, 19 Xinjiekouwai Street, Beijing 100875, China e-mail: malte.rasch@bnu.edu.cn; wusi@bnu.edu.cn