The neuronal response at extended timescales: long-term correlations without long-term memory

- Department of Electrical Engineering, the Laboratory for Network Biology Research, Technion, Haifa, Israel

Long term temporal correlations frequently appear at many levels of neural activity. We show that when such correlations appear in isolated neurons, they indicate the existence of slow underlying processes and lead to explicit conditions on the dynamics of these processes. Moreover, although these slow processes can potentially store information for long times, we demonstrate that this does not imply that the neuron possesses a long memory of its input, even if these processes are bidirectionally coupled with neuronal response. We derive these results for a broad class of biophysical neuron models, and then fit a specific model to recent experiments. The model reproduces the experimental results, exhibiting long term (days-long) correlations due to the interaction between slow variables and internal fluctuations. However, its memory of the input decays on a timescale of minutes. We suggest experiments to test these predictions directly.

1. Introduction

Long term temporal correlations, or “f−α statistics” (Keshner, 1982), are ubiquitously found at multiple levels of brain and behavior (Ward and Greenwood, 2007, and refrences therein). For example, f−α statistics appear in human cognition (Gilden et al., 1995; Repp, 2005), brain and network activity (measured using electroencephalograph or local field potentials Bédard et al., 2006, and refrences therin), and even Action Potentials (APs) generated by single neurons (Musha and Yamamoto, 1997; Gal et al., 2010). The presence of these long correlations in a neuron's AP responses suggests it is affected by processes with slow dynamics, which can retain information for long times. As a result, if these slow processes are also affected by APs, then the generation of each AP (indirectly) depends on a rather long history of the neuron's previous inputs and APs. This potentially allows a single neuron to perform complex computations over very long timescales. However, it remains unclear whether this type of computation indeed occurs.

Cortical neurons indeed contain processes taking place on multiple timescales. Many types of ion channels are known, with a large range of kinetic rates (Channelpedia, http://channelpedia.epfl.ch/). Additional new sub-cellular kinetic processes are being discovered at an explosive rate (Bean, 2007; Sjöström et al., 2008; Debanne et al., 2011). This variety is particularly large for very slow processes (Marom, 2010). Such rich biophysical machinery can potentially modulate the generation of APs on long timescales. Evidence for such abilities was observed in recent works, which investigated how cortical neurons temporally integrate noisy current stimuli (Lundstrom et al., 2008, 2010; Pozzorini et al., 2013). The temporal integration of the input was approximated using filters with power law decay, reflecting “long memory.” However, these filters were fitted only up to a timescale of about 10 s (or equivalently, frequencies smaller than 10−1 Hz), possibly due to the limited duration of the experiments, which involve intracellular recording.

This raises the question – would the neuron still have long memory on timescales longer than 10 s? Generally, the answer may depend on the type of stimulus used. For example, certain ion channels may “remember” non-sparse inputs longer than sparse inputs (Soudry and Meir, 2010). Here, we focus on the case of the sparse (AP-like) input (Figure 1), imitating the “natural” input for an axonal compartment which receives APs from a previous compartment. Such stimulation is used in various experiments (e.g., Grossman et al., 1979; De Col et al., 2008; Gal et al., 2010).

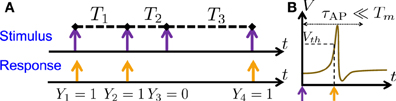

Figure 1. Stimulation Regime. (A) Stimulation consists of (extracellular) sparse current spikes, with inter-stimulus intervals Tm and Action Potential (AP) occurrences Ym. (B) An AP “occurred” if the voltage V crossed a threshold Vth following the (sparse) stimulus, with Tm ≫ τAP.

We find general conditions under which a neuron can generate f−α statistics in its spiking activity, and show that this does not imply that a neuron has long memory of its history. Specifically, in order to generate f−α statistics slow processes should span a wide range of timescales with slower processes having a higher level of internally generated fluctuations (e.g., more “noisy,” due to lower ion channel numbers). However, in a minimal model that generates this behavior, slow processes do not retain memory of the input fluctuations beyond a finite “short” timescale, even though they are affected by the membrane's voltage. A main reason for this is that the “fastest adaptation process” in the model adjusts the neuron's response in such a way that any perturbation in the response is canceled out, before slower processes are affected.

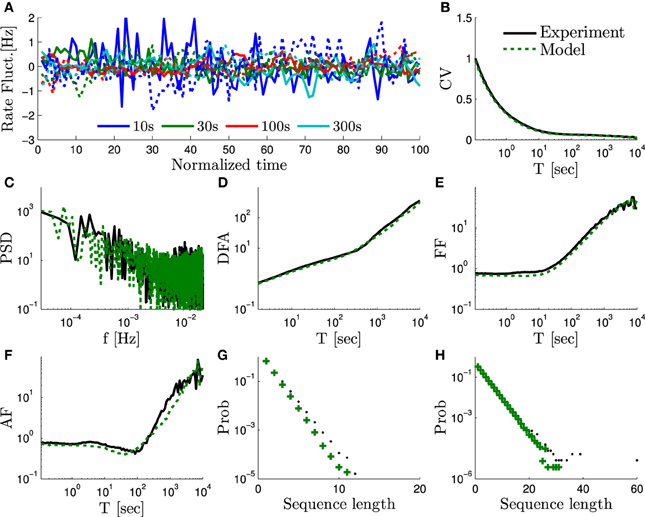

We fit the minimal model to the days-long experiments in Gal et al. (2010), where synaptically isolated individual neurons, from a rat cortical culture, were stimulated with extra-cellular sparse current pulses for an unprecedented duration of days. The neurons exhibited f−α statistics, responding in a complex and irregular manner from seconds to days. The synaptic isolation of the neurons in the network, and their low cross-correlations indicate that these f−α fluctuations are internally generated in the neurons (Appendix D). We are able to reproduce their results (Figure 3), and predict that the neuron should remember perturbations in its input for about 102 s (Figure 4). We suggest further experiments to test these predictions (Figure 5).

The remainder of the paper is organized as follows. We begin in section 2.1 by presenting the basic setup. Then, in section 2.2, we present the general framework for biophysical modeling of neurons. Working in this framework, in section 2.3 we recall the mathematical formalism from Soudry and Meir (2014) and derive the power spectrum density for periodic input stimuli. Following a description of f−α behavior in section 3.1, we provide in section 3.2 both general and “minimal” conditions for a neuron to display such scaling. In section 3.3 we consider the implications of the model for the input–output relation of the neuron, given general stationary inputs. In section 3.4 we demonstrate this numerically in a specific biophysical model which is fitted to the experimental results of Gal et al. (2010). We conclude in section 4 with a summary and discussion of our results. An extensive appendix contains many of the technical details used throughout the paper (see Supplementary Material).

2. Methods

2.1. Preliminaries

In our notation 〈·〉 is an ensemble average, i ≜ , a non-capital boldfaced letter x ≜ (x1,…, xn)⊤ is a column vector [where (·)⊤ denotes transpose], and a boldfaced capital letter X is a matrix (with components Xmn).

2.1.1. Stimulation

As in Soudry and Meir (2014) we examine a single, synaptically isolated, excitable neuron under “spike” stimulation. In this stimulation regime, the stimulation current, I(t), consists of a train of identical short pulses arriving at times tm and amplitude I0. The intervals between the stimulation times are denoted Tm ≜ tm + 1 − tm (Figure 1A, top). We assume that the stimulation is sparse, i.e., Tm ≫ τAP, with τAP being the timescale of an AP (Figure 1B). Since the neuron is “excitable” it does not generate APs unless stimulated, as in Gal et al. (2010) (i.e., the neuron is neither oscillatory nor spontaneously firing). However, after a stimulation the neuron can either respond with a detectable AP or not respond. We denote AP occurrences a Ym, where Ym = 1 if an AP occurred immediately after the m-th stimulation, and 0 otherwise (Figure 1A, bottom). Note also that Ym is not generally the same as the common count process generated from the APs by binning them into equally sized bins (Appendix B.1) – unless Tm is constant and equal to the bin size.

2.1.2. Statistics

We assume both Ym and Tm are wide-sense stationary (Papoulis and Pillai, 1965). We denote p* ≜ 〈 Ym 〉 to be the mean probability to generate an AP and T* ≜ 〈 Tm 〉 as the mean stimulation period. Furthermore, we denote Ŷm ≜ Ym − p* and m = Tm − T* as the perturbations of Ym and Tm from their means. An important tool in quantifying the statistics of signals is the power spectral density (PSD), namely the Fourier transform of the auto-covariance (Papoulis and Pillai, 1965). For analytical convenience, in this work we will use a PSD of the form

with 0 ≤ f ≪ T−1* in Hertz frequency units. Note that this PSD is proportional to the PSD of the common binned AP (Equation 70), under periodical stimulus and for low frequencies – which is the regime under which we will investigate the PSD (similarly to the experiment Gal et al., 2010). We similarly define the PSD

and the cross-PSD

2.2. General Framework

We model the neuron in the standard framework of biophysical neural models – i.e., Conductance Based Models (CBMs). However, rather than focusing only on a specific model, we establish general results about a broad class of models. In this framework, the voltage dynamics of an isopotential neuron are determined by ion channels, protein pores which change conformations stochastically with voltage-dependent rates (Hille, 2001). On the population level, such dynamics are generically very well described by models of the form of Soudry and Meir (2014), (Equations 4–6)

with voltage V, stimulation current I(t), rapid variables r (e.g., m, n, h in the Hodgkin–Huxley (HH) model Hodgkin and Huxley, 1952), slow “excitability” variables s ∈ [0, 1]M (e.g., slow sodium inactivation Chandler and Meves, 1970), white noise processes ξr/s (with zero mean and unit variance). Also, the matrices Ar/s and the vectors br/s can be written explicitly using the kinetic rates of the ion channels, while the matrices Br/s can be written using those rates in addition to ion channel numbers. Lastly, we denote

as the diffusion matrices (Orio and Soudry, 2012). In these models the voltage and the rapid variables constitute the AP generation, while the slow variables modulate the excitability of the cell. For simplicity, we assumed that r and s are not coupled directly, but this is non-essential (Soudry and Meir, 2014). The parameter space can be constrained (Soudry and Meir, 2012), since we consider here only excitable, non-oscillatory neurons which do not fire spontaneously1 and which have a single resting state – as is common for isolated cortical cells, e.g., Gal et al. (2010).

2.3. The Power Spectral Density of the Response

PSD-based estimators are central tools in quantifying long term correlations (Robinson, 2003; Lowen and Teich, 2005), and are commonly used in experimental settings – as in Gal et al. (2010). Therefore, in this section we focus on the PSD of the neural response under sparse stimulation regime (section 2.1) of a CBM (section 2.2).

2.3.1. Recap – previous mathematical results

Typically, CBMs (Equations 4–6) contain many unknown parameters, and are highly non-linear. Therefore, it is quite hard to fit them using a purely simulation based approach, especially over long timescales, where simulations are long and models have more unknown parameters. Therefore, we developed a reduction method that simplifies analysis and enables fitting of such models. We refer the reader to Soudry and Meir (2014) for full mathematical details.

In this method, we semi-analytically2 reduce the full model (Equations 4–6) to a simplified model, under the assumption that the timescales of rapid and slow variables are well separated. Given another assumption, that the neuron dynamics are sufficiently “noisy,” we can linearize the model dynamics, so that (Soudry and Meir, 2014, Equation 12)

where em is a white-noise signal with zero mean and variance σ2e ≜ p* − p2* (recall p* is the mean probability to generate an AP) and s* (the excitability fixed point) and wj (an “effective weight” of component sj) can be found self-consistently together with p* as a function of T* (Soudry and Meir, 2014, Equation 10). After these quantities are found, an expression for the output PSD SY (f) in this model can be written explicitly. We let X+, X− and X0 denote the averages of the quantity Xs during an AP response, a failed AP response and rest, respectively. Also, we denote

as the steady state mean value of Xs [this would be X(p*, T*) in Equation 7 in Soudry and Meir, 2014]. For example, A* and D* are the respective steady state means of As and Ds. Additionally, we denote (definition below Equation 12 in Soudry and Meir, 2014)

as a “feedback” vector (see Figure 1C in Soudry and Meir (2014) to understand this interpretation), and (Soudry and Meir, 2014, Equation 14)

as the “closed loop transfer function” (including the effect of the feedback), with I being the identity matrix. Using the above notation, we can derive the PSD of the response. Given a periodical stimulation (m = 0) we obtain (Soudry and Meir, 2014, Equation 13)

Though Equation (10) relies on two simplifying assumptions, extensive numerical simulations (Soudry and Meir, 2014, Figures 3–5) showed that this expression is rather robust and remains accurate in many cases even if these assumptions do not hold. Therefore, in this work we will always assume that Equation (10) is accurate.

2.3.2. The effect of feedback

In the neuron, the slow excitability variables s affect the response of the neuron, which, in turn, affects the dynamics of the slow excitability variables. To simplify analysis, it is desirable to “isolate” this feedback effect. In order to do this, we apply the Sherman Morrison lemma to Equation (9),

with

being the “open loop” version of Hc (f) (i.e., if a is set to zero). Using this in Equation (10) we obtain

with SoY (f) being the “open loop” version of SY (f) (i.e., SY (f) with a set to zero),

and κ (f) determines the effect of the feedback

Note that κ (f) depends on the feedback through the variable a. If a → 0, for example, the kinetic rates of s are not sensitive to AP occurrences3. In that case κ (f) → 1 and SY (f) → SoY (f).

2.3.3. Partial fractions decomposition

In order to simplify analysis, we decompose the vector expressions in Equations (13, 14) to partial fractions.

If A* is diagonalizable, than we can write Equation (13) as (Appendix A.1)

where the poles λk are the inverse timescales of the slow variables (the eigenvalues of A*), arranged from large to small according to their magnitudes (0 < |λM| < |λM − 1| < … < |λ1|) and

being the amplitude of these poles, with Dkj and wk being the respective components of D* and w in a basis in which A* is diagonal. Note that, ∀k, Re [λk] < 0 (from the properties of A*).

Using a similar derivation for κ (f), we obtain

with ak and wk being the respective components of a and w in a base in which A* is diagonal.

2.3.4. Example – a “diagonal” model

For concreteness, we demonstrate our results on a simple model in which A* is a diagonal matrix and, as a result, D* (which depends on A*) is also diagonal. In this “diagonal” model all the components of s are uncoupled (i.e., belong to different channel types), Equation (6) can be written as (Soudry and Meir, 2014, section 4.1)

∀ k∈{1, …, M}, where σs, k(V, s) = [(δk(V) (1 − sk) + γk(V)sk) N − 1s, k]1/2 and Ns, k are the number of slow ion channels of type k. Similarly as before, γ+, k,γ−, k and γ0, k denote the averages of the kinetic rate γk(V) during an AP response, a failed AP response and rest, respectively. In addition

is the average γk(V) in steady state. We use a similar notation for δ. Therefore

with zero on all other (non-diagonal) components and

Therefore, in Equations (15, 16) we have,

Importantly, by tuning the parameters M, γk(V), δk(V), Ns, k and wk we seem to have complete freedom in determining λk, ck and ak (Equations 19–21). This, in turn, would give complete freedom in tuning SoY (f) and κ (f). Therefore, it seems that for any CBM (i.e., not only diagonal models) we can find an equivalent diagonal model – which produces exactly the same PSD of the response.

The only caveat in the previous argument is that in non-diagonal models λk can be complex, but not in a diagonal model, since the kinetic rates γk(V) and δk(V) must be real numbers. How would the situation change if some of the poles had complex values? Complex poles (i.e., for which Im[λk] > 0) always come in conjugate pairs. These pairs behave asymptotically (i.e., for 2π f ≫ |λk| or 2π f ≪ |λk|) very similarly to two real poles, with an additional “resonance” (either a bump or depression) in a narrow range in the vicinity of these poles (i.e., 2π f ~ |λk|) (see Appendix A.2, or Oppenheim et al., 1983).

3. Results

3.1. Background on f−α Statistics

As observed in Gal et al. (2010), the responses of isolated neurons exhibit long-term correlations robustly 4, under sparse pulse stimulation (Figure 1 and section 2.1). Signals with such long-term correlations are often described by the term “f−α noise.” This is because the Power Spectral Density (PSD, Papoulis and Pillai, 1965) is a central tool in detecting and quantifying such signals (Robinson, 2003; Lowen and Teich, 2005). As the name implies, if the AP pattern Ym is a “f−α noise signal” then its PSD (Equation 10) has a f−α shape

where the PSD is defined here as in Equation (1). As is usually the case for most f − α phenomena, Equation (22) is true only in a certain range fmin ≤ f ≤ fmax, and with 0 < α ≤2. Note also that if α > 1, then fmin > 0 necessarily5. Such f−α behavior is considered interesting due to its “scale-free” properties, which can sometimes indicate a “long memory,” as explained in the introduction. Therefore, it is interesting to ask the following questions:

1. What is the biophysical origin of the f−α behavior?

2. Does this f−α behavior imply that the neuron “remembers” its history on very long timescales (hours and days)?

We aim to answer the first question in section 3.2, focusing on the case of periodical stimulation Tm = T*, as in Gal et al. (2010). The second question is addressed in section 3.3, where we examine a general sparse stimulation process Tm. Finally, in section 3.4.2 we fit a specific CBM (which is an extension of a previous CBM) so it adheres to this set of minimal constraints. We numerically reproduce the experimental results of Gal et al. (2010) and demonstrate our predictions.

3.2. Biophysical Modeling of f−α Statistics

As we explained in the introduction, neurons contain a large variety of processes operating on slow timescales. These processes are, in many cases, not well characterized or contain unknown parameters. Therefore, it is hard to model the behavior of the neuron on slow timescales with a CBM using only simulation. With so many unknowns, an exhaustive parameter search is unfeasible 6. Fortunately, since we derived a semi-analytic expression for the PSD (Equation 10), starting from some initial “guess” (as to which process to include, and with what parameters), it is relatively straightforward to tune the parameters so that the CBM reproduces the experimental results (i.e., by maximizing some “goodness of fit” measure).

However, even if a specific model could be found to reproduce the experimental results, it would still be unclear whether or not this is would be a “useful” model – one which can be used to infer the biophysical properties of the neuron, or its response to untested inputs. The first problem is that CBMs are highly degenerate, where different parameter values can generate similar behaviors7, so we can never be sure if the “correct” model was inferred. The second problem is that it is unclear whether a “correct” model would be generally useful – since different neurons from the same type can have very different parameters (Marder and Goaillard, 2006).

In order to address the first problem, initially, in section 3.2.1 we analyze Equation (10), and attempt to answer a more general question – what class of CBM models can generate the experimental results? We find “rather general” sufficient conditions – i.e., which, given a few assumptions, also become necessary conditions. Next, in section 3.2.2, we aim to find a “minimal” set of constraint on a CBM to fulfill theses conditions. Qualitatively, these conditions indicate that, in order to reproduce the experimental results, a general CBM must:

1. Include only a finite number of ion channels of each type (implying a stochastic model).

2. Include few slow processes with timescales “covering” the range of timescales over which SY (f) ∝ f−α is observed.

3. Obey a certain scaling relation (with an exponent of 1−α), implying that slower processes are more “noisy.”

More detailed explanations of these conditions, and a concrete example, are provided in the following two subsections.

3.2.1. General conditions for f−α statistics

In this section we derive general conditions on the parameters of a CBM (section 2.2) so it can generate robust f−α statistics in SY (f). Here, we focus on the case of sparse periodical input Tm = T* ≫ τAP (as in Gal et al., 2010).

This analysis is based on the decomposition of the PSD SY (f) as a ratio of SoY (f) and the feedback term |κ (f)|2. Recall that SY (f) ∝ f−α is robustly observed for all stimulation parameters – even when p* is near 0 or 1 (see section 3.1). Note that one can arbitrarily vary p* by changing the stimulation parameters (such as I0 or T*). It is straightforward to show that when p* → 0 or p* → 1, the effect of feedback is negligible8, and therefore SY (f) ≈ SoY (f). This implies that, at least for some simulation parameters, SoY (f) ∝ f−α. For this reason, and for the sake of analytical simplicity, we first develop general conditions so that SoY (f) ∝ f−α, and later we discuss the effects of the feedback κ (f).

Note from Equation (15) that if M (the dimension of s – the number of slow processes) is finite, one can have SoY (f) ∝ f−α exactly if and only if α = 0 or 2. However, these values are far from what was measured experimentally (Equation 42). Therefore, SoY (f) ∝ f−α can be generated exactly only in some limit (in which M is infinite), or approximately (if M is finite). Also, note that if 2π f ≫ |λ1|, then SoY (f) − T* σ2e ∝ f−2. Additionally, if 2π f ≪ |λM|, we have SoY (f) ≈ constant. Therefore, Equation (15) can generate SoY (f) ∝ f−α with 0 < α < 2 only for |λM| < 2π f < |λ1|.

Next, we explain when this becomes possible. For simplicity assume that in Equation (15) T* σ2e is negligible and all the poles are real (the effect of complex poles will be discussed below). We define the following pole density

where δ(·) is Dirac's delta function. Using Equations (15 and 23) we obtain

For |λM| ≪ 2π f ≪ |λ1| and 0 < α < 2, Equation (24) becomes

if and only if (Appendix A.3)

in the range |λ1| > |λ| > |λM|, with ρ0 = 2π−1C sin(πα/2). Therefore, ρ(λ), the distribution of the poles, must scale similarly to SoY (f) (but with a different exponent).

Several comments are in order at this point.

1. It was previously known that, in a linear system, a f−α PSD could be generated using a similarly scaled sum of real poles (Keshner, 1979, 1982). The novelty here is two-fold: (1) Quantitatively analyzing the PSD of CBMs (which are highly non-linear) in a similar way (through Equation 10) (2) Finding that condition 26 is not only sufficient, but necessary.

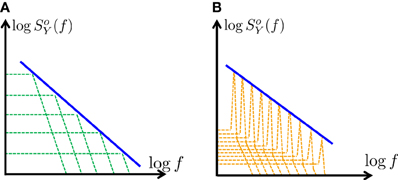

2. Formally, Equation (26) can be exact only in the continuum limit where the number poles is infinite and they are closely packed. However, in practice, Equation (25) remains a rather accurate approximation even if the poles are few and well separated (Figure 2A), as we shall demonstrate in the next section (as in Keshner, 1979, 1982). Clearly, for simulation purposes, it is beneficial to use a CBM with a finite number of (preferably, few) poles.

3. We have assumed that all the poles are real. What happens if some of the poles are complex? Recall (section 2.3.4) that if some poles have complex values then SoY (f) also has “resonances” (bumps or depressions) in a narrow range near these poles. Technically, scaling these resonance peaks can also be used to approximate Equation (25) (Figure 2B). However, we did not pursue this method here since it would require significantly more poles and would be much harder to implement.

4. Note that so far we have discussed only SoY (f). One can perform a similar analysis directly on SY (f). However, we find it is easier to first simplify κ (f) and then use Equation (12). From Equation (12) the PSD SY (f) will have a power-law shape in the range |λM|≪2π f ≪ |λ1| if, in that range: either (1) the magnitude of κ (f) is constant or slowly varying, or (2) κ (f) also has a power-law shape. In the first case the exponent of SY (f) will be the same as the exponent of SoY (f), and in the second case the exponent will differ. The conditions for both cases can be derived similarly to our analysis of SoY (f). We demonstrate this next, in a more specific context.

Figure 2. Generating f-α PSD using a finite number of poles – a graphic description. Using partial fraction decomposition (Equation 15) SoY (f) ∝ f-α (blue) can be approximated (on a log–log scale) in two distinct ways: (A) Using a sum of a real poles (green), with scaled amplitudes (approximating Equation 26) (B) Using a sum of complex poles (orange), with scaled “resonance peaks” (Equation 46). In this work we focus on the first case (A), since it is simpler and requires far fewer poles.

3.2.2. A minimal model for f−α statistics

In the previous section we found general conditions under which Equation (13) gives SoY (f) ∝ f−α. In this section, we aim is to generate SoY (f) ∝ f−α over fmin < f < fmax in a minimal model, in which M (the dimension of s) is as small as possible. As explained in section 2.3.4 we do not lose any relevant generality if we restrict ourselves to the case where A* is diagonal (Equation 18). From Equation (26), we know that |λk| must “cover” the frequency range fmin < f < fmax. In order for M to be small, we choose λk to be uniform over a logarithmic scale (similarly to Keshner, 1982), so λk ∝ ϵk with ϵ < 1. The “simplest” way to achieve this is to have (see Equation 18)

so

In order for λk/(2π) to cover the range [fmin, fmax] we require that

Given M, this sets a constraint on ϵ. In order to have scaling in ρ(λ), as in Equation (26), we also require that ck ∝ |λ |1−α dλ ∝ ϵ(2−α)k, since dλ = λk − λk − 1 ∝ ϵk. Therefore, from Equations (21) and (20) we have

so that SoY (f) ∝ f−α. Therefore, we require that wk ∝ ϵ−μ k, Ns, k ∝ ϵνk with 2μ + ν = α − 1. For μ > 0 the slower processes (larger k) have larger weight. For ν > 0 slower processes have a smaller number of ion channels (therefore, they are more “noisy”).

In Appendix A.4, we investigate what type of scaling will generate also SY (f) ∝ f−α, taking into account the effects of feedback (through κ (f)). We conclude that, because of the feedback, a value of μ > 0 would not change the exponent of SY (f) over a “reasonable” range of parameters (i.e., assuming ν > −2). Therefore, the simplest way to generate SY (f) ∝ f−α would be to take μ = 0. In this case, we have (Equation 59), for −1 < ν < 1 and |λM| ≪ 2π f ≪ |λ1|,

where the logarithmic correction arises from the effect of feedback κ (f). A few comments on Equation (30) are in order at this point.

1. Due to the logarithmic correction, in order to approximate SY (f) ∝ f−α it is a reasonable choice to set ν slightly higher than α−1, e.g.,

2. Even if there is no scaling in the parameters (i.e., μ = ν = 0), we obtain SY (f) ∝ f−1 (neglecting logarithmic factors).

3. Equation (30) is based on asymptotic derivation, which is correct in two opposing limits (“sparse” or “dense” poles, Appendix A.5), indicating that these results are rather robust to parameter perturbations.

4. The magnitude of the ion channels number Ns,1 is inversely proportional to the magnitude of SY (f) (i.e., its proportionality constant), while the value w1 (the magnitude of the weights) does not affect SY (f).

5. When Ns,1 → ∞ we have SY (f) → 0, implying that in the deterministic limit, such a CBM does not generate f−α noise (in accordance with our results from Soudry and Meir, 2012).

3.3. The Input–Output Relation of the Neuron

In the previous section we derived minimal biophysical constraints under which a neuron may generate f−α statistics in response to periodic stimulation. In this section we explore the input–output relation of the neuron under these constraints, in the case where the inter-stimulus intervals Tm form a general (sparse) random process. We decompose the neuronal response into contributions from its “long” history of internal fluctuations and its “short” history of inputs, quantifying neuronal memory.

3.3.1. The linearized input–output relation

Recall that m ≜ Tm − T*, with T* ≜ 〈 Tm 〉 and ST (f) the PSD of Tm (Equation 2). As explained in Soudry and Meir (2014), for a general CBM9 we can decompose Ŷm, the fluctuations in the neuronal response, to a linear sum of the history of the input and internal noise, i.e.,

with the filter hextk used to integrate external fluctuations in the inputs, and the filter hintk used to integrate zm, a zero mean and unit variance white noise representing internal fluctuations (e.g., ion channel noise). It is easier to analyze this I/O in the frequency domain, where Equation (32) becomes (Soudry and Meir, 2014, Equation 20)

where we define X(f) to be the Fourier transform of X(t). Together, Hext(f) and Hint(f) describe the m → Ŷm neuronal I/O at very long timescales.

Note that these filters are related to the PSDs, in the following way

where we recall that SYT (f) is the cross-PSD (Equation 3). Notably, from Equation (35), if the input to the neuron is not periodical (so, ST (f) ≠ 0), then the PSD SY (f) should be the same as calculated previously, except for the addition of |Hext(f)|2 ST (f).

3.3.2. The shape of the input–output filters

For a general CBM, we can derive semi-analytically the exact form of the filters in Equation (33) from its parameters, as we did for SY (f). For example, if m = 0 (periodical input), then also ST (f) = 0, and so

where SY (f) is the PSD we derived previously (Equation 10). Additionally, we obtain (Soudry and Meir, 2014, Equation 17)

with

Next, we find both filters for the minimal model described in section 3.2.2. Recall that in this model

with a1 and d1, respectively given by Equations (8) and (38). To simplify analysis, we derive an asymptotic form for both filters, for the cases |λM| ≪ 2π f ≪ |λ1| and 2π f ≫ |λ1|. First, from Equation (36), and Equation (59), we find

Similarly, from Equation (37), we find (Appendix A.6) that for the minimal model the interpolation between the two asymptotic cases is monotonic, so we can approximate

where q ≜ (1 − ϵ)−1T−1*w1. A few comments on Equations (40, 41) are in order at this point.

1. We found that Hext(f) is a low pass filter with a pole at fext = qa1/2π while Hint(f) ~ f−α/2 for 2π f ≪ |λ1|. Consequently, in the temporal domain (Equation 32), for large t (i.e., large k), the neuron's memory of its external input decays exponentially (hextk ~ e−f1T*k), while its memory of its internal fluctuations decays as a power law (hintk ~ k−(1−α/2)). Therefore, the input memory has a finite timescale (equal to f−1ext), while the memory of internal fluctuations is “long” (with a cutoff only near f−1min).

2. It is perhaps surprising that Equation (37), which has multiple poles, becomes a low pass filter with a single pole f1. The derivation (Appendix A.6) gives two main reasons for this. First, the scaling of wk and dk in Equation (39) induces only a weak (logarithmic) scaling of the poles in open-loop. Second, even this weak scaling is canceled by the effects of the feedback.

3. Naturally, other models may have a different shape of Hext(f). This could be probed directly, as we explain later, in section 3.4.3.

3.4. Modeling Experimental Results

In this section we apply our results to experimental data, described in section 3.4.1. In section 3.4.2 we implement the set of “minimal constraints” we found in section 3.2.2 in a specific CBM, and fit it to experimental data in which SY (f) ∝ f−α. The analytical results in section 3.2 suggest that this specific CBM is a “reasonable” representative of the family of CBMs that can generate the experimental results. Other members of this family can be reached by varying the parameters within the (either minimal or general) constraints. Next, in section 3.4.3 we use our results from section 3.3.2 on the fitted model. We show that, although internal fluctuations in the model can affect the neural response on a timescale of days, the memory of the input is only retained for a duration of minutes. We suggest specific experiments to test this prediction. In section 3.4.4 we suggest further predictions

3.4.1. Experimental details

The experiment from Gal et al. (2010), where a single synaptically isolated neuron, residing in a culture of rat cortical neurons, is stimulated periodically with a train of extracellular short current pulses with constant amplitude I0. The observed neuronal response was characterized by different modes (Gal et al., 2010, Figure 2). We focus on the “intermittent mode” steady state, in which 0 < p* < 1 (i.e., sometimes the stimulation evokes an AP, and sometimes it does not). The patterns observed in Ym, the AP occurrences timeseries, are rather irregular (Gal et al., 2010, Figure 2E), span multiple timescales (Gal et al., 2010, Figure 5) and variable (i.e., patterns are not repeatable Gal et al., 2010, Figure 9A). More quantitatively, as indicated by the analysis (Gal et al., 2010, Figure 6), for all intermittently firing neurons, the patterns in Ym fall into the category of “f−α noise” where the value of α varied significantly between neurons – with

(mean ± SD). As we explained in section 3.1, this f−α behavior is true only in some limited range fmin < f < fmax. From the experimental data, (Figure 6C in Gal et al., 2010) it can be estimated that fmin < 10−5Hz and fmax ~ 10−2Hz. Also, since α > 1, then 0 < fmin (see section 3.1).

3.4.2. The HHMS model – a biophysical implementation of the minimal constraints

In our previous work (Soudry and Meir, 2012) we already fitted a model that fits many of the “mean” properties of the neuronal response (e.g., firing modes, transients and firing rate). This model is an extension of the original Hodgkin–Huxley model which includes Slow sodium inactivation (Chandler and Meves, 1970; Fleidervish et al., 1996) (The HHS model, see Appendix C.1). In order to maintain this fit with the experimental results, we extend the HHS model with additional slow components, obeying Equation (18). We denote this as the HHMS model (Hodgkin Huxley model with Many Slow variables, Appendix C.2). The equations are identical to the HHS model, except that in the voltage equation (Equation 73) gNas is replaced by gNaM−1 , where s1 has the same equation as s in the HHS model (Equation 77). By symmetry, this gives identical weights to component si (i.e., ∀k: wk = w1). The remaining rates (for k ≥ 2) are chosen according to our constraints, so γk(V) = γ(V) ϵk − 1, δk(V) = δ(V) ϵk − 1 (as in Equation 27), where γ(V) and δ(V) are taken from the HHS model (Equation 77) and also Ns, k = Ns ϵν k. Therefore, the only free parameters are ϵ, M, Ns, ν and I0 (I0 is the current amplitude of the stimulation pulses).

This model can be used to fit the experimental results for any α ∈ [0,2). We performed a numerical simulation of the full equations (Equations 4–6) of the HHMS model under periodical stimulation with T* = 50 ms. We aimed to fit an experiment from Gal et al. (2010), which had a similar stimulation and exhibited SY (f) ~ f−α, with α = 1.4 (which is approximately the average α value measured in Gal et al., 2010). The current amplitude I0 was set to I0 = 7.7 μ A so that the model would have the same mean response probability p* ≈ 0.4 as in the experimental data (using the self consistent equations for p* from Soudry and Meir, 2014). We chose M = 5 and ϵ = 0.2 in order to satisfy constraint Equation (29) with a minimal M. We chose ν = 0.5 to satisfy Equation (31). Lastly, we chose Ns = 104 in order to fit the magnitude of the SY (f). This reproduced all the scaling relations observed experimentally (Figure 3).

Figure 3. The measures of “scale free” rate dynamics in the HHMS model – comparison of the experimental data from Gal et al. (2010) and a simulation of the extended HHS model (solid and dashed lines, respectively). We use here the same measures as in Figure 6 in Gal et al. (2010): (A) The firing rate fluctuations estimated using bins of different sizes (T = 10, 30, 100, and 300 s) and plotted on a normalized time axis (units in number of bins), after subtracting the mean of each series. (B) CV of the bin counts, as a function of bin size, plotted on a log-linear axis. (C) Firing rate periodogram. (D) Detrended fluctuations analysis. (E) Fano factor (FF) curve. (f) Allan factor (AF) curve. (G) Length distribution of spike–response sequences, on a half-logarithmic axes. (H) Length distribution of no-spike-response sequences, on a double-logarithmic axes. For additional details on measures used see Appendix B.1.

3.4.3. Predictions – probing the input–output relation of the neuron

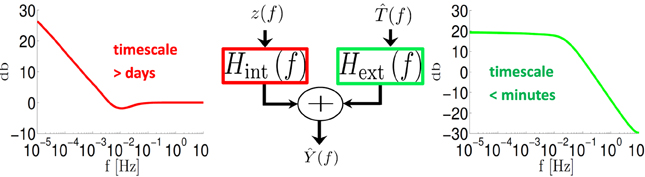

After fitting the HHMS model to the experimental results, we can examine its resulting linearized input–output relation, described by the filters Hext(f) and Hint(f) (Equation 33). The Hint(f) filter integrates internal fluctuations, while the Hext(f) filter determines how external fluctuations (in the input) affect its response.

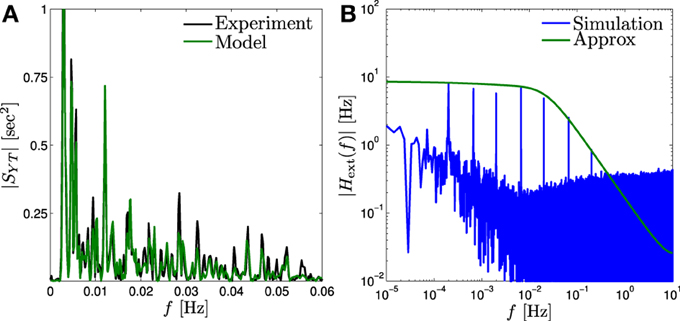

In accordance with the asymptotic forms in Equations (40) and (41), we find that Hext(f) is a low pass filter with a pole fext ~ 10−2 Hz (Figure 4, green) while Hint(f) ~ f−α/2 for fmin < f < 10−2 Hz (Figure 4, red) with fmin < 10−5 Hz. Therefore, as explained in section 3.3.2 this model implies that the response of the neuron is affected by internal fluctuations over the scale of days (~ f−1min) or more, generating the f−α behavior we observe in Figure 3. However, external input is remembered only for minutes (~ f−1ext).

Figure 4. System decomposition into external (input – (f)) and internal (fluctuation – z(f)) filters. For a fitted HHMS model, Hext(f) is a low pass filter with cutoff <10−2 Hz while Hint (f) ~ f-α/2 for f < 10−2 Hz.

Next, we examine two methods which allow us to probe Hext(f) directly and examine these predictions.

First, a simple method to probe the external input filter Hext(f) is through Equation (34). Allowing reliable estimation of Hext(f) in a certain frequency range requires a random process stimulation for which |Hext (f) |2ST (f) ≫ |Hint(f)| in that range, as explained in Appendix B.2. To demonstrate this method we estimate STY (f) from the existing experimental data taken from Gal and Marom (2013), in which ST (f) ~ f−β (above some lower cutoff). In Figure 5A we compare this estimation with STY (f) in the HHMS model in a limited range where ST (f) is sufficiently high for estimation to be accurate. It is similar to STY (f) from our fitted HHMS model, validating our estimate of Hext(f) for that frequency range.

Figure 5. Input memory in fitted model. (A) Comparison of |SYT (f)| of the fitted model (“Model”) to that estimated from the experimental confirms (“Experiment”) the prediction of the input filter |Hext(f)| for probed range. (B) This filter (“Approx”) can be probed more accurately by peaks of Ŷ (f) (“Simulation”), by applying a “sum of sines” input (Equation 43).

Second, The filter Hext(f) could be probed more accurately and at lower frequencies – by sinusoidally modulating the input (the internal-stimulus intervals), analogously to the sinusoidally modulated input current used in Lundstrom et al. (2008, 2010) and Pozzorini et al. (2013),

As we explain in Appendix B.3, in this case the output of the neuron would be

This allows us to easily estimate |Hext(f)| using the peaks of Tamp−1 Ŷ (f) (the Fourier transform of T−1amp Ŷm) at frequencies fl, as we demonstrate in Figure 5B, using our fitted HHMS model.

3.4.4. Additional predictions

As explained in Gal et al. (2010) and Soudry and Meir (2012) the latency of the AP can serve as an indicator of the cell's excitability. Specifically, this is true in the HHMS model, for periodical stimulus and p* = 1, where the PSD of the latency, SL (f), is a shifted and scaled version of SY (f) with p* → 1 (see section 4.4.6 in Soudry and Meir, 2014). Therefore, in the HHMS model we also have SL (f) ∝ f−α approximately (neglecting logarithmic factors).

Next, suppose we vary some measurable stimulation parameter, such as the mean stimulation rate T−1*. How would this affect the shape of the filters we derived? The analytical results allow us to calculate this explicitly in the HHMS model.

First, we consider the gain of the external input filter Hext(f) (i.e., Hext(0)). As we explain in Appendix A.7, if f ≪ fcutoff, than

which is the mean firing rate of the neuron – a simply measurable quantity.

Second, how would Hint (f) change if T* is varied? Since Hint (f) is directly measurable only through SY (f) (Equation 36), we consider SY (f) instead. From Equation (59) it is clear that if SY (f) ~ f−α approximately at low frequencies then the exponent α should not depend much on any external parameter (assuming 0 < p* < 1). This was observed experimentally when the stimulation rate (T−1*) was varied, as can be seen in Figure 1G in Gal and Marom (2013).

4. Discussion

4.1. Generating f−α PSD

In this work we aim to explain biophysically the phenomenon of f−α behavior in the response of isolated neurons, and explore its implications on the input–output relation of the neuron. We do this under a regime of sparse stimulation (Figure 1), and in the biophysical framework of stochastic conductance-based models (CBMs, Equations 4–6). In this setting our analytical results (Soudry and Meir, 2014) can be used to derive a closed form expression for the Power Spectral Density (PSD, Equation 10) based on the parameters of the slow kinetics in the CBM. This PSD is affected by the closed-loop interaction – the slow dynamics affect the AP response, which, in turn, feeds back and affects the kinetics of the slow processes (section 2.3.2). Moreover, the contribution of each slow process to the PSD can be exactly quantified (section 2.3.3), as we demonstrate using a simple model (section 2.3.4).

These mathematical results expose the large parameter degeneracy of CBMs (Marder and Goaillard, 2006; Soudry and Meir, 2014), i.e., that many “different” models will quantitatively produce the same behavior. Due to the degeneracy of CBMs, we first aimed to derive rather general sufficient conditions for the generation of f−α noise in a CBM (section 3.2.1). These conditions indicate which types of CBMs can generate the observed behavior. We show that, in order to generate f−α behavior, neurons should have intrinsic fluctuations (e.g., due to ion channel noise), and have a number of slow processes with a large range of timescales, “covering” the entire range over which f−α statistics is observed. Furthermore, the parameters of these processes must be scaled in a certain way in order to generate f−α noise with a specific α (Equation 26).

We implement these constraints in a minimal CBM (section 3.2.2), in which the slow processes are uncoupled, except through the voltage, as in Soudry and Meir (2012). Initially, we find that the specific scaling relation can be achieved either by scaling the (1) conductances or (2) the ion channel numbers. This scaling implies that slower processes will be either (1) “stronger” or (2) “noisier.” However, the “feedback” effect in the model (the slow process being affected by the APs) prevents f−α statistics from being generated in case (1). In contrast, option (2) can robustly generate the observed f−α statistics in the neuronal response for any 0 < α < 2 (Equation 30 and Figure 6).

Naturally, outside of the framework of CBMs (Equations 4–6) long term correlations may be modeled differently, since there are numerous distinct ways to generate power law distributions (Newman, 2005). For example, as numerically demonstrated in Gal and Marom (2013), 1/f statistics in neuronal firing patterns can be generated by assuming global (cooperative) interactions between ion channels (i.e., not through the voltage). Biophysically, the significance of interactions between ion channels is still not clear (Naundorf et al., 2006 and Brief Communications arising), but other cellular processes that might affect excitability on slower timescales clearly exhibit interactions (e.g., gene regulation networks Davidson and Levin, 2005). Mathematically, such interactions render the slow dynamics (Equation 6) non-linear at constant voltage (Gillespie, 2000). It would be interesting to generalize the theory we presented here in order to understand how to tune the PSD in such a non-linear setting, since this has the potential to further reduce the number of parameters and model complexity.

4.2. Biophysical Implementation

We examine our theoretical predictions numerically. We do this using a stochastic Hodgkin Huxley type model with slow sodium inactivation that was previously fitted to the basic experimental results (Soudry and Meir, 2012). We extend this model to include four additional slow processes, which resemble slow sodium inactivation (Appendix C.2). The only difference is that each process is slower than the previous one, and has a lower number of ion channels, according to the specific scaling relation that was derived. The resulting model indeed generates f−α noise, and is demonstrated numerically (Figure 3) to fit the experimental results of Gal et al. (2010). This is the first time, to our knowledge, that a cortical neuron model (either biophysical or phenomenological) reproduces experimental results over such long timescales. Notably, without the analytical results, it would be hard to tune the parameters of a biophysical neuron, due to the large number of unknown parameters.

Previous works (Lowen et al., 1999; Soen and Braun, 2000) demonstrated numerically that, even with constant current stimulation, incorporating slow processes into an excitable cell model can generate f−α in its response. In Lowen et al. (1999) a HH model was extended to include multiple slow processes with scaled rates in the potassium channel produced f−α firing rate response. Their model produced an exponent of α ≈ 0.5, replicating experiments measurements from the auditory nerves. Another work (Soen and Braun, 2000), aiming to reproduce the activity of heart cells, produced long term correlations with α ≈ 1.6 − 2 using a reflected diffusion process.

The identity of the specific slow processes involved in generating f−α remains a mystery at this point, since there are many possible mechanisms which can modulate the excitability of the cell in such long timescales. For example, ion channel numbers, conductances and kinetics are constantly being regulated and may change over time (e.g., Levitan, 1994; Staub et al., 1997). Also, the ionic concentrations in the cell depend on the activity of the ionic pumps, which can be affected by metabolism (Silver et al., 1997). Finally, the spike initiation region can significantly shift its location with time (e.g., 17 μm distally during 48 h of high activity Grubb and Burrone, 2010), and so can cellular neurites (Paola et al., 2006; Nishiyama et al., 2007). Only after such slow processes are quantitatively characterized, can we determine their effect on the neuron's excitability at long timescales.

4.3. The Input–Output Relation

The linearized input–output relation of the fitted CBM was derived using the methods described in Soudry and Meir (2014). This linearized relation decomposes the contributions of external inputs and internal fluctuations to the response of the neuron. This decomposition (Equation 33) shows that even though the neuron can “remember” its intrinsic fluctuations over timescales of days, its memory of past pulse inputs can be limited to a shorter timescale of ~102 s (Figure 4). Notably, the neuron has this limited memory for such inputs even though processes on much slower timescales exist in the model.

In the introduction we mentioned previous works (Lundstrom et al., 2008, 2010; Pozzorini et al., 2013) which also described the temporal integration in the neuron using power-law filters, although in a rather different (non-sparse) stimulation regime. Our fitted model indicates that similar power-law integration still occurs at very long timescales. However, it is not the input that is being integrated, but the internal fluctuations in the neuron, and this is what drives the f−α statistics measured by Gal et al. (2010). Also, as in Lundstrom et al. (2008, 2010) and Pozzorini et al. (2013), the neuronal response in our model is indeed affected by the last 10 s of its external inputs. However, our model suggests the response will not be significantly affected by spike perturbations in its input that occurred more than 102 s ago.

Qualitatively, this specific timescale of the input memory stems from the “fastest slow negative feedback process” in the model (in this specific model, slow sodium inactivation). This process responds to perturbations in the input which change the firing rate much more quickly than all the other slow processes. Its response to perturbation brings the firing rate back to its steady state, before slower processes even register that the firing rate has changed. Therefore, effectively, these slower processes do not store much information about input perturbations. We suggest experiments to test input memory directly, by using f−α stimulation (Figure 5A), “sum of sines” stimulation (Figure 5B) and a variation of the mean stimulation rate (Equation 44 and Supplementary Figure 2).

4.4. Significance

This work makes several practical contributions. First, our results impose specific constraints on the slow processes that modulate the excitability on very long timescales (e.g., a ratio between timescales and channel numbers). Such constraints facilitate the construction of neuronal models with “realistic” input–output relations over extended timescales. Hopefully, these constraints may also help to identify the relevant slow biophysical processes. Second, our results suggest that for sparse spiking inputs, the memory of a cortical neuron stretches back to the last minute of its input, but not much more. This limit could be especially relevant when fitting statistical models to neuronal data, and for setting limitations on neuronal computations.

As for the functional significance, it is still not clear why the neuronal response fluctuates so wildly, especially at long timescales. We end this paper by offering some speculations on this issue. We see three possible scenarios. One possibility is that these fluctuations are beneficial. For example, such non-stationary fluctuations should increase network heterogeneity, which may be advantageous (Tessone et al., 2006; Padmanabhan and Urban, 2010). Another possibility is that these fluctuations do not affect neural response when the neuron is connected within a network. For example, this could be due to network feedback canceling slow changes in excitability. Finally, it is possible that such slow fluctuations are deleterious, an unavoidable “noise” generated by the non-stationary environment of the cell. Interestingly, f−α noise imposes important constraints on electronic circuits, and was predicted to impose similar constraints on neural circuits (Sarpeshkar, 1998).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors are grateful to O. Barak, N. Brenner, Y. Elhanati, A. Gal, T. Knafo, Y. Kafri, S. Marom, J. Schiller, and M. Ziv for insightful discussions and for reviewing parts of this manuscript. The authors are also grateful to A. Gal and S. Marom for supplying the experimental data. The research was partially funded by the Technion V.P.R. fund and by the Intel Collaborative Research Institute for Computational Intelligence (ICRI-CI).

Supplementary Material

The Supplementary Material for this article can be found online at: http://www.frontiersin.org/journal/10.3389/fncom.2014.00035/abstract

Footnotes

1. ^I.e., if ∀t : I(t) = 0, then the probability that a neuron will fire is negligible – on any relevant finite time interval (e.g., minutes or days).

2. ^A semi-analytic derivation is an analytic derivation in which some terms are obtained by relatively simple numerics.

3. ^For example, this can happen if the kinetic rates all have low voltage threshold, resulting in A+ ≈ A_ and b+ ≈ b_.

4. ^I.e., in all neurons for which 0 < p* < 1.

5. ^Otherwise, 0.25 ≥ p* − p*2 = 〈 Ŷm2 〉 ≥ 2 (f)df = ∞, which is a contradiction.

6. ^Also simulations take a long time, since experiments, as in Gal et al. (2010), are days-long.

7. ^E.g., in Equation (16) many different parameters would give the same ck.

8. ^Near the edges, w → 0 (Equation 80 in Soudry and Meir, 2014), and so κ (f) → 1.

9. ^I.e., Equations (4–6), with the same assumptions as we had in section 2.3.1.

References

Bean, B. P. (2007). The action potential in mammalian central neurons. Nat. Rev. Neurosci. 8, 451–465. doi: 10.1038/nrn2148

Bédard, C., Kröger, H., and Destexhe, A. (2006). Does the 1/f frequency scaling of brain signals reflect self-organized critical states? Phys. Rev. Lett. 97:118102. doi: 10.1103/PhysRevLett.97.118102

Bendat, J. S., and Piersol, A. G. (2000). Random Data Analysis and Measurement Procedures, Vol. 11, 3rd edn. New York, NY: Wiley.

Chandler, W. K., and Meves, H. (1970). Slow changes in membrane permeability and long-lasting action potentials in axons perfused with fluoride solutions. J. Physiol. 211, 707–728.

Davidson, E., and Levin, M. (2005). Gene regulatory networks. Proc. Natl. Acad. Sci. U.S.A. 102:4935. doi: 10.1073/pnas.0502024102

De Col, R., Messlinger, K., and Carr, R. W. (2008). Conduction velocity is regulated by sodium channel inactivation in unmyelinated axons innervating the rat cranial meninges. J. Physiol. 586, 1089–1103. doi: 10.1113/jphysiol.2007.145383

Debanne, D., Campanac, E., Bialowas, A., and Carlier, E. (2011). Axon physiology. Physiol. Rev. 91, 555–602. doi: 10.1152/physrev.00048.2009

Fleidervish, I. A., Friedman, A., and Gutnick, M. J. (1996). Slow inactivation of NaC current and slow cumulative spike adaptation in mouse and guinea-pig neocortical neurones in slices. J. Physiol. 493, 83–97.

Gal, A., Eytan, D., Wallach, A., Sandler, M., Schiller, J., and Marom, S. (2010). Dynamics of excitability over extended timescales in cultured cortical neurons. J. Neurosci. 30, 16332–16342. doi: 10.1523/JNEUROSCI.4859-10.2010

Gal, A., and Marom, S. (2013). Entrainment of the intrinsic dynamics of single isolated neurons by natural-like input. J. Neurosci. 33, 7912–7918. doi: 10.1523/JNEUROSCI.3763-12.2013

Gal, A., and Marom, S. (2013). Self-organized criticality in single neuron excitability. Phys. Rev. E 88:062717. doi: 10.1103/PhysRevE.88.062717

Gilden, D., Thornton, T., and Mallon, M. (1995). 1/f noise in human cognition. Science 267, 1837–1839. doi: 10.1126/science.7892611

Gillespie, D. T. (2000). The chemical Langevin equation. J. Chem. Phys. 113, 297. doi: 10.1063/1.481811

Grossman, B. Y. Y., Parnas, I., and Spira, M. E. (1979). Differential conduction block in branches of a bifurcating axon. J. Physiol. 295, 307–322.

Grubb, M. S., and Burrone, J. (2010). Activity-dependent relocation of the axon initial segment fine-tunes neuronal excitability. Nature 465, 1070–1074. doi: 10.1038/nature09160

Hodgkin, A. L., and Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500.

Keshner, M. S. (1979). Renewal Process and Diffusion Models of 1/f Noise. Ph.D. thesis, Massachusetts Institute of Technology.

Levitan, I. B. (1994). Modulation of ion channels by protein phosphorylation and dephosphorylation. Annu. Rev. Physiol. 11, 193–212. doi: 10.1146/annurev.ph.56.030194.001205

Lowen, S. B., Liebovitch, L. S., and White, J. A. (1999). Fractal ion-channel behavior generates fractal firing patterns in neuronal models. Phys. Rev. E 59, 5970. doi: 10.1103/PhysRevE.59.5970

Lowen, S. B., and Teich, M. C. (2005). Fractal-Based Point Processes, 1st edn. Hoboken, NJ: Wiley-Interscience. doi: 10.1002/0471754722

Lundstrom, B. N., Fairhall, A. L., and Maravall, M. (2010). Multiple timescale encoding of slowly varying whisker stimulus envelope in cortical and thalamic neurons in vivo. J. Neurosci. 30, 5071–5077. doi: 10.1523/JNEUROSCI.2193-09.2010

Lundstrom, B. N., Higgs, M. H., Spain, W. J., and Fairhall, A. L. (2008). Fractional differentiation by neocortical pyramidal neurons. Nat. Neurosci. 11, 1335–1342. doi: 10.1038/nn.2212

Marder, E., and Goaillard, J.-M. (2006). Variability, compensation and homeostasis in neuron and network function. Nat. Rev. Neurosci. 7, 563–574. doi: 10.1038/nrn1949

Marom, S. (2010). Neural timescales or lack thereof. Prog. Neurobiol. 90, 16–28. doi: 10.1016/j.pneurobio.2009.10.003

Musha, T., and Yamamoto, M. (1997). “1/f fluctuations in biological systems,” in Proceedings of the 19th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. ‘Magnificent Milestones and Emerging Opportunities in Medical Engineering’ (Cat. No.97CH36136), Vol. 6 (Chicago, IL: IEEE), 2692–2697. doi: 10.1109/IEMBS.1997.756890

Naundorf, B., Wolf, F., and Volgushev, M. (2006). Unique features of action potential initiation in cortical neurons. Nature 440, 1060–1063. doi: 10.1038/nature04610

Newman, M. (2005). Power laws, Pareto distributions and Zipf's law. Contemp. Phys. 46, 323–351. doi: 10.1080/00107510500052444

Nishiyama, H., Fukaya, M., and Watanabe, M. (2007). Axonal motility and its modulation by activity are branch-type specific in the intact adult cerebellum. Neuron 56, 472–487. doi: 10.1016/j.neuron.2007.09.010

Oppenheim, A., Willsky, A., and Nawab, S. (1983). Signals and Systems. Englewood Cliffs, NJ: Prentice Hall.

Orio, P., and Soudry, D. (2012). Simple, fast and accurate implementation of the diffusion approximation algorithm for stochastic ion channels with multiple states. PLoS ONE 7:e36670. doi: 10.1371/journal.pone.0036670

Padmanabhan, K., and Urban, N. N. (2010). Intrinsic biophysical diversity decorrelates neuronal firing while increasing information content. Nat. Neurosci. 13, 1276–1282. doi: 10.1038/nn.2630

Paola, V. D., Holtmaat, A., Knott, G., Song, S., Wilbrecht, L., Caroni, P., et al. (2006). Cell type-specific structural plasticity of axonal branches and boutons in the adult neocortex. Neuron 49, 861–875. doi: 10.1016/j.neuron.2006.02.017

Papoulis, A., and Pillai, S. U. (1965). Probability, Random Variables, and Stochastic Processes. New York, NY: McGraw-Hill.

Pozzorini, C., Naud, R., Mensi, S., and Gerstner, W. (2013). Temporal whitening by power-law adaptation in neocortical neurons. Nat. Neurosci. 16, 942–948. doi: 10.1038/nn.3431

Repp, B. H. (2005). Sensorimotor synchronization: a review of the tapping literature. Psychon. Bull. Rev. 12, 969–992. doi: 10.3758/BF03206433

Sarpeshkar, R. (1998). Analog versus digital: extrapolating from electronics to neurobiology. Neural Comput. 10, 1601–1638. doi: 10.1162/089976698300017052

Silver, I. A., Deas, J., and Erecinska, M. (1997). Ion homeostasis in brain cells: differences in intracellular ion responses to energy limitation between cultured neurons and glial cells. Neuroscience 78, 589–601. doi: 10.1016/S0306-4522(96)00600-8

Sjöström, P. J., Rancz, E. A., Roth, A., and Häusser, M. (2008). Dendritic excitability and synaptic plasticity Physiol. Rev. 88, 769–840. doi: 10.1152/physrev.00016.2007

Soen, Y., and Braun, E. (2000). Scale-invariant fluctuations at different levels of organization in developing heart cell networks. Phys. Rev. E 61, R2216–R2219. doi: 10.1103/PhysRevE.61.R2216

Soudry, D., and Meir, R. (2014). The neuronal response at extended timescales: a linearized spiking input-output relation. Front. Comput. Neurosci. 8:35. doi: 10.3389/fncom.2014.00035

Soudry, D., and Meir, R. (2010). History-dependent dynamics in a generic model of ion channels - an analytic study. Front. Comput. Neurosci. 4:1–10. doi: 10.3389/fncom.2010.00003

Soudry, D., and Meir, R. (2012). Conductance-based neuron models and the slow dynamics of excitability. Front. Comput. Neurosci. 6:4. doi: 10.3389/fncom.2012.00004

Staub, O., Gautschi, I., Ishikawa, T., Breitschopf, K., Ciechanover, A., Schild, L., et al. (1997). Regulation of stability and function of the epithelial Na+ channel (ENaC) by ubiquitination. EMBO J. 16, 6325–6336. doi: 10.1093/emboj/16.21.6325

Tessone, C., Mirasso, C., Toral, R., and Gunton, J. (2006). Diversity-induced resonance. Phys. Rev. Lett. 97, 1–4. doi: 10.1103/PhysRevLett.97.194101

Keywords: neurons, temporal correlations, long memory, noise, input–output analysis, linear filters, power spectral density

Citation: Soudry D and Meir R (2014) The neuronal response at extended timescales: long-term correlations without long-term memory. Front. Comput. Neurosci. 8:35. doi: 10.3389/fncom.2014.00035

Received: 20 December 2013; Accepted: 06 March 2014;

Published online: 01 April 2014.

Edited by:

David Hansel, University of Paris, FranceReviewed by:

Germán Mato, Centro Atomico Bariloche, ArgentinaGianluigi Mongillo, Paris Descartes University, France

Copyright © 2014 Soudry and Meir. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Daniel Soudry, Department of Statistics, Center for Theoretical Neuroscience, Columbia University, 1255 Amsterdam Avenue, New York, NY 10027, USA e-mail: daniel.soudry@gmail.com

Daniel Soudry

Daniel Soudry Ron Meir

Ron Meir