Neural Computations in a Dynamical System with Multiple Time Scales

- ¹Brain Science Center, Institute of Basic Medical Sciences, Beijing, China

- ²State Key Lab of Cognitive Neuroscience and Learning, IDG/McGovern Institute for Brain Research, Beijing Normal University, Beijing, China

Neural systems display rich short-term dynamics at various levels, e.g., spike-frequency adaptation (SFA) at the single-neuron level, and short-term facilitation (STF) and depression (STD) at the synapse level. These dynamical features typically cover a broad range of time scales and exhibit large diversity in different brain regions. It remains unclear what computational benefit the brain gains from such variability in short-term dynamics. In this study, we propose that the brain can exploit such dynamical features to implement multiple seemingly contradictory computations in a single neural circuit. To demonstrate this idea, we use a continuous attractor neural network (CANN) as a working model and include STF, SFA and STD with increasing time constants in its dynamics. Three computational tasks are considered: persistent activity, adaptation, and anticipative tracking. These tasks require conflicting neural mechanisms, and hence cannot be implemented by a single dynamical feature or any combination with similar time constants. However, with properly coordinated STF, SFA and STD, we show that the network is able to implement the three computational tasks concurrently. We hope this study will shed light on the understanding of how the brain orchestrates its rich dynamics at various levels to realize diverse cognitive functions.

1. Introduction

The brain performs computations by updating its internal states in response to external inputs. Neurons, synapses, and circuits are the fundamental units for implementing brain functions. At the synapse level, neurons interact with each other to enhance or depress their responses. At the single neuron level, a neuron integrates synaptic inputs and generates spikes if its membrane potential crosses a threshold. At the network level, the topology of the neuronal connection pattern shapes the overall population activity. Taken together, the dynamics of individual neurons, the efficacy of synapses, and the network structure jointly determine the dynamical behavior of a neural system in response to external inputs, which in turn determines and restricts the computations the system can perform. Thus, understanding the dynamical properties of neural systems and their roles in neural computations is at the core of using mathematical models to elucidate brain functions (Herz et al., 2006).

Experimental data has revealed that neural systems display rich dynamical behaviors. For instance, at the single neuron level, in addition to voltage-gated sodium and potassium currents for generating action potentials, a neuron also initiates slow calcium-activated potassium currents, and the latter suppress neuronal responses to a prolonged stimulation, a property called spike-frequency adaptation (SFA) (Gutkin and Zeldenrust, 2014). At the synapse level, rather than being a constant, the efficacy of a synapse exhibits temporal changes depending on the firing history of the pre-synaptic neuron, a property called short-term plasticity (STP) (Markram et al., 1998; Dittman et al., 2000; Abbott and Regehr, 2004). At the network level, experience-dependent long-term plasticity of synapses reshapes the connectivity of a network, creating new memory states in the system (Bliss and Collingridge, 1993). These rich dynamical features of neural networks form the basis for the brain to carry out various computational tasks.

Building up a neural network model and elucidating how the network dynamics reproduces experimental data is a common practice in computational neuroscience research. However, conventional modeling studies often focus on exploring how a single neural computation is realized by certain dynamical features of a neural circuit, although in reality the same neural circuit is often engaged in many computational tasks. This is not a trivial issue, since the dynamical features needed for implementing different computations may contradict each other, casting doubt on the feasibility of the model in practice. For instance, persistent activity and adaptation are two such computational tasks requiring seemingly conflicting neural dynamics. Persistent activity, referring to the phenomenon that neurons keep firing after the stimulation is removed, is widely regarded as the neural substrate of short-term memory (Amit, 1989; note that here our definition of persistent activity refers to the general phenomenon of sustained neuronal response after the stimulation is removed, and it is not limited to working memory). Adaptation, referring to the phenomenon that neuronal firing rates attenuate over time in response to an invariant stimulation, is generally believed to be a strategy by which neural systems utilize resources efficiently (Laughlin, 1989; Wark et al., 2007). Achieving persistent activity essentially requires a positive feedback loop in neuronal interaction, which retains neural activity in the absence of external drive (Wang, 2001; Carter and Wang, 2007), whereas achieving adaptation requires a negative feedback loop, which suppresses neural activity in the presence of external drive (Abbott et al., 1997). Thus, to achieve both persistent activity and adaptation in a single neural circuit, conflicting requirements on positive and negative feedback need to be properly reconciled.

In this work, we propose that the brain exploits different time scales of different dynamical features to accommodate contradictory computational requirements in a single neural circuit. To demonstrate this idea, we employ continuous attractor neural networks (CANNs) as the working model. CANNs are recurrent networks that can hold a family of stationary states due to their translation-invariant property, and they have been widely used as a canonical model to describe the encoding of continuous variables, such as orientation (Ben-Yishai et al., 1995), moving direction (Georgopoulos et al., 1993), head direction (Zhang, 1996), and spatial location of objects (Samsonovich and McNaughton, 1997), in neural systems. They have also been used as models to study working memory and navigation behaviors of animals (Taube, 2007). Recent experimental data has shown that CANNs capture some fundamental features of neural information representation (Mante et al., 2013; Ponce-Alvarez et al., 2013; Wimmer et al., 2014), suggesting that CANNs serve as a good mathematical model to investigate the general principles of neural computation (Wu et al., 2016). Based on CANNs, we investigate how coordination of SFA in the neuronal dynamics and STP in the synapse dynamics [which is further divided into short-term facilitation (STF) and short-term depression (STD)] enables a CANN to implement three different tasks: persistent activity, adaptation, and anticipative tracking.

In the literature, a large volume of theoretical studies has revealed that when short-term dynamics, such as STD, STF, or SFA, are included in a CANN, new interesting dynamical behaviors emerge, such as population spike, adaptive response, spontaneous traveling wave, and anticipative tracking, and that these new dynamical properties lead to new computational powers of a network (see e.g., Kilpatrick and Bressloff, 2009; York and Rossum, 2009; Ermentrout et al., 2010; Kilpatrick and Bressloff, 2010a,b; Itskov et al., 2011; Bressloff, 2012; Fung et al., 2012; Miller, 2013; Miller and Katz, 2013; Tsodyks and Wu, 2013; Fung and Amari, 2015 and references therein). The study of Renart et al. (2003) also showed that activity-dependent homeostatic scaling of synaptic strengths helps a CANN with heterogeneity in synapses to hold persistent activity reliably. The study of Barak and Tsodyks (2007) investigated systematically how different combinations of STD and STF result in qualitatively different traces of a network reaching persistent activity, which suggests that the varied network responses mediated by STP can encode time-dependent stimuli. Compared to those previous studies, the contribution of the present study is mainly a demonstration that coordination of multiple short-term dynamics with different time scales can enable a single neural circuit to implement contradictory computational tasks.

2. Materials and Methods

We employ two-dimensional CANNs as our working model to demonstrate the effects of different dynamical features. Consider that neurons are uniformly distributed in a two-dimensional space (x, y) with x, y∈(−∞, ∞). Let U(x, t) denote the synaptic input to the neuron at the position x = (x, y), and r(x, t) the neuronal firing rate. The dynamics of the network are given by

where τ denotes the synaptic time constant, which is typically on the order of 1–2 ms. ρ denotes the neuronal density. The variables f(x, t) and p(x, t) denote the STF and STD effects, respectively. J(x, x′) is the interaction strength between the neurons at x and x′, which is set to be

where the parameter a controls the range of neuronal interaction. Note that J(x, x′) is translation-invariant in the space, in the sense that it is a function of (x − x′) rather than of x.
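As a reading aid, one form of Equations (1) and (2) that is consistent with the symbol definitions in this section can be sketched as follows. Evaluating f and p at the presynaptic position x′ and normalizing J by 1/(2πa²) follow the common CANN convention and are assumptions here, not statements taken from the text.

```latex
\begin{align}
\tau \frac{\partial U(\mathbf{x},t)}{\partial t}
  &= -U(\mathbf{x},t)
   + \rho \int J(\mathbf{x},\mathbf{x}')\, f(\mathbf{x}',t)\, p(\mathbf{x}',t)\, r(\mathbf{x}',t)\, d\mathbf{x}'
   - V(\mathbf{x},t)
   + g(\mathbf{x},t)\, I^{\mathrm{ext}}(\mathbf{x},t), \\
J(\mathbf{x},\mathbf{x}')
  &= \frac{J_0}{2\pi a^2} \exp\!\left[-\frac{|\mathbf{x}-\mathbf{x}'|^2}{2a^2}\right].
\end{align}
```

In this sketch the recurrent term carries the positive feedback (scaled by f and p), V enters with a negative sign as the SFA current, and the product g·Iext is the depressed feedforward drive.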

The firing rate of a neuron is determined by its received synaptic input according to

where [.]+ is the rectifying function. The firing rate first increases with the synaptic input and then saturates gradually due to normalization by the total network activity. The latter can be realized by shunting inhibition, with the parameter k denoting inhibition strength (Hao et al., 2009; Zhang et al., 2013).
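The saturation by divisive normalization described here can be illustrated numerically. The sketch below assumes the common CANN form r = [U]₊² / (1 + kρ∫[U]₊² dx′); the quadratic exponent, the function name `firing_rate`, and the grid and input values are assumptions made for illustration.

```python
import numpy as np

def firing_rate(U, k, rho, cell_area):
    """Rectified-quadratic rate with divisive normalization (assumed form)."""
    u_plus_sq = np.maximum(U, 0.0) ** 2            # [U]_+^2, zero for U <= 0
    total = rho * np.sum(u_plus_sq) * cell_area    # rho * integral of [U]_+^2
    return u_plus_sq / (1.0 + k * total)           # shunting-style normalization

# Illustrative 2D grid on (-pi, pi] x (-pi, pi]; all values are hypothetical.
n = 50
cell_area = (2 * np.pi / n) ** 2
rho = n**2 / (2 * np.pi) ** 2                      # neurons per unit area
xs = np.linspace(-np.pi, np.pi, n, endpoint=False)
X, Y = np.meshgrid(xs, xs, indexing="ij")

# A Gaussian bump of synaptic input sitting on a slightly negative baseline.
U = 2.0 * np.exp(-(X**2 + Y**2) / (4 * 0.5**2)) - 0.1
r = firing_rate(U, k=0.01, rho=rho, cell_area=cell_area)

# For very strong input the summed activity approaches 1/k (saturation).
r_strong = firing_rate(1e3 * np.ones((n, n)), k=0.01, rho=rho, cell_area=cell_area)
total_strong = rho * np.sum(r_strong) * cell_area
```

Under this assumed form the population activity ρ∫r dx is bounded by 1/k, which is the sense in which a larger k means stronger global inhibition.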

The network model exhibits a number of short-term dynamical features.

Short-Term Facilitation in Recurrent Connection

The variable f(x, t) on the right-hand side of Equation (1) represents STF in recurrent interactions, whose dynamics are given by

where τf is the time scale of STF and the parameter α controls the amplitude of the STF effect. The variable f(x, t) increases with the neuronal firing rate and takes values between a minimum of fmin and a maximum of 1.

Short-Term Depression in Recurrent Connection

The variable p(x, t) on the right-hand side of Equation (1) represents STD in recurrent interactions, whose dynamics are given by

where τd is the time scale of STD and the parameter β controls the amplitude of the STD effect. The variable p(x, t) decreases with the neuronal firing rate and takes values between 0 and 1.

Short-Term Depression in Feedforward Connection

The variable g(x, t) on the right-hand side of Equation (1) represents STD in feedforward connections to the neuron. Its product with the raw external input, g(x, t)Iext(x, t), gives the diminished feedforward synaptic current to the neuron. Its dynamics are given by

where τg is the time scale of STD and the parameter η controls the amplitude of the STD effect. The variable g(x, t) decreases with the external input Iext(x, t) and takes values between 0 and 1.
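The ranges stated for f, p, and g can be checked against simple first-order relaxation equations. The forms below are a sketch chosen only to reproduce the stated limits (f between fmin and 1, p and g between 0 and 1); they are assumptions and may differ in detail from the equations used for the simulations.

```latex
\begin{align}
\tau_f \frac{\partial f}{\partial t} &= -(f - f_{\min}) + \alpha\,(1 - f)\, r,
  & f^{*} &= \frac{f_{\min} + \alpha r}{1 + \alpha r} \in [f_{\min}, 1), \\
\tau_d \frac{\partial p}{\partial t} &= 1 - p - \beta\, p\, r,
  & p^{*} &= \frac{1}{1 + \beta r} \in (0, 1], \\
\tau_g \frac{\partial g}{\partial t} &= 1 - g - \eta\, g\, I^{\mathrm{ext}},
  & g^{*} &= \frac{1}{1 + \eta I^{\mathrm{ext}}} \in (0, 1].
\end{align}
```

Here the starred quantities are the steady states for a constant firing rate r or a constant input: f* rises from fmin toward 1 as r grows, while p* and g* fall from 1 toward 0.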

Spike Frequency Adaptation at Single Neuron

The current V(x, t) on the right-hand side of Equation (1) represents the effect of SFA, whose dynamics are given by

where τv is the time scale of SFA and the parameter m controls the amplitude of the SFA effect. Solving the above equation shows that V(x, t) is determined by the averaged neural activity over a period of τv. The higher the neural activity, the larger the negative feedback.
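The statement that V averages activity over a window of τv can be made concrete with a short numerical sketch. It assumes the linear form τv dV/dt = −V + mU, whose solution for constant input U0 starting from V = 0 is V(t) = mU0(1 − e^(−t/τv)); this form, the function name `integrate_sfa`, and the parameter values are illustrative assumptions.

```python
import numpy as np

def integrate_sfa(U_trace, dt, tau_v, m, V0=0.0):
    """Forward-Euler integration of tau_v * dV/dt = -V + m * U (assumed form)."""
    V = np.empty(len(U_trace) + 1)
    V[0] = V0
    for i, U in enumerate(U_trace):
        V[i + 1] = V[i] + dt / tau_v * (-V[i] + m * U)
    return V

# Hypothetical values: time in ms, constant synaptic input U0.
tau_v, m, dt, U0 = 80.0, 0.5, 0.1, 1.0
steps = round(10 * tau_v / dt)                 # simulate for ten time constants
V = integrate_sfa(np.full(steps, U0), dt, tau_v, m)

# Closed-form solution for constant input, used as a reference.
t = dt * np.arange(steps + 1)
V_exact = m * U0 * (1.0 - np.exp(-t / tau_v))
max_error = np.max(np.abs(V - V_exact))
```

The adaptation current builds up over roughly τv and settles at mU0, so a sustained higher activity produces a proportionally larger negative feedback.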

The External Input

The external input used in the present study is given by

where Aamp is the input strength, vext the speed of the moving input, and θ the moving direction. For studying persistent activity, we apply a transient input to the network, which is obtained by setting Aamp > 0 for a short time interval and vext = 0. For studying adaptation, we apply a sustained input to the network, which is obtained by setting Aamp to be a positive constant and vext = 0. For studying anticipative tracking, we apply a moving input to the network, which is obtained by setting Aamp to be a positive constant and vext ≠ 0.
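The three stimulation protocols differ only in Aamp and vext, which the sketch below illustrates. It assumes a Gaussian input profile of width set by a, interprets θ as an angle in the plane, and wraps displacements periodically on (−π, π]; these choices, the function name `external_input`, and the time unit are assumptions for illustration.

```python
import numpy as np

def external_input(X, Y, t, A_amp, v_ext, theta, a):
    """Gaussian input bump centred at v_ext * t along direction theta (assumed form)."""
    cx = v_ext * t * np.cos(theta)
    cy = v_ext * t * np.sin(theta)
    # Wrap displacements onto (-pi, pi] to respect a periodic space.
    dx = np.angle(np.exp(1j * (X - cx)))
    dy = np.angle(np.exp(1j * (Y - cy)))
    return A_amp * np.exp(-(dx**2 + dy**2) / (4 * a**2))

n = 100
xs = np.linspace(-np.pi, np.pi, n, endpoint=False)
X, Y = np.meshgrid(xs, xs, indexing="ij")

# Static input (v_ext = 0): used for the transient and the sustained protocols,
# which differ only in how long A_amp is kept positive.
I_static = external_input(X, Y, t=0.5, A_amp=0.1, v_ext=0.0, theta=0.0, a=0.5)

# Moving input (v_ext != 0): the bump centre has travelled v_ext * t along x.
I_moving = external_input(X, Y, t=0.5, A_amp=0.1, v_ext=0.8, theta=0.0, a=0.5)

peak_static = np.unravel_index(np.argmax(I_static), I_static.shape)
peak_moving = np.unravel_index(np.argmax(I_moving), I_moving.shape)
```

With θ = 0 the peak of the moving input shifts along the first grid axis while the static input stays centred at the origin.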

3. Results

3.1. Network Dynamics with Individual Dynamical Features

To start, we review the effect of each individual dynamical feature on the network dynamics. It can be checked that without these dynamical features (by setting α = β = m = η = 0), a CANN can hold a continuous family of Gaussian-shaped stationary states (called bumps) in the absence of external drive (Iext = 0), when the global inhibition strength k is below a critical value (Fung et al., 2010). The peak position of the bump, q = (qx, qy), is a free parameter of these stationary states.
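The existence of Gaussian bumps below a critical inhibition strength can be verified in a few lines. The derivation below is a sketch that assumes the common CANN forms J(x, x′) = J0/(2πa²) exp[−|x − x′|²/(2a²)] and r = [U]₊²/(1 + kρ∫[U]₊² dx′), with the short-term variables switched off (f = p = 1, V = 0) and Iext = 0.

```latex
\begin{align}
\bar{U}(\mathbf{x}|\mathbf{q}) &= U_0 \exp\!\left[-\frac{|\mathbf{x}-\mathbf{q}|^2}{4a^2}\right],
\qquad
\bar{r}(\mathbf{x}|\mathbf{q}) = r_0 \exp\!\left[-\frac{|\mathbf{x}-\mathbf{q}|^2}{2a^2}\right], \\
U_0 &= \frac{\rho J_0}{2}\, r_0,
\qquad
r_0 = \frac{U_0^2}{1 + 2\pi a^2 k \rho\, U_0^2}, \\
0 &= 2\pi a^2 k \rho\, U_0^2 - \frac{\rho J_0}{2}\, U_0 + 1
\quad\Longrightarrow\quad
k \le k_c = \frac{\rho J_0^2}{32\pi a^2}.
\end{align}
```

The first relation in the second line uses the fact that convolving two Gaussians adds their variances; the bound on k is the condition for the quadratic in U0 to have a real root. Under these assumptions, 100 × 100 neurons on (−π, π] × (−π, π] give ρ = 10⁴/(2π)² ≈ 253.3, and J0 = 0.05, a = 0.5 give kc ≈ 0.0252.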

3.1.1. The Effect of STF

In response to an external input, STF temporarily strengthens the interaction between neurons, which enhances the positive feedback loop between them, and hence STF provides a mechanism to retain neural activity after the input is removed. Figure 1A presents the phase diagram of a CANN with only STF included, which shows that STF enlarges the parameter regime for the network to hold active bump states.

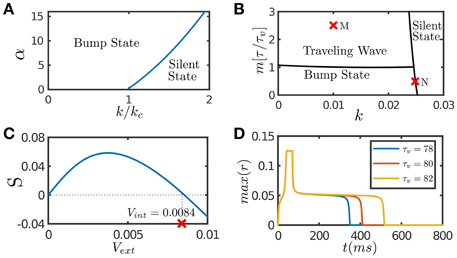

Figure 1. Network dynamics with individual features. (A) The phase diagram of a CANN with only STF included. The graph shows the stationary states of the network with varying STF strength α and global inhibition strength k. kc is the critical inhibition strength below which a CANN without STF can hold bump states. STF allows the regime of bump states to expand to k > kc. (B) The phase diagram of a CANN with only SFA included. The network can hold traveling waves if the strength of SFA satisfies m > τ/τv. (C) The tracking performance of a CANN with SFA. An external moving input given by Equation (8) is applied to the network, with Aamp = 0.1. S is the separation between the network bump and the external input once tracking has reached a stationary state. Svext > 0 implies anticipative tracking. The parameters (k, m) = (0.01, 2.5) at the point M in (B), where the network holds traveling waves, are used. vint denotes the speed of the traveling wave the network can hold without relying on external drive. Anticipation occurs when vext < vint. (D) The plateau decay of a CANN with SFA when the network is marginally unstable. The parameters (k, m) = (0.0249, 0.5) at the point N close to the boundary in (B) are used. The lifetime of the plateau increases with the time scale τv of SFA. The simulations were done with a 2D CANN having 100 × 100 neurons uniformly distributed in the space (−π, π] with periodic boundary conditions. Other parameters are J0 = 0.05, a = 0.5, τ = 1 ms.

3.1.2. The Effect of STD

The effect of STD is to temporarily depress the interaction between neurons. For recurrent connections, STD weakens the positive feedback loop between neurons and hence has the effect of suppressing STF-triggered neural activity. For feedforward connections, STD reduces the synaptic input to a neuron and hence has the effect of suppressing the neuronal response to a prolonged stimulation. STD and SFA have similar effects on the CANN dynamics, except that the former operates at the synapse level and the latter at the neuron level. For simplicity, we only present the result for SFA (see below). The result for STD can be straightforwardly deduced.

3.1.3. The Effect of SFA

SFA induces an activity-dependent negative current to a neuron, which serves as a self-inhibition mechanism to suppress the neuronal response if a neuron has experienced prolonged firing. The phase diagram of a CANN with only SFA is presented in Figure 1B. Note that SFA induces a new form of stationary state, called the traveling wave state (Ben-Yishai et al., 1997; York and Rossum, 2009; Bressloff, 2012; Tsodyks and Wu, 2013). In such a state, the network holds a spontaneously moving bump without relying on external drive. This spontaneous moving activity may be related to the traveling wave phenomenon widely observed in experiments (Wu et al., 2008). This dynamical property is also intuitively understandable. Suppose that a bump is initiated at a position. Due to SFA, those neurons which are most active are desensitized by the strongest negative feedback, and their activities will consequently be suppressed. With competition from neighboring neurons which are less affected by SFA, the bump tends to shift to the neighborhood; and at the new location, SFA starts to suppress neuronal responses again. Thus, the bump will keep propagating in the network like a traveling wave. Interestingly, when a CANN is within the parameter regime having a traveling wave solution, the network response to an external moving input will lead the input's instantaneous position, if the speed of the moving input is smaller than that of the traveling wave the network can hold in the absence of external drive (Mi et al., 2014). This result is shown in Figure 1C, and the detailed mathematical analysis is presented in the Appendix (Supplementary Material). Overall, the effect of SFA is to induce mobility to the network state, such that the network can track a moving input anticipatively. An illustration of this anticipative tracking behavior is presented in Figure 4A, and the computational role of anticipative tracking is discussed in Section 3.2.3.

In the parameter regime close to the boundary between active and silent states (e.g., the point N in Figure 1B), the network dynamics displays another interesting phenomenon, called the plateau decay behavior (see Figure 1D): starting from an initial active state, the network activity decays very slowly on the time scale of SFA and then falls rapidly. The detailed analysis of this plateau decay behavior is presented in the Appendix (Supplementary Material), and its computational role is discussed in Section 3.2.1. Since the effect of STD is to induce negative feedback modulation similar to that of SFA, STD can also generate the plateau decay behavior if its amplitude is properly chosen (Fung et al., 2012).

3.2. Network Dynamics with Combined Short-Term Dynamical Features

We now come to study how combined dynamical features with different time scales endow a CANN with the capacity of implementing three contradictory computational tasks, which are: (1) retaining persistent neural firing for a considerable amount of time after removing the stimulation, (2) generating adaptive neural responses to a prolonged invariant stimulation, and (3) tracking an external moving input anticipatively.

As described above, the effects of STF and of STD or SFA on the network dynamics are opposite to each other. If we simply combine them with the same time scale, a CANN is unable to implement the three computational tasks concurrently. However, by assigning them different time scales, a CANN may be able to achieve this goal. For instance, by setting the time constants τf ≪ τd (note that other parameters, such as the neuronal connection strengths J(x, x′), also need to be set properly; see, e.g., the analysis in Barak and Tsodyks, 2007), a network can, on the one hand, enhance the interaction between neurons on the shorter time scale τf, as needed for generating persistent activity, and, on the other hand, reduce the interaction on the longer time scale τd, as needed for adaptation and for shutting down neural activity. The effects of STD and SFA on the network dynamics are similar, in that both essentially produce negative feedback modulation, but by including both of them with different time scales, a CANN is able to exploit multiple computational properties associated with the negative feedback modulation.
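The benefit of separating τf from τd can be seen in a toy calculation of the synaptic efficacy f·p under a constant firing rate. The sketch below assumes first-order forms τf df/dt = −(f − fmin) + α(1 − f)r and τd dp/dt = 1 − p − βpr; these forms, the rate r = 0.05, and τd = 1000 ms are hypothetical choices, while α, β, fmin, and τf are taken from the caption of Figure 2.

```python
import numpy as np

def simulate_efficacy(r, T, dt, tau_f, tau_d, alpha, beta, f_min):
    """Euler integration of assumed STF (f) and STD (p) equations at fixed rate r."""
    n = round(T / dt)
    f = np.empty(n + 1)
    p = np.empty(n + 1)
    f[0], p[0] = f_min, 1.0                      # resting values before stimulation
    for i in range(n):
        f[i + 1] = f[i] + dt / tau_f * (-(f[i] - f_min) + alpha * (1.0 - f[i]) * r)
        p[i + 1] = p[i] + dt / tau_d * (1.0 - p[i] - beta * p[i] * r)
    t = dt * np.arange(n + 1)
    return t, f, p

# Times in ms; tau_f << tau_d so facilitation acts first and depression later.
t, f, p = simulate_efficacy(r=0.05, T=2000.0, dt=0.1, tau_f=10.0, tau_d=1000.0,
                            alpha=20.0, beta=100.0, f_min=0.1)

efficacy = f * p                                  # effective scaling of J
t_peak = t[np.argmax(efficacy)]                   # time of strongest recurrent coupling
```

In this toy setting the efficacy first rises well above its resting value within a few multiples of τf and later falls below it on the time scale of τd, which is the ordering of positive and negative feedback that the argument relies on.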

Below we show that if the time scales of different dynamical features satisfy the condition

$$\tau_f \ll \tau_v \ll \tau_d, \tag{9}$$

the CANN is able to implement the above three contradictory computational tasks. It is known that STP and SFA show large diversity across cortical regions and neuron types. The parameters we consider here tend to hold in the sensory cortex, where the synapses are STD-dominating (Wang et al., 2006; Gutkin and Zeldenrust, 2014).

3.2.1. Persistent Activity

Persistent activity refers to the fact that neurons keep firing after removing the external drive, a property widely regarded as the neural substrate of short-term memory (Amit, 1989). With the condition Equation (9), our network model can reproduce this phenomenon.

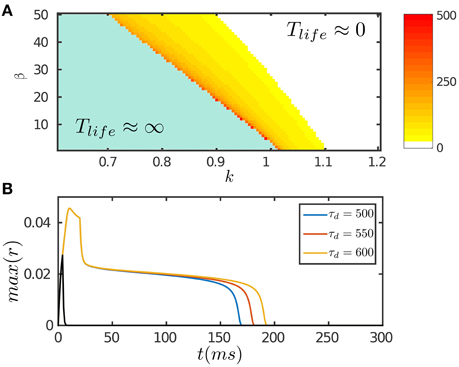

The results are presented in Figure 2. Figure 2A displays the phase diagram of the network with varying STD and inhibition strengths, which shows that the network holds persistent activity of finite lifetimes over a range of parameters around the boundary where the network is marginally unstable. In response to a transient input, the network first generates a strong response due to facilitation of the interaction between neurons via STF. If the stimulation duration is long enough, up to the order of τf, the neuronal recurrent connections will be sufficiently facilitated to retain neural activity without relying on external drive; otherwise, the neural activity fades away rapidly (Figure 2B). As time goes on, the negative feedback modulation via both SFA and STD begins to dominate and eventually suppresses the neural activity. We require that the amplitudes of SFA and STD satisfy two requirements: (1) the amplitude of SFA alone, which dominates on the time scale of τv (τf ≪ τv ≪ τd), is not adequate to suppress the neural activity, so that persistent activity lasts over the time scale τv. This condition is also required for implementing anticipative tracking introduced below. (2) The amplitude of STD, which dominates on the time scale of τd (τd ≫ τv), is in the parameter regime where the network is marginally unstable (Figure 2A), so that the network activity decays very slowly like a plateau on the time scale of τd and then falls rapidly (Figure 2B). We interpret this plateau decay as persistent activity of a finite lifetime. The plateau decay behavior is analyzed for SFA as shown in Figure 1D and with details given in Appendix (Supplementary Material). Since SFA and STD have similar effects on the network dynamics, it is understandable that STD also holds this property.

Figure 2. Persistent activity of the network in response to a transient input. The input is given by Equation (8). (A) The phase diagram of the network with varying STD strength β and inhibition strength k. The network can hold persistent activity of finite lifetimes over a range of parameter values around the boundary. The lifetime of persistent activity, Tlife, is measured by the length of the decaying plateau. (B) The lifetime of persistent activity with varying time scale of STD, τd, for β = 100 and k = 0.12. The network holds persistent activity if the stimulation duration is long enough, on the order of τf; otherwise the network response fades away rapidly, as indicated by the black curve. Other parameters are: a = 0.5, J0 = 0.5, fmin = 0.1, α = 20, m = 2.5τ/τv, η = 40, τ = 1 ms, τf = 10 ms, τv = 80 ms, τg = 3500 ms, Aamp = 0.15.

Note that we here consider a mechanism using STD to realize short-term memory in a network, and the duration of this kind of short-term memory is on the time scale of STD (Figure 2B). This mechanism has the advantage that it does not require an extra operation to turn off the network activity (Gutkin et al., 2001). There also exist other types of short-term memory with different time scales in the brain, such as working memory in the prefrontal cortex, whose duration ranges from seconds to minutes. These different types of short-term memory may recruit different mechanisms to hold and turn off neuronal persistent activities.

3.2.2. Adaptation

Adaptation refers to the phenomenon that neurons dynamically adjust their response properties in accordance with the statistics of the external inputs. It has been widely suggested that adaptation is a strategy for a neural system to utilize its resources (such as spikes) efficiently to encode input information (Laughlin, 1989; Wark et al., 2007). In luminance adaptation, in which the visual system adapts to a sustained stimulation of constant luminance, neuronal responses exhibit a stereotyped temporal characteristic: at the onset of the stimulation, neuronal responses increase dramatically; and afterwards, neuronal responses attenuate gradually down to a level close to background activity (Boynton and Whitten, 1970). Under the condition in Equation (9), our model can reproduce this phenomenon.

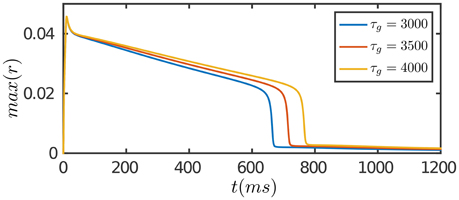

The results are presented in Figure 3. At the onset of the stimulation, the network first generates a strong transient response due to facilitation of neuronal interaction via STF. As time goes on, SFA and STD in recurrent connections begin to dominate and suppress neuronal responses. Furthermore, STD in feedforward connections takes effect on the time scale of τg and depresses the feedforward inputs to neurons. The overall effect is that neuronal responses attenuate over time, although the external input remains invariant, and eventually the network activity reaches a level close to background activity. Compared to the case of persistent activity, STD in feedforward connections plays a crucial role in adaptation, since it diminishes the feedforward current to a neuron for large external inputs.

Figure 3. Adaptation of the network in response to a constant input. The input is given by Equation (8). The duration of adaptation, measured from the onset of stimulation to the moment the network activity fades away, is determined by the time scale of STD in feedforward connection, τg. Other parameters are the same as Figure 2.

3.2.3. Anticipative Tracking

Anticipative tracking refers to the phenomenon that a neural system smoothly tracks a moving stimulus in such a manner that the neural representation leads the actual position of the stimulus. This phenomenon has been widely observed in retinal neurons (Leonardo and Meister, 2013), and in head direction (HD) neurons when animals are navigating in space (Taube, 2007). For instance, in the anterior dorsal thalamic nuclei of rodents, it was found that the head direction encoded by HD neurons led the actual instantaneous direction of the rat head by around 20 ms, i.e., the neural representation pointed to the angle the rat head would turn to 20 ms later (Blair et al., 1997). Similarly, anticipation occurs when our eyes smoothly pursue moving objects (Heinen and Liu, 1997). Anticipative tracking is fundamental for the brain to process motion information, since transmission delays of neural signals are significant and need to be compensated, e.g., the transmission delay of neural signals from the retina to V1 is about 50–80 ms (Nowak et al., 1995). Under the condition in Equation (9), our model can reproduce this phenomenon.

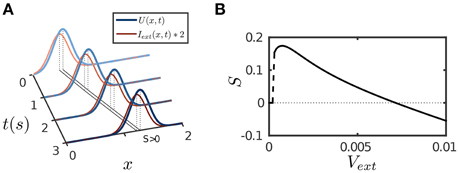

The results are presented in Figure 4. The parameter values are the same as for implementing persistent activity and adaptation. For a transient or a static input, since STD has the longest time scale τd, its effect will eventually dominate the network dynamics and suppress neural activity as described above. However, for a moving input, once the network bump moves away on the time scale of τv (τv ≪ τd) to track the input, the effect of depressed synapses at the old location can be neglected, thus, the network dynamics can be largely understood as if the CANN only includes SFA; and the latter is analyzed in Figures 1B,C. We therefore set the amplitude of SFA in the parameter range where the network holds traveling waves and is able to achieve anticipative tracking. Figure 4A displays an example of anticipative tracking of the network. Figure 4B presents the condition for anticipative tracking. Note that when the speed of the moving input is too small, no tracking occurs, since STD suppresses the neural activity before it starts to move.

Figure 4. Tracking performance of the network. The moving input is given by Equation (8). (A) An example of anticipative tracking of the network. vext = 0.8/s. For illustration purposes, the 2D bump is projected on the normal of the moving direction, and the external input strength is scaled up twofold. (B) The condition for anticipative tracking. Anticipation occurs when Svext > 0, where S is the separation between the network bump and the external input. When the speed is too small, S = 0 implies no tracking at all; and when the speed is too large, Svext < 0 implies lagging. Other parameters are the same as in Figure 2.

4. Discussion

Neural systems display rich short-term dynamics at all levels, from neurons to synapses and to circuits. These dynamical features cover a broad range of time scales and exhibit large diversity in different cortical regions. For instance, the time constants of SFA for different neuron types and in different areas span from tens to thousands of milliseconds (Benda and Herz, 2003); the time constants of STP cover a similar range, but the relative sizes of STF and STD vary across cortical areas, e.g., STF is dominating and has a longer time constant in the prefrontal cortex, whereas STD is dominating and has a longer time constant in the sensory cortex (Wang et al., 2006). It remains largely unclear what purpose such variability in dynamical features and their time scales serves for the brain.

In the present study, we argue that one benefit for having multiple dynamical features with varied time scales is that the brain can fully exploit the advantages of these features to implement what are otherwise contradictory computational tasks. To demonstrate this idea, we consider STF, SFA, and STD with increasing time constants in the dynamics of a CANN, and show that the network is able to implement three seemingly contradictory computations, which are persistent activity, adaptation and anticipative tracking. Simply stated, the role of STF is to hold persistent activity in the absence of external drive, the role of SFA is to support anticipative tracking for a moving input, and the role of STD is to eventually suppress neural activity for a static or transient input. Notably, the time constants of SFA and STD can be swapped with each other, since SFA and STD have similar effects on the network dynamics. Nevertheless, we need to include both of them, or only one of them (either SFA or STD) but with multiple time scales, since a single negative feedback modulation with a constant time scale is unable to achieve both anticipative tracking and plateau decay concurrently. The implementation of each individual computational task based on a single or combined dynamical features has been studied previously (e.g., Barak and Tsodyks, 2007; Fung et al., 2012). Here, our main contribution is a demonstration that contradictory computational tasks can be realized concurrently in a single neural circuit if different short-term dynamics are combined properly.

In the present study, we have considered STP and SFA with multiple time scales. Alternatively, a neural system may have other mechanisms to realize short-term dynamics of varied time scales. For instance, the work of Goldman (2009) considered heterogeneities in synapse strengths and neuronal connections among neuronal groups, such that different groups have different time constants in response to a transient external input, and a read-out neuron can integrate the responses of different neuronal groups over time to achieve persistent firing. It will be interesting to explore whether such a mechanism can also implement the three computational tasks considered in this study concurrently.

Finally, we should point out that we have not found direct experimental evidence confirming that a single cortical region realizes the three computational tasks considered in this study. However, based on the known experimental data, we expect that this is very likely to be true. For instance, the primary visual cortex V1 may realize these three computations. It is known that in the sensory cortex, neuronal synapses are STD-dominating, fitting the parameter condition in Equation (9), and that neurons in V1 exhibit adaptive behaviors, have the capacity to track a moving stimulus anticipatively (Xu et al., 2012), and can hold long-lasting residual activities in response to a transient stimulation (Jancke et al., 2004). Further experimental studies are needed to validate our hypothesis. Nevertheless, we hope that this study can shed light on our understanding of how the brain orchestrates its rich dynamics at various levels to realize diverse cognitive functions.

Author Contributions

YM and SW conceived the project idea. XL implemented the simulation. YM and SW performed the theoretical analysis. XL, YM, and SW wrote the paper.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We acknowledge Malte Rasch for helping us to improve the language. This work was supported by the National Basic Research Program of China [2014CB846101 (SW)], the National Natural Science Foundation of China [31261160495 (SW), 11305112 (YM)], the Fundamental Research Funds for the Central Universities [24810584GK (SW)], and the Specialized Research Fund for the Doctoral Program of Higher Education [20130003110022 (SW)].

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/article/10.3389/fncom.2016.00096

References

Abbott, L. F., and Regehr, W. G. (2004). Synaptic computation. Nature 431, 796–803. doi: 10.1038/nature03010

Abbott, L. F., Varela, J. A., Sen, K., and Nelson, S. B. (1997). Synaptic depression and cortical gain control. Science 275, 221–224. doi: 10.1126/science.275.5297.221

Amit, D. J. (1989). Modeling Brain Function: The World of Attractor Neural Networks. Cambridge, UK: Cambridge University Press. doi: 10.1017/cbo9780511623257

Barak, O., and Tsodyks, M. (2007). Persistent activity in neural networks with dynamic synapses. PLoS Comput. Biol. 3:e35. doi: 10.1371/journal.pcbi.0030035

Ben-Yishai, R., Bar-Or, R. L., and Sompolinsky, H. (1995). Theory of orientation tuning in visual cortex. Proc. Natl. Acad. Sci. U.S.A. 92, 3844–3848. doi: 10.1073/pnas.92.9.3844

Ben-Yishai, R., Hansel, D., and Sompolinsky, H. (1997). Traveling waves and the processing of weakly tuned inputs in a cortical network module. J. Comput. Neurosci. 4, 57–77.

Benda, J., and Herz, A. V. (2003). A universal model for spike-frequency adaptation. Neural Comput. 15, 2523–2564. doi: 10.1162/089976603322385063

Blair, H. T., Lipscomb, B. W., and Sharp, P. E. (1997). Anticipatory time intervals of head-direction cells in the anterior thalamus of the rat: implications for path integration in the head-direction circuit. J. Neurophysiol. 78, 145–159.

Bliss, T. V., and Collingridge, G. L. (1993). A synaptic model of memory: long-term potentiation in the hippocampus. Nature 361, 31–39. doi: 10.1038/361031a0

Boynton, R. M., and Whitten, D. N. (1970). Visual adaptation in monkey cones: recordings of late receptor potentials. Science 170, 1423–1426. doi: 10.1126/science.170.3965.1423

Bressloff, P. C. (2012). Spatiotemporal dynamics of continuum neural fields. J. Phys. A Math. Theor. 45:033001. doi: 10.1088/1751-8113/45/3/033001

Carter, E., and Wang, X.-J. (2007). Cannabinoid-mediated disinhibition and working memory: dynamical interplay of multiple feedback mechanisms in a continuous attractor model of prefrontal cortex. Cereb. Cortex 17, i17–i26. doi: 10.1093/cercor/bhm103

Dittman, J. S., Kreitzer, A. C., and Regehr, W. G. (2000). Interplay between facilitation, depression, and residual calcium at three presynaptic terminals. J. Neurosci. 20, 1374–1385.

Ermentrout, G. B., Jalics, J. Z., and Rubin, J. E. (2010). Stimulus-driven traveling solutions in continuum neuronal models with a general smooth firing rate function. SIAM J. Appl. Math. 70, 3039–3064. doi: 10.1137/090775737

Fung, C. C. A., and Amari, S. I. (2015). Spontaneous motion on two-dimensional continuous attractors. Neural Comput. 27, 507–547. doi: 10.1162/NECO_a_00711

Fung, C. C. A., Wong, K. Y. M., and Wu, S. (2010). A moving bump in a continuous manifold: a comprehensive study of the tracking dynamics of continuous attractor neural networks. Neural Comput. 22, 752–792. doi: 10.1162/neco.2009.07-08-824

Fung, C. C. A., Wong, K. Y. M., Wang, H., and Wu, S. (2012). Dynamical synapses enhance neural information processing: gracefulness, accuracy, and mobility. Neural Comput. 24, 1147–1185. doi: 10.1162/NECO_a_00269

Georgopoulos, A. P., Taira, M., and Lukashin, A. (1993). Cognitive neurophysiology of the motor cortex. Science 260, 47–52. doi: 10.1126/science.8465199

Goldman, M. (2009). Memory without feedback in a neural network. Neuron 61, 621–634. doi: 10.1016/j.neuron.2008.12.012

Gutkin, B., and Zeldenrust, F. (2014). Spike frequency adaptation. Scholarpedia 9:30643. doi: 10.4249/scholarpedia.30643

Gutkin, B. S., Laing, C. R., Colby, C. L., Chow, C. C., and Ermentrout, G. B. (2001). Turning on and off with excitation: the role of spike-timing asynchrony and synchrony in sustained neural activity. J. Comput. Neurosci. 11, 121–134. doi: 10.1023/A:1012837415096

Hao, J., Wang, X.-D., Dan, Y., Poo, M.-M., and Zhang, X.-H. (2009). An arithmetic rule for spatial summation of excitatory and inhibitory inputs in pyramidal neurons. Proc. Natl. Acad. Sci. U.S.A. 106, 21906–21911. doi: 10.1073/pnas.0912022106

Heinen, S. J., and Liu, M. (1997). Single-neuron activity in the dorsomedial frontal cortex during smooth-pursuit eye movements to predictable target motion. Vis. Neurosci. 14, 853–865. doi: 10.1017/S0952523800011597

Herz, A. V., Gollisch, T., Machens, C. K., and Jaeger, D. (2006). Modeling single-neuron dynamics and computations: a balance of detail and abstraction. Science 314, 80–85. doi: 10.1126/science.1127240

Itskov, V., Curto, C., Pastalkova, E., and Buzsáki, G. (2011). Cell assembly sequences arising from spike threshold adaptation keep track of time in the hippocampus. J. Neurosci. 31, 2828–2834. doi: 10.1523/JNEUROSCI.3773-10.2011

Jancke, D., Chavane, F., Naaman, S., and Grinvald, A. (2004). Imaging cortical correlates of illusion in early visual cortex. Nature 428, 423–426. doi: 10.1038/nature02396

Kilpatrick, Z. P., and Bressloff, P. C. (2009). Spatially structured oscillations in a two-dimensional excitatory neuronal network with synaptic depression. J. Comput. Neurosci. 28, 193–209. doi: 10.1007/s10827-009-0199-6

Kilpatrick, Z. P., and Bressloff, P. C. (2010a). Binocular rivalry in a competitive neural network with synaptic depression. SIAM J. Appl. Dyn. Syst. 9, 1303–1347. doi: 10.1137/100788872

Kilpatrick, Z. P., and Bressloff, P. C. (2010b). Effects of synaptic depression and adaptation on spatiotemporal dynamics of an excitatory neuronal network. Physica D 239, 547–560. doi: 10.1016/j.physd.2009.06.003

Leonardo, A., and Meister, M. (2013). Nonlinear dynamics support a linear population code in a retinal target-tracking circuit. J. Neurosci. 33, 16971–16982. doi: 10.1523/JNEUROSCI.2257-13.2013

Mante, V., Sussillo, D., Shenoy, K. V., and Newsome, W. T. (2013). Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature 503, 78–84. doi: 10.1038/nature12742

Markram, H., Wang, Y., and Tsodyks, M. (1998). Differential signaling via the same axon of neocortical pyramidal neurons. Proc. Natl. Acad. Sci. U.S.A. 95, 5323–5328. doi: 10.1073/pnas.95.9.5323

Mi, Y., Li, L., Wang, D., and Wu, S. (2014). “A synaptical story of persistent activity with graded lifetime in a neural system,” in Advances in Neural Information Processing Systems (Montreal, QC), 352–360.

Miller, P. (2013). Stabilization of memory states by stochastic facilitating synapses. J. Math. Neurosci. 3, 1–22. doi: 10.1186/2190-8567-3-19

Miller, P., and Katz, D. B. (2013). Accuracy and response-time distributions for decision-making: linear perfect integrators versus nonlinear attractor-based neural circuits. J. Comput. Neurosci. 35, 261–294. doi: 10.1007/s10827-013-0452-x

Nowak, L. G., Munk, M. H., Girard, P., and Bullier, J. (1995). Visual latencies in areas V1 and V2 of the macaque monkey. Vis. Neurosci. 12, 371–384. doi: 10.1017/S095252380000804X

Ponce-Alvarez, A., Thiele, A., Albright, T. D., Stoner, G. R., and Deco, G. (2013). Stimulus-dependent variability and noise correlations in cortical MT neurons. Proc. Natl. Acad. Sci. U.S.A. 110, 13162–13167. doi: 10.1073/pnas.1300098110

Renart, A., Song, P., and Wang, X.-J. (2003). Robust spatial working memory through homeostatic synaptic scaling in heterogeneous cortical networks. Neuron 38, 473–485. doi: 10.1016/S0896-6273(03)00255-1

Samsonovich, A., and McNaughton, B. L. (1997). Path integration and cognitive mapping in a continuous attractor neural network model. J. Neurosci. 17, 5900–5920.

Taube, J. S. (2007). The head direction signal: origins and sensory-motor integration. Annu. Rev. Neurosci. 30, 181–207. doi: 10.1146/annurev.neuro.29.051605.112854

Tsodyks, M., and Wu, S. (2013). Short-term synaptic plasticity. Scholarpedia 8:3153. doi: 10.4249/scholarpedia.3153

Wang, X.-J. (2001). Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci. 24, 455–463. doi: 10.1016/S0166-2236(00)01868-3

Wang, Y., Markram, H., Goodman, P. H., Berger, T. K., Ma, J., and Goldman-Rakic, P. S. (2006). Heterogeneity in the pyramidal network of the medial prefrontal cortex. Nat. Neurosci. 9, 534–542. doi: 10.1038/nn1670

Wark, B., Lundstrom, B. N., and Fairhall, A. (2007). Sensory adaptation. Curr. Opin. Neurobiol. 17, 423–429. doi: 10.1016/j.conb.2007.07.001

Wimmer, K., Nykamp, D. Q., Constantinidis, C., and Compte, A. (2014). Bump attractor dynamics in prefrontal cortex explains behavioral precision in spatial working memory. Nat. Neurosci. 17, 431–439. doi: 10.1038/nn.3645

Wu, S., Wong, K. Y. M., Fung, C. C. A., Mi, Y., and Zhang, W. (2016). Continuous attractor neural networks: candidate of a canonical model for neural information representation. F1000 Res. 66, 209–226. doi: 10.12688/f1000research.7387.1

Wu, J.-Y., Huang, X., and Zhang, C. (2008). Propagating waves of activity in the neocortex: what they are, what they do. Neuroscientist 14, 487–502. doi: 10.1177/1073858408317066

Xu, S. J., Jiang, W. C., Poo, M. M., and Dan, Y. (2012). Activity recall in a visual cortical ensemble. Nat. Neurosci. 15, 449–455. doi: 10.1038/nn.3036

York, L. C., and van Rossum, M. C. W. (2009). Recurrent networks with short term synaptic depression. J. Comput. Neurosci. 27, 607–620.

Zhang, K. (1996). Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: a theory. J. Neurosci. 16, 2112–2126.

Keywords: continuous attractor neural networks, short-term plasticity, spike frequency adaptation, persistent activity, adaptation, anticipative tracking

Citation: Mi Y, Lin X and Wu S (2016) Neural Computations in a Dynamical System with Multiple Time Scales. Front. Comput. Neurosci. 10:96. doi: 10.3389/fncom.2016.00096

Received: 15 April 2016; Accepted: 25 August 2016;

Published: 13 September 2016.

Edited by:

Yoram Burak, Hebrew University of Jerusalem, Israel

Reviewed by:

Paul Miller, Brandeis University, USA

Pedro J. Goncalves, University College London, UK

Copyright © 2016 Mi, Lin and Wu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Si Wu, wusi@bnu.edu.cn

†These authors have contributed equally to this work.
