- Centre for Advanced Imaging, The University of Queensland, Brisbane, QLD, Australia

Digital Imaging Processing (DIP) requires that data extracted from, and output by, a visualization tool be consistent. Data handling and transmission between a server and a user are systematic steps in an interpretation service. Combining integrated medical services for managing and viewing imaging data with a mobile visualization tool can greatly facilitate data analysis and interpretation. This paper presents an integrated mobile application and DIP service, called M-DIP. The objectives of the system are to (1) automate direct data tiling, conversion, and pre-tiling of brain images from Medical Imaging NetCDF (MINC) and Neuroimaging Informatics Technology Initiative (NIFTI) formats to RAW format; (2) speed up querying of imaging measurements; and (3) display high-resolution three-dimensional images in real-world coordinates. In addition, M-DIP can be used on a mobile phone or tablet without any software installation, using web-based protocols. M-DIP implements a three-tier architecture: a relational middle-layer database, a stand-alone DIP server, and a mobile application logic layer that supports user interpretation through direct querying and communication. The software can display biological imaging data at multiple zoom levels and increase image quality to meet users’ expectations. Interpretation of bioimaging data is facilitated by an interface analogous to online mapping services, with browsing in real-world coordinates. This allows mobile devices to display multiple datasets simultaneously from a remote site. M-DIP can be used as a measurement repository accessible from any network environment, including portable mobile or tablet devices. In addition, this system, combined with mobile applications, establishes a visualization tool in the neuroinformatics field that speeds up interpretation services.

Introduction

Digital Imaging Processing (DIP) is a rapidly evolving area in medical science. It plays an important role in the analysis, interpretation, and viewing of data, and has been widely adopted owing to technological advances in digital imaging, computer processors, and mass storage devices (1). In medical science, DIP involves the modification of data to improve image quality for interpretation. Processing and extracting information from images is indispensable in the experimental workflow of medical science research, particularly in cell biology and neuroscience (2). DIP not only helps to maximize details of interest for image extraction and analysis but also provides the capability of capturing the raw data for further study and interpretation.

New digital acquisition techniques in biomedical research have led to a vast increase in scientific data across spatial and temporal scales (3). Technological improvements in the last decade, such as in image resolution, contrast enhancement, and microscopic precision, have resulted in ever-increasing data sizes (4, 5). These datasets reach multiple gigabytes or terabytes when re-stacked (i.e., when serial sections are reconstructed into a 3D block), which complicates image manipulation, retrieval, and sharing. Several systems have addressed image processing and data management, covering data handling, extraction, protocol management, and real-world collaboration; examples include the LONI system from UCLA (6), the XNAT system (7), and the HID system (8) [for a review, see Turner and Van Horn (9)]. A great challenge for DIP is that extracting information from large raw datasets is slow and can limit scientific progress. To overcome these issues, individual laboratories have had to acquire the expertise for developing enterprise software tools, or even simply for running the hardware necessary for data management and analysis at this scale (2). Such processing still requires specialized hardware with advanced processors and mass storage, which is not conducive to remote viewing because of the massive data communication involved.

In the biomedical field, several open-source neuroinformatics implementations of DIP have been introduced (10, 11). For example, an open-source cell finding and tracking package called ZFIQ (12) is available for quantitative, reproducible, and accurate data analysis. Such implementations serve well to address the basic parameters of a biomedical study (13). However, recent advances in mobile technology have broadened the application of technology to telemedicine and image processing (14). This technology can greatly increase the ability of local hospitals or clinics to provide general neurologic consultation services (15). It is now possible to perform DIP so that users can query images remotely without significant data transfer. Mobile devices that support this must be capable of displaying imaging data (with tri-planar views) and must support high-performance direct image tiling.

The purpose of this study is to (a) facilitate wider use of mobile DIP applications in medical science, (b) offer an innovative application that displays information graphically, (c) improve the performance of data handling, and (d) increase the speed of rendering, particularly for MRI datasets. This study describes a comprehensive design for the current mobile implementation and the future potential of remote tools in medical science. The application developed by the authors is called TissueStack (4). TissueStack is an HTML5, multi-dimensional, tile-based image-viewing platform with targeted interfaces for desktop and mobile clients. It allows visualization of high-resolution MRI and histological data. The server component runs on an Apache Tomcat web server, is multi-threaded, and is connected to a Postgres (16) database. Its development was motivated by the need to view large multi-dimensional MRI and histological imaging data in the neuroinformatics field.

Software Design

Rapid advances in bioimaging technology and mobile networks enable an M-DIP system to improve the quality of image extraction and the speed of workflows (17–19). M-DIP for bioimaging services is intended to provide (3): (a) a solid technological architecture; (b) transparent data extraction and querying; (c) technical and semantic interoperability between multiple datasets; (d) demonstrated operational efficiency and flexibility; and (e) reliable user access and support through a friendly user interface (UI). M-DIP offers a comprehensive framework for multi-center data acquisition and is deployed for TissueStack datasets, specifically those in Medical Imaging NetCDF (MINC), Neuroimaging Informatics Technology Initiative (NIFTI), or RAW image formats. The aim of M-DIP is to select single voxels from TissueStack datasets and perform image interpretation. Three main image processing techniques have been implemented in M-DIP: image lookup, data extraction, and direct tiling. The front-end interface comprises two components: a web service and a direct querying service.

System architecture

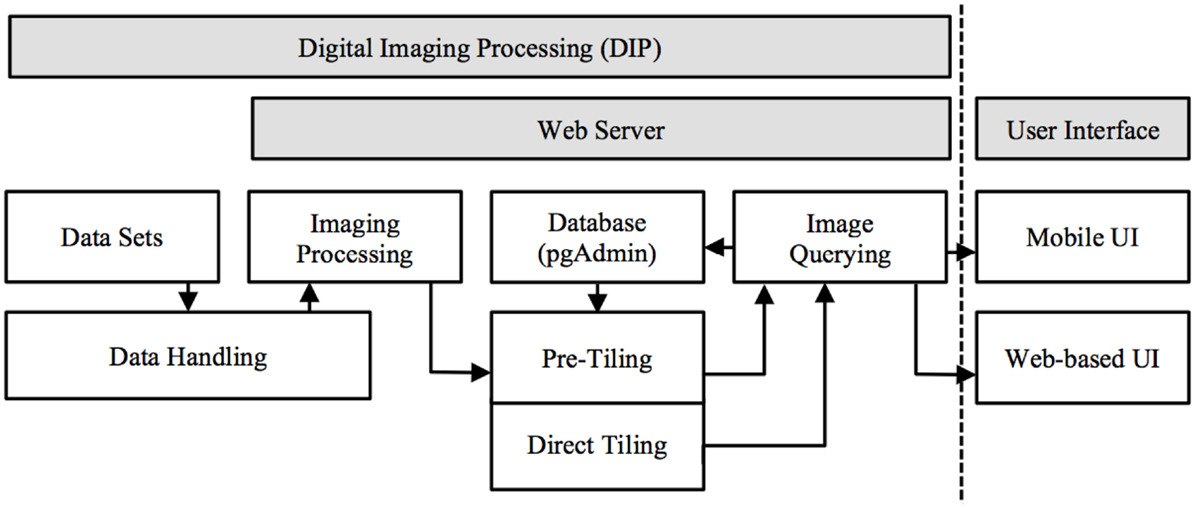

M-DIP provides a structured information context for interpreting histological and MRI voxel data. It includes a low-level server and a high-level client. Several programming languages were used: (a) Structured Query Language (SQL) for image queries against the relational database; (b) C on the server side for image processing, data handling, and manipulation; and (c) Java (Oracle Inc., USA) for server- and client-side web services. M-DIP implements a three-tier architecture comprising a DIP server (back-end, server side), an application middle tier with a relational database (middle-end), and a mobile UI (front-end) (Figure 1).

Figure 1. Mobile digital imaging processing system architecture. Images are processed on the server side and presented in the front-end UI (data flow from left to right and are handled according to dataset format).

The three-level design can be both modular and flexible depending on the needs of the user. The server side performs three main functions. (a) Data handling and conversion: either the dataset can be loaded into memory for use by the server, or it must first be converted to the RAW file format. (b) Data manipulation: extracting the required slice(s) from the volume, performing image processing (color mapping, scaling, and rotation), and passing the result on to the server. (c) Server communication: once the data are ready, the server sends them via sockets [Transmission Control Protocol (TCP) or UNIX], so that they can be handled locally (by another program using a UNIX socket) or remotely over a standard TCP connection. A communication component has been created to communicate directly with the browser and send images as binary data.
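The paragraph above says processed images leave the server as binary data over a socket, but the wire format is not described. The Python sketch below therefore assumes a simple length-prefixed frame (a 4-byte big-endian length followed by the image bytes); `send_image`, `recv_image`, and the framing itself are hypothetical and are not TissueStack's actual protocol. Python is used for brevity, although the paper's server is written in C.

```python
import socket
import struct

# Hypothetical frame header: payload length as an unsigned 32-bit big-endian integer.
HEADER = struct.Struct("!I")

def send_image(sock: socket.socket, payload: bytes) -> None:
    """Send one binary image (e.g. an encoded tile) as a length-prefixed frame."""
    sock.sendall(HEADER.pack(len(payload)) + payload)

def _recv_exactly(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; recv() on a stream socket may return fewer."""
    chunks = []
    remaining = n
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed in the middle of a frame")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)

def recv_image(sock: socket.socket) -> bytes:
    """Receive one length-prefixed frame and return its payload."""
    (length,) = HEADER.unpack(_recv_exactly(sock, HEADER.size))
    return _recv_exactly(sock, length)
```

With `socket.socketpair()` standing in for a real UNIX or TCP connection, `send_image(a, data)` followed by `recv_image(b)` returns `data` unchanged. The same framing works over either socket family, which is what lets one communication component serve both local and remote clients.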

A Java middle layer links the database, front-end, and server. It handles new information sent by the server, storing it in and retrieving it from the database; the information can then be transmitted to the front-end interface. To facilitate communication from the front-end to the Java middle-end, a web service has been created and integrated into the latter. Its responses are formatted as JavaScript Object Notation (JSON) or Extensible Markup Language (XML). The web service also communicates with the server side through a UNIX socket when the two instances are on the same machine, and through TCP when one part is remote.
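The transport rule in the last sentence (a UNIX socket for co-located processes, TCP otherwise) can be sketched as follows. The function names, socket path, and port are hypothetical placeholders, and the sketch illustrates only the selection logic, not the Java web service itself.

```python
import socket
from typing import Tuple, Union

Address = Union[str, Tuple[str, int]]

def choose_endpoint(server_host: str, local_host: str,
                    unix_path: str, tcp_port: int) -> Tuple[int, Address]:
    """Return (address family, address) for reaching the image server.

    A UNIX domain socket is chosen when the server runs on the same machine
    and the platform supports AF_UNIX; otherwise a TCP address is returned.
    """
    if server_host == local_host and hasattr(socket, "AF_UNIX"):
        return socket.AF_UNIX, unix_path
    return socket.AF_INET, (server_host, tcp_port)

def connect_to_image_server(server_host: str, local_host: str,
                            unix_path: str, tcp_port: int) -> socket.socket:
    """Open a stream socket to the image server over the chosen transport."""
    family, address = choose_endpoint(server_host, local_host, unix_path, tcp_port)
    sock = socket.socket(family, socket.SOCK_STREAM)
    try:
        sock.connect(address)
    except OSError:
        sock.close()  # do not leak the descriptor if the server is unreachable
        raise
    return sock
```

A UNIX socket avoids the TCP/IP stack entirely for local traffic, which is why it is the natural choice when both tiers share a host.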

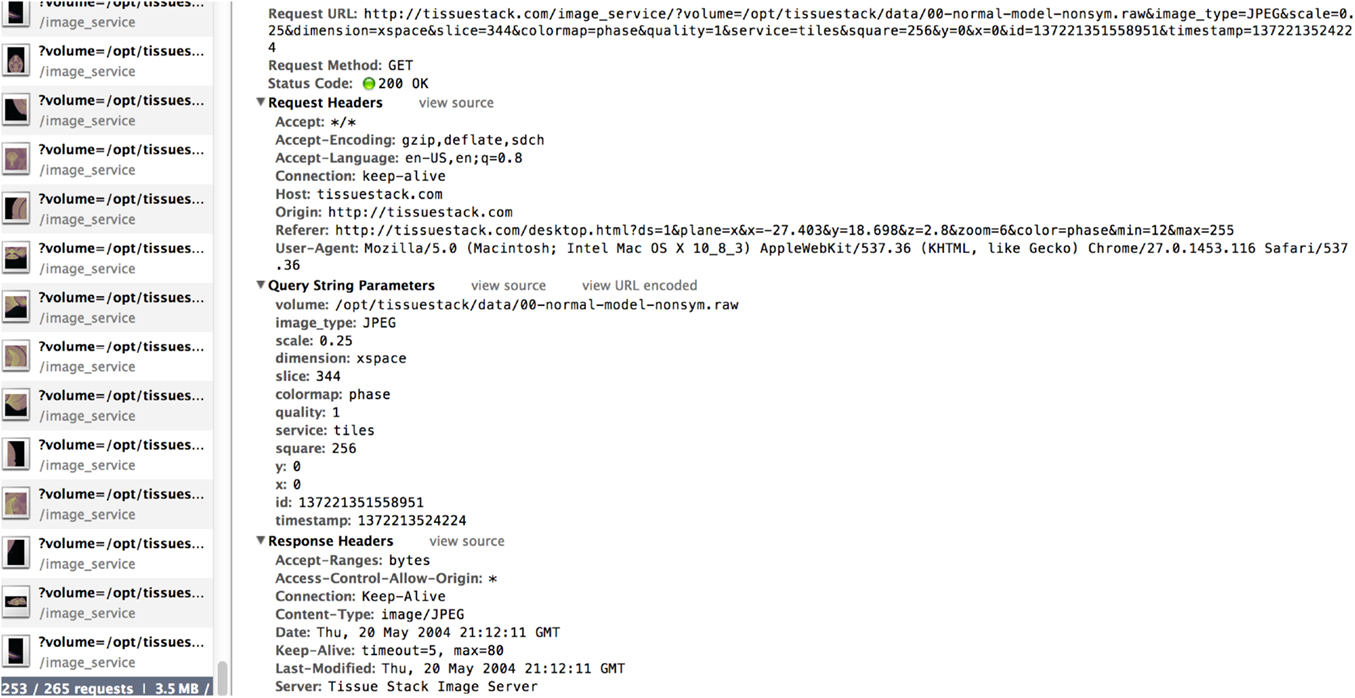

The third part of the system is an HTML5 web-based front-end, the UI for the end user. It is the only part of TissueStack visible to a user; nevertheless, it communicates frequently with the other layers via Asynchronous JavaScript and XML (AJAX) requests. Figure 2 shows an example of an image-querying request captured with the Google Chrome WebKit inspector. This approach improves the user experience by increasing responsiveness, because the entire page is not reloaded with each request.

Data handling (server side)

A major problem with current imaging formats is how data are represented and stored inside a volume. MINC (20) and NIFTI (21) files store only one dimension of data linearly; the other dimensions are retrieved via permutation (22). This severely affects the performance of data retrieval and storage. When accessing the first dimension, speed and resource use are constant, but the remaining dimensions become progressively slower because of the permutation. The storage techniques used by MINC and NIFTI have their advantages (e.g., file size and portability) but are not conducive to high-performance image retrieval, and retrieval speed is critical when extracting images in real time. Three main solutions exist: (a) down-sampling the data to create smaller datasets, (b) dicing the volume into multiple smaller cubes, and (c) loading the entire volume into memory. Down-sampling increases the performance of data manipulation, but its inherent loss of quality is unacceptable for this project. Dicing requires a file and directory structure that is fragile to file movement and can be difficult to maintain; it is nevertheless used by many imaging tools to improve application speed (23). Loading the entire file into memory is not feasible because gigabyte- or terabyte-scale datasets require substantial RAM. None of these three solutions was used in TissueStack; instead, we created a specific imaging format to meet the project’s primary need: speed.
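To make the cost of reading the non-primary dimensions concrete, the sketch below counts how many separate contiguous ranges must be read to extract one slice from a volume stored in C order (last index varying fastest). It is a simplified model that ignores caching, chunking, and compression (MINC 2, for example, is built on HDF5, which may chunk data), so it illustrates the access pattern rather than measuring MINC or NIFTI themselves. All function names are hypothetical.

```python
from itertools import product

def c_order_strides(shape):
    """Element strides of a C-order array: the last axis has stride 1."""
    strides, step = [], 1
    for n in reversed(shape):
        strides.append(step)
        step *= n
    return tuple(reversed(strides))

def slice_offsets(shape, axis, index):
    """Sorted linear offsets of all voxels whose coordinate on `axis` equals `index`."""
    strides = c_order_strides(shape)
    ranges = [range(n) if i != axis else [index] for i, n in enumerate(shape)]
    return sorted(sum(c * s for c, s in zip(coord, strides))
                  for coord in product(*ranges))

def count_runs(offsets):
    """Number of maximal runs of consecutive offsets, i.e. separate seeks and reads."""
    runs, prev = 0, None
    for off in offsets:
        if prev is None or off != prev + 1:
            runs += 1
        prev = off
    return runs
```

For a (4, 5, 6) volume, a slice perpendicular to axis 0 is a single run of 30 voxels, axis 1 needs 4 runs, and axis 2 needs 20 single-voxel reads. The gap widens with volume size, which is the progressive slowdown described above.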

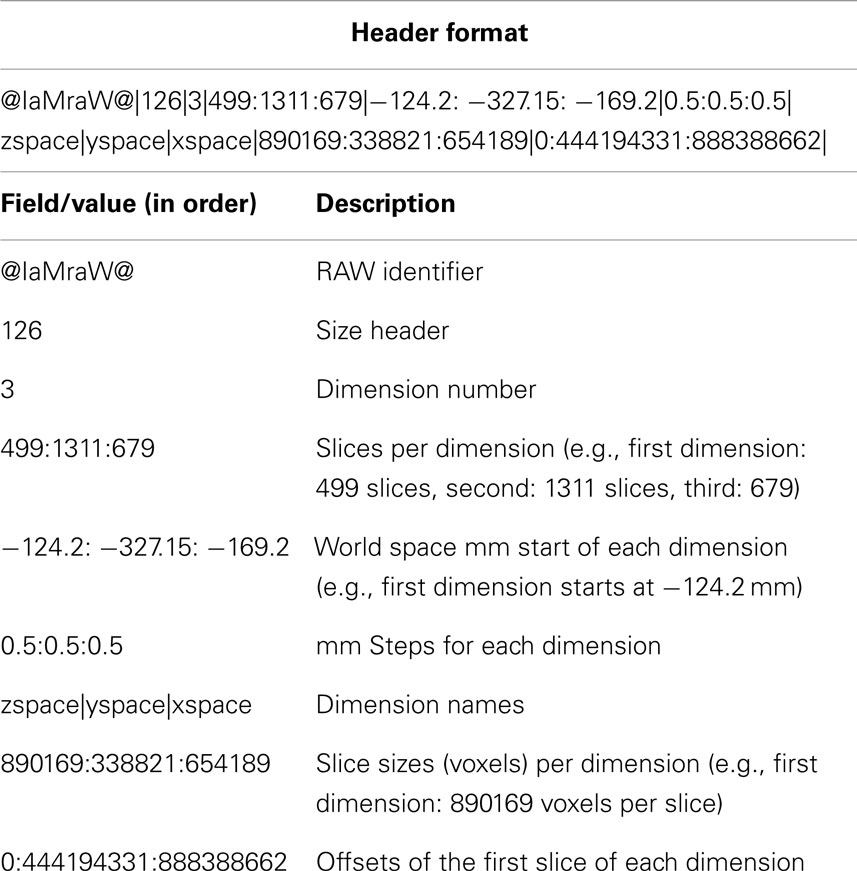

Our file format, known as RAW, stores data linearly not only for the first dimension but for all dimensions. This implies redundancy: every voxel value is stored x times, where x is the number of dimensions. For this reason, the approach is currently only tractable for three-dimensional datasets, as each additional dimension adds another full copy of an already larger volume. Despite the larger storage size, the format has several benefits: (a) voxels are stored as raw values, meaning that no modification, transformation, or translation is applied to the original voxel values, so they can be copied directly into a new image (e.g., JPEG or PNG) with minimal computational overhead; (b) the redundancy allows data to be fetched linearly for all dimensions, avoiding the dimension permutation required by other imaging formats, which causes a speed loss that grows with volume size; (c) there is no need to load an entire volume into memory, as portions can be loaded thanks to the sequential nature of the file; and (d) it is straightforward to compute the offset of, and retrieve, any data in the volume. In theory, the dataset could be arbitrarily large while any slice or voxel in any dimension is still fetched at constant performance. Despite its speed, the format remains simple, with a minimal header (see Table 1). To ease its adoption, a converter has been created to automatically convert MINC and NIFTI files to the RAW format.
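The redundant layout can be sketched in a few lines. The sketch below assumes 1 byte per voxel, a C-order input volume, and one copy per axis in which that axis is the slowest-varying index; the real RAW header (Table 1), voxel types, and copy ordering in TissueStack may differ, and all function names are hypothetical. It illustrates benefit (d): any slice in any dimension is one contiguous read at a computable offset.

```python
from itertools import product

def c_offset(coord, shape):
    """Linear offset of `coord` in a C-order (last index fastest) array."""
    offset = 0
    for c, n in zip(coord, shape):
        offset = offset * n + c
    return offset

def build_redundant_payload(volume: bytes, shape) -> bytes:
    """One full copy of the volume per axis; in copy k, axis k varies slowest."""
    ndim = len(shape)
    out = bytearray()
    for axis in range(ndim):
        # Axis order for this copy: `axis` first, the others in original order.
        order = [axis] + [a for a in range(ndim) if a != axis]
        for permuted in product(*(range(shape[a]) for a in order)):
            coord = [0] * ndim
            for a, c in zip(order, permuted):
                coord[a] = c
            out.append(volume[c_offset(coord, shape)])
    return bytes(out)

def slice_location(shape, axis: int, index: int):
    """(byte offset within the voxel payload, byte length) of one slice."""
    total = 1
    for n in shape:
        total *= n
    slice_len = total // shape[axis]
    return axis * total + index * slice_len, slice_len

def read_slice(payload: bytes, shape, axis: int, index: int) -> bytes:
    """Fetch one slice from an in-memory payload with a single contiguous slice."""
    start, length = slice_location(shape, axis, index)
    return payload[start:start + length]

def read_slice_from_file(path: str, header_size: int, shape, axis: int, index: int) -> bytes:
    """Same lookup against a file on disk: one seek and one read, no full load."""
    start, length = slice_location(shape, axis, index)
    with open(path, "rb") as f:
        f.seek(header_size + start)
        return f.read(length)
```

For 3-D data the payload is three times the volume size, which is the storage trade-off described above; in exchange, the cost of fetching a slice no longer depends on which axis it is taken along.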

Web access and mobile interface (front-end)

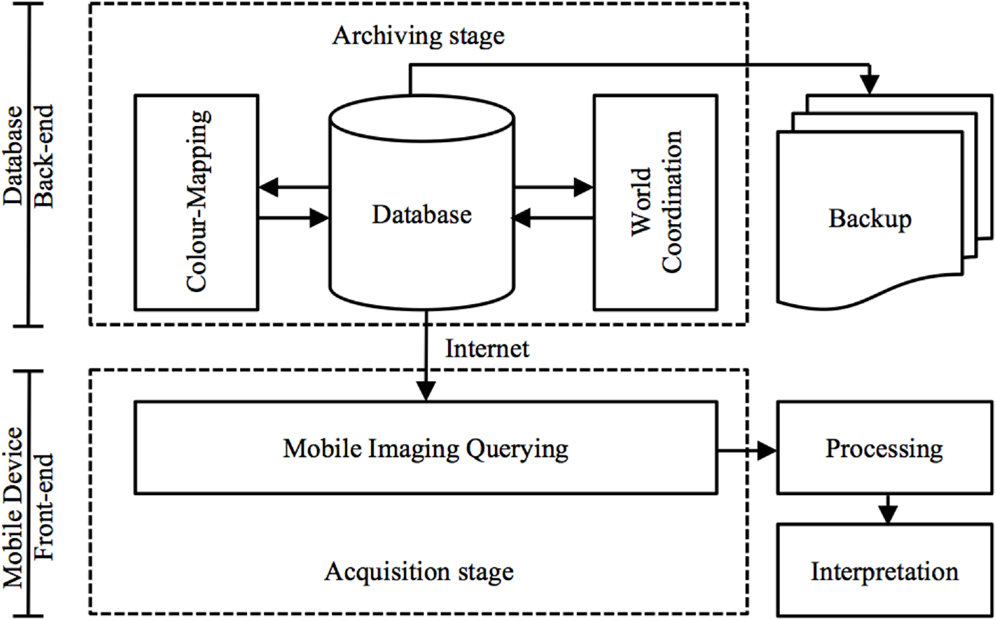

The front-end of M-DIP is divided into five main modules: data and imaging manipulation, world coordinates, image querying, color mapping, and system administration. Screenshots of the mobile UI are shown in the section “Results” (Figure 9). This multi-level mobile application features two distinct stages of data flow: (a) an archiving stage, with database management and a web access front-end; and (b) an acquisition stage that enables data querying and image interpretation for subsequent analysis (Figure 3).

Figure 3. Data flow via a mobile visualization tool (application). An archiving stage (back-end) focuses on database management, and an acquisition stage (front-end) handles image querying and interpretation.

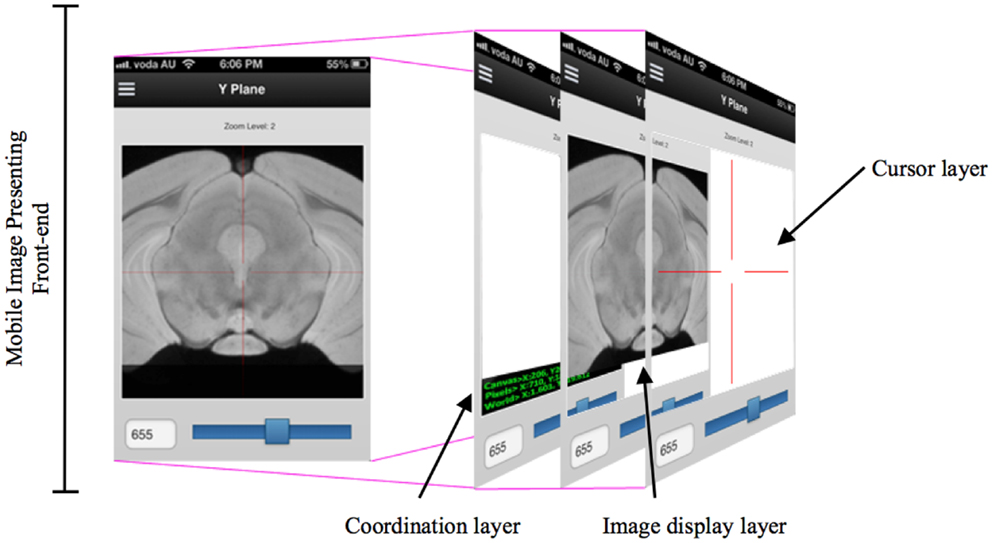

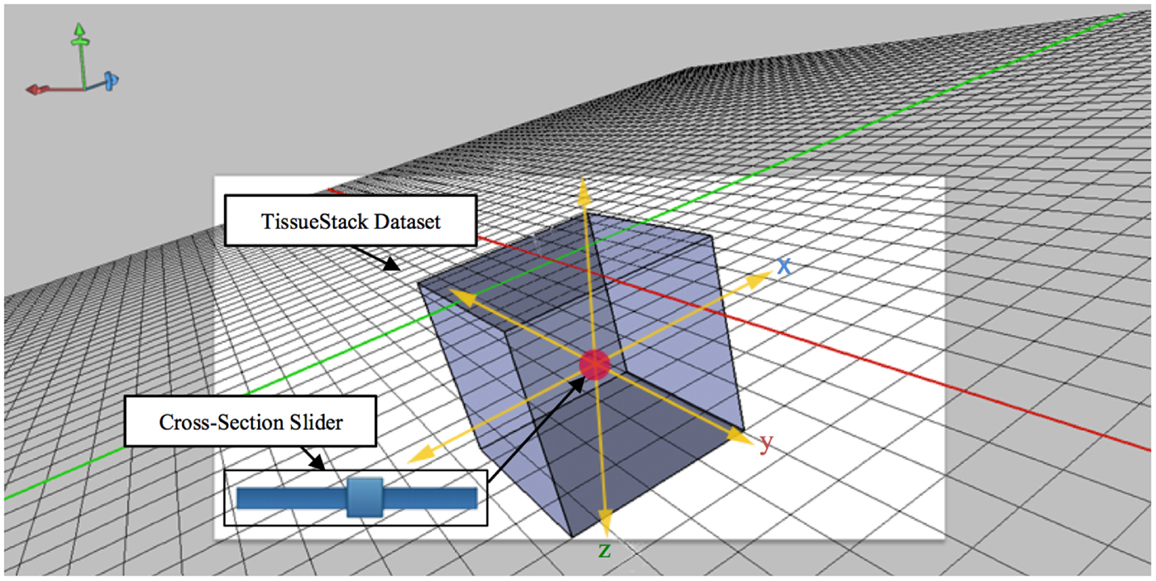

While developing the mobile application to cooperate with the DIP server, the various interface layers had to be implemented so that researchers can access DIP models in a seamless and intuitive fashion and easily access other images in the same plane. When designing a mobile UI, it is useful to provide a familiar style to take advantage of human recognition (24), and M-DIP adopts this idea in its development goals: the UI behaves like a mapping tool so that it is intuitive to use. The interface also provides a cross-section tool to zoom in and out when displaying longitudinal planes (Figure 4), so that users can easily interpret histological and MRI data at different scales without image degradation. The M-DIP interface has three layers: a top, independent cursor layer that avoids unnecessary redrawing; a middle layer that contains the raw images; and a bottom layer that contains the world coordinates. Unlike the top layer, the bottom two must be redrawn after every user interaction.
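Because the interface behaves like a mapping tool, only the tiles covering the visible part of a slice need to be requested at a given zoom level. The sketch below computes those tiles; the 256-pixel tile size, the idea of expressing zoom as a scale factor, and the function names are assumptions for illustration, not TissueStack's documented tiling scheme.

```python
import math

TILE_SIZE = 256  # hypothetical tile edge length in rendered pixels

def tile_grid(slice_w: int, slice_h: int, scale: float, tile: int = TILE_SIZE):
    """(columns, rows) of tiles needed to cover a slice rendered at `scale`."""
    return math.ceil(slice_w * scale / tile), math.ceil(slice_h * scale / tile)

def visible_tiles(slice_w, slice_h, scale, view_x, view_y, view_w, view_h,
                  tile: int = TILE_SIZE):
    """(col, row) of every tile that intersects the viewport.

    The viewport origin and size are in rendered-pixel coordinates, i.e. after
    scaling, as they would be once the user has panned and zoomed.
    """
    cols, rows = tile_grid(slice_w, slice_h, scale, tile)
    c0 = max(0, int(view_x // tile))
    r0 = max(0, int(view_y // tile))
    c1 = min(cols - 1, int((view_x + view_w - 1) // tile))
    r1 = min(rows - 1, int((view_y + view_h - 1) // tile))
    return [(c, r) for r in range(r0, r1 + 1) for c in range(c0, c1 + 1)]
```

A 1311 × 679 slice (two of the dimensions quoted in the Results) at scale 1 needs a 6 × 3 tile grid, yet a 320 × 480 phone viewport at the top-left corner requests only four tiles. Raising the scale to 2 grows the grid to 11 × 6 while the number of visible tiles stays bounded by the screen size.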

Figure 4. Multiple layers in a mobile digital imaging processing application. Layers include, but are not limited to, a coordinate layer, an image display layer, and a cursor layer.

The technique shown in Figure 5 extends the idea of an area cursor by allowing users to move the image object as well as the coordinates using touch gestures. Users can interpret histological data while querying images by moving their fingers over the object, and the relative positions can be associated with other tissue planes. For example, when users interact with the x dimension at the point [106,72,52], the y dimension will move from the previous position to [52,72,106]. Moving the longitudinal plane in this way is useful because it enables effective search and interpretation of image data without display artifacts. During acquisition, M-DIP can provide users with sufficient information by querying and applying image processing, such as color mapping, in real time.

Figure 5. Cross-section slider for viewing any plane defined by two of the axes along a third axis orthogonal to aforementioned plane.
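A common way to keep tri-planar views in sync, sketched below under stated assumptions, is to maintain a single 3-D cursor: a tap in one view sets the two in-plane coordinates, and the cross-section slider moves the cursor along that view's normal axis. The axis assignment per view and all names are hypothetical conventions, and the sketch is not claimed to reproduce the exact coordinate behaviour in the example given earlier in this section.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Cursor:
    x: int
    y: int
    z: int

# Hypothetical convention: each view's slice (normal) axis and in-plane axes (u, v).
VIEWS = {
    "axial":    {"slice": "z", "u": "x", "v": "y"},
    "coronal":  {"slice": "y", "u": "x", "v": "z"},
    "sagittal": {"slice": "x", "u": "y", "v": "z"},
}

def _as_dict(cursor: Cursor) -> dict:
    return {"x": cursor.x, "y": cursor.y, "z": cursor.z}

def tap(cursor: Cursor, view: str, u: int, v: int) -> Cursor:
    """Tap at (u, v) in `view`: update the in-plane coordinates, keep the view's slice."""
    axes = VIEWS[view]
    coords = _as_dict(cursor)
    coords[axes["u"]] = u
    coords[axes["v"]] = v
    return Cursor(**coords)

def slide(cursor: Cursor, view: str, index: int) -> Cursor:
    """Cross-section slider: move along the axis orthogonal to `view`."""
    coords = _as_dict(cursor)
    coords[VIEWS[view]["slice"]] = index
    return Cursor(**coords)

def slice_indices(cursor: Cursor) -> dict:
    """Slice each view should display so all three planes pass through the cursor."""
    return {name: getattr(cursor, axes["slice"]) for name, axes in VIEWS.items()}
```

Because every view is derived from one cursor, moving any plane immediately tells the other two views which slice to request, so only those layers need redrawing.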

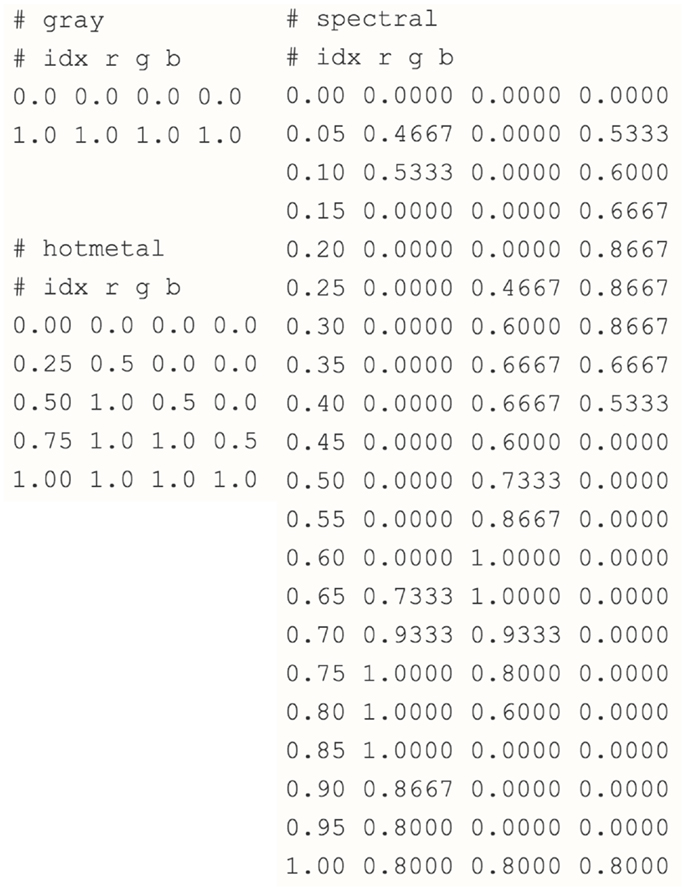

Remote access via a standard Internet (mobile) connection allows users to extend both metadata and workflow models for analysis. This type of service is referred to as an “archiving stage,” in which a back-end service holds and manages the data and delivers a view of the images to the front-end interface. The service includes color mapping, which displays images in a contrast view and allows cell regions to be tracked and identified in a TissueStack dataset. The key M-DIP implementation in the archiving stage includes two components. (a) World coordinate space: a key concept for interpretation in the histological field is the spatial representation of the data. The object can be constructed from data on the client side and displayed with respect to a world coordinate space. This millimetric mapping allows users to consistently locate and identify sub-regions in a dataset, and, via external registration and realignment, regions and coordinates can be related across multiple datasets. (b) Color mapping: an automated transformation of the image that allows a user to compress or expand the range of image values being displayed. It also allows arbitrary colors to be assigned to different parts of an image based on their intensity. Figure 6 shows the RGB values of the “gray,” “hot metal,” and “spectral” color maps. The first column of each color map represents the gray-scale intensity, and the other three columns give the RGB values mapped to it. All values range from 0 to 1, and the number of steps (rows) from 0 to 1 defines the complexity of the color map’s spectrum. This allows large-scale datasets to be presented in a compelling graphical manner.
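The two archiving-stage components can be illustrated together. The sketch below assumes color-map tables laid out as in Figure 6 (rows of intensity, R, G, B, all in [0, 1]) and interpolates linearly between rows. The interpolation rule, the contrast window, and the axis-aligned voxel-to-millimetre mapping (start + index × step per axis, with no rotation) are assumptions rather than TissueStack's documented behaviour, and `EXAMPLE_HOT` is a hypothetical three-row table, not the real “hot metal” map.

```python
from bisect import bisect_right

# Rows are (intensity, r, g, b), all in [0, 1], with increasing intensity.
GRAY = [(0.0, 0.0, 0.0, 0.0), (1.0, 1.0, 1.0, 1.0)]
# Hypothetical three-row table for illustration; NOT the real "hot metal" values.
EXAMPLE_HOT = [(0.0, 0.0, 0.0, 0.0), (0.5, 1.0, 0.0, 0.0), (1.0, 1.0, 1.0, 1.0)]

def window(value: float, low: float, high: float) -> float:
    """Contrast control: map [low, high] onto [0, 1], clamping values outside it."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))

def apply_colormap(t: float, table) -> tuple:
    """RGB for normalized intensity t, interpolated linearly between table rows."""
    keys = [row[0] for row in table]
    i = bisect_right(keys, t)
    if i == 0:               # below the first row: clamp to its color
        return tuple(table[0][1:])
    if i == len(table):      # at or above the last row: clamp to its color
        return tuple(table[-1][1:])
    (t0, *c0), (t1, *c1) = table[i - 1], table[i]
    f = (t - t0) / (t1 - t0)
    return tuple(a + f * (b - a) for a, b in zip(c0, c1))

def voxel_to_world(index, start, step):
    """Millimetre position of a voxel, assuming axis-aligned sampling per axis:
    world = start + index * step (direction cosines ignored)."""
    return tuple(s + i * d for i, s, d in zip(index, start, step))
```

Adding rows to a table gives a more complex spectrum without changing the lookup code, which matches the description of the number of rows defining the color map's complexity.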

User roles

Based on reported use of DIP in the medical science field (1, 25, 26), M-DIP should allow users to view, annotate, and measure multi-dimensional images remotely. In this paper we focus on a possible solution to DIP in a mobile environment for users in the neuroinformatics field. There are three major user roles: researchers, editors, and administrators. Researchers use the application for data interpretation; editors can upload and edit data and perform image tiling and conversion; administrators have rights to access the image server and database and to change user roles. Our implementation embraces new technologies for user interaction, and most of this work has been conducted in environments where adoption is voluntary. A voluntary-use environment is defined as “one in which users perceive the technology adoption or use to be a good choice; a mandated environment is where users perceive use to be organizationally compulsory” (27). In such an environment, the adopted system must be able to complete job tasks that are tightly integrated across multiple users (28).
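The three roles can be expressed as a small permission table. The action names below are hypothetical labels for the capabilities listed in the text, and the sketch assumes the roles are cumulative (an editor can also do what a researcher does, and an administrator what an editor does), which the text does not state explicitly.

```python
from enum import Enum

class Role(Enum):
    RESEARCHER = "researcher"
    EDITOR = "editor"
    ADMINISTRATOR = "administrator"

# Hypothetical action names; roles are assumed to be cumulative.
_RESEARCHER = frozenset({"view", "annotate", "measure", "query"})
_EDITOR = _RESEARCHER | {"upload", "edit", "tile", "convert"}
_ADMINISTRATOR = _EDITOR | {"access_image_server", "access_database", "change_roles"}

PERMISSIONS = {
    Role.RESEARCHER: _RESEARCHER,
    Role.EDITOR: _EDITOR,
    Role.ADMINISTRATOR: _ADMINISTRATOR,
}

def is_allowed(role: Role, action: str) -> bool:
    """True if `role` may perform `action`."""
    return action in PERMISSIONS[role]
```

Keeping the table as data rather than scattered checks makes it easy to change a role's rights, which matches the administrator's ability to change user roles.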

Materials and Methods

This research has focused on three key elements (data handling, link speed, and mobile UI) of a web-based mobile application for processing medical images, particularly MRI datasets. (a) Data handling: create a specific imaging format that meets specialists’ needs (higher speed and associated performance) to enhance its information and “system” qualities. (b) Link speed: test system performance and the communication between the mobile device and the image server to meet specialists’ “satisfaction” and increase “service quality.” (c) Mobile UI: provide a mobile graphical interface for visualizing image data, creating an environment for image interpretation and analysis in real time.

To improve the quality of our M-DIP implementation, we refer to the overall level of user need and also use it as a metric to measure information, system, and service qualities. Our implementation aims to achieve these qualities by providing new methods of data handling with both theoretical and practical contributions. To demonstrate the effectiveness of TissueStack, we evaluated the system using the Information System Success Model (ISSM) (29). The ISSM defines clear, specific dimensions of success or acceptance for a new system implementation, and the relationships between them. In the ISSM, “intention to use” or “user satisfaction” is determined by “information,” “system,” and “service” qualities (30). Combining these independent factors, we used a survey to identify users’ experience and intentions while using M-DIP.

Data Handling

To demonstrate the benefit of a RAW file over a MINC/NIFTI file, we performed a speed test extracting all slices from all dimensions of a dataset. We calculated the number of “operations per second” (OPS) for a volume using the formula:

In addition, we compared MINC/NIFTI and RAW file performance in this specific environment by measuring OPS. A simple ratio between the RAW OPS and the MINC OPS of the same volume could quantify the speed gain; however, OPS depend on file size. The file size of the dataset therefore needs to be considered to obtain an accurate representation of the numerical gain. The gain is calculated with the following formula:

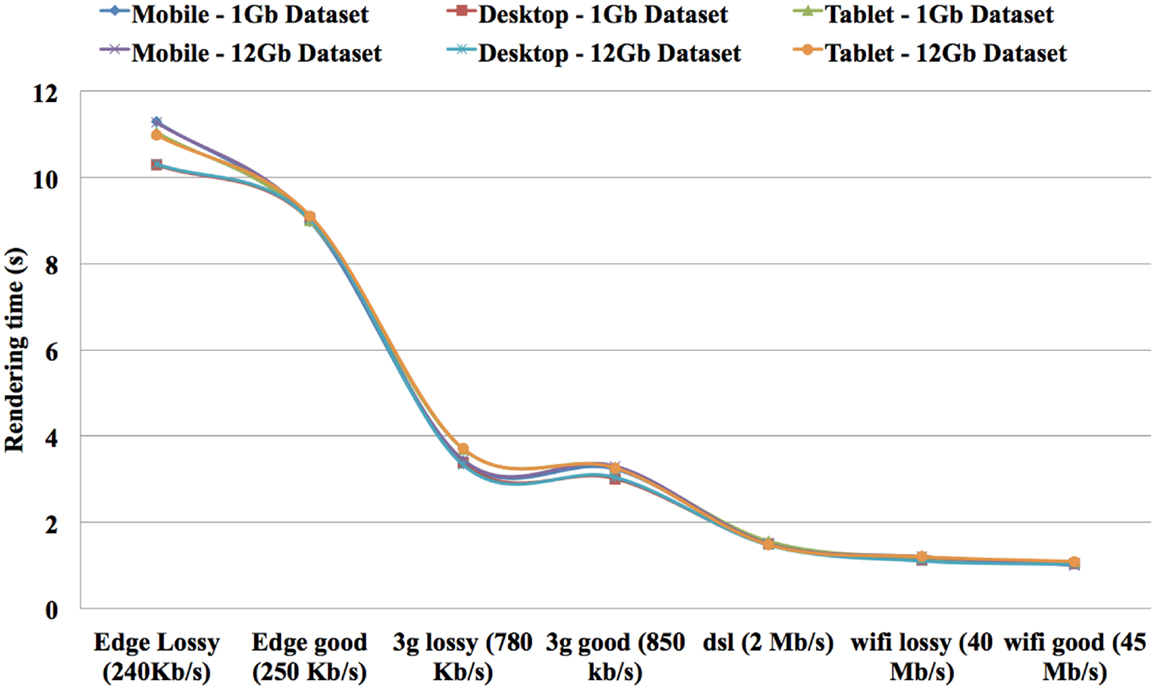
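The OPS and gain formulas themselves are not reproduced in this text. As a rough illustration of the measurement procedure only, the sketch below times the extraction of every slice along every axis and reports slices per second. Treating one slice extraction as one “operation” is an assumption, `measure_ops` and the `extract_slice(axis, index)` callback are hypothetical, and the sketch does not implement the authors’ size-adjusted gain.

```python
import time
from typing import Callable, Sequence, Tuple

def measure_ops(extract_slice: Callable[[int, int], object],
                shape: Sequence[int]) -> Tuple[float, int]:
    """Extract every slice along every axis once.

    Returns (slices per second, number of slices extracted). Counting one
    slice extraction as one operation is an assumption of this sketch.
    """
    n_ops = 0
    start = time.perf_counter()
    for axis, size in enumerate(shape):
        for index in range(size):
            extract_slice(axis, index)
            n_ops += 1
    elapsed = time.perf_counter() - start
    ops = n_ops / elapsed if elapsed > 0 else float("inf")
    return ops, n_ops
```

Running the same harness against a RAW reader and a MINC/NIFTI reader for the same volume yields the simple OPS ratio mentioned above, which the authors then adjust for file size.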

Link-Speed

To demonstrate the effect of link-speed on the user experience, a “network degradation” test was performed. Instances of TissueStack were loaded with different network speeds for two datasets and the respective rendering times (RT) recorded. RT was calculated with the following formula:

This RT formula captures the time difference when querying datasets of different sizes. The evaluation assesses service and information qualities when mobile devices are used for bioimaging interpretation.
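The RT formula is not reproduced in this text. For intuition only, the sketch below uses a generic first-order model, not the authors’ formula: render time is approximately latency plus server time plus payload bits divided by link speed. Because a tiled viewer transfers only the visible tiles, the payload depends on screen size and zoom rather than on volume size, which is consistent with the Results finding that RT varies with link speed but not with dataset size. The parameter names and any payload sizes are hypothetical.

```python
def estimated_render_time(payload_bytes: int, link_bits_per_s: float,
                          latency_s: float = 0.0, server_s: float = 0.0) -> float:
    """First-order estimate in seconds: latency + server time + transfer time."""
    if link_bits_per_s <= 0:
        raise ValueError("link speed must be positive")
    transfer_s = payload_bytes * 8 / link_bits_per_s
    return latency_s + server_s + transfer_s
```

A pure bandwidth term would predict roughly a 187-fold slowdown between 45 Mb/s and 240 kb/s, far more than the roughly tenfold difference reported in the Results, which suggests that fixed latency, overheads, or other factors also contribute in practice.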

Mobile User Interface

A number of software development methods were used while creating the mobile interface in order to maximize user image interpretation and analysis. These methods were chosen to ensure “information quality” in the resulting interface.

The first was to take perceptual aspects into account so that the implementation can be used with minimal errors and frustration. Second, the mobile UI provides content adaptation and prioritizes the most relevant items when a user searches for information; this is referred to as “adaptation of visible components layout” (31). Third, the whole implementation followed the principle of “keep it super simple (KISS)” (32). M-DIP aims to change the way bioimaging is presented and to increase users’ “intention to use” and “user satisfaction.”

Results

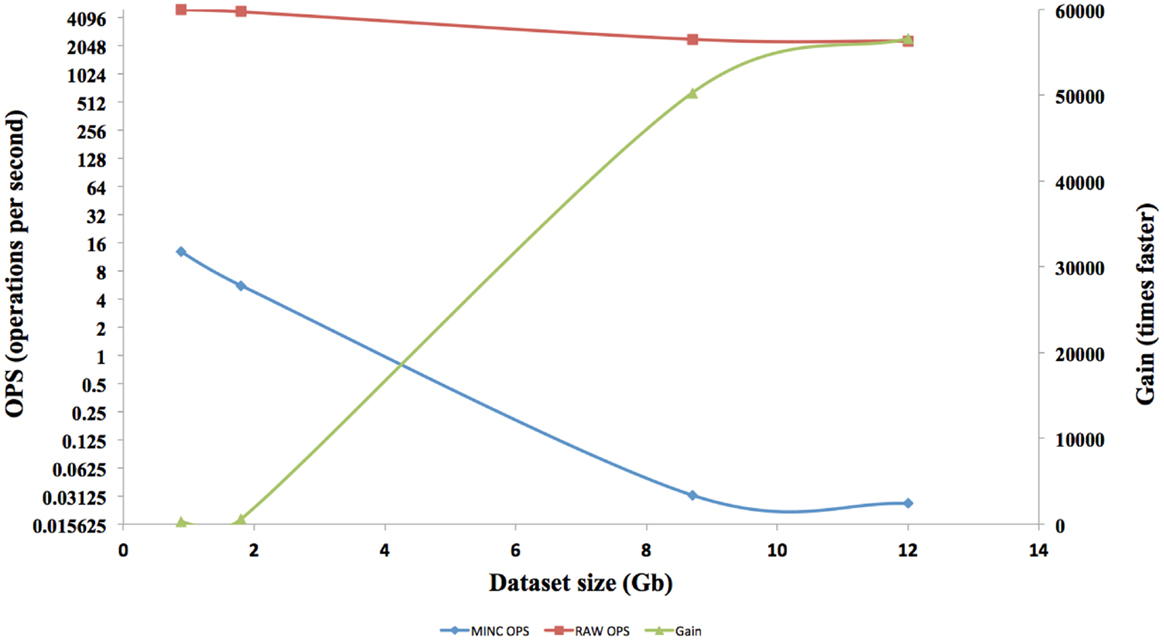

The interface response times of four datasets of different sizes in RAW format are shown in Figure 7. Each speed test was repeated with MINC files, the results were compared, and the speed gain was calculated. The speed gain is plotted on the right axis and the speed on the left axis.

The speed results show a substantial gain when using a RAW file, and this gain grows as the dataset size increases. The RAW OPS also decreased slightly as the volume size increased, but not on the scale seen for MINC files. Figure 8 shows the RT for RAW files from 1 GB to 12 GB on both the TissueStack mobile (M-DIP) and desktop (DIP) platforms at varying link speeds.

As expected, RT decreases as connection speed increases. Importantly, RT is affected only by connection speed and not by volume size. For example, it took 1 s to display a ∼12 GB dataset on a mobile device with a 45 Mb/s link, and 11.28 s to display the same dataset over a 240 kb/s connection. Similarly, it took 1.03 s to display a ∼1 GB dataset on a mobile device with a 45 Mb/s link, and 11.30 s over a 240 kb/s connection. RT is also comparable across platforms: it took 1.20 s to display a ∼12 GB dataset on a tablet with a 40 Mb/s link versus 1.1 s for the same dataset on a desktop at the same link speed, and 1.18 s to display a ∼1 GB dataset on a tablet at 40 Mb/s versus 1.12 s on a desktop. RT can therefore be considered equal on the mobile and desktop platforms. These results show an increase in “service quality” for data communication.

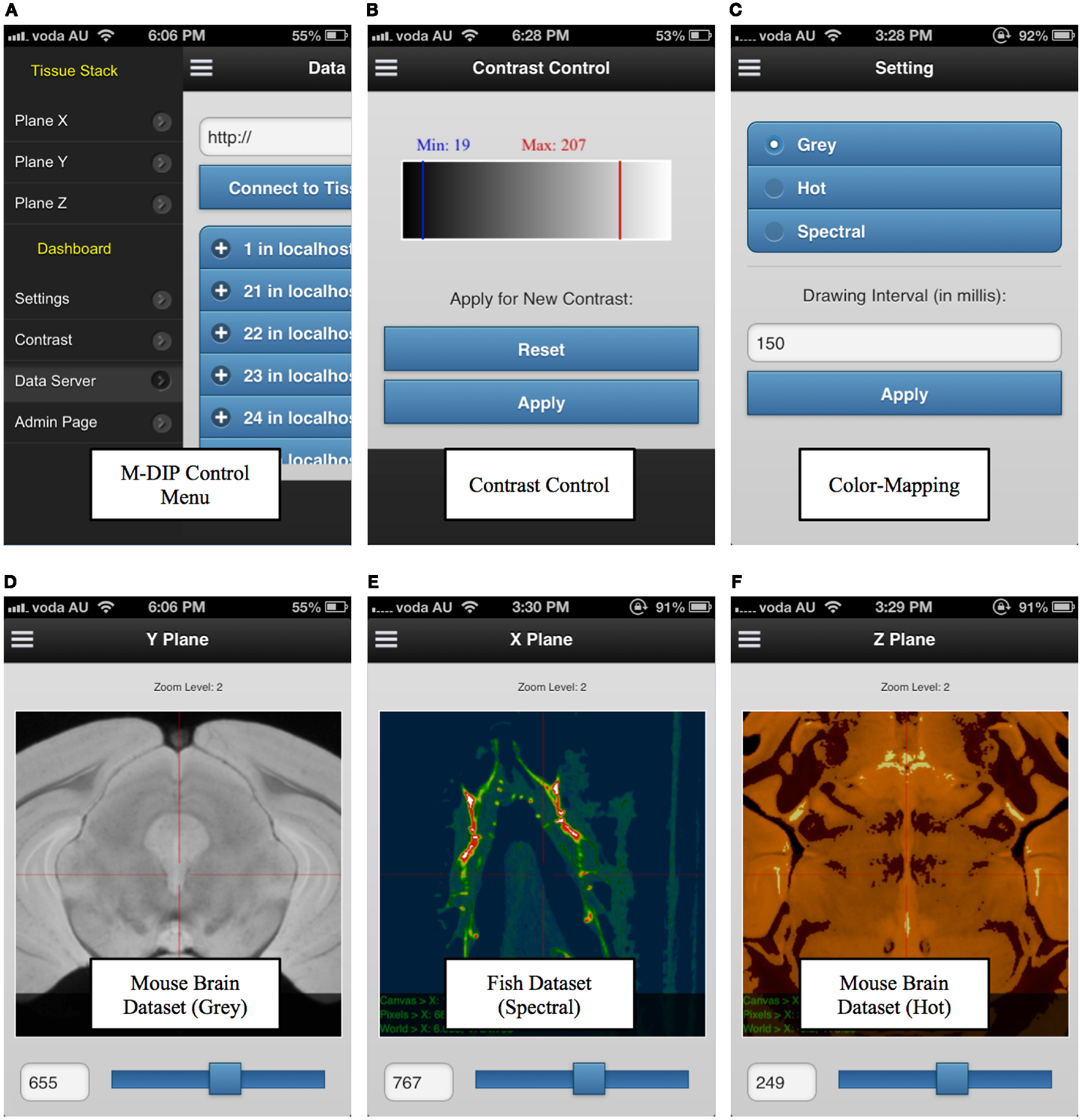

M-DIP has also been successfully deployed on mobile devices. The system was publicly released for ∼100 tests by 10 specialists with significant experience using image-viewing software. Each test comprised at least 250 requests, consisting of multiple-modality querying (e.g., plane dimensions, color mapping) and behavioral testing of mobile interactions (KISS method). M-DIP is able to query data of up to ∼12 TB and process data with the same speed (RT) as desktop browsers with far greater memory and processing power. The M-DIP interface allows researchers and specialists to execute queries in a simple and intuitive manner (see Figure 9), with easy browsing and data interaction. This has been achieved by basing as much of the interface as possible on known mobile interaction paradigms from well-known existing applications such as mobile mapping. Screenshots of various parts of the system are shown in Figure 9, and a demonstration mobile interface can be viewed at http://tissuestack.com/phone.html.

Figure 9. Mobile digital imaging processing (M-DIP) application user interface. The user interface contains a control menu (A), a contrast control panel (B), color mapping panel with three choices (C), and the three viewing planes (D–F). In each plane, the slice number and zoom level are also presented.

M-DIP has been tested extensively on both iOS and Android devices, which together covered 87.8% of the mobile market in Q4 2012 (33). M-DIP is able to build an atlas view of a larger image, similar to the Google Maps application on mobile devices. Overall, M-DIP currently provides a framework for more than 12 unique dataset profiles, with volumes of approximately 444,000,000 voxels (499 × 1311 × 679). It can handle more than three concurrent interactive functions (color mapping, contrast, and zoom levels) and over ∼12 TB of imaging data. M-DIP showed highly positive potential for image interpretation in a mobile environment.

Discussion

This study addresses the needs of biomedical researchers and details specific implications for theory and practice. A good user experience is one of the main goals of any successful application (30, 34). To achieve it, an application must attend to many details, such as UI, accessibility, and response time. For a web-based mobile application, RT is crucial. Many optimizations can improve the RT of an application, but at some point it becomes dependent on the link speed and, to some extent, on the dataset size. In our implementation, the use of RAW files has reduced the dependence on file size to a negligible amount. Matching mobile interpretation requirements to a particular service for MRI datasets has made the mobile application a valuable analysis and diagnosis tool. Our mobile implementation can retrieve datasets in real time without image degradation, even when multiple M-DIP capabilities are used. Note that an M-DIP system without acceptable speed may lead to lower “satisfaction” in users’ attitudes toward the application.

Numerous products are capable of digital processing and even of managing large-scale three-dimensional datasets on the front-end or server side. To our knowledge, none of these systems was designed to address the speed of data handling in a mobile Internet environment. Although REDCap (35) and Deduce (36) provide web-based applications for inputting and modifying bioimaging data, no program yet increases the speed of direct tiling or offers comparable capabilities across imaging modalities.

In M-DIP, data access speed has been increased by providing better real-time pre-tiling on the server side. This was made possible by creating and using RAW files, which provide a considerable speed gain over standard MINC or NIFTI files. The gain from RAW files was higher than expected: they were in fact 56,000 times faster than a MINC file. This gain results from the RAW file structure and the number of OPS reached. However, the gain cannot be extrapolated beyond files of around 12TB, because even if the RAW OPS were constant, hardware factors [disk input/output (I/O)] would still decrease OPS as the volume size increases.

In theory, this result should be linear, but the system hits hardware limitations such as I/O saturation. Nevertheless, RAW file performance only halved for a volume 12 times larger, whereas MINC file performance dropped by a factor of almost 500. RAW files were created to solve a speed bottleneck for real-time tiling; they do not offer all the capabilities of a structured format such as MINC or NIFTI and can therefore only be used for TissueStack’s specific purpose. They are nevertheless a good solution to the speed requirements that determine “system quality” in M-DIP. This method retrieves data in the shortest possible time and improves user “satisfaction.” In addition, we found that, with RAW files, dataset size does not hinder application speed; this meets specialists’ current needs and should therefore increase their intention to use the application. RT is affected only by connection speed and not by volume size, and all versions of TissueStack (mobile, tablet, and desktop) have near-identical RT, which further benefits the end-user experience.

This study demonstrated that delivering M-DIP via a specialized format and an integrated web-based mobile environment provides an efficient tool for imaging specialists. Our survey found that 86% of participants felt that M-DIP was beneficial for initiating appropriate analyses and making more accurate assessments, and 98% stated that the M-DIP application facilitated image data visualization. In general, imaging specialists found that M-DIP processed bioimaging data considerably faster than their preferred imaging software, and that DIP allowed parallel browsing of linked datasets of differing scales. These findings support the premise behind M-DIP: adopting mobile technology and improving data-handling performance in DIP can deliver better image processing solutions to users.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This project is supported by the Australian National Data Service (ANDS). ANDS is supported by the Australian Government through the National Collaborative Research Infrastructure Strategy Program and the Education Investment Fund (EIF) Super Science Initiative. The authors acknowledge the facilities, and the scientific and technical assistance of the National Imaging Facility at the University of Queensland. TissueStack is also an open-source project licensed under the GNU GPLv3. It is currently available on GitHub (github.com/NIF-au/TissueStack).

References

1. Seemann T. Digital Image Processing Using Local Segmentation. Faculty of Information Technology. Melbourne: Monash University (2002).

2. Swedlow JR, Eliceiri KW. Open source bioimage informatics for cell biology. Trends Cell Biol (2009) 19(11):656–60. doi:10.1016/j.tcb.2009.08.007

3. Van Horn JD, Toga AW. Is it time to re-prioritize neuroimaging databases and digital repositories? Neuroimage (2009) 47(4):1720–34. doi:10.1016/j.neuroimage.2009.03.086

4. Janke AL, Waxenegger H, Ullmann J, Galloway G. TissueStack: A New Way to View Your Imaging Data. Munich: International Neuroinformatics Coordinating Facility (2012).

5. Lin MK, Mula JM, Gururajan R, Leis JW. Development of a prototype multi-touch ECG diagnostic decision support system using mobile technology for monitoring cardiac patients at a distance. 15th Pacific Asia Conference on Information Systems (PACIS2011); July 11–13; Brisbane, Australia (2011).

6. LONI. Laboratory of Neuro Imaging, UCLA (2009) [cited 2013 Jan 14]. Available from: http://www.loni.ucla.edu/

7. Marcus DS, Olsen TR, Ramaratnam M, Buckner RL. The Extensible Neuroimaging Archive Toolkit: an informatics platform for managing, exploring, and sharing neuroimaging data. Neuroinformatics (2007) 5:11–34.

8. Keator DB, Grethe JS, Marcus D, Ozyurt B, Gadde S, Murphy S, et al. A national human neuroimaging collaboratory enabled by the Biomedical Informatics Research Network (BIRN). IEEE Trans Inf Technol Biomed (2008) 12:162–72. doi:10.1109/TITB.2008.917893

9. Turner JA, Van Horn JD. Electronic data capture, representation, and applications for neuroimaging. Front Neuroinform (2012) 6:16. doi:10.3389/fninf.2012.00016

10. Peng H. Bioimage informatics: a new area of engineering biology. Bioinformatics (2008) 24:1827–36. doi:10.1093/bioinformatics/btn346

11. Zhou X, Liu KY, Bradley P, Perrimon N, Wong ST. Towards automated cellular image segmentation for RNAi genome-wide screening. Medical Image Computing and Computer-Assisted Intervention, 8th International Conference; Palm Springs, CA, USA: Medical Image Computing and Computer-Assisted Intervention – MICCAI (2005). p. 885–92.

12. Liu T, Nie J, Li G, Guo L, Wong ST. ZFIQ: a software package for zebrafish biology. Bioinformatics (2008) 24(3):438–9. Epub 2007. doi:10.1093/bioinformatics/btm615

13. Rubin MN, Wellik KE, Channer DD, Demaerschalk BM. Systematic review of teleneurology: methodology. Front Neurol (2012) 3:156. doi:10.3389/fneur.2012.00156

14. Fang Q, Sufi F, Cosic I. A mobile device based ECG analysis system. In: Giannopoulou E, editor. Data Mining in Medical and Biological Research. Austria: I-Tech Education and Publishing KG (2008). p. 209–26.

15. Wechsler LR, Tsao JW, Levine SR, Swain-Eng RJ, Adams RJ, Demaerschalk BM, et al. Teleneurology applications: report of the Telemedicine Work Group of the American Academy of Neurology. Neurology (2013) 80(7):670–6. doi:10.1212/WNL.0b013e3182823361

16. PostgreSQL. PostgreSQL: The PostgreSQL Global Development Group (1996) [cited 2012 Dec 05]. Available from: http://www.postgresql.org/

17. Caban JJ, Joshi A, Nagy P. Rapid development of medical imaging tools with open-source libraries. J Digit Imaging (2007) 20(1):83–93. doi:10.1007/s10278-007-9062-3

18. Das S, Zijdenbos AP, Harlap J, Vins D, Evans AC. LORIS: a web-based data management system for multi-center studies. Front Neuroinform (2012) 5:37. doi:10.3389/fninf.2011.00037

19. PHILIPS. Mobile Radiography Realizes its Full Potential: Integrated, Wireless Workflows Increase Mobile Radiography’s Contribution to Patient Care. Philips Healthcare (2011).

20. BIC McConnell Brain Imaging Center. MINC. Montreal Neurological Institute. Montreal: McGill University (2000).

21. Mark J. NIfTI-1 Data Format: Neuroimaging Informatics Technology Initiative (2007) [cited 2012 Nov 01]. Available from: http://nifti.nimh.nih.gov/nifti-1

22. Sled JG, Vincent RD, Baghdadi L. MINC/Reference/MINC2.0 Users Guide Wikibook (2011) [cited 2012 Nov 01]. Available from: http://en.wikibooks.org/wiki/MINC/Reference/MINC2.0_Users_Guide

23. Jeong WK, Beyer J, Hadwiger M, Blue R, Law C, Vázquez-Reina A, et al. Ssecrett and NeuroTrace: interactive visualization and analysis tools for large-scale neuroscience data sets. IEEE Comput Graph Appl (2010) 30(3):58–70. doi:10.1109/MCG.2010.56

24. Horner V. Developing a Consumer Health Informatics Decision Support System Using Formal Concept. South Africa: University of Pretoria (2007).

25. Eliceiri KW, Berthold MR, Goldberg IG, Ibáñez L, Manjunath BS, Martone ME, et al. Biological imaging software tools. Nat Methods (2012) 9(7):697–710. doi:10.1038/nmeth.2084

26. Prodanov D. Data ontology and an information system realization for web-based management of image measurements. Front Neuroinform (2011) 5:25. doi:10.3389/fninf.2011.00025

27. Jason PS, Joan C, Carole A, Lois B, Damien R. Enhancing user acceptance of mandated technology implementation in a mobile healthcare setting: a case study. In: Wilson JU, Bunker D, Campbell B, editors. ACIS2005: Socialising IT: Thinking about the People; Sydney, Australia: Australasian Chapter of the Association for Information Systems (2005). p. 642–6.

28. Brown SA, Massey AP, Montoya-Weiss MM, Burkman JR. A decision model and support system for the optimal design of health information networks. IEEE Trans Syst Man Cybern (2001) 31(2):146–58. doi:10.1109/5326.941839

29. DeLone WH, McLean ER. Information system success: the quest for the dependent variable. Inf Syst Res (1992) 3(1):60–95. doi:10.1287/isre.3.1.60

30. DeLone WH, McLean ER. Information system success revisited. 35th Hawaii International Conference on System Sciences; Jan 7–10; Big Island, HI (2002).

31. Vasilyeva E, Pechenizkiy M, Puuronen S. Towards the framework of adaptive user interfaces for eHealth. The 18th IEEE Symposium on Computer-Based Medical Systems; June 23–24; Ireland (2005).

32. Misra RB. Global IT outsourcing: metrics for success of all parties. J Inform Technol Cases Appl (2004) 6(3):21.

33. Fingas J. Strategy Analytics: Android claimed 70 Percent of World Smartphone Share in Q4 2012: Engadget (2013) [cited 2013 Feb 05]. Available from: http://www.engadget.com/2013/01/29/strategy-analytics-android-70-percent-share/

34. Thursky KA, Mahemoff M. User-centered design techniques for a computerised antibiotic decision support system in an intensive care unit. Int J Med Inform (2007) 76(10):760–8. doi:10.1016/j.ijmedinf.2006.07.011

35. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) – a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform (2009) 42:377–81. doi:10.1016/j.jbi.2008.08.010

Keywords: mobile digital imaging processing, mobile visualization tool, medical science, bioimaging, biomedical research, neuroinformatics

Citation: Lin MK, Nicolini O, Waxenegger H, Galloway GJ, Ullmann JFP and Janke AL (2013) Interpretation of medical imaging data with a mobile application: a mobile digital imaging processing environment. Front. Neurol. 4:85. doi: 10.3389/fneur.2013.00085

Received: 14 March 2013; Accepted: 19 June 2013;

Published online: 04 July 2013.

Edited by:

William J. Culpepper, University of Maryland School of Pharmacy, USA

Copyright: © 2013 Lin, Nicolini, Waxenegger, Galloway, Ullmann and Janke. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and subject to any copyright notices concerning any third-party graphics etc.

*Correspondence: Meng Kuan Lin, The Centre for Advanced Imaging, Gehrmann Laboratory (Building 60), Research Road, The University of Queensland, St Lucia, QLD 4072, Australia; e-mail: adam.lin@cai.uq.edu.au