A meta-analytic review of multisensory imagery identifies the neural correlates of modality-specific and modality-general imagery

- Department of Communication Sciences and Disorders, Northwestern University, Evanston, IL, USA

The relationship between imagery and mental representations induced through perception has been the subject of philosophical discussion since antiquity and of vigorous scientific debate in the last century. The relatively recent advent of functional neuroimaging has allowed neuroscientists to look for brain-based evidence for or against the argument that perceptual processes underlie mental imagery. Recent investigations of imagery in many new domains and the parallel development of new meta-analytic techniques now afford us a clearer picture of the relationship between the neural processes underlying imagery and perception, and indeed between imagery and other cognitive processes. This meta-analysis surveyed 65 studies investigating modality-specific imagery in auditory, tactile, motor, gustatory, olfactory, and three visual sub-domains: form, color and motion. Activation likelihood estimate (ALE) analyses of activation foci reported within and across sensorimotor modalities were conducted. The results indicate that modality-specific imagery activations generally overlap with, but are not confined to, corresponding somatosensory processing and motor execution areas, and suggest that there is a core network of brain regions recruited during imagery, regardless of task. These findings have important implications for investigations of imagery and theories of cognitive processes, such as perceptually-based representational systems.

Perception describes our immediate environment. Imagery, in contrast, affords us a description of past, future and hypothetical environments. Imagery and perception are thus two sides of the same coin: Perception relates to mental states induced by the transduction of energy external to the organism into neural representations, and imagery relates to internally-generated mental states driven by representations encoded in memory. Various forms of mental imagery have been implicated in a wide array of cognitive processes, from language comprehension (Bottini et al., 1994), to socially-motivated behaviors such as perspective taking (Ruby and Decety, 2001), to motor learning (Yágüez et al., 1998). Understanding the networks supporting imagery thus provides valuable insights into many behaviors.

What are the Neural Substrates of Modality-Specific Imagery?

Though representations generated through mental imagery clearly have perceptual analogs, a persistent question of the imagery literature concerns the extent to which imagery and perceptual processes overlap. Within the visual imagery domain, Kosslyn and Thompson (2003) analyzed contemporary neuroimaging studies to explain the lack of consistency with which studies demonstrate recruitment of early visual cortex during imagery. They showed that imagery was most likely to recruit early visual cortex when it requires attention to high-resolution detail, suggesting that perceptual processing during imagery depends on attention or processing level (Craik and Lockhart, 1972). The analogs question has been posed in the auditory and motor imagery domains, with some studies finding activation in primary sensorimotor areas (Wheeler et al., 2000; Hanakawa, 2002; Bunzeck et al., 2005) and others not (Zatorre and Halpern, 1996; Halpern and Zatorre, 1999; Vingerhoets et al., 2002).

In an early review of the imagery literature, Kosslyn et al. (2001) concluded from the auditory and motor imagery studies that dominated the literature at the time that “most of the neural processes that underlie like-modality perception are also used in imagery,” (p. 641). Subsequent study of imagery in other modalities and continuations of earlier lines of imagery study now afford a clearer picture of imagery across all sensory modalities and, importantly, of imagery in general. Moreover, recently developed analytic techniques now permit a more precise description of the perception-related processes underlying imagery. The present paper uses one such analytic technique to explore the body of modality-specific imagery literature with the overall aim of identifying the neural substrates of modality-specific and modality-general imagery. As will be discussed below, of particular importance is the question of whether modality-specific imagery recruits primary sensorimotor cortex as a rule. The resolution of this question bears importantly on issues central to cognitive processes with which imagery is tightly bound.

Imagery and Perceptually-Grounded Representations: Theoretical Issues

Semantic memory (one's knowledge of the meaning of things) critically supports a wide array of cognitive processes, from language production and comprehension to action planning. Of all cognitive processes, imagery and semantic processing are perhaps most closely related. Imagery regularly relies on previously organized and stored semantic information (Kosslyn et al., 1997) about the features to be imagined. A large body of literature makes the complementary argument that the reactivation of perceptual representations (that is, imagery) underlies semantic retrieval. The assumption that imagery underlies semantic retrieval is the central premise of perceptually-based theories of cognition. The Perceptual Symbol System account (Barsalou, 1999) assumes that reactivation of perceptual representations (“perceptual simulations”) underlies semantic retrieval and provides one of the most recent and explicit accounts of the importance of imagery to semantic processing. Under this account, perceiving an object elicits a unique pattern of activation in primary sensorimotor cortices encoding salient perceptual properties of that object. Perceptually-based theories argue that encoding and retrieving these activations within the perceptual system naturally permits high-fidelity perceptually-rich representations. Similar ideas underlie Warrington and McCarthy's sensory/functional theory (Warrington and McCarthy, 1987), and Paivio's dual coding theory (Paivio, 1971), which explicitly argues that abstract propositional and (visual) imagery representations comprise concept knowledge.

Full elucidation of the assumptions and criticisms of a perceptually-grounded system is beyond the scope of this article, but these have been given extensive consideration elsewhere (Barsalou, 1999; Simmons and Barsalou, 2003). One advantage of perceptually-grounded models is that they arguably overcome the reverse inference problem (Poldrack, 2006), which is the neuroimaging equivalent of the symbol grounding problem (Harnad, 1990). The symbol grounding problem describes the circularity inherent in relating arbitrary symbols to an equally arbitrary symbol system. The solution Harnad proposes is for one symbolic system to be non-arbitrary, that is, to be grounded in an external physical system. Because primary sensory cortices contain populations driven by external physical systems, the perceptual system provides the grounding required to understand those cognitive systems that interact with it. For example, patterns of activations within olfactory cortex reflect detection of particular smells. Olfactory imagery and knowledge retrieval might engage a wide network of brain areas related to any number of cognitive processes. However, if imagery is perceptually-grounded, one would additionally expect an involvement of the corresponding sensory cortex. Whatever other brain regions may contribute toward olfactory imagery, it is relatively straightforward to argue that activity within olfactory cortex is part of an olfactory representation.

Imagery and Perceptually-Grounded Representations: Methodological Issues

The strong theoretical ties between perceptually-based semantic theories and imagery suggest that a thorough understanding of the former requires an understanding of imagery. It is important to reiterate that perceptually-based representational theories assume that semantic representations are rooted in imagery, rather than perception per se. Nonetheless, a common practice is to localize these perceptually-based representational systems using perceptual tasks. For example, Simmons et al. (2007) investigated color knowledge retrieval within color-sensitive visual cortex localized using a modified Farnsworth-Munsell 100-Hue Task (Farnsworth, 1957).

The demonstration of a common neural basis underlying perception and modality-specific semantic knowledge provides compelling support for perceptually-based theories. Such findings support a strong version of a perceptually-grounded semantic system, namely that perceptual processing is implied by semantic retrieval. The approach of using primary sensory cortex to define these representational areas has some limitations, however. First, the choice of localizer task is not a trivial consideration, and may impact the ability to detect the true extent of the modality-specific region. For example, recruitment of color-selective areas has been shown to be modulated by attention (Beauchamp et al., 1999), and thus different perceptual localizer tasks may give different estimates of the corresponding perceptual areas. Second, multiple localization tasks and specialized delivery apparatus required for some perceptual tasks may be impractical for investigations of multiple representational modalities. Even when primary sensory regions are well-defined, there remains one important consideration: Semantic encoding and retrieval processes are assumed to be rooted in imagery. Thus, to the extent that the network supporting imagery extends beyond primary somatosensory perceptual areas, important imagery-related contributions to semantic encoding and retrieval may be overlooked. An understanding of the neural substrates underlying imagery therefore provides critical insight into the organization of the semantic system, and can guide investigations of representational systems.

The ALE Meta-Analytic Technique

An empirically-driven characterization of the neural correlates of modality-specific and modality-general imagery processes has been made possible in recent years by the development of meta-analytic techniques for assessing neuroimaging data. Techniques such as Activation Likelihood Estimation (ALE) (Chein et al., 2002; Turkeltaub et al., 2002) and Multilevel Kernel Density Analysis (MKDA) (Wager et al., 2007) allow the application of statistical measures to the literature to assess the reliability with which an effect is demonstrated in a particular brain area. In short, these methods permit an empirical test of consensus within a body of neuroimaging literature. A detailed explanation of the advantages and underlying statistics behind voxel-based meta-analytic approaches was presented by Laird et al. (2005). Briefly, these approaches examine the activation foci reported for a common contrast among multiple studies. Statistical tests on these data (e.g., chi-square analyses, Monte Carlo simulations) provide quantifiable, statistically-thresholded measures of the reliability of activation for a given contrast within a given region. As with other meta-analytic techniques, these approaches importantly highlight commonalities among studies, and minimize idiosyncratic effects. The ALE approach has been used in recent years to examine representational knowledge in the semantic system in general (Binder et al., 2009) and for more specific representational knowledge about categories such as tools and animals (Chouinard and Goodale, 2010). The utility of this approach in identifying important networks within these domains suggests it may be similarly useful in the conceptually-related imagery domain.
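As a rough illustration of the idea behind ALE (and not the GingerALE implementation), the sketch below treats each reported focus as a Gaussian blob of activation probability on a one-dimensional strip of voxels. Foci within a study are combined into a modelled activation map by taking the voxel-wise maximum, which is one common convention, and the maps are then combined across studies as a probabilistic union. The kernel width `sigma`, the peak probability `peak`, the grid, and the foci are all hypothetical values chosen for illustration.

```python
import math

def modelled_activation(foci, n_voxels, sigma, peak):
    """Per-study map: at each voxel, the largest Gaussian-weighted
    probability contributed by any of the study's foci."""
    ma = []
    for v in range(n_voxels):
        ma.append(max(peak * math.exp(-((v - f) ** 2) / (2 * sigma ** 2))
                      for f in foci))
    return ma

def ale_map(studies, n_voxels, sigma=1.5, peak=0.5):
    """ALE value per voxel: probability that at least one study activates
    the voxel, computed as the union 1 - prod(1 - MA_i) over studies."""
    maps = [modelled_activation(foci, n_voxels, sigma, peak) for foci in studies]
    ale = []
    for v in range(n_voxels):
        p_none = 1.0
        for ma in maps:
            p_none *= 1.0 - ma[v]
        ale.append(1.0 - p_none)
    return ale

# Three hypothetical studies: two report a focus at voxel 5, one at voxel 12.
studies = [[5], [5], [12]]
ale = ale_map(studies, n_voxels=20)
print(ale.index(max(ale)))  # voxel where reported foci converge most strongly
```

In this toy example the ALE value is highest where two studies agree, which is the sense in which the technique measures concordance rather than the strength of any single reported activation.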

What follows is an ALE analysis of the neuroimaging literatures in modality-specific imagery across visual, auditory, motor, tactile, olfactory, and gustatory modalities. These analyses provide a descriptive survey of the imagery literature and were intended to meet three main goals: First, to identify the brain areas recruited during imagery, regardless of modality. Second, to identify within each modality the brain regions associated with modality-specific imagery with particular attention to the extent to which primary sensorimotor perceptual regions are recruited. Finally, various sub-processes are carried out by different and well-defined populations of neurons tuned for processing color, form and motion during visual perception. The number of studies investigating corresponding subtypes of visual imagery provides an opportunity to investigate whether evidence for a similar organization can be found during visual imagery.

Materials and Methods

Searches for candidate imagery studies were conducted in the PubMed and Google Scholar databases for fMRI and PET studies related to imagery or sensory-specific imagery (e.g., “gustatory imagery,” “taste imagery”). Iterative searches within the citations among candidate imagery studies located additional candidate imagery studies with the intention of creating a comprehensive list of studies explicitly examining imagery or imagery-like tasks. For purposes of this study, imagery-like tasks were defined as those for which the retrieval of perceptual information from long term memory was required. These tasks were framed as perceptual knowledge retrieval by study authors and extensively cited or were cited by explicit studies of imagery. As discussed above, perception-based theories of knowledge representations are explicitly rooted in imagery (Paivio, 1971; Warrington and McCarthy, 1987; Barsalou, 1999), and a large body of literature supports the hypothesis that imagery underlies perceptual knowledge retrieval. Consequently, in many cases, similar tasks were used by different authors to investigate perceptual knowledge retrieval and imagery [e.g., color feature verification used by Kellenbach et al. (2001) and color feature comparison used by Howard et al. (1998)]. ALE measures concordance among reported activations; therefore heterogeneity among studies should lead to a reduction in power, rather than inflation of type I error. To the extent that perceptual knowledge retrieval does not involve imagery processes, inclusion of perceptually-based knowledge studies should therefore lead to slightly more conservative estimates of imagery activation. These studies comprised a small minority of the overall body of literature surveyed, however, so any such conservative bias should be rather small. For these reasons, these inclusionary criteria were deemed appropriate. 
Studies investigating special populations (e.g., synaesthetes, neurological patients) were excluded, as were those that did not conduct whole brain analyses or report coordinates in stereotactic space for significant modality vs. baseline contrasts. The studies included in the present analysis are listed in Table 1.

Imagery vs. low-level baseline contrasts were categorized with respect to one of eight modality conditions: Auditory, Tactile, Motor, Olfactory, Gustatory, Visual-Form, Visual-Color, and Visual-Motion. Modality categorizations were generally straightforward to determine (e.g., taste recall clearly relating to gustatory imagery), though classification of visual imagery subtypes required careful consideration of the task, stimuli and baseline contrasts used. One study (Roland and Gulyás, 1995) required participants to recall both the colors and geometric description of colored geometric patterns. The remaining visual form studies used monochromatic stimuli. The relative scarcity of color imagery studies, and the saliency of both form and color information in the task motivated the inclusion of this study in both modalities. Despite this single commonality, the ALE maps for these two modalities did not resemble one another.

The focus on the lowest-level baseline contrast was mandated by the fact that it alone was included across all imagery studies. Though the baseline task varied among studies, ranging from rest baselines to passive viewing baselines controlling for other modalities (e.g., passive viewing of scrambled scenes for auditory imagery) or within-modality (e.g., passive viewing of letter strings for form imagery), no particular baseline task dominated any modality. Implicit or resting-baselines were used in approximately 40% of studies, nearly all of which employed tasks requiring no overt response on the part of the participant. The remaining studies employed somewhat more complex baseline tasks generally designed to account for attention or response processes (e.g., those associated with button presses) under the assumption of cognitive subtraction. Direct contrasts between perception- and imagery-related activity tend to show reduced activity in primary perceptual areas for imagery relative to perception (e.g., Ganis, 2004). Care was thus taken to ensure that baselines involving a sensorimotor component excluded activity only in modalities of non-interest. For example, the detection of taste within a tasteless solution (Veldhuizen et al., 2007) likely involves motor activity in the planning and execution of passing the solution over the tongue and swallowing. The baseline task used in that study involved swallowing the solution without making a taste judgment. Under the assumption of pure insertion, the contrast between the two tasks should reveal activations associated only with the gustatory judgment (but see Friston et al., 1996 for a critique of the logic of cognitive subtraction). In the analyses that follow, however, the lack of systematicity among active baseline tasks somewhat mitigates concerns about the validity of cognitive subtraction. 
Aggregated across studies, imagery-related activations should be more reliable than those related to particular baseline choices, just as random-effects analyses across participants distinguish the influence of an experimental manipulation from noise. Though the complexity of baseline task was generally commensurate with that of the experimental task across studies, baseline complexity was investigated in detail in the general imagery analysis, where the numbers of studies permitted such an analysis. The reduced number of studies available for individual modalities, however, precluded such an analysis within each modality.

Concordance among imagery vs. baseline activation foci reported across the neuroimaging literature was analyzed using a widely used activation likelihood estimate (ALE) meta-analytic approach (Eickhoff et al., 2012). Analyses were performed using GingerALE 2.1 (http://brainmap.org/ale/). Correction for multiple comparisons was performed using a false-discovery rate (FDR) threshold of pN < 0.05. GingerALE reports the number of voxels meeting the selected FDR threshold within each ALE map. Except where noted, a cluster size threshold, equal to the FDR rate times the number of suprathreshold voxels, was applied to each map (hereafter extent-thresholded clusters). For example, if 1000 voxels reached an FDR threshold of 0.05, then the expected number of false positives within that ALE map would be 50. A cluster size threshold of 50 in this example ensures that no extent-thresholded cluster would consist entirely of false positives. Because the number of FDR-significant voxels varied by modality, this approach resulted in different cluster thresholds across modalities. It should be noted, however, that imagery-related clusters were analyzed independently and with respect to sensorimotor ROIs rather than with each other (see below). Thus, these differences had little bearing on the results that follow, other than to increase the confidence with which conclusions can be drawn about the meaningfulness of any given extent-thresholded cluster for that analysis.
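The extent threshold described above is simple arithmetic, and can be sketched as follows. The function names are hypothetical, cluster sizes are counted in voxels for simplicity, and treating a cluster exactly at the threshold as surviving is an assumption of this sketch.

```python
def extent_threshold(n_suprathreshold_voxels, fdr_rate):
    """Expected number of false-positive voxels among those passing FDR."""
    return n_suprathreshold_voxels * fdr_rate

def surviving_clusters(cluster_sizes, n_suprathreshold_voxels, fdr_rate):
    """Keep clusters at least as large as the expected false-positive count."""
    k = extent_threshold(n_suprathreshold_voxels, fdr_rate)
    return [size for size in cluster_sizes if size >= k]

# The worked example from the text: 1000 voxels at an FDR rate of 0.05.
print(extent_threshold(1000, 0.05))
print(surviving_clusters([10, 49, 51, 200], 1000, 0.05))
```

Because the threshold scales with the number of suprathreshold voxels, maps with more FDR-significant voxels receive a larger extent threshold, which is why the thresholds differ across modalities.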

ROI Definition and Overlap Analysis

The question of whether modality-specific imagery activates primary sensorimotor cortex was addressed within each modality by assessing the overlap between extent-thresholded ALE clusters and the primary sensorimotor ROI defined for each modality. ROIs were drawn from several publicly available anatomical atlases. The source(s) for each ROI are indicated in each modality analysis. Multiple atlases were necessitated by the fact that no single atlas contained ROI definitions corresponding to all modalities included in the present analysis. In some cases, different atlases contained different definitions of the same region. When a given anatomical region was defined in exactly one atlas, that definition was taken as the ROI; when multiple atlases defined the same region, the intersection (i.e., only those voxels common to all definitions) was taken as the ROI. This atlas-based approach was intended to arrive at a set of ROIs that are easily reproducible and that should be generally accepted as representative of the corresponding sensorimotor cortices.
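The ROI selection rule above can be sketched with plain sets of voxel coordinates. The representation (a set of `(x, y, z)` tuples per atlas definition) and the function name `build_roi` are assumptions made for illustration; the actual ROIs were volumetric atlas masks.

```python
def build_roi(definitions):
    """Combine atlas definitions of one region into a single ROI.

    definitions: list of sets of (x, y, z) voxel coordinates, one set per
    atlas that defines the region. A single definition is used as-is;
    several definitions are reduced to the voxels common to all of them.
    """
    if not definitions:
        raise ValueError("region is not defined in any atlas")
    roi = set(definitions[0])
    for other in definitions[1:]:
        roi &= set(other)
    return roi

# Two hypothetical atlases that disagree at the edges of a region.
atlas_a = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
atlas_b = {(1, 0, 0), (2, 0, 0), (3, 0, 0)}
print(sorted(build_roi([atlas_a, atlas_b])))
```

Taking the intersection is the conservative choice: a voxel counts as part of the ROI only if every atlas that defines the region agrees on it.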

The degree of overlap was assessed for each ROI by determining whether the number of voxels in the extent-thresholded ALE clusters overlapping with a given ROI reached an overlap criterion. The overlap criterion was set independently for each ROI using 3dClustSim (part of the AFNI fMRI analysis package, available at http://afni.nimh.nih.gov/afni/download). Briefly, 3dClustSim calculates a cluster size threshold (k) for false positive (noise-only) clusters at a specified uncorrected alpha level. Though the ALE analyses used FDR corrected alpha thresholds, the equivalent voxel-wise alpha threshold for each ALE map is available in the GingerALE output. 3dClustSim carries out a user-specified number of Monte Carlo simulations of random noise activations at a particular voxel-wise alpha level within a masked brain volume. Ten thousand such simulations for each ALE map were used for this study. The number of simulations in which clusters of various sizes appear within the volumetric mask is tallied among these simulations. These data are then used to calculate size thresholds across a range of probability values for that region. For example, in a specified volume using a voxel-wise alpha of 0.001, if clusters of size 32 mm3 or greater appear in 50 of 10,000 iterations by chance, this corresponds to a chance probability of 0.005, which satisfies a p < 0.05 cluster-level significance threshold. In other words, within the specified volume using a voxel-wise alpha of 0.001, clusters exceeding 32 mm3 are unlikely to occur by chance. To be clear, the cluster thresholds calculated using 3dClustSim were used to calculate an overlap criterion for each ROI, and not as an additional ALE cluster thresholding step. To the author's knowledge, no previous meta-analysis of neuroimaging data has attempted to qualify overlap between ALE clusters and a priori ROIs. However, the cluster size threshold approach is widely used to test statistical significance of clusters in conventional ROI analyses. 
That is, size thresholding is often used to determine whether a cluster of a particular size occurring within a given ROI is statistically significant. The present analysis had identical requirements, thus it was deemed to be an appropriate metric of overlap significance. A benefit of this approach when considering different ROIs is that it naturally takes into account differences in ROI extents: Larger sensorimotor ROIs require correspondingly greater overlap with imagery clusters for the overlaps to reach statistical significance.
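The logic of a Monte Carlo cluster-size threshold can be illustrated with a deliberately simplified sketch. This is not 3dClustSim: it uses unsmoothed, independent noise, face-connected clusters, a tiny hypothetical mask, and far fewer iterations than the ten thousand used in this study. All names and parameter values are assumptions for illustration.

```python
import random

def max_cluster_size(active):
    """Size (in voxels) of the largest face-connected cluster in a set of
    active (x, y, z) coordinates; 0 if the set is empty."""
    unvisited = set(active)
    largest = 0
    while unvisited:
        stack = [unvisited.pop()]
        size = 0
        while stack:
            x, y, z = stack.pop()
            size += 1
            for n in ((x + 1, y, z), (x - 1, y, z), (x, y + 1, z),
                      (x, y - 1, z), (x, y, z + 1), (x, y, z - 1)):
                if n in unvisited:
                    unvisited.remove(n)
                    stack.append(n)
        largest = max(largest, size)
    return largest

def cluster_size_threshold(mask, alpha, p_cluster, n_iter, seed=0):
    """Smallest cluster size k such that noise-only clusters of size >= k
    appear in fewer than p_cluster of the simulated volumes."""
    rng = random.Random(seed)
    voxels = sorted(mask)
    maxima = []
    for _ in range(n_iter):
        # Each voxel is independently "active" with probability alpha.
        active = {v for v in voxels if rng.random() < alpha}
        maxima.append(max_cluster_size(active))
    k = 1
    while sum(m >= k for m in maxima) / n_iter >= p_cluster:
        k += 1
    return k

# Hypothetical 6 x 6 x 6 voxel ROI mask.
mask = [(x, y, z) for x in range(6) for y in range(6) for z in range(6)]
print(cluster_size_threshold(mask, alpha=0.01, p_cluster=0.05, n_iter=200))
```

Because the simulation is confined to the mask, a larger mask offers more opportunities for chance clusters and tends to yield a larger threshold, which is the property that lets the overlap criterion adapt to ROI extent.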

Finally, it is important to note that the following analyses identify concordance of activation across studies within each modality, rather than contrast modalities directly. That is, they do not identify regions of activation unique to imagery in a particular modality. There are regions for which only studies of imagery for one modality converge (e.g., gustatory cortex activation apparent only for gustatory imagery studies). Nonetheless, the following results do not speak to whether one imagery modality recruits a particular region more than any other imagery modality. Inter-modal contrasts were not performed for two reasons: First, such contrasts address the question, not of what regions are implicated in a particular type of imagery, but what regions are implicated more for that type of imagery than any other. Networks defined by such contrasts would thus be more exclusive, reducing the usefulness of these analyses to those interested in a non-comparative description of imagery for a particular modality. Second, there is a practical problem imposed by the disparity between the frequencies with which imagery in each sensorimotor modality has been investigated. This disparity would plausibly skew any such comparisons and generate networks driven by a single modality. When analyses are restricted to within-modality, however, differences with respect to numbers of studies are not problematic: a coherent network can be identified from relatively few studies, provided they are mutually consistent. Though modality-specific activations are not explicitly contrasted, crossmodal overlap between clusters is noted where it occurs.

Results

The results are presented in order of generality. The first analysis identifies those regions consistently active relative to baseline in neuroimaging studies of imagery across all modalities. The eight subsequent analyses identify regions consistently active relative to baseline in modality-specific imagery for each of 5 sensorimotor modalities and 3 subtypes of visual imagery. All coordinates are reported in MNI standard space.

General Imagery Network

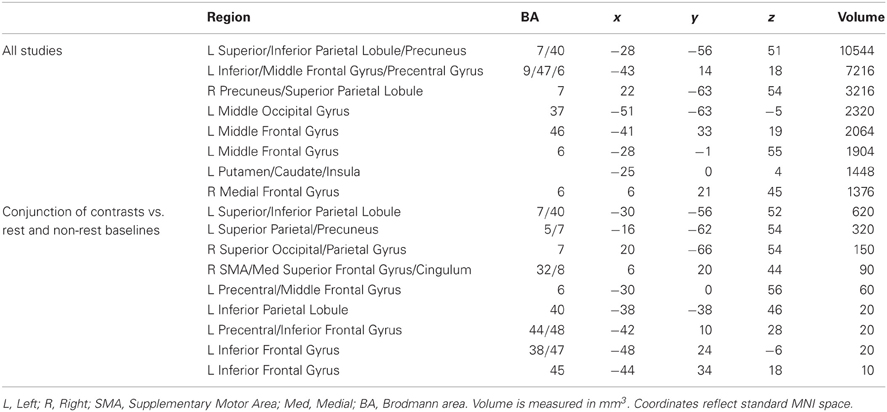

A statistical threshold of pN < 0.01 (FDR corrected) and a minimum cluster size threshold of 800 mm3 were used for the general imagery analysis. One thousand hundred and three foci from 84 contrasts involving 915 participants contributed toward these results. Nine primarily left-lateralized clusters reached the significance threshold (Table 2, Figure 1). These activations were found in bilateral dorsal parietal, left inferior frontal and anterior insula regions.

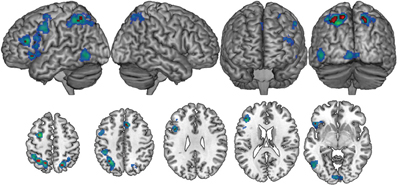

Figure 1. The general imagery network (cool colors) was primarily left-lateralized, with bilateral activations in superior parietal regions. A conjunction analysis of studies employing complex and resting state baselines found nine clusters (red) within the general imagery network that were active across all imagery conditions, regardless of baseline task.

As indicated earlier, one advantage of meta-analytic techniques is that random-effects analyses minimize spurious effects attributable to idiosyncratic experimental design decisions among studies (e.g., choice of baseline) and highlight commonalities among them (e.g., choice of imagery modality). Imagery vs. baseline contrasts in the ALE analyses involved two broad classes of low-level baseline tasks: The resting state baseline tasks are assumed to be homogeneous across the 33 contrasts that used them. The non-resting state baseline tasks used across the remaining 50 contrasts were more varied, typically involving passive perceptual control conditions (for non-target modalities) or foil trials. Because ALE is sensitive to activation consistencies, it was plausible that baseline-related (rather than strictly imagery-related) networks might emerge in the ALE statistics. This concern was conservatively addressed by a conjunction analysis of resting-baseline vs. non-resting-baseline studies. The significance threshold was maintained at pN < 0.01 (FDR corrected) for both baseline conditions, though no cluster extent threshold was used (the resulting false discovery rate was 0.0001). The conjunction analysis found nine clusters, primarily in bilateral dorsal parietal and left inferior frontal regions, that were active across all imagery modalities for all baseline conditions (Table 2). These results are suggestive of a core imagery network, though the extent of activation beyond this core network presumably depends on the baseline task. As the remaining analyses indicate, these activations also depend on imagery modality.
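The conjunction logic can be sketched as the intersection of two independently thresholded maps. The dictionary representation of an ALE map, the threshold values, and the function names below are hypothetical. The joint rate of 0.0001 quoted above is consistent with multiplying the two 0.01 rates, which assumes the two sets of studies are independent.

```python
def conjunction(map_a, map_b, threshold_a, threshold_b):
    """Voxels that are suprathreshold in both maps.

    map_a, map_b: dicts mapping (x, y, z) voxel coordinates to ALE values.
    """
    return {v for v in map_a
            if v in map_b
            and map_a[v] >= threshold_a
            and map_b[v] >= threshold_b}

def joint_false_discovery_rate(rate_a, rate_b):
    """Rate at which a voxel is a false discovery in both maps,
    assuming the two maps are independent."""
    return rate_a * rate_b

# Hypothetical ALE values for resting-baseline and active-baseline studies.
rest = {(0, 0, 0): 0.020, (1, 0, 0): 0.004, (2, 0, 0): 0.015}
active = {(0, 0, 0): 0.018, (1, 0, 0): 0.030, (2, 0, 0): 0.002}
print(conjunction(rest, active, 0.010, 0.010))
print(joint_false_discovery_rate(0.01, 0.01))
```

A voxel survives only if it is reliable under both classes of baseline, so baseline-specific activations drop out of the conjunction.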

Auditory Imagery

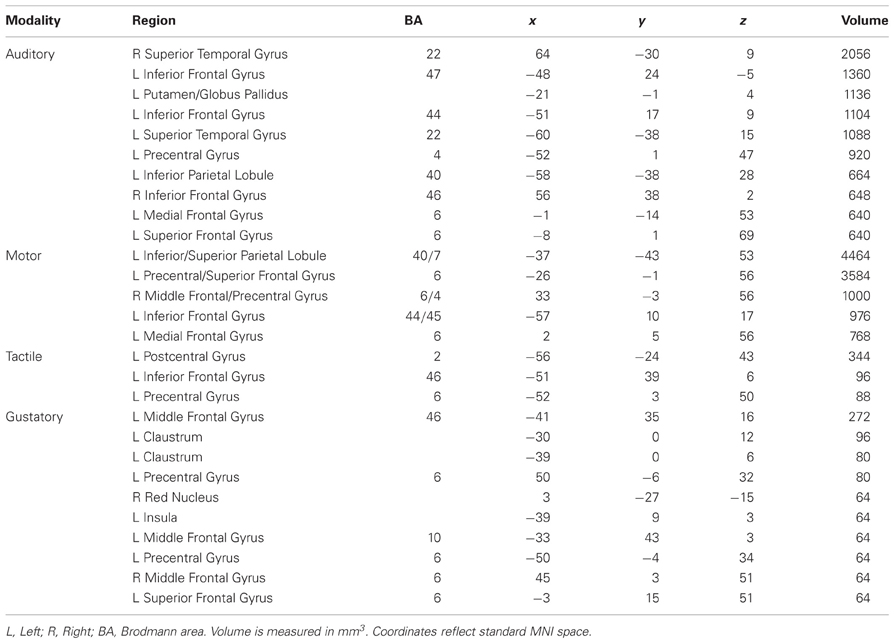

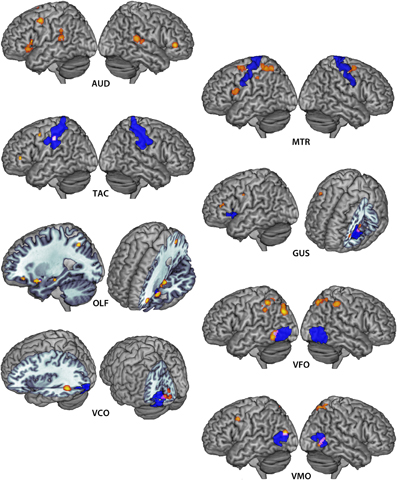

Minimum cluster size threshold in the ALE analysis of auditory imagery studies was set at 632 mm3. Ninety-three foci from 11 experiments involving 127 participants contributed toward these results. For the purposes of this analysis, primary auditory cortex was defined by the AAL template definition of Heschl's Gyrus within the MRIcroN software package (http://www.mccauslandcenter.sc.edu/mricro/mricron/index.html). Ten clusters were reliably associated with auditory imagery at a statistical threshold of pN < 0.05 (FDR corrected) (Table 3). No cluster overlapped with primary auditory cortex. Seven of eleven auditory imagery experiments reported activation peaks within two ALE clusters bilaterally overlapping secondary auditory cortex (planum temporale), indicating reliable activation of these areas during auditory imagery (Figure 2). Bilateral activations of inferior frontal cortex were also apparent. Because the imagery tasks used across auditory imagery experiments were non-linguistic in nature (e.g., tone imagery), involvement of Broca's area in auditory imagery was not readily attributable to language-related phonological processing.

Table 3. Weighted centers of significant clusters in the auditory, motor, tactile, and gustatory imagery ALE analyses.

Figure 2. Extent-thresholded clusters of voxels reaching pN < 0.05 significance in the auditory (AUD), motor (MTR), tactile (TAC), gustatory (GUS), olfactory (OLF), visual form (VFO), visual color (VCO) and visual motion (VMO) imagery ALE maps are depicted in warm values. Corresponding primary sensorimotor cortices are depicted in violet. Overlapping regions are depicted in pink/white.

Motor Imagery

Minimum cluster size threshold in the ALE analysis of motor imagery studies was set at 712 mm3. One hundred and fifty seven foci from 13 experiments involving 137 participants contributed toward these results. For the purposes of this analysis, motor cortex was defined by the Brodmann area 4 definition within the MRIcroN software package. Five clusters were reliably associated with motor imagery at a statistical threshold of pN < 0.05 (FDR corrected) (Table 3; Figure 2). Recruitment of primary motor cortex in motor imagery was not apparent in either hemisphere, though three clusters overlapped to a large extent (right: 222 mm3; left superior: 608 mm3; left inferior: 72 mm3) with premotor cortex. The posterior-most motor imagery cluster, centered at (x = −37, y = −43, z = 53), did overlap substantially with the tactile imagery ROI. The overlapping region was centered at (x = −38, y = −37, z = 53: 1351 mm3). The 3dClustSim simulations determined that the volume of this overlapping region corresponded to a cluster size corrected threshold of p < 0.001 within the primary somatosensory cortex ROI.

Tactile Imagery

Minimum cluster size threshold in the ALE analysis of tactile imagery studies was set at 88 mm3. Forty-nine foci from four experiments involving 44 participants contributed toward these results. For the purposes of this analysis, primary somatosensory cortex was defined by the union of the Brodmann area 1, 2, and 3 definitions within the MRIcroN software package. Three left-lateralized clusters were reliably associated with tactile imagery at a statistical threshold of pN < 0.05 (FDR corrected) (Table 3; Figure 2). Recruitment of primary sensorimotor cortex was apparent in the cluster centered at (x = −56, y = −24, z = 43: 344 mm3), which overlapped entirely with primary somatosensory cortex. The 3dClustSim simulations determined that the volume of this overlapping region corresponded to a cluster size corrected threshold of p < 0.001 within the primary somatosensory cortex ROI. No tactile imagery ALE cluster overlapped with the primary motor cortex ROI.

Gustatory Imagery

Minimum cluster size threshold in the ALE analysis of gustatory imagery studies was set at 45 mm3. Note that this cluster size threshold was smaller than the GingerALE-recommended minimum threshold for this dataset. The 3dClustSim analysis determined that clusters of size 45 mm3 would occur by chance within the gustatory ROI with a probability of 0.05. This reduced cluster threshold permitted the detection of clusters that would reach corrected-level significance in the ROI. Fifty-three foci from five experiments involving 63 participants contributed toward these results. For the purposes of this analysis, gustatory cortex was defined by the AAL template definition of bilateral frontal operculum and anterior bilateral insula (y > 7, corresponding to the anterior third of the volume of the AAL template insula definition). The ALE analysis of all gustatory imagery studies found nine clusters that were reliably associated with gustatory imagery (Table 3; Figure 2). There was evidence for left-lateralized recruitment of gustatory cortex in gustatory imagery: One cluster, centered at (left: x = −39, y = 9, z = 3: 64 mm3), was overlapped completely by the gustatory cortex definition. The 3dClustSim simulations determined that the volume of this overlapping region corresponded to a cluster size corrected threshold of p < 0.05 within the primary gustatory cortex ROI.
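The gustatory ROI construction described above (frontal operculum joined with the portion of the insula anterior to y = 7) can be sketched as a coordinate filter followed by a set union. The sketch assumes voxels are given as (x, y, z) coordinates in millimetres; the function names and the toy coordinates are hypothetical and are not AAL template values.

```python
def anterior_part(voxels_mm, y_cutoff=7):
    """Keep voxels whose y coordinate (mm) lies strictly anterior to the cutoff."""
    return {v for v in voxels_mm if v[1] > y_cutoff}


def gustatory_roi(frontal_operculum, insula, y_cutoff=7):
    """Union of the frontal operculum with the anterior portion of the insula."""
    return set(frontal_operculum) | anterior_part(insula, y_cutoff)


# Toy coordinates (assumption): illustrative points only, not atlas data.
operculum = {(-40, 20, 4), (-42, 22, 4)}
insula = {(-38, 10, 2), (-38, 7, 2), (-38, -4, 2)}

roi = gustatory_roi(operculum, insula)
print(sorted(roi))
```

The same union pattern applies to ROIs built from several atlas labels, such as the Brodmann area 1, 2, and 3 definition of primary somatosensory cortex.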

Olfactory Imagery

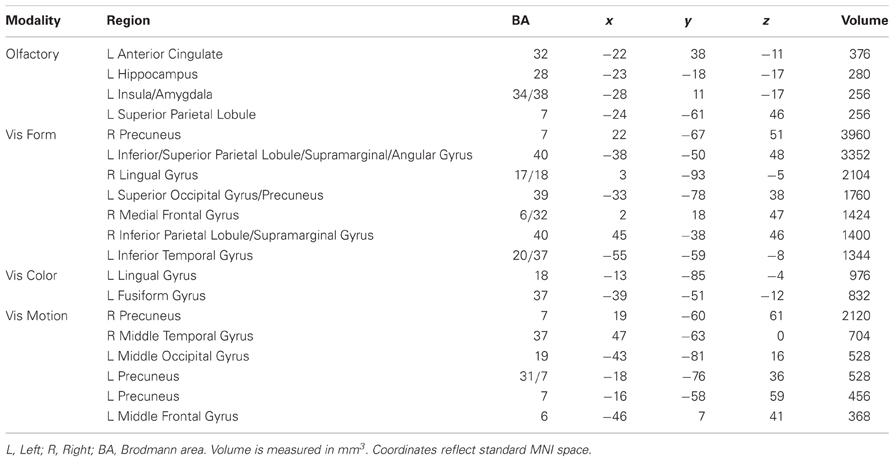

Minimum cluster size threshold in the ALE analysis of olfactory imagery studies was set at 136 mm3. Fifty-one foci from five experiments involving 80 participants contributed toward these results. Olfactory cortex was defined by the AAL template definition of bilateral piriform cortex. The ALE analysis of all olfactory imagery studies found four clusters that were reliably associated with olfactory imagery (Table 4; Figure 2). There was overlap, centered at (x = −25, y = 8, z = −16: 14 mm3), between olfactory cortex and the third largest cluster centered at (x = −28, y = 11, z = −17). The 3dClustSim simulations determined that the volume of this overlapping region corresponded to a cluster size corrected threshold of p < 0.001 within the primary olfactory cortex ROI.

Table 4. Weighted centers of significant clusters in the olfactory, visual form, visual color, and visual motion imagery ALE analyses.

Visual Imagery

Whether early visual cortex, corresponding to Brodmann areas 17 and 18, participates critically in visual imagery has been a subject of much study (Kosslyn and Thompson, 2003). Visual input is rich in information, however, and we distinguish between different types of visual information. Importantly, a number of functionally specialized brain regions contain neurons that are preferentially tuned to different aspects of visual input: The lateral occipital complex (LOC) is specialized for shape processing (Sathian, 2005); neurons in area V4 are tuned to discriminate color (Bramão et al., 2010), and neurons in area V5/MT are critical in the perception of motion (Grèzes, 2001). Kosslyn and Thompson concluded that early visual cortex is involved in visual imagery, in the general sense, when the imagery task requires high-fidelity representations (Kosslyn and Thompson, 2003). An interesting extension to this question is whether the functional organization apparent in visual perception may be found in various subtypes of visual imagery. This is a strong test of the hypothesis that perceptual processes underlie imagery, as there is no reason that retrieval of stored visual representations—that is, visual information that has already been processed by the perceptual system—should necessarily require the involvement of these specialized brain regions. The following three analyses test whether form, color and motion imagery recruits the corresponding functionally specialized visual perception areas. Overlap between the form, color and motion ROIs was avoided by removing voxels appearing in the LOC ROI from the V4 and V5 ROI definitions.
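Making the visual ROIs mutually exclusive with respect to LOC, as described above, amounts to a set difference on the voxel masks. The following is a minimal sketch under the assumption that each ROI is a set of voxel coordinates; `remove_overlap` and the toy masks are hypothetical.

```python
def remove_overlap(priority_roi, other_rois):
    """Drop from every ROI in other_rois any voxel that also belongs to priority_roi."""
    return {name: set(voxels) - set(priority_roi) for name, voxels in other_rois.items()}


# Toy masks (assumption): a few voxels chosen so that V4 and V5 each share one voxel with LOC.
loc = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
v4 = {(2, 0, 0), (3, 0, 0)}
v5 = {(0, 0, 0), (0, 1, 0)}

trimmed = remove_overlap(loc, {"V4": v4, "V5": v5})
print(trimmed)
```

Giving LOC priority means any contested voxel counts toward the form ROI only, so an imagery cluster cannot be credited to two ROIs through the same voxel.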

Visual Form Imagery

Minimum cluster size threshold in the ALE analysis of visual form imagery studies was set at 2384 mm3. Two hundred and forty eight foci from 21 experiments involving 218 participants contributed toward these results. For the purposes of this analysis, LOC was defined by the intersection of the Harvard-Oxford Cortical Structural Atlas definition of Lateral Occipital Cortex with the reverse inference map generated by Neurosynth (Yarkoni et al., 2011) for the term “LOC,” thresholded at Z > 5.39. The ALE analysis of all visual form imagery studies found seven clusters, bilaterally- but primarily left-distributed, reliably associated with visual form imagery (Table 4; Figure 2). There was overlap, centered at (x = −52, y = −62, z = −4: 631 mm3), between LOC and the smallest cluster centered at (x = −55, y = −59, z = −8). The 3dClustSim simulations determined that the volume of this overlapping region corresponded to a cluster size corrected threshold of p < 0.001 within the LOC ROI. Because the visual ROIs were adjacent to one another, the overlap of the visual form clusters with the color and motion ROIs was additionally assessed. The cluster overlapping with LOC additionally overlapped the visual color ROI definition by 126 mm3, which corresponded to a cluster size corrected threshold of p < 0.005 within the visual color ROI. No visual form ALE cluster overlapped with the visual motion ROI.
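The LOC definition used in this section, an anatomical atlas mask intersected with a reverse inference map thresholded at Z > 5.39, can be sketched as a filter over atlas voxels. The sketch assumes the atlas mask is a set of voxel coordinates and the Z map is a dictionary from voxel to Z score; `define_roi` and the toy values are hypothetical and do not reproduce Neurosynth or Harvard-Oxford data.

```python
def define_roi(atlas_voxels, z_map, z_threshold=5.39):
    """Keep atlas voxels whose Z score strictly exceeds the threshold.

    Voxels missing from z_map are treated as sub-threshold.
    """
    return {v for v in atlas_voxels if z_map.get(v, float("-inf")) > z_threshold}


# Toy inputs (assumption): three atlas voxels and a sparse Z map.
atlas = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
z_scores = {(0, 0, 0): 6.1, (1, 0, 0): 5.39, (3, 0, 0): 7.0}

loc_roi = define_roi(atlas, z_scores)
print(loc_roi)
```

Only the first voxel survives: the second sits exactly at the threshold, the third atlas voxel has no Z score, and the high-scoring voxel at (3, 0, 0) lies outside the atlas mask.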

Visual Color Imagery

Minimum cluster size threshold in the ALE analysis of visual color imagery was set at 192 mm3. Eighty-one foci from seven experiments involving 76 participants contributed toward these results. For the purposes of this analysis, V4 was defined by the Juelich Histological Atlas definition of left and right V4. The ALE analysis of all visual color imagery studies found four left-lateralized clusters that were reliably associated with visual color imagery (Table 4; Figure 2). Overlap, centered at (left: x = −18, y = −82, z = −6: 42 mm3), was found between V4 and the largest cluster centered at (x = −13, y = −85, z = −4). The 3dClustSim simulations determined that the volume of this overlapping region corresponded to a cluster size corrected threshold of p < 0.05 within the V4 ROI. No visual color ALE cluster overlapped with either the visual form or visual motion ROIs.

Visual Motion Imagery

Minimum cluster size threshold in the ALE analysis of visual motion imagery was set at 368 mm3. One hundred and ten foci from 10 experiments involving 97 participants contributed toward these results. For the purposes of this analysis, V5 was defined by the intersection of the Juelich Histological Atlas definition of left and right V5 with the reverse inference map generated by Neurosynth (Yarkoni et al., 2011) for the term “mt,” thresholded at Z > 5.39. The ALE analysis of all visual motion imagery studies found six clusters that were reliably associated with visual motion imagery (Table 4; Figure 2). Bilateral overlap between ALE clusters and V5 was noted (left: x = −42, y = −8, z = 14, 204 mm3; right: x = 47, y = −61, z = 1, 548 mm3). The 3dClustSim simulations determined that the volume of both overlapping regions corresponded to a cluster size corrected threshold of p < 0.001 within the V5 ROI. The cluster overlapping with right V5 additionally overlapped the visual form ROI definition by 262 mm3, which corresponded to a cluster size corrected threshold of p < 0.001 within the visual form ROI. The form and motion imagery clusters did not overlap within either of the ROIs; however, there was a 244 mm3 overlap between form and motion imagery clusters centered in the right superior parietal lobule (x = 17, y = −66, z = 57; Brodmann area 7). The overlap between form and motion imagery activations in BA 7 is notable in light of the implication of this region in the integration of visual and motor information (Wolpert et al., 1998).

General Discussion

Modality-General Imagery

The first goal of this study was to identify the neural substrate underpinning modality-general imagery. Across all sensorimotor modalities, and many experimental paradigms, a core network emerged of brain regions associated with imagery. Activations were seen bilaterally in the general imagery analysis, and in some modalities (auditory, motor, gustatory, visual form and visual motion), but were primarily left-lateralized. It was noted earlier that perceptually-based representational theories assume that multisensory imagery underlies semantic retrieval. Others have suggested that the default-mode network, a well-defined network of brain regions more active during periods of rest than under cognitive load, may arise in part out of introspective processes, including imagery (Daselaar et al., 2010). Though the general imagery network bears a superficial resemblance to the resting state network described in the literature, it does not generally overlap with this network. The imagery network was derived from activations for contrasts of imagery greater than baseline. Activation of the resting state network would thus be precluded by definition. These results should not, therefore, be taken as evidence implying any particular property of the default-mode network. For example, a relative increase in imagery network activation may be apparent when resting state activity is compared to tasks that do not involve imagery.

Modality-Specific Imagery

A second goal of this study was to identify the neural substrates underpinning modality-specific imagery, and assess the degree to which imagery in each modality recruited sensorimotor cortex. The ALE analysis of activation loci suggests that modality-specific imagery or knowledge retrieval for most modalities is associated with increased activation in corresponding sensorimotor regions. Though modalities differ with respect to the lateralization and extent of this recruitment, this suggests that modality-specific imagery generally recruits the corresponding primary perceptual areas. Whether these differences reflect differences in cognitive processing or have behavioral implications remains unclear. For example, proportionally greater recruitment of perceptual regions may be associated with higher fidelity imagery, whereas greater recruitment of adjacent areas may be associated with more abstract (e.g., linguistically-dependent) manipulations of imagery representations.

One challenge for this interpretation concerns the failure to show recruitment of primary sensorimotor perceptual cortices for the auditory and motor modalities. The ALE analyses showed that imagery in these modalities does reliably recruit posterior superior temporal gyrus (STG) and premotor cortex, respectively. These results are consistent with the finding of Kosslyn et al.'s (2001) review that auditory imagery does not activate primary auditory cortex (A1), but does activate auditory associative areas. The same review concluded that motor imagery conditionally activates motor areas, but required a more liberal definition of motor area: Of the studies reviewed, most reported imagery-related activations in premotor cortex but not primary motor cortex. Posterior STG and premotor cortex have been associated with maintaining auditory and motor sequence representations, respectively (Ohbayashi et al., 2003; Arnott et al., 2005; Buchsbaum and D'Esposito, 2008). Thus, an alternative interpretation of imagery-related activations is that they reflect activations within memory systems for these modalities, and that these systems are situated adjacent to, rather than within, primary auditory and motor cortices. This may indeed be the case, though such a conclusion rests on the sort of circular logic that highlights the centrality of the symbol grounding problem to understanding the neural bases of cognitive processes. It thus remains to be seen whether a satisfactory solution to the symbol grounding problem can be found for these imagery-related processes. These patterns are, however, suggestive of a modality-specific working memory system.

Though the question of whether visual imagery, in the general sense, recruits early visual cortex has been extensively studied (Kosslyn and Thompson, 2003), the more specific question of whether the functional distinction of color, motion and form perception is reliably found in visual imagery has remained unclear. A third goal of the present study was to determine whether similar functional specialization occurs in visual imagery. The present results indicate that, though visual imagery may activate early visual areas, imaginary color, motion, and shape processing is facilitated by upstream visual areas specialized for color, motion, and form perception, respectively. This parallel specialization during visual imagery is interesting in light of the fact that imagery involves the retrieval of stored representations. That is, imagery is based on information previously processed by the perceptual system. Nonetheless, imagery recruits brain regions involved in processing the original perceptual stream. To retrieve pre-processed rather than post-processed representations would thus be a sub-optimal strategy unless it conveys some other benefit. One possibility is that this activity does not reflect processing of the raw visual stream. Rather, these regions may encode perceptual patterns that are reliably associated with information in other modalities. If this information is captured in the perceptual processing stream, it would be unnecessary to encode this information at higher levels of abstraction. Thus, imagery processes implied by perceptually-based representational theories may recruit these areas in order to generate more veridical multisensory representations.

Several crossmodal asymmetries were observed within the modality-specific imagery results. When the results of the motor and tactile imagery analyses are taken together, they suggest that motor imagery may imply a tactile component, but not the converse. This asymmetry may arise from the types of motor imagery tasks used: in more than half of the motor imagery tasks, the task implied imagery of an action on an object. This asymmetry would be predicted by the dependency of imagery on perceptual experience: one is often passively touched by objects (i.e., tactile perception without an associated motor response), but seldom acts on an object without also touching it. Similarly, an asymmetrical relationship existed among the three visual modality subtypes: First, form imagery clusters additionally overlapped the color ROI, but not vice versa. Second, motion imagery clusters additionally overlapped the form ROI, but not vice versa. This second asymmetry plausibly reflects our visual experience of moving objects: Form processing may be commonly implicated in motion processing because one typically perceives motion of an object with form. The converse relationship does not seem quite as strong, as we regularly encounter inanimate forms that do not move. In contrast, the apparently consistent recruitment of primary color processing regions during form imagery, but not the converse, is puzzling. We do not usually experience fields of color, but instead see colored objects, or forms. On the other hand, we do regularly experience well-defined forms without any associated color: square vs. oval windows, for example. The observed asymmetry would thus appear to be the reverse of what one would expect on the basis of real-world experience. One possibility is that it reflects an interaction between a statistical artifact of the number of form imagery studies and the proximity of the two regions of interest. More visual form imagery studies were conducted with more participants, generating more extensive ALE maps, with a higher probability of overlapping an adjacent ROI. Alternatively, it may reflect a real property of the systems involved in color and form imagery, though that remains a subject for future investigation.

Modality-Specific Imagery and Perceptually-Grounded Representations

Finally, and perhaps most importantly for investigations of perceptually-grounded representations, in no modality were imagery clusters restricted to brain regions immediately involved in perception. Those clusters that did overlap with primary somatosensory regions generally extended beyond these areas. In contrast to perception or imagery-based accounts of knowledge representations, amodal models of semantic memory assume concept knowledge is maintained as an abstraction bearing no connection to perceptual processing (Pylyshyn, 1973; Tyler and Moss, 2001). It is no less reasonable to suppose that a modality-specific representational system encodes information in sensory association areas, but not necessarily in primary sensorimotor areas. This perspective is consistent with Thompson-Schill's review of neuroimaging studies of semantic memory (Thompson-Schill, 2003), which concluded that the literature supported a distributed modality-specific semantic system, but that “studies which have directly compared semantic retrieval and perception have consistently found an anterior shift in activation during semantic processing” (p. 283).

The present meta-analysis suggests that a perceptually-grounded representational system recruits primary sensory cortex to a modest and varying degree, but that processing relies greatly on upstream (though not necessarily anatomically anterior) unimodal convergence zones (Binder and Desai, 2011; McNorgan et al., 2011). These regions tend to be adjacent to their associated perceptual areas, and integrate downstream perceptual codes into somewhat more abstract (but perceptually-grounded) representations. This account would be consistent with the distribution of modality-specific imagery activations about primary somatosensory cortices, and with the theoretical ties between modality-specific representations and imagery. This interpretation would also be consistent with a recent investigation of visual imagery and memory by Slotnick et al. (2011) in which the authors concluded that “visual memory and visual mental imagery are mediated by largely overlapping neural substrates in both frontal-parietal control regions and occipital-temporal sensory regions” (p. 20). These results suggest that neuroimaging investigations of perceptually-based knowledge might pay particular attention to primary sensorimotor areas implicated in imagery, but should also consider contributions of other brain regions supporting imagery processes.

Conclusions

Though neuroscientific studies of imagery have proliferated over the last decade, not all forms of imagery have been investigated to the same extent—imagery of the chemical senses and tactile imagery appear to be relatively underrepresented. Some of the questions posed here may not be adequately answerable without further study in these imagery domains. Similarly, the present review omits studies of imagery in other domains, such as emotional, temporal or spatial imagery, which may be more abstract forms of meta-imagery involving the integration of multiple modalities or function as representational primitives.

Finally, these results are generally consistent with the assumption that mental imagery underlies representational knowledge, though the matter is far from resolved. These considerations point toward a need for further investigation in the imagery domain. These efforts will help to relate cognitive processes to one another and to arrive at a fully grounded model of cognitive processing.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Alivisatos, B., and Petrides, M. (1997). Functional activation of the human brain during mental rotation. Neuropsychologia 35, 111–118.

Arnott, S. R., Grady, C. L., Hevenor, S. J., Graham, S., and Alain, C. (2005). The functional organization of auditory working memory as revealed by fMRI. J. Cogn. Neurosci. 17, 819–831.

Barnes, J., Howard, R. J., Senior, C., Brammer, M., Bullmore, E. T., Simmons, A., et al. (2000). Cortical activity during rotational and linear transformations. Neuropsychologia 38, 1148–1156.

Beauchamp, M. S., Haxby, J. V., Jennings, J. E., and Deyoe, E. A. (1999). An fMRI version of the Farnsworth–Munsell 100-Hue test reveals multiple color-selective areas in human ventral occipitotemporal cortex. Cereb. Cortex 9, 257–263.

Belardinelli, M. O., Palmiero, M., Sestieri, C., Nardo, D., Di Matteo, R., Londei, A., et al. (2009). An fMRI investigation on image generation in different sensory modalities: the influence of vividness. Acta Psychol. 132, 190–200.

Binder, J. R., and Desai, R. H. (2011). The neurobiology of semantic memory. Trends Cogn. Sci. 15, 527–536.

Binder, J. R., Desai, R. H., Graves, W. W., and Conant, L. L. (2009). Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb. Cortex 19, 2767–2796.

Bottini, G., Corcoran, R., Sterzi, R., Paulesu, E., Schenone, P., Scarpa, P., et al. (1994). The role of the right hemisphere in the interpretation of figurative aspects of language: a positron emission tomography activation study. Brain 117, 1241–1253.

Bramão, I., Faísca, L., Forkstam, C., Reis, A., and Petersson, K. M. (2010). Cortical brain regions associated with color processing: an fMRI study. Open Neuroimaging J. 4, 164–173.

Buchsbaum, B. R., and D'Esposito, M. (2008). The search for the phonological store: from loop to convolution. J. Cogn. Neurosci. 20, 762–778.

Bunzeck, N., Wuestenberg, T., Lutz, K., Heinze, H.-J., and Jancke, L. (2005). Scanning silence: mental imagery of complex sounds. Neuroimage 26, 1119–1127.

Canessa, N., Borgo, F., Cappa, S. F., Perani, D., Falini, A., Buccino, G., et al. (2007). The different neural correlates of action and functional knowledge in semantic memory: an fMRI study. Cereb. Cortex 18, 740–751.

Chein, J. M., Fissell, K., Jacobs, S., and Fiez, J. A. (2002). Functional heterogeneity within Broca's area during verbal working memory. Physiol. Behav. 77, 635–639.

Chouinard, P. A., and Goodale, M. A. (2010). Category-specific neural processing for naming pictures of animals and naming pictures of tools: an ALE meta-analysis. Neuropsychologia 48, 409–418.

Craik, F. I. M., and Lockhart, R. S. (1972). Levels of processing: a framework for memory research. J. Verbal Learn. Verbal Behav. 11, 671–684.

Creem-Regehr, S. H., Neil, J. A., and Yeh, H. J. (2007). Neural correlates of two imagined egocentric transformations. Neuroimage 35, 916–927.

Daselaar, S. M., Porat, Y., Huijbers, W., and Pennartz, C. M. A. (2010). Modality-specific and modality-independent components of the human imagery system. Neuroimage 52, 677–685.

Dechent, P. (2004). Is the human primary motor cortex involved in motor imagery? Cogn. Brain Res. 19, 138–144.

De Lange, F. P., Hagoort, P., and Toni, I. (2005). Neural topography and content of movement representations. J. Cogn. Neurosci. 17, 97–112.

D'Esposito, M., Detre, J. A., Aguirre, G. K., Stallcup, M., Alsop, D. C., Tippet, L. J., et al. (1997). A functional MRI study of mental image generation. Neuropsychologia 35, 725–730.

Djordjevic, J., Zatorre, R. J., Petrides, M., Boyle, J. A., and Jones-Gotman, M. (2005). Functional neuroimaging of odor imagery. Neuroimage 24, 791–801.

Eickhoff, S. B., Bzdok, D., Laird, A. R., Kurth, F., and Fox, P. T. (2012). Activation likelihood estimation meta-analysis revisited. Neuroimage 59, 2349–2361.

Farnsworth, D. (1957). The Farnsworth-Munsell 100-Hue Test for the Examination of Color Vision. Baltimore, MD: Munsell Color Company.

Friston, K. J., Price, C. J., Fletcher, P., Moore, C., Frackowiak, R. S. J., and Dolan, R. J. (1996). The trouble with cognitive subtraction. Neuroimage 4, 97–104.

Ganis, G. (2004). Brain areas underlying visual mental imagery and visual perception: an fMRI study. Cogn. Brain Res. 20, 226–241.

Goebel, R., Khorram-Sefat, D., Muckli, L., Hacker, H., and Singer, W. (1998). The constructive nature of vision: direct evidence from functional magnetic resonance imaging studies of apparent motion and motion imagery. Eur. J. Neurosci. 10, 1563–1573.

Gottfried, J. A., and Dolan, R. J. (2004). Human orbitofrontal cortex mediates extinction learning while accessing conditioned representations of value. Nat. Neurosci. 7, 1144–1152.

Grèzes, J. (2001). Does perception of biological motion rely on specific brain regions? Neuroimage 13, 775–785.

Guillot, A., Collet, C., Nguyen, V. A., Malouin, F., Richards, C., and Doyon, J. (2009). Brain activity during visual versus kinesthetic imagery: an fMRI study. Hum. Brain Mapp. 30, 2157–2172.

Gulyás, B. (2001). Neural networks for internal reading and visual imagery of reading: a PET study. Brain Res. Bull. 54, 319–328.

Halpern, A. R., and Zatorre, R. J. (1999). When that tune runs through your head: a PET investigation of auditory imagery for familiar melodies. Cereb. Cortex 9, 697–704.

Hanakawa, T. (2002). Functional properties of brain areas associated with motor execution and imagery. J. Neurophysiol. 89, 989–1002.

Hauk, O., Johnsrude, I., and Pulvermüller, F. (2004). Somatotopic representation of action words in human motor and premotor cortex. Neuron 41, 301–307.

Howard, R. J., Ffytche, D. H., Barnes, J., Mckeefry, D., Ha, Y., Woodruff, P. W., et al. (1998). The functional anatomy of imagining and perceiving colour. Neuroreport 9, 1019–1023.

Hsu, N. S., Frankland, S. M., and Thompson-Schill, S. L. (2012). Chromaticity of color perception and object color knowledge. Neuropsychologia 50, 327–333.

Hsu, N. S., Kraemer, D. J. M., Oliver, R. T., Schlichting, M. L., and Thompson-Schill, S. L. (2011). Color, context, and cognitive style: variations in color knowledge retrieval as a function of task and subject variables. J. Cogn. Neurosci. 23, 2544–2557.

Ishai, A., Ungerleider, L. G., and Haxby, J. V. (2000). Distributed neural systems for the generation of visual images. Neuron 28, 979–990.

Johnson, S. (2002). Selective activation of a parietofrontal circuit during implicitly imagined prehension. Neuroimage 17, 1693–1704.

Jordan, K., Heinze, H. J., Lutz, K., Kanowski, M., and Jancke, L. (2001). Cortical activations during the mental rotation of different visual objects. Neuroimage 13, 143–152.

Kaas, A., Weigelt, S., Roebroeck, A., Kohler, A., and Muckli, L. (2010). Imagery of a moving object: the role of occipital cortex and human MT/V5+. Neuroimage 49, 794–804.

Kellenbach, M. L., Brett, M., and Patterson, K. (2001). Large, colorful, or noisy? Attribute- and modality-specific activations during retrieval of perceptual attribute knowledge. Cogn. Affect. Behav. Neurosci. 1, 207–221.

Kiefer, M., Sim, E. J., Herrnberger, B., Grothe, J., and Hoenig, K. (2008). The sound of concepts: four markers for a link between auditory and conceptual brain systems. J. Neurosci. 28, 12224–12230.

Kikuchi, S., Kubota, F., Nisijima, K., Washiya, S., and Kato, S. (2005). Cerebral activation focusing on strong tasting food: a functional magnetic resonance imaging study. Neuroreport 18, 281–283.

Kobayashi, M., Takeda, M., Hattori, N., Fukunaga, M., Sasabe, T., Inoue, N., et al. (2004). Functional imaging of gustatory perception and imagery: top-down processing of gustatory signals. Neuroimage 23, 1271–1282.

Kosslyn, S. M., Alpert, N. M., Thompson, W. L., Maljkovic, V., Weise, S. B., Chabris, C. F., et al. (1993). Visual mental imagery activates topographically organized visual cortex: PET investigations. J. Cogn. Neurosci. 5, 263–287.

Kosslyn, S. M., Ganis, G., and Thompson, W. L. (2001). Neural foundations of imagery. Nat. Rev. Neurosci. 2, 635–642.

Kosslyn, S. M., and Thompson, W. L. (2003). When is early visual cortex activated during visual mental imagery? Psychol. Bull. 129, 723–746.

Kosslyn, S. M., Thompson, W. L., and Alpert, N. M. (1997). Neural systems shared by visual imagery and visual perception: a positron emission tomography study. Neuroimage 6, 320–334.

Kosslyn, S. M., Thompson, W. L., Kim, I. J., and Alpert, N. M. (1995). Topographical representations of mental images in primary visual cortex. Nature 378, 496–498.

Laird, A. R., Fox, P. M., Price, C. J., Glahn, D. C., Uecker, A. M., Lancaster, J. L., et al. (2005). ALE meta-analysis: controlling the false discovery rate and performing statistical contrasts. Hum. Brain Mapp. 25, 155–164.

McNorgan, C., Reid, J., and McRae, K. (2011). Integrating conceptual knowledge within and across representational modalities. Cognition 118, 211–233.

Mellet, E., Tzourio, N., Crivello, F., Joliot, M., Denis, M., and Mazoyer, B. (1996). Functional anatomy of spatial mental imagery generated from verbal instructions. J. Neurosci. 16, 6504–6512.

Newman, S. D., Klatzky, R. L., Lederman, S. J., and Just, M. A. (2005). Imagining material versus geometric properties of objects: an fMRI study. Cogn. Brain Res. 23, 235–246.

Nyberg, L. (2001). Reactivation of motor brain areas during explicit memory for actions. Neuroimage 14, 521–528.

Nyberg, L., Habib, R., McIntosh, A. R., and Tulving, E. (2000). Reactivation of encoding-related brain activity during memory retrieval. Proc. Natl. Acad. Sci. U.S.A. 97, 11120–11124.

Ohbayashi, M., Ohki, K., and Miyashita, Y. (2003). Conversion of working memory to motor sequence in the monkey premotor cortex. Science 301, 233–236.

Oliver, R. T., Geiger, E. J., Lewandowski, B. C., and Thompson-Schill, S. L. (2009). Remembrance of things touched: how sensorimotor experience affects the neural instantiation of object form. Neuropsychologia 47, 239–247.

Plailly, J., Delon-Martin, C., and Royet, J.-P. (2012). Experience induces functional reorganization in brain regions involved in odor imagery in perfumers. Hum. Brain Mapp. 33, 224–234.

Poldrack, R. A. (2006). Can cognitive processes be inferred from neuroimaging data? Trends Cogn. Sci. 10, 59–63.

Pylyshyn, Z. W. (1973). What the mind's eye tells the mind's brain: a critique of mental imagery. Psychol. Bull. 80, 1–24.

Roland, P. E., and Gulyás, B. (1995). Visual memory, visual imagery, and visual recognition of large field patterns by the human brain: functional anatomy by positron emission tomography. Cereb. Cortex 5, 79–93.

Ruby, P., and Decety, J. (2001). Effect of subjective perspective taking during simulation of action: a PET investigation of agency. Nat. Neurosci. 4, 546–550.

Sack, A. T., Sperling, J. M., Prvulovic, D., Formisano, E., Goebel, R., Di Salle, F., et al. (2002). Tracking the mind's image in the brain II: transcranial magnetic stimulation reveals parietal asymmetry in visuospatial imagery. Neuron 35, 195–204.

Sathian, K. (2005). Visual cortical activity during tactile perception in the sighted and the visually deprived. Dev. Psychobiol. 46, 279–286.

Servos, P., Osu, R., Santi, A., and Kawato, M. (2002). The neural substrates of biological motion perception: an fMRI study. Cereb. Cortex 12, 772–782.

Simmons, W. K., and Barsalou, L. W. (2003). The similarity-in-topography principle: reconciling theories of conceptual deficits. Cogn. Neuropsychol. 20, 451–486.

Simmons, W. K., Ramjee, V., Beauchamp, M. S., McRae, K., Martin, A., and Barsalou, L. W. (2007). A common neural substrate for perceiving and knowing about color. Neuropsychologia 45, 2802–2810.

Slotnick, S. D., Thompson, W. L., and Kosslyn, S. M. (2005). Visual mental imagery induces retinotopically organized activation of early visual areas. Cereb. Cortex 15, 1570–1583.

Slotnick, S. D., Thompson, W. L., and Kosslyn, S. M. (2011). Visual memory and visual mental imagery recruit common control and sensory regions of the brain. Cogn. Neurosci. 3, 14–20.

Small, D. M., Gregory, M. D., Mak, Y. E., Gitelman, D., Mesulam, M. M., and Parrish, T. (2003). Dissociation of neural representation of intensity and affective valuation in human gustation. Neuron 39, 701–711.

Thompson, W. L., Kosslyn, S. M., Sukel, K. E., and Alpert, N. M. (2001). Mental imagery of high- and low-resolution gratings activates area 17. Neuroimage 14, 454–464.

Thompson-Schill, S. L. (2003). Neuroimaging studies of semantic memory: inferring “how” from “where”. Neuropsychologia 41, 280–292.

Trojano, L., Grossi, D., Linden, D. E., Formisano, E., Hacker, H., Zanella, F. E., et al. (2000). Matching two imagined clocks: the functional anatomy of spatial analysis in the absence of visual stimulation. Cereb. Cortex 10, 473–481.

Turkeltaub, P. E., Eden, G. F., Jones, K. M., and Zeffiro, T. A. (2002). Meta-analysis of the functional neuroanatomy of single-word reading: method and validation. Neuroimage 16, 765–780.

Tyler, L. K., and Moss, H. E. (2001). Towards a distributed account of conceptual knowledge. Trends Cogn. Sci. 5, 244–252.

Veldhuizen, M. G., Bender, G., Constable, R. T., and Small, D. M. (2007). Trying to detect taste in a tasteless solution: modulation of early gustatory cortex by attention to taste. Chem. Senses 32, 569–581.

Vingerhoets, G., Delange, F., Vandemaele, P., Deblaere, K., and Achten, E. (2002). Motor imagery in mental rotation: an fMRI study. Neuroimage 17, 1623–1633.

Wager, T. D., Lindquist, M., and Kaplan, L. (2007). Meta-analysis of functional neuroimaging data: current and future directions. Soc. Cogn. Affect. Neurosci. 2, 150–158.

Warrington, E. K., and McCarthy, R. A. (1987). Categories of knowledge. Further fractionations and an attempted integration. Brain 110(Pt 5), 1273–1296.

Wheeler, M. E., Petersen, S. E., and Buckner, R. L. (2000). Memory's echo: vivid remembering reactivates sensory-specific cortex. Proc. Natl. Acad. Sci. U.S.A. 97, 11125–11129.

Wolpert, D. M., Goodbody, S. J., and Husain, M. (1998). Maintaining internal representations: the role of the human superior parietal lobe. Nat. Neurosci. 1, 529–533.

Yágüez, L., Nagel, D., Hoffman, H., Canavan, A. G. M., Wist, E., and Hömberg, V. (1998). A mental route to motor learning: improving trajectorial kinematics through imagery training. Behav. Brain Res. 90, 95–106.

Yarkoni, T., Poldrack, R. A., Nichols, T. E., Van Essen, D. C., and Wager, T. D. (2011). Large-scale automated synthesis of human functional neuroimaging data. Nat. Meth. 8, 665–670.

Yeshurun, Y., Lapid, H., Dudai, Y., and Sobel, N. (2009). The privileged brain representation of first olfactory associations. Curr. Biol. 19, 1869–1874.

Yomogida, Y. (2004). Mental visual synthesis is originated in the fronto-temporal network of the left hemisphere. Cereb. Cortex 14, 1376–1383.

Yoo, S.-S., Freeman, D. K., McCarthy, J. J. I., and Jolesz, F. A. (2003). Neural substrates of tactile imagery: a functional MRI study. Neuroreport 14, 581–585.

Keywords: embodied cognition, imagination, imagery, modality-independent, modality-specific, semantic memory

Citation: McNorgan C. (2012) A meta-analytic review of multisensory imagery identifies the neural correlates of modality-specific and modality-general imagery. Front. Hum. Neurosci. 6:285. doi: 10.3389/fnhum.2012.00285

Received: 22 August 2012; Accepted: 28 September 2012; Published online: 17 October 2012.

Edited by:

Penny M. Pexman, University of Calgary, Canada

Reviewed by:

Ron Borowsky, University of Saskatchewan, Canada

Sharlene D. Newman, Indiana University, USA

Copyright © 2012 McNorgan. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and subject to any copyright notices concerning any third-party graphics etc.

*Correspondence: Chris McNorgan, Department of Communication Sciences and Disorders, Northwestern University, 2240 Campus Drive, Evanston, IL 60208, USA. e-mail: chris.mcnorgan@alumni.uwo.ca