Multimodal emotion perception after anterior temporal lobectomy (ATL)

- 1Swiss Center for Affective Sciences, University of Geneva, Geneva, Switzerland

- 2Neuroscience of Emotion and Affective Dynamics Laboratory, Department of Psychology, Faculty of Psychology and Educational Sciences, University of Geneva, Geneva, Switzerland

- 3Laboratory for Neurology and Imaging of Cognition, Department of Neurology and Department of Neuroscience, Medical School, University of Geneva, Geneva, Switzerland

- 4Epilepsy Unit, Department of Neurology, Geneva University Hospital, Geneva, Switzerland

In the context of emotion information processing, several studies have demonstrated the involvement of the amygdala in emotion perception, for unimodal and multimodal stimuli. However, it seems that not only the amygdala, but several regions around it, may also play a major role in multimodal emotional integration. In order to investigate the contribution of these regions to multimodal emotion perception, five patients who had undergone unilateral anterior temporal lobe resection were exposed to both unimodal (vocal or visual) and audiovisual emotional and neutral stimuli. In a classic paradigm, participants were asked to rate the emotional intensity of angry, fearful, joyful, and neutral stimuli on visual analog scales. Compared with matched controls, patients exhibited impaired categorization of joyful expressions, whether the stimuli were auditory, visual, or audiovisual. Patients confused joyful faces with neutral faces, and joyful prosody with surprise. In the case of fear, unlike matched controls, patients provided lower intensity ratings for visual stimuli than for vocal and audiovisual ones. Fearful faces were frequently confused with surprised ones. When we controlled for lesion size, we no longer observed any overall difference between patients and controls in their ratings of emotional intensity on the target scales. Lesion size had the greatest effect on intensity perceptions and accuracy in the visual modality, irrespective of the type of emotion. These new findings suggest that a damaged amygdala, or a disrupted bundle between the amygdala and the ventral part of the occipital lobe, has a greater impact on emotion perception in the visual modality than it does in either the vocal or audiovisual one. We can surmise that patients are able to use the auditory information contained in multimodal stimuli to compensate for difficulty processing visually conveyed emotion.

Introduction

The ability to decode emotional information is crucial in everyday life, allowing us to adapt our behaviors when confronted with salient information, both for survival and for social adaptiveness purposes. The emotional features of objects in the environment have been shown to bring about an increase in the neuronal response, compared with the processing of non-emotional information (for a review, see Phan et al., 2002). The role that different brain regions play in decoding emotional information appears to depend on the modality. Furthermore, research has shown that both the primary and secondary sensory regions are modulated by emotion. For example, visual extrastriate regions are modulated by emotions conveyed by facial expressions (e.g., Morris et al., 1998; Pourtois et al., 2005a; Vuilleumier and Pourtois, 2007), while temporal voice-sensitive areas have been shown to be modulated by emotional prosody (e.g., Mitchell et al., 2003; Grandjean et al., 2005; Schirmer and Kotz, 2006; Wildgruber et al., 2006; Frühholz et al., 2012).

According to Haxby’s face perception model (Haxby et al., 2000), visual information is processed along a ventral pathway leading from the primary visual cortex (V1) to the fusiform face area (FFA) and inferior temporal cortex (ITC). Face perception is sufficient to activate the FFA (see, for example, Pourtois et al., 2005a; Kanwisher and Yovel, 2006; Pourtois et al., 2010), but the activity of this structure is enhanced when the facial information is emotional (see, for example, Breiter et al., 1996; Dolan et al., 2001; Vuilleumier et al., 2001; Williams et al., 2004; Vuilleumier and Pourtois, 2007). Another structure whose activity increases when decoding emotional facial information is the amygdala (see, for example, Haxby et al., 2000; Calder and Young, 2005; Phelps and LeDoux, 2005; Adolphs, 2008). In monkeys, this structure has been shown to project to almost every step along the visual ventral pathway (Amaral et al., 2003). Human studies, meanwhile, have suggested that connectivity between the amygdala and the FFA is modulated by emotion perception (Morris et al., 1998; Dolan et al., 2001; Vuilleumier et al., 2004; Sabatinelli et al., 2005; Vuilleumier, 2005; Vuilleumier and Pourtois, 2007).

Regarding the amygdala’s role in emotion perception, the current hypothesis is that this structure detects salience, a general feature of emotion (for a discussion, see Sander et al., 2003; Armony, 2013; Pourtois et al., 2013), through reciprocal connections with the cortex (Amaral et al., 2003). Its main function is to facilitate attention and perception processing (e.g., Armony and LeDoux, 1997, 1999; Whalen, 1998; Vuilleumier et al., 2001) without explicit voluntary attention (for a review, see Vuilleumier and Pourtois, 2007). According to LeDoux’s (2007) model, the amygdala’s output is directed both to regions that modulate bodily responses (via the endocrine system related to the autonomic system), and to the primary and associative cortices. These encompass regions modulated by emotion such as in the extrastriate visual system, the FFA for face perception, and the voice area in the superior temporal gyrus (STG; including the primary auditory region).

Further insight into emotional face perception and its subcortical bases has been provided by studies of patients with lesions of the amygdala. More specifically, studies have assessed patients with temporal lobe epilepsy whose lesions are linked either to the epileptogenic disease itself or else to its surgical treatment (see, for example, Cristinzio et al., 2007). These studies included patients with congenital or acquired diseases resulting in bilateral lesions, and patients with unilateral epilepsy arising from mesial temporal sclerosis who had undergone lobectomy with amygdalectomy. Patients with bilateral damage have been found to display impaired fearful face perception (Adolphs et al., 1994, 1995; Young et al., 1995; Calder et al., 1996; Broks et al., 1998) and deficits in the perception of surprise and anger (Adolphs et al., 1994). Unilateral lesions have yielded either no differences (Adolphs et al., 1995; prior surgery, Batut et al., 2006) or else a deficit for patients with right-sided lesions covering either a range of emotions (Anderson et al., 2000; Adolphs and Tranel, 2004) or solely fearful faces (prior surgery, Meletti et al., 2003). Palermo et al. (2010) found that both left- and right-lesion groups exhibited a deficit in fear intensity perception, but the left-lesion group was more impaired for fear detection. Anterior temporal lobectomy with amygdalectomy is generally expected to affect the perceived intensity of facial emotional expressions. The functional explanation for this is a lack of modulation by the amygdala of the ventral visual processing network and, more specifically in the case of emotional faces, of the FFA.

In addition to visual emotional information, the amygdala has been shown to be associated with different responses to emotional vocalizations. According to Schirmer et al. (2012), the processing of auditory information takes place along three streams in the temporal lobe: a posterior stream passing through the posterior part of the superior temporal sulcus (pSTS) for sound embodiment; a ventral stream directed toward the middle temporal gyrus (MTG) for concept processing; and an anterior stream extending as far as the temporal pole (TmP) for the perceptual domain (i.e., semantic processing). Another specificity of emotional vocalization perception is the hemispheric specificity modeled by Schirmer and Kotz (2006). In their model, the left temporal lobe has a higher temporal resolution for processing information than the right hemisphere, and is more involved in linguistic signal processing (segmental information), with suprasegmental analysis taking place in the right hemisphere. The amygdala has been shown to be modulated by emotional vocalizations, including onomatopoeia (e.g., Morris et al., 1999; Fecteau et al., 2007; Plichta et al., 2011), and emotional prosody consisting either of pseudowords (e.g., Grandjean et al., 2005; Sander et al., 2005; Frühholz and Grandjean, 2012, 2013), or of words and sentences (e.g., Ethofer et al., 2006, 2009; Wiethoff et al., 2009).

In contrast to research on emotional face perception, studies of auditory emotion processing in patients with bilateral amygdala lesions have produced divergent results. Some have failed to find any effect at all on emotion recognition (semantically neutral sentences: Adolphs and Tranel, 1999; names and onomatopoeia: Anderson and Phelps, 1998). Others have reported either a general impairment (counting sequences: Brierley et al., 2004) or specific impairments for fear (semantically neutral sentences: Scott et al., 1997; non-verbal vocalizations: Dellacherie et al., 2011), surprise (Dellacherie et al., 2011), anger (Scott et al., 1997), or sadness perception (musical excerpt: Gosselin et al., 2007). There is a similar divergence for unilateral lesions, with either no effects (Adolphs and Tranel, 1999; Adolphs et al., 2001) or a specific impairment for fear (counting sequences: Brierley et al., 2004; meaningless words: Sprengelmeyer et al., 2010; non-verbal vocalizations: Dellacherie et al., 2011). To sum up current knowledge about auditory emotion processing, there is a strong hypothesis about right hemispheric involvement for emotional prosody. The amygdala appears to be involved in prosody perception, but may also be sensitive to the proximal context of the stimulus presentation (for a discussion, see Frühholz and Grandjean, 2013).

In the case of face-voice emotion integration, studies featuring audiovisual emotional stimuli have replicated the response facilitation effect at the behavioral level, namely an increase in perceptual sensitivity and reduced reaction times (e.g., Massaro and Egan, 1996; De Gelder and Vroomen, 2000; Dolan et al., 2001; Kreifelts et al., 2007), that has already been demonstrated in non-emotional studies (e.g., Miller, 1982; Schröger and Widmann, 1998). Responsibility for the behavioral improvement has been mainly attributed to various cortical substrates, including the left MTG (e.g., Pourtois et al., 2005b), the posterior STG (pSTG; e.g., Ethofer et al., 2006; Kreifelts et al., 2007), and, interestingly, the amygdala, either bilaterally (e.g., Klasen et al., 2011) or on the left side (e.g., Dolan et al., 2001; Ethofer et al., 2006; Müller et al., 2012). Animal studies have yielded a more detailed multimodal model, with different levels of integration. For instance, a rhinal cortex lesion, as opposed to a direct lesion of the amygdala, is sufficient to disrupt associative mechanisms (Goulet and Murray, 2001). Meanwhile, a comparison of the roles of the perirhinal cortex (PRC) and the pSTS led Taylor et al. (2006) to suggest that the pSTS plays a presemantic integration role, while the PRC integrates higher level conceptual representations.

In summary, studies of the amygdala’s modal specificity have reported impairments in patients with temporal lobectomy or specific amygdalectomy for faces and either voices (Scott et al., 1997; Sprengelmeyer et al., 1999; Brierley et al., 2004) or emotion in music (Gosselin et al., 2007, 2011). However, some patients seem to have a specific deficit for visual emotional stimuli (Adolphs et al., 1994, 2001; Anderson and Phelps, 1998; Adolphs and Tranel, 1999). Discrepancies between studies have been explained by a number of different factors, including the date of epilepsy onset (e.g., McClelland et al., 2006) and the nature and context of the stimuli (e.g., face presentation duration; Graham et al., 2007; Palermo et al., 2010). The fear specificity of amygdala processing has also been strongly called into question (for a discussion, see Cahill et al., 1999; Murray, 2007; Morrison and Salzman, 2010). To the best of our knowledge, however, the role of lesion size has not been taken into account thus far.

Our aim in the present study was to test whether the categorization and intensity perception of unimodal (i.e., either visual or non-verbal auditory) emotional stimuli, as opposed to bimodal (i.e., audiovisual) emotional stimuli, is modified in patients who have undergone unilateral anterior temporal lobectomy with amygdalectomy. The impact of anterior temporal lobe ablation is assumed to differ with modality. Regarding the auditory network, above and beyond the absence of voice area modulations owing to amygdala resection, Schirmer et al. (2012) suggest that the anterior temporal lobe is more involved in semantic processing, representing the final temporal step before the processing shifts to the frontal regions associated with emotion evaluation. We would therefore expect disruption of this input to have an impact on categorization, with patients making more mistakes or confusing more items than matched controls. For the visual modality, we would expect to find the same kind of deficit, stemming from the lack of emotion-related modulation of visual cortical input. Finally, for audiovisual material, we would expect to observe either a better preserved ability for correct detection and perceived intensity, if an intact pSTS and a more dorsal pathway toward the frontal lobe are sufficient to integrate audiovisual information, or no improvement because of the PRC lesion.

Participants rated the intensity of brief onomatopoeic vocalizations produced by actors (Bänziger et al., 2012) and animated synthetic faces (Roesch et al., 2011) on visual analog scales. At the group level, we expected the patients to have a higher error rate than controls when it came to identifying unimodal emotional stimuli. This has been shown to be the case in the visual modality for fearful faces (bilateral lesion: Adolphs et al., 1994, 1995; Young et al., 1995; Calder et al., 1996; Broks et al., 1998; unilateral lesion: Anderson et al., 2000; McClelland et al., 2006), and in the auditory modality for both fearful voices (bilateral lesion: Scott et al., 1997; Adolphs and Tranel, 2004; unilateral lesion: Scott et al., 1997; Brierley et al., 2004; Sprengelmeyer et al., 2010; Dellacherie et al., 2011) and angry voices (bilateral lesion: Scott et al., 1997). For the audiovisual stimuli, we expected to observe a higher error rate for fear identification, arising from the combined effects of the unimodal deficits in each modality. Regarding intensity perception, we expected to observe similar patterns, even after controlling for the extent of the lesion along the ventral pathway. Finally, we investigated the effects of lesion size on emotion recognition. We predicted that perception of emotion intensity would be modulated by the size of the lesion, with more extensive lesions resulting in impairment at different levels of information processing. We developed an additional hypothesis to explain the discrepant findings of previous studies.

Materials and Methods

Participants

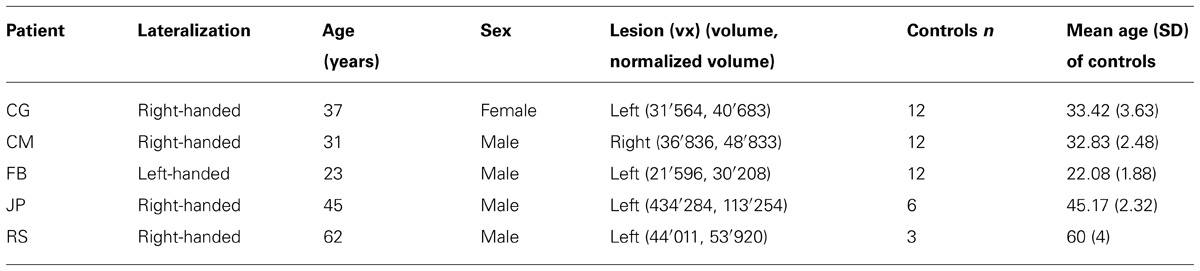

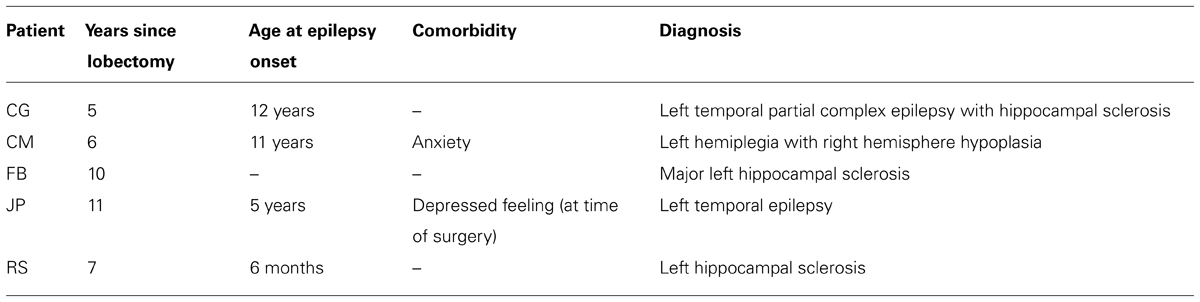

We recruited five patients who had undergone unilateral anteromedial temporal lobectomy together with the unilateral removal of the amygdala. One patient (JP) had a lesion that extended to the occipital and posterior parietal lobes. The surgery had been performed to control the patients’ medically intractable seizures (see Figure 1 for the location and extent of their lesions): four on the left side (FB, 23 years old; CG, 37 years old; JP, 45 years old; and RS, 62 years old) and one on the right (CM, 31 years old). CG was the only woman in the patient group, and FB the only left-handed patient. Controls were recruited via local advertisements: 12 were matched with FB, CM, and CG for sex, handedness, and age; six with JP; and three with RS (see Table 1 for a summary and Table 2 for a detailed description of each patient). Patients did not exhibit any gnosis deficit in their respective neuropsychological tests. The study was approved by the local ethics committee, and all the participants gave their written informed consent. The controls received financial compensation (CHF 15) for taking part in the experiment.

FIGURE 1. (A) Anatomical images of the lesions for each patient: each lesion was delineated manually on the axial plane and corrected using the coronal plane. (B) Probability map for the normalized lesion size.

Lesion Delimitation and Description

In order to compute the lesion size of each patient, anatomical images were segmented and normalized using a unified segmentation approach (Ashburner and Friston, 2005) together with the Clinical toolbox1. For the purpose of cost function masking (Andersen et al., 2010), lesion masks drawn on the patients’ anatomical scans were included in the brain segmentation. Structural images and lesion masks were normalized to MNI space with the DARTEL toolbox, using individual flow fields, which were estimated on the basis of the segmented gray (GM) and white matter (WM) tissue classes. The normalized lesion masks were used to calculate the lesion size for each patient in standard space.

CG had a left anterior temporal lesion with an intact inferior temporal gyrus (ITG) and lateral occipitotemporal gyrus (LOTG). The lesion area included the periamygdaloid cortex (PAM), entorhinal cortex (Ent), medial occipitotemporal gyrus (MOTG), inferior part of the hippocampus (Hi), parahippocampal gyrus (PHG), and amygdala, and ended in the lateral anterior portion of the temporal lobe, in the MTG and TmP.

CM had a right anterior temporal lesion extending to the middle and ventromedial part of the temporal lobe, including the inferior temporal pole (ITmP), ITG, Ent, PAM, PRC, amygdala, inferior Hi, STG, anterior fusiform gyrus (FuG), and rhinal sulcus. In the posterior part of the lesion, the planum polare (PPo), STG and STS were intact.

FB had a left anterior temporal lesion that included the TmP, MTG, MOTG, Ent, Hi, PAM, amygdala, anterior STG, and posterior temporal cortex (PTe). The lesion ended in two separate tails: one in the lateral anterior part of the temporal lobe, the other in the medial part.

JP had an extended left lateral resection including the temporal, frontal, parietal, and occipital lobes. The temporal part included the TmP, MTG, Ent, MOTG, ITG, PTe, anterior STG, and PHG. The frontal part included the lateral inferior and superior frontal gyri, precentral gyrus and postcentral gyrus. Finally, part of the lateral superior posterior occipital gyrus had been removed, but the FFA was intact.

RS had a left anterior temporal lesion encompassing the TmP, STG, MTG, MOTG, ITG, FuG, amygdala, anterior Hi, Ent, PAM, and anterior PHG. It ended in the lateral anterior part. See Figure 1 for visual descriptions of the patients’ brain damage.

Stimuli and Procedure

Non-verbal auditory expressions were drawn from the validated Geneva Multimodal Emotion Portrayal (GEMEP) corpus (Bänziger et al., 2012). We selected angry, joyful, and fearful non-verbal sounds (“ah”) produced by two male and two female actors, on the basis of the recognition rate established in a previous pilot study. For the neutral stimuli, we chose the most neutrally rated vocal expressions produced by the same actors (neutrality rating: M = 26.5, SD = 15.67), and the fundamental frequency was flattened using Praat (Boersma and Weenink, 2011). Sounds were cut and/or stretched to achieve a duration of 1 s (mean duration before time stretch = 0.92 s, SD = 0.30 s) with SoundForge2, and 0.025 s fade-ins and fade-outs were included using Audacity3. The dynamic faces were created with FACSGen (Roesch et al., 2011), which allows for the parametric manipulation of 3D emotional facial expressions according to the Facial Action Coding System (Ekman and Friesen, 1978). They were selected on the basis of results of a previous study in which participants assessed the gender and believability of each avatar (Roesch et al., 2011). The lips were animated to match the intensity contour of each different sound for both unimodal visual and audiovisual items. The action units (AUs) for each emotion began at 0.25 s and ended at 0.75 s after onset, with their apex at 0.5 s (100% intensity). VirtualDub4 was used to generate the image sequences and to combine the voiced sounds with them at a rate of 26 frames per second (the final image was a dark screen).
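
The timing of the facial action units described above can be illustrated with a minimal sketch. The source gives only the onset (0.25 s), apex (0.5 s, 100% intensity), and offset (0.75 s) of the AUs and the reported frame rate; the linear rise and fall, and the names `au_intensity` and `frame_intensities`, are assumptions made purely for illustration and may not match the envelope actually used in FACSGen.

```python
FRAME_RATE = 26  # frames per second, as reported for the image sequences


def au_intensity(t, onset=0.25, apex=0.5, offset=0.75):
    """Return the AU intensity in [0, 1] at time t (seconds after stimulus onset).

    ASSUMPTION: the intensity rises linearly from onset to apex and falls
    linearly from apex to offset; the source does not specify the curve.
    """
    if t <= onset or t >= offset:
        return 0.0
    if t <= apex:
        return (t - onset) / (apex - onset)
    return (offset - t) / (offset - apex)


def frame_intensities(duration=1.0, frame_rate=FRAME_RATE):
    """Sample the assumed envelope once per frame over the stimulus duration."""
    n_frames = round(duration * frame_rate)
    return [au_intensity(i / frame_rate) for i in range(n_frames)]


if __name__ == "__main__":
    for i, value in enumerate(frame_intensities()):
        print(f"frame {i:2d}  t = {i / FRAME_RATE:.3f} s  intensity = {value:.2f}")
```

Under these assumptions, roughly the first and last quarter of each 1 s clip contain no emotional facial movement, which is worth keeping in mind when considering how much visual information was available per item.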

After signing the consent form, participants completed the behavioral inhibition system (BIS)/behavioral approach system (BAS) scales and the state trait anxiety inventory (STAI) on a web interface. They then rated the intensity of 216 items in unimodal [auditory (A), or visual (V)] and audiovisual (AV; congruent: same information in both modalities; incongruent: one modality emotional, the other neutral) conditions. The unimodal and congruent audiovisual stimuli could either express the emotions of anger, fear, or joy, or be neutral (control condition). Each condition (modality, emotion, or congruency) was repeated 12 times. Items were presented using E-Prime (standard v2.08.905) in a pseudorandomized order to avoid repetition of the same stimulus (i.e., synthetic face or actor’s voice) or condition. The participants gave their answers by clicking on a continuous line between Not intense and Very intense for six different emotions (disgust, joy, anger, surprise, fear, sadness), plus neutral. In each trial, they could provide ratings on one or more scales. At the end of the experiment, they completed a debriefing questionnaire.
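
As a check on the design, the total of 216 items can be reproduced by enumerating the condition cells. The sketch below assumes that the incongruent audiovisual condition covered both directions (emotional voice with neutral face, and neutral voice with emotional face) for each of the three emotions; this breakdown is an inference that happens to match the reported total, not a description taken from the source. The names `build_conditions`, `CONDITIONS`, and `TOTAL_ITEMS` are hypothetical.

```python
EMOTIONS = ["anger", "fear", "joy"]   # emotional categories in the design
CATEGORIES = EMOTIONS + ["neutral"]   # neutral serves as the control condition
REPETITIONS = 12                      # each condition was repeated 12 times


def build_conditions():
    """Enumerate design cells as (condition, auditory_content, visual_content)."""
    cells = []
    # Unimodal cells: only one channel carries content.
    for category in CATEGORIES:
        cells.append(("A", category, None))
        cells.append(("V", None, category))
    # Congruent audiovisual cells: same category in both channels.
    for category in CATEGORIES:
        cells.append(("AV-congruent", category, category))
    # Incongruent audiovisual cells: one channel emotional, the other neutral.
    # ASSUMPTION: both directions were presented for every emotion.
    for emotion in EMOTIONS:
        cells.append(("AV-incongruent", emotion, "neutral"))
        cells.append(("AV-incongruent", "neutral", emotion))
    return cells


CONDITIONS = build_conditions()
TOTAL_ITEMS = len(CONDITIONS) * REPETITIONS

if __name__ == "__main__":
    print(len(CONDITIONS), "cells x", REPETITIONS, "repetitions =", TOTAL_ITEMS)
```

With 8 unimodal cells, 4 congruent cells, and 6 incongruent cells, the enumeration gives 18 cells and therefore 18 × 12 = 216 items.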

Statistical Analysis

Since multiple intensity scales were used to collect the answers, our data mostly contained zero ratings. To assess the interactions, we therefore ran a zero-inflated mixed model on congruent trials only, using the glmmADMB package for R6. In this model, the excess zeros were treated as a binomial response (zero vs. non-zero), and the remaining values were fitted with a generalized linear model (GLM) following a negative binomial distribution. Main effects were tested for group (control vs. patient), modality (audio, visual, audiovisual), and emotion (anger, fear, or joy, plus neutral). Contrasts were performed to test specific hypotheses.
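
For readers unfamiliar with zero-inflated models, the general textbook form of a zero-inflated negative binomial distribution is sketched below. The symbols are introduced here for illustration only and do not come from the source: $\pi$ is the probability of an excess zero, $\mu$ the mean of the negative binomial component, and $k$ its dispersion parameter. The exact parameterization fitted by glmmADMB in this study, and the way ratings were mapped onto the model's support, are not specified in the text.

```latex
\begin{align}
P(Y = 0) &= \pi + (1 - \pi)\left(\frac{k}{k + \mu}\right)^{k}, \\
P(Y = y) &= (1 - \pi)\,\frac{\Gamma(y + k)}{\Gamma(k)\, y!}
            \left(\frac{k}{k + \mu}\right)^{k}
            \left(\frac{\mu}{k + \mu}\right)^{y},
            \qquad y = 1, 2, \dots
\end{align}
```

In a mixed model of this kind, $\log \mu$ would typically be a linear combination of the fixed effects (here group, modality, emotion, and the control variables) plus random intercepts for participant and stimulus.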

The first hypothesis we tested was a group effect for a specific emotion on the target scale (e.g., fearful item ratings on the fear scale) for each modality (A, V, AV). Sex, age, and normalized lesion size were added as control variables. Participant and stimulus ID were added as random effects. A different model was run for each of the three emotions, plus neutral. Second, four different models, one for each emotion, plus neutral, were tested in order to compare the impact of the three different modalities in each group. For instance, for angry item ratings on the anger scale, the modalities were tested in pairs (AV-A, AV-V, A-V) for the patient group, and individually for the control group. For this second set of models, we added the same control and random variables as for the first model. The third model was run to investigate the lateralization effect of the lesion for a specific modality and a specific emotion, controlling for handedness, age, and sex, and with random effect variables for participant ID and stimulus ID. Owing to the limited size of our patient sample, this comparison was of a purely descriptive and exploratory nature. In order to test whether the effects we found in the different modalities were perceptual or emotional, we ran a complementary analysis to compare emotional versus neutral items in each modality and each group, adding age, sex, and normalized lesion size as control variables, and participant ID and stimulus ID as random effects. Intergroup effects were also tested for emotional versus neutral items in each modality (A, AV, V), with the same control variables. Finally, we tested the impact of lesion size by including the number of voxels in a separate linear model for each emotion and each modality. In this final set of models, random effect variables (participant ID and stimulus ID) were added.

Results

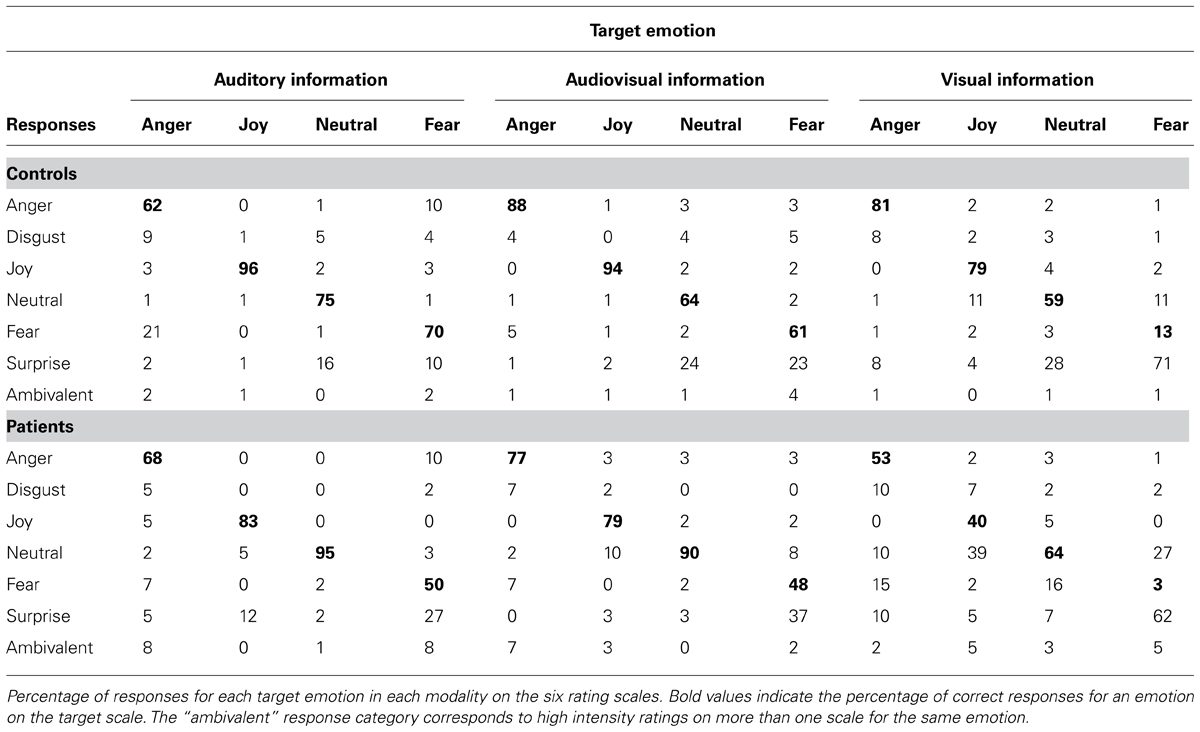

Categorical Responses

Participants could rate the intensity of each item on seven different scales (anger, disgust, fear, surprise, joy, sadness, and neutral). For each item, we identified the scale with the highest rating, and calculated a proportional corrected score for each participant (Heberlein et al., 2004; Dellacherie et al., 2011), by looking at how many other members of the participant’s group (patient or control) had given the same response. This score could range from 0, meaning that nobody else in the group had chosen the same scale, to 1, meaning that everyone in the group had chosen the same scale. This type of correction is used to weight labeling errors, bearing in mind that some errors are closer to the correct response than others. For instance, it is easier to confuse visual fear and surprise (see, for example, Etcoff and Magee, 1992) than it is to confuse fear and anger, as the first two expressions share a number of AUs. For vocal expressions, confusion is also possible, but between different pairs of emotions (see, for example, Banse and Scherer, 1996; Belin et al., 2008; Bänziger et al., 2009).
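
The proportional corrected score described above can be sketched as follows. This is a minimal illustration of the verbal description (the share of other group members who chose the same scale for a given item), not the authors' analysis code; the function names, the participant IDs, and the handling of ties and single-member groups are assumptions.

```python
from typing import Dict


def top_scale(ratings: Dict[str, float]) -> str:
    """Return the scale with the highest rating for one item.

    ASSUMPTION: ties are resolved in favor of the first scale encountered;
    the source does not say how ties were handled.
    """
    return max(ratings, key=ratings.get)


def proportional_scores(choices: Dict[str, str]) -> Dict[str, float]:
    """Compute proportional corrected scores for ONE item within ONE group.

    `choices` maps a participant ID to the scale that participant rated
    highest. Each score is the fraction of the OTHER group members who
    chose the same scale: 0 if nobody else did, 1 if everyone else did.
    """
    scores = {}
    for participant, choice in choices.items():
        others = [c for p, c in choices.items() if p != participant]
        if not others:
            scores[participant] = 0.0  # ASSUMPTION: undefined for a group of one
        else:
            scores[participant] = sum(c == choice for c in others) / len(others)
    return scores


if __name__ == "__main__":
    # Hypothetical responses of a five-member group to one fearful face.
    item_choices = {
        "P1": "surprise",
        "P2": "surprise",
        "P3": "surprise",
        "P4": "fear",
        "P5": "surprise",
    }
    print(proportional_scores(item_choices))
```

In this hypothetical example, a participant who chose surprise scores 0.75 (three of the four others agreed), whereas the lone participant who chose fear scores 0, which illustrates how a frequent confusion is weighted less severely than an idiosyncratic one.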

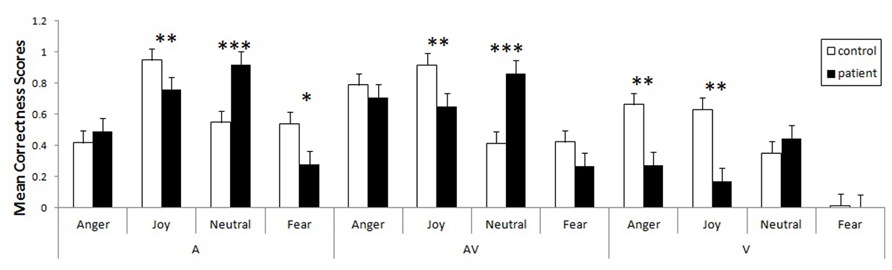

Using these corrected scores, we looked for possible differences between the two groups. As our data violated the assumptions of homoscedasticity and normal distribution, we ran non-parametric tests for multiple groups. In order to pinpoint differences between the groups within a specific emotion in a specific modality, we used the Kruskal–Wallis test, calculating z scores and p values corrected for multiple comparisons of mean ranks (z′). These multiple comparisons are summarized in Figure 2. The control group was more accurate than the patient group in recognizing joy, whether it was expressed vocally (z′ = 3.02, p < 0.005), visually (z′ = 3.17, p < 0.005), or bimodally (z′ = 3.19, p < 0.005). Greater accuracy within the control group was also observed for visual anger (z′ = 2.99, p < 0.005), vocal fear (z′ = 2.78, p < 0.01) and, marginally, visual (z′ = 1.69, p = 0.08) and bimodal fear (z′ = 1.89, p = 0.058). Finally, a reverse group effect was observed for the neutral vocal (z′ = 3.64, p < 0.001) and audiovisual (z′ = 3.64, p < 0.001) stimuli.

FIGURE 2. Mean proportional corrected scores for patients and controls, taking modality and emotion into account (bars represent the standard error of the mean, *p < 0.05, **p < 0.005, ***p < 0.001).

Finally, we tested the impact of lesion size on the corrected hit rate for emotion recognition. We ran supplementary analyses using a GLM to test this effect with the modality (A, AV, V) and emotion (anger, joy, fear, neutral) factors, and added the normalized lesion size as a covariate. The control variables were age, sex, and lateralization. We observed a significant linear relationship between normalized lesion size and corrected hit score for visual anger (z = -2.91, p < 0.005), visual joy (z = -2.37, p < 0.05) and visual neutral stimuli (z = -3.52, p < 0.001). All the regression slopes were negative, meaning that the more extensive the lesion, the lower the corrected score. We observed no such effect for fear in the visual modality, as patients did not recognize this emotion (their corrected score was equal to 0), confusing it with surprise.

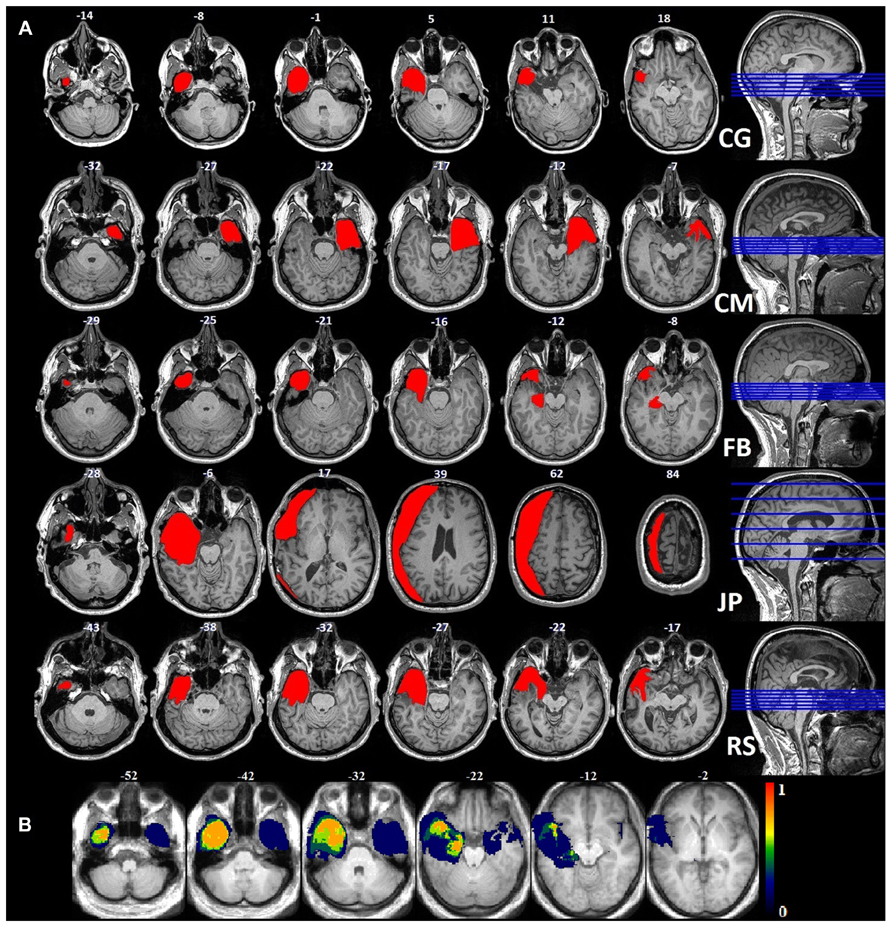

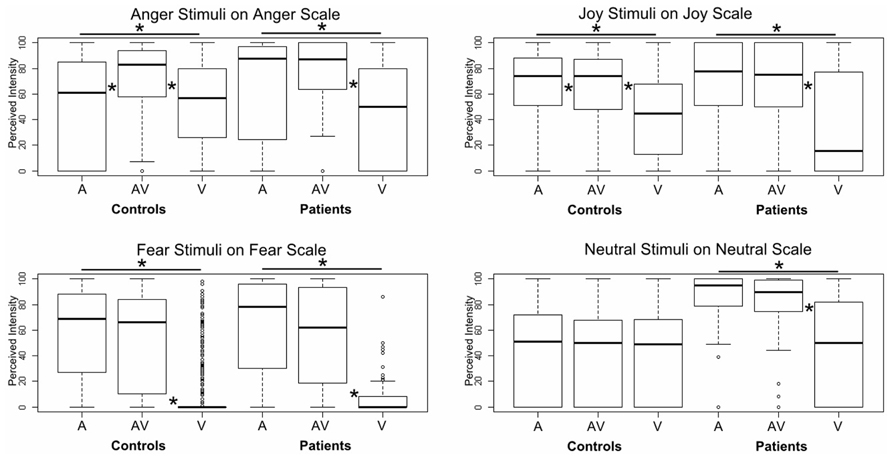

Intensity Perception

Using a GLM, we first compared the two groups on each specific emotion in each specific modality, controlling for sex, age, and normalized lesion size, and adding participant and stimulus ID as random effects. No significant results were observed, even for the fear items. However, when we ran pairwise comparisons of the modalities for a specific emotion on its target scale and for a specific group, we did observe significant effects, particularly for the three emotional categories (see Figure 3). Patients provided higher intensity ratings of audiovisual versus unimodal visual information for angry (z = -4.14, p < 0.001), joyful (z = -6.14, p < 0.001), fearful (z = -8.45, p < 0.001), and neutral (z = -5.61, p < 0.001) items. They also provided higher intensity ratings of auditory versus visual information for the same categories (anger: z = -4.14, p < 0.001; joy: z = -6.14, p < 0.001; fear: z = -8.45, p < 0.001; neutral: z = -5.61, p < 0.001). The differences between audiovisual and unimodal auditory information were not significant for any of the emotions (p > 0.15). In the control group, a slightly different pattern emerged for anger and joy. Anger was given a higher intensity rating in the audiovisual condition than in either the auditory (z = -3.27, p < 0.001) or visual (z = -10.93, p < 0.001) condition, and a higher rating in the auditory condition than in the visual one (z = -6.94, p < 0.001). For joy, audiovisual information was perceived as more intense than visual information (z = -12.69, p < 0.001), but auditory information was given a higher intensity rating than both audiovisual information (z = 3.07, p < 0.005) and visual information (z = -15.58, p < 0.001). Finally, fear stimuli were rated as more intense in the audiovisual modality than in the visual one (z = -11.74, p < 0.001), and also more intense in the auditory modality than in the visual one (z = -12.33, p < 0.001). No significant differences were observed between the modalities for neutral stimuli (p > 0.4).

FIGURE 3. Boxplot of GLM results for intensity ratings of each emotion on the corresponding target scale. Each box corresponds to a specific modality (A: auditory, AV: audiovisual, V: visual) and a specific group (patients vs. controls). The difference between A and AV for the controls is almost invisible, as zero values were included in the plot. *Indicates a significant difference between modalities (pairwise).

In order to ascertain whether the results were perceptual or emotional, we tested another model contrasting emotional versus neutral stimuli for each group and each modality (A, V, AV). Controls rated emotional auditory items as more intense than neutral auditory items (z = 5.61, p < 0.001), and this was also the case for audiovisual information (z = 5.15, p < 0.001). By contrast, the patients provided higher intensity ratings for neutral items than they did for emotional items in the auditory (z = -2.64, p < 0.01) and audiovisual (z = -2.42, p < 0.05) modalities. In the visual modality, patients (z = -3.56, p < 0.001) and controls (z = -8.40, p < 0.001) alike gave higher intensity ratings for neutral items than for emotional ones. When we compared the two groups on emotional and neutral items in each modality, we found that the patients rated the intensity of the neutral items more highly than the controls did in the auditory modality (z = 2.24, p < 0.025). For the audiovisual modality, the effect was only marginal (z = 1.86, p = 0.062).

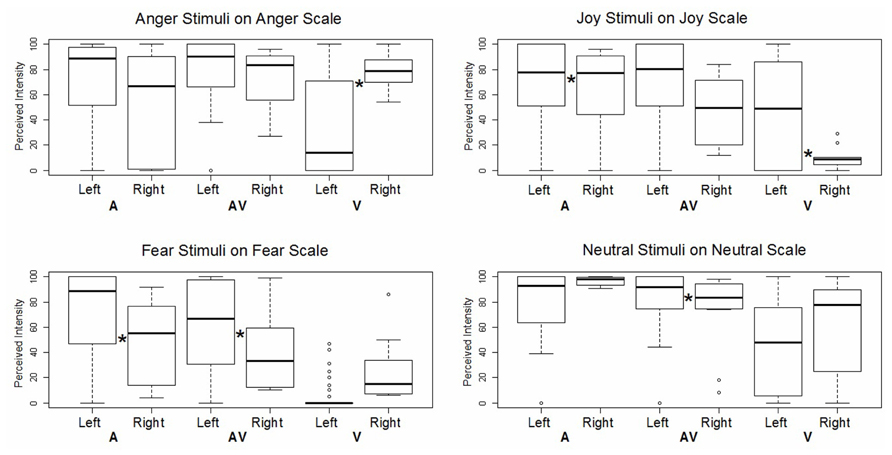

Intensity Perception and Lesion Effect

We then assessed the impact of lesion lateralization for each specific emotion in each specific modality. In this GLM analysis, we compared the patients’ ratings on the target scale according to the side of their lesion, controlling for handedness, age, and sex, and adding participant and stimulus ID as random effects (see Figure 4). The patient with a right lesion was found to provide higher intensity ratings than the patients with left lesions, but only for angry faces (z = -4.36, p < 0.001) and auditory joy (z = -3.23, p < 0.005). All other significant effects concerned the opposite relationship, namely, the patients with left lesions rated the items as more intense than the patient with a right lesion did. This was the case for visual joy (z = 3.19, p < 0.005), auditory fear (z = 8.29, p < 0.001), audiovisual fear (z = 8.23, p < 0.001), and audiovisual neutral items (z = 3.67, p < 0.001).

FIGURE 4. Boxplot of GLM results for intensity ratings of each emotion on the corresponding target scale. Each box corresponds to a specific modality (A: auditory, AV: audiovisual, V: visual) and a specific patient subgroup (left lesion vs. right lesion). *Indicates a significant difference between left and right lesion conditions.
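The lateralization analysis described above (target-scale ratings compared by lesion side, with handedness, age, and sex as covariates and participant and stimulus ID as random effects) can be written schematically as a mixed model. The notation below is an illustrative assumption rather than the authors' exact specification: the link function g, the coding of the predictors, and the sign convention for the lesion-side coefficient are not given in the text.

```latex
% Schematic mixed model for patient i rating stimulus j (assumed notation)
g\!\left(\mathbb{E}\left[y_{ij} \mid u_i, v_j\right]\right)
  = \beta_0
  + \beta_1\,\mathrm{side}_i
  + \beta_2\,\mathrm{hand}_i
  + \beta_3\,\mathrm{age}_i
  + \beta_4\,\mathrm{sex}_i
  + u_i + v_j,
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2), \quad
v_j \sim \mathcal{N}(0, \sigma_v^2)
```

Here y_ij is the intensity rating on the target scale, u_i is the random intercept for participant, and v_j is the random intercept for stimulus. Under this reading, the z statistics reported for each emotion and modality would correspond to tests of the lesion-side coefficient β1.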

When we added the normalized lesion size as a covariate and compared the interactions of perceived intensity and modality for a specific emotion on the target scale, we observed a massive effect in the visual modality across all emotions: the larger the lesion, the less intensely the patients perceived visual anger (z = -2.90, p < 0.005), visual joy (z = -2.79, p < 0.005), and visual fear (z = -2.96, p < 0.005). Neutral visual stimuli, however, failed to reach significance (p > 0.15). This relationship also held for audiovisual joy (z = -2.15, p < 0.05), but no significant effects were observed either for other audiovisual expressions (angry, fearful, or neutral) or for auditory stimuli (p > 0.15).
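As a reading aid for the z statistics reported in this section, the following minimal sketch converts a z value into a two-tailed p-value under a standard normal reference distribution. Both the two-tailed test and the normal reference are assumptions, since the text does not state how the p-values were obtained, so the results are approximate checks rather than a reproduction of the authors' computation. The function name `two_tailed_p` is hypothetical.

```python
import math


def two_tailed_p(z: float) -> float:
    """Two-tailed p-value for a z statistic, assuming a standard normal
    reference distribution (an assumption, not stated in the text)."""
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for the normal CDF Phi.
    return math.erfc(abs(z) / math.sqrt(2.0))


# z values taken from the Results text; labels are for display only.
reported_z = {
    "visual anger vs. lesion size": -2.90,
    "audiovisual joy vs. lesion size": -2.15,
    "audiovisual neutral, patients vs. controls": 1.86,
}

p_values = {label: two_tailed_p(z) for label, z in reported_z.items()}

for label, p in p_values.items():
    print(f"{label}: z = {reported_z[label]:+.2f}, approx. p = {p:.4f}")
```

Under these assumptions, a z of 1.86 gives a p-value of about 0.063, close to the marginal p = 0.062 reported for the audiovisual group comparison. Small discrepancies of this kind are expected from rounding of the z values.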

Discussion

Categorical Responses

Our main goal was to investigate the relationship between emotion and modality, comparing patients who had undergone unilateral anterior temporal lobectomy and amygdalectomy with a matched control group. Overall, proportional corrected scores revealed that patients detected joy less accurately across all modalities, in contrast to previous studies postulating that impairments are restricted to negatively valenced stimuli (e.g., Brierley et al., 2004). In addition, the patients displayed deficits for auditory fear and visual anger. The massive effect we observed for decoding joy has several possible explanations. First, this effect could be associated with the amount of information needed for accurate decoding. For instance, Graham et al. (2007) reported that patients were impaired in categorizing emotional faces when these were only presented for a limited duration. In the auditory domain, timing is also a crucial feature for prosody decoding. In healthy individuals, researchers have shown that there is a positive correlation between the duration of the sound and the correct recognition of the vocal stimulus (Pollack et al., 1960; Cornew et al., 2010; Pell and Kotz, 2011). Furthermore, happy prosody needs a duration of at least 1 s to be decoded accurately (Pell and Kotz, 2011), and our stimuli included 0.25 s fade-ins and fade-outs, thus reducing the amount of available information and its actual duration. The second explanation also concerns a lack of information. In the visual items, the lips were animated to match the intensity contour of each vocal stimulus, even in the unimodal visual condition. As a result, this manipulation may have had an impact on emotion recognition because the information needed to detect a smile was masked by the movement of the lips accompanying the vocalization.
More specifically, the visual cues in the mouth region that are needed to detect joy (AU 12 – lip corner puller) and anger (AUs 10 – upper lip raise, 17 – chin raise, 23 – lip funnel, 24 – lip press) were less visible, and thus less salient. Although we expected fear perception accuracy to be poorer among patients than among controls across all the modalities, we found that it was only diminished for auditory stimuli, indicating that unilateral amygdala damage is not sufficient to impair fear recognition in the visual domain. Numerical differences in the confusion matrix (Table 3) suggest that the lack of an effect for visual information stemmed from the fact that fearful faces and faces expressing surprise were confused by both patients (62%) and controls (71%). This confusion between fear and surprise at the visual level is easily explained by the proximity of the AUs used to produce these emotional expressions. In fact, they differ by only two AUs: one in the brow region (AU 4 – brow lowerer), the other around the mouth (AU 20 – lip stretcher).
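The proximity between fear and surprise described above can be illustrated with a small set comparison. The AU sets below are simplified, hypothetical prototypes chosen to be consistent with the statement that the two expressions differ only by AU 4 and AU 20. The shared AUs (1, 2, 5, 26) are an assumption based on commonly cited FACS prototypes and are not necessarily the exact configurations used to build the FACSGen stimuli.

```python
# Hypothetical, simplified AU prototypes (assumed for illustration only).
FEAR_AUS = {1, 2, 4, 5, 20, 26}
SURPRISE_AUS = {1, 2, 5, 26}

# Names of the two distinguishing AUs, as given in the text.
AU_NAMES = {4: "brow lowerer", 20: "lip stretcher"}


def distinguishing_aus(a: set, b: set) -> list:
    """AUs present in exactly one of the two expressions."""
    return sorted(a ^ b)


def jaccard_overlap(a: set, b: set) -> float:
    """Shared AUs divided by all AUs used by either expression."""
    return len(a & b) / len(a | b)


diff = distinguishing_aus(FEAR_AUS, SURPRISE_AUS)
overlap = jaccard_overlap(FEAR_AUS, SURPRISE_AUS)

print("Distinguishing AUs:", [f"AU {au} ({AU_NAMES[au]})" for au in diff])
print(f"Overlap (Jaccard index): {overlap:.2f}")
```

With these assumed prototypes, four of the six AUs involved are shared, which is one way of quantifying why fearful and surprised faces are easily confused when the brow and mouth cues are weak or masked.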

Interestingly, the patients were more accurate than controls in their detection of neutral expressions in both the auditory and audiovisual modalities. In this experiment, controls may have been biased toward emotional stimuli, in that 75% of items contained emotional information. They were therefore more driven to search for emotional cues in the faces. Assuming that emotion detection plays a functional role, we can surmise that it is less detrimental to identify an object as emotional than to miss information that could indicate a threat. One can also argue that the patients’ emotion detection networks were less activated (expressed behaviorally by emotional blunting) by emotional stimuli, meaning that a neutral item was more likely to be perceived as non-emotional.

Intensity Perception

First, controls and patients alike provided lower intensity ratings for visual emotional items than for auditory or audiovisual ones. More specifically, the control group rated visual angry, joyful, and fearful items as significantly less intense, while the patient group gave significantly lower intensity ratings for all the visual items (both emotional and neutral), when lesion size was taken into account. This less intense perception of visual stimuli could be explained by the differing nature of the auditory (real human voices) and visual (synthetic faces created with FACSGen) items. Nevertheless, the control group exhibited specific patterns of intensity perception for auditory and audiovisual items, depending on the emotion. In the case of anger, audiovisual items were perceived as more intense than unimodal auditory ones. This could be interpreted as an increase in the perceived potential threat, driven by the redundant information in the bimodal condition, as we are hard-wired to attribute particular importance to threat-related signals in order to avoid danger more effectively (Marsh et al., 2005). For joy, we observed the opposite pattern, in that auditory joyful items were rated as more intense than audiovisual items. Finally, there was no difference between the intensity ratings provided for auditory and audiovisual fear items, either in the control group or in the patient group. It seems, therefore, that anterior temporal lobe lesions disrupt the processing not just of fear-related stimuli, but also of other emotions in the visual modality. An additional analysis comparing emotional and neutral items showed that patients produced higher intensity ratings for neutral items than for emotional ones, regardless of modality. This effect across modalities lends further weight to the assumption of emotional blunting among these patients.
When we compared the groups on emotional and neutral items for each modality, we found that differences only showed up in the auditory and audiovisual modalities, with higher ratings for neutral items provided by patients compared with controls. It is not entirely clear whether the lesions alone were responsible for this effect or whether a more general dysfunction of the epileptic brain was to blame, although the correlations between lesion size and emotional judgments suggest that the lesions themselves had an impact, beyond a general epileptic effect.

The present data indicate that the anterior temporal lobe plays a variety of roles, depending on the modality. First, patients exhibited a greater deficit in intensity perception for the visual modality as a linear function of lesion size for all emotional expressions. This result highlights an important role of this region at the end of the ventral visual pathway, regardless of the nature of the emotional information. Second, modality had an impact on the ratings provided by the controls for specific emotions. Anger, for instance, was perceived as more intense in the auditory modality than in the audiovisual or visual ones, while joy was perceived as more intense in the audiovisual modality than in the two unimodal ones. This emotional modality preference has already been noted by Bänziger et al. (2009). Until now, however, it has never been observed in patients. It could be linked to the deficit in the visual pathway mentioned earlier, as no differences were observed between the unimodal auditory condition and the audiovisual one, suggesting that the disruption of the visual processing channel meant that the processing focus had to be switched to the auditory modality. We can therefore hypothesize that our patients’ audiovisual processing was impaired as a consequence of a lack of input from the visual pathway toward the anterior temporal lobe. Crossmodal integration in the PRC, an associative area in the anterior temporal lobe that has been highlighted in both animal (e.g., Goulet and Murray, 2001) and human (e.g., Taylor et al., 2006) studies, may therefore play a major role in audiovisual integration.

Intensity Perception and Lesion Effect

We expected the patient with right amygdala damage to exhibit a greater deficit than those with left damage, given that emotion decoding appears to be right-lateralized (e.g., Adolphs, 2002; Schirmer and Kotz, 2006). Different deficit patterns were observed, however, depending on emotion and modality. The patient with a right temporal lesion displayed a deficit in auditory and audiovisual fear perception, along with a deficit in visual joy perception, while the left-lesion patients rated joyful prosody and angry visual expressions as less intense. These last two emotions can be seen as approach emotions, and behavioral activation system (BAS) scores have been shown to correlate with activity in the left hemisphere (Harmon-Jones and Allen, 1997; Coan and Allen, 2003).

In addition to the lateralization effect, results highlighted a major impact of lesion size, mainly for the recognition and intensity ratings of visual emotional items. This massive visual impairment could be explained by the impact of the resection on part of the visual “what” (ventral) pathway: the absence or disruption of this component of the visual pathway system may have had a greater effect because of the reduced cues for determining expressions in the visual stimuli (i.e., masking by lip movements matched with vocalizations). Based on prior research with animals (Ungerleider and Mishkin, 1982), Catani and Thiebaut de Schotten (2008) showed, using diffusion tensor imaging, that the inferior longitudinal fasciculus, a ventral associative bundle, connects the occipital and temporal lobes (more specifically, the visual areas) to the amygdala. Given that lesion size particularly seemed to affect the visual modality in our study, we can surmise that a compensatory mechanism was at work, whereby the lack of discriminating information in a specific modality triggered a shift toward another modality (see, for example, Bavelier and Hirshorn, 2010).

Limitations

The first caveat regarding our experiment concerns the small number of patients, and the fact that only one patient had undergone a right anterior temporal resection, while another had a larger resection. However, the discrepancy between the number of patients and the number of controls did not impede our statistical analysis, owing to our choice of model and the fact that we tested every model excluding Patients JP or CM to see if we observed any change, which was not the case. The more important point to take into consideration is the difference between the visual and auditory information. The sounds were taken from the GEMEP database, which features real human voices. By contrast, the visual stimuli were non-natural faces (i.e., avatars), and this difference could account for the increased difficulty in labeling the expressions, even though they matched the Ekman coding system (see FACSGen; Roesch et al., 2011).

Conclusion

The results revealed a visual deficit in the perceived intensity of emotional stimuli. This deficit was explained by lesion size, in that the larger the lesion, the lower the intensity ratings for the visual items. This could be caused by disruption to the visual pathway connecting the occipital lobe and the amygdala, but further investigation is needed to test this hypothesis. Furthermore, emotional blunting effects may also have played a part, given that the neutral expressions were given higher intensity ratings by patients than by controls. It would be useful to determine whether the absence of audiovisual enhancement in the patients’ perception can be accounted for solely by the amygdala or whether the absence of the PRC, an area that has already been identified as an integrating area in both animals (Goulet and Murray, 2001) and humans (Taylor et al., 2006), is also an important factor.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank the reviewers for their valuable comments, as well as Elizabeth Wiles-Portier for preparing the manuscript. This research was partly funded by the National Center of Competence in Research (NCCR) Affective Sciences, financed by the Swiss National Science Foundation (no. 51NF40-104897 – Didier Grandjean), and hosted by the University of Geneva.

Footnotes

- ^ http://www.mccauslandcenter.sc.edu/CRNL/clinical-toolbox

- ^ http://www.sonycreativesoftware.com/soundforge

- ^ http://audacity.sourceforge.net/

- ^ http://www.virtualdub.org/

- ^ http://www.pstnet.com/eprime.cfm

- ^ http://www.r-project.org/

References

Adolphs, R. (2002). Neural systems for recognizing emotion. Curr. Opin. Neurobiol. 12, 169–177. doi: 10.1016/S0959-4388(02)00301-X

Adolphs, R. (2008). Fear, faces, and the human amygdala. Curr. Opin. Neurobiol. 18, 166–172. doi: 10.1016/j.conb.2008.06.006

Adolphs, R., and Tranel, D. (1999). Intact recognition of emotional prosody following amygdala damage. Neuropsychologia 37, 1285–1292. doi: 10.1016/S0028-3932(99)00023-8

Adolphs, R., and Tranel, D. (2004). Impaired judgments of sadness but not happiness following bilateral amygdala damage. J. Cogn. Neurosci. 16, 453–462. doi: 10.1162/089892904322926782

Adolphs, R., Tranel, D., and Damasio, H. (2001). Emotion recognition from faces and prosody following temporal lobectomy. Neuropsychology 15, 396–404. doi: 10.1037/0894-4105.15.3.396

Adolphs, R., Tranel, D., Damasio, H., and Damasio, A. (1994). Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372, 669–672. doi: 10.1038/372669a0

Adolphs, R., Tranel, D., Damasio, H., and Damasio, A. R. (1995). Fear and the human amygdala. J. Neurosci. 15, 5879–5891.

Amaral, D. G., Behniea, H., and Kelly, J. L. (2003). Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience 118, 1099–1120. doi: 10.1016/S0306-4522(02)01001-1

Anderson, A. K., and Phelps, E. A. (1998). Intact recognition of vocal expressions of fear following bilateral lesions of the human amygdala. Neuroreport 9, 3607–3613. doi: 10.1093/neucas/6.6.442

Anderson, A. K., Spencer, D. D., Fulbright, R. K., and Phelps, E. A. (2000). Contribution of the anteromedial temporal lobes to the evaluation of facial emotion. Neuropsychology 14, 526–536. doi: 10.1037/0894-4105.14.4.526

Andersen, S. M., Rapcsak, S. Z., and Beeson, P. M. (2010). Cost function masking during normalization of brains with focal lesions: still a necessity? Neuroimage 53, 78–84. doi: 10.1016/j.neuroimage.2010.06.003

Armony, J. L. (2013). Current emotion research in behavioral neuroscience: the role(s) of the amygdala. Emot. Rev. 5, 104–115. doi: 10.1177/1754073912457208

Armony, J. L., and Ledoux, J. E. (1997). How the brain processes emotional information. Ann. N. Y. Acad. Sci. 821, 259–270. doi: 10.1111/j.1749-6632.1997.tb48285.x

Armony, J. L., and Ledoux, J. E. (1999). “How danger is encoded: towards a systems, cellular, and computational understanding of cognitive-emotional interactions,” in The New Cognitive Neuroscience, ed. M. S. Gazzaniga (Cambridge, MA: MIT Press), 1067–1079.

Ashburner, J., and Friston, K. J. (2005). Unified segmentation. Neuroimage 26, 839–851. doi: 10.1016/j.neuroimage.2005.02.018

Banse, R., and Scherer, K. R. (1996). Acoustic profiles in vocal emotion expression. J. Pers. Soc. Psychol. 70, 614–636. doi: 10.1037/0022-3514.70.3.614

Bänziger, T., Grandjean, D., and Scherer, K. R. (2009). Emotion recognition from expressions in face, voice, and body: the Multimodal Emotion Recognition Test (MERT). Emotion 9, 691–704. doi: 10.1037/a0017088

Bänziger, T., Mortillaro, M., and Scherer, K. R. (2012). Introducing the Geneva multimodal expression corpus for experimental research on emotion perception. Emotion 12, 1161–1179. doi: 10.1037/a0025827

Batut, A. C., Gounot, D., Namer, I. J., Hirsch, E., Kehrli, P., and Metz-Lutz, M. N. (2006). Neural responses associated with positive and negative emotion processing in patients with left versus right temporal lobe epilepsy. Epilepsy Behav. 9, 415–423. doi: 10.1016/j.yebeh.2006.07.013

Bavelier, D., and Hirshorn, E. A. (2010). I see where you’re hearing: how cross-modal plasticity may exploit homologous brain structures. Nat. Neurosci. 13, 1309–1311. doi: 10.1038/nn1110-1309

Belin, P., Fillion-Bilodeau, S., and Gosselin, F. (2008). The Montreal Affective Voices: a validated set of non-verbal affect bursts for research on auditory affective processing. Behav. Res. Methods 40, 531–539. doi: 10.3758/BRM.40.2.531

Boersma, P., and Weenink, D. (2011). “Praat: doing phonetics by computer [Computer program]”. 5.2.37. Available at: http://www.praat.org/ (accessed September 2, 2011).

Breiter, H. C., Etcoff, N. L., Whalen, P. J., Kennedy, W. A., Rauch, S. L., Buckner, R. L., et al. (1996). Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17, 875–887. doi: 10.1016/S0896-6273(00)80219-6

Brierley, B., Medford, N., Shaw, P., and David, A. S. (2004). Emotional memory and perception in temporal lobectomy patients with amygdala damage. J. Neurol. Neurosurg. Psychiatry 75, 593–599. doi: 10.1136/jnnp.2002.006403

Broks, P., Young, A. W., Maratos, E. J., Coffey, P. J., Calder, A. J., Isaac, C. L., et al. (1998). Face processing impairments after encephalitis: amygdala damage and recognition of fear. Neuropsychologia 36, 59–70. doi: 10.1016/S0028-3932(97)00105-X

Cahill, L., Weinberger, N. M., Roozendaal, B., and McGaugh, J. L. (1999). Is the amygdala a locus of “conditioned fear?” Some questions and caveats. Neuron 23, 227–228. doi: 10.1016/S0896-6273(00)80774-6

Calder, A. J., and Young, A. W. (2005). Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 6, 641–651. doi: 10.1038/nrn1724

Calder, A. J., Young, A. W., Rowland, D., Perrett, D. I., Hodges, J. R., and Etcoff, N. L. (1996). Facial emotion recognition after bilateral amygdala damage: differentially severe impairment of fear. Cogn. Neuropsychol. 13, 699–745. doi: 10.1080/026432996381890

Catani, M., and Thiebaut de Schotten, M. (2008). A diffusion tensor imaging tractography atlas for virtual in vivo dissections. Cortex 44, 1105–1132. doi: 10.1016/j.cortex.2008.05.004

Coan, J. A., and Allen, J. J. (2003). Varieties of emotional experience during voluntary emotional facial expressions. Ann. N. Y. Acad. Sci. 1000, 375–379. doi: 10.1196/annals.1280.034

Cornew, L., Carver, L., and Love, T. (2010). There’s more to emotion than meets the eye: a processing bias for neutral content in the domain of emotional prosody. Cogn. Emot. 24, 1133–1152. doi: 10.1080/02699930903247492

Cristinzio, C., Sander, D., and Vuilleumier, P. (2007). Recognition of emotional face expressions and amygdala pathology. Epileptologie 24, 130–138.

De Gelder, B., and Vroomen, J. (2000). The perception of emotions by ear and by eye. Cogn. Emot. 14, 289–311. doi: 10.1080/026999300378824

Dellacherie, D., Hasboun, D., Baulac, M., Belin, P., and Samson, S. (2011). Impaired recognition of fear in voices and reduced anxiety after unilateral temporal lobe resection. Neuropsychologia 49, 618–629. doi: 10.1016/j.neuropsychologia.2010.11.008

Dolan, R. J., Morris, J. S., and De Gelder, B. (2001). Crossmodal binding of fear in voice and face. Proc. Natl. Acad. Sci. U.S.A. 98, 10006–10010. doi: 10.1073/pnas.171288598

Ekman, P., and Friesen, W. V. (1978). Facial Action Coding System: A Technique for the Measurement of Facial Movement. Palo Alto, CA: Consulting Psychologists Press.

Etcoff, N. L., and Magee, J. J. (1992). Categorical perception of facial expressions. Cognition 44, 227–240. doi: 10.1016/0010-0277(92)90002-Y

Ethofer, T., Anders, S., Wiethoff, S., Erb, M., Herbert, C., Saur, R., et al. (2006). Effects of prosodic emotional intensity on activation of associative auditory cortex. Neuroreport 17, 249–253. doi: 10.1097/01.wnr.0000199466.32036.5d

Ethofer, T., De Ville, D. V., Scherer, K., and Vuilleumier, P. (2009). Decoding of emotional information in voice-sensitive cortices. Curr. Biol. 19, 1028–1033. doi: 10.1016/j.cub.2009.04.054

Fecteau, S., Belin, P., Joanette, Y., and Armony, J. L. (2007). Amygdala responses to non-linguistic emotional vocalizations. Neuroimage 36, 480–487. doi: 10.1016/j.neuroimage.2007.02.043

Frühholz, S., Ceravolo, L., and Grandjean, D. (2012). Specific brain networks during explicit and implicit decoding of emotional prosody. Cereb. Cortex 22, 1107–1117. doi: 10.1093/cercor/bhr184

Frühholz, S., and Grandjean, D. (2012). Towards a fronto-temporal neural network for the decoding of angry vocal expressions. Neuroimage 62, 1658–1666. doi: 10.1016/j.neuroimage.2012.06.015

Frühholz, S., and Grandjean, D. (2013). Amygdala subregions differentially respond and rapidly adapt to threatening voices. Cortex 49, 1394–1403. doi: 10.1016/j.cortex.2012.08.003

Gosselin, N., Peretz, I., Hasboun, D., Baulac, M., and Samson, S. (2011). Impaired recognition of musical emotions and facial expressions following anteromedial temporal lobe excision. Cortex 47, 1116–1125. doi: 10.1016/j.cortex.2011.05.012

Gosselin, N., Peretz, I., Johnsen, E., and Adolphs, R. (2007). Amygdala damage impairs emotion recognition from music. Neuropsychologia 45, 236–244. doi: 10.1016/j.neuropsychologia.2006.07.012

Goulet, S., and Murray, E. A. (2001). Neural substrates of crossmodal association memory in monkeys: the amygdala versus the anterior rhinal cortex. Behav. Neurosci. 115, 271–284. doi: 10.1037/0735-7044.115.2.271

Graham, R., Devinsky, O., and Labar, K. S. (2007). Quantifying deficits in the perception of fear and anger in morphed facial expressions after bilateral amygdala damage. Neuropsychologia 45, 42–54. doi: 10.1016/j.neuropsychologia.2006.04.021

Grandjean, D., Sander, D., Pourtois, G., Schwartz, S., Seghier, M. L., Scherer, K. R., et al. (2005). The voices of wrath: brain responses to angry prosody in meaningless speech. Nat. Neurosci. 8, 145–146. doi: 10.1038/nn1392

Harmon-Jones, E., and Allen, J. J. (1997). Behavioral activation sensitivity and resting frontal EEG asymmetry: covariation of putative indicators related to risk for mood disorders. J. Abnorm. Psychol. 106, 159–163. doi: 10.1037/0021-843X.106.1.159

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Heberlein, A. S., Adolphs, R., Tranel, D., and Damasio, H. (2004). Cortical regions for judgments of emotions and personality traits from point-light walkers. J. Cogn. Neurosci. 16, 1143–1158. doi: 10.1162/0898929041920423

Kanwisher, N., and Yovel, G. (2006). The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B Biol. Sci. 361, 2109–2128. doi: 10.1098/rstb.2006.1934

Klasen, M., Kenworthy, C. A., Mathiak, K. A., Kircher, T. T., and Mathiak, K. (2011). Supramodal representation of emotions. J. Neurosci. 31, 13635–13643. doi: 10.1523/JNEUROSCI.2833-11.2011

Kreifelts, B., Ethofer, T., Grodd, W., Erb, M., and Wildgruber, D. (2007). Audiovisual integration of emotional signals in voice and face: an event-related fMRI study. Neuroimage 37, 1445–1456. doi: 10.1016/j.neuroimage.2007.06.020

Marsh, A. A., Ambady, N., and Kleck, R. E. (2005). The effects of fear and anger facial expressions on approach- and avoidance-related behaviors. Emotion 5, 119–124. doi: 10.1037/1528-3542.5.1.119

Massaro, D. W., and Egan, P. B. (1996). Perceiving affect from the voice and the face. Psychon. Bull. Rev. 3, 215–221. doi: 10.3758/BF03212421

McClelland, S. III, Garcia, R. E., Peraza, D. M., Shih, T. T., Hirsch, L. J., Hirsch, J., et al. (2006). Facial emotion recognition after curative non-dominant temporal lobectomy in patients with mesial temporal sclerosis. Epilepsia 47, 1337–1342. doi: 10.1111/j.1528-1167.2006.00557.x

Meletti, S., Benuzzi, F., Rubboli, G., Cantalupo, G., Stanzani Maserati, M., Nichelli, P., et al. (2003). Impaired facial emotion recognition in early-onset right mesial temporal lobe epilepsy. Neurology 60, 426–431. doi: 10.1212/WNL.60.3.426

Miller, J. (1982). Divided attention: evidence for coactivation with redundant signals. Cogn. Psychol. 14, 247–279. doi: 10.1016/0010-0285(82)90010-X

Mitchell, R. L. C., Elliott, R., Barry, M., Cruttenden, A., and Woodruff, P. W. R. (2003). The neural response to emotional prosody, as revealed by functional magnetic resonance imaging. Neuropsychologia 41, 1410–1421. doi: 10.1016/S0028-3932(03)00017-4

Morris, J. S., Friston, K. J., Buchel, C., Frith, C. D., Young, A. W., Calder, A. J., et al. (1998). A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121(Pt 1), 47–57. doi: 10.1093/brain/121.1.47

Morris, J. S., Scott, S. K., and Dolan, R. J. (1999). Saying it with feeling: neural responses to emotional vocalizations. Neuropsychologia 37, 1155–1163. doi: 10.1016/S0028-3932(99)00015-9

Morrison, S. E., and Salzman, C. D. (2010). Re-valuing the amygdala. Curr. Opin. Neurobiol. 20, 221–230. doi: 10.1016/j.conb.2010.02.007

Müller, V. I., Cieslik, E. C., Turetsky, B. I., and Eickhoff, S. B. (2012). Crossmodal interactions in audiovisual emotion processing. Neuroimage 60, 553–561. doi: 10.1016/j.neuroimage.2011.12.007

Murray, E. A. (2007). The amygdala, reward and emotion. Trends Cogn. Sci. 11, 489–497. doi: 10.1016/j.tics.2007.08.013

Palermo, R., Schmalzl, L., Mohamed, A., Bleasel, A., and Miller, L. (2010). The effect of unilateral amygdala removals on detecting fear from briefly presented backward-masked faces. J. Clin. Exp. Neuropsychol. 32, 123–131. doi: 10.1080/13803390902821724

Pell, M. D., and Kotz, S. A. (2011). On the time course of vocal emotion recognition. PLoS ONE 6:e27256. doi: 10.1371/journal.pone.0027256

Phan, K. L., Wager, T., Taylor, S. F., and Liberzon, I. (2002). Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. Neuroimage 16, 331–348. doi: 10.1006/nimg.2002.1087

Phelps, E. A., and LeDoux, J. E. (2005). Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron 48, 175–187. doi: 10.1016/j.neuron.2005.09.025

Plichta, M. M., Gerdes, A. B., Alpers, G., Harnisch, W., Brill, S., Wieser, M., et al. (2011). Auditory cortex activation is modulated by emotion: a functional near-infrared spectroscopy (fNIRS) study. Neuroimage 55, 1200–1207. doi: 10.1016/j.neuroimage.2011.01.011

Pollack, I., Rubenstein, H., and Horowitz, A. (1960). Communication of verbal modes of expression. Lang. Speech 3, 121–130. doi: 10.1177/002383096000300301

Pourtois, G., Dan, E. S., Grandjean, D., Sander, D., and Vuilleumier, P. (2005a). Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum. Brain Mapp. 26, 65–79. doi: 10.1002/hbm.20130

Pourtois, G., De Gelder, B., Bol, A., and Crommelinck, M. (2005b). Perception of facial expressions and voices and of their combination in the human brain. Cortex 41, 49–59.

Pourtois, G., Schettino, A., and Vuilleumier, P. (2013). Brain mechanisms for emotional influences on perception and attention: what is magic and what is not. Biol. Psychol. 92, 492–512. doi: 10.1016/j.biopsycho.2012.02.007

Pourtois, G., Spinelli, L., Seeck, M., and Vuilleumier, P. (2010). Modulation of face processing by emotional expression and gaze direction during intracranial recordings in right fusiform cortex. J. Cogn. Neurosci. 22, 2086–2107. doi: 10.1162/jocn.2009.21404

Roesch, E. B., Tamarit, L., Reveret, L., Grandjean, D., Sander, D., and Scherer, K. R. (2011). FACSGen: a tool to synthesize emotional facial expressions through systematic manipulation of facial action units. J. Nonverbal Behav. 35, 1–16. doi: 10.1007/s10919-010-0095-9

Sabatinelli, D., Bradley, M. M., Fitzsimmons, J. R., and Lang, P. J. (2005). Parallel amygdala and inferotemporal activation reflect emotional intensity and fear relevance. Neuroimage 24, 1265–1270. doi: 10.1016/j.neuroimage.2004.12.015

Sander, D., Grafman, J., and Zalla, T. (2003). The human amygdala: an evolved system for relevance detection. Rev. Neurosci. 14, 303–316. doi: 10.1515/REVNEURO.2003.14.4.303

Sander, D., Grandjean, D., Pourtois, G., Schwartz, S., Seghier, M. L., Scherer, K. R., et al. (2005). Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. Neuroimage 28, 848–858. doi: 10.1016/j.neuroimage.2005.06.023

Schirmer, A., Fox, P. M., and Grandjean, D. (2012). On the spatial organization of sound processing in the human temporal lobe: a meta-analysis. Neuroimage 63, 137–147. doi: 10.1016/j.neuroimage.2012.06.025

Schirmer, A., and Kotz, S. A. (2006). Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn. Sci. 10, 24–30. doi: 10.1016/j.tics.2005.11.009

Schröger, E., and Widmann, A. (1998). Speeded responses to audiovisual signal changes result from bimodal integration. Psychophysiology 35, 755–759. doi: 10.1111/1469-8986.3560755

Scott, S. K., Young, A. W., Calder, A. J., Hellawell, D. J., Aggleton, J. P., and Johnson, M. (1997). Impaired auditory recognition of fear and anger following bilateral amygdala lesions. Nature 385, 254–257. doi: 10.1038/385254a0

Sprengelmeyer, R., Atkinson, A. P., Sprengelmeyer, A., Mair-Walther, J., Jacobi, C., Wildemann, B., et al. (2010). Disgust and fear recognition in paraneoplastic limbic encephalitis. Cortex 46, 650–657. doi: 10.1016/j.cortex.2009.04.007

Sprengelmeyer, R., Young, A. W., Schroeder, U., Grossenbacher, P. G., Federlein, J., Buttner, T., et al. (1999). Knowing no fear. Proc. Biol. Sci. 266, 2451–2456. doi: 10.1098/rspb.1999.0945

Taylor, K. I., Moss, H. E., Stamatakis, E. A., and Tyler, L. K. (2006). Binding crossmodal object features in perirhinal cortex. Proc. Natl. Acad. Sci. U.S.A. 103, 8239–8244. doi: 10.1073/pnas.0509704103

Ungerleider, L. G., and Mishkin, M. (1982). “Two cortical visual systems,” in Analysis of Visual Behavior, eds D. J. Ingle, M. Goodale, and R. J. W. Mansfield (Cambridge, MA: MIT Press), 549–586.

Vuilleumier, P. (2005). How brains beware: neural mechanisms of emotional attention. Trends Cogn. Sci. 9, 585–594. doi: 10.1016/j.tics.2005.10.011

Vuilleumier, P., Armony, J. L., Driver, J., and Dolan, R. J. (2001). Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron 30, 829–841. doi: 10.1016/S0896-6273(01)00328-2

Vuilleumier, P., and Pourtois, G. (2007). Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia 45, 174–194. doi: 10.1016/j.neuropsychologia.2006.06.003

Vuilleumier, P., Richardson, M. P., Armony, J. L., Driver, J., and Dolan, R. J. (2004). Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat. Neurosci. 7, 1271–1278. doi: 10.1038/nn1341

Whalen, P. J. (1998). Fear, vigilance, and ambiguity: initial neuroimaging studies of the human amygdala. Curr. Dir. Psychol. Sci. 7, 177–188.

Wiethoff, S., Wildgruber, D., Grodd, W., and Ethofer, T. (2009). Response and habituation of the amygdala during processing of emotional prosody. Neuroreport 20, 1356–1360. doi: 10.1097/WNR.0b013e328330eb83

Wildgruber, D., Ackermann, H., Kreifelts, B., and Ethofer, T. (2006). Cerebral processing of linguistic and emotional prosody: fMRI studies. Prog. Brain Res. 156, 249–268. doi: 10.1016/S0079-6123(06)56013-3

Williams, M. A., Moss, S. A., and Bradshaw, J. L. (2004). A unique look at face processing: the impact of masked faces on the processing of facial features. Cognition 91, 155–172. doi: 10.1016/j.cognition.2003.08.002

Keywords: amygdala, emotion perception, multimodal, prosody, facial expression

Citation: Milesi V, Cekic S, Péron J, Frühholz S, Cristinzio C, Seeck M and Grandjean D (2014) Multimodal emotion perception after anterior temporal lobectomy (ATL). Front. Hum. Neurosci. 8:275. doi: 10.3389/fnhum.2014.00275

Received: 16 June 2013; Accepted: 14 April 2014; Published online: 05 May 2014.

Edited by: Benjamin Kreifelts, University of Tübingen, Germany

Reviewed by: Thomas Ethofer, University of Tübingen, Germany; Martin Klasen, RWTH Aachen University, Germany

Copyright © 2014 Milesi, Cekic, Péron, Frühholz, Cristinzio, Seeck and Grandjean. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Valérie Milesi, Swiss Center for Affective Sciences, University of Geneva, Rue des Battoirs 7, 1205 Geneva, Switzerland. e-mail: valerie.milesi@unige.ch
