Facilitated detection of social cues conveyed by familiar faces

- ¹ Department of Psychological and Brain Sciences, Dartmouth College, Hanover, NH, USA

- ² Department of Experimental, Diagnostic and Specialty Medicine (DIMES), Medical School, University of Bologna, Italy

Recognition of the identity of familiar faces in conditions with poor visibility or over large changes in head angle, lighting, and partial occlusion is far more accurate than recognition of unfamiliar faces in similar conditions. Here we used a visual search paradigm to test whether one class of social cues transmitted by faces, the direction of another's attention as conveyed by gaze direction and head orientation, is perceived more rapidly in personally familiar faces than in unfamiliar faces. We found a strong effect of familiarity on the detection of these social cues, suggesting that these signals are processed markedly faster in familiar faces than in unfamiliar faces. In the light of these new data, we formulate and discuss hypotheses on the organization of the visual system for processing faces.

Introduction

In previous work we have proposed that recognition of familiar faces is based on activation of a distributed network of areas, including areas involved in theory of mind and in emotional responses (Gobbini et al., 2004; Leibenluft et al., 2004; Gobbini and Haxby, 2006, 2007; Gobbini, 2010). In this manuscript we present new data from a series of psychophysical experiments that focus on visual processing of familiar faces.

We are constantly exposed to faces, and face perception is extremely efficient and fast. Even when visual awareness is disrupted by various forms of masking and interocular suppression, faces seem to be detected and processed by the visual system more readily than other categories of stimuli. For example, upright faces break through interocular suppression one-half second faster than do inverted faces, indicating that the upright facial configuration is processed even when the subject is unaware of the image (Jiang et al., 2007; Yang et al., 2007; Zhou et al., 2010). Social cues such as facial expressions, head direction, and eye gaze direction also appear to be processed when the subject is unaware of the face image, as evidenced by faster breakthrough from interocular suppression of faces with fearful expressions, faces presented in full-frontal view, and faces with eye gaze directed at the viewer (Jiang and He, 2006; Yang et al., 2007; Stein et al., 2011; Gobbini et al., 2013a). Neural responses to masked or suppressed faces with fearful expressions have been reported in the amygdala, suggesting a possible subcortical pathway for fast processing of socially relevant stimuli (Morris et al., 1998; Whalen et al., 1998; Williams et al., 2004; for review see Tamietto and de Gelder, 2010; but see also Pessoa and Adolphs, 2010; and Valdés-Sosa et al., 2011).

Measurements of saccadic reaction times have shown that faces can be detected as early as 100 ms after stimulus onset (Crouzet et al., 2010). Some research supports the idea that faces, like colors, shapes, or orientations, might be processed pre-attentively and automatically, in the sense of parallel processing as defined by Treisman and Gelade (1980) (Hershler and Hochstein, 2005; but see also VanRullen, 2006). Interestingly, the first face-specific evoked potential has been consistently reported at around 170 ms post-stimulus (Bentin et al., 1996; Puce et al., 1999; Eimer and Holmes, 2002), raising the question of which aspects of a face are processed, and at what level, at shorter latencies (before the N170) to enable rapid face detection.

According to our functional model of face perception (Haxby et al., 2000, 2002), the structural aspects of a face that afford recognition of identity are encoded by a pathway distinct from the one involved in perception of facial movements and, more generally, biological motion (Allison et al., 2000; O'Toole et al., 2002; Winston et al., 2004; Gobbini et al., 2007, 2011; Pitcher et al., 2012). Whereas the ventral temporal pathway, in particular the fusiform gyrus, seems to be involved in recognition of the invariant aspects of a face, the posterior superior temporal sulcus (pSTS) seems to be involved in perception of its changeable aspects. The STS also seems to be involved in detecting other people's direction of attention. Neurons in the anterior temporal cortex of the monkey are tuned to cues that signal the direction of another's attention, such as head orientation, eye gaze, and body movements (Perrett et al., 1985). In humans, fMRI studies have shown that specific regions, such as the posterior and anterior superior temporal sulcus, the fusiform gyrus, and the medial prefrontal cortex, are preferentially engaged by eye gaze and head turns, suggesting that dedicated neural populations are involved in processing these socially relevant cues (Hoffman and Haxby, 2000; Pageler et al., 2003; Pelphrey et al., 2003; Engell and Haxby, 2007; Schweinberger et al., 2007; Carlin et al., 2012; and for a review Senju and Johnson, 2009).

We have shown that personally familiar faces are detected more efficiently than faces of strangers in conditions in which attentional resources are reduced and in which faces are rendered subjectively invisible (Gobbini et al., 2013b). Other studies using visual search have reported faster detection of familiar faces (Tong and Nakayama, 1999; see also Deuve et al., 2009) and have shown that detecting a specific identity involves a serial search with no pop-out. In Tong and Nakayama (1999), one's own face or a familiar face was detected faster than unfamiliar faces, whereas the effect of familiarity on search rate (the increase in search time with each added item) was smaller: it was not significant in one experiment and was less than half of the effect on detection time in a second experiment.

With the present experiment we tested whether social cues, which are thought to be processed by a pathway distinct from that for identity, are detected more efficiently when conveyed by familiar faces. We predicted that the familiarity of a face affects not only the visual representation of the invariant aspects used for identification, but also the perception of subtle changes that can signal an internal state, such as direction of attention. Extensive experience with a familiar face might result in efficient processing that is independent of attentional capture. We used a visual search paradigm in which the task was to detect a target with a specified direction of attention (toward or away from the viewer), as conveyed by the gaze direction or head angle of personally familiar or unfamiliar faces. Importantly, all distractors on target present trials were unfamiliar faces, so that faster processing of the target social cue in a familiar face would not be confounded with attentional capture by the familiar face, an effect that would bias search toward checking the familiar face containing the target feature earlier than the distractor faces (such a confound muddied the interpretation of results in Buttle and Raymond, 2003). If distractors are familiar faces, a shallower slope for the effect of set size on reaction time (the response time vs. set size function, RSF) could be due to faster processing of the familiar distractor faces rather than to attentional biasing of a serial search, as was the case in Persike et al. (2013). Thus, in our paradigm, an effect of the familiarity of the target face on the RSF would indicate attentional capture unconfounded by faster processing of distractors. Conversely, an effect of familiarity on target detection without an effect on the RSF would indicate faster processing of the social cue in familiar faces, independent of attentional capture.
Results showed no effect of target face familiarity on the RSF, indicating that the main effect of familiarity on reaction time, which was constant across set sizes, was due to faster processing of the target stimulus alone, not to altered processing of distractors or to an attention-driven bias to process familiar target stimuli earlier in the visual search.

Thus, our results confirm our prediction. Two facial cues to another's direction of attention, gaze direction and head angle, are detected much faster if the faces are personally familiar, corroborating our previous findings on facilitated detection of personally familiar faces under conditions of lack of awareness and reduced attentional resources (Gobbini et al., 2013b). These results suggest that the learned representation of a familiar face includes not only invariant features for identifying the individual but also changeable features for social communication.

Methods

Participants

Two sets of four friends (three females, five males) participated in the experiment. As a criterion for familiarity, we chose friends who had interacted extensively with each other for more than a year before the experiment. They were recruited from the Dartmouth College community. Their pictures were taken with different head and gaze orientations to be used as stimuli in the experiment. To ensure that all stimuli were equal in image quality, we took the pictures in a photo studio with identical lighting, camera placement, and camera settings. Subjects were reimbursed for their participation; all gave written informed consent for the use of their pictures and for participation in the experiment. The experiment was approved by the local Institutional Review Board (IRB).

Stimuli

For each subject we created three sets of images: familiar target faces (three identities), unfamiliar target faces (three identities), and unfamiliar distractor faces (five identities). The three unfamiliar target identities were pseudo-randomly sampled from a set of eight identities (four females), and the remaining five identities were used as distractors; images of distractor identities were never used as targets. The pictures of the eight unfamiliar individuals had been taken previously at the University of Vermont with the same lighting, camera placement, and settings used for the friends.

Images were cropped, resized to 150 × 150 pixels, and converted to grayscale using ImageMagick (version 6.8.7-7 Q16, x86_64, 2013-11-27) on Mac OS X 10.9.2. The mean pixel intensity of each image (on a 0–255 scale) was then set to 128 and its standard deviation to 40 using the SHINE toolbox (function lumMatch) (Willenbockel et al., 2010) in MATLAB (version 8.1.0.604, R2013a).
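The luminance normalization amounts to a linear rescaling of pixel values to a target mean and standard deviation. The sketch below is a simplified Python analogue, not the SHINE lumMatch implementation: it assumes a flat list of grayscale values and uses the population standard deviation, and the helper names are hypothetical.

```python
import statistics


def match_luminance(pixels, target_mean=128.0, target_sd=40.0):
    """Linearly rescale grayscale values to a target mean and SD.

    Hypothetical stand-in for SHINE's lumMatch: uses the population SD
    and operates on a flat list of pixel values.
    """
    mean = statistics.fmean(pixels)
    sd = statistics.pstdev(pixels)
    if sd == 0:
        # A uniform image has no contrast to rescale; return the target mean.
        return [float(target_mean)] * len(pixels)
    gain = target_sd / sd
    return [target_mean + (p - mean) * gain for p in pixels]


def to_uint8(pixels):
    """Round and clip to 0-255 for storage; this can shift the mean/SD slightly."""
    return [min(255, max(0, round(p))) for p in pixels]


image = [10, 50, 90, 130, 170, 210, 250, 30, 70]  # toy 3 x 3 image, flattened
matched = match_luminance(image)
print(round(statistics.fmean(matched), 6), round(statistics.pstdev(matched), 6))
```

Because rounding and clipping happen after rescaling, stored 8-bit images may deviate slightly from the exact target values.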

Experimental Setup

The experiment was run on an Apple Mac Pro (MacPro1,1) with a 23″ Apple Cinema HD display set to a resolution of 1280 × 800 pixels and a 60 Hz refresh rate, using Psychtoolbox (version 3.0.8) (Brainard, 1997; Pelli, 1997; Kleiner et al., 2007) in MATLAB (version 7.8.0.347, R2009a).

Before the actual experiment, subjects practiced the task with a set of unrelated images. They sat approximately 80 cm from the screen (eyes to screen) in a dimly lit room. The experiment consisted of four tasks (described in detail below), each presented in a separate block. At the beginning of each block, a visual cue indicated the current task. After two blocks, the script prompted subjects to take a break and to tell the experimenter that they had completed the first part of the experiment. After this break, the experimenter started the script for the second part, and subjects completed the last two blocks. The order of the tasks was randomized.

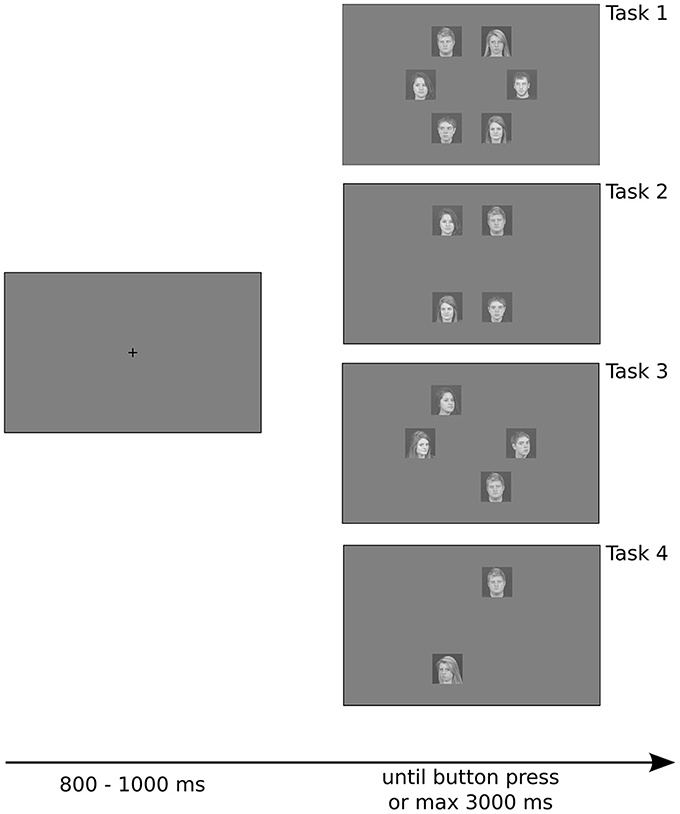

Stimuli were presented on a gray background (pixel intensity 128 for all pixels), with their centers approximately 6.89° from the fixation point. Each stimulus subtended approximately 4.08° × 4.08° of visual angle. Intertrial intervals were randomly jittered between 800 and 1000 ms, during which subjects were required to maintain fixation on a black cross at the center of the screen. Stimulus presentation ended with the subject's response, or after 3000 ms if no response was made. Subjects were not required to maintain fixation during stimulus presentation (Figure 1).
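The reported visual angles follow from on-screen size and viewing distance. A minimal Python sketch, assuming a physical screen width of about 49.5 cm spread across the 1280-pixel width (our assumption for a 23-inch 16:10 panel; the pixel pitch is not reported) and the approximate 80 cm viewing distance; the helper names are hypothetical.

```python
import math

# ASSUMPTION: ~49.5 cm visible width over 1280 px; not stated in the Methods.
CM_PER_PX = 49.5 / 1280
VIEWING_DISTANCE_CM = 80.0  # approximate eye-to-screen distance from the Methods


def size_deg(extent_px, cm_per_px=CM_PER_PX, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle subtended by an extent centered on the line of sight."""
    extent_cm = extent_px * cm_per_px
    return math.degrees(2 * math.atan(extent_cm / (2 * distance_cm)))


def eccentricity_deg(offset_px, cm_per_px=CM_PER_PX, distance_cm=VIEWING_DISTANCE_CM):
    """Angle between fixation and a point offset_px away on a flat screen."""
    return math.degrees(math.atan(offset_px * cm_per_px / distance_cm))


print(round(eccentricity_deg(250), 2))  # distance of stimulus centers from fixation
print(round(size_deg(150), 2))          # width of a 150-px face image
```

Under these assumptions the 250-px placement radius comes out at about 6.9°, close to the reported 6.89°, while a 150-px image comes out at about 4.15°, slightly larger than the reported 4.08°; the assumed pitch and distance are therefore only approximations.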

Figure 1. Examples of trials with different set sizes used in the experiment. Stimulus positions were spaced 60° apart on a circle, so that stimuli were equidistant from the fixation point and lay on the vertices of a regular hexagon. Note that for set sizes of two and four there are three possible shapes that the stimuli can form (the shape depicted here and its rotations by 60 and 120°), which were randomly chosen from trial to trial. See details in the text.

Tasks

Subjects were required to detect a target among a variable number of distractors (set sizes of 2, 4, or 6 stimuli) and pressed the left arrow key (YES) if they found the target, or the right arrow key (NO) if the target was absent. They heard a beep if they responded incorrectly or took too long to respond (maximum allowed time of 3 s).

The experiment had four tasks. The first two tasks investigated detection of a target with gaze orientation that differed from distractors, controlling for head orientation—all stimuli depicted faces in frontal view. In Task 1 subjects detected a face with gaze directed to the observer among faces with averted gaze. In Task 2 they detected a face with averted gaze among faces with gaze directed to the observer. The other two tasks investigated detection of a target with head orientation that differed from distractors, controlling for gaze orientation—all stimuli depicted faces with gaze directed to the observer. In Task 3 subjects detected a face in full view among faces in profile view (head turned approximately 40°). In Task 4 subjects detected a face in profile view among faces in full view. The order of the tasks was randomized for each participant.

We manipulated the set size (total number of stimuli on the screen: 2, 4, or 6), the familiarity of the target, and the presence of the target. For all set sizes, the stimuli were placed on vertices of a regular hexagon inscribed in a circle with a radius of 250 px (6.89° of visual angle) centered on the fixation point. Thus, all stimuli were equidistant from the fixation point, and the first saccade covered the same distance regardless of condition. We constrained the positions of the stimuli so that the shape they formed was always symmetrical with respect to the fixation point (see Figure 1). Thus, the number of possible shapes was 3, 3, and 1 for set sizes of 2, 4, and 6, respectively (for set sizes of 2 and 4, the other possible shapes are rotations by 60 and 120° of the shapes in Figure 1).
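The count of admissible layouts can be checked by enumeration. The Python sketch below assumes that the hexagon's first vertex lies at 0° (directly right of fixation), which the text does not state, and reads "symmetrical with respect to the fixation point" as point symmetry: every occupied vertex must have its opposite vertex occupied. Under that reading it reproduces the 3, 3, and 1 layouts for set sizes 2, 4, and 6. The function names are hypothetical.

```python
import itertools
import math

RADIUS_PX = 250  # radius of the placement circle, from the Methods
# ASSUMPTION: vertex k sits at 60 * k degrees, so vertex 0 is directly right of fixation.
ANGLES_DEG = [60 * k for k in range(6)]


def symmetric_layouts(set_size):
    """Vertex subsets that are point-symmetric about the fixation point."""
    layouts = []
    for subset in itertools.combinations(range(6), set_size):
        chosen = set(subset)
        # Each occupied vertex must have its opposite vertex (k + 3) occupied too.
        if all((k + 3) % 6 in chosen for k in chosen):
            layouts.append(subset)
    return layouts


def vertex_xy(k, radius=RADIUS_PX):
    """Offset (x rightward, y upward, in px) of vertex k from fixation."""
    angle = math.radians(ANGLES_DEG[k])
    return (radius * math.cos(angle), radius * math.sin(angle))


for n in (2, 4, 6):
    print(n, symmetric_layouts(n))
```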

Because time constraints did not allow us to fully cross target position with the possible shapes, we instead balanced the occurrence of the target in the left and right hemifields to avoid any lateral bias. The shape and the target position were randomly determined on each trial, with the constraint that the target was on the left side in 50% of the trials.
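One way to enforce the 50% left-side constraint is to fix the list of target sides in advance, shuffle it, and then draw a layout and a target vertex on the required side for each trial. This is a hypothetical Python sketch, not the experiment's MATLAB script; it assumes hexagon vertices at 0°, 60°, ..., 300°, so that no vertex lies on the vertical midline and every point-symmetric layout has positions on both sides.

```python
import math
import random


def vertex_is_left(k):
    """True if hexagon vertex k (ASSUMED at 60 * k degrees) lies left of fixation."""
    return math.cos(math.radians(60 * k)) < 0


def assign_target_positions(layouts, n_trials, rng):
    """Pick a layout and a target vertex per trial, with exactly half on the left."""
    if n_trials % 2:
        raise ValueError("n_trials must be even for an exact left/right split")
    sides = ["left"] * (n_trials // 2) + ["right"] * (n_trials // 2)
    rng.shuffle(sides)
    trials = []
    for side in sides:
        layout = rng.choice(layouts)
        # Point-symmetric layouts always contain vertices on both sides.
        candidates = [k for k in layout if vertex_is_left(k) == (side == "left")]
        trials.append((layout, rng.choice(candidates)))
    return trials


# The three point-symmetric four-item layouts (vertex indices 0-5).
LAYOUTS_4 = [(0, 1, 3, 4), (0, 2, 3, 5), (1, 2, 4, 5)]
trials = assign_target_positions(LAYOUTS_4, 24, random.Random(7))
print(sum(vertex_is_left(k) for _, k in trials), "of", len(trials), "targets on the left")
```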

The target could be either a familiar face or a stranger's face. Likewise, on each target absent trial, one distractor image showed a target identity (familiar or stranger) with the same gaze and head orientation as the other distractors. Half of the target absent trials had a familiar target identity as a distractor, and half had a stranger target identity. Thus, the presence of a target identity was not informative about the presence of the target gaze or head orientation.

We also controlled for rightward and leftward orientation of gaze and head angle of targets in Tasks 2 and 4, in which the target had either averted gaze or averted head angle. The orientation of the targets was balanced to the left and right. In Tasks 1 and 3 the orientation of the distractors was similarly balanced. For each trial, all distractors were oriented to one side. Half of the trials had all distractors oriented to the left, and the other half had all distractors oriented to the right.

For each task we presented each target identity twice for each combination of set size, target presence, and orientation (rightward or leftward), yielding 144 trials per task (6 target identities × 2 repetitions × 3 set sizes × 2 target presence conditions × 2 orientations = 144). Trial order was randomized.
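The trial count is simply the product of the crossed factors. A hypothetical Python sketch that builds one task's trial list from the factor levels described above (the dictionary keys and identity labels are illustrative, not taken from the experiment code):

```python
import itertools
import random
from collections import Counter

# Factor levels from the Methods; the labels themselves are hypothetical.
IDENTITIES = [("familiar", i) for i in range(3)] + [("stranger", i) for i in range(3)]
REPETITIONS = range(2)
SET_SIZES = (2, 4, 6)
TARGET_PRESENT = (True, False)
ORIENTATIONS = ("left", "right")


def build_trial_list(rng):
    """Fully crossed trial list for one task, in random order."""
    trials = [
        {"identity": ident, "repetition": rep, "set_size": n,
         "target_present": present, "orientation": side}
        for ident, rep, n, present, side in itertools.product(
            IDENTITIES, REPETITIONS, SET_SIZES, TARGET_PRESENT, ORIENTATIONS)
    ]
    rng.shuffle(trials)
    return trials


trials = build_trial_list(random.Random(0))
print(len(trials))
print(Counter(t["set_size"] for t in trials))
```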

Results

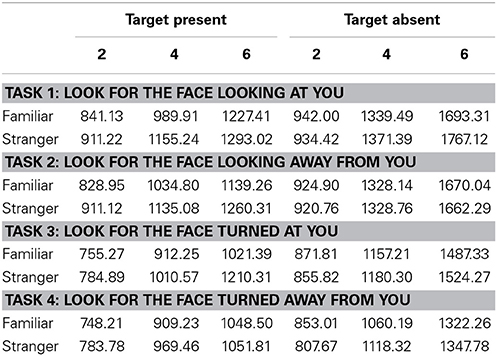

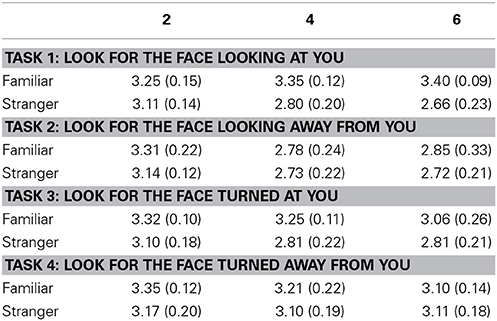

We analyzed reaction times for target present and target absent trials separately. Table 1 shows mean reaction times (RTs) in ms, and Table 2 shows mean d' values and standard errors (SE) for each task and condition.

Data were analyzed in R (version 3.0.2, R Core Team, 2013) with linear mixed-effects models on RTs and d' values, as implemented in the package lme4 (version 1.0-6, Bates et al., 2014). Models were fitted by maximum-likelihood estimation. To find the best-fitting model, candidate models were compared using the Akaike Information Criterion (AIC) and likelihood ratio tests (Baayen et al., 2008). Once the best model was found, its fixed effects (interactions and main effects) were also evaluated with likelihood ratio tests (Baayen et al., 2008).
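The model-comparison arithmetic can be sketched independently of the fitting software. The models themselves were fitted with lme4 in R; this Python sketch (hypothetical helper names, standard library only) only illustrates how AIC and a likelihood ratio test are computed from the log-likelihoods of two nested maximum-likelihood fits, with a closed-form chi-square tail probability for integer degrees of freedom.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike Information Criterion, 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_likelihood


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square statistic with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: tail for df = 1 plus correction terms for df = 3, 5, ...
    root = math.sqrt(x)
    total = math.erfc(root / math.sqrt(2))
    density = math.exp(-x / 2) / math.sqrt(2 * math.pi)  # N(0, 1) density at sqrt(x)
    term = root
    for i in range(1, (df - 1) // 2 + 1):
        total += 2 * density * term
        term *= x / (2 * i + 1)
    return total


def likelihood_ratio_test(loglik_reduced, loglik_full, df):
    """Compare nested ML fits; df = number of parameters dropped from the full model."""
    statistic = 2 * (loglik_full - loglik_reduced)
    return statistic, chi2_sf(statistic, df)


# Illustrative log-likelihoods (hypothetical): the reduced model drops one parameter.
stat, p = likelihood_ratio_test(loglik_reduced=-100.00, loglik_full=-99.36, df=1)
print(round(stat, 2), round(p, 3))
```

As a check, these functions give p ≈ 0.26 for χ²(1) = 1.28 and p ≈ 0.050 for χ²(3) = 7.83, in line with the values reported below. Likelihood ratio tests on fixed effects require maximum-likelihood rather than REML fits, consistent with the fitting procedure described above.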

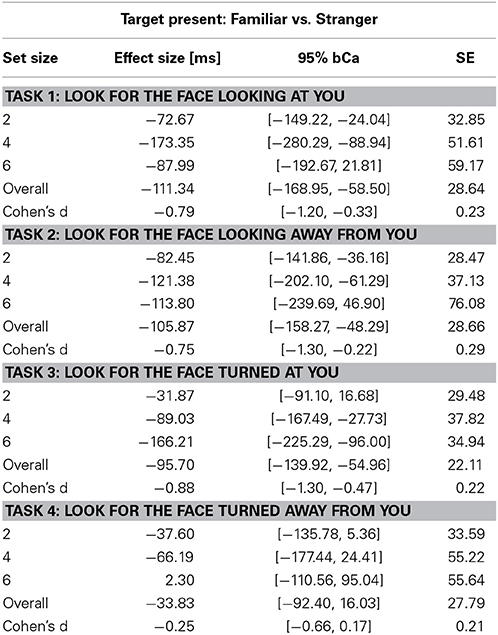

The reliability of parameter estimates for main fixed effects and contrasts was evaluated through parametric bootstrapping (10,000 replicates) by computing 95% basic bootstrap confidence intervals (bCI). Effect sizes for familiarity and their 95% bias-corrected and accelerated (bCa) confidence intervals (10,000 repetitions), shown in Tables 3 and 4, were computed using the package bootES (version 1.01, Kirby and Gerlanc, 2013).
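The basic bootstrap interval reflects the bootstrap quantiles around the point estimate: [2θ̂ − q(1−α/2), 2θ̂ − q(α/2)]. The analyses here used parametric bootstrapping of the fitted mixed models; the Python sketch below swaps in a simpler nonparametric resampling of two groups to illustrate only the interval formula. The helper name and the RT values are hypothetical.

```python
import random
import statistics


def basic_bootstrap_ci(sample_a, sample_b, n_boot=10_000, alpha=0.05, seed=0):
    """Basic bootstrap CI for mean(sample_a) - mean(sample_b).

    Nonparametric: each group is resampled with replacement. The basic
    interval reflects the bootstrap quantiles around the point estimate.
    """
    rng = random.Random(seed)
    estimate = statistics.fmean(sample_a) - statistics.fmean(sample_b)
    replicates = sorted(
        statistics.fmean(rng.choices(sample_a, k=len(sample_a)))
        - statistics.fmean(rng.choices(sample_b, k=len(sample_b)))
        for _ in range(n_boot)
    )
    # Simple order-statistic quantiles (no interpolation).
    q_low = replicates[int(alpha / 2 * n_boot)]
    q_high = replicates[int((1 - alpha / 2) * n_boot) - 1]
    return estimate, (2 * estimate - q_high, 2 * estimate - q_low)


# Hypothetical RTs (ms) for one subject: familiar vs. unfamiliar targets.
familiar = [600, 620, 650, 580, 610, 640, 630, 590]
unfamiliar = [700, 720, 690, 740, 710, 730, 705, 695]
estimate, (low, high) = basic_bootstrap_ci(familiar, unfamiliar)
print(estimate, round(low, 1), round(high, 1))
```

The bCa intervals reported for effect sizes add bias and acceleration corrections to the percentile method and are not reproduced by this sketch.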

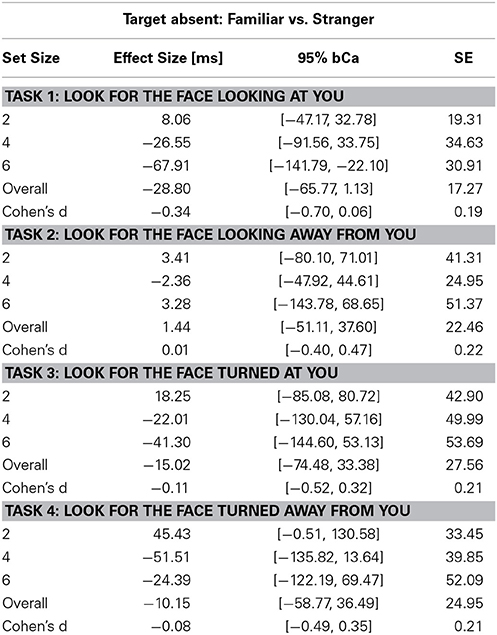

Table 3. Unstandardized effect size [ms] of familiarity for the Target Present condition and Cohen's d effect size of familiarity across set sizes in the four tasks (bCa bias-corrected and accelerated confidence intervals, computed with 10,000 repetitions).

Table 4. Unstandardized effect size [ms] of familiarity for the Target Absent conditions and Cohen's d effect size of familiarity across set sizes in the four tasks (bCa bias-corrected and accelerated confidence intervals, computed with 10,000 repetitions).

Target Present

We first created a general model with main effects of task, set size, and target familiarity and the interaction between set size and familiarity; subjects and target items were entered as random effects with random intercepts and random slopes for familiarity. We then removed the random slopes for familiarity (one at a time) to test whether a more parsimonious model could be found. Removing the random slopes for both random effects decreased the AIC, and the χ² likelihood ratio tests were not significant.

The RSFs for familiar and unfamiliar targets did not differ significantly, as indicated by a non-significant interaction between familiarity and set size (χ²(1) = 1.28, p = 0.26). We therefore further simplified the model by removing this interaction. This yielded the best model in terms of AIC, with task, set size, and familiarity as main fixed effects and subjects and target items as random effects with random intercepts.

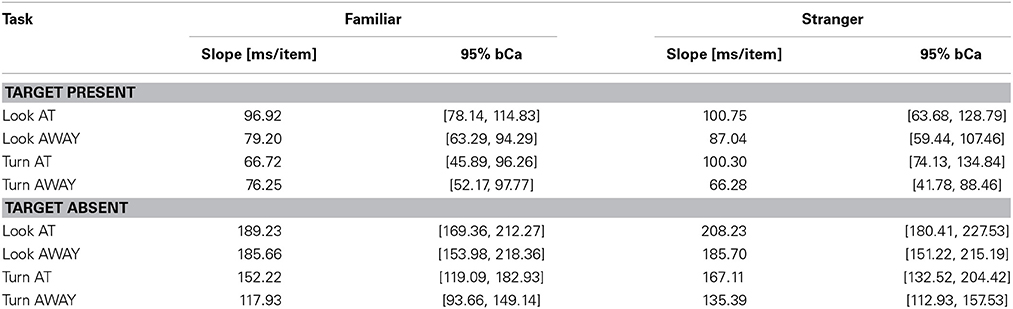

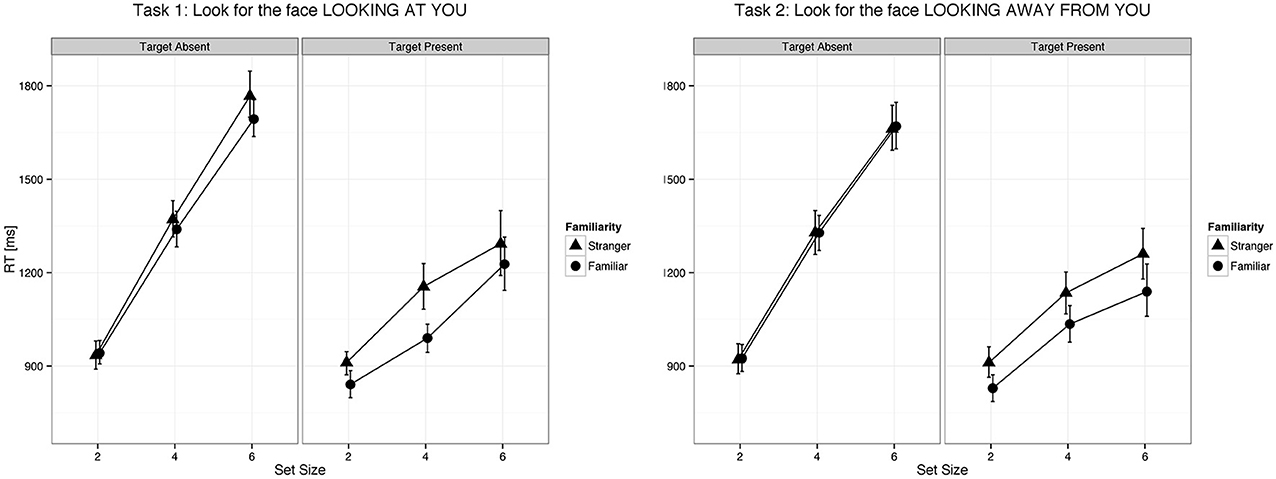

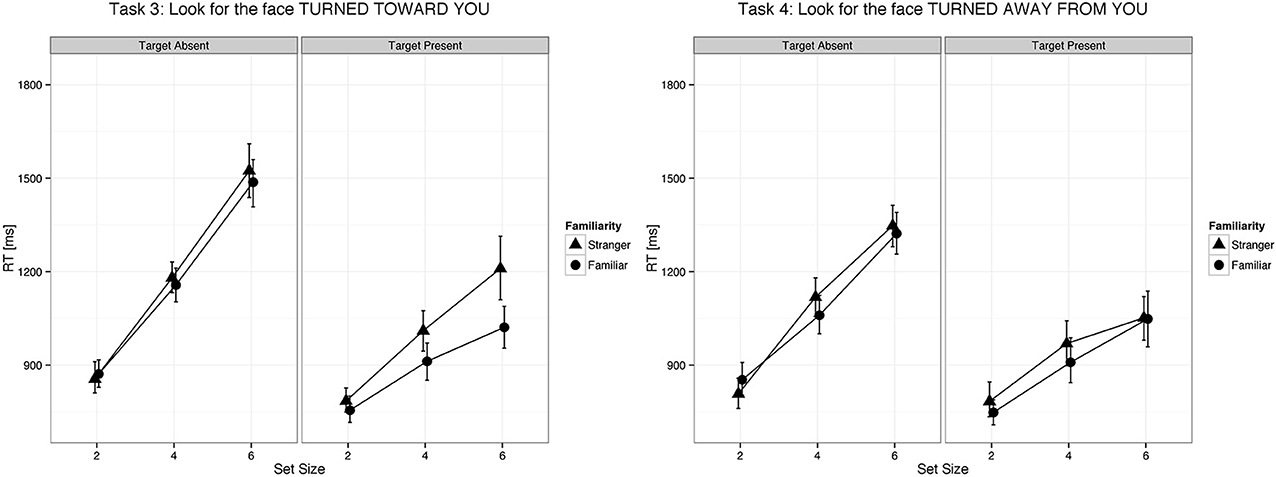

We found main effects of familiarity (χ²(1) = 21.07, p < 0.0001, parameter estimate = −83.8 ms, 95% bCI: [−115.7, −52.1]), set size (χ²(1) = 385.35, p < 0.0001, parameter estimate = 168.6 ms, bCI: [152.4, 185.1]), and task (χ²(3) = 73.94, p < 0.0001). The strong effect of set size on target present trials in all tasks indicates that visual search for gaze direction and head angle is serial, with no evidence of parallel search or pop-out. The mean RSF slope on target present trials was 91 ms/item for gaze direction and 77 ms/item for head angle. The mean difference in detection time between familiar and unfamiliar faces was 109 ms for gaze direction and 65 ms for head angle. The gaze tasks differed significantly from the head tasks (Gaze vs. Head, parameter estimate = 62.97 ms, bCI: [49.2, 76.7]), but there was no difference between Task 1 and Task 2 (parameter estimate = 9.18 ms, bCI: [−11.5, 30.1]) or between Task 3 and Task 4 (parameter estimate = 15.57 ms, bCI: [−5.1, 36.1]). For an overview of all results in the Target Present conditions, see Tables 1–3 and 5 and Figures 2 and 3.
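An RSF slope in ms/item is the least-squares slope of mean RT on set size. A minimal Python sketch with hypothetical mean RTs; the second call shows that the same data yield twice the slope if set size is coded in steps of two items. The paper does not state how set size was coded in the mixed model; if it was coded per step of two items (2, 4, 6 as 1, 2, 3), the 168.6 ms estimate would correspond to about 84 ms/item, in line with the 91 and 77 ms/item slopes for the gaze and head tasks.

```python
def rsf_slope(set_sizes, mean_rts):
    """Ordinary least-squares slope of mean RT on set size."""
    n = len(set_sizes)
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(set_sizes, mean_rts))
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    return sxy / sxx


# Hypothetical mean target-present RTs (ms) at set sizes 2, 4, and 6.
rts = [900.0, 1080.0, 1260.0]
print(rsf_slope([2, 4, 6], rts))  # → 90.0 (ms per added item)
print(rsf_slope([1, 2, 3], rts))  # → 180.0 (ms per step of two items)
```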

Table 5. RSF slope estimates for Target Present and Target Absent conditions for the four tasks and for Familiar/Stranger targets (bCa bias-corrected and accelerated confidence intervals, computed with 10,000 repetitions).

Figure 2. Eye gaze was detected faster in familiar faces than in unfamiliar faces both when it was directed to the viewer and when it was averted. Error bars represent 95% bootstrapped confidence intervals.

Figure 3. Changes in head orientation were detected faster in familiar faces than in unfamiliar faces. Error bars represent 95% bootstrapped confidence intervals.

Target Absent

We ran the same analysis for target absent trials and found that the best model again had task, set size, and familiarity as main fixed effects, and subjects and target items as random effects with random intercepts. None of the two-way or three-way interactions was significant.

We found main effects of task (χ²(3) = 215.88, p < 0.0001) and set size (χ²(1) = 1443.3, p < 0.0001, parameter estimate = 335.5 ms, bCI: [320.9, 350.0]), but not of familiarity (χ²(1) = 1.3, p = 0.26, parameter estimate = −15.1 ms, bCI: [−41.9, 11.2]). The mean RSF slope on target absent trials was 192 ms/item for gaze direction and 143 ms/item for head angle. Task contrasts showed that the first two tasks differed significantly from the last two (Gaze vs. Head, parameter estimate = 95.0 ms, bCI: [82.6, 107.0]) and that Task 3 differed significantly from Task 4 (Detect Full View vs. Detect Profile View, parameter estimate = 47.2 ms, bCI: [29.6, 65.1]), but Task 1 did not differ significantly from Task 2 (Detect Direct Gaze vs. Detect Averted Gaze, parameter estimate = 17.6 ms, bCI: [−0.1, 35.6]). For an overview of all results in the Target Absent conditions, see Tables 1, 2, 4, and 5 and Figures 2 and 3.

d' Values

Because many subjects had false alarm (FA) rates of 0, we computed hit and FA rates by adding 0.5 to the number of hits (or false alarms) and dividing by N + 1, where N is the number of target present (or target absent) trials; this correction keeps the rates away from the extreme values of 0 and 1. We analyzed d' values with the same linear mixed-effects modeling procedure described above. The best model had task, set size, and familiarity as main fixed effects, and subjects as random effects with random intercepts. None of the two-way or three-way interactions was significant.
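The corrected d' computation can be written out directly. A minimal Python sketch with hypothetical helper names, using `statistics.NormalDist` for the inverse normal CDF. By our reading of the design, each task × familiarity × set size cell contains 12 target present and 12 target absent trials, which motivates the toy counts in the example.

```python
from statistics import NormalDist


def corrected_rate(count, n_trials):
    """Log-linear correction: (count + 0.5) / (N + 1) avoids rates of exactly 0 or 1."""
    return (count + 0.5) / (n_trials + 1)


def d_prime(hits, n_target_present, false_alarms, n_target_absent):
    """d' = z(hit rate) - z(false-alarm rate), using corrected rates."""
    z = NormalDist().inv_cdf
    hit_rate = corrected_rate(hits, n_target_present)
    fa_rate = corrected_rate(false_alarms, n_target_absent)
    return z(hit_rate) - z(fa_rate)


# Hypothetical cell: 11 of 12 hits and 0 of 12 false alarms.
print(round(d_prime(11, 12, 0, 12), 3))
```

Without the correction, a false alarm rate of 0 would give an infinite z-score and an undefined d'.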

We found main effects of set size (χ²(1) = 10.26, p = 0.0014, parameter estimate = −0.1284, 95% bCI: [−0.2074, −0.0509]), familiarity (χ²(1) = 14.32, p = 0.0002, parameter estimate = 0.2490, bCI: [0.1258, 0.3769]), and task (χ²(3) = 7.83, p = 0.0497). None of the task contrasts specified above was significant for d' values: Gaze vs. Head angle, parameter estimate = −0.0538 [−0.3510, 0.0060]; Task 1 vs. Task 2, parameter estimate = 0.0872 [−0.2134, 0.1430]; Task 3 vs. Task 4, parameter estimate = −0.0570 [−0.1081, 0.2547] (see Table 2 for mean d' values and SE for each condition and task).

Discussion

Face perception is arguably one of the most developed visual skills in humans. Faces are detected more readily than other objects (Crouzet et al., 2010). Familiar face perception is especially sensitive and efficient and is dramatically better than unfamiliar face perception (Jenkins and Burton, 2011). Here we show that one class of social cues transmitted by faces, the direction of another's attention, is detected much more rapidly in familiar faces than in unfamiliar faces. In previous work, we have shown that personally familiar faces, as compared to faces of strangers, are detected more readily in conditions with reduced attentional resources and even without awareness (Gobbini et al., 2013b). With the experiments reported in the present manuscript, we extend this line of research to show that the increased efficiency afforded by familiarity includes not only simple detection but also the perception of socially relevant cues.

We used a visual search paradigm to test the effect of face familiarity on the detection of a target with a different gaze or head orientation. We found that the familiarity of the face with the target feature had a strong effect on detection time but no effect on RSF slopes—in other words, a facilitation of social cue detection that was constant across set sizes. This result indicates that the social cue was detected much faster in familiar than unfamiliar faces and that attentional capture—a bias to process the familiar faces earlier in a serial visual search—did not play a significant role, as such an effect would be reflected in a flatter RSF.

As expected, increasing the number of distractors made the task harder, as evidenced by increased reaction times and decreased d' values. Also as expected, detecting a target head orientation was faster than detecting a target gaze direction, although accuracy did not differ. This effect could arise because differences in head orientation produce larger changes in the visual stimulus than do differences in gaze direction, making the visual search easier.

Our results clearly show that detection of target gaze directions and head angles involves a serial visual search with no indication of parallel processing or pop-out. Detection times on target present trials showed a strong effect of set size. This finding is consistent with those of Tong and Nakayama (1999), who found that detection of a target individual (self or a stranger) among distractor faces involved a serial search. Pop-out for simple face detection among non-face distractors was shown in one report using large set sizes (Hershler and Hochstein, 2005) but appears to be due to low-level visual features, namely the amplitude spectrum of spatial frequencies (VanRullen, 2006).

Images of familiar and unfamiliar faces were carefully matched: all pictures were taken with the same lighting and photographic equipment in a studio setting, and mean luminance and contrast were equated across all stimuli. Thus, spurious low-level differences are unlikely to account for the performance differences between detection of familiar and stranger targets. Despite this matching, we found a large main effect of familiarity on both the speed and the accuracy of target detection.

The slope of the RSF indicates how much time is required to check each stimulus for the target feature. Target absent trials require checking all stimuli for the target feature, resulting in RSF slopes roughly twice as steep as those for target present trials, on which visual search terminates once the target feature is detected. Checking each distractor for gaze orientation, as indicated by the RSF slope on target absent trials, required on average 192 ms, and checking each distractor for head angle required 143 ms. In this context, the effect of familiarity in the gaze orientation and head angle tasks (109 ms and 65 ms, respectively) suggests that these signals are processed markedly faster in familiar faces than in unfamiliar faces.
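The factor of two follows from a serial self-terminating search model. A minimal Python sketch under that model, assuming a fixed inspection time per item (hypothetical function names): taking the target absent slopes as per-item inspection times, the predicted target present slopes are 96 ms/item for gaze and 71.5 ms/item for head angle, close to the observed 91 and 77 ms/item. Under the same assumption, the familiarity effects of 109 and 65 ms correspond to roughly half of the time needed to inspect one face (109/192 ≈ 0.57; 65/143 ≈ 0.45).

```python
def expected_items_checked(set_size, target_present):
    """Mean number of items inspected in a serial self-terminating search."""
    if target_present:
        # The target is equally likely to be reached 1st, 2nd, ..., n-th.
        return (set_size + 1) / 2
    # Without a target, every item must be inspected before answering "no".
    return float(set_size)


def predicted_slope(per_item_ms, target_present, set_sizes=(2, 4, 6)):
    """RSF slope (ms/item) implied by a fixed inspection time per item."""
    first, last = set_sizes[0], set_sizes[-1]
    gain = (expected_items_checked(last, target_present)
            - expected_items_checked(first, target_present))
    return per_item_ms * gain / (last - first)


for cue, absent_slope in (("gaze", 192), ("head", 143)):
    print(cue, predicted_slope(absent_slope, target_present=True))
```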

Familiar faces might also attract attention, biasing visual search to process familiar faces earlier than unfamiliar faces, an effect that could also cause faster detection of social cues in familiar faces. Such an effect, however, would make the RSF slope flatter for familiar target trials than for unfamiliar target trials, and this difference was not significant in the current study. In Tong and Nakayama (1999), the RSF slope was slightly flatter for finding one's own face than for finding an unfamiliar face target in a visual search task. This effect was not significant in their first experiment, with an RSF slope difference of 15 ms/item, and was significant in the second experiment, with an RSF slope difference of 23 ms/item. Our estimate of the equivalent effect, based on target present trials as in Tong and Nakayama (1999), was 10 ms/item and was not significant. Even when we include this non-significant effect in a model that accounts for the difference in detection times with both cue processing and RSF slope differences, the facilitation of detection by familiarity is still due mostly to faster processing of the social cue rather than to looking at familiar faces earlier. The more parsimonious explanation of our data, therefore, is that the target social cue (gaze direction or head angle) is examined in each stimulus in the search array, that this process is serial, that a familiar face is no more likely than an unfamiliar face to be examined earlier in the serial search, and that the social cue is processed more quickly if the face is familiar.
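The two accounts make different predictions for the RSF. A toy Python model, with hypothetical parameter values chosen only for illustration, contrasts faster processing of the cue in the familiar target (a constant RT advantage at every set size) with an extreme form of attentional capture in which the familiar target is always inspected first (an advantage that grows with set size, that is, a flatter RSF). Partial capture would produce an intermediate pattern.

```python
def predicted_rt_present(set_size, base_ms, per_item_ms, target_item_ms, target_first=False):
    """Mean target-present RT (ms) in a serial self-terminating search.

    Distractors take per_item_ms each to inspect; the target takes target_item_ms.
    With target_first=True (an extreme form of attentional capture) the target
    is always inspected first, so no distractor time accrues.
    """
    if target_first:
        return base_ms + target_item_ms
    # Target equally likely at serial positions 1..n, so on average
    # (n - 1) / 2 distractors are inspected before it.
    return base_ms + (set_size - 1) / 2 * per_item_ms + target_item_ms


SET_SIZES = (2, 4, 6)
# Hypothetical parameters, chosen only for illustration.
BASE, PER_ITEM, UNFAMILIAR_TARGET, FAMILIAR_TARGET = 500.0, 180.0, 180.0, 80.0

unfamiliar = [predicted_rt_present(n, BASE, PER_ITEM, UNFAMILIAR_TARGET) for n in SET_SIZES]
faster_cue = [predicted_rt_present(n, BASE, PER_ITEM, FAMILIAR_TARGET) for n in SET_SIZES]
capture = [predicted_rt_present(n, BASE, PER_ITEM, UNFAMILIAR_TARGET, target_first=True)
           for n in SET_SIZES]

print("faster cue processing:", [u - f for u, f in zip(unfamiliar, faster_cue)])
print("attentional capture:  ", [u - c for u, c in zip(unfamiliar, capture)])
```

Only the first pattern, a familiarity advantage that does not change with set size, matches the constant main effect and unchanged RSF reported here.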

We also found that responding “no” on target absent trials was slowed by 20–40 ms if all distractors had attention directed away from the viewer, as indicated by either averted gaze or averted head angle. Perceived gaze and head orientation are strong signals for reallocating attention in humans, and the shift of attention in the direction indicated when someone else looks or turns their head away from us appears to be automatic (Friesen and Kingstone, 1998; Frischen et al., 2007). This automatic diversion of attention may underlie the slower response times on target absent trials when distractor faces had averted gaze or head angle. To summarize, not only are familiar faces detected faster than faces of strangers (Tong and Nakayama, 1999; Deuve et al., 2009; Ramon et al., 2011; Gobbini et al., 2013b), but cues that represent strong social signals (Perrett et al., 1985; Senju and Johnson, 2009; Stein et al., 2011; Gobbini et al., 2013a), namely eye gaze and head direction, are also detected much more rapidly if they are perceived in a familiar face.

We spend a great deal of time looking at the faces of immediate family and close friends, which become intimately familiar through repeated exposure and social interaction extending over years. This slow and prolonged exposure may contribute to a more stable representation of the visual appearance of a familiar face. Personally familiar faces, in contrast to the faces of strangers, are detected faster and recognized with great efficiency in conditions of poor visibility and over large changes in head angle, lighting, partial occlusion, and age (Burton et al., 1999; O'Toole et al., 2006; Johnston and Edmonds, 2009; Burton and Jenkins, 2011). Personally familiar faces are among the most highly learned and salient visual stimuli for humans and are associated with changes in the representation of both the visual appearance and associated person knowledge, affording highly efficient and robust recognition. By contrast, recognition of unfamiliar faces, that is, identifying a target unfamiliar face among other faces, is surprisingly inaccurate (Burton et al., 1999; O'Toole et al., 2006; Burton and Jenkins, 2011). Whereas machine vision systems for face recognition perform on par with humans for unfamiliar faces, human performance for familiar face recognition is much better (Jenkins and Burton, 2011; O'Toole et al., 2011). Understanding the perceptual and neural mechanisms underlying this remarkable performance is of great interest both for understanding how neural systems become highly efficient for highly salient stimuli and for designing better machine vision systems. The relative roles of detectors for fragmentary or holistic visual features and of top-down influences of semantic information in the facilitation of familiar face processing are unknown.
Face detection and perception of the direction of another's attention, however, appear to be extremely fast, efficient, and independent of attentional resources and even awareness (Jiang et al., 2007; Crouzet and Thorpe, 2011; Gobbini et al., 2013a), suggesting that top-down influences of semantic information may play a minor role and that facilitation of familiar face processing may be due mostly to the development of detectors of fragmentary or holistic visual features that are specific to familiar individuals.

A distributed system for face perception has been described in humans (Haxby et al., 2000, 2002; Ishai et al., 2005; Gobbini and Haxby, 2007; Haxby and Gobbini, 2011) and monkeys (Tsao et al., 2008; Freiwald and Tsao, 2010). In humans, the system includes visual cortical areas involved in the perception of invariant visual attributes diagnostic of identity and of changeable aspects such as facial expression and speech (the “core system”), and additional areas involved in representing information associated with faces, such as person knowledge, emotion, and spatial attention (the “extended system”) (Haxby et al., 2000, 2002; Ishai et al., 2005; Gobbini and Haxby, 2007; Taylor et al., 2009; Natu and O'Toole, 2011; Bobes et al., 2013). Repeated exposure to faces might result in natural and protracted learning that tunes this hierarchical and distributed system at all levels to afford efficient and robust detection and identification of these faces. Such tuning could reflect the development of representations of visual appearance across many different changes in head angle, lighting, expression, and partial occlusion. Integrating these multiple representations into a general representation of an individual could help build a system that is stable, robust, and efficient (Bruce, 1994; Burton et al., 2011). Neurophysiological data from monkeys suggest that a view-independent representation of faces is achieved through a series of processing steps from posterior to more anterior face-responsive patches in the temporal cortex, whose population responses are tuned to head angle in more posterior patches (MF/ML) and to head-angle-invariant face identity in more anterior patches (AM) (Freiwald and Tsao, 2010). In humans, face areas in the core system are tuned differentially to face parts (the occipital face area, OFA), to invariant aspects that support recognition of identity (the fusiform face area, FFA), and to changeable aspects such as facial expression, eye gaze, and speech movements (the posterior superior temporal sulcus, pSTS). In addition, human face areas have been described in anterior temporal and inferior frontal cortices (the ATFA and IFFA) that may play a critical role in identification (Kriegeskorte et al., 2007; Rajimehr et al., 2009; Natu et al., 2010; Nestor et al., 2011; Kietzmann et al., 2012; Anzellotti and Caramazza, 2014; Anzellotti et al., 2014).

Classical cognitive models of face perception and recognition posit that visual recognition necessarily precedes access to person knowledge (Bruce and Young, 1986). Evoked potential studies have shown that the first face-specific response to a face, the N170, is not modulated by familiarity (Bentin et al., 1999; Puce et al., 1999; Eimer, 2000; Paller et al., 2000; Abdel Rahman, 2011; but see also Caharel et al., 2011). Instead, modulation of the response by familiarity appears at later latencies (greater than 250 ms) (Eimer, 2000; Schweinberger et al., 2004; Tanaka et al., 2006). Whereas early face-specific evoked potentials are recorded at posterior temporal locations, the later potentials that are modulated by familiarity are recorded at temporal, frontal, and parietal locations (Bentin et al., 1999; Puce et al., 1999; Eimer, 2000; Tanaka et al., 2006). Faster detection of personally familiar faces than of the faces of strangers, even without awareness, suggests that early face processing that precedes explicit recognition may be facilitated for personally familiar faces (Gobbini et al., 2013b). Models of object perception hypothesize that the recognition of objects despite pronounced changes in appearance is due to a multistep sequence of processing, characterized by stages in which stimulus features of increasing complexity are analyzed and combined until a representation invariant to visual transformations is achieved in the inferior temporal cortex (Ullman et al., 2002; Riesenhuber and Poggio, 2002; Serre et al., 2007; DiCarlo et al., 2012; but see also Kravitz et al., 2013).
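The core idea of these hierarchical models can be illustrated with a deliberately tiny two-stage example. It is loosely in the spirit of Riesenhuber and Poggio (2002), but it is not a reimplementation of any published model. A template-matching (“simple”) stage responds to a feature at one specific position, and a max-pooling (“complex”) stage keeps only the strongest match, so its output no longer depends on where in the image the feature appeared. The image size, the two 3 × 3 templates, and the function names `s_layer` and `c_layer` are hypothetical choices made for illustration. Real models stack many such stages, pool over local neighbourhoods rather than the whole image, and learn their templates from natural images.

```python
import numpy as np

def s_layer(image, templates):
    """Template-matching ("simple") stage: dot product of each template with
    the image patch at every valid position. Output shape: (n_templates, rows, cols)."""
    n, th, tw = templates.shape
    H, W = image.shape
    out = np.zeros((n, H - th + 1, W - tw + 1))
    for k in range(n):
        for i in range(H - th + 1):
            for j in range(W - tw + 1):
                out[k, i, j] = np.sum(image[i:i + th, j:j + tw] * templates[k])
    return out

def c_layer(s_maps):
    """Pooling ("complex") stage: max over all positions, giving one value per
    template and discarding where the best match occurred."""
    return s_maps.reshape(s_maps.shape[0], -1).max(axis=1)

# Two hypothetical 3 x 3 "features".
cross = np.array([[0, 1, 0], [1, 1, 1], [0, 1, 0]], dtype=float)
ell = np.array([[1, 0, 0], [1, 0, 0], [1, 1, 1]], dtype=float)
templates = np.stack([cross, ell])

# The same feature placed at two different locations in a 12 x 12 image.
img_a = np.zeros((12, 12))
img_a[3:6, 3:6] = cross
img_b = np.zeros((12, 12))
img_b[6:9, 7:10] = cross

s_a, s_b = s_layer(img_a, templates), s_layer(img_b, templates)
c_a, c_b = c_layer(s_a), c_layer(s_b)

# S-stage responses are position-specific: the peak moves with the feature.
print("S peaks:", np.unravel_index(np.argmax(s_a[0]), s_a[0].shape),
      np.unravel_index(np.argmax(s_b[0]), s_b[0].shape))
# C-stage responses are the same for both images: position tolerance.
print("C responses:", c_a, c_b)
```

In this toy case only translation tolerance is obtained. The models cited above propose that repeating such template-and-pool stages with progressively more complex templates yields progressively greater invariance.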

Psychophysical studies have shown that faces can be detected very rapidly, with the earliest reliable saccades to faces at 100–110 ms (Crouzet et al., 2010; Crouzet and Thorpe, 2011). Face-specific patterns of neural activity can be detected with EEG as early as 100 ms using multivariate pattern analysis (Cauchoix et al., 2014). These very rapid responses to faces may be due to low-level visual features that are more frequent in faces (Tanskanen et al., 2005). For example, Honey et al. (2008) and Crouzet and Thorpe (2011) demonstrated the importance of specific spatial frequency amplitudes for ultra-fast face detection. Specific properties of faces, such as eye gaze direction, head angle, and personal familiarity, differentially facilitate detection even without awareness (Stein et al., 2011; Gobbini et al., 2013a,b). These findings raise the question of how such fast and preconscious processing is achieved: through a subcortical system (for a review see Tamietto and de Gelder, 2010; but see also Pessoa and Adolphs, 2010), or through a cortical route with fast feed-forward integration of information (VanRullen and Thorpe, 2001) and activation of the distributed network in fronto-parietal areas for retrieval of person knowledge. Highly learned representations of personally familiar faces may also include detectors for visual features (face fragments or more holistic configurations) that are diagnostic for familiar individuals (Butler et al., 2010). The facilitation of familiar face processing, which appears to be at least partially independent of attentional resources and awareness, may be due to activation of such learned diagnostic feature detectors. The results presented here suggest that these detectors may also be specific for features that carry social signals, such as eye gaze direction, head orientation, and expression.

A largely unexplored mechanism in the expertise for familiar faces involves detectors for diagnostic facial features in early visual cortex. Petro et al. (2013) have shown that facial attributes such as gender and expression can be decoded from V1 cortical patches using multivariate pattern analysis (MVPA). Diagnostic features specific to familiar faces might be learned through experience and might afford “pre-recognition” detection, namely facilitated detection of highly familiar faces without explicit recognition of their identity. By contrast, explicit recognition of a highly familiar face may require top-down processing from neural systems involved in retrieval of person knowledge and in the emotional response, and this top-down input could serve to tune and amplify the visual representation of personally familiar faces (Gobbini and Haxby, 2007; Gobbini, 2010).
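To make concrete what it means for an attribute to be “decoded” from a set of voxels, the following sketch runs leave-one-out decoding on simulated response patterns. It assumes a correlation-based nearest-centroid classifier, similar in spirit to the correlation analyses of Haxby et al. (2001). This is not necessarily the classifier used by Petro et al. (2013). The numbers of voxels and trials, the noise level, and the helper names `corr_rows` and `loo_nearest_centroid` are arbitrary and hypothetical, and the data are synthetic rather than taken from any study.

```python
import numpy as np

def corr_rows(a, b):
    """Pearson correlation between every row of `a` and every row of `b`."""
    a = (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)
    b = (b - b.mean(axis=1, keepdims=True)) / b.std(axis=1, keepdims=True)
    return a @ b.T / a.shape[1]

def loo_nearest_centroid(X, y):
    """Leave-one-out decoding: each held-out pattern is assigned to the class
    whose mean training pattern it correlates with most strongly."""
    classes = np.unique(y)
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i          # exclude the held-out trial
        centroids = np.stack([X[train & (y == c)].mean(axis=0) for c in classes])
        r = corr_rows(X[i:i + 1], centroids)[0]
        correct += classes[np.argmax(r)] == y[i]
    return correct / len(y)

# Simulated data: two hypothetical stimulus classes (e.g. two expressions),
# each with its own mean voxel pattern plus independent trial-by-trial noise.
rng = np.random.default_rng(0)
n_voxels, n_trials = 50, 20
class_means = rng.normal(size=(2, n_voxels))
y = np.repeat([0, 1], n_trials)
X = class_means[y] + rng.normal(size=(2 * n_trials, n_voxels))

acc = loo_nearest_centroid(X, y)
print(f"leave-one-out accuracy: {acc:.2f} (chance = 0.50)")
```

Above-chance accuracy on held-out trials is the evidence that the patterns carry information about the attribute. With real fMRI data, cross-validation is usually performed across scanning runs rather than single trials, so that training and test data are not temporally dependent.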

In this manuscript we have presented new evidence for facilitated processing of personally familiar faces and have highlighted the importance of testing the human system for familiar face detection and recognition. Experiments using familiar faces as stimuli can offer insight into the organization of the neural systems for recognition of highly familiar objects, can help improve face recognition software, and can shed further light on practical issues such as flaws in eyewitness reports. Our expertise in face recognition appears to be most developed for familiar faces, whereas recognition of unfamiliar faces is comparatively poor. This expertise with familiar faces could be due to the integrated functioning of the distributed neural system for face perception at multiple levels (Haxby et al., 2000, 2001; Gobbini and Haxby, 2007; Haxby and Gobbini, 2011). The extended system components for the representation of person knowledge may interact with the representation of visual appearance to stabilize and strengthen the representation of visual features that are diagnostic of the identity and facial gestures of familiar individuals. The development of a robust representation of the visual appearance of familiar individuals affords detection even in conditions of poor visibility (O'Toole et al., 2006; Burton and Jenkins, 2011). Activation of detectors for these diagnostic features might facilitate detection preceding explicit recognition, as well as the processing of social signals. Understanding how learning tunes the integrated processing of personally familiar faces in the hierarchical system for face perception may serve as a model for how learning tunes neural systems for recognition of other highly salient stimuli, such as gestures and actions, personal objects and places, or voices and written words.

Author Contributions

M. Ida Gobbini conceived the idea; M. Ida Gobbini, J. Swaroop Guntupalli and Matteo Visconti di Oleggio Castello designed the experiment; J. Swaroop Guntupalli and Hua Yang collected and analyzed the data for a pilot study; Matteo Visconti di Oleggio Castello collected and analyzed the data for the final experiment; M. Ida Gobbini and Matteo Visconti di Oleggio Castello wrote the manuscript; Hua Yang and J. Swaroop Guntupalli provided critical input on the final version of the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Jim Haxby and Carlo Cipolli for insightful discussions and comments on the manuscript. The authors would like to thank also Ming Meng and Patrick Cavanagh for feedback on the experimental design. Furthermore, the authors would like to thank Easha Narayan, Kelsey Wheeler and Courtney Rogers for help with subject recruitment and stimulus development.

References

Abdel Rahman, R. (2011). Facing good and evil: early brain signatures of affective biographical knowledge in face recognition. Emotion 11, 1397–1405. doi: 10.1037/a0024717

Allison, T., Puce, A., and McCarthy, G. (2000). Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278. doi: 10.1016/S1364-6613(00)01501-1

Anzellotti, S., and Caramazza, A. (2014). Individuating the neural bases for the recognition of conspecifics with MVPA. Neuroimage 89, 165–170. doi: 10.1016/j.neuroimage.2013.12.005

Anzellotti, S., Fairhall, S. L., and Caramazza, A. (2014). Decoding representations of face identity that are tolerant to rotation. Cereb. Cortex 24, 1988–1995. doi: 10.1093/cercor/bht046

Baayen, R. H., Davidson, D. J., and Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. J. Mem. Lang. 59, 390–412. doi: 10.1016/j.jml.2007.12.005

Bates, D., Maechler, M., Bolker, B., and Walker, S. (2014). lme4: Linear Mixed-Effects Models Using Eigen and S4. R package version 1.0-6. Available online at: http://CRAN.R-project.org/package=lme4

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565. doi: 10.1162/jocn.1996.8.6.551

Bentin, S., Deouell, L. Y., and Soroker, N. (1999). Selective visual streaming in face recognition: evidence from developmental prosopagnosia. Neuroreport 10, 823–827.

Bobes, M. A., Lage Castellanos, A., Quiñones, I., García, L., and Valdes-Sosa, M. (2013). Timing and tuning for familiarity of cortical responses to faces. PLoS ONE 8:e76100. doi: 10.1371/journal.pone.0076100

Bruce, V. (1994). Stability from variation: the case of face recognition. The M. D. Vernon memorial lecture. Q. J. Exp. Psychol. 47A, 5–28.

Burton, A. M., and Jenkins, R. (2011). “Unfamiliar face perception,” in Handbook of Face Perception, eds A. J. Calder, G. Rhodes, M. H. Johnson, and J. V. Haxby (Oxford: Oxford University Press), 287–306.

Burton, A. M., Jenkins, R., and Schweinberger, S. R. (2011). Mental representations of familiar faces. Br. J. Psychol. 102, 943–958. doi: 10.1111/j.2044-8295.2011.02039.x

Burton, A. M., Wilson, S., Cowan, M., and Bruce, V. (1999). Face recognition in poor quality video: evidence from security surveillance. Psychol. Sci. 10, 243–248.

Butler, S., Blais, C., Gosselin, F., Bub, D., and Fiset, D. (2010). Recognizing famous people. Atten. Percept. Psychophys. 72, 1444–1449. doi: 10.3758/APP.72.6.1444

Buttle, H., and Raymond, J. E. (2003). High familiarity enhances visual change detection for face stimuli. Percept. Psychophys. 65, 1296–1306. doi: 10.3758/BF03194853

Caharel, S., Jacques, C., d'Arripe, O., Ramon, M., and Rossion, B. (2011). Early electrophysiological correlates of adaptation to personally familiar and unfamiliar faces across viewpoint changes. Brain Res. 1387, 85–98. doi: 10.1016/j.brainres.2011.02.070

Carlin, J. D., Rowe, J. B., Kriegeskorte, N., Thompson, R., and Calder, A. J. (2012). Direction-sensitive codes for observed head turns in human superior temporal sulcus. Cereb. Cortex 22, 735–744. doi: 10.1093/cercor/bhr061

Cauchoix, M., Barragan-Jason, G., Serre, T., and Barbeau, E. J. (2014). The neural dynamics of face detection in the wild revealed by MVPA. J. Neurosci. 34, 846–854. doi: 10.1523/JNEUROSCI.3030-13.2014

Crouzet, S. M., Kirchner, H., and Thorpe, S. J. (2010). Fast saccades toward faces: face detection in just 100 ms. J. Vis. 10, 1–17. doi: 10.1167/10.4.16

Crouzet, S. M., and Thorpe, S. J. (2011). Low-level cues and ultra-fast face detection. Front. Psychol. 2:342. doi: 10.3389/fpsyg.2011.00342

Deuve, C., Van der Stigchel, S., Brédart, S., and Theeuwes, J. (2009). You do not find your own face faster; you just look at it longer. Cognition 111, 114–122. doi: 10.1016/j.cognition.2009.01.003

DiCarlo, J. J., Zoccolan, D., and Rust, N. C. (2012). How does the brain solve visual object recognition? Neuron 73, 415–434. doi: 10.1016/j.neuron.2012.01.010

Eimer, M. (2000). The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport 11, 2319–2324. doi: 10.1097/00001756-200007140-00050

Eimer, M., and Holmes, A. (2002). An ERP study on the time course of emotional face processing. Neuroreport 13, 427–431. doi: 10.1097/00001756-200203250-00013

Engell, A. D., and Haxby, J. V. (2007). Facial expression and gaze-direction in human superior temporal sulcus. Neuropsychologia 45, 3234–3241. doi: 10.1016/j.neuropsychologia.2007.06.022

Persike, M., Meinhardt-Injac, B., and Meinhardt, G. (2013). The preview benefit for familiar and unfamiliar faces. Vision Res. 87, 1–9. doi: 10.1016/j.visres.2013.05.005

Freiwald, W. A., and Tsao, D. Y. (2010). Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science 330, 845–851. doi: 10.1126/science.1194908

Friesen, C. K., and Kingstone, A. (1998). The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychon. Bull. Rev. 5, 490–495.

Frischen, A., Bayliss, A. P., and Tipper, S. P. (2007). Gaze cueing of attention: visual attention, social cognition, and individual differences. Psychol. Bull. 133, 694–724. doi: 10.1037/0033-2909.133.4.694

Gobbini, M. I. (2010). “Distributed process for retrieval of person knowledge,” in Social Neuroscience: Toward the Underpinnings of the Social Mind, eds A. Todorov, S. T. Fiske, and D. A. Prentice (New York, NY: Oxford University Press), 40–53.

Gobbini, M. I., Gentili, C., Ricciardi, E., Bellucci, C., Salvini, P., Laschi, C., et al. (2011). Distinct neural systems involved in agency and animacy detection. J. Cogn. Neurosci. 23, 1911–1920. doi: 10.1162/jocn.2010.21574

Gobbini, M. I., Gors, J. D., Halchenko, Y. O., Hughes, H. C., and Cipolli, C. (2013a). Processing of invisible social cues. Conscious. Cogn. 22, 765–770. doi: 10.1016/j.concog.2013.05.002

Gobbini, M. I., Gors, J. D., Halchenko, Y. O., Rogers, C., Guntupalli, J. S., Hughes, H. C., et al. (2013b). Prioritized detection of personally familiar faces. PLoS ONE 8:e66620. doi: 10.1371/journal.pone.0066620

Gobbini, M. I., and Haxby, J. V. (2006). Neural response to the visual familiarity of faces. Brain Res. Bull. 71, 76–82. doi: 10.1016/j.brainresbull.2006.08.003

Gobbini, M. I., and Haxby, J. V. (2007). Neural systems for recognition of familiar faces. Neuropsychologia 45, 32–41. doi: 10.1016/j.neuropsychologia.2006.04.015

Gobbini, M. I., Koralek, A. C., Bryan, R. E., Montgomery, K. J., and Haxby, J. V. (2007). Two takes on the social brain: a comparison of theory of mind tasks. J. Cogn. Neurosci. 19, 1803–1814. doi: 10.1162/jocn.2007.19.11.1803

Gobbini, M. I., Leibenluft, E., Santiago, N., and Haxby, J. V. (2004). Social and emotional attachment in the neural representation of faces. Neuroimage 22, 1628–1635. doi: 10.1016/j.neuroimage.2004.03.049

Haxby, J. V., and Gobbini, M. I. (2011). “Distributed neural systems for face perception,” in Handbook of Face Perception, eds A. J. Calder, G. Rhodes, M. H. Johnson, and J. V. Haxby (Oxford: Oxford University Press), 93–110.

Haxby, J. V., Gobbini, M. I., Furey, M. L., Ishai, A., Schouten, J. L., and Pietrini, P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293, 2425–2430. doi: 10.1126/science.1063736

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233.

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2002). Human neural systems for face recognition and social communication. Biol. Psychiatry 51, 59–67. doi: 10.1016/S0006-3223(01)01330-0

Hershler, O., and Hochstein, S. (2005). At first sight: a high-level pop out effect for faces. Vision Res. 45, 1707–1724. doi: 10.1016/j.visres.2004.12.021

Hoffman, E. A., and Haxby, J. V. (2000). Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat. Neurosci. 3, 80–84. doi: 10.1038/71152

Honey, C., Kirchner, H., and VanRullen, R. (2008). Faces in the cloud: Fourier power spectrum biases ultrarapid face detection. J. Vis. 8, 9.1–9.13. doi: 10.1167/8.12.9

Ishai, A., Schmidt, C. F., and Boesiger, P. (2005). Face perception is mediated by a distributed cortical network. Brain Res. Bull. 67, 87–93. doi: 10.1016/j.brainresbull.2005.05.027

Jenkins, R., and Burton, A. M. (2011). Stable face representations. Philos. Trans. R. Soc. Lond. B Biol. Sci. 366, 1671–1683. doi: 10.1098/rstb.2010.037

Jiang, Y., Costello, P., and He, S. (2007). Processing of invisible stimuli: advantage of upright faces and recognizable words in overcoming interocular suppression. Psychol. Sci. 18, 349–355. doi: 10.1111/j.1467-9280.2007.01902.x

Jiang, Y., and He, S. (2006). Cortical responses to invisible faces: dissociating subsystems for facial-information processing. Curr. Biol. 16, 2023–2029. doi: 10.1016/j.cub.2006.08.084

Johnston, R. A., and Edmonds, A. J. (2009). Familiar and unfamiliar face recognition: a review. Memory 17, 577–596. doi: 10.1080/09658210902976969

Kirby, K. N., and Gerlanc, D. (2013). BootES: an R package for bootstrap confidence intervals on effect sizes. Behav. Res. Methods 45, 905–927. doi: 10.3758/s13428-013-0330-5

Kleiner, M., Brainard, D., and Pelli, D. (2007). What's new in psychtoolbox-3? Perception 36(ECVP Abstract Supplement), 14.

Kravitz, D. J., Saleem, K. S., Baker, C. I., Ungerleider, L. G., and Mishkin, M. (2013). The ventral visual pathway: an expanded neural framework for the processing of object quality. Trends Cogn. Sci. 17, 26–49. doi: 10.1016/j.tics.2012.10.011

Kriegeskorte, N., Formisano, E., Sorger, B., and Goebel, R. (2007). Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc. Natl. Acad. Sci. U.S.A. 104, 20600–20605. doi: 10.1073/pnas.0705654104

Leibenluft, E., Gobbini, M. I., Harrison, T., and Haxby, J. V. (2004). Mothers' neural activation in response to pictures of their, and other, children. Biol. Psychiatry 56, 225–232. doi: 10.1016/j.biopsych.2004.05.017

Morris, J. S., Ohman, A., and Dolan, R. J. (1998). Conscious and unconscious emotional learning in the human amygdala. Nature 393, 467–470. doi: 10.1038/30976

Natu, V., and O'Toole, A. J. (2011). The neural processing of familiar and unfamiliar faces: a review and synopsis. Br. J. Psychol. 102, 726–747. doi: 10.1111/j.2044-8295.2011.02053.x

Natu, V. S., Jiang, F., Narvekar, A., Keshvari, S., Blanz, V., and O'Toole, A. J. (2010). Dissociable neural patterns of facial identity across changes in viewpoint. J. Cogn. Neurosci. 22, 1570–1582. doi: 10.1162/jocn.2009.21312

Nestor, A., Plaut, D. C., and Behrmann, M. (2011). Unraveling the distributed neural code of facial identity through spatiotemporal pattern analysis. Proc. Natl. Acad. Sci. U.S.A. 108, 9998–10003. doi: 10.1073/pnas.1102433108

O'Toole, A. J., Jiang, F., Roark, D., and Abdi, H. (2006). “Predicting human performance for face recognition,” in Face Processing, Advanced Modeling and Methods, eds W. Zhao and R. Chellappa (Burlington, MA; San Diego, CA; London, UK: Elsevier), 293–320.

O'Toole, A. J., Roark, D., and Abdi, H. (2002). Recognizing moving faces: a psychological and neural synthesis. Trends Cogn. Sci. 6, 261–266. doi: 10.1016/s1364-6613(02)01908-3

O'Toole, A. J., Phillips, P. J., Weimer, S., Roark, D. A., Ayyad, J., Barwick, R., et al. (2011). Recognizing people from dynamic and static faces and bodies: dissecting identity with a fusion approach. Vision Res. 51, 74–83. doi: 10.1016/j.visres.2010.09.035

Pageler, N. M., Menon, V., Merin, N. M., Eliez, S., Brown, W. E., and Reiss, A. L. (2003). Effect of head orientation on gaze processing in fusiform gyrus and superior temporal sulcus. Neuroimage 20, 318–329. doi: 10.1016/S1053-8119(03)00229-5

Paller, K. A., Gonsalves, B., Grabowecky, M., Bozic, V. S., and Yamada, S. (2000). Electrophysiological correlates of recollecting faces of known and unknown individuals. Neuroimage 11, 98–110. doi: 10.1006/nimg.1999.0521

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat. Vis. 10, 437–442. doi: 10.1163/156856897X00366

Pelphrey, K. A., Singerman, J. D., Allison, T., and McCarthy, G. (2003). Brain activation evoked by perception of gaze shifts: the influence of context. Neuropsychologia 41, 156–170. doi: 10.1016/S0028-3932(02)00146-X

Perrett, D. I., Smith, P., Potter, D., Mistlin, A., Head, A., Milner, A., et al. (1985). Visual cells in the temporal cortex sensitive to face view and gaze direction. Proc. R. Soc. Lond. B Biol. Sci. 223, 293–317. doi: 10.1098/rspb.1985.0003

Pessoa, L., and Adolphs, R. (2010). Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat. Rev. Neurosci. 11, 773–783. doi: 10.1038/nrn2920

Petro, L. S., Smith, F. W., Schyns, P. G., and Muckli, L. (2013). Decoding face categories in diagnostic subregions of primary visual cortex. Eur. J. Neurosci. 37, 1130–1139. doi: 10.1111/ejn.12129

Pitcher, D., Goldhaber, T., Duchaine, B., Walsh, V., and Kanwisher, N. (2012). Two critical and functionally distinct stages of face and body perception. J. Neurosci. 32, 15877–15885. doi: 10.1523/JNEUROSCI.2624-12.2012

Puce, A., Allison, T., and McCarthy, G. (1999). Electrophysiological studies of human face perception. III: effects of top-down processing on face-specific potentials. Cereb. Cortex 9, 445–458. doi: 10.1093/cercor/9.5.445

Rajimehr, R., Young, J. C., and Tootell, R. B. (2009). An anterior temporal face patch in human cortex, predicted by macaque maps. Proc. Natl. Acad. Sci. U.S.A. 106, 1995–2000. doi: 10.1073/pnas.0807304106

Ramon, M., Caharel, S., and Rossion, B. (2011). The speed of recognition of personally familiar faces. Perception 40, 437–449. doi: 10.1068/p6794

R Core Team (2013). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing. Available online at: http://www.R-project.org/

Riesenhuber, M., and Poggio, T. (2002). Neural mechanisms of object recognition. Curr. Opin. Neurobiol. 12, 162–168. doi: 10.1016/S0959-4388(02)00304-5

Schweinberger, S. R., Huddy, V., and Burton, A. M. (2004). N250r: a face-selective brain response to stimulus repetitions. Neuroreport 15, 1501–1505. doi: 10.1097/01.wnr.0000131675.00319.42

Schweinberger, S. R., Kloth, N., and Jenkins, R. (2007). Are you looking at me? Neural correlates of gaze adaptation. Neuroreport 18, 693–696. doi: 10.1097/WNR.0b013e3280c1e2d2

Senju, A., and Johnson, M. H. (2009). The eye contact effect: mechanisms and development. Trends Cogn. Sci. 13, 127–134. doi: 10.1016/j.tics.2008.11.009

Serre, T., Oliva, A., and Poggio, T. (2007). A feedforward architecture accounts for rapid categorization. Proc. Natl. Acad. Sci. U.S.A. 104, 6424–6429. doi: 10.1073/pnas.0700622104

Stein, T., Senju, A., Peelen, M. V., and Sterzer, P. (2011). Eye contact facilitates awareness of faces during interocular suppression. Cognition 119, 307–311. doi: 10.1016/j.cognition.2011.01.008

Kietzmann, T. C., Swisher, J. D., König, P., and Tong, F. (2012). Prevalence of selectivity for mirror-symmetric views of faces in the ventral and dorsal visual pathways. J. Neurosci. 32, 11763–11772. doi: 10.1523/JNEUROSCI.0126-12.2012

Tamietto, M., and de Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709. doi: 10.1038/nrn2889

Tanaka, J. W., Curran, T., Porterfield, A. L., and Collins, D. (2006). Activation of preexisting and acquired face representations: the N250 event-related potential as an index of face familiarity. J. Cogn. Neurosci. 18, 1488–1497. doi: 10.1162/jocn.2006.18.9.1488

Tanskanen, T., Näsänen, R., Montez, T., Päällysaho, J., and Hari, R. (2005). Face recognition and cortical responses show similar sensitivity to noise spatial frequency. Cereb. Cortex 15, 526–534. doi: 10.1093/cercor/bhh152

Taylor, M. J., Arsalidou, M., Bayless, S. J., Morris, D., Evans, J. W., and Barbeau, E. J. (2009). Neural correlates of personally familiar faces: parents, partner and own faces. Hum. Brain Mapp. 30, 2008–2020. doi: 10.1002/hbm.20646

Tong, F., and Nakayama, K. (1999). Robust representations for faces: evidence from visual search. J. Exp. Psychol. Hum. Percept. Perform. 25, 1016–1035. doi: 10.1037/0096-1523.25.4.1016

Treisman, A. M., and Gelade, G. (1980). A feature-integration theory of attention. Cogn. Psychol. 12, 97–136. doi: 10.1016/0010-0285(80)90005-5

Tsao, D. Y., Moeller, S., and Freiwald, W. A. (2008). Comparing face patch systems in macaques and humans. Proc. Natl. Acad. Sci. U.S.A. 105, 19514–19519. doi: 10.1073/pnas.0809662105

Ullman, S., Vidal-Naquet, M., and Sali, E. (2002). Visual features of intermediate complexity and their use in classification. Nat. Neurosci. 5, 682–687. doi: 10.1038/nn870

Valdés-Sosa, M., Bobes, M. A., Quiñones, I., Garcia, L., Valdes-Hernandez, P. A., Iturria, Y., et al. (2011). Covert face recognition without the fusiform-temporal pathways. Neuroimage 57, 1162–1176. doi: 10.1016/j.neuroimage.2011.04.057

VanRullen, R. (2006). On second glance: still no high-level pop-out effect for faces. Vision Res. 46, 3017–3027. doi: 10.1016/j.visres.2005.07.009

VanRullen, R., and Thorpe, S. J. (2001). The time course of visual processing: from early perception to decision-making. J. Cogn. Neurosci. 13, 454–461. doi: 10.1162/08989290152001880

Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B., and Jenike, M. A. (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J. Neurosci. 18, 411–418.

Willenbockel, V., Sadr, J., Fiset, D., Horne, G. O., Gosselin, F., and Tanaka, J. W. (2010). Controlling low-level image properties: the SHINE toolbox. Behav. Res. Methods 42, 671–684. doi: 10.3758/BRM.42.3.671

Williams, M. A., Morris, A. P., McGlone, F., Abbott, D. F., and Mattingley, J. B. (2004). Amygdala responses to fearful and happy facial expressions under conditions of binocular suppression. J. Neurosci. 24, 2898–2904. doi: 10.1523/JNEUROSCI.4977-03.2004

Winston, J. S., Henson, R. N., Fine-Goulden, M. R., and Dolan, R. J. (2004). fMRI-adaptation reveals dissociable neural representations of identity and expression in face perception. J. Neurophysiol. 92, 1830–1839.

Yang, E., Zald, D. H., and Blake, R. (2007). Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion 7, 882–886. doi: 10.1037/1528-3542.7.4.882

Keywords: face perception, familiar face recognition, attention, visual search, eye gaze, head angle, social cognition

Citation: Visconti di Oleggio Castello M, Guntupalli JS, Yang H and Gobbini MI (2014) Facilitated detection of social cues conveyed by familiar faces. Front. Hum. Neurosci. 8:678. doi: 10.3389/fnhum.2014.00678

Received: 06 June 2014; Accepted: 13 August 2014;

Published online: 02 September 2014.

Edited by:

Aina Puce, Indiana University, USA

Reviewed by:

Joseph Allen Harris, Otto-von-Guericke Universität Magdeburg, Germany

Bozana Meinhardt-Injac, Johannes Gutenberg University Mainz, Germany

Copyright © 2014 Visconti di Oleggio Castello, Guntupalli, Yang and Gobbini. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: M. Ida Gobbini, DIMES at the Medical School, University of Bologna, Viale C. Berti-Pichat 5, 40126 Bologna, Italy e-mail: mariaida.gobbini@unibo.it; maria.i.gobbini@dartmouth.edu

† These authors have contributed equally to this work.
