Automated real-time behavioral and physiological data acquisition and display integrated with stimulus presentation for fMRI

- 1 Brain Imaging and Analysis Center, Duke University, Durham, NC, USA

- 2 Department of Radiology, Stanford University, Stanford, CA, USA

- 3 Department of Radiology, Massachusetts General Hospital, Boston, MA, USA

- 4 Department of Psychiatry, University of California Irvine, Irvine, CA, USA

Functional magnetic resonance imaging (fMRI) is based on correlating blood oxygen-level dependent (BOLD) signal fluctuations in the brain with other time-varying signals. Although the most common reference for correlation is the timing of a behavioral task performed during the scan, many other behavioral and physiological variables can also influence fMRI signals. Variations in cardiac and respiratory function in particular are known to contribute significant BOLD signal fluctuations. Variables such as skin conductance, eye movements, and other measures that may be relevant to task performance can also be correlated with BOLD signals and can therefore be used in image analysis to differentiate multiple components in complex brain activity signals. Combining real-time recording and data management of multiple behavioral and physiological signals in a way that can be routinely used with any task stimulus paradigm is a non-trivial software design problem. Here we discuss software methods that give users control of paradigm-specific audio–visual or other task stimuli combined with automated simultaneous recording of multi-channel behavioral and physiological response variables, all synchronized with sub-millisecond temporal accuracy. We also discuss the implementation and importance of real-time display feedback to ensure data quality of all recorded variables. Finally, we discuss standards and formats for storage of temporal covariate data and its integration into fMRI image analysis. These neuroinformatics methods have been adopted for behavioral task control at all sites in the Functional Biomedical Informatics Research Network (FBIRN) multi-center fMRI study.

Introduction

Functional magnetic resonance imaging (fMRI) has become a standard neuroimaging method for measuring human brain function. Performing fMRI studies typically involves the use of three different types of computer software: MRI pulse sequence software for image acquisition, behavioral software for temporal control of stimulus presentation and response recording, and image analysis software for extracting brain function signals from MR images. For most fMRI applications, use of image acquisition and image analysis software packages involves specifying a variety of important parameter options but does not otherwise involve much computer programming by the user. Behavioral control software, however, does typically require some custom programming for each application in order to specify the task the subject is to perform, to control the subject’s sensory environment, and to record the subject’s behavior while they perform the task.

For most fMRI tasks the emphasis in developing behavioral control software is on the task stimuli and on detecting task-elicited button-press responses. Tasks used for fMRI can range from the simplest resting-state paradigm (e.g., “lie still with your eyes open,” followed by a blank screen) to complex adaptive behavior tasks where the stimulus varies depending on the subject’s real-time responses. Because of the variability of specific task designs, programs such as E-Prime (Psychology Software Tools Inc.), Presentation (Neurobehavioral Systems Inc.), Cogent¹, Paradigm², and others provide a variety of different programming approaches to enable users to prepare customized sequences of visual and auditory stimuli, and to accept behavioral responses from button press and cursor movement devices. Stimulus programming is typically all user-defined and organized in a single processing stream consisting of a series of stimulus events. Response recording is designed to be driven by external events (either interrupt-triggered or via fast device polling), but the user program specifies when to expect responses during the task and how those responses should be linked to particular stimulus events. The only non-task signal typically monitored in most fMRI software programs is the timing of the beginning of MR scanner image acquisition, which is needed to ensure proper synchronization of behavioral task timing with the time series of brain images being collected.

Increasingly, however, fMRI users recognize that brain BOLD signals can be affected by many physiological processes other than the specific sensory and motor behaviors that their cognitive task is designed to elicit. For example, ongoing regular cardiac and respiratory oscillations have been shown to contribute phase-dependent fluctuations in T2*-weighted image intensity comparable to the magnitude of task-dependent signal changes (Dagli et al., 1999; Glover et al., 2000). Variations in the rate or amplitude of respiratory or cardiac processes during an fMRI scan can contribute additional large fluctuations in the apparent BOLD signal due to a combination of brain tissue motion, susceptibility effects, and variations in blood oxygenation (Kruger and Glover, 2001; Birn et al., 2006, 2008; Shmueli et al., 2007; Chang and Glover, 2009a,b; Chang et al., 2009). Other physiological processes such as fluctuations in visual behavior (e.g., eye movements and gaze location) or emotional responses (e.g., skin conductance changes or pupil dilation) may differ widely within or across scans or subjects and contribute significantly to fMRI signals. Recording multiple physiological signals during fMRI provides temporal reference time courses that can be used in a multivariate analysis to regress out different components of complex brain activity signals and help isolate specific task-dependent signals of interest. Recording multiple behavioral signals can also provide a quality control check on whether the subject was performing the task in the expected manner.

Despite broad recognition of the potential significance of physiological and behavioral signal fluctuations, most fMRI applications do not routinely record such variables because most fMRI behavioral software does not make it easy to integrate physiological signals within user-defined task stimulus programs. Modern computers are fast enough to handle even complex stimulus designs while simultaneously recording from multiple external devices, but accurate interleaving of stimulus and recording timing in real-time is non-trivial. One solution is to run separate programs simultaneously, one for controlling the behavioral task and another for recording physiological signals. Running such programs on different computers allows each to run independently but involves the extra expense of multiple computer systems and the added complexity of setting up and synchronizing different programs and later integration of data files. The logistical problem is somewhat simplified if multiple programs are run simultaneously on a single computer, but at the risk of one program affecting the timing performance of the other. Running multiple programs on the same computer depends on the operating system to time-share resources so that each appears to run independently, but interleaving multiple processing threads can interfere with the real-time accuracy of each. Using a single computer with multiple processors can reduce that interference but still involves the logistical complication of synchronizing the programs, avoiding screen display interference, and integrating recorded data files.

Here we describe novel neuroinformatics methods as implemented by the Functional Biomedical Informatics Research Network (FBIRN³) for combining accurate multi-modal physiological recordings with any behavioral task paradigm in a single computer program. The FBIRN is a multi-center research consortium designed to develop and test neuroinformatics methods and infrastructure for performing collaborative fMRI clinical research studies. As part of this development effort, the FBIRN made use of the CIGAL software package⁴ (Voyvodic, 1999) to provide automated integration of fMRI stimulus control and multi-channel behavioral recording at all its data collection sites. To ensure standardization and to enhance data quality across multiple centers, CIGAL was enhanced during the FBIRN study to enable dual video display output, with task stimuli presented on one screen and simultaneous real-time display of all recorded physiological and behavioral signals on a second screen. The format for storing recorded behavioral data was also standardized to include adequate provenance metadata and to allow compatibility with multiple fMRI analysis packages. This combination of automated data integration, real-time data monitoring, and well-documented output files produces more comprehensive behavioral data sets and has broad applicability for fMRI and other behavioral research studies.

Materials and Methods

The FBIRN is a large multi-phase project aimed at optimizing standardized methodologies for MR image acquisition, behavioral task control, clinical assessments, data analysis, and data sharing for multi-center clinical fMRI studies. The current report describes only those aspects of the study that are directly related to the control and acquisition of behavioral data during fMRI scanning; other aspects of the project will be reported elsewhere (e.g., Brown et al., 2011; Glover et al., 2012; Greve et al., 2011).

Sites

The FBIRN is a collaboration of 12 universities (Duke, Harvard, Iowa, Minnesota, New Mexico, Stanford, UC Irvine, UC Los Angeles, UC San Diego, UC San Francisco, UNC Chapel Hill, and Yale) each of which has a different configuration of MRI scanners, video and audio stimulus presentation, and behavioral response recording hardware. In the phase of the project described here (FBIRN Phase 3) data acquisition was restricted to eight MRI sites using only Siemens 3T (Iowa, Minnesota, New Mexico, UC Irvine, UCLA, UCSF) or GE 3T (Duke, UCSD) scanners. At each acquisition site the behavioral control hardware already included a Windows PC, MR-compatible video projector or goggles, audio headphones, multi-channel button response box, and a cable connection for sending scanner acquisition trigger pulses to the PC.

To standardize physiological data collection, each site used a respiratory belt transducer (Biopac, TSD201) and a finger cuff pulse-oximeter (Biopac, TSD123A), providing analog signals that were connected to a simple analog/digital (A/D) acquisition device (Measurement Computing, USB-1208FS) with a USB interface to the Windows PC. The respiratory transducer is a variable resistor, which was connected directly to the A/D device using the A/D’s 5 V supply and a 6-kΩ biasing resistor. The cardiac pulse-oximeter needed a separate power supply and amplifier (Biopac OXY100C), the analog output of which was connected directly to the A/D device. Where available, some sites connected additional behavioral response signals as A/D inputs, such as galvanic skin response (Biopac, EL507 and GSR100C), or connected eye-tracking signals via a serial input cable from a second PC running ViewPoint software (Arrington Research, Inc.) to track pupil position from an MR-compatible eye camera (MagConcepts Inc.) mounted on the scanner head coil.
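The biasing arrangement for the respiratory belt forms a simple voltage divider. The sketch below is illustrative only and assumes a topology the text does not state: the 6-kΩ resistor runs from the +5 V supply to the A/D input and the belt transducer runs from that input to ground, so the digitized voltage rises as belt resistance rises. The function names are hypothetical.

```python
SUPPLY_V = 5.0      # A/D device's +5 V supply (from the text)
BIAS_OHMS = 6000.0  # 6-kΩ biasing resistor (from the text)


def divider_voltage(transducer_ohms, supply_v=SUPPLY_V, bias_ohms=BIAS_OHMS):
    """Voltage at the A/D input, ASSUMING the bias resistor runs from +5 V to
    the input and the belt transducer runs from the input to ground."""
    return supply_v * transducer_ohms / (transducer_ohms + bias_ohms)


def transducer_ohms_from_volts(measured_v, supply_v=SUPPLY_V, bias_ohms=BIAS_OHMS):
    """Invert the divider to recover belt resistance from a digitized reading."""
    if not 0.0 <= measured_v < supply_v:
        raise ValueError("reading must lie in [0, supply) volts")
    return bias_ohms * measured_v / (supply_v - measured_v)
```

Under these assumptions a belt resistance of 6 kΩ reads as half the supply voltage (2.5 V). As a general property of this circuit, the voltage change per ohm of belt stretch is largest when the bias resistor is close to the transducer's working resistance.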

Behavioral Control Software

For the FBIRN phase 3 study all sites used the CIGAL software package (Voyvodic, 1999). CIGAL can be downloaded for free for research applications (see text footnote 4). CIGAL was chosen because it could support all of the programming needs of the study’s behavioral tasks and simultaneously provide continuous automated recording of all physiological and other behavioral input signals. CIGAL is a single C program that defines an interactive user environment and two different programming languages: a command scripting language and a real-time control language (Voyvodic, 1999). CIGAL was recently enhanced to support separate displays on multiple video monitors. The program supports a wide variety of peripheral hardware devices, including keyboard, mouse, gameport joysticks, serial ports, parallel ports, analog/digital devices (Measurement Computing, National Instruments), and network socket connections. During installation at each site, the configuration of locally available peripheral hardware options and standard preferred stimulus presentation and data acquisition settings were specified and saved using interactive dialog menus.

Task Paradigms

The FBIRN study included three different types of task paradigms. The simplest were rest tasks, in which a blank screen stimulus was displayed and the subject was instructed only to stay awake with their eyes closed. This task was used during resting-state fMRI scans and during arterial spin-labeled (ASL) perfusion scans. The second type of paradigm was an auditory-oddball (AudOddball) task (Stevens et al., 2000) in which the subject heard a series of audio tones presented every 0.5 s and was instructed to press a button when they heard an unusual tone within the series of mostly identical tones. The third paradigm was an object working-memory (ObjWM) task involving an emotional distractor component and an adaptive difficulty design. For this event-related paradigm, each task trial started with a 1-s presentation of a photograph from the International Affective Picture System (IAPS). Following a 500-ms pause the subject was presented with a static image of 2–10 separate objects randomly arranged in a 12-position grid, which they were instructed to remember. After a 2-s pause, a single object image was presented and the subject pressed a yes or no button to indicate whether the object was in the previous memory set. The interval between trials varied from 2 to 14 s.
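The ObjWM trial structure can be laid out as a list of timed events. This Python sketch uses the durations stated above; the memory-set and probe durations are not given in the text and are hypothetical placeholders, and the inter-trial interval is assumed to be drawn uniformly from the stated 2–14 s range, measured from the end of the probe.

```python
import random
from dataclasses import dataclass

IAPS_S = 1.0         # distractor photo duration (from the text)
PAUSE1_S = 0.5       # pause before the memory set (from the text)
MEMORY_S = 2.0       # HYPOTHETICAL: memory-set duration is not stated
PAUSE2_S = 2.0       # delay before the probe (from the text)
PROBE_S = 2.0        # HYPOTHETICAL: probe/response window
GRID_POSITIONS = 12  # memory-grid size (from the text)


@dataclass
class Event:
    onset: float     # seconds from task onset
    duration: float  # seconds
    kind: str


def build_trial(start, set_size, rng):
    """Return the events of one trial and the time at which the probe ends."""
    if not 2 <= set_size <= 10:
        raise ValueError("set size must be 2-10 objects")
    positions = sorted(rng.sample(range(GRID_POSITIONS), set_size))
    iaps_on = start
    memory_on = iaps_on + IAPS_S + PAUSE1_S
    probe_on = memory_on + MEMORY_S + PAUSE2_S
    events = [
        Event(iaps_on, IAPS_S, "iaps"),
        Event(memory_on, MEMORY_S, f"memory {positions}"),
        Event(probe_on, PROBE_S, "probe"),
    ]
    return events, probe_on + PROBE_S


def build_run(set_sizes, seed=0):
    """Lay out consecutive trials; set_sizes would come from the adaptive rule."""
    rng = random.Random(seed)
    events, t = [], 0.0
    for size in set_sizes:
        trial, t = build_trial(t, size, rng)
        events.extend(trial)
        t += rng.uniform(2.0, 14.0)  # ASSUMED uniform jitter within 2-14 s
    return events
```

In the real task the set size for each trial was adjusted adaptively per subject; here it is simply passed in as a list.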

Integration of stimulus presentation and behavioral recording was accomplished using the generic Showplay program for fMRI written in CIGAL’s scripting language. For each behavioral task Showplay read a user-defined paradigm input text file containing a list of parameter options and a table of task stimulus events. Execution of the task involved three automated stages, each of which was initiated by the user via graphical menus. The stages were: (1) running a series of CIGAL script modules to load stimulus files and prepare the task, (2) linking together multiple real-time program modules depending on task options to create a single real-time program, which was then compiled and executed using CIGAL’s real-time processor (Voyvodic, 1999), and (3) running a series of CIGAL script modules for saving task data in output files. Most tasks use only pre-existing program modules for all three stages and thus require no user-programming other than the paradigm input file itself. Because the FBIRN ObjWM task involved an interactive adaptive component to adjust task difficulty independently for each subject, that task included a customized data preparation script and a customized real-time task program module in order to manipulate how many objects were presented during the memory portion of each trial. A customized post-processing script module was also inserted for all the FBIRN tasks to accommodate an FBIRN-specific file naming convention and to generate an extra summary data file containing trial-by-trial performance results.
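Stage 2 can be pictured as assembling a list of real-time modules from the task options and then running the three stages in order. The following Python sketch is purely illustrative. The option names, module names, and the `prepare`/`execute`/`save` callables are hypothetical stand-ins, not Showplay's actual paradigm-file keys or script modules.

```python
from dataclasses import dataclass, field


@dataclass
class TaskOptions:
    # HYPOTHETICAL option names standing in for paradigm-file settings
    analog_inputs: bool = True
    scanner_trigger: bool = True
    eye_tracker: bool = False
    second_monitor: bool = False
    custom_modules: list = field(default_factory=list)


def link_modules(opts: TaskOptions) -> list:
    """Stage 2 sketch: pick the real-time modules to combine for one run."""
    modules = ["stimulus_events", "button_hardware", "button_response"]
    if opts.analog_inputs:
        modules.append("analog_input")
    if opts.scanner_trigger:
        modules.append("scanner_trigger")
    if opts.eye_tracker:
        modules.append("eye_tracker")
    if opts.second_monitor:
        modules.append("feedback_display")
    modules.extend(opts.custom_modules)  # e.g. a task-specific adaptive module
    return modules


def run_task(opts, prepare, execute, save):
    """Run the three user-initiated stages in order."""
    context = prepare(opts)                          # stage 1: load stimuli
    results = execute(link_modules(opts), context)   # stage 2: combined program
    return save(results)                             # stage 3: write outputs
```

Because every task goes through the same three stages, a task such as ObjWM only needs to add its own entries to `custom_modules` rather than rewrite the recording logic.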

The real-time programs created at run-time for execution of the behavioral task itself used standard generic Showplay modules for all stimulus and response processing, plus one extra run module for adaptation of the ObjWM task. CIGAL’s “real-time” command is a single-threaded software event processor that allows multiple stimulus and response components of the task to be programmed independently and run in parallel with accurate real-time control. The software processor automatically interleaves execution of events in each program module so that they all occur at the designated real-time moment. CIGAL thus provides the illusion of parallel processing via efficient real-time multi-tasking within a single serial process. For the FBIRN study the real-time programs for all tasks at each site included up to seven independent modules running in parallel: (1) the main stimulus event module, (2) a button-press hardware-specific module, (3) a generic button response module, (4) an analog data input module recording physiological signals at 100 Hz using the Measurement Computing USB device in its automatic sampling mode, with all accumulated data transferred from the USB device to CIGAL every 100 ms, (5) a scanner trigger acquisition module (included at most sites), (6) an eye-tracker input module recording eye position and pupil diameter at 30 Hz (included at one site), and (7) a behavioral data feedback module (included when a second monitor was available), providing a continuous oscilloscope-like display of scanner pulses, button presses, task accuracy, and all other behavioral input signals. Communication across parallel real-time modules was mediated by common data variables. Where necessary, individual modules specified short series of events that could not be interrupted, to ensure accurate inter-module synchronization.
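The interleaving idea can be illustrated with a cooperative, single-threaded event loop. This is a minimal Python sketch, not CIGAL’s real-time language: the module functions, the shared dictionary standing in for common data variables, and the simulated clock are all assumptions. Each module is a generator that yields the absolute time of its next event, and the loop always resumes whichever module is due soonest. Code between two yields in one module runs without interruption, which is one way to realize the uninterruptible event series described above.

```python
import heapq
from itertools import count


def run_interleaved(modules, end_time):
    """Cooperatively run generator-based modules on one thread.

    Each module yields the absolute time (s) of its next event and is resumed
    with gen.send(t) when that time arrives. The clock here is simulated; a
    real loop would wait on a hardware timer until time t.
    """
    queue, order = [], count()      # order breaks ties between equal times
    for gen in modules:
        t = next(gen, None)         # prime the module: first requested time
        if t is not None:
            heapq.heappush(queue, (t, next(order), gen))
    while queue:
        t, _, gen = heapq.heappop(queue)
        if t > end_time:
            break
        try:
            # Everything the module does before its next yield runs
            # uninterrupted, so a short event series stays atomic.
            t_next = gen.send(t)
        except StopIteration:
            continue                # module has no further events
        heapq.heappush(queue, (t_next, next(order), gen))


def stimulus_module(log, onsets):
    for onset in onsets:
        now = yield onset
        log.append((now, "stimulus"))


def analog_module(log, shared, rate_hz=100, transfer_s=0.1):
    k = 1
    while True:
        now = yield k * transfer_s
        shared["samples"] += round(rate_hz * transfer_s)  # buffered since last transfer
        log.append((now, "analog"))
        k += 1


def trigger_module(log, tr_s=2.0):
    k = 0
    while True:
        now = yield k * tr_s
        log.append((now, "trigger"))
        k += 1


log, shared = [], {"samples": 0}  # shared dict stands in for common variables
run_interleaved([stimulus_module(log, [0.5, 1.5]),
                 analog_module(log, shared),
                 trigger_module(log)], end_time=2.0)
```

With a 100 Hz sampling rate and a 100-ms transfer interval, each analog transfer carries 10 samples, so 2 s of simulated task yields 200 samples alongside two stimulus events and two trigger events. Any lateness in a real system arises from the wait that this sketch replaces with a simulated clock.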

Behavioral Data Output

For each task paradigm CIGAL automatically generated multiple output files in different formats to accommodate different analysis goals. The type and format of output files produced depended on user-defined settings in the paradigm input files. The standard output files included:

(1) a data archive file in CIGAL’s custom “Pdigm” format containing all recorded data in separate binary records, a text list of all software parameter settings including all software version numbers, a binary run-time log table describing the timing of all important stimulus and I/O events with 20 μs accuracy, a copy of the paradigm input text file, a copy of the Showplay script and run-time programs executed for that task, a summary of the status of the current hardware configuration, and copies of most of the other output files listed below. The Pdigm files included all data necessary to generate all other task output files. An optional associated XML text header file describes the contents of the Pdigm archive.

(2) an XML text format Events file (Gadde et al., 2011) describing every stimulus and associated button-press response, along with user-defined coding of different types of stimulus events.

(3) multiple physiological and eye-tracking data files in multi-column text format where each line included a time-stamp relative to task onset and all digitized analog values recorded at that time.

(4) trial-by-trial summary text files describing the conditions for each trial plus subject response values in table format appropriate for direct input to spreadsheet or database programs.

(5) multiple timing files in three-column text format used by the FSL image processing package (Smith et al., 2004) describing the timing of individual trials grouped by task conditions.

(6) a stimulus–response summary text file in customized format to include FBIRN-specific parameters and customized task performance tables. This file was generated by an FBIRN-defined optional script module run during the post-processing sequence.
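FSL’s custom three-column timing format lists, on each line, an event onset and a duration (both in seconds, relative to the start of the fMRI time series) followed by a weight. A minimal sketch of grouping trials by condition into such files follows. The trial dictionary keys and the file naming scheme are assumptions, not the FBIRN convention.

```python
from collections import defaultdict
from pathlib import Path


def fsl_3col_text(trials):
    """Group trials by condition and format FSL three-column timing text.

    Each trial is a dict with 'condition', 'onset' and 'duration' (seconds)
    and an optional 'weight' (default 1). Returns {condition: file_text}.
    """
    groups = defaultdict(list)
    for trial in trials:
        groups[trial["condition"]].append(trial)
    texts = {}
    for cond, rows in groups.items():
        rows.sort(key=lambda r: r["onset"])
        texts[cond] = "".join(
            f"{r['onset']:.3f}\t{r['duration']:.3f}\t{r.get('weight', 1.0):g}\n"
            for r in rows
        )
    return texts


def write_fsl_3col(trials, out_dir, prefix):
    """Write one timing file per condition; the naming scheme is HYPOTHETICAL."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for cond, text in fsl_3col_text(trials).items():
        (out / f"{prefix}_{cond}.txt").write_text(text)
```

Keeping formatting separate from file writing makes the text easy to check, and other output formats can be generated from the same trial list.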

In addition to these files created for each task run, CIGAL also generated a session summary text file listing all tasks run, the computer used, the person operating it, and any comments entered for each run during the session. Finally, a “keylog” text output file was generated and stored for each session, which listed every keyboard and mouse click event performed by the computer user while running CIGAL. All output files for one session were stored together within a single data directory. Collectively they provided a comprehensive record of all aspects of the behavioral session.

Subjects

The FBIRN Phase 3 study involved both patients with schizophrenia and healthy control subjects of both sexes between 19 and 60 years of age. All subjects provided local IRB-approved informed consent for research and for sharing of data results across all FBIRN sites.

MRI Scanning

Imaging sessions at each site were 2 h long and followed a standardized scanning and behavioral task protocol across all sites. The fMRI scans used T2*-weighted gradient-echo EPI (TR/TE/flip angle = 2000 ms/30 ms/77°, 64 × 64 × 32 voxels, FOV = 22 cm); there were 7 runs of the ObjWM fMRI task (200 volumes), 2 resting-state fMRI scans (162 and 30 volumes), and 2 runs of the AudOddball task (140 volumes).
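As a worked check of the protocol arithmetic, each run lasts volumes × TR. Reading the volume counts above as per-run values, ObjWM contributes 7 × 400 s = 2800 s, the resting scans 324 s + 60 s, and AudOddball 2 × 280 s = 560 s, for 3744 s (62.4 min) of EPI within the 2-h session. The trigger check below assumes exactly one scanner trigger per acquired volume; whether dummy scans also emit pulses depends on the scanner, and the tolerance is an arbitrary illustrative value.

```python
TR_S = 2.0  # EPI repetition time: 2000 ms

# (runs, volumes per run), reading the protocol's volume counts as per-run values
PROTOCOL = {
    "ObjWM": (7, 200),
    "rest_1": (1, 162),
    "rest_2": (1, 30),
    "AudOddball": (2, 140),
}


def run_seconds(volumes, tr_s=TR_S):
    """Duration of one run: volumes x TR."""
    return volumes * tr_s


def total_epi_seconds(protocol=PROTOCOL, tr_s=TR_S):
    """Total EPI acquisition time across all runs in the protocol."""
    return sum(n_runs * run_seconds(vols, tr_s) for n_runs, vols in protocol.values())


def triggers_look_right(trigger_times, volumes, tr_s=TR_S, tol_s=0.05):
    """ASSUMES one scanner trigger per acquired volume, spaced one TR apart.
    tol_s is an arbitrary illustrative tolerance."""
    if len(trigger_times) != volumes:
        return False
    gaps = (b - a for a, b in zip(trigger_times, trigger_times[1:]))
    return all(abs(g - tr_s) <= tol_s for g in gaps)
```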

Results

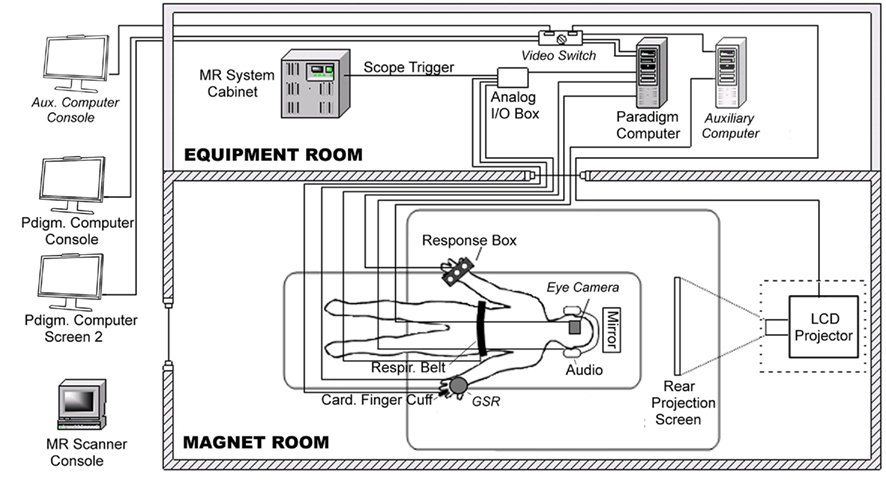

Because behavioral results had been variable in earlier study phases, the FBIRN project decided that all eight MRI acquisition sites in its Phase 3 multi-center fMRI study should purchase and install similar behavioral and physiological monitoring equipment. Although the starting configuration for scanner, fMRI task computer, and associated equipment differed across sites, all sites were able to install the specified respiratory and cardiac analog signal transducers, cardiac amplifier with power supply, and simple USB analog/digital converter device by following simple on-line instructions. The most complicated aspect of the hardware setup involved connecting analog input cables from the physiology transducers to the USB analog/digital converter device, so for simplicity one FBIRN site (Stanford) created a standard cable interface box for all sites with BNC connectors for all inputs. To enable real-time monitoring of behavioral performance, all sites attached dual video monitors at the operator’s console for their paradigm computer; due to local differences in computer hardware, video boards, and cable arrangements, the dual video configurations varied somewhat across sites. In addition to these hardware changes, all sites also successfully downloaded and installed the CIGAL software, using its interactive dialog interface to adjust the software’s hardware interface options to work with their local hardware configurations. Figure 1 illustrates the variety and connectivity of devices involved in behavioral control in this study.

Figure 1. Diagram of behavioral hardware connections. fMRI data acquisition at all FBIRN Phase 3 data acquisition sites involved a paradigm control computer (Windows OS) with dual video monitors, a video projector, a scanner trigger cable, a manual response button box, a respiratory belt transducer, a cardiac finger pulse-oximeter and associated Biopac amplifier, and a Measurement Computing Analog/Digital interface USB device. One site (site 3 – Duke) also included an MR-compatible eye-tracker camera, an auxiliary computer with eye-tracking software, a video switch, and galvanic skin resistance electrodes and Biopac amplifier. The diagram illustrates all equipment as configured at Duke; components unique to that site are shaded lighter gray and labeled in italics.

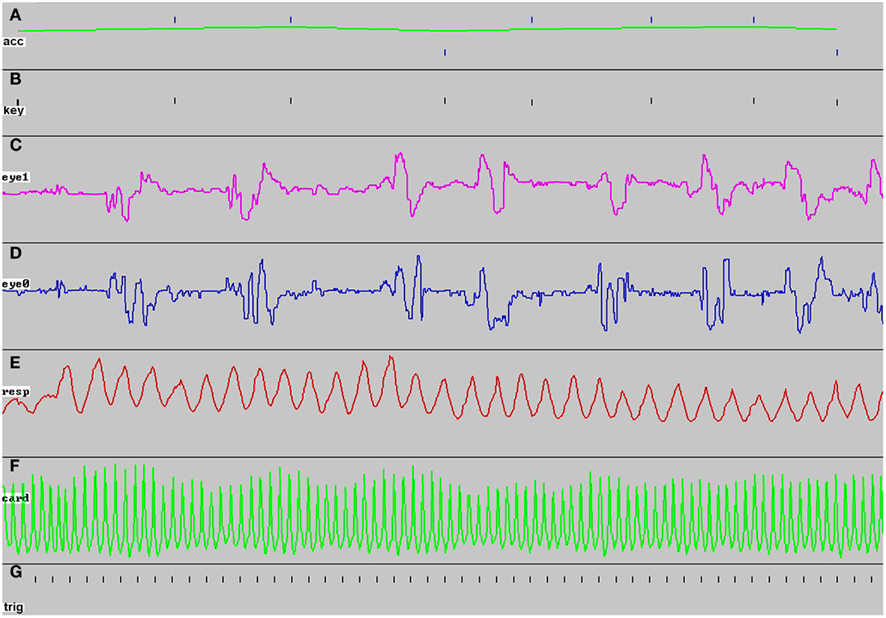

To date, the FBIRN Phase 3 study has scanned 330 subjects using the cardiac and respiratory data recording setup. For most subjects at each site (89% of subjects across all sites) the dual video screens were used to monitor behavioral recording during each task paradigm. Figure 2 shows an example of CIGAL’s oscilloscope-like real-time behavioral performance display seen on one computer monitor during the ObjWM task while the stimulus was simultaneously displayed on the other monitor. The behavioral data appeared as a continuous time sweep from left to right across the screen, updated at up to 100 Hz depending on the user-controlled sweep speed setting. The display automatically paused whenever the task expected a button-press response from the subject to minimize the possibility of delaying detection of the response event; after the response (or a 2-s timeout) the display was automatically filled in and continued in real-time. Behavioral feedback during fMRI allowed problems with either equipment function or subject behavior to be detected quickly. When serious task performance problems were detected (e.g., no responses or wrong buttons being used) the scan could be aborted immediately to avoid spending valuable scanner time collecting low quality data. Less serious task performance problems (e.g., inattention or task-correlated respiration patterns) or equipment problems (e.g., loose transducers or incorrect parameter settings) could be identified and diagnosed during the scan and then steps could often be taken quickly between scans to correct the problem.
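The pause-and-fill-in behavior of the feedback display can be sketched as a small buffering class. This is an illustrative assumption about how such a display might be organized, not CIGAL code. The `draw` callback is hypothetical, and the 2-s timeout follows the text.

```python
class SweepBuffer:
    """Hold display samples while a subject response is expected.

    draw(t, values) is a HYPOTHETICAL callback that renders one time point of
    the sweep. While paused, samples are queued instead of drawn; on a
    response or after timeout_s they are drawn in one burst.
    """

    def __init__(self, draw, timeout_s=2.0):
        self.draw = draw
        self.timeout_s = timeout_s
        self.pending = []
        self.paused_at = None

    def expect_response(self, now):
        """Called by the task when a button press becomes possible."""
        self.paused_at = now

    def add_sample(self, t, values):
        if self.paused_at is None:
            self.draw(t, values)
            return
        self.pending.append((t, values))
        if t - self.paused_at >= self.timeout_s:
            self._resume()          # no response: stop waiting

    def response_received(self):
        if self.paused_at is not None:
            self._resume()

    def _resume(self):
        self.paused_at = None
        for t, values in self.pending:
            self.draw(t, values)    # fill in the skipped part of the sweep
        self.pending.clear()
```

Deferring drawing keeps display work out of the interval in which response timing matters, while no recorded samples are lost from the display.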

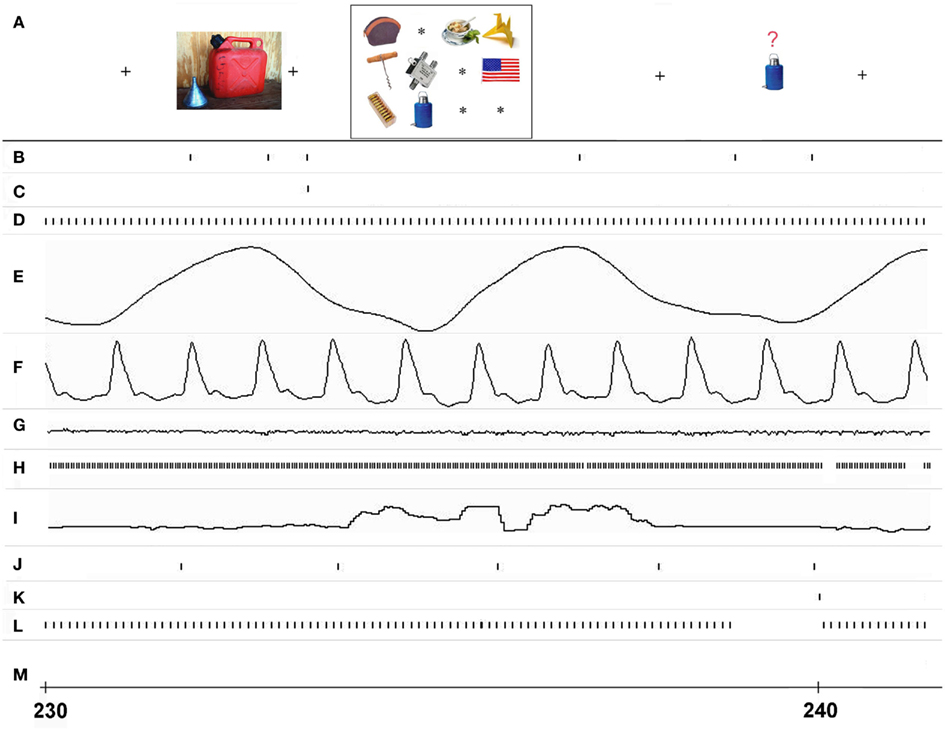

Figure 2. Example of real-time behavioral performance display seen on screen 2 during ObjWM task. The image shows 100 s of the task display for a subject scanned at site 3 just before the screen was erased to begin a new sweep [the bold letters (A–G) were added later]. During the scan the display appeared as a continuous sweep in real-time, except for ~1 s pauses each time a subject response was expected. At the start of each task, the number of sweep panels displayed was determined and scaled automatically depending on the local hardware and software configuration being used for that scan. (A) Task performance appeared as a tick mark each time the subject was asked to respond, with the tick position indicating correct (upper ticks) or incorrect (lower ticks) responses; the green line indicated average performance over the most recent five trials, (B) every response key press appeared as a tick mark, whether expected by the task or not, (C) vertical eye position recorded from a serial input signal sent from a separate eye-tracking computer, (D) horizontal eye position, (E) respiration recorded from an elastic belt transducer connected directly as an analog input, (F) cardiac pulse-oximeter signal recorded from a Biopac amplifier as an analog input, (G) the scanner trigger pulse was recorded once per TR interval throughout each scan.

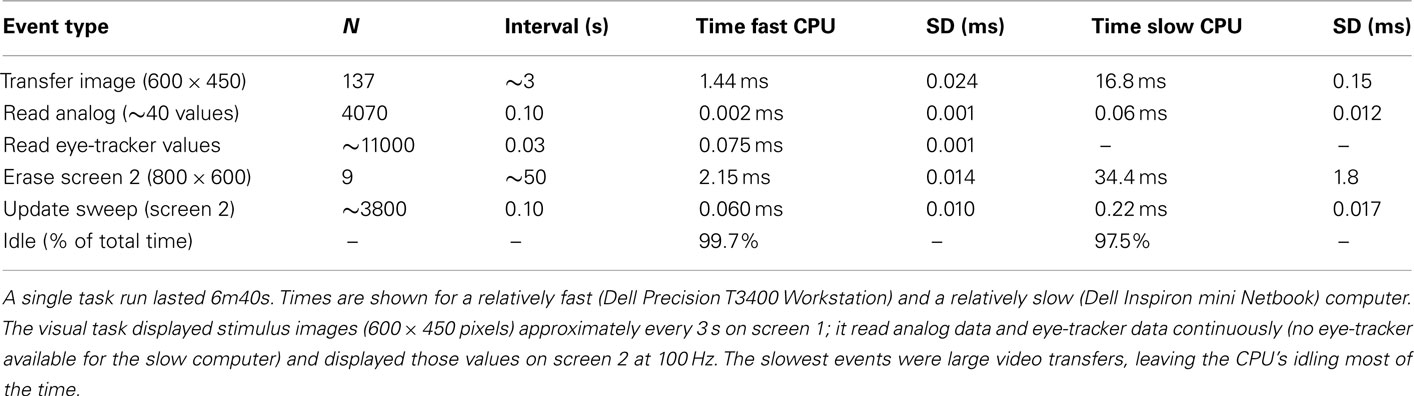

We measured whether adding automated interleaving of physiological recording and real-time feedback displays required enough computer processing resources to interfere with the processing necessary for task stimulus presentation or response detection. These measurements were based on extracting the timing of real-time events from the run logs generated by CIGAL, which recorded the exact time of occurrence of all stimulus and response events with 20 μs resolution (Figure 3). We calculated how much computer time was necessary for behavioral recording and display by running repeated test scans using the ObjWM task in which both the beginning and end of I/O events were recorded (normally CIGAL records only the start of I/O events). The results for the relatively fast Windows computer (Dell Precision T3400 with NVIDIA Quadro FX570 video) normally used for scanning at site 3 (Duke) are shown in Table 1. Table 1 also shows processing times for a relatively slow Windows computer (Dell Inspiron Netbook), to cover a wide range of processor speeds. Overall, we found that on a fast computer the total amount of computer time required to process all behavioral recording events was approximately 0.15% of the total time for each task, and that stimulus presentation processing and I/O for the FBIRN ObjWM task accounted for approximately 0.20% of total time. For the remaining >99% of the time the computer was idle, waiting between scheduled I/O events. Even on a simple Netbook, which is slower than any computer used at FBIRN sites and slower than any computer likely to be used for real data recording, interleaved processing of all stimulus and response events was still fast enough that 97% of the computer’s time was spent idling between events.
Running similar CIGAL performance tests on a non-FBIRN animated movie task on the fast scanner paradigm computer at site 3, we found that even when the stimulus involved continuously displaying a new image (720 × 480 pixels) at 30 frames/s, the processor was still only busy 16% of the time.
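Given a log with a start and end timestamp for each I/O event, as in the special test runs described above, the busy and idle percentages can be computed along the following lines. The log layout and function names are hypothetical, since CIGAL’s actual run-log format is not described here. Overlaps are merged defensively so that no time is counted twice.

```python
def merged_length(intervals):
    """Total time covered by (start, end) intervals, merging any overlaps."""
    total, cur_start, cur_end = 0.0, None, None
    for start, end in sorted(intervals):
        if cur_end is None or start > cur_end:
            if cur_end is not None:
                total += cur_end - cur_start
            cur_start, cur_end = start, end
        else:
            cur_end = max(cur_end, end)
    if cur_end is not None:
        total += cur_end - cur_start
    return total


def busy_fraction(intervals, task_start, task_end):
    """Fraction of the task window during which logged I/O was in progress."""
    clipped = [(max(s, task_start), min(e, task_end))
               for s, e in intervals if e > task_start and s < task_end]
    return merged_length(clipped) / (task_end - task_start)


def busy_by_stream(events, task_start, task_end):
    """events: iterable of (stream_name, start, end) tuples."""
    streams = {}
    for name, s, e in events:
        streams.setdefault(name, []).append((s, e))
    return {name: busy_fraction(iv, task_start, task_end)
            for name, iv in streams.items()}
```

Idle time is then simply 1 minus the summed busy fraction.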

Figure 3. Real-time event interleaving during a single trial of the ObjWM task. Timing of I/O events and associated data in different processing streams is shown for approximately 11 s starting at 230 s, as recorded at site 3. (A) The stimulus events as displayed on screen 1 and seen by the subject (time positions are approximate), (B) stimulus image I/O events for screen 1, (C) whole display erase events for screen 1, (D) analog data input events from USB A/D device, (E) respiratory data analog signal, (F) cardiac data analog signal, (G) galvanic skin resistance analog data signal, (H) eye-tracker data input events from serial port, (I) vertical eye position signal, (J) MR scanner trigger recorded each TR interval via serial port, (K) subject button-press input from serial USB port, (L) sweep display events for all channels to screen 2, (M) whole display erase events for screen 2.

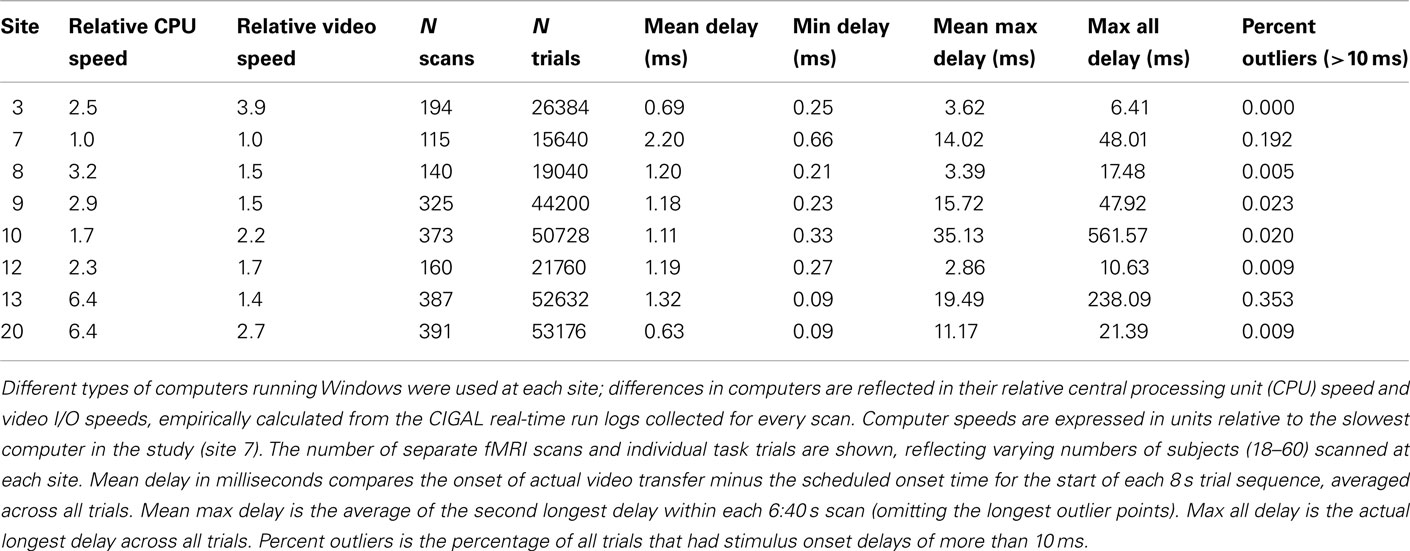

To test directly whether interleaving continuous behavioral recording interfered with the timing accuracy of stimulus presentation, we compared the time at which each visual stimulus was actually transferred to video output during the ObjWM task with the time at which it was scheduled to be displayed. The results for all scans at every site are summarized in Table 2. Stimulus timing delays varied depending on computer and video board processor speeds. For the site with the fastest computer and video hardware, the average stimulus delay was less than 1 ms and the maximum delay was 6 ms across all 39 subjects scanned. The site using the slowest computer and video hardware had an average stimulus delay of 2.2 ms, with 99.8% of all delays less than 10 ms relative to the scheduled time. Occasional outlier delays (>10 ms) occurred at most sites and were usually less than 50 ms. Such variability is expected because standard Windows computers are not designed for precise real-time control and all programs are interrupted regularly for operating system events. Our timing data showed that some sites had rare stimulus delays lasting hundreds of milliseconds, which is longer than normal operating system interrupts and was probably due either to another program running concurrently or to a network delay briefly hanging the operating system. Determining the source of any particular delay is difficult because CIGAL’s logs do not indicate what other Windows programs were running during the task. However, because CIGAL automatically records the actual time of every stimulus event and reports those times in its timing output files, rare significant variations in task timing were easily detected in post-processing and those trials could be omitted from analysis.
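The comparison of scheduled and actual output times reduces to a few lines. This sketch assumes integer microsecond timestamps, which suit a log with 20 μs resolution, and uses the 10 ms threshold quoted above. The function name and return keys are hypothetical. Flagged event indices are the candidates for exclusion from analysis.

```python
from statistics import mean


def delay_summary(scheduled_us, actual_us, outlier_ms=10.0):
    """Summarize stimulus output delays from logged timestamps (microseconds)."""
    if len(scheduled_us) != len(actual_us) or not scheduled_us:
        raise ValueError("need matching, non-empty timestamp lists")
    delays_ms = [(a - s) / 1000.0 for s, a in zip(scheduled_us, actual_us)]
    outliers = [i for i, d in enumerate(delays_ms) if d > outlier_ms]
    return {
        "mean_ms": mean(delays_ms),
        "max_ms": max(delays_ms),
        "frac_within": 1.0 - len(outliers) / len(delays_ms),
        "outlier_events": outliers,  # candidates to drop from analysis
    }
```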

Behavioral data acquired during MRI scanning (ObjWM, AudOddball, and resting scans) were stored in multiple types of output files (see Materials and Methods). The run-time log, input and run-time parameter settings, and all raw data values (in original data units) were stored in CIGAL’s custom “Pdigm” archive format file. All other output files were generated from data stored in the Pdigm archive files, which also provided all the data for the current task performance analyses. The archives were also very valuable during the course of the study whenever a question or problem arose concerning a particular data set, because the parameter and log records contained enough information to reconstruct most aspects of the behavioral acquisition process. For interoperability with the various software approaches used to analyze the FBIRN data, CIGAL automatically generated behavioral task timing files in XML format, FSL “schedule” file format, text matrix format, and text summary formats to accommodate, respectively, interpretation using Duke’s custom Eventstats program, FSL’s FEAT scripts, Excel or database tables, and human readers. Although these output files contained largely redundant information, having each different format proved very useful for allowing different members of the multi-center collaboration to carry out their preferred form of analysis without needing to write additional code to reformat the behavioral data. The fact that all other output files were created from data in the Pdigm archive also proved useful partway through the study, when a coding error was discovered in some task input files: once the error was identified, CIGAL was used to read the archive files and automatically regenerate corrected versions of all output files in the other formats.
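The benefit of deriving every output from one archive can be shown with a small sketch. The dictionary layout of `archive` and the generator functions are hypothetical stand-ins, since the binary Pdigm format is not described here. The point is that a coding correction is applied once, to a copy of the archived events, and every derived format is rebuilt from that same corrected source while the archive itself stays untouched.

```python
def regenerate(archive, generators, code_fix=None):
    """Rebuild every derived output from archived events.

    archive: {'events': [{'onset': float, 'code': str, ...}, ...]} (HYPOTHETICAL layout)
    generators: {output_name: function(events) -> output}
    code_fix: optional {wrong_code: corrected_code} mapping
    """
    events = [dict(e) for e in archive["events"]]  # copy: archive stays untouched
    if code_fix:
        for e in events:
            e["code"] = code_fix.get(e["code"], e["code"])
    return {name: make(events) for name, make in generators.items()}


def as_table(events):
    """Example derived format: tab-separated onset and event code."""
    return "".join(f"{e['onset']:.3f}\t{e['code']}\n" for e in events)


def count_by_code(events):
    """Example derived summary: number of events per code."""
    counts = {}
    for e in events:
        counts[e["code"]] = counts.get(e["code"], 0) + 1
    return counts
```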

Discussion

As fMRI matures, there is growing recognition of the importance of multiple aspects of the subject’s behavior beyond simply performing a stimulus-driven overt response task. Changes in task performance levels can indicate variations in the degree of functional engagement, which can in turn affect the quality of the fMRI BOLD response. Other variables, such as cardiac and respiratory oscillations, can generate relatively large fluctuations in observed BOLD signals. Both phase-dependent pulsation and breathing-cycle effects and slower amplitude modulation across many cycles contribute to brain fMRI signals in different ways (Dagli et al., 1999; Kruger and Glover, 2001; Shmueli et al., 2007; Wise et al., 2007; Birn et al., 2008; Chang et al., 2009). Although these signal sources can be partially compensated by frequency filtering during post-processing, the complexity of these physiological processes requires that they be explicitly recorded during MRI scanning if they are to be fully integrated into image analysis procedures. As the emphasis in functional imaging moves increasingly toward analysis of more subtle task effects or toward resting-state connectivity analyses, the importance of recording and properly removing physiological signals becomes even more apparent.
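One established way to turn recorded cardiac timing into phase-dependent nuisance regressors is RETROICOR (Glover et al., 2000). The sketch below covers only the cardiac terms. It assumes R-peak times have already been detected from the recorded pulse or ECG trace, and the function names are ours. Each acquisition time is assigned a phase φ = 2π(t − t₁)/(t₂ − t₁) between the surrounding peaks t₁ and t₂, and low-order Fourier terms of φ become regressors.

```python
"""RETROICOR-style cardiac regressors (after Glover et al., 2000).

Sketch only: assumes R-peak times were already detected; respiratory terms
are omitted. Function names are illustrative.
"""
import bisect
import math


def cardiac_phase(t, peak_times):
    """Phase in [0, 2*pi) of time t, defined by the last peak at or before t
    and the next peak after it (peak_times must be sorted)."""
    i = bisect.bisect_right(peak_times, t)
    if i == 0 or i == len(peak_times):
        raise ValueError("t must lie between the first and last detected peak")
    t1, t2 = peak_times[i - 1], peak_times[i]
    return 2 * math.pi * (t - t1) / (t2 - t1)


def retroicor_cardiac(acq_times, peak_times, order=2):
    """One row per acquisition time: [cos(phi), sin(phi), ..., cos(M*phi), sin(M*phi)]."""
    rows = []
    for t in acq_times:
        phi = cardiac_phase(t, peak_times)
        row = []
        for m in range(1, order + 1):
            row.extend([math.cos(m * phi), math.sin(m * phi)])
        rows.append(row)
    return rows
```

In practice the acquisition times would be per-slice times taken from the synchronized scanner trigger record, and the rows would enter the image analysis as nuisance covariates.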

For simple resting-state functional imaging, simultaneous recording of cardiac and respiratory physiology is quite straightforward, requiring only accurate synchronization with the timing of MR image acquisition. For task-dependent fMRI, however, accurately recording multiple streams of physiological and other behavioral response data, synchronized with both image acquisition and the presentation of task stimuli, is considerably more complicated. Each new fMRI study generally involves creating a new stimulus paradigm, which requires some degree of task software programming. Users designing new tasks focus on programming the stimulus sequence and on handling overt task-elicited responses. They are unlikely to want to worry as well about how to record physiological or other behavioral inputs, such as eye-tracking data, or about how stimulus and response processing may interfere with each other.

The solution presented here integrates stimulus presentation with accurate, highly automated response recording and real-time monitoring of both stimuli and responses for continuous data quality assessment. Behavioral task programming is simplified because CIGAL’s real-time processor allows any number of data processing streams to be written independently, linked together and compiled at run-time, and then automatically interleaved during execution so that they run in parallel in real time. Pre-existing program modules handle a wide variety of stimulus sequences and all supported data input devices. For most simple tasks, users need to do no programming beyond preparing their specific stimulus files and the paradigm input file that lists the stimulus sequence and sets a few global parameters. For more complex tasks that involve response-dependent stimulus modification, the user can modify an existing module or add a new program module. Real-time processing modules are written in CIGAL’s custom real-time programming language (Voyvodic, 1999). Because the modules run independently in parallel, a user can, if necessary, completely rewrite a stimulus presentation module without adversely affecting physiological recording or real-time feedback monitoring.
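The idea of independently written streams that are interleaved at run time can be illustrated with Python generators and a due-time priority queue. This is not CIGAL’s real-time language or scheduler. It is a hypothetical sketch that uses simulated times rather than a hardware clock. Each stream yields the time at which it next wants to run, and the scheduler always resumes whichever stream is due earliest.

```python
"""Cooperative interleaving of independent processing streams.

Hypothetical sketch (not CIGAL): times are simulated seconds, and stream and
function names are illustrative.
"""
import heapq
import itertools


def periodic(period_s, start_s=0.0):
    """A stream that runs every period_s seconds (e.g. an A/D block read)."""
    due = start_s
    while True:
        now = yield due                  # wait until due; scheduler sends "now"
        # ... one short unit of work at `now` would happen here ...
        due = now + period_s


def stimulus_sequence(onsets_s):
    """A stream that runs once at each scheduled stimulus onset, then finishes."""
    for onset in onsets_s:
        now = yield onset                # wait until this onset is due
        # ... present the stimulus at `now` ...


def run_streams(streams, until_s):
    """Resume whichever stream is due earliest; return [(time, stream_name), ...]."""
    order = itertools.count()            # tie-breaker so heapq never compares generators
    heap = [(next(gen), next(order), name, gen) for name, gen in streams.items()]
    heapq.heapify(heap)
    log = []
    while heap:
        now, _, name, gen = heapq.heappop(heap)
        if now > until_s:
            break
        log.append((now, name))
        try:
            due = gen.send(now)          # stream does its work, reports next due time
        except StopIteration:
            continue                     # stream has finished
        heapq.heappush(heap, (due, next(order), name, gen))
    return log
```

Because each stream only states when it next needs to run, a stimulus stream can be rewritten without touching the recording stream, which mirrors the module independence described above.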

Temporal accuracy of event timing is limited by how long it takes to complete the slowest events in each separate processing stream, which tend to be the stimulus video display events. Video I/O speeds vary greatly across video hardware boards and account for most of the variability in timing accuracy across sites seen in Table 2. The CPUs and peripheral recording devices, such as the simple USB analog/digital (A/D) converter used here, easily handled all of the computational and data input demands of every task tested. Using the A/D converter in its asynchronous analog scanning mode allows up to eight analog channels to be recorded continuously at relatively high rates (up to 12 kHz), unaffected by events occurring in any other processing stream. Blocks of analog data need only be transferred periodically to computer memory at a rate convenient for the real-time feedback display (typically 10 Hz).
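The periodic block transfer can be sketched as a producer that appends samples continuously and a consumer that drains whole blocks at the display rate. The sketch does not model the USB device’s actual driver interface. The class and function names, the example 1 kHz per-channel rate, and the choice to size the buffer at two blocks are hypothetical. We also do not assume whether the 12 kHz limit applies per channel or in aggregate.

```python
"""Block transfer of continuously sampled A/D data to memory.

Hypothetical sketch: rates, names, and buffer sizing are illustrative and do
not model any particular device driver.
"""
from collections import deque


def samples_per_block(rate_hz_per_channel, n_channels, transfer_hz):
    """Samples (all channels interleaved) that accumulate between two transfers."""
    total = rate_hz_per_channel * n_channels / transfer_hz
    if total != int(total):
        raise ValueError("choose rates so each block holds a whole number of samples")
    return int(total)


class ScanBuffer:
    """Fixed-size FIFO standing in for the device scan buffer: the acquisition
    side appends samples, and the display side drains everything accumulated."""

    def __init__(self, capacity):
        self.buf = deque(maxlen=capacity)   # oldest samples are discarded on overflow
        self.dropped = 0

    def write(self, samples):
        for s in samples:
            if len(self.buf) == self.buf.maxlen:
                self.dropped += 1           # count overwritten samples
            self.buf.append(s)

    def drain(self):
        block = list(self.buf)
        self.buf.clear()
        return block
```

With 8 channels at a hypothetical 1 kHz each and 10 Hz transfers, each block holds 800 samples. A two-block buffer (1600 samples) tolerates one late transfer without loss, and the `dropped` counter makes any overflow visible.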

Given the processing speed of current personal computers, a single program can easily accommodate all the stimulus and behavioral data recording requirements of most fMRI studies. Overall performance is limited by the computer’s ability to keep up with the demands of the stimulus paradigm. In this respect, the FBIRN ObjWM task provides a fairly typical example of fMRI task timing. With stimulus images appearing at approximately 1 s intervals, it is not particularly demanding of computer power, and, as our performance analysis demonstrated, standard PCs can accommodate all of its stimulus and recording operations using only approximately 1% of the available processing capacity. Even a task with an I/O-intensive stimulus, such as a full-frame movie running at 30 frames/s, can be run on a fast dual-core PC using only 16% of one processor’s time. All events involved in behavioral monitoring and the real-time feedback display are fast enough that their impact on the timing accuracy of any particular stimulus is in the sub-millisecond range.
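As a rough worked check of the movie example, assuming the 16% figure reflects CPU time spread evenly across frames, the per-frame budget and usage are approximately:

```latex
\Delta t_{\text{frame}} = \frac{1}{30\ \text{frames/s}} \approx 33.3\ \text{ms},
\qquad
t_{\text{CPU}} \approx 0.16 \times 33.3\ \text{ms} \approx 5.3\ \text{ms per frame}
```

This leaves roughly 28 ms of each frame interval for other processing streams, consistent with the statement that monitoring and feedback events do not measurably delay stimuli.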

However fast the software and hardware, there will inevitably be task designs that exceed the available performance capabilities. Because CIGAL records both the scheduled time and the actual presentation time of every stimulus, a task that exceeds a particular computer’s processing capabilities can be readily detected. CIGAL also provides a performance testing option that users can select to generate a summary of timing accuracy for their task on their particular computer system. When a task exceeds the hardware capabilities, either the task can be modified or the computer can be upgraded to meet the stimulus performance demand. Our multi-site timing data show that there is considerable variability in standard PC performance characteristics, and that high-end computers with fast video boards and dual processors can provide very accurate stimulus timing.
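One simple heuristic for separating a task that exceeds the hardware from isolated operating system stalls is to check whether typical delays grow over the run. This heuristic is ours, not a description of CIGAL’s performance testing option. The thresholds are illustrative. Medians of the first and last quarters of the run are compared so that a single long outlier is not mistaken for a trend.

```python
"""Distinguish sustained overload from isolated timing outliers.

Hypothetical heuristic: thresholds and labels are illustrative assumptions,
not CIGAL's performance-test output.
"""
from statistics import median


def diagnose_timing(delays_ms, growth_limit_ms=5.0, outlier_ms=10.0):
    """Classify one run's stimulus delays, given in scheduled-time order.

    An overloaded task falls progressively behind, so typical late-run delays
    exceed early-run delays; isolated OS or network stalls appear as a few
    large delays on an otherwise flat baseline.
    """
    if len(delays_ms) < 4:
        raise ValueError("need at least four events")
    k = len(delays_ms) // 4
    growth = median(delays_ms[-k:]) - median(delays_ms[:k])
    if growth > growth_limit_ms:
        return "overload"
    if any(d > outlier_ms for d in delays_ms):
        return "isolated outliers"
    return "ok"
```

A run classified as "overload" suggests modifying the task or upgrading the computer, whereas "isolated outliers" suggests excluding only the affected trials.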

As more types of behavioral data are recorded during MRI scanning, the question of how best to organize and store behavioral data files becomes increasingly important. There are as yet no generally accepted standard file formats for such data, so the choice of behavioral data format is largely a matter of convenience, determined by which analysis software is likely to be used. For the FBIRN study described here, we addressed this issue by creating multiple output files in order to facilitate interoperability with all of the major analysis packages used by different sites within the multi-center collaboration. This was implemented as a modular set of data output scripts in CIGAL, from which any user can select simply by setting a control parameter. In general, however, a flexible generic approach, such as the XML-based data resources in XCEDE (Gadde et al., 2011), which we have extended here to describe CIGAL’s Pdigm archive files, could be generalized to provide a standardized method for accessing any type of behavioral data.
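Selecting output writers through a single control parameter can be sketched as a registry of formatting functions. This is a hypothetical illustration, not CIGAL’s output scripts. In particular, the XML element and attribute names are invented for the example and are not the XCEDE schema.

```python
"""Output writers selected by a control parameter.

Hypothetical sketch: writer names and XML element/attribute names are
illustrative only and do NOT follow the XCEDE schema.
"""
import xml.etree.ElementTree as ET

WRITERS = {}


def writer(name):
    """Register a formatting function under a control-parameter value."""
    def register(fn):
        WRITERS[name] = fn
        return fn
    return register


@writer("xml")
def to_xml(events):
    # Illustrative element names, not XCEDE.
    root = ET.Element("events")
    for onset, duration, condition in events:
        ET.SubElement(root, "event", onset=f"{onset:.3f}",
                      duration=f"{duration:.3f}", condition=condition)
    return ET.tostring(root, encoding="unicode")


@writer("summary")
def to_summary(events):
    counts = {}
    for _, _, condition in events:
        counts[condition] = counts.get(condition, 0) + 1
    return "\n".join(f"{c}: {n}" for c, n in sorted(counts.items()))


def write_outputs(events, formats):
    """formats is a comma-separated control parameter, e.g. "xml,summary";
    an unregistered name raises KeyError."""
    return {name: WRITERS[name](events) for name in formats.split(",") if name}
```

Adding a format then means registering one more function, and users choose formats without editing any writer.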

The FBIRN experience illustrates that routine integrated stimulus presentation and multi-modal physiological data recording can be implemented effectively without on-site technical expertise. Both the software and hardware described here are generally available and simple to install and configure. The dual-screen approach, which provides real-time feedback for simultaneously monitoring task presentation and subject behavioral data, is a simple and comprehensive quality assurance tool. Having this feedback during the current data acquisition stage of the FBIRN study has allowed the quality of task performance and recorded physiological data to be assessed for every scan. Integrating these physiological data into the FBIRN infrastructure is expected to significantly enhance the various fMRI analysis efforts to be undertaken in this large multi-center study. The improvements that make our behavioral data acquisition tools easier to use, together with their successful implementation and testing across multiple data acquisition sites, suggest that these tools may be broadly applicable to other studies.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank all of their collaborators in the FBIRN. This work was supported in part by NIH grants P01NS041328 and 1U24RR025736-01.


References

Birn, R. M., Diamond, J. B., Smith, M. A., and Bandettini, P. A. (2006). Separating respiratory-variation-related fluctuations from neuronal-activity-related fluctuations in fMRI. Neuroimage 31, 1536–1548.

Birn, R. M., Smith, M. A., Jones, T. B., and Bandettini, P. A. (2008). The respiration response function: the temporal dynamics of fMRI signal fluctuations related to changes in respiration. Neuroimage 40, 644–654.

Brown, G. G., Mathalon, D. H., Stern, H., Ford, J., Mueller, B., Greve, D., McCarthy, G., Voyvodic, J., Glover, G., Diaz, M., Yetter, E., Ozyurt, B., Jorgenson, K., Wible, C., Turner, J., Thompson, W., Potkin, S., and FBIRN. (2011). Multisite reliability of cognitive BOLD data. Neuroimage 54, 2163–2175.

Chang, C. E., Cunningham, J. P., and Glover, G. H. (2009). Influence of heart rate on the BOLD signal: the cardiac response function. Neuroimage 44, 857–869.

Chang, C. E., and Glover, G. H. (2009a). Relationship between respiration, end-tidal CO2, and BOLD signals in resting-state fMRI. Neuroimage 47, 1381–1393.

Chang, C. E., and Glover, G. H. (2009b). Effects of model-based physiological noise correction on default mode network anti-correlations and correlations. Neuroimage 47, 1448–1459.

Dagli, M. S., Ingeholm, J. E., and Haxby, J. V. (1999). Localization of cardiac-induced signal change in fMRI. Neuroimage 9, 407–415.

Gadde, S., Aucoin, N., Grethe, J. S., Keator, D. B., Marcus, D. S., Pieper, S., FBIRN, MBIRN, and BIRN-CC. (2011). XCEDE: an extensible schema for biomedical data. Neuroinformatics. doi: 10.1007/s12021-011-9119-9. [Epub ahead of print].

Glover, G. H., Li, T. Q., and Ress, D. (2000). Image-based method for retrospective correction of physiological motion effects in fMRI: RETROICOR. Magn. Reson. Med. 44, 162–167.

Glover, G. H., Mueller, B. A., Turner, J. A., van Erp, T. G. M., Liu, T. T., Greve, D. N., Voyvodic, J. T., Rasmussen, J., Brown, G. G., Keator, D. B., Calhoun, V. D., Lee, H. J., Ford, J. M., Mathalon, D. H., Diaz, M., O’Leary, D. S., Gadde, S., Preda, A., Lim, K. O., Wible, C. G., Stern, H. S., Belger, A., McCarthy, G., Ozyurt, B., Potkin, S. G., and FBIRN. (2012). Function Biomedical Informatics Research Network recommendations for prospective multi-center functional magnetic resonance imaging studies. J. Magn. Reson. Imaging (in press).

Greve, D. N., Mueller, B. A., Liu, T., Turner, J. A., Voyvodic, J., Yetter, E., Diaz, M., McCarthy, G., Wallace, S., Roach, B. J., Ford, J. M., Mathalon, D. H., Calhoun, V. D., Wible, C. G., Potkin, S. G., Glover, G., and FBIRN. (2011). A novel method for quantifying scanner instability in fMRI. Magn. Reson. Med. 65, 1053–1061.

Kruger, G., and Glover, G. H. (2001). The physiological noise in oxygen-sensitive magnetic resonance imaging. Magn. Reson. Med. 46, 631–637.

Shmueli, K., van Gelderen, P., de Zwart, J. A., Horovitz, S. G., Fukunaga, M., Jansma, J. M., and Duyn, J. H. (2007). Low-frequency fluctuations in the cardiac rate as a source of variance in the resting-state fMRI BOLD signal. Neuroimage 38, 306–320.

Smith, S. M., Jenkinson, M., Woolrich, M. W., Beckmann, C. F., Behrens, T. E. J., Johansen-Berg, H., Bannister, P. R., De Luca, M., Drobnjak, I., Flitney, D., Niazy, R., Saunders, J., Vickers, J., Zhang, Y., De Stefano, N., Brady, J. M., and Matthews, P. M. (2004). Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage 23(Suppl. 1), S208–S219.

Stevens, A. A., Skudlarski, P., Gatenby, J. C., and Gore, J. C. (2000). Event-related fMRI of auditory and visual oddball tasks. Magn. Reson. Imaging 18, 495–502.

Voyvodic, J. T. (1999). Real-time fMRI paradigm control, physiology, and behavior combined with near real-time statistical analysis. Neuroimage 10, 91–106.

Wise, R. G., Pattinson, K. T., Bulte, D. P., Chiarelli, P. A., Mayhew, S. D., Balanos, G. M., O’Connor, D. F., Pragnell, T. R., Robbins, P. A., Tracey, I., and Jezzard, P. (2007). Dynamic forcing of end-tidal carbon dioxide and oxygen applied to functional magnetic resonance imaging. J. Cereb. Blood Flow Metab. 27, 1521–1532.

Keywords: brain imaging, fMRI, heart beat, respiration, eye-tracking

Citation: Voyvodic JT, Glover GH, Greve D, Gadde S and FBIRN (2011) Automated real-time behavioral and physiological data acquisition and display integrated with stimulus presentation for fMRI. Front. Neuroinform. 5:27. doi: 10.3389/fninf.2011.00027

Received: 15 September 2011;

Paper pending published: 03 October 2011;

Accepted: 20 October 2011;

Published online: 23 December 2011.

Edited by:

Jessica A. Turner, Mind Research Network, USA

Reviewed by:

James Kozloski, IBM Research Division, USA

Thomas Wennekers, University of Plymouth, UK

Copyright: © 2011 Voyvodic, Glover, Greve and Gadde. This is an open-access article subject to a nonexclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: James T. Voyvodic, Brain Imaging and Analysis Center, Duke University, 2424 Erwin Road, Suite 501, Box 2737 DUMC, Durham, NC 27705, USA. e-mail: jim.voyvodic@duke.edu