- ¹Bernstein Center Freiburg, Albert-Ludwig University of Freiburg, Freiburg, Germany

- ²Department of Bioengineering, Imperial College London, London, UK

The performance of neural decoders can degrade over time due to non-stationarities in the relationship between neuronal activity and behavior. In this case, brain-machine interfaces (BMI) require adaptation of their decoders to maintain high performance across time. One way to achieve this is by means of periodic calibration phases, during which the BMI system (or an external human demonstrator) instructs the user to perform certain movements or behaviors. This approach has two disadvantages: (i) calibration phases interrupt the autonomous operation of the BMI and (ii) between two calibration phases the BMI performance might not be stable but continuously decrease. A better alternative would be a BMI decoder that continuously adapts in an unsupervised manner during autonomous BMI operation, i.e., without knowing the movement intentions of the user. In the present article, we present an efficient method for such unsupervised training of BMI systems for continuous movement control. The proposed method utilizes a cost function derived from neuronal recordings, which guides a learning algorithm to evaluate the decoding parameters. We verify the performance of our adaptive method by simulating a BMI user with an optimal feedback control model and its interaction with our adaptive BMI decoder. The simulation results show that the cost function and the algorithm yield fast and precise trajectories toward targets at random orientations on a 2-dimensional computer screen. For initially unknown and non-stationary tuning parameters, our unsupervised method is still able to generate precise trajectories and to keep its performance stable in the long term. The algorithm can optionally also work with neuronal error signals instead of, or in conjunction with, the proposed unsupervised adaptation.

1. Introduction

Brain-Machine Interfaces (BMI) are systems that translate users' brain signals into choices, text, or movement (Birbaumer et al., 1999; Donoghue, 2002; Wolpaw et al., 2002; Nicolelis, 2003; Lebedev and Nicolelis, 2006). Although still in development, BMI systems can potentially provide assistive technology to people with severe neurological disorders and spinal cord injuries, as their functioning does not depend on intact muscles. For motor control tasks, parameters of intended movements (e.g., movement direction or velocity) can be decoded from electrophysiological recordings of individual neurons (Wessberg et al., 2000; Hochberg et al., 2006), from local field potentials inside (Mehring et al., 2003; Scherberger et al., 2005) and on the surface of the cerebral cortex (Leuthardt et al., 2004; Mehring et al., 2004; Schalk et al., 2007; Pistohl et al., 2008; Ball et al., 2009), or from electrical fields on the scalp (Blankertz et al., 2003; Wolpaw and McFarland, 2004; Waldert et al., 2008). The decoded parameters can be used for online control of external effectors (Hochberg et al., 2006; Schalk et al., 2008; Velliste et al., 2008; Ganguly and Carmena, 2009).

The relation between recorded brain activity and movement is subject to change as a result of neuronal adaptation or of changes in the attention, motivation, and vigilance of the user. Moreover, the relationship between neural activity and movement might be affected by changes in the behavioral context or in the recording conditions. All these non-stationarities can decrease the accuracy of movements decoded from brain activity. A solution to this problem is to employ adaptive decoders, i.e., decoders that learn online from measured neuronal activity during the operation of a BMI system and that track the changing tuning parameters (Taylor et al., 2002; Wolpaw and McFarland, 2004).

Adaptive BMI decoders can be categorized according to the signals employed for adaptation. Supervised adaptive decoders use the user's known movement intentions in conjunction with the corresponding neuronal signals. During autonomous daily operation of a BMI system, however, neither the user's precise movement intention nor the movement goal is known to the BMI decoder; otherwise one would not need a decoder. Therefore, supervised decoders can only adapt during calibration phases, in which the BMI system guides the user to perform pre-specified movements. Unsupervised adaptive decoders, in contrast, track tuning changes automatically without a calibration phase. They can, for example, exploit multi-modal distributions of neuronal signals to perform probabilistic unsupervised clustering (Blumberg et al., 2007; Vidaurre et al., 2010, 2011a,b). Evidently, much less information is available to the adaptation algorithm in the unsupervised case than in the supervised case. Unsupervised decoders, hence, might not work for strong non-stationarities and might be less accurate and slower during adaptation. The third category, error-signal based adaptive decoders, does not use an informative supervision signal such as the instantaneous movement velocity or the target position, but employs neuronal evaluation (or error) signals, which the brain generates, e.g., if the current movement of the external effector differs from the intended movement or if the movement goal is not reached (Diedrichsen et al., 2005; Krigolson et al., 2008; Milekovic et al., 2012). In contrast to supervised adaptive decoders, unsupervised and error-based adaptive decoders are applicable during autonomous BMI control.

1.1. Related Work on Brain-Machine Interfaces

In earlier work, BMI research has already addressed the issue of online adaptivity. For instance, Taylor et al. (2002) proposed a BMI system in which the directional tuning changes of individual neurons are tracked with online adaptive linear filters. Wolpaw and McFarland (2004) showed that intended 2-dimensional cursor movements can be estimated from EEG recordings. In that study, they employed the Least Mean Squares (LMS) algorithm to update the parameters of a linear filter after each trial. Later, Wolpaw and colleagues also showed that a similar method can be used to decode 3-dimensional movements from EEG recordings (McFarland et al., 2010). Vidaurre et al. (2006, 2007, 2010) proposed adaptive versions of Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis for cue-based discrete-choice BMI tasks. These works employ supervised learning algorithms, i.e., they require that the decoder knows the target of the movement or the choice in advance and uses it to adapt the decoding parameters. In other words, the employed methods know and make use of the true label of the recorded neural activity.

More recently, DiGiovanna et al. (2009), Sanchez et al. (2009), and Gage et al. (2005) proposed co-adaptive BMIs, in which both the subjects (rats) and the decoders adapt in order to perform a defined task. This task is either a discrete choice task such as pushing a lever (DiGiovanna et al., 2009; Sanchez et al., 2009) or a continuous estimation task such as reproducing the frequency of a cue tone with neural activity (Gage et al., 2005). Gage et al. employ a supervised adaptive Kalman filter to update the decoder parameters that map the neural activity to the cue tone frequency. DiGiovanna et al. and Sanchez et al. utilize a reward signal to train the decoder. The reward signal indicates the successful completion of the discrete choice task, and the decoder adaptation follows a reinforcement learning algorithm rather than a supervised one. In contrast to a fully autonomous BMI task, however, the decoder knows whether the target has been reached.

Error-related activity in neural recordings (Gehring et al., 1993; Falkenstein et al., 2000) is very interesting from a BMI perspective. In both discrete choice tasks and cursor movement tasks, EEG activity has been shown to be modulated when subjects notice their own errors (Blankertz et al., 2003; Parra et al., 2003). Because this modulation is correlated with the failure of the BMI task, it can be used to modify the decoder model. With reliable detection of error-related activity, the requirement for the decoder to know the target location could be removed; instead, the error signal could be utilized as an inverse reward signal (Rotermund et al., 2006; Mahmoudi and Sanchez, 2011). An unsupervised approach, i.e., one working in the complete absence of a supervision or error signal, has also been taken for an EEG-based binary choice BMI task: Blumberg et al. (2007) proposed an adaptive unsupervised LDA method, in which the distribution parameters of each class are updated by the Expectation-Maximization algorithm. More recently, unsupervised LDA has also been applied to an EEG-based discrete choice task (Vidaurre et al., 2010, 2011a,b). Unsupervised LDA, however, is limited to a finite number of targets. In other words, it cannot be applied to BMI tasks in which the possible target locations are arbitrarily many and uniformly (or unimodally) distributed. Kalman filtering methods for unsupervised adaptation after an initial supervised calibration have also been proposed for trajectory decoding tasks (Eden et al., 2004a,b; Wang and Principe, 2008). These methods adapt by maintaining consistency between a model of movement kinematics and a neuronal encoding model.

1.2. Optimal Control Theory for Motor Behavior

Motor behavior and the associated limb trajectories are most commonly and successfully explained by optimality principles that trade off precision, smoothness, or speed against energy consumption (Todorov, 2004). This trade-off is often expressed as a motor cost function. Within optimality-based theories of motor behavior, open-loop and feedback optimization form two distinct classes of motor control models. The former optimizes the movement before its start and ignores online sensory feedback, whereas the latter incorporates a feedback mechanism and intervenes in the average movement when intervention is sufficiently cheap. Under uncertainty, optimal feedback control (OFC) models explain optimal strategies better than open-loop models (Todorov and Jordan, 2002b). OFC models also provide a framework in which high movement goals can be discounted based on the online flow of sensory input (Todorov, 2004). Optimal feedback control usually comprises a state estimator module, e.g., a Kalman filter, and a Linear-Quadratic controller, which expresses the motor command as a linear mapping of the estimated state (Stengel, 1994). The state estimator uses sensory feedback as well as the efference copy of the motor command. The motor command thus follows a feedback rule between the sensorimotor system and the environment. OFC models obey the minimal intervention principle, i.e., they spend more effort and cost on relatively unsuccessful movements in order to correct for the errors (Todorov and Jordan, 2002a,b). The minimal intervention principle is also very important for the current work, as substantial deviations can result from both noise and a model mismatch between the organism and the environment. Non-minimal intervention can hence be interpreted as a sign of a possible model mismatch between a BMI user and the decoder.
Recent studies indicate that OFC models should also account for trial-by-trial and online adaptation in order to be consistent with empirical findings on motor adaptation (Izawa et al., 2008; Braun et al., 2009).

1.3. Scope and Goals of Our Research

During autonomous operation of a BMI system, the BMI decoder knows neither the individual movement intentions of the subject nor the goal of the movement, apart from what can be derived from the measured brain activity and from sensing the environment. Hence, the decoder has no access to an explicit supervision signal for adaptation. We therefore developed an algorithmic framework for adaptive decoding without supervision, in which the following adaptive decoding strategies can be implemented:

(i) Unsupervised: the adaptation uses exclusively the neuronal signals controlling the BMI movements.

(ii) Error-signal based: the adaptation uses binary neuronal error signals that indicate the time points at which the decoded movement deviates from the intended movement by more than a certain amount.

(iii) Unsupervised + error-signal based: the combination of the adaptive mechanisms of (i) and (ii).

With a BMI system implementing these strategies, lifelong changes in brain dynamics do not have to be tracked by supervised calibration phases, during which users would have to undergo attentive training. Instead, decoder adaptation would track possible model mismatches continually. The BMI user's behavior could provide a hint to the decoder even without an explicit supervision signal. Presumably, inaccurate movements result in corrective attempts, which in turn increase the control signals and their variability. Optimal feedback control models, which explain human motor behavior in a wide range of tasks, support this presumption, as they generate jerky and larger control signals when the user's and the system's tuning parameters are mismatched. Here, we develop a cost measure for online unsupervised decoder adaptation that takes the amplitudes and the variations of the user's control signals into account (strategy (i)). Our unsupervised method incorporates a log-linear model that relates the decoding parameters to the cost via meta-parameters. Randomly chosen decoding parameters are tested during exploration episodes that are themselves chosen at random. The rest of the time, the best decoding parameters according to the current model (initially random) are used. The switch between these exploration and exploitation episodes is random and follows an ε-greedy policy (see Section 2). The costs harvested in all episodes, together with the associated decoding parameters, compose the training data, from which the meta-parameters are estimated recursively with the least squares method. Note that we utilize the same algorithm for strategies (ii) and (iii). In strategy (ii), we employ the error signal as the cost instead of the derived one; in strategy (iii), a combination of both measures serves as the cost.

2. Materials and Methods

2.1. Simulated Task

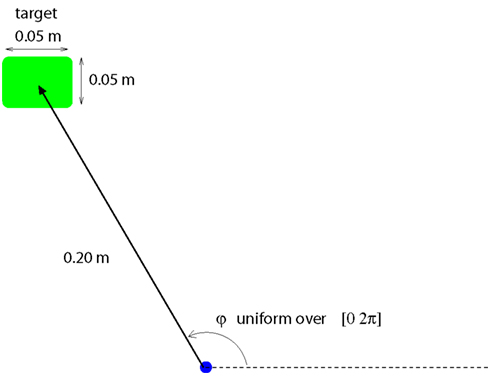

The user's task is to move a cursor on a 2-dimensional screen from one target to the next. Each new target is located randomly on a circle of 0.2 m radius around the previous target (Figure 1). If the user reaches the target within 4 s and stays there for 0.16 s, the trial is successful. After an unsuccessful trial, the user selects a new random target; upon success, the trial immediately ends and the user likewise selects a new target. The state of the controlled system, i.e., computer screen and cursor, at discrete time step t is given by

$$x_t = \left[p^x_t,\; p^y_t,\; v^x_t,\; v^y_t,\; g^x_t,\; g^y_t\right]^T,$$

where $v^x_t$, $p^x_t$, and $g^x_t$ are the horizontal cursor velocity, cursor position, and goal position, respectively, and $v^y_t$, $p^y_t$, and $g^y_t$ are the corresponding vertical state variables. The screen state evolves according to first-order linear discrete-time dynamics,

$$x_{t+1} = A\,x_t + B_d\,u_t, \qquad (1)$$

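where $u_t$ is the C-dimensional control signal and $B_d$ is a 6 × C decoder matrix. We assume that the motor command $u_t$ directly affects only the cursor velocity. Therefore, the first 2 and last 2 rows of $B_d$ are 0:

$$B_d = \begin{bmatrix} 0_{2\times C} \\ B_d^{(v)} \\ 0_{2\times C} \end{bmatrix},$$

where $B_d^{(v)}$ denotes the 2 × C block formed by the third and fourth rows of $B_d$.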

Figure 1. BMI task: The user has to move the computer cursor toward the target. The target is 0.2 m away, in a random direction. The target location is chosen by the user and is unknown to the decoder, also during training. The target has to be reached within 4 s, and the cursor has to stay on the target for at least 0.16 s. Upon either success or failure, the user selects a new target.

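The state transition matrix A models the temporal evolution of the screen state. It simply performs the operation $p_{t+1} = p_t + \Delta t\, v_t$ for the cursor position $p_t = [p^x_t, p^y_t]^T$ and velocity $v_t = [v^x_t, v^y_t]^T$, where $\Delta t$ is the duration of one time step.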

Note that the goal position remains constant within a trial and it is left untouched by the linear dynamics of the screen state. Including the goal position in the state vector, however, simplifies the formulation of control signal generation by the user model (Section 2.2).
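The screen model can be sketched in a few lines of plain Python. This is a minimal illustration, not the simulation code used in this study: it assumes the state ordering x = [px, py, vx, vy, gx, gy] (inferred from the noise SDs listed in Section 2.2), a 40 ms time step, and an A matrix whose velocity rows are zero so that the decoded command sets the next velocity. The helper names `make_A`, `make_Bd`, and `step` are hypothetical.

```python
DT = 0.04  # time step in seconds (40 ms, Section 2.2)


def make_A(dt=DT):
    """6x6 transition matrix for the assumed state x = [px, py, vx, vy, gx, gy].

    Assumption: positions integrate the current velocity, the velocity rows are
    zero (the decoder output sets the next velocity), and the goal is constant."""
    A = [[0.0] * 6 for _ in range(6)]
    A[0][0] = A[1][1] = 1.0   # p_{t+1} = p_t + dt * v_t
    A[0][2] = A[1][3] = dt
    A[4][4] = A[5][5] = 1.0   # goal unchanged
    return A


def make_Bd(B_vel):
    """Embed a 2 x C velocity block into a 6 x C matrix (rows 1-2 and 5-6 zero)."""
    C = len(B_vel[0])
    return [[0.0] * C, [0.0] * C, list(B_vel[0]), list(B_vel[1]), [0.0] * C, [0.0] * C]


def matvec(M, v):
    """Matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def step(x, u, A, Bd):
    """Noise-free screen update x_{t+1} = A x_t + Bd u_t."""
    return [a + b for a, b in zip(matvec(A, x), matvec(Bd, u))]
```

With a constant command, the position starts to change one step after the velocity, because under this assumed A the position integrates the previous velocity.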

2.2. User Model: Stochastic Optimal Controller

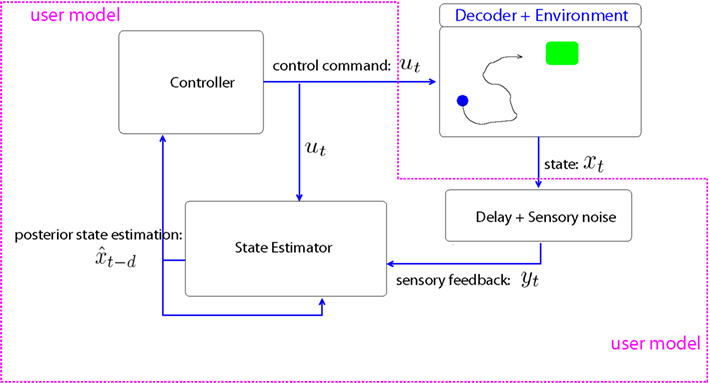

The BMI user is modeled as a stochastic optimal controller that sends the C-dimensional control command $u_t$ at discrete time step t (Figure 2). The controller, i.e., the user, assumes that the screen state evolves according to first-order discrete-time dynamics,

$$x_{t+1} = A\,x_t + B_u\,u_t, \qquad (2)$$

where $B_u$ is the user's estimate of the decoder matrix $B_d$.

Figure 2. BMI-user model and the environment. We model the BMI user with a stochastic optimal controller. The state estimator module is a Kalman filter that corrects the forward-model estimate with sensory feedback. The controller generates the C-dimensional control command $u_t$, which the decoder linearly converts into a 2-dimensional cursor movement.

The BMI user perceives the state of the cursor with a sensory delay and normally distributed zero-mean noise,

$$y_t = H\,x_{t-d} + \eta_t, \qquad (3)$$

where d is the sensory delay in time steps and $\eta_t$ is the noise drawn from $\mathcal{N}(0, \Omega^{\eta})$. The user observes a 4-dimensional vector $y_t$, which contains the velocity and position observations. H is therefore:

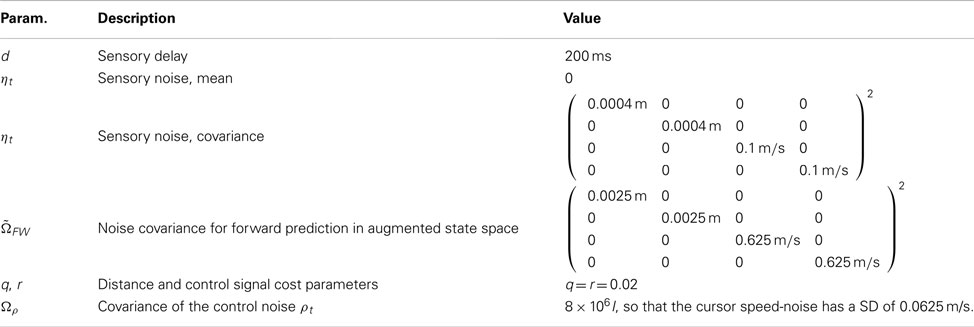

In computer simulations, we use a time step of 40 ms. Sensory delay is set to 200 ms, i.e., 5 time steps. We assume that all dimensions of ηt are independently normally distributed with SD of (0.0004 m, 0.0004 m, 0.1 m/s, 0.1 m/s)T.

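We model the control signals from the BMI user as the output of a stochastic optimal controller. The BMI-user model aims at minimizing the cost function

$$J = \mathbb{E}\left[\sum_{t} \left( q\,\|p_t - g_t\|^2 + r\,u_t^T u_t \right)\right], \qquad (4)$$

where $p_t$ and $g_t$ are the 2-dimensional cursor position and goal position vectors, and $\|p_t - g_t\|$ stands for the Euclidean distance between them. q and r are constants that set the relative weights of the two terms in the cost. The same cost can alternatively be written as

$$J = \mathbb{E}\left[\sum_{t} \left( x_t^T Q_t\, x_t + u_t^T R_t\, u_t \right)\right],$$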

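where $Q_t$ is a 6 × 6 matrix that allows for the quadratic expression of the distance cost,

$$Q_t = q\begin{bmatrix} I_2 & 0 & -I_2 \\ 0 & 0 & 0 \\ -I_2 & 0 & I_2 \end{bmatrix},$$

and $R_t = rI$. In our cost model, $Q_t$ and $R_t$ stay constant over time, i.e., $Q_t = Q$ and $R_t = R$ for all t.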
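A quick numerical check that the quadratic form reproduces the distance term of the user's cost. The block layout of Q in this sketch is an assumption that follows the same hypothetical state ordering [px, py, vx, vy, gx, gy]; `make_Q`, `quad`, and `stage_cost` are illustrative names.

```python
def make_Q(q):
    """6x6 matrix with x^T Q x = q * ||p - g||^2 for x = [px, py, vx, vy, gx, gy] (assumed order)."""
    Q = [[0.0] * 6 for _ in range(6)]
    for i in (0, 1):  # position index i pairs with goal index i + 4
        Q[i][i] = Q[i + 4][i + 4] = q
        Q[i][i + 4] = Q[i + 4][i] = -q
    return Q


def quad(x, M):
    """Quadratic form x^T M x."""
    n = len(x)
    return sum(x[i] * M[i][j] * x[j] for i in range(n) for j in range(n))


def stage_cost(x, u, q, r):
    """Per-step user cost x^T Q x + u^T R u with R = r I."""
    return quad(x, make_Q(q)) + r * sum(ui * ui for ui in u)
```

The velocity entries of x do not contribute, since their rows and columns of Q are zero; only the cursor-to-goal offset is penalized.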

Assume that the stochastic optimal controller minimizes the cost by sending the optimal control command $u_t^*$ at every time step t. In practice, however, the optimal command is disturbed by noise. Here, we model the inherent noise in biological circuits with a zero-mean, normally distributed additive noise vector $\rho_t$,

$$u_t = u_t^* + \rho_t, \qquad \rho_t \sim \mathcal{N}(0, \Omega^{\rho}).$$

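This noise consequently also appears as an additive term in the state update of equation (1),

$$x_{t+1} = A\,x_t + B_d\,u_t^* + \omega_t,$$

where $\omega_t = B_d\rho_t \sim \mathcal{N}(0, B_d\,\Omega^{\rho} B_d^T)$. The problem of computing $u_t^*$ is known as Linear-Quadratic-Gaussian (LQG) control and can be solved recursively by an interconnected Linear-Quadratic Regulator (Stengel, 1994; Todorov, 2005),

$$u_t^* = -L_t\,\hat{x}_t, \qquad L_t = \left(R + B_u^T S_{t+1} B_u\right)^{-1} B_u^T S_{t+1} A, \qquad S_t = Q + A^T S_{t+1}\left(A - B_u L_t\right), \qquad (5)$$

and a state-estimating Kalman filter,

$$\hat{x}_t^- = A\,\hat{x}_{t-1} + B_u\,u_{t-1}, \qquad \Sigma_t^- = A\,\Sigma_{t-1}A^T + \Omega^{FW}, \qquad (6)$$

$$K_t = \Sigma_t^- H^T\left(H\,\Sigma_t^- H^T + \Omega^{\eta}\right)^{-1}, \qquad (7)$$

$$\hat{x}_t = \hat{x}_t^- + K_t\left(y_t - H\,\hat{x}_t^-\right), \qquad \Sigma_t = \left(I - K_t H\right)\Sigma_t^-, \qquad (8)$$

where $\Omega^{\eta}$ is the covariance of the observation noise $\eta_t$.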

Here, $\Sigma_t$ is the covariance estimate of the state vector $x_t$, and $\hat{x}_t$ is the estimate of its mean posterior to the noisy observation $y_t$. $\Omega^{FW}$ is the covariance of the noise associated with the forward-model prediction. The Kalman filter above is a model of the state estimator in the user's motor control circuitry. $B_u$ is the user's estimate of $B_d$; when $B_u$ deviates from $B_d$, the user's control signals are no longer optimal. The above equations assume a sensory delay of 1 time step. Larger sensory delays, e.g., of d time steps, can be realized with an augmented state vector that stacks d + 1 consecutive states (Todorov and Jordan, 2002b; Braun et al., 2009),

$$\tilde{x}_t = \left[x_t^T,\; x_{t-1}^T,\; \ldots,\; x_{t-d}^T\right]^T.$$
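To make the Linear-Quadratic Regulator concrete, the backward Riccati recursion can be sketched for a deliberately simplified 1-D version of the task: full, noise-free state knowledge (no Kalman filter, no delay), a single control channel, and a hypothetical user model B_u = [0, b, 0]^T for the state [p, v, g]. Because the control is scalar, R + B^T S B is a number and no matrix inversion is needed. This is a toy sketch under these assumptions, not the 2-D controller used in the simulations; `lqr_gains_1d` and `simulate_1d` are illustrative names.

```python
def lqr_gains_1d(q, r, b, dt, horizon):
    """Finite-horizon LQR gains for a 1-D toy version of the cursor task.

    Assumed model: state x = [p, v, g], scalar control u, B_u = [0, b, 0]^T,
    dynamics p' = p + dt*v, v' = b*u, g' = g. Returns [L_0, ..., L_{N-1}]
    such that u_t = -L_t . x_t."""
    A = [[1.0, dt, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
    B = [0.0, b, 0.0]
    Q = [[q, 0.0, -q], [0.0, 0.0, 0.0], [-q, 0.0, q]]  # q * (p - g)^2
    S = [row[:] for row in Q]                          # terminal cost-to-go S_N = Q
    gains = []
    for _ in range(horizon):
        BtS = [sum(B[k] * S[k][j] for k in range(3)) for j in range(3)]   # B^T S
        denom = r + sum(BtS[j] * B[j] for j in range(3))                  # R + B^T S B
        L = [sum(BtS[k] * A[k][j] for k in range(3)) / denom for j in range(3)]
        ABL = [[A[i][j] - B[i] * L[j] for j in range(3)] for i in range(3)]  # A - B L
        SABL = [[sum(S[i][k] * ABL[k][j] for k in range(3)) for j in range(3)]
                for i in range(3)]
        # Riccati step: S_t = Q + A^T S_{t+1} (A - B L_t)
        S = [[Q[i][j] + sum(A[k][i] * SABL[k][j] for k in range(3)) for j in range(3)]
             for i in range(3)]
        gains.append(L)
    gains.reverse()  # computed backward in time; return in forward order
    return gains


def simulate_1d(gains, x0, b, dt):
    """Apply u_t = -L_t . x_t with full, noise-free state knowledge."""
    p, v, g = x0
    for L in gains:
        u = -(L[0] * p + L[1] * v + L[2] * g)
        p, v = p + dt * v, b * u
    return p, v, g
```

With q = r = 0.02, Δt = 40 ms, and a 100-step horizon, the first command points toward the goal and the simulated cursor ends closer to the goal than it started. In the full model, the same gains would act on the Kalman estimate of the state rather than on the true state.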

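The state transition, control, and observation matrices are redefined for the augmented state space,

$$\tilde{A} = \begin{bmatrix} A & 0 & \cdots & 0 & 0 \\ I & 0 & \cdots & 0 & 0 \\ 0 & I & \cdots & 0 & 0 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & I & 0 \end{bmatrix}, \qquad \tilde{B}_d = \begin{bmatrix} B_d \\ 0 \\ \vdots \\ 0 \end{bmatrix}, \qquad \tilde{B}_u = \begin{bmatrix} B_u \\ 0 \\ \vdots \\ 0 \end{bmatrix}, \qquad \tilde{H} = \begin{bmatrix} 0 & \cdots & 0 & H \end{bmatrix},$$

in order to satisfy the system dynamics $\tilde{x}_{t+1} = \tilde{A}\tilde{x}_t + \tilde{B}_d u_t^* + \tilde{\omega}_t$ and $y_t = \tilde{H}\tilde{x}_t + \eta_t$, where $\tilde{\omega}_t$ contains $\omega_t$ in its first block and zeros elsewhere. The Kalman filter equations (6–8) are modified accordingly for the augmented states and system parameters: the prior state and covariance estimates before the delayed observation, i.e., $\hat{\tilde{x}}_t^-$ and $\tilde{\Sigma}_t^-$, are computed using the subject's forward model,

$$\hat{\tilde{x}}_t^- = \tilde{A}\,\hat{\tilde{x}}_{t-1} + \tilde{B}_u\,u_{t-1}, \qquad \tilde{\Sigma}_t^- = \tilde{A}\,\tilde{\Sigma}_{t-1}\tilde{A}^T + \tilde{\Omega}^{FW},$$

where $\tilde{\Omega}^{FW}$ is the forward-model noise covariance in the augmented space. The posterior state and covariance estimates are computed similarly using the Kalman gain matrix $\tilde{K}_t$,

$$\tilde{K}_t = \tilde{\Sigma}_t^- \tilde{H}^T\left(\tilde{H}\,\tilde{\Sigma}_t^-\tilde{H}^T + \Omega^{\eta}\right)^{-1}, \qquad \hat{\tilde{x}}_t = \hat{\tilde{x}}_t^- + \tilde{K}_t\left(y_t - \tilde{H}\,\hat{\tilde{x}}_t^-\right), \qquad \tilde{\Sigma}_t = \left(I - \tilde{K}_t\tilde{H}\right)\tilde{\Sigma}_t^-.$$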

Note that in our simulations, the forward-model noise covariance $\Omega^{FW}$ is set to a diagonal matrix whose first 4 diagonal entries are the squares of the noise SDs (0.0025 m, 0.0025 m, 0.625 m/s, 0.625 m/s)$^T$ and whose remaining entries are 0.
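The delay augmentation can be checked on a small example. The sketch below, with hypothetical helper names, builds the stacked transition, control, and observation matrices for an n-dimensional state and a delay of d steps; it assumes the block layout in which the newest state comes first and the observation reads the oldest block.

```python
def matvec(M, v):
    """Matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def augment(A, H, d):
    """Augmented transition and observation matrices for a delay of d steps.

    The augmented state stacks [x_t, x_{t-1}, ..., x_{t-d}] (n * (d + 1) entries).
    A_aug applies A to the newest block and shifts the older blocks down by one;
    H_aug reads the observation from the oldest block x_{t-d}."""
    n, m = len(A), len(H)
    N = n * (d + 1)
    A_aug = [[0.0] * N for _ in range(N)]
    for i in range(n):
        for j in range(n):
            A_aug[i][j] = A[i][j]                        # newest block: x_{t+1} = A x_t (+ B u_t)
    for blk in range(1, d + 1):
        for i in range(n):
            A_aug[blk * n + i][(blk - 1) * n + i] = 1.0  # shift each older block one slot down
    H_aug = [[0.0] * N for _ in range(m)]
    for i in range(m):
        for j in range(n):
            H_aug[i][d * n + j] = H[i][j]                # y_t = H x_{t-d} (+ noise)
    return A_aug, H_aug


def augment_B(B, d):
    """Control enters only the newest block: B_aug = [B; 0; ...; 0]."""
    C = len(B[0])
    return [list(row) for row in B] + [[0.0] * C for _ in range(len(B) * d)]
```

For a scalar system $x_{t+1} = 2x_t$ with d = 2, two steps from [1, 0, 0] give [4, 2, 1], and the augmented observation returns 1, i.e., the state from two steps earlier.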

2.3. Decoder Models

The decoder is modeled by $B_d$, i.e., it decodes velocity information from the neuronal control signal $u_t$. This decoder matrix might deviate from the user's estimate $B_u$, on the basis of which the user generates the control signals. Therefore, the proposed adaptive decoders adapt $B_d$ toward $B_u$. In this section, we describe three decoders: our recursive least squares (RLS) based learning algorithm with unsupervised and with error-signal based cost functions, as well as a supervised RLS filter for performance comparison.

2.3.1. Unsupervised learning algorithm

For unsupervised and error-signal based decoder adaptation, we define a cost function and estimate $B_u$ by optimizing it. In the unsupervised setting, the cost is associated with the control signal,

$$J^{amp}_n = \sum_{t=n-T+1}^{n} u_t^T u_t. \qquad (9)$$

Here, $u_t^T$ stands for the transpose of the control command vector, t and n are indices over time steps, and T is the number of time steps in the control signal history used to compute the cost. Note that the decoder needs to know only the control signal $u_t$ in order to compute this cost function, which reflects the control-related term of the user's cost function, equation (4). The value of the cost function is expected to be high if the user attempts to correct movement errors that result from a model mismatch between the user and the decoder, i.e., between $B_u$ and $B_d$.

We call the cost in equation (9) the amplitude cost, as it is based on the amplitudes of the control commands. We also propose a further cost function for decoder adaptation, namely the deviation cost. The deviation cost uses the variances of the control signals across time instead of the summed squared norms of the control commands,

$$J^{dev}_n = \sum_{c=1}^{C} \frac{1}{T}\sum_{t=n-T+1}^{n}\left(u_{c,t} - \bar{u}_{c,n}\right)^2, \qquad (10)$$

where c is an index over control channels, $u_{c,t}$ is the control command at channel c at time step t, and $\bar{u}_{c,n}$ is the mean value of $u_{c,t}$ for channel c across the interval [n − T + 1, n]. A weighted sum of the above costs can also be used as the cost function,

$$J_n = J^{amp}_n + Z\,J^{dev}_n, \qquad (11)$$

where Z is a constant that weights the contributions of the individual cost types.
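The three control-based costs can be computed from a sliding window of the last T control vectors, which is all the decoder observes. In this sketch the amplitude cost is an unnormalized sum and the deviation cost uses the per-channel population variance (division by T); both normalizations, and the function names, are assumptions.

```python
def amplitude_cost(U):
    """Sum of squared control norms over the window (U: list of T control vectors)."""
    return sum(sum(c * c for c in u) for u in U)


def deviation_cost(U):
    """Sum over channels of each channel's variance across the window (divides by T)."""
    T, C = len(U), len(U[0])
    total = 0.0
    for c in range(C):
        mean = sum(u[c] for u in U) / T
        total += sum((u[c] - mean) ** 2 for u in U) / T
    return total


def combined_cost(U, Z):
    """Weighted sum: amplitude cost + Z * deviation cost."""
    return amplitude_cost(U) + Z * deviation_cost(U)
```

For the window [[1, 0], [3, 0]] the amplitude cost is 10, the deviation cost is 1 (only the first channel varies), and with Z = 2 the combined cost is 12.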

Alternatively, if neuronal evaluation signals (i.e., error signals) are available in the recordings, we use the number of errors over a finite number of discrete time steps as the cost,

$$J^{err}_n = \sum_{t=n-T+1}^{n} err_t. \qquad (12)$$

We simulated the neuronal error signal by assuming that it is generated whenever the angle between the intended and the performed velocity exceeds a certain amount,

$$err_t = \begin{cases} 1 & \text{if } \angle\left(v_t, v^{intent}_t\right) > 20^\circ, \\ 0 & \text{otherwise}, \end{cases}$$

where $v_t$ is the decoded (performed) cursor velocity and $v^{intent}_t$ is the intended velocity. To reflect the limited reliability of error signals, $err_t$ is swapped with probability κ. Note that similar binary movement-mismatch events have also been recorded in human ECoG (Milekovic et al., 2012), although the 20° threshold in our simulation was chosen arbitrarily (see Discussion).
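The simulated error signal can be sketched as follows: an error is flagged when the angle between the decoded and the intended velocity exceeds 20°, and the flag is then flipped with probability κ to model unreliable detection. The function names and the use of Python's `random` module are illustrative; both velocity vectors are assumed to be non-zero.

```python
import math
import random


def angle_deg(a, b):
    """Angle in degrees between two non-zero 2-D vectors."""
    dot = a[0] * b[0] + a[1] * b[1]
    cos_ang = dot / (math.hypot(a[0], a[1]) * math.hypot(b[0], b[1]))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_ang))))  # clamp rounding errors


def error_signal(v_decoded, v_intent, kappa, threshold_deg=20.0, rng=random):
    """Binary error flag (angle above threshold), flipped with probability kappa."""
    err = 1 if angle_deg(v_decoded, v_intent) > threshold_deg else 0
    if rng.random() < kappa:
        err = 1 - err
    return err


def error_cost(errs):
    """Number of flagged errors in the window."""
    return sum(errs)
```

With κ = 0.2, as in most of the simulations, one in five flags is expected to be wrong on average.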

We assume a log-linear model for the decoder cost. Let β be the parameter vector obtained by horizontally concatenating the third and fourth rows of the matrix $B_d$, i.e., $\beta = [B_{d,3}, B_{d,4}]$. The model estimates the decoder cost as

$$\hat{J}_d = \exp\left(-[\beta, b]\,w\right), \qquad (13)$$

where b is a constant bias value concatenated to the flattened decoder parameter β, and w is the column vector of meta-parameters of this log-linear model. We denote the negative logarithm of the decoder cost by ℓ,

$$\ell = -\log J_d.$$

Let $\beta' = [\beta, b]$. The task is then to learn w from the collected pairs of explored β and observed $J_d$, and simultaneously to optimize β for a given w. Note that for a given w, the cost-minimizing β would go to infinity, since the negative log-cost $\beta' w$ depends linearly on β. Therefore, the minimization is restricted to the unit sphere, i.e., |β| = 1. The motivation here is to generate trajectories in the right direction rather than to optimize the speed of movement. The goal of the unsupervised as well as the error-signal based learning algorithm is to minimize the summed squared error,

$$\xi_n = \sum_{k=1}^{n} \lambda^{n-k} e_k^2, \qquad e_k = \ell_k - \beta'_k w, \qquad (14)$$

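where n is the index of the current time step, k is an index over past time steps, and λ (0 < λ ≤ 1) is a forgetting factor that discounts the relative contribution of past time steps. $\xi_n$ can thus be expressed as

$$\xi_n = \sum_{k=1}^{n} \lambda^{n-k}\left(\ell_k - \beta'_k w\right)^2.$$

The optimal parameters can be found by solving

$$\frac{\partial \xi_n}{\partial w} = 0.$$

Defining

$$\Psi_n = \sum_{k=1}^{n} \lambda^{n-k}\,\beta'^T_k \beta'_k, \qquad \theta_n = \sum_{k=1}^{n} \lambda^{n-k}\,\beta'^T_k\,\ell_k,$$

the solution to $\partial \xi_n / \partial w = 0$ can be found as

$$\hat{w}_n = \Psi_n^{-1}\theta_n.$$

Utilizing the matrix inversion lemma, the Recursive Least Squares (RLS) algorithm (Farhang-Boroujeny, 1999) provides a recursive formulation for $\Psi_n^{-1}$,

$$\Psi_n^{-1} = \lambda^{-1}\left(\Psi_{n-1}^{-1} - k_n\,\beta'_n\,\Psi_{n-1}^{-1}\right),$$

where $k_n = \Psi_{n-1}^{-1}\beta'^T_n \big/ \left(\lambda + \beta'_n\Psi_{n-1}^{-1}\beta'^T_n\right)$ is the gain vector, and the meta-parameters are updated as $\hat{w}_n = \hat{w}_{n-1} + k_n\left(\ell_n - \beta'_n\hat{w}_{n-1}\right)$.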

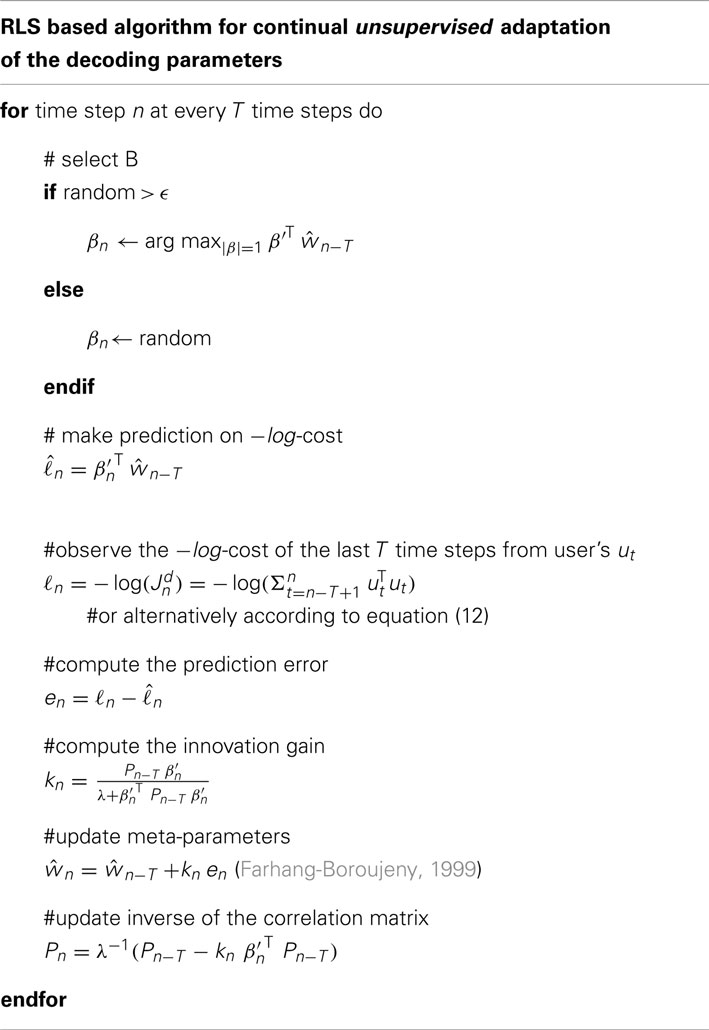

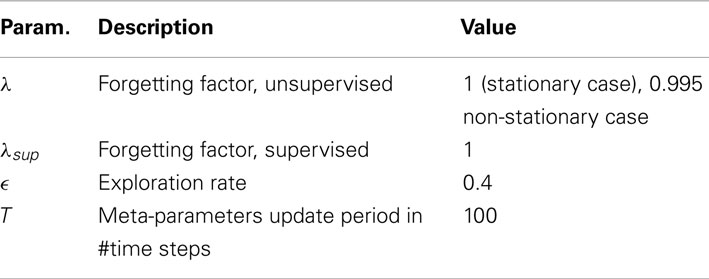
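A minimal RLS sketch for the log-linear cost model, with the regressor φ = β' = [β, b] and the target ℓ = −log J_d. It uses the standard exponentially weighted RLS update (gain vector, a-priori error, inverse-correlation update). The initialization P_0 = 100 I for this algorithm is an assumption borrowed from the supervised filter in Section 2.3.2, and the helper names are hypothetical.

```python
def rls_update(w, P, phi, target, lam=1.0):
    """One RLS step for the linear model target ~ phi . w.

    phi is the regressor beta' = [beta, b], target is ell = -log(J_d), and P
    tracks Psi^{-1}."""
    n = len(w)
    Pphi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
    denom = lam + sum(phi[i] * Pphi[i] for i in range(n))
    k = [x / denom for x in Pphi]                          # gain vector k_n
    err = target - sum(phi[i] * w[i] for i in range(n))    # a-priori error
    w_new = [w[i] + k[i] * err for i in range(n)]
    # Psi^{-1} update (P - k phi^T P) / lam, using phi^T P = (P phi)^T for symmetric P
    P_new = [[(P[i][j] - k[i] * Pphi[j]) / lam for j in range(n)] for i in range(n)]
    return w_new, P_new


def fit_log_linear(samples, dim, lam=1.0, p0=100.0):
    """Run RLS over (phi, ell) pairs, starting from w = 0 and P = p0 * I (assumed init)."""
    w = [0.0] * dim
    P = [[p0 if i == j else 0.0 for j in range(dim)] for i in range(dim)]
    for phi, ell in samples:
        w, P = rls_update(w, P, phi, ell, lam)
    return w
```

With λ = 1 and noise-free targets generated from a known w, the estimate approaches that w up to the small bias introduced by the finite P_0.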

Our method aims to simultaneously harvest costs for various decoding parameters $B_d$ (and, hence, β) and to estimate the optimal meta-parameters w. These subtasks correspond to the exploration and exploitation phases of a reinforcement learning algorithm, respectively. We employ an ε-greedy exploration policy: with a predefined probability ε, the algorithm explores the parameter space, i.e., a new β is chosen randomly; otherwise, i.e., with probability 1 − ε, the algorithm uses the best decoding parameters, i.e., the β that minimizes the estimated decoder cost, equation (13). Given the current estimate of w, the optimal unit-norm β is computed by finding $\arg\max_{|\beta| = 1} \beta' w$. Because the bias term does not depend on β, this is equivalent to maximizing the cosine between β and the vector $w_{1:2C}$ of the first 2C entries of w, which is achieved by setting β equal to $w_{1:2C}$ and normalizing it to unit length. Pseudocode for the algorithm is given in Table 1.

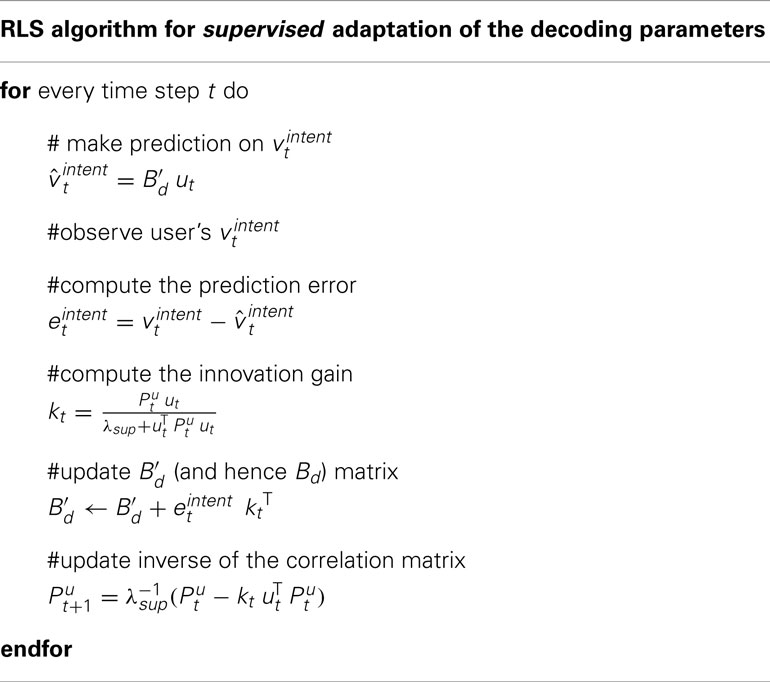
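The exploration/exploitation switch and the closed-form exploitation step can be sketched as below. Exploitation normalizes the non-bias part of w, since the bias contribution b·w_last does not depend on β; exploration draws a direction uniformly on the unit sphere by normalizing a standard Gaussian vector. The function names are illustrative, and the bias entry is assumed to be stored last in w.

```python
import math
import random


def best_beta(w):
    """Unit-norm beta maximizing beta' w = beta . w[:-1] + b * w[-1].

    The bias term does not depend on beta, so the maximizer is the normalized
    non-bias part of w (assumed non-zero)."""
    head = w[:-1]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]


def random_unit(dim, rng):
    """Direction drawn uniformly on the unit sphere (normalized Gaussian vector)."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def choose_beta(w, eps, rng):
    """Epsilon-greedy choice of beta; returns (beta, explored)."""
    if rng.random() < eps:
        return random_unit(len(w) - 1, rng), True   # exploration
    return best_beta(w), False                       # exploitation
```

With ε = 0.4, as used in the simulations, roughly 40% of the update periods would test a random β.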

2.3.2. Adaptive supervised recursive least squares filtering

Under the assumption that the decoder knows the intended movements of the user, $B_d$ can be adapted to $B_u$ with an RLS filter. Let $v^{intent}_t$ be the intended velocity of the user at time step t,

$$v^{intent}_t = B_u^{(v)}\,u_t,$$

where $B_u^{(v)}$ is the 2 × C submatrix consisting of the third and fourth rows of $B_u$, i.e., $B_u^{(v)} = [B_{u,3}; B_{u,4}]$. The supervised decoder estimates the intended velocity using the corresponding submatrix $B_d^{(v)}$ of $B_d$,

$$\hat{v}_t = B_d^{(v)}\,u_t.$$

For the supervised decoder, it is assumed that the user's intent $v^{intent}_t$ is known to the decoder. The supervised RLS learning algorithm infers $B_u$ online from the pairs $(u_t, v^{intent}_t)$. The supervised adaptive decoder is used to benchmark the proposed unsupervised and error-based adaptive decoders. The supervised RLS method is described in Table 2. Note that $P_t$ stands for the inverse of the C × C sample correlation matrix of $(u_0, \ldots, u_t)$. $\lambda_{sup}$ is the forgetting parameter of the supervised algorithm and is set to 1, and $P_0$ is set to 100I.
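For comparison, a sketch of a supervised RLS filter: both rows of the velocity block are estimated from the pairs (u_t, v_t^intent) and share one inverse correlation matrix P of the inputs, initialized as P_0 = 100 I with λ_sup = 1 as stated above. The function name and the shared-P formulation are assumptions about how such a filter may be organized, not a transcription of Table 2.

```python
def supervised_rls(samples, C, lam=1.0, p0=100.0):
    """Estimate the 2 x C velocity block from (u_t, v_intent_t) pairs.

    Each output row is an RLS problem with regressor u_t; both rows share the
    same inverse correlation matrix P (initialized to p0 * I)."""
    W = [[0.0] * C for _ in range(2)]
    P = [[p0 if i == j else 0.0 for j in range(C)] for i in range(C)]
    for u, v in samples:
        Pu = [sum(P[i][j] * u[j] for j in range(C)) for i in range(C)]
        denom = lam + sum(u[i] * Pu[i] for i in range(C))
        k = [x / denom for x in Pu]                                 # shared gain vector
        for r in range(2):
            err = v[r] - sum(W[r][j] * u[j] for j in range(C))     # a-priori error
            for j in range(C):
                W[r][j] += k[j] * err
        P = [[(P[i][j] - k[i] * Pu[j]) / lam for j in range(C)] for i in range(C)]
    return W
```

Sharing P is possible because both velocity components are regressed on the same input u_t, so only the a-priori errors differ between the rows.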

2.4. Simulation Procedures

We simulated the interaction of the optimal feedback controller with the different adaptive decoders described in Section 2.3. The behavior of the BMI user was simulated using the framework of stochastic optimal feedback control, which has been shown to provide a good model of human motor behavior in various motor tasks (Todorov and Jordan, 2002b; Braun et al., 2009; Diedrichsen et al., 2010). The combined system of the optimal controller and the adaptive decoder was simulated at 40 ms time steps with a sensory delay of 200 ms. The user's task was to control a mouse cursor. At each trial, the user selects a target at 0.2 m distance in a random direction. The user has to reach the target within 4 s and stay on it for at least 0.16 s. Upon either success or failure, the user selects a new target. The distance cost parameter q and the control signal cost parameter r are both set to 0.02. We set $\Omega^{\rho}$ to $8 \times 10^{6} I$, so that the cursor speed noise had an average SD of 0.0625 m/s over a uniform distribution of unit-norm β vectors. The variance value was manually adjusted to obtain the desired speed noise by testing on $10^4$ random unit-norm β vectors. The values of the optimal feedback controller and decoder parameters used in our simulations are listed in Tables 3 and 4, respectively.

Note that the decoder does not have the information whether a trial is finished or continuing, nor does it know the target of the cursor movement. We simulated and evaluated the following adaptive decoders:

2.4.1. Unsupervised

The decoder learns exclusively from continuous neuronal control signals of the user according to equation (9), without any additional information. Note that the decoder knows neither whether the target has been reached nor when a trial finishes.

2.4.2. Error-signal based

The adaptation uses binary neuronal error signals that indicate the time points at which the decoded movement deviates from the intended movement by more than 20°. The reliability of the neuronal error signal was assumed to be 80% in most simulations, i.e., the swapping probability κ was 0.2. The effect of different values of κ on the decoding performance was also investigated (Section 3.3).

2.4.3. Unsupervised + error-signal based

The combination of the unsupervised and the error-signal based decoders, i.e., ℓn was a linear combination of the unsupervised ℓn and the error-signal based ℓn.

For all of the above algorithms, the current cost is computed from the last 100 time steps (T = 100), which corresponds to a parameter update period of 4 s. λ of equation (14) was set to 1, i.e., no gradual discounting of the parameter history was performed. The exploration rate was ε = 0.4. We simulated 50 random instantiations of each of these unsupervised and error-signal based adaptive decoding algorithms, with 1501 successive target-reaching trials per instantiation. From trial 1463 on, the adaptation of the decoding algorithms was frozen, and the current optimal decoding parameters were used for the last 39 trials (decoder-freeze). We evaluated their performance and compared it to that of a supervised adaptive decoder whose adaptation is based on perfect knowledge of the intended movement velocity at each time step (see Section 2.3.2). Such a supervised adaptive decoder yields the best possible adaptation; however, it assumes knowledge that is certainly not available during autonomous BMI operation. In addition, we compared the performance of our adaptive decoders to that of a static, untrained random decoder.

3. Results

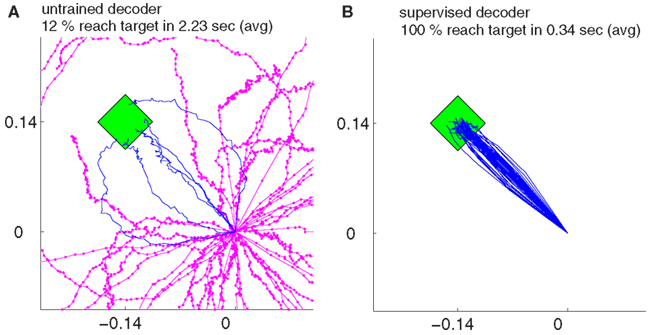

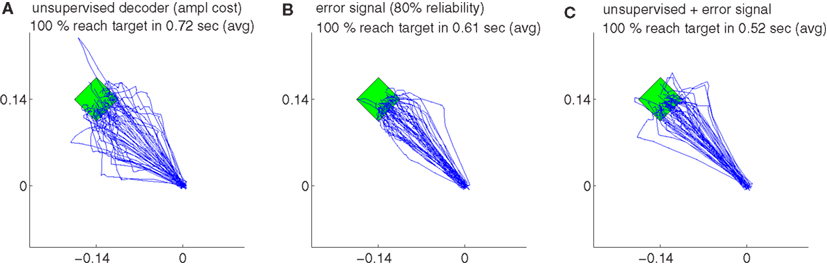

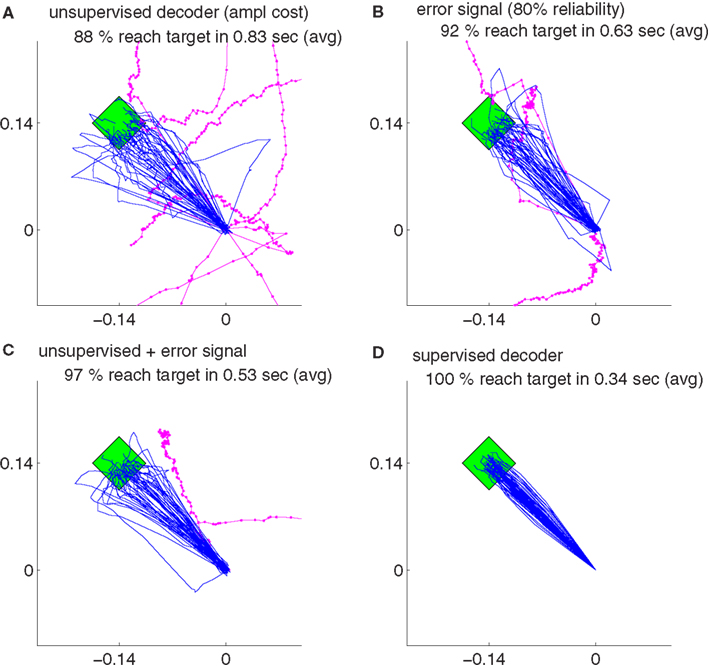

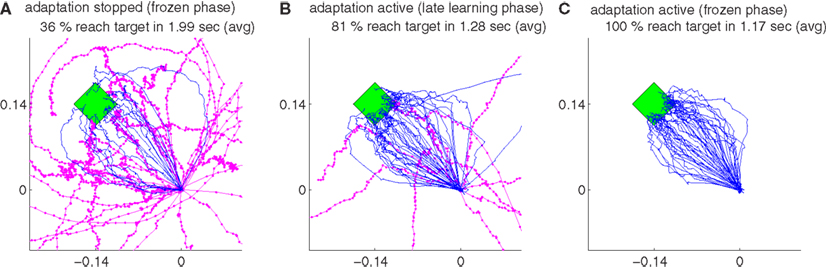

Our findings show that all the decoders described in Section 2.4 can rapidly adapt from a completely unknown tuning of the neuronal signals to movement velocity to accurate cursor control, whereas the random decoder fails to reach the target (Figure 3A). Although the trajectories of the unsupervised and error-based decoders after adaptation are more jerky than in the supervised case, they are still mostly straight and yield a target hit rate of nearly 100% (Figure 4). These results show that decoders can be trained during autonomous BMI control in the absence of any explicit supervision signal.

Figure 3. The trajectories for random decoders (A) and supervised adaptive decoders (B) during decoder-freeze, i.e., after decoder exploration and adaptation have been switched off for performance evaluation. Thick magenta curves indicate failed trajectories. Each plot depicts the trajectories of 50 training simulations, each at trial 1501. The 50 different targets and trajectories at trial 1501 are rotated to the same orientation for better visual evaluation.

Figure 4. The trajectories for different strategies and their variations during decoder-freeze. The trajectories are shown for the unsupervised decoder (A), for the error-signal based decoder (B), and for the combination of both (C). Thick magenta curves indicate failed trajectories. Each plot depicts the trajectories of 50 training simulations, each at trial 1501. Note that the entire history of 1501 trials, not only trial 1501, was simulated 50 times with random initial tunings. The 50 different targets and trajectories at trial 1501 are rotated to the same orientation for better visual evaluation.

3.1. Comparison of Different Adaptation Algorithms

As a baseline for comparisons, we implemented the supervised decoder that knows the intention of the user and fits the decoder parameters $B_d$ based on this intention (see Section 2.3.2). Though unrealistic, this learning scheme is clearly the most successful of the presented methods (Figure 3B). To compare the different algorithms, we use a measure that accounts for the cumulative distance to the movement target and call it the cumulative error,

$$E_m = \sum_{t=1}^{M_m} \left\| g_{m,t} - p_{m,t} \right\|,$$

where $g_{m,t}$ and $p_{m,t}$ are the 2-dimensional target and cursor position vectors at time step t of trial m, respectively, and $M_m$ is the duration of trial m in time steps. For a more intuitive interpretation of this error measure, we present our results in terms of the relative cumulative error, i.e., the cumulative error normalized by the average cumulative error of the supervised decoder after adaptation,

$$E^{rel}_m = \frac{E_m}{\bar{E}^{sup}}.$$

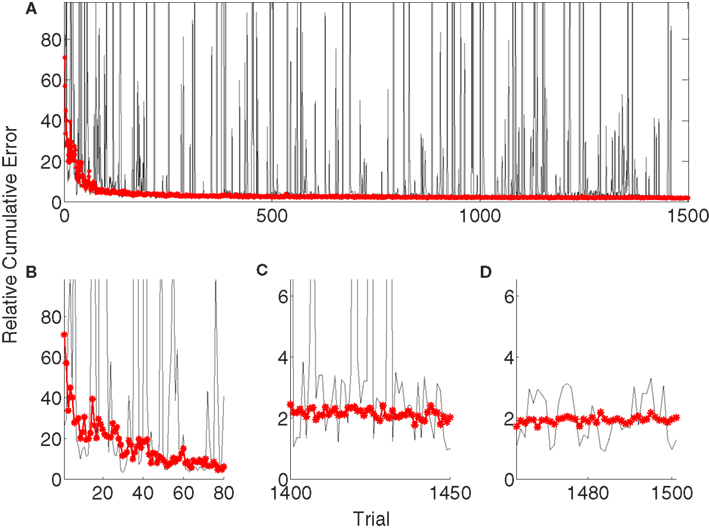
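The cumulative error and its relative version can be sketched in a few lines of Python. This is a minimal sketch that assumes the "distance" is the Euclidean norm summed over the Mm time steps of a trial. The function names and toy trajectories are hypothetical. They only illustrate two steps: normalizing by the supervised decoder's mean error, and taking the median over simulations as in the MRCE curves.

```python
import numpy as np


def cumulative_error(targets, cursor):
    """Cumulative error of one trial: sum over time steps of ||g_t - p_t||.

    targets, cursor: arrays of shape (M_m, 2) with the target position g_t and
    the cursor position p_t at each time step. Assumption: Euclidean distance.
    """
    targets = np.asarray(targets, dtype=float)
    cursor = np.asarray(cursor, dtype=float)
    return float(np.sum(np.linalg.norm(targets - cursor, axis=1)))


def relative_cumulative_error(errors, supervised_errors):
    """Divide cumulative errors by the mean error of the adapted supervised decoder."""
    return np.asarray(errors, dtype=float) / float(np.mean(supervised_errors))


# Toy trial (hypothetical): the cursor moves from the origin to a target at (3, 4).
g = np.tile([3.0, 4.0], (3, 1))
p = np.array([[0.0, 0.0], [1.5, 2.0], [3.0, 4.0]])
E = cumulative_error(g, p)  # distances 5.0 + 2.5 + 0.0 = 7.5

# Two hypothetical simulations, normalized by a supervised reference of 7.5.
rce = relative_cumulative_error([E, 2 * E], supervised_errors=[7.5, 7.5])
mrce = np.median(rce)  # median across simulations, as plotted for the MRCE curves
```

A relative cumulative error of 1 therefore means "as good as the adapted supervised decoder on average". Values above 1 quantify how much worse a given strategy is.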

Figure 5A depicts the evolution of the relative cumulative error for a single simulation (gray) of the unsupervised algorithm and the median relative cumulative error (MRCE) over 50 randomly initialized simulations (red, *). The jumps in the gray curve correspond to exploration periods, in which random decoder matrices, Bd, are explored and evaluated. The relative cumulative error shows different characteristics in the early learning (Figure 5B), late learning (Figure 5C), and decoder-freeze (Figure 5D) phases. The early learning phase was used to compare the learning speeds of the different algorithms, whereas the late learning phase shows the saturated final performance of the algorithms while adaptation continues. During decoder-freeze (the last 39 trials), adaptation (including exploration) was stopped and the final performance of the decoder was evaluated. Because exploration is switched off, no jumps in performance occur in this phase.

Figure 5. Evolution of the relative cumulative error (RCE) for the unsupervised strategy. (A) The RCE as a function of trial number for a single simulation (gray) and the median RCE (MRCE) for 50 simulations with random initial tuning parameters (red with marker). Zoom into the RCE and MRCE curves for early (B) and late (C) learning and during decoder-freeze (D).

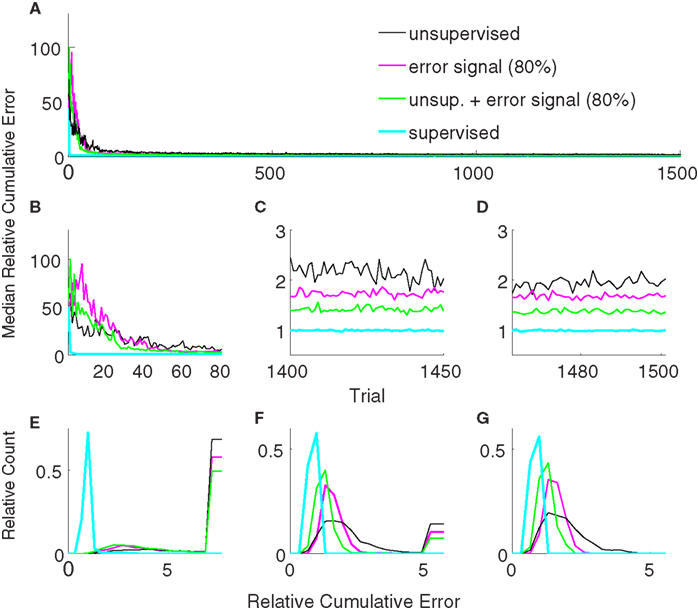

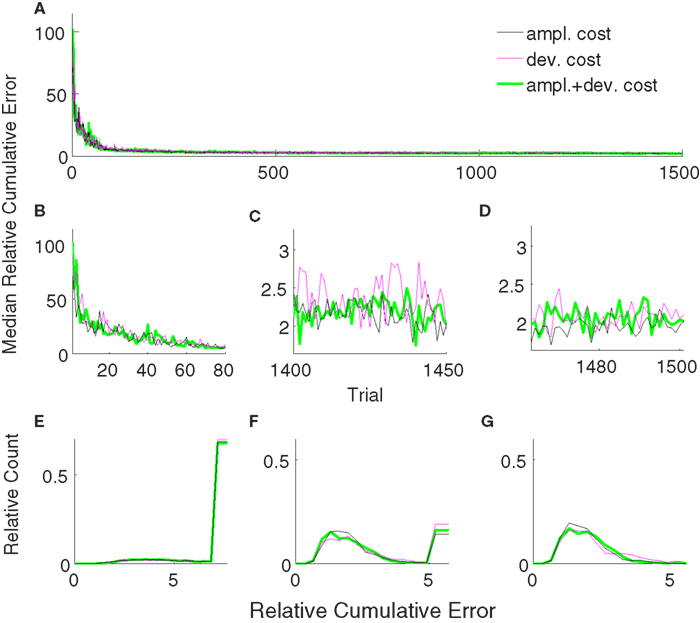

Figure 6 compares the supervised, error-signal based, and unsupervised algorithms. MRCEs across 50 runs are shown for the entire simulation (Figure 6A), for early learning (Figure 6B), for late learning (Figure 6C), and during decoder-freeze (Figure 6D). The distributions of the relative cumulative errors for the individual phases (Figures 6E–G) reveal that the supervised algorithm is superior to the other algorithms in all phases (p < 0.01, Wilcoxon rank sum test). In all phases, the combination of the error-signal based and the unsupervised strategies yielded a significantly lower cumulative error than either strategy alone (p < 0.01, Wilcoxon rank sum test). The error-based learning also performed significantly better than the unsupervised strategy in all phases (p < 0.01, Wilcoxon rank sum test). Note that the trajectories reached the targets not only during decoder-freeze (Figure 4) but, in most cases, also in the late learning phase (Figure 7), where exploration can occasionally cause some trajectories to deviate strongly from a straight line toward the target.
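The significance statements above rely on the Wilcoxon rank sum test. Below is a minimal, self-contained sketch of the two-sided test using the large-sample normal approximation. Ties receive average ranks, and no tie or continuity correction is applied. This is the same statistic that `scipy.stats.ranksums` computes. The sample values are synthetic and are not results from this study.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation, average ranks for ties)."""
    pooled = sorted((v, i) for i, v in enumerate(list(x) + list(y)))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # Extend j over a block of tied values.
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1  # average 1-based rank of the tied block
        for k in range(i, j + 1):
            ranks[pooled[k][1]] = avg
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p


# Synthetic relative cumulative errors of two groups of 50 simulations each.
x = [1.0 + 0.01 * k for k in range(50)]  # group with lower errors
y = [2.0 + 0.01 * k for k in range(50)]  # group with higher errors
z, p = rank_sum_test(x, y)
```

A negative z indicates that the first group tends to have lower errors. Because the test uses ranks only, a few outlier trials with very large errors cannot dominate the comparison. This suits the heavy-tailed error distributions in Figures 6E–G.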

Figure 6. Comparison of the unsupervised (black), the error-signal based (80% reliability, magenta), their combination (green), and the supervised (cyan) strategies. Medians of relative cumulative errors of 50 simulations from each group for all of the trials (A). Zoomed medians for early (B) and late (C) learning and during decoder-freeze (D). The distributions of the relative cumulative errors for each of the phases (E–G). The rightmost values of the distribution plots denote the total relative counts of the outlier values that are greater than the associated x-axis value. The number of outlier values decreased across trials, i.e., it was highest during early learning and zero during decoder-freeze. Outliers correspond to the failed trajectories in Figures 4 and 7.

Figure 7. The trajectories for different strategies and their variations in the late learning phase. The trajectories are shown for the unsupervised decoder (A), for the error-signal based decoder (B), for the combination of the error-signal based and unsupervised decoders (C), and for the supervised decoder (D). Magenta thick curves indicate the failed trajectories. Each plot depicts the trajectories of 50 training simulations, each at trial 1404. Durations and target hit rates, however, are computed from pooled trajectories of 5 consecutive trials (1402–1406, 250 trajectories in total).

3.2. Effect of the Parameter Update Period

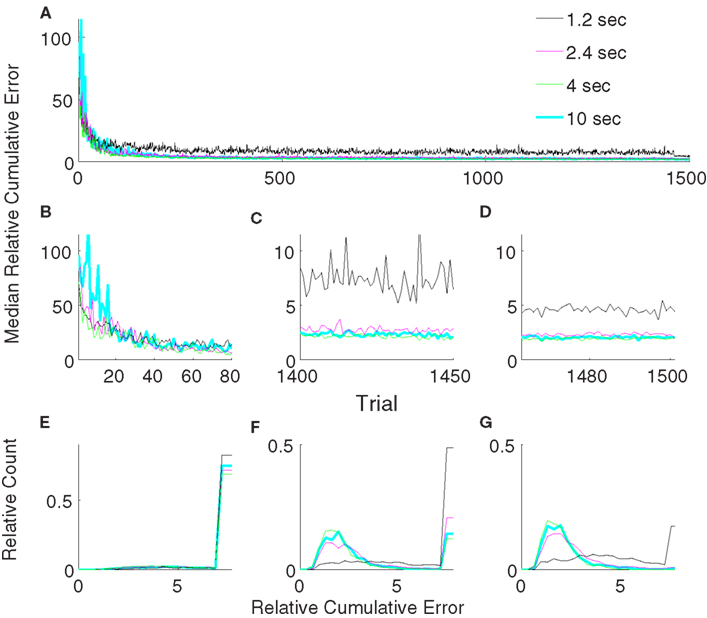

We varied the parameter update period, T, between 1.2 and 10 s to check the stability of the unsupervised strategy with respect to this parameter (Figure 8). For all tested update periods greater than or equal to 2.4 s, performance depended only weakly on the exact value of the update period. Performance with an update period of 2.4 s was significantly inferior (p < 0.05, Wilcoxon rank sum test) to that with update periods of 4 or 10 s, both in late learning and during decoder-freeze, but the difference was minor. Moreover, performance with update periods of 4 and 10 s did not differ significantly during decoder-freeze (p > 0.05, Wilcoxon rank sum test). We therefore conclude that our algorithm is robust to the choice of update period as long as the period is not too short (at least 2.4 s in our tests), and we used an update period of 4 s for all remaining simulations.

Figure 8. Comparison of relative cumulative error measures using the unsupervised strategy for different update periods (T). The algorithm updates the decoding parameters every 1.2 s (black), 2.4 s (magenta), 4 s (green), or 10 s (cyan). Medians of relative cumulative errors of 50 simulations from each group for all of the trials (A). Zoomed medians for early (B) and late (C) learning and during decoder-freeze (D). The distributions of the relative cumulative errors for each of the phases (E–G). The rightmost values of the distribution plots denote the total relative counts of the outlier values that are greater than the associated x-axis value. The number of outlier values decreased across trials, i.e., it was highest during early learning and lowest during decoder-freeze.

3.3. Effect of Error-Signal Reliability

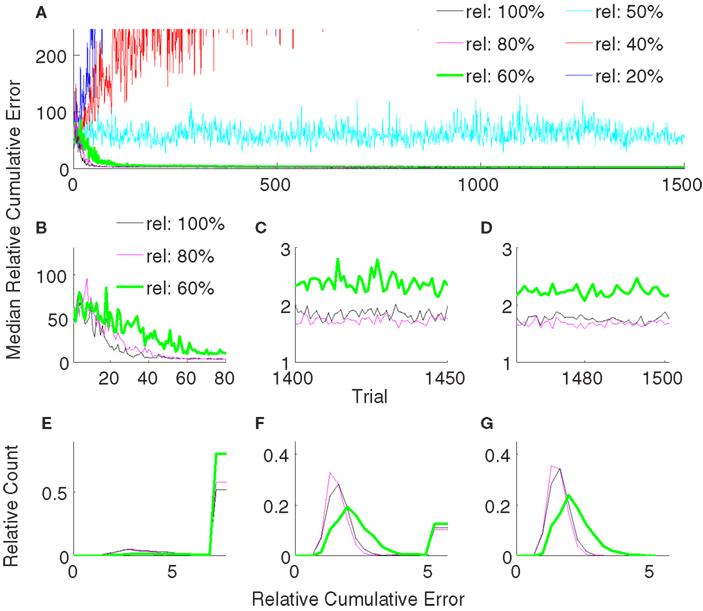

The performance of the error-signal based decoder naturally depends on the reliability of the error signals. The results so far used an error signal with 80% reliability, i.e., κ = 0.2. Several studies have shown evidence of neuronal error-signals (Gehring et al., 1993; Falkenstein et al., 2000; Diedrichsen et al., 2005; Krigolson et al., 2008), but conclusive quantitative data on the reliability of these signals are still missing. To determine how the error-based adaptive decoder depends on κ, we varied κ between 0 and 0.8. Our findings show that the reliability must be greater than 50% for successful adaptation (Figures 9A–D). Reliabilities of 80 and 100% yielded statistically indistinguishable performance during decoder-freeze (p > 0.05, Wilcoxon rank sum test), although 80% was slightly but significantly better than 100% in the late learning phase (p = 0.049). A decoder with 60% reliability performed significantly worse than the decoders with 80 and 100% reliability in all phases (Figures 9E–G, Wilcoxon rank sum test, p < 0.05), but its median error during late learning and decoder-freeze was only about 30% higher. Decreasing the reliability further to 50% drastically increased the median relative cumulative error.
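The role of κ can be illustrated with a small simulation of a partially reliable error-signal. This is a sketch under stated assumptions, not the authors' simulation code. Following the Discussion, a true error is present when the angle between intended and decoded movement exceeds 20°, and the cost is the number of error-signals detected in an update period. The symmetric noise model is our assumption: with probability κ the detector reports the opposite state, which produces both misses and false alarms. The per-time-step evaluation and all function names are also hypothetical.

```python
import math
import random


def angle_deg(u, v):
    """Unsigned angle between two 2-D vectors in degrees."""
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:
        return 0.0  # hypothetical convention: a zero vector counts as no deviation
    cos = (u[0] * v[0] + u[1] * v[1]) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def error_signal(intended, decoded, kappa, rng, threshold_deg=20.0):
    """True if an error-signal is detected at this time step.

    The true error state is 'deviation > threshold_deg'. With probability kappa the
    detector reports the opposite state (hypothetical noise model), so the
    reliability is 1 - kappa.
    """
    true_error = angle_deg(intended, decoded) > threshold_deg
    flipped = rng.random() < kappa
    return true_error != flipped


def error_cost(intended_seq, decoded_seq, kappa, rng, threshold_deg=20.0):
    """Cost of one update period: the number of detected error-signals."""
    return sum(
        error_signal(i, d, kappa, rng, threshold_deg)
        for i, d in zip(intended_seq, decoded_seq)
    )


rng = random.Random(0)
intended = [(1.0, 0.0)] * 10
decoded = [(1.0, 0.0)] * 5 + [(0.0, 1.0)] * 5  # 5 steps on course, 5 steps off by 90 deg
perfect = error_cost(intended, decoded, kappa=0.0, rng=rng)  # 100% reliability
noisy = error_cost(intended, decoded, kappa=0.2, rng=rng)    # 80% reliability
```

Under this model, κ = 0.5 makes the detected signal statistically independent of the true error state, so the cost carries no information about decoding quality. This is consistent with the observation that reliability must exceed 50% for successful adaptation.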

Figure 9. Comparison of relative cumulative error measures using the error-signal-based training strategy for different reliabilities of error signals. The error-signal reliability is 100% (black), 80% (magenta), 60% (green), 50% (cyan), 40% (red), or 20% (blue). Medians of relative cumulative errors of 50 simulations from each group for all of the trials (A). Zoomed medians for early (B) and late (C) learning and during decoder-freeze (D). The distributions of the relative cumulative errors for each of the phases (E–G). The rightmost values of the distribution plots denote the total relative counts of the outlier values that are greater than the associated x-axis value. The number of outlier values decreased across trials, i.e., it was highest during early learning and lowest during decoder-freeze. Outliers correspond to the failed trajectories in Figures 4 and 7. In general, the higher the reliability, the better the performance. A minimum reliability of 60% is needed for successful training. Reliabilities of 100 and 80% are statistically indistinguishable during decoder-freeze (rank sum test, p > 0.05).

3.4. Adaptivity to Non-Stationary Tuning

We furthermore investigated whether the unsupervised adaptive algorithm can cope with continual changes in the tuning. The velocity tuning parameters of the user, βu (the flattened third and fourth rows of Bu), were changed after each trial according to the following random walk model,

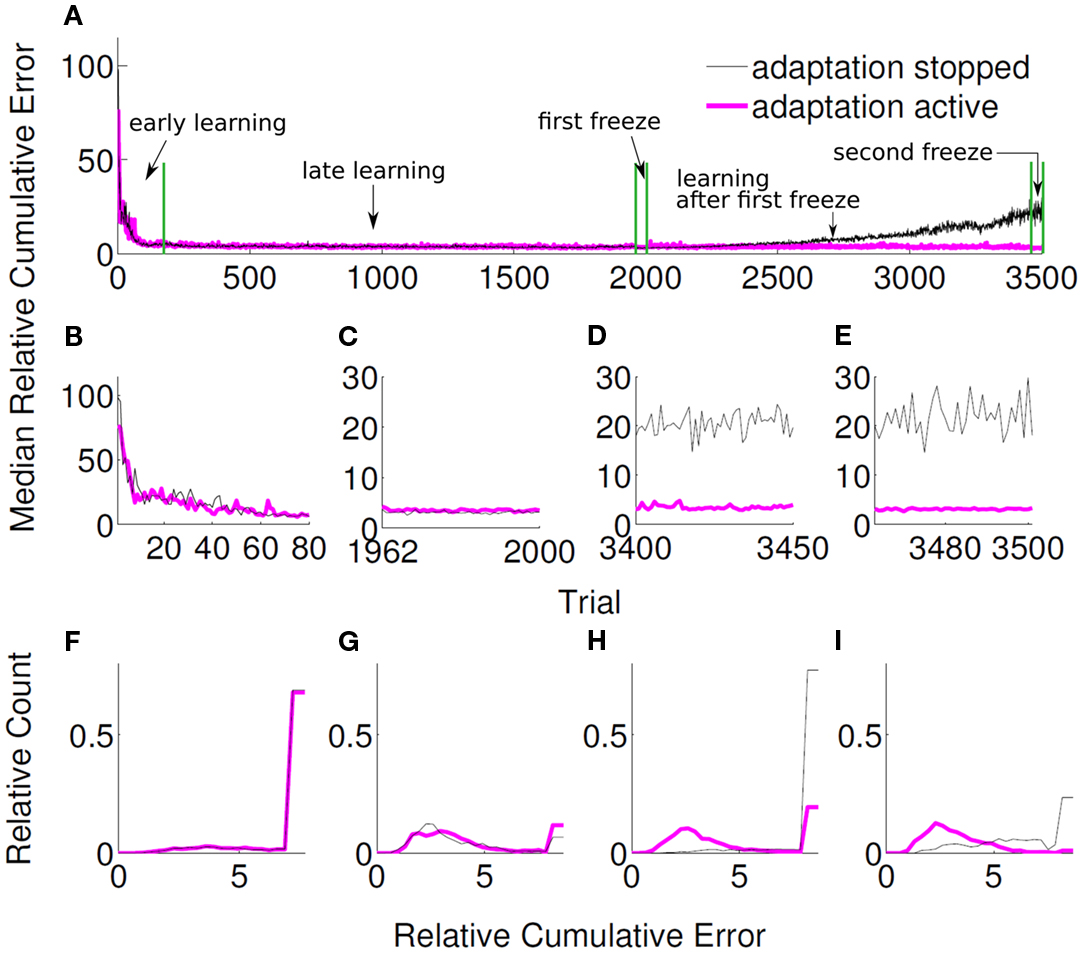

where ϱ is a 40-dimensional row vector whose entries are randomly drawn from a normal distribution. We imposed a hard limit on the entries of βu so that they remained within [−0.3, 0.3]. To investigate the performance of our algorithm under non-stationary tuning, we compared 50 randomly initialized unsupervised adaptive decoders with another group of 50 randomly initialized unsupervised decoders for which adaptation was stopped after a certain number of trials. Both decoder groups were adaptive for the first 1961 trials, by the end of which they had reached a stationary performance (Figures 10A,B,F). Adaptation of both groups was then switched off during trials 1962 to 2000 (1st freeze) to compare their baseline performance (Figures 10C,G). As expected, both groups performed equally well during the first 2000 trials (p > 0.1, Wilcoxon rank sum test). For the first group, adaptation was then switched on again for the next 1462 trials, whereas for the other group it was kept off. The non-adaptive decoders could not cope with the changing tuning, and their performance degraded strongly (Figures 10A,D,H and 11A). In contrast, the adaptive decoders tracked the changes in Bu well and kept their performance stable (Figures 10A,D,H). After trial 3462, a 2nd freeze phase of 39 trials was used to quantify the difference in performance between both groups for non-stationary tuning parameters: adaptive decoders yielded an error about 7 times smaller than that of non-adaptive decoders (p < 0.0001, Wilcoxon rank sum test; Figures 10A,E,I). In these simulations, we employed the unsupervised decoder cost as in equation (9). The simulation settings are the same as described at the beginning of Section 3, except for λ: here we set λ = 0.995 to reduce the influence of earlier trials relative to recent ones. This improves performance because recent trials carry more information about the current Bu. Trajectories of the adaptive group reach the target very accurately during decoder-freeze (Figure 11C) and, slightly less accurately but still with high precision, during the late learning phase (Figure 11B).
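The drift of the velocity tuning can be sketched as a clipped Gaussian random walk. This is a minimal sketch under stated assumptions. The shape of Bu (4 × 20, so that the flattened third and fourth rows give the 40 entries of βu) and the step size `sigma` are hypothetical, because the variance of ϱ is not given in this section. The hard limit of ±0.3 follows the text.

```python
import numpy as np


def random_walk_step(B_u, sigma, rng, limit=0.3):
    """Apply one trial of random-walk drift to the velocity tuning of B_u.

    beta_u = flattened rows 3 and 4 of B_u (0-based rows 2 and 3). Each entry gets
    independent Gaussian noise with standard deviation sigma (hypothetical value)
    and is then clipped to [-limit, limit].
    """
    B_new = B_u.copy()
    beta = B_new[2:4, :].ravel()                            # 40 velocity-tuning entries
    beta = beta + rng.normal(0.0, sigma, size=beta.shape)   # add the random vector rho
    beta = np.clip(beta, -limit, limit)                     # hard limit from the text
    B_new[2:4, :] = beta.reshape(2, -1)
    return B_new


rng = np.random.default_rng(0)
B_u = rng.uniform(-0.3, 0.3, size=(4, 20))  # hypothetical initial tuning, 20 channels
B0 = B_u.copy()
for trial in range(1000):
    B_u = random_walk_step(B_u, sigma=0.01, rng=rng)
```

Only the velocity rows drift, and the clipping keeps the walk bounded. Because βu therefore never stops changing, a decoder that freezes its parameters accumulates error over trials, as Figure 10 shows for the non-adaptive group.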

Figure 10. Relative cumulative errors for the unsupervised strategy under non-stationary tuning. The black curve shows the median RCE of 50 simulations in which adaptation was active between trials 1 and 1961 and stopped after that. The magenta curve shows the median RCE of 50 simulations in which adaptation was active both between trials 1 and 1961 and after trial 2000. In both groups, adaptation was inactive between trials 1962 and 2000 for comparison purposes (A). Zoomed medians during the early learning phase (B), the first decoder-freeze (C), the late learning phase after the first freeze (D), and the second decoder-freeze (E). The distributions of the relative cumulative errors for each of the phases (F–I). The rightmost values of the distribution plots denote the total relative counts of the outlier values that are greater than the associated x-axis value. Outliers correspond to the failed trajectories in Figure 11.

Figure 11. The trajectories under non-stationary tuning for unsupervised strategy and their variations during late learning and during decoder-freeze. The trajectories are shown for the decoder-freeze phase of the simulation group, whose adaptation was kept off after trial 1961 (A) as well as for the late learning (B) and decoder-freeze (C) phases of the simulation group, whose adaptation was kept active also between trials 2001 and 3462. Magenta thick curves indicate the failed trajectories. Each plot depicts the trajectories of 50 training simulations. Trajectories during decoder-freeze belong to trial 3501. The late learning trajectories were recorded at trial 3402, durations and target hit rates are from pooled trajectories of trials 3402–3406. The 50 different targets and trajectories at the recorded trial are rotated to the same orientation for a better visual evaluation.

3.5. Different Decoder Costs for Unsupervised Adaptation

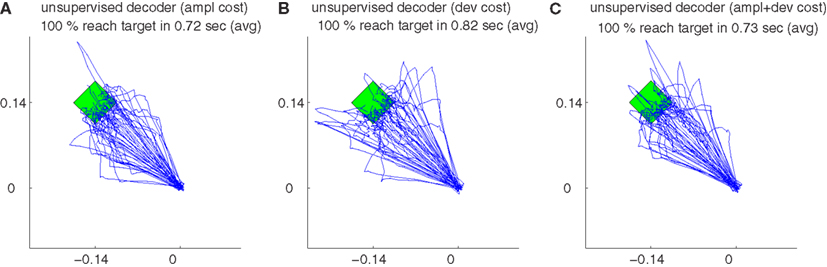

Figure 12B shows the trajectories obtained during decoder-freeze by 50 simulations of the unsupervised algorithm using the deviation cost (equation 10). The trajectories were precise and fast.

Figure 12. The trajectories for different cost measures and their variations during decoder-freeze. The trajectories are shown for the amplitude cost (A), for the deviation cost (B), and for the combination of both costs (C). Magenta thick curves indicate the failed trajectories. Each plot depicts the trajectories of 50 training simulations, each at trial 1501. Note that not only trial 1501 was simulated 50 times: the whole history of 1501 trials was simulated 50 times with random initial tunings. The 50 different targets and trajectories at trial 1501 are rotated to the same orientation for a better visual evaluation.

The trajectories obtained using the combined amplitude and deviation cost (equation 11) are shown in Figure 12C. Again, straight and fast movements were obtained. The trajectories for the amplitude cost are shown again in Figure 12A for direct visual comparison between the cost measures. A comparison between the three unsupervised cost functions is presented in Figure 13. The three costs yielded statistically indistinguishable performance in all phases (p > 0.05, Wilcoxon rank sum test).

Figure 13. Comparison of relative cumulative errors for the unsupervised strategy using different cost measures. The algorithm employs either the amplitude cost (black), the deviation cost (magenta), or their combination (green). Medians of relative cumulative errors of 50 simulations from each group for all of the trials (A). Zoomed medians for early (B) and late (C) learning and during decoder-freeze (D). The distributions of the relative cumulative errors for each of the phases (E–G). The rightmost values of the distribution plots denote the total relative counts of the outlier values that are greater than the associated x-axis value. The number of outlier values decreased across trials, i.e., it was highest during early learning and zero during decoder-freeze.

4. Discussion

Our results show that under realistic conditions, adaptive BMI decoding starting with random tuning parameters is feasible without an explicit supervision signal. Decoding performance gradually improves across trials and reaches a value close to the maximum possible performance, as obtained by a supervised adaptive decoder that assumes perfect knowledge of the intended movement of the BMI user. Moreover, we propose an adaptive decoder that employs neuronal error-signals and show that this decoder yields a performance similar to that of our unsupervised adaptive decoder. Unsupervised and error-signal based decoders adapt rapidly and generate precise movement trajectories to the target. The suggested decoders do not require a supervision signal, e.g., the intended movement, and can therefore be used during autonomous BMI control. The suggested unsupervised adaptation is based on the minimization of a simple cost function, which penalizes high control signals and/or high variability of the neuronal control signals. The rationale behind these costs is that inaccurate decoding causes corrective attempts by the BMI user, which in turn increase the control signals and their variability. Accurate movement decoding therefore corresponds to lower costs, and minimizing the suggested cost functions improves the accuracy of BMI movement control. Because the approach is general, we expect it to work in other kinds of motor tasks as well, not only in the reaching task considered in our simulations. Note that the cost function could alternatively be derived from the trajectories alone instead of the control signals (e.g., by penalizing deviations from a straight line).
An additional argument in favor of our cost functions comes from behavioral studies of human motor control: A wide range of human motor behavior can be described by optimal feedback control (OFC) models, which minimize cost functions containing the same dependence on the motor control signals as we used in our decoder cost (Todorov and Jordan, 2002b; Braun et al., 2009; Diedrichsen et al., 2010).
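Equations (9) to (11), which define the amplitude, deviation, and combined costs, are not reproduced in this section. To make the rationale above concrete, the sketch below uses hypothetical stand-ins. The amplitude cost is the mean squared norm of the control vectors within an update period. The deviation cost is their mean squared distance from the period's mean control vector. The combined cost is the sum of the two. These forms are our assumptions and may differ from the paper's definitions. The toy data only show that corrective, fluctuating commands raise both costs.

```python
import numpy as np


def amplitude_cost(U):
    """Hypothetical amplitude cost: mean squared norm of the control vectors.

    U has shape (n_steps, n_channels): one neuronal control vector per time step.
    """
    U = np.asarray(U, dtype=float)
    return float(np.mean(np.sum(U ** 2, axis=1)))


def deviation_cost(U):
    """Hypothetical deviation cost: mean squared distance from the mean control vector."""
    U = np.asarray(U, dtype=float)
    return float(np.mean(np.sum((U - U.mean(axis=0)) ** 2, axis=1)))


def combined_cost(U):
    """Hypothetical combination: sum of the amplitude and deviation costs."""
    return amplitude_cost(U) + deviation_cost(U)


# Toy control sequences (hypothetical, 2 channels, 4 time steps).
steady = [[1.0, 0.0]] * 4                                      # accurate decoding
corrective = [[1.0, 0.0], [2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # corrective attempts
```

With these definitions, the steady sequence has amplitude cost 1.0 and deviation cost 0.0. The corrective sequence has 2.0 and 0.75, respectively. This ordering is the property the decoder exploits when it minimizes the cost.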

Besides adaptation to unknown but static neuronal tuning to movement, we demonstrated that the proposed algorithms can also keep the decoding performance stable for non-stationary tuning. This is even possible if the tuning is not only non-stationary but also initially unknown. In these simulations, we assumed that the non-stationarity of the tuning parameters follows a random walk model and, hence, is independent of the decoder. If the decoded movement is fed back to the BMI user, the neuronal signals might adapt (Jarosiewicz et al., 2008) and the learning speed as well as the final accuracy might even increase beyond the presented values.

To train the decoder, we assumed a log-linear model that relates the decoder parameters to the cost via meta-parameters. We introduced a learning algorithm that explores the parameter space with an ε-greedy policy. Our method performs least squares regression recursively to estimate the optimal values of the meta-parameters. In other words, the algorithm performs simultaneous exploration of the decoding parameters and recursive least squares (RLS) (Farhang-Boroujeny, 1999) regression on the decoder cost function. The same algorithm also works with neuronal error-signals, where the cost is the number of error-signals detected in a given time period. Error-related neuronal activity has indeed been recorded from the brain via EEG (Gehring et al., 1993; Falkenstein et al., 2000; Krigolson et al., 2008), functional magnetic resonance imaging (fMRI) (Diedrichsen et al., 2005), and single-unit electrophysiology (Ito et al., 2003; Matsumoto et al., 2007). Here, we assume a simple, partially reliable error-signal that indicates a substantial deviation from the movement intention. Neuronal activity related to this kind of movement execution error has been found in ECoG (Milekovic et al., 2012) and in fMRI (Diedrichsen et al., 2005). Milekovic et al. (2012) observed neuronal responses evoked by a 180° movement mismatch during continuous one-dimensional joystick movements. In our simulations of 2-dimensional movements, we assumed that neuronal error-signals are evoked when the deviation between intended and decoded movement exceeds a somewhat arbitrary threshold of 20°. Future studies must still show that neuronal error-signals are indeed observable at this threshold. Nevertheless, we consider this a plausible assumption and expect our algorithm to be robust against the exact value of the threshold.
Our results show that the overall performance of our algorithm is robust against different parameter update periods (T) and different error-signal reliabilities (>50%). Arguably, the proposed algorithm has the potential to work with various types of neuronal error-signals, though the computation of the cost function in terms of error signals might need adjustments to achieve high performance.
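The learning scheme described above combines an ε-greedy search over decoder parameters with recursive least squares on the log-cost. It can be sketched as follows. This is a hedged illustration, not the authors' implementation. The separable quadratic features, the uniform exploration distribution, and the names `LogCostRLS` and `choose_parameters` are our assumptions. The decoder parameters are also reduced to a short vector `theta` instead of the full Bd. The RLS update itself (gain vector, prediction-error correction, and P-matrix update with forgetting factor λ) is the standard exponentially weighted form.

```python
import numpy as np


class LogCostRLS:
    """Exponentially weighted RLS fit of log-cost versus decoder parameters.

    Hypothetical model: log c(theta) ~ w0 + sum_i (a_i * theta_i + b_i * theta_i**2).
    lam is the RLS forgetting factor; delta scales the initial P matrix.
    """

    def __init__(self, dim, lam=0.995, delta=1e4):
        self.dim = dim
        self.lam = lam
        n = 1 + 2 * dim
        self.w = np.zeros(n)        # meta-parameters (w0, a, b)
        self.P = delta * np.eye(n)  # inverse correlation matrix of the features

    def features(self, theta):
        theta = np.asarray(theta, dtype=float)
        return np.concatenate(([1.0], theta, theta ** 2))

    def update(self, theta, cost):
        phi = self.features(theta)
        Pphi = self.P @ phi
        k = Pphi / (self.lam + phi @ Pphi)                    # gain vector
        self.w = self.w + k * (np.log(cost) - phi @ self.w)   # correct by prediction error
        self.P = (self.P - np.outer(k, Pphi)) / self.lam      # P update with forgetting

    def argmin(self, bound):
        """Minimizer of the fitted quadratic, or None while it is not convex."""
        a = self.w[1:1 + self.dim]
        b = self.w[1 + self.dim:]
        if np.any(b <= 0):
            return None
        return np.clip(-a / (2 * b), -bound, bound)


def choose_parameters(model, rng, eps, bound):
    """Epsilon-greedy choice: explore uniformly with probability eps, else exploit."""
    greedy = model.argmin(bound)
    if greedy is None or rng.random() < eps:
        return rng.uniform(-bound, bound, size=model.dim)
    return greedy


# Synthetic demo: a log-quadratic cost with a hypothetical optimum at (0.5, -0.2).
rng = np.random.default_rng(1)
model = LogCostRLS(dim=2)
true_opt = np.array([0.5, -0.2])
for _ in range(200):
    theta = choose_parameters(model, rng, eps=0.2, bound=1.0)
    cost = np.exp(np.sum((theta - true_opt) ** 2))
    model.update(theta, cost)
best = model.argmin(bound=1.0)
```

With forgetting factor λ, an observation made n updates ago is weighted by λ^n. For λ = 0.995 the effective memory is about 1/(1 − λ) = 200 updates. This is why a smaller λ lets the fit follow drifting tuning more closely, as in Section 3.4.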

An alternative to our algorithm would be to use standard reinforcement learning algorithms and generalization methods (Sutton and Barto, 1998) to train the decoding parameters directly, without a meta-parametric model relating cost to decoding parameters. In our practical experience, keeping a record of the previously explored parameters via the P matrix of the RLS algorithm and relating the parameters to the log-cost yields good performance. Comparing our method with other reinforcement learning algorithms that use the same cost and/or cost functions other than those suggested here is an interesting topic for future studies. Previously, Kalman filtering methods have also been applied for unsupervised adaptation during trajectory decoding (Eden et al., 2004a,b; Wang and Principe, 2008; Wu and Hatsopoulos, 2008). These methods adapt by maintaining consistency between a model of movement kinematics and a neuronal encoding model. They have been shown to track non-stationarities once an initial model has been learned via supervised calibration (Eden et al., 2004a,b; Wang and Principe, 2008). Our unsupervised approach is fundamentally different from these methods. We assume that the statistics of the control signals change in the aftermath of decoding errors, and we use this change for unsupervised adaptation. In the future, it would be worthwhile to compare the performance of these different methods and their robustness against model violations in online BMI tasks.

In principle, our adaptive decoding framework is independent of the type of neuronal signal used to control the movement. As neuronal control commands, the instantaneous firing rates of multiple single units or multi-unit activity could be used. Alternatively, filtered LFP, ECoG, EEG, or MEG signals, or the power of these signals in different frequency bands, could be employed. Our algorithms assume that neuronal control signals are linearly related to movement velocity. For many different neuronal signal types, movement trajectories can indeed be reconstructed well under this assumption (Wessberg et al., 2000; Serruya et al., 2002; Taylor et al., 2002 for SUA; Schalk et al., 2007; Pistohl et al., 2008 for ECoG). Linear tuning to movement position, or simultaneous linear tuning to position and velocity, can easily be implemented in our algorithms by straightforward modifications of the B matrices (see Section 2). Future extensions of our algorithmic framework might also consider non-linear tuning. The cost measures we introduced might need some modifications depending on the tuning of the recorded signals. For instance, the control signal (e.g., the firing rates of individual recording channels) might show all-or-none behavior, i.e., certain channels are on for one direction and off for another. In that case, the norms of the command vectors might hardly vary across movement directions, and the deviation cost might be preferable to the amplitude cost.

How realistic is the online BMI-user model used in the simulations? The user model is based on optimal feedback control, which can predict motor behavior at the level of movement kinematics (Todorov and Jordan, 2002b; Braun et al., 2009; Diedrichsen et al., 2010) in several different motor tasks. Whether the framework of OFC correctly predicts the subject’s behavior during BMI control is an open question, which can only be addressed by online closed-loop BMI experiments. For the suggested unsupervised adaptive decoder to work, however, neither optimality nor the validity of OFC is required. Instead, it would be sufficient that the magnitudes of the control signals change in consequence of the observed mismatches between the intended and decoded movements. OFC models fulfill this requirement, as would many other control policies. In our opinion, penalizing control signals with larger magnitudes should therefore work in many different movement tasks. Moreover, the framework might not even require changes in the magnitudes of the control signals. As long as the statistics of the neuronal control signals consistently change in response to movement mismatches, a suitably designed decoder cost function could penalize these changes and thereby allow the decoder to adapt. The simulation model of the BMI user is even less relevant for the error-signal based method, which depends solely on the existence of reliable and precise error-signals.

It could also be argued that not all components of the cortical recordings used as input to the BMI decoder represent a cortical movement control signal, i.e., part of them could also reflect ongoing activity, attention, or other signals that are not directly related to the movement. In many cases it should be possible to model this movement-irrelevant activity as part of the noise in equation (5). The algorithm should therefore still be able to adapt despite these task-irrelevant components, as long as consistent statistical changes in the control signal follow movement mismatches.

For our simulation studies, we needed to make further assumptions. For instance, we used a constant sensory delay as well as normally distributed and temporally uncorrelated noise in the equations for the optimal control command and the sensory feedback (equations 3 and 5). However, we believe that these assumptions are not critical and that moderate deviations from them will only weakly affect the proposed adaptation method.

In summary, we present a novel adaptive BMI decoder that utilizes neuronal responses to movement mismatches and/or neuronal error-signals. The decoder is robust and does not depend on specific assumptions about the BMI user’s behavior or neuronal signals. To ultimately demonstrate the usability of our approach, the decoder has to be tested in closed-loop online BMI experiments.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank the German Bundesministerium für Bildung und Forschung for grant 01GQ0830 to BFNT Freiburg-Tübingen and the Boehringer Ingelheim Funds for supporting this work. We further acknowledge the anonymous referees, whose feedback contributed significantly to the quality of the presented work.

References

Ball, T., Schulze-Bonhage, A., Aertsen, A., and Mehring, C. (2009). Differential representation of arm movement direction in relation to cortical anatomy and function. J. Neural Eng. 6, 016006.

Birbaumer, N., Ghanayim, N., Hinterberger, T., Iversen, I., Kotchoubey, B., Kübler, A., et al. (1999). A spelling device for the paralysed. Nature 398, 297–298.

Blankertz, B., Dornhege, G., Schäfer, C., Krepki, R., Kohlmorgen, J., Müller, K.-R., et al. (2003). Boosting bit rates and error detection for the classification of fast-paced motor commands based on single-trial EEG analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 11, 127–131.

Blumberg, J., Rickert, J., Waldert, S., Schulze-Bonhage, A., Aertsen, A., and Mehring, C. (2007). Adaptive classification for brain computer interfaces. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2007, 2536–2539.

Braun, D. A., Aertsen, A., Wolpert, D. M., and Mehring, C. (2009). Learning optimal adaptation strategies in unpredictable motor tasks. J. Neurosci. 29, 6472–6478.

Diedrichsen, J., Hashambhoy, Y., Rane, T., and Shadmehr, R. (2005). Neural correlates of reach errors. J. Neurosci. 25, 9919–9931.

Diedrichsen, J., Shadmehr, R., and Ivry, R. B. (2010). The coordination of movement: optimal feedback control and beyond. Trends Cogn. Sci. (Regul. Ed.) 14, 31–39.

DiGiovanna, J., Mahmoudi, B., Fortes, J., Principe, J. C., and Sanchez, J. C. (2009). Coadaptive brain–machine interface via reinforcement learning. IEEE Trans. Biomed. Eng. 56, 54–64.

Donoghue, J. P. (2002). Connecting cortex to machines: recent advances in brain interfaces. Nat. Neurosci. 5(Suppl.), 1085–1088.

Eden, U. T., Frank, L. M., Barbieri, R., Solo, V., and Brown, E. N. (2004a). Dynamic analysis of neural encoding by point process adaptive filtering. Neural Comput. 16, 971–998.

Eden, U., Truccolo, W., Fellows, M., Donoghue, J., and Brown, E. (2004b). Reconstruction of hand movement trajectories from a dynamic ensemble of spiking motor cortical neurons. Conf. Proc. IEEE Eng. Med. Biol. Soc. 6, 4017–4020.

Falkenstein, M., Hoormann, J., Christ, S., and Hohnsbein, J. (2000). ERP components on reaction errors and their functional significance: a tutorial. Biol. Psychol. 51, 87–107.

Farhang-Boroujeny, B. (1999). Adaptive Filters: Theory and Applications, 1st Edn. New York, NY: John Wiley & Sons, Inc.

Gage, G. J., Ludwig, K. A., Otto, K. J., Ionides, E. L., and Kipke, D. R. (2005). Naive coadaptive cortical control. J. Neural Eng. 2, 52–63.

Ganguly, K., and Carmena, J. M. (2009). Emergence of a stable cortical map for neuroprosthetic control. PLoS Biol. 7, e1000153. doi:10.1371/journal.pbio.1000153

Gehring, W. J., Goss, B., Coles, M. G. H., Meyer, D. E., and Donchin, E. (1993). A neural system for error detection and compensation. Psychol. Sci. 4, 385–390.

Hochberg, L. R., Serruya, M. D., Friehs, G. M., Mukand, J. A., Saleh, M., Caplan, A. H., et al. (2006). Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature 442, 164–171.

Ito, S., Stuphorn, V., Brown, J. W., and Schall, J. D. (2003). Performance monitoring by the anterior cingulate cortex during saccade countermanding. Science 302, 120–122.

Izawa, J., Rane, T., Donchin, O., and Shadmehr, R. (2008). Motor adaptation as a process of reoptimization. J. Neurosci. 28, 2883–2891.

Jarosiewicz, B., Chase, S. M., Fraser, G. W., Velliste, M., Kass, R. E., and Schwartz, A. B. (2008). Functional network reorganization during learning in a brain-computer interface paradigm. Proc. Natl. Acad. Sci. U.S.A. 105, 19486–19491.

Krigolson, O. E., Holroyd, C. B., Gyn, G. V., and Heath, M. (2008). Electroencephalographic correlates of target and outcome errors. Exp. Brain Res. 190, 401–411.

Lebedev, M. A., and Nicolelis, M. A. L. (2006). Brain-machine interfaces: past, present and future. Trends Neurosci. 29, 536–546.

Leuthardt, E. C., Schalk, G., Wolpaw, J. R., Ojemann, J. G., and Moran, D. W. (2004). A brain-computer interface using electrocorticographic signals in humans. J. Neural Eng. 1, 63–71.

Mahmoudi, B., and Sanchez, J. C. (2011). A symbiotic brain-machine interface through value-based decision making. PLoS ONE 6, e14760. doi:10.1371/journal.pone.0014760

Matsumoto, M., Matsumoto, K., Abe, H., and Tanaka, K. (2007). Medial prefrontal cell activity signaling prediction errors of action values. Nat. Neurosci. 10, 647–656.

McFarland, D. J., Sarnacki, W. A., and Wolpaw, J. R. (2010). Electroencephalographic (EEG) control of three-dimensional movement. J. Neural Eng. 7, 036007.

Mehring, C., Nawrot, M. P., de Oliveira, S. C., Vaadia, E., Schulze-Bonhage, A., Aertsen, A., et al. (2004). Comparing information about arm movement direction in single channels of local and epicortical field potentials from monkey and human motor cortex. J. Physiol. Paris 98, 498–506.

Mehring, C., Rickert, J., Vaadia, E., de Oliveira, S. C., Aertsen, A., and Rotter, S. (2003). Inference of hand movements from local field potentials in monkey motor cortex. Nat. Neurosci. 6, 1253–1254.

Milekovic, T., Ball, T., Schulze-Bonhage, A., Aertsen, A., and Mehring, C. (2012). Error-related electrocorticographic activity in humans during continuous movements. J. Neural Eng. 9, 026007.

Nicolelis, M. A. L. (2003). Brain-machine interfaces to restore motor function and probe neural circuits. Nat. Rev. Neurosci. 4, 417–422.

Parra, L. C., Spence, C. D., Gerson, A. D., and Sajda, P. (2003). Response error correction–a demonstration of improved human-machine performance using real-time EEG monitoring. IEEE Trans. Neural Syst. Rehabil. Eng. 11, 173–177.

Pistohl, T., Ball, T., Schulze-Bonhage, A., Aertsen, A., and Mehring, C. (2008). Prediction of arm movement trajectories from ECoG-recordings in humans. J. Neurosci. Methods 167, 105–114.

Rotermund, D., Ernst, U. A., and Pawelzik, K. R. (2006). Towards on-line adaptation of neuro-prostheses with neuronal evaluation signals. Biol. Cybern. 95, 243–257.

Sanchez, J. C., Mahmoudi, B., DiGiovanna, J., and Principe, J. C. (2009). Exploiting co-adaptation for the design of symbiotic neuroprosthetic assistants. Neural Netw. 22, 305–315.

Schalk, G., Kubánek, J., Miller, K. J., Anderson, N. R., Leuthardt, E. C., Ojemann, J. G., et al. (2007). Decoding two-dimensional movement trajectories using electrocorticographic signals in humans. J. Neural Eng. 4, 264–275.

Schalk, G., Miller, K. J., Anderson, N. R., Wilson, J. A., Smyth, M. D., Ojemann, J. G., et al. (2008). Two-dimensional movement control using electrocorticographic signals in humans. J. Neural Eng. 5, 75–84.

Scherberger, H., Jarvis, M. R., and Andersen, R. A. (2005). Cortical local field potential encodes movement intentions in the posterior parietal cortex. Neuron 46, 347–354.

Serruya, M. D., Hatsopoulos, N. G., Paninski, L., Fellows, M. R., and Donoghue, J. P. (2002). Instant neural control of a movement signal. Nature 416, 141–142.

Sutton, R. S., and Barto, A. G. (1998). Reinforcement Learning: An Introduction (Adaptive Computation and Machine Learning). Cambridge: The MIT Press.

Taylor, D. M., Tillery, S. I. H., and Schwartz, A. B. (2002). Direct cortical control of 3D neuroprosthetic devices. Science 296, 1829–1832.

Todorov, E. (2005). Stochastic optimal control and estimation methods adapted to the noise characteristics of the sensorimotor system. Neural Comput. 17, 1084–1108.

Todorov, E., and Jordan, M. I. (2002a). “A minimal intervention principle for coordinated movement,” in Advances in Neural Information Processing Systems, Vol. 15, eds S. Becker, S. Thrun, and K. Obermayer (Cambridge: MIT Press), 27–34.

Todorov, E., and Jordan, M. I. (2002b). Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 5, 1226–1235.

Velliste, M., Perel, S., Spalding, M. C., Whitford, A. S., and Schwartz, A. B. (2008). Cortical control of a prosthetic arm for self-feeding. Nature 453, 1098–1101.

Vidaurre, C., Kawanabe, M., von Bünau, P., Blankertz, B., and Müller, K. R. (2011a). Toward unsupervised adaptation of LDA for brain-computer interfaces. IEEE Trans. Biomed. Eng. 58, 587–597.

Vidaurre, C., Sannelli, C., Müller, K.-R., and Blankertz, B. (2011b). Co-adaptive calibration to improve BCI efficiency. J. Neural Eng. 8, 025009.

Vidaurre, C., Sannelli, C., Müller, K.-R., and Blankertz, B. (2010). Machine-learning-based coadaptive calibration for brain-computer interfaces. Neural Comput. 23, 791–816.

Vidaurre, C., Schlögl, A., Cabeza, R., Scherer, R., and Pfurtscheller, G. (2006). A fully on-line adaptive BCI. IEEE Trans. Biomed. Eng. 53, 1214–1219.

Vidaurre, C., Schlögl, A., Cabeza, R., Scherer, R., and Pfurtscheller, G. (2007). Study of on-line adaptive discriminant analysis for EEG-based brain computer interfaces. IEEE Trans. Biomed. Eng. 54, 550–556.

Waldert, S., Preissl, H., Demandt, E., Braun, C., Birbaumer, N., Aertsen, A., et al. (2008). Hand movement direction decoded from MEG and EEG. J. Neurosci. 28, 1000–1008.

Wang, Y., and Principe, J. C. (2008). Tracking the non-stationary neuron tuning by dual Kalman filter for brain machine interfaces decoding. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2008, 1720–1723.

Wessberg, J., Stambaugh, C. R., Kralik, J. D., Beck, P. D., Laubach, M., Chapin, J. K., et al. (2000). Real-time prediction of hand trajectory by ensembles of cortical neurons in primates. Nature 408, 361–365.

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain-computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791.

Wolpaw, J. R., and McFarland, D. J. (2004). Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proc. Natl. Acad. Sci. U.S.A. 101, 17849–17854.

Keywords: brain-machine interfaces, optimal feedback control, unsupervised learning, brain-computer interface, movement decoding

Citation: Gürel T and Mehring C (2012) Unsupervised adaptation of brain-machine interface decoders. Front. Neurosci. 6:164. doi: 10.3389/fnins.2012.00164

Received: 02 March 2012; Accepted: 24 October 2012; Published online: 16 November 2012.

Edited by:

Niels Birbaumer, Istituto di Ricovero e Cura a Carattere Scientifico Ospedale San Camillo, Italy

Reviewed by:

Ricardo Chavarriaga, Ecole Polytechnique Fédérale de Lausanne, Switzerland

Dennis J. McFarland, Wadsworth Center for Laboratories and Research, USA

Copyright: © 2012 Gürel and Mehring. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and subject to any copyright notices concerning any third-party graphics etc.

*Correspondence: Tayfun Gürel, Bernstein Center Freiburg, Albert-Ludwig University Freiburg, Hansastrasse 9a, Freiburg 79104, Germany. e-mail: tayfun.guerel@bcf.uni-freiburg.de; Carsten Mehring, Department of Bioengineering and Electrical and Electronic Engineering, Imperial College London, South Kensington Campus, London SW7 2AZ, UK. e-mail: mehring@imperial.ac.uk

†Present address: Tayfun Gürel, Department of Bioengineering, Imperial College London, London, UK.