Corrigendum: Investigating Emotional Top Down Modulation of Ambiguous Faces by Single Pulse TMS on Early Visual Cortices

- 1Centre for Cognition and Decision Making, National Research University Higher School of Economics, Moscow, Russia

- 2Department of Experimental Psychology, University of Groningen, Groningen, Netherlands

- 3Department of Psychology, National Research University Higher School of Economics, Moscow, Russia

Top-down processing is a mechanism in which memory, context and expectation are used to perceive stimuli. In this study we investigated how emotional content, induced by music mood, influences perception of happy and sad emoticons. Using single pulse TMS we stimulated the right occipital face area (rOFA), primary visual cortex (V1) and vertex while subjects performed a face-detection task and listened to happy and sad music. At baseline, incongruent audio-visual pairings decreased performance, demonstrating a dependence on emotion when perceiving ambiguous faces. However, face identification performance decreased during rOFA stimulation regardless of emotional content. No differences were found between Cz and V1 stimulation. These results suggest that while rOFA is important for processing faces regardless of emotion, V1 stimulation had no effect. Our findings suggest that early visual cortex activity may not integrate emotional auditory information with visual information during emotional top-down modulation of faces.

Introduction

When perceptual input is ambiguous, observers may rely on contextual information in order to process what they see (Jolij and Meurs, 2011). The fact that contextual information can be employed for top-down modulation suggests that perception is rather Bayesian in nature, in that observers generate the most likely interpretation of their visual input, relying on contextual information, memories and expectations (Bar, 2004; Kersten et al., 2004; Summerfield and Egner, 2009).

Top-down modulation of perception is not only facilitated by what we expect, but is also contingent on our emotional state. In some studies participants tend to show an emotional bias toward ambiguous stimuli in emotion-related paradigms. For example, when subjects in a negative mood view ambiguous faces, they tend to judge the facial expressions as sad (Bouhuys et al., 1995; Niedenthal, 2007).

Enhancing emotional significance of stimuli using music has been shown to influence the perception of facial expressions. Emotion laden influences on perception have been demonstrated in a face detection task while subjects listen to happy and sad music (Jolij and Meurs, 2011; Jolij et al., 2011). Jolij and Meurs (2011) were able to demonstrate that when subjects rated facial features of emoticons as happy or sad while passively listening to happy or sad music, participants became more sensitive to emoticons that were congruent with the music mood. They explained this phenomenon by suggesting that perception may be influenced by emotional state in a top-down manner.

For this study we plan to investigate the influence of emotional state on perception of ambiguous faces within the visual cortex. While some studies have been conducted on top-down emotional influence of perception of faces (Baumgartner et al., 2006; Li et al., 2009; Jeong et al., 2011; Müller et al., 2012), no studies have investigated the role of the early visual cortex in the context of emotional top-down perception of faces. In particular, we will investigate the role of the rOFA, a region within the inferior occipital gyrus which processes face parts (Rossion et al., 2003; Pitcher et al., 2007; Liu et al., 2010; Nichols et al., 2010; Renzi et al., 2015), has been linked with integration of facial stimuli (Cohen Kadosh et al., 2011), and becomes active 60–100 ms after stimulus onset, prior to the fusiform gyrus (Pizzagali et al., 1999; Halgren et al., 2000; Liu et al., 2002; Rossion et al., 2003; Pitcher et al., 2007, 2008; Rotshtein et al., 2007; Cohen Kadosh et al., 2010; Sadeh et al., 2010; Pitcher, 2014). In addition to the rOFA, we chose to stimulate V1 since it has been shown to be relevant for auditory-visual integration (Clavagnier et al., 2004; Muckli et al., 2015), and becomes active during face perception around the same time as the rOFA, ~90 ms (Pourtois et al., 2004).

The purpose of the study is to investigate the role of the early visual cortex during emotion-dependent top-down processing of faces. To this end, we will use single pulse TMS to disrupt activation of rOFA and V1 while subjects identify ambiguous facial expressions and simultaneously listen to happy and sad classical music. We expect that stimulation of the rOFA and V1 will interfere with identification of happy and sad ambiguous faces, especially when music and faces are mismatched in emotional content.

Methods and Materials

Subjects

Twenty-four right-handed Bachelor students (15 males, mean age 22 years, SD 1.4 years) at the University of Groningen with normal or corrected-to-normal vision were recruited for the study. Each participant was paid 7 euros per hour for the 2.5 h spent in the lab. Individuals who were taking drugs or had been prescribed medication were excluded from the participant pool. The study was approved by the local Ethics Committee (“Ethische Commissie van het Heymans Instituut voor Psychologisch Onderzoek”) and conducted according to the Declaration of Helsinki. Written informed consent was obtained from all participants.

Visual Stimulation

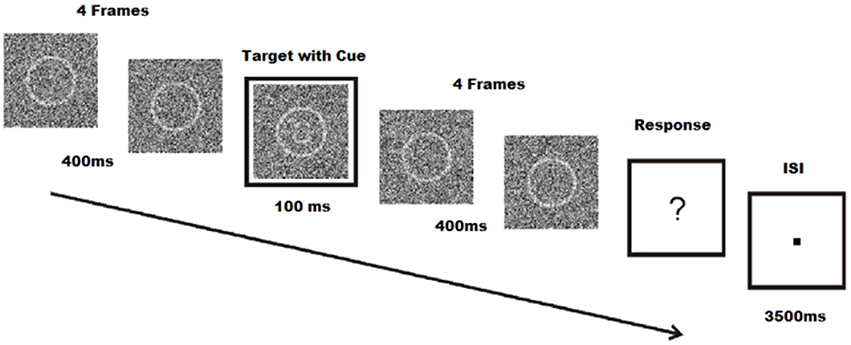

Stimuli were created using Matlab R2010a (The Mathworks Inc., Natick, Massachusetts, USA), and consisted of a 140 by 140 pixel array of random noise. Within the random noise, a circle with a diameter of 74 pixels was centered and shaded with a lighter contrast. All 255 values of the grayscale palette were used to create the random noise images. The difference between target and non-target stimuli was that the target stimuli also contained eyes, eyebrows, and a mouth within the circle, forming a graphic representation of a face with either a sad or a happy expression. Schematic faces have been shown to have similar emotional content compared to real faces, and may be used to influence emotion in perceptual tasks (Jolij and Lamme, 2005). Stimuli were presented on a 19-inch Philips Brilliance 190B TFT monitor (Philips BV, Eindhoven, The Netherlands) with a resolution of 800 by 600 pixels; viewing distance was ~80 cm. Trials were animations of 9 frames, each frame lasting 100 ms. The fifth frame also included a cue, a white square around the noise patch, which also lasted for 100 ms. After the 9 frames, subjects were presented with a fixation dot until they responded. Once they responded, subjects were presented with an inter-stimulus interval of between 3500 and 4500 ms. The following trial began after the inter-stimulus interval (Figure 1). Target and non-target stimuli were chosen at random in each trial to avoid expectancy or learning effects. Noise patches were randomly chosen from a list of 500 different noise patches. Within these noise patches, targets were chosen from a series of 50 happy and 50 sad images. While the schematic faces themselves were identical, the noise patches added to them made each stimulus different.
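The stimulus and timing parameters above can be sketched in a few lines of code. The following is a minimal illustration in Python rather than the Matlab code used for the study, which is not reproduced here. The names `make_noise_patch` and `trial_schedule`, the grey-level offset `LIGHTEN`, the 8-bit range 0 to 255, and the uniform draw of the inter-stimulus interval are all assumptions made for illustration; only the patch size, circle diameter, frame count, frame duration, cue frame and interval bounds come from the text. Facial features are not drawn.

```python
import random

SIZE = 140          # patch width and height in pixels (from the text)
DISC_DIAMETER = 74  # diameter of the lighter central circle (from the text)
N_FRAMES = 9        # frames per trial (from the text)
FRAME_MS = 100      # duration of each frame in ms (from the text)
CUE_FRAME = 5       # frame that carries the white-square cue (from the text)
LIGHTEN = 40        # ASSUMPTION: grey-level offset of the circle, not given in the text


def make_noise_patch(rng):
    """Return a SIZE x SIZE grid of grey levels with a lighter central disc."""
    centre = (SIZE - 1) / 2
    radius_sq = (DISC_DIAMETER / 2) ** 2
    patch = []
    for y in range(SIZE):
        row = []
        for x in range(SIZE):
            level = rng.randint(0, 255)  # ASSUMPTION: 8-bit grey levels
            if (x - centre) ** 2 + (y - centre) ** 2 <= radius_sq:
                level = min(255, level + LIGHTEN)  # lighten pixels inside the disc
            row.append(level)
        patch.append(row)
    return patch


def trial_schedule(rng):
    """Return frame timings for one trial plus the interval that follows it."""
    frames = [
        {"frame": i, "onset_ms": (i - 1) * FRAME_MS, "cue": i == CUE_FRAME}
        for i in range(1, N_FRAMES + 1)
    ]
    # ASSUMPTION: the 3500-4500 ms interval is drawn from a uniform distribution.
    interval_ms = rng.uniform(3500, 4500)
    return frames, interval_ms


rng = random.Random(0)
patch = make_noise_patch(rng)
frames, interval_ms = trial_schedule(rng)
```

Under these assumptions the cued fifth frame starts 400 ms into the animation, and the nine frames together last 900 ms.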

Figure 1. Face-detection task. Participants identify facial expressions of emoticons during a 100 ms target frame with cue. Each target, together with four pre- and four post-frames, is masked with noise. Subjects are required to respond even when no face is seen. Modified from Jolij and Meurs (2011).

Music Manipulation

While performing the task, participants listened to classical music, which lasted a maximum of 30 min for all blocks in the happy and sad music conditions. For the happy music blocks, pieces that had higher and more frequent pitches (faster beats per minute) as well as a dominant major key were chosen. Conversely, pieces in a minor key and with a slower tempo were chosen to represent sad music. Pieces were played in random order. Music type was counterbalanced across subjects. Music was played via an MP3 player with a handheld speaker. Headphones were not used because the TMS stimulation would cause interference when stimulating the rOFA. Participants chose a volume that they felt comfortable with. Happy music pieces: Violin Concerto in A, RV 347 (Ed. Malipiero): I. Allegro; Vivaldi, Concerto for Guitar and String Orchestra in A Major: I. Allegro non molto; Concerto No. 1 in E, RV 269 “Spring”: I. Allegro; Vivaldi, Concerto for Guitar and String Orchestra in A Major: III. Allegro; Vivaldi, Concerto for Guitar and String Orchestra in D Major: III. Allegro; Concerto No. 1 in E, RV 269 “Spring”: III. Allegro; Concerto No. 3 in F, RV 293 “Autumn”: III. Allegro; Violin Concerto in A, RV 347 (Ed. Malipiero): III. Allegro. Sad music pieces: Beethoven Moonlight Sonata (i); Chopin Nocturne No. 20 in c# (The Pianist); Chopin Prelude 6; Chopin Etude 6; Chopin Prelude No. 20 in c minor; Violin Concerto in A Minor, RV 356 (Ed. Malipiero): II. Largo; Scriabin Etude Op2 No1 (1887); Barber Adagio for Strings. See Supplementary Material for the full list of music pieces.

Mood Assessment

Participants' emotional state was evaluated using the Self-Assessment Manikin (SAM), a non-verbal method used to assess the valence, arousal, and dominance associated with a person's emotional state (Bradley and Lang, 1994). In this experiment, the SAM was used to assess the contrast in arousal and emotional valence between music and no music conditions while performing the task. The SAM has previously been used to assess mood while participants performed a face-detection task and listened to music (Jolij and Meurs, 2011). The authors demonstrated that while music increases arousal level, music mood may influence subjects' emotional valence depending on the direction of the music mood.

Experimental Procedure

Subjects were randomly assigned to one of three TMS locations (rOFA, V1, or Cz as a baseline stimulation site). Subjects completed 4 blocks for each music condition (happy, sad, no music), 12 blocks in total. Each block consisted of 72 trials, divided into happy, sad or no face trials. TMS pulses were fixed at 80 ms after stimulus onset for all conditions. We settled on a fixed stimulation time because our main hypothesis concerned the role of the rOFA, which activates at around 80 ms. Participants had to indicate whether they had seen a sad face or a happy face by using the computer keyboard (the F and J keys, respectively), or to press the Spacebar when they had not seen a face. Participants were specifically instructed to be conservative with their responses, i.e., to only respond when they were absolutely sure to have seen a face. Trials were excluded from the average reaction times if the participant responded later than 3 s after stimulus onset. No feedback was given. Participants' mood was assessed using the SAM immediately after each music condition.
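The design described above can be made concrete with a short sketch of the trial bookkeeping. This is a hypothetical illustration in Python, not the experiment software: the names `congruence` and `score_trial` are invented here, and returning `None` for no-face trials reflects an assumption that congruence is only defined when a face is present. The block counts, key mapping, TMS delay and 3 s reaction-time cutoff come from the text.

```python
MUSIC_CONDITIONS = ("happy", "sad", "none")   # music conditions (from the text)
BLOCKS_PER_MUSIC = 4                          # blocks per music condition (from the text)
TRIALS_PER_BLOCK = 72                         # trials per block (from the text)
TMS_DELAY_MS = 80                             # pulse delay after stimulus onset (from the text)
MAX_RT_S = 3.0                                # slower responses are left out of mean RT
RESPONSE_KEYS = {"f": "sad", "j": "happy", "space": "none"}


def congruence(music, face):
    """Classify a face trial by the match between music mood and expression."""
    if face == "none":
        return None  # ASSUMPTION: congruence is undefined without a face
    if music == "none":
        return "no_music"
    return "congruent" if music == face else "incongruent"


def score_trial(music, face, key, rt_s):
    """Score one response for accuracy and reaction-time inclusion."""
    return {
        "congruence": congruence(music, face),
        "correct": RESPONSE_KEYS[key] == face,
        "rt_included": rt_s <= MAX_RT_S,
    }


total_blocks = len(MUSIC_CONDITIONS) * BLOCKS_PER_MUSIC   # 3 x 4 blocks
total_trials = total_blocks * TRIALS_PER_BLOCK            # blocks x 72 trials
example = score_trial("happy", "sad", "f", 1.2)
```

With these values each subject completes 12 blocks and 864 trials; `example` is an incongruent trial answered correctly within the reaction-time window.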

Magnetic Stimulation and Coil Positioning

The TMS regions of interest were located by first converting foci of the rOFA (Rossion et al., 2003) into the EEG 10–20 system using T2T Munster (http://archive.is/wwwneuro03.uni-muenster.de). Cz was located by marking 50% of the distance between the inion and nasion and 50% of the distance between the left and right preauricular points. V1 was located at electrode Oz, 10% above the inion. The rOFA was located at electrode T6 by scoring 10% above the inion (Oz), 10% above the right preauricular point (T4) and 20% of the total circumference between Oz and T4, ~5 cm to the right of V1. TMS was applied using a Magstim Model 200 stimulator (The Magstim Company Ltd, Wales, UK) with a 70 mm figure-of-eight coil. Intensity was set below the visual threshold for each subject (between 65 and 80% for all subjects).
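As a worked illustration of the percentage rules for the two midline sites, the sketch below converts head measurements into scalp distances. It assumes, following the usual 10–20 convention, that the percentages for Cz and Oz refer to the nasion-to-inion arc and the preauricular arc; the function name `midline_sites` and the example head measurements are hypothetical and are not taken from the study. The rOFA (T6) site is left out because its description involves the head circumference.

```python
def midline_sites(nasion_inion_cm, preauricular_cm):
    """Return scalp distances for Cz and Oz from two head measurements.

    ASSUMPTION: percentages refer to the nasion-inion arc (Cz, Oz) and
    the left-right preauricular arc (Cz), as in the standard 10-20 system.
    """
    return {
        "Cz_from_inion_cm": 0.50 * nasion_inion_cm,              # midpoint of nasion-inion arc
        "Cz_from_left_preauricular_cm": 0.50 * preauricular_cm,  # midpoint of ear-to-ear arc
        "Oz_above_inion_cm": 0.10 * nasion_inion_cm,             # 10% above the inion
    }


# Hypothetical head: 36 cm nasion-inion arc, 38 cm preauricular arc.
sites = midline_sites(nasion_inion_cm=36.0, preauricular_cm=38.0)
```

For this hypothetical head, Cz lies 18 cm from the inion along the midline and Oz about 3.6 cm above the inion.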

Statistical Analyses and Post-hoc Comparisons

Data were analyzed using SPSS version 18.0.03 (SPSS Inc., Chicago, Illinois, USA). Mixed ANOVA tests were performed on accuracy and reaction time of facial expression identification (correct detection of face) with a within-subjects factor, Congruence (No music, Congruent, Incongruent), and a between-subjects factor, Location (rOFA, V1, Cz). A separate mixed ANOVA was performed to test the effect of music arousal on accuracy, with the within-subjects factors Music condition (No music, Sad, Happy) and Face condition, and the between-subjects factor Location (rOFA, V1, Cz). Post-hoc comparisons were corrected using the Bonferroni procedure. Sphericity was not violated for any of the effects (all p > 0.05). T-tests were used to analyze three scales of the SAM (valence, dominance, and arousal) for each music condition.

Results

SAM Questionnaire

The valence scale of the SAM revealed a main effect of music mood, suggesting that subjects report a more positive mood after listening to happy music and a more negative mood after listening to sad music (F = 23.995, p < 0.01). The arousal scale of the SAM revealed higher arousal when listening to sad music compared to the happy and no music conditions (F = 8.556, p = 0.002). No differences in the dominance scale were tested.

Behavioral Data

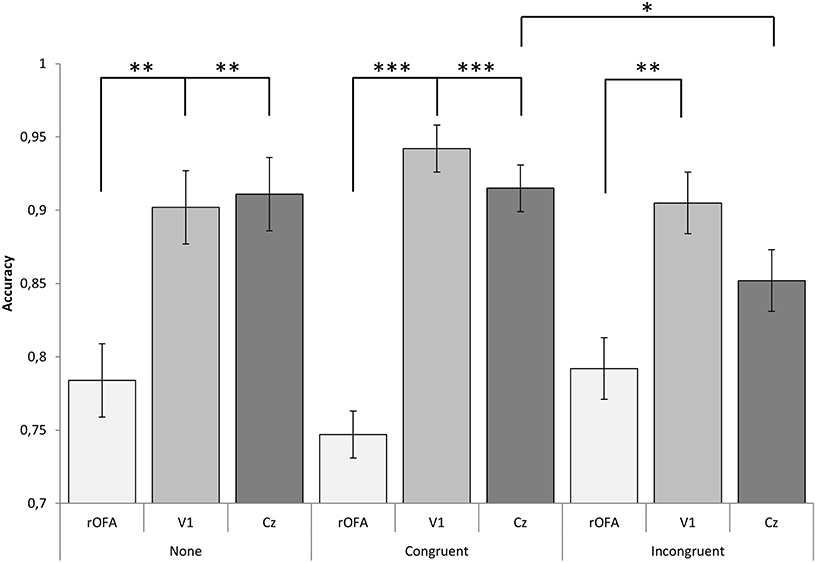

The mixed ANOVA on Congruency × Location revealed a two-way interaction effect [F(4, 42) = 2.993, p = 0.031, partial η2 = 0.219]. When comparing across Congruency, differences were observed between congruent and incongruent for subjects that received stimulation on Cz (p = 0.043).

When comparing across Location, Bonferroni corrected comparisons revealed statistical significance in accuracy between groups receiving rOFA and Cz stimulation for congruent face/music stimuli (p < 0.001) and stimuli with no music (p = 0.005). Statistical differences were also found between rOFA and V1 for congruent stimuli (p < 0.001) and the no music condition (p = 0.009). Both of these effects were driven by a decrease in performance under rOFA stimulation.

Conversely, for incongruent stimuli the difference between the rOFA and Cz groups was non-significant (p = 0.186), whereas the difference between rOFA and V1 stimulation was significant (p = 0.004). No differences were observed between V1 and Cz across Congruency (see Figure 2).

Figure 2. Graphical representation of interaction effect. An effect between Congruence and Location reflects emotion dependent top-down influence of face perception at baseline level (Cz), yet for V1 and rOFA, no effects were found. Instead, rOFA disrupted perception of faces regardless of emotion content while V1 stimulation facilitated performance. ***p < 0.001; **p < 0.01; *p < 0.05; p < 0.10.

Results also revealed a main effect of location [F(2, 21) = 25.467, p < 0.001, partial η2 = 0.708]. Subjects receiving rOFA stimulation correctly identified 77% of faces, ~14% less than subjects receiving V1 stimulation (μ = 92%; p < 0.001) and 12% less than subjects receiving Cz stimulation (μ = 89%; p < 0.001).
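The reported degrees of freedom and the effect size for the main effect of location can be checked from the design. The sketch below assumes a standard mixed design with 24 subjects, three Location groups and three Congruence levels, and uses the general identity partial η2 = (F × df_effect) / (F × df_effect + df_error). The function names are hypothetical and this is not the SPSS procedure itself.

```python
def mixed_anova_dfs(n_subjects, n_groups, n_levels):
    """Degrees of freedom for a one-between, one-within mixed ANOVA."""
    return {
        "between": (n_groups - 1, n_subjects - n_groups),
        "interaction": (
            (n_groups - 1) * (n_levels - 1),
            (n_subjects - n_groups) * (n_levels - 1),
        ),
    }


def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


dfs = mixed_anova_dfs(n_subjects=24, n_groups=3, n_levels=3)
eta_location = partial_eta_squared(25.467, *dfs["between"])
```

Here `dfs["between"]` is (2, 21) and `dfs["interaction"]` is (4, 42), matching the degrees of freedom reported for the main effect and the interaction, and `eta_location` rounds to 0.708.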

Furthermore, no significant effects were found for reaction time across conditions. Finally, due to an increase in arousal level for sad music compared to the other two conditions, we divided the Congruency condition into Music condition and Face condition and compared behavioral scores on face identification. This analysis revealed no effects of Music condition alone, indicating that the effects reported above were due to incongruence between music and face stimuli.

Discussion

For this study we investigated the functional role of early visual cortical areas involved in emotion dependent top-down modulation on the perception of facial expressions. This was done by stimulating rOFA and V1 while subjects identified ambiguous happy and sad faces and simultaneously listened to happy and sad music. Similarly to a previous behavioral study, performance of face identification during conditions of incongruent music mood decreased with respect to conditions of congruent music mood, yet only at baseline level. During stimulation of the rOFA and V1, identification of faces was modulated, irrespective of congruence between music mood and faces. While rOFA decreased performance of face identification in all music-face pairings, V1 increased performance across conditions.

In contrast to our expectations, rOFA stimulation decreased face perception, irrespective of auditory stimuli. The finding that music did not facilitate or impede facial expression identification compared to the no music condition demonstrates how the rOFA is selective to face processing. One potential explanation may be that stimulation time was fixed at 80 ms for all conditions. Since this time is critical for rOFA during face processing (Pitcher, 2014), this may explain why performance of face identification decreased equivalently across music mood. On the other hand, V1 stimulation did not alter performance of face identification compared to baseline, contrary to our expectations. Although V1 becomes active early on, the role of V1 may not be specific to emotion-dependent top-down modulation of face perception. It has been shown, however, that multisensory integration occurs from long-distance feedback connections projecting from higher cortical areas to V1 in a top-down hierarchical fashion (Ahissar and Hochstein, 2000; Juan and Walsh, 2003; Clavagnier et al., 2004; Muckli et al., 2015). This may suggest that V1 becomes active for emotion-dependent top-down modulation at a later stage, after activation of higher cortical areas.

In previous studies, incongruent music-face pairings show activation of the bilateral fusiform gyrus (Jeong et al., 2011), while congruent auditory-visual stimuli activate the right fusiform gyrus (Baumgartner et al., 2006). However, in this latter study classical music and fearful and sad pictures were used to induce emotional congruence, rather than face stimuli. This may suggest that while the right fusiform gyrus may account for auditory-visual integration of emotional stimuli in general, the rOFA specifically may account for emotional auditory-visual integration of faces. Further research is necessary to explore the distinctive functional roles of rOFA and right fusiform gyrus in face perception while influenced by emotional state.

In this study we stimulated early visual cortical regions to investigate emotion-dependent top-down processing of faces. However, the influences on face perception may instead be attributed to higher cortical areas. For example, the superior temporal region has been associated with the binding of faces with voices (Watson et al., 2013, 2014a,b). In particular, the posterior superior temporal sulcus plays a crucial role in audio-visual integration which gates influences from the fusiform gyrus toward the left amygdala when processing emotion-laden faces (Müller et al., 2012). Furthermore, generation of top-down signals during face perception revealed activity within the anterior cingulate cortex, orbitofrontal cortex and left dorsolateral prefrontal cortex (Li et al., 2009). Therefore, emotional state may influence face perception from higher cortical regions such as the superior temporal cortex, anterior cingulate cortex, orbitofrontal cortex or left dorsolateral prefrontal cortex. For this reason, it is necessary to further explore neural networks involved in emotion-dependent top-down modulation in order to understand how emotional state influences visual perception of faces.

Conclusion

Earlier studies suggest that V1 facilitates integration of multisensory stimuli while the rOFA is involved in processing of face parts. In this study we attempted to investigate the role of the rOFA and V1 during emotion-dependent top-down modulation of face perception. Subjects who passively listened to emotionally congruent or incongruent music were instructed to identify ambiguous happy and sad faces in a face-detection task. Results provided evidence of emotional influence on face perception in the control (Cz) stimulation condition, yet stimulation of rOFA decreased accuracy of face identification, irrespective of congruency. No difference was found between V1 and baseline. These results suggest that while the rOFA has a more general role in face perception, early stimulation of V1 (80 ms) did not affect emotion-dependent top-down modulation.

Author Contributions

Data collection, analysis, and writing of the report were mainly performed by ZY. RV assisted with analysis and writing of the report. Study design and supervision were conducted by JJ.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The study has been funded by the Russian Academic Excellence Project “5–100” and by the University of Groningen.

References

Ahissar, M., and Hochstein, S. (2000). The spread of attention and learning in feature search: effects of target distribution and task difficulty. Vision Res. 40, 1349–1364. doi: 10.1016/S0042-6989(00)00002-X

Baumgartner, T., Lutz, K., Schmidt, C. F., and Jäncke, L. (2006). The emotional power of music: how music enhances the feeling of affective pictures. Brain Res. 1075, 151–164. doi: 10.1016/j.brainres.2005.12.065

Bouhuys, A. L., Bloem, G. M., and Groothuis, T. G. G. (1995). Induction of depressed and elated mood by music influences the perception of facial emotional expressions in healthy subjects. J. Affect. Disord. 33, 215–225. doi: 10.1016/0165-0327(94)00092-N

Bradley, M. M., and Lang, P. J. (1994). Measuring emotion: the Self-Assessment Manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 25, 49–59. doi: 10.1016/0005-7916(94)90063-9

Clavagnier, S., Falchier, A., and Kennedy, H. (2004). Long-distance feedback projections to area V1: implications for multisensory integration, spatial awareness, and visual consciousness. Cogn. Affect. Behav. Neurosci. 4, 117–126. doi: 10.3758/CABN.4.2.117

Cohen Kadosh, K. C., Walsh, V., and Cohen Kadosh, R. C. (2011). Investigating face-property specific processing in the right OFA. Soc. Cogn. Affect. Neurosci. 6, 58–65. doi: 10.1093/scan/nsq015

Cohen Kadosh, K., Henson, R. N. A., Cohen Kadosh, R., Johnson, M. H., and Dick, F. (2010). Task-dependent activation of face-sensitive cortex: an fMRI adaptation study. J. Cogn. Neurosci. 22, 903–917. doi: 10.1162/jocn.2009.21224

Halgren, E., Raij, T., Marinkovic, K., Jousmaki, V., and Hari, R. (2000). Cognitive response profile of the human fusiform face area as determined by MEG. Cereb. Cortex 10, 69–81. doi: 10.1093/cercor/10.1.69

Jeong, J. W., Diwadkar, V. A., Chugani, C. D., Sinsoongsud, P., Muzik, O., Behen, B. E., et al. (2011). Congruence of happy and sad emotion in music and faces modifies cortical audiovisual activation. Neuroimage 54, 2973–2982. doi: 10.1016/j.neuroimage.2010.11.017

Jolij, J., and Lamme, V. A. F. (2005). Repression of unconscious information by conscious processing: evidence from transcranial magnetic stimulation-induced blindsight. Proc. Natl. Acad. Sci. U.S.A. 102, 10747–10751. doi: 10.1073/pnas.0500834102

Jolij, J., and Meurs, M. (2011). Music alters visual perception. PLoS ONE 6:e18861. doi: 10.1371/journal.pone.0018861

Jolij, J., Meurs, M., and Haitel, E. (2011). Why do we see what's not there? Commun. Integ. Biol. 4, 764–767. doi: 10.4161/cib.17754

Juan, C. H., and Walsh, V. (2003). Feedback to V1: a reverse hierarchy in vision. Exp. Brain Res. 150, 259–263. doi: 10.1007/s00221-003-1478-5

Kersten, D., Mamassian, P., and Yuille, A. (2004). Object perception as Bayesian inference. Annu. Rev. Psychol. 55, 271–304. doi: 10.1146/annurev.psych.55.090902.142005

Li, J., Liu, J., Liang, J., Zhang, H., Zhao, J., Huber, D. E., et al. (2009). A distributed neural system for top-down face processing. Neurosci. Lett. 451, 6–10. doi: 10.1016/j.neulet.2008.12.039

Liu, J., Harris, A., and Kanwisher, N. (2002). Stages of processing in face perception: an MEG study. Nat. Neurosci. 5, 910–916. doi: 10.1038/nn909

Liu, J., Harris, A., and Kanwisher, N. (2010). Perception of face parts and face configurations: an fMRI study. J. Cogn. Neurosci. 22, 203–211. doi: 10.1162/jocn.2009.21203

Muckli, L., De Martino, F., Vizioli, L., Petro, L. S., Smith, F. W., Ugurbil, K., et al. (2015). Contextual feedback to superficial layers of V1. Curr. Biol. 25, 2690–2695. doi: 10.1016/j.cub.2015.08.057

Müller, V., Cieslik, E., Turetsky, B. I., and Eickhoff, S. B. (2012). Crossmodal interactions in audiovisual emotion processing. Neuroimage 60, 553–561. doi: 10.1016/j.neuroimage.2011.12.007

Nichols, D. F., Betts, L. R., and Wilson, H. R. (2010). Decoding of faces and face components in face-sensitive human visual cortex. Front. Psychol. 1:28. doi: 10.3389/fpsyg.2010.00028

Pitcher, D., Garrido, L., Walsh, V., and Duchaine, B. (2008). TMS disrupts the perception and embodiment of facial expressions. J. Neurosci. 28, 8929–8933. doi: 10.1523/JNEUROSCI.1450-08.2008

Pitcher, D., Walsh, V., Yovel, G., and Duchaine, B. (2007). TMS evidence for the involvement of the right occipital face area in early face processing. Curr. Biol. 17, 1568–1573. doi: 10.1016/j.cub.2007.07.063

Pitcher, D. (2014). Facial expression recognition takes longer in the posterior superior temporal sulcus than in the occipital face area. J. Neurosci. 34, 9173–9177. doi: 10.1523/JNEUROSCI.5038-13.2014

Pizzagali, D., Regard, M., and Lehmann, D. (1999). Rapid emotional face processing in the human right and left brain hemisphere: an ERP study. Neuroreport 10, 2691–2698. doi: 10.1097/00001756-199909090-00001

Pourtois, G., Grandjean, D., Sander, D., and Vuilleumier, P. (2004). Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cereb. Cortex 14, 619–633. doi: 10.1093/cercor/bhh023

Renzi, C., Ferrari, C., Schiavi, S., Pisoni, A., Papagno, C., et al. (2015). The role of the occipital face area in holistic processing involved in face detection and discrimination: a tDCS study. Neuropsychology 29, 409–416. doi: 10.1037/neu0000127

Rossion, B., Caldara, R., Seghier, M., Schuller, A. M., Lazeyras, F., and Mayer, E. (2003). A network of occipito-temporal face-sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain 126, 2381–2395. doi: 10.1093/brain/awg241

Rotshtein, P., Geng, J. J., Driver, J., and Dolan, R. J. (2007). Role of features and second-order spatial relations in face discrimination, face recognition, and individual face skills: behavioral and functional magnetic resonance imaging data. J. Cogn. Neurosci. 19, 1435–1452. doi: 10.1162/jocn.2007.19.9.1435

Sadeh, B., Podlipsky, I., Zadanov, A., and Yovel, G. (2010). Face-selective fMRI and event-related potential responses are highly correlated: evidence from simultaneous ERP-fMRI investigation. Hum. Brain Mapp. 31, 1490–1501. doi: 10.1002/hbm.20952

Summerfield, C., and Egner, T. (2009). Expectation (and attention) in visual cognition. Trends Cogn. Sci. 13, 403–409. doi: 10.1016/j.tics.2009.06.003

Watson, R., Latinus, M., Charest, I., Crabbe, F., and Belin, P. (2014b). People-selectivity, audiovisual integration and heteromodality in the superior temporal sulcus. Cortex 50, 125–136. doi: 10.1016/j.cortex.2013.07.011

Watson, R., Latinus, M., Noguchi, T., Garrod, O., Crabbe, F., and Belin, P. (2013). Dissociating task difficulty from incongruence in face-voice emotion integration. Front. Hum. Neurosci. 7:744. doi: 10.3389/fnhum.2013.00744

Keywords: emotion dependent top-down processing, face perception, occipital face area, primary visual cortex, TMS

Citation: Yaple ZA, Vakhrushev R and Jolij J (2016) Investigating Emotional Top Down Modulation of Ambiguous Faces by Single Pulse TMS on Early Visual Cortices. Front. Neurosci. 10:305. doi: 10.3389/fnins.2016.00305

Received: 22 March 2016; Accepted: 16 June 2016;

Published: 30 June 2016.

Edited by:

Huan Luo, Peking University, China

Reviewed by:

Zonglei Zhen, Beijing Normal University, China

Ke Zhou, Chinese Academy of Sciences, China

Copyright © 2016 Yaple, Vakhrushev and Jolij. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zachary A. Yaple, zachyaple@gmail.com
