Neural substrates of reliability-weighted visual-tactile multisensory integration

- 1 Department of Neurobiology and Anatomy, University of Texas Health Science Center at Houston, Houston, TX, USA

- 2 Department of Psychology and Program in Cognitive Neuroscience, The City College of the City University of New York, New York, NY, USA

As sensory systems deteriorate in aging or disease, the brain must relearn the appropriate weights to assign each modality during multisensory integration. Using blood-oxygen-level-dependent functional magnetic resonance imaging of human subjects, we tested a model for the neural mechanisms of sensory weighting, termed “weighted connections.” This model holds that the connection weights between early and late areas vary depending on the reliability of the modality, independent of the level of early sensory cortex activity. When subjects detected viewed and felt touches to the hand, a network of brain areas was active, including visual areas in lateral occipital cortex, somatosensory areas in inferior parietal lobe, and multisensory areas in the intraparietal sulcus (IPS). In agreement with the weighted connections model, the connection weight between somatosensory cortex and IPS, measured with structural equation modeling, increased for somatosensory-reliable stimuli, and the connection weight between visual cortex and IPS increased for visual-reliable stimuli. This double dissociation of connection strengths was similar to the pattern of behavioral responses during incongruent multisensory stimulation, suggesting that weighted connections may be a neural mechanism for behavioral reliability weighting.

Introduction

Integrating information from different sensory modalities is critical for obtaining an accurate representation of the environment. On a windy day, it may be more accurate to rely on the visual modality to determine if an insect has landed on one’s arm because the somatosensory stimulation of the skin by the breeze renders the somatosensory modality unreliable, while on a calm day the somatosensory modality may be more reliable. Behavioral experiments show that neurologically normal subjects take reliability into account when making behavioral decisions, weighting each modality by its reliability (Ernst and Banks, 2002 ; Alais and Burr, 2004 ). A better understanding of the neural mechanisms for reliability-weighted multisensory integration may help in the development of treatment and rehabilitation strategies for the many disorders in which the information from a sensory modality is degraded, such as vision loss due to macular degeneration.

Computational modeling studies have suggested that reliability weighting could occur by a simple linear summation of neuronal responses (Ma et al., 2006 ; Ma and Pouget, 2008 ). This model, which we term the “linear summation” model, predicts that increasing stimulus reliability scales the responses of neurons in sensory cortex (“early” areas) that respond to that stimulus. An explicit prediction of this model is that connection weights between early and late areas should not change (Ma et al., 2006 ). In an alternative model, which we term the “weighted connections” model, the connection weights between early and late areas change depending on the reliability of the stimulus (and are independent of the level of activity in early areas). This model receives some support from a recent electrophysiological study of visual-vestibular multisensory integration in macaque monkeys. Morgan et al. (2008) found that the effective weight of visual inputs into area MST decreased when the visual stimulus was made less reliable. However, Morgan et al. derived these weights from response measurements within a single area, without directly measuring connection strengths between areas.
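The contrast between the two models can be made concrete with a toy calculation. The sketch below is a deliberately simplified illustration, not the formulation of Ma et al. (2006): the function names and reliability values are hypothetical, reliability is a single number per modality, and the late-area response is assumed to be a plain weighted sum of two early-area responses.

```python
import numpy as np

def linear_summation(stim_vis, stim_som, rel_vis, rel_som):
    """Toy 'linear summation' model (hypothetical simplification).

    Reliability scales the early-area responses; the early-to-late
    connection weights stay fixed at 1.
    """
    early = np.array([rel_vis * stim_vis, rel_som * stim_som])
    weights = np.array([1.0, 1.0])
    late = float(weights @ early)
    return early, weights, late

def weighted_connections(stim_vis, stim_som, rel_vis, rel_som):
    """Toy 'weighted connections' model (hypothetical simplification).

    Early-area responses are unaffected by reliability; the early-to-late
    connection weights carry the reliability instead.
    """
    early = np.array([stim_vis, stim_som])
    weights = np.array([rel_vis, rel_som])
    late = float(weights @ early)
    return early, weights, late

# Hypothetical visual-reliable condition: vision four times as reliable as touch.
for model in (linear_summation, weighted_connections):
    early, weights, late = model(stim_vis=1.0, stim_som=1.0, rel_vis=4.0, rel_som=1.0)
    print(model.__name__, early, weights, late)
```

In this toy case both models give the same late-area output; they differ only in where reliability enters (early activity vs. early-to-late weights), which is why measuring early-area activity and connection strength separately can distinguish them.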

In order to better understand the neural substrates of reliability-weighted multisensory integration, we measured activity in both early and late areas using blood-oxygen level dependent functional magnetic resonance imaging (BOLD fMRI). Subjects detected viewed and felt touches delivered to the right index finger. The reliability of each sensory modality was adjusted by varying the signal-to-noise ratio (SNR) of the stimulus.

We used the average BOLD signal change to assess the neural activity associated with visual and somatosensory processing within early sensory areas. To measure connection strengths between early sensory areas and later multisensory ones, we used structural equation modeling, a validated technique for examining the effective connectivity between different brain areas (McIntosh et al., 1994 ; Horwitz et al., 1995 ; Buchel and Friston, 2001 ; Stein et al., 2007 ). The weighted connections model predicts that connection weights should be modulated by reliability, independent of the level of activity in early sensory areas.

Materials and Methods

Experiments were conducted in accordance with the Institutional Review Boards of the University of Texas Health Science Center at Houston and the City University of New York. Written informed consent was obtained from each subject prior to experimentation.

Sensory Stimuli and Task

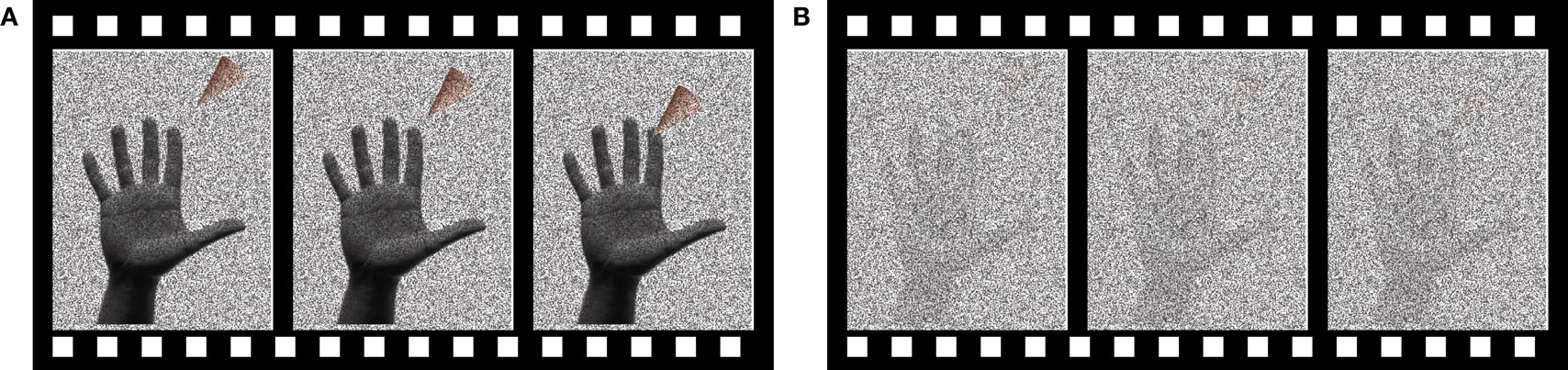

Subjects performed a two-alternative forced choice task, deciding whether a touch was delivered to the index finger of the right hand or not. Visual stimuli consisted of a 1.5 s video of an animated triangular probe approaching the tip of the index finger of a photograph of an actor’s hand (Figure 1 ). On “visual touch” trials, the probe contacted the finger at t = 1.0 s and then withdrew. On “visual no-touch” trials, the probe stopped just short of the fingertip at t = 1.0 s and then withdrew.

Figure 1. The visual stimulus. The visual stimulus consisted of a video of an animated probe (triangular shape) approaching the image of a hand. Three frames of the video are shown. (A) Reliable visual stimulus. Dynamic random noise was overlaid on the visual stimulus. During reliable visual stimulation, the dynamic noise was transparent. (B) Unreliable visual stimulus. During unreliable visual stimulation, the dynamic noise was opaque.

The reliability of the visual stimulus was manipulated by adjusting the transparency of the dynamic noise. First, the digital video of the probe approaching the finger was generated using Apple Final Cut software. Next, single video frames containing white noise (i.e., dynamic random black-and-white bitmaps) were generated using MATLAB and overlaid on the video. Finally, Neurobehavioral Systems Presentation software was used to combine each frame of the probe video with a randomly selected white-noise frame using the alpha-channel compositing method (Porter and Duff, 1984 ). Every pixel in the image was assigned the same alpha-channel value, which was then used to combine the two images: lower values made the white-noise frame more transparent, and higher values made it more opaque. Transparent noise made it easy to discriminate the touch and no-touch stimuli (reliable); opaque noise made it difficult (unreliable).
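Because the noise was composited over an opaque video frame with one alpha value for every pixel, the Porter-Duff "over" operation reduces to a linear blend of the two images. A minimal NumPy sketch, assuming grayscale frames scaled to [0, 1]; the function name, frame size, and alpha levels are hypothetical and this is not the Presentation implementation:

```python
import numpy as np

def composite_frame(video_frame, noise_frame, alpha):
    """Blend a noise frame over a video frame using one global alpha value.

    With an opaque video frame underneath, Porter-Duff "noise over video"
    reduces to: out = alpha * noise + (1 - alpha) * video.
    alpha = 0 leaves the noise fully transparent (reliable stimulus);
    alpha = 1 makes it fully opaque (stimulus hidden).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    video = np.asarray(video_frame, dtype=float)
    noise = np.asarray(noise_frame, dtype=float)
    return alpha * noise + (1.0 - alpha) * video

rng = np.random.default_rng(0)
height, width = 48, 64                         # hypothetical frame size
video_frame = np.full((height, width), 0.5)    # stand-in for one probe-video frame
# One random black-and-white bitmap (0 = black, 1 = white); the text draws
# a new, randomly selected bitmap for every video frame.
noise_frame = rng.integers(0, 2, size=(height, width)).astype(float)

reliable = composite_frame(video_frame, noise_frame, alpha=0.2)    # hypothetical level
unreliable = composite_frame(video_frame, noise_frame, alpha=0.8)  # hypothetical level
```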

Somatosensory stimuli were delivered using piezoelectric benders attached to the tip of the index finger of the subject’s right hand. The somatosensory stimulus consisted of a small deflection of the piezoelectric benders that was perceived as a faint tap. The deflection was created by a 150 ms Gaussian-modulated sine wave delivered to the benders under computer control at t = 1.0 s, precisely synchronized with the visual touch during multisensory trials.

On multisensory trials, subjects perceived the visual probe touching the index finger shown on screen as “causing” the tap delivered by the bender to their index finger, an inference familiar to those who have played video games that provide tactile feedback.

To adjust the reliability of the somatosensory stimulus, a 100 Hz background oscillation of variable amplitude was introduced into the piezoelectric benders throughout the entire 1.5 s stimulus period, analogous to the dynamic noise in the visual stimulus. This background noise was perceived as a tactile hum, and was distinct from the “tap” percept produced by the Gaussian monopulse. A low amplitude of the background noise resulted in the tap being easily detectable above the background (reliable); high-amplitude noise made it difficult to detect the tap (unreliable). On “somatosensory touch” trials, taps were delivered; on “somatosensory no-touch” trials, no tap was delivered (only background noise).
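The bender drive signal described above (a 150 ms Gaussian-modulated sine at t = 1.0 s on top of a 100 Hz background oscillation lasting 1.5 s) can be sketched as follows. The sample rate, the tap's carrier frequency, the envelope width, and the placement of the envelope within its 150 ms window are not reported and are assumptions here; the hum is modeled as a pure 100 Hz sinusoid.

```python
import numpy as np

FS = 10_000          # samples per second (assumed)
DURATION = 1.5       # s, stimulus period (from the text)
TAP_ONSET = 1.0      # s, tap time (from the text)
TAP_LENGTH = 0.150   # s, tap duration (from the text)
HUM_FREQ = 100.0     # Hz, background oscillation (from the text)
TAP_CARRIER = 200.0  # Hz, tap carrier frequency (hypothetical; not reported)

def bender_drive(tap, tap_amp, hum_amp, fs=FS):
    """Return (time, drive signal) for one hypothetical 1.5 s bender waveform."""
    t = np.arange(int(round(DURATION * fs))) / fs
    # Background "hum": 100 Hz oscillation over the whole stimulus period.
    # A larger hum_amp makes the tap harder to detect (less reliable).
    signal = hum_amp * np.sin(2 * np.pi * HUM_FREQ * t)
    if tap:
        center = TAP_ONSET + TAP_LENGTH / 2
        sigma = TAP_LENGTH / 6  # envelope near zero at the window edges (assumed)
        in_window = (t >= TAP_ONSET) & (t < TAP_ONSET + TAP_LENGTH)
        envelope = np.exp(-0.5 * ((t - center) / sigma) ** 2)
        carrier = np.sin(2 * np.pi * TAP_CARRIER * (t - center))
        signal = signal + in_window * tap_amp * envelope * carrier
    return t, signal

# "Somatosensory touch" vs. "no-touch" trials at one hypothetical noise level.
t, touch = bender_drive(tap=True, tap_amp=1.0, hum_amp=0.3)
_, no_touch = bender_drive(tap=False, tap_amp=1.0, hum_amp=0.3)
```

The touch and no-touch waveforms are identical outside the 150 ms tap window, so the only task-relevant difference is the tap itself.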

Behavioral Experiment

In the first experiment, 21 subjects participated in a behavioral experiment on visual-somatosensory multisensory integration. Subjects always performed the same touch/no-touch judgment. Subjects viewed the video on a 21-inch CRT display placed 42 cm from the face. The right hand was placed out of sight on a table in a palm-up position, corresponding to the posture of the viewed right hand on the display. Subjects reported verbally whether or not they saw or felt a tap; an experimenter entered each response into the computer. In the first part of the experiment, subjects performed a staircase level-setting procedure to find the stimulus reliability level (adjusted by manipulating the SNR of the stimulus) that produced 70% correct performance in each modality in isolation. Subjects then viewed and felt unisensory visual touches, unisensory somatosensory touches, and congruent and incongruent multisensory touches. In congruent multisensory trials, a touch stimulus was presented in both modalities or a no-touch stimulus was presented in both modalities. In incongruent trials, a touch stimulus was presented in one modality (e.g., a tactile tap) and a no-touch stimulus in the other (e.g., a video showing the probe missing the finger). In the neuroimaging experiment, only congruent touches were presented.
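The text does not specify the staircase rule. One common choice for a target near 70% correct is a 1-up/2-down rule: the level is made harder only after two consecutive correct responses and easier after every error, so it settles where the probability of two correct in a row is 0.5, i.e. p ≈ 0.707. A minimal sketch under that assumption, run on a hypothetical simulated observer whose accuracy declines with a noise level in [0, 1]:

```python
import math
import random

def simulated_observer(noise_level, rng):
    """Hypothetical observer: accuracy falls from ~100% toward chance (50%) as noise rises."""
    p_correct = 0.5 + 0.5 / (1.0 + math.exp(8.0 * (noise_level - 0.6)))
    return rng.random() < p_correct

def staircase(n_trials=400, start=0.2, step=0.02, seed=1):
    """1-up/2-down staircase on the noise level; returns the threshold estimate."""
    rng = random.Random(seed)
    noise, streak, last_direction = start, 0, 0
    reversals = []
    for _ in range(n_trials):
        if simulated_observer(noise, rng):
            streak += 1
            if streak < 2:
                continue                # need two correct in a row before changing level
            streak, direction = 0, +1   # two correct: add noise (harder)
        else:
            streak, direction = 0, -1   # one error: remove noise (easier)
        if last_direction and direction != last_direction:
            reversals.append(noise)     # record the level at each change of direction
        last_direction = direction
        noise = min(max(noise + direction * step, 0.0), 1.0)
    last = reversals[-10:]              # average the final reversals
    return sum(last) / len(last)

threshold = staircase()
```

For this simulated observer the 70.7% point lies at a noise level of about 0.64, so the estimate should land near that value.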

During unisensory somatosensory trials, subjects viewed a fixation crosshair that changed intensity to signal the beginning of each trial, and the somatosensory stimulus was then delivered. The fixation crosshair then changed intensity again, signaling subjects to respond. During unisensory visual trials, subjects viewed the stimulus video until it was replaced by the fixation crosshair, signaling them to respond. During multisensory trials, the somatosensory and visual stimuli began at the same time, and trial offset was signaled by the return of the fixation crosshair. Congruent and incongruent multisensory trials were equally frequent and randomly intermixed, with 40 trials of each type.

Visual-somatosensory stimuli can produce changes in response criteria in addition to true changes in sensitivity (Johnson et al., 2006 ). Therefore, our primary behavioral measure was d′, a measure of sensitivity independent of response biases.
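In signal detection terms, a hit is a "touch" response on a touch trial and a false alarm is a "touch" response on a no-touch trial; d′ = z(hit rate) − z(false-alarm rate) and the criterion c = −[z(hit rate) + z(false-alarm rate)]/2, with positive c indicating a conservative bias. A minimal sketch using only the Python standard library; the log-linear correction for rates of 0 or 1 and the trial counts are assumptions, since the text does not say how extreme rates were handled.

```python
from statistics import NormalDist

def dprime_and_criterion(hits, misses, false_alarms, correct_rejections):
    """Signal-detection sensitivity (d') and criterion (c) for a yes/no touch task.

    The log-linear correction (add 0.5 to each count, 1 to each total) keeps
    rates away from 0 and 1, where z is infinite; this correction is an
    assumption, not a detail from the text.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion

# Hypothetical counts for 80 trials (40 touch, 40 no-touch).
d, c = dprime_and_criterion(hits=30, misses=10, false_alarms=10, correct_rejections=30)
```

With these symmetric hypothetical counts d′ is about 1.31 and c is zero; a subject who says "touch" more often on both trial types would show a negative c without necessarily changing d′.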

Neuroimaging Experiment

In the second experiment, nine subjects participated in an fMRI experiment (data from one subject were discarded because of drowsiness). An MR-compatible eye-tracking system (Applied Science Laboratories, Bedford, MA, USA) was used to monitor fixation and behavioral state. The visual display was projected into the bore of the MR scanner using an LCD projector; otherwise, the visual stimuli were identical to those in the behavioral experiment. The right hand was placed out of sight at the subject's side in the palm-up position, corresponding to the posture of the viewed hand. Subjects responded with an fMRI-compatible button box (Current Designs, Philadelphia, PA, USA) held in the left hand. Before scanning began, the same level-setting procedure as in the behavioral experiment was used to find the appropriate noise level for each modality.

Anatomical MRI

Anatomical MRI scans were obtained from each subject using a 3 T whole-body MR scanner (Philips Medical Systems, Bothell, WA, USA). Images were collected using a magnetization-prepared 180° radio-frequency pulse and rapid gradient-echo (MP-RAGE) sequence optimized for gray–white matter contrast, with 1 mm thick sagittal slices and an in-plane resolution of 0.938 mm × 0.938 mm. AFNI software (Cox, 1996 ) was used to analyze MRI data. 3D cortical surface models were created with FreeSurfer (Fischl et al., 1999a ) and visualized in SUMA (Argall et al., 2006 ). Surface averages were created using the FreeSurfer template (Fischl et al., 1999b ) and volume averages were created using the N27 template brain (Mazziotta et al., 2001 ).

fMRI Experimental Design and Data Analysis

Functional images were collected using a gradient-recalled-echo echo-planar-imaging sequence sensitive to the BOLD signal. Thirty-three axial slices were collected with a repetition time (TR) of 2000 ms, an echo time of 30 ms and a flip angle of 90°. Slice thickness was 3 mm and in-plane resolution was 2.75 mm × 2.75 mm. Each scan series contained 150 scans. Following motion correction and slice timing correction, data were smoothed with a spatial Gaussian filter with root-mean-square deviation of 3 mm.

Experimental Conditions and Region of Interest Creation

A block design was used in all scan series. Each block contained 10 trials with total duration of 20 s followed by 10 s of fixation baseline in which no stimulus was presented. Each trial within a block consisted of the presentation of a 1.5 s stimulus, followed by a 0.5 s response window for total trial duration of 2.0 s.
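With a 2 s TR and 150 scans, each scan series lasted 300 s, and each block occupied 20 s of stimulation (10 trials × 2.0 s) followed by 10 s of fixation. A block-design regressor of the kind entered into a general linear model can be sketched as a boxcar convolved with a hemodynamic response function. The text does not state how the hemodynamic response was modeled, so the gamma-variate HRF, its parameters, and the block onsets below are assumptions for illustration.

```python
import numpy as np

TR = 2.0          # s, repetition time (from the text)
N_SCANS = 150     # volumes per scan series (from the text)
BLOCK_ON = 20.0   # s, 10 trials x 2.0 s (from the text)

def gamma_hrf(t, b=8.6, c=0.547):
    """Gamma-variate HRF with peak value 1 at t = b * c (assumed shape and parameters)."""
    t = np.clip(t, 0.0, None)
    return (t / (b * c)) ** b * np.exp(b - t / c)

def block_regressor(onsets, dt=0.1):
    """Boxcar (1 during each 20 s block) convolved with the HRF, sampled once per TR."""
    n_fine = int(round(N_SCANS * TR / dt))
    t = np.arange(n_fine) * dt
    boxcar = np.zeros(n_fine)
    for onset in onsets:
        boxcar[(t >= onset) & (t < onset + BLOCK_ON)] = 1.0
    hrf = gamma_hrf(np.arange(0.0, 30.0, dt))
    response = np.convolve(boxcar, hrf)[:n_fine] * dt
    sampled = response[:: int(round(TR / dt))]
    return sampled / sampled.max()  # scale so the peak value is 1

# Hypothetical onsets (s) for one block type; with 20 s blocks plus 10 s of
# fixation, consecutive blocks of any type start 30 s apart.
regressor = block_regressor(onsets=[30.0, 120.0, 210.0])
```

One such regressor per block type, sampled at the 150 acquisition times, forms the columns of interest in the design matrix.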

There were nine block types: unisensory visual (reliable and unreliable); unisensory somatosensory (reliable and unreliable); multisensory visual + somatosensory (both modalities reliable or both modalities unreliable); multisensory visual + somatosensory (visual-reliable/somatosensory-unreliable and visual-unreliable/somatosensory-reliable); and passive tactile stimulation (touches only, with no behavioral task). The multisensory stimuli were always congruent and touch and no-touch trials were randomly intermixed within each block.

We had strong a priori hypotheses about three brain regions: secondary somatosensory cortex, lateral occipital cortex, and the anterior intraparietal sulcus (IPS). In previous studies using piezoelectric benders, we have observed robust activity in the inferior parietal lobe and the parietal operculum (Beauchamp et al., 2007 , 2009 ), the location of secondary somatosensory cortex and associated areas (Disbrow et al., 2000 ; Francis et al., 2000 ; Ruben et al., 2001 ; Beauchamp et al., 2007 , 2009 ; Eickhoff et al., 2007 , 2008 ; Burton et al., 2008 ). In fMRI studies using visual motion stimuli (such as the moving probe) and biological stimuli (such as the image of the hand), the strongest activity was observed in lateral occipital cortex (Tootell et al., 1995 ; Beauchamp et al., 1997 , 2002 , 2003 ; Downing et al., 2001 ; Wheaton et al., 2004 ; Pelphrey et al., 2005 ). Studies of visual-somatosensory interactions have implicated the anterior IPS, at its junction with the postcentral sulcus, in visual-somatosensory integration (Grefkes and Fink, 2005 ; Culham and Valyear, 2006 ; Stilla and Sathian, 2008 ; Pasalar et al., 2010 ). Because the somatosensory stimulus was delivered to the right hand, it evoked somatosensory responses in the contralateral (left) hemisphere, whereas the behavioral response was made with the left hand, evoking motor responses in the right hemisphere. To maximize the contribution of the somatosensory stimulus and minimize the contribution of the motor response to the observed activity, ROIs were created only in the left hemisphere. Independent data from each subject were used to create the ROIs and to perform the comparisons of interest (BOLD amplitude between reliable and unreliable stimulation), to prevent bias (Simmons et al., 2007 ; Vul et al., 2009 ).

BOLD Amplitude Measures

We used the 3dREMLfit program in the AFNI package (Cox, 1996 ) to account for serial correlations in the fMRI data by fitting an autoregressive moving average model with one autoregressive term and one moving average term separately to each voxel. The time series data were analyzed with the general linear model; the motion correction estimates were used as regressors of no interest. A separate regressor of interest was used for each block type. The beta-weight of the regressor for each block type was converted to percent signal change and used as a measure of response amplitude. The full F (omnibus) statistic from only reliable stimuli was thresholded at p < 0.001 corrected for false discovery rate (Genovese et al., 2002 ) to identify voxels that responded significantly.
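Two steps of this analysis can be sketched in NumPy: converting a regressor's beta weight to percent signal change relative to the baseline, and false discovery rate thresholding with the Benjamini-Hochberg step-up procedure described by Genovese et al. (2002). This is a simplified sketch, not the AFNI pipeline: it uses ordinary least squares rather than the ARMA(1,1) noise model fitted by 3dREMLfit, and the function names, voxel data, and p-values are hypothetical.

```python
import numpy as np

def percent_signal_change(y, regressor):
    """Fit y = b0 + b1 * regressor by ordinary least squares; return 100 * b1 / b0.

    OLS is a simplification: it ignores the serial-correlation (ARMA(1,1))
    model that 3dREMLfit fits to each voxel.
    """
    X = np.column_stack([np.ones_like(regressor), regressor])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return 100.0 * beta[1] / beta[0]

def fdr_mask(p_values, q):
    """Benjamini-Hochberg step-up procedure: True where a test survives FDR level q."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    passes = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if passes.any():
        k = np.nonzero(passes)[0].max()  # largest rank whose p-value is under its bound
        mask[order[: k + 1]] = True      # reject that test and every smaller p-value
    return mask

# Noise-free hypothetical voxel: baseline 1000, response 10 -> 1% signal change.
block = np.array([0, 0, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1], dtype=float)
voxel = 1000.0 + 10.0 * block
psc = percent_signal_change(voxel, block)
significant = fdr_mask([0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205], q=0.05)
```

In the analysis described above the FDR level was 0.001 and the p-values came from the omnibus F statistic of every voxel; the eight p-values here only show how the step-up rule compares each ranked p-value with its own bound.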

Structural Equation Modeling

In BOLD fMRI, correlations between the activity of different areas can be used to derive the connection strength between them (Buchel and Friston, 2001 ; Stein et al., 2007 ). For each subject and each ROI, a normalized time series was constructed by subtracting the mean response to each condition from the ROI's average time series; this prevents the high-amplitude block onsets and offsets from artificially inflating the correlation between ROIs (Buchel and Friston, 1997 ). We used the 1ddot program in AFNI to calculate the correlation matrix between the ROI time series. Two separate matrices were constructed, one from the time series during each of two block types: visual-reliable/somatosensory-unreliable and visual-unreliable/somatosensory-reliable. The correlation weights were calculated independently for each subject and then averaged across subjects.
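The normalization and correlation steps can be sketched in NumPy. This sketch assumes that "the mean response to each condition" means the block-locked mean at each time point within a block; the function names and the synthetic data are hypothetical, and only the correlation step (performed here with AFNI's 1ddot) is shown, not a structural equation model fit.

```python
import numpy as np

def remove_mean_block_response(ts, condition, position):
    """Subtract each condition's mean block-locked response from every ROI.

    ts:        (n_timepoints, n_rois) ROI-averaged time series
    condition: (n_timepoints,) block-type label for each time point
    position:  (n_timepoints,) index of the time point within its block
    """
    resid = np.array(ts, dtype=float)
    for c in np.unique(condition):
        for p in np.unique(position[condition == c]):
            idx = (condition == c) & (position == p)
            resid[idx] -= resid[idx].mean(axis=0)
    return resid

def roi_correlation_matrix(ts, condition, position, block_type):
    """Pearson correlations between ROIs, using only time points of one block type."""
    resid = remove_mean_block_response(ts, condition, position)
    return np.corrcoef(resid[condition == block_type], rowvar=False)

# Hypothetical data: 40 blocks of 10 time points, 3 ROIs sharing a block
# response; ROIs 0 and 2 also share block-to-block fluctuations.
rng = np.random.default_rng(0)
n_blocks, block_len = 40, 10
position = np.tile(np.arange(block_len), n_blocks)
condition = np.zeros(position.size, dtype=int)  # a single block type
shared_response = 5.0 * np.sin(np.pi * position / (block_len - 1))
coupled = rng.standard_normal(position.size)
ts = np.column_stack([
    shared_response + coupled + rng.standard_normal(position.size),
    shared_response + rng.standard_normal(position.size),
    shared_response + coupled + rng.standard_normal(position.size),
])
raw = np.corrcoef(ts, rowvar=False)
cleaned = roi_correlation_matrix(ts, condition, position, block_type=0)
```

In the raw matrix every ROI pair looks strongly correlated because all ROIs follow the block structure; after the mean block response is removed, only the pair that shares fluctuations (ROIs 0 and 2) remains correlated.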

Results

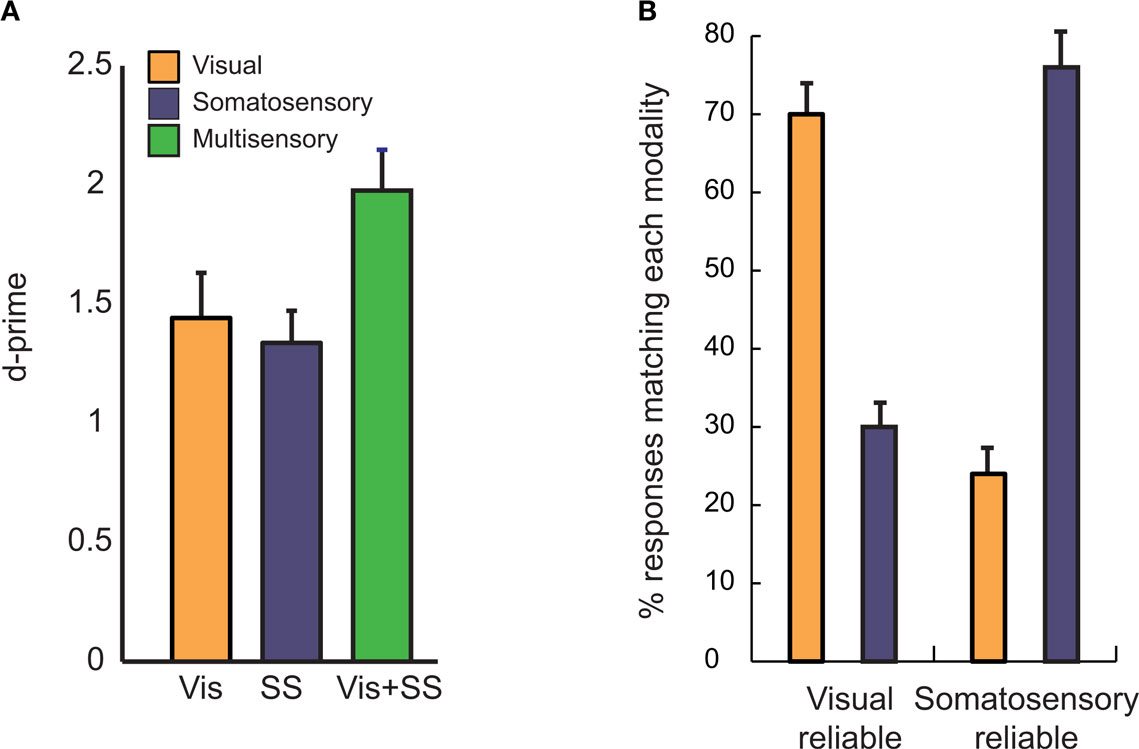

Behavioral Experiment: Multisensory Increases in Sensitivity

For reliable unisensory stimuli, subjects were able to determine with near-perfect accuracy whether or not a visual or somatosensory touch occurred. For unreliable unisensory stimuli, performance decreased to 68 ± 5% (SEM) for visual and 58 ± 5% for somatosensory. When unreliable stimuli were presented in both modalities simultaneously, performance improved to 79 ± 3% for visual-somatosensory (Figure 2 A). As measured with d′, a criterion-independent measure of performance, there was a significant benefit of multisensory stimulation, demonstrating that the visual-somatosensory stimulus successfully induced multisensory integration [d′ = 1.98 ± 0.17 for visual-somatosensory vs. d′ = 1.44 ± 0.19 for visual and d′ = 1.34 ± 0.13 for somatosensory, F(2,40) = 12.41; p = 6 × 10⁻⁵]. There were no differences in criterion between the conditions [mean c = 0.31, F(2,40) = 1.78; p = 0.18].

Figure 2. Behavioral measures of visual-somatosensory multisensory integration. (A) In the visual condition (Vis, orange), subjects made a touch/no-touch judgment, discriminating between noisy movies of a probe touching or just missing the finger (see Figure 1 ). In the somatosensory condition (SS, blue), a touch/no-touch judgment was performed on a background vibration delivered to the finger with or without an additional touch. In the congruent multisensory condition (Vis + SS, green), the touch/no-touch judgment was performed on a touch that was both seen and felt, or neither seen nor felt. The error bars show the SEM (n = 21 subjects). (B) In the incongruent multisensory condition, subjects made a touch/no-touch judgment for stimuli that were reliable in one modality but not the other (e.g., the probe clearly missed the finger in the video but a barely detectable touch was delivered in the somatosensory modality). The orange bars show the percentage of responses that corresponded to the visual stimulus; the blue bars show the percentage of responses that corresponded to the somatosensory stimulus, collapsed across touch and no-touch conditions. Subjects' responses usually matched the stimulus presented in the more reliable modality, with responses corresponding to the visual modality in the visual-reliable condition (left bars) and to the somatosensory modality in the somatosensory-reliable condition (right bars).

Reliability Weighting

To measure the effectiveness of each modality in driving behavior, we presented incongruent multisensory stimuli in which a touch occurred in one modality but not the other. The reliability-weighting literature predicts that subjects should weight the more reliable modality more heavily in their judgment (Ernst and Banks, 2002 ; Alais and Burr, 2004 ). As predicted, subjects' responses usually matched the stimulus presented in the more reliable modality (Figure 2 B). This effect was significant for both modalities in paired t-tests (visual-reliable: 70 ± 3% visual wins vs. 30 ± 3% somatosensory wins, p < 10⁻⁶; somatosensory-reliable: 24 ± 4% visual wins vs. 76 ± 4% somatosensory wins, p < 10⁻⁶), where a "visual win" is a response that matched the visual stimulus and a "somatosensory win" is a response that matched the somatosensory stimulus.

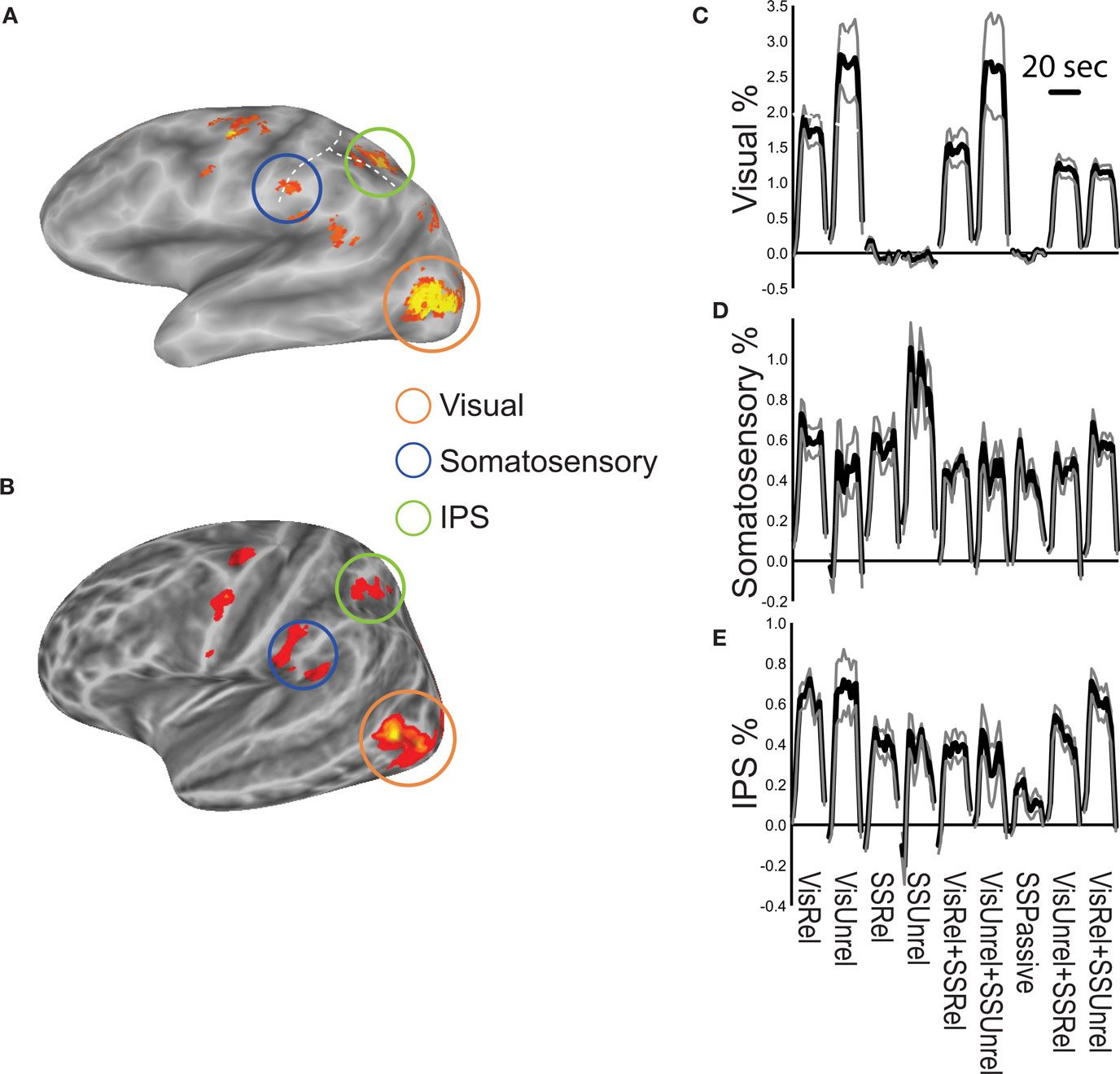

Localizers: Active Brain Areas

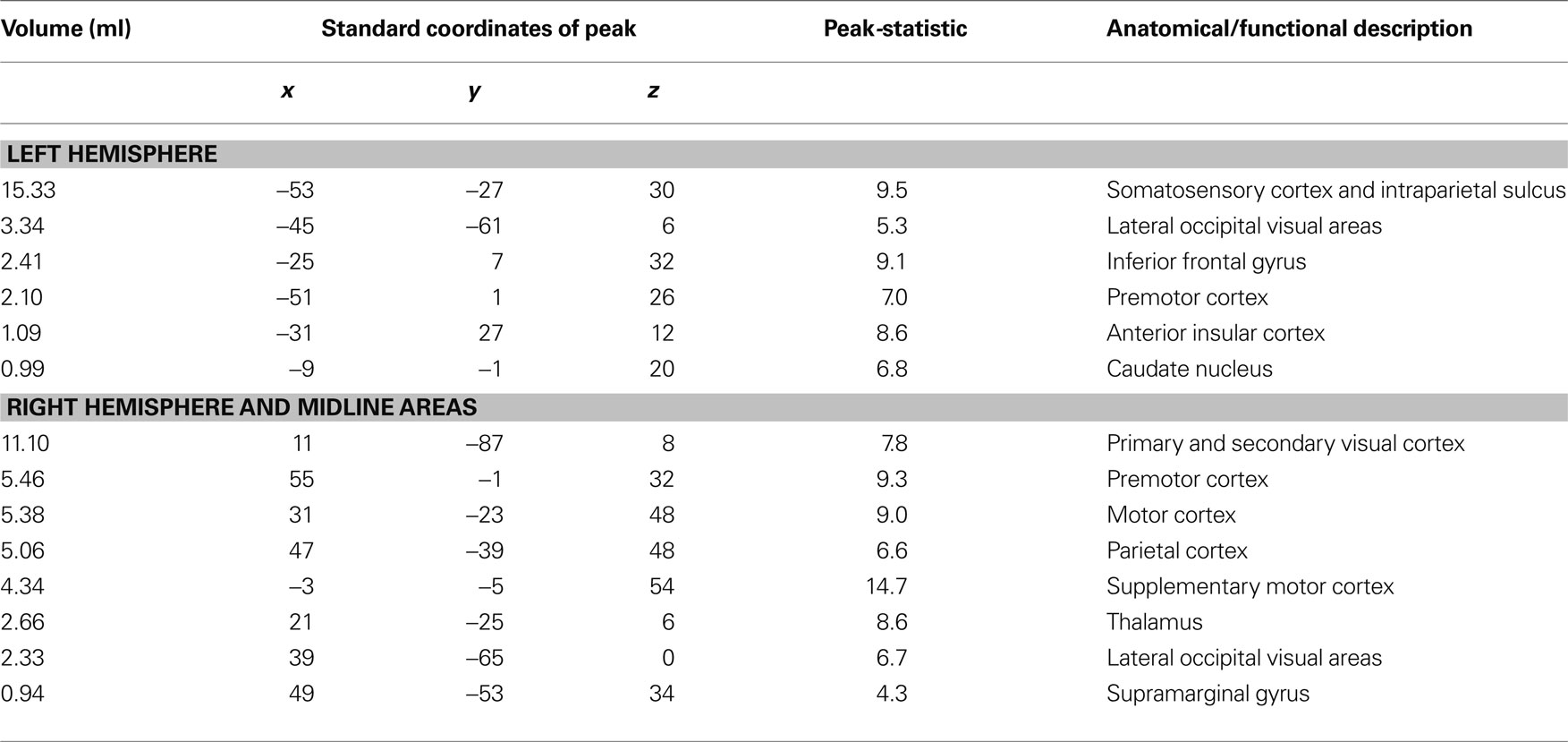

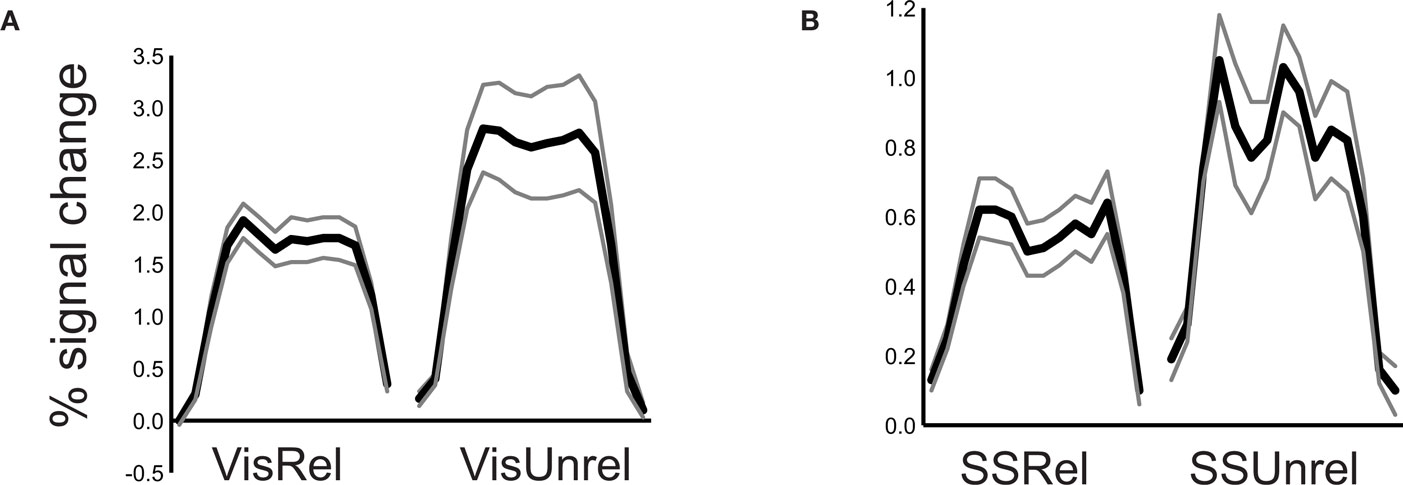

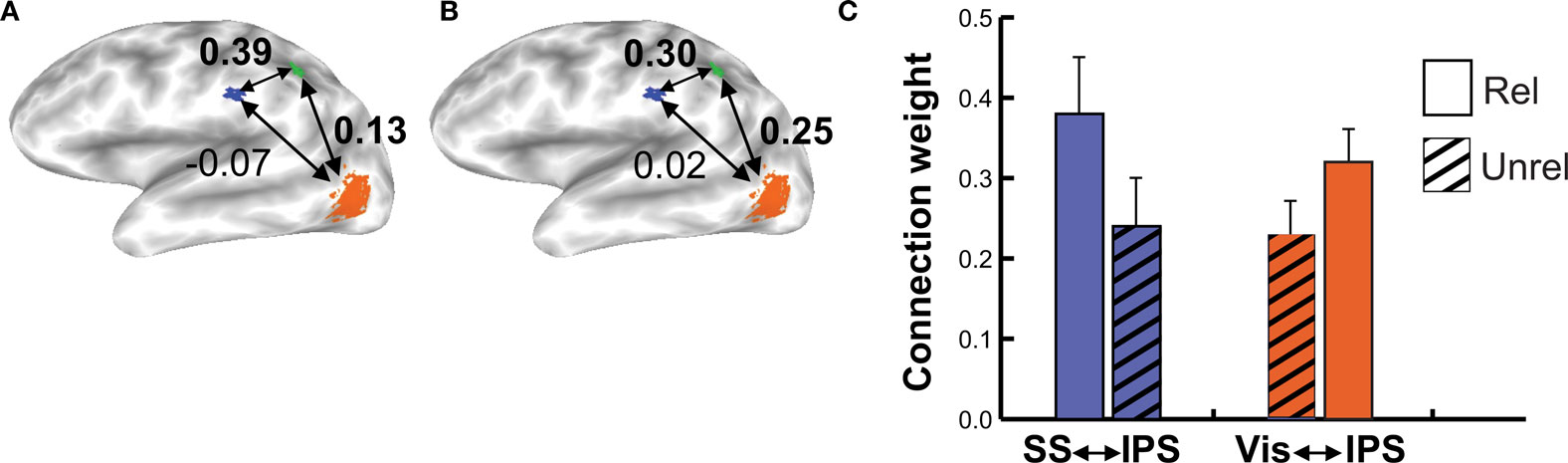

When subjects viewed and felt touches, the largest clusters of activity were observed in extrastriate visual areas in lateral occipital cortex, in inferior parietal lobe in the location of secondary somatosensory cortex and associated areas, and in anterior IPS near its junction with the postcentral sulcus (see Figure 3 and Table 1 for a list of all active regions). To test the two competing models of multisensory integration, we measured BOLD fMRI activity in three regions of interest: visual, somatosensory, and IPS (see Figure 3 for the average time series from each ROI in each stimulus condition). As shown in Figure 4 , the response to unreliable stimuli was slightly greater than the response to reliable stimuli (0.92% vs. 0.76% for somatosensory, 2.7% vs. 2.3% for visual; p = 0.06, paired t-test).

We next examined the connectivity between visual cortex, somatosensory cortex, and IPS during multisensory stimulation with varying stimulus reliability (Figure 5 ). The connection weight, measured as a correlation coefficient, between somatosensory cortex and IPS was lower during somatosensory-unreliable stimulation than during somatosensory-reliable stimulation (0.24 vs. 0.38, p = 0.002, paired t-test), even though somatosensory cortex was slightly more active in the unreliable condition. Similarly, the connection weight between visual cortex and IPS was lower during visual-unreliable stimulation than during visual-reliable stimulation (0.23 vs. 0.32, p = 0.001), even though visual cortex was slightly more active in the unreliable condition. As predicted by the weighted connections model, connection weights were higher for the more reliable modality even though activity in the corresponding early sensory cortex was lower; that is, connection weight and mean BOLD signal change in early cortex moved in opposite directions. The connection weight between visual cortex and somatosensory cortex was unaffected by reliability (0.11 vs. 0.15, p = 0.3).

Figure 3. Summary of fMRI activations. (A) Activation during performance of the multisensory touch detection task shown on an inflated average cortical surface model (left hemisphere, single subject). The orange circle highlights active visual areas in lateral occipital cortex. The blue circle highlights active areas in inferior parietal lobe, the location of secondary somatosensory cortex. The green circle highlights active areas in and around the intraparietal sulcus (IPS). The horizontal dashed white line shows the intraparietal sulcus; the vertical dashed white line shows the postcentral sulcus. (B) Group activation map from n = 8 subjects. (C) Time course of the BOLD response in the visual cortex ROI during 20 s stimulation blocks of each experimental condition, averaged across blocks and subjects (black lines show the mean percent signal change, gray lines show ±SEM). (D) Time course of the somatosensory cortex response. (E) Time course of the IPS response.

Table 1. Summary table showing all active brain areas during the localizer scan series using the contrast of multisensory touch vs. fixation baseline, constructed from the group average volume activation map (n = 8 subjects). The active brain areas are ordered by the size of the active region, shown in the first column, followed by the location of the peak activation within the active region and the t-statistic of the peak activation. The origin of the coordinate system is the anterior commissure, with left, posterior, and inferior as the negative directions and right, anterior, and superior as the positive directions. The final column gives the anatomical description.

Figure 4. Response to reliable and unreliable unisensory stimuli. (A) The average BOLD signal change in the visual cortex ROI during 20 s stimulation blocks of unisensory visual-reliable stimulation (left plot) and unisensory visual-unreliable stimulation (right plot). Black line shows mean response, gray lines show ±SEM (n = 8 subjects). (B) The average BOLD signal change in the somatosensory cortex ROI during unisensory somatosensory-reliable stimulation blocks (left plot) and somatosensory-unreliable stimulation blocks (right plot).

Figure 5. Connection weights during reliable and unreliable stimulation. (A) Connectivity in the multisensory somatosensory-reliable/visual-unreliable condition in an individual subject, viewed on that subject’s inflated cortical surface. Colored regions show areas with a significant fMRI response during the localizer scan used to create the regions of interest, with a different color for each region of interest (orange for visual, blue for somatosensory, green for IPS). The numbers adjacent to each arrow show the weights between that pair of ROIs, as derived from the structural equation model. (B) Connectivity in the multisensory somatosensory-unreliable/visual-reliable condition in the same subject. (C) Group data showing connection strengths across subjects during multisensory reliable and unreliable stimulation (n = 8 subjects). The blue bars show the connection strength from somatosensory cortex to the IPS, the orange bars show the connection strength from the visual ROI to the IPS ROI. The solid bar in each pair represents the reliable condition for that modality; the hatched bar in each pair is the unreliable condition.

Discussion

We found that a somatosensory tap to the finger in combination with a video showing a finger touch produced behavioral visual-somatosensory multisensory integration. Behavioral studies have shown that vision can enhance touch perception, especially for touches to the hand (Kennett et al., 2001 ; Ro et al., 2004 ; Haggard et al., 2007 ; for a review, see Maravita et al., 2003 ). While it might seem surprising that a video of an actor’s hand being touched by an artificial probe could produce multisensory integration, this is consistent with previous results showing that a video feed of the subject’s own hand (Tipper et al., 1998 ), a flash of light near the subject’s hand (Johnson et al., 2006 ), or an image or line drawing of a hand (Schaefer et al., 2005 ; Igarashi et al., 2008 ) can produce multisensory integration. Multisensory enhancements are even observed at the end of tools that artificially extend the hand (Farne et al., 2007 ; Holmes et al., 2007 ).

The behavioral multisensory integration that occurred during a touch to the finger was reliability weighted, with the more reliable modality receiving a stronger behavioral weighting. Reliability weighting makes intuitive sense as an adaptation to changes in sensation: with age and in some diseases, different modalities lose sensitivity at different rates, and the brain must compensate. Ernst and Banks (2002) made the important discovery that behavioral reliability weighting of visual and somatosensory stimuli is statistically optimal. By artificially adjusting the amount of noise in the visual stimulus, they showed that observers weight each modality in proportion to its reliability, defined as the inverse of its variance. This finding has been extended to other visual-somatosensory tasks (Helbig and Ernst, 2007 ) and other modality combinations (Alais and Burr, 2004 ). The ubiquity of reliability weighting suggests that it may be a fundamental building block of multisensory integration.
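For two cues, the inverse-variance rule gives the visual weight w_vis = σ_vis⁻² / (σ_vis⁻² + σ_som⁻²), with w_som = 1 − w_vis, and the combined estimate has variance 1 / (σ_vis⁻² + σ_som⁻²), which is never larger than that of the better single cue. The sketch below writes this out with hypothetical numbers. Ernst and Banks studied continuous estimates (object size), whereas the task here is a binary touch/no-touch judgment, so this only illustrates the weighting rule, not a model of the present data.

```python
def reliability_weighted_estimate(est_vis, sd_vis, est_som, sd_som):
    """Combine two noisy estimates with weights proportional to reliability (1 / variance)."""
    rel_vis, rel_som = 1.0 / sd_vis**2, 1.0 / sd_som**2
    w_vis = rel_vis / (rel_vis + rel_som)
    w_som = rel_som / (rel_vis + rel_som)
    combined = w_vis * est_vis + w_som * est_som
    # The combined variance is 1 / (sum of reliabilities), never larger than
    # the variance of the better single cue.
    combined_sd = (1.0 / (rel_vis + rel_som)) ** 0.5
    return w_vis, w_som, combined, combined_sd

# Hypothetical cues: vision twice as precise (SD 1 vs. 2), so it gets weight 0.8.
w_vis, w_som, combined, combined_sd = reliability_weighted_estimate(10.0, 1.0, 20.0, 2.0)
```

Here the combined estimate (12) is pulled toward the more reliable visual cue (10), and its SD (about 0.89) is smaller than either single-cue SD.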

To study the neural mechanisms of reliability weighting, we performed BOLD fMRI experiments in which human subjects detected a touch to the index finger. Consistent with previous studies of visual-somatosensory integration, activity was observed in a network of brain areas. These areas were subdivided into three regions of interest: visual, somatosensory, and multisensory. The visual ROI contained a group of visual areas in lateral occipito-temporal cortex, centered on posterior middle temporal gyrus and inferior temporal sulcus, corresponding to areas that respond strongly to moving objects and to pictures or videos of hands and hand-held manipulable objects (Downing et al., 2001 ; Beauchamp et al., 2002 , 2003 ; Wheaton et al., 2004 ; Pelphrey et al., 2005 ). While these areas are located in classical visual cortex, they also respond to touch (Amedi et al., 2001 , 2002 ; Hagen et al., 2002 ; James et al., 2002 ; Prather et al., 2004 ; Beauchamp et al., 2007 ; Summers et al., 2009 ). The somatosensory ROI contained a group of areas in the inferior parietal lobe that respond to hand stimulation and have been labeled S2+ (Disbrow et al., 2000 ; McGlone et al., 2002 ; Burton et al., 2004 , 2008 ; Beauchamp et al., 2007 ; Eickhoff et al., 2007 , 2008 ). For reasons that are not fully clear, in fMRI studies that stimulate the hand, especially with vibrotactile stimuli like those used in the present study, S2+ activation is much stronger than S1 activation (Ruben et al., 2001 ; Gizewski et al., 2005 ; Beauchamp et al., 2007 , 2009 ). S2+ is also active during observation of touch (Keysers et al., 2004 ; Blakemore et al., 2005 ; Schaefer et al., 2006 ). The multisensory ROI contained association areas in and around the IPS thought to be critical for the integration of vision and touch (Grefkes and Fink, 2005 ).
Although many studies have used fMRI to show that the IPS responds to visual and somatosensory stimulation (Bremmer et al., 2001 ; Saito et al., 2003 ; Makin et al., 2007 ; Peltier et al., 2007 ; Nakashita et al., 2008 ; Stilla and Sathian, 2008 ; Tal and Amedi, 2009 ) this does not demonstrate the necessity of the IPS for behavioral multisensory integration. We recently conducted a transcranial magnetic stimulation (TMS) study that disrupted activity in the IPS while subjects performed a touch/no-touch discrimination task using visual and somatosensory stimuli similar to those used in the present study (Pasalar et al., 2010 ). Without TMS, a significant behavioral improvement for multisensory compared with unisensory stimuli was observed. However, when activity in the IPS was disrupted with TMS, multisensory behavioral improvement was eliminated. Unisensory discrimination performance was not affected, illustrating that the effect was specific to multisensory integration. Furthermore, TMS of a control brain location did not interfere with multisensory integration, illustrating that the effect could not be attributed to non-specific effects of TMS, such as the auditory click produced by each TMS pulse.

We classified the visual and somatosensory ROIs as “early” and the IPS ROI as “late,” based on their location in the cortical processing hierarchy (Felleman and Van Essen, 1991 ). Early areas responded more to less reliable sensory stimuli. While this is contrary to the predictions of the linear summation model (Ma et al., 2006 ; Ma and Pouget, 2008 ) it is likely to be a result of our method of creating unreliable stimuli, and therefore does not disconfirm the linear summation model. In our experiments, we determined the threshold for visual and tactile stimulation, and then decreased the reliability of the stimuli by adding noise. This added noise is likely to evoke neural activity in a broad population of neurons in the sensory cortex, causing the observed increases in the BOLD fMRI signal. However, the linear summation model hypothesizes only that the neurons carrying information about the sensory stimulus (in this case, the touch vs. no-touch distinction) show decreased activity with decreasing reliability. With BOLD fMRI, we cannot easily distinguish the neural activity of the relatively small population of neurons in a voxel that carry information about the sensory stimulus (and presumably show a decreased response with increasing noise) from other neurons in the voxel that do not carry information about the sensory stimulus (and show an increased response to the dynamic noise present in the unreliable condition). In future experiments, it may be possible to create a better test of the linear summation model by using fMRI adaptation to measure activity in only those neurons that carry information about the sensory stimulus, or by manipulating reliability in other ways, such as decreasing the signal strength by making the visual and tactile stimuli weaker.
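The argument that a voxel can respond more to noisy stimuli even while its informative neurons respond less can be checked with a toy mixture. Every number below (the population fractions and the response slopes) is hypothetical and chosen only to illustrate the reasoning; none is estimated from data.

```python
def voxel_response(noise, frac_informative=0.1):
    """Toy voxel: a small informative population plus a larger noise-driven one.

    All numbers are hypothetical. The informative neurons respond less as
    noise rises; the other neurons respond more to the added noise.
    """
    informative = 1.0 - 0.5 * noise    # carries touch vs. no-touch information
    noise_driven = 0.2 + 0.3 * noise   # driven by the dynamic noise itself
    voxel = frac_informative * informative + (1.0 - frac_informative) * noise_driven
    return voxel, informative

for noise in (0.2, 0.8):  # hypothetical "reliable" and "unreliable" noise levels
    voxel, informative = voxel_response(noise)
    print(f"noise={noise}: voxel={voxel:.3f}, informative={informative:.2f}")
```

With these values the summed voxel signal rises from 0.324 to 0.456 as noise increases, while the informative population's response falls from 0.90 to 0.60, so a BOLD increase in early areas does not by itself contradict the linear summation model.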

Unlike the linear summation model, the weighted connections model makes no predictions about the response amplitude of early sensory areas. Instead, it predicts that the connection strength between early and late areas should be proportional to reliability. To test this prediction, structural equation modeling was used to assess the connection strengths. During presentation of reliable visual stimuli, the connection strength between visual cortex and IPS was high; during presentation of unreliable visual stimuli, this connection strength decreased. Conversely, during presentation of reliable somatosensory stimuli, the connection strength between somatosensory cortex and IPS was high, and during presentation of unreliable somatosensory stimuli, this connection strength was low. These findings match the predictions of the weighted connections model.
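As a rough illustration of what a change in connection strength means here, the sketch below simulates a hypothetical IPS time series driven by visual and somatosensory time series with condition-specific weights, then estimates the two path coefficients. It is a simplified stand-in: it assumes a just-identified recursive path model with observed variables only (visual-to-IPS and somatosensory-to-IPS paths), for which maximum-likelihood SEM path coefficients coincide with least-squares regression coefficients. It does not reproduce the model specification or software used in the study, the weights are invented, and it ignores the temporal autocorrelation of real BOLD time series.

```python
import numpy as np


def path_coefficients(v, s, ips):
    """Least-squares estimates of the visual->IPS and somatosensory->IPS path weights."""
    design = np.column_stack([v, s, np.ones_like(v)])  # last column is an intercept
    coef, *_ = np.linalg.lstsq(design, ips, rcond=None)
    return coef[0], coef[1]


def simulate(w_vis, w_som, n=2000, noise=0.5, seed=0):
    """Hypothetical ROI time series: IPS is a weighted sum of V and S plus noise."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(n)
    s = rng.standard_normal(n)
    ips = w_vis * v + w_som * s + noise * rng.standard_normal(n)
    return v, s, ips


# Invented weights mimicking the two reliability conditions
vis_reliable = path_coefficients(*simulate(0.7, 0.2, seed=1))
som_reliable = path_coefficients(*simulate(0.2, 0.7, seed=2))
```

The weights here are unstandardized; SEM studies often report standardized path coefficients, which rescale each weight by the ratio of the source and target standard deviations.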

The double dissociation of connection strengths between the IPS and somatosensory and visual cortex in the two conditions mirrored the pattern of behavioral responses observed during incongruent multisensory stimulation, in which the reliable modality was more effective at driving behavior. This correspondence between neural connection strengths and behavior supports the idea that changes in connection weights may underlie behavioral reliability weighting.

Two recent studies in non-human primates examined visual-vestibular integration (Morgan et al., 2008 ; Fetsch et al., 2009 ). Responses of single neurons in area MST were well described by a weighted sum of their visual and vestibular responses: as the visual stimulus was degraded, the weight of the visual modality decreased while the weight of the vestibular modality increased. This converging evidence from two different modality combinations (visual-vestibular and visual-somatosensory) and techniques (electrophysiology and BOLD fMRI) supports the weighted connections model. However, the electrophysiological data might also be consistent with a modified version of the linear summation model (Morgan et al., 2008 ; Fetsch et al., 2010 ).
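The weighted-sum description of MST responses can be made concrete with a small fitting sketch. Following the general approach of fitting bimodal responses as a weighted linear sum of unimodal responses, the code below builds hypothetical heading-tuning curves, generates bimodal responses for every combination of vestibular and visual headings, and recovers the two weights by least squares. All tuning parameters, weights, and the noise level are invented for illustration; the low-coherence condition simply mimics a degraded visual stimulus, and the sketch is not the analysis code of the cited studies.

```python
import numpy as np

HEADINGS = np.linspace(-90.0, 90.0, 9)  # hypothetical heading directions (deg)


def tuning(amplitude, preferred=0.0, width=45.0, baseline=5.0):
    """Hypothetical Gaussian heading-tuning curve (spikes/s)."""
    return baseline + amplitude * np.exp(-(HEADINGS - preferred) ** 2 / (2 * width**2))


def bimodal_responses(r_vest, r_vis, w_vest, w_vis, const, noise_sd, rng):
    """Responses to every vestibular x visual heading pair, generated as a weighted sum."""
    grid = w_vest * r_vest[:, None] + w_vis * r_vis[None, :] + const
    return grid + noise_sd * rng.standard_normal(grid.shape)


def fit_weights(r_vest, r_vis, r_bimodal):
    """Least-squares fit of r_bimodal[i, j] ~ a_vest * r_vest[i] + a_vis * r_vis[j] + c."""
    vest_grid, vis_grid = np.meshgrid(r_vest, r_vis, indexing="ij")
    design = np.column_stack([vest_grid.ravel(), vis_grid.ravel(), np.ones(vest_grid.size)])
    (a_vest, a_vis, _c), *_ = np.linalg.lstsq(design, r_bimodal.ravel(), rcond=None)
    return a_vest, a_vis


rng = np.random.default_rng(0)
r_vest = tuning(amplitude=30.0)
r_vis_high = tuning(amplitude=40.0)  # high-coherence (reliable) visual motion
r_vis_low = tuning(amplitude=15.0)   # low-coherence (degraded) visual motion

high = fit_weights(r_vest, r_vis_high,
                   bimodal_responses(r_vest, r_vis_high, 0.4, 0.9, 2.0, 0.5, rng))
low = fit_weights(r_vest, r_vis_low,
                  bimodal_responses(r_vest, r_vis_low, 0.8, 0.5, 2.0, 0.5, rng))
```

Because every vestibular heading is paired with every visual heading, the two predictors vary independently across the grid, which is what allows the two weights to be separated.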

We considered the possibility that the observed changes in connection weights could be more parsimoniously explained by visual attention. Attention to the visual modality increases the connection weights between early visual cortex and parietal regions (Buchel and Friston, 1997 ). In this attention scenario, if subjects attended more to the visual modality in the visual-reliable stimulus condition, the visual-to-IPS connection weights would increase (as predicted by the weighted connections model), but the weight change would be mediated by top-down visual attention rather than by reliability. However, in addition to changing connection weights, visual attention also increases responses in early visual areas, including lateral occipital areas such as area MT (Beauchamp et al., 1997 ; Buchel et al., 1998 ; Kastner et al., 1998 ; Saenz et al., 2002 ). If visual-reliable stimuli attracted more attention, they should therefore evoke a larger response in visual areas. We saw the opposite pattern, with a trend towards reliable stimuli evoking smaller responses in visual areas (although this may have been a consequence of the visual noise that we added to make the stimuli less reliable). Conversely, if subjects attended more to the unreliable visual stimuli because they were harder to see, the connection weight between visual cortex and IPS should increase for the unreliable visual condition (Buchel and Friston, 1997 ), which is the opposite of the observed weight change. Therefore, our BOLD fMRI data are incompatible with a simple effect of top-down visual attention, and are consistent with behavioral studies showing that reliability weighting is independent of modality-specific attention (Helbig and Ernst, 2008 ).

A caveat to the weighted connections model is that effective and functional connectivity methods applied to BOLD fMRI data do not necessarily reflect direct axonal projections from one area to another (Buchel and Friston, 2001 ). Information may instead flow through a third area that is not modeled, such as thalamic nuclei in the “porpoise model” of Sherman (2007). However, there is anatomical evidence for direct connections between IPS, somatosensory cortex, and visual cortex. Tracer-injection studies in macaque monkeys have shown that area VIP in anterior IPS receives strong inputs from extrastriate visual areas, including area MST, but weak or absent inputs from primary visual cortex (V1), and that VIP and nearby areas also receive direct projections from the upper-body representation of secondary somatosensory cortex (Boussaoud et al., 1990 ; Lewis and Van Essen, 2000 ). Diffusion tensor imaging tractography studies in humans have shown comparable results, with anterior IPS showing the strongest anatomical connectivity with the superior longitudinal fasciculus, which connects temporal, parietal, and frontal regions (Rushworth et al., 2006 ).

The results of the present study suggest a number of promising avenues for future exploration. In the influential behavioral experiments of Ernst and Banks (2002) and Alais and Burr (2004) , subjects made quantitative estimates about stimulus properties using different sensory modalities. When discrepancies between the modalities were introduced, the quantitative weight given to each modality in the sensory judgment could be measured experimentally. However, in our behavioral experiment, the judgment was qualitative (touch vs. no-touch) rather than quantitative, so we were unable to precisely measure the weights of different modalities. Multidimensional scaling could be used to extend optimal integration to situations in which perceptual judgments are qualitative instead of quantitative, such as word perception (Ma et al., 2009 ).
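For the quantitative case, the maximum-likelihood cue-combination rule tested by Ernst and Banks (2002) can be written in a few lines. This sketch assumes independent, Gaussian-distributed visual and tactile estimates of a continuous stimulus property; it shows how inverse-variance weights are computed and how a weight can be recovered from the mean response to a cue-conflict stimulus, which is the step that a qualitative touch/no-touch judgment does not permit. The function names are hypothetical.

```python
def mle_weights(sigma_v, sigma_t):
    """Inverse-variance (reliability) weights for a visual and a tactile cue."""
    rel_v, rel_t = 1 / sigma_v**2, 1 / sigma_t**2
    w_v = rel_v / (rel_v + rel_t)
    return w_v, 1 - w_v


def combined_estimate(s_v, s_t, sigma_v, sigma_t):
    """Predicted bimodal estimate and its variance under MLE integration."""
    w_v, w_t = mle_weights(sigma_v, sigma_t)
    variance = 1 / (1 / sigma_v**2 + 1 / sigma_t**2)
    return w_v * s_v + w_t * s_t, variance


def empirical_visual_weight(mean_bimodal, s_v, s_t):
    """Visual weight recovered from a cue-conflict condition (requires s_v != s_t)."""
    return (mean_bimodal - s_t) / (s_v - s_t)


# Conflict condition: visual cue says 10, tactile cue says 0
estimate, variance = combined_estimate(s_v=10.0, s_t=0.0, sigma_v=1.0, sigma_t=2.0)
recovered_w_v = empirical_visual_weight(estimate, s_v=10.0, s_t=0.0)
```

With a visual noise s.d. of 1 and a tactile noise s.d. of 2, the visual weight is 0.8, so this conflict predicts a combined estimate of 8 with variance 0.8, and the visual weight of 0.8 is recovered from that estimate. The combined variance is smaller than either unisensory variance, the signature of optimal integration.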

By introducing incongruent stimulation (even though the judgments were qualitative), our behavioral experiment demonstrated that subjects gave more weight to the more reliable modality in their perceptual decisions. However, in our neuroimaging experiment, all of the stimuli were congruent. Therefore, we were unable to measure perceptual reliability weighting or to compare the percept on each trial with the BOLD fMRI data from the different ROIs on that trial. In future experiments that include incongruent stimuli during scanning, neural connection strengths could be compared with the percepts on a trial-by-trial basis to ascertain the relationship between neural connection strengths and perception.

There has been a burst of interest in the use of multi-voxel pattern analysis (MVPA) to decode the sensory stimulus presented to the subject from the observed BOLD fMRI response (Norman et al., 2006 ). Recently, we demonstrated that MVPA could be used to decode the location of somatosensory stimulation from the fMRI signal across the whole brain or in somatosensory cortex (Beauchamp et al., 2009 ). An extension of the present study would be to determine the effects of stimulus reliability on MVPA decoding performance.
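The proposed extension can be sketched in simulation. The code below uses a simple nearest-centroid classifier, chosen for brevity rather than taken from Beauchamp et al. (2009), to decode which of two hypothetical stimulus locations evoked a simulated voxel pattern, with separate training and test sets. Less reliable stimulation is modeled, purely as an assumption, as larger trial-to-trial noise in the voxel patterns; the voxel count, trial count, and noise levels are arbitrary.

```python
import numpy as np


def simulate_patterns(means, n_trials, noise_sd, rng):
    """Hypothetical voxel patterns: class mean pattern plus Gaussian trial noise."""
    X = [m + noise_sd * rng.standard_normal((n_trials, m.size)) for m in means]
    y = np.repeat(np.arange(len(means)), n_trials)  # class labels in vstack order
    return np.vstack(X), y


def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Assign each test pattern to the class whose training centroid is closest."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    dists = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    predicted = classes[dists.argmin(axis=1)]
    return (predicted == y_test).mean()


def decoding_accuracy(noise_sd, n_voxels=50, n_trials=100, seed=0):
    """Train on one simulated run and test on an independent one."""
    rng = np.random.default_rng(seed)
    means = [rng.standard_normal(n_voxels) for _ in range(2)]  # two stimulus locations
    X_train, y_train = simulate_patterns(means, n_trials, noise_sd, rng)
    X_test, y_test = simulate_patterns(means, n_trials, noise_sd, rng)
    return nearest_centroid_accuracy(X_train, y_train, X_test, y_test)


acc_reliable = decoding_accuracy(noise_sd=1.0)
acc_unreliable = decoding_accuracy(noise_sd=10.0)
```

Under this assumption, decoding accuracy falls toward chance (0.5 for two classes) as noise increases; whether real reliability manipulations affect decoding in the same way is the empirical question raised above.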

A further avenue for exploration will be examining connectivity changes in patients with sensory loss. For instance, macular degeneration causes the visual stimulus to be constantly degraded, as in our dynamic noise condition. Our results predict that in patients with macular degeneration, the connection weight between visual cortex and IPS should be low, regardless of the amplitude of the BOLD response in visual cortex to a given stimulus. However, it may be possible to strengthen this reduced connectivity through attention or other top-down mechanisms, counteracting the effects of the disease. Similarly, patients who are blinded at an early age may have reduced connectivity between visual cortex and other brain areas because of the lack of normal input. In these patients, restoration of the retinal image in adulthood does not allow them to regain the visual abilities critical for daily function (Levin et al., 2010 ). A better understanding of how reliability weighting adjusts connection strengths between sensory cortex and other brain areas may help in the rehabilitation of these patients (Ro and Rafal, 2006 ).

In summary, our experiments suggest that changes in BOLD signal amplitude and changes in connection weights between early and late areas may both be important for behavioral reliability weighting in visual-somatosensory multisensory integration. Because reliability weighting is a ubiquitous phenomenon across many modality combinations (Witten and Knudsen, 2005 ), future experiments will need to test the weighted connections model in a variety of modality combinations and behavioral conditions, and to investigate the synaptic and physiological mechanisms underlying changes in connection weights. Our findings suggest that particularly dramatic weight changes should be observed following brain damage affecting one sensory system (Ro et al., 2007 ).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was supported by National Science Foundation Cognitive Neuroscience Initiative Research Grants 0642532 to MSB and 0847607 to TR and NIH NS065395 to MSB. SP was supported by NIH T32HD049350. NIH S10 RR19186 provided partial funding for the purchase of the 3T scanner. We thank Vips Patel for assistance with MR data collection and Kyung Hwa Kim and Olivia Plante for assistance with behavioral data collection.

References

Alais, D., and Burr, D. (2004). The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 14, 257–262.

Amedi, A., Jacobson, G., Hendler, T., Malach, R., and Zohary, E. (2002). Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb. Cortex 12, 1202–1212.

Amedi, A., Malach, R., Hendler, T., Peled, S., and Zohary, E. (2001). Visuo-haptic object-related activation in the ventral visual pathway. Nat. Neurosci. 4, 324–330.

Argall, B. D., Saad, Z. S., and Beauchamp, M. S. (2006). Simplified intersubject averaging on the cortical surface using SUMA. Hum. Brain Mapp. 27, 14–27.

Beauchamp, M. S., Cox, R. W., and DeYoe, E. A. (1997). Graded effects of spatial and featural attention on human area MT and associated motion processing areas. J. Neurophysiol. 77, 516–520.

Beauchamp, M. S., Laconte, S., and Yasar, N. (2009). Distributed representation of single touches in somatosensory and visual cortex. Hum. Brain Mapp. 30, 3163–3171.

Beauchamp, M. S., Lee, K. E., Haxby, J. V., and Martin, A. (2002). Parallel visual motion processing streams for manipulable objects and human movements. Neuron 34, 149–159.

Beauchamp, M. S., Lee, K. E., Haxby, J. V., and Martin, A. (2003). fMRI responses to video and point-light displays of moving humans and manipulable objects. J. Cogn. Neurosci. 15, 991–1001.

Beauchamp, M. S., Yasar, N. E., Kishan, N., and Ro, T. (2007). Human MST but not MT responds to tactile stimulation. J. Neurosci. 27, 8261–8267.

Blakemore, S. J., Bristow, D., Bird, G., Frith, C., and Ward, J. (2005). Somatosensory activations during the observation of touch and a case of vision-touch synaesthesia. Brain 128, 1571–1583.

Boussaoud, D., Ungerleider, L. G., and Desimone, R. (1990). Pathways for motion analysis: cortical connections of the middle superior temporal and fundus of the superior temporal visual areas in the macaque. J. Comp. Neurol. 296, 462–495.

Bremmer, F., Schlack, A., Shah, N. J., Zafiris, O., Kubischik, M., Hoffmann, K., Zilles, K., and Fink, G. R. (2001). Polymodal motion processing in posterior parietal and premotor cortex: a human fMRI study strongly implies equivalencies between humans and monkeys. Neuron 29, 287–296.

Buchel, C., and Friston, K. (2001). Interactions among neuronal systems assessed with functional neuroimaging. Rev. Neurol. (Paris) 157, 807–815.

Buchel, C., and Friston, K. J. (1997). Modulation of connectivity in visual pathways by attention: cortical interactions evaluated with structural equation modelling and fMRI. Cereb. Cortex 7, 768–778.

Buchel, C., Josephs, O., Rees, G., Turner, R., Frith, C. D., and Friston, K. J. (1998). The functional anatomy of attention to visual motion. A functional MRI study. Brain 121, 1281–1294.

Burton, H., Sinclair, R. J., and McLaren, D. G. (2004). Cortical activity to vibrotactile stimulation: an fMRI study in blind and sighted individuals. Hum. Brain Mapp. 23, 210.

Burton, H., Sinclair, R. J., Wingert, J. R., and Dierker, D. L. (2008). Multiple parietal operculum subdivisions in humans: tactile activation maps. Somatosens. Mot. Res. 25, 149–162.

Cox, R. W. (1996). AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res. 29, 162–173.

Culham, J. C., and Valyear, K. F. (2006). Human parietal cortex in action. Curr. Opin. Neurobiol. 16, 205–212.

Disbrow, E., Roberts, T., and Krubitzer, L. (2000). Somatotopic organization of cortical fields in the lateral sulcus of Homo sapiens: evidence for SII and PV. J. Comp. Neurol. 418, 1–21.

Downing, P. E., Jiang, Y., Shuman, M., and Kanwisher, N. (2001). A cortical area selective for visual processing of the human body. Science 293, 2470–2473.

Eickhoff, S. B., Grefkes, C., Fink, G. R., and Zilles, K. (2008). Functional lateralization of face, hand, and trunk representation in anatomically defined human somatosensory areas. Cereb. Cortex 18, 2820–2830.

Eickhoff, S. B., Grefkes, C., Zilles, K., and Fink, G. R. (2007). The somatotopic organization of cytoarchitectonic areas on the human parietal operculum. Cereb. Cortex 17, 1800–1811.

Ernst, M. O., and Banks, M. S. (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429–433.

Farne, A., Serino, A., and Ladavas, E. (2007). Dynamic size-change of peri-hand space following tool-use: determinants and spatial characteristics revealed through cross-modal extinction. Cortex 43, 436–443.

Felleman, D. J., and Van Essen, D. C. (1991). Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex 1, 1–47.

Fetsch, C. R., DeAngelis, G. C., and Angelaki, D. E. (2010). Neural correlates of dynamic sensory cue re-weighting in macaque area MSTd. Comput. Syst. Neurosci. doi: 10.3389/conf.fnins.2010.03.00299.

Fetsch, C. R., Turner, A. H., DeAngelis, G. C., and Angelaki, D. E. (2009). Dynamic reweighting of visual and vestibular cues during self-motion perception. J. Neurosci. 29, 15601–15612.

Fischl, B., Sereno, M. I., and Dale, A. M. (1999a). Cortical surface-based analysis. II: inflation, flattening, and a surface-based coordinate system. Neuroimage 9, 195–207.

Fischl, B., Sereno, M. I., Tootell, R. B., and Dale, A. M. (1999b). High-resolution intersubject averaging and a coordinate system for the cortical surface. Hum. Brain Mapp. 8, 272–284.

Francis, S. T., Kelly, E. F., Bowtell, R., Dunseath, W. J., Folger, S. E., and McGlone, F. (2000). fMRI of the responses to vibratory stimulation of digit tips. Neuroimage 11, 188–202.

Genovese, C. R., Lazar, N. A., and Nichols, T. (2002). Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage 15, 870–878.

Gizewski, E. R., Koeze, O., Uffmann, K., de Greiff, A., Ladd, M. E., and Forsting, M. (2005). Cerebral activation using a MR-compatible piezoelectric actuator with adjustable vibration frequencies and in vivo wave propagation control. Neuroimage 24, 723–730.

Grefkes, C., and Fink, G. R. (2005). The functional organization of the intraparietal sulcus in humans and monkeys. J. Anat. 207, 3–17.

Hagen, M. C., Franzen, O., McGlone, F., Essick, G., Dancer, C., and Pardo, J. V. (2002). Tactile motion activates the human middle temporal/V5 (MT/V5) complex. Eur. J. Neurosci. 16, 957–964.

Haggard, P., Christakou, A., and Serino, A. (2007). Viewing the body modulates tactile receptive fields. Exp. Brain Res. 180, 187–193.

Helbig, H. B., and Ernst, M. O. (2007). Optimal integration of shape information from vision and touch. Exp. Brain Res. 179, 595–606.

Helbig, H. B., and Ernst, M. O. (2008). Visual-haptic cue weighting is independent of modality-specific attention. J. Vis. 8, 1–16.

Holmes, N. P., Calvert, G. A., and Spence, C. (2007). Tool use changes multisensory interactions in seconds: evidence from the crossmodal congruency task. Exp. Brain Res. 183, 465–476.

Horwitz, B., McIntosh, A. R., Haxby, J. V., and Grady, C. L. (1995). Network analysis of brain cognitive function using metabolic and blood flow data. Behav. Brain Res. 66, 187–193.

Igarashi, Y., Kimura, Y., Spence, C., and Ichihara, S. (2008). The selective effect of the image of a hand on visuotactile interactions as assessed by performance on the crossmodal congruency task. Exp. Brain Res. 184, 31–38.

James, T. W., Humphrey, G. K., Gati, J. S., Servos, P., Menon, R. S., and Goodale, M. A. (2002). Haptic study of three-dimensional objects activates extrastriate visual areas. Neuropsychologia 40, 1706–1714.

Johnson, R. M., Burton, P. C., and Ro, T. (2006). Visually induced feelings of touch. Brain Res. 16, 398–406.

Kastner, S., De Weerd, P., Desimone, R., and Ungerleider, L. G. (1998). Mechanisms of directed attention in the human extrastriate cortex as revealed by functional MRI. Science 282, 108–111.

Kennett, S., Taylor-Clarke, M., and Haggard, P. (2001). Noninformative vision improves the spatial resolution of touch in humans. Curr. Biol. 11, 1188–1191.

Keysers, C., Wicker, B., Gazzola, V., Anton, J. L., Fogassi, L., and Gallese, V. (2004). A touching sight: SII/PV activation during the observation and experience of touch. Neuron 42, 335–346.

Levin, N., Dumoulin, S. O., Winawer, J., Dougherty, R. F., and Wandell, B. A. (2010). Cortical maps and white matter tracts following long period of visual deprivation and retinal image restoration. Neuron 65, 21–31.

Lewis, J. W., and Van Essen, D. C. (2000). Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J. Comp. Neurol. 428, 112–137.

Ma, W. J., Beck, J. M., Latham, P. E., and Pouget, A. (2006). Bayesian inference with probabilistic population codes. Nat. Neurosci. 9, 1432–1438.

Ma, W. J., and Pouget, A. (2008). Linking neurons to behavior in multisensory perception: a computational review. Brain Res. 1242, 4–12.

Ma, W. J., Zhou, X., Ross, L. A., Foxe, J. J., and Parra, L. C. (2009). Lip-reading aids word recognition most in moderate noise: a Bayesian explanation using high-dimensional feature space. PLoS ONE 4, e4638. doi: 10.1371/journal.pone.0004638.

Makin, T. R., Holmes, N. P., and Zohary, E. (2007). Is that near my hand? Multisensory representation of peripersonal space in human intraparietal sulcus. J. Neurosci. 27, 731–740.

Maravita, A., Spence, C., and Driver, J. (2003). Multisensory integration and the body schema: close to hand and within reach. Curr. Biol. 13, R531–R539.

Mazziotta, J., Toga, A., Evans, A., Fox, P., Lancaster, J., Zilles, K., Woods, R., Paus, T., Simpson, G., Pike, B., Holmes, C., Collins, L., Thompson, P., MacDonald, D., Iacoboni, M., Schormann, T., Amunts, K., Palomero-Gallagher, N., Geyer, S., Parsons, L., Narr, K., Kabani, N., Le Goualher, G., Boomsma, D., Cannon, T., Kawashima, R., and Mazoyer, B. (2001). A probabilistic atlas and reference system for the human brain: international consortium for brain mapping (ICBM). Philos. Trans. R. Soc. Lond., B, Biol. Sci. 356, 1293–1322.

McGlone, F., Kelly, E. F., Trulsson, M., Francis, S. T., Westling, G., and Bowtell, R. (2002). Functional neuroimaging studies of human somatosensory cortex. Behav. Brain Res. 135, 147–158.

McIntosh, A. R., Grady, C. L., Ungerleider, L. G., Haxby, J. V., Rapoport, S. I., and Horwitz, B. (1994). Network analysis of cortical visual pathways mapped with PET. J. Neurosci. 14, 655–666.

Morgan, M. L., DeAngelis, G. C., and Angelaki, D. E. (2008). Multisensory integration in macaque visual cortex depends on cue reliability. Neuron 59, 662–673.

Nakashita, S., Saito, D. N., Kochiyama, T., Honda, M., Tanabe, H. C., and Sadato, N. (2008). Tactile-visual integration in the posterior parietal cortex: a functional magnetic resonance imaging study. Brain Res. Bull. 75, 513–525.

Norman, K. A., Polyn, S. M., Detre, G. J., and Haxby, J. V. (2006). Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn. Sci. 10, 424–430.

Pasalar, S., Ro, T., and Beauchamp, M. S. (2010). TMS of posterior parietal cortex disrupts visual tactile multisensory integration. Eur. J. Neurosci. 31, 1783–1790.

Pelphrey, K. A., Morris, J. P., Michelich, C. R., Allison, T., and McCarthy, G. (2005). Functional anatomy of biological motion perception in posterior temporal cortex: an fMRI study of eye, mouth and hand movements. Cereb. Cortex 15, 1866–1876.

Peltier, S., Stilla, R., Mariola, E., LaConte, S., Hu, X., and Sathian, K. (2007). Activity and effective connectivity of parietal and occipital cortical regions during haptic shape perception. Neuropsychologia 45, 476–483.

Prather, S. C., Votaw, J. R., and Sathian, K. (2004). Task-specific recruitment of dorsal and ventral visual areas during tactile perception. Neuropsychologia 42, 1079–1087.

Ro, T., Farne, A., Johnson, R. M., Wedeen, V., Chu, Z., Wang, Z. J., Hunter, J. V., and Beauchamp, M. S. (2007). Feeling sounds after a thalamic lesion. Ann. Neurol. 62, 433–441.

Ro, T., and Rafal, R. (2006). Visual restoration in cortical blindness: insights from natural and TMS-induced blindsight. Neuropsychol. Rehabil. 16, 377–396.

Ro, T., Wallace, R., Hagedorn, J., Farne, A., and Pienkos, E. (2004). Visual enhancing of tactile perception in the posterior parietal cortex. J. Cogn. Neurosci. 16, 24–30.

Ruben, J., Schwiemann, J., Deuchert, M., Meyer, R., Krause, T., Curio, G., Villringer, K., Kurth, R., and Villringer, A. (2001). Somatotopic organization of human secondary somatosensory cortex. Cereb. Cortex 11, 463–473.

Rushworth, M. F., Behrens, T. E., and Johansen-Berg, H. (2006). Connection patterns distinguish 3 regions of human parietal cortex. Cereb. Cortex 16, 1418–1430.

Saenz, M., Buracas, G. T., and Boynton, G. M. (2002). Global effects of feature-based attention in human visual cortex. Nat. Neurosci. 5, 631–632.

Saito, D. N., Okada, T., Morita, Y., Yonekura, Y., and Sadato, N. (2003). Tactile-visual cross-modal shape matching: a functional MRI study. Brain Res. Cogn. Brain Res. 17, 14–25.

Schaefer, M., Flor, H., Heinze, H. J., and Rotte, M. (2006). Dynamic modulation of the primary somatosensory cortex during seeing and feeling a touched hand. Neuroimage 29, 587–592.

Schaefer, M., Heinze, H. J., and Rotte, M. (2005). Seeing the hand being touched modulates the primary somatosensory cortex. Neuroreport 16, 1101–1105.

Simmons, W. K., Bellgowan, P. S., and Martin, A. (2007). Measuring selectivity in fMRI data. Nat. Neurosci. 10, 4–5.

Stein, J. L., Wiedholz, L. M., Bassett, D. S., Weinberger, D. R., Zink, C. F., Mattay, V. S., and Meyer-Lindenberg, A. (2007). A validated network of effective amygdala connectivity. Neuroimage 36, 736–745.

Stilla, R., and Sathian, K. (2008). Selective visuo-haptic processing of shape and texture. Hum. Brain Mapp. 29, 1123–1138.

Summers, I. R., Francis, S. T., Bowtell, R. W., McGlone, F. P., and Clemence, M. (2009). A functional-magnetic-resonance-imaging investigation of cortical activation from moving vibrotactile stimuli on the fingertip. J. Acoust. Soc. Am. 125, 1033–1039.

Tal, N., and Amedi, A. (2009). Multisensory visual-tactile object related network in humans: insights gained using a novel crossmodal adaptation approach. Exp. Brain Res. 198, 165–182.

Tipper, S., Lloyd, D., Shorland, B., Dancer, C., Howard, L., and McGlone, F. (1998). Vision influences tactile perception without proprioceptive orienting. Neuroreport 9, 1741–1744.

Tootell, R. B., Reppas, J. B., Kwong, K. K., Malach, R., Born, R. T., Brady, T. J., Rosen, B. R., and Belliveau, J. W. (1995). Functional analysis of human MT and related visual cortical areas using magnetic resonance imaging. J. Neurosci. 15, 3215–3230.

Vul, E., Harris, C., Winkielman, P., and Pashler, H. (2009). Puzzlingly high correlations in fMRI studies of emotion, personality and social cognition. Perspect. Psychol. Sci. 4, 274–290.

Wheaton, K. J., Thompson, J. C., Syngeniotis, A., Abbott, D. F., and Puce, A. (2004). Viewing the motion of human body parts activates different regions of premotor, temporal, and parietal cortex. Neuroimage 22, 277–288.

Keywords: effective connectivity, intraparietal cortex, BOLD fMRI, structural equation modeling, weighted connections, area MT

Citation: Beauchamp MS, Pasalar S and Ro T (2010) Neural substrates of reliability-weighted visual-tactile multisensory integration. Front. Syst. Neurosci. 4:25. doi: 10.3389/fnsys.2010.00025

Received: 03 March 2010;

Paper pending published: 31 March 2010;

Accepted: 25 May 2010;

Published online: 23 June 2010

Edited by:

Barry Horwitz, National Institutes of Health, USA

Reviewed by:

Gregory C. DeAngelis, University of Rochester, USA

Randy McIntosh, Rotman Research Institute, Canada

Copyright: © 2010 Beauchamp, Pasalar and Ro. This is an open-access article subject to an exclusive license agreement between the authors and the Frontiers Research Foundation, which permits unrestricted use, distribution, and reproduction in any medium, provided the original authors and source are credited.

*Correspondence: Michael S. Beauchamp, Department of Neurobiology and Anatomy, University of Texas Health Science Center at Houston, 6431 Fannin St Suite G.550G, Houston, TX 77030, USA. e-mail: michael.s.beauchamp@uth.tmc.edu