Is the auditory evoked P2 response a biomarker of learning?

- 1Department of Speech and Hearing Sciences, University of Washington, Seattle, WA, USA

- 2Baycrest Centre, Rotman Research Institute, Toronto, ON, Canada

- 3Department of Medical Biophysics, University of Toronto, Toronto, ON, Canada

- 4Department of Radiology, Integrated Brain Imaging Center, University of Washington, Seattle, WA, USA

- 5Life Sciences Department, Royal Military Academy, Brussels, Belgium

- 6Unité de Recherche en Neurosciences Cognitives, Centre de Recherches en Cognition et Neurosciences Université Libre de Bruxelles, Brussels, Belgium

Even though auditory training exercises for humans have been shown to improve certain perceptual skills of individuals with and without hearing loss, there is a lack of knowledge pertaining to which aspects of training are responsible for the perceptual gains, and which aspects of perception are changed. To better define how auditory training impacts brain and behavior, electroencephalography (EEG) and magnetoencephalography (MEG) have been used to determine the time course and coincidence of cortical modulations associated with different types of training. Here we focus on P1-N1-P2 auditory evoked responses (AEP), as there are consistent reports of gains in P2 amplitude following various types of auditory training experiences, including music and speech-sound training. The purpose of this experiment was to determine if the auditory evoked P2 response is a biomarker of learning. To do this, we taught native English speakers to identify a new pre-voiced temporal cue that is not used phonemically in the English language so that coinciding changes in evoked neural activity could be characterized. To differentiate possible effects of repeated stimulus exposure and a button-pushing task from learning itself, we examined modulations in brain activity in a group of participants who learned to identify the pre-voicing contrast and compared them with those of participants, matched in time and stimulus exposure, who did not. The main finding was that the amplitude of the P2 auditory evoked response increased across repeated EEG sessions for all groups, regardless of any change in perceptual performance. Moreover, these effects were retained for months. Changes in P2 amplitude were attributed to changes in neural activity associated with the acquisition process and not the learned outcome itself. A further finding was the expression of a late negativity (LN) wave 600–900 ms post-stimulus onset, observed post-training exclusively for the group that learned to identify the pre-voiced contrast.

Introduction

Long before the effects of auditory deprivation and stimulation on the brain were known, audiologists used auditory training exercises as a way to help people compensate for hearing loss (Carhart, 1960). The motivation for such exercises stemmed from the fact that adults and children with hearing loss often needed help in dealing with their speech perception deficits that remained after being fit with hearing aid amplification devices (Boothroyd, 2010). Some people reported training exercises to be helpful and others did not, so the use of auditory training exercises was questioned and slowly faded from clinical practice. By the year 2005, a mere 30% of audiology practices reported using auditory training type interventions in routine clinical practice (Kricos, 2006).

Advances in neuroscience reignited the interest in auditory training because of the plethora of research documenting the capacity of the human brain to change, depending on the type of sensory input or lack thereof. Here we focus on auditory perceptual training as a means of exploring the human capacity to learn so that brain plasticity can be optimized in ways that enhance the rehabilitation of people with hearing loss. Previous studies have shown that training-related changes in neural activity precede changes in auditory perception (Tremblay et al., 1998; Atienza et al., 2002); therefore, non-invasive physiological measures might provide an opportunity to monitor and optimize intervention efforts in people with different types of hearing loss.

Even though auditory training exercises in humans have been shown to improve certain perceptual skills of individuals with and without hearing loss (Boothroyd, 1997; Tremblay et al., 1997, 1998, 2001; Fu et al., 2004; Irvine and Wright, 2005; Sweetow and Sabes, 2006; Burk and Humes, 2007; Tremblay and Moore, 2012; Anderson et al., 2013; Chisolm et al., 2013; Sullivan et al., 2013), there is a lack of knowledge pertaining to which aspects of training are responsible for the perceptual gains, and which aspects of perception are changed (Amitay et al., 2006, 2013; Boothroyd, 2010; Henshaw and Ferguson, 2013; Jacoby and Ahissar, 2013). This lack of knowledge hinders the rehabilitation of people with hearing loss because individuals do not always respond as expected to the training program in which they participate. Even among normal hearing listeners, the effects of training can be highly heterogeneous. Without knowing which aspects of the training exercises are responsible for observed benefits, it is difficult to determine which components of the training paradigm are ineffective and what individual needs still require targeted intervention.

To better define how auditory training exercises impact brain and behavior, electroencephalography (EEG) and magnetoencephalography (MEG) have been used to determine the time course and coincidence of cortical and sub-cortical modulations in evoked activity associated with different types of auditory training (Tremblay et al., 1997, 2001, 2009, 2010; Brattico et al., 2003; Shahin et al., 2003; Bosnyak et al., 2004; Sheehan et al., 2005; Alain et al., 2010; Carcagno and Plack, 2011; Shahin, 2011; Anderson et al., 2013; Barrett et al., 2013). Here we focus on studies involving the P1-N1-P2 waves of the cortical auditory evoked response (AEP), as there are consistent reports of gains in P2 amplitude following various types of auditory training experiences, including music (Shahin et al., 2003; Kuriki et al., 2007; Seppänen et al., 2012; Kühnis et al., 2013) and speech-sound training. Despite converging evidence that increases in the amplitude of the P2 wave of the P1-N1-P2 complex coincide with improved perception, little is known about the functional meaning and neural generators of the auditory P2 response and whether or not it could serve as a biological marker of auditory learning. Our earlier studies show the center of activity for P2 to be in the anterior auditory cortex, but how this relates to learning is still unknown (Ross and Tremblay, 2009).

Speech sounds and acoustic elements thereof are represented in the neural activity patterns along the auditory pathway. One example is the representation of voice-onset time (VOT), as reflected through a sequence of onset responses recorded from primary auditory cortices in feline, primate, and human models (Eggermont, 1995; Steinschneider et al., 2005). Monotonic increases in VOT result in latency shifts and double onset responses involving the N1 peak of the P1-N1-P2 complex (Tremblay et al., 2003; Steinschneider et al., 2005). The N1 is often described as an “exogenous” response, meaning that it is sensitive to physical characteristics of the sound used to evoke the response (see Picton, 2013 for a recent review). As an example, the N1 reflects the detection of acoustic changes, including the onset of sound and acoustic changes within an ongoing sound (such as consonant-vowel transitions) (Ostroff et al., 1998; Wagner et al., 2013). The P1 wave is thought to reflect gating of auditory information to the auditory cortex (Alho et al., 1994), whereas the P2 may reflect auditory processing beyond sensation (Crowley and Colrain, 2004). For this reason, the P1-N1-P2 complex has been used to examine the neural representation of perceptually relevant temporal cues such as VOT.

In a series of past experiments, the effects of VOT training on the human P1-N1-P2 complex have also been studied (Tremblay et al., 2001, 2009, 2010; Sheehan et al., 2005; Alain et al., 2010). These experiments were used to determine if neural VOT codes could be altered through training. That is, could the perception of two within category VOT stimuli (e.g., identification and/or discrimination) that are perceived alike, and that evoke similar N1 peak latencies be altered with training? What’s more, if perception changes, does the neural representation of VOT, marked by the latency of N1, change?

The VOT training studies described earlier did not reveal modifications in the latency of the N1 response. Instead, P2 amplitudes increased following VOT training. Training-related enhancements in P2 turned out not to be specific to VOT or VOT training. Enhanced P2 amplitudes appeared after various types of sound exposures (Tremblay and Ross, 2007; Tremblay et al., 2001, 2009; Atienza et al., 2002; Bosnyak et al., 2004; Sheehan et al., 2005) including identification or discrimination training; for different types of stimuli including tones and speech sounds; presented in different types of event-related potential (ERP) contexts (homogeneous block or oddball paradigm, monaurally or binaurally); over different time courses (1 day vs. 1 year); using EEG or MEG. The P2 effect is robust, can be reliably seen in individuals, and is retained for months following initial exposure (Tremblay et al., 2010). This phenomenon is not limited to the laboratory either; enhanced P2 amplitudes appear to reflect life-long learning such as musical training (Kuriki et al., 2006; Shahin, 2011).

Even though P2 amplitude gains have been reported to be physiological correlates of auditory learning, it is important to challenge this notion by recognizing that contributions of stimulus exposure, executive function, cognitive tasks, and memory are inherent in any auditory training paradigm. Any one or combination of these components, rather than learning itself, could be influencing P2 changes reported in the literature. In fact, our previous studies (Ross and Tremblay, 2009; Tremblay et al., 2010), and others (Sheehan et al., 2005), suggest that mere stimulus exposure during EEG and MEG recording sessions and behavioral baseline testing, in the absence of training or changes in perceptual performance, contributes to enhanced P2 amplitude.

Expanding this program of research by including different experimental designs, while involving the same stimuli, enables us to identify converging evidence across the studies. Therefore, the purpose of this study was to determine whether or not P2 amplitude changes represent biologic markers of auditory learning. To do so required examining modulations in brain activity in a group of participants who learned the task and comparing it to participants, matched in time, task, and stimulus exposure, that did not learn. Modulations in P2 amplitude could be viewed as a biomarker of auditory learning if P2 amplitudes increased only for the group that learned the VOT contrast, but not in the other groups.

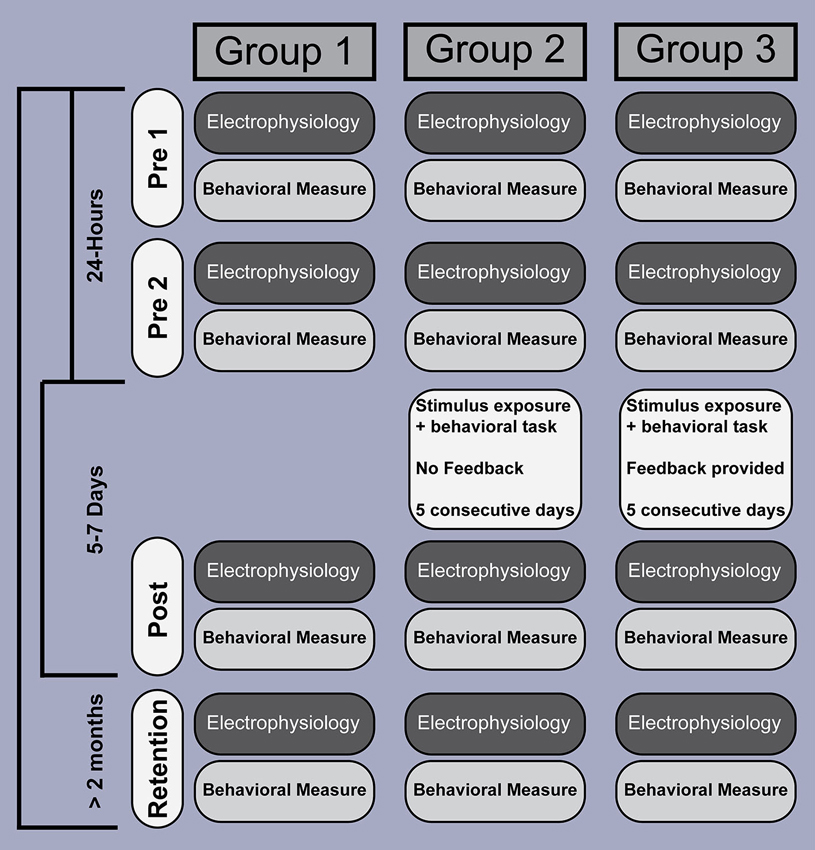

We therefore recorded behavioral responses and brain activity, elicited by stimuli differing in VOT, from three groups of participants, who were tested within similar time windows (Figure 1). The first group served as a control group without intervening listening or training experience, so that quantifiable modulations in brain activity could be related solely to the passage of time. The remaining two experimental groups (Groups 2 and 3) participated in listening tasks during a 5 day intervening period between pre 2 and post sessions. Both groups heard the same number of stimulus sounds during these intervening days, but the two groups differed in the type of task and feedback they received. One facilitated learning whereas the other did not. For example, members of Group 2 were asked to click a mouse button (to proceed to the next sound) after hearing each sound without receiving any feedback to facilitate learning the VOT contrast. Group 3 members were instructed how to label each sound (a two-alternative forced-choice task) by clicking a mouse button, and feedback about their performance followed to facilitate learning. In doing so, we were able to examine changes in brain activity among groups that did and did not learn the VOT contrast. We also looked beyond a typical P1-N1-P2 time window (<200 ms in latency), to determine if VOT training modulates more endogenous, higher-level, aspects of sound processing.

Figure 1. Experiment design and time course. EEG recording and behavioral testing were performed at similar points in time, across four sessions, and involved three groups. EEG data were acquired separately from the behavioral sessions. Whereas participants in Groups 2 and 3 were exposed to, and interacted with, the stimuli over a 5 day period between test sessions, Group 1 did not. The number of stimuli (amount of stimulus exposure) was identical across Groups 2 and 3, and participants were required to perform a similar task (click the mouse to advance to the next stimulus), but what differed between the two groups was the instructions and feedback. Participants in Group 3 received instructions and response feedback intended to improve their ability to correctly identify each of the two pre-voiced stimuli, but participants in Group 2 did not.

Materials and Methods

Participants

Thirty normal-hearing native-English speakers (18–39 years) were randomly assigned to one of three groups (10 in each group). Normal hearing was defined as pure tone thresholds ≤25 dB HL across frequencies between 250 and 8000 Hz. All participants were right-handed and provided their written informed consent prior to participation. The Research Ethics Board of the University of Washington approved the study. Data from ten of these subjects (Group 1) were previously described in a publication that reported only the effects of repeated stimulus exposure (Tremblay et al., 2010).

Stimuli

Two Klatt synthesized pre-voiced “ba” syllables, 180 ms in duration, were used in this experiment. They were the same stimuli used in a series of experiments designed to examine the neural encoding of VOT with training (see series of experiments by Tremblay et al., 1997 through 2010). Adult native English speakers consistently describe both pre-voiced stimuli as “ba” (McClaskey et al., 1983), but following training, they can learn to identify and label the −10 ms VOT stimulus as “ba” and the −20 ms VOT stimulus as “mba” (Tremblay et al., 1997).

Behavioral Tests

The ability to correctly identify the two stimuli was tested in four sessions for all groups within the same time frame (Figure 1). The first two tests were performed on 2 subsequent days, termed pre 1 and 2, and provided baseline performance scores. A post-training test was administered 5–7 days later and a retention test more than 2 months later. All groups were involved in the identification task, which was the same for all sessions. Participants were presented with randomized trials of the “mba” and “ba” stimuli. Twenty-five of the “mba” and 25 “ba” stimuli were presented in each session binaurally at a level of 76 dB SPL using insert earphones (Etymotic Research ER3a). The test was self-paced and a response, entered via a computer mouse, triggered the presentation of the next sound. Feedback was not provided in any test. The instructions to all participants were: “You will hear some sounds and I want you to label the sounds as you hear them using the left button on the computer mouse. You will label the sounds based on two choices that will be displayed on the computer monitor. There is no right or wrong answer; it is simply your perception of what you hear”. Two labels appeared on the computer screen as text: “mba” and “ba”.

Behavioral Training

Group 1 participated in the four behavioral tests only and served as a control group for examining changes in perception and physiology, over the same time periods as Groups 2 and 3. Groups 2 and 3 participated in training sessions on 5 consecutive days, starting immediately following the pre 2 behavioral testing. Both groups heard four blocks of 50 randomized presentations of the “mba” and “ba” syllables, 25 of each on each day. Behavioral testing was self-paced and lasted approximately 20 min each day. Whereas the number of stimuli (amount of stimulus exposure) and the motor task of clicking the mouse were similar across the two groups, the instructions and feedback differed between groups. The task for Group 3 involved evaluating the stimulus they had just heard, making a decision about what label to assign to each sound, and then clicking the mouse to indicate which sound they heard. Group 3 also received feedback, which was intended to motivate participants to “correctly” label each sound.

Participants in Group 2 were instructed: “You will hear some sounds. After each sound press the button on the screen to continue to the following sound”. A button labeled “NEXT” was displayed on the computer screen to advance the task following each stimulus presentation.

Group 3 participants were instructed: “Now, we’re going to help you label one sound /ba/ and one /mba/. You will be given feedback following each trial. If you select the correct label, it will turn green. If you do not select the correct label, the next trial will begin”. Two text labels, “mba” and “ba”, were displayed on the computer screen.

Electroencephalography (EEG) Acquisition

EEG recordings and behavioral testing were completed in a sound-attenuated booth on 2 consecutive days (sessions pre 1 and 2), 1 week following initial testing (post-training session), and 2 months to 1+ year following initial testing (retention session). Retention tests were staggered in time so changes in brain and behavior could be tracked over a large time window.

Similar to our previous experiments, stimuli were delivered monaurally via insert earphones to the right ear at 76 dB SPL; the same intensity was used for the behavioral tests. A passive EEG paradigm was used, meaning participants watched closed-captioned movies and were instructed to stay alert, but no particular attention to the stimuli was requested. No behavioral task took place during EEG recordings. Four hundred presentations of the same stimulus type (“ba” or “mba”) were presented in a block with an inter-stimulus interval (ISI) of 1993 ms. Following a 5 min break, a block of the other sound stimulus (“mba” or “ba”) was recorded. Stimulus order was counter-balanced across groups and test sessions. This particular ISI was used because our previous studies have shown that younger and older adults are differentially sensitive to stimulus presentation rates faster than 2 s, and in future studies we wish to compare these data to those of older adults (Tremblay et al., 2004).

Continuous EEG signals were recorded from 59 electrodes using an elastic cap (Electro-cap International, Inc.) and a PC-based Neuroscan system (SCAN, ver. 4.3.3) with SynAmps2 amplifiers. The electrode montage followed an extended 10–20 system, reported in more detail in Tremblay et al. (2010). Four additional electrodes were placed on the inferior and outer canthus of each eye to monitor eye blink activity. EEG signals were referenced to the Cz electrode, analog bandpass-filtered between 0.15 and 100 Hz (12 dB/octave roll off), amplified with a gain of 500, and digitized at a sampling rate of 1000 Hz.

For offline analysis, an artifact correction procedure using BESA (5.2) was applied to reduce the effects of contamination from eye-blinks and ocular movements. Eye-blink artifacts were identified by a threshold criterion and corresponding waveforms were averaged to obtain a template of ocular artifacts. A principal component analysis of these averaged recordings provided a set of components that best explained the eye movements. The scalp projections of these components were then removed from the EEG signal to minimize ocular contamination.

In BESA the continuous EEG signal was parsed into stimulus onset related epochs of 1200 ms length, including a 200 ms pre-stimulus interval, which was used for baseline-correction. The signals were averaged for each stimulus condition and re-referenced to the average across all electrodes. Waveforms were low pass filtered at 32 Hz. The peak amplitudes and latencies of the N1 and P2 waves were measured as the signal maxima at electrode Cz in the latency intervals of ±50 ms around 100 ms and 200 ms for each participant, each stimulus type and each session.
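The baseline correction and peak measurement described above can be illustrated with a minimal sketch. This is not the BESA procedure itself: the waveform is synthetic, the function names are hypothetical, and the sketch assumes that N1 is taken as the most negative value and P2 as the most positive value within ±50 ms of 100 ms and 200 ms, respectively. Only the sampling rate (1000 Hz), epoch length (1200 ms), and pre-stimulus interval (200 ms) come from the text.

```python
import math

FS_HZ = 1000        # sampling rate reported in the text
PRE_STIM_MS = 200   # pre-stimulus interval used for baseline correction
EPOCH_MS = 1200     # total epoch length, including the pre-stimulus interval


def ms_to_index(latency_ms):
    """Convert a latency relative to stimulus onset into a sample index."""
    return int(round((latency_ms + PRE_STIM_MS) * FS_HZ / 1000))


def baseline_correct(epoch):
    """Subtract the mean of the pre-stimulus samples from the whole epoch."""
    n_pre = ms_to_index(0)
    baseline = sum(epoch[:n_pre]) / n_pre
    return [v - baseline for v in epoch]


def peak_in_window(epoch, center_ms, half_width_ms=50, polarity=1):
    """Return (amplitude, latency_ms) of the extremum within the window.

    polarity=+1 picks the most positive sample, polarity=-1 the most negative.
    """
    start = ms_to_index(center_ms - half_width_ms)
    stop = ms_to_index(center_ms + half_width_ms)
    idx = max(range(start, stop + 1), key=lambda i: polarity * epoch[i])
    return epoch[idx], idx * 1000 / FS_HZ - PRE_STIM_MS


def synthetic_epoch():
    """Made-up averaged waveform: N1 at 100 ms, P2 at 200 ms, 0.5 uV offset."""
    samples = []
    for i in range(EPOCH_MS * FS_HZ // 1000):
        t = i * 1000 / FS_HZ - PRE_STIM_MS
        n1 = -1.8 * math.exp(-(((t - 100) / 20) ** 2))
        p2 = 2.0 * math.exp(-(((t - 200) / 30) ** 2))
        samples.append(0.5 + n1 + p2)
    return samples


corrected = baseline_correct(synthetic_epoch())
n1_amp, n1_lat = peak_in_window(corrected, center_ms=100, polarity=-1)
p2_amp, p2_lat = peak_in_window(corrected, center_ms=200, polarity=1)
print(f"N1: {n1_amp:.2f} uV at {n1_lat:.0f} ms; P2: {p2_amp:.2f} uV at {p2_lat:.0f} ms")
```

In practice the same two functions would be applied to each participant's averaged Cz waveform for each stimulus type and session.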

Results

Behavioral Data Analysis

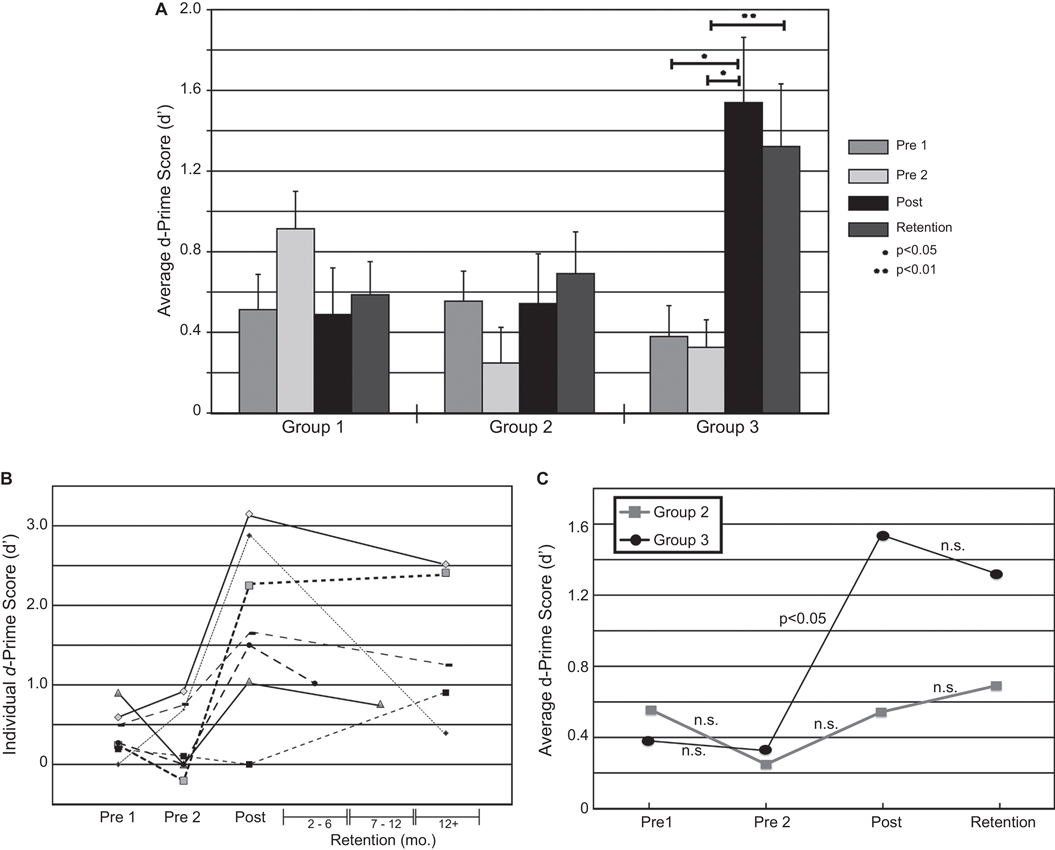

To assess perceptual performance across groups, d-prime (d′) scores (Macmillan and Creelman, 1991) were computed for each participant from the rates of hits, misses, false alarms, and correct rejections for each behavioral test. A response was scored correct (hit) if the participant assigned the label “mba” to the −20 ms VOT stimulus. A correct rejection involved choosing the label “ba” for the −10 ms VOT stimulus. A split-plot 3 (fixed between groups; “Group”) × 4 (fixed within groups; “Session”) mixed model ANOVA was used to test the effects of “Group” and “Session” as well as their interaction on the d′-scores. F-statistics for the within-group effects and interactions were adjusted to control for Type I error due to significance of Mauchly’s test of sphericity. Follow-up pairwise comparisons were made using the Dunn-Sidak multiple comparisons procedure to control for Type I error. Figures 2A–C summarize the behavioral results. Significant improvement in identification performance was seen for Group 3 only, and was retained for as long as 1 year for some individuals.
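As an illustration of the d′ measure, the following sketch computes d′ as z(hit rate) − z(false-alarm rate) from the four response counts. The response counts are hypothetical, and the 0.5 added to each cell (a log-linear correction that avoids infinite z-scores when a rate is exactly 0 or 1) is an assumption made here; the text does not state how extreme rates were handled.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Return d' = z(hit rate) - z(false-alarm rate).

    A hit is an "mba" response to the -20 ms VOT stimulus; a false alarm is
    an "mba" response to the -10 ms VOT stimulus. Adding 0.5 to each cell is
    an assumed correction, not taken from the study.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical listener with 25 "mba" and 25 "ba" trials per session.
before = d_prime(hits=12, misses=13, false_alarms=12, correct_rejections=13)
after = d_prime(hits=22, misses=3, false_alarms=5, correct_rejections=20)
print(f"d' before: {before:.2f}, d' after: {after:.2f}")
```

A listener who labels both stimuli “mba” equally often obtains d′ = 0, which corresponds to the baseline situation in which both stimuli are heard as the same sound.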

Figure 2. Changes in behavioral performance over the time course of the experiment. (A) Significant increases in performance were seen only for Group 3. Members of Group 3 participated in the identification task and received feedback. (B) Changes in d′ over time for the 7 out of 10 individuals in Group 3 who participated in the retention sessions. (C) A comparison in performance, over time, between Groups 2 and 3. Each group experienced the same number of trials and executed a button-pushing task, but Group 2 did not receive instructions or feedback designed to facilitate learning. No significant changes in performance were seen for Group 2.

Performance Evaluation—Pre 1, Pre 2 and Post Sessions

There was a significant main effect of “Session” on d′-scores (F(2.33, 53.50) = 6.59, p < 0.01, partial ω² = 0.13), as well as a “Group” × “Session” interaction (F(4.65, 53.50) = 7.17, p < 0.001, partial ω² = 0.28). Follow-up pairwise comparisons showed an increase in d′ between baseline (Pre 2) and the post-test for Group 3 only, i.e., for those participants in the training who received performance feedback (p-values < 0.05).

Performance Retention

Figure 2B shows changes in d′-scores over time for Group 3. Three individuals were lost to attrition and were unavailable to return for retention testing. Analysis of d′-scores measured more than 2 months after the initial testing (Retention) revealed sustained improvements in performance for Group 3 (Figure 2A). Significant increases in d′-scores were seen between the baseline session (pre 2) and the post-training measures (p = 0.033), with significant differences between baseline and retention (p = 0.009) and no significant differences between post-training and retention measures (p = 0.697). Thus, the improvements in performance found between pre- and post-training measures persisted in the retention measure.

Electroencephalography (EEG) Analysis: Auditory Evoked Responses

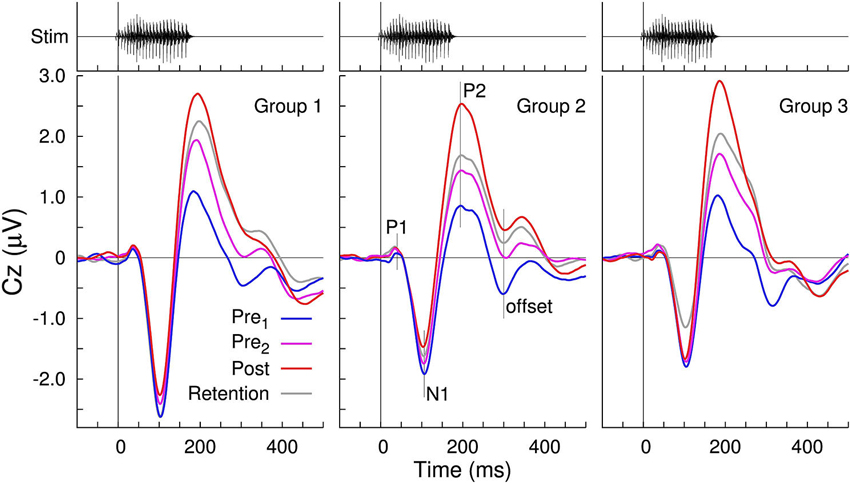

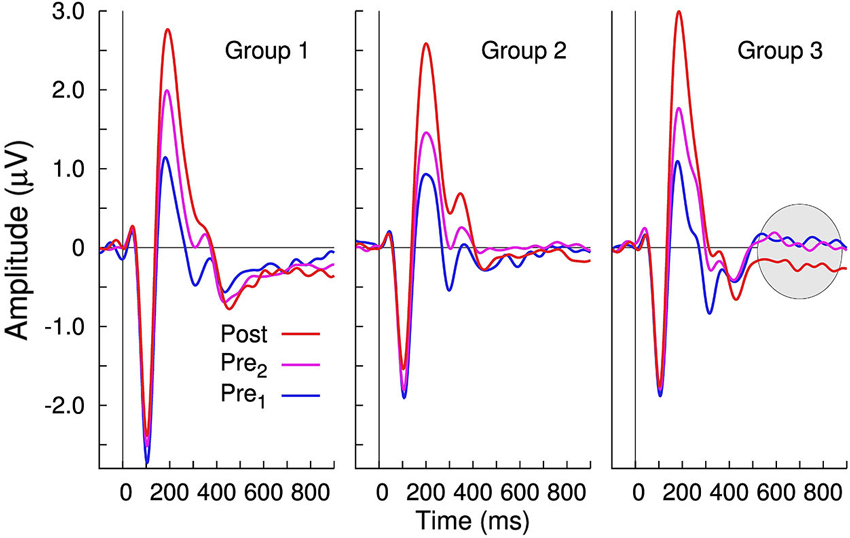

To allow comparison with our previously published studies, grand averaged evoked responses at electrode Cz are shown for the three groups and the four recording sessions in Figures 3 and 4. All waveforms are in response to the −10 ms prevoiced “ba” stimulus and show prominent N1-P2 waves. The P1 wave is small. Although the response morphologies are quite different between groups as, for example, expressed in different ratios of the N1 and P2 amplitudes and variations at longer latencies beyond 300 ms, the effect of increasing P2 amplitudes between the first baseline recording and the post-training session is apparent in all three groups. Also, similar to our previous study (Tremblay et al., 2009), P2 amplitude measured across staggered retention sessions more than 2 months after the first recording remained larger than the initially measured P2 amplitude. In contrast, changes in N1 amplitude over the time course of the experiment were small. Offset responses also appear to decrease over time, but we assume them to be driven by growth of P2.

Figure 3. Grand averaged voltages at the vertex electrode Cz in response to the −10 ms VOT stimulus “ba”. Prominent N1 and P2 waves are visible in all time-series as well as the gradual increase in the P2 amplitude across the three sessions. Offset responses decrease across sessions, presumably due to the P2 amplitude growth.

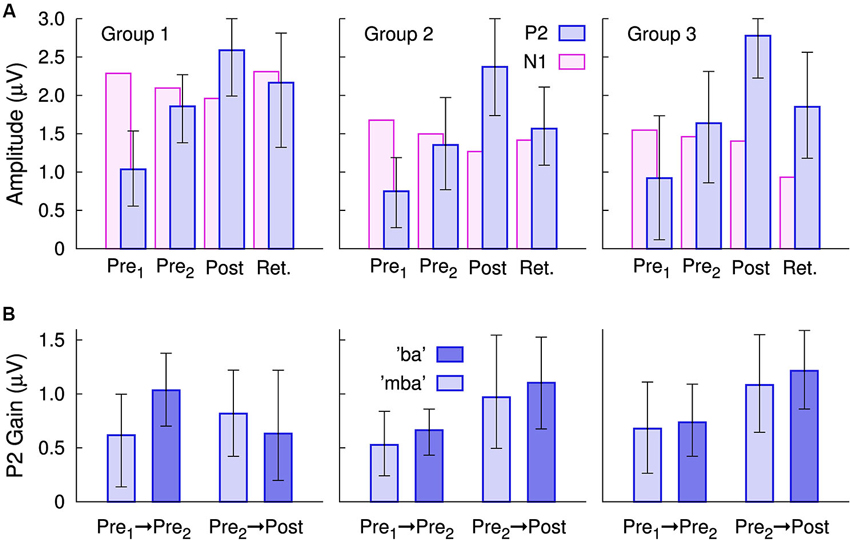

Figure 4. Changes in the group mean P2 amplitudes, measured at electrode Cz. (A) In all three groups, P2 amplitude gains were seen for the Pre 2, post-training, and even during the retention sessions. P2 amplitudes were still larger in the retention session than in the first session. By comparison, changes in the N1 amplitude were small. The error bars indicate the 95% confidence intervals for each group mean. (B) Gain in the P2 amplitudes between Pre 1 and Pre 2 sessions and between the Pre 2 and post-training sessions.

The N1 amplitude showed smaller between-session changes than that of P2 (Figure 4A). N1 amplitude diminished over the time course of the first three recordings. A repeated measures ANOVA for the N1 amplitude revealed main effects of “Session” (F(3,81) = 4.67, p = 0.0046) and “Stimulus” (F(1,27) = 5.32, p = 0.029) and a “Session” × “Group” interaction (F(6,81) = 3.11, p = 0.0087). When averaged across sessions, the stimulus effect appeared to be driven by the slightly larger “ba” amplitude for all three groups (mba: 1.63 µV and ba: 1.85 µV). The interaction diminished when considering the first three recordings only, suggesting it was mainly caused by the continuing N1 decrease in the retention session in Group 3 only. It should be kept in mind that an N1 amplitude decrease means a positive voltage shift at the Cz electrode, which is in line with the P2 amplitude increases. Thus, cross talk from the P2 changes has to be considered when interpreting the N1 changes. No significant changes in N1 latency were found for either stimulus, across sessions.

A repeated measures ANOVA on P2 amplitude with the between subjects factor “Group” (3 levels) and the within subjects factors “Session” (4 levels) and “Stimulus” (2 levels) revealed no main effect of “Group” (F(2,27) = 0.5), but there were main effects of “Session” (F(3,27) = 62.7, p < 0.0001) and of “Stimulus” (F(2,27) = 13.6, p = 0.001). No “Group” × “Session” or “Group” × “Stimulus” interaction was significant. For the mean across groups, the P2 amplitude increased from 0.90 µV to 1.61 µV between the pre 1 and 2 baseline recordings, continued to increase to 2.59 µV in the post-training session, and decreased to 1.86 µV in the retention session. Compared to the first baseline recording, the P2 amplitude increased by 79% at the second baseline recording and by 187% at the post-training session, and remained more than twice the initial amplitude after more than 2 months.
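The percentage gains quoted above follow directly from the group mean amplitudes. A short sketch of the arithmetic, using only the four mean values reported in the text (the dictionary and function names are illustrative):

```python
# Group mean P2 amplitudes at Cz in microvolts, as reported in the text.
p2_mean_uv = {"pre 1": 0.90, "pre 2": 1.61, "post-training": 2.59, "retention": 1.86}


def percent_change(baseline, value):
    """Percentage change of value relative to baseline."""
    return 100.0 * (value - baseline) / baseline


baseline_uv = p2_mean_uv["pre 1"]
gains = {
    session: percent_change(baseline_uv, amplitude)
    for session, amplitude in p2_mean_uv.items()
    if session != "pre 1"
}
for session, gain in gains.items():
    ratio = p2_mean_uv[session] / baseline_uv
    print(f"{session}: {gain:+.1f}% relative to pre 1 ({ratio:.2f}x)")
```

The pre 2 gain rounds to 79%, the post-training gain is just under 188% (reported as 187%), and the retention amplitude is a little over twice the pre 1 value.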

Gains in P2 amplitude between the pre-training sessions and between pre- and post-training sessions are illustrated in Figure 4B. An ANOVA revealed a main effect of “Session” (F(1,27) = 4.9, p = 0.035) and a “Session” × “Group” interaction (F(2,27) = 5.2, p = 0.030) because the P2 gain between pre- and post-training was larger than the P2 increase between the baseline sessions in Group 2 (t(19) = 2.21, p = 0.040) and in Group 3 (t(19) = 2.31, p = 0.035) but not in Group 1 (t(19) = 0.3). There were no differences in the amount of P2 gain between Groups 2 and 3.

Results of a spatio-temporal principal component analysis on the evoked response waveforms observed in Group 3 are summarized in Figure 5 with the topographic distributions of the five largest components, which explain in total 98.4% of the variance, and the corresponding waveforms separately for the two baseline sessions and the post-training session. Overlaid are the responses to the “ba” and the “mba” stimuli. The aim of this analysis was to explore whether learning to identify the two stimuli would result in different responses to “ba” and “mba”. Recognizing there are spatial precision limitations with EEG, we report the largest component, characterized by the N1-P2 waves, as being maximal at frontal midline electrodes, and the second largest component was predominant above the posterior parietal region. Smaller components were localized to left and right temporal and inferior frontal regions. Although the smallest component explained only 2.2% of the signal variance, the corresponding time series were clearly reproduced between sessions. Most importantly, no clear distinction between “ba” and “mba” responses became obvious. Accordingly, a formal multivariate test using PLS analysis showed a main effect of “Session” but no “Session” × “Stimulus” interaction. So far, the current data do not suggest that learning results in different cortical representation of the learned stimulus item beyond the statistical power of our analysis.

Figure 5. Principal component analysis of the group averaged responses in the training group with feedback (Group 3). The graphs show the time series of the five largest principal components (PC) for the two pre-training and the post-training recordings. The responses to the “ba” and “mba” stimuli are overlaid for comparison. The topographic map of the potential distribution across the head is shown to the right of the time-series graphs. The first PC explains 74% of the signal variance and shows the typical fronto-central maximum corresponding to two tangential dipole sources in left and right auditory cortices.

Late Negativity

Changes in evoked neural activity, in the 600–900 ms latency interval, were also observed during post-training and retention sessions in the trained Group 3. Therefore, the mean amplitude in the 600–900 ms latency interval was measured and compared between groups and recording sessions. The repeated measures ANOVA for this late negativity (LN) revealed only a tendency toward significance for “Group” (F(2,27) = 2.71, p = 0.085); however, a main effect of the within-subject factor “Session” (F(2,54) = 6.92, p = 0.0021) and a “Session” × “Group” interaction (F(4,54) = 3.47, p = 0.0135) were observed. Pairwise comparisons help to explain the interaction because between-session differences in the LN were significant in Group 3 only (Figure 6). In Group 3, the LN was larger after the training compared to the pre 1 session (t(19) = 4.18, p < 0.0001) and compared to the pre 2 session (t(19) = 3.62, p = 0.0018). Despite the significant training-related changes in the LN latency range for Group 3, the magnitude of perceptual change did not correlate with the amount of LN amplitude change (R² = 0.07, F = 0.61, p = 0.46). Also, even though a visible LN can be seen in the retention data, the between-subject variability was large (likely because of the staggered test times) and thus the retention effect was not significant.

Figure 6. Group averaged time series of the largest principal component of the evoked responses. Whereas no between-session changes in the 600–900 ms latency interval are noticeable in Groups 1 and 2, in Group 3 there is an increased negativity following training.

Discussion

There is a long history of using auditory training exercises as a part of auditory rehabilitation programs for people with and without hearing loss. One assumption is that listening training modifies the way sound is encoded and processed in the central auditory system; another is that listening exercises permit the person to make better use of existing neural codes. We still do not know what aspects of auditory training are responsible for perceptual gains (Boothroyd, 2010) and how coincident changes in neural activity relate to the auditory cue being trained. To address this issue, we compared training-related changes in perception and physiology, evoked by the same VOT stimuli, so that brain-behavior relationships could be established. N1 and P2 latencies are consistently reported to be important neural correlates of VOT (Wagner et al., 2013); however, when people are trained to alter the perception of VOT, changes in P2 amplitude, rather than in N1 or P2 latencies, are observed. Therefore, the purpose of this experiment was to determine if the auditory evoked P2 response is a biomarker of VOT learning.

Is P2 A Biomarker of Learning?

The main finding was that P2 amplitude increases were observed for people who did and did not learn the novel VOT contrast. Based on these data, the most obvious conclusion is that P2 amplitude is not a biomarker of learning. This conclusion is reinforced by the growing body of evidence suggesting it is the elements of training (exposure, task execution) that contribute to P2 enhancements, and not the learned product of a goal-directed act. It would also explain why no study has been able to establish a one-to-one relationship between the magnitude of P2 change and the magnitude of perceptual change (Tremblay et al., 2001; Sheehan et al., 2005) and why enhancements appear to generalize to other stimuli that listeners were exposed to but did not necessarily learn (Tremblay et al., 2009). However, it is also possible that the large training-related changes in P2 might overlay and obscure smaller effects of learning, or reflect other related processes not measured here. Therefore, to entirely dismiss a relationship between P2 and auditory learning would be to ignore converging evidence, from multiple laboratories, linking enhanced neuroplastic P2 activity to multiple forms of learned behaviors. When learning to discriminate the rate of frequency modulation in tones, for example, differences in performance gain were related to different learning strategies and were reported to be reflected in P2 amplitude increases (Orduña et al., 2012). In a study of pitch discrimination training, absolute P2 amplitude correlated with reaction time (Tong et al., 2009). Also, long-term experience in musicianship and effects of auditory training in musicians were expressed in larger P2 amplitudes and amplitude increments compared to non-musicians (Seppänen et al., 2012). Collectively, the growing body of literature linking enhanced P2 amplitudes to auditory learning makes it difficult to entirely reject some type of brain-behavior relationship. We therefore put forth an alternative hypothesis: changes in P2 amplitude reflect neural activity associated with the acquisition process, but not the learned outcome itself. The neural mechanisms that are associated with this process, and that drive modulations in P2 activity, still need to be defined.

Based on source modeling we can assume some degree of auditory cortex involvement. Ross and Tremblay (2009) showed N1 and P2 to originate from different anatomical structures that likely serve different functions. N1 sources lay in the posterior part of auditory cortex, the planum temporale, whereas the center of activity for P2 lay in anterior auditory cortex, the lateral part of Heschl’s gyrus. P2 sources have also been identified in planum temporale, Brodmann’s area 22, and auditory association cortices (Crowley and Colrain, 2004). The P2 increase exhibits a neuroplastic nature, with enhanced activity becoming evident only after a period of sleep (Atienza et al., 2002; Ross and Tremblay, 2009; Zhu, 2010) and persisting for months, whereas decreases in N1 amplitude occur within an experimental session and return to baseline in subsequent recordings (Ross and Tremblay, 2009). This type of N1 behavior pattern is more in line with habituation and less so with the types of learning-related N1 changes exhibited during active EEG recordings, where modulations in brain activity are recorded while the participant is attending to and executing the training task (Alain et al., 2010). Then again, habituation is sometimes termed “non-associative learning” and may be facilitating the P2 effects reported here (Rankin et al., 2009). N1 suppression mechanisms may also help consolidation, resulting in an increase of P2 between sessions.

The stimuli and passive recording paradigms used in our original VOT studies were designed to determine if neural codes reflecting VOT, and reflected by the N1, could be altered through training. If so, these far-field AEP recordings could be used clinically to assess the temporal resolution and rehabilitation of populations with suspected temporal processing disorders. The passive EEG recording paradigm is ideal for difficult-to-test populations and avoids potential confounds that can interfere with perceptual performance. Moreover, the stimulus block design was chosen with future clinical applications in mind, as these types of recording paradigms are within the capacity of, and similar to, electrophysiological procedures used in audiology clinics today. However, to date, using this approach, no evidence of significant N1 latency shifts reflecting perceptual changes in VOT over time has been reported. One possibility is that N1 latencies do not reflect subtle differences in pre-voicing. Another is that mechanisms underlying N1 are resistant to training (Wagner et al., 2013), or that changes in synchronous activity are so modest that they cannot be detected using far-field recordings in humans. However, there is some evidence that N1 (and some subcomponents) can be modified with training, but these modifications were all observed as amplitude rather than latency changes (Menning et al., 2000; Brattico et al., 2003; Bosnyak et al., 2004). An exception is Reinke et al. (2003), who reported decreased N1 and P2 latencies, as well as enhanced P2 amplitudes, following training, but these latency changes were recorded using an active EEG task while listeners took part in a vowel segregation training task. This means that attention, auditory and visual sensory processing, memory and executive function could have contributed to the observed latency changes. Thus, it is difficult to differentiate sensory vs. cognitive (top-down) contributions to learning, as well as the various types of top-down contributors.

The P1-N1-P2 responses recorded here were acquired in a passive way and as such are described as being mainly exogenous in nature, meaning they are highly dependent on the physical properties of the stimulus used to evoke them. However, these AEPs can be endogenous, and modulated by attention in certain circumstances (Hillyard et al., 1973; Woldorff and Hillyard, 1991; Woods, 1995). This point is important when considering potential contributors to enhanced P2 activity. In our design, participants heard stimuli during the AEP sessions and during each perceptual training and testing task. They saw visual instructions and text response options. In all instances, auditory and visual input tapped into memory sources because sessions were repeated on different days. So, as described by Tremblay et al. (2001) and others, it is possible that some of the training-related physiological changes reported here might reflect other top-down modulatory influences that are activated during AEP recordings as well as focused listening tasks. What’s more, the P2 effects might not even be auditory specific. Similar to the auditory evoked P2, the visually evoked P2 is modulated by attention, language context information, and memory and repetition effects. It is also considered to be part of a cognitive matching system that compares sensory inputs with stored memory (Luck and Hillyard, 1994; Freunberger et al., 2007). Therefore, although our source modeling studies (Ross and Tremblay, 2009) showed involvement of primary and association cortical areas, we have not yet ruled out multisensory interactions from contributing to our results. Until future experiments are designed to disentangle the various multisensory top-down contributing components, such as attention, memory, and executive function, we are left to speculate about neural mechanisms and their contributions to the results reported here.

One possibility worth exploring in future studies is the concept of object representation (Näätänen and Winkler, 1999; Ross et al., 2013). If we view N1 and P2 as reflecting synchronous evoked auditory activity involved in the early stages of perceptual learning, where the neural representation of the sensory input takes place, we could speculate that the P2 indicates memory updating and consolidation, where the two similar sounding stimuli (“ba” and “mba”) are stored in a buffer. This phase could be passive, not requiring engagement of the participants, which would explain enhanced P2 activity from session to session in the absence of training. With directions and feedback, it would become possible to separate this sensory information into two objects, “mba” and “ba”. Within this framework, we suggest that P2 plays a role in stimulus familiarization and auditory object representation, both critical processes for successful perception. The second phase of learning is likely mediated by top-down processes and probably involves many interactive aspects involving attention, motivation, reinforcement, etc. Whereas the first stage applies to the neural detection of sound, the second stage reflects how the brain makes use of the sound. To better understand later stages in sound processing, we expanded our prior analyses to determine if auditory training, and its components, result in recordable modulations in brain activity later in time. As seen in previous studies (Tremblay et al., 2009), there might be experience-related changes occurring outside the P2 latency region that are visible in different scalp locations.

Late Negativity (LN)

A previously unreported finding was the presence of the LN in the post-training session, for the group that learned to identify the pre-voiced contrast. It appears to a lesser degree in the retention data as well, but brain-and-behavior scores do not correlate with each other. Like the P2, the magnitude of LN change does not predict a person’s perceptual change score. So what does the LN reflect?

It is well established that distinct forms of cognitive control are associated with unique patterns of activation over a distributed network of regions. These networks can include the dorsolateral prefrontal cortex (DLPFC), ventrolateral prefrontal cortex (VLPFC), supplementary and pre-supplementary motor areas, the anterior cingulate cortex (ACC), and superior and inferior aspects of the posterior parietal cortex (Corbetta and Shulman, 2002; Cole and Schneider, 2007). What’s more, many aspects of cognitive control have been shown to manifest themselves as negativities in ERP recordings (e.g., N2, Nd, MMN, N400, Late Difference Negativity (LDN) and Error Related Negativity (ERN)). However, these types of negativities are typically recorded when the task involves attention switching or other complex stimulus paradigms, such as an oddball paradigm, and they often require active participation during the EEG recording. In the present experiment, participants were not engaged in a purposeful attention task and the stimuli were presented as a homogeneous train of equiprobable events with no salient deviant stimuli. Thus, our use of the term LN is descriptive and does not neatly fit a well-characterized ERP profile. If left to speculate, we hypothesize that members of Group 3 learned to identify subtle acoustic cues that separated the two pre-voiced stimuli prior to the final ERP session. It is possible then that the training sessions drew greater attention to the stimuli as being separate objects. At the time of post-training EEG sessions, these two stimuli were automatically recognized as two separate auditory objects, but members of Group 3 were the only ones who were taught to attach each object to a perceptual label.

Summary and Conclusions

The purpose of this study was to determine if enhanced auditory evoked P2 activity is a biomarker of learning. The question is relevant to the study of auditory rehabilitation in that neurophysiological correlates of auditory training are needed to better understand the mechanisms of action presumed to be involved when using training as an intervention approach for people with and without hearing loss. This study showed increases in P2 AEP amplitude following exposure to auditory stimuli as well as participation in tasks (with and without feedback). Enhanced P2 amplitudes were seen regardless of any change in perceptual performance and were therefore not interpreted to be a biomarker of learning. Instead, modulations in P2 amplitude were attributed to changes in neural activity associated with the acquisition process and not the learned outcome itself, a process that is robust enough to be retained for months. A further finding was the expression of an LN wave 600–900 ms post-stimulus onset, in the post-training session, exclusively for the group that learned to identify the pre-voiced contrast. Collectively, we conclude that being exposed to and interacting with sound alters the way those sounds are represented in the brain, and that these changes in neural activity are part of the learning process. Consistent with our earlier findings (Tremblay et al., 1998, 2009), changes in neural activity appear to precede changes in auditory perception and are retained for months. The application of this information to the assessment and rehabilitation of people with hearing loss and other communication-based disorders will depend on future studies aimed at disentangling multimodal bottom-up and top-down neural mechanisms contributing to changes in the N1, P2 and LN. However, a final take-home point is that research directed at identifying neural mechanisms related to training and learning should take into consideration the contribution of repeated stimulus exposure as well as other possible coincident contributors to reported physiological changes.

Author Contributions

All authors contributed substantially to the concept/design of the work; the interpretation of data; draft reviews including revisions, and approved the final version to be published. Collectively we are accountable for all aspects of the work, including accuracy and integrity. Additionally, Katrina McClannahan and Gregory Collet contributed to data collection.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by the National Institutes of Health (R01-DC007705; R01DC012769), (P30-DC04661), (F30-DC010297), and the Fonds National de Recherche Scientifique (FRS-FNRS), Brussels, Belgium. Funding from the American Academy of Audiology awarded to Katrina McClannahan is also acknowledged. The authors would also like to thank Dr. Chris Bishop for his review of earlier drafts and Yu He for her assistance with data processing.

References

Alain, C., Campeanu, S., and Tremblay, K. (2010). Changes in sensory evoked responses coincide with rapid improvement in speech identification performance. J. Cogn. Neurosci. 22, 392–403. doi: 10.1162/jocn.2009.21279

Alain, C., Snyder, J. S., He, Y., and Reinke, K. S. (2007). Changes in auditory cortex parallel rapid perceptual learning. Cereb. Cortex 17, 1074–1084. doi: 10.1093/cercor/bhl018

Alho, K., Teder, W., Lavikainen, J., and Näätänen, R. (1994). Strongly focused attention and auditory event-related potentials. Biol. Psychol. 38, 73–90. doi: 10.1016/0301-0511(94)90050-7

Amitay, S., Irwin, A., and Moore, D. R. (2006). Discrimination learning induced by training with identical stimuli. Nat. Neurosci. 9, 1446–1448. doi: 10.1038/nn1787

Amitay, S., Zhang, Y. X., Jones, P. R., and Moore, D. R. (2013). Perceptual learning: top to bottom. Vision Res. doi: 10.1016/j.visres.2013.11.006. [Epub ahead of print].

Anderson, S., White-Schwoch, T., Parbery-Clark, A., and Kraus, N. (2013). A dynamic auditory-cognitive system supports speech-in-noise perception in older adults. Hear. Res. 300, 18–32. doi: 10.1016/j.heares.2013.03.006

Atienza, M., Cantero, J. L., and Dominguez-Marin, E. (2002). The time course of neural changes underlying auditory perceptual learning. Learn. Mem. 9, 138–150. doi: 10.1101/lm.46502

Barrett, K. C., Ashley, R., Strait, D. L., and Kraus, N. (2013). Art and science: how musical training shapes the brain. Front. Psychol. 4:713. doi: 10.3389/fpsyg.2013.00713

Boothroyd, A. (2010). Adapting to changed hearing: the potential role of formal training. J. Am. Acad. Audiol. 21, 601–611. doi: 10.3766/jaaa.21.9.6

Bosnyak, D. J., Eaton, R. A., and Roberts, L. E. (2004). Distributed auditory cortical representations are modified when non-musicians are trained at pitch discrimination with 40 Hz amplitude modulated tones. Cereb. Cortex 14, 1088–1099. doi: 10.1093/cercor/bhh068

Brattico, E., Tervaniemi, M., and Picton, T. W. (2003). Effects of brief discrimination-training on the auditory N1 wave. Neuroreport 14, 2489–2492. doi: 10.1097/00001756-200312190-00039

Burk, M. H., and Humes, L. E. (2007). Effects of training on speech recognition performance in noise using lexically hard words. J. Speech Lang. Hear. Res. 50, 25–40. doi: 10.1044/1092-4388(2007/003)

Carcagno, S., and Plack, C. J. (2011). Subcortical plasticity following perceptual learning in a pitch discrimination task. J. Assoc. Res. Otolaryngol. 12, 89–100. doi: 10.1007/s10162-010-0236-1

Carhart, R. (1960). Assessment of sensorineural response in otosclerotics. AMA Arch. Otolaryngol. 71, 141–149. doi: 10.1001/archotol.1960.03770020013004

Chisolm, T. H., Saunders, G. H., Frederick, M. T., McArdle, R. A., Smith, S. L., and Wilson, R. H. (2013). Learning to listen again: the role of compliance in auditory training for adults with hearing loss. Am. J. Audiol. doi: 10.1044/1059-0889(2013/12-0081). [Epub ahead of print].

Cole, M. W., and Schneider, W. (2007). The cognitive control network: integrated cortical regions with dissociable functions. Neuroimage 37, 343–360. doi: 10.1016/j.neuroimage.2007.03.071

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Crowley, K. E., and Colrain, I. M. (2004). A review of the evidence for P2 being an independent component process: age, sleep and modality. Clin. Neurophysiol. 115, 732–744. doi: 10.1016/j.clinph.2003.11.021

Eggermont, J. J. (1995). Representation of a voice onset time continuum in primary auditory cortex of the cat. J. Acoust. Soc. Am. 98, 911–920. doi: 10.1121/1.413517

Freunberger, R., Klimesch, W., Doppelmayr, M., and Holler, Y. (2007). Visual P2 component is related to theta phase-locking. Neurosci. Lett. 426, 181–186. doi: 10.1016/j.neulet.2007.08.062

Fu, Q. J., Galvin, J., Wang, X., and Nogaki, G. (2004). Effects of auditory training on adult cochlear implant patients: a preliminary report. Cochlear Implants Int. 5(Suppl. 1), 84–90. doi: 10.1002/cii.181

Henshaw, H., and Ferguson, M. A. (2013). Efficacy of individual computer-based auditory training for people with hearing loss: a systematic review of the evidence. PLoS One 8:e62836. doi: 10.1371/journal.pone.0062836

Hillyard, S. A., Hink, R. F., Schwent, V. L., and Picton, T. W. (1973). Electrical signs of selective attention in the human brain. Science 182, 177–180. doi: 10.1126/science.182.4108.177

Irvine, D. R., and Wright, B. A. (2005). Plasticity of spectral processing. Int. Rev. Neurobiol. 70, 435–472. doi: 10.1016/s0074-7742(05)70013-1

Jacoby, N., and Ahissar, M. (2013). What does it take to show that a cognitive training procedure is useful? A critical evaluation. Prog. Brain Res. 207, 121–140. doi: 10.1016/B978-0-444-63327-9.00004-7

Kricos, P. B. (2006). Audiologic management of older adults with hearing loss and compromised cognitive/psychoacoustic auditory processing capabilities. Trends Amplif. 10, 1–28. doi: 10.1177/108471380601000102

Kühnis, J., Elmer, S., Meyer, M., and Jäncke, L. (2013). Musicianship boosts perceptual learning of pseudoword-chimeras: an electrophysiological approach. Brain Topogr. 26, 110–125. doi: 10.1007/s10548-012-0237-y

Kuriki, S., Kanda, S., and Hirata, Y. (2006). Effects of musical experience on different components of MEG responses elicited by sequential piano-tones and chords. J. Neurosci. 26, 4046–4053. doi: 10.1523/jneurosci.3907-05.2006

Kuriki, S., Ohta, K., and Koyama, S. (2007). Persistent responsiveness of long-latency auditory cortical activities in response to repeated stimuli of musical timbre and vowel sounds. Cereb. Cortex 17, 2725–2732. doi: 10.1093/cercor/bhl182

Luck, S. J., and Hillyard, S. A. (1994). Electrophysiological correlates of feature analysis during visual search. Psychophysiology 31, 291–308. doi: 10.1111/j.1469-8986.1994.tb02218.x

Macmillan, N. A., and Creelman, C. D. (1991). Detection Theory: A User’s Guide. Mahwah, NJ: Lawrence Erlbaum Associates.

McClaskey, C. L., Pisoni, D. B., and Carrell, T. D. (1983). Transfer of training of a new linguistic contrast in voicing. Percept. Psychophys. 34, 323–330. doi: 10.3758/bf03203044

Menning, H., Roberts, L. E., and Pantev, C. (2000). Plastic changes in the auditory cortex induced by intensive frequency discrimination training. Neuroreport 11, 817–822. doi: 10.1097/00001756-200003200-00032

Näätänen, R., and Winkler, I. (1999). The concept of auditory stimulus representation in cognitive neuroscience. Psychol. Bull. 125, 826–859. doi: 10.1037/0033-2909.125.6.826

Orduña, I., Liu, E. H., Church, B. A., Eddins, A. C., and Mercado, E. (2012). Evoked-potential changes following discrimination learning involving complex sounds. Clin. Neurophysiol. 123, 711–719. doi: 10.1016/j.clinph.2011.08.019

Ostroff, J. M., Martin, B. A., and Boothroyd, A. (1998). Cortical evoked response to acoustic change within a syllable. Ear Hear. 19, 290–297. doi: 10.1097/00003446-199808000-00004

Picton, T. (2013). Hearing in time: evoked potential studies of temporal processing. Ear Hear. 34, 385–401. doi: 10.1097/aud.0b013e31827ada02

Rankin, C. H., Abrams, T., Barry, R. J., Bhatnagar, S., Clayton, D. F., Colombo, J., et al. (2009). Habituation revisited: an updated and revised description of the behavioral characteristics of habituation. Neurobiol. Learn. Mem. 92, 135–138. doi: 10.1016/j.nlm.2008.09.012

Reinke, K. S., He, Y., Wang, C., and Alain, C. (2003). Perceptual learning modulates sensory evoked response during vowel segregation. Brain Res. Cogn. Brain Res. 17, 781–791. doi: 10.1016/s0926-6410(03)00202-7

Ross, B., Jamali, S., and Tremblay, K. L. (2013). Plasticity in neuromagnetic cortical responses suggests enhanced auditory object representation. BMC Neurosci. 14:151. doi: 10.1186/1471-2202-14-151

Ross, B., and Tremblay, K. (2009). Stimulus experience modifies auditory neuromagnetic responses in young and older listeners. Hear. Res. 248, 48–59. doi: 10.1016/j.heares.2008.11.012

Seppänen, M., Hämäläinen, J., Pesonen, A. K., and Tervaniemi, M. (2012). Music training enhances rapid neural plasticity of N1 and P2 source activation for unattended sounds. Front. Hum. Neurosci. 6:43. doi: 10.3389/fnhum.2012.00043

Shahin, A. J. (2011). Neurophysiological influence of musical training on speech perception. Front. Psychol. 2:126. doi: 10.3389/fpsyg.2011.00126

Shahin, A., Bosnyak, D. J., Trainor, L. J., and Roberts, L. E. (2003). Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. J. Neurosci. 23, 5545–5552.

Sheehan, K. A., McArthur, G. M., and Bishop, D. V. (2005). Is discrimination training necessary to cause changes in the P2 auditory event-related brain potential to speech sounds? Brain Res. Cogn. Brain Res. 25, 547–553. doi: 10.1016/j.cogbrainres.2005.08.007

Steinschneider, M., Volkov, I. O., Fishman, Y. I., Oya, H., Arezzo, J. C., and Howard, M. A. 3rd. (2005). Intracortical responses in human and monkey primary auditory cortex support a temporal processing mechanism for encoding of the voice onset time phonetic parameter. Cereb. Cortex 15, 170–186. doi: 10.1093/cercor/bhh120

Sullivan, J. R., Thibodeau, L. M., and Assmann, P. F. (2013). Auditory training of speech recognition with interrupted and continuous noise maskers by children with hearing impairment. J. Acoust. Soc. Am. 133, 495–501. doi: 10.1121/1.4770247

Sweetow, R. W., and Sabes, J. H. (2006). The need for and development of an adaptive listening and communication enhancement (LACE™) program. J. Am. Acad. Audiol. 17, 538–558. doi: 10.3766/jaaa.17.8.2

Tong, Y., Melara, R. D., and Rao, A. (2009). P2 enhancement from auditory discrimination training is associated with improved reaction times. Brain Res. 1297, 80–88. doi: 10.1016/j.brainres.2009.07.089

Tremblay, K. L., Billings, C., and Rohila, N. (2004). Speech evoked cortical potentials: effects of age and stimulus presentation rate. J. Am. Acad. Audiol. 15, 226–237. doi: 10.3766/jaaa.15.3.5

Tremblay, K. L., Inoue, K., McClannahan, K., and Ross, B. (2010). Repeated stimulus exposure alters the way sound is encoded in the human brain. PLoS One 5:e10283. doi: 10.1371/journal.pone.0010283

Tremblay, K., Kraus, N., Carrell, T. D., and McGee, T. (1997). Central auditory system plasticity: generalization to novel stimuli following listening training. J. Acoust. Soc. Am. 102, 3762–3773. doi: 10.1121/1.420139

Tremblay, K., Kraus, N., and McGee, T. (1998). The time course of auditory perceptual learning: neurophysiological changes during speech-sound training. Neuroreport 9, 3557–3560. doi: 10.1097/00001756-199811160-00003

Tremblay, K., Kraus, N., McGee, T., Ponton, C., and Otis, B. (2001). Central auditory plasticity: changes in the N1-P2 complex after speech-sound training. Ear Hear. 22, 79–90. doi: 10.1097/00003446-200104000-00001

Tremblay, K., and Moore, D. R. (2012). Current issues in auditory plasticity and auditory training. Transl. Perspect. Aud. Neurosci. BOOK 3, 165–189.

Tremblay, K. L., Piskosz, M., and Souza, P. (2003). Effects of age and age-related hearing loss on the neural representation of speech cues. Clin. Neurophysiol. 114, 1332–1343. doi: 10.1016/s1388-2457(03)00114-7

Tremblay, K. L., and Ross, B. (2007). Auditory rehabilitation and the aging brain. ASHA Lead. 12, 12–13.

Tremblay, K. L., Shahin, A. J., Picton, T., and Ross, B. (2009). Auditory training alters the physiological detection of stimulus-specific cues in humans. Clin. Neurophysiol. 120, 128–135. doi: 10.1016/j.clinph.2008.10.005

Van Veen, V., and Carter, C. S. (2002). The timing of action-monitoring processing in the anterior cingulate cortex. J. Cogn. Neurosci. 14, 593–602.

Wagner, M., Shafer, V. L., Martin, B., and Steinschneider, M. (2013). The effect of native-language experience on the sensory-obligatory components, the P1-N1-P2 and the T-complex. Brain Res. 1522, 31–37. doi: 10.1016/j.brainres.2013.04.045

Woldorff, M. G., and Hillyard, S. A. (1991). Modulation of early auditory processing during selective listening to rapidly presented tones. Electroencephalogr. Clin. Neurophysiol. 79, 170–191. doi: 10.1016/0013-4694(91)90136-r

Woods, D. L. (1995). The component structure of the N1 wave of the human auditory evoked potential. Electroencephalogr. Clin. Neurophysiol. Suppl. 44, 102–109.

Zhu, K. D. (2010). Neuroplastic changes during auditory perceptual learning over multiple practice sessions within and between days. Master of Arts thesis, Department of Psychology, University of Toronto. https://tspace.library.utoronto.ca/bitstream/1807/24291/3/Zhu_KuangDa_20103_MA_thesis.pdf

Keywords: auditory, training, ERP, P2, exposure, learning, rehabilitation, electrophysiology

Citation: Tremblay KL, Ross B, Inoue K, McClannahan K and Collet G (2014) Is the auditory evoked P2 response a biomarker of learning? Front. Syst. Neurosci. 8:28. doi: 10.3389/fnsys.2014.00028

Received: 28 October 2013; Accepted: 06 February 2014;

Published online: 20 February 2014.

Edited by:

Arthur Wingfield, Brandeis University, USA

Reviewed by:

Jonas Obleser, Max Planck Institute for Human Cognitive and Brain Sciences, Germany
Lisa Payne, Brandeis University, USA

Copyright © 2014 Tremblay, Ross, Inoue, McClannahan and Collet. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kelly L. Tremblay, Department of Speech and Hearing Sciences, University of Washington, Eagleson Hall, 1417 NE 42nd St., Seattle, WA 98105, USA. e-mail: tremblay@uw.edu
