- 1 Department of Psychology, University of York, York, UK

- 2 Laboratoire de Psychologie Sociale et Cognitive, Centre National de la Recherche Scientifique, University Blaise Pascal, Clermont-Ferrand, France

Many studies have repeatedly shown an orthographic consistency effect in the auditory lexical decision task. Words with phonological rimes that could be spelled in multiple ways (i.e., inconsistent words) typically produce longer auditory lexical decision latencies and more errors than do words with rimes that could be spelled in only one way (i.e., consistent words). These results have been extended to different languages and tasks, suggesting that the effect is quite general and robust. Despite this growing body of evidence, some psycholinguists believe that orthographic effects on spoken language are exclusively strategic, post-lexical, or restricted to peculiar (low-frequency) words. In the present study, we manipulated consistency and word-frequency orthogonally in order to explore whether the orthographic consistency effect extends to high-frequency words. Two different tasks were used: lexical decision and rime detection. Both tasks produced reliable consistency effects for both low- and high-frequency words. Furthermore, in Experiment 1 (lexical decision), an interaction revealed a stronger consistency effect for low-frequency words than for high-frequency words, as initially predicted by Ziegler and Ferrand (1998), whereas no interaction was found in Experiment 2 (rime detection). Our results extend previous findings by showing that the orthographic consistency effect is obtained not only for low-frequency words but also for high-frequency words. Furthermore, these effects were also obtained in a rime detection task, which does not require the explicit processing of orthographic structure. Globally, our results suggest that literacy changes the way people process spoken words, even for frequent words.

Introduction

The Influence of Orthographic Information in Spoken Language Processing

Over the last 30 years, a number of studies have provided a growing body of evidence of orthographic influences on the perception of spoken words. An early study by Seidenberg and Tanenhaus (1979; see also Donnenwerth-Nolan et al., 1981) found that rime judgments for pairs of spoken words were delayed for orthographically dissimilar words (e.g., rye–tie) compared to orthographically similar words (e.g., pie–tie). Because orthographic information is not relevant for making rime judgments, one would not expect to find evidence for the activation of orthographic information in this task1. This result suggests that some form of orthographic representation is automatically activated as a consequence of hearing a spoken word. Later studies have employed a variety of tasks to explore the influence of orthographic information in spoken language processing. For instance, Ziegler and Ferrand (1998) demonstrated that in the auditory lexical decision task, words with phonological rimes that could be spelled in multiple ways (i.e., inconsistent words such as “beak”) typically produce longer auditory lexical decision latencies and more errors than do words with rimes that could be spelled in only one way (i.e., consistent words such as “luck”). This finding, called the orthographic consistency effect, has been replicated many times in different languages (see, e.g., Ventura et al., 2004, 2007, 2008; Ziegler et al., 2004, 2008; Pattamadilok et al., 2007; Perre and Ziegler, 2008; Dich, 2011) and it strongly supports the claim that orthography affects the perception of spoken words.

Since then, orthographic effects have been found in a variety of other tasks involving spoken stimuli, such as primed lexical decision (Slowiaczek et al., 2003; Chéreau et al., 2007; Perre et al., 2009a), pseudohomophone priming (Taft et al., 2008), semantic categorization (Pattamadilok et al., 2009a), gender categorization (Peereman et al., 2009), rime detection (Ziegler et al., 2004), phoneme detection (Frauenfelder et al., 1990; Dijkstra et al., 1995; Hallé et al., 2000), and the visual-world paradigm (Salverda and Tanenhaus, 2010). Brain imaging and event-related potential (ERP) studies have also reported orthographic effects in spoken-word processing (Perre and Ziegler, 2008; Pattamadilok et al., 2009a; Perre et al., 2009a,b; Dehaene et al., 2010).

Taken together, these results demonstrate convincingly that some orthographic knowledge has a substantial influence on the processing of a spoken word. However, it remains debated whether an orthographic code is activated online whenever we hear a spoken word, or whether orthography changes the nature of phonological representations (see, e.g., Perre et al., 2009a,b; Dehaene et al., 2010; Pattamadilok et al., 2010; Dehaene and Cohen, 2011; Ranbom and Connine, 2011). According to the “online orthographic activation” hypothesis, learning to read and write would create strong and permanent associations between phonological representations used in spoken language and orthographic representations used in written language, thus orthography would be automatically activated whenever we hear a spoken word (e.g., Grainger and Ferrand, 1996; Ziegler and Ferrand, 1998). According to the “phonological restructuring” hypothesis, orthography contaminates phonology during the process of learning to read and write, thus altering the very nature of the phonological representations themselves (e.g., Muneaux and Ziegler, 2004; Ziegler and Goswami, 2005). Thus, orthography would not be activated in an online fashion but would rather influence the quality of phonological representations at an earlier stage. Of course, both effects might occur simultaneously, that is, orthography might be activated online in addition to having changed the nature of phonological representations (see, e.g., Perre et al., 2009b for such a suggestion)2.

Orthographic Consistency Effects

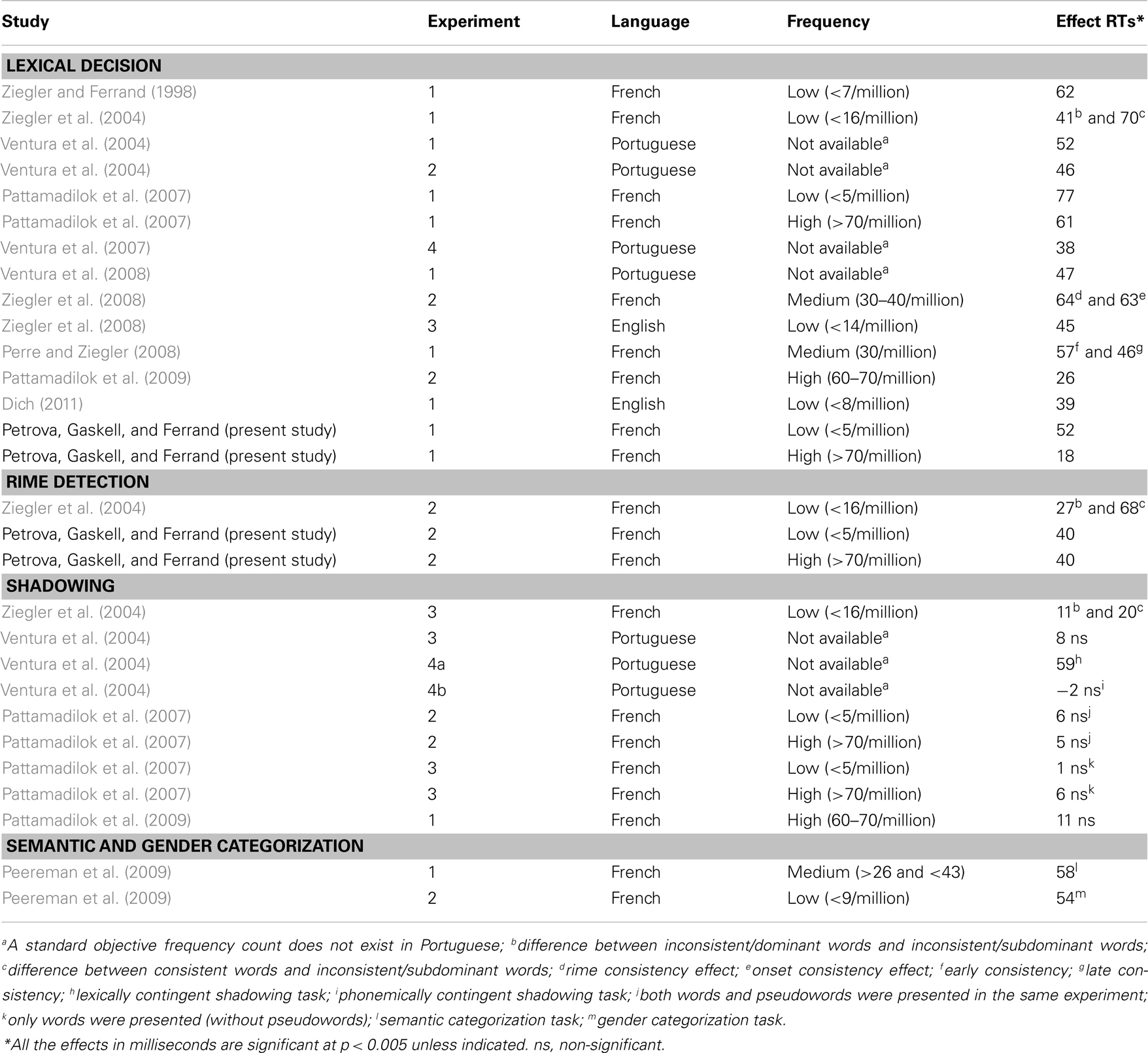

In the present article, we focus on the orthographic consistency effect initially discovered by Ziegler and Ferrand (1998). Since this demonstration, many studies have repeatedly shown an orthographic consistency effect in the auditory lexical decision task (see Table 1 for a brief summary of orthographic consistency effects with adults). These results have been extended to different languages and tasks, suggesting that the effect is quite general and robust (again, see Table 1). Furthermore, recent ERP studies have also provided information about the time course of the activation of orthographic information in spoken-word recognition (Perre and Ziegler, 2008; Pattamadilok et al., 2009a), clearly showing that orthographic information is activated rapidly and relatively early in the recognition process (see also Salverda and Tanenhaus, 2010). Indeed, two ERP studies showed that the orthographic consistency effect can be obtained in both lexical decision (Perre and Ziegler, 2008) and semantic categorization tasks (Perre et al., 2009a) as early as 300–350 ms after the stimulus onset, which is earlier in time than the word-frequency effect (a classic marker of lexical access). These findings suggest that orthography effects are not restricted to post-lexical/decisional stages but rather that the activation of orthographic information occurs early enough to affect the core processes of lexical access.

Table 1. Summary of empirical investigations of orthographic consistency effects on adults with monosyllabic words.

Despite this growing body of evidence, some psycholinguists have argued that orthographic effects on spoken language are exclusively strategic, post-lexical or restricted to peculiar (low-frequency) words (see, e.g., Taft et al., 2008; Cutler et al., 2010; Damian and Bowers, 2010).

The Present Study: Orthographic Consistency and Word-Frequency

The orthographic consistency effect has often been interpreted within the framework of a recurrent network theory proposed by Stone and Van Orden (1994; see also Van Orden and Goldinger, 1994; Stone et al., 1997). The recurrent network assumes a bi-directional flow of activation between orthography and phonology. The coupling between orthography and phonology constitutes a general mechanism not only in visual word perception but also in auditory word perception. Consistent symmetrical relations result in stable and fast activation, whereas inconsistent and asymmetrical relations slow down the system on its way to equilibrium. Thus, according to the model, inconsistency in both directions (spelling-to-sound, and sound-to-spelling) should slow down word recognition. Therefore, this model naturally predicts that sound-to-spelling inconsistency should affect spoken-word processing and this is what Ziegler and Ferrand (1998) indeed found.

Although Ziegler and Ferrand (1998) did not manipulate word frequency (all their items were low-frequency words), they predicted that the auditory consistency effect, much like the visual consistency effect, should be stronger for low-frequency words than for high-frequency words (see, e.g., Seidenberg et al., 1984). As they put it (p. 686), the recurrent network “may account for the consistency × frequency interaction, because it assumes that the greater amount of learning for high-frequency words will reinforce spelling-to-sound mappings at the biggest grain size (i.e., word level). Except with homographs and homophones, inconsistency at this level is smaller than inconsistency at the subword level. Thus, the more stable word-level grain sizes of high-frequency words can help the inconsistent words to overcome competition at subword grain sizes more efficiently.”

In the present study, we manipulated consistency and word-frequency orthogonally. The frequency manipulation had two main goals. First, we wanted to test the prediction of the recurrent network (Stone and Van Orden, 1994) assuming a significant interaction between consistency and frequency. Based on results obtained in the visual modality, in which the consistency effect was found only with low-frequency words (Seidenberg et al., 1984), Ziegler and Ferrand (1998) predicted that the auditory consistency effect should also be stronger for low-frequency than for high-frequency words. Second, we wanted to explore whether, irrespective of the presence or absence of a consistency by frequency interaction, the orthographic consistency effect extends to high-frequency words (so far, only low- or medium-frequency words have been tested; see Table 1 for a summary of the lexical decision studies). A finding of a consistency effect for high-frequency words would have an important impact on theories of spoken-word recognition because no current view predicts such a result.

We are aware of only one published study manipulating consistency and word-frequency orthogonally (Pattamadilok et al., 2007). Surprisingly, these authors found no interaction between consistency and word frequency, although the consistency effect tended to be larger for low-frequency words (77 ms) than for high-frequency words (61 ms), as predicted by Ziegler and Ferrand (1998). Our aim was to try to replicate this study by using the same materials but with an increased number of participants in order to re-examine the influence of frequency on orthographic consistency (57 participants were tested in the present experiment vs. 26 in the original study). Furthermore, we controlled for item familiarity (something that was not done in Pattamadilok et al., 2007), since it has been suggested that low-frequency inconsistent words may be rated as less familiar than low-frequency consistent words even though they are matched on objective frequency (Peereman et al., 1998).

Experiment 1: Lexical Decision

Method

Participants

Eighty-six psychology students from Paris Descartes University, France, participated in the study (aged 18–29 years; average: 21 years): 57 in the auditory lexical decision task and 29 in the familiarity rating task. All were native speakers of French and received course credit for their participation. None of the 86 participants had any hearing problems. They gave written informed consent to inclusion in this study.

Stimuli and design

The set of 80 words and 80 pseudowords used by Pattamadilok et al. (2007; see Appendix A for a full list of words) was used in the present experiment. All items were recorded by a female native French speaker3. They were digitized on a PC using Praat (Boersma and Weenink, 2004). All items were matched across conditions on at least their initial phoneme (this material was aimed at testing both lexical decision and shadowing).

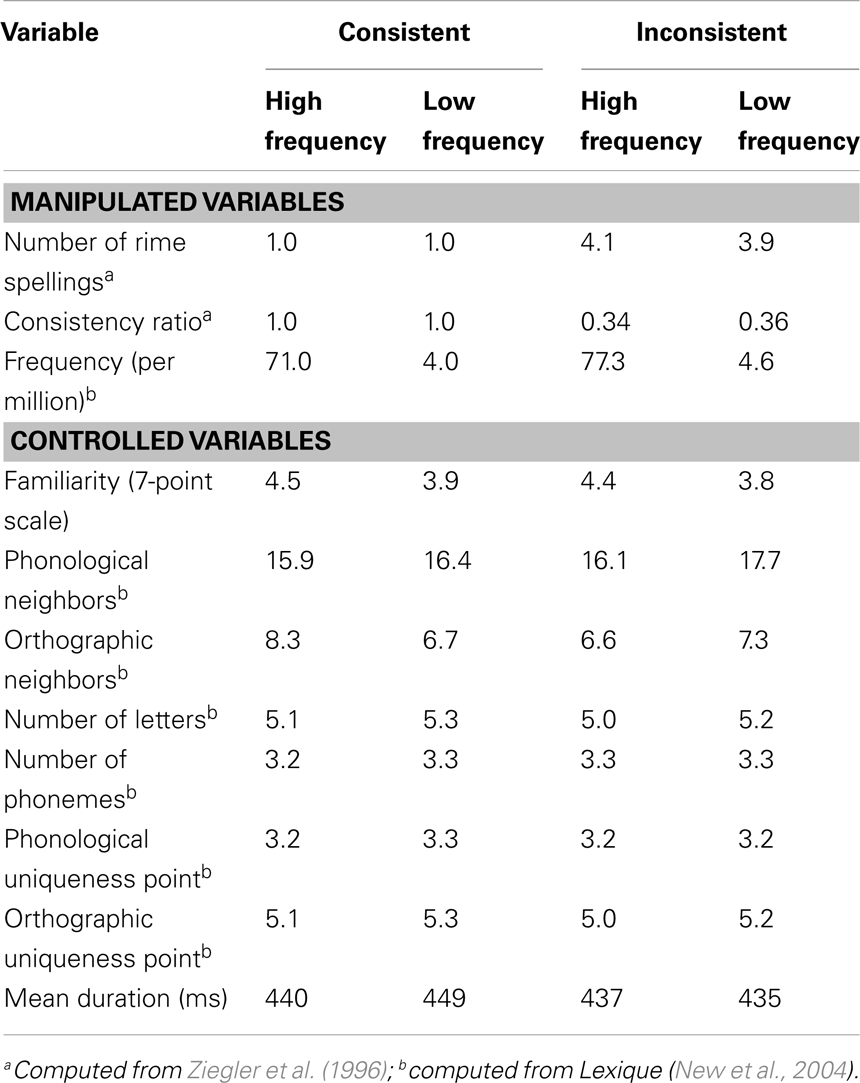

The factorial manipulation of orthographic consistency and word-frequency resulted in four groups: (1) consistent and high-frequency words (e.g., “douche”), (2) inconsistent and high-frequency words (e.g., “douce”), (3) consistent and low-frequency words (e.g., “digue”), and (4) inconsistent and low-frequency words (e.g., “dose”). Each group contained 20 words. Consistent words (with phonological rimes that are spelled in only one way) and inconsistent words (with phonological rimes that can be spelled in more than one way) were selected on the basis of Ziegler et al.’s (1996) statistical analysis of bi-directional consistency of spelling and sound in French. The stimuli were matched on the following variables across conditions (item characteristics, computed from the Lexique database; New et al., 2004, are given in Table 2): number of phonological and orthographic neighbors, number of phonemes and letters, phonological and orthographic uniqueness point, and mean duration (none of the stimuli was compressed or stretched). In the group of high-frequency words, consistent and inconsistent words were also matched for frequency (this was also the case for the group of low-frequency words). Finally, to complement the objective frequency measure, we also obtained subjective familiarity ratings (see, e.g., Peereman et al., 1998). Twenty-nine students who had not participated in the experiment rated familiarity using a 7-point scale in which 1 was very unfamiliar and 7 very familiar. The stimuli were presented auditorily and participants were asked to circle the number that corresponded best to the familiarity of the item. Mean familiarity for the four groups of items is given in Table 2. The results showed that consistent and inconsistent words did not significantly differ in rated familiarity: this is true for both high-frequency words (4.5 vs. 4.4) and low-frequency words (3.9 vs. 3.8)4. Note, however, that the high-frequency words were rated as significantly more familiar than the low-frequency words (p < 0.001). Experiment 1 was a 2 (orthographic consistency: inconsistent vs. consistent) × 2 (word frequency: high-frequency vs. low-frequency) within-participants design.

Table 2. Stimulus characteristics of words used in Experiment 1 (lexical decision) manipulating orthographic consistency and word frequency.

The 80 pseudowords were manipulated on orthographic consistency, resulting in two groups: (1) consistent pseudowords (i.e., that ended with a consistent rime), and (2) inconsistent pseudowords (i.e., that ended with an inconsistent rime). These stimuli were created by replacing the initial phoneme(s) of the critical consistent and inconsistent words, so that they retained only the critical word’s rime.

Procedure

Stimulus presentation and data collection were controlled by DMDX software (Forster and Forster, 2003) run on a PC. Participants were tested individually in a sound-proof room. The stimuli were presented to the participants at a comfortable sound level through a pair of headphones. They were instructed to listen carefully to each stimulus and to indicate as quickly and accurately as possible, by pushing one of the two response buttons on a joystick, whether the item was a real French word or a pseudoword. The participants responded on a Logitech Dual Action Gamepad, which is used for superfast computer games and does not have the time delays associated with keyboards (see, e.g., Shimizu, 2002). The clock measuring response latency was started at the onset of the auditory stimulus and was stopped when the participant responded. Each trial was preceded by a fixation cross (for 500 ms).

The 160 stimuli were presented in 10 lists. In each list, the words were presented in a pseudo-random way, with the following constraints: words or pseudowords never occurred more than three times consecutively; consistent or inconsistent stimuli never occurred more than three times consecutively; the same phonological onset or rime never occurred consecutively. To familiarize the participants with the task, the session started with a practice block of 40 trials (consisting of 20 words and 20 pseudowords). The rimes of these stimuli were different from those used in the experimental phase.
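The ordering constraints described above can be expressed as a simple check on a candidate trial order. The following is only a minimal sketch, not the procedure actually used to build the lists: the `Stimulus` record, the function names, and the onset and rime labels in the example are hypothetical and serve purely as illustration.

```python
from typing import List, NamedTuple


class Stimulus(NamedTuple):
    # Hypothetical record; onset and rime are illustrative phonological labels.
    item: str
    is_word: bool
    is_consistent: bool
    onset: str
    rime: str


def max_run(values: List[bool]) -> int:
    """Return the length of the longest run of identical consecutive values."""
    longest = current = 0
    previous = object()  # sentinel that equals no real value
    for value in values:
        current = current + 1 if value == previous else 1
        longest = max(longest, current)
        previous = value
    return longest


def satisfies_constraints(order: List[Stimulus], limit: int = 3) -> bool:
    """Check the three ordering constraints stated in the text."""
    # Words or pseudowords: no more than `limit` in a row.
    if max_run([s.is_word for s in order]) > limit:
        return False
    # Consistent or inconsistent stimuli: no more than `limit` in a row.
    if max_run([s.is_consistent for s in order]) > limit:
        return False
    # The same onset or rime never occurs on two consecutive trials.
    for first, second in zip(order, order[1:]):
        if first.onset == second.onset or first.rime == second.rime:
            return False
    return True


example = [
    Stimulus("douche", True, True, "d", "uS"),
    Stimulus("klonne", False, True, "kl", "On"),
    Stimulus("bru", True, False, "br", "y"),
]
print(satisfies_constraints(example))
```

A list generator could shuffle the items and keep the first order that passes this check, although the text does not say how the ten lists were actually produced.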

Results

Reaction times more than 2.5 SD above or below the mean RT were discarded from the response time analyses on correct responses. This was done, by participant, separately for each stimulus type (as defined by frequency and consistency), leading us to eliminate 2.21% of the RT data on words and 2.63% on pseudowords. The data from seven words were also discarded due to excessively high error rates (>50%): “brade,” “lange,” and “pagne” from the low-frequency consistent words and “bru,” “couse,” “teigne,” and “tisse” from the low-frequency inconsistent words. The four word groups were still perfectly matched on frequency and all other potentially confounding variables. The results are presented in Table 3.
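The trimming rule can be illustrated with a short sketch. It assumes that the criterion is applied within each participant × stimulus-type cell and that the sample standard deviation is used; the text does not specify the latter, and the function name and data layout are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_rts(trials, criterion=2.5):
    """Drop RTs beyond mean +/- criterion * SD within each participant x condition cell.

    `trials` is an iterable of (participant, condition, rt_ms) tuples for
    correct responses only. Using the sample SD is an assumption.
    """
    cells = defaultdict(list)
    for participant, condition, rt in trials:
        cells[(participant, condition)].append(rt)

    kept = []
    for (participant, condition), rts in cells.items():
        if len(rts) < 2:
            # An SD cannot be computed from a single RT; keep it unchanged.
            kept.extend((participant, condition, rt) for rt in rts)
            continue
        centre, spread = mean(rts), stdev(rts)
        lower, upper = centre - criterion * spread, centre + criterion * spread
        kept.extend((participant, condition, rt) for rt in rts if lower <= rt <= upper)
    return kept


# Hypothetical cell: 19 responses at 800 ms and one very slow response.
demo = [("p1", "LF-inconsistent", 800)] * 19 + [("p1", "LF-inconsistent", 2000)]
print(len(trim_rts(demo)))
```

Because the cut-offs are computed separately for each cell, a response that is slow for one participant in one condition can be removed even if it would be unremarkable for another participant.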

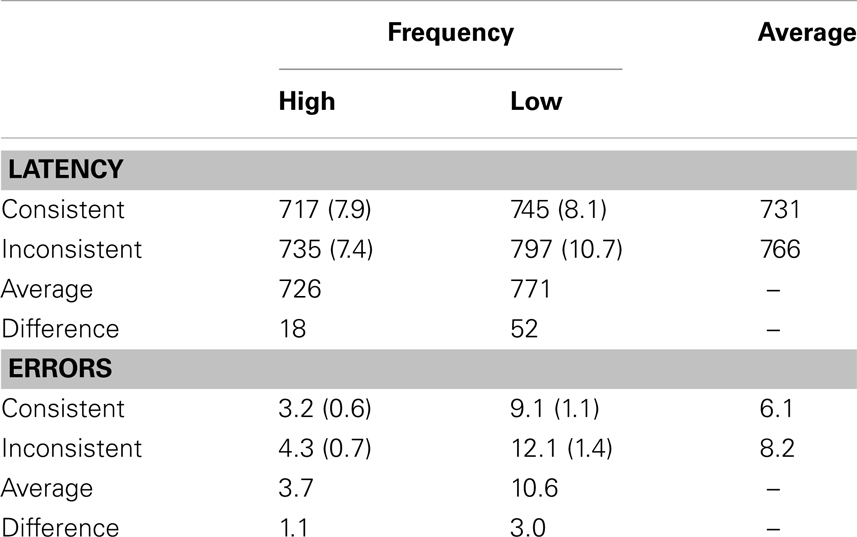

Table 3. Mean latencies (in milliseconds) and percentages of errors for an orthogonal manipulation of consistency and frequency in the auditory lexical decision task of Experiment 1 (SEs are in brackets).

Analyses of variance (ANOVAs) run by participants (F1) and by items (F2) on the RT data for word stimuli included orthographic consistency (consistent vs. inconsistent words) and word frequency (high-frequency vs. low-frequency words) as within-participant factors in the participant analyses and as between-item factors in the item analyses.
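The difference between the two analyses lies in how trial-level latencies are aggregated before the ANOVA. The sketch below shows only that aggregation step, under the assumption that cell means are simple arithmetic means; the function name, the tuple layout, and the RT values are hypothetical.

```python
from collections import defaultdict
from statistics import mean

PARTICIPANT, ITEM = 0, 1  # positions of the aggregation unit in a trial tuple


def cell_means(trials, unit_index):
    """Mean RT per (unit, consistency, frequency) cell.

    Each trial is (participant, item, consistency, frequency, rt_ms).
    """
    cells = defaultdict(list)
    for trial in trials:
        cells[(trial[unit_index], trial[2], trial[3])].append(trial[4])
    return {cell: mean(rts) for cell, rts in cells.items()}


# Hypothetical latencies for two participants and two high-frequency items.
trials = [
    ("p1", "douche", "consistent", "high", 700),
    ("p2", "douche", "consistent", "high", 720),
    ("p1", "douce", "inconsistent", "high", 730),
    ("p2", "douce", "inconsistent", "high", 750),
]

by_participant = cell_means(trials, PARTICIPANT)  # input to the F1 analysis
by_item = cell_means(trials, ITEM)                # input to the F2 analysis
print(by_participant[("p1", "consistent", "high")])
print(by_item[("douche", "consistent", "high")])
```

In the participant analysis every participant contributes a mean to each of the four cells, so both factors are within-participant. In the item analysis each word belongs to exactly one cell, which is why the same factors are treated as between-item.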

These analyses revealed an orthographic consistency effect [F1(1,56) = 62.46, $\eta_p^2$ = 0.527, p < 0.0001; F2(1,69) = 5.27, $\eta_p^2$ = 0.071, p < 0.05], with inconsistent words being processed 35 ms slower than consistent words. The frequency effect was significant as well [F1(1,56) = 110.13, $\eta_p^2$ = 0.662, p < 0.0001; F2(1,69) = 11.40, $\eta_p^2$ = 0.141, p = 0.01], with high-frequency words being processed 46 ms faster than low-frequency words. The interaction between the two factors was significant [F1(1,56) = 17.09, $\eta_p^2$ = 0.233, p = 0.001; F2(1,69) = 5.91, $\eta_p^2$ = 0.078, p < 0.05]. This interaction shows that the orthographic consistency effect was larger for low-frequency words (52 ms) than for high-frequency words (18 ms). Simple main effects tests revealed that the consistency effect for high-frequency words was significant when tested on its own [F1(1,56) = 15.69, $\eta_p^2$ = 0.218, p < 0.001; F2(1,69) = 5.82, $\eta_p^2$ = 0.077, p < 0.05], as was the case for low-frequency words [F1(1,56) = 52.08, $\eta_p^2$ = 0.481, p < 0.001; F2(1,69) = 5.18, $\eta_p^2$ = 0.069, p < 0.05].
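The effect sizes reported alongside each F statistic appear to be partial eta squared values, which can be recovered from F and its degrees of freedom as F × df(effect) / (F × df(effect) + df(error)). This reading is an inference from the reported numbers rather than something stated in the text, and the function name below is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Consistency effect on RTs in Experiment 1, by participants and by items.
print(round(partial_eta_squared(62.46, 1, 56), 3))  # 0.527
print(round(partial_eta_squared(5.27, 1, 69), 3))   # 0.071
```

For some of the other tests the recomputed value differs from the reported one in the third decimal, which is expected when the reported F values have themselves been rounded.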

Analyses on the accuracy data revealed a significant consistency effect [F1(1,56) = 7.26, $\eta_p^2$ = 0.114, p = 0.01; F2 = 1.18], with inconsistent words producing more errors than consistent words. The frequency effect was also significant [F1(1,56) = 44.59, $\eta_p^2$ = 0.443, p < 0.0001; F2(1,69) = 13.06, $\eta_p^2$ = 0.159, p = 0.001], with high-frequency words producing fewer errors than low-frequency words. The interaction between consistency and frequency was not significant (F1 = 1.44; F2 < 1).

For pseudowords, two items were excluded due to high error rates (>35%; “klonne” in the consistent condition and “vierre” in the inconsistent condition). The analyses revealed no consistency effect in the RT (834 vs. 846 ms for consistent and inconsistent pseudowords respectively; F1 and F2 < 1) and the accuracy data (2.18 vs. 2.99 %ER for consistent and inconsistent pseudowords respectively; F1 = 1.78; F2 < 1).

Discussion

In Experiment 1, word frequency and orthographic consistency were manipulated orthogonally. The goal of this experiment was twofold: (1) examine whether word frequency and orthographic consistency interact; and (2) determine whether orthographic consistency affects the processing of high-frequency words. As predicted by Ziegler and Ferrand (1998), we found an interaction between consistency and frequency: the consistency effect was obtained for both low- and high-frequency words, but it was three times as large for low-frequency words as for high-frequency words. This contrasts with Pattamadilok et al.’s (2007) results showing no interaction between these factors (although there was a tendency in their data; see Table 1)5. Note that in the present study, we tested 57 participants whereas Pattamadilok et al. had 26. It is therefore possible that the interaction remained undetected due to insufficient statistical power in the study conducted by Pattamadilok et al. (2007). Furthermore, because it had been suggested (Peereman et al., 1998) that low-frequency inconsistent words may be rated as less familiar than low-frequency consistent words even though they are matched on objective frequency, we had participants rate Pattamadilok et al.’s stimuli for familiarity. The results showed that consistent and inconsistent words did not significantly differ in rated familiarity (see Table 2). This suggests that the present consistency effects (obtained for both low-frequency and high-frequency words) are not reducible to simple differences in rated familiarity. Objective word frequency also had a reliable effect on decision latencies (as is usually the case in auditory word recognition; see Cleland et al., 2006). Finally, there was no consistency effect for pseudowords (in agreement with Ziegler and Ferrand, 1998; Ventura et al., 2004, 2007; Dich, 2011; but see Pattamadilok et al., 2009b; see also Taft, 2011, for an in-depth discussion).

Apart from Pattamadilok et al.’s (2007) study, no other studies have examined the influence of frequency on consistency (see Table 1). Here, we not only report a frequency by consistency interaction but we also show the influence of orthographic consistency on the processing of high-frequency words. The finding of a consistency effect for high-frequency words has interesting theoretical implications (see General Discussion).

In sum, the results of this experiment generalize the consistency effect to high-frequency words. This result allows us to eliminate the hypothesis according to which during auditory word recognition, orthography is activated only with peculiar (i.e., low-frequency) words.

Experiment 2: Rime Detection

In this and previous studies (Ziegler and Ferrand, 1998; Ventura et al., 2004; Ziegler et al., 2004, 2008; Pattamadilok et al., 2007, 2009b; Perre and Ziegler, 2008; Dich, 2011), the auditory lexical decision task was used to investigate the consistency effect. One could argue that the lexical decision task is sufficiently difficult and unusual that participants might try to “visualize” the spoken word in order to improve task performance (see Taft et al., 2008; Cutler et al., 2010, for such a criticism). Thus, it cannot be ruled out that participants use an orthographic checking mechanism in a strategic way in the lexical decision task. In order to address this potential criticism, we attempted to replicate the present effects in a less difficult auditory task.

The rime detection task6 is an interesting candidate since, as suggested by Ziegler et al. (2004), it is a purely phonological task and a participant does not have to be literate to do the task. Furthermore, it is quite easy, because the rime, unlike the phoneme, is an easily accessible unit in speech perception (Kirtley et al., 1989; Goswami, 1999). Finally, orthographic consistency effects (for low-frequency words) on rime judgments have been found in previous studies (see, e.g., Ziegler et al., 2004). Furthermore, the orthographic endings of words have been found to influence performance on auditory rime decisions in both adults and children (Seidenberg and Tanenhaus, 1979; Donnenwerth-Nolan et al., 1981; Cone et al., 2008; Desroches et al., 2010; but see Damian and Bowers, 2010). Given that tasks such as auditory rime decision only require processing in the phonological domain, these findings suggest that orthographic representations are activated automatically during spoken language processing.

As in Experiment 1, word frequency and orthographic consistency were manipulated orthogonally. On each trial, participants were presented auditorily with a target rime followed by a target word. On half of the trials, the target rime was present in the word, and on the other half the target rime was absent.

Method

Participants

Fifty additional psychology students from Paris Descartes University participated in the rime detection task (aged 18–27 years; average: 21 years). All were native speakers of French and received course credit for their participation. None of the participants had any hearing problems. They gave written informed consent to inclusion in this study.

Stimuli and design

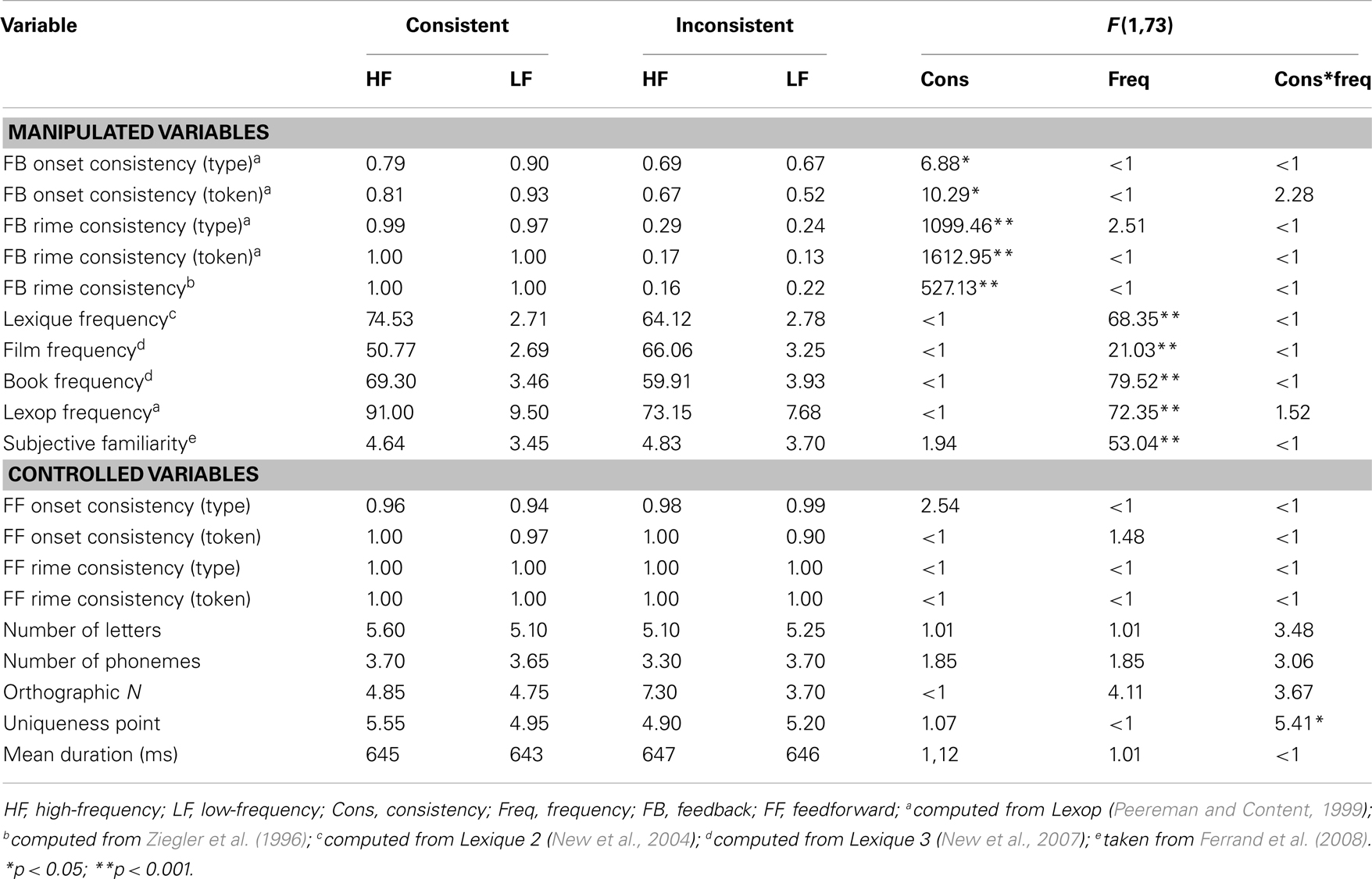

We used the same design as in Experiment 1, but with different stimuli for the purpose of the rime detection task. Item characteristics are presented in Table 4, and all items are listed in Appendix B. As can be seen in Table 4, the stimuli used in Experiment 2 had very similar characteristics to those tested in Experiment 1.

Table 4. Stimulus characteristics of words used in Experiment 2 (rime detection) manipulating orthographic consistency and word frequency.

The factorial manipulation of orthographic consistency and word-frequency resulted in four groups: (1) consistent and high-frequency words (e.g., “lune”), (2) inconsistent and high-frequency words (e.g., “gare”), (3) consistent and low-frequency words (e.g., “cuve”), and (4) inconsistent and low-frequency words (e.g., “puce”). Each group contained 20 words. Consistent words (with phonological rimes that are spelled in only one way) and inconsistent words (with phonological rimes that can be spelled in more than one way) were selected on the basis of Peereman and Content’s (1999) and Ziegler et al.’s (1996) statistical analyses of bi-directional consistency of spelling and sound in French. The stimuli were matched on the following variables across conditions (item characteristics, computed from the Lexique database; New et al., 2004, 2007, are given in Table 4): feedforward consistency, number of phonemes and letters, number of orthographic neighbors, uniqueness point, and mean duration (none of the stimuli was compressed or stretched). In the group of high-frequency words, consistent and inconsistent words were also matched for frequency (this was also the case for the group of low-frequency words). Finally, to complement the objective frequency measure, we also provide the subjective familiarity ratings (taken from Ferrand et al., 2008). Mean familiarity for the four groups of items is given in Table 4. The results showed that consistent and inconsistent words did not significantly differ in rated familiarity: this is true for both high-frequency words (4.6 vs. 4.8) and low-frequency words (3.5 vs. 3.7). Note, however, that, as in Experiment 1, the high-frequency words were rated as significantly more familiar than the low-frequency words (p < 0.001). Experiment 2 was a 2 (orthographic consistency: inconsistent vs. consistent) × 2 (word frequency: high-frequency vs. low-frequency) within-participants design.

Procedure

The procedure used was identical to the one used by Ziegler et al. (2004). Stimulus presentation and data collection were controlled by DMDX software (Forster and Forster, 2003) running on a PC. The stimuli were presented to the participants at a comfortable sound level through a pair of headphones. At the end of the auditory rime and after a delay of 50 ms, the target was presented. The participants were instructed to judge as quickly and as accurately as possible whether the auditorily presented rime was present or absent in the following French word. The participants gave their responses by pressing either the “yes” or the “no” button on a Logitech Dual Action Gamepad. The participants were tested individually in a sound-proof room. They were first given 20 practice trials. No feedback was provided during the experiment.

Results

Reaction times more than 2.5 SD above or below the mean RT were discarded from the response time analyses on correct responses. This was done, by participant, separately for each stimulus type (as defined by frequency and consistency), leading us to eliminate 1.93% of the RT data. The data from three words were also discarded due to excessively high error rates: “lourd,” “chance,” and “gare” from the high-frequency inconsistent words. The four word groups were still perfectly matched on frequency and all other potentially confounding variables. The results are presented in Table 5.

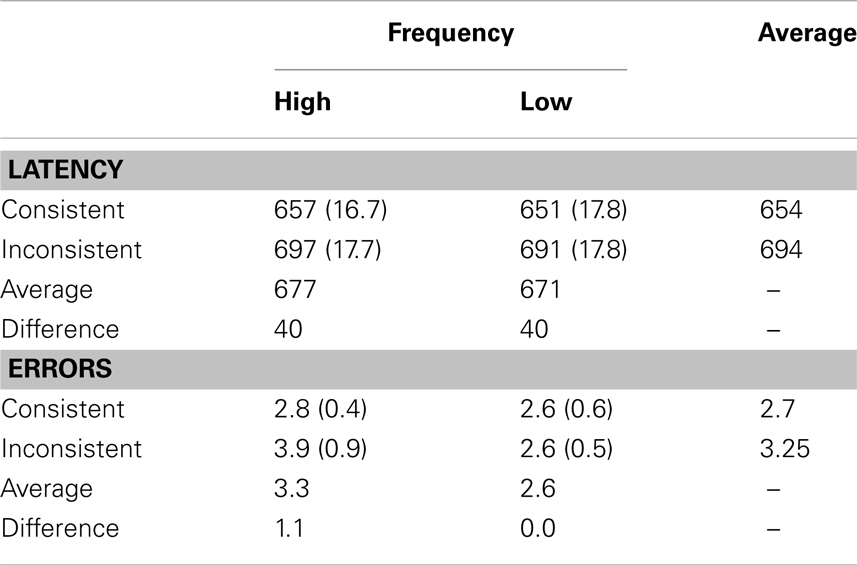

Table 5. Mean latencies (in milliseconds) and percentages of errors for an orthogonal manipulation of consistency and frequency in the auditory rime detection task of Experiment 2 (SEs are in brackets).

The ANOVAs run by participants (F1) and by items (F2) on the RT data for word stimuli included orthographic consistency (consistent vs. inconsistent words) and word frequency (high-frequency vs. low-frequency words) as within-participant factors in the participant analyses and as between-item factors in the item analyses.

These analyses revealed an orthographic consistency effect [F1(1,49) = 89.95, $\eta_p^2$ = 0.647, p < 0.0001; F2(1,73) = 8.79, $\eta_p^2$ = 0.107, p < 0.005], with inconsistent words being processed 40 ms slower than consistent words. The frequency effect was non-significant (F1 = 2.41; F2 < 1), as was the interaction between the two factors (F1 and F2 < 1). Simple main effects tests revealed that the consistency effect for high-frequency words was significant when tested on its own [40 ms; F1(1,49) = 40.16, $\eta_p^2$ = 0.450, p < 0.0001; F2(1,73) = 4.30, $\eta_p^2$ = 0.055, p < 0.05]. It was also the case for low-frequency words [40 ms; F1(1,49) = 36.77, $\eta_p^2$ = 0.428, p < 0.0001; F2(1,73) = 4.49, $\eta_p^2$ = 0.057, p < 0.05].

Analyses on the accuracy data revealed no consistency effect (F1 = 1; F2 < 1) and no frequency effect (F1 = 2.58; F2 = 1.71). The interaction between consistency and frequency was not significant (F1 and F2 < 1).
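The effect sizes reported with the RT analyses above can be checked against the standard relation between partial eta squared and an F ratio, F·df_effect / (F·df_effect + df_error). Reading the reported values as partial eta squared is an inference from the numbers rather than something stated in the text, so the sketch below should be taken as a consistency check under that assumption.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, effect size as reported in the text)
reported = [
    ("consistency, F1", 89.95, 1, 49, 0.647),
    ("consistency, F2", 8.79, 1, 73, 0.107),
    ("high-frequency words, F1", 40.16, 1, 49, 0.450),
    ("high-frequency words, F2", 4.30, 1, 73, 0.055),
    ("low-frequency words, F1", 36.77, 1, 49, 0.428),
    ("low-frequency words, F2", 4.49, 1, 73, 0.057),
]

for label, f_value, df_effect, df_error, reported_value in reported:
    computed = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: computed {computed:.4f}, reported {reported_value}")
```

Under this assumption, each computed value agrees with the reported one to within about 0.001, a gap that rounding of the published F ratios could account for.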

Discussion

In Experiment 2, word frequency and orthographic consistency were manipulated orthogonally. A consistency effect was obtained for both low- and high-frequency words, and it was equivalent in magnitude for the two frequency classes. This contrasts with the results of Experiment 1, which showed a significant interaction between consistency and frequency. This absence of interaction might be explained by the lack of a frequency effect in this task (see Footnote 7). However, the finding that the orthographic consistency effect (obtained for both low- and high-frequency words) is present in rime detection, and is therefore not confined to the lexical decision task, suggests that the effect is robust and not strategic. Most importantly, a significant effect of consistency was found for high-frequency words once again.

General Discussion

The results of the present experiments demonstrate that high-frequency words, as well as low-frequency words, produced significant effects of orthographic consistency in lexical decision and rime detection tasks. In Experiment 1 (lexical decision), the magnitude of the consistency effect was three times as large for low-frequency words as for high-frequency words, whereas in Experiment 2 (rime detection), this magnitude was similar for high- and low-frequency words. Overall, these results suggest that high-frequency words are not immune to effects of orthographic consistency.

The Loci of the Consistency Effect

Previous studies with skilled adult readers have shown that the orthographic consistency effect is obtained preferentially with stimuli and/or in situations that involve lexical activation (Ziegler and Ferrand, 1998; Ventura et al., 2004; Ziegler et al., 2004; Pattamadilok et al., 2007). First, the effect is usually observed only for words and not for pseudowords (e.g., the present study; Ziegler and Ferrand, 1998; Ventura et al., 2004, 2007, 2008; Dich, 2011). Second, Ziegler et al. (2004) found that the size of the consistency effect decreased across tasks (lexical decision > rime detection > shadowing) because these were likely to rely less and less on accessing lexical representations. It therefore seems that the influence of orthography in spoken-word recognition is stronger when lexical representations are involved. However, the results of Experiment 2 (rime detection) also suggest a sublexical locus of the consistency effect. In the rime detection task, access to the lexicon might be beneficial for segmenting words into onsets and rimes, but it is not strictly required for performing the task. In the present experiments, consistency effects were no smaller in rime detection than in lexical decision. Recent findings suggest that the involvement of orthography in spoken-word recognition also occurs at the sublexical level, since orthographic information starts to be activated before the listener has heard the end of the word (Perre and Ziegler, 2008; Pattamadilok et al., 2009a). This is consistent with the results of Experiment 2 (rime detection), in which a robust consistency effect was obtained without lexical involvement (indexed by the lack of a frequency effect), suggesting that the effect occurred at the sublexical level in this task. Taken together, our results suggest both a sublexical and a lexical locus of the consistency effect.

Implications for Theories of Word Perception

What are the implications of our results for the recurrent network theory of word perception proposed by Stone and Van Orden (1994; see also Van Orden and Goldinger, 1994; Stone et al., 1997)? In their recurrent network, the flow of activation between orthography and phonology is inherently bi-directional. The presentation of a spoken word activates phoneme nodes, which in turn activate letter nodes. Similarly, the visual presentation of a word activates letter nodes, which in turn activate phoneme nodes. Following initial activation, recurrent feedback begins between these two node families. Whenever the activation that is sent is consistent (compatible) with the activation that is returned, nodes conserve and strengthen their activation in relatively exclusive and stable feedback loops. The capacity to conserve and strengthen activation thus depends on the consistency of the coupling between phonology and orthography. Such a model naturally explains why sound-to-spelling inconsistency affects the perception of spoken words.
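The feedback-loop idea can be illustrated with a deliberately simple toy, which is not an implementation of the Stone and Van Orden framework. The update rule, the parameter values, and the function name are all assumptions made for this sketch: activation sent from a phonological rime is split evenly across its candidate spellings, and only the share reaching the target spelling is returned as compatible feedback.

```python
def cycles_to_threshold(n_spellings, rate=0.5, start=0.1,
                        threshold=0.9, max_cycles=1000):
    """Count feedback cycles until a rime node's activation reaches threshold.

    Toy assumptions (not taken from the cited model):
    - activation sent to orthography is divided evenly over `n_spellings`;
    - only the target spelling's share comes back as compatible feedback;
    - activation grows in proportion to that feedback and saturates at 1.
    Returns None if the threshold is not reached within `max_cycles`.
    """
    if n_spellings < 1:
        raise ValueError("n_spellings must be at least 1")
    activation = start
    for cycle in range(1, max_cycles + 1):
        feedback = activation / n_spellings
        activation += rate * feedback * (1.0 - activation)
        if activation >= threshold:
            return cycle
    return None


consistent = cycles_to_threshold(n_spellings=1)    # rime with one spelling
inconsistent = cycles_to_threshold(n_spellings=3)  # rime with three spellings
print(consistent, inconsistent)
```

In this toy, a rime with several possible spellings needs more cycles to stabilize than a rime with a single spelling, which is the qualitative pattern the resonance account attributes to inconsistent words.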

In this framework, it is not clear however whether high-frequency words are expected to show smaller effects of consistency than low-frequency words or whether the effect of orthographic consistency is thought to be absent for high-frequency words. The recurrent network (whose performance is characterized by the asymptotic learning principle described in Van Orden et al., 1990) would necessarily produce diminishing effects of consistency with increasing exposure to a word. Explicit simulation is needed to determine whether the processing dynamics of such a recurrent network would predict the residual consistency effect on high-frequency words (and indeed such implementation has not yet been carried out for spoken-word recognition).

The orthographic consistency effect has also been explained within the framework of the bimodal interactive activation model of word recognition proposed by Grainger and Ferrand (1994, 1996; see also Ferrand and Grainger, 2003; Grainger et al., 2003; Ziegler et al., 2003; Grainger and Holcomb, 2009). This model assumes the existence of feedback (sound-to-spelling) and feedforward (spelling-to-sound) connections between the phonological and orthographic representations at both lexical and sublexical levels (see Footnote 8). In auditory tasks, inconsistent words are at a disadvantage compared to consistent words because the sublexical phonological representation activated by an inconsistent phonological rime would activate several spellings that are incompatible with the orthographic representation of the target. This orthographic inconsistency would slow down and/or make less precise the activation of the phonological representation of inconsistent spoken words. The present results show that this applies also to high-frequency words. In the bimodal interactive activation model, high-frequency words are expected to show effects of consistency because the effects can take place at both a sublexical and a lexical level.

The present results support a theory according to which orthographic information is activated online in spoken-word recognition (Stone and Van Orden, 1994; Van Orden and Goldinger, 1994; Grainger and Ferrand, 1996; Ziegler and Ferrand, 1998; Ziegler et al., 2003; Perre and Ziegler, 2008; Pattamadilok et al., 2009a). According to this view, the existence of strong functional links between spoken and written word forms automatically activates visual/orthographic representations of words. For inconsistent spellings, this gives rise to competition at the visual/orthographic level which slows responses relative to words with consistent spellings. However, these results are also consistent with the phonological restructuration hypothesis according to which phonological representations are contaminated by orthographic representations (Perre et al., 2009b; Pattamadilok et al., 2010). According to this view, learning to read alters preexisting phonological representations, in such a way that literacy restructures phonological representations and introduces an advantage for words with consistent spellings that arises at a purely phonological level. Orthographically consistent words would develop better and finer-grained phonological representations than do inconsistent words in the course of reading development. Although many studies (including the present one; see also Table 1) provide strong evidence for early and automatic activation of orthographic information in spoken-word recognition, at the moment, none of them is able to tease apart these hypotheses (note however that they need not be mutually exclusive).

Turning to the models specifically developed for spoken-word recognition, none of them allows orthographic knowledge to affect performance. For instance, in TRACE (McClelland and Elman, 1986), the Neighborhood Activation Model (Luce et al., 2000), and MERGE (Norris et al., 2000), spoken words are perceived without reference to their orthography. Such models need to be seriously revised in order to integrate the influence of orthographic knowledge in spoken-word recognition (see Taft, 2011, for such an endeavor).

Conclusion

In conclusion, the present results show that (1) for skilled adult readers, all words (even high-frequency words) are affected by orthographic consistency; (2) consistency effects are not restricted to the lexical decision task; and (3) learning about orthography permanently alters the way we perceive spoken language, even for frequent words. However, much remains to be discovered regarding how orthography alters spoken language. An important challenge for future research is to determine whether an orthographic code is activated online whenever we hear a spoken word, or whether orthography changes the nature of phonological representations (e.g., Perre et al., 2009a,b; Dehaene et al., 2010; Pattamadilok et al., 2010).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

- ^Although many have argued that orthographic information may influence such metaphonological judgments.

- ^Note that the current study was not designed to distinguish between these two possibilities.

- ^We would like to thank Chotiga Pattamadilok for sending us the auditory stimuli. However, because these stimuli were recorded by a Belgian speaker, we decided to record them again with a Parisian speaker.

- ^Another analysis based on visual subjective frequency (Ferrand et al., 2008) confirmed these results. Consistent and inconsistent words did not significantly differ in rated subjective frequency: for high-frequency words (4.5 vs. 4.6) and for low-frequency words (3.9 vs. 3.9; all Fs < 1).

- ^On average, French participants were 165 ms faster (for words) than Belgian participants; it is therefore possible that the interaction between frequency and consistency only emerges for fast participants. In her Ph.D. thesis, Pattamadilok (2006, p. 80) reports an analysis of Pattamadilok et al.’s (2007) Experiment 1 on the processing speed of their participants (separating the participants into two groups of fast vs. slow respondents, using the median of the overall RTs as the cut-off point). She found that the interaction between consistency and frequency was significant only in the fast group but not in the slow group. In the fast group, although the consistency effect was significant for both high- and low-frequency words, it was significantly larger [F(1,12) = 6.7, p < 0.025] for low- than for high-frequency words (77 vs. 45 ms respectively). In the slow group however, the size of the effect was the same on high- and low-frequency words (77 ms). We have conducted the same analysis on processing speed (although our participants are globally fast compared to Pattamadilok et al.’s participants). There was no interaction between speed and consistency [F(1,54) = 1.96]. However, the consistency × frequency interaction was significant in both groups. In the fast group (n = 28), the consistency effect was significant for both high- and low-frequency words, but it was significantly larger [F(1,27) = 5.31, p < 0.05] for low- than for high-frequency words (38 vs. 18 ms respectively). Similarly, in the slow group (n = 28), the consistency effect was significant for both high- and low-frequency words, but again it was significantly larger [F(1,27) = 13.41, p < 0.005] for low- than for high-frequency words (66 vs. 17 ms respectively). Finally, the consistency × frequency × speed interaction approached significance [F(1,54) = 3.43, p = 0.069], suggesting that, if anything, the consistency × frequency interaction tended to be stronger for the slow group.

- ^The rime detection task we used was the one developed by Ziegler et al. (2004) and as such, it does not suffer from the limitations raised by Damian and Bowers (2010).

- ^Note that the same stimuli tested in visual lexical decision exhibited a robust frequency effect when RTs were taken from the French Lexicon Project (Ferrand et al., 2010). On average, high-frequency words were responded to faster than low-frequency words [624 vs. 676 ms: F(1,73) = 27.55, p < 0.001]. The absence of a frequency effect might therefore be attributed to the rime detection task being shallower than the lexical decision task (see Ziegler et al., 2004, for such a proposal).

- ^Note however that Ziegler et al. (2008) found evidence for feedback connections to be restricted to the auditory modality (but see Barnhart and Goldinger, 2010).

References

Barnhart, A. S., and Goldinger, S. D. (2010). Interpreting chicken-scratch: lexical access for handwritten words. J. Exp. Psychol. Hum. Percept. Perform. 36, 906–923.

Boersma, P., and Weenink, D. (2004). Praat: Doing Phonetics by Computer. Available at: http://www.fon.hum.uva.nl/praat/

Chéreau, C., Gaskell, M. G., and Dumay, N. (2007). Reading spoken words: orthographic effects in auditory priming. Cognition 102, 341–360.

Cleland, A. A., Gaskell, M. G., Quinlan, P. T., and Tamminen, J. (2006). Frequency effects in spoken and visual word recognition: evidence from dual-task methodologies. J. Exp. Psychol. Hum. Percept. Perform. 32, 104–119.

Cone, N. E., Burman, D. D., Bitan, T., Bolger, D. J., and Booth, J. R. (2008). Developmental changes in brain regions involved in language processing. Neuroimage 41, 623–635.

Cutler, A., Treiman, R., and van Ooijen, B. (2010). Strategic deployment of orthographic knowledge in phoneme detection. Lang. Speech 53, 307–320.

Damian, M. F., and Bowers, J. S. (2010). Orthographic effects in rhyme monitoring tasks: are they automatic? Eur. J. Cogn. Psychol. 22, 106–116.

Dehaene, S., and Cohen, L. (2011). The unique role of the visual word form area in reading. Trends Cogn. Sci. (Regul. Ed.) 15, 254–262.

Dehaene, S., Pegado, F., Braga, L. W., Ventura, P., Filho, G. B., Jobert, A., Dehaene-Lambertz, G., Kolinsky, R., Morais, J., and Cohen, L. (2010). How learning to read changes the cortical networks for vision and language. Science 330, 1359–1364.

Desroches, A. S., Cone, N. E., Bolger, D. J., Bitan, T., Burman, D. D., and Booth, J. R. (2010). Children with reading difficulties show differences in brain regions associated with orthographic processing during spoken language processing. Brain Res. 1356, 73–84.

Dich, N. (2011). Individual differences in the size of orthographic effects in spoken word recognition: the role of listeners’ orthographic skills. Appl. Psycholinguist. 32, 169–186.

Dijkstra, T., Roelofs, A., and Fieuws, S. (1995). Orthographic effects on phoneme monitoring. Can. J. Exp. Psychol. 49, 264–271.

Donnenwerth-Nolan, S., Tanenhaus, M. K., and Seidenberg, M. S. (1981). Multiple code activation in word recognition: evidence from rhyme monitoring. J. Exp. Psychol. Hum. Learn. 7, 170–180.

Ferrand, L., Bonin, P., Méot, A., Augustinova, M., New, B., Pallier, C., and Brysbaert, M. (2008). Age of acquisition and subjective frequency estimates for all generally known monosyllabic French words and their relation with other psycholinguistic variables. Behav. Res. Methods 40, 1049–1054.

Ferrand, L., and Grainger, J. (2003). Homophone interference effects in visual word recognition. Q. J. Exp. Psychol. 56A, 403–419.

Ferrand, L., New, B., Brysbaert, M., Keuleers, E., Bonin, P., Méot, A., Augustinova, M., and Pallier, C. (2010). The French Lexicon Project: lexical decision data for 38,840 French words and 38,840 pseudowords. Behav. Res. Methods 42, 488–496.

Forster, K. I., and Forster, J. C. (2003). DMDX: a windows display program with millisecond accuracy. Behav. Res. Methods Instrum. Comput. 35, 116–124.

Frauenfelder, U. H., Segui, J., and Dijkstra, T. (1990). Lexical effects in phonemic processing: facilitatory or inhibitory? J. Exp. Psychol. Hum. Percept. Perform. 16, 77–91.

Goswami, U. (1999). Causal connections in beginning reading: the importance of rhyme. J. Res. Read. 22, 217–240.

Grainger, J., Diependaele, K., Spinelli, E., Ferrand, L., and Farioli, F. (2003). Masked repetition and phonological priming within and across modalities. J. Exp. Psychol. Learn. Mem. Cogn. 29, 1256–1269.

Grainger, J., and Ferrand, L. (1994). Phonology and orthography in visual word recognition: effects of masked homophone primes. J. Mem. Lang. 33, 218–233.

Grainger, J., and Ferrand, L. (1996). Masked orthographic and phonological priming in visual word recognition and naming: cross-task comparisons. J. Mem. Lang. 35, 623–647.

Grainger, J., and Holcomb, P. J. (2009). Watching the word go by: on the time-course of component processes in visual word recognition. Lang. Linguist. Compass 3, 128–156.

Hallé, P. A., Chéreau, C., and Segui, J. (2000). Where is the/b/in “absurde” [apsyrd]? It is in French listeners’ minds. J. Mem. Lang. 43, 618–639.

Kirtley, C., Bryant, P., MacLean, M., and Bradley, L. (1989). Rhyme, rime, and the onset of reading. J. Exp. Child Psychol. 48, 224–245.

Luce, P. A., Goldinger, S. D., Auer, E. T., and Vitevitch, M. S. (2000). Phonetic priming, neighborhood activation, and PARSYN. Percept. Psychophys. 62, 615–625.

McClelland, J. L., and Elman, J. L. (1986). The TRACE model of speech perception. Cogn. Psychol. 18, 1–86.

Muneaux, M., and Ziegler, J. (2004). Locus of orthographic effects in spoken word recognition: novel insights from the neighbor generation task. Lang. Cogn. Process. 19, 641–660.

New, B., Brysbaert, M., Véronis, J., and Pallier, C. (2007). The use of film subtitles to estimate word frequencies. Appl. Psycholinguist. 28, 661–677.

New, B., Pallier, C., Brysbaert, M., and Ferrand, L. (2004). Lexique 2: a new French lexical database. Behav. Res. Methods Instrum. Comput. 36, 516–524.

Norris, D., McQueen, J. M., and Cutler, A. (2000). Merging information in speech recognition: feedback is never necessary. Behav. Brain Sci. 23, 299–325.

Pattamadilok, C. (2006). Orthographic Effects on Speech Processing: Studies on the Conditions of Occurrence. Ph.D., Faculté des Sciences Psychologiques et de l’Education, Université Libre de Bruxelles, Bruxelles.

Pattamadilok, C., Knierim, I. N., Duncan, K. J. K., and Devlin, J. T. (2010). How does learning to read affect speech perception? J. Neurosci. 30, 8435–8444.

Pattamadilok, C., Morais, J., Ventura, P., and Kolinsky, R. (2007). The locus of the orthographic consistency effect in auditory word recognition: further evidence from French. Lang. Cogn. Process. 22, 700–726.

Pattamadilok, C., Perre, L., Dufau, S., and Ziegler, J. C. (2009a). On-line orthographic influences on spoken language in a semantic task. J. Cogn. Neurosci. 21, 169–179.

Pattamadilok, C., Morais, J., De Vylder, O., Ventura, P., and Kolinsky, R. (2009b). The orthographic consistency effect in the recognition of French spoken words: an early developmental shift from sublexical to lexical orthographic activation. Appl. Psycholinguist. 30, 441–462.

Peereman, R., and Content, A. (1999). LEXOP: a lexical database providing orthography-phonology statistics for French monosyllabic words. Behav. Res. Methods Instrum. Comput. 31, 376–379.

Peereman, R., Content, A., and Bonin, P. (1998). Is perception a two-way street? The case of feedback consistency in visual word recognition. J. Mem. Lang. 39, 151–174.

Peereman, R., Dufour, S., and Burt, J. S. (2009). Orthographic influences in spoken word recognition: the consistency effect in semantic and gender categorization tasks. Psychon. Bull. Rev. 16, 363–368.

Perre, L., Midgley, K., and Ziegler, J. C. (2009a). When beef primes reef more than leaf: orthographic information affects phonological priming in spoken word recognition. Psychophysiology 46, 739–746.

Perre, L., Pattamadilok, C., Montant, M., and Ziegler, J. C. (2009b). Orthographic effects in spoken language: on-line activation or phonological restructuring? Brain Res. 1275, 73–80.

Perre, L., and Ziegler, J. C. (2008). On-line activation of orthography in spoken word recognition. Brain Res. 1188, 132–138.

Ranbom, L. J., and Connine, C. M. (2011). Silent letters are activated in spoken word recognition. Lang. Cogn. Process. 26, 236–261.

Salverda, A. P., and Tanenhaus, M. K. (2010). Tracking the time course of orthographic information in spoken-word recognition. J. Exp. Psychol. Learn. Mem. Cogn. 36, 1108–1117.

Seidenberg, M. S., and Tanenhaus, M. K. (1979). Orthographic effects on rhyme monitoring. J. Exp. Psychol. Hum. Learn. 5, 546–554.

Seidenberg, M. S., Waters, G. S., Barnes, M. A., and Tanenhaus, M. K. (1984). When does irregular spelling or pronunciation influence word recognition? J. Verbal Learn. Verbal Behav. 23, 383–404.

Shimizu, H. (2002). Measuring keyboard response delays by comparing keyboard and joystick inputs. Behav. Res. Methods Instrum. Comput. 34, 250–256.

Slowiaczek, L. M., Soltano, E. G., Wieting, S. J., and Bishop, K. (2003). An investigation of phonology and orthography in spoken-word recognition. Q. J. Exp. Psychol. 56A, 233–262.

Stone, G. O., and Van Orden, G. C. (1994). Building a resonance framework for word recognition using design and system principles. J. Exp. Psychol. Hum. Percept. Perform. 20, 1248–1268.

Stone, G. O., Vanhoy, M., and Van Orden, G. C. (1997). Perception is a two-way street: feedforward and feedback phonology in visual word recognition. J. Mem. Lang. 36, 337–359.

Taft, M. (2011). Orthographic influences when processing spoken pseudowords: theoretical implications. Front. Psychol. 2:140.

Taft, M., Castles, A., Davis, C., Lazendic, G., and Nguyen-Hoan, M. (2008). Automatic activation of orthography in spoken word recognition: pseudohomograph priming. J. Mem. Lang. 58, 366–379.

Van Orden, G. C., and Goldinger, S. D. (1994). Interdependence of form and function in cognitive systems explains perception of printed words. J. Exp. Psychol. Hum. Percept. Perform. 20, 1269–1291.

Van Orden, G. C., Pennington, B. F., and Stone, G. O. (1990). Word identification and the promise of subsymbolic psycholinguistics. Psychol. Rev. 97, 488–522.

Ventura, P., Kolinsky, R., Pattamadilok, C., and Morais, J. (2008). The developmental turnpoint of orthographic consistency effects in speech recognition. J. Exp. Child Psychol. 100, 135–145.

Ventura, P., Morais, J., and Kolinsky, R. (2007). The development of the orthographic consistency effect in speech recognition: from sublexical to lexical involvement. Cognition 105, 547–576.

Ventura, P., Morais, J., Pattamadilok, C., and Kolinsky, R. (2004). The locus of the orthographic consistency effect in auditory word recognition. Lang. Cogn. Process. 19, 57–95.

Ziegler, J. C., and Ferrand, L. (1998). Orthography shapes the perception of speech: the consistency effect in auditory word recognition. Psychon. Bull. Rev. 5, 683–689.

Ziegler, J. C., Ferrand, L., and Montant, M. (2004). Visual phonology: the effects of orthographic consistency on different word recognition tasks. Mem. Cognit. 32, 732–741.

Ziegler, J. C., and Goswami, U. (2005). Reading acquisition, developmental dyslexia, and skilled reading across languages: a psycholinguistic grain size theory. Psychol. Bull. 131, 3–29.

Ziegler, J. C., Jacobs, A. M., and Stone, G. O. (1996). Statistical analysis of the bidirectional inconsistency of spelling and sound in French. Behav. Res. Methods Instrum. Comput. 28, 504–515.

Ziegler, J. C., Muneaux, M., and Grainger, J. (2003). Neighborhood effects in auditory word recognition: phonological competition and orthographic facilitation. J. Mem. Lang. 48, 779–793.

Ziegler, J. C., Petrova, A., and Ferrand, L. (2008). Feedback consistency effects in visual and auditory word recognition: where do we stand after more than a decade? J. Exp. Psychol. Learn. Mem. Cogn. 34, 643–661.

Appendix A

Stimuli used in experiment 1 (taken from Pattamadilok et al., 2007)

High-frequency consistent words. Blonde, bombe, bonne, bouche, brume, cave, chaude, code, coude, douche, lâche, linge, monde, plage, poche, pompe, roche, tâche, tige, trompe.

Low-frequency consistent words. Blague, bague, boude, biche, brade*, cube, chauve, conne, quiche, digue, louche, lange*, moche, plombe, pagne*, ponce, rhume, talc, taupe, trouille.

High-frequency inconsistent words. Blouse, bol, boire, basse, brûle, coeur, chaud, caisse, cure, douce, loin, lampe, môme, plante, pierre, pont, rampe, tour, tiers, tronche.

Low-frequency inconsistent words. Blâme, bouffe, bosse, bouse, bru*, coiffe, chauffe, couse*, case, dose, laine, latte, moelle, plaide, panne, pull, râle, teigne*, tisse*, trousse.

*An asterisk marks words that were excluded from analyses due to excessively high error rates (>50%).

Appendix B

Stimuli used in experiment 2

High-frequency consistent words. Branche, bronze, charge, charme, couple, crise, gauche, genre, grave, jambe, lune, marche, nombre, plume, preuve, prince, proche, ronde, rouge, sombre.

Low-frequency consistent words. Broche, bulbe, cube, cuve, dinde, dune, figue, fougue, frange, fronde, larme, luge, lustre, morgue, muse, norme, pulpe, rustre, sonde, zèbre.

High-frequency inconsistent words. Basse, caisse, chance*, classe, flamme, frappe, frère, gare*, laisse, lourd*, masse, nuque, plante, rare, russe, sac, seize, soeur, style, zone.

Low-frequency inconsistent words. Bûche, châle, cintre, flair, graine, gramme, grappe, griffe, grippe, latte, mule, noce, puce, score, spleen, store, stress, tank, tempe, transe.

*An asterisk marks words that were excluded from analyses due to excessively high error rates (>50%).

Keywords: orthographic consistency, literacy, word recognition, frequency, spoken language, lexical decision, rime detection

Citation: Petrova A, Gaskell MG and Ferrand L (2011) Orthographic consistency and word-frequency effects in auditory word recognition: new evidence from lexical decision and rime detection. Front. Psychology 2:263. doi: 10.3389/fpsyg.2011.00263

Received: 29 June 2011; Accepted: 21 September 2011;

Published online: 19 October 2011.

Edited by:

Chotiga Pattamadilok, Université Libre de Bruxelles, Belgium

Reviewed by:

Lisa D. Sanders, University of Massachusetts Amherst, USA

Patrick Bonin, University of Bourgogne and Institut Universitaire de France, France

Copyright: © 2011 Petrova, Gaskell and Ferrand. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Ludovic Ferrand, Laboratoire de Psychologie Sociale et Cognitive, UMR 6024, CNRS, University Blaise Pascal, 34, Avenue Carnot, 63037 Clermont-Ferrand, France. e-mail: ludovic.ferrand@univ-bpclermont.fr