- 1 Department of Experimental Psychology, University of Oxford, Oxford, UK

- 2 Institute of Cognitive Neuroscience and Department of Psychology, University College London, London, UK

The ability to process facial information is vital for social interactions. Previous research has shown that mature face processing depends on the extraction of featural and configural face information. It has also been shown that the acquisition of these processing skills is prolonged in children. The order in which different face properties are processed is less well understood. While some research has supported a parallel-route model that groups different properties according to their variability, other studies have shown that specific invariant properties, such as facial identity, can serve as a reference frame for interpreting more dynamic aspects, such as facial expression or eye gaze direction. The current study tested a different approach, which proposes that face property processing varies with task requirements. Sixteen adults performed a same-different task in which the second face could differ from the first in identity, expression, gaze, or any combination of these. We found that reaction times increased and accuracy rates decreased when the identity was repeated, suggesting that changes in facial identity were the most salient. Finally, we tested two groups of 7- to 8- and 10- to 11-year-old children and found lower accuracy rates for those face properties that rely in particular on configural information processing strategies. This suggests that while face processing strategies are overall adult-like from 7 years of age, the processing of specific face properties develops continuously throughout mid-childhood.

Introduction

Humans consult a person’s face to determine the identity of its bearer, their emotional state, or their current focus of attention via the direction of their eye gaze. Over recent decades, much research has aimed to describe the specific cognitive mechanisms for extracting facial information (Yin, 1969; Carey and Diamond, 1977; Maurer et al., 2002). In a seminal study, Carey and Diamond (1977) introduced the term configural information to describe the interrelationship between different facial features, suggesting that configural face information processing differs from a more featural information processing style that can be employed to process any object. It has been shown that adults use these inter-featural distances to recognize facial identities (Mondloch et al., 2006) or changes in expression (Calder and Young, 2005; Leppaenen and Nelson, 2006; Durand et al., 2007), while featural strategies are more often used to determine the direction of gaze (Mondloch et al., 2002). The distinction between configural and featural information processing strategies has been supported by studies of face inversion (Yin, 1969) and of the manipulation of local features in the Thatcher illusion (Thompson, 1980). There is some evidence to suggest that configural information processing is reserved for objects of expertise (Gauthier et al., 1999, 2000) and that it is specific to the processing of objects in their canonical upright orientation (Mondloch et al., 2002). In 1969, Yin showed that turning faces upside down disrupts their recognition, and in a variety of follow-up studies this inversion effect was not obtained at the same magnitude for any other class of objects. Moreover, it has been shown that turning a face upside down is particularly disruptive for the processing of facial identity, but less so for the processing of gaze (Mondloch et al., 2003).
We note that while configural and featural information processing strategies have proved to be extremely useful models to describe face processing strategies in humans, much remains to be learned from investigating the effect of changing face properties on performance directly.

The processing order for the different face properties is less well understood. It remains to be determined whether these properties are processed in a serial fashion and largely independently of each other, or whether they are instead processed in parallel and therefore influence each other. Two decades ago, Bruce and Young (1986) proposed a model that postulated independent processing routes for identity vs. expression and speech movements (parallel-route hypothesis). This model has been supported by neuropsychological studies that found a double dissociation between identity and expression processing, with participants with prosopagnosia being unable to identify a particular face while being surprisingly proficient at determining the expression of the same face (Young et al., 1993; Behrmann and Avidan, 2005; Humphreys et al., 2006). Additional support came from brain imaging studies with healthy participants that revealed segregated response patterns for invariant (such as identity) vs. changeable (such as expression or gaze) face aspects within the different face-network areas (Haxby et al., 2000).

Recent data from a range of methods challenge this model (see Calder and Young, 2005 for a review). Ganel and Goshen-Gottstein (2004) found evidence for interdependent processing of identity and expression at the behavioral level; more specifically, facial identity appears to be used as a reference frame for interpreting idiosyncratic influences on specific expressions. Similarly, interactions were found for gaze and expression processing (Klucharev and Sams, 2004), suggesting that the processing of different face properties depends on the particular strategy used (Ganel et al., 2005a). In a recent study, however, Ganel (2011) found evidence for independent processing of expression and gaze. Last, Calder and Young’s (2005) principal component analysis (PCA) model of identity and expression processing revealed principal components that capture each face property either separately or in combination, suggesting that a strict parallel-route model cannot explain the behavioral evidence.

Based on the previously presented data, we would like to go a step further and offer a different approach to interpreting face property specific processing. Namely, we propose that the processing of different face properties could be linked to the cognitive strategies induced by the specific tasks (strategy hypothesis). Strategy-dependent processing means that face properties that rely on similar facial information (and consequently on the same cognitive and possibly neural mechanism) will influence each other (for a similar idea in other cognitive domains, see: Posner et al., 1990; Fias et al., 2001; Cohen Kadosh and Henik, 2006). For example, one can encourage the use of configural information processing strategies by instructing participants to compare the facial identity of two faces while ignoring concurrent expression information. In this case, due to the overlap at the information extraction level, the concurrent, but task-irrelevant expression information will interfere with identity processing. Support for overlap at the neural level comes from high-resolution fMRI-adaptation studies that probe the response properties for regions in the core face processing network as a function of stimulus property repetition (Henson et al., 2002; Ganel et al., 2005b; Cohen Kadosh et al., 2010). Cohen Kadosh et al. (2010) used fMR-adaptation to show that the regions in the core face-network respond equally to changing facial identities and expressions, but not gaze, as a function of top-down modulated processing strategies. A similar conclusion can be drawn from the results of the Ganel et al. (2005b) fMR-adaptation study. Ganel and colleagues found that the fusiform gyrus processed task-irrelevant, but strategy-relevant emotional expression information in a configural identity processing task.

The present study used a new paradigm to investigate whether three different face properties (identity, expression, and gaze) are processed interdependently in adults and in 7- to 8- and 10- to 11-year-old children. In our same-different task, participants had to compare two consecutively presented faces that could differ with regard to facial identity, expression, direction of gaze, or any combination of these. Because a same-different decision could not be based on any single face property, this design prevented the adoption of a single strategy; crucially, to perform successfully, participants had to use both configural and featural information processing strategies. We also included conditions with a combination of two or three face property changes to examine the interdependent processing of the different face properties, such as the influence of co-occurring identity and expression changes. We note that the current experiment investigated the processing of different face properties directly, an approach that allowed us to contrast the two processing hypotheses that have been proposed for faces (parallel-route hypothesis vs. strategy hypothesis). In Experiment 1, we assessed which face property adults would use to compare two faces. We were particularly interested in interactions between simultaneously changing face properties and in whether any specific combination would make a comparison more difficult or easier. For this experiment, we predicted, in line with the strategy hypothesis, that face properties relying on the same facial information (configural vs. featural information) would interact with each other.
In counterbalanced blocks, we also turned the faces upside down (a manipulation shown to selectively impair the use of configural information processing) to draw conclusions about the respective influence of the two cognitive processing strategies. In line with previous studies, we predicted that face inversion would interfere with configural face processing and in particular with the processing of facial identity. Experiment 2 tested how 7- to 8- and 10- to 11-year-old children process the three face properties in the same-different task, using upright faces only. Testing two age groups of children had the dual purpose of tracing the progressive acquisition of face processing abilities for different face properties and of examining the influence of the two processing strategies, which have been suggested to develop along different developmental trajectories (e.g., Mondloch et al., 2002). For Experiment 2, we predicted developmental differences for those face properties that are processed by extracting configural face information. In line with previous reports of prolonged trajectories, we also predicted that these differences would still be present in our older group of children.

Experiment 1

The underlying rationale for Experiment 1 was to investigate the processing order of all three face properties in adults. Using both the canonical upright orientation and the inverted orientation, which has been shown to selectively disrupt face-specific processing strategies, we aimed to establish whether the three face properties are processed independently (parallel-route hypothesis) or whether specific properties interact, possibly as a result of similar underlying processing strategies (strategy hypothesis).

Methods

Participants

Sixteen adults (mean age: 28.38 years, SD: 10.44 years, eight male) participated in Experiment 1. All participants had normal or corrected-to-normal vision, and informed consent was obtained before testing. The study was approved by the local ethics committee at Birkbeck College, University of London.

Stimulus and apparatus

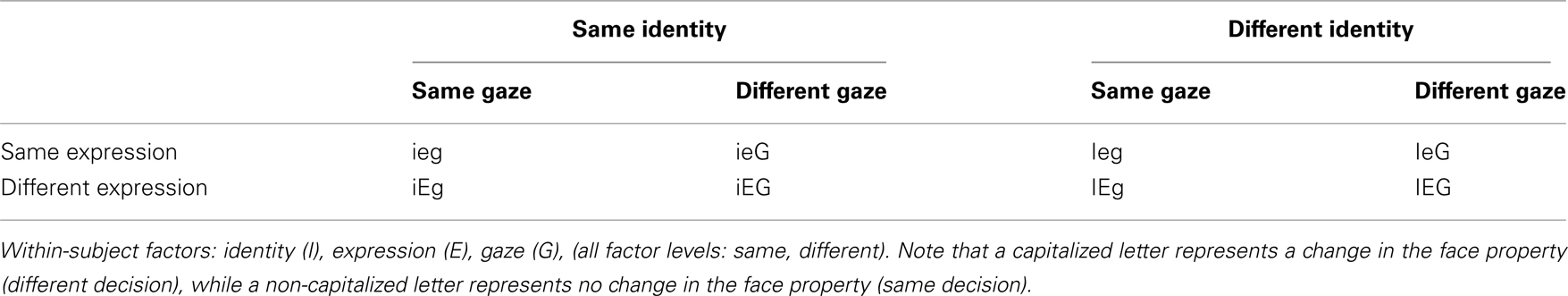

A stimulus set was created from eight color photographs taken under standard lighting conditions (two female identities × two expressions [happy, angry] × two directions of gaze [directed to the right or to the left]; see Table 1 for the factorial design). The stimuli subtended a visual angle of 6.3° × 7° at a viewing distance of 57 cm. Stimulus pairs in each trial varied with regard to none, one, two, or three face properties. The experimental design therefore consisted of the face property factors identity × expression × gaze, each of which was either repeated or changed (two levels). We also included the factor face orientation (upright, inverted). We note that the same design and stimulus set have been used in a recent transcranial magnetic stimulation (TMS) study with adults (Cohen Kadosh et al., 2011).
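The stimulus set and the viewing geometry can be sketched in a few lines. The identity labels below are hypothetical placeholders (the text only states "two females"), and the size calculation assumes the standard relation between visual angle θ, viewing distance d, and physical extent s on the screen, s = 2d·tan(θ/2). At 57 cm this gives roughly 1 cm per degree, which is why that distance is a common choice.

```python
import itertools
import math

# Hypothetical labels: the source only states "two females".
IDENTITIES = ("female A", "female B")
EXPRESSIONS = ("happy", "angry")
GAZES = ("left", "right")

# Full factorial crossing: 2 identities x 2 expressions x 2 gaze directions = 8 photographs.
STIMULI = list(itertools.product(IDENTITIES, EXPRESSIONS, GAZES))


def size_on_screen_cm(visual_angle_deg: float, distance_cm: float) -> float:
    """Physical extent s that subtends visual_angle_deg at distance_cm: s = 2 * d * tan(theta / 2)."""
    return 2 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2)


# Reported stimulus size (6.3 x 7 degrees) at the reported viewing distance (57 cm).
width_cm = size_on_screen_cm(6.3, 57)
height_cm = size_on_screen_cm(7.0, 57)
print(len(STIMULI), round(width_cm, 2), round(height_cm, 2))
```

With these inputs the sketch reports 8 stimuli and an on-screen size of about 6.27 cm × 6.97 cm, i.e., close to one centimetre per degree of visual angle.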

Procedure

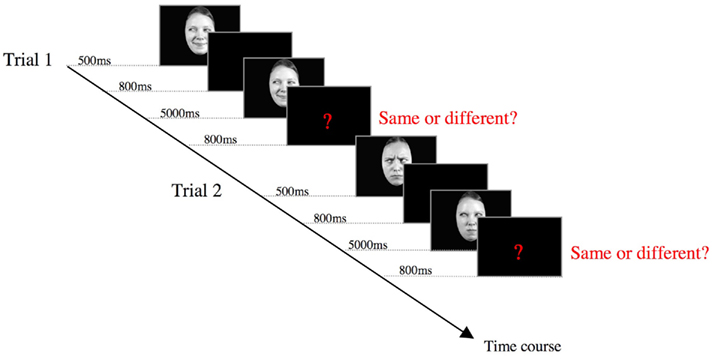

Each trial consisted of a face presented for 500 ms in the center of the computer screen, followed by a fixation cross for 800 ms and then by a second face. The second face (test stimulus) remained on the screen until a button press was registered or for a maximum of 5000 ms, and was followed by a question mark for 800 ms, indicating the beginning of a new trial (Figure 1). The participants had to decide whether the two consecutively presented faces were the same or different with regard to any face property. The second face could change with regard to any number of face properties, thus preventing the adoption of a single strategy, such as a featural strategy that would have been suitable for comparing faces on the basis of gaze direction only. Note that this also means that a “same” decision could not depend on a particular face property alone. Participants were asked to respond as quickly as possible while avoiding mistakes, and indicated their choices by pressing one of two keys (“f” or “j”) on a keyboard. Prior to testing, it was pointed out to each participant that a change in any face property should lead to a “different” response, and each participant was familiarized with the presentation speed and the general pace of the design by viewing three example trials. The assignment of the keys to “same” and “different” decisions was counterbalanced across blocks within each participant. The numbers of trials requiring “same” and “different” responses were equal, and each participant completed 224 trials. The order of the upright and inverted blocks was counterbalanced across participants.
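The trial logic described above reduces to a simple rule: the correct answer is “same” only if none of the three properties changed. A minimal sketch, assuming a hypothetical `Face` record with one field per property; the timing constants are the durations given in the Procedure.

```python
from dataclasses import dataclass

# Durations from the Procedure description, in milliseconds.
FIRST_FACE_MS = 500
FIXATION_MS = 800
TEST_FACE_MAX_MS = 5000  # test face stays up until a key press or this deadline
QUESTION_MARK_MS = 800

PROPERTIES = ("identity", "expression", "gaze")


@dataclass(frozen=True)
class Face:
    """Hypothetical description of one stimulus by its three face properties."""
    identity: str
    expression: str
    gaze: str


def changed_properties(first: Face, second: Face) -> set:
    """Names of the face properties that differ between the two faces."""
    return {name for name in PROPERTIES if getattr(first, name) != getattr(second, name)}


def correct_response(first: Face, second: Face) -> str:
    """A change in any face property requires a "different" response."""
    return "different" if changed_properties(first, second) else "same"


def max_trial_duration_ms() -> int:
    """Longest possible trial (no response before the deadline), first face to end of question mark."""
    return FIRST_FACE_MS + FIXATION_MS + TEST_FACE_MAX_MS + QUESTION_MARK_MS
```

For example, `correct_response(Face("A", "happy", "left"), Face("A", "happy", "right"))` returns `"different"`, because gaze alone changed, and the longest possible trial lasts 7100 ms.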

Results

Reaction times

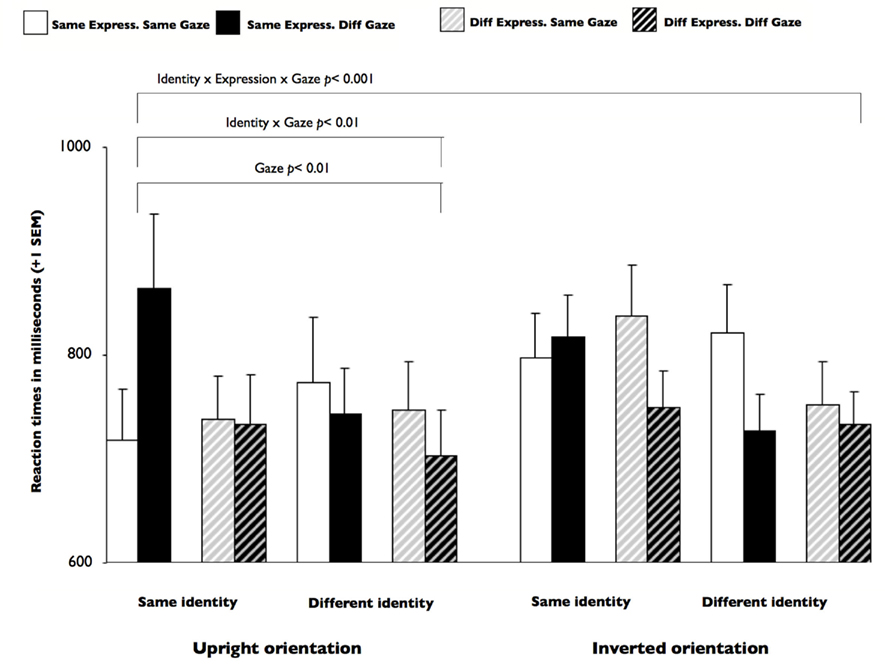

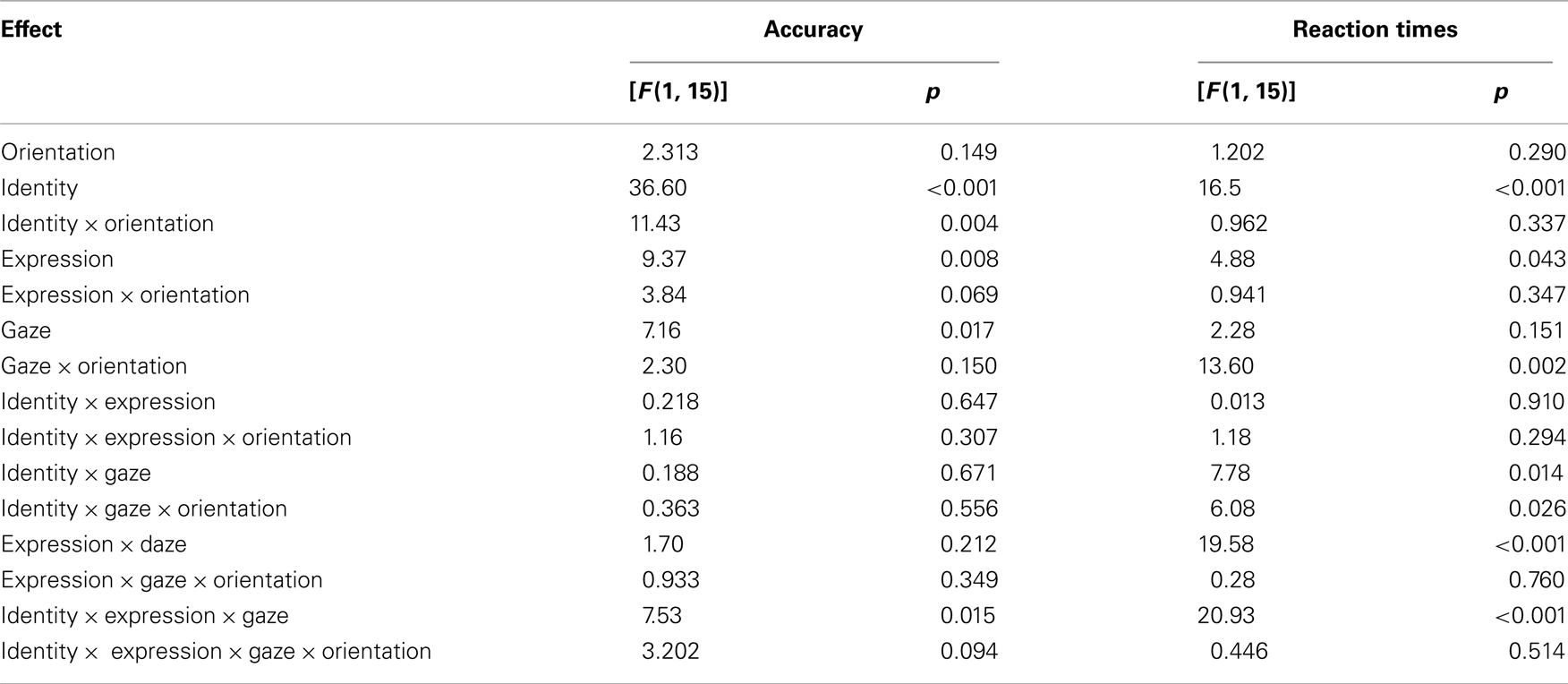

The mean reaction times (RTs) for correct responses were calculated for each participant in each condition and subjected to a four-way repeated-measures analysis of variance (ANOVA) with identity, expression, gaze (factor levels: same, different), and orientation (upright, inverted) as within-subject factors. Table 2 provides a full description of all effects. Only the main effects of identity and expression were significant [identity: F(1, 15) = 16.48, p = 0.001; expression: F(1, 15) = 4.87, p = 0.04]. Moreover, the three-way interaction between identity, expression, and gaze was significant [F(1, 15) = 20.93, p < 0.001], as was the interaction between orientation, identity, and gaze [F(1, 15) = 6.08, p = 0.026]. The three-way interaction between identity, expression, and gaze is presented in Figure 2.
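As an illustration of the arithmetic behind these tests (not the authors' analysis script), the sketch below averages correct-trial RTs per participant and condition and then uses the identity that, for a within-subject factor with only two levels, the repeated-measures F(1, n − 1) for its main effect equals the squared paired t statistic computed on each participant's marginal means. The trial record format (keys `participant`, `condition`, `correct`, `rt`) is a hypothetical assumption.

```python
import math
import statistics
from collections import defaultdict


def mean_correct_rt(trials):
    """Mean RT per (participant, condition), keeping only correct trials.

    Each trial is a hypothetical dict with keys 'participant', 'condition',
    'correct' (bool) and 'rt' (ms).
    """
    cells = defaultdict(list)
    for trial in trials:
        if trial["correct"]:
            cells[(trial["participant"], trial["condition"])].append(trial["rt"])
    return {key: statistics.mean(rts) for key, rts in cells.items()}


def paired_t(xs, ys):
    """Paired t statistic for two equal-length lists of per-participant means."""
    diffs = [x - y for x, y in zip(xs, ys)]
    n = len(diffs)
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))


def two_level_main_effect_f(level_a, level_b):
    """F(1, n - 1) for a two-level within-subject factor, computed as t squared.

    level_a and level_b hold each participant's mean averaged over all cells
    at that level of the factor (e.g., same vs. different identity).
    """
    return paired_t(level_a, level_b) ** 2
```

Read the other way, the reported identity effect F(1, 15) = 16.48 corresponds to a paired t(15) of about 4.06 on the marginal means.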

Figure 2. Experiment 1: overview of the average reaction times for all conditions for both orientation blocks. Additional parentheses highlight significant F-tests (interactions and main effects, not t-tests). The three-way interaction for identity × expression × gaze was significant (see also Table 2). When faces were presented in the canonical upright orientation, a significant interaction was found for the factors identity and gaze, which was due to participants being slower to detect a change in gaze, when identity remained the same. For the inverted condition, no significant interaction between identity and gaze was observed. The simple main effects were significant for both identity and gaze, with participants being faster to detect a change in either feature. Diff, different; Express, expression.

Table 2. Experiment 1: main effects and interactions for the experimental factors identity × expression × gaze × orientation.

In order to understand the source of the interaction between identity, expression, and gaze, we analyzed the simple two-way interactions for expression and gaze under same identity and different identity (Keppel, 1991). Note that only significant interactions were decomposed and that all t-tests are two-tailed.
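In a design where every factor has two levels, each simple interaction of expression × gaze within one identity level reduces to a single contrast per participant: the gaze effect under same expression minus the gaze effect under different expression. The sketch below tests that contrast with a one-sample t-test and reports F(1, n − 1) = t². It assumes that each simple interaction is tested against its own participant-by-contrast error term; a pooled error term, as sometimes used in the Keppel approach, would give different degrees of freedom.

```python
import math
import statistics


def interaction_contrast(same_expr_same_gaze, same_expr_diff_gaze,
                         diff_expr_same_gaze, diff_expr_diff_gaze):
    """Per-participant 2 x 2 contrast: gaze effect (same expression) minus gaze effect (different expression)."""
    return (same_expr_diff_gaze - same_expr_same_gaze) - (diff_expr_diff_gaze - diff_expr_same_gaze)


def simple_interaction_f(cells_per_participant):
    """F(1, n - 1) for the expression x gaze interaction within one identity level.

    cells_per_participant holds one 4-tuple of cell means per participant,
    ordered as in interaction_contrast. Returns (F, (df1, df2)).
    """
    contrasts = [interaction_contrast(*cells) for cells in cells_per_participant]
    n = len(contrasts)
    t = statistics.mean(contrasts) / (statistics.stdev(contrasts) / math.sqrt(n))
    return t ** 2, (1, n - 1)
```

A significant contrast is then followed up, as in the text, by comparing gaze change vs. gaze repetition separately at each expression level.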

Same identity

The interaction between expression and gaze was significant [F(1, 15) = 28.20, p < 0.001]. Further decompositions revealed that participants took longer to detect a gaze change when the expression was repeated [t(15) = 3.92, p = 0.001]. In contrast, when the expression changed, a trend toward the reversed pattern was observed, with longer RTs for gaze repetition [t(15) = 2.043, p = 0.059].

Different identity

No interaction was observed between expression and gaze [F(1, 15) = 0.571, p = 0.461], and only the simple main effect of gaze was significant [F(1, 15) = 7.57, p = 0.015], whereas that of expression was not [F(1, 15) = 2.70, p = 0.121]. The simple main effect of gaze was due to faster responses for changed gaze (1088 ms) than for repeated gaze (1154 ms).

To understand how the orientation of the faces affected the processing of identity and gaze, we further probed the interaction between identity and gaze for the upright and inverted faces conditions separately. Note that we decomposed based on a priori hypotheses that orientation would affect face processing abilities and that all t-tests are Bonferroni corrected.
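The follow-up t-tests here are stated to be Bonferroni corrected. A minimal sketch of that adjustment, assuming its standard form: with m tests, each p value is multiplied by m and capped at 1, which is equivalent to comparing each raw p value against α/m.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p values: each p multiplied by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def significant_after_bonferroni(p_values, alpha=0.05):
    """Equivalent decision rule: compare each raw p value with alpha / m."""
    m = len(p_values)
    return [p < alpha / m for p in p_values]
```

For two follow-up tests, the per-test threshold becomes 0.05 / 2 = 0.025.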

Upright orientation

When faces were presented in the canonical upright orientation, a significant interaction was found for the factors identity and gaze [F(1, 15) = 9.07, p = 0.008], which was due to participants being slower to detect a change in gaze when identity remained the same [t(15) = 3.353, p = 0.004] than when it changed [t(15) = 2.75, p = 0.015].

Inverted orientation

For the inverted condition, no significant interaction was observed between identity and gaze [F(1, 15) = 1.205, p = 0.290]. The simple main effects were significant for both identity [F(1, 15) = 13.0, p = 0.003] and gaze [F(1, 15) = 12.5, p = 0.003], with participants being faster to detect a change in either feature [same identity (800 ms) vs. different identity (758 ms); or same gaze (802 ms) vs. different gaze (757 ms)].

Accuracy rates

The main effects of all three face properties were significant [identity: F(1, 15) = 36.6, p < 0.001; expression: F(1, 15) = 9.37, p = 0.008; gaze: F(1, 15) = 7.16, p = 0.017]. Moreover, the two-way interaction between orientation and identity [F(1, 15) = 11.44, p = 0.004] and the three-way interaction between identity, expression, and gaze [F(1, 15) = 7.53, p = 0.015; Figure 3] were significant. As for the RTs, we decomposed the interaction between expression and gaze for same and different identity separately.

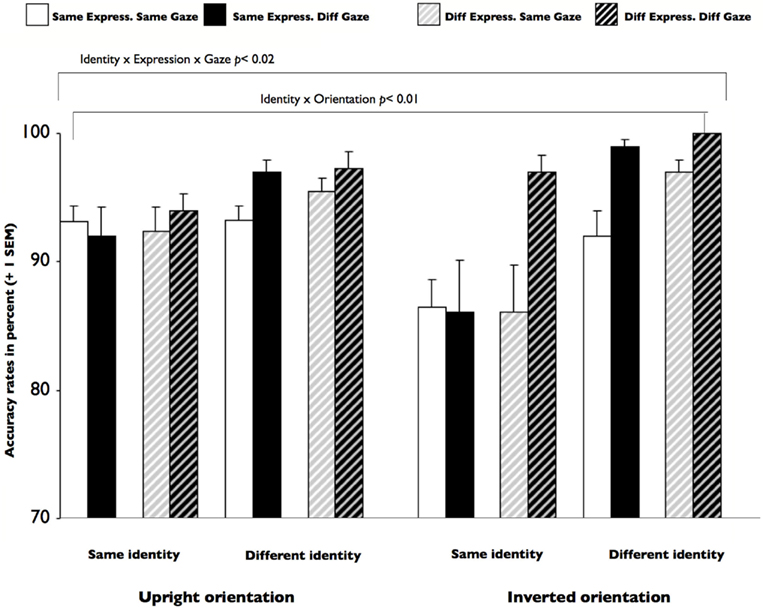

Figure 3. Experiment 1: overview of the mean accuracy rates for all conditions for both orientation blocks. Additional parentheses highlight significant F-tests (interactions and main effects, not t-tests). The three-way interaction for identity × expression × gaze for the accuracy rates was significant. The interaction between identity and orientation was due to significantly increased accuracies for detecting a change in identity in the upright orientation condition, and even more so for detecting an identity change in the inverted orientation condition. Diff, different; Express, expression.

Same identity

The interaction between expression and gaze was significant when identity remained the same [F(1, 15) = 5.74, p = 0.03]. Further decompositions established that this interaction was driven by higher accuracy rates in the condition in which both expression and gaze changed [t(15) = 2.75, p = 0.015].

Different identity

The interaction between expression and gaze was significant [F(1, 15) = 4.67, p = 0.047] and further analyses established that it was due to lower accuracies for making a “different” decision based on gaze information, independent of the expression information {same expression [t(15) = 3.47, p = 0.003]; different expression [t(15) = 2.34, p = 0.033]}.

Finally, to probe the source of the interaction between identity and orientation, we looked at the simple main effect for identity in the two orientation conditions. We found that accuracies increased significantly for detecting identity changes in the upright orientation condition as opposed to identity repetition [t(15) = 3.51, p = 0.003; same identity (93%) vs. different identity (96%)], but even more so for the inverted orientation condition [t(15) = 5.38, p < 0.001; same identity (88%) vs. different identity (97%)].

Discussion

Experiment 1 probed the processing sequence and interaction of the different face properties as a function of stimulus orientation. Independently of face orientation, RTs increased and accuracy rates decreased when the identity was repeated, suggesting that changes in facial identity were the most salient. The analysis of the RTs for the upright condition also suggests that face property-specific processing was conducted in parallel and then integrated. At the same time, we found support for the strategy hypothesis in that face properties that rely on similar information, such as configural face information in the case of identity and expression, were processed together. For example, the decomposition of the interaction between the three face properties revealed that RTs were slowest when identity and expression remained the same and a “different” decision had to be based on gaze. Similarly, participants were slower for gaze-based decisions when both identity and expression changed. This is somewhat surprising, as one could have expected a change in either face property to be sufficiently salient. Instead, it seems that information is integrated across different processing strategies before the decision is made. With regard to the orientation factor, the dominant effect of identity processing was more pronounced in the inverted orientation condition, a finding in line with the evidence for disrupted configural information processing for inverted faces.

Experiment 2

Based on the results of Experiment 1, we then tested two groups of 7- to 8- and 10- to 11-year-old children with the same experimental design to assess the developmental trajectories for the processing of the three face properties.

Developmental studies on emerging face processing abilities in children have provided valuable insights into the processing sequence of different face properties. Human infants exhibit a particular interest in faces from very early in development (Johnson et al., 1991; de Haan and Nelson, 1999; Farroni et al., 2005), and evidence for rudimentary abilities to process the identity, expression, or gaze of a face has been found in several studies (de Haan et al., 2001; Senju et al., 2006; Grossmann and Johnson, 2007). It has therefore been a somewhat surprising finding that face processing abilities reach adult-like levels only during mid-childhood and adolescence (Diamond and Carey, 1977; Carey et al., 1980; Farroni et al., 2002; Mondloch et al., 2003, 2006; Mosconi et al., 2005; Durand et al., 2007; Thomas et al., 2007; Karayanidis et al., 2009; Johnston et al., 2011). Some research suggests that, in contrast to adults, who predominantly use configural information, children tend to rely more on featural information processing until 10 years of age and can easily be distracted by face-irrelevant paraphernalia such as a hat or glasses (Carey and Diamond, 1977; Freire and Lee, 2001). Mondloch et al. (2002) asked 6-, 8-, and 10-year-old children and adults to compare a limited set of upright and inverted female faces when stimuli differed either in the spacing between the face properties (configural set) or with regard to specific features (featural set). They found that while all child groups exhibited greater difficulties with the spacing set, only the 6- and 8-year-olds also showed lower accuracies in the featural set. Moreover, the size of the inversion effect was found to increase with age, which was interpreted as a sign that the upright orientation of faces becomes more canonical and easier to process with increasing age.
These age differences persisted when controlling for factors such as poor encoding efficiency, limited memory span, and low saliency of the stimulus changes (Mondloch et al., 2004). These results suggest that the observed developmental differences can be attributed to differences in face-specific processing strategies, and not simply to general cognitive abilities. Similarly, Karayanidis et al. (2009) accommodated possible age-dependent differences in memory and executive function by using reduced stimulus samples for different age groups in a facial identity and expression matching task with a sample of 139 children aged 5 to 15 years and adults. Nevertheless, they found a significant increase in accuracy with age, with children aged 12 years or younger being significantly worse at matching facial identities.

Experiment 2 compared the processing patterns for the three face properties in adults to those in 7- to 11-year-old children.

Testing two age groups of children had the dual purpose of tracing the progressive acquisition of face processing abilities for different face properties and of examining the influence of the two processing strategies, which have been suggested to develop along different developmental trajectories. In line with the empirical evidence reviewed above, we predicted developmental differences for those face properties that are processed by extracting configural face information, and expected these differences to still be present in our older group of children.

Method

Participants

Twenty-nine children participated in the experiment: thirteen 7- to 8-year-olds (mean age: 8.3 years, SD: 0.28 years, seven male) and sixteen 10- to 11-year-olds (mean age: 11.3 years, SD: 0.26 years, eight male). All participants had normal or corrected-to-normal vision, and informed consent was obtained from the parents or primary caregivers before testing. The study was approved by the local ethics committee at Birkbeck College.

Stimulus, apparatus, and procedure

The stimuli and experimental procedure were identical to those used in Experiment 1, with the one exception that only upright faces were presented. See Experiment 1 for a detailed description.

Results

Reaction times

Mean RTs for correct responses were calculated for each participant in each condition. To investigate developmental differences between the three age groups, these means were subjected to a four-way mixed ANOVA with the within-subject factors identity, expression, and gaze (factor levels: same, different) and the between-subject factor age (7–8 years, 10–11 years, adults; see Table 3 for all effects). Note that the adult group data were the same as those reported in Experiment 1. The main effects of identity, gaze, and age, but not expression, were significant (Table 3). Further, the three-way interaction between identity × expression × gaze was significant [F(1, 42) = 37.6, p < 0.001; Figure 4], as were the interactions between age × identity [F(2, 42) = 7.47, p = 0.002] and age × gaze [F(2, 42) = 3.93, p = 0.03].
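The degrees of freedom for this mixed design follow from the group sizes. A sketch of the standard bookkeeping, assuming one between-subject factor (age group) and within-subject factors with two levels each: with 13 + 16 + 16 = 45 participants in k = 3 groups, the error term has N − k = 42 df, within-subject main effects have 1 numerator df, and any effect involving age has k − 1 = 2 numerator df. The dictionary keys are illustrative names, not labels from the paper.

```python
def mixed_anova_df(group_sizes, within_levels=2):
    """(numerator df, error df) for a mixed ANOVA with one between-subject factor.

    group_sizes: participants per between-subject group (here, age groups).
    within_levels: number of levels of the within-subject factor considered.
    """
    n_total = sum(group_sizes)
    k = len(group_sizes)
    between_error_df = n_total - k
    within_error_df = (within_levels - 1) * between_error_df
    return {
        "age": (k - 1, between_error_df),
        "within factor": (within_levels - 1, within_error_df),
        "age x within factor": ((k - 1) * (within_levels - 1), within_error_df),
    }
```

Calling `mixed_anova_df([13, 16, 16])` yields (2, 42) for age, (1, 42) for a two-level face property, and (2, 42) for its interaction with age.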

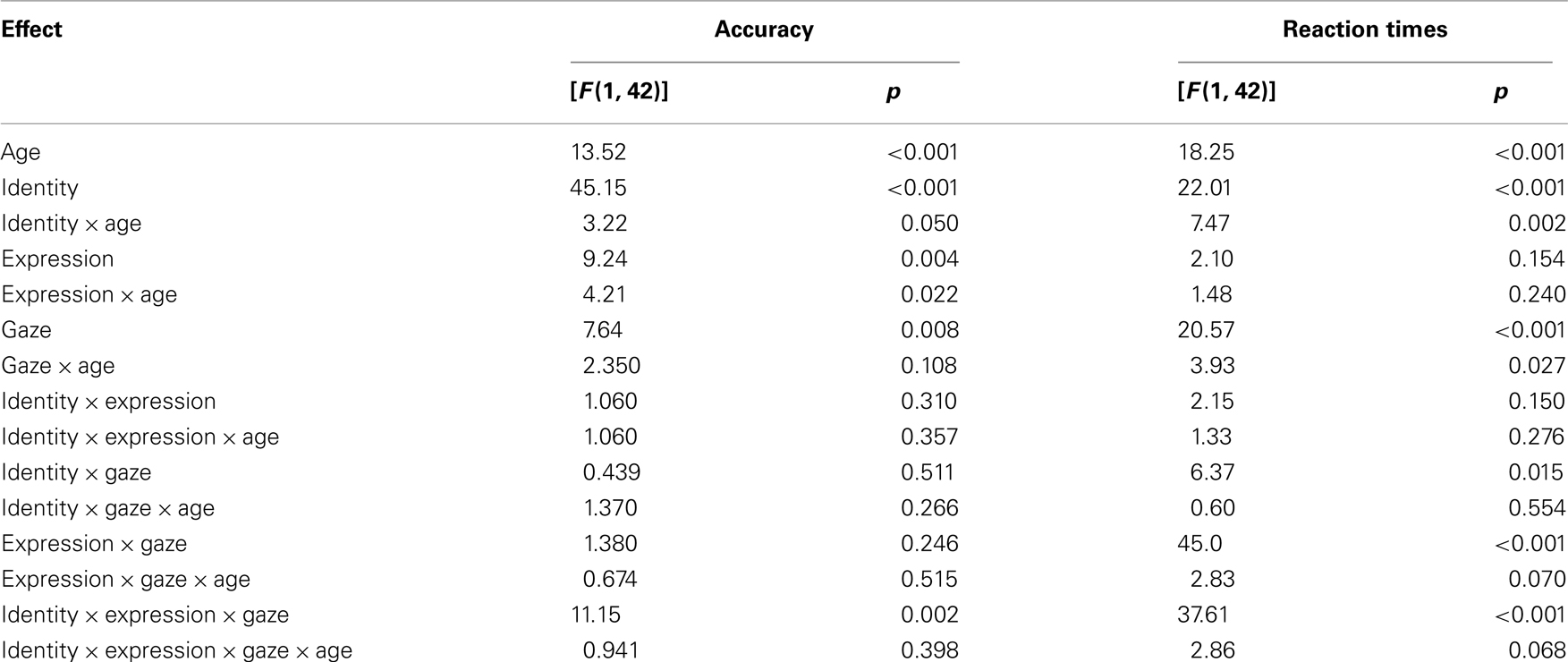

Table 3. Experiment 2: main effects and interactions for the experimental factors identity × expression × gaze × age group.

Figure 4. Experiment 2: three-way interaction for identity × expression × gaze for the reaction times for all three age groups. Additional parentheses highlight significant F-tests (interactions and main effects, not t-tests). Diff, different; Express, expression; ID, identity.

To reveal the source of the three-way interaction between identity × expression × gaze, we decomposed it for the same- and different-identity conditions separately.

Same identity

An interaction between expression and gaze was found only when identity remained the same [F(1, 44) = 48.4, p < 0.001], and further decompositions showed that this interaction was due to increased reaction times when either expression [t(44) = 2.28, p = 0.028] or gaze changed [t(44) = 7.47, p < 0.001]. Thus, participants were slower to detect a change in expression or gaze when the identity was repeated.

Different identity

The interaction between expression × gaze was not significant for this condition [F(1, 44) = 0.006, p = 0.937].

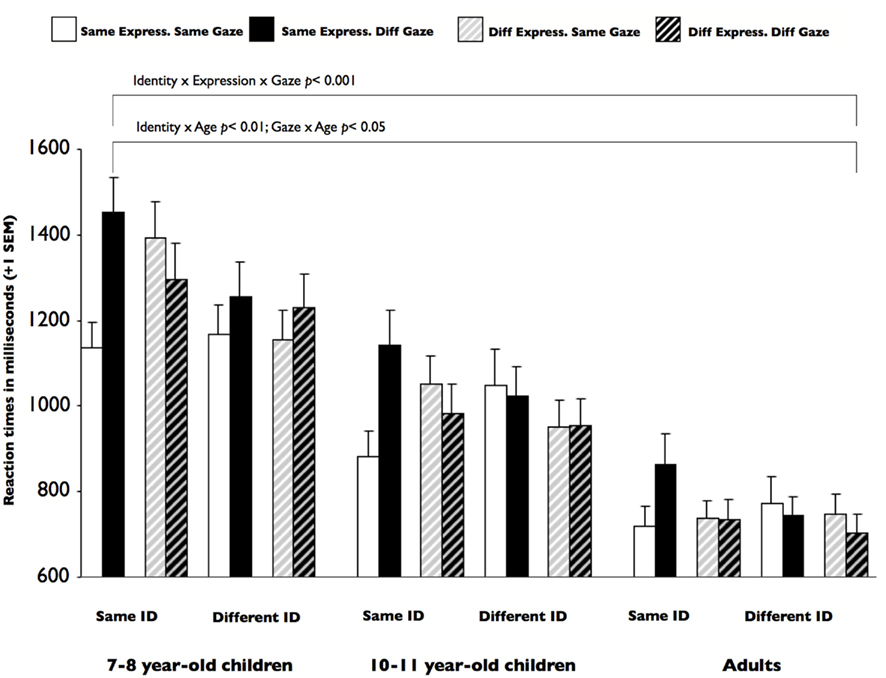

Identity × Age

The interaction between identity × age was due to the 7- to 8-year-olds taking significantly longer to decide that the identity had not changed from the first to the second face than the other two age groups [7–8 years: t(12) = 3.82, p = 0.002; 10- to 11-years: t(15) = 1.47, p = 0.161; adults: t(15) = 1.55, p = 0.143; Figure 5].

Figure 5. Experiment 2: two-way interaction for identity × age group in the reaction times. The interaction was due to the 7- to 8-year-olds taking significantly longer to decide that the identity had not changed from the first to the second face than the other two age groups.

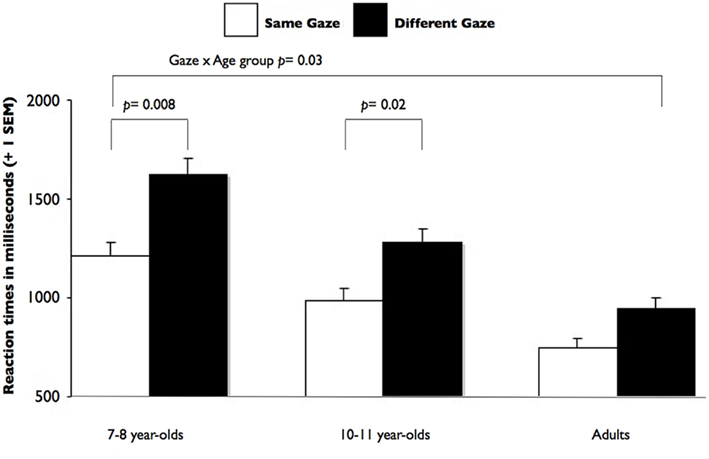

Gaze × Age

A decomposition of the interaction showed that increased RTs for detecting a change in gaze in the two child groups were the source of this interaction [7–8 years: t(12) = 3.17, p = 0.008; 10- to 11-years: t(15) = 2.60, p = 0.02; adults: t(15) = 1.36, p = 0.2; Figure 6].

Figure 6. Experiment 2: two-way interaction for gaze × age group in the reaction times. Further decompositions showed that this interaction was driven by increased reaction times for detecting a change in gaze in the two child groups.

Accuracy rates

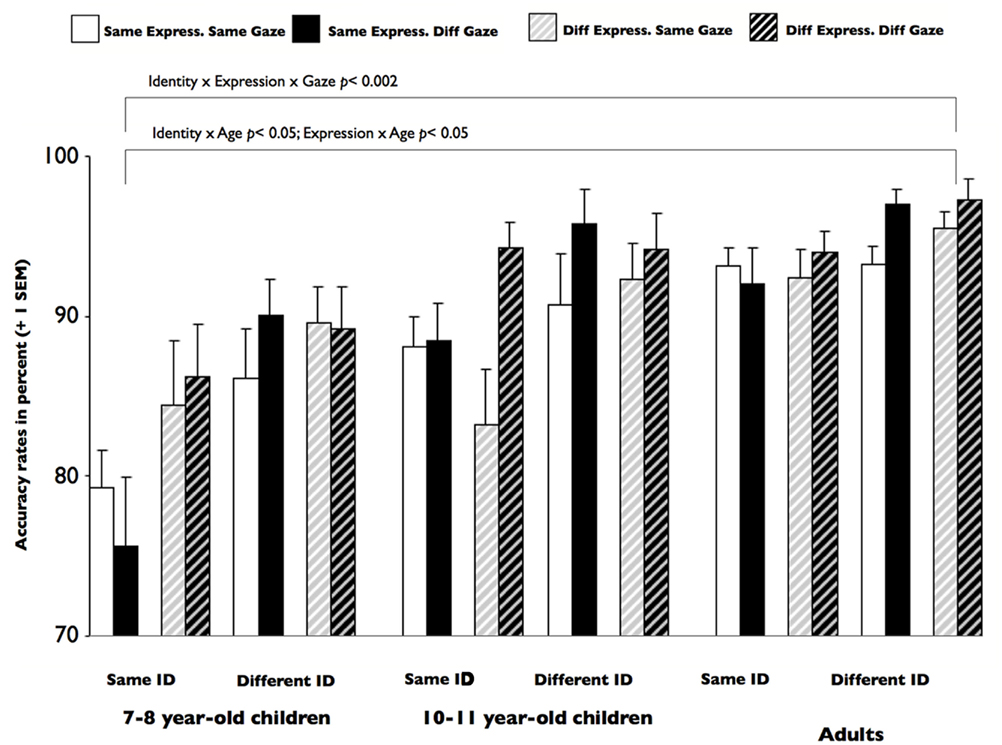

The analysis of the accuracy rates had the same factorial structure as the RT analysis. All four main effects were significant (see Table 3 for all effects), as were the three-way interaction between identity, expression, and gaze [F(1, 42) = 11.15, p = 0.002; Figure 7] and the interactions between age and identity [F(2, 42) = 3.22, p = 0.05] and between age and expression [F(2, 42) = 4.21, p = 0.022]. Further analyses decomposed the three-way interaction between the three face properties for the same- and different-identity conditions separately.

Figure 7. Experiment 2: three-way interaction for identity × expression × gaze for the accuracy rates for all three age groups. Additional parentheses highlight significant F-tests (interactions and main effects, not t-tests). Diff, different; Express, expression; ID, identity.

Same identity

The interaction between expression and gaze was significant [F(1, 44) = 8.35, p = 0.006], and further decompositions revealed that it was due to lower accuracy rates when the expression, but not the gaze, changed between the first and second face presentation [t(44) = 3.15, p = 0.003], as opposed to the condition in which the expression remained the same [t(44) = 0.705, p = 0.484]. Therefore, accuracy decreased if identity was repeated and expression changed.

Different identity

The interaction between expression and gaze was not significant [F(1, 44) = 3.49, p = 0.068].

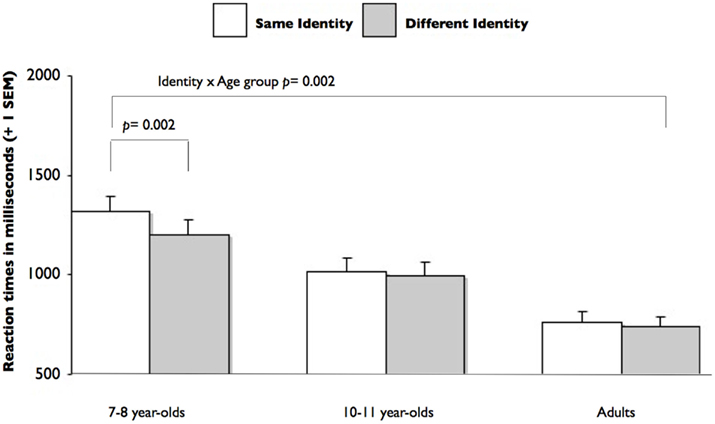

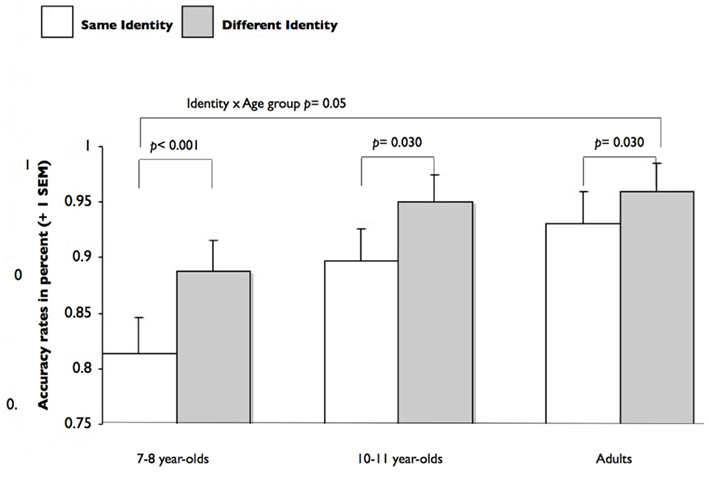

Identity × Age

The interaction between identity and age was due to significantly higher accuracy for detecting a change in identity in comparison to no change in identity in all three age groups [7- to 8-year-olds: t(12) = 4.48, p < 0.001; 10- to 11-year-olds: t(15) = 3.47, p = 0.003; adults: t(15) = 3.50, p = 0.003]. This increase was significantly larger for the combined child groups than for the adult group [t(42) = 2.54, p = 0.015], but did not differ between the two child groups [t(27) = 1.2, p = 0.24; Figure 8].

Figure 8. Experiment 2: two-way interaction for identity × age group in the accuracy rates. The interaction between identity and age was due to significantly higher accuracy for detecting a change in identity in comparison to no change in identity in all three age groups. This increase was significantly larger for the combined child groups than for the adult group, but did not differ between the two child groups.

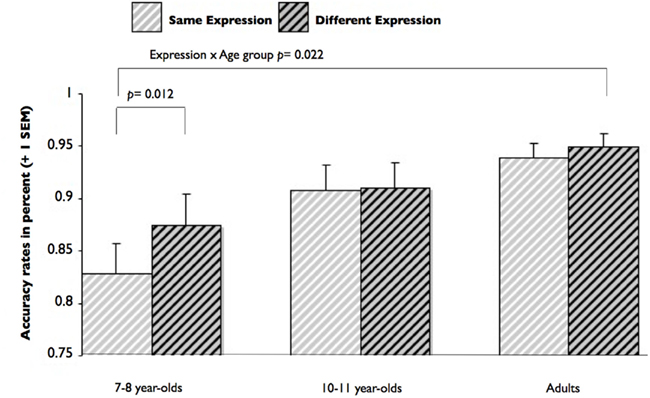

Expression × Age

Only the group of 7- to 8-year-old children made significantly more mistakes in detecting expression changes [7- to 8-year-olds: t(12) = 2.95, p = 0.012; 10- to 11-year-olds: t(15) = 0.930, p = 0.360; adults: t(15) = 0.930, p = 0.360; Figure 9].

Figure 9. Experiment 2: two-way interaction for expression × age group in the accuracy rates. This interaction was due to the group of 7- to 8-year-old children making significantly more mistakes in detecting expression changes.

Discussion Experiment 2

The aim of Experiment 2 was to investigate whether the processing order of different face properties changes as a function of age. From 7 years of age, the overall processing order did not differ for children (i.e., there were no higher-order interactions between age and the face properties). However, both child groups were less accurate at processing facial identity, and the younger children made more mistakes in detecting expression changes. This corresponds to previously reported lower proficiency levels for face properties that depend predominantly on configural strategies. We note that all three groups showed similar response patterns for processing facial identity, with children being significantly less accurate. The younger children were also slower to process identity. Because they were both slower and less accurate, a speed–accuracy trade-off cannot explain the developmental results. Finally, the younger children were slower to detect a gaze change, while the older children and the adults only showed a trend toward the same effect.

General Discussion

The current experiments set out to investigate the processing order of three face properties in children and adults, and to disentangle the respective influences of stimulus characteristics and processing strategies. We used a paradigm that required participants to process all three face properties simultaneously, while preventing the adoption of a single strategy (Cohen Kadosh et al., 2011). As outlined in the Introduction, possible face processing models fall along a continuum:

- models that propose a strict separation of different face properties (i.e., the parallel-route hypothesis);
- more permissive models that allow for interactions between some face properties;
- multi-factorial face space models, in which each face has an idiosyncratic loading pattern on the different factorial dimensions.

We propose a different approach, namely that the processing of different face properties depends on the specific task-induced strategy, and that properties relying on similar strategies will interact (strategy hypothesis). This approach has received some support from recent fMRI-adaptation studies of the neural basis of face processing (Henson et al., 2002; Ganel et al., 2005b; Cohen Kadosh et al., 2010). These studies showed that the same regions of the core face network process the three face properties differentially, depending on the specific task requirements.

First, we tested a group of adults to establish the processing order for upright faces. We then turned the faces upside down to assess the effect of selectively impairing configural information processing strategies. This approach allowed us to probe the respective influences of stimulus characteristics and processing strategies.

In adults, the processing of facial identity had the strongest influence on the same-different decision: participants were slower and less accurate when identity remained the same. Interestingly, identity processing also relied on information from expressions. Participants responded faster when identity and expression either both changed or were both repeated, supporting the suggestion that the two face properties are processed integratively. Some behavioral evidence suggests that facial identity can serve as a reference point for assessing expressions (Schweinberger and Soukup, 1998; Baudouin et al., 2000; Ganel and Goshen-Gottstein, 2004). More direct support comes from a recent TMS study that used the same experimental design while stimulating the right occipital face area (rOFA) of the core face network in adults (Cohen Kadosh et al., 2011). In this TMS study, we found that accuracy for the combined processing of identity and expression, but not for gaze, decreased after TMS to the rOFA. This supports the notion that these two face properties are also processed in combination at the neural level.

The finding that accuracy was highest when all three face properties changed is counterintuitive, as one might expect a change in a particularly salient face property to be sufficient for making a same-different decision. Rather, it seems that configural and featural information processing strategies run in parallel and that their outputs are matched prior to making a decision. This also validates our methodological approach, which explicitly avoided tapping into a single strategy.
We also found that inverting the face stimuli slightly improved overall accuracy. One possible explanation is that face inversion selectively impairs configural information processing (Yin, 1969; Maurer et al., 2002; Mondloch et al., 2002), so that participants focus on processing specific face properties with a featural strategy. The three-way interaction between identity, gaze, and orientation supports this interpretation. In sum, our findings provide strong support for the strategy hypothesis, which proposes that face properties relying on the same cognitive, and possibly neural, mechanisms will influence each other. Conversely, our findings provide little support for the parallel-route hypothesis, which proposes independent processing routes for identity and expression (Bruce and Young, 1986).

The developmental results established that 7-year-old children process the different face properties in an order similar to that of adults. However, they also exhibit selective difficulties with face properties relying on configural information processing strategies. That is, the younger children were less accurate in processing facial identity and expression, while the older children had difficulties with the processing of identity only. The RT results further support this: children were faster to detect a change in identity but slower to detect a change in gaze, suggesting that gaze is processed at a later point in time.

The finding of differential accuracy effects for the three face properties refutes the notion that poorer face processing abilities are due to overall differences in general cognitive abilities, such as a shorter attention span or lower working memory capacity (e.g., Crookes and McKone, 2009). The results also show that, from the age of 7, face property-specific processing is determined by the underlying processing strategy, and that processing proficiency for a particular face property improves along with that strategy.

We note that the current study relied on a commonly used approach to keep age differences in general cognitive skills to a minimum (i.e., a small stimulus set), which somewhat limits the generalizability of the results. In addition, processing abilities might differ when participants process the faces of age-matched peers. This possibility may be particularly relevant for the child participants and should be addressed in future studies.

To conclude, the current study investigated the processing patterns for different face properties. In adults, we found that the processing of different face properties depends on their specific cognitive strategy, and that face properties that depend on the same strategy influence each other, thus supporting the strategy hypothesis. We also found that overall face processing is adult-like in 7-year-old children. However, specific properties that depend on configural information processing continue to lag behind until at least 11 years of age, underlining the importance of factors such as exposure and training for this stimulus category.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

I would like to thank Tzvi Ganel, Mark Johnson, Roi Cohen Kadosh, and Wim Notebaert for helpful comments on an earlier version of this manuscript, and Julia Kennedy for help with testing participants.

References

Baudouin, J. Y., Sansone, S., and Tiberghien, G. (2000). Recognizing expression from familiar and unfamiliar faces. Pragmatics Cogn. 8, 123–146.

Behrmann, M., and Avidan, G. (2005). Congenital prosopagnosia: face-blind from birth. Trends Cogn. Sci. (Regul. Ed.) 9, 180–187.

Calder, A. J., and Young, A. W. (2005). Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 6, 641–651.

Carey, S., and Diamond, R. (1977). From piecemeal to configurational representation of faces. Science 195, 312–314.

Carey, S., Diamond, R., and Wood, B. (1980). Development of face recognition – a maturational component. Dev. Psychol. 16, 257–269.

Cohen Kadosh, K., Walsh, V., and Cohen Kadosh, R. (2011). Investigating face-property specific processing in the right OFA. Soc. Cogn. Affect. Neurosci. 6, 58–65.

Cohen Kadosh, K., Henson, R. N. A., Cohen Kadosh, R., Johnson, M. H., and Dick, F. (2010). Task-dependent activation of face-sensitive cortex: an fMRI adaptation study. J. Cogn. Neurosci. 22, 903–917.

Cohen Kadosh, R., and Henik, A. (2006). A common representation for semantic and physical properties: a cognitive-anatomical approach. Exp. Psychol. 53, 87–94.

Crookes, K., and McKone, E. (2009). Early maturity of face recognition: no childhood development of holistic processing, novel face encoding, or face-space. Cognition 111, 219–247.

de Haan, M., and Nelson, C. A. (1999). Brain activity differentiates face and object processing in 6-month-old infants. Dev. Psychol. 35, 1113–1121.

de Haan, M., Johnson, M. H., Maurer, D., and Perrett, D. I. (2001). Recognition of individual face and average face prototypes by 1- and 3-month-old infants. Cogn. Dev. 16, 659–678.

Diamond, R., and Carey, S. (1977). Developmental changes in the representation of faces. J. Exp. Child Psychol. 23, 1–22.

Durand, K., Gallay, M., Seigneuric, A., Robichon, F., and Baudouin, J.-Y. (2007). The development of facial emotion recognition: the role of configural information. J. Exp. Child Psychol. 97, 14–27.

Farroni, T., Csibra, G., Simion, F., and Johnson, M. H. (2002). Eye contact detection in humans from birth. Proc. Natl. Acad. Sci. U.S.A. 99, 9602–9605.

Farroni, T., Johnson, M. H., Menon, E., Zulian, L., Faraguna, D., and Csibra, G. (2005). Newborns’ preference for face-relevant stimuli: effects of contrast polarity. Proc. Natl. Acad. Sci. U.S.A. 102, 17245–17250.

Fias, W., Lauwereyns, J., and Lammertyn, J. (2001). Irrelevant digits affect feature-based attention depending on the overlap of neural circuits. Brain Res. Cogn. Brain Res. 12, 415–423.

Freire, A., and Lee, K. (2001). Face recognition in 4- to 7-year-olds: processing of configural, featural, and paraphernalia information. J. Exp. Child Psychol. 80, 347–371.

Ganel, T. (2011). Revisiting the relationship between the processing of gaze direction and the processing of facial expression. J. Exp. Psychol. Hum. Percept. Perform. 37, 48–57.

Ganel, T., and Goshen-Gottstein, Y. (2004). Effects of familiarity on the perceptual integrality of the identity and expression of faces: the parallel-route hypothesis revisited. J. Exp. Psychol. Hum. Percept. Perform. 30, 583–597.

Ganel, T., Goshen-Gottstein, Y., and Goodale, M. A. (2005a). Interactions between the processing of gaze direction and facial expression. Vision Res. 45, 1191–1200.

Ganel, T., Valyear, K. F., Goshen-Gottstein, Y., and Goodale, M. A. (2005b). The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43, 1645–1654.

Gauthier, I., Skudlarski, P., Gore, J. C., and Anderson, A. W. (2000). Expertise for cars and birds recruits brain areas involved in face recognition. Nat. Neurosci. 3, 191–197.

Gauthier, I., Tarr, M. J., Anderson, A. W., Skudlarski, P., and Gore, J. C. (1999). Activation of the middle fusiform “face area” increases with expertise in recognizing novel objects. Nat. Neurosci. 2, 568–573.

Grossmann, T., and Johnson, M. H. (2007). The development of the social brain in human infancy. Eur. J. Neurosci. 25, 909–919.

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. (Regul. Ed.) 4, 223–233.

Henson, R. N. A., Shallice, T., Gorno-Tempini, M. L., and Dolan, R. J. (2002). Face repetition effects in implicit and explicit memory tests as measured by fMRI. Cereb. Cortex 12, 178–186.

Humphreys, K., Avidan, G., and Behrmann, M. (2006). A detailed investigation of facial expression processing in congenital prosopagnosia as compared to acquired prosopagnosia. Exp. Brain Res. 176, 356–373.

Johnson, M. H., Dziurawiec, S., Ellis, H., and Morton, J. (1991). Newborns’ preferential tracking of face-like stimuli and its subsequent decline. Cognition 40, 1–19.

Johnston, P. J., Kaufman, J., Bajic, J., Sercombe, A., Michie, P. T., and Karayanidis, F. (2011). Facial emotion and identity processing development in 5- to 15-year-old children. Front. Psychol. 2:26. doi: 10.3389/fpsyg.2011.00026

Karayanidis, F., Kelly, M., Chapman, P., Mayes, A., and Johnston, P. (2009). Facial identity and facial expression matching in 5-12-year-old children and adults. Infant Child Dev. 18, 404–421.

Keppel, G. (1991). Design and Analysis: A Researcher’s Handbook, 3rd Edn. Upper Saddle River: Prentice Hall.

Klucharev, V., and Sams, M. (2004). Interaction of gaze direction and facial expression processing: ERP study. Neuroreport 15, 621–625.

Leppaenen, J. M., and Nelson, C. A. (2006). “The development and neural bases of facial emotion recognition,” in Advances in Child Development and Behavior, Vol. 34, ed. R. V. Kail (San Diego: Academic Press), 207–245.

Maurer, D., Le Grand, R., and Mondloch, C. J. (2002). The many faces of configural processing. Trends Cogn. Sci. (Regul. Ed.) 6, 255–260.

Mondloch, C. J., Dobson, K. S., Parsons, J., and Maurer, D. (2004). Why 8-year-olds cannot tell the difference between Steve Martin and Paul Newman: factors contributing to the slow development of sensitivity to the spacing of facial features. J. Exp. Child Psychol. 89, 159–181.

Mondloch, C. J., Geldart, S., Maurer, D., and Le Grand, R. (2003). Developmental changes in face processing skills. J. Exp. Child Psychol. 86, 67–84.

Mondloch, C. J., Le Grand, R., and Maurer, D. (2002). Configural face processing develops more slowly than featural face processing. Perception 31, 553–566.

Mondloch, C. J., Maurer, D., and Ahola, S. (2006). Becoming a face expert. Psychol. Sci. 17, 930–934.

Mosconi, M. W., Mack, P. B., McCarthy, G., and Pelphrey, K. A. (2005). Taking an “intentional stance” on eye-gaze shifts: a functional neuroimaging study of social perception in children. Neuroimage 27, 247–252.

Posner, M. I., Sandson, J., Dhawan, M., and Shulman, G. L. (1990). Is word recognition automatic? A cognitive-anatomical approach. J. Cogn. Neurosci. 1, 50–60.

Schweinberger, S. R., and Soukup, G. R. (1998). Asymmetric relationships among perceptions of facial identity, emotion, and facial speech. J. Exp. Psychol. Hum. Percept. Perform. 24, 1748–1765.

Senju, A., Johnson, M. H., and Csibra, G. (2006). The development and neural basis of referential gaze perception. Soc. Neurosci. 1, 220–234.

Thomas, L. A., De Bellis, M. D., Graham, R., and LaBar, K. S. (2007). Development of emotional facial recognition in late childhood and adolescence. Dev. Sci. 10, 547–558.

Keywords: face processing, development, cognitive strategies

Citation: Cohen Kadosh K (2012) Differing processing abilities for specific face properties in mid-childhood and adulthood. Front. Psychology 2:400. doi: 10.3389/fpsyg.2011.00400

Received: 23 August 2011;

Accepted: 31 December 2011;

Published online: 02 February 2012.

Edited by:

Jordy Kaufman, Swinburne University of Technology, Australia

Reviewed by:

Frini Karayanidis, University of Newcastle, Australia

Olivier Pascalis, CNRS and Universite Pierre Mendes France, France

Copyright: © 2012 Cohen Kadosh. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Kathrin Cohen Kadosh, Department of Experimental Psychology, Institute of Cognitive Neuroscience, University of Oxford, 17 Queen Square, London WC1N 3AR, UK. e-mail: kathrin.cohenkadosh@psy.ox.ac.uk