- Department of Psychology, University of Nevada, Reno, NV, USA

The perceived configuration of a face can be strongly biased by prior adaptation to a face with a distorted configuration. These aftereffects have been found to be weaker when the adapt and test faces differ along a number of dimensions. We asked whether the adaptation shows more transfer between faces that share a common identity, by comparing the strength of aftereffects when the adapt and test faces differed either in expression (a configural change in the same face identity) or gender (a configural change between identities). Observers adapted to expanded or contracted images of either male or female faces with either happy or fearful expressions, and then judged the perceived configuration in either the same faces or faces with a different gender and/or expression. The adaptation included exposure to a single face (e.g., expanded happy) or to alternated faces where the distortion was contingent on the attribute (e.g., expanded happy versus contracted fearful). In all cases the aftereffects showed strong transfer and thus only weak selectivity. However, selectivity was equal to or stronger for the change in expression than for the change in gender. Our results thus suggest that the distortion aftereffects between faces can be weakly modulated by both variant and invariant attributes of the face.

Introduction

The appearance of a face can be strongly biased by adaptation to faces to which an observer has previously been exposed. For example, after viewing a face that has been configurally distorted so that it appears too expanded, an undistorted test face appears too contracted (Webster and MacLin, 1999). Numerous studies have now characterized the properties of these aftereffects and their implications for the perception and neural representation of faces (Webster and MacLeod, 2011). In particular, aftereffects have been demonstrated for many of the characteristic dimensions along which faces naturally vary, including their individual identity (Leopold et al., 2001) and attributes such as their gender, ethnicity, expression (Hsu and Young, 2004; Webster et al., 2004), or age (Schweinberger et al., 2010; O’Neil and Webster, 2011). Thus the adaptation may play an important role in shaping how different aspects of the face are encoded and interpreted.

Several studies have explored whether separate face aftereffects could be induced for different types of faces, for example so that male faces appear too contracted while female faces look too expanded. Partial selectivity has been found for a number of dimensions including differences in identity, gender, ethnicity, age, and species (Little et al., 2005, 2008; Yamashita et al., 2005; Ng et al., 2006; Jaquet and Rhodes, 2008; Jaquet et al., 2008). This selectivity is of interest in part because it might reveal the response characteristics or tuning of the underlying adapted mechanisms. It has also been used to explore the extent to which distinct adaptable processes underlie the encoding of different facial attributes, for instance so that different norms or prototypes could be established for different populations of faces. However, the basis for this selectivity, and the extent to which it reflects face-specific versus more generic levels of visual coding, remain poorly understood (Webster and MacLeod, 2011).

In this study we compared the relative selectivity of the adaptation for two different facial attributes – changes in expression or changes in gender. Dimensions like gender reflect stable or invariant aspects of identity and thus distinguish one face from another, while facial expressions instead represent an example of variant facial configurations that correspond to changes in the state or pose of the same identity (Bruce and Young, 1986). A number of lines of evidence suggest that the variant and invariant properties of the face are represented in processing streams that are at least partially separable (Haxby et al., 2000; Andrews and Ewbank, 2004; Calder and Young, 2005; Said et al., 2011). Both gender and expression changes can induce strong adaptation effects (Hsu and Young, 2004; Webster et al., 2004; Little et al., 2005; Ng et al., 2006; Jaquet and Rhodes, 2008; Barrett and O’Toole, 2009) that are consistent with sensitivity changes arising, at least in part, at high and possibly face-specific levels of processing (Bestelmeyer et al., 2008; Davidenko et al., 2008; Afraz and Cavanagh, 2009; Ghuman et al., 2010). For example, after adapting to a male face, an androgynous face appears more female, while adapting to an angry face causes a test face to appear less angry. Studies have also shown that the aftereffects are selective for the specific expression, so that an angry face has a larger effect on the appearance of angry faces than happy ones (Hsu and Young, 2004; Rutherford et al., 2008; Skinner and Benton, 2010; Cook et al., 2011; Pell and Richards, 2011). Moreover, for both expression and identity the aftereffects appear to reflect shifts in the norm for each facial dimension rather than shifts along arbitrary axes determined by the morphing sequence (Rhodes and Jeffery, 2006; Benton and Burgess, 2008).

However, there are intriguing asymmetries between expression and identity adaptation. Expression aftereffects are weaker when the adapt and test faces differ in identity (Fox and Barton, 2007; Ellamil et al., 2008) or gender (Bestelmeyer et al., 2009). By contrast, changes in expression did not affect the degree of identity adaptation (Fox et al., 2008). These differences could not be attributed to the degree of physical difference between the images (e.g., so that two expressions of the same identity are more similar than two identities with the same expression; Ellamil et al., 2008; Fox et al., 2008) or to response changes to the low-level features of the images (Butler et al., 2008; Fox et al., 2008). This suggests that the asymmetry might at least in part reflect differences in how expressions and facial identities are encoded, and specifically, that the processes coding invariant features like identity or gender might reflect a more abstracted representation that is independent of the variant “pose” of the face. Consistent with this, other studies have found analogous asymmetric effects of expression or identity changes on face recognition and discrimination tasks (Schweinberger and Soukup, 1998; Schweinberger et al., 1999; Atkinson et al., 2005). (Conversely, there are also examples in which changeable aspects of the face, such as mouth shape, show aftereffects with little dependence on identity; Jones et al., 2010.)

One possible account of these differences in selectivity for attributes like expression or gender is that adaptation shows greater transfer between an adapt and test face when the two faces appear to share a common identity – i.e., when both images appear to be drawn from the same person. This idea was suggested by Yamashita et al. (2005) in a study comparing how selective the face adaptation was for a variety of different “low-level” changes in the images. Some differences, including a change in size, average contrast, or average color between the adapt and test faces, had weak or no effect on the magnitude of the adaptation. Yamashita et al. noted that these stimulus changes had in common that they did not alter the apparent identity of the face. Conversely, bandpass filtering the images into different spatial frequency ranges, or inverting the contrasts so that the adapt and test images had different polarities, resulted in substantially weakened aftereffects, and these were stimulus manipulations that also caused the adapt and test face to look like different individuals. Their hypothesis might account for why face aftereffects are relatively robust to changes in size or position, differences which are in fact frequently introduced to try to isolate high-level and possibly face-specific levels of the adaptation (Leopold et al., 2001; Zhao and Chubb, 2001; Afraz and Cavanagh, 2008). Moreover, the aftereffects are also surprisingly robust across global transformations such as uniformly stretching the images (Hole, 2011). This stretching alters many of the configural relationships in the image (e.g., the relative distances between the eyes and nose), yet again has little effect on the recognizability of the face (Hole et al., 2002).
Finally, the proposal might also explain why aftereffects are selective for differences in the actual identity of faces (and may become more selective as the similarity between two identities decreases; Yamashita et al., 2005), but are not selective for differences in the identity strength of a given face (e.g., between a face and its caricature; Loffler et al., 2005; Rotshtein et al., 2005; Bestelmeyer et al., 2008).

We sought to test this hypothesis in the context of invariant versus variant aspects of the face. In particular, by this account aftereffects might show less selectivity for changes in expression because these changes do not alter the perceived identity of the face. Conversely, the aftereffects should show less transfer when the gender is altered, because a change in gender is also a change in identity. To test this, we compared the relative selectivity for changes in expression or gender on the same configural aftereffect. Surprisingly, however, the results instead suggest that if anything the adaptation was more selective for the expression difference, thus arguing against perceived identity as the primary factor controlling how adaptation to the configural distortion transferred from one face to another.

Materials and Methods

Subjects

Observers included the authors (denoted as S1 and S2 in the figures), and four additional observers who were unaware of the aims of the study, with different observers participating in different subsets of the experiments. All observers had normal or corrected-to-normal visual acuity and participated with informed consent. Experiments followed protocols approved by the University’s Institutional Review Board.

Stimuli

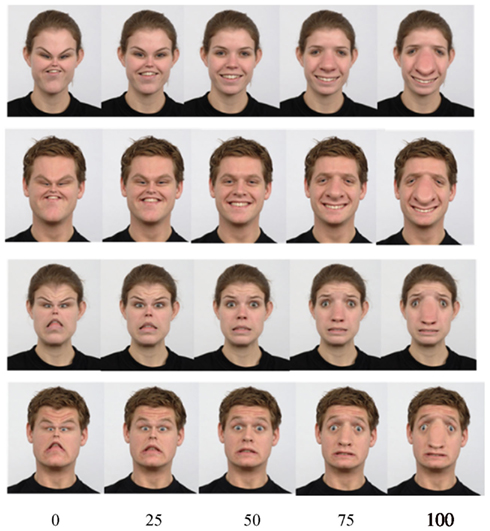

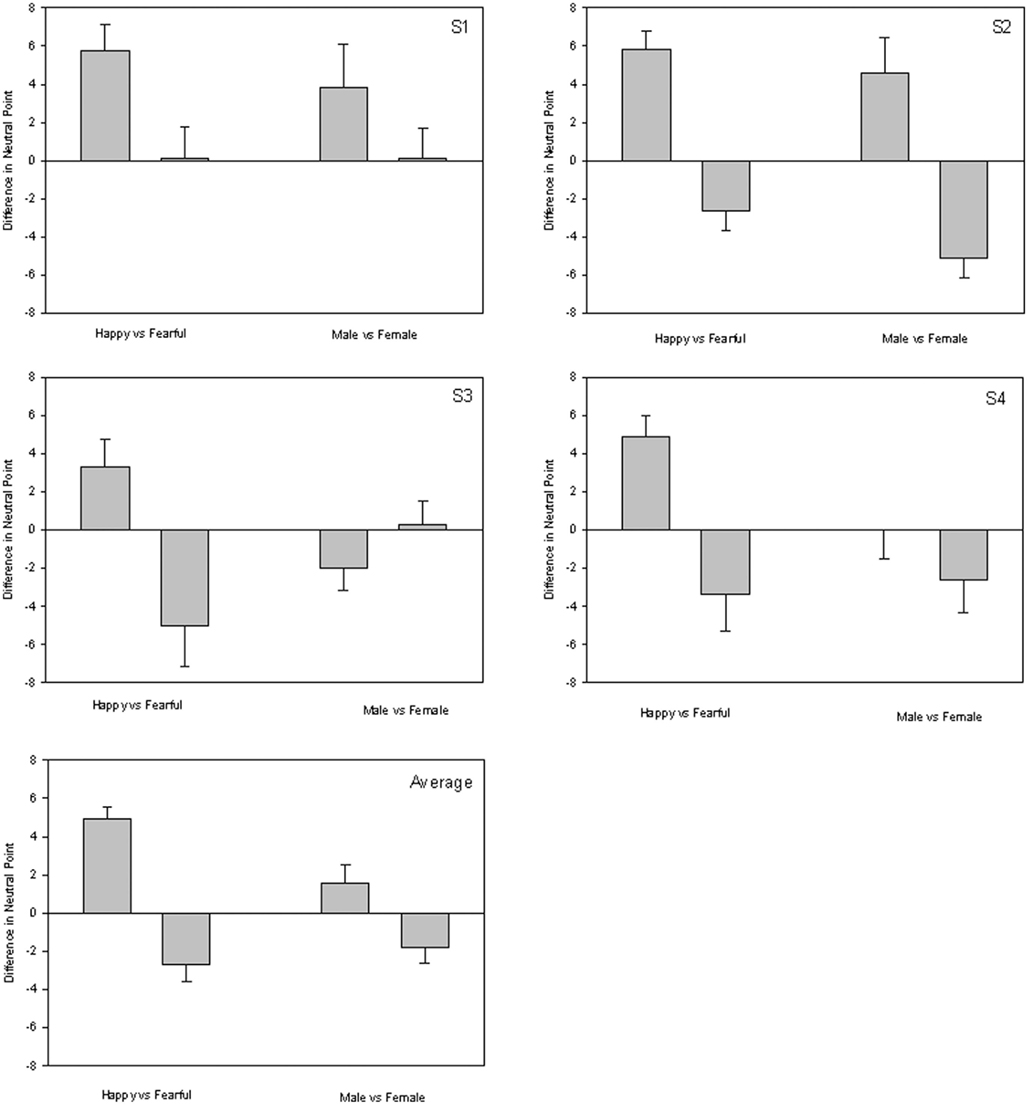

Faces for the study consisted of full-color frontal-view images of Dutch female and male faces with happy or fearful posed expressions, acquired from the Radboud Face Database (Langner et al., 2010; http://www.socsci.ru.nl:8180/RaFD2/RaFD?p=main). Two models (female 32 and male 23) were used throughout as the test images, while the same models as well as additional faces were chosen as the adapting images. To maximize the identity cues to the face, the images were not cropped and thus included the full outline of the head and neckline. (Models were dressed uniformly in black shirts and with their hair pulled back.) The images were distorted by a local expansion or contraction of the face relative to a midpoint on the nose, using a procedure similar to the algorithms described by Webster and MacLin (1999) and Yamashita et al. (2005). Equal expansions were applied to the vertical and horizontal axes of the image. The magnitude of the distortion was varied in finely graded steps to generate an array of 100 images, which ranged from fully contracted (0) to fully expanded (100), with the original face corresponding to a level of 50 (Figure 1).

Figure 1. Test faces corresponding to a happy female, happy male, fearful female, or fearful male. For each the face was distorted from maximally contracted (0) to maximally expanded (100). The original, undistorted face is shown in the middle column, corresponding to a level of 50.
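The local expansion or contraction can be pictured as a radial remapping of pixel positions about the nose midpoint. The sketch below is purely illustrative and rests on assumptions not stated in the text: a power-law warp confined to a circle of radius `radius`, and a hypothetical linear mapping (`level_to_exponent`, `max_strength`) from the 0–100 distortion level onto the warp exponent. It is not the exact algorithm of Webster and MacLin (1999) or Yamashita et al. (2005).

```python
import math

def level_to_exponent(level, max_strength=0.5):
    """Hypothetical mapping from distortion level to warp exponent.

    Level 50 (original face) gives exponent 1 (no change); 100 gives
    1 + max_strength (fully expanded); 0 gives 1 - max_strength (fully contracted).
    """
    return 1.0 + (level - 50) / 50 * max_strength

def source_point(x, y, cx, cy, radius, level):
    """Inverse mapping: the source-image point sampled for output pixel (x, y).

    Within `radius` of the centre (cx, cy), e.g. the nose midpoint, the
    normalised distance r is remapped to r ** p. With p > 1 output pixels
    sample from nearer the centre, so the face is expanded; with p < 1 it
    is contracted. Points outside the circle are left unchanged.
    """
    dx, dy = x - cx, y - cy
    r = math.hypot(dx, dy)
    if r == 0 or r >= radius:
        return (x, y)
    p = level_to_exponent(level)
    scale = radius * (r / radius) ** p / r
    # The same scale on both axes gives equal vertical and horizontal distortion.
    return (cx + dx * scale, cy + dy * scale)
```

Because the function returns a source coordinate for each output pixel, it could be paired with any image-resampling routine; the warp is continuous at the circle's edge, where r ** p equals r.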

The images were displayed on a SONY E540 monitor, centered on a 16 by 12° gray background with a similar mean luminance of 15 cd/m². The test images subtended 5 by 5.8° at the 140 cm viewing distance, while the adapt images were shown 1.5 times larger to reduce the potential influence of low-level aftereffects. Observers viewed the stimuli binocularly in an otherwise dark room and used a handheld keypad to record their responses.
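As a worked check on the display geometry, the physical size of an image subtending an angle θ at viewing distance d is 2d·tan(θ/2). The sketch assumes that “1.5 times larger” refers to linear extent, so the adapt images would subtend about 7.5 by 8.7°; the physical sizes it prints are derived here and are not reported in the original.

```python
import math

def size_cm(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

DISTANCE_CM = 140
TEST_DEG = (5.0, 5.8)                          # width, height of the test images
ADAPT_DEG = tuple(1.5 * a for a in TEST_DEG)   # assumed 1.5x linear scaling

for label, (w, h) in (("test", TEST_DEG), ("adapt", ADAPT_DEG)):
    print(f"{label}: {w:.1f} x {h:.1f} deg = "
          f"{size_cm(w, DISTANCE_CM):.1f} x {size_cm(h, DISTANCE_CM):.1f} cm")
```

At these small angles, scaling the visual angle by 1.5 and scaling the physical image by 1.5 give nearly identical results, so the choice between the two readings matters little.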

Procedure

Observers first adapted to either a single distorted face image or to alternating pairs of distorted images for a period of 2–5 min. In the single face condition, the adapt face image remained static and corresponded to a happy male, happy female, fearful male, or fearful female, shown either fully contracted or fully expanded. The opposing face condition involved adapting to face images that differed in expression and/or gender and that were paired with opposing distortions (e.g., adapting to a contracted happy male alternated with an expanded happy female). The faces were alternated at a rate of 1 image/s. After the initial adapt period, observers were presented with test images shown for 1 s and interleaved with 4 s periods of readaptation, with a blank gray screen shown for 150 ms between each adapt and test period. Observers made a forced-choice response to indicate whether the test face appeared “too contracted” or “too expanded.” The distortion level on subsequent trials was varied with a staircase, and the level that appeared undistorted was estimated from the mean of the final eight reversals. Either two or four test faces were shown in randomly interleaved order during the run, each adjusted by its own staircase. These consisted of test faces that were the same as the adapt, differed in expression, differed in gender, or differed in both expression and gender. In a single session each observer completed four repeated settings with the test images for a single adapting condition, with the order of adapting conditions counterbalanced across sessions.
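The staircase logic can be sketched as follows. The start level, step size, total number of reversals, and the simulated observer in `run_interleaved` (Gaussian response noise around a fixed neutral point) are hypothetical choices made for illustration; only the two response categories, the separate randomly interleaved staircase for each test face, and the mean-of-final-eight-reversals estimate come from the procedure above.

```python
import random

class Staircase:
    """1-up/1-down staircase on the 0-100 distortion scale.

    The start level, step size, and total number of reversals are
    hypothetical; the text specifies only the final-eight-reversal estimate.
    """

    def __init__(self, start=50, step=4, n_reversals=12):
        self.level = start
        self.step = step
        self.n_reversals = n_reversals
        self.last_dir = None
        self.reversals = []

    @property
    def done(self):
        return len(self.reversals) >= self.n_reversals

    def update(self, too_expanded):
        """Record one forced-choice response and set the next test level."""
        # "Too expanded" -> show a more contracted face next, and vice versa.
        direction = -1 if too_expanded else +1
        if self.last_dir is not None and direction != self.last_dir:
            self.reversals.append(self.level)  # level at which the response flipped
        self.last_dir = direction
        self.level = min(100, max(0, self.level + direction * self.step))

    def estimate(self, n_final=8):
        """Neutral point: mean of the final n_final reversal levels."""
        last = self.reversals[-n_final:]
        return sum(last) / len(last)


def run_interleaved(neutral_points, noise=3.0, seed=0):
    """Run one staircase per test face, randomly interleaved across trials.

    neutral_points maps a test-face label to the level a *simulated*
    observer sees as undistorted; responses include Gaussian noise.
    """
    rng = random.Random(seed)
    stairs = {face: Staircase() for face in neutral_points}
    while not all(s.done for s in stairs.values()):
        face = rng.choice([f for f, s in stairs.items() if not s.done])
        s = stairs[face]
        too_expanded = s.level + rng.gauss(0, noise) > neutral_points[face]
        s.update(too_expanded)
    return {face: s.estimate() for face, s in stairs.items()}
```

A 1-up/1-down rule settles where “too expanded” and “too contracted” responses are equally likely, which is the perceived neutral point that the settings are meant to estimate.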

Results

Distortion Aftereffects for Neutral or Expressive Faces

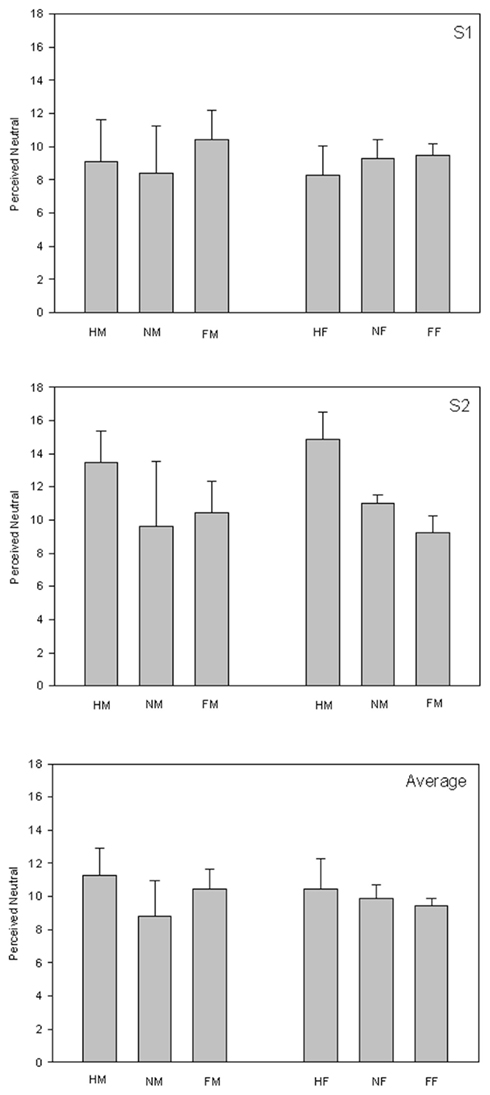

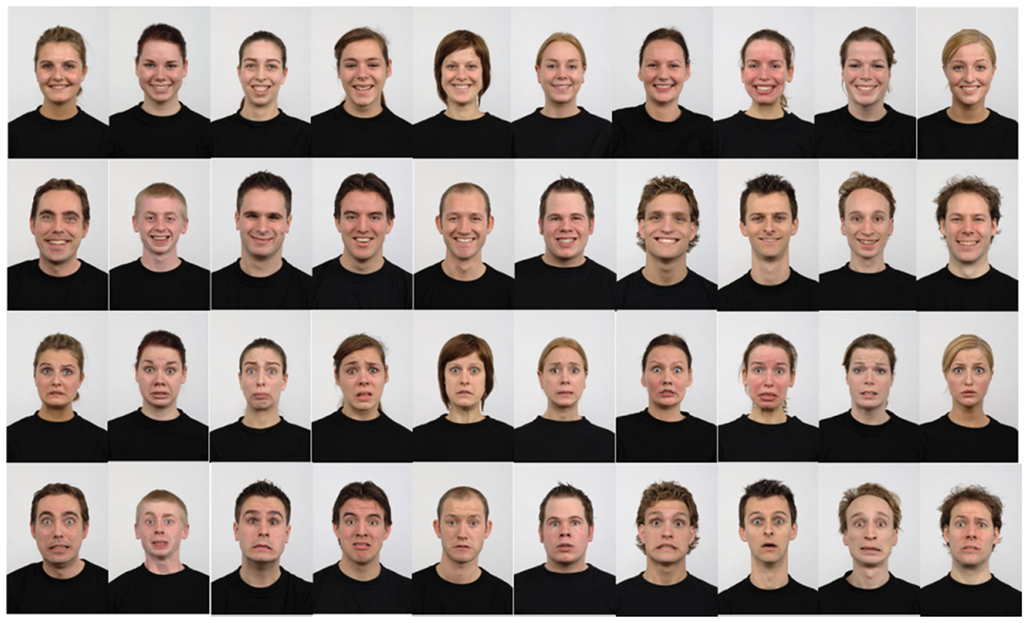

The basic aftereffects we examined involved changes in the perceived configuration of faces with different expressions or genders after adapting to expanded or contracted faces. These distortions can themselves alter the perceived expression of the face (Zhao and Chubb, 2001; Neth and Martinez, 2009), and conversely the expression might alter the apparent distortion. Thus, as a preliminary control experiment, we examined whether adaptation to the distortions depended on the expression or gender. In pilot studies we found that horizontal distortions in the images were difficult to judge because the neutral, undistorted level was unclear in the highly expressive faces. As noted in the Methods, we therefore applied both vertical and horizontal distortions in the actual experiment. For these, observers could more reliably judge the undistorted face, and the simple aftereffects for these faces did not differ in magnitude from the aftereffects for the same configural distortions in images of faces with neutral expressions. These aftereffects are shown in Figure 2, which plots the difference between the physical distortion levels of the faces that appeared undistorted to the observer after adapting to the expanded versus the contracted face. A two-way ANOVA showed no significant effect of expression [F(2,35) = 0.47, p = 0.63] or gender [F(1,35) = 0.05, p = 0.82] on the strength of the aftereffects.

Figure 2. Aftereffects for adapt and test faces with the same expression and gender. The aftereffects are plotted as the difference in the perceived neutral point for each test face after adapting to an expanded face versus a contracted face. The faces corresponded to the male and female models with a happy, fearful, or neutral expression. Panels plot the settings for the two individual observers and the average.

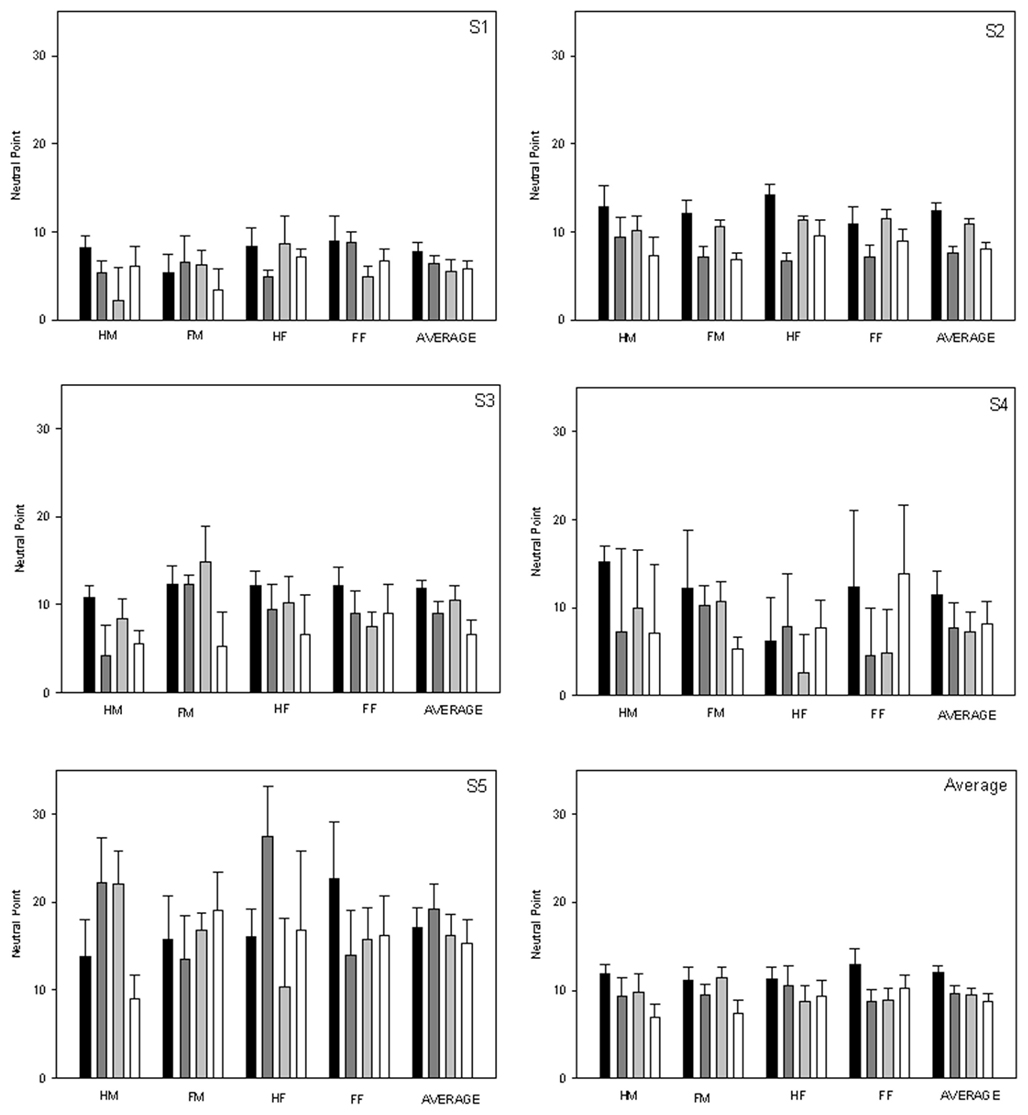

Transfer of Adaptation across Changes in Expression or Identity

To compare the selectivity of adaptation for different facial attributes, we first investigated the transfer from a single adapting face to either the same or a different face. The observers adapted for 2 min to the image of a happy male, a happy female, a fearful male, or a fearful female. For each they then judged the perceived configuration of images that were the same as the adapt, different in expression, different in gender, or different in both expression and gender, with the displayed face chosen at random on each trial (Figure 3). Aftereffects were again assessed as the difference in the null settings after adapting to an expanded face versus a contracted face (Figure 4). The adaptation showed strong transfer across all four test faces: the aftereffects were strong whether the adapt and test faces were the same or different. The sizes of the aftereffects were compared with a Kruskal–Wallis one-way ANOVA on ranks, which showed a significant effect of the adapt–test combination [H(3) = 16.29, p = 0.001]. Pairwise comparisons revealed a significantly larger aftereffect when the test face was the same as the adapt than when the test face differed in expression (Q = 3.05, p < 0.05) or in both expression and gender (Q = 3.80, p < 0.05). However, the adaptation magnitude did not differ between test faces that were the same as the adapt and test faces that differed only in gender (Q = 2.37, NS). Finally, the aftereffects were also similar whether the test and adapt faces differed in gender or expression (Q = 0.69, NS). Thus overall the aftereffects tended to be modulated as much as or more by the expression difference than by the gender difference between the faces.

Figure 3. Adaptation to a single image. After adapting to a single face image, the observers were presented with one of four test face images, which included the same adapt face, a face differing in expression, a face differing in gender, and a face differing in both expression and gender.

Figure 4. Transfer of adaptation across individual faces. Aftereffects are plotted as the difference between the settings following adaptation to expanded or contracted faces. Sets of bars correspond to the four adapt faces (hm, happy male; fm, fearful male; hf, happy female; and ff, fearful female) or to the average for the four adapting faces. For each the bars show the settings when the test face was the same as the adapt (black), differed in expression (dark gray), gender (light gray), or both attributes (unfilled). Each panel plots the settings for the five individual observers or the average.
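For readers unfamiliar with the rank-based test used above, the Kruskal–Wallis H statistic can be computed as sketched below, with the usual correction for tied ranks. With four adapt–test combinations it has k − 1 = 3 degrees of freedom, matching H(3). This is a generic textbook implementation for illustration only; the statistical software the authors used, and the post hoc procedure behind the Q values, are not specified here, and in practice a library routine such as `scipy.stats.kruskal` would normally be used.

```python
from itertools import chain

def rank_with_ties(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of sorted positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with the standard tie correction."""
    data = list(chain.from_iterable(groups))
    n = len(data)
    ranks = rank_with_ties(data)
    total, start = 0.0, 0
    for g in groups:
        r = ranks[start:start + len(g)]
        total += sum(r) ** 2 / len(g)  # squared rank sum per group
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n).
    counts = {}
    for v in data:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    return h / (1 - ties / (n ** 3 - n)) if ties else h
```

The H value is referred to a chi-square distribution with k − 1 degrees of freedom, which is why four conditions give H(3).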

Contingent Adaptation

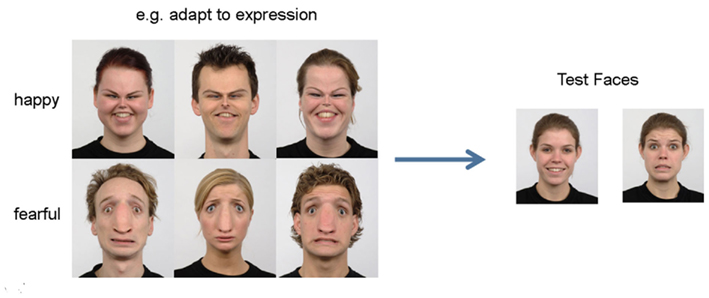

To better isolate the components of adaptation that are actually selective for the facial attributes, we next measured the aftereffects in a contingent adaptation task, in which the expanded and contracted distortions were paired with differences in the gender and/or expression of the face. This has the advantage that any non-selective adaptation is canceled out between the two opposing distortions, thus leaving a more sensitive probe of the selectivity (Yamashita et al., 2005). The observers were adapted for 2 min to a 1-s alternation between two opposing faces with opposite distortions that differed in either gender or expression, while the test faces consisted of two interleaved faces that were the same as the adapt faces (Figure 5).

Figure 5. Contingent adaptation. Observers adapted to an alternation between two faces with opposite distortions that differed in either gender or expression, and then judged the apparent distortion in each gender or expression.

Figure 6 plots the mean neutral settings after adapting to opposing distortions paired with the different expressions or genders. The selectivity of the adaptation was assessed by comparing the difference between the aftereffects for the same test face across the two adapting conditions. For the two test faces, these differences should be of opposite sign if the aftereffects were contingent on the facial attribute (since in one pair the difference corresponded to expanded adapt – contracted adapt, while for the other it was contracted adapt – expanded adapt). Alternatively, the difference should be similar for both faces if the distortion aftereffect did not depend on the value of the attribute. There were significant contingent aftereffects for the adapting face pairs whether they differed in expression [t(31) = 6.67, p < 0.001] or gender [t(31) = 2.52, p = 0.017]. However, the contingent aftereffects for expression differences were significantly stronger than for the gender differences [t(31) = 2.27, p = 0.030]. Thus again the results pointed to stronger selectivity for the expression differences.

Figure 6. Aftereffects for the contingent adaptation for expression or gender. Bars plot the difference between the settings for the happy or fearful face (left) or male or female face (right) after adapting to opposing distortions in the faces. Panels show the settings for the four observers or the mean.
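The logic of the contingent measure can be made explicit by splitting the two test faces' differences into a part that reverses sign with the facial attribute and a part the faces share. The decomposition and the helper names (`contingent_components`, `paired_t`) are illustrative assumptions; the text does not specify exactly how settings were paired or pooled to give 31 degrees of freedom.

```python
import math
import statistics

def contingent_components(d_a, d_b):
    """Split the setting differences for test faces A and B.

    d_a, d_b: setting after adapting to (A expanded, B contracted) minus
    setting after adapting to (A contracted, B expanded), for each test face.
    A contingent aftereffect makes d_a and d_b opposite in sign; a purely
    non-selective aftereffect makes them equal.
    """
    contingent = (d_a - d_b) / 2  # part that flips with the attribute
    shared = (d_a + d_b) / 2      # part common to both test faces
    return contingent, shared

def paired_t(x, y):
    """Paired-samples t statistic and its degrees of freedom."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

A paired t-test of d_a against d_b is equivalent to a one-sample t-test of the contingent component against zero, since that component is simply half of each paired difference.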

Transfer of Adaptation across Gender and Expression in Faces with Different Identities

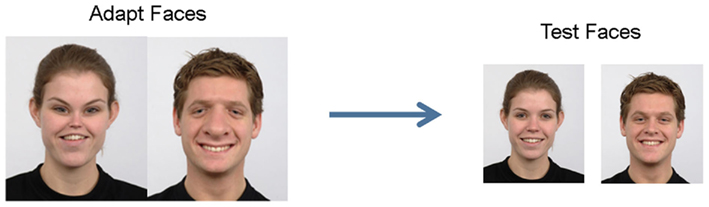

In the preceding experiments, we used only two faces, which corresponded to two individual identities as well as two genders. Moreover, we had no way of controlling the magnitude of the identity difference relative to the expression difference. Thus a possible confound was that the relative selectivity we observed for “gender” and “expression” depended on the specific pair of faces we tested, in which the identity difference may have been weak. To control for this, in the final experiment we tested contingent adaptation aftereffects for sets of faces that might more directly capture the attributes of expression and gender. For this, we used 10 female and 10 male faces with the same happy and fearful expressions (Figure 7). The observer adapted to these 20 faces, which were interleaved with each other and alternated between the two distortions, with the distortions paired with differences in gender, expression, or both (Figure 8). Observers were then tested on the same two model faces used in the preceding experiments, which were no longer part of the adapting set.

Figure 7. Face sets shown for adaptation to populations of female or male, or happy or fearful faces.

Figure 8. Adaptation to different face sets with different attributes. Observers viewed an alternation between two faces with opposite distortions that were drawn at random from sets that differed in either gender and/or expression, and then judged the apparent distortion in each gender and/or expression.

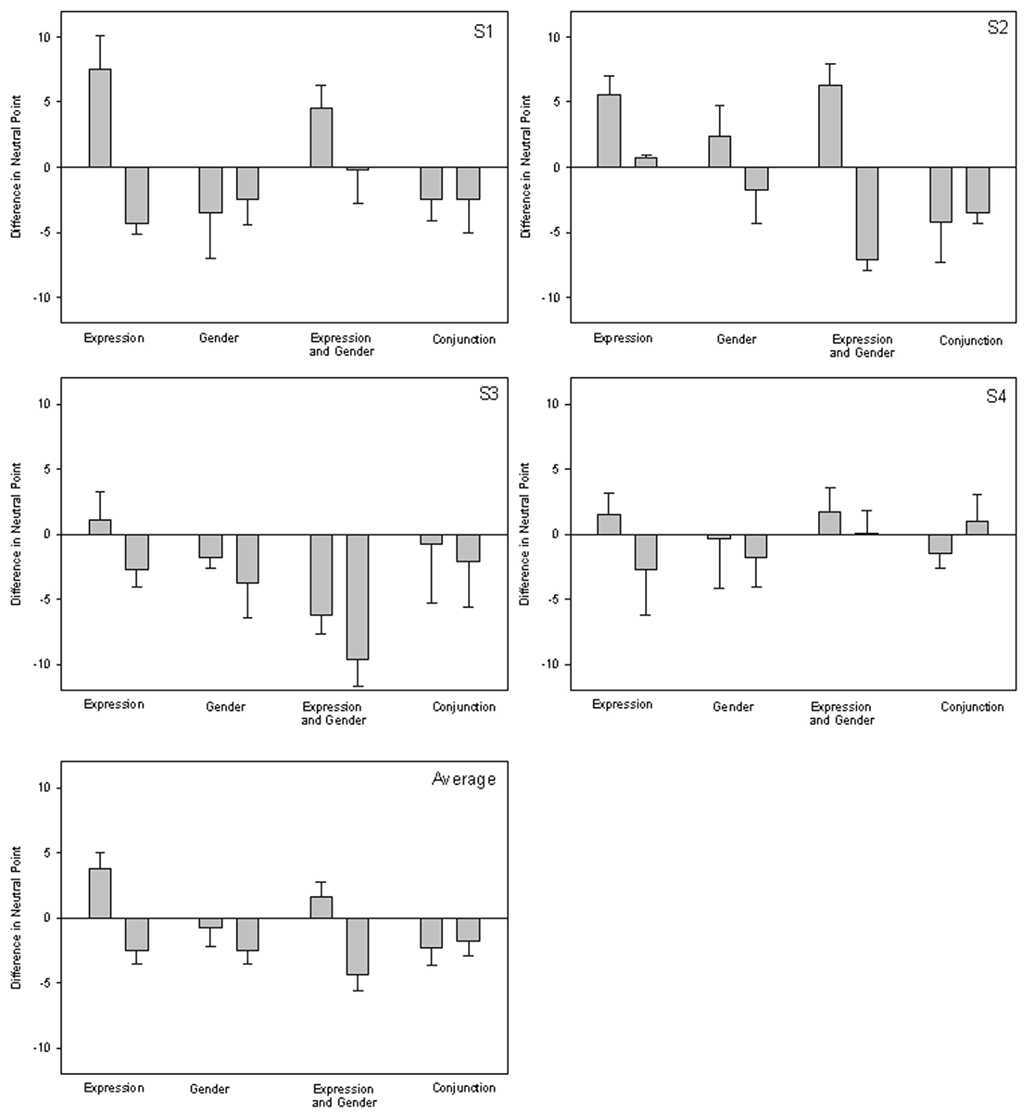

Aftereffects were now measured for four conditions. In the expression difference, the faces within each group were all happy or all fearful, but were drawn equally from males or females. In the gender difference, the two groups were all male or all female, with half showing a happy expression and half fearful. In the correlated expression and gender difference, each member of the adapting group had the same expression and gender (e.g., happy females versus fearful males or fearful females versus happy males). Finally, in the conjunction of expression and gender differences, observers were adapted to both expressions and genders within each group but combined in opposite ways. For example, they were exposed to expanded faces that were either happy females or fearful males, alternated with contracted faces that were either fearful females or happy males. Again, these conditions allowed us to compare adaptation to the attributes of expression or gender, which were now less closely tied to a given individual identity. The latter two cases also allowed us to test what happens when the adapting images differ along more than one dimension, and whether this depends on whether these differences covary or reflect higher-order combinations of the adapting attributes.
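The four adapting contingencies can be written as rules that assign each gender and expression combination to the expanded or the contracted set. The sketch assumes, for illustration, that each of the 10 female and 10 male models contributes both a happy and a fearful image; the model indices, the `RULES` table, and the random alternation in `adapt_sequence` are hypothetical stand-ins rather than the authors' code.

```python
import random
from itertools import product

GENDERS = ("female", "male")
EXPRESSIONS = ("happy", "fearful")

# Each rule returns +1 (expanded set), -1 (contracted set), or None (unused).
# Which attribute value receives which distortion is arbitrary in this sketch.
RULES = {
    "expression": lambda g, e: +1 if e == "happy" else -1,
    "gender": lambda g, e: +1 if g == "female" else -1,
    # Correlated: e.g. happy females versus fearful males only.
    "correlated": lambda g, e: {("female", "happy"): +1,
                                ("male", "fearful"): -1}.get((g, e)),
    # Conjunction: happy females + fearful males versus fearful females + happy males.
    "conjunction": lambda g, e: +1 if (g == "female") == (e == "happy") else -1,
}

def build_sets(rule, n_models=10):
    """Return {+1: [...], -1: [...]} lists of (gender, expression, model) images."""
    sets = {+1: [], -1: []}
    for g, e, m in product(GENDERS, EXPRESSIONS, range(n_models)):
        sign = RULES[rule](g, e)
        if sign is not None:
            sets[sign].append((g, e, m))
    return sets

def adapt_sequence(sets, n_images, seed=0):
    """Alternate between the expanded and contracted sets, one image per step,
    drawing each image at random from the current set."""
    rng = random.Random(seed)
    seq = []
    for i in range(n_images):
        sign = +1 if i % 2 == 0 else -1
        seq.append((sign, rng.choice(sets[sign])))
    return seq
```

Under the conjunction rule, each set contains both genders and both expressions, so neither attribute alone predicts the distortion; only their combination does.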

Mean settings at which the test faces appeared undistorted are shown in Figure 9 for each of the four adapting contingencies. The results showed significant selective aftereffects for the expression difference [t(14) = 4.55, p < 0.001], and for a difference in both expression and gender [t(30) = 5.03, p < 0.001]. However, for the gender difference the selectivity did not reach significance [t(15) = 1.34, p = 0.20]. Moreover, a significant contingent aftereffect was not found when the observers were adapted to different conjunctions of gender and expression [t(15) = −0.218, p = 0.76]. Thus in this case the selectivity of the aftereffects across all of the conditions appeared to largely depend on the differences in expression.

Figure 9. Adaptation to opposing distortions in sets of faces. Bars plot the settings for the two opposing conditions when the sets of faces differed in expression, gender, both gender and expression, or conjunctions of gender and expression. Panels show the settings for four observers or the mean.

Discussion

As noted in the Introduction, our study was motivated by the possibility that face distortion aftereffects might be more robust to image changes that preserved the identity of the face than to changes that caused the adapt and test faces to appear to be drawn from different individuals (Yamashita et al., 2005). This difference is generally consistent with the selectivity of the aftereffects for low-level transformations in the images, as well as a number of higher-level aspects of the adaptation. We therefore asked whether this difference might be manifest when comparing the selectivity of aftereffects between natural variations within the same face versus between different faces. However, we did not find stronger transfer when the adapt and test images showed different expressions of the same face than when they differed in gender and thus identity. Instead, in our case the aftereffects tended to be more selective for the expression change. Moreover, selectivity for both the expression and gender differences was surprisingly weak. We consider the relative selectivity and the general lack of selectivity in turn.

An obvious problem in interpreting comparisons across the facial dimensions is that the differences in expression may have represented larger physical differences in the images. The differences in selectivity could then simply reflect the degree to which the adapt and test faces differed as images. Indeed, this factor has been suggested as a possible reason for differences in the susceptibility of identity versus expression adaptation to suppression from visual awareness (Moradi et al., 2005; Adams et al., 2010, 2011; Yang et al., 2010). However, by this account the previously reported asymmetries between expression and identity aftereffects should have been reversed, for again the identity aftereffects showed greater transfer across expression (implying that the expression differences were weaker; Fox and Barton, 2007; Fox et al., 2008). Fox and colleagues also showed that physical similarity could not account for the asymmetries they observed, because there were no corresponding differences in discrimination thresholds for the faces (Fox et al., 2008). In our case, we did not evaluate an independent measure of facial similarity. Yet we did not observe stronger selectivity when the faces differed in both expression and gender, which might have been expected if overall similarity were the important factor in determining the degree of transfer. Moreover, even if the expression change introduced a larger physical difference in the image, the images corresponded to natural patterns of variation in the face, and thus coding and adaptation for the relative attributes might be expected to be matched to the relative range of variation along the two dimensions (Robbins et al., 2007; Webster and MacLeod, 2011). In any case, these differences would not alter our conclusion that natural variations in the same identity owing to a change in expression resulted in similar or more selective aftereffects than natural variations across identities owing to a change in gender.
Thus our results would still be inconsistent with a strong form of the proposal that the aftereffects transfer more strongly across changes that preserve identity (though this assumes that the expression changes were not so strong that they in fact masked the model’s identity).

Why might our conditions have led to a different pattern of selectivity for identity and expression than found previously (Fox and Barton, 2007; Fox et al., 2008)? An important difference between our study and these previous studies is that the latter tested the effect of facial changes directly on identity and expression aftereffects. That is, they tested how a change in identity affected the perceived expression of the face or vice versa. In contrast, our aftereffects measured how the same configural change (the perceived expansion or contraction of the face) was modulated by a difference in gender or expression. This had the advantage that the same aftereffects could be compared for different variations between the adapt and test images. However, it has the important drawback that the aftereffects are not directly tapping the perception of the specific dimensions of gender or expression. Thus our results are not inconsistent with asymmetries between expression and identity aftereffects, but instead suggest that the configural changes induced by adaptation to the distorted faces can be affected by differences in both expression and gender. Thus again they are inconsistent with the specific hypothesis we tested that the distortion aftereffects would be stronger between faces that shared a common identity.

A conspicuous feature of our results was that the degree of selectivity we observed for both expression and gender was in fact very weak. The aftereffects were instead arguably notable for the high degree of transfer across fairly obvious changes in the appearance of the adapt and test faces. This is all the more compelling because the images were not cropped and thus provided unusually strong cues to the identity difference. The strong transfer is consistent with studies that have pointed out that changes in facial attributes lead to only partial selectivity in the distortion aftereffect (Jaquet and Rhodes, 2008), though it remains possible that the degree of selectivity varies with the specific form and magnitude of the configural change.

The basis for selectivity in face aftereffects is uncertain. One account assumes that different types of faces might be encoded relative to distinct norms (Little et al., 2005, 2008; Jaquet and Rhodes, 2008; Jaquet et al., 2008). In this case adaptation to distortions in a male or female face might each induce a mean shift in the appearance of the corresponding subpopulation. Selectivity in such models assumes that the channels are very broadly tuned along one coding dimension (since this broad tuning is required to account for the normalization observed in face adaptation), while more narrowly tuned along other dimensions (so that stronger distortion aftereffects occur when the adapt and test have shared attributes). By this model, under the specific conditions we examined, the channels coding the configural distortions are fairly broadly tuned for both gender and expression, and in particular, are not more selective for the identity attribute of gender than for the variant attribute of expression.
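A toy version of this multiple-norm account makes the link between tuning width and transfer explicit. Everything in the sketch is an assumption for illustration (two channels at attribute values −1 and +1, Gaussian tuning of width `sigma`, and norm shifts that scale linearly with each channel's response to the adaptor); it is not a model fitted to the present data or taken from the cited studies.

```python
import math

def weight(a, b, sigma):
    """Hypothetical Gaussian tuning overlap between attribute values a and b."""
    return math.exp(-((a - b) ** 2) / (2 * sigma ** 2))

def aftereffect(adapt_attr, test_attr, sigma, shift=1.0, channels=(-1.0, 1.0)):
    """Shift in the perceived-neutral distortion level for a test face.

    Each channel (e.g. one tuned to male faces, one to female faces) keeps its
    own norm. Adapting to a distorted face moves each channel's norm by
    `shift`, scaled by how strongly that channel responds to the adaptor.
    The test face reads out a response-weighted average of the channel norms.
    """
    norms = [shift * weight(adapt_attr, c, sigma) for c in channels]
    w = [weight(test_attr, c, sigma) for c in channels]
    return sum(wi * ni for wi, ni in zip(w, norms)) / sum(w)

def transfer(sigma):
    """Aftereffect for a different-attribute test relative to a same-attribute test."""
    return aftereffect(-1.0, 1.0, sigma) / aftereffect(-1.0, -1.0, sigma)

for sigma in (0.5, 1.0, 2.0, 5.0):
    print(f"tuning width {sigma}: transfer = {transfer(sigma):.2f}")
```

In this toy model, broad tuning along the attribute dimension produces near-complete transfer, the pattern reported here, while narrow tuning produces strongly attribute-specific aftereffects.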

Webster and MacLeod (2011) noted that the contingent adaptation for different facial attributes could also reflect a form of tilt aftereffect in the multidimensional space, so that adaptation to a specific identity trajectory (or “angle” in the space) biases the appearance of other face trajectories away from the adapting axis. For example, after adapting to an axis defined by a variation from expanded males to contracted females, male and female faces might appear “tilted” toward opposite distortions, while androgynous contracted or expanded faces would be shifted toward opposite genders. This pattern is similar to the changes in perceived hue following adaptation to different color directions (Webster and Mollon, 1994), and has the advantage that the aftereffects still reflect shifts relative to a single common norm. However, selective response changes in this model reflect a form of “contrast adaptation” that adjusts to the variance of the faces, and is distinct from the “mean adaptation” that characterizes most face aftereffects (Webster and MacLeod, 2011). Adaptation-induced changes in the perceived variance of faces have been difficult to demonstrate (MacLin and Webster, 2001), suggesting that this form of adaptation may generally be weak. Under this model, then, the weak selectivity we found for changes in expression or gender is consistent with the possibility that adaptation to the facial distortions primarily induces a mean bias in the face norm rather than a bias in the perceived contrast or gamut of faces relative to the norm.
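The distinction between mean and contrast adaptation can be illustrated in a two-dimensional face space, borrowing the gain-reduction idea often used to describe adaptation to color directions. In the sketch, the axes (distortion and gender), the linear gain loss `k`, and the function names are assumptions made for illustration; the text does not specify a quantitative model.

```python
import math

def mean_adapt(v, adapt_mean):
    """Mean adaptation: responses are re-centred on the adapting mean (norm shift)."""
    return (v[0] - adapt_mean[0], v[1] - adapt_mean[1])

def contrast_adapt(v, axis, k):
    """Contrast adaptation: gain along the adapted axis is reduced by a factor k.

    v    : face as (distortion, gender) coordinates; + = expanded / male.
    axis : direction of the adapting variation, e.g. (1, 1) for
           contracted female <-> expanded male.
    k    : hypothetical loss of gain along that axis (0 = none, 1 = complete).
    """
    norm = math.hypot(*axis)
    u = (axis[0] / norm, axis[1] / norm)
    proj = v[0] * u[0] + v[1] * u[1]  # component of v along the adapted axis
    return (v[0] - k * proj * u[0], v[1] - k * proj * u[1])

axis = (1.0, 1.0)  # alternating expanded males and contracted females
for name, face in {"undistorted male": (0.0, 1.0),
                   "undistorted female": (0.0, -1.0),
                   "expanded androgynous": (1.0, 0.0)}.items():
    x, y = contrast_adapt(face, axis, k=0.5)
    print(f"{name}: distortion {x:+.2f}, gender {y:+.2f}")
```

Because the alternating adaptors average to the undistorted, androgynous norm, `mean_adapt` with a zero mean leaves every face unchanged, whereas `contrast_adapt` rotates test faces away from the adapting axis: the undistorted male shifts toward contraction, the undistorted female toward expansion, and the expanded androgynous face toward female. The coordinates and gain factor are unit-free and purely illustrative.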

Finally, it remains possible that the configural aftereffects we tested show weak tuning for the subtle image variations that define different faces because the aftereffects depend at least in part on response changes at more generic levels of visual coding. Adaptation can potentially arise at many if not all levels of the visual pathway (Webster, 2011; Webster and MacLeod, 2011). While the size difference between the adapt and test images provided a commonly used control for simple retinotopic afterimages (Zhao and Chubb, 2001), aftereffects for the distortions we probed have nevertheless been found to include shape-generic as well as shape-specific and possibly face-specific components. For example, Dickinson et al. (2010) have noted that the aftereffects for configural distortions could in part arise from changes in the distribution of local orientations in the images, a pattern that could be preserved even when the adapt and test images differ in size. On the other hand, aftereffects for the distortions survive the size change even when the faces are altered to remove all internal structure except the eyes and mouth, so that the aftereffect in this case cannot be driven by the local texture (Yamashita et al., 2005). Moreover, aftereffects for distortions along one axis (e.g., horizontally stretching the face) transfer across changes in head orientation, and thus must again include a response change that is specific to the object (Watson and Clifford, 2003). Susilo et al. (2010) further examined the extent to which the configural aftereffects might be face-specific. They found that distorting faces by varying eye height induced aftereffects that showed complete transfer from faces to “T” shapes when the images were inverted, but only partial transfer when the images were upright. This suggested that the aftereffects for the distorted faces were driven by non-selective shape aftereffects when the images were inverted, but reflected both shape-selective and face-selective response changes when the faces were upright.

Again, in the present experiments we used configural distortions in order to have a common metric for comparing the expression and gender aftereffects. The fact that these aftereffects were contingent on the facial attributes indicates that the adaptation did not depend on the distortion alone. Yet as the preceding studies suggest, it is also unlikely that they reflected response changes in mechanisms that respond only to faces. Different configural manipulations may vary in the extent to which they isolate face-specific levels of processing (Susilo et al., 2010), and these might reveal patterns of selectivity different from those we observed. Whatever the response changes and coding sites underlying the current configural aftereffects, our results suggest that they adjust to a configural change largely independently of the specific face carrying it, and in particular do not show more selectivity for an invariant attribute like gender than for a variant attribute like expression.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Supported by National Eye Institute Grant EY-10834.

References

Adams, W. J., Gray, K. L., Garner, M., and Graf, E. W. (2010). High-level face adaptation without awareness. Psychol. Sci. 21, 205–210.

Adams, W. J., Gray, K. L., Garner, M., and Graf, E. W. (2011). On the “special” status of emotional faces. Comment on Yang, Hong, and Blake (2010). J. Vis. 11(3): 10, 1–4.

Afraz, A., and Cavanagh, P. (2009). The gender-specific face aftereffect is based in retinotopic not spatiotopic coordinates across several natural image transformations. J. Vis. 9(10): 10, 1–7.

Andrews, T. J., and Ewbank, M. P. (2004). Distinct representations for facial identity and changeable aspects of faces in the human temporal lobe. Neuroimage 23, 905–913.

Atkinson, A. P., Tipples, J., Burt, D. M., and Young, A. W. (2005). Asymmetric interference between sex and emotion in face perception. Percept. Psychophys. 67, 1199–1213.

Barrett, S. E., and O’Toole, A. J. (2009). Face adaptation to gender: does adaptation transfer across age categories? Vis. Cogn. 17, 700–715.

Benton, C. P., and Burgess, E. C. (2008). The direction of measured face aftereffects. J. Vis. 8(15): 1, 1–6.

Bestelmeyer, P. E. G., Jones, B. C., DeBruine, L. M., Little, A. C., Perrett, D. I., Schneider, A., Welling, L. L. M., and Conway, C. A. (2008). Sex-contingent face aftereffects depend on perceptual category rather than structural encoding. Cognition 107, 353–365.

Bestelmeyer, P. E. G., Jones, B. C., DeBruine, L. M., Little, A. C., and Welling, L. L. M. (2009). Face aftereffects suggest interdependent processing of expression and sex and of expression and race. Vis. Cogn. 18, 255–274.

Butler, A., Oruc, I., Fox, C. J., and Barton, J. J. (2008). Factors contributing to the adaptation aftereffects of facial expression. Brain Res. 1191, 116–126.

Calder, A. J., and Young, A. W. (2005). Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 6, 641–651.

Cook, R., Matei, M., and Johnston, A. (2011). Exploring expression space: adaptation to orthogonal and anti-expressions. J. Vis. 11(4): 2, 1–9.

Davidenko, N., Witthoft, N., and Winawer, J. (2008). Gender aftereffects in face silhouettes reveal face-specific mechanisms. Vis. Cogn. 16, 99–103.

Dickinson, J. E., Almeida, R. A., Bell, J., and Badcock, D. R. (2010). Global shape aftereffects have a local substrate: A tilt aftereffect field. J. Vis. 10(13): 5, 1–12.

Ellamil, M., Susskind, J. M., and Anderson, A. K. (2008). Examinations of identity invariance in facial expression adaptation. Cogn. Affect. Behav. Neurosci. 8, 273–281.

Fox, C. J., and Barton, J. J. (2007). What is adapted in face adaptation? The neural representations of expression in the human visual system. Brain Res. 1127, 80–89.

Fox, C. J., Oruc, I., and Barton, J. J. (2008). It doesn’t matter how you feel. The facial identity aftereffect is invariant to changes in facial expression. J. Vis. 8(3): 11, 1–13.

Ghuman, A. S., McDaniel, J. R., and Martin, A. (2010). Face adaptation without a face. Curr. Biol. 20, 32–36.

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233.

Hole, G. J. (2011). Identity-specific face adaptation effects: evidence for abstractive face representations. Cognition 119, 216–228.

Hole, G. J., George, P. A., Eaves, K., and Rasek, A. (2002). Effects of geometric distortions on face-recognition performance. Perception 31, 1221–1240.

Hsu, S. M., and Young, A. W. (2004). Adaptation effects in facial expression recognition. Vis. Cogn. 11, 871–899.

Jaquet, E., and Rhodes, G. (2008). Face aftereffects indicate dissociable, but not distinct, coding of male and female faces. J. Exp. Psychol. Hum. Percept. Perform. 34, 101–112.

Jaquet, E., Rhodes, G., and Hayward, W. G. (2008). Race-contingent aftereffects suggest distinct perceptual norms for different race faces. Vis. Cogn. 16, 734–753.

Jones, B. C., Feinberg, D. R., Bestelmeyer, P. E., DeBruine, L. M., and Little, A. C. (2010). Adaptation to different mouth shapes influences visual perception of ambiguous lip speech. Psychon. Bull. Rev. 17, 522–528.

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H. J., Hawk, S. T., and van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cogn. Emot. 24, 1377–1388.

Leopold, D. A., O’Toole, A. J., Vetter, T., and Blanz, V. (2001). Prototype-referenced shape encoding revealed by high-level aftereffects. Nat. Neurosci. 4, 89–94.

Little, A. C., DeBruine, L. M., and Jones, B. C. (2005). Sex-contingent face after-effects suggest distinct neural populations code male and female faces. Proc. Biol. Sci. 272, 2283–2287.

Little, A. C., DeBruine, L. M., Jones, B. C., and Waitt, C. (2008). Category contingent aftereffects for faces of different races, ages and species. Cognition 106, 1537–1547.

Loffler, G., Yourganov, G., Wilkinson, F., and Wilson, H. R. (2005). fMRI evidence for the neural representation of faces. Nat. Neurosci. 8, 1386–1390.

MacLin, O. H., and Webster, M. A. (2001). Influence of adaptation on the perception of distortions in natural images. J. Electron. Imaging 10, 100–109.

Moradi, F., Koch, C., and Shimojo, S. (2005). Face adaptation depends on seeing the face. Neuron 45, 169–175.

Neth, D., and Martinez, A. M. (2009). Emotion perception in emotionless face images suggests a norm-based representation. J. Vis. 9(1): 5, 1–11.

Ng, M., Ciaramitaro, V. M., Anstis, S., Boynton, G. M., and Fine, I. (2006). Selectivity for the configural cues that identify the gender, ethnicity, and identity of faces in human cortex. Proc. Natl. Acad. Sci. U.S.A. 103, 19552–19557.

O’Neil, S., and Webster, M. A. (2011). Adaptation and the perception of facial age. Vis. Cogn. 19, 534–550.

Pell, P. J., and Richards, A. (2011). Cross-emotion facial expression aftereffects. Vision Res. 51, 1889–1896.

Rhodes, G., and Jeffery, L. (2006). Adaptive norm-based coding of facial identity. Vision Res. 46, 2977–2987.

Robbins, R., McKone, E., and Edwards, M. (2007). Aftereffects for face attributes with different natural variability: adapter position effects and neural models. J. Exp. Psychol. Hum. Percept. Perform. 33, 570–592.

Rotshtein, P., Henson, R. N., Treves, A., Driver, J., and Dolan, R. J. (2005). Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat. Neurosci. 8, 107–113.

Rutherford, M. D., Chattha, H. M., and Krysko, K. M. (2008). The use of aftereffects in the study of relationships among emotion categories. J. Exp. Psychol. Hum. Percept. Perform. 34, 27–40.

Said, C. P., Haxby, J. V., and Todorov, A. (2011). Brain systems for assessing the affective value of faces. Philos. Trans. R. Soc. Lond. B Biol. Sci. 366, 1660–1670.

Schweinberger, S. R., Burton, A. M., and Kelly, S. W. (1999). Asymmetric dependencies in perceiving identity and emotion: experiments with morphed faces. Percept. Psychophys. 61, 1102–1115.

Schweinberger, S. R., and Soukup, G. R. (1998). Asymmetric relationships among perceptions of facial identity, emotion, and facial speech. J. Exp. Psychol. Hum. Percept. Perform. 24, 1748–1765.

Schweinberger, S. R., Zaske, R., Walther, C., Golle, J., Kovacs, G., and Wiese, H. (2010). Young without plastic surgery: perceptual adaptation to the age of female and male faces. Vision Res. 50, 2570–2576.

Skinner, A. L., and Benton, C. P. (2010). Anti-expression aftereffects reveal prototype-referenced coding of facial expressions. Psychol. Sci. 21, 1248–1253.

Susilo, T., McKone, E., and Edwards, M. (2010). Solving the upside-down puzzle: why do upright and inverted face aftereffects look alike? J. Vis. 10(13): 1, 1–16.

Watson, T. L., and Clifford, C. W. G. (2003). Pulling faces: an investigation of the face-distortion aftereffect. Perception 32, 1109–1116.

Webster, M. A., Kaping, D., Mizokami, Y., and Duhamel, P. (2004). Adaptation to natural facial categories. Nature 428, 557–561.

Webster, M. A., and MacLeod, D. I. A. (2011). Visual adaptation and face perception. Philos. Trans. R. Soc. Lond. B Biol. Sci. 366, 1702–1725.

Webster, M. A., and MacLin, O. H. (1999). Figural aftereffects in the perception of faces. Psychon. Bull. Rev. 6, 647–653.

Webster, M. A., and Mollon, J. D. (1994). The influence of contrast adaptation on color appearance. Vision Res. 34, 1993–2020.

Yamashita, J. A., Hardy, J. L., De Valois, K. K., and Webster, M. A. (2005). Stimulus selectivity of figural aftereffects for faces. J. Exp. Psychol. Hum. Percept. Perform. 31, 420–437.

Yang, E., Hong, S. W., and Blake, R. (2010). Adaptation aftereffects to facial expressions suppressed from visual awareness. J. Vis. 10(12): 24, 1–13.

Keywords: adaptation, aftereffects, face perception, facial expressions

Citation: Tillman MA and Webster MA (2012) Selectivity of face distortion aftereffects for differences in expression or gender. Front. Psychology 3:14. doi: 10.3389/fpsyg.2012.00014

Received: 02 October 2011;

Paper pending published: 24 November 2011;

Accepted: 11 January 2012;

Published online: 30 January 2012.

Edited by:

Peter James Hills, Anglia Ruskin University, UK

Copyright: © 2012 Tillman and Webster. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Michael A. Webster, Department of Psychology, University of Nevada, Reno, NV 89557, USA. e-mail: mwebster@unr.edu