- 1 Center for Cognitive Neuroscience, Duke University, Durham, NC, USA

- 2 Department of Psychology and Neuroscience, Duke University, NC, USA

Emotional arousal at encoding is known to facilitate later memory recall. In the present study, we asked whether this emotion-modulation of episodic memory is also evident at very short time scales, as measured by “feature integration effects,” the moment-by-moment binding of relevant stimulus and response features in episodic memory. This question was motivated by recent findings that negative emotion appears to potentiate first-order trial sequence effects in classic conflict tasks, an effect that has been attributed to emotion-modulation of conflict-driven cognitive control processes. However, these effects could equally well have been carried by emotion-modulation of mnemonic feature binding processes, which were perfectly confounded with putative control processes in these studies. In the present experiments, we tried to shed light on this question by testing explicitly whether feature integration processes, assessed in isolation from conflict–control, are in fact susceptible to negative emotion-modulation. For this purpose, we adopted a standard protocol for assessing the rapid binding of stimulus and response features in episodic memory (Experiment 1) and paired it with the presentation of either neutral or fearful background face stimuli, shown either at encoding only (Experiment 2), or at both encoding and retrieval (Experiment 3). Whereas reliable feature integration effects were observed in all three experiments, no evidence for emotion-modulation of these effects was detected, in spite of significant effects of emotion on response times. These findings suggest that rapid feature integration of foreground stimulus and response features is not subject to modulation by negative emotional background stimuli and further suggest that previous reports of emotion-modulated trial–transition effects are likely attributable to the effects of emotion on cognitive control processes.

Introduction

Affectively salient stimuli have been found to impact cognitive processing in a variety of ways. For instance, a survival-relevant stimulus, such as a fearful face, can exert a powerful exogenous pull on attention (LeDoux, 2000; Öhman et al., 2001), even if it is irrelevant to the task at hand (Mathews and MacLeod, 1985; McKenna, 1986; Isenberg et al., 1999; Phelps et al., 2006; Reeck and Egner, 2011). In addition to attentional effects, a rich animal and human research literature has documented that affectively salient stimuli or situations also modulate episodic memory processes. Specifically, it is well-established that emotional arousal at the time of encoding enhances long-term memory consolidation in comparison to emotionally neutral conditions (McGaugh and Roozendaal, 2002; McGaugh, 2004; for reviews, see Hamann, 2001; LaBar and Cabeza, 2006). Other studies have shown that emotional stimuli also have more immediate effects on memory encoding, which are likely attention-mediated and may enhance episodic memory in the short-term (that is, prior to consolidation into long-term memory) as well as in the long term (Hamann et al., 1999; Hamann, 2001; Tabert et al., 2001).

In the latter work, an important distinction has been drawn between the recall of a specific object that carries emotional salience (item memory) and the binding of that item and its context (source memory). Specifically, a number of studies suggest that emotional arousal facilitates the recall of emotional stimuli themselves but either does not enhance (Mather and Nesmith, 2008) or is detrimental to the recall of contextual features that are not part of the salient object, resulting in poor context or source memory (Mather et al., 2006; Mitchell et al., 2006). These effects have been theorized to reflect the fact that emotionally arousing stimuli attract attention at the expense of other, co-occurring stimuli, which results in a boost to emotional item memory but poorer mnemonic integration of incidental, non-emotional stimuli into episodic memory (Mather, 2007; but see Hadley and Mackay, 2006). In the current study, we sought to further elucidate the interplay between (negative) emotional stimuli and mnemonic feature binding processes, but with a focus on immediate (or very fast) binding effects, which occur at a time-scale that has been largely ignored in the previous literature.

The temporal lag between memory encoding and retrieval in studies that assessed emotion effects on short-term memory is typically in the range of several minutes (LaBar and Phelps, 1998; Hamann et al., 1999; Tabert et al., 2001; Sharot and Phelps, 2004), and at minimum in the range of 7–8 s (Mather et al., 2006; Mitchell et al., 2006). By contrast, in the present study, we sought to evaluate the influence of emotional stimuli on very fast-acting processes in episodic memory associated with “feature integration,” the seemingly obligatory moment-by-moment binding of stimulus and response features into compound episodic memory traces referred to as “event files” (Hommel, 1998). These binding processes appear to take effect almost instantaneously, as their consequences can be observed at a time-scale of less than 1 s (Hommel, 1998), and they last at least several seconds (Hommel et al., 2004). Note that this type of feature integration involves not only the binding of different perceptual features, which has typically been the focus of previous emotional memory studies (see Mather, 2007), but also the integration of these stimulus features with a motor action on the part of the subject (Hommel, 1998).

Specifically, building on the notion of “object files” – the momentary binding of object features at attended locations into a cohesive percept and memory representation (Kahneman et al., 1992) – Hommel has shown convincingly that both task-relevant (and sometimes task-irrelevant) stimulus and response features comprising an “event” appear to be integrated into a common short-term memory trace, such that processing costs are incurred when this integrated memory has to be bypassed or “unbound” subsequently (e.g., Hommel, 1998, 2004; Hommel et al., 2004). For instance, if a participant performs a right-hand button-press response that temporally coincides with the presentation of a particular visual stimulus (e.g., a blue square), the stimulus and response features are thought to be bound together into an episodic event file. If, shortly afterward, the subject has to perform a left-hand button-press in the presence of the same stimulus features (a “partial repetition” of the previous event), the response will be slowed in comparison to conditions in which either all response/stimulus features repeat or they all change. This is argued to reflect the fact that the reoccurring stimulus features will reactivate (or retrieve) the strongest or most recent memory trace involving those features, including their associated response, which now conflicts with the required response; this conflict has to be overcome for the correct response to be selected, resulting in slower response times (RT; for a similar scheme, see Logan, 1988). These partial repetition costs incurred on partial repetition trials as compared to complete repetitions or alternations can therefore serve as a behavioral index of feature integration in short-term episodic memory, and they will be employed as the dependent variable for testing the effects of emotional stimuli on fast short-term memory binding processes in the present study.
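The event-file logic can be made concrete with a small sketch. Assuming a single stimulus feature and a binary response (a simplification; the names `Transition`, `classify`, and `expects_binding_cost` are illustrative and not taken from the original study), every transition from one event to the next falls into one of three classes, and only the mixed class is predicted to incur the retrieval conflict described above.

```python
from enum import Enum


class Transition(Enum):
    COMPLETE_REPETITION = "complete repetition"
    PARTIAL_REPETITION = "partial repetition"
    COMPLETE_ALTERNATION = "complete alternation"


def classify(feature_repeats: bool, response_repeats: bool) -> Transition:
    """Classify one stimulus-feature/response transition between two successive events."""
    if feature_repeats and response_repeats:
        return Transition.COMPLETE_REPETITION
    if not feature_repeats and not response_repeats:
        return Transition.COMPLETE_ALTERNATION
    # Exactly one bound element repeats: under the event-file account it retrieves the
    # old trace, whose other element now conflicts with the current event.
    return Transition.PARTIAL_REPETITION


def expects_binding_cost(feature_repeats: bool, response_repeats: bool) -> bool:
    """Only partial repetitions are predicted to be slowed."""
    return classify(feature_repeats, response_repeats) is Transition.PARTIAL_REPETITION


# The blue-square example: same stimulus, but a left instead of a right response.
print(classify(feature_repeats=True, response_repeats=False).value)  # partial repetition
```

Treating the feature-change/response-repeat case as symmetric with the feature-repeat/response-change case follows the account above, in which either repeating element can retrieve the stored event file.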

The major motivation for assessing the impact of (negative) emotion on these feature integration processes in the current study stems from the fact that this type of feature binding represents a well-known confound in many studies investigating trial–transition effects in traditional conflict tasks (like Stroop and Simon tasks; Hommel et al., 2004; for review see Egner, 2007). Here, conflict (or interference) effects are typically reduced following an incongruent, high-conflict trial compared to a congruent, low-conflict trial, because performance on successive incongruent and successive congruent trials tends to be faster than performance on incongruent trials following a congruent trial and on congruent trials following an incongruent trial, respectively (Gratton et al., 1992). These inter-trial dependencies in behavioral performance are typically attributed to the operation of a conflict-driven cognitive control mechanism that enhances the selection of task-relevant stimulus information following an incongruent trial, thus reducing the performance difference between congruent and incongruent trials under these conditions (Botvinick et al., 2001; Egner and Hirsch, 2005). However, this sequence effect can often equally well be interpreted in terms of feature integration processes, because in conflict tasks with small stimulus sets, successive congruent and incongruent trials tend to be associated with either an exact repetition of all stimulus and response features or a complete change of these features, whereas congruent trials following an incongruent trial and incongruent trials following a congruent trial tend to be partial repetition trials (Hommel et al., 2004; Egner, 2007).
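The confound can be checked by enumerating every trial pair in a minimal conflict task. The sketch below assumes a hypothetical two-color Stroop task (color words RED/GREEN printed in red or green ink, response determined by ink color) and labels each trial pair by its congruency sequence and its feature-repetition class.

```python
from itertools import product

COLORS = ("red", "green")  # hypothetical two-color Stroop stimulus set


def stimuli():
    """All (word, ink) pairs; the correct response is determined by the ink color."""
    return list(product(COLORS, COLORS))


def congruency(stim):
    word, ink = stim
    return "C" if word == ink else "I"


def transition_class(prev, curr):
    """Classify a trial pair by which stimulus/response features repeat."""
    word_rep = prev[0] == curr[0]
    ink_rep = prev[1] == curr[1]  # with ink-based responding, ink repetition == response repetition
    if word_rep and ink_rep:
        return "complete repetition"
    if not word_rep and not ink_rep:
        return "complete alternation"
    return "partial repetition"


def classes_by_sequence():
    """Map each congruency sequence (e.g. 'CI') to the set of transition classes it contains."""
    table = {}
    for prev, curr in product(stimuli(), stimuli()):
        key = congruency(prev) + congruency(curr)
        table.setdefault(key, set()).add(transition_class(prev, curr))
    return table


for seq, classes in sorted(classes_by_sequence().items()):
    print(seq, sorted(classes))
# CC ['complete alternation', 'complete repetition']
# CI ['partial repetition']
# IC ['partial repetition']
# II ['complete alternation', 'complete repetition']
```

In this minimal set, congruency sequence and feature-repetition class are perfectly aligned, so a faster cC/iI than iC/cI pattern is predicted by feature integration alone, without invoking conflict-driven control.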

Importantly, recent studies have suggested that emotional states modulate these trial–transition effects (van Steenbergen et al., 2009, 2010; but see Stürmer et al., 2011), whereby negative emotion is held to potentiate inter-trial dependencies, and these effects have been interpreted as reflecting the modulation of conflict-driven cognitive control by emotion (van Steenbergen et al., 2009, 2010). However, the designs of these studies did not allow for segregating the effects of conflict-driven control from those of feature integration, such that it is presently not clear whether the emotion effects observed were carried by modulation of conflict-driven control or by modulation of feature integration processes. Here, we sought to clarify this issue by assessing the impact of negative emotion-evoking stimuli on a pure measure of feature integration, outside of the context of a conflict task: if negative emotion were to potentiate feature binding, the previously reported effects might be attributable to this type of modulation, whereas if there were no evidence for emotion-modulation of feature integration, the potentiating effects of negative emotion on trial–transition effects in previous studies are likely attributable to the modulation of conflict-driven control processes.

Given this particular perspective on emotion–memory interactions, our design entailed an additional distinction from many previous studies of emotional memory, in that we did not assess memory for the emotional stimulus itself, but rather whether the mere presence of a (task-irrelevant) background stimulus thought to evoke emotional arousal would modulate episodic binding processes concerning task-relevant foreground stimuli and responses. This approach mimics more closely the type of designs used in investigating emotion-modulation of cognitive control processes (Dreisbach and Goschke, 2004; van Steenbergen et al., 2009, 2010; Stürmer et al., 2011). From the item vs. context perspective in the emotional memory literature highlighted above, this design could be cast as assessing whether emotional arousal modulates fast binding processes of contextual stimuli that are task-relevant but not part of the emotional stimulus itself. In sum, our goal was to gauge whether threat-related emotional (as compared to neutral) background stimuli would influence the short-term episodic binding phenomena underlying the moment-by-moment integration of stimulus and response features.

The Present Experiments

We approached the question of whether emotional stimuli modulate feature integration by adopting the basic task design developed by Hommel (1998). Specifically, in each trial, a multi-featured stimulus (a colored shape) was first paired with a pre-cued response in an arbitrary manner (i.e., the response was not determined by the stimulus features), followed by a second stimulus presentation where the task-relevant stimulus feature (color) determined which one of two possible responses to perform. This design allows one to independently vary repetitions vs. changes in the task-relevant stimulus feature (color), the irrelevant stimulus feature (shape), and the response (left vs. right) from the first to the second stimulus presentation. Consequently, the degree of integration between the response and the relevant and irrelevant stimulus features can be gauged via the respective partial repetition costs (i.e., the relative increase in RT in partial repetition trials as compared to complete repetitions and alternations). In Experiment 1, we simply aimed at replicating the basic stimulus–response feature integration effects reported by Hommel (1998) in the absence of any additional stimuli. In the second and third experiments, we then tested whether presenting additional task-irrelevant background stimuli of varying emotional valence (face stimuli with neutral vs. fearful expressions) either at encoding (Experiment 2) or at encoding and retrieval (Experiment 3) would differentially modulate the episodic binding between the foreground stimulus features and the response. For a different approach to investigating emotional modulation of feature integration effects, see Colzato et al. (2007), which will be addressed in detail in the Section “General Discussion.”
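Because R1 is pre-cued rather than chosen on the basis of S1, color, shape, and response transitions are fully crossed. The following sketch (illustrative names, not the authors' code) enumerates the resulting 2 × 2 × 2 transition cells and marks, for each cell, whether the color–response and shape–response pairings constitute partial repetitions.

```python
from itertools import product


def is_partial(feature_repeats: bool, response_repeats: bool) -> bool:
    """A feature-response pairing is a partial repetition when exactly one of the two repeats."""
    return feature_repeats != response_repeats


def design_cells():
    """Enumerate the 2 (color) x 2 (shape) x 2 (response) transition cells."""
    cells = []
    for color_rep, shape_rep, resp_rep in product((True, False), repeat=3):
        cells.append({
            "color": "rep" if color_rep else "alt",
            "shape": "rep" if shape_rep else "alt",
            "response": "rep" if resp_rep else "alt",
            "color_response_partial": is_partial(color_rep, resp_rep),
            "shape_response_partial": is_partial(shape_rep, resp_rep),
        })
    return cells


for cell in design_cells():
    print(cell)
```

Half of the cells are partial repetitions for each feature, and the two features are independent, so color–response and shape–response binding can be estimated separately from the same data.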

Experiment 1

Our first experiment simply served to ascertain that we could replicate the standard feature integration effects reported by Hommel (1998) in a simple task where the task-relevant stimulus feature consisted of stimulus color and the task-irrelevant feature consisted of stimulus shape. We anticipated observing reliable binding effects between stimulus color and response and weaker (if any) binding effects between stimulus shape and response.

Methods

Subjects

Twenty healthy college student volunteers (mean age = 19.15 years, SD = 1.1, eight women) participated in this study for course credit. All participants were fluent in English and had normal or corrected-to-normal vision. Prior to study participation, written informed consent was obtained from each participant in accordance with institutional guidelines.

Stimuli and procedure

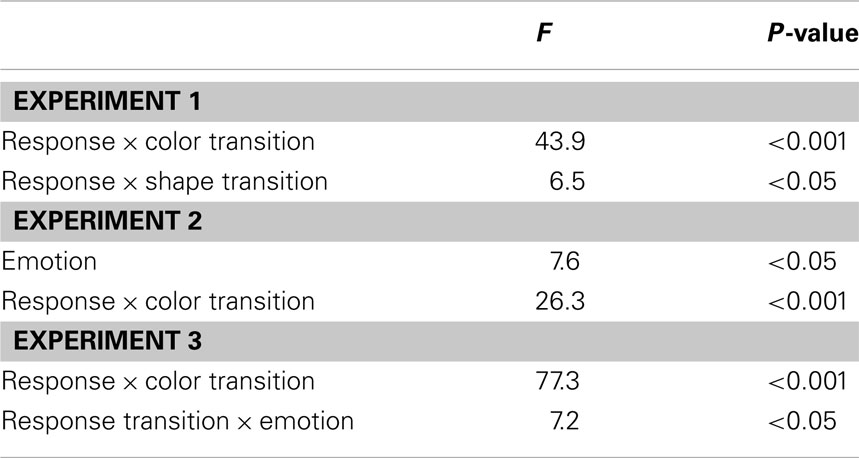

The experiment was programmed and presented using Presentation software (Neurobehavioral Systems, http://nbs.neuro-bs.com), with stimuli displayed on a 19-inch Dell Flat Panel 1907FPVt monitor that was controlled by a Dell Optiplex 960 computer. Stimuli consisted of luminance-equated blue and green circles and squares; response cues were the words “left” and “right,” printed in black uppercase letters. Both stimuli and response cues always appeared in the center of the screen on a gray background (see Figure 1A). Participants were seated approximately 60 cm from the screen. The colored shape stimuli subtended approximately 5 (height) × 5 (width) degrees of visual angle and the response cues subtended approximately 2 (height) × 3 (width) degrees of visual angle. Responses were made by pressing the “N” and “J” keys of a standard QWERTY keyboard with the index and middle finger of the right hand, respectively.
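The physical stimulus sizes are not reported, but they can be back-calculated from the visual angles with the standard relation size = 2 · d · tan(θ/2). The sketch below assumes a viewing distance of exactly 60 cm, so the resulting sizes are approximate.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an object of a given size at a given viewing distance."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Physical size (cm) needed to subtend a given visual angle at a given viewing distance."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# A 5-degree colored shape at an assumed 60 cm is roughly 5.2 cm on a side.
print(round(size_for_angle_cm(5, 60), 2))  # 5.24
```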

Figure 1. Experimental protocols. In each experiment, participants first performed a delayed simple reaction task, where a response cue pre-determined the response (left vs. right) subjects had to execute upon presentation of stimulus 1 (S1), regardless of the particular S1 stimulus features. This was followed by a binary-choice reaction task upon presentation of stimulus 2 (S2), where subjects had to respond in accordance with the color of a colored shape stimulus (e.g., blue = left response, green = right response, counterbalanced across subjects). In Experiment 1 (A), this task was performed without any background stimuli. In Experiment 2 (B), a task-irrelevant face stimulus that could display either a neutral or a fearful expression was shown in the background during S1 presentation. In Experiment 3 (C), a task-irrelevant face stimulus that could display either a neutral or a fearful expression was shown in the background during both S1 and S2 presentation.

The task was modeled after Hommel’s (1998) feature integration experiments: in a given trial, participants first performed a delayed simple reaction task (T1), followed by a binary-choice reaction task (T2). For T1, responses (R1) had to be performed in accordance with the content of a preceding response cue (i.e., “left,” “right”) as soon as the stimulus (S1) appeared on the screen. Importantly, S1 only served as a generic “go” signal for the execution of the pre-cued response, such that the S1 stimulus features did not determine which response had to be selected. For T2, the color (i.e., blue vs. green) of the second stimulus (S2) had to be identified by pressing the left or right response button (R2). Color-to-button response mappings were counterbalanced across participants.

The timing of events in each trial was adopted from Hommel (1998) and was as follows: a fixation cross was presented for 2,000 ms, after which the response cue was shown for 1,500 ms. The fixation cross reappeared for 1,000 ms, followed by S1 presentation for 500 ms. Following S1, there was another fixation interval of 500 ms before S2 was displayed for 2,000 ms. Trials were presented to each participant in one of five pseudo-random orders, generated to satisfy the criterion of equal numbers of occurrences of the possible S2 color (blue vs. green) and shape (circle vs. square) combinations, as well as the possible transition relationships between S1 and S2 regarding color and shape (i.e., repetition vs. alternation), and the two possible relationships between R1 and R2 (repetition vs. alternation). Moreover, the stimulus sequences did not include any direct repetitions of trial types. The experimental session consisted of 4 blocks of 48 trials each, preceded by 20 practice trials. Participants were instructed to respond as fast as possible while maintaining accuracy and they were allowed to take short breaks between blocks.
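The trial structure and ordering constraints can be sketched as follows. The timeline constants come from the procedure above; the trial-type definition (S2 color × S2 shape × color, shape, and response transitions, giving 32 types, six copies each for 192 trials) and the use of rejection sampling to exclude direct repetitions are assumptions, because the exact generation procedure for the five orders is not reported.

```python
import itertools
import random

# Event timeline of one trial (ms), as described in the procedure above.
TIMELINE_MS = (
    ("fixation", 2000),
    ("response cue", 1500),
    ("fixation", 1000),
    ("S1", 500),
    ("fixation", 500),
    ("S2", 2000),
)

COLORS = ("blue", "green")
SHAPES = ("circle", "square")
TRANSITIONS = (True, False)  # True = repetition, False = alternation


def trial_duration_ms():
    """Total duration of one trial, assuming S2 stays on screen for its full 2,000 ms."""
    return sum(duration for _, duration in TIMELINE_MS)


def trial_types():
    """Hypothetical trial types: S2 color x S2 shape x color, shape, and response transitions."""
    return list(itertools.product(COLORS, SHAPES, TRANSITIONS, TRANSITIONS, TRANSITIONS))


def make_sequence(n_copies=6, seed=0, max_attempts=10_000):
    """Shuffle a balanced trial list until no trial type occurs twice in a row (rejection sampling)."""
    rng = random.Random(seed)
    trials = trial_types() * n_copies
    for _ in range(max_attempts):
        rng.shuffle(trials)
        if all(a != b for a, b in zip(trials, trials[1:])):
            return list(trials)
    raise RuntimeError("no order without direct repetitions found")


def split_blocks(sequence, block_size=48):
    """Cut the session sequence into consecutive blocks."""
    return [sequence[i:i + block_size] for i in range(0, len(sequence), block_size)]


# Five pseudo-random orders, one per seed.
orders = [make_sequence(seed=s) for s in range(5)]
```

Rejection sampling is the simplest way to satisfy a "no direct repetition" constraint while keeping every trial type equally frequent; with 32 types it typically needs on the order of a hundred shuffles per order, which is negligible.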

Analyses

The analyses focused on R2 RT. Only trials where both R1 and R2 had been performed correctly were considered correct trials. All error and post-error trials, as well as outlier RTs deviating by more than 2 SDs from the subject-specific grand mean, were excluded from the analysis. Accuracy was at ceiling (mean = 97.3%, SD = 3.2) and was not considered for statistical analysis. We analyzed R2 RT data as a function of the transition relationship (i.e., repetition vs. alternation) between the S1/R1 and the S2/R2 stimulus and response features. Specifically, the data were submitted to a 2 (color: repetition vs. alternation) × 2 (shape: repetition vs. alternation) × 2 (response: repetition vs. alternation) repeated-measures ANOVA.
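A minimal sketch of the exclusion pipeline and cell-mean computation for one subject is given below. The field names (`r1_correct`, `r2_correct`, `rt2`, and the transition labels) are hypothetical, and two details the text leaves open are assumptions: post-error exclusion ignores block boundaries, and the 2-SD criterion uses the sample SD of the remaining correct, non-post-error trials.

```python
from collections import defaultdict
from statistics import mean, stdev


def clean_trials(trials, sd_cutoff=2.0):
    """Apply the exclusion rules to one subject's trials, given in presentation order.

    Each trial is a dict with boolean 'r1_correct' and 'r2_correct' and an R2 RT 'rt2' (ms).
    """
    kept = []
    previous_error = False
    for trial in trials:
        correct = trial["r1_correct"] and trial["r2_correct"]
        # Keep only correct trials that do not immediately follow an error.
        if correct and not previous_error:
            kept.append(trial)
        previous_error = not correct
    rts = [t["rt2"] for t in kept]
    grand_mean, sd = mean(rts), stdev(rts)
    return [t for t in kept if abs(t["rt2"] - grand_mean) <= sd_cutoff * sd]


def cell_means(trials, factors=("color", "shape", "response")):
    """Mean R2 RT per design cell, keyed by the trial's transition label for each factor."""
    groups = defaultdict(list)
    for trial in trials:
        groups[tuple(trial[f] for f in factors)].append(trial["rt2"])
    return {cell: mean(rts) for cell, rts in groups.items()}
```

The per-subject cell means produced this way are the input to the repeated-measures ANOVA.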

Results

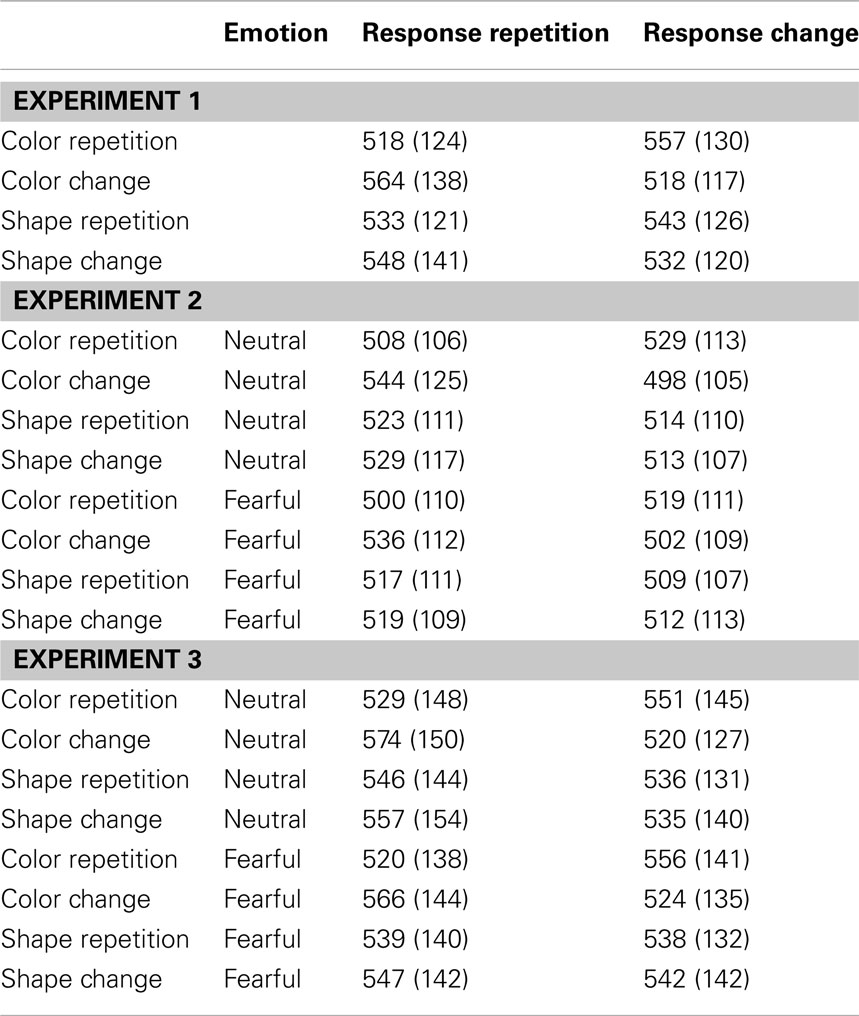

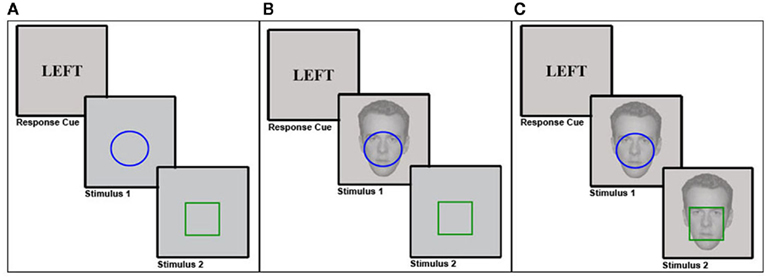

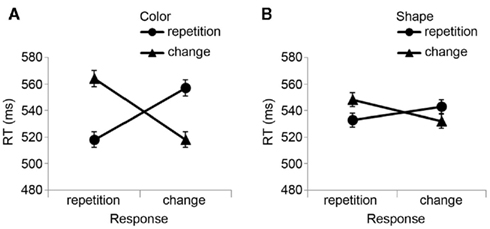

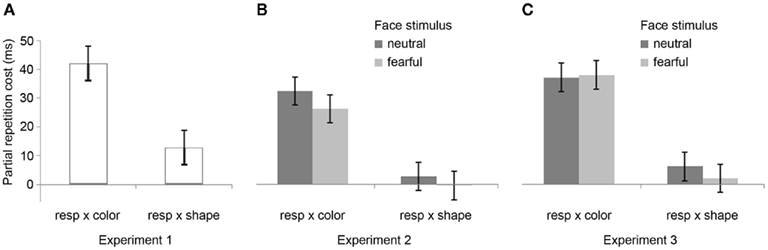

The R2 RT data (Table 1) displayed no main effects of the color, shape, or response transition factors but, as expected, response and color transitions interacted [F(1, 19) = 43.9, P < 0.001], as response repetitions were faster than response changes when they were accompanied by color feature repetitions (518 vs. 557 ms), but slower when they were accompanied by color feature changes (564 vs. 518 ms; Figure 2A). Shape transitions also interacted with response transitions [F(1, 19) = 6.5, P < 0.05], as response repetitions were faster than response changes when accompanied by a shape feature repetition (533 vs. 543 ms) but slower when accompanied by a shape feature change (548 vs. 532 ms; Figure 2B). No other main or interaction effects were obtained. A direct comparison between the respective color–response and shape–response binding effects revealed that the former were significantly larger than the latter [t(19) = 5.0, P < 0.001]. In order to facilitate comparison with results from Experiments 2 and 3, Figure 3A plots the two-way interactions between response and stimulus features as single values that capture the degree of binding between response and stimulus features by indicating the RT cost of having to overcome conflicting bindings (partial repetition costs), using the formula [(feature repetition, response change + feature change, response repetition)/2 − (feature repetition, response repetition + feature change, response change)/2]. Thus, positive RT values reflect the relative slow-down in responses when the response repeats but a given stimulus feature changes (and vice versa), compared to when both the response and stimulus feature repeat or alternate in concert (cf. Hommel, 2005).
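The partial repetition cost formula can be applied directly to the group cell means reported above. This is only an illustration: the reported means are rounded, and the statistical comparison in the text was carried out on per-subject scores rather than on these group values.

```python
def partial_repetition_cost(rr_fr, rc_fr, rr_fc, rc_fc):
    """Binding (partial repetition) cost in ms.

    Arguments are mean R2 RTs for: response repetition/feature repetition,
    response change/feature repetition, response repetition/feature change,
    and response change/feature change.
    """
    partial = (rc_fr + rr_fc) / 2   # the two partial repetition cells
    complete = (rr_fr + rc_fc) / 2  # complete repetition and complete alternation
    return partial - complete


color_cost = partial_repetition_cost(rr_fr=518, rc_fr=557, rr_fc=564, rc_fc=518)
shape_cost = partial_repetition_cost(rr_fr=533, rc_fr=543, rr_fc=548, rc_fc=532)
print(color_cost, shape_cost)  # 42.5 13.0
```

Because the cost is a linear contrast, applying it to group means gives the same value as averaging the per-subject costs (up to the rounding of the reported means).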

Figure 2. Experiment 1 feature integration effects. Mean R2 response times (±SEM) in Experiment 1 are displayed as a function of (A) stimulus color and response transitions, and (B) stimulus shape and response transitions.

Figure 3. Feature integration effects across Experiments 1–3. Bar graphs summarize mean partial repetition costs (±SEM) involving the task-relevant foreground feature (color) and responses, and the task-irrelevant foreground feature (shape) and responses for Experiments 1 (A), 2 (B), and 3 (C), as well as the modulation of these effects by the affective expression of background face stimuli (neutral vs. fearful) for Experiments 2 (B) and 3 (C). Note that there were no background face stimuli (and thus no modulation thereby) in Experiment 1. Each bar represents the two-way interaction between response and stimulus features as a single value that captures the degree of binding between response and stimulus features by indicating the RT cost of partial repetitions [(feature repetition, response change + feature change, response repetition)/2 − (feature repetition, response repetition + feature change, response change)/2]. In other words, positive RT values reflect the relative slow-down in responses when the response repeats but a given stimulus feature changes (and vice versa), compared to when both the response and stimulus feature repeat or alternate in concert (cf. Hommel, 2005).

Discussion

The data obtained in Experiment 1 represent a successful replication of the key feature integration results obtained by Hommel (1998): substantial costs are incurred when a response has to be repeated in the presence of a stimulus feature change, and vice versa, compared to complete repetition and complete alternation conditions. Moreover, the binding effect is much more pronounced for the task-relevant stimulus feature (color) than for the task-irrelevant one (shape) (Hommel, 1998, 2005). In the subsequent experiments, we sought to test whether either one of these indices of fast episodic stimulus–response binding processes would be modulated by the presence of a (task-irrelevant) emotionally salient stimulus of negative valence.

Experiment 2

Our basic research question concerned the impact of emotion on the short-term episodic memory phenomenon of feature integration. In Experiment 1, we established the basic procedure for obtaining reliable feature integration effects. In Experiment 2, we aimed at adding a manipulation of emotion to that procedure. As emotion-provoking stimuli, we chose photographs of male faces with fearful expressions, to be contrasted with the same individuals’ faces posing neutral expressions. A large literature has established that fearful face stimuli are potent emotional stimuli that evoke heightened arousal (as measured by galvanic skin response; e.g., Williams et al., 2001), trigger strong responses in neural fear circuits centering on the amygdala (e.g., Breiter et al., 1996), and can have immediate effects on perceptual and cognitive processing (e.g., Phelps et al., 2006; Reeck and Egner, 2011). In order to assess the effects of threat-related, negative emotion on feature integration, in Experiment 2 we therefore simply added a task-irrelevant background face stimulus (neutral vs. fearful) during the encoding phase (that is, at S1) of the feature integration task we had employed in Experiment 1 (see Figure 1B).

Methods

Subjects

Twenty-one healthy college student volunteers (mean age = 19.33 years, SD = 1.4, eight women) participated in this study for course credit. All participants were fluent in English and had normal or corrected-to-normal vision. Prior to study participation, written informed consent was obtained from each participant in accordance with institutional guidelines.

Stimuli and procedure

The stimuli and procedure were identical to the ones in Experiment 1, with the following modification: for the S1 stimuli, the colored shapes were now overlaid on semi-transparent (40% opacity) gray-scale photographs of male faces, cropped tightly around the eye and mouth regions of each face (see Figure 1B). The face stimuli subtended approximately 10 (height) × 8 (width) degrees of visual angle. The faces stemmed from the NimStim Set of Facial Expressions (Tottenham et al., 2009) and consisted of six male actors posing neutral and fearful facial expressions. Thus, a given S1 could have either a neutral or fearful face displayed in the background. The faces (and their expressions) were irrelevant to the subjects’ task. The experimental session was composed of 1 practice block of 20 trials followed by 8 experimental blocks of 48 trials each.
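One way to picture the 40% opacity manipulation is standard "over" alpha compositing of the gray-scale face onto the gray screen background. The sketch assumes an 8-bit gray background value of 128, which is hypothetical (the background luminance is not reported), and does not claim to reproduce how Presentation renders transparency internally.

```python
FACE_OPACITY = 0.4
BACKGROUND_GRAY = 128  # hypothetical 8-bit gray-background value; not reported in the study


def blend(foreground: float, background: float, opacity: float) -> float:
    """'Over' compositing for one gray-scale channel (0-255)."""
    return opacity * foreground + (1 - opacity) * background


def face_pixel(face_value: int) -> int:
    """Displayed value of a face pixel drawn at 40% opacity over the gray background."""
    return round(blend(face_value, BACKGROUND_GRAY, FACE_OPACITY))


# Under these assumptions, a full black-to-white face range is compressed to 77-179.
print(face_pixel(0), face_pixel(255))  # 77 179
```

The compression of the face's luminance range illustrates why a semi-transparent face can remain recognizable while staying visually subordinate to the fully opaque colored shape drawn on top of it.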

Analyses

The analyses focused on R2 RT. Only trials where both R1 and R2 had been performed correctly were considered correct trials. All error and post-error trials, as well as outlier RTs deviating by more than 2 SDs from the subject-specific grand mean, were excluded from the analysis. Accuracy was at ceiling (mean = 97.8%, SD = 2.0) and was not considered for statistical analysis. We analyzed R2 RT data as a function of the transition relationship between the S1/R1 and the S2/R2 features, as well as of the emotional expression (facial affect) of the background face at S1. Specifically, the data were submitted to a 2 (color: repetition vs. alternation) × 2 (shape: repetition vs. alternation) × 2 (response: repetition vs. alternation) × 2 (facial affect: neutral vs. fearful) repeated-measures ANOVA.
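The emotion-modulation test amounts to asking whether the partial repetition cost differs between fearful and neutral trials. The sketch below (hypothetical function names, not the authors' analysis code) computes that difference for one subject; because the three-way interaction has a single degree of freedom in a fully within-subjects design, its F(1, n − 1) equals the squared one-sample t of these per-subject difference scores tested against zero.

```python
import math
from statistics import mean, stdev


def binding_cost(means, emotion):
    """Partial repetition cost (ms) within one emotion condition.

    `means` maps (emotion, feature_repeats, response_repeats) -> mean R2 RT in ms.
    """
    rr_fr = means[(emotion, True, True)]
    rc_fr = means[(emotion, True, False)]
    rr_fc = means[(emotion, False, True)]
    rc_fc = means[(emotion, False, False)]
    return (rc_fr + rr_fc) / 2 - (rr_fr + rc_fc) / 2


def emotion_modulation(means):
    """Fearful minus neutral binding cost for one subject (the three-way interaction contrast)."""
    return binding_cost(means, "fearful") - binding_cost(means, "neutral")


def one_sample_f(scores):
    """F for a single-df within-subject effect: squared one-sample t of the scores against 0."""
    t = mean(scores) / (stdev(scores) / math.sqrt(len(scores)))
    return t ** 2, 1, len(scores) - 1
```

A null result here means that the per-subject fearful-minus-neutral differences in binding cost do not reliably depart from zero.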

Results

In the R2 RT data (Table 1), a main effect of emotion was evident [F(1, 20) = 7.6, P < 0.05], as responses were generally slightly faster following the presentation of a fearful background face (514 ms) than following an emotionally neutral face stimulus (520 ms). There was also a trend toward a main effect of response transition [F(1, 20) = 3.7, P = 0.069], with responses tending to be generally slower on response repetition (522 ms) than response change trials (513 ms). More importantly, the standard feature integration interaction effect between response and color transitions was obtained [F(1, 20) = 26.3, P < 0.001], as response repetitions were faster than response changes when they were accompanied by color feature repetitions (504 vs. 524 ms), but slower when they were accompanied by color feature changes (540 vs. 500 ms). No significant interaction was observed between response and shape transitions. However, most pertinent to our current concerns, as can be seen in Figure 3B, the emotional expression of the background face in S1 did not have any impact on the foreground stimulus–response binding effects, as neither the three-way interaction involving emotion, shape, and response transitions [F(1, 20) = 0.4, P = 0.55] nor that involving emotion, color, and response transitions [F(1, 20) = 2.0, P = 0.17] was significant.

Discussion

The data obtained in Experiment 2 replicated the basic partial repetition costs associated with episodic stimulus–response integration, though that effect was only significant for the task-relevant color feature. Importantly, we observed no interaction of this feature integration effect with the emotionality of the background face stimulus presented at encoding, suggesting that emotional responses do not modulate the fast-acting short-term binding of foreground stimulus and response features in episodic memory. It is important to note that the emotion manipulation as such did have an impact on performance, as expressed in a main effect of facial affect at S1 on R2 RT, where responses were found to be faster following a fearful background face stimulus than a neutral one. This general speed-up of RT suggests that the fearful face stimuli indeed triggered an increase in threat-related arousal, but that this emotional response had no effect on the foreground feature binding. Crucially, this finding documents that the lack of an emotion-modulation effect on feature integration cannot be attributed to a weak or ineffective manipulation of emotion. It also demonstrates that, even though the face stimuli were irrelevant to the subjects’ task, subjects did not manage to avoid processing of those stimuli. In Experiment 3, we sought to replicate these findings while controlling for a possible confound stemming from the fact that faces were presented only at S1 but not at S2.

Experiment 3

One aspect of the design of Experiment 2 that may have impeded the expression of emotion-modulation of feature integration is that the background face stimuli were shown at encoding (S1) but were not displayed at S2. While this approach makes intuitive sense when one is interested in how emotion impacts encoding processes, the fact that there was a major feature change in the stimulus display between S1 and S2 could plausibly have confounded the results. Specifically, it is likely that the face stimuli themselves would form part of the event file that is encoded at S1, such that the absence of faces at S2 would essentially render every trial a partial repetition trial: even if all other S1 and R1 features repeat, one salient aspect of the stimulus display does not. In order to avoid any possible confounds stemming from the non-repetition of the background face stimuli, in Experiment 3 we simply added the background faces both to S1 and S2, such that on each trial, the exact same face (identity and expression) was shown both at encoding and during S2/R2 (see Figure 1C).

Methods

Subjects

Twenty-four healthy college student volunteers (mean age = 21.08 years, SD = 4.9, 14 women) participated in this study for course credit. All participants were fluent in English and had normal or corrected-to-normal vision. Prior to study participation, written informed consent was obtained from each participant in accordance with institutional guidelines.

Stimuli and procedure

Stimuli and procedure were identical to Experiment 2, with the only exception that the background faces shown during S1 were now also present during S2. Specifically, the exact same face stimulus (identity plus expression) shown in S1 was repeated in S2 on each trial (see Figure 1C).

Analyses

The analyses focused on R2 RT. Only trials where both R1 and R2 had been performed correctly were considered correct trials. All error and post-error trials, as well as outlier RTs deviating by more than 2 SDs from the subject-specific grand mean, were excluded from the analysis. Accuracy was at ceiling (mean = 96.8%, SD = 2.8) and was not considered for statistical analysis. We analyzed R2 RT data as a function of the transition relationship between the S1/R1 and the S2/R2 features, as well as of the emotional expression (facial affect) of the background face. Specifically, the data were submitted to a 2 (color: repetition vs. alternation) × 2 (shape: repetition vs. alternation) × 2 (response: repetition vs. alternation) × 2 (facial affect: neutral vs. fearful) repeated-measures ANOVA.

Results

In the R2 RT data (Table 1), we observed a trend toward a main effect of response transition [F(1, 23) = 4.0, P = 0.057], due to a tendency for slower responses on response repetition (547 ms) as compared to response change trials (538 ms). A trend toward a main effect of color transitions [F(1, 23) = 3.7, P = 0.066] was characterized by a tendency for faster responses on color repetition (539 ms) than color change trials (546 ms). Moreover, a trend toward a main effect of shape transitions [F(1, 23) = 4.1, P = 0.054] was characterized by a tendency for faster responses on shape repetition (540 ms) than shape change trials (545 ms). More importantly, the standard feature integration interaction effect between response and color transitions was obtained [F(1, 23) = 77.3, P < 0.001], as response repetitions were faster than response changes when they were accompanied by color feature repetitions (525 vs. 554 ms), but slower when they were accompanied by color feature changes (570 vs. 522 ms). No significant interaction was observed between response and shape transitions but, interestingly, response transitions interacted with the emotional expression of the background face stimulus [F(1, 23) = 7.2, P < 0.05], as response repetitions were slower than response changes in the presence of a neutral face stimulus (551 vs. 535 ms), but of similar speed in the presence of a fearful face (543 vs. 540 ms). Most importantly, as can be observed in Figure 3C, the emotional expression of the background face stimulus again did not modulate the standard feature integration effect(s), as neither the three-way interaction involving emotion, shape, and response transitions [F(1, 23) = 0.3, P = 0.58] nor that involving emotion, color, and response transitions [F(1, 23) = 0.1, P = 0.81] was significant.

Discussion

As in Experiments 1 and 2, Experiment 3 reproduced the basic feature integration effect, that is, binding costs associated with the relevant stimulus feature and the response. However, we again obtained no evidence that this effect is susceptible to modulation by a threat-related emotional background stimulus, suggesting that feature integration is not affected by negative emotional states. In contrast to Experiment 2, these results were obtained with (constant) background stimuli present during both S1 and S2. This rules out the possibility that the additional stimulus feature change between S1 and S2 in Experiment 2 could somehow have masked an emotion-modulation effect on feature integration. Importantly, just as in Experiment 2, the emotion manipulation as such did affect behavior. Whereas in Experiment 2 there was a main effect of emotion on R2 RT, in Experiment 3 the emotional expression of the face stimulus interacted with the response transition factor in influencing R2 RT. This finding rules out the possibility that the lack of emotion-modulation of the feature integration effect was due to an ineffective manipulation of emotion, or that subjects somehow managed to ignore the irrelevant face stimuli.

General Discussion

We adopted Hommel’s (1998) experimental set-up for gauging rapid episodic memory integration effects of stimulus and response features (Experiment 1) and tested whether such feature binding was susceptible to modulation by emotional arousal, as evoked by background fearful (vs. neutral) face stimuli (Experiments 2 and 3). The results were clear-cut, in that we reliably reproduced the behavioral signature of the basic feature integration effect (partial repetition costs) (see Table 2) but were unable to detect any evidence for emotional modulation of this effect, regardless of whether threat-related background stimuli were displayed at encoding (S1) or at both encoding and retrieval (S1 and S2). In fact, a direct comparison of feature integration effects across Experiments 1–3 (with experiment serving as a between-subjects factor) reveals that the two-way interaction between the relevant stimulus feature (color) and response transitions (that is, the standard feature integration effect) did not differ across the experiments [three-way interaction of color × response × experiment, F(2, 62) = 0.5, P = 0.61]. Moreover, a between-experiment analysis involving only Experiments 2 and 3, which included two subtly different emotion manipulations, revealed no effect of experiment on the relationship between emotion and color/response transitions [four-way interaction of emotion × color × response × experiment, F(1, 43) = 1.7, P = 0.20]. Thus, feature integration between the relevant stimulus feature and response was entirely unaffected by the emotion manipulations, both within and across experiments.
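As an illustrative check on how a reported P value follows from an F statistic and its degrees of freedom: when the numerator has 2 degrees of freedom, as in the color × response × experiment interaction, the upper-tail probability of an F(2, ν) distribution has the closed form (1 + 2F/ν)^(−ν/2). The helper below (hypothetical name) uses only that formula. Tests with 1 numerator degree of freedom, like the other F tests reported here, would instead need the regularized incomplete beta function, as implemented for example by `scipy.stats.f.sf`.

```python
def f_sf_df1_2(f_value, df2):
    """Upper-tail probability P(F > f_value) for an F(2, df2) distribution.

    Valid only for numerator df = 2, where the survival function reduces to
    (1 + 2 * f / df2) ** (-df2 / 2).
    """
    if f_value < 0 or df2 <= 0:
        raise ValueError("f_value must be >= 0 and df2 must be > 0")
    return (1.0 + 2.0 * f_value / df2) ** (-df2 / 2.0)


# Color x response x experiment interaction: F(2, 62) = 0.5
print(round(f_sf_df1_2(0.5, 62), 2))  # 0.61
```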

In the context of these null effects, it is crucial to note, however, that the emotion manipulation per se was effective, as the expression of the background face stimulus significantly affected R2 performance in both Experiments 2 and 3. In Experiment 2, fearful facial expressions in S1 sped up R2 RT compared to neutral expressions, and in Experiment 3, the emotional face expression interacted with the response transition factor, reflecting slower R2 RT for response repetitions than response changes in the presence of neutral faces, but similar RT for response changes and repetitions in the presence of fearful faces. These data allow us to rule out a number of alternative explanations for the absence of emotion-modulation effects on feature integration. First, the fact that R2 RT in both Experiments 2 and 3 was significantly modulated by the emotional expression of the task-irrelevant face stimuli clearly shows that these stimuli influenced information processing, ruling out the possibility that our manipulation of emotion was too weak to affect subjects’ behavior. Second, these significant effects demonstrate that our design was sensitive enough to detect the influences of facial expressions on behavior. Finally, these findings also rule out the possibility that subjects were somehow able to filter out the task-irrelevant emotional face stimuli. Given these data, we conclude that our study should have been able to detect negative emotion-modulation of short-term feature binding processes if such effects existed, unless those bindings were, for some unknown reason, subject to a substantially higher threshold for emotion-modulation than response selection and transition effects.

As noted above, the emotion manipulations in Experiments 2 and 3 had qualitatively different effects on behavior. Recall that in Experiment 2, face stimuli were shown only at encoding (S1), whereas in Experiment 3 they were shown during both encoding and retrieval. This change in procedure could plausibly account for the differential effects. Alternatively, these distinct effects could in theory have stemmed from a difference in how the face stimuli were processed during S1/R1. We therefore analyzed mean R1 RT as a function of facial affect and experiment. However, the effect of neutral vs. fearful faces on R1 RT did not differ across experiments [F(1, 43) = 0.34, P = 0.56]. Thus, it appears that the procedural differences between the two experiments were responsible for the distinct effects of emotion. We nevertheless remain agnostic about the exact reasons for this differential impact of emotion, as well as about the specifics of its interaction with the response transition factor in Experiment 3.

Our main motivation for assessing the effect of emotion on rapid feature integration processes stemmed from recent reports of emotion-modulation of trial-by-trial effects of conflict-driven control in experiments that did not tease apart pure conflict–control effects from possible feature integration effects (van Steenbergen et al., 2009, 2010). Specifically, these studies documented that negative emotional states potentiate trial–transition effects in conflict tasks, and the present experiments were designed to test whether these findings may have been mediated by potentiation of feature integration rather than of cognitive control processes. We observed no evidence of emotion modulating feature integration processes. With respect to these and other previous studies of emotion manipulations on trial–transition effects (e.g., Dreisbach and Goschke, 2004; Stürmer et al., 2011), the current results therefore suggest that such effects are unlikely to be carried by emotion-modulation of rapid feature integration and thus probably reflect emotion-modulation of cognitive control processes. A plausible route for the rapid modulation of cognitive control processes by positive or negative emotional stimuli is provided by the well-known interactions between the midbrain dopamine system and the prefrontal cortex (e.g., Braver and Cohen, 2000). An important caveat concerning the current conclusions, however, is that we only assessed the effects of a negative, threat-related emotion manipulation (in comparison to neutral stimuli), from which one cannot necessarily extrapolate to the effects of positive emotional stimuli or rewards. Thus, it remains possible that feature integration effects of the type we investigated here are susceptible to modulation by positive emotional states.

Some suggestive evidence for this possibility has been supplied by a study on feature integration and emotion by Colzato et al. (2007). Using a protocol similar to the current one, these authors presented either negative or positive task-irrelevant emotional pictures not during encoding of S1/R1 but just prior to the retrieval of these S–R ensembles. Specifically, emotional stimuli were displayed 1,000 ms after S1 offset, beginning 200 ms before S2 onset and remaining on screen until S2 had been presented (and R2 executed). Thus, this study likely gauged the effects of emotion on the retrieval of recent S–R bindings, in contrast to the present study’s focus on their encoding. Colzato et al. (2007) found some evidence that feature integration effects were less pronounced following negative than following positive stimuli, though this effect was observed only when the task-relevant S2 feature was stimulus shape, not when it was stimulus location or color. The authors explained this feature specificity by suggesting that processing positive/negative pictures would require the processing of shape but not of color or location information. Because the Colzato et al. (2007) study did not include an emotionally neutral baseline, it is impossible to tell to what degree this modulation was carried by the positive or the negative stimuli. However, given the present finding that feature integration effects do not differ between negative and neutral stimuli, it could be speculated that the Colzato et al. (2007) results were driven by the positive emotional stimuli modulating the retrieval of recently formed S–R bindings.

However, given the considerable differences in design between the present experiments and those of Colzato et al. (2007), any conclusions drawn from contrasting their results must be regarded as tentative at best. Besides the use of positive and negative stimuli in Colzato et al. (2007), as opposed to negative and neutral stimuli in the present study, differences in results could also stem from the two studies targeting different time points in the binding process (encoding vs. retrieval) and/or from the use of different task-relevant stimulus features. Specifically, unlike Colzato et al. (2007), the present study employed only color as the task-relevant stimulus feature, so it is in principle not certain whether our findings generalize to contexts where other stimulus features are task-relevant. In any case, even though there is some evidence for (positive) emotion-modulation of feature integration (Colzato et al., 2007), the direction of this effect (positive emotional stimuli enhancing feature integration) is precisely opposite to that of emotion manipulations in cognitive control tasks, where negative emotional states appear to potentiate trial-by-trial performance dependencies and positive states appear to loosen them (Dreisbach and Goschke, 2004; van Steenbergen et al., 2009, 2010). Thus, this prior study (Colzato et al., 2007) actually supports our conclusion that the previously observed effects of emotion on trial–transition metrics of cognitive control are unlikely to have been mediated by emotion-modulated feature integration.

From the perspective of the emotional memory literature, the current data could be argued to support the “object-based” framework of emotional arousal put forward by Mather (2007), which holds that emotional arousal benefits memory for an emotional item itself but not for other stimuli providing the context for that item. The present results are consistent with the latter claim, in that the presence of emotional stimuli did not confer any memory advantage (or disadvantage) on other, non-emotional stimuli presented concurrently (see also Mather and Nesmith, 2008; Mather et al., 2009). Moreover, the current data extend this notion to a shorter time scale than has been investigated in previous studies, and to the binding of concurrent stimulus and response features rather than of stimulus features alone. While the present results are thus in principle in line with an object-based binding framework of emotional arousal, additional experiments would be required to determine whether memory for the emotional stimuli themselves would actually be enhanced in the type of protocol we employed here, as this theory predicts (Mather, 2007).

Conclusion

In sum, the rapid integration of task-relevant stimulus and response features into episodic “event files” is not modulated by the concurrent presentation of threat-related negative emotional stimuli, whether these are shown at encoding only or at both encoding and retrieval. This finding is concordant with the proposal that emotional arousal facilitates memory for emotional items but does not extend this mnemonic benefit to other concurrent stimulus or response features. Moreover, this lack of emotion-modulation of feature integration has important implications for interpreting emotion-modulated trial–transition effects in studies of cognitive control: those effects are unlikely to be mediated by an influence of emotion on feature integration, which is commonly confounded with cognitive control in such designs.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by NIMH R01 MH087610-02 (Tobias Egner).

References

Botvinick, M. M., Braver, T. S., Barch, D. M., Carter, C. S., and Cohen, J. D. (2001). Conflict monitoring and cognitive control. Psychol. Rev. 108, 624–652.

Braver, T. S., and Cohen, J. D. (2000). “On the control of control: the role of dopamine in regulating prefrontal function and working memory,” in Attention and Performance XVIII: Control of Cognitive Processes, eds S. Monsell and J. Driver (Cambridge: MIT Press), 713–737.

Breiter, H. C., Etcoff, N. L., Whalen, P. J., Kennedy, W. A., Rauch, S. L., Buckner, R. L., Strauss, M. M., Hyman, S. E., and Rosen, B. R. (1996). Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17, 875–887.

Colzato, L. S., van Wouwe, N. C., and Hommel, B. (2007). Feature binding and affect: emotional modulation of visuo-motor integration. Neuropsychologia 45, 440–446.

Dreisbach, G., and Goschke, T. (2004). How positive affect modulates cognitive control: reduced perseveration at the cost of increased distractibility. J. Exp. Psychol. Learn Mem. Cogn. 30, 343–353.

Egner, T. (2007). Congruency sequence effects and cognitive control. Cogn. Affect. Behav. Neurosci. 7, 380–390.

Egner, T., and Hirsch, J. (2005). Cognitive control mechanisms resolve conflict through cortical amplification of task-relevant information. Nat. Neurosci. 8, 1784–1790.

Gratton, G., Coles, M. G., and Donchin, E. (1992). Optimizing the use of information: strategic control of activation of responses. J. Exp. Psychol. Gen. 121, 480–506.

Hadley, C. B., and Mackay, D. G. (2006). Does emotion help or hinder immediate memory? Arousal versus priority-binding mechanisms. J. Exp. Psychol. Learn Mem. Cogn. 32, 79–88.

Hamann, S. (2001). Cognitive and neural mechanisms of emotional memory. Trends Cogn. Sci. 5, 394–400.

Hamann, S. B., Ely, T. D., Grafton, S. T., and Kilts, C. D. (1999). Amygdala activity related to enhanced memory for pleasant and aversive stimuli. Nat. Neurosci. 2, 289–293.

Hommel, B. (1998). Event files: evidence for automatic integration of stimulus-response episodes. Vis. Cogn. 5, 183–216.

Hommel, B. (2004). Event files: feature binding in and across perception and action. Trends Cogn. Sci. 8, 494–500.

Hommel, B. (2005). How much attention does an event file need? J. Exp. Psychol. Hum. Percept. Perform. 31, 1067–1082.

Hommel, B., Proctor, R. W., and Vu, K. P. (2004). A feature-integration account of sequential effects in the Simon task. Psychol. Res. 68, 1–17.

Isenberg, N., Silbersweig, D., Engelien, A., Emmerich, S., Malavade, K., Beattie, B., Leon, A. C., and Stern, E. (1999). Linguistic threat activates the human amygdala. Proc. Natl. Acad. Sci. U.S.A. 96, 10456–10459.

Kahneman, D., Treisman, A., and Gibbs, B. J. (1992). The reviewing of object files: object-specific integration of information. Cogn. Psychol. 24, 175–219.

LaBar, K. S., and Cabeza, R. (2006). Cognitive neuroscience of emotional memory. Nat. Rev. Neurosci. 7, 54–64.

LaBar, K. S., and Phelps, E. A. (1998). Arousal-mediated memory consolidation: role of the medial temporal lobe in humans. Psychol. Sci. 9, 527–540.

Mather, M. (2007). Emotional arousal and memory binding: an object-based framework. Perspect. Psychol. Sci. 2, 33–52.

Mather, M., Gorlick, M. A., and Nesmith, K. (2009). The limits of arousal’s memory-impairing effects on nearby information. Am. J. Psychol. 122, 349–369.

Mather, M., Mitchell, K. J., Raye, C. L., Novak, D. L., Greene, E. J., and Johnson, M. K. (2006). Emotional arousal can impair feature binding in working memory. J. Cogn. Neurosci. 18, 614–625.

Mather, M., and Nesmith, K. (2008). Arousal-enhanced location memory for pictures. J. Mem. Lang. 58, 449–464.

Mathews, A. M., and MacLeod, C. (1985). Selective processing of threat cues in anxiety states. Behav. Res. Ther. 23, 563–569.

McGaugh, J. L. (2004). The amygdala modulates the consolidation of memories of emotionally arousing experiences. Annu. Rev. Neurosci. 27, 1–28.

McGaugh, J. L., and Roozendaal, B. (2002). Role of adrenal stress hormones in forming lasting memories in the brain. Curr. Opin. Neurobiol. 12, 205–210.

McKenna, F. P. (1986). Effects of unattended emotional stimuli on color-naming performance. Curr. Psychol. Res. Rev. 5, 3–9.

Mitchell, K. J., Mather, M., Johnson, M. K., Raye, C. L., and Greene, E. J. (2006). A functional magnetic resonance imaging investigation of short-term source and item memory for negative pictures. Neuroreport 17, 1543–1547.

Ohman, A., Flykt, A., and Esteves, F. (2001). Emotion drives attention: detecting the snake in the grass. J. Exp. Psychol. Gen. 130, 466–478.

Phelps, E. A., Ling, S., and Carrasco, M. (2006). Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychol. Sci. 17, 292–299.

Reeck, C., and Egner, T. (2011). Affective privilege: asymmetric interference by emotional distracters. Front. Psychol. 2:232. doi:10.3389/fpsyg.2011.00232

Sharot, T., and Phelps, E. A. (2004). How arousal modulates memory: disentangling the effects of attention and retention. Cogn. Affect. Behav. Neurosci. 4, 294–306.

Stürmer, B., Nigbur, R., Schacht, A., and Sommer, W. (2011). Reward and punishment effects on error processing and conflict control. Front. Psychol. 2:335. doi:10.3389/fpsyg.2011.00335

Tabert, M. H., Borod, J. C., Tang, C. Y., Lange, G., Wei, T. C., Johnson, R., Nusbaum, A. O., and Buchsbaum, M. S. (2001). Differential amygdala activation during emotional decision and recognition memory tasks using unpleasant words: an fMRI study. Neuropsychologia 39, 556–573.

Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., Marcus, D. J., Westerlund, A., Casey, B. J., and Nelson, C. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 168, 242–249.

van Steenbergen, H., Band, G. P., and Hommel, B. (2009). Reward counteracts conflict adaptation. Evidence for a role of affect in executive control. Psychol. Sci. 20, 1473–1477.

van Steenbergen, H., Band, G. P., and Hommel, B. (2010). In the mood for adaptation: how affect regulates conflict-driven control. Psychol. Sci. 21, 1629–1634.

Williams, L. M., Phillips, M. L., Brammer, M. J., Skerrett, D., Lagopoulos, J., Rennie, C., Bahramali, H., Olivieri, G., David, A. S., Peduto, A., and Gordon, E. (2001). Arousal dissociates amygdala and hippocampal fear responses: evidence from simultaneous fMRI and skin conductance recording. Neuroimage 14, 1070–1079.

Keywords: feature integration, emotion, cognitive control, event files, binding

Citation: Trübutschek D and Egner T (2012) Negative emotion does not modulate rapid feature integration effects. Front. Psychology 3:100. doi: 10.3389/fpsyg.2012.00100

Received: 08 December 2011; Accepted: 16 March 2012;

Published online: 09 April 2012.

Edited by:

Mara Mather, University of Southern California, USA

Reviewed by:

Hao Zhang, Duke University Medical Center, USA

Mara Mather, University of Southern California, USA

Bernhard Hommel, Leiden University, Netherlands

Copyright: © 2012 Trübutschek and Egner. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Tobias Egner, Center for Cognitive Neuroscience, Levine Science Research Center, Box 90999, Durham, NC 27708, USA. e-mail: tobias.egner@duke.edu