- ¹ TNO, Soesterberg, Netherlands

- ² Department of Information and Computing Sciences, University Utrecht, Utrecht, Netherlands

In the current study, participants explored a desktop virtual environment (VE) representing a suburban neighborhood with signs of public disorder (neglect, vandalism, and crime) while being exposed to either room air (control group) or a subliminal level of ambient odor: tar (unpleasant; typically associated with burned or waste material) or freshly cut grass (pleasant; typically associated with natural or fresh material). They reported all signs of disorder they noticed during their walk, together with their associated emotional response. Based on recent evidence that odors reflexively direct visual attention to (either semantically or affectively) congruent visual objects, we hypothesized that participants would notice more signs of disorder in the presence of ambient tar odor (since this odor may bias attention toward unpleasant and negative features), and fewer signs of disorder in the presence of ambient grass odor (since this odor may bias visual attention toward the vegetation in the environment and away from the signs of disorder). Contrary to our expectations, the results provide no indication that the presence of an ambient odor affected the participants’ visual attention to signs of disorder or their emotional response. However, the paradigm used in the present study does not allow us to draw any conclusions in this respect. We conclude that a closer affective, semantic, or spatiotemporal link between the contents of a desktop VE and ambient scents may be required to effectively establish diagnostic associations that guide a user’s attention. In the absence of these direct links, ambient scent may be more diagnostic for the physical environment of the observer as a whole than for the particular items in that environment (or, in this case, items represented in the VE).

Introduction

Background

Desktop virtual environments (VEs) are increasingly deployed to study future design plans and the possible effects of environmental qualities and interventions on human behavior and feelings of safety in built environments with signs of public disorder (Cozens et al., 2003; Park et al., 2008, 2010; Toet and van Schaik, 2012). Desktop VEs offer cost-effective, safe, controlled, and flexible environments that allow researchers to investigate human responses to a wide range of environmental factors without the constraints, distractions, and dangers of the real world (e.g., Nasar and Cubukcu, 2011). They are relatively cheap, widely available, and easy to use, and most users are familiar with these displays and their interaction devices. Desktop VEs are also preferred for communicating design and intervention plans because they can be made accessible to a large number of users through internet applications (Dang et al., 2012). For these applications it is essential that users perceive the desktop VE in the same way as they would perceive its real-world counterpart. Previous studies have shown that environmental characteristics like lighting, sound, and dynamic elements affect the perception of desktop VEs and real environments in similar ways (Bishop and Rohrmann, 2003; Houtkamp et al., 2008). Ambient scent is another important environmental characteristic that is currently lacking in most VEs. Ambient scent is known to significantly affect our perception of real environments (Wrzesniewski et al., 1999), and people have strong expectations about the way an environment should smell (Henshaw and Bruce, 2012). It has also been shown that ambient odor can increase the sense of presence in immersive VEs (Dinh et al., 1999; Washburn et al., 2003; Tortell et al., 2007). Thus, ambient odors may be an effective tool to tune users’ perception of less immersive desktop VEs (e.g., by evoking implicit associations).

Despite the importance of scent in everyday life, olfaction is rarely applied in VEs (Baus and Bouchard, 2010). Recent technological developments enable the effective and localized dispersion and control of scents (Yanagida et al., 2003, 2004, 2005; Yu et al., 2003; Oshima et al., 2007; for reviews see Richard et al., 2006; Riener and Harders, 2012), thereby providing VE researchers and developers with the ability to use scent to create compelling VEs (Tomono et al., 2011). Adding olfactory stimuli to VEs may enhance the user experience by heightening the sense of reality (Chalmers et al., 2009; Ghinea and Ademoye, 2011). It has indeed been shown that adding olfactory cues to an immersive VE can increase the user’s sense of presence, memory, and perceived realism of the simulated environment (Dinh et al., 1999; Washburn et al., 2003; Tortell et al., 2007). However, it is still unknown whether ambient scents can influence attention to details in a desktop VE (Ghinea and Ademoye, 2011).

In a previous study we found that signs of disorder influence the affective appraisal of a desktop VE to a large degree in the same way as the appraisal of its real-world counterpart (Toet and van Schaik, 2012). However, participants appeared to focus more on signs of disorder in a desktop VE than in a similar real-world environment. This bias, which may seriously degrade the ecological validity of VEs for the aforementioned applications, was partly reduced by adding a realistic soundscape to the VE simulation. We argued that in the real world the saliency of signs of public disorder is modulated by various environmental factors that are typically lacking in a desktop VE, such as ambient sounds and tactile or olfactory cues. For instance, their saliency may be reduced by the sound of birds, a soft warm breeze, sunshine, and pleasant ambient smells of fresh air and vegetation, or enhanced by loud noise, strong cold wind, or unpleasant (e.g., garbage and urine) smells. In this study we investigated whether ambient odors can influence visual attention to these details in a desktop VE.

Visual-Olfactory Interactions

Interactions between olfaction and vision appear to be widespread. Neuroimaging studies have shown that interaction between olfaction and vision occurs at multiple levels of information processing (Gottfried and Dolan, 2003; Österbauer et al., 2005; Walla, 2008; Seubert et al., 2013). Also, it was found that stimulation of the human visual cortex enhances odor discrimination (Jadauji et al., 2012). Linking the perceptions of odors and colors appears to occur mainly in the amygdala and the orbitofrontal cortex (OFC; Gottfried and Dolan, 2003; Österbauer et al., 2005).

The amygdala is a central perceptual node where information from olfactory, visual, auditory, and tactile modalities converges (Zald, 2003). It is an integral component of a distributed affective circuit in the mammalian brain that mediates both positive and negative affect and the processing of reward-predicting cues (Murray, 2007). Recent evidence suggests that the amygdala also plays a central causal role in the modulation of visual attention (Vuilleumier, 2005; Williams et al., 2005; Duncan and Feldman Barrett, 2007; for a recent overview see Pourtois et al., 2013). The amygdala enhances the visual saliency of affective targets (Duncan and Feldman Barrett, 2007). This suggests that the activation state of the amygdala determines whether affective features or objects are prioritized. Since the amygdala responds to both positively and negatively valenced odors (but not to neutral odors: Winston et al., 2005), olfactory-induced amygdala activity may boost visual attention to affectively congruent (potentially threatening or rewarding) targets (Vuilleumier, 2005; Mohanty et al., 2009; Jacobs et al., 2012).

There is ample evidence for the visual modulation of olfactory perception. A neutral suprathreshold odor is rated significantly more pleasant after viewing positive pictures and significantly less pleasant and more intense after viewing unpleasant pictures (Pollatos et al., 2007). A visual feature that has a particularly strong influence on odor perception is color (Zellner, 2013). Color enhances the perceived intensity of odors (independent of color appropriateness: Zellner and Kautz, 1990). Color also modulates the hedonic value of odors: both the neural response in the brain area that encodes the hedonic value of smells (Österbauer et al., 2005) and the subjectively judged pleasantness of color-odor combinations (Zellner et al., 1991) increase with perceived color-odor appropriateness. Odors are detected faster and more accurately in the presence of semantically congruent colors (Zellner et al., 1991) or pictures (Gottfried and Dolan, 2003; Demattè et al., 2009), while incongruent colors and shape cues reduce odor discrimination accuracy (Demattè et al., 2009). Color-smell associations can be so compelling that color can even completely change the quality of the perceived odor (a white wine is perceived as having the odor of a red wine when artificially colored red: Morrot et al., 2001). Visual-olfactory interactions appear to be automatic: color and shape cues affect the accuracy of odor discrimination, even when the information is task-irrelevant and when participants are explicitly instructed to ignore these cues (Demattè et al., 2009). Specific odor components of complex odor mixtures that are congruent with a presented color are perceived as more prominent, suggesting that color directs olfactory attention to color-associated components (Arao et al., 2012).
Functional magnetic resonance imaging (fMRI) studies have revealed neurophysiological correlates of the modulation of olfactory responses by color cues: activity in caudal regions of the OFC and in the insular cortex increases progressively with perceived odor-color congruency (Österbauer et al., 2005).

In contrast to the large amount of evidence for the visual modulation of olfactory perception, there are fewer reports on the reverse. However, recent evidence shows that olfactory input can indeed modulate visual perception. Fear-related chemical signals modulate visual emotion perception in an emotion-specific way (Zhou and Chen, 2009), while unpleasant odors reduce the perceived attractiveness of faces (Demattè et al., 2007). Olfactory cues also bias the dynamic process of binocular rivalry: an odorant that is congruent with one of the competing images prolongs the time that image is visible and shortens its suppression time (Zhou et al., 2010, 2012). Finally, subliminal olfactory cues modulate visual sex discriminations made on the basis of biological motion cues: ambiguous point-light walkers are more often judged as male in the presence of unconsciously perceived male sweat (Hacker et al., 2013). Hence, there is now sufficient evidence for the modulation of visual perception by olfactory input.

Olfaction and Visual Attention

An organism continuously and simultaneously receives an overload of multisensory input from its environment. Because of limitations in processing capacity, simultaneous stimuli cannot be fully analyzed in parallel and thus compete for processing resources in order to gain access to higher cognitive stages and awareness. Attention serves as a gating mechanism to prioritize and enhance sensory information that is relevant for survival, such as threats (Fox et al., 2002; Koster et al., 2004; Williams et al., 2006; Lin et al., 2009) or rewards (Anderson, 2013), while suppressing irrelevant information. Attentional selection is typically driven by stimulus saliency, novelty, and reward-related associations (Anderson, 2013). Attention acts upon and modulates information in each sensory modality (visual, auditory, olfactory, etc.; Woldorff et al., 1993; Zelano et al., 2005). Information from different sensory modalities is pre-attentively integrated into a unified coherent percept, resulting in multimodal internal representations to which attention can be directed (Driver and Spence, 1998). As a result, tactile (Van der Burg et al., 2009), auditory (Van der Burg et al., 2008), and olfactory (Seo et al., 2010; Tomono et al., 2011; Seigneuric et al., 2012; Chen et al., 2013; Durand et al., 2013) cues can boost the saliency of visual features, even when the cues provide no information about the location or nature of the visual feature. Thus, ambient odors (even at sub-threshold levels) can modulate visual attention (Morrin and Ratneshwar, 2000; Michael et al., 2003, 2005; Chen et al., 2013), even in 4-month-old infants (Durand et al., 2013). Recent studies have shown that odors can reflexively direct visual attention to semantically congruent visual objects (Seo et al., 2010; Tomono et al., 2011; Seigneuric et al., 2012; Chen et al., 2013).
Objects that are semantically congruent with a presented odor are looked at faster and more frequently than other objects in a scene (Seo et al., 2010; Chen et al., 2013), even if participants are not aware that an odor has been presented (Seigneuric et al., 2010). Crossmodal odor-object associations appear to be activated automatically, without the need for explicit odor identification (Seigneuric et al., 2012), thus boosting the saliency of the corresponding visual object (Chen et al., 2013). Ambient odors also bias visual attention to favor stimuli that are affectively congruent with their hedonic quality (a case of affect-biased attention: Todd et al., 2012). Pleasant odors facilitate the processing of positive visual cues (Leppänen and Hietanen, 2003), while unpleasant odors facilitate the processing of negative cues (Ehrlichman and Halpern, 1988) and inhibit the processing of positive cues (Leppänen and Hietanen, 2003). The pre-attentive affective bias induced by ambient unpleasant odors probably serves the ecological purpose of facilitating threat detection (Krusemark and Li, 2012).

Current Study

The current study was performed to test whether exposure to ambient odor can modulate visual attention to signs of disorder in a desktop VE representing an urban area. Participants performed a walking tour through the VE while being exposed to either room air (control group), the odor of tar (typically perceived as unpleasant and frequently associated with burned or waste material), or the odor of freshly cut grass (typically perceived as pleasant and frequently associated with natural or fresh material). Whenever they noticed a sign of disorder during their walk, they reported it together with their emotional response. The scent of cut grass had semantically congruent visual and auditory representations in the simulation, since the VE showed abundant greenery and its soundtrack contained the occasional sound of lawn mowers. The scent of tar could be associated with the occasional sounds of construction activities (e.g., hammering, sawing) in the soundtrack of the VE, and was affectively congruent with derelict areas in general. Since people tend to respond to an environment as a whole (a “molar” environment) rather than to its individual features (Bitner, 1992; Bell et al., 2010; Brosch et al., 2010; Houtkamp, 2012), and since affective qualities are prioritized in this categorization process (Brosch et al., 2010), the presence of an ambient scent with an affective (pleasant or unpleasant) loading was expected to bias visual attention away from (pleasant) or toward (unpleasant) signs of disorder in the VE. More specifically, it was hypothesized that (H1) participants in the ambient tar (unpleasant) odor condition would report more signs of public disorder than participants in the control condition, because the unpleasant odor would bias visual attention to visual cues with a negative affective connotation.
In contrast, it was expected that (H2) participants in the cut grass (pleasant) odor condition would report fewer signs of public disorder than participants in the control condition, because the smell of cut grass would bias their attention toward the semantically congruent greenery and thereby distract them from the negative cues.

Materials and Methods

Virtual Environment

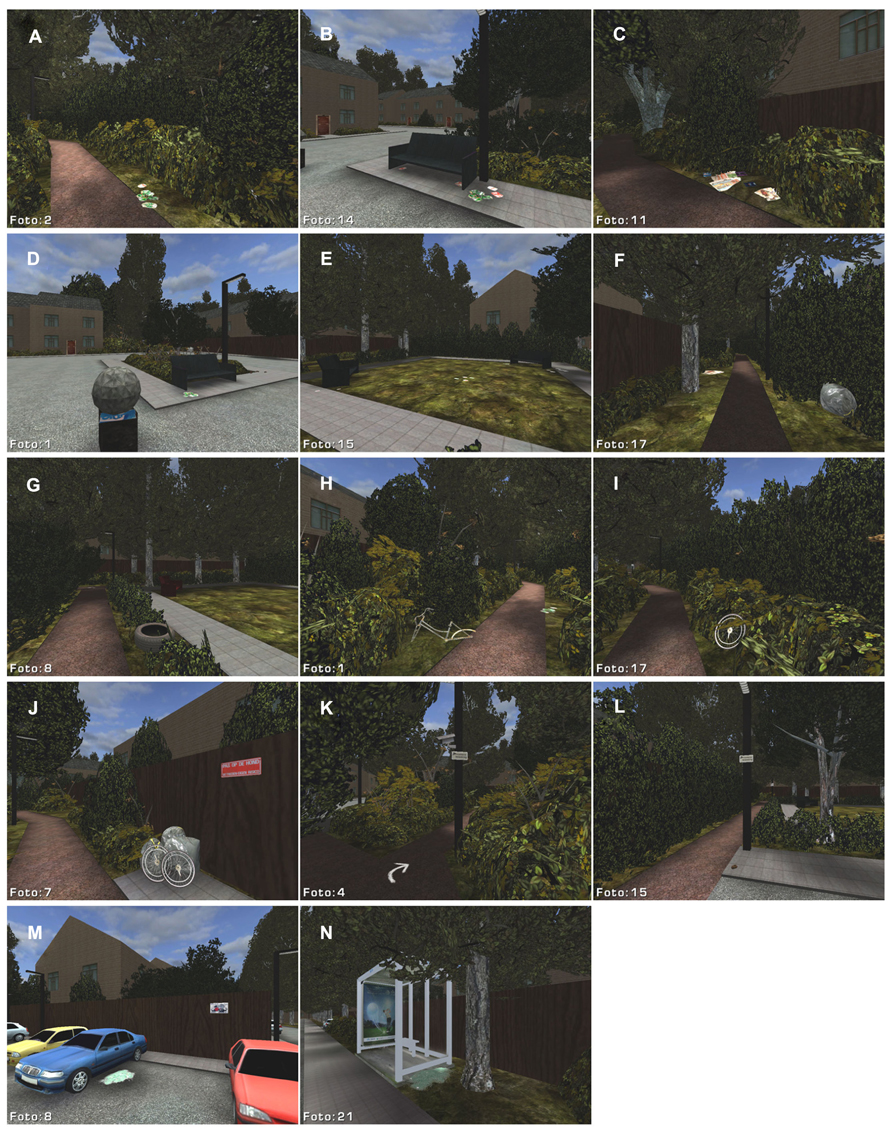

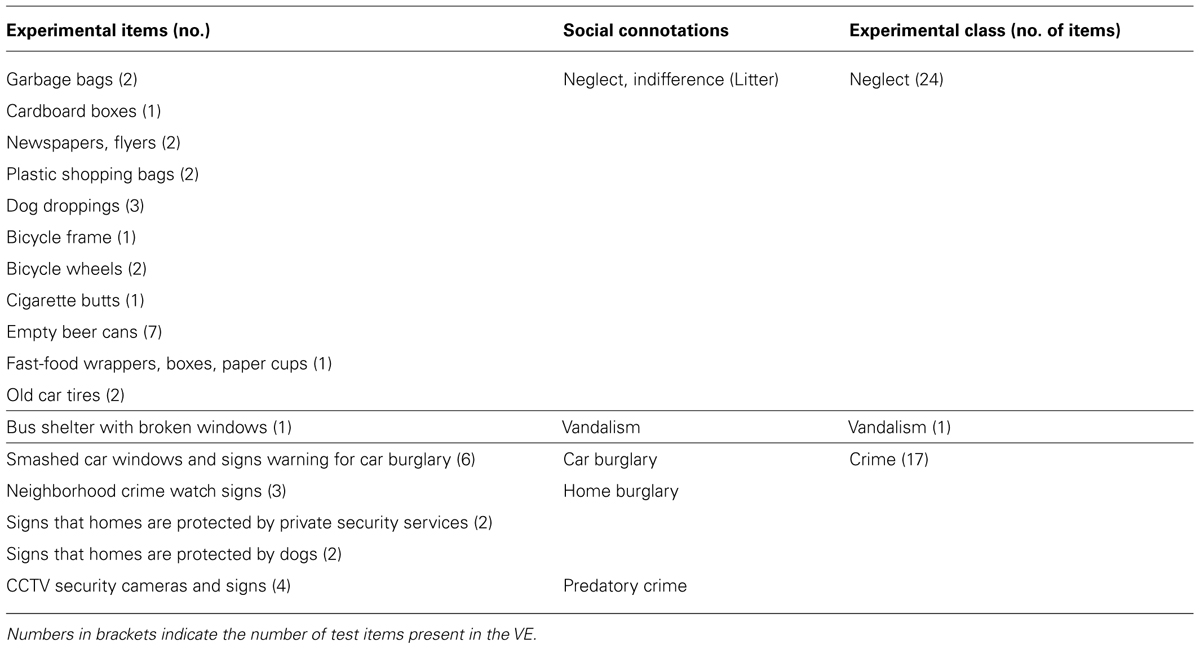

A small area in the town of Soesterberg, The Netherlands (rectangular, with a total extent of about 200 m × 200 m; coordinates 52°7′ N, 5°17′34″ E) was simulated in 3D using the Unreal Tournament 2004 game engine v2.5 (Epic Games Inc.; for further details on the VE model and its contents see Toet and van Schaik, 2012). The area is enclosed by roads on four sides and contains blocks of houses, two squares with parking places, benches, and statues, two playgrounds with benches, and a network of pathways connecting the squares and playgrounds (see Figure 1). All houses have a back garden, typically enclosed by a wooden fence, with an exit door to a pathway. The pathways are typically covered with tarmac and bordered on both sides by trees and shrubs. The houses are generally well maintained and quite uniform. The pathways and parks are reasonably well kept. The walking route (designated by arrows drawn on the ground) had no intersections and covered most of the area. To simulate a state of public disorder, 42 test items were distributed over 34 different locations in the VE. The items signaled three different classes of social incivilities: Neglect (24 items), Vandalism (one item), and Crime (17 items; see Table 1; Perkins et al., 1992; Caughy et al., 2001), and had social connotations ranging from indifference (e.g., litter, trash, dog droppings) and loitering (e.g., empty beer cans, cigarette butts, fast food wrappers) to vandalism (broken bus shelter windows) and predatory crime (smashed car windows, crime watch signs, CCTV cameras, and camera surveillance signs).

FIGURE 1. Screen shots of the virtual environment, showing locations with litter (A–E), garbage (F,J), bicycle- and car parts (G–J), warning signs (J–M), cameras (K), and evidence of car burglary (M) and vandalism (N).

TABLE 1. Experimental items, their connotations of physical and social disorder, and the experimental classification.

The simulation was run on Dell Precision 490 PC computers equipped with Dell 19″ monitors. Logitech Rumblepad 2 Gamepads were used for navigation. Users moved through the VE from a first-person perspective; the walking motion supported forward and backward translation and left and right rotation. Movement speed was fixed, and collision detection was enabled to prevent users from walking through objects. A non-repeating soundscape that was characteristic of the environment was composed from sounds (birds twittering, cars passing by, children shouting, hammering and drilling, and dogs barking) recorded at several locations and at different times in the corresponding real environment. The soundscape was presented through Sennheiser eH 150 headphones. A previous study showed that this soundscape effectively increased the ecological validity of the VE (Toet and van Schaik, 2012).

Odor Selection

The scent of freshly cut grass was selected as a semantically congruent pleasant odor in this study. This scent is generally considered to be stimulating and refreshing (the smell of freshly cut grass ranks among the top five preferred smells in several recent, independent, large-scale polls in Britain: Reynolds, 2012; Henning, 2013). Since the VE used in this study shows a lot of grass and vegetation, the scent of grass may direct attention toward the greenery (Seo et al., 2010; Tomono et al., 2011; Seigneuric et al., 2012). The smell of cut grass was created by mixing ethanol with cis-3-hexenol (leaf alcohol) in a 9:1 ratio. The associations that this scent could elicit in combination with the VE were investigated by presenting it to a panel of 10 participants while they were viewing the VE. The scent was presented in small glass tubes containing a cotton swab with three to four drops of the solution, which participants sniffed at approximately 5 inches from their nose. About 9 out of 10 participants reported associations with greenery (four mentioned grass, three named freshly cut leaves, and one mentioned broken twigs). All participants judged the scent to be pleasant.

An affectively congruent unpleasant scent was selected in a pilot test from a set of eight candidate aversive smells: Burned Wood (RS/420), Reptile (RS/424), Diesel Fumes (RS/423), Metal (RS/426), Dusty (RS/425), Tar (RS/401), Cow Manure, and Natural Gas (all obtained from RetroScent, Rotterdam, The Netherlands: www.geurmachine.nl). The scents were labeled with randomly assigned numbers, presented in small glass tubes containing a cotton swab with 3–4 drops of aroma oil, and sniffed in random order by the 10 participants of the pilot test, at approximately 5 inches from their nose, while they viewed the VE. The degree to which each scent fitted the VE (how environmentally appropriate the scent was for the VE) was evaluated on an 11-point Likert scale (ranging from 0 = absolutely not to 10 = definitely). Tar received the highest mean score (7.4), followed by Dusty (5.7). In addition, although none of the testers identified the exact nature of the tar smell, 8 out of 10 spontaneously reported associations with fire and burned material, and it was unanimously judged to be a very unpleasant scent that could occur in an environment such as the one represented by the VE.
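
The selection rule used in this pilot (retain the candidate with the highest mean fit rating) amounts to a simple ranking. The sketch below assumes that per-participant ratings are available as lists of integers on the 0 to 10 scale; the function name and input format are hypothetical, and the individual ratings themselves are not reported in this study.

```python
from __future__ import annotations

from statistics import mean


def rank_by_mean_fit(ratings: dict[str, list[int]]) -> list[tuple[str, float]]:
    """Return (scent, mean fit rating) pairs, best-fitting scent first.

    ratings maps a scent label to the fit ratings of the individual pilot
    participants on the 0 (absolutely not) to 10 (definitely) scale.
    Hypothetical helper for illustration only.
    """
    for scent, values in ratings.items():
        if not values:
            raise ValueError(f"no ratings for {scent!r}")
        if any(not 0 <= v <= 10 for v in values):
            raise ValueError(f"rating outside the 0-10 scale for {scent!r}")
    means = {scent: mean(values) for scent, values in ratings.items()}
    # Sort by mean rating, highest (most environmentally appropriate) first.
    return sorted(means.items(), key=lambda item: item[1], reverse=True)
```

The first element of the returned list is the candidate that would be retained; ties would need an explicit tie-breaking rule, which the pilot description does not specify.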

A second pilot test served to investigate the spontaneous associations that the two selected scents (grass and tar) may elicit independent of visual input. Three small glass tubes containing a cotton swab with three to four drops of either the grass odor solution, the tar aroma oil, or clear tap water were presented in random order to 10 participants (who did not take part in the first pilot test). The tap water served as a control condition. The participants sniffed the samples at approximately 5 inches from their nose and rated their pleasantness and familiarity on 5-point Likert scales (ranging from 0 = absolutely not to 4 = very much). The grass smell received the highest mean pleasantness rating (3.6), followed by tap water (2.6), while the tar smell received the lowest mean pleasantness rating (0.2). The tar smell received the highest mean familiarity score (2.9), followed by tap water (2.0) and grass (1.9). For the tar smell, 6 out of 10 participants reported associations with smoke, fire, and burned material, two participants associated this smell with industrial activities, and the remaining two associated it with garages and garbage dumps, respectively. For the grass smell, 5 out of 10 participants reported associations with nature, flowers, pine trees, or leaves, one was reminded of fruit, and four participants associated it with air fresheners or cleaning products. Hence, the tar smell was perceived as unpleasant and frequently associated with negative (burned or waste) material, while the grass smell was predominantly considered pleasant and associated with positive (natural) material.

Odor Diffusion

Scents were diffused in the room (about 25 m²) through a commercial electronic dispenser (1-3 RS-Classic Scentvertiser, RetroScent, Rotterdam, The Netherlands: www.geurmachine.nl). No odor was applied in the control condition. The dispenser was placed behind a screen, out of the participant’s sight. The participants could not hear the sound of the dispenser while wearing their headphones and listening to the soundscape of the VE. The experimenter turned on the dispenser after the participants had started their tour through the VE and turned it off before they were instructed to take off their headphones. Odor was diffused intermittently (with a cycle period of 1 min) during the experiment, so that the participants received fluctuating concentrations over time, thereby preventing full olfactory adaptation.
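
The intermittent diffusion regime can be expressed as an on/off schedule with a fixed cycle period. In this minimal sketch the 1-min period comes from the text, whereas the per-cycle emission time (`on_s`) is a hypothetical placeholder: the dispenser setting and duty cycle were calibrated in a pilot, and their values are not given here.

```python
from __future__ import annotations


def diffusion_schedule(session_s: float, period_s: float = 60.0,
                       on_s: float = 10.0) -> list[tuple[float, float]]:
    """Return (start, stop) times in seconds during which the dispenser emits.

    period_s: cycle period (1 min in the study).
    on_s: emission time per cycle. HYPOTHETICAL default; the calibrated
          duty cycle used in the study is not reported.
    """
    if not 0 < on_s <= period_s:
        raise ValueError("on_s must lie in (0, period_s]")
    intervals = []
    start = 0.0
    while start < session_s:
        # Emit for on_s seconds, clipped to the end of the session.
        intervals.append((start, min(start + on_s, session_s)))
        start += period_s
    return intervals
```

For example, a 150-s stretch with a 10-s emission per cycle yields bursts starting at 0, 60, and 120 s; the gaps between bursts are what produce the fluctuating concentration described above.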

Both aversive and pleasant odors are likely to engage sensory-driven attentional systems even at subthreshold levels, facilitating the detection and analysis of behaviorally relevant stimuli (Krusemark and Li, 2012). In this study, olfactory stimulation was therefore intentionally performed at a near-threshold level to preclude top-down influences on visual perception (e.g., the use of explicit search strategies), thereby restricting any effects to bottom-up, sensory-driven attentional systems that facilitate threat or reward detection. Ideally, the odor should be just strong enough to be noticeable when attended to. The odor intensity used in this study was between low and intermediate, corresponding to a mean level between 3 and 5 on a 10-point scale. A pilot experiment was performed to determine a dispenser setting and a duty cycle that resulted in a mean rating of 5.

The room in which the test was performed was well ventilated before each session. Only one scent per day was diffused to avoid mixing odors, and the lab was fully ventilated overnight to remove any lingering trace of the scent. Each morning before testing began, the two experimenters “sniff-tested” the room; no residual odors were detected.

Instruments

General Questionnaire

As the results may be influenced by the characteristics of the participants, they were asked to complete a General Questionnaire including socio-demographic measures (sex, age, and education). Education was clustered into four groups: middle-level education, higher-level education, academic education, and other types of education.

Mental State Questionnaire

A 7-item Mental State Questionnaire (adapted from Spielberger, 1983), consisting of four negative (agitated, angry, anxious, distressed), two neutral (calm, relaxed), and one positive (cheerful) emotional terms served to assess the emotions elicited by the individual incivilities. On each encounter with a sign of disorder during their walk participants reported their emotional reaction by selecting one of the seven items (“I feel⋯”).

Post-Experiment Questionnaire

A 4-item Post-Experiment Questionnaire contained three questions investigating the extent to which the ambient temperature, illumination, and atmosphere in the room were characteristic for the VE (these three items were scored on a 5-point Likert scale, ranging from 1 = completely disagree to 5 = completely agree) and an open question (“Was there anything else you noticed during the experiment?”) to test if the participants had noticed the ambient scent in the room.

Experimental Procedure

After their arrival at the laboratory, the participants first read and signed an informed consent form. Next, they filled out the General Questionnaire. Then they read the following instructions:

“The experiment concerns an area of Soesterberg near the TNO lab, and will take about 45 minutes. Citizens living in this area are concerned about the increasing social disorder in their neighborhood. They intend to draft a plan of action to confront this problem. After making an inventory of the different types of incivilities occurring in their neighborhood, the citizens will prioritize the order in which these should be addressed. To enable a large number of people to give their opinion on the social disorder in this area, the concerned citizens have commissioned a realistic and highly detailed computer model of their neighborhood.

It is your task to make a tour through this virtual model and assess the social disorder in this neighborhood. Your route is marked by arrows drawn on the ground. Each time you notice signs of incivilities (e.g., litter, dog droppings, broken car windows, etc.) during your inspection tour, you are requested to:

1. Make a snapshot of each sign of incivilities you notice (by pressing key F12).

2. Enter a brief description of the incivility on your questionnaire.

3. Report your current mental state by choosing one of the 7 emotional terms on the ‘Mental State Questionnaire’ (agitated, angry, anxious, distressed, calm, relaxed, cheerful).”

Next, the experimenter verified that the participants had understood their instructions and started the simulation. The experimenter then explained the function of the gamepad and gave the participant the opportunity to practice maneuvering through the VE for about 5 min. At the end of this practice period the experimenter checked whether the participant was able to perform the required maneuvers and paid attention to the arrows on the ground and the signs of disorder. Then, the experimenter gave the participants the printed questionnaires on which they could fill out their reports, and positioned the point of view in the VE at the starting location, facing the direction of the route. The participants then put on their headphones and started their walkthrough, which they performed at their own pace. Each time the participants noticed signs of disorder during their walk, they reported the item they had noticed and their current mental state. During the test, the experimenter was seated behind a screen in the room and intermittently turned on the odor dispenser at one-minute intervals, maintaining a slightly fluctuating, near-threshold ambient odor level. Finally, after finishing their walkthrough, the participants filled out the Post-Experiment Questionnaire.

The experimental protocol was reviewed and approved by the TNO internal review board on experiments with human participants (TNO Toetsings Commissie Proefpersoon Experimenten, Soesterberg, The Netherlands), and was in accordance with the Helsinki Declaration of 1975, as revised in 2000 (World Medical Association, 2000). The participants provided their written informed consent prior to testing. The participants received a modest financial compensation for their participation.

Participants

The experiment was performed by 69 participants (3 groups of 23 each) who were selected from the TNO database of volunteers: 39 males and 30 females, aged 43 ± 18 years. The selection criteria ensured that they were not familiar with the urban area represented by the VE, had no problems with their sense of smell, and had normal or corrected-to-normal vision with no color deficiencies. None of the participants were aware of the aim of the experiment. The participants’ mean age, level of education, computer proficiency, and game experience were approximately the same in all three (no ambient odor, ambient tar odor, and ambient grass odor) experimental conditions.

Data Analysis

The emotional responses reported for the detected signs of disorder (from the Mental State Questionnaires) were clustered for each of the three classes of experimental items: neglect, vandalism, and crime. Analysis of variance (ANOVA) was used to test the relationships between the main variables. Chi-squared tests were performed to determine whether observed frequencies were significantly different from expected frequencies. The statistical analyses were performed with IBM SPSS 20.0 for Windows. For all analyses a probability level of p < 0.05 was considered to be statistically significant.
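
For readers without SPSS, the two test statistics named above can be written out in a few lines. This is a minimal, standard-library sketch of the textbook formulas (the one-way ANOVA F ratio and Pearson’s chi-squared statistic for a contingency table of observed counts); it is not the SPSS procedure used in the study, the function names are hypothetical, and it returns statistics and degrees of freedom only, not p-values.

```python
from __future__ import annotations

from statistics import mean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = mean([x for g in groups for x in g])
    group_means = [mean(g) for g in groups]
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def chi_square_independence(table: list[list[float]]) -> tuple[float, int]:
    """Return (chi2, df) for an r x c table of observed frequencies."""
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    total = sum(row_tot)
    if total == 0 or 0 in row_tot or 0 in col_tot:
        raise ValueError("every row and column needs a nonzero total")
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected frequency under independence of rows and columns.
            expected = row_tot[i] * col_tot[j] / total
            chi2 += (observed - expected) ** 2 / expected
    return chi2, (len(table) - 1) * (len(table[0]) - 1)
```

The chi-squared statistic compares each observed frequency with the frequency expected if emotional response and item class were independent, which is the comparison described in the paragraph above.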

Results

A chi-squared test showed a significant difference (χ² = 18.94, df = 4, p < 0.05) between the observed and expected frequencies of the emotional responses (negative, neutral, or positive) associated with the reported items (signs of incivilities) in the classes Neglect, Vandalism, and Crime. Items in the classes Vandalism and Crime were more frequently associated with negative emotional responses than items in the class Neglect.
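
The degrees of freedom follow from a 3 × 3 table of emotional response (negative, neutral, positive) by item class (Neglect, Vandalism, Crime): df = (3 − 1)(3 − 1) = 4. For an even number of degrees of freedom, the chi-squared upper tail has a closed form, so the p-value of the reported statistic can be checked without a statistics package. This sketch assumes that the reported χ² = 18.94 is the statistic for that 3 × 3 table.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-squared variable with an
    even number of degrees of freedom, using the closed form
    exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)**i / i!."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)**i / i! incrementally
        total += term
    return math.exp(-half) * total


# df = (3 emotion categories - 1) * (3 item classes - 1) = 4
p = chi2_sf_even_df(18.94, 4)
print(f"p = {p:.4f}")  # prints "p = 0.0008"
```

Under that assumption the upper-tail probability is about 0.0008, comfortably below the 0.05 criterion used in this study.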

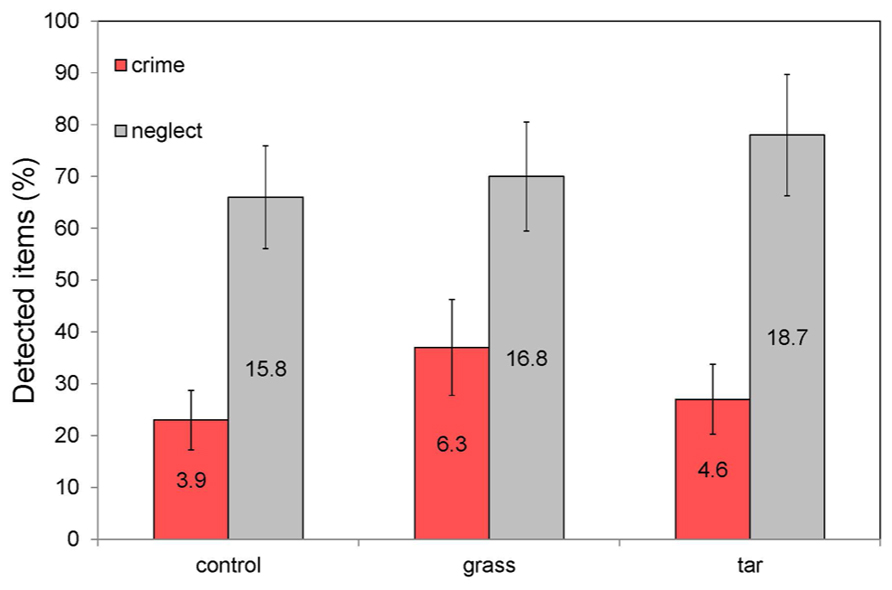

Figure 2 shows the detection performance for items signaling Neglect and Crime in each of the three experimental conditions. To enable a comparison of performance between the experimental classes (which were each represented by a different number of test items), the results are expressed as percentages (for the sake of completeness, the figure also provides the mean number of detected items for each condition). Figure 2 clearly shows that the relative detection performance is lower for signs of crime than for signs of neglect in all conditions.

FIGURE 2. Percentage of detected items signaling Neglect (a total of 24 items) and Crime (a total of 17 items) in each of the three experimental ambient scent conditions (no odor, grass odor, tar odor). The labels inside the bars represent the mean number of detected items. The error bars represent the standard error of the mean.
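The sketch below shows one way to turn detection counts into the percentages and error bars of Figure 2. It assumes that percentages were computed per participant and then averaged. It also assumes that the standard error is the sample standard deviation divided by the square root of the group size. The item totals (24 Neglect, 17 Crime) come from the caption. The helper names and the detection counts are hypothetical.

```python
import math
from statistics import mean, stdev

NEGLECT_ITEMS = 24  # number of Neglect items in the VE (Figure 2 caption)
CRIME_ITEMS = 17    # number of Crime items in the VE (Figure 2 caption)


def percent_detected(counts, n_items):
    """Convert per-participant detection counts to percentages of n_items."""
    return [100.0 * c / n_items for c in counts]


def mean_and_sem(values):
    """Mean and standard error of the mean (sample SD / sqrt(n))."""
    return mean(values), stdev(values) / math.sqrt(len(values))


# Hypothetical detection counts for a few participants in one condition
# (illustrative only, NOT the study's data).
neglect_counts = [12, 15, 9, 18, 14]
m, sem = mean_and_sem(percent_detected(neglect_counts, NEGLECT_ITEMS))
print(f"Neglect: {m:.1f}% +/- {sem:.1f}% (SEM)")
```

Because the number of items per class is fixed, the mean percentage equals the mean count divided by the item total. This is why the figure can show both values side by side.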

A one-way ANOVA showed that the mean numbers of detected items signaling Neglect and Crime did not differ significantly between the three ambient odor conditions. The following results are given for Neglect and Crime, respectively. There were no significant differences between the Control and Grass conditions (F(1, 42) = 0.57, p = 0.45 and F(1, 37) = 1.76, p = 0.19). There were none between the Control and Tar conditions (F(1, 45) = 3.10, p = 0.09 and F(1, 36) = 0.96, p = 0.33). Nor were there any between the Tar and Grass conditions (F(1, 42) = 0.79, p = 0.38 and F(1, 38) = 0.01, p = 0.93). The hypotheses (H1 and H2) were that participants in the unpleasant (tar) odor condition would notice more signs of disorder, and participants in the pleasant (grass) odor condition fewer, than participants in the odorless control condition. The present data support neither hypothesis. Participants in both ambient odor conditions (tar and grass) reported a similar mean number (and percentage) of signs of disorder as participants in the odorless control condition. In addition, the hedonic tone of the ambient odor appears to have had no effect on visual attention toward neglect or crime objects. Ambient scent also did not affect participants’ subjectively reported emotional state. Age and level of education showed no main or interaction effects, so these factors were omitted from later analyses.
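Each pairwise comparison above is a one-way ANOVA on two groups. Below is a minimal sketch of the F statistic. It assumes complete data with no handling of missing values, and the function name and example counts are hypothetical. It returns only F and its degrees of freedom (df_between = k - 1, df_within = N - k). A p-value additionally requires the survival function of the F distribution, for example `scipy.stats.f.sf`. Alternatively, `scipy.stats.f_oneway` returns both F and p.

```python
def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on k groups of
    observations (e.g., per-participant detection counts per odor condition)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    # Between-groups sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-groups sum of squares: spread of observations around their group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, group_means))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical per-participant detection counts (NOT the study's data).
control = [12, 15, 9, 18, 14]
grass = [11, 13, 10, 16, 12]
f_stat, df_b, df_w = one_way_anova(control, grass)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

With exactly two groups, this F equals the square of the equal-variance Student's t statistic for the same comparison.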

The VE contained multiple objects representing Neglect and Crime, but only a single object signaling Vandalism (a broken bus shelter). Because this item was rather conspicuous, no participant ever missed it. The results for this item therefore have no discriminative value and are not discussed further.

In response to the open question in the Post-Experiment Questionnaire, one participant (out of 23) in the control condition reported noticing a Lysol smell in the room. In the tar odor condition, one participant (out of 23) reported having noticed a smell. This participant was unable to identify it and did not link it to the exploration task. No participant noticed a smell in the grass odor condition.

Discussion

Based on the present results, we cannot conclude whether a subliminal ambient scent can affect the perception of the VE. Ambient scent did not seem to affect participants’ subjectively reported emotional state. This agrees with earlier related studies, which found that pleasant ambient scents did not affect self-reported mood and arousal (Morrin and Ratneshwar, 2000, 2003; Teller and Dennis, 2011).

Contrary to our expectations, the presence of the ambient odors also did not bias the participants’ attention toward the experimental items. Thus, we found no indication that an ambient smell of a given nature selectively biases visual attention to details in a desktop VE. The design of the current study does not allow us to determine whether this null result reflects (1) the absence of an effect or (2) the limited power of the study design itself. In either case, ambient smell may have only limited effectiveness as a tool to direct a user’s attention to specific details in a desktop VE. This result is somewhat surprising given the substantial evidence that odors draw visual attention to congruent visual objects (e.g., Seo et al., 2010; Tomono et al., 2011; Seigneuric et al., 2012; Chen et al., 2013). However, it agrees with earlier reports that ambient scent has no effect on shopping behavior (Schifferstein and Blok, 2002; Teller and Dennis, 2011). It has in fact been argued that reports of significant effects of ambient scents on perception, emotions, and behavior in shopping environments should be treated with caution. Most of those studies did not control for different sources of bias (Teller and Dennis, 2011). Our results also agree with those of Schifferstein and Blok (2002), who found that the scent of freshly cut grass did not affect sales of thematically (in)congruent products. They argue that ambient scent is probably more diagnostic for the physical environment of the observer than for the particular items in that environment. This suggests that ambient scent may only effectively guide visual attention when there is a close link between the affective or semantic qualities of the scent and visual features in the VE.
Although there may be a semantic link between the scent of cut grass and the greenery shown in the VE, the link between the scent of tar and signs of disorder is probably less evident. Also, more immersive VEs may be required to automatically establish associations between ambient scents and the VE itself. In the case of desktop VEs, effective diagnostic associations that guide a user’s attention may require a close spatiotemporal link between the contents of the VE and the scents with which they are supposed to be associated. That is, smells and visual features may need to appear and disappear together to effectively induce the illusion that the smells actually emanate from the objects shown on the screen.

Experimental items signaling vandalism (e.g., a damaged bus shelter) and crime (e.g., home protection signs and cameras) evoked negative affective appraisals more frequently than items representing neglect (e.g., litter, dog droppings, old bicycle parts). This finding is consistent with the discriminant validity of different types of perceptual incivilities observed in the real world (e.g., between crime and social incivilities: Worrall, 2006; Armstrong and Katz, 2010). In reality, signs of crime are also more likely to evoke negative appraisals, since they are typically associated with the risk of personal victimization (Phillips and Smith, 2004). This suggests that the affective appraisal of the VE had at least some ecological validity.

In all experimental conditions, the relative detection performance for signals of crime was lower than for signals of neglect. This is probably because most signals of crime (i.e., the warning signs and CCTV cameras) were positioned at eye height or higher in the VE (e.g., attached to trees, lamp posts, or walls). The signals of neglect, by contrast, were on the ground or on low supports (statues). Participants were informed about the nature of the signals of disorder and shown examples during their introduction to the experiment. Even so, they may have focused primarily on the signs of neglect on the ground and paid less attention to signals higher up in the scene. The walking route was indicated by arrows drawn on the ground, which may also have induced a downward bias in their visual search.

In summary, the present study does not allow us to conclude whether ambient odors can be an effective tool to direct a user’s attention to specific (congruent) objects in a desktop VE (e.g., by evoking implicit associations).

Limitations of the Present Study

In previous studies on the effects of odor on visual attention, participants freely inspected visual scenes without any explicit instructions. In those studies, odor-induced attentional bias became manifest in spontaneous fixation behavior (Seigneuric et al., 2010; Seo et al., 2010). In the current study, the participants were explicitly instructed to look for signs of disorder in the VE. The cognitive effort associated with this explicit search task may have overridden any odor-induced attentional bias. However, participants noticed only a fraction of the targets, which suggests that there was still room for odor-modulated performance enhancement.

The walking route through the VE was indicated by arrows drawn on the ground, which may have induced a bias for visual search near the ground. Unfortunately, fixation behavior was not measured in this study, so this hypothesis cannot be verified.

The scent of grass had an explicit visual representation in the VE, while the scent of tar could only implicitly be linked to visual (litter) and auditory (construction sounds) elements in the VE. Future studies should preferably employ scents that have explicit and unequivocal visual counterparts in the VE. Also, a range of both (1) neutral odors or odors with the same valence but different semantic connotations, and (2) odors of different valence but without any semantic counterparts in the VE should be deployed to enable a distinction between effects induced by hedonic or semantic congruency.

There was only one sign of vandalism in this study (the broken bus shelter), and it was also highly salient. As a result, this item had no discriminative value. Future studies should include a larger number of test items for each experimental class, with different (including low) visual saliencies. The attention-enhancing effects of olfactory cues may be more prominent for targets with low visual saliency.

The participants in this study reported that they had no problems with their sense of smell at the time of the experiment. In addition, the TNO database of volunteers contained no record of olfactory deficiencies noted during their participation in previous smell experiments. However, since we did not explicitly test their sense of smell in the current experiment, there is no guarantee that they all had normal olfactory function.

Suggestions for Future Research

It would be interesting to test whether the finding that specific odors can reflexively direct visual attention to semantically congruent visual objects (Seo et al., 2010; Tomono et al., 2011; Seigneuric et al., 2012; Chen et al., 2013) can also be replicated with dynamic desktop VEs. Effectively guiding a user’s attention probably requires dynamic olfactory displays. Such displays can establish a close spatiotemporal link between the contents of the VE and the scents with which they are supposed to be associated.
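One way to realize such a spatiotemporal link is to switch odor channels on and off as scented objects enter and leave the user's view. The sketch below is purely illustrative and hypothetical. The `ScentedObject` type, the odor channel names, the 10-unit distance limit and the 90° field of view are all assumptions. It also ignores the onset and clearance delays of real olfactory displays. It only decides which odors should be active for a given viewer position and heading. A real system would call it every frame and drive its display hardware accordingly.

```python
import math
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class ScentedObject:
    name: str
    position: Tuple[float, float]  # (x, y) on the VE ground plane
    odor: str                      # hypothetical channel on an olfactory display


def active_odors(viewer: Tuple[float, float], heading_deg: float,
                 objects: List[ScentedObject], max_dist: float = 10.0,
                 half_fov_deg: float = 45.0) -> Set[str]:
    """Odor channels whose source objects are currently 'in view': within
    max_dist of the viewer and within +/- half_fov_deg of the heading
    (heading measured counterclockwise from the +x axis, in degrees)."""
    active: Set[str] = set()
    for obj in objects:
        dx = obj.position[0] - viewer[0]
        dy = obj.position[1] - viewer[1]
        if math.hypot(dx, dy) > max_dist:
            continue
        bearing = math.degrees(math.atan2(dy, dx))
        # Signed angle between object bearing and heading, wrapped to [-180, 180).
        offset = (bearing - heading_deg + 180.0) % 360.0 - 180.0
        if abs(offset) <= half_fov_deg:
            active.add(obj.odor)
    return active


# Hypothetical scene: grass ahead, litter behind, a lawn out of range.
scene = [
    ScentedObject("grass_verge", (5.0, 0.0), "grass"),
    ScentedObject("litter_pile", (-5.0, 0.0), "tar"),
    ScentedObject("distant_lawn", (20.0, 0.0), "grass"),
]
print(active_odors((0.0, 0.0), 0.0, scene))    # facing +x
print(active_odors((0.0, 0.0), 180.0, scene))  # facing -x
```

Tying the release of each odor to the visibility of its source object is one way for smells to appear and disappear together with the corresponding visual features.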

Future studies should also register eye movements, since human fixation behavior may provide valuable additional information to subjectively reported results. Also, future studies should track the exact path of the participants through the VE. It is in principle possible that participants use scent cues to adjust their distance to certain items in the VE (e.g., that they show an approach or avoidance behavior, maintaining a larger distance to unpleasant smelling items, and coming closer to pleasant smelling items). Since distance affects the visual saliency and detectability of targets this may affect the results. Path deviations are not likely to be a significant confounding factor in the present study, since most parts of the route were rather narrow and did not leave much room for deviations.
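Approach or avoidance could be quantified from a logged path by computing each participant's closest approach to every test item. The minimal sketch below assumes the path is logged as sampled 2D ground-plane positions in the VE's units. The function, item names and coordinates are hypothetical. Distances are measured to the sampled positions only, not to the segments between them.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) on the VE ground plane, in VE units


def closest_approach(path: List[Point], items: Dict[str, Point]) -> Dict[str, float]:
    """For each item, the smallest Euclidean distance between the item and any
    sampled position on the participant's logged path."""
    return {
        name: min(math.dist(pos, item_pos) for pos in path)
        for name, item_pos in items.items()
    }


# Hypothetical logged path and item positions (illustrative only).
path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
items = {"bus_shelter": (1.0, 2.0), "litter": (2.0, 0.5)}
print(closest_approach(path, items))
```

These per-item distances could then be compared between odor conditions, for example with an ANOVA, to test whether participants kept a larger distance from certain items in one condition.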

It has previously been shown that adding olfactory cues to an immersive VE increases the user’s sense of presence and the perceived realism of the simulated environment. It ultimately also improves the user’s memory for details therein (Dinh et al., 1999; Washburn et al., 2003; Tortell et al., 2007). It would therefore be interesting to investigate whether an odor-induced visual attention bias also becomes manifest for desktop VEs when memory for details is tested instead of the number of detections. Based on these previous studies, we would expect specific differences after participants complete their inspection tour of the VE. Participants in an unpleasant odor condition should remember more signs of disorder, and participants in a pleasant odor condition fewer, than participants in an odorless control condition.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Anderson, B. A. (2013). A value-driven mechanism of attentional selection. J. Vis. 13, 7. doi: 10.1167/13.3.7

Arao, M., Suzuki, M., Katayama, J., and Akihiro, Y. (2012). An odorant congruent with a colour cue is selectively perceived in an odour mixture. Perception 41, 474–482. doi: 10.1068/p7152

Armstrong, T., and Katz, C. (2010). Further evidence on the discriminant validity of perceptual incivilities measures. Justice Q. 27, 280–304. doi: 10.1080/07418820802506198

Baus, O., and Bouchard, S. (2010). The sense of olfaction: its characteristics and its possible applications in virtual environments. J. Cyber Ther. Rehab. 3, 31–50.

Bell, P. A., Greene, T. C., Fisher, J. D., and Baum, A. (2010). Environmental Psychology. 5th Edn. London: Lawrence Erlbaum Associates.

Bishop, I. D., and Rohrmann, B. (2003). Subjective responses to simulated and real environments: a comparison. Landsc. Urban Plan. 65, 261–277. doi: 10.1016/S0169-2046(03)00070-7

Bitner, M. J. (1992). Servicescapes: the impact of physical surroundings on customers and employees. J. Mark. 56, 57–71. doi: 10.2307/1252042

Brosch, T., Pourtois, G., and Sander, D. (2010). The perception and categorisation of emotional stimuli: a review. Cogn. Emot. 24, 377–400. doi: 10.1080/02699930902975754

Caughy, M. O., O’Campo, P. J., and Patterson, J. (2001). A brief observational measure for urban neighborhoods. Health Place 7, 225–236. doi: 10.1016/S1353-8292(01)00012-0

Chalmers, A., Debattista, K., and Ramic-Brkic, B. (2009). Towards high-fidelity multi-sensory virtual environments. Vis. Comput. 25, 1101–1108. doi: 10.1007/s00371-009-0389-2

Chen, K., Zhou, B., Chen, S., He, S., and Zhou, W. (2013). Olfaction spontaneously highlights visual saliency map. Proc. Biol. Sci. 280, 1–7. doi: 10.1098/rspb.2013.1729

Cozens, P., Neal, R., Whitaker, J., and Hillier, D. (2003). Investigating personal safety at railway stations using “virtual reality” technology. Facilities 21, 188–194. doi: 10.1108/02632770310489936

Dang, A., Liang, W., and Chi, W. (2012). “Review of VR application in digital urban planning and managing,” in Geospatial Techniques in Urban Planning, ed. Z. Shen (Berlin: Springer), 131–154.

Demattè, M. L., Osterbauer, R., and Spence, C. (2007). Olfactory cues modulate facial attractiveness. Chem. Sens. 32, 603–610. doi: 10.1093/chemse/bjm030

Demattè, M. L., Sanabria, D., and Spence, C. (2009). Olfactory discrimination: when vision matters? Chem. Sens. 34, 103–109. doi: 10.1093/chemse/bjn055

Dinh, H. Q., Walker, N., Hodges, L. F., Song, C., and Kobayashi, A. (1999). “Evaluating the importance of multi-sensory input on memory and the sense of presence in virtual environments,” in Proceedings of the Virtual Reality Annual International Symposium (Piscataway, NJ: IEEE Press), 222–228.

Driver, J., and Spence, C. (1998). Cross-modal links in spatial attention. Phil. Trans. R. Soc. B Biol. Sci. 353, 1319–1331. doi: 10.1098/rstb.1998.0286

Duncan, S., and Feldman Barrett, L. (2007). The role of the amygdala in visual awareness. Trends Cogn. Sci. 11, 190–192. doi: 10.1016/j.tics.2007.01.007

Durand, K., Baudouin, J. Y., Lewkowicz, D. J., Goubet, N., and Schaal, B. (2013). Eye-catching odors: olfaction elicits sustained gazing to faces and eyes in 4-month-old infants. PLoS ONE 8:e70677. doi: 10.1371/journal.pone.0070677

Ehrlichman, H., and Halpern, J. N. (1988). Affect and memory: effects of pleasant and unpleasant odors on retrieval of happy and unhappy memories. J. Pers. Soc. Psychol. 55, 769–779. doi: 10.1037/0022-3514.55.5.769

Fox, E., Russo, R., and Dutton, K. (2002). Attentional bias for threat: evidence for delayed disengagement from emotional faces. Cogn. Emot. 16, 355–379. doi: 10.1080/02699930143000527

Ghinea, G., and Ademoye, O. A. (2011). Olfaction-enhanced multimedia: perspectives and challenges. Multimed. Tools Appl. 55, 601–626. doi: 10.1007/s11042-010-0581-4

Gottfried, J. A., and Dolan, R. J. (2003). The nose smells what the eye sees: crossmodal visual facilitation of human olfactory perception. Neuron 39, 375–386. doi: 10.1016/S0896-6273(03)00392-1

Hacker, G., Brooks, A., and van der Zwan, R. (2013). Sex discriminations made on the basis of ambiguous visual cues can be affected by the presence of an olfactory cue. BMC Psychol. 1:10. doi: 10.1186/2050-7283-1-10

Henning, E. (2013). Britain’s Favourite Smells. Available at: http://blog.ambius.com/britains-favourite-smells

Henshaw, V., and Bruce, N. (2012). “Smell and sound expectation and the ambiances of English cities,” in Proceedings of the 2nd International Congress on Ambiances (Montreal), 449–454.

Houtkamp, J. M. (2012). Affective Appraisal of Virtual Environments. Ph.D. Thesis. University Utrecht, Utrecht. Available at: http://igitur-archive.library.uu.nl/dissertations/2012-0620-200449/UUindex.html

Houtkamp, J. M., Schuurink, E. L., and Toet, A. (2008). “Thunderstorms in my computer: the effect of visual dynamics and sound in a 3D environment,” in Proceedings of the International Conference on Visualisation in Built and Rural Environments BuiltViz’08, eds M. Bannatyne and J. Counsell (Los Alamitos: IEEE Computer Society), 11–17.

Jacobs, R. H., Renken, R., Aleman, A., and Cornelissen, F. W. (2012). The amygdala, top-down effects, and selective attention to features. Neurosci. Biobehav. Rev. 36, 2069–2084. doi: 10.1016/j.neubiorev.2012.05.011

Jadauji, J. B., Djordjevic, J., Lundström, J. N., and Pack, C. C. (2012). Modulation of olfactory perception by visual cortex stimulation. J. Neurosci. 32, 3095–3100. doi: 10.1523/JNEUROSCI.6022-11.2012

Koster, E. H., Crombez, G., Van Damme, S., Verschuere, B., and De Houwer, J. (2004). Does imminent threat capture and hold attention? Emotion 4, 312–317. doi: 10.1037/1528-3542.4.3.312

Krusemark, E., and Li, W. (2012). Enhanced olfactory sensory perception of threat in anxiety: an event-related fMRI study. Chemosens. Percept. 5, 37–45. doi: 10.1007/s12078-011-9111-7

Leppänen, J. M., and Hietanen, J. K. (2003). Affect and face perception: odors modulate the recognition advantage of happy faces. Emotion 3, 315–326. doi: 10.1037/1528-3542.3.4.315

Lin, J. Y., Murray, S. O., and Boynton, G. M. (2009). Capture of attention to threatening stimuli without perceptual awareness. Curr. Biol. 19, 1118–1122. doi: 10.1016/j.cub.2009.05.021

Michael, G. A., Jacquot, L., Millot, J.-L., and Brand, G. (2003). Ambient odors modulate visual attentional capture. Neurosci. Lett. 352, 221–225. doi: 10.1016/j.neulet.2003.08.068

Michael, G. A., Jacquot, L., Millot, J.-L., and Brand, G. (2005). Ambient odors influence the amplitude and time course of visual distraction. Behav. Neurosci. 119, 708–715. doi: 10.1037/0735-7044.119.3.708

Mohanty, A., Egner, T., Monti, J. M., and Mesulam, M. M. (2009). Search for a threatening target triggers limbic guidance of spatial attention. J. Neurosci. 29, 10563–10572. doi: 10.1523/JNEUROSCI.1170-09.2009

Morrin, M., and Ratneshwar, S. (2000). The impact of ambient scent on evaluation, attention, and memory for familiar and unfamiliar brands. J. Bus. Res. 49, 157–165. doi: 10.1016/S0148-2963(99)00006-5

Morrin, M., and Ratneshwar, S. (2003). Does it make sense to use scents to enhance brand memory? J. Market. Res. 40, 10–25. doi: 10.1509/jmkr.40.1.10.19128

Morrot, G., Brochet, F., and Dubourdieu, D. (2001). The color of odors. Brain Lang. 79, 309–320. doi: 10.1006/brln.2001.2493

Murray, E. A. (2007). The amygdala, reward and emotion. Trends Cogn. Sci. 11, 489–497. doi: 10.1016/j.tics.2007.08.013

Nasar, J. L., and Cubukcu, E. (2011). Evaluative appraisals of environmental mystery and surprise. Environ. Behav. 43, 387–414. doi: 10.1177/0013916510364500

Oshima, C., Wada, A., Ando, H., Matsuo, N., Abe, S., and Yanagida, Y. (2007). “Improved delivery of olfactory stimulus to keep drivers awake,” in Workshop on DSP for in-Vehicle and Mobile Systems, Istanbul.

Österbauer, R. A., Matthews, P. M., Jenkinson, M., Beckmann, C. F., Hansen, P. C., and Calvert, G. A. (2005). The color of scents: chromatic stimuli modulate odor responses in the human brain. J. Neurophysiol. 93, 3434–3441. doi: 10.1152/jn.00555.2004

Park, A. J., Calvert, T., Brantingham, P. L., and Brantingham, P. J. (2008). The use of virtual and mixed reality environments for urban behavioural studies. Psychnol. J. 6, 119–130.

Park, A. J., Spicer, V., Guterres, M., Brantingham, P. L., and Jenion, G. (2010). “Testing perception of crime in a virtual environment,” in Proceedings of the 2010 IEEE International Conference on Intelligence and Security Informatics (ISI) (Piscataway, NJ: IEEE Press), 7–12.

Perkins, D. D., Meeks, J. W., and Taylor, R. B. (1992). The physical environment of street blocks and resident perceptions of crime and disorder: implications for theory and measurement. J. Environ. Psychol. 12, 21–34. doi: 10.1016/S0272-4944(05)80294-4

Phillips, T., and Smith, P. (2004). Emotional and behavioral responses to everyday incivility: challenging the fear/avoidance paradigm. J. Sociol. 40, 378–399. doi: 10.1177/1440783304048382

Pollatos, O., Kopietz, R., Linn, J., Albrecht, J., Sakar, V., Anzinger, A., et al. (2007). Emotional stimulation alters olfactory sensitivity and odor judgment. Chem. Sens. 32, 583–589. doi: 10.1093/chemse/bjm027

Pourtois, G., Schettino, A., and Vuilleumier, P. (2013). Brain mechanisms for emotional influences on perception and attention: what is magic and what is not. Biol. Psychol. 92, 492–512. doi: 10.1016/j.biopsycho.2012.02.007

Reynolds, E. (2012). Our favourite smells: cut grass, aftershave, a freshly cleaned house, baking and a Sunday roast. Daily Mail. Available at: http://www.dailymail.co.uk/femail/article-2157519/ (accessed June 11, 2012).

Richard, E., Tijou, A., Richard, P., and Ferrier, J.-L. (2006). Multi-modal virtual environments for education with haptic and olfactory feedback. Virtual Reality 10, 207–225. doi: 10.1007/s10055-006-0040-8

Riener, R., and Harders, M. (2012). “Olfactory and gustatory aspects,” in Virtual Reality in Medicine, eds R. Riener and M. Harders (London: Springer), 149–159.

Schifferstein, H. N. J., and Blok, S. T. (2002). The signal function of thematically (in)congruent ambient scents in a retail environment. Chem. Sens. 27, 539–549. doi: 10.1093/chemse/27.6.539

Seigneuric, A., Durand, K., Jiang, T., Baudouin, J. Y., and Schaal, B. (2010). The nose tells it to the eyes: crossmodal associations between olfaction and vision. Perception 39, 1541–1554. doi: 10.1068/p6740

Seigneuric, A., Durand, K., Jiang, T., Baudouin, J. Y., and Schaal, B. (2012). The nose tells it to the eyes: crossmodal associations between olfaction and vision. Perception 39, 1541–1554. doi: 10.1068/p6740

Seo, H. S., Roidl, E., Müller, F., and Negoias, S. (2010). Odors enhance visual attention to congruent objects. Appetite 54, 544–549. doi: 10.1016/j.appet.2010.02.011

Seubert, J., Freiherr, J., Djordjevic, J., and Lundström, J. N. (2013). Statistical localization of human olfactory cortex. Neuroimage 66, 333–342. doi: 10.1016/j.neuroimage.2012.10.030

Spielberger, C. D. (1983). State-Trait Anxiety Inventory for adults. Mountain View, CA: Consulting Psychologists Press, Inc.

Teller, C., and Dennis, C. (2011). The effect of ambient scent on consumers’ perception, emotions and behaviour: a critical review. J. Mark. Manage. 28, 14–36. doi: 10.1080/0267257X.2011.560719

Todd, R. M., Cunningham, W. A., Anderson, A. K., and Thompson, E. (2012). Affect-biased attention as emotion regulation. Trends Cogn. Sci. 16, 365–372. doi: 10.1016/j.tics.2012.06.003

Toet, A., and van Schaik, M. G. (2012). Effects of signals of disorder on fear of crime in real and virtual environments. J. Environ. Psychol. 32, 260–276. doi: 10.1016/j.jenvp.2012.04.001

Tomono, A., Kanda, K., and Otake, S. (2011). Effect of smell presentation on individuals with regard to eye catching and memory. Electron. Comm. Jpn. 94, 9–19. doi: 10.1002/ecj.10319

Tortell, R., Luigi, D. P., Dozois, A., Bouchard, S., Morie, J. F., and Ilan, D. (2007). The effects of scent and game play experience on memory of a virtual environment. Virtual Real. 11, 61–68. doi: 10.1007/s10055-006-0056-0

Van der Burg, E., Olivers, C. N., Bronkhorst, A. W., and Theeuwes, J. (2008). Pip and pop: nonspatial auditory signals improve spatial visual search. J. Exp. Psychol. Hum. Percept. Perform. 34, 1053–1065. doi: 10.1037/0096-1523.34.5.1053

Van der Burg, E., Olivers, C. N., Bronkhorst, A. W., and Theeuwes, J. (2009). Poke and pop: tactile-visual synchrony increases visual saliency. Neurosci. Lett. 450, 60–64. doi: 10.1016/j.neulet.2008.11.002

Vuilleumier, P. (2005). How brains beware: neural mechanisms of emotional attention. Trends Cogn. Sci. 9, 585–594. doi: 10.1016/j.tics.2005.10.011

Walla, P. (2008). Olfaction and its dynamic influence on word and face processing: cross-modal integration. Prog. Neurobiol. 84, 192–209. doi: 10.1016/j.pneurobio.2007.10.005

Washburn, D. A., Jones, L. M., Satya, R. V., Bowers, C. A., and Cortes, A. (2003). Olfactory use in virtual environment training. Model. Simulat. Mag. 2, 19–25.

Williams, L. M., Palmer, D., Liddell, B. J., Song, L., and Gordon, E. (2006). The ‘when’ and ‘where’ of perceiving signals of threat versus non-threat. Neuroimage 31, 458–467. doi: 10.1016/j.neuroimage.2005.12.009

Williams, M. A., McGlone, F., Abbott, D. F., and Mattingley, J. B. (2005). Differential amygdala responses to happy and fearful facial expressions depend on selective attention. Neuroimage 24, 417–425. doi: 10.1016/j.neuroimage.2004.08.017

Winston, J. S., Gottfried, J. A., Kilner, J. M., and Dolan, R. J. (2005). Integrated neural representations of odor intensity and affective valence in human amygdala. J. Neurosci. 25, 8903–8907. doi: 10.1523/JNEUROSCI.1569-05.2005

Woldorff, M. G., Gallen, C. C., Hampson, S. A., Hillyard, S. A., Pantev, C., Sobel, D., et al. (1993). Modulation of early sensory processing in human auditory cortex during auditory selective attention. Proc. Natl. Acad. Sci. U.S.A. 90, 8722–8726. doi: 10.1073/pnas.90.18.8722

World Medical Association. (2000). World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. J. Am. Med. Assoc. 284, 3043–3045. doi: 10.1001/jama.284.23.3043

Worrall, J. L. (2006). The discriminant validity of perceptual incivility measures. Justice Q. 23, 360–383. doi: 10.1080/07418820600869137

Wrzesniewski, A., McCauley, C., and Rozin, P. (1999). Odor and affect: individual differences in the impact of odor on liking for places, things and people. Chem. Sens. 24, 713–721. doi: 10.1093/chemse/24.6.713

Yanagida, Y., Adachi, T., Miyasato, T., Tomono, A., Kawato, S., Noma, H., et al. (2005). “Integrating a projection-based olfactory display with interactive audio-visual contents,” in HCI International 2005, Las Vegas.

Yanagida, Y., Kawato, S., Noma, H., Tetsutani, N., and Tomono, A. (2003). “A nose-tracked, personal olfactory display,” in International Conference on Computer Graphics and Interactive Techniques. ACM SIGGRAPH 2003 Sketches & Applications (New York: ACM), 1.

Yanagida, Y., Kawato, S., Noma, H., Tomono, A., and Tetsutani, N. (2004). “Projection-based olfactory display with nose tracking,” in Proceedings of IEEE Virtual Reality 2004 (Piscataway, NJ: IEEE), 43–50.

Yu, J., Yanagida, Y., Kawato, S., and Tetsutani, N. (2003). “Air cannon design for projection-based olfactory display,” in Proceedings of the 13th International Conference on Artificial Reality and Telexistence, Tokyo, 136–142.

Zald, D. H. (2003). The human amygdala and the emotional evaluation of sensory stimuli. Brain Res. Rev. 41, 88–123. doi: 10.1016/S0165-0173(02)00248-5

Zelano, C., Bensafi, M., Porter, J., Mainland, J., Johnson, B., Bremner, E., et al. (2005). Attentional modulation in human primary olfactory cortex. Nat. Neurosci. 8, 114–120. doi: 10.1038/nn1368

Zellner, D. A. (2013). Color odor interactions: a review and model. Chemosens. Percept. 1–15. doi: 10.1007/s12078-013-9154-z

Zellner, D. A., Bartoli, A. M., and Eckard, R. (1991). Influence of color on odor identification and liking ratings. Am. J. Psychol. 104, 547–561. doi: 10.2307/1422940

Zellner, D. A., and Kautz, M. A. (1990). Color affects perceived odor intensity. J. Exp. Psychol. Hum. Percept. Perform. 16, 391–397. doi: 10.1037/0096-1523.16.2.391

Zhou, W., and Chen, D. (2009). Fear-related chemosignals modulate recognition of fear in ambiguous facial expressions. Psychol. Sci. 20, 177–183. doi: 10.1111/j.1467-9280.2009.02263.x

Zhou, W., Jiang, Y., He, S., and Chen, D. (2010). Olfaction modulates visual perception in binocular rivalry. Curr. Biol. 20, 1356–1358. doi: 10.1016/j.cub.2010.05.059

Keywords: attention, ambient odor, semantic congruency, affective congruency, virtual environment

Citation: Toet A and van Schaik MG (2013) Visual attention for a desktop virtual environment with ambient scent. Front. Psychol. 4:883. doi: 10.3389/fpsyg.2013.00883

Received: 11 September 2013; Accepted: 06 November 2013;

Published online: 26 November 2013.

Edited by:

Ilona Croy, University of Gothenburg, Sweden

Reviewed by:

Johannes Frasnelli, Université de Montréal, Canada

Han-Seok Seo, University of Arkansas, USA

Copyright © 2013 Toet and van Schaik. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alexander Toet, TNO, Kampweg 5, 3769 DE Soesterberg, Netherlands e-mail: lex.toet@tno.nl; lextoet@gmail.com
