- Music Dynamics Lab, Department of Psychology, University of Connecticut, Storrs, CT, USA

Is there something special about the way music communicates feelings? Theorists since Meyer (1956) have attempted to explain how music could stimulate varied and subtle affective experiences by violating learned expectancies, or by mimicking other forms of social interaction. Our proposal is that music speaks to the brain in its own language; it need not imitate any other form of communication. We review recent theoretical and empirical literature, which suggests that all conscious processes consist of dynamic neural events, produced by spatially dispersed processes in the physical brain. Intentional thought and affective experience arise as dynamical aspects of neural events taking place in multiple brain areas simultaneously. At any given moment, this content comprises a unified “scene” that is integrated into a dynamic core through synchrony of neuronal oscillations. We propose that (1) neurodynamic synchrony with musical stimuli gives rise to musical qualia including tonal and temporal expectancies, and that (2) music-synchronous responses couple into core neurodynamics, enabling music to directly modulate core affect. Expressive music performance, for example, may recruit rhythm-synchronous neural responses to support affective communication. We suggest that the dynamic relationship between musical expression and the experience of affect presents a unique opportunity for the study of emotional experience. This may help elucidate the neural mechanisms underlying arousal and valence, and may offer a new approach to exploring the complex dynamics of how and why emotions are experienced.

Introduction

Every known civilization creates unique and sophisticated musical forms to communicate affect, and humans consciously seek out musical experiences because of the feelings they evoke. There is widespread agreement that music induces emotional experiences, and that at least some aspects of this phenomenon are universal. But what is the nature of musical feelings and what is the relationship between musical feelings and emotions? Over the years, competing theories have been developed to address these issues, and sophisticated experimental paradigms have been devised to investigate them. However, incommensurate claims and variable findings leave many open questions. Are affective responses to music accidents of evolution? Is there something special about musical communication? Can the study of emotion teach us anything about the nature of music? And what, if anything, can music teach us about the nature of emotional experience?

Since Meyer’s (1956) pioneering work in music and emotion, theorists have struggled to explain how music could stimulate emotional experiences by somehow triggering basic, evolutionarily ancient psychological processes tied to survival. Empirical approaches have sought specific behavioral and/or physiological responses to music (Juslin and Sloboda, 2001). One specific problem that arises is the question of how an object-directed emotion can be evoked by music with no external referent. Another is how a sophisticated, non-referential form of cultural expression, learned over many years of exposure, could engender innate, survival-related responses.

The goal of this paper is to stake out some new territory in the debate on musical emotion. First, we will ask whether emotional experiences are really “basic” (Allport, 1924; Izard, 1971), or whether they are psychologically constructed from domain-general processes (Barrett, 2009a). Next, we will explore the idea that all conscious states consist of multiple neural processes, produced by spatially dispersed events in the physical brain and integrated into a seamless neurodynamic whole through synchrony of neural oscillations (Edelman, 2003). Core affect is thought to be one primitive, domain-general aspect of the dynamic core of consciousness (Russell, 2003), especially relevant to emotional experience (Barrett, 2009a). Then, we will review recent evidence that neuronal synchrony with music gives rise to musical qualia including tonal and temporal expectancies (Large and Jones, 1999; Lerdahl, 2001; Huron, 2005; Large, 2010a). Finally, we will argue that music-synchronous responses couple into the dynamic core of consciousness, directly modulating core affect.

Theories of Music and Emotion

Music does not have obvious survival value (cf. Pinker, 1997) and yet is able to elicit strong emotional reactions. Many biological perspectives hold that the primary function of emotion is to respond to behavioral demands that may require mobilization for action; on this view, emotions evolved to prepare an individual to deal with situations that were significant for survival and reproduction (Darwin, 1872/1965; Cosmides and Tooby, 2000; Huron, 2005, 2006). Darwin (1872/1965) proposed that the origin of the musical communication of emotion was to be found in the evolutionary process of sexual selection.

Psychological approaches to musical emotions have been heavily influenced by the theory of basic emotion. Basic emotion theorists have sought to identify categories of emotions that share distinct collections of properties such as patterns of autonomic nervous system activity and behavioral responses or action tendencies (Allport, 1924; Izard, 1971; Ekman, 1972; Panksepp, 1998). Other approaches consider emotions to be constructed from more primitive processes, including affect (Irons, 1987; Ortony et al., 1988; Russell, 2003; Barrett, 2006, 2009b; Duncan and Barrett, 2007). Mechanisms of musically induced emotion have been explored at great length, with varying causal accounts, interpretations, and results.

Meyer’s (1956) approach to musical emotion has been highly influential in part because he was the first to seriously take into account both philosophical works (e.g., Langer, 1951) and psychological theories of emotion (cf. Dewey, 1895; MacCurdy, 1925; Angier, 1927; Rapaport, 1950). Meyer observed that namable emotions are – unlike music – event-directed, and that emotional experiences are much more subtle than the “crude and standardized words we use to denote them.” He also observed that emotional responses are not innate; they are highly variable, and they depend on learning and enculturation (Meyer, 1956). He therefore concluded that, for the most part, music does not communicate genuine emotions. “That which we wish to consider” wrote Meyer, “is that which is most vital and essential in emotional experience, the feeling-tone accompanying emotional experience, that is, the affect” (Meyer, 1956, p. 12).

Meyer was also the first to suggest that what is now called statistical learning applies to music and determines musical feelings. For example, a passage of tonal music leads to the feeling that some pitches are more stable than others. More stable pitches are felt as points of repose, and less stable pitches are felt to point toward, or be attracted to, the more stable ones (Lerdahl, 2001). Such relationships are reflected in naming conventions of many musical cultures, including Western, Indian, and Chinese (Meyer, 1956). Citing the failure of earlier attempts to account for musical communication in terms of vibrations, ratios of intervals, and so on, he argued that feelings of stability and attraction are learned through experience with the music of a particular culture. Moreover, the associations of musical moods, such as happy and sad, with major or minor harmonies, or the affective qualities associated with ragas in North Indian tonal systems, are conventional designations having little to do with the sound itself (Meyer, 1956).

Finally, Meyer (1956) argued that the frustration of expectancy is the basis for affective responses to music. He believed that affect is aroused when an action tendency is inhibited. Music, unlike other emotional stimuli, is not referential; it both creates and inhibits expectancies, thereby providing meaningful and relevant resolutions within itself. Music communicates affect through violations and resolutions of learned expectancies.

The latter two points were taken up by modern empiricists and theorists, who studied musical expectancy and statistical learning in a variety of musical domains, and from various points of view (e.g., Krumhansl, 1990; Narmour, 1990; Large and Jones, 1999; Tillmann et al., 2000; Lerdahl, 2001; Huron, 2005; Temperley, 2007). Perhaps the most comprehensive attempt to extend Meyer’s expectancy theory of musical emotion is Huron’s “Sweet Anticipation” (2006). Huron argues that a fundamental job of the brain is to make predictions about the world, and that successful predictions are rewarded. Within a tonal context, the most stable pitches are experienced as most pleasant; within a metrical context, events that occur at expected times are more pleasurable. Thus, Huron argues, music is fundamentally a hedonic experience.
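The statistical-learning idea behind these expectancy accounts can be made concrete with a deliberately small model. The following is a minimal sketch, assuming a first-order (bigram) model over scale degrees with add-one smoothing, which is far simpler than the models Huron (2006) reviews; the toy corpus and the function names are hypothetical. Surprisal, −log₂ p, is low for continuations the model has learned to expect and high for those it has not.

```python
import math
from collections import Counter, defaultdict


def learn_transitions(melodies):
    """Count first-order transitions between successive scale degrees."""
    counts = defaultdict(Counter)
    for melody in melodies:
        for prev, nxt in zip(melody, melody[1:]):
            counts[prev][nxt] += 1
    return counts


def surprisal(counts, prev, nxt, alphabet_size=7, alpha=1.0):
    """Surprisal in bits of `nxt` following `prev`.

    Add-alpha smoothing gives never-heard continuations a finite (but large)
    surprisal instead of an infinite one.
    """
    row = counts[prev]
    total = sum(row.values())
    p = (row[nxt] + alpha) / (total + alpha * alphabet_size)
    return -math.log2(p)


# Hypothetical toy corpus of scale degrees (1 = tonic, 7 = leading tone).
corpus = [
    [1, 2, 3, 4, 5, 4, 3, 2, 1],
    [5, 6, 7, 1],
    [3, 2, 7, 1],
    [1, 7, 1, 2, 1],
]
model = learn_transitions(corpus)
print(round(surprisal(model, 7, 1), 2))  # 1.32: leading tone resolving to tonic
print(round(surprisal(model, 7, 6), 2))  # 3.32: leading tone moving down
```

In this toy corpus the leading tone always resolves to the tonic, so that continuation carries about 2 bits less surprisal than a descent to the sixth degree; on a learning account, that difference is what a listener's expectancy would track.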

Huron emphasizes that tonal and temporal expectancies in music are learned. Musical events evoke distinctive musical qualia, and Huron reviews the body of empirical evidence showing that qualia such as stability and attraction (“scale degree qualia”) correlate with statistical properties of music (Huron, 2006). He agrees with Meyer that the associations of major and minor modes with happy and sad qualia are learned associations.

In a key break from Meyer, however, Huron argues that expectancy evokes emotions, not merely affect. He adopts a two-process approach, which posits a fast time-scale reaction and a slow time-scale appraisal (LeDoux, 1996). Specific emotional responses involve primitive circuits that are conserved throughout mammalian evolution, and function relatively independently of cognitive circuits (LeDoux, 2000). He hypothesizes, for example, that unexpected events in music activate the neural circuitry for fear, leading to the feeling of surprise. He goes so far as to suggest that basic survival-related responses, including fight, flight and freezing, lead to the specific subjective musical experiences of frisson, laughter, and awe, respectively.

Juslin and Vastfjall (2008) consider emotions to be affective responses that involve subjective feelings, physiological arousal, expressions, and action tendencies. They, too, reject Meyer’s claim that music does not induce genuine emotions, because musical responses can display all these features. They endorse the notion that emotions involve intentionality¹; emotions are “about” something. However, they claim that music induces a wide range of both basic and complex emotions because music triggers a variety of psychological mechanisms beyond expectancy. They go on to describe how brain stem reflexes, evaluative conditioning, visual imagery, episodic memory, and emotional contagion can lead to genuine emotional responses. Brain stem reflexes trigger emotional responses because certain acoustic characteristics are taken by the brain stem to signal an urgent event. Evaluative conditioning is a special kind of classical conditioning in which a stimulus without an emotional meaning, e.g., music, is consistently paired with an emotional experience, eventually coming to trigger the emotional response. Presumably, the learned pairing of major with happy and minor with sad (Meyer, 1956; Huron, 2006) would be an example of this phenomenon. Triggering of visual imagery as well as episodic memories (Janata et al., 2007) can also lead to emotional experiences (Sloboda and O’Neill, 2001). In all of these cases the emotional responses are intentional – they are about something.

Emotional contagion, in Juslin and Vastfjall’s (2008) view, is a process in which a listener perceives the emotional expression of the music, and then “mimics” this expression internally, as with other forms of interpersonal interaction like bodily gestures, facial gestures, and speech. In their conceptualization, music evokes basic emotions with distinct nonverbal expressions (Juslin and Laukka, 2003; Laird and Strout, 2007), and this process operates similarly to emotional contagion via facial and vocal expressions of emotion (Tomkins, 1962; Ekman, 1993). Emotional contagion is linked to activation of the so-called mirror neuron system (Rizzolatti and Craighero, 2004), and Juslin (2001) suggests that music can operate in this way because in some sense it imitates other forms of social interaction. Below we offer a somewhat different view of contagion.

If we consider the full spectrum of phenomena discussed by Juslin and Vastfjall (2008), it seems clear that a wide range of emotional responses can be triggered by music. However, from a musical point of view, some of these mechanisms are more interesting than others. Loud unexpected sounds can frighten us, and auditory stimuli can trigger conditioned responses. But in these examples, music serves merely as a trigger. Many other kinds of stimuli can trigger such responses equally well; they need not be musical or even auditory (e.g., LeDoux, 2000). Episodic memory and visual imagery likely account for a significant proportion of the emotional responses people experience on a day-to-day basis (Janata et al., 2007). Moreover, there is good reason to believe that music is especially effective at eliciting episodic memories (Janata et al., 2007; Eschrich et al., 2008). Understanding the fundamental mechanisms of musical communication may help explain why music evokes episodic memories so effectively. Here, we take a different approach to understanding the ability of music to elicit feelings, one that does not treat music merely as a trigger but rather focuses on fundamental dynamic mechanisms of affective communication.

The remainder of this article is concerned primarily with musical expectancy and contagion, as these are mechanisms that seem to us to be most inherently musical. Expectancies arise in response to complex, explicitly musical structures such as tonality and meter. Contagion is a kind of empathic resonance (Molnar-Szakacs and Overy, 2006; Chapin et al., 2010) that enables music to function as a type of interpersonal communication. Our approach will be to link expectancy and contagion with the dynamics of the physical brain. This involves addressing several basic questions. What is the nature of emotional experience: are emotions basic, evolutionarily adapted and cross-cultural, or are emotions constructed from more fundamental psychological ingredients? Are musical qualia based solely on learned contingencies, or do they arise from intrinsic neurodynamics? And what is the nature of the relationship, such that music is able to elicit affective experiences?

Emotion, Affect, and Consciousness

Influenced by Darwin’s (1872/1965) theory of pan-cultural emotions, Tomkins (1963) and Ekman et al. (1987) argued that emotions are genetically determined products of evolution. Basic emotions are discrete, and each category shares a distinctive collection of properties including patterns of autonomic nervous system activity, behavioral responses or action tendencies, and a set of emotion-specific brain structures that are thought to mediate these particular “basic” emotions. Each basic emotion derives from a particular causal mechanism: an evolutionarily preserved module in the brain (Tomkins, 1963; Ekman, 1992; Panksepp, 1998). Ekman (1984) proposed that the natural boundaries between types of emotion could be determined by differences in facial expression. Huron’s (2005) approach to musical emotion and Juslin and Vastfjall’s (2008) multiple mechanisms theory tend to endorse the basic emotion view. On this view, basic emotions are cross-cultural, whereas non-basic emotions are specific to cultural upbringing. However, there is little agreement about which emotions are basic, how many emotions are basic, and how basic emotions are defined.

Recent behavioral, psychophysiological, and neural findings (e.g., Barrett, 2006; Pessoa, 2008; Lindquist et al., 2012) have led a number of emotion theorists to question the basic emotion view (Ortony and Turner, 1990; Russell and Barrett, 1999; Duncan and Barrett, 2007). An alternative approach holds that diverse human emotions result from the interplay of more fundamental domain-general processes (Russell, 2003; Pessoa, 2008; Barrett, 2009b). Psychological constructionists argue that emotions are culturally relative and learned, and that, though they are a result of evolution, they are not biologically basic (Russell and Barrett, 1999; Duncan and Barrett, 2007). Emotions are combinations of psychologically primitive processes that encompass both affective and intentional components. A specific emotion is not the invariable result of activation in a particular brain area; neural circuitry realizes more basic processes across emotion categories (Pessoa, 2008; Wilson-Mendenhall et al., 2013). Meyer’s approach to the musical communication of affect is consistent with this view.

Contemporary neurodynamic approaches hypothesize that every conscious state is a multimodal process entailed by physical events occurring in the brain (Tononi and Edelman, 1998; Engel and Singer, 2001; Searle, 2001; Seth et al., 2006; Pessoa, 2008). The neural structures and mechanisms underlying consciousness contribute domain-general processes to many psychological phenomena. Importantly, when spatially distinct areas contribute to the contents of consciousness, they enter into a unified neurodynamic core (e.g., Edelman, 2003). Neurodynamic theories of consciousness propose that the synchronous activations of the thalamocortical system give rise to the unity of conscious experience (Edelman and Tononi, 2000; Varela et al., 2001; Cosmelli et al., 2006). Binding of spatially distinct processes is thought to occur through enhanced synchrony in gamma and beta band rhythms (Engel and Singer, 2001; Fujioka et al., 2012), and high frequency activity is modulated by slower rhythms such as delta and theta (Lakatos et al., 2005; Buzsáki, 2006; Canolty et al., 2006).
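Synchrony between two band-limited signals is commonly quantified with the phase-locking value (PLV): the length of the average unit vector of their phase difference. The following is a minimal sketch, assuming the instantaneous phases have already been extracted (in practice, for example, by band-pass filtering and a Hilbert transform); here they are synthesized directly, and the 20 Hz beta frequency, sampling rate, lag, and jitter are hypothetical values chosen only for illustration.

```python
import cmath
import math
import random


def phase_locking_value(phases_a, phases_b):
    """|mean(exp(i * (phi_a - phi_b)))|.

    1 means the phase difference never varies; values near 0 mean there is
    no consistent phase relationship between the two signals.
    """
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("need two equal-length, non-empty phase series")
    z = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(z) / len(phases_a)


fs, f_beta, n = 1000.0, 20.0, 2000  # hypothetical: sampling rate (Hz), beta frequency (Hz), samples
rng = random.Random(0)

phase_a = [2 * math.pi * f_beta * i / fs for i in range(n)]
locked_b = [p - 0.7 + rng.gauss(0, 0.3) for p in phase_a]     # constant lag plus small jitter
unrelated_b = [rng.uniform(0, 2 * math.pi) for _ in range(n)]  # no consistent relation

print(round(phase_locking_value(phase_a, locked_b), 2))     # close to 1
print(round(phase_locking_value(phase_a, unrelated_b), 2))  # close to 0
```

A constant lag leaves the PLV at 1 regardless of its size, so the index captures the consistency of relative timing rather than simultaneity, which is the sense of "synchrony" relevant to binding.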

Intentionality and affect are fundamental properties of conscious experience (Searle, 2001). Conscious processes point to or are about something (Brentano, 1973; Searle, 2000), and they possess a valence and a level of activation (Barrett, 1998, 2006; Searle, 2000). Searle’s theory of consciousness (Searle, 1992, 2004), Edelman’s dynamic core theory (Edelman, 1987; Edelman and Tononi, 2000) and Damasio’s somatic marker hypothesis (cf. Damasio, 1999) all emphasize dynamic processes that encompass both intentionality (e.g., appraisal, see Scherer, 2001; Smith and Kirby, 2001) and affect (Russell and Barrett, 1999; Davidson, 2000). An emotional experience includes affect as one important ingredient, but intentional psychological processes – perception, cognition, attention, and behavior – are also necessary (Pessoa, 2008; Barrett, 2009b). To a great extent, the difference between an emotion and a cognition depends on the level of attention paid to the core affect (Russell, 2003).

Affect can be characterized as fluctuating levels of valence (pleasure/displeasure) and arousal (activation/deactivation; Wundt, 1897; Russell, 2003; Barrett and Bliss-Moreau, 2009). It is the most elementary consciously accessible sensation evident in moods and emotions (Russell, 2003). Core affect is so called because it is thought to arise in the core of the body or in neural representations of body state change (Russell and Barrett, 1999; Russell, 2003). It has been observed in subjective reports (Barrett, 2004), in peripheral nervous system activation (Cacioppo et al., 2000), and in facial and vocal expression (Cacioppo et al., 2000; Russell, 2003). The experience of core affect is thought to be present in infants (Lewis, 2000) and psychologically universal (Russell, 1991; Mesquita, 2003).

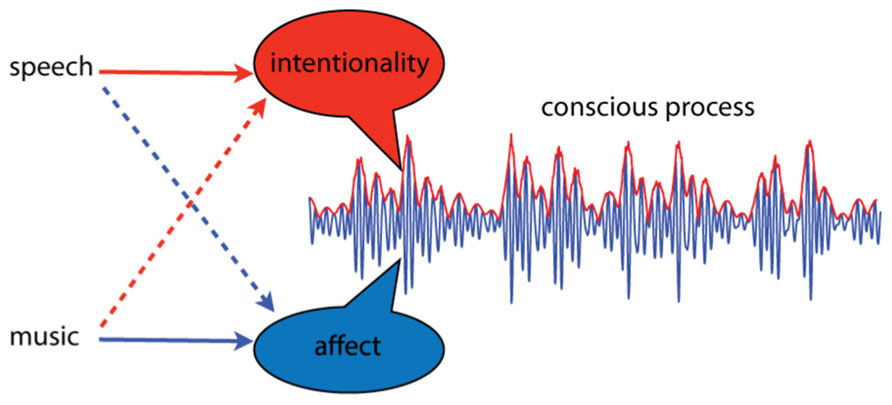

Intentional thought and affective experience are thought to arise as dynamic aspects of spatially distinct neural processes, integrated through synchrony of neural oscillations (Tononi and Edelman, 1998; Searle, 2001; Seth et al., 2006). Let us attempt to illustrate this idea, emphasizing dynamic over spatial aspects, by integrating over neural location. The result is an average, summarizing the activity of multiple brain areas, as shown in Figure 1. The dynamic properties of this pattern are the critical features; intentionality and affect correspond to dynamic aspects of the integrated neural activity. In this illustration, affective aspects correspond to changes in higher frequency activity, while intentional aspects take place at lower frequencies, and appear as amplitude modulations. This is only a visual aid, of course; we do not know enough to speculate about which frequency bands or dynamic features might correspond to intentionality and affect. Here we oversimplify to illustrate the point that relevant aspects of experience may correspond to dynamical aspects of integrated neural processes. If this approach is on the right track, however, then this way of thinking about core affect may lead to a better understanding of why music is such an especially effective means of affective communication.

FIGURE 1. A conscious process is conceived as a dynamic pattern of activity. Intentionality and affect are conceived as separable aspects of such processes, and different types of communication sounds may convey more of one or the other. Language primarily communicates intentionality; it is “about” events in the external world. However, certain aspects of speech, such as prosody, can communicate affect. Music, on the other hand, communicates primarily affect; it is most often not “about” anything. However, under certain circumstances music can signify events or elicit memories.

Musical Neurodynamics

At any given moment, a unified neurodynamic process is shaped by exogenous sensory input such as sights or sounds, input from the body such as vestibular sensations, endogenous constructs such as autobiographical memories, and by communication sounds such as music and speech. It seems likely that if intentionality and affect are different dynamic aspects of these spatiotemporal patterns, then different kinds of communication sounds may couple into different aspects of the dynamics. Of course, it is well established that different modes of auditory communication, i.e., music and speech, convey more of one aspect or the other. Speech primarily communicates intentionality; it is “about” events in the external world. Nevertheless, certain aspects of speech, such as prosody, directly communicate affect. Music, on the other hand, communicates primarily affect; it is most often not “about” anything. However, music can signify objects or events, and it can evoke memories and images. Thus, both types of signals can induce emotions, although in different ways. What we want to suggest is that music may couple directly into affective dynamics because it causes the brain to resonate in certain ways.

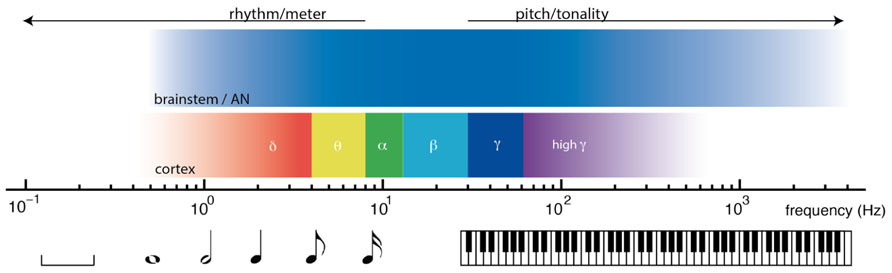

Nonlinear oscillation and resonant responses to acoustic signals are found at multiple time scales in the nervous system, from thousands of hertz in the auditory nerve and brainstem, to cortical oscillations in delta, theta, beta, and gamma ranges. The relative timescales of these processes are illustrated in Figure 2. From the earliest stages of the auditory system, volleys of action potentials time-lock to dynamic features of acoustic waves (Joris et al., 2004; Laudanski et al., 2010). Time-locked brainstem responses are thought to be important in the perception of pitch, which is observed from 30 Hz (Pressnitzer et al., 2001) up to about 4000 Hz (Plack and Oxenham, 2005). In auditory cortex, endogenous cortical oscillations entrain to low frequency rhythms of acoustic stimuli (Lakatos et al., 2008; Nozaradan et al., 2011). Cortical entrainment is thought to be important in the perception of rhythm, which extends from about 8 Hz (Repp, 2005a) down to ultra-low cortical frequencies (Buzsáki, 2006; Large, 2008). Between the timescales of pitch and rhythm lie the frequencies thought to be important in binding neural processes into unified conscious scenes (Engel and Singer, 2001; Seth et al., 2006; Fujioka et al., 2012).

FIGURE 2. Timescales of neural dynamics and timescales of musical communication. Music engages the brain on multiple timescales, from fractions of one cycle per second (left) to thousands of cycles per second (right). At rhythmic timescales, cortical oscillations entrain to rhythmic stimuli. At tonal timescales, volleys of action potentials time-lock to acoustic waves.
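The boundary between these timescales is a matter of simple arithmetic (a repetition period T corresponds to a rate f = 1/T), and it can help to see where a given periodic stimulus falls. The following is a minimal sketch, assuming the approximate ranges quoted above (pitch from about 30 Hz to about 4000 Hz, rhythm at or below about 8 Hz) are treated as hard cut-offs, which real perceptual limits are not; the function name is hypothetical.

```python
def click_train_regime(interval_s):
    """Return (repetition rate in Hz, rough regime) for a periodic click train.

    Boundaries follow the approximate ranges quoted in the text: pitch from
    about 30 Hz to about 4000 Hz, rhythm at or below about 8 Hz. They are
    soft perceptual limits, treated here as hard cut-offs for illustration.
    """
    if interval_s <= 0:
        raise ValueError("inter-click interval must be positive")
    rate_hz = 1.0 / interval_s
    if rate_hz <= 8.0:
        regime = "rhythm"
    elif rate_hz < 30.0:
        regime = "intermediate"  # between the rhythm and pitch timescales
    elif rate_hz <= 4000.0:
        regime = "pitch"
    else:
        regime = "above cited pitch range"
    return rate_hz, regime


for interval in (0.5, 0.05, 0.005):  # inter-click intervals in seconds
    rate, regime = click_train_regime(interval)
    print(f"{interval} s -> {rate:.0f} Hz: {regime}")
# 0.5 s -> 2 Hz: rhythm
# 0.05 s -> 20 Hz: intermediate
# 0.005 s -> 200 Hz: pitch
```

The same click generator yields a 2 Hz pulse or a 200 Hz pitch depending only on its period, which is why the difference between rhythm and pitch can be framed as one of timescale.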

Pitch and Tonality

In central auditory circuits, action potentials phase- and mode-lock to the fine time structure and the temporal envelope modulations of auditory stimuli at many different neural levels (Langner, 1992; Large et al., 1998; Joris et al., 2004; Laudanski et al., 2010). Neural synchrony is thought to be important in pitch perception (Cariani and Delgutte, 1996; Hartmann, 1996), consonance (Ebeling, 2008; Shapira Lots and Stone, 2008), and musical tonality (Tramo et al., 2001; Large, 2010a). While phase-locking is well established, mode-locked spiking patterns have recently been reported in the mammalian auditory system (Laudanski et al., 2010) and may explain the highly nonlinear responses to musical intervals that can be measured in the human auditory brainstem response (Lee et al., 2009; Large and Almonte, 2012; Lerud et al., 2013).

Mode-locking implies binding between neural frequencies that display particular frequency relationships (Hoppensteadt and Izhikevich, 1997). In this form of synchrony, a periodic stimulus interacts with intrinsic neural dynamics, causing m cycles of the oscillation to lock to k cycles of the stimulus. Mode-locking leads to neural resonance at harmonics (k·f₁), subharmonics (f₁/m), summation frequencies (e.g., f₁ + f₂), difference frequencies (e.g., f₂ − f₁), and integer ratios (e.g., k·f₁/m).² This implies feature binding based on harmonicity (Bregman, 1990), and suggests a role for mode-locking in the perception of pitch (cf. Cartwright et al., 1999). This also predicts a significant cross-cultural musical invariant (Burns, 1999) because octave frequency relationships (2:1 and 1:2) are the most stable, followed by fifths (3:2) and fourths (4:3). Mode-locking may provide a neurodynamic explanation for musical consonance and dissonance (Shapira Lots and Stone, 2008) that does not depend on interference (e.g., Plomp and Levelt, 1965).
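Mode-locking can be illustrated with the sine circle map, a textbook model of an oscillator that is periodically driven by a stimulus. This is a generic stand-in chosen for brevity, not the neural oscillator models used in the studies cited above; the function name and the parameter values are assumptions. The winding number measures oscillator cycles per stimulus cycle (m/k in the notation above), and a winding number that stays fixed at a simple ratio while the oscillator's natural frequency is varied is the signature of mode-locking.

```python
import math


def winding_number(omega, coupling, n_iter=5000, theta0=0.0):
    """Mean phase advance per stimulus cycle of the sine circle map

        theta[n+1] = theta[n] + omega - coupling / (2*pi) * sin(2*pi*theta[n])

    theta is the oscillator phase (in cycles) sampled once per stimulus cycle,
    and omega is the ratio of the oscillator's natural frequency to the
    stimulus frequency. A result that sticks at a simple ratio m/k over a
    range of omega signals m:k mode-locking.
    """
    theta = theta0
    for _ in range(n_iter):
        theta += omega - coupling / (2 * math.pi) * math.sin(2 * math.pi * theta)
    return (theta - theta0) / n_iter


# Without coupling the winding number simply follows omega; with coupling
# it forms plateaus at rational values such as 0, 1/2 and 1.
for omega in (0.05, 0.1, 0.3, 0.5, 0.7, 0.95):
    free = winding_number(omega, 0.0)
    locked = winding_number(omega, 0.9)
    print(f"omega={omega:.2f}  uncoupled={free:.3f}  coupled={locked:.3f}")
```

In a fine sweep over omega, the coupled winding number traces a staircase whose widest steps tend to belong to ratios with small denominators; this is the intuition behind treating simple ratios such as 2:1 and 3:2 as especially stable.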

Perhaps most relevant to the current discussion is the issue of scale degree qualia (Huron, 2006), which has important implications for understanding musical expectancy (Meyer, 1956; Zuckerkandl, 1956). Scale degree qualia differentiate musical sound sequences from arbitrary sound sequences, and are thought to enable non-referential sound patterns to carry meaning. Most discussions of expectancy and emotion assume scale degree qualia to be learned based on the statistics of tonal sequences (e.g., Meyer, 1956; Krumhansl and Kessler, 1982; Lerdahl, 2001; Huron, 2006), and therefore culture-dependent. However, recent dynamical analyses have shown that mode-locking provides a better explanation for quantitative measurements of stability in both Western and North Indian tonal systems (Krumhansl and Kessler, 1982; Castellano et al., 1984; Large, 2010a; Large and Almonte, 2011). Thus, scale degree qualia likely depend on the interaction of the stimulus sequence with intrinsic neurodynamic properties of the physical brain.

Rhythm and Meter

At the timescale of rhythm and meter, relationships between musical and neural rhythms are equally striking (Musacchia et al., 2013). In auditory cortex, brain rhythms nest hierarchically; for example, delta phase modulates theta amplitude, and theta phase modulates gamma amplitude (Lakatos et al., 2005). Like neural rhythms, musical rhythms nest hierarchically, such that faster metrical frequencies subdivide the pulse (London, 2004). Pulse perception provides a good match for the delta band (0.5–4 Hz, see London, 2004) while fast metrical frequencies occupy theta (4–8 Hz, see e.g., Repp, 2005b; Large, 2008). Importantly, acoustic stimulation in the pulse range synchronizes auditory cortical rhythms in the delta band (Will and Berg, 2007; Stefanics et al., 2010; Nozaradan et al., 2011) and modulates the amplitude of higher frequency beta and gamma rhythms (Snyder and Large, 2005; Iversen et al., 2006; Fujioka et al., 2012). Models of synchronization to acoustic rhythms (see e.g., Large, 2008) have successfully predicted a wide range of behavioral observations in time perception (Jones, 1990; McAuley, 1995), meter perception (Large and Kolen, 1994; Large, 2000), attention allocation (Large and Jones, 1999; Stefanics et al., 2010), and motor coordination (Kelso et al., 1990; Repp, 2005c). Moreover, musical qualia, including metrical expectancy (Huron, 2005), syncopation (London, 2004), and groove (Tomic and Janata, 2008; Janata et al., 2012), have all been linked to synchronization of cortical rhythms and/or bodily movements. In addition, synchronization of rhythmic movements to music (Burger et al., 2013) and synchronization between individuals (e.g., Hove and Risen, 2009) have been linked to affective responses.
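Nesting of this kind, in which the phase of a slow rhythm shapes the amplitude of a fast one, is usually quantified as phase-amplitude coupling. The following is a minimal, dependency-free sketch, assuming a synthetic signal with known 2 Hz (delta) and 40 Hz (gamma) components and hand-picked band edges; it computes a mean-vector-length index in the spirit of Canolty et al. (2006), not an analysis pipeline from any of the studies cited. Real recordings would need proper filtering and surrogate statistics, and all names here are hypothetical.

```python
import cmath
import math


def band_analytic(x, fs, lo, hi):
    """Analytic signal of x restricted to [lo, hi] Hz, built from a DFT mask:
    keep only in-band positive-frequency bins, double them, and invert.
    Only the in-band bins are computed, which keeps this plain DFT cheap."""
    n = len(x)
    out = [0j] * n
    for k in range(1, (n + 1) // 2):
        if lo <= k * fs / n <= hi:
            xk = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for t in range(n):
                out[t] += 2 * xk * cmath.exp(2j * math.pi * k * t / n) / n
    return out


def modulation_index(x, fs, phase_band, amp_band):
    """Mean vector length |mean(A_fast * exp(i * phi_slow))|: large when the
    fast-band amplitude depends systematically on the slow-band phase."""
    slow = band_analytic(x, fs, *phase_band)
    fast = band_analytic(x, fs, *amp_band)
    z = sum(abs(a) * cmath.exp(1j * cmath.phase(s)) for a, s in zip(fast, slow))
    return abs(z) / len(x)


def nested_signal(coupling, fs=1000, dur=1.0, f_slow=2.0, f_fast=40.0):
    """Hypothetical test signal: a 2 Hz rhythm plus a 40 Hz rhythm whose
    amplitude follows the slow rhythm with the given modulation depth."""
    x = []
    for i in range(int(fs * dur)):
        slow = math.cos(2 * math.pi * f_slow * i / fs)
        x.append(slow + (1 + coupling * slow) * math.cos(2 * math.pi * f_fast * i / fs))
    return x


for c in (0.0, 0.8):
    mi = modulation_index(nested_signal(c), 1000, (1, 3), (30, 50))
    print(f"coupling={c}: MI={mi:.3f}")
# coupling=0.0: MI=0.000
# coupling=0.8: MI=0.400
```

For this clean signal the index equals half the modulation depth, because averaging (1 + c·cos φ)·e^{iφ} over whole cycles leaves c/2; without nesting it is essentially zero.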

The perception of rhythm also provides an example of synchronous time-locked patterns of activity integrating the function of multiple brain regions. When people listen to musical rhythms that have a pulse or basic beat, multiple brain regions are activated, including auditory cortices, cerebellum, basal ganglia, premotor cortex, and the supplementary motor cortex (Zatorre et al., 2007; Chen et al., 2008; Grahn and Rowe, 2009). In these areas, the amplitude of beta band activity waxes and wanes with the pulse of the acoustic stimulation (Snyder and Large, 2005; Iversen et al., 2006; Fujioka et al., 2012). The specific neural structures involved depend on the tempo of the stimulus, and it appears that the synchrony of beta band processes is what binds the neural activity (Fujioka et al., 2012). This suggests that perhaps it is not the areas per se, but the integrated neural activity that corresponds to the experience of pulse.

Musical Communication as Neurodynamic Resonance

We can summarize the above discussion by saying that music taps into brain dynamics at the right time scales to cause both brain and body to resonate to its patterns. This resonance produces spatiotemporal patterns of activity on multiple temporal and spatial scales within the nervous system. The dynamical characteristics of such spatiotemporal patterns – oscillations, bifurcations, stability, attraction, and responses to perturbations – predict perceptual, attentional, and behavioral responses to music, as well as musical qualia including tonal and rhythmic expectations. Conceptualizing consciousness in similar neurodynamic terms suggests a new way to think about how music may communicate affective content. Neurodynamic responses that give rise to musical qualia also resonate with affective circuits, enabling music to directly engage the sorts of feelings that are associated with emotional experiences. In this section we ask how affective resonance might take place, whether musical qualia arise from intrinsic neurodynamics, and what exactly is communicated.

Affective Resonance

We begin with an example of affective resonance to rhythm. Expressive piano performance is a kind of social interaction in which correlated fluctuations in timing and intensity transfer emotional information from the performer to the listener (Bhatara et al., 2010). Expressive tempo fluctuations display 1/f structure (Rankin et al., 2009; Hennig et al., 2011), and listeners predict such tempo changes when entraining to musical performances (Rankin et al., 2009; Rankin, 2010). A recent study compared BOLD responses to an expressive performance and a mechanical performance, in which the piece was “performed” by computer, with no fluctuations in timing and intensity. Greater activations were found in emotion- and reward-related areas for the expressive performance, consistent with transfer of affective information. Tempo fluctuations, BOLD activations, and real-time ratings of valence and arousal were also compared for the expressive performance. Over the 3.5-min performance, fluctuations in timing correlated with BOLD changes in motor networks known to be involved in rhythmic entrainment, and in a network consistent with the human “mirror neuron” system (Chapin et al., 2010). As tempo increased, activation in these regions increased. Tempo fluctuations also correlated with real-time reports of affective arousal.

Although the tempo-correlated activations were observed in so-called mirror neuron areas, this was not motor mirroring: half the participants were not musicians, and none were familiar with the piece. Could listener responses arise from a more general form of contagion, in which the perception of affective expression directly induces the same emotion in the perceiver (Carr et al., 2003; Rizzolatti and Craighero, 2004; Molnar-Szakacs and Overy, 2006)? Based on what is known about neural responses to rhythm (Nozaradan et al., 2011; Fujioka et al., 2012), we propose a simple, if somewhat speculative, interpretation. Activation in mirror regions reflects resonance of endogenous cortical rhythms to exogenous musical rhythms. Activation increases as tempo increases because, as this neural circuit entrains to the musical rhythm, it tracks the tempo (i.e., the frequency modulations) of the performance (Herrmann et al., 2013). The frequency modulations themselves would represent violations of temporal expectancy (Large and Jones, 1999). The expressive performance also led to emotion- and reward-related neural activations (when compared with a mechanical performance that precisely controlled for melody, harmony, and rhythm; see Chapin et al., 2010). We hypothesize that the frequency modulation of activity in mirror regions led to these activations (Molnar-Szakacs and Overy, 2006; Chapin et al., 2010). Thus, music may couple directly into affective circuitry by exploiting resonant modes of cortical function, thereby creating the basis for affective communication.
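The idea that an entraining circuit tracks the frequency modulations of a performance can be illustrated with a simple adaptive oscillator. The sketch below swaps in a linear phase- and period-correction rule of the kind used in sensorimotor synchronization models, not the nonlinear oscillator models cited above; the gains `alpha` and `beta`, and the accelerando from 500 ms to 400 ms inter-onset intervals, are hypothetical. The prediction errors it records serve only as a crude stand-in for violations of temporal expectancy.

```python
def accelerando(n_ramp=20, n_hold=30, ioi_start=0.5, ioi_end=0.4):
    """Hypothetical stimulus onsets (s): IOIs shrink linearly, then hold steady."""
    onsets = [0.0]
    for i in range(n_ramp):
        ioi = ioi_start + (ioi_end - ioi_start) * (i + 1) / n_ramp
        onsets.append(onsets[-1] + ioi)
    for _ in range(n_hold):
        onsets.append(onsets[-1] + ioi_end)
    return onsets


def track_tempo(onsets, period0, alpha=0.5, beta=0.3):
    """Adaptive oscillator with linear phase and period correction (assumed gains).

    Returns the period estimate and the prediction error after each onset.
    """
    expected = onsets[0]
    period = period0
    periods, errors = [], []
    for onset in onsets[1:]:
        expected += period          # predicted time of the next beat
        error = onset - expected    # negative: the beat arrived earlier than predicted
        expected += alpha * error   # phase correction
        period += beta * error      # period correction follows the tempo change
        periods.append(period)
        errors.append(error)
    return periods, errors


onsets = accelerando()
periods, errors = track_tempo(onsets, period0=0.5)
print(f"final period estimate: {periods[-1]:.3f} s")
```

During the accelerando the onsets tend to arrive earlier than predicted, so the period estimate shrinks; once the tempo stabilizes, the errors decay and the period settles near the 0.4 s stimulus interval.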

Intrinsic Dynamics, Musical Qualia and Cognitive Development

The preceding discussion suggests that at least some aspects of affective responses to music are deeply rooted in the intrinsic physics of the brain and body. If this is true, then neurodynamic investigations may ultimately explain how musical rhythms couple into neural circuits and modulate affective responses. But could the neurodynamic approach explain musical qualia more generally? Consider the fundamental qualitative difference between pitch and rhythm. A simple acoustic click, repeated at 5 ms intervals, generates a pitch percept at 200 Hz. Increase the interval to 500 ms and the percept is that of a series of discrete events, with a pulse rate of 2 Hz. From a dynamical systems point of view, it makes sense that the neural mechanisms brought to bear on the two stimuli may be similar; the difference is merely one of timescale. Yet from a phenomenological point of view, the two are fundamentally different: a single continuous event versus a rhythmic sequence. Why the difference in qualia? Perhaps it is because the timescale at which distinct neural events are bound together into unified conscious scenes lies between the timescales of pitch and rhythm. In other words, the difference in qualia may lie in the timescale relationship, not in the mechanism per se. If so, neural oscillation may explain not only rhythm-related responses, but also pitch-related responses, such as stability and attraction.
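The click-train example can be written as a short computation in which the same procedure is applied at both timescales and only the resulting rate differs. In this sketch the 30 Hz boundary between "pitch" and "discrete pulses" is a hypothetical value chosen for illustration; the text itself does not specify where the binding timescale lies.

```python
# Hypothetical boundary (Hz) between rates heard as pitch and as discrete events.
PITCH_FLOOR_HZ = 30.0


def click_train(interval_ms, duration_ms):
    """Onset times (ms) of identical clicks repeated every interval_ms."""
    count = int(duration_ms // interval_ms)
    return [i * interval_ms for i in range(count)]


def repetition_rate_hz(onsets_ms):
    """Repetition rate estimated from the mean inter-onset interval."""
    intervals = [b - a for a, b in zip(onsets_ms, onsets_ms[1:])]
    return 1000.0 / (sum(intervals) / len(intervals))


def percept(rate_hz, pitch_floor_hz=PITCH_FLOOR_HZ):
    """Label a rate relative to the assumed boundary."""
    return "pitch" if rate_hz >= pitch_floor_hz else "discrete pulses"


for interval in (5.0, 500.0):
    rate = repetition_rate_hz(click_train(interval, duration_ms=2000.0))
    print(f"{interval:6.1f} ms -> {rate:6.1f} Hz -> {percept(rate)}")
```

Both inputs pass through identical code; the label changes only because the two rates fall on opposite sides of the assumed boundary, which is the sense in which the difference may lie in the timescale relationship rather than in the mechanism.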

We have argued elsewhere that the terms stability and attraction, used by theorists to describe scale degree qualia (Meyer, 1956; Zuckerkandl, 1956; Lerdahl and Jackendoff, 1983; Lerdahl, 2001), are not metaphorical. These refer to real, dynamical stability and attraction relationships in a neural field stimulated by external frequencies (Large, 2010a; Large and Almonte, 2011). In other words, scale degree qualia are simply what it feels like when our brains resonate to tonal sequences. This approach can explain the perception of tonal stability and attraction in Western modes (Krumhansl and Kessler, 1982; Large, 2010a), and North Indian raga (Castellano et al., 1984; Large and Almonte, 2011). It may also shed light on the development of statistical regularities in tonal melodies, implying that certain pitches occur more frequently because they have greater dynamical stability in underlying neural networks.
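As a rough illustration of the idea that tonal stability reflects resonance between frequencies, the sketch below ranks the degrees of a major scale by a simple proxy for resonance strength. Both the 5-limit just-intonation ratios and the scaling law `eps ** ((k + m - 2) / 2)`, under which k:m resonances between small integers are stronger, are assumptions made for this example; it is not a reimplementation of the neural field models in Large (2010a) or Large and Almonte (2011).

```python
from fractions import Fraction

# Assumed 5-limit just-intonation ratios of major-scale degrees to the tonic.
MAJOR_SCALE = {
    "1 (tonic)": Fraction(1, 1),
    "2": Fraction(9, 8),
    "3": Fraction(5, 4),
    "4": Fraction(4, 3),
    "5": Fraction(3, 2),
    "6": Fraction(5, 3),
    "7": Fraction(15, 8),
    "8 (octave)": Fraction(2, 1),
}


def resonance_strength(ratio, eps=0.5):
    """Hypothetical relative strength of a k:m resonance.

    Assumes strength scales as eps ** ((k + m - 2) / 2), so ratios built
    from small integers resonate more strongly; eps is a coupling parameter.
    """
    k, m = ratio.numerator, ratio.denominator
    return eps ** ((k + m - 2) / 2)


ranked = sorted(
    MAJOR_SCALE.items(),
    key=lambda item: resonance_strength(item[1]),
    reverse=True,
)
for name, ratio in ranked:
    print(f"{name:>10}  {str(ratio):>5}  {resonance_strength(ratio):.4f}")
```

The proxy places the tonic, octave, fifth, and fourth at the top and the second and seventh degrees at the bottom, but it considers each ratio in isolation and should not be expected to reproduce measured tonal hierarchies, such as those of Krumhansl and Kessler (1982), in detail.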

There is now a great deal of evidence regarding the development of basic music structure cognition, including meter (Hannon and Trehub, 2005; Kirschner and Tomasello, 2009; Winkler et al., 2009) and tonality (Trainor and Trehub, 1992; Schellenberg and Trehub, 1996; Trehub et al., 1999). Such results reveal developmental trajectories that unfold over the first several years of life, as well as perceptual invariants that are consistent with intrinsic neurodynamics (Large, 2010b) tuned by Hebbian plasticity (Hoppensteadt and Izhikevich, 1996; Large, 2010a). Dalla Bella et al. (2001) asked whether children can determine if music is happy or sad. Children aged 3–4 years failed to distinguish happy from sad above chance, 5-year-olds’ responses were affected by tempo, and 6- to 8-year-olds used both tempo and mode. Thus, children begin to use tempo at about the same age at which the ability to synchronize movements emerges, and they begin to use mode at about the same age at which sensitivity to key emerges (Trainor and Trehub, 1992; Schellenberg and Trehub, 1999; McAuley et al., 2006). The fact that the development of the two main musical dimensions – rhythm and tonality – follows the same time course as that of their affective correlates strongly suggests a link between the development of neurodynamic responses and music-induced affective experience.

We do not claim that musical qualia are hard-wired; however, our argument does suggest that substantive aspects of musical expectancy and musical contagion may be explainable directly in neurodynamic terms, linking “high-level” perception with “low-level” neurodynamics. In combination with Hebbian plasticity, intrinsic neurodynamic constraints could explain the sensitivity of infant listeners to musical invariants, as well as the ability to acquire sophisticated musical knowledge. Moreover, this explanation suggests that the association of affective responses with the musical modes of diverse cultures may not be due entirely to convention, as has been speculated previously (Meyer, 1956; Huron, 2005). Indeed, cross-cultural studies in the perception of Western music suggest that happiness and sadness are communicated, at least in part, based on mode (Balkwill et al., 2004; Fritz et al., 2009). Similarly, Western listeners who are unenculturated in Indian music may be able to understand the moods intended by Indian raga performances (Balkwill and Thompson, 1999; Chordia and Rae, 2008). At the very least, these cross-cultural findings suggest that associations of mood with mode have been prematurely dismissed as conventional, and that these relationships deserve to be reevaluated.

What is Communicated – Basic Emotion or Core Affect?

Juslin and Vastfjall (2008) propose that emotional contagion operates similarly to the facial expression of basic emotions (Juslin and Laukka, 2003; Laird and Strout, 2007). However, because the theory of basic emotions has recently been called into question, it makes sense to review the body of evidence that pertains to music. In communication studies, both performances and listener judgments of intended emotion have been linked to specific musical features, including tempo, articulation, intensity, and timbre (Gabrielsson and Juslin, 1996; Peretz et al., 1998; Juslin, 2000; Juslin and Laukka, 2003). Yet these studies also show that, at least for Western music, happiness and sadness are the most reliably communicated emotions (Kreutz et al., 2002; Lindström et al., 2002; Kallinen, 2005; Kreutz et al., 2008; Mohn et al., 2011), while other “basic” emotions are more often confused (Gabrielsson and Juslin, 1996; Peretz et al., 1998; Juslin, 2000; Juslin and Laukka, 2003; Kreutz et al., 2008; Mohn et al., 2011). Interestingly, Ekman (1993) and Izard et al. (2000) have both questioned the theory of facial expression of basic emotion on the basis of variability and confusability. Moreover, analyses of physiological responses to music show that while musical stimuli elicit significant responses, physiological measures do not generally match listener self-reports using emotion terms (Krumhansl, 1997).

Basic emotion theory has been linked to an approach in which music is supposed to imitate or mimic more biologically relevant stimuli, such as speech or mother-infant interactions (Juslin and Laukka, 2003), leading to the direct perception of emotion. Such discussions generally assume that musical communication was not evolutionarily selected and must therefore piggyback on more fundamental mechanisms. Our proposal is that music speaks to the brain in its own language; it need not imitate any other form of communication. In this sense, other forms of communication may be seen to induce or modulate emotions more indirectly, i.e., their effect is more cognitive (cf. Langer, 1951). Thus, the study of music may provide a unique window into the fundamental nature of affective communication, which might explain, for example, why music has the ability to evoke emotional memories (Janata et al., 2007).

It is tempting to try to unify core affect with basic emotion (Juslin, 2001) by assuming that each emotion category is associated with a specific core affective state (e.g., fear is unpleasant and highly arousing, sadness is unpleasant and less arousing, etc.). However, the mapping of emotion to affect is not unique; core affective states experienced during two different episodes of a given, nameable emotion (e.g., fear) typically differ depending on the situation (Meyer, 1956; Barrett, 2009b; Wilson-Mendenhall et al., 2013). Moreover, musical variables such as melodic contour, tempo, loudness, texture, and timbral sharpness predict real-time listener ratings of arousal and valence well (Schubert, 1999, 2001, 2004), and correlate with BOLD responses in a number of brain regions (Chapin et al., 2010). Neuroimaging studies have also revealed BOLD responses to parametric manipulation of pleasantness (Blood et al., 1999; Koelsch et al., 2006), and these overlap with responses to intensely pleasurable music (Blood and Zatorre, 2001; Salimpoor et al., 2010).

Conclusion

In summary, we believe that a coherent picture is developing, based on recent findings of nonlinear resonant responses to acoustic stimulation at multiple timescales (Ruggero, 1992; Joris et al., 2004; Lakatos et al., 2005; Lee et al., 2009; Laudanski et al., 2010; Nozaradan et al., 2011) and theoretical analyses that show how such processes could underlie complex cognitive computations as well as phenomenal and affective aspects of our musical experiences (Baldi and Meir, 1990; Hoppensteadt and Izhikevich, 1997; Izhikevich, 2002; Shapira Lots and Stone, 2008; Large, 2010b). Such results and analyses suggest that neurodynamics provides an appropriate level at which to understand not only perceptual and cognitive responses to music, but ultimately affective and emotional responses as well. We suggest that, to support affective communication, music need not mimic some other type of social interaction; it need only engage the nervous system at the appropriate timescales. Indeed, music may be a unique type of stimulus that engages the brain in ways that no other stimulus can.

Thus, we suggest that there is something special about the way music communicates emotion. Our approach recasts musical expectancy and affective contagion as nonlinear resonance to musical patterns. Resonance occurs simultaneously on multiple timescales, leading to stable or metastable patterns of neural responses. Such patterns are inherently spatiotemporal; however, the temporal aspects of the stimulus determine which neural structures are involved at any specific point. Violations of expectancy, such as the occurrence of a strong rhythmic event on a weak beat (a syncopation) or the prolongation of an unstable tone where a stable tone is expected (an appoggiatura), would correspond to disruptions, or perturbations, of the ongoing pattern. Implication and realization would correspond to relaxation toward, and reestablishment of, a stable orbit. In this context, stability and instability are determined by the intrinsic neurodynamics of the brain networks involved, which depend, in part, on tuning of the dynamics via synaptic plasticity. In this way, music may modulate affective neurodynamics directly by coupling into those aspects of the dynamic core of consciousness that govern our subjective feelings from moment to moment.
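The proposed correspondence between expectancy violation and perturbation of an ongoing pattern can be sketched with a single relative-phase variable. The example below assumes the phase equation d(phi)/dt = -K sin(phi), a textbook description of an oscillator locked to a periodic input, and models a syncopation as a sudden phase kick; the coupling K, the kick sizes, and the settling tolerance are hypothetical. It captures only the relaxation back to the stable, locked state, not how such perturbations would engage affective circuits.

```python
import math

DT = 0.001  # integration step (s)


def relax_after_perturbation(kick, coupling=4.0, dt=DT, duration=2.0):
    """Euler-integrate d(phi)/dt = -coupling * sin(phi) after a phase kick.

    phi is the relative phase (radians) between an entrained oscillator and
    the musical pulse: phi = 0 is the stable, locked state and phi = pi is
    unstable. Returns phi sampled every dt seconds.
    """
    phi = kick
    trajectory = [phi]
    for _ in range(int(round(duration / dt))):
        phi += -coupling * math.sin(phi) * dt
        trajectory.append(phi)
    return trajectory


def settle_time(trajectory, dt=DT, tol=0.05):
    """First time (s) at which |phi| falls below tol, or None if it never does."""
    for i, phi in enumerate(trajectory):
        if abs(phi) < tol:
            return i * dt
    return None


small = relax_after_perturbation(kick=0.5)  # mild expectancy violation
large = relax_after_perturbation(kick=2.5)  # stronger violation
for label, traj in (("small", small), ("large", large)):
    print(f"{label} kick: relative phase settles in about {settle_time(traj):.3f} s")
```

The larger kick starts closer to the unstable state at phi = pi, so it takes roughly twice as long to return to the locked state.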

Glossary

Affect/Core affect: The most elementary consciously accessible sensation evident in moods and emotions. Affect can be characterized as a fluctuating level of valence (pleasure/displeasure) and arousal (activation/deactivation; Barrett and Bliss-Moreau, 2009). Core affect is so called because it is thought to arise in the core of the body or in neural representations of body state change (Russell, 2003).

Basic emotions: A few privileged emotion kinds (e.g., anger, sadness, fear, and happiness), each of which is thought to derive from an evolutionarily preserved brain module. Basic emotions are discrete, and each category shares a distinctive collection of properties, including patterns of autonomic nervous system activity, behavioral responses, and action tendencies. A set of emotion-specific brain structures is thought to mediate these particular “basic” emotions (Allport, 1924; Izard, 1971; Ekman, 1972; Panksepp, 1998).

Dynamic core: Functional clusters of neuronal groups in the thalamocortical system that are hypothesized to underlie consciousness. Distinct neuronal groups contribute to the contents of consciousness through enhanced synchrony of neural rhythms. The boundaries of this core are suggested to shift over time, with transitions occurring under the influence of internal and external stimulation (Seth, 2007).

Empathy: A feeling that arises when the perception of an emotional gesture in another person directly induces the same emotion in the perceiver without any appraisal process (see Juslin and Vastfjall, 2008).

Emotional contagion/Affective contagion: A process that occurs between individuals in which emotional or affective information is transferred from one individual to another. The idea that people may “catch” the emotions of others when seeing their facial expressions, hearing their vocal expressions, or hearing their musical performances (see Juslin and Vastfjall, 2008).

Emotion: Affective responses to situations that usually involve a number of sub-components – subjective feeling, physiological arousal, thought, expression, action tendency, and regulation – which are more or less synchronized (Juslin and Vastfjall, 2008). Emotions are intentional; they are about an object or event.

Feelings: The subjective phenomenal character of an experience, used informally to refer to qualia or affect.

Intentionality: The power of minds to be about, to represent, or to stand for, things, properties and states of affairs (Jacob, 2010).

Psychological constructionism: The theory that emotions result from the combination of psychologically primitive processes, which encompass both affective and intentional components. A specific emotion is not the invariable result of activation in a particular brain area; neural circuitry realizes more basic processes across emotion categories. Psychological constructionists argue that emotions are culturally relative and learned (see Russell and Barrett, 1999; Barrett and Bliss-Moreau, 2009; Barrett, 2009a,b).

Qualia/musical qualia: The distinctive subjective character of a mental state; what it is like to experience each state; the introspectively accessible, phenomenal aspects of our mental lives (Tye, 2013). Musical qualia refers to the subjective character of specific musical events, experienced within a tonal and/or temporal context.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by NSF grant BCS-1027761 to Edward W. Large. We wish to thank Dan Levitin and all of the reviewers (with special thanks to reviewer 3) for their careful reading and excellent suggestions for improving this manuscript.

Footnotes

- ^ We use the word intentionality to refer to any mental phenomena that have referential content (see glossary).

- ^ f1 and f2 denote frequencies of pure tones and k and m are positive integers.

References

Anders, S., Heinzle, J., Weiskopf, N., Ethofer, T., and Haynes, J. D. (2011). Flow of affective information between communicating brains. Neuroimage 54, 439–446. doi: 10.1016/j.neuroimage.2010.07.004

Angier, R. P. (1927). The conflict theory of emotion. Am. J. Psychol. 39, 390–401. doi: 10.2307/1415425

Baldi, P., and Meir, R. (1990). Computing with arrays of coupled oscillators: an application to preattentive texture discrimination. Neural Comput. 2, 458–471. doi: 10.1162/neco.1990.2.4.458

Balkwill, L. L., and Thompson, W. F. (1999). A cross-cultural investigation of the perception of emotion in music: psychophysical and cultural cues. Music Percept. 17, 43–64. doi: 10.2307/40285811

Balkwill, L. L., Thompson, W. F., and Matsunaga, R. (2004). Recognition of emotion in Japanese, Western, and Hindustani music by Japanese listeners. Jpn. Psychol. Res. 46, 337–349. doi: 10.1111/j.1468-5584.2004.00265.x

Barrett, L. F. (1998). Discrete emotions or dimensions? The role of valence focus and arousal focus. Cogn. Emot. 12, 579–599. doi: 10.1080/026999398379574

Barrett, L. F. (2004). Feelings or words? Understanding the content in self-report ratings of experienced emotion. J. Pers. Soc. Psychol. 87, 266–281. doi: 10.1037/0022-3514.87.2.266

Barrett, L. F. (2006). Valence as a basic building block of emotional life. J. Res. Pers. 40, 35–55. doi: 10.1016/j.jrp.2005.08.006

Barrett, L. F. (2009a). The future of psychology: connecting mind to brain. Perspect. Psychol. Sci. 4, 326–339. doi: 10.1111/j.1745-6924.2009.01134.x

Barrett, L. F. (2009b). Variety is the spice of life: a psychological construction approach to understanding variability in emotion. Cogn. Emot. 23, 1284–1306. doi: 10.1080/02699930902985894

Barrett, L. F., and Bliss-Moreau, E. (2009). Affect as a psychological primitive. Adv. Exp. Soc. Psychol. 41, 167–218. doi: 10.1016/S0065-2601(08)00404-8

Bhatara, A., Tirovolas, A. K., Duan, L. M., Levy, B., and Levitin, D. J. (2010). Perception of emotional expression in musical performance. J. Exp. Psychol. Hum. Percept. Perform. 37, 921–934. doi: 10.1037/a0021922

Blood, A. J., and Zatorre, R. J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U.S.A. 98, 11818–11823. doi: 10.1073/pnas.191355898

Blood, A. J., Zatorre, R. J., Bermudez, P., and Evans, A. C. (1999). Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nat. Neurosci. 2, 382–387. doi: 10.1038/7299

Bregman, A. S. (1990). Auditory Scene Analysis: The Perceptual Organization of Sound. Cambridge, MA: MIT Press.

Burger, B., Saarikallio, S., Luck, G., Thompson, M. R., and Toiviainen, P. (2013). Relationships between perceived emotions in music and music-induced movement. Music Percept. 30, 517–533. doi: 10.1525/mp.2013.30.5.517

Burns, E. M. (1999). “Intervals, scales, and tuning,” in The Psychology of Music, ed. D. Deutsch (San Diego: Academic Press), 215–264. doi: 10.1016/B978-012213564-4/50008-1

Buzsáki, G. (2006). Rhythms of the Brain. New York: Oxford University Press. doi: 10.1093/acprof:oso/9780195301069.001.0001

Cacioppo, J. T., Berntson, G. G., Larsen, J. T., Poehlmann, K. M., and Ito, T. A. (2000). “The psychophysiology of emotion,” in Handbook of Emotions, eds M. Lewis and J. M. Haviland-Jones (New York: Guilford Press), 173–191.

Canolty, R. T., Edwards, E., Dalal, S. S., Soltani, M., Nagarajan, S. S., Kirsch, H. E., et al. (2006). High gamma power is phase-locked to theta oscillations in human neocortex. Science 313, 1626–1628. doi: 10.1126/science.1128115

Cariani, P. A., and Delgutte, B. (1996). Neural correlates of the pitch of complex tones. I. Pitch and pitch salience. J. Neurophysiol. 76, 1698–1716.

Carr, L., Iacoboni, M., Dubeau, M.-C., Mazziotta, J. C., and Lenzi, G. L. (2003). Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proc. Natl. Acad. Sci. U.S.A. 100, 5497–5502. doi: 10.1073/pnas.0935845100

Cartwright, J. H. E., González, D. L., and Piro, O. (1999). Nonlinear dynamics of the perceived pitch of complex sounds. Phys. Rev. Lett. 82, 5389–5392. doi: 10.1103/PhysRevLett.82.5389

Castellano, M. A., Bharucha, J. J., and Krumhansl, C. L. (1984). Tonal hierarchies in the music of North India. J. Exp. Psychol. Gen. 113, 394–412. doi: 10.1037/0096-3445.113.3.394

Chapin, H., Jantzen, K. J., Kelso, J. A. S., Steinberg, F., and Large, E. W. (2010). Dynamic emotional and neural responses to music depend on performance expression and listener experience. PLoS ONE 5:e13812. doi: 10.1371/journal.pone.0013812

Chen, J. L., Penhune, V. B., and Zatorre, R. J. (2008). Moving on time: brain network for auditory-motor synchronization is modulated by rhythm complexity and musical training. J. Cogn. Neurosci. 20, 226–239. doi: 10.1162/jocn.2008.20018

Chordia, P., and Rae, A. (2008). “Understanding emotion in raag: an empirical study of listener responses,” in Computer music modeling and retrieval. Sense of sounds, ed. D. Swaminathan (Berlin: Springer), 110–124. doi: 10.1007/978-3-540-85035-9_7

Cosmelli, D., Lachaux, J.-P., and Thompson, E. (2006). “Neurodynamical approaches to consciousness,” in The Cambridge handbook of consciousness, eds P. D. Zelazo, M. Moscovitch, and E. Thompson (Cambridge: Cambridge University Press), 731–774.

Cosmides, L., and Tooby, J. (2000). “Consider the source: the evolution of adaptations for decoupling and metarepresentations,” in Metarepresentations: A Multidisciplinary Perspective, ed. D. Sperber (Oxford: Oxford University Press), 53–115.

Dalla Bella, S., Peretz, I., Rousseau, L., and Gosselin, N. (2001). A developmental study of the affective value of tempo and mode in music. Cognition 80, B1–B10. doi: 10.1016/S0010-0277(00)00136-0

Damasio, A. (1999). The Feeling of What Happens: Body and Emotion in the Making of Consciousness. New York: Harcourt Brace.

Darwin, C. (1872/1965). The Expression of the Emotions in Man and Animals. Chicago, IL: University of Chicago Press. (Original work published 1872).

Davidson, R. J. (2000). “The functional neuroanatomy of affective style,” in Cognitive Neuroscience of Emotion, eds R. D. Lane and L. Nadel (New York: Oxford University Press), 371–388.

Duncan, S., and Barrett, L. F. (2007). Affect is a form of cognition: a neurobiological analysis. Cogn. Emot. 21, 1184–1211. doi: 10.1080/02699930701437931

Ebeling, M. (2008). Neuronal periodicity detection as a basis for the perception of consonance: a mathematical model of tonal fusion. J. Acoust. Soc. Am. 124, 2320–2329. doi: 10.1121/1.2968688

Edelman, G. M. (1987). Neural Darwinism: The Theory of Neuronal Group Selection. New York: Basic Books.

Edelman, G. M. (2003). Naturalizing consciousness: a theoretical framework. Proc. Natl. Acad. Sci. U.S.A. 100, 5520–5524. doi: 10.1073/pnas.0931349100

Edelman, G. M., and Tononi, G. (2000). A Universe of Consciousness: How Matter Becomes Imagination. New York: Basic Books.

Ekman, P. (1972). “Universal and cultural differences in facial expressions of emotions,” in Nebraska Symposium on Motivation, ed. J. K. Cole (Lincoln: University of Nebraska Press), 207–283.

Ekman, P. (1984). “Expression and the nature of emotion,” in Approaches to Emotion, eds K. Scherer and P. Ekman (Hillsdale, NJ: Lawrence Erlbaum Associates Inc), 319–344.

Ekman, P. (1992). An argument for basic emotions. Cogn. Emot. 6, 169–200. doi: 10.1080/02699939208411068

Ekman, P. (1993). Facial expression and emotion. Am. Psychol. 48, 384–392. doi: 10.1037/0003-066X.48.4.384

Ekman, P., Friesen, W. V., O’Sullivan, M., Chan, A., Diacoyanni-Tarlatzis, I., Heider, K., et al. (1987). Universals and cultural differences in the judgments of facial expressions of emotion. J. Pers. Soc. Psychol. 53, 712–717. doi: 10.1037/0022-3514.53.4.712

Engel, A. K., and Singer, W. (2001). Nonlinear spectrotemporal sound analysis by neurons in the auditory midbrain. J. Neurosci. 22, 4114–4131.

Eschrich, S., Münte, T. F., and Altenmüller, E. O. (2008). Unforgettable film music: the role of emotion in episodic long-term memory for music. BMC Neurosci. 9:48. doi: 10.1186/1471-2202-9-48

Fritz, T., Jentschke, S., Gosselin, N., Sammler, D., Peretz, I., Turner, R., et al. (2009). Universal recognition of three basic emotions in music. Curr. Biol. 19, 573–576. doi: 10.1016/j.cub.2009.02.058

Fujioka, T., Trainor, L. J., Large, E. W., and Ross, B. (2012). Internalized timing of isochronous sounds is represented in neuromagnetic beta oscillations. J. Neurosci. 32, 1791–1802. doi: 10.1523/JNEUROSCI.4107-11.2012

Gabrielsson, A., and Juslin, P. N. (1996). Emotional expression in music performance: between the performer’s intention and the listener’s experience. Psychol. Music 24, 68–91. doi: 10.1177/0305735696241007

Grahn, J. A., and Rowe, J. B. (2009). Feeling the beat: premotor and striatal interactions in musicians and non-musicians during beat processing. J. Neurosci. 29, 7540–7548. doi: 10.1523/JNEUROSCI.2018-08.2009

Hannon, E. E., and Trehub, S. E. (2005). Metrical categories in infancy and adulthood. Psychol. Sci. 16, 48–55. doi: 10.1111/j.0956-7976.2005.00779.x

Hartmann, W. M. (1996). Pitch, periodicity, and auditory organization. J. Acoust. Soc. Am. 100, 3491–3502. doi: 10.1121/1.417248

Hennig, H., Fleischmann, R., Fredebohm, A., Hagmayer, Y., Nagler, J., Witt, A., et al. (2011). The nature and perception of fluctuations in human musical rhythms. PLoS ONE 6:e26457. doi: 10.1371/journal.pone.0026457

Herrmann, B., Henry, M., Grigutsch, M., and Obleser, J. (2013). Oscillatory phase dynamics in neural entrainment underpin illusory percepts of time. J. Neurosci. 33, 15799–15809. doi: 10.1523/JNEUROSCI.1434-13.2013

Hoppensteadt, F. C., and Izhikevich, E. M. (1996). Synaptic organizations and dynamical properties of weakly connected neural oscillators II: learning phase information. Biol. Cybern. 75, 126–135.

Hoppensteadt, F. C., and Izhikevich, E. M. (1997). Weakly Connected Neural Networks. New York: Springer. doi: 10.1007/978-1-4612-1828-9

Hove, M. J., and Risen, J. L. (2009). It’s all in the timing: interpersonal synchrony increases affiliation. Soc. Cogn. 27, 949–960. doi: 10.1521/soco.2009.27.6.949

Huron, D. B. (2005). Exploring the musical mind: cognition, emotion, ability, function. Music Sci. 9, 217–221.

Huron, D. B. (2006). Sweet Anticipation: Music and the Psychology of Expectation. Cambridge: MIT Press.

Iversen, J. R., Repp, B. H., and Patel, A. D. (2006). “Metrical interpretation modulates brain responses to rhythmic sequences,” in Proceedings of the 9th International Conference on Music Perception and Cognition (ICMPC9), eds M. Baroni, A. R. Addessi, R. Caterina, and M. Costa (Bologna), 468.

Izard, C. E., Ackerman, B. P., Schoff, K. M., and Fine, S. E. (2000). “Self-organization of discrete emotions, emotion patterns, and emotion-cognition relations,” in Emotion, Development, and Self-Organization: Dynamic Systems Approaches to Emotional Development, eds M. D. Lewis and I. Granic (Cambridge: Cambridge University Press), 15–36.

Izhikevich, E. M. (2002). Resonance and selective communication via bursts in neurons having subthreshold oscillations. Biosystems 67, 95–102. doi: 10.1016/S0303-2647(02)00067-9

Jacob, P. (2010). “Intentionality,” in The Stanford Encyclopedia of Philosophy, ed. E. N. Zalta. Available at: http://plato.stanford.edu/archives/fall2010/entries/intentionality/

Janata, P., Tomic, S. T., and Haberman, J. M. (2012). Sensorimotor coupling in music and the psychology of the groove. J. Exp. Psychol. Gen. 141, 54–75. doi: 10.1037/a0024208

Janata, P., Tomic, S. T., and Rakowski, S. K. (2007). Characterization of music-evoked autobiographical memories. Memory 15, 845–860. doi: 10.1080/09658210701734593

Jones, M. R. (1990). “Musical events and models of musical time,” in Cognitive Models of Psychological Time, ed. R. A. Block (Hillsdale, NJ: Lawrence Erlbaum), 207–240.

Joris, P. X., Schreiner, C. E., and Rees, A. (2004). Neural processing of amplitude-modulated sounds. Physiol. Rev. 84, 541–577. doi: 10.1152/physrev.00029.2003

Juslin, P. N. (2000). Cue utilization in communication of emotion in music performance: relating performance to perception. J. Exp. Psychol. Hum. Percept. Perform. 26, 1797–1813. doi: 10.1037/0096-1523.26.6.1797

Juslin, P. N. (2001). “Communicating emotion in music performance: a review and a theoretical framework,” in Music and Emotion: Theory and Research, eds P. N. Juslin and J. A. Sloboda (New York: Oxford University Press), 309–337.

Juslin, P. N., and Laukka, P. (2003). Communication of emotions in vocal expression and music performance: different channels, same code? Psychol. Bull. 129, 770–814. doi: 10.1037/0033-2909.129.5.770

Juslin, P. N., and Sloboda, J. (Eds.). (2001). Music and Emotion: Theory and Research. New York: Oxford University Press.

Juslin, P. N., and Vastfjall, D. (2008). Emotional responses to music: the need to consider underlying mechanisms. Behav. Brain Sci. 31, 559–621. doi: 10.1017/S0140525X08005293

Kallinen, K. (2005). Emotional ratings of music excerpts in the western art music repertoire and their self-organization in the Kohonen neural network. Psychol. Music 33, 373–393. doi: 10.1177/0305735605056147

Kelso, J. A. S., de Guzman, G. C., and Holroyd, T. (1990). “The self-organized phase attractive dynamics of coordination,” in Self-Organization, Emerging Properties, and Learning, ed. A. Babloyantz (New York: Plenum Publishing Corporation), 41–62.

Kirschner, S., and Tomasello, M. (2009). Joint drumming: social context facilitates synchronization in preschool children. J. Exp. Child Psychol. 102, 299–314. doi: 10.1016/j.jecp.2008.07.005

Koelsch, S., Fritz, T., von Cramon, D. Y., Müller, K., and Friederici, A. D. (2006). Investigating emotion with music: an fMRI study. Hum. Brain Mapp. 27, 239–250. doi: 10.1002/hbm.20180

Kreutz, G., Bongard, S., and Jussis, J. V. (2002). Cardiovascular responses to music listening: on the influences of musical expertise and emotion. Music Sci. 6, 257–278.

Kreutz, G., Ott, U., Teichmann, D., Osawa, P., and Vaitl, D. (2008). Using music to induce emotions: influences of musical preference and absorption. Psychol. Music 36, 101–126. doi: 10.1177/0305735607082623

Krumhansl, C. L. (1997). An exploratory study of musical emotions and psychophysiology. Can. J. Exp. Psychol. 51, 336–352. doi: 10.1037/1196-1961.51.4.336

Krumhansl, C. L., and Kessler, E. J. (1982). Tracing the dynamic changes in perceived tonal organization in a spatial representation of musical keys. Psychol. Rev. 89, 334–368. doi: 10.1037/0033-295X.89.4.334

Laird, J. D., and Strout, S. (2007). “Emotional behaviors as emotional stimuli,” in Handbook of Emotion Elicitation and Assessment, eds J. A. Coan and J. J. Allen (New York: Oxford University Press), 54–64.

Lakatos, P., Karmos, G., Mehta, A. D., Ulbert, I., and Schroeder, C. E. (2008). Entrainment of neuronal oscillations as a mechanism of attentional selection. Science 320, 110–113. doi: 10.1126/science.1154735

Lakatos, P., Shah, A. S., Knuth, K. H., Ulbert, I., and Schroeder, C. E. (2005). An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J. Neurophysiol. 94, 1904–1911. doi: 10.1152/jn.00263.2005

Langner, G. (1992). Periodicity coding in the auditory system. Hear. Res. 60, 115–142. doi: 10.1016/0378-5955(92)90015-F

Large, E. W. (2000). On synchronizing movements to music. Hum. Mov. Sci. 19, 527–566. doi: 10.1016/S0167-9457(00)00026-9

Large, E. W. (2008). “Resonating to musical rhythm: theory and experiment,” in The Psychology of Time, ed. S. Grondin (Cambridge: Emerald), 189–231.

Large, E. W. (2010a). “Dynamics of musical tonality,” in Nonlinear Dynamics in Human Behavior, eds R. Huys and V. Jirsa (New York: Springer), 193–211.

Large, E. W. (2010b). “Neurodynamics of music,” in Springer Handbook of Auditory Research: Music Perception, eds M. Riess Jones, R. R. Fay, and A. N. Popper (New York: Springer), 201–231.

Large, E. W., and Almonte, F. (2011). Neurodynamics and Learning in Musical Tonality. Rochester, NY: Society for Music Perception and Cognition.

Large, E. W., and Almonte, F. V. (2012). Neurodynamics, tonality, and the auditory brainstem response. Ann. N. Y. Acad. Sci. 1252, E1–E7. doi: 10.1111/j.1749-6632.2012.06594.x

Large, E. W., and Jones, M. R. (1999). The dynamics of attending: how people track time-varying events. Psychol. Rev. 106, 119–159. doi: 10.1037/0033-295X.106.1.119

Large, E. W., and Kolen, J. F. (1994). Resonance and the perception of musical meter. Connect. Sci. 6, 177–208. doi: 10.1080/09540099408915723

Large, E. W., Kozloski, J., and Crawford, J. D. (1998). A dynamical model of temporal processing in the fish auditory system. Assoc. Res. Otolaryngol. Abstr. 21, 717.

Laudanski, J., Coombes, S., Palmer, A. R., and Sumner, C. J. (2010). Mode-locked spike trains in responses of ventral cochlear nucleus chopper and onset neurons to periodic stimuli. J. Neurophysiol. 103, 1226–1237. doi: 10.1152/jn.00070.2009

LeDoux, J. E. (1996). Emotional networks and motor control: a fearful view. Prog. Brain Res. 107, 437–446. doi: 10.1016/S0079-6123(08)61880-4

LeDoux, J. E. (2000). Emotion circuits in the brain. Annu. Rev. Neurosci. 23, 155–184. doi: 10.1146/annurev.neuro.23.1.155

Lee, K. M., Skoe, E., Kraus, N., and Ashley, R. (2009). Selective subcortical enhancement of musical intervals in musicians. J. Neurosci. 29, 5832–5840. doi: 10.1523/JNEUROSCI.6133-08.2009

Lerdahl, F., and Jackendoff, R. (1983). An overview of hierarchical structure in music. Music Percept. 1, 229–252. doi: 10.2307/40285257

Lerud, K. D., Almonte, F. V., Kim, J. C., and Large, E. W. (2013). Mode-locking neurodynamics predict human auditory brainstem responses to musical intervals. Hear. Res. 308, 41–49. doi: 10.1016/j.heares.2013.09.010

Lewis, M. (2000). “The emergence of human emotions,” in Handbook of Emotions, eds M. Lewis and J. M. Haviland-Jones (New York: Guilford Press), 265–280.

Lindquist, K. A., Wager, T. D., Kober, H., Bliss-Moreau, E., and Barrett, L. F. (2012). The brain basis of emotion: a meta-analytic review. Behav. Brain Sci. 35, 121–143. doi: 10.1017/S0140525X11000446

Lindström, E., Juslin, P. N., Bresin, R., and Williamon, A. (2002). “Expressivity comes from within your soul”: a questionnaire study of music students’ perspectives on expressivity. Res. Stud. Music Educ. 20, 23–47. doi: 10.1177/1321103X030200010201

London, J. (2004). Hearing in Time: Psychological Aspects of Musical Meter. Oxford: Oxford University Press. doi: 10.1093/acprof:oso/9780195160819.001.0001

McAuley, J. D. (1995). Perception of Time as Phase: Toward an Adaptive-Oscillator Model of Rhythmic Pattern Processing. Unpublished doctoral dissertation. Bloomington: Indiana University.

McAuley, J. D., Jones, M. R., Holub, S., Johnston, H. M., and Miller, N. S. (2006). The time of our lives: life span development of timing and event tracking. J. Exp. Psychol. Gen. 135, 348–367. doi: 10.1037/0096-3445.135.3.348

Mesquita, B. (2003). “Emotions as dynamic cultural phenomena,” in Handbook of the Affective Sciences, eds R. Davidson and K. Scherer (New York: Oxford University Press), 871–890.

Mohn, C., Argstatter, H., and Wilker, F. W. (2011). Perception of six basic emotions in music. Psychol. Music 39, 503–517. doi: 10.1177/0305735610378183

Molnar-Szakacs, I., and Overy, K. (2006). Music and mirror neurons: from motion to emotion. Soc. Cogn. Affect. Neurosci. 1, 235–241. doi: 10.1093/scan/nsl029

Musacchia, G., Large, E. W., and Schroeder, C. E. (2013). Thalamocortical mechanisms for integrating musical tone and rhythm. Trends Neurosci. 51, 2812–2824.

Narmour, E. (1990). The Analysis and Cognition of Basic Melodic Structures: The Implication-Realization Model. Chicago: University of Chicago Press.

Nozaradan, S., Peretz, I., Missal, M., and Mouraux, A. (2011). Tagging the neuronal entrainment to beat and meter. J. Neurosci. 31, 10234–10240. doi: 10.1523/JNEUROSCI.0411-11.2011

Ortony, A., Clore, G. L., and Collins, A. (1988). The Cognitive Structure of Emotions. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511571299

Ortony, A., and Turner, T. J. (1990). What’s basic about basic emotions? Psychol. Rev. 97, 315–331. doi: 10.1037/0033-295X.97.3.315

Peretz, I., Gagnon, L., and Bouchard, B. (1998). Music and emotion: perceptual determinants, immediacy, and isolation after brain damage. Cognition 68, 111–141. doi: 10.1016/S0010-0277(98)00043-2

Pessoa, L. (2008). On the relationship between emotion and cognition. Nat. Rev. Neurosci. 9, 148–158. doi: 10.1038/nrn2317

Plack, C. J., and Oxenham, A. J. (2005). “The psychophysics of pitch,” in Pitch: Neural Coding and Perception, eds C. J. Plack, R. R. Fay, A. J. Oxenham, and A. N. Popper (New York: Springer), 7–55.

Plomp, R., and Levelt, W. J. M. (1965). Tonal consonance and critical bandwidth. J. Acoust. Soc. Am. 38, 548–560. doi: 10.1121/1.1909741

Pressnitzer, D., Patterson, R. D., and Krumbholz, K. (2001). The lower limit of melodic pitch. J. Acoust. Soc. Am. 109, 2074–2084. doi: 10.1121/1.1359797

Rankin, S. K. (2010). 1/f Structure of Temporal Fluctuation in Rhythm Performance and Rhythmic Coordination. Unpublished doctoral dissertation. Boca Raton, FL: Florida Atlantic University.

Rankin, S. K., Large, E. W., and Fink, P. W. (2009). Fractal tempo fluctuation and pulse prediction. Music Percept. 26, 401–413. doi: 10.1525/mp.2009.26.5.401

Repp, B. H. (2005a). Rate limits of on-beat and off-beat tapping with simple auditory rhythms: 1. Qualitative observations. Music Percept. 22, 479–496. doi: 10.1525/mp.2005.22.3.479

Repp, B. H. (2005b). Rate limits of on-beat and off-beat tapping with simple auditory rhythms: 2. The roles of different kinds of accent. Music Percept. 23, 165–187. doi: 10.1525/mp.2005.23.2.165

Repp, B. H. (2005c). Sensorimotor synchronization: a review of the tapping literature. Psychon. Bull. Rev. 12, 969–992. doi: 10.3758/BF03206433

Rizzolatti, G., and Craighero, L. (2004). The mirror-neuron system. Annu. Rev. Neurosci. 27, 169–192. doi: 10.1146/annurev.neuro.27.070203.144230

Ruggero, M. A. (1992). Responses to sound of the basilar membrane of the mammalian cochlea. Curr. Opin. Neurobiol. 2, 449–456. doi: 10.1016/0959-4388(92)90179-O

Russell, J. A. (1991). Culture and the categorization of emotion. Psychol. Bull. 110, 426–450. doi: 10.1037/0033-2909.110.3.426

Russell, J. A. (2003). Core affect and the psychological construction of emotion. Psychol. Rev. 110, 145–172. doi: 10.1037/0033-295X.110.1.145

Russell, J. A., and Barrett, L. F. (1999). Core affect, prototypical emotional episodes, and other things called emotion: dissecting the elephant. J. Pers. Soc. Psychol. 76, 805–819. doi: 10.1037/0022-3514.76.5.805

Salimpoor, V. N., Chang, C., and Menon, V. (2010). Neural basis of repetition priming during mathematical cognition: repetition suppression or repetition enhancement? J. Cogn. Neurosci. 22, 790–805. doi: 10.1162/jocn.2009.21234

Schellenberg, E. G., and Trehub, S. E. (1996). Natural musical intervals: evidence from infant listeners. Psychol. Sci. 7, 272–277. doi: 10.1111/j.1467-9280.1996.tb00373.x

Schellenberg, E. G., and Trehub, S. E. (1999). Culture-general and culture-specific factors in the discrimination of melodies. J. Exp. Child Psychol. 74, 107–127. doi: 10.1006/jecp.1999.2511

Scherer, K. R. (2001). “Appraisal considered as a process of multilevel sequential processing,” in Appraisal Processes in Emotion: Theory, Methods, Research, eds K. R. Scherer, A. Schorr, and T. Johnstone (New York: Oxford University Press), 92–120.

Schubert, E. (1999). Measuring emotion continuously: validity and reliability of the two-dimensional emotion-space. Austr. J. Psychol. 51, 154–165. doi: 10.1080/00049539908255353

Schubert, E. (2001). “Continuous measurement of self-report emotional response to music,” in Music and Emotion: Theory and Research, eds P. N. Juslin and J. A. Sloboda (New York: Oxford University Press), 393–414.

Schubert, E. (2004). Modeling perceived emotion with continuous musical features. Music Percept. 21, 561–585. doi: 10.1525/mp.2004.21.4.561

Searle, J. R. (2000). Consciousness. Annu. Rev. Neurosci. 23, 557–578. doi: 10.1146/annurev.neuro.23.1.557

Searle, J. R. (2001). Free will as a problem in neurobiology. Philosophy 76, 491–514. doi: 10.1017/S0031819101000535

Seth, A. K., Izhikevich, E., Reeke, G. N., and Edelman, G. M. (2006). Theories and measures of consciousness: an extended framework. Proc. Natl. Acad. Sci. U.S.A. 103, 10799–10804. doi: 10.1073/pnas.0604347103

Shapira Lots, I., and Stone, L. (2008). Perception of musical consonance and dissonance: an outcome of neural synchronization. J. R. Soc. Interface 5, 1429–1434. doi: 10.1098/rsif.2008.0143

Sloboda, J. A., and O’Neill, S. A. (2001). “Emotions in everyday listening to music,” in Music and Emotion: Theory and Research, eds P. N. Juslin and J. A. Sloboda (New York: Oxford University Press), 415–429.

Smith, C. A., and Kirby, L. D. (2001). “Toward delivering on the promise of appraisal theory,” in Appraisal Processes in Emotion: Theory, Methods, Research, eds K. R. Scherer and A. Schorr (New York: Oxford University Press), 121–138.

Snyder, J. S., and Large, E. W. (2005). Gamma-band activity reflects the metric structure of rhythmic tone sequences. Cogn. Brain Res. 24, 117–126. doi: 10.1016/j.cogbrainres.2004.12.014

Stefanics, G., Hangya, B., Hernadi, I., Winkler, I., Lakatos, P., and Ulbert, I. (2010). Phase entrainment of human delta oscillations can mediate the effects of expectation on reaction speed. J. Neurosci. 30, 13578–13585. doi: 10.1523/JNEUROSCI.0703-10.2010

Tillmann, B., Bharucha, J. J., and Bigand, E. (2000). Implicit learning of tonality: a self-organizing approach. Psychol. Rev. 107, 885–913. doi: 10.1037/0033-295X.107.4.885

Tomic, S. T., and Janata, P. (2008). Beyond the beat: modeling metric structure in music and performance. J. Acoust. Soc. Am. 124, 4024–4041. doi: 10.1121/1.3006382

Tomkins, S. S. (1962). Affect, Imagery, Consciousness, Volume 1: The positive affects. New York: Springer.

Tomkins, S. S. (1963). Affect, Imagery, Consciousness, Volume 2: The negative affects. New York: Springer.

Tononi, G., and Edelman, G. M. (1998). Consciousness and the integration of information in the brain. Adv. Neurol. 77, 245–279.

Trainor, L. J., and Trehub, S. E. (1992). A comparison of infants’ and adults’ sensitivity to Western musical structure. J. Exp. Psychol. Hum. Percept. Perform. 18, 394–402. doi: 10.1037/0096-1523.18.2.394

Tramo, M. J., Cariani, P. A., Delgutte, B., and Braida, L. D. (2001). Neurobiological foundations for the theory of harmony in western tonal music. Ann. N. Y. Acad. Sci. 930, 92–116. doi: 10.1111/j.1749-6632.2001.tb05727.x

Trehub, S. E., Schellenberg, E. G., and Kamenetsky, S. B. (1999). Infants’ and adults’ perception of scale structure. J. Exp. Psychol. Hum. Percept. Perform. 25, 965–975. doi: 10.1037/0096-1523.25.4.965

Tye, M. (2013). “Qualia,” in The Stanford Encyclopedia of Philosophy, ed. E. N. Zalta. Available at: http://plato.stanford.edu/archives/fall2013/entries/qualia/

Varela, F., Lachaux, J. P., Rodriguez, E., and Martinerie, J. (2001). The brainweb: phase synchronization and large-scale integration. Nat. Rev. Neurosci. 2, 229–239. doi: 10.1038/35067550

Will, U., and Berg, E. (2007). Brain wave synchronization and entrainment to periodic acoustic stimuli. Neurosci. Lett. 424, 55–60. doi: 10.1016/j.neulet.2007.07.036

Wilson-Mendenhall, C. D., Barrett, L. F., and Barsalou, L. W. (2013). Neural evidence that human emotions share core affective properties. Psychol. Sci. 24, 947–956. doi: 10.1177/0956797612464242

Winkler, I., Háden, G., Ladinig, O., Sziller, I., and Honing, H. (2009). Newborn infants detect the beat in music. Proc. Natl. Acad. Sci. U.S.A. 106, 2468–2471. doi: 10.1073/pnas.0809035106

Wundt, W. (1897). Outlines of Psychology. (C. H. Judd, Trans.). Oxford: Engelman. doi: 10.1037/12908-000

Zatorre, R. J., Chen, J. L., and Penhune, V. B. (2007). When the brain plays music: auditory–motor interactions in music perception and production. Nat. Rev. Neurosci. 8, 547–558. doi: 10.1038/nrn2152

Keywords: neurodynamics, consciousness, affect, emotion, musical expectancy, oscillation, synchrony

Citation: Flaig NK and Large EW (2014) Dynamic musical communication of core affect. Front. Psychol. 5:72. doi: 10.3389/fpsyg.2014.00072

Received: 30 April 2013; Paper pending published: 01 July 2013; Accepted: 19 January 2014; Published online: 17 March 2014.

Edited by: Daniel J. Levitin, McGill University, Canada

Reviewed by: Sarah Creel, University of California at San Diego, USA; Silke Anders, Universität zu Lübeck, Germany; Emery Schubert, University of New South Wales, Australia

Copyright © 2014 Flaig and Large. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Edward W. Large, Music Dynamics Lab, Department of Psychology, University of Connecticut, 406 Babbidge Road, Unit 1020, Storrs, CT 06269-1020, USA. e-mail: edward.large@uconn.edu
