- School of Psychology and Speech Pathology, Curtin University, Perth, WA, Australia

The adoption of mixed methods research in psychology has trailed behind other social science disciplines. Teaching psychology students, academics, and practitioners about mixed methodologies may increase the use of mixed methods within the discipline. However, tailoring and evaluating education and training in mixed methodologies requires an understanding of, and a way of measuring, attitudes toward mixed methods research in psychology. To date, no such measure exists. In this article we present the development and initial validation of a new measure: Attitudes toward Mixed Methods Research in Psychology. A pool of 42 items was developed from previous qualitative research on attitudes toward mixed methods research and refined through expert review to 34 items, which were administered, along with validation measures, via an online survey to a convenience sample of 274 psychology students, academics, and psychologists. Principal axis factoring with varimax rotation on a subset of the sample produced a four-factor, 12-item solution. Confirmatory factor analysis on a separate subset of the sample indicated that a higher order four-factor model provided the best fit to the data. The four factors (‘Limited Exposure,’ ‘(in)Compatibility,’ ‘Validity,’ and ‘Tokenistic Qualitative Component’) each have acceptable internal reliability. Known groups validity analyses based on preferred research orientation and self-rated mixed methods research skills, and convergent and divergent validity analyses based on measures of attitudes toward psychology as a science and scientist and practitioner orientation, provide initial validation of the measure. This brief, internally reliable measure can be used to assess attitudes toward mixed methods research in psychology, to measure change in attitudes as part of the evaluation of mixed methods education, and in larger research programs.

Introduction

The emergence of mixed methods research (the integration of quantitative and qualitative research methods within one project; Johnson et al., 2007) has been heralded as a ‘new era’ (Tashakkori and Creswell, 2007) and ‘the third methodological movement’ (Lopez-Fernandez and Molina-Azorin, 2011) whose ‘time has come’ (Johnson and Onwuegbuzie, 2004). However, the adoption of mixed methods research in psychology has trailed behind other social science disciplines. We begin this article with a brief overview of the prevalence of mixed methods research in the social sciences, before narrowing our focus to mixed methods research in psychology. We argue that teaching mixed methods research in psychology is central to increasing the use of mixed methods within this field, but that teaching alone is not enough. We need to be able to understand and measure attitudes toward mixed methods research in order to tailor and evaluate education and training in this research methodology. There are currently no measures available to identify and measure changes in attitudes toward mixed methods research in psychology student, academic, and practitioner populations. We present the development and initial validation of a measure of attitudes toward mixed methods research in psychology, building on our earlier research that identified the range of attitudes toward mixed methods research held by psychology students and academics (Povee and Roberts, 2014b). We conclude this article with an evaluation of the psychometric properties of the measure and provide recommendations for its use in assessing attitudes, evaluating the teaching of mixed methods research, and in larger research programs.

Mixed Methods Research in the Social Sciences

The use of mixed methods research in the social sciences is increasing in some disciplines, particularly in applied research areas (Teddlie and Johnson, 2009; Alise and Teddlie, 2010), where mixed methods research may be viewed as a “practical necessity” (Fielding, 2010, p. 127). The proportion of publications featuring mixed methods research in applied social science disciplines (estimated at 16%) is two to three times higher than in ‘pure’ social sciences (including psychology), with an estimated prevalence rate of 6% (Alise and Teddlie, 2010). However, the proportion of published articles reporting mixed methods research varies by journal, even within the same discipline. For example, within education journals, prevalence estimates have ranged widely. Truscott et al. (2010) reviewed articles published in education journals from 1995 to 2005, reporting that 14% of articles presented mixed methods research, with no systematic increase across the period. In contrast, Ross and Onwuegbuzie (2010) noted that mixed methods research was reported in 24% of articles published in the American Educational Research Journal and 33% of articles published in the Journal for Research in Mathematics Education, and Alise and Teddlie (2010) reported an estimated prevalence rate of 24% across five elite education journals.

The increased publication rates of mixed methods research in the social sciences have been accompanied by increased scholarly attention to mixed methods as a research methodology. This has included publications on how to conduct mixed methods research (e.g., Tashakkori and Teddlie, 2010; Creswell and Plano-Clark, 2011; Creswell, 2015), how to evaluate mixed methods research (e.g., Leech et al., 2010; O’Cathain, 2010; Collins et al., 2012; Heyvaert et al., 2013), and how to write mixed methods articles (e.g., Leech et al., 2011; Mertens, 2011).

The increased use of mixed methods approaches has also been followed by demand for training and education in using mixed methods research. There is an emerging literature on teaching mixed methods research (e.g., Tashakkori and Teddlie, 2003; Early, 2007; Christ, 2009; Baran, 2010; Ivankova, 2010; Onwuegbuzie et al., 2011, 2013; Poth, 2014), highlighting the difficulties faced by ‘first generation’ mixed methods instructors (Tashakkori and Teddlie, 2003; Early, 2007; Onwuegbuzie et al., 2011), who often have not themselves been trained in mixed methods research (Early, 2007). There is an identified need for dialog and development of resources to support the teaching of mixed methods research (Tashakkori and Teddlie, 2003; Early, 2007).

Mixed Methods Research in Psychology

The uptake of mixed methods research is lower in psychology journals than in many other social science disciplines. Based on systematic random sampling, Alise and Teddlie (2010) estimated that 7% of articles published in five elite psychology journals immediately prior to 2006 presented mixed methods research, with all adopting a quasi-mixed design. Other studies have reported lower prevalence estimates, ranging from 1.7 to 3% (Ponterotto, 2005; Leech and Onwuegbuzie, 2011; Lopez-Fernandez and Molina-Azorin, 2011).

The relative paucity of mixed methods research in psychology is perhaps unsurprising given the historical dominance of behaviorist, positivist, and post-positivist research paradigms and the associated valuing of quantitative and experimental methods (Ponterotto, 2005; Alise and Teddlie, 2010), as evidenced by publications in psychology journals, funding, research training, and current teaching models (Walsh-Bowers, 2002; Bhati et al., 2013). Psychology students continue to be socialized within the dominant culture of positivism and quantitative research methods (Breen and Darlaston-Jones, 2010; Bhati et al., 2013). Compounding this, the ‘paradigm wars’ (Gage, 1989) have juxtaposed quantitative and qualitative methods as binary opposites arising from incompatible world views and therefore not suitable for mixing (Wiggins, 2011). Other barriers to conducting mixed methods research include the difficulty of learning and applying both methods (Hanson et al., 2005), particularly given the limited availability of education and training in mixed methods research (O’Cathain et al., 2010). These factors may have contributed to perceptions of a lack of rigor in the field (Bergman, 2011).

However, over the last decade there have been encouraging signs of increased interest in mixed methods research in psychology. There have been calls to increase the adoption of this approach to research in psychology (e.g., Haverkamp et al., 2005; Weisner and Fiese, 2011; Barnes, 2012), and for the teaching of a wide range of methodologies within the undergraduate psychology curriculum (Breen and Darlaston-Jones, 2010). These calls have not, however, been universally welcomed (see, for example, Toomela (2011, p. 38), who argued that qualitative and mixed methods in psychology “may be just other paths to a fairy land; to a land where science and fairy-tales are equally acceptable truths”).

Because lack of familiarity with, and expertise in conducting, mixed methods research underlies the low levels of mixed methods research published in psychology journals, teaching mixed methods in psychology is central to increasing its use in the discipline. In order to teach mixed methods research in psychology effectively, it is important to understand the attitudes toward mixed methods held by psychology students, academics, and psychologists. As part of a larger mixed methods project examining attitudes toward qualitative and mixed methods research in psychology (see Povee and Roberts, 2014a; Roberts and Povee, 2014 for information on the component of the project examining attitudes toward qualitative research), we interviewed 21 psychology students and academics about their attitudes toward mixed methods research (Povee and Roberts, 2014b). Using the multicomponent model (also known as the tripartite or neotripartite model) of attitudes (Eagly and Chaiken, 1993, 2007) as a framework, we conducted a thematic analysis of the interview transcripts and identified a range of behavioral and cognitive themes underlying attitudes toward mixed methods research.

The behavioral component of attitudes refers to experience and intentions (Eagly and Chaiken, 1993, 2007). Three themes were identified within the behavior domain. First, mixed methods research was seen as providing opportunities for broadening perspectives of research, sharing knowledge, and learning (‘Expanding Research Capabilities’). Second, a lack of training and experience was seen as limiting opportunities for conducting mixed methods research (‘Limited Exposure’). Third, mixed methods research was viewed as requiring more time, resources, and effort than other types of research (‘Time and Resource Intensive’). The latter two themes represent barriers to conducting mixed methods research (Povee and Roberts, 2014b).

The cognitive component of attitudes refers to the associations and attributes ascribed to mixed methods research (Eagly and Chaiken, 1993, 2007). Seven themes were identified within the cognitive domain. Mixed methods research was viewed as a flexible approach to psychological inquiry (‘Flexibility’), with the combination of qualitative and quantitative components viewed as either complementary or incompatible [‘(in)Compatibility’]. Mixed methods research was described by some as the most ‘valid’ approach to psychological inquiry, while others were concerned about a possible mismatch between the findings of the qualitative and quantitative components (‘Validity’). Concern was also raised that the qualitative component of mixed methods research was too often secondary to the quantitative component (‘Tokenistic Qualitative Component’), that mixed methods research may be adopted simply to satisfy quantitatively oriented academics, researchers, or thesis markers (‘Skepticism of Motivation’), that methodologies may be mixed without a considered rationale (‘Rationale for Mixing’), and that a mixed methods approach was more susceptible to researcher bias than purely qualitative or purely quantitative research studies (‘Researcher Bias’).

While understanding the range of attitudes toward mixed methods research held by psychologists and psychology students is helpful in tailoring education and training in mixed methods research, it is also important to be able to evaluate the efficacy of that education and training, both in terms of increased knowledge and skills and in terms of attitudes. Previous research on the teaching of research methodologies has demonstrated that increased knowledge is not always associated with more positive attitudes. For example, research on psychology students’ attitude change with the teaching of quantitative research methods and statistics suggests that while teaching may increase knowledge, attitudes toward the perceived utility of both research methods and statistics decline following teaching (Manning et al., 2006; Sizemore and Lewandowski, 2009). Similar results have been reported across disciplines (Schau and Emmioglu, 2012). Attitudes influence judgments and behaviors (Petty et al., 1997), and if the teaching of mixed methods results in more negative attitudes toward mixed methods research, mixed methods education is unlikely to result in the increased conduct of mixed methods research.

There are currently no measures available to identify and measure changes in attitudes toward mixed methods research in psychology student, academic, and practitioner populations. In this article we draw on the themes identified in our previous research to develop and begin the validation of a new measure to fill this gap: Attitudes toward Mixed Methods Research in Psychology (AMMRP). Given the increasing interest in mixed methods research in psychology, such a measure is required to quickly identify attitudes and measure changes in attitudes over time. It may be of particular use within psychology student populations, to identify pre-existing attitudes before students enter a course and to measure changes in attitudes after completion of mixed methods research education.

The primary aim of this study was to develop a psychometrically sound brief measure of attitudes toward mixed methods research in psychology. Mixed methods designs have been recommended for instrument development, particularly the development of Likert scales (Onwuegbuzie et al., 2010), and in our research we used a sequential mixed methods design. Thematic analysis of qualitative interviews (results reported in Povee and Roberts, 2014b) produced a pool of items, which was then administered to a large sample of psychology students, academics, and practitioners. A combination of exploratory and confirmatory factor analyses was used to assess the factor structure of the measure, and Cronbach’s alpha was calculated to test the internal reliability of the factors. This study also begins the validation of the new measure. Known groups validity was examined by comparing scores on the AMMRP scales of those with a stated preference for mixed methods research with those of respondents with a stated preference for qualitative or quantitative research. Convergent and divergent validity were examined through correlations with scores on the ‘Scientist’ and ‘Practitioner’ scales of the Scientist–Practitioner Inventory for Psychology (Leong and Zachar, 1991) and the Psychology as Science Scale (Friedrich, 1996).

Materials and Methods

A cross-sectional correlational design was utilized with data collected using an online survey.

Participants

Participants were a convenience sample of 274 psychology students, academics, and practitioners. Reflecting the gender imbalance in the psychology student and practitioner population, 74.1% of the research participants were female. Participants ranged in age from 18 to 87 (M = 28 years, SD = 12 years) and resided in a range of countries including Australia (58.8%), the UK (15.6%), the USA (11.5%), and Singapore (5%). The majority were psychology students (78.1%), ranging from first year undergraduate to Ph.D. students. Approximately one-fifth (22.2%) of the sample were academics with between one and 33 years in academia, and 11.7% were currently employed as psychologists across a range of specialities¹. Almost half of participants (46.2%) expressed a preference for conducting mixed methods research, while approximately a quarter each expressed a preference for qualitative (28.4%) and quantitative research (25.4%). Self-rated skills in conducting mixed methods research ranged from very poor (5.6%), poor (21.6%), fair (43.7%), and good (24.6%) to very good (4.5%).

Measures

An online questionnaire was constructed containing items designed to measure attitudes to qualitative² and mixed methods research, the Psychology as Science Scale, the Scientist–Practitioner Inventory for Psychology, and a range of demographic questions.

Attitudes toward Mixed Methods Research in Psychology items were developed based on the themes identified in our prior qualitative analysis (Povee and Roberts, 2014b). The authors developed a pool of 42 items (short statements) designed to measure eleven themes: ‘Expanding Research Capabilities,’ ‘Limited Exposure,’ ‘Time and Resource Intensive,’ ‘Flexibility,’ ‘(in)Compatibility,’ ‘Increased Validity,’ ‘(in)Congruency,’ ‘Tokenistic Qualitative Component,’ ‘Skepticism of Motivation,’ ‘Rationale for Mixing,’ and ‘Researcher Bias’ (the ‘Validity’ theme was represented by two sets of items, ‘Increased Validity’ and ‘(in)Congruency’). This pool of items was sent to a psychology academic with expertise in research methodologies for expert review. Based on the feedback provided, some items were removed and minor changes were made to others, leaving a final pool of 34 items for use in the survey. Attitudes are expected to vary in valence (from positive to negative) and strength (from weak to strong; Maio and Haddock, 2010). Because the items vary in valence, a response format was needed that could also capture strength; accordingly, a strongly disagree to strongly agree response format was used for all items.

The Psychology as Science Scale (Friedrich, 1996) is a 15-item scale designed to measure perceptions of psychology as a science. Three factors underlie the scale, measuring (a) perceptions of psychology as a hard science (example item “It’s just as important for psychology students to do experiments as it is for students in chemistry and biology”), (b) the perceived value of methodological training and psychological research (example item “Courses in psychology place too much emphasis on research and experimentation”), and (c) deterministic views regarding the predictability of human behavior (example item “Carefully controlled research is not likely to be useful in solving psychological problems”). This measure was selected because it has a bias toward quantitative research in psychology. Each item is rated on a seven point scale ranging from (1) strongly disagree to (7) strongly agree, and seven items are reverse-scored. Scores range from 15 to 105, with higher scores indicating stronger perceptions of psychology as a science. The measure is intended to be used as an overall score (Friedrich, 1996), and the total scale has acceptable internal reliability with samples of undergraduate psychology students (α = 0.71 to 0.72; Friedrich, 1996; Holmes and Beins, 2009). In this sample the internal reliability was good (α = 0.86).
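The scoring rules for the Psychology as Science Scale can be sketched in Python. This is a minimal illustration, assuming responses are stored as a respondents × items array of 1–7 ratings; the positions listed in `REVERSED_ITEMS` are hypothetical placeholders, since the actual reverse-keyed items must be taken from Friedrich (1996). The sketch also shows how the possible range of totals follows from 15 items rated from 1 to 7.

```python
import numpy as np

N_ITEMS = 15
SCALE_MIN, SCALE_MAX = 1, 7

# HYPOTHETICAL 0-based positions of the seven reverse-scored items; the real
# item numbers must be taken from Friedrich (1996).
REVERSED_ITEMS = [1, 3, 5, 7, 9, 11, 13]

# Possible totals follow from the item count and the response scale.
MIN_TOTAL = N_ITEMS * SCALE_MIN  # 15 x 1 = 15
MAX_TOTAL = N_ITEMS * SCALE_MAX  # 15 x 7 = 105


def score_pss(responses):
    """Return total scores for an (n_respondents, 15) array of 1-7 ratings."""
    r = np.asarray(responses, dtype=float)
    if r.ndim != 2 or r.shape[1] != N_ITEMS:
        raise ValueError("expected a 2-D array with 15 item columns")
    scored = r.copy()
    # On a 1-7 scale, reverse scoring maps x to 8 - x (1 <-> 7, 2 <-> 6, ...).
    scored[:, REVERSED_ITEMS] = (SCALE_MIN + SCALE_MAX) - scored[:, REVERSED_ITEMS]
    return scored.sum(axis=1)
```

A respondent who answers 7 on every item receives 7 on the eight forward-keyed items but 1 on the seven reverse-keyed items, which is why totals near the middle of the range do not necessarily indicate neutral responding.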

The Scientist–Practitioner Inventory for Psychology (Leong and Zachar, 1991) is a 42-item inventory that measures scientist and practitioner interests in psychology. Participants are asked to rate each item in terms of their level of interest in conducting the specified activities in their future careers. The five point response scale ranges from (1) very low interest to (5) very high interest. Whilst exploratory factor analysis suggests a seven factor solution underlies the items, there are two second order factors and the measure is treated as two separate scales: ‘Scientist’ scale and ‘Practitioner’ scale. Each scale consists of 21 items. Example items are “Collecting data on a research project you designed” (‘Scientist’ scale) and “Conducting group psychotherapy sessions” (‘Practitioner’ scale). Possible scale scores range from 21 to 105, with higher scores representing higher interest. The scales have good internal reliability: ‘Scientist’ scale α = 0.91, ‘Practitioner’ scale α = 0.88 to 0.94 (Leong and Zachar, 1991; Holmes and Beins, 2009). In this sample, the internal reliability was also high (‘Scientist’ scale α = 0.94, ‘Practitioner’ scale α = 0.95).

Procedure

Prior to commencing the research, ethics approval was obtained from the Curtin University Human Research Ethics Committee. Recruitment for the research commenced in July 2012, through messages posted on social networking sites (Facebook; LinkedIn) and advertisements on noticeboards around the university. Interested persons were provided with a link to an online participant information sheet and, upon consenting to participate, were redirected to an online questionnaire. Participation in the research was voluntary, and participants who completed the survey were offered the opportunity to enter a draw for a US$100 Amazon.com gift voucher. In addition, students in the second year undergraduate psychology participant pool had the option of participating in this study, and those electing to do so were assigned credits toward their research participation requirement.

Recruitment ceased in December 2012, and survey data were downloaded into SPSS v. 20 for analysis. In total, 324 participants had accessed the online survey. In initial screening, 50 cases in which the survey had not been commenced or the mixed methods items had not been completed were deleted, leaving a dataset of 274 cases. To meet the suggested sample size criterion of five cases per variable, 170 cases (5 × 34 variables) were randomly selected as the dataset for the exploratory factor analysis. The remaining 104 cases were used as the dataset for the confirmatory factor analysis.
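A random split of this kind can be sketched as follows. This assumes the 274 retained cases are indexed 0 to 273; the random seed is an arbitrary choice for reproducibility, as the software routine and seed used in the study are not reported.

```python
import numpy as np


def split_sample(n_cases, n_items, cases_per_item=5, seed=None):
    """Randomly split case indices into an EFA subset and a CFA holdout.

    The EFA subset size follows the cases-per-item rule of thumb
    (5 x 34 = 170); all remaining cases form the CFA subset.
    """
    n_efa = cases_per_item * n_items
    if n_efa >= n_cases:
        raise ValueError("too few cases to leave a holdout sample")
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_cases)
    return np.sort(order[:n_efa]), np.sort(order[n_efa:])


# Seed chosen arbitrarily for illustration (not taken from the study).
efa_idx, cfa_idx = split_sample(274, 34, seed=2012)
```

Keeping the two index sets disjoint is what allows the confirmatory analysis to test the exploratory solution on cases that played no part in deriving it.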

Results

Exploratory Factor Analysis

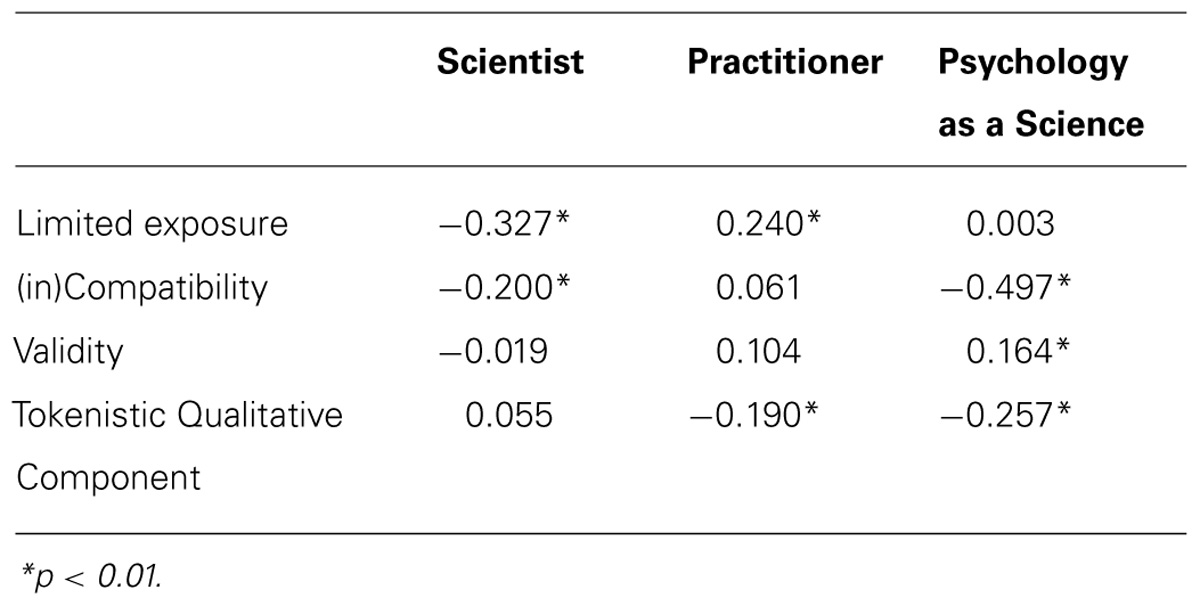

The exploratory factor analysis dataset was inspected for missing values. In total there were 16 missing data points across the 34 items (fewer than 0.3% of the 5,780 responses), and these were replaced with item mean scores. Prior to conducting the exploratory factor analysis, a parallel analysis was conducted, which indicated that five factors should be retained. Based on this, principal axis factoring with varimax rotation was conducted with a forced five factor extraction. Items with a loading greater than 0.3 on a second factor (cross-loading items) were removed. This resulted in the removal of the fifth factor, as all of its items cross-loaded above 0.3 on other factors. An iterative process was used to continue to remove items that cross-loaded, loaded weakly, or reduced the internal reliability of their factor. The resulting 12-item, four factor model, with factor loadings, is presented in Table 1. The items in the first factor reflect the behavioral component of attitudes and relate to the individual’s perceived ability to conduct mixed methods research, reflecting the ‘Limited Exposure’ theme from the qualitative analysis. The second, third, and fourth factors contain items reflecting the ‘(in)Compatibility,’ ‘Validity,’ and ‘Tokenistic Qualitative Component’ themes from the qualitative research (Povee and Roberts, 2014b) and have been named accordingly.

TABLE 1. Factor loadings for exploratory factor analysis with varimax rotation for attitudes to mixed methods.
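Mean substitution of missing values and parallel analysis can both be sketched with NumPy. The parallel analysis below is one common variant of Horn's method: observed eigenvalues of the item correlation matrix are compared with the 95th percentile of eigenvalues from random normal data of the same size, and factors are retained while the observed eigenvalue exceeds that threshold. The number of iterations, the percentile, and the use of unreduced correlation-matrix eigenvalues are assumptions, since the text does not state which variant was used; the test data are synthetic.

```python
import numpy as np


def mean_impute(data):
    """Replace each missing value (NaN) with the mean of its item (column)."""
    x = np.asarray(data, dtype=float)
    col_means = np.nanmean(x, axis=0)
    return np.where(np.isnan(x), col_means, x)


def parallel_analysis(data, n_iter=100, percentile=95, seed=None):
    """Horn's parallel analysis on correlation-matrix eigenvalues.

    Returns (n_factors, observed eigenvalues, random-data thresholds), where
    n_factors counts the leading observed eigenvalues that exceed the chosen
    percentile of eigenvalues from random normal data of the same shape.
    """
    x = np.asarray(data, dtype=float)
    n, p = x.shape
    # eigvalsh returns ascending eigenvalues; reverse to descending order.
    observed = np.linalg.eigvalsh(np.corrcoef(x, rowvar=False))[::-1]
    rng = np.random.default_rng(seed)
    random_eigs = np.empty((n_iter, p))
    for i in range(n_iter):
        sim = rng.standard_normal((n, p))
        random_eigs[i] = np.linalg.eigvalsh(np.corrcoef(sim, rowvar=False))[::-1]
    threshold = np.percentile(random_eigs, percentile, axis=0)
    n_factors = 0
    for obs, thr in zip(observed, threshold):
        if obs <= thr:  # stop at the first eigenvalue that does not beat chance
            break
        n_factors += 1
    return n_factors, observed, threshold
```

Mean substitution is simple but shrinks item variances slightly; with fewer than 0.3% of responses missing, as here, that effect is negligible.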

Confirmatory Factor Analysis

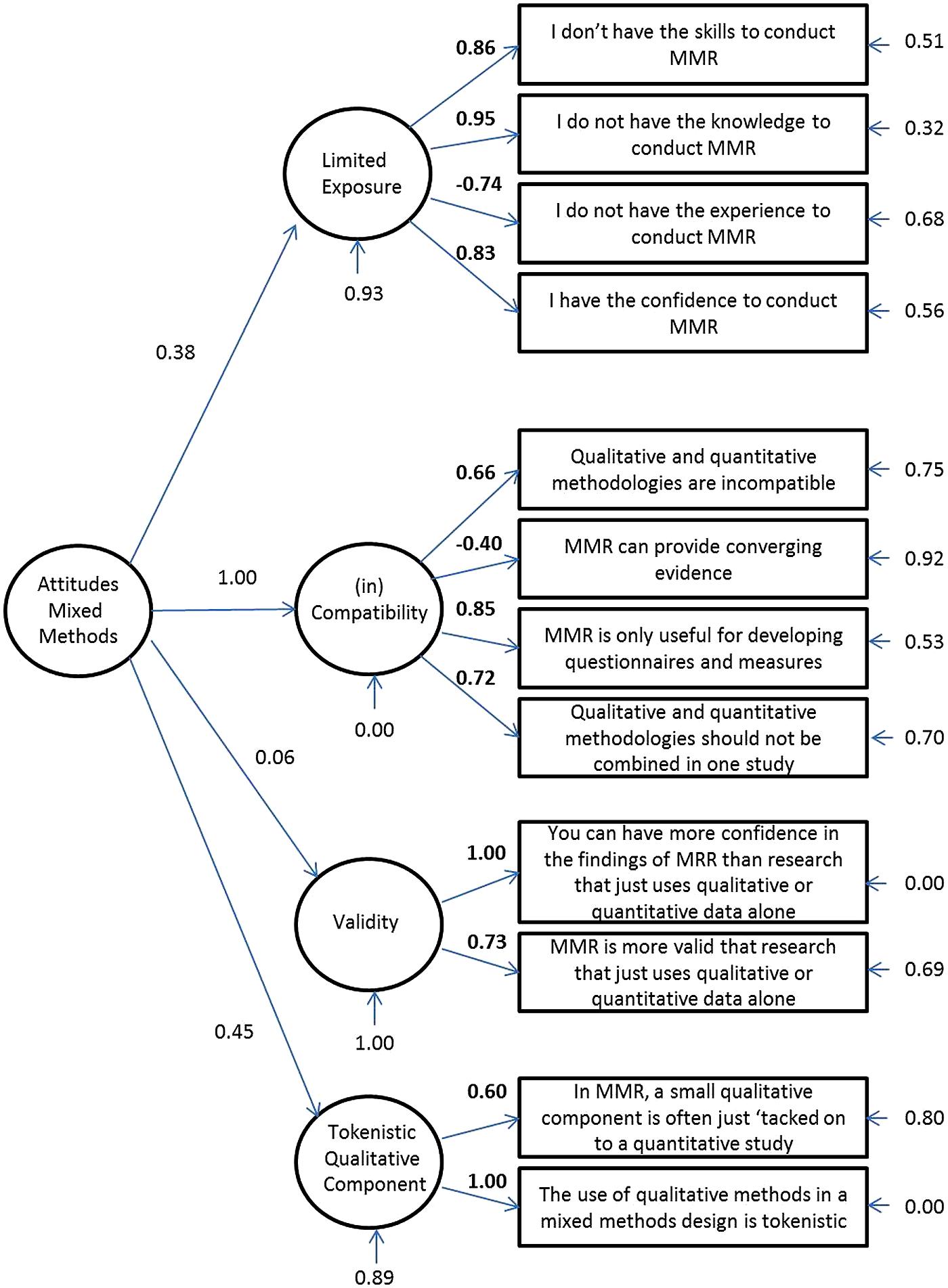

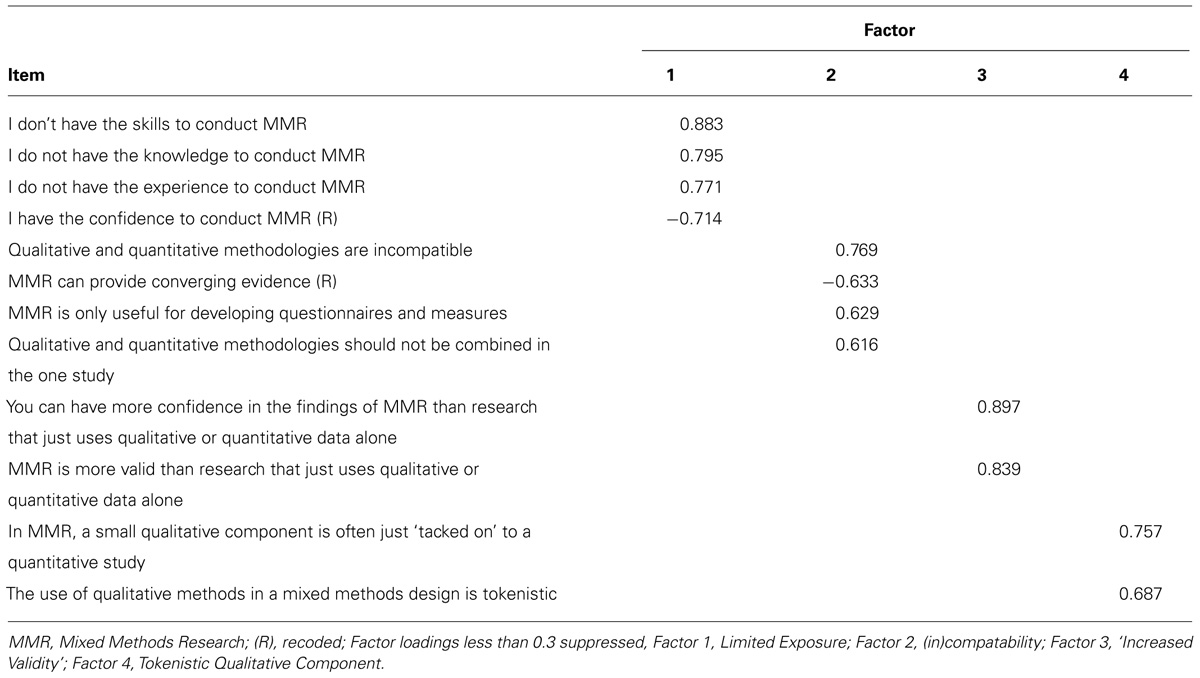

The remaining 104 cases were used as the sample for the confirmatory factor analysis, conducted using EQS v. 6.2. This dataset was inspected for missing values, and the two missing data points were replaced with item means. A higher order four factor model and an uncorrelated four factor model were tested against a single factor model for goodness of fit using the recommended cut-offs for four fit indices: the Satorra–Bentler scaled chi-square divided by its degrees of freedom, the comparative fit index (CFI), the non-normed fit index (NNFI), and the root mean square error of approximation (RMSEA). The fit indices for each model are presented in Table 2 and clearly indicate that the higher order four factor model is preferred, as it is the only model that meets all the recommended cut-off criteria for good fit. The higher order four factor model is presented in Figure 1.

TABLE 2. Fit indices (robust statistics) for confirmatory factor analysis models of the attitudes to mixed methods measure.
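The four fit indices named above have standard closed-form definitions in terms of the chi-square values of the fitted model and of the baseline (independence) model. The sketch below assumes those values are already available from SEM software; for the robust statistics reported here, Satorra–Bentler scaled chi-squares would be substituted, although software packages may apply further corrections to robust CFI and RMSEA, so values need not match EQS output exactly. The inputs in the usage line are hypothetical and are not the study's results.

```python
import math


def fit_indices(chi2_m, df_m, chi2_b, df_b, n):
    """Chi2/df, CFI, NNFI (TLI), and RMSEA for a model (m) vs. baseline (b)."""
    d_m = max(chi2_m - df_m, 0.0)  # model noncentrality estimate
    d_b = max(chi2_b - df_b, 0.0)  # baseline noncentrality estimate
    denom = max(d_m, d_b)
    cfi = 1.0 - d_m / denom if denom > 0 else 1.0
    nnfi = ((chi2_b / df_b) - (chi2_m / df_m)) / ((chi2_b / df_b) - 1.0)
    # RMSEA uses N - 1 here; some programs use N instead.
    rmsea = math.sqrt(d_m / (df_m * (n - 1)))
    return {"chi2/df": chi2_m / df_m, "CFI": cfi, "NNFI": nnfi, "RMSEA": rmsea}


# HYPOTHETICAL chi-square values for a sample of 104 cases.
example = fit_indices(chi2_m=60.0, df_m=50, chi2_b=600.0, df_b=66, n=104)
```

Because CFI and NNFI compare the model with the independence baseline while RMSEA and chi2/df assess the model alone, requiring all four to meet their cut-offs guards against a model that looks acceptable on a single index.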

Internal Reliability and Validity Analyses

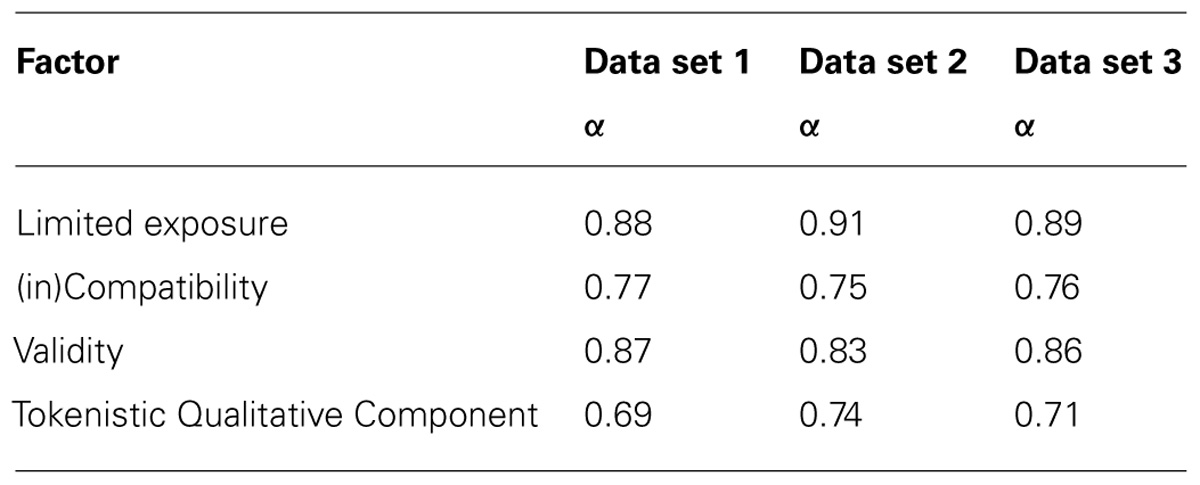

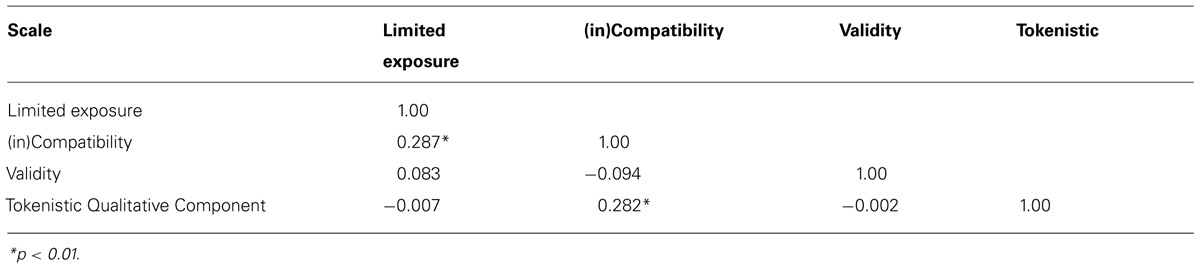

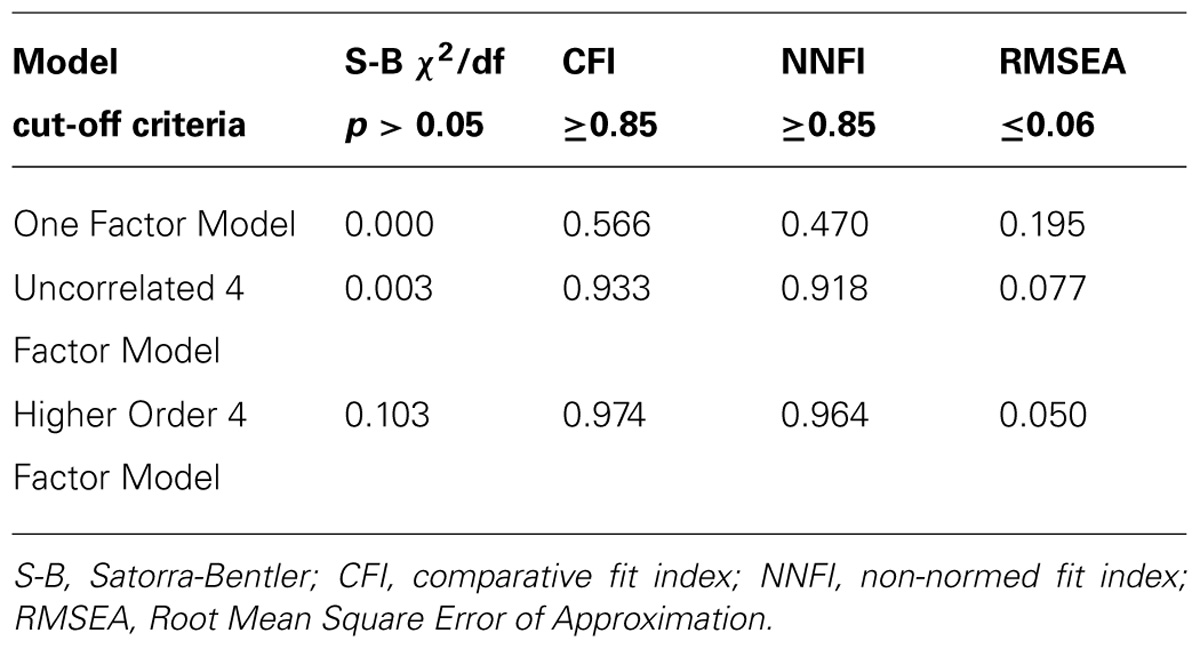

The two datasets were merged for the internal reliability and validity analyses. Internal reliability coefficients for each factor in the exploratory factor analysis dataset (dataset 1), the confirmatory factor analysis dataset (dataset 2), and the merged dataset (dataset 3) are presented in Table 3. All factors have acceptable internal reliability. Descriptive statistics for the new measure are presented in Table 4 and correlations between factors in Table 5.
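Cronbach's alpha for a scale can be computed from the item variances and the variance of the total score: α = k/(k − 1) × (1 − sum of item variances / total-score variance), where k is the number of items. A minimal NumPy sketch, assuming a respondents × items array for one scale with no missing values:

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) array."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_vars = x.var(axis=0, ddof=1)        # sample variance of each item
    total_var = x.sum(axis=1).var(ddof=1)    # sample variance of scale totals
    return (k / (k - 1)) * (1.0 - item_vars.sum() / total_var)
```

Alpha is computed separately for each factor's items, which is why reliability is reported per factor rather than for the 12 items as a whole.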

TABLE 4. Descriptive statistics for the attitudes toward mixed methods research in psychology measure.

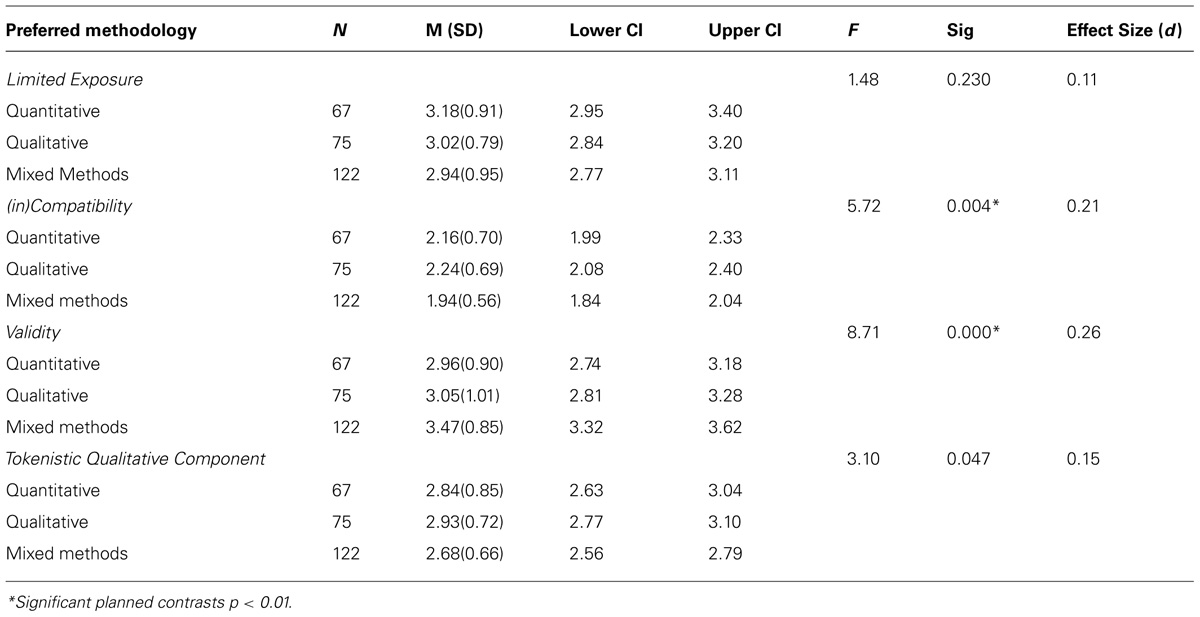

A conservative alpha level of 0.01 was adopted for all validity tests. Known groups validity was examined by comparing scores on the AMMRP scales of those with a stated preference for mixed methods research with those of respondents with a stated preference for qualitative or quantitative research. Four one-way ANOVAs with planned contrasts were conducted; the results are presented in Table 6. While those preferring mixed methods research did not differ significantly on ‘Limited Exposure’ to mixed methods or on perceptions of a ‘Tokenistic Qualitative Component,’ they were less likely to view the mixing of qualitative and quantitative components as problematic [‘(in)Compatibility’] and more likely to view mixed methods as a valid methodology (‘Validity’).
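A planned contrast within a one-way ANOVA tests a weighted combination of group means against the pooled within-group variance. The sketch below assumes three groups ordered as mixed methods, qualitative, and quantitative preference, and uses weights (2, -1, -1) to compare the mixed methods group with the average of the other two; these weights are an illustrative assumption, as the contrast coefficients used in the study are not reported. It returns the t statistic and its degrees of freedom; a p-value can then be obtained from the t distribution (for example, with SciPy).

```python
import numpy as np


def planned_contrast(groups, weights):
    """t statistic and df for a planned contrast in a one-way ANOVA."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    w = np.asarray(weights, dtype=float)
    if not np.isclose(w.sum(), 0.0):
        raise ValueError("contrast weights must sum to zero")
    ns = np.array([len(g) for g in groups])
    means = np.array([g.mean() for g in groups])
    # Pooled within-group mean square (the ANOVA error term).
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_within = int(ns.sum()) - len(groups)
    ms_within = ss_within / df_within
    estimate = (w * means).sum()
    se = np.sqrt(ms_within * (w ** 2 / ns).sum())
    return estimate / se, df_within


# HYPOTHETICAL scale scores: mixed, qualitative, quantitative preference groups.
t_stat, df = planned_contrast([[1, 2, 3], [4, 5, 6], [4, 5, 6]], [2, -1, -1])
```

Using the pooled error term from all three groups gives the contrast more degrees of freedom than a separate two-sample comparison would have.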

Respondents’ self-ratings of mixed methods skills (measured on a 5-point scale ranging from ‘very poor’ to ‘very good’) were correlated with AMMRP scale scores using Spearman’s rank correlation. There was a strong negative correlation (rS = -0.57, p < 0.001, N = 268) between self-rated mixed methods skills and ‘Limited Exposure,’ and weaker correlations with ‘(in)Compatibility’ (rS = -0.22, p < 0.001, N = 268) and ‘Validity’ (rS = 0.15, p < 0.001, N = 268). There was no significant relationship between self-rated skills and the ‘Tokenistic Qualitative Component’ scale.
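Spearman’s rank correlation is the Pearson correlation of the ranks, with tied values (common on a five-point self-rating) assigned the average of the ranks they span. A minimal NumPy sketch; the data in the test cases are made up.

```python
import numpy as np


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their ranks."""
    x = np.asarray(values, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        # Positions i..j hold tied values; they share the mean of ranks i+1..j+1.
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation with average ranks for ties."""
    return np.corrcoef(average_ranks(x), average_ranks(y))[0, 1]
```

Because only the order of values matters, a rank correlation suits an ordinal self-rating such as ‘very poor’ to ‘very good’ better than a Pearson correlation on the raw codes.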

Independent samples t-tests revealed that students (N = 211) scored significantly higher than other survey respondents (N = 59) on the ‘Limited Exposure’ (students M = 3.23, SD = 0.80; non-students M = 2.30, SD = 0.88; t(268) = 7.725, p < 0.001, d = 1.14) and ‘(in)Compatibility’ scales (students M = 2.15, SD = 0.61; non-students M = 1.88, SD = 0.73; t(268) = 2.862, p = 0.005, d = 0.42). However, they did not differ significantly at the 0.01 level on the ‘Validity’ (students M = 3.29, SD = 0.88; non-students M = 2.98, SD = 1.08; t(268) = 2.315, p = 0.021, d = 0.33) or ‘Tokenistic Qualitative Component’ scales (students M = 2.75, SD = 0.69; non-students M = 2.93, SD = 0.85; t(80.56) = -1.50, p = 0.136, d = -0.25). Students’ responses on the AMMRP scales varied by year of study, with significant negative correlations between year of study and ‘Limited Exposure’ (rS = -0.431, p < 0.001) and ‘(in)Compatibility’ (rS = -0.406, p < 0.001).
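Cohen’s d with a pooled standard deviation, and Welch’s t with Welch–Satterthwaite degrees of freedom (the source of fractional df such as 80.56), can both be computed from group summary statistics alone. The sketch below is illustrative; the usage lines simply plug in the student and non-student means, standard deviations, and group sizes reported for two of the scales.

```python
import math


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d using the pooled (n - 1 weighted) standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Students vs. non-students, using summary statistics reported in the text.
d_limited_exposure = cohens_d(3.23, 0.80, 211, 2.30, 0.88, 59)
t_tokenistic, df_tokenistic = welch_t(2.75, 0.69, 211, 2.93, 0.85, 59)
```

Welch’s version does not assume equal group variances, which matters here because the two groups differ considerably in size (211 vs. 59).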

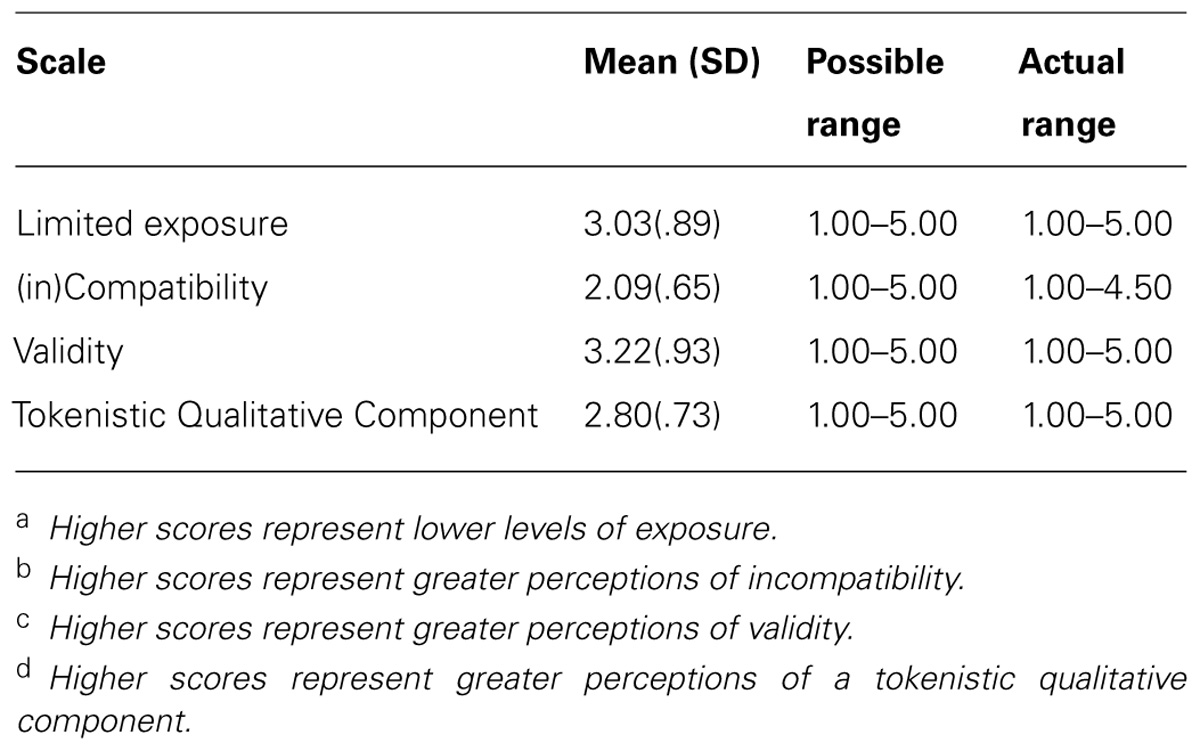

Convergent and divergent validity were examined by correlating scores on the newly developed AMMRP scales with the ‘Scientist’ and ‘Practitioner’ scales of the Scientist–Practitioner Inventory for Psychology (Leong and Zachar, 1991) and the Psychology as Science Scale (Friedrich, 1996). The results are presented in Table 7. Scores on the ‘(in)Compatibility’ scale were negatively associated with scores on both the Psychology as Science Scale, the strongest negative relationship observed (large effect size), and the ‘Scientist’ scale of the Scientist–Practitioner Inventory for Psychology (small to medium effect size). ‘Limited Exposure’ was positively correlated with the ‘Practitioner’ scale but negatively correlated with the ‘Scientist’ scale of the Scientist–Practitioner Inventory for Psychology (small to medium correlations). ‘Tokenistic Qualitative Component’ was negatively correlated with both the ‘Practitioner’ scale of the Scientist–Practitioner Inventory for Psychology and the Psychology as Science Scale (small to medium correlations). ‘Validity’ was weakly correlated with the Psychology as Science Scale but not with either the ‘Scientist’ or the ‘Practitioner’ scale of the Scientist–Practitioner Inventory for Psychology.

Discussion

In this paper we have presented the development and initial validation of a new brief measure of attitudes toward mixed methods research in psychology, the AMMRP. This measure has good content validity, covering four themes that emerged in previous qualitative research (Povee and Roberts, 2014b). The converging results of the exploratory and confirmatory factor analyses support the factor structure of the measure, with the confirmatory factor analysis indicating that a higher order four factor model best represented the measure. Internal reliability testing indicated that all scales have acceptable internal consistency.

Supporting the validity of the measure, known groups analyses demonstrated that respondents indicating a preference for mixed methods research rated the mixing of qualitative and quantitative components as less ‘(in)Compatible’ and mixed methods research as higher in ‘Validity’ than respondents with a preference for qualitative or quantitative research. Perhaps surprisingly, a preference for mixed methods research was not significantly associated with ‘Limited Exposure.’ This is, however, consistent with previous findings that training in a particular research methodology, while increasing skill levels, may have a negative effect on the perceived utility of that method (Manning et al., 2006; Sizemore and Lewandowski, 2009). Self-ratings of mixed methods skills were strongly negatively related to scores on the ‘Limited Exposure’ scale, in addition to being negatively related to ‘(in)Compatibility’ and positively related to ‘Validity,’ further supporting the known groups validity of the measure.

Students scored higher on the ‘Limited Exposure’ scale than other respondents, and ‘Limited Exposure’ scores were negatively related to year of study. Similarly, students rated combining qualitative and quantitative methods as more problematic [‘(in)Compatibility’ scale] than non-students, and these scores were likewise negatively related to year of study. Combined, these results suggest that as psychology students progress through their undergraduate and postgraduate studies, their exposure to mixed methods increases and their perceptions of incompatibility decrease. This is a positive finding for the future of mixed methods research within the discipline.

In the absence of an existing ‘gold standard’ measure of attitudes to mixed methods research, divergent and convergent validity were assessed through associations with scientist and practitioner measures and a measure of psychology as a science. With the exception of the large negative correlation between ‘(in)Compatibility’ and the Psychology as Science Scale, correlations between the AMMRP scales and the three other measures were small to medium, supporting divergent validity. Interestingly, ‘(in)Compatibility’ and ‘Tokenistic Qualitative Component’ were negatively correlated, and ‘Validity’ positively correlated, with perceptions of psychology as a science. This suggests that, in this sample, mixed methods research was viewed as compatible with a belief in psychology as a science.

A limitation of this study is the use of convenience sampling. Given the limited amount of mixed methods research currently published in psychology journals (Ponterotto, 2005; Alise and Teddlie, 2010; Leech and Onwuegbuzie, 2011; Lopez-Fernandez and Molina-Azorin, 2011), a surprisingly large proportion of respondents (almost half of the sample) nominated mixed methods research as their preferred methodology. The topic of this research may have attracted students, academics, and practitioners with an interest in mixed methods approaches; as such, the sample cannot be seen as representative of the wider psychology student, academic, and practitioner population. Despite this, respondents’ self-rated mixed methods research skills were approximately normally distributed over the full range of possible scores, and scores on each of the AMMRP scales were moderate while covering the full range of possible scores, increasing our confidence in our findings. However, future research with a larger, more representative sample is encouraged to examine the stability of the factor structure of the AMMRP. This will also enable further testing of the relationships between AMMRP scales and validity measures because, despite the adoption of a conservative alpha level of 0.01 for validity testing, multiple testing leaves open the possibility of Type I errors.
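The multiple-testing concern can be made concrete: if k independent tests are each run at α = 0.01, the probability of at least one Type I error is 1 − (1 − α)^k. The number of tests below is hypothetical, and independence is a simplifying assumption, since the validity tests here share scales and respondents; the Bonferroni bound shown alongside holds without that assumption.

```python
def familywise_error(alpha, k):
    """P(at least one Type I error) across k independent tests at level alpha."""
    return 1.0 - (1.0 - alpha) ** k


def bonferroni_alpha(family_alpha, k):
    """Per-test alpha that keeps the familywise error rate at or below family_alpha."""
    return family_alpha / k


k_tests = 20  # HYPOTHETICAL number of validity tests, for illustration only
fwer = familywise_error(0.01, k_tests)
per_test = bonferroni_alpha(0.05, k_tests)
```

Even at α = 0.01, a family of 20 independent tests carries roughly an 18% chance of at least one false positive, which is why replication in a new sample is the stronger safeguard.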

The promising psychometric properties and brevity of the AMMRP indicate its suitability for use in teaching, evaluation, and research. Administered at the start of a mixed methods course, the AMMRP can provide instructors with information on common misperceptions of mixed methods research that can be targeted during teaching. Administered again at the end of the course, it can be used to measure change in attitudes and thereby evaluate the effectiveness of mixed methods training in changing attitudes toward mixed methods research in psychology. The AMMRP is also suitable for research projects investigating attitudes toward mixed methods research as a predictor or outcome of other variables of interest. A suite of measures assessing attitudes toward research is now available: the AMMRP, the Attitudes Toward Qualitative Research in Psychology scale (ATQRP; Roberts and Povee, 2014), and the Psychology as a Science scale (Friedrich, 1996). Used together, these three measures allow a more nuanced assessment of attitudes toward psychological research.

In summary, this paper has presented the development and initial validation of a 12-item measure of attitudes toward mixed methods research in psychology, the AMMRP. This is a brief, internally reliable measure that can be used in assessing attitudes toward mixed methods research in psychology and measuring change in attitudes over time.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was funded by a PsyLIFE Small Grant awarded to Associate Professor Lynne Roberts and Kate Povee from the School of Psychology and Speech Pathology at Curtin University.

Footnotes

1. Some respondents fell into multiple categories (e.g., academic and practicing psychologist).

2. Only the mixed methods items are of interest in this paper. Details of the results for qualitative items have been reported in Roberts and Povee (2014).

References

Alise, M. A., and Teddlie, C. (2010). A continuation of the paradigm wars? Prevalence rates of methodological approaches across the social/behavioral sciences. J. Mix. Methods Res. 4, 103–126. doi: 10.1177/1558689809360805

Baran, M. (2010). Teaching multi-methodology research courses to doctoral students. Int. J. Mult. Res. Approaches 4, 19–27. doi: 10.5172/mra.2010.4.1.019

Barnes, B. R. (2012). Using mixed methods in South African psychological research. S. Afr. J. Psychol. 42, 463–475.

Bergman, M. M. (2011). The good, the bad, and the ugly in mixed methods research and design. J. Mix. Methods Res. 5, 271–275. doi: 10.1177/1558689811433236

Bhati, K. S., Hoyt, W. T., and Huffman, K. L. (2013). Integration or assimilation? Locating qualitative research in psychology. Qual. Res. Psychol. 11, 98–114. doi: 10.1080/14780887.2013.772684

Breen, L. J., and Darlaston-Jones, D. (2010). Moving beyond the enduring dominance of positivism in psychological research: implications for psychology in Australia. Aust. Psychol. 45, 67–76. doi: 10.1080/00050060903127481

Christ, T. W. (2009). Designing, teaching, and evaluating two complementary mixed methods research courses. J. Mix. Methods Res. 3, 292–325. doi: 10.1177/1558689809341796

Collins, K. M. T., Onwuegbuzie, A. J., and Johnson, R. B. (2012). Securing a place at the table: a review and extension of legitimation criteria for the conduct of mixed research. Am. Behav. Sci. 56, 849–865. doi: 10.1177/0002764211433799

Creswell, J. W., and Plano Clark, V. L. (2011). Designing and Conducting Mixed Methods Research. Thousand Oaks: Sage.

Eagly, A. H., and Chaiken, S. (1993). The Psychology of Attitudes. Orlando, FL: Harcourt Brace Jovanovich College Publishers.

Eagly, A. H., and Chaiken, S. (2007). The advantages of an inclusive definition of attitude. Soc. Cogn. 25, 582–602. doi: 10.1521/soco.2007.25.5.582

Early, M. A. (2007). Developing a syllabus for a mixed-methods research course. Int. J. Soc. Res. Methodol. 10, 145–162. doi: 10.1080/13645570701334118

Fielding, N. (2010). Mixed methods research in the real world. Int. J. Soc. Res. Methodol. 13, 127–138. doi: 10.1080/13645570902996186

Friedrich, J. (1996). Assessing students’ perceptions of psychology as a science: validation of a self-report measure. Teach. Psychol. 23, 7–13. doi: 10.1207/s15328023top2301_1

Gage, N. L. (1989). The paradigm wars and their aftermath: a “historical” sketch of research on teaching since 1989. Educ. Res. 18, 4–10. doi: 10.3102/0013189X018007004

Hanson, W. E., Creswell, J. W., Plano Clark, V. L., Petska, K. S., and Creswell, J. D. (2005). Mixed methods research designs in counseling psychology. J. Couns. Psychol. 52, 224–235. doi: 10.1037/0022-0167.52.2.224

Haverkamp, B. E., Morrow, S. L., and Ponterotto, J. G. (2005). A time and place for qualitative and mixed methods in counseling psychology research. J. Couns. Psychol. 52, 123–125. doi: 10.1037/0022-0167.52.2.123

Heyvaert, M., Hannes, K., Maes, B., and Onghena, P. (2013). Critical appraisal of mixed methods studies. J. Mix. Methods Res. 7, 302–327. doi: 10.1177/1558689813479449

Holmes, J. D., and Beins, B. C. (2009). Psychology is a science: at least some students think so. Teach. Psychol. 36, 5–11. doi: 10.1080/00986280802529350

Ivankova, N. V. (2010). Teaching and learning mixed methods research in computer mediated environment: educational gains and challenges. Int. J. Mult. Res. Approaches 4, 49–65. doi: 10.5172/mra.2010.4.1.049

Johnson, R. B., and Onwuegbuzie, A. J. (2004). Mixed methods research: a research paradigm whose time has come. Educ. Res. 33, 14–26. doi: 10.3102/0013189X033007014

Johnson, R. B., Onwuegbuzie, A. J., and Turner, L. A. (2007). Toward a definition of mixed methods research. J. Mix. Methods Res. 1, 112–133. doi: 10.1177/1558689806298224

Leech, N. L., Dellinger, A. B., Brannagan, K. B., and Tanaka, H. (2010). Evaluating mixed research studies: a mixed methods approach. J. Mix. Methods Res. 4, 17–31. doi: 10.1177/1558689809345262

Leech, N. L., and Onwuegbuzie, A. J. (2011). Mixed research in counselling: trends in the literature. Meas. Eval. Couns. Dev. 44, 169–180. doi: 10.1177/0748175611409848

Leech, N. L., Onwuegbuzie, A. J., and Combs, J. P. (2011). Writing publishable mixed research articles: guidelines for emerging scholars in the health sciences and beyond. Int. J. Mult. Res. Approaches 5, 7–24. doi: 10.5172/mra.2011.5.1.7

Leong, F. T., and Zachar, P. (1991). Development and validation of the scientist-practitioner inventory for psychology. J. Couns. Psychol. 38, 331–341. doi: 10.1037/0022-0167.38.3.331

Lopez-Fernandez, O., and Molina-Azorin, J. (2011). The use of mixed methods research in the field of behavioral sciences. Qual. Quant. 45, 1459–1472. doi: 10.1007/s11135-011-9543-9

Maio, G., and Haddock, G. (2010). The Psychology of Attitudes and Attitude Change. Thousand Oaks: Sage.

Manning, K., Zachar, P., Ray, G. E., and LoBello, S. (2006). Research methods courses and the scientist and practitioner interests of psychology majors. Teach. Psychol. 33, 194–196.

Mertens, D. M. (2011). Publishing mixed methods research. J. Mix. Methods Res. 5, 3–6. doi: 10.1177/1558689810390217

O’Cathain, A. (2010). “Assessing the quality of mixed methods research: toward a comprehensive framework,” in Sage Handbook of Mixed Methods in Social and Behavioral Research, 2nd edn, eds A. Tashakkori and C. Teddlie (Thousand Oaks: Sage), 531–557.

O’Cathain, A., Murphy, E., and Nicholl, J. (2010). Three techniques for integrating data in mixed methods studies. Br. Med. J. 341, 1147–1150. doi: 10.1136/bmj.c4587

Onwuegbuzie, A. J., Bustamante, R. M., and Nelson, J. A. (2010). Mixed research as a tool for developing quantitative instruments. J. Mix. Methods Res. 4, 56–78. doi: 10.1177/1558689809355805

Onwuegbuzie, A. J., Frels, R. K., Collins, K. M. T., and Leech, N. L. (2013). Conclusion: a four-phase model for teaching and learning mixed research. Int. J. Mult. Res. Approaches 7, 133–156. doi: 10.5172/mra.2013.7.1.133

Onwuegbuzie, A. J., Frels, R. K., Leech, N. L., and Collins, K. M. T. (2011). A mixed research study of pedagogical approaches and student learning in doctoral-level mixed research courses. Int. J. Mult. Res. Approaches 5, 169–199. doi: 10.5172/mra.2011.5.2.169

Petty, R. E., Wegener, D. T., and Fabrigar, L. R. (1997). Attitudes and attitude change. Annu. Rev. Psychol. 48, 609–647. doi: 10.1146/annurev.psych.48.1.609

Ponterotto, J. G. (2005). Qualitative research in counseling psychology: a primer on research paradigms and philosophy of science. J. Couns. Psychol. 52, 126–136. doi: 10.1037/0022-0167.52.2.126

Poth, C. (2014). What constitutes impactful learning experiences in a mixed methods research course? An examination from the student perspective. Int. J. Mult. Res. Approaches 8, 74–86. doi: 10.5172/mra.2014.8.1.74

Povee, K., and Roberts, L. D. (2014a). Qualitative research in psychology: attitudes of psychology students and academic staff. Aust. J. Psychol. 66, 28–37. doi: 10.1111/ajpy.12031

Povee, K., and Roberts, L. D. (2014b). Attitudes toward mixed methods research in psychology: the best of both worlds? Int. J. Soc. Res. Methodol. doi: 10.1080/13645579.2013.872399

Roberts, L. D., and Povee, K. (2014). A brief measure of attitudes towards qualitative research in psychology. Aust. J. Psychol. 66, 249–256. doi: 10.1111/ajpy.12059

Ross, A. A., and Onwuegbuzie, A. J. (2010). Mixed methods research design: a comparison of prevalence in JRME and AERJ. Int. J. Mult. Res. Approaches 4, 233–245. doi: 10.5172/mra.2010.4.3.233

Schau, C., and Emmioglu, E. (2012). Do introductory statistics courses in the United States improve students’ attitudes? Stat. Educ. Res. J. 11, 70–79.

Sizemore, O. J., and Lewandowski, G. W. (2009). Learning might not equal liking: research methods course changes knowledge but not attitudes. Teach. Psychol. 36, 90–95. doi: 10.1080/00986280902739727

Tashakkori, A., and Creswell, J. W. (2007). Editorial: the new era of mixed methods. J. Mix. Methods Res. 1, 3–7. doi: 10.1177/2345678906293042

Tashakkori, A., and Teddlie, C. (2003). Issues and dilemmas in teaching research methods courses in social and behavioral sciences: US perspective. Int. J. Soc. Res. Methodol. 6, 61–77. doi: 10.1080/13645570305055

Tashakkori, A., and Teddlie, C. (eds). (2010). Sage Handbook of Mixed Methods in Social and Behavioral Research, 2nd Edn. Thousand Oaks: Sage.

Teddlie, C., and Johnson, R. B. (2009). “Methodological thought since the 20th century,” in Foundations of Mixed Methods Research: Integrating Quantitative and Qualitative Approaches in the Social and Behavioral Sciences, eds C. Teddlie and A. Tashakkori (Thousand Oaks: Sage), 40–61.

Toomela, A. (2011). Travel into a fairy land: a critique of modern qualitative and mixed methods psychologies. Integr. Psychol. Behav. Sci. 45, 21–47. doi: 10.1007/s12124-010-9152-5

Truscott, D. M., Swars, S., Smith, S., Thornton-Reid, F., Zhao, Y., Dooley, C., et al. (2010). A cross-disciplinary examination of the prevalence of mixed methods in educational research: 1995–2005. Int. J. Soc. Res. Methodol. 13, 317–328. doi: 10.1080/13645570903097950

Walsh-Bowers, R. (2002). Constructing qualitative knowledge in psychology: students and faculty negotiate the social content of inquiry. Can. Psychol. 43, 163–178. doi: 10.1037/h0086913

Weisner, T. S., and Fiese, B. H. (2011). Introduction to special section of the journal of family psychology, advances in mixed methods in family psychology: integrative and applied solutions for family science. J. Fam. Psychol. 25, 795–798. doi: 10.1037/a0026203

Keywords: attitudes, measure development, mixed methods research, psychology, teaching and learning

Citation: Roberts LD and Povee K (2014) A brief measure of attitudes toward mixed methods research in psychology. Front. Psychol. 5:1312. doi: 10.3389/fpsyg.2014.01312

Received: 28 August 2014; Paper pending published: 06 October 2014;

Accepted: 29 October 2014; Published online: 12 November 2014.

Edited by:

Carl Senior, Aston University, UK

Reviewed by:

Jie Zhang, The College at Brockport, State University of New York, USA

Olusola Olalekan Adesope, Washington State University, USA

Copyright © 2014 Roberts and Povee. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lynne D. Roberts, School of Psychology and Speech Pathology, Curtin University, GPO Box U1987, Perth, WA 6845, Australia; e-mail: lynne.roberts@curtin.edu.au
