- 1 Department of Psychological Sciences, University of Chieti, Chieti, Italy

- 2 Schepens Eye Research Institute, Harvard Medical School, Boston, MA, USA

In this study, we investigated whether age-related differences in emotion regulation priorities influence online dynamic emotional facial discrimination. A group of 40 younger and a group of 40 older adults were invited to recognize a positive or negative expression as soon as the expression slowly emerged and subsequently rate it in terms of intensity. Our findings show that older adults recognized happy expressions faster than angry ones, while the direction of emotional expression does not seem to affect younger adults’ performance. Furthermore, older adults rated both negative and positive emotional faces as more intense compared to younger controls. This study detects age-related differences with a dynamic online paradigm and suggests that different regulation strategies may shape emotional face recognition.

Introduction

Face perception is one of the most well-developed visual skills in human beings. Moreover, it is present from the very early stages of life (Johnson et al., 1991) and plays a crucial role in social communication (Haxby et al., 2000). Indeed, we infer feelings, intentions, motivations, impressions and, above all, emotions from faces, which reveal a large amount of information to the perceiver, and at least six emotions are communicated through facial expressions. In fact, happiness, fear, surprise, anger, disgust, and sadness are typically identified with extreme precision whether they are shown in static or dynamic images (Howell and Jorgensen, 1970; Buck et al., 1972; Wagner et al., 1986; Ekman et al., 1987). Most importantly, face perception, although sensitive to aging and clinical conditions, plays an adaptive role (Zebrowitz et al., 2015).

Interestingly, contrary to this adaptive function, which would lead us to expect an advantage for negative faces, the literature on emotional face recognition has consistently identified a behavioral recognition advantage for happy faces over negative ones (Calvo and Beltrán, 2013). One reason for this advantage may be that participants in these studies are generally asked to recognize only the final version of an emotional face. Here, we were interested in examining emotional biases and preferences in the online recognition of emotional faces, which may be more closely related to motivational preferences (Fairfield et al., 2015a).

Increasing evidence shows that face recognition may be impaired in older adults. Studies investigating the effects of aging on face perception using tasks such as face detection (Norton et al., 2009), face identification (Habak et al., 2008; Megreya and Bindemann, 2015) and emotion recognition (Calder et al., 2003) have shown that older adults are slower and less accurate on these face perception tasks (Hildebrandt et al., 2011, 2013). More importantly, aging seems to be related to qualitative changes in face perception as well as quantitative ones [e.g., reaction times (RTs), accuracy, etc.]. Different fields of psychology, such as perception and memory, have shown that older adults seem to prefer positive emotional stimuli, a phenomenon referred to in the literature as the positivity effect. This effect on working memory is well documented in the literature (Mammarella et al., 2012; Fairfield et al., 2013), and many studies on the effects of aging on memory have highlighted that older adults, compared to younger adults, show enhanced memory for positive-valence autobiographical events (Kennedy et al., 2004) and for positive images (Mikels et al., 2005). In addition, studies on trait impression have shown that older adults tend to judge faces as more positive than younger adults do and to perceive faces as more trustworthy, less hostile and less dangerous, especially the most threatening-looking faces (Ruffman et al., 2006; Castle et al., 2012; Zebrowitz et al., 2013).

Traditionally, tasks that assess emotion perception use static facial stimuli representing happy, fearful, and neutral expressions, but a potentially important factor influencing visual emotion perception is the role of dynamic information. It has been reported that healthy controls show an improvement in emotion recognition for dynamic over static point-light displays (Atkinson et al., 2004). Dynamic stimuli therefore present an interesting case for investigating emotion perception in aging. In fact, few studies have assessed the threshold of intensity at which emotions are most consistently identified.

Previous studies have used dynamic emotion recognition tasks based on real videos (Banziger et al., 2009; Minardi, 2012), but here we adopted a new online task. Starting from two pictures of the “Karolinska Directed Emotional Faces” (Lundqvist et al., 1998) portraying the same actor, we generated several morphs and subsequently created videos of faces in which facial expressions changed their intensity from neutral to happy or from neutral to angry. In this way, we were able to examine whether normal aging is associated with reduced perceptual processing of emotional cues and to determine whether older adults require more intense stimuli to correctly label and discriminate emotional facial expressions. We recorded RTs in facial expression recognition in younger and older adults. In line with the facial expression recognition literature, we expected older adults to perform more slowly than younger adults. In addition, to investigate the direction of emotions (i.e., positivity bias for older adults), we asked participants to rate happy, angry and hybrid faces on a visual analog scale from positive to negative. In this case, we predicted that older adults would rate faces more positively than younger ones.

Materials and Methods

Participants

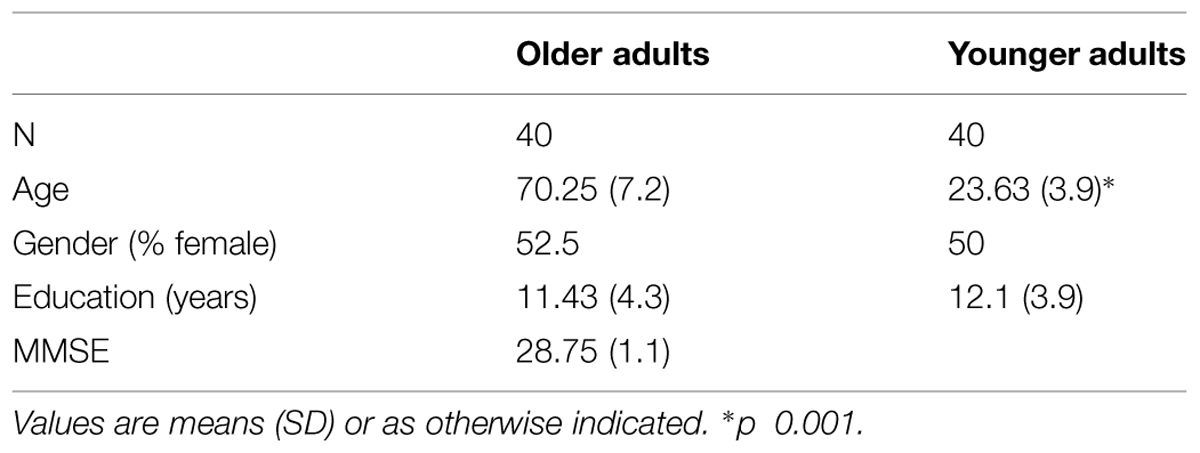

A group of 40 younger and 40 healthy older adults who scored high on the Mini-Mental State Examination (MMSE; Folstein et al., 1975; M = 28.75, SD = 1.1; maximum score = 30) participated in the experiment after giving written informed consent in accordance with the Declaration of Helsinki. The study was approved by the local departmental ethical committee. Participants’ demographic and clinical characteristics are presented in Table 1. Exclusion criteria included history of severe head trauma, stroke, neurological disease, severe medical illness, or alcohol or substance abuse in the past 6 months. All participants reported normal or corrected-to-normal visual and auditory acuity, and both younger and older adults reported being in good health.

Stimuli

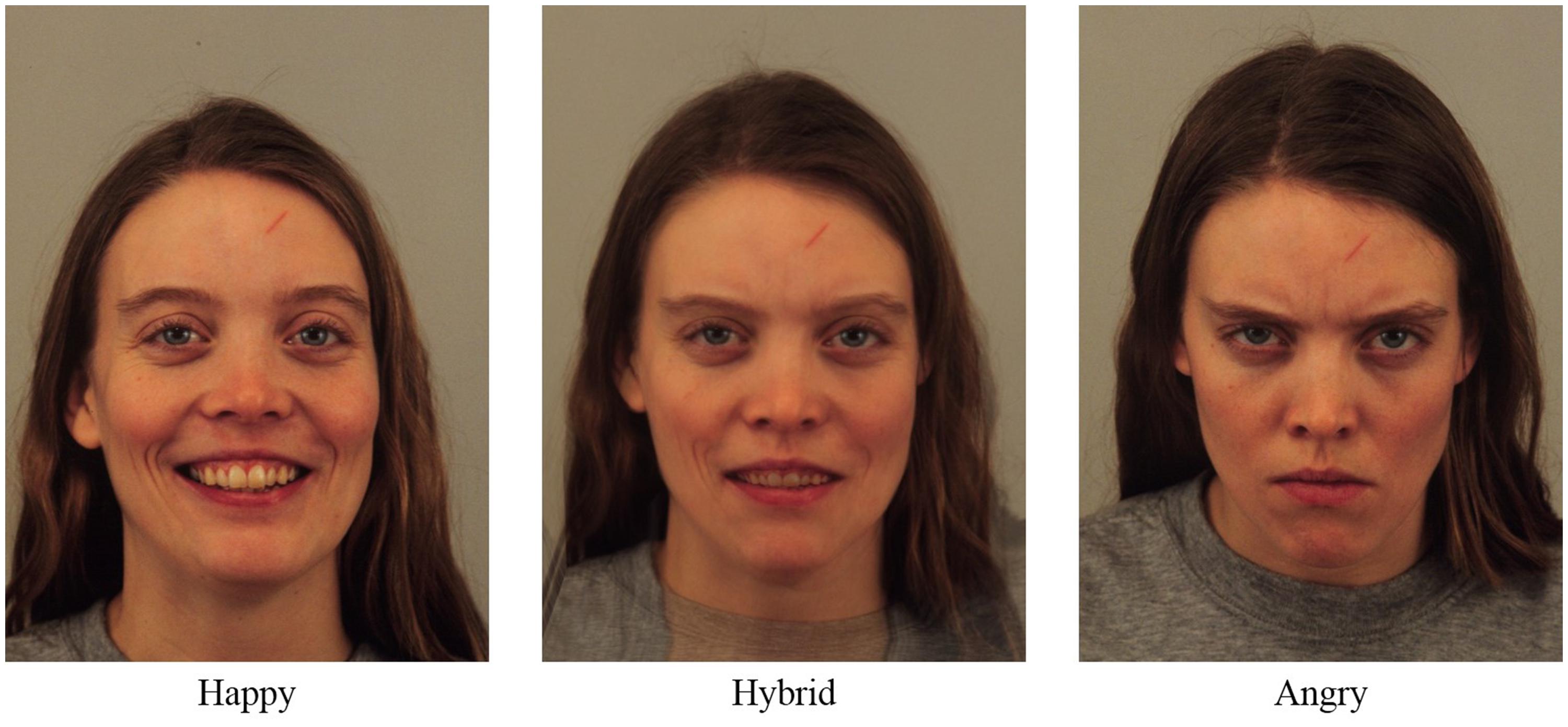

We created 20 dynamic videos, each from two pictures of the same actor selected from the “Karolinska Directed Emotional Faces” (Lundqvist et al., 1998). The first picture was neutral while the second was happy or angry (gender of the actors and emotions were balanced across trials). These two pictures were then morphed to obtain 98 hybrid faces with an increasing percentage of happiness or anger, and the resulting 100 pictures were presented in sequence, from the neutral to the happy/angry face, for 40 ms each in order to generate the video.
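The frame timing described above can be sketched in a few lines. This is only an illustration, not the authors' stimulus code: it assumes that the morph percentage increases linearly across the 100 frames, that RTs are measured in milliseconds from video onset, and the names `FRAME_MS`, `N_FRAMES`, `frame_at`, and `intensity_at` are hypothetical.

```python
FRAME_MS = 40    # duration of each picture, as stated in the text
N_FRAMES = 100   # 2 original pictures + 98 morphed hybrids

def video_duration_ms() -> int:
    """Total video length implied by the frame count and frame duration."""
    return FRAME_MS * N_FRAMES

def frame_at(rt_ms: float) -> int:
    """0-based index of the frame on screen rt_ms after video onset."""
    if rt_ms < 0:
        raise ValueError("rt_ms must be non-negative")
    # Responses after the last frame are clamped to the final picture.
    return min(int(rt_ms // FRAME_MS), N_FRAMES - 1)

def intensity_at(rt_ms: float) -> float:
    """Expression intensity (%) at rt_ms, ASSUMING a linear morph:
    frame 0 is the neutral face (0%), frame 99 the full expression (100%)."""
    return 100.0 * frame_at(rt_ms) / (N_FRAMES - 1)

if __name__ == "__main__":
    print(video_duration_ms())   # 4000
    print(frame_at(1000.0))      # 25
```

Under these assumptions, 100 frames of 40 ms give the 4000 ms video length reported in the Procedure, and a response time can be read as the morph level that was on screen when the participant pressed the space bar.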

Procedure

Recognition Phase

The recognition phase was split into two identical sessions to avoid fatigue. In each session, participants watched 10 videos in the center of the screen and then completed a forced choice recognition test.

During the videos, an initially neutral face gradually changed to assume an expression of happiness or anger. Each video, preceded by a 200 ms fixation point, lasted 4000 ms. Participants pressed the space bar as soon as they were able to identify the emotional expression the face was assuming. Participants subsequently pressed the “m” key if the face had assumed a positive expression or the “z” key if the face had assumed a negative one.

Rating Phase

Participants rated 24 new faces according to valence. Six faces were happy, six faces were angry and 12 faces were hybrid (Figure 1). Each hybrid face (50% happy and 50% angry) was created starting from two pictures of the “Karolinska Directed Emotional Faces” portraying the same actor; the first picture was happy and the second was angry (gender of actors was balanced across trials). Each face, preceded by a 200 ms fixation point, was presented in the center of the screen for 1000 ms. Participants were then instructed to evaluate, using a visual analog scale (i.e., a line presented horizontally in the center of the screen), how positive or negative the face seemed by moving a slider along the line with the mouse. The line represented a double-ended continuum where the two ends indicated the maximum value of positivity on one side and the maximum value of negativity on the opposite side. The direction of the continuum positive/negative or negative/positive was balanced across participants.
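Scoring a counterbalanced visual analog scale can be sketched as follows. The article does not report the numeric range of the scale or how slider positions were coded, so the ±100 range, the pixel coordinates, and the function name `vas_score` are assumptions made purely for illustration.

```python
def vas_score(x: float, left: float, right: float,
              positive_on_right: bool, max_abs: float = 100.0) -> float:
    """Convert a slider position into a signed valence score.

    x                  slider position (e.g., in pixels)
    left, right        positions of the two ends of the line
    positive_on_right  True if the 'positive' end was on the right
                       for this participant (direction was counterbalanced)
    max_abs            HYPOTHETICAL score at either end of the line
    """
    if right <= left:
        raise ValueError("right must be greater than left")
    x = min(max(x, left), right)                       # keep the slider on the line
    centered = 2.0 * (x - left) / (right - left) - 1.0  # -1 (left) .. +1 (right)
    score = centered * max_abs
    # Flip the sign when the positive end was on the left, so that
    # positive scores always mean 'more positive' across participants.
    return score if positive_on_right else -score

if __name__ == "__main__":
    print(vas_score(1000, 500, 1000, positive_on_right=True))   # 100.0
    print(vas_score(1000, 500, 1000, positive_on_right=False))  # -100.0
```

Re-signing the score in this way puts ratings from both orientations of the continuum on a common scale before they are averaged.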

Results

First, the t-test on recognition accuracy did not show any significant differences between groups (t = 1.71, p = 0.09). This indicates that older and younger adults were equally able to process, label and discriminate faces.

Second, we submitted the accuracy scores (percentage) for facial expression changes to a 2 (Emotion: Happy, Angry) × 2 (Group: Younger vs. Older Adults) mixed-design analysis of variance. No significant effects were found, indicating that neither younger nor older adults differed in discriminating changes to happy versus changes to angry (the average accuracy was 96%).

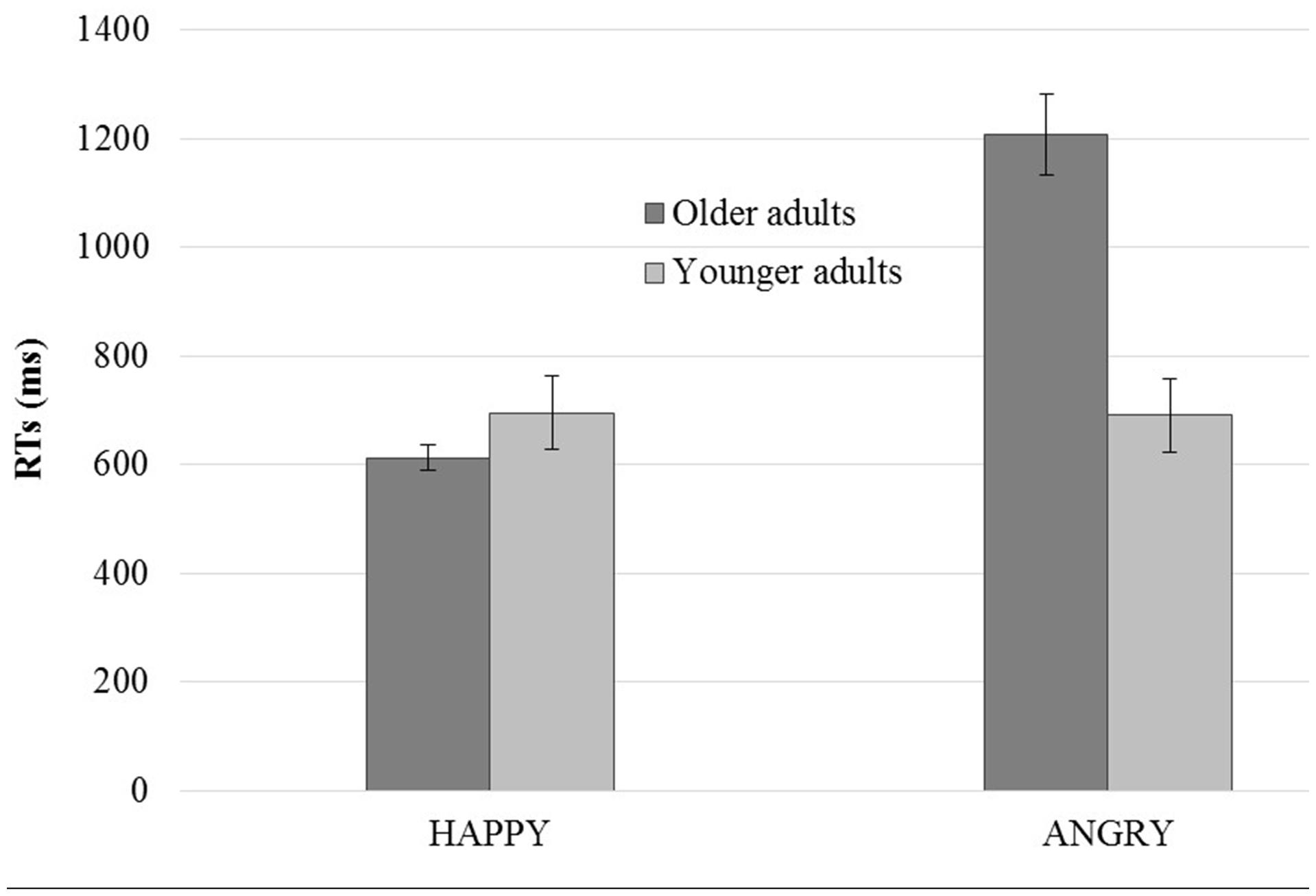

Third, in order to evaluate differences between groups in the temporal processing of the facial expression changes, we submitted RTs to a 2 (Emotion: Happy, Angry) × 2 (Group: Younger vs. Older Adults) mixed-design analysis of variance. The mixed ANOVA revealed a main effect of group [F(1,78) = 9.99, p < 0.01], since younger adults were faster than older adults; a main effect of emotion [F(1,78) = 30.08, p < 0.001], because participants recognized changes from neutral to happy faster than changes to angry; and a significant two-way Emotion × Group interaction [F(1,78) = 31.19, p < 0.001]. The post hoc analysis on the Emotion × Group interaction confirmed that older adults were slower to recognize changes from neutral to angry (M = 1208.5) than changes to happy (M = 613.1, p < 0.001). No differences were found in the RTs of younger adults (p = 0.94; Figure 2).
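For readers less familiar with mixed designs, the Emotion × Group interaction in a 2 × 2 design with one within-subject factor can be understood through a known equivalence: its F statistic equals the squared pooled-variance t statistic comparing the two groups on each participant's angry-minus-happy difference score. The sketch below illustrates this equivalence with the Python standard library only. It is not the authors' analysis script, the function name `interaction_f` is hypothetical, and the numbers in the usage example are invented toy values, not study data.

```python
from statistics import mean, variance

def interaction_f(diff_a, diff_b):
    """F statistic and degrees of freedom for the 2 x 2 mixed-design
    interaction, computed from per-participant difference scores
    (e.g., RT to angry minus RT to happy) in two independent groups."""
    n_a, n_b = len(diff_a), len(diff_b)
    df_error = n_a + n_b - 2
    # Pooled variance of the difference scores across the two groups.
    pooled = ((n_a - 1) * variance(diff_a)
              + (n_b - 1) * variance(diff_b)) / df_error
    se = (pooled * (1.0 / n_a + 1.0 / n_b)) ** 0.5
    t = (mean(diff_a) - mean(diff_b)) / se
    return t * t, (1, df_error)

if __name__ == "__main__":
    # Toy difference scores, invented purely to show the call.
    f_value, df = interaction_f([1, 2, 3], [4, 5, 6])
    print(f_value, df)
```

With 40 participants per group, the error degrees of freedom come out as 40 + 40 − 2 = 78, which matches the F(1,78) reported for the interaction.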

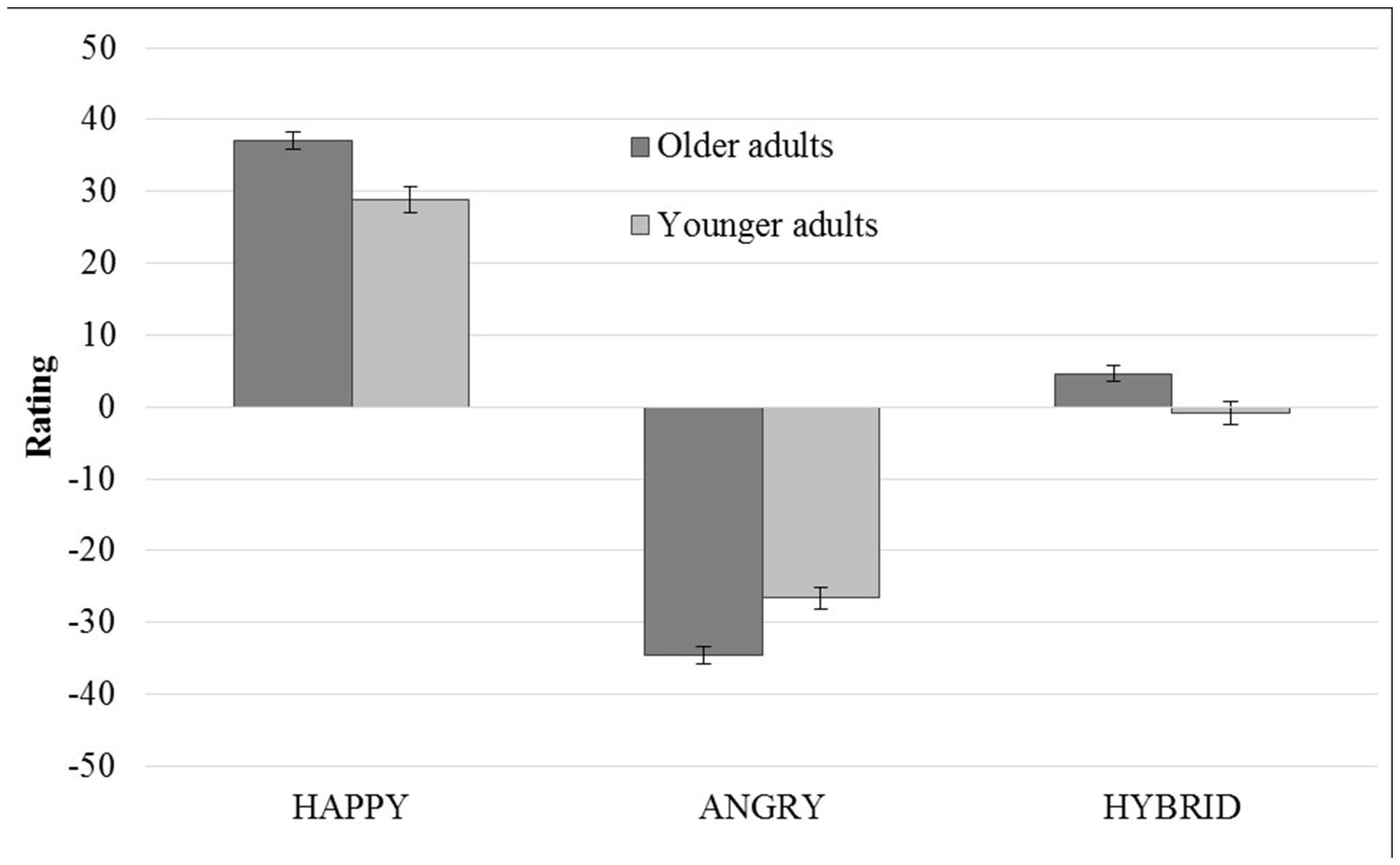

Finally, in order to examine differences between groups in facial expression ratings, we submitted the face judgment ratings to a 3 (Emotion: Happy, Hybrid, Angry) × 2 (Group: Younger vs. Older Adults) mixed-design analysis of variance. The mixed ANOVA revealed a main effect of group [F(1,78) = 4.72, p < 0.05], because younger adults judged faces more negatively than older adults; a main effect of emotion [F(1,78) = 838.74, p < 0.001]; and a significant two-way Emotion × Group interaction [F(2,156) = 15.59, p < 0.001]. The post hoc analysis confirmed that older adults rated negative facial expressions more negatively (M = -34.53) than younger adults (M = -26.64) and positive facial expressions more positively (M = 37.09) than younger adults (M = 28.77). Older adults also rated the hybrid faces as more positive (M = 4.65) than younger adults (M = -0.85; Figure 3).

Discussion

The aim of this paper was to examine which aspects of emotional facial recognition are impaired in older adults by using a novel emotional face recognition task that combines a dynamic recognition phase with a more general static facial rating. Accuracy data indicated that both groups were able to perform the task correctly. However, when we analyzed RTs, we found that older and younger adults showed different patterns of recognition depending on facial expression. Older adults detected happy expressions faster than angry expressions, while younger adults did not show any difference in the time it took them to recognize the two expressions. This pattern of performance seems to be linked to the emotional valence of the facial expression, since we did not find any differences between the two groups when we asked them to complete a subsequent forced choice recognition phase to evaluate general recognition difficulties. Altogether, these results seem to suggest a positivity bias during dynamic emotion recognition in older adults. We did not find the happy face advantage typically found in younger adults. This may be because participants did not recognize a single final face in our study, but pressed a key as soon as they were able to detect the direction of the emotional change on a face. The recognition task in itself was very easy and led to ceiling effects in the younger adults that may have “hidden” the happy face advantage. In addition, we found that older adults evaluated unambiguous emotional faces of both valences more intensely than controls. Interestingly, when faces were ambiguous, as in the hybrid condition, older adults still gave more positive ratings than younger adults.

Older adults exhibited enhanced recognition of happy expressions. This finding is consistent with literature showing that older adults prefer positive emotional stimuli (Mather and Carstensen, 2005; Mammarella et al., 2013; Di Domenico et al., 2014). It is possible that age-related motivational changes guide the processing of emotional information and subsequently lead to emotional effects. In fact, older adults often show enhanced memory for positive emotional information. Accordingly, they tend to focus less on negative information, because perceived time limitations lead to motivational shifts that direct attention to emotionally meaningful goals (Carstensen, 1995; Fairfield et al., 2015b). Younger adults, in contrast, typically perceive time as more expansive and consequently prioritize goals related to knowledge acquisition.

However, our results might also be influenced by the fact that older adults favor different facial features (e.g., Wong et al., 2005). Indeed, specific parts of the face can drive emotional processing, for example, the mouth for happiness and the eyes for anger (e.g., Schyns et al., 2007). Future studies may want to investigate the scanning path of older adults compared to younger adults by manipulating experimental emotional faces.

Conclusion

In our study, the age-related differences in emotional facial expression recognition evidenced how different regulation strategies shape preferences in emotion processing leading older adults to show a preference for positive information, while younger adults prefer negative information. These findings may have implications for developing new clinical treatments in terms of new emotional facial recognition training programs.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgment

We thank all the participants in our study.

References

Atkinson, A. P., Dittrich, W. H., Gemmell, A. J., and Young, A. W. (2004). Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33, 717–746. doi: 10.1068/p5096

Banziger, T., Grandjean, D., and Scherer, K. R. (2009). Emotion recognition from expressions in face, voice, and body: the Multimodal Emotion Recognition Test (MERT). Emotion 9, 691–704. doi: 10.1037/a0017088

Buck, R. W., Savin, V. J., Miller, R. E., and Caul, W. F. (1972). Communication of affect through facial expressions in humans. J. Pers. Soc. Psychol. 23, 362–371. doi: 10.1037/h0033171

Calder, A. J., Keane, J., Manly, T., Sprengelmeyer, R., Scott, S., Nimmo-Smith, I., et al. (2003). Facial expression recognition across the adult life span. Neuropsychologia 41, 195–202. doi: 10.1016/S0028-3932(02)00149-5

Calvo, M. G., and Beltrán, D. (2013). Recognition advantage of happy faces: tracing the neurocognitive processes. Neuropsychologia 51, 2051–2061. doi: 10.1016/j.neuropsychologia.2013.07.010

Carstensen, L. L. (1995). Evidence for a life-span theory of socioemotional selectivity. Curr. Dir. Psychol. Sci. 4, 151–156. doi: 10.1111/1467-8721.ep11512261

Castle, E., Eisenberger, N. I., Seeman, T. E., Moons, W. G., Boggero, I. A., Grinblatt, M. S., et al. (2012). Neural and behavioral bases of age differences in perceptions of trust. Proc. Natl. Acad. Sci. U.S.A. 109, 20848–20852. doi: 10.1073/pnas.1218518109

Di Domenico, A., Fairfield, B., and Mammarella, N. (2014). Aging and others’ pain processing: implications for hospitalization. Curr. Gerontol. Geriatr. Res. 2014, 737291. doi: 10.1155/2014/737291

Ekman, P., Friesen, W. V., and O’Sullivan, M. (1987). Universals and cultural differences in the judgments of facial expressions of emotion. J. Pers. Soc. Psychol. 53, 712–717. doi: 10.1037/0022-3514.53.4.712

Fairfield, B., Mammarella, N., and Di Domenico, A. (2013). Centenarians’ “holy” memory: is being positive enough? J. Gen. Psychol. 174, 42–50. doi: 10.1080/00221325.2011.636399

Fairfield, B., Mammarella, N., and Di Domenico, A. (2015a). Motivated goal pursuit and working memory: are there age-related differences? Motiv. Emot. 39, 201–215. doi: 10.1007/s11031-014-9428-z

Fairfield, B., Mammarella, N., Di Domenico, A., and Palumbo, R. (2015b). Running with emotion: when affective content hampers working memory performance. Int. J. Psychol. 50, 161–164. doi: 10.1002/ijop.12101

Folstein, M. F., Folstein, S. E., and McHugh, P. R. (1975). “Mini-mental state.” A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198. doi: 10.1016/0022-3956(75)90026-6

Habak, C., Wilkinson, F., and Wilson, H. R. (2008). Aging disrupts the neural transformations that link facial identity across views. Vision Res. 48, 9–15. doi: 10.1016/j.visres.2007.10.007

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Hildebrandt, A., Wilhelm, O., Herzmann, G., and Sommer, W. (2013). Face and object cognition across adult age. Psychol. Aging 28, 243–248. doi: 10.1037/a0031490

Hildebrandt, A., Wilhelm, O., Schmiedek, F., Herzmann, G., and Sommer, W. (2011). On the specificity of face cognition compared with general cognitive functioning across adult age. Psychol. Aging 26, 701–715. doi: 10.1037/a0023056

Howell, R. J., and Jorgensen, E. C. (1970). Accuracy of judging unposed emotional behavior in a natural setting: a replication study. J. Soc. Psychol. 81, 269–270. doi: 10.1080/00224545.1970.9922450

Johnson, M. H., Dziurawiec, S., Ellis, H., and Morton, J. (1991). Newborns’ preferential tracking of face-like stimuli and its subsequent decline. Cognition 40, 1–19. doi: 10.1016/0010-0277(91)90045-6

Kennedy, Q., Mather, M., and Carstensen, L. L. (2004). The role of motivation in the age-related positivity effect in autobiographical memory. Psychol. Sci. 15, 208–214. doi: 10.1111/j.0956-7976.2004.01503011.x

Lundqvist, D., Flykt, A., and Öhman, A. (1998). The Karolinska Directed Emotional Faces–KDEF [CD-ROM]. Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, Stockholm.

Mammarella, N., Fairfield, B., De Leonardis, V., Carretti, B., Borella, E., Frisullo, E., et al. (2012). Is there an affective working memory deficit in patients with chronic schizophrenia? Schizophr. Res. 138, 99–101. doi: 10.1016/j.schres.2012.03.028

Mammarella, N., Fairfield, B., Frisullo, E., and Di Domenico, A. (2013). Saying it with a natural child’s voice! When affective auditory manipulations increase working memory in aging. Aging Ment. Health 17, 853–862. doi: 10.1080/13607863.2013.790929

Mather, M., and Carstensen, L. L. (2005). Aging and motivated cognition: The positivity effect in attention and memory. Trends Cogn. Sci. 9, 496–502. doi: 10.1016/j.tics.2005.08.005

Megreya, A. M., and Bindemann, M. (2015). Developmental improvement and age-related decline in unfamiliar face matching. Perception 44, 2–44. doi: 10.1068/p7825

Mikels, J. A., Larkin, G. R., Reuter-Lorenz, P. A., and Carstensen, L. L. (2005). Divergent trajectories in the aging mind: changes in working memory for affective versus visual information with age. Psychol. Aging 20, 542–553. doi: 10.1037/0882-7974.20.4.542

Minardi, H. (2012). Developing the dynamic emotion recognition instrument (DERI). Nurs. Stand. 26, 35–42. doi: 10.7748/ns2012.08.26.50.35.c9239

Norton, D., McBain, R., and Chen, Y. (2009). Reduced ability to detect facial configuration in middle-aged and elderly individuals: associations with spatiotemporal visual processing. J. Gerontol. Psychol. Sci. 64, 328–334. doi: 10.1093/geronb/gbp008

Ruffman, T., Sullivan, S., and Edge, N. (2006). Differences in the way older and younger adults rate threat in faces but not situations. J. Gerontol. Ser. B Psychol. Sci. Soc. Sci. 61, 187–194. doi: 10.1093/geronb/61.4.P187

Schyns, P. G., Petro, L. S., and Smith, M. L. (2007). Dynamics of visual information integration in the brain for categorizing facial expressions. Curr. Biol. 17, 1580–1585. doi: 10.1016/j.cub.2007.08.048

Wagner, H. L., MacDonald, C. J., and Manstead, A. S. (1986). Communication of individual emotions by spontaneous facial expressions. J. Pers. Soc. Psychol. 50, 737–743. doi: 10.1037/0022-3514.50.4.737

Wong, B., Cronin-Golomb, A., and Neargarder, S. (2005). Patterns of visual scanning as predictors of emotion identification in normal aging. Neuropsychology 19, 739–749. doi: 10.1037/0894-4105.19.6.739

Zebrowitz, L. A., Franklin, R. G. J., Hillman, S., and Boc, H. (2013). Comparing older and younger adults’ first impressions from faces. Psychol. Aging 28, 202–212. doi: 10.1037/a0030927

Keywords: aging, positivity bias, emotion recognition, facial expression recognition, face perception

Citation: Di Domenico A, Palumbo R, Mammarella N and Fairfield B (2015) Aging and emotional expressions: is there a positivity bias during dynamic emotion recognition? Front. Psychol. 6:1130. doi: 10.3389/fpsyg.2015.01130

Received: 15 April 2015; Accepted: 20 July 2015;

Published: 04 August 2015.

Edited by:

Bozana Meinhardt-Injac, Johannes Gutenberg University Mainz, Germany

Reviewed by:

Nicola Jane Van Rijsbergen, University of Glasgow, UK

Ahmed Megreya, Qatar University, Qatar

Copyright © 2015 Di Domenico, Palumbo, Mammarella and Fairfield. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alberto Di Domenico, Department of Psychological Sciences, University of Chieti, Via dei Vestini 31, Chieti 66100, Italy, alberto.didomenico@unich.it

†These authors have contributed equally to this work.

Alberto Di Domenico, Rocco Palumbo, Nicola Mammarella and Beth Fairfield