- 1Key Laboratory of Behavioral Science, Institute of Psychology, Chinese Academy of Sciences, Beijing, China

- 2Natural Language Processing Laboratory, College of Information Science and Engineering, Northeastern University, Liaoning, China

- 3Department of Learning and Philosophy, Aalborg University, Aalborg, Denmark

Adolescence is a critical period for the neurodevelopment of social-emotional processing, wherein the automatic detection of changes in facial expressions is crucial for the development of interpersonal communication. Two groups of participants (an adolescent group and an adult group) were recruited to complete an emotional oddball task featuring one happy and one fearful condition. Event-related potentials were measured via electroencephalography and electrooculography recording to detect visual mismatch negativity (vMMN) and to compare the automatic detection of changes in facial expressions between the two age groups. The current findings demonstrated that the adolescent group featured more negative vMMN amplitudes than the adult group in the fronto-central region during the 120–200 ms interval. During the time window of 370–450 ms, only the adult group showed better automatic processing of fearful faces than happy faces. The present study indicates that adolescents possess stronger automatic detection of changes in emotional expression relative to adults, and sheds light on the neurodevelopment of automatic processes concerning social-emotional information.

Introduction

From a neurodevelopmental perspective, adolescence is a crucial period for cognitive and emotional development (Paus, 2005; Steinberg, 2005; Yurgelun-Todd, 2007; Casey et al., 2008; Ahmed et al., 2015). During adolescence, dramatic changes in white and gray matter density (Giedd et al., 1999) reflect alterations in the neural plasticity of regions supporting social and cognitive functions (Steinberg, 2005; Blakemore and Choudhury, 2006; Casey et al., 2010, 2011; Somerville et al., 2010; Burnett et al., 2011; Pfeifer et al., 2011; Kadosh et al., 2013). In particular, the adolescent brain appears to be more emotionally driven, featuring heightened sensitivity to affective information and a subsequent increase in vulnerability to affective disorders (Monk et al., 2003; Dahl, 2004; Taylor et al., 2004; Scherf et al., 2011). This vulnerability likely reflects the high risk for mood and behavioral disorders observed during adolescence (Nelson et al., 2005; Blakemore, 2008; Casey et al., 2008). Therefore, it is vital to investigate the neurodevelopmental processes underlying emotional development in healthy adolescents, and identify appropriate standards to characterize abnormal development in adolescents at risk of mood disorders and psychosis (Passarotti et al., 2009; van Rijn et al., 2011).

Facial expressions encode essential social-emotional information, wherein the appropriate perception and interpretation of facial expression is important for the development and maturation of social-emotional processing in adolescence (Monk et al., 2003; Herba and Phillips, 2004; Taylor et al., 2004; Guyer et al., 2008; Hare et al., 2008; Passarotti et al., 2009; Segalowitz et al., 2010; Scherf et al., 2012; van den Bulk et al., 2012). Furthermore, electrophysiological studies indicate that several event-related potential (ERP) components are associated with different stages of facial expression processing. This includes the P1 component, which peaks at approximately 100 ms after stimulus onset, and mediates the early visual processing of face categorization in the temporal and occipital areas (Linkenkaer-Hansen et al., 1998; Itier and Taylor, 2002; Pourtois et al., 2005). In addition, the N170 component has been reported to reflect the structural encoding of faces and/or facial expression within the temporal-occipital region (Bentin et al., 1996; Rossion et al., 1999a,b; Taylor et al., 1999; Itier and Taylor, 2002, 2004a,b; Segalowitz et al., 2010; Zhang et al., 2013; Dundas et al., 2014). Moreover, the late positive potential (LPP), which peaks approximately 200 ms after exposure to emotional stimuli, mediates the sustained attention to affective information (Cuthbert et al., 2000; Itier and Taylor, 2002; Olofsson et al., 2008; Foti et al., 2009; Hajcak and Dennis, 2009; Hajcak et al., 2011). Accordingly, LPP amplitudes are greater in response to emotional rather than neutral stimuli (Kujawa et al., 2012a,b).

In electrophysiological studies, children and adolescents demonstrate longer N170 latencies and smaller negative N170 amplitudes compared to adults (Taylor et al., 1999; Dundas et al., 2014). Furthermore, adolescents exhibit greater LPP amplitudes at occipital sites relative to young adults (Gao et al., 2010). Neuroimaging studies indicate that the neural networks implicated in the perception of facial expression, including the limbic, amygdala, temporal, parietal and prefrontal regions, undergo a period of maturation between early childhood and late adolescence (Taylor et al., 1999; Killgore et al., 2001; Monk et al., 2003; McClure et al., 2004; Nelson et al., 2005; Batty and Taylor, 2006; Lobaugh et al., 2006; Costafreda et al., 2008). However, the majority of existing studies have investigated the role of attentional focus in the perception of facial expression, while the automatic detection of changes in emotional expression has received relatively little attention. Since this process is also crucial for the development of social-emotional communication in adolescence (Miki et al., 2011; Hung et al., 2012), it requires a stronger focus in current research.

Electrophysiological studies have demonstrated that the ERP component mismatch negativity (MMN) reflects automatic change detection of auditory and visual stimuli (auditory MMN [aMMN] and visual MMN [vMMN], respectively) (Czigler, 2007; Näätänen et al., 2007). In the passive oddball paradigm, standard stimuli, which are presented at frequent intervals, are randomly replaced by deviant stimuli. The MMN value is therefore computed by subtracting the neural responses to standard stimuli from that of deviant stimuli (Pazo-Alvarez et al., 2003; Czigler, 2007; Näätänen et al., 2007). vMMN responses to facial expressions have been identified between 70 and 430 ms post-stimulus presentation within single (Susac et al., 2004; Zhao and Li, 2006; Gayle et al., 2012) or multiple time windows (Astikainen and Hietanen, 2009; Chang et al., 2010; Kimura et al., 2012; Li et al., 2012; Stefanics et al., 2012; Astikainen et al., 2013; Kecskés-Kovács et al., 2013; Kreegipuu et al., 2013). Moreover, the neural distribution of emotion-related vMMN has been reported to include the bilateral posterior occipito-temporal regions (Susac et al., 2004; Zhao and Li, 2006; Astikainen and Hietanen, 2009; Chang et al., 2010; Gayle et al., 2012; Kimura et al., 2012; Li et al., 2012; Stefanics et al., 2012; Astikainen et al., 2013; Kreegipuu et al., 2013) and the anterior frontal regions (Astikainen and Hietanen, 2009; Kimura et al., 2012; Stefanics et al., 2012). The methodology of expression-related vMMN studies was improved by Stefanics et al. (2012), wherein a primary task was added to the emotional oddball paradigm. The primary task aimed to draw the participant’s attention toward a central cross, and the participant was required to press a response button when the central cross changed in size, and ignore the bilateral emotional faces. 
The emotional oddball paradigm was presented simultaneously with the primary task, but the changes in expression occurred independently of the changes in the size of the central cross. The authors reported significant expression-related vMMN at the bilateral occipito-temporal electrode sites, 170–360 ms following stimulus presentation (Stefanics et al., 2012). Based on prior vMMN studies, the emotional vMMN component might provide an important measure of automatic emotional processing in adolescence, and a means of further exploring the automatic detection of regularity violation, and predictive memory representation of facial expressions.

The main aim of the current study was to investigate the automatic detection of changes in facial expression in adolescents, which was achieved using an oddball paradigm similar to that previously described. Expression-related vMMN responses were then analyzed and compared between two age groups (adolescent and adult) to reveal the differing neural dynamics in the automatic detection of changes in facial expression.

Materials and Methods

Subjects

The present study included 36 subjects: 19 adolescent participants (male, 9; female, 10; age range, 14.2–14.9 years; mean age, 14.6 years) recruited from a normal middle school, and 17 adult participants (male, 8; female, 9; age range, 21.3–29.7 years; mean age, 26.2 years) recruited as undergraduate students. The enrolment of participants occurred in agreement with the Declaration of Helsinki. This study was approved by the Ethics Committee of the Institute of Psychology, Chinese Academy of Sciences. Written informed consent was obtained from all participants and from the parents of adolescent participants. Each subject was paid 100 RMB for participating. All participants were right-handed with normal or corrected-to-normal visual acuity, and none had been diagnosed with neurological or psychiatric disorders. All participants were naïve to the purposes of the experiment.

Materials and Procedure

Figure 1 illustrates the procedure used in the present study. The presentation screen was a computer monitor (17″, 1024 × 768 at 100 Hz) with a black background, and the viewing distance was 60 cm. A primary task (in the central visual field) and an expression-related oddball task (on the bilateral sides of the central visual field) were presented simultaneously but independently on the screen. Participants were required to focus their attention on the primary task and ignore the surrounding emotional stimuli (images of facial expressions). This design was used to ensure that the perception of emotional information (presented in the oddball paradigm) was automatic.

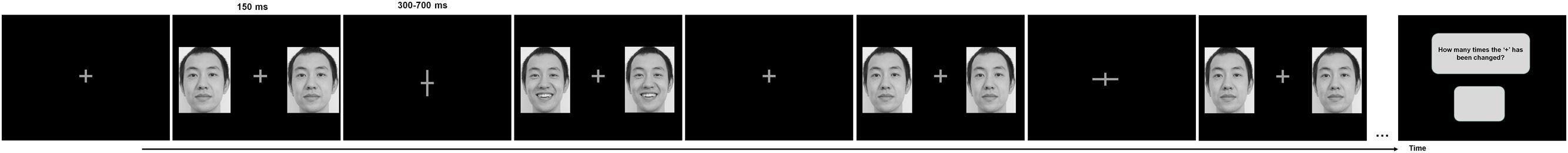

FIGURE 1. A sample of the experimental procedure in the happy oddball condition. A central cross changed (became wider or longer) occasionally during each sequence, and participants were instructed to detect the changes and to report how many times the cross had changed at the end of each sequence. Two identical faces with the same expression were displayed on the bilateral sides of the cross for 150 ms, followed by an inter-stimulus interval of 300–700 ms. The presentation of face-pairs and the changes of the fixation cross were independent.

The emotional stimuli used were images of facial expressions (in a light gray color) from 10 Chinese models (male, 5; female, 5) in three set expressions: neutral, happy, and fearful. Normative 9-point scale ratings were carried out by another 30 volunteers (male, 16; female, 14; age range, 22.3–28.7 years) to assess the valence and arousal of each facial image. For the valence rating, t-tests demonstrated that happy images (M = 7.84, SD = 0.23) featured higher scores than neutral images (M = 5.03, SD = 0.21), while neutral images featured higher scores than fearful images (M = 1.92, SD = 0.2) (ps < 0.001). The reliability of the volunteers’ judgments with regard to valence was high (Kendall’s W = 0.94, χ2 = 816.91, p < 0.001). For the arousal rating, happy (M = 6.8, SD = 0.21) and fearful images (M = 6.97, SD = 0.26) produced a higher level of arousal than neutral images (M = 1.33, SD = 0.25) (ps < 0.001), while no significant differences were observed between happy and fearful images with regard to arousal scores (p > 0.05). The reliability of the volunteers’ judgments with regard to arousal was also high (Kendall’s W = 0.76, χ2 = 663.48, p < 0.001).
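The relation between Kendall’s W and its χ2 statistic can be illustrated with a minimal sketch. It assumes untied ranks (the 9-point ratings used here would in practice need a tie correction), and the function names `kendall_w` and `kendall_chi2` are hypothetical. For m raters and n items, W = 12S / (m²(n³ − n)), where S is the sum of squared deviations of the item rank totals from their mean, and χ2 = m(n − 1)W with n − 1 degrees of freedom.

```python
def kendall_w(ranks):
    """Kendall's W for a raters x items table of untied ranks (1..n per rater)."""
    m = len(ranks)        # number of raters
    n = len(ranks[0])     # number of rated items
    totals = [sum(rater[i] for rater in ranks) for i in range(n)]
    mean_total = sum(totals) / n
    s = sum((t - mean_total) ** 2 for t in totals)
    return 12 * s / (m ** 2 * (n ** 3 - n))


def kendall_chi2(w, m, n):
    """Chi-square statistic associated with W (df = n - 1)."""
    return m * (n - 1) * w


# Three raters in perfect agreement over three items give W = 1.
print(kendall_w([[1, 2, 3], [1, 2, 3], [1, 2, 3]]))
# Assumption: 30 raters and 30 images (10 models x 3 expressions).
print(kendall_chi2(0.94, 30, 30))
```

Under the assumption of 30 raters and 30 images, W = 0.94 corresponds to χ2 ≈ 817.8, which is close to the reported 816.91 once rounding of W is taken into account.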

The face-pairs that were presented in each sequence were randomly selected from the stimulus pool of 10 models. For each trial of the oddball task, two identical expressions from one model were synchronously displayed on both sides of the central cross. Each face was displayed with a visual angle of 6° horizontally and 8° vertically. Each face-pair was displayed for 150 ms, followed by an inter-stimulus interval (offset-to-onset) of 300–700 ms. Two experimental conditions were adopted: one happy oddball condition and one fearful oddball condition, and the presentation order of the two oddball conditions was randomized across participants. For the happy oddball condition, happy faces were presented as the deviant stimuli (probability of 0.2) and neutral faces as the standard stimuli (probability of 0.8). For the fearful oddball condition, fearful faces were presented as the deviant stimuli (probability of 0.2) and neutral faces as the standard stimuli (probability of 0.8). Each condition consisted of six practice sequences and 60 formal sequences, and each sequence contained 10 face-pair presentations (two deviant stimuli and eight standard stimuli). In total, 480 standard stimuli and 120 deviant stimuli were presented for each condition. Furthermore, each deviant was presented after at least two standards in each sequence, and the average position of the deviants was 7.2. Each sequence lasted for 10 s, and the next sequence started directly after participants reported the number of changes. These settings guaranteed that the memory trace could survive the break and that vMMN responses could be elicited (Sulykos et al., 2013).
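The sequence constraints described above can be sketched as follows. This is an illustrative reconstruction, not the authors’ stimulus script: it assumes that "after at least two standards" means that the two trials immediately preceding each deviant are standards, and that inter-stimulus intervals are drawn uniformly from 300–700 ms. All names (`make_sequence`, `make_isis`, `make_condition`) are hypothetical, and the sketch does not attempt to reproduce the reported average deviant position of 7.2.

```python
import random

N_TRIALS = 10             # face-pair presentations per sequence
N_DEVIANTS = 2            # deviants per sequence (probability 0.2)
MIN_STANDARDS_BEFORE = 2  # assumed: standards immediately preceding each deviant


def make_sequence(rng):
    """Return one sequence of 'standard'/'deviant' labels (rejection sampling)."""
    while True:
        positions = sorted(rng.sample(range(N_TRIALS), N_DEVIANTS))
        labels = ["standard"] * N_TRIALS
        for p in positions:
            labels[p] = "deviant"
        ok = all(
            p >= MIN_STANDARDS_BEFORE
            and labels[p - MIN_STANDARDS_BEFORE:p] == ["standard"] * MIN_STANDARDS_BEFORE
            for p in positions
        )
        if ok:
            return labels


def make_isis(rng, n=N_TRIALS, lo_ms=300, hi_ms=700):
    """Draw one offset-to-onset interval per trial (uniform draw is an assumption)."""
    return [rng.randint(lo_ms, hi_ms) for _ in range(n)]


def make_condition(seed=0, n_sequences=60):
    """Build the 60 formal sequences of one oddball condition."""
    rng = random.Random(seed)
    return [make_sequence(rng) for _ in range(n_sequences)]


sequences = make_condition()
print(sum(seq.count("standard") for seq in sequences))  # 480
print(sum(seq.count("deviant") for seq in sequences))   # 120
```

Because every sequence holds exactly two deviants and eight standards, 60 sequences always yield the 480 standards and 120 deviants stated in the text, whatever the random seed.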

For the primary task, participants were instructed to detect and count how many times the central cross (“+”) had changed in size (either the horizontal line became wider or the vertical line became longer), and to report this number at the end of each sequence. The start and end of each sequence were synchronized with those of the oddball task. Each change in cross size lasted 300 ms, before the cross returned to its original size. During each sequence, there were nine possible options for the number of cross changes. Participants were required to concentrate on counting the number of changes, and to press the corresponding button on the keyboard (“0” to “9”) with their right index finger at the end of each sequence. The answer screen did not fade away until participants pressed a button.

EEG Recording and Analysis

Sixty-four electrodes embedded in a NeuroScan Quik-Cap were used to record electroencephalography (EEG) data, and the electrodes were positioned according to the 10–20 system locations. For electrooculography (EOG) recording, four bipolar electrodes were positioned on the inferior and superior regions of the left eye and the outer canthi of both eyes to monitor vertical and horizontal EOG (VEOG and HEOG). Electrode impedance was maintained below 5 kΩ, and the EEG signal was continuously recorded with an online band-pass filter of 0.05–100 Hz, using the nose as reference. The signal was amplified using SynAmps amplifiers with a sample rate of 500 Hz. The continuous signal was segmented into epochs from 50 ms before stimulus onset (used for baseline correction) to 450 ms after stimulus onset. Epochs contaminated by eye blinks, movement artifacts, or muscle potentials exceeding ±70 μV at any electrode were excluded. ERPs underwent offline zero-phase-shift digital filtering (band-pass: 1–30 Hz, slope: 24 dB/octave).
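The epoching and rejection steps can be sketched in plain Python. This is a simplified illustration under stated assumptions rather than the NeuroScan processing actually used: signals are lists of microvolt values per channel, the baseline is the mean of the 50 ms pre-stimulus interval, and the ±70 μV criterion is applied to baseline-corrected data (the text does not specify the order). The function names are hypothetical.

```python
SFREQ_HZ = 500      # sample rate reported in the text
PRE_MS = 50         # pre-stimulus interval used as baseline
POST_MS = 450       # post-stimulus interval
REJECT_UV = 70.0    # absolute rejection threshold in microvolts


def ms_to_samples(ms, sfreq=SFREQ_HZ):
    """Convert a duration in milliseconds to a whole number of samples."""
    return int(round(ms * sfreq / 1000))


def extract_epoch(signal, onset_idx):
    """Cut one baseline-corrected epoch from a single-channel signal.

    Returns None when the epoch would run past either end of the recording.
    """
    n_pre = ms_to_samples(PRE_MS)
    start = onset_idx - n_pre
    stop = onset_idx + ms_to_samples(POST_MS)
    if start < 0 or stop > len(signal):
        return None
    epoch = signal[start:stop]
    baseline = sum(epoch[:n_pre]) / n_pre
    return [v - baseline for v in epoch]


def is_clean(epoch_by_channel, threshold=REJECT_UV):
    """True when no sample at any channel exceeds the absolute threshold."""
    return all(abs(v) <= threshold for channel in epoch_by_channel for v in channel)


flat = [5.0] * 1000                    # constant 5 uV offset on one channel
epoch = extract_epoch(flat, onset_idx=100)
print(len(epoch), is_clean([epoch]))   # 250 samples, offset removed by baseline
```

At 500 Hz the epoch spans 25 pre-stimulus and 225 post-stimulus samples, so a constant offset is removed entirely by the baseline subtraction.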

For the fearful condition, the mean number of retained trials for the deviant stimuli (fearful expression) was 94 in adolescents and 97 in adults, while the mean number for the standard stimuli (neutral expression) was 323 in adolescents and 328 in adults. For the happy condition, the mean number of retained trials for the deviant stimuli (happy expression) was 94 in adolescents and 96 in adults, while the mean number for the standard stimuli (neutral expression) was 325 in adolescents and 324 in adults.

Three ERP components were analyzed: P1 (100–170 ms), N170 (150–220 ms) and LPP (210–280 ms) within the temporal and occipital regions (average neural activation of the electrodes positioned at TP7, P7, PO7, CB1, O1, TP8, P8, PO8, CB2, O2) (Bentin et al., 1996; Campanella et al., 2002; Pourtois et al., 2005). Three-way repeated-measures ANOVAs were used to analyze the peak latency and amplitude of each ERP component, with the independent variables of Age-group (adolescent vs. adult), Stimulus-type (standard vs. deviant), and Expression condition (happy vs. fearful). Greenhouse–Geisser corrections for violations of sphericity were used where appropriate, and significant interactions were further investigated using SIDAK post hoc tests with Bonferroni correction for multiple comparisons.
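Peak latency and amplitude within a component window can be extracted as in the sketch below. It assumes an averaged ERP given as a list of microvolt values with a matching list of time points in milliseconds, and it takes the most positive sample as the peak for P1 and LPP and the most negative sample for N170. The helper name `peak_in_window` is hypothetical, and the waveform is synthetic.

```python
COMPONENT_WINDOWS_MS = {
    "P1": (100, 170, +1),    # positive-going component
    "N170": (150, 220, -1),  # negative-going component
    "LPP": (210, 280, +1),   # positive-going component
}


def peak_in_window(erp, times_ms, t_min, t_max, polarity):
    """Return (latency_ms, amplitude) of the extreme sample inside the window."""
    window = [(t, v) for t, v in zip(times_ms, erp) if t_min <= t <= t_max]
    if not window:
        raise ValueError("no samples fall inside the requested window")
    pick = max if polarity > 0 else min
    return pick(window, key=lambda tv: tv[1])


# Synthetic example: 500 Hz sampling, epoch from -50 ms up to 448 ms.
times = list(range(-50, 450, 2))
erp = [4.0 if t == 130 else -3.0 if t == 180 else 0.0 for t in times]

for name, (t_min, t_max, polarity) in COMPONENT_WINDOWS_MS.items():
    print(name, peak_in_window(erp, times, t_min, t_max, polarity))
```

Because the P1 and N170 windows overlap between 150 and 170 ms, the polarity argument is what keeps the two searches from picking the same sample.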

The difference waveforms were created by subtracting the ERP responses to standard stimuli from the ERP responses to deviant stimuli, to analyze the automatic processing of emotional stimuli (Czigler, 2007; Näätänen et al., 2007). The time windows of vMMN were determined according to previous vMMN literature and visual inspection of the current waveforms (Stefanics et al., 2014, 2015). Three time windows were selected for vMMN analysis: 120–200 ms, 230–320 ms, and 370–450 ms within the fronto-central (averaging the electrodes at F1, FC1, C1, F2, FC2, and C2) and occipito-temporal areas (averaging the electrodes at TP7, P7, PO7, O1, TP8, P8, PO8, and O2). The mean amplitudes of the difference waveforms were compared with zero to confirm that the vMMN responses were significant for the different brain regions and time windows in both age groups. Next, a three-way repeated-measures ANOVA was used to analyze the peak amplitudes of vMMNs with regard to the independent variables of Age-group (adolescent vs. adult), Expression condition (happy vs. fearful), and Anterior-posterior distribution (fronto-central vs. occipito-temporal). Greenhouse–Geisser corrections for violations of sphericity were applied where appropriate, and significant interactions were further analyzed using the SIDAK test.
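The deviant-minus-standard subtraction, the window averaging, and the comparison with zero can be sketched as follows. The sketch assumes one region-averaged ERP per participant and stimulus type, and it computes only the one-sample t statistic (the p-value would come from a t distribution with n − 1 degrees of freedom). Function names and the example values are hypothetical.

```python
import math
import statistics

VMMN_WINDOWS_MS = {"early": (120, 200), "middle": (230, 320), "late": (370, 450)}


def difference_wave(deviant, standard):
    """Deviant-minus-standard waveform, sample by sample."""
    return [d - s for d, s in zip(deviant, standard)]


def mean_amplitude(wave, times_ms, t_min, t_max):
    """Mean amplitude of the waveform inside a time window (inclusive bounds)."""
    values = [v for t, v in zip(times_ms, wave) if t_min <= t <= t_max]
    return sum(values) / len(values)


def one_sample_t(values, mu=0.0):
    """t statistic for testing whether the mean of values differs from mu."""
    n = len(values)
    return (statistics.mean(values) - mu) / (statistics.stdev(values) / math.sqrt(n))


# Synthetic single-participant example: a -1 uV deflection in the early window.
times = list(range(-50, 450, 2))
standard = [0.0] * len(times)
deviant = [-1.0 if 120 <= t <= 200 else 0.0 for t in times]
vmmn = difference_wave(deviant, standard)
for name, (t_min, t_max) in VMMN_WINDOWS_MS.items():
    print(name, mean_amplitude(vmmn, times, t_min, t_max))

# Hypothetical per-participant mean amplitudes for one window, tested against zero.
print(one_sample_t([-1.0, -2.0, -3.0]))
```

A negative t statistic indicates that the difference wave is on average below zero in that window, which is the direction expected for a mismatch negativity.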

Results

Effect of Deviant and Standard Emotional Stimuli on ERPs

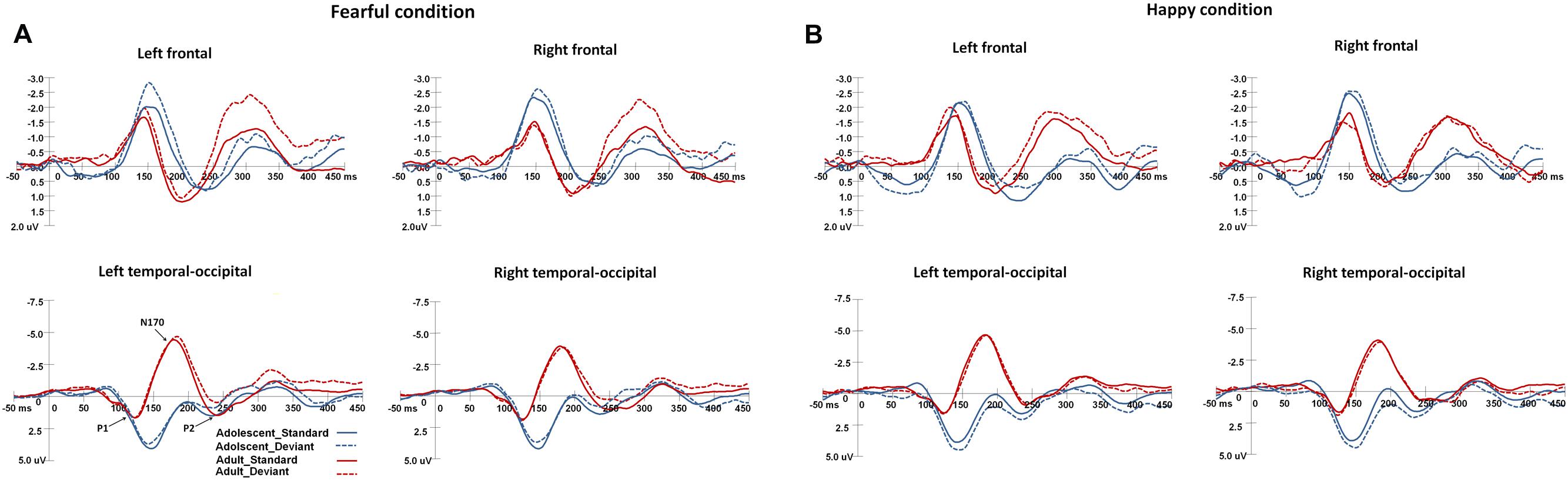

Figure 2 shows the grand-averaged ERP waveforms elicited by standard and deviant stimuli in both the happy and fearful oddball conditions.

FIGURE 2. The grand-averaged ERPs elicited by the standard and deviant stimuli in the happy and fearful oddball conditions for both adolescent and adult groups. ERP responses at electrodes F1, FC1, and C1 were averaged to plot the neural activity over the left frontal areas, and those at F2, FC2, and C2 were averaged for the right frontal areas. The ERP responses in the left occipito-temporal areas were averaged from electrodes TP7, P7, PO7, CB1, and O1, and the ERP responses at electrodes TP8, P8, PO8, CB2, and O2 were averaged for the right occipito-temporal areas. (A) shows the ERP waveforms in the fearful condition, and (B) shows the ERP waveforms in the happy condition.

For P1 latency, the main effect of Age-group was significant [F(1,34) = 64.1, p < 0.001, η2 = 0.62], and adolescents demonstrated longer P1 latencies than adults (145 ms [SD = 2 ms] vs. 120 ms [SD = 2.4 ms]). The main effect of Expression condition was also significant [F(1,34) = 4.2, p < 0.05, η2 = 0.1], and participants demonstrated faster P1 responses in the happy condition compared to the fearful (132 ms [SD = 1.7 ms] vs. 134 ms [SD = 1.5 ms]). With regard to P1 amplitudes, the main effect of Age-group was significant [F(1,34) = 10.6, p < 0.005, η2 = 0.2], wherein adolescents featured larger P1 amplitudes than adults (4.4 μV [SD = 0.5 μV] vs. 1.9 μV [SD = 0.6 μV]). The interaction effect of Stimulus-type × Expression condition [F(1,34) = 4.1, p < 0.05, η2 = 0.1], was also significant, wherein deviant stimuli induced larger P1 amplitudes than standard stimuli in the happy condition (3.4 μV [SD = 0.38 μV] vs. 2.9 μV [SD = 0.38 μV], p < 0.05).

For N170 latency, the main effect of Age-group was significant [F(1,34) = 25.4, p < 0.001, η2 = 0.4], and adolescents featured longer N170 latencies than adults (199 ms [SD = 2.1 ms] vs. 182 ms [SD = 2.5 ms]). With regard to N170 amplitudes, the main effect of Age-group was also significant [F(1,34) = 15.8, p < 0.001, η2 = 0.29], and adolescents demonstrated less negative N170 amplitudes than adults (-1.1 μV [SD = 0.6 μV] vs. -4.6 μV [SD = 0.7 μV]).

For LPP latency, the main effect of Age-group was significant [F(1,34) = 12.8, p < 0.005, η2 = 0.25], and adolescents had faster LPP responses than adults (238 ms [SD = 2.7 ms] vs. 253 ms [SD = 3.2 ms]). The interaction effect of Expression condition × Age-group was also significant [F(1,34) = 3.8, p < 0.05, η2 = 0.1], and post hoc analysis indicated that adults had faster LPP responses in the fearful condition than in the happy condition (250 ms [SD = 3.5 ms] vs. 256 ms [SD = 3.4 ms], p < 0.05). For LPP amplitudes, the interaction effect of Expression condition × Stimulus-type was significant [F(1,34) = 12.6, p < 0.005, η2 = 0.25], and post hoc analysis showed that the deviant stimuli elicited larger LPP amplitudes in the happy condition than in the fearful condition (1.9 μV [SD = 0.4 μV] vs. 1.2 μV [SD = 0.5 μV], p < 0.05). In addition, the standard stimuli induced larger LPP amplitudes than the deviant stimuli in the fearful condition (1.7 μV [SD = 0.4 μV] vs. 1.2 μV [SD = 0.5 μV], p < 0.05); however, the standard stimuli elicited smaller LPP amplitudes than the deviant stimuli in the happy condition (1.5 μV [SD = 0.5 μV] vs. 1.9 μV [SD = 0.4 μV], p < 0.05).

vMMN Responses in the Happy and Fearful Oddball Conditions

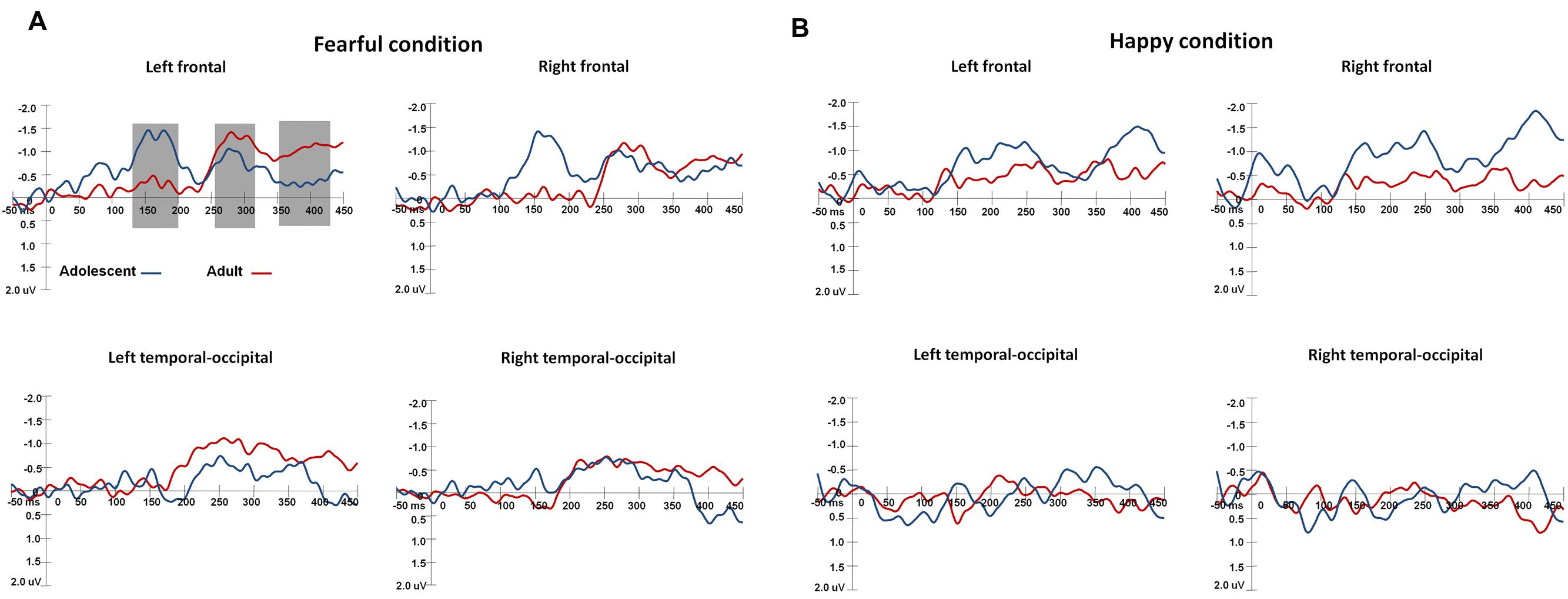

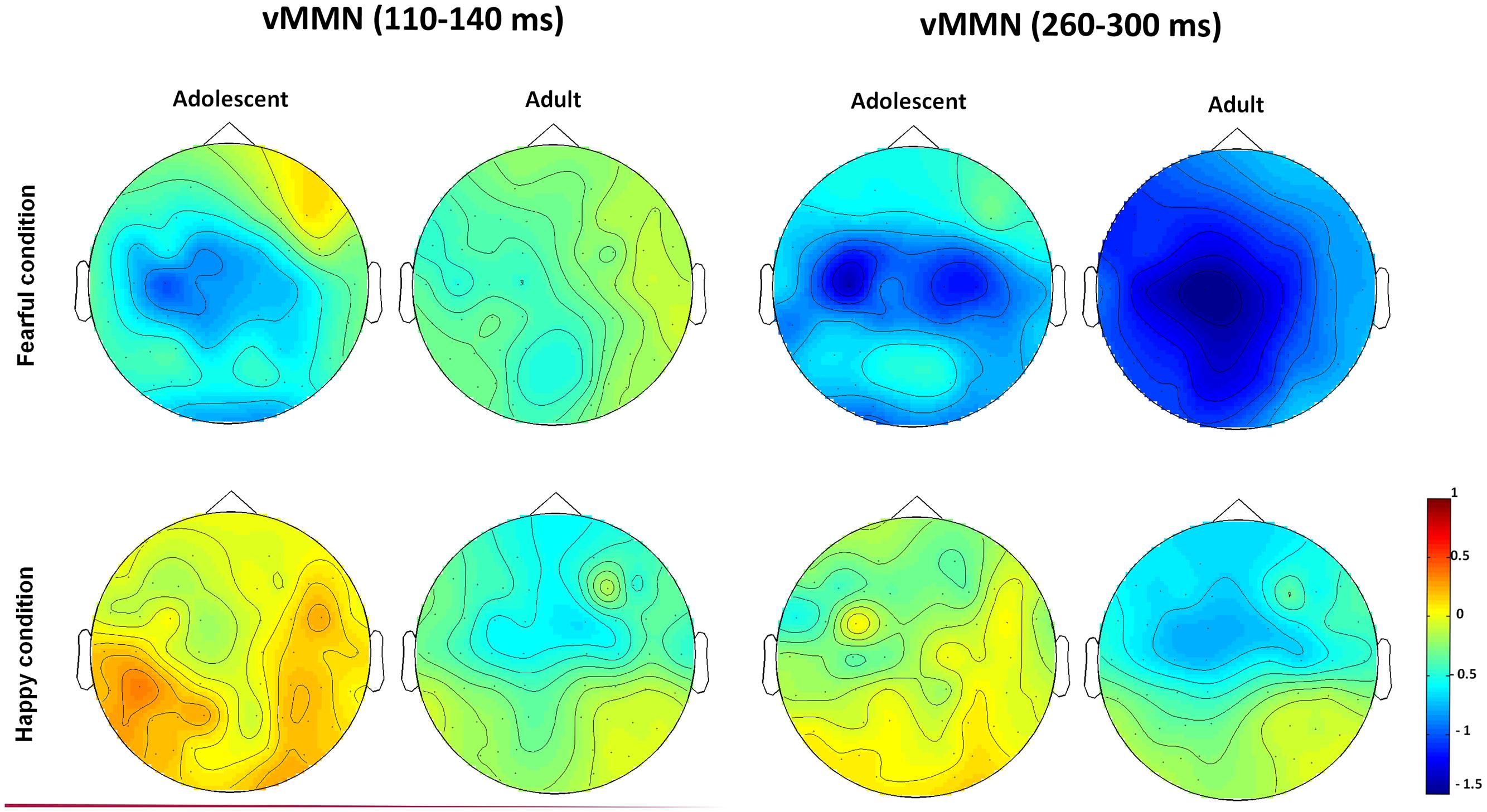

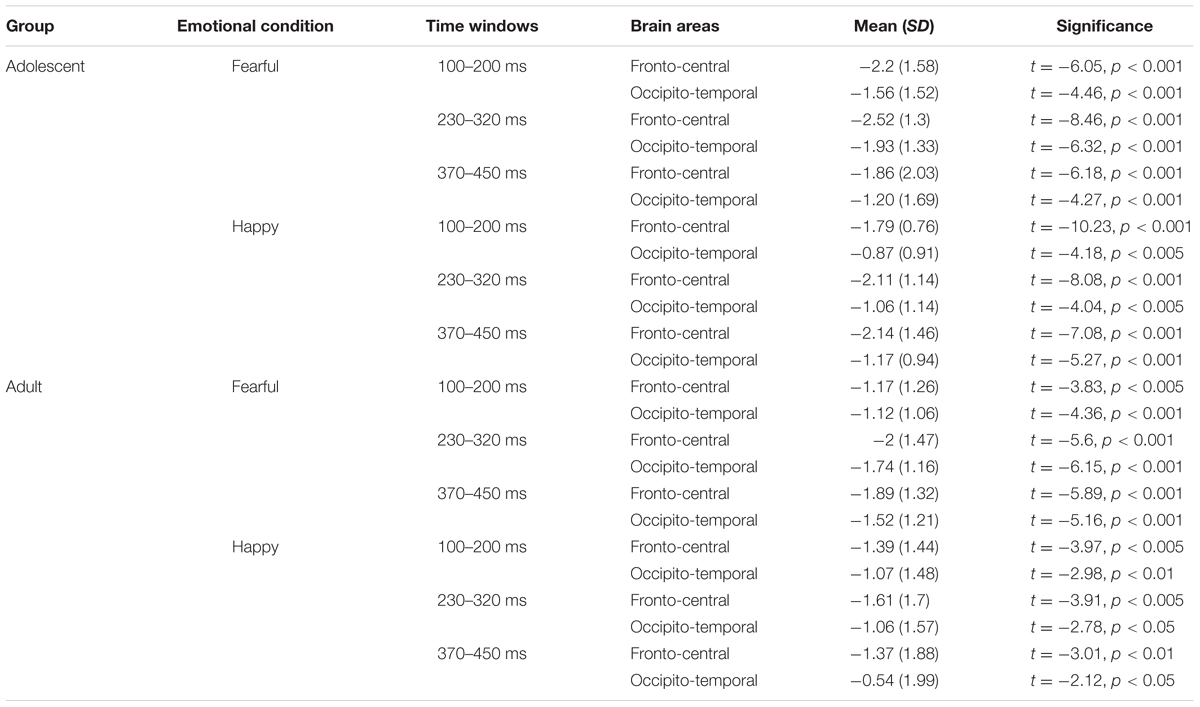

The waveforms of vMMNs in both the happy and fearful conditions are displayed in Figure 3 and the topographic maps are presented in Figure 4. The mean amplitudes of the difference waveforms were compared with zero, and the t-test results indicated that the vMMN components were significant for all time windows, brain regions, and emotional conditions for adults and adolescents (see Table 1).

FIGURE 3. The waveforms of vMMNs in both happy and fearful conditions. The ERP responses at electrodes F1, FC1, and C1 were averaged to plot the neural activity over the left frontal areas, and those at F2, FC2, and C2 were averaged for the right frontal areas. The ERP responses at electrodes TP7, P7, PO7, CB1, and O1 were averaged to plot the neural activity over the left occipito-temporal areas, and those at TP8, P8, PO8, CB2, and O2 were averaged for the right occipito-temporal areas. (A) shows the vMMN waveforms in the fearful condition, and (B) shows the vMMN waveforms in the happy condition.

FIGURE 4. The topographic maps of the vMMNs for both adolescent and adult groups in the happy and fearful conditions.

TABLE 1. The comparisons between mean amplitudes (μV) of vMMN and zero in different brain areas, time windows, and emotional conditions.

For early vMMN values (120–200 ms), the interaction between Age-group and Anterior-posterior distribution was significant [F(1,34) = 4.97, p < 0.05, η2 = 0.13], and the adolescent group demonstrated more negative vMMN amplitudes than the adult group over the fronto-central regions (p < 0.05). vMMN amplitude was more negative in the fronto-central regions than in the occipito-temporal areas in the adolescent group (p < 0.001), but not in the adult group (p = 0.33).

For middle vMMN values (230–320 ms), the main effect of Expression condition was significant [F(1,34) = 9.41, p < 0.005, η2 = 0.22], and vMMN was greater in the fearful condition relative to the happy condition. The main effect of Anterior-posterior distribution was also significant [F(1,34) = 15.36, p < 0.001, η2 = 0.31], and vMMN was more negative in the fronto-central regions than in the occipito-temporal regions.

With regard to late vMMN values (370–450 ms), the main effect of Expression condition remained significant [F(1,34) = 3.98, p = 0.05, η2 = 0.11], wherein vMMN was greater in the fearful condition than in the happy condition. The main effect of Anterior-posterior distribution was also significant [F(1,34) = 23.05, p < 0.001, η2 = 0.4], and vMMN was more negative in the fronto-central regions relative to the occipito-temporal regions. The Expression condition × Age-group interaction was marginally significant [F(1,34) = 3.98, p = 0.06, η2 = 0.11], and the post hoc analyses indicated that adults featured a more negative vMMN in the fearful condition relative to the happy condition (p < 0.05).
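As a consistency check on the effect sizes, an F statistic with its degrees of freedom determines partial eta squared through η2p = (F × df1) / (F × df1 + df2). The sketch below assumes that the η2 values reported for the vMMN analyses are partial eta squared, which the text does not state explicitly; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values reported for the vMMN analyses, all with df = (1, 34).
for label, f_value in [
    ("early, Age-group x Distribution", 4.97),
    ("middle, Expression condition", 9.41),
    ("middle, Distribution", 15.36),
    ("late, Distribution", 23.05),
]:
    print(label, round(partial_eta_squared(f_value, 1, 34), 2))
```

Under this assumption the four F values give 0.13, 0.22, 0.31, and 0.40, which agree with the η2 values reported for these effects.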

Discussion

The current study investigated the neurodevelopmental processes underlying the automatic detection of changes in facial expression. This was achieved by evaluating the electrophysiological responses of adolescents and adults to emotional and neutral facial stimuli. The main findings of this study indicated that adolescents featured a greater level of vMMN compared to adults in the fronto-central areas in the early stage of processing, and that only adults featured a more negative vMMN in the fearful condition relative to the happy condition in the late stage of processing.

Sensory Responses

Changes in visual evoked potentials (VEPs) are reported to persist throughout late childhood and adolescence, likely due to the continuous electrophysiological maturation of the human visual system (Brecelj et al., 2002; Clery et al., 2012). With regard to the ERP responses evoked by deviant and standard stimuli, adolescents demonstrated larger P1 amplitudes, indicating that adolescents featured stronger early visual detection of facial expressions than adults (Guyer et al., 2008; Passarotti et al., 2009; Scherf et al., 2012; van den Bulk et al., 2012). The N170 component reflects the structural encoding of facial expression (Bentin et al., 1996), and shorter N170 latencies relate to more accurate facial perception in both adolescents and adults (McPartland et al., 2004). In the present study, adults demonstrated greater N170 amplitudes and faster responses, supporting reports of more accurate structural encoding in adults versus adolescents. These findings were consistent with previous reports that adult N170 response patterns are not reached even by mid- and late adolescence (Taylor et al., 1999, 2004; Itier and Taylor, 2004a,b; Batty and Taylor, 2006; Miki et al., 2011). Moreover, with regard to the LPP component, adults exhibited faster LPP responses in the fearful condition than in the happy condition. This is in agreement with the prevalence of negativity bias and suggests that a negative stimulus triggers a greater degree of context evaluation and stimulus elaboration than a positive stimulus (Williams et al., 2006).

Visual Mismatch Responses

Visual mismatch negativity is reported to reflect the automatic process underlying the detection of mismatches between sensory input and the representation of frequently presented stimuli in transient memory (Czigler, 2007). vMMN relates to prediction errors arising between the transient representation of visual information and actual perceptual input (Winkler and Czigler, 2012; Stefanics et al., 2014, 2015). Sources of vMMN are reported to include retinotopic regions of the visual cortex (Czigler et al., 2004; Sulykos and Czigler, 2011) and prefrontal regions (Kimura et al., 2010, 2011). Despite an increasing number of vMMN studies in adults, relatively few have examined vMMN in children and adolescents. Clery et al. (2012) examined the visual mismatch response (vMMR) in children (8–14 years) and adults. In particular, the authors reported that the detection of changes in non-emotional information required a longer duration for children in late childhood than adults (Clery et al., 2012). In addition, Tomio et al. (2012) investigated the developmental changes in vMMN for subjects aged 2–27 years old, and observed that vMMN latencies decreased with age, maturing to adult level by the age of 16 years. In contrast, another ERP study reported no significant differences in vMMN responses (180–400 ms) to color modality between children (aged ∼10 years) and adults (Horimoto et al., 2002).

The current study was the first to investigate vMMN with regard to the development of emotional processing in adolescence. In this experiment, adolescents demonstrated a greater level of vMMN compared to adults in the fronto-central regions during the early processing phase (120–200 ms), which might indicate that adolescents are more sensitive to changes in facial expression at this stage. This early vMMN finding paralleled the P1 amplitude result (peaking during 100–170 ms), wherein adolescents featured greater P1 amplitudes compared to adults. Since this early vMMN overlaps with the P1 time window, it is possible that early vMMN represents the initial processing of standard and deviant stimuli reflected by P1 amplitude. Furthermore, adolescents featured more negative vMMN responses in the fronto-central regions than in the occipito-temporal regions, which was not observed in adults. This was consistent with previous studies, in which enhanced prefrontal activity was identified in adolescents in response to affective faces (Killgore et al., 2001; Yurgelun-Todd and Killgore, 2006). Several frontal structures are considered to be essential neural interfaces between cognitive and emotional processes, including the ventrolateral prefrontal cortex (VLPFC) and dorsal anterior cingulate cortex (dACC) (Petrides and Pandya, 2002; Pavuluri et al., 2007). Furthermore, the frontal cortex undergoes a prominent transformation during adolescence (Gogtay et al., 2004; Taylor et al., 2014), which correlates strongly with the profound neural development of social-emotional processing during this period (Monk et al., 2003; Palermo and Rhodes, 2007; Blakemore, 2008; Fusar-Poli et al., 2009).

Adults showed more negative late vMMN (370–450 ms) in the fearful condition than in the happy condition, which was consistent with previous expression-related vMMN studies (Kimura et al., 2011; Stefanics et al., 2012). This also highlighted the enhanced automatic detection of fearful expressions relative to happy expressions, which is in line with the effects of negativity bias, wherein individuals demonstrate comparatively faster behavioral responding and/or greater ERP responses to negative stimuli compared to positive or neutral stimuli (Nelson and de Haan, 1996; Ito et al., 1998; Carretié et al., 2001; Batty and Taylor, 2003; Smith et al., 2003; Dawson et al., 2004; Rossignol et al., 2005; Leppänen et al., 2007; Vaish et al., 2008). However, adolescents did not show such differences: their vMMN responses were comparable in the fearful and happy conditions, which suggests that, during the late automatic processing phase, adolescents process both types of affective information to a similar degree.

With regard to vMMN during the middle and late processing phases (230–320 ms and 370–450 ms, respectively), both groups demonstrated greater vMMN responses in the fronto-central regions than in the occipito-temporal regions. This indicates that fronto-central brain regions were strongly activated in response to affective changes in both adults and adolescents. Accordingly, mismatch components with regard to the processing of emotional faces have also been reported in the frontal regions (Astikainen and Hietanen, 2009; Kimura et al., 2012; Stefanics et al., 2012; Astikainen et al., 2013), wherein Kimura et al. (2012) demonstrated that frontal activation was observed in all phases of vMMN. In addition, Yurgelun-Todd and Killgore (2006) reported that age was positively correlated with neural activation in the prefrontal cortex during the perception of fearful but not happy faces in children and adolescents. The neural generators of vMMN with regard to non-emotional information have also been observed in the medial prefrontal and lateral prefrontal areas (Yucel et al., 2007; Kimura et al., 2010; Urakawa et al., 2010). Moreover, prior neuroimaging studies have reported that adolescents feature greater amygdala and fusiform activation during passive viewing of fearful expressions (Guyer et al., 2008). Therefore, the effects of negativity bias in adolescents indicate that the recognition of negative expressions is essential for social-emotional development (Williams et al., 2009).

The current study has two limitations. First, no vMMN data for non-emotional stimuli were collected in adolescents, so it remains uncertain whether the observed vMMN differences between adolescents and adults are specific to facial emotion. Future studies could include a control condition with a non-emotional standard and deviant, in order to analyze both emotional and non-emotional vMMN in adolescents and adults. Second, a flip-flop design, as used by Stefanics et al. (2012), was not implemented in the current study. In a flip-flop paradigm, the images used as the standard and deviant stimuli in one oddball sequence exchange roles in a subsequent sequence. Incorporating a flip-flop design in future work would enable the analysis and comparison of vMMN responses elicited under both assignments of standard and deviant.
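As an illustration only, the flip-flop arrangement described above can be sketched in a few lines of Python. The trial count, the deviant probability, and the function names `oddball_block` and `flip_flop_blocks` are hypothetical choices made for this sketch; they are not parameters of the present study or of Stefanics et al. (2012). The sketch also assumes that every deviant must be preceded by at least one standard.

```python
import random


def oddball_block(standard, deviant, n_trials=100, p_deviant=0.2, seed=0):
    """Build one oddball sequence in which no two deviants are adjacent.

    n_trials and p_deviant are illustrative assumptions, not values
    taken from the study.
    """
    rng = random.Random(seed)
    n_dev = round(n_trials * p_deviant)
    n_std = n_trials - n_dev
    if n_dev > n_std:
        raise ValueError("deviants must not outnumber standards")
    # Pick which standards are followed by a deviant; because each slot
    # holds at most one deviant, deviants can never be consecutive.
    slots = set(rng.sample(range(n_std), n_dev))
    sequence = []
    for i in range(n_std):
        sequence.append(standard)
        if i in slots:
            sequence.append(deviant)
    return sequence


def flip_flop_blocks(stim_a, stim_b, n_trials=100, p_deviant=0.2, seed=0):
    """Return two blocks in which stim_a and stim_b exchange roles."""
    block_1 = oddball_block(stim_a, stim_b, n_trials, p_deviant, seed)
    block_2 = oddball_block(stim_b, stim_a, n_trials, p_deviant, seed + 1)
    return [block_1, block_2]


blocks = flip_flop_blocks("happy", "fearful")
```

With both blocks available, the response to a given expression as a deviant in one block can be compared with the response to the same expression as a standard in the reversed block, so that the difference wave is less likely to reflect physical differences between the two images.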

Conclusion

The current study investigated the neurodevelopmental processes underlying the automatic detection of changes in emotional expression in adolescents. Adolescents demonstrated stronger automatic processing of emotional faces in the early processing stage. During the late phase, only adults showed better automatic processing of fearful faces than of happy faces, whereas adolescents showed comparable automatic change detection for fearful and happy expressions. The present findings reveal dynamic differences in the automatic neural processing of facial expressions between adolescents and adults.

Author Contributions

TL designed the experiment and collected the data. TX analyzed the data. TL, TX, and JS wrote the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgment

This research was supported by the National Natural Science Foundation of China (Grant No. 31370020).

References

Ahmed, S. P., Bittencourt-Hewitt, A., and Sebastian, C. L. (2015). Neurocognitive bases of emotion regulation development in adolescence. Dev. Cogn. Neurosci. 15, 11–25.

Astikainen, P., Cong, F., Ristaniemi, T., and Hietanen, J. K. (2013). Event-related potentials to unattended changes in facial expressions: detection of regularity violations or encoding of emotions? Front. Hum. Neurosci. 7:557. doi: 10.3389/fnhum.2013.00557

Astikainen, P., and Hietanen, J. K. (2009). Event-related potentials to task-irrelevant changes in facial expressions. Behav. Brain Funct. 5:30. doi: 10.1016/S0926-6410(03)00174-5

Batty, M., and Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Brain Res. Cogn. Brain Res. 17, 613–620. doi: 10.1111/j.1467-7687.2006.00480.x

Batty, M., and Taylor, M. J. (2006). The development of emotional face processing during childhood. Dev. Sci. 9, 207–220. doi: 10.1162/jocn.1996.8.6.551

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565. doi: 10.1038/nrn2353

Blakemore, S. J. (2008). The social brain in adolescence. Nat. Rev. Neurosci. 9, 267–277. doi: 10.1111/j.1469-7610.2006.01611.x

Blakemore, S. J., and Choudhury, S. (2006). Development of the adolescent brain: implications for executive function and social cognition. J. Child Psychol. Psychiatry 47, 296–312. doi: 10.1037/0894-4105.12.3.446

Brecelj, J., Štrucl, M., Zidar, I., and Tekačič-Pompe, M. (2002). Pattern ERG and VEP maturation in school children. Clin. Neurophysiol. 113, 1764–1770. doi: 10.1016/j.neubiorev.2010.10.011

Burnett, S., Sebastian, C., Kadosh, K. C., and Blakemore, S. J. (2011). The social brain in adolescence: evidence from functional magnetic resonance imaging and behavioural studies. Neurosci. Biobehav. Rev. 35, 1654–1664. doi: 10.1162/089892902317236858

Campanella, S., Quinet, P., Bruyer, R., Crommelinck, M., and Guerit, J. M. (2002). Categorical perception of happiness and fear facial expressions: an ERP study. J. Cogn. Neurosci. 14, 210–227. doi: 10.1016/S0167-8760(00)00195-1

Carretié, L., Mercado, F., Tapia, M., and Hinojosa, J. A. (2001). Emotion, attention, and the ‘negativity bias’, studied through event-related potentials. Int. J. Psychophysiol. 41, 75–85. doi: 10.1016/j.neuron.2010.08.033

Casey, B. J., Duhoux, S., and Cohen, M. M. (2010). Adolescence: what do transmission, transition, and translation have to do with it? Neuron 67, 749–760. doi: 10.1196/annals.1440.010

Casey, B., Jones, R. M., and Hare, T. A. (2008). The adolescent brain. Ann. N. Y. Acad. Sci. 1124, 111–126. doi: 10.1111/j.1532-7795.2010.00712.x

Casey, B., Jones, R. M., and Somerville, L. H. (2011). Braking and accelerating of the adolescent brain. J. Res. Adolesc. 21, 21–33. doi: 10.1016/j.neulet.2010.01.050

Chang, Y., Xu, J., Shi, N., Zhang, B., and Zhao, L. (2010). Dysfunction of processing task-irrelevant emotional faces in major depressive disorder patients revealed by expression-related visual MMN. Neurosci. Lett. 472, 33–37. doi: 10.1016/j.neuropsychologia.2012.01.035

Clery, H., Roux, S., Besle, J., Giard, M.-H., Bruneau, N., and Gomot, M. (2012). Electrophysiological correlates of automatic visual change detection in school-age children. Neuropsychologia 50, 979–987. doi: 10.1016/j.brainresrev.2007.10.012

Costafreda, S. G., Brammer, M. J., David, A. S., and Fu, C. H. Y. (2008). Predictors of amygdala activation during the processing of emotional stimuli: a meta-analysis of 385 PET and fMRI studies. Brain Res. Rev. 58, 57–70. doi: 10.1016/S0301-0511(99)00044-7

Cuthbert, B. N., Schupp, H. T., Bradley, M. M., Birbaumer, N., and Lang, P. J. (2000). Brain potentials in affective picture processing: covariation with autonomic arousal and affective report. Biol. Psychol. 52, 95–111. doi: 10.1027/0269-8803.21.34.224

Czigler, I. (2007). Visual mismatch negativity: violating of nonattended environmental regularities. J. Psychophysiol. 21, 224–230. doi: 10.1016/j.neulet.2004.04.048

Czigler, I., Balazs, L., and Pato, L. G. (2004). Visual change detection: event-related potentials are dependent on stimulus location in humans. Neurosci. Lett. 364, 149–153. doi: 10.1196/annals.1308.034

Dahl, R. E. (2004). Adolescent development and the regulation of behavior and emotion: introduction to part VIII. Ann. N. Y. Acad. Sci. 1021, 294–295. doi: 10.1111/j.1467-7687.2004.00352.x

Dawson, G., Webb, S. J., Carver, L., Panagiotides, H., and McPartland, J. (2004). Young children with autism show atypical brain responses to fearful versus neutral facial expressions of emotion. Dev. Sci. 7, 340–359. doi: 10.1016/j.neuropsychologia.2014.05.006

Dundas, E. M., Plaut, D. C., and Behrmann, M. (2014). An ERP investigation of the co-development of hemispheric lateralization of face and word recognition. Neuropsychologia 61, 315–323. doi: 10.1111/j.1469-8986.2009.00796.x

Foti, D., Hajcak, G., and Dien, J. (2009). Differentiating neural responses to emotional pictures: evidence from temporal-spatial PCA. Psychophysiology 46, 521–530.

Fusar-Poli, P., Placentino, A., Carletti, F., Landi, P., Allen, P., Surguladze, S., et al. (2009). Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci. 34, 418–432. doi: 10.3724/sp.j.1041.2010.00342

Gao, P. X., Liu, H. J., Ding, N., and Guo, D. J. (2010). An event-related-potential study of emotional processing in adolescence. Acta Psychol. Sin. 42, 342–351. doi: 10.3389/fnhum.2012.00334

Gayle, L. C., Gal, D., and Kieffaber, P. D. (2012). Measuring affective reactivity in individuals with autism spectrum personality traits using the visual mismatch negativity event-related brain potential. Front. Hum. Neurosci. 6:334. doi: 10.3389/fnhum.2012.00334

Giedd, J. N., Blumenthal, J., Jeffries, N. O., Castellanos, F. X., Liu, X., Zijdenbos, A., et al. (1999). Brain development during childhood and adolescence: a longitudinal MRI study. Nat. Neurosci. 2, 861–863. doi: 10.1073/pnas.0402680101

Gogtay, N., Giedd, J. N., Lusk, L., Hayashi, K. M., Greenstein, D., Vaituzis, A. C., et al. (2004). Dynamic mapping of human cortical development during childhood through early adulthood. Proc. Natl. Acad. Sci. U.S.A. 101, 8174–8179. doi: 10.1162/jocn.2008.20114

Guyer, A. E., Monk, C. S., McClure-Tone, E. B., Nelson, E. E., Roberson-Nay, R., Adler, A. D., et al. (2008). A developmental examination of amygdala response to facial expressions. J. Cogn. Neurosci. 20, 1565–1582. doi: 10.1016/j.biopsycho.2008.11.006

Hajcak, G., and Dennis, T. A. (2009). Brain potential during affective picture processing in children. Biol. Psychol. 80, 333–338.

Hajcak, G., Weinberg, A., MacNamara, A., and Foti, D. (2011). “ERPs and the study of emotion,” in Handbook of Event-Related Potential Components, eds S. J. Luck and E. Kappenman (New York, NY: Oxford University Press). doi: 10.1016/j.biopsych.2008.03.015

Hare, T. A., Tottenham, N., Galvan, A., Voss, H. U., Glover, G. H., and Casey, B. J. (2008). Biological substrates of emotional reactivity and regulation in adolescence during an emotional go-nogo task. Biol. Psychiatry 63, 927–934. doi: 10.1111/j.1469-7610.2004.00316.x

Herba, C., and Phillips, M. (2004). Annotation: development of facial recognition from childhood to adolescence: behavioral and neurological perspectives. J. Child Psychol. Psychiatry 45, 1185–1189. doi: 10.1016/S0387-7604(02)00086-4

Horimoto, R., Inagaki, M., Yano, T., Sata, Y., and Kaga, M. (2002). Mismatch negativity of the color modality during a selective attention task to auditory stimuli in children with mental retardation. Brain Dev. 24, 703–709. doi: 10.1016/j.neuroimage.2011.12.003

Hung, Y., Smith, M. L., and Taylor, M. J. (2012). Development of ACC-amygdala activations in processing unattended fear. Neuroimage 60, 545–552. doi: 10.1006/nimg.2001.0982

Itier, R. J., and Taylor, M. J. (2002). Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. Neuroimage 15, 353–372. doi: 10.1111/j.1467-7687.2004.00367.x

Itier, R. J., and Taylor, M. J. (2004a). Effects of repetition and configural changes on the development of face recognition processes. Dev. Sci. 7, 469–487. doi: 10.1111/j.1467-7687.2004.00342.x

Itier, R. J., and Taylor, M. J. (2004b). Face inversion and contrast-reversal effects across development: in contrast to the expertise theory. Dev. Sci. 7, 246–260. doi: 10.1037/0022-3514.75.4.887

Ito, T. A., Larsen, J. T., Smith, N. K., and Cacioppo, J. T. (1998). Negative information weighs more heavily on the brain: the negativity bias in evaluative categorizations. J. Pers. Soc. Psychol. 75, 887–900. doi: 10.1111/desc.12054

Kadosh, K. C., Linden, D. E. J., and Lau, J. Y. F. (2013). Plasticity during childhood and adolescence: innovative approaches to investigating neurocognitive development. Dev. Sci. 16, 574–583. doi: 10.3389/fnhum.2013.00532

Kecskés-Kovács, K., Sulykos, I., and Czigler, I. (2013). Is it a face of a woman or a man? Visual mismatch negativity is sensitive to gender category. Front. Hum. Neurosci. 7:532. doi: 10.3389/fnhum.2013.00532

Killgore, W. D., Oki, M., and Yurgelun-Todd, D. A. (2001). Sex-specific developmental changes in the amygdala responses to affective faces. Neuroreport 12, 427–433. doi: 10.1093/cercor/bhr244

Kimura, M., Kondo, H., Ohira, H., and Schröger, E. (2012). Unintentional temporal context-based prediction of emotional faces: an electrophysiological study. Cereb. Cortex 22, 1774–1785. doi: 10.1016/j.neulet.2010.09.011

Kimura, M., Ohira, H., and Schröger, E. (2010). Localizing sensory and cognitive systems for pre-attentive visual deviance detection: an sLORETA analysis of the data of Kimura et al. (2009). Neurosci. Lett. 485, 198–203. doi: 10.1097/WNR.0b013e32834973ba

Kimura, M., Schröger, E., and Czigler, I. (2011). Visual mismatch negativity and its importance in visual cognitive sciences. Neuroreport 22, 669–673. doi: 10.3389/fnhum.2013.00714

Kreegipuu, K., Kuldkepp, N., Sibolt, O., Toom, M., Allik, J., and Näätänen, R. (2013). vMMN for schematic faces: automatic detection of change in emotional expression. Front. Hum. Neurosci. 7:714. doi: 10.3389/fnhum.2013.00714

Kujawa, A. J., Hajcak, G., Torpey, D., Kim, J., and Klein, D. N. (2012a). Electrocortical reactivity to emotional faces in young children and associations with maternal and paternal depression. J. Child Psychol. Psychiatry 53, 207–215. doi: 10.1016/j.dcn.2012.03.005

Kujawa, A. J., Klein, D. N., and Hajcak, G. (2012b). Electrocortical reactivity to emotional images and faces in middle childhood to early adolescence. Dev. Cogn. Neurosci. 2, 458–467. doi: 10.1111/j.1467-8624.2007.00994.x

Leppänen, J. M., Moulson, M. C., Vogel-Farley, V. K., and Nelson, C. A. (2007). An ERP study of emotional face processing in the adult and infant brain. Child Dev. 78, 232–245. doi: 10.1186/1744-9081-8-7

Li, X., Lu, Y., Sun, G., Gao, L., and Zhao, L. (2012). Visual mismatch negativity elicited by facial expressions: new evidence from the equiprobable paradigm. Behav. Brain Funct. 8:7. doi: 10.1016/S0304-3940(98)00586-2

Linkenkaer-Hansen, K., Palva, J. M., Sams, M., Hietanen, J. K., Aronen, H. J., and Ilmoniemi, R. J. (1998). Face-selective processing in human extrastriate cortex around 120 ms after stimulus onset revealed by magneto- and electroencephalography. Neurosci. Lett. 253, 147–150. doi: 10.1097/01.wnr.0000198946.00445.2f

Lobaugh, N. J., Gibson, E., and Taylor, M. J. (2006). Children recruit distinct neural systems for implicit emotional face processing. Neuroreport 17, 215–219. doi: 10.1016/S0065-2407(09)03706-9

McClure, E. B., Monk, C. S., Nelson, E. E., Zarahn, E., Leibenluft, E., Bilder, R. M., et al. (2004). A developmental examination of gender differences in brain engagement during evaluation of threat. Biol. Psychiatry 55, 1047–1055. doi: 10.1111/j.1469-7610.2004.00318.x

McPartland, J., Dawson, G., Webb, S. J., Panagiotides, H., and Carver, L. J. (2004). Event related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. J. Child Psychol. Psychiatry 45, 1235–1245. doi: 10.1016/j.clinph.2010.07.013

Miki, K., Watanabe, S., Teruya, M., Takeshima, Y., Urakawa, T., Hirai, M., et al. (2011). The development of the perception of facial emotional change examined using ERPs. Clin. Neurophysiol. 122, 530–538. doi: 10.1016/S1053-8119(03)00355-0

Monk, C. S., McClure, E. B., Nelson, E. E., Zarahn, E., Bilder, R. M., Leibenluft, E., et al. (2003). Adolescent immaturity in attention-related brain engagement to emotional facial expressions. Neuroimage 20, 420–428. doi: 10.1016/j.clinph.2007.04.026

Näätänen, R., Paavilainen, P., Rinne, T., and Alho, K. (2007). The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin. Neurophysiol. 118, 2544–2590. doi: 10.1016/j.clinph.2003.04.001

Nelson, C. A., and de Haan, M. (1996). Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Dev. Psychobiol. 29, 577–595. doi: 10.1017/S0033291704003915

Nelson, E. E., Leibenluft, E., Mcclure, E. B., and Pine, D. S. (2005). The social re-orientation of adolescence: a neuroscience perspective on the process and its relation to psychopathology. Psychol. Med. 35, 163–174. doi: 10.1016/j.biopsycho.2007.11.006

Olofsson, J. K., Nordin, S., Sequeira, H., and Polich, J. (2008). Affective picture processing: an integrative review of ERP findings. Biol. Psychol. 77, 247–265. doi: 10.1016/j.neuropsychologia.2006.04.025

Palermo, R., and Rhodes, G. (2007). Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia 45, 75–92. doi: 10.1093/scan/nsp029

Passarotti, A. M., Sweeney, J. A., and Pavuluri, M. N. (2009). Neural correlates of incidental and directed facial emotion processing in adolescents and adults. Soc. Cogn. Affect. Neurosci. 4, 387–398. doi: 10.1016/j.tics.2004.12.008

Paus, T. (2005). Mapping brain maturation and cognitive development during adolescence. Trends Cogn. Sci. 9, 60–68. doi: 10.1016/j.biopsych.2006.07.011

Pavuluri, M. N., O’Connor, M. M., Harral, E. M., and Sweeney, J. A. (2007). Affective neural circuitry during facial emotion processing in pediatric bipolar disorder. Biol. Psychiatry 62, 158–167. doi: 10.1016/S0301-0511(03)00049-8

Pazo-Alvarez, P., Cadaveira, F., and Amenedo, C. E. (2003). MMN in the visual modality: a review. Biol. Psychol. 63, 199–236. doi: 10.1046/j.1460-9568.2001.02090.x

Petrides, M., and Pandya, D. N. (2002). Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and cortico-cortical connection patterns in the monkey. Eur. J. Neurosci. 16, 291–310. doi: 10.1016/j.neuron.2011.02.019

Pfeifer, J. H., Masten, C. L., Moore, W. E. III, Oswald, T. M., Mazziotta, J. C., Iacoboni, M., et al. (2011). Entering adolescence: resistance to peer influence, risky behavior, and neural changes in emotion reactivity. Neuron 69, 1029–1036. doi: 10.1002/hbm.20130

Pourtois, G., Dan, E. S., Grandjean, D., Sander, D., and Vuilleumier, P. (2005). Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum. Brain Mapp. 26, 65–79. doi: 10.1016/j.neulet.2004.11.091

Rossignol, M., Philippot, P., Douilliez, C., Crommelinck, M., and Campanella, S. (2005). The perception of fearful and happy facial expression is modulated by anxiety: an event-related potential study. Neurosci. Lett. 377, 115–120. doi: 10.1016/S1388-2457(98)00037-6

Rossion, B., Campanella, S., Gomez, C., Delinte, A., Debatisse, D., Liard, L., et al. (1999a). Task modulation of brain activity related to familiar and unfamiliar face processing: an ERP study. Clin. Neurophysiol. 110, 449–462. doi: 10.1016/S0301-0511(99)00013-7

Rossion, B., Delvenne, J. F., Debatisse, D., Goffaux, V., Bruyer, R., Crommelinck, M., et al. (1999b). Spatio-temporal localization of the face inversion effect: an event-related potentials study. Biol. Psychol. 50, 173–189. doi: 10.1016/j.dcn.2011.07.016

Scherf, K. S., Behrmann, M., and Dahl, R. E. (2012). Facing changes and changing faces in adolescence: a new model for investigating adolescent-specific interactions between pubertal brain and behavioral development. Dev. Cogn. Neurosci. 2, 199–219. doi: 10.1093/cercor/bhq269

Scherf, K. S., Luna, B., Avidan, G., and Behrmann, M. (2011). What precedes which: developmental neural tuning in face- and place-related cortex. Cortex 21, 1663–1680. doi: 10.1016/j.bandc.2009.10.003

Segalowitz, S. J., Santesso, D. L., and Jetha, M. K. (2010). Electrophysiological changes during adolescence: a review. Brain Cogn. 72, 86–100. doi: 10.1016/S0028-3932(02)00147-1

Smith, N. K., Cacioppo, J. T., Larsen, J. T., and Chartrand, T. L. (2003). May I have your attention, please: electrocortical responses to positive and negative stimuli. Neuropsychologia 41, 171–183. doi: 10.1080/87565641.2010.549865

Somerville, L. H., Jones, R. M., and Casey, B. J. (2010). A time of change: behavioral and neural correlates of adolescent sensitivity to appetitive and aversive environmental cues. Brain Cogn. 72, 124–133. doi: 10.1016/j.bandc.2009.07.003

Stefanics, G., Astikainen, P., and Czigler, I. (2015). Visual mismatch negativity (vMMN): a prediction error signal in the visual modality. Front. Hum. Neurosci. 8:1074. doi: 10.3389/fnhum.2014.01074

Stefanics, G., Csukly, G., Komlósi, S., Czobor, P., and Czigler, I. (2012). Processing of unattended facial emotions: a visual mismatch negativity study. Neuroimage 59, 3042–3049. doi: 10.1016/j.neuroimage.2012.06.068

Stefanics, G., Kremláček, J., and Czigler, I. (2014). Visual mismatch negativity: a predictive coding view. Front. Hum. Neurosci. 8:666. doi: 10.3389/fnhum.2014.00666

Steinberg, L. (2005). Cognitive and affective development in adolescence. Trends Cogn. Sci. 9, 69–74. doi: 10.1016/j.brainres.2011.05.009

Sulykos, I., and Czigler, I. (2011). One plus one is less than two: visual features elicit non-additive mismatch-related brain activity. Brain Res. 1398, 64–71. doi: 10.1027/0269-8803/a000085

Sulykos, I., Kecskés-Kovács, K., and Czigler, I. (2013). Memory mismatch in vision: no reactivation. J. Psychophysiol. 27, 1–6. doi: 10.1023/B:BRAT.0000032863.39907.cb

Susac, A., Ilmoniemi, R. J., Pihko, E., and Supek, S. (2004). Neurodynamic studies on emotional and inverted faces in an oddball paradigm. Brain Topogr. 16, 265–268. doi: 10.1162/0898929042304732

Taylor, M. J., Batty, M., and Itier, R. J. (2004). The faces of development: a review of early face processing over childhood. J. Cogn. Neurosci. 16, 1426–1442. doi: 10.3389/fnhum.2014.00453

Taylor, M. J., Doesburg, S. M., and Pang, E. W. (2014). Neuromagnetic vistas into typical and atypical development of frontal lobe functions. Front. Hum. Neurosci. 8:453. doi: 10.3389/fnhum.2014.00453

Taylor, M. J., McCarthy, G., Saliba, E., and Degiovanni, E. (1999). ERP evidence of developmental changes in processing of faces. Clin. Neurophysiol. 110, 910–915. doi: 10.1007/s11062-012-9280-2

Tomio, N., Fuchigami, T., Fujita, Y., Okubo, O., and Mugishima, H. (2012). Developmental changes of visual mismatch negativity. Neurophysiology 44, 138–143. doi: 10.1111/1469-8986.3850816

Urakawa, T., Inui, K., Yamashiro, K., and Kakigi, R. (2010). Cortical dynamics of the visual change detection process. Psychophysiology 47, 905–912. doi: 10.1111/j.1469-8986.2010.00987.x

Vaish, A., Grossmann, T., and Woodward, A. (2008). Not all emotions are created equal: the negativity bias in social-emotional development. Psychol. Bull. 134, 383–403. doi: 10.1016/j.dcn.2012.09.005

van den Bulk, B. G., Koolschijn, P. C., Meens, P. H., van Lang, N. D., van der Wee, N. J., Rombouts, S. A., et al. (2012). How stable is activation in the amygdala and prefrontal cortex in adolescence? A study of emotional face processing across three measurements. Dev. Cogn. Neurosci. 4, 65–76. doi: 10.1017/S0033291710000929

van Rijn, S., Aleman, A., de Sonneville, L., Sprong, M., Ziermans, T., Schothorst, P., et al. (2011). Misattribution of facial expressions of emotion in adolescents at increased risk of psychosis: the role of inhibitory control. Psychol. Med. 41, 499–508. doi: 10.1080/13803390802255635

Williams, L. M., Mathersul, D., Palmer, D. M., Gur, R. C., Gur, R. E., and Gordon, E. (2009). Explicit identification and implicit recognition of facial emotions: I. Age effects in males and females across 10 decades. J. Clin. Exp. Neuropsychol. 31, 257–277. doi: 10.1016/j.neuroimage.2005.12.009

Williams, L. M., Palmer, D., Liddell, B. J., Song, L., and Gordon, E. (2006). The ‘when’ and ‘where’ of perceiving signals of threat versus non-threat. Neuroimage 31, 458–467. doi: 10.1016/j.ijpsycho.2011.10.001

Winkler, I., and Czigler, I. (2012). Evidence from auditory and visual event-related potential (ERP) studies of deviance detection (MMN and vMMN) linking predictive coding theories and perceptual object representations. Int. J. Psychophysiol. 83, 132–143. doi: 10.1016/j.neuroimage.2006.08.050

Yucel, G., McCarthy, G., and Belger, A. (2007). fMRI reveals that involuntary visual deviance processing is resource limited. Neuroimage 34, 1245–1252. doi: 10.1016/j.conb.2007.03.009

Yurgelun-Todd, D. (2007). Emotional and cognitive changes during adolescence. Curr. Opin. Neurobiol. 17, 251–257. doi: 10.1016/j.neulet.2006.07.046

Yurgelun-Todd, D. A., and Killgore, W. D. (2006). Fear-related activity in the prefrontal cortex increases with age during adolescence: a preliminary fMRI study. Neurosci. Lett. 406, 194–199. doi: 10.1016/j.brainres.2013.03.044

Zhang, D., Luo, W., and Luo, Y. (2013). Single-trial ERP analysis reveals facial expression category in a three-stage scheme. Brain Res. 1512, 78–88. doi: 10.1016/j.neulet.2006.09.081

Keywords: automatic change detection, ERPs, visual mismatch negativity, facial expression perception, adolescence

Citation: Liu T, Xiao T and Shi J (2016) Automatic Change Detection to Facial Expressions in Adolescents: Evidence from Visual Mismatch Negativity Responses. Front. Psychol. 7:462. doi: 10.3389/fpsyg.2016.00462

Received: 08 December 2015; Accepted: 15 March 2016; Published: 30 March 2016.

Edited by:

Rosario Cabello, University of Granada, Spain

Reviewed by:

Andrea Berger, Ben-Gurion University of the Negev, Israel

István Czigler, Hungarian Academy of Sciences, Hungary

Copyright © 2016 Liu, Xiao and Shi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tongran Liu, liutr@psych.ac.cn; Jiannong Shi, shijn@psych.ac.cn
