- Brighton and Sussex Medical School, Brighton, UK

Background and aims: Most of the instruments used to assess the quality of health information on the Web (e.g., the JAMA criteria) only analyze one dimension of information quality (IQ), trustworthiness. In this study, we analyzed the type of intervention that websites describe, whether supported by evidence-based medicine (EBM) or not, to provide a further dimension of IQ, accuracy, and correlated this with the established criteria.

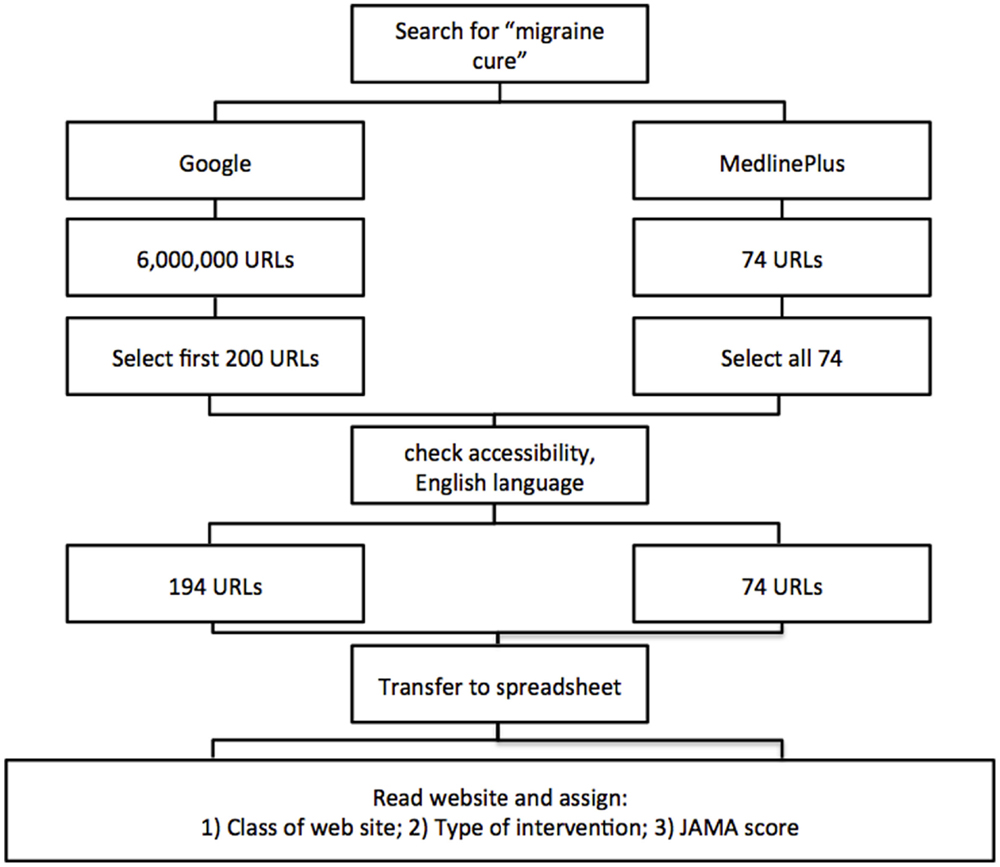

Methods: We searched Google for “migraine cure” and analyzed the first 200 websites for: (1) JAMA criteria (authorship, attribution, disclosure, currency); (2) class of websites (commercial, health portals, professional, patient groups, no-profit); and (3) type of intervention described (approved drugs, alternative medicine, food, procedures, lifestyle, drugs still at the research stage). We used hierarchical cluster analysis to identify different patterns of websites according to their classification and the information provided. Subgroup analysis on the first 10 websites returned was performed.

Results: Google returned health portals (44%), followed by commercial websites (31%) and journalism websites (11%). The type of intervention mentioned most often was alternative medicine (55%), followed by procedures (49%), lifestyle (42%), food (41%), and approved drugs (35%). Cluster analysis indicated that health portals are more likely to describe more than one type of treatment while commercial websites most often describe only one. The average JAMA score of commercial websites was significantly lower than for health portals or journalism websites, and this was mainly due to lack of information on the authors of the text and indication of the date the information was written. Looking at the first 10 websites from Google, commercial websites are underrepresented and approved drugs overrepresented.

Conclusion: Analyzing the type of therapies/prevention methods provides additional information to the trustworthiness measures, such as the JAMA score, and could be a convenient and objective indicator of websites whose information is based on EBM.

Introduction

As more patients search for health information on the Internet, many studies have analyzed the quality of health information available on the web for different pathological conditions. There is a concern that, because the Internet is practically not controlled or regulated, this might expose the public to misinformation, particularly those with low information literacy, which is the ability to critically appraise the information (1, 2). This could potentially result in patients turning to non-approved therapies, whose efficacy (and risks associated) has not been scientifically proven. Furthermore, in recent years, there have been an increasing number of commercial websites selling counterfeit medicines, with additional risks for vulnerable patients (3).

Although there are a number of specialized health websites, most patients will use generic search engines, such as Google, to search for health-related information (4), and several studies have tried to address the quality of health information available on the Internet using various methods.

The most used are the Health-on-the-Net [HON (5)] seal of approval, the JAMA criteria (6), and the DISCERN criteria (7). The latter two are, in fact, instruments to quantitatively measure the information quality (IQ) by assigning a score based on the website matching some requirements. In particular, these criteria consider whether a website provides information about authorship, ownership/financial interests, advertising, contact details, or date of update (currency). Another parameter that is also evaluated when assessing health information is its readability. For health information to be accessible to lay people, it has to be comprehensible by the average reader, and it was suggested that a patient information leaflet should be aimed at a reading ease of sixth grade in order to be understandable by 75% of the population (8). Studies have shown that the average reading level of health websites is between 10th and 13th grade (9, 10), and thus may not be accessible to individuals with lower literacy. Of note, readability and the trustworthiness indicators above do not always correlate, and one study reported that readability might be lower in websites displaying health seals of approval (11).

All of the criteria for evaluating health websites mentioned above do not take into consideration the content of the website. Therefore, they are mainly evaluating the transparency/trustworthiness of the website rather than the overall IQ, in that they do not consider the content of the site and whether the information provided is scientifically correct and evidence-based.

There are several dimensions of IQ as originally described by Wang and Strong in their seminal study (12). The study identified almost 200 data quality attributes and 18 dimensions of IQ. This and other studies group the various attributes of IQ in four categories of IQ: accessibility IQ, representational IQ (that includes understandability, format, appearance), contextual IQ (including completeness and timeliness), and intrinsic IQ (that include accuracy, correctness, and objectivity) (13). In the context of health information, probably intrinsic IQ components should include the correctness of the description of the scientific basis of the information described, that would be missing, for instance, in a website reporting that AIDS is not due to a viral infection. Assessment of these intrinsic dimensions of IQ will require an in-depth analysis of the content to be done by a panel of experts. A few studies have analyzed the content of the websites. For instance, Peterlin et al. analyzed the IQ available on cluster headache in 40 websites found using the search engine, MetaCrawler (14). In that study, the authors used a scoring system for the technical component that evaluated the correctness of the information on aspects, such as epidemiology, risk factors, diagnosis, pathophysiology, treatment, and prevention. A similar approach has been used to assess the IQ in 114 websites returned by MetaCrawler or MSN searching for retinopathy of prematurity (15).

In this study, we aim analyzing the type of treatment reported, an alternative, and simpler strategy to add an intrinsic quality dimension to the health IQ, beyond the first layer of contextual and representational IQ measured by the JAMA score.

We searched the words “migraine cure” because we aimed at looking at the information that would be returned from a search done by a layperson. In fact, studies have shown that the quality of information varies with the search term used, that is the more sophisticated the search term, the higher the IQ (16, 17). It should be noted, however, that we were more interested in setting up a methodology to study the correlations between classes of websites, type of recommendation, and the JAMA criteria as a trustworthiness index, and we did not assign a particular importance to the search terms used.

We selected the search engine, Google, because it is the most popular search engine, and, as a reference, one specialized health portal (the US Government Medline Plus). We analyzed the first 200 hits and classified the type of websites according to their affiliation (i.e., if they were commercial, professional, health portal, journalism, patient group, non-profit, or other). We also analyzed the text of the websites and classified the treatments/interventions described (whether approved drugs, alternative medicine, lifestyle or nutritional advice, etc.). We also used an established measure of the health IQ of websites (the JAMA criteria).

Materials and Methods

We searched for websites containing information relating to management of migraine. The search was conducted between December 2013 and January 2014, using google.com (US) clearing cookies and using the “private browsing” of Mozilla Firefox browser to prevent, as much as possible, personalized results due to previous browsing history. We are aware that the search results may still be influenced by the detection of the geographical location via the IP address.

Google search found 6,040,000 hits and we considered the first 200. Of those, 198 were accessible (thus excluding non-functioning links; denied direct access through password requirement, payment or subscription; not written in English) and were used in the subsequent analysis. We did not take into account the websites marked “advertisement” that Google shows before the actual search results.

MedlinePlus returned 74 hits that were all accessible and included in the analysis. The flow chart describing how the data were collected and processed is shown in Figure 1. Website links were transferred into a spreadsheet from Google using the SEOquake tool. For MedlinePlus, links were manually transferred into a spreadsheet.

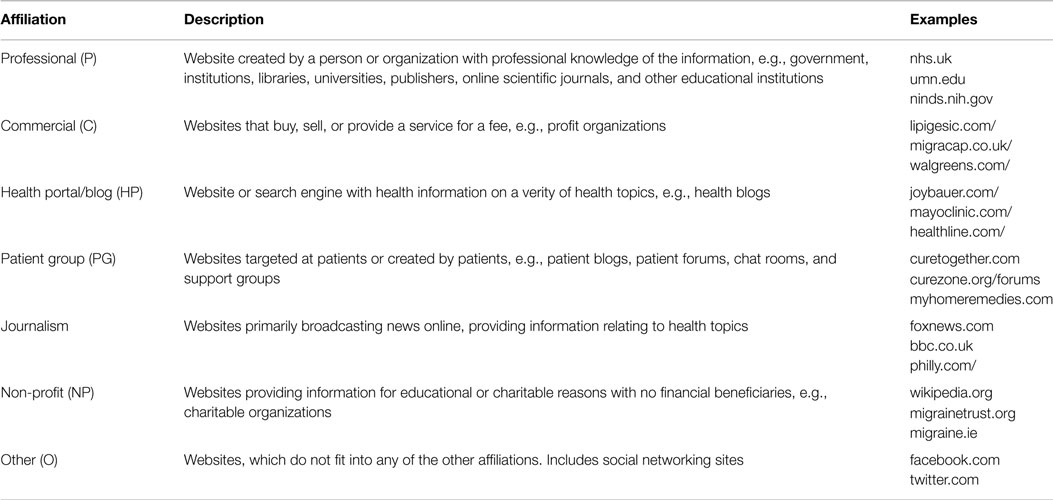

Websites were classified in accordance to their affiliation as being professional, patient group, commercial, health portal, non-profit, journalism, or others, as summarized in Table 1. Two researchers independently classified the websites and their findings were compared. For those cases where there was no agreement, the website was revisited by both researchers and a consensus achieved by discussion.

There were 10 disagreements on 196 websites (95% agreement). Calculating inter-rater reliability for two coders using Recal2 (18) showed that the highest disagreement was on “Other” websites (Cohen’s kappa coefficient, −0.01; six disagreements) followed by “Professional” websites (Cohen’s kappa coefficient, 0.76; three disagreements), and “Patients Groups” (Cohen’s kappa coefficient, 0.86; three disagreements). All other classes of websites (Commercial, Health Portal, Journalism, No-profit) showed higher agreement rates, with Cohen’s kappa coefficient >0.93. In the case of Health Portals, there were five disagreements but, because of the large number of websites in this class, Cohen’s kappa coefficient was 0.95. There was no disagreement on the type of intervention described as that was less subjective as was simply based on an intervention being mentioned in the text.

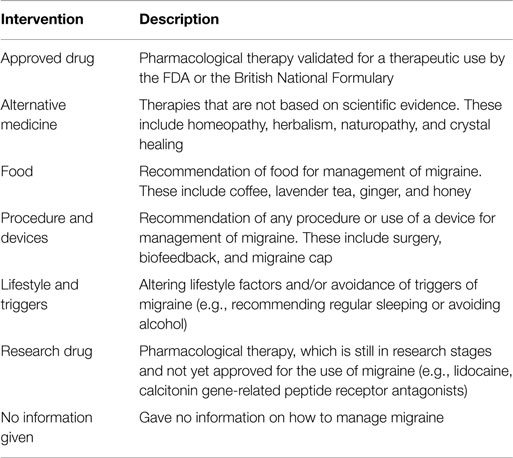

Websites were then classified according to the different types of intervention they indicated as described in Table 2. One website could mention more than one intervention. If a website gave a link to a different page of the same website, this information was also analyzed. However, if the link was given to an external site, this information was dismissed. If a website mentioned an intervention only to state that it is not effective, then the information was dismissed. Therefore, a website was tagged for a type of intervention if either it mentioned the intervention being an effective one, or listed it as one of the possible interventions without further comments.

We then gave each website a score according to the JAMA criteria (6, 19, 20). For this purpose, websites were analyzed for the following information: (1) authorship (identification of authors/contributors); (2) attribution (references listing sources of information); (3) disclosure (of ownership, advertising, conflict of interests); and (4) indication of date content was posted or updated. For each of these four criteria, we assigned a score of 1 if the information was present, or 0 if absent or unclear. If the information was not available on the initial website information, then the three-click rule was used. The three-click rule is an unofficial website navigation rule that suggests information should be accessible within three clicks (21). In previous studies, a website scoring a mean JAMA score of 3 or above has been suggested to be of high quality (19, 22).

The Kruskal–Wallis test was used for multiple comparisons of non-parametric variables, followed by Dunn’s test, using GraphPad Prism software (GraphPad Prism Software Inc., La Jolla, CA, USA). The Mann–Whitney test was used where there were two independent groups. When indicated, contingency tables were analyzed using a one-tailed Chi-square test for non-parametric data. Hierarchical cluster analysis was performed using the Genesis software (http://genome.tugraz.at/genesisclient/genesisclient_description.shtml) (Version 1.7.6 for Mac OSX).

Because websites URLs are not permanent to ensure that the reader will be able to see examples of the search results, the top 10 URLs returned in each of the 5 Google SERPs listed in Tables S1 and S2 in Supplementary Material were archived. For this purpose, we used WebCite®, an on-demand archiving system for webreferences and the archived URL is provided next to the original URL. Two webpages, all from the domain, www.health.com, were indicated as not available (n/a) as they could not be archived, presumably because either the site in question refuses connections by crawling robots or is inaccessible from the WebCite network.

Results

Distribution of Websites

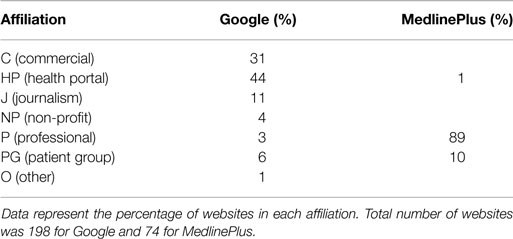

The 198 websites returned by Google were analyzed by their affiliation and the type of intervention they describe. The raw data, with the list of websites URLs and how they were classified in terms of class of websites, type of intervention, and JAMA scores are provided in Table S1 in Supplementary Material. As shown in Table 3, commercial websites and health portals made up for 75% of the total, in contrast with the 74 returned by MedlinePlus, where professional websites accounted for nearly 90% of the hits returned.

Table 3. Distribution of websites generated from search in Google or MedlinePlus according to their affiliation.

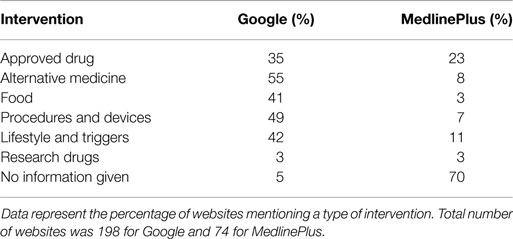

Table 4 shows the distribution of websites according to the type of intervention described. Of the 198 websites returned by Google, 184 could be assigned to an “intervention” group. However, of the 74 websites returned by MedlinePlus, 70% did not mention an intervention and could not be considered for this type of analysis. The largest proportion of websites returned by Google dealt with alternative medicine (55%) followed by procedures and devices (49%). The total for Google adds up to more than 100% because, many websites mentioned more than one type of intervention.

Table 4. Distribution of websites generated from search in Google or MedlinePlus according to the intervention indicated.

Cluster Analysis of Website Patterns

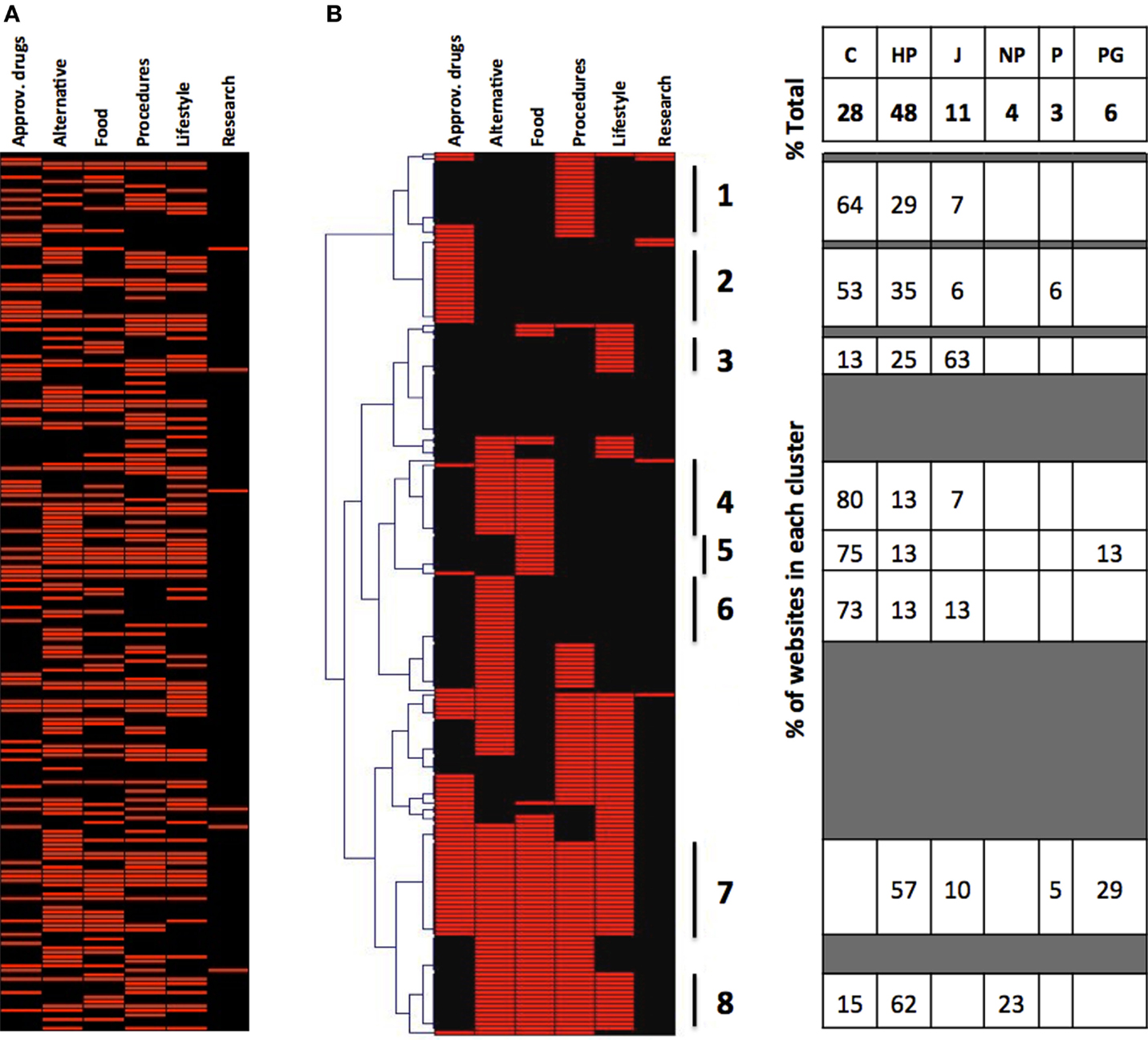

We then decided to analyze whether groups of websites could be identified based on the type of interventions they describe and taking into account the fact that most of them will describe more than one type of intervention. The type of data visualization used above does not allow a detailed analysis of co-occurrence of intervention mentioned. Therefore, we have used a graphic representation where each website is assigned a value of 1 for each type of intervention mentioned. Each website is listed in one row and the type of intervention in a column. The value 1 is then represented in red and the visual representation is shown in Figure 2A, where one can appreciate that some websites mention more than one intervention and some only one.

Figure 2. Cluster analysis of the websites returned by Google. (A) Type of intervention mentioned by the 198 websites (in alphabetical order by website URL). (B) Hierarchical cluster analysis on websites from (A), clustered by type of intervention. The table on the right shows the composition by class of websites of the eight clusters identified in this figure.

We wanted to see, using this type of visual representation, whether websites mentioned or excluded specific interventions and whether this correlated with the class of website. We thus first performed a hierarchical cluster analysis of the websites according to the intervention they mention, and the results are presented in Figure 2B. In the left part of the figure, it can be seen that we can identify clusters of websites that describe only procedure and devices (cluster 1), only approved drugs (cluster 2), only lifestyle (cluster 3), only alternative medicine (cluster 6), and websites that describe all possible interventions (cluster 7) except for the experimental drugs that were mentioned in very few websites. While there were several websites (cluster 8) that described multiple interventions excluding approved drugs, only one website described multiple interventions excluding alternative medicine.

This type of visualization allowed us to then analyze the composition, in terms of the different classes of websites, of these clusters, and the results are shown in the left side of Figure 2B.

The commercial websites preferentially mention only one intervention. In fact, while commercial websites are 28% of the total, they are 75% of those mentioning only nutrition, 73% of those mentioning only alternative medicine, 64% of those mentioning only procedures and devices. However, there are no commercial websites among those mentioning all possible indications (cluster 7), and only 15% in those mentioning all interventions excluding approved drugs (cluster 8). Health portals, which are 48% of the total, were overrepresented among the websites reporting several treatment options (clusters 7 and 8). Journalism websites, which represent 11% of the total, are prevalent among those that only mention “lifestyle” interventions (cluster 3, 63%) and never mention all the possible interventions (cluster 7). It is difficult to comment on the other classes of websites due to their small number. Likewise, a cluster analysis of the MedlinePlus did not provide much information due to the small number of websites, of those returned, that describe an intervention (not shown).

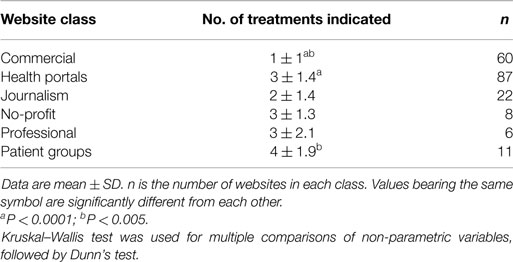

The fact that commercial websites preferentially describe only one type of intervention is also evident by calculating the mean number of different types of intervention described by the different classes of websites (Table 5).

It can be seen that the average number of treatments described by the website is 1 in the commercial websites, followed by journalistic sources with an average of 2, health portals, non-profit, and professional websites with an average of 3, while patient group websites are those describing a wider picture of treatments (an average of 4). Commercial websites describe significantly less treatment options than health portals or patient groups.

Distribution of Classes of Websites and Types of Intervention in the Ranking of Google Search: Analysis of the First 10 Websites Returned

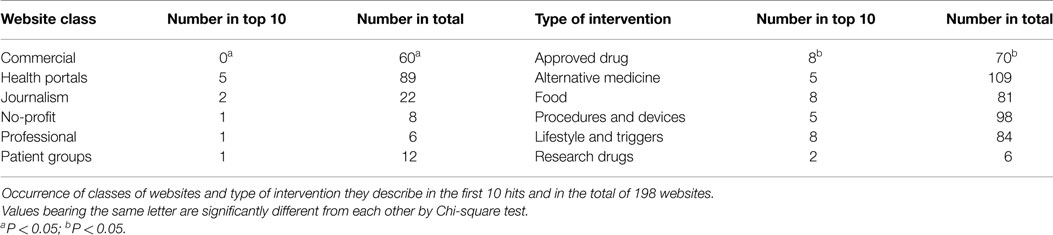

We wanted to investigate whether the top 10 websites returned by Google were following a different pattern in terms of class of website or type of intervention described. As shown in Table 6, there were significantly less commercial websites (0/10) in the top 10 then in all 198 websites (60/198). No other significant difference was observed for any of the other classes of websites. Thus, commercial websites are underrepresented in the top 10 results. Table 6 also shows the occurrence of the different types of intervention in the top 10 websites and in the total number of websites. The only significant difference found was that approved drugs are overrepresented in the 10 top ranking websites.

Table 6. Distribution of classes of websites and types of intervention in the ranking of Google search.

Analysis of Trustworthiness (JAMA scores) of the Different Classes of Websites

In this part of the study, we wanted to assess whether different classes of websites or websites describing different types of interventions differed for their JAMA score, as a recognized measure of website quality/trustworthiness.

Overall, there was a small but significant difference (P < 0.02 by Student’s t-test) in the average JAMA score of websites returned from Google (1.9 ± 0.8; 27% having a score ≥3) or MedlinePlus (2.1 ± 0.6; 19% having a score ≥3). The number of websites with a JAMA score ≥3 was slightly higher with Google (Google, 55/198, 27%; MedlinePlus, 14/74, 19%) but the difference was not statistically significant (Chi-square without Yates correction gave a one-tailed P value of 0.0675). The distribution of JAMA scores was different, and while in MedlinePlus 72% of the websites scored 2, and 19% scored 3, Google had a broader distribution, with 39, 33, and 27%, scoring 1, 2, and 3, respectively).

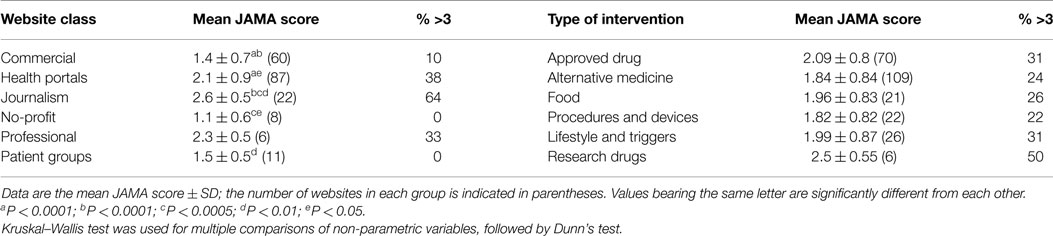

We then analyzed the JAMA score of websites returned from Google according to their class and the type of intervention, and the results are reported in Table 7. It can be seen that there are significant differences in the mean JAMA score of different classes of websites, with journalistic websites scoring the highest and commercial websites the lowest. This is also evident if we look at the percentage of websites that have a JAMA score of ≥3, where journalism websites score the highest, followed by health portals, and commercial websites the lowest. It is difficult to comment on classes of websites with 10 or less websites.

When we look at the JAMA score of websites classified by types of intervention, approved drugs score slightly higher but the differences are small and not statistically significant. Again, it is difficult to comment on the high score of websites that mention research drugs, as there are only 6.

Because MedlinePlus returned only two types of websites, and few of them indicated an intervention, this analysis has not been performed on those websites.

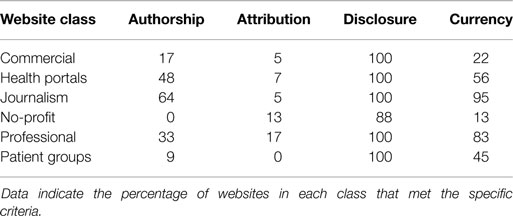

To identify the reasons for the different JAMA scores in the different classes of website described in Table 7, we have disaggregated the JAMA score in its four components and have analyzed them in the different classes of websites (Table 8). From this is clear that most websites met the “disclosure” criteria, and very few the “attribution.” The two criteria for which commercial websites and no-profit ones differed the most from the ones having a higher JAMA score (health portals and journalism) were “authorship” and “currency.”

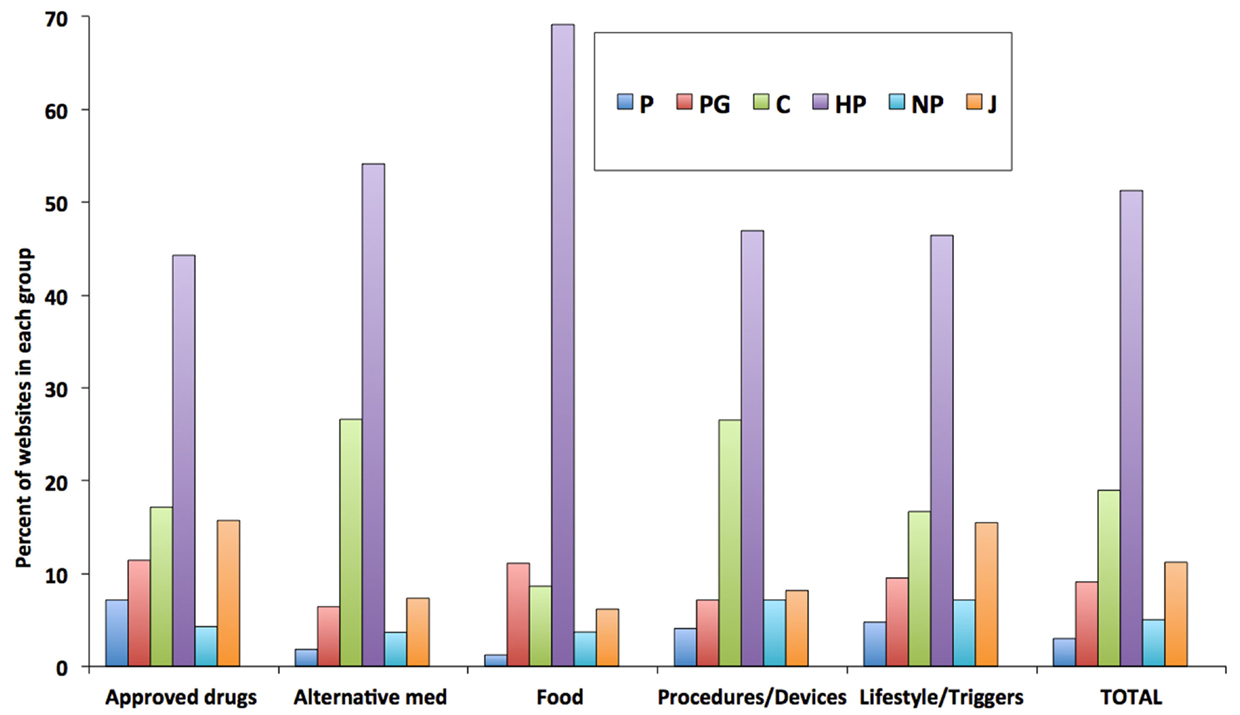

We then analyzed the distribution of intervention groups by class of websites. As shown in Figure 3, it is clear that the pattern of classes of websites is similar across all the types on intervention mentioned, i.e., there is not a type of intervention that is preferentially described by a specific class of websites. Possibly, there is a preference toward alternative medicine and procedure/devices in commercial websites, and for nutritional interventions in health portals. Of note, the pattern cannot be compared with that given in Table 3 because website can mention multiple types of interventions and an average value, obtained by adding up the values for all the interventions, is shown for comparison.

Figure 3. Composition of websites mentioning different types of intervention. Data are expressed as a percentage of the total number of websites mentioning a type of intervention. Data labels are shown above each bar. Research drugs as type of intervention are not included because of the small number of websites (n = 4).

Discussion

The present study extends our previous approach of assessing the quality of the health information of websites returned by search engines using a medical search term. In the previous work, we analyzed different classes of websites using instruments (HON code, JAMA score) that measure the trustworthiness of a website rather than the quality of the information provided. We have analyzed the websites returned by a Google search in terms of the type of intervention indicated in their content. Of course, we cannot make conclusion from our findings that could be extended to any search query. Also, results returned by Google will change with time, location, and search history. Nevertheless, the 200 websites returned represent a good sample of migraine-related websites so that some conclusion can be drawn on the usefulness of the methodology described here.

The approach used here is unbiased, and does not provide an absolute indicator of “health IQ.” However, seen from the perspective of evidence-based medicine (EBM), one should consider a website of higher quality if it points to a drug approved by a regulatory agency, and that has gone through EBM criteria for its approval, rather than to, for instance, crystal healing.

In this respect, it is important to note that, as shown in Table 7, a trustworthiness score, such as the JAMA score, is not predictive of whether a website will promote the use of alternative medicine rather than that of an approved drug, confirming that the type of intervention and the criteria used in the JAMA score are assessing different dimensions of IQ. This confirms conclusions made from a larger study on over 300 web pages providing information on breast cancer, where the JAMA score was found not to predict whether the information provided was scientifically accurate (23).

Commercial websites, no-profit organizations and patient groups have a lower JAMA score lower than health portals, professional, or journalism websites. This is exactly the same pattern that we previously reported in a study on health information on diabetic neuropathy, independently on the search engine used, whether it was Google, Yahoo, Bing, or Ask (24).

One recommendation that can be made from this observation is that owners/publishers of commercial, patient group, and no-profit websites try to improve their trustworthiness, which could often be achieved by providing simple information, such as the author of the text, and date of last update.

Another pattern that is evidenced in the present study (particularly in the cluster analysis and in Table 5) is that commercial websites will often describe only one type of intervention. This comes as no surprise and it was probably expected that a website, www.tylenol.com, describes only acetaminophen; it is probably a very good source of information on how to use this drug. However, for a patient searching the Internet on how to cure their disease, it is important to have websites that mention different options, possibly with a critical analysis, or at least a description, of their respective benefits, risks, and the scientific evidence for their efficacy. This might identify criteria to be considered in evaluating health websites, inclusivity as opposed to exclusiveness. Clearly, including various intervention options, it may be desirable or not depending on the purpose of a website, but it may be helpful to direct patients toward health portals if they want to have a general idea of the different options and to commercial websites if they seek information on one specific type of intervention.

An important question in studies of this kind is whether it is relevant to analyze 200 websites returned by Google. In fact, many studies have shown that the user directs most of the attention to the first items in the search list (25) and a study in patients searching health-related information found that they will usually look at the first 10 websites returned by the search engine (2). The difference in the pattern observed for all 200 websites and the top 10 is most interesting. Contrary to common belief that for-profit search engines, like Google, will preferentially return commercial websites, we found, as shown in Table 6, that these were in fact underrepresented, to a statistically significant extent, in the top 10 results. Of course, this may depend on the search terms, or the disease condition we search for. We have reanalyzed the raw data from our previous study where we searched the term “diabetic pain” using Google and analyzed 200 websites returned (24), and found that also in that dataset, commercial websites are underrepresented in the first 10 hits. In fact, in that study, the total number of commercial websites was 42 but they were not present in the first 10 hits. Also in that case, commercial websites were significantly underrepresented. To assess how much this depends on the terms used in the query, we performed, during the revision of this manuscript, 4 different searches on “migraine cure,” “migraine medicine,” “migraine treatment,” and “migraine therapy” and looked at the top 10 websites returned by Google. As shown in Table S2 in Supplementary Material, there were no websites classifiable as “Commercial” in any of these SERPs. Clearly, we cannot generalize and the distribution of websites returned will vary with the terms used in the queries. It would be interesting to characterize the pattern of websites returned by different search queries, possibly analyzing also existing, published data. We reanalyzed the data reported in an IQ study on kidney transplant for which a list of 94 websites was reported. In that study, commercial websites were 25% of the total, but none was in the top 10 returned by Google (26).

Also, it is often suspected that the Internet would generally point the patient toward alternative medicine approaches rather than medicinal products whose approval is based on EBM criteria. However, the data reported here show that, although among the total 200 websites analyzed that alternative medicine interventions are mentioned more frequently than approved drugs (55 vs. 35%), this is not true in the first 10 items returned by Google, where approved drugs are significantly overrepresented.

Of course, there are other features of the websites that should be taken into account. The fact that a website is returned in a Google search does not mean that it will be read. That depends on several issues including attractiveness, readability, and various aspects of the website’s design and content, that would require, in addition to the readability test mentioned above, using eye-tracking analysis (27).

More importantly, we need to stress once again that these results were obtained with a specific query. Anyone who had a chance of searching the Internet for health information knows how often low-quality websites pointing the layperson toward treatments, or strategies for prevention, that are not evidence-based, are found in the top results. Ideally, performing a large number of searches on different health-related queries would point at where misinformation, or disinformation, makes it to the top of the search. Clearly, this is a larger project that would require machine processing rather than individual scoring. The use of the type of intervention described here is something that could be implemented by defining list of keywords that using automated machine learning. This study shows that analyzing websites for the type of intervention they describe could provide other dimensions of health IQ in addition to those described by the JAMA score. In fact, at least within the sample of 200 websites retrieved here, the JAMA score did not differentiate between websites describing approved drugs from those describing alternative medicine approaches.

Performing a cluster analysis of websites, based on their classification and the intervention described, can identify patterns of websites pointing patients toward one or more treatments. In the specific example of health-related query studied here, we were surprised that commercial websites ranked low in the search list. In terms of the type of intervention recommended, complementary/alternative medicine occurred more often than approved, EBM-based drugs, but the latter were more frequent in the top 10 results, possibly due to the intrinsic higher IQ features of these websites.

It would be important to apply this type of analysis over a wide range of search terms and disease conditions and health topics in order to identify areas that are more at risk of directing patients toward non-EBM types of intervention, and thus potentially pose problems to public healthcare systems and health insurance companies. This would indicate where policy makers and professional organizations should concentrate their effort to inform patients on the benefits, risk, and scientific basis of existing therapies.

Limitations

The results of the study are specific for one specific query on one health topic. In particular, the observation that commercial websites are under-represented in the first 10 results, and that health portals are the most frequent class of websites and alternative medicine the most frequently described type of intervention may be specific to this topic; searches in other health domains may give completely different results. Additional studies on different health topics will be needed to assess the usefulness of the proposed methodology (classification by type of intervention and cluster analysis). Finally, the type of classification we have used (both in terms of website classes and types of interventions) may need to be tailored to the specific research questions.

Author Contributions

MY and PG both analyzed the data and wrote the paper.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at http://journal.frontiersin.org/article/10.3389/fpubh.2015.00204

Abbreviations

EBM, evidence-based medicine; IQ, information quality; JAMA, Journal of the American Medical Association

References

1. Berland GK, Elliott MN, Morales LS, Algazy JI, Kravitz RL, Broder MS, et al. Health information on the internet: accessibility, quality, and readability in English and Spanish. JAMA (2001) 285:2612–21. doi: 10.1001/jama.285.20.2612

2. Eysenbach G, Kohler C. How do consumers search for and appraise health information on the world wide web? Qualitative study using focus groups, usability tests, and in-depth interviews. BMJ (2002) 324:573–7. doi:10.1136/bmj.324.7337.573

3. Liang BA, Mackey T. Searching for safety: addressing search engine, website, and provider accountability for illicit online drug sales. Am J Law Med (2009) 35(1):125–84. doi:10.1177/009885880903500104

4. Fox S, Duggan M. Health Online 2013. PEW Internet and American Life Project. Washington, DC: Pew Research Center’s Internet and American Life Project (2013).

5. Health on the Net Foundation. The HON Code of Conduct for Medical and Health Web Sites (HONcode). Available from: http://www.hon.ch/HONcode/Conduct.html

6. Silberg WM, Lundberg GD, Musacchio RA. Assessing, controlling, and assuring the quality of medical information on the internet: caveant lector et viewor – let the reader and viewer beware. JAMA (1997) 277:1244–5. doi:10.1001/jama.1997.03540390074039

7. Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health (1999) 53:105–11. doi:10.1136/jech.53.2.105

8. Brosnan S, Barron E, Sahm LJ. Health literacy and the clozapine patient. Perspect Public Health (2012) 132:39–42. doi:10.1177/1757913911431038

9. Kaphingst KA, Zanfini CJ, Emmons KM. Accessibility of web sites containing colorectal cancer information to adults with limited literacy (United States). Cancer Causes Control (2006) 17:147–51. doi:10.1007/s10552-005-5116-3

10. Thompson AE, Graydon SL. Patient-oriented methotrexate information sites on the internet: a review of completeness, accuracy, format, reliability, credibility, and readability. J Rheumatol (2009) 36:41–9. doi:10.3899/jrheum.080430

11. Kaicker J, Debono VB, Dang W, Buckley N, Thabane L. Assessment of the quality and variability of health information on chronic pain websites using the DISCERN instrument. BMC Med (2010) 8:59. doi:10.1186/1741-7015-8-59

12. Wang RY, Strong DM. Beyond accuracy: what data quality means to data consumers. J Manag Inf Syst (1996):5–33.

13. Lee YW, Strong DM, Kahn BK, Wang RY. AIMQ: a methodology for information quality assessment. Inf Manag (2002) 40:133–46. doi:10.1016/S0378-7206(02)00043-5

14. Peterlin BL, Gambini-Suarez E, Lidicker J, Levin M. An analysis of cluster headache information provided on internet websites. Headache (2008) 48:378–84. doi:10.1111/j.1526-4610.2007.00951.x

15. Martins EN, Morse LS. Evaluation of internet websites about retinopathy of prematurity patient education. Br J Ophthalmol (2005) 89:565–8. doi:10.1136/bjo.2004.055111

16. Dy CJ, Taylor SA, Patel RM, Kitay A, Roberts TR, Daluiski A. The effect of search term on the quality and accuracy of online information regarding distal radius fractures. J Hand Surg Am (2012) 37:1881–7. doi:10.1016/j.jhsa.2012.05.021

17. Fabricant PD, Dy CJ, Patel RM, Blanco JS, Doyle SM. Internet search term affects the quality and accuracy of online information about developmental hip dysplasia. J Pediatr Orthop (2013) 33:361–5. doi:10.1097/BPO.0b013e31827d0dd2

18. Freelon DG. ReCal: intercoder reliability calculation as a web service. Int J Internet Sci (2010) 5:20–33.

19. Meric F, Bernstam EV, Mirza NQ, Hunt KK, Ames FC, Ross MI, et al. Breast cancer on the world wide web: cross sectional survey of quality of information and popularity of websites. BMJ (2002) 324:577–81. doi:10.1136/bmj.324.7337.577

20. Barker S, Charlton NP, Holstege CP. Accuracy of internet recommendations for prehospital care of venomous snake bites. Wilderness Environ Med (2010) 21:298–302. doi:10.1016/j.wem.2010.08.016

21. Zeldman J. Taking Your Talent to the Web: A Guide for the Transitioning Designer. San Francisco, CA: New Riders Publishing (2001).

22. Ghezzi P, Chumber S, Brabazon T. Educating medical students to evaluate the quality of health information on the web. The Philosophy of Information Quality. Cham: Springer International (2014). p. 183–99.

23. Bernstam EV, Walji MF, Sagaram S, Sagaram D, Johnson CW, Meric-Bernstam F. Commonly cited website quality criteria are not effective at identifying inaccurate online information about breast cancer. Cancer (2008) 112:1206–13. doi:10.1002/cncr.23308

24. Chumber S, Huber J, Ghezzi P. A methodology to analyze the quality of health information on the internet: the example of diabetic neuropathy. Diabetes Educ (2014) 41(1):95–105. doi:10.1177/0145721714560772

25. Cutrell E, Guan Z. What are you looking for? An eye-tracking study of information usage in web search. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. New York, NY: ACM (2007). p. 407–16.

26. Hanif F, Abayasekara K, Willcocks L, Jolly EC, Jamieson NV, Praseedom RK, et al. The quality of information about kidney transplantation on the World Wide Web. Clin Transplant (2007) 21:371–6. doi:10.1111/j.1399-0012.2006.00652.x

Keywords: Internet, IQ, websites, migraine, information, online

Citation: Yaqub M and Ghezzi P (2015) Adding dimensions to the analysis of the quality of health information of websites returned by Google: cluster analysis identifies patterns of websites according to their classification and the type of intervention described. Front. Public Health 3:204. doi: 10.3389/fpubh.2015.00204

Received: 13 April 2015; Accepted: 10 August 2015;

Published: 25 August 2015

Edited by:

Nuria Oliver, Telefonica Research and Development, SpainReviewed by:

Aleksandar Matic, Telefonica Research and Development, SpainLuis Fernandez Luque, Norut, Norway

Copyright: © 2015 Yaqub and Ghezzi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Pietro Ghezzi, Brighton and Sussex Medical School, Trafford Centre, Eastern Ring Road, Falmer, Brighton BN1 9RY, UK, p.ghezzi@bsms.ac.uk

Mubashar Yaqub

Mubashar Yaqub Pietro Ghezzi

Pietro Ghezzi