- 1College of Information and Computer Sciences, University of Massachusetts, Amherst, MA, USA

- 2Neuroscience and Behavior Program, University of Massachusetts, Amherst, MA, USA

- 3Department of Mechanical and Industrial Engineering, University of Massachusetts, Amherst, MA, USA

Objective: We aimed to elucidate how our domain-general cuing algorithm improved multitasking performance and changed behavioral strategies in human operators.

Background: Though many gaze-control systems have been designed, previous real-time gaze-aware assistance systems were not both successful and domain-general. It is largely unknown what constitutes optimal search efficiency using the eyes, or ideal control using the mouse. It is unclear what the best coordinating strategies are between these two modalities. Our previously developed closed-loop multitasking aid drastically improved multitasking performance, though the behavioral mechanisms through which it acted were unknown.

Methods: We performed in-depth analyses and generated novel eye tracking and mouse movement measures, to explore the complex effects of our helpful system on gaze and motor behavior.

Results: Our overlay cuing algorithm improved control efficiency and reduced well-known biases in search patterns. This system also reduced micromanaging behavior, with humans rationally relying more on imperfect automation in experimental assistance cue conditions. We showed that mouse and gaze were more independently specialized in the helpful cuing condition than in control conditions. Specifically, with our aid, the gaze performed more global movement, and the mouse performed more local clustered movement. Further, the gaze shifted toward search over processing with the helpful cuing system. We also illustrated a relationship between the mouse and the gaze, such that in these studies, “the hand was quicker than the eye.”

Conclusion: Overall, results suggested that our cuing system improved performance and reduced short-term working memory load on humans by delegating it to the computer in real time. Further, it reduced the number of required repeated decisions by an estimate of about one per second. It also enabled the gaze to specialize for improved visual search behavior, and the mouse to specialize for improved control.

1. Introduction

The vast majority of people are poor multitaskers (Watson and Strayer, 2010). To make matters worse, some of those who score worst on measures of multitasking performance tend to perceive that they are better at multitasking, with a negative correlation between perception and ability in large studies (Sanbonmatsu et al., 2013). These issues are particularly important, since in every day work-life, multitasking may often be necessary or efficient for a variety of human labor.

Tracking a participant’s eye movements while multitasking is an especially good way to glean optimal cognitive strategies. Much work has shown that eye tracking to determine point of gaze can reliably convey the location at which humans’ visual attention is currently directed (Just and Carpenter, 1976; Nielsen and Pernice, 2010). Locus of attention is a factor that can illustrate which of multiple tasks a participant is currently attending to, as well as many other details. Further, measuring where humans look tends to be highly informative of what is interesting to them in a particular scene (Buswell, 1935; Yarbus, 1967), and can be helpful for inferring cognitive strategies. Generally, gaze appears deeply intertwined with cognitive processes. For example, eye movements during an actual event have been shown to resemble those during the recollection of a similar event (de’Sperati, 2003). Even though “looked but did not see” events have been experimentally recorded in special circumstances, typically even brief gazes are informative about attention. For example, the location of very small unintentional eye movements called microsaccades may index intention to look at a non-fixated location or even mere interest in a non-fixated location in the form of covert attention (Hafed and Clark, 2002).

Multitasking principles also apply when managing multiple items in working memory (Heathcote et al., 2014). The canonical number of items capable of maintenance in working memory is 7 ± 2 (Miller, 1956), though this is likely a high estimate, whereas the real-world version, running memory, is likely around 5 chunks of “familiar information” (Moray, 1980). For working memory, another cognitive construct that is difficult to measure and discussed at length below, eye movement paradigms have revealed how visual search tasks can be interfered with when working memory is being taxed (Downing, 2000; Oh and Kim, 2004; Woodman and Luck, 2004).

Though many paradigms have been developed to study multitasking using eye tracking, most traditional applications of eye tracking are not used in real time, but instead to augment training, or simply to observe optimal strategies. For an example of training, post-experiment analysis of gaze data can be used to determine an attention strategy of the best-performing participants or groups. Then, these higher-performing strategies can be taught during training sessions at a later date (Rosch and Vogel-Walcutt, 2013). Implemented examples include educating health care professionals on visual scanning patterns associated with reduced incidence of medical documentation errors (Marquard et al., 2011; He et al., 2014), and training novice drivers’ gaze behaviors to mimic more experienced drivers with lower crash risk (Taylor et al., 2013). As eye tracking methods become more popular, they have been applied in the field of human–computer interaction and usability (Jaimes and Sebe, 2007; Strandvall, 2009; Drewes, 2010), as well as human–robot interaction (Atienza and Zelinsky, 2002; Bhuiyan et al., 2004; Majaranta et al., 2011), though in this area, guiding principles for optimal gaze strategies are still nascent.

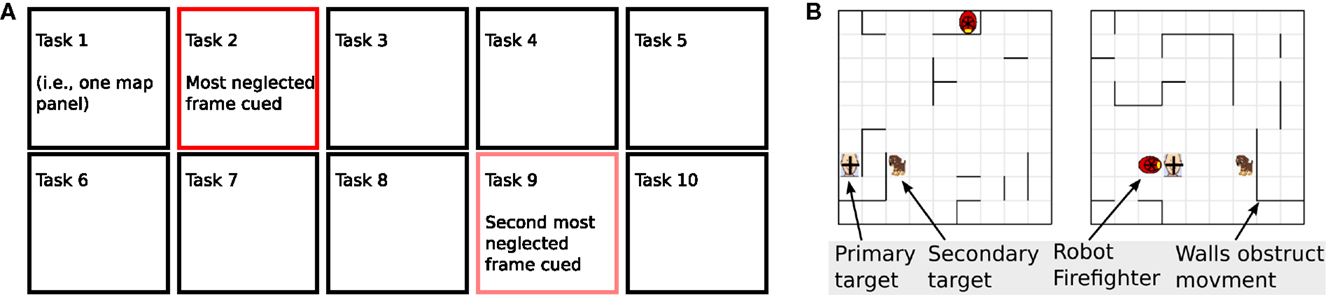

Real-time reminders for tasks can improve user performance (Moray, 1981). Generally, real-time cuing of goals can speed or increase the accuracy of detection (Eriksen and Collins, 1969). Highlighting display elements in a multi-display may assist in directing attention (Fisher and Tan, 1989; Hammer, 1999), though eye tracking may often be critical to reliably automate such reminders for many tasks. As described above, there is little previous work developing real-time eye tracking assistance, with most research focused on training, evaluation, or basic hypothesis testing. The real-time systems developed previously, elaborated extensively in the Discussion below (Section 4.5), were lacking in domain-generality, utility, and flexibility. For example, one previous application-specific approach has been to cue the gaze history itself, as a form of bookmark of where has been visited (Ohno, 2004). There appears to be a need for an assistive device for managing multiple visual tasks, which is domain-general, transparent, intuitive, non-interfering, non-command, improves control (without replacing direct control), and adaptively extrapolates to a variety of circumstances. Somewhat counter-intuitively, in the current experiment we explicitly and simply cued the inverse of gaze recency. Thus, we evaluated a system that successfully addressed the need for domain-general multitasking assistance (Figure 1). The goal of this paper is to illustrate the mechanistic means by which this system influenced human strategies for multitasking.

Figure 1. Diagram of the general cuing system employed. (A) Panels of tasks compose a visual array (10 tasks depicted here). A dark red frame cues the most neglected map panel task (looked at longest ago), while pale red highlights the next most neglected map panel task. (B) Participants controlled firefighting robots to rescue targets on map panel tasks (2 displayed). Robots were displayed as red firefighter helmets, each traveling through one separate map panel task to rescue targets. Participants received points for rescuing targets via contact. Each robot remained within its own separate map panel task, and navigated independently of other robots and other panels. Occasional human intervention could improve upon error-prone semi-autonomous movement. In our experiment, each task was an independent robot game task.

The structure of our paper is as follows: the Materials and Methods section details the design of our cuing system, our previous evaluation of its basic effectiveness toward improving multitasking performance, the participant details, technical implementation, and statistical procedures. All of our measures (except one) were novel and custom to this circumstance. Thus, the algorithms for analysis were not included in the Methods section, but instead were interleaved with the Results below for better readability, and since these analysis methods are new contributions themselves. Then, the Section Discussion includes both the findings in the context of the engineering psychology literature and in-depth review of the related eye tracking work. We end the paper with brief conclusions and suggestions of wider application.

2. Materials and Methods

2.1. Experiment: The Game and Conditions

In a previous paper, we demonstrated that cuing participants with the inverse of eye-gaze recency (the most neglected task at the moment) drastically improved users’ performance (Taylor et al., 2015). The eye tracker continuously notified the computer and game software of the location of gaze. Frame cues for the most neglected map panel were automatically quickly removed and re-updated if a participant gazed at any map panel task.

To evaluate this system, participants played a multi-agent game (Ember’s game): they managed multiple simulated robotic firefighters simultaneously to save rescue victims. The semi-random automated movement of the robot would eventually rescue some targets, though occasional human intervention could speed rescue times. Each participant session had 7 experimental blocks, and the number of robots they managed increased from 4 to 10 across the 7 blocks, e.g., they managed 4 robots in Block-1, 5 robots in Block-2, and 10 robots in Block-7. Each robot moved in a separate map panel task. An image of the game and eye tracking algorithm design was included in Figure 1, and with full detail in Taylor et al. (2015). To optimize performance, a participant must divide their attention across many independent map panel tasks.

This study adopted a between subject design with one independent variable, the type of frame cuing each of multiple simultaneous map panel tasks. Participants were randomly assigned to one of the three conditions (one test and two control), determined by three frame cue types: (1) “On” condition: helpful gaze history frame cues surrounded the most neglected task (test experimental condition), (2) “Random” condition: randomly moving frames, which were the same physical stimulus, but without any relationship to the gaze (an “active” control condition), and (3) “Off” condition: no frames (an “absent” control condition). All other game parameters were equal across conditions.

In our previous study, we analyzed data collected from participants’ eye movements, mouse movements, and task performance (score). The system displayed large improvements in performance in the On (Helpful frame cue) condition over both controls. In addition, the Helpful frame cue group demonstrated faster reaction times and showed reduced pupil dilation as a proxy for reduced cognitive load (Taylor et al., 2015). Given the simple nature of the frame cue aid, this performance improvement is likely to be similarly seen in many multitasking scenarios. Specifically, it likely extrapolates to many tasks where a user must engage with multiple separate visual processes or agents simultaneously, and the probability that any single task entity needs user interaction increases with duration of time since the user’s last interaction with that same task. Overall, our solution as described in Taylor et al. (2015), appears to be uniquely assistive, domain-general, non-interfering, purely gaze-aware, improves pre-existing control, and most importantly, yielded improvements in task performance with very large effect sizes. However, we did not fully explore how these improvements manifested mechanistically. Therefore, in this current work, we investigated and compared the visual scanning patterns and motor control behaviors between the three experimental groups, to further explore the mechanisms of benefit.

2.2. Participants

A total of 44 human subjects participated in Ember’s game. All procedures complied with departmental and university guidelines for research with human participants and were approved by the university institutional review board, and participants provided written informed consent. Participants were recruited from the university population at large and were compensated for their time with $5 USD. Data were not excluded based on behavioral task performance in order to obtain a generalizable sample of individual variation on performance of the task while avoiding a restriction of range (Myers et al., 2010). Two participants with vision correction causing poor calibration quality for entire blocks were excluded, leaving 42 subjects. No data were excluded within this remaining pool of subjects. Each participant reported extent of past video game experience, current vision correction if any, age, and sleep measures for the previous several days; this was done after rather than before experimental task participation to prevent bias. The statistics describing these demographic surveys were detailed below at the end of the Analysis and Results section. Further participant details were provided in Taylor et al. (2015).

2.3. Technical Implementation and Data Logging

We used a desk-mounted GazePoint GP3 eye tracker positioned directly under the computer monitor to pinpoint the users’ point of gaze, i.e., the point on the screen the user was fixating. All experimental presentation procedures, data collection, and analyses were fully automated. Python and PyGame were used to program the experiment, and interfaced with the eye tracker’s open standard API via TCP/IP, generously provided by GazePoint (http://www.gazept.com). The eye tracker has an accuracy of about [0.5–1] degrees of visual angle, and error was minimized by re-calibrating after every 150 s block. Data logging included the status of all experimental variables on every refresh (at 30 Hz) during experimental trials. Behavioral data were indexed by the location and status of all game elements, such as robot location, path location, target location, and time of target detection. Eye data were indexed by left and right point of gaze on the screen (x, y coordinates) at the refresh rate frequency, the calibration quality data (error quantity) before every new block, and pupil dilation of left and right eye diameter in milliliters at every time-step. Mouse location (x, y coordinates) was recorded at the same frequency for comparison with gaze data. Full technical experimental procedures were described in Taylor et al. (2015).

2.4. Statistical Procedures

Most statistics were displayed within figures themselves, either (1) as SEM bars, which in our experiment conservatively indicate statistically significant differences between groups by approximating t-tests if SEM bars are not overlapping between conditions, as explained below, (2) as pairwise t-tests superimposed on map bias task arrays, (3) as Pearson’s product moment correlation coefficient r and p-values superimposed on scatter plots, (4) and as effect sizes calculated via Cohen’s d (Table 1).

Table 1. Effect sizes presented as Cohen’s d.

Table 1. Effect sizes presented as Cohen’s d.

The t-statistic is defined as the difference between the means of two compared groups, divided by the SEM, (u1–u2)/SEM. Thus, within the parameters of this experiment (and above any typical n) it is a mathematical necessity that when the SEM bars do not overlap, a t-test on those same data would be significant above an alpha criterion of around p < 0.03 for a one-tailed t-test for effects in the expected direction (as most were in this experiment).

The low number of tests within proposed statistical families, the presence of consistent global trends, and guidelines cited here below, all argue against correcting any values for multiple comparisons (Rothman, 1990; Saville, 1990; Perneger, 1998; Feise, 2002; Gelman et al., 2012). Further, many statisticians do not recommend numerically correcting for multiple comparisons (Rothman, 1990; Saville, 1990). Rather, it is often suggested to document individual uncorrected comparisons and descriptive statistics (e.g., SEM or effect sizes), while being transparent that no correction was performed. Further, it should be noted that our conclusions rested not upon a single test, but upon globally uniform patterns.

Automated data processing and plotting were programed in the R-project statistical environment (Core Team, 2013).

3. Analysis and Results

3.1. Compliance with Gaze Assistance was Confirmed

3.1.1. Procedure and Justification

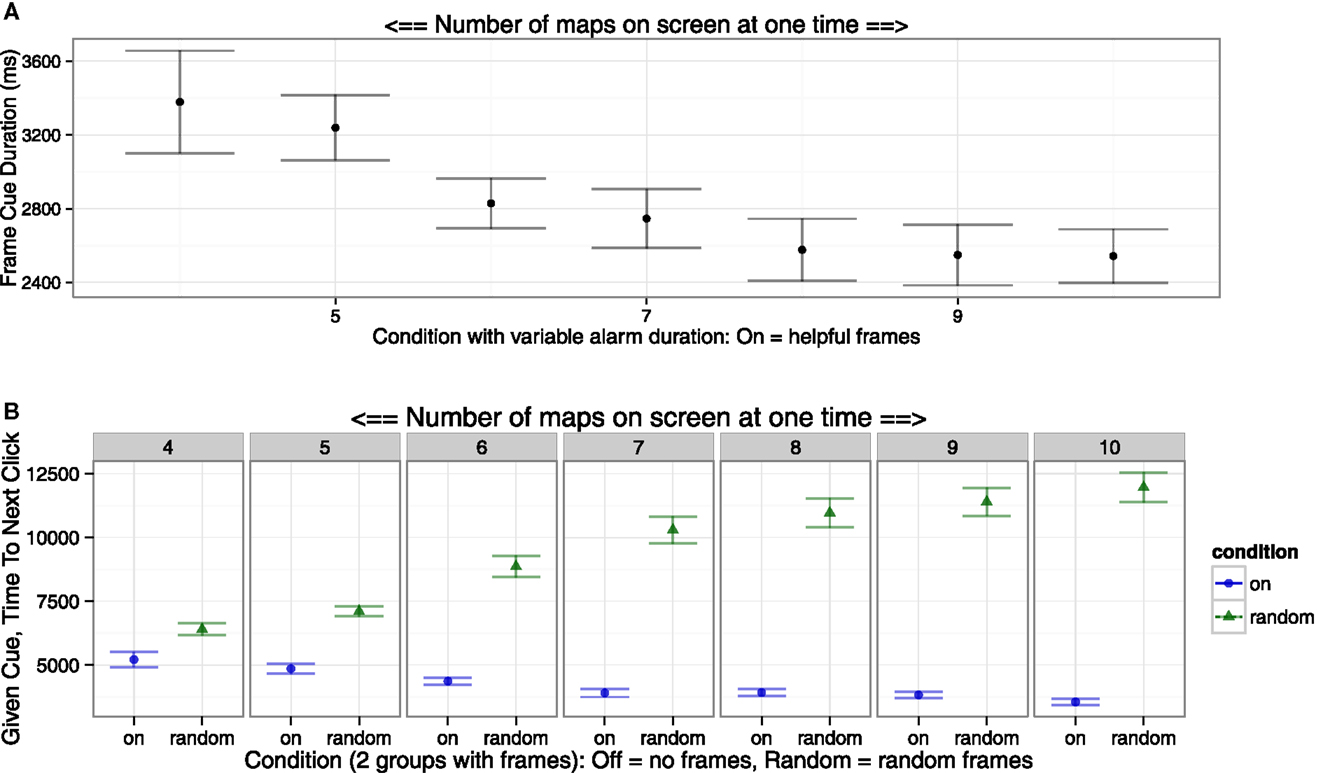

To determine whether participants actually used the assistive frame cue, we employed two overlapping measures to see how quickly participants responded to frame cues, one with the eyes, and another with the mouse: (1) For the eyes, we calculated the mean duration of the most neglected frame cue, for each of the 7 blocks (i.e., 4, 5, …, 10 map task panels) in the treatment group, only in the Helpful “On” condition. This duration was defined as an interval from when the most neglected cue frame started to highlight a map panel task to when that frame disappeared from that panel (Figure 2A). Since a cue frame disappeared from a panel immediately after that panel received the participant’s point of gaze, the duration is an estimate of the cue-to-eye response time. (2) For the mouse, we calculated the mean time between the most neglected frame cue starting to highlight a map panel task and a mouse clicking on that panel. We calculated this measure for each of the 7 blocks in “On” and “Random” groups (Figure 2B).

Figure 2. Participants followed the Helpful frame cue eye aid on average. (A) The duration of time a frame cue highlighted the most neglected map panel task served as an estimate of the time since the frame cue appeared until the participant looked at the cued map panel task. (B) Another similar duration was calculated: given that a frame cue was on the map panel task, the mean duration until participants clicked on that map panel task. Both results confirmed that participants were using the frame cues as instructed.

3.1.2. Result

The duration from the cue starting until the gaze or click interaction with that map task was reduced as map number increased (Figures 2A,B), perhaps reaching a lower threshold. Participants in the On group subjectively reported using the Helpful cues via verbal self-report during and after the experiment.

3.1.3. Interpretation

The decreasing durations for the Helpful frame cue condition suggested that the users were consistently looking at, and taking action (clicking), on the highlighted map panel tasks, confirming usage of the Helpful frame cue. This decrease in time means that participants utilized the cuing system to a greater degree over time, until an observed ceiling of compliance. The increasing duration in the Random condition associated with increased map task numbers, suggesting participants were choosing which map panel task to look at independently of the frame highlighting. This was as expected, since the participants in the Random condition were instructed that the frames were irrelevant to game-play.

3.2. Mouse and Click Efficiency Measures were Improved

3.2.1. Procedure and Justification

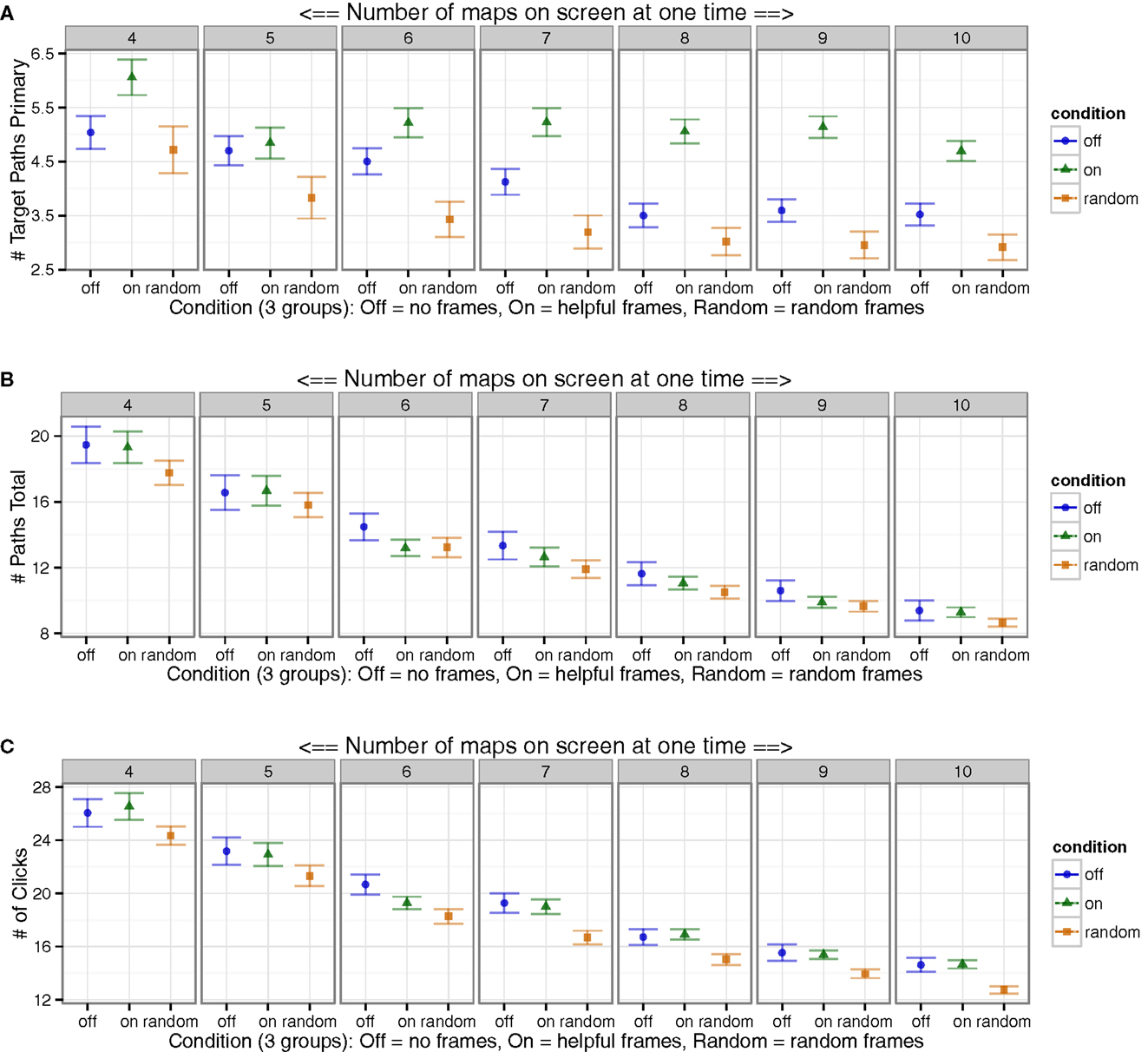

Multiple measures of mouse and click efficiency were calculated to determine the strategies employed by users in the better-performing Helpful frame cue condition: (1) The total number of paths users set to send the robot to primary targets was calculated; (2) the total number of paths was calculated as a baseline reference; (3) the total number of clicks was calculated as another baseline reference.

3.2.2. Result

The number of paths the users set to send the robot directly to primary targets was higher (better) with Helpful frames compared to both controls, while the Random condition had the lowest (worst) values (Figure 3A). The total number of paths set for robots to go to any location was not appreciably different between conditions (Figure 3B), and the total number of clicks in the block was not appreciably different (Figure 3C).

Figure 3. Participants clicked more efficiently. (A) Participants in Helpful frame conditions set a greater number of paths to targets (number of times the robot was sent directly to the target by the human). By contrast, there were no convincing effects for (B) total number of paths (number of locations the robot was directed toward by the human), or (C) total number of clicks (all clicks within the block, whether relevant or not). Overall, participants displayed an improved efficiency of strategy in their clicks.

3.2.3. Interpretation

Use of the eye tracking cuing system improved strategic efficiency of user’s mouse interaction with the task. Since it appeared that relevant clicks were increased in number over irrelevant clicks in the Helpful condition, these measures inspired the next analysis to explore micromanaging.

3.3. Micromanaging Measures were Reduced

3.3.1. Procedure and Justification

When performing the task a user could have either sequentially specified many intermediate path goals along the way to a target, or sent the semi-automated robot directly toward a primary rescue target with a single click at its ultimate goal. These two strategies illustrate the range of behaviors for “micromanaging” the robot’s location and path. Two measures of micromanaging were defined. (1) The ratio of clicking directly on-target path goals (clicking on the target) versus clicking on non-target (intermediate location) path goals was computed. This proportion resulted in our micromanaging coefficient, with lower numbers indicating less micromanaging (Figure 4A). (2) Another measure related to micromanaging was defined as the average length of a path. Path length was the mean distance from the robot’s current location, to the end-goal of the path set for that robot (Figure 4B).

Figure 4. Micromanaging was reduced with Helpful frame cues. (A) Helpful frame groups micromanaged less than controls, by setting larger percentages of paths directly from the robot to the target, rather than specifying intermediate points along the way first. (B) We calculated a mean path length using the distance the robot had to cross to get to each assigned target location. Participants set longer more direct paths with the Helpful frames. (C) When including all, conditions, individuals, and blocks, in this analysis, longer paths associated with better performance, such that individuals who set longer paths tended to perform better. Overall, participants in the Helpful frame cue condition sent the robot more directly to targets, which allowed the robot algorithm to make micro-decisions.

3.3.2. Result

The Helpful frame cue condition demonstrated less micromanaging behavior than the Off frames condition, which in turn demonstrated less than the Random condition (Figure 4A). Path length was longer in the Helpful frame cue condition (Figure 4B). Interestingly, when averaging across all conditions, to plot all individual data points, longer path length was associated with higher total scores (Figure 4C).

3.3.3. Interpretation

These results highlighted the importance of utilizing automated systems to their fullest extent, often even when such automation is incomplete and error prone. Some robots heading directly to targets likely took inefficient paths, whereas other robots were not directed to targets at all, the latter being a much more important task to satisfy. This cuing system reduced such irrational perseverance and micromanaging behavior, and improved reliance on the semi-autonomous robots. Participants in the Helpful condition appeared to rely more on the robot’s sub-optimal automation.

3.4. Large-Scale Movement was Greater for Gaze and Reduced for Mouse

3.4.1. Procedure and Justification

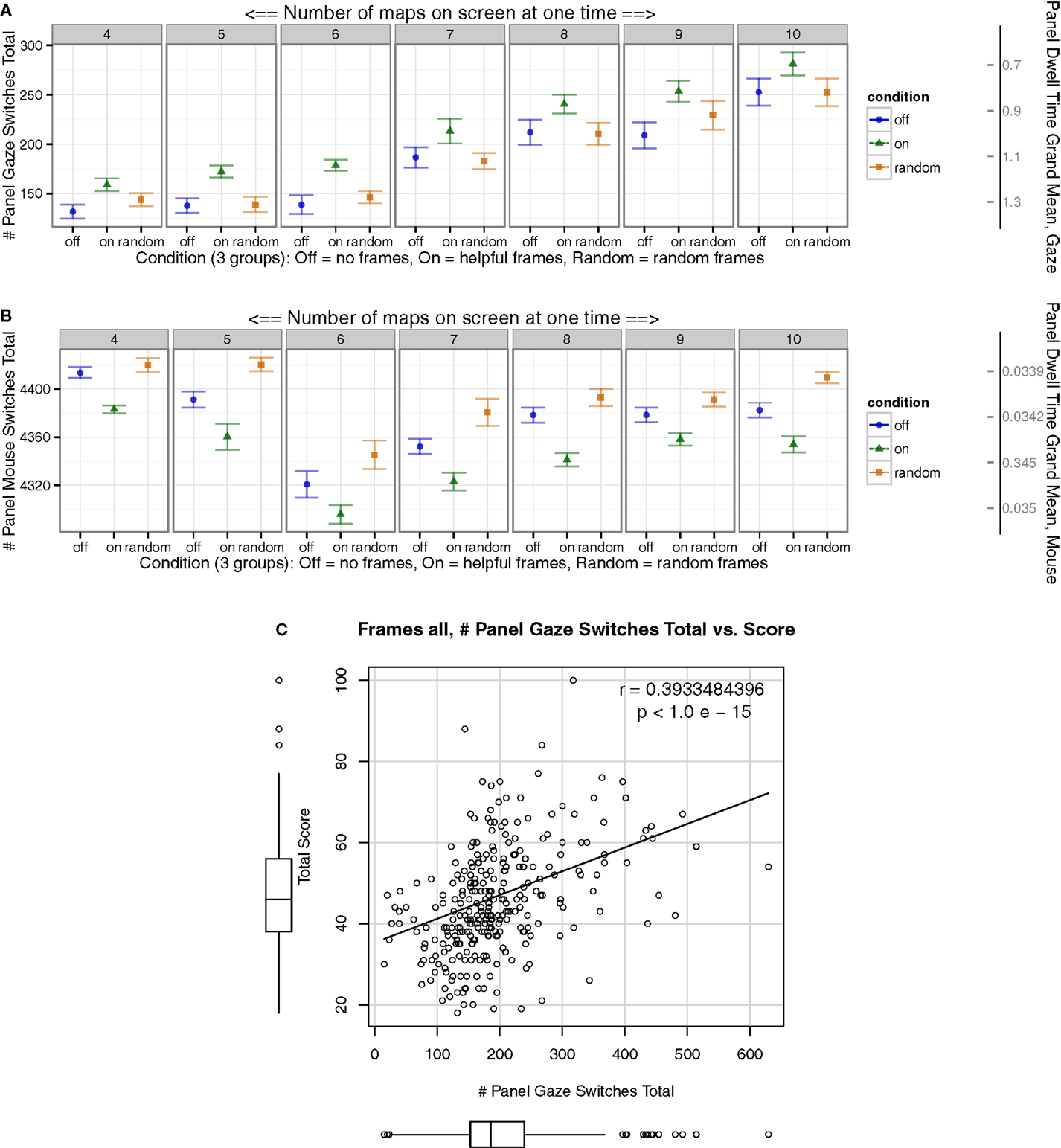

To ascertain information about the type of movement strategies, the mouse and gaze were performing across conditions, the global and local dynamics of eye movements were explored. Multiple measures were calculated: (1) For both the mouse and the gaze, the number of times the mouse or gaze switched between map panel tasks in the array of 4–10 panels was calculated for each condition. The larger this number, the greater the global movement, and large-scale task switching. (2) A related measure was generated by taking the mean time that the mouse or gaze spent within a single map panel task, before leaving it and moving to the next map panel task, averaged across all map panel tasks in a single block.

3.4.2. Result

The Helpful frames increased the number of macro-level map panel task switches for gaze relative to the two control conditions (Figure 5A, left Y-axis). Fascinatingly, a similar measure for mouse movement yielded the opposite pattern, with reduced global mouse movement (Figure 5B, left Y-axis). This opposite pattern was confirmed in another related measure. With Helpful frames less eye time was spent per panel on average (Figure 5A, right Y-axis), while for the mouse, the opposite was true with more time per panel (Figure 5B, right Y-axis). Note that right Y-axes display inverted values. This increased activity of the gaze for global movement was also associated with higher total scores when comparing across all individuals in the study (Figure 5C).

Figure 5. Participants’ gaze and mouse switches across map panel tasks (left Y-axis) and mean time spent on each map panel task before continuing (right Y-axis). [(A)-left] Gaze switched map tasks more frequently over the whole trial in the Helpful “On” condition compared to controls. [(B)-left] Mouse switched map tasks less frequently over the whole trial in the Helpful “On” condition compared to controls. [(A)-right]. Note: inverted scale. Participants spent less time gazing at each panel in the experimental “On” conditions, and [(B)-right] more time for mouse. (C) Number of gaze map panel task switches positively associated with better score across all observations. Overall, the gaze demonstrated more global movement, while the mouse showed reduced global movement.

3.4.3. Interpretation

These results suggested that gaze movements were more distributed, long range, or global, while the mouse made fewer macro-level map panel task transitions, perhaps for more efficient task switching. These results inspired the next measure, extending the investigation of mouse movements.

3.5. Mouse Movements Appeared more Local and Clustered

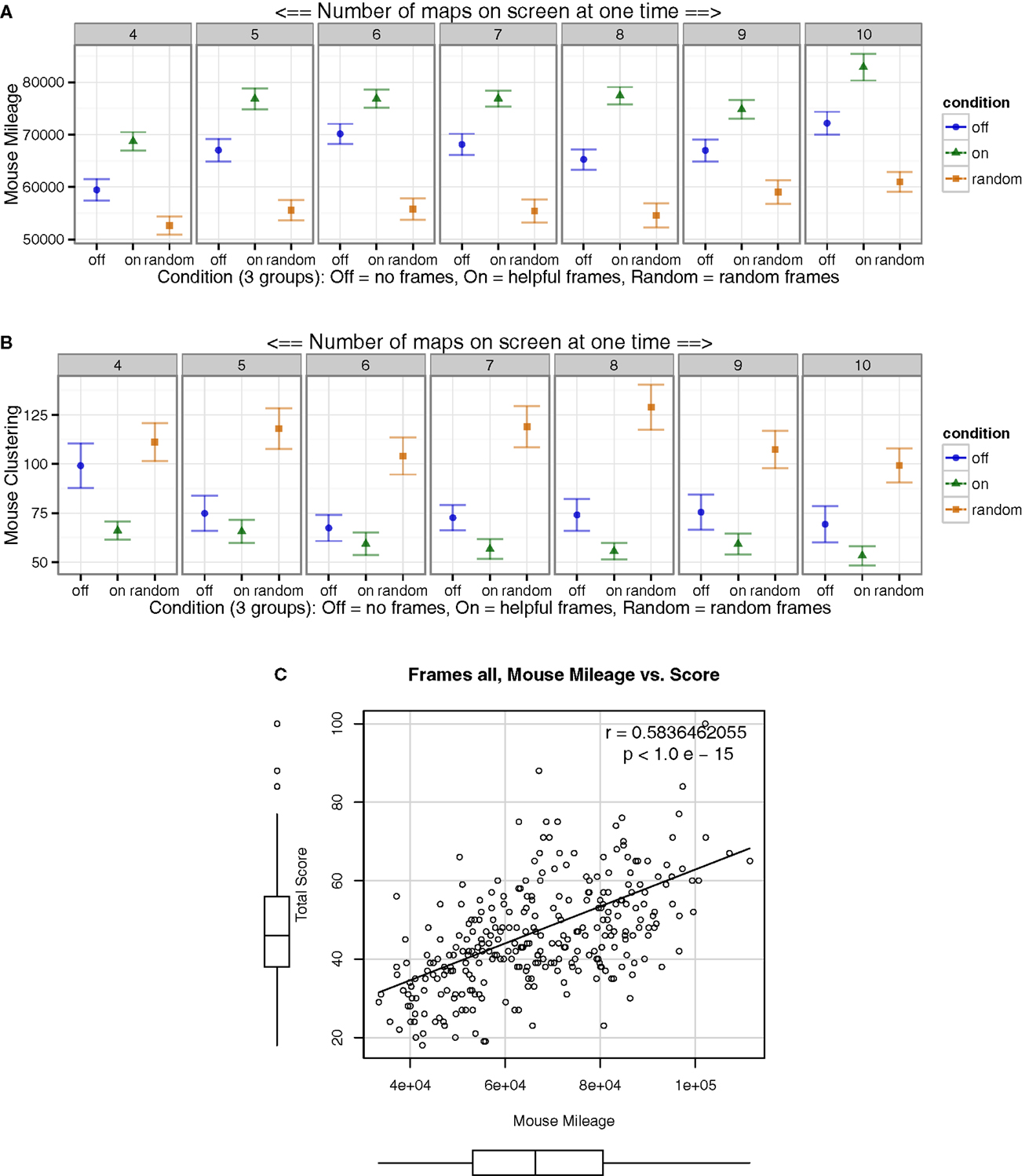

3.5.1. Procedure and Justification

To further characterize the global–local properties of mouse movements, these were analyzed using two measures: (1) The total cumulative distance covered by the mouse traversing the computer monitor over the course of a block, for each condition and block, was computed. (2) A novel measure of mouse-clustering was defined. Mouse movements were classified into clusters by setting an upper limit on the Euclidean distance (>2 cm) between a minimum number of sequential adjacent fixation coordinates, which spanned roughly 100 ms. We then quantified the proportion of mouse movements not in clusters (large movements) versus within clusters (small adjacent movements). The proportion of adjacent long movements (>2 cm) versus shorter movements estimated the degree to which mouse movements were clustered. Larger values indicate greater proportion of long movements, and smaller values indicate greater clustering (smaller movements).

3.5.2. Result

Surprisingly, despite the above discussed decrease in global mouse movement (number of panel mouse switches), an increase in mouse mileage was observed in the Helpful experimental condition, indicating more local movement relative to global movement with the mouse (Figure 6A). With Helpful frames, more clustered micro-movements were observed compared to the control conditions, and the Random condition showed an exaggerated decrease of mouse micro-movements with less clustering (Figure 6B). Greater cumulative mouse mileage over all observations was positively associated with higher total scores (Figure 6C).

Figure 6. Mouse movements were more local and more clustered in the experimental “On” condition compared to controls. (A) Over the course of a block, the mouse traversed more total distance, in pixels, in “On” condition compared to controls. (B) Measures of mouse clustering were developed, with higher ratios indicating a greater proportion of larger distributed movements, and smaller numbers indicating increased proportion of clustered small movements. Y-axis plotted the ratio of long-range mouse movements/short-range adjacent movements. Mouse movements were more clustered in the Helpful experimental condition. (C) Greater mouse mileage associated with better performance. Overall, Helpful frame groups showed an increase in local mouse activity.

3.5.3. Interpretation

There was an observed opposite pattern in the gaze and mouse measures for map panel task switches (previously), which agreed with the current result of higher mouse mileage when compared to lower mouse global switches. Together, these indicated a functional specialization. Specifically, the mouse and gaze could work more independently; i.e., the gaze could search broadly, and the mouse could move in a more clustered local manner. In conclusion, with Helpful frames compared to other conditions, the eye-gaze appeared to specialize for global search, while the mouse for local movement.

3.6. Measures of Gaze Demonstrated more Saccades and Fewer Fixations

3.6.1. Procedure and Justification

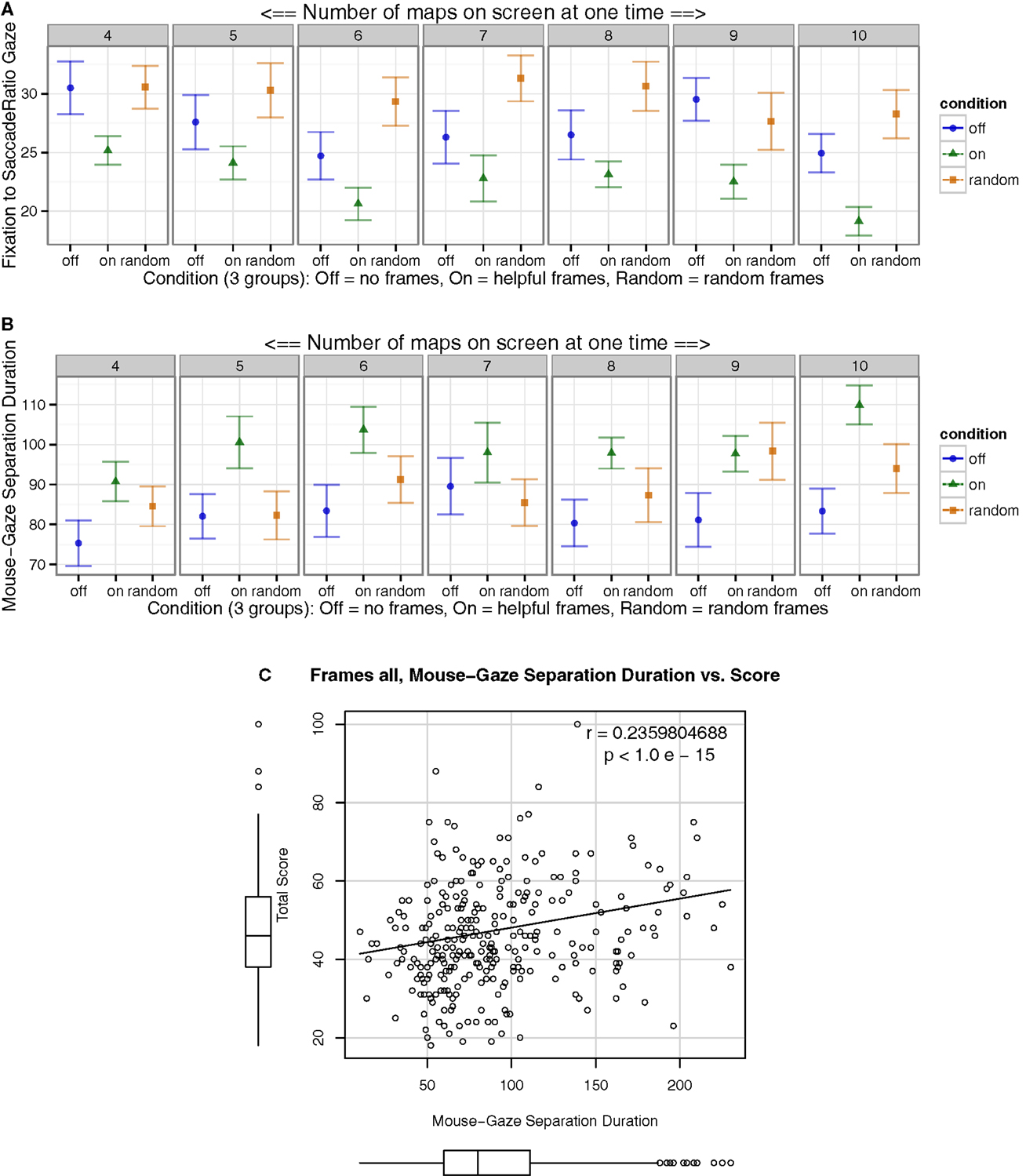

Much previous work defines the basic terminology for eye-gaze patterns (Goldberg and Kotval, 1999; Jacob and Karn, 2003; Poole and Ball, 2006). A fixation is defined as a consistent gaze position within roughly two degrees of visual angle with a minimum duration (usually 100–200 ms), and velocity lower than a threshold (around 15–100°/s). Saccades are fast movements of the eyes, ranging from 20 to 100 ms depending on experimental context. A gaze is defined as a series of repeated contiguous fixations within an area of interest; a fixation occurring outside the area of interest ends the gaze. Search behavior can be characterized by breaking down punctuated eye movements to particular locations by their duration, into fixations (typically >120 ms) and saccades (typically <120 ms). Fixation and saccade measurements have been shown to be informative of attentional processing (Velichkovsky et al., 2000; Velichkovsky, 2002; Unema et al., 2005). Importantly, the fixation-to-saccade ratio has be used as an index of processing (fixation) versus search (saccades) (Goldberg and Kotval, 1999); higher ratios indicate either more processing and/or less search activity than lower ratios.

To further explore search behavior, two measures were computed: (1) We estimated the fixation-to-saccade ratio. Fixations were gazes lasting >120 ms, within roughly 1 cm, while any shorter duration movements were classified as saccades. (2) To explore the temporal relationship between the mouse and gaze during search, a measure of how long the mouse and gaze were separated before reuniting was calculated, the mouse-gaze separation duration. Specifically, when the mouse and gaze overlapped in 2D space (were on the same square centimeter), they were classified as overlapping. The mean duration between these overlap events was computed, to represent the duration of time the mouse and gaze spent separate. The average duration in time between these overlap events (i.e., the mean duration of time-segments where the mouse and gaze were separate) was plotted (Figure 7B).

Figure 7. Gaze, “search” versus “processing.” (A) Results indicated more short saccades or fewer long fixations in experimental “On” condition. (B) In Helpful frame conditions, the duration of contiguous time the mouse and gaze were separate before reuniting (i.e., are close) was longer. Thus, the mouse and gaze were nearby less frequently with Helpful frames than controls. (C) Mouse-gaze separation duration associated with better performance. Overall, in the Helpful frames condition, the gaze appeared to specialize for search behavior, and the mouse and gaze remained distant longer.

3.6.2. Result

In the Helpful frames condition, the fixation-to-saccade ratio was lower, with a smaller proportion of fixations or greater proportion of saccades (Figure 7A). Further, the Random condition had larger ratios than the Off condition. With Helpful frames the duration in mean time the mouse and gaze were separate for a contiguous block before reuniting, was larger compared to controls (Figure 7B). Though the Random condition may have had a longer mouse-gaze separation duration than Off, these differences were not consistent. Greater duration of time between this spatial overlap of mouse and gaze positively associated with higher total scores (Figure 7C).

3.6.3. Interpretation

More short saccades and fewer long fixations would be classically interpreted to mean that participants searched more and processed less, respectively. These results indicated that the eye gaze may have specialized for search behavior (saccades) over processing (fixations), which could then be more efficient and directed. This was congruent with the above finding of greater global movement of the eyes with Helpful frames. This further supports the notion that the mouse and gaze were able to be functionally and spatially independent to a greater degree in the Helpful frames condition. This indicates a functional specialization of the mouse and gaze in the Helpful frames condition.

3.7. Gaze, Mouse, and Click Timing: The Hand is Quicker than the Eye

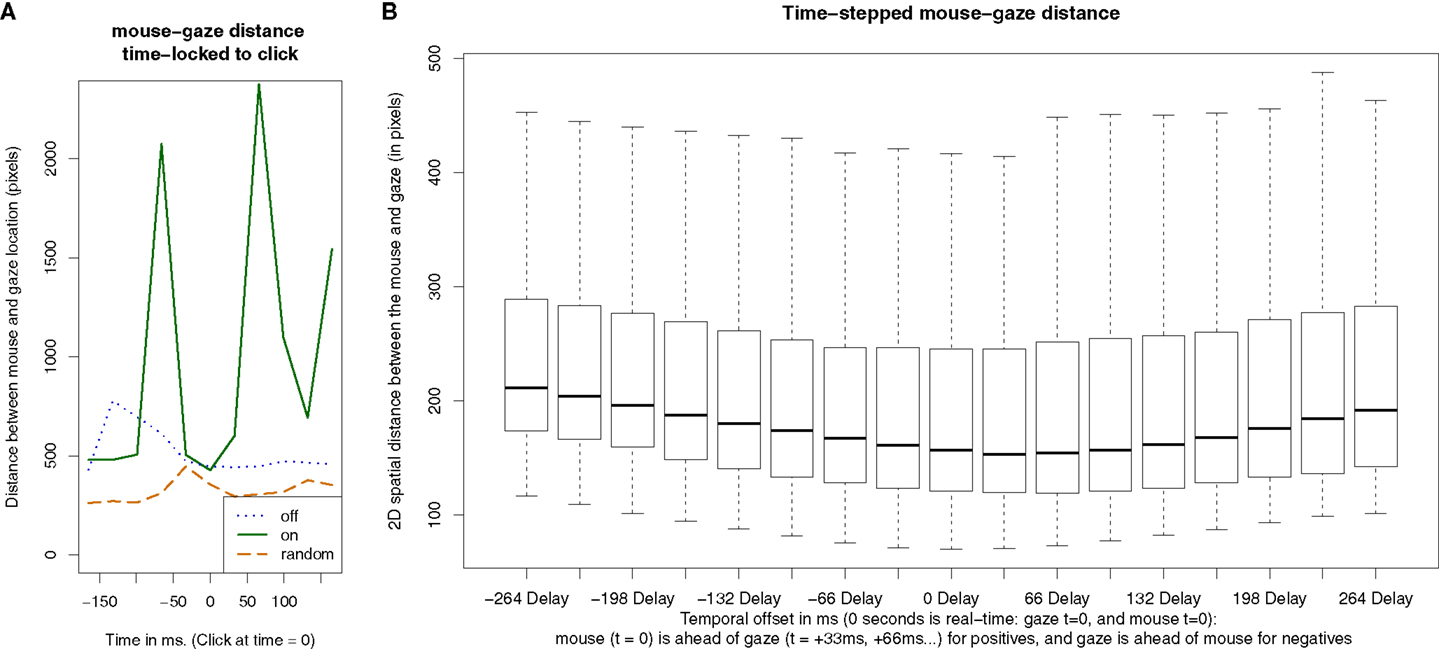

3.7.1. Procedure and Justification

To further elucidate mouse-gaze relationships, three more novel measures were defined: (1) Mouse-gaze distance around click. It was predicted that the mouse and gaze would be closer in 2D space around the time of a click, relative to when clicks were not occurring. To test this prediction, the distance between the mouse and gaze was plotted as a function of time, time-locked to the click, averaged across all clicks and across all blocks (Figure 8A). Time-locking refers to using a consistent time-point of reference for the purpose of averaging in relation to that point. In order to calculate the mean distance time series, for each click, we collected the time series of mouse and gaze position information 200 ms before and after that click, and computed the mouse-gaze distance during that period surrounding the click. For example, there were many clicks for each participant over the course of the experiment. For every click in each condition, we calculated mouse-gaze distances between the 200 ms before and after the click, generating as many time-series as clicks. The time-course of mouse-gaze distance was averaged over all these instances while time-locked to the click. To do so, for each point in time within the window (33 ms increments), we calculated the average distance at that equivalent time point across all time series. Following this procedure, we were able to generate a single distance time series for each condition. This measure was inspired by event related potential (ERP) analyses derived from electroencephalogram (EEG) recordings of neuronal activity. In those analysis, EEG waveforms are averaged time-locked to a particular event of interest to increase the signal to noise (SNR) of the neurological correlates of that event, when the neurological event is minuscule relative to background noise. This method applied here served to increase the SNR of factors related to clicks (mouse-gaze distance), revealing the small statistical relationship between mouse and gaze, in relation to the timing of clicks. (2) Mouse-click distance around click. A similar measure was calculated where the location of the click was treated as fixed, and artificially distributed to the entire “mouse” time-line, and then compared to the gaze over the same duration. In other words, the distance between the gaze and the click itself was plotted over the time just before and after the click. (3) Time-stepped mouse-gaze correlation. To further explore whether the gaze follows the mouse, or the opposite, a measure of mouse-gaze correlation was computed over parametrically varied artificial time-shifts. For a mouse position point and a gaze position at the same point of time, we first calculated their distance, and this was the 0 delay scenario. Then, we shifted the time series of mouse positions forward, e.g., the original time 0 became now −33 ms, denoted as delay −33 ms, and used the new mouse position and gaze position at the adjacent point in time to calculate mouse-gaze distance. In other words, the coordinates for the mouse at time-step t = 0, were used to calculate a distance from the gaze, at the same time-step (t = 0), or at later or earlier time-steps (t = −33 ms or +33 ms.). First, the mouse coordinates at t = 0 were compared to the gaze coordinates at t = 0. Then, the mouse coordinates at t = +33 ms were compared to the gaze location at t = 0. This was iterated forward and backward in time. The delay with the minimum distance in this plot was the timing at which the mouse and gaze were closest (most correlated) in space, out of all possible artificial time-shifts we calculated. The average distance between mouse and gaze was plotted as a function of the time-offset (Figure 8B).

Figure 8. Mouse-gaze-click temporal relationships. (A) Mean mouse-gaze distance time-locked to the click across all trials (see text for details). Y-axis is 2D spatial distance between mouse and gaze. In the Helpful condition, participants’ mouse and gaze worked more independently, apparently moving further apart between clicks. Based on minima in distance relative to click (+20 to +55 ms), mouse might have slightly lead or preceded gaze. (B) Mouse and gaze distance, as a function of repeated artificial shift in time to compare location, either comparing present to past locations of mouse and gaze, or present to future locations. Y-axis is 2D distance between mouse and gaze. 0 delay is real (actual) time for both mouse and gaze. Based on which offset minimizes distance (mouse ahead of gaze by 33 or 66 ms), mouse location may predict gaze location in time. It is important to note that, on the right half of the plot, the coordinates in mouse distance are at t = 0 with the gaze following at later time-steps (33 ms increments), and on the left half of the plot the gaze is at t = 0 with the mouse following at later time-steps. Overall, both measures indirectly suggested that the mouse may have lead the gaze.

3.7.2. Result

The mouse and gaze appeared distant from each other, except when they came close immediately after a click (Figure 8A). In the Helpful “On” frames condition, the mouse and gaze were much further apart immediately preceding and following the click. The distance between mouse and gaze may have been closest around 25–50 ms after the click, congruent with the possibility that the mouse location may lead or predict the location of the gaze (Figure 8A). Gaze-click distance (not graphically depicted) results appeared equivalent to mouse-gaze distance plots (Figure 8A). Time-stepped mouse-gaze correlations demonstrated that the mouse and gaze were closest when the mouse lead the gaze by 33 ms (Figure 8B).

3.7.3. Interpretation

The mouse-gaze-click analysis further extended the earlier observation that the mouse and gaze may have been functionally specialized to a greater degree in the Helpful frames “On” condition. An equivalence of gaze-click-click and gaze-mouse-click plots indicated that the mouse stills, slowing movement just before the click to a greater degree than the gaze, which continues to move up until the click. The click analyses and the time-stepped correlation both indicate, but do not necessitate, the conclusion that the mouse preceded the gaze. These two findings supported the adage that the “the hand is quicker than the eye,” and previous studies (Land et al., 1999; Ishida and Sawada, 2004). Some studies have also found that when manipulating objects the eyes follow the hand (Ballard et al., 1992). Note, we only assert that the measured variable of mouse predicts the measured eye-gaze location, not that one causes the other. A causal model might include covert attention and internal motor-planning, which cause both the hand to move and the eyes to follow. These ideas are congruent with the role of intention and covert attention preceding eye movements, e.g., as is demonstrated in parafoveal preview (scanning the upcoming words with non-foveal retina) benefiting reading speeds. Mouse-gaze-click measures suggested a greater independence of function of mouse and gaze for the Helpful conditions.

3.8. Commonly Occurring Horizontal Transition Biases were Reduced

3.8.1. Procedure and Justification

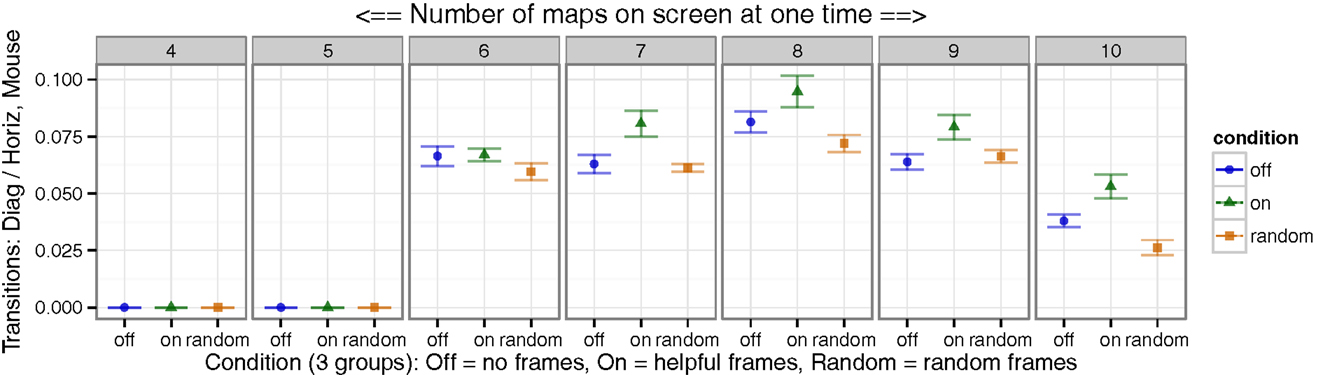

Much previous work has demonstrated that human observers have a bias toward horizontal detection and transition over a diagonal, even when diagonal transitions are optimal (Megaw and Richardson, 1979; Parasuraman, 1986; Donk, 1994; Bellenkes et al., 1997). It has been hypothesized that the human visual system has a bias and enhanced ability to process horizontal search or change (over vertical) due to the overwhelming experience of most land dwelling vertebrates with information varying more in horizontal plane than the vertical; alternatively, the majority of written languages are read on a horizontal plane, with years of reading experience also providing extensive practice with horizontal visual shifts. To determine which specific biases users were overcoming in mouse movements, we calculated full transition matrices, for each block and condition. Each mouse transition matrix contained the frequency of mouse transition from every map panel task, to every other map panel task, over the entire block. These matrices were then used to calculate horizontal and diagonal transition counts for the mouse. These were then used to compute the proportion of mouse cursor transitions diagonally versus horizontally (Figure 9).

Figure 9. Mouse transitional frequencies were less biased horizontally. Since horizontal transitions are typically over-represented in humans, we measured the ratio of diagonal map-to-map transitions, over horizontal transitions. Greater diagonal relative to horizontal transitions were observed for mouse movement in the Helpful condition. For blocks with only 4 or 5 map panel tasks, all fit on one row, and thus there were no diagonal transitions. The Helpful group displayed reduced common toward horizontal search behaviors.

3.8.2. Result

With Helpful frames, participants transitioned more frequently between map panel tasks diagonally relative to horizontally in the Helpful frames condition compared to controls (Figure 9).

3.8.3. Interpretation

In addition to our previous demonstration of reducing global bias (Taylor et al., 2015), this finding further suggested that the Helpful frames may have reduced common biases and improved rational search behavior.

3.9. Effect Sizes

To measure effect sizes, Cohen’s d was computed for the comparison of each control to the experimental condition. Traditional specifications classify effect sizes as: small at around <0.2, medium ranging from 0.3–0.6, and large >0.6–0.8. With Cohens d around 1, a difference between the means is large, at one full SD. With a Cohen’s d value of 1, it is also the case that there is a 76% chance that a participant sampled randomly from our experimental condition will have a higher score than a participant chosen randomly from a control condition (i.e., probability of superiority), and that 84% of our experimental group is above the mean of a control group (i.e., Cohen’s U3 percentage). Many of our theoretically relevant effects demonstrated large effect sizes around 1 or greater (Table 1).

3.10. Participant Sample Statistics

We confirmed that there were no incidental differences between subject groups in each condition for measured features known to influence experimental performance. To do so, we tested the null hypothesis that each group had the same population mean using ANOVA for the following measures: (A) hours of sleep in the previous week did not differ (F = 0.2, p < 0.8), (B) mean age in years was 26 and did not differ (F = 1.2, p < 0.3), and (C) multiple measures of video game experience did not vary between conditions, as measured by post-experimental surveys assessing multiple measures of gaming frequency (F = 0.8, p < 0.5 – days/year; F = 0.8, p < 0.5 – h/week), and gaming history (F = 0.1, p < 0.9 – duration; F = 0.5, p < 0.6 – age started playing). Full details were included in Taylor et al. (2015).

4. Discussion and Related Work

4.1. Divided Attention and Bias, Supervisory Sampling, and Search

Dividing attention over multiple tasks or entities is notoriously problematic for most humans (Watson and Strayer, 2010). For example, operators have been found to be overly biased to the most important elements of a display (Bellenkes et al., 1997). Biases for search and scanning patterns appear in the upper left of a display, hypothesized as an artifact of western left-to-right reading (Megaw and Richardson, 1979). Operators are biased to use horizontal over diagonal eye movements and scanning patterns, even when diagonal scanning was optimal (Donk, 1994). Search also tends to be overly biased toward central regions of available visual space, termed an “edge effect” (Parasuraman, 1986). To remedy some problems with divided attention, augmented displays have been the subject of extensive study, such as classic experiments with heads up displays (HUD), which superimposed important information transparently over the frontal field of view (Weintraub, 1992; Newman, 1995; Wickens, 1997; Wickens and Seidler, 1997), with mixed results, such that care must be taken when designing such assistive systems.

In supervisory control sampling tasks, where operators perform something like instrument scanning or sampling (Moray, 1981, 1986), expertise improves sampling probabilities (Bellenkes et al., 1997). Sampling reminders for these tasks similarly improve user performance (Moray, 1981). This functions in part because generally, cuing targets can speed or increase the accuracy of detection (Eriksen and Collins, 1969). Highlighting display elements in a multi-display may assist in directing attention (Fisher and Tan, 1989; Hammer, 1999). Cuing for target detection has been studied in the real world in helmet displays, finding that cues were helpful for expected targets, but harmful for unexpected targets (Yeh et al., 1998). In theoretical support of external cuing, it has been suggested that exogenously captured attention drives faster saccades than endogenously intended reaction times as measured by saccades (Kean and Lambert, 2003). Predictive displays show operators what possible future states might arise (Kelley, 1968; Gallaher et al., 1977; Lintern et al., 1990). Preview of which element to attend to next may ameliorate delays for rapid decision making (Grossman, 1960; Elkind and Sprague, 1961; Reid and Drewell, 1972; Grunwald, 1985). Our algorithm took advantage of many of these principles, using a simple external cue, which applies domain-generally.

4.2. Task Switching

The costs for task switching are many: the rapid decision making required, the code-switching needed to switch between tasks, and the observed perseverance bias for continuing tasks longer than ideal (Jersild, 1927; Sheridan, 1972; Moray, 1986; Rogers and Monsell, 1995; Schutte and Trujillo, 1996). For tracking or decision tasks, a limit has been observed where human operators can not reliably make greater than two decisions per second (Craik, 1948; Elkind and Sprague, 1961; Fitts and Posner, 1967; Debecker and Desmedt, 1970). Our system potentially eliminates one decision per second, or per map switch, a non-trivial benefit.

Irrational perseverance is also illustrated by a past study showing that when noticing a problem with one element, operators failed to continue monitoring all tasks well, and did not move on effectively (Moray and Rotenberg, 1989), which may have been observed with our control conditions micromanaging more. For task management, it has been shown that operators’ planning strategies are not elaborate or ideal, and are typically overly simplified (Liao and Moray, 1993; Raby and Wickens, 1994; Laudeman and Palmer, 1995), because these planning strategies are resource intensive and require high cognitive workload (Tulga and Sheridan, 1980). Confusion between tasks when task switching is greater for sub-tasks which are similar when compared to sub-tasks which are dissimilar (Hirst and Kalmar, 1987), perhaps due to what is termed outcome conflict (Navon, 1984; Navon and Miller, 1987). Outcome conflict is complementary but different from the multiple resource model (Wickens et al., 1986), where different types of sensory input to an operator interfere less than similar input (Tayyari and Smith, 1987; Martin et al., 1988). Our system was particularly helpful for managing multiple similar tasks.

To ameliorate task switching costs, visual external cues for task switching may assist operators (Allport et al., 1994; Wickens, 1997). Cuing important or neglected tasks can be helpful (Wiener and Curry, 1980; Funk, 1996; Hammer, 1999); however, doing so successfully in a domain-general manner has not been accomplished. Secondary tasks have long been used to index workload for a primary task (Rolfe, 1973; Ogden et al., 1979); while indirectly these results speak to the benefit of eliminating the secondary task (tracking gaze history) in our game. Therefore, for high workload situations (like 8 or more maps here) it may be optimal to have a computer-externalized planning strategy.

Our studies took advantage of these phenomena to optimize the primary task, when a secondary task is also helpful to perform, but can be performed by the computer. Our frame cue aid may allow for more full focus on a single map, with more effective and rapid task switching between maps.

4.3. Working Memory

With multiple entities to track and evaluate, past studies showed that the human user is often limited by functional working memory load. Different working memory resources are thought to exist for different modalities or mental processes; for example, visual working memory has been proposed to be stored in what is called the visuospatial sketchpad (Salway and Logie, 1995), with dissociable memory components for different modalities (e.g., auditory) or even visual domains (Logie and Pearson, 1997). Without rehearsal, the typical duration of working memory is 10–20s, though with more items in a set to remember the set decays even faster (Brown, 1958; Peterson and Peterson, 1959; Melton, 1963; Moray, 1986). The classically proposed number of items capable of maintenance in working memory is seven chunks of familiar information, plus or minus two (Miller, 1956). However, artificial lab experiments produced an unrealistically high estimate of seven items, whereas the real-world version of working memory (running memory) is probably closer to five chunks of familiar information (Moray, 1980). Expertise expands working memory for items for which the expert has experience, for example, in chess (Chase and Simon, 1973; Groot, 1978; Gobet, 1998) or computer programing (Vessey, 1985; Ye and Salvendy, 1994; Barfield, 1997), in part via chunking (Anderson and Neely, 1996a,b; Anderson et al., 1996). In summary, a single human is capable, in principle, of monitoring multiple semi-autonomous operations, but the number is limited, in part, by running memory capacity. We expanded this functional capacity for multi-agent management tasks, by delegating working memory requirements to the computer.

With industrial relevance, vigilance tasks are hypothesized to produce continuous loads on working memory (Parasuraman, 1979; Deaton and Parasuraman, 1988). Working memory updating is error prone when updating a similar variable repeatedly (as in map location) as opposed to different variables (Yntema, 1963). Items in working memory can experience retroactive interference where items presented later interfere with the first, as well as proactive interference where items presented before an item to be remembered interfere (McGeoch, 1936; Keppel and Underwood, 1962; Anderson and Neely, 1996a), while up to 10 s between item presentation is needed to offset these interference effects. Maintaining an item in working memory is interfered with by having participants attempt to maintain a second similar set of items, more than if the second set of items is dissimilar to the first (McGeoch, 1936; Bailey, 1989; Anderson and Neely, 1996a,b). For example, presenting new spatial information interferes with previously presented spatial information, compared to interference from non-spatial information (Hole, 1996). Particularly for a spatial task-like robot manipulation, spatial working memory is taxed by performing multiple spatial tasks at one time. Short-term or working memory capacity is further limited by the fact that keeping track of which items need to be re-checked (secondary task) uses the same cognitive resources as the spatial manipulation task (primary task), further limiting task performance by the necessity of remembering attention history.

To perform optimally the user must, among other things, remember where was monitored last, since the longer the time that has elapsed since a check, the greater the probability of a situation requiring human assistance; this, however, is also a spatial working memory task. With high numbers of tasks, working memory load increases, as measured by blunting typical assistance via peripheral preview (Tulga and Sheridan, 1980). Previous studies have shown it can be practically helpful to supplement working memory with an external display, e.g., air traffic control (Wiener and Nagel, 1988) or cockpit display of traffic information (CDTI) (Hart and Loomis, 1980).

Our algorithm aids multitasking in a domain-general manner, for the first time. It is likely that one mechanism of action was via the delegation of short-term working memory to the computer in real time, freeing cognitive resources from a secondary task, so that the resources can be invested in the primary task.

4.4. Augmented Cognition, Human-Agent, Human–Robot, Human–Swarm Interaction, and Automation Assistance

Extensive research has been performed under DARPA initiatives to further real-time assistance for technical scenarios (St. John et al., 2004). In such studies of augmented cognition, assistance often involves detection and utilization of the human operator’s mental state to optimize performance (Dorneich et al., 2003; Schmorrow and McBride, 2004; Erdogmus et al., 2005; Greitzer, 2005; Ivory et al., 2005; Miller and Dorneich, 2006; Carlson et al., 2007; Fuchs et al., 2007b; Kollmorgen, 2007; Schmorrow and Reeves, 2007; Barber et al., 2008; Ushakov and Bubeev, 2008; Vogel-Walcutt et al., 2008; Agarwal and Dagli, 2013; Kolsch et al., 2013; Abbass et al., 2014; Putze and Schultz, 2014).

Similarly, human–robot interaction must be optimized for human performance; such studies take the form of designing command interfaces, optimizing naturalness, gesture-based communication, hardware design, and social considerations (Waldherr et al., 2000; Goodrich and Schultz, 2007; Lavine et al., 2007; Pfeifer et al., 2007; Bicho et al., 2011; Goodrich et al., 2011a,b; Lenzi et al., 2011; Sciutti et al., 2012; Canning and Scheutz, 2013; Kondo et al., 2013; Murphy and Schreckenghost, 2013; Tiberio et al., 2013; Trafton et al., 2013). Human–robot interactions are not always singular, and there has been progress in the design and communication of human–robot teams (Cuevas et al., 2007; Fiore et al., 2011). These multi-agent communications become even more complex when considering autonomous swarm-based agents, and some studies have begun to make progress in improving human control of swarms (Naghsh et al., 2008; Hashimoto et al., 2009; Marjovi et al., 2009; Ducatelle et al., 2011; Penders et al., 2011; Giusti et al., 2012a,b; Goodrich et al., 2012, 2013; Kerman et al., 2012; Kolling et al., 2012, 2013; Nagi et al., 2012; Brambilla et al., 2013; Mi and Yang, 2013). We build upon these studies by demonstrating a method to improve human control over multiple automated agents.

Though simple cuing can assist users in knowing what to attend to in multitasking scenarios (Seidlits et al., 2003; Boucheix and Lowe, 2010; Groen and Noyes, 2010; Ozcelik et al., 2010), the highly complex multitasking needed to manage multiple autonomous agents, requires more advanced computational assistance. For more abstract or complex tasks, proceduralization is a term used to define systematic externalized systems for improving decision making (Bazerman, 1998), often with computer assistance (Dawes et al., 1989), which our system does. Computerized displays to assist users in decision making are often domain-specific expert systems (Shortliffe, 1983; Chignell and Peterson, 1988; Schkade and Kleinmuntz, 1994; Stone et al., 1997), whereas our system is domain-general. Automation assistance has been developed to assist human users in managing automation when the tasks are complex and dynamic (Endsley, 1999; Inagaki, 2003; Kaber and Endsley, 2004), which our algorithm would assist with as well.

4.5. Real-Time Eye Tracking: Pupil Size, Contingent, Gaze-Control, and Gaze-Aware

Gaze location can be used to modify a display or physical device in real-time, depending on either pupil size or on the location of gaze itself, with both summarized in the following five parts: (1) Augmented cognition, (2) Contingency, (3) Gaze-control, (4) Gaze-aware systems, and (5) Previous limitations.

4.5.1. Augmented Cognition: Real-Time Assessment and Utilization of Pupil Size, as a Theoretical Proxy for Cognitive Load

The DARPA augmented cognition initiative has considered the use of gaze tracking as a potentially important for assisting users in military scenarios (Crosby et al., 2003; Marshall and Raley, 2004; Nicholson et al., 2005; Fuchs et al., 2007a; Stanney et al., 2009). The majority of these studies used pupil dilation as a measure of cognitive load (Marshall, 2002; Marshall et al., 2003; St John et al., 2003; Taylor et al., 2003; Raley et al., 2004; St. John et al., 2004; Johnson et al., 2005; Mathan et al., 2005; Russo et al., 2005; Ververs et al., 2005; De Greef et al., 2007; Coyne et al., 2009), while only few attempted to actually develop the technical infrastructure for real-time assistance via gaze location (Barber et al., 2008), though none produced results using gaze location.

4.5.2. Contingent: Gaze-Based Real-Time Display Updating, as an Experimental Tool

Contingent (interactive) eye tracking is defined as modifying a display or process in real-time based on gaze location, and has previously been used in lab experiments, though often as an impediment, not for the point of optimizing performance. Early in the development of the technology, the fields of linguistics and reading employed paradigms like the moving window paradigm (Reder, 1973; McConkie and Rayner, 1975), which impedes the user in real-time by replacing the upcoming periphery with noise or random letters, for example, while reading, to eliminate parafoveal preview; alternately one can also blur the periphery. The moving mask paradigm is the opposite, blurring the fovea (Rayner and Bertera, 1979); the moving mask paradigm has been used to study visual learning (Castelhano and Henderson, 2008) and visual search (Miellet et al., 2010). Another method is the parafoveal magnification paradigm (Miellet et al., 2009), which involves magnifying the periphery, to compensate for reduced resolution in the periphery, and is also used in reading studies to manipulate parafoveal preview. Others have created a central hole allowing visibility only at the fovea, like seeing through a telescope (Shimojo et al., 2003). In light of the fact that breaking or harming performance can serve as an excellent experimental probe, these paradigms were successful. However, none improved human performance on a practical task in real-time.

4.5.3. Gaze Control: Computer, Robot, and Swarm Control

A very large quantity of work on gaze-based paradigms, which are intended to control computerized systems, wheelchairs, or other robots with the eyes, exists. These have been pioneered both inside academia and out, for groups of individuals with diseases like amyotrophic lateral sclerosis (ALS), a peripheral motor-neuron disease paralyzing the body, while leaving eye movements in tact. These studies most often involve the movement of a cursor, wheelchair, and accessories via the point of gaze, while defining a variety of mouse-click paradigms, including blinks and others, as well as the use of gaze location to control computer graphical menus, zooming of windows, or context-sensitive presentation of information (Jacob, 1990, 1991, 1993a,b; Jakob, 1998; Zhai et al., 1999; Tanriverdi and Jacob, 2000; Ashmore et al., 2005; Laqua et al., 2007; Liu et al., 2012; Sundstedt, 2012; Hild et al., 2013; Wankhede et al., 2013). These paradigms have been extended to improve upon human control of robots (Carlson and Demiris, 2009), as well as humans controlling swarms (Couture-Beil et al., 2010; Monajjemi et al., 2013). Our study may assist those attempting to control multiple robots or swarms, since the algorithm may improve a variety of such control systems.

Many of these gaze control-based paradigms are very beneficial, and some include gaze-aware features, though we would like to distinguish the purely gaze-aware from the purely control or control which has some additional assistive function. The experiments presented here demonstrate a gaze-aware system, which can improve performance, without direct input, and may assist operators in a variety of scenarios, both control and non-control. We now progress to further discussion of gaze-aware components.

4.5.4. Gaze-Aware: Command vs. Assistance, Domain-Specific vs. General, and Descriptive vs. Predictive

Most gaze-display paradigms have some control component, though some displays are purely gaze-aware but not intended for user assistance: for example, video compression is used in gaze contingent displays (GCDs) to maintain image resolution while compressing the periphery and to optimize computational resources (Reingold et al., 2003; Duchowski et al., 2004; Loschky and Wolverton, 2007). Despite the fact that they do not assist the user, these systems illustrate many good features of user-assisting systems: being non-intrusive, intuitive, transparent, and non-command, features which are currently underrepresented in gaze or attentive user interfaces (AUIs).

Most of the currently existing gaze-aware systems or AUIs actually also include an active control component, for example, in the selection of which window to interact with, or which menu item to enlarge. Many of these attentive or gaze-aware interfaces are also quite domain-specific, with examples including reading, menu selection, scrolling, or information presentation (Bolt, 1981; Starker and Bolt, 1990; Sibert and Jacob, 2000; Hyrskykari et al., 2003; Fono and Vertegaal, 2004, 2005; Iqbal and Bailey, 2004; Ohno, 2004, 2007; Spakov and Miniotas, 2005; Hyrskykari, 2006; Merten and Conati, 2006; Kumar et al., 2007; Buscher et al., 2008; Bulling et al., 2011).

In robot interaction, most real-time eye interfaces have been designed to mimic gaze for social or communicative reasons (Staudte and Crocker, 2008, 2009, 2011; Jones and Schmidlin, 2011; Boucher et al., 2012; Kohlbecher et al., 2012). Only few human–robot studies took gaze location into consideration for improving human task performance (DeJong et al., 2011), which involved improving human performance in spatial transformations using robotic arms.

A common theme in gaze-aware interfaces is to attempt to predict the users’ preferences or gaze location in domain-specific scenarios, such as map scanning, reading, eye-typing, or entertainment media (Goldberg and Schryver, 1993, 1995; Salvucci, 1999; Qvarfordt and Zhai, 2005; Bee et al., 2006; Buscher and Dengel, 2008; Jie and Clark, 2008; Xu et al., 2008; Hwang et al., 2013). Some of these attempts at prediction may generalize well to forecasting wider subsets of real-world tasks (Hwang et al., 2013), though still require intelligent computer vision, image processing, and adaptation of algorithms for new tasks to be predicted optimally. For a paradigm to be domain-general to the greatest degree, it must eliminate specific prediction, relying upon description, or generalized probability distributions over the display space.

Though some have attempted domain-general systems, such attempts have interfered with the user by obscuring the display inflexibly (e.g., make everything looked at entirely opaque), or only apply to a specific subset of behavior, such as within some types of search (Pavel et al., 2003; Roy et al., 2004; Bosse et al., 2007). Our system addresses many of these limitations, in that it does not require the computer have content knowledge (e.g., vision), is domain-general, intuitive, closed-loop, non-predictive, and most importantly, effective.

4.5.5. Obviousness and Previous Limitations

Given the seeming obviousness of our solution, it is notable that despite the need and benefit from this situation-blind multitasking aid, no others exist to date. The benefits were non-trivial, with very large statistical effect sizes, and potential for wide generalization. Previous eye trackers cost upwards of $20,000 USD, which often prohibited this as a feasible solution. However, recently eye trackers have come down in price, with the eye tracker used in this study being printed on a 3D printer, with an open API, while many others have now published designs for open and inexpensive hardware (Babcock and Pelz, 2004; Li et al., 2006; Lemahieu and Wyns, 2011).

4.6. Conclusion

In part, previous applications attempted to predict human intentions, to better provide the human with what they wanted. For general benefits, it is more efficient to eliminate prediction, and define domain-general probability utility over the visual display, such that the display is transparent, non-interfering, and assistive. Our application may function via delegating short-term memory load to the computer, and reducing the number of repeated decisions the user needed to make. Highlighting the gaze history on multiple tracking tasks could theoretically improve performance on many tasks where the probability of task relevance relates to the delay since gaze, even in complex ways. The design of the assistive algorithms tested here demonstrated clearly greater application-independence than any previous AUIs or gaze-aware interfaces. Rather than enable novel control means, these experiments demonstrated an overlay procedure to transparently accelerate normal interaction or pre-existing control, likely generalizing to a wide variety of multi-agent applications.

Author Contributions

PT designed experiment, with assistance from NB, HS, and ZH. NB programmed experiment, with contributions and edits by PT. PT and ZH ran human subjects. ZH and PT programmed data analysis. PT wrote manuscript, with contributions from HS, ZH, and NB. HS supervised all research.

Data Sharing

Source code for the experimental game will be provided upon request, under the open GPLv3 license.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Funding

We thank the Office of Naval Research, award: N00014-09-1-0069.

References

Abbass, H. A., Tang, J., Amin, R., Ellejmi, M., and Kirby, S. (2014). “Augmented cognition using real-time EEG-based adaptive strategies for air traffic control,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Vol. 58 (Chicago, IL: SAGE), 230–234. doi:10.1177/1541931214581048

Agarwal, S., and Dagli, C. H. (2013). Augmented cognition in human-system interaction through coupled action of body sensor network and agent based modeling. Procedia Comput. Sci. 16, 20–28. doi:10.1016/j.procs.2013.01.003

Allport, D., Styles, E., and Hsieh, S. (1994). “Shifting intentional set: exploring the dynamic control of tasks,” in Attention and Performance 15: Conscious and Nonconscious Information Processing, eds C. Umilt and M. Moscovitch (Cambridge, MA: The MIT Press), 421–452.

Anderson, J., and Neely, J. (1996a). “Chapter 8 – interference and inhibition in memory retrieval,” in Memory, eds E. L. Bjork and R. A. Bjork (San Diego, CA: Academic Press), 237–313.

Anderson, J. R., Reder, L. M., and Lebiere, C. (1996). Working memory: activation limitations on retrieval. Cogn. Psychol. 30, 221–256. doi:10.1006/cogp.1996.0007

Anderson, M. C., and Neely, J. H. (1996b). “Chapter 8 – interference and inhibition in memory retrieval,” in Memory, eds E. L. Bjork and R. A. Bjork (San Diego, CA: Academic Press), 237–313.

Ashmore, M., Duchowski, A. T., and Shoemaker, G. (2005). Efficient Eye Pointing with a Fisheye Lens, Vol. 05. Waterloo, ON: Canadian Human-Computer Communications Society, School of Computer Science, University of Waterloo, 203–210.

Atienza, R., and Zelinsky, A. (2002). “Active gaze tracking for human-robot interaction,” in Proceedings of the 4th IEEE International Conference on Multimodal Interfaces (Washington, DC: IEEE Computer Society), 261. doi:10.1109/ICMI.2002.1167004

Babcock, J. S., and Pelz, J. B. (2004). “Building a lightweight eyetracking headgear,” in Proceedings of the 2004 Symposium on Eye Tracking Research & Applications (New York, NY: ACM). 109–114. doi:10.1145/968363.968386

Bailey, R. (1989). Human Performance Engineering: Using Human Factors/Ergonomics to Achieve Computer System Usability. Englewood-Cliffs, NJ: Prentice Hall.

Ballard, D. H., Hayhoe, M. M., Li, F., Whitehead, S. D., Frisby, J. P., Taylor, J. G., et al. (1992). Hand-eye coordination during sequential tasks [and discussion]. Philos. Trans. R. Soc. Lond. B Biol. Sci. 337, 331–339. doi:10.1098/rstb.1992.0111

Barber, D., Davis, L., Nicholson, D., Finkelstein, N., and Chen, J. Y. (2008). The Mixed Initiative Experimental (MIX) Testbed for Human Robot Interactions with Varied Levels of Automation. Technical Report. Orlando, FL: DTIC Document.

Barfield, W. (1997). Skilled performance on software as a function of domain expertise and program organization. Percept. Mot. Skills 85, 1471–1480. doi:10.2466/pms.1997.85.3f.1471

Bee, N., Prendinger, H., Andrè, E., and Ishizuka, M. (2006). “Automatic preference detection by analyzing the gaze ‘cascade effect’,” in Electronic Proceedings of the 2nd conference on communication by gaze interaction (Turin: COGAIN), 61–64.

Bellenkes, A. H., Wickens, C. D., and Kramer, A. F. (1997). Visual scanning and pilot expertise: the role of attentional flexibility and mental model development. Aviat. Space Environ. Med. 68, 569–579.

Bhuiyan, M., Ampornaramveth, V., Muto, S.-Y, and Ueno, H. (2004). On tracking of eye for human-robot interface. Int. J. Robot. Autom. 19, 42–54. doi:10.2316/Journal.206.2004.1.206-2605

Bicho, E., Erlhagen, W., Louro, L., and Costa e Silva, E. (2011). Neuro-cognitive mechanisms of decision making in joint action: a human-robot interaction study. Hum. Mov. Sci. 30, 846–868. doi:10.1016/j.humov.2010.08.012

Bolt, R. A. (1981). “Gaze-orchestrated dynamic windows,” in SIGGRAPH ’81 (New York, NY: ACM), 109–119.

Bosse, T., Van Doesburg, W., van Maanen, P.-P., and Treur, J. (2007). “Augmented metacognition addressing dynamic allocation of tasks requiring visual attention,” in Foundations of Augmented Cognition. eds D. Schmorrow and L. Reeves (Berlin Heidelberg: Springer), 166–175. doi:10.1007/978-3-540-73216-7_19

Boucheix, J.-M., and Lowe, R. K. (2010). An eye tracking comparison of external pointing cues and internal continuous cues in learning with complex animations. Learn. Instruct. 20, 123–135. doi:10.1016/j.learninstruc.2009.02.015

Boucher, J.-D., Pattacini, U., Lelong, A., Bailly, G., Elisei, F., Fagel, S., et al. (2012). I reach faster when I see you look: gaze effects in human-human and human-robot face-to-face cooperation. Front. Neurorobot. 6:3. doi:10.3389/fnbot.2012.00003

Brambilla, M., Ferrante, E., Birattari, M., and Dorigo, M. (2013). Swarm robotics: a review from the swarm engineering perspective. Swarm Intell. 7, 1–41. doi:10.1007/s11721-012-0075-2

Brown, J. (1958). Some tests of the decay theory of immediate memory. Q. J. Exp. Psychol. 10, 12–21. doi:10.1080/17470215808416249

Bulling, A., Roggen, D., and Troester, G. (2011). What’s in the eyes for context-awareness? IEEE Pervasive Comput. 10, 48–57. doi:10.1109/MPRV.2010.49

Buscher, G., and Dengel, A. (2008). “Attention-based document classifier learning,” in Document Analysis Systems, 2008. DAS’08. The Eighth IAPR International Workshop on (Nara: IEEE), 87–94.

Buscher, G., Dengel, A., and van Elst, L. (2008). “Query expansion using gaze-based feedback on the subdocument level,” in SIGIR ’08 (New York, NY: ACM), 387–394. doi:10.1145/1390334.1390401

Buswell, G. (1935). How People Look at Pictures: A Study of the Psychology and Perception in Art. Oxford: Univ. Chicago Press.

Canning, C., and Scheutz, M. (2013). Functional near-infrared spectroscopy in human-robot interaction. J. Hum. Robot Interact. 2, 62–84. doi:10.5898/JHRI.2.3.Canning

Carlson, R. A., Gray, W. D., Kirlik, A., Kirsh, D., Payne, S. J., and Neth, H. (2007). “Immediate interactive behavior: how embodied and embedded cognition uses and changes the world to achieve its goal,” in Proceedings of the 29th Annual Conference of the Cognitive Science Society (Nashville, TN), 33–34.

Carlson, T., and Demiris, Y. (2009). Using Visual Attention to Evaluate Collaborative Control Architectures for Human Robot Interaction. 38–43.

Castelhano, M. S., and Henderson, J. M. (2008). Stable individual differences across images in human saccadic eye movements. Can. J. Exp. Psychol. 62, 1–14. doi:10.1037/1196-1961.62.1.1

Chase, W. G., and Simon, H. A. (1973). “The mind’s eye in chess,” in Visual Information Processing, Vol. xiv. ed. W. G. Chase (Oxford: Academic), 555

Chignell, M. H., and Peterson, J. G. (1988). Strategic issues in knowledge engineering. J. Hum. Fact. Ergon. Soc. 30, 381–394. doi:10.1177/001872088803000402

Core Team, R. (2013). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

Couture-Beil, A., Vaughan, R. T., and Mori, G. (2010). “Selecting and commanding individual robots in a multi-robot system,” in Computer and Robot Vision (CRV), 2010 Canadian Conference on (Ottawa, ON: IEEE), 159–166. doi:10.1109/CRV.2010.28

Coyne, J., Baldwin, C., Cole, A., Sibley, C., and Roberts, D. (2009). “Applying real time physiological measures of cognitive load to improve training,” in Foundations of Augmented Cognition. Neuroergonomics and Operational Neuroscience. eds D. Schmorrow, I. Estabrooke, and M. Grootjen (Springer Berlin: Springer), 469–478. doi:10.1007/978-3-642-02812-0_55

Craik, K. J. W. (1948). Theory of the human operator in control systems. Br. J. Psychol. 38, 142–148. doi:10.1111/j.2044-8295.1948.tb01149.x

Crosby, M. E., Iding, M. K., and Chin, D. N. (2003). “Research on task complexity as a foundation for augmented cognition,” in International Conference on System Sciences, 2003. Proceedings of the 36th Annual Hawaii (Hawaii: IEEE), 9.

Cuevas, H. M., Fiore, S. M., CAlDWEll, B. S., and StRAtER, L. (2007). Augmenting team cognition in human-automation teams performing in complex operational environments. Aviat. Space Environ. Med. 78(Suppl. 1), B63–B70.

Dawes, R. M., Faust, D., and Meehl, P. E. (1989). Clinical versus actuarial judgment. Science 243, 1668–1674. doi:10.1126/science.2648573

De Greef, T., van Dongen, K., Grootjen, M., and Lindenberg, J. (2007). “Augmenting cognition: reviewing the symbiotic relation between man and machine,” in Foundations of Augmented Cognition. eds D. Schmorrow and L. Reeves (Berlin Heidelberg: Springer), 439–448. doi:10.1007/978-3-540-73216-7_51

Deaton, J., and Parasuraman, R. (1988). Effects of Task Demands and Age on Vigilance and Subjective Workload. Anaheim, CA.

Debecker, J., and Desmedt, J. E. (1970). Maximum capacity for sequential one-bit auditory decisions. J. Exp. Psychol. 83, 366. doi:10.1037/h0028848

DeJong, B., Colgate, J., and Peshkin, M. (2011). “Mental transformations in human-robot interaction,” in Mixed Reality and Human-Robot Interaction. ed. X. Wang (Netherlands: Springer), 35–51. doi:10.1007/978-94-007-0582-1_3

de’Sperati, C. (2003). “The inner-workings of dynamic visuo-spatial imagery as revealed by spontaneous eye movements,” in The Mind’s Eye: Cognitive and Applied Aspects of Eye Movement Research. eds R. Radach, J. Hyona, and H. Deubel (Oxford: Elsevier).

Donk, M. (1994). Human monitoring behavior in a multiple-instrument setting: independent sampling, sequential sampling or arrangement-dependent sampling. Acta Psychol. 86, 31–55. doi:10.1016/0001-6918(94)90010-8

Dorneich, M. C., Whitlow, S. D., Ververs, P. M., and Rogers, W. H. (2003). “Mitigating cognitive bottlenecks via an augmented cognition adaptive system,” in Systems, Man and Cybernetics, 2003. IEEE International Conference on, Vol. 1 (Washington, DC: IEEE), 937–944.

Downing, P. E. (2000). Interactions between visual working memory and selective attention. Psychol. Sci. 11, 467–473. doi:10.1111/1467-9280.00290

Ducatelle, F., Di Caro, G. A., Pinciroli, C., Mondada, F., and Gambardella, L. M. (2011). “Communication assisted navigation in robotic swarms: self-organization and cooperation,” in Intelligent Robots and Systems (IROS), 2011 IEEE/RSJ International Conference on (San Francisco, CA), 4981–4988.