FIJI: A Framework for the Immersion-Journalism Intersection

- 1 The University of Texas at Dallas, Richardson, TX, United States

- 2 Future Immersive Virtual Environments (FIVE) Lab, Department of Computer Science, The University of Texas at Dallas, Richardson, TX, United States

As journalists experiment with developing immersive journalism—first-person, interactive experiences of news events—guidelines are needed to help bridge a disconnect between the requirements of journalism and the capabilities of emerging technologies. Many journalists need to better understand the fundamental concepts of immersion and the capabilities and limitations of common immersive technologies. Similarly, developers of immersive journalism works need to know the fundamentals that define journalistic professionalism and excellence and the key requirements of various types of journalistic stories. To address these gaps, we have developed a Framework for the Immersion-Journalism Intersection (FIJI). In FIJI, we have identified four domains of knowledge that intersect to define the key requirements of immersive journalism: the fundamentals of immersion, common immersive technologies, the fundamentals of journalism, and the major types of journalistic stories. Based on these key requirements, we have formally defined four types of immersive journalism that are appropriate for public dissemination. In this article, we discuss the history of immersive journalism, present the four domains and key intersection of FIJI, and provide a number of guidelines for journalists new to creating immersive experiences.

Introduction

The concept of immersive journalism was introduced by de la Peña et al. (2010) as “the production of news in a form in which people can gain first-person experiences of the events or situation described in news stories.” To accomplish this, de la Peña et al. (2010) used virtual reality (VR) technologies, such as head-worn displays (HWDs; Cakmakci and Rolland, 2006), to allow people to enter virtual worlds and scenarios representing actual news stories. Much earlier, however, Biocca and Levy (1995) had discussed the possibilities of employing VR devices for journalistic purposes. They believed VR would enable journalists to move steadily closer to fulfilling “the oldest dream of the journalist, to conquer time and space” by creating “a sense on the part of audiences of being present at distant, newsworthy locations and events.” This “dream,” to conquer time and space and transport an audience from the real world to the story world, is the heart of narrative in any form, including journalistic storytelling. It is also the primary view of narrative transportation theory, which proposes that an individual’s mental processes, attitudes, and beliefs can change when the individual is “transported from the real world to a story world” through a narrative’s “integrative melding of attention, imagery, and feelings” (Green and Brock, 2000).

Although journalism currently is presented in many interactive formats, from interactive maps (Parasie and Dagiral, 2013) to news games (Bogost et al., 2012), de la Peña et al. (2010) differentiated between these “interactive journalism or low-level immersive journalism” forms and “deep immersive journalism,” in which the audience member “can feel that his or her actual location has been transformed to the location of the news story.” In practice, the most common approach to immersive journalism has been to use inexpensive HWDs, such as the Google Cardboard, and 360° videos to immerse the audience in the location of the news story. The New York Times was the first news organization to distribute Google Cardboard to its subscribers so they could experience 360° videos, such as The Displaced, one of The New York Times’ first immersive journalism mini-documentaries about three refugee children forced to leave their homes in Lebanon, South Sudan, and Ukraine (Sirkkunen et al., 2016). The New York Times now has a news channel, The Daily 360, to highlight its immersive journalism production.

However, in addition to immersing the audience in the visuals of the news story’s location, de la Peña et al. (2010) contended that it is even more important that the audience member’s “actual body has transformed, becoming a central part of the news story itself.” To accomplish this, they tracked the head rotations of the user and then applied those same rotations to the head of a virtual avatar resembling a Guantanamo Bay detainee, which could be seen from both a first-person perspective and a third-person perspective, thanks to a virtual mirror. They took this approach to evoke in audience members a sense of body ownership (see Body Ownership) of the virtual detainee’s avatar.

The concept of body ownership has not been embraced by many journalists, however. Instead, audiences are often presented with immersive experiences that lack any visual representation of the viewer’s real or virtual body and offer only low levels of interactivity. Such practices illustrate a major disconnect in the current state of immersive journalism production. Many journalists need to better understand the capabilities and limitations of immersive technologies for telling impactful stories, whereas developers need to better understand the requirements that govern journalism and the fundamental types of journalistic storytelling.

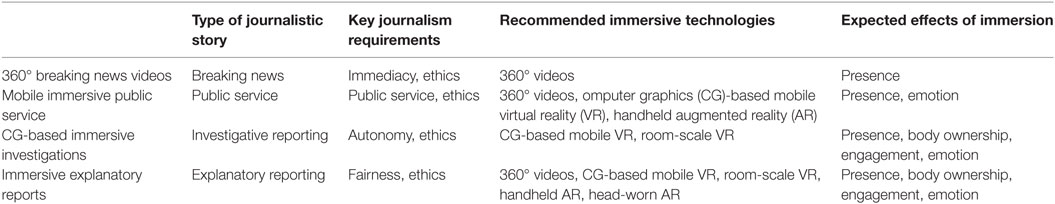

To address these gaps and help guide journalists and developers new to immersive journalism, we have created a Framework for the Immersion-Journalism Intersection (FIJI). As numerous journalism organizations begin experimenting with immersive technology, our theoretical framework is designed to take a step back and remind both journalists and developers to keep in mind the diverse foundational requirements that intersect during the process of creating immersive journalism. FIJI accounts for the fundamentals of immersion and how they affect various aspects of the user’s experience. Closely related are the capabilities and limitations of common immersive technologies that journalists could use currently, such as 360° videos, computer graphics (CG)-based mobile VR, room-scale VR, handheld augmented reality (AR), and head-worn AR. FIJI also carefully considers the fundamentals of journalism, including the requirements that define the journalism profession and govern journalistic excellence. FIJI identifies four types of journalistic stories—breaking news, public service, investigative reporting, and explanatory reporting—and their key journalistic requirements. Finally, we have used these four domains of knowledge and their intersection within FIJI to formally define four types of immersive journalism that are appropriate for public dissemination (Figure 1).

Figure 1. As a guide for journalists and developers, the Framework for the Immersion-Journalism Intersection integrates four domains of knowledge key to creating and defining requirements for four types of immersive journalism, based on their intersection.

In this article, we examine the theoretical, technological, professional, and practical considerations that are key to creating immersive journalism works. We start with a brief overview of related immersive journalism works and research. We then present the four foundational domains of knowledge and requirements that FIJI encapsulates: the fundamentals of immersion, common immersive technologies, the fundamentals of journalism, and the types of journalistic stories. We then use the intersection of these domains to formally define and discuss four types of immersive journalism works. We provide several design guidelines for both journalists and developers to follow to create better immersive journalism works and present four case studies that demonstrate the four types of immersive journalism.

Related Work

In this section, we provide a brief historical account of notable immersive journalism pieces and research. This review is only intended to provide a perspective of the evolution of immersive journalism. It is not meant to serve as a complete historical record of immersive journalism.

Groundbreaking Immersive Journalism

While Biocca and Levy (1995) were perhaps the first to formally discuss the possibilities of using immersive technologies for journalistic purposes, Columbia University’s Center for New Media took the first major steps toward implementing those possibilities. Using one of the first omnidirectional cameras (Nayar, 1997), a team of nine students created a 360° video of the 1997 St. Patrick’s Day Parade in New York (Pérez Seijo, 2017). The immersive video captured members of the Irish Lesbian and Gay Organization protesting their exclusion from the parade and police arresting numerous demonstrators.

In addition to capturing 360° videos, Columbia University also pioneered the use of AR technologies for journalism purposes. Feiner et al. (1997) developed the “mobile journalist’s workstation,” a head-tracked, see-through HWD and handheld 2D display with stylus and trackpad, driven by a wireless computer, that was capable of presenting multimedia information about a prior event in the spatial location and context of that event. This technology was used to recreate the 1968 student revolt at the same location of the original event on Columbia University’s campus (Pérez Seijo, 2017) through images, audio recordings, and videos (Feiner et al., 1997).

Journalism in Second Life

Second Life, “a computer-generated alternative reality” (Brennen and Dela Cerna, 2010), ushered in the next major era of immersive journalism’s evolution. While not truly immersive due to the third-person perspective, the desktop-based VR platform provided a virtual world for users from all over the world to navigate and interact via avatars. Second Life gave rise to three newspapers focused on the events of the virtual world: the Alphaville Herald, the Metaverse Messenger, and the Second Life Newspaper (Brennen and Dela Cerna, 2010). In addition to these virtual-world newspapers, Second Life facilitated the Metanomics (Metaverse and economics) weekly interview program hosted by Robert Bloomfield (Jensen, 2009). Metanomics became so successful that Cruz and Fernandes (2011) described it as “the seminal form of what journalism will look like in the 21st century.”

Around the same time, de la Peña investigated using Second Life as an opportunity for interactive journalism. Her first Second Life journalism piece was Gone Gitmo, a virtual recreation of the Guantanamo Bay prison incident, in which the user is represented by an avatar that is unexpectedly detained and imprisoned in a cage in Camp X-Ray (de la Peña et al., 2010). The Second Life experience also confronted the audience with documentary footage from the US Department of Defense of the real-life detainees. Her next Second Life work was Cap and Trade, which explored the cap-and-trade markets by having users first select the aspect of their lives that they intended to change to offset their annual carbon emissions: their cars, a transcontinental plane flight, or heating their house for a year. The audience would then be presented with “virtual replicas of actual projects where human-rights consequences, financial waste, and questionable practices provide a glimpse behind an opaque system” (de la Peña et al., 2010). Users’ avatars were also followed by a personal carbon cloud to emphasize their individual responsibilities in the greater pollution problem.

Immersive Journalism with First-Person CG

Following her pursuits with Second Life, de la Peña spearheaded the use of immersive VR technologies and CG-rendered simulations in an effort to put the audience into first-person reproductions of real-life events. Her first notable work was an adaptation of Gone Gitmo that used a tracking system to match the head rotations of a Guantanamo Bay detainee avatar to the user’s physical head movements (de la Peña et al., 2010). It was through an exploration of this piece that de la Peña et al. (2010) determined that body ownership was an important aspect of immersive journalism.

Hunger in Los Angeles was de la Peña’s next piece of immersive CG journalism, which was presented at the 2012 Sundance Film Festival. Like the previous piece, each audience member would use an HWD to immersively view a CG-based simulation. However, in Hunger in Los Angeles, the simulation reproduced a real-life event involving a diabetic man having a seizure and falling into a coma at a food bank outside the First Unitarian Church in Los Angeles in August 2010 (Kavner, 2012). The immersive CG piece was so moving that many viewers were “coming out crying” (Kavner, 2012).

Continuing her success with HWDs and CG-based simulations, de la Peña’s next major immersive journalism piece was Project Syria in 2014 (Pérez Seijo, 2017). Originally commissioned by the World Economic Forum, Project Syria was based on actual photographs, audio, and video taken from an event involving an explosion during Syria’s civil war. The immersive journalism piece focused on the plight of the children who made up more than half of Syria’s refugees. More recently, de la Peña presented Across the Line, a piece about antiabortion protestors, and Kiya, a piece about domestic violence, at the 2016 Sundance Film Festival (Robertson, 2016). Like her previous immersive CG works, Across the Line and Kiya are virtual reproductions of real-life events.

Aside from de la Peña, others are beginning to experiment in immersive CG journalism. In an early experiment produced in 2014, The Des Moines Register developed a mixed reality (MR) production called Harvest of Change, which blended 360° video and a CG virtual farm. The immersive, interactive experience accompanied a series of published articles examining the state of American farming (Jackson and Eller, 2014). In 2015, the Los Angeles Times’ Data and Data Visualization desks developed a CG experience allowing audiences to explore a virtual crater on Mars (Emamdjomeh, 2015). Kors et al. (2016) developed an immersive CG experience called A Breathtaking Journey, in which the user is placed “in the shoes of a refugee who is fleeing from a war-torn country, hiding in the back of a truck, to reach a safe haven.” However, Kors et al. (2016) went beyond immersive CG alone by incorporating several MR elements into their piece, including placing the user in a wooden crate to simulate the cramped back of the truck, automatically dropping a pair of mandarins in the user’s lap when a virtual pair falls within the virtual environment, and providing a gasmask that emitted an olfactory stimulus for the mandarins and measured the user’s breathing. The Associated Press now has its AP Digital Products news channel, which includes a variety of immersive journalism experiences using CG presented in a 360° video format, such as a virtual journey inside the brain to explore scientific theories on changes that are believed to contribute to the development of Alzheimer’s disease. Remembering Pearl Harbor is an immersive journalism experience produced for the HTC Vive HWD by Deluxe VR and presented by TIME’s LIFE VR (Rothman, 2016). The immersive CG experience allows the audience member to experience the surprise Japanese aerial attack on Pearl Harbor in 1941 and its aftermath from the perspective of Lt. Jim Downing, one of the oldest living American veterans to have witnessed the attack. The piece was created from Downing’s memories of serving as the postmaster on the USS West Virginia and resources provided by the National WWII Museum and the Library of Congress.

Immersive Journalism with 360° Videos

Whereas interactive experiences using CG-based virtual environments are largely in the experimental stage, the proliferation of inexpensive HWDs and 360° video cameras gave rise to what is more commonly presented as immersive journalism. The first notable 360° video journalism piece was Clouds Over Sidra, the story of a 12-year-old girl named Sidra in Za’atari, a Syrian refugee camp in Jordan (Kool, 2016). The 360° video was created by Chris Milk, in collaboration with the United Nations and Samsung. It won the Interactive Award at the Sheffield International Documentary Festival in 2015.

In November 2015, The New York Times published its first 360° video, The Displaced (Sirkkunen et al., 2016). As described in the introduction, the mini-documentary focused on the stories of three children forced to leave their homes due to crises in Lebanon, South Sudan, and Ukraine. Around the same time, The New York Times hired a street artist to create its magazine cover and published Walking New York, a 360° video showing the artist’s process (Sirkkunen et al., 2016). Since 2015, The New York Times has published more than one hundred 360° videos and launched its news channel, The Daily 360. Other news outlets have also started producing their own 360° videos, including ABC, BBC, Vice, and The Verge.

While the use of both immersive CG and 360° video continues to grow, it will be interesting to see whether both survive the near future. Both approaches have their benefits and limitations for immersive journalism. How effectively immersive journalists use them will ultimately decide which medium, if either, becomes the standard. The framework that we present in this article is intended to inform and help journalists take steps toward better immersive journalism practices.

FIJI: A Framework for the Immersion-Journalism Intersection

As explained in the introduction, we developed FIJI to help journalists better understand the fundamentals of immersion and common immersive technologies and to help developers better understand the fundamentals of journalism and types of journalistic stories. By inspecting the intersection of these domains of knowledge, we have identified four types of immersive journalism that are appropriate for public dissemination (Figure 1). This section first describes the fundamentals of immersion and the common immersive technologies that are available to journalists. The section then presents the fundamentals of journalism and the types of journalistic stories that should be considered when developing immersive journalism works. Finally, the section details the four types of immersive journalism that we have identified—360° breaking videos, mobile public service, CG investigative reporting, and purposed explanatory reporting.

Fundamentals of Immersion

In this section, we discuss the fundamentals of immersion and how it affects various aspects of the user’s experience, including presence, body ownership, engagement, emotion, and cybersickness.

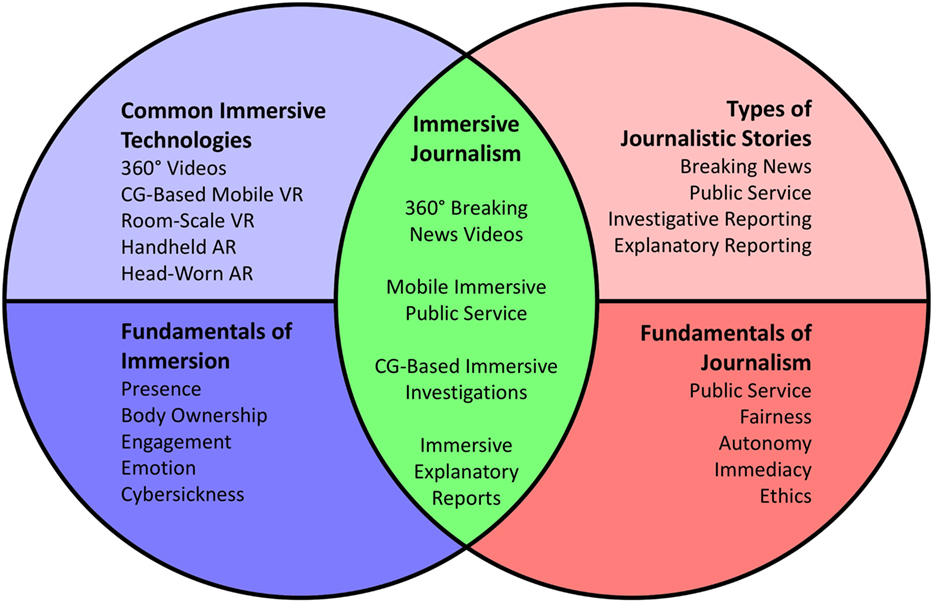

Slater and Wilbur (1997) explain that immersion is a description of “the extent to which the computer displays are capable of delivering an inclusive, extensive, surrounding and vivid illusion of reality to the senses of a human participant.” They further define inclusive as “the extent to which physical reality is shut out” (Slater and Wilbur, 1997). For example, most modern HWDs, such as the Oculus Rift and HTC Vive, are inclusive by completely enclosing the user’s view with goggle-like form factors, as opposed to the visor-like form factors of some HWDs that permit the real world to be seen in the user’s periphery (Ma et al., 2016). Refer to Figure 2 for (A) an example of a completely inclusive HWD and (B) an example of a partially inclusive HWD.

Figure 2. Head-worn displays can be (A) completely inclusive when the devices entirely enclose the user’s view and shut out the physical reality or (B) only partially inclusive by permitting the real world to be seen in the user’s periphery. (Image courtesy of Ryan P. McMahan; published with the written and informed consent of the subject.)

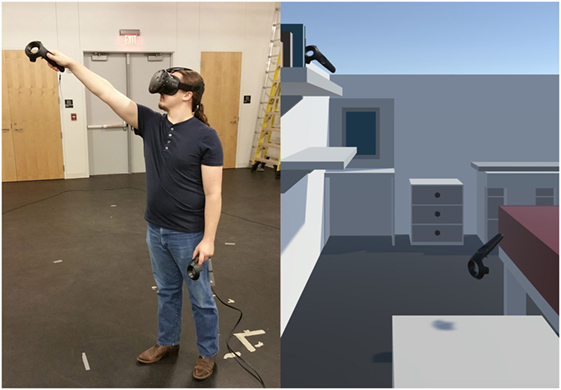

Slater and Wilbur (1997) define extensive as indicating “the range of sensory modalities accommodated.” Many forms of multimedia provide visuals and audio; however, immersive experiences also afford proprioception, which is “a person’s sense of the position and orientation of his body and limbs” (Mine et al., 1997), by permitting users to physically look and move around the virtual world (Figure 3). Some immersive experiences provide additional sensory stimuli, such as tactile (Kim et al., 2013; Sodhi et al., 2013; Tang et al., 2014), olfactory (Dinh et al., 1999; Howell et al., 2016), vestibular (Brooks, 1999; Fung et al., 2004; Riecke et al., 2005), and gustatory cues (Nakamura and Miyashita, 2011; Narumi et al., 2011a,b).

Figure 3. Room-scale virtual reality experiences permit users to physically look and move around the virtual world, which affords proprioception. (Published with written and informed consent of the subject.)

Slater and Wilbur (1997) used the term surrounding to refer to the extent to which an immersive experience is panoramic. Nearly all modern HWDs provide a full 360° field of regard (FOR), which is the total size of the visual field surrounding the user (Bowman and McMahan, 2007), by leveraging inertial measurement units to track the orientation of the user’s head (LaValle et al., 2014). Within this full FOR, many modern HWDs provide approximately a 90° field of view (FOV), which is the size of the visual field that can be viewed instantaneously (Bowman and McMahan, 2007). Refer to Figure 4 for a depiction of the relationship between a 360° FOR and a 90° FOV.

Figure 4. A depiction of the relationship between a 360° field of regard (FOR) and a 90° field of view (FOV). The red shape highlights the boundaries of the FOV while the unseen portions of the FOR are shown in gray.
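To make the relationship between FOR and FOV concrete, the following minimal Python sketch considers only the horizontal (yaw) axis, which is a simplifying assumption. The function names, the default 90° FOV, and the flat angular model are illustrative choices introduced here, not part of any HWD software or of the cited works. The sketch computes what share of the FOR is visible at one instant and whether a point of interest in a scene currently falls inside the viewer's FOV.

```python
def angular_offset(target_deg: float, head_deg: float) -> float:
    """Signed horizontal angle from the head direction to the target,
    wrapped into the range [-180, 180) degrees."""
    return (target_deg - head_deg + 180.0) % 360.0 - 180.0


def is_in_fov(head_yaw_deg: float, target_yaw_deg: float,
              fov_deg: float = 90.0) -> bool:
    """True if the target direction lies within the instantaneous FOV,
    assuming the FOV is centered on the head's yaw (hypothetical model)."""
    return abs(angular_offset(target_yaw_deg, head_yaw_deg)) <= fov_deg / 2.0


def visible_fraction(fov_deg: float = 90.0, for_deg: float = 360.0) -> float:
    """Share of the horizontal FOR that can be seen at one instant."""
    return min(fov_deg, for_deg) / for_deg


if __name__ == "__main__":
    # With a 90 degree FOV inside a 360 degree FOR, a quarter of the
    # surrounding scene is visible at any moment.
    print(visible_fraction())
    # A viewer facing 350 degrees can see an event at 10 degrees,
    # but not one directly behind at 170 degrees.
    print(is_in_fov(350.0, 10.0), is_in_fov(350.0, 170.0))
```

Under these assumptions, three quarters of a 360° scene is outside the viewer's sight at any moment, so a check like `is_in_fov` could help a producer reason about whether a key event is likely to be missed unless the viewer turns.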

Finally, Slater and Wilbur (1997) used the term vivid to indicate “the resolution, fidelity, and variety of energy simulated within a particular modality.” This quality addresses a broad range of aspects, including display resolution (Ni et al., 2006), visual realism (Lee et al., 2013), visual complexity (Ragan et al., 2015), and many others. For more in-depth discussions of the components of immersion, and system fidelity in general, we recommend reading Bowman and McMahan (2007), McMahan (2011), Bowman et al. (2012), McMahan et al. (2012), Ragan et al. (2015), and McMahan and Herrera (2016).

The objective qualities of immersive VR systems mentioned previously have been repeatedly demonstrated to elicit visceral subjective responses, such as presence, body ownership, engagement, emotion, and cybersickness. We define and explain each of these subjective responses to immersion below. Additionally, we provide examples of how immersion influences such responses.

Presence

Presence is the term used to describe the psychological and phenomenological sense of being in a virtual environment and having a first-person experience of a computer-generated world or simulation. Presence is “a state of consciousness” experienced during the sense of “being there” in a virtual world (Slater and Wilbur, 1997). At its optimal communicative state, presence is a product of place illusion and plausibility illusion. Place illusion is the feeling of a virtual embodied transformation into the VR experience, while plausibility illusion is the belief that events are really happening even though the user knows that the events are not real (Slater, 2009). Based on prior empirical evidence, Slater (2009) has argued that users will respond realistically to a VR experience when both place illusion and plausibility illusion occur. Hence, through place illusion and plausibility illusion, VR offers the possibility of achieving the journalist’s dream of transporting the audience from the real world to the news-story world.

Immersion has been demonstrated to affect presence in a number of ways. For example, Barfield et al. (1999) found that the introduction of stereoscopy—“the display of different images to each eye to provide an additional depth cue” (Bowman and McMahan, 2007)—resulted in increased levels of reported presence, despite not improving user performance for a 3D wire tracing task. Similarly, Freeman et al. (2000) and IJsselsteijn et al. (2001) found that stereoscopy caused increases in reported presence when viewing virtual scenes. Lin et al. (2002) used a three-screen driving simulator to control FOR and found that users reported greater presence with increasing degrees of FOR. Similarly, McMahan et al. (2012) used a six-sided CAVE (a room-sized display environment) and found that a 360° FOR yielded significantly greater presence than a 90° FOR. In the same study, McMahan et al. (2012) also found that a higher fidelity interaction metaphor resulted in significantly greater presence than a low-fidelity keyboard and mouse interaction technique. Tan et al. (2003) evaluated the effects of display size by controlling the FOV and display resolution of two displays located at different distances from the user and determined that the larger display afforded greater presence than the smaller display for a spatial orientation task. Meehan et al. (2002) evaluated the effects of frame rate on presence in a stressful virtual environment and found that presence significantly increased with faster frame rates and decreased latency.

From these results, we can see that several components of immersion affect presence, including stereoscopy, FOR, display size, and frame rate. These effects of immersion on presence are only a subset of those possible. Many more are reported throughout the literature, but such an extensive survey is beyond the scope of this article.

Body Ownership

Body ownership is “the special perceptual status of one’s own body, which makes bodily sensations seem unique to oneself” (Tsakiris et al., 2006). Research indicates that body ownership may play a critical role in the somatosensory nervous system, which processes internal signals from the body (Tsakiris, 2010). However, researchers have demonstrated that immersive VR systems can be used to create body ownership illusions, in which “healthy subjects experience an artificial body as if it were their own physical body” (Maselli and Slater, 2013). In other words, users may unconsciously accept an avatar representation of themselves as their own body and respond to events involving their avatar, such as possible harm (González-Franco et al., 2014), uncontrolled movements (Pomes and Slater, 2013), expected social behaviors (Kilteni et al., 2013), and even time travel (Friedman et al., 2014). If immersive technologies can be used to induce such body ownership illusions, particularly time travel, they can clearly be used to place the audience within a news story.

Various aspects of immersion have been shown to influence the success of body ownership illusions. Petkova et al. (2011) found results indicating that a first-person perspective is needed for the body ownership illusion. Maselli and Slater (2013) later confirmed in one of their experiments that a first-person perspective was indeed a necessary condition for the body ownership illusion to occur. Maselli and Slater (2013) also demonstrated that seeing a realistic virtual body in the same location and posture as the physical body is sufficient to elicit the body ownership illusion, without the need for visual sensorimotor cues, such as moving one’s head to look around, or the need to have synchronous visuotactile cues (i.e., receiving a tactile stimulus at the same time as seeing the virtual body being touched by an object). Using HWDs, Petkova and Ehrsson (2008) demonstrated that the body ownership illusion could occur even when the appearance of the virtual body was overly simple like a mannequin or was even a different gender from the user. Other results indicate that the illusion can even occur with virtual bodies of different skin color (Kilteni et al., 2013) or even an unnatural appendage, such as a tail, when viewed from a third-person perspective (Steptoe et al., 2013). However, research by Maselli and Slater (2013) indicates that the body ownership illusion is strongest when the virtual body closely resembles the user in terms of skin color and clothes.

There has been an explosion of research on the body ownership illusion within the past decade, including how immersion affects the success of the illusion. For those interested in more details, we recommend reading the articles cited above.

Engagement

Like presence, engagement is a state of consciousness, but one in which the user’s attention is attracted to, involved with, and occupied by a user interface or piece of multimedia. Engagement is “a quality of user experience that depends on several factors, including the esthetic appeal, novelty, and usability of the system, the ability of the user to attend to and become involved in the experience, and the user’s overall evaluation of the salience of the experience” (O’Brien and Toms, 2013). As such, engagement is clearly important for immersive journalism. In order to “provide people with information they need” (Kovach and Rosenstiel, 2014), the news story and its presentation must attract, involve, and occupy the attention of the audience; otherwise, the information will not be encoded and instead will be lost (Chun and Turk-Browne, 2007).

Due to its multifaceted nature, engagement depends on several variables, such as the interestingness of the content (Arapakis et al., 2014), the user’s gender (Chou and Tsai, 2007), the user’s motives for “selecting and engaging in activities” (Tan, 2008), and how well the skills of the user match the challenges presented by the activity (Sutcliffe, 2009). However, some qualities of immersion have been found to affect engagement. Freeman et al. (2000) found that stereoscopy increased users’ subjective ratings of involvement when viewing a virtual scene. Lugrin et al. (2013) compared a CAVE-based implementation of a first-person shooter, which used a six-degree-of-freedom (6-DOF) tracked device for 3D shooting and hand-directed steering, to a traditional desktop version that used keyboard and mouse. They found that the CAVE-based implementation yielded greater evidence of engagement than the desktop version. Similarly, McMahan et al. (2012) found that increased display fidelity (via increased FOR and added stereoscopy) resulted in greater reported engagement, as did increased interaction fidelity (via a 6-DOF tracked device, as opposed to a keyboard and mouse metaphor). Additionally, McMahan et al. (2012) found that increasing both display fidelity and interaction fidelity yielded the highest levels of reported engagement. For a more thorough discussion of engagement and what affects it, we recommend reading Boyle et al. (2012).

Emotion

According to Barrett et al. (2007), “experiences of emotion are content-rich events that emerge at the level of psychological description, but must be causally constituted by neurobiological processes.” As Shih and Liu (2007) point out, users are no longer satisfied with usability and usefulness alone; they also want “emotional satisfaction” from their systems. This is why design processes have recently focused much more on the emotional impact of systems (Hartson and Pyla, 2012). In addition to satisfaction, emotions are known to affect how users cognitively process information and events (Barrett et al., 2007). Hence, emotions will affect how each audience member understands a news story.

As with engagement, several processes and variables influence emotional states (Barrett et al., 2007), including immersion. One of the early successful uses of immersive VR systems was inducing fear for phobia therapy treatments (Bowman and McMahan, 2007), including acrophobia (Rothbaum et al., 1995), arachnophobia (Garcia-Palacios et al., 2002), and glossophobia (Pertaub et al., 2002). Similarly, Chittaro et al. (2014) found that fear and elevated skin conductance could be induced by providing high-fidelity portrayals of character harm (e.g., rendering of blood) during an aircraft evacuation simulation. However, Volonte et al. (2016) found that lower fidelity cartoon and sketch-based rendering algorithms elicited greater negative emotions (e.g., anger, fear, and guilt) than a high-fidelity rendering algorithm, when interacting with a deteriorating virtual patient.

In other research, Riva et al. (2007) demonstrated that the audiovisual features of a virtual environment could be manipulated to specifically elicit anxiety and relaxation. More recently, Naz et al. (2017) investigated how the visual aspects of a virtual environment influenced emotional qualities and found that color affected warmth, brightness affected spaciousness, and both color and brightness affected excitement. Additionally, Kruijff et al. (2016) have investigated how multisensory cues affect emotion in immersive systems and found that surprise could be elicited by low-frequency sounds and back vibrations while happiness could be elicited by wind and smells.

These are just a few of the examples of immersion influencing emotional states. For a more in-depth review of how VR impacts emotional reactions, we recommend reading Diemer et al. (2015).

Cybersickness

Cybersickness is the feeling of physical discomfort brought on by the use of immersive technologies (LaViola et al., 2017). It is also often referred to as simulator sickness, or simply motion sickness (Stanney et al., 1997). The primary symptoms of cybersickness include disorientation, difficulty focusing, nausea, blurred vision, dizziness, vertigo, fatigue, headache, eye strain, increased salivation, sweating, stomach awareness, and burping (Kennedy et al., 1993). There are three prominent theories for what causes cybersickness—the poison theory, the postural instability theory, and the sensory conflict theory (LaViola, 2000). The poison theory stipulates that the body misreads the stimuli produced during the immersive experience as if it had ingested some type of toxic substance, which leads to “an emetic response” (LaViola, 2000). The postural instability theory is based on the idea that one of the primary behavioral goals of humans is to maintain postural stability, and therefore, prolonged postural instability results in motion sickness symptoms (Riccio and Stoffregen, 1991). Finally, “the most longstanding and popular explanation for cybersickness” is the sensory conflict theory (Davis et al., 2014), which is based on the premise that discrepancies among the senses regarding the body’s orientation and motion cause a perceptual conflict that the body does not know how to handle (LaViola, 2000).

While we may not know exactly why the body’s processes cause cybersickness when using immersive technologies, we do know that several factors can contribute to or induce cybersickness. Davis et al. (2014) identified three categories of factors that affect cybersickness—individual factors, device factors, and task factors. Individual factors include aspects specific to each user, such as age (Reason and Brand, 1975), gender (Biocca, 1992), preexisting illness (LaViola, 2000), and posture (Riccio and Stoffregen, 1991). Device factors include issues specific to the immersive hardware being used, including latency (Pausch et al., 1992), flicker (Harwood and Foley, 1987), interpupillary calibration (Davis et al., 2014), and ergonomics (McCauley and Sharkey, 1992). Finally, task factors include the level of control that the user has and the duration of the specific task that the user is performing (Davis et al., 2014). It is important to note that while immersive journalists have little control over individual factors and device factors, they can actively attempt to ensure that the audience’s task does not contribute to or induce cybersickness. For more details and discussion regarding cybersickness, we recommend reading LaViola (2000) and Davis et al. (2014).

Common Immersive Technologies

In this section, we discuss common immersive technologies that most journalists could use currently. We have purposely excluded research-oriented technologies, such as full-body motion capture systems, special tactile feedback devices, and olfactory displays. We also note that, even with commonly available technologies, several factors limit the production and dissemination of immersive journalism experiences. First, in order to use such technologies, journalists will need to expand their skill sets and/or establish new relationships with researchers or developers capable of creating and using such technologies. Second, news organizations will need sufficient monetary resources to cover the currently high costs of development. Third, even though such unique technologies might afford more-realistic experiences that optimize the impact of journalistic storytelling, dissemination could be extremely limited to audiences that have the physical and financial capability to access the technologies. We view immersive journalism in its current state as a form of storytelling that can supplement or complement other forms of presentation and therefore focus on technologies that are more readily accessible now to both journalists and broader audiences.

360° Videos

As discussed previously, 360° videos are the most common technology currently being used to create immersive experiences. One reason is that 360° videos do not require any CG rendering or 3D virtual objects to create the videos, unlike the rest of the technologies discussed below. CG effects are only needed for 360° videos if information or infographics are added during the post-processing stage (Owen et al., 2015).

There are a number of cameras for capturing 360° videos. Consumer 360° cameras usually cost less than $1,000 USD, and examples include the Samsung Gear 360, Nikon KeyMission 360, Nico360, Kodak Pixpro SP360, 360fly, and Ricoh Theta cameras. Professional 360° cameras support higher-resolution videos and usually provide stereoscopic capabilities. However, these professional cameras are expensive and usually involve complex processes for stitching multiple camera feeds into a single 360° video. Examples of professional 360° cameras include the Jump by Google, Nokia OZO, PanoCam3D, and the non-stereoscopic GoPro Omni. It is important to note here that The New York Times uses the Samsung Gear 360 for capturing its 360° videos, partially due to the simple stitching process that the camera supports.

Another advantage of using 360° videos is that they can be viewed through several means. Nearly any HWD, from the inexpensive Google Cardboard to the HTC Vive, can immerse the viewer in a 360° video. Using the same inertial tracking technology, 360° videos can also be viewed on nearly any smartphone by moving the device around like a window to the virtual world. Finally, 360° videos can also be viewed through most web browsers by dragging to change how the current FOV is mapped to the 360° FOR. This wide range of viewing options makes 360° videos the most accessible immersive technology for broad audience consumption.
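As a rough illustration of how a browser-based viewer might map the current view direction onto the full 360° FOR, the following minimal sketch assumes the video frames are stored in an equirectangular layout, which is an assumption on our part and not something stated above. The function names, the `deg_per_px` drag sensitivity, and the frame dimensions are all hypothetical.

```python
def view_to_pixel(yaw_deg, pitch_deg, width, height):
    """Map a view direction to the pixel at the center of the view.

    Assumes an equirectangular frame: yaw spans the full width
    (-180..180 degrees) and pitch spans the full height (+90 at the top).
    """
    # Wrap yaw into [-180, 180) so dragging past the seam continues smoothly.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    # Clamp pitch so the viewer cannot look past straight up or straight down.
    pitch = max(-90.0, min(90.0, pitch_deg))
    x = (yaw + 180.0) / 360.0 * width
    y = (90.0 - pitch) / 180.0 * height
    return int(x) % width, min(int(y), height - 1)


def drag_view(yaw_deg, pitch_deg, dx_px, dy_px, deg_per_px=0.1):
    """Update the view direction after a mouse drag (hypothetical sensitivity)."""
    new_yaw = yaw_deg - dx_px * deg_per_px
    new_pitch = max(-90.0, min(90.0, pitch_deg + dy_px * deg_per_px))
    return new_yaw, new_pitch
```

In this sketch, dragging only changes the viewing direction; the viewing position stays fixed at the camera's location.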

In terms of subjective responses, 360° videos can afford relatively high levels of presence when viewed through an HWD. However, they afford less presence when viewed on smartphones (Rupp et al., 2016), and even less when viewed through a web browser, based on anecdotal evidence collected by the authors. As several results indicate a strong correlation between presence and emotion (Diemer et al., 2015), 360° videos are more likely to elicit emotions when viewed through an HWD.

While 360° videos can enable presence and emotions, they are not apt for affording body ownership. In many 360° videos, there is no representation of the viewer’s body. In some videos, visual artifacts, such as a camera tripod or a person carrying the camera, clearly indicate that the viewer’s body is not present in the world of the video. As such, most 360° videos will not provide the sense of body ownership. However, there are some exceptions, such as Jane Gauntlett’s work “Dancing With Myself”.1

Another limitation of 360° videos is the lack of interactivity. Because 360° videos are nothing more than 2D pixels wrapped around the viewer, the worlds seen in the videos cannot be changed (at least not without complex computer vision algorithms and advanced processes). Hence, users cannot grab or manipulate objects that they see in the news story world. Additionally, users cannot change their viewing position within the videos, as that is determined by the camera’s position. Users can only change the direction of their view from the camera’s position by rotating their heads. Based on the work by McMahan et al. (2012), this lack of interactivity indicates that 360° videos cannot afford extremely high levels of engagement, as some other immersive technologies may be able to.

Finally, journalists must be careful how they capture 360° videos, as they can induce cybersickness, especially when viewed through an immersive HWD. As discussed in Section “Cybersickness,” cybersickness is likely caused by discrepancies between the visual and vestibular senses. Such discrepancies occur with 360° videos when the camera is being moved, as the recorded visuals will indicate that the viewer’s body is moving while the viewer’s inner ear will indicate that it is not. Hence, moving the camera while capturing a 360° video is very likely to induce cybersickness in the audience members, which we have observed anecdotally. This leads to our design guideline not to move the camera, even slowly, when capturing 360° video (see Design Guidelines for Immersive Journalism).

CG-Based Mobile VR

This category generally refers to any technology that uses a smartphone, a mobile HWD peripheral, and a CG-based simulation to provide an immersive experience. Examples of mobile VR devices include the Samsung Gear VR, Google Cardboard, and the Google Daydream HWDs. While 360° videos can also be viewed on these devices, the use of immersive CG is what sets CG-based mobile VR experiences apart. For example, Chernobyl VR Project is an immersive journalism piece that combines CG-based simulations with a documentary narrative approach to inform the audience about the Chernobyl disaster in Ukraine.

Computer graphics-based mobile VR experiences afford relatively high levels of presence, similar to viewing a 360° video with an HWD. While some journalists may worry about how the CG-based simulations of mobile VR experiences impact the emotions of their audience, prior research indicates that emotions can not only be elicited with CG (Chittaro et al., 2014), but that lower fidelity visual realism may elicit more emotions than high-fidelity visual realism (Volonte et al., 2016). Also, CG-based mobile VR is more likely to induce the sense of body ownership than 360° videos because the viewer’s body can be easily represented in the virtual world. For example, though not a journalistic piece, Insurgent VR is a CG-based mobile VR experience that shows the viewer’s virtual body statically positioned in a chair. The experience even portrays robotic wires connecting to the virtual body in a Matrix-like fashion. However, the sense of body ownership is limited, as CG-based mobile VR devices only track the rotations of the user’s head and are incapable of tracking bodily movements like walking or grabbing objects.

Because CG-based mobile VR relies on CG-based simulations, it affords more interactivity than a 360° video does. The virtual world can be interacted with and changed according to the user’s actions. However, those actions are restricted by and based solely on the user’s head orientation, a few peripheral buttons, and possibly a joystick, if an additional game controller is used with the mobile VR device. Such interactions are extremely unnatural and likely to decrease the user’s sense of engagement (Bowman et al., 2012).

As with 360° videos, journalists must be careful not to induce cybersickness with their CG-based mobile VR applications. While CG-based simulations can allow users to travel in any direction at any time, immersive journalists and developers should avoid the temptation to provide steering via a game controller or to use simulated walking animations with mobile VR devices. Both of these approaches present the same visual-vestibular conflicts that 360° camera movements do. Instead, a teleporting approach should be used, in which the user selects the next position to view the virtual world from, and the simulation uses a fade-out-and-fade-in technique to change the user’s view without any visual-vestibular conflicts. Remembering Pearl Harbor employs such a technique.
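The teleporting approach described above can be sketched in a few lines. This is a minimal, engine-agnostic illustration and not the implementation used by any of the works cited here; the function names and the half-second `duration` are hypothetical choices. The key property is that the viewpoint is never interpolated: it changes only at the midpoint, when the overlay is fully opaque.

```python
def fade_alpha(t, duration=0.5):
    """Opacity of a full-screen black overlay, t seconds into a teleport.

    Ramps from 0 to 1 over the first half of the transition (fade-out)
    and from 1 back to 0 over the second half (fade-in).
    """
    if t <= 0.0 or t >= duration:
        return 0.0
    half = duration / 2.0
    return t / half if t < half else (duration - t) / half


def teleport_position(t, start, target, duration=0.5):
    """Viewpoint during a teleport: it jumps once, at the midpoint.

    Because the screen is fully black at that moment, the user never
    sees visual motion that the vestibular system does not feel.
    """
    return start if t < duration / 2.0 else target
```

A steering or simulated-walking technique would instead move the viewpoint continuously between `start` and `target`, which is exactly the visual motion this sketch avoids.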

Room-Scale VR

Room-scale VR technologies are very similar to CG-based mobile VR technologies, except that room-scale technologies have more tracking capabilities. These additional capabilities allow users to walk around the physical room (hence, the term “room-scale”) and to naturally interact with virtual objects using handheld controllers, as opposed to an unnatural joystick or button technique. There are currently three room-scale VR systems commercially available: the Oculus Rift + Touch, the HTC Vive, and the PlayStation VR.

With the increased level of interactivity afforded by the additional sensing technologies, room-scale VR affords some of the most-convincing immersive experiences. Audience members are more likely to experience presence as a result of the increased interaction fidelity (McMahan et al., 2012). The sense of body ownership is more likely to be elicited, as inverse kinematics can be used to move a virtual avatar’s arms in the same manner that users move their arms (Maselli and Slater, 2013). With increased presence and body ownership, room-scale VR experiences are more likely to elicit emotion from the audience members (de la Peña et al., 2010). The increased interaction fidelity is also likely to elicit more engagement on the part of the audience (McMahan et al., 2012). Finally, due to the ability to walk around, room-scale VR systems are less likely to present the visual-vestibular conflicts that cause cybersickness for many users. It is important to note that a teleporting technique should still be used instead of steering or simulated walking when the user desires to move to a position beyond the physical tracking limits.

Handheld AR

Another technology that has been used for immersive journalism is handheld AR, the same technology used by the once wildly popular Pokémon GO game. With handheld AR, a camera-equipped smartphone or tablet is pointed at a predefined 2D image and then a video or virtual 3D object is overlaid onto that image (Pavlik and Bridges, 2013). Essentially, the smartphone or tablet becomes a handheld window to a world composed of the surrounding real world and virtual objects. For example, The New York Times used handheld AR to transform its masthead banner into a 3D animation of New York City (Pavlik and Bridges, 2013). Another example of handheld AR journalism is the CI-Spy application developed by Singh et al. (2015), in which users were introduced to the history of a local historic site.

Handheld AR can be used with nearly any smartphone or tablet, as the functionality is afforded by software, rather than hardware. This makes immersive journalism via handheld AR available to a wide audience, as many people have smartphones today. In order to create the necessary software, journalists and developers can use AR solutions, such as ARToolKit, Vuforia, Aurasma, and Layar.

The effect of handheld AR on a user’s sense of presence is questionable. In one regard, the user is already physically located in the news story world, so this would lead to the thought that the user must experience “being there.” However, if the virtual objects are intended to alter the story world, the user may not feel present in that alternate world. Additionally, handheld devices have been shown to afford less presence than immersive HWDs for 360° videos (Rupp et al., 2016). We believe determining the effects of handheld AR on presence to be an open research question. Likewise, we believe the effect of handheld AR on eliciting emotions is also currently unknown.

Because AR relies on the user’s physical surrounding environment, it affords high levels of interactivity with real objects as the user can interact with those objects as he or she normally would. Additionally, it should also provide a high sense of body ownership, as a virtual avatar is not even necessary, and the user can see their own body and limbs. However, with handheld AR, the user is required to hold the smartphone or tablet, which can impede the user’s ability to interact with the physical environment. Also, if the user is expected to interact with virtual objects, interactions such as swiping and pinching the touchscreen will likely be used instead of grasping and reaching. Hence, the user’s level of engagement may be impeded by such unnatural interactions. Finally, handheld AR has been demonstrated to not significantly impact cybersickness (Berning et al., 2014), though it may cause arm fatigue due to holding the smartphone or tablet (LaViola et al., 2017).

Head-Worn AR

Another technology that may soon be adopted for immersive journalism is head-worn AR, in which the user wears a see-through HWD, and videos or virtual 3D objects are superimposed within the user’s view. Currently, examples of head-worn AR journalism are difficult to find. However, devices such as the Epson Moverio BT-300, Microsoft HoloLens, and upcoming Meta 2 could be used to produce immersive journalism pieces similar to the handheld AR examples above.

In terms of immersion and subjective responses, the biggest difference between handheld AR and head-worn AR is that the user wears a headset instead of holding a smartphone or tablet. The headset provides a stereoscopic display and head tracking, whereas handheld AR does not. This means that head-worn AR is likely to afford greater levels of presence (Steptoe et al., 2014). However, it also means that head-worn AR is more likely to induce cybersickness due to accommodation problems, latency, and poor headset ergonomics (Kaufmann and Dünser, 2007).

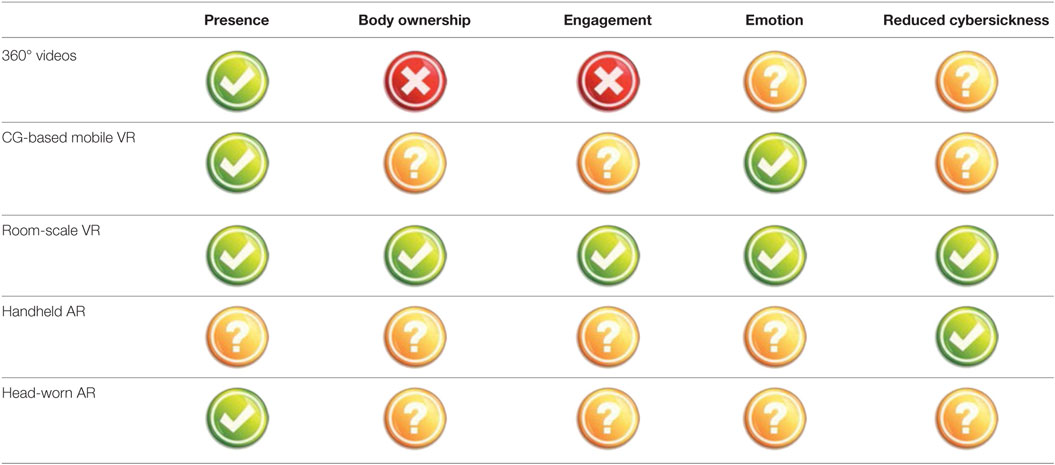

In Table 1, we provide a summary of the capabilities and limitations of the immersive technologies discussed above.

Fundamentals of Journalism

Journalism is essentially storytelling with the purpose “to provide people with information they need to understand the world” (Kovach and Rosenstiel, 2014). Defining professional journalism in its current practice is easily the subject of a large volume of research and therefore beyond the scope of this article. However, to present a concise discussion, we employ the framework proposed by Deuze (2005) that captures five key requirements of journalism—public service, fairness (originally objectivity), autonomy, immediacy, and ethics—all of which are commonly reflected in more extensive analyses of professional journalism, such as the one presented by Kovach and Rosenstiel (2014). These requirements not only define professional journalism throughout the reporting, editing, and presentation processes, but they are also barometers for measuring journalistic excellence. Numerous award competitions, such as The Pulitzer Prizes,2 recognize the best journalism based on these measures of excellence, which we describe subsequently.

Public Service

Journalism that provides public service essentially has a broad impact on a wide audience. Deuze (2005) contends that journalists provide a public service as “watchdogs” and “active collectors and disseminators of information.” Professional journalists and news organizations strive to use resources to report and present stories with the greatest importance to the general public. Additionally, public service is highly prioritized within many journalism codes of ethics. The Society of Professional Journalists (SPJ) Code of Ethics3 states that the “highest and primary obligation of ethical journalism is to serve the public” and that journalists should seek “to ensure that the public’s business is conducted in the open, and that public records are open to all.” The Radio Television Digital News Association (RTDNA) Code of Ethics4 decrees that journalism “places the public’s interests ahead of commercial, political and personal interests” and “empowers viewers, listeners and readers to make more informed decisions for themselves.”

Fairness

The goal of professional journalism is to provide fair reporting and proportional news presentation through a thorough, contextual, and balanced fact-gathering process. The SPJ Code of Ethics states that ethical journalism “should be accurate and fair. Journalists should be honest and courageous in gathering, reporting and interpreting information.” The Media, Entertainment & Arts Alliance Journalist Code of Ethics5 informs journalists not to “suppress relevant available facts, or give distorting emphasis.”

Originally, Deuze (2005) used the term objectivity for this key requirement, but we believe the term fairness better reflects the current philosophy of professional journalism while at the same time recognizing the subjective nature of decision-making throughout the entire journalistic process. Even Deuze (2005) recognized that “objectivity has a problematic status in current thinking about the impossibility of value-neutrality” and that “academics and journalists alike revisit this value through synonymous concepts like ‘fairness’.”

Autonomy

As Deuze (2005) indicates, “journalists must be autonomous, free and independent in their work.” The SPJ Code of Ethics informs journalists to “avoid conflicts of interest, real or perceived. Disclose unavoidable conflicts” and to “resist internal and external pressure to influence coverage.” The RTDNA Code of Ethics addresses the importance of transparency by “acknowledging sponsor-provided content, commercial concerns or political relationships” and points out that “transparency alone is not adequate. It does not entitle journalists to lower their standards of fairness or truth.”

Immediacy

News, by its nature, is about recent current events. “News isn’t news if it isn’t fresh and being first to a story is part of what drives journalism” (Sanders, 2003). That said, many forms of reporting, particularly explanatory or investigative stories, require time to produce. Immediacy can be considered relative in terms of its relation to the actual moment of an event, but journalists are motivated to provide information as near to the event’s occurrence as possible. Therefore, journalism “involves notions of speed, fast decision-making, hastiness, and working in accelerated real-time” (Deuze, 2005). However, as the RTDNA Code of Ethics points out, “facts change over time. Responsible reporting includes updating stories and amending archival versions to make them more accurate and to avoid misinforming those who, through search, stumble upon outdated material.”

Ethics

What elevates journalism as a profession are the ethics that guide the creation process: reporting, editing, presentation and dissemination. As Deuze (2005) states, “journalists have a sense of ethics, validity and legitimacy” that give the professionals a sense of purpose and guide their decision making. In addition to the requirements of serving the general public while acting fairly, autonomously, and with some sense of immediacy, journalistic ethics comprise other foundational concepts that any journalistic story must meet. When devising our framework for journalism requirements, we apply ethical standards for accuracy, honesty and context across the board to all story types. As journalist Walter Isaacson states, “In the end, you’re going to be judged on whether you got it right, not just on whether you got it first” (Sanders, 2003). Along these lines, the RTDNA Code of Ethics indicates that “ethical journalism requires owning errors, correcting them promptly and giving corrections as much prominence as the error itself had.” The SPJ Code of Ethics underscores the importance of minimizing harm and treating “sources, subjects, colleagues and members of the public as human beings deserving of respect.” This includes realizing “that private people have a greater right to control information about themselves than public figures and others who seek power, influence, or attention.”

Types of Journalistic Stories

Journalistic stories are produced in many forms and cover wide ranges of topics. In this section, we discuss major types of hard news—news that is politically relevant, reports in a thematic way, focuses on the societal consequences of events, and is impersonal and unemotional in its style (Reinemann et al., 2012). In particular, we discuss four types of hard news based on the categories of The Pulitzer Prizes (see text footnote 2): breaking news, public service, investigative reporting, and explanatory reporting. The four Pulitzer Prize categories used here serve as a high-level umbrella for all subgenres of hard news, such as local, national, or international reporting.

Below, we examine each of the five journalistic requirements discussed in the previous section as they relate to the four hard-news story types. Although all five requirements are clearly important to any journalistic production, some requirements warrant greater emphasis based on the key characteristics of the story type. Table 2 summarizes these key journalism requirements, which allow us to discuss which immersive technologies are best suited for each type of story in the next section.

Breaking News

The Pulitzer website describes this category as the reporting of “breaking news that, as quickly as possible, captures events accurately as they occur, and, as time passes, illuminates, provides context and expands upon the initial coverage.” By its nature, news is new information, time-pressured by varying degrees, especially as events unfold and information changes rapidly. When considering the five requirements of journalism, the key requirement for breaking news is immediacy and providing the public with the best information available in an often-changing environment.

Public Service

The Pulitzer website describes this category as “a distinguished example of meritorious public service by a newspaper or news site through the use of its journalistic resources.” Although this category can be immediate in nature, require investigative reporting, and use deep explanation, the primary requirement for public service stories is public service through the magnitude of the story’s impact on a broad audience. The core value guiding public service journalism is the deontological ethic that journalists and news organizations embrace as their “notion of duty” to serve democracy as a watchdog for public interest (Sanders, 2003; Kovach and Rosenstiel, 2014). This means disseminating information as widely and affordably as possible to broad audiences.

Investigative Reporting

The term “investigative” typically is understood as journalism that exposes details of a subject of public interest that, for example, might be hidden from public view or dissemination, or a topic that becomes apparent only through reporting that aggregates data and connects disparate information into a cohesive narrative (Kovach and Rosenstiel, 2014). Although many forms of reporting can be considered investigative, in-depth investigative reporting has two hallmark characteristics according to the Investigative Reporters & Editors Awards6: “substantially the product of the reporter’s own initiative and effort” and “uncovers facts that someone or some agency may have tried to keep from public scrutiny.” These characteristics elevate the importance of autonomous, independent reporting that may be required to overcome legal, financial, or political pressures. A foundational value in journalism is that journalists “must maintain an independence from those that they cover” (Kovach and Rosenstiel, 2014), and this requirement takes on additional importance when reporters may have to resist influences of those that want to keep stories and facts hidden.

Explanatory Reporting

The Pulitzer website describes explanatory reporting as journalism that “illuminates a significant and complex subject, demonstrating mastery of the subject, lucid writing and clear presentation, using any available journalistic tool.” While providing thorough, contextual, and proportional information is critical for all types of journalism, we believe it is especially important to explanatory reporting, in which the primary objective is to illuminate a complex subject from all perspectives. Narrative structure serves to identify the most significant facts to present and, in the interest of efficiency and understanding, eliminates or reduces others. However, the overarching goal of explanatory journalism is to broaden the narrative and to include more information, which might be left out of other types of news stories.

Types of Immersive Journalism

Now that we have described the four fundamental domains of knowledge of FIJI, we present four types of immersive journalism based on our analysis of the intersection of the fundamental domains. This intersection helps us identify which of the immersive technologies are best suited for each type of journalistic story, given its key journalism requirements. Additionally, we present examples of each type of immersive journalism. Table 3 provides a summary of the four types of immersive journalism, including their type of journalistic story, key journalism requirements, the immersive technologies that we recommend implementing them with, and the expected effects of immersion, given the recommended technologies.

360° Breaking News Videos

The first type of immersive journalism that we have identified is 360° breaking news videos, which use 360° videos to convey breaking news stories. As identified earlier within our framework, immediacy is the key journalistic requirement for breaking news stories. Due to the time- and resource-intensive nature of creating CG-based simulations, most of the immersive technologies previously discussed are not suitable for breaking news stories. However, 360° videos can be rapidly captured and produced to meet the immediacy requirement of breaking news. For example, the 2016 Olympics held in Rio de Janeiro were covered by multiple news outlets using 360° videos, including live streams by BBC and NBC. By using a 360° camera, such as the Samsung Gear 360, journalists can capture and produce immersive journalism pieces in a breaking news fashion. However, this also limits immersive breaking news stories to primarily affording only presence, as body ownership and engagement are usually limited when using 360° videos, as discussed in Section “360° Videos.”

Mobile Immersive Public Service

The second type of immersive journalism that we have identified is mobile immersive public service pieces, which use affordable mobile technologies to widely disseminate public service news stories. As explained earlier, the primary focus of public service news stories is wide dissemination of important news that impacts broad audiences. This requirement therefore quickly eliminates some immersive technologies from being used for public service pieces. For example, the number of users that can currently experience a highly interactive public service news story in room-scale VR is limited. This is because room-scale VR technologies are new to consumers, with the Oculus Rift, HTC Vive, and PlayStation VR all being released in 2016, and these technologies require expensive desktop computers with high-end graphics cards, which many consumers cannot afford. Similarly, head-worn AR technologies, such as the Microsoft HoloLens, are even more scarce and facilitate an even smaller audience. Hence, neither room-scale VR nor head-worn AR technologies are useful for immersive public service journalism.

To reach the broadest audience, a 360° video is the best current option for immersive public service. As discussed earlier, these videos can be consumed via web browsers, smartphones, and immersive HWDs, which facilitate a very large audience in a number of ways. CG-based mobile VR technologies can also be used to reach a fairly broad audience, as most people have smartphones, and HWD peripherals like the Google Cardboard are inexpensive. For similar reasons, handheld AR can also be used to widely disseminate immersive public service journalism. Interestingly, handheld AR can also be used to provide a public service news story within its spatial context to local audiences that have access to the real-world location.

An example of a mobile immersive public service piece is Still Living With Bottled Water in Flint, a 360° video published by The New York Times in January 2017. The immersive journalism piece depicts how Flint, MI, USA, residents are still facing issues due to poorly filtered water, despite officials stating that the water is safe. Because the piece is a 360° video, it can be easily experienced via a desktop web browser, a smartphone app, or with an immersive HWD.

CG-Based Immersive Investigation

The third type of immersive journalism that we have identified is CG-based immersive investigations, which use CG-based simulations to provide virtual access to key locations or events being exposed in an investigative reporting news story. As discussed earlier, the key requirement of investigative reporting is autonomy of the journalist, as there are often third parties that wish to keep information hidden. In many cases, it may be difficult or impossible for the journalist to access the physical real-world locations or events that are the subject of an investigative piece. Hence, it is unlikely that a 360° video can be used for an immersive investigation piece. Additionally, if the journalist is unable to access the real-world locations and events, it is improbable that the general public would be able to access them. Hence, handheld AR and head-worn AR technologies would not be effective for most immersive investigation pieces. CG-based mobile VR and room-scale VR simulations are the only way to provide the general public access to locations or events being exposed in investigative reports.

Many of de la Peña’s immersive journalism works have been CG-based immersive investigations that reproduce real-life events. Hunger in Los Angeles provides viewers virtual access to a real-life event in which a diabetic man seized and fell into a coma at a food bank in Los Angeles in August 2010 (Kavner, 2012). Project Syria portrays a bomb explosion that occurred on a busy street in Aleppo, Syria in November 2012 (Pérez Seijo, 2017). More recently, Kiya depicts the real-life events surrounding a domestic violence incident that resulted in a murder-suicide in North Charleston, SC, USA in June 2013 (Robertson, 2016). In order to produce these CG-based immersive investigations, de la Peña used actual photographs, audio, and video taken from the events to recreate them through CG-based simulation, which resonates with the point of Kovach and Rosenstiel (2014) that investigative reporting aggregates data and connects disparate information into a cohesive narrative.

Immersive Explanatory Reports

The fourth type of immersive journalism that we have identified is immersive explanatory reports, which can use any type of immersive technology to convey complex subjects through explanatory reporting. As discussed earlier, the key requirement of explanatory reporting is fairness by providing thorough, contextual, and proportional information. Immediacy, public service, and autonomy may still be important, but many explanatory stories are guided by the need to clearly present complex information “using any available journalistic tool,” as quoted from The Pulitzer website. Hence, immersive explanatory reports should use whichever of the common immersive technologies best conveys the story’s information.

A 360° video example of an immersive explanatory report is How Garbage Becomes ‘Black Gold’, published by The New York Times in June 2017. The immersive journalism piece shows how New York City is collecting food waste to divert organic refuse from landfills and instead create compost and gas resources. A CG-based mobile VR example of an immersive explanatory report is Alzheimer’s Disease: Exploring The Brain published by AP Digital Products, which explains the latest scientific theories on changes in the brain that are believed to contribute to Alzheimer’s disease. A head-worn AR example of an immersive explanatory report is the early immersive documentary explaining the events of the 1968 Columbia University student revolt through images, audio recordings, and videos (Feiner et al., 1997).

Design Guidelines for Immersive Journalism

Throughout our research, we have identified a number of design guidelines for immersive journalism. We present those here:

Guideline: Do not move the camera when capturing a 360° video.

As explained in Section “360° Videos,” moving the camera when capturing a 360° video will create a visual-vestibular sensory conflict for viewers, especially for those consuming the video through an immersive HWD. Such sensory conflicts are believed to be the cause of most cybersickness cases and should be avoided (LaViola, 2000). Hence, journalists must be careful not to move the camera when capturing a 360° video. If the journalist desires to portray multiple locations, a separate 360° video should be created for each location. A fade-out-and-fade-in technique, like the one described in Section “CG-Based Mobile VR,” could be used to transition between the videos.

Guideline: Avoid virtual camera motion in CG-based mobile and room-scale VR.

Many mobile VR and room-scale VR developers provide steering or simulated walking techniques for traveling within their CG-based simulations. However, like 360° camera movements, these virtual camera-motion techniques create visual-vestibular conflicts for users. While cybersickness may be an acceptable side effect for some immersive experiences, such as VR-based videogames, it should be avoided at all costs for immersive journalism pieces, which are meant to broadly serve the general public. Hence, we recommend using a teleportation technique that allows the user to point to a new location and then uses a fade-out-and-fade-in animation to transition the user to the new location to eliminate sensory conflicts and avoid inducing cybersickness.
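The fade-out-and-fade-in teleportation recommended above can be illustrated with a minimal Python sketch. It is not tied to any particular VR engine; the `Frame` class, the `teleport_frames` function, and the `fade_frames` parameter are hypothetical names introduced only for illustration. The point it shows is that the virtual camera changes position only while the view is fully black, so the user never sees simulated motion.

```python
from dataclasses import dataclass
from typing import List, Tuple

Position = Tuple[float, float, float]


@dataclass
class Frame:
    position: Position  # where the virtual camera is for this frame
    fade_alpha: float   # 0.0 = scene fully visible, 1.0 = view fully black


def teleport_frames(start: Position, target: Position,
                    fade_frames: int = 10) -> List[Frame]:
    """Build the frame sequence for one teleport (hypothetical helper).

    The camera stays at `start` while the view fades to black, jumps to
    `target` while the view is black, and then fades back in at `target`.
    No frame ever shows an intermediate camera position.
    """
    frames: List[Frame] = []
    # Fade out: alpha rises to 1.0 while the camera stays at the start.
    for i in range(1, fade_frames + 1):
        frames.append(Frame(start, i / fade_frames))
    # Fade in: the first frame at the target is still fully black,
    # then alpha falls back to 0.0.
    for i in range(fade_frames, -1, -1):
        frames.append(Frame(target, i / fade_frames))
    return frames


if __name__ == "__main__":
    for frame in teleport_frames((0.0, 0.0, 0.0), (5.0, 0.0, 2.0), fade_frames=4):
        print(frame)
```

In a real application, the frame sequence would be driven by the rendering loop of whichever engine is used; the sketch only captures the ordering of the fade and the jump.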

Guideline: Use 360° videos when immediacy is your primary journalistic requirement.

As discussed in Section “360° Breaking News Videos,” CG-based simulations are costly to produce in terms of time and resources. On the other hand, consumer 360° cameras can be used to quickly capture and produce immersive journalism pieces, even in real time for live events, such as the 2016 Olympics.

Guideline: Do not use room-scale VR or head-worn AR when public service is your primary journalistic requirement.

Currently, room-scale VR and head-worn AR technologies have not yet been adopted by a wide audience. As discussed in Section “Public Service,” public service pieces should target a wide audience for dissemination. Hence, neither room-scale VR nor head-worn AR should be used for immersive public service journalism.

Guideline: Use CG-based mobile VR or room-scale VR technologies when working on an investigative reporting piece involving restricted real-world locations or events.

As explained in Section “Investigative Reporting,” investigative reporting often involves third parties that do not want information disclosed. As a result, physical access to the real-world locations involved in the story may be limited or not available. Hence, 360° videos, handheld AR, and head-worn AR are less viable options for such immersive investigative reporting pieces, as discussed in Section “CG-Based Immersive Investigation.” Therefore, CG-based mobile VR or room-scale VR technologies are recommended for such immersive stories.
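Taken together, the technology recommendations in these guidelines amount to a small lookup from story type to key journalistic requirement and suitable technologies. The following Python sketch encodes that lookup. The story types, requirements, and technology names come from the framework as described in this article; the data layout and the names `FIJI_GUIDE` and `recommend` are hypothetical and serve only as an illustration, not as part of FIJI itself.

```python
# The five common immersive technologies discussed in the article.
ALL_TECHNOLOGIES = (
    "360° video",
    "CG-based mobile VR",
    "room-scale VR",
    "handheld AR",
    "head-worn AR",
)

# Hypothetical lookup table summarizing the guidelines. The investigative
# entry assumes a story involving restricted real-world locations or events.
FIJI_GUIDE = {
    "breaking news": {
        "requirement": "immediacy",
        "technologies": ("360° video",),
    },
    "public service": {
        "requirement": "wide dissemination",
        "technologies": ("360° video", "CG-based mobile VR", "handheld AR"),
    },
    "investigative": {
        "requirement": "autonomy",
        "technologies": ("CG-based mobile VR", "room-scale VR"),
    },
    "explanatory": {
        "requirement": "fairness",
        "technologies": ALL_TECHNOLOGIES,
    },
}


def recommend(story_type):
    """Return (key requirement, suitable technologies) for a story type."""
    entry = FIJI_GUIDE[story_type.lower()]
    return entry["requirement"], list(entry["technologies"])


if __name__ == "__main__":
    for story_type in FIJI_GUIDE:
        requirement, technologies = recommend(story_type)
        print(f"{story_type}: {requirement} -> {', '.join(technologies)}")
```

Such a table is only a starting point: an explanatory piece, for instance, may still need to weigh immediacy or dissemination when choosing among the listed technologies.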

This concludes our design guidelines for immersive journalists. For information on how to develop immersive journalism pieces, we recommend reading de la Peña et al. (2010) and Owen et al. (2015). In particular, Owen et al. (2015) discuss several design guidelines beyond the scope of this article.

Case Studies: FIJI and the 2017 Pulitzer Prize Winners

In this section, we briefly discuss the most recent Pulitzer Prize winning entries as case studies to demonstrate how FIJI could be used to guide decisions about how to create immersive journalism experiences for each story type.

Case Study #1: 2017 Pulitzer Prize for Breaking News Reporting

Numerous journalists from the East Bay Times in Oakland, California, were awarded this prize for coverage of an early morning warehouse fire that killed 36 people. The rapidly developing story was first reported at 3:45 a.m. on Saturday, December 3, 2016. As details unfolded, the news staff captured and disseminated information through text reports, photography, and video that was presented in print, on websites and blogs, and using social media. Immediacy was the driving journalism requirement; various technology and media platforms were used to present new information as quickly as possible to family members and concerned loved ones about potential fire victims. Within 18 h, the East Bay Times published seven major updates of the news story.

According to the Pulitzer website, Harry Harris was the first reporter from the East Bay Times on the scene and was initially limited to talking with firefighters still trying to extinguish the fire. If Harris had had access to a consumer 360° camera, he could have captured a 360° video of the entire scene, including the continuing blaze, the firefighters still fighting to extinguish it, and the crowd watching the events unfold near the corner of 31st Avenue and International Boulevard. Viewers would likely experience presence watching the 360° breaking news video and feel like they were there at the scene as the blaze continued out of control until morning.

Case Study #2: 2017 Pulitzer Prize for Public Service

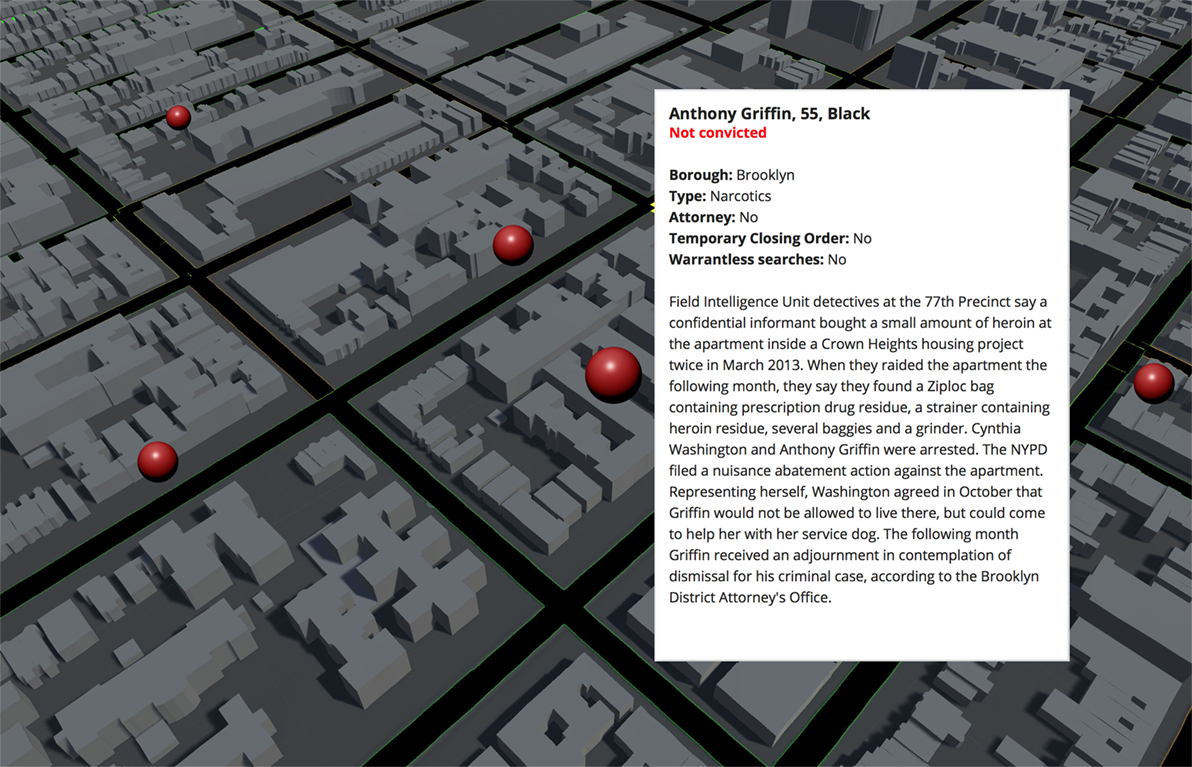

The New York Daily News and ProPublica collaborated to analyze public records and publish information over a 10-month period about how and against whom the city of New York was enforcing its nuisance abatement law to oust hundreds of people, most of them poor minorities, from their residences. The news organizations developed an interactive graphic that connected each nuisance abatement case to a mapped location of the residence. Stories, editorials, and graphics were presented through print and websites for wide dissemination to a larger audience, including those likely less affluent.

As per our framework, a mobile immersive public service piece would be the most suitable form of immersive journalism to ensure wide dissemination, especially to those potentially affected by the nuisance abatement law. One potential approach would be to use 360° videos to show those affected by the cases and their apartment homes and neighborhoods. Such videos would likely provide a sense of presence within the residences and evoke emotions while listening to the residents speak about being evicted from their homes. Another immersive journalism approach would be to create a CG-based mobile VR version of the interactive graphic connecting each case to a mapped location. Such a CG-based simulation could be created by integrating an external tool like Mapbox with information collected by the reporters (see Figure 5 for a mockup). Finally, a handheld AR application could be developed by displaying similar information near the real-world residences, based on Global Positioning System data.

Figure 5. A mockup of what a computer graphics-based mobile virtual reality application might visualize for the 2017 Pulitzer Prize for public service news story.
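As a rough illustration of the handheld AR idea described above, the following Python sketch selects which mapped cases lie close enough to the user's GPS position to be displayed. It uses the standard haversine formula for great-circle distance. The record layout, the 200 m radius, the function names, and the coordinates are all hypothetical placeholders; they are not taken from the reporters' data or from any actual application.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_cases(user_lat, user_lon, cases, radius_m=200.0):
    """Return the cases within radius_m of the user, nearest first."""
    measured = [
        (haversine_m(user_lat, user_lon, c["lat"], c["lon"]), c)
        for c in cases
    ]
    measured.sort(key=lambda pair: pair[0])
    return [c for distance, c in measured if distance <= radius_m]


if __name__ == "__main__":
    # Placeholder records with made-up coordinates, for illustration only.
    cases = [
        {"id": "case-A", "lat": 40.7000, "lon": -73.9000},
        {"id": "case-B", "lat": 40.7010, "lon": -73.9000},
        {"id": "case-C", "lat": 40.7500, "lon": -73.9000},
    ]
    for case in nearby_cases(40.7000, -73.9000, cases):
        print(case["id"])
```

A production application would additionally need to account for GPS error, which can be comparable to the distance between neighboring buildings in dense urban areas.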

Case Study #3: 2017 Pulitzer Prize for Investigative Reporting

The 2017 Pulitzer Prize for Investigative Reporting was awarded to Eric Eyre of the Charleston Gazette-Mail, in Charleston, West Virginia. Judges recognized Eyre “for courageous reporting, performed in the face of powerful opposition, to expose the flood of opioids” into West Virginia. This recognition highlights our framework’s guiding requirement for investigative journalism—autonomy. Large drug companies repeatedly fought the newspaper’s efforts to access the sealed court records that revealed massive shipments of opioids to the state. Print and online stories described the connection between the state’s high number of addictions and overdose deaths, and the millions of pain pills sent to local pharmacies.

Following our framework, a CG-based immersive investigation could be created for this news story, using either CG-based mobile VR or room-scale VR technology, to allow the general public to view simulations of the real-life events behind this story. For the less-interactive mobile VR technology, a CG-based simulation could allow the user to observe how a Raleigh County doctor lectured one woman about the benefits of vitamins but handed her prescriptions for OxyContin, which resulted in her addiction to pain pills. For the more-interactive room-scale VR technology, a CG-based simulation of Hurley Pharmacy, one of West Virginia’s “pill mills,” could allow the user to see, and even dispense, the 157,400 hydrocodone tablets that a drug wholesaler shipped to the pharmacy in January 2008. If implemented with an avatar, such a room-scale CG-based immersive investigation could afford body ownership, in addition to presence, engagement, and emotion.

Case Study #4: 2017 Pulitzer Prize for Explanatory Reporting

This Pulitzer Prize was awarded to the International Consortium of Investigative Journalists, McClatchy and the Miami Herald for a series of stories exposing the hidden infrastructure and global scale of offshore tax havens. These stories were generated by a collaboration of more than 300 reporters on six continents, representative of what we identify in our framework as the preeminent journalism requirement of explanatory journalism—thoroughness in presenting complex subjects fairly and contextually. The stories are based on a “massive” leak of documents, which revealed offshore holdings of politicians and public officials around the world, and thus by their very nature were largely presented as written analyses of textual data. In several cases, multimedia presentations were used to help explain the complexity of offshore corporations and how they operate. The Miami Herald, for example, produced a graphic and short representational video titled “The Secret Shell Game” that helped illustrate how offshore companies work.