Peripersonal Space: An Index of Multisensory Body–Environment Interactions in Real, Virtual, and Mixed Realities

- 1 Laboratory of Cognitive Neuroscience, Center for Neuroprosthetics and Brain Mind Institute, École Polytechnique Fédérale de Lausanne (EPFL), Lausanne, Switzerland

- 2 Department of Clinical Neuroscience, Centre Hospitalier Universitaire Vaudois (CHUV), Lausanne, Switzerland

- 3 Vanderbilt Brain Institute, Vanderbilt University, Nashville, TN, United States

- 4 Department of Cognitive Science and Psychology, University of Trento, Trento, Italy

- 5 Department of Neurology, Université de Genève, Geneva, Switzerland

Human–environment interactions normally occur in the physical milieu, and thus by means of the body and within the space immediately adjacent to and surrounding the body, the peripersonal space (PPS). However, human interactions increasingly occur with or within virtual environments, and hence novel approaches and metrics must be developed to index human–environment interactions in virtual reality (VR). Here, we present a multisensory task that measures the spatial extent of human PPS in real, virtual, and augmented realities. We validated it in a mixed reality (MR) ecosystem in which the real environment and virtual objects are blended together in order to administer and control visual, auditory, and tactile stimuli in ecologically valid conditions. Within this MR environment, participants are asked to respond as fast as possible to tactile stimuli on their body, while task-irrelevant visual or audiovisual stimuli approach their body. Results demonstrate that, in analogy with observations derived from monkey electrophysiology and from studies in real environmental surroundings, tactile detection is enhanced when visual or auditory stimuli are close to the body, and not when they are far from it. We then calculate the location where this multisensory facilitation occurs as a proxy of the boundary of PPS. We observe that mapping PPS with audiovisual looming stimuli, as opposed to visual stimuli alone, yields sigmoidal fits (which capture the bifurcation between near and far space) with greater goodness of fit. In sum, our approach is able to capture the boundaries of PPS on a spatial continuum, at the individual-subject level, and within a fully controlled and previously laboratory-validated setup, while maintaining the richness and ecological validity of real-life events. The task can therefore be applied to study the properties of PPS in humans and to index the features governing human–environment interactions in virtual reality or MR. 
We propose PPS as an ecologically valid and neurophysiologically established metric in the study of the impact of VR and related technologies on society and individuals.

Introduction

The manner in which the brain integrates information from different senses in order to boost perception and guide actions is a major research topic in cognitive neuroscience (Calvert et al., 2004; Spence and Driver, 2004; Stein, 2012) and a topic of increasing interest in the design of virtual environments. Multisensory integration of bodily inputs, in particular, has recently been proposed as a key mechanism underlying the experience of oneself within a body that is perceived as one’s own (body ownership), that occupies a specific location in space (self-location), and from which the external world is perceived (first-person perspective), i.e., the different components of what has been called bodily self-consciousness (Blanke and Metzinger, 2009; Blanke, 2012; Blanke et al., 2015). Accordingly, the manipulation of bodily inputs has been used to induce the feeling that an artificial or virtual body is one’s own and to generate the sensation of being located within a virtual environment (Tsakiris, 2010; Blanke, 2012; Ehrsson, 2012; Serino et al., 2013; Noel et al., 2015b; Salomon et al., 2017). These findings thus highlight the particularly relevant role of bodily inputs for virtual reality (VR) (Herbelin et al., 2016). Multisensory integration of bodily relevant inputs naturally happens within a limited space immediately surrounding the body, where external stimuli can come into direct contact with the body, i.e., the peripersonal space (PPS; Figure 1; Rizzolatti et al., 1997; Ladavas, 2002; Graziano and Cooke, 2006). PPS has been suggested to index the self-space (Blanke et al., 2015; Noel et al., 2015b, 2017; Salomon et al., 2017) and to represent the space wherein the individual interacts with external stimuli. Evolutionarily, until very recently, all direct body–object interactions have been experienced within a physical PPS. 
However, as human interactions increasingly occur not only within the real world but also within virtual or mixed realities, it is of interest to study and characterize how PPS is represented in VR (see Iachini et al., 2016, for a recent delineation of interpersonal space in virtual and real environments). Here, we propose and demonstrate that it is possible to delineate and measure a representation of PPS within virtual and mixed reality (MR) environments.

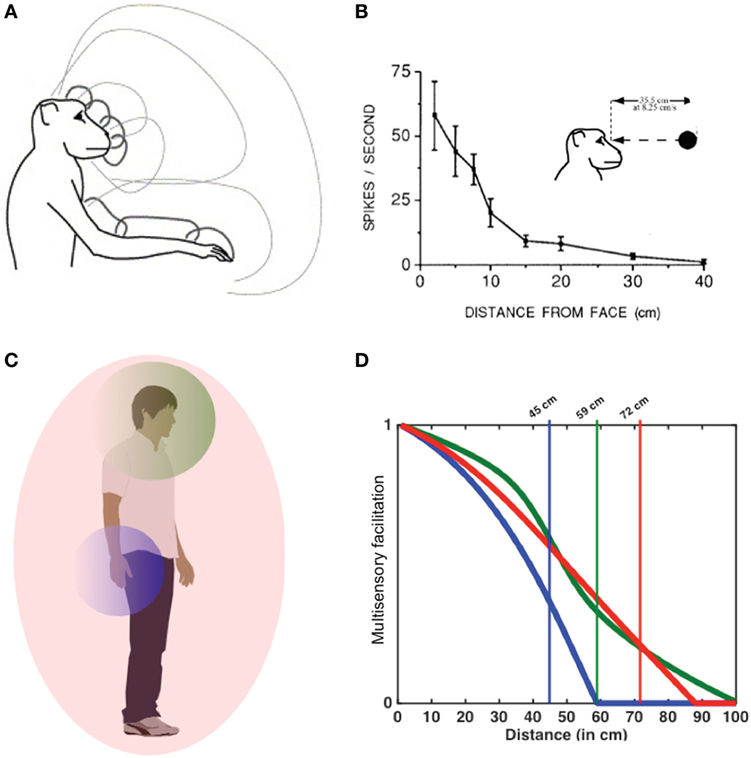

Figure 1. Peripersonal space (PPS) in monkeys and humans. In monkeys, PPS is represented by specific neuronal populations with tactile receptive fields on different parts of the animal’s body and visual and/or auditory receptive fields extending a few centimeters into the space around the same body part (A). As a result, PPS neurons respond to an external stimulus as a function of its distance from the animal’s body, depending on the extent of their multisensory receptive fields (B). In humans, an analogous multisensory system representing the PPS around different body parts has been described (C), so that visual and/or auditory stimuli interact more strongly with tactile processing the closer they are to the stimulated body part (D). The farthest distance evoking a significant multisensory interaction is considered a proxy of the boundary of PPS in humans.

Several lines of work in neurophysiology and neuroimaging have shown that PPS representation is implemented by specific neuronal populations, which selectively integrate tactile stimuli on the body with visual or auditory cues related to external objects as they approach the body (Ladavas and Serino, 2008; Macaluso and Maravita, 2010; Cléry et al., 2015, 2017). In this manner, the brain builds a representation of the spatial locations in the environment where contact between the body and objects may potentially occur (Cléry et al., 2015, 2017), a mechanism postulated to be fundamental for both defensive and approaching behaviors (Cléry et al., 2015; de Vignemont and Iannetti, 2015). In monkey neurophysiology, PPS has been studied by measuring the response properties of multisensory neurons, mainly located in the ventral premotor cortex (Rizzolatti et al., 1981; Graziano et al., 1997) and the posterior parietal cortex (Duhamel et al., 1998; Avillac et al., 2005). These neurons respond to tactile stimulation on a particular part of the animal’s body (most commonly the hand, face, or trunk), as well as to visual or auditory stimuli presented close to the same body part (see Figure 1). Importantly, these neurophysiological recordings suggest that neurons encoding PPS respond only when the exteroceptive stimulus is close to the body, and not when auditory or visual stimuli are presented far from it. Additionally, these neurons respond most strongly to moving, as opposed to static, stimuli (Fogassi et al., 1996). The extent of PPS is defined by the size of the multisensory receptive fields of this particular class of neurons.

Directly inspired by the monkey neurophysiology work, we have developed a psychophysical task to measure behaviorally, in humans, the extent of PPS around different parts of the body (Canzoneri et al., 2012; Teneggi et al., 2013; Serino et al., 2015a). This approach has been extensively used in neuroscience research to investigate different properties of human PPS (Canzoneri et al., 2013a; Bassolino et al., 2014; Taffou and Viaud-Delmon, 2014; Ferri et al., 2015a,b; Galli et al., 2015; Noel et al., 2015b; Serino et al., 2015a; Kandula et al., 2017; Pellencin et al., 2017; Salomon et al., 2017). In this task, participants are requested to respond as fast as possible to a tactile stimulus administered on a given body part, while a task-irrelevant auditory or visual stimulus, which they are instructed to ignore, is presented at different distances from the participant’s body as it approaches along the frontal plane. Taken together, the results of the experiments mentioned above demonstrate that tactile reaction times (RTs) speed up as sounds or visual stimuli are presented closer to the body. Further, and critically, the speed-up of tactile detection as a function of the distance of exteroceptive stimuli from the body is not linear, but sigmoidal. Thus, there is a veritable inflection point: when auditory or visual stimuli are presented within a given spatial range, tactile detection is facilitated, and this range can be identified. Since the main property of the PPS system is the integration of tactile processing with external stimuli occurring within the PPS (Maravita et al., 2003), the critical distance at which sounds or visual stimuli speed up tactile RTs is taken as a proxy of the extent of PPS. 
This measure has been reliably used to precisely study the extent of individuals’ PPS (Ferri et al., 2015a,b; Serino, 2016), its plastic and dynamic modification following different kinds of sensory manipulations (Canzoneri et al., 2013b; Ferri et al., 2015a,b; Noel et al., 2015a,b; Serino et al., 2015b; Patané et al., 2016), and its modification following interactions, such as social interactions (Teneggi et al., 2013; Iachini et al., 2014; Pellencin et al., 2017).

The PPS measurement task, originally developed to measure audiotactile interactions, has been adapted to a visuotactile version using 3D computer graphics and head-mounted displays (HMDs) in order to present dynamic visual stimuli (Herbelin et al., 2015; Serino et al., 2015a). Here, we describe the most recent evolution of the task, based on MR, in which the real environment and virtual objects are blended. This technology allows for the administration and control of visual, auditory, and tactile stimuli, while participants see their own body online, immersed in a highly realistic prerecorded panoramic capture of a real environment. MR provides us, and cognitive, social, and behavioral scientists generally, with the ability to empirically study the interface between the user’s body and the environment. This technology also leaves experimenters free to decide whether a virtual or a real environment and/or body is most desirable, or even whether some mixture of the real and the virtual is most appropriate.

In this document, after introducing the general setup of the PPS task in its visuotactile version, we present how the MR setup allows delineating the PPS of participants in a trimodal (audiovisuotactile) condition. That is, we ask whether the bifurcation between near and far space is better defined, in terms of goodness of fit, when additional exteroceptive input is administered. In this manner, we ask whether the representation of PPS may differentiate between the real environment (where all naturalistic sensory cues are present), mixed realities (where some naturalistic sensory cues may be present), and virtual environments (where the sensory periphery has no access to the real world). In the experiments reported below, the real body is always rendered within an environment composed of real contextual cues and virtual objects. In a first experiment (Experiment 1), we present visual looming stimuli, while in the second experiment (Experiment 2), we use audiovisual dynamic stimuli. In both cases, stimuli are combined with tactile stimulation. The results show that the PPS task is able to capture the boundaries of the multisensory PPS at the individual level, in a fully controlled and previously laboratory-validated setup, and, for the first time, while maintaining the richness and ecological validity of real-life situations. In addition, the results suggest that the trimodal version of the task, as opposed to the bimodal one, allows for the most reliable delineation of PPS (in terms of goodness of fit), further highlighting the need to employ ecologically valid and multisensory scenarios, be it in the real or a virtual environment.

Materials and Methods

Technological Components

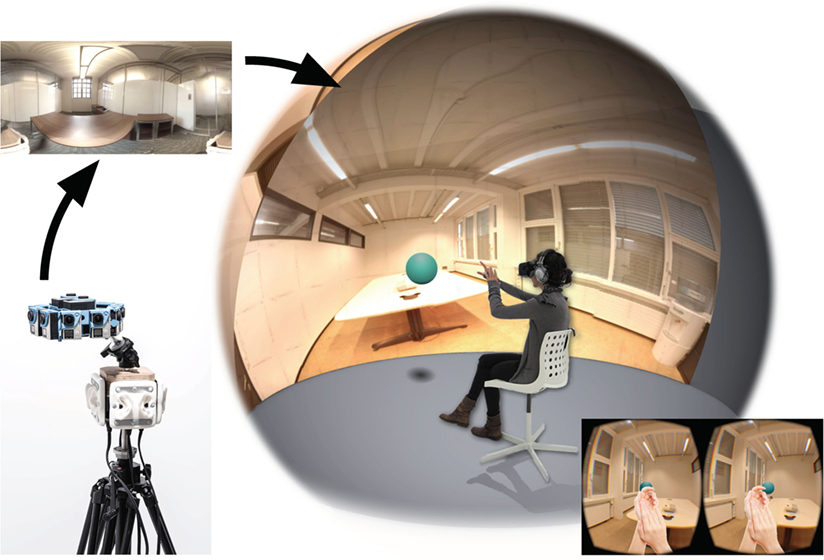

We developed a mixed-reality technology for simulating real and/or virtual environments in first-person perspective based on the omnidirectional capture and recording of visual and auditory stimuli. This approach involves two phases: capture and re-experiencing. For capturing the scene, several cameras and microphones are assembled to cover the entire sphere of perception around a viewpoint (360° horizontal and vertical stereoscopic vision, horizontal panoramic binaural audio). The panoramic video environment is captured using seven pairs of GoPro Hero4 cameras placed in a spherical rig (3D 360hero 3DH3PRO14H, 6.3 cm intercamera distance per pair) and stitched into two large panoramic videos. Four pairs of binaural microphones (3DIO Omni Binaural Microphone) are used to capture binaural audio in four directions. Our in-house MR software (RealiSM, http://lnco.epfl.ch/realism) then aggregates all data into a single high-resolution panoramic and stereoscopic audiovisual custom format (one panorama per eye, acoustic interpolation of binaural audio between directions). For the re-experiencing phase, VR devices such as an HMD (Oculus Rift DK2; 960 × 1,080 per eye at 75 Hz, ~105° FOV diagonal, ~85° FOV horizontal) and stereophonic noise-canceling headphones (BOSE QC15) are used to immerse subjects in the scene. Importantly, a head-mounted stereoscopic depth camera (Duo3D MLX, 752 × 480 at 56 Hz) fixed on the HMD captures the user’s body from a first-person perspective, and the stereoscopic image of the body is merged into the virtual scene in place of the real body (see Figure 2).

Figure 2. The RealiSM technology. Reality substitution combines the features of classical virtual reality with 360° video and audio capturing, thus offering extended capabilities: stereoscopic rendering, binaural panoramic audio, merging of virtual objects, and integration of first-person perspective stereoscopic video images of the body in the video environment. (Written and informed consent has been obtained from the depicted individual for the publication of their identifiable image.)

The resulting rendered scene closely resembles the recorded scene, and subjects experience seeing themselves (and not a 3D avatar) teleported there. However, only head rotations around the captured viewpoint are possible, so placing subjects in a sitting position is preferable. Any kind of virtual multimedia object can also be merged into the scene, allowing fully controlled presentation of sensory stimuli.
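The capture pipeline above mentions an "acoustic interpolation of binaural audio between directions" but does not specify the scheme. The sketch below is only an illustration, assuming (hypothetically) that the four binaural pairs face 0°, 90°, 180°, and 270° of yaw and that playback linearly crossfades between the two recordings bracketing the listener's current head yaw; RealiSM may well use a different method, and `crossfade_weights` and `MIC_YAWS` are names of our own.

```python
# Hypothetical capture directions (degrees of yaw) for the four binaural
# microphone pairs; the actual orientations used in RealiSM are not stated.
MIC_YAWS = [0.0, 90.0, 180.0, 270.0]


def crossfade_weights(head_yaw_deg, mic_yaws=MIC_YAWS):
    """Return one gain per capture direction for a given head yaw.

    Linear crossfade between the two capture directions that bracket the
    head orientation; all other directions get zero gain. Gains sum to 1.
    """
    yaw = head_yaw_deg % 360.0
    n = len(mic_yaws)
    weights = [0.0] * n
    for i in range(n):
        start = mic_yaws[i]
        end = mic_yaws[(i + 1) % n]
        span = (end - start) % 360.0    # angular gap to the next direction
        offset = (yaw - start) % 360.0  # how far past `start` the head points
        if offset < span:               # head yaw lies in [start, end)
            frac = offset / span
            weights[i] = 1.0 - frac
            weights[(i + 1) % n] = frac
            break
    return weights
```

Each output sample would then be the weighted sum of the four binaural tracks. A linear crossfade keeps the gains summing to 1; an equal-power law is a common alternative when the recordings are not strongly correlated.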

The control of the experimental flow, the synchronization between tactile, visual, and auditory stimuli, and the recording of responses were implemented in our custom software ExpyVR. This software provides a graphical user interface (Qt4) and scripting capabilities (Python 2.7) to drive all the equipment used in the PPS task. It is freely available online at http://lnco.epfl.ch/expyvr.

PPS Measurement Components: Tactile Stimuli

For all experiments presented here, tactile stimuli of 10 ms are delivered to the participant’s right cheek by means of a mechanical solenoid controlled via a stimulator (MSTC-3 tappers, M&E Solve).

PPS Measurement Components: Acoustic Stimuli

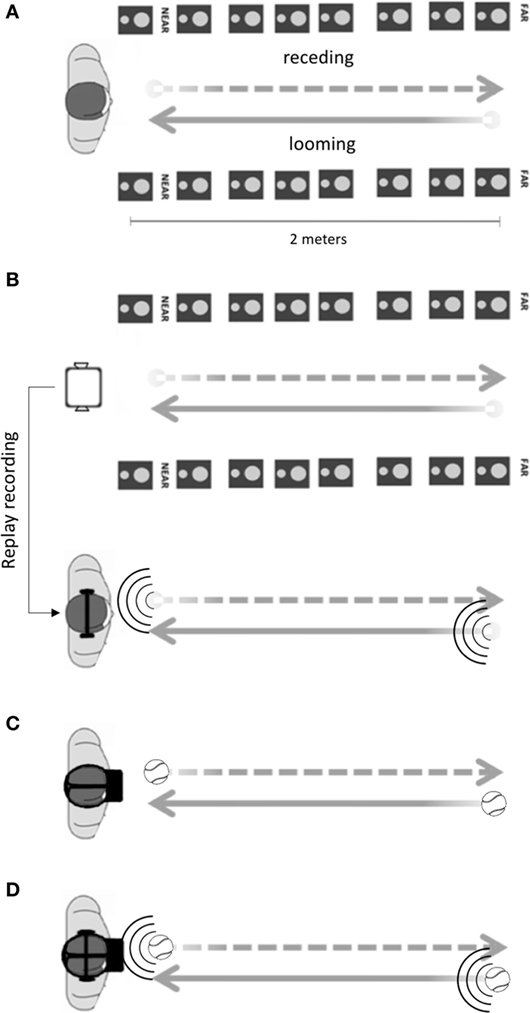

Prior work from our group has extensively used 2-speaker (Canzoneri et al., 2012) and 16-speaker (Galli et al., 2015; Noel et al., 2015b; Serino et al., 2015a) setups expressly developed for the measurement of PPS boundaries. The 16-loudspeaker setup is an audio rendering system composed of two uniform linear arrays of eight loudspeakers each (JBL Control 1 Pro WH Pair, M-Audio FastTrack Ultra 8R), which simulates a white noise sound source, perceived at the midpoint between the two loudspeaker rows, approaching from 2 m away up to the position of the participant (see Figure 3A). The dynamic nature, intensity, and origin of the sound are manipulated by software acoustic simulations, and the algorithm governing the placement of the virtual sound source has been detailed previously (Serino et al., 2015a). Here, in order to adapt the acoustic stimuli to a VR setup, we developed a headphone version of the task, whereby sounds generated via the abovementioned 16-speaker setup are recorded with binaural microphones (3Dio Omni Binaural) and replayed over stereo headphones (see Figure 3B). This version of the setup hence allows the measurement of PPS via an audiotactile paradigm without the need for the large array of speakers (see Figure 1 in Galli et al., 2015).

Figure 3. Peripersonal space (PPS) experimental setup. (A) In the audiotactile version of the task, auditory looming sounds were presented by placing participants between two arrays of eight speakers (2 m of longitudinal distance, and 50 cm between the participant’s midline and each array of speakers in the horizontal plane). The loudspeakers spatialized a broadband sound source moving at a constant speed. (B) In order to create a portable version of the audiotactile task, the RealiSM technology was used to prerecord the sounds from the location of an ideal participant by means of the 360° audio capturing system; those sound tracks were then played back to actual participants through stereo headphones. (C) In the visuotactile version of the task, looming virtual stimuli are presented through an HMD, superimposed on an online recording (or prerecorded video) of the external environment and of the participant’s body within the scene. (D) In the trimodal, audiovisuotactile version of the task, virtual visual (through the HMD) and auditory (through headphones) stimuli are presented simultaneously [combining (B,C)].
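The algorithm that places and moves the virtual sound source is detailed in Serino et al. (2015a) and is not reproduced here. As a rough, hypothetical illustration of one cue that makes an approaching source sound closer, the sketch below computes the level change of a point source under a free-field inverse-distance law (sound pressure proportional to 1/r), relative to the 2 m starting distance. The inverse-distance model and the function name `looming_gain_db` are our assumptions; the published algorithm may rely on additional or different cues.

```python
import math


def looming_gain_db(distance_cm, reference_cm=200.0):
    """Level change (dB) of a point source relative to its start distance.

    Assumes a free-field inverse-distance law (pressure ~ 1/r), i.e.,
    about +6 dB per halving of distance. Illustrative model only.
    """
    if distance_cm <= 0:
        raise ValueError("distance must be positive")
    return 20.0 * math.log10(reference_cm / distance_cm)
```

Under this assumption, a source approaching from 200 cm to 100 cm gains about 6 dB, and one reaching 20 cm gains 20 dB.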

PPS Measurement Components: Visual Stimuli

The PPS task can also be extended to the visual modality (Pellencin et al., 2017). In this case, a three-dimensional virtual tennis ball looming toward the participant’s face is used as the visual stimulus (Figure 3C). This ball travels 2 m in virtual space at a velocity of 75 cm/s until making fictive contact with the participant’s face. The virtual ball is superimposed on the recording of the environment, and the images are presented on the HMD.
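The ball's trajectory is fully specified by the two values given above (2 m starting distance, 75 cm/s constant velocity), so its distance from the face is linear in time. A minimal sketch with hypothetical function names:

```python
START_CM = 200.0       # starting distance of the virtual ball (from the text)
SPEED_CM_PER_S = 75.0  # constant approach velocity (from the text)


def ball_distance_cm(t_s):
    """Distance of the looming ball from the face t_s seconds after onset."""
    return max(START_CM - SPEED_CM_PER_S * t_s, 0.0)


def time_to_contact_s():
    """Time for the ball to cover the full 2 m at constant speed."""
    return START_CM / SPEED_CM_PER_S
```

At this speed, the ball needs 200/75 ≈ 2.67 s to cover the full 2 m; one second after onset it is 125 cm from the face.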

Participants

Here, we report two datasets, from 27 students (11 females, mean age 24 years) and 26 students (12 females, mean age 23 years) of the École Polytechnique Fédérale de Lausanne (EPFL), who participated in the visuotactile (Experiment 1) and the trimodal audiovisuotactile (Experiment 2) versions of the experiment, respectively. Subjects were right-handed, with normal or corrected-to-normal vision, normal hearing, and no history of neurological or psychiatric disease. All participants received monetary compensation for their time (20 CHF/h) and gave written informed consent to take part in this study, which was approved by the ethics committee of the Brain and Mind Institute of the EPFL.

Experimental Design

Seventy percent (70%) of trials are experimental multisensory trials (visuotactile in Experiment 1 and audiovisuotactile in Experiment 2) in which participants see a moving ball (and, in Experiment 2, also hear a sound) approaching them. At a given moment in time (hereafter T), they receive the tactile target stimulus. Participants are requested to respond to touch as rapidly as possible via button press. Because looming stimuli by definition start far away and come closer to the participant over time, the temporal delay of the tactile stimulus and the distance of the exteroceptive stimulus are negatively and linearly related: the longer the delay, the closer the stimulus. That is, D1 and D2 correspond, respectively, to the last and penultimate temporal delays, and so forth.
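Because distance decreases linearly with the tactile delay, the distance labels simply run in the reverse order of the delays. The sketch below makes this mapping explicit, assuming the visual kinematics described above (200 cm start, 75 cm/s). The function name is hypothetical, and the delay values in the usage note are illustrative only, since the actual delays are not listed here.

```python
def label_delays(delays_s, start_cm=200.0, speed_cm_per_s=75.0):
    """Map tactile delays (s from stimulus onset) to distance labels.

    Longer delays mean a closer stimulus, so the last delay is D1 (nearest),
    the penultimate D2, and so on. Returns {label: (delay_s, distance_cm)}.
    """
    ordered = sorted(delays_s, reverse=True)  # longest delay first = nearest
    labels = {}
    for rank, delay in enumerate(ordered, start=1):
        distance = max(start_cm - speed_cm_per_s * delay, 0.0)
        labels[f"D{rank}"] = (delay, distance)
    return labels
```

For example, `label_delays([0.4, 0.8, 1.2, 1.6, 2.0, 2.4])` maps the longest delay (2.4 s) to D1 at 20 cm and the shortest (0.4 s) to D6 at 170 cm.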

Ten percent (10%) of trials are unimodal visual trials in which only the virtual ball is presented and no tactile stimulus is given. Given the task instructions, these are catch trials, and participants are to withhold responding. Catch trials are important to avoid entrainment of an automatic motor response and to ensure that participants are attentive to the task. They also allow measuring false positives and reducing temporal expectancy effects (Kandula et al., 2017).

Because the aim of the task is to identify the farthest distance from the body (D) at which visual stimuli significantly speed up tactile processing, that is, where visuotactile RTs become significantly faster than responses to tactile stimulation alone, the task also includes 20% unimodal tactile trials in which a vibrotactile target stimulus is delivered in the absence of visual stimulation. Unimodal tactile trials are considered baseline trials and are used to demonstrate a multisensory facilitation effect, i.e., faster tactile RTs when visual or audiovisual stimuli are presented within the PPS than when tactile stimuli are presented alone. Note that we refer to the PPS effect (namely, the facilitation of tactile RTs by exteroceptive stimuli presented near the body) as a multisensory facilitation effect, and not as an index of multisensory integration, as neither statistical summation nor sensory binding is indexed here.

Procedure

Upon arrival at the laboratory, a tactile stimulator is placed on the participant’s face (right cheek). Subjects are informed that they will feel a tactile vibration and that their task is to respond as accurately and rapidly as possible to this tactile stimulation. Participants are also informed that task-irrelevant visual (Experiment 1) or audiovisual (Experiment 2) stimuli will approach them. Finally, participants are informed that in some trials (catch trials) only visual stimuli will be presented, without tactile stimulation, and that in other trials (baseline trials) only a tactile vibration will be administered (see above for the breakdown of trials).

On each trial, the tactile target stimulus is delivered at a different delay from trial onset; thus, in the multisensory trials, the tactile stimulus is processed when the visual (or audiovisual) stimulus is perceived at different distances from the participant (see Serino et al., 2015a, Figure 1, for evidence that, in this context, approaching auditory stimuli are localized as closer the longer they have loomed). In Experiment 1, the visual stimulus approached the participant’s face at a constant speed of 75 cm/s and was presented for 2,600 ms. After the ball stopped moving, it remained on screen for 400 ms, followed by 500 ms without stimulation. A fixation cross was presented for 1,200 ms between trials, and the ball began its approach toward the participant’s face 300 ms after the offset of the fixation cross. Each trial lasted 5,000 ms. In this experiment, six different temporal delays were used for the unimodal and bimodal conditions. In total, Experiment 1 consisted of 300 trials (36 trials per delay for the multimodal condition, randomly intermingled with 8 unimodal tactile trials per delay and 36 unimodal visual trials). Trials were equally divided into four blocks of 75 trials, each lasting approximately 7 min.
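The Experiment 1 trial counts and timing described above can be checked with a small trial-list builder. This is a sketch under stated assumptions, not ExpyVR code: delays are coded as indices 1 to 6, trials are shuffled once across the whole session (the text says only that they were randomly intermingled), and the shuffled list is cut into four consecutive blocks of 75. All names are hypothetical.

```python
import random

N_DELAYS = 6
VT_PER_DELAY = 36      # visuotactile trials per delay
TACTILE_PER_DELAY = 8  # unimodal tactile (baseline) trials per delay
N_CATCH = 36           # unimodal visual (catch) trials
N_BLOCKS = 4

# Per-trial timeline in ms, as described in the text.
TIMELINE_MS = {"fixation": 1200, "pre_onset": 300, "ball_motion": 2600,
               "ball_static": 400, "blank": 500}


def build_trials(seed=None):
    """Return a shuffled Experiment 1 trial list split into equal blocks."""
    trials = []
    for delay in range(1, N_DELAYS + 1):
        trials += [("visuotactile", delay)] * VT_PER_DELAY
        trials += [("tactile", delay)] * TACTILE_PER_DELAY
    trials += [("catch", None)] * N_CATCH
    random.Random(seed).shuffle(trials)  # single global shuffle (assumption)
    size = len(trials) // N_BLOCKS
    return [trials[i * size:(i + 1) * size] for i in range(N_BLOCKS)]
```

With these counts, the session contains 216 visuotactile (72%), 48 tactile-only (16%), and 36 catch trials (12%), close to the nominal 70/20/10 split, and the five timeline segments add up to the stated 5,000 ms per trial.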

In the trimodal version of the task (Experiment 2), the visual stimulation was the same as in Experiment 1, but in addition, dynamic sounds moving at the same velocity and in the same direction as the virtual ball were presented simultaneously. The prerecorded binaural sounds were administered during the experiment via noise-canceling headphones (see PPS Measurement Components: Acoustic Stimuli). Five different temporal delays were used for the unimodal and multisensory conditions in Experiment 2, which consisted of a total of 540 trials (12 trials per delay for each trimodal condition, 12 trials per delay for each unimodal condition, and 60 catch trials), divided into four blocks of 135 trials, each lasting about 12 min.

We measure RTs to tactile stimuli at each temporal interval, i.e., each distance, and search for the critical distance at which the dynamic multisensory stimulus significantly speeds up RT to tactile stimuli, as compared to the unimodal tactile baseline condition. This distance indicates the spatial location where an external stimulus in space significantly interacts with tactile processing on the body and is taken as a proxy of individuals’ PPS boundaries.

Analysis

The analysis procedure is identical for Experiments 1 and 2. Preliminary analyses are conducted on the unimodal auditory/visual catch trials to test accuracy in the tactile task. Given the settings of the tactile target stimulation, participants are very accurate in the task; performance is therefore analyzed in terms of RT only.

We first test whether the modulation of tactile RT depends on the distance of the auditory or visual stimuli. To this aim, we compare RTs in the multisensory condition with those in the unimodal baseline condition at the different temporal delays by running a repeated-measures ANOVA on RT with sensory condition (unimodal and bimodal) and distance as factors. We look for a significant interaction and then check that a significant effect of distance is present in the bimodal condition and not (or much less so) in the unimodal condition. In this way, baseline trials are used to control for spurious RT modulations due to an expectancy effect (i.e., the fact that if a trial started some time ago and no tactile vibration has yet been given, it becomes increasingly likely that the tactile stimulus is imminent).
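For a fully within-subject 2 (condition) × K (distance) design, the interaction F tested above can be computed directly from per-participant cell means, using the condition × distance × subject interaction as the error term. The sketch below is a hypothetical NumPy helper; it applies no sphericity correction, and for real analyses an established implementation (for example, `AnovaRM` in statsmodels) is preferable.

```python
import numpy as np


def rm_interaction_f(rt):
    """Condition x distance interaction for a fully within-subject design.

    rt: array of shape (n_subjects, n_conditions, n_distances) holding each
    participant's mean RT per cell. Returns (F, df_effect, df_error).
    """
    rt = np.asarray(rt, dtype=float)
    n, j, k = rt.shape
    grand = rt.mean()
    cell = rt.mean(axis=0)          # (j, k) condition x distance means
    cond = rt.mean(axis=(0, 2))     # (j,) condition means
    dist = rt.mean(axis=(0, 1))     # (k,) distance means
    subj = rt.mean(axis=(1, 2))     # (n,) subject means
    subj_cond = rt.mean(axis=2)     # (n, j) subject x condition means
    subj_dist = rt.mean(axis=1)     # (n, k) subject x distance means

    # Condition x distance interaction effects and their sum of squares.
    inter = cell - cond[:, None] - dist[None, :] + grand
    ss_effect = n * np.sum(inter ** 2)

    # Error term: the condition x distance x subject interaction residuals.
    resid = (rt - cell[None] - subj_cond[:, :, None] - subj_dist[:, None, :]
             + cond[None, :, None] + dist[None, None, :]
             + subj[:, None, None] - grand)
    ss_error = np.sum(resid ** 2)

    df_effect = (j - 1) * (k - 1)
    df_error = df_effect * (n - 1)
    f = (ss_effect / df_effect) / (ss_error / df_error)
    return f, df_effect, df_error
```

For 27 participants and six distances the degrees of freedom come out as (5, 130), and for 26 participants and five distances as (4, 100), matching the F statistics reported in the Results.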

At this point, in order to identify the location of the external visual or audiovisual stimulus in space that leads to a significant modulation of tactile processing, RTs in the multisensory conditions are corrected for baseline performance on an individual basis. That is, for each participant, we identify the unimodal tactile condition resulting in the fastest RT. We calculate the mean raw RT for that condition, and this value is subtracted from the mean raw RT to the tactile stimulus in each audiotactile or visuotactile condition. In this way, we adopt the most conservative criterion for demonstrating a facilitation effect of visual or audiovisual presentation on tactile RT. Negative deviations from the baseline (which is now zero by definition) indicate a multisensory facilitation effect (visuotactile or audiovisuotactile RTs faster than the fastest unimodal response). To identify the boundaries of PPS representations, we search for the farthest point in space where visual or audiovisual stimuli induce a significant facilitation effect as compared to baseline (i.e., the fastest unimodal tactile condition).
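The baseline correction above amounts to one subtraction per participant. A minimal NumPy sketch, with a hypothetical function name and RTs in milliseconds:

```python
import numpy as np


def baseline_correct(multi_rt, tactile_rt):
    """Subtract each participant's fastest unimodal tactile mean RT.

    multi_rt, tactile_rt: arrays of shape (n_subjects, n_distances) with
    mean raw RTs per condition. Negative values in the result indicate
    multisensory facilitation relative to the most conservative baseline.
    """
    multi_rt = np.asarray(multi_rt, dtype=float)
    fastest = np.asarray(tactile_rt, dtype=float).min(axis=1, keepdims=True)
    return multi_rt - fastest
```

For a participant whose unimodal tactile means are 430, 440, and 450 ms, the baseline is 430 ms, so multisensory means of 400, 420, and 460 ms become -30, -10, and +30 ms; only the first two indicate facilitation.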

Finally, in order to extract single parameters that estimate the PPS boundary at the individual level, we also fit the data with a sigmoidal function (Eq. 1),

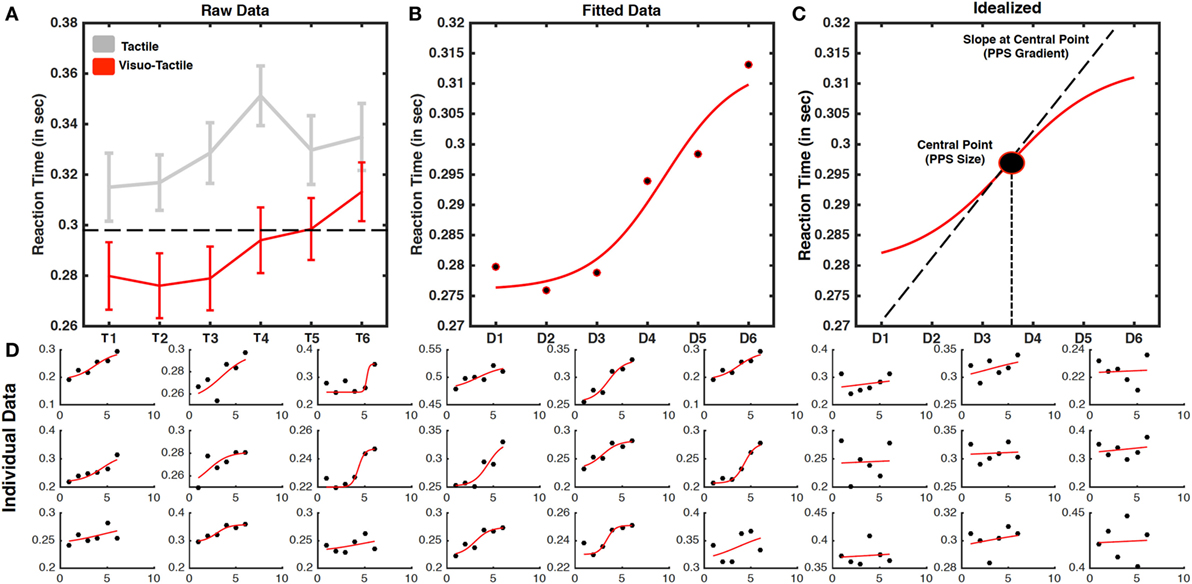
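Eq. 1 appears above only as an image. Assuming it is the standard four-parameter logistic, which reproduces every property listed in the next paragraph (saturation at ymin and ymax, y = (ymin + ymax)/2 at x = xc, and a slope at the central point that becomes shallower as b grows), it reads:

```latex
y(x) = \frac{y_{\min} + y_{\max}\, e^{(x - x_c)/b}}{1 + e^{(x - x_c)/b}},
\qquad
\left.\frac{dy}{dx}\right|_{x = x_c} = \frac{y_{\max} - y_{\min}}{4b}
```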

where x represents the independent variable (i.e., the distance of the sound or ball), y is the dependent variable (i.e., the RT), ymin and ymax represent the lower and upper saturation levels of the sigmoid, xc is the value of the abscissa at the central point of the sigmoid [i.e., the value of x at which y = (ymin + ymax)/2], and b sets the slope of the sigmoid at the central point. The ideal sigmoidal function fitting RT in the multimodal condition is reported in Figure 4 (top right panel). Two parameters are free to vary and are thus estimated: the central position of the sigmoid and the slope of the sigmoid at the central point. The root-mean-square error and the coefficient of determination (R²) are also extracted from the fitting procedure as goodness-of-fit measures. Each of these parameters gives specific information about the spatial modulation of multisensory interaction at the individual level. The R² is used to evaluate the goodness of fit of the function, i.e., how well the spatially dependent modulation of RT is described by a sigmoidal function. We have shown that a sigmoidal model explains RT modulation in the bimodal condition better than a linear model (Canzoneri et al., 2012). However, there are individual differences in the goodness of fit of the model. For individual data with R² < 0.50, no other parameters are considered, since a sigmoidal model does not adequately fit the data. For R² ≥ 0.50, the central point of the sigmoidal function indicates the middle of the spatial range where the pattern of RT changes from slow to fast, typically corresponding to far and near sound or ball locations, respectively. Thus, the function’s central point can be considered a single-value proxy of the location where the multisensory facilitation effect occurs, and therefore of the PPS boundary. Finally, the slope of the function reflects how quickly the transition between slow and fast RT occurs. 
Thus, it can be considered a measure of how well defined the PPS boundary is (Noel et al., 2016). Note that, according to the formula above, the larger the parameter b, the shallower the slope, and vice versa.
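The fitting step can be sketched as follows, assuming Eq. 1 is the logistic y = (ymin + ymax·e^((x − xc)/b)) / (1 + e^((x − xc)/b)), written here in the algebraically equivalent form ymin + (ymax − ymin) / (1 + e^(−(x − xc)/b)). The text states that only xc and b are free; how ymin and ymax are fixed is not stated, so this sketch assumes (hypothetically) that they are set to the participant's lowest and highest mean RT. It also swaps in an exhaustive grid search over (xc, b), with grid ranges of our choosing, for whatever least-squares routine was originally used, and takes x as the distance index 1 to n. The function name is hypothetical.

```python
import numpy as np


def fit_pps_sigmoid(x, y, r2_threshold=0.50):
    """Fit a two-free-parameter logistic to one participant's mean RTs.

    x: distance index per condition (e.g., 1..n, 1 = nearest).
    y: mean RT (ms) per condition.
    Returns xc, b, R^2, RMSE, and whether R^2 passes the acceptance criterion.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Assumption: saturation levels fixed to the observed extremes.
    y_min, y_max = y.min(), y.max()

    # Exhaustive grid over the two free parameters (hypothetical ranges).
    xc_grid = np.linspace(x.min(), x.max(), 501)
    b_grid = np.linspace(0.05, 3.0, 296)
    xc, b = np.meshgrid(xc_grid, b_grid, indexing="ij")

    # Predictions for every (xc, b) pair at every x: shape (501, 296, len(x)).
    z = (x[None, None, :] - xc[..., None]) / b[..., None]
    pred = y_min + (y_max - y_min) / (1.0 + np.exp(-z))
    sse = np.sum((pred - y) ** 2, axis=-1)

    # Best grid point and its goodness-of-fit measures.
    i, j = np.unravel_index(np.argmin(sse), sse.shape)
    ss_tot = np.sum((y - y.mean()) ** 2)
    r2 = 1.0 - sse[i, j] / ss_tot if ss_tot > 0 else 0.0
    rmse = float(np.sqrt(sse[i, j] / y.size))
    return {"xc": float(xc[i, j]), "b": float(b[i, j]), "r2": float(r2),
            "rmse": rmse, "accepted": bool(r2 >= r2_threshold)}
```

A participant whose R² falls below 0.50 is flagged with `accepted=False`, in which case, following the criterion above, xc and b are not interpreted. A grid search is slower than a gradient-based fit but always returns the best point within the searched ranges rather than a local minimum.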

Figure 4. Representative results from a visuotactile peripersonal space (PPS) task. (A) Averaged reaction times (RTs) (error bars represent SEM) to tactile stimulation as a function of temporal delay for unimodal tactile (gray) and visuotactile trials (red). Visuotactile stimuli induced a stronger modulation of tactile RT than unimodal tactile stimuli as a function of temporal delay, that is, of the position of the virtual ball in space at the time of tactile stimulation. The PPS boundary is identified as the distance at which the visual stimulus induced significantly faster RTs than the fastest unimodal tactile RT (indicated by the dashed line). (B) Sigmoidal fit of averaged raw RTs in the visuotactile condition. (C) Ideal PPS curve from sigmoidal fitting: the central point of the curve is a single-value proxy of the transition between slow and fast RTs, i.e., between PPS and extrapersonal space, and the slope of the function at the central point indicates how sharp this transition is. (D) RTs and fits for individual subjects (ordered by goodness of fit, based on individual R²).

Results

Experiment 1—Visuotactile PPS

Mean RTs to tactile stimuli were calculated for each temporal delay (from T1 to T6) and submitted to a 2 (Condition: Visuotactile, Tactile) × 6 (Distance of the ball) repeated-measures ANOVA. As illustrated in Figure 4A, the interaction was significant [F(5,130) = 6.796; p < 0.001], showing that tactile responses were more strongly modulated as a function of temporal delay in the visuotactile than in the unimodal tactile condition. The ANOVA run on visuotactile trials showed that RTs became progressively faster as the ball distance decreased. To identify the location in space where the virtual ball made visuotactile RTs significantly faster than unimodal responses, we first identified, for each participant, the unimodal tactile condition resulting in the fastest RT. We compared this value with the mean RT at each distance in the visuotactile condition by means of one-sample t-tests, Bonferroni-corrected for multiple comparisons (six comparisons). RTs in the visuotactile condition were faster than the fastest unimodal RT when tactile stimulation was associated with a virtual ball at D1, D2, and D3 (all p-values < 0.001), and not when the ball was at farther distances, i.e., D4, D5, and D6. Thus, the PPS boundary was located between D3 and D4.
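The boundary rule used here (the farthest distance whose multisensory RT is significantly faster than the fastest unimodal RT after Bonferroni correction) can be written as a small helper. The sketch below is hypothetical: it takes the uncorrected p-values of the one-sample t-tests and the corresponding mean differences (multisensory minus fastest unimodal, so negative means facilitation), ordered from D1 (nearest) to Dn (farthest).

```python
def pps_boundary_index(p_values, mean_diffs, alpha=0.05):
    """Return k such that the PPS boundary lies between Dk and Dk+1.

    A distance counts as facilitated when its Bonferroni-corrected test is
    significant and the mean difference is negative (faster than baseline).
    Returns 0 if no distance shows facilitation.
    """
    if len(p_values) != len(mean_diffs):
        raise ValueError("p_values and mean_diffs must have equal length")
    threshold = alpha / len(p_values)  # Bonferroni across all distances
    farthest = 0
    for index, (p, diff) in enumerate(zip(p_values, mean_diffs), start=1):
        if p < threshold and diff < 0:
            farthest = index  # keep the farthest facilitated distance
    return farthest
```

With the Experiment 1 pattern (D1 to D3 significant, D4 to D6 not), the helper returns 3, i.e., a boundary between D3 and D4.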

To represent this differential modulation of tactile processing at the individual-subject level, we fit the relationship between tactile RTs and the timing of tactile stimuli with the sigmoidal function described above (Eq. 1). The fit to the averaged data is shown in Figure 4B (see Figure 4C for an idealized case). Importantly, the sigmoidal function captured the distance-dependent modulation of tactile responses at the individual level. R2 was higher than 0.10 in 20 of 27 participants and higher than 0.50 in 16 participants, with a mean R2 of 0.83 (individual fits are shown in Figure 4D). From these data, we estimated the average central point of the sigmoidal function at a distance of 123 cm (3.71/6.00 × 200 cm).
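Eq. 1 is not reproduced in this section. The sketch below assumes a standard four-parameter sigmoid, RT(x) = (y_min + y_max·e^((x − x_c)/b)) / (1 + e^((x − x_c)/b)), where x_c is the central point and b is the slope. The paper's Eq. 1 may be parameterized differently.

The sketch does not use a general nonlinear optimizer (e.g., `scipy.optimize.curve_fit`). Instead, it runs a plain-Python grid search over x_c and b. For each candidate pair it solves for y_min and y_max in closed form, which works because the model is linear in those two parameters once x_c and b are fixed. The function names, the grid, and the synthetic RTs are hypothetical.

```python
import math


def logistic_rt(x, xc, b, y_min, y_max):
    """Assumed sigmoid: tends to y_min at near distances and y_max at far ones.

    Algebraically equal to (y_min + y_max*exp((x-xc)/b)) / (1 + exp((x-xc)/b)).
    """
    s = 1.0 / (1.0 + math.exp(-(x - xc) / b))
    return y_min * (1.0 - s) + y_max * s


def fit_sigmoid(xs, ys, b_values, xc_step=0.01):
    """Grid search over (xc, b); y_min and y_max solved by linear least squares."""
    n = len(xs)
    mean_y = sum(ys) / n
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    best = None
    n_xc = int(round((max(xs) - min(xs)) / xc_step))
    for i in range(n_xc + 1):
        xc = min(xs) + i * xc_step  # central point restricted to the tested range
        for b in b_values:
            s = [1.0 / (1.0 + math.exp(-(x - xc) / b)) for x in xs]
            u = [1.0 - si for si in s]
            # 2x2 normal equations for the weights on u (y_min) and s (y_max)
            suu = sum(a * a for a in u)
            sss = sum(a * a for a in s)
            sus = sum(a * c for a, c in zip(u, s))
            suy = sum(a * y for a, y in zip(u, ys))
            ssy = sum(a * y for a, y in zip(s, ys))
            det = suu * sss - sus * sus
            if abs(det) < 1e-12:
                continue  # y_min and y_max not identifiable for this (xc, b)
            y_min = (suy * sss - ssy * sus) / det
            y_max = (ssy * suu - suy * sus) / det
            ss_res = sum((y - logistic_rt(x, xc, b, y_min, y_max)) ** 2
                         for x, y in zip(xs, ys))
            if best is None or ss_res < best["ss_res"]:
                best = {"xc": xc, "b": b, "y_min": y_min, "y_max": y_max, "ss_res": ss_res}
    # Goodness of fit, as used to rank and screen individual participants
    best["r2"] = 1.0 - best["ss_res"] / ss_tot if ss_tot > 0 else float("nan")
    return best


# Hypothetical usage on noise-free synthetic RTs (ms), distance index 1 = nearest.
b_grid = [0.1 + 0.05 * k for k in range(59)]  # 0.10 .. 3.00
xs = [1, 2, 3, 4, 5, 6]
ys = [logistic_rt(x, 3.7, 0.5, 400.0, 460.0) for x in xs]
fit = fit_sigmoid(xs, ys, b_grid)
print(round(fit["xc"], 2), round(fit["r2"], 3))  # 3.7 1.0
```

The grid contains the generating parameters, so the fit recovers a central point of about 3.7 and an R2 close to 1. With real, noisy RTs, a finer grid or a nonlinear optimizer seeded from the grid optimum would usually be preferable.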

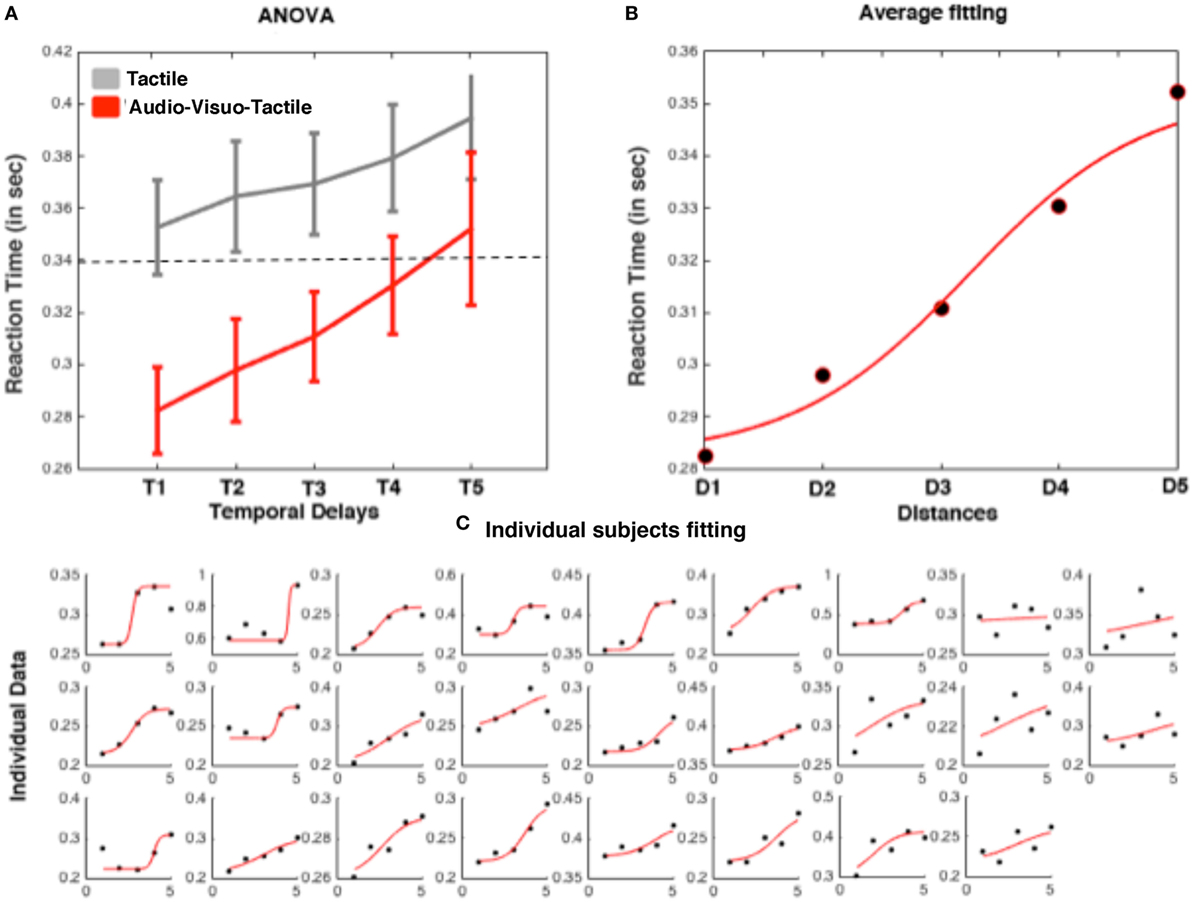

Experiment 2—Audiovisuotactile PPS Task

Mean RTs to tactile stimuli were calculated for each temporal delay (from D1 to D5) and submitted to a 2 (Condition: Audiovisuotactile, Tactile) × 5 (Distance of the ball/sound) repeated-measures ANOVA. The interaction was significant [F(4,100) = 2.71; p = 0.037]. Tactile responses were therefore more strongly modulated by temporal delay in the audiovisuotactile condition than in the unimodal tactile condition. The ANOVA run on audiovisuotactile trials showed that RTs became progressively faster as ball/sound distance decreased (see Figure 5A). To identify the location in space at which the virtual ball (and sound) made audiovisuotactile RTs significantly faster than unimodal responses, we used the same procedure as in Experiment 1. For each participant, the fastest unimodal tactile RT was compared with the mean RT at each distance in the audiovisuotactile condition using one-sample t-tests, Bonferroni-corrected for multiple comparisons (five comparisons). Audiovisuotactile RTs were faster than the fastest unimodal RT when tactile stimulation was associated with a virtual ball at D1, D2, and D3 (all p-values < 0.01). They were not faster when the ball was at farther distances, i.e., D4 and D5. Thus, the PPS boundary was located between D3 and D4.

Figure 5. Representative results from a trimodal audiovisuotactile peripersonal space (PPS) task. (A) Averaged reaction times (RTs) to tactile stimulation as a function of temporal delay, for unimodal tactile trials (gray) and audiovisuotactile trials (red). Error bars represent SEM. (B) Sigmoidal fit of averaged raw RT in the trimodal condition. (C) RTs and fits for individual subjects, ordered by goodness of fit.

As in Experiment 1, we fit the relationship between tactile RTs and the timing of tactile stimuli with the sigmoidal function described above (Eq. 1), in order to represent the differential modulation of tactile processing at the individual-subject level. The fit to the averaged data is shown in Figure 5B. Importantly, the sigmoidal function captured the distance-dependent modulation of tactile responses at the individual level. R2 was higher than 0.10 in 24 of 26 participants and higher than 0.50 in 18 participants, whose mean R2 was 0.97 (individual fits are shown in Figure 5C). From these data, we estimated the average central point of the sigmoidal function at a distance of 105 cm (2.63/5.00 × 200 cm).
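The conversion of the central point into centimeters follows the arithmetic given in the text: central point / number of delays × 200 cm. Below is a minimal sketch, assuming that this linear mapping holds exactly. The helper name is hypothetical. Note that 3.71/6.00 × 200 evaluates to about 123.7 cm, which the Experiment 1 results report as 123 cm.

```python
def central_point_to_cm(xc, n_steps, max_distance_cm=200.0):
    """Convert a sigmoid central point on the 1..n_steps delay scale to cm.

    Assumption: the linear mapping xc / n_steps * 200 cm, mirroring the
    arithmetic reported in the text, not a documented calibration.
    """
    return xc / n_steps * max_distance_cm


print(round(central_point_to_cm(3.71, 6), 1))  # Experiment 1: 123.7
print(round(central_point_to_cm(2.63, 5), 1))  # Experiment 2: 105.2
```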

Contrast between Visuotactile and Audiovisuotactile Delineations of PPS

A qualitative comparison of Experiments 1 and 2 suggests that the extent of PPS is similar whether it is measured with a visuotactile or an audiovisuotactile paradigm. The PPS boundary was estimated at 123 cm in Experiment 1 and at 105 cm in Experiment 2, and these two average estimates did not differ significantly (independent-samples t-test, p = 0.21). Of note, however, the trimodal paradigm appears to capture PPS more readily than the bimodal one, in terms of goodness of fit. In Experiment 1, the sigmoidal function fit the data of 74% of subjects with R2 > 0.10, whereas 92% of subjects in Experiment 2 met this threshold. Similarly, 59% of subjects in Experiment 1 were fit with R2 > 0.50, a proportion that increased to 69% in Experiment 2. Finally, the average R2 (after rejecting participants with R2 < 0.60) was 0.83 (SEM = 0.05) in Experiment 1, significantly lower than the 0.97 (SEM = 0.05) in Experiment 2 [unpaired t-test t(51) = 2.19, p = 0.032].
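The percentages above follow directly from the participant counts reported for each experiment: 20 and 16 of 27 participants in Experiment 1, and 24 and 18 of 26 in Experiment 2. The small sketch below reproduces them. The `share_above` helper shows how such counts could be obtained from a list of per-subject R2 values. Both helper names are hypothetical.

```python
def percent_at_least(count, n_total):
    """Share of participants (in %) meeting a criterion, rounded to an integer."""
    return round(100 * count / n_total)


def share_above(r2_values, threshold):
    """Count per-subject R^2 values strictly above a threshold (hypothetical helper)."""
    return sum(1 for r2 in r2_values if r2 > threshold)


# Counts reported in the Results: (n with R2 > 0.10, n with R2 > 0.50, N)
counts = {"Experiment 1": (20, 16, 27), "Experiment 2": (24, 18, 26)}
for name, (n010, n050, n) in counts.items():
    print(name, percent_at_least(n010, n), percent_at_least(n050, n))
# Experiment 1 74 59
# Experiment 2 92 69
```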

Discussion

We present how the boundaries of PPS can be measured, in terms of spatially dependent modulation of multisensory responses, with a simple behavioral task performed while participants are immersed in an MR environment. In this context, the PPS boundary is identified as the location in space where tactile processing is significantly boosted by the presentation of an external event, signaled by visual or audiovisual stimulation. Further, we show that approaching audiovisual stimuli delineate PPS more robustly (in terms of goodness of fit) than visual stimuli alone. This latter finding suggests a graded relationship between the faithfulness or completeness of the exteroceptive sensory representation and the delineation of PPS. That is, near and far space are most clearly separated when sensory information about the external environment is richer.

Our results indicate that the extent of multisensory PPS assessed behaviorally in MR is comparable with the extent of multisensory receptive fields observed in neurophysiological studies. For example, the spatial modulations of tactile responses in Figures 4 and 5 are similar to the spatial modulation of PPS neurons shown in Figure 1B. Furthermore, individual PPS is most robustly measured with a multimodal approach such as the MR technology presented here. Our technology merges pre-recorded scenes with real-time input and computer graphics. It can therefore present multimodal stimuli while participants are immersed in a surrounding visual and acoustic environment. Importantly, participants also see their own body acting within the same environment. The setup combines ecological richness with full control of the experimental apparatus. On the one hand, this lets us run the PPS task with the scientific rigor of previous laboratory setups. On the other hand, it lets us present rich, ecologically valid scenarios close to real-life events. This is an essential added value for cognitive science research, since PPS is a multisensory-motor representation of the body in interaction with its environment (Serino et al., 2015a). This approach opens new perspectives for studying the cognitive foundations of human behavior in real-life contexts, while the subject is interacting with the environment and, perhaps even more interestingly, with other people. Indeed, recent studies have shown that the nature of one’s interaction with another agent (Teneggi et al., 2013), or even one’s social perception of the other (Pellencin et al., 2017), shapes one’s multisensory PPS.

The proposed analyses are based on standard analysis of variance and, perhaps most importantly, on function fitting. They allow the PPS boundary to be measured accurately at the group level. When audiovisual stimuli approached participants, they also allowed it to be measured at the individual level for the majority of participants. This sigmoidal fitting approach, and the observation that fits are robust under audiovisual conditions, have important implications for the study of individual differences in PPS representation at the neural (Ferri et al., 2015a,b), physiological (Sambo and Iannetti, 2013), and behavioral (Taffou and Viaud-Delmon, 2014) levels. Indeed, an array of recent observations indicates that PPS is heavily influenced not only by external or environmental conditions but also by personality traits such as anxiety (Sambo and Iannetti, 2013) or claustrophobia (Lourenco et al., 2011). Similarly, theoretical proposals suggest that the representation of PPS may play an under-appreciated role in psychopathology (Candini et al., 2017; Noel et al., 2017). Future studies of individual differences in PPS may therefore be best served by combining function fitting with immersion in a realistic environment and with multiple cues about the external environment.

A sigmoidal fit separating PPS from extrapersonal space was most robust (i.e., it fit the raw data appropriately in a greater percentage of participants) when audiovisual, rather than visual-only, stimuli loomed in a virtual environment. This observation has strong implications for the study of bodily self-consciousness and of multisensory integration in general. It implies that the degree to which a virtual environment is rendered affects bodily representation, that is, the separation between the external environment and the body. Interestingly, Samad et al. (2015) recently cast the rubber-hand illusion (RHI; Botvinick and Cohen, 1998) in light of Bayesian Causal Inference (Körding et al., 2007; Shams and Beierholm, 2010). In the RHI, participants feel ownership over a fake hand after congruent visuosomatosensory stimulation. Under the Bayesian Causal Inference framework, the localization of an object or organism in the environment depends on the relative reliability of its sensory representation, as well as on that of other objects or organisms present in the environment. Samad et al. (2015) accordingly predicted, computationally, that the rubber-hand illusion would not occur after a visuosomatosensory displacement of approximately 30 cm, because the sources of sensory information would be too far apart. This prediction is consistent with empirical findings (Lloyd, 2007). Interestingly, 30 cm also corresponds to the approximate size of the perihand representation (Serino et al., 2015a), suggesting that embodiment of a fake hand may occur only within PPS. In turn, the current findings suggest that the faithfulness with which virtual environments are rendered may affect the possibility of embodiment within them (see also Gonzalez-Franco and Lanier, 2017). Future studies should determine the interplay between the faithfulness of virtual environment renderings and bodily representations.
For instance, does the ability to embody alternative bodies (such as a body with a very long arm; Kilteni et al., 2012) differ between unisensory and multisensory environmental conditions? Similarly, it has been suggested that embodiment relaxes the temporal constraints on multisensory integration (Maselli et al., 2012), while others have shown that audiovisual temporal acuity is impaired within PPS (Noel et al., 2016). This raises the question of how PPS and embodiment, as affected by virtual representations, interact with multisensory processes such as temporal binding or even cross-modal attention (e.g., Gonzalez-Franco et al., 2017).
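The causal-inference argument invoked above can be illustrated with the one-dimensional Gaussian model of Körding et al. (2007). The sketch computes the posterior probability that a seen (fake) hand and a felt hand share a common cause. That probability falls as the visuoproprioceptive displacement grows, and it falls faster when the cues are more reliable (smaller σ). This is not Samad et al.'s (2015) model or parameterization. The noise values, the prior, and the function name are hypothetical and chosen only to show the qualitative trend.

```python
import math


def p_common_cause(x_vis, x_prop, sigma_vis, sigma_prop,
                   mu_prior=0.0, sigma_prior=50.0, p_common=0.5):
    """Posterior P(C = 1 | x_vis, x_prop) under a 1-D Gaussian causal-inference model.

    x_vis: seen hand position; x_prop: felt (proprioceptive) hand position.
    All parameter values used with this function here are hypothetical.
    """
    v1, v2, vp = sigma_vis ** 2, sigma_prop ** 2, sigma_prior ** 2
    # Likelihood of both measurements under a single common source (C = 1)
    d1 = v1 * v2 + v1 * vp + v2 * vp
    q1 = ((x_vis - x_prop) ** 2 * vp
          + (x_vis - mu_prior) ** 2 * v2
          + (x_prop - mu_prior) ** 2 * v1) / d1
    like_c1 = math.exp(-0.5 * q1) / (2.0 * math.pi * math.sqrt(d1))
    # Likelihood under two independent sources (C = 2)
    q2 = (x_vis - mu_prior) ** 2 / (v1 + vp) + (x_prop - mu_prior) ** 2 / (v2 + vp)
    like_c2 = math.exp(-0.5 * q2) / (2.0 * math.pi * math.sqrt((v1 + vp) * (v2 + vp)))
    num = like_c1 * p_common
    return num / (num + like_c2 * (1.0 - p_common))


# Hypothetical usage: displacement (cm) between seen and felt hand.
for displacement in (0, 10, 20, 30):
    post = p_common_cause(float(displacement), 0.0, sigma_vis=2.0, sigma_prop=8.0)
    print(displacement, round(post, 2))
```

With these made-up values, the posterior is well above 0.5 at zero displacement and close to zero at 30 cm. The actual crossover point depends entirely on the assumed noise levels and prior.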

Lastly, given the growing interest in VR technologies, it is becoming essential to evaluate and scientifically study human interactions in virtual and MR conditions (Herbelin et al., 2016). The PPS measure presented here is intended for scientists in the cognitive and behavioral sciences and for researchers studying presence and interactivity in VR. It offers them an objective and easily implemented assessment of basic neural responses during rich, immersive exposure to complex interactive scenarios. The delineation of PPS has a strong tradition in neurophysiology and a growing body of literature in psychophysics. Perhaps even more relevant to studying the impact of virtual environments on individuals and society, PPS is taken to index human–environment interactions. In other words, PPS has been shown to surround not the physical body but the perceived self-location (Noel et al., 2015b; Salomon et al., 2017). It therefore appears to be a metric that can readily be used to characterize presence or immersion in virtual environments. On the other hand, as we demonstrate here, the boundary of PPS is most readily delineated when a rich environmental context is provided (i.e., a multisensory delineation of the external environment). Mixed realities involve a push and pull between two goals: providing a rich virtual experience that leads to presence and place illusion, and providing enough real-world environmental context to remain grounded in the physical milieu. The PPS measure appears well suited to arbitrate between them. Thus, the mixed-reality PPS task presented here might be particularly powerful for studying social interactions at the individual-subject level. It allows rich and complex social contexts to be manipulated (e.g., by presenting crowded environments) while preserving the sensitivity and rigor of a proper experimental protocol.

Finally, as our societies become accustomed to, and even entrenched in, virtual environments, it may be interesting to chart how representations of environmental space, such as PPS, are altered by long-term VR experience. To support such studies, the setup presented here would benefit from several technological improvements. First and foremost, our panoramic capture does not support navigation in space. Other approaches to graphic rendering (3D graphics or volumetric reconstruction) and audio rendering (HRTF-based and acoustic spatial audio simulation) should be used to enable free navigation inside the scene. Second, body integration would benefit from a wider field of view and from additional visuotactile cues [such as those described in Gonzalez-Franco and Lanier (2017)]. These improvements would strengthen the illusion of owning the body presented in the simulated environment. They would also help clarify how PPS changes dynamically when participants are active during VR immersion.

Ethics Statement

Studies were approved by the ethics committee of the Brain and Mind Institute of the EPFL.

Author Contributions

AS, OB, BH, and JN defined the scientific goals and designed the experiments. BH, RM, and JBR developed the technology and implemented the experimental platform. JN, AS, EC, EP, and FB conducted the experiments and analyzed the data. AS, JN, and BH wrote the manuscript. All authors contributed to and reviewed the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

ExpyVR is a multimedia and virtual reality platform for cognitive science experimentation developed at the EPFL Laboratory of Cognitive Neurosciences (http://lnco.epfl.ch) by Bruno Herbelin, Javier Bello Ruiz, Nathan Evans, and Tobias Leugger.

Funding

RM, EC, and FB are supported by W-Science Investment, Zurich, within the Reality Substitution Machine project (RealiSM, http://lnco.epfl.ch/realism). AS, BH, and OB are supported by grants from the Swiss National Science Foundation. OB is supported by the Bertarelli Foundation. AS is supported by the Leenaard Foundation.

References

Avillac, M., Denève, S., Olivier, E., Pouget, A., and Duhamel, J. R. (2005). Reference frames for representing visual and tactile locations in parietal cortex. Nat. Neurosci. 8, 941–949. doi:10.1038/nn1480

Bassolino, M., Finisguerra, A., Canzoneri, E., Serino, A., and Pozzo, T. (2014). Dissociating effect of upper limb non-use and overuse on space and body representations. Neuropsychologia 70, 385–392. doi:10.1016/j.neuropsychologia.2014.11.028

Blanke, O. (2012). Multisensory brain mechanisms of bodily self-consciousness. Nat. Rev. Neurosci. 13, 556–571.

Blanke, O., and Metzinger, T. (2009). Full-body illusions and minimal phenomenal selfhood. Trends Cogn. Sci. 13, 7–13.

Blanke, O., Slater, M., and Serino, A. (2015). Behavioral, neural, and computational principles of bodily self-consciousness. Neuron 88, 145–166.

Botvinick, M., and Cohen, J. (1998). Rubber hands ‘feel’ touch that eyes see. Nature 391, 756. doi:10.1038/35784

Calvert, G., Spence, C., and Stein, B. E. (2004). The Handbook of Multisensory Processes. Cambridge, MA: MIT Press.

Candini, M., Giuberti, V., Manattini, A., Grittani, S., di Pellegrino, G., and Frassinetti, F. (2017). Personal space regulation in childhood autism: effects of social interaction and person’s perspective. Autism Res. 10, 144–154. doi:10.1002/aur.1637

Canzoneri, E., Magosso, E., and Serino, A. (2012). Dynamic sounds capture the boundaries of peripersonal space representation in humans. PLoS ONE 7:e44306. doi:10.1371/journal.pone.0044306

Canzoneri, E., Marzolla, M., Amoresano, A., Verni, G., and Serino, A. (2013a). Amputation and prosthesis implantation shape body and peripersonal space representations. Sci. Rep. 3, 2844. doi:10.1038/srep02844

Canzoneri, E., Ubaldi, S., Rastelli, V., Finisguerra, A., Bassolino, M., and Serino, A. (2013b). Tool-use reshapes the boundaries of body and peripersonal space representations. Exp. Brain Res. 228, 25–42. doi:10.1007/s00221-013-3532-2

Cléry, J., Guipponi, O., Odouard, S., Pinède, S., Wardak, C., and Ben Hamed, S. (2017). The prediction of impact of a looming stimulus onto the body is subserved by multisensory integration mechanisms. J. Neurosci. 37, 10656–10670. doi:10.1523/JNEUROSCI.0610-17.2017

Cléry, J., Guipponi, O., Wardak, C., and Ben Hamed, S. (2015). Neuronal bases of peripersonal and extrapersonal spaces, their plasticity and their dynamics: knowns and unknowns. Neuropsychologia 70, 313–326. doi:10.1016/j.neuropsychologia.2014.10.022

de Vignemont, F., and Iannetti, G. D. (2015). How many peripersonal spaces? Neuropsychologia 70, 327–334. doi:10.1016/j.neuropsychologia.2014.11.018

Duhamel, J. R., Colby, C. L., and Goldberg, M. E. (1998). Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J. Neurophysiol. 79, 126–136. doi:10.1152/jn.1998.79.1.126

Ehrsson, H. H. (2012). “The concept of body ownership and its relation to multisensory integration,” in The New Handbook of Multisensory Processes, ed. B. E. Stein (Cambridge, MA: MIT Press).

Ferri, F., Costantini, M., Huang, Z., Perrucci, M. G., Ferretti, A., Romani, G. L., et al. (2015a). Intertrial variability in the premotor cortex accounts for individual differences in peripersonal space. J. Neurosci. 35, 16328–16339. doi:10.1523/JNEUROSCI.1696-15.2015

Ferri, F., Tajadura-Jimenez, A., Valjamae, A., Vastano, R., and Costantini, M. (2015b). Emotion-inducing approaching sounds shape the boundaries of multisensory peripersonal space. Neuropsychologia 70, 468–475. doi:10.1016/j.neuropsychologia.2015.03.001

Fogassi, L., Gallese, V., Fadiga, L., Luppino, G., Matelli, M., and Rizzolatti, G. (1996). Coding of peripersonal space in inferior premotor cortex (area F4). J. Neurophysiol. 76, 141–157. doi:10.1152/jn.1996.76.1.141

Galli, G., Noel, J. P., Canzoneri, E., Blanke, O., and Serino, A. (2015). The wheelchair as a full-body tool extending the peripersonal space. Front. Psychol. 6:639. doi:10.3389/fpsyg.2015.00639

Gonzalez-Franco, M., and Lanier, J. (2017). Model of illusions and virtual reality. Front. Psychol. 8:1125. doi:10.3389/fpsyg.2017.01125

Gonzalez-Franco, M., Maselli, A., Florencio, D., Smolyanskiy, N., and Zhang, Z. (2017). Concurrent talking in immersive virtual reality: on the dominance of visual speech cues. Sci. Rep. 7, 3817. doi:10.1038/s41598-017-04201-x

Graziano, M. S., and Cooke, D. F. (2006). Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia 44, 845–859. doi:10.1016/j.neuropsychologia.2005.09.011

Graziano, M. S., Hu, X. T., and Gross, C. G. (1997). Visuospatial properties of ventral premotor cortex. J. Neurophysiol. 77, 2268–2292. doi:10.1152/jn.1997.77.5.2268

Herbelin, B., Mange, R., Pellencin, E., Canzoneri, E., Blanke, O., and Serino, A. (2015). “Reality substitution captures the boundaries of peripersonal space in ecological conditions,” in Proceedings of ACM ITS 2015 Conference (Madeira, Portugal).

Herbelin, B., Serino, A., Salomon, R., and Blanke, O. (2016). “Neural mechanisms of bodily self-consciousness and the experience of presence in virtual reality,” in Human Computer Confluence, eds A. Gaggioli, A. Ferscha, G. Riva, S. Dunne, and I. Viaud-Delmon (De Gruyter Online), 80–96.

Iachini, T., Coello, Y., Frassinetti, F., and Ruggiero, G. (2014). Body space in social interactions: a comparison of reaching and comfort distance in immersive virtual reality. PLoS ONE 9:e111511. doi:10.1371/journal.pone.0111511

Iachini, T., Coello, Y., Frassinetti, F., Senese, V. P., Galante, F., and Ruggiero, G. (2016). Peripersonal and interpersonal space in virtual and real environments: effects of gender and age. J. Environ. Psychol. 45, 154–164. doi:10.1016/j.jenvp.2016.01.004

Kandula, M., Van der Stoep, N., Hofman, D., and Dijkerman, H. C. (2017). On the contribution of tactile expectations to visuo-tactile interactions within the peripersonal space. Exp. Brain Res. 235, 2511–2522. doi:10.1007/s00221-017-4965-9

Kilteni, K., Normand, J., Sanchez-Vives, M. V., and Slater, M. (2012). Extending body space in immersive virtual reality: a very long arm illusion. PLoS ONE 7:e40867. doi:10.1371/journal.pone.0040867

Körding, K. P., Beierholm, U., Ma, W. J., Quartz, S., Tenenbaum, J. B., and Shams, L. (2007). Causal inference in multisensory perception. PLoS ONE 2:e943. doi:10.1371/journal.pone.0000943

Ladavas, E. (2002). Functional and dynamic properties of visual peripersonal space. Trends Cogn. Sci. 6, 17–22. doi:10.1016/S1364-6613(00)01814-3

Ladavas, E., and Serino, A. (2008). Action-dependent plasticity in peripersonal space representations. Cogn. Neuropsychol. 25, 1099–1113. doi:10.1080/02643290802359113

Lloyd, D. M. (2007). Spatial limits on referred touch to an alien limb may reflect boundaries of visuo-tactile peripersonal space surrounding the hand. Brain Cogn. 64, 104–109. doi:10.1016/j.bandc.2006.09.013

Lourenco, S. F., Longo, M. R., and Pathman, T. (2011). Near space and its relation to claustrophobic fear. Cognition 119, 448–453. doi:10.1016/j.cognition.2011.02.009

Macaluso, E., and Maravita, A. (2010). The representation of space near the body through touch and vision. Neuropsychologia 48, 782–795. doi:10.1016/j.neuropsychologia.2009.10.010

Maravita, A., Spence, C., and Driver, J. (2003). Multisensory integration and the body schema: close to hand and within reach. Curr. Biol. 13, 531–539. doi:10.1016/S0960-9822(03)00449-4

Maselli, A., Kilteni, K., López-Moliner, J., and Slater, M. (2012). The sense of body ownership relaxes temporal constraints for multisensory integration. Sci. Rep. 6, 30628. doi:10.1038/srep30628

Noel, J. P., Lukowska, M., Wallace, M., and Serino, A. (2016). Multisensory simultaneity judgment and proximity to the body. J. Vis. 16, 1–17. doi:10.1167/16.3.21

Noel, J. P., Grivaz, P., Marmaroli, P., Lissek, H., Blanke, O., and Serino, A. (2015a). Full body action remapping of peripersonal space: the case of walking. Neuropsychologia 70, 375–384. doi:10.1016/j.neuropsychologia.2014.08.030

Noel, J. P., Pfeiffer, C., Blanke, O., and Serino, A. (2015b). Full body peripersonal space as the space of the bodily self. Cognition 144, 49–57. doi:10.1016/j.cognition.2015.07.012

Patané, I., Iachini, T., Farnè, A., and Frassinetti, F. (2016). Disentangling action from social space: tool-use differently shapes the space around us. PLoS ONE 11:e0154247. doi:10.1371/journal.pone.0154247

Pellencin, E., Paladino, P., Herbelin, B., and Serino, A. (2017). Social perception of others shapes one’s own multisensory peripersonal space. Cortex. doi:10.1016/j.cortex.2017.08.033

Rizzolatti, G., Fadiga, L., Fogassi, L., and Gallese, V. (1997). The space around us. Science 277, 190–191. doi:10.1126/science.277.5323.190

Rizzolatti, G., Scandolara, C., Matelli, M., and Gentilucci, M. (1981). Afferent properties of periarcuate neurons in macaque monkeys. I. Somatosensory responses. Behav. Brain Res. 2, 125–146.

Salomon, R., Noel, J.-P., Lukowska, M., Faivre, N., Metzinger, T., Serino, A., et al. (2017). Unconscious integration of multisensory bodily input in the peripersonal space shapes bodily self consciousness. Cognition 166, 174–183. doi:10.1016/j.cognition.2017.05.028

Samad, M., Chung, A. J., and Shams, L. (2015). Perception of body ownership is driven by Bayesian sensory inference. PLoS ONE 10:e0117178. doi:10.1371/journal.pone.0117178

Sambo, C. F., and Iannetti, G. D. (2013). Better safe than sorry? The safety margin surrounding the body is increased by anxiety. J. Neurosci. 33, 14225–14230. doi:10.1523/JNEUROSCI.0706-13.2013

Serino, A. (2016). Variability in multisensory responses predicts the self-space. Trends Cogn. Sci. 20, 169–170. doi:10.1016/j.tics.2016.01.005

Serino, A., Alsmith, A., Costantini, M., Mandrigin, A., Tajadura-Jimenez, A., and Lopez, C. (2013). Bodily ownership and self-location: components of bodily self-consciousness. Conscious. Cogn. 22, 1239–1252.

Serino, A., Canzoneri, E., Marzolla, M., di Pellegrino, G., and Magosso, E. (2015a). Extending peripersonal space representation without tool-use: evidence from a combined behavioral computational approach. Front. Behav. Neurosci. 9:4. doi:10.3389/fnbeh.2015.00004

Serino, A., Noel, J. P., Galli, G., Canzoneri, E., Marmaroli, P., Lissek, H., et al. (2015b). Body part-centered and full body-centered peripersonal space representations. Sci. Rep. 5, 18603. doi:10.1038/srep18603

Shams, L., and Beierholm, U. R. (2010). Causal inference in perception. Trends Cogn. Sci. 14, 425–432. doi:10.1016/j.tics.2010.07.001

Spence, C., and Driver, J. (2004). Crossmodal Space and Crossmodal Attention. Oxford, UK: Oxford University Press.

Taffou, M., and Viaud-Delmon, I. (2014). Cynophobic fear adaptively extends peri-personal space. Front. Psychiatry 5:122. doi:10.3389/fpsyt.2014.00122

Teneggi, C., Canzoneri, E., di Pellegrino, G., and Serino, A. (2013). Social modulation of peripersonal space boundaries. Curr. Biol. 23, 406–411. doi:10.1016/j.cub.2013.01.043

Keywords: virtual reality, mixed reality, peripersonal space, multisensory integration, body, self

Citation: Serino A, Noel J-P, Mange R, Canzoneri E, Pellencin E, Ruiz JB, Bernasconi F, Blanke O and Herbelin B (2018) Peripersonal Space: An Index of Multisensory Body–Environment Interactions in Real, Virtual, and Mixed Realities. Front. ICT 4:31. doi: 10.3389/fict.2017.00031

Received: 31 October 2017; Accepted: 20 December 2017;

Published: 22 January 2018

Edited by:

Mel Slater, University of Barcelona, Spain

Reviewed by:

Alessandro Farne, Institut National de la Santé et de la Recherche Médicale, France

Mar Gonzalez-Franco, Microsoft Research, United States

Copyright: © 2018 Serino, Noel, Mange, Canzoneri, Pellencin, Ruiz, Bernasconi, Blanke and Herbelin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Andrea Serino, andrea.serino@unil.ch

Andrea Serino1,2*

Jean-Paul Noel

Elisa Canzoneri

Olaf Blanke

Bruno Herbelin