Toward a new model of scientific publishing: discussion and a proposal

- Unit on Learning and Plasticity, Laboratory of Brain and Cognition, National Institute of Mental Health, National Institutes of Health, Bethesda, MD, USA

The current system of publishing in the biological sciences is notable for its redundancy, inconsistency, sluggishness, and opacity. These problems persist, and grow worse, because the peer review system remains focused on deciding whether or not to publish a paper in a particular journal rather than on providing (1) a high-quality evaluation of scientific merit and (2) the information necessary to organize and prioritize the literature. Online access has eliminated the need for journals as distribution channels, so their primary current role is to provide authors with feedback prior to publication and to give other researchers a quick way to prioritize the literature based on which journal publishes a paper. However, the feedback provided by reviewers focuses not on scientific merit but on whether to publish in a particular journal, which is generally of little use to authors and an opaque and noisy basis for prioritizing the literature. Further, each submission of a rejected manuscript requires the entire machinery of peer review to creak to life anew. This redundancy incurs delays, inconsistency, and increased burdens on authors, reviewers, and editors. Finally, reviewers have no real incentive to review well or quickly, as their performance is not tracked, let alone rewarded. A recurring suggestion for modifying the current peer review system is the introduction of some form of post-publication reception and the development of a marketplace in which the priority of a paper rises and falls based on its reception from the field (see other articles in this special topic). However, the information that accompanies a paper into the marketplace is as important as the marketplace’s mechanics. 
Beyond suggestions concerning the mechanisms of reception, we propose an update to the system of publishing in which publication is guaranteed, but pre-publication peer review still occurs, giving the authors the opportunity to revise their work following a mini pre-reception from the field. This step also provides a consistent set of rankings and reviews to the marketplace, allowing for early prioritization and stabilizing its early dynamics. We further propose to improve the general quality of reviewing by providing tangible rewards to those who do it well.

Introduction

To begin, it is important to understand the scope and purpose of this paper. First, this paper is an attempt to describe the problems with scientific publishing as it is currently instantiated. We are both cognitive neuroscientists, and while some of the issues discussed in this paper are undoubtedly applicable to a wide array of fields, they are most directly applicable to psychology and neuroscience. Second, this paper is an attempt to lay out, in a very broad way, the quantifiable and intangible costs and benefits associated with publishing, so that both the functioning of the current system and the relative costs of alternatives can be evaluated. To provide some empirical basis, we performed an informal survey of colleagues to obtain estimates of some of the costs. Finally, this paper includes a proposal for an alternative form of scientific publishing and post-publication review. This proposal represents our best attempt at defining an improved system that could actually be implemented given the realities of transitioning from the current system. The proposal is quite specific, but that specificity is meant more to serve as a catalyst and basis for discussion than as a final prescription for a new form of publishing.

The paper begins with a brief discussion of the current system from a historical perspective, with consideration of its modern function. Following this section is a detailed description of peer review and its tangible and intangible costs and benefits. Based on these analyses, we then propose a new system for publishing empirical papers that streamlines the existing system while still serving the purposes of modern publishing. We then address the costs and benefits of this new system relative to the current system and lay out the remaining open questions.

Current System

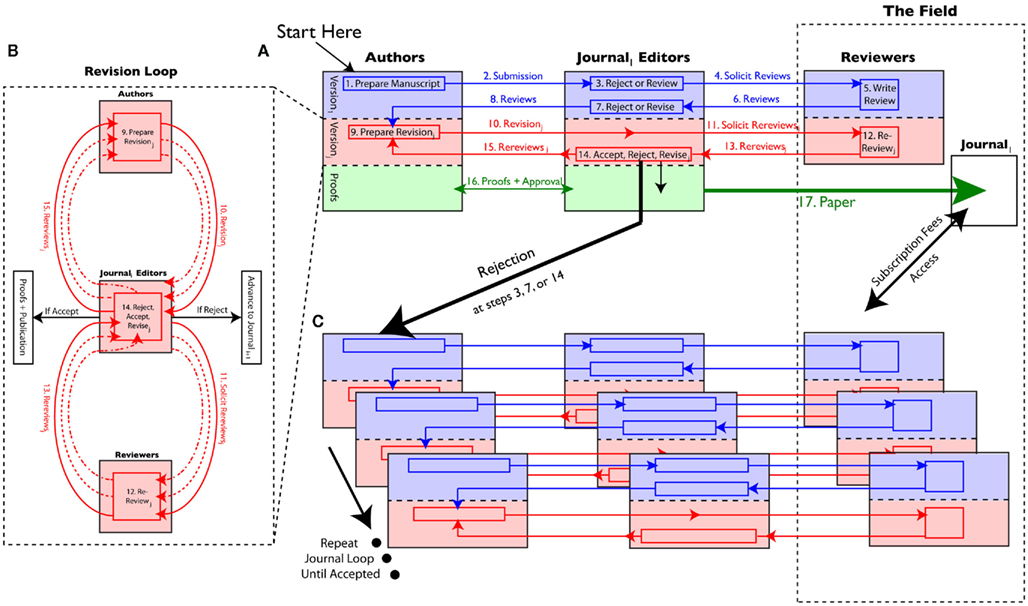

First, we examine the origins of the system of scientific publishing before specifying its modern form in detail. We then analyze the pragmatic, quantifiable costs of publishing based on an informal survey of 22 of our colleagues, which asked them to describe their experience with peer review on several of their most recent papers (see Supplementary Material for the survey) and yielded information on 55 cognitive and neuroscience papers. Following this quantification of the tangible costs, we examine the intangible effects of the current system caused by the misalignment of its structure and incentives with the functions of scientific publishing.

History and Modern Purpose

Scientific papers are published through a legacy system that was not designed to meet the needs of contemporary scientists or the demands of modern publishing, or to take advantage of current technology. The system is largely carried forward from one designed for publishers and scientists in 1665 (UK House of Commons, 2004). The most important historical constraint shaping scientific publishing was a restriction on the available publication space. Publishing a journal, even in the recent past, was quite expensive and its likely audience quite small. Further, publishing costs are the same regardless of the quality of the content (good and bad thought cost the same to print and ship). Thus, publishers had a strong incentive to limit publication size, so that the costs to readers were reasonable, and to find the strongest possible content to fill that limited space. In this context, pre-publication peer review provided the publisher with a test run of the reception a paper was likely to receive from the field, yielding a ranking of the likely quality of all the submitted papers. The journal then simply selected the top n papers for publication to meet its size requirement.

From the point of view of the scientists, the journals were an absolute necessity for broadly distributing their work to colleagues while still establishing ownership and precedence over a particular result (UK House of Commons, 2004). Peer review also gave scientists the same pre-reception it provided the journals, and with it the opportunity to revise or retract work before it was sent to the larger scientific community.

As the number of scientists grew and, concomitantly, the number of papers submitted, this system of publishing unexpectedly provided another benefit: prioritization of the literature. Consider the following: the price of a journal is dependent on the perceived quality of its content more than on the number of papers published. The top journal has little impetus to publish more papers as submissions increase, since by simply maintaining the number of accepted papers, the exclusivity of the journal increases and with it the perceived quality and price, with little additional expenditure (Young et al., 2008). Rejections also create a market for lower-tier journals to publish rejected papers at a reduced, but still profitable price. Scientists will naturally want to publish their work in the journal with the highest perceived quality they can, so they will submit papers to those journals first. A series of rejections and resubmissions to the next best journal will naturally lead a paper to land in the journal whose perceived quality matches that of the manuscript. Given broad agreement between scientists as to the ordering of journals by quality, and assuming that peer review is highly accurate in gauging scientific quality, the journal where a paper is published is an index to quality and thus provides its priority.
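The rejection-and-resubmission cascade described above behaves like a simple sorting procedure. The sketch below is a hypothetical toy model, not something derived from our survey: each journal is represented only by an acceptance bar, ordered from most to least selective, and each journal's assessment is the paper's true quality plus Gaussian noise. The quality scale, the threshold values, the noise model, and the function name `submission_cascade` are all illustrative assumptions. With zero noise (the "highly accurate peer review" assumption), a paper's final journal is fully determined by its quality.

```python
import random


def submission_cascade(quality, bars, noise_sd=0.0, rng=None):
    """Return the index of the journal that finally accepts a paper, or None.

    quality  -- the paper's true quality on an arbitrary (hypothetical) scale
    bars     -- acceptance thresholds, ordered from the most to the least selective journal
    noise_sd -- standard deviation of the Gaussian error in each journal's assessment
    """
    rng = rng or random.Random(0)
    for index, bar in enumerate(bars):
        # Each submission is judged on a fresh, noisy assessment of the same paper.
        assessed = quality + rng.gauss(0.0, noise_sd)
        if assessed >= bar:
            return index
        # Rejected: the authors resubmit to the next journal down the list.
    return None  # rejected everywhere, i.e. abandoned


if __name__ == "__main__":
    # Hypothetical tiers; the last venue accepts anything, so no paper is abandoned.
    bars = [0.9, 0.7, 0.5, float("-inf")]
    rng = random.Random(1)
    for noise in (0.0, 0.15):
        landings = [submission_cascade(0.75, bars, noise, rng) for _ in range(1000)]
        counts = [landings.count(i) for i in range(len(bars))]
        print(f"noise_sd={noise}: papers per journal tier {counts}")
```

With `noise_sd=0.0`, every paper of quality 0.75 lands in the second journal (index 1); with `noise_sd=0.15`, papers of that identical quality are spread over several tiers, so the journal alone becomes a noisier index of quality.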

In the modern world, this prioritization and the pre-reception afforded by peer review are the primary benefits the current system of publishing provides to scientists, as the Internet has essentially eliminated any need for journals as distribution channels. However, as the following analyses will show, the actual mechanisms of scientific publishing are poorly optimized to serve these functions.

Quantification of Modern Peer Review

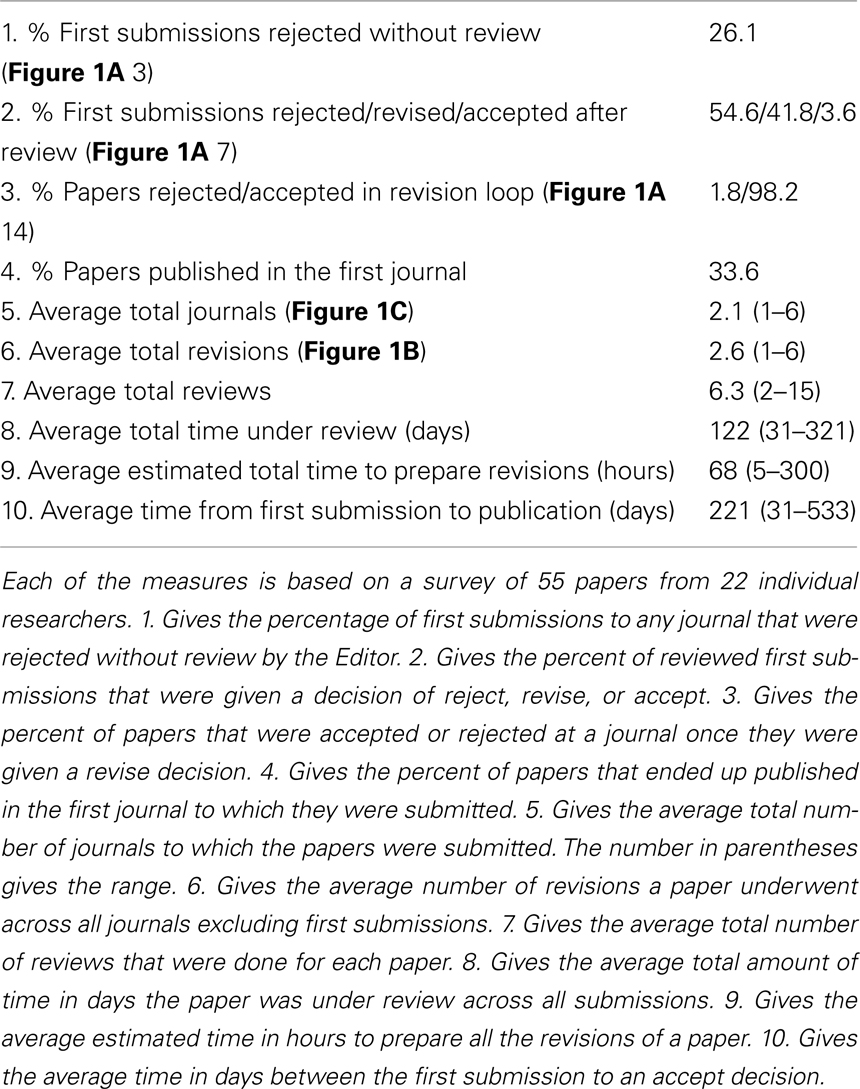

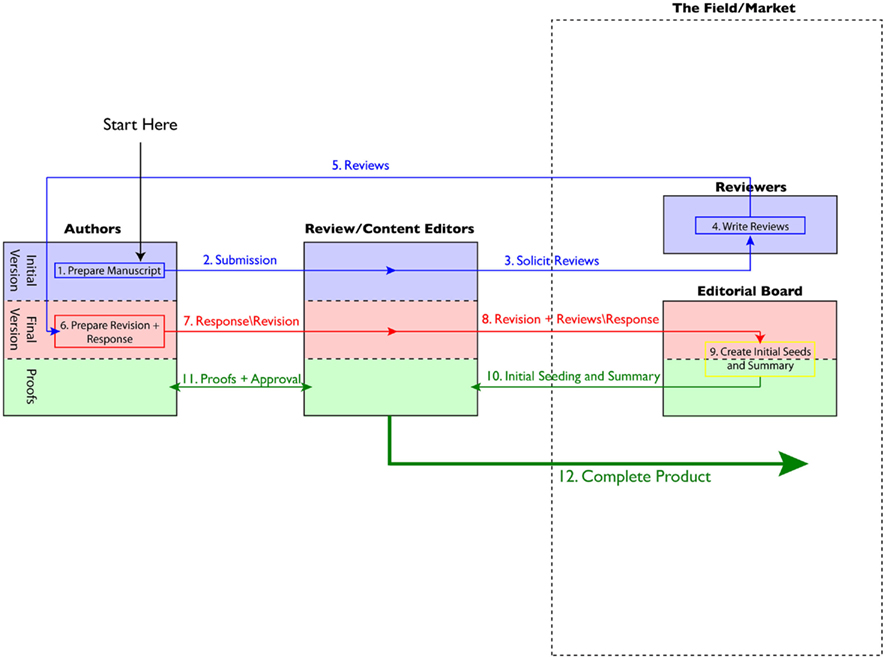

To effectively evaluate peer review, it is helpful to fully specify the process by which a peer-reviewed paper is currently published (Figure 1). There are three primary groups that participate in this process: Authors, who perform research and prepare papers; Editors, who coordinate the process of review and publication and make decisions about whether to publish or reject papers; and Reviewers, who provide expert opinions on which the Editors base their decisions. After the initial submission by the Authors, Editors decide whether to review or reject the manuscript. If they decide to review the paper, Reviewers are solicited and, on the basis of their opinions, Editors decide whether to allow revisions to address the Reviewers’ comments or to reject the paper (Figure 1A). If the decision is to allow revision, a theoretically unbounded revision loop begins in which the revisions pass between the three groups until the Editors ultimately reject or accept the paper (Figure 1B). In the case of a rejection, the Authors generally proceed to submit the paper to a different journal, beginning a journal loop bounded only by the number of journals available and the dignity of the Authors (Figure 1C). When a paper is accepted, it is published in the journal and becomes available to the Field, which, for our purposes, is the set of researchers within a certain domain of research (e.g., cognitive neuroscience).

Figure 1. Diagram of the current publishing process. (A) Outline of the steps involved in the first submission of a paper to a journal. (1, 2) Authors prepare and submit the manuscript. (3) Journal editors decide whether to reject the paper or send it for review. If the decision is to review, reviews are solicited (4), written by the reviewers (5), and sent back to the editors (6). (7) Editors then decide whether to accept, reject, or allow revision and resubmission of the manuscript. In practice, almost no paper is accepted in this first round of review. If the decision is to allow revision, the reviews are sent to the authors (8) and a theoretically unbounded revision loop (B) then proceeds. This loop can terminate in either acceptance or rejection. If the paper is accepted, it proceeds to the proof stage, where it is exchanged between the editors and authors (16) until ready for publication in the journal (17). If the paper is rejected at any of the decision points (3, 7, 14), the authors will generally proceed to submit it to another journal, beginning a journal loop (C). (B) Details of the revision loop. In each iteration the authors prepare the revision (9), which is communicated to the reviewers by the editors (10, 11). The reviewers write re-reviews (12) that are sent back to the editors (13). (14) The editors then decide whether to accept, reject, or allow revision. If the decision is revision, the loop begins again and continues until an accept or reject decision is reached. (C) If a paper is rejected, a loop of repeated submissions to many journals begins until the paper is accepted. In practice, few papers are ever abandoned, so the loop generally continues until acceptance and publication. Each new submission has the same steps as the original submission (A).

Having specified the process, we can now analyze it in terms of its efficiency (time), its cost/benefit ratio (actual expenditures of money and effort against the benefits provided), and its predictability (variability in that time and effort). An ideal process maximizes efficiency and the benefit obtained per unit cost, while simultaneously being highly predictable. A process that is unpredictable incurs indirect costs related to the uncertainty of its function (see below).

We begin with averages representing the efficiency of the process, derived from our informal survey (Table 1). There are three decision points at which Editors determine whether a paper will be rejected or continue through the process at a particular journal. First, they decide whether to send papers out for review or to reject them outright (26.1% were rejected at this stage; Figure 1A 3). Second, following the receipt of the initial reviews, Editors decide whether to accept, reject, or request revisions to the manuscript (Figure 1A 7). Functionally, almost no manuscripts in our survey were accepted in the first round of review (3.6%), with most rejected (54.6%) or returned for revision (41.8%). Third, once the revision loop begins, Editors repeatedly make the same accept, reject, or revise decision (Figures 1A,B 14). The vast majority of papers that entered the revision loop were ultimately accepted at the same journal (98.2%). Overall, however, only 33.6% of papers were published in the journal to which they were first submitted. On average, papers were submitted to 2.1 different journals (Figure 1C), underwent 2.6 revisions across all journals, and received a total of 6.3 reviews before they were published. We only collected information on papers that had been published, but it is likely that very few papers are abandoned without publication anywhere, especially given the diversity of journals now available (see also Fabiato, 1994; Suls and Martin, 2009).
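The decision rates above can be chained into the probability that a single submission ends in acceptance at that journal. The sketch below assumes, as a simplification, that the survey rates apply independently and uniformly to every submission; the function name `p_accept_at_journal` is ours. Under that assumption the result, about 33%, is close to the 33.6% of papers that were published at the journal to which they were first submitted.

```python
def p_accept_at_journal(p_desk_reject, p_accept_first, p_revise_first, p_accept_in_loop):
    """Probability that one submission is eventually accepted at that journal.

    A paper must survive the desk decision (Figure 1A, 3) and then either be
    accepted outright after the first reviews (Figure 1A, 7) or enter the
    revision loop (Figure 1B) and be accepted there.
    """
    p_sent_for_review = 1.0 - p_desk_reject
    return p_sent_for_review * (p_accept_first + p_revise_first * p_accept_in_loop)


# Survey rates reported in the text, expressed as fractions
p = p_accept_at_journal(
    p_desk_reject=0.261,
    p_accept_first=0.036,
    p_revise_first=0.418,
    p_accept_in_loop=0.982,
)
print(f"P(accepted at a given journal) = {p:.3f}")  # 0.330
```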

Beyond these raw numbers, our survey also provided estimates of the amount of time taken by the various steps of the process. Here, what is striking is less the average amount of time, long as it is, than its unpredictability. In total, each paper was under review for an average of 122 days, but with a minimum of 31 days and a maximum of 321. The average time between the first submission and acceptance, including time for revisions by the authors, was 221 days (range: 31–533). This uncertainty makes it difficult to schedule and predict the outcome of large research projects. For example, it is difficult to be certain whether a novel result will be published before a competitor’s, even if it was submitted first, or to know when follow-up studies can be published. It also makes it difficult for junior researchers to plan their careers, as job applications and tenure depend on having published papers.

We also asked for the amount of time taken to prepare submissions and reviews, allowing us to estimate the actual work and expenditure consumed in the process. Leaving aside the initial preparation of the paper (Figure 1A 1), we begin with the preparation of reviews (Figure 1A 5). Each paper received, on average, 6.3 reviews, and each review takes, on average, 6 h to prepare (based on an informal survey of post-docs in our lab). At the average salary for an NIH post-doc ($47,130 for approximately 2000 yearly hours)¹, this roughly translates to a cost of $140 per review and $840 per paper. Importantly, these reviews will never be seen outside of the review process, so their only utility is in refining published manuscripts. Next, we consider the preparation of revisions and submissions to different journals. In our survey, Authors estimated that they spent, on average, 68 h on all the revisions and resubmissions, roughly translating to a cost of $1600 per paper prior to acceptance. While these estimates of time spent may not be highly precise, they do provide a rough basis for estimating the total cost. Finally, based on the last few publications from our lab, the average direct cost of publishing a paper in terms of publication fees (e.g., color figure costs) was $1930. Beyond the costs of actually performing the research and preparing the first draft of the manuscript, publishing costs the field of neuroscience, and ultimately the funding agencies, approximately $4370 per paper and $9.2 million over the approximately 2100 neuroscience papers published last year. This excludes the substantial expense of the journal subscriptions required to actually read the research the field produces, as well as the unquantifiable cost of the publishing lag (221 days) and the uncertainty incurred by that delay.
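The cost estimates above are simple arithmetic, and the sketch below reproduces them so that each assumption is explicit. Hours are converted at the NIH post-doc rate quoted in the text; the per-paper total deliberately uses the rounded figures the text reports ($840 for reviews, $1600 for revisions and resubmissions, $1930 in fees) rather than recomputing them. The helper name `cost_of_hours` is illustrative.

```python
# Hourly rate: NIH post-doc salary divided by approximately 2000 working hours per year
HOURLY_RATE = 47_130 / 2_000  # about $23.57 per hour


def cost_of_hours(hours, rate=HOURLY_RATE):
    """Convert hours of researcher effort into dollars."""
    return hours * rate


cost_per_review = cost_of_hours(6)   # about $141, reported as $140
revision_cost = cost_of_hours(68)    # about $1602, reported as $1600

# Rounded per-paper components as reported in the text
per_paper_components = {
    "reviews": 840,
    "revisions and resubmissions": 1600,
    "publication fees": 1930,
}
per_paper_total = sum(per_paper_components.values())  # 4370
papers_per_year = 2100  # approximate number of neuroscience papers published last year
field_total = per_paper_total * papers_per_year       # 9,177,000, i.e. about $9.2 million

print(f"Per review: ${cost_per_review:,.0f}; revisions per paper: ${revision_cost:,.0f}")
print(f"Per paper: ${per_paper_total:,}; field per year: ${field_total:,}")
```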

Intangible Costs and Benefits

Given these costs, we now turn to evaluating the functionality provided by the current system to the field, which ultimately funds its every component. Beyond the ineffectiveness of the current system in providing pre-reception and a prioritization of the literature, we also highlight the costs of the misaligned incentives and of the adversarial relationship between Reviewers and Authors that the current system creates.

The current system serves the purposes of the journals, providing them with a pre-reception that allows them to prioritize papers for publication. However, this pre-reception is ill-suited to the needs of scientists, as it is optimized to help the journals decide whether or not to publish rather than to provide feedback about scientific merit. Further, because the sample of Reviewers is so small relative to the size of the Field, and their identities are generally unknown, it is very hard for Authors to know how general the Reviewers’ opinions will be in all but the most extreme cases. Reviewers may also be implicitly biased by their feelings about particular Authors. One study (Peters and Ceci, 1982) resubmitted 12 articles already published in high-tier journals under different authors’ names and institutions. First, only three of the papers were detected as already published, and this at a time when the number of published papers was much lower than it is today. Second, eight of the nine remaining papers were rejected, none for lack of novelty, but generally for “serious methodological flaws.” This result might suggest either a systematic bias by Reviewers or that peer review itself is unreliable. In either case, this form of pre-reception is clearly not optimal for Authors.

The prioritization of the literature afforded by this system is also quite poor. From the point of view of the Authors, the system is so stochastic and redundant as to be an active hindrance to the progress of research. The redundancy also increases the burden on Reviewers, who are essentially uncompensated, as the same paper requires a multitude of reviews through the revision and journal loops. From the point of view of an individual researcher in the field, there is no guarantee that the criteria of a journal or those of the Reviewers match their own, especially in the case of the highest-tier journals, in which novelty plays a large part in the decision to publish. Not only is novelty inherently subjective, but the question is also put to specialists, who are unlikely to have a good intuition of novelty or general interest in the larger scientific community. Further, the general novelty of a result may have little to do with its actual importance to the research program of any particular researcher. Thus the prioritization of the literature provided by this process is, at best, noisy, opaque, and very expensive.

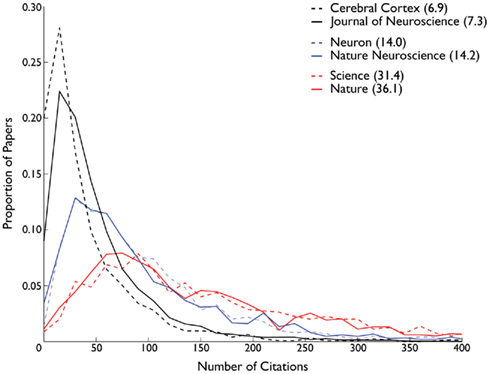

To quantitatively evaluate the performance of the journals in prioritizing the literature, we extracted from SCOPUS the current number of citations for all the neuroscience papers published between 2000 and 2007 in six major journals. The journals were chosen from three distinct tiers based on impact factor, the dominant measure of journal quality used in the field. If the journal is a good marker of a paper’s quality and eventual impact on the field, then the eventual citation count of that paper should be predictable from the journal where it was published. Viewed retrospectively, this should lead to largely non-overlapping distributions of citation counts between journals in different tiers. It should be noted that this measure is somewhat confounded by the fact that high-tier journals are both more visible and more likely to attract submissions than lower-tier journals. However, both of these confounds should act to increase the distinctions between the tiers. Our evaluation reveals that, far from the journals acting as a perfect filter, the distributions of citations largely overlap across all six journals (Figure 2). We then asked whether the citation count of a paper could predict the tier at which it was published, and found that this could be achieved with only 66% accuracy between adjacent tiers and 79% accuracy between the top and third tiers². Thus, even given the self-reinforcing confounds, journal tiers are far from a perfect method of prioritizing the literature.

Figure 2. Prioritization of the literature by the current system. Histogram of the distribution of the current number of citations for every neuroscience paper published between 2000 and 2007 in six major journals (bin width: 15 citations). The x-axis is cut off at 400 citations for display purposes only; the small proportion of papers with more citations were included in all analyses. The journals fall into three rough tiers based on their 2010 impact factors (shown to the right of the journal names in the legend). Note the large amount of overlap between the distributions, indicating that the journal where a paper is published is not strongly predictive of the eventual number of citations it will acquire.
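The tier-prediction analysis asks how well a single number, a paper's citation count, separates papers from two tiers. The exact procedure behind the 66% and 79% figures is not specified in this section, so the sketch below is only one simple, hypothetical way to pose the same question: try every observed citation count as a cutoff, predict "higher tier" for papers at or above it, and report the best balanced accuracy (the mean of the two per-tier hit rates, so that chance is 50% even when the tiers contain different numbers of papers). The function name and the citation counts in the example are made-up toy values, not our SCOPUS data.

```python
def best_threshold_accuracy(higher_tier, lower_tier):
    """Best balanced accuracy achievable with a single citation-count cutoff.

    Papers with at least `cutoff` citations are predicted to come from the higher
    tier. Balanced accuracy averages the hit rate within each tier, so a useless
    cutoff scores 0.5 regardless of how many papers each tier contains.
    """
    if not higher_tier or not lower_tier:
        raise ValueError("both tiers need at least one paper")
    best = 0.5
    for cutoff in sorted(set(higher_tier) | set(lower_tier)):
        hit_high = sum(c >= cutoff for c in higher_tier) / len(higher_tier)
        hit_low = sum(c < cutoff for c in lower_tier) / len(lower_tier)
        best = max(best, (hit_high + hit_low) / 2)
    return best


# Toy citation counts (hypothetical), not the data analysed above
overlapping = best_threshold_accuracy([10, 20, 30, 40], [5, 15, 25, 35])
separated = best_threshold_accuracy([100, 200, 300], [1, 2, 3])
print(overlapping, separated)  # 0.625 1.0
```

Heavily interleaved distributions keep this score near 0.5, while perfectly separated tiers reach 1.0; the 66% and 79% figures reported above fall between these extremes.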

The current system is also notable for the misalignment of incentives for both Authors and Reviewers relative to progress in science. Scientific progress is supposed to be largely incremental, with each new result fully contextualized within the extant literature and fully explored with many different analyses and manipulations. Replications, even with the tiniest additional manipulations, are critical to refining our understanding of the implications of any result. Yet, with the focus on worthiness for publication, especially novelty, rather than on scientific merit, Reviewers regard strong links with previous literature as a weakness rather than a strength. Authors are incentivized to highlight the novelty of a result, often to the detriment of linking it with the previous literature or overarching theoretical frameworks. Worse still, the novelty constraint discourages even performing incremental research or replications, as they cost just as much as running novel studies and will likely not be published in high-tier journals.

The current system also creates an adversarial relationship between Reviewers and Authors. Asking Reviewers to make judgments about publication worthiness reduces criticism to a dichotomy: Accept or Reject. Most of the comments in reviews reduce to this boolean, so Authors are incentivized not to argue or discuss points but simply to do enough to get a paper past the Reviewers. Reviewers are essentially uncompensated and completely anonymous, so there is no incentive to produce timely, let alone detailed and constructive, reviews. To Authors, a review often reduces to a list of tasks rather than a scientific critique or discussion that refines a paper. In practice, most reviews are rejections or lists of control experiments, often not central to the theoretical point being addressed, that bloat papers rather than refining them. To be clear, these problems occur even with the most conscientious Reviewers, which most researchers try to be, simply because of the nature of the current system of publishing. With no reward for, or training in, good reviewing, and with counter-productive incentives, it is unsurprising that peer review is ineffective at producing either a high-quality pre-reception or a prioritization of the literature.

Proposed System of Peer Review

Luckily, these deficiencies are structural and do not arise because of evil Authors, Reviewers, or Editors. Rather, they are largely a symptom of the legacy system of scientific publishing, which grew from a constraint on the amount of physical space available in journals. The advent of the Internet eliminates the need for physical copies of journals and with it any real space restrictions. In fact, none of the researchers in our lab had read a physical copy of a journal in the past year that was not sent to them for free. Without the space constraint, there is no need to deny publication to any but the most egregiously unscientific papers. Indeed, we argue that simply guaranteeing publication for any scientifically valid empirical manuscript attenuates all of the intangible and quantifiable costs described above. Functionally, publication is already guaranteed; it is simply accomplished through a very inefficient system. Of all papers that enter the revision loop, 98.2% are published at that same journal, and few papers are abandoned over the course of the journal loop.

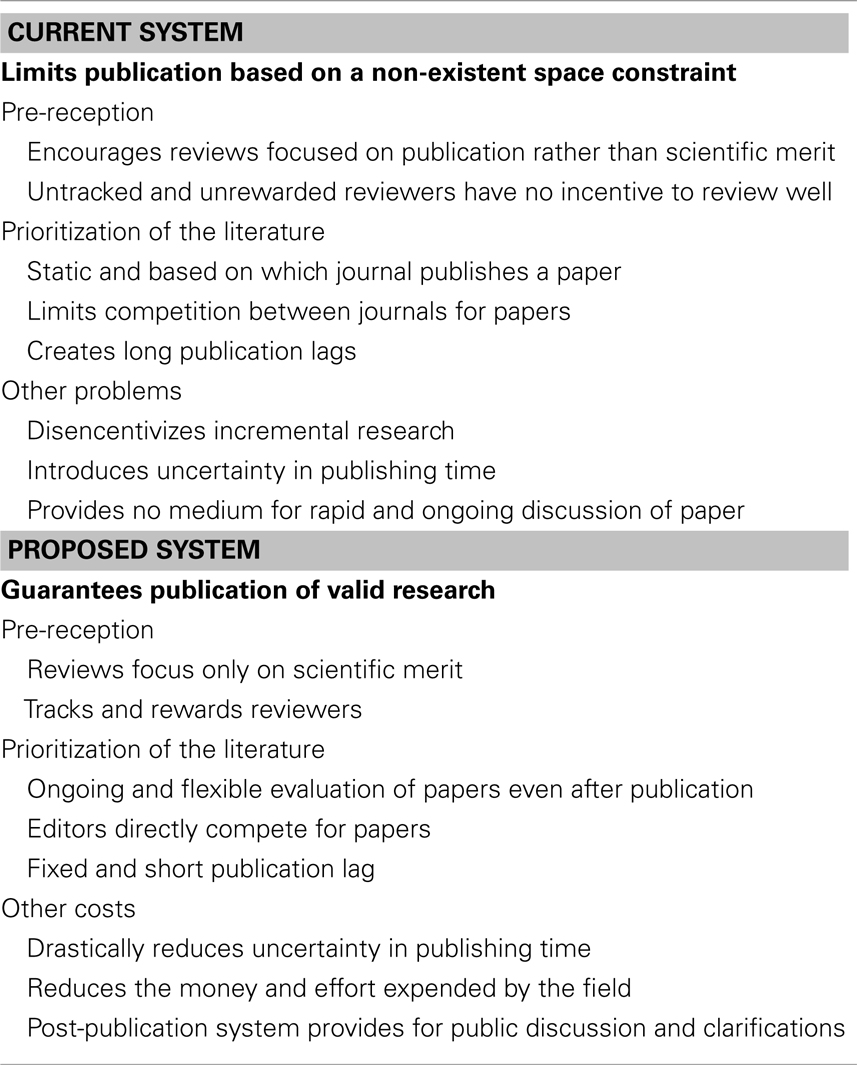

Guaranteeing publication would dramatically simplify the process of peer review, align the incentives of Authors and Reviewers with scientific progress, and reduce costs in time, money, effort, and uncertainty. In our detailed description of the proposed system (see below), we show that guaranteed publication does not sacrifice, and in fact improves, both pre-reception and the prioritization of the literature. We begin with a specification of the mechanisms and costs of the proposed system, followed by a discussion of the intangible costs and benefits. A high-level summary can be found in Table 2.

Table 2. This table contains a rough summary of the key differences between the current and proposed systems of peer review.

Proposed System of Peer Review and Quantification

Guaranteeing publication would eliminate the redundancy of the revision and journal loops, improving every quantifiable aspect of peer review. Under the proposed system (Figure 3), all papers are reviewed. The purpose of the Editors is twofold. First, they coordinate the entire review process. Second, they maintain the anonymity of both the Reviewers and Authors, so that all reviewing is double-blind (see Peters and Ceci, 1984 for a discussion). Editors pick a set of three anonymous reviewers based on their expertise and availability (Figure 3 3). Once the reviews are prepared, they are passed automatically to the Authors, without the need for any editorial decisions (Figure 3 5). The purpose of these reviews is not to decide whether the paper should be published, but to give the Authors feedback on the scientific quality of the research and on the Reviewers’ understanding of its context and importance in the field. This scientific pre-reception affords the Authors the opportunity to significantly revise or retract their work if they choose. If both the Authors and Reviewers agree, multiple rounds of review are possible (e.g., the Frontiers system). However, in most cases, the Authors will instead respond to the reviews once and make some revisions to their manuscript (Figure 3 6 + 7). Once that revision has been communicated to the Editors, publication is guaranteed, with no further rounds of revision or review. Eliminating the revision and journal loops significantly reduces inefficiency and speeds publication, but a method is still required for prioritizing the literature.

Figure 3. Diagram of the proposed publishing process. (1, 2) Authors prepare and submit the manuscript. As all papers are reviewed, the editors select a set of reviewers and solicit reviews (3). The reviewers write the reviews (4), which are automatically conveyed to the authors (5). Authors then have the choice of revising the manuscript or leaving it unchanged. They prepare the revision and their response to the reviews (6), which are conveyed to the editors (7). The editors select an appropriate editorial board member and convey the reviews, revision, and the authors’ response (8). The board member uses these components to craft a high-level summary of the work, its importance and context within the literature, and a set of initial numerical seeds representing the quality of the paper (9). This summary is conveyed to the editors (10), who begin the proof exchange with the authors (11). Once the proofs are approved, the editors publish the complete product (12): the final manuscript, the board summary, and the seed values.

To this end, we propose combining post-publication review (see below) with an Editorial Board, whose function is to provide initial seeds that will be the basis of the early prioritization of papers as they are published. The Editorial Board essentially acts as a rating service, fashioning a coherent summary and set of ratings from the raw initial reviews and responses that the field can use to initially prioritize a paper. The Board will be composed of a small set of leaders in the field, chosen, at least initially (see below), by a vote of the field. Editors will send the paper, reviews, and responses (all anonymous) to a primary member of the Editorial Board, who will be responsible for providing the initial ratings and summary, including their own impressions of the implications of the paper in the context of the literature (see also Faculty of 1000 for a related system; Figure 3 9). Once these seeds are complete and the proofs receive final approval (Figure 3 11), the paper is immediately published.
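This section does not fix how the Board's seeds would be combined with later post-publication ratings. One hypothetical way to see how seeds could stabilize a paper's early reception is a pseudo-count (Bayesian-average-style) score, in which the seed counts as if it were `seed_weight` ratings from the field. The function name, the rating scale, and the default weight of 5 are assumptions for illustration only, not part of the proposal as specified here.

```python
def blended_rating(seed, ratings, seed_weight=5.0):
    """Combine an Editorial Board seed with post-publication ratings (hypothetical scheme).

    The seed acts like `seed_weight` pseudo-ratings: with no field ratings the
    score equals the seed, a few outlying ratings move it only a little, and
    with many ratings the field's own mean dominates.
    """
    if seed_weight < 0:
        raise ValueError("seed_weight must be non-negative")
    count = seed_weight + len(ratings)
    if count == 0:
        raise ValueError("need a positive seed_weight or at least one rating")
    return (seed_weight * seed + sum(ratings)) / count


print(blended_rating(8.0, []))          # 8.0: only the seed is available
print(blended_rating(8.0, [2.0]))       # 7.0: one harsh early rating barely moves it
print(blended_rating(8.0, [2.0] * 95))  # 2.3: many ratings override the seed
```

A larger `seed_weight` makes early scores more stable but slower to respond to the field; a smaller one hands control to post-publication ratings sooner.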

The proposed system will immediately reduce the burden in time, money, and effort on the entire field. Given a single round of review, the number of reviews is reduced from a current average of 6.3 to 3, saving the field 18.2 h of reviewing and $430 per paper on average (52%). There is only a single, optional round of revision, saving Authors an average of 42 h and $990 per paper (62%) according to our survey. Even assuming that publication and submission fees remain constant to pay for the implementation of the new system (and color figure fees would certainly be eliminated), a total saving of $1420 would be achieved for each paper (32%). That translates to an annual saving of roughly three million dollars for the field, not including the benefits of a reduction in publication lag and the decrease in uncertainty.
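The savings follow from the same hourly conversion used for the current system. The sketch below takes the hours saved and the rounded dollar figures from the text; the per-paper baseline of $4370 and the figure of roughly 2100 papers per year are the estimates given earlier in this paper for the current system.

```python
HOURLY_RATE = 47_130 / 2_000  # NIH post-doc salary / approximately 2000 yearly hours

review_saving = 18.2 * HOURLY_RATE   # about $429 of reviewing, reported as $430
revision_saving = 42 * HOURLY_RATE   # about $990 of author revision time

per_paper_saving = 430 + 990         # rounded figures from the text: $1420
current_cost_per_paper = 4370        # estimated cost per paper under the current system
share_saved = per_paper_saving / current_cost_per_paper  # about 0.325
annual_saving = per_paper_saving * 2100                  # 2,982,000, roughly $3 million

print(f"review ${review_saving:,.0f}, revision ${revision_saving:,.0f}")
print(f"per paper ${per_paper_saving:,} ({share_saved:.0%}), per year ${annual_saving:,}")
```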

Intangible Costs and Benefits

The proposed system of peer review streamlines the existing system, benefiting Authors without fundamentally changing their role. Authors continue to perform research and write papers, but a greater proportion of their time can now be devoted to actually doing those things. They are also the beneficiaries of an improved, more scientific pre-reception and a reduced cost and lag for publishing papers. The reduced variability in time reduces uncertainty, helping junior scientists plan their careers more effectively, and helping senior researchers plan large-scale research projects.

The role of Reviewers is altered from assessing publication worthiness to providing a critique of the paper’s scientific merit. This should reduce the adversarial relationship between Authors and Reviewers and foster more constructive criticism. When this system is combined with an appropriate system of post-publication review, it may also provide Reviewers with the opportunity to be directly rewarded for producing high-quality, punctual reviews (see Compensation for Reviewers section below).

For Editors, the change will be fundamental. Currently, Editors are the gatekeepers to publication in a particular journal. Their purpose is to serve the interests of the journal as a business and not the interests of Authors. There is also no real competition between Editors, as the entire system rests on a relatively well-established hierarchy of journals to provide the prioritization of the literature. In the proposed system, journals do not truly exist as distinct entities for the purposes of peer review (though they may play a role in post-publication, as discussed below in the Financing section). Instead, Editors must function in a way somewhat analogous to an investment bank, shepherding a paper into the market in its best possible form. Editors can compete with each other based on the price and quality of the services they provide. For example, Editors can coordinate the pre-publication review process, edit the manuscript and figures more or less extensively, provide digestible press releases for high-profile papers, and promote the manuscript within the community. Nature Publishing Group has already started offering a variant of this service, by offering to edit manuscripts it will not necessarily publish³. The proposed system aligns Editors’ incentives with the desire of the Authors to publish the best possible paper in a certain time frame at a reasonable cost.

Reasons for Double-Blind Pre-Publication Review

Unlike many other proposals, we propose maintaining some pre-publication peer review. While eliminating this step would further simplify and streamline publishing, we believe it is critical for three reasons. First, review by experts in the field prior to publication provides the Authors with an effective pre-reception that can be the basis for revising or retracting papers before they become widely available. Second, the reviews, once synthesized by the Editorial Board, can serve as an early input into the post-publication market, stabilizing the initial reception. Third, it guarantees that every paper will receive an initial set of reviews, eliminating the concern that a paper never commented on post-publication is essentially invisible to any prioritization (see also below).

We further argue that this pre-publication review should be double-blind, with the identities of the Authors and the Reviewers each unknown to the other. The anonymity of the Reviewers is critical to obtaining unadulterated reviews, particularly when more junior scientists are reviewing the work of senior faculty (e.g., Wright, 1994). Under completely open peer review, reviews become more positive and acceptances increase (Van Rooyen et al., 1999), but so does reluctance to review in the first place. It is unclear whether the increased positivity reflects genuine enthusiasm or merely the desire to avoid conflict. The anonymity of the Authors reduces the possibility of Reviewer bias for or against particular authors or institutions (see Peters and Ceci, 1982 for an example). While Reviewers might guess the identity of the Authors, any ambiguity should act to reduce this bias.

Reasons for Including an Editorial Board

Beyond streamlining the existing system of peer review, we propose adding an Editorial Board, responsible for preparing a summary of the initial reviews and a set of initial ratings that accompany a paper as it is published into the market. This group adds steps and time to the process of publication and creates a new burden on the field. Nonetheless, we believe the benefits of the Editorial Board outweigh these costs.

Current systems that depend on post-publication review are plagued by an uneven initial reception. Complete post-publication review puts an enormous burden on the field to conscientiously search the literature and offer commentary without any compensation whatsoever (Lipworth et al., 2011). The only researchers likely to offer comments are those deeply invested in a particular result, and there is little incentive to offer positive commentary on a paper. In current open review systems, some papers are commented on extensively, while others never receive a single comment (e.g., the Nature open peer review debate; footnote 4). The latter case gives neither the field nor the Authors any feedback on the quality of the research, nor any prioritization of the literature. The Editorial Board provides ratings and a summary that offer an early prioritization of papers and guarantee that every paper is read and placed in the context of the extant literature.

The Editorial Board offers significant advantages over publishing the raw initial reviews with the paper. First, many of the initial issues will be fully addressed by the Authors’ response and will add nothing to the early reception of the paper. Second, publishing the reviews would tend to recreate the adversarial relationship between the Authors and Reviewers, as the Reviewers would be implicitly accepting the Authors’ response without the opportunity to argue their points or revise their reviews. Including an impartial third party to provide the final word on whether issues have been addressed or remain outstanding gives both the Reviewers and the Authors some distance from their reviews and responses. Finally, the Editorial Board can also evaluate the quality and timeliness of reviews, perhaps providing a metric by which Reviewers can be rewarded (see Compensation for Reviewers section below).

Proposed Post-Publication System

There are four primary functions that the structure of a paper must serve if it is to be considered effective. (1) It must convey the content of the research in such a way that it can be understood and replicated. The existing structure of published papers is well-established and entirely sufficient to accomplish this goal. (2) It must provide a way to contact Authors for clarifications. (3) The structure must provide an easy method for indexing the paper in relation to the issues it addresses and the rest of the literature. Currently, this indexing is accomplished through the combination of keyword searches and citation linkages. (4) It must have a set of statistics and comments associated with it that allow its reception by the field to be tracked for the purposes of evaluating individuals for funding and promotions and prioritizing it within the literature. Some journals and search engines have already begun to track download count and number of citations. While the current structure has been adapted to serve these functions, it is far from optimal, and online access allows us the opportunity to design a new structure with superior functionality.

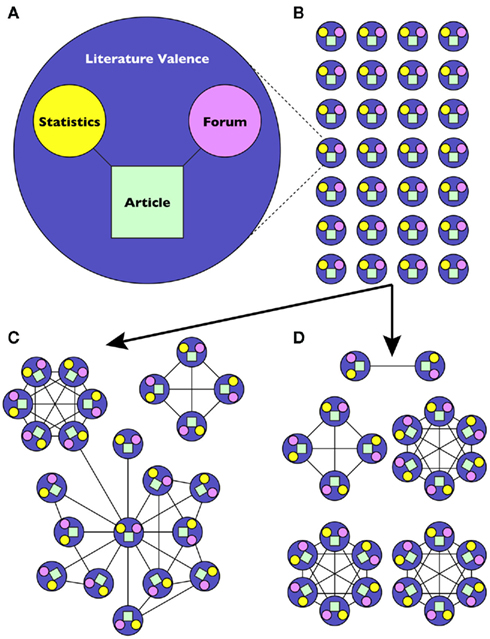

Structure of Papers Post-Publication

Under the proposed system, a published paper will consist of the following components. First, the article itself (Figure 4A, green box), which has essentially the same structure as current papers, with the addition of the summary and initial ratings from the Editorial Board. The original article will be the only immutable component: once published, it will never change. This component provides a consistent way for Authors to claim work as their own, and its familiar format is ideally suited to serving the first function. The only major change will be a consistent format: we will, as a Field, agree on a common list of components (e.g., abstract, introduction, etc.) and stick to it, rather than reformatting manuscripts for each journal.

Figure 4. Post-publication system. (A) A paper in its complete form under the proposed publishing process. Every paper will consist first of the manuscript itself (green square). This manuscript will have a standard format composed of the familiar components along with the editorial board summary and ratings. The manuscript will be stable over time, and none of its components will be subject to change. Associated with this manuscript will be a set of statistics (yellow circle) that include any additional ratings from the field collected post-publication and continually updated counts of its citations and downloads. A forum will also be attached to the manuscript, where members of the field can post their own detailed comments on the paper and authors have the opportunity to respond. Members of the field may also pose theoretical and methodological questions that can be answered either by the authors or by any other member of the field. Finally, the manuscript also has a literature valence (large blue circle), composed of its citations and the papers that have cited it, as well as topics and uncited work deemed related by the board, the authors, or the field. The valence also includes ID numbers for the authors, the editorial board member who provided the summary, and the reviewers. (B) Currently, a literature search generates a relatively unstructured list of papers, organized by author or subject heading. The literature valence will allow for dynamic organizations of the literature based on the needs of a particular researcher. (C) For example, organizing the papers in (B) by citation and topic might reveal that there were actually two sub-fields within the list: one a small set of distinct, closely related papers, the other a large, complex set centered on a single paper. (D) The organization might differ entirely when the papers are grouped by the method used, revealing a different set of relationships among them.

Second, the paper will also be associated with a forum (Figure 4A, purple circle) within which members of the field can ask methodological and theoretical questions as well as offer their own detailed reviews. Upon publication, the original Reviewers will be invited to post their reviews anonymously (with any modifications) if they choose, but all other contributions will be open and directly associated with particular researchers. Authors, like members of the field in general, are free to respond to any post in the forum, adding comments or additional data as appropriate. The forum provides a way for Authors to publicly refine their work and theories as the process of reception unfolds, without needing to publish new papers on minor incremental or clarifying points. The forum also provides a way for the field to reach a consensus on the implications and limitations of any result. Critically, the forums provide a record of these discussions, again allowing Authors to claim at least informal ownership of particular ideas outside the context of published papers.

The paper will also contain a set of continuously updated statistics (Figure 4A, yellow circle) which track the reception the paper is receiving from the field. These statistics are essentially numeric data that provide an easy way of prioritizing the paper by tracking things like citation and download counts. They also include ratings provided by members of the field after publication.

Finally, all of these components, along with some additional information, make up a literature valence (Figure 4A, large blue circle), which can be used both to prioritize the paper and to place it in the context of the literature. The additional information includes other work that has cited the paper since publication, the IDs of the Authors, Reviewers, Editors, and Editorial Board member, keywords, and additional related literature suggested by any of these individuals or by other members of the field.
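The components of a published paper can be sketched as a simple data structure. The following is a minimal Python sketch under our own assumptions: the class and field names (`Article`, `ForumPost`, `PaperRecord`, and the rating axes) are hypothetical, and a real system would keep these records in a shared database rather than in memory. What it illustrates is the split between a frozen article and the forum, statistics, and valence that accumulate around it.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Article:
    """The published manuscript: frozen, so it cannot change after publication."""
    title: str
    abstract: str
    body: str
    board_summary: str
    board_ratings: tuple  # hypothetical axes, e.g. (("rigor", 4), ("significance", 3))


@dataclass
class ForumPost:
    author_id: str                   # anonymous ID for original Reviewers, real ID otherwise
    text: str
    reply_to: Optional[int] = None   # index of the post being answered, if any


@dataclass
class PaperRecord:
    article: Article
    # Forum: open discussion attached to the paper
    forum: list = field(default_factory=list)
    # Statistics: continuously updated reception data
    downloads: int = 0
    field_ratings: list = field(default_factory=list)
    # Literature valence: links to other papers and to the people involved
    cites: set = field(default_factory=set)
    cited_by: set = field(default_factory=set)
    related: set = field(default_factory=set)
    keywords: set = field(default_factory=set)
    author_ids: tuple = ()
    reviewer_ids: tuple = ()
    board_member_id: str = ""

    @property
    def citation_count(self) -> int:
        return len(self.cited_by)

    def post(self, author_id: str, text: str, reply_to: Optional[int] = None) -> int:
        """Append a forum post and return its index."""
        self.forum.append(ForumPost(author_id, text, reply_to))
        return len(self.forum) - 1
```

Assigning to `record.article.title` raises `dataclasses.FrozenInstanceError`, while forum posts, ratings, and citations can be added freely, mirroring the distinction between the immutable article and everything attached to it.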

The structure described above is relatively similar to the backbone of social networking websites like Facebook. The problems addressed by the two systems are similar in that both create a virtual anchor for an actual person or paper, to which content can be continuously added and indexed online without altering the fundamental link between the anchor and the actual content. In fact, beyond the statistics tracking, most of the functionality described above could be achieved simply by making a Facebook page for a paper. This similarity is a strength of the proposed system, as it dramatically simplifies its implementation and reduces its cost (see Financing section below).

Searching and Organizing the Literature

When the information in the literature valence is combined with appropriate algorithms, it can yield a very powerful and flexible way of organizing the literature. For example, literature searches currently yield a list of papers associated with a particular keyword or author, generally ordered by date (Figure 4B). While this organization is useful as a first pass, an additional algorithm that takes citations into account might reveal a much more informative structure: in this example, two distinct subgroups of papers, one of which is centered on a single seminal paper (Figure 4C). Alternatively, an organization based on the methods used (e.g., fMRI) might show an entirely different grouping, with many different methods being used to address the same topic (Figure 4D). The proposed system would also allow searches and organizations based on who reviewed the paper, which Editorial Board member wrote the summary, or the post-publication ratings of a particular researcher. The point is not any particular organization but a structure flexible enough to support a wide range of organizations tailored to the needs of individual researchers.
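One simple way to obtain an organization like that in Figure 4C is sketched below in Python. It is an illustration under stated assumptions, not an algorithm the proposal prescribes: it treats citations as undirected links, groups papers into connected components, and labels each group by its most-connected paper. The input format (a dictionary from hypothetical paper IDs to the IDs they cite) is our assumption.

```python
def citation_clusters(citations):
    """Group papers linked by citation (direction ignored) into clusters.

    citations: dict mapping a paper ID to a list of the paper IDs it cites.
    Returns a list of (hub, members) pairs, where hub is the member with the
    most citation links; ties are broken alphabetically.
    """
    # Build an undirected adjacency map from the citation lists
    graph = {}
    for paper, refs in citations.items():
        graph.setdefault(paper, set())
        for ref in refs:
            graph[paper].add(ref)
            graph.setdefault(ref, set()).add(paper)

    seen, clusters = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        # Depth-first search collects every paper reachable from start
        stack, members = [start], set()
        seen.add(start)
        while stack:
            node = stack.pop()
            members.add(node)
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        ordered = sorted(members)
        hub = max(ordered, key=lambda p: len(graph[p]))
        clusters.append((hub, ordered))
    return clusters


example = {
    "P2": ["P1"], "P3": ["P1"], "P4": ["P1", "P2"], "P5": ["P1"],  # centered on P1
    "Q2": ["Q1"], "Q3": ["Q2"],                                    # a small, separate chain
}
print(citation_clusters(example))
# [('P1', ['P1', 'P2', 'P3', 'P4', 'P5']), ('Q2', ['Q1', 'Q2', 'Q3'])]
```

Connected components merge two sub-fields joined by even a single citation, so a working system would more likely use a community-detection method; the sketch only shows how the valence data could feed such an organization.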

Open Questions

Financing the System and Transition from the Current System

In the preceding sections we proposed a new system of publishing that does not completely demolish the existing system but streamlines it and optimizes it to leverage currently available technology. This approach is critical, as it yields a system to which the field can transition easily and cheaply. In this section we review the major components of the proposed system that will require expenditures of money and effort to implement and maintain.

First, there is the coordination of the review process. Currently, this function is served by journals that are financed by a combination of subscription, submission, advertising, and publication fees. In the proposed system, the editorial process is decoupled from publication, all published papers are freely available, and physical copies of journals are no longer produced. This reduces the revenue for the editorial process essentially to submission fees paid by the Authors. There are, however, several factors that will attenuate these costs. (1) Publication is guaranteed, so payment of the fee will definitely lead to a publication. (2) Editors will now have to compete directly with one another on the basis of price and quality of service (i.e., speed, copy editing, publicity for high-profile results). This competition should lead to a wide range of Editor pricing and services and should reduce fees overall. (3) It is likely advisable to have a single electronic backbone for coordinating Reviewers and the Editorial Board. This system could track the number of papers currently assigned to each individual, making the assignment of new papers more efficient. It would also eliminate redundant implementations of similar systems by different Editors and provide a common set of anonymous IDs for Reviewers across all submissions. All of these factors should increase efficiency and reduce the overall price. Implementing and maintaining such a system is quite simple, and it could easily be paid for from a general funding source (e.g., NIH) or by a proportion of the submission fees. The transition to this system of pre-publication review will probably need to be made by the field as a whole, as the proposed system would be hard-pressed to compete with the more prestigious journals that already exist. The alternative is to create such a system and wait for its increased efficiency to render the other modes of publication obsolete, likely over a period of many years.
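The shared backbone for coordinating Reviewers could be as simple as the Python sketch below, which is our own illustration rather than a specified design. The class name `ReviewerPool`, the least-loaded assignment rule, the `exclude` list for conflicts of interest, and the salted SHA-256 pseudonyms are all assumptions chosen to show the two functions named above: tracking each Reviewer's current load and issuing anonymous IDs that stay the same across submissions.

```python
import hashlib
import heapq


class ReviewerPool:
    """Hypothetical shared backbone that hands new papers to the least-loaded Reviewers."""

    def __init__(self, reviewers, salt):
        self.load = {name: 0 for name in reviewers}  # papers currently assigned
        self.salt = salt  # secret held by the backbone so outsiders cannot recompute pseudonyms

    def anonymous_id(self, reviewer):
        """Stable pseudonym: the same Reviewer gets the same ID on every submission."""
        digest = hashlib.sha256((self.salt + reviewer).encode("utf-8")).hexdigest()
        return "R-" + digest[:8]

    def assign(self, n, exclude=()):
        """Assign a paper to the n eligible Reviewers with the fewest open papers.

        exclude lists Reviewers with a conflict of interest (e.g., the Authors).
        Ties in load are broken alphabetically by name.
        """
        eligible = [(load, name) for name, load in self.load.items() if name not in exclude]
        if len(eligible) < n:
            raise ValueError("not enough eligible reviewers")
        chosen = [name for _, name in heapq.nsmallest(n, eligible)]
        for name in chosen:
            self.load[name] += 1
        return chosen

    def complete(self, reviewer):
        """Record that a Reviewer has returned a review."""
        self.load[reviewer] -= 1


pool = ReviewerPool(["ann", "bob", "cat", "dan"], salt="example-only")
print(pool.assign(2))                   # ['ann', 'bob']
print(pool.assign(2))                   # ['cat', 'dan']
print(pool.assign(2, exclude={"ann"}))  # ['bob', 'cat']
```

Editors and the Editorial Board would see only `anonymous_id(...)` values, while the backbone alone maps them back to names; because the pseudonym is stable, review-quality statistics can accumulate under it across papers.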

Second, the backbone of the post-publication market must be implemented and maintained. Again, it is likely advisable that a single system serve the entire field, to maintain consistency, reduce redundancy, and provide a common access point for the literature. A single system could also be used to track all users and to restrict access to accredited institutions and individuals, or to ban users who abuse the system if need be. Since the basic structure of the proposed post-publication market is similar to existing social networking sites, the minor extensions required would not be overly costly for the companies that run those sites to implement or maintain. Revenue could again be generated by taking a proportion of the submission fees. It could also be generated through targeted advertisements: the topic headings of papers provide an excellent index into the scientific apparatus likely needed by researchers reading them. Whereas most advertisements for these products are currently scattershot, pushed through journals or emails, associating the ads with particular papers might be more effective. Another advantage of the proposed post-publication market is that it can be implemented independently of the proposed system of pre-publication review. Even existing papers can be adapted into the proposed marketplace and their reception tracked, easing the transition to the proposed system.

Finally, the front-end service by which the literature can be searched, organized, prioritized, and presented to researchers will need to be funded. Currently, a number of search engines (e.g., PubMed), financed by the major funding agencies, could be adapted to serve these functions. However, this is also a potential market for the existing journals, which could provide several distinct services to scientists. (1) Journals can produce their own proprietary prioritizations of the literature. In the proposed system, any prioritization essentially reduces to some, likely linear, formula combining all the available factors. That formula can be proprietary, and journals can offer their own prioritizations to researchers for a fee. In fact, some journals have already begun to offer something similar by providing field-wide research highlights with every published issue. This can lead to the strange experience of being rejected by a journal and then having the same paper highlighted within it later. (2) Similarly, journals can offer new algorithms for organizing the literature, perhaps even as a direct service to researchers. (3) Journals might also be the logical outlet for review articles, which would be trivial to publish under the proposed system. If review articles were limited to invited pieces in particular journals, they could be published under a different system more directly suited to them. Journals could also charge for access to these articles, just as they currently charge for empirical pieces.
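A linear prioritization of the kind described above can be sketched in a few lines of Python. The statistic names and both weightings below are hypothetical; the sketch only shows how two "proprietary" weight vectors applied to the same public statistics can order the same papers differently.

```python
def priority(stats, weights):
    """Linear score: weighted sum of a paper's statistics (missing weights count as 0)."""
    return sum(weights.get(name, 0.0) * value for name, value in stats.items())


def rank(papers, weights):
    """Return paper IDs ordered from highest to lowest priority."""
    return sorted(papers, key=lambda pid: priority(papers[pid], weights), reverse=True)


papers = {
    "A": {"citations": 10, "downloads": 200, "board_rating": 3.5},
    "B": {"citations": 25, "downloads": 50, "board_rating": 4.5},
}
# Two hypothetical "proprietary" weightings of the same public statistics
citation_heavy = {"citations": 1.0, "downloads": 0.05, "board_rating": 2.0}
download_heavy = {"downloads": 0.2, "board_rating": 1.0}
print(rank(papers, citation_heavy))  # ['B', 'A']
print(rank(papers, download_heavy))  # ['A', 'B']
```

Raw counts live on very different scales, so a realistic formula would probably normalize or log-transform them before weighting; the ranking logic itself would stay the same.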

Compensation for Reviewers

Our proposed system reduces the reviewing burden on the field and better aligns incentives, but we recognize that it still depends on the efficiency and quality of the reviews. Unless reviewing is directly rewarded, it will always be at the bottom of the stack for any researcher. Further, we, as a Field, need to acknowledge the importance of reviewing as part of doing good science and reward researchers for doing it well. In the current system, good reviewing is not even defined, let alone tracked, even though reviewing is the backbone of all publishing. Finding a way to track and reward good reviewing might also reveal a heretofore unknown group of researchers who are gifted at it and who might teach the rest of us how to do it effectively.

Our proposed system provides mechanisms that allow reviewing to be tracked and rewarded. The raw initial reviews are provided to the Editorial Board, whose members could be asked to rate the usefulness and insightfulness of those reviews. Provided the Reviewers remain anonymous to the Board, this could give a relatively unbiased estimate of review quality, similar to a system already in place at some journals (e.g., PLoS ONE). Upon publication, the Reviewers could also be asked to provide final ratings, which could be regressed against the paper’s actual reception, and final reviews, which could be rated by the field.
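As an illustration of comparing a Reviewer’s final ratings with the later reception of the same papers, the Python sketch below computes their Pearson correlation for one Reviewer. Correlation is our stand-in for the regression mentioned above (it equals the regression slope once both series are standardized), and the choice of reception measure, such as a paper’s mean post-publication rating from the field, is an assumption.

```python
from math import sqrt


def calibration(ratings, reception):
    """Pearson correlation between one Reviewer's final ratings and the later
    reception of the same papers (a hypothetical reviewer-quality signal)."""
    n = len(ratings)
    if n != len(reception) or n < 2:
        raise ValueError("need two equal-length series covering at least two papers")
    mean_r = sum(ratings) / n
    mean_c = sum(reception) / n
    cov = sum((r - mean_r) * (c - mean_c) for r, c in zip(ratings, reception))
    spread_r = sqrt(sum((r - mean_r) ** 2 for r in ratings))
    spread_c = sqrt(sum((c - mean_c) ** 2 for c in reception))
    if spread_r == 0 or spread_c == 0:
        # A Reviewer who gives every paper the same score carries no predictive signal
        return 0.0
    return cov / (spread_r * spread_c)
```

A value near 1 would indicate a Reviewer whose judgments anticipated the field’s eventual reception; with only a handful of papers per Reviewer, the estimate would be noisy and would need to accumulate over time.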

Having tracked the quality of individual Reviewers, the question is how best to reward them. First, statistics representing the quality of a Reviewer could be cited in job applications and tenure reviews. Second, high-quality reviewing could qualify a Reviewer for membership on the Editorial Board (see below). Finally, Reviewers could be paid a proportion of the submission fees for each paper they review, commensurate with the quality of their reviewing. These fees would not have to be paid to Reviewers directly; instead, they could be added to existing grants in the Reviewer’s lab or could simply defray submission costs for the Reviewer’s own papers.
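Paying Reviewers "commensurate with the quality of their reviewing" amounts to a proportional split. The Python sketch below assumes a hypothetical fixed share of each submission fee set aside for Reviewers and non-negative quality scores (for example, the Editorial Board’s ratings of each review); neither value is specified in the proposal.

```python
def split_reviewer_share(fee, share, quality):
    """Split the Reviewers' portion of one paper's submission fee by review quality.

    fee: submission fee for the paper
    share: fraction of the fee set aside for Reviewers (hypothetical, e.g. 0.3)
    quality: dict of anonymous Reviewer ID -> non-negative quality score
    """
    if not quality:
        return {}
    pool = fee * share
    total = sum(quality.values())
    if total == 0:
        # No review was rated useful, so nothing is paid out
        return {rid: 0.0 for rid in quality}
    return {rid: pool * q / total for rid, q in quality.items()}


print(split_reviewer_share(1000, 0.3, {"R-1": 3, "R-2": 1}))  # {'R-1': 225.0, 'R-2': 75.0}
```

The resulting amounts could be credited to a lab’s grant or against future submission fees rather than paid out, as suggested above.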

Mechanisms of the Editorial Board

Under the proposed system, the Editorial Board has the important responsibility of providing the initial summary and ratings that accompany a paper into the marketplace. This responsibility also carries a burden: members must produce these summaries and ratings for every published paper. As such, the size of the Board, its membership, and the compensation for serving on it must be carefully considered.

The size of the Board is the least complicated of these issues. All that is required is to estimate the average time it takes to produce a summary and a set of initial ratings for each paper. Assuming that this process is comparable to reviewing a paper (6 h), an Editorial Board member could reasonably handle two papers a week. Dividing the number of papers submitted in a week (∼40; footnote 5) by this number yields a rough estimate of the necessary size of the Editorial Board (∼20). This number could then be adjusted after the system begins operation. Alternates could also be designated to contribute during periods with very high numbers of submissions.
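The estimate above can be written out as a small calculation. The Python sketch uses the figures given in the text (about 2100 papers per year from footnote 5, 6 h per paper); the weekly commitment of 12 h per member is our assumption, chosen because it is what "two papers a week" implies.

```python
def board_size(papers_per_year, hours_per_paper=6.0, hours_per_member_week=12.0):
    """Rough Editorial Board size: weekly load divided by one member's weekly capacity.

    hours_per_member_week=12 is the load implied by the text (two 6-h papers a week).
    """
    papers_per_week = papers_per_year / 52
    papers_per_member = hours_per_member_week / hours_per_paper
    return papers_per_week / papers_per_member


print(round(board_size(2100), 1))  # 20.2
```

Because both inputs are rough, the result (about 20 members) is best read as an order of magnitude; halving the weekly commitment per member, for example, doubles the required Board size.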

The membership of the Editorial Board is a more complex issue. Initially, members should probably be elected to set terms by the members of the field. Once those terms end or members resign, they can be replaced through a vote. Some positions might also be filled by the best Reviewers in the field (see Compensation for Reviewers section above), providing another reward for good reviewing.

Finally, serving on the Editorial Board incurs a significant cost in time and effort, and its members will need to be compensated. On the one hand, serving on the Editorial Board will be very prestigious and provides the opportunity to help shape the direction of the field, so in some sense serving is its own reward. On the other hand, members could also receive direct compensation, likely in the form of guaranteed funding for their labs. This would remove members from the grant treadmill, freeing them to immerse themselves more fully in the literature. Further, it would reduce the burden on grant reviewers, who would no longer have to review grants that are very likely to be funded (particularly if membership on the Editorial Board is determined by voting).

Conclusion

Ultimately, the process of reforming the current system of publishing will be long, arduous, and fraught with uncertainty. The purpose of this manuscript is not to propose a final solution; by no means is the proposed system perfect. Instead we sought to highlight the problems in the current system, the functions that should guide the new system, and the necessity of reforming the system (see Table 2). It is to this final point that we now turn in some additional detail. Above, we have argued, in some depth, that the current system is needlessly redundant, expensive, and ill-suited to meeting the needs of the field, specifically a scientific pre-reception for Authors, and a prioritization of the literature for all researchers. To these factors we now add several more dynamics that will make the current system of publishing in the neurosciences even more untenable in the future.

First, neuroscience as a discipline has several characteristics that make the current system of publishing particularly problematic. The brain is a hugely complicated system: its components cannot easily be studied in isolation, nor can strong conclusions be drawn about the function of isolated components within the complete system. Progress depends on developing large-scale theoretical frameworks and building consensus around the critical data that support, refine, or repudiate them. The intuitions and theories conveyed by a paper, and the relationship between those theories and the literature, are often as important as the data themselves. The current system encourages novel-seeming, isolated research, which often runs directly counter to establishing theories and interpretations in relation to the literature. Research designed to refine or address existing theories is relegated to specialist journals. This dynamic would be acceptable if such research were widespread, but there are few incentives to actually perform it. The lag and uncertainty in publication time, and the relative uselessness of low-profile publications in promotion and tenure decisions, discourage junior faculty and post-docs from pursuing it, yet these two groups perform most of the research in the labs of tenured faculty.

Second, the field of neuroscience is growing quickly, in both papers and researchers. This year over 700 neuroscience doctorates will likely be awarded, compared with only 276 in 1993 (NSF Survey of Graduate Students). This increase in the number of researchers translates into an increase in the number of submissions to existing journals (e.g., average annual increase from 2006 to 2009: Nature, 4.8%; Journal of Neuroscience, 2.6%). The concomitant increase in the number of rejections, together with the ease of launching an online journal, has also led to the creation of new journals. From 2000 to 2006 the number of neuroscience journals was essentially steady at around 200. From 2006 to 2009 the number increased to 231, an annual increase of approximately 5% (derived from the Web of Science). As the field and its literature grow, the inefficiencies of the current system will become increasingly problematic. The time it takes to publish a paper, the number of reviews written, and the difficulty of organizing and comprehending the literature will all increase and will eventually become a limiting factor on progress in the field, if they are not already.
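The "approximately 5%" figure for journal growth can be checked as a compound annual growth rate. The Python sketch below assumes the 2006 starting count is exactly 200, which the text gives only approximately.

```python
def annual_growth(start, end, years):
    """Compound annual growth rate between two counts."""
    return (end / start) ** (1 / years) - 1


# Neuroscience journals: about 200 in 2006, 231 in 2009 (figures from the text)
print(f"{annual_growth(200, 231, 3):.1%}")  # 4.9%
```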

Hopefully, this paper will help begin a conversation about the problems and inefficiencies inherent in the current system of publishing. The system proposed in this paper is not meant as a final proposal, but as a reasonable starting point that addresses many of the current flaws in the system and could reasonably be implemented. We hope that it will engender debate, which is at the heart of scientific progress, but too little emphasized in the current system of publishing.

This paper is also not meant to be an indictment of the existing journals; they are businesses whose purpose is to provide a service at a reasonable price. By and large they accomplish this purpose and are staffed by dedicated professionals wrestling with a difficult job. This paper is an indictment of the service that we, as a field, ask them to provide. We are paying, in both time and money, for a system constrained by the physical distribution of papers, even though we no longer read physical copies of journals. What we should be paying for, and where private companies can innovate, is the coordination of the review process, the publicizing of results, and methods for searching and organizing the literature. Providing this last service can be quite profitable; Google, for example, has a profit margin of 21%. A better post-publication system will also improve the quality and frequency of scientific discussion between labs, which is now largely limited to conferences and published papers. At a time of increasingly constrained budgets, when funding sources need to see progress to justify taxpayer outlays, reforming the system of publishing might not only decrease our costs but also increase our productivity.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Please note that the opinions expressed in this article are solely the opinion of the authors and do not necessarily reflect the opinion of the NIH. Thanks to Niko Kriegeskorte and Punitha Manavalan for many helpful discussions. Thanks to Sandra Truong for her extensive comments. Thanks to all those researchers who provided data for our analysis of the current system of peer review.

Supplementary Material

The Supplementary Material for this article can be found online at http://www.frontiersin.org/Computational_Neuroscience/10.3389/fncom.2011.00055/abstract

Footnotes

- ^http://www.glassdoor.com/Salary/NIH-Postdoctoral-Fellow-Salaries-E11709_D_KO4,23.htm

- ^This calculation was performed by drawing every possible boundary in citation count and assessing the proportion of the distribution for each journal that fell on either side of the boundary. Subtracting the proportions of each journal that fell on the same side of the boundary from one another provides the percent correct for a particular boundary. The percent correct from the best boundary is reported.

- ^http://languageediting.nature.com/

- ^http://www.nature.com/nature/peerreview/debate/index.html

- ^This number was calculated by dividing the number of papers with the topic “neuroscience” published in 2010 (2100) by 52 weeks.

References

Lipworth, W. L., Kerridge, I. H., Carter, S. M., and Little, M. (2011). Journal peer review in context: a qualitative study of the social and subjective dimensions of manuscript review in biomedical publishing. Soc. Sci. Med. 72, 1056–1063.

Peters, D. P., and Ceci, S. J. (1982). Peer-review practices of psychological journals: the fate of published articles, submitted again. Behav. Brain Sci. 5, 187–195.

Suls, J., and Martin, R. (2009). The air we breathe: a critical look at practices and alternatives in the peer-review process. Perspect. Psychol. Sci. 4, 40–50.

UK House of Commons. (2004). The Origin of the Scientific Journal and the Process of Peer Review. Available at: http://eprints.ecs.soton.ac.uk/13105/2/399we23.htm

Van Rooyen, S., Godlee, F., Evans, S., Black, N., and Smith, R. (1999). Effect of open peer review on quality of reviews and on reviewers’ recommendations: a randomized trial. Br. Med. J. 318, 23–27.

Keywords: peer review, neuroscience, publishing

Citation: Kravitz DJ and Baker CI (2011) Toward a new model of scientific publishing: discussion and a proposal. Front. Comput. Neurosci. 5:55. doi: 10.3389/fncom.2011.00055

Received: 20 June 2011;

Accepted: 11 November 2011;

Published online: 05 December 2011.

Edited by:

Nikolaus Kriegeskorte, Medical Research Council Cognition and Brain Sciences Unit, UK

Reviewed by:

Thomas Boraud, Universite de Bordeaux, CNRS, France

Marc Timme, Max Planck Institute for Dynamics and Self Organization, Germany

Copyright: © 2011 Kravitz and Baker. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Dwight J. Kravitz, Unit on Learning and Plasticity, Laboratory of Brain and Cognition, National Institute of Mental Health, National Institutes of Health, Bethesda, MD 20892, USA. e-mail: kravitzd@mail.nih.gov