Self-organization of synchronous activity propagation in neuronal networks driven by local excitation

- 1Mercator Research Group “Structure of Memory”, Ruhr-Universität Bochum, Bochum, Germany

- 2Department of Physics, Institute for Advanced Studies in Basic Sciences, Zanjan, Iran

- 3School of Cognitive Sciences, Institute for Research in Fundamental Sciences, Tehran, Iran

- 4School of Mathematics, Institute for Research in Fundamental Sciences, Tehran, Iran

- 5Department of Psychology, Ruhr-Universität Bochum, Bochum, Germany

Many experimental and theoretical studies have suggested that the reliable propagation of synchronous neural activity is crucial for neural information processing. The propagation of synchronous firing activity in so-called synfire chains has been studied extensively in feed-forward networks of spiking neurons. However, it remains unclear how such neural activity could emerge in recurrent neuronal networks through synaptic plasticity. In this study, we investigate whether local excitation, i.e., neurons that fire at a higher frequency than the other, spontaneously active neurons in the network, can shape a network to allow for synchronous activity propagation. We use two-dimensional, locally connected and heterogeneous neuronal networks with spike-timing dependent plasticity (STDP). We find that, in our model, local excitation drives profound network changes within seconds. In the emergent network, neural activity originates from the site of the local excitation and propagates synchronously through the network. The synchronous activity propagation persists, even when the local excitation is removed, since it derives from the synaptic weight matrix. Importantly, once this connectivity is established it remains stable even in the presence of spontaneous activity. Our results suggest that synfire-chain-like activity can emerge in a relatively simple way in realistic neural networks by locally exciting the desired origin of the neuronal sequence.

1. Introduction

The propagation of neural spiking activity has been observed in various parts of the brain, including neocortex (Mao et al., 2001; Ikegaya et al., 2004; Pinto et al., 2005) and hippocampus (Miles et al., 1988; Nádasdy et al., 1999; Buhry et al., 2011; Cheng, 2013). It has been suggested that the reliable propagation and transformation of neural activity between different brain regions is crucial for neural information processing. Therefore, a number of computational and theoretical studies have examined the conditions under which neural activity can propagate reliably in neural networks (Diesmann et al., 1999; Kistler and Gerstner, 2002; Yazdanbakhsh et al., 2002; Aviel et al., 2005; Kumar et al., 2008). A prominent model for activity propagation is the synfire chain model, in which groups of neurons that fire synchronously are chained together into a larger feedforward network (Abeles et al., 1993; Mao et al., 2001; Abeles, 2009). Although isolated feedforward networks can account for the repeated dynamics in cortical networks (Diesmann et al., 1999; van Rossum et al., 2002), anatomical studies suggest that biological networks are more adequately modeled by local recurrent connectivity than by feedforward structures (Hellwig, 2000).

Furthermore, most biological networks are not hard-wired; they are often structured by synaptic plasticity, such as spike-timing dependent plasticity (STDP) (Gerstner et al., 1996; Markram, 1997; Bi and Poo, 1998; Zhang et al., 1998; Kempter et al., 1999). STDP is widely thought to underlie learning processes, and is the focus of many theoretical studies (Leibold et al., 2008; Voegtlin, 2009; Masquelier et al., 2009; D'Souza et al., 2010; Gilson et al., 2010). The shape of the STDP curve has been proposed to trade off two competing features of STDP, synaptic competition and synaptic stability (Gütig et al., 2003; Morrison et al., 2007; Babadi and Abbott, 2010, 2013). In the case of anti-symmetric STDP, reversing the ordering of pre- and post-synaptic spikes reverses the direction of synaptic change. It breaks strong reciprocal connections between neuron pairs (Abbott and Nelson, 2000; Kozloski and Cecchi, 2010). Any inhomogeneity in initial synaptic weights, or in firing rates, determines which of the synapses will be potentiated and which will be eliminated (Babadi and Abbott, 2013). Through structural changes such as these, STDP also alters the network dynamics. For instance, STDP tends to enhance population synchrony in recurrent networks (Levy et al., 2001; Kitano et al., 2002a,b; Takahashi et al., 2009), and facilitates the formation of synfire chains when applied to feedforward or random networks (Hertz and Prügel-Bennett, 1996; Suri and Sejnowski, 2002).

The converse effect, namely that network activity affects network structure, is observed as well. For instance, if some components in a network fire at a higher rate than the remaining network, the dynamics of physical (Gavrielides et al., 1998; Valizadeh et al., 2010) or biological systems (Glass and Mackey, 1988; Panfilov and Holden, 1997) change. The neurons with the highest inherent frequency can serve as pacemakers and train their post-synaptic partner neurons in networks with all-to-all (Bayati and Valizadeh, 2012) and random connections (Takahashi et al., 2009). If two mutually connected neurons fire at different intrinsic rates, STDP strengthens the synapse from the high-frequency to the low-frequency neuron and weakens the reverse synapse. This occurs provided that the initial synaptic strengths are large enough for frequency synchronization to set in once STDP has modified them. The same argument holds for larger networks as well. When the intrinsic firing rates of the neurons differ, the reciprocal connections are broken in favor of the fast-to-slow links, such that an effective flow of connections emerges from fast to slow neurons (Bayati and Valizadeh, 2012). This example shows how STDP, a spatially local mechanism for modifying synapses, induces global structure in recurrent networks (Babadi and Abbott, 2013).

Here, we therefore asked whether local excitation together with STDP can drive the establishment of robust propagation of synchronous activity that is characteristic of synfire chains. To do so, we study the population activity in a locally connected random network (LCRN). Local excitation is provided by a small number of neurons that fire at a higher frequency (fast-spiking neurons, FSNs). We find that robust activity propagation indeed arises and does so quickly within seconds of simulated time. The synchronous activity originates at the location of the FSNs and propagates away from them. This network behavior is the result of an effective feedforward structure that emerges spontaneously in a recurrent network through STDP. Once the network reaches the steady state, synchronous activity propagation remains stable even when the local excitation is removed. It is thus conceivable that temporary local excitation is provided to a biological neural network to establish synchronous activity propagation, and to have that activity pattern continue after the excitation is removed.

2. Materials and Methods

2.1. Network Dynamics and Topology

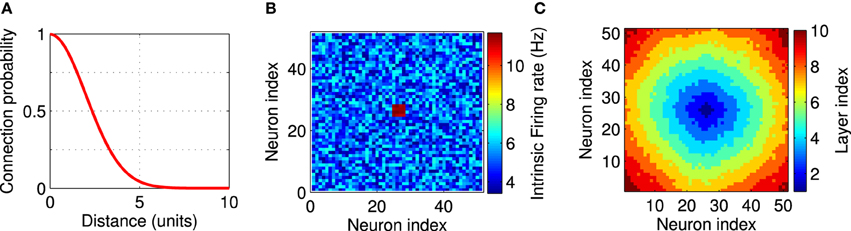

In our simulations we use an LCRN (Mehring et al., 2003; Kumar et al., 2008) comprising N = 51 × 51 units on a two-dimensional square grid. The distance between two neighboring units along the main axes of the grid is one unit. The adjacency matrix aij (Reka and Barabási, 2002) indicates whether neuron j projects to neuron i (aij = 1), or not (aij = 0). We choose the probability of a connection between any pair of neurons to decrease with the Euclidean distance (dij) between the neurons, since anatomical studies have found that cortical networks are mostly locally connected (Hellwig, 2000). More specifically, we connected neuron i to ki post-synaptic partner neurons, which are chosen at random according to a local connectivity kernel, a Gaussian with zero mean and standard deviation σ = 2 (in units of the grid spacing) (Figure 1A). The post-synaptic partners were determined as follows. We sampled a random number according to the connectivity kernel, which indicates the distance between the pre- and post-synaptic neurons, and a random number from a uniform distribution between 0° and 360°, which indicates the direction from the pre- to the post-synaptic neuron. The connection was discarded if the chosen position of the post-synaptic partner lay outside the boundaries of the network, which was more frequently the case for neurons at the edges of the network. We repeated the sampling of post-synaptic partners 40 times, so that ki ≤ 40. Our connection procedure allows only one connection between two neurons. Anatomical studies suggest σ ≃ 0.5 mm (Hellwig, 2000). So our model covers a cortical surface of about 6 cm² with only 2601 neurons. That is, we assume that the network described here constitutes a subset of a larger cortical network whose effect is considered as external currents to the modeled neurons (Kumar et al., 2008; Hahn et al., 2014).
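The sampling procedure for post-synaptic partners can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `sample_targets`, taking the absolute value of the Gaussian draw as the distance, rounding to the nearest grid point, and excluding self-connections are all assumptions made here.

```python
import math
import random

def sample_targets(i_row, i_col, size=51, sigma=2.0, attempts=40, rng=random):
    """Return the set of post-synaptic grid positions for one neuron."""
    targets = set()
    for _ in range(attempts):
        # Distance drawn from the Gaussian kernel (zero mean, s.d. sigma).
        # Assumption: the absolute value is used as the distance.
        dist = abs(rng.gauss(0.0, sigma))
        # Direction drawn uniformly between 0 and 360 degrees.
        angle = math.radians(rng.uniform(0.0, 360.0))
        # Assumption: the sampled position is rounded to the nearest grid point.
        row = i_row + round(dist * math.sin(angle))
        col = i_col + round(dist * math.cos(angle))
        if not (0 <= row < size and 0 <= col < size):
            continue  # position outside the network: connection discarded
        if (row, col) == (i_row, i_col):
            continue  # assumption: no self-connections
        targets.add((row, col))  # a set keeps at most one link per neuron pair
    return targets
```

Because out-of-range and repeated draws are dropped, the number of partners returned is at most 40, and it tends to be smaller for neurons near the edges of the grid.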

Figure 1. Network setup. The network consists of a square grid of N = 51 × 51 = 2601 excitatory leaky integrate-and-fire neurons. (A) Synaptic connectivity kernel in the two-dimensional network model. Each neuron is pre-synaptic to up to 40 of its neighbors. (B) An example for the firing rate of the neurons in the network. Each neuron receives a constant external input current, independently selected from a uniform distribution on the interval [I0 − δ, I0 + δ] with I0 = 16.21 mV, δ = 0.2 mV, which leads to background firing rates from 3.4 to 6.8 Hz. Twelve neurons in the center of the network (shown in red) receive higher input currents (I0 = 18.05 mV, δ = 0.15 mV), and therefore fire at higher rates between 11.1 and 11.7 Hz. These high-frequency neurons provide local excitation to the network above the background firing. Firing rates are measured in isolated neurons without recurrent connections. (C) A layer index is assigned to each neuron based on the synaptic distance to the high-frequency neurons. All neurons with the same layer index can be thought of as being arranged in an abstract layer.

The neurons' subthreshold dynamics were modeled by a leaky integrate-and-fire (LIF) model:

where Vi represents the membrane potential of neuron i and i = 1, 2, …, N. The membrane potentials were set to random initial values at the beginning of each simulation. τm = 20 ms is the membrane time constant, Vrest = −70 mV the resting membrane potential, Ii the external input current and Iij the synaptic current from neuron j to neuron i. Although these inputs appear as currents, they are measured in units of the membrane potential (mV), since a factor of the membrane resistance has been absorbed into their definition. The differential equations were solved using the Runge-Kutta second-order method with a timestep of 0.1 ms. Whenever the membrane potential of a neuron reaches a fixed threshold at Vth = −54 mV, the neuron generates an action potential, the membrane potential is reset to the resting potential, and a refractory period of 2 ms follows. Each time a neuron spikes, a synaptic current gij is transmitted from the pre-synaptic to the post-synaptic neuron as a pulse, with a delay of D = 1 ms regardless of the distance between the connected neurons. Thus, synaptic dynamics are neglected. Writing the neuron's response function (Dayan and Abbott, 2001) as xj (t) = ∑m δ (t − tmj), where tmj is the time of the m-th spike of neuron j and δ (x) is the Dirac delta function, the synaptic current Iij is given by

In the units that we adopted here, gij represents the synaptic strength. It is positive throughout this study since we only modeled excitatory synapses. All synaptic weights were initially set to g0 = 0.02 mV.

Inhomogeneity in the intrinsic firing rates was imposed by unequal external currents. The external input currents of all neurons, except for the FSNs, were randomly chosen from a uniform distribution on the interval [I0 − δ, I0 + δ]. With I0 = 16.21 mV and δ = 0.2 mV, the background firing rates range between 3.4 and 6.8 Hz. Local excitation was provided to the network by choosing those n neurons that are closest to the center of the network and providing them with higher input currents (I0 = 18.05 mV, δ = 0.15 mV). These neurons therefore fire at higher rates between 11.1 and 11.7 Hz (Figure 1B), which is why we call them FSNs. All of these firing rates are measured in isolated neurons without recurrent connections. Firing rates are different when the network is recurrently connected as described above and fluctuate during the evolution of the network (see Figure 7A). Throughout this paper n = 12, unless otherwise noted.

The synaptic strengths evolved according to a pair-based STDP rule with nearest spike neighbors interaction (Izhikevich and Desai, 2003), neglecting interactions between remote spike pairs (Froemke and Dan, 2002; Pfister and Gerstner, 2006). A change of synaptic strength Δgij was induced by a pair of pre- and post-synaptic action potentials with time difference Δt = tpost − tpre. The functional relation between the synaptic modification and the pairing interval was

The positive parameters A+ and A− specify the maximum potentiation and depression, respectively. We expressed the synaptic strengths in units of the membrane potential (mV), so A+ and A− have units of mV. The time constants τ+ and τ− determine the temporal spread of the STDP window for potentiation and depression, which have been reported to be in the 10–20 ms range (Song et al., 2000). We impose hard bounds on the synaptic weights (0 < g < gmax, where gmax = 2 g0), by truncating any modification that would take a synaptic weight outside the allowed range.

Therefore, all synapses in the network are excitatory, which is in accord with in vitro findings that synchronous activity propagation depends mainly on excitatory synaptic connections (Pinto et al., 2005), but may be modulated by inhibitory neurons (Mehring et al., 2003; Aviel et al., 2005).

To set the value of A±, we assumed that synaptic weakening through STDP is, overall, slightly larger than synaptic strengthening (A− τ− > A+ τ+). This condition ensures that uncorrelated pre- and post-synaptic spikes weaken synapses (Song et al., 2000). In our simulations, we used A− τ− / A+ τ+ ≃ 1.06. The individual parameters were A+ = 5 × 10−5 mV, A− = 4.4 × 10−5 mV, τ+ = 10 ms, and τ− = 12 ms.
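The STDP window and the hard bounds can be illustrated with a short sketch. It assumes the usual exponential window of Song et al. (2000), i.e., Δg = A+ exp(−Δt/τ+) for Δt > 0 and Δg = −A− exp(Δt/τ−) for Δt < 0, which matches the parameters defined above but is stated here as an assumption. The function names and the treatment of Δt = 0 are hypothetical, and the nearest-neighbor spike pairing is not implemented.

```python
import math

A_PLUS, A_MINUS = 5e-5, 4.4e-5      # maximum potentiation / depression (mV)
TAU_PLUS, TAU_MINUS = 10.0, 12.0    # STDP time constants (ms)
G0 = 0.02                           # initial synaptic weight (mV)
G_MAX = 2 * G0                      # upper hard bound (mV)

def stdp_delta(dt):
    """Weight change for one spike pair, with dt = t_post - t_pre in ms."""
    if dt > 0:
        return A_PLUS * math.exp(-dt / TAU_PLUS)     # pre before post
    if dt < 0:
        return -A_MINUS * math.exp(dt / TAU_MINUS)   # post before pre
    return 0.0  # assumption: simultaneous spikes leave the weight unchanged

def update_weight(g, dt):
    """Apply one pairing and truncate the result to the range [0, G_MAX]."""
    return min(G_MAX, max(0.0, g + stdp_delta(dt)))

# Ratio of the integrated depression and potentiation windows.
ratio = (A_MINUS * TAU_MINUS) / (A_PLUS * TAU_PLUS)
```

With the parameter values given in the text, `ratio` evaluates to 1.056, in line with the stated A− τ− / A+ τ+ ≃ 1.06.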

2.2. Network Analysis

To better illustrate the structure of the network, we assigned each neuron a layer index to indicate its distance from the FSNs (Figure 1C). We define the layer index li as the smallest number of directed edges that are necessary to move from an FSN to neuron i. Neurons with the same layer index are considered to be in the same abstract layer (Masuda and Kori, 2007). In Figure 1C, all pixels of the same color represent neurons in the same layer. In a strictly feedforward network, neurons would project only to other neurons that are located in a higher layer. To compare the network structures that emerge after synaptic plasticity to a feedforward network, we therefore quantified the connectivity between the abstract layers in our recurrent networks.
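Since the layer index is a shortest directed path length from the set of FSNs, it can be obtained with a multi-source breadth-first search. The sketch below is one possible way to compute it; the function name and the dictionary-based graph representation are assumptions. Following the literal definition, the FSNs themselves receive index 0 here; whether the numbering used in the figures is offset by one is not specified by this sketch.

```python
from collections import deque

def layer_indices(targets, sources):
    """Multi-source BFS over directed edges.

    targets: dict mapping each neuron to an iterable of its post-synaptic
             partners (hypothetical representation of the adjacency matrix).
    sources: the fast-spiking neurons.
    Returns a dict neuron -> smallest number of directed edges from any source.
    Neurons that cannot be reached from a source are absent from the result.
    """
    layer = {s: 0 for s in sources}
    queue = deque(sources)
    while queue:
        pre = queue.popleft()
        for post in targets.get(pre, ()):
            if post not in layer:          # first visit gives the shortest path
                layer[post] = layer[pre] + 1
                queue.append(post)
    return layer
```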

In reference to the FSNs, for each layer (l), we define the “forward synaptic flow” as the sum of the weights of all links ij whose post-synaptic neuron has a larger layer index than the pre-synaptic neuron (li > lj):

Likewise, we define the “backward synaptic flow” as the sum of the weights of all links ij whose post-synaptic neuron has a smaller layer index than the pre-synaptic neuron (li < lj):

To quantify the asymmetry of connections, we introduced the difference between the forward and backward synaptic flows for each layer, Cl = C+l − C−l, as the feedforward parameter. For simplicity, we normalized the feedforward parameter by dividing it by the sum of the forward and backward synaptic flows. A positive feedforward parameter means that the forward synapses are stronger than the backward synapses. The opposite would be indicated by a negative feedforward parameter. Note that only the inter-layer connections are considered in the calculation of the feedforward parameter, so a large value of the feedforward parameter does not rule out the presence of intra-layer connections.

To quantify the effective imbalance in the network we introduced an average feedforward parameter

in which Nl is the total number of abstract layers. The average feedforward parameter can be used as a tool to survey the evolution of the network and determine the emergence of feedforward structures (Bayati and Valizadeh, 2012). When all the feedforward parameters are zero the average feedforward parameter is also zero, but the converse is not true.
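A sketch of how the normalized feedforward parameter and its average over layers might be computed is given below. Two choices are assumptions made only for illustration: a link is attributed to the layer of its pre-synaptic neuron, and layers without any inter-layer synaptic weight are left out rather than assigned a value. The function names and data layout are hypothetical as well.

```python
def feedforward_parameters(weights, layer):
    """Normalized feedforward parameter per layer.

    weights: dict mapping (post, pre) -> synaptic weight g of the link pre -> post.
    layer:   dict mapping neuron -> layer index.
    """
    forward, backward = {}, {}
    for (post, pre), g in weights.items():
        l = layer[pre]  # assumption: a link belongs to its pre-synaptic layer
        if layer[post] > layer[pre]:
            forward[l] = forward.get(l, 0.0) + g      # forward synaptic flow
        elif layer[post] < layer[pre]:
            backward[l] = backward.get(l, 0.0) + g    # backward synaptic flow
        # intra-layer links (equal indices) are ignored
    params = {}
    for l in set(forward) | set(backward):
        f, b = forward.get(l, 0.0), backward.get(l, 0.0)
        if f + b > 0:  # assumption: skip layers without inter-layer weight
            params[l] = (f - b) / (f + b)
    return params

def average_feedforward(params):
    """Mean of the per-layer feedforward parameters."""
    return sum(params.values()) / len(params)
```

A value of 1 for a layer means that all of its inter-layer weight points to higher layers, and a value of −1 means that all of it points back toward the FSNs.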

2.3. Analysis of Network Activity

To study the coherence of the neural activity in the network, we used the average response of all the neurons in the network

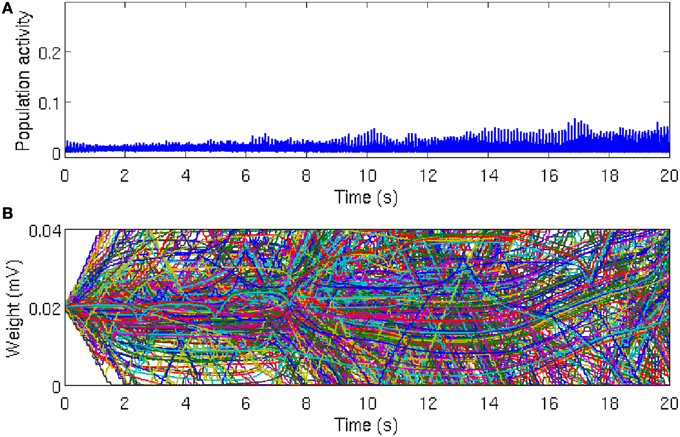

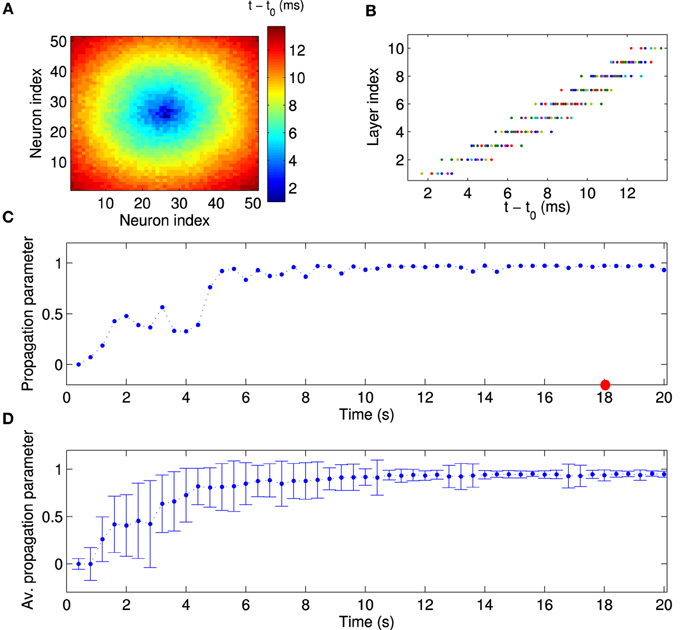

which we call the population activity. In our simulation, the population activity was calculated in time bins of 1 ms. Asynchronous firing of neurons results in low and noisy population activity (Figure 2A). By contrast, large values of the population activity indicate that the network is active coherently, such as during oscillatory behavior (Figure 3). The population activity can thus be used to measure the degree of synchrony in the network.
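As an illustration, the binned population activity could be computed from pooled spike times as in the sketch below. Normalizing the spike count in each bin by the number of neurons is an assumption about the exact definition, and the function name and arguments are hypothetical.

```python
import math

def population_activity(spike_times, n_neurons, t_end, bin_ms=1.0):
    """Fraction of neurons spiking per time bin.

    spike_times: spike times in ms, pooled over all neurons.
    n_neurons:   number of neurons in the network (N).
    t_end:       duration of the recording in ms.
    """
    n_bins = int(t_end / bin_ms)
    counts = [0] * n_bins
    for t in spike_times:
        k = math.floor(t / bin_ms)
        if 0 <= k < n_bins:       # ignore spikes outside the recording
            counts[k] += 1
    # Assumption: the count is normalized by the number of neurons.
    return [c / n_neurons for c in counts]
```

For example, with four neurons and pooled spikes at 0.2, 0.7, and 2.5 ms, a 3 ms recording gives the activity 0.5, 0.0, and 0.25 in the three bins.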

Figure 2. Population activity remains asynchronous in the absence of local excitation. (A) Global network activity, as measured by the average number of neurons firing within a time bin of 1 ms, increases over time due to ongoing synaptic plasticity. However, the network activity remains relatively low and noisy, which indicates that the neurons in the network are firing asynchronously. While there is a tendency for the peak of the population activity to increase slightly, reflecting more synchronous activity, there is no evidence for global synchrony in the network. (B) Time course of 500 randomly chosen synaptic weights. Most of the synaptic weights fluctuate between the bounding values, which are g = 0 mV and g = 0.04 mV, respectively. Since STDP eliminates synaptic loops in neural networks, after a sufficiently long simulation time, about 50% of the synaptic weights reach the bounding values, but there is no specific direction for the elimination of synaptic connections. The reason is that the heterogeneity of the firing rates is large and the initial synaptic weights are not strong enough to overcome this inhomogeneity (disorder), so synaptic connections are eliminated in random directions in the network.

Figure 3. Global synchronous activity is established by local excitation. (A) In the presence of local excitation, after about 5 s of simulation time, global synchrony emerges in the network. Periodic network activity with relatively large amplitudes indicates that the system is in a one-cluster state. Insets of the network activity at magnified time scale show that (B) in the transient state synchronized network activity emerges gradually and (C) activity peaks are precise in the steady state. Thus, local excitation enables the network to overcome the disorder in the firing rates of the neurons and to establish global synchronous activity. We used one particular population burst [shown by the red circle in (A)] to show reliable synchronous activity propagation in Figures 4A,B.

In this study, we were interested in the temporal fine structure of population bursts in the network activity and therefore we identified the time periods in which the population bursts occurred with the following algorithm. We first defined a search window of 180 ms, which corresponds roughly to the average period of neurons in the network. This search window was moved through time in steps of 15 ms until the population was silent at the beginning of the search window t0, i.e., X(t0) = 0. Once this condition was satisfied, we similarly moved the end of the search window t1 forward in time until X(t1) = 0. Therefore, the width of the search window was variable. If at any time between t0 and t1, the population activity exceeded the threshold Xth = 0.015, we considered the search window to contain a population burst and used it for further analysis (see next paragraph). Otherwise, we rejected the search window.
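The burst-detection procedure can be sketched as follows, operating on the population activity sampled in 1 ms bins. Several details are assumptions made for this illustration: the end of the window is advanced in the same 15 ms steps as its beginning, the next search starts where the previous window ended, and a window that has not closed by the end of the recording is dropped. The function name is hypothetical.

```python
def find_bursts(X, window=180, step=15, x_th=0.015):
    """Return a list of (t0, t1) windows, in ms, that contain a population burst.

    X: population activity per 1 ms bin, so X[t] is the activity at time t (ms).
    """
    bursts = []
    n = len(X)
    t0 = 0
    while t0 + window < n:
        if X[t0] != 0:
            t0 += step            # slide until the population is silent at t0
            continue
        t1 = t0 + window
        while t1 < n and X[t1] != 0:
            t1 += step            # assumption: the end moves in the same steps
        if t1 >= n:
            break                 # recording ends before the window closes
        if max(X[t0:t1 + 1]) > x_th:
            bursts.append((t0, t1))   # the window contains a population burst
        t0 = t1                   # assumption: continue from the window's end
    return bursts
```

Windows in which the activity never exceeds the threshold are skipped, so the function returns an empty list for a network that stays asynchronous at low activity.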

To quantify how orderly neural activity propagates through the abstract layers, we calculated the rank-order correlation between the time of the first spikes fi that the neurons fired within the search window and the layer indices li. We refer to this correlation value as the propagation parameter.

where xi is the rank of neuron i in the list fi, and yi is its rank according to li. The means of these values are represented by x̄ and ȳ, respectively.
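A rank-order correlation of this kind can be computed as the Pearson correlation of the two rank lists, as in the sketch below. Assigning tied values their average rank is an assumption (many neurons share a layer index, so ties are common), and the function names are hypothetical.

```python
import math

def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1                          # extend over the block of ties
        mean_rank = (pos + end) / 2 + 1
        for k in order[pos:end + 1]:
            ranks[k] = mean_rank
        pos = end + 1
    return ranks

def propagation_parameter(first_spike_times, layer_index):
    """Rank-order correlation between first-spike times and layer indices."""
    x = average_ranks(first_spike_times)
    y = average_ranks(layer_index)
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)
```

The value is 1 when first-spike times increase strictly with the layer index and −1 when the order is reversed. The function is undefined if all neurons share the same spike time or the same layer.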

3. Results

3.1. Activity in a Locally Connected, Random Network is Asynchronous Despite STDP

As the asynchronous state is a more realistic description of ongoing cortical activity in the absence of strong external excitation, we set the initial conditions such that the network activity remains asynchronous. In this case, the population activity remains relatively low and noisy with irregular small peaks due to the synchronous firing of a few neurons by chance (Figure 2A). In line with this irregular activity, the synaptic weights in the network fluctuate, as evident in the evolution of 500 randomly selected synaptic weights (Figure 2B). It is well-known that after a long time, most of the synapses reach the limiting values imposed by the hard bounds in the conventional linear STDP (Song et al., 2000). However, in the intermediate time range no specific network structure emerges and most of the synaptic weights remain in-between the bounding values. These results demonstrate that in the homogeneous network, in the absence of local excitation, STDP cannot establish synchronous firing. Similar asynchronous network activity has been reported for a different type of STDP rule (Morrison et al., 2007).

3.2. Synchronous Activity Propagation Develops due to Local Excitation

It has been reported that the presence of neurons with higher firing rates can substantially change the dynamics of neural networks (Masuda and Kori, 2007; Bayati and Valizadeh, 2012). In our simulation, we introduced stronger inputs to n = 12 neurons than to the other neurons in the network (see Materials and Methods). This setup can be related to a biological neural network in which only a small subset of neurons receive external inputs while the remaining neurons do not. With the local excitation, the network dynamics gradually change from initially asynchronous to synchronous after about 5 s of simulated time (Figure 3). The periodic peaks in the population activity in the stationary state are related to the maximum background firing rate (6.8 Hz). We confirmed that an overall increase in network activity, e.g., by increasing the background firing rate of all neurons to the range 7.4–10.4 Hz, does not give rise to synchronous activity propagation (data not shown). We therefore conclude that local excitation in an LCRN with STDP drives the network toward global synchronous activity.

Next, we examined the population activity within a population burst at a finer temporal resolution to reveal the relative spike timing of the neurons. Figure 4A shows one particular population burst in which the neural activity propagates from the center outward. A comparison with the layer index (Figure 1C) suggests that neural activity starts in the FSNs (first layer) and then propagates to downstream layers. In addition, neurons that belong to the same or nearest layers fire synchronously. These observations are confirmed by a direct comparison of relative spike times during the population burst and the assigned layer index (Figure 4B). The population burst in Figures 4A,B shows that activity is propagated along the abstract layers much like in synfire chains (Abeles, 1991, 2009). Note that this synchronous activity is evident in the first layer, in that spike times in the first layer have a narrow distribution (Figure 4B), and then propagates through the network. This pattern contrasts with other models in which, for instance, synchrony builds up as activity propagates through the layers of a feedforward network (Tetzlaff et al., 2002, 2003). Our model therefore shows how synfire chains could emerge in recurrent networks driven by a small number of FSNs.

Figure 4. Quantitative analysis of synchronous activity propagation. (A) One particular population burst of activity propagation in the stationary state. Colors indicate the spike time of each neuron relative to the beginning of the population burst (t0 = 18 s). Note how the activity propagates from the first layer (high-frequency neurons) to higher layers (compare to Figure 1C). (B) Direct comparison of spike time and assigned layer index for each neuron, for the same time window as in (A). Neurons indeed fire sequentially according to their layer index. Since neurons in the same layer also fire synchronously, activity in our network propagates much like in synfire chains. Note that this synchronous propagation emerged in our network in a self-organized manner and was not hand-tuned. To quantitatively measure synchronous activity propagation in the network, we calculated the rank-order correlation between layer index and spike time (propagation parameter). For instance, the propagation parameter in this example is about 0.98 (red circle in C). (C) The propagation parameter as a function of time for one example network. In this case, activity propagation is established after about 5 s. The fluctuations are reduced after 10 s. (D) The propagation parameter averaged across 50 simulations with different random input currents. Errorbars indicate the standard deviation. It decreases as the synchronous propagation in the network stabilizes after about 10 s.

To quantitatively measure synchronous activity propagation in the network, we calculated the propagation parameter for each population burst, i.e., the rank-order correlation between layer index and time of first spike (see Materials and Methods). For instance, the propagation parameter for the example in Figures 4A,B is about 0.98, which indicates highly reliable activity propagation. We then followed the propagation parameter across time. During the initial stages of the simulation, the propagation parameter increases rapidly, then fluctuates at a high level, and finally stabilizes after about 10 s (Figure 4C). This temporal pattern is seen consistently across multiple simulations, when the propagation parameter is averaged across 50 simulations with different random input currents (Figure 4D). Note, in particular, the higher standard deviation for the data points during the transient state. When the network reaches the stationary state, the variability is fairly small. During the transient state, the network is in two or more cluster states and the propagation can be seen in part of the network (results not shown). In conclusion, local excitation drives network changes such that initially asynchronous firing becomes coherent and neurons in each layer fire successively one after another.

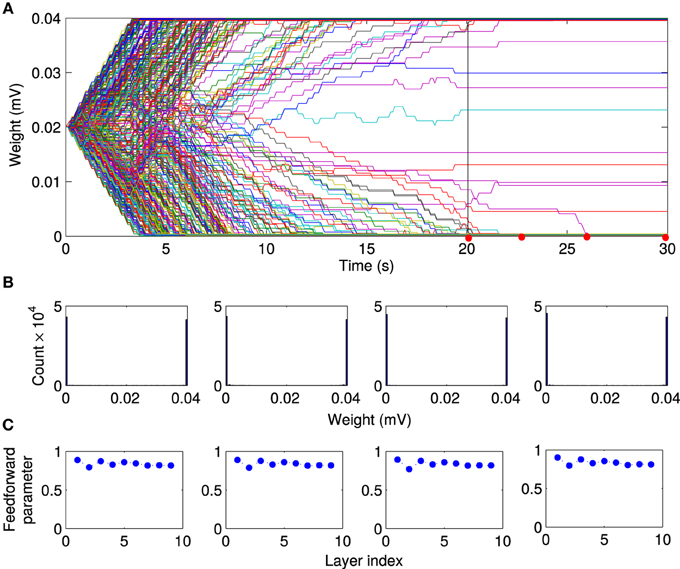

3.3. Local Excitation and STDP Create Feedforward Network Structures

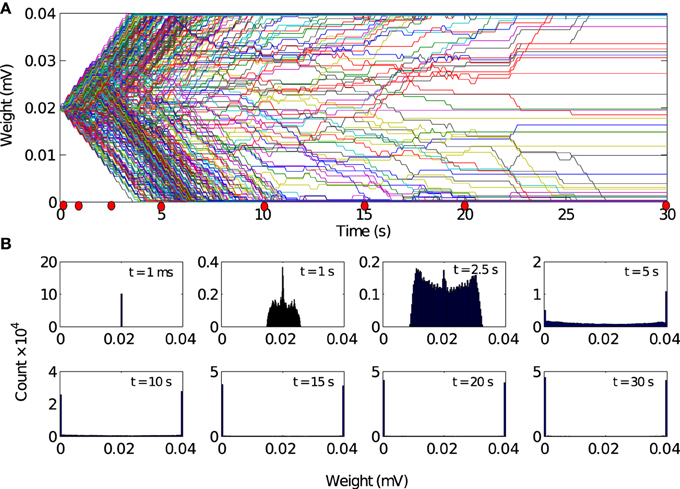

How does local excitation lead to the emergence of synchronous activity propagation? We hypothesized that the answer lies in the changes in the network structure, rather than the dynamic effects of FSNs alone. The evolution of 500 randomly selected synaptic weights reveals that the network activity and STDP force most of the synaptic weights (about 90%) to converge to the bounding values (Figure 5A), g = 0 mV and g = 0.04 mV. We sought to confirm this observation by examining the distribution of all synaptic weights at different times during the simulation. After the transient state, the synaptic weights quickly reach a stable bimodal distribution (Figure 5B). Note that the convergence of the synaptic weights to the limiting values is much faster than in the case of the networks without local excitation.

Figure 5. Evolution of synaptic weights driven by local excitation. (A) Results are shown for 500 randomly selected synapses. Most weights (about 90%) converged toward the limiting values of 0 mV and 0.04 mV. (B) Histogram of the synaptic weight distribution at the different timepoints marked by red dots in (A). The distribution of synaptic weights converges from a delta-function distribution to a stable bimodal distribution. There are no apparent changes in the weight distributions between t = 20 s and t = 30 s. We therefore conclude that the network has reached a steady state after 20 s of simulation time.

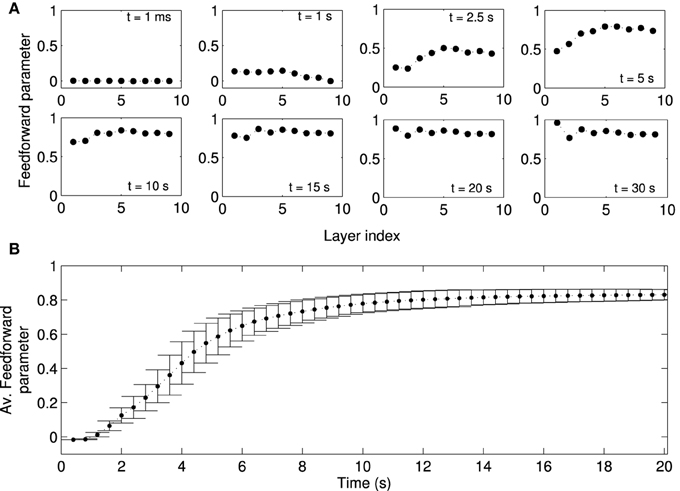

We next studied the directionality of synaptic connections, which is more important for activity propagation than the distribution of synaptic weights. Due to the random assignment of initial synaptic connections, the synaptic projections in the forward and backward directions are initially balanced, i.e., the initial feedforward parameters in all layers are close to zero (Figure 6A). Over time, a feedforward structure gradually emerges in the network. The feedforward structure first emerges in the layers with larger indices, i.e., those far from the FSNs, and later in layers close to the FSNs. After about 20 s of simulation time, the feedforward parameter is close to one in all the layers.

Figure 6. Formation of a feed-forward structure in locally connected networks. Local excitation drives STDP in the network such that synapses from lower to higher layers become strengthened, while the synapses in the reverse direction are weakened. Note that “layers” in our study refers to abstract layers based on synaptic distance to high frequency neurons (Figure 1C). We do not manually set up a feedforward structure in our network. (A) The feedforward parameter as a function of the layer is shown at different times (same as Figure 5B) for one representative network simulation. Initially, the feed-forward parameters are close to zero because the initial synaptic weights are symmetric. Values of the feedforward parameter near 1 indicate a feedforward structure. Due to plasticity the feedforward structure emerges gradually in the network, first in higher layers (t = 5 s) and later in the lower layers. (B) Feedforward parameter as a function of simulation time averaged across 50 simulations with different random input currents. There are no apparent changes in the structure of the network after t = 20 s. Errorbars show the standard deviation (SD) at each timepoint. Note that the SD decreases as the weights reach the steady state.

The example studied above is representative of other instances of the random network. When averaged across 50 simulations, in which networks receive a different sampling of random input currents, the evolution of the average feedforward parameter clearly shows the three stages discussed for the example above. The network is initially (t < 2 s) symmetric and the average feedforward parameter is near zero with little variability (Figure 6B). As the network structure changes in the transient state (2 s < t < 8 s), the average feedforward parameter increases and there is large variability. When the network reaches the stationary state, the average feedforward parameter approaches the maximal value and the variability is markedly reduced. The evolution of the network structure, while not perfectly matching, parallels the evolution of network activity.

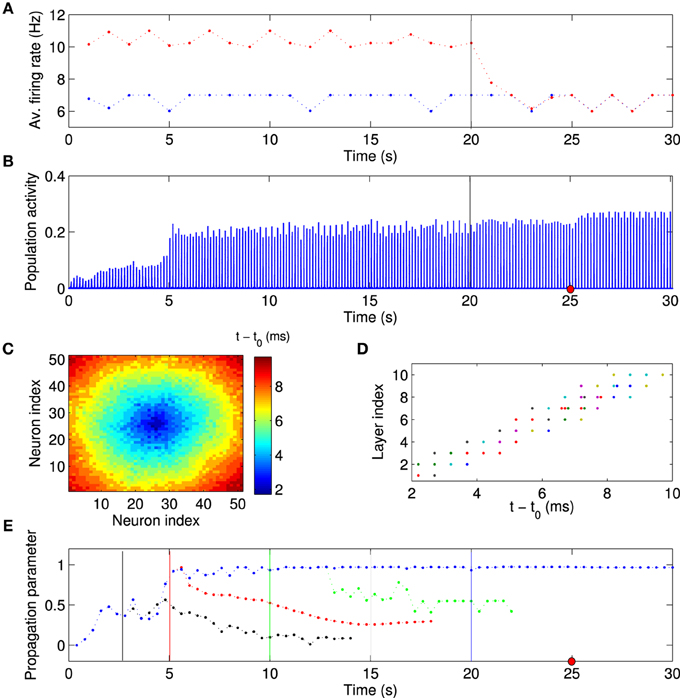

3.4. Synfire Chain Activity Persists in Absence of Local Excitation

Since we view the local excitation as a model of some salient, but temporary, external stimulation (Ritz and Sejnowski, 1997), one might expect that the firing rates of the FSNs return to baseline once the external stimuli are removed. We therefore asked whether the established network structure and activity patterns remain stable after the local excitation ceases. After the network has reached the stationary state (t = 20 s), we reset the external input current to the FSNs to values drawn from the same distribution as for the background neurons. Thus, the firing rates of the former FSNs are now the same as those of the other neurons in the network (Figure 7A). Importantly, the population activity remains synchronous (Figure 7B) and neural activity continues to propagate through the abstract layers of the network (Figures 7C–E). Surprisingly, the values of the population activity and propagation parameter are slightly higher and less variable after local excitation has been removed (Figures 7B,E), indicating more stable activity propagation.

Figure 7. Synchronous activity propagation persists after local excitation is removed. (A) Average firing rate of background neurons (blue points) and high-frequency neurons (red points) as a function of time. After the network has reached the stationary state, the local excitation is removed at t = 20 s (vertical line), i.e., the high-frequency neurons receive an input current from the same distribution as the other neurons in the network. Despite this manipulation, (B) the network activity remains highly synchronized and the activity propagation remains stable (C–E). (C,D) Example of spike times, taken at the time indicated by the red dot in (B). (E) If anything, the activity propagation appears to be more stable since there are smaller fluctuations in the propagation parameter (blue data points). The same analysis was performed with the local excitation removed at different times as indicated by the vertical lines (black, red, green, and blue). When the local excitation is removed at earlier time points, activity propagation destabilizes. This simulation used the same network parameters as the one shown in Figure 6. Visual inspection suggests that the feedforward parameter (Figure 6A) determines whether activity propagation remains stable after the local excitation has been removed. If the network structure is feedforward, including the first layer, i.e., the feedforward parameter is larger than about 0.75, then synfire-chain-like propagation remains stable after learning.

We then investigated the mechanism responsible for the synchronous activity propagation through the network in more detail in two different ways. First, we removed the local excitation at earlier timepoints (t = 2.5 s, t = 5 s, and t = 10 s) in the same network we used to generate Figure 6. Synchronous activity propagation degraded in each of these cases. By comparing the network structure at these times (Figure 6A) and the propagation parameter after removing the local excitation (Figure 7E), we found that the synfire-chain-like propagation remains stable without local excitation only when the whole network, including the first layer, reaches a feedforward structure. This result is consistent with previous studies of synfire chains, but unlike in previous models, STDP continues to operate in our model and could therefore change synaptic weights after the local excitation has been removed. In other words, the overall network structure is stable against changes in individual synapses, which continue to occur in our model (Figure 8).

Figure 8. Stability of feed-forward structure after removing local excitation. Comparing the evolution of the network structure in the presence of local excitation t < 20 s and after removing it (t > 20 s). (A) After the local excitation is removed, the synaptic weights remain at their stationary values, although STDP is still active in the network. Furthermore, as shown at selected timepoints [red dots in (A)], (B) the bimodal distribution of synaptic weights, and (C) the feedforward structure remains stable after removing the local excitation.

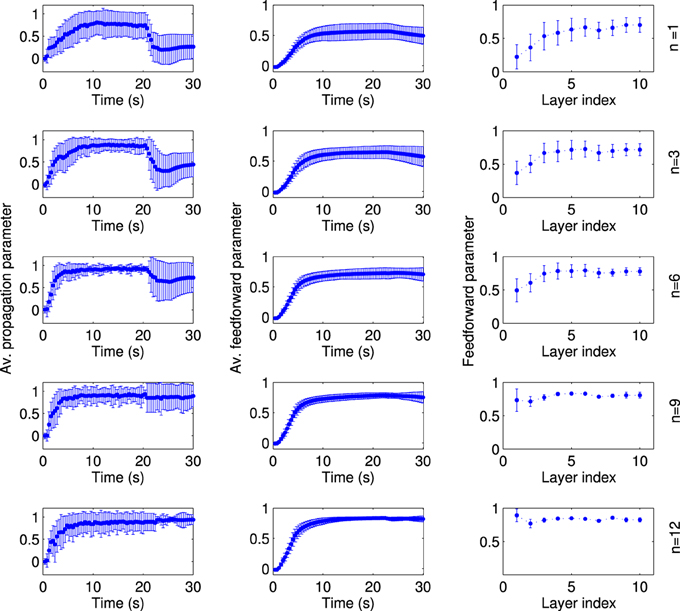

Second, we changed the extent of the local excitation, i.e., the number of FSNs, while fixing the average firing rate of FSNs at fFSN ≈ 11.4 Hz. Otherwise, network simulations were set up as before and run for t = 20 s, before the local excitation was switched off. It is striking, but consistent with experimental evidence (Miles and Wong, 1983), that even a single FSN can drive synfire chain activity in the network (Figure 9, top left panel). However, the network activity pattern is not stable when local excitation is removed (t > 20 s). This stability occurs only for larger numbers of FSNs, n > 8 (Figure 9, left column). The reason for this difference appears to be that the structure of the network is not completely feedforward for small numbers of FSNs (Figure 9, middle column). In particular, at the end of the learning period (t = 20 s) the feedforward parameter of the first layer is quite small for n ≤ 8 (Figure 9, right column). These results suggest that even a small number of FSNs can change the network structure for synchronous activity propagation, but only a larger number of FSNs can drive more substantial changes in the network that are sustained after the FSNs are removed.

Figure 9. The extent of local excitation that is required to establish lasting network structures. Each row shows the results for local excitation involving a different number n of fast-spiking neurons, as indicated on the far right. The networks are simulated in the presence of local excitation for 20 s, after which the local excitation is removed. All data points are averaged across 50 simulations with different random input currents. The average propagation parameter (left column) shows that stable activity propagation can be established for any number of fast-spiking cells. However, after the local excitation is removed the average propagation parameter remains stable only for n ≥ 8. The reason for this differentiation is evident in the network structure. For n < 8, the average feedforward parameter (middle column) is smaller and, importantly, the feedforward parameter of the first layer at the stationary state (right column) is smaller as compared to the simulations with n ≥ 8.

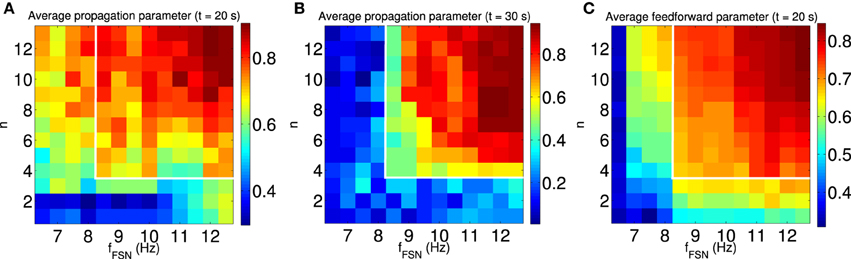

To confirm the relationship between feedforward network structure and sustained synchronous activity propagation, we systematically studied the dependence of these properties on the model parameters. Since plasticity is driven by the FSNs, we chose as independent variables the extent of the local excitation, as introduced above, and the intensity of local excitation, i.e., the difference between the average firing rate of FSNs and the background firing rate. In the presence of local excitation, synchronous activity propagation emerges in the steady state (t = 20 s; Figure 10A) for a large number of parameter combinations. However, in only a subset of these cases is synchronous activity propagation sustained after the local excitation is removed (t = 30 s; Figure 10B, see white outlines). In the same parameter regime (Figure 10, white outlines), and only there, the average feedforward parameter is approximately ≥ 0.7 (Figure 10C). These results confirm our hypothesis that the synchronous activity propagation is sustainable in the absence of local excitation if the network has a strong feedforward structure.
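The comparison between the sustained-propagation regime and the feedforward regime can be phrased as a comparison of two boolean masks over the (extent, intensity) grid. The sketch below only illustrates that bookkeeping. The criterion for "sustained" (propagation present at t = 20 s and retaining at least 90% of its value at t = 30 s), the helper names, and all numbers in the toy grid are assumptions made for illustration and are not simulation results; only the threshold of 0.7 for the feedforward parameter is taken from the text.

```python
import numpy as np


def sustained_region(prop_on, prop_off, keep_fraction=0.9, min_on=0.5):
    """Grid cells where propagation exists with excitation and survives its removal.

    prop_on / prop_off: propagation parameter at t = 20 s and t = 30 s.
    keep_fraction and min_on are illustrative thresholds, not values from the paper.
    """
    prop_on = np.asarray(prop_on, dtype=float)
    prop_off = np.asarray(prop_off, dtype=float)
    return (prop_on >= min_on) & (prop_off >= keep_fraction * prop_on)


def masks_agree(sustained, ff, ff_threshold=0.7):
    """True if sustained propagation occurs exactly where the network is feedforward."""
    return bool(np.array_equal(sustained, np.asarray(ff) >= ff_threshold))


# Made-up 2 x 3 grid (rows: extent, columns: intensity), purely for illustration.
prop_on = np.array([[0.2, 0.8, 0.9],
                    [0.7, 0.9, 0.9]])
prop_off = np.array([[0.1, 0.3, 0.9],
                     [0.2, 0.85, 0.9]])
ff = np.array([[0.1, 0.5, 0.8],
               [0.4, 0.75, 0.9]])

region = sustained_region(prop_on, prop_off)
print(region)
print(masks_agree(region, ff))
```

In this made-up grid the two masks coincide, which is the pattern reported for Figure 10: propagation can exist without a strong feedforward structure while the excitation is on, but it is retained only in cells where the feedforward parameter exceeds the threshold.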

Figure 10. Synchronous activity propagation is sustained only if the network is feedforward. We systematically characterized the propagation of synchronous activity in the network as a function of two properties of the local excitation: the extent and the intensity of local excitation. (A,B) The average propagation parameter in the steady state [t = 20 s, (A)] and after removing the local excitation [t = 30 s, (B)] indicated by the color scale. The white outline indicates the region with sustained propagation of synchronous activity in the absence of local excitation. Note that for some parameters synchronous activity propagates through the network only when the local excitation is present. (C) The average feedforward parameter in the steady state (t = 20 s) is indicated by color scale. Only when the structure of the network is mostly feedforward (average feedforward parameter approximately ≥ 0.7), is the synchronous activity propagation sustained after removing the local excitation (see white outlines). All data points are averaged across 100 simulations with different random input currents.

4. Discussion

Here, we have explored the dynamics of the network activity and the structure of a locally connected random network (LCRN) using computational simulations. Specifically, we have studied the role of local excitation in shaping such a network through spike-timing dependent plasticity (STDP) to generate synchronous activity propagation through the network. This synfire-chain like pattern of activity emerges when a feedforward structure self-organizes in the network.

Our work is clearly distinct from previous models of synchronous activity propagation in neural networks. Most previous models use hard-wired synaptic connections (Abeles, 1991; Diesmann et al., 1999; Kumar et al., 2010; Azizi et al., 2013, for instance), but models in which synchronous activity propagation self-organizes also differ from ours. For instance, Fukai and colleagues (Kitano et al., 2002a,b) discuss models in which a chain of a handful of clusters emerged. The neurons within the clusters fire nearly synchronously, while the clusters excite each other sequentially. Thus, in their model, activity propagates in volleys through the network. By contrast, in our model, activity propagates at the single neuron level and there is significant overlap between the activity in different layers, which appears to be a better description of in vivo data (Ikegaya et al., 2004).

4.1. Relation to Other Studies and Predictions

Our modeling results can account for modeling findings which show that network activity propagation can be transiently synchronized by external stimulation and return to an asynchronous state after the stimulation is removed (Tsodyks and Sejnowski, 1995; Mehring et al., 2003). We showed here that synchronous activity propagation remains transient, if the network structure has not become fully feedforward during the stimulation, because the external stimulation was not long-lasting, extensive or intense enough (Figures 7, 10). We therefore predict that changing the stimulation parameters in the experiments will result in synchronous activity propagation that persists beyond the duration of stimulation. Furthermore, our results predict that the switch between transient and stable synfire chain activity is controlled by the degree to which the network structure is feedforward. Finally, our model predicts that the feedforward network structure starts to form first in the last layer and then propagates backwards to the first layer. These predictions of our model can be contrasted with an alternative account of the experimental observation based on balanced excitation and inhibition (Marder and Buonomano, 2004). In this account, the network initially responds with an explosion in activity when the stimulation is applied, and then switches to an asynchronous state due to the global inhibition when the excitation is removed.

In our study, local excitation drives the self-organization of a largely feedforward network structure in LCRN through STDP. Our results thus further strengthen the view that feedforward network structures are particularly well-suited to propagate synchronous activity. An in vitro study reported that the neural activity becomes progressively more synchronized as it propagates through the layers of constructed feedforward networks (Reyes, 2003). Furthermore, after removing local excitation in the stationary state (t > 20 s), the synchronous activity propagation appears to be robust. For instance, when we resampled the external input to all neurons from the interval of background firing rates ([3.4–6.8] Hz), the network continued to support the propagation of synchronous activity (data not shown). This result suggests that the feedforward structure of the network can compensate for the perturbation in the network inputs. The robustness to other forms of network manipulation remains to be explored in the future.

Although neural synchronization might be important for information processing in the nervous system, excessive synchrony may impair brain function and cause several neurological disorders (Pyragas et al., 2007). Therefore, it is important for the brain to control this spontaneous synchronous activity. In Section 3.4, we showed that synchronous activity persists even though local excitation is switched off. We also found that reducing the average background firing rate by 0.6 Hz switches off the synchronous activity propagation (data not shown). In other words, the activity in the network is driven by the external input and synchronized by the synaptic connections. Without any external input the activity in the network ceases.

4.2. The Importance of the Initial Weights and Baseline Firing Rates

The population activity is sensitive to any sufficiently large inhomogeneity. In the absence of local excitation the network breaks into several synchronous subclusters, probably because of small disparities in the firing rates. For a range of parameters, local excitation can override this clustering effect and establish a feedforward network structure. However, after the local excitation is removed, the network structure breaks into the subclusters again and abolishes the synfire chain activity. To avoid this clustering effect, we had to adjust the initial weight matrix and dispersion of the input currents. If the initial weights are low and baseline firing rates are drawn from a wide distribution, the neurons near the boundary cannot be trained by the central ones and network activity remains asynchronous. On the other hand, some neurons randomly have a higher firing rate than their neighboring neurons and are therefore able to entrain some of their neighbors. As a result, the population activity tends to become more synchronous and its peak increases (Figure 2A), despite the absence of local excitation.

The asynchronous network state is considered a more realistic model of cortical background activity in the absence of external stimulation to the network (Brunel, 2000; Mehring et al., 2003). However, the initial weights cannot be too small since activity has to propagate through the abstract layers in order to train the weights (Kumar et al., 2008, 2010; Jahnke et al., 2013). Therefore, the initial weights and dispersion of input currents in our model were chosen to balance these opposing requirements.

There is little experimental data to suggest what the distribution of initial synaptic weights before the learning period looks like, even though the initial weights play a central role in the dynamics of the network activity and structure. For instance, Babadi and Abbott (2013) have shown for networks of two excitatory neurons that different initial weights of the reciprocal connections lead to different final network structures in the steady state. The exact relationship depends on the STDP rule and on the firing rates of the two neurons. If the STDP rule is dominated by depression, which is the case in our model, and firing rates are the same, then the stronger weight will prevail and the other connection is eliminated. That is, unless the weights are both weak, in which case both synapses are eliminated. If, on the other hand, the two neurons have different firing rates and equal initial synaptic weights, the low frequency neuron receives more excitation, and as a result, the synapse from the high to low frequency neuron grows and the other synapse weakens, ultimately leading to a feedforward network (Bayati and Valizadeh, 2012, for instance). These results also suggest that in large networks, the initial distribution of synaptic weights might be an important factor in determining the final steady state structure of the network. In our current simulations, we used identical weights; future work is needed to study the impact of the initial weight distribution on the synchronous activity propagation.

4.3. Other Directions for Future Studies

In addition to the initial synaptic weights and baseline firing rates, a number of other variables might affect synchronous activity propagation. The network dynamics of a simulated recurrent network of spiking neurons where all connections between excitatory neurons are subject to STDP is quite sensitive to the particular STDP rule that is used. Variants of the STDP rule have been proposed in order to prevent the instability induced by conventional STDP (Pfister and Gerstner, 2006; Babadi and Abbott, 2010, 2013; Clopath and Gerstner, 2010; Clopath et al., 2010). In this study, we used the additive STDP model, which modifies the synapses independently of the synaptic weight (Song et al., 2000). This STDP rule leads to a bimodal synaptic weight distribution, which in turn forms a synfire-chain-like structure in our work. In contrast, weight-dependent STDP does not lead to the emergence of synfire-chain-like connectivity patterns from a random architecture, despite local excitation of selected neuron groups (Morrison et al., 2007). The main reason is that weight-dependent STDP gives rise to a unimodal weight distribution and strong synapses are harder to potentiate than weak ones. While the network effects of the STDP rule can be partly predicted by the results for a two-neuron network, our work shows that the emergent structure is also affected by the initial network architecture and the presence of local excitation. Further studies are needed to reveal how different STDP rules affect the emergent structure.
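The difference between the two families of rules can be illustrated with a single pair-based update. The sketch below is an assumption-laden illustration, not the implementation used in our simulations: the additive rule follows the general form of Song et al. (2000) with hard bounds, the weight-dependent rule uses a simple multiplicative form in which potentiation scales with the remaining distance to the upper bound, and the amplitudes, time constant and function names are illustrative placeholders.

```python
import math


def additive_stdp(w, dt, a_plus=0.005, a_minus=0.00525, tau=20.0, w_max=1.0):
    """Weight-independent update with hard bounds; dt = t_post - t_pre in ms."""
    if dt > 0:      # pre before post: potentiation
        dw = a_plus * w_max * math.exp(-dt / tau)
    elif dt < 0:    # post before pre: depression
        dw = -a_minus * w_max * math.exp(dt / tau)
    else:           # coincident spikes: no change (a convention chosen here)
        dw = 0.0
    return min(max(w + dw, 0.0), w_max)


def multiplicative_stdp(w, dt, a_plus=0.005, a_minus=0.00525, tau=20.0, w_max=1.0):
    """Weight-dependent update: potentiation shrinks as w approaches w_max."""
    if dt > 0:
        dw = a_plus * (w_max - w) * math.exp(-dt / tau)
    elif dt < 0:
        dw = -a_minus * w * math.exp(dt / tau)
    else:
        dw = 0.0
    return w + dw


# Same pre-before-post pairing (dt = 10 ms) applied to a weak and a strong synapse.
for rule in (additive_stdp, multiplicative_stdp):
    gain_weak = rule(0.1, 10.0) - 0.1
    gain_strong = rule(0.9, 10.0) - 0.9
    print(rule.__name__, gain_weak, gain_strong)
```

Under the additive rule the weak and the strong synapse gain the same amount, so strong synapses keep growing until they hit the upper bound, which favors a bimodal distribution. Under the multiplicative rule the strong synapse gains only one ninth of what the weak synapse gains in this example, which is the sense in which strong synapses are harder to potentiate.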

Another variable of interest is the synaptic delay, which can be implemented either as an axonal or as a dendritic delay. Previous studies showed that these different types of delays lead to different results. When only dendritic, and not axonal, delays are implemented (Morrison et al., 2007), simultaneous firing of two reciprocally connected neurons leads to the selective strengthening of recurrent connections between those neurons, instead of exerting a decoupling force as observed when axonal delays are implemented (Lubenov and Siapas, 2008). Here we have used an axonal delay of 1 ms for all synapses regardless of the distance between the neurons. This might be another reason for the discrepancy between our results and those of Morrison et al. (2007).
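The opposite effects of the two delay types follow from the spike-time difference that the synapse actually experiences. The sketch below assumes the usual convention that an axonal delay postpones the arrival of the presynaptic spike at the synapse, whereas a dendritic delay postpones the arrival of the back-propagating postsynaptic spike; the function names are hypothetical and the 1 ms value mirrors the delay used in our simulations.

```python
def effective_dt(t_pre, t_post, axonal_delay=0.0, dendritic_delay=0.0):
    """Spike-time difference (ms) as seen at the synapse: post arrival minus pre arrival."""
    pre_arrival = t_pre + axonal_delay        # presynaptic spike travels along the axon
    post_arrival = t_post + dendritic_delay   # postsynaptic spike travels back along the dendrite
    return post_arrival - pre_arrival


def stdp_sign(dt):
    """+1 for potentiation (pre before post), -1 for depression, 0 for coincidence."""
    return (dt > 0) - (dt < 0)


# Two reciprocally connected neurons that fire at exactly the same time (t = 0 ms).
# Both synapses see the same situation because the firing times are identical.
axonal_only = effective_dt(0.0, 0.0, axonal_delay=1.0)
dendritic_only = effective_dt(0.0, 0.0, dendritic_delay=1.0)

print(axonal_only, stdp_sign(axonal_only))
print(dendritic_only, stdp_sign(dendritic_only))
```

With a purely axonal delay, the presynaptic spike reaches each synapse after the postsynaptic neuron has fired, so both reciprocal synapses are depressed and synchrony decouples the pair. With a purely dendritic delay the order at the synapse is reversed and both synapses are potentiated.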

On the other hand, several studies have suggested that conduction delays are heterogeneous (Soleng et al., 2003; Pyka et al., 2014) and that this heterogeneity has important functional consequences (Izhikevich, 2006; Pyka and Cheng, 2014; Sadeghi and Valizadeh, 2014). It was shown previously that neuronal synchrony can arise in a network which contains long conduction delays (Vicente et al., 2008). Nevertheless, more work is needed to study whether the results reported here can be reproduced in networks with heterogeneous and long conduction delays.

There is an overwhelming consensus in neuroscience that information processing in the brain is organized in functional modules (Perkel and Bullock, 1968) and that these modules are further arranged in hierarchies, such as in the visual system (Payne and Peters, 2001). If we consider LCRNs to be the modules, in which activity is synchronized locally, as we show in this paper, then an interesting question is how a system with multiple synaptically linked modules would behave.

5. Conclusion

Synfire chains have been popular for modeling the synchronous propagation of neural activity in neural networks. Here we found a biologically plausible mechanism for the self-organization of synfire-chain-like activity by combining local excitation and STDP in an LCRN. Local excitation could be supplied either by external stimuli or by another brain region. Only a few seconds of local excitation suffice to drive the establishment of a feedforward structure that persists even after the local excitation is removed.

Author Contributions

Conceived and designed the experiments: MB, AV, AA, and SC. Performed the experiments: MB and SC. Analyzed the data: MB, AV, AA, and SC. Contributed reagents/materials/analysis tools: MB. Wrote the paper: MB and SC.

Funding

This work was supported by a grant (SFB 874, project B2) from the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) and a grant from the Stiftung Mercator, both to SC. Preliminary work on this project was supported by a grant from IPM (No.92920128) to MB.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank Sam Neymotin for helpful comments on the manuscript.

References

Abbott, L. F., and Nelson, S. B. (2000). Synaptic plasticity: taming the beast. Nat. Neurosci. 3, 1178–1183. doi: 10.1038/81453

Abeles, M., Bergman, H., Margalit, E., and Vaadia, E. (1993). Spatiotemporal firing patterns in the frontal cortex of behaving monkeys. J. Neurophysiol. 70, 1629–1638.

Abeles, M. (1991). Corticonics: Neural Circuits of the Cerebral Cortex. Cambridge: Cambridge University Press.

Aviel, Y., Horn, D., and Abeles, M. (2005). Memory capacity of balanced networks. Neural Comput. 17, 691–713. doi: 10.1162/0899766053019962

Azizi, A. H., Wiskott, L., and Cheng, S. (2013). A computational model for preplay in the Hippocampus. Front. Comput. Neurosci. 7:161. doi: 10.3389/fncom.2013.00161

Babadi, B., and Abbott, L. F. (2010). Intrinsic stability of temporally shifted spike-timing dependent plasticity. PLoS Comput. Biol. 6:e1000961. doi: 10.1371/journal.pcbi.1000961

Babadi, B., and Abbott, L. F. (2013). Pairwise analysis can account for network structures arising from spike-timing dependent plasticity. PLoS Comput. Biol. 9:e1002906. doi: 10.1371/journal.pcbi.1002906

Bayati, M., and Valizadeh, A. (2012). Effect of synaptic plasticity on the structure and dynamics of disordered networks of coupled neurons. Phys. Rev. E. Stat. Nonlin. Soft Matter Phys. 86:011925. doi: 10.1103/PhysRevE.86.011925

Bi, G. Q., and Poo, M. M. (1998). Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 18, 10464–10472.

Brunel, N. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comput. Neurosci. 8, 183–208. doi: 10.1023/A:1008925309027

Buhry, L., Azizi, A. H., and Cheng, S. (2011). Reactivation, replay, and preplay: how it might all fit together. Neural Plast. 2011:203462. doi: 10.1155/2011/203462

Cheng, S. (2013). The CRISP theory of hippocampal function in episodic memory. Front. Neural Circuits 7:88. doi: 10.3389/fncir.2013.00088

Clopath, C., and Gerstner, W. (2010). Voltage and spike timing interact in STDP - a unified model. Front. Synaptic Neurosci. 2:25. doi: 10.3389/fnsyn.2010.00025

Clopath, C., Busing, L., Vasilaki, E., and Gerstner, W. (2010). Connectivity reflects coding: a model of voltage-based STDP with homeostasis. Nat. Neurosci. 13, 344–352. doi: 10.1038/nn.2479

Dayan, P., and Abbott, L. F. (2001). Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems. Cambridge, MA: MIT Press.

Diesmann, M., Gewaltig, M.-O., and Aertsen, A. (1999). Stable propagation of synchronous spiking in cortical neural networks. Nature 402, 529–533.

D'Souza, P., Liu, S.-C., and Hahnloser, R. H. R. (2010). Perceptron learning rule derived from spike-frequency adaptation and spike-time-dependent plasticity. Proc. Natl. Acad. Sci. U.S.A. 107, 4722–4727. doi: 10.1073/pnas.0909394107

Froemke, R. C., and Dan, Y. (2002). Spike-timing-dependent synaptic modification induced by natural spike trains. Nature 416, 433–438. doi: 10.1038/416433a

Gütig, R., Aharonov, R., Rotter, S., and Sompolinsky, H. (2003). Learning input correlations through nonlinear temporally asymmetric Hebbian plasticity. J. Neurosci. 23, 3697–3714.

Gavrielides, A., Kottos, T., Kovanis, V., and Tsironis, G. P. (1998). Self-organization of coupled nonlinear oscillators through impurities. Europhys. Lett. 44, 559–564.

Gerstner, W., Kempter, R., van Hemmen, J. L., and Wagner, H. (1996). A neuronal learning rule for sub-millisecond temporal coding. Nature 383, 76–81.

Gilson, M., Burkitt, A. N., Grayden, D. B., Thomas, D. A., and van Hemmen, J. L. (2010). Representation of input structure in synaptic weights by spike-timing-dependent plasticity. Phys. Rev. E. Stat. Nonlin. Soft Matter Phys. 82:021912. doi: 10.1103/PhysRevE.82.021912

Glass, L., and Mackey, M. (1988). From Clocks to Chaos: The Rhythms of Life. Princeton, NJ: Princeton University Press.

Hahn, G., Bujan, A. F., Frégnac, Y., Aertsen, A., and Kumar, A. (2014). Communication through resonance in spiking neuronal networks. PLoS Comput. Biol. 10:e1003811. doi: 10.1371/journal.pcbi.1003811

Hellwig, B. (2000). A quantitative analysis of the local connectivity between pyramidal neurons in layers 2/3 of the rat visual cortex. Biol. Cybern. 82, 111–121. doi: 10.1007/PL00007964

Hertz, J., and Prügel-Bennett, A. (1996). Learning synfire chains: turning noise into signal. Int. J. Neural Syst. 7, 445–450.

Ikegaya, Y., Aaron, G., Cossart, R., Aronov, D., Lampl, I., Ferster, D., et al. (2004). Synfire chains and cortical songs: temporal modules of cortical activity. Science 304, 559–564. doi: 10.1126/science.1093173

Izhikevich, E. M., and Desai, N. S. (2003). Relating STDP to BCM. Neural Comput. 15, 1511–1523. doi: 10.1162/089976603321891783

Izhikevich, E. M. (2006). Polychronization: computation with spikes. Neural Comput. 18, 245–282. doi: 10.1162/089976606775093882

Jahnke, S., Memmesheimer, R.-M., and Timme, M. (2013). Propagating synchrony in feed-forward networks. Front. Comput. Neurosci. 7:153. doi: 10.3389/fncom.2013.00153

Kempter, R., Gerstner, W., and van Hemmen, J. (1999). Hebbian learning and spiking neurons. Phys. Rev. E. Stat. Nonlin. Soft Matter Phys. 59, 4498–4514.

Kistler, W. M., and Gerstner, W. (2002). Stable propagation of activity pulses in populations of spiking neurons. Neural Comput. 14, 987–997. doi: 10.1162/089976602753633358

Kitano, K., Câteau, H., and Fukai, T. (2002a). Self-organization of memory activity through spike-timing-dependent plasticity. Neuroreport 13, 795–798. doi: 10.1097/00001756-200205070-00012

Kitano, K., Câteau, H., and Fukai, T. (2002b). Sustained activity with low firing rate in a recurrent network regulated by spike-timing-dependent plasticity. Neurocomputing 44, 473–478. doi: 10.1016/S0925-2312(02)00404-6

Kozloski, J., and Cecchi, G. A. (2010). A theory of loop formation and elimination by spike timing-dependent plasticity. Front. Neural Circuits 4:7. doi: 10.3389/fncir.2010.00007

Kumar, A., Rotter, S., and Aertsen, A. (2008). Conditions for propagating synchronous spiking and asynchronous firing rates in a cortical network model. J. Neurosci. 28, 5268–5280. doi: 10.1523/JNEUROSCI.2542-07.2008

Kumar, A., Rotter, S., and Aertsen, A. (2010). Spiking activity propagation in neuronal networks: reconciling different perspectives on neural coding. Nat. Rev. Neurosci. 11, 615–627. doi: 10.1038/nrn2886

Leibold, C., Gundlfinger, A., Schmidt, R., Thurley, K., Schmitz, D., and Kempter, R. (2008). Temporal compression mediated by short-term synaptic plasticity. Proc. Natl. Acad. Sci. U.S.A. 105, 4417–4422. doi: 10.1073/pnas.0708711105

Levy, N., Horn, D., Meilijson, I., and Ruppin, E. (2001). Distributed synchrony in a cell assembly of spiking neurons. Neural Netw. 14, 815–824. doi: 10.1016/S0893-6080(01)00044-2

Lubenov, E. V., and Siapas, A. G. (2008). Decoupling through synchrony in neuronal circuits with propagation delays. Neuron 58, 118–131. doi: 10.1016/j.neuron.2008.01.036

Mao, B.-Q., Hamzei-Sichani, F., Aronov, D., Froemke, R. C., and Yuste, R. (2001). Dynamics of spontaneous activity in neocortical slices. Neuron 32, 883–898. doi: 10.1016/S0896-6273(01)00518-9

Marder, C. P., and Buonomano, D. V. (2004). Timing and balance of inhibition enhance the effect of long-term potentiation on cell firing. J. Neurosci. 24, 8873–8884. doi: 10.1523/JNEUROSCI.2661-04.2004

Markram, H. (1997). Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275, 213–215.

Masquelier, T., Guyonneau, R., and Thorpe, S. J. (2009). Competitive STDP-based spike pattern learning. Neural Comput. 21, 1259–1276. doi: 10.1162/neco.2008.06-08-804

Masuda, N., and Kori, H. (2007). Formation of feedforward networks and frequency synchrony by spike-timing-dependent plasticity. J. Comput. Neurosci. 22, 327–345. doi: 10.1007/s10827-007-0022-1

Mehring, C., Hehl, U., Kubo, M., Diesmann, M., and Aertsen, A. (2003). Activity dynamics and propagation of synchronous spiking in locally connected random networks. Biol. Cybern. 88, 395–408. doi: 10.1007/s00422-002-0384-4

Miles, R., and Wong, R. K. (1983). Single neurones can initiate synchronized population discharge in the Hippocampus. Nature 306, 371–373.

Miles, R., Traub, R. D., and Wong, R. K. (1988). Spread of synchronous firing in longitudinal slices from the CA3 region of the Hippocampus. J. Neurophysiol. 60, 1481–1496.

Morrison, A., Aertsen, A., and Diesmann, M. (2007). Spike-timing-dependent plasticity in balanced random networks. Neural Comput. 19, 1437–1467. doi: 10.1162/neco.2007.19.6.1437

Nádasdy, Z., Hirase, H., Czurkó, A., Csicsvari, J., and Buzsáki, G. (1999). Replay and time compression of recurring spike sequences in the Hippocampus. J. Neurosci. 19, 9497–9507.

Perkel, D. H., and Bullock, T. H. (1968). Neural coding: a report based on an NRP work session. Neurosci. Res. Program Bull. 6, 219–349.

Pfister, J.-P., and Gerstner, W. (2006). Triplets of spikes in a model of spike timing-dependent plasticity. J. Neurosci. 26, 9673–9682. doi: 10.1523/JNEUROSCI.1425-06.2006

Pinto, D. J., Patrick, S. L., Huang, W. C., and Connors, B. W. (2005). Initiation, propagation, and termination of epileptiform activity in rodent neocortex in vitro involve distinct mechanisms. J. Neurosci. 25, 8131–8140. doi: 10.1523/JNEUROSCI.2278-05.2005

Pyka, M., and Cheng, S. (2014). Pattern association and consolidation emerges from connectivity properties between cortex and Hippocampus. PLoS ONE 9:e85016. doi: 10.1371/journal.pone.0085016

Pyka, M., Klatt, S., and Cheng, S. (2014). Parametric anatomical modeling: a method for modeling the anatomical layout of neurons and their projections. Front. Neuroanat. 8:91. doi: 10.3389/fnana.2014.00091

Pyragas, K., Popovych, O., and Tass, P. (2007). Controlling synchrony in oscillatory networks with a separate stimulation-registration setup. Europhys. Lett. 80:40002. doi: 10.1209/0295-5075/80/40002

Albert, R., and Barabási, A.-L. (2002). Statistical mechanics of complex networks. Rev. Mod. Phys. 74, 47–97. doi: 10.1103/RevModPhys.74.47

Reyes, A. D. (2003). Synchrony-dependent propagation of firing rate in iteratively constructed networks in vitro. Nat. Neurosci. 6, 593–599. doi: 10.1038/nn1056

Ritz, R., and Sejnowski, T. J. (1997). Synchronous oscillatory activity in sensory systems: new vistas on mechanisms. Curr. Opin. Neurobiol. 7, 536–546.

Sadeghi, S., and Valizadeh, A. (2014). Synchronization of delayed coupled neurons in presence of inhomogeneity. J. Comput. Neurosci. 36, 55–66. doi: 10.1007/s10827-013-0461-9

Soleng, A. F., Raastad, M., and Andersen, P. (2003). Conduction latency along CA3 hippocampal axons from rat. Hippocampus 13, 953–961. doi: 10.1002/hipo.10141

Song, S., Miller, K. D., and Abbott, L. F. (2000). Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 3, 919–926. doi: 10.1038/78829

Suri, R. E., and Sejnowski, T. J. (2002). Spike propagation synchronized by temporally asymmetric Hebbian learning. Biol. Cybern. 87, 440–445. doi: 10.1007/s00422-002-0355-9

Takahashi, Y. K., Kori, H., and Masuda, N. (2009). Self-organization of feed-forward structure and entrainment in excitatory neural networks with spike-timing-dependent plasticity. Phys. Rev. E 79:051904. doi: 10.1103/physreve.79.051904

Tetzlaff, T., Geisel, T., and Diesmann, M. (2002). The ground state of cortical feed-forward networks. Neurocomputing 44, 673–678. doi: 10.1016/S0925-2312(02)00456-3

Tetzlaff, T., Buschermöhle, M., Geisel, T., and Diesmann, M. (2003). The spread of rate and correlation in stationary cortical networks. Neurocomputing 52, 949–954. doi: 10.1016/S0925-2312(02)00854-8

Tsodyks, M. V., and Sejnowski, T. (1995). Rapid state switching in balanced cortical network models. Network 6, 111–124.

Valizadeh, A., Kolahchi, M. R., and Straley, J. P. (2010). Single phase-slip junction site can synchronize a parallel superconducting array of linearly coupled Josephson junctions. Phys. Rev. B 82:144520. doi: 10.1103/physrevb.82.144520

van Rossum, M. C. W., Turrigiano, G. G., and Nelson, S. B. (2002). Fast propagation of firing rates through layered networks of noisy neurons. J. Neurosci. 22, 1956–1966.

Vicente, R., Gollo, L. L., Mirasso, C. R., Fischer, I., and Pipa, G. (2008). Dynamical relaying can yield zero time lag neuronal synchrony despite long conduction delays. Proc. Natl. Acad. Sci. U.S.A. 105, 17157–17162. doi: 10.1073/pnas.0809353105

Voegtlin, T. (2009). Adaptive synchronization of activities in a recurrent network. Neural Comput. 21, 1749–1775. doi: 10.1162/neco.2009.02-08-708

Yazdanbakhsh, A., Babadi, B., Rouhani, S., Arabzadeh, E., and Abbassian, A. (2002). New attractor states for synchronous activity in synfire chains with excitatory and inhibitory coupling. Biol. Cybern. 86, 367–378. doi: 10.1007/s00422-001-0293-y

Keywords: synfire chains, spike timing dependent plasticity (STDP), locally connected random networks, feed-forward networks, neuronal sequence

Citation: Bayati M, Valizadeh A, Abbassian A and Cheng S (2015) Self-organization of synchronous activity propagation in neuronal networks driven by local excitation. Front. Comput. Neurosci. 9:69. doi: 10.3389/fncom.2015.00069

Received: 02 December 2014; Accepted: 20 May 2015; Published: 04 June 2015.

Edited by: Guenther Palm, University of Ulm, Germany

Copyright © 2015 Bayati, Valizadeh, Abbassian and Cheng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sen Cheng, Mercator Research Group “Structure of Memory”, Ruhr-Universität Bochum, Universitätsstraße 150, 44801 Bochum, Germany, sen.cheng@rub.de
