Synaptic dynamics and neuronal network connectivity are reflected in the distribution of times in Up states

- 1IBENS, Ecole Normale Supérieure, Applied Mathematics and Computational Biology, Paris, France

- 2Institute of Neuroscience, Technische Universität München, Munchen, Germany

- 3Institut de Neurobiologie de la Méditerranée-INSERM U901, Marseille, France

The dynamics of neuronal networks connected by synaptic dynamics can sustain long periods of depolarization that can last for hundreds of milliseconds such as Up states recorded during sleep or anesthesia. Yet the underlying mechanism driving these periods remain unclear. We show here within a mean-field model that the residence time of the neuronal membrane potential in cortical Up states does not follow a Poissonian law, but presents several peaks. Furthermore, the present modeling approach allows extracting some information about the neuronal network connectivity from the time distribution histogram. Based on a synaptic-depression model, we find that these peaks, that can be observed in histograms of patch-clamp recordings are not artifacts of electrophysiological measurements, but rather are an inherent property of the network dynamics. Analysis of the equations reveals a stable focus located close to the unstable limit cycle, delimiting a region that defines the Up state. The model further shows that the peaks observed in the Up state time distribution are due to winding around the focus before escaping from the basin of attraction. Finally, we use in vivo recordings of intracellular membrane potential and we recover from the peak distribution, some information about the network connectivity. We conclude that it is possible to recover the network connectivity from the distribution of times that the neuronal membrane voltage spends in Up states.

Significant Statement

Neuronal networks generate complex patterns of activity that depend on functional synapses. Synaptic dynamics generates network dynamics characterized by long periods of depolarization called Up states, that can last for hundreds of milliseconds. Yet understanding the network connectivity and the mechanisms underlying these periods remain unclear. Using a mean-field model, we find that the distribution of times in Up states does not follow a Poissonian law, but presents several unexplained peaks. These peaks are not artifacts of electrophysiological measurements, but result from the inherent dynamics properties of the network. Moreover, the present method allows resolving a reverse problem: how to extract the neuronal network connectivity from the distribution of times in a time series (Up states). We conclude that the distribution of times in Up states is due to synaptic dynamics in a neuronal network with sufficient connections and the position of peaks in the time distribution characterizes the degree of functional network connectivity, usually difficult to estimate.

1. Introduction

The cerebral cortex is continuously active even in the absence of external stimuli, showing patterns of activation that resemble the ones generated by direct stimulations (Kenet et al., 2003; Chen et al., 2013). Recurrent patterns have also been found in neuronal ensembles (Cossart et al., 2003). Yet the origin of this recurrent activity based on network properties remains unexplained. Several computational studies have addressed successfully the role of noise in generating oscillations in recurrent networks (Verechtchaguina et al., 2006; Nesse et al., 2008). The spontaneous activity of the membrane potential of connected neurons has further revealed that it can switch between an Up and a Down state (Lampl et al., 1999; Anderson et al., 2000; Chen et al., 2013).

This Up and Down state phenomena has been modeled for excitatory network by synaptic-depression mean-field equations (Tsodyks et al., 1998; Torres et al., 2002; Holcman and Tsodyks, 2006), where early simulations suggested that the residence time of the membrane potential in the cortical Up state does not follow a Poissonian law, but presents several unexplained peaks. Using a mean field model, we show here that these peaks are neither artifacts of numerical simulations nor electrophysiological measurements, but are rather an inherent property of the underlying dynamics.

Using excitatory synaptic transmission only, we shall see that the model used in this manuscript reproduces experimental settings, where inhibition is shut down in picrotoxin condition. By analyzing the times in Up states, we will show that their distribution presents oscillation peaks, allowing a novel characterization of time series recording of Up and Down states. We show in Supplementary Information that adding inhibition affects but do not change the nature of the oscillation peaks. Note that these peaks were never reported before and are completely different from the phenomenon of stochastic amplification of the voltage in Up state, where fluctuations of the mean rate in the Up states shows a single peak in the power spectrum (Hidalgo et al., 2012).

We present here a model and a method to analyze the mean properties of neuronal networks and in particular to recover the degree of connectivity from the distribution of times in depolarized states. We apply our analysis to in vivo recordings of intracellular membrane potential. The first part of our approach consists in studying a mean-field model and we find that the Up states are characterized by a basin of attraction where the stable focus is located near the unstable limit cycle. For a specific range of the noise amplitude, we demonstrate that the distribution of times in Up states, which is the survival probability of the Fokker-Planck equation has periodic decreasing peaks. The period of this oscillatory resonance is surprisingly exactly the imaginary part of the eigenvalue of the jacobian of the vector field at the focus, in agreement with asymptotic computations, obtained for generic dynamical systems (Dao Duc et al., 2014). We conclude that neuronal network driven by synaptic depression operates in a regime of parameters that leads to non-Poissonian residence time in the Up state. Finally, recovering the network connectivity from the distribution of times of the mean neuronal membrane voltage or in general from the distribution of firing rates, is a general concept that should be applied to neuronal networks of various sizes.

2. Materials and Methods

2.1. Mean-field Equations for Synaptic-depression

A neuronal network connected by excitatory connections is described here by its mean firing rate averaged over the neural population. When the neuronal network is mostly connected with depressing synapses, the state of a synapse is represented by the depression parameter μ (Tsodyks and Markram, 1997). The mean neuronal activity follows the stochastic system (Bart et al., 2005; Holcman and Tsodyks, 2006)

where V is an average voltage (measured in mV with a base line at 0 mV), J is the average synaptic strength in the network, U and tr are utilization parameters and recovery time constants of the synaptic depression respectively (Tsodyks and Markram, 1997; Bart et al., 2005). We use a δ-correlated white noise of mean zero and variance one (Schuss, 2010). The first term on the right-hand side of the first equation of Equation (fdt) accounts for the intrinsic biophysical decay to equilibrium. The second term represents the synaptic input, which is scaled by the synaptic depression parameter μ. The third one summarizes all uncorrelated sources of noise with amplitude σ. R(V) is the average firing rate (in Hz) approximated by a threshold-linear voltage dependence function

where T > 0 is a threshold and α = 1Hz∕mV is a conversion factor. In general, the function R(V) is a sigmoid because it should account for the saturation of the neuronal firing rate. However, due to the synaptic-depression, the dynamics never reaches the saturation regime and we can thus use the function R(V) defined above. The second equation in fdt describes the activity-dependent synaptic depression according to the classical phenomenological model (Tsodyks and Markram, 1997): briefly, every incoming spike leads to an abrupt decrease in the instantaneous synaptic efficacy, measured by a utilization factor U, due to depletion of neurotransmitters. Between spikes, the synaptic efficacy recovers to its original state (μ = 1) with a time constant tr (see parameters in Table 1). Entering into an Up state corresponds to an escape event in the region between the separatrices and the unstable limit cycle. It is known that the dynamics shows a fast rotation (population spike) around the unstable limit cycle and a small noise fluctuation can produce the impulsion necessary to push a trajectory into an Up state (Holcman and Tsodyks, 2006). This model has been used to describe excitatory neuronal network.

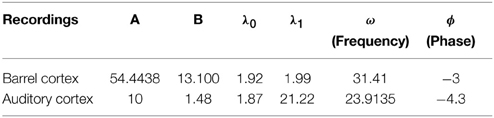

Table 1. Simulation parameters (Holcman and Tsodyks, 2006).

2.2. Experimental Protocols

2.2.1. In vivo Whole-cell Recording in the Barrel Cortex

Experimental procedures were performed in accordance with the recommendations of the French Institut National de la Santé et de la Recherche Médicale animal care and use committee and the European Council Directives (2010/63/UE). A male wistar rat (P27/87 g) was anesthetized briefly with isoflurane followed by urethane (1 g/kg body weight, i.p.) plus additional doses of a mixture of ketamine (50 mg/kg) and xylazine (2.5 mg/kg) as needed then head-fixed into a stereotaxic frame. A local analgesic (lidocane) was injected under the skin before the first incision. The skull was exposed and a small craniotomy was performed over the somatosensory cortex (2.5 mm posterior and 5.5 mm lateral to the bregma). Blind in vivo whole-cell recordings were obtained using previously described procedures (Margrie et al., 2002). Borosilicate glass patch pipettes (resistance 5–7 M?) were filled with an intracellular solution containing (in mM): 130 KMeSO4, 5 KCl, 5 NaCl, 10 HEPES-K, 2.5 MgATP, 0.3 NaGTP, 0.2 EGTA and 0.1 % of biocytin (pH adjusted to 7.2) and advanced into the brain perpendicular to the surface of the cortex. The cell membrane potential was recorded in current clamp mode without any current injection. The signal was amplified using an Axoclamp-2B amplifier (Molecular devices, Sunnyvale, California), low-pass filtered online at 3 kHz and digitized with a digidata 1440A (Molecular devices) at 20 kHz.

2.2.2. In vivo Whole-Cell Patch-Clamp Recording of Layer 2/3 Neurons in the Auditory Cortex

Whole-cell patch-clamp recordings of layer 2/3 neurons in the auditory cortex of C57BL/6 mice (30–50 postnatal days old) were obtained using the procedure as described previously (Kitamura et al., 2008; Jia et al., 2010; Chen et al., 2011). Briefly, the mouse was placed onto a warming plate (37.5–38°C) and anesthetized with 0.8–1.2% isoflurane in pure O2. The recording chamber was perfused with normal Ringer's solution containing (in mM): 125 NaCl, 4.5 KCl, 26 NaHCO3, 1.25 NaH2PO4, 2 CaCl2, 1 MgCl2, and 20 glucose, pH 7.4, when bubbled with 95% O2 and 5% CO2. The body temperature of the mouse was maintained in the range of 36.5–37.5°C throughout the recording period. Somatic current-clamp recordings were performed with an EPC-10 amplifier (USB Quadro Amplifier, HEKA Elektronik, Lambrecht/Pfalz, Germany) under two-photon imaging guidance. Borosilicate glass pipettes with open tip resistance of 5–7 MΩ were filled with an intracellular solution containing: 112 mM K-gluconate, 8 mM KCl, 10 mM HEPES, 4 mM Mg-ATP, 0.3 mM Na2GTP, 10 mM Na-Phosphocreatine, titrated to pH 7.20–7.25. The pipette series resistance was continuously measured and neurons were rejected for data analyses if the resistance was higher than 30 MΩ. Electrophysiological data were filtered at 10 kHz and sampled at 20 kHz using Patchmaster software (HEKA, Lambrecht, Germany). Two-photon imaging was performed with a custom-built video-rate two-photon microscope based on a resonant scanner (Leybaert et al., 2005) and a mode-locked femtosecond pulse laser, operating at 710–920 nm wavelength (MaiTai, Spectra Physics, Mountain View, CA). The scanner was mounted on an upright microscope (BX61WI, Olympus, Tokyo, Japan) equipped with a 40/0.80 water-immersion objective (Nikon, Japan).

2.3. Fitting Procedure

We fitted the distribution of time in the Up state using the following fitting procedure:

1. We first fitted the first exponential Ae−λ0t using the Matlab fitting toolbox using the constraint: 0.9λ~0 ≤ λ0 ≤ 1.1λ~0.λ~0 = 1/MFPT, where the MFPT is computed by averaging the time in the Up state.

2. We fitted the second exponential Be−λ1t by subtracting the first one from the data and by considering the convex hull of the resulting curve. λ1 was manually adjusted maintaining the condition that it is larger than λ0.

3. We fitted the periodic part cos(ωt + ϕ) by manually finding the period T between two consecutive peaks on the histogram with .

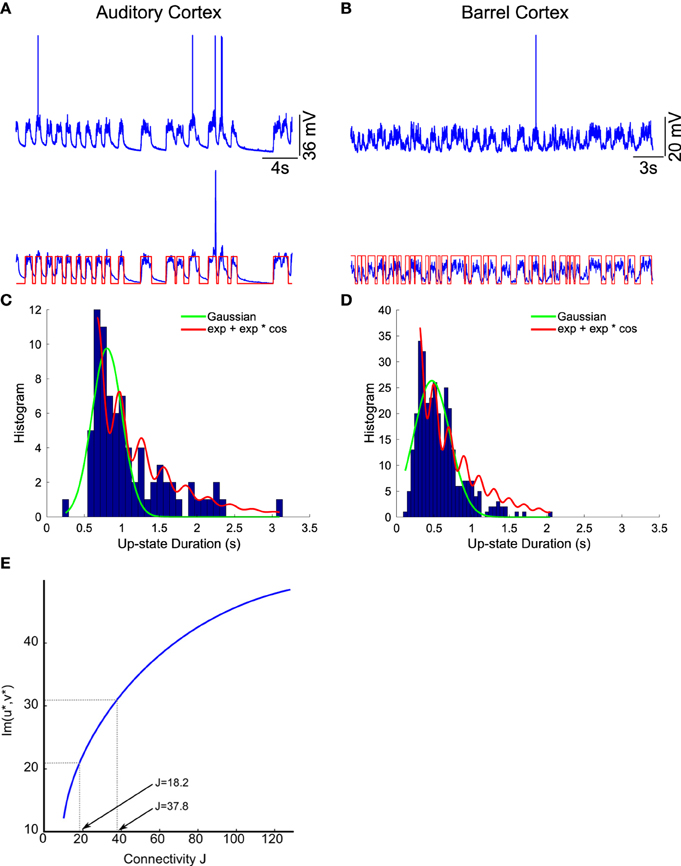

The Up state histogram is obtained from electrophysiological recordings lasting 300 s of neurons located in the barrel cortex. The data were down-sampled by 100 Hz and the minimal value was subtracted so that now it is equal to zero. We annotate the Up states defined by a starting spike, followed by a period of stable depolarized membrane potential and the end is usually a single exponential decay. From the Up and Down states classification, we extracted the histogram of the Up states duration. It is composed of 325 Up states, that we bin into epochs of 0.0293 s (70 bins for 2.05 s). The histogram is presented in Figure 8A.

We also analyzed the histogram of Up states, recorded in the auditory cortex from 5 different electrophysiological traces, each lasting 32 s, obtained in vivo from a neuron of the L2 cerebral cortex in an anesthetized mouse (Chen et al., 2013). We extracted the histogram of the Up states duration, composed of 79 Up states that were put into bins each representing 0.0622 s (50 bins for 3.1 s). The histogram is presented in Figure 8B.

3. Results/Discussion

3.1. The Phase-space Analysis Unveils the Origin of the Peak Oscillation

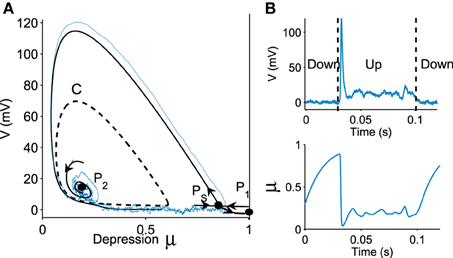

We now represent the Phase-Space (V, μ) in Figure 1A, which contains three critical points: two attractors P1 (located at V = 0, μ = 1), P2 and one saddle point PS. The basin of attraction for the stable focus P2 is delimited by an unstable limit cycle C (dashed line in Figure 1A), which defines the Up state region. This basin of attraction (Up state) appears when the network connectivity J exceeds a minimal value (see Holcman and Tsodyks, 2006). Indeed, as the parameter J increases, following a super-critical (with a minus sign) Hopf-bifurcation, the point P2, which was previously a repulser becomes an attractor and an unstable limit cycle appears around it (Figure 1A). Intuitively, this bifurcation occurs when the recurrent connections are becoming sufficiently strong.

Figure 1. Transition to an Up state. (A) Phase portrait (V, μ) defined by Equation (fdt), containing a stochastic trajectory (blue). The phase space shows a limit cycle C (dashed line) containing a stable focus P2, a saddle point PS and a stable attractor P1. The Up state is the domain inside the limit cycle C. (B) transition of a trajectory to an Up state in the time domain for the variable V and μ.

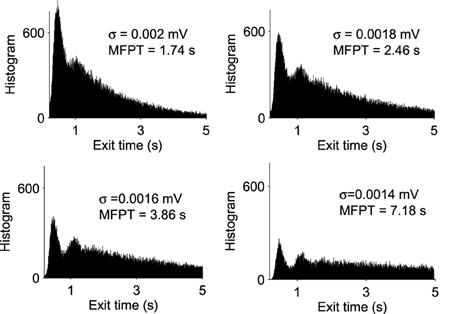

A trajectory enters the limit cycle by a noise induced transition (see Figure 1B). The stable focus is at the intersection of nullclines (in red in Figure 3). The time in the Up state is precisely the mean first passage time for a trajectory before it reaches the unstable limit cycle (dashed line) which is the basin of attraction and defines the Up state in the phase space. Once a trajectory exits through the limit cycle, the dynamics relaxes exponentially to the Down-state. We study here the distribution of times spent in the Up states (DTUS) only. The mean of this time has been estimated asymptotically and is the reciprocal of the escape rate (Schuss, 1980, 2010; Matkowsky and Schuss, 1982; Freidlin and Wentzell, 1998). It characterizes the escape in the generic activation problem. However, this analysis is not sufficient to characterize escape when the focus is located close the separatrix as it is the case here. Indeed, the DTUS shows various oscillation peaks in Figure 2, confirming that it is non-Poissonian and in addition, the amplitude of the DTUS, but not the frequency of DTUS oscillation depends on the noise size. As the noise decreases, the distribution of exit time evolves from an initially decaying exponentially to an oscillatory decay with several apparent peaks. We conclude that these peaks are a direct consequence of the dynamics induced by the geometrical organization of the recurrent ensembles, where the focus is very close to the unstable limit cycle. In the remaining part of this article, we study these peak oscillations.

Figure 2. Distribution of exit times from Up States for various noise amplitudes (σ = 0.0014, 0.0016, 0.0018, and 0.002 mV). With each histogram, we computed the MFPT, which is a decreasing function of σ (total of 100,000 runs).

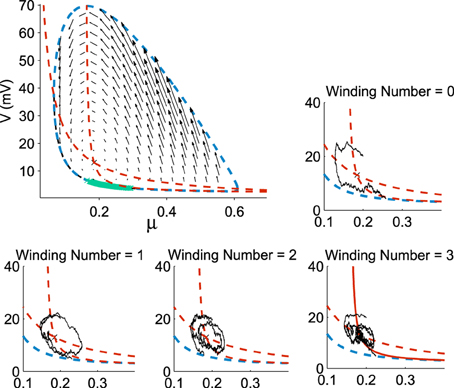

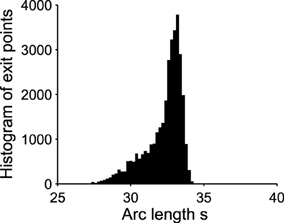

Already, we see that for a focus located near the limit cycle, the vector field vanishes close at the boundary, leading to a dynamics dominated by the noise. Thus, escape should occur in a small region of the limit cycle close to the focus. Consequently, if a trajectory does not exit in that small region, it winds around the focus before returning to the region where it can escape. This is shown in Figure 3. This rotation phenomena is responsible for the peaks in the DTUS. Moreover, the periodic peaks in DTUS is associated with a distribution of exit points concentrated in a very small part of the limit cycle, as shown in Figure 4. These periodic peaks in DTUS are in contrast with the Down-states distribution that shows a simple exponential (see Supplementary Information).

Figure 3. Distribution of exit points from the Up states. The exit points (green) for trajectories are located on a small portion of the limit cycle (dotted blue) with nullclines (dotted red) and the vector field (black arrows) (100,000 runs). In each sub-figure, a trajectory reaches the limit cycle after 0, 1, 2, and 3 rotations. All trajectories start at the initial point (μ0, V0) = (0.2, 20).

Figure 4. Exit point distribution along the unstable limit cycle. The distribution of exit points (associated to the green points in Figure 3) peaks in a very small region of the boundary. The x-axis is the arc length along the limit cycle oriented counterclockwise. The origin is (μ, V) = (0.035, 40) and the total arclength is 130. The initial point for all trajectories is (0.3, 30). Number of runs = 10000.

We further characterize the peak oscillations as follows: we find that the oscillation frequency in DTUS is equal to the imaginary part of the Jacobian of the deterministic vector field of system fdt at the focus point. Indeed, the coordinate of the focus in the phase-space is given by Holcman and Tsodyks (2006)

where . Using parameters of Table 1, we find the coordinates (μ*, V*) = (0.1882, 12.7865). The Jacobian matrix at a point (μ, V) is

and the imaginary part of the eigenvalues is

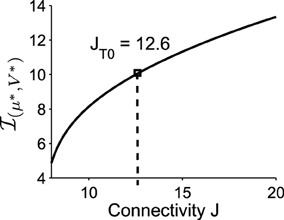

Applying formula Im, we find that the imaginary part of the eigenvalues of the Jacobian matrix at the Up state is . The associated period is thus , which is very close to the period that we obtained with Brownian simulations (see Figure 1) . This result shows that the oscillation frequency in the DTUS is determined by the deterministic property of the field at the focus only, a situation that is exact for a large class of dynamical system (Dao Duc et al., 2014). Finally, the oscillation frequency is an increasing function of the connectivity J, as shown in Figure 5.

Figure 5. The imaginary part of the eigenvalues of the linearized system at the Upstate. It increases with the connectivity J (the value of J used in the previous simulations is J = JT0 = 12.6).

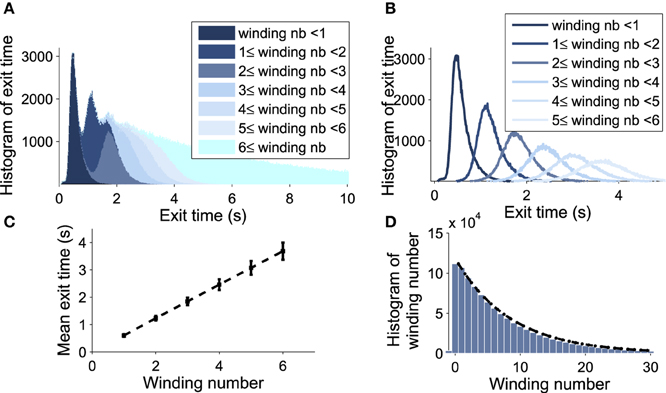

3.2. Winding-decomposition for the Time Distribution in Up State

We explore now the contribution to the DTUS of each trajectory making exactly k-turns before exit. We decompose the empirical distribution of exit times by peaks, which are precisely determined by the winding number of a trajectory around the focus: the first peak is mainly composed of trajectories making no rotation, the second one is made of trajectories making exactly one rotation and so on (see Figures 6A,B). However, the dispersion of exit times for trajectories making several turns (Figure 6C) smoothes out the shape of the next peaks. To gain a better understanding of the DTUS, we decompose the exit time based on the turning number: after k turns, the exit time is the sum , where τe is the time to exit without turning and τq is the winding time between the qth and q−1th turn. The variables τq are i.i.d because a trajectory restarts independently of its previous initial position close to the focus. Defining the probability p = Pr{τe < τr} to rotate before exit (τr is the first time to complete a rotation), we use Bayes'law to decompose the distribution of exit times as

where Prrot{k} is the probability of making exactly k rotations before exit. Due to the independence of the renewal process after each rotation, it is equal to the probability to make exactly k−1 turns multiplied by the probability p to exit

Figure 6. Decomposition of the DTUS with respect to the winding number. (A) Distribution of exit times. (B) Distribution of exit times conditioned on the winding number. (C) Mean exit time and variance increase linearly with the winding number. (D) Exponential approximation of the winding probability. The probability of escaping after n turns is f(n) = p(1−p)n−1, where p is computed empirically as the ratio of trajectories making one turn to the total number. We use 106 runs, trajectories start at (μ0, V0) = (0.21, 20mV) with σ = 0.0015.

We confirm the exponential decay of the probability Prrot{k} to exit after k turns (Equation W). It is well approximated by empirical stochastic simulations (Figure 6D). Furthermore, by computing the empirical ratio of the number of trajectories that exit after a single turn to the ones that rotate, we obtain the probability p = 0.12 (using parameters of Table 1). Finally, using the i.i.d property of the rotation times τq, the conditional distribution probability to exit after k rotations Pr(τe<t|k) is the k-convolution of the distribution of times of a single rotation f1(t) = Pr(τ1 = t) by the distribution of exit time without turning :

where is the kth convolution power of f0 (). Thus, the pdf of exit time is given by

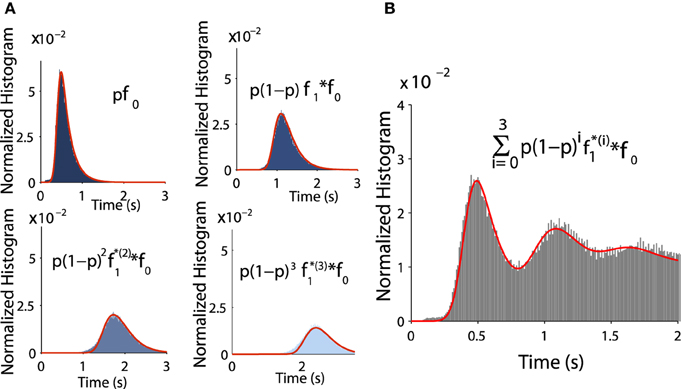

To validate Equation (tau), we estimated for all successive peaks and compared them to Brownian simulations as follows: the distributions f0 and f1 are exponentially distributed at infinity and can be approximated by

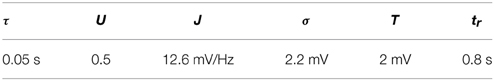

where k = 0, 1 and the normalization constants Ck are obtained by . Fitting the empirical histograms conditioned on a winding number < 1, we obtain for f0 the following parameters a0 = 0.43, b0 = 0.1, λ0 = 4.8. By simulating trajectories that are making one turn, we obtain for f1: a1 = 0.48, b1 = 0.1, λ1 = 5.5. Then we use these analytical expressions and compare them with the Brownian simulations (see histograms obtained for k = 1, 2, 3, 4 in Figures 7A,B).

Figure 7. Distribution of exit time conditioned by the winding number. (A) Comparison between histograms of trajectories of 1, 2, 3, and 4 turns and formulae f0 and fk (in red) (B) Comparison between the histogram of exit time obtained from subfigure A with the analytical formula (in red) Equation (8).

The oscillations in the Up state histogram shown in Figure 7 were not reported before and are for instance completely different from the stochastic amplification phenomena for the voltage in the Up states reported in Hidalgo et al. (2012), which concerns fluctuations of the mean firing rate with a peak in the power spectrum. This effect is fundamentally different: while the frequency of the peak depends on the noise amplitude in Hidalgo et al. (2012), it is independent here and determined only by the Jacobian of the deterministic system at the attractor.

Moreover, the oscillation peaks that we found here are a consequence of the close proximity between the two-dimensional limit cycle and the point attractor. For that same reason, our findings also differ from that of Mejias et al. (2010), in which the stochastic dynamical system is reduced to a one dimensional Ornstein-Uhlenbeck process. Computing the power distribution under this reduction implies that the attractor is far enough away from the basin of attraction of the Up state. However, this situation is not the one obtained from parameters accounting for physiological conditions (Tsodyks and Markram, 1997; Holcman and Tsodyks, 2006; Dao Duc et al., 2015), where the attractor is located in the boundary of the basin of attraction (see Figure 1). In this case, the exit time distribution cannot be well characterized simply by the first eigenvalue of the associated Fokker-Planck equation. Indeed, the second one is also needed and contains an imaginary component (Dao Duc et al., 2014), a phenomenon that is only possible for nonself-adjoint operator in two dimensions at least.

3.3. Extracting the Neuronal Network Connectivity from the Distribution of Times in Up States

The distribution of times in Up states is non-Poissonian, because the underlying dynamics is a non-classical escape problem (Holcman and Schuss, 2014). Analysis of the Fokker-Planck equation for generic dynamical systems (Dao Duc et al., 2014) reveals that the distribution of time can be expanded in eigenvalue series

where A0, Cm, n are constants, λn, m complex-valued higher-order eigenvalues and λ0 is the principal eigenvalue of the Fokker-Planck in the domain that defines the Up state (basin of attraction delimited by an unstable limit cycle). The second order approximation is

where A, B are constants and the intrinsic parameters are λ0, λ1, ω, ϕ. All these constants needed to be identified. The theory predicts (Schuss, 2010) that , which is the mean time the voltage membrane stays in Up states. It can be directly estimated from the histogram by computing the mean (first moment). The other parameters should be fitted from the histogram with the constraint that λ1 > λ0 and is the period between two consecutive peaks and can be determined from histograms.

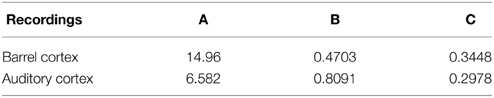

We apply the present theory to two different intracellular recordings obtained in the barrel cortex and the auditory cortex (see Section 2) (Figure 8) and we obtain for the first exponent λ0 = 1.92 and λ0 = 1.48 respectively (see Table 2). We found that the period interval between two consecutive peaks and , leading respectively to J = 37 and J = 18.2, where we used the graph presented in Figure 8. We can recover the connectivity from the imaginary part of the eigenvalue of the field at the attractor, which is exactly the period T. We obtained for each case the degree of connectivity using τ = 0.02. The fitted curves are represented in Figure 8 where we also plotted Gaussian fits (Table 3). It is remarkable that in both cases, the first and the second eigenvalues λ0 and λ1 are sufficiently close, leading to the oscillation phenomena in the distribution of exit times.

Figure 8. Two electrophysiological recordings (top) and their annotation after processing (bottom). (A) An entire 32 s recording from a L2 cortical neuron of the auditory cortex. (B) A 30 s sample of a recording from a L4 cortical neuron (barrel cortex). Histogram of Up states duration obtained from intracellular in vivo recordings from neurons in auditory (C) (with error R2 = 0.73 Gaussian and R2 = 0.89 for the model fUp) and barrel cortex (D) (with error R2 = 0.85 Gaussian and R2 = 0.90 for the model fUp). We fitted each distribution with a Gaussian curve (green) and the function fUp(t) = (red), predicted by our model. The parameters of the fit are summarized in Table 2. The fit reveals the peak oscillations predicted by the depression mean-field model. (E) Imaginary part or period of the peaks as a function of the connectivity J (τ = 0.02). We obtained the connectivity J = 18.2 and 37.8.

We conclude that in both cases, we obtain that the distance between two peaks is the period T = 0.2, which is related to the imaginary of the eigenvalue at the attractor. Using Figure 8, we obtain that the degree of connectivity J responsible for such dynamics is J = 30. It is possible to relate the abstract degree of connectivity J used in the equations to the actual number of synapses by calibrating the model with experimental data. This procedure has been applied in neuronal cultures, where we recently related the connectivity J = 40 (for τ = 0.05) to an approximating 3000 synapses per neuron in hippocampal islands containing 5–30 neurons homogenously connected (Dao Duc et al., 2015). Finally, the present analysis based on the depression-facilitation model differs significantly from the hidden-Markov model approach (McFarland et al., 2011), where several exponential distributions including the gamma distribution and inverse Gaussian distribution and the geometric distribution were used to fit the distribution.

4. Conclusion

Using a mean-field model, we have shown here that the distribution of times in the Up state of the neuronal membrane potential shows several oscillation peaks. These oscillations are due to the intrinsic synaptic dynamics in the depression model. The peaks frequency is independent of the noise amplitude, but depends on the amount of synaptic connection (Figure 5). It is conceivable that neurons have found a possibility to exploit this quantification property. A possible prediction of the model is that working memory could also show period time, where the histogram of durations show multiple peaks, a phenomena that would be interesting to demonstrate experimentally. The peak oscillation phenomena described here is a generic effect of stochastic dynamical systems where recurrent set (focus point here) are located close enough to the boundary.

Another aspect of the present work is the possibility to extract the mean network connectivity from the distribution of peaks, located in the Up state time series histogram. This analysis is a direct consequence of the model, from which we show the distance between two consecutive peaks depends on network connectivity (mean number of synapses per neurons). In future work, more general models, including inhibition, intrinsic electrical properties of neurons, should be used to recover more information about the network connectivity from the distribution of times of the mean neuronal membrane voltage or in general from the distribution of firing rates, which could be applied to neuronal network of various sizes.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/article/10.3389/fncom.2015.00096

References

Anderson, J., Lampl, I., Reichova, I., Carandini, M., and Ferster, D. (2000). Stimulus dependence of two-state fluctuations of membrane potential in cat visual cortex. Nat. Neurosci. 3, 617–621. doi: 10.1038/75797

Bart, E., Bao, S., and Holcman, D. (2005). Modeling the spontaneous activity of the auditory cortex. J. Comput. Neurosci. 19, 357–378. doi: 10.1007/s10827-005-3099-4

Chen, X., Leischner, U., Rochefort, N. L., and Nelken, I. Konnerth, A. (2011). Functional mapping of single spines in cortical neurons in vivo. Nature 475, 501–505. doi: 10.1038/nature10193

Chen, X., Rochefort, N. L., Sakmann, B., and Konnerth, A. (2013). Reactivation of the same synapses during spontaneous up states and sensory stimuli. Cell Rep. 4, 31–39. doi: 10.1016/j.celrep.2013.05.042

Cossart, R., Aronov, D., and Yuste, R. (2003). Attractor dynamics of network UP states in the neocortex. Nature 423, 283–288. doi: 10.1038/nature01614

Dao Duc, K., Cohen, D., Segal, M., Rouach, N., and Holcman, D. (2015). Facilitation-depression synapses underlie neuronal network reverberation. PLoS ONE 10:e0124694. doi: 10.1371/journal.pone.0124694

Dao Duc, K., Schuss, Z., and Holcman, D. (2014). Oscillatory decay of the survival probability of activated diffusion across a limit cycle. Phys. Rev. E Stat. Nonlin. Soft. Matter Phys. 89:030101. doi: 10.1103/PhysRevE.89.030101

Freidlin, M. I., and Wentzell, A. D. (1998). Random Perturbations of Dynamical Systems 260, 2nd Edn. New York, NY: Springer-Verlag.

Hidalgo, J., Seoane, L. F., Cortés, J. M., and Muñoz, M. A. (2012). Stochastic amplification of fluctuations in cortical Up-States. PLoS ONE 7:e40710. doi: 10.1371/journal.pone.0040710

Holcman, D., and Schuss, Z. (2014). Oscillatory survival probability and eigenvalues of the non-self adjoint Fokker-Planck operator. Multiscale Model. Simul. 12, 1294–1308. doi: 10.1137/130925414

Holcman, D., and Tsodyks, M. (2006). The emergence of Up and Down states in cortical networks. PLoS Comput. Biol. 2:e23. doi: 10.1371/journal.pcbi.0020023

Jia, H., Rochefort, N. L., Chen, X., and Konnerth, A. (2010). Dendritic organization of sensory input to cortical neurons in vivo. Nature 464, 1307–1312. doi: 10.1038/nature08947

Kenet, T., Bibitchkov, D., Tsodyks, M., Grinvald, A., and Arieli, A. (2003). Spontaneously emerging cortical representations of visual attributes. Nature 425, 954–956. doi: 10.1038/nature02078

Kitamura, K., Judkewitz, B., Kano, M., Denk, W. Hausser, M. (2008). Targeted patch-clamp recordings and single-cell electroporation of unlabeled neurons in vivo. Nat. Methods 5, 61–67. doi: 10.1038/nmeth1150

Lampl, I., Reichova, I., and Ferster, D. (1999). Synchronous membrane potential fluctuations in neurons of the cat visual cortex. Neuron 22, 361–374. doi: 10.1016/S0896-6273(00)81096-X

Leybaert, L., de Meyer, A., and Mabilde, C. Sanderson, M. J. (2005). A simple and practical method to acquire geometrically correct images with resonant scanning-based line scanning in a custom-built video-rate laser scanning microscope. J. Microsc. 219, 133–140. doi: 10.1111/j.1365-2818.2005.01502.x

Margrie, T. W., Brecht, M., and Sakmann, B. (2002). In vivo, low-resistance, whole-cell recordings from neurons in the anaesthetized and awake mammalian brain. Pflugers Arch. 444, 491–498. doi: 10.1007/s00424-002-0831-z

Matkowsky, B. J., and Schuss, Z. (1982). Diffusion across characteristic boundaries. SIAM J. Appl. Math. 42, 822–834. doi: 10.1137/0142057

Mejias, J. F., Kappen, H. J., and Torres, J. J. (2010). Irregular dynamics in up and down cortical states. PLoS ONE 5:e13651. doi: 10.1371/journal.pone.0013651

McFarland, J. M., Hahn, T. T., and Mehta, M. R. (2011). Explicit-duration hidden Markov model inference of UP-DOWN states from continuous signals. PLoS ONE 6:e21606. doi: 10.1371/journal.pone.0021606

Nesse, W. H., Negro, C. A., and Bressloff, P. C. (2008). Oscillation regularity in noise-driven excitable systems with multi-timescale adaptation. Phys. Rev. Lett. 101:088101. doi: 10.1103/PhysRevLett.101.088101

Schuss, Z. (1980). Theory and Applications of Stochastic Differential Equations. Wiley series in Probability and Statistics. New York, NY: John Wiley Sons, Inc.

Schuss, Z. (2010). Diffusion and Stochastic Processes: An Analytical Approach. Springer series on Applied mathematical sciences, Vol. 170. New York, NY: Springer.

Torres, J., Pantic, L., and Kappen, H. (2002). Storage capacity of attractor neural networks with depressing synapses. Phys. Rev. E 66:061910. doi: 10.1103/PhysRevE.66.061910

Tsodyks, M., Pawelzik, K., and Markram, H. (1998). Neural networks with dynamic synapses. Neural Comput. 10, 821–835. doi: 10.1162/089976698300017502

Tsodyks, M. V., and Markram, H. (1997). The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc. Natl. Acad. Sci. U.S.A. 94, 719–723. (Erratum in: Proc. Natl. Acad. Sci. U.S.A. 1997, 94:5495). doi: 10.1073/pnas.94.2.719

Keywords: modeling, inverse problem, synaptic depression, Up states, non-poissonnian distribution, first passage times, mean-field model, neuronal networks

Citation: Dao Duc K, Parutto P, Chen X, Epsztein J, Konnerth A and Holcman D (2015) Synaptic dynamics and neuronal network connectivity are reflected in the distribution of times in Up states. Front. Comput. Neurosci. 9:96. doi: 10.3389/fncom.2015.00096

Received: 07 March 2015; Accepted: 13 July 2015;

Published: 29 July 2015.

Edited by:

David Hansel, University of Paris, FranceReviewed by:

Carl Van Vreeswijk, Centre National de la Recherche Scientifique, FranceJoaquín J. Torres, University of Granada, Spain

Copyright © 2015 Dao Duc, Parutto, Chen, Epsztein, Konnerth and Holcman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: David Holcman, IBENS, Ecole Normale Supérieure, Applied Mathematics and Computational Biology, 46 rue d'Ulm, 75005 Paris, France, holcman@biologie.ens.fr

Khanh Dao Duc

Khanh Dao Duc Pierre Parutto

Pierre Parutto Xiaowei Chen2

Xiaowei Chen2  David Holcman

David Holcman