Exploring Combinations of Different Color and Facial Expression Stimuli for Gaze-Independent BCIs

- 1 Key Laboratory of Advanced Control and Optimization for Chemical Processes, Ministry of Education, East China University of Science and Technology, Shanghai, China

- 2 Brain Embodiment Lab, School of Systems Engineering, University of Reading, Reading, UK

- 3 Riken Brain Science Institute, Wako-shi, Japan

- 4 Systems Research Institute of Polish Academy of Sciences, Warsaw, Poland

- 5 Skolkovo Institute of Science and Technology, Moscow, Russia

Background: Several studies have shown that a conventional visual brain-computer interface (BCI) based on overt attention cannot be used effectively when eye movement control is not possible. To solve this problem, a visual BCI based on covert attention and feature attention has been proposed, called the gaze-independent BCI. Color and shape differences between stimuli and backgrounds have generally been used in gaze-independent BCIs. Recently, a new paradigm based on facial expression changes was presented and obtained high performance. However, some facial expressions were so similar that users could not tell them apart, especially when they were presented at the same position in a rapid serial visual presentation (RSVP) paradigm. Consequently, the performance of the BCI was reduced.

New Method: In this paper, we combined facial expressions and colors to optimize the stimuli presentation in the gaze-independent BCI. This optimized paradigm was called the colored dummy face pattern. It is suggested that different colors and facial expressions could help users to locate the target and evoke larger event-related potentials (ERPs). In order to evaluate the performance of this new paradigm, two other paradigms were presented, called the gray dummy face pattern and the colored ball pattern.

Comparison with Existing Method(s): The key point that determined the value of the colored dummy face stimuli in BCI systems was whether they could obtain higher performance than gray face or colored ball stimuli. Ten healthy participants (seven male, aged 21–26 years, mean 24.5 ± 1.25) participated in our experiment. Online and offline results of three different paradigms were obtained and comparatively analyzed.

Results: The results showed that the colored dummy face pattern could evoke higher P300 and N400 ERP amplitudes, compared with the gray dummy face pattern and the colored ball pattern. Online results showed that the colored dummy face pattern had a significant advantage in terms of classification accuracy (p < 0.05) and information transfer rate (p < 0.05) compared to the other two patterns.

Conclusions: The stimuli used in the colored dummy face paradigm combined color and facial expressions. Compared with colored ball and gray dummy face stimuli, this paradigm had a significant advantage in terms of the evoked P300 and N400 amplitudes and resulted in high classification accuracies and information transfer rates.

Introduction

A brain-computer interface (BCI) is designed to establish a communication channel between a human and external devices without the help of peripheral nerves and muscle tissue (Wolpaw et al., 2002; Neuper et al., 2006; Allison et al., 2007; Mak and Wolpaw, 2009; Treder et al., 2011; Rodríguez-Bermúdez et al., 2013). Event-related potential (ERP)-based BCIs are able to obtain high classification accuracy and information transfer rates. Consequently, they are among the most widely used BCI systems. ERP-based BCIs are used to control external devices such as wheelchairs, spelling devices, and computers (Lécuyer et al., 2008; Cecotti, 2011; Li et al., 2013; Yin et al., 2013).

The N200, P300, and N400 ERPs are most frequently used in ERP-based BCIs. The P300 component is a positive potential, observed at central and parietal electrode sites about 300–400 ms after stimulus onset, which can be observed during an oddball paradigm (Polich, 2007; Acqualagna and Blankertz, 2013). The N200 and N400 components are negative potentials, which can be observed approximately 200–300 and 400–700 ms after stimulus onset (Polich, 2007).

A P300 BCI is a typical example of a BCI system based on visual, audio, or tactile stimuli (Hill et al., 2004; Fazel-Rezai, 2007; Kim et al., 2011; Mak et al., 2011; Jin et al., 2014a; Kaufmann et al., 2014). The first P300-based BCI system was presented by Farwell and Donchin using a 6 × 6 matrix of letters (Farwell and Donchin, 1988). This stimulus matrix was a mental typewriter, which consisted of symbols laid out in six rows and six columns. The user focused on one of the symbols while the rows and columns were highlighted in a random order. The symbol the user focused on (the target) could be identified based on the classification result of the ERPs. However, the information transfer rate and classification accuracy of the system were not high enough for practical applications. Many studies have been conducted to improve the classification accuracies and information transfer rates of the P300 speller (Donchin et al., 2000; Guan et al., 2004; Townsend et al., 2010; Bin et al., 2011; Jin et al., 2011; Zhang et al., 2011).

One of the main goals of BCI is to help people who have lost the ability to communicate or control external devices. Most visual BCI systems use a matrix like Farwell and Donchin's system (Farwell and Donchin, 1988). However, recent studies have shown that the matrix-based speller does not work well for individuals who are not able to control their gaze (Brunner et al., 2010; Treder and Blankertz, 2010; Frenzel et al., 2011). In these patterns, the absence of early occipital components reduced classification performance (Brunner et al., 2010). It has been shown that these components are modulated by overt attention and contribute to classification performance in BCI systems (Shishkin et al., 2009; Bianchi et al., 2010; Brunner et al., 2010; Frenzel et al., 2011). Furthermore, Frenzel et al.'s research suggested that the occipital N200 component mainly indexed the locus of eye gaze and that the P300 mainly indexed the locus of attention (Frenzel et al., 2011).

In view of the above problems, researchers have made efforts to develop BCI systems that are independent of eye gaze. A possible solution is to use non-visual stimuli such as auditory or tactile stimuli (Klobassa et al., 2009; Kübler et al., 2009; Brouwer and van Erp, 2010; Chreuder et al., 2011; Schreuder et al., 2011; Lopez-Gordo et al., 2012; Thurlings et al., 2012). Additionally, for visual BCI systems, some new areas of research were developed (Marchetti et al., 2013; An et al., 2014; Lesenfants et al., 2014). For example, Treder et al. presented the first study on gaze-independent BCIs in 2010 (Treder and Blankertz, 2010). Following on from this work, they optimized the gaze-independent pattern by using non-spatial feature attention and facilitating spatial covert attention in 2011 (Treder et al., 2011).

Schaeff et al. reported a gaze-independent paradigm with motion VEPs in 2012 (Schaeff et al., 2012). Acqualagna et al. presented a novel gaze-independent paradigm called the rapid serial visual presentation (RSVP) paradigm in 2010 (Acqualagna et al., 2010). In this paradigm, all the symbols were presented one by one in a serial manner and in the center of the display. The classification accuracy of these presented paradigms was acceptable and could work for individuals who could not control their gaze. However, the classification accuracies and information transfer rates of these gaze-independent BCIs should be improved further for practical applications. The common stimuli used in gaze-independent paradigms were letters, numbers, and polygons, which were used to evoke false positive ERPs in the non-target trials.

It has been shown that face stimuli can be used to obtain high BCI performance (Jin et al., 2012; Kaufmann et al., 2012; Zhang et al., 2012). Facial expressions on dummy faces can evoke strong ERPs (Curran and Hancock, 2007; Chen et al., 2014; Jin et al., 2015). Additionally, the use of different facial expressions has been shown to produce different ERP amplitudes during a BCI control experiment. In our study, the stimuli were colored balls and dummy faces, the latter composed only of simple lines and arcs; that is, every face was a cartoon face. Dummy faces can show a person's facial expressions and are easily edited without copyright infringement problems. The primary goal of this study was to investigate whether stimuli that combine different facial expressions and colors could obtain higher performance than the traditional gaze-independent patterns.

Different colors and facial expressions were used to help participants to locate the target stimulus, resulting in enlarged evoked ERPs when the participant was focusing on the target. Furthermore, different facial expressions were used to decrease the repetition effects in evoking the ERPs (Jin et al., 2014b). To evaluate the validity of the colored dummy face pattern in increasing information transfer rates, improving classification accuracies, and evoking ERPs, three different paradigms were presented, which were called the colored dummy face pattern, the gray dummy face pattern, and the colored ball pattern. The colored ball pattern was a gaze-independent paradigm using different colors; the gray dummy face pattern was a gaze-independent paradigm using different facial expressions; finally, the colored dummy face pattern was a gaze-independent paradigm that combined the different colors and facial expressions.

Materials and Methods

Participants

Ten healthy individuals (seven male, aged 21–26 years, mean 24.5 ± 1.25) participated in this study, and were marked as S1, S2, S3, S4, S5, S6, S7, S8, S9, and S10. The 10 participants signed a written consent form prior to this experiment and were paid for their participation. The local ethics committee approved the consent form and experimental procedure before any individuals participated. The native language of all the participants was Mandarin Chinese.

Stimuli and Procedure

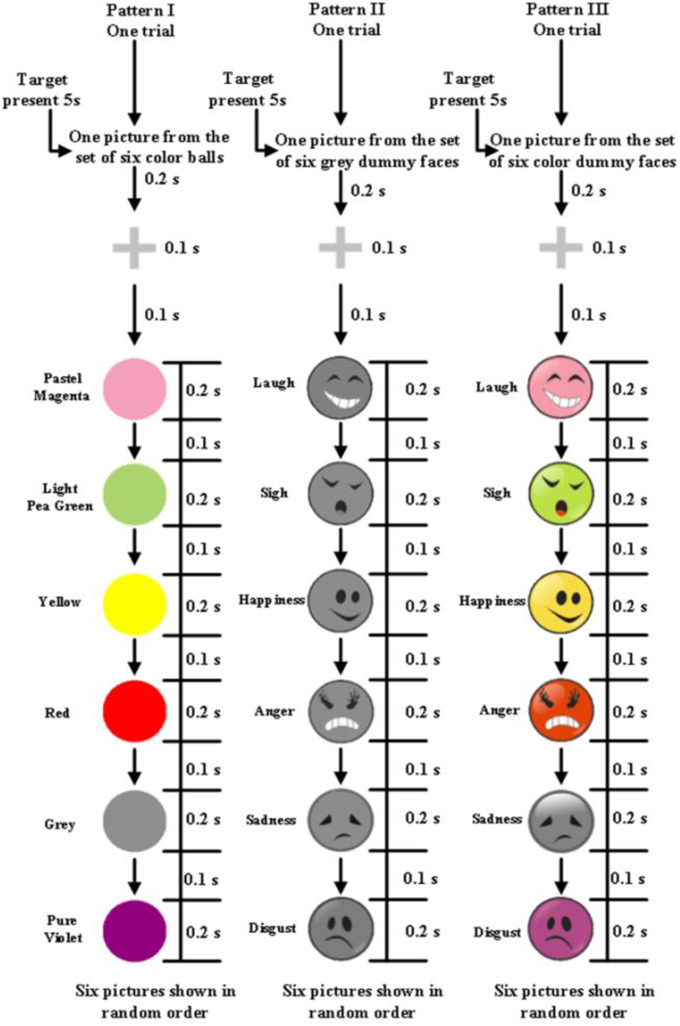

After being prepared for EEG recording, the participants were seated in a comfortable chair 70 ± 3 cm in front of a standard 24 inch LED monitor (60 Hz refresh rate, 1920 × 1080 screen resolution) in a shielded room. The stimuli were presented in the middle of the computer screen. During data acquisition, participants were asked to relax and avoid unnecessary movement. There were three experimental paradigms: the colored ball paradigm (Pattern I), the gray dummy face paradigm (Pattern II), and the colored dummy face paradigm (Pattern III). Every dummy face paradigm included six different cartoon face stimuli, which were taken from the internet and modified with Photoshop 7.0. These face stimuli encoded six facial expressions: laughter, sighing, happiness, anger, sadness, and disgust. The facial stimuli had the same size and lighting. The stimuli used in the three paradigms are shown in Figure 1. Every dummy face picture consisted of simple lines and arcs.

Figure 1. One trial of the experiment. Pattern I is the colored ball paradigm, pattern II is the gray dummy face paradigm, and pattern III is the colored dummy face paradigm.

Each stimulus picture was shown in the middle of the computer screen (Figure 2). The serial number of each picture (target or non-target) was shown at the top of the screen. The three conditions differed only in the stimulus images, and every condition contained six pictures (Figure 1). The stimulus on time was 200 ms and the off time was 100 ms (Figure 1). During the stimulus off time, the screen was blank. The stimuli of the three paradigms are illustrated in Figure 2.

Experiment Setup, Offline, and Online Protocols

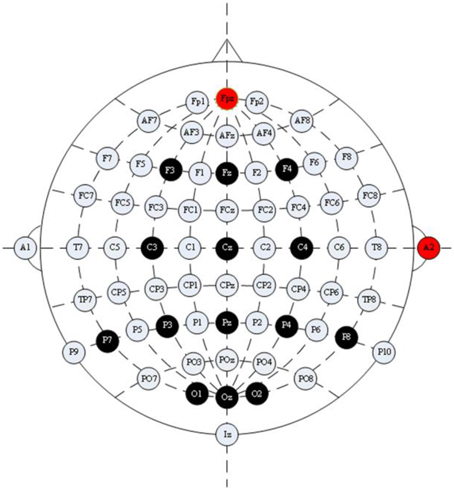

EEG signals were recorded with a g.USBamp and a g.EEGcap (Guger Technologies, Graz, Austria) with a sensitivity of 100 μV, band-pass filtered between 0.1 and 30 Hz, and sampled at 256 Hz. We recorded from 14 EEG electrode positions based on the extended International 10–20 system (Figure 3). These electrodes were Cz, Pz, Oz, Fz, F3, F4, C3, C4, P3, P4, P7, P8, O1, and O2. The right mastoid electrode was used as the reference and the frontal electrode (Fpz) was used as the ground. Data were recorded and analyzed using the ECUST BCI platform software package, which was developed by East China University of Science and Technology (Jin et al., 2011).

Figure 3. Configuration of electrode positions. The electrode positions used in our experiment were F3, F4, Fz, C3, C4, Cz, P7, P3, Pz, P4, P8, O1, O2, and Oz; Fpz was used as the ground electrode position; A2 was used as the reference electrode position.

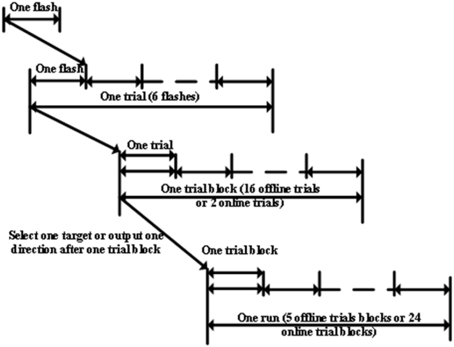

In this paper, the term “flash” referred to each individual event. A single character flash pattern was used here. In each trial, each ball, or dummy face, was flashed once. In other words, each trial included six flashes. All patterns had 200 ms of flashes followed by a 100 ms delay, and each trial lasted 1.8 s with one target and five non-targets (see Figure 1). A trial block referred to a group of trials with the same target. During the offline experiment, there were 16 trials per trial block and each run consisted of five trial blocks, each of which involved a different target. Participants had a 2 min break after each offline run. During the online experiment participants attempted to identify 24 targets (see Figure 4).
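The trial arithmetic described above can be checked with a short sketch. The constants come from the text; the function and variable names are illustrative only, and cue, feedback, and rest intervals are deliberately left out.

```python
# Timing constants taken from the text; all names are illustrative.
FLASH_ON_MS = 200       # stimulus on time
FLASH_OFF_MS = 100      # delay after each stimulus
STIMULI_PER_TRIAL = 6   # one target and five non-targets
TRIALS_PER_BLOCK = 16   # offline experiment
BLOCKS_PER_RUN = 5      # offline experiment


def trial_duration_s() -> float:
    """Duration of one trial: six flashes of 200 ms on plus 100 ms off."""
    return STIMULI_PER_TRIAL * (FLASH_ON_MS + FLASH_OFF_MS) / 1000.0


def offline_run_summary() -> dict:
    """Flash counts for one offline run (cue and feedback time ignored)."""
    trials = TRIALS_PER_BLOCK * BLOCKS_PER_RUN
    flashes = trials * STIMULI_PER_TRIAL
    return {
        "trials": trials,
        "flashes": flashes,
        "target_flashes": trials,                # one target per trial
        "non_target_flashes": flashes - trials,  # five non-targets per trial
        "stimulation_time_s": trials * trial_duration_s(),
    }


if __name__ == "__main__":
    print(trial_duration_s())
    print(offline_run_summary())
```

Under these numbers, one offline run yields five times as many non-target flashes as target flashes, which is the class imbalance the classifier is trained on.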

There were three conditions, which were presented to each participant in random order. For each condition, participants first took part in three offline runs. Participants had 2 min rest between each offline run (Figure 4). After all offline runs, participants were asked to attempt to identify 24 targets for each pattern in the online experiment. Feedback and target selection time was 5 s before each trial block. Participants had 2 min rest before starting the online task for each condition. The target cue (a dummy face or a colored ball) was shown in the middle of the screen for 2 s before each run. Participants were instructed to focus on, and count, appearances of this cue during both the online and offline experiments. The feedback, which was obtained during the online experiments, was shown at the top of the screen.

Feature Extraction Procedure

A third-order Butterworth band-pass filter was used to filter the EEG between 0.1 and 30 Hz. The EEG was then down-sampled from 256 Hz to approximately 51 Hz by selecting every fifth sample from the filtered EEG. For each flash, an 800 ms segment of EEG was extracted from the data. For the offline data, winsorizing was used to remove the electrooculogram (EOG). The 10th percentile and the 90th percentile were computed for the samples from each electrode. Amplitude values lying below the 10th percentile or above the 90th percentile were replaced by the 10th percentile or the 90th percentile, respectively (Hoffmann et al., 2008).
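The down-sampling, epoch extraction, and winsorizing steps can be sketched in plain Python as follows. This is a minimal illustration for one electrode, not the ECUST platform code: the band-pass filter is assumed to have been applied already, the function names are hypothetical, and the linear-interpolation percentile is an assumption because the text does not say how percentiles were computed.

```python
FS_RAW = 256      # sampling rate in Hz (from the text)
DECIMATION = 5    # keep every fifth sample, giving about 51 Hz
EPOCH_MS = 800    # segment length extracted for each flash


def downsample(signal, step=DECIMATION):
    """Keep every `step`-th sample of an already filtered signal."""
    return signal[::step]


def extract_epoch(signal, onset_index, fs, epoch_ms=EPOCH_MS):
    """Return the samples covering `epoch_ms` starting at a flash onset."""
    n_samples = int(fs * epoch_ms / 1000)
    return signal[onset_index:onset_index + n_samples]


def percentile(values, q):
    """Percentile with linear interpolation (an assumed convention)."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lower = int(pos)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (pos - lower)


def winsorize(values, low_q=10, high_q=90):
    """Clip one electrode's samples to its 10th and 90th percentiles."""
    low = percentile(values, low_q)
    high = percentile(values, high_q)
    return [min(max(v, low), high) for v in values]


if __name__ == "__main__":
    raw = [float(i % 50) for i in range(FS_RAW * 2)]  # synthetic channel
    reduced = downsample(raw)
    epoch = extract_epoch(reduced, onset_index=0, fs=FS_RAW / DECIMATION)
    print(len(reduced), len(epoch), winsorize(epoch)[:5])
```

Clipping rather than discarding samples keeps every epoch the same length, so large ocular deflections are attenuated without changing the feature dimension.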

Classification Scheme

Bayesian linear discriminant analysis (BLDA) is an extension of Fisher's linear discriminant analysis (FLDA) that uses regularization to avoid overfitting to high-dimensional and possibly noisy datasets. The details of the algorithm can be found in Hoffmann et al. (2008). By using a Bayesian analysis, the degree of regularization can be estimated automatically and quickly from the training data (Hoffmann et al., 2008). Data acquired from the offline experiment were used to train the BLDA classifier and obtain the classifier model. This model was then used in the online system.

Raw Bit Rate and Practical Bit Rate

In this paper, we used a bit rate calculation method called raw bit rate (RBR), which was calculated via

$$\mathrm{RBR} = \frac{\log_2 N + P \log_2 P + (1 - P)\log_2\left(\frac{1 - P}{N - 1}\right)}{T}$$

where P denotes the classification accuracy and N denotes the number of possible targets in each trial. N was equal to six in our experiment. T denotes the completion time of the target selection task. Bit rate is an objective measure of BCI performance and allows different BCIs to be compared (Wolpaw et al., 2002). RBR is calculated without selection time as defined in Wolpaw et al. (2002).

Practical bit rate (PBR) may be used to estimate the speed of the system in a real-world setting. PBR incorporates the fact that every error requires two additional selections to correct it: selecting the wrong character is followed by a backspace and the selection of the correct character. The PBR is calculated as RBR × (1 − 2 × P1), where RBR is the raw bit rate and P1 is the online error rate of the system (Townsend et al., 2010). If P1 > 50%, the PBR is zero. The PBR also incorporates the time between selections (4 s).
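A minimal sketch of both bit rate measures follows. It assumes the standard bits-per-selection definition of Wolpaw et al. (2002), divided by the selection time in minutes. The function names are illustrative, and whether the selection time includes the interval between selections is left to the caller.

```python
import math


def bits_per_selection(accuracy: float, n_targets: int) -> float:
    """Wolpaw bits per selection; terms with a zero factor are skipped."""
    bits = math.log2(n_targets)
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits


def raw_bit_rate(accuracy: float, n_targets: int, minutes_per_selection: float) -> float:
    """Raw bit rate in bits per minute."""
    return bits_per_selection(accuracy, n_targets) / minutes_per_selection


def practical_bit_rate(rbr: float, error_rate: float) -> float:
    """RBR scaled by (1 - 2 * error rate); zero when the error rate exceeds 50%."""
    if error_rate > 0.5:
        return 0.0
    return rbr * (1.0 - 2.0 * error_rate)


if __name__ == "__main__":
    # Illustrative values only, not results from the experiment.
    accuracy, n_targets, seconds = 0.9, 6, 5.4
    rbr = raw_bit_rate(accuracy, n_targets, seconds / 60.0)
    print(rbr, practical_bit_rate(rbr, 1.0 - accuracy))
```

With six possible targets, chance-level accuracy (1/6) carries zero bits per selection and perfect accuracy carries log2(6), about 2.58 bits, which bounds the rate achievable at any given selection time.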

Results

Offline Analysis

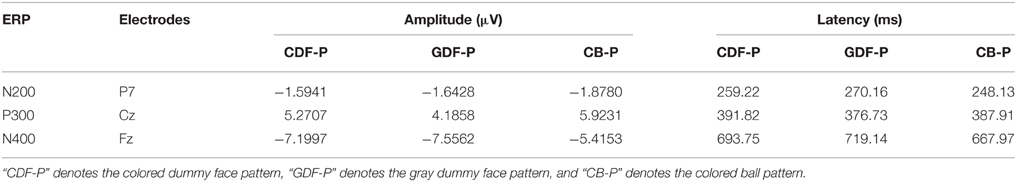

In this paper, electrode P7 was selected to measure the amplitude of N200 difference (Hong et al., 2009); electrode Cz was selected to measure the amplitude of P300 (Treder et al., 2011); and electrode Fz was selected to measure the amplitude of the N400 (Jin et al., 2014a).

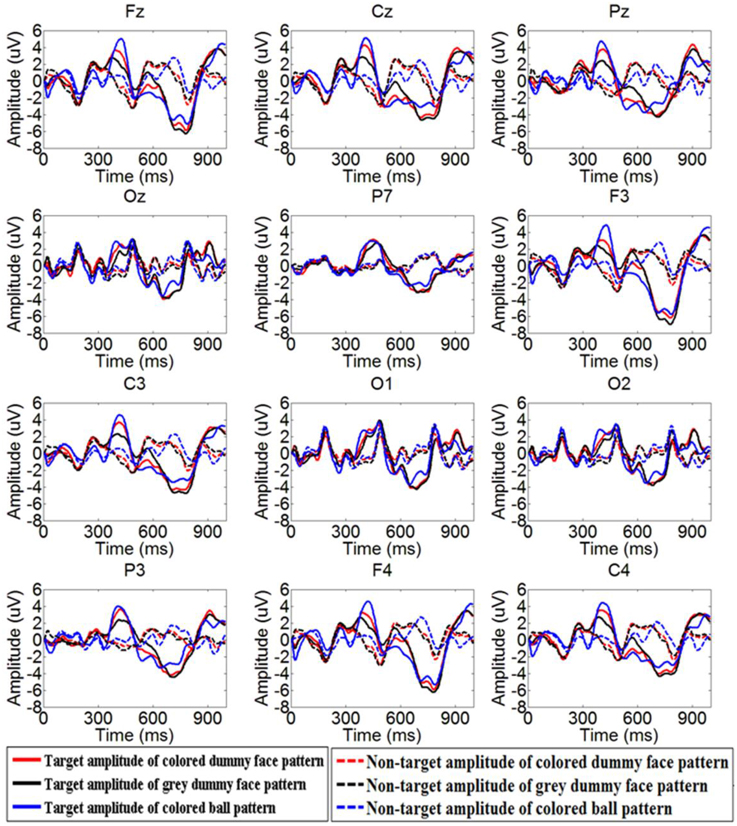

The mean latencies and amplitudes of the ERPs from all 10 participants are shown in Table 1. Figure 5 shows the grand averaged amplitudes of target and non-target flashes across all participants over 12 electrode sites for the colored dummy face pattern, the gray dummy face pattern, and the colored ball pattern. Specifically, frontal and central channels contain an early negative ERP at around 250 ms (N200), followed by a high positive potential at around 350 ms (P300), and then a larger negative ERP at around 700 ms (N400).

Figure 5. Grand averaged ERPs of target flashes across all participants over 12 electrode sites. The red line, black line, and green line indicate the target amplitude of the colored dummy face pattern, gray dummy face pattern, and colored ball pattern, respectively.

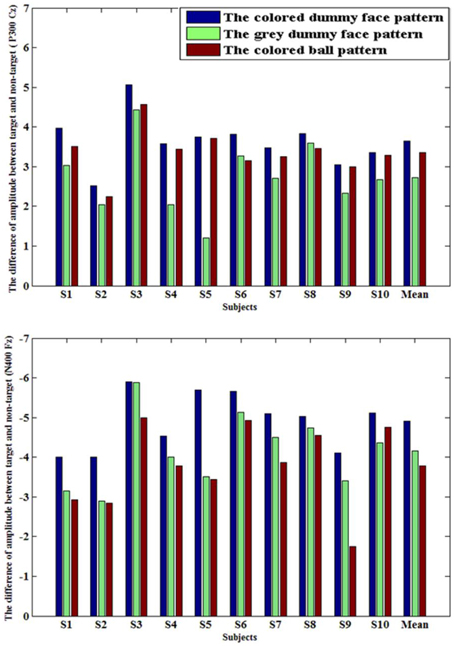

Figure 6 shows the amplitude differences between target and non-target ERPs at Cz (P300, peak point ± 25 ms) and at Fz (N400, peak point ± 25 ms). A one-way repeated measures ANOVA was used to compare the P300 peak amplitude [F(2, 27) = 4.1, p = 0.0279] and the N400 peak amplitude [F(2, 27) = 3.9, p = 0.0324] among the three patterns. The colored dummy face pattern evoked a significantly higher P300 ERP (p < 0.05) and N400 ERP (p < 0.05) than the other two patterns.

Figure 6. Upper part: The amplitude difference of P300 between target and non-target ERP amplitudes at electrode Cz across all 10 participants (μV); Lower part: The amplitude difference of N400 between target and non-target ERP amplitudes at electrode Fz across all 10 participants (μV).
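One way to obtain an amplitude measure of the "peak point ± 25 ms" kind is sketched below. The text does not state how the peak was located or whether the window was averaged, so the search window, the averaging, and the function name are all assumptions made for illustration.

```python
def peak_window_mean(diff_wave, fs, search_ms, polarity, half_width_ms=25.0):
    """Mean of a target-minus-non-target wave around its peak.

    diff_wave: samples at one electrode, index 0 being stimulus onset.
    search_ms: (start, stop) latency range in which the peak is sought.
    polarity:  +1 for a positive component (P300), -1 for a negative one (N400).
    Returns (mean amplitude in the window, peak latency in ms).
    """
    start = int(search_ms[0] * fs / 1000)
    stop = min(int(search_ms[1] * fs / 1000), len(diff_wave) - 1)
    choose = max if polarity > 0 else min
    peak = choose(range(start, stop + 1), key=lambda i: diff_wave[i])
    half = int(round(half_width_ms * fs / 1000))
    low = max(peak - half, 0)
    high = min(peak + half, len(diff_wave) - 1)
    segment = diff_wave[low:high + 1]
    return sum(segment) / len(segment), 1000.0 * peak / fs


if __name__ == "__main__":
    # Synthetic triangular deflection peaking at 350 ms, sampled at 1 kHz.
    wave = [max(0, 50 - abs(i - 350)) for i in range(1000)]
    print(peak_window_mean(wave, fs=1000, search_ms=(300, 400), polarity=+1))
```

Averaging over a short window rather than reading a single sample makes the measure less sensitive to residual noise in the averaged waveform.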

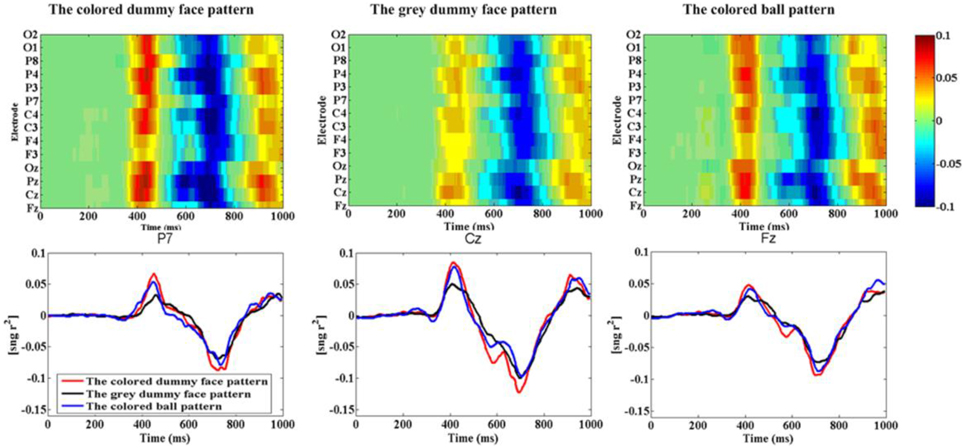

Figure 7 shows the grand average r-squared values of ERPs across all 10 participants at sites Fz, Cz, Pz, Oz, F3, F4, C3, C4, P7, P3, P4, P8, O1, and O2. A one-way repeated measures ANOVA was used to test the difference in r-squared values among the three patterns across all 10 participants. The difference was not significant at electrode P7 [F(2, 27) = 0.12, p = 0.91] for the N200 (peak point ± 25 ms), but it was significant at Cz [F(2, 27) = 3.74, p = 0.0368] for the P300 (peak point ± 25 ms) and at Fz [F(2, 27) = 3.38, p = 0.049] for the N400 (peak point ± 25 ms). The colored dummy face pattern obtained significantly higher r-squared values during the P300 ERP (p < 0.05) and the N400 ERP (p < 0.05) compared to the other two patterns.

Figure 7. R-squared values of ERPs. (A) R-squared values of ERPs from the three paradigms between 1 and 1000 ms averaged from participants 1–10 at sites Fz, Cz, Pz, Oz, F3, F4, C3, C4, P7, P3, P4, P8, O1, and O2. (B) The r-squared values at P7, Cz, and Fz.
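The text does not define the r-squared value. A common choice in the BCI literature, assumed here, is the squared Pearson correlation between single-flash amplitudes at one electrode and time point and the binary class label (target or non-target). The sketch below follows that assumption, and the function name is illustrative.

```python
def r_squared(target_values, non_target_values):
    """Squared correlation between amplitude and class label (1 = target)."""
    values = list(target_values) + list(non_target_values)
    labels = [1.0] * len(target_values) + [0.0] * len(non_target_values)
    n = len(values)
    mean_v = sum(values) / n
    mean_l = sum(labels) / n
    cov = sum((v - mean_v) * (l - mean_l) for v, l in zip(values, labels))
    var_v = sum((v - mean_v) ** 2 for v in values)
    var_l = sum((l - mean_l) ** 2 for l in labels)
    if var_v == 0.0 or var_l == 0.0:
        return 0.0  # no variance in amplitude or only one class present
    return cov * cov / (var_v * var_l)


if __name__ == "__main__":
    # Synthetic amplitudes in microvolts, for illustration only.
    print(r_squared([4.0, 5.0, 6.0], [1.0, 2.0, 0.5, 1.5]))
```

A value near one means the amplitude at that electrode and latency separates target from non-target flashes almost perfectly, while a value near zero means it carries little class information.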

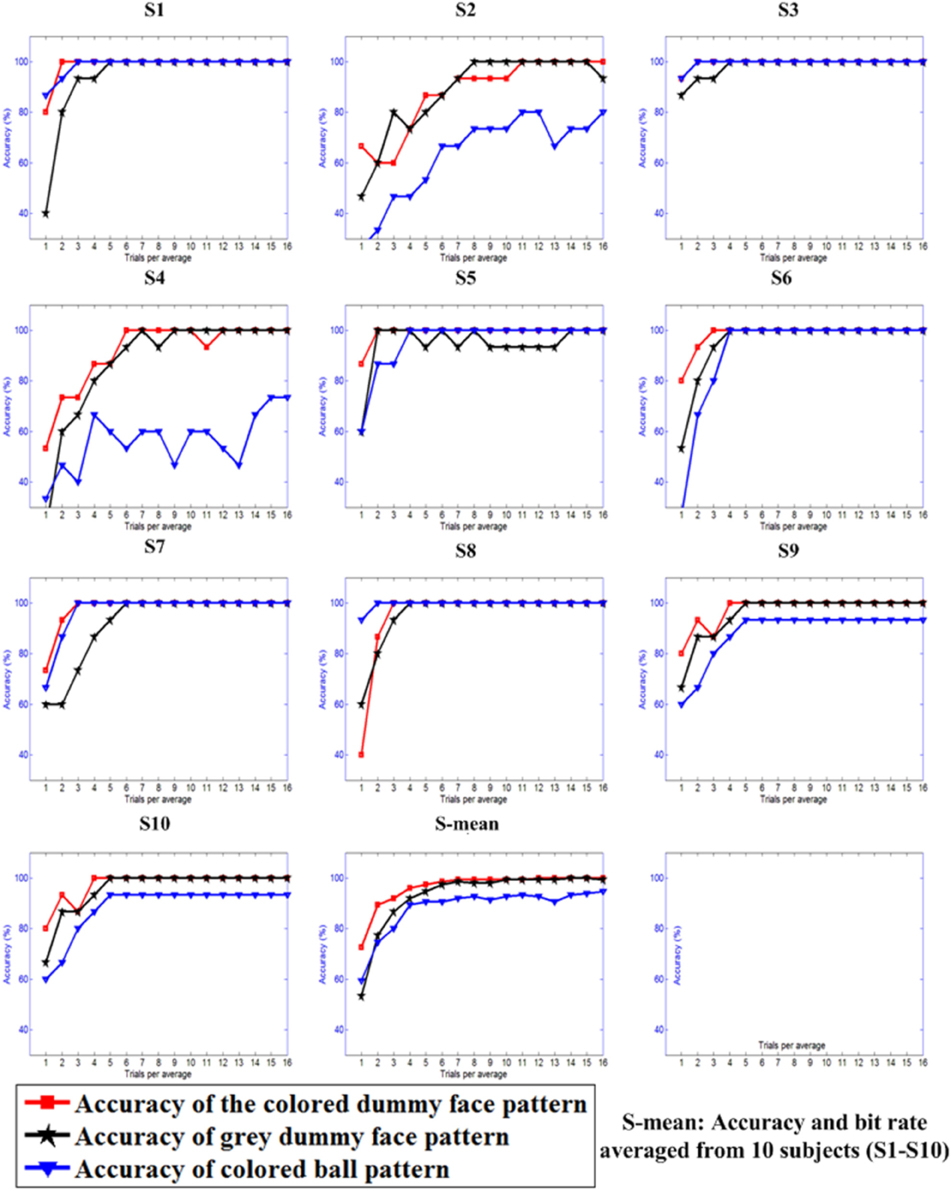

Figure 8 shows the offline classification accuracies of the three patterns when differing numbers of trials (1–16) were used to construct the averaged ERP.

Figure 8. Offline classification accuracies with differing numbers of trials used for constructing the averaged ERPs.
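The accuracy curve over the number of trials can be sketched as follows. For a linear classifier, scoring an ERP averaged over k trials is equivalent to averaging the k single-trial scores, so the sketch sums per-stimulus scores over the first k trials and selects the largest. The data layout and function names are assumptions, not the authors' implementation.

```python
def select_target(scores, k):
    """Pick the stimulus with the largest summed score over the first k trials.

    scores: list of trials, each a list with one classifier score per stimulus.
    """
    n_stimuli = len(scores[0])
    totals = [sum(trial[s] for trial in scores[:k]) for s in range(n_stimuli)]
    return max(range(n_stimuli), key=lambda s: totals[s])


def accuracy_by_trials(blocks, max_trials):
    """Accuracy for k = 1..max_trials.

    blocks: list of (scores, target_index) pairs, one per trial block.
    """
    curve = []
    for k in range(1, max_trials + 1):
        correct = sum(1 for scores, target in blocks
                      if select_target(scores, k) == target)
        curve.append(correct / len(blocks))
    return curve


if __name__ == "__main__":
    # One synthetic block with two stimuli; stimulus 0 is the target.
    block = ([[0.1, 0.9], [0.8, 0.2], [0.9, 0.1]], 0)
    print(accuracy_by_trials([block], max_trials=3))
```

In the offline data described in the text, each trial block would supply 16 trials of six scores each, giving one accuracy value per k from 1 to 16.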

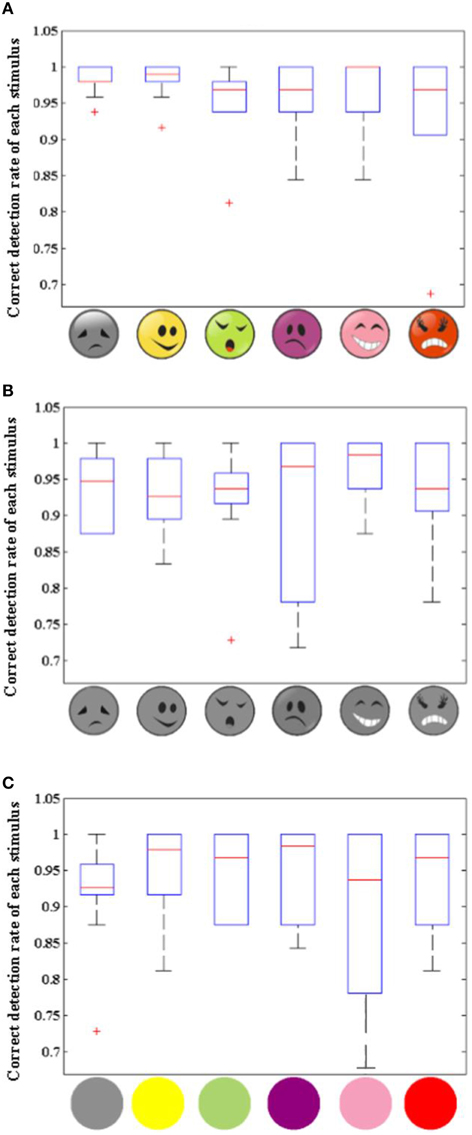

Figure 9 shows three boxplots, which illustrate the distribution of correct detection rates of each stimulus across all 10 participants for the three patterns. Correct detection rate of a stimulus shows the rate of correct classification for one stimulus. It indicates that the classification accuracy of the six stimuli in the colored dummy face pattern was the most stable when compared to the other two patterns.

Figure 9. Boxplot of correct detection rate of each stimulus for 10 participants. Panels (A), (B), and (C) are the Boxplots of correct detection rate of each stimulus across all 10 participants for the colored dummy face pattern (A), the gray dummy face pattern (B), and the colored ball pattern (C), respectively.

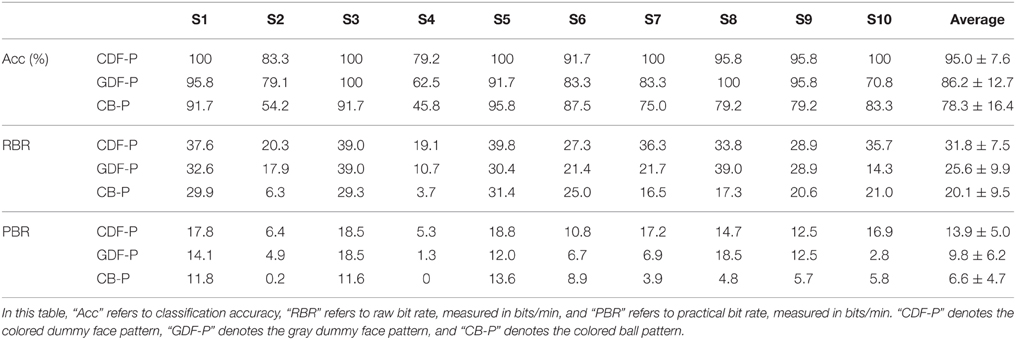

Online Results

Table 2 shows the online classification accuracies and information transfer rates with an adaptive strategy (Jin et al., 2011). A one-way repeated measures ANOVA was used to test the difference in classification accuracies [F(2, 27) = 4.27, p = 0.0245], RBRs [F(2, 27) = 4.17, p = 0.0264], and PBRs [F(2, 27) = 4.63, p = 0.0186] across the three patterns. The colored dummy face pattern achieved significantly higher classification accuracies (p < 0.05), RBRs (p < 0.05), and PBRs (p < 0.05) than the gray dummy face pattern and the colored ball pattern.

Table 2. Classification accuracy, raw bit rate, and practical bit rates achieved during online experiments.

Discussion

The primary goal of this study was to verify that the colored dummy face pattern could increase the distinguishability of stimuli and improve classification accuracies and information transfer rates. Facial expression stimuli could evoke strong N200, P300, and N400 ERPs, while colored stimuli could also evoke high P300 ERPs, especially in gaze-independent paradigms (Treder et al., 2011; Acqualagna and Blankertz, 2013; Chen et al., 2014; Jin et al., 2015). In this paper, different colors and facial expressions were combined to produce enlarged ERPs. The results show that higher N400 and P300 ERPs were evoked by the colored dummy face pattern, compared with the gray dummy face pattern and the colored ball pattern. The colored dummy face pattern had a significant advantage in terms of the P300 (p < 0.05) and N400 (p < 0.05) ERP amplitudes (see Figure 6) compared to the other two patterns. Furthermore, the colored dummy face pattern had an advantage over the other two patterns in terms of r-squared values at Cz for the P300 ERP (p < 0.05) and at Fz for the N400 ERP (p < 0.05).

Classification Accuracy and Information Transfer Rate

Classification accuracy and information transfer rate are two important indexes of the performance of BCI systems. Online classification accuracies and information transfer rates of the three patterns are shown in Table 2. A one-way repeated measures ANOVA was used to test the difference in classification accuracy (F = 4.27, p < 0.05), RBR (F = 4.17, p < 0.05), and PBR (F = 4.63, p < 0.05) between the three patterns. The colored dummy face pattern resulted in significantly higher classification accuracies (p < 0.05), RBRs (p < 0.05), and PBRs (p < 0.05) than the gray dummy face pattern and the colored ball pattern. The mean classification accuracy of the colored dummy face pattern was 8.8% higher than that of the gray dummy face pattern, while the mean RBR of the colored dummy face pattern was 6.2 bits min−1 higher than that of the gray dummy face pattern. The mean classification accuracy and RBR of the colored dummy face pattern were 16.7% and 11.7 bits min−1 higher than those of the colored ball pattern.

Potential Advantage for Users

The system is a gaze-independent BCI based on RSVP, which could be used by individuals who have completely or partially lost the ability to control their eye gaze. Figure 9 shows that the correct detection rates of the stimuli used in the colored dummy face pattern were more stable across participants than those of the other two patterns, which indicates an advantage of the colored dummy face pattern for practical applications.

Conclusions

A colored dummy face paradigm for visual attention-based BCIs was presented. The stimuli used in this pattern combined colors and facial expressions, which led to high classification accuracies during RSVP. The paradigm had a significant advantage in terms of the evoked P300 and N400 amplitudes. It also obtained higher classification accuracies and information transfer rates than the color change paradigm and the facial expression paradigm. In the future, we will further verify the performance of this paradigm with patients.

Author Contributions

LC and JJ designed and carried out the experiment. ID, YZ, XW, and AC provided guidance. LC wrote the manuscript. JJ and ID revised the manuscript. XW provided the required experimental funds.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant Nos. 61573142, 61203127, 91420302, and 61305028. This work was also supported by the Fundamental Research Funds for the Central Universities (WG1414005, WH1314023, and WH1516018) and the Shanghai Chenguang Program under Grant 14CG31.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Acqualagna, L., and Blankertz, B. (2013). Gaze-independent BCI-spelling using rapid serial visual presentation (RSVP). Clin. Neurophysiol. 124, 901–908. doi: 10.1016/j.clinph.2012.12.050

Acqualagna, L., Treder, M. S., Schreuder, M., and Blankertz, B. (2010). “A novel brain-computer interface based on the rapid serial visual presentation paradigm,” in Conference Proceedings IEEE Engineering in Medicine and Biology Society (Buenos Aires), 2686–2689.

Allison, B. Z., Wolpaw, E. W., and Wolpaw, J. R. (2007). Brain-computer interface systems: progress and prospects. Expert Rev. Med. Devices 4, 463–474. doi: 10.1586/17434440.4.4.463

An, X., Höhne, J., Ming, D., and Blankertz, B. (2014). Exploring combinations of auditory and visual stimuli for gaze-independent brain-computer interfaces. PLoS ONE 9:0111070. doi: 10.1371/journal.pone.0111070

Bianchi, L., Sami, S., Hillebrand, A., Fawcett, I. P., Quitadamo, L. R., and Seri, S. (2010). Which physiological components are more suitable for visual ERP based brain-computer interface? A preliminary MEG/EEG study. Brain Topogr. 23, 180–185. doi: 10.1007/s10548-010-0143-0

Bin, G., Gao, X., Wang, Y., Li, Y., Hong, B., and Gao, S. (2011). A high-speed BCI based on code modulation VEP. J. Neural Eng. 8:025015. doi: 10.1088/1741-2560/8/2/025015

Brouwer, A. M., and van Erp, J. B. (2010). A tactile P300 brain-computer interface. Front. Neurosci. 4:00019. doi: 10.3389/fnins.2010.00019

Brunner, P., Joshi, S., Briskin, S., Wolpaw, J. R., Bischof, H., and Schalk, G. (2010). Does the “P300” speller depend on eye gaze? J. Neural Eng. 7:056013. doi: 10.1088/1741-2560/7/5/056013

Cecotti, H. (2011). Spelling with non-invasive brain-computer interfaces-current and future trends. J. Physiol. Paris. 105, 106–114. doi: 10.1016/j.jphysparis.2011.08.003

Chen, L., Jin, J., Zhang, Y., Wang, X., and Cichocki, A. (2014). A survey of dummy face and human face stimuli used in BCI paradigm. J. Neurosci. Methods 239, 18–27. doi: 10.1016/j.jneumeth.2014.10.002

Chreuder, M. S., Rost, T., and Tangermann, M. (2011). Listen, you are writing! Speeding up online spelling with a dynamic auditory BCI. Front. Neurosci. 5:00112. doi: 10.3389/fnins.2011.00112

Curran, T., and Hancock, J. (2007). The FN400 indexes familiarity-based recognition of faces. Neuroimage 36, 464–471. doi: 10.1016/j.neuroimage.2006.12.016

Donchin, E., Spencer, K. M., and Wijesinghe, R. (2000). The mental prosthesis: assessing the speed of a P300-based brain-computer interface. IEEE Trans. Rehabil. Eng. 8, 381–388. doi: 10.1109/86.847808

Farwell, L. A., and Donchin, E. (1988). Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 70, 510–523. doi: 10.1016/0013-4694(88)90149-6

Fazel-Rezai, R. (2007). Human error in P300 speller paradigm for brain-computer interface. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2007, 2516–2519. doi: 10.1109/IEMBS.2007.4352840

Frenzel, S., Neubert, E., and Bandt, C. (2011). Two communication lines in a 3 × 3 matrix speller. J. Neural Eng. 8:036021. doi: 10.1088/1741-2560/8/3/036021

Guan, C., Thulasidas, M., and Wu, J. (2004). “High performance P300 speller for brain-computer interface,” in IEEE International Workshop on Biomedical Circuits and Systems (Singapore), S3/5/INV–S3/13-16

Hill, N. J., Lal, T. M., Bierig, K., Birbaumer, N., and Schölkopf, B. (2004). An Auditory Paradigm for Brain-Computer Interface NIPS. Available online at: books.nips.cc/papers/files/nips17/NIPS2004_0503.ps.gz

Hoffmann, U., Vesin, J. M., Ebrahimi, T., and Diserens, K. (2008). An efficient P300-based brain-computer interface for disabled subjects. J. Neurosci. Methods 167, 115–125. doi: 10.1016/j.jneumeth.2007.03.005

Hong, B., Guo, F., Liu, T., Gao, X., and Gao, S. (2009). N200-speller using motion-onset visual response. Clin. Neurophysiol. 120, 1658–1666. doi: 10.1016/j.clinph.2009.06.026

Jin, J., Allison, B. Z., Kaufmann, T., Kübler, A., Zhang, Y., Wang, X., et al. (2012). The changing face of P300 BCIs: a comparison of stimulus changes in a P300 BCI involving faces, emotion, and movement. PLoS ONE 7:0049688. doi: 10.1371/journal.pone.0049688

Jin, J., Allison, B. Z., Sellers, E. W., Brunner, C., Horki, P., Wang, X., et al. (2011). An adaptive P300-based control system. J. Neural Eng. 8:036006. doi: 10.1088/1741-2560/8/3/036006

Jin, J., Allison, B. Z., Zhang, Y., Wang, X., and Cichocki, A. (2014b). An ERP-based BCI using an oddball paradigm with different faces and reduced errors in critical functions. Int. J. Neural Syst. 24:1450027. doi: 10.1142/S0129065714500270

Jin, J., Daly, I., Zhang, Y., Wang, X., and Cichocki, A. (2014a). An optimized ERP brain–computer interface based on facial expression changes. J. Neural Eng. 11:036004. doi: 10.1088/1741-2560/11/3/036004

Jin, J., Sellers, E. W., Zhou, S., Zhang, Y., Wang, X., and Cichocki, A. (2015). A P300 brain-computer interface based on a modification of the mismatch negativity paradigm. Int. J. Neural Syst. 25:1550011. doi: 10.1142/S0129065715500112

Kaufmann, T., Herweg, A., and Kübler, A. (2014). Toward brain-computer interface based wheelchair control utilizing tactually-evoked event-related potentials. J. Neuroeng Rehabil. 11:7. doi: 10.1186/1743-0003-11-7

Kaufmann, T., Schulz, S. M., Köblitz, A., Renner, G., Wessig, C., and Kübler, A. (2012). Face stimuli effectively prevent brain–computer interface inefficiency in patients with neurodegenerative disease. Clin. Neurophysiol. 124, 893–900. doi: 10.1016/j.clinph.2012.11.006

Kim, D. W., Hwang, H. J., Lim, J. H., Lee, Y. H., Jung, K. Y., and Im, C. H. (2011). Classification of selective attention to auditory stimuli: toward vision-free brain-computer interfacing. J. Neurosci. Methods 197, 180–185. doi: 10.1016/j.jneumeth.2011.02.007

Klobassa, D. S., Vaughan, T. M., Brunner, P., Schwartz, N. E., Wolpaw, J. R., Neuper, C., et al. (2009). Toward a high-throughput auditory P300-based brain-computer interface. Clin. Neurophysiol. 120, 1252–1261. doi: 10.1016/j.clinph.2009.04.019

Kübler, A., Furdea, A., Halder, S., Hammer, E. M., Nijboer, F., and Kotchoubey, B. (2009). A brain-computer interface controlled auditory event-related potential (P300) spelling system for locked-in patients. Ann. N.Y. Acad. Sci. 1157, 90–100. doi: 10.1111/j.1749-6632.2008.04122.x

Lécuyer, A., Lotte, F., Reilly, R. B., Leeb, R., Hirose, M., and Slater, M. (2008). Brain-computer interfaces, virtual reality, and video games. Computer 41, 66–72. doi: 10.1109/MC.2008.410

Lesenfants, D., Habbal, D., Lugo, Z., Lebeau, M., Horki, P., Amico, E., et al. (2014). An independent SSVEP-based brain-computer interface in locked-in syndrome. J. Neural Eng. 11:035002. doi: 10.1088/1741-2560/11/3/035002

Li, J., Liang, J., Zhao, Q., Li, J., Hong, K., and Zhang, L. (2013). Design of assistive wheelchair system directly steered by human thoughts. Int. J. Neural Syst. 23:1350013. doi: 10.1142/S0129065713500135

Lopez-Gordo, M. A., Pelayo, F., Prieto, A., and Fernandez, E. (2012). An auditory brain-computer interface with accuracy prediction. Int. J. Neural Syst. 22:1250009. doi: 10.1142/S0129065712500098

Mak, J. N., Arbel, Y., Minett, J. W., McCane, L. M., Yuksel, B., Ryan, D., et al. (2011). Optimizing the P300-based brain-computer interface: current status, limitations and future directions. J. Neural Eng. 8:025003. doi: 10.1088/1741-2560/8/2/025003

Mak, J. N., and Wolpaw, J. R. (2009). Clinical applications of brain–computer interfaces: current state and future prospects. IEEE Rev. Biomed. Eng. 2, 187–199. doi: 10.1109/RBME.2009.2035356

Marchetti, M., Piccione, F., Silvoni, S., Gamberini, L., and Priftis, K. (2013). Covert visuospatial attention orienting in a brain-computer interface for amyotrophic lateral sclerosis patients. Neurorehabil. Neural Repair 27, 430–438. doi: 10.1177/1545968312471903

Neuper, C., Müller-Putz, G. R., Scherer, R., and Pfurtscheller, G. (2006). Motor-imagery and EEG-based control of spelling devices and neuroprostheses. Prog. Brain Res. 159, 393–409. doi: 10.1016/S0079-6123(06)59025-9

Polich, J. (2007). Updating P300: an integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148. doi: 10.1016/j.clinph.2007.04.019

Rodríguez-Bermúdez, G., García-Laencina, P. J., and Roca-Dorda, J. (2013). Efficient automatic selection and combination of EEG features in least squares classifiers for motor imagery brain-computer interfaces. Int. J. Neural Syst. 23:1350015. doi: 10.1142/S0129065713500159

Schaeff, S., Treder, M. S., Venthur, B., and Blankertz, B. (2012). Exploring motion VEPs for gaze-independent communication. J. Neural Eng. 9:045006. doi: 10.1088/1741-2560/9/4/045006

Schreuder, M., Hohne, J., Treder, M. S., Blankertz, B., and Tangermann, M. (2011). Performance optimization of ERP-based BCIs using dynamic stopping. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2011, 4580–4583. doi: 10.1109/iembs.2011.6091134

Shishkin, S. L., Ganin, I. P., Basyul, I. A., Zhigalov, A. Y., and Kaplan, A. Y. (2009). N1 wave in the P300 BCI is not sensitive to the physical characteristics of stimuli. J. Integr. Neurosci. 8, 471–485. doi: 10.1142/S0219635209002320

Thurlings, M. E., van Erp, J. B., Brouwer, A. M., Blankertz, B., and Werkhoven, P. (2012). Control-display mapping in brain-computer interfaces. Ergonomics 55, 564–580. doi: 10.1080/00140139.2012.661085

Townsend, G., LaPallo, B. K., Boulay, C. B., Krusienski, D. J., Frye, G. E., and Hauser, C. K. (2010). A novel P300-based brain-computer interface stimulus presentation paradigm: moving beyond rows and columns. Clin. Neurophysiol. 121, 1109–1120. doi: 10.1016/j.clinph.2010.01.030

Treder, M. S., and Blankertz, B. (2010). (C)overt attention and visual speller design in an ERP-based brain-computer interface. Behav. Brain Funct. 6:28. doi: 10.1186/1744-9081-6-28

Treder, M. S., Schmidt, N. M., and Blankertz, B. (2011). Gaze-independent brain-computer interfaces based on covert attention and feature attention. J. Neural Eng. 8:066003. doi: 10.1088/1741-2560/8/6/066003

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain-computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/S1388-2457(02)00057-3

Yin, E., Zhou, Z., Jiang, J., Chen, F., Liu, Y., and Hu, D. (2013). A novel hybrid BCI speller based on the incorporation of SSVEP into the P300 paradigm. J. Neural Eng. 10:026012. doi: 10.1088/1741-2560/10/2/026012

Zhang, D., Honglai, X., Wu, W., Gao, S., and Hong, B. (2011). “Integrating the spatial profile of the N200 speller for asynchronous brain-computer interfaces,” in Conference Proceedings IEEE Engineering in Medicine and Biology Society (Boston, MA), 4564–4567.

Keywords: event-related potentials, brain-computer interface (BCI), dummy face, fusion stimuli, gaze-independent, facial expression

Citation: Chen L, Jin J, Daly I, Zhang Y, Wang X and Cichocki A (2016) Exploring Combinations of Different Color and Facial Expression Stimuli for Gaze-Independent BCIs. Front. Comput. Neurosci. 10:5. doi: 10.3389/fncom.2016.00005

Received: 13 November 2015; Accepted: 11 January 2016; Published: 29 January 2016.

Edited by:

John Suckling, University of Cambridge, UK

Copyright © 2016 Chen, Jin, Daly, Zhang, Wang and Cichocki. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jing Jin, jinjingat@gmail.com; Xingyu Wang, xywang@ecust.edu.cn
