Gesturing meaning: non-action words activate the motor system

- 1 School of Psychology, Bangor University, Bangor, UK

- 2 School of Psychology, University of Plymouth, Plymouth, UK

- 3 Institute of Sport Science, Saarland University, Saarbrücken, Germany

Across cultures, speakers produce iconic gestures, which add – through the movement of the speakers’ hands – a pictorial dimension to the speakers’ message. These gestures capture not only the motor content but also the visuospatial content of the message. Here, we provide first evidence for a direct link between the representation of perceptual information and the motor system that can account for these observations. Across four experiments, participants’ hand movements captured both shapes that were directly perceived, and shapes that were only implicitly activated by unrelated semantic judgments of object words. These results were obtained even though the objects were not associated with any motor behaviors that would match the gestures the participants had to produce. Moreover, implied shape affected not only gesture selection processes but also their actual execution – as measured by the shape of hand motion through space – revealing intimate links between implied shape representation and motor output. The results are discussed in terms of ideomotor theories of action and perception, and provide one avenue for explaining the ubiquitous phenomenon of iconic gestures.

Introduction

When people talk, even on the phone, they often find themselves producing iconic gestures: without any conscious intention, their hand movements capture the actions and objects they speak about (e.g., Iverson and Goldin-Meadow, 1998). Despite its unconscious and non-strategic nature, the production of these gestures is not without its uses. Gesturing enhances verbal fluency (Krauss et al., 1996; Kita, 2000) and transmits information that complements the information transmitted by language (e.g., Melinger and Levelt, 2004), even when not consciously accessible by the speaker (Goldin-Meadow and Wagner, 2005). On the side of the receiver, observing gestures helps language comprehension, as the gestural content is automatically integrated with the accompanying sentence (e.g., Holle and Gunter, 2007; Wu and Coulson, 2007a,b) and engages the same semantic system as language itself (e.g., Gunter and Bach, 2004; Özyürek et al., 2007).

Despite these important functions for communication, the phenomenon of iconic gestures has not been convincingly explained. Why is gesturing such an effortless process, considering that it happens alongside the complex act of speaking? Why does gesturing even improve the verbal fluency of the speaker, and, conversely, why does preventing people from gesturing interfere with speaking (Rauscher et al., 1996)?

Two frameworks provide potential explanations for these phenomena. One avenue for explanation is provided by “simulation” or “motor resonance” accounts of language that presuppose that any mental representation of a bodily movement relies on the sensorimotor structures that are involved in its actual production (e.g., Buccino et al., 2005; for a review, see Fischer and Zwaan, 2008; for neuroimaging data, see Pulvermüller and Fadiga, 2010). Various studies have demonstrated that seeing actions primes similar actions in the observer and engages motor-related brain structures (di Pellegrino et al., 1992; Brass et al., 2000; Bach and Tipper, 2007; Griffiths and Tipper, 2009). Important for explaining gesturing in a language context, these effects are also observed for movements that are only available mentally, not visually. For instance, reading words denoting actions (e.g., “close the drawer”) primes the associated movements in the same way as seeing the actions themselves (e.g., pushing one’s hand forward, Glenberg and Kaschak, 2002; for similar results, see Buccino et al., 2005; Zwaan and Taylor, 2006), and object words evoke the actions that are typically performed with them, such as appropriate hand configurations for grasping or use (e.g., Glover et al., 2004; Tucker and Ellis, 2004; Bub et al., 2008). That implicit activation of action information suffices to induce motor activation has also been demonstrated with face stimuli. Identifying famous soccer and tennis players affects responses with the effectors involved in their sports, even when these athletes were identified from their faces and no direct visual cues to their sports were present (Bach and Tipper, 2006; Candidi et al., 2010; Tipper and Bach, 2010).

These findings indicate that the actions associated with words or objects are automatically retrieved and flow into the motor system, suggesting that gesturing may be a direct consequence of representing the action content of a message. Yet, there is reason to believe that such motor resonance accounts are too limited. Iconic gestures are not only driven by action content. Many iconic gestures refer to the spatial content of language and capture, for instance, spatial layouts, motion through space, or object shape (e.g., Graham and Argyle, 1975; Seyfeddinipur and Kita, 2001; Kita and Özyürek, 2003; for a review, see Alibali, 2005). Another class of theories – so-called ideomotor or common coding views of cognition (e.g., Greenwald, 1970; Prinz, 1990; Hommel et al., 2001; Knoblich and Prinz, 2005) – is not affected by these limitations and can account also for the spatial content transmitted by gestures. According to these theories, the motor priming effects discussed above are only one instantiation of a more general principle. Movements are planned on a perceptual level, in terms of their directly perceivable consequences (their effects). The same representations are therefore used to describe stimuli in the environment and to plan movements toward them. For example, the same code that represents the orientation of a bar in front of you would also be used as possible goal state for the orientation of your own hand, allowing visual stimuli to directly evoke appropriate movements toward them. There are numerous studies that demonstrate the automatic translation of simple spatial stimulus features into motor actions, such as laterality (Simon, 1969) or orientation (Craighero et al., 1999), but also complex features, such as hand postures (Stürmer et al., 2000), or body parts (Bach et al., 2007; Gillmeister et al., 2008; Tessari et al., 2010; for a recent review, see Knoblich and Prinz, 2005).

Ideomotor accounts therefore differ from motor simulation accounts in that they assume that motor output can also be driven by the perceptual rather than the motor content of a message. They predict that not only action, but any spatial content – even such complex cues as an object’s shape – should drive the motor system, irrespective of whether it directly relates to action. In fact, the motor system may be influenced by words denoting objects that we have never interacted with (e.g., “moon”), as long as their representation carries strong visual shape information. This study directly tests this hypothesis. It investigates whether mentally representing an object automatically activates iconic movements that capture the object’s shape. Importantly, and in contrast to previous research investigating motor effects in word processing (e.g., Glenberg and Kaschak, 2002; Tucker and Ellis, 2004; Zwaan and Taylor, 2006; Bub et al., 2008), it investigates gestures that are far removed from the actual way in which we typically interact with the objects, minimizing affordance-based contributions to gestural output.

We used the following strategy. The first experiment uses a stimulus–response compatibility (SRC) paradigm to establish a technique that can then be applied to study word processing. Participants saw abstract geometrical shapes – circles or squares – appearing on the screen, and were instructed to produce either a bimanual round or a bimanual square gesture in the vertical space in front of their body (Figure 1), depending on the color of the shapes. We found that the similarity of seen and to-be-produced shapes affected both the time to initiate the gesture and the actual time required to perform the gestures. This study therefore demonstrates that even such complex cues as geometrical shape are mapped onto the motor system and facilitate the selection and performance of corresponding gestures. The second experiment takes this logic further, and investigates whether shape information also flows into the motor system when this shape information is not directly available from the stimulus, but is carried by words referring to either round (e.g., “carousel”) or square objects (e.g., “billboard”), which have no direct links to action. We tested whether such influences on gesturing require explicit semantic processing of the words. Experiment 3 replicates these effects with non-words that were, in a previous learning task, arbitrarily associated with objects. Finally, Experiment 4 investigates the actual performance of the gestures with motion tracking techniques and directly demonstrates that processing words that represent objects of particular shapes influences the actual performance of gestures – the shape of hand motion through space – and not just gesture selection processes. The experiment therefore reveals intimate links between the representation of implied shape and actual motor control.

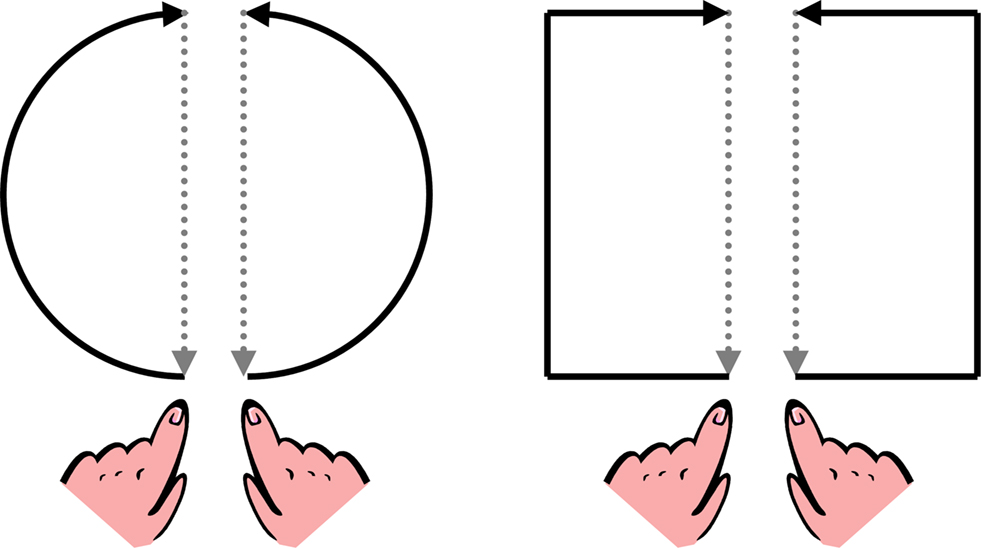

Figure 1. The two gestures that participants were instructed to perform in all experiments. Black arrows show the actual shapes, gray arrows show movements back to the rest keys.

Experiment 1: Visual Shapes

Experiment 1 investigates whether seeing abstract geometrical shapes affects ongoing behavior, even when irrelevant to current behavioral goals. Participants produced bimanual square or circle gestures in the vertical plane in front of their body (Figure 1) in response to the color of squares and circles. From a motor simulation perspective, there should be minimal grounds for predicting effects of the perceived shapes on motor behavior. First, in contrast to the 3D photographs of real objects with strong affordances used in prior research (Tucker and Ellis, 1998, 2001; Bub et al., 2008), we presented abstract geometrical shapes as two-dimensional wire frames, which are unlikely to be associated with specific motor responses (e.g., Symes et al., 2007). Second, the bimanual gestures required of the participants do not mirror any object-directed behavior and are not part of the typical set of affordances of commonplace objects. Yet, if the same spatial codes represent both shapes in the environment and actions one can perform, then these hand movements should nevertheless be influenced by the presented shapes. In particular, participants should be quicker to initiate and produce square gestures in response to seeing a square and slower when seeing a circle, and vice versa. Such findings would confirm that not only simple perceptual properties such as laterality (Simon, 1969) are represented in common perceptual-motor codes, but that the same is true for more complex geometrical shape information.

Materials and Methods

Participants

Ten participants (seven females), all students at Bangor University, Wales, took part in the experiment. They ranged in age from 18 to 22 years and had normal or corrected-to-normal vision. The participants received course credits for their participation. They satisfied all requirements in volunteer screening and gave informed consent approved by the School of Psychology at Bangor University, Wales, and the North-West Wales Health Trust, and in accordance with the Declaration of Helsinki.

Materials and apparatus

The experiment was controlled by the software Presentation run on a 3.2-GHz PC running Windows XP. The stimulus set consisted of seven pictures: a fixation cross (generated by the + sign of the Trebuchet MS font in white on a black background), a green circle, a green square, a red circle and a red square, plus a white square and a white circle. The circles and squares were line drawings (line strength 4.5) generated in PowerPoint and subsequently exported to bitmap (.bmp) format. The circles and squares were presented on a black background and were 12 cm tall and wide.

Procedure and design

The participants were seated in a dimly lit room facing a color monitor at an approximate distance of 60 cm. The participants received computer-driven instructions and their response assignment (i.e., whether the red color cued square gestures and the green color cued round gestures, or vice versa). They then performed 12 training trials. When both experimenter and participant were satisfied that the task was understood, the main experiment started. It consisted of four blocks of 60 trials each, separated by a short pause that participants could terminate by pressing the space bar. Altogether, there were 12 different trial types, resulting from the factorial combination of two movements (square, round), two shapes (square, round), and three SOAs (100, 200, 500 ms).
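The factorial structure described above can be made concrete with a short sketch. It enumerates the 12 trial types and assembles one 60-trial block. The text does not say how trial types were distributed within a block, so equal repetition (five per type) and random ordering are assumptions here, and all names (`trial_types`, `build_block`) are hypothetical.

```python
import itertools
import random

GESTURES = ("square", "round")   # required response, cued by color
SHAPES = ("square", "round")     # task-irrelevant shape shown on screen
SOAS_MS = (100, 200, 500)        # delay between neutral shape and color cue


def trial_types():
    """All factorial combinations of gesture, shape, and SOA."""
    return list(itertools.product(GESTURES, SHAPES, SOAS_MS))


def build_block(n_trials=60, seed=None):
    """One block with every trial type repeated equally often, shuffled.

    Equal repetition and shuffling are assumptions, not stated in the text.
    """
    types = trial_types()
    reps, remainder = divmod(n_trials, len(types))
    if remainder:
        raise ValueError("n_trials must be a multiple of the number of trial types")
    block = types * reps
    random.Random(seed).shuffle(block)
    return block
```

Under these assumptions, four such blocks give 20 observations per trial type and participant.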

Participants initiated a trial by resting the index fingers of their left and right hands on previously designated “rest” buttons (the V and N keys on the computer keyboard). After the presentation of a fixation cross (500 ms) and a short blank (500 ms), one of the two neutral stimuli was presented (white square or circle). After an SOA of 100, 200, or 500 ms, the shape turned either green or red. This served as the imperative cue for the participants to perform one of the previously designated gestures. Depending on the color of the stimulus, the participant would lift their fingers from the rest keys and either gesture a circle or a square with both hands (index fingers extended) in the vertical space in front of their body (black arrows in Figure 1). After finishing the gesture, participants were instructed to bring the fingers back as quickly as possible, and in a straight line, to the rest keys (gray arrows in Figure 1). The assignment of gestures to colors was counterbalanced across participants. As soon as the fingers left the keys, the displayed shape was removed and replaced by a blank screen that remained on screen for the duration of the movement. As soon as the fingers returned to the rest keys, the next trial started.

Both the times from stimulus onset to release of the rest keys (response times/RTs) and times from movement onset to return to the rest keys (movement times/MTs) were measured. RTs and MTs were calculated from the average RTs and MTs of both hands. Please note that the equipment used in Experiment 1 does not allow us to measure gesturing accuracy. The issue of gesturing accuracy is specifically addressed in Experiment 4, in which motion tracking was used to record the participants’ movements through 3D space.
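As an illustration of the two dependent measures, the following sketch derives RT and MT for a single trial from key-event timestamps and averages them over both hands. The data layout (dictionaries keyed by hand) and the function name `trial_times` are hypothetical, and stimulus onset is taken here to be the onset of the color cue, which is an assumption for Experiment 1.

```python
from statistics import mean

HANDS = ("left", "right")


def trial_times(stimulus_onset, release, returned):
    """Return (RT, MT) in ms for one trial, averaged over both hands.

    stimulus_onset: time of the imperative stimulus (assumed: the color cue)
    release:  dict hand -> time the finger left its rest key
    returned: dict hand -> time the finger was back on its rest key
    """
    rts = [release[h] - stimulus_onset for h in HANDS]   # onset to key release
    mts = [returned[h] - release[h] for h in HANDS]      # key release to return
    return mean(rts), mean(mts)


# Made-up timestamps, for illustration only
rt, mt = trial_times(
    1000,
    {"left": 1400, "right": 1420},
    {"left": 3000, "right": 3040},
)
```

With these made-up timestamps, the left and right RTs are 400 and 420 ms, and the MTs are 1600 and 1620 ms, each pair then being averaged.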

Results

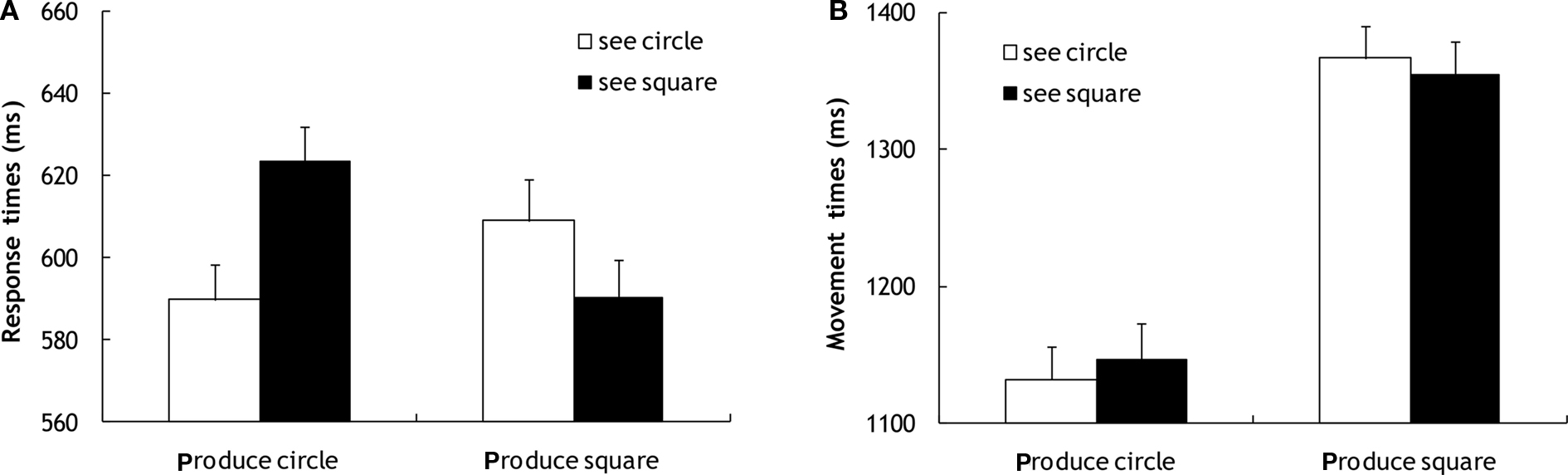

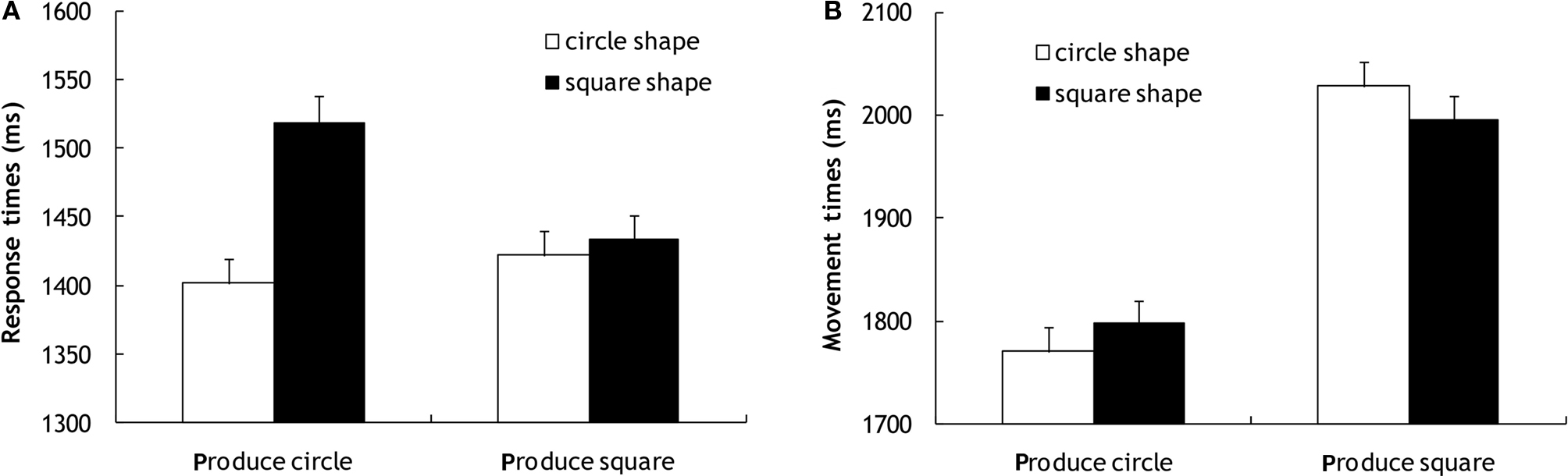

For the analysis of RTs (Figure 2A), only trials were considered in which the release of both rest keys followed by the return to both rest keys was detected. All trials that lay beyond three standard deviations of the condition means were excluded (2.9% of trials). The remaining RTs were analyzed with a 2 × 2 repeated-measures ANOVA with the factors Shape (circle, square) and Gesture (circle, square). Entering SOA as a further variable in the ANOVA did not reveal any additional interactions (F < 1); data were therefore collapsed across the three SOAs. The analysis revealed neither a main effect of Gesture (F[1, 9] < 1), nor a main effect of Shape (F[1, 9] = 2.25; p = 0.17), but the predicted interaction of both factors was significant (F[1, 9] = 27.23; p < 0.001, η² = 0.752). Post hoc t-tests showed that participants initiated a circle more quickly when seeing a circle than a square (t[9] = 5.21; p < 0.001), and that they initiated a square more quickly when they saw a square than a circle (t[9] = 2.55; p = 0.031).
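The exclusion criterion can be sketched as follows. The text does not specify whether condition means and standard deviations were computed per participant or across the sample, so the sketch simply takes one list of times per condition. The function name `trim_outliers` and the data layout are hypothetical.

```python
from statistics import mean, stdev


def trim_outliers(times_by_condition, n_sd=3.0):
    """Drop values more than n_sd standard deviations from their condition mean.

    times_by_condition: dict mapping a condition label to a list of times (ms),
    with at least two values per condition.
    Returns (trimmed dict, proportion of trials excluded).
    """
    trimmed, total, kept = {}, 0, 0
    for condition, values in times_by_condition.items():
        m, sd = mean(values), stdev(values)
        keep = [v for v in values if abs(v - m) <= n_sd * sd]
        trimmed[condition] = keep
        total += len(values)
        kept += len(keep)
    return trimmed, 1 - kept / total
```

Note that with very few trials per condition no value can lie three standard deviations from the mean, so the criterion only has an effect with reasonably large cells.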

Figure 2. Response times (A) and movement times (B) in Experiment 1. Error bars show the standard error of the mean.

Movement times (Figure 2B) were analyzed in an analogous manner. As SOA did not interact with compatibility effects (F = 1.470, p = 0.259), we again collapsed the data across this factor. The analysis did not reveal a main effect of Shape (F[1, 9] < 1), but did reveal a main effect of Gesture (F[1, 9] = 32.44; p < 0.001), with participants gesturing circles more quickly than squares. The predicted interaction of Shape and Gesture just failed to reach full significance (F[1, 9] = 4.85; p = 0.055, η² = 0.350). Numerically, participants gestured circles more quickly when the stimulus was a circle than when it was a square (t[9] = 1.89; p = 0.091), and gestured a square more quickly when responding to a square than to a circle (t[9] = 1.79; p = 0.11), though neither effect reached significance.
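The effect sizes reported for the two interactions are consistent with partial eta squared, which can be recovered from an F value and its degrees of freedom as F·df_effect / (F·df_effect + df_error). That the authors used this particular formula is an inference from the reported numbers, not something the text states. The helper name below is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Shape x Gesture interactions of Experiment 1, using the reported F values
rt_effect = round(partial_eta_squared(27.23, 1, 9), 3)   # RT interaction
mt_effect = round(partial_eta_squared(4.85, 1, 9), 3)    # MT interaction
```

Both values round to the effect sizes given in the text (0.752 and 0.350).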

Discussion

The experiment demonstrated that seeing geometrical shapes facilitates the production of similar gestures. Squares were gestured more efficiently when responding to a square, and circles were gestured more efficiently when responding to a circle. These effects were found even though the shapes did not belong to recognizable objects and even though they did not have to be encoded for the participant’s color decision task. Moreover, the required movements of the participants were not goal directed and did not match the usage patterns of commonplace objects. Finally, typical object-based affordance effects are usually not observed when responses are based on an action-irrelevant feature, such as color (e.g., Tipper et al., 2006). That robust effects on gesture output were nevertheless detected therefore indicates that the shape of viewed objects feeds directly into the motor system, affecting the production of ongoing movements, as predicted by ideomotor accounts of action control. Our data therefore put shape information on a similar level as perceptual-motor features, such as laterality (i.e., the Simon effect, Simon, 1969; for a review, see Hommel, 2010) or magnitude (i.e., the SNARC effect, Dehaene et al., 1993), for which similar perception-action links have been reported.

Although the effect was less robust, the compatibility of shape and movement appeared to not only affect gesture selection (as measured by RTs), but also their actual execution (MTs). As the geometrical shapes disappeared as soon as the participants released the rest key and started the movement, this MT effect cannot be attributed to direct perceptual interference with gesture production. Rather, it appears to have a more cognitive origin, suggesting that the shape codes generated during shape observation have a long lasting effect on both gesture selection and actual execution.

Experiment 2: Object Words

Experiment 1 demonstrated that simply seeing abstract shapes – circles or squares – may elicit gestures of the same shape. Although no language stimuli were utilized, the data are nevertheless relevant to understanding gesture. In many situations, people have to coordinate their actions and attention to share information or achieve a goal. Often, this coordination is achieved not only through language, but through iconic gestures that transmit the communicative intent by capturing the objects’ attributes (e.g., Morsella and Krauss, 2005). Experiment 1 provides evidence for a natural and highly automatic pathway that can explain gesturing in such circumstances.

To explain gesturing in a language context, it is important to demonstrate that similar processes also occur for content that is available only mentally, not visually. Experiment 2 addresses this question by activating shape information through words denoting either round or square objects. Previous work has demonstrated motor system activation for words directly representing actions, such as “kick” or “push” (Hauk et al., 2004; Kemmerer et al., 2008), or objects that have become associated with specific motor responses (e.g., Tucker and Ellis, 2004; Bub et al., 2008). Here, we go beyond these findings to demonstrate that motor codes are activated even when the words do not describe actions or objects associated with specific behaviors. The word “billboard,” for example, possesses no directly available shape information (the word itself has no square shape), does not directly describe any specific behavior (unlike words such as “push”), and is not linked to a prior history of associated motor behaviors. Moreover, as in Experiment 1, the bimanual gestures participants had to produce were not goal directed and did not correspond to the typical usage patterns of commonplace objects. Yet, if (a) reading object words activates a visual representation of the object’s shape, and (b) shape information is represented in terms of motor codes, then compatibility effects analogous to Experiment 1 should nevertheless be observed. Both MTs and RTs should again be slowed when participants have to gesture a circle in response to a word denoting a square object compared to a round object, and vice versa when participants have to gesture a square.

A second issue is how deeply the word has to be encoded for these effects to take place. If gestural effects are driven by visual shape information associated with the semantic knowledge of the word, then motor codes should only be activated when the word is processed semantically. We therefore compared two conditions, tested in separate experimental blocks. In one condition, participants had to select the gesture to perform – round or square – on the basis of the color of the presented word, minimizing the requirement of semantic or even lexical analysis of the word. In the other condition, participants selected the gesture based on semantic information carried by the word. If the referent object was typically found inside the house (e.g., fridge) participants would have to perform one gesture and the other gesture if the object was typically found outside the house (e.g., billboard).

Materials and Methods

Participants

Sixteen participants (14 females) took part in the experiment, ranging from 18 to 25 years. All other aspects of the participant selection were identical to Experiment 1.

Materials and apparatus

The apparatus was identical to Experiment 1. The materials consisted of a fixation cross (see Experiment 1) and 16 target words. Four words denoted round objects found outside the house (moon, steering wheel, carousel, roundabout), four words denoted square objects found outside the house (bungalow, goal posts, billboard, garage door), four words denoted square objects found inside the house (TV set, book, picture frame, fridge), and four words denoted round objects found inside the house (fish bowl, bottle top, hamster ball, dart board). The words were displayed on a black background in a 20-point Times New Roman font and were printed in either yellow or green (resulting in 32 different color-word combinations as stimuli).

Procedure and design

Each participant performed two task blocks (order counterbalanced across participants). In each task block, the procedure was similar to Experiment 1. Participants first received computer-driven instructions and the response assignment (i.e., whether the yellow color cued square gestures and the green color cued round gestures, or vice versa; or whether objects found inside the house cued round movements and outside objects square movements, or vice versa). They then performed 16 training trials, and two blocks of 96 trials of the actual experiment, again separated by a short pause that could be terminated by pressing the space bar. There were 32 different trial types altogether, resulting from the factorial combination of four words/objects for each of the two locations (inside, outside), two shapes (round, square), and two colors (yellow, green). The course of each trial was identical to Experiment 1, with the exception that the geometrical shape stimuli were replaced with the word stimuli and that there was no neutral stimulus (i.e., the words immediately appeared in their final colors without any SOA). In the location task, participants ignored the color of the word and gestured a square or circle, depending on the typical location of the object (inside/outside the house). In the color task, they ignored the object’s typical location and produced a circle or square depending on the word’s color (green/yellow), with the assignment of colors/locations to responses counterbalanced across participants. Again, the target word was replaced with a blank screen as soon as the fingers left the rest keys.
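How a trial comes to be compatible or incompatible in the two tasks can be sketched as follows. The word list is taken from the Materials section. The function names, the string labels, and the example mapping are hypothetical, and the mapping shown is only one of the counterbalanced assignments.

```python
# word: (shape of referent object, typical location), from the Materials section
WORDS = {
    "moon": ("round", "outside"), "steering wheel": ("round", "outside"),
    "carousel": ("round", "outside"), "roundabout": ("round", "outside"),
    "bungalow": ("square", "outside"), "goal posts": ("square", "outside"),
    "billboard": ("square", "outside"), "garage door": ("square", "outside"),
    "TV set": ("square", "inside"), "book": ("square", "inside"),
    "picture frame": ("square", "inside"), "fridge": ("square", "inside"),
    "fish bowl": ("round", "inside"), "bottle top": ("round", "inside"),
    "hamster ball": ("round", "inside"), "dart board": ("round", "inside"),
}
COLORS = ("yellow", "green")


def required_gesture(word, color, task, mapping):
    """Gesture cued on this trial; the word's shape never determines it.

    task: "location" or "color"
    mapping: dict from the task-relevant value to a gesture,
             e.g. {"inside": "round", "outside": "square"}
    """
    _, location = WORDS[word]
    key = location if task == "location" else color
    return mapping[key]


def is_compatible(word, gesture):
    """True if the implied object shape matches the gesture to be produced."""
    return WORDS[word][0] == gesture


# One hypothetical counterbalancing assignment for the location task
mapping = {"inside": "round", "outside": "square"}
gesture = required_gesture("fridge", "green", "location", mapping)
```

Because shape is crossed with both location and color, each gesture is cued equally often by round and square objects under any mapping, which is what makes the implied shape task-irrelevant.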

Results

All trials for which either the RTs or MTs lay beyond three standard deviations of the condition mean were again excluded (location task, 2.5% of the trials; color task, 2.6%). For each task, the remaining trials were analyzed with 2 × 2 repeated-measures ANOVAs with Shape (word referring to round or square object) and Gesture (circle, square) as within-subject factors.
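In a 2 × 2 within-subject design, the interaction F(1, n − 1) equals the squared one-sample t statistic of the per-participant interaction contrast (incompatible minus compatible cells). The sketch below uses this identity. It is not the authors' analysis code, the function name and data layout are hypothetical, and the example values are invented for illustration only.

```python
from math import sqrt
from statistics import mean, stdev


def interaction_f(cells):
    """F and error df for the Shape x Gesture interaction (2 x 2 within-subject).

    cells: one dict per participant with mean times for the keys
    (shape, gesture), each element being "round" or "square".
    """
    # Per participant: (incompatible cells) minus (compatible cells)
    contrast = [
        (p[("round", "square")] + p[("square", "round")])
        - (p[("round", "round")] + p[("square", "square")])
        for p in cells
    ]
    n = len(contrast)
    t = mean(contrast) / (stdev(contrast) / sqrt(n))  # one-sample t against 0
    return t * t, n - 1


# Invented cell means (ms) for four hypothetical participants
toy = [
    {("round", "round"): 400, ("square", "square"): 400,
     ("round", "square"): 400 + d, ("square", "round"): 400 + d}
    for d in (10, 20, 15, 15)
]
f_value, df_error = interaction_f(toy)
```

The same contrast, divided by two, gives the mean compatibility effect in milliseconds for each participant.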

Location task

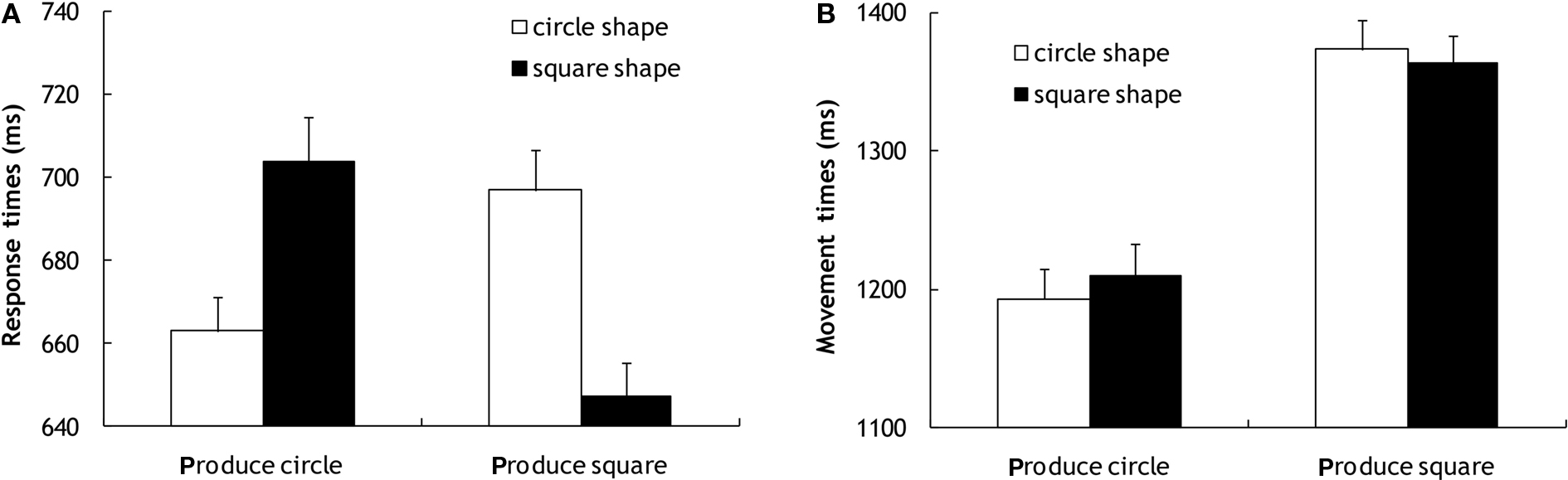

For RTs (Figure 3A), the analysis revealed no main effect of Gesture (F[1, 15] < 1) and no main effect of Shape (F[1, 15] < 1), but the critical interaction was significant (F[1, 15] = 6.14; p = 0.026; η² = 0.291). When gesturing a square, participants were quicker to initiate the movement when responding to a word denoting a square than a round object (t[15] = 2.84; p = 0.012). When they gestured a circle, they were numerically quicker to initiate the movement when responding to a word denoting a round object than a square object (t[15] = 1.70; p = 0.11).

Figure 3. Response times (A) and movement times (B) in the location task in Experiment 2. Error bars show the standard error of the mean.

Movement times were analyzed in an analogous manner (Figure 3B). The ANOVA did not reveal a main effect of Shape (F[1, 15] < 1), but did reveal a main effect of Gesture (F[1, 15] = 31.1; p < 0.001). The critical interaction of Shape and Gesture was marginally significant (F[1, 15] = 3.63; p = 0.076; η² = 0.195). Gesturing a circle was faster when responding to a word denoting a round object than a square object (t[15] = 2.70; p = 0.017), whereas gesturing a square was only numerically faster when responding to a word denoting a square object (t[15] < 1; n.s.).

Color task

For RTs (Figure 4A), an analogous ANOVA revealed neither a main effect of Gesture (F[1, 15] = 1.53; p = 0.236), nor a main effect of Shape (F[1, 15] < 1), nor an interaction of Gesture and Shape (F[1, 15] = 1.78; p = 0.202; η² = 0.106). For MTs (Figure 4B), the analysis revealed the known main effect of Gesture (F[1, 15] = 43.0; p < 0.001) and a main effect of Shape (F[1, 15] = 5.31; p = 0.036). However, there was no sign of the critical interaction of Gesture and Shape (F[1, 15] < 1).

Figure 4. Response times (A) and movement times (B) in the color task in Experiment 2. Error bars show the standard error of the mean.

Discussion

Experiment 2 replicated the results of Experiment 1 and demonstrated that words that contain no direct visual or semantic cues to action nevertheless influence gesture production. Both RTs and – to a lesser extent – MTs were slower when the shape of the object denoted by a word was different to the gesture that participants produced. Participants took longer to select and produce circle gestures when processing a word denoting a square object (e.g., “billboard”). Conversely, when executing a square gesture, participants took longer for round objects (e.g., “carousel”). This indicates, first, that word comprehension involves a perceptual simulation of the referent object’s shape. Second, as was the case for directly perceived shapes, implicit shape information fed into the motor system and affected ongoing behavior, as predicted by the notion that perceptual and motor codes are directly linked (Prinz, 1990; Hommel et al., 2001).

Interestingly, compatibility effects were only observed when participants attended to the semantic properties of the words and decided whether the object would be typically found inside or outside a house. No significant effects were detected when participants responded to the color in which the word was printed. As the effect was nevertheless present numerically, it may have been that responses in the color task were too fast for such an influence to be detected with the subject numbers used here. Indeed, when correlating the size of the RT compatibility effects with participants’ overall RT, positive correlations emerged in both tasks, significant in the color task (r = 0.58; p = 0.019) and marginal in the location task (r = 0.49; p = 0.054), suggesting that those participants with the slowest RTs do show compatibility effects even in the color task. Thus, the data from both tasks provide evidence for a highly automatic mechanism that activates shape information of referent objects and feeds it into the motor system. Although tentative, our data suggest that such links between word meaning and motor activation are established even when participants merely attend to the color of the printed words, as long as RTs are long enough for incidental semantic processing to take place (for similar findings, see research on the Stroop task, Stroop, 1935; for a review, see MacLeod, 1991).
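The p values reported for the two correlations can be checked from r and the sample size alone, using t = r·√(n − 2) / √(1 − r²) with n − 2 degrees of freedom. The sketch below assumes that all 16 participants of Experiment 2 entered both correlations and that the tests were two-tailed, neither of which the text states. To stay within the standard library, the tail probability is obtained by numerically integrating the t density with Simpson's rule. All function names are hypothetical.

```python
from math import gamma, pi, sqrt


def t_from_r(r, n):
    """t statistic (df = n - 2) for a Pearson correlation r from n pairs."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p for Student's t, by Simpson's rule on the density (steps even)."""
    norm = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))

    def pdf(x):
        return norm * (1 + x * x / df) ** (-(df + 1) / 2)

    t = abs(t)
    h = t / steps
    area = pdf(0) + pdf(t)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * pdf(i * h)
    area *= h / 3            # integral of the density from 0 to |t|
    return 1 - 2 * area


n = 16  # assumption: all participants of Experiment 2
p_color = two_tailed_p(t_from_r(0.58, n), n - 2)
p_location = two_tailed_p(t_from_r(0.49, n), n - 2)
```

Because r is only reported to two decimals, the recovered p values can only be expected to agree approximately with those in the text.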

Experiment 3: Arbitrary Word–Meaning Associations

One limitation of the experiments reported so far is that, although unlikely, there might be subtle differences in the visual word forms themselves that might have carried shape information, which in turn might have affected gesture output. For example, there is a large variation in the visual properties of the words used in these experiments. Some words are short, such as “moon,” while others are longer, such as “billboard.” Moreover, the two Os in “moon” might have evoked roundness, which in turn might have been responsible for the effects on gesture production. To show that the effects truly originate on a semantic level, we therefore replicated the location task of Experiment 2 with non-words, for which length and syllable structure were balanced. Participants first had to learn novel word–meaning associations. For example, they learnt that “prent” meant “moon” whereas “onhid” meant “billboard.” We then investigated whether effects on gesture production can also be observed when participants had to retrieve the meaning previously associated with these non-words. Across participants, we counterbalanced whether the non-words were associated with a round object or a square object, allowing us to rule out influences of visual word forms on gesture output.

Materials and Methods

Participants

Twenty-six participants (20 females) took part in the experiment, ranging from 18 to 23 years. All other aspects of the participant selection were identical to Experiment 1.

Materials and apparatus

The non-words to be learned were presented to the participants paired with an English word on an A4 card. Four of the non-words had one syllable (frosp, spand, prent, pring), and four had two syllables (onhid, arlet, aglot, undeg). There were four English indoor words (dart board, fridge, fish bowl, book case) and four outdoor words (moon, bungalow, sunflower, billboard). In each of these conditions there were two circular and two square objects. There were two sets of pairings to counterbalance the assignment of non-words to round/square objects across participants. All further experimental manipulations were run on a 3.2-GHz PC running Windows XP. The learning phase was controlled by E-Prime. The gesture production phase was controlled by Presentation.

Procedure and design

The experiment was divided into three phases. In the first phase, participants were told that they would take part in a word learning/categorization experiment and were given eight five-letter non-word/English word pairs to learn. They were instructed that they would have to retrieve the meaning of the non-words in the experiment’s later stages. Each participant was given 7 min to learn the pairings.

The second phase was a learning/testing phase intended to further familiarize participants with the non-words and their assigned meanings. This second phase was subdivided into two sections. In the first section, participants were presented with one of the non-words in the upper half of the screen and a choice of two English words on the left and right of the lower half of the screen. They indicated their choice by pressing either the “C” key for the word on the left, or the “N” key for the word on the right. If their choice was incorrect, they were given feedback and shown the correct pairing. Each trial began with a fixation cross (1000 ms), followed by a screen presenting the word and its two possible matches. This screen remained on for 3000 ms or until the participant responded. This was followed by a blank screen (2000 ms), which, in the case of an incorrect response, notified the participants – together with a warning sound – of their error and displayed the correct English/non-word pair. In the second section, participants were presented with an English word and given a choice of two non-words. In both sections, the trials continued until participants had correctly matched 16 words in a row or had completed 128 trials. At the end of this phase participants were read each of the non-words and asked to give the correct English match. Two female participants were excluded from the study as they could not correctly recall all the appropriate English words. All participants included in the study completed the learning/testing phase without needing the full 128 presentations in each section.
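The stopping rule of each learning/testing section (16 correct matches in a row, or 128 trials at most) can be illustrated with a minimal sketch. It is not the E-Prime script used in the experiment; the function name `run_learning_section` and its parameters are hypothetical, and the input is simply a sequence of correct/incorrect responses.

```python
def run_learning_section(responses, streak_needed=16, max_trials=128):
    """Apply the stopping rule to a sequence of booleans (True = correct).

    Returns (trials_used, reached_criterion). Hypothetical helper written
    for illustration; only the two thresholds come from the text.
    """
    streak = 0
    trials = 0
    for correct in responses:
        trials += 1
        # A correct response extends the streak, an error resets it.
        streak = streak + 1 if correct else 0
        if streak >= streak_needed:
            return trials, True
        if trials >= max_trials:
            return trials, False
    # The sequence ended before either stopping condition was met.
    return trials, False


# Example: one error on trial 6, then 16 correct responses in a row.
print(run_learning_section([True] * 5 + [False] + [True] * 16))
```

Under this reading, a participant who reaches the criterion exactly on trial 128 still counts as having met it, because the streak is checked before the trial limit.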

The course of each trial in the third phase of the experiment was the same as in the location task in Experiment 2, except for the use of non-words as stimuli. Again, participants had to gesture either a circle or square depending on the typical location of the referent object.

Results

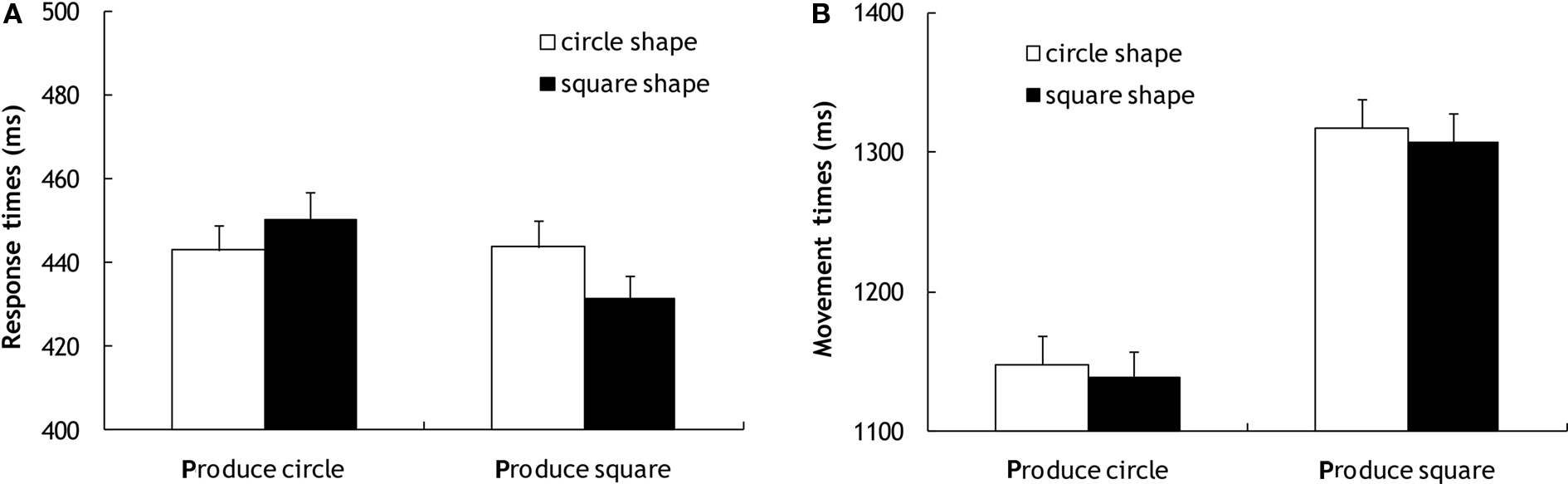

Again, only trials were considered in which the release of both rest keys followed by the return to both rest keys was detected. All trials that lay beyond three standard deviations of the condition means were excluded (2.9% of trials). The remaining RTs (Figure 5A) were analyzed with a 2 × 2 repeated measures ANOVA with the factors Shape (circle, square) and Gesture (circle, square). This analysis revealed no main effect of Gesture (F[1, 23] = 1.15; p = 0.295), no main effect of Shape (F[1, 23] = 2.16; p = 0.156), and no interaction (F[1, 23] = 1.26; p = 0.273; η2 = 0.052).
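The three-standard-deviation exclusion criterion can be sketched as follows. This is an illustration under assumptions rather than the original analysis script: the function name `trim_outliers` and the `(condition, value)` data layout are hypothetical, the sample standard deviation is used, and the text does not state whether the criterion was applied per participant or across participants.

```python
from statistics import mean, stdev


def trim_outliers(trials, n_sd=3.0):
    """Keep trials within n_sd standard deviations of their condition mean.

    trials: list of (condition_label, value) pairs, e.g. RTs in ms.
    Assumption: the criterion is computed separately for each condition.
    """
    by_condition = {}
    for condition, value in trials:
        by_condition.setdefault(condition, []).append(value)

    bounds = {}
    for condition, values in by_condition.items():
        centre = mean(values)
        # A single observation has no spread; it is then always kept.
        spread = stdev(values) if len(values) > 1 else 0.0
        bounds[condition] = (centre - n_sd * spread, centre + n_sd * spread)

    return [(c, v) for c, v in trials
            if bounds[c][0] <= v <= bounds[c][1]]


# Example: one very slow response among otherwise uniform RTs.
data = [("circle/round", 500)] * 19 + [("circle/round", 5000)]
print(len(trim_outliers(data)))
```

Because the outlier itself inflates the standard deviation, a single extreme value can only exceed three standard deviations when the condition contains enough trials, which is one reason such criteria are usually applied to full data sets rather than to small cells.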

Figure 5. Response times (A) and movement times (B) in Experiment 3. Error bars show the standard error of the mean.

The analysis of MTs (Figure 5B) revealed no main effect of Shape (F[1, 23] < 1), but the known main effect of Gesture (F[1, 23] = 28.1; p < 0.001), with participants gesturing circles more quickly than squares. Importantly, for MTs, the predicted interaction of Shape and Gesture was highly significant (F[1, 23] = 12.0; p = 0.002; η2 = 0.342). Participants gestured circles more quickly after reading a word referring to a round object than a square object (t[23] = 3.32; p = 0.003), and gestured squares numerically more quickly when reading a word referring to a square object, although this difference was not significant (t[23] = 1.58; p = 0.13).

Discussion

The experiment confirmed that semantic word processing affects gestural output, even in the case of non-words that had previously been associated with specific objects. The RT effects did not reach significance, but the MT effects were highly significant, demonstrating compatibility effects similar to those observed when categorizing real words. Several factors might have contributed to the shift of the effect from RTs to MTs in this experiment. The mean RTs reported here were over 700 ms longer than those of Experiment 2 when categorizing words. The RTs were therefore strongly affected by the additional demands of retrieving word meaning from memory, introducing considerable noise. Moreover, due to the demand of retrieving word meaning, participants might have fully processed the meaning – and therefore the shape of the target words – only after they initiated the gesture. That highly significant effects were observed despite these additional task requirements and potential shifts in strategy extends our proposal that the representation of implicit shape can influence an arbitrary action. Here, a previously unfamiliar set of letters influenced how a person moves their fingers to map out a particular shape in space.

Experiment 4: Hand Trajectories

Experiment 2 indicated that deep semantic processing is required to evoke gestures that match the objects’ shape. If such semantic processing takes place, the shape of the processed objects affects not only the selection of gestures (RT) but also their actual performance (MT). However, so far, it has been hard to ascertain the form of the MT effects. It is possible that the gesture was spatially accurate in both compatible and incompatible conditions, just being easier when object shape and hand action match. Alternatively, it may be the case that the meaning of the word alters the actual form of the hand path itself. Such a finding would provide direct evidence that implied shape information not only affects relatively high-level gesture selection processes, but is also directly linked to the control of arbitrary movements through space.

To examine hand trajectory, we recorded hand movements through 3D space as participants produced circle and square gestures. Two measures were of interest. First, we specifically measured gesturing errors, which was impossible in the previous experiments. Ideomotor accounts predict that viewing an incompatible shape might not only affect RTs, but might lead participants to accidentally select the wrong gesture to perform. Second, if the same codes are used to describe stimuli in the environment and to control one’s own actions in space, not only the selection of gestures but also their actual performance should be affected. In other words, even those gestures that were selected correctly should be produced differently when the target word is incompatible. Processing a round object should therefore render square gestures more circle-like, and circle gestures should be more similar to squares when processing a square object.

Materials and Methods

Participants

Fifteen participants, all students at the University of Wales, Bangor, took part in the experiment. They ranged in age from 18 to 30 years. All other aspects of the participant selection were identical to the previous experiments.

Materials and apparatus

The experiment was controlled by Presentation run on a 3.2-GHz PC running Windows XP. Participants had one retroreflective marker placed on each of their index fingers. Participants’ movements were tracked using a Qualisys ProReflex motion capturing system (Qualisys AB, Gothenburg, Sweden), and the data were recorded using Qualisys Track Manager (QTM) software (Qualisys AB). The stimuli consisted of a fixation cross and 16 target words. The target words were displayed in a 20-point Times New Roman font and appeared either in red or green on a black background. Four of the words were square outside objects (bungalow, billboard, garage door, goal posts), four of the words were round outside objects (carousel, sunflower, moon, tire), four of the words were square inside objects (picture frame, book, fridge, TV set), and four of the words were round inside objects (darts board, fishbowl, bottle top, hamster ball).

Procedure and design

The procedure and design corresponded in all aspects to the location task of Experiment 2.

Data Recording and analysis

The recording of trajectories started as soon as both fingers left the rest keys and concluded as soon as both fingers returned. The Qualisys output for a single trajectory consists of x, y, and z coordinates for the markers on the participants’ two fingernails, sampled 200 times per second. As the participants were instructed to produce movements in the vertical plane in front of their body, we only considered the movement in a 2D plane, that is, we only used the y (height) and x (left/right) coordinates. The z coordinate (the distance away from the participant) was not considered. All trials were excluded for which the trajectories were not complete (i.e., not covering the full movement path from release to return to the rest keys), as were trials for which either RT or MT lay beyond three standard deviations from the condition means (2.2% of trials), as in the previous experiments.

For the purpose of data reduction and to be able to compare participants’ trajectories to one another, we transformed the different trajectories of each participant into one common reference frame. For that purpose, each trajectory of each participant was transformed such that they had identical height, based on the difference between the highest and lowest points of a trajectory. This stretches or compresses each trajectory on the y axis, but leaves x coordinates untouched. The average distance from the midline on the x coordinate was then extracted for eight equidistant intervals along each trajectory’s height, for each hand separately. As the gestures produced by the left and the right hand were mirror images of one another, the data from the left hand was mirrored along the y axis and averaged with the data from the right hand. This procedure yields, for each trial of a participant, the deviation from the midline for eight equidistant coordinates on the y axis. The first bin was not analyzed as it mainly captures release of the rest keys.
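The data reduction described above can be illustrated with a minimal sketch. It is not the original analysis code: the function names `bin_hand` and `trial_signature`, the `(x, y)` sample layout, and the default midline at x = 0 are assumptions made for illustration. Height normalization is implemented by expressing each sample's y coordinate relative to the trajectory's own lowest and highest points.

```python
def bin_hand(points, midline_x, mirror, n_bins=8):
    """Mean horizontal distance from the midline in n_bins height intervals.

    points: list of (x, y) samples for one hand in one trial.
    mirror: True for the left hand, whose x values are reflected
            about the midline so both hands share one reference frame.
    """
    ys = [y for _, y in points]
    y_min, y_max = min(ys), max(ys)
    height = y_max - y_min
    if height == 0:
        raise ValueError("trajectory has no vertical extent")

    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for x, y in points:
        if mirror:
            x = 2 * midline_x - x
        # Relative height in [0, 1]; this equates height across trials.
        rel = (y - y_min) / height
        idx = min(int(rel * n_bins), n_bins - 1)
        sums[idx] += x - midline_x
        counts[idx] += 1
    # Empty bins are reported as None rather than guessed.
    return [s / c if c else None for s, c in zip(sums, counts)]


def trial_signature(left, right, midline_x=0.0, n_bins=8):
    """Average the mirrored left-hand and right-hand profiles bin by bin."""
    left_bins = bin_hand(left, midline_x, True, n_bins)
    right_bins = bin_hand(right, midline_x, False, n_bins)
    signature = []
    for l, r in zip(left_bins, right_bins):
        present = [v for v in (l, r) if v is not None]
        signature.append(sum(present) / len(present) if present else None)
    return signature


# Example: two straight vertical strokes, 10 units either side of the midline.
right = [(10.0, float(y)) for y in range(81)]
left = [(-10.0, y * 0.5) for y in range(81)]
print(trial_signature(left, right))
```

In this sketch the first bin would be dropped afterwards (for example with `signature[1:]`), mirroring the exclusion of the bin that mainly captures the release of the rest keys.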

Erroneous gestures were identified with a correlation-based criterion. For every trial of every participant, we calculated how well the trial correlated with both the participant’s average signature of the gesture she/he should have produced in this trial (averaged over both compatible and incompatible trials), and the alternative gesture. Trials were considered errors if they correlated more strongly with the respective other gesture than with the required gesture. This correlation-based procedure provides an objective measure of gesturing accuracy, measuring how well each trial conformed to the ideal shape that should have been produced in this trial. The distances from the midline in the remaining trials (x coordinates) were then averaged, for each participant, condition, and y coordinate separately.
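The correlation-based error criterion can be sketched as follows, assuming that each trial and each participant-specific average gesture is represented by its midline distances in the analyzed height bins. The function names `pearson` and `is_gesture_error` and the example profiles are hypothetical and serve illustration only; they are not the original analysis code or data.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equally long numeric sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def is_gesture_error(trial, required_template, other_template):
    """A trial counts as an error if it correlates more strongly with the
    participant's average of the other gesture than with the required one."""
    return pearson(trial, other_template) > pearson(trial, required_template)


# Made-up profiles over seven height bins (illustrative values only).
circle_template = [4, 7, 9, 10, 9, 7, 4]
square_template = [8, 10, 10, 10, 10, 10, 8]
trial = [4.2, 6.8, 9.1, 9.9, 9.2, 7.1, 3.9]  # resembles a circle

print(is_gesture_error(trial, square_template, circle_template))  # square required
print(is_gesture_error(trial, circle_template, square_template))  # circle required
```

Because the correlation is insensitive to overall size, this criterion classifies a trial by the form of its path rather than by how wide the gesture was.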

Results

Gesture errors

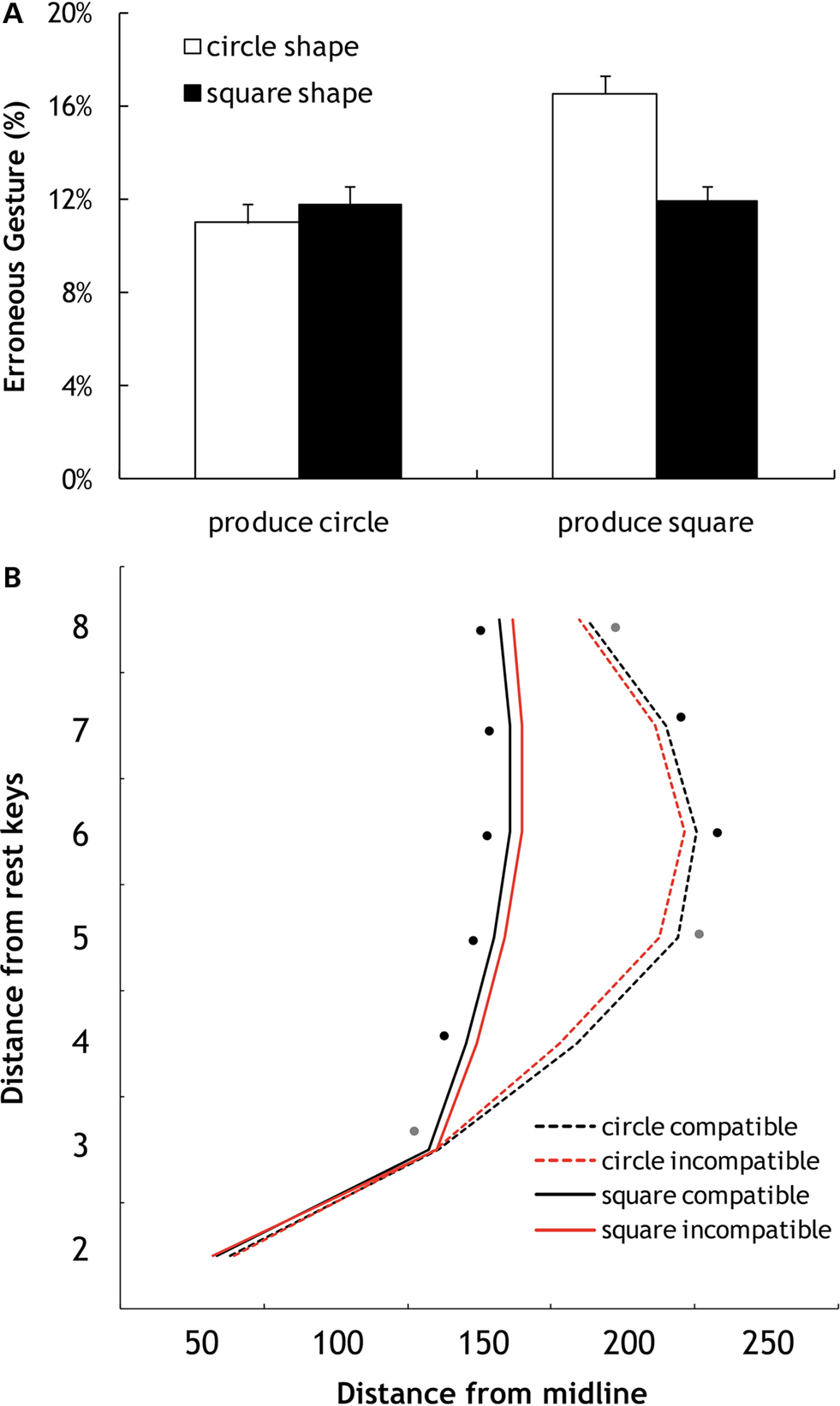

Figure 6A shows the percentage of erroneous trials identified by the correlation criterion in each condition. An analysis performed on the participants’ mean error rates revealed no main effect of Gesture (F[1, 14] = 1.66; p = 0.219) and no main effect of Shape (F[1, 14] = 2.82; p = 0.116), but the critical interaction was marginally significant (F[1, 14] = 3.71; p = 0.075; η2 = 0.209). When required to produce a square, participants more often erroneously produced a circle after reading a round word than after reading a square word (t[14] = 2.74; p = 0.016). For circles, a small and non-significant (t[14] < 1) difference in the other direction was apparent.

Figure 6. (A) Percentage of erroneous trials detected by the correlation procedure. Error bars show the standard error of the mean. (B) Trajectories for circles and squares in the compatible and incompatible trials. Dots next to the trajectories mark significant differences in pairwise two-sided t-tests (gray: p < 0.10; black: p < 0.05) between the compatible and incompatible conditions for circles and squares for each of the y coordinates.

Trajectories

Figure 6B shows the mean across-participant trajectories when required to produce circles (dotted lines) and squares (solid lines) when gesture and shape were either compatible (black) or incompatible (red). To investigate whether there are significant differences in how round and square gestures were performed in the compatible and incompatible conditions, we entered each participant’s x coordinates (distance from the midline) into a 2 × 2 × 7 repeated measures ANOVA with the factors Gesture (circle, square), Compatibility (compatible, incompatible) and y coordinate (second to eighth bin). This analysis revealed main effects of Gesture (F[1, 14] = 5.92; p = 0.029) and y coordinate (F[6, 84] = 33.78; p < 0.001). Circles were generally wider than squares, and x coordinates were generally wider at the middle and the top than at the bottom just after release from the rest keys. The interaction of Gesture and y coordinate (F[6, 84] = 5.66; p < 0.022) confirmed that for circles and squares the trajectories developed differently across y coordinates. Most importantly, the critical interactions of Gesture and Compatibility (F[1, 14] = 8.03; p < 0.013; η2 = 0.365) and of Gesture, Compatibility and y coordinate (F[6, 84] = 4.70; p < 0.021; η2 = 0.251) were significant. As can be seen in Figure 6B, in the incompatible conditions, the trajectories of circles and squares were closer to each other than in the compatible conditions. In other words, incompatible object shapes intruded on gesture production and pushed circles inwards toward the typical trajectories of squares, and squares outwards toward the typical trajectories of circles.
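The pairwise comparisons of compatible and incompatible trajectories at each y coordinate (the dots in Figure 6B) amount to one paired t-test per height bin. The following sketch computes the t statistic and degrees of freedom only; the function names `paired_t` and `per_bin_tests`, the data layout, and the example values are hypothetical. Obtaining p values additionally requires the t distribution (for example from a statistics library), which is omitted here.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for two matched samples.

    Raises ZeroDivisionError if all paired differences are identical.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


def per_bin_tests(compatible, incompatible):
    """Run one paired test per height bin.

    Each argument is a list over participants; each participant is a list
    of mean midline distances, one value per analyzed bin.
    """
    n_bins = len(compatible[0])
    return [paired_t([p[b] for p in compatible],
                     [p[b] for p in incompatible])
            for b in range(n_bins)]


# Made-up data: five participants, two bins (illustrative values only).
compatible = [[10, 5.0], [12, 6.0], [11, 7.0], [13, 8.0], [12, 9.0]]
incompatible = [[9, 5.5], [10, 5.0], [10, 8.0], [11, 7.0], [11, 9.5]]
for t_value, df in per_bin_tests(compatible, incompatible):
    print(round(t_value, 3), df)
```

With 15 participants, as in Experiment 4, each such test would have 14 degrees of freedom.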

Discussion

Experiment 4 confirms and extends the gesture–language compatibility effects observed in the previous experiments. We found evidence for two effects. First, when required gesture and object shape mismatched, participants tended to accidentally select the incorrect gesture. This confirms the effects on gesture selection demonstrated in Experiments 1 and 2. Second, and more importantly, even the correctly selected gestures were influenced by shape information carried by the words. When tracking the actual movement trajectories produced by the participants, we found that circles became more square-like when reading an incompatible word referring to a square object (“billboard”), and squares became more circle-like when reading an incompatible word referring to a round object (“carousel”).

This is the first demonstration that highly specific motor properties such as hand trajectory are influenced by stimuli that have no direct action properties. Shape information therefore affects not only high-level gesture selection processes but also the control of hand movements through space, indicating intimate links between shape representation and the control of non-goal-directed movements in space. This is consistent with the idea that action control is mediated by “common codes” (Prinz, 1997) that are utilized to represent shapes in the environment and to control the actor’s own hand movements. In such models, shape information therefore functions both as a description of stimuli in the environment and as goal states for one’s own movements.

General Discussion

We investigated the processes that may underlie the production of iconic gestures. Previous studies have demonstrated that sentences describing actions or objects directly associated with behaviors automatically activate the motor system and influence ongoing behavior (e.g., Glenberg and Kaschak, 2002; Zwaan and Taylor, 2006; Bub et al., 2008), suggesting that gesturing might be a function of the action content of the message. Here, we show that the link between perception and action is more widespread. Across multiple experiments, complex gestures were automatically released by words with no direct links to action, providing evidence for a more general mechanism that automatically transforms shape information into trajectories of congruent gestures.

In Experiment 1, participants produced square or round gestures in response to the color of presented circles or squares. We found that seeing circles or squares directly influenced gesture production, facilitating gestures of the same shape as displayed on the screen. Importantly, the seen shapes influenced not only the selection of the appropriate gesture (as measured by RTs) but also its actual production (as measured by MT), although the latter effect was less robust. These data indicate that, once encoded, shape feeds directly into motor output systems and affects ongoing behavior. To our knowledge, this is the first demonstration that shape information can facilitate the production of similar movements in space, even when the shapes were not part of recognizable objects, the movements of the participants did not match the typical usage patterns of such objects, and even when shape did not have to be encoded for the participants’ task.

Further experiments extended these findings to a language context and demonstrated that the shapes need not be directly perceived to influence ongoing behavior. Here, the shapes were not primed visually, but through words that denoted either round (“carousel”) or square (“billboard”) objects. When semantic analysis of the words was required – deciding whether the referent object would be found inside or outside a house – word meaning facilitated both the selection (RTs) and production (MTs) of gestures of the same shape. Although tentative, subtle effects were even detected when participants ignored the semantics of the words and focused on their color. The analysis did not reveal overall effects on RTs or MTs, but correlational analyses suggested that those participants with slower RTs showed compatibility effects similar to those of the participants in the location task. Moreover, similar effects were also observed when the objects were arbitrarily associated with non-words (Experiment 3), further supporting a semantic origin of the effects. Finally, the MT effect was shown to be due, at least in part, to changes in the shape of the hand path (Experiment 4), where the shape of the referent object intruded into the shape the participant was attempting to produce. We found that reading words referring to square objects rendered round gestures more square-like, and vice versa for reading words referring to round objects. Shape information therefore affects not only high-level gesture selection processes but also the control of hand movements through space, indicating intimate links between (explicit or implicit) shape representation and motor control.

These findings demonstrate that directly perceiving or thinking about an object activates information about its shape, which in turn feeds into the motor system and elicits congruent movements in space. As noted, the objects and shapes presented were not associated with specific motor behaviors. Moreover, the gestures participants were required to perform – bimanual movements in the vertical space in front of their body – were abstract, not directed toward a goal, and unrelated to typical actions directed toward objects. The data therefore suggest that gesture–language interactions emerge not only from motor simulations that capture the action content of a message, but that perceptual information about an object (here, its shape) suffices to elicit congruent gestures.

Previous studies have provided evidence that language comprehension entails highly specific perceptual simulations that allow language users to interact with the material as if it was actually perceived (e.g., Zwaan et al., 2002; Richardson et al., 2003; Zwaan and Yaxley, 2003; Pecher et al., 2009). For example, reading a sentence like “the ranger saw an eagle in the sky” primes images of an eagle with spread wings as opposed to an eagle with its wings folded (Zwaan et al., 2002). Our data indicate, first, that such perceptual simulations of an object’s shape can also be observed outside of a sentence context, and occur on a single word basis, at least in situations where the requirement to produce gestures renders shape information relatively salient (cf. Pecher et al., 1998; see Hommel, 2010, for a similar point with regard to the Simon effect). Second, and more importantly, the data suggest that these perceptual simulations may suffice to engender motor processes that capture these shapes, and may give rise to the production of corresponding gestures. Such findings are predicted by ideomotor theories of action control that assume that the same codes are utilized to represent stimuli in the environment and plan one’s own actions (e.g., Greenwald, 1970; Prinz, 1990; Hommel et al., 2001). In such models, shape information functions both as a description of objects in the environment and of possible movements that the observer can perform.

A direct link between perceptual information and the motor system is consistent with observations from spontaneous gesturing. Iconic gestures similarly capture not only the motor but also visuospatial aspects of a message. It has been found, for example, that people with high spatial skills produce more iconic gestures (Hostetter and Alibali, 2007), that iconic gestures predominantly occur with spatial words (Rauscher et al., 1996; Krauss, 1998; Morsella and Krauss, 2005; Trafton et al., 2006) and that restricting people from gesturing selectively impairs the production of spatial words (Rauscher et al., 1996). Various accounts have been put forward to explain the link between gesturing and visuospatial information. It has been proposed, for example, that gesturing reduces cognitive load by offloading spatial information onto the motor system (Goldin-Meadow et al., 2001), supports the segregation of complex material into discrete elements for verbalization (e.g., Kita, 2000), or facilitates lexical access of words in the mental lexicon (e.g., Krauss et al., 2000). Our data suggest that, even though gesturing might well have these benefits, the general tendency to gesture may arise on a more basic level and reflect direct links between perceptual information and the motor system (e.g., Prinz, 1997; Hommel et al., 2001). Even though future studies have to confirm that similar links can be observed when people are actively producing language, the present data suggest that gesturing is a direct consequence of a cognitive system that utilizes the same codes to represent stimuli in the environment and to control possible movements in space (see Hostetter and Alibali, 2008, for a similar account based on data of spontaneous gesturing).

Further Predictions and Implications

The notion that perceptual simulations may suffice to elicit complex gestures in space has implications for the interpretation of embodied effects observed in language paradigms. Various studies (e.g., Glenberg and Kaschak, 2002; Zwaan and Taylor, 2006) have reported language–action compatibility effects, where reading a sentence such as “turn up the volume” leads to a facilitation of corresponding movements (turning a knob to the right). Our findings open up the possibility that these effects may not – or not only – reflect the “motor” components of the message, but their perceptual components. In the above example, the motor effects could therefore reflect the clockwise motion that results from the observed action, rather than the implied motor action itself. If true, then a sentence such as “the wheel spins clockwise” should be as efficient as the sentence “he spins the wheel clockwise” in eliciting turning gestures of one’s hand. A second prediction is that any motor activation observed in such paradigms is not directly due to the language understanding processes, but due to perceptual simulations of the sentence content. This assumption can be tested with language comprehension paradigms that reliably measure both motor activation (e.g., Glenberg and Kaschak, 2002) and perceptual activation (e.g., Zwaan et al., 2002; Zwaan and Yaxley, 2003). Mediation analysis (e.g., Baron and Kenny, 1986) would then allow one to establish whether the motor effects indeed reflect underlying perceptual simulations. If so, then the presence of perceptual effects should fully explain the extent to which motor effects are evoked by the experimental manipulation across participants, but not vice versa.

Ideomotor theories not only predict that perceptual information will elicit corresponding motor output, but they also predict that, conversely, action planning will affect perception and cognition (e.g., Hommel et al., 2001). If actions are controlled by their perceptual effects, then planning or performing an action should affect how stimuli in the environment are perceived, and how easily they are processed. There are now numerous illustrations of this principle. For example, in a remarkable demonstration, Wohlschläger and Wohlschläger (1998) and Wohlschläger (2000) have reported that clockwise or counterclockwise movements of one’s hand cause an ambiguously rotating figure to be perceived as rotating in the same direction. Similarly, the perception of directional cues (i.e., arrows) is affected when participants plan movements in the same or a different direction (e.g., Müsseler and Hommel, 1997; for similar effects, see Symes et al., 2008, 2009, 2010). Such reverse effects from action on perception and cognition have indeed also been demonstrated for gesturing. For example, gesturing facilitates word retrieval processes in tip-of-the-tongue states (Pine et al., 2007). Sentence production is more fluent when people are allowed to gesture, and impaired when they are restricted from gesturing (Rauscher et al., 1996). Finally, people who are allowed to gesture speak more about spatial topics (Rimè et al., 1984) and rely more strongly on spatial strategies in problem solving tasks (Alibali et al., 2004).

This “self-priming function” of gestures can be tested with paradigms analogous to those reported here. If motor codes are linked to perceptual effects, then producing gestures of distinct shapes should affect participants’ ability to process stimuli of similar shapes. For example, while producing a round gesture, participants should find it easier to make judgments about (verbally presented) objects with round shapes than objects with square shapes. Such bidirectional links between perception and action can also be investigated with new methods in functional magnetic resonance imaging (fMRI). Repetition suppression and multivoxel pattern classification (MVPC) allow one to investigate not only whether the same neuronal populations are engaged during production of a particular shape, but also whether they represent this shape when it belongs to an object, irrespective of whether it is directly perceived or presented verbally. Such data would provide direct evidence that produced, observed and merely implicitly represented shapes rely on the same neural codes.

Conclusions

Previous accounts have assumed that object-based motor effects reflect the processing of affordances: learned ways to interact with the objects that are automatically retrieved upon object perception, or automatic motor simulation of action verbs. Our results demonstrate that the interaction between perception and action is far more widespread. Words can influence how participants produce motor behavior, even when the words have no direct links to action. This demonstrates the broad interactions between perceptual and motor systems consistent with accounts that assume that visual representations overlap with motor representations and are either indistinguishable or very closely interconnected (Greenwald, 1970; Prinz, 1990; Hommel et al., 2001). This link between perceptual and motor information may underlie the ubiquitous phenomenon of iconic gestures.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The work was supported by a Wellcome Trust Programme grant awarded to Steven P. Tipper. We thank Ed Symes for many helpful comments and Anna Tett, Richard Webb, and Cheree Baker for help with the data collection.

References

Alibali, M. W. (2005). Gesture in spatial cognition: expressing, communicating, and thinking about spatial information. Spat. Cogn. Comput. 5, 307–331.

Alibali, M. W., Spencer, R. C., and Kita, S. (2004). “Spontaneous gestures influence strategy choice in problem solving,” in Paper presented at the Biennial Meeting of the Society for Research in Child Development, Atlanta, GA.

Bach, P., Peatfield, N. A., and Tipper, S. P. (2007). Focusing on body sites: the role of spatial attention in action perception. Exp. Brain Res. 178, 509–517.

Bach, P., and Tipper, S. P. (2006). Bend it like Beckham: embodying the motor skills of famous athletes. Q. J. Exp. Psychol. 59, 2033–2039.

Bach, P., and Tipper, S. P. (2007). Implicit action encoding influences personal-trait judgments. Cognition 102, 151–178.

Baron, R. M., and Kenny, D. A. (1986). The moderator-mediator variable distinction in social psychological research: conceptual, strategic and statistical considerations. J. Pers. Soc. Psychol. 51, 1173–1182.

Brass, M., Bekkering, H., Wohlschläger, A., and Prinz, W. (2000). Compatibility between observed and executed finger movements: comparing symbolic, spatial, and imitative cues. Brain Cogn. 143, 124–143.

Bub, D. N., Masson, M. E. J., and Cree, G. S. (2008). Evocation of functional and volumetric gestural knowledge by objects and words. Cognition 106, 27–58.

Buccino, G., Riggio, L., Melli, G., Binkofski, F., Gallese, V., and Rizzolatti, G. (2005). Listening to action-related sentences modulates the activity of the motor system: a combined TMS and behavioral study. Brain Res. Cogn. Brain Res. 24, 355–363.

Candidi, M., Vicario, C. M., Abreu, A. M., and Aglioti, S. M. (2010). Competing mechanisms for mapping action-related categorical knowledge and observed actions. Cereb. Cortex. doi: 10.1093/cercor/bhq033.

Craighero, L., Fadiga, L., Rizzolatti, G., and Umiltà, C. (1999). Action for perception: a motor-visual attentional effect. J. Exp. Psychol. Hum. Percept. Perform. 25, 1673–1692.

Dehaene, S., Bossini, S., and Giraux, P. (1993). The mental representation of parity and numerical magnitude. J. Exp. Psychol. Gen. 122, 371–396.

di Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V., and Rizzolatti, G. (1992). Understanding motor events: a neurophysiological study. Exp. Brain Res. 91, 176–180.

Fischer, M. H., and Zwaan, R. A. (2008). Embodied language - a review of the role of the motor system in language comprehension. Q. J. Exp. Psychol. 61, 825–850.

Gillmeister, H., Catmur, C., Liepelt, R., Brass, M., and Heyes, C. (2008). Experience-based priming of body parts: a study of action imitation. Brain Res. 1217, 157–170.

Glenberg, A. M., and Kaschak, M. P. (2002). Grounding language in action. Psychon. Bull. Rev. 9, 558–565.

Glover, S., Rosenbaum, D. A., Graham, J., and Dixon, P. (2004). Grasping the meaning of words. Exp. Brain Res. 154, 103–108.

Goldin-Meadow, S., Nusbaum, H., Kelly, S., and Wagner, S. (2001). Explaining math: gesturing lightens the load. Psychol. Sci. 12, 516–522.

Goldin-Meadow, S., and Wagner, S. M. A. (2005). How our hands help us learn. Trends Cogn. Sci. 9, 234–241.

Graham, J. A., and Argyle, M. (1975). A cross-cultural study of the communication of extra-verbal meaning by gestures. Int. J. Psychol. 10, 57–67.

Greenwald, A. G. (1970). Sensory feedback mechanisms in performance control: with special reference to the ideomotor mechanism. Psychol. Rev. 77, 73–99.

Griffiths, D., and Tipper, S. P. (2009). Priming of reach trajectory when observing actions: hand-centred effects. Q. J. Exp. Psychol. 62, 2450–2470.

Gunter, T. C., and Bach, P. (2004). Communicating hands: ERPs elicited by meaningful symbolic hand postures. Neurosci. Lett. 372, 52–56.

Hauk, O., Johnsrude, I., and Pulvermüller, F. (2004). Somatotopic representation of action words in the motor and premotor cortex. Neuron 41, 301–307.

Holle, H., and Gunter, T. C. (2007). The role of iconic gestures in speech disambiguation: ERP evidence. J. Cogn. Neurosci. 19, 1175–1192.

Hommel, B. (2010). The Simon effect as tool and heuristic. Acta Psychol. doi: 10.1016/j.actpsy.2010.04.011.

Hommel, B., Müsseler, J., Aschersleben, G., and Prinz, W. (2001). The theory of event coding (TEC). A framework for perception and action planning. Behav. Brain Sci. 24, 849–878.

Hostetter, A. B., and Alibali, M. W. (2007). Raise your hand if you’re spatial: relations between verbal and spatial skills and gesture production. Gesture 7, 73–95.

Hostetter, A. B., and Alibali, M. W. (2008). Visible embodiment: gestures as simulated action. Psychon. Bull. Rev. 15, 495–514.

Kemmerer, D., Castillo, J. G., Talavage, T., Patterson, S., and Wiley, C. (2008). Neuroanatomical distribution of five semantic components of verbs: evidence from fMRI. Brain Lang. 107, 16–43.

Kita, S. (2000). “How representational gestures help speaking,” in Language and Gesture, ed. D. McNeill (Cambridge, UK: Cambridge University Press), 162–185.

Kita, S., and Özyürek, A. (2003). What does cross-linguistic variation in semantic coordination of speech and gesture reveal? Evidence for an interface representation of spatial thinking and speaking. J. Mem. Lang. 48, 16–32.

Knoblich, G., and Prinz, W. (2005). “Linking perception and action: an ideomotor approach,” in Higher-Order Motor Disorders, eds H.-J. Freund, M. Jeannerod, M. Hallett, and R. C. Leiguarda (Oxford, UK: Oxford University Press), 79–104.

Krauss, R. M., Chen, Y., and Chawla, P. (1996). Nonverbal behavior and nonverbal communication: what do conversational hand gestures tell us? Adv. Exp. Soc. Psychol. 28, 389–450.

Krauss, R. M., Chen, Y., and Gottesman, R. (2000). “Lexical gestures and lexical access: a process model,” in Language and Gesture, ed. D. McNeill (Cambridge, UK: Cambridge University Press), 261–283.

MacLeod, C. (1991). Half a century of research on the Stroop effect: an integrative review. Psychol. Bull. 109, 163–203.

Melinger, A., and Levelt, W. (2004). Gesture and the communicative intention of the speaker. Gesture 4, 119–141.

Morsella, E., and Krauss, R. M. (2005). Muscular activity in the arm during lexical retrieval: implications for gesture-speech theories. J. Psycholinguist. Res. 34, 415–427.

Müsseler, J., and Hommel, B. (1997). Blindness to response-compatible stimuli. J. Exp. Psychol. Hum. Percept. Perform. 23, 861–872.

Özyürek, A., Willems, R. M., Kita, S., and Hagoort, P. (2007). On-line integration of semantic information from speech and gesture: insights from event-related brain potentials. J. Cogn. Neurosci. 19, 605–616.

Pecher, D., van Dantzig, S., Zwaan, R. A., and Zeelenberg, R. (2009). Language comprehenders retain implied shape and orientation of objects. Q. J. Exp. Psychol. 62, 1108–1114.

Pecher, D., Zeelenberg, R., and Raaijmakers, J. G. W. (1998). Does pizza prime coin? Perceptual priming in lexical decision and pronunciation. J. Mem. Lang. 38, 401–418.

Pine, K., Bird, H., and Kirk, E. (2007). The effects of prohibiting gestures on children’s lexical retrieval ability. Dev. Sci. 10, 747–754.

Prinz, W. (1990). “A common-coding approach to perception and action,” in Relationships Between Perception and Action: Current Approaches, eds O. Neumann and W. Prinz (Berlin/Heidelberg/New York: Springer), 167–201.

Pulvermüller, F., and Fadiga, L. (2010). Active perception: sensorimotor circuits as a cortical basis for language. Nat. Rev. Neurosci. 11, 351–360.

Rauscher, F. H., Krauss, R. M., and Chen, Y. (1996). Gesture, speech, and lexical access: the role of lexical movements in speech production. Psychol. Sci. 7, 226–231.

Richardson, D. C., Spivey, M. J., Barsalou, L. W., and McRae, K. (2003). Spatial representations activated during real-time comprehension of verbs. Cogn. Sci. 27, 767–780.

Rimé, B., Schiaratura, L., Hupet, M., and Ghysselinckx, A. (1984). Effects of relative immobilization on the speaker’s nonverbal behavior and on the dialogue imagery level. Motiv. Emot. 8, 311–325.

Seyfeddinipur, M., and Kita, S. (2001). “Gesture and disfluencies in speech,” in Oralité et gestualité: Interactions et comportements multimodaux dans la communication [Orality and gestuality: multimodal interaction and behavior in communication]. Actes du colloque [Proceedings of the meeting of] ORAGE 2001, eds C. Cavé, I. Guaïtella, and S. Santi (Paris, France: L’Harmattan), 266–270.

Stroop, J. R. (1935). Studies of interference in serial verbal reactions. J. Exp. Psychol. 18, 643–662.

Stürmer, B., Aschersleben, G., and Prinz, W. (2000). Correspondence effects with manual gestures and postures: a study of imitation. J. Exp. Psychol. Hum. Percept. Perform. 26, 1746–1759.

Symes, E., Ellis, R., and Tucker, M. (2007). Visual object affordances: object orientation. Acta Psychol. 124, 238–255.

Symes, E., Ottoboni, G., Tucker, M., Ellis, R., and Tessari, A. (2009). When motor attention improves selective attention: the dissociating role of saliency. Q. J. Exp. Psychol. doi: 10.1080/17470210903380806.

Symes, E., Tucker, M., Ellis, R., Vainio, L., and Ottoboni, G. (2008). Grasp preparation improves change detection for congruent objects. J. Exp. Psychol. Hum. Percept. Perform. 34, 854–871.

Symes, E., Tucker, M., and Ottoboni, G. (2010). Integrating action and language through biased competition. Front. Neurorobot. 4:9. doi: 10.3389/fnbot.2010.00009.

Tessari, A., Ottoboni, G., Symes, E., and Cubelli, R. (2010). Hand processing depends on the implicit access to a spatially and bio-mechanically organized structural description of the body. Neuropsychologia 48, 681–688.

Tipper, S. P., and Bach, P. (2010). The face inhibition effect: social contrast or motor competition? Eur. J. Cogn. Psychol. (in press).

Tipper, S. P., Paul, M., and Hayes, A. (2006). Vision-for-action: the effects of object property discrimination and action state on affordance compatibility effects. Psychon. Bull. Rev. 13, 493–498.

Trafton, J. G., Trickett, S. B., Stitzlein, C. A., Saner, L., Schunn, C. D., and Kirschenbaum, S. S. (2006). The relationship between spatial transformations and iconic gestures. Spat. Cogn. Comput. 6, 1–29.

Tucker, M., and Ellis, R. (1998). On the relations between seen objects and components of potential actions. J. Exp. Psychol. Hum. Percept. Perform. 24, 830–846.

Tucker, M., and Ellis, R. (2001). The potentiation of grasp types during visual object recognition. Vis. Cogn. 8, 769–800.

Tucker, M., and Ellis, R. (2004). Action priming by briefly presented objects. Acta Psychol. 116, 185–203.

Wohlschläger, A., and Wohlschläger, A. (1998). Mental and manual rotation. J. Exp. Psychol. Hum. Percept. Perform. 24, 397–412.

Wu, Y. C., and Coulson, S. (2007a). Iconic gestures prime related concepts: an ERP study. Psychon. Bull. Rev. 14, 57–63.

Wu, Y. C., and Coulson, S. (2007b). How iconic gestures enhance communication: an ERP study. Brain Lang. 101, 234–245.

Zwaan, R. A., Stanfield, R. A., and Yaxley, R. H. (2002). Language comprehenders mentally represent the shapes of objects. Psychol. Sci. 13, 168–171.

Zwaan, R. A., and Taylor, L. J. (2006). Seeing, acting, understanding: motor resonance in language comprehension. J. Exp. Psychol. Gen. 135, 1–11.

Keywords: stimulus–response compatibility, affordances, ideomotor theories, language, gesture, object shape

Citation: Bach P, Griffiths D, Weigelt M and Tipper SP (2010) Gesturing meaning: non-action words activate the motor system. Front. Hum. Neurosci. 4:214. doi: 10.3389/fnhum.2010.00214

Received: 15 June 2010;

Paper pending published: 16 June 2010;

Accepted: 14 October 2010;

Published online: 04 November 2010.

Edited by:

Harold Bekkering, University of Nijmegen, Netherlands

Reviewed by:

Diane Pecher, Erasmus University Rotterdam, Netherlands

Birgit Stürmer, Humboldt Universität Berlin, Germany

Copyright: © 2010 Bach, Griffiths, Weigelt and Tipper. This is an open-access article subject to an exclusive license agreement between the authors and the Frontiers Research Foundation, which permits unrestricted use, distribution, and reproduction in any medium, provided the original authors and source are credited.

*Correspondence: Patric Bach, School of Psychology, University of Plymouth, Drake Circus, Devon PL4 8AA, UK. e-mail: patric.bach@plymouth.ac.uk