Moral Enhancement Using Non-invasive Brain Stimulation

- Berenson-Allen Center for Noninvasive Brain Stimulation, Cognitive Neurology Unit at Beth Israel Deaconess Medical Center, Harvard Medical School, Boston, MA, USA

Biomedical enhancement refers to the use of biomedical interventions to improve capacities beyond normal, rather than to treat deficiencies due to diseases. Enhancement can target physical or cognitive capacities, but also complex human behaviors such as morality. However, the complexity of normal moral behavior makes it unlikely that morality is a single capacity that can be deficient or enhanced. Instead, our central hypothesis will be that moral behavior results from multiple, interacting cognitive-affective networks in the brain. First, we will test this hypothesis by reviewing evidence for modulation of moral behavior using non-invasive brain stimulation. Next, we will discuss how this evidence affects ethical issues related to the use of moral enhancement. We end with the conclusion that while brain stimulation has the potential to alter moral behavior, such alteration is unlikely to improve moral behavior in all situations, and may even lead to less morally desirable behavior in some instances.

Introduction

Biomedical enhancement refers to biomedical interventions used to improve certain capacities beyond normal, rather than to restore capacities deficient as a result of a disease (Chatterjee, 2004). Cognitive enhancement, for example, involves using biomedical interventions like drugs (Maher, 2008) or non-invasive brain stimulation (Wurzman et al., 2016) to improve an individual's memory, attention, executive functions, or other cognitive functions beyond normal. The use of cognitive enhancement has increased, leading to intense ethical debates about whether such enhancement is morally permissible (Chatterjee, 2004; Greely et al., 2008; Darby, 2010).

Moral enhancement refers to improving moral or social behavior, rather than cognition or physical attributes (Harris and Savulescu, 2014). Morality, broadly defined, refers to evaluating whether an action (or person performing an action) is good or bad (Haidt, 2001). The idea of using biomedical interventions to improve moral behavior is not new. For example, Delgado, an early pioneer in brain stimulation, argued that progress toward a “psycho-civilized society” would require both educational and biomedical interventions to improve moral motivations and reduce tendencies toward violence (Delgado, 1969). His concerns about unrestrained advances in “technologies of destruction” (Delgado, 1969) without accompanying advances in moral behavior parallel those of many modern ethicists (Persson and Savulescu, 2013). Interventions to treat violent or immoral behavior were also advocated by early supporters of psychosurgery (Mark, 1970).

Moral enhancement raises a number of unique ethical questions that differ from the ethical issues raised by cognitive enhancement. First, cognitive enhancement benefits the individual but can be harmful to society in terms of distributive justice and fairness. The opposite argument could be made for moral enhancement, which is more likely to benefit society but not necessarily the individual (Douglas, 2016). Second, allowing cognitive enhancement is argued to maximize an individual's autonomy [although coercion to use cognitive enhancement in order to “keep up” may limit autonomy (Darby, 2010)]. In contrast, critics of moral enhancement argue that such interventions would significantly limit an individual's freedom of choice, a freedom that is intrinsically valuable even if that choice is to behave immorally (Harris, 2011). Third, the “moral” decision in many situations remains uncertain, and depends on one's philosophical, political, religious, or cultural beliefs. Moral “enhancement” might therefore differ depending on one's ideology. Finally, while proponents of cognitive enhancement argue that cognitive interventions should be morally permissible, certain proponents of moral enhancement argue that moral enhancement should be morally obligatory, or required (Persson and Savulescu, 2013).

A critical point is that discussions about cognitive enhancement often focus on improving cognitive capacities as a means to improve cognition, while discussions in moral enhancement have focused on the ultimate ends of moral enhancement. However, by doing so, the practical question of how altering specific cognitive-affective capacities can lead to changes in moral behavior is neglected. Given the intense and conflicting ethical opinions about moral enhancement, it is especially important to consider whether morality can be enhanced, and, if so, what the likely consequences of such modification will be (Douglas, 2013). We will argue that morality does not consist of a single neuropsychological capacity, but rather is a group of neuropsychological capacities that guide normal social behavior (Darby et al., 2016). This position is not new, and is based on a growing body of evidence from moral psychology (Cushman et al., 2006; Cushman and Young, 2011), functional neuroimaging (Greene et al., 2001, 2004; Young et al., 2007), and the study of neurological patients with antisocial behaviors (Moll et al., 2005; Ciaramelli et al., 2007, 2012; Koenigs et al., 2007; Mendez, 2009; Thomas et al., 2011; Fumagalli and Priori, 2012; Ibañez and Manes, 2012) demonstrating the complexity and heterogeneity of the “moral” brain.

In this article, we review the existing literature on the use of non-invasive brain stimulation (NIBS) to modulate moral behavior. Rather than improving or impairing a single “moral” process, we will show that non-invasive brain stimulation alters specific neuropsychological processes contributing to normal moral behavior. Such alterations can be viewed as moral “enhancement” in certain situations, but may lead to immoral behavior in other situations. Finally, we will discuss how the evidence from non-invasive brain stimulation affects the ethical debate on moral enhancement.

Methods

We searched PubMed (from indexing through 1/2016) for all articles involving non-invasive brain stimulation (using the terms “transcranial magnetic stimulation” OR “transcranial direct current stimulation” OR TMS OR tDCS OR “non-invasive brain stimulation” OR “theta burst stimulation” OR TBS) and moral behavior (using the terms moral OR morality OR altruism OR cooperation OR fairness OR unfairness OR empathy OR social OR aggression). We also searched the reference lists of review articles for additional studies. This search resulted in 470 articles. Of these, 48 specifically involved the use of non-invasive brain stimulation on morally relevant behaviors. We classified articles based on the methodology (neurophysiological response to moral stimuli using NIBS vs. modulation of moral behavior using NIBS), NIBS parameters (TMS vs. tDCS, stimulation, frequency, duration, location, use of neuronavigation), psychological tests used, and significant findings.
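
The boolean search described above can be written out as a single query string. The following is a minimal sketch, not the authors' actual search script: the helper names `or_block` and `build_query` are hypothetical, and joining the two term groups with AND is an assumption based on the wording of the search description.

```python
# Term lists copied from the search description above.
STIMULATION_TERMS = [
    "transcranial magnetic stimulation",
    "transcranial direct current stimulation",
    "TMS",
    "tDCS",
    "non-invasive brain stimulation",
    "theta burst stimulation",
    "TBS",
]
BEHAVIOR_TERMS = [
    "moral", "morality", "altruism", "cooperation", "fairness",
    "unfairness", "empathy", "social", "aggression",
]


def or_block(terms):
    """Join terms with OR, quoting multi-word phrases (hypothetical helper)."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(stimulation_terms, behavior_terms):
    """Combine both groups; the AND between them is an assumption."""
    return or_block(stimulation_terms) + " AND " + or_block(behavior_terms)


QUERY = build_query(STIMULATION_TERMS, BEHAVIOR_TERMS)
print(QUERY)
```

Writing the query this way keeps the two concept groups separate, so either list can be extended without touching the other.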

Modulation of Moral Judgments Using Non-invasive Brain Stimulation

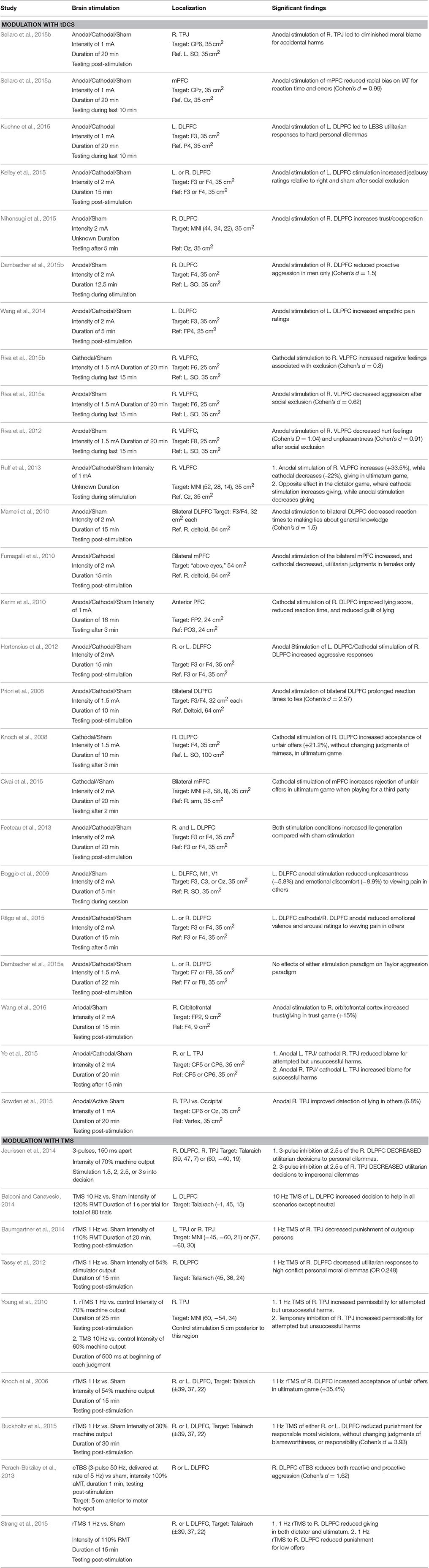

A small but growing number of studies have examined the role of non-invasive brain stimulation in moral behavior and decision-making in normal persons (Table 1). Repetitive transcranial magnetic stimulation (rTMS) studies use low frequency rTMS to create a “functional lesion” to test hypotheses regarding whether specific brain regions are necessary for specific moral judgments, while high frequency rTMS is thought to depolarize or activate cortex. Similarly, transcranial direct current stimulation (tDCS) studies examine whether increasing (anodal) or decreasing (cathodal) cortical excitability in a particular brain region alters moral behavior. However, the actual physiological effects of specific brain stimulation parameters remain unknown. Therefore, it is most appropriate to state that a particular NIBS protocol modulates moral behavior while remaining agnostic as to whether this is due to increasing or decreasing brain activity in a specific region vs. a more complex pattern of modulation. The majority of studies focus exclusively on the role of the dorsolateral prefrontal cortex (DLPFC), although a smaller number have investigated the role of the right temporal-parietal junction (TPJ) and the medial frontal lobe. Finally, it should be noted that this review is likely to under-report non-significant findings given publishing biases toward positive results.

Modulation of Specific Moral Behaviors

One important and well-studied factor in moral behavior is the aversion to violating the personal rights of other persons, such as causing them physical harm. One method of experimentally testing aversion to harming others has been to use personal and impersonal moral dilemmas, where a subject must choose whether to harm one person in order to save many others (Greene et al., 2001, 2004). These dilemmas require choosing between competing moral considerations: the personal rights of a single person, and the aversion to causing direct harm to them, versus the utilitarian benefit of saving a larger number of persons. One Hertz rTMS over the right DLPFC increased utilitarian responses to first-person, high conflict dilemmas specifically, suggesting a diminished aversion to violating the rights of others (Tassy et al., 2012). A potentially conflicting result was seen in a study where three repetitive pulses administered to the right DLPFC at critical time-points resulted in decreased utilitarian responses, suggesting either a disruption in utilitarian considerations, or an enhanced consideration of the personal rights of others (Jeurissen et al., 2014). However, the time-specific protocol of this study in relation to the final moral judgment makes it difficult to interpret the causal role of the right DLPFC in either of these processes. It may be that the right DLPFC instead integrates both harm aversion and utilitarian consequences, so that inhibition at a critical time-point would prevent the input of the emotional salience of personal harms from reaching the right DLPFC. This highlights the uncertainty of determining the actual neurophysiologic effects of different types of brain stimulation.

Complicating matters further, anodal stimulation to the left DLPFC led to decreased utilitarian responses (or an increased sensitivity to moral harms) (Kuehne et al., 2015), while in a different study, bilateral anodal stimulation of the medial prefrontal cortex increased, while cathodal stimulation decreased, utilitarian responses in females only (Fumagalli et al., 2010). It therefore remains uncertain whether modulation in this task is based on right vs. left hemisphere stimulation, medial vs. lateral stimulation, or a combination of these factors. Moreover, given that the task leads to competing moral considerations, it is unclear which factor (harm aversion or utilitarian reasoning) is being modified by brain stimulation.

Non-invasive brain stimulation can also influence altruism, trust, cooperation, and other prosocial behaviors. One approach to studying prosocial behavior uses economic games where one person (player A) is given a sum of money and has the option to give a portion of that money to another person (player B). In the dictator game, there is no threat of retribution for unfair offers, while in the ultimatum game, player B can reject an unfair offer, in which case neither player will receive any money (in a sense, paying to punish the unfair offer of player A). Anodal tDCS to the right DLPFC led to lower offers without the threat of punishment but higher offers with the threat of punishment, while cathodal tDCS had the opposite effect (Ruff et al., 2013). However, in a different study, 1 Hz rTMS to the same region reduced giving in both the dictator and ultimatum games (Strang et al., 2015). In another economic game, player A can choose to “trust” player B by giving money to player B, which will be tripled. Player B can then give back a portion of this investment to player A (“cooperate”), or keep an even larger sum of money and not give any money back to player A. Anodal stimulation of the right DLPFC [or the right orbitofrontal cortex (Wang et al., 2016)] increased decisions to trust (as player A) and cooperate (as player B), indicating a general enhancement of prosocial behavior (Nihonsugi et al., 2015). Similarly, 10 Hz rTMS to the right DLPFC enhanced the willingness to intervene to help others in simulated situations (Balconi and Canavesio, 2014). Cathodal stimulation of the right DLPFC increased lying, a behavior where one is being untrustworthy (Karim et al., 2010).
These results generally support the view that increasing activity in the right DLPFC increases decisions to trust and cooperate, although reduced giving in the dictator game in one study (Ruff et al., 2013) suggests that this may not be due to pure altruism per se, but instead may be due to an increased adherence to expected social norms. Understanding the social expectations of other players in these economic games can lead to the mutually beneficial outcomes available through cooperation compared with selfish playing.
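
The payoff rules of the three economic games described above can be made concrete with a short sketch. This is an illustration under stated assumptions, not the protocol of any cited study: the function names and the endowment of 10 units are hypothetical, player B is assumed to start the trust game with no endowment of their own, and only the tripling of the transferred amount is taken from the description above.

```python
def dictator_game(endowment, offer):
    """Player A splits the endowment; player B has no way to respond."""
    if not 0 <= offer <= endowment:
        raise ValueError("offer must lie between 0 and the endowment")
    return endowment - offer, offer


def ultimatum_game(endowment, offer, accepted):
    """As in the dictator game, but a rejection leaves both players with nothing."""
    if not 0 <= offer <= endowment:
        raise ValueError("offer must lie between 0 and the endowment")
    if not accepted:
        return 0, 0
    return endowment - offer, offer


def trust_game(endowment, sent, returned, multiplier=3):
    """Player A sends money, which is multiplied; player B decides how much to return."""
    if not 0 <= sent <= endowment:
        raise ValueError("sent must lie between 0 and the endowment")
    received = sent * multiplier
    if not 0 <= returned <= received:
        raise ValueError("returned must lie between 0 and the multiplied amount")
    # Assumption: player B has no endowment beyond what player A sends.
    return endowment - sent + returned, received - returned


# Payoffs are (player A, player B); the numbers are illustrative only.
print(dictator_game(10, 3))           # (7, 3)
print(ultimatum_game(10, 3, False))   # (0, 0): B forgoes 3 units to punish A
print(trust_game(10, 5, 0))           # (5, 15): B keeps everything
print(trust_game(10, 5, 7))           # (12, 8): cooperation leaves both better off than no transfer
```

The last two lines show why the trust game separates trust from cooperation: player A gains only if player B returns more than was sent, while player B always earns most by returning nothing.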

A related issue is whether to punish others who are unfair or violate other social norms. Both low frequency rTMS (Knoch et al., 2006) and cathodal tDCS (Knoch et al., 2008) of the right DLPFC increase a subject's willingness to accept unfair offers as player B in the ultimatum game, suggesting that the perceived unfairness of the offer (which remains unchanged by stimulation) exerts less influence on decision-making. In other words, participants are less willing to “pay” to punish those who give unfair offers. In a model of determining blame and punishment for those committing crimes, simulating the experience of jurors in a criminal trial, 1 Hz rTMS to the right DLPFC reduced the punishment, without affecting blameworthiness, of the criminals (Buckholtz et al., 2015). In contrast, reducing right DLPFC activity (by applying anodal tDCS to the left and cathodal to the right DLPFC) increased punishment of others who were critical of subjects (Hortensius et al., 2012). Retributive punishment after being socially excluded in a virtual ball-sharing task is reduced after anodal stimulation to the right DLPFC (Riva et al., 2015a), as is proactive aggression without any provocation (Dambacher et al., 2015b). These latter findings suggest that the right DLPFC may play a more general role in tempering aggressive behaviors toward others.

Finally, several studies have investigated modulation of the right temporal-parietal junction (right TPJ), an area important in mediating the effects of intentions and mental states on moral judgments, a process referred to as theory of mind (Young et al., 2007). Low frequency rTMS or cathodal tDCS to the right TPJ reduces the effect of the agent's negative belief on moral blameworthiness for attempted but unsuccessful harms (e.g., attempted murder) (Young et al., 2010; Ye et al., 2015), while anodal tDCS to this area resulted in either increased blame for actual harms (Ye et al., 2015), or an increased consideration of an agent's innocent intentions in accidental harms (e.g., accidentally shooting someone), reducing judgments of moral blame (Sellaro et al., 2015b). These results are consistent with neuroimaging literature showing that this area is particularly sensitive to the role of intentions in moral judgments (Young et al., 2007; Koster-Hale et al., 2013). The right TPJ is also involved in biasing in-group vs. out-group status, as inhibition of this region reduces the increased punishment that normally occurs when out-group members do not cooperate compared with in-group members (Baumgartner et al., 2014). A review of how brain stimulation to the right TPJ affects social behavior outside the domain of morality can be found elsewhere (Donaldson et al., 2015; Mai et al., 2016).

Neuroanatomical Specificity of Brain Stimulation on Moral Behavior

Based on these studies, tentative proposals can be made for the regionally specific effects of brain stimulation on moral behavior.

Inhibition of the right DLPFC reduces the influence of harm on decision-making, both when deciding to perform a harmful action oneself, and when punishing harmful or unfair behaviors in others. This reduces decisions to punish or disapprove of potentially harmful personal moral violations in others. However, it also increases the likelihood of performing a harmful action oneself, such as lying or reacting aggressively. In contrast, excitatory brain stimulation to the right DLPFC reduces aggression and increases adherence to social norms. Taken together, these results suggest that the right DLPFC is important for representing aversion to harm, leading individuals to avoid harming others, but also to punish those who do cause harm.

This role may not be specific to the right hemisphere, as stimulation of the left DLPFC may also result in increased cooperation and increased consideration of harm violations in moral dilemmas.

Finally, the right TPJ presumably exerts a more fundamental role in modulating the role of beliefs and intentions in moral judgments. This may influence the degree to which a harmful action is perceived as moral (e.g., intended) vs. non-moral (e.g., an accident). It also more generally shifts focus toward the viewpoint of other persons, leading, for example, to improved lie detection (Sowden et al., 2015).

Moral Enhancement and Non-invasive Brain Stimulation

The previous section provides evidence to support our hypothesis that non-invasive brain stimulation can modulate certain cognitive-affective capacities involved in moral cognition. In the following section, we will discuss how modulating capacities contributing to moral behavior, rather than assuming enhancement of a single moral capacity, alters key ethical considerations in moral enhancement.

Autonomy

Autonomy refers to the capacity of a person to define his or her preferences, desires, values, and ideals (Dworkin, 1988). A key component of such autonomy is the ability to critically reflect upon, and either accept or change, one's beliefs (Dworkin, 1988). Proponents of cognitive enhancement argue that individuals should be allowed to use cognitive enhancement if such use aligns with their values and beliefs (Darby, 2010). A similar argument can be made for moral enhancement. Moral enhancement allows for an individual to express his or her autonomy by choosing to improve specific capacities contributing to moral behavior. For example, brain stimulation could potentially modulate one's capacity for empathy, or one's predisposition toward reacting aggressively. However, as discussed previously, such modulation is likely to affect only certain moral behaviors, but not others. Moreover, altering a capacity that decreases reactive aggression, which may be desired, might also decrease one's willingness to punish those who act violently, which may not be desired. So, in order for individuals to make autonomous decisions regarding moral enhancement, it is critical to understand how altering a capacity will alter moral behavior in ways that are either desirable or undesirable.

Permissible vs. Obligatory Moral Enhancement

Certain proponents have argued that moral enhancement should be required, or obligatory (Persson and Savulescu, 2013, 2014). They argue that technological advances have created tools that can cause human suffering on catastrophic levels, ranging from climate instability to weapons of mass destruction, similar to historical arguments in favor of moral enhancement (Delgado, 1969). Without increasing our moral capacities, it is argued that humanity faces inevitable destruction. In order to prevent such destruction, mandatory moral enhancement is required. Such requirements clearly violate one's autonomy, but the impending moral catastrophe outweighs this loss of personal liberties.

Again, such an argument assumes that one intervention can be used to enhance all moral decisions, without considering the mechanism by which such enhancement must occur. The evidence presented from non-invasive brain stimulation instead shows that only specific cognitive-affective capacities contributing to moral behavior are likely to be enhanced, affecting some but not all behaviors, and not always in the desired direction. For example, improving empathy may lead to improved moral behavior toward one's in-group, but harsher retaliation against those outside of one's group. Conversely, increasing cognitive processes contributing to utilitarian reasoning to consider the interests of many groups of persons might come at the expense of decreasing one's aversion to harming persons in general. Given this reality, it is unlikely that moral biomedical enhancement will alter behavior to the extent necessary to justify requiring individuals to use moral enhancing technologies. Proponents of moral enhancement agree that such technologies are unlikely in the near future but should be possible eventually (Persson and Savulescu, 2013, 2014). However, it is difficult to imagine how such a technology could ever be developed given our current understanding of moral psychology.

Freedom of Choice and Authenticity

Harris (2011, 2013) has argued that moral enhancement would reduce an individual's freedom of choice, even if that choice is to act immorally. Invoking Milton's Paradise Lost, he argues that part of what makes humanity worthwhile is the freedom to fall from grace (Harris, 2011). Again, this implies that moral enhancement would make it impossible to act immorally, while the evidence from non-invasive brain stimulation suggests that such universal improvement is unlikely. More likely, moral enhancement will alter an individual's tendencies to make certain moral decisions but not others. Because the decision to enhance would be free, doing so would improve an individual's ability to act in accordance with what they deem to be morally appropriate. While preferences might shift, the choice would remain free.

This argument is perhaps similar to the authenticity debate in cognitive enhancement, where some have expressed concern that enhancement would taint the authentic, or true, self (Parens, 2005). One could similarly argue that moral enhancement would lead to an inauthentic person, even if morally superior. This debate centers on whether authenticity is considered an innate, static gift that should be appreciated and unchanged, or an ultimate goal that one must aspire toward (Parens, 2005). According to the latter definition, neither cognitive nor moral enhancement threatens authenticity.

Moral Plurality

Part of the difficulty in understanding moral enhancement is that many moral challenges do not have clear solutions. So while there is agreement that improvements in memory, multi-tasking, and executive functions are indicators of cognitive enhancement, it is not clear how moral enhancement is supposed to affect our views on controversial topics like abortion, distributive justice, or choosing between the welfare of different groups of persons. A more reasonable goal would be to enhance certain moral motivations that are universally agreed upon, which research in non-invasive brain stimulation has shown to be theoretically possible. However, enhancing a single capacity is unlikely to lead to what one would consider a superior moral decision in every instance.

That being said, it is reasonable that enhancing certain cognitive-affective processes would lead to improved moral behavior on average (Douglas, 2016). Moreover, those with relatively low baseline capacities for certain moral motivations might show a significant benefit from moral enhancement. By understanding the mechanism and implications of moral enhancement using non-invasive brain stimulation, a rational but ethical implementation is possible.

Conclusions

In conclusion, limited but growing evidence thus far suggests that brain stimulation can modulate specific cognitive-affective processes involved in moral behavior, making moral enhancement possible. However, rather than improving one single moral capacity, brain stimulation alters specific neuropsychological processes contributing to moral behavior. Enhancement of these processes can lead to morally enhanced behavior in some situations, but less morally desirable behavior in other circumstances. This influences the ethical debate regarding moral enhancement, showing that technologies will be unlikely to change moral behavior to the extent required to make moral enhancement obligatory, or to raise concern regarding our freedom to act immorally. However, the more modest goal of improving our tendencies to act in accordance with our moral motivations is likely possible, and may be desirable for large numbers of people. Given the very real threats of weapons of mass destruction and other technologies noted by past and current scholars, there is a clear need to research moral interventions in greater depth. Such research is needed to determine the implications of enhancing certain capacities contributing to moral behavior so that informed, rational debates regarding the use of moral enhancement are possible.

Author Contributions

RD for study concept and design and for writing the manuscript, AP for critical revisions of the manuscript.

Funding

This work was supported by funding from the Sidney R. Baer, Jr. Foundation (RD, AP), the NIH (R01HD069776, R01NS073601, R21 NS082870, R21 MH099196, R21 NS085491, R21 HD07616 to AP), the Football Players Health Study at Harvard University (AP), and Harvard Catalyst, The Harvard Clinical and Translational Science Center (NCRR and the NCATS NIH, UL1 RR025758 to AP).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Balconi, M., and Canavesio, Y. (2014). High-frequency rTMS on DLPFC increases prosocial attitude in case of decision to support people. Soc. Neurosci. 9, 82–93. doi: 10.1080/17470919.2013.861361

Baumgartner, T., Schiller, B., Rieskamp, J., Gianotti, L. R., and Knoch, D. (2014). Diminishing parochialism in intergroup conflict by disrupting the right temporo-parietal junction. Soc. Cogn. Affect. Neurosci. 9, 653–660. doi: 10.1093/scan/nst023

Boggio, P. S., Zaghi, S., and Fregni, F. (2009). Modulation of emotions associated with images of human pain using anodal transcranial direct current stimulation (tDCS). Neuropsychologia 47, 212–217. doi: 10.1016/j.neuropsychologia.2008.07.022

Buckholtz, J. W., Martin, J. W., Treadway, M. T., Jan, K., Zald, D. H., Jones, O., et al. (2015). From blame to punishment: disrupting prefrontal cortex activity reveals norm enforcement mechanisms. Neuron 87, 1369–1380. doi: 10.1016/j.neuron.2015.08.023

Chatterjee, A. (2004). Cosmetic neurology: the controversy over enhancing movement, mentation, and mood. Neurology 63, 968–974. doi: 10.1212/01.WNL.0000138438.88589.7C

Ciaramelli, E., Braghittoni, D., and di Pellegrino, G. (2012). It is the outcome that counts! Damage to the ventromedial prefrontal cortex disrupts the integration of outcome and belief information for moral judgment. J. Int. Neuropsychol. Soc. 18, 962–971. doi: 10.1017/S1355617712000690

Ciaramelli, E., Muccioli, M., Làdavas, E., and di Pellegrino, G. (2007). Selective deficit in personal moral judgment following damage to ventromedial prefrontal cortex. Soc. Cogn. Affect. Neurosci. 2, 84–92. doi: 10.1093/scan/nsm001

Civai, C., Miniussi, C., and Rumiati, R. I. (2015). Medial prefrontal cortex reacts to unfairness if this damages the self: a tDCS study. Soc. Cogn. Affect. Neurosci. 10, 1054–1060. doi: 10.1093/scan/nsu154

Cushman, F., and Young, L. (2011). Patterns of moral judgment derive from nonmoral psychological representations. Cogn. Sci. 35, 1052–1075. doi: 10.1111/j.1551-6709.2010.01167.x

Cushman, F., Young, L., and Hauser, M. (2006). The role of conscious reasoning and intuition in moral judgment: testing three principles of harm. Psychol. Sci. 17, 1082–1089. doi: 10.1111/j.1467-9280.2006.01834.x

Dambacher, F., Schuhmann, T., Lobbestael, J., Arntz, A., Brugman, S., and Sack, A. T. (2015a). No effects of bilateral tDCS over inferior frontal gyrus on response inhibition and aggression. PLoS ONE 10:e0132170. doi: 10.1371/journal.pone.0132170

Dambacher, F., Schuhmann, T., Lobbestael, J., Arntz, A., Brugman, S., and Sack, A. T. (2015b). Reducing proactive aggression through non-invasive brain stimulation. Soc. Cogn. Affect. Neurosci. 10, 1303–1309. doi: 10.1093/scan/nsv018

Darby, R. (2010). Ethical issues in the use of cognitive enhancement. Pharos Alpha Omega Alpha Honor. Med. Soc. 73, 16–22.

Darby, R. R., Edersheim, J., and Price, B. H. (2016). What patients with behavioral-variant frontotemporal dementia can teach us about moral responsibility. AJOB Neurosci. 7, 193–201. doi: 10.1080/21507740.2016.1236044

Delgado, J. M. R. (1969). Physical Control of the Mind: Towards a Psychocivilized Society. New York, NY: Harper and Row. Available online at: https://books.google.com/books?id=nzIenQEACAAJ

Donaldson, P. H., Rinehart, N. J., and Enticott, P. G. (2015). Noninvasive stimulation of the temporoparietal junction: a systematic review. Neurosci. Biobehav. Rev. 55, 547–572. doi: 10.1016/j.neubiorev.2015.05.017

Douglas, T. (2013). Moral enhancement via direct emotion modulation: a reply to John Harris. Bioethics 27, 160–168. doi: 10.1111/j.1467-8519.2011.01919.x

Douglas, T. (2016). Moral enhancement. J. Appl. Philos. 25, 228–245. doi: 10.1111/j.1468-5930.2008.00412.x

Dworkin, G. (ed.). (1988). Autonomy, science, and morality, in “The Theory and Practice of Autonomy,” (New York, NY: Cambridge University Press), 48–61.

Fecteau, S., Boggio, P., Fregni, F., and Pascual-Leone, A. (2013). Modulation of untruthful responses with non-invasive brain stimulation. Front. Psychiatry 3:97. doi: 10.3389/fpsyt.2012.00097

Fumagalli, M., and Priori, A. (2012). Functional and clinical neuroanatomy of morality. Brain 135, 2006–2021. doi: 10.1093/brain/awr334

Fumagalli, M., Vergari, M., Pasqualetti, P., Marceglia, S., Mameli, F., Ferrucci, R., et al. (2010). Brain switches utilitarian behavior: does gender make the difference? PLoS ONE 5:e8865. doi: 10.1371/journal.pone.0008865

Greely, H., Sahakian, B., Harris, J., Kessler, R. C., Gazzaniga, M., Campbell, P., et al. (2008). Towards responsible use of cognitive-enhancing drugs by the healthy. Nature 456, 702–705. doi: 10.1038/456702a

Greene, J. D., Nystrom, L. E., Engell, A. D., Darley, J. M., and Cohen, J. D. (2004). The neural bases of cognitive conflict and control in moral judgment. Neuron 44, 389–400. doi: 10.1016/j.neuron.2004.09.027

Greene, J. D., Sommerville, R. B., Nystrom, L. E., Darley, J. M., and Cohen, J. D. (2001). An fMRI investigation of emotional engagement in moral judgment. Science 293, 2105–2108. doi: 10.1126/science.1062872

Haidt, J. (2001). The emotional dog and its rational tail: a social intuitionist approach to moral judgment. Psychol. Rev. 108, 814–834. doi: 10.1037/0033-295X.108.4.814

Harris, J. (2011). Moral enhancement and freedom. Bioethics 25, 102–111. doi: 10.1111/j.1467-8519.2010.01854.x

Harris, J. (2013). Moral progress and moral enhancement. Bioethics 27, 285–290. doi: 10.1111/j.1467-8519.2012.01965.x

Harris, J., and Savulescu, J. (2014). A debate about moral enhancement. Camb. Q. Healthc. Ethics 24, 8–22. doi: 10.1017/S0963180114000279

Hortensius, R., Schutter, D. J., and Harmon-Jones, E. (2012). When anger leads to aggression: induction of relative left frontal cortical activity with transcranial direct current stimulation increases the anger-aggression relationship. Soc. Cogn. Affect. Neurosci. 7, 342–347. doi: 10.1093/scan/nsr012

Ibañez, A., and Manes, F. (2012). Contextual social cognition and the behavioral variant of frontotemporal dementia. Neurology 78, 1354–1362. doi: 10.1212/WNL.0b013e3182518375

Jeurissen, D., Sack, A. T., Roebroeck, A., Russ, B. E., and Pascual-Leone, A. (2014). TMS affects moral judgment, showing the role of DLPFC and TPJ in cognitive and emotional processing. Front. Neurosci. 8:18. doi: 10.3389/fnins.2014.00018

Karim, A. A., Schneider, M., Lotze, M., Veit, R., Sauseng, P., Braun, C., et al. (2010). The truth about lying: inhibition of the anterior prefrontal cortex improves deceptive behavior. Cereb. Cortex 20, 205–213. doi: 10.1093/cercor/bhp090

Kelley, N. J., Eastwick, P. W., Harmon-Jones, E., and Schmeichel, B. J. (2015). Jealousy increased by induced relative left frontal cortical activity. Emotion 15, 550–555. doi: 10.1037/emo0000068

Knoch, D., Nitsche, M. A., Fischbacher, U., Eisenegger, C., Pascual-Leone, A., and Fehr, E. (2008). Studying the neurobiology of social interaction with transcranial direct current stimulation–the example of punishing unfairness. Cereb. Cortex 18, 1987–1990. doi: 10.1093/cercor/bhm237

Knoch, D., Pascual-Leone, A., Meyer, K., Treyer, V., and Fehr, E. (2006). Diminishing reciprocal fairness by disrupting the right prefrontal cortex. Science 314, 829–832. doi: 10.1126/science.1129156

Koenigs, M., Young, L., Adolphs, R., Tranel, D., Cushman, F., Hauser, M., et al. (2007). Damage to the prefrontal cortex increases utilitarian moral judgements. Nature 446, 908–911. doi: 10.1038/nature05631

Koster-Hale, J., Saxe, R., Dungan, J., and Young, L. L. (2013). Decoding moral judgments from neural representations of intentions. Proc. Natl. Acad. Sci. U.S.A. 110, 5648–5653. doi: 10.1073/pnas.1207992110

Kuehne, M., Heimrath, K., Heinze, H.-J., and Zaehle, T. (2015). Transcranial direct current stimulation of the left dorsolateral prefrontal cortex shifts preference of moral judgments. PLoS ONE 10:e0127061. doi: 10.1371/journal.pone.0127061

Mai, X., Zhang, W., Hu, X., Zhen, Z., Xu, Z., Zhang, J., et al. (2016). Using tDCS to explore the role of the right temporo-parietal junction in theory of mind and cognitive empathy. Front. Psychol. 7:380. doi: 10.3389/fpsyg.2016.00380

Mameli, F., Mrakic-Sposta, S., Vergari, M., Fumagalli, M., Macis, M., Ferrucci, R., et al. (2010). Dorsolateral prefrontal cortex specifically processes general but not personal knowledge deception: multiple brain networks for lying. Behav. Brain Res. 211, 164–168. doi: 10.1016/j.bbr.2010.03.024

Mark, V. H. (1970). Violence and the Brain. New York, NY: Harper and Row. Available online at: https://books.google.com/books?id=x5I4YT_njaUC

Mendez, M. F. (2009). The neurobiology of moral behavior: review and neuropsychiatric implications. CNS Spectr. 14, 608–620. doi: 10.1017/S1092852900023853

Moll, J., Zahn, R., de Oliveira-Souza, R., Krueger, F., and Grafman, J. (2005). Opinion: the neural basis of human moral cognition. Nat. Rev. Neurosci. 6, 799–809. doi: 10.1038/nrn1768

Nihonsugi, T., Ihara, A., and Haruno, M. (2015). Selective increase of intention-based economic decisions by noninvasive brain stimulation to the dorsolateral prefrontal cortex. J. Neurosci. 35, 3412–3419. doi: 10.1523/JNEUROSCI.3885-14.2015

Parens, E. (2005). Authenticity and ambivalence: toward understanding the enhancement debate. Hastings Cent. Rep. 35, 34–41. doi: 10.1353/hcr.2005.0067

Perach-Barzilay, N., Tauber, A., Klein, E., Chistyakov, A., Ne'eman, R., and Shamay-Tsoory, S. G. (2013). Asymmetry in the dorsolateral prefrontal cortex and aggressive behavior: a continuous theta-burst magnetic stimulation study. Soc. Neurosci. 8, 178–188. doi: 10.1080/17470919.2012.720602

Persson, I., and Savulescu, J. (2013). Getting moral enhancement right: the desirability of moral bioenhancement. Bioethics 27, 124–131. doi: 10.1111/j.1467-8519.2011.01907.x

Persson, I., and Savulescu, J. (2014). The art of misunderstanding moral bioenhancement. Camb. Q. Healthc. Ethics 24, 48–57. doi: 10.1017/S0963180114000292

Priori, A., Mameli, F., Cogiamanian, F., Marceglia, S., Tiriticco, M., Mrakic-Sposta, S., et al. (2008). Lie-specific involvement of dorsolateral prefrontal cortex in deception. Cereb. Cortex 18, 451–455. doi: 10.1093/cercor/bhm088

Rêgo, G. G., Lapenta, O. M., Marques, L. M., Costa, T. L., Leite, J., Carvalho, S., et al. (2015). Hemispheric dorsolateral prefrontal cortex lateralization in the regulation of empathy for pain. Neurosci. Lett. 594, 12–16. doi: 10.1016/j.neulet.2015.03.042

Riva, P., Romero Lauro, L. J., Dewall, C. N., and Bushman, B. J. (2012). Buffer the pain away: stimulating the right ventrolateral prefrontal cortex reduces pain following social exclusion. Psychol. Sci. 23, 1473–1475. doi: 10.1177/0956797612450894

Riva, P., Romero Lauro, L. J., DeWall, C. N., Chester, D. S., and Bushman, B. J. (2015a). Reducing aggressive responses to social exclusion using transcranial direct current stimulation. Soc. Cogn. Affect. Neurosci. 10, 352–356. doi: 10.1093/scan/nsu053

Riva, P., Romero Lauro, L. J., Vergallito, A., DeWall, C. N., and Bushman, B. J. (2015b). Electrified emotions: modulatory effects of transcranial direct stimulation on negative emotional reactions to social exclusion. Soc. Neurosci. 10, 46–54. doi: 10.1080/17470919.2014.946621

Ruff, C. C., Ugazio, G., and Fehr, E. (2013). Changing social norm compliance with noninvasive brain stimulation. Science 342, 482–484. doi: 10.1126/science.1241399

Sellaro, R., Derks, B., Nitsche, M. A., Hommel, B., van den Wildenberg, W. P., van Dam, K., et al. (2015a). Reducing prejudice through brain stimulation. Brain Stimul. 8, 891–897. doi: 10.1016/j.brs.2015.04.003

Sellaro, R., Güroğlu, B., Nitsche, M. A., van den Wildenberg, W. P., Massaro, V., Durieux, J., et al. (2015b). Increasing the role of belief information in moral judgments by stimulating the right temporoparietal junction. Neuropsychologia 77, 400–408. doi: 10.1016/j.neuropsychologia.2015.09.016

Sowden, S., Wright, G. R., Banissy, M. J., Catmur, C., and Bird, G. (2015). Transcranial current stimulation of the temporoparietal junction improves lie detection. Curr. Biol. 25, 2447–2451. doi: 10.1016/j.cub.2015.08.014

Strang, S., Gross, J., Schuhmann, T., Riedl, A., Weber, B., and Sack, A. T. (2015). Be nice if you have to–the neurobiological roots of strategic fairness. Soc. Cogn. Affect. Neurosci. 10, 790–796. doi: 10.1093/scan/nsu114

Tassy, S., Oullier, O., Duclos, Y., Coulon, O., Mancini, J., Deruelle, C., et al. (2012). Disrupting the right prefrontal cortex alters moral judgement. Soc. Cogn. Affect. Neurosci. 7, 282–288. doi: 10.1093/scan/nsr008

Thomas, B. C., Croft, K. E., and Tranel, D. (2011). Harming kin to save strangers: further evidence for abnormally utilitarian moral judgments after ventromedial prefrontal damage. J. Cogn. Neurosci. 23, 2186–2196. doi: 10.1162/jocn.2010.21591

Wang, G., Li, J., Yin, X., Li, S., and Wei, M. (2016). Modulating activity in the orbitofrontal cortex changes trustees' cooperation: a transcranial direct current stimulation study. Behav. Brain Res. 303, 71–75. doi: 10.1016/j.bbr.2016.01.047

Wang, J., Wang, Y., Hu, Z., and Li, X. (2014). Transcranial direct current stimulation of the dorsolateral prefrontal cortex increased pain empathy. Neuroscience 281, 202–207. doi: 10.1016/j.neuroscience.2014.09.044

Wurzman, R., Hamilton, R. H., Pascual-Leone, A., and Fox, M. D. (2016). An open letter concerning do-it-yourself users of transcranial direct current stimulation. Ann. Neurol. 80, 1–4. doi: 10.1002/ana.24689

Ye, H., Chen, S., Huang, D., Zheng, H., Jia, Y., and Luo, J. (2015). Modulation of neural activity in the temporoparietal junction with transcranial direct current stimulation changes the role of beliefs in moral judgment. Front. Hum. Neurosci. 9:659. doi: 10.3389/fnhum.2015.00659

Young, L., Camprodon, J. A., Hauser, M., Pascual-Leone, A., and Saxe, R. (2010). Disruption of the right temporoparietal junction with transcranial magnetic stimulation reduces the role of beliefs in moral judgments. Proc. Natl. Acad. Sci. U.S.A. 107, 6753–6758. doi: 10.1073/pnas.0914826107

Keywords: morality, enhancement, brain stimulation, TMS, tDCS, ethics, neuroethics

Citation: Darby RR and Pascual-Leone A (2017) Moral Enhancement Using Non-invasive Brain Stimulation. Front. Hum. Neurosci. 11:77. doi: 10.3389/fnhum.2017.00077

Received: 16 September 2016; Accepted: 08 February 2017; Published: 22 February 2017.

Edited by: Martin J. Herrmann, University of Würzburg, Germany

Reviewed by: Chao Liu, Beijing Normal University, China; Elena Rusconi, University College London, UK

Copyright © 2017 Darby and Pascual-Leone. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: R. Ryan Darby, rdarby@bidmc.harvard.edu
