Isolating Discriminant Neural Activity in the Presence of Eye Movements and Concurrent Task Demands

- 1U.S. Army Research Laboratory, Future Soldier Technologies Division, Human Research and Engineering Directorate, Aberdeen Proving Ground, Aberdeen, MD, United States

- 2Teledyne Scientific Company, Durham, NC, United States

- 3Qusp Labs, San Diego, CA, United States

A growing number of studies use the combination of eye-tracking and electroencephalographic (EEG) measures to explore the neural processes that underlie visual perception. In these studies, fixation-related potentials (FRPs) are commonly used to quantify early and late stages of visual processing that follow the onset of each fixation. However, FRPs reflect a mixture of bottom-up (sensory-driven) and top-down (goal-directed) processes, in addition to eye movement artifacts and unrelated neural activity. At present there is little consensus on how to separate this evoked response into its constituent elements. In this study we sought to isolate the neural sources of target detection in the presence of eye movements and over a range of concurrent task demands. Here, participants were asked to identify visual targets (Ts) amongst a grid of distractor stimuli (Ls), while simultaneously performing an auditory N-back task. To identify the discriminant activity, we used independent components analysis (ICA) for the separation of EEG into neural and non-neural sources. We then further separated the neural sources, using a modified measure-projection approach, into six regions of interest (ROIs): occipital, fusiform, temporal, parietal, cingulate, and frontal cortices. Using activity from these ROIs, we identified target from non-target fixations in all participants at a level similar to other state-of-the-art classification techniques. Importantly, we isolated the time course and spectral features of this discriminant activity in each ROI. In addition, we were able to quantify the effect of cognitive load on both fixation-locked potential and classification performance across regions. Together, our results show the utility of a measure-projection approach for separating task-relevant neural activity into meaningful ROIs within more complex contexts that include eye movements.

Introduction

Goal-directed eye movements are a ubiquitous component of everyday life and integral to our perception of the world. Over recent decades, numerous visual search studies have used eye movement patterns to better understand perceptual and attentional processes that underlie human vision (Kowler, 2011). In contrast, the majority of human electrophysiological studies of visual search continue to use fixation constrained paradigms, artificially limiting the natural linkage between attentional shifts and subsequent eye movements. Thus, extending these paradigms into a framework of overt visual search would enable the validation of attentional models in a more natural context. However, a number of potential confounds and analytical challenges emerge when interpreting electroencephalography (EEG) in the presences of eye movements (Nikolaev et al., 2016). One of the primary confounds is the large eye movement related signals around the events of interest, namely saccades and fixations. These include the corneo-retinal and saccadic spike potentials along with eyelid artifacts. Importantly, the magnitude of these signals systematically scale with the direction, amplitude, and velocity of the saccade. Furthermore, saccade features themselves can systematically vary with task design or conditions. To address this concern, a number of methods have been developed to account for the effects of saccade sequence (Dandekar et al., 2012b) or isolate eye movement related signals within the EEG record (Plöchl et al., 2012). These approaches have been successful to the degree that they were able to reveal task-relevant activity, such as the P3 component, that may otherwise have been conflated with eye movement related artifacts (Dandekar et al., 2012a; Devillez et al., 2015).

Robust saccade detection and quantification presents another methodological challenge. Previously, EEG studies often relied on an explicit electrooculography (EOG) measurement, horizontal or vertical, for detecting the onset of a saccade (Gaarder et al., 1964; Thickbroom et al., 1991; Kazai and Yagi, 1999). The benefit of using this signal is both the high temporal resolution and the de facto alignment with the EEG record. Unfortunately, the lack of precision in determining the direction and distance of saccades limits these studies to paradigms with a small number of predetermined fixation locations. However, recent advances in the speed and accuracy of infrared eye-tracking technology has made it possible to link gaze position with neural activity at both high spatial and temporal resolution. This has led to a growing number of studies that explore the neural correlates of target detection during visual search, in both controlled (Brouwer et al., 2013) and free-viewing paradigms (Kamienkowski et al., 2012; Dias et al., 2013; Jangraw et al., 2014; Kaunitz et al., 2014; Ušćumlić and Blankertz, 2016; Wenzel et al., 2016).

In addition to the above measurement and signal processing challenges, there is the more nuanced task of interpreting brain activity in the context of planned and executed eye movements (Nikolaev et al., 2016). This remains a significant obstacle for studies focusing on both perceptual and cognitive phenomena. First, there is the task of quantifying or controlling for stimulus properties. When the eyes are free to move, stimuli impinging on the retina will necessarily vary across conditions and participants, even when gaze position is guided by the task sequence. In more controlled settings, experimental design can ensure that small differences in eye position do not significantly bias the statistics of the stimuli. However, this becomes more challenging for the ultimate goal of free-viewing in natural scenes where spatial frequency, orientation, and chromatic distributions can vary widely within a single image. Likewise, there is the challenge of separating saccade planning and execution from the perceptual or cognitive signal of interest. Even when utilizing high density EEG and source localization techniques, the spatial resolution of the saccadic preparatory signals is limited. Thus, accounting for these signals via subtraction across equated conditions (Nikolaev et al., 2013), regression (Dandekar et al., 2012b), or other techniques (Dias et al., 2013) is an important factor for the interpretation of para-saccadic neural activity.

Despite these recent advancements, there remains a need for development and validation of methods for the quantification of both perceptual and cognitive phenomena in the presence of eye movements. Part of this process is the evaluation of novel analytical approaches within paradigms that enable a more direct comparison to related fixation-constrained studies. Similarly, any particular method may only address some of the above challenges while still providing valuable insight when applied within the appropriate constraints or combined with other techniques. It is within this context that we propose the following approach for separating neural activity into meaningful regions of interest (ROIs) in the presence of eye movements. To evaluate our approach, we utilized data from a previously publish study (Ries et al., 2016) that employed a dual-task paradigm, visual target detection and auditory N-back, to quantify the effect of working memory load on the lambda response. The primary observation from this study was a small but significant reduction in the lambda amplitude with increasing cognitive load.

Here, we were able to separate the neural response to each fixation into six ROIs by applying a technique that linearly combines activity from independent sources based on their equivalent dipole location. Within each ROI we show a distinct neural response that, to varying degrees, discriminated target from non-target fixations and was differentially modulated by cognitive load. While the task design mitigated the overlapping response from adjacent saccades, common in free-viewing visual search, this approach is a substantive step in the interpretation of fixation-related brain activity. When combined with GLM-based techniques for the deconvolution of overlapping FRPs, this approach can be applied to more natural contexts where the interplay between bottom-up and top-down neural activity is not well understood.

Materials and Methods

The experiment used in this study has been described in a previous publication (Ries et al., 2016). Here, we provide a summary of stimuli and procedure, followed by a more detailed description of the novel ROI analysis method.

Participants

Fourteen participants volunteered for the study; all participants were right-handed males with an average age of 32.8 years. All participants had 20/20 vision or corrected to 20/20 vision. This study was conducted in accordance with the U.S. Army Research Laboratory's IRB requirements (32 CFR 219 and DoDI 3216.02). The voluntary, fully informed consent of research participants was obtained in written form. The study was reviewed and approved by the U.S. Army Research Laboratory's IRB before the study began.

Stimuli and Procedure

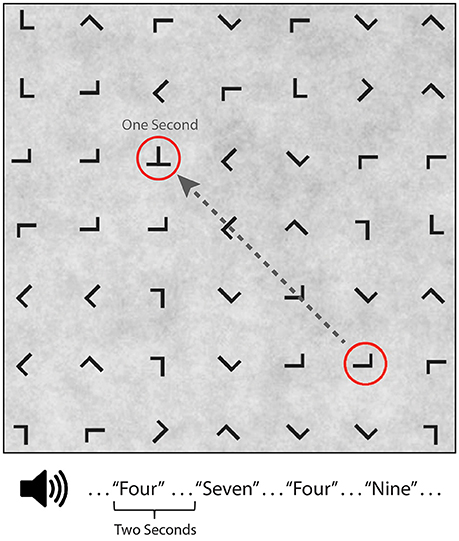

Participants performed a guided visual target detection task on a 7 × 7 grid (23.9° × 23.9° visual angle) of equally spaced and variably oriented “T” or “L” characters (1.1° visual angle) presented on a low contrast 1/f noise background from a viewing distance of approximately 65 cm (Figure 1). Eye fixations were guided across the grid by a red annulus (2.3° visual angle) that randomly surrounded one of the characters for a duration of 1 s before moving to the next randomly selected character. Participants were instructed to saccade to and fixate on the character in the center of the red annulus and to press a button (left hand) only when a “T” (visual target) was present. Visual target characters appeared on 10% of trials. Participants were instructed to maintain fixation on the character until the next red annulus appeared. All red annuli surrounding a non-target “L” were at least two characters from any “T” present on the grid to minimize peripheral detection. The guided visual target detection task was performed in one of five conditions: visual alone (silent condition), while ignoring binaurally presented digits (numbers 0–9), or while using the auditory digits in a 0, 1, or 2-back working memory task. The digit “0” was only used in the 0-Back condition where it served as the auditory target. Auditory stimuli were presented every 2 s with a 500 ms offset from a shift in the red annulus location. Participants were instructed to make a button press (right hand) for auditory targets, which occurred on 20% of trials. Thus, the same number of targets appeared in both tasks during the 0-back, 1-back, and 2-back conditions. Participants performed two consecutive blocks of the same condition (silent, ignore, 0-back, 1-back, 2-back) with the condition order counterbalanced. Each of the 10 blocks had a duration of 200 s with self-paced rest periods between blocks. Participants were given practice in each N-back condition, prior to experimental data collection, until they reached above chance performance.

Figure 1. Guided visual target detection task with concurrent auditory N-back task. Example search grid containing target (“T”) and non-target (“L”) stimuli. A red annuls, surrounding one character, randomly shifted grid location every second. Auditory stimuli consisted of digits (numbers 0–9), binaurally presented 500 ms after annuls onset. Auditory task conditions included silent (no auditory stimuli), ignore, 0-back, 1-back, and 2-back.

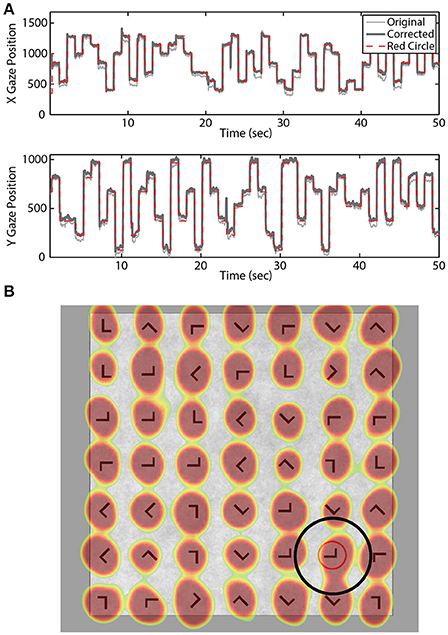

Eye Tracking

Eye-tracking data were sampled at 250 Hz using the SMI RED 250 system (Teltow, Germany). A 15-point calibration was performed prior to the practice and experimental blocks. A post-hoc model was fit to the eye-tracking data for each participant to increase accuracy of the gaze position estimate. Briefly, we used the expected eye position (i.e., location of the red annulus) to fit a quadratic regression model for both the horizontal and vertical gaze position vectors (Figure 2). A temporal lag (250 ms) was applied to the expected location (red annulus) to account for the delay between annulus onset and subsequent fixation.

Figure 2. Gaze location estimation. (A) Example X and Y gaze vectors before and after correction. Dashed red line indicates location of the red annulus. (B) Example search grid overlaid with aggregate gaze position across all blocks for one participant. Black circle illustrates the fixation area considered valid for that stimulus location.

Saccades and fixations were detected in the eye-tracking data using a velocity-based algorithm (Engbert and Mergenthaler, 2006; Dimigen et al., 2011). Saccades and fixations were detected using a velocity factor of 6 (standard deviations of the velocity distributions), minimum saccade duration of 20 ms, minimum fixation duration of 350 ms. If two saccades occurred within a 350 ms window, only the fixation corresponding to the largest saccade was preserved. Fixations were only considered task-relevant or “valid” if they were within 3 degrees of the current stimulus location. These criteria were chosen to focus analyses on the first saccade onto the new stimulus (red annulus) location.

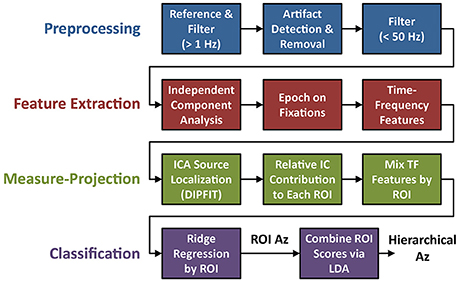

Electroencephalography and Feature Extraction

Electrophysiological signal acquisition and analysis steps are outlined in Figure 3. EEG recordings were digitally sampled at 1,024 Hz from 64 scalp electrodes over the entire scalp using a BioSemi Active Two system (Amsterdam, Netherlands). External leads were placed on the outer canthi, and above and below the orbital fossa of the right eye to record electrooculography (EOG). EEG was referenced offline to the average mastoids, down-sampled to 256 Hz (fs), and digitally high-pass filtered above 1 Hz using the EEGLAB toolbox (Delorme and Makeig, 2004). Large artifacts were detected using a previous described technique (Touryan et al., 2016). Briefly, EEG sessions were segmented into high-resolution 100 ms epochs, with a 10 ms step size. Epochs were marked as high noise if the average power between 90 and 120 Hz was greater than three standard deviations above the mean for all epochs. These epochs were then removed and the remaining EEG record was lowpass filtered below 50 Hz.

Figure 3. Flowchart of EEG preprocessing and model fitting. The four steps of the analysis include: preprocessing (blue), feature extraction (red), ROI-based measure-projection (green), and hierarchical classification (purple).

Each “clean” EEG session was decomposed into independent components using the Extended Infomax ICA algorithm implemented in EEGLAB (Delorme and Makeig, 2004). The equivalent dipole locations of these independent sources were then estimated using the EEGLAB implementation of DIPFIT (Scherg, 1990; Pascual-Marqui et al., 1994). IC activation epochs were extracted around each valid fixation using a temporal window spanning 300 ms before and 1,000 ms after fixation onset. Time-frequency features were also calculated for each epoch using a wavelet transform (Torrence and Compo, 1998). Specifically, we used the Morlet wavelet function:

where ω0 is the central frequency and c the normalization constant. This function was used to create a basis set of 30 wavelets covering the available frequency range with minimum scale of 2/fs and a discrete step size of 0.25 (wavelet transform software available at http://paos.colorado.edu/research/wavelets/). After the wavelet transform, the spectral power of each epoch was computed via multiplication with the complex conjugate of the corresponding epoch. While this time-frequency decomposition included frequencies from 1 to 128 Hz, only frequencies below 32 Hz were included in subsequent analyses.

To isolate activity in brain regions of interest (ROIs), the above IC activation epochs were linearly mixed based on equivalent dipole location using the initial steps of measure-projection analysis (Bigdely-Shamlo et al., 2013). ICs with equivalent dipoles outside of the MNI model brain volume were identified and excluded from analysis (see Supplementary Section 3). These ICs often corresponded to corneo-retinal potentials (i.e., EOG) or muscle artifacts (i.e., EMG). The remaining k IC processes were preserved and their corresponding fixation-locked activation epochs used as the “measure” for each dipole location in the mixing process. Specifically, the fixation-locked activations or measures can be indexed as Mi, i = 1…k for each IC, and the equivalent dipole location x ∈ V ⊂ R3 indexed as D(xi), i = 1 …k. Importantly, there exists uncertainty in dipole localization arising from errors in tissue conductivity parameters, electrode co-registration, noise in the IC estimate process, and between-subject variability in the location of equivalent functional cortical areas. To capture this uncertainty in the mixing process we can instead model each equivalent dipole as a spherical (3-D) Gaussian with uniform covariance σ2, centered at the estimated dipole location xi. The spherical Gaussian is truncated at t*σ to minimize the erroneous influence of distant dipoles in sparsely populated regions. Thus, the probability of dipole D(xj) being located at position y ∈ V now becomes , where TN is a truncated normal distribution centered at xj. Then for an arbitrary location y ∈ V, the expected value of the measure becomes:

Where M(y) is the combined fixation-locked activity at location y from all proximal ICs. We used this approach to calculate the aggregate measure 〈M(y)〉, either fixation-related potential (FRP) or time-frequency spectrum, for specified regions of the brain volume. For this study, six a priori ROIs (Figure 4) were defined using the Measure Projection Toolbox (http://sccn.ucsd.edu/wiki/MPT). Each ROI consisted of all regions of LONI LPBA40 atlas (Shattuck et al., 2008) that included the corresponding anatomical label (e.g., “occipital”). The only additional parameter σ (standard deviation of the Gaussian distribution) in this calculation was set to 12 mm. This value produced smooth spatial distributions in each ROI given the relatively small number of participants (N = 14). The six ROIs included: occipital, fusiform, temporal, parietal, cingulate, and frontal cortices.

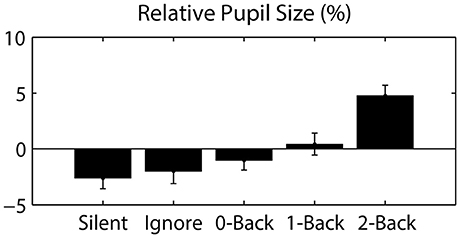

Figure 4. Pupil size, relative to the session average, as a function of auditory task condition. Error bars represent standard error of the mean (SEM).

Hierarchical Classification

For the classification step, we used a two-stage hierarchical approach to dissociate target and non-target fixation epochs. In the first stage, ridge regression (MATLAB® ridge function) was applied separately to the time-frequency epochs from each ROI. Specifically, we applied regularized regression to the entire temporal epoch and frequencies up to 32 Hz. The regularization parameter was determined via calculating the effective degrees of freedom as a function of lambda (λ):

where dj are the singular values of the n x m data matrix X. As these functions were roughly similar across ROIs (Supplementary Figure 3), we selected a hyperparameter value (Lemm et al., 2011) such there were approximately 1 target and 10 non-target observations per degree of freedom (see Model Considerations for a discussion of the validity of this approach). However, the exact value had minimal effect on the results (see Supplementary Section 2). The second stage utilized the regression output, or latent variable estimate, from the six ROIs to provide a single classification score and label for each fixation epoch. In this second stage, we employed linear discriminant analysis (LDA; MATLAB® fitcdiscr function) and coefficients for both stages were fit within a single 5-fold cross-validation scheme. Area under the ROC curve (Az) was calculated for each ROI, as well as for the second-stage LDA classifier. Finally, for direct inference into the discriminant neural activity we calculated the forward model for each ROI (Haufe et al., 2014). Specifically, the regression weights (W) were used to estimate the forward model (A), such that , where ΣX and are the empirical data and score covariance respectively.

To facilitate comparison with other approaches, we included two techniques commonly used for single-trial classification of EEG. Both methods were applied directly to the filtered EEG data (64 channels) using the same fixation epochs described above. First, Hierarchical Discriminant Components Analysis (HDCA) was applied with each epoch divided into 8 equal-sized temporal windows (Gerson et al., 2006). Second, we used the xDAWN algorithm (Rivet et al., 2009) to identify the 8 most discriminant spatial filters followed by a Bayesian linear discriminant analysis, collectively referred to as XD+BLDA (Cecotti et al., 2011). Area under the ROC curve was calculated for both of these classifiers on all participants.

Results

Behavioral and Ocular Measures

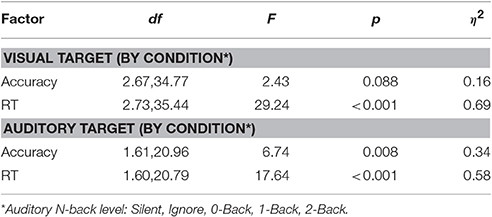

Detailed behavioral analysis of this study has been previously reported (Ries et al., 2016), however the relevant statistics are summarized below for comparison with the classification results. Reaction time and accuracy were analyzed separately for the visual and auditory tasks using a one-way repeated measures ANOVA (Greenhouse-Geisser correction reported where appropriate). The primary factor was auditory task condition, which had five levels in the visual task (Silent, Ignore, 0-Back, 1-Back, 2-Back), and three levels in the auditory task (0-Back, 1-Back, 2-Back). There was a trend for decreased accuracy in the visual task as a function of cognitive load (i.e., auditory N-back level); however this was not statistically significant (Table 1). We did observe a highly significant effect of cognitive load on reaction time (RT) in the visual task [F(2.73, 35.44) = 29.24, p < 0.001, η2 = 0.69] showing that visual target RT increased as a function of cognitive load. Likewise, analysis of the auditory task showed both a significant decrease in accuracy [F(1.61, 20.96) = 6.74, p < 0.01, η2 = 0.34] and increase in RT [F(1.60, 20.79) = 17.64, p < 0.001, η2 = 0.58] with increasing auditory task demands. While the behavioral results showed that auditory working memory load had a significant negative impact on visual task performance, exhibited through increased RT, the near-ceiling accuracy likely mitigated any decline of this corresponding metric. Together, the behavioral results suggest that participants were not exclusively favoring one modality as performance declined in both the visual and auditory tasks with increased cognitive load.

Since eye movements were constrained by the nature of the visual task (guided target detection), the majority of ocular metrics did not significantly differ across blocks or conditions. As expected, we found no significant difference in fixation duration (0.962 ± 0.053 s; mean ± STD) or saccade distance (13.316 ± 1.072 degrees) across auditory task conditions. However, we did observe a large change in pupil dilation as a function of cognitive load (Figure 4). Specifically, we calculated the average pupil size in each condition relative to the average size across each participant's entire session. This relative pupil-size metric was significantly modulated by auditory task condition [F(3.04, 39.57) = 7.98, p < 0.001, η2 = 0.38], exhibiting an increase in size with working memory load. Due to static luminance and counterbalanced condition order (see Materials and Methods), this modulation was unlikely to be a consequence of either changes in luminance or time-on-task (Beatty, 1982). Thus, our results indicate that the task-induced cognitive load increased the arousal level of participants, as has been shown in similar paradigms (Kahneman and Beatty, 1966).

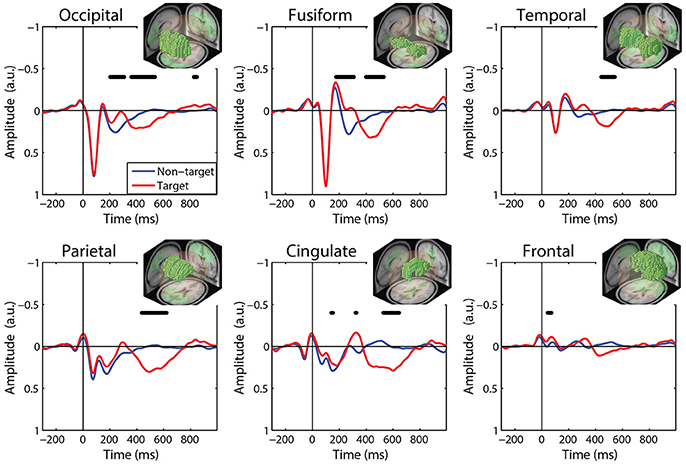

Fixation-Related Potentials (FRPs) by ROI

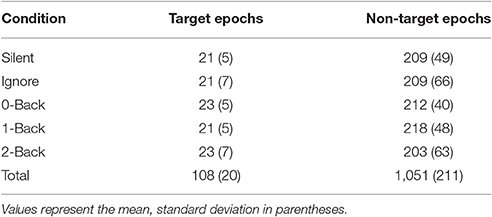

We calculated FRPs for each brain region by combining independent components activations within fixation epochs, using a ROI-based measure-projection approach (ROI-MPA). An IC's contribution to a given ROI was determined by the overlap between the anatomically defined region and the equivalent dipole Gaussian density function (see Materials and Methods). Importantly, by excluding equivalent dipoles located outside of this brain volume this approach attempts to minimize the influence of non-brain signals, such as those generated by eye movements, from the ROIs (see Supplementary Section 3). Figure 5 shows the grand average FRPs from each ROI: occipital, fusiform, temporal, parietal, cingulate, and frontal cortices. To account for the differing number of included ICs, FRPs from each participant were uniformly scaled by total variance and are shown in arbitrary units. All Included epochs were from valid fixations (within 3 degrees of the current stimulus) and free of large artifacts. The average number of target and non-target epochs, by condition, are shown in Table 2.

Figure 5. Grand average FRP by ROI. Target and non-target waveforms from each ROI (black line indicates a significant difference, p < 0.01). All voxels included in the ROI are shown as the inset. FRP response consists of a linear sum of IC activations weighted by their contribution to the corresponding ROI and are shown in arbitrary units. Note: ICs with equivalent dipoles located outside of the brain volume, such as those produced by EOG, are not aggregated in the ROI FRPs.

The ROI FRP waveforms shown in Figure 5 exhibit a clear distinction across brain regions. Both target and non-target FRPs show a temporal progression through the visual cortices (occipital, fusiform, temporal) and reflect known electrophysiological signatures, such as the P1 or lambda component. Importantly, the distinction between target and non-target FRPs is evident in most ROIs. To identify periods of significant difference in the FRP waveforms we used a paired t-test at causal time points in each ROIs (255 time points × 6 ROIs). A single false-discovery-rate correction for multiple comparisons was then applied to all p-values (Benjamini and Hochberg, 1995). As expected, visual cortices show this distinction in earlier epochs, consistent with the visual mismatch negativity (vMMN: 150–250 ms), while the parietal and cingulate cortex exhibit a clear late positive deflection, indicative of the P3 component. In contrast, the frontal cortex shows little saccade-related EOG artifact that would be expected to dominate frontal electrodes (e.g., Fz).

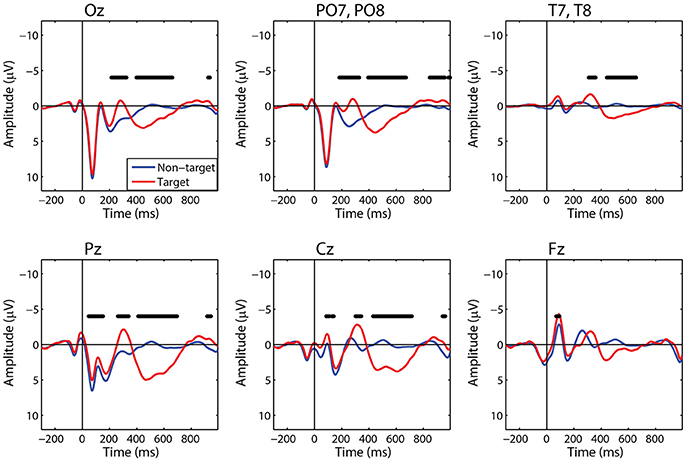

For comparison to the standard approach, we also calculated target and non-target FRPs for electrodes that most directly correspond to each ROI. Figure 6 shows the grand average FRP from these corresponding electrodes, using the same fixation epochs as above (Table 2). For occipital regions, the electrode and ROI FRPs are quite similar, as these electrodes are least affected by changes in the corneo-retinal potential and other saccade related activity. However, EOG artifact increasingly dominates the anterior regions, especially frontal electrodes (e.g., Fz). This can lead to difficulty in dissociating neural from EOG phenomena in more cognitive processes. For these grand averages, the number of target epochs was an order of magnitude lower than that for non-target epochs. However, a similar result was found for both ROI and electrode FRPs when these numbers were equated by randomly sampling a subset of non-target epochs (Supplementary Figures 1, 2).

Figure 6. Grand average FRP by electrode. Target and non-target waveforms from electrodes corresponding to each ROI (black line indicates a significant difference, p < 0.01).

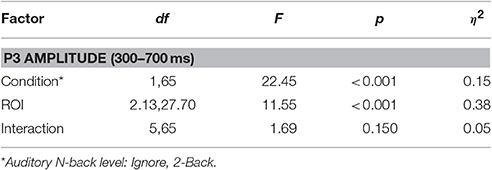

Finally, to quantify the effect of auditory task condition (i.e., working memory load) on the ROI FRP we performed the following analysis. We measured the amplitude of the FRP for auditory conditions at either end of the difficulty spectrum: Ignore and 2-Back. These were chosen as representative of low and high cognitive load conditions; although similar results were found when comparing the Silent and 2-Back conditions. To capture the P3 waveform, we calculated the average amplitude within a 300–700 ms post-fixation window. We then applied a two-way repeated measures ANOVA, with factors ROI and condition, to quantify the effect of cognitive load on this components (Table 3). As expected, there was a strong effect of auditory task condition [F(1, 65) = 22.45, p < 0.001, η2 = 0.15] with the amplitude of the P3 being significantly smaller during high, relative to low, working memory load.

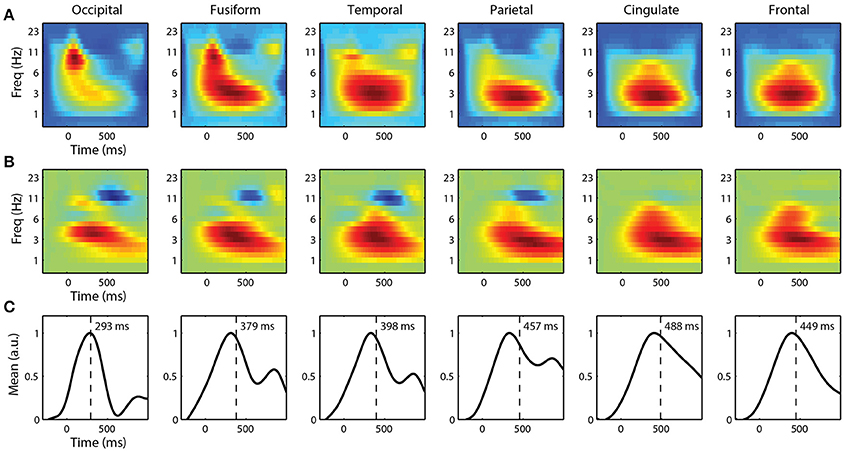

Classification by ROI

For single-trial classification, we used fixation-locked time-frequency features from each ROI. Before linearly mixing IC activations, we first applied a Morlet wavelet transform to each epoch. We then calculated the spectral power of the wavelet transform before combining these time-frequency epochs. Figure 7A shows the grand average spectral FRPs for target epochs from each ROI. These average time-frequency responses, analogous to event related spectral perturbations (ERSP), show a similar time course as the FRPs above. Visual cortices have an early, mid-frequency (alpha band) component that is the spectral equivalent of the lambda response. Similarly, the parietal, cingulate, and frontal cortices are dominated by a later lower frequency (delta band) activity, reflecting the P3 component.

Figure 7. Grand average fixation-related time-frequency features by ROI. (A) Average time-frequency features of target epochs. (B) Forward model and (C) corresponding marginal activation showing the discriminant time course of each ROI. Vertical line represents center-of-mass.

To classify target from non-target fixation epochs, we used ridge regression on these high-dimensional time-frequency features. We constructed separate classifiers for each ROI that utilized spectral information, below 32 Hz, from the entire fixation epoch. The forward models for each ROI are shown in Figure 7B. Again, the time course is similar to the grand average FRPs, where visual cortices have discriminant activity with smaller latencies and higher-frequency components. The marginal activations (Figure 7C) provide a more direct view of the temporal profile of the discriminant activity.

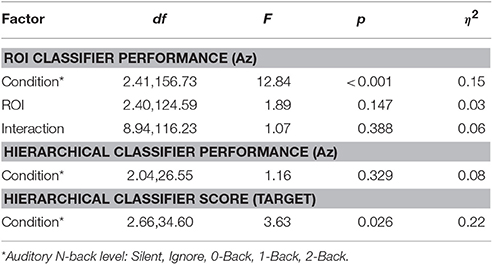

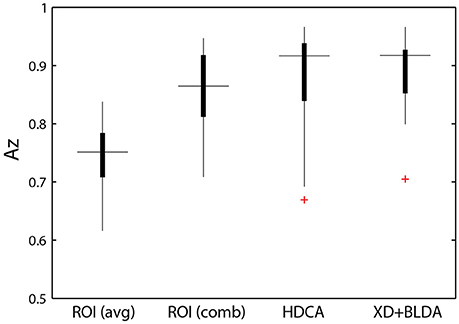

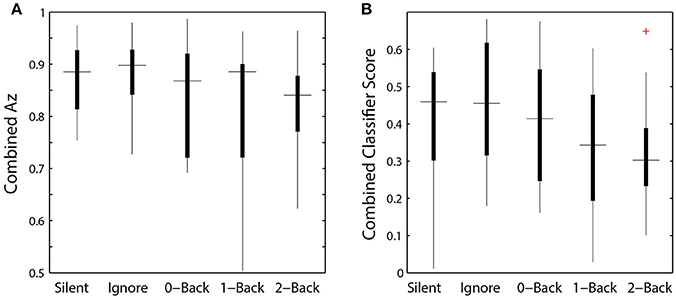

The relative discriminant power of each ROI was quantified by using classifier performance in a two-way repeated measures ANOVA, with factors ROI and auditory task condition (Table 4). We found a significant modulation of the area under the ROC curve (Az) by region [F(2.41, 156.73) = 12.84, p < 0.001, η2 = 0.15]. The average performance across all ROIs and participants was 0.741 ± 0.068 (Figure 8), substantially below behavioral performance in the visual detection task (average accuracy = 0.974 ± 0.030).

Figure 8. Classifier performance. ROI classifier performance (averaged) compared with the hierarchical approach (combined). Also shown are channel-based classification techniques HDCA and XD+BLDA. Horizontal lines indicate median values and box area covers the 25th–75th percentiles.

Integration across regions required a second-stage classifier applied to the output of the ROI regression step. For each epoch, the output from the ROI classifiers (i.e., vector of six classification scores) were combined using a linear discriminant function. Not surprisingly, this hierarchical approach resulted in significantly better performance (Az: 0.851 ± 0.096) than the individual ROI classifiers (p < 0.001; Wilcoxon signed rank test). Interestingly, there was a wide range in classifier performance across participants with Az values ranging from 0.708 to 0.947, indicating that for some individuals our approach was able to identify visual targets at an accuracy similar to behavioral performance. This was despite ongoing neural activity related to the concurrent auditory task as well as the planning and execution of eye movements.

This hierarchical approach compared favorably to other common classification techniques (Figure 8B). Specifically, we applied Hierarchical Discriminant Components Analysis (Gerson et al., 2006) to the EEG channel data using the same epochs as above. We also applied the xDAWN filtering algorithm (Rivet et al., 2009) followed by Bayesian linear discriminant analysis, or XD+BLDA (Cecotti et al., 2011). HDCA and XD+BLDA classification accuracies were similar to our hierarchical approach with HDCA having slightly higher overall performance (p = 0.013).

While we were able to classify visual target from non-target stimuli during a concurrent auditory task, there was a significant modulation of ROI classification performance as a function of cognitive load (Table 4). Here, this modulation was the inverse of that observed in the relative pupil size. Classification performance decreased with increasing auditory task demands; except in the silent and ignore condition in which performance was similar. At the hierarchical stage, while the target scores were significantly modulated by condition (Figure 9B), the classification performance was not (Table 4, Figure 9A). Much like behavioral accuracy in the visual task, the hierarchical classifier performance remained relatively constant across auditory task conditions. However, increasing working memory load clearly affected both the neural activity and pupil diameter in a manner consistent with increased arousal (Murphy et al., 2011).

Figure 9. Hierarchical classifier performance and task difficulty. (A) Classifier performance as a function of auditory task condition. (B) Classifier target scores as a function of auditory task condition. Horizontal lines indicate median values and box area covers the 25th–75th percentiles.

Discussion

In this study, we used a novel approach to determine if the neural response associated with visual target detection could be separated into meaningful components in the presence of eye movements and concurrent task demands. Here, we employed a single framework to isolate neural from non-neural activity and to separate the FRP into cortical regions. Using common statistical techniques we were then able to classify target from non-target FRPs across ROIs on a single-trial basis at a level similar to state-of-the-art machine learning algorithms. By doing so, we were able to show a clear time-course of discriminant activity associated with target detection as well as the modulating effect of cognitive load. While our task design mitigated the overlapping response from previous or subsequent fixations, the results demonstrate the potential for separating task-relevant neural activity in more complex contexts that include eye movements and concurrent tasks.

The EEG analysis framework described here is both specific enough to separate activity by ROI and sensitive enough to evaluate the effects of cognitive load. While more traditional channel-based approaches of FRP analysis may be able to separate these effects by scalp location (e.g., Oz vs. Pz), the inference into the constituent neural sources remains more difficult. Likewise, channel-based approaches require an explicit EOG mitigation or removal process, using ICA (Plöchl et al., 2012) or other techniques (Parra et al., 2005). For example, a number of recent studies have utilized ICA for the identification and removal of EOG components (Nikolaev et al., 2011, 2013; Devillez et al., 2015). However, these studies typically included a manual or semi-manual step for the identification of ICs related to corneo-retinal potentials and eyelid artifacts (although see Mognon et al., 2011; Plöchl et al., 2012). In contrast, our approach uses equivalent dipole locations to include or exclude particular ICs. While there is ongoing debate as to the accuracy of source localization techniques such as LORETA, there is growing evidence that suggests independent sources are indeed dipolar (Delorme et al., 2012). Fortunately, eye movement related ICs typically explained large fractions of the total signal variance and resolve to equivalent dipoles outside the brain volume with relatively little residual error.

In comparison, IC clustering results (Makeig et al., 2002) are highly dependent on the choice of the clustering parameters (in many cases up to 12 tunable parameters without a clear physiological interpretation for each parameter) and provide no guarantees in terms of producing clusters at particular ROIs. However, the ROI-based measure projection approach (ROI-MPA) is able to focus the analysis on selected ROIs while utilizing only a single parameter with a physiological interpretation (i.e., the expected spatial uncertainty in IC dipole localization). Likewise, IC clusters are not well-suited for single-trial analysis. Simply averaging the single-trial activity of the ICs contained in each cluster would not properly account the spatial distribution of dipole locations. For example, ICs adjacent to the cluster boundary would be excluded (weighted zero) while ICs just inside the cluster weighted at unity. ROI-MPA directly incorporates this spatial information and the ROI structure by forming a weighted sum based on IC spatial probability overlap with each ROI.

Eye Tracking, Pupillometry, and EEG

A growing number of studies are combining eye tracking with EEG to enable the exploration for neural activity during visual search. While the task employed here was not a visual search paradigm, our results demonstrate the ability to acquire and utilize gaze position to detect saccades and quantify evoked neural activity. Importantly, our experimental configuration employed a head-free tracking system (SMI RED 250) without a requisite chinrest. This configuration facilitates visual search paradigms or related tasks requiring a large field of view that may naturally engender small head movements. To improve the spatial accuracy of such a system, our analysis included a post-hoc calibration of gaze position. Specifically, we utilized task information to infer gaze position when adjusting the offline calibration model. While this type of information may not always be available, experimenters can and should use an opportunistic calibration approach during periods where gaze position can reasonably be inferred (e.g., prior to trial initiation or visual target detection).

An additional benefit derived from the inclusion of eye tracking is the coincident measure of pupil size. For example, the change in pupil diameter shown here suggests that increased working memory load resulted in an increase in arousal level, an important modulator of cognitive performance. Since arousal is largely regulated through the norepinephrine system via the locus coeruleus (LC), a nucleus within the dorsal pons, it cannot be measured directly via EEG. However, several studies have shown that pupil dilation can be used as a proxy for LC activity (Aston-Jones and Cohen, 2005; Murphy et al., 2011; Hong et al., 2014). Furthermore, the LC receives input from anterior cingulate and dorsolateral prefrontal cortex and some studies have suggested that the LC system underlies the parietal P3 ERP, specifically the P3b (Nieuwenhuis et al., 2005, 2011).

Overall, our results confirm the localization of the P3 to parietal ROIs and show a significant effect of arousal on both pupil diameter and P3 amplitude (Murphy et al., 2011). In addition, target FRPs in the posterior ROIs show a negative deflection, relative to non-target FRPs, beginning around 200 ms post-fixation. This difference is consistent with the visual mismatch negativity (vMMN); a negative posterior deflection elicited by an infrequent (deviant) visual stimulus presented in a homogenous sequence of frequent (standard) stimuli (Czigler et al., 2002). In particular, this difference is consistent with the later components of the vMMN associated with memory-comparison-based change detection (Kimura et al., 2009). However, since the infrequent stimuli (Ts) are also task-relevant, it is difficult to dissociate this vMMN from the attentional orienting component of the P3 (Polich, 2007).

Interestingly, the frontal ROI and Fz electrode showed a small but significant difference between target and non-target fixations at an early latency (approximately 80 ms). Previous studies have shown activity associated with peripheral detection in frontal-parietal regions early in and even prior to target fixations (Dias et al., 2013; Devillez et al., 2015). In this paradigm, target stimuli were never immediately adjacent to the current grid location (red annulus), making the peripheral detection of an upcoming target unlikely. However, it would be reasonable for participants to anticipate a target fixation after a sequence of non-target stimuli were encountered. This phenomenon illustrates the manifold difficulties in the interpretation of eye movement related activity given the dependencies between eye movement behavior (e.g., saccade size, fixation duration) and the elicited response. Similarly, in free-viewing contexts there remains the additional challenge of separating overlapping responses from adjacent saccades and fixations. Fortunately, several techniques have now been proposed to address this potential confound using regression or GLM-based approaches (Burns et al., 2013; Smith and Kutas, 2015; Kristensen et al., 2017).

Model Considerations

The ICA-based, hierarchical classification algorithm described here is not ideally suited for real-time application or meant as an alternative to other channel-based approaches (e.g., HDCA). Rather, the goal of this study was to identify the discriminant neural response in each ROI and to quantify the effect of cognitive load on that response. As such, we did not take additional steps to separate data in our cross-validation scheme. Due to data limitations, ICA was applied to the entire EEG record for each participant rather than independently to each training set. Since ICA is an unsupervised technique, however, the potential for overfitting is limited. Additionally, we selected the hyperparameter by balancing the effective degrees of freedom with the number of data points (fixation epochs) rather than through a separate cross-validation step. Here, the exact choice of parameter did not substantially influence the results (see Supplementary Section 2). The added separation of these additional cross-validation steps would significantly reduce the amount of data and the quality of the estimated discriminate functions without providing any additional insight into the neural processes.

Implications for BCI

Importantly, our hierarchical classification scheme was shown to perform at a level similar to other state-of-the-art machine learning algorithms such as HDCA and XD-BLDA. This result suggests that our approach captured the majority of the task-related variance within the EEG record. While the average FRPs are useful and exhibit an effect of cognitive load, single-trial classification techniques can reveal additional discriminative activity (Brouwer et al., 2012). The forward model of each ROI (Figure 7) reveal both the time course and spectral characteristics of the discriminant neural response. Thus, while our approach imposes an additional computational burden, compared with the above methods, it adds insight into the source of task-related neural activity.

Our results can likewise be used to guide BCI development and future applications. P3-based paradigms remain a key component of the BCI application space, such as the P300 Speller (Krusienski et al., 2006). Presently, these reactive BCIs typically classify the neural response to passively viewed stimuli, such as in a rapid serial visual presentation (Gerson et al., 2005; Touryan et al., 2011; Bigdely-Shamlo et al., 2013). In this passive condition, stimuli are presented to the user who detects the desired target (e.g., target object within an image). In contrast, for targets occurring in natural or ordered environments, a more ecologically valid approach for detection would be through goal-directed visual search (Jangraw et al., 2014; Ušćumlić and Blankertz, 2016). In this case, stimulus presentation is controlled through the user's search strategy, with fixation onset serving as a natural time-locking event. Thus, the growing body of work on single-trial classification of FRPs will support the improved performance of future FRP BCI technology.

Conclusion and Future Work

In this study, we provide a principled framework for interpreting EEG in the presence of eye movements and concurrent task demands by adapting a recently developed independent source aggregation technique (Bigdely-Shamlo et al., 2013). This approach enabled us to both quantify the discriminant information contained within each cortical region and measure the effect of cognitive load on the evoked response. While these phenomena have been previously observed, our results demonstrate the feasibility and utility of combining synchronous recordings of EEG and eye-tracking to measure both sensory and cognitive processes. Our approach can be extended to tasks that incorporate unconstrained eye movements, however, additional techniques would be needed to account for the overlapping activity from adjacent saccades and fixations.

In this experiment we did not explicitly manipulate top-down (goal-directed) or bottom-up (stimulus driven) components of the visual task beyond increasing the overall working memory load. However, our ROI mapping framework would be well suited for such an assessment. ROI analysis could be applied across conditions to identify what factors bias top-down vs. bottom-up neural activity in a visual search paradigm. Eye movements biased by top-down task influences may have greater pre- or post-saccadic activity in frontal cortices (Nikolaev et al., 2013). Likewise, eye movements driven by bottom-up stimulus influences may have greater post-saccadic activity in occipital cortex. This distinction may become essential for understanding free-viewing search in natural scenes where visual information leading to a detection event can be accumulated across fixations (Jangraw et al., 2014) rather than isolated to a single gaze position.

Ethics Statement

The voluntary, fully informed consent of the persons used in this research was obtained in written form. The document used to obtain informed consent was approved by the U.S. Army Research Laboratory's Institutional Review Board (IRB) in accordance with 32 CFR 219 and AR 70-25, and also in compliance with the Declaration of Helsinki. The study was reviewed and approved (approval# ARL 14-042) by the U.S. Army Research Laboratory's IRB before the study began.

Author Contributions

JT, AR, and PC develop the task and conducted the experiment. VL and NB developed the single-trial ROI method. AR and JT analyzed the data. JT wrote the manuscript.

Funding

Research was sponsored by the U.S. Army Research Laboratory and was accomplished under Contract Number W911NF-09-D-0001, W911NF-10-D-0022, and ARL-74A-HR53. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Army Research Laboratory or U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Barry Ahrens for the development of the task software and data synchronization scheme. The authors would also like to thank Stephen Gordon and Michael Nonte for help with HDCA and XD+BLDA classification and Anne-Marie Brouwer for helpful comments on the manuscript.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/article/10.3389/fnhum.2017.00357/full#supplementary-material

References

Aston-Jones, G., and Cohen, J. D. (2005). An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu. Rev. Neurosci. 28, 403–450. doi: 10.1146/annurev.neuro.28.061604.135709

Beatty, J. (1982). Task-evoked pupillary responses, processing load, and the structure of processing resources. Psychol. Bull. 91, 276–292. doi: 10.1037/0033-2909.91.2.276

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. B 55, 289–300.

Bigdely-Shamlo, N., Mullen, T., Kreutz-Delgado, K., and Makeig, S. (2013). Measure projection analysis: a probabilistic approach to EEG source comparison and multi-subject inference. Neuroimage 72, 287–303. doi: 10.1016/j.neuroimage.2013.01.040

Brouwer, A.-M., Hogervorst, M. A., Erp, J. B. F., Heffelaar, T., Zimmerman, P. H., and Oostenveld, R. (2012). Estimating workload using EEG spectral power and ERPs in the n-back task. J. Neural Eng. 9:045008. doi: 10.1088/1741-2560/9/4/045008

Brouwer, A.-M., Reuderink, B., Vincent, J., van Gerven, M. A. J., and van Erp, J. B. F. (2013). Distinguishing between target and nontarget fixations in a visual search task using fixation-related potentials. J. Vis. 13:17. doi: 10.1167/13.3.17

Burns, M. D., Bigdely-Shamlo, N., Smith, N. J., Kreutz-Delgado, K., and Makeig, S. (2013). “Comparison of averaging and regression techniques for estimating Event Related Potentials,” in 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Osaka), 1680–1683.

Cecotti, H., Rivet, B., Congedo, M., Jutten, C., Bertrand, O., Maby, E., et al. (2011). A robust sensor-selection method for P300 brain–computer interfaces. J. Neural Eng. 8:016001. doi: 10.1088/1741-2560/8/1/016001

Czigler, I., Balázs, L., and Winkler, I. (2002). Memory-based detection of task-irrelevant visual changes. Psychophysiology 39, 869–873. doi: 10.1111/1469-8986.3960869

Dandekar, S., Ding, J., Privitera, C., Carney, T., and Klein, S. A. (2012a). The fixation and saccade P3. PLoS ONE 7:e48761. doi: 10.1371/journal.pone.0048761

Dandekar, S., Privitera, C., Carney, T., and Klein, S. A. (2012b). Neural saccadic response estimation during natural viewing. J. Neurophysiol. 107, 1776–1790. doi: 10.1152/jn.00237.2011

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Delorme, A., Palmer, J., Onton, J., Oostenveld, R., and Makeig, S. (2012). Independent EEG sources are dipolar. PLoS ONE 7:e30135. doi: 10.1371/journal.pone.0030135

Devillez, H., Guyader, N., and Guérin-Dugué, A. (2015). An eye fixation–related potentials analysis of the P300 potential for fixations onto a target object when exploring natural scenes. J. Vis. 15, 20–20. doi: 10.1167/15.13.20

Dias, J. C., Sajda, P., Dmochowski, J. P., and Parra, L. C. (2013). EEG precursors of detected and missed targets during free-viewing search. J. Vis. 13, 13–13. doi: 10.1167/13.13.13

Dimigen, O., Sommer, W., Hohlfeld, A., Jacobs, A. M., and Kliegl, R. (2011). Coregistration of eye movements and EEG in natural reading: analyses and review. J. Exp. Psychol. Gen. 140, 552–572. doi: 10.1037/a0023885

Engbert, R., and Mergenthaler, K. (2006). Microsaccades are triggered by low retinal image slip. Proc. Natl. Acad. Sci. U.S.A. 103, 7192–7197. doi: 10.1073/pnas.0509557103

Gaarder, K., Krauskopf, J., Graf, V., Kropfl, W., and Armington, J. C. (1964). Averaged brain activity following saccadic eye movement. Science 146, 1481–1483.

Gerson, A. D., Parra, L. C., and Sajda, P. (2005). Cortical origins of response time variability during rapid discrimination of visual objects. Neuroimage 28, 342–353. doi: 10.1016/j.neuroimage.2005.06.026

Gerson, A. D., Parra, L. C., and Sajda, P. (2006). Cortically coupled computer vision for rapid image search. IEEE Trans. Neural Syst. Rehabil. Eng. 14, 174–179. doi: 10.1109/TNSRE.2006.875550

Haufe, S., Meinecke, F., Görgen, K., Dähne, S., Haynes, J.-D., Blankertz, B., et al. (2014). On the interpretation of weight vectors of linear models in multivariate neuroimaging. Neuroimage 87, 96–110. doi: 10.1016/j.neuroimage.2013.10.067

Hong, L., Walz, J. M., and Sajda, P. (2014). Your eyes give you away: prestimulus changes in pupil diameter correlate with poststimulus task-related EEG dynamics. PLoS ONE 9:e91321. doi: 10.1371/journal.pone.0091321

Jangraw, D. C., Wang, J., Lance, B. J., Chang, S.-F., and Sajda, P. (2014). Neurally and ocularly informed graph-based models for searching 3D environments. J. Neural Eng. 11:046003. doi: 10.1088/1741-2560/11/4/046003

Kamienkowski, J. E., Ison, M. J., Quiroga, R. Q., and Sigman, M. (2012). Fixation-related potentials in visual search: a combined EEG and eye tracking study. J. Vis. 12:4. doi: 10.1167/12.7.4

Kaunitz, L. N., Kamienkowski, J. E., Varatharajah, A., Sigman, M., Quiroga, R. Q., and Ison, M. J. (2014). Looking for a face in the crowd: fixation-related potentials in an eye-movement visual search task. Neuroimage 89, 297–305. doi: 10.1016/j.neuroimage.2013.12.006

Kazai, K., and Yagi, A. (1999). Integrated effect of stimulation at fixation points on EFRP (eye-fixation related brain potentials). Int. J. Psychophysiol. 32, 193–203. doi: 10.1016/S0167-8760(99)00010-0

Kimura, M., Katayama, J., Ohira, H., and Schröger, E. (2009). Visual mismatch negativity: new evidence from the equiprobable paradigm. Psychophysiology 46, 402–409. doi: 10.1111/j.1469-8986.2008.00767.x

Kowler, E. (2011). Eye movements: the past 25 years. Vision Res. 51, 1457–1483. doi: 10.1016/j.visres.2010.12.014

Kristensen, E., Guerin-Dugué, A., and Rivet, B. (2017). Regularization and a general linear model for event-related potential estimation. Behav. Res. Methods doi: 10.3758/s13428-017-0856-z. [Epub ahead of print].

Krusienski, D. J., Sellers, E. W., Cabestaing, F., Bayoudh, S., McFarland, D. J., Vaughan, T. M., et al. (2006). A comparison of classification techniques for the P300 Speller. J. Neural Eng. 3, 299–305. doi: 10.1088/1741-2560/3/4/007

Lemm, S., Blankertz, B., Dickhaus, T., and Müller, K.-R. (2011). Introduction to machine learning for brain imaging. Neuroimage 56, 387–399. doi: 10.1016/j.neuroimage.2010.11.004

Makeig, S., Westerfield, M., Jung, T.-P., Enghoff, S., Townsend, J., Courchesne, E., et al. (2002). Dynamic brain sources of visual evoked responses. Science 295, 690–694. doi: 10.1126/science.1066168

Mognon, A., Jovicich, J., Bruzzone, L., and Buiatti, M. (2011). ADJUST: an automatic EEG artifact detector based on the joint use of spatial and temporal features. Psychophysiology 48, 229–240. doi: 10.1111/j.1469-8986.2010.01061.x

Murphy, P. R., Robertson, I. H., Balsters, J. H., and O'connell, R. G. (2011). Pupillometry and P3 index the locus coeruleus–noradrenergic arousal function in humans. Psychophysiology 48, 1532–1543. doi: 10.1111/j.1469-8986.2011.01226.x

Nieuwenhuis, S., Aston-Jones, G., and Cohen, J. D. (2005). Decision making, the P3, and the locus coeruleus–norepinephrine system. Psychol. Bull. 131:510. doi: 10.1037/0033-2909.131.4.510

Nieuwenhuis, S., De Geus, E. J., and Aston-Jones, G. (2011). The anatomical and functional relationship between the P3 and autonomic components of the orienting response. Psychophysiology 48, 162–175. doi: 10.1111/j.1469-8986.2010.01057.x

Nikolaev, A. R., Jurica, P., Nakatani, C., Plomp, G., and van Leeuwen, C. (2013). Visual encoding and fixation target selection in free viewing: presaccadic brain potentials. Front. Syst. Neurosci. 7:26. doi: 10.3389/fnsys.2013.00026

Nikolaev, A. R., Meghanathan, R. N., and van Leeuwen, C. (2016). Combining EEG and eye movement recording in free viewing: pitfalls and possibilities. Brain Cogn. 107, 55–83. doi: 10.1016/j.bandc.2016.06.004

Nikolaev, A. R., Nakatani, C., Plomp, G., Jurica, P., and van Leeuwen, C. (2011). Eye fixation-related potentials in free viewing identify encoding failures in change detection. Neuroimage 56, 1598–1607. doi: 10.1016/j.neuroimage.2011.03.021

Parra, L. C., Spence, C. D., Gerson, A. D., and Sajda, P. (2005). Recipes for the linear analysis of EEG. Neuroimage 28, 326–341. doi: 10.1016/j.neuroimage.2005.05.032

Pascual-Marqui, R. D., Michel, C. M., and Lehmann, D. (1994). Low resolution electromagnetic tomography: a new method for localizing electrical activity in the brain. Int. J. Psychophysiol. 18, 49–65. doi: 10.1016/0167-8760(84)90014-X

Plöchl, M., Ossandón, J. P., and König, P. (2012). Combining EEG and eye tracking: identification, characterization, and correction of eye movement artifacts in electroencephalographic data. Front. Hum. Neurosci. 6:278. doi: 10.3389/fnhum.2012.00278

Polich, J. (2007). Updating P300: an integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148. doi: 10.1016/j.clinph.2007.04.019

Ries, A. J., Touryan, J., Ahrens, B., and Connolly, P. (2016). The impact of task demands on fixation-related brain potentials during guided search. PLoS ONE 11:e0157260. doi: 10.1371/journal.pone.0157260

Rivet, B., Souloumiac, A., Attina, V., and Gibert, G. (2009). xDAWN algorithm to enhance evoked potentials: application to brain-computer interface. IEEE Trans. Biomed. Eng. 56, 2035–2043. doi: 10.1109/TBME.2009.2012869

Scherg, M. (1990). “Fundamentals of dipole source potential analysis,” in Auditory Evoked Magnetic Fields and Electric Potentials, eds F. Grandori, M. Hoke, G. L. Romani (Basel: Karger), 40–69.

Shattuck, D. W., Mirza, M., Adisetiyo, V., Hojatkashani, C., Salamon, G., Narr, K. L., et al. (2008). Construction of a 3D probabilistic atlas of human cortical structures. Neuroimage 39, 1064–1080. doi: 10.1016/j.neuroimage.2007.09.031

Smith, N. J., and Kutas, M. (2015). Regression-based estimation of ERP waveforms: I. The rERP framework. Psychophysiology 52, 157–168. doi: 10.1111/psyp.12317

Thickbroom, G. W., Knezevič, W., Carroll, W. M., and Mastaglia, F. L. (1991). Saccade onset and offset lambda waves: relation to pattern movement visually evoked potentials. Brain Res. 551, 150–156. doi: 10.1016/0006-8993(91)90927-N

Torrence, C., and Compo, G. P. (1998). A practical guide to wavelet analysis. Bull. Am. Meteorol. Soc. 79, 61–78.

Touryan, J., Gibson, L., Horne, J. H., and Weber, P. (2011). Real-time measurement of face recognition in rapid serial visual presentation. Front. Psychol. 2:42. doi: 10.3389/fpsyg.2011.00042

Touryan, J., Lance, B. J., Kerick, S. E., Ries, A. J., and McDowell, K. (2016). Common EEG features for behavioral estimation in disparate, real-world tasks. Biol. Psychol. 114, 93–107. doi: 10.1016/j.biopsycho.2015.12.009

Ušćumlić, M., and Blankertz, B. (2016). Active visual search in non-stationary scenes: coping with temporal variability and uncertainty. J. Neural Eng. 13:016015. doi: 10.1088/1741-2560/13/1/016015

Keywords: fixation-related potentials, EEG, eye tracking, target detection, cognitive load

Citation: Touryan J, Lawhern VJ, Connolly PM, Bigdely-Shamlo N and Ries AJ (2017) Isolating Discriminant Neural Activity in the Presence of Eye Movements and Concurrent Task Demands. Front. Hum. Neurosci. 11:357. doi: 10.3389/fnhum.2017.00357

Received: 10 January 2017; Accepted: 21 June 2017;

Published: 07 July 2017.

Edited by:

Fabien Lotte, Institut National de Recherche en Informatique et en Automatique (INRIA), FranceReviewed by:

Benjamin Blankertz, Technische Universität Berlin, GermanyAndrey R. Nikolaev, KU Leuven, Belgium

Copyright © 2017 Touryan, Lawhern, Connolly, Bigdely-Shamlo and Ries. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jon Touryan, jonathan.o.touryan.civ@mail.mil

Jon Touryan

Jon Touryan Vernon J. Lawhern

Vernon J. Lawhern Patrick M. Connolly

Patrick M. Connolly Nima Bigdely-Shamlo

Nima Bigdely-Shamlo Anthony J. Ries

Anthony J. Ries