Static and Dynamic Measures of Human Brain Connectivity Predict Complementary Aspects of Human Cognitive Performance

- 1Department of Psychology, Rice University, Houston, TX, United States

- 2Department of Physics & Astronomy, Rice University, Houston, TX, United States

- 3Center for Theoretical Biological Physics, Rice University, Houston, TX, United States

- 4Department of Bioengineering, Rice University, Houston, TX, United States

In cognitive network neuroscience, the connectivity and community structure of the brain network are related to measures of cognitive performance, like attention and memory. Research in this emerging discipline has largely focused on two measures of connectivity, modularity and flexibility, which, for the most part, have been examined in isolation. The current project investigates the relationship between these two measures of connectivity and how they make separable contributions to predicting individual differences in performance on cognitive tasks. Using resting state fMRI data from 52 young adults, we show that flexibility and modularity are highly negatively correlated. We use a Brodmann parcellation of the fMRI data and a sliding window approach for calculation of the flexibility. We also demonstrate that flexibility and modularity make unique contributions to explaining task performance, with a clear result showing that modularity, not flexibility, predicts performance for simple tasks and that flexibility plays a greater role in predicting performance on complex tasks that require cognitive control and executive functioning. The theory and results presented here allow for stronger links between measures of brain network connectivity and cognitive processes.

Introduction

Research in cognitive neuroscience has typically focused on identifying the function of individual brain regions. Recent advances, however, have led to thinking about the brain as consisting of interacting subnetworks that can be identified by examining connectivity across the whole brain. This emerging discipline of cognitive network neuroscience has been made possible by combining methods from functional neuroimaging and network science (Bullmore et al., 2009; Sporns, 2014; Medaglia et al., 2015; Mill et al., 2017). Functional and diffusion MRI methods provide a rich source of data for characterizing the connections—either functionally or structurally—between different brain regions. Using these data, network science provides mathematical tools for investigating the structure of the brain network, with brain regions serving as nodes, and the connections between brain regions serving as edges in the analysis.

Under this framework, the structure of the brain network can be characterized with a variety of measures. For example, one measure, network modularity, captures the extent to which a network has community structure, by dividing the brain into different modules that are more internally dense than would be expected by random connections (Newman, 2006). A different measure, network flexibility, characterizes how frequently regions of the brain switch allegiance from one module to another over time (Bassett et al., 2010). Going forward, a major challenge of cognitive network neuroscience is to determine the relationship between measures of brain network structure and cognitive processes (Sporns, 2014).

Relating individual differences in brain network structure to behavioral performance on cognitive tasks provides one tool for addressing this challenge. Both modularity and flexibility have been shown to correlate with variation in cognitive performance. Previous empirical research from our laboratory (Yue et al., 2017) and others (Cohen and D'Esposito, 2016) suggests an interaction between measures of network structure and performance on simple vs. complex tasks. For example, previous studies have shown that individual differences in modularity correlate with variation in memory capacity (e.g., Stevens et al., 2012; Meunier et al., 2014). This work has been extended in several recent studies (Cohen and D'Esposito, 2016; Yue et al., 2017), which report a systematic relationship between network modularity and an individual's performance on a range of behavioral tasks. Both studies report a cross-over interaction: individuals with lower network modularity perform better on complex tasks, like working memory tasks (n-back or operation span), that likely require communication across different brain networks, while individuals with higher network modularity perform better on simpler tasks, like reaction to exogenous cues of attention, simple visual change detection, or low level motor learning. This interaction is predicted by theoretical work on modularity in biological systems (Deem, 2013), which finds that at short time scales, systems with higher modularity afford greater fitness than systems with lower modularity, while at longer time scales, systems with lower modularity are preferred. At the same time, many recent studies have reported that individual variation in network flexibility can explain a host of performance measures, including skill learning (e.g., Bassett et al., 2010, 2013), cognitive control (e.g., Alavash et al., 2015; Braun et al., 2015), and mood (Betzel et al., 2016). Indeed, brain network flexibility has been identified as a biomarker of the cognitive construct of cognitive flexibility (Braun et al., 2015).

These prior investigations have focused on either modularity or flexibility as a network measure. Some of the studies have investigated quite different cognitive processes for modularity (e.g., attentional control) and flexibility (e.g., mood). On the other hand, other studies have focused on similar constructs (e.g., working memory for modularity and cognitive control for flexibility or motor learning for both). Thus, these studies leave open the question of the extent to which modularity and flexibility underlie different or similar cognitive abilities. There is an intuitive basis for thinking that they reflect different capacities, as flexibility relates to how much brain networks change over time, and modularity relates to differences in interconnectivity. However, such a conclusion would be premature, since each of these previous studies measured modularity and flexibility in isolation, without considering whether the other measure could also explain variation in the same cognitive performance and whether each contributes independently when the contributions of both are considered simultaneously. No study has directly addressed the basic question of the relationship between modularity and flexibility¹. This relationship might be one key to understanding how different measures from network neuroscience relate to different cognitive functions.

The current study investigated the relationship between flexibility and modularity, demonstrating a strong relationship between the two measures and presenting a theoretical framework that explains this relationship. Despite this correlation, we argue that modularity and flexibility still reflect distinct cognitive abilities. Specifically, previous theory suggests that high modularity should result in better performance on simple tasks while low modularity should result in better performance on complex tasks (Deem, 2013). This same theory suggests that flexibility should be negatively correlated with performance on simple tasks and positively correlated with performance on complex tasks, and so we investigated the relationship between flexibility, modularity and performance on a battery of simple and complex cognitive tasks. Based on both theory and previous empirical results, we predict that higher brain modularity and/or lower flexibility is related to better performance on simpler tasks while lower brain modularity and/or higher flexibility is related to better performance on more complex tasks.

Methods

Participants

Participants were 52 students from Rice University (18–26 years old, mean: 19.8 years; 16 males and 36 females) with no neurological or psychiatric disorders. Subjects gave informed consent in accordance with procedures approved by the Rice University Institutional Review Board. Subjects were compensated with $50 upon their participation in both the behavioral and imaging sessions.

Resting-State fMRI

Imaging Data Acquisition

A high-resolution T1-weighted structural scan and three resting state functional scans were acquired during a 30-min session using a 3T Siemens Magnetom Tim Trio scanner equipped with a 12-channel head coil. Scanning was done at the Core for Advanced Magnetic Resonance Imaging (CAMRI) at Baylor College of Medicine. A T1-weighted structural scan was collected first, followed by three consecutive 7-min functional scans. In between runs, subjects were instructed to remain lying down in the scanner and were informed that the next run would begin shortly. All 52 subjects participated in the imaging session. The T1-weighted structural scan involved the following parameters: TR = 2,500 ms, TE = 4.71 ms, FoV = 256 mm, matrix size = 256 × 256, voxel size = 1 × 1 × 1 mm³. Functional runs were three 7-min resting-state scans obtained by using the following sequences: TR = 2,000 ms, TE = 40 ms, FoV = 220 mm, voxel size = 3 × 3 × 4 mm³, slice thickness = 4 mm. A total of 210 volumes per run, each with 34 slices, were acquired in the axial plane to cover the whole brain.

Preprocessing

Image preprocessing was conducted using the afni_proc.py script from example 9a of AFNI (version AFNI_2011_12_21_1014; Cox, 1996), following the preprocessing pipelines recommended by Jo et al. (2013). Each functional run was preprocessed separately, including de-spiking of large fluctuations for some time points, slice timing correction, and head motion correction. Then each subject's functional images were aligned to that individual's structural image, warped to the Talairach standard space, and resampled to 3-mm isotropic voxels. Next, the functional images were spatially smoothed with a 4-mm full-width half-maximum Gaussian kernel. A whole brain mask was then generated and applied for all subsequent analysis. The outlier censoring process recorded the time points in which the head motion exceeded a distance (Euclidean norm) of 0.2 mm with respect to the previous time point, or in which >10% of whole brain voxels were considered outliers by AFNI's 3dToutcount. Then, the recorded time points in each brain voxel were censored by replacing the signal at these points using linear interpolation. A multiple regression model was then applied to each voxel's time series to regress out several nuisance signals, including third-order polynomial baseline trends, six head motion correction parameters, and six derivatives of head motion²,³. In order to reduce the effects of low frequency physiological noise and to ensure that no nuisance-related variation was introduced, bandpass filtering (0.005–0.1 Hz) was conducted in the same regression model (Biswal et al., 1995; Cordes et al., 2001; Hallquist et al., 2013; Ciric et al., 2017). The residual time series after application of the regression model were used for the following network analyses.
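The censoring rule described above can be illustrated with a short sketch. It is not the AFNI implementation: the function name `censor_and_interpolate` and its inputs (a per-volume motion norm and a per-volume outlier fraction) are hypothetical, and only the two thresholds (0.2 mm and 10%) come from the text.

```python
import numpy as np

def censor_and_interpolate(ts, enorm, outlier_frac,
                           motion_thresh=0.2, outlier_thresh=0.10):
    """Flag bad time points and replace them by linear interpolation.

    ts           : (T,) signal of one voxel
    enorm        : (T,) Euclidean norm of frame-to-frame head motion, in mm
    outlier_frac : (T,) fraction of brain voxels flagged as outliers
    """
    ts = np.asarray(ts, dtype=float)
    # A time point is censored if either criterion is exceeded.
    bad = (np.asarray(enorm) > motion_thresh) | (np.asarray(outlier_frac) > outlier_thresh)
    good_idx = np.flatnonzero(~bad)
    bad_idx = np.flatnonzero(bad)
    cleaned = ts.copy()
    # Interpolate censored samples from the surrounding retained samples.
    cleaned[bad_idx] = np.interp(bad_idx, good_idx, ts[good_idx])
    return cleaned, bad

# Toy example: the third volume exceeds the motion threshold.
cleaned, bad = censor_and_interpolate(
    ts=[0.0, 1.0, 10.0, 3.0, 4.0],
    enorm=[0.0, 0.0, 0.5, 0.0, 0.0],
    outlier_frac=[0.0, 0.0, 0.0, 0.0, 0.0],
)
```

In the toy example the spike at the third volume is replaced by the value lying on the line between its two neighbours.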

Network Re-construction, Modularity, and Flexibility Calculation

Network re-construction

The whole brain network was re-constructed using several functional and anatomical brain parcellations, including those used in the resting state literature (Power et al., 2011; Craddock et al., 2012; Glasser et al., 2016; Gordon et al., 2016). The full set of results for all parcellation schemes is reported in the Supplementary Material. The results are largely consistent across parcellation schemes, but were the clearest with the anatomical parcellation from the 84 Brodmann areas (BA) (42 Brodmann areas for each of the left and right hemispheres). Therefore, results from the BA anatomical parcellation are reported below. First, Brodmann area masks were generated using the TT_Daemon standard AFNI atlas (Lancaster et al., 2000), from the AFNI_2011_12_21_1014 version. Then the mean time series for each area was extracted by averaging the preprocessed time series across all voxels covered by the corresponding mask. In the network, each Brodmann area served as a node and the edge between any two nodes was defined by the Pearson correlation of the time series for those two nodes. For each subject and each run, edges for all pairs of nodes in the network were estimated, resulting in an 84 × 84 correlation matrix. While the modularity values varied within subject across the three runs, the average of the three modularity values calculated from each run separately was highly correlated (r = 0.92, p = 1.8 × 10⁻²²) with the modularity value obtained from a correlation matrix that averaged the correlations across the three runs. Thus, the averaged correlation matrix across three runs was later used to calculate modularity for each subject by applying the Newman algorithm (Newman, 2006).
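As an illustration of the network construction step, the sketch below builds one Pearson correlation matrix per run and averages the three matrices. The random arrays stand in for real regional time series, and the function names are hypothetical.

```python
import numpy as np

def run_correlation(roi_ts):
    """roi_ts: (T, N) array with one column per region.
    Returns the N x N matrix of Pearson correlations between regions."""
    return np.corrcoef(roi_ts, rowvar=False)

def average_correlation(runs):
    """Element-wise mean of the per-run correlation matrices."""
    return np.mean([run_correlation(r) for r in runs], axis=0)

# Placeholder data: 3 runs, 210 volumes, 84 regions (random, for shape only).
rng = np.random.default_rng(0)
runs = [rng.standard_normal((210, 84)) for _ in range(3)]
mean_corr = average_correlation(runs)
```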

Modularity

Modularity is a measure of the excess probability of connections within the modules, relative to what is expected by chance. To calculate modularity, we first took the absolute value of each correlation and set all the diagonal elements of the correlation matrix to zero. Since fewer than 0.05% of the elements in the matrix were negative and their absolute values were relatively small, taking absolute values did not have a major effect on the results. As a check, the correlation between modularity and flexibility from raw data is also reported. The resulting matrix was binarized by setting the largest 400 edges (i.e., 11.47% graph density) in the network to 1 and all others to 0. To show that the results were robust to the cutoff, we also considered 300 edges (8.61%) and 500 edges (14.34%). The non-linear filtering afforded by the binarization process has been argued to improve detection of modularity by increasing the signal-to-noise ratio (Chen and Deem, 2015). Modularity was defined as in Equation (1) below, where A_ij is 1 if there is an edge between Brodmann areas i and j and zero otherwise, a_i = Σ_j A_ij is the degree of Brodmann area i, and e = (1/2) Σ_i a_i is the total number of edges, here set to 300, 400, and 500, respectively. Newman's algorithm was applied to the binarized matrix to obtain the (maximal) modularity value and the corresponding partitioning of Brodmann areas into different modules for each subject.
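The binarization step and the modularity score of a given partition can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, the modularity function evaluates the standard Newman quantity for a partition supplied by the caller, and it does not perform the maximization over partitions that Newman's algorithm carries out.

```python
import numpy as np

def binarize_top_edges(corr, n_edges=400):
    """Keep the n_edges largest |correlations| as edges of an undirected graph."""
    w = np.abs(np.asarray(corr, dtype=float))
    np.fill_diagonal(w, 0.0)
    iu = np.triu_indices_from(w, k=1)            # each node pair counted once
    order = np.argsort(w[iu])[::-1][:n_edges]    # strongest pairs first
    adj = np.zeros_like(w, dtype=int)
    adj[iu[0][order], iu[1][order]] = 1
    return adj + adj.T                           # symmetric 0/1 matrix

def modularity(adj, labels):
    """Newman modularity of the partition `labels` on a binary graph."""
    adj, labels = np.asarray(adj), np.asarray(labels)
    degree = adj.sum(axis=1)
    two_e = degree.sum()                         # twice the number of edges
    same = labels[:, None] == labels[None, :]
    return float(((adj - np.outer(degree, degree) / two_e) * same).sum() / two_e)

# Density of a 400-edge graph on 84 nodes (random placeholder correlations).
rng = np.random.default_rng(0)
corr = np.corrcoef(rng.standard_normal((84, 210)))
adj = binarize_top_edges(corr, n_edges=400)
density = adj.sum() / 2 / (84 * 83 / 2)

# Toy graph: two triangles joined by a single edge.
toy = np.zeros((6, 6), dtype=int)
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    toy[i, j] = toy[j, i] = 1
q_toy = modularity(toy, [0, 0, 0, 1, 1, 1])
```

With 84 nodes there are 84 × 83 / 2 = 3,486 possible edges, so 400 edges correspond to the 11.47% density quoted in the text.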

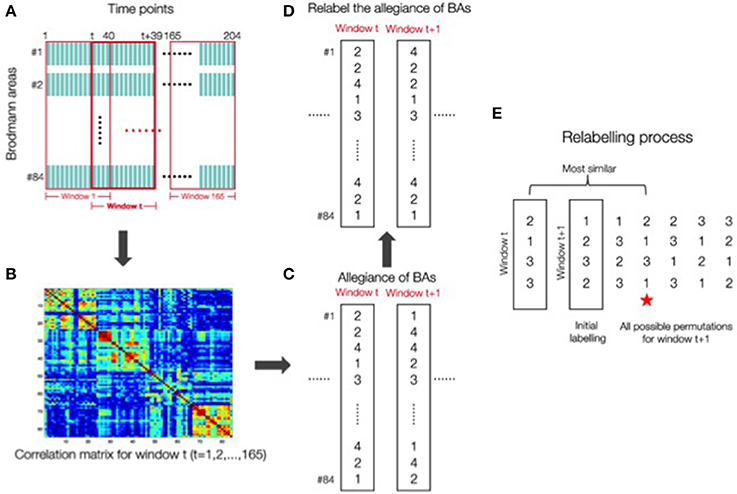
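For readers working from the text alone, Equation (1) can be written out from the definitions given in the preceding paragraph. The form below is the standard Newman modularity and is offered as a reconstruction, not a transcription of the figure; the module label c_i and the Kronecker delta are notation assumed here, and e denotes the number of edges, so that the degrees sum to 2e.

```latex
M = \frac{1}{2e} \sum_{i,j} \left( A_{ij} - \frac{a_i a_j}{2e} \right) \delta(c_i, c_j),
\qquad a_i = \sum_j A_{ij},
\qquad e = \frac{1}{2} \sum_i a_i
```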

Flexibility

Different methods have been proposed for calculating flexibility, either from methods that rely on modeling the networks as a multi-layer ensemble (Bassett et al., 2010; Mucha et al., 2010), or from a sliding window approach, in which the scan session is divided into overlapping sub-intervals, modularity is calculated over each sub-interval, and the resulting partitions of brain regions into modules are compared across adjacent windows (Hutchison et al., 2013). Here we adopt this latter approach for calculating flexibility, as this sliding window analysis is the most commonly used strategy for examining dynamics in resting-state connectivity (see Hutchison et al., 2013 for a discussion). Flexibility was calculated from the time series data using a sliding window of 40 time points (Figure 1A). This window length was chosen because the autocorrelations returned to zero at around time 40 (see Supplementary Figure 3) and because it is within the norm of previous studies (Leonardi and Van De Ville, 2015; Zalesky and Breakspear, 2015). For each window we obtained a correlation matrix as shown in Figure 1B. Figure 1C reflects the computation of Ci(t), the record of which module the ith Brodmann area is in at time window t, 1≤t≤165. Flexibility for a given Brodmann area of a given subject is the number of changes in the value of Ci(t) across the 165 time windows of length 40. Note that the labeling of the modules can change between time points. Therefore, to account for this effect, we relabeled the modules so that the difference, defined as the number of areas that have Ci(t+1)≠Ci(t), i = 1–84, is minimized. The assumption behind this is that the allegiance of areas changes only minimally, or not at all, because adjacent windows differ in only one time point out of 40. This minimized distance is considered the real difference between windows (Figures 1C,D). A detailed illustration of the relabeling process is in Figure 1E. Flexibility for a given subject is the average of flexibility values from three runs across all Brodmann areas for that subject. The data from the three runs were not concatenated. Such concatenation would introduce a discontinuity in the time series data, include machine artifacts at the beginning of each run, and lead to spurious correlations in the dynamics. Several recent studies have raised questions about the use of this sliding window approach for calculating network flexibility (Hindriks et al., 2016; Kudela et al., 2017). In particular, these studies raise issues about the ability to differentiate signal from noise with small time series of resting-state data. We can address this concern with our data by correlating the flexibility values obtained from each of the three runs across participants. If these flexibility values simply reflect fluctuations caused by noise, then correlations should not be observed across runs. However, flexibility values are significantly correlated with each other (p < 0.03, see Supplementary Information), indicating that signal has been detected in the data.
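The window, relabel, and count steps of the flexibility calculation can be sketched as below. This is an illustrative version under assumptions, not the authors' code: function names are hypothetical, module labels are assumed to be integers 0..K-1, the module assignments per window are taken as given, and the relabeling searches all label permutations by brute force, which is practical only for a small number of modules.

```python
from itertools import permutations
import numpy as np

def sliding_windows(ts, width=40):
    """Yield overlapping windows of `width` rows, advancing one time point at a time."""
    for start in range(ts.shape[0] - width + 1):
        yield ts[start:start + width]

def relabel(prev, curr, n_labels):
    """Permute the labels of `curr` so it disagrees with `prev` in as few regions as possible."""
    prev, curr = np.asarray(prev), np.asarray(curr)
    best, best_diff = curr, int((curr != prev).sum())
    for perm in permutations(range(n_labels)):
        cand = np.asarray(perm)[curr]            # apply the label permutation
        diff = int((cand != prev).sum())
        if diff < best_diff:
            best, best_diff = cand, diff
    return best

def flexibility(assignments):
    """assignments: (W, N) module label of each of N regions in each of W windows.
    Returns, per region, the number of label changes between adjacent windows."""
    assignments = np.asarray(assignments)
    n_labels = int(assignments.max()) + 1
    aligned = [assignments[0]]
    for curr in assignments[1:]:
        aligned.append(relabel(aligned[-1], curr, n_labels))
    aligned = np.array(aligned)
    return (aligned[1:] != aligned[:-1]).sum(axis=0)

# Four regions, three windows; labels in windows 2 and 3 are arbitrarily renumbered.
# The first window is the 0-based version of the "2 1 3 3" labeling in Figure 1E.
assignments = [[1, 0, 2, 2],
               [0, 1, 2, 1],
               [0, 1, 2, 1]]
flex = flexibility(assignments)
n_windows = len(list(sliding_windows(np.zeros((50, 3)), width=40)))
```

In the toy example only the fourth region changes module, and it does so once; the apparent changes in the other regions are an artifact of label numbering that the relabeling removes. A per-subject score would then average these counts over regions and runs. For larger numbers of modules, an assignment solver such as `scipy.optimize.linear_sum_assignment` could replace the permutation search.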

Figure 1. (A) Flexibility calculation using a sliding window method with 40 time points. (B) For each window a correlation matrix was obtained. (C) Computation of Ci(t), the record of which module the ith Brodmann area was in at time window t, 1≤t≤165. (D) Relabeling of the allegiance of Brodmann areas was done to account for the fact that the labeling of the modules can change between time points. This relabeling process was done to ensure that the difference, defined as the number of areas that have Ci(t+1) ≠ Ci(t) (i = 1–84), was minimized. (E) A detail of the relabeling process is shown here. The red star indicates that the permutation for window (t+1) “2 1 3 1” is the closest to the window t labeling “2 1 3 3”. The relabeling process will choose the starred permutation for window (t+1).

Behavioral Tasks

Previous empirical research from our laboratory (Yue et al., 2017) and others (Cohen and D'Esposito, 2016) suggests an interaction between measures of network structure and performance on simple vs. complex tasks. This interaction is supported by theoretical work on modularity (Deem, 2013), which finds that at short time scales, biological systems with higher modularity are preferred over systems with lower modularity, while at longer time scales, biological systems with lower modularity are preferred. Relating this theory to brain modularity and performance on cognitive tasks, we predict that higher brain modularity would be related to better performance on simpler tasks while lower brain modularity would be related to better performance on more complex tasks. For the purposes of the current research, this simple vs. complex task distinction is operationalized in the following way: complex tasks are those in which executive attention and cognitive control (the ability to ignore preponderant distractors while correctly performing the task at hand) are required to properly perform the task. Simple tasks are those whose performance does not depend on these operations. Because of the engagement of cognitive control, complex tasks typically require longer processing times than simple tasks. The full battery of tasks is described below. Complex tasks in our battery include measures that require shifting between tasks [operation span (Unsworth et al., 2005) and task-shifting], attentional control (visual arrays; Shipstead et al., 2014), short-term memory maintenance as well as controlled search of memory (digit span; Unsworth and Engle, 2007), and the resolution component of the ANT, which taps into the coordination of perception and cognitive control. Simple tasks include the alerting and orienting components of the ANT, which measure automatic responses to exogenous attentional cues (Corbetta and Shulman, 2002), and the traffic light task, which is a measure of response to low level visual properties. It is important to note that sorting the tasks in a dichotomous manner is merely a practical procedure and not a suggestion that cognitive control cannot be a continuous process, as has been suggested in previous work (Rougier et al., 2005). Future work will examine cognitive control as a continuous mechanism of varying degrees.

Operation span

Subjects were administered the operation span task (Unsworth et al., 2005) to measure their working memory capacity. This task has been shown to have high test-retest reliability, thus providing a stable measure in terms of the rankings of individuals across test sessions (Redick et al., 2012). In this task, for each trial, participants saw an arithmetic problem, e.g., (2 × 3)+1, and were instructed to solve the arithmetic problem as quickly and accurately as possible. The problem was presented for 2 s. Then, a digit, e.g., 7, was presented on the next screen. Subjects judged whether this digit was a correct solution to the previous arithmetic problem by using a mouse to click a “True” or “False” box on the screen. After the arithmetic problem, a letter was presented on the screen for 800 ms that subjects were instructed to remember. Then the second arithmetic problem was presented, followed by the digit and then the second letter, with the same processing requirements for both arithmetic problem and letter, and so forth. At the end of each trial, subjects were asked to recall the letters in the same order in which they were presented. The recall screen consisted of a 3 × 4 matrix of letters, and subjects checked the boxes beside the letters to recall them. Subjects used the mouse to respond to the arithmetic problem and to recall letters. The experimental trials included set sizes of six or seven arithmetic problem-letter pairs. There were 12 trials for each set size, resulting in a total of 156 letters and 156 math problems. The six and seven set size trials were randomly presented.

Before the actual experiment, a practice session was administered to familiarize subjects with the task. The practice session consisted of a block involving only letter recall, e.g., recalling 2 or 3 letters in a trial, a block involving only arithmetic problems, and a mixed block in which the trial had the same procedure as in the experimental trials, i.e., solving the arithmetic problems while memorizing the letters, and recalling them at the end, but with smaller set sizes of 2, 3, or 4. The response times for math problems and accuracy for arithmetic problems and letter recall were recorded. The operation span score is the accuracy for letter recall, calculated as the number of letters that were recalled at the correct position out of the total number of presented letters. The maximum span score is 156.
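The scoring rule can be made concrete with a small sketch. The function name and the string representation of trials are hypothetical; only the rule (count letters recalled at the correct serial position) and the trial counts come from the text.

```python
def span_score(presented, recalled):
    """Number of letters recalled at their original serial position, summed over trials."""
    return sum(
        sum(p == r for p, r in zip(trial_presented, trial_recalled))
        for trial_presented, trial_recalled in zip(presented, recalled)
    )

# Maximum score: 12 trials of set size six plus 12 trials of set size seven.
max_score = 12 * 6 + 12 * 7

# Two toy trials: the last two letters of the first trial are transposed.
score = span_score(presented=["FHJKLN", "PQRSTYX"],
                   recalled=["FHJKNL", "PQRSTYX"])
```

Here the first trial contributes four correctly placed letters and the second contributes seven.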

Visual arrays task

A visual arrays task was used to tap visual short-term memory capacity. In this task, subjects were instructed to fixate at the center of the screen. Arrays of 2–5 colored squares at different positions on the screen were presented for 500 ms, followed by a blank screen for 500 ms, and then by multi-colored masks for 500 ms. A single probe square was then presented at one of the locations where the colored squares had appeared. Subjects had to judge whether the probe square had the same color as, or a different color from, the square that had appeared at that position. The order of different array sizes was random. Each array size condition had 32 trials, half of which were positive response trials and half negative. The visual short-term memory score was calculated by averaging the accuracy across all array sizes.

Digit span

In this task, a list of numbers was presented in auditory form at the rate of one number per second and participants were required to memorize them. After the last number in the list was presented, a blank screen prompted participants to recall the numbers in the order in which they were presented by typing on the keyboard. Participants were given five trials for each set size, starting at two. The program would terminate if participants got fewer than 3 correct trials for a given set size (60% accuracy). Digit span was calculated by estimating the list length at which the subject would score 60% correct, using linear interpolation between the two set sizes that spanned this threshold.
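The interpolation used for the digit span estimate can be sketched as follows. The function name, the dictionary input, and the handling of the case where the threshold is never crossed are assumptions made for illustration.

```python
def digit_span(accuracy_by_size, threshold=0.6):
    """accuracy_by_size: dict mapping set size -> proportion of correct trials.
    Returns the interpolated list length at which accuracy falls to `threshold`."""
    sizes = sorted(accuracy_by_size)
    for lo, hi in zip(sizes, sizes[1:]):
        a_lo, a_hi = accuracy_by_size[lo], accuracy_by_size[hi]
        if a_lo >= threshold > a_hi:
            # Linear interpolation between the two set sizes spanning the threshold.
            return lo + (a_lo - threshold) / (a_lo - a_hi) * (hi - lo)
    return None  # threshold never crossed in the tested range

# Toy example: 4 of 5 trials correct at length 6, 2 of 5 correct at length 7.
span = digit_span({5: 1.0, 6: 0.8, 7: 0.4})
```

In the toy example the 60% point lies halfway between list lengths 6 and 7, so the estimated span is approximately 6.5.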

Task-shifting task

In this task, participants responded to an object according to a preceding cue word. The object was either a square or a triangle, and the color of the object was either blue or yellow. If the cue was “color,” participants pressed a button to indicate whether the object was blue or yellow, and if the cue was “shape,” they pressed a button to indicate whether the object was a square or a triangle. The same buttons were used for the two tasks. The response time was recorded from the onset of the object. For half of the trials, the cue was the same as that in the previous trial (a repeat trial), and for the other half, the cue changed (a switch trial). For each condition, to take into account both response time and accuracy in a single measure, we calculated the inverse efficiency (IE) score (Townsend and Ashby, 1985), defined as mean RT divided by proportion correct. The task shifting cost was measured as the difference in IE score between the repeat and switch trials. We also varied the cue-stimulus interval (CSI), which is the time between onset of the cue and onset of the object, using CSIs of 200, 400, 600, and 800 ms. However, as the effect of modularity on IE did not differ for different CSIs, the data were averaged across CSI. In total, there were 256 repeat trials and 256 switch trials.
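A brief sketch of the inverse efficiency score and the shifting cost follows. The function names and numbers are illustrative, and the sign convention (switch minus repeat, so that a larger value means a larger cost) is an assumption, since the text specifies only that the cost is the difference between the two trial types.

```python
def inverse_efficiency(mean_rt, prop_correct):
    """Inverse efficiency: mean response time divided by proportion correct."""
    return mean_rt / prop_correct

def switch_cost(repeat, switch):
    """repeat, switch: (mean RT in ms, proportion correct) for each trial type.
    Assumed convention: IE on switch trials minus IE on repeat trials."""
    return inverse_efficiency(*switch) - inverse_efficiency(*repeat)

# Toy values: slower and less accurate responses on switch trials.
ie_repeat = inverse_efficiency(600.0, 0.96)
ie_switch = inverse_efficiency(720.0, 0.90)
cost = switch_cost(repeat=(600.0, 0.96), switch=(720.0, 0.90))
```

Dividing by accuracy penalizes fast but error-prone responding, which is why a single IE value can stand in for both speed and accuracy.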

Attention network test

The Attention Network Test (ANT; Fan et al., 2002) was used to measure three different attentional components: alerting, orienting, and conflict resolution. In this task, subjects responded to the direction of a central arrow, pressing the left or right mouse button to indicate whether it was pointing left or right. The arrow(s) appeared above or below a fixation cross, which was in the center of the screen. The central arrow appeared alone on a third of the trials (the neutral condition) and was flanked by two arrows on the left and two on the right on the remaining two thirds of the trials. The flanked trials were evenly split between a congruent condition, in which the flanking arrows pointed in the same direction as the central one, and an incongruent condition, in which they pointed in the opposite direction. On three-quarters of the trials, the arrow(s) were cued by one or two asterisks, which appeared for 100 ms on the screen. The interval between offset of the cue and onset of the arrow was 400 ms. There were four cue conditions: (1) a no-cue condition, (2) a center-cue condition, with a cue at fixation, (3) a double-cue condition, with one cue above and the other below fixation, and (4) a spatial-cue condition, where the cue appeared above or below fixation to indicate where the arrows would appear. Thus, the task had a 4 cue × 3 flanker condition factorial design. The experimental trials consisted of three sessions, with 96 trials in each session and 8 trials for each condition. For half of all trials, arrows were presented above the fixation and for the other half below. Also, for half of the trials, the middle arrow pointed left and for the other half right. The order of trials in each session was random. Before the experimental trials, 24 practice trials with feedback, including trials of all types, were given to subjects.

Response times and accuracy were recorded. Mean RT for each condition for each subject was computed based on correct trials only. As with task shifting, we calculated the inverse efficiency (IE) score for each condition. The alerting effect was computed by subtracting the IE for the no cue condition from the IE for the double cue condition. The orienting effect was computed by subtracting the IE for the center cue condition from the IE for the spatial cue condition. The conflict effect was computed by subtracting the IE for the congruent condition from the IE for the incongruent condition. To make the direction of the conflict effect the same as that of the alerting and orienting effects, we reversed the sign of the conflict effect. Thus, the more negative the conflict effect value, the greater the interference from the incongruent flankers.
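The three ANT effects follow directly from the subtractions described above, as the sketch below shows. The function name, dictionary keys, and IE values are hypothetical; the subtraction order and the sign reversal for the conflict effect are taken from the text.

```python
def ant_effects(ie):
    """ie: dict of inverse-efficiency scores keyed by condition name."""
    alerting = ie["double_cue"] - ie["no_cue"]
    orienting = ie["spatial_cue"] - ie["center_cue"]
    # Sign reversed so that all three effects point in the same direction.
    conflict = -(ie["incongruent"] - ie["congruent"])
    return {"alerting": alerting, "orienting": orienting, "conflict": conflict}

# Toy IE scores in ms.
effects = ant_effects({
    "no_cue": 650.0, "double_cue": 610.0,
    "center_cue": 620.0, "spatial_cue": 580.0,
    "congruent": 600.0, "incongruent": 700.0,
})
```

With these toy values a participant who benefits from cues and suffers from incongruent flankers obtains negative scores on all three effects.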

Traffic light task

In this task, subjects saw a red square in the center of the screen, which was replaced after an unpredictable time delay (from 2 to 3 s) by a green circle. Subjects pressed a button as quickly as possible when they saw the green circle. There were 25 trials in total. Mean response time was calculated for each subject.

All 52 subjects participated in the operation span and task-shifting tasks. Forty-three of them participated in the ANT task and visual short term memory task, and 44 subjects participated in the traffic light task and digit span task, as these were done in a different session, and not all subjects returned to participate in all tasks. The interval between neuroimaging and behavioral sessions varied from 0 (i.e., measuring resting-state fMRI and behavior on the same day but during different sessions) to 140 days.

Methods for Linking Brain and Behavior

To limit the number of behavioral measures in the analysis, improve the reliability of the dependent measure, and tap into the cognitive mechanisms shared by the tasks (Winer et al., 1971; Nunnally and Bernstein, 1994), two composite scores, simple and complex, were calculated for the 40 subjects who participated in all of the tasks described above. These composites were computed by summing the z-scores (calculated by subtracting the mean from each individual score and dividing the result by the standard deviation) of the performance measures for the simple and complex tasks. A series of planned comparisons, drawn from the theoretical literature, was evaluated. Because these tests are all based on a priori hypotheses, no correction for multiple comparisons was applied. The present study examined the relationship between modularity and flexibility, between modularity and simple and complex task performance, and between flexibility and simple and complex task performance by calculating Pearson product-moment correlation coefficients. Additionally, in order to measure the unique contributions of modularity and flexibility to simple and complex task performance, partial correlation analyses were used in which the effect of one variable was controlled for to examine the effect of the other.
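The composite scores and the partial correlations described above can be sketched in a few lines. This is an illustration under assumptions: function names are hypothetical, the sample standard deviation (ddof=1) is assumed for the z-scores, the data are random placeholders, and any sign alignment of measures for which lower values mean better performance is left to the caller because the text does not specify it.

```python
import numpy as np

def zscore(x):
    """Subtract the mean and divide by the standard deviation (sample SD assumed)."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std(ddof=1)

def composite(*measures):
    """Sum of z-scored measures, one value per subject."""
    return np.sum([zscore(m) for m in measures], axis=0)

def partial_corr(x, y, z):
    """Pearson correlation between x and y after controlling for z."""
    r_xy = np.corrcoef(x, y)[0, 1]
    r_xz = np.corrcoef(x, z)[0, 1]
    r_yz = np.corrcoef(y, z)[0, 1]
    return (r_xy - r_xz * r_yz) / np.sqrt((1 - r_xz**2) * (1 - r_yz**2))

# Placeholder data for 40 subjects (random; for demonstration only).
rng = np.random.default_rng(1)
modularity_vals = rng.standard_normal(40)
flexibility_vals = -0.8 * modularity_vals + 0.6 * rng.standard_normal(40)
task_a, task_b = rng.standard_normal(40), rng.standard_normal(40)
complex_score = composite(task_a, task_b)
r_unique = partial_corr(flexibility_vals, complex_score, modularity_vals)
```

The first-order partial correlation used here is equivalent to correlating the residuals of the two variables after each has been regressed on the control variable.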

Results

Correlations of Modularity and Flexibility

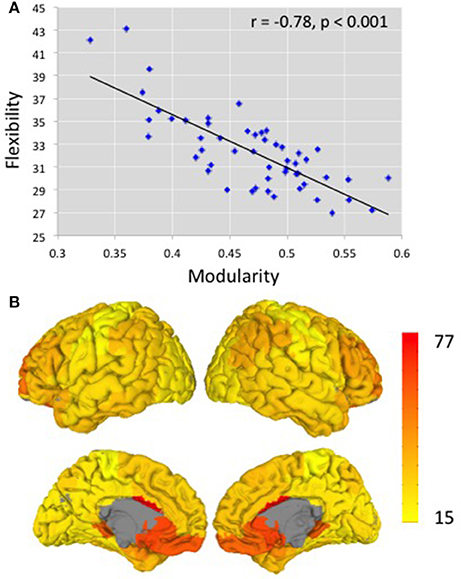

Using Brodmann areas as nodes and functional connectivity between these nodes (determined from resting state fMRI) as the measure of the strength of edges, we determined modularity and flexibility for each subject. Figure 2A depicts the relationship between modularity and flexibility across our 52 participants. For the 400-edge analysis, modularity values ranged from 0.33 to 0.59, with a mean of 0.47 (standard deviation 0.056) on a scale from 0 to 1.0. Flexibility values ranged from 27 to 43 with a mean of 32.38 (standard deviation 3.40). A strong negative correlation, r = −0.78 (p < 0.001), was obtained between these two mathematically different measures, a relationship that had not been previously reported. The 300- and 500-edge analyses yielded negative correlations of r = −0.81 (p < 0.001) and r = −0.74 (p < 0.001), respectively. To show that taking the absolute value does not have a major effect on the results, we also computed the correlation between flexibility and modularity from raw non-binarized data, which was again strong (r = −0.647, p < 0.001). Results from other functional and anatomical brain parcellations, including others used in the resting state literature (Power et al., 2011; Craddock et al., 2012; Glasser et al., 2016; Gordon et al., 2016), are reported in Supplementary Table 1.

Figure 2. (A) The relationship between modularity and flexibility across 52 participants. r = −0.78 (p < 0.001). The y-axis shows the averaged flexibility values over all Brodmann areas across subjects. The x-axis shows modularity values, the excess probability of connections within the modules, relative to what is expected at random. (B) Illustration of flexibility measures over cortical structures. Brodmann areas with higher flexibility are shown in red and those with lower flexibility are shown in yellow.
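To make the caption's definition of modularity concrete (connections within modules in excess of what is expected at random), the following sketch computes Newman's modularity Q for a binarized network and a given module assignment. It is an illustration under stated assumptions rather than the pipeline used here: the binarization rule (keeping the strongest edges by absolute correlation), the function names, and the idea that module labels are already available are all assumptions, and the community detection step itself is not shown.

```python
import numpy as np

def binarize_top_edges(corr, n_edges):
    """Keep the n_edges strongest connections (by absolute value) as an undirected 0/1 graph."""
    corr = np.asarray(corr, dtype=float)
    n = corr.shape[0]
    rows, cols = np.triu_indices(n, k=1)          # each region pair once
    strength = np.abs(corr[rows, cols])
    keep = np.argsort(strength)[::-1][:n_edges]   # indices of the strongest pairs
    adj = np.zeros((n, n), dtype=int)
    adj[rows[keep], cols[keep]] = 1
    return adj + adj.T                            # symmetric adjacency matrix

def newman_modularity(adj, labels):
    """Q = (1 / 2m) * sum_ij (A_ij - k_i * k_j / 2m) * [module_i == module_j]."""
    adj = np.asarray(adj, dtype=float)
    labels = np.asarray(labels)
    degree = adj.sum(axis=1)
    two_m = degree.sum()
    same_module = labels[:, None] == labels[None, :]
    expected = np.outer(degree, degree) / two_m   # edges expected at random
    return float(((adj - expected) * same_module).sum() / two_m)

# Hypothetical example: two disconnected triangles, each forming its own module.
triangles = np.zeros((6, 6), dtype=int)
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)]:
    triangles[i, j] = triangles[j, i] = 1
q_two_modules = newman_modularity(triangles, [0, 0, 0, 1, 1, 1])
```

Placing every node in a single module gives Q = 0 under this definition, which is why Q is read as an excess over chance.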

Consistency of Constituent BAs in Modules and Flexibility across BAs

Previous research has shown variability in brain regions, with some regions exhibiting higher connectivity variability and other regions showing lower variability (Allen et al., 2014), as well as inter-subject variability in functional connectivity (Mueller et al., 2013). As is shown in Figure 2B, which aggregates flexibility across subjects for each BA, the same pattern was observed in our data set. Some BAs more frequently changed module alignment across time, and therefore had higher flexibility scores, than others. Regions with higher flexibility include the anterior cingulate cortex, ventromedial prefrontal cortex, orbitofrontal cortex, and dorsolateral prefrontal cortex bilaterally, regions typically associated with cognitive control and executive functions. Regions with lower flexibility are those regions involved in motor, gustatory, visual, and auditory processes such as postcentral gyrus, primary motor cortex, primary gustatory cortex, and secondary visual cortex. The regions with higher and lower flexibility from the Brodmann areas anatomical atlas discussed here are similar to those obtained when calculating flexibility from functional atlases (Craddock et al., 2012; Supplementary Figure 2). In general, the regions showing lower consistency in module assignment, as measured by the average distance of an individual's modular organization to the modular organization of the group-average data (distance is described in Yue et al., 2017), were the same regions showing greater flexibility (r = 0.606, p < 0.001). One concern for interpreting these analyses is that Brodmann areas vary in size; therefore it is possible that the heterogeneity in the size of the regions could affect the results. However, in an additional analysis, the size of each BA was partialled out and the correlation between a region's flexibility and its distance from the average modular organization remained, indicating that size did not have an effect on the results.

Relationship with Cognitive Performance

For task-shifting, there were 52 subjects, but one performed at chance level of accuracy and one other showed a shift cost more than three standard deviations above the group mean. Thus, these two participants were excluded from the analysis. For the visual arrays and ANT tasks, 43 subjects participated, and for the digit span and traffic light tasks there were 44 subjects. Task-shifting results demonstrated that there was a task shifting effect [mean shift cost = 194 ms, SD; t(49) = 14.54 ms, p < 0.0001]. The mean accuracy for the visual arrays task was 89.5%, with a standard deviation of 5.4%. This result has been reported previously (Cowan, 2000). The mean capacity for digit span was 4.26, with a standard deviation of 0.94. The results from the ANT task demonstrated a significant alerting effect [mean = 43 ms/proportion correct, SD: 28 ms/proportion correct; t(42) = 10.14, p < 0.001], a significant orienting effect [mean = 49 ms/proportion correct, SD: 29 ms/proportion correct; t(42) = 10.89, p < 0.001], and a significant conflict effect [mean = 139 ms/proportion correct, SD: 42 ms/proportion correct; t(41) = 21.26, p < 0.001], replicating previous findings (Fan et al., 2002). The group mean RT from the traffic light task was 227 ms, with a standard deviation of 17 ms. All tasks (except for alerting) had medium to high reliabilities. The Spearman-Brown prophecy reliabilities were 0.85 for operation span, 0.84 for the visual arrays task, 0.90 for digit span, 0.68 for the shifting task, 0.55 for the conflict measure of the ANT task, 0.38 for the orienting measure of the ANT task, and 0.94 for the traffic light task. The reliability for the alerting measure of the ANT task was −0.31; thus, this measure was eliminated from further consideration.
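The Spearman-Brown prophecy formula behind these reliabilities steps a half-test correlation up to the full test length. A minimal sketch follows; the odd/even trial split and the function names are assumptions for illustration, since the text does not state how the halves were formed.

```python
import numpy as np

def spearman_brown(r_half, factor=2.0):
    """Predicted reliability when test length is multiplied by `factor` (2 for split halves)."""
    return factor * r_half / (1.0 + (factor - 1.0) * r_half)

def split_half_reliability(trials):
    """trials: subjects x trials array of scores.

    Assumption: halves are formed from odd- and even-numbered trials.
    """
    trials = np.asarray(trials, dtype=float)
    odd_half = trials[:, 0::2].mean(axis=1)
    even_half = trials[:, 1::2].mean(axis=1)
    r_half = np.corrcoef(odd_half, even_half)[0, 1]
    return float(spearman_brown(r_half))
```

A negative half-test correlation yields a negative corrected value, which is how a reliability such as the −0.31 reported for alerting can arise.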

Scores from the seven remaining behavioral measures were converted into z-scores by subtracting the group mean from the individual score and dividing the result by the standard deviation. They were then combined to create a simple composite score that included an orienting measure of the ANT task and the traffic light task and a complex composite score composed of the operation span task, visual arrays task, digit span task, shifting task, and a conflict resolution measure from the ANT task. We found that all five complex tasks' measures correlated significantly with the complex composite score (r = 0.63, p < 0.001 for operation span; r = 0.49, p = 0.001 for visual arrays; r = 0.72, p < 0.001 for digit span; r = 0.58, p < 0.001 for conflict from the ANT task; r = 0.37, p = 0.018 for shifting), but were not significantly correlated with the simple composite score (r = −0.25, p = 0.13 for operation span; r = 0.27, p = 0.09 for visual arrays; r = 0.08, p = 0.63 for digit span; r = 0.06, p = 0.7 for conflict from the ANT task; r = −0.15, p = 0.34 for shifting). For the simple composite score, given that only two measures went into the composite, the correlations between the individual measures and the composite were necessarily high and equivalent (r = 0.73, p < 0.001 for orienting measures of the ANT task; r = 0.73, p < 0.001 for the traffic light task). The scores from the simple tasks were not significantly correlated with the complex composite score (r = 0.03, p = 0.84 for orienting measures of the ANT task; r = −0.03, p = 0.87 for the traffic light task). The correlation between the simple and complex composite scores was near zero (r = 0.005, p = 0.98).

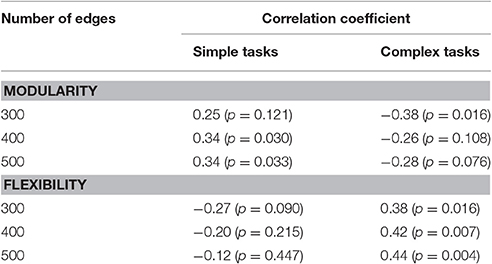

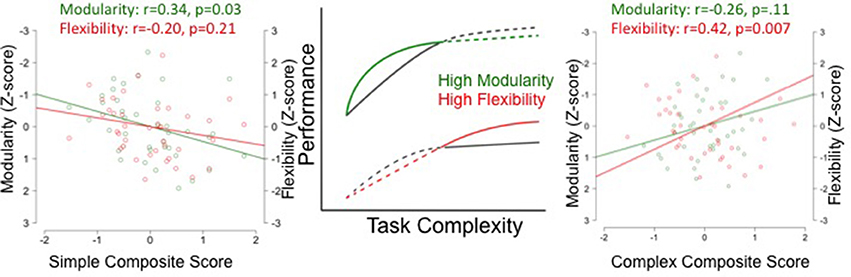

A priori correlation analyses revealed a non-significant negative correlation between modularity measured with 400 edges and the complex composite (r = −0.26, p = 0.108). For simpler tasks, individuals with high modularity performed better, with a significant positive correlation between modularity and the simple composite (r = 0.34, p = 0.030). As might be expected, given the strong negative correlation between modularity and flexibility, there was a significant positive correlation between flexibility measured with 400 edges and the complex composite (r = 0.42, p = 0.007) and a non-significant negative correlation with the simple composite (r = −0.20, p = 0.215). The same pattern was observed at different edge densities (see Table 1).

Despite the strong correlation between flexibility and modularity, it is possible that they make independent contributions to explaining individual differences in cognitive performance. As shown in Figure 3 and Table 1, the magnitude of the correlation coefficient between modularity and the simple task composite is larger than the correlation coefficient between flexibility and the simple task composite, across edge densities. The opposite pattern is true for the complex tasks: the correlation coefficient between flexibility and task performance is higher than the correlation coefficient between modularity and task performance. This pattern is partly confirmed by partial correlation analyses, computed for the network with 400 edges, in which the effect of one measure was controlled for in order to determine the significance of the unique contribution of the other. For the simple task composite, the partial correlation for modularity was significant (r = 0.32, p = 0.046), but that for flexibility was not (r = 0.13, p = 0.44). The partial correlation for the complex task composite and flexibility was significant (r = 0.36, p = 0.022), but that for modularity was not (r = 0.13, p = 0.39).
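A partial correlation of this kind can be sketched by regressing the controlled variable out of both variables of interest and correlating the residuals. The code below is illustrative only: the function names are hypothetical, and the text does not state which software or estimator was used.

```python
import numpy as np

def residualize(y, z):
    """Residuals of y after ordinary least-squares regression on z (with an intercept)."""
    y = np.asarray(y, dtype=float)
    z = np.asarray(z, dtype=float)
    design = np.column_stack([np.ones_like(z), z])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ beta

def partial_corr(x, y, z):
    """Correlation between x and y with the linear effect of z removed from both."""
    return float(np.corrcoef(residualize(x, z), residualize(y, z))[0, 1])
```

For example, `partial_corr(modularity, simple_composite, flexibility)` would estimate the unique association of modularity with the simple composite, where the three arguments are hypothetical per-subject arrays. Significance would be assessed with n − 3 degrees of freedom for a single control variable.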

Figure 3. Modularity and Flexibility predict different performance during simple and complex tasks. The two graphs on the left and right illustrate the relationship between simple and complex composite scores (calculated by summing the z-scores for the performance measures for the simple and complex tasks) and modularity (green) and flexibility (red). This relationship is represented by the magnitude of the coefficient between modularity and task performance and flexibility and task performance for simple (left) and complex (right). The center of the figure depicts the theoretical prediction relating performance to task complexity for individuals with high and low modularity (green curve) and flexibility (red curve).

Discussion

The present results show that the two measures of brain network structure that are treated as independent in the literature—flexibility and modularity—are actually highly related. Still, each seems to make independent contributions to cognitive performance, with modularity contributing more to performance on simple tasks, and flexibility contributing more to performance on complex tasks.

The first major finding in the current study is that two prominent network neuroscience measures, modularity and flexibility, have a strong negative relationship across individuals. In some sense, this finding is consistent with previous studies that have examined relationships between static and dynamic measures of connectivity. For example, Thompson and Fransson (2015) focused on variation in the connectivity between brain regions. They used a sliding time-window of 90 s and calculated the correlation coefficients between regions during each time window and then calculated the mean and variance of that coefficient for each connection across all subjects. Connections with a higher mean connectivity tended to have a low variance and vice-versa. Unlike this previous work, the current study focuses on measures that take into account the entire brain network, with modularity a static measure of whole-brain network structure, and flexibility a dynamic measure of whole-brain network structure. Instead of analyzing variation across individual connections, we analyzed variation across individual subjects. We found a negative relationship between static and dynamic measures of functional connectivity.
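The sliding-window procedure summarized above (correlations per window, then the mean and variance of each connection over windows) can be sketched as follows. This is a generic illustration, not the code of either study: the window length is given in samples, so a 90 s window would be 90 divided by the repetition time, and the step size and function names are assumptions.

```python
import numpy as np

def sliding_window_connectivity(ts, window, step=1):
    """ts: timepoints x regions array. Returns a windows x regions x regions correlation stack."""
    ts = np.asarray(ts, dtype=float)
    starts = range(0, ts.shape[0] - window + 1, step)
    # np.corrcoef expects variables in rows, so each window is transposed.
    return np.stack([np.corrcoef(ts[s:s + window].T) for s in starts])

def connection_mean_and_variance(conn):
    """Mean and variance of every connection across windows."""
    return conn.mean(axis=0), conn.var(axis=0)
```

Relating the off-diagonal entries of the mean matrix to those of the variance matrix is the connection-level comparison; the present study instead compares subject-level summaries.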

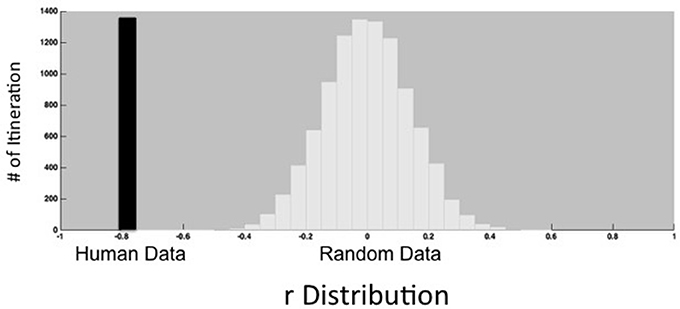

How might we account for this strong negative correlation between flexibility and modularity? An intuitive explanation derives from a dynamical systems perspective that views different configurations of brain regions as attractor states, with modularity measuring the depth of the attractor states (Smolensky et al., 1996). Flexibility measures how frequently the brain transitions between states. Deeper states will naturally be more stable and resistant to transitions, leading to a negative correlation between modularity and flexibility. Given the high degree of correlation between these two measures, it is difficult to interpret findings in the literature that report only one of these measures in isolation. To test whether the relationship between modularity and flexibility could be explained by the method alone, we computed this relationship for randomized signal and compared the result for the human subjects with that for an artificial network in which modularity was matched to the modularity values from the human subjects. Figure 4 shows that the negative correlation coefficient we observed for the 52 human subjects was more than just a method-based correlation.

Figure 4. Observed correlation between modularity and flexibility with 52 participants (black bar) compared with the distribution of the correlation between modularity and flexibility from simulations of an artificial network in which modularity is matched to the modularity values from the human subjects (white bars). The observed correlation falls well outside of the distribution of correlations from the random data.

However, it would be incorrect to conclude that flexibility and modularity are simply two measures of the same property of the brain network. The second major result from the current investigation is that flexibility and modularity make independent contributions to explaining task performance and, therefore, are likely to link to different cognitive processes. Specifically, our results suggest that flexibility may reflect cognitive control processes (Bassett et al., 2010, 2013), while modularity may reflect simple processes like reaction to exogenous cues of attention, simple visual change detection, or low level motor learning. The regions that show the highest flexibility (Figure 2B) are those that have been previously implicated in control and/or multimodal processes. The complex tasks used in the current study all require aspects of control such as switching between tasks, response selection, and maintaining working memory, while the simple tasks do not. Assuming flexibility indexes cognitive control capacity, we can explain why variation in flexibility plays a larger role in explaining performance on complex tasks. Modularity, on the other hand, seems to explain performance for simple processes. That is, network systems that are highly modular favor performance related to simple processes. This result has been shown in previous work from our laboratory (Yue et al., 2017) and in Cohen and D'Esposito (2016). Strong within-module connections favor low level processing while strong between-module connections favor high order processing. However, establishing the unique contributions of modularity and flexibility to task performance requires further work with larger sample sizes.

In contrast to the complex tasks, flexibility is only weakly related to performance on simple tasks. For these simple tasks, there is a clear contribution of modularity to task performance, even when the contribution of flexibility is partialled out. One interpretation of this finding is that simple tasks tend to rely on only a single network module (Cohen and D'Esposito, 2016; Yue et al., 2017). Stronger connections within that module yield better performance on these tasks, while stronger connections between that module and other network modules have a negative impact on performance for these simple tasks. Given that higher modularity scores correspond to higher within module connections and lower between module connections, higher modularity should be related to better performance on simple tasks that rely on a single network module.

However, flexibility has previously been reported to have strong relationships with the ability to learn even in very simple motor tasks (Bassett et al., 2010). How can we account for these seemingly contradictory results? One possibility is that the methods for measuring flexibility differ between the two studies, with the current study using a sliding-window method for calculating flexibility while Bassett and colleagues rely on a multi-layer dynamic modeling approach. There remains open debate about how to appropriately measure network flexibility from resting state data (Hindriks et al., 2016; Kudela et al., 2017). However, it seems unlikely that these methodological differences would flip the direction of the correlation, with individuals who have higher than average flexibility values by one metric consistently showing lower than average flexibility values by the other, or vice versa. A more theoretically interesting possibility is that the initial stages of learning even a simple skill benefit from cognitive control operations, making tasks appear more complex. Therefore, at the initial stages of learning, it is beneficial to have a more flexible brain (Bassett et al., 2013). As learning progresses and the task becomes automatized, cognitive control is no longer necessary and the task becomes simpler. Following the theory depicted in Figure 3, as learning progresses, flexibility should decrease and modularity should increase, as has been previously observed (Bassett et al., 2013, 2015).

There are several methodological concerns that arise from the current study. However, we contend that while additional studies should address these limitations, they are unlikely to reverse the two main findings: first, that modularity and flexibility have a strong negative correlation and, second, that modularity and flexibility make separable contributions to explaining task performance. One concern is that the negative correlation between modularity and flexibility could have a method-based rationale. In order to ensure this was not the case, we tested the relationship between modularity and flexibility for randomized signal and compared it to that of human subjects as well as to an artificial network where modularity values matched those of the human subjects. As shown in Figure 4, the negative correlation coefficient between modularity and flexibility observed in our 52 human subjects was more than just a method-based correlation. A second concern is that there was wide variability in the time that elapsed between collecting the behavioral measures and resting state fMRI data (from 0 to 140 days). Yue et al. (2017) report that the correlations between modularity and task performance weaken with a greater time between behavioral testing and resting state fMRI. Consistent with this, including longer elapsed times in our analysis did in fact weaken the relationship between either modularity or flexibility and performance during the individual tasks (Supplementary Table 3). However, this concern has no bearing on the relationship between modularity and flexibility, because both measures are calculated over the same set of resting-state fMRI data. Moreover, the elapsed time to the behavioral sessions is identical for flexibility and modularity, so it is unclear how variation in time between behavioral testing and neuroimaging could explain why flexibility has a stronger association with complex task performance and modularity has a stronger association with simple task performance.
A third concern is that motion artifacts have been known to introduce signal biases in the resting state data, and individuals vary in the extent to which they move during scanning (Ciric et al., 2017). However, our analyses of the relationship between modularity, flexibility and task performance regressed out individual differences in motion (time series with excessive motion) during scanning. Therefore, the results of the current study are not simply an artifact of individual differences in motion. Still, the relationship between motion and connectivity is an area that requires more investigation. For a review of recent systematic comparisons of various motion correction practices, see Ciric et al. (2017). In terms of the measures available to study brain network and cognitive function relationships, the current study focused on two, modularity and flexibility, which were appropriate for the questions explored here. Future studies should examine other types of network measures such as the degree of local information integration, global information segregation, local properties such as the degree centrality of a node, or core periphery organization in order to arrive at a complete picture (Medaglia et al., 2015).

A final concern raised by the current study is how the measures of network organization vary as a function of brain parcellation scheme. Specifically, the results reported here focused on an anatomical atlas (Brodmann's areas). Other functionally-defined atlases were used to calculate both modularity and flexibility (see Supplementary Table 1) but were not related to behavioral measures because Yue et al. (2017) report the strongest correlations between modularity in the BA parcellation and performance. Similarly, we found that the correlation between modularity and flexibility was significant with all parcellations considered, but highest for the BA parcellation. Other studies have reported low consistency in correlations between functional and anatomical atlases. For example, Cohen and D'Esposito (2016) reported that the correlation between performance on a simple sequence tapping task and modularity was significant for an anatomical parcellation but not for a functional atlas. Across at least several studies, anatomical atlases appear to be better at characterizing brain networks than functionally defined atlases. Since previous work has argued that inappropriate node definition might mischaracterize brain regions which have distinctive functions (Wig et al., 2011), and found that nodes derived based on task-based fMRI studies did not align well with anatomical parcellations (Power et al., 2011), one might have expected the opposite: that using network nodes determined at least in part from functional activations (e.g., Glasser et al., 2016) would be better for cognitive network neuroscience than anatomically defined atlases. Given the results of the current study and work from other labs, the question of the best parcellation scheme for cognitive network neuroscience remains an open one. However, it is beyond the scope of the current study.

Conclusion

For cognitive network neuroscience to advance, better links between measures of network structure and cognitive and neural computations must be developed (Sporns, 2014). The theory and results presented here, disentangling the effects of two commonly used but interrelated measures, are one step. By considering how different measures of brain structure relate to each other and relate to variation in performance, we can start to develop stronger links between the cognitive and the network sides of this new approach.

Ethics Statement

This study was carried out in accordance with the recommendations of the Rice University Office of Research Compliance with written informed consent from all subjects. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the Rice University Institutional Review Board.

Author Contributions

AR: Collected the resting state data and organized it for analysis, discussed the tasks used in this study, interpreted the results, and wrote the manuscript. SF, RM, MD: Provided the theoretical background, discussed the tasks, interpreted the results and edited the manuscript drafts. QY: Collected the resting state and behavioral data, discussed the tasks, and edited the manuscript drafts. FY: Preprocessed and analyzed the resting state data and calculated modularity and flexibility, and discussed the tasks.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer HYL and handling Editor declared their shared affiliation, and the handling Editor states that the process nevertheless met the standards of a fair and objective review.

Acknowledgments

We are grateful for the students who participated in this study. This work was partially supported by the T.L.L. Temple Foundation. MD and FY were partially supported by the Center for Theoretical Biological Physics under NSF grant #PHY-1427654.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/article/10.3389/fnhum.2017.00420/full#supplementary-material

Footnotes

1. ^There are several recent studies that have focused on the relationships between static and dynamic measures of connectivity that are tangentially relevant to our current investigation. Thompson and Fransson (2015) focus on variation in the connectivity between brain regions. They used a sliding time-window of 90 seconds and calculated the correlation coefficients between regions during each time window. They calculated the mean and variance of the connectivity time-series for each subject and pair of connections. Their results showed that the mean and variance of fMRI connectivity time-series scale negatively. Betzel et al. (2016) examined variation in connectivity over time, using a sliding window to identify time periods when functional connectivity deviates significantly from the connectivity found for data from the whole session. These time periods with greater deviance showed higher modularity compared with time periods in which the functional connectivity is closer to the average. Both papers suggest some relationship between static and dynamic measures of functional connectivity, though neither investigates the relationship between whole brain flexibility and modularity across individual subjects.

2. ^Some preprocessing pipelines suggest regressing out signal from white matter and ventricles, under the assumption that any signal measured in these regions must be nuisance signal. However, other recent studies have suggested that BOLD response detected in the white matter reflects a local physiological response, which would suggest that regressing out white matter signal is unwarranted (Gawryluk et al., 2014). Aurich et al. (2015) have shown that different preprocessing strategies can have an impact on some graph theoretical measures. Given the concern that white matter activity reflects something other than a nuisance signal, we ran the analyses both ways. When we regressed out WM and CSF signal, the correlations between flexibility and task performance dropped substantially. We reason that if WM and CSF are simply nuisance variables, then removing them should not remove the relationship between flexibility and the behavioral measures of interest. Therefore, the main results are presented from data where WM and CSF signal were not regressed out, and Supplementary Table 2 presents the results with WM and CSF signal regressed out.

3. ^There is an ongoing debate about the best practices for motion correction, which is outside of the scope of the current study (Power et al., 2015; Ciric et al., 2017). Since we are calculating measures of flexibility, censoring out time points and leaving gaps in the data would not have been the best practice. Therefore, we used linear interpolation to replace the censored signal at time points in which the head motion exceeded a distance (Euclidean norm) of 0.2 mm relative to the previous time point.
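The censor-and-interpolate step in this footnote can be sketched as below. This is a simplified illustration and not the preprocessing pipeline itself: the function names are hypothetical, the Euclidean norm is taken over frame-to-frame differences of six motion parameters treated as commensurate (an assumption), and a single one-dimensional time series is handled at a time.

```python
import numpy as np

def motion_enorm(params):
    """Euclidean norm of the change in motion parameters relative to the previous time point.

    params: timepoints x 6 array. The first time point has no predecessor and is set to 0.
    """
    diffs = np.diff(np.asarray(params, dtype=float), axis=0)
    return np.concatenate([[0.0], np.sqrt((diffs ** 2).sum(axis=1))])

def interpolate_censored(signal, enorm, threshold=0.2):
    """Replace samples whose motion exceeds `threshold` by linear interpolation from retained samples."""
    signal = np.asarray(signal, dtype=float)
    censored = np.asarray(enorm) > threshold
    t = np.arange(signal.shape[0])
    filled = signal.copy()
    # np.interp holds the nearest retained value constant beyond the first/last retained sample.
    filled[censored] = np.interp(t[censored], t[~censored], signal[~censored])
    return filled
```

Filling the gaps keeps the time series evenly sampled, so that every sliding window contains the same number of time points.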

References

Alavash, M., Hilgetag, C. C., Thiel, C. M., and Gießing, C. (2015). Persistency and flexibility of complex brain networks underlie dual-task interference. Hum. Brain Mapp. 36, 3542–3562. doi: 10.1002/hbm.22861

Allen, E. A., Damaraju, E., Plis, S. M., Erhardt, E. B., Eichele, T., and Calhoun, V. D. (2014). Tracking whole-brain connectivity dynamics in the resting state. Cereb. Cortex 24, 663–676. doi: 10.1093/cercor/bhs352

Aurich, N. K., Filho, J. O. A., da Silva, A. M. M., and Franco, A. R. (2015). Evaluating the reliability of different preprocessing steps to estimate graph theoretical measures in resting state fMRI data. Front. Neurosci. 9:48. doi: 10.3389/fnins.2015.00048

Bassett, D. S., Wymbs, N. F., Porter, M. A., Mucha, P. J., Carlson, J. M., and Grafton, S. T. (2010). Dynamic reconfiguration of human brain networks during learning. Proc. Natl. Acad. Sci. U.S.A. 108, 7641–7646. doi: 10.1073/pnas.1018985108

Bassett, D. S., Wymbs, N. F., Rombach, M. P., Porter, M. A., Mucha, P. J., and Grafton, S. T. (2013). Task-based core-periphery organization of human brain dynamics. PLoS Comput. Biol. 10:e1003617. doi: 10.1371/journal.pcbi.1003617

Bassett, D. S., Yang, M., Wymbs, N. F., and Grafton, S. T. (2015). Learning-induced autonomy of sensorimotor systems. Nat. Neurosci. 18, 744–751. doi: 10.1038/nn.3993

Betzel, R. F., Satterthwaite, T. D., Gold, J. I., and Bassett, D. S. (2016). A positive mood, a flexible brain. arXiv:1601.07881v1.

Biswal, B., Yetkin, F. Z., Haughton, V. M., and Hyde, J. S. (1995). Functional connectivity in the motor cortex of resting human brain using echo-planar MRI. Magn. Reson. Med. 34, 537–541. doi: 10.1002/mrm.1910340409

Braun, U., Schäfer, A., Walter, H., Erk, S., Romanczuk-Seiferth, N., Haddad, L., et al. (2015). Dynamic reconfiguration of frontal brain networks during executive cognition in humans. Proc. Natl. Acad. Sci. U.S.A. 112, 11678–11683. doi: 10.1073/pnas.1422487112

Bullmore, E., and Sporns, O. (2009). Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 10, 186–198. doi: 10.1038/nrn2575

Chen, M., and Deem, M. W. (2015). Development of modularity in the neural activity of children's brains. Phys. Biol. 12:16009. doi: 10.1088/1478-3975/12/1/016009

Ciric, R., Wolf, D. H., Power, J. D., Roalf, D. R., Baum, G. L., Ruparel, K., et al. (2017). Benchmarking of participant-level confound regression strategies for the control of motion artifact in studies of functional connectivity. Neuroimage 154, 174–187. doi: 10.1016/j.neuroimage.2017.03.020

Cohen, J. R., and D'Esposito, M. (2016). The Segregation and integration of distinct brain networks and their relationship to cognition. J. Neurosci. 36, 12083–12094. doi: 10.1523/JNEUROSCI.2965-15.2016

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 215–229. doi: 10.1038/nrn755

Cordes, D., Haughton, V. M., Arfanakis, K., Carew, J. D., Turski, P. A., Moritz, C. H., et al. (2001). Frequencies contributing to functional connectivity in the cerebral cortex in “resting-state” data. Am. J. Neuroradiol. 22, 1326–1333.

Cowan, N. (2000). The magical number 4 in short-term memory: a reconsideration of mental storage capacity. Behav. Brain Sci. 24, 87–185. doi: 10.1017/S0140525X01003922

Cox, R. W. (1996). AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res. 29, 162–173. doi: 10.1006/cbmr.1996.0014

Craddock, R. C., James, G. A., Holtzheimer, P. E., Hu, X. P., and Mayberg, H. S. (2012). A whole brain fMRI atlas generated via spatially constrained spectral clustering. Hum. Brain Mapp. 33, 1914–1928. doi: 10.1002/hbm.21333

Deem, M. W. (2013). Statistical mechanics of modularity and horizontal gene transfer. Annu. Rev. Condens. Matter Phys. 4, 287–311. doi: 10.1146/annurev-conmatphys-030212-184316

Fan, J., McCandliss, B. D., Sommer, T., Raz, A., and Posner, M. I. (2002). Testing the efficiency and independence of attentional networks. J. Cogn. Neurosci. 14, 340–347. doi: 10.1162/089892902317361886

Gawryluk, J. R., Mazerolle, E. L., and D'Arcy, R. C. N. (2014). Does functional MRI detect activation in white matter? A review of emerging evidence, issues, and future directions. Front. Neurosci. 8:239. doi: 10.3389/fnins.2014.00239

Glasser, M. F., Coalson, T. S., Robinson, E. C., Hacker, C. D., Harwell, J., Yacoub, E., et al. (2016). A multi-modal parcellation of human cerebral cortex. Nature 536, 171–178. doi: 10.1038/nature18933

Gordon, E. M., Laumann, T. O., Adeyemo, B., Huckins, J. F., Kelley, W. M., and Petersen, S. E. (2016). Generation and evaluation of a cortical area parcellation from resting-state correlations. Cereb. Cortex 26, 288–303. doi: 10.1093/cercor/bhu239

Hallquist, M. N., Hwang, K., and Luna, B. (2013). The nuisance of nuisance regression: spectral misspecification in a common approach to resting-state fMRI preprocessing reintroduces noise and obscures functional connectivity. Neuroimage 82, 208–225. doi: 10.1016/j.neuroimage.2013.05.116

Hindriks, R., Adhikari, M. H., Murayama, Y., Ganzetti, M., Mantini, D., Logothetis, N. K., et al. (2016). Can sliding-window correlations reveal dynamic functional connectivity in resting-state fMRI? Neuroimage 127, 242–256. doi: 10.1016/j.neuroimage.2015.11.055

Hutchison, R. M., Womelsdorf, T., Allen, E. A., Bandettini, P. A., Calhoun, V. D., Corbetta, M., et al. (2013). Dynamic functional connectivity: promise, issues, and interpretations. Neuroimage 80, 360–378. doi: 10.1016/j.neuroimage.2013.05.079

Jo, H. J., Gotts, S. J., Reynolds, R. C., Bandettini, P. A., Martin, A., Cox, R. W., et al. (2013). Effective preprocessing procedures virtually eliminate distance-dependent motion artifacts in resting state FMRI. J. Appl. Math. 2013:935154. doi: 10.1155/2013/935154

Kudela, M., Harezlak, J., and Lindquist, M. A. (2017). Assessing uncertainty in dynamic functional connectivity. Neuroimage 149, 165–177. doi: 10.1016/j.neuroimage.2017.01.056

Lancaster, J. L., Woldorff, M. G., Parsons, L. M., Liotti, M., Freitas, C. S., Rainey, L., et al. (2000). Automated Talairach atlas labels for functional brain mapping. Hum. Brain Mapp. 10, 120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8

Leonardi, N., and Van De Ville, D. (2015). On spurious and real fluctuations of dynamic functional connectivity during rest. Neuroimage 104, 430–436. doi: 10.1016/j.neuroimage.2014.09.007

Medaglia, J. D., Lynall, M.-E., and Bassett, D. S. (2015). Cognitive network neuroscience. J. Cogn. Neurosci. 27, 1471–1491. doi: 10.1162/jocn_a_00810

Meunier, D., Fonlupt, P., Saive, A.-L., Plailly, J., Ravel, N., and Royet, J.-P. (2014). Modular structure of functional networks in olfactory memory. Neuroimage 95, 264–275. doi: 10.1016/j.neuroimage.2014.03.041

Mill, R. D., Ito, T., and Cole, M. W. (2017). From connectome to cognition: the search for mechanism in human functional brain networks. Neuroimage. doi: 10.1016/j.neuroimage.2017.01.060. [Epub ahead of print].

Mucha, P. J., Richardson, T., Macon, K., Porter, M. A., and Onnela, J.-P. (2010). Community structure in time-dependent, multiscale, and multiplex networks. Science 328, 876–878. doi: 10.1126/science.1184819

Mueller, S., Wang, D., Fox, M. D., Yeo, B. T. T., Sepulcre, J., Sabuncu, M. R., et al. (2013). Individual variability in functional connectivity architecture of the human brain. Neuron 77, 586–595. doi: 10.1016/j.neuron.2012.12.028

Newman, M. E. J. (2006). Modularity and community structure in networks. Proc. Natl. Acad. Sci. U.S.A. 103, 8577–8582. doi: 10.1073/pnas.0601602103

Nunnally, J. C., and Bernstein, I. H. (1994). Psychometric Theory, 3rd Edn. New York, NY: McGraw-Hill.

Power, J. D., Cohen, A. L., Nelson, S. M., Wig, G. S., Barnes, K. A., Church, J. A., et al. (2011). Functional network organization of the human brain. Neuron 72, 665–678. doi: 10.1016/j.neuron.2011.09.006

Power, J. D., Schlaggar, B. L., and Petersen, S. E. (2015). Recent progress and outstanding issues in motion correction in resting state fMRI. Neuroimage 105, 536–551. doi: 10.1016/j.neuroimage.2014.10.044

Redick, T. S., Broadway, J. M., Meier, M. E., Kuriakose, P. S., Unsworth, N., Kane, M. J., et al. (2012). Measuring working memory capacity with automated complex span tasks. Eur. J. Psychol. Assess. 28, 164–171. doi: 10.1027/1015-5759/a000123

Rougier, N. P., Noelle, D. C., Braver, T. S., Cohen, J. D., and O'Reilly, R. C. (2005). Prefrontal cortex and flexible cognitive control: rules without symbols. Proc. Natl. Acad. Sci. U.S.A. 102, 7338–7343. doi: 10.1073/pnas.0502455102

Shipstead, Z., Lindsey, D. R. B., Marshall, R. L., and Engle, R. W. (2014). The mechanisms of working memory capacity: primary memory, secondary memory, and attention control. J. Mem. Lang. 72, 116–141. doi: 10.1016/j.jml.2014.01.004

Smolensky, P., Mozer, M. C., and Rumelhart, D. E. (1996). “Mathematical perspective,” in Mathematical Perspectives on Neural Networks, eds P. Smolensky, M. C. Mozer, and D. E. Rumelhart (Mahwah, NJ: Lawrence Erlbaum Associates, Inc.), 875.

Sporns, O. (2014). Contributions and challenges for network models in cognitive neuroscience. Nat. Neurosci. 17, 652–660. doi: 10.1038/nn.3690

Stevens, A. A., Tappon, S. C., Garg, A., and Fair, D. A. (2012). Functional brain network modularity captures inter- and intra-individual variation in working memory capacity. PLoS ONE 7:e30468. doi: 10.1371/journal.pone.0030468

Thompson, W. H., and Fransson, P. (2015). The mean–variance relationship reveals two possible strategies for dynamic brain connectivity analysis in fMRI. Front. Hum. Neurosci. 9:398. doi: 10.3389/fnhum.2015.00398

Townsend, J. T., and Ashby, F. G. (1985). Stochastic modeling of elementary psychological processes. Am. J. Psychol. 98, 480–484. doi: 10.2307/1422636

Unsworth, N., and Engle, R. W. (2007). On the division of short-term and working memory: an examination of simple and complex span and their relation to higher order abilities. Psychol. Bull. 133, 1038–1066. doi: 10.1037/0033-2909.133.6.1038

Unsworth, N., Heitz, R. P., Schrock, J. C., and Engle, R. W. (2005). An automated version of the operation span task. Behav. Res. Methods 37, 498–505. doi: 10.3758/BF03192720

Wig, G. S., Schlaggar, B. L., and Petersen, S. E. (2011). Concepts and principles in the analysis of brain networks. Ann. N. Y. Acad. Sci. 1224, 126–146. doi: 10.1111/j.1749-6632.2010.05947.x

Winer, B. J., Brown, D. R., and Michels, K. M. (1971). Statistical Principles in Experimental Design, Vol. 2. New York, NY: McGraw-Hill.

Yue, Q., Martin, R., Fischer-Baum, S., Ramos-Nuñez, A., Ye, F., and Deem, M. (2017). Brain modularity mediates the relation between task complexity and performance. J. Cogn. Neurosci. 29, 1532–1546. doi: 10.1162/jocn_a_01142

Keywords: flexibility, modularity, resting-state fMRI, task complexity, individual differences, brain network connectivity

Citation: Ramos-Nuñez AI, Fischer-Baum S, Martin RC, Yue Q, Ye F and Deem MW (2017) Static and Dynamic Measures of Human Brain Connectivity Predict Complementary Aspects of Human Cognitive Performance. Front. Hum. Neurosci. 11:420. doi: 10.3389/fnhum.2017.00420

Received: 03 January 2017; Accepted: 04 August 2017; Published: 24 August 2017.

Edited by: Joshua Oon Soo Goh, National Taiwan University, Taiwan

Reviewed by: John Dominic Medaglia, University of Pennsylvania, United States; Hsiang-Yuan Lin, National Taiwan University Hospital, Taiwan

Copyright © 2017 Ramos-Nuñez, Fischer-Baum, Martin, Yue, Ye and Deem. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Randi C. Martin, rmartin@rice.edu

Aurora I. Ramos-Nuñez, Simon Fischer-Baum, Randi C. Martin, Qiuhai Yue, Fengdan Ye, Michael W. Deem