Classifying Schizotypy Using an Audiovisual Emotion Perception Test and Scalp Electroencephalography

- 1Department of Psychology, Korea University, Seoul, South Korea

- 2Department of Information Security, Korea University, Seoul, South Korea

- 3Department of Neuropsychiatry, Jeju National University Hospital, Jeju, South Korea

Schizotypy refers to the personality trait of experiencing “psychotic” symptoms and can be regarded as a predisposition to schizophrenia-spectrum psychopathology (Raine, 1991). Cumulative evidence has revealed that individuals with schizotypy, as well as schizophrenia patients, have emotional processing deficits. In the present study, we investigated multimodal emotion perception in schizotypy and applied a machine learning technique to determine whether a schizotypy group (ST) is distinguishable from a control group (NC) using electroencephalogram (EEG) signals. Forty-five subjects (30 ST and 15 NC) were divided into two groups based on their scores on the Schizotypal Personality Questionnaire. All participants performed an audiovisual emotion perception test while EEG was recorded. After the preprocessing stage, the discriminatory features were extracted using a mean subsampling technique. For an accurate estimation of covariance matrices, the shrinkage linear discriminant algorithm was used. The classification attained over 98% accuracy and a false-positive rate of zero. This method may have important clinical implications for identifying those in the general population who have a subtle risk for schizotypy and may require early intervention.

Introduction

Schizotypy is a pervasive pattern of intrapersonal and interpersonal deficits, characterized by perceptual, emotional, and cognitive distortions, as well as eccentric behaviors found on a schizophrenia spectrum (Chapman et al., 1994; Claridge et al., 1996; Kerns, 2006). The spectral view of psychosis, but not the categorical view, postulates that there is no clear-cut criterion between sanity and insanity (Eysenck, 1992; Claridge et al., 1996). Accordingly, schizotypy has been thought to have relatively mild positive and negative symptoms and dimensions, compared to those found in patients clinically diagnosed with schizophrenia (Gruzelier and Doig, 1996), and show different levels of cognitive and emotional impairments within certain contexts (Genetic Risk Outcome in Psychosis (GROUP) Investigators, 2011; Hori et al., 2012; Rossler et al., 2014). Therefore, studies of schizotypy in a normal population have provided a promising framework to understand the psychopathology of schizophrenia, as well as elucidating the psychobiological underpinnings of schizotypy itself, within the spectral view of psychosis.

One of the cardinal symptoms associated with schizophrenia concerns deficits in emotion perception. Patients with schizophrenia have consistently been reported to show deficits in recognizing emotions in facial (Borod et al., 1993; Hall et al., 2004; Kosmidis et al., 2007) and vocal (Bozikas et al., 2006; Leitman et al., 2010; Gold et al., 2012) expressions, and this finding is observed in both behavioral and electrophysiological studies (Campanella et al., 2006; Turetsky et al., 2007; Lynn and Salisbury, 2008; Wynn et al., 2008; Ramos-Loyo et al., 2009; Pinheiro et al., 2013).

For symptoms associated with schizotypy, recent neuroimaging studies have shown that individuals with schizotypy have a mild level of emotional deficits. In a functional magnetic resonance imaging (fMRI) study, for instance, individuals with schizotypy showed emotion regulation difficulties, with stronger activation in a number of prefrontal regions and decreased amygdala activation compared to controls while reappraising emotion (Modinos et al., 2010). Further, the results of a study using emotional facial stimuli suggested the possibility that individuals with schizotypy have social interaction difficulties (Huang et al., 2013). Additionally, individuals with schizotypy have shown different patterns of brain activation to dynamically changing facial expressions compared to controls. This was especially true in the positive type of schizotypy, which showed deficits in emotion-cognition processes, with qualitative alterations in neural processing patterns while performing an emotional Stroop task (Mohanty et al., 2005). Lastly, in an electroencephalogram (EEG) study, individuals with schizotypy showed impaired processing of social-emotional information. During exposure to auditory displays of strong emotion, these individuals showed less prefrontal-posterior functional coupling than controls; such coupling is considered to be indicative of a mechanism that protects the individual from becoming overwhelmed by the perception of social-emotional information (Papousek et al., 2014).

As schizotypy is associated with a risk of developing schizophrenia, and some individuals with schizotypy become clinically ill, the discovery of a reliable method that can distinguish individuals with schizotypy from healthy individuals is vital. However, no reliable method based on an objective measure has thus far been developed for discriminating individuals with schizotypy from normal controls. Although the Schizotypal Personality Questionnaire (SPQ; Raine, 1991) has been widely used for schizophrenia spectrum diagnoses, it is a self-report measure. Self-report measures are more often used for measuring levels of traits or symptom severity. Moreover, self-report questionnaires can suffer from social desirability bias, which can cause over-reporting of socially desirable behaviors and under-reporting of socially undesirable ones (Paulhus and Vazire, 2007).

In previous studies, EEG has been consistently used as a sensitive biomarker to distinguish individuals with impaired mental functioning, including Parkinson's Disease, Schizophrenia, and Alzheimer's Disease (AD), from controls (Kalatzis et al., 2004; Chapman et al., 2007; Lehmann et al., 2007; Sabeti et al., 2011; Yuvaraj and Murugappan, 2016). For instance, EEG was recorded in patients with Parkinson's disease with the left (LPD) or right side affected (RPD) and controls during the identification of six basic emotions: happiness, sadness, fear, anger, surprise, and disgust. Poorer classification performance using emotion-specific EEG features was shown in patients with LPD (inferred right-hemisphere pathology), which indicates that patients with LPD were more impaired in emotion processing compared to patients with RPD as well as controls. Moreover, EEG has been used to predict the progression to AD among individuals with Mild Cognitive Impairment (MCI), which is a transitional state between normal aging and AD (Chapman et al., 2011). In this study, a model was developed using an EEG signal for the discrimination of individuals with schizotypy and controls.

Several studies have shown the application of machine learning algorithms for the classification of EEG signals (Cao et al., 2003; Wang and Paliwal, 2003; Liu et al., 2010; Subasi and Gursoy, 2010; Blankertz et al., 2011; Zhang et al., 2013; Chen et al., 2016). In this study, a supervised classification algorithm called the shrinkage linear discriminant analysis (SKLDA) was used to classify subjects based on their event-related potentials (ERP), since the sample size is much smaller than the dimensions of the feature vector. Previous research revealed that when the number of instances of the training data is much smaller than the dimensions of the feature vectors, a classifier could provide poor results (Jain and Chandrasekaran, 1982; Raudys and Jain, 1991). Researchers have recommended using at least 5–10 times as many training samples (per class) as the dimensionality. However, this is not easy for brain-computer interface (BCI) applications because the dimensionality of the ERP feature vectors is usually much larger than the training set. It is possible to use other algorithms for dimensionality reduction, such as principal component analysis, to reduce the dimensionality significantly. However, EEG signals have a weak signal-to-noise ratio, and discriminatory features could easily be lost during the reduction. The SKLDA algorithm was proposed (Blankertz et al., 2011) to remedy this bias. The key advantage of SKLDA is that it estimates the covariance matrix accurately, which is particularly hard in studies with high dimensionality.

In the previous studies, a variety of tests using visual or auditory stimuli were used to investigate whether individuals with schizotypy have emotional deficits. However, as far as we know, there has been no investigation differentiating individuals with schizotypy from controls using a multimodal (audiovisual) emotion perception test. In a social context, emotional processing utilizing multiple sensory information is much more natural and common, as people rely on the visual (e.g., facial expressions and gestures) as well as the auditory modality (e.g., vocal tone, prosody, and accent) to judge the emotional states of others. Moreover, as individuals with schizotypy are considered to be non-clinical subjects, the heightened impact of emotionally laden inputs through a multimodal emotional test might produce significant differences between them and normal controls. Therefore, this study adopted a multimodal emotion perception test that might be theoretically appropriate as well as ecologically valid for assessing the brain function of individuals with schizotypy.

In summary, the objective of this study was to propose a reliable method to distinguish individuals with schizotypy from controls, based on measures of brain activity during emotional processing. To achieve this purpose, we first developed a multimodal (audiovisual) emotion perception test, in which subjects were asked to judge the emotions from face and voice stimuli presented simultaneously. We expected that performing a test with a higher emotional processing demand would allow for the assessment of brain functioning that may be affected by schizotypy. Furthermore, we adopted EEG methods, which can provide high temporal resolution. Whereas fMRI is temporally limited by the latency of the hemodynamic response, EEG directly measures the electric fields produced by neuronal activity (Dale et al., 2000). Therefore, it could be adequate for detecting brain activity during the emotional multisensory integration process. To distinguish individuals with schizotypy from controls based on their EEG data, we implemented the SKLDA. The details of the EEG data processing and feature extraction methods are presented in the sections below.

Materials and Methods

Subjects

A set of questionnaires was administered online to 1,287 Korean university students. The set included a consent form, demographic questions, the SPQ, and the Center for Epidemiological Studies Depression Scale (CES-D; Radloff, 1977).

The SPQ is a 74-item scale (score ranges: 0–74) modeled on DSM-III-R criteria for schizotypal personality disorder. One hundred and five subjects who scored in the top 10% on the SPQ (scores of ≥ 31) were selected as the schizotypy (ST) group, and 464 subjects who scored within ±0.5 standard deviation (SD) from the mean (M) score on the SPQ were selected as the normal control (NC) group. Among them, 51 subjects from the ST group and 45 subjects from the NC group were excluded based on their CES-D scores (i.e., scores ≥ 25) because depression was reported to be comorbid with schizophrenia (Lewandowski et al., 2006) and could influence facial emotion recognition (Feinberg et al., 1986; Persad and Polivy, 1993).

Thirty-four subjects from the ST group (age: M = 20.83, SD = 2.42; % female: 57.22) and 17 subjects from NC group (age: M = 21.06, SD = 1.60; % female: 50.00) were randomly selected to participate in the experiment among the 513 subjects (ST: 94, NC: 419). Among them, data from 6 subjects (4 NC and 2 ST) were excluded from the analyses because of excessive electrical artifacts during EEG recordings. Mean SPQ scores from ST and NC groups were 37.35 (SD = 7.57) and 15.53 (SD = 3.12), respectively. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the Ethics Committee of Korea University (1040548-KU-IRB-14-75-A-3). All subjects had normal or corrected to normal vision and no history of neurological and/or psychiatric disorders.

Audiovisual Emotion Perception Test (AEPT)

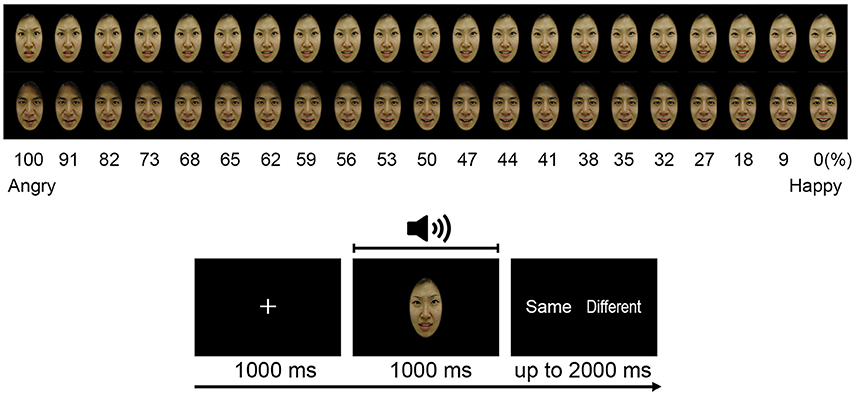

Figure 1 illustrates the Audiovisual emotion perception test. The facial images (2 males, 2 females) were created from the Korea University Facial Expression Collection (KUFEC; Kim et al., 2011). Photoshop CS5 (Adobe systems, USA) was used to crop the photographs, remove non-facial attributes (e.g., hair, ears), and create a uniform black background. A computerized morphing program (Abrosoft FantaMorph version 5.4.1; Abrosoft, USA) was used to create a linear continuum of facial images. Morphed faces were created by merging two face pictures (e.g., angry face and happy face) in 1% steps, resulting in 101 morphed face images (e.g., from 0 to 100% happy), with the graded blending of the facial features of the two faces. Two prototypical images (e.g., 100% angry and happy faces), 6 unambiguous images near to the prototypical images (91, 82, 73, 27, 18, and 9% morphed faces), and 13 ambiguous images in the midrange (68, 65, 62, 59, 56, 53, 50, 47, 44, 41, 38, 35, and 32% morphed faces) were selected from the continuum and used for the AEPT.

Figure 1. Audiovisual emotion perception test. Subjects were asked to judge whether the emotions on the face and the voice were the same or not. The figure shows the morphed face images used in the AEPT (upper) and the AEPT trial sequence (lower).

Vocal stimuli of happy and angry emotional valence were recorded by four amateur actors (two males, two females) in a noise-free room. Because content cues could help in identifying the emotion, the actors were instructed to pronounce four semantically neutral sentences in either an angry or a happy voice. The four Korean sentences were “stayed in the house,” “went by airplane,” “will be going to Seoul,” and “arrived in Busan.” The mean length of the 16 voice stimuli (4 sentences × 2 actors × 2 emotions) was 1.06 s (SD = 0.059).

The AEPT consisted of 840 trials (4 identities × 21 morphed images × 2 voice emotions × 5 repetitions): 400 congruent trials (e.g., angry face/angry voice or happy face/happy voice audiovisual stimulus pairs), 400 incongruent trials (e.g., angry face/happy voice or happy face/angry voice audiovisual stimulus pairs), and 40 trials with no correct answer (e.g., 50% morphed face/happy voice or 50% morphed face/angry voice audiovisual stimulus pairs). These 40 trials were excluded from the data analysis. In the test, subjects were asked to judge whether the emotions on the face and the voice were the same (congruent) or not (incongruent).
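The trial counts above follow directly from the factorial design, and a short enumeration makes the arithmetic easy to check. The sketch below is illustrative only: it assumes the morph levels are expressed as percent happy, and the identity labels are hypothetical placeholders.

```python
from itertools import product

# The 21 morph levels, read here as "% happy" (an assumption for illustration).
MORPH_LEVELS = [100, 91, 82, 73, 68, 65, 62, 59, 56, 53, 50,
                47, 44, 41, 38, 35, 32, 27, 18, 9, 0]
IDENTITIES = ["F1", "F2", "M1", "M2"]  # hypothetical labels for the 4 face identities
VOICES = ["happy", "angry"]
REPETITIONS = 5


def trial_type(percent_happy, voice):
    """Label a face/voice pair as congruent, incongruent, or ambiguous."""
    if percent_happy == 50:
        return "ambiguous"  # no correct answer; excluded from the analysis
    face = "happy" if percent_happy > 50 else "angry"
    return "congruent" if face == voice else "incongruent"


counts = {"congruent": 0, "incongruent": 0, "ambiguous": 0}
for _, level, voice, _ in product(IDENTITIES, MORPH_LEVELS, VOICES, range(REPETITIONS)):
    counts[trial_type(level, voice)] += 1

print(sum(counts.values()), counts)
# 840 {'congruent': 400, 'incongruent': 400, 'ambiguous': 40}
```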

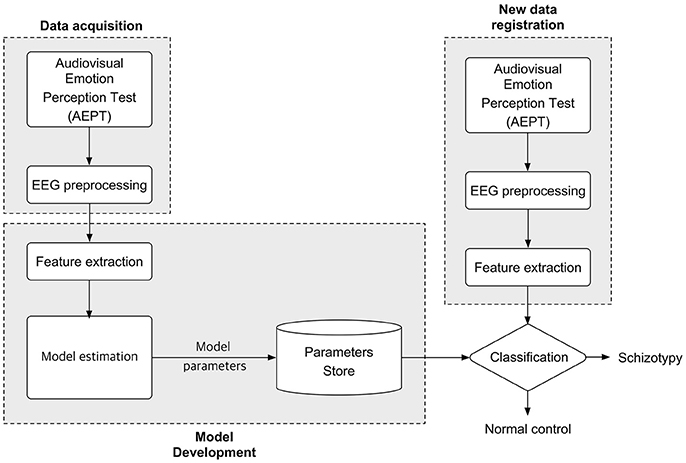

A trial started with the presentation of a 1,000 ms fixation, which was followed by the presentation of the audiovisual stimulus pair. The face was presented for 1,000 ms, while the voice was delivered via earphone. When the voice was longer than 1,000 ms, a blank black screen was presented for the rest of its duration. Subjects pressed either a “same” or “different” button during the subsequent 2,000 ms. Subjects pressed buttons using one hand, and response hands were counterbalanced across subjects. The flowchart for the proposed classification approach is depicted in Figure 2.

EEG Recordings and Preprocessing

EEG was recorded continuously from 14 electrodes (Fz, Cz, Pz, Oz, F3, F4, C3, C4, P3, P4, O1, O2, T5, and T6) using a Grass Model 12 Neurodata Acquisition System (Grass Technologies Astro-Med, Inc., West Warwick, USA) according to the extended 10–20 system. The vertical electrooculogram (EOG), which records the voltage difference between two electrodes placed above and below the left eye, was used to detect eye blinks. A single ground electrode was placed on the forehead, and the reference electrode was located at the right earlobe. The signals were recorded continuously at a sampling rate of 1,024 Hz (bandpass filter, 0.01–100 Hz). The electrode impedances were below 5 kΩ.

The offline data analysis was performed with EEGLAB (version 12.0.2.2) running under Matlab 2012a (Mathworks, USA; MATLAB, 2012). All EEG data were re-referenced to linked earlobes. Gross movement artifacts were removed from the data based on visual inspection. The data were digitally filtered with a band pass of 0.1–30 Hz. The data were epoched between −200 and 800 ms, relative to stimulus onset. The epochs were baseline-corrected, and those containing artifacts larger than ±50 μV were removed. Individual EOG artifact correction was conducted using an independent component analysis. The data were then downsampled to 350 data points by taking the mean of four consecutive data points.
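As a rough illustration of two of the preprocessing steps described above (baseline correction and amplitude-based epoch rejection), the following sketch works on plain Python lists. It is not the EEGLAB pipeline used in the study. It assumes that the baseline is the mean of the pre-stimulus samples and that the ±50 μV criterion is applied to the baseline-corrected values; the function names and toy epochs are hypothetical.

```python
def baseline_correct(epoch, n_baseline):
    """Subtract the mean of the first n_baseline (pre-stimulus) samples from every sample."""
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [value - baseline for value in epoch]


def is_clean(epoch, threshold_uv=50.0):
    """True if no sample exceeds the +/- threshold (in microvolts)."""
    return all(abs(value) <= threshold_uv for value in epoch)


# Two toy single-channel epochs; the first 4 samples stand in for the pre-stimulus interval.
raw_epochs = [
    [10.0, 12.0, 11.0, 13.0, 20.0, 30.0],  # stays within +/-50 uV after correction
    [5.0, 5.0, 5.0, 5.0, 90.0, 6.0],       # contains an 85 uV deflection after correction
]

corrected = [baseline_correct(epoch, n_baseline=4) for epoch in raw_epochs]
kept = [epoch for epoch in corrected if is_clean(epoch)]
print(len(kept), kept[0])
```

In a real recording the same check would be applied across all channels of an epoch, and the epoch would be dropped if any channel failed it.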

Behavioral and Event-Related Potentials Analysis

The epochs were separately averaged for the ST and NC groups to obtain ERP. The assumptions for the normality and homogeneity of variance were tested with the Shapiro–Wilk and Levene's test, respectively, for both behavioral and ERP data. For the behavioral data analysis, one-way ANOVAs were performed on the percent of correct responses and reaction times. For the ERP data analysis, mixed-model analysis of variance (ANOVA) for averaged ERP amplitude in a time window ranging from 150 to 270 ms (P2 component) was performed, in which site (14) was the within-subjects factor and group (2) was the between-subjects factor. The Greenhouse-Geisser adjustment was used to correct for violations of sphericity.

Feature Extraction

Let the ERP at channel $c$, which varies as a function of time $t$, be denoted by $X_c(t)$. Assume that a trial lasts for $T$ time units, where $T = \{t_1, t_2, \ldots, t_N\}$, and is sampled at equally spaced intervals. Let $C_i(t)$ be the potential of the $i$th channel at time point $t$. Then, the vector $X_{C_i}(T) = [C_i(t_1), C_i(t_2), \ldots, C_i(t_N)]$ defines the ERP of this channel for the duration $T$. Let $C = \{C_1, C_2, \ldots, C_M\}$ be the subset of channels chosen for analysis, where each $C_j$, $j = 1, 2, \ldots, M$, represents a chosen channel. Equation (1) defines a vector of potential values for the subset of channels at time point $t$ for the $k$th trial, where $\tau$ denotes vector transpose.

$$x_k(C; t) = [C_1(t), C_2(t), \ldots, C_M(t)]^{\tau} \tag{1}$$

Concatenating those vectors for all time points of $T$ across all chosen channels for the $k$th trial gives the spatio-temporal features shown in Equation (2).

$$X_k(C; T) = [x_k(C; t_1), x_k(C; t_2), \ldots, x_k(C; t_N)] \tag{2}$$

For the sake of readability, notations are simplified as follows: both $C$ and $T$ (constants) are omitted, and $X_k(C; T)$ is denoted by $X_k$. Hence, $X_k \in \mathbb{R}^{M \times N}$ represents the spatiotemporal data of one participant for the $k$th trial. The data set is grouped into three sets based on the stimuli used in the AEPT. The first set contains all trials with happy visual stimuli only. Similarly, the second set contains all trials with angry stimuli only, and the third set contains all trials with both happy and angry stimuli. Let $x_H$, $x_A$, and $x_{HA}$ denote the data sets of happy only, angry only, and both happy and angry stimuli, respectively. Then, the averages of the $i$th participant's data over stimulus type are defined using Equation (3).

$$\bar{X}^{i}_{m} = \frac{1}{N_m} \sum_{X_k \in x_m} X_k, \quad m \in \{A, H, HA\} \tag{3}$$

Here, $\bar{X}^{i}_{m}$, $m \in \{A, H, HA\}$, is the mean of the $i$th participant's samples over stimulus type; $N_H$ and $N_A$ are the numbers of trials with happy and angry stimuli, respectively, and $N_{HA} = N_H + N_A$. As explained earlier in this section, $T$ has equally spaced intervals. Hence, dimensionality reduction is achieved by mean subsampling (replacing each run of $n$ consecutive data points by their mean), where $n$ is a heuristically determined constant.

Specifically, in this study, ERP values from 0 ms (right after stimulus onset) to 800 ms after stimulus onset were chosen for analysis. The sampling rate was reduced from 1,024 to 256 Hz using mean subsampling (n = 4). After downsampling, features were extracted per channel. For the analysis, the first 200 features of each channel were selected. In total, 2,800 features were extracted from the 14 channels and used as input for classification.
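The feature dimensions quoted above can be traced with a small sketch of mean subsampling and concatenation. This is an illustrative reconstruction, not the authors' MATLAB code: the synthetic epoch, the function names, and the choice to drop a trailing remainder shorter than n samples are all assumptions.

```python
def mean_subsample(signal, n):
    """Replace each run of n consecutive samples by its mean (a trailing remainder is dropped)."""
    usable = len(signal) - len(signal) % n
    return [sum(signal[i:i + n]) / n for i in range(0, usable, n)]


def feature_vector(epoch, n=4, keep=200):
    """Concatenate the first `keep` subsampled points of every channel into one vector."""
    features = []
    for channel in epoch:
        features.extend(mean_subsample(channel, n)[:keep])
    return features


# Synthetic stand-in for one averaged epoch: 14 channels, 0-800 ms at 1,024 Hz.
n_channels = 14
n_samples = int(0.8 * 1024)  # 819 samples per channel
epoch = [[float(ch + t) for t in range(n_samples)] for ch in range(n_channels)]

x = feature_vector(epoch)
print(len(mean_subsample(epoch[0], 4)), len(x))  # 204 2800
```

With n = 4, each channel yields 204 subsampled points, of which the first 200 are kept, giving 14 × 200 = 2,800 features.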

Classification

SKLDA

The conventional LDA has been widely adopted for feature reduction during the classification of ERP for BCI applications (Lehmann et al., 2007; Lotte et al., 2007; Subasi and Gursoy, 2010). Due to the high similarity of covariance matrices in the Gaussian distribution corresponding to the features of ERP and non-ERP (i.e., targets and non-targets), LDA performs well for the classification of ERP (Blankertz et al., 2011), and it can be described using the Rayleigh quotient, as defined by Equation (4).

$$J(W) = \frac{W^{T} S_b W}{W^{T} S_w W} \tag{4}$$

where $S_b$ and $S_w$ are the between-class and within-class scatter matrices, respectively; $W$ is the projection vector and $T$ denotes the transpose. The optimum transformation is obtained by the maximization of $W^{T} S_b W$ and the minimization of $W^{T} S_w W$.
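To make the criterion concrete, the following two-dimensional sketch computes the scatter matrices for two toy classes, derives the classical two-class LDA direction (the inverse of the within-class scatter applied to the difference of class means), and compares its Rayleigh quotient against a few other directions. The data points are invented for illustration, and the closed-form 2 × 2 inverse is used only to keep the example dependency-free.

```python
def mean_vec(points):
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]


def scatter(points, mu):
    """2 x 2 scatter matrix: sum of outer products of deviations from the class mean."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = [p[0] - mu[0], p[1] - mu[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def rayleigh(w, sb, sw):
    """Ratio of between-class to within-class scatter along direction w."""
    def quad(m):
        return sum(w[i] * m[i][j] * w[j] for i in range(2) for j in range(2))
    return quad(sb) / quad(sw)


# Invented toy data for two classes.
class0 = [[1.0, 2.0], [2.0, 3.0], [3.0, 3.0], [2.0, 1.0]]
class1 = [[5.0, 4.0], [6.0, 6.0], [7.0, 5.0], [6.0, 4.0]]

mu0, mu1 = mean_vec(class0), mean_vec(class1)
s0, s1 = scatter(class0, mu0), scatter(class1, mu1)
sw = [[s0[i][j] + s1[i][j] for j in range(2)] for i in range(2)]
diff = [mu1[0] - mu0[0], mu1[1] - mu0[1]]
sb = [[diff[i] * diff[j] for j in range(2)] for i in range(2)]

# LDA direction: w = inverse(sw) applied to diff, using the closed-form 2 x 2 inverse.
det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
w = [(sw[1][1] * diff[0] - sw[0][1] * diff[1]) / det,
     (-sw[1][0] * diff[0] + sw[0][0] * diff[1]) / det]

for name, direction in [("lda", w), ("mean difference", diff), ("x-axis", [1.0, 0.0])]:
    print(name, round(rayleigh(direction, sb, sw), 4))
```

The LDA direction attains the largest quotient of the three, which is the sense in which it maximizes between-class scatter relative to within-class scatter.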

The empirical covariance is the standard estimator of the covariance matrix. This estimator is unbiased and has good properties under usual conditions. However, it may give an imprecise estimate if data with high dimensionality and low sample size are used for training. This is because the number of unknown parameters that have to be estimated is quadratic in the number of dimensions, leading to a systematic error: the large eigenvalues of the covariance matrix are estimated as too large, and the small eigenvalues as too small.

Shrinkage of the estimated covariance matrix is a way of correcting this systematic bias. With an appropriately selected shrinkage parameter, the SKLDA can remedy the ill-conditioning of the covariance matrix and can effectively enhance the generalization capability of the classifier, thereby providing more accurate classification of ERP, even when using insufficient training samples (Blankertz et al., 2011). The SKLDA is an accurate covariance matrix estimator, particularly useful in high-dimensional studies. Let $x_1, \ldots, x_n \in \mathbb{R}^{d}$ be $n$ feature vectors with dimension $d$. Denote the unbiased estimator of the covariance matrix as $\hat{\Sigma}$. Then, a shrinkage parameter $\gamma$ is used to relate the shrunk estimate $\tilde{\Sigma}$ to $\hat{\Sigma}$, as defined in Equation (5).

$$\tilde{\Sigma}(\gamma) = (1 - \gamma)\,\hat{\Sigma} + \gamma \nu I \tag{5}$$

In this equation, $\gamma \in [0, 1]$ is the shrinkage parameter, $\tilde{\Sigma}$ and $\hat{\Sigma}$ are the shrinkage and empirical covariances, respectively, and $\nu$ is the average eigenvalue of the empirical covariance matrix, $\nu = \mathrm{trace}(\hat{\Sigma})/d$; $d$ denotes the dimensionality of the data, and $I$ the identity matrix. The fact that $\tilde{\Sigma} = \hat{\Sigma}$ when $\gamma = 0$ means that the sample-based estimated covariance can accurately measure the variability of the training sets, and no shrinkage is required. However, $\tilde{\Sigma} = \gamma \nu I = \nu I$ when $\gamma = 1$, which means that the estimated covariance matrix poorly measures the variability in the sample. The candidate values of $\gamma$ were set heuristically to $S = \{0, 0.05, 0.2, 0.4, 0.6, 0.8, 1.0\}$, considering its linear property. Schäfer and Strimmer (2005) provide the analytical solution of $\gamma$ for reference. In this paper, nested cross-validation is used to estimate $\gamma$.
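The effect of the shrinkage parameter can be seen on a small example. The sketch below assumes the usual convex-combination form of the shrinkage estimator, (1 − γ) times the empirical covariance plus γν times the identity, with ν the average eigenvalue (trace divided by dimension). The toy samples and function names are illustrative and are not taken from the study.

```python
def empirical_covariance(samples):
    """Unbiased sample covariance of a list of equal-length feature vectors."""
    n, d = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(d)]
    return [[sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples) / (n - 1)
             for j in range(d)] for i in range(d)]


def shrink_covariance(cov, gamma):
    """Return (1 - gamma) * cov + gamma * nu * I, with nu = trace(cov) / d."""
    d = len(cov)
    nu = sum(cov[i][i] for i in range(d)) / d
    return [[(1 - gamma) * cov[i][j] + (gamma * nu if i == j else 0.0)
             for j in range(d)] for i in range(d)]


# Four toy samples in three dimensions (invented values).
samples = [[2.0, 0.0, 1.0], [0.0, 1.0, 3.0], [4.0, 2.0, 2.0], [2.0, 1.0, 6.0]]
cov = empirical_covariance(samples)

for gamma in (0.0, 0.05, 1.0):
    shrunk = shrink_covariance(cov, gamma)
    print(gamma, [round(shrunk[i][i], 3) for i in range(3)])
```

At γ = 0 the empirical covariance is returned unchanged, at γ = 1 the result is a scaled identity matrix, and for any γ in between the trace is preserved while the spread of the diagonal entries (and hence of the eigenvalues) is reduced.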

LOOCV

The leave-one-out cross-validation (LOOCV) procedure, implemented here as a nested cross-validation, is used for classifier evaluation. It works as follows: (1) split the entire training set into N folds; (2) holding the first fold out, split the data from the remaining N−1 folds into M folds; (3) holding the first of the M folds out, train a model (classifier) using the data from the remaining M−1 folds; (4) score (predict) the held-out inner fold using the developed model, and keep this classifier and its accuracy; (5) repeat from the third step onward for every inner fold; (6) choose the model giving the minimum classification error rate among the models from the fourth step as a candidate classifier; (7) score (validate) the fold held out in step one using the candidate classifier obtained in step six. Repeat from step one for the remaining folds. These steps produce N scores that do not capitalize on chance. Choose the model with the highest classification accuracy. The assumption is that classifiers showing higher classification accuracy on the testing data are more likely to have higher classification accuracy on the validation data.
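The nesting described above can be sketched generically as follows. This is a simplified variant and not the authors' implementation: it selects the hyperparameter by mean inner-fold accuracy and then refits on all inner data, rather than keeping the single best inner model. The fold counts, the one-dimensional toy data, and the threshold classifier with an `offset` hyperparameter are all hypothetical.

```python
def make_folds(labels, n_folds):
    """Stratified split: deal each class's indices round-robin into n_folds lists."""
    folds = [[] for _ in range(n_folds)]
    for cls in sorted(set(labels)):
        members = [i for i, y in enumerate(labels) if y == cls]
        for pos, idx in enumerate(members):
            folds[pos % n_folds].append(idx)
    return folds


def nested_cv(X, y, grid, fit, predict, n_outer=5, n_inner=4):
    """Return one accuracy per outer fold; the hyperparameter is tuned on inner folds only."""
    def accuracy(model, idx):
        return sum(predict(model, X[i]) == y[i] for i in idx) / len(idx)

    outer_scores = []
    for held_out in make_folds(y, n_outer):
        rest = [i for i in range(len(y)) if i not in held_out]
        inner = make_folds([y[i] for i in rest], n_inner)  # positions within `rest`
        best_param, best_score = None, -1.0
        for param in grid:
            scores = []
            for test_pos in inner:
                test_idx = [rest[p] for p in test_pos]
                train_idx = [i for i in rest if i not in test_idx]
                model = fit([X[i] for i in train_idx], [y[i] for i in train_idx], param)
                scores.append(accuracy(model, test_idx))
            mean_score = sum(scores) / len(scores)
            if mean_score > best_score:
                best_param, best_score = param, mean_score
        # Refit on all inner data with the selected parameter, then score the untouched outer fold.
        model = fit([X[i] for i in rest], [y[i] for i in rest], best_param)
        outer_scores.append(accuracy(model, held_out))
    return outer_scores


def fit(X, y, offset):
    """Toy 1-D classifier: threshold halfway between the class means, shifted by `offset`."""
    m0 = sum(x for x, c in zip(X, y) if c == 0) / y.count(0)
    m1 = sum(x for x, c in zip(X, y) if c == 1) / y.count(1)
    return (m0 + m1) / 2 + offset


def predict(threshold, x):
    return int(x > threshold)


# Imbalanced toy data (10 vs. 20 samples), well separated on purpose.
X = [0.1 * i for i in range(10)] + [3.0 + 0.1 * i for i in range(20)]
y = [0] * 10 + [1] * 20
print(nested_cv(X, y, grid=[-5.0, 0.0, 5.0], fit=fit, predict=predict))
```

The key property is that the outer fold is never seen while the hyperparameter is chosen, so the outer scores are not inflated by the tuning step.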

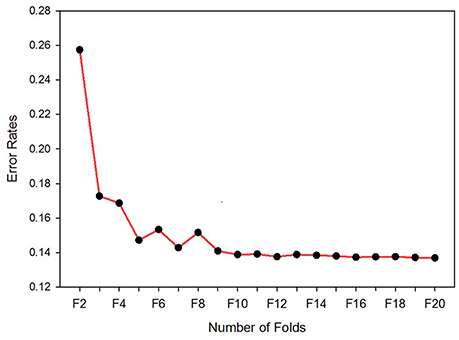

The number of folds used for the cross-validation was decided by running the classifier with 2 to 20 folds. Figure 3 depicts the error rates versus the number of folds. The experimental result shows that as the number of folds increased, the error rate decreased rapidly up to nine folds. However, from ten folds onwards the error rate decreased very slowly. Taking into consideration the iteration cost and the error rate, 10 folds were used for cross-validation. Stratification was used to split the sample sets.

Figure 3. Error rates vs. number of folds. The optimum number of folds for the cross-validation was determined by running the classifier 20 times per fold.

Performance Measures

To evaluate the correctness of a classifier, some statistical performance measures can be used. These measures depend on the four entries of a confusion matrix, namely, the number of correctly recognized class examples (true positives, TP), the number of correctly recognized examples that do not belong to the class (true negatives, TN), and examples that either were incorrectly assigned to the class (false positives, FP) or those that were not recognized as class examples (false negatives, FN). Based on the four terms, the following performance measures were used to evaluate the classifier.

Accuracy: the fraction of correct classifications. It summarizes the overall effectiveness of the classifier.

Recall: proportion of actual positives that are predicted positive. Also known as sensitivity.

Precision: proportion of predicted positives that are actually positive.

Specificity: proportion of actual negatives that are predicted negative. Also referred to as the true negative rate.

F1-measure (weighted harmonic mean): combined measure that assesses precision/recall tradeoff. It provides a relation between the positive labels of the data and those given by a classifier.

where ρ is an adaptive normalization constant used for imbalanced data. It is computed as the ratio of the number of samples in the smaller class to the number of samples in the larger class.
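The measures listed above can be computed directly from the four confusion-matrix entries, as in the sketch below. It uses the textbook definitions and the standard, unweighted F1 (the harmonic mean of precision and recall); the ρ-weighted variant mentioned above is not reproduced here. The counts in the usage example are invented and are not the study's results.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard measures derived from the four entries of a binary confusion matrix."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    recall = tp / (tp + fn)        # sensitivity, true positive rate
    precision = tp / (tp + fp)
    specificity = tn / (tn + fp)   # true negative rate
    f1 = 2 * precision * recall / (precision + recall)  # unweighted harmonic mean
    return {
        "accuracy": accuracy,
        "recall": recall,
        "precision": precision,
        "specificity": specificity,
        "f1": f1,
    }


# Invented counts for illustration: 30 positives, 15 negatives, 2 positives missed.
metrics = classification_metrics(tp=28, tn=15, fp=0, fn=2)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

With imbalanced classes such as these, accuracy alone can be misleading, which is why recall, precision, and specificity are reported alongside it.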

Channel Selection

To choose the smallest subset of the 14 channels with the highest discriminatory information, a systematic selection algorithm is required. For this, the sequential forward selection (SFS) greedy algorithm (Marcano-Cedeño et al., 2010; Liu et al., 2015) can be used. The SFS algorithm starts with an empty list. The pair of channels giving the highest classification accuracy is searched for and added to the list. Next, a third channel satisfying two conditions is sought: first, it should give the highest classification accuracy when combined with the previously chosen channels; second, the classification accuracy of the three channels must be greater than that of the previously chosen channels. These steps are repeated until adding another channel decreases the performance.
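A minimal sketch of this greedy search is given below. The `score` callback stands in for whatever cross-validated accuracy estimate is used, and the toy scoring function with its per-channel contributions is entirely made up so that the example runs deterministically; it says nothing about which channels are informative in the actual data.

```python
from itertools import combinations


def sequential_forward_selection(channels, score):
    """Start from the best pair, then add one channel at a time while accuracy strictly improves."""
    selected = list(max(combinations(channels, 2), key=lambda pair: score(list(pair))))
    best = score(selected)
    remaining = [c for c in channels if c not in selected]
    while remaining:
        candidate = max(remaining, key=lambda c: score(selected + [c]))
        candidate_score = score(selected + [candidate])
        if candidate_score <= best:  # no improvement: stop
            break
        selected.append(candidate)
        remaining.remove(candidate)
        best = candidate_score
    return selected, best


CHANNELS = ["Fz", "Cz", "Pz", "Oz", "F3", "F4", "C3", "C4",
            "P3", "P4", "O1", "O2", "T5", "T6"]

# Made-up contributions used only to drive the toy score below.
TOY_GAIN = {"Cz": 0.30, "O1": 0.12, "F3": 0.06}


def toy_score(subset):
    """Hypothetical accuracy: informative channels help, every other channel costs a little."""
    gain = sum(TOY_GAIN.get(c, 0.0) for c in subset)
    penalty = 0.01 * sum(c not in TOY_GAIN for c in subset)
    return 0.5 + gain - penalty


selected, best = sequential_forward_selection(CHANNELS, toy_score)
print(selected, round(best, 2))
```

Because the search is greedy, it evaluates far fewer subsets than an exhaustive search over all channel combinations, at the cost of possibly missing the globally best subset.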

Results

Behavior

In total, 30 ST and 15 NC subjects were included in the data analyses. The percentage of correct responses (%) was not significantly different between the ST (M = 81.73, SD = 5.17) and NC (M = 83.97, SD = 4.23) groups [F(1, 44) = 0.050, p = 0.83]. The reaction time (ms) was also not significantly different between the two groups [M = 414, SD = 138 for ST; M = 424, SD = 135 for NC; F(1, 44) = 2.097, p = 0.16].

ERP

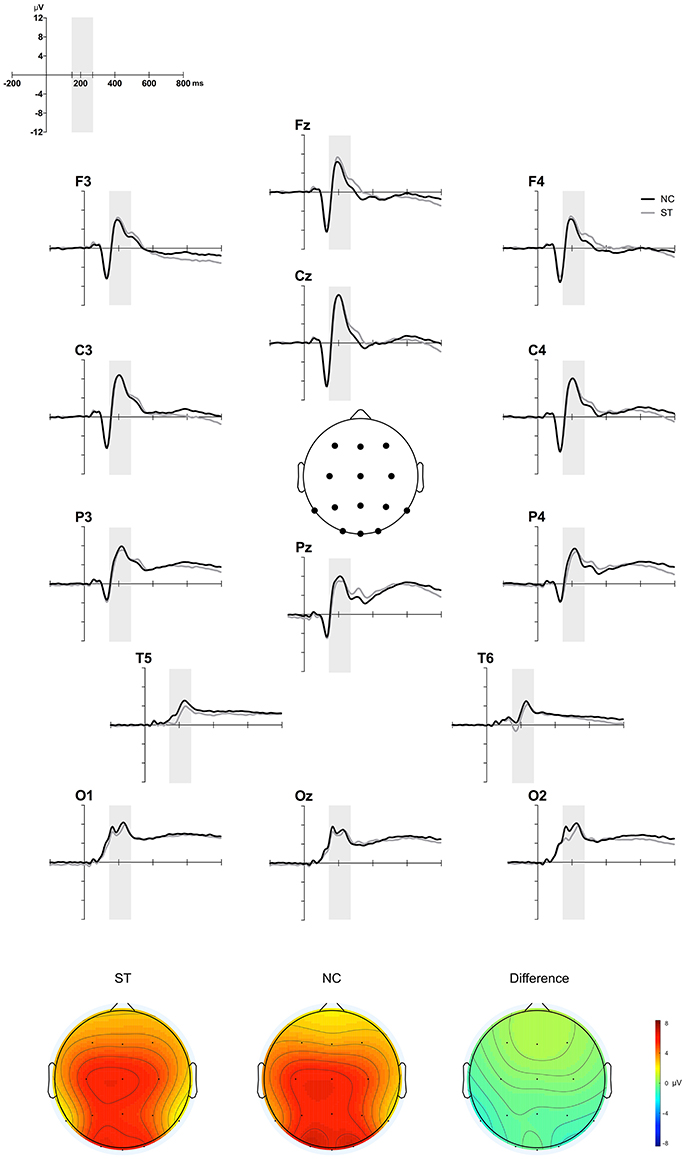

Figure 4 depicts the grand-averaged ERP waveforms at all electrode sites (upper) and the NC minus ST difference in topographies in the 150–270 ms time window (lower). A group × site ANOVA showed a significant site effect [M = 3.88, SE = 0.49 for Fz; M = 3.89, SE = 0.42 for F3; M = 3.77, SE = 0.49 for F4; M = 6.23, SE = 0.55 for Cz; M = 5.96, SE = 0.42 for C3; M = 5.11, SE = 0.47 for C4; M = 5.73, SE = 0.51 for Pz; M = 5.55, SE = 0.36 for P3; M = 4.86, SE = 0.42 for P4; M = 6.25, SE = 0.47 for Oz; M = 6.88, SE = 0.51 for O1; M = 6.57, SE = 0.45 for O2; M = 2.89, SE = 0.26 for T5; M = 2.32, SE = 0.29 for T6; F(13, 559) = 20.69, p < 0.001], but not a significant group effect [M = 4.82, SE = 0.38 for ST; M = 5.16, SE = 0.53 for NC; F(1, 43) = 0.27, p = 0.60]. Interaction effects were not observed [F(13, 559) = 1.01, p = 0.39]. These results suggest that the P2 component, an indicator of multisensory processing, did not differ between the two groups.

Figure 4. ERP results. Figure shows the grand-averaged ERP waveforms during the AEPT for the ST and NC at 12 sites (upper) and the NC minus ST difference topographical maps in the 150–270 ms time window (lower).

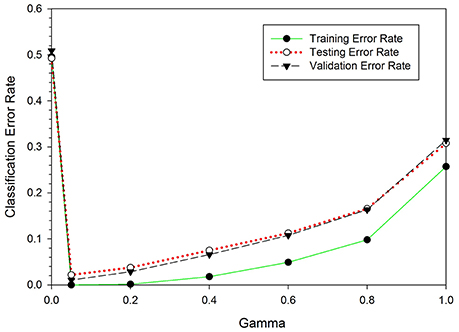

Classification

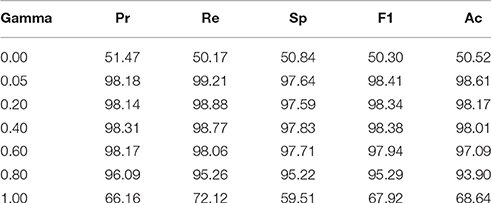

The SKLDA algorithm was implemented in MATLAB 2012a. The classifier was trained using EEG data from the NC and ST groups; it was evaluated using LOOCV (Section LOOCV) and the performance measures provided in Section Performance Measures. As mentioned earlier, one key advantage of SKLDA is an accurate estimation of covariance matrices; this is particularly useful for high dimensional data. Before estimating the covariance, the shrinkage parameter value was computed. Figure 5 shows the classification error rates as a function of the shrinkage parameter γ. In this figure, the vertical and the horizontal axes represent the classification error rates and the shrinkage parameters, respectively. The three curves represent the misclassification rates for the training (green), testing (red), and validation (blue) sets. All three curves attain their minimum at γ = 0.05, supporting the robustness of this value of γ. Considering the convexity of Equation (4), and principles of calculus for first derivatives, the optimal value of γ was estimated to be 0.05. Table 1 presents performance rates of the classifier for the validation set as a function of γ. When the value of γ increased from 0.00 to 0.05, the classification accuracy increased from 50% to over 98%. Similarly, the values of the precision (Pr), recall (Re), specificity (Sp), and F1-measure (F1) also increased. However, when γ increased from 0.05 to 1.0, the classification accuracy and other performance measures decreased. Therefore, we chose γ = 0.05 as the optimal value for the shrinkage parameter. Unless mentioned otherwise, all results reported in this paper are based on γ = 0.05.

Figure 5. Classification error rates as a function of the shrinkage parameter, γ. When γ increased from 0 to 0.05, the classification error rates dropped from 0.55 to 0.04. However, as γ increased from 0.05 to 1.0 the classification error rates increased from 0.04 to 0.31. For all three curves, the error rate is minimum at γ = 0.05.

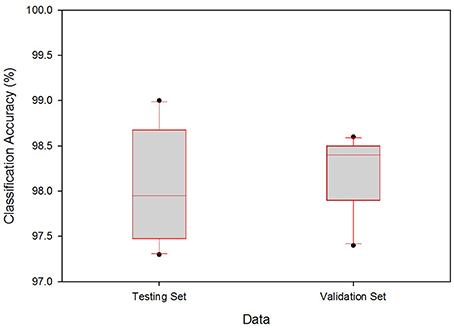

The boxplot in Figure 6 shows the range of classification accuracy rates at γ = 0.05. The vertical axis denotes the classification accuracy for the testing and validation data. For the testing set, the accuracies range from a minimum of 97.32% to a maximum of 99.0%. Similarly, the minimum and maximum classification accuracies for the validation set are 97.42 and 98.56%, respectively. The accuracy range for the validation set is thus narrower than that for the testing set, which supports the assumption given in Section LOOCV, i.e., that the classifiers performing best on the testing data also tend to perform well on the validation data.

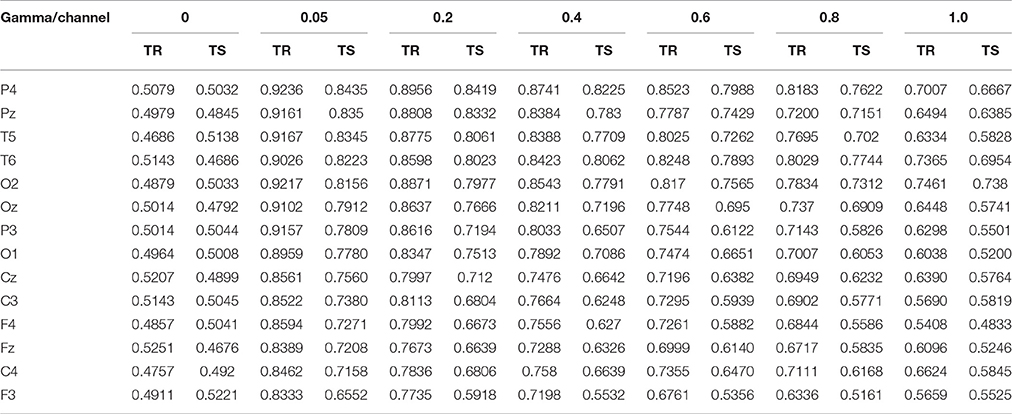

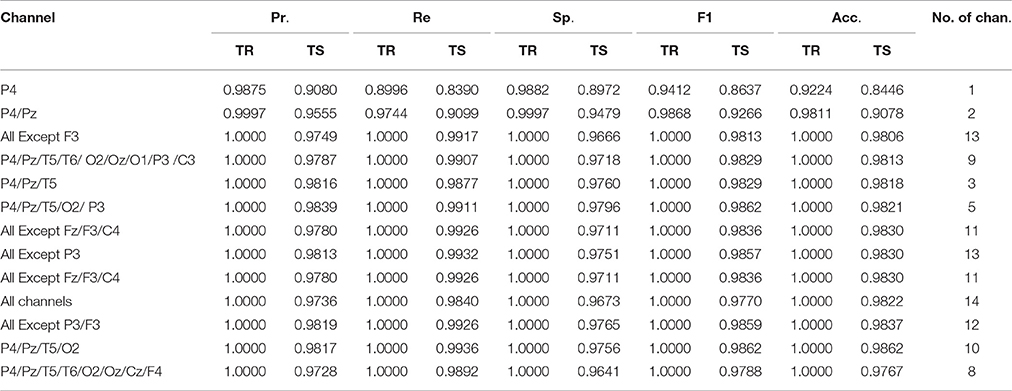

Table 2 presents the classification accuracies for both the training (TR) and testing (TS) sets. Channels found at the top of this table have the highest classification accuracies (smallest error rates), and those found near the bottom have the lowest classification accuracies. As in the case of using all 14 channels at once, these classifiers attained their minimum classification error at γ = 0.05.

Using the SFS greedy selection algorithm, we found that three channels (P4, Pz, and T5) perform very similarly to the full set of 14 channels, but at a lower computational cost during the development phase. Using the selected channels, we obtained a classification accuracy of 98.18%, which is approximately equal to the accuracy obtained when using all 14 channels at once (98.22%). Table 3 presents the results of the SFS algorithm.

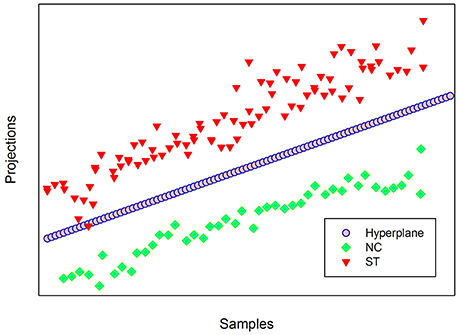
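The greedy logic of sequential forward selection can be sketched in a few lines of Python. This is a generic illustration rather than the procedure used to produce Table 3: the channel labels `c1` to `c4`, the utility values, and the additive `toy_score` function are made up so that the toy run has an unambiguous answer. In practice, the score of a candidate subset would be something like its cross-validated classification accuracy.

```python
def sequential_forward_selection(candidates, score, k):
    """Greedily grow a subset: at each step, add the candidate that
    maximizes score(subset + [candidate]), until k items are chosen."""
    selected = []
    remaining = list(candidates)
    while remaining and len(selected) < k:
        best = max(remaining, key=lambda c: score(selected + [c]))
        selected.append(best)
        remaining.remove(best)
    return selected

# Hypothetical per-channel utilities (not values from the study).
toy_utility = {"c1": 0.2, "c2": 0.9, "c3": 0.5, "c4": 0.7}

def toy_score(subset):
    """Stand-in for a cross-validated accuracy of a channel subset."""
    return sum(toy_utility[c] for c in subset)

selected = sequential_forward_selection(toy_utility, toy_score, k=3)
print(selected)  # ['c2', 'c4', 'c3']
```

Because each step only evaluates one-channel extensions of the current subset, the number of classifier evaluations grows roughly with the number of channels times the subset size, rather than with the number of all possible subsets.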

In linear binary classification, the hyperplane W' · X − b = 0 is used as the boundary between the two classes, where W' is the transpose of W defined in Equation (4), X is the data to be classified, and b is a constant. The classifier assigns a given input sample X ∈ R^d (in this case, d = 2,800) to a class according to the sign of (W' · X − b): if the sign is positive, X belongs to the schizotypy group, and if it is negative, X belongs to the control group. Figure 7 shows the projection of the NC (green) and ST (red) groups onto a two-dimensional coordinate plane. The hyperplane, indicated by a broken line, forms an ideal boundary between the two groups. For any unseen data, if the projection lies on the lower side of the hyperplane, the data are classified as NC; if it lies on the upper side, they are classified as ST. The classifier achieved true positive and true negative rates of 98.8 and 100%, respectively.
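As a small worked example of this decision rule and of the performance measures derived from it, the Python sketch below classifies four two-dimensional points with a hand-picked hyperplane and computes the resulting rates. The weights, offset, data points, and labels are hypothetical and chosen only for illustration; recall corresponds to the true positive rate and specificity to the true negative rate, with ST treated as the positive class.

```python
import numpy as np

def classify(X, w, b):
    """Return 1 (ST) where w @ x - b > 0, otherwise 0 (NC)."""
    return (X @ w - b > 0).astype(int)

def binary_metrics(y_true, y_pred):
    """Compute standard rates from the four confusion-matrix counts."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))

    def ratio(num, den):
        return num / den if den else float("nan")

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)          # true positive rate
    specificity = ratio(tn, tn + fp)     # true negative rate
    f1 = ratio(2 * precision * recall, precision + recall)
    accuracy = ratio(tp + tn, len(y_true))
    return {"precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1, "accuracy": accuracy}

# Hypothetical hyperplane: the horizontal axis, with "upper side" = ST.
w = np.array([0.0, 1.0])
b = 0.0
X = np.array([[1.0, 2.0], [-1.0, -0.5], [0.3, 1.0], [2.0, -3.0]])
y_true = np.array([1, 0, 1, 1])  # the last point is a deliberately missed ST case

y_pred = classify(X, w, b)
metrics = binary_metrics(y_true, y_pred)
print(y_pred)   # [1 0 1 0]
print(metrics)
```

In this toy case there are no false positives, so precision and specificity are both 1.0, while the single missed ST sample lowers recall to 2/3 and the F1-measure to 0.8.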

Discussion and Conclusions

To distinguish individuals with schizotypy from controls, a customized version of the linear discriminant analysis algorithm, called SKLDA, was used. EEG data were fed as input into the discriminant analysis to obtain the discriminant function. The classifier achieved an average precision of 98.18% and an average recall of 98.77%. Our method achieves a “partially unbiased estimate,” as the data used to develop the discriminant function were not used during validation. Thus, the results of this approach generalize better.

The two important tasks that contribute to the enhanced accuracy of our method are the estimation of the shrinkage parameter and the LOOCV. The LOOCV procedure was particularly important because, in this method, the subjects being tested are not involved in the development of the classification functions. This crucial step gives our approach the ability to generalize results to unseen subjects. In addition to the LOOCV, the use of the shrinkage parameter helped to establish a covariance matrix with optimal discriminative information. The minimum classification error occurred at γ = 0.05. The misclassification rate reached its maximum at γ = 0.0, which indicates that the empirical covariance did not contain enough discriminatory information. This also shows the advantage of shrinkage linear discriminant analysis over traditional linear discriminant analysis.
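The principle that tested subjects take no part in building the classifier can be sketched as a leave-one-subject-out loop in Python. This is an assumption-laden illustration rather than the exact LOOCV protocol of the study: it assumes that each trial carries a subject identifier and that all trials of one subject are held out together. A nearest-class-mean classifier stands in for SKLDA to keep the example short, and the data are synthetic.

```python
import numpy as np

def leave_one_subject_out(X, y, subject_ids, fit, predict):
    """Hold out all trials of one subject at a time and return a dict
    mapping each subject to the accuracy obtained on its trials."""
    accuracies = {}
    for s in np.unique(subject_ids):
        test = subject_ids == s
        model = fit(X[~test], y[~test])  # held-out subject is never seen here
        accuracies[int(s)] = float(np.mean(predict(model, X[test]) == y[test]))
    return accuracies

def fit_nearest_mean(X, y):
    """Stand-in classifier (not SKLDA): store the two class means."""
    return X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)

def predict_nearest_mean(model, X):
    """Assign each row of X to the class whose mean is closer."""
    mu0, mu1 = model
    d0 = np.linalg.norm(X - mu0, axis=1)
    d1 = np.linalg.norm(X - mu1, axis=1)
    return (d1 < d0).astype(int)

# Synthetic data (assumption): 6 subjects, 10 trials each, 5 features.
rng = np.random.default_rng(1)
subject_ids = np.repeat(np.arange(6), 10)
y = (subject_ids >= 3).astype(int)            # one group label per subject
X = rng.normal(size=(60, 5)) + 3.0 * y[:, None]

accs = leave_one_subject_out(X, y, subject_ids,
                             fit_nearest_mean, predict_nearest_mean)
for s, a in accs.items():
    print(f"subject {s}: accuracy {a:.2f}")
```

Splitting by subject rather than by trial matters because trials from the same person tend to be correlated; mixing them across training and test folds would make the estimate look better than it is for genuinely unseen subjects.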

The other important finding of our experiment is the output of the SFS greedy selection algorithm. We found that classification using three channels (Pz, P4, and T5) performed similarly to classification using all 14 channels in distinguishing individuals with schizotypy from controls, but with significantly reduced computational and calibration cost. This result suggests that brain activity in the parieto-temporal region may reflect differences between the two groups in the emotional processing of multisensory stimuli. Previous brain imaging studies using functional magnetic resonance imaging (Calvert, 2001) and magnetoencephalography (Raij et al., 2000) have found that parieto-temporal regions are part of the brain network involved in audiovisual integration. More direct evidence comes from a positron emission tomography study that used a test with emotional audiovisual pairs (facial expressions and emotional voices) and found that the left lateral temporal cortex was related to the processing of multisensory stimuli (Pourtois et al., 2005). ERP studies have also found that a positive ERP deflection (P2), a marker of multisensory processing, was mainly localized in the posterior areas (Balconi and Carrera, 2014).

Our study has several advantages. First, using a paradigm with emotional processing demands, which are believed to be affected by schizotypy, allows specific assessment of brain functioning in schizotypy. Because individuals with schizotypy are considered non-clinical subjects, EEG measurements taken under the high demands imposed by multimodal emotional processing might reveal significant differences between the two groups. Moreover, in social contexts, the ability to process emotion through multiple sensory modalities is an essential component. While people predominantly rely on the visual modality to judge the emotional states of others, the auditory modality also provides a great deal of emotional information. The ability to extract emotional salience from both visual and auditory signals, and to integrate this information, has important implications for successful communication in social life. Therefore, an advantage of our study is the use of an ecologically valid test for assessing brain function in individuals with schizotypy.

Second, a discriminant function is suggested in this paper as a useful diagnostic validator that can reliably distinguish individuals with schizotypy from controls. Although self-report questionnaires have been widely used for schizophrenia spectrum diagnoses, the suggested method has two advantages. First, whereas self-report questionnaires, such as the SPQ, are affected by the subject's understanding and introspective ability and suffer from response biases, such as the social desirability bias and acquiescence bias (Paulhus and Vazire, 2007), brain activity measurements might be considered more objective and less vulnerable to these types of bias. Second, because schizotypy is associated with a vulnerability to schizophrenia, it is important to predict which individuals could later develop schizophrenia. This method may have important clinical implications for identifying, among the general population, individuals with schizotypy who have a subtle risk and need intervention in advance. Therapeutic intervention is effective in the early phase of the disease, and early detection at the beginning of a schizophrenia spectrum disorder results in a direct therapeutic benefit for the potential patient population.

The limitation of our study stems directly from the use of cross-validation with data from the same session. Some previous research reported that classification performance can degrade because of session-to-session transfer (Marcel and Millán, 2007). However, a recent in-depth study on the stability of EEG features for biometrics concluded that EEG signals contain discriminative information that is stable across time (Maiorana et al., 2016). Although we have not estimated this type of degradation here, we expect it to be minimal, given the reported stability of EEG features across sessions.

In sum, this paper proposes a successful method for distinguishing individuals with schizotypy from controls based on neurophysiological outcomes of audiovisual emotion perception and shrinkage linear discriminant analysis. High accuracy and a zero false-positive rate are among the advantages of our method. The classification accuracy was high (98.22%), with subjects correctly classified at an average precision of 98.18% and an average recall of 98.77%. This method could be useful in the early detection of psychosis-prone individuals, such as those with schizotypy, and could help to elucidate the progression from schizotypy to schizophrenia.

Author Contributions

JJ designed the experiment, analyzed the data, and wrote the manuscript. TW analyzed the data and wrote the manuscript. EC performed literature research and data analysis and edited the manuscript. YC performed literature research and data analysis and conducted the experiment. JP provided clinical bases of data analysis. HJK supervised the methodological aspects of this study and managed the overall data analysis. HTK supervised the theoretical works and the practical aspects of this study and managed the overall data collection and analysis. All of the authors contributed to the correction of the final manuscript.

Funding

This work was supported by a Korea University Grant and the MSIP (Ministry of Science, ICT and Future Planning), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2017-2015-0-00385) supervised by the IITP (Institute for Information & communication Technology Promotion).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Balconi, M., and Carrera, A. (2014). Cross-modal integration of emotional face and voice in congruous and incongruous pairs: the P2 ERP effect. J. Cogn. Psychol. 23, 132–139. doi: 10.1080/20445911.2011.473560

Blankertz, B., Lemm, S., Treder, M., Haufe, S., and Muller, K. R. (2011). Single-trial analysis and classification of ERP components–a tutorial. Neuroimage 56, 814–825. doi: 10.1016/j.neuroimage.2010.06.048

Borod, J. C., Martin, C. C., Alpert, M., Brozgold, A., and Welkowitz, J. (1993). Perception of facial emotion in schizophrenic and right brain-damaged patients. J. Nerv. Ment. Dis. 181, 494–502. doi: 10.1097/00005053-199308000-00004

Bozikas, V. P., Kosmidis, M. H., Anezoulaki, D., Giannakou, M., Andreou, C., and Karavatos, A. (2006). Impaired perception of affective prosody in schizophrenia. J. Neuropsychiatry Clin. Neurosci. 18, 81–85. doi: 10.1176/jnp.18.1.81

Calvert, G. A. (2001). Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cereb. Cortex 11, 1110–1123. doi: 10.1093/cercor/11.12.1110

Campanella, S., Montedoro, C., Streel, E., Verbanck, P., and Rosier, V. (2006). Early visual components (P100, N170) are disrupted in chronic schizophrenic patients: an event-related potentials study. Neurophysiol. Clin. 36, 71–78. doi: 10.1016/j.neucli.2006.04.005

Cao, L. J., Chua, K. S., Chong, W. K., Lee, H. P., and Gu, Q. M. (2003). A comparison of PCA, KPCA and ICA for dimensionality reduction in support vector machine. Neurocomputing 55, 321–336. doi: 10.1016/S0925-2312(03)00433-8

Chapman, L. J., Chapman, J. P., Kwapil, T. R., Eckblad, M., and Zinser, M. C. (1994). Putatively psychosis-prone subjects 10 years later. J. Abnorm. Psychol. 103, 171–183. doi: 10.1037/0021-843X.103.2.171

Chapman, R. M., Mccrary, J. W., Gardner, M. N., Sandoval, T. C., Guillily, M. D., Reilly, L. A., et al. (2011). Brain ERP components predict which individuals progress to Alzheimer's disease and which do not. Neurobiol. Aging 32, 1742–1755. doi: 10.1016/j.neurobiolaging.2009.11.010

Chapman, R. M., Nowlis, G. H., McCrary, J. W., Chapman, J. A., Sandoval, T. C., Guillily, M. D., et al. (2007). Brain event-related potentials: diagnosing early-stage Alzheimer's disease. Neurobiol. Aging 28, 194–201. doi: 10.1016/j.neurobiolaging.2005.12.008

Chen, Y., Dessalegn, A., Schlattner, I., Tariku, W., Roh, M. C., Kim, H. J., et al. (2016). A high-security EEG-based login system with RSVP stimuli and dry electrodes. IEEE Trans. Inf. Forensic Secur. 11, 2635–2647. doi: 10.1109/TIFS.2016.2577551

Claridge, G., McCreery, C., Mason, O., Bentall, R., Boyle, G., Slade, P., et al. (1996). The factor structure of 'schizotypal' traits: a large replication study. Br. J. Clin. Psychol. 35(Pt 1), 103–115. doi: 10.1111/j.2044-8260.1996.tb01166.x

Dale, A. M., Liu, A. K., Fischl, B. R., Buckner, R. L., Belliveau, J. W., Lewine, J. D., et al. (2000). Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron 26, 55–67. doi: 10.1016/S0896-6273(00)81138-1

Eysenck, H. J. (1992). The definition and measurements of psychoticism. Pers. Individ. Dif. 13, 757–785. doi: 10.1016/0191-8869(92)90050-Y

Feinberg, T. E., Rifkin, A., Schaffer, C., and Walker, E. (1986). Facial discrimination and emotional recognition in schizophrenia and affective disorders. Arch. Gen. Psychiatry 43, 276–279. doi: 10.1001/archpsyc.1986.01800030094010

Genetic Risk and Outcome in Psychosis (GROUP) Investigators (2011). Evidence that familial liability for psychosis is expressed as differential sensitivity to cannabis: an analysis of patient-sibling and sibling-control pairs. Arch. Gen. Psychiatry 68, 138–147. doi: 10.1001/archgenpsychiatry.2010.132

Gold, R., Butler, P., Revheim, N., Leitman, D. I., Hansen, J. A., Gur, R. C., et al. (2012). Auditory emotion recognition impairments in schizophrenia: relationship to acoustic features and cognition. Am. J. Psychiatry 169, 424–432. doi: 10.1176/appi.ajp.2011.11081230

Gruzelier, J. H., and Doig, A. (1996). The factorial structure of schizotypy: Part II. Cognitive asymmetry, arousal, handedness, and sex. Schizophr. Bull. 22, 621–634. doi: 10.1093/schbul/22.4.621

Hall, J., Harris, J. M., Sprengelmeyer, R., Sprengelmeyer, A., Young, A. W., Santos, I. M., et al. (2004). Social cognition and face processing in schizophrenia. Br. J. Psychiatry 185, 169–170. doi: 10.1192/bjp.185.2.169

Hori, H., Teraishi, T., Sasayama, D., Hattori, K., Ota, M., Matsuo, J., et al. (2012). Schizotypal trait in healthy women is associated with a shift away from dextrality on a spatial motor control task, but not on a force control task. Psychiatry Res. 200, 629–634. doi: 10.1016/j.psychres.2012.05.032

Huang, J., Wang, Y., Jin, Z., Di, X., Yang, T., Gur, R. C., et al. (2013). Happy facial expression processing with different social interaction cues: an fMRI study of individuals with schizotypal personality traits. Prog. Neuropsychopharmacol. Biol. Psychiatry 44, 108–117. doi: 10.1016/j.pnpbp.2013.02.004

Jain, A. K., and Chandrasekaran, B. (1982). 39 dimensionality and sample size considerations in pattern recognition practice. Handb. Stat. 2, 835–855. doi: 10.1016/S0169-7161(82)02042-2

Kalatzis, I., Piliouras, N., Ventouras, E., Papageorgiou, C. C., Rabavilas, A. D., and Cavouras, D. (2004). Design and implementation of an SVM-based computer classification system for discriminating depressive patients from healthy controls using the P600 component of ERP signals. Comput. Methods Programs Biomed. 75, 11–22. doi: 10.1016/j.cmpb.2003.09.003

Kerns, J. G. (2006). Schizotypy facets, cognitive control, and emotion. J. Abnorm. Psychol. 115, 418–427. doi: 10.1037/0021-843X.115.3.418

Kim, M. W., Cho, Y. S., and Choi, J. S. (2011). The Korea University Facial Expression Collection (KUFEC) and semantic differential ratings of emotion. Korean J. Psychol. Gen. 30, 1189–1211. doi: 10.3389/fpsyg.2017.00769

Kosmidis, M. H., Bozikas, V. P., Giannakou, M., Anezoulaki, D., Fantie, B. D., and Karavatos, A. (2007). Impaired emotion perception in schizophrenia: a differential deficit. Psychiatry Res. 149, 279–284. doi: 10.1016/j.psychres.2004.09.011

Lehmann, C., Koenig, T., Jelic, V., Prichep, L., John, R. E., Wahlund, L. O., et al. (2007). Application and comparison of classification algorithms for recognition of Alzheimer's disease in electrical brain activity (EEG). J. Neurosci. Methods 161, 342–350. doi: 10.1016/j.jneumeth.2006.10.023

Leitman, D. I., Laukka, P., Juslin, P. N., Saccente, E., Butler, P., and Javitt, D. C. (2010). Getting the cue: sensory contributions to auditory emotion recognition impairments in schizophrenia. Schizophr. Bull. 36, 545–556. doi: 10.1093/schbul/sbn115

Lewandowski, K. E., Barrantes-Vidal, N., Nelson-Gray, R. O., Clancy, C., Kepley, H. O., and Kwapil, T. R. (2006). Anxiety and depression symptoms in psychometrically identified schizotypy. Schizophr. Res. 83, 225–235. doi: 10.1016/j.schres.2005.11.024

Liu, J., Zhang, C., and Zheng, C. (2010). EEG-based estimation of mental fatigue by using KPCA–HMM and complexity parameters. Biomed. Signal Process Control. 5, 124–130. doi: 10.1016/j.bspc.2010.01.001

Liu, K. H., Yan, S., and Kuo, C. C. J. (2015). Age estimation via grouping and decision fusion. IEEE Trans. Inf. Forensic Secur. 10, 2408–2423. doi: 10.1109/TIFS.2015.2462732

Lotte, F., Congedo, M., Lecuyer, A., Lamarche, F., and Arnaldi, B. (2007). A review of classification algorithms for EEG-based brain-computer interfaces. J. Neural. Eng. 4, R1–R13. doi: 10.1088/1741-2560/4/2/R01

Lynn, S. K., and Salisbury, D. F. (2008). Attenuated modulation of the N170 ERP by facial expressions in schizophrenia. Clin. EEG Neurosci. 39, 108–111. doi: 10.1177/155005940803900218

Maiorana, E., La Rocca, D., and Campisi, P. (2016). On the permanence of EEG signals for biometric recognition. IEEE Trans. Inf. Forensic Secur. 11, 163–175. doi: 10.1109/TIFS.2015.2481870

Marcano-Cedeño, A., Quintanilla-Domínguez, J., Cortina-Januchs, M. G., and Andina, D. (2010). “Feature selection using sequential forward selection and classification applying artificial metaplasticity neural network,” in IECON 2010-36th Annual Conference on IEEE Industrial Electronics Society (Glendale, CA), 2845–2850.

Marcel, S., and Millán, J. D. R. (2007). Person authentication using brainwaves (EEG) and maximum a posteriori model adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 29, 743–752. doi: 10.1109/TPAMI.2007.1012

Modinos, G., Renken, R., Shamay-Tsoory, S. G., Ormel, J., and Aleman, A. (2010). Neurobiological correlates of theory of mind in psychosis proneness. Neuropsychologia 48, 3715–3724. doi: 10.1016/j.neuropsychologia.2010.09.030

Mohanty, A., Herrington, J. D., Koven, N. S., Fisher, J. E., Wenzel, E. A., Webb, A. G., et al. (2005). Neural mechanisms of affective interference in schizotypy. J. Abnorm. Psychol. 114, 16–27. doi: 10.1037/0021-843X.114.1.16

Papousek, I., Weiss, E. M., Mosbacher, J. A., Reiser, E. M., Schulter, G., and Fink, A. (2014). Affective processing in positive schizotypy: Loose control of social-emotional information. Brain Cogn. 92C, 84–91. doi: 10.1016/j.bandc.2014.10.008

Paulhus, D. L., and Vazire, S. (2007). “The self-report method,” in Handbook of Research Methods in Personality Psychology, eds R. W. Robins, R. C. Fraley, and R. F. Krueger (New York, NY: Guilford Press, Inc.), 224–239.

Persad, S. M., and Polivy, J. (1993). Differences between depressed and nondepressed individuals in the recognition of and response to facial emotional cues. J. Abnorm. Psychol. 102, 358–368. doi: 10.1037/0021-843X.102.3.358

Pinheiro, A. P., Del Re, E., Mezin, J., Nestor, P. G., Rauber, A., McCarley, R. W., et al. (2013). Sensory-based and higher-order operations contribute to abnormal emotional prosody processing in schizophrenia: an electrophysiological investigation. Psychol. Med. 43, 603–618. doi: 10.1017/S003329171200133X

Pourtois, G., De Gelder, B., Bol, A., and Crommelinck, M. (2005). Perception of facial expressions and voices and of their combination in the human brain. Cortex 41, 49–59. doi: 10.1016/S0010-9452(08)70177-1

Radloff, L. S. (1977). The CES-D scale: a self-report depression scale for research in the general population. Appl. Psychol. Meas. 1, 385–401. doi: 10.1177/014662167700100306

Raij, T., Uutela, K., and Hari, R. (2000). Audiovisual integration of letters in the human brain. Neuron 28, 617–625. doi: 10.1016/S0896-6273(00)00138-0

Raine, A. (1991). The SPQ: a scale for the assessment of schizotypal personality based on DSM-III-R criteria. Schizophr. Bull. 17, 555–564. doi: 10.1093/schbul/17.4.555

Ramos-Loyo, J., Gonzalez-Garrido, A. A., Sanchez-Loyo, L. M., Medina, V., and Basar-Eroglu, C. (2009). Event-related potentials and event-related oscillations during identity and facial emotional processing in schizophrenia. Int. J. Psychophysiol. 71, 84–90. doi: 10.1016/j.ijpsycho.2008.07.008

Raudys, S. J., and Jain, A. K. (1991). Small sample size effects in statistical pattern recognition: Recommendations for practitioners. IEEE Trans. Pattern Anal. Mach. Intell. 13, 252–264. doi: 10.1109/34.75512

Rossler, W., Hengartner, M. P., Ajdacic-Gross, V., Haker, H., and Angst, J. (2014). Impact of childhood adversity on the onset and course of subclinical psychosis symptoms–results from a 30-year prospective community study. Schizophr. Res. 153, 189–195. doi: 10.1016/j.schres.2014.01.040

Sabeti, M., Katebi, R., Boostani, R., and Price, G. W. (2011). A new approach for EEG signal classification of schizophrenic and control participants. Expert Syst. Appl. 38, 2063–2071. doi: 10.1016/j.eswa.2010.07.145

Schäfer, J., and Strimmer, K. (2005). A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Stat. Appl. Genet. Mol. Biol. 4, 32. doi: 10.2202/1544-6115.1175

Subasi, A., and Gursoy, M. I. (2010). EEG signal classification using PCA, ICA, LDA and support vector machines. Expert Syst. Appl. 37, 8659–8666. doi: 10.1016/j.eswa.2010.06.065

Turetsky, B. I., Kohler, C. G., Indersmitten, T., Bhati, M. T., Charbonnier, D., and Gur, R. C. (2007). Facial emotion recognition in schizophrenia: when and why does it go awry? Schizophr. Res. 94, 253–263. doi: 10.1016/j.schres.2007.05.001

Wang, X., and Paliwal, K. K. (2003). Feature extraction and dimensionality reduction algorithms and their applications in vowel recognition. Pattern Recogn. 36, 2429–2439. doi: 10.1016/S0031-3203(03)00044-X

Wynn, J. K., Lee, J., Horan, W. P., and Green, M. F. (2008). Using event related potentials to explore stages of facial affect recognition deficits in schizophrenia. Schizophr. Bull. 34, 679–687. doi: 10.1093/schbul/sbn047

Yuvaraj, R., and Murugappan, M. (2016). Hemispheric asymmetry non-linear analysis of EEG during emotional responses from idiopathic Parkinson's disease patients. Cogn. Neurodynamics 10, 225–234. doi: 10.1007/s11571-016-9375-3

Keywords: Schizotypy, classification, EEG, multimodal emotion perception, shrinkage linear discriminant analysis

Citation: Jeong JW, Wendimagegn TW, Chang E, Chun Y, Park JH, Kim HJ and Kim HT (2017) Classifying Schizotypy Using an Audiovisual Emotion Perception Test and Scalp Electroencephalography. Front. Hum. Neurosci. 11:450. doi: 10.3389/fnhum.2017.00450

Received: 26 December 2016; Accepted: 24 August 2017;

Published: 12 September 2017.

Edited by:

Chang S. Nam, North Carolina State University, United States

Reviewed by:

Sung-Phil Kim, Ulsan National Institute of Science and Technology, South Korea

Rahul Goel, University of Houston, United States

Copyright © 2017 Jeong, Wendimagegn, Chang, Chun, Park, Kim and Kim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hyoung Joong Kim, khj-@korea.ac.kr

Hyun Taek Kim, neurolab@korea.ac.kr

†These authors have contributed equally to this work.
