- 1 Systems Neuroscience Section, Department of Rehabilitation for Brain Functions, Research Institute of National Rehabilitation Center for Persons with Disabilities, Tokorozawa, Japan

- 2 Mechatronics Section, Department of Rehabilitation Engineering, Research Institute of National Rehabilitation Center for Persons with Disabilities, Tokorozawa, Japan

The brain–machine interface (BMI) or brain–computer interface is a new interface technology that uses neurophysiological signals from the brain to control external machines or computers. This technology is expected to support daily activities, especially for persons with disabilities. To expand the range of activities enabled by this type of interface, here, we added augmented reality (AR) to a P300-based BMI. In this new system, we used a see-through head-mount display (HMD) to create control panels with flicker visual stimuli to support the user in areas close to controllable devices. When the attached camera detects an AR marker, the position and orientation of the marker are calculated, and the control panel for the pre-assigned appliance is created by the AR system and superimposed on the HMD. The participants were required to control system-compatible devices, and they successfully operated them without significant training. Online performance with the HMD was not different from that using an LCD monitor. Posterior and lateral (right or left) channel selections contributed to operation of the AR–BMI with both the HMD and LCD monitor. Our results indicate that AR–BMI systems operated with a see-through HMD may be useful in building advanced intelligent environments.

Introduction

The brain–machine interface (BMI) or brain–computer interface (BCI) is a new interface technology that uses neurophysiological signals from the brain to control external computers or machines (Birbaumer et al., 1999; Wolpaw and McFarland, 2004; Birbaumer and Cohen, 2007). Electroencephalography (EEG), in which neurophysiological signals are recorded using electrodes placed on the scalp, is the primary non-invasive method used in BMI research. Our group applied EEG to develop a BMI-based system for environmental control and communication. In this system, we modified a P300 speller (Farwell and Donchin, 1988). The P300 speller uses the P300 paradigm and presents a selection of icons arranged in a matrix. The participant focuses on one icon in the matrix as the target, and each row/column or single icon of the matrix is then intensified in a random sequence. The target stimuli are presented as rare stimuli (i.e., the oddball paradigm). We elicited P300 responses to the target stimuli and then extracted and classified these responses to identify the target. In our previous studies, we used a green/blue flicker matrix because this color combination is considered the safest (Parra et al., 2007). We showed that the green/blue flicker matrix was associated with a better subjective feeling of comfort than the white/gray flicker matrix, and we also found that the green/blue flicker matrix was associated with better performance (Takano et al., 2009a,b). The BMI system was satisfactorily used by individuals with cervical spinal cord injury (Komatsu et al., 2008; Ikegami et al., 2011).

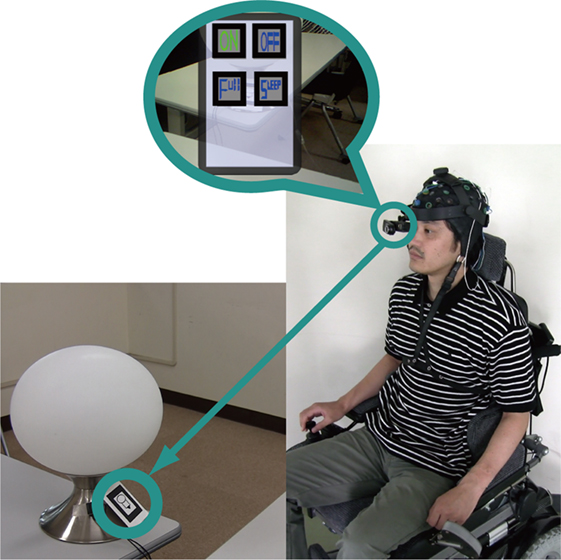

Such a system could be used by persons with disabilities to support their daily activities. In this type of system, users rely on control panels that are pre-equipped; thus, each system is specialized for the user’s specific environment (e.g., his or her home). To expand the range of possible activities, it is desirable to develop a new system that can be readily used in new environments, such as hospitals. To make this possible, here, we added an augmented reality (AR) feature to a P300-based BMI. In the system, we used a see-through head-mount display (HMD) to create control panels with flicker visual stimuli, thereby giving users suitable panels when they come close to a controllable device. When the attached camera detects an AR marker, the position and orientation of the marker are calculated, and the control panel for the pre-assigned appliance is created by the AR system and superimposed on the scene via the HMD (Figures 1 and 2).

Figure 1. Diagram of the AR–BMI system. When the USB camera detects an AR marker, the control panels for a pre-assigned appliance (e.g., desk light) are added to the user’s sight and the device becomes controllable. The subjects are able to operate the appliance by focusing on an icon on the augmented control panel.

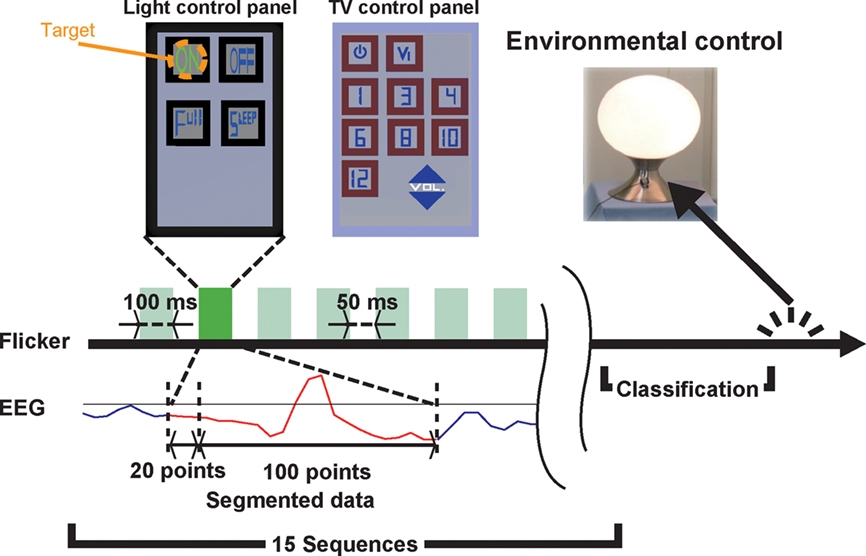

Figure 2. Experimental procedure. The icons change color from blue to green at the time of intensification, and the elicited ERPs are recorded. The red solid line in the EEG data indicates the segmented portion used for classification. The target was classified by Fisher’s linear discriminant analysis after 15 sequences. The light control panel had four icons (turn on, turn off, light up, and dim), whereas the TV control panel had 11 icons, including turn on, change channel, change video mode, volume up, and volume down.

To evaluate the effects of different types of visual stimuli on the new AR–BMI, we compared a see-through HMD with an LCD monitor. The participants were asked to control devices using the AR–BMI system with both displays. Online performance with the see-through HMD did not differ significantly from that with the LCD monitor, indicating that the AR–BMI system worked well with the see-through HMD.

Materials and Methods

Subjects

Fifteen subjects were recruited as participants (aged 19–46 years; 3 females, 12 males). All subjects were neurologically normal and strongly right-handed according to the Edinburgh Inventory (Oldfield, 1971). Our study was approved by the Institutional Review Board at the National Rehabilitation Center for Persons with Disabilities. All subjects provided written informed consent in accordance with institutional guidelines.

Experimental Design

Augmented reality techniques were combined with a BMI (Figure 1). The AR–BMI system consisted of an HMD (LE750A, Liteye Systems, Inc., Centennial, CO, USA) or an LCD monitor (E207WFPc, Dell Inc., Round Rock, TX, USA), a PC, a USB camera (QCAM-200V, Logicool, Tokyo, Japan), an EEG amplifier (g.USBamp, Guger Technologies OEG, Graz, Austria), and an EEG cap (g.EEGcap, Guger Technologies OEG). The system was built with the ARToolKit C-language library (Kato and Billinghurst, 1999). When the camera detects an AR marker, the pre-assigned infrared-controlled appliance becomes controllable. The position and orientation of the AR marker were calculated from the camera images, and a control panel for the appliance was created by the AR system and superimposed within the subject’s field of view. A TV and a desk light served as controllable devices, and an AR marker was prepared for each of their control panels (Figure 2).

We used green/blue flicker matrices (Takano et al., 2009b) as control panels. The durations of intensification and rest were 100 and 50 ms, respectively. In each sequence, every icon flickered once in random order, and one classification was carried out every 15 sequences (Figure 2). Subjects were asked to focus on the target icon and to send five commands to each of the two devices (TV and desk light).
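To make the timing concrete, the sketch below builds a stimulus schedule under stated assumptions: each icon is intensified for 100 ms and then rests for 50 ms, the next intensification starts immediately afterwards (a 150 ms onset-to-onset interval), and there is no extra pause between sequences. The last two points are not specified in the text, and the function names are hypothetical.

```python
import random

INTENSIFY_MS = 100  # icon shown in green (intensified), from the text
REST_MS = 50        # rest before the next intensification, from the text
# Assumption: flashes follow back-to-back, so onsets are 150 ms apart.
SOA_MS = INTENSIFY_MS + REST_MS


def make_schedule(n_icons, n_sequences=15, seed=None):
    """Return (onset_ms, icon_index) pairs for one classification.

    Each sequence flashes every icon exactly once in a fresh random order.
    Assumes no extra pause between consecutive sequences.
    """
    rng = random.Random(seed)
    schedule = []
    t = 0
    for _ in range(n_sequences):
        order = list(range(n_icons))
        rng.shuffle(order)
        for icon in order:
            schedule.append((t, icon))
            t += SOA_MS
    return schedule


def selection_time_s(n_icons, n_sequences=15):
    """Total stimulation time needed for one command, in seconds."""
    return n_icons * n_sequences * SOA_MS / 1000.0
```

Under these assumptions, one command requires 4 × 15 × 150 ms = 9.0 s of stimulation with the 4-icon light panel and 11 × 15 × 150 ms = 24.75 s with the 11-icon TV panel.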

EEG Recording and Analysis

Eight-channel (Fz, Cz, Pz, P3, P4, Oz, Po7, and Po8) EEG data were recorded using the EEG cap. All channels were referenced to Fpz and grounded to AFz. Electrode impedance was kept below 20 kΩ. The EEG data were amplified and digitized with the g.USBamp; because the amplifier’s internal sampling rate is higher than 128 Hz, the data were down-sampled to 128 Hz. The digitized data were filtered with an eighth-order high-pass filter at 0.1 Hz and a fourth-order 48–52 Hz notch filter.

For the analyses, the recorded EEG data were filtered with a first-order band-pass filter (1.27–2.86 Hz), and segments of 120 sampling points were extracted time-locked to each intensification. The first 20 points (before intensification) were used for baseline correction, and the remaining 100 points (after intensification) were down-sampled to 25.6 Hz and used for classification.
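At 128 Hz, the 120-point segment spans 937.5 ms, of which the 20 baseline points cover about 156 ms before intensification and the 100 classification points cover about 781 ms after it; down-sampling by a factor of 5 (128 Hz to 25.6 Hz) leaves 20 points per channel. The following Python sketch shows one way to cut such an epoch. It assumes the data are already band-pass filtered, uses mean subtraction for baseline correction, and down-samples by averaging non-overlapping blocks of five samples; the text does not state which down-sampling method was used, and `extract_epoch` is a hypothetical helper.

```python
import numpy as np

FS = 128    # sampling rate in Hz, from the text
PRE = 20    # samples before intensification, used for the baseline
POST = 100  # samples after intensification, used for classification
DECIM = 5   # 128 Hz / 5 = 25.6 Hz, so 100 samples become 20


def extract_epoch(eeg, onset):
    """Cut one baseline-corrected, down-sampled epoch.

    eeg   : array (n_channels, n_samples), already band-pass filtered
    onset : sample index of the intensification
    Returns an array of shape (n_channels, POST // DECIM).
    """
    if onset < PRE or onset + POST > eeg.shape[1]:
        raise ValueError("epoch runs past the edge of the recording")
    segment = eeg[:, onset - PRE:onset + POST]            # 120 samples
    baseline = segment[:, :PRE].mean(axis=1, keepdims=True)
    post = segment[:, PRE:] - baseline                    # 100 samples
    # Assumed down-sampling: average non-overlapping blocks of 5 samples.
    n_ch = post.shape[0]
    return post.reshape(n_ch, POST // DECIM, DECIM).mean(axis=2)
```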

For the training set, EEG data were recorded beforehand to create the feature vectors. Subjects were asked to focus on a target icon in each of four trials, with a different target icon in each trial. Sixty segments (4 trials × 15 intensifications) were recorded as the target data set, and 600 segments (4 trials × 15 intensifications × 10 non-target icons) were recorded as the non-target data set. Each segment contained 100 points per EEG channel, which were down-sampled to 20 points per channel. In total, 160-dimensional feature vectors (20 points × 8 channels) were calculated from the segmented data for each subject. Feature vectors were derived separately for each experimental condition (LCD and HMD).
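The bookkeeping of the training set can be expressed compactly. This hypothetical sketch flattens each 8-channel × 20-point epoch into a 160-dimensional vector and stacks target and non-target epochs with binary labels; the channel-major ordering is an assumption, since the text gives only the total dimension.

```python
import numpy as np

N_CHANNELS = 8                       # Fz, Cz, Pz, P3, P4, Oz, Po7, Po8
N_POINTS = 20                        # down-sampled points per channel
N_FEATURES = N_CHANNELS * N_POINTS   # 160

N_TARGET = 4 * 15                    # 4 trials x 15 intensifications = 60
N_NONTARGET = 4 * 15 * 10            # x 10 non-target icons = 600


def to_feature_vector(epoch):
    """Flatten a (channels, points) epoch into a 160-dimensional vector.

    Channel-major concatenation is an assumption; any fixed order works
    as long as training and testing use the same one.
    """
    if epoch.shape != (N_CHANNELS, N_POINTS):
        raise ValueError(f"expected {(N_CHANNELS, N_POINTS)}, got {epoch.shape}")
    return epoch.reshape(-1)


def build_training_set(target_epochs, nontarget_epochs):
    """Stack lists of epochs into X (n, 160) and labels y (1 = target, 0 = non-target)."""
    epochs = list(target_epochs) + list(nontarget_epochs)
    X = np.array([to_feature_vector(e) for e in epochs])
    y = np.array([1] * len(target_epochs) + [0] * len(nontarget_epochs))
    return X, y
```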

In the test sessions, target and non-target icons were discriminated from the feature vectors using Fisher’s linear discriminant analysis. The classification scores for each icon were summed over the 15 sequences, and the icon with the maximum summed score was taken as the icon to which the subject was attending.
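The classification step can be sketched as follows. This is a textbook two-class Fisher discriminant (weight vector proportional to the inverse within-class scatter times the difference of class means), not necessarily the exact implementation used in the study; the small ridge regularization and the function names are assumptions. Scores for each icon are summed over all intensifications before taking the maximum, as described above.

```python
import numpy as np


def fit_fisher_lda(X, y, ridge=1e-6):
    """Fisher's linear discriminant for target (1) vs non-target (0).

    Returns (w, b) so that score = X @ w + b tends to be larger for targets.
    The small ridge term is an assumption added for numerical stability.
    """
    X1, X0 = X[y == 1], X[y == 0]
    m1, m0 = X1.mean(axis=0), X0.mean(axis=0)
    # Pooled within-class scatter matrix
    Sw = (X1 - m1).T @ (X1 - m1) + (X0 - m0).T @ (X0 - m0)
    d = Sw.shape[0]
    Sw += ridge * np.trace(Sw) / d * np.eye(d)
    w = np.linalg.solve(Sw, m1 - m0)
    b = -0.5 * (m1 + m0) @ w  # threshold midway between projected class means
    return w, b


def classify_selection(features, icon_ids, w, b, n_icons):
    """Pick the attended icon by summing scores over all sequences.

    features : (n_flashes, n_features) feature vectors, one per intensification
    icon_ids : (n_flashes,) index of the icon flashed at each intensification
    """
    scores = features @ w + b
    summed = np.zeros(n_icons)
    np.add.at(summed, icon_ids, scores)  # accumulate scores per icon
    return int(np.argmax(summed)), summed
```

Because every icon is intensified the same number of times, the bias `b` does not change which icon wins; it matters only if single-flash scores are thresholded.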

Results

Online Performance and Offline Evaluation

In the current study, we prepared an AR–BMI to control system-compatible devices. We used both a see-through HMD and an LCD monitor to further evaluate the effect of different types of visual stimuli on the AR–BMI.

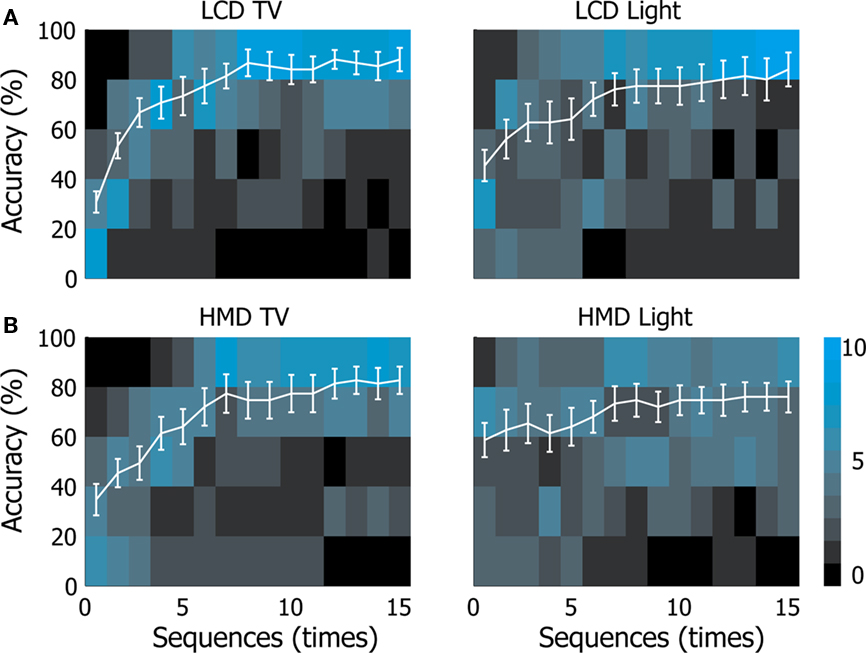

Online performance was evaluated: the mean accuracy rate for the TV control panel was 88.0% (SD = 3.20) with the LCD monitor and 82.7% (SD = 2.63) with the HMD, a difference that was not significant (Figure 3). In contrast, the offline evaluation showed a significant difference [two-way repeated-measures ANOVA, F(1,420) = 13.6, p < 0.05; Tukey–Kramer test, p < 0.05].

Figure 3. Subjects’ control accuracy. Accuracy in controlling the TV and desk light is shown. The horizontal axes indicate the number of sequences, and the vertical axes indicate accuracy. White solid lines show the mean accuracy with the SE. The blue squares behind the white lines form two-dimensional histograms; each square indicates the number of subjects who reached a given accuracy at a given number of sequences [(A): LCD, (B): HMD].

The mean accuracy rate for light control was 84% (SD = 3.40) with the LCD monitor and 76% (SD = 2.06) with the HMD; this difference was not significant in either the online or the offline evaluation. Thus, our AR–BMI system could be operated not only with an LCD monitor but also with a see-through HMD.
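The text does not detail how the offline evaluation was computed. One common approach, assumed in this sketch, is to re-classify each recorded command using only the first k sequences and to report the resulting accuracy for k = 1 to 15, matching the horizontal axes of Figure 3. The array layout and function name are hypothetical.

```python
import numpy as np


def accuracy_by_sequences(scores, icon_ids, targets, n_icons, max_seq=15):
    """Offline accuracy when only the first k sequences are used (k = 1..max_seq).

    scores   : (n_commands, max_seq * n_icons) classifier score for each flash
    icon_ids : same shape, index of the icon flashed at each position
    targets  : (n_commands,) icon the subject was asked to attend to
    Assumes each sequence flashes every icon once, so one sequence is
    n_icons consecutive flashes.
    """
    n_commands = scores.shape[0]
    acc = np.zeros(max_seq)
    for k in range(1, max_seq + 1):
        n_flash = k * n_icons
        correct = 0
        for c in range(n_commands):
            summed = np.zeros(n_icons)
            # Sum scores per icon over the first k sequences only
            np.add.at(summed, icon_ids[c, :n_flash], scores[c, :n_flash])
            correct += int(np.argmax(summed) == targets[c])
        acc[k - 1] = correct / n_commands
    return acc
```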

Channel Selection

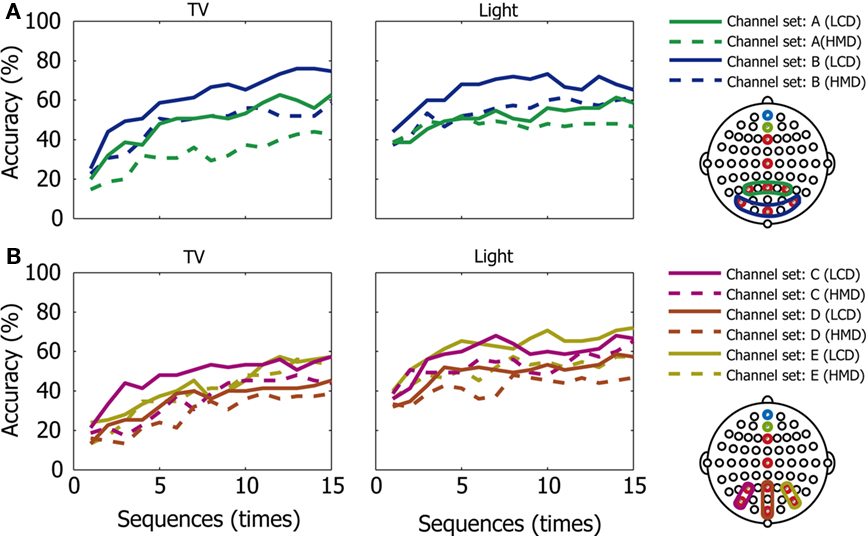

We further investigated the effects of channel selection on the operation of the AR–BMI with the HMD and LCD monitor by dividing the EEG channels into sets and evaluating the accuracy obtained with each set.

When we analyzed the data using two horizontal channel sets [A (P3, Pz, and P4) and B (Po7, Oz, and Po8); Figure 4A], set B (posterior set) showed significantly higher accuracy than set A (anterior set) in all sessions and under all conditions (p < 0.05, two-way repeated-measures ANOVA, no interaction).

Figure 4. Control accuracy in the channel sets. Accuracy in controlling the TV and desk light is shown for different channel sets (A–E). The horizontal axes indicate the number of sequences, and the vertical axes indicate accuracy. (A): channel sets A (P3, Pz, and P4) and B (Po7, Oz, and Po8); (B): channel sets C (P3 and Po7), D (Pz and Oz), and E (P4 and Po8). Solid lines indicate performance with the LCD, and broken lines indicate performance with the HMD for each channel set.

When we analyzed the data using three vertical channel sets [C (P3 and Po7), D (Pz and Oz), and E (P4 and Po8); Figure 4B], set D (middle set) showed significantly lower accuracy than the lateral (left and right) sets in all sessions and under all conditions (p < 0.05, two-way repeated-measures ANOVA, no interaction; Tukey–Kramer post hoc test).

These results show that the posterior and lateral (right or left) channel sets provided better performance in the operation of the AR–BMI with both the HMD and LCD monitor.
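Evaluating a channel set amounts to restricting the feature vector to the columns of the chosen channels and retraining the classifier on that subset. The sketch below assumes the channel order listed in EEG Recording and Analysis and a channel-major layout of 20 points per channel; both are assumptions, and `feature_columns` is a hypothetical helper.

```python
import numpy as np

# Assumed order of channels within the 160-dimensional feature vector
CHANNELS = ["Fz", "Cz", "Pz", "P3", "P4", "Oz", "Po7", "Po8"]
N_POINTS = 20  # down-sampled points per channel

# Channel sets A-E as defined in the text
CHANNEL_SETS = {
    "A": ["P3", "Pz", "P4"],
    "B": ["Po7", "Oz", "Po8"],
    "C": ["P3", "Po7"],
    "D": ["Pz", "Oz"],
    "E": ["P4", "Po8"],
}


def feature_columns(channel_names):
    """Column indices of the feature vector belonging to the given channels.

    Assumes channel i occupies columns i*20 through i*20 + 19.
    """
    cols = []
    for name in channel_names:
        i = CHANNELS.index(name)
        cols.extend(range(i * N_POINTS, (i + 1) * N_POINTS))
    return np.array(cols)
```

For example, `X[:, feature_columns(CHANNEL_SETS["B"])]` selects the 60 columns of the posterior set B, which can then be used to train and test the discriminant in place of the full 160-dimensional data.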

Discussion

In this study, participants successfully operated system-compatible devices without significant training using an AR–BMI system operated with a see-through HMD, which provides suitable control panels when users come close to a controllable device.

HMD vs. LCD Monitor

Because BMI systems based on visual evoked potentials rely on visual stimulation, the properties of the visual stimuli need to be evaluated. Townsend et al. (2010) reported that a checkerboard paradigm for visual stimuli increased accuracy. Our group found that green/blue flicker stimuli improved performance during operation of a P300-based BMI (Takano et al., 2009b). A BMI study that presented visual stimuli with an immersive HMD and with an LCD monitor found no significant difference between the two (Bayliss, 2003).

In this study, we applied both a see-through HMD and an LCD monitor to an AR–BMI system to further evaluate the effect of different types of visual stimuli; in the online evaluation, performance with the HMD did not differ from that with the LCD monitor. Mean accuracy in this study ranged from 76 to 88%. Because accuracy above 70% is regarded as sufficient for practical use (Kubler and Birbaumer, 2008; Nijboer et al., 2008), the system can be considered to have reached a level suitable for actual use.

In the offline analyses, the see-through HMD yielded significantly lower accuracy for TV control than the LCD monitor. Because icon size and the distance between icons can affect classification accuracy (Sellers et al., 2006), this difference may have been caused by differences in the visual stimuli presented by the HMD and the LCD monitor. The effects of visual stimuli on BMI operation therefore warrant further investigation.

Channel Set

We also investigated the effects of channel selection on operation of the AR–BMI using an HMD and LCD monitor, and found that posterior and lateral (right or left) channel selections contributed favorably to the operation of the AR–BMI with both the HMD and LCD monitor. Important roles for postero-lateral channels in driving a P300-based BMI have been reported (Krusienski et al., 2008; Rakotomamonjy and Guigue, 2008). Rakotomamonjy and Guigue (2008) scored the effectiveness of channels in a P300-based BMI using a support vector machine and found an advantage with Po7 and Po8. Krusienski et al. (2008) showed that the occipito-parietal (Po7, Oz, and Po8) and midline (Fz, Cz, and Pz) electrodes provided better accuracy.

The neuronal mechanisms of the P300 have been investigated, and it has been noted that the P300 reflects stimulus-driven and top-down attentional processes together with other cognitive processes, including categorization (Bledowski et al., 2004; Polich, 2007). Because our tasks used green/blue color stimuli, they also required the processing of chromatic information, which occurs primarily in area V4 (Lueck et al., 1989; Plendl et al., 1993; Murphey et al., 2008). Additional studies are necessary to fully understand the neuronal processes underlying the P300 paradigm with green/blue flicker stimuli; however, this study suggests the importance of posterior and lateral (right or left) channel sets in the operation of an AR–BMI with both an HMD and an LCD monitor.

Toward Advanced Intelligent Environments

Several combinations of BMI with other technologies have been attempted, such as BMI with eye tracking (Popescu et al., 2006) and BMI with robotics (Valbuena et al., 2007). AR was combined with an SSVEP-based BMI to provide a rich virtual environment (Faller et al., 2010), and we combined AR, an LCD monitor, and an agent robot with a P300-based BMI so that users could operate home electronics in the robot’s environment (Kansaku et al., 2010). In this study, we developed an AR–BMI system operated with a see-through HMD, which may be useful in building advanced intelligent environments (Kansaku, in press).

The systems developed by our group use a modified P300 speller (Farwell and Donchin, 1988). Although the P300 speller has primarily been used for communication by spelling out letters, it has recently been used to control more complex system-compatible devices, including robots (Bell et al., 2008; Komatsu et al., 2008). In such systems, each icon is assigned a more complex meaning, such as a device command, so that selecting it expresses the user’s intention.

Our AR–BMI with a see-through HMD can be used to control more types of devices and may therefore help expand the range of activities for persons with disabilities. Further extension of the environment for human activities along these lines, using either non-invasive neurophysiological signals or neuronal firing data, may enable new daily activities not only for persons with physical disabilities but also for able-bodied persons.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This study was supported in part by a grant from the Ministry of Health, Labor, and Welfare to K. Kansaku. K. Takano and N. Hata were supported by NRCD fellowships. We thank T. Komatsu, S. Ikegami, and M. Wada for their help, and Y. Nakajima and M. Suwa for their continuous encouragement.

References

Bayliss, J. D. (2003). Use of the evoked potential P3 component for control in a virtual apartment. IEEE Trans. Neural Syst. Rehabil. Eng. 11, 113–116.

Bell, C. J., Shenoy, P., Chalodhorn, R., and Rao, R. P. (2008). Control of a humanoid robot by a noninvasive brain-computer interface in humans. J. Neural Eng. 5, 214–220.

Birbaumer, N., and Cohen, L. G. (2007). Brain-computer interfaces: communication and restoration of movement in paralysis. J. Physiol. 579, 621–636.

Birbaumer, N., Ghanayim, N., Hinterberger, T., Iversen, I., Kotchoubey, B., Kubler, A., Perelmouter, J., Taub, E., and Flor, H. (1999). A spelling device for the paralysed. Nature 398, 297–298.

Bledowski, C., Prvulovic, D., Hoechstetter, K., Scherg, M., Wibral, M., Goebel, R., and Linden, D. E. (2004). Localizing P300 generators in visual target and distractor processing: a combined event-related potential and functional magnetic resonance imaging study. J. Neurosci. 24, 9353–9360.

Faller, J., Leeb, R., Pfurtscheller, G., and Scherer, R. (2010). “Avatar navigation in virtual and augmented reality environments using an SSVEP BCI,” in International Conference on Applied Bionics and Biomechanics-2010, Venice, 1–4.

Farwell, L. A., and Donchin, E. (1988). Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 70, 510–523.

Ikegami, S., Takano, K., Saeki, N., and Kansaku, K. (2011). Operation of a P300-based brain-computer interface by individuals with cervical spinal cord injury. Clin. Neurophysiol. 122, 991–996.

Kansaku, K. (in press). “The intelligent environment: brain–machine interfaces for environmental control,” in Smart Houses: Advanced Technology for Living Independently, eds. M. Ferguson-Pell and D. Stefanov (Berlin: Springer Verlag).

Kansaku, K., Hata, N., and Takano, K. (2010). My thoughts through a robot’s eyes: an augmented reality-brain-machine interface. Neurosci. Res. 66, 219–222.

Kato, H., and Billinghurst, M. (1999). “Marker tracking and HMD calibration for a video-based augmented reality conferencing system,” in The 2nd International Workshop on Augmented Reality (IWAR 99), San Francisco, 85–94.

Komatsu, T., Hata, N., Nakajima, Y., and Kansaku, K. (2008). A non-training EEG-based BMI system for environmental control. Neurosci. Res. 61(Suppl. 1), S251.

Krusienski, D. J., Sellers, E. W., McFarland, D. J., Vaughan, T. M., and Wolpaw, J. R. (2008). Toward enhanced P300 speller performance. J. Neurosci. Methods 167, 15–21.

Kubler, A., and Birbaumer, N. (2008). Brain-computer interfaces and communication in paralysis: extinction of goal directed thinking in completely paralysed patients? Clin. Neurophysiol. 119, 2658–2666.

Lueck, C. J., Zeki, S., Friston, K. J., Deiber, M. P., Cope, P., Cunningham, V. J., Lammertsma, A. A., Kennard, C., and Frackowiak, R. S. (1989). The colour centre in the cerebral cortex of man. Nature 340, 386–389.

Murphey, D. K., Yoshor, D., and Beauchamp, M. S. (2008). Perception matches selectivity in the human anterior color center. Curr. Biol. 18, 216–220.

Nijboer, F., Sellers, E. W., Mellinger, J., Jordan, M. A., Matuz, T., Furdea, A., Halder, S., Mochty, U., Krusienski, D. J., Vaughan, T. M., Wolpaw, J. R., Birbaumer, N., and Kubler, A. (2008). A P300-based brain-computer interface for people with amyotrophic lateral sclerosis. Clin. Neurophysiol. 119, 1909–1916.

Oldfield, R. C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113.

Parra, J., Lopes Da Silva, F. H., Stroink, H., and Kalitzin, S. (2007). Is colour modulation an independent factor in human visual photosensitivity? Brain 130, 1679–1689.

Plendl, H., Paulus, W., Roberts, I. G., Botzel, K., Towell, A., Pitman, J. R., Scherg, M., and Halliday, A. M. (1993). The time course and location of cerebral evoked activity associated with the processing of colour stimuli in man. Neurosci. Lett. 150, 9–12.

Polich, J. (2007). Updating P300: an integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148.

Popescu, F., Badower, Y., Fazli, S., Dornhege, G., and Müller, K. (2006). “EEG-based control of reaching to visual targets,” in EPFL-LATSIS Symposium 2006, Lausanne, 123–124.

Rakotomamonjy, A., and Guigue, V. (2008). BCI competition III: dataset II- ensemble of SVMs for BCI P300 speller. IEEE Trans. Biomed. Eng. 55, 1147–1154.

Sellers, E. W., Krusienski, D. J., McFarland, D. J., Vaughan, T. M., and Wolpaw, J. R. (2006). A P300 event-related potential brain-computer interface (BCI): the effects of matrix size and interstimulus interval on performance. Biol. Psychol. 73, 242–252.

Takano, K., Ikegami, S., Komatsu, T., and Kansaku, K. (2009a). Green/blue flicker matrices for the P300 BCI improve the subjective feeling of comfort. Neurosci. Res. 65(Suppl. 1), S182.

Takano, K., Komatsu, T., Hata, N., Nakajima, Y., and Kansaku, K. (2009b). Visual stimuli for the P300 brain-computer interface: a comparison of white/gray and green/blue flicker matrices. Clin. Neurophysiol. 120, 1562–1566.

Townsend, G., Lapallo, B. K., Boulay, C. B., Krusienski, D. J., Frye, G. E., Hauser, C. K., Schwartz, N. E., Vaughan, T. M., Wolpaw, J. R., and Sellers, E. W. (2010). A novel P300-based brain-computer interface stimulus presentation paradigm: moving beyond rows and columns. Clin. Neurophysiol. 121, 1109–1120.

Valbuena, D., Cyriacks, M., Friman, O., Volosyak, I., and Graser, A. (2007). “Brain-computer interface for high-level control of rehabilitation robotic systems,” in IEEE 10th International Conference on Rehabilitation Robotics (ICORR 2007), Noordwijk, 619–625.

Keywords: BMI, BCI, augmented reality, head-mount display, environmental control system

Citation: Takano K, Hata N and Kansaku K (2011) Towards intelligent environments: an augmented reality–brain–machine interface operated with a see-through head-mount display. Front. Neurosci. 5:60. doi: 10.3389/fnins.2011.00060

Received: 15 October 2010;

Accepted: 08 April 2011;

Published online: 20 April 2011.

Edited by:

Herta Flor, Central Institute of Mental Health, Germany

Reviewed by:

Klaus R. Mueller, Technical University, Germany

Eugen Diesch, Central Institute of Mental Health, Germany

Copyright: © 2011 Takano, Hata and Kansaku. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Kenji Kansaku, Systems Neuroscience Section, Department of Rehabilitation for Brain Functions, Research Institute of National Rehabilitation Center for Persons with Disabilities, 4-1 Namiki, Tokorozawa, Saitama 359-8555, Japan. e-mail: kansaku-kenji@rehab.go.jp