- 1Department of Information and Communication Technologies, Universitat Pompeu Fabra, Barcelona, Spain

- 2Department of Communication, Universitat Pompeu Fabra, Barcelona, Spain

We introduce a new neurofeedback approach that allows users to manipulate expressive parameters in music performances using their emotional state, and we present the results of a pilot clinical experiment applying the approach to alleviate depression in elderly people. Ten adults (9 female and 1 male; mean age = 84, SD = 5.8) with normal hearing participated in the neurofeedback study, which consisted of 10 sessions (2 sessions per week) of 15 min each. EEG data were acquired using the Emotiv EPOC EEG device. In all sessions, subjects were asked to sit in a comfortable chair facing two loudspeakers, to close their eyes, and to avoid moving during the experiment. Participants listened to music pieces preselected according to their music preferences and were encouraged to increase the loudness and tempo of the pieces, based on their arousal and valence levels. The neurofeedback system was tuned so that increased arousal, computed as the beta to alpha activity ratio in the frontal cortex, corresponded to increased loudness, and increased valence, computed as relative frontal alpha activity in the right lobe compared to the left lobe, corresponded to increased tempo. Pre- and post-evaluation of six participants was performed using the BDI depression test, showing an average improvement of 17.2% (1.3) in their BDI scores at the end of the study. In addition, an analysis of the participants' EEG data showed a significant decrease of relative alpha activity in their left frontal lobe (p = 0.00008), which may be interpreted as an improvement of their depression condition.

Introduction

There is ample literature reporting on the importance and benefits of music for older adults (Ruud, 1997; Cohen et al., 2002; McCaffrey, 2008). Some studies suggest that music contributes to positive aging by promoting self-esteem and feelings of competence and independence while diminishing feelings of isolation (Hays and Minichiello, 2005). Older adults appear to rate listening to music as a very pleasant experience, since it promotes relaxation, decreases anxiety, and distracts people from unpleasant experiences (Cutshall et al., 2007; Ziv et al., 2007; Fukui and Toyoshima, 2008). It can also evoke very strong feelings, both positive and negative, which very often result in physiological changes (Lundqvist et al., 2009). These positive effects also seem to be experienced by people with dementia (Särkämö et al., 2012, 2014; Hsu et al., 2015). Such findings have led many researchers to study the contribution of music to the quality of life and life satisfaction of older people (Vanderak et al., 1983). Music activities (both passive and active) can affect older adults' perceptions of their quality of life, and older adults highly value the non-musical dimensions of being involved in music activities, such as physical, psychological, and social aspects (Coffman, 2002; Cohen-Mansfield et al., 2011). Music experiences led by music therapists or other caregivers, besides being a source of entertainment, seem to provide older people with these benefits (Hays and Minichiello, 2005; Solé et al., 2010). Music has also been shown to be beneficial in patients with different medical conditions. Särkämö et al. (2010) demonstrated that, in stroke patients, merely listening to music and speech after neural damage can induce long-term plastic changes in early sensory processing, which, in turn, may facilitate the recovery of higher cognitive functions. The Cochrane review by Maratos et al. (2008) highlighted the potential benefits of music therapy for improving mood in people with depression. Erkkilä et al. (2011) showed that music therapy combined with standard care is effective for depression among working-age people: patients receiving music therapy plus standard care showed greater improvement in depression symptoms than those receiving standard care only.

Neurofeedback has been found to be effective in producing significant improvements in medical conditions such as depression (Kumano et al., 1996; Rosenfeld, 2000; Hammond, 2004), anxiety (Vanathy et al., 1998; Kerson et al., 2009), migraine (Walker, 2011), epilepsy (Swingle, 1998), attention deficit/hyperactivity disorder (Moriyama et al., 2012), alcoholism/substance abuse (Peniston and Kulkosky, 1990), and chronic pain (Jensen et al., 2007), among many others (Kropotov, 2009). For instance, Sterman (2000) reports that 82% of the most severe, uncontrolled epileptics demonstrated a significant reduction in seizure frequency, with an average reduction of 70% in seizures. The benefits of neurofeedback in this context were shown to include significant normalization of brain activity, even when patients were asleep. The effectiveness of neurofeedback was validated against medication and placebo (Kotchoubey et al., 2001). Similarly, Monastra et al. (2002) found neurofeedback to be significantly more effective than Ritalin in treating ADD/ADHD, without requiring patients to remain on medication. Other studies have found improvements comparable to those produced by Ritalin with 20 h of neurofeedback training (forty 30-min sessions) (Fuchs et al., 2003), and even after only twenty 30-min sessions (Rossiter and La Vaque, 1995). In the context of depression treatment, several clinical protocols are used to apply neurofeedback: shifting the alpha predominance in the left hemisphere to the right, either by decreasing left-hemispheric alpha activity or by increasing right-hemispheric alpha activity; shifting an asymmetry index toward the right in order to rebalance activation levels in favor of the left hemisphere; and reducing Theta activity (4–8 Hz) relative to Beta activity (15–28 Hz) in the left prefrontal cortex, i.e., decreasing the Theta/Beta ratio over the left prefrontal cortex (Gruzelier and Egner, 2005; Michael et al., 2005; Ali et al., 2015). Dias and van Deusen (2011) applied a neurofeedback protocol that simultaneously addresses the training demands of Alpha asymmetry and of an increased Beta/Theta ratio in the left prefrontal cortex.

A still relatively new field of research in affective computing attempts to detect users' emotional states from electroencephalogram (EEG) data (Chanel et al., 2006). Alpha and beta wave activity may be used in different ways to detect emotional (arousal and valence) states of mind in humans. For instance, Choppin (2000) proposes using EEG signals to classify six emotions with neural networks. Choppin's approach characterizes emotional valence, arousal, and dominance from EEG signals. He characterizes positive emotions by high frontal coherence in alpha and high right parietal beta power. Higher arousal (excitation) is characterized by higher beta power and coherence in the parietal lobe, plus lower alpha activity, while the dominance (strength) of an emotion is characterized by an increase in the beta/alpha activity ratio in the frontal lobe, plus an increase in beta activity in the parietal lobe. Ramirez and Vamvakousis (2012) characterize emotional states by computing arousal levels as the prefrontal cortex beta to alpha ratio and valence levels as the alpha asymmetry between lobes. They show that by applying machine learning techniques (support vector machines with different kernels) to the computed arousal and valence values, it is possible to classify the user's emotion into high/low arousal and positive/negative valence states, with average accuracies of 77.82% and 80.11%, respectively. These results indicate that the computed arousal and valence values contain meaningful information about the user's emotional state.

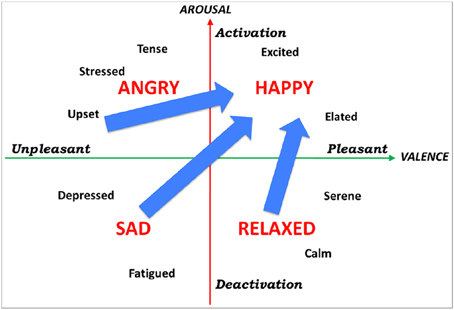

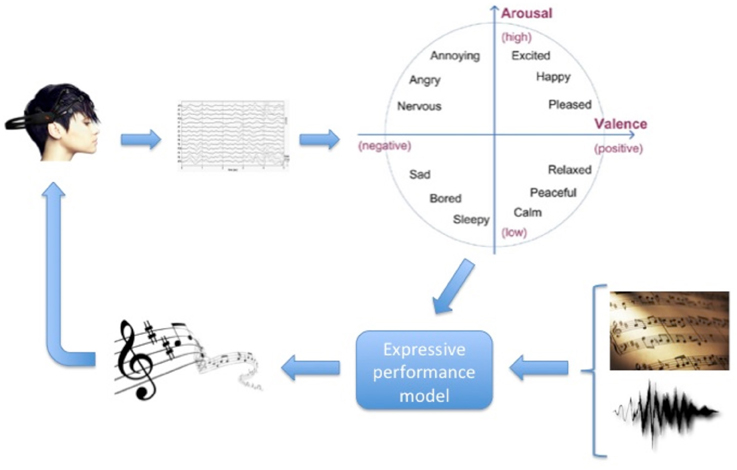

In this paper we investigate the potential benefits of combining music (therapy), neurofeedback, and emotion detection for improving elderly people's mental health. Specifically, our main goal is to investigate the emotional reinforcement capacity of automatic music neurofeedback systems and their effect on depression in elderly people. With this aim, we propose a new neurofeedback approach that allows users to manipulate expressive parameters in music performances using their emotional state. The user's instantaneous emotional state is characterized by a coordinate in the arousal-valence plane decoded from their EEG activity. The resulting coordinate is then used to change expressive aspects of the music such as tempo, dynamics, and articulation. We present the results of a pilot clinical experiment applying our neurofeedback approach to a group of 10 elderly people with depression.

Materials and Methods

Participants

Ten adults (9 female and 1 male; mean age = 84, SD = 5.8) with normal hearing participated in the neurofeedback study, which consisted of 10 sessions (2 sessions per week) of 15 min each. All participants gave written informed consent, and the procedures were approved by the Clinical Research Ethical Committee of the Parc de Salut Mar (CEIC-Parc de Salut Mar), Barcelona, Spain, under reference number 2015/6343/I. EEG data were acquired using the Emotiv EPOC EEG device. Participants were either residents or day users of an elderly home in Barcelona and were selected according to their cognitive capacities, sensitivity to music, and depression condition: all of them declared that they regularly listened to music and presented with a primary complaint of depression, which was confirmed by the psychologist of the center. Four participants abandoned the study toward its end due to illness.

Materials

Music Material

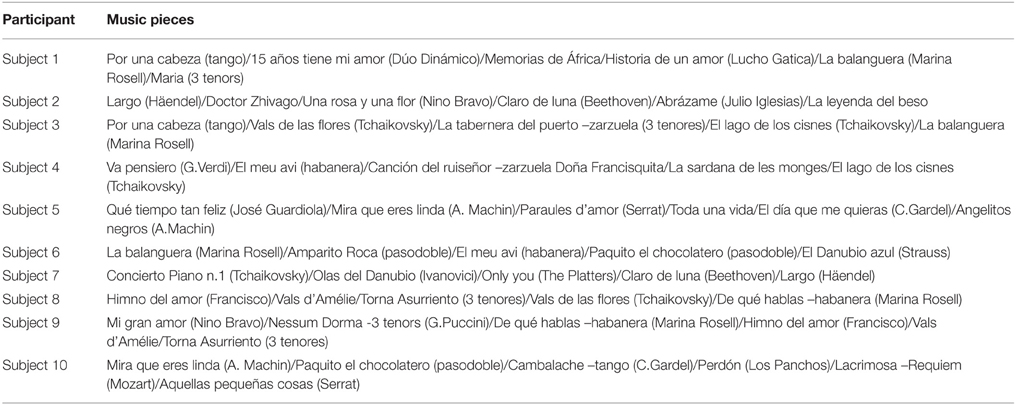

Prior to the first session, the participants in the study were interviewed in order to determine the music they liked and to identify particular pieces to be included in their feedback sessions. Following the interviews, for each participant a set of 5–6 music pieces was collected from commercial audio CDs. During each session a subset of the selected pieces was played to the participant. Table 1 shows the selected pieces for each participant.

Data Acquisition and Processing

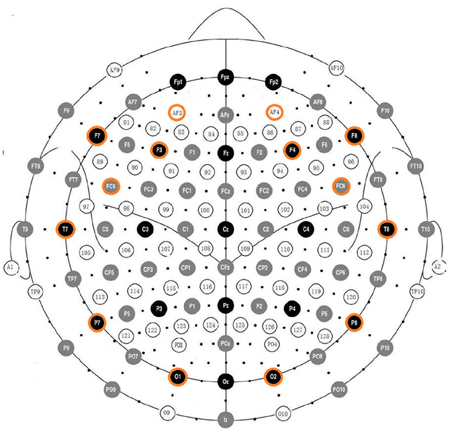

The Emotiv EPOC EEG system (Emotiv, 2014)¹ was used for acquiring the EEG data. It consists of 16 wet saline electrodes, providing 14 EEG channels, and a wireless amplifier. The electrodes were located at positions AF3, F7, F3, FC5, T7, P7, O1, O2, P8, T8, FC6, F4, F8, and AF4 according to the international 10–20 system (see Figure 1). Two electrodes located just above the subject's ears (P3, P4) were used as references. The data were digitized using the embedded 16-bit ADC at a sampling frequency of 128 Hz per channel and sent to the computer via Bluetooth. The EEG signals were band-pass filtered with Butterworth filters into the 8–12 Hz (alpha) and 12–28 Hz (beta) bands. The impedance of the electrode contact to the scalp was visually monitored using the Emotiv Control Panel software.
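A minimal sketch of the band-splitting step in Python with SciPy is shown below. It assumes a 4th-order Butterworth design applied causally as second-order sections; the text reports only the pass bands and the 128 Hz sampling rate, and in the study this stage ran inside OpenViBE rather than in Python. The band-power helper (mean squared amplitude over a window) is likewise an illustrative assumption.

```python
import numpy as np
from scipy.signal import butter, sosfilt

FS = 128  # Hz, sampling rate reported for the Emotiv EPOC
BANDS = {"alpha": (8.0, 12.0), "beta": (12.0, 28.0)}  # Hz, pass bands from the text
FILTER_ORDER = 4  # assumption: the filter order is not reported


def design_bandpass(low_hz, high_hz, fs=FS, order=FILTER_ORDER):
    """Butterworth band-pass filter as second-order sections (numerically stable)."""
    return butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")


# Design each band filter once and reuse it for every window.
FILTERS = {name: design_bandpass(lo, hi) for name, (lo, hi) in BANDS.items()}


def bandpass(signal, band):
    """Causally filter one EEG channel into the named band ("alpha" or "beta")."""
    return sosfilt(FILTERS[band], np.asarray(signal, dtype=float))


def band_power(filtered_window):
    """Mean squared amplitude of an already band-passed window."""
    window = np.asarray(filtered_window, dtype=float)
    return float(np.mean(window ** 2))
```

A causal filter is used here because feedback has to be computed online; an offline analysis could instead use zero-phase filtering (for example `scipy.signal.sosfiltfilt`). The first part of each filtered recording contains the filter's start-up transient and is best discarded before computing band power.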

The Emotiv EPOC EEG system is one of a number of low-cost EEG systems that have recently been commercialized [a usability review of some of them can be found in (10)]. These systems are mainly marketed as gaming devices, and the quality of the signal they capture is lower than that captured by more expensive equipment. However, recent research evaluating the reliability of some of these low-cost EEG devices for research purposes suggests that they are reliable for measuring EEG signals (Debener et al., 2012; Thie et al., 2012; Badcock et al., 2013). In our study, the Emotiv EPOC device provided several important pragmatic advantages over more expensive equipment. Its setup time at the beginning of each session is considerably shorter than that of an expensive EEG system, for which an experienced clinical professional can take up to an hour to place the electrodes on the patient's scalp, resulting in long and tedious sessions. Furthermore, expensive EEG systems typically require the application of conductive gel to create a reliable connection between each electrode and the patient's scalp (the gel sticks to the patient's hair and can only be properly removed by washing the entire head at the end of each session). Setting up the Emotiv EPOC takes a few minutes (typically 3–5 min), and its wet saline electrodes do not require conductive gel. However, the inferior signal quality of the Emotiv EPOC device is a limitation of this study, and future studies should use a more accurate EEG device.

We collected and processed the data using the OpenViBE platform (Renard et al., 2010). In order to play and transform the music feedback through the OpenViBE platform, a VRPN (Virtual-Reality Peripheral Network) to OSC (Open Sound Control) gateway was implemented and used to connect OpenViBE with Pure Data (Puckette, 1996). OSC is a protocol for networking sound synthesizers, computers, and other multimedia devices for purposes such as musical performance, while VRPN is a device-independent and network-transparent system for accessing virtual reality peripherals in applications. The VRPN-OSC gateway connects to a VRPN server, converts the tracking data, and sends it to an OSC server. Music feedback was played by the AudioMulch VST host application, which received MIDI messages from Pure Data and in which a tempo transformation plugin (Mayor et al., 2011) was installed. The plugin performs pitch-independent real-time tempo transformations (i.e., time-stretch transformations) using audio spectral analysis-synthesis techniques. Its parameters were controlled by the MIDI messages sent by Pure Data: music tempo and loudness were each assigned to a corresponding MIDI control message. Both data acquisition and music playback were performed on a laptop with an Intel Core i5 2.53 GHz processor and 4 GB of RAM, running the Windows 7 64-bit operating system and using the laptop's internal sound card (Realtek ALC269). Music was amplified by two Roland MA150U loudspeakers.

Methods

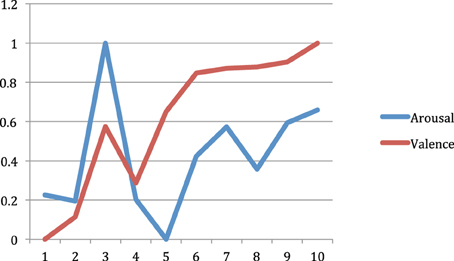

Participants were treated individually. At the beginning of each feedback session, participants were informed about the experimental procedure and were asked to sit in a comfortable chair facing two loudspeakers, to close their eyes, and to avoid moving during the experiment. Participants listened for 15 min to music pieces preselected according to their music preferences. Within these 15 min, music pieces were separated by a pause of 1 s. Participants were encouraged to increase the loudness and tempo of the pieces so that the pieces sounded “happier.” As the system was tuned so that increased arousal corresponded to increased loudness and increased valence corresponded to increased tempo, participants were in effect encouraged to increase their arousal and valence, in other words to direct their emotional state toward the high-arousal/positive-valence quadrant of the arousal-valence plane (see Figure 2). At the end of each session, participants were asked whether they perceived that they had been able to modify the music tempo and volume. Pre- and post-evaluation of participants was performed using the BDI depression test.

Figure 2. Arousal-valence plane. By encouraging participants to increase the loudness and tempo of musical pieces, they were encouraged to increase their arousal and valence, and thus direct their emotional state to the top-right quadrant in the arousal-valence plane.

No artifact detection or elimination method was applied to the measured EEG signal. Both electrooculographic (EOG) and electromyographic (EMG) artifacts were minimized by asking participants to close their eyes and avoid movement, and no control of the interface through eye or muscle movement was observed during the experimental sessions. However, the reported study should be extended or repeated using artifact detection methods.

The EEG data processing was adapted from Ramirez and Vamvakousis (2012). The arousal level was determined from a person's EEG signal by computing the ratio of beta (12–28 Hz) to alpha (8–12 Hz) brainwave activity. The EEG signal was measured at four locations (i.e., electrodes) in the prefrontal cortex: AF3, AF4, F3, and F4 (see Figure 1). Beta waves (β) are associated with an alert or excited state of mind, whereas alpha waves (α) are more dominant in a relaxed state. Alpha activity has also been associated with brain inactivation. Thus, the beta/alpha ratio is a reasonable indicator of the arousal state of a person. Concretely, the arousal level was computed as follows:
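As a rough sketch of this arousal measure, the function below assumes that per-channel alpha and beta band powers for the current window are already available. Summing the powers of the four prefrontal electrodes before taking the ratio is an assumption about how the electrodes are combined; averaging per-electrode ratios would be an equally plausible reading of the description above.

```python
# Prefrontal electrodes named in the text.
PREFRONTAL = ("AF3", "AF4", "F3", "F4")


def arousal(alpha_power, beta_power, channels=PREFRONTAL):
    """Beta/alpha power ratio over the prefrontal electrodes.

    alpha_power and beta_power map channel name -> band power for the current
    window. Powers are summed across channels before the ratio is taken
    (an assumed aggregation).
    """
    total_alpha = sum(alpha_power[ch] for ch in channels)
    total_beta = sum(beta_power[ch] for ch in channels)
    if total_alpha <= 0:
        raise ValueError("total alpha power must be positive")
    return total_beta / total_alpha
```

With this aggregation, electrodes with larger absolute power weigh more in the ratio, which is one reason a per-electrode normalization might be preferred in practice.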

In order to determine the valence level, the activation levels of the two cortical hemispheres were compared. A large number of EEG studies (Henriques and Davidson, 1991; Davidson, 1992, 1995, 1998) have shown that the left frontal area is associated with more positive affect and memories, while the right hemisphere is more involved in negative emotion. F3 and F4 are the most commonly used positions for examining alpha/beta activity related to valence, as they are located in the prefrontal lobe, which plays a crucial role in emotion regulation and conscious experience. Valence values were computed by comparing the alpha power α in channels F3 and F4. Concretely, the valence level was computed as follows:
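A corresponding valence sketch compares right (F4) and left (F3) frontal alpha power. Because alpha power is inversely related to cortical activation, a larger F4 − F3 difference indicates relatively greater left-frontal activation and hence more positive valence. Using a plain difference of band powers, rather than a ratio or a log-ratio, is an assumption of this sketch.

```python
def valence(alpha_power):
    """Frontal alpha asymmetry: right (F4) minus left (F3) alpha band power.

    alpha_power maps channel name -> alpha band power for the current window.
    Positive values mean more right-frontal alpha, i.e. relatively more
    left-frontal activation, which is read as more positive valence.
    """
    return alpha_power["F4"] - alpha_power["F3"]


def valence_series(alpha_windows):
    """Valence for a sequence of windows, e.g. to compare session start and end."""
    return [valence(window) for window in alpha_windows]
```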

The valence and arousal computation was adapted from Ramirez and Vamvakousis (2012), who show that the computed arousal and valence values contain meaningful information about the user's emotional state.

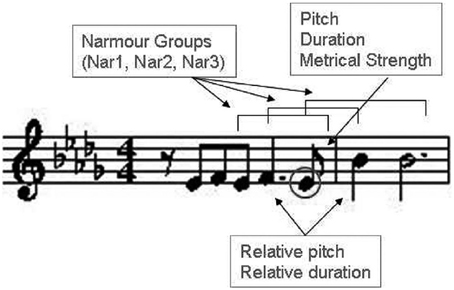

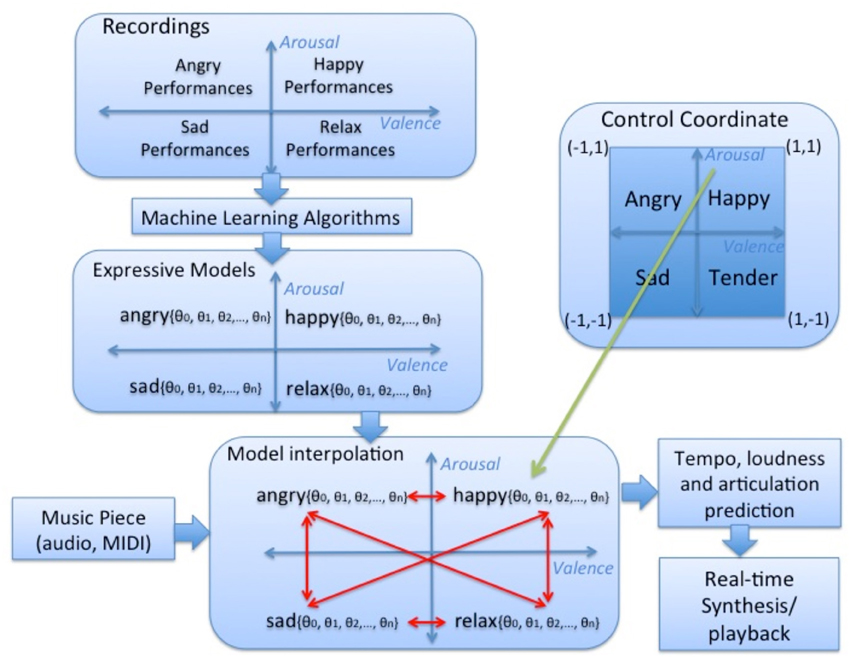

Computed arousal and valence values are fed into an expressive music performance system, which calculates appropriate expressive transformations of timing, loudness, and articulation (in the present study, only timing and loudness transformations are considered). The expressive performance system is based on a music performance model obtained by training four models using machine learning techniques (Mitchell, 1997) on recordings of musical pieces performed in four emotions: happy, relaxed, sad, and angry (each corresponding to a quadrant of the arousal-valence plane). The coefficients of the four models were interpolated in order to obtain intermediate models (in addition to the four trained models) and corresponding performance predictions (Figure 3). Details about the expressive music performance system and our approach to expressive performance modeling can be found in Ramirez et al. (2010, 2012), Giraldo (2012), Giraldo and Ramirez (2013), and Marchini et al. (2014). In order to model expression in music performances, we characterized each performed note by a set of inter-note features representing both properties of the note itself and aspects of the musical context in which the note appears (Figure 4). Information about the note included note pitch (Pitch), note duration (dur), and note metrical strength (MetrStr), while information about its melodic context included the relative pitch and duration of the neighboring notes, i.e., the previous and following notes (PrevPitch, PrevDur, NextPitch, NextDur), as well as the music structure (i.e., Narmour groups) in which the note appears (Narmour, 1991). We also extracted the amount of legato with the previous note, the amount of legato with the following note, and the mean energy.

We applied machine learning techniques to train linear regression models for predicting duration and energy deviations, expressed as ratios of the values specified in the score (since energy is not specified in the score, we take as its score value the average energy of all the notes in the piece). For instance, a predicted duration value of 1.14 represents a 14% lengthening of the note with respect to the score; in the case of energy, the same value indicates that the note should be played 14% louder than the average energy of the piece.
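The sketch below illustrates one way the four emotion-specific linear models could be blended for an arbitrary point in the arousal-valence plane and then used to scale a note's score duration. The text states only that the models' coefficients were interpolated; the normalization of valence and arousal to [−1, 1], the quadrant assignment (happy: positive valence and high arousal; relaxed: positive valence and low arousal; sad: negative valence and low arousal; angry: negative valence and high arousal), and the choice of bilinear interpolation are assumptions, and the feature vectors are placeholders rather than the study's actual feature set.

```python
import numpy as np

# Corner of the normalized valence-arousal square occupied by each model,
# as (valence, arousal) in {-1, +1}. Assumed quadrant assignment.
CORNERS = {"sad": (-1, -1), "relaxed": (1, -1), "angry": (-1, 1), "happy": (1, 1)}


def interpolate_coefficients(models, valence, arousal):
    """Bilinearly blend the coefficient vectors of the four emotion models.

    models maps each name in CORNERS to a coefficient vector whose last
    entry is the intercept. valence and arousal are clipped to [-1, 1].
    """
    u = (float(np.clip(valence, -1.0, 1.0)) + 1.0) / 2.0  # 0 = negative, 1 = positive
    s = (float(np.clip(arousal, -1.0, 1.0)) + 1.0) / 2.0  # 0 = low, 1 = high
    blended = 0.0
    for name, (corner_v, corner_a) in CORNERS.items():
        weight_v = u if corner_v > 0 else 1.0 - u
        weight_a = s if corner_a > 0 else 1.0 - s
        blended = blended + weight_v * weight_a * np.asarray(models[name], dtype=float)
    return blended


def predict_ratio(coefficients, features):
    """Linear prediction of a deviation ratio (e.g. 1.14 = 14% above the score value)."""
    x = np.append(np.asarray(features, dtype=float), 1.0)  # append the bias input
    return float(np.dot(coefficients, x))


def performed_duration(score_duration, ratio):
    """Apply a predicted duration ratio to the nominal score duration."""
    return score_duration * ratio
```

Because each model is linear in its coefficients, blending the coefficients this way gives the same result as blending the four models' predictions with the same weights.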

Figure 3. Overview of the expressive music performance system. Happy, relaxed, sad, and angry models were learnt from music recordings with these emotions using machine learning techniques and interpolated in order to obtain intermediate models and corresponding performance predictions.

The proposed emotion-based musical neurofeedback system is depicted in Figure 5. It consists of a real-time feedback loop in which the participant's brain activity is processed to estimate their emotional state, which in turn is used to control an expressive rendition of the music piece. The user's EEG activity is mapped into a coordinate in the arousal-valence space that is fed to a pre-trained expressive music model in order to trigger appropriate expressive transformations of a given music piece (audio or MIDI).
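As a deliberately simplified stand-in for the expressive performance model, the sketch below maps each new arousal/valence estimate directly to a loudness gain and a tempo factor, following the direction of the mapping used in the sessions (higher arousal gives louder playback, higher valence gives faster playback). The calibration ranges, output ranges, and exponential smoothing are hypothetical parameters chosen for illustration; the text does not report how estimates were scaled or smoothed.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackMapper:
    """Map windowed arousal/valence estimates to loudness and tempo controls.

    All ranges are illustrative assumptions, not values from the study.
    """
    arousal_range: tuple = (0.5, 1.5)    # hypothetical calibration min/max
    valence_range: tuple = (0.0, 1.5)    # hypothetical calibration min/max
    tempo_range: tuple = (0.8, 1.2)      # playback-rate factor
    gain_range_db: tuple = (-12.0, 0.0)  # gain relative to full level, in dB
    smoothing: float = 0.2               # weight given to the newest estimate
    _a: float = field(default=0.5, init=False)  # smoothed normalized arousal
    _v: float = field(default=0.5, init=False)  # smoothed normalized valence

    @staticmethod
    def _normalize(x, lo, hi):
        """Scale x from [lo, hi] to [0, 1], clipping values outside the range."""
        return min(max((x - lo) / (hi - lo), 0.0), 1.0)

    def update(self, arousal, valence):
        """Return (gain_db, tempo_factor) for the latest window's estimates."""
        a = self._normalize(arousal, *self.arousal_range)
        v = self._normalize(valence, *self.valence_range)
        # Exponential smoothing keeps the music from jumping between windows.
        self._a += self.smoothing * (a - self._a)
        self._v += self.smoothing * (v - self._v)
        lo_db, hi_db = self.gain_range_db
        lo_t, hi_t = self.tempo_range
        return lo_db + self._a * (hi_db - lo_db), lo_t + self._v * (hi_t - lo_t)
```

A mapper of this kind would be called once per analysis window, and its two outputs sent on to the playback chain (for example as MIDI control messages).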

Figure 5. Neurofeedback system overview: a real-time feedback loop in which the brain activity of a person is processed to produce an expressive rendition of a music piece according to the person's estimated emotional state.

Results

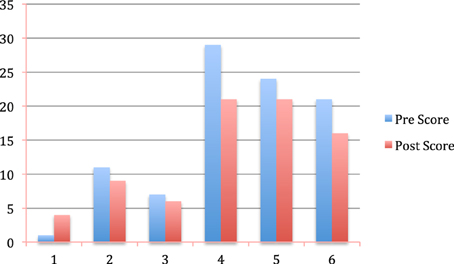

Seven participants completed the training, which required a total of ten 15-min sessions (2.5 h) of neurofeedback, with no other psychotherapy provided. Four people abandoned the study toward its end due to health problems. Pre- and post-evaluation of 6 participants was performed using the BDI depression test (one participant was not able to respond to the BDI depression test at the end of the treatment due to serious health reasons). The BDI evaluation showed an average improvement of 17.2% (1.3) in BDI scores at the end of the study. Pre–post changes in BDI scores are shown in Figure 6.

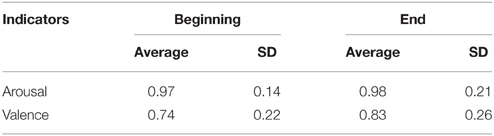

We computed average valence and arousal values at the beginning of the first session and at the beginning of the last session of the study. The average valence values were 0.74 (0.22) and 0.83 (0.26), and the average arousal values were 0.97 (0.14) and 0.98 (0.21), for the beginning of the first and the last session, respectively (Table 2).

Figure 7 shows the within-session correlation between time (in 1-min periods) and the computed arousal and valence values. For valence we obtained a correlation of r = 0.919 (p = 0.000171), while for arousal we obtained a correlation of r = 0.315 (p = 0.375335).
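The within-session correlation can be sketched as follows: a valence (or arousal) time series is averaged over consecutive 1-min periods, and the Pearson correlation between the period index and these means is computed with `scipy.stats.pearsonr`. The number of estimates per minute and the dropping of an incomplete final minute are assumptions; the text does not describe how the periods were aggregated across sessions or participants.

```python
import numpy as np
from scipy.stats import pearsonr


def per_minute_means(values, samples_per_minute):
    """Average a within-session time series over consecutive 1-min periods.

    An incomplete trailing minute is dropped (an assumption).
    """
    values = np.asarray(values, dtype=float)
    n_minutes = len(values) // samples_per_minute
    trimmed = values[: n_minutes * samples_per_minute]
    return trimmed.reshape(n_minutes, samples_per_minute).mean(axis=1)


def time_correlation(values, samples_per_minute):
    """Pearson r and two-sided p between minute index and 1-min means."""
    means = per_minute_means(values, samples_per_minute)
    minutes = np.arange(1, len(means) + 1)
    r, p = pearsonr(minutes, means)
    return float(r), float(p)
```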

Discussion

Five out of the six participants who responded to the BDI test improved their BDI scores, and one patient improved from the depressed range to the “slight perturbation” range of the BDI scale. One participant, who initially scored as not depressed in the BDI pre-test (score = 1), did not show any improvement in her BDI post-test score (score = 4), which was also in the non-depressed range. Either this participant was not depressed at the beginning of the study, or her responses to the BDI tests were not reliable. Excluding her from the BDI analysis, the mean decrease in BDI scores was 20.6% (0.06). This decrease was statistically significant (p = 0.018).

EEG data obtained during the course of the study showed that the overall valence level increased at the end of the treatment compared to the starting level. The difference between valence values at the beginning and at the end of the study is statistically significant (p = 0.00008). Given how valence is computed, this result corresponds to a decrease of relative alpha activity in the left frontal lobe, which may be interpreted as an improvement of the depression condition (Henriques and Davidson, 1991; Gotlib et al., 1999). Arousal values at the beginning and at the end of the study showed no significant difference (p = 0.33). However, in this study the most important indicator was valence, since it reflects changes in negative/positive emotional states, which are directly related to depression.

The correlation between valence values and time within sessions was significant (p < 0.00018), but the correlation between arousal values and time was not. The lack of a significant arousal-time correlation is not a negative result, since valence is the most relevant indicator for depression.

Taking into account the within- and cross-session improvements in valence levels obtained, and the limited duration of each session (15 min) and of the complete treatment (10 sessions), it seems reasonable to expect that further improvements in valence could have been achieved with longer sessions and/or a longer treatment. We plan to investigate the impact of longer treatments in the future.

Very few studies in the literature have examined the long-term effect of neurofeedback, but those that did found promising results (Gani et al., 2008; Gevensleben et al., 2010). Both Gani et al. (2008) and Gevensleben et al. (2010) showed that improvements were maintained after the end of their studies and that some additional benefits could be noted, suggesting that patients kept improving even after the end of treatment. In the current study, apart from the post-study BDI test, no follow-up of the participants was conducted to examine the long-term effect of our approach. This issue should be investigated in the future.

As is the case for most of the literature on the use of neurofeedback to treat depression, which consists mainly of uncontrolled case reports, no control group was included in this pilot study. In order to quantify the benefits of combining music and neurofeedback compared to other approaches, three groups should ideally have been considered: one group receiving music therapy, one group receiving neurofeedback, and one group receiving the proposed approach combining music therapy and neurofeedback. This design would have made it possible to quantify the added value of combining the two. However, due to the limited number of participants, this was not possible.

Some researchers have shown that listening to music regularly during the early stages of rehabilitation can aid recovery by maintaining attention and preventing depressed and confused moods in stroke patients (Särkämö et al., 2008). Särkämö et al. conclude that, in addition to these effects, music listening may also have general effects on brain plasticity, as the activation it causes in the brain involves both hemispheres and is more widely distributed than that caused by verbal material alone. In the current study, we propose a new neurofeedback approach that combines emotion-driven neurofeedback with (active) music listening. In light of the benefits of music listening and receptive music therapy mentioned above (Grocke et al., 2007), it is reasonable to expect that incorporating music listening into a neurofeedback setting can improve on the benefits of traditional neurofeedback systems. Furthermore, we argue that the combination of neurofeedback and receptive music therapy provides the benefits of both techniques while eliminating potential drawbacks of each. When considered as separate methods, the advantages of receptive music therapy and neurofeedback are clear: both are noninvasive and lack contraindications. In addition, neurofeedback encourages patients to self-regulate their brain activity in order to promote beneficial activity patterns, while receptive music therapy relies on the therapeutic emotional effects of listening to music. These positive properties of both techniques are preserved by the proposed approach. On the other hand, neurofeedback procedures can often be tedious and consist of tasks involving visual or auditory feedback with little or no emotional content (e.g., moving a car on a computer screen). Furthermore, a drawback of neurofeedback is that it is based on traditional EEG rhythms (e.g., theta, alpha, beta), which are functionally heterogeneous and individual (Hammond, 2010). Receptive music therapy methods, for their part, face the difficulty of selecting music material that corresponds to the individual needs of the patient (MacDonald, 2013). The proposed system avoids these shortcomings: it provides attractive feedback consisting of music material specifically selected by the individual participants, and it is based on high-level descriptors (i.e., arousal and valence) representing the emotional state of users.

The results obtained in the current study seem to indicate that music has the potential to be a useful component in neurofeedback treatment. However, future research needs to explore, through direct experimental comparison, the variables that shape individual responses to music. Future investigation of individual variables, such as music sensitivity (e.g., music experience or familiarity) and the impact of depression severity, together with a more stringent methodology, is required.

In summary, we have introduced a new neurofeedback approach that allows users to manipulate expressive parameters in music performances using their emotional state, and we have presented the results of a clinical pilot study applying it to treat depression in elderly people. The neurofeedback study consisted of 10 sessions (2 sessions per week) of 15 min each and initially involved 10 participants from a residential home for the elderly in Barcelona. Participants listened to music pieces they had preselected according to their music preferences and were encouraged to increase the loudness and tempo of the pieces, based on their arousal and valence levels, respectively: arousal was computed as the beta to alpha activity ratio in the frontal cortex, and valence was computed as relative frontal alpha activity in the right lobe compared to the left lobe. Pre- and post-evaluation of 6 participants was performed using the BDI depression test, showing an average improvement of 17.2% (1.3) in their BDI scores at the end of the study. Analysis of the participants' EEG data showed a decrease of relative alpha activity in their left frontal lobe, which may be interpreted as an improvement of their depression condition. The positive results of our clinical experiment suggest that further research with the proposed music neurofeedback approach is worthwhile.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work has been partly sponsored by the Ministerio de Economia y Competitividad under Grant TIN2013-48152-C2-2-R (TIMuL Project).

Footnotes

1. Emotiv Systems Inc. Researchers. (2014). Available online at: http://www.emotiv.com/researchers/

References

Ali, Y., Mahmud, N. A., and Samaneh, R. (2015). Current advances in neurofeedback techniques for the treatment of ADHD. Biomed. Pharma. J. 8, 65–177. doi: 10.13005/bpj/573

Badcock, N. A., Mousikou, P., Mahajan, Y., de Lissa, P., Johnson, T., and McArthur, G. (2013). Validation of the Emotiv EPOC® EEG gaming system for measuring research quality auditory ERPs. PeerJ 1:e38. doi: 10.7717/peerj.38

Chanel, G., Kronegg, J., Grandjean, D., and Pun, T. (2006). “Emotion assessment: arousal evaluation using EEG's and peripheral physiological signals,” in MRCS 2006. LNCS, Vol. 4105, eds B. Gunsel, A. K. Jain, A. M. Tekalp, and B. Sankur (Heidelberg: Springer), 530–537.

Choppin, A. (2000). EEG-based Human Interface for Disabled Individuals: Emotion Expression with Neural Networks. Master's thesis, Tokyo Institute of Technology, Yokohama, Japan.

Coffman, D. D. (2002). Banding together: new horizons in lifelong music making. J. Aging Identity 7, 133–143. doi: 10.1023/A:1015491202347

Cohen, A., Bailey, B., and Nilsson, T. (2002). The importance of music to seniors. Psychomusicology 18, 89–102. doi: 10.1037/h0094049

Cohen-Mansfield, J., Marx, M. S., Thein, K., and Dakheel-Ali, M. (2011). The impact of stimuli on affect in persons with dementia. J. Clin. Psychiatry 72, 480–486. doi: 10.4088/JCP.09m05694oli

Cutshall, S. M., Fenske, L. L., Kelly, R. F., Phillips, B. R., Sundt, T. M., and Bauer, B. A. (2007). Creation of a healing program at an academic medical center. Complement. Ther. Clin. Pract. 13, 217–223. doi: 10.1016/j.ctcp.2007.02.001

Davidson, R. J. (1992). Emotion and affective style: hemispheric substrates. Psychol. Sci. 3, 39–43. doi: 10.1111/j.1467-9280.1992.tb00254.x

Davidson, R. J. (1995). “Cerebral asymmetry, emotion and affective style,” in Brain Asymmetry, eds R. J. Davidson and K. Hugdahl (Cambridge, MA: MIT Press), 361–387.

Davidson, R. J. (1998). Affective style and affective disorders: perspectives from affective neuroscience. Cogn. Emot. 12, 307–330. doi: 10.1080/026999398379628

Debener, S., Minow, F., Emkes, R., Gandras, G., and de Vos, M. (2012). How about taking a low-cost, small, and wireless EEG for a walk? Psychophysiology 49, 1617–1621. doi: 10.1111/j.1469-8986.2012.01471.x

Dias, A. M., and van Deusen, A. (2011). A new neurofeedback protocol for depression. Span. J. Psychol. 14, 374–384. doi: 10.5209/rev_SJOP.2011.v14.n1.34

Erkkilä, J., Punkanen, M., Fachner, J., Ala-Ruona, E., Pöntiö, I., Tervaniemi, M., et al. (2011). Individual music therapy for depression: randomised controlled trial. Br. J. Psychiatry 199, 132–139. doi: 10.1192/bjp.bp.110.085431

Fuchs, T., Birbaumer, N., Lutzenberger, W., Gruzelier, J. H., and Kaiser, J. (2003). Neurofeedback treatment for attention-deficit/hyperactivity disorder in children: a comparison with methylphenidate. Appl. Psychophysiol. Biofeedback 28, 1–12. doi: 10.1023/A:1022353731579

Fukui, H., and Toyoshima, K. (2008). Music facilitates the neurogenesis, regeneration and repair of neurons. Med. Hypotheses 71, 765–769. doi: 10.1016/j.mehy.2008.06.019

Gani, C., Birbaumer, N., and Strehl, U. (2008). Long term effects after feedback of slow cortical potentials and of theta-beta-amplitudes in children with attention-deficit/hyperactivity disorder (ADHD). Int. J. Bioelectromagn. 10, 209–232.

Gevensleben, H., Holl, B., and Albrecht, B. (2010). Neurofeedback training in children with ADHD: 6-month follow-up of a randomized controlled trial. Eur. Child Adolesc. Psychiatry 19, 715–724. doi: 10.1007/s00787-010-0109-5

Giraldo, S. (2012). Modeling Embellishment, Duration and Energy Expressive Transformations in Jazz Guitar. Master's thesis, Pompeu Fabra University, Barcelona, Spain.

Giraldo, S., and Ramirez, R. (2013). “Real Time Modeling of Emotions by Linear Regression,” in International Workshop on Machine Learning and Music, International Conference on Machine Learning (Edinburgh).

Gotlib, I. H., Ranganath, C., and Rosenfeld, J. P. (1999). Frontal EEG alpha asymmetry, depression, and cognitive functioning. Cogn. Emot. 12, 449–478. doi: 10.1080/026999398379673

Grocke, D. E., and Wigram, T. (2007). Receptive Methods in Music Therapy: Techniques and Clinical Applications for Music Therapy Clinicians, Educators and Students. London, UK: Jessica Kingsley Publishers.

Gruzelier, J., and Egner, T. (2005). Critical validation studies of neurofeedback. Child Adolesc. Psychiatr. Clin. N. Am. 14, 83–104. doi: 10.1016/j.chc.2004.07.002

Hammond, D. C. (2004). Neurofeedback treatment of depression and anxiety. J. Adult Dev. 4, 45–56. doi: 10.1007/s10804-005-7029-5

Hammond, D. C. (2010). The need for individualization in neurofeedback: heterogeneity in QEEG patterns associated with diagnoses and symptoms. Appl. Psychophysiol. Biofeedback 35, 31–36. doi: 10.1007/s10484-009-9106-1

Hays, T., and Minichiello, V. (2005). The meaning of music in the lives of older people: a qualitative study. Psychol. Music 33, 437–451. doi: 10.1177/0305735605056160

Henriques, J. B., and Davidson, R. J. (1991). Left frontal hypoactivation in depression. J. Abnorm. Psychol. 100, 535–545. doi: 10.1037/0021-843X.100.4.535

Hsu, M. H., Flowerdew, R., Parker, M., Fachner, J., and Odell-Miller, H. (2015). Individual music therapy for managing neuropsychiatric symptoms for people with dementia and their carers: a cluster randomised controlled feasibility study. BMC Geriatr. 15:84. doi: 10.1186/s12877-015-0082-4

Jensen, M. P., Grierson, R. N., Tracy-Smith, V., Bacigalupi, S. C., and Othmer, S. F. (2007). Neurofeedback treatment for pain associated with complex regional pain syndrome type I. J. Neurother. 11, 45–53. doi: 10.1300/J184v11n01_04

Kerson, C., Sherman, R. A., and Kozlowski, G. P. (2009). Alpha suppression and symmetry training for generalized anxiety symptoms. J. Neurother. 13, 146–155. doi: 10.1080/10874200903107405

Kotchoubey, B., Strehl, U., Uhlmann, C., Holzapfel, S., König, M., Fröscher, W., et al. (2001). Modification of slow cortical potentials in patients with refractory epilepsy: a controlled outcome study. Epilepsia 42, 406–416. doi: 10.1046/j.1528-1157.2001.22200.x

Kropotov, J. D. (2009). Quantitative EEG, Event-related Potentials and Neurotherapy. San Diego, CA: Elsevier Academic Press.

Kumano, H., Horie, H., Shidara, T., Kuboki, T., Suematsu, H., and Yasushi, M. (1996). Treatment of a depressive disorder patient with EEG-driven photic stimulation. Biofeedback Self Regul. 21, 323–334. doi: 10.1007/BF02214432

Lundqvist, L.-O., Carlsson, F., Hilmersson, P., and Juslin, P. N. (2009). Emotional responses to music: experience, expression, and physiology. Psychol. Music 37, 61–90. doi: 10.1177/0305735607086048

MacDonald, R. A. (2013). Music, health, and well-being: a review. Int. J. Qual. Stud. Health Well-being 8:20635. doi: 10.3402/qhw.v8i0.20635

Maratos, A. S., Gold, C., Wang, X., and Crawford, M. J. (2008). Music therapy for depression. Cochrane Database Syst. Rev. 1:CD004517. doi: 10.1002/14651858.CD004517.pub2

Marchini, M., Ramirez, R., Maestre, E., and Papiotis, P. (2014). The sense of ensemble: a machine learning approach to expressive performance modelling in string quartets. J. New Music Res. 43, 303–317. doi: 10.1080/09298215.2014.922999

Mayor, O., Bonada, J., and Janer, J. (2011). “Audio transformation technologies applied to video games,” in Audio Engineering Society Conference: 41st International Conference: Audio for Games (London, UK).

McCaffrey, R. (2008). Music listening: its effects in creating a healing environment. J. Psychosoc. Nurs. 46, 39–45. doi: 10.3928/02793695-20081001-08

Michael, A. J., Krishnaswamy, S., and Mohamed, J. (2005). An open label study of the use of EEG biofeedback using beta training to reduce anxiety for patients with cardiac events. Neuropsychiatr. Dis. Treat. 1:357.

Monastra, V. J., Monastra, D. M., and George, S. (2002). The effects of stimulant therapy, EEG biofeedback and parenting style on the primary symptoms of attention deficit/hyperactivity disorder. Appl. Psychophysiol. Biofeedback 27, 231–246. doi: 10.1023/A:1021018700609

Moriyama, T. S., Polanczyk, G., Caye, A., Banaschewski, T., Brandeis, D., and Rohde, L. A. (2012). Evidence-based information on the clinical use of neurofeedback for ADHD. Neurotherapeutics 9, 588–598. doi: 10.1007/s13311-012-0136-7

Narmour, E. (1991). The Analysis and Cognition of Melodic Complexity: The Implication-Realization Model. Chicago, IL: University of Chicago Press.

Peniston, E. G., and Kulkosky, P. J. (1990). Alcoholic personality and alpha-theta brainwave training. Med. Psychother. 3, 37–55.

Puckette, M. (1996). “Pure Data: another integrated computer music environment,” in Proceedings of the Second Intercollege Computer Music Concerts (Tachikawa), 37–41.

Ramirez, R., Maestre, E., and Serra, X. (2010). Automatic performer identification in commercial monophonic Jazz performances. Pattern Recognit. Lett. 31, 1514–1523. doi: 10.1016/j.patrec.2009.12.032

Ramirez, R., Maestre, E., and Serra, X. (2012). A rule-based evolutionary approach to music performance modeling. IEEE Trans. Evol. Comput. 16, 96–107. doi: 10.1109/TEVC.2010.2077299

Ramirez, R., and Vamvakousis, Z. (2012). “Detecting emotion from EEG signals using the Emotive Epoc device,” in Proceedings of the 2012 International Conference on Brain Informatics, LNCS 7670 (Macau: Springer), 175–184.

Renard, Y., Lotte, F., Gibert, G., Congedo, M., Maby, E., Delannoy, V., et al. (2010). An open-source software platform to design, test, and use brain-computer interfaces in real and virtual environments. MIT Press J. Presence 19, 35–53. doi: 10.1162/pres.19.1.35

Rosenfeld, J. P. (2000). An EEG biofeedback protocol for affective disorders. Clin. Electroencephalogr. 31, 7–12. doi: 10.1177/155005940003100106

Rossiter, T. R., and La Vaque, T. J. (1995). A comparison of EEG biofeedback and psychostimulants in treating attention deficit/hyperactivity disorders. J. Neurother. 1, 48–59. doi: 10.1300/J184v01n01_07

Ruud, E. (1997). Music and the quality of life. Nord. J. Music Ther. 6, 86–97. doi: 10.1080/08098139709477902

Särkämö, T., Laitinen, S., Tervaniemi, M., Numminen, A., Kurki, M., and Rantanen, P. (2012). Music, emotion, and dementia: insight from neuroscientific and clinical research. Music Med. 4, 153–162. doi: 10.1177/1943862112445323

Särkämö, T., Tervaniemi, M., Laitinen, S., Forsblom, A., Soinila, S., Mikkonen, M., et al. (2008). Music listening enhances cognitive recovery and mood after middle cerebral artery stroke. Brain 131, 866–876. doi: 10.1093/brain/awn013

Särkämö, T., Tervaniemi, M., Laitinen, S., Numminen, A., Kurki, M., Johnson, J. K., et al. (2014). Cognitive, emotional, and social benefits of regular musical activities in early dementia: randomized controlled study. Gerontologist 54, 634–650. doi: 10.1093/geront/gnt100

Särkämö, T., Tervaniemi, M., Soinila, S., Autti, T., Silvennoinen, H. M., Laine, M., et al. (2010). Auditory and cognitive deficits associated with acquired amusia after stroke: a magnetoencephalography and neuropsychological follow-up study. PLoS ONE 5:e15157. doi: 10.1371/journal.pone.0015157

Solé, C., Mercadal, M., Gallego, S., and Riera, M. A. (2010). Quality of life of older people: contributions of music. J. Music Ther. 47, 264–281. doi: 10.1093/jmt/47.3.264

Sterman, M. B. (2000). Basic concepts and clinical findings in the treatment of seizure disorders with EEG operant conditioning. Clin. Electroencephalogr. 31, 45–55. doi: 10.1177/155005940003100111

Swingle, P. G. (1998). Neurofeedback treatment of pseudoseizure disorder. Biol. Psychiatry 44, 1196–1199. doi: 10.1016/S0006-3223(97)00541-6

Thie, J., Klistorner, A., and Graham, S. L. (2012). Biomedical signal acquisition with streaming wireless communication for recording evoked potentials. Doc. Ophthalmol. 125, 149–159. doi: 10.1007/s10633-012-9345-y

Vanathy, S., Sharma, P. S. V. N., and Kumar, K. B. (1998). The efficacy of alpha and theta neurofeedback training in treatment of generalized anxiety disorder. Indian J. Clin. Psychol. 25, 136–143.

Vanderak, S., Newman, I., and Bell, S. (1983). The effects of music participation on quality of life of the elderly. Music Ther. 3, 71–81. doi: 10.1093/mt/3.1.71

Walker, J. E. (2011). QEEG-guided neurofeedback for recurrent migraine headaches. Clin. EEG Neurosci. 42, 59–61. doi: 10.1177/155005941104200112

Keywords: music, neurofeedback, emotions, expressive performance, depression, electroencephalography, elderly patients

Citation: Ramirez R, Palencia-Lefler M, Giraldo S and Vamvakousis Z (2015) Musical neurofeedback for treating depression in elderly people. Front. Neurosci. 9:354. doi: 10.3389/fnins.2015.00354

Received: 01 May 2015; Accepted: 17 September 2015;

Published: 02 October 2015.

Edited by:

Julian O'Kelly, Royal Hospital for Neuro-disability, UK

Reviewed by:

Lutz Jäncke, University of Zurich, Switzerland
Eric Miller, Montclair State University, USA

Copyright © 2015 Ramirez, Palencia-Lefler, Giraldo and Vamvakousis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rafael Ramirez, Department of Information and Communication Technologies, Universitat Pompeu Fabra, Roc Boronat 138, 08018 Barcelona, Spain, rafael.ramirez@upf.edu
