Citizen Science Practices for Computational Social Science Research: The Conceptualization of Pop-Up Experiments

- 1 Complexity Lab Barcelona, Departament de Física Fonamental, Universitat de Barcelona, Barcelona, Spain

- 2 OpenSystems Research, Departament de Física Fonamental, Universitat de Barcelona, Barcelona, Spain

Under the name of Citizen Science, many innovative practices in which volunteers partner up with scientists to pose and answer real-world questions are growing rapidly worldwide. Citizen Science can furnish ready-made solutions with citizens playing an active role. However, this framework is still far from being well established as a standard tool for computational social science research. Here, we present our experience in bridging the gap between computational social science and the philosophy underlying Citizen Science, which in our case has taken the form of what we call “pop-up experiments.” These are non-permanent, highly participatory collective experiments which blend features developed by big data methodologies and behavioral experimental protocols with the ideals of Citizen Science. The main issues to take into account when planning experiments of this type are classified, discussed and grouped into three categories: infrastructure, public engagement, and the knowledge return for citizens. We explain the solutions we have implemented, providing practical examples grounded in our own experience in an urban context (Barcelona, Spain). Our aim is for this work to serve as a guideline for groups willing to adopt and expand such in vivo practices, and we hope it opens up the debate regarding the possibilities (and also the limitations) that the Citizen Science framework can offer the study of social phenomena.

1. Introduction

The relationship between knowledge and society has always been an important aspect to consider when one tries to understand how science advances and how research is performed [1, 2]. The general public has, however, mostly been left out of this methodology and creation processes [3, 4]. Citizens are generally considered as passive subjects to whom only finished results are presented in the form of simplified statements; yet paradoxically, we implicitly ask them to support and encourage research. The acknowledgment of this ivory tower problem has recently opened up new and exciting opportunities to open-minded scientists. The advent of digital communication technologies, mobile devices and Web 2.0 is fostering a new kind of relation between professional scientists and dedicated volunteers or participants.

Under the name of Citizen Science (CS), many innovative practices in which “volunteers partner with scientists to answer and pose real-world questions” (as stated on the Cornell Ornithology Lab web page; one of the precursors of CS practices in the 1980s) are growing rapidly worldwide [5–8]. Recently, CS has been formally defined by the Socientize White Paper as: “general public engagement in scientific research activities when citizens actively contribute to science either with their intellectual effort or surrounding knowledge or with their own tools and resources” [9]. This open, networked and transdisciplinary scenario favors more democratic research, thanks to contributions from amateur or non-professional scientists [10]. Over the last few years, important results have been published in high-impact journals by using participatory practices [6, 7]. Increasingly, the hidden power of thousands of hands working together is making itself apparent in many fields, showing performance comparable to (or even better than) expensive supercomputers when used to analyse or classify astronomical images [11], to reconstruct 3D brain maps based on 2D images [12], or to find stable biomolecular structures [13], to name but a few of the cases with a large impact. Citizen contributions can also have a direct impact on society by, for instance, helping to create exhaustive and shared geolocalized datasets [14] at a density level unattainable by the vast majority of private sensor networks (and at a much reduced cost) or by collectively gathering empirical evidence to force public administration action (for example, the shutdown of a noisy factory located in London [15]). The most active volunteers can contribute by providing experimental data to widen the reach of researchers, raise new questions and co-create a new scientific culture [3, 16].

Computational social science (CSS) is a multidisciplinary field at the intersection of social, computational and complexity sciences, whose subject of study is human interactions and society itself [17, 18]. However, CS practices remain vastly unexplored in this context when compared to other fields such as environmental sciences, in which they already have a long history [19–21]. Attempts to incorporate the participation of ordinary citizens as playing an important role can be found in fields such as experimental economics [22], the design of financial trading floors [23], and human mobility [24]. Work on the emergence of cooperation [25] and the dynamics of social interactions [26] is also noteworthy. All these experiments yielded important scientific outcomes, with protocols that are well-established and robust within the behavioral sciences (see for instance [27] and [28]), but unfortunately they remain on the very first level of the CS scale [15]. At that level, citizens are involved only as sensors or volunteer subjects for certain experiments in strictly controlled environments; their participation and potential are only partially unleashed. One possible way out of this first level was already provided by Latour [3, 29], when he proposed collective experiments in which the public becomes a driving force of the research. Researchers in the wild are then directly concerned with the knowledge they produce, because they are both objects and subjects of their research [4]. Some interesting research initiatives have emerged along these lines and involve massive experiments in collaboration with a CS foundation, such as Ibercivis [30] or through online platforms such as Volunteer Science (a Lazer Lab platform). More radical initiatives consider collaboration with artists as well, and some have been realized in museums or exhibitions and as large-scale performance art [31, 32].

CSS research has also recently been applied in the so-called “big data” paradigm [33, 34]. Much has been said about it and the possibilities it offers to society, industry and researchers. “Smart cities” pack urban areas with all kinds of sensors and integrate the information into a broad collection of datasets. Mobile devices also represent a powerful tool to monitor real-time user-related statistics, such as health, and major business opportunities are already being foreseen by companies. However, these approaches again treat citizens as passive subjects from whom one records private data in a non-consensual way, and they raise the aggravated problem that the unaware producers of these data (i.e., citizens) lose control of their use, exploitation and analysis. The validity of the conclusions drawn from the analysis of such datasets is still today a subject of discussion, mainly due to poor control of the process of gathering the data (by the public in general and by scientists in particular), inherent population and sampling biases [35] and the lack of reproducibility, among other systemic problems [36]. Last but not least, the big data paradigm has so far failed to provide society with the necessary public debate and transparent practices, adopting the bottom-up approach it advocates. It currently relies on huge infrastructures only available to private corporations, whose objectives may not coincide with those of researchers and the citizenry, and provides conditioned access to the data contents which, in addition, generally cannot be freely (re)used without filtering.

Our purpose here, however, is not to discuss problems inherent to big data. Rather, the approach we present aims to explore the potential of blending interesting features recently developed by big data methodologies with the ambitious and democratic ideals of CS. Public participation and scientific empowerment induce a level of (conscious) proximity with the subjects of the experiments that can be a highly valuable source of high-quality data [37, 38], or at least, of non-conflictive information with regard to data anonymity [39], that may correct biases and systematic experimental errors. This approach is potentially a way to overcome privacy and ethical issues that arise when collecting data from digital social platforms, while keeping high standards of participation [33, 40, 41]. Moreover, CS projects can use a vast variety of social platforms to optimize dissemination, encourage and increase participation and develop gamification strategies [42] to reinforce engagement. The so-called Science of Citizen Science studies the emergent participatory dynamics in this class of projects [43, 44], so this also opens the door to new contexts within which to study social phenomena.

The open philosophy at the heart of CS methods, such as open data licensing and coding, can also clearly improve science–society–policy interactions in a democratic and transparent way [45] through so-called deliberative democracy [46]. The CS approach simultaneously represents a powerful example of responsible research and innovation (RRI) practices included in the EU Horizon 2020 research programme [47] and the Quadruple Helix model in which government, industry, academia and civil participants work together to co-create the future and drive structural changes far beyond the scope of what any one organization or person could do alone [48]. Along these lines, we consider that the potential of CSS when adopting CS methods is vast, since its subject of study is citizens themselves. Therefore, their engagement with projects that study their own behavior is highly likely, since it has an immediate impact on their daily lives. As a result, large motivated communities and scientists can work hand in hand to tackle the challenges arising from CSS, but also collectively circumvent potential side effects. The possibility of reaching wider and more diverse communities will help in the refinement of more universal statements avoiding the population biases [49] and problems of reproducibility present in empirical social science studies [50, 51]. Another important advantage of working jointly with different communities is that it allows scientists to set up lab-in-the-field or in vivo experiments, which instead of isolating subjects from their natural urban environment—where socialization takes place—are transparent, fully consensual and enriched, thanks to the active participation of citizens [4, 31]. Such practices and methodologies, however, are still far from being well established as a standard tool for CSS research.

The main goal of this paper is precisely to motivate the somewhat unexplored incorporation of CS practices into CSS research activities. Given the arduous nature of such a task, here we limit ourselves to a reformatting of existing standard experimental strategies and methods in science through what we call “pop-up experiments” (PUEs). Such a concept has been shaped by the lessons we have learned while running experiments in public spaces in the city of Barcelona (Spain). Section 2 introduces this very flexible solution which makes collective experimentation possible, and discusses its three essential ingredients: adaptable infrastructure, public engagement and the knowledge return for citizens. Finally, Section 3 concludes the manuscript with a discussion of what we have presented to that point, together with some considerations concerning the future of CS practices within CSS research.

2. A Flexible Solution for Citizen Science Practices Within CSS: the Pop-Up Experiment

2.1. Context and Motivation

Over the last 4 years, the local authorities in Barcelona (i.e., City Council) and its Creativity and Innovation Direction in collaboration with several organizations have set as an objective the exploration of the possibilities of transforming the city into a public living lab [48], where new creative technologies can be tested and new knowledge can be constructed collectively. This has been done through the Barcelona Lab platform and one of its most pre-eminent actions has been establishing CS practices in and with the city. The first task was to create the Barcelona Citizen Science Office and to build a community of practitioners where most of the CS projects from different research institutions in Barcelona could converge. The Office serves as a meeting point for CS projects, where researchers can pool forces, experiences and knowledge, and also where citizens can connect with these initiatives easily and effectively. The second task is directly linked with the subject of this paper and was conceived to test how far the different public administrations can go in opening up their resources to collectively run scientific experiments [31]. The CS toolbox clearly provides the perfect framework for the design of public experiments, and exploration of the emergent tensions and problematic issues when running public living labs. Furthermore, the involvement of the City Council provided us with the opportunity to embed these experiments into important massive cultural events, which constitute the perfect environment for reinforcing the openness and transparency of our research process with respect to society, or at least to the citizens of Barcelona.

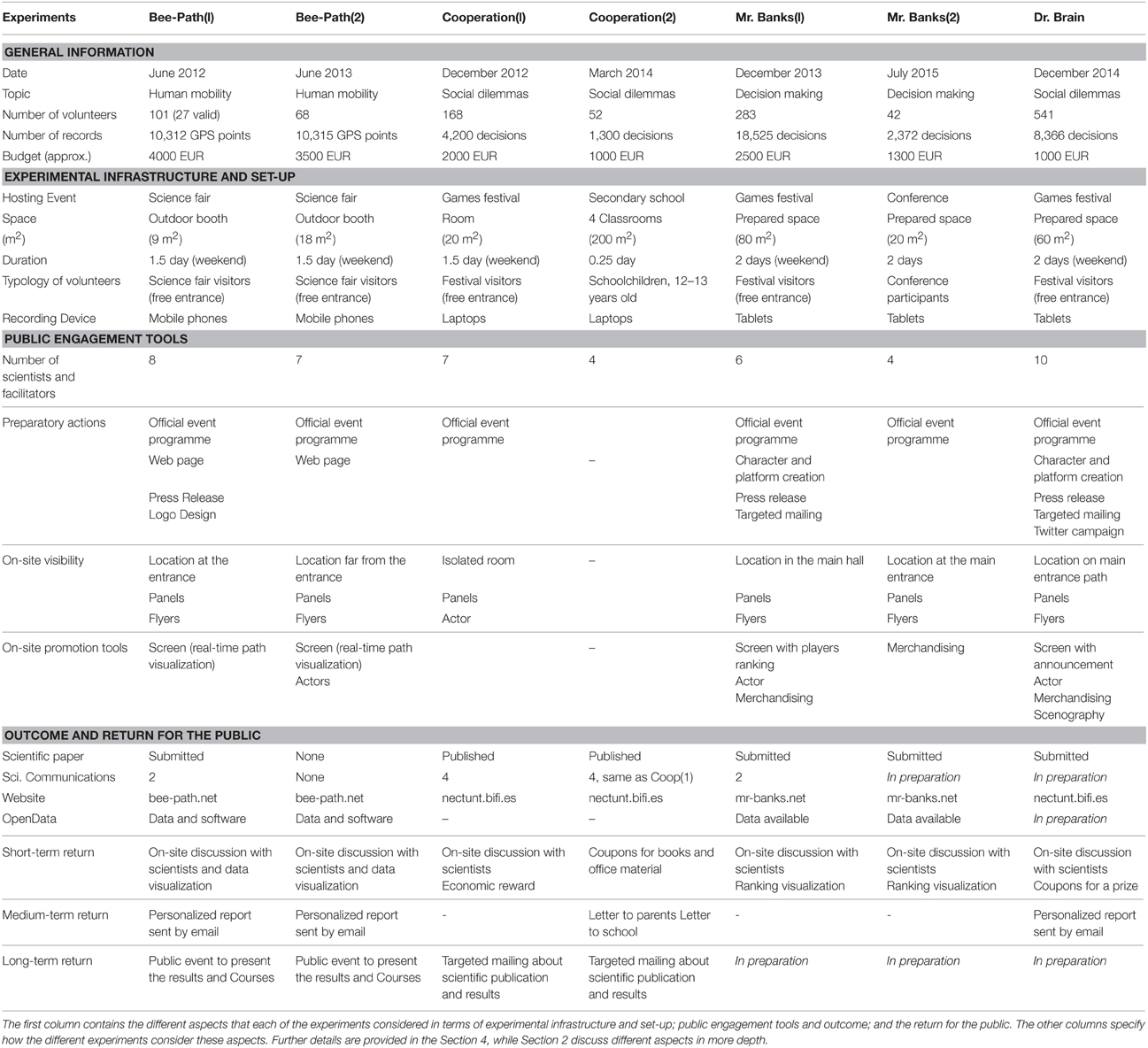

We have conducted several experiments to put CS ideals into practice and test their potential in urban contexts. In contrast with existing environmental CS projects in other cities such as London or New York (with civic initiatives such as Mapping for Change or Public Lab, respectively), we focussed our attention on CSS-related problems. Our aim was to explore relations between city, citizens and scientists, which we considered had been neglected or inadequately addressed. More specifically, this rather wild testing consisted of seven different experiments performed between 2012 and 2015 on three different topics that address different questions: human mobility (How do we move?), social dilemmas (How cooperative are we?) and decision-making processes (How do we take decisions in a very uncertain environment such as financial markets?). These are summarized in Table 1 and fully described in Section 4. Other points common to these experiments are the large number of volunteers that participated (up to 541 in a single experiment; 1255 in total) and the consequent large number of records (up to 18,525 decisions for a single experiment, 55,390 entries in total), despite the rather limited budget allocated to the experiments (from 1000 EUR to 4000 EUR per experiment, and around 2200 EUR on average). Despite dealing with different research questions, common problems (related to CS practices, but also to CSS research studying human behavior in general [34]) were identified and potential solutions were developed to overcome them in all cases. Some of the potential solutions that were implemented were successful, others were not; but all the experiences have shaped the concept and the process of experimentation in CSS research consistent with CS ideals.

Table 1. Summary of our seven experiments to test CS practices with pop-up experiments in urban contexts and through computational social science research.

2.2. Definition of a Pop-Up Experiment and the Underlying Process

The generic definition of a pop-up, according to the Cambridge dictionary, is: “Pop-up (adj.): used to describe a shop, restaurant, etc. that operates temporarily and only for a short period when it is likely to get a lot of customers.” From the initial stages, we thought that this description fitted well with our non-permanent but highly participatory experimental set-up when applying CS principles to CSS research in urban contexts. The parallel helps to clarify the more formal definition that we use to describe our approach from a theoretical perspective. It is based on the expertise gained from the seven experiments carried out over the last 4 years and reads:

A PUE is a physical, light, very flexible, highly adaptable, reproducible, transportable, tuneable, collective, participatory and public experimental set-up for urban contexts that: (1) applies Citizen Science practices and ideals to provide ground-breaking knowledge; and (2) transforms the experiment into a valuable, socially respectful, consented and transparent experience for non-expert volunteer participants with the possibility of building common urban knowledge that arises from fact-based effective knowledge valid for both cities and citizens.

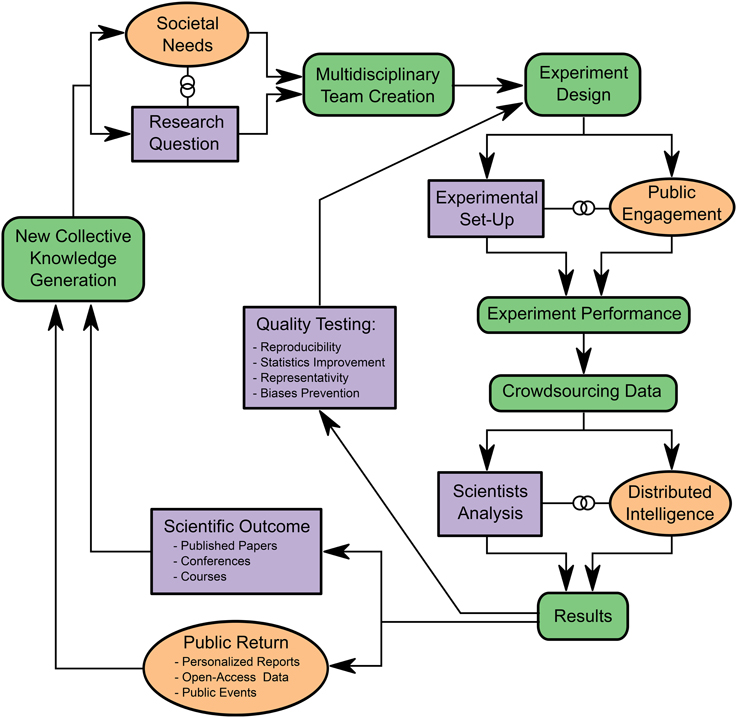

In our case, we apply this concept to CSS with the aim of answering very specific research questions with the participation of larger population samples than those in behavioral experiments. The research process that emerges from PUEs can be synthesized in the flow diagram in Figure 1. The whole process starts with a research question or a challenge for society that may be promoted by citizens or scientists, but also by private organizations, public institutions or civil movements. The initial impulse helps to create an adequate research group, which will need to be multidisciplinary if it is to tackle a complex problem consisting of many intertwined issues. The group then co-creates the experiment, considering both the experimental set-up and the tasks that unavoidably involve public engagement. The experiment is then carried out and data are generated collectively (crowdsourced) under the particular constraints of public spaces, which depend not only on the conditions designed by the scientists but also on many other practical limitations. The data are then analyzed using standard scientific methods, but non-professional scientists are also invited to contribute to specific tasks [11, 12] or by using other non-standard strategies in the exploration of the data [13]. These two contributions by volunteers make up what we call “distributed intelligence” and generate results that are difficult to match by conventional computer analysis. The results can take many forms, depending on the audience being addressed: from a scientific paper to personalized reports that can be read by any citizen or even recommendations that are valid for policymakers at the city level. Finally, the whole process can generate the impulse necessary to promote and face a new social and scientific challenge or an existing need through the same scheme.

Figure 1. The research process in pop-up experiments. We identify the different steps in the whole process in a set of boxes, from conception to completion. Starting from the research question, we introduce the design and performance of a given experiment that is intended to respond to a particular challenge. The crowdsourced data gathered can be analyzed both by expert scientists and amateur citizens to produce new knowledge (the return) in different forms. The results are finally taken as inspiration and renewed energy to face new challenges and new research questions. Data quality is also part of the process: the lessons learned from the scientific analysis can improve future experimental conditions. Ovals in orange: volunteers' contributions; squares in magenta: tasks performed exclusively by scientists; rounded rectangles in green: tasks shared by both citizens and scientists.

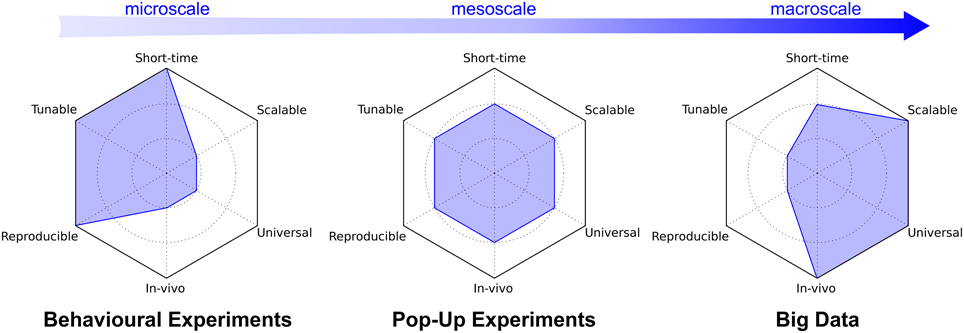

The PUE solution also represents a middle ground between behavioral science experiments and big data methodologies. To better understand where the PUEs we propose fit, Figure 2 compares the different approaches using a radar chart that qualitatively rates, with three degrees of intensity (low, medium and high), six different aspects that characterize each type of experiment. We can observe that the three different approaches cover different areas. Behavioral experiments and big data have a limited overlap, while the PUEs share several aspects with both of them. One might argue that the excess of openness of CS constitutes a severe limitation with respect to objectivity, compared to the solid experimental protocols in behavioral science [27, 52]. However, it is also true that the highly participatory nature of CS can be very effective at reaching a more realistic spectrum of the population and a larger sample, thereby obtaining more general statements with stronger statistical support (see [28], for alternative and complementary methods). Since it is directly attached to real-world situations, the PUE solution avoids the danger of exclusive and distorted spaces of in vitro (or ex vivo) laboratory experiments. It also adds value to the more classic social science lab-in-the-field experiments, which generally limit interaction among subjects and scientists as much as possible. At the other extreme, CS practices will never be able to compete with the big data world in terms of the quantity of data, but this can be compensated for. A better understanding of the volunteers involved and improved knowledge of their peculiarities helps to avoid possible biases. Furthermore, the active nature of PUEs allows some conditions of the experiments to be tuned to explore alternative scenarios. PUEs can indeed be an alternative to the controversial virtual labs in social networks and mobile games, which have yielded interesting results, for instance on emotional contagion with experiments on the Facebook platform [53], though not without an intense public debate on ethical and privacy issues concerning the way the experiments were performed [54].

Figure 2. Radar chart comparing six characteristics of the pop-up experiment approach with behavioral experiments and big data research. The features are graded in three different degrees of intensity from low (smallest radius) to high (largest radius). “Short-time” describes the time required to run the experiment. “Scalable” qualifies how easy it is to scale up and increase the number of subjects in the experiment while preserving the original design. “Universal” quantifies the generality of the statements produced by the experiments. “In vivo” measures how close the experimental set-up is to everyday situations or everyday life. “Reproducible” assesses the capacity to repeat the experiment under identical conditions. Finally, “Tunable” quantifies how flexible and versatile the conditions of the experimental design are.

We think that PUEs could become an essential approach for the empirical testing of the many statements of CSS, one that is complementary to lab-in-the-field, virtual lab and in vitro experiences. For this to happen, we have identified the main obstacles that hinder the development of CS initiatives with respect to other forms of social experimentation. They can be grouped into three categories: infrastructure, engagement, and return. In what follows, we detail each of the obstacles and illustrate the solutions that PUEs offer, together with practical examples applied to each case.

2.3. Light and Flexible Experimental Infrastructure

By infrastructure, we understand all the logistics necessary to make the experiments possible. In a broad CS context, the necessary elements differ from those of orthodox scientific infrastructure. As discussed in Bonney et al. [6] and Franzoni and Sauermann [55], they include other tools, other technical support and other spaces. The second block of Table 1 lists some of the elements we have deemed capital to satisfactorily collect reliable data. PUEs should be designed favoring scalability, in the sense of easily allowing an increase in the population sample size or repetition of the experiment in another space. To make this possible, the experiments must rely on solid and well-tested infrastructure, with an appealing volunteer experience to avoid frustrating the participants. When considering the experimental set-up, we used several strategies to foster participation and ensure the success of the experiments.

First, we physically set up the PUEs in very particular contexts in urban areas and, in all cases, we placed them in crowded (moderately to highly dense) places, to reach volunteers easily. In other words, we preferred to go where citizens were instead of encouraging them to come to our labs. To make this possible, the City Council offered specific windows, for instance, at a couple of festivals, as hosting events (the Bee-Path(1), Bee-Path(2), Cooperation(1), Mr. Banks and Dr. Brain experiments). This meant that we had to adapt to these specific out-of-the-lab and in vivo contexts; the logistics and the composition of the research teams thus unavoidably became more diverse, complex, heterodox and highly multidisciplinary. In collaboration with the event organizers, we then prepared a specific space, of reduced dimensions, for the experiment, where the volunteers (whose typology was different in each case) could participate through a recording device.

Second, PUEs demand that the devices used by participants to collect and manipulate data, either actively or passively, must be familiar to them. In our experiments we designed specific software to run on laptops (the Cooperation(1) and Cooperation(2) experiments), mobile phones (the Bee-Path(1) and Bee-Path(2) experiments) and tablets (the Mr. Banks and Dr. Brain experiments). However, it may also be possible to use cameras, video cameras, or any other sophisticated device as long as the participants easily become familiarized with it after a few instructions or a tutorial. This sort of infrastructure is in the end what allows us to carry out experiments in a participant's everyday (not strange: in vivo) environment. Initially, we overlooked this aspect in the design of the set-up and the allocation of resources, but having a user-friendly interface is important if our aim is for people to behave normally. Similarly, both the instructions and interface should be understandable and manageable for people of all ages.

Third, in order to study social behavior in different environments, the experiments need to be adaptable, tunable, transportable, versatile and easy to set up in different places. All the devices mentioned above fulfill this requirement as well.

Fourth, PUEs are typically one-shot affairs, since they are hosted at a festival, a fair or in a classroom, which means that they are concentrated in time (with a duration of 0.25–2 days) and there is no chance of a second shot. All the collectable data could be threatened if something goes wrong. Extensive beta testing and defensive programming are imperative to ensure that the collected data are reliable. It is also necessary to anticipate potential problems: one must be flexible enough to switch to alternative research questions on the fly if the PUE location and conditions are not fully satisfactory for the initial research purposes.
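As an illustration of what defensive programming can mean for a one-shot event, the following minimal sketch validates every incoming record and writes it to disk immediately, so that a crash of the device loses at most the record being written. It is not the software used in the experiments described here: the field names (`participant_id`, `round`, `action`), the allowed actions and the `.rejected` sidecar file are all hypothetical choices made for the example.

```python
import json
import os
import time
from pathlib import Path

# Hypothetical schema: field names and allowed values are illustrative only.
ALLOWED_ACTIONS = {"cooperate", "defect"}


def validate_record(record):
    """Return a list of problems found in one record (empty list means valid)."""
    problems = []
    participant = record.get("participant_id")
    if not isinstance(participant, str) or not participant.strip():
        problems.append("participant_id must be a non-empty string")
    round_no = record.get("round")
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(round_no, bool) or not isinstance(round_no, int) or round_no < 1:
        problems.append("round must be an integer >= 1")
    if record.get("action") not in ALLOWED_ACTIONS:
        problems.append("action must be one of " + ", ".join(sorted(ALLOWED_ACTIONS)))
    return problems


def append_record(path, record):
    """Validate a record and append it as one JSON line, flushed to disk.

    Invalid records are not discarded: they go to a sidecar file so that
    nothing collected during a one-shot event is silently lost.
    Returns True if the record was valid, False otherwise.
    """
    path = Path(path)
    problems = validate_record(record)
    target = path if not problems else path.with_name(path.name + ".rejected")
    entry = dict(record, received_at=time.time(), problems=problems)
    with open(target, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")
        handle.flush()
        os.fsync(handle.fileno())  # force the write to disk before returning
    return not problems
```

Keeping rejected records in a separate file, rather than raising an error in front of the participant, is one possible design choice: it keeps the volunteer experience smooth while leaving the decision about questionable entries to the later cleaning stage.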

Finally, numbers matter and experiments must reach enough statistical strength for rigorous analysis to be performed, and this needs to be carefully taken into account during the design phase of the experiment (see Figure 1). Typically, the more expensive the devices are, the fewer data collectors you can have; the capacity to collect data is therefore reduced, which is not a very effective strategy in a rather short-lived one-shot event. Cheap infrastructure thus favors scalability in the end. Alternatively, new collaborators need to be found or an extra effort is required to find sponsorship (for instance, related to science outreach) which, in any case, will complicate the preparation phase of the experiment. Scalability is indeed interesting, but it has its side effects as well: relying on infrastructure provided by volunteers, such as smartphones, can greatly influence the quality of the data and its normalization (one of the central problems related to the big data paradigm). In the Bee-Path(1) experiment, where we used the GPS of the smartphones of the participants themselves, the cleaning process was far more laborious than in the other cases for this reason (see numbers in Table 1). In contrast, the Cooperation(1), Cooperation(2), Mr. Banks and Dr. Brain experiments, where we designed and programmed the software and supplied the hardware for the experiences of the participants, did not require much post-collection treatment.
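The trade-off between statistical strength and the number of devices can be made concrete with a rough planning sketch. The first function uses the standard normal-approximation formula for estimating a proportion (for instance, a cooperation rate) within a given margin of error; the second converts that number of participants into a number of devices. Both function names, and the figures in the usage note (a 5% margin, an 8-hour event, 15-minute sessions), are assumptions chosen for illustration and are not taken from the experiments in Table 1.

```python
import math


def sample_size_for_proportion(margin, z=1.96, p=0.5):
    """Participants needed to estimate a proportion within +/- margin.

    Uses the normal approximation n = z^2 * p * (1 - p) / margin^2.
    z = 1.96 corresponds to 95% confidence; p = 0.5 is the most
    conservative choice because it gives the largest n.
    """
    if not 0 < margin < 1:
        raise ValueError("margin must be between 0 and 1")
    if not 0 < p < 1:
        raise ValueError("p must be between 0 and 1")
    return math.ceil(z * z * p * (1 - p) / (margin * margin))


def devices_needed(participants, event_hours, minutes_per_session):
    """Devices required if each device serves one participant at a time.

    Assumes devices are in use for the whole event with no idle time,
    so the result is a lower bound on the hardware actually needed.
    """
    if event_hours <= 0 or minutes_per_session <= 0:
        raise ValueError("event_hours and minutes_per_session must be positive")
    sessions_per_device = math.floor(event_hours * 60 / minutes_per_session)
    if sessions_per_device == 0:
        raise ValueError("a single session does not fit in the event")
    return math.ceil(participants / sessions_per_device)
```

Under these illustrative assumptions, `sample_size_for_proportion(0.05)` gives 385 participants, and `devices_needed(385, 8, 15)` gives 13 devices. Experiments in which participants interact in groups, or in which several conditions are compared, would need a different calculation.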

2.4. Public Engagement Tools and Strategies

PUEs are physically based and rooted in particular, temporal, local contexts. This delimited framework allows dissemination resources and efforts to be concentrated at a given spot over a certain time, which increases the effectiveness and efficiency of the campaign in terms of both workforce and budget. Additionally, the one-shot nature of PUEs allows us to avoid the problem of keeping participants engaged in an activity spanning long periods of time; but consequently they rely completely on constantly renewing the base of participants (which may require higher dissemination). To this end, the initial action was the creation of a census of volunteers shared by all members of the Barcelona Citizen Science Office research group.

Another factor of major importance is related to the contact between researchers, organizers and citizens in the set-up of the experiment. This allows for pleasant dialogue and exchange of views that in turn helps to frame the scientific question being studied, as well as developing possible improvements for future experiences. This can be achieved by stimulating the curiosity of participants concerning the experiment and the research associated with it. The research question should be focussed and understandable to an average person who is not an expert in the field.

To engage citizens in such a dialogue, however, requires certain steps. First, it is necessary to attract potential participants with an appealing set-up. This includes location in the physical space, but also an effective publicity campaign on the days prior to the experiment (see preparatory actions in Table 1). It is important to offer a harmonized design with common themes that citizens can relate to the experiment. To make our material appealing, we collaborated with an artist (the Bee-Path(1) and Bee-Path(2) experiments) and a graphic designer (the Cooperation(1), Cooperation(2), Mr. Banks(1), Mr. Banks(2), and Dr. Brain experiments) whose main contribution was the creation of characters associated with each experiment. The function of these characters, not far from the world of cartoons, was to attract the attention of the public, but also, in most cases, to present the experiment as an attractive game, since one of the most powerful elements that engages people in an activity is the expectation of having fun. It is certainly possible to maintain scientific rigor while using gamification strategies to create an atmosphere of play for the study, thus transforming it into a more complete experience [56]. Moreover, actors were used as human representations of these characters (Mr. Banks and Dr. Brain). The actors were indeed an important element in making the experiment attractive on site, along with: having a large team of scientists and facilitators present (a rotating team of up to 10 people); an optimum and visible location inside the event space; and material and devices to promote the experiment, such as screens to visualize the results of the experiments in real time or promotional material (flyers and merchandise). Based on this experience, we have optimized all these ingredients and have included some scenographic elements in the last experiment, performed within the Barcelona DAU Board Games Festival (Dr. Brain).

Simply getting a large number of people engaged is, however, not enough. It is also necessary to aim for universality in the population sample. The experiment must be designed in such a way that people of all ages and conditions can really participate. Furthermore, a PUE has to be transportable at a minimal cost after it has been implemented once, in order to be reproduced in different environments (which may favor certain types of population). The Cooperation(1) and Cooperation(2) experiments are very illustrative in this respect. In the Cooperation(1) experiment, we discovered that different age groups, especially children ranging from 10 to 16 years old, behaved in different ways and cooperated with different probabilities. Apparently, children were more volatile and less prone to cooperate than the control group in a repeated prisoner's dilemma. Fifteen months later, we repeated the experiment in a secondary school [12–13 years old: the Cooperation(2) experiment]. On the one hand, the results in this case showed the same levels of cooperation as the control group in the Cooperation(1) experiment. On the other hand, the same volatile behavior, more intense than for the control group in Cooperation(1), was again observed. Therefore, thanks to the repetition of the experiment, we rejected the early idea of different levels of cooperation in children, while at the same time strengthening the claim that children exhibit volatile behavior (Poncela-Casasnovas et al., submitted).

2.5. Outcome and Return for the Public

Last but not least come the factors related to the management of the aftermath of the experiment (fourth section of Table 1). PUEs, as we have implemented them, are intrinsically cross-disciplinary and involve a large number of agents and institutions, which in turn may have diverging interests and expectations regarding the outcome of a particular experiment. Any successful PUE must be able to accommodate all these interests and create positive environments of collaboration in which all the actors contribute in a mutually cooperative way.

The organizers of a festival will, for instance, find in PUEs an innovative format with participatory activities to add to their programme [the Cooperation(1), Mr. Banks and Dr. Brain experiments]. PUEs can also be a transparent and pro-active system of gathering data to provide information and opportunities for analysis of the event itself: useful for planning and improvement. The Bee-Path(1) experiment studied how visitors moved around a given space and provided information based on actual facts that could be used to improve the spatial distribution in future editions of the fair where it was developed. City, local and other administrations will find in PUEs an innovative way to establish direct contact with citizens; to co-create new knowledge valid for the interests of the city as a whole and eventually to generate a census of highly motivated citizens, prepared to participate in this kind of activity. Scientists will obviously try to publish new research based on the data gathered.

All these expectations are very different from each other and should converge organically if a collective experiment is to be run successfully [3]. However, we should not forget to include quite specifically the expected return for our central actors: the volunteers. Their contribution is essential in CS practices [10] and it is therefore completely fair to argue that citizens who agree to participate in these initiatives need to see a clear benefit from their perspective, comparable to (albeit different from) that of the local authorities, festival organizers, scientists or any other contributors. Moreover, the high degree of concentration in space and time, together with the intense public exposure of PUEs, increases volunteer expectations even more compared to other ordinary cases in CS [11, 12]. The face-to-face relationship established between researchers and citizens in all the PUEs we have run testifies to this being a very delicate issue that needs to be managed with great care.

Any PUE should manage expectations on three different time scales: short term (during and immediately after the experiment), medium term (a week or a month afterwards), and long term (the following months or even years). In some of the experiments we failed in this aspect on at least one of the three time scales, since we did not properly anticipate the effort required to respond to the expectations of the volunteers.

The short term responds to basic curiosity. This point is related to engagement and the experimental set-up: the physical presence of scientists (with no mediation) allows them to explain the experiment in the most convinced and convincing way, and thus to motivate people. Also, the introduction of large screens where the progress of other participants can be followed in real time helped in this matter. In the Bee-Path experiments [Bee-Path(1) and Bee-Path(2)] we showed the GPS locations of the participants on a map; while in the Mr. Banks experiment we showed a ranking of the best players (best performances by the participants). This information was intended to boost participation and it was also chosen in such a way that it distorted the questions addressed to a minimal degree and thus did not influence the results of each experiment. The medium term relates to expectations regarding the results of the experiment. Participants want to know whether the set-up was successful and whether they performed well enough. In order to complement their short-term experience, it is important to keep participants informed as to the outcomes of the PUE. An example of a medium timescale is the Dr. Brain case, where a personalized report of performance during the experiment was sent to each participant by e-mail. In some cases, and based on these results, this also generated new dialogue between scientists and citizens. The last timescale to be managed consists of a more formal way of presenting the results of the study, through public presentations and talks. So, in the case of the Bee-Path(1) experiment, outreach conferences, public debates and even a summer course for (graduate and undergraduate) students interested in CS practices were organized.

All these are important for the success of PUEs and should be clearly laid out to volunteers before they agree to participate. The return for volunteers at all these scales is a key ingredient in the building of a critical mass of engaged citizens; not only for further experiments, but also to fulfill the objectives of the work that are not strictly scientific. For scientists, the direct relation with volunteers helps to improve the message and the way of delivering that message; to refine understanding of the phenomena involved in an experiment and even to refine a given experiment at future venues. One final positive side effect of this contact is the rise in public awareness of the difficulty and importance of science. As can be seen, forming the project around a rich and functional web page helps to harmonize all the time scales discussed and opens up new and interesting perspectives to bridge PUEs with other online-based CS practices. It also serves as an efficient way to communicate results and news of the project to interested citizens, and it could be used to improve data handling and sharing standards by allowing participants direct access to and management of their personal registers.

3. Discussion

The advent of globalization and the fast track taken by innovation [48], combined with enormous challenges, have created demand for answers at a very fast pace. Deeply intertwined global and local actions are necessary to meet social challenges such as the continuous growth of the human population, the effects of climate change and even the need for collective decision-taking mechanisms prepared for effective policy-making. These urgent requirements collide with the typically long-winded process of scientific research, and this situation is affecting the philosophy, available resources and methods underlying science itself [55]. Society expects much from us as scientists but still lacks reflection and knowledge concerning the route toward more collaborative, public, open and responsive research. CS practices, even though they may not provide definitive answers to social challenges, aim to shorten the gap between the public and researchers, or in the worst case scenario, at least to increase social awareness of the problems tackled. CS practices can thus allow science to furnish ready-made solutions in public, and with citizens playing an active role.

In this work we have presented our experience in bridging the gap between CSS and the philosophy underlying CS, which in our case has taken the form of what we call PUEs. We hope that this work serves as a guideline for groups willing to adopt and expand such practices, and that it opens up the debate regarding the possibilities (and also the limitations) these approaches can offer. The flexibility of behavioral experiments can be combined with the strengths of big data to create a new tool capable of generating new collective knowledge. We have conceptually identified the main issues to be taken into account whenever CS research is planned in the field of CSS. We have grouped the challenges into three categories, in accordance with our experience: infrastructure, engagement and return. Furthermore, we have explained the solutions we implemented under the framework of a PUE, providing practical examples grounded in our own experience. The importance of team work and of widening the scope to consider questions that are not directly related with lab work has been highlighted, as well as the need to work hand in hand with both the public and other social actors. Other technical aspects of the approach related to necessary but peculiar infrastructure have also been reviewed.

We firmly support the idea of abandoning the ivory tower and opening up science and its research processes. Indeed, CS research essentially relies on the collaboration of citizens, but not just in passive data gathering. We think that placing distributed intelligence (with contributions from both experts and amateurs) at the very core of scientific analysis could also be a valid strategy to obtain rigorous and valuable results. We also believe that the PUEs we present here can potentially empower citizens to take their own civil action, relying on a collectively constructed facts-based approach [16]. To co-create and co-design a smart city with citizens, along the lines of the big data paradigm, will then be much easier and even more natural, as interests and concerns will be shared throughout the whole research process. Data gathered in the wild or in vivo contexts could thus be understood as truly public and open, while data ownership and knowledge would be shared from the very start [4]. Our future venues and experiments will be more deeply inspired by the open-source, do-it-yourself, do-it-together and makers movements [57], which facilitate learning-by-doing, and low-cost heuristic skills for everybody. A fresh look at problems can result in innovative and imaginative ideas that in the end can lead to out of the box solutions. However, as scientists, we will also have to find a way to reconcile unorthodox and intuitive forms with the standards and methodologies of the world of science. Lessons will need to be learnt from the “open prototyping” approach, in which an industrial product (such as a car) can be shaped by an iterative process during which the company owning the product has no problem allowing input from outside [58]. Some other clues can be found in the form of collective experimentation, where a fruitful dialogue can be established between the matters of concern raised by citizens and the matters of fact raised by scientists. Latour [29] already introduced these concepts and discussed their symbiotic relationship by considering the case study of ecologism (a civil movement) and ecology (a scientific activity). There are still many aspects to test and explore concerning this approach in the field of CSS research.

We would also like, however, to briefly present open questions related to the way we perform science nowadays, and to echo fundamental contradictions that science is not properly handling in this era of globalization [55]. CS practices yield pleasing outputs for communities; but they require a major effort from scientists, with the downside of providing very low (formal and bureaucratic) professional recognition. Open social experiments demand a high level of involvement in cooperation with non-scientific actors, which may divert professional researchers from the activity for which they are generally evaluated: the publication of results. Furthermore, such experiments often involve multidisciplinary teams, which may then struggle to find appropriate journals in which to publish their findings and to gain acceptance in established communities. We thus urge the scientific community to actively recognize the valuable advantages of performing science within our proposed experimental framework.

Lessons learned must be shared, both within the community (as this paper attempts to do) and outside, in public spaces, including public institutions and policy makers. Science and CS are mostly publicly funded and therefore belong to society. The Internet has provided new ways in which relations between science and society can be strongly reinforced. We believe that this is good for everyone as it raises concern for science, by enhancing participation and, most importantly, by exploring new effective ways to push the boundaries of knowledge further. We hope that the present work helps in theoretically establishing the concept of the PUE and in encouraging the adoption of CS practices in science, whatever the field.

4. Materials and Methods

In this section we provide descriptions of our experiences over 4 years of performing PUEs using CS practices in the city of Barcelona. Here we detail the experimental methods and briefly summarize the outcomes. All the experiments were performed in accordance with institutional (from the Universitat de Barcelona in all cases except for the Cooperation(1) experiment, when they were from the Universidad Carlos III) and national guidelines and regulations concerning data privacy (in accordance with Spanish data protection law: the LOPD), and all participants gave written informed consent in accordance with the Declaration of Helsinki. All interfaces used included informed consent from all subjects. The data collected were properly anonymized and not related to personal details, which in our case were age range, sex, level of education and electronic (e-mail) address.

4.1. The Bee-Path(1) Experiment

The aim of this experiment was to study the movement of visitors during their exploration of an outdoor science and technology fair where several stands with activities were located in an area of approximately 3 ha inside a public park. The experiment took place during the weekend of 16th and 17th June 2012, specifically on Saturday afternoon (from 16 to 20 h) and Sunday morning (from 11 to 15 h). The participants had very different interests, origins, backgrounds and ages; the organization of the event estimated that 10,000 people visited the fair. The Bee-Path information stand was located at the main entrance where visitors were encouraged to participate in the experiment by downloading an App onto their mobile phones. After very simple registration and instructions on how to activate the App, the participants were left to wander around the fair while being tracked. After a laborious cleaning process, we analyzed the movement and trajectories of 27 subjects from the records provided by the 101 volunteers. We found spatio-temporal patterns in their movement and we developed a theoretical model based on Langevin dynamics driven by a gravitational potential landscape created by the stands. This model was capable of explaining the results of the experiment and predicted scenarios with other spatial configurations of the stands. A scientific paper has been submitted for publication [59]. The project description, results and data are freely accessible on the web page: www.bee-path.net.
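
To make the idea of Langevin dynamics in a potential landscape concrete, the following is a minimal sketch and not the model fitted in [59]. It assumes an overdamped walker integrated with the Euler-Maruyama scheme, and a softened inverse-distance ("gravitational") well at each stand. The stand coordinates and weights, the softening length, the diffusion constant and all function names are hypothetical choices made for illustration only.

```python
import math
import random


def force(pos, stands, softening=1.0):
    """Attractive force at `pos` from softened gravitational wells.

    Each stand is (x, y, mass); the potential of one stand is assumed to be
    U = -mass / sqrt(r^2 + softening^2), an illustrative choice.
    """
    fx = fy = 0.0
    for sx, sy, mass in stands:
        dx, dy = sx - pos[0], sy - pos[1]
        r2 = dx * dx + dy * dy + softening ** 2
        pull = mass / (r2 * math.sqrt(r2))  # mass / (r^2 + eps^2)^(3/2)
        fx += dx * pull
        fy += dy * pull
    return fx, fy


def simulate_walker(start, stands, steps=1000, dt=0.1, diffusion=0.5, seed=0):
    """Euler-Maruyama integration of dx = F(x) dt + sqrt(2 D dt) * xi."""
    rng = random.Random(seed)
    x, y = start
    path = [(x, y)]
    noise = math.sqrt(2.0 * diffusion * dt)
    for _ in range(steps):
        fx, fy = force((x, y), stands)
        x += fx * dt + noise * rng.gauss(0.0, 1.0)
        y += fy * dt + noise * rng.gauss(0.0, 1.0)
        path.append((x, y))
    return path


# Hypothetical layout: three stands with different attractiveness.
stands = [(10.0, 0.0, 5.0), (0.0, 15.0, 8.0), (-12.0, -4.0, 3.0)]
trajectory = simulate_walker((0.0, 0.0), stands, steps=500)
```

Moving the entries of `stands` and re-running the simulation is the kind of "other spatial configuration" scenario that such a model can explore; a real analysis would first fit the free parameters to the recorded trajectories.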

4.2. The Bee-Path(2) Experiment

Following the previous Bee-Path(1) experiment, this set-up also focussed on studying the movement of people, but in this case we were interested in search patterns: how people move when exploring a landscape to find something. The experiment took place at the following edition of the same science and technology fair (June 15th and 16th 2013). The participants also downloaded an App, similar to that used in Bee-Path(1), that tracked them; but in this case they were instructed to find 10 dummies hidden in the park. Numerous problems were encountered due to several technological and non-technological factors which impeded satisfactory performance of the experiment. The technological issues were principally two: the low accuracy of the recordings, due to the proximity of the regional parliament building where Wi-Fi and mobile phone coverage was inhibited; and the limited performance of the App when running on low-end devices. Non-technological problems included the poor placement of our stand (far from the entrance), which made recruitment of volunteers hard; and the unexpected fact that some members of the public altered, stole or changed the positions of some of the dummies. Notwithstanding the failure of the experiment to produce meaningful and useful results, we learnt important lessons from these complications.

4.3. The Cooperation(1) Experiment

Here we explored how important age is in the emergence of cooperation when people repeatedly face the prisoner's dilemma (PD). The experiment was carried out with 168 volunteers selected from the attendees of the Barcelona DAU Festival 2012 (Barcelona's 1st board game fair; December 15th and 16th). The set of volunteers was divided into 42 subsets of 4 players according to age: seven different age groups plus one control group in which the subjects were not distinguished by age. Each subset took part in a game where the four participants played 25 rounds (although they were not aware of it) deciding between two colors associated with a certain PD pay-off matrix. The participants played a 2 × 2 PD game with each of their 3 neighbors, choosing the same action for all opponents. In order to play with an incentive, they were remunerated with real money proportionally to their final score. During the game, volunteers interacted through software specially programmed for the experiment and installed on a laptop. They were not allowed to talk or signal in any way, but to further guarantee that potential interactions among the players would not influence the results of the experiment, the assignment of players to the different computers in the room was completely random. In this experiment, together with the “Jesuïtes Casp” experiment described in the next subsection, we found that the elderly cooperated more, and that there is a behavioral transition from reciprocal but more volatile behavior to more persistent actions toward the end of adolescence. For further details see [60].
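
The round structure described above (one action per player, played simultaneously against each of the three other group members) can be sketched as follows. The pay-off values are hypothetical and only respect the PD ordering T > R > P > S, since the matrix used in the experiment is not given here. The function names are likewise illustrative.

```python
# Hypothetical PD pay-offs for (my action, opponent's action); T=5 > R=3 > P=1 > S=0.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def round_payoffs(actions):
    """Pay-off of each player in one round.

    `actions` holds one action ("C" or "D") per player; every player uses
    that single action against each of the other group members.
    """
    return [
        sum(PAYOFF[(mine, theirs)] for j, theirs in enumerate(actions) if j != i)
        for i, mine in enumerate(actions)
    ]


def cooperation_level(history):
    """Fraction of cooperative actions over all players and rounds."""
    actions = [a for round_actions in history for a in round_actions]
    return actions.count("C") / len(actions)


# Two example rounds for a group of four players.
history = [["C", "C", "C", "C"], ["D", "C", "C", "C"]]
scores = [sum(p) for p in zip(*(round_payoffs(r) for r in history))]
```

A final score accumulated in this way over the 25 rounds is the quantity to which a proportional monetary reward could be tied.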

4.4. The Cooperation(2) Experiment

The purpose of repeating the Cooperation(1) experiment, first run at the DAU festival, was to confirm the apparent tendency of children to cooperate less than the average population. Thus, we repeated the experiment simply to increase the pool of subjects in this age range, which allowed us to be more statistically accurate. We analyzed the performance of 52 secondary school children (the “Jesuïtes Casp” experiment) ranging from 12 to 13 years old. The methods and protocols were the same as in the Cooperation(1) experiment, as was the software installed on the laptops. The results of this experiment refuted the hypothesis that children cooperate less on average, but at the same time confirmed their more volatile behavior, as described in Gutiérrez-Roig et al. [60].

4.5. The Mr. Banks(1) Experiment

The Mr. Banks experiment was set up to study how non-expert people make decisions in uncertain environments; specifically, assessing their performance when trying to guess if a real financial market price will go up or down. We analyzed the performance of 283 volunteers at the Barcelona DAU Festival 2013 (from approximately 6000 attendees) on December 14th and 15th. All the volunteers played via an interface that was specifically created for the experiment and was accessible via identical tablets only available in a specific room under researcher surveillance. On the main screen the devices showed the historic daily market price curve and some other information such as 5-day and 30-day average window curves, the high-frequency price on the previous day, the opinion of an expert, the price direction on previous days and price directions of other markets around the world. All the price curves and information were extracted from real historical series. The participants could play in four different scenarios with different time and information availability constraints. In each scenario they were required to make guesses for 25 rounds, while every click on the screen was recorded. Each player started with 1000 coins and earned an additional 5% of their current score if their guess was correct or lost an equivalent negative return if their guess was wrong. We used gamification strategies and we did not provide any economic incentive, in contrast to the social dilemma experiments: Cooperation(1), Cooperation(2), and Dr. Brain. The analysis of the 18,436 recorded decisions and 44,703 clicks allowed us to conclude that participants tend to follow intuitive strategies called “market imitation” and “win–stay, lose–switch.” These strategies are followed less closely when there is more time to make a decision or when some information is provided (Gutiérrez-Roig et al., submitted). Both the experiment and information on the project are available at: www.mr-banks.net.
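
The scoring rule can be written as a short compounding update. This is a sketch under one assumed reading of the rule: a wrong guess costs the same 5% of the current score that a correct guess earns. The function and parameter names are hypothetical.

```python
def play_rounds(guesses, outcomes, start=1000.0, rate=0.05):
    """Return the score after each round for a sequence of up/down guesses.

    `guesses` and `outcomes` are equal-length sequences of "up"/"down".
    """
    score = start
    scores = []
    for guess, outcome in zip(guesses, outcomes):
        # Assumed reading of "equivalent negative return": lose 5% of the current score.
        score *= (1 + rate) if guess == outcome else (1 - rate)
        scores.append(score)
    return scores


# One correct guess followed by one wrong guess.
example = play_rounds(["up", "down"], ["up", "up"])
```

Under this reading the update is multiplicative, so one correct and one wrong guess leave the player slightly below the starting score (1000 × 1.05 × 0.95 = 997.5).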

4.6. The Mr. Banks(2) Experiment

Here we repeated the Mr. Banks(1) experiment in a different context, in order to study the reproducibility of the results. The interface and the experimental set-up were the same as in Mr. Banks(1) but the typology of participants and the type of event were significantly different. The experiment, named Hack your Brain in the conference programme, was situated at the main entrance of the CAPS2015 conference: the International Event on Collective Awareness Platforms for Sustainability and Social Innovation, held in Brussels (July 7th and 8th 2015). The 42 volunteers who played the game provided 2372 recorded decisions. The volunteers were all registered participants at the conference with very diverse profiles (scientists, mostly from the social sciences; social innovators; designers; social entrepreneurs; policymakers; etc.). The results of the Mr. Banks(1) experiment proved to be reproducible: the percentage of correct guesses was similar in Mr. Banks(1) and Mr. Banks(2) (53.4 and 52.7%, respectively), as was the percentage of market-up decisions (60.8 and 60.5%, respectively). Deeper analysis of the results is underway, to check that the strategies adopted by Mr. Banks(1) volunteers were the same as those used by Mr. Banks(2) volunteers. A paper has been submitted for publication: (Gutiérrez-Roig et al., submitted).
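
One simple way to quantify "similar" is a two-proportion z statistic. The sketch below is an illustration, not the analysis performed in the submitted paper. It assumes that the reported percentages are taken over the recorded decisions (18,436 and 2372) and treats decisions as independent, which ignores the fact that each volunteer contributes many decisions.

```python
import math


def two_proportion_z(p1, n1, p2, n2):
    """z statistic for the difference of two proportions with a pooled variance."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se


# Percentages from the text; sample sizes are the assumed denominators.
z_correct = two_proportion_z(0.534, 18436, 0.527, 2372)  # correct guesses
z_up = two_proportion_z(0.608, 18436, 0.605, 2372)       # market-up decisions
```

Values of |z| below about 1.96 are conventionally read as compatible with no difference at the 5% level; a per-player analysis would be needed to account for the clustering of decisions.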

4.7. The Dr. Brain Experiment

This was a lab-in-the-field experiment that allowed for a phenotypic characterization of individuals when facing different social dilemmas. Instead of playing with the same fixed pay-off matrix, as in the Cooperation experiments, here the values and the neighbors changed every round. We discretized the (T, S)-plane as a lattice of 11 × 11 sites, allowing us to explore up to 121 different games grouped in 4 categories: Harmony Games, Stag Hunt Games, Snowdrift Games and Prisoner's Dilemma Games. Each player was given a tablet with the App for the experiment installed. The participants were shown a brief tutorial, but were not instructed in any particular way, nor with any particular goal in mind. They were informed that they had to make decisions under different conditions and against different opponents in every round. Due to practical limitations, we could only host around 25 players simultaneously, so the experiment was conducted in several sessions over a period of 2 days. In every session, all the individuals played a different number of rounds, picked at random between 13 and 18. The total number of participants in our experiment was 541, and a total of 8366 game actions were collected. In order to play with an incentive, they received coupons for a prize of EUR 50 to spend in neighborhood shops. During the game, the volunteers interacted through software specially programmed for the experiment and installed on a laptop. They were not allowed to talk or signal in any other way, and again were spatially placed at random. From this experiment we concluded that we can distinguish, empirically and without making any assumptions, five different types of player behavior or phenotypes that are not theoretically predicted. A paper has been submitted for publication: (Poncela-Casasnovas et al., submitted).
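
The grouping of lattice sites into the four categories follows from the usual ordering of the pay-offs T (temptation), R (reward), P (punishment) and S (sucker's pay-off). The sketch below uses assumed values that are not stated in this section: R = 10 and P = 5, with integer T from 5 to 15 and integer S from 0 to 10. Sites lying exactly on T = R or S = P are labeled "boundary" here, which is an illustrative choice rather than the convention of the experiment.

```python
from collections import Counter


def classify_game(T, S, R=10, P=5):
    """Classify a symmetric 2x2 game by where (T, S) lies relative to R and P."""
    if T == R or S == P:
        return "boundary"  # illustrative label for sites on the dividing lines
    if T < R and S > P:
        return "Harmony"
    if T < R and S < P:
        return "Stag Hunt"
    if T > R and S > P:
        return "Snowdrift"
    return "Prisoner's Dilemma"  # T > R and S < P


# Assumed 11 x 11 lattice of integer (T, S) values.
lattice = [(T, S) for T in range(5, 16) for S in range(0, 11)]
counts = Counter(classify_game(T, S) for T, S in lattice)
```

With these assumed ranges the lattice has 121 sites, and each of the four game types occupies one open quadrant of the (T, S)-plane.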

Author Contributions

OS, MG, IB, and JP equally conceived and wrote the work. All the authors approved the final version of the manuscript.

Funding

The research leading to these results received funding from: Barcelona City Council (Spain); RecerCaixa (Spain) through grant Citizen Science: Research and Education; MINECO (Spain) through grants FIS2013-47532-C3-2-P and FIS2012-38266-C2-2; Generalitat de Catalunya (Spain) through grants 2014-SGR-608 and 2012-ACDC-00066; and Fundación Española para la Ciencia y la Tecnología (FECYT, Spain) through the Barcelona Citizen Science Office project of the Barcelona Lab programme. OS also acknowledges financial support from the Generalitat de Catalunya (FI programme) and the Spanish MINECO (FPU programme).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to acknowledge the participation of at least 1255 anonymous volunteers who made this research possible. We especially thank Mar Canet, Nadala Fernández, Oscar Marín from Outliers, Carlota Segura, Clàudia Payrató, Pedro Lorente, Fran Iglesias, David Roldán, Marc Anglès, and Berta Paco for all the logistics and their full collaboration which made the experiments possible in one way or another. We also acknowledge the co-authors Anxo Sánchez, Yamir Moreno, Carlos Gracia-Lázaro, Jordi Duch, Julián Vicens, Julia Poncela-Casasnovas, Jesús Gómez-Gardenes, Albert Díaz-Guilera, Federic Bartomeus, Aitana Oltra, and John Palmer in the research papers following the experiments reported here. We also thank the director of the DAU (Oriol Comas) for giving us the opportunity to perform the three experiments at the DAU Barcelona Festival. We are greatly indebted to the Barcelona Lab programme, promoted by the Direction of Creativity and Innovation of the Barcelona City Council led by Inés Garriga for their help and support in setting up the experiments at the Barcelona Science Fair (Parc de la Ciutadella, public park in Barcelona) and at the DAU Barcelona Festival (Fabra i Coats, Creativity Fabrique of the City Council). Finally, we also thank Marta Arniani from Sigma-Orionis for hosting the Mr. Banks(2) experiment at the CAPS event.

References

1. Latour B. From the world of science to the world of research? Science (1998) 280:208–9. doi: 10.1126/science.280.5361.208

2. Kuhn TS. The structure of scientific revolutions. Chicago, IL: University of Chicago Press (2012).

3. Latour B. Which protocol for the new collective experiments? (2006) 32–3. Available online at: http://www.bruno-latour.fr/sites/default/files/P-95-METHODS-EXPERIMENTS.pdf

4. Callon M, Rabeharisoa V. Research “in the wild” and the shaping of new social identities. Technol Soc. (2003) 25:193–204. doi: 10.1016/S0160-791X(03)00021-6

6. Bonney R, Shirk JL, Phillips TB, Wiggins A, Ballard HL, Miller-Rushing AJ, et al. Next steps for citizen science. Science (2014) 343:1436–7. doi: 10.1126/science.1251554

9. Socientize. White Paper on Citizen Science. Socientize (2014). Available online at: http://ec.europa.eu/digital-agenda/en/news/green-paper-citizen-science-europe-towards-society-empowered-citizens-and-enhanced-research-0

10. Irwin A. Citizen science: A Study of People, Expertise and Sustainable Development. New York, NY: Psychology Press (1995).

11. Lintott CJ, Schawinski K, Slosar A, Land K, Bamford S, Thomas D, et al. Galaxy Zoo: morphologies derived from visual inspection of galaxies from the Sloan Digital Sky Survey. Mon Not R Astron Soc. (2008) 389:1179–89. doi: 10.1111/j.1365-2966.2008.13689.x

12. Kim JS, Greene MJ, Zlateski A, Lee K, Richardson M, Turaga SC, et al. Spacetime wiring specificity supports direction selectivity in the retina. Nature (2014) 509:331–6. doi: 10.1038/nature13240

13. Cooper S, Khatib F, Treuille A, Barbero J, Lee J, Beenen M, et al. Predicting protein structures with a multiplayer online game. Nature (2010) 466:756–60. doi: 10.1038/nature09304

14. Lauro FM, Senstius SJ, Cullen J, Neches R, Jensen RM, Brown MV, et al. The Common oceanographer: crowdsourcing the collection of oceanographic data. PLoS Biol. (2014) 12:e1001947. doi: 10.1371/journal.pbio.1001947

15. Haklay M. Citizen science and volunteered geographic information: overview and typology of participation. In: Sui D, Elwood S, Goodchild M, editors. Crowdsourcing Geographic Knowledge. Dordrecht: Springer (2013). pp. 105–122.

16. McQuillan D. The countercultural potential of citizen science. M/C J. (2014) 17. Available online at: https://www.media-culture.org.au/index.php/mcjournal/article/viewArticle/919

17. Lazer D, Pentland A, Adamic L, Aral S, Barabasi AL, Brewer D, et al. Computational social science. Science (2009) 323:721–3. doi: 10.1126/science.1167742

18. Cioffi-Revilla C. Computational social science. Wiley Interdiscip Rev. (2010) 2:259–71. doi: 10.1002/wics.95

19. Russell SA. Diary of a Citizen Scientist: Chasing Tiger Beetles and Other New Ways of Engaging the World. Corvallis, OR: Oregon State University Press (2014).

20. Dickinson JL, Bonney R. Citizen Science: Public Participation in Environmental Research. New York, NY: Comstock Publishing Associates (2015).

21. Silvertown J. A new dawn for citizen science. Trends Ecol Evol. (2009) 24:467–71. doi: 10.1016/j.tree.2009.03.017

22. Hoggatt AC. An experimental business game. Behav Sci. (1959) 4:192–203. doi: 10.1002/bs.3830040303

23. Cueva C, Roberts RE, Spencer T, Rani N, Tempest M, Tobler PN, et al. Cortisol and testosterone increase financial risk taking and may destabilize markets. Sci Rep. (2015) 5:11206. doi: 10.1038/srep11206

24. Yoshimura Y, Sobolevsky S, Ratti C, Girardin F, Carrascal JP, Blat J, et al. An analysis of visitors behavior in the louvre museum: a study using bluetooth data. Environ Plan B (2014) 41:1113–31. doi: 10.1068/b130047p

25. Grujić J, Fosco C, Araujo L, Cuesta JA, Sánchez A. Social experiments in the mesoscale: humans playing a spatial prisoner's dilemma. PLoS ONE (2010) 5:e13749. doi: 10.1371/journal.pone.0013749

26. Weiss CH, Poncela-Casasnovas J, Glaser JI, Pah AR, Persell SD, Baker DW, et al. Adoption of a high-impact innovation in a homogeneous population. Phys Rev X (2014) 4:041008. doi: 10.1103/PhysRevX.4.041008

27. Kagel JH. The Handbook of Experimental Economics. Princeton, NJ: Princeton University Press (1997).

28. Margolin D, Lin YR, Brewer D, Lazer D. Matching data and interpretation: towards a Rosetta Stone joining behavioral and survey data. In: Seventh International AAAI Conference on Weblogs and Social Media. Boston, MA (2013).

30. Gracia-Lázaro C, Ferrer A, Ruiz G, Tarancón A, Cuesta JA, Sánchez A, et al. Heterogeneous networks do not promote cooperation when humans play a Prisoner's Dilemma. Proc Natl Acad Sci USA. (2012) 109:12922–26. doi: 10.1073/pnas.1206681109

31. Perelló J, Murray-Rust D, Nowak A, Bishop SR. Linking science and arts: intimate science, shared spaces and living experiments. Eur Phys J Spec Top. (2012) 214:597–634. doi: 10.1140/epjst/e2012-01707-y

32. Cattuto C, Van den Broeck W, Barrat A, Colizza V, Pinton JF, Vespignani A. Dynamics of person-to-person interactions from distributed RFID sensor networks. PLoS ONE (2010) 5:e11596. doi: 10.1371/journal.pone.0011596

33. Schroeder R. Big Data and the brave new world of social media research. Big Data Soc. (2014) 1:2053951714563194. doi: 10.1177/2053951714563194

34. Conte R, Gilbert N, Bonelli G, Cioffi-Revilla C, Deffuant G, Kertesz J, et al. Manifesto of computational social science. Eur Phys J Spec Top. (2012) 214:325–46. doi: 10.1140/epjst/e2012-01697-8

35. Lazer D, Kennedy R, King G, Vespignani A. The parable of Google Flu: traps in big data analysis. Science (2014) 343:1203–5. doi: 10.1126/science.1248506

36. Boyd D, Crawford K. Critical questions for big data: provocations for a cultural, technological, and scholarly phenomenon. Inf Commun Soc. (2012) 15:662–79. doi: 10.1080/1369118X.2012.678878

37. Vianna GMS, Meekan MG, Bornovski TH, Meeuwig JJ. Acoustic telemetry validates a citizen science approach for monitoring sharks on coral reefs. PLoS ONE (2014) 9:e95565. doi: 10.1371/journal.pone.0095565

38. Rossiter DG, Liu J, Carlisle S, Zhu AX. Can citizen science assist digital soil mapping? Geoderma (2015) 259–260:71–80. doi: 10.1016/j.geoderma.2015.05.006

39. Bowser A, Wiggins A, Shanley L, Preece J, Henderson S. Sharing data while protecting privacy in citizen science. Interactions (2014) 21:70–73. doi: 10.1145/2540032

40. Resnik DB, Elliott KC, Miller AK. A framework for addressing ethical issues in citizen science. Environ Sci Policy (2015) 54:475–81. doi: 10.1016/j.envsci.2015.05.008

41. Riesch H, Potter C. Citizen science as seen by scientists: methodological, epistemological and ethical dimensions. Public Underst Sci. (2013) 23:107–20. doi: 10.1177/0963662513497324

42. Prestopnik NR, Crowston K. Gaming for (Citizen) science. In: Seventh IEEE International Conference on e-Science Workshops. New York, NY (2011). pp. 28–33.

43. Sauermann H, Franzoni C. Crowd science user contribution patterns and their implications. Proc Natl Acad Sci USA. (2015) 112:679–84. doi: 10.1073/pnas.1408907112

44. Curtis V. Online Citizen Science Projects: An Exploration of Motivation, Contribution and Participation. Ph.D. thesis. The Open University (2015).

45. Petersen JC. Citizen Participation in Science Policy. Amherst, MA: University of Massachusetts Press (1984).

47. EU Commission. Responsible Research and Innovation: Europe's Ability to Respond to Societal Challenges (2014). (Accessed: 2015-07-29). Available online at: http://ec.europa.eu/research/science-society/document_library/pdf_06/responsible-research-and-innovation-leaflet_en.pdf

48. Chesbrough HW. Open Innovation: The New Imperative for Creating and Profiting From Technology. Cambridge, MA: Harvard Business Press (2006).

49. Henrich J, Heine SJ, Norenzayan A. Most people are not WEIRD. Nature (2010) 466:29. doi: 10.1038/466029a

50. Open Science Collaboration. Estimating the reproducibility of psychological science. Science (2015) 349:aac4716. doi: 10.1126/science.aac4716

51. Bohannon J. Many psychology papers fail replication test. Science (2015) 349:910–1. doi: 10.1126/science.349.6251.910

52. Webster MJ, Sell J. Laboratory Experiments in the Social Sciences. Boston, MA: Academic Press (2014).

53. Kramer ADI, Guillory JE, Hancock JT. Experimental evidence of massive-scale emotional contagion through social networks. Proc Natl Acad Sci USA. (2014) 111:8788–90. doi: 10.1073/pnas.1320040111

54. Gleibs IH. Turning virtual public spaces into laboratories: thoughts on conducting online field studies using social network sites. Anal Soc Issues Publ Policy (2014) 14:352–70. doi: 10.1111/asap.12036

55. Franzoni C, Sauermann H. Crowd science: the organisation of scientific research in open collaborative projects. Res Policy (2014) 43:1–20. doi: 10.1016/j.respol.2013.07.005

56. Morris B, Croker S, Zimmerman C, Gill D, Romig C. Gaming science: the ‘Gamification’ of scientific thinking. Front Psychol. (2013) 4:607. doi: 10.3389/fpsyg.2013.00607

58. Bullinger AC, Hoffmann H, Leimeister JM. The next step-open prototyping. In: ECIS. Atlanta, GA (2011).

59. Gutiérrez-Roig M, Sagarra O, Oltra A, Palmer JRB, Bartumeus F, Diaz-Guilera A, et al. Active and reactive behaviour in human mobility: the influence of attraction points on pedestrians. arXiv:1511.03604 (2015).

Keywords: Citizen Science, participation, engagement, computational social science, data, experiments, collective, methods

Citation: Sagarra O, Gutiérrez-Roig M, Bonhoure I and Perelló J (2016) Citizen Science Practices for Computational Social Science Research: The Conceptualization of Pop-Up Experiments. Front. Phys. 3:93. doi: 10.3389/fphy.2015.00093

Received: 22 September 2015; Accepted: 09 December 2015; Published: 05 January 2016.

Edited by:

Javier Borge-Holthoefer, Qatar Computing Research Institute, Qatar

Reviewed by:

Raimundo Nogueira Costa Filho, Universidade Federal do Ceará, Brazil

Gerardo Iñiguez, Centro de Investigación y Docencia Económicas A.C., Mexico

Copyright © 2016 Sagarra, Gutiérrez-Roig, Bonhoure and Perelló. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Josep Perelló, josep.perello@ub.edu
