- ¹Division of Clinical Psychology, Psychotherapy, and Health Psychology, Department of Psychology, University of Salzburg, Salzburg, Austria

- ²Department of Psychiatry, Psychotherapy, and Psychosomatics, Medical Faculty, RWTH Aachen University, Aachen, Germany

- ³Translational Brain Medicine, Jülich Aachen Research Alliance, Aachen, Germany

- ⁴Institute of Neuroscience and Medicine (INM-1), Research Center Jülich, Jülich, Germany

- ⁵Department of Psychiatry and Psychotherapy, University of Tübingen, Tübingen, Germany

- ⁶Centre for Cognitive Neuroscience, Department of Psychology, University of Salzburg, Salzburg, Austria

Negative social evaluations represent social threats and elicit negative emotions such as anger or fear. Positive social evaluations, by contrast, may increase self-esteem and generate positive emotions such as happiness and pride. Gender differences are likely to shape both the perception and the expression of positive and negative social evaluations. Yet, current knowledge is limited by a reliance on studies that used static images of individual expressers with limited external validity. Furthermore, only a few studies have considered gender differences on both the expresser and the perceiver side. The present study addressed these limitations by using a naturalistic stimulus set displaying nine males and nine females (expressers) delivering social evaluative sentences to 32 female and 26 male participants (perceivers). Perceivers watched 30 positive, 30 negative, and 30 neutral messages while facial electromyography (EMG) was continuously recorded and subjective ratings were obtained. Results indicated that men expressing positive evaluations elicited stronger EMG responses in both perceiver genders. Arousal was rated higher when positive evaluations were expressed by the opposite gender. Thus, gender differences need to be considered more explicitly in research on social cognition and affective science that uses naturalistic social stimuli.

Introduction

Gender differences have fascinated scientists and lay people alike. Differences in physical characteristics such as height, weight, and brain size have been documented in a large body of literature (e.g., Faith et al., 2001; Rosenfeld, 2004; Cahill, 2006; Kirchengast and Marosi, 2008). Gender differences in cognitive abilities, behavior, and personality traits are likewise well documented (e.g., Bussey and Bandura, 1999; Taylor et al., 2000; Chapman et al., 2007). However, gender differences in general reactivity to emotional stimuli such as affective pictures or films have been studied less (e.g., Bradley et al., 2001; Derntl et al., 2009, 2010; Bagley et al., 2011). Research on gender differences in interpersonal emotional contexts that examines both the stimulus side (expresser gender) and the perceiver side (participant gender) is even scarcer. It may reasonably be argued that this scarcity of research contrasts with the multitude of gender stereotypes about emotions in social interactions held in the general population. The present study was designed to shed more light on this issue.

Social interactions encompass a coherent set of facial expressions, vocal components, and postural/gestural markers (Keltner and Haidt, 1999; Schweinberger and Schneider, 2014). Such rich communicative cues are thought to facilitate and disambiguate communication on multiple levels. Positively valenced social interactions expressing compliments, approval, and support are thought to signal sympathy and indicate affiliation or even attraction. In contrast, negatively valenced social interactions expressing criticism, disapproval, and discouragement tend to repel the interaction partner and express antipathy or even hostility. Valenced social communication has wide-ranging psychological effects on the perceiver. For instance, positive evaluations may evoke happiness and pride and positively affect self-esteem (Fleming and Courtney, 1984). Negative social evaluations are frequent and powerful stressors, eliciting anger, sadness, fear, or embarrassment that may decrease self-esteem (Leary et al., 2006). In the following, we review existing behavioral, observational, and psychometric research on how gender modulates these response patterns, distinguishing between the expression (expresser) side and the perception (perceiver) side.

Research on gender differences in the expression of emotions during social interactions (expresser side) has revealed greater emotional expressivity in women (Dimberg and Lundquist, 1990; Kring and Gordon, 1998). Behaviorally, women have been shown to express positive evaluations such as compliments more frequently than men (Holmes, 1993), possibly to enhance bonding with their interaction partners (Brown and Levinson, 1987). In contrast, both genders use negative social evaluations with similar frequency (Björkqvist et al., 1992). Furthermore, gender differences in emotional expressivity depend on the context and on the nature of the expressed emotion: women report more sadness, fear, shame, or guilt and tend to engage more in related expressive behaviors in social encounters, whereas men tend to exhibit more aggressive behavior than women when they feel angry (Biaggio, 1989; Pasick et al., 1990; Sharkin, 1993; Fischer et al., 2004a; Carré et al., 2013). Both biological and cultural accounts of the etiology of such gender differences have been put forward (Buck et al., 1974; Ekman and Friesen, 1982). According to the cultural account, social display rules may modulate the emotional response displayed in facial expressions (Buck, 1984). For instance, expressing negative emotions might be more culturally acceptable for men than for women. According to the biological account, it is important to consider the evolutionary importance of mating situations and the role of positive expressions between opposite-sex interaction partners in supporting affiliation and mating. Consistent with this account, emotional facial expressions of the opposite gender have been shown to be detected faster than emotional facial expressions of one’s own gender (Hofmann et al., 2006).

When perceiving negative emotions and evaluations involving verbal aggression (perceiver side), women tend to attribute them to stress and loss of self-control, whereas men tend to view aggressive behavior as a tool to control others and demonstrate status (Campbell and Muncer, 1987). In response to positive evaluations, by contrast, men feel uncomfortable, especially when they perceive them as compliments (Holmes, 1993). Gender differences have also been observed in the accuracy of facial expression recognition, with results mostly indicating better performance in women regardless of the displayed emotion (Thayer and Johnson, 2000; Hall and Matsumoto, 2004; Guillem and Mograss, 2005), which has been taken as evidence of women’s superiority in social communicative skills. However, several studies did not replicate these differences (for review see Kret and De Gelder, 2012), suggesting that the discrepancy between women and men might be task-related. In fact, in simple emotion recognition tasks with intense facial expressions, women and men perform similarly (Lee et al., 2002; Hoheisel et al., 2005; Habel et al., 2007). In addition, women tend to perform better in recognizing self-conscious emotions (e.g., pride; Tracy and Robins, 2008), whereas men seem to be faster in recognizing anger (Biele and Grabowska, 2006).

Gender differences have been examined not only with subjective but also with physiological measures. Facial electromyography (EMG) research has shown rapid and spontaneous mirroring of emotional expressions in static facial displays (Buck, 1984; Dimberg, 1997), so this method may help answer questions regarding gender differences. Specifically, positive facial expressions such as happiness evoke increased activity of the zygomaticus major muscle (which pulls the corners of the mouth upward in a smile), whereas negative facial expressions such as anger elicit increased activity of the corrugator supercilii muscle (responsible for frowning; Dimberg, 1990). Research examining basic emotions with short video clips revealed that the corrugator muscle showed increased activity to expressions of anger, sadness, and disgust, and pronounced relaxation to happy expressions (Hess and Blairy, 2001). Several studies investigating gender differences used static emotional faces and found that women generally exhibited greater facial EMG responses, which were most pronounced for positive facial expressions (Dimberg and Lundquist, 1990). In contrast, research using general emotional images from the International Affective Picture System (IAPS; Lang et al., 1997) did not show differences in facial EMG activity between genders (Bianchin and Angrilli, 2012). However, research has also put forward a dynamic facial expression approach to better represent social encounters and has observed greater emotion-consistent EMG activity to dynamic than to static expressions (e.g., Weyers et al., 2006). Importantly, dynamic angry facial expressions of avatars elicited increased corrugator muscle activity in male perceivers, whereas dynamic happy facial expressions elicited higher zygomaticus muscle activity in female perceivers (Soussignan et al., 2013).

Two relevant aspects have largely been neglected in the research reviewed above. First, the perceiver and the expresser perspective have rarely been considered jointly (Biaggio, 1989; Lee et al., 2002; Hall and Matsumoto, 2004; Guillem and Mograss, 2005; Bianchin and Angrilli, 2012; Carré et al., 2013). Obtaining a complete picture of social interactions requires fully crossing perceiver and expresser gender in a 2 (perceiver gender) × 2 (expresser gender) design. Second, static images of emotional facial expressions lack the dynamic complexity of naturalistic social-emotional interactions and therefore have limited external validity. Interestingly, Kret et al. (2011), comparing different types of stimulus material (body postures vs. faces), found increased activation in men compared to women in a widespread network including the fusiform gyrus, the superior temporal sulcus, and the extrastriate body area. Male observers showed these increased activation patterns particularly when exposed to threatening vs. neutral male body postures, but not to facial expressions. This study demonstrated the importance of considering the interaction of gender and specific stimulus types in emotion perception research. In line with this, some researchers have recently called for ‘more naturalistic stimuli’ and noted that ‘taking into account the sex of the actor could provide further insight into the issues at stake’ (Kret and De Gelder, 2012, p. 1212). To address these aspects, we recently developed a naturalistic video set (Blechert et al., 2013) that aims to maximize external validity within the laboratory context. This video set (termed E.Vids) is balanced for gender to facilitate perceiver gender × expresser gender studies. Emotional valence-specific subjective, facial, and neural (electrocortical, hemodynamic) responses to this video set have been documented (Blechert et al., 2013; Reichenberger et al., 2015; Wiggert et al., 2015).

Based on previous findings, we expected that videos with negative expressions of male actors would be rated as more unpleasant and arousing than those of female actors by both female and male perceivers (Blechert et al., 2013; Reichenberger et al., 2015; Wiggert et al., 2015). In contrast, we expected that videos with positive expressions of female actors would be rated as more pleasant and arousing than those of male actors by both female and male perceivers. To the degree that these experiential effects translate into specific facial expressions (Rinn, 1984; Cacioppo et al., 1992; Bunce et al., 1999; Neumann et al., 2005), more positive valence ratings should be reflected in increased zygomaticus muscle activity and more negative valence ratings in increased corrugator muscle activity. Moreover, these effects may be modulated by perceiver gender. For instance, women may respond more negatively to negative evaluations delivered by men. Likewise, positive evaluations may be perceived as more pleasant and arousing when expressed by an actor of the opposite gender; both patterns would contribute to a three-way Emotion condition × Perceiver gender × Expresser gender interaction. The present study allows a reexamination of gender differences in responses to neutral and negatively valenced social stimuli, which have mainly been studied with static images. Furthermore, it extends previous research by including positively valenced, naturalistic stimuli in a fully crossed participant gender × stimulus gender design. Finally, following a multi-method approach, both experiential and facial-muscular responses were collected.

Materials and Methods

Participants

A sample of 58 participants (32 female) with a mean age of 22.9 years (SD = 2.5) was recruited through online advertisements and in psychology classes. Participants reported no current mental or neurological disorders, no current use of prescription medication except contraceptives, and no current alcohol or drug dependence. Men and women did not differ in age, years of education, or body mass index (BMI), ts(56) < 1.13, ps > 0.061. Eligible participants read and signed a consent form approved by the ethics committee of the University of Salzburg and received monetary compensation or course credit for participation.

Video Set

The E.Vids video set (Blechert et al., 2013) comprises 3000-ms videos of eight negative, eight neutral, and eight positive sentences delivered by 20 actors (10 female) with the respective facial and gestural expressions in a naturalistic, untrained manner. Negative sentences were chosen to express social criticism/disapproval (e.g., “I hate you”, “You are embarrassing!”), positive sentences to express compliments and approval (e.g., “I’m proud of you”, “You’ve got it!”), and neutral sentences to convey neutral content (e.g., “It’s 4 o’clock.”, “The train goes fast.”). Expressers were instructed to act spontaneously and naturalistically and to speak directly to the camera to facilitate the perception of a real interaction in observers. Each video started with a neutral facial expression, which transitioned into the sentence with an associated facial expression after an average of 602.50 ms (SD = 220.32 ms). The present study used all sentences of 18 of the 20 E.Vids expressers.

Stimulus-Condition Assignment

In research with static faces, each expresser identity is typically used to display several different basic emotions, an approach that is well suited for emotion recognition (e.g., Phillips et al., 1998; Goeleven et al., 2008). It may reasonably be argued, however, that assessing emotional reactivity requires an unequivocal assignment of expressers to conditions. Thus, in the present task, a given expresser was always presented within one emotional condition (negative, neutral, or positive) for a given participant (repeatedly, but with different sentences), whereas expressers ‘cycled’ through conditions across participants (Pejic et al., 2013; Hermann et al., 2014) to avoid confounding expresser identity with emotional condition. Another unique feature of the present stimulus set is that the sentences spoken by a given expresser within one condition vary for a given perceiver (five out of eight sentences). This creates a more varied and presumably more engaging, naturalistic experience of the stimuli. The present passive viewing task included 90 different expresser/sentence combinations: 30 neutral, 30 negative, and 30 positive videos. In each of the three conditions, each perceiver watched six different expressers (three male), each delivering five different sentences. To validate the whole stimulus set, a different set of five sentences was drawn from the eight sentences available for each condition, so that across perceivers each sentence was presented with equal frequency.
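The counterbalancing logic described above can be illustrated with a short Python sketch. It is a hypothetical scheme, not the authors' actual assignment procedure. The following are all assumptions: the expresser IDs (M1 to M9, F1 to F9), the split into three fixed groups of six expressers (three male, three female), the rotation of these groups through the three conditions, and the cyclic choice of five of the eight sentences (shared by all expressers within a condition). Under these assumptions, a block of 24 perceivers (the least common multiple of 3 group rotations and 8 sentence offsets) balances both expresser-by-condition and sentence-by-condition frequencies.

```python
from collections import Counter
from itertools import product

CONDITIONS = ("negative", "neutral", "positive")
N_SENTENCES = 8       # sentences available per condition in E.Vids
N_PER_PERCEIVER = 5   # sentences each perceiver sees per expresser

# Hypothetical expresser IDs: 9 male (M1-M9) and 9 female (F1-F9).
MALE = [f"M{i}" for i in range(1, 10)]
FEMALE = [f"F{i}" for i in range(1, 10)]
# Assumed split into three fixed groups of six (three male, three female).
GROUPS = [MALE[3 * g:3 * g + 3] + FEMALE[3 * g:3 * g + 3] for g in range(3)]


def build_trials(participant):
    """Return (condition, expresser, sentence_index) tuples for one perceiver."""
    # Assumed cyclic choice of 5 of the 8 sentences, shifted by one per perceiver.
    sentences = [(participant + k) % N_SENTENCES for k in range(N_PER_PERCEIVER)]
    trials = []
    for g, group in enumerate(GROUPS):
        # Rotate groups through conditions: within a perceiver each expresser
        # stays in one condition; across perceivers it cycles through all three.
        condition = CONDITIONS[(g + participant) % len(CONDITIONS)]
        for expresser, sentence in product(group, sentences):
            trials.append((condition, expresser, sentence))
    return trials


def balance_counts(n_participants=24):
    """Count (condition, sentence) and (expresser, condition) pairs across perceivers."""
    sentence_counts, expresser_counts = Counter(), Counter()
    for p in range(n_participants):
        for condition, expresser, sentence in build_trials(p):
            sentence_counts[(condition, sentence)] += 1
            expresser_counts[(expresser, condition)] += 1
    return sentence_counts, expresser_counts
```

`build_trials(0)` returns the 90 trials for the first perceiver (30 per condition). Across 24 perceivers, `balance_counts(24)` yields 90 trials for every (condition, sentence) pair and 40 trials for every (expresser, condition) pair.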

Procedure

The laboratory assessment started with sensor application for peripheral physiological measurements, followed by a 4-min quiet-sitting baseline and a 3-min heartbeat perception phase (results not reported here). Before the start of the task, perceivers (participants) were asked to imagine a real interaction with the displayed expressers to facilitate emotional engagement with the stimuli (Blechert et al., 2012, 2015). The 90 three-second videos were presented on a 23-inch LCD monitor with a resolution of 1920 × 1080 pixels and a 120 Hz refresh rate, using E-Prime 2.0 Professional (Psychology Software Tools, Inc., Sharpsburg, PA, USA). The intertrial interval varied randomly between 5600 and 6400 ms. Video volume (delivered via external active speakers: X-140 2.0 PC-speaker system, 5 W RMS, Logitech, Apples, Switzerland) was constant across perceivers. After completing the task and sensor removal, perceivers filled in several questionnaires and were then debriefed and compensated (10€) for participation.
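As a rough illustration of the trial timing, the following Python sketch draws jittered intertrial intervals (ITIs). The uniform distribution in whole milliseconds is an assumption (the text only states that the interval varied randomly between 5600 and 6400 ms), the function names are hypothetical, and the actual E-Prime implementation is not described here.

```python
import random

VIDEO_MS = 3000                      # video duration
ITI_MIN_MS, ITI_MAX_MS = 5600, 6400  # reported intertrial-interval range
N_TRIALS = 90


def jittered_itis(n_trials=N_TRIALS, seed=None):
    """Draw one ITI per trial, uniformly in whole milliseconds (assumed distribution)."""
    rng = random.Random(seed)
    # randint is inclusive on both ends, matching the reported range
    return [rng.randint(ITI_MIN_MS, ITI_MAX_MS) for _ in range(n_trials)]


def video_and_iti_time_ms(itis):
    """Total video plus ITI time; time spent on ratings is not included."""
    return len(itis) * VIDEO_MS + sum(itis)
```

Ignoring rating time, 90 trials then take between 90 × 8600 ms = 774,000 ms (12.9 min) and 90 × 9400 ms = 846,000 ms (14.1 min) of video and ITI time.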

Self-Report Measures

Valence and arousal were assessed via horizontal on-screen visual analog scales (“How would you feel meeting this person?”). Immediately after each video, perceivers rated their emotional response to the stimulus by indicating (un)pleasantness (0 = pleasant to 100 = unpleasant) and arousal (0 = calm to 100 = aroused/excited).

Psychophysiological Measures: Recording, Offline Analysis, and Response Scoring

Psychophysiological data were recorded with a REFA 8-72 digital amplifier system (TMSi) at 24-bit resolution and a 400 Hz sampling rate, streamed to disk, and displayed on a PC monitor for online monitoring of data quality. Facial EMG electrodes for bipolar recording of corrugator supercilii and zygomaticus major activity were placed on the left side of the face according to international guidelines (Fridlund and Cacioppo, 1986). Offline data inspection and manual artifact rejection for EMG were done in ANSLAB 2.6, a customized software suite for psychophysiological recordings (Wilhelm et al., 1999; Wilhelm and Peyk, 2005). EMG preprocessing comprised a 28 Hz high-pass filter, a 50 Hz notch filter, rectification, low-pass filtering (15.92 Hz), and a 50 ms moving average filter. Responses were defined as the average across the 3000 ms of the video plus 1 s after video offset (the interval before the ratings, included because preliminary analyses revealed continued responding after video offset), referenced to a 2000 ms baseline immediately before video onset. Separate averages were computed for positive, negative, and neutral videos and for each expresser gender.
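The preprocessing chain and response definition above can be sketched in Python with NumPy and SciPy. This is not the ANSLAB 2.6 implementation. The filter types and orders, the notch quality factor (Q = 30), zero-phase filtering, and the centered moving average are assumptions, and `preprocess_emg`, `trial_response`, and `cell_means` are hypothetical names. The 15.92 Hz low-pass is modeled as a first-order filter because 15.92 Hz is the cutoff that corresponds to a 10 ms time constant, 1/(2π × 0.01 s).

```python
import numpy as np
from scipy import signal

FS = 400  # Hz; sampling rate reported for the REFA 8-72 recording


def preprocess_emg(raw, fs=FS):
    """Sketch of the described EMG chain; filter orders and Q are assumptions."""
    # 28 Hz high-pass (assumed 4th-order Butterworth, zero-phase) attenuates
    # low-frequency drift and movement artifacts
    b, a = signal.butter(4, 28.0, btype="highpass", fs=fs)
    x = signal.filtfilt(b, a, raw)
    # 50 Hz notch against mains interference (assumed Q = 30)
    b, a = signal.iirnotch(50.0, 30.0, fs=fs)
    x = signal.filtfilt(b, a, x)
    # full-wave rectification
    x = np.abs(x)
    # 15.92 Hz low-pass, assumed first-order (10 ms time constant)
    b, a = signal.butter(1, 15.92, btype="lowpass", fs=fs)
    x = signal.lfilter(b, a, x)
    # 50 ms moving average (20 samples at 400 Hz), centered
    win = int(round(0.050 * fs))
    return np.convolve(x, np.ones(win) / win, mode="same")


def trial_response(emg, onset, fs=FS, baseline_s=2.0, response_s=4.0):
    """Mean over the video (3 s) plus 1 s after offset, minus the 2 s pre-video baseline."""
    baseline = emg[onset - int(baseline_s * fs):onset].mean()
    response = emg[onset:onset + int(response_s * fs)].mean()
    return response - baseline


def cell_means(scored_trials):
    """Average baseline-corrected scores per (condition, expresser gender) cell."""
    cells = {}
    for condition, expresser_gender, score in scored_trials:
        cells.setdefault((condition, expresser_gender), []).append(score)
    return {cell: float(np.mean(v)) for cell, v in cells.items()}
```

With `onset` given as a sample index, the response window spans 1600 samples (4 s at 400 Hz) and the baseline 800 samples (2 s).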

Data Reduction and Statistical Analysis

Subjective ratings of valence and arousal were analyzed in two separate 2 (Expresser gender: male vs. female) × 3 (Condition: positive, neutral, negative) × 2 (Perceiver gender: male vs. female) repeated measures analyses of variance (ANOVAs), with perceiver gender as a between-subjects factor. The EMG measures of corrugator and zygomaticus muscle activity were submitted to two separate repeated measures ANOVAs with the same design. The alpha level for all analyses was set to 0.05, and significant main or interaction effects were followed up with pairwise comparisons for repeated measures designs using the Šidák correction (mean differences [MeanDiff], significance levels, and 95% confidence intervals [CI] are reported). Effect sizes are reported as partial eta squared (η²p). When the sphericity assumption was violated, the Greenhouse-Geisser correction was applied; nominal degrees of freedom and the correction factor ε are reported. All statistical analyses were performed using PASW Statistics 21 (SPSS Inc., Chicago, IL, USA).
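The two corrections named above can be expressed in a few lines of Python (SciPy assumed). The sketch shows the standard Šidák adjustment for m comparisons and how a Greenhouse-Geisser ε enters the p value: both nominal degrees of freedom are multiplied by ε while F itself is unchanged. It is an illustration, not a reproduction of the SPSS procedure, and the function names are hypothetical.

```python
from scipy import stats


def sidak_adjust(p, m):
    """Šidák-adjusted p value for m comparisons: 1 - (1 - p)**m, capped at 1."""
    return min(1.0, 1.0 - (1.0 - p) ** m)


def sidak_alpha(alpha, m):
    """Per-comparison alpha that keeps the familywise error rate at alpha."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


def gg_p_value(F, df_effect, df_error, epsilon):
    """p value of F after Greenhouse-Geisser correction.

    Both degrees of freedom are multiplied by epsilon; F is left unchanged.
    """
    return stats.f.sf(F, df_effect * epsilon, df_error * epsilon)
```

With three emotion conditions there are m = 3 pairwise comparisons, so the per-comparison alpha is 1 - 0.95^(1/3) ≈ 0.0170. Likewise, the zygomaticus condition effect, F(2,110) = 6.70 with ε = 0.81, is evaluated on 1.62 and 89.1 degrees of freedom.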

Results

Self-Report Measures

Valence

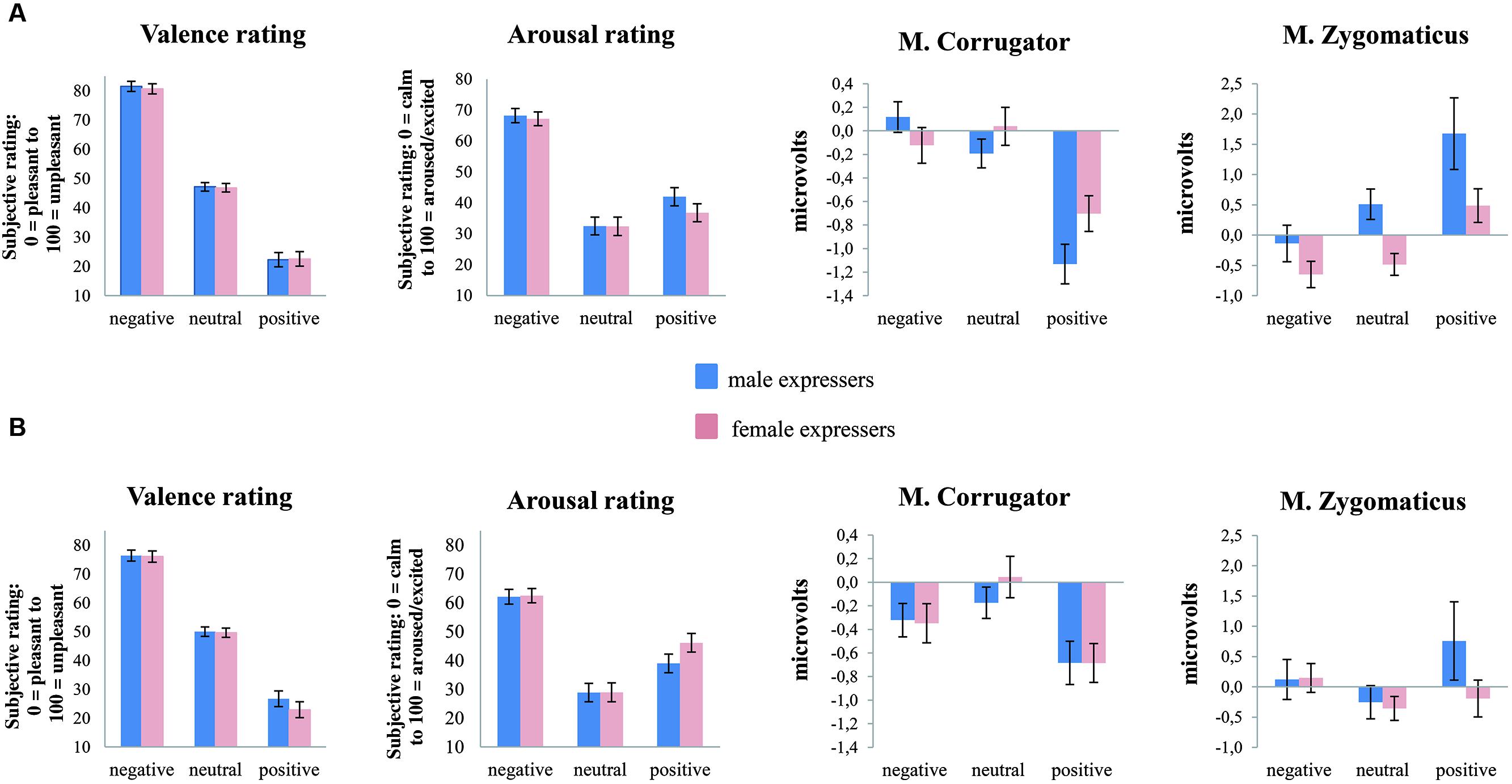

The 2 (Expresser gender: male vs. female) × 3 (Emotion condition: negative, neutral, positive) × 2 (Perceiver gender: male vs. female) repeated measures ANOVA of valence ratings revealed a main effect of Expresser gender, F(1,56) = 4.16, p = 0.046, η²p = 0.07, with male expressers perceived as more unpleasant than female expressers (MeanDiff = 0.92, p = 0.046, 95% CI(male-female expresser) [0.017, 1.83]). As expected from previous research with this stimulus set, there was a main effect of Emotion condition, F(2,112) = 351.00, p < 0.001, η²p = 0.86, ε = 0.69. Negative videos were rated as more unpleasant than neutral videos, which in turn were rated as more unpleasant than positive videos (MeanDiffs > 24.80, ps < 0.001, 95% CI(neg-neu) [25.80, 34.69], 95% CI(neu-pos) [20.89, 28.72]; Figures 1A,B). There was no main effect of Perceiver gender, F(1,56) = 0.003, p > 0.05, and no Expresser gender × Emotion condition or Perceiver gender × Emotion condition interaction, Fs < 2.16, ps > 0.121.

FIGURE 1. (A) Response patterns of female participants for valence and arousal ratings and for M. corrugator and M. zygomaticus activity as facial expressive responses to emotion-evocative video clips (negative, neutral, positive). (B) Response patterns of male participants for valence and arousal ratings and for M. corrugator and M. zygomaticus activity. Error bars indicate the standard error.
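Because η²p = SS_effect/(SS_effect + SS_error) and F = (SS_effect/df_effect)/(SS_error/df_error), the valence effect sizes reported above can be recovered from F and the nominal degrees of freedom. The short Python check below assumes that each F uses its effect's own error term, as in a standard mixed ANOVA; the function name is hypothetical. Because the Greenhouse-Geisser correction multiplies both degrees of freedom by the same ε, it does not change η²p.

```python
def partial_eta_squared(F, df_effect, df_error):
    """η²p = F * df_effect / (F * df_effect + df_error)."""
    return F * df_effect / (F * df_effect + df_error)


# Valence effects reported above: Expresser gender and Emotion condition.
expresser_effect = partial_eta_squared(4.16, 1, 56)
condition_effect = partial_eta_squared(351.00, 2, 112)
```

Rounded to two decimals, these give 0.07 and 0.86, matching the reported values.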

Arousal

The ANOVA of arousal ratings revealed a significant Emotion condition effect, F(2,108) = 100.52, p < 0.001, η²p = 0.65, showing that negative videos were rated as more arousing than positive and neutral videos (MeanDiffs > 23.79, ps < 0.001, 95% CI(neg-neu) [27.99, 41.20], 95% CI(neg-pos) [18.24, 29.35]). In addition, both negative and positive videos were rated as more arousing than neutral videos (MeanDiffs > -34.60, ps < 0.001, 95% CI(neu-neg) [-41.20, -27.99], 95% CI(neu-pos) [-17.05, -4.56]), indicating that emotional videos elicited more arousal than neutral videos. Moreover, a significant Expresser gender × Perceiver gender interaction, F(1,54) = 12.00, p = 0.001, η²p = 0.18, and a significant three-way Expresser gender × Emotion condition × Perceiver gender interaction, F(2,108) = 9.63, p < 0.001, η²p = 0.15, ε = 0.88, emerged. In line with our hypotheses, follow-up analyses showed that female perceivers rated positive videos of male expressers as more arousing than those of female expressers (MeanDiff = 5.28, p = 0.014, 95% CI(male-female expresser) [1.12, 9.43]; Figure 1A), whereas male perceivers showed the reverse pattern, rating positive videos of female expressers as more arousing than those of male expressers (MeanDiff = 7.14, p = 0.002, 95% CI(female-male expresser) [2.68, 11.60]; Figure 1B).

Facial EMG

Corrugator Supercilii Muscle

The 2 (Expresser gender: male vs. female) × 3 (Emotion condition: negative, neutral, positive) × 2 (Perceiver gender: male vs. female) repeated measures ANOVA revealed a significant Emotion condition effect, F(2,110) = 24.24, p < 0.001, η²p = 0.31, with positive videos eliciting consistent corrugator relaxation in both perceiver genders (MeanDiffs > -0.73, ps < 0.001, 95% CI(pos-neg) [-0.94, -0.32], 95% CI(pos-neu) [-1.02, -0.44]) relative to the other two conditions, which in turn did not differ from each other (MeanDiff = -0.10, p = 0.676). Moreover, significant Emotion condition × Perceiver gender, F(2,110) = 3.13, p = 0.048, η²p = 0.05, and Emotion condition × Expresser gender interactions, F(2,110) = 6.78, p = 0.002, η²p = 0.11, emerged. Both two-way interactions were qualified by a three-way interaction, F(2,110) = 4.37, p = 0.015, η²p = 0.07 (Figures 1A,B). The three-way interaction was due to stronger condition effects in female perceivers, particularly when confronted with male expressers: only in this combination (female perceivers/male expressers) did all three conditions reliably differ (MeanDiffs > -1.25, ps < 0.001, 95% CI(pos-neg) [-1.71, -0.79], 95% CI(pos-neu) [-1.34, -0.54]), with activity increasing from positive to neutral to negative evaluations.

In contrast, male perceivers did not show different corrugator responses to male vs. female expressers (MeanDiff = -0.06, p = 0.479), but they did show condition effects, F(2,50) = 10.16, p < 0.001, η²p = 0.29, with unexpectedly larger corrugator relaxation to positive than to neutral videos (MeanDiff = 0.63, p = 0.002, 95% CI(neu-pos) [0.19, 1.05]). In sum, corrugator activity suggested that male expressers elicit strong, linear emotion effects in female perceivers, with an overall special role for positive sentences (Figure 1A).

Zygomaticus Major Muscle

Zygomaticus activity partially mirrored this pattern. The 2 (Expresser gender: male vs. female) × 3 (Emotion condition: negative, neutral, positive) × 2 (Perceiver gender: male vs. female) repeated measures ANOVA revealed a significant Emotion condition effect, F(2,110) = 6.70, p = 0.004, η²p = 0.11, ε = 0.81, indicating that positive videos elicited smiling in both perceiver genders (MeanDiffs > 0.81, ps < 0.026, 95% CI(pos-neg) [0.08, 1.55], 95% CI(pos-neu) [0.15, 1.51]) relative to the other two conditions, which in turn did not differ from each other (MeanDiff = 0.01, p = 1.00). The significant Emotion condition × Perceiver gender interaction, F(2,110) = 3.38, p = 0.048, η²p = 0.06, ε = 0.81, revealed that female perceivers showed more reliable, condition-consistent zygomaticus responses than male perceivers (paralleling the corrugator findings). Pairwise comparisons showed that female perceivers’ zygomaticus activity increased from negative to positive and from neutral to positive conditions, irrespective of expresser gender (MeanDiffs > 1.07, ps < 0.018, 95% CI(pos-neg) [0.48, 2.47], 95% CI(pos-neu) [0.15, 1.99]; Figure 1A). In male perceivers, Emotion condition effects did not reach significance (MeanDiffs < 0.59, ps > 0.311), regardless of expresser gender. The Emotion condition × Expresser gender interaction, F(2,110) = 5.47, p = 0.009, η²p = 0.09, ε = 0.84, revealed that positive videos of male expressers triggered enhanced smiling in both perceiver genders relative to neutral or negative videos (MeanDiffs > 1.09, ps < 0.020, 95% CI(pos-neg) [0.29, 2.16], 95% CI(pos-neu) [0.14, 2.04]; Figures 1A,B), an effect underlined by the main effect of Expresser gender, F(1,55) = 11.84, p = 0.001, η²p = 0.18. However, the three-way interaction was not significant, F(2,110) = 0.83, p = 0.440.

None of the dependent variables were significantly correlated (all ps > 0.05).

Discussion

Gender differences are biologically and culturally influenced (Rudman and Glick, 2010), and multiple approaches have been put forward to explain them. However, a large number of inconsistent findings makes it difficult to test their respective validity. The current study aimed to help clarify this issue. We addressed several limitations of previous research by studying social interactions from the perspective of both expresser and perceiver gender, using a well-validated, naturalistic, emotion-evocative, social-evaluative video set.

Self-Report Data

In line with our prediction, we found an opposite-sex preference for positive sentences (compliments/approval) in arousal ratings, supporting the interpretation that both genders are more receptive to such evaluations when they are expressed by the opposite sex, even if these are not explicitly sexual in nature. This result is in line with previous research on gender differences and positive emotions (e.g., Hofmann et al., 2006). An arousal effect driven by the opposite gender is thus generally consistent with the biological evolutionary approach, which emphasizes that mating strategies supporting reproduction influence positively valenced communication between the sexes (cf., Darwin, 1871). Possessing positive traits, expressing them, and perceiving them in potential opposite-sex mates have evolutionary significance, since they predict successful partnership and can be passed on to offspring. Positive statements from the opposite gender may elicit excitement, and the associated physiological arousal may support approach behavior. Interestingly, valence ratings did not show this distinct pattern of gender differences, possibly because pleasantness ratings of expresser videos were more influenced by idiosyncratic preferences. Nevertheless, valence ratings confirmed the expected emotional condition effects in both perceiver genders, with greater subjective pleasantness for positive evaluations and greater unpleasantness for negative evaluations.

EMG Responses in the Context of One’s Own Gender Evaluations

Corrugator and zygomaticus activity patterns differed between perceiver genders depending on expresser gender. In the context of one’s own gender, female perceivers exhibited corrugator responses that increased from positive to negative evaluations by female expressers. This was partially mirrored by zygomaticus activity, which decreased from positive to negative evaluations by female expressers. Male perceivers exhibited an analogous pattern from positive to negative evaluations by male expressers. Both perceiver genders showed the expected valence-consistent zygomaticus response (smiling) to positive evaluations. This suggests that positive evaluations conveying acceptance and appreciation may elicit positive emotions such as happiness and pride, which in turn may elevate self-esteem (Fleming and Courtney, 1984). However, neither perceiver gender displayed the expected “frowning” response of the corrugator muscle to negative evaluations by their own gender. Emotional mimicry is conceptualized as a tendency to imitate the emotional expressions of interaction partners, particularly when people are motivated to bond with each other (Hess and Fischer, 2013); according to this research, positive emotion displays are assumed to be mimicked, whereas facial expressions perceived as offensive are not (Fischer et al., 2012). In the present study, a happy face may have been mimicked by the perceiver because it was accompanied by positive evaluations that underline an affiliative intention. In contrast, negative evaluations by one’s own gender may have been perceived as particularly hostile, leading to an inhibition of facial responding.

EMG Responses in the Context of Opposite Gender Evaluations

Interestingly, in the context of opposite-gender evaluations, female perceivers were most responsive to male expressers, with corrugator activity increasing from positive to neutral and from neutral to negative evaluations. This was further supported by zygomaticus activity, which increased from negative to neutral and from neutral to positive evaluations. This is in line with prior research associating facial expressions of happiness and anger with stronger emotional responses in female than in male perceivers (Biele and Grabowska, 2006). The evolutionary approach (Darwin, 1871) may point toward a particular female sensitivity to the affective states of potential male partners and future caregivers. Women may respond more accurately and faster to anger expressions of men because men, who are often physically stronger, may represent greater threats than women (for an overview see Rudman and Glick, 2010). Additionally, female perceivers have been reported to exhibit enhanced corrugator activity compared to men when exposed to anger-eliciting stimuli (Schwartz et al., 1980; Kring and Gordon, 1998; Bradley et al., 2001). In contrast, other studies have shown that men respond faster and more precisely to anger-eliciting stimuli, specifically when these are posed by other males (Goos and Silverman, 2002; Seidel et al., 2010). This is incongruent with the present finding that male perceivers responded differentially only to positive evaluations, regardless of expresser gender. Because compliments expressed by men are relatively scarce in Western societies (Holmes, 1993), a positive evaluation from a male expresser may have been a particularly pleasant and surprising event, which could explain the increased smiling of both perceiver genders toward positive social evaluations by male expressers.

Given their more distinct emotional facial muscle responses, women were overall more emotionally responsive than men. This is in line with our expectation and with the majority of studies investigating gender differences in emotionality using EMG and facial expressions (e.g., Greenwald et al., 1989; Thunberg and Dimberg, 2000; Bradley et al., 2001). Furthermore, from a biological perspective, these gender differences in responsiveness may also be due to differences in emotional contagion, defined as “catching another person’s emotion” (Hatfield et al., 1993): automatically mimicking that emotion and, through interoceptive feedback mechanisms, in turn also feeling it (Flack, 2006). Previous research has revealed positive associations between facial imitative responses and empathy (Sonnby-Borgstrom, 2002; Sonnby-Borgstrom et al., 2003), and women tend to exhibit higher empathy scores than men (Baron-Cohen and Wheelwright, 2004; Rueckert and Naybar, 2008; Derntl et al., 2010).

Unexpectedly, male expressers of positive social evaluations elicited stronger corrugator and zygomaticus responses, with the corrugator effect specific to female perceivers. This result is contrary to our expectation and to previous findings showing faster responses in women to angry male faces (Rotteveel and Phaf, 2004) and stronger responses and activation in specific brain areas (e.g., ACC, visual cortex) in men to threatening male stimuli (Mazurski et al., 1996; Fischer et al., 2004b). However, Seidel et al. (2010) showed that happy male faces were rated more positively than happy female faces, whereas angry and disgusted male faces were rated more negatively than female faces. Our subjective ratings do not match these previous findings, but our facial muscle activity partially matches this set of prior subjective results. Although such discrepancies are commonplace and not always well understood, they suggest that much of our nonverbal communication is not well represented in our conscious experiential systems. This raises the possibility that some populations show dysfunctional facial-communicative behavior without being able to report, or even become aware of, this discrepancy, leading to ambivalent or disturbing expressions or perceptions. Concordance between self-report and psychophysiological measures is often low, which highlights the importance of assessing variables from both domains in emotion research (Evers et al., 2014).

It has been suggested that women are generally more emotionally expressive than men (Kring and Gordon, 1998), but, as reviewed above, angry male faces tend to elicit stronger responses in both genders. Our results suggest that the stronger responses elicited by male expressers are not limited to negative social evaluations but extend to positive social encounters as well. Stimulus differences may explain why this effect extended to the positive condition: prior research predominantly used basic facial emotions, thus disregarding social contexts and higher-order emotions such as pride, appreciation, or embarrassment. Yet research has shown that gender differences in expressive behavior are context-dependent, shaped by socialized display rules, and emotion-dependent (for review, see Kret and De Gelder, 2012). Most emotions we experience in daily life arise in social interactions and appear to be dynamic and multifaceted rather than static and uniform. Hence, our study emphasized naturalistic, dynamic stimuli with multimodal expressions (speech, gestures, and movements).

Limitations

Our study has several limitations. We did not assess participants’ sexual orientation. Furthermore, assessing contraceptive use and cycle phase in women, both of which have been associated with emotion recognition (Derntl et al., 2008, 2013), may further clarify variance in women’s emotional reactivity. Additionally, recording from more facial EMG sites could give further insight into the involvement of specific emotions (for review, see Hess and Fischer, 2013). Future research may apply facial action coding (Ekman and Friesen, 1978) to this stimulus set to map emotion expressions in social interactions more precisely. We also cannot rule out that men and women used different cognitive emotion regulation strategies. More generally, in this type of research it is difficult to disentangle emotional reactivity due to emotional contagion from emotional mimicry. Furthermore, the sample was chosen to match the age of the actors, and the language of the stimuli was age-appropriate for university students between 20 and 30 years of age. Thus, the present results are probably most applicable to this age group and to peer interaction; other age groups or intergenerational interactions might well show different response patterns. This limits the generalizability of the results and points to new avenues of research.

Conclusion

In summary, the current study helps clarify gender differences in emotional social interactions using a more ecologically valid, naturalistic paradigm. Specifically, both perceiver genders displayed valence-congruent facial muscle responses in the positive social evaluation condition. Furthermore, this study takes a first step toward revealing pronounced positive expressive communication patterns in men (male expressers) during social interactions. Therefore, gender differences in positive social encounters, considered from both the perceiver and the expresser perspective, deserve more attention in future research.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgment

This research was funded by the “Österreichische Nationalbank Fonds (ÖNB-Funds)” [Austrian National Bank Funds] (J15555).

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2015.01372

References

Bagley, S. L., Weaver, T. L., and Buchanan, T. W. (2011). Sex differences in physiological and affective responses to stress in remitted depression. Physiol. Behav. 104, 180–186. doi: 10.1016/j.physbeh.2011.03.004

Baron-Cohen, S., and Wheelwright, S. (2004). The empathy quotient: an investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. J. Autism Dev. Disord. 34, 163–175. doi: 10.1023/B:JADD.0000022607.19833.00

Biaggio, M. K. (1989). Sex differences in behavioral reactions to provocation of anger. Psychol. Rep. 64, 23–26. doi: 10.2466/pr0.1989.64.1.23

Bianchin, M., and Angrilli, A. (2012). Gender differences in emotional responses: a psychophysiological study. Physiol. Behav. 105, 925–932. doi: 10.1016/j.physbeh.2011.10.031

Biele, C., and Grabowska, A. (2006). Sex differences in perception of emotion intensity in dynamic and static facial expressions. Exp. Brain Res. 171, 1–6. doi: 10.1007/s00221-005-0254-0

Björkqvist, K., Lagerspetz, K. M. J., and Kaukiainen, A. (1992). Do girls manipulate and boys fight? Developmental trends in regard to direct and indirect aggression. Aggress. Behav. 18, 117–127. doi: 10.1002/1098-2337(1992)18:2<117::AID-AB2480180205>3.0.CO;2-3

Blechert, J., Schwitalla, M., and Wilhelm, F. H. (2013). Ein Video-Set zur experimentellen Untersuchung von Emotionen bei sozialen Interaktionen: Validierung und erste Daten zu neuronalen Effekten [A video-set for experimental assessment of emotions during social interaction: validation and first data on neuronal effects]. Z. Psychiatr. Psychol. Psychother. 61, 81–91.

Blechert, J., Sheppes, G., Di Tella, C., Williams, H., and Gross, J. J. (2012). See what you think: reappraisal modulates behavioral and neural responses to social stimuli. Psychol. Sci. 23, 346–353. doi: 10.1177/0956797612438559

Blechert, J., Wilhelm, F. H., Williams, H., Braams, B. R., Jou, J., and Gross, J. J. (2015). Reappraisal facilitates extinction in healthy and socially anxious individuals. J. Behav. Ther. Exp. Psychiatry 46, 141–150. doi: 10.1016/j.jbtep.2014.10.001

Bradley, M. M., Codispoti, M., Sabatinelli, D., and Lang, P. J. (2001). Emotion and motivation II: sex differences in picture processing. Emotion 1, 300–319. doi: 10.1037/1528-3542.1.3.300

Brown, P., and Levinson, S. (1987). Politeness: Some Universals in Language Usage. Cambridge: Cambridge University Press.

Buck, R., Miller, R. E., and Caul, W. F. (1974). Sex, personality, and physiological variables in the communication of affect via facial expression. J. Pers. Soc. Psychol. 30, 587–596. doi: 10.1037/h0037041

Bunce, S. C., Bernat, E., Wong, P. S., and Shevrin, H. (1999). Further evidence for unconscious learning: preliminary support for the conditioning of facial EMG to subliminal stimuli. J. Psychiatr. Res. 33, 341–347. doi: 10.1016/S0022-3956(99)00003-5

Bussey, K., and Bandura, A. (1999). Social cognitive theory of gender development and differentiation. Psychol. Rev. 106, 676–713. doi: 10.1037/0033-295X.106.4.676

Cacioppo, J. T., Bush, L. K., and Tassinary, L. G. (1992). Microexpressive facial actions as a function of affective stimuli: replication and extension. Pers. Soc. Psychol. Bull. 18, 515–526. doi: 10.1177/0146167292185001

Cahill, L. (2006). Why sex matters for neuroscience. Nat. Rev. Neurosci. 7, 477–484. doi: 10.1038/nrn1909

Campbell, A., and Muncer, S. (1987). Models of anger and aggression in the social talk of women and men. J. Theory Soc. Behav. 17, 489–511. doi: 10.1111/j.1468-5914.1987.tb00110.x

Carré, J. M., Murphy, K. R., and Hariri, A. R. (2013). What lies beneath the face of aggression? Soc. Cogn. Affect. Neurosci. 8, 224–229. doi: 10.1093/scan/nsr096

Chapman, B. P., Duberstein, P. R., Sörensen, S., and Lyness, J. M. (2007). Gender differences in five factor model personality traits in an elderly cohort: extension of robust and surprising findings to an older generation. Pers. Individ. Dif. 43, 1594–1603. doi: 10.1016/j.paid.2007.04.028

Derntl, B., Finkelmeyer, A., Eickhoff, S., Kellermann, T., Falkenberg, D. I., Schneider, F., et al. (2010). Multidimensional assessment of empathic abilities: neural correlates and gender differences. Psychoneuroendocrinology 35, 67–82. doi: 10.1016/j.psyneuen.2009.10.006

Derntl, B., Habel, U., Windischberger, C., Robinson, S., Kryspin-Exner, I., Gur, R. C., et al. (2009). General and specific responsiveness of the amygdala during explicit emotion recognition in females and males. BMC Neurosci. 10:91. doi: 10.1186/1471-2202-10-91

Derntl, B., Kryspin-Exner, I., Fernbach, E., Moser, E., and Habel, U. (2008). Emotion recognition accuracy in healthy young females is associated with cycle phase. Horm. Behav. 53, 90–95. doi: 10.1016/j.yhbeh.2007.09.006

Derntl, B., Schopf, V., Kollndorfer, K., and Lanzenberger, R. (2013). Menstrual cycle phase and duration of oral contraception intake affect olfactory perception. Chem. Senses 38, 67–75. doi: 10.1093/chemse/bjs084

Dimberg, U. (1990). Facial electromyography and emotional reactions. Psychophysiology 27, 481–494. doi: 10.1111/j.1469-8986.1990.tb01962.x

Dimberg, U. (1997). Facial reactions: rapidly evoked emotional responses. J. Psychophysiol. 11, 115–123.

Dimberg, U., and Lundquist, L. O. (1990). Gender differences in facial reactions to facial expressions. Biol. Psychol. 30, 151–159. doi: 10.1016/0301-0511(90)90024-Q

Ekman, P., and Friesen, W. (1978). Facial Action Coding System: A Technique for the Measurement of Facial Movement. Palo Alto: Consulting Psychologists Press.

Ekman, P., and Friesen, W. V. (1982). Felt, false, and miserable smiles. J. Nonverbal Behav. 6, 238–252. doi: 10.1007/BF00987191

Evers, C., Hopp, H., Gross, J. J., Fischer, A. H., Manstead, A. S., and Mauss, I. B. (2014). Emotion response coherence: a dual-process perspective. Biol. Psychol. 98, 43–49. doi: 10.1016/j.biopsycho.2013.11.003

Faith, M. S., Flint, J., Fairburn, C. G., Goodwin, G. M., and Allison, D. B. (2001). Gender differences in the relationship between personality dimensions and relative body weight. Obes. Res. 9, 647–650. doi: 10.1038/oby.2001.86

Fischer, A. H., Becker, D., and Veenstra, L. (2012). Emotional mimicry in social context: the case of disgust and pride. Front. Psychol. 3:475. doi: 10.3389/fpsyg.2012.00475

Fischer, A. H., Rodriguez Mosquera, P. M., Van Vianen, A. E., and Manstead, A. S. (2004a). Gender and culture differences in emotion. Emotion 4, 87–94. doi: 10.1037/1528-3542.4.1.87

Fischer, H., Fransson, P., Wright, C. I., and Bäckman, L. (2004b). Enhanced occipital and anterior cingulate activation in men but not in women during exposure to angry and fearful male faces. Cogn. Affect. Behav. Neurosci. 4, 326–334. doi: 10.3758/CABN.4.3.326

Flack, W. F. (2006). Peripheral feedback effects of facial expressions, bodily postures, and vocal expressions on emotional feelings. Cogn. Emot. 20, 177–195. doi: 10.1080/02699930500359617

Fleming, J. S., and Courtney, B. E. (1984). The dimensionality of self-esteem: II. Hierarchical facet model for revised measurement scales. J. Pers. Soc. Psychol. 46, 404–421. doi: 10.1037/0022-3514.46.2.404

Fridlund, A. J., and Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology 23, 567–589. doi: 10.1111/j.1469-8986.1986.tb00676.x

Goeleven, E., De Raedt, R., Leyman, L., and Verschuere, B. (2008). The Karolinska directed emotional faces: a validation study. Cogn. Emot. 22, 1094–1118. doi: 10.1080/02699930701626582

Goos, L. M., and Silverman, I. (2002). Sex related factors in the perception of threatening facial expressions. J. Nonverbal Behav. 26, 27–41. doi: 10.1023/A:1014418503754

Greenwald, M. K., Cook, E. W., and Lang, P. J. (1989). Affective judgement and psychophysiology. J. Psychophysiol. 3, 51–64.

Guillem, F., and Mograss, M. (2005). Gender differences in memory processing: evidence from event-related potentials to faces. Brain Cogn. 57, 84–92. doi: 10.1016/j.bandc.2004.08.026

Habel, U., Windischberger, C., Derntl, B., Robinson, S., Kryspin-Exner, I., Gur, R. C., et al. (2007). Amygdala activation and facial expressions: explicit emotion discrimination versus implicit emotion processing. Neuropsychologia 45, 2369–2377. doi: 10.1016/j.neuropsychologia.2007.01.023

Hall, J. A., and Matsumoto, D. (2004). Gender differences in judgments of multiple emotions from facial expressions. Emotion 4, 201–206. doi: 10.1037/1528-3542.4.2.201

Hatfield, E., Cacioppo, J. T., and Rapson, R. L. (1993). Emotional Contagion. Cambridge: Cambridge University Press.

Hermann, A., Keck, T., and Stark, R. (2014). Dispositional cognitive reappraisal modulates the neural correlates of fear acquisition and extinction. Neurobiol. Learn. Mem. 113, 115–124. doi: 10.1016/j.nlm.2014.03.008

Hess, U., and Blairy, S. (2001). Facial mimicry and emotional contagion to dynamic emotional facial expressions and their influence on decoding accuracy. Int. J. Psychophysiol. 40, 129–141. doi: 10.1016/S0167-8760(00)00161-6

Hess, U., and Fischer, A. (2013). Emotional mimicry as social regulation. Pers. Soc. Psychol. Rev. 17, 142–157. doi: 10.1177/1088868312472607

Hofmann, S. G., Suvak, M., and Litz, B. T. (2006). Sex differences in face recognition and influence of facial affect. Pers. Individ. Dif. 40, 1683–1690. doi: 10.1016/j.paid.2005.12.014

Hoheisel, B., Habel, U., Windischberger, C., Robinson, S., Kryspin-Exner, I., and Moser, E. (2005). Amygdala and emotions: investigation of gender and cultural differences in facial emotion recognition. Eur. Psychiatry 20, S199.

Holmes, J. (1993). New Zealand women are good to talk to: an analysis of politeness strategies in interaction. J. Pragmat. 20, 91–116. doi: 10.1016/0378-2166(93)90078-4

Keltner, D., and Haidt, J. (1999). Social functions of emotions at four levels of analysis. Cogn. Emot. 13, 505–521. doi: 10.1080/026999399379168

Kirchengast, S., and Marosi, A. (2008). Gender differences in body composition, physical activity, eating behavior and body image among normal weight adolescents–an evolutionary approach. Coll. Antropol. 32, 1079–1086.

Kret, M. E., and De Gelder, B. (2012). A review on sex differences in processing emotional signals. Neuropsychologia 50, 1211–1221. doi: 10.1016/j.neuropsychologia.2011.12.022

Kret, M. E., Pichon, S., Grezes, J., and De Gelder, B. (2011). Men fear other men most: Gender specific brain activations in perceiving threat from dynamic faces and bodies. An fMRI study. Front. Psychol. 2:3. doi: 10.3389/fpsyg.2011.00003

Kring, A. M., and Gordon, A. H. (1998). Sex differences in emotion: expression, experience, and physiology. J. Pers. Soc. Psychol. 74, 686–703. doi: 10.1037/0022-3514.74.3.686

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (1997). International Affective Picture System (IAPS): Digitized Photographs, Instruction Manual and Affective Ratings. Technical Report A-6. Gainesville, FL: University of Florida.

Leary, M. R., Twenge, J. M., and Quinlivan, E. (2006). Interpersonal rejection as a determinant of anger and aggression. Pers. Soc. Psychol. Rev. 10, 111–132. doi: 10.1207/s15327957pspr1002_2

Lee, T. M., Liu, H. L., Hoosain, R., Liao, W. T., Wu, C. T., Yuen, K. S., et al. (2002). Gender differences in neural correlates of recognition of happy and sad faces in humans assessed by functional magnetic resonance imaging. Neurosci. Lett. 333, 13–16. doi: 10.1016/S0304-3940(02)00965-5

Mazurski, E. J., Bond, N. W., Siddle, D. A., and Lovibond, P. F. (1996). Conditioning with facial expressions of emotion: effects of CS sex and age. Psychophysiology 33, 416–425. doi: 10.1111/j.1469-8986.1996.tb01067.x

Neumann, R., Hess, M., Schulz, S. M., and Alpers, G. W. (2005). Automatic behavioural responses to valence: evidence that facial action is facilitated by evaluative processing. Cogn. Emot. 19, 499–513. doi: 10.1080/02699930512331392562

Pasick, R. S., Gordon, G., and Meth, R. L. (1990). “Helping men understand themselves,” in Men in Therapy, eds R. L. Meth and R. S. Pasick (New York: Guilford Press), 131–151.

Pejic, T., Hermann, A., Vaitl, D., and Stark, R. (2013). Social anxiety modulates amygdala activation during social conditioning. Soc. Cogn. Affect. Neurosci. 8, 267–276. doi: 10.1093/scan/nsr095

Phillips, P. J., Wechsler, H., Huang, J., and Rauss, P. J. (1998). The FERET database and evaluation procedure for face-recognition algorithms. Image Vis. Comput. 16, 295–306. doi: 10.1016/S0262-8856(97)00070-X

Reichenberger, J., Wiggert, N., Wilhelm, F. H., Weeks, J. W., and Blechert, J. (2015). “Don’t put me down but don’t be too nice to me either”: fear of positive vs. negative evaluation and responses to positive vs. negative social-evaluative films. J. Behav. Ther. Exp. Psychiatry 46, 164–169. doi: 10.1016/j.jbtep.2014.10.004

Rinn, W. E. (1984). The neuropsychology of facial expression: a review of the neurological and psychological mechanisms for producing facial expressions. Psychol. Bull. 95, 52–77. doi: 10.1037/0033-2909.95.1.52

Rosenfeld, R. G. (2004). Gender differences in height: an evolutionary perspective. J. Pediatr. Endocrinol. Metab. 17(Suppl. 4), 1267–1271.

Rotteveel, M., and Phaf, R. H. (2004). Automatic affective evaluation does not automatically predispose for arm flexion and extension. Emotion 4, 156–172. doi: 10.1037/1528-3542.4.2.156

Rueckert, L., and Naybar, N. (2008). Gender differences in empathy: the role of the right hemisphere. Brain Cogn. 67, 162–167. doi: 10.1016/j.bandc.2008.01.002

Schwartz, G. E., Brown, S.-L., and Ahern, G. L. (1980). Facial muscle patterning and subjective experience during affective imagery: sex differences. Psychophysiology 17, 75–82. doi: 10.1111/j.1469-8986.1980.tb02463.x

Schweinberger, S. R., and Schneider, D. (2014). Wahrnehmung von Personen und soziale Kognitionen [Person perception and social cognition]. Psychol. Rundsch. 65, 212–226. doi: 10.1026/0033-3042/a000225

Seidel, E. M., Habel, U., Kirschner, M., Gur, R. C., and Derntl, B. (2010). The impact of facial emotional expressions on behavioral tendencies in women and men. J. Exp. Psychol. Hum. Percept. Perform. 36, 500–507. doi: 10.1037/a0018169

Sharkin, B. S. (1993). Anger and gender: theory, research, and implications. J. Counsel. Dev. 71, 386–389. doi: 10.1002/j.1556-6676.1993.tb02653.x

Sonnby-Borgstrom, M. (2002). Automatic mimicry reactions as related to differences in emotional empathy. Scand. J. Psychol. 43, 433–443. doi: 10.1111/1467-9450.00312

Sonnby-Borgstrom, M., Jonsson, P., and Svensson, O. (2003). Emotional empathy as related to mimicry reactions at different levels of information processing. J. Nonverbal Behav. 27, 3–23. doi: 10.1023/A:1023608506243

Soussignan, R., Chadwick, M., Philip, L., Conty, L., Dezecache, G., and Grezes, J. (2013). Self-relevance appraisal of gaze direction and dynamic facial expressions: effects on facial electromyographic and autonomic reactions. Emotion 13, 330–337. doi: 10.1037/a0029892

Taylor, S. E., Klein, L. C., Lewis, B. P., Gruenewald, T. L., Gurung, R. A., and Updegraff, J. A. (2000). Biobehavioral responses to stress in females: tend-and-befriend, not fight-or-flight. Psychol. Rev. 107, 411–429. doi: 10.1037/0033-295X.107.3.411

Thayer, J. F., and Johnson, B. (2000). Sex differences in judgement of facial affect: a multivariate analysis of recognition errors. Scand. J. Psychol. 41, 243–246. doi: 10.1111/1467-9450.00193

Thunberg, M., and Dimberg, U. (2000). Gender differences in facial reactions to fear-relevant stimuli. J. Nonverbal Behav. 24, 45–51. doi: 10.1023/A:1006662822224

Tracy, J. L., and Robins, R. W. (2008). The nonverbal expression of pride: evidence for cross-cultural recognition. J. Pers. Soc. Psychol. 94, 516–530. doi: 10.1037/0022-3514.94.3.516

Weyers, P., Mühlberger, A., Hefele, C., and Pauli, P. (2006). Electromyographic responses to static and dynamic avatar emotional facial expressions. Psychophysiology 43, 450–453. doi: 10.1111/j.1469-8986.2006.00451.x

Wiggert, N., Wilhelm, F. H., Reichenberger, J., and Blechert, J. (2015). Exposure to social-evaluative video clips: neural, facial-muscular, and experiential responses and the role of social anxiety. Biol. Psychol. 110, 59–67. doi: 10.1016/j.biopsycho.2015.07.008

Wilhelm, F. H., Grossman, P., and Roth, W. T. (1999). Analysis of cardiovascular regulation. Biomed. Sci. Instrum. 35, 135–140.

Wilhelm, F. H., and Peyk, P. (2005). Autonomic Nervous System Laboratory (ANSLAB) – Shareware Version. Software Repository of the Society for Psychophysiological Research. Available at: http://www.sprweb.org

Keywords: sex differences, social evaluation, emotion, facial electromyography, social interaction

Citation: Wiggert N, Wilhelm FH, Derntl B and Blechert J (2015) Gender differences in experiential and facial reactivity to approval and disapproval during emotional social interactions. Front. Psychol. 6:1372. doi: 10.3389/fpsyg.2015.01372

Received: 30 April 2015; Accepted: 26 August 2015;

Published: 22 September 2015.

Edited by:

Agneta H. Fischer, University of Amsterdam, Netherlands

Reviewed by:

Stefan Sütterlin, Lillehammer University College, Norway
Mariska Esther Kret, University of Amsterdam, Netherlands

Copyright © 2015 Wiggert, Wilhelm, Derntl and Blechert. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nicole Wiggert, Division of Clinical Psychology, Psychotherapy, and Health Psychology, Department of Psychology, University of Salzburg, Hellbrunnerstrasse 34, 5020 Salzburg, Austria, nicole.wiggert@sbg.ac.at
