- Department of Psychology, MacEwan University, Edmonton, AB, Canada

Music perception of cochlear implant (CI) users is constrained by the absence of salient musical pitch cues crucial for melody identification, but is made possible by timing cues that are largely preserved by current devices. While musical timing cues, including beats and rhythms, are a potential route to music learning, it is not known to what extent they are perceptible to CI users in complex sound scenes, especially when pitch and timbral features can co-occur and obscure these musical features. The task at hand, then, becomes one of optimizing the available timing cues for young CI users by exploring ways that they might be perceived and encoded simultaneously across multiple modalities. Accordingly, we examined whether training tasks that engage active music listening through dance might enhance the song identification skills of deaf children with CIs. Nine CI children learned new songs in two training conditions: (1) listening only (auditory learning), and (2) listening and dancing (auditory-motor learning). We examined children's ability to identify original song excerpts, as well as mistuned and piano versions, in a closed-set task. While CI children were less accurate than their normal hearing peers, they showed greater song identification accuracies in versions that preserved the original instrumental beats following learning that engaged active listening with dance. The observed performance advantage is further qualified by a medium effect size, indicating that the gains afforded by auditory-motor learning are practically meaningful. Furthermore, kinematic analyses of body movements showed that CI children synchronized to temporal structures in music in a manner that was comparable to normal hearing age-matched peers. Our findings are the first to indicate that input from CI devices enables good auditory-motor integration of timing cues in child CI users for the purposes of listening and dancing to music.
Beyond the heightened arousal from active engagement with music, our findings indicate that a more robust representation or memory of musical timing features was made possible by multimodal processing. Methods that encourage CI children to entrain, or track musical timing with body movements, may be more effective in consolidating musical knowledge than methods that engage listening only.

Introduction

When asked to explain how she attains the elusive musicality in her dancing, Makarova (1975), one of the most celebrated classical dancers of the twentieth century, replied, “Even the ears must dance” (p. 65). This is more than a motto summarizing an artistic approach to a craft (one that emphasizes the thoughtful listening necessary to achieve musicality in dance); it is also an apt description of the overlapping sensory, motor, and psychological processes that underlie the joint activities of music listening and our inclination to move to it.

We have a natural tendency to move to music, and we do so with ease and without explicit training. This involves the coordinated interplay between different sensory systems that enables us to gain meaningful and multifaceted musical experiences. For children with profound hearing loss, a barrier to music learning stems from a lack of access to salient acoustic cues that form the basis of many musical structures. For an increasing number of profoundly deaf children, this sensory deficit is partially offset by cochlear implants (CIs). These are surgically implanted sensory prostheses that generate hearing-like sensations by means of an electrode array that stimulates the auditory nerve with electrical patterns that code the acoustic features of sound.

Cochlear Implants Are Effective for Speech, but Not for Music

What is known is that the auditory input of CIs conveys timing information that is within the normal range of hearing listeners (Gfeller and Lansing, 1991; Gfeller et al., 1997). In practice, this timing information can convey acoustic-phonetic features of speech in ideal (i.e., quiet) listening conditions (Remez et al., 1981; Wilson, 2000). Fine structure information necessary for pitch perception, however, is sacrificed in favor of this timing information (Shannon et al., 1995).

Incomplete as this form of electric hearing is, CIs afford the possibility for children who lost their hearing early in life to become good oral communicators, and many children with CIs can attain speech proficiencies that are on par with their hearing peers (Svirsky et al., 2000). In other domains such as music and voice perception, however, such constrained auditory input presents many challenges for child users' listening skills (Gfeller and Lansing, 1991; Stordahl, 2002; Vongpaisal et al., 2012) because they are mainly reliant on timing cues to decode non-speech communication signals. This presents a unique challenge for CI users in their attempts to make sense of sounds in which pitch cues are prominent and timing cues are less important. For instance, melody recognition depends critically on pitch cues and less so on timing cues. Consequently, CI children have difficulty recognizing popular folk tunes (Stordahl, 2002). However, they are able to recognize songs at well-above chance levels when the original acoustic cues at the time of learning are presented (Vongpaisal et al., 2006, 2009).

For the purposes of music listening, CI users are able to use available timing cues to detect tempo in music (Kong et al., 2004), to discriminate rhythm (Gfeller and Lansing, 1991; Gfeller et al., 1997), and to recognize familiar songs when original pitch and timing cues are preserved (Volkova et al., 2014). However, it is not known to what extent these timing cues are perceptible to CI users in complex sound scenes, especially when pitch and timbral features co-occur and obscure temporal features of music. Furthermore, most Western music places greater emphasis on melodic detail, with less distinctiveness occurring in the temporal and rhythmic dimension (Fraisse, 1982). Thus, timing-based memory representations form weaker representations of songs in comparison to melodic-based ones (Hébert and Peretz, 1997), making song recognition on the basis of temporal cues a difficult task (White, 1960; Volkova et al., 2014).

Consequently, music listening based exclusively on temporal features presents a unique challenge for CI users and may limit music learning and appreciation to its full capacity, more often in adult recipients with previous hearing experience than child recipients (Fujita and Ito, 1999; Gfeller et al., 2002). Remarkably, many child CI recipients acquire music appreciation demonstrating that their perceptual acuity problems do not deter their enjoyment of music with many incorporating music and dance activities in their daily life (Stordahl, 2002; Mitani et al., 2007; Trehub et al., 2009). Nevertheless, their music learning lags behind that of their hearing peers (Gfeller and Lansing, 1991; Stordahl, 2002). Since the aforementioned limitations are unlikely to be resolved with the current configuration of CI devices, the challenge then becomes one of optimizing the available cues through novel multimodal learning strategies.

Music and Movement Go Hand in Hand: Auditory and Motor Contributions to Learning

Much of the research conducted to date on CI children's music perception has focused on assessing their listening-based musical skills (Gfeller and Lansing, 1991; Stordahl, 2002; Gfeller et al., 2005; Vongpaisal et al., 2006, 2009). Not surprisingly, CI children largely underperform their hearing peers in an array of music perception tasks (Gfeller and Lansing, 1991; Vongpaisal et al., 2004). However, such a restricted approach does not consider the role of the other senses and the multiple influences that contribute to the rich and varied musical experiences of listeners in natural settings. Furthermore, the other senses may be especially important in compensating for the restricted auditory input of current devices, thereby providing CI children an alternative route to music. For instance, hearing listeners are propelled to move to music, from tapping along to the beat to dancing. The embodiment of music (the integration of actions, or purposeful movements, with sensory information to influence how we learn and think about music) involves overlapping systems that enable sensory-motor interactions to occur (Sevdalis and Keller, 2011). That is, sensory experiences can influence movement to music, while movement, in turn, can influence how music is perceived.

This natural affinity to synchronize or entrain to music emerges in early life, and the influence of movement on the perception of musical timing is evident in infancy (Phillips-Silver and Trainor, 2005). Although neurological evidence indicates that auditory and motor systems engage and map onto the same neural structures (Chen et al., 2006), much is still unknown about what this joint activation entails. For instance, do auditory and motor systems work independently and thus contribute something unique to learning, or do these systems depend on each other such that the functioning of one system is integral to the functioning of the other?

Some insight into this process has been gained from observing the auditory-motor performance of musicians and its influences on memory formation. For them, learning was greatest under multimodal conditions that engaged auditory and motor systems jointly in comparison to learning that engaged these modalities independently (Palmer and Meyer, 2000; Brown and Palmer, 2012, 2013). The findings suggest that the coupling of motor and auditory learning enhances encoding of music by creating a greater abstract or gist representation of melodies, provides multiple routes for the retrieval of information, and can provide complementary information beyond that enabled by any individual modality (Palmer and Meyer, 2000; Brown and Palmer, 2012, 2013).

While these findings corroborate what is known about the benefits of coupling action and perception in speech and language learning (MacLeod et al., 2010; MacLeod, 2011), they are the first to contribute to a unified framework on multimodal learning in music. Taken together, these findings are conceptually important and of practical significance to our present research on the musical skills of hearing impaired populations. Our focus on CI children allows us to probe more deeply into how this multimodal framework is affected by hearing loss.

Music Entrainment and Music Learning in Children with Hearing Loss

While there has been no research to date examining beat entrainment in CI children, there has been only one systematic study that examined musical beat synchronization of adult CI users to music. In comparison to hearing controls who can bounce accurately to different renditions of dance stimuli, Phillips-Silver et al. (2015) found that adult CI users bounced best to simplified drum renditions and synchronized poorly to dance stimuli that contained melodic pitch variations. While the diverse hearing histories of adults, who received their implant later in life, no doubt contribute to the variable tracking of musical tempo, the ultimate demonstration of beat entrainment with CIs would be to observe these skills in child CI users who were either born deaf or prelingually deafened.

There is little research to date examining children's movement in dance and its relationship to the psychological functions involved in music listening. However, recent work by Demir et al. (2014) demonstrating the unique contribution of gestural cues to enhance complex language processing in hearing children, lends a strong basis to our predictions on beat entrainment to enhance music learning in children with hearing loss. They found that teachers who used gestures to highlight verbal input during storytelling, encouraged more complex narratives in children's retelling of these stories in comparison to those who learned through auditory or auditory-visual means without gestures. Furthermore, the advantages of gestures were particularly pronounced in children whose language abilities have been compromised by early brain injury (Demir et al., 2014). By extension, the use of rich multimodal cues that include gestural components could augment auditory and musical skill in our sample of children with hearing loss in educational and rehabilitative contexts.

Taken together, the body of research on multimodal learning provides us with a foundation to explore how the coupling of motor and auditory systems can be used to improve musical and communicative outcomes in children with hearing impairments. From a basic research perspective, the study of children with CIs offers an unparalleled opportunity to study the limits of perception, and the conditions that enable the development of listening and communication skills when hearing is impaired and partially restored. Furthermore, the findings will expand our understanding of how the developing auditory and motor systems adapt to sensory deficits in children, how the integration of auditory and other sensory functions contribute to music learning and auditory capacity in general, and how explicit training can alter the barriers imposed by hearing loss.

In short, the coupling of motor and auditory learning enhances encoding of music, provides multiple routes for the retrieval of information, and can complement or compensate for missing information in an individual modality. These findings are conceptually important and of practical significance for the present study on the musical skills of CI children. In turn, studies on CI children allow us to probe more deeply into how this multimodal framework is affected by sensory impairment.

The aim of the present study was to examine whether dancing and movement during music listening can improve CI children's song learning by enhancing their sensitivity to musical time (e.g., beat, rhythm). We predicted that CI children's learning of songs will be better (as demonstrated by higher accuracy scores) when they dance along to the music in comparison to when they listen to the music only. Purposeful movement that is synchronized to the beat is expected to consolidate the encoding of musical timing information to a greater extent than that achieved by passive listening.

Materials and Methods

Participants

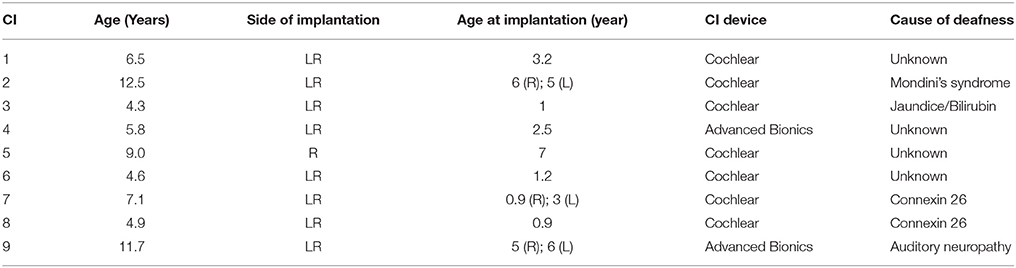

Ten CI children were initially recruited for the present study. However, one CI participant did not complete the study due to disinterest. Except for one child who was implanted in the right ear only, all were bilateral CI users (see Table 1 for individual details). They used their devices for an average of 4.3 years (SD = 1.7), ranging from 2 to 6.7 years. All children received a small toy and gift card as a token of appreciation.

A final sample of nine CI children (M = 7.4 years, SD = 3.0 years) participated in the study. For the listen and dance training task, kinematic analysis from three CI participants was not possible due to the inability to measure stable movement patterns from their motor behaviors. This included running around the room as a response to music listening (n = 1), or limited variability in movement due to shyness (n = 2). Therefore, data from seven CI children (CI 1, 4, 5, 6, 7, 8, and 9) were amenable to kinematic analyses. We recruited seven individually age-matched normal hearing controls (named according to their matched CI peer: NH 1, 4, 5, 6, 7, 8, and 9; M = 8.0 years, SD = 3.4 years), who were within one year of their CI peer's age. We relied on parent reports of the normal hearing status of their child. This was confirmed by the experimenters, who observed no difficulties in NH children with the listening demands of the tasks.

All parents provided written consent granting their child's participation, and all children provided verbal assent to participate in the present study. The present study was approved through MacEwan University's policy on the ethical review of research with human participants. It was carried out in full accordance with the ethical standards of the Canadian Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans (TCPS 2).

Stimuli

The set of stimuli consisted of eight song excerpts (20–30 s in duration) of the pop-rock genre. We derived a song list (see Table 2) that was child-friendly and likely to be unfamiliar to the young children in this sample. All selections were mid- to up-tempo songs that were contemporary popular music hits from previous decades, and were not in regular rotation on television, radio, or other entertainment media at the time of the study. For each child, three different song excerpts were randomly selected for each learning condition. Prior to testing, parents were asked to confirm whether the songs selected for testing were unfamiliar to their child; in all cases, parents did so.

Alternative renditions were generated for a subsequent song recognition task. For the mistuned versions of the excerpts, select notes were shifted by 1–2 semitones using a digital audio pitch-correcting software program (Melodyne, Celemony Software GmbH). Piano versions of the excerpts were generated by an experienced musician who performed and recorded the piano versions of the vocal melody and instrumental beat accompaniment in the original pitch and tempo of the excerpts (see Supplementary Materials). The tempo of each song was measured with a metronome in beats per minute and converted to Hertz. Table 2 lists the song set and the beat frequency of individual songs.
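The two conversions mentioned above can be illustrated with a minimal sketch. It assumes equal-tempered tuning for the semitone shift (the internal behavior of the pitch-correction software is not described here), and the function names and the 120 bpm tempo are hypothetical, not values from Table 2.

```python
def bpm_to_hz(bpm: float) -> float:
    """Convert a tempo in beats per minute to a beat frequency in Hz."""
    return bpm / 60.0


def semitone_ratio(semitones: float) -> float:
    """Frequency ratio of a pitch shift, assuming equal temperament."""
    return 2.0 ** (semitones / 12.0)


# Hypothetical tempo used only for illustration.
print(bpm_to_hz(120))               # 2.0
print(round(semitone_ratio(1), 4))  # 1.0595
print(round(semitone_ratio(2), 4))  # 1.1225
```

Under this assumption, a shift of 1–2 semitones changes a note's fundamental frequency by roughly 6–12%.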

Procedure

Participants were tested individually and learned unfamiliar pop songs in two training conditions: (1) Auditory-only: by passive listening to songs, and (2) Auditory-motor: by listening and dancing to songs. For each training condition, children learned a set of three unfamiliar pop songs that were selected randomly from the set in Table 2. The order of training conditions was counterbalanced across children.

In the auditory-only condition, children were introduced to the song title, which was depicted by a cartoon image presented onscreen. The images for the song titles were to be used in a subsequent song recognition task. To listen to the song, the child touched, or clicked on, the image onscreen. While the music played through loudspeakers at a comfortable listening level (65 dB SPL), the child remained seated in front of a blank computer monitor. Each excerpt was played at least two times; however, the songs could be played as many times as requested. In actuality, each excerpt was played between two and four times. No child requested replays beyond this number, presumably to minimize restlessness or disinterest.

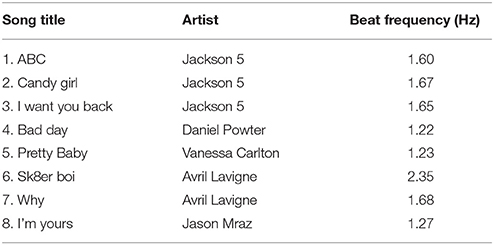

In the auditory-motor condition, children were presented with a projected point-light image of themselves onscreen (see Figure 1). A three-dimensional motion sensor camera (Microsoft Kinect for Windows) was placed in front of the child (2.1 m from the camera to the center of the dance platform, at a height of 0.76 m from the floor), and was used to capture and record the child's motion. The camera was connected to a laptop computer, which also controlled a multimedia projector (Optoma DW339) that displayed the captured point-light image on a screen (2.1 × 1.2 m, width by height, respectively) positioned in front of the child. This setting enabled the child to view his or her movements mirrored in the point-light image while the music played. Figure 1 shows the joint indices captured from a child's dancing in the auditory-motor learning condition. Twenty body indices were tracked and recorded for kinematic analyses to be conducted offline.

Figure 1. Motion capture recording (Kinect for Windows) tracking the dancing of a child participant (7 years old). Three body indices were chosen for analysis in the present study including the head, distance between hands, and distance between feet.

Children were instructed that the point-light image moved along with them, and their task was to dance, or generate movements, as they listened to the music. As with the auditory-only condition, each song excerpt was preceded by the presentation of the song title in the form of a cartoon image displayed onscreen, after which the music played. Each excerpt was played at least two times during which the children were encouraged to dance along to the songs. This was repeated as requested until they felt they could remember the songs. As with the auditory-only condition, no child requested more than four replays for any given excerpt.

Immediately following each training session, children's song learning was assessed in a computerized task that presented the original song excerpts and alternative versions including the mistuned and piano versions. Song versions were presented in blocks, and the block order was randomized for each participant. Within each block, each song excerpt was presented twice (for a total score out of 6) in pseudorandom order with the condition that no excerpt was repeated sequentially. A computer program played song excerpts through loudspeakers and recorded children's selections among three-alternative song title images presented on a touchscreen monitor. Non-contingent feedback was provided in the form of a visually engaging cartoon caricature image that encouraged children to continue.
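The trial-ordering constraint described above (three excerpts, each presented twice, with no excerpt repeated on consecutive trials) can be sketched as follows. This is an illustration using simple rejection sampling, not the program used in the study; the function name `block_order` and the excerpt labels are hypothetical.

```python
import random


def block_order(excerpts, repeats=2, rng=None):
    """Return a shuffled trial list in which no excerpt occurs twice in a row.

    Uses rejection sampling: reshuffle until the constraint is satisfied.
    """
    if repeats > 1 and len(set(excerpts)) < 2:
        raise ValueError("need at least two distinct excerpts to avoid repeats")
    rng = rng or random.Random()
    trials = list(excerpts) * repeats
    while True:
        rng.shuffle(trials)
        # Accept the order only if every adjacent pair differs.
        if all(a != b for a, b in zip(trials, trials[1:])):
            return list(trials)


# Hypothetical labels standing in for three learned song excerpts.
order = block_order(["song_A", "song_B", "song_C"], rng=random.Random(1))
print(order)  # six trials, no immediate repetitions
```

With three excerpts presented twice each, one third of all possible orderings satisfy the constraint, so the loop typically terminates after a few shuffles.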

Results

Our goal in the present study was to ascertain whether auditory and motor processes engaged in dancing and music listening, in comparison to passive listening, influenced song learning in CI children. Accordingly, our analyses focused on examining children's accuracy in identifying original and alternate versions of songs. We also sought to examine motor entrainment to music by evaluating children's body movement patterns in relation to the beat patterns in music.

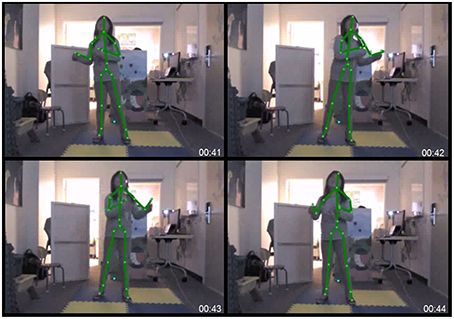

Song Recognition Task

Figure 2A shows that NH children's accuracies across conditions were uniformly high. One-sample t-tests confirmed that all six mean scores across learning and song version conditions are not significantly different from perfect accuracy, 100% (ps > 0.05). Therefore, any benefit of auditory-motor training would not emerge due to the overall ceiling performance of hearing children in this task.

Figure 2. Song recognition accuracy of NH children (A) and CI children (B) in the original and alternative song renditions in the listen-only (auditory) and listen-and-dance (auditory-motor) training conditions. Error bars represent standard errors of the mean.

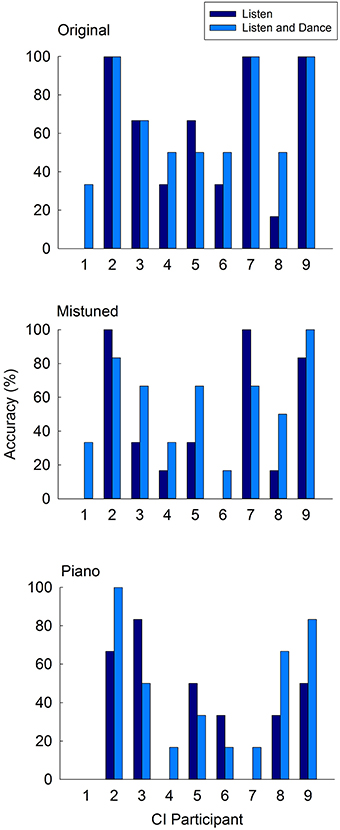

By comparison, CI children achieved more modest accuracies and showed greater variability in performance across conditions. Figure 2B shows the accuracy scores of CI children across song versions in each training condition, and individual scores are presented in Figure 3. To assess for any order effects in the administration of training conditions, we examined whether there was any difference in the overall mean scores between children who were instructed to listen-only first (n = 5), and those who were instructed to listen-and-dance first (n = 4). An independent samples t-test shows that there was no significant difference (p > 0.05) in the overall mean scores that is attributed to training order differences between these groups (M = 46.1 and 55.6%; SD = 37.6 and 27.7%, listen only first, and listen-and-dance first, respectively).

Figure 3. Individual song accuracy scores for CI participants in listening-only and listen-and-dance learning conditions.

A two-way within-subjects ANOVA was used to examine training (listen-only, listen and dance) and song version (original, mistuned, piano) effects on CI children's recognition accuracy. A main effect of training was found, F(1, 8) = 6.28, p = 0.037, indicating that CI children's overall accuracy in the listen and dance training (M = 55.5%, SD = 30.0%) was greater than that in the listen-only condition (M = 45.1%, SD = 36.5%). In addition, a main effect of song version was found, F(2, 16) = 3.93, p = 0.041. Paired-sample t-tests reveal that CI children's recognition of the original song versions (M = 62.0%, SD = 32.2%) was more accurate than their recognition of mistuned versions (M = 50.0%, SD = 34.3%), t(17) = 3.20, p = 0.005. In addition, their accuracy on the original versions was greater than that on piano versions (M = 38.9%, SD = 31.8%), t(17) = 3.08, p = 0.007. However, there was no significant difference between their recognition of mistuned and piano versions, t(17) = 1.36, p = 0.19. The two-way interaction between training and song version was not significant, F < 1.

To examine the difference between training conditions more closely, we conducted one-sample t-tests to compare the mean scores in each condition against chance performance, where the chance probability is 1/3. In the listen-only training condition, CI children's recognition accuracy of the original versions approached significance, t(8) = 1.888, p = 0.096. However, their accuracies in the mistuned and piano versions were not significantly different from chance performance, ps > 0.05. In the listen and dance training condition, however, CI children scored above chance in the original and mistuned versions (ps = 0.005, 0.026), while their accuracies on the piano versions were not different from chance (p > 0.05).
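For readers who wish to see the form of the comparison against chance, the following is a minimal sketch of a one-sample t statistic tested against a chance level of 1/3. The scores are hypothetical proportions correct (out of six trials) and are not the study's data; the helper name `one_sample_t` is also an assumption.

```python
import math
import statistics


def one_sample_t(scores, mu):
    """Return (t, df) for a one-sample t-test of mean(scores) against mu."""
    n = len(scores)
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample SD (n - 1 in the denominator)
    t = (mean - mu) / (sd / math.sqrt(n))
    return t, n - 1


CHANCE = 1 / 3  # three-alternative closed-set task

# Hypothetical scores for nine children; NOT the data reported here.
scores = [6/6, 4/6, 3/6, 5/6, 2/6, 6/6, 4/6, 3/6, 5/6]
t, df = one_sample_t(scores, CHANCE)
print(f"t({df}) = {t:.3f}")
```

The resulting t value would then be referred to a t distribution with n − 1 degrees of freedom (8 for nine children) to obtain the two-tailed p value.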

Furthermore, inspection of individual accuracies in the original versions (Figure 3) reveals that four children (CI 1, 4, 6, 8) showed gains from listen and dance training. Three other CI children (CI 2, 7, 9) achieved perfect scores that were equal to those achieved by listening only, and only one CI child (CI 5) scored slightly lower following listen and dance training. A clear advantage for listen and dance training, however, was observed in the recognition of mistuned songs. While three CI children (CI 4, 6, 8) showed this advantage across original and mistuned versions, the majority (7 of 9; including CI 1, 3, 4, 5, 6, 8, and 9) performed better following listen and dance training in this version. Scores in the piano versions showed no clear pattern associated with training, presumably due to the less distinctive beat cues in these versions in comparison to the full instrumental versions.

To determine the magnitude of the auditory-motor advantage, we examined the relationship between accuracy scores in the listen-only and listen and dance training. Because the accuracy scores are not normally distributed, a Spearman's correlation was used to examine the association. The analysis revealed a significant and strong positive correlation between accuracy in the listen-only and listen and dance conditions, rs = 0.81, p < 0.001. A Cohen's d of 0.66 (accounting for learning condition as a within-subjects variable) reveals a medium effect size, indicating that the auditory-motor advantage is of moderate practical significance.
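Several conventions exist for computing Cohen's d in a within-subjects design, and the text does not specify which was applied. As an illustration only, the sketch below computes one common variant, d_z (the mean of the paired differences divided by their standard deviation). The function name and the scores are hypothetical, and this variant is not necessarily the one that produced d = 0.66.

```python
import statistics


def cohens_dz(cond_a, cond_b):
    """Cohen's d_z for paired scores: mean difference / SD of differences."""
    if len(cond_a) != len(cond_b):
        raise ValueError("paired conditions must have the same length")
    diffs = [b - a for a, b in zip(cond_a, cond_b)]
    return statistics.mean(diffs) / statistics.stdev(diffs)


# Hypothetical proportion-correct scores; NOT the study's data.
listen_only = [0.33, 0.50, 0.17, 0.67, 0.50]
listen_dance = [0.50, 0.67, 0.33, 0.67, 0.83]
print(f"d_z = {cohens_dz(listen_only, listen_dance):.2f}")
```

Other variants (for example, those that standardize by the average of the two condition standard deviations or correct for the correlation between conditions) can yield different values from the same data.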

Thus, with short-term exposure to songs as seen in the current study, training involving listening and dancing yielded better than chance performance in versions that contained beat cues in their original instrumentation. The advantage was pronounced in a task that demanded greater transfer of learning, as that occurring in the mistuned versions.

Analysis of Body Movement and Beat Synchronization

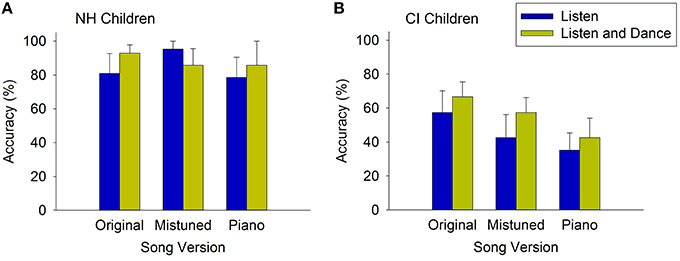

While the Kinect motion capture camera tracks and records the movement of 20 body indices (see Figure 1), we focused on three body indices that enabled us to best characterize the full body movement patterns of children in the current experimental set-up. These included movement patterns in the head, distance between hands, and distance between feet.

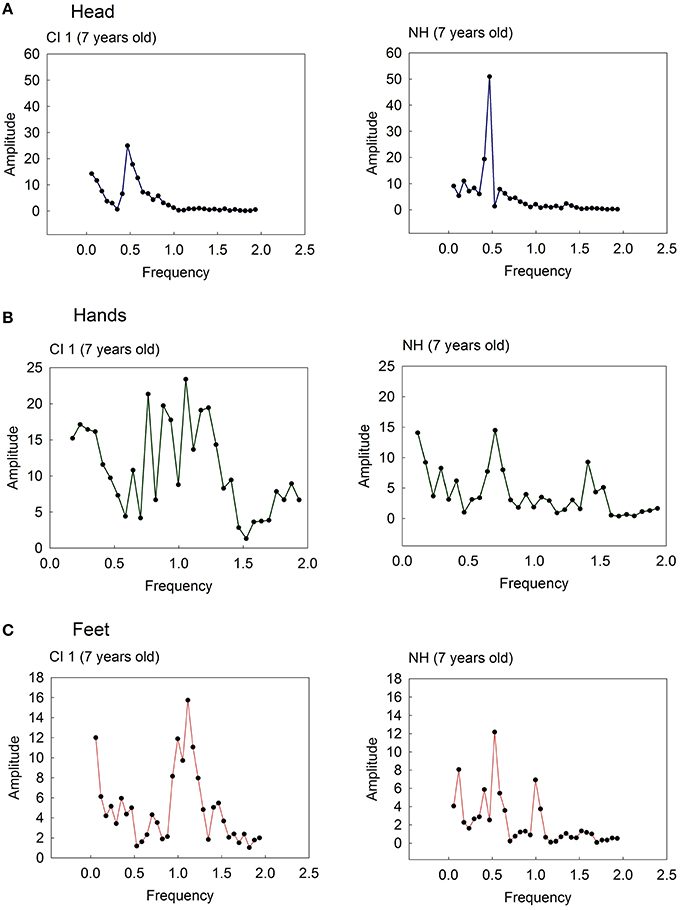

To assess whether children moved in synchrony to the beat of songs, we examined whether the body movement frequencies matched the beat frequencies of the songs. Since children's movement frequencies may vary with song, and may vary according to idiosyncratic movement tendencies, we examined body synchrony at four related beat frequencies according to the following ratios: 0.25:1, 0.5:1, 1:1, and 2:1. For each trial, body movement variability (for head, between-hands, between-feet distances) was computed as the difference between the observed body movement frequency and the expected beat frequency of the song. Figure 4 shows the movement frequency distribution of the head, between-hands, and between-feet distances of a 7-year-old child and an age-matched control for the same song. The data were submitted to a fast Fourier transform (FFT) analysis to extract the dominant movement frequency. As can be seen, a dominant frequency can be extracted from the child's movement patterns (e.g., head), and in some cases, more than one dominant frequency may emerge. This often corresponds to a complex movement sequence comprising more than one movement component. For instance, two dominant frequencies in the NH child's frequency distribution of hand movements correspond to a periodic arm swing and hand shake as part of a single movement sequence to a beat (see Figure 4B). In such cases, we included up to two dominant frequencies in the computation of the average movement frequency for a body index (see Table 3).
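The frequency analysis described above can be sketched in a few lines. The example below is a simplified illustration rather than the analysis pipeline used here: it extracts only the single largest spectral peak (not up to two), it assumes a 30 Hz sampling rate for the motion data, and it uses a synthetic 1 Hz head bounce with a hypothetical 2 Hz song beat. All function and variable names are assumptions.

```python
import numpy as np


def dominant_frequency(signal, fs):
    """Return the frequency (Hz) of the largest peak in the amplitude spectrum."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                      # remove the mean so 0 Hz does not dominate
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return float(freqs[np.argmax(spectrum)])


def beat_differences(observed_hz, beat_hz, ratios=(0.25, 0.5, 1.0, 2.0)):
    """Difference between observed movement frequency and each beat level."""
    return {r: observed_hz - r * beat_hz for r in ratios}


fs = 30.0                                  # assumed frame rate of the motion sensor
t = np.arange(600) / fs                    # 20 s of samples
head_y = 0.05 * np.sin(2 * np.pi * 1.0 * t)  # synthetic 1 Hz vertical head bounce
beat_hz = 2.0                              # hypothetical song beat (120 bpm)

observed = dominant_frequency(head_y, fs)
for ratio, diff in beat_differences(observed, beat_hz).items():
    print(f"{ratio}:1 -> difference = {diff:+.2f} Hz")
```

In this synthetic case the difference is zero at the 0.5:1 level, which corresponds to a movement on every second beat.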

Figure 4. Frequency distribution of the (A) head, (B) between-hands, and (C) between-feet movements of a child with cochlear implants (CI, 7 years old) and an age-matched normal hearing (NH) child.

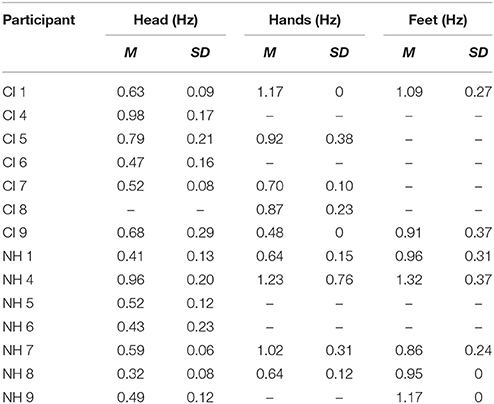

Table 3. Mean frequency of head, between-hands, between-feet movements for individual CI participants and age-matched hearing controls.

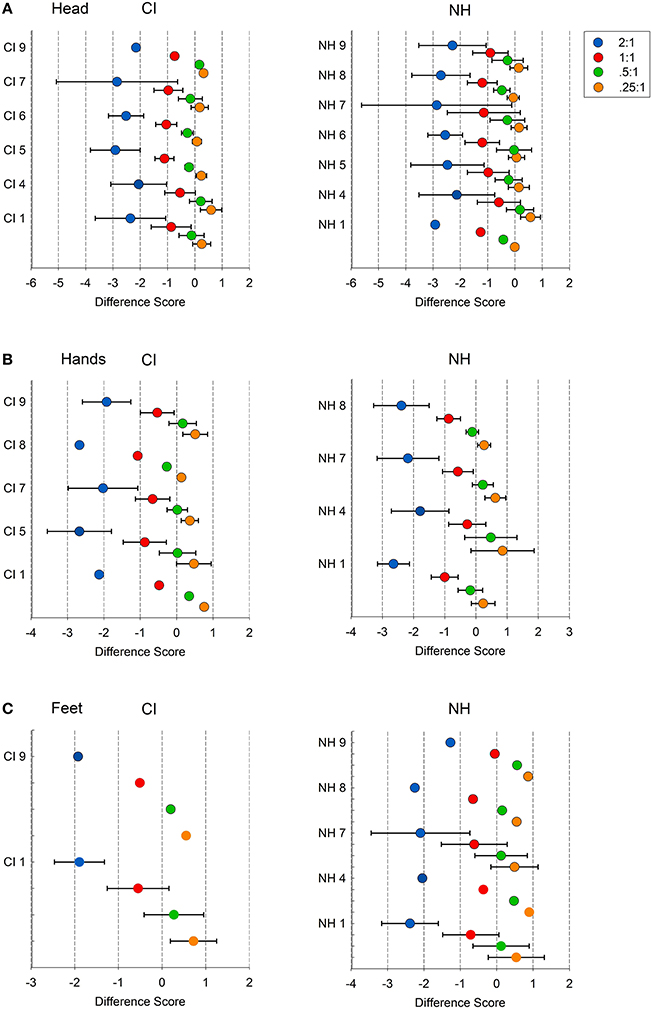

To derive a global measure of body movement variability at each beat frequency level, the average frequency across songs was calculated for each body index. Figure 5 reports the mean movement frequencies for the head, hands, and feet, and associated two-sided 95% confidence intervals, generated by each child. One-sample t-tests (2-tailed) on these means were conducted to determine whether the movement variability at each beat frequency level differed from zero. Good beat synchronization occurs when there is a close match (i.e., no significant difference) between the observed body movement frequency and the actual beat frequency level of the song. These analyses were possible when at least three movement frequencies were extracted across all song samples.
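The synchronization criterion above (a mean difference score whose 95% confidence interval includes zero) can be illustrated with a short sketch. The critical values are standard two-sided 95% values of the t distribution; the function names and the difference scores are hypothetical and do not come from Figure 5.

```python
import math
import statistics

# Two-sided 95% critical values of Student's t, indexed by degrees of freedom.
T_CRIT_95 = {2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571, 6: 2.447, 7: 2.365, 8: 2.306}


def mean_ci95(diffs):
    """Return (mean, lower, upper) of a 95% CI for 3 to 9 difference scores."""
    n = len(diffs)
    if n - 1 not in T_CRIT_95:
        raise ValueError("this sketch supports between 3 and 9 samples")
    mean = statistics.mean(diffs)
    half_width = T_CRIT_95[n - 1] * statistics.stdev(diffs) / math.sqrt(n)
    return mean, mean - half_width, mean + half_width


def is_synchronized(diffs):
    """True when the 95% CI of the mean difference score includes zero."""
    _, lower, upper = mean_ci95(diffs)
    return lower <= 0.0 <= upper


# Hypothetical difference scores (Hz) across three songs at one beat level.
print(is_synchronized([-0.1, 0.0, 0.1]))  # True
print(is_synchronized([0.9, 1.0, 1.1]))   # False
```

The lower bound of three samples in this sketch mirrors the requirement that at least three movement frequencies be available before significance testing is attempted.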

Figure 5. Mean difference scores for individual CI children and age-matched controls at four beat frequency levels (2:1, 1:1, 0.5:1, and 0.25:1) and for three body indices: (A) head, (B) between-hands, and (C) between-feet. Difference scores are averaged across songs, and are computed from the difference between body movement frequency and song beat frequency. Error bars indicate 95% confidence intervals. Difference scores closer to zero indicate good beat synchrony.

When there was an insufficient number of samples to conduct meaningful significance testing, the means were simply plotted (e.g., mean head and hand frequencies for CI 9). As can be seen, for both CI and NH children's movements, confidence intervals most often included zero for the 0.5:1 and 0.25:1 beat structure levels of songs. That is, both groups of children tended to produce body movements that are synchronized to every second beat or every fourth beat in a song, respectively. Inspection of this figure also reveals that fewer children generated periodic movements with their hands and feet.

One notable observation is the occurrence of synchronized body movements to the 1:1 beat level by individual NH children (head: NH 4 and 7; hands: NH 4; and feet: NH 1 and 7) and CI children (hands: CI 4; feet: CI 1). This likely reflects a greater tendency to generate more complex body movements with individual components that are synchronized to more than one beat structure in songs. In short, kinematic analyses indicate that most NH and CI children synchronize to every second and fourth beat in songs, with some generating movement components that synchronize to every beat.

Discussion

The aim of the present study was to examine whether learning that engages auditory-motor processing during listening leads to better song knowledge than learning that engages auditory processes only. We found that, within the short time span of the present study, dancing to music had an impact on CI children's song learning, as shown by greater memory for songs in a follow-up identification task. This advantage was qualified by a medium effect size (Cohen's d = 0.66) indicating that auditory-motor processing in active music listening with dance is of practical importance. By comparison, hearing children performed at ceiling levels in remembering songs learned when dancing and when listening passively to music. Any potential gains from auditory-motor learning were likely masked due to the ease with which hearing children could remember music in the current task.
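For readers unfamiliar with the effect size reported here, the following sketch shows one common way to compute Cohen's d, using the pooled standard deviation of two sets of scores. The text does not state which variant of d was used, so the pooled-SD form is an assumption, and the scores below are invented solely to illustrate the arithmetic. By Cohen's conventional benchmarks, values near 0.2, 0.5, and 0.8 are described as small, medium, and large, respectively.

```python
import math
import statistics


def cohens_d(scores_a, scores_b):
    """Cohen's d for two sets of scores, using the pooled sample SD."""
    n_a, n_b = len(scores_a), len(scores_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each condition needs at least two scores")
    var_a = statistics.variance(scores_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(scores_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    if pooled_sd == 0:
        raise ValueError("pooled standard deviation is zero")
    return (statistics.mean(scores_a) - statistics.mean(scores_b)) / pooled_sd


# Invented scores for illustration only; these are not data from the study.
auditory_motor = [2, 4, 6]
auditory_only = [1, 3, 5]
print(cohens_d(auditory_motor, auditory_only))
```

Because each child in a within-subject design contributes a score to both conditions, a variant based on the standard deviation of paired differences is another option and would generally give a different value.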

Although CI children were considerably less accurate than their hearing peers, learning conditions that engaged auditory and motor skills enabled them to identify songs at above chance levels in the original and mistuned transformations. In contrast, their identification of the piano versions was at chance level. This advantage suggests that motor responses to music are most effective in consolidating musical representations in versions that retain the original percussive beats.

We observed that the transfer of learning from listening and dance training was best seen in the mistuned condition—an unfamiliar version that retained the original instrumentation of the percussive beats. Their lower accuracies in the mistuned version, in comparison to the original version, suggest that the original spectral information was important for song recognition as distortions from our pitch shifting manipulation disrupted performance. Nevertheless, it is exceptional that they succeeded in achieving scores above chance levels in mistuned versions when engaged in listening and dancing, while they were unable to do so following training that involved passive listening.

The observed advantage is likely conservative given the short-term and self-determined lengths of exposure to songs in the training session. These margins could be increased over longer training periods and greater duration of exposure to stimuli. In addition, providing children greater structure, or guided direction in generating movements to specific timing structures, could go further in improving beat synchronization and song learning.

To further understand children's motor response to music, we examined whether their dance movements synchronized to the temporal structures in songs. Because the main objective of the present study was to examine children's natural entrainment to the beat, no attempts of any kind were made to constrain or choreograph children's musical movements. What emerged was a picture of children's implicit interpretation of timing features in music, and their natural movement patterns toward them. The majority of children generated improvisational expressive gestures that corresponded with the timing structures in songs.

Kinematic analyses of body movements showed that CI children entrained most frequently to every second or fourth beat in songs, indicating that they can hear and generate a synchronized motor response to temporal structures in music. Furthermore, an inspection of patterns across groups reveals that CI children attuned to key timing features in ways that appear qualitatively similar to those of hearing peers. This finding is consistent with the observations reported by Phillips-Silver et al. (2015) showing that adult CI users entrain to the beat of Latin Merengue music as well as hearing controls; however, for our young sample of CI children, there is no evidence that the complex spectral variations in pop songs interfered with their ability to dance to the beat. This may be due, in part, to the benefits of greater adaptation to electrical auditory input by children, and also to the heightened salience of stereotyped beats in mainstream popular dance music. Taken together, our findings suggest that partial hearing restoration with CIs can enable the development of auditory-motor circuits that support synchronized dance movements to music, which may be underpinned by mirror neuron systems that integrate motor-auditory-visual inputs (Le Bel et al., 2009).

Due to the naturalistic conditions of the study, the results represent the variable and individual movement tendencies of children to music in everyday listening conditions. Some children demonstrated a greater range of full-body movements to music, while other children tended to generate expressive head movements only. Prior to the study, none of the CI children had received any formal dance or music lessons; therefore, the greater variability in body movements across head, hands, and feet observed in some children cannot be attributed to any training advantages, and likely reflects individual differences in the production of expressive movement. Future research could determine whether explicit training, or structured learning tasks focusing on music and motor entrainment, could lead to further improvements in temporal pattern processing or musical knowledge in general.

Although it is not possible to determine any systematic effects of demographic variables or device characteristics on performance outcomes in this small sample, inspection across individual results (Figure 3) reveals that the highest song identification accuracies across versions were achieved by the oldest CI children (CI 2 and CI 9). While these children were a late implantee and a sequential bilateral implantee, respectively (with the latter possibly receiving delayed implantation as a result of auditory neuropathy), their advantage likely stemmed from a longer duration of device use and more advanced general cognitive ability than their younger peers. It is also noteworthy that the participant (CI 1) who had the most difficulty with song recognition across versions was among the younger CI participants (CI 3, 4, 6, and 8) in this group (median age 6.5 years). Furthermore, among these younger CI users, this participant (CI 1) was the oldest at implantation despite having a similar length of device use. By contrast, all other younger CI participants received their implants at < 3 years of age. Thus, the participant's younger age, in combination with more advanced age at implantation, could underlie the observed poorer performance in this task relative to other CI children in our sample. Finally, it is also notable that the only unilateral implantee (CI 5) displayed no particular disadvantage in this task as a result of single-sided CI input, scoring within the range of bilateral implantees.

To what can we attribute CI children's greater success in song learning when listening and dancing to music? Auditory and motor processes engaged simultaneously in music listening can promote the encoding of timing redundancies in music via rich multimodal representations of musical structure. This entrainment to music can generate heightened attention to musical features rendering them more salient for learning and memory in comparison to processing that occurs in one modality alone (Bahrick et al., 2002). This is supported by evidence indicating that neural responses to rhythms are enhanced following training that couples hand tapping movements to auditory rhythm processing (Chemin et al., 2014).

While multimodal processing involving visual-motor (Horn et al., 2007) and fine motor skills (Horn et al., 2006) has been linked with language outcomes in CI children, the present findings are an important first step toward understanding the basic auditory-motor contributions to music learning in these children. Our goal of linking motor behavior and perception in CI children's music learning may have broader implications in facilitating their learning in a range of non-musical domains. This is based on a growing body of research showing that the same auditory and motor skills engaged in music could transfer to the language domain by increasing children's sensitivity to acoustic speech features (Tierney and Kraus, 2013). In short, our findings indicate that learning strategies that recruit complementary multimodal information and capitalize on entrainment can enhance learning and memory for music. Accordingly, this sets an important precedent for future CI research to examine the possible transfer of multimodal music learning to other domains that depend on good auditory capacity and listening skills.

Author Contributions

TV was primarily responsible for the conception and design of the study. All authors undertook the major task of data acquisition. All authors contributed to the analysis and interpretation of the data, as well as to the preparation and final approval of the manuscript. Finally, all authors are accountable for all aspects of the present study.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was supported by laboratory start-up funds provided by the Department of Psychology, a grant from the Research Services Office at MacEwan University, as well as a SSHRC Insight Development Grant awarded to TV. We thank the Cochlear Implant Unit at the Glenrose Rehabilitation Hospital (Edmonton, Alberta) for advice, as well as Cheryl Redhead at the Connect Society for assistance with recruiting participants in the community.

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2016.00835

References

Bahrick, L. E., Flom, R., and Lickliter, R. (2002). Intersensory redundancy facilitates discrimination of tempo in 3-month-old infants. Dev. Psychobiol. 41, 352–363. doi: 10.1002/dev.10049

Brown, R. M., and Palmer, C. (2012). Auditory-motor learning influences auditory memory for music. Mem. Cognit. 40, 567–578. doi: 10.3758/s13421-011-0177-x

Brown, R. M., and Palmer, C. (2013). Auditory and motor imagery modulate learning in music performance. Front. Hum. Neurosci. 7:320. doi: 10.3389/fnhum.2013.00320

Chemin, B., Mouraux, A., and Nozaradan, S. (2014). Body movement selectivity shapes the neural representation of musical rhythms. Psychol. Sci. 25, 2147–2159. doi: 10.1177/0956797614551161

Chen, J. L., Zatorre, R. J., and Penhune, V. B. (2006). Interactions between auditory and dorsal premotor cortex during synchronization to musical rhythms. Neuroimage 32, 1771–1781. doi: 10.1016/j.neuroimage.2006.04.207

Demir, O. E., Fisher, J. A., Goldin-Meadow, S., and Levine, S. C. (2014). Narrative processing in typically developing children and children with early unilateral brain injury: seeing gestures matters. Dev. Psychol. 50, 815–828. doi: 10.1037/a0034322

Fraisse, P. (1982). “Rhythm and tempo,” in The Psychology of Music, ed D. Deutsch (New York, NY: Academic Press), 149–180.

Fujita, S., and Ito, J. (1999). Ability of nucleus cochlear implantees to recognize music. Ann. Otol. Rhinol. Laryngol. 108, 634–640.

Gfeller, K., and Lansing, C. R. (1991). Melodic, rhythmic, and timbral perception of adult cochlear implant users. J. Speech Hear. Res. 34, 916–920. doi: 10.1044/jshr.3404.916

Gfeller, K., Olszewski, C., Rychener, M., Sena, K., Knutson, J. F., Witt, S., et al. (2005). Recognition of “real-world” musical excerpts by cochlear implant recipients and normal-hearing adults. Ear Hear. 26, 237–250. doi: 10.1097/00003446-200506000-00001

Gfeller, K., Witt, S., Woodworth, G., Mehr, M. A., and Knutson, J. (2002). Effects of frequency, instrumental family, and cochlear implant type on timbre recognition and appraisal. Ann. Otol. Rhinol. Laryngol. 111, 349–356. doi: 10.1177/000348940211100412

Gfeller, K., Woodworth, G., Robin, D. A., Witt, S., and Knutson, J. F. (1997). Perception of rhythmic and sequential pitch patterns by normally hearing adults and cochlear implant users. Ear Hear. 18, 252–260. doi: 10.1097/00003446-199706000-00008

Hébert, S., and Peretz, I. (1997). Recognition of music in long-term memory: are melodic and temporal patterns equal partners? Mem. Cognit. 25, 518–533. doi: 10.3758/BF03201127

Horn, D. L., Fagan, M. K., Dillon, C. M., Pisoni, D. B., and Miyamoto, R. T. (2007). Visual-motor integration skills of prelingually deaf children: Implications for pediatric cochlear implantation. Laryngoscope 117, 2017–2025. doi: 10.1097/MLG.0b013e3181271401

Horn, D. L., Pisoni, D. B., and Miyamoto, R. T. (2006). Divergence of fine and gross motor skills in prelingually deaf children: implications for cochlear implantation. Laryngoscope 116, 1500–1506. doi: 10.1097/01.mlg.0000230404.84242.4c

Kong, Y. Y., Cruz, R., Jones, J. A., and Zeng, F. G. (2004). Music perception with temporal cues in acoustic and electric hearing. Ear Hear. 25, 173–185. doi: 10.1097/01.AUD.0000120365.97792.2F

Le Bel, R. M., Pineda, J. A., and Sharma, A. (2009). Motor-auditory-visual integration: The role of the human mirror neuron system in communication and communication disorders. J. Commun. Disord. 42, 299–304. doi: 10.1016/j.jcomdis.2009.03.011

MacLeod, C. M. (2011). I said, you said: the production effect gets personal. Psychon. Bull. Rev. 18, 1197–1202. doi: 10.3758/s13423-011-0168-8

MacLeod, C. M., Gopie, N., Hourihan, K. L., Neary, K. R., and Ozubko, J. D. (2010). The production effect: delineation of a phenomenon. J. Exp. Psychol. Learn. Mem. Cogn. 36, 671–685. doi: 10.1037/a0018785

Mitani, C., Nakata, T., Trehub, S. E., Kanda, Y., Kumagami, H., Takasaki, K., et al. (2007). Music recognition, music listening, and word recognition by deaf children with cochlear implants. Ear Hear. 28, 29S–33S. doi: 10.1097/AUD.0b013e318031547a

Palmer, C., and Meyer, R. K. (2000). Conceptual and motor learning in music performance. Psychol. Sci. 11, 63–68. doi: 10.1111/1467-9280.00216

Phillips-Silver, J., Toiviainen, P., Gosselin, N., Turgeon, C., Lepore, F., and Peretz, I. (2015). Cochlear implant users move in time to the beat of drum music. Hear. Res. 321, 25–34. doi: 10.1016/j.heares.2014.12.007

Phillips-Silver, J., and Trainor, L. J. (2005). Feeling the beat: movement influences infant rhythm perception. Science 308:1430. doi: 10.1126/science.1110922

Remez, R. E., Rubin, P. E., Pisoni, D. B., and Carrell, T. D. (1981). Speech perception without traditional speech cues. Science 212, 947–950. doi: 10.1126/science.7233191

Sevdalis, V., and Keller, P. E. (2011). Captured by motion: dance, action understanding, and social cognition. Brain Cogn. 77, 231–236. doi: 10.1016/j.bandc.2011.08.005

Shannon, R., Zeng, F.-G., Kamath, V., Wygonski, J., and Ekelid, M. (1995). Speech recognition with primarily temporal cues. Science 270, 303–304.

Stordahl, J. (2002). Song recognition and appraisal: A comparison of children who use cochlear implants and normally hearing children. J. Music Ther. 39, 2–19. doi: 10.1093/jmt/39.1.2

Svirsky, M. A., Robbins, A. M., Kirk, K. I., Pisoni, D. B., and Miyamoto, R. T. (2000). Language development in profoundly deaf children with cochlear implants. Psychol. Sci. 11, 153–158. doi: 10.1111/1467-9280.00231

Tierney, A., and Kraus, N. (2013). The ability to move to a beat is linked to the consistency of neural responses to sound. J. Neurosci. 33, 14981–14988. doi: 10.1523/JNEUROSCI.0612-13.2013

Trehub, S. E., Vongpaisal, T., and Nakata, T. (2009). Music in the lives of deaf children with cochlear implants. Ann. N.Y. Acad. Sci. 1169, 534–542. doi: 10.1111/j.1749-6632.2009.04554.x

Volkova, A., Trehub, S. E., Schellenberg, E. G., Papsin, B. C., and Gordon, K. A. (2014). Children's identification of familiar songs from pitch and timing cues. Front. Psychol. 5:863. doi: 10.3389/fpsyg.2014.00863

Vongpaisal, T., Trehub, S. E., and Schellenberg, E. G. (2006). Song recognition by children and adolescents with cochlear implants. J. Speech Lang. Hear. Res. 49, 1091–1103. doi: 10.1044/1092-4388(2006/078)

Vongpaisal, T., Trehub, S. E., and Schellenberg, E. G. (2009). Identification of TV tunes by children with cochlear implants. Music Percept. 27, 17–24. doi: 10.1525/mp.2009.27.1.17

Vongpaisal, T., Trehub, S. E., Schellenberg, E. G., and van Lieshout, P. (2012). Age-related changes in talker recognition with reduced spectral cues. J. Acoust. Soc. Am. 131, 501–508. doi: 10.1121/1.3669978

Vongpaisal, T., Trehub, S., Schellenberg, E. G., and Papsin, B. (2004). Music recognition by children with cochlear implants. Int. Congr. Ser. 1273, 193–196. doi: 10.1016/j.ics.2004.08.002

Keywords: auditory-motor learning, multimodal learning, music, dance, deafness, cochlear implants, children

Citation: Vongpaisal T, Caruso D and Yuan Z (2016) Dance Movements Enhance Song Learning in Deaf Children with Cochlear Implants. Front. Psychol. 7:835. doi: 10.3389/fpsyg.2016.00835

Received: 26 November 2015; Accepted: 18 May 2016; Published: 15 June 2016.

Edited by:

Klaus Libertus, University of Pittsburgh, USA

Reviewed by:

Andrej Kral, Hannover School of Medicine, Germany

María Teresa Daza González, University of Almería, Spain

Copyright © 2016 Vongpaisal, Caruso and Yuan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tara Vongpaisal, vongpaisalt@macewan.ca
