- 1Department of Psychology, University of Houston, Houston, TX, United States

- 2Department of Psychiatry, McLean Hospital, Harvard Medical School, Belmont, MA, United States

- 3School of Education, American University, Washington, DC, United States

- 4Communication Sciences and Disorders, MGH Institute of Health Professions, Charlestown, MA, United States

- 5Department of Psychology, University of Denver, Denver, CO, United States

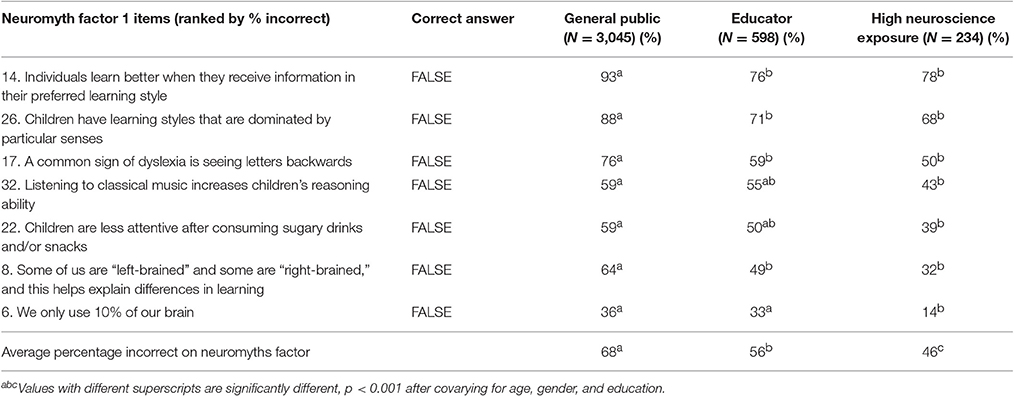

Neuromyths are misconceptions about brain research and its application to education and learning. Previous research has shown that these myths may be quite pervasive among educators, but less is known about how these rates compare to the general public or to individuals who have more exposure to neuroscience. This study is the first to use a large sample from the United States to compare the prevalence and predictors of neuromyths among educators, the general public, and individuals with high neuroscience exposure. Neuromyth survey responses and demographics were gathered via an online survey hosted at TestMyBrain.org. We compared performance among the three groups of interest: educators (N = 598), high neuroscience exposure (N = 234), and the general public (N = 3,045) and analyzed predictors of individual differences in neuromyths performance. In an exploratory factor analysis, we found that a core group of 7 “classic” neuromyths factored together (items related to learning styles, dyslexia, the Mozart effect, the impact of sugar on attention, right-brain/left-brain learners, and using 10% of the brain). The general public endorsed the greatest number of neuromyths (M = 68%), with significantly fewer endorsed by educators (M = 56%), and still fewer endorsed by the high neuroscience exposure group (M = 46%). The two most commonly endorsed neuromyths across all groups were related to learning styles and dyslexia. More accurate performance on neuromyths was predicted by age (being younger), education (having a graduate degree), exposure to neuroscience courses, and exposure to peer-reviewed science. These findings suggest that training in education and neuroscience can help reduce but does not eliminate belief in neuromyths. We discuss the possible underlying roots of the most prevalent neuromyths and implications for classroom practice. 
These empirical results can be useful for developing comprehensive training modules for educators that target general misconceptions about the brain and learning.

Introduction

Educational neuroscience (also known as mind, brain, education science) is an emerging field that draws attention to the potential practical implications of neuroscience research for educational contexts. It represents the intersection of education with neuroscience and the cognitive and developmental sciences, among other fields, in order to develop evidence-based recommendations for teaching and learning (Fischer et al., 2010). The field has garnered growing interest (e.g., Gabrieli, 2009; Carew and Magsamen, 2010; Sigman et al., 2014), but it is also widely recognized that attempts to create cross-disciplinary links between education and neuroscience may create opportunities for misunderstanding and miscommunication (Bruer, 1997; Goswami, 2006; Bowers, 2016). In the field of educational neuroscience, some of the most pervasive and persistent misunderstandings about the function of the brain and its role in learning are termed “neuromyths” (OECD, 2002).

The Brain and Learning project of the Organisation for Economic Co-operation and Development (OECD) drew attention to the issue of neuromyths in 2002, defining a neuromyth as “a misconception generated by a misunderstanding, a misreading, or a misquoting of facts scientifically established (by brain research) to make a case for the use of brain research in education or other contexts” (OECD, 2002). Neuromyths often originate from overgeneralizations of empirical research. For example, the neuromyth that people are either “left-brained” or “right-brained” partly stems from findings in the neuropsychological and neuroimaging literatures demonstrating the lateralization of some cognitive skills (e.g., language). Because some neuromyths are loosely based on empirical findings that have been misunderstood or exaggerated, they can be difficult to dispel.

There are several factors that contribute to the emergence and proliferation of neuromyths, most notably: (1) differences in the training background and professional vocabulary of education and neuroscience (Howard-Jones, 2014), (2) different levels of inquiry spanning basic science questions about individual neurons to evaluation of large-scale educational policies (Goswami, 2006), (3) inaccessibility of empirical research behind paywalls which fosters increased reliance on media reports rather than the original research (Ansari and Coch, 2006), (4) the lack of professionals and professional organizations trained to bridge the disciplinary gap between education and neuroscience (Ansari and Coch, 2006; Goswami, 2006), and (5) the appeal of explanations that are seemingly based on neuroscientific evidence, regardless of its legitimacy (McCabe and Castel, 2008; Weisberg et al., 2008).

Many leaders in the field have pointed out the potential benefits of bidirectional collaborations between education and neuroscience (Ansari and Coch, 2006; Goswami, 2006; Howard-Jones, 2014), but genuine progress will require a shared foundation of basic knowledge across both fields. One first step in this pursuit should be dispelling common neuromyths. Toward this end, we launched the current study to identify and quantify neuromyths that persist in educational circles and to test whether these myths are specific to educators or whether they persist in the general public and in those with high exposure to neuroscience as well. The goal of this study was to provide empirical guidance for teacher preparation and professional development programs.

The existence of neuromyths has been widely acknowledged in both the popular press (e.g., Busch, 2016; Weale, 2017) and in the educational neuroscience field (Ansari and Coch, 2006; Goswami, 2006, 2008; Geake, 2008; Pasquinelli, 2012), which has prompted research efforts to quantify teachers' beliefs in neuromyths across countries and cultures. Most of these studies suggest that the prevalence of neuromyths among educators may be quite high (Dekker et al., 2012; Simmonds, 2014). For example, Dekker et al. (2012) administered a neuromyths survey to primary and secondary school teachers throughout regions of the UK and the Netherlands and found that, on average, teachers believed about half of the myths. Persistent neuromyths included the idea that students learn best when they are taught in their preferred learning style (i.e., VAK: visual, auditory, or kinesthetic; Coffield et al., 2004; Pashler et al., 2008), the idea that students should be classified as either “right-brained” or “left-brained,” and the idea that motor coordination exercises can help to integrate right and left hemisphere function. Surprisingly, they found that educators with more general knowledge about the brain were also more likely to believe neuromyths (Dekker et al., 2012). One potential explanation is that teachers who are interested in learning about the brain may be exposed to more misinformation and/or may misunderstand the content such that they end up with more false beliefs. However, it is equally possible that teachers who believe neuromyths may seek out more information about the brain.

To date, the largest study of educators (N = 1,200) was conducted in the UK by the Wellcome Trust using an online survey of neuromyths (Simmonds, 2014). Consistent with the results of Dekker et al. (2012), Simmonds (2014) found that the learning styles neuromyth was the most pervasive, with 76% of educators indicating that they currently use this approach in their practice. The next most frequently endorsed neuromyth was left/right brain learning, with 18% of educators reporting that they currently use this idea in their practice (Simmonds, 2014). These results indicate that neuromyths persist among educators and are being used in current practice.

Additional studies utilizing similar surveys of neuromyths have been conducted with samples of teachers in Greece (Deligiannidi and Howard-Jones, 2015), Turkey (Karakus et al., 2015), China (Pei et al., 2015), and Latin America (Gleichgerrcht et al., 2015). Similar patterns of neuromyth endorsement have emerged across this body of literature. Two of the most pervasive myths across countries have been related to learning styles and left-brain/right-brain learning.

The global proliferation of neuromyths among educators is concerning as many of the neuromyths are directly related to student learning and development, and misconceptions among educators could be deleterious for student outcomes. For example, if an educator believes the myth that dyslexia is caused by letter reversals, students who have dyslexia but do not demonstrate letter reversals might not be identified or provided appropriate services. Another harmful consequence of neuromyths is that some “brain-based” commercialized education programs are based on these misconceptions and have limited empirical support. School districts that are unfamiliar with neuromyths may devote limited time and resources to such programs, which could have otherwise been used for empirically-validated interventions. Thus, it is important to obtain additional data about the prevalence and predictors of neuromyths in order to design effective approaches for dispelling these myths.

Available studies examining the prevalence of neuromyths provide information about neuromyth endorsement from a range of countries and cultures; however, no study to date has compared teachers' beliefs in neuromyths to a group of non-educators. Furthermore, to our knowledge, no study has systematically examined neuromyths in a sample from the United States. Given large variations in teacher preparation across countries, it is worthwhile to explore the prevalence of neuromyths in a US sample. For this reason, the current study recruited a US sample of educators and included a comparison group of individuals from the general public. We also included a second comparison group of individuals with high neuroscience exposure to further contextualize the results from the groups of educators and general public. Notably, most previous studies have used samples of educators in the range of N = 200–300, many of whom were recruited from targeted schools (for an exception, see Simmonds, 2014). As a result, the current study sought to obtain a larger sample of educators (N~600) from a broad range of schools across the US through a citizen science website (TestMyBrain.org). This citizen science approach also allowed us to recruit a large sample of individuals from the general public (N~3,000). Our goal was to explore a variety of factors that might predict belief in myths, including demographics, educational attainment, and career and neuroscience-related exposure. We predicted that the most common neuromyths found in previous studies (e.g., right-brain/left-brain learners; learning styles) would also be prominent among US samples. We also predicted that the quality of an individual's neuroscience exposure would be related to belief in neuromyths, such that higher exposure to self-identified media might lead to more neuromyths, while higher exposure to academic neuroscience would lead to fewer neuromyths.

Methods

Participants

The neuromyths survey and associated demographic questionnaires were hosted on the website TestMyBrain.org from August 2014 to April 2015. TestMyBrain.org is a citizen science website where members of the public can participate in research studies to contribute to science and learn more about themselves. In addition to these citizen scientists, we also explicitly advertised this study to individuals with educational or neuroscience backgrounds and encouraged them to visit TestMyBrain.org. Advertisements were distributed through professional and university listservs and social networks (e.g., Council for Exceptional Children, Spell-Talk, Society for Neuroscience DC chapter, American University (AU) School of Education, AU Behavior, Cognition, and Neuroscience program, AU College of Arts and Sciences Facebook page), as well as professional and personal contacts of the authors. In order to increase participation from educators, we used a snowball sampling technique in which each participant who completed the survey was asked to share the survey link with an educator that he/she knew through various social media (e.g., Twitter, Facebook, LinkedIn, email). This study was carried out in accordance with the recommendations of the Belmont Report as specified in US federal regulations (45 CFR 46). All participants gave written informed consent. The Institutional Review Board (IRB) at American University approved the research protocol for data collection and the IRB at the University of Denver determined that the research was exempt for de-identified data analysis.

The starting sample included surveys from 17,129 respondents worldwide. Only fully completed surveys were logged for further analysis. Participants were excluded if they reported experiencing technical problems with the survey (735 dropped), reported taking the survey more than once (based on a yes/no question at the end of the survey; 2,670 dropped), or reported cheating (e.g., looking up answers on the internet or discussing with an external person; 17 dropped).

Further exclusion criteria were age <18 years (2,121 dropped), missing data on gender or educational background (361 dropped), and non-US participant (according to IP address or self-endorsement; 6,899 dropped). Next, we plotted overall survey accuracy by time to complete the survey to filter individuals who might have rushed through the survey without reading the questions (22 dropped). On the demographics questionnaire, participants were asked if they were currently enrolled or completed middle school, high school, some college, college degree, or graduate degree. We excluded individuals with a middle school or high school degree from the analyses because of inadequate representation of these individuals in the sample (N = 424 general public, 3 educators, 0 high neuroscience exposure). Therefore, our analyses were limited to those with “some college” experience or beyond. These filtering and data cleaning steps brought our final sample size to N = 3,877 (N = 3,045 general public, 598 educators, 234 high neuroscience exposure).
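The exclusion steps above can be tallied as a quick arithmetic check. The sketch below is illustrative only: it assumes the reported exclusions were applied sequentially and without overlap, and the step labels and the `apply_exclusions` helper are hypothetical names introduced here, not part of the study's analysis code.

```python
# Counts are taken from the text; labels are shorthand chosen for this sketch.
STARTING_SAMPLE = 17_129

EXCLUSIONS = [
    ("technical problems", 735),
    ("repeat survey takers", 2_670),
    ("reported cheating", 17),
    ("age under 18", 2_121),
    ("missing gender or education", 361),
    ("non-US participant", 6_899),
    ("rushed responses", 22),
    # Middle/high school only: 424 general public + 3 educators + 0 neuroscience
    ("middle or high school only", 424 + 3 + 0),
]


def apply_exclusions(start, exclusions):
    """Return (label, remaining sample size) after each sequential exclusion."""
    remaining = start
    trail = []
    for label, dropped in exclusions:
        remaining -= dropped
        trail.append((label, remaining))
    return trail


trail = apply_exclusions(STARTING_SAMPLE, EXCLUSIONS)
final_n = trail[-1][1]

for label, remaining in trail:
    print(f"{label:<30} {remaining:>6,}")
```

Under these assumptions the running total ends at the reported final sample size, which also equals the sum of the three group sizes (3,045 + 598 + 234).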

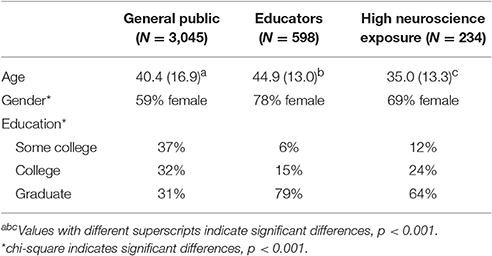

One of the goals of this study was to compare the neuromyths performance of three groups: the general public, self-identified educators, and individuals with high neuroscience exposure. We defined “high neuroscience exposure” using a question on the demographics questionnaire which asked, “Have you ever taken a college/university course related to the brain or neuroscience?” Answer options for this item were “none,” “one,” “a few,” or “many.” Those who indicated “many” were categorized in the neuroscience group, unless they also reported being an educator, in which case they remained in the educator group (N = 53 educators also reported taking many neuroscience courses). We prioritized educator status over neuroscience exposure in this grouping in order to understand the full range of training backgrounds in the educator group. The “general public” group was composed of all individuals who completed the survey but did not self-identify as an educator or having high neuroscience exposure. We use the term “general public” to refer to the citizen scientists who participated, but we acknowledge the selection artifacts inherent to this group and address these limitations in the discussion section. Table 1 shows the demographic features of the three groups.
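The grouping rule described above can be written as a short function. This is a minimal sketch of the stated logic, not the authors' code; the function name `assign_group` and the argument names are assumptions made for illustration.

```python
VALID_ANSWERS = {"none", "one", "a few", "many"}


def assign_group(is_educator: bool, neuro_courses: str) -> str:
    """Assign a respondent to one of the three comparison groups.

    neuro_courses is the answer to the coursework question
    ("none", "one", "a few", or "many").
    """
    if neuro_courses not in VALID_ANSWERS:
        raise ValueError(f"unexpected answer: {neuro_courses!r}")
    # Educator status takes priority over neuroscience exposure.
    if is_educator:
        return "educator"
    if neuro_courses == "many":
        return "high neuroscience exposure"
    return "general public"


# An educator who took many neuroscience courses stays in the educator group.
print(assign_group(True, "many"))
print(assign_group(False, "many"))
print(assign_group(False, "a few"))
```

Checking educator status first is what keeps the 53 educators with many neuroscience courses in the educator group.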

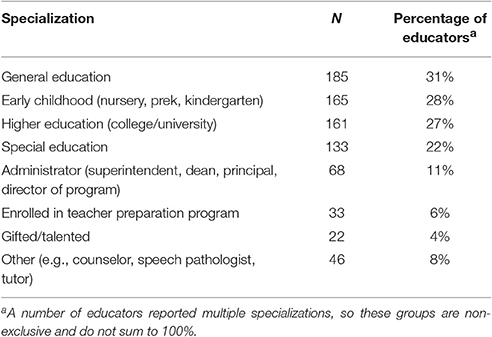

We examined differences among the three groups on basic demographic variables with one-way ANOVA and chi-square tests (Table 1). Age, gender, and highest level of education differed significantly between the groups and so were maintained as covariates in all subsequent analyses. Tables 2, 3 provide information about specializations in the educator and high neuroscience exposure groups.

Measures

Neuromyths Survey

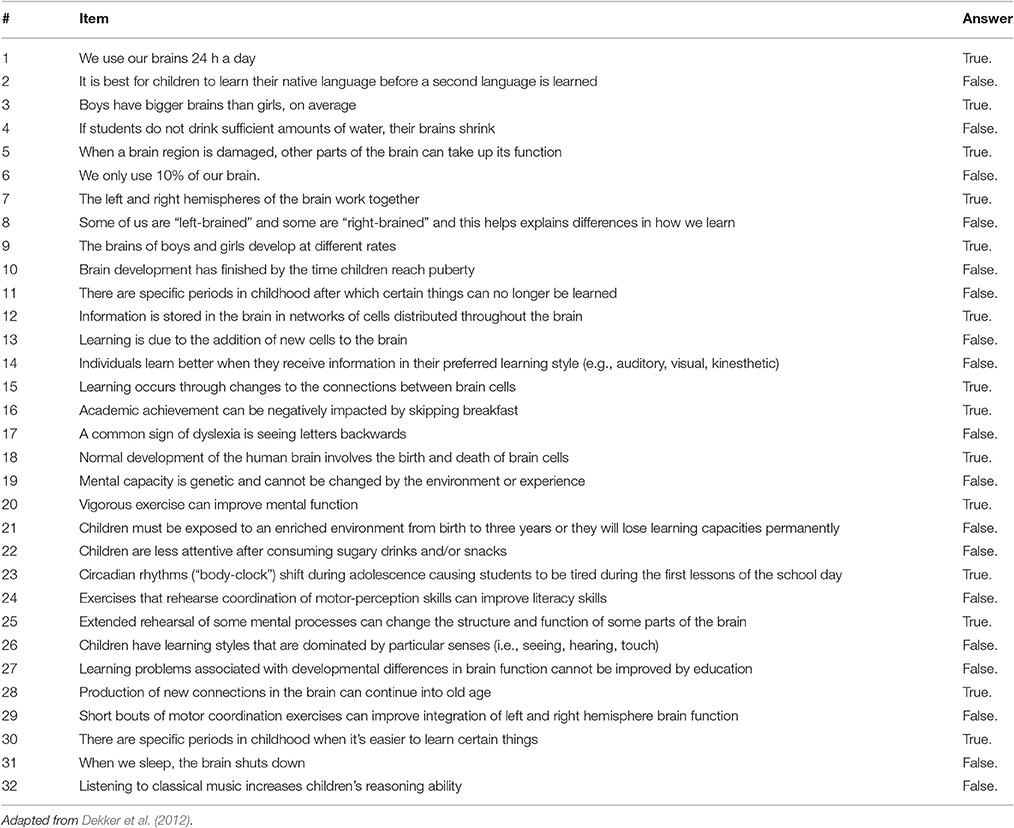

The web-based survey was adapted from Dekker et al. (2012) and consisted of 32 statements related to the brain and learning. In their survey, Dekker et al. (2012) designated 15 items as neuromyths and 17 items as general brain knowledge (e.g., “Learning occurs through changes to the connections between brain cells.”). We chose to investigate the factor structure of our adapted survey in order to determine which items would be categorized as “neuromyths” in our US-based sample.

Modifications from the survey by Dekker et al. (2012) were made to improve clarity for a US audience and to use language that is consistent with many widely held beliefs in the US. Substantive changes included revisions to the answer format from correct/incorrect to true/false. Additionally, neuromyth items that were worded to elicit a true response in Dekker et al. (2012) were reversed, such that a false response was now correct, except in one case to avoid a double-negative (#25, full survey is available in Table A1). Two questions were dropped and two questions were added in the current adaptation. A question about caffeine's effect on behavior was dropped following consultation with experts, who concluded that a simple true/false statement would not take into account the complexities of dosage. A question about fatty acid supplements (omega-3 and omega-6) affecting academic achievement was also dropped because of an emerging mixed literature on the effects of these supplements in ADHD (Johnson et al., 2009; Bloch and Qawasmi, 2011; Hawkey and Nigg, 2014). A question about the Mozart effect was added (#32) since this remains a prominent neuromyth in the US (Chabris, 1999; Pietschnig et al., 2010). A statement about dyslexia was added (#17) because of widespread misunderstanding about this neurodevelopmental disorder in the US (Moats, 2009). The appendix includes the modified questionnaire and intended answers. Items were presented in a different randomized order to each participant, and respondents were instructed to endorse each statement as either true or false. Only participants who answered all questions were included in analyses.

Demographics Questionnaire

A demographics questionnaire was also included with questions about education background, career, neuroscience exposure, and science-related media exposure. Participants who reported having a career as an educator or within the field of education were directed to answer additional questions about their training, current employment, and specializations (e.g., special education, early education, higher education).

Data Cleaning and Analysis

The data were analyzed using Stata/IC 14.0 for Windows. The statistical threshold was set to balance competing issues of the multiple comparison burden associated with 32 individual items on the survey and the expected correlation between the items. We opted to set the statistical threshold at p < 0.001 in order to account for the individual analyses of the 32 items on the neuromyth questionnaire (0.05/32 ≈ 0.0016) and to adjust in the conservative direction for additional analyses of the composite neuromyth score.
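The threshold reasoning can be reproduced in a few lines. This is only an illustrative sketch; the constant names and the `is_significant` helper are hypothetical and do not come from the study's Stata code.

```python
ALPHA = 0.05            # conventional family-wise alpha
N_ITEMS = 32            # individual survey items tested
STUDY_THRESHOLD = 0.001 # threshold adopted in the study

# Bonferroni-corrected per-item threshold for 32 tests.
bonferroni = ALPHA / N_ITEMS


def is_significant(p, threshold=STUDY_THRESHOLD):
    """True if a p-value falls below the study's adopted threshold."""
    return p < threshold


print(f"Bonferroni threshold: {bonferroni:.7f}")
print(f"Study threshold is stricter: {STUDY_THRESHOLD < bonferroni}")
```

Because 0.001 is below the Bonferroni value, a result such as p = 0.009 would be treated as a nonsignificant trend under this rule.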

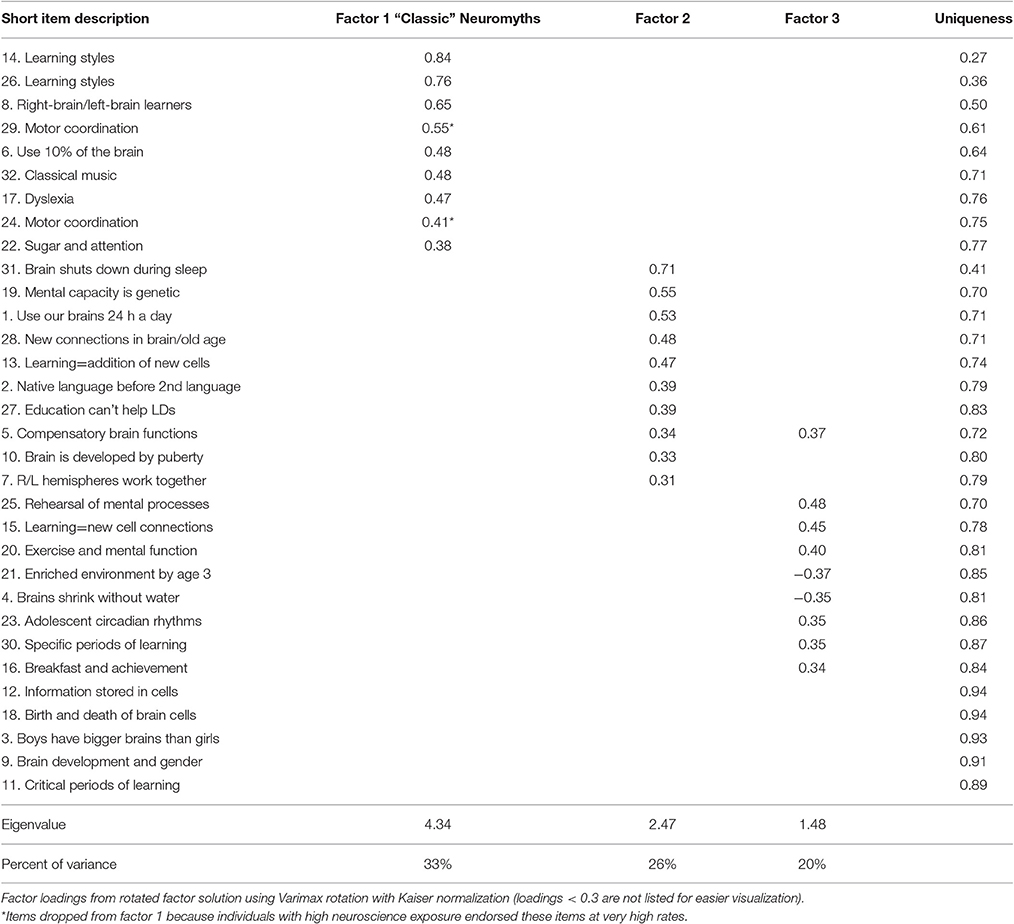

An exploratory factor analysis (EFA) was conducted to examine the underlying relationships among the survey items. Because each survey item was dichotomous (correct or incorrect), a polychoric correlation matrix was used. Based on this EFA, a neuromyths factor score was constructed by summing the number of incorrect responses on the 7 neuromyth items that loaded on the first factor. For this factor, a high score indicated poor performance on neuromyths (i.e., more neuromyths endorsed).
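As an illustration of the scoring rule, the sketch below sums incorrect responses over the seven retained items (the item numbers are those reported in the Results). The function name and the example respondent are hypothetical.

```python
# Item numbers that loaded on the first factor, as reported in the Results.
NEUROMYTH_ITEMS = (6, 8, 14, 17, 22, 26, 32)


def neuromyth_score(incorrect_by_item):
    """Count incorrect responses on the seven neuromyth items.

    incorrect_by_item maps item number -> True if the item was answered
    incorrectly (i.e., the myth was endorsed). Higher scores mean more
    neuromyths endorsed.
    """
    return sum(1 for item in NEUROMYTH_ITEMS if incorrect_by_item[item])


# Hypothetical respondent who endorsed the myths in items 8, 14, and 17.
respondent = {item: item in (8, 14, 17) for item in range(1, 33)}
score = neuromyth_score(respondent)
print(f"{score} of {len(NEUROMYTH_ITEMS)} neuromyths endorsed")
```

Items outside the seven-item set are ignored, so errors on general brain knowledge questions do not affect this score.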

Group comparisons between the general public, educators, and those with high neuroscience exposure were conducted for the neuromyths factor score and overall survey accuracy using one-way ANCOVAs, covarying for age, gender, and education (dummy coded with college as reference). Performance on individual neuromyth questions (true/false) was compared using logistic regressions covarying for age, gender, and education.

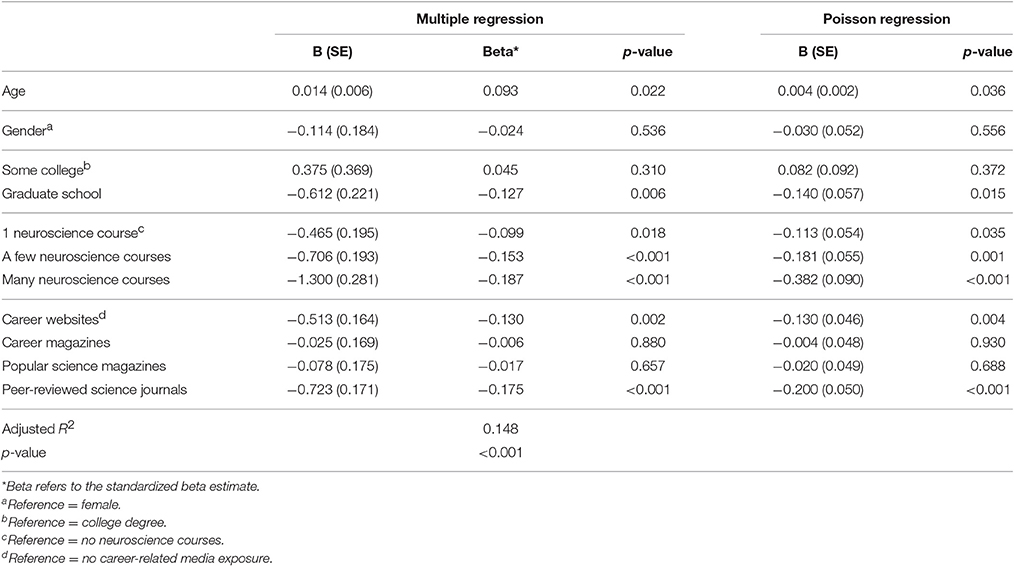

We were also interested in what factors predicted neuromyth performance in the full sample and in the subsample of educators. Ordinary least squares (OLS) and Poisson regressions were used to test the unique contribution of neuroscience exposure and exposure to science-related media, above and beyond the effects of age, gender, and education. In these regression analyses, categorical indicators (neuroscience exposure, career-related media, gender, and education level) were dummy-coded with reference categories indicated in Tables 7, 8.

We conducted Poisson regressions to analyze the variables predicting neuromyths because ordinary least squares regression (OLS) can give biased standard errors and significance tests for count data (Coxe et al., 2009). However, the coefficients of OLS can be more intuitively interpreted, so we also present the results from OLS multiple regressions, though we highlight the potential bias in the statistical significance of these results. Poisson regressions were appropriate because the neuromyths score was a count of incorrect items ranging from 0 to 7 that most closely resembled a Poisson distribution. Count variables are most appropriately analyzed using Poisson regression when the mean of the count is <10 (Coxe et al., 2009). In this study, the mean number of neuromyths endorsed was M = 4.53 (SD = 1.74). The Poisson distribution provided a good fit for the data [Pearson goodness of fit = 2361.94, p = 0.99] and we did not find significant evidence of over-dispersion of the distribution (Likelihood ratio test of alpha, p = 0.99) indicating that the standard Poisson model was appropriate for this analysis.
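A rough intuition for the over-dispersion result comes from comparing the variance of the count with its mean, since a Poisson variable has equal mean and variance. The sketch below uses only the summary statistics reported above; it is a marginal check under that assumption and is not a substitute for the likelihood ratio test of alpha on the fitted model. The helper name `dispersion_ratio` is hypothetical.

```python
# Summary statistics reported in the text for the 0-7 neuromyth count.
MEAN_COUNT = 4.53
SD_COUNT = 1.74


def dispersion_ratio(mean, sd):
    """Ratio of variance to mean; a Poisson variable has a ratio of 1."""
    if mean <= 0:
        raise ValueError("mean must be positive")
    return sd ** 2 / mean


ratio = dispersion_ratio(MEAN_COUNT, SD_COUNT)
print(f"variance / mean = {ratio:.2f}")
# A ratio above 1 would point toward over-dispersion.
print("over-dispersed" if ratio > 1 else "no sign of over-dispersion")
```

With these values the variance is smaller than the mean, which is consistent with the absence of over-dispersion reported by the authors.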

Lastly, within the educator subsample, we conducted three additional regression analyses to examine the impact of specific specializations on neuromyth endorsement. We examined special educators and early educators as distinct specializations because we hypothesized that both groups might be exposed to more neuromyths: special educators because of their work with children with developmental disorders and early educators because of the prominence of birth-to-three myths about critical periods. We also examined those teaching in higher education as a distinct group with the hypothesis that these individuals might endorse fewer neuromyths. We made this prediction because those in higher education would have easier access to evidence-based pedagogical resources (e.g., peer-reviewed journals, membership in national societies) and perhaps more interactions with neuroscience/psychology colleagues because of their college/university setting.

Results

Psychometrics

An exploratory factor analysis based on the polychoric correlation table, which was appropriate for our categorical (True/False) data, revealed three factors with eigenvalues >1. We rotated the factors using varimax rotation with Kaiser normalization (Kaiser, 1958). An orthogonal rotation, as opposed to an oblique rotation, was chosen because the three factors showed low correlations with each other (r = 0.04–0.21). Factor 1 had high factor loadings (>0.35) for seven “classic” neuromyths (#6, 8, 14, 17, 22, 26, and 32) (KR-20 = 0.63) related to learning styles, dyslexia, the Mozart effect, the impact of sugar on attention, the role of the right and left hemispheres in learning, and using 10% of the brain (Table 4). Items 24 and 29 (i.e., relationships between motor coordination and integrating right/left hemispheres and literacy) also loaded on factor 1, but we observed that those who reported having high neuroscience exposure also endorsed these items at very high rates, which led us to question whether the wording of these items led to confusion (i.e., perhaps respondents were thinking of the beneficial effects of exercise on cognition generally; for a review, see Hillman et al., 2008). We noted that those who reported having high neuroscience exposure performed at least 15 percentage points better than the general public on all other items loading on factor 1, except for items 24 and 29. As a result, we dropped both items from the neuromyths factor and present the data for these items separately in Table 6. A neuromyths factor score was constructed by summing the number of incorrect items on the seven “neuromyths” items, such that higher scores on the neuromyths factor reflect a larger number of incorrect responses (i.e., endorsing more neuromyths). Further analyses used this neuromyths factor score to examine the prevalence and predictors of these “classic” neuromyths.
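For readers unfamiliar with KR-20, the internal consistency statistic reported above, a minimal sketch of the standard formula for dichotomous items follows. The `kr20` function and the toy response matrix are illustrative assumptions, not the study's data or code, and the sketch uses the population variance of total scores (some software uses the sample variance instead).

```python
from statistics import pvariance


def kr20(responses):
    """Kuder-Richardson 20 for a list of respondents' 0/1 item vectors.

    KR-20 = (k / (k - 1)) * (1 - sum(p_i * q_i) / variance of total scores),
    where p_i is the proportion scoring 1 on item i and q_i = 1 - p_i.
    """
    n = len(responses)
    k = len(responses[0])
    if k < 2:
        raise ValueError("KR-20 needs at least two items")
    totals = [sum(row) for row in responses]
    var_total = pvariance(totals)
    if var_total == 0:
        raise ValueError("total scores have zero variance")
    pq_sum = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n
        pq_sum += p * (1 - p)
    return (k / (k - 1)) * (1 - pq_sum / var_total)


# Toy data: three items answered identically within each respondent.
toy = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
print(f"KR-20 for perfectly consistent toy items: {kr20(toy):.2f}")
```

Values closer to 1 indicate that items rise and fall together across respondents; the toy data are constructed to hit that upper bound.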

The second and third factors were less interpretable and theoretically coherent. Internal consistency for the items loading >0.35 on the two factors was quite low (KR-20 = 0.27–0.35). As a result, these factors were not retained for further analysis, but instead data for individual survey items are reported in Table 6.

Group Comparisons for Neuromyths

Results from logistic regressions and ANCOVA analyses examining differences between groups on individual items and overall performance are presented in Tables 5, 6. Individuals in the general public endorsed significantly more neuromyths compared to educators and individuals with high neuroscience exposure. In turn, educators endorsed significantly more neuromyths than individuals with high neuroscience exposure (general public M = 68%, educators M = 56%, high neuroscience exposure M = 46%). A similar trend is present for the majority of the individual items that compose the neuromyths factor such that the general public endorsed the myths at the highest rate, followed by educators, followed by individuals with high neuroscience exposure who endorsed the myths at the lowest rate (Table 5).

The most commonly endorsed neuromyths across groups were related to learning styles and dyslexia. The most commonly endorsed neuromyths item was “individuals learn better when they receive information in their preferred learning style (e.g., auditory, visual, kinesthetic)” (general public M = 93%, educators M = 76%, high neuroscience exposure M = 78%). The second most commonly endorsed neuromyth was also related to learning styles “children have learning styles that are dominated by particular senses (i.e., seeing, hearing, touch)” (general public M = 88%, educators M = 71%, high neuroscience exposure M = 68%). Another common misconception was related to dyslexia: “a common sign of dyslexia is seeing letters backwards” (general public M = 76%, educators M = 59%, high neuroscience exposure M = 50%). It was interesting to note the very high rates of endorsement of these neuromyths even amongst individuals with high neuroscience exposure, though we note that these items are more closely related to the learning and special education fields than to neuroscience.

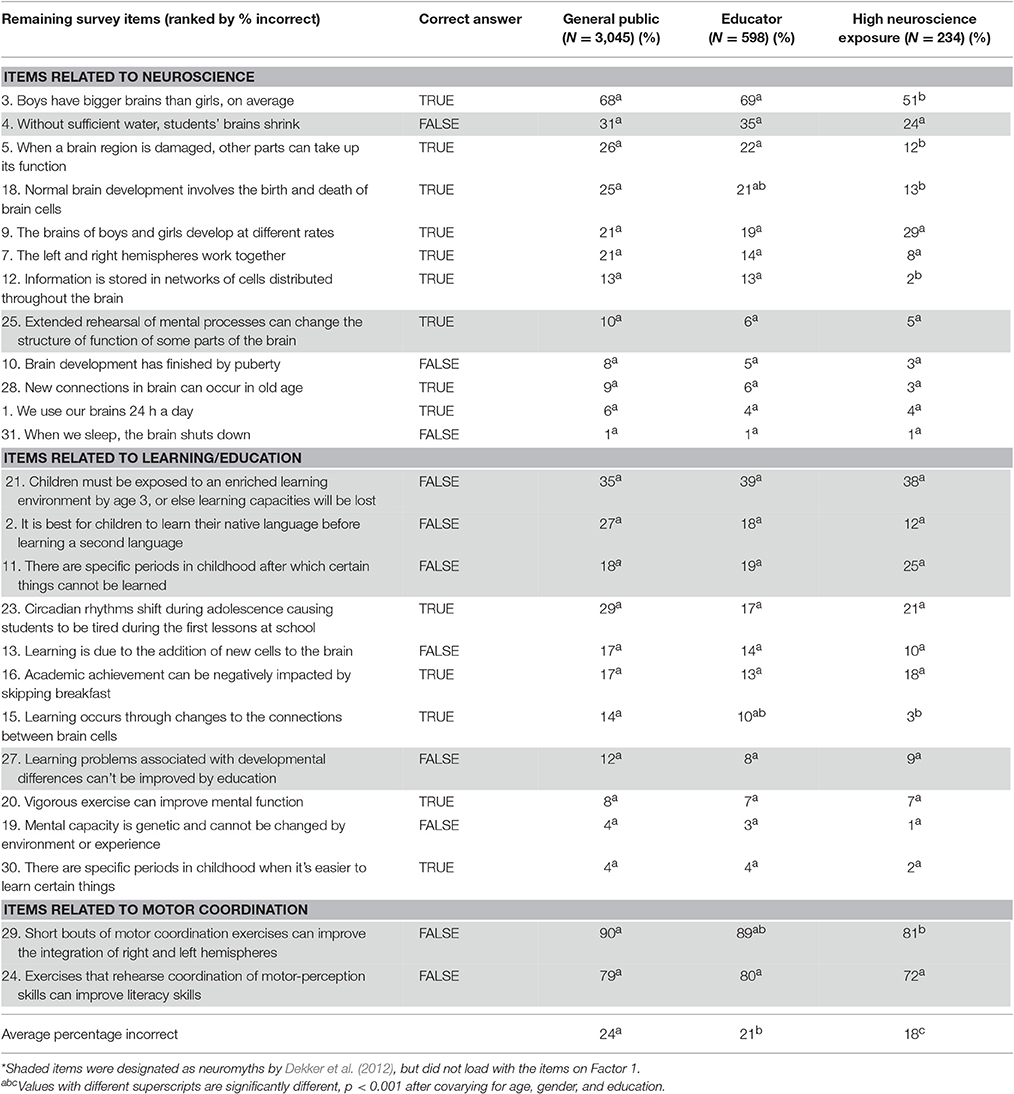

Group Comparisons for Remaining Survey Items

The remaining survey items are grouped by topic areas (i.e., learning/education, brain/neuroscience, motor coordination/exercise) in Table 6. These survey items generally followed the same trend that was evident for the neuromyths, such that the general public performed least accurately and those with high neuroscience exposure performed most accurately, with educators falling in the middle (Table 6). Of these survey items which mostly tested factual brain knowledge, it is worth noting that 10% or fewer of those with high neuroscience exposure answered these items incorrectly, consistent with the alignment of their coursework with these factual neuroscience questions. This finding stands in contrast to the performance of the high neuroscience exposure group on the “classic” neuromyth items, where one-third to three-quarters of these individuals answered incorrectly depending on the item (with the exception of the 10% of the brain item, #6).

Two items (#24 and 29) involving motor coordination and exercise were the most frequently incorrect across groups. These items were endorsed at such high rates by the high neuroscience exposure group that we questioned whether the items were persistent neuromyths or whether the item wording was misunderstood by participants. Because of this ambiguity, we decided not to include these items on the neuromyths factor. After these two items, the most frequently incorrect item across groups was “Boys have bigger brains than girls, on average” (#3).

Predictors of Neuromyths

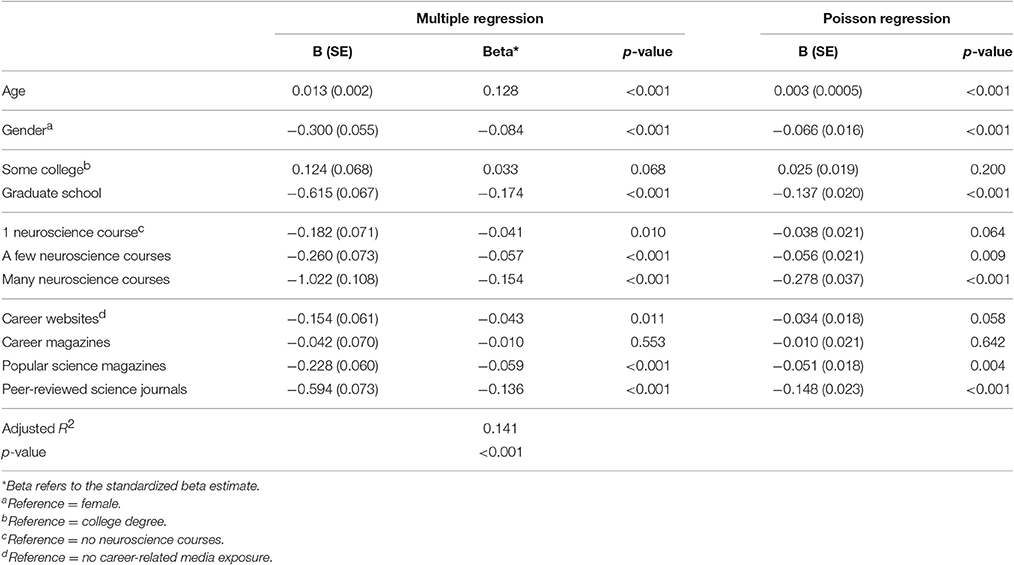

Results from both OLS multiple regression and Poisson regression are presented for the full sample and for the subsample of educators in Tables 7, 8. In both cases, the sum of incorrect items on the neuromyths factor was the outcome variable. Predictors included neuroscience exposure and science career-related media exposure, with age, gender, and education level as covariates.

Table 7. Regression results predicting neuromyths (i.e., % of items incorrect from neuromyths factor) in the full sample (N = 3,877).

Table 8. Regression results predicting neuromyths (i.e., % of items incorrect from neuromyths factor) in the educator sample (N = 598).

The results of the Poisson and OLS regressions were largely consistent. In cases of divergence, we deferred to the Poisson results, which are most appropriate for the data distribution. For the full sample, demographic predictors of better performance on the neuromyths survey (p < 0.001) were age (being younger) and gender (being female). Education level also predicted neuromyth accuracy, such that those with a graduate degree performed better than those who finished college (Table 7). There was no significant difference between those who completed some college vs. those who obtained their college degree. Thus, only graduate education seemed to reduce the rate of neuromyth endorsement. Exposure to college-level neuroscience coursework also predicted neuromyths, such that those who reported completing many neuroscience courses performed better than those with no neuroscience courses. Individuals with one or a few neuroscience courses did not differ significantly from those with none, though there were nonsignificant trends (p = 0.064, p = 0.009, respectively). Exposure to science and career-related information also predicted neuromyths; specifically, those who reported reading peer-reviewed scientific journals performed better on neuromyths items (i.e., endorsed fewer myths) than those who did not engage with any career-related media. There were nonsignificant trends for those who read popular science magazines or visited career websites to perform better on neuromyths (p = 0.004; p = 0.058, respectively). Overall, the strongest predictors of lower rates of neuromyth endorsement in the full sample (determined by comparing standardized betas) were having a graduate degree, completing many neuroscience courses, and reading peer-reviewed journals. Although these were the strongest predictors, the effect sizes were modest. For instance, for the strongest predictor (graduate education; partial η² = 0.021), those with a graduate degree endorsed less than one fewer item (out of 7) on the survey than those with a college degree.

In the subsample of educators, significant predictors (p < 0.001) of neuromyths were similar to the full sample. Better performance was predicted by taking many neuroscience courses and reading peer-reviewed scientific journals. Although these were the strongest predictors, the effect sizes were modest. For instance, for the strongest predictor (neuroscience courses; partial η² = 0.035), those who reported taking many neuroscience courses endorsed 1.3 fewer items on the survey (out of 7 total items) compared to those who had not taken any neuroscience courses.

In the educator subsample, we examined the impact of three specializations: special education, early education, and higher education. Each regression mirrored those in Tables 7 and 8 (predictors for age, gender, education, neuroscience exposure, and science career-related media), except that an additional predictor for specialization was added. In all three cases, the specialization was not associated with a significant difference in neuromyth performance in comparison to other educators without this specialization: special education (standardized β = −0.03, p = 0.45), early education (standardized β = −0.038, p = 0.33), and higher education (standardized β = −0.092, p = 0.06). The latter analysis for higher education was conducted only in those with graduate degrees (N = 471) because only a handful (n = 9) of individuals reported that they were teaching college but did not have a graduate degree. Although our results for higher education may suggest a trend for those in higher education to endorse fewer neuromyths, we note that this predictor does not meet our alpha threshold (p < 0.001) and its effect size is more modest than those of age, graduate school, and exposure to neuroscience and peer-reviewed science. Taken together, there was little support for educator specializations having a strong effect on neuromyth performance.

Discussion

Neuromyths are frequently mentioned as an unfortunate consequence of cross-disciplinary educational neuroscience efforts, but there is relatively little empirical data on the pervasiveness of neuromyths, particularly in large samples from the US. Existing empirical data on neuromyths also exclusively focuses on educators, so an important question is whether educators' beliefs are consistent with the general public. One assumes that training in education and in neuroscience would dispel neuromyths, but it is unclear whether and to what extent this is the case. The goal of the current study was to establish an empirical baseline for neuromyth beliefs across three broad groups: the general public, educators, and individuals with high neuroscience exposure. Our results show that both educators and individuals with high neuroscience exposure perform significantly better than the general public on neuromyths, and individuals with neuroscience exposure further exceed the performance of educators. Thus, we find that training in both education and neuroscience (as measured by self-report of taking many neuroscience courses) is associated with a reduction in belief in neuromyths. Notably, however, both educators and individuals with high neuroscience exposure continue to endorse about half or more of the “classic” neuromyths, despite their training.

From the individual differences analyses, we found that the strongest predictors of neuromyths for the full sample were having a graduate degree and completing many neuroscience courses. For the subsample of educators, the strongest predictors were completing many neuroscience courses and reading peer-reviewed scientific journals. These findings suggest that higher levels of education and increased exposure to rigorous science either through coursework or through scientific journals are associated with the ability to identify and reject these misconceptions. As we hypothesized, the quality of the media exposure matters: peer-reviewed scientific journals showed the strongest association to neuromyths accuracy compared to other science-related media sources (i.e., websites, magazines). We discuss the implications of these results further below with an emphasis on implications for professional development and targeted educational programs addressing neuromyths.

Clustering of Neuromyths

Our analyses began with a psychometric investigation of our modified version of the widely-used neuromyths survey developed by Dekker et al. (2012). Results from an exploratory factor analysis revealed one factor consisting of seven core neuromyths (i.e., items about learning styles, dyslexia, the Mozart effect, the impact of sugar on attention, the role of the right and left hemispheres in learning, and using 10% of the brain). It was somewhat surprising to us that these “classic” neuromyths items clustered together because it seemed equally plausible that belief in one neuromyth would be independent from the other neuromyths. Nevertheless, the relationships among these neuromyths indicate that curriculum development should address several misunderstandings simultaneously because individuals who believe one neuromyth are likely to believe others as well. It is unclear why this might be the case, although one speculation is that a few misunderstandings about the complexity of learning and the brain will make one susceptible to a myriad of neuromyths. Alternatively, it is possible that these neuromyths are taught explicitly and simultaneously in some professional contexts. Regardless of the source, our data pointing to the clustering of neuromyths suggests that curricula must address multiple misunderstandings simultaneously, perhaps pointing out the connections between myths, in order to effectively address their persistence among educators.
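The clustering described above implies that endorsement of one item is positively correlated with endorsement of another. As a rough illustration, the sketch below computes the phi coefficient (the Pearson correlation for two binary variables) between two true/false items. This is a simpler stand-in for the exploratory factor analysis, not a reproduction of it. The response vectors are hypothetical and are not the study data.

```python
import math

def phi(x, y):
    """Phi coefficient between two binary (0/1) response vectors."""
    n11 = sum(1 for a, b in zip(x, y) if a and b)
    n10 = sum(1 for a, b in zip(x, y) if a and not b)
    n01 = sum(1 for a, b in zip(x, y) if not a and b)
    n00 = sum(1 for a, b in zip(x, y) if not a and not b)
    denom = math.sqrt((n11 + n10) * (n01 + n00) * (n11 + n01) * (n10 + n00))
    # Return 0.0 when an item has no variance, where phi is undefined.
    return (n11 * n00 - n10 * n01) / denom if denom else 0.0

# HYPOTHETICAL responses from 8 participants (1 = endorsed the myth).
learning_styles = [1, 1, 1, 1, 0, 0, 1, 0]
left_right_brain = [1, 1, 1, 0, 0, 0, 1, 0]

r = phi(learning_styles, left_right_brain)
print(f"phi = {r:.3f}")
```

A matrix of such pairwise coefficients across all items is the kind of input a factor analysis summarizes. Items that load on a common factor tend to show consistently positive pairwise correlations.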

One characteristic that seems to unite the 7 “classic” neuromyths that clustered together is an underestimation of the complexity of human behavior, especially cognitive skills like learning, memory, reasoning, and attention. Rather than highlighting these complexities, each neuromyth seems to originate from a tendency to rely on a single explanatory factor, such as the single teaching approach that will be effective for all children (learning styles) or the single sign of dyslexia (reversing letters), or the single explanation for why a child is acting out (sugar). Such over-simplified explanations do not align with the scientific literature on learning and cognition. For example, in the last 20 years, the field of neuropsychology has experienced a paradigm shift marked by an evolution from single deficit to multiple deficit theories to reflect an improved understanding of complex cognitive and behavioral phenotypes (Bishop and Snowling, 2004; Sonuga-Barke, 2005; Pennington, 2006). Such a multifactorial framework might be helpful for developing a curriculum that could dispel neuromyths. Within this framework, lessons would explicitly identify the many factors that influence learning and cognition and demonstrate how a misunderstanding of the magnitude of this complexity can lead to each neuromyth.

A curriculum to effectively dispel neuromyths should also include a preventative focus. For instance, lessons might make individuals aware of the cognitive bias to judge arguments as more satisfying and logical when they include neuroscience, even if this neuroscience is unrelated to the argument (McCabe and Castel, 2008; Weisberg et al., 2008). It might be particularly useful to point out that individuals with less neuroscience experience are particularly susceptible to this error in logic (Weisberg et al., 2008). A greater awareness of this cognitive error might be an important first step toward preventing new neuromyths from proliferating.

Group Comparisons: Educators, General Public, High Neuroscience Exposure

Our findings suggest that while teachers are better able to identify neuromyths than the general public, they still endorse many of the same misconceptions at high rates. These results are quite consistent with a parallel study conducted by Dekker et al. (2012) in a sample obtained from the UK and the Netherlands. Their study also reported that specific neuromyths showed a strikingly high prevalence among educators. Three of the neuromyth items that factored together in our study were asked with identical wording by Dekker et al. and so can be directly compared. For these items, our sample showed modestly better performance (learning styles (#14): Dekker-UK: 93% incorrect, Dekker-NL: 96%, US: 76%; sugar and attention (#22): Dekker-UK: 57%, Dekker-NL: 55%, US: 50%; 10% of brain (#6): Dekker-UK: 48%, Dekker-NL: 46%, US: 33%). Although performance was slightly better in the US sample, one likely explanation for this finding stems from the differences in recruitment between the studies. Dekker et al. (2012) used a school-based recruitment strategy where they invited all teachers in a school to participate, whereas the current study used online recruitment. Even in the context of these sampling differences, however, what is most notable is the degree of consistency in the prevalence of these neuromyths.
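The cross-sample comparison for the three identically worded items can be tabulated directly from the percentages quoted above. The short sketch below only restates those figures and computes the gap, in percentage points, between each Dekker et al. (2012) sample and the US sample. The variable and function names are illustrative.

```python
# Percent answering incorrectly, copied from the paragraph above.
incorrect = {
    "learning styles (#14)": {"Dekker-UK": 93, "Dekker-NL": 96, "US": 76},
    "sugar and attention (#22)": {"Dekker-UK": 57, "Dekker-NL": 55, "US": 50},
    "10% of brain (#6)": {"Dekker-UK": 48, "Dekker-NL": 46, "US": 33},
}

def gap_to_us(rates):
    """Percentage-point difference between each non-US sample and the US sample."""
    return {s: r - rates["US"] for s, r in rates.items() if s != "US"}

gaps = {item: gap_to_us(rates) for item, rates in incorrect.items()}
for item, gap in gaps.items():
    print(item, gap)
```

The gaps range from 5 to 20 percentage points, and in every case the US rate is the lower one.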

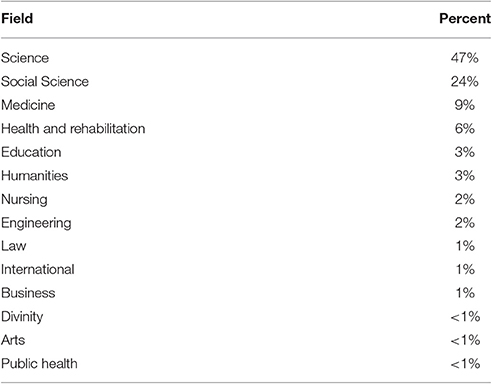

As expected, the high neuroscience exposure group endorsed the lowest number of neuromyths compared to educators and the general public, which is consistent with their background; however, they still endorsed about half (46%) of the neuromyths, on average, which might still be perceived as high. This result is even more striking in the context of the self-reported disciplinary affiliation of these individuals. Eighty-eight percent of the high neuroscience exposure group reported obtaining their highest degree in science, social science, medicine, health and rehabilitation, or nursing (47% science, 24% social science, 9% medicine, 6% health-related field, and 2% nursing), all fields that we would expect to have a strong neuroscience curriculum. Although we cannot be sure of the level and content of the neuroscience courses that the participants reported, it is striking that the vast majority of these individuals obtained their highest degree (88% college or graduate degrees) in a science- or health-related field, and yet the endorsement of neuromyths remained quite high.

One possible explanation for this surprising finding is to consider that the most commonly endorsed items by individuals with high neuroscience exposure were more closely related to learning and education than the brain and its function. This suggests that individuals with a significant number of neuroscience classes are susceptible to these misunderstandings because of the different levels of analysis of neuroscience vs. education. For instance, students might learn the synaptic basis of learning in a neuroscience course, but not educational theories of learning and how learning styles as an educational concept has developed and proliferated (see Pashler et al., 2008 for a discussion). Similarly, neuroscience students might learn the brain correlates of a developmental disorder such as dyslexia without discussing the behavioral research dispelling the neuromyth that it is caused by letter reversals. This finding suggests that if educators were to take a class in neuroscience that did not specifically address neuromyths, it would be unlikely to help with dispelling the misconceptions that are most closely related to learning and education.

Among all three groups, the two most prevalent neuromyths were related to learning styles theory and dyslexia (reversing letters). Endorsement of these neuromyths ranged from 76 to 93% in the general public group, 59 to 76% in the educator group, and 50 to 78% in the high neuroscience exposure group. Notably, over half of the surveyed teachers endorsed these items, which have direct implications for educational practice.

Learning Styles

Regarding learning styles theory, a meta-analysis from Pashler et al. (2008) reviewed findings from rigorous research studies testing learning styles theories and concluded that there was an insufficient evidence base to support its application to educational contexts. For example, the VAK (Visual, Auditory, Kinesthetic) learning styles theory posits that each student has one favored modality of learning, and that teachers should identify students' preferred learning styles and create lesson plans aimed at these learning styles (i.e., if a student self-identifies as a visual learner, content should be presented visually; Lethaby and Harries, 2015). The premise that visual learners perform better when presented with visual information alone than when presented with auditory information and vice versa for auditory learners is known as the “meshing hypothesis” (Pashler et al., 2008). While it is clear that many individuals have preferences for different styles of learning, Pashler et al. (2008) argue that the lack of empirical evidence for assessing these preferences and using them to inform instruction in order to improve student outcomes raises concerns about the pervasiveness of the theory and its near universal impact on current classroom environments. Willingham et al. (2015) argue that limited time and resources in education should be aimed at developing and implementing evidence-based interventions and approaches, and that psychology and neuroscience researchers should communicate to educators that learning styles theory has not been empirically validated (Willingham et al., 2015).

Several authors have discussed the lack of evidence for the VAK learning styles theory (Pashler et al., 2008; Riener and Willingham, 2010; Willingham et al., 2015), but in classroom practice, teachers have adapted the theory to fit classroom needs. For example, teachers weave visual and auditory modalities into a single lesson rather than providing separate modality-specific lessons to different groups of children based on self-identified learning style preferences. Hence, an unintended and potentially positive outcome of the perpetuation of the learning styles neuromyth is that teachers present material to students in novel ways through multiple modalities, thereby providing opportunities for repetition which is associated with improved learning and memory in the cognitive (for a review see Wickelgren, 1981) and educational literatures (for reviews, see Leinhardt and Greeno, 1986; Rosenshine, 1995).

Thus, the integration of multiple modalities can be beneficial for learning and this practice is conflated with the learning styles neuromyth. In other words, this particular neuromyth presents a challenge to the education field because it seems to be supporting effective instructional practice, but for the wrong reasons. To dispel this particular myth might inadvertently discourage diversity in instructional approaches if it is not paired with explicit discussion of the distinctions between learning styles theory and multimodal instruction. This specific challenge reflects the broader need to convey nuances across disciplinary boundaries of education and neuroscience to best meet the instructional and learning needs of students and educators. We would generally advocate for better information to dispel neuromyths that could be distributed broadly; however, the learning styles neuromyth appears to be a special case that requires deeper engagement with the educational community. For example, coursework or professional development might be the most effective way to address questions and controversies about the learning styles neuromyth. This stands in contrast to other neuromyths, such as that we only use 10% of our brain, which might be more easily dispelled by a handout with a short justification.

Dyslexia and Letter Reversals

In contrast to the learning styles neuromyth, which might have unintended positive consequences, the neuromyth that dyslexia is characterized by seeing letters backwards is potentially harmful for the early identification of children with dyslexia and interferes with a deeper understanding of why readers with dyslexia struggle. This idea about “backwards reading” originates from early visual theories of dyslexia (Orton, 1925). Such visual theories of dyslexia were rejected decades ago as it became clear that impairments in language abilities, primarily phonological awareness, formed the underpinnings of dyslexia (Shaywitz et al., 1999; Pennington and Lefly, 2001; Vellutino et al., 2004). Some children with dyslexia do make letter reversals, but typically-developing children make reversals as well, particularly during early literacy acquisition (Vellutino, 1979). Such reversals early in literacy acquisition (i.e., kindergarten) are not related to later reading ability (i.e., 2nd-3rd grade) (Treiman et al., 2014). For children with dyslexia who make persistent letter reversals beyond the normative age, these reversals can best be understood as a consequence of poor reading and its associated cognitive impairments, rather than a cause of the reading problems. One prominent theory regarding the mechanisms underlying letter reversals posits that the reversals are the results of phonological confusion, rather than visual confusion (Vellutino, 1979). This research clarifies that the core deficit in dyslexia is not visual, yet this myth is remarkably persistent among educators (Moats, 1994; Washburn et al., 2014). One harmful effect of this neuromyth is evident in anecdotal reports from child assessment clinics where parents and teachers have delayed a referral for dyslexia because, though the child is struggling with reading, he/she is not reversing letters. 
Misunderstanding of the causal factors in dyslexia also leads to the persistence of visual interventions for reading that do not have an evidence base, and which may delay access to more effective phonologically-based treatments (Pennington, 2008, 2011; Fletcher and Currie, 2011). Efforts to educate teachers, parents, and medical professionals about the true underlying causes of dyslexia continue through national professional associations (e.g., American Academy of Pediatrics, 2009, 2014) and non-profit foundations like the International Dyslexia Association (e.g., Fletcher and Currie, 2011; Pennington, 2011).

Limitations and Future Directions

Although these results provide an important empirical baseline for neuromyth prevalence in a broad US sample including educators, individuals with high neuroscience exposure, and the general public, the findings should be interpreted in light of the study limitations. First, our online recruitment strategy requires consideration of the generalizability of the sample. In this case, we primarily relied on volunteers who visited the TestMyBrain.org website, some of whom were directed there by our emails to professional organizations for educators and neuroscientists. Hence, the sample was over-selected for individuals who have advanced education and who are already interested in science. Due to a limited number of participants who reported an education level as less than “some college,” we restricted our analyses to only those with some college or more. Therefore, the term “general public” may be misleading as our sample had higher rates of education than a nationally representative sample. In our sample, 100% of individuals had “some college” or more, 69% had a college degree or more, and 31% had a graduate degree, whereas these corresponding rates in a nationally representative sample are 59% (some college or more), 33% (bachelor's degree or more), and 12% (graduate degree) (Ryan and Bauman, 2016). The same critique is relevant to the educator sample where the rate of graduate education in our sample was 79%, which exceeds the national average of 43% (Goldring et al., 2013). Although this selection is a limitation of the study, the fact that graduate education is associated with fewer neuromyths suggests that our results reflect the most optimistic case for the prevalence of neuromyths. We expect that the rate of neuromyths endorsement would only increase if a more representative sample were obtained.
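The expectation that endorsement would rise in a more representative sample can be illustrated with a simple reweighting (post-stratification) sketch. The cumulative education rates are those quoted above. The per-stratum endorsement rates are hypothetical placeholders, not results of this study. They were chosen only so that graduate education is associated with lower endorsement, as the regression results suggest.

```python
# Cumulative education rates (percent) quoted in the paragraph above.
sample = {"some_college_plus": 100, "college_plus": 69, "graduate": 31}
national = {"some_college_plus": 59, "college_plus": 33, "graduate": 12}

def stratum_shares(cum):
    """Convert cumulative rates into shares of three exclusive strata,
    renormalized to people with some college or more."""
    raw = {
        "some_college": cum["some_college_plus"] - cum["college_plus"],
        "college": cum["college_plus"] - cum["graduate"],
        "graduate": cum["graduate"],
    }
    total = sum(raw.values())
    return {k: v / total for k, v in raw.items()}

# HYPOTHETICAL proportion of neuromyths endorsed within each stratum;
# placeholder values for illustration only, not study results.
hypothetical_endorsement = {"some_college": 0.70, "college": 0.70, "graduate": 0.60}

def weighted_mean(shares, means):
    """Average of stratum means weighted by stratum shares."""
    return sum(shares[k] * means[k] for k in shares)

sample_mean = weighted_mean(stratum_shares(sample), hypothetical_endorsement)
reweighted_mean = weighted_mean(stratum_shares(national), hypothetical_endorsement)
print(f"sample mix: {sample_mean:.3f}, national mix: {reweighted_mean:.3f}")
```

Under these assumed stratum rates, shifting the education mix toward the national distribution raises the overall endorsement rate. The size of the shift depends entirely on the placeholder values, and the sketch still excludes people with less than some college, as the study sample did.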

Another study limitation is related to our high neuroscience exposure group. Because we doubted the validity of self-report about the quality, content, and level of neuroscience courses, we opted for a simplified question: “Have you ever taken a college/university course related to the brain or neuroscience?” This item leaves many open questions about the nature of the neuroscience coursework, its specific emphasis, and what year it was taken. These variables are undoubtedly important to consider as there is certainly wide variation in quality and content of neuroscience curricula across the country, but would be time-intensive to collect with high validity (i.e., transcripts). A more realistic approach to control for variations in coursework would be to design studies using students who are enrolled in the same university neuroscience course.

Our online survey is subject to legitimate questions regarding quality control. We employed a number of procedures throughout the data cleaning process to ensure that we captured only legitimate responses, including examining the data for participants who were experiencing technical difficulties, cheating, taking the survey too quickly, and taking the survey more than once. Germine and colleagues have replicated well-known cognitive and perceptual experiments using the website used in the current study (TestMyBrain.org) with similar data cleaning techniques (Germine et al., 2012; Hartshorne and Germine, 2015). Although there is legitimate concern about noise with such an online approach, it is generally offset by the increase in sample size that is possible with such methods (Germine et al., 2012; Hartshorne and Germine, 2015). In this study, we note that sample sizes were largest for the educator and general public samples, while the group of individuals with neuroscience exposure was smaller. Future research could benefit from further understanding of different disciplinary emphases within neuroscience training.

An additional limitation is that we did not have access to student outcomes, so we were unable to analyze how neuromyths endorsement among educators might influence academic performance in their students. Such data are important to consider in the context of our findings, as they are the only way to empirically determine whether or not believing neuromyths “matters” in the context of student outcomes. No studies to date have examined how teachers' endorsement of neuromyths may influence their attitudes toward their students' growth and learning. However, findings from previous research suggest that teachers' attitudes about the potential of students with learning disabilities to benefit from instruction predict teachers' approaches to classroom instruction as well as students' achievement (Hornstra et al., 2010). Given this precedent, it is certainly possible that belief in neuromyths could impact teaching and student outcomes.

Lastly, we employed a slightly modified version of the neuromyths survey used by Dekker et al. (2012) because it has been widely used in a number of studies. Nevertheless, we did receive feedback from some participants that the true/false forced choice response was too simplistic. For example, the statement “There are specific periods in childhood after which certain things can no longer be learned” is false in most cases, but some well-informed participants correctly pointed to areas of sensation and lower-level perception where this statement is true (e.g., Kuhl, 2010). This example demonstrates the limitation of a true/false paradigm for capturing complex scientific findings. Another limitation of the true/false paradigm is that we cannot discern participants' certainty of their answers because we did not include an “I don't know” answer choice. Future research should include such a choice or ask participants to rate their confidence in their answers on a Likert scale. Such information would be helpful for designing training curricula because a different approach might be necessary for individuals who are very sure that there are “right-brained” and “left-brained” learners, for example, compared to those who are unsure about this particular neuromyth.

Summary

Although training in education and neuroscience predicts better performance on neuromyths, such exposure does not eliminate the neuromyths entirely. Rather, some of the most common myths, such as those related to learning styles and dyslexia, remain remarkably prevalent (~50% endorsement or higher) regardless of exposure to education or neuroscience. This finding is concerning because of the time and resources that many school districts may allocate toward pedagogical techniques related to these neuromyths that have very little empirical support. The findings reported in the current study create an opportunity for cross-disciplinary collaboration among neuroscientists and educators in order to develop a brief, targeted, and robust training module to address these misconceptions.

Author Contributions

LM developed the study concept and collaborated with LG on design and implementation. LM adapted the surveys for the current project. LG developed the platform for launching the survey and for collecting the web-based data. JC, AA, and LG provided consultation to LM regarding survey design and analysis. KM and LM performed the data analyses and planned the manuscript. KM wrote the first draft of the manuscript. KM and LM collaboratively revised the manuscript. LG, JC, and AA provided critical feedback on the manuscript and approved the final version.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors received funding to conduct this study from a faculty research support grant from American University. The authors wish to thank Sanne Dekker, Ph.D. for sharing the neuromyths questionnaire and for insightful discussions about its design. The authors also wish to thank Peter Sokol-Hessner, Ph.D. for discussions that helped to clarify the manuscript's impact and message. The authors also gratefully acknowledge the thousands of volunteers who generously participated in this research and encouraged those in their social network to do the same.

References

American Academy of Pediatrics (2009). Learning disabilities, dyslexia, and vision. Pediatrics 124, 837–844. doi: 10.1542/peds.2009-1445

American Academy of Pediatrics (2014). Joint Statement: Learning Disabilities, Dyslexia, and Vision – Reaffirmed 2014. Available online at: https://www.aao.org/clinical-statement/joint-statement-learning-disabilities-dyslexia-vis

Ansari, D., and Coch, D. (2006). Bridges over troubled waters: Education and cognitive neuroscience. Trends Cogn. Sci. 10, 146–151. doi: 10.1016/j.tics.2006.02.007

Bishop, D. V., and Snowling, M. J. (2004). Developmental dyslexia and specific language impairment: same or different? Psychol. Bull. 130, 858–886. doi: 10.1037/0033-2909.130.6.858

Bloch, M. H., and Qawasmi, A. (2011). Omega-3 fatty acid supplementation for the treatment of children with attention-deficit/hyperactivity disorder symptomatology: systematic review and meta-analysis. J. Am. Acad. Child Adolesc. Psychiatry 50, 991–1000. doi: 10.1016/j.jaac.2011.06.008

Bowers, J. S. (2016). The practical and principled problems with educational neuroscience. Psychol. Rev. 123, 600–612. doi: 10.1037/rev0000025

Bruer, J. T. (1997). Education and the brain: a bridge too far. Educ. Res. 26, 4–16. doi: 10.3102/0013189X026008004

Busch, B. (2016). Four Neuromyths That Are Still Prevalent in Schools – Debunked. Available online at: https://www.theguardian.com/teacher-network/2016/feb/24/four-neuromyths-still-prevalent-in-schools-debunked (Accessed May 25, 2017).

Carew, T. J., and Magsamen, S. H. (2010). Neuroscience and education: an ideal partnership for producing evidence-based solutions to guide 21st century learning. Neuron 67, 685–688. doi: 10.1016/j.neuron.2010.08.028

Chabris, C. F. (1999). Prelude or requiem for the ‘Mozart effect’? Nature 400, 826–827. doi: 10.1038/23608

Coffield, F., Moseley, D., Hall, E., and Ecclestone, K. (2004). Should we be Using Learning Styles? What Research Has to Say To Practice. London: Learning & Skills Research Centre.

Coxe, S., West, S. G., and Aiken, L. S. (2009). The analysis of count data: a gentle introduction to Poisson regression and its alternatives. J. Pers. Assess. 91, 121–136. doi: 10.1080/00223890802634175

Dekker, S., Lee, N. C., Howard-Jones, P., and Jolles, J. (2012). Neuromyths in education: prevalence and predictors of misconceptions among teachers. Front. Psychol. 3:429. doi: 10.3389/fpsyg.2012.00429

Deligiannidi, K., and Howard-Jones, P. (2015). The neuroscience literacy of teachers in Greece. Proc. Soc. Behav. Sci. 174, 3909–3915. doi: 10.1016/j.sbspro.2015.01.1133

Fischer, K. W., Goswami, U., and Geake, J. (2010). The future of educational neuroscience. Mind Brain Educ. 4, 68–80. doi: 10.1111/j.1751-228X.2010.01086.x

Fletcher, J. M., and Currie, D. (2011). Vision efficiency interventions and reading disability. Perspect. Lang. Literacy 37, 21–24.

Gabrieli, J. D. (2009). Dyslexia: a new synergy between education and cognitive neuroscience. Science 325, 280–283. doi: 10.1126/science.1171999

Geake, J. (2008). Neuromythologies in education. Educ. Res. 50, 123–133. doi: 10.1080/00131880802082518

Germine, L., Nakayama, K., Duchaine, B. C., Chabris, C. F., Chatterjee, G., and Wilmer, J. B. (2012). Is the Web as good as the lab? Comparable performance from Web and lab in cognitive/perceptual experiments. Psychon. Bull. Rev. 19, 847–857. doi: 10.3758/s13423-012-0296-9

Gleichgerrcht, E., Lira Luttges, B., Salvarezza, F., and Campos, A. L. (2015). Educational neuromyths among teachers in Latin America. Mind Brain Educ. 9, 170–178. doi: 10.1111/mbe.12086

Goldring, R., Gray, L., and Bitterman, A. (2013). Characteristics of Public and Private Elementary and Secondary School Teachers in the United States: Results from the 2011–12 Schools and Staffing Survey (NCES 2013-314). U.S. Department of Education. Washington, DC: National Center for Education Statistics.

Goswami, U. (2006). Neuroscience and education: from research to practice? Nat. Rev. Neurosci. 7, 406–413. doi: 10.1038/nrn1907

Goswami, U. (2008). Principles of learning, implications for teaching: a cognitive neuroscience perspective. J. Philos. Educ. 42, 381–399. doi: 10.1111/j.1467-9752.2008.00639.x

Hartshorne, J. K., and Germine, L. T. (2015). When does cognitive functioning peak? The asynchronous rise and fall of different cognitive abilities across the life span. Psychol. Sci. 26, 433–443. doi: 10.1177/0956797614567339

Hawkey, E., and Nigg, J. T. (2014). Omega-3 fatty acid and ADHD: blood level analysis and meta-analytic extension of supplementation trials. Clin. Psychol. Rev. 34, 496–505. doi: 10.1016/j.cpr.2014.05.005

Hillman, C. H., Erickson, K. I., and Kramer, A. F. (2008). Be smart, exercise your heart: exercise effects on brain and cognition. Nat. Rev. Neurosci. 9, 58–65. doi: 10.1038/nrn2298

Hornstra, L., Denessen, E., Bakker, J., van den Bergh, L., and Voeten, M. (2010). Teacher attitudes toward dyslexia: effects on teacher expectations and the academic achievement of students with dyslexia. J. Learn. Disabil. 43, 515–529. doi: 10.1177/0022219409355479

Howard-Jones, P. A. (2014). Neuroscience and education: myths and messages. Nat. Rev. Neurosci. 15, 817–824. doi: 10.1038/nrn3817

Johnson, M., Ostlund, S., Fransson, G., Kadesjö, B., and Gillberg, C. (2009). Omega-3/omega-6 fatty acids for attention deficit hyperactivity disorder: a randomized placebo-controlled trial in children and adolescents. J. Atten. Disord. 12, 394–401. doi: 10.1177/1087054708316261

Kaiser, H. F. (1958). The varimax criterion for analytic rotation in factor analysis. Psychometrika 23, 187–200. doi: 10.1007/BF02289233

Karakus, O., Howard-Jones, P., and Jay, T. (2015). Primary and secondary school teachers' knowledge and misconceptions about the brain in Turkey. Proc. Soc. Behav. Sci. 174, 1933–1940. doi: 10.1016/j.sbspro.2015.01.858

Kuhl, P. K. (2010). Brain mechanisms in early language acquisition. Neuron 67, 713–727. doi: 10.1016/j.neuron.2010.08.038

Leinhardt, G., and Greeno, J. G. (1986). The cognitive skill of teaching. J. Educ. Psychol. 78, 75. doi: 10.1037/0022-0663.78.2.75

Lethaby, C., and Harries, P. (2015). Learning styles and teacher training: are we perpetuating neuromyths? ELT J. 70, 16–25. doi: 10.1093/elt/ccv051

McCabe, D. P., and Castel, A. D. (2008). Seeing is believing: the effect of brain images on judgments of scientific reasoning. Cognition 107, 343–352. doi: 10.1016/j.cognition.2007.07.017

Moats, L. (1994). The missing foundation in teacher education: knowledge of the structure of spoken and written language. Ann. Dyslexia 44, 81–102. doi: 10.1007/BF02648156

Moats, L. (2009). Still wanted: Teachers with knowledge of language. J. Learn. Disabil. 42, 387–391. doi: 10.1177/0022219409338735

OECD (2002). Organisation for Economic Co-operation and Development. Understanding the Brain: Towards a New Learning Science. Paris: OECD Publishing.

Orton, S. T. (1925). Word-blindness in school children. Arch. Neurol. Psychiatry 14, 581–615. doi: 10.1001/archneurpsyc.1925.02200170002001

Pashler, H., McDaniel, M., Rohrer, D., and Bjork, R. (2008). Learning styles: concepts and evidence. Psychol. Sci. Public Interest 9, 105–119. doi: 10.1111/j.1539-6053.2009.01038.x

Pasquinelli, E. (2012). Neuromyths: why do they exist and persist? Mind Brain Educ. 6, 89–96. doi: 10.1111/j.1751-228X.2012.01141.x

Pei, X., Howard-Jones, P., Zhang, S., Liu, X., and Jin, Y. (2015). Teachers' understanding about the brain in East China. Proc. Soc. Behav. Sci. 174, 3681–3688. doi: 10.1016/j.sbspro.2015.01.1091

Pennington, B. F. (2006). From single to multiple deficit models of developmental disorders. Cognition 101, 385–413. doi: 10.1016/j.cognition.2006.04.008

Pennington, B. F. (2008). Diagnosing Learning Disorders: A Neuropsychological Framework. New York, NY: Guilford Press.

Pennington, B. F., and Lefly, D. L. (2001). Early reading development in children at family risk for dyslexia. Child Dev. 72, 816–833. doi: 10.1111/1467-8624.00317

Pietschnig, J., Voracek, M., and Formann, A. K. (2010). Mozart effect–Shmozart effect: a meta-analysis. Intelligence 38, 314–323. doi: 10.1016/j.intell.2010.03.001

Riener, C., and Willingham, D. (2010). The myth of learning styles. Change 42, 32–35. doi: 10.1080/00091383.2010.503139

Rosenshine, B. (1995). Advances in research on instruction. J. Educ. Res. 88, 262–268. doi: 10.1080/00220671.1995.9941309

Ryan, C. L., and Bauman, K. (2016). Educational Attainment in the United States: 2015: Current Population Reports. Washington, DC: US Census Bureau.

Shaywitz, S. E., Fletcher, J. M., Holahan, J. M., Shneider, A. E., Marchione, K. E., Stuebing, K. K., et al. (1999). Persistence of dyslexia: the Connecticut longitudinal study at adolescence. Pediatrics 104, 1351–1359. doi: 10.1542/peds.104.6.1351

Sigman, M., Peña, M., Goldin, A. P., and Ribeiro, S. (2014). Neuroscience and education: prime time to build the bridge. Nat. Neurosci. 17, 497–502. doi: 10.1038/nn.3672

Simmonds, A. (2014). How Neuroscience is Affecting Education: Report of Teacher and Parent Surveys. Wellcome Trust.

Sonuga-Barke, E. J. (2005). Causal models of attention-deficit/hyperactivity disorder: from common simple deficits to multiple developmental pathways. Biol. Psychiatry 57, 1231–1238. doi: 10.1016/j.biopsych.2004.09.008

Treiman, R., Gordon, J., Boada, R., Peterson, R. L., and Pennington, B. F. (2014). Statistical learning, letter reversals, and reading. Sci. Stud. Reading 18, 383–394. doi: 10.1080/10888438.2013.873937

Vellutino, F. R., Fletcher, J. M., Snowling, M. J., and Scanlon, D. M. (2004). Specific reading disability (dyslexia): what have we learned in the past four decades? J. Child Psychol. Psychiatry 45, 2–40. doi: 10.1046/j.0021-9630.2003.00305.x

Washburn, E. K., Binks-Cantrell, E. S., and Joshi, R. (2014). What do preservice teachers from the USA and the UK know about dyslexia? Dyslexia 20, 1–18. doi: 10.1002/dys.1459

Weale, S. (2017). Teachers Must Ditch ‘Neuromyth’ Of Learning Styles, Say Scientists. Available online at: https://www.theguardian.com/education/2017/mar/13/teachers-neuromyth-learning-styles-scientists-neuroscience-education (Accessed May 25, 2017).

Weisberg, D. S., Keil, F. C., Goodstein, J., Rawson, E., and Gray, J. R. (2008). The seductive allure of neuroscience explanations. J. Cogn. Neurosci. 20, 470–477. doi: 10.1162/jocn.2008.20040

Wickelgren, W. (1981). Human learning and memory. Annu. Rev. Psychol. 32, 21–52. doi: 10.1146/annurev.ps.32.020181.000321

Willingham, D. T., Hughes, E. M., and Dobolyi, D. G. (2015). The scientific status of learning styles theories. Teach. Psychol. 42, 266–271. doi: 10.1177/0098628315589505

Appendix A

Keywords: neuromyths, educational neuroscience, learning styles, dyslexia

Citation: Macdonald K, Germine L, Anderson A, Christodoulou J and McGrath LM (2017) Dispelling the Myth: Training in Education or Neuroscience Decreases but Does Not Eliminate Beliefs in Neuromyths. Front. Psychol. 8:1314. doi: 10.3389/fpsyg.2017.01314

Received: 24 March 2017; Accepted: 17 July 2017; Published: 10 August 2017.

Edited by:

Jesus de la Fuente, University of Almería, Spain

Reviewed by:

Thomas James Lundy, Cuttlefish Arts, United States

Steve Charlton, Douglas College, Canada

Copyright © 2017 Macdonald, Germine, Anderson, Christodoulou and McGrath. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lauren M. McGrath, lauren.mcgrath@du.edu
