- ¹Department of Library and Information Science, National Taiwan University, Taipei, Taiwan

- ²Department of Psychology, Department of Bio-Industry Communication and Development, National Taiwan University, Taipei, Taiwan

To support older users’ access to and learning of prevalent information and communication technologies (ICTs), libraries, as informal learning institutions, are committed to information literacy education activities with friendly interfaces. Chatbots using Voice User Interfaces (VUIs), which offer natural and intuitive interactions, have received growing research and practical attention; however, older users report frequent frustrations and problems in using them. To serve as a basis for the subsequent design and development of an automated dialog mechanism for senior-friendly chatbots, a between-subjects user experiment was conducted with 30 older adults divided into three groups. This paper reports preliminary findings on their interactions with voice chatbots designed with different error handling strategies. Participants’ behavioral patterns, performance, and the tactics they employed in interacting with the three types of chatbots were analyzed. The results showed that using multiple error handling strategies helps older users achieve effectiveness and satisfaction in human-robot interaction and improves their attitudes toward information technology. This study contributes empirical evidence to the applied field of gerontechnology and extends voice chatbot research by exploring conversation errors in human-robot interaction, with findings that could inform the design of educational and everyday-living gerontechnology.

Introduction

In response to the popularity of information and communication technologies (ICTs) and the growing proportion of older adult users, facilitating older users’ access to information is gaining research and practical attention. The public and private sectors are both investing in the research and development of gerontechnology and service design (Subasi et al., 2011; American Library Association, 2017). As important information agencies and social educational institutions, libraries actively use information technologies to provide older patrons with resources, including collections, services, activities, and facilities, that meet their psychological, physical, and information needs. Beyond technical services, which mostly involve library automation infrastructure, a few but significant endeavors have adopted information technologies to offer reader services that involve social interaction, such as library guidance and book-finding service robots (Lin et al., 2014; Tatham, 2016), a reading companion robot (Yueh et al., 2020), and a computer skills tutor robot (Waldman, 2014), all of which used Voice User Interfaces (VUIs) to communicate with users. Novice users have been found to prefer VUIs over keyboards, and VUIs are regarded as highly accessible for older users because they afford natural, intuitive, and easy interaction (Portet et al., 2013; Ziman and Walsh, 2018). However, our reception and interpretation of auditory stimuli are limited by innate physiological mechanisms such as linear processing, which reduce overall comprehension (Yankelovich, 1996), and by acquired psychological factors such as low self-efficacy, which reduce willingness to interact (Bulyko et al., 2005). These mechanisms and factors make misinterpretations and errors inevitable.
Previous studies of human communication with both humans and artificial agents suggest that, when errors occur, both parties attempt to repair the conversation, and the strategies they adopt to handle errors also affect users’ conversational behaviors and performance (Clark and Brennan, 1991; Oulasvirta et al., 2006). Despite sporadic discussions of the quantitative and qualitative nature and effectiveness of error-handling strategies, including single vs. multiple uses (Portet et al., 2013; Opfermann and Pitsch, 2017) and reprompt vs. suggestion styles (Oviatt et al., 1998; Bulyko et al., 2005), the findings have been inconsistent and have taken little account of the specific characteristics of older users. Previous studies have focused on developing dialog systems that use error handling strategies other than reprompt for general user models, and therefore lack a comprehensive comparison of error handling strategies in real use contexts. Furthermore, because user characteristics are closely associated with the technological affordances of the system interface and modalities, these studies also called for more research attention to users’ behaviors, performance, and preferences (Oviatt et al., 1998; Bulyko et al., 2005; Portet et al., 2013; Lu et al., 2017). More empirical and systematic investigations of older users’ interactions with VUIs are needed as a basis for designing adaptive voice AIs and voice services. This study therefore aimed to explore how older users interact with a voice chatbot that uses different error-handling strategies. Based on a systematic investigation of older users’ conversational behaviors, performance, and experiences from the error handling perspective, this study intends to present a senior-friendly VUI conversation model as a basis for designing conversational AI chatbots in the future.

In addition to error handling strategies, the motivation and performance of older users in using ICTs are also affected psychologically by their self-efficacy (Bandura, 2006; Hsiao and Tang, 2015). While previous studies of human–computer interaction suggest that older users hold relatively low beliefs and self-perceptions about their ability to use technology to access information and accomplish their goals (Hajiheydari and Ashkani, 2018), studies of human–robot interaction have further indicated that when users interact with more human-like agents, their self-efficacy varies due to emotional and cultural influences (Pütten and Bock, 2018). Older users’ low self-efficacy in ICT use is also affected by physical and cognitive deterioration. As aging decreases the ability to retrieve and recognize words, older users become less sensitive to sounds and take longer to find the right words to express themselves (Baba et al., 2004). In terms of cognitive processing, older adults are less able to suppress interference from errors or misinterpretations, and they have difficulty recalling old information and associating it with new input (Lee, 2015), both of which create obstacles to their interaction with VUIs. When older users experience difficulties in accessing information due to these physical and psychological declines, they report more frustration, stress, and self-condemnation (Rodin, 1986; Myers et al., 2018) and are more likely to make errors, which can in turn keep them from interacting, learning, or completing the tasks they set out to perform. To help older users escape this vicious cycle and have better user experiences, VUIs need not only to receive and recognize user commands but also to help users handle errors in real time and provide adaptive feedback and guidance.
Previous studies have shown that active intervention by a conversational agent can reduce the occurrence of errors (Bulyko et al., 2005); building on this, the present study comprehensively incorporated different error handling strategies into the design of voice chatbot guidance and responses to identify effective error-handling strategies for older users in real use contexts. Toward the design of a senior-friendly VUI, the specific research questions were: (1) whether the behaviors and performance of homogeneous older users differed when interacting with chatbots using different error-handling strategies; and (2) how older users with different levels of self-efficacy interacted with the voice chatbots, and whether their performance and preferences differed.

Materials and Methods

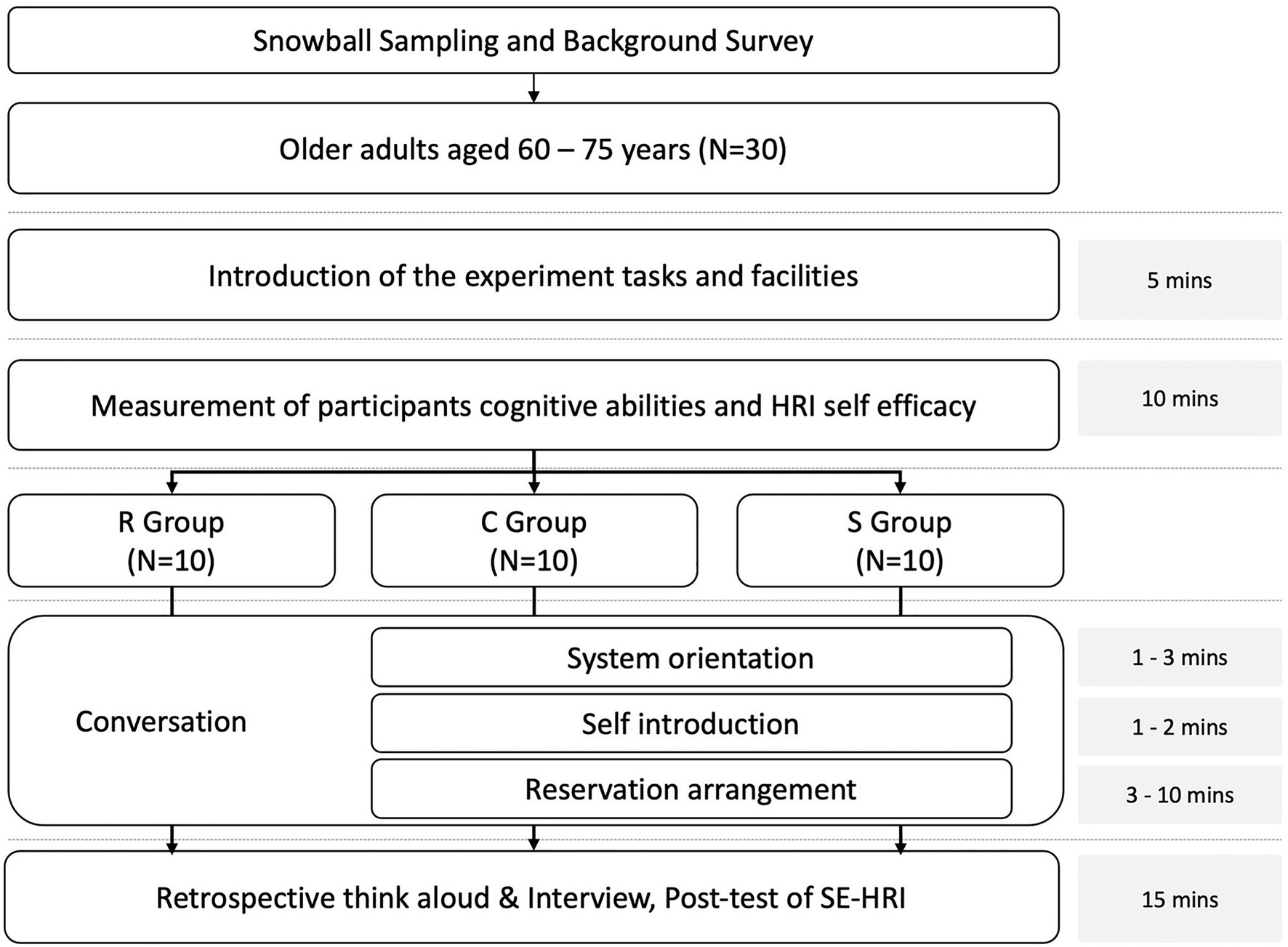

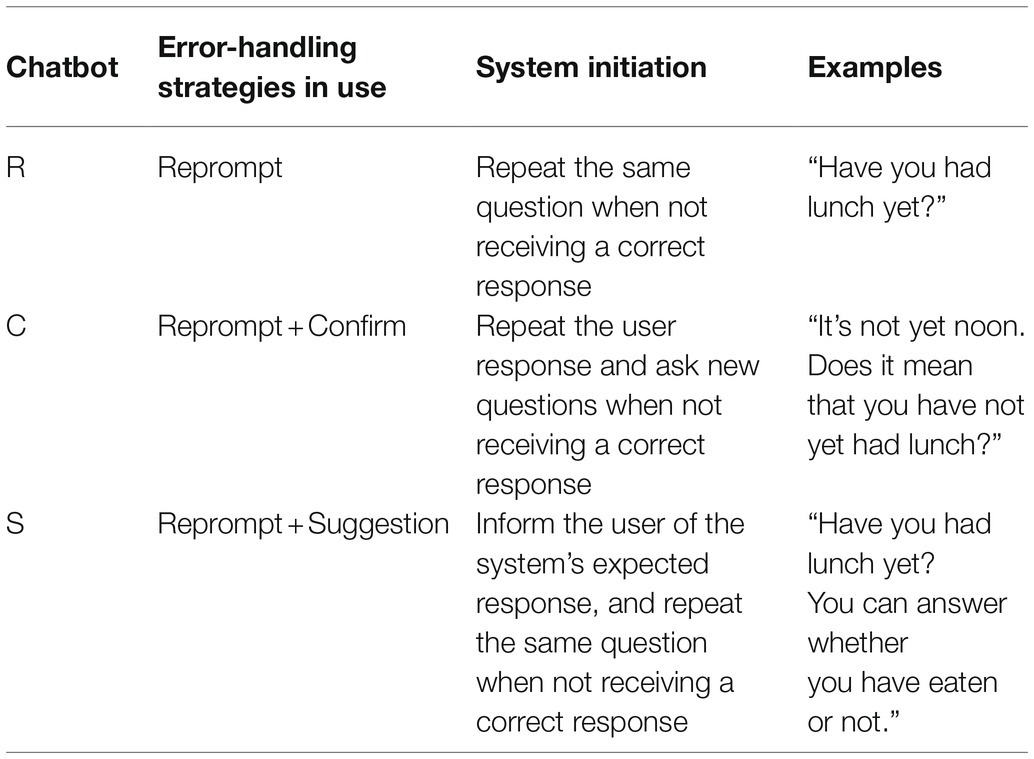

The present study adopted a user experiment with a between-subjects design to investigate how the different error handling strategies of voice chatbots affect older users’ interaction behaviors and performance. The apparatus consisted of three voice chatbots designed with different levels of error handling in terms of the types and number of system repair initiations. To avoid unnecessary technical interference (Klemmer et al., 2000), the voice chatbots were prototyped using the Wizard-of-Oz technique, with human researchers simulating the speech system. The procedure of the user experiment is illustrated in Figure 1. Within the interactional task of making a restaurant reservation, the session comprised three phases of conversation: system orientation, self-introduction, and arranging the restaurant reservation, each with a set of questions and formulaic utterance openings scripted to acquaint older users with the voice chatbots. As shown in Table 1, three types of voice chatbots were designed and developed, namely Reprompt (R), Reprompt + Confirm (C), and Reprompt + Suggestion (S) chatbots, with reference to the error-handling strategies discussed in previous studies (Oviatt et al., 1998; Bulyko et al., 2005; Bohus and Rudnicky, 2008; Paek and Pieraccini, 2008; Portet et al., 2013; Opfermann and Pitsch, 2017; Ziman and Walsh, 2018).
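The three conditions differ only in what the system says after it fails to understand the user. Because the study used a Wizard-of-Oz setup, it does not describe an automated implementation; the following is a minimal Python sketch, assuming a hypothetical `repair_prompt` function, of how an automated dialog mechanism might generate a repair utterance for each condition. The prompt wording, the `best_guess` recognition hypothesis used for confirmation, and the `options` list used for suggestion are illustrative assumptions, not the study's actual scripts.

```python
from enum import Enum
from typing import Optional, Sequence


class Strategy(Enum):
    """The three chatbot conditions, labeled after the study's R, C, and S groups."""
    REPROMPT = "R"
    REPROMPT_CONFIRM = "C"
    REPROMPT_SUGGEST = "S"


def repair_prompt(
    strategy: Strategy,
    question: str,
    best_guess: Optional[str] = None,
    options: Sequence[str] = (),
) -> str:
    """Build a hypothetical repair utterance after a non-understanding.

    best_guess: assumed top recognition hypothesis (used only by condition C).
    options: assumed list of valid answers for the current question (condition S).
    """
    # Every condition starts by re-asking the original question (reprompt).
    reprompt = f"Sorry, I didn't catch that. {question}"
    if strategy is Strategy.REPROMPT_CONFIRM and best_guess:
        # Confirmation: echo the system's interpretation so the user can accept or correct it.
        return f"{reprompt} Did you say '{best_guess}'?"
    if strategy is Strategy.REPROMPT_SUGGEST and options:
        # Suggestion: constrain the answer space with explicit choices.
        return f"{reprompt} You can say: {', '.join(options)}."
    # Condition R, or C/S when the extra information is unavailable: plain reprompt.
    return reprompt


if __name__ == "__main__":
    q = "How many people will be dining?"
    print(repair_prompt(Strategy.REPROMPT_SUGGEST, q, options=["two", "four", "six"]))
    # prints: Sorry, I didn't catch that. How many people will be dining? You can say: two, four, six.
```

Falling back to a plain reprompt when no hypothesis or option list is available is a design choice of this sketch; it keeps every condition a superset of condition R, mirroring how the study layered confirmation and suggestion on top of reprompt.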

This study employed snowball sampling, a purposeful, non-probability sampling technique (Parker et al., 2019), to recruit older adults from public libraries and community centers. A total of 30 older adults aged 60–75 years (M = 68.7, SD = 4.2) voluntarily participated in the experiment. Their prior knowledge of and experience with ICTs and VUIs were surveyed during recruitment with a self-developed Background Survey. Before the experiment, the participants’ cognitive abilities were measured with the Saint Louis University Mental Status Exam (SLUMS; Tariq et al., 2006), and their self-efficacy in interacting with technologies was measured with the Self-Efficacy in Human-Robot-Interaction Scale (SE-HRI; Pütten and Bock, 2018). According to the pre-tests, all 30 participants had normal cognitive functioning by the SLUMS criteria. Eighteen (60.0%) of them had previously used the voice assistants on their mobile phones, and 28 (93.3%) frequently used computers to access and process information.

Participants with different amounts of experience were evenly assigned to the three groups for interaction with the voice chatbot. The wizard read the prompts, which used the error handling strategy of the participant’s group, through the voice chatbot, and the entire session was recorded by two video cameras to capture participants’ utterances, facial expressions, and gestures. After the experiment, a retrospective think-aloud protocol was used in an interview to clarify the intentions behind participants’ behaviors. The interview consisted of 14 open-ended questions investigating participants’ comprehension of the chatbot utterances, their perceived effort and feelings during the experiment session, and the reasons for their subjective preferences. In addition, the SE-HRI was administered again as a post-test to measure older adults’ perceived control, confidence, ease, and satisfaction with the voice chatbots. The Research Ethics Committee of the University approved all procedures, the protocol, and the methodology (NTU-REC 201907HS018).

Results and Discussion

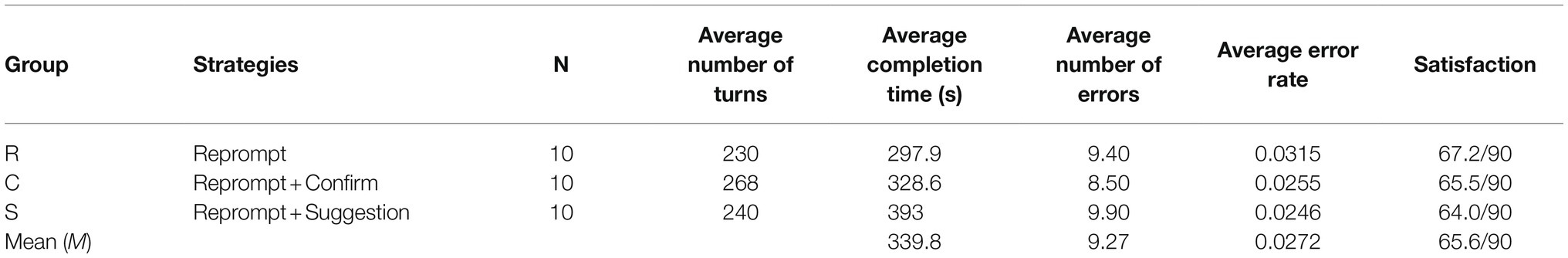

On average, participants in all three groups spent nearly 6 min (M = 339.8 s) talking to the voice chatbot and completing the restaurant reservation task. A one-way ANOVA comparing the performance of the three groups showed that participants who interacted with Chatbot C, which used reprompt and confirmation error handling strategies, spent significantly more time than those interacting with Chatbot S and Chatbot R (F = 5.7159, p < 0.01). As shown in Table 2, the number of back-and-forth exchanges between the participants and Chatbot C was the largest. Meanwhile, participants in Group R were the fastest to complete the task, but they spent a larger percentage of their time dealing with errors, whereas Group C spent more time advancing the conversation with the voice chatbot. In addition, participants’ own use of error handling strategies was influenced by the chatbot they encountered. The interviews revealed that participants in Group C regarded their voice chatbot as more human-like and were more likely to interact with it in a human-like manner, assimilating their conversational behaviors and actively reducing uncertainty by adding words. As one participant reflected, “It’s like teaching a child to talk, I could tell the robot what was wrong and expect improvement” (P29); she felt that the chatbot asked questions about everything, just as novice, curious children do. Conversely, participants in Group S regarded the voice chatbot as a more typical machine; thus, they were more likely to treat it as a subordinate or to express their needs directly and briefly. Participants in Group R adopted different response strategies depending on their experience or the characteristics of the robot interaction.
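As a worked illustration of the test reported above, the sketch below computes the one-way between-subjects ANOVA F statistic from raw group scores using only the Python standard library. It does not reproduce the study's data; the function name `one_way_anova_f` is hypothetical, and any inputs passed to it are illustrative. With three groups of 10 participants, the test has (2, 27) degrees of freedom, for which the critical F at α = 0.01 is approximately 5.49, consistent with the reported F = 5.7159 and p < 0.01.

```python
from statistics import mean
from typing import Sequence, Tuple


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    # Between-group sum of squares: spread of each group mean around the grand mean,
    # weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of each observation around its own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    # F is the ratio of the between-group to the within-group mean square.
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

This sketch yields only the F statistic and its degrees of freedom; obtaining the p-value requires the F distribution, which a library routine such as SciPy's `scipy.stats.f_oneway` provides directly from the raw group samples.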

When errors occurred in the conversations, the use of multiple handling strategies, namely suggestion and confirmation in addition to reprompt, reduced the error rate compared to the single, common strategy of reprompt. Triangulating observations with participants’ satisfaction showed that, although less preferred by the older users, the “reprompt + suggestion” strategy was the most effective way to handle conversation errors because the system provided clear guidance that reduced uncertainty and unnecessary trials, resulting in fewer conversational turns and a lower error rate. The “reprompt + confirmation” strategy was less effective because it lacked clear suggestions, so participants could more easily become trapped in error loops. However, the analysis of participants’ subjective satisfaction suggested that even with higher error rates, the older users were still able to achieve satisfaction, echoing previous findings in human–robot interaction that social robots trigger users’ mental models and expectations of human–human interaction (Lohse, 2011). Just as a pratfall can increase a competent person’s attractiveness (Aronson et al., 1966), robots that make mistakes may appear more human-like and thus be easier to accept (Salem et al., 2013).

The analysis of the errors revealed that the “reprompt” strategy alone was unable to reduce the number of user errors. This finding is in line with previous studies suggesting that older adults are less experienced with, and therefore less aware of, the technological affordances of chatbots, so they tend to guess or generate responses blindly without identifying what caused the errors (Opfermann and Pitsch, 2017). Older adults’ longer word-finding times (Schmitter-Edgecombe et al., 2000) could be associated with the higher error rate in Group R, in which the chatbot used the single error handling strategy of reprompt without providing any guidance for the older adults to repair the conversation.

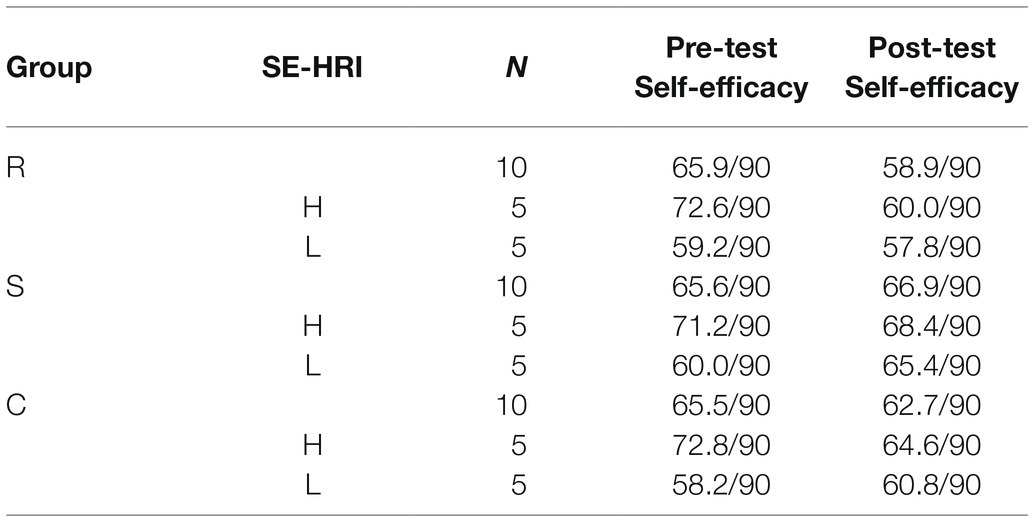

Further examination of participants’ self-efficacy and conversational performance, using a paired-sample t-test to compare pre- and post-test SE-HRI scores, revealed that older adults with lower initial self-efficacy perceived more control and capability after interacting with the voice chatbots, whereas those with higher initial self-efficacy showed a significant decrease (t = 3.33, p < 0.05), indicating that high self-efficacy users tended to rely less on the voice chatbots to complete tasks. As shown in Table 3, those with lower initial self-efficacy perceived significantly more control and capability in Group S (+5.4) and Group C (+2.6). Those in Groups S and C with higher self-efficacy experienced decreases, but the decrease was smaller in Group S (−2.8) than in Group C (−8.2). However, although participants with lower self-efficacy in Group S showed the largest increases in self-perception and conversational effectiveness, they were the least satisfied and were disappointed with the voice chatbot using the reprompt and suggestion strategies to handle errors. According to the interviews, they had expected the voice chatbot to be smarter than a human but found that they could only answer with the limited options it suggested: “I felt a little annoyed that I could only answer the chatbot’s questions with a limited number of choices. I thought it should be smarter” (P15). Low self-efficacy participants were the most satisfied with the voice chatbot using reprompt and confirmation strategies because they regarded the chatbot as smart in “pointing out their errors” (P03), which eliminated uncertainties in the conversation.
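To make the pre/post comparison above concrete, the following minimal Python sketch computes a paired-sample t statistic and the average post-minus-pre change, the kind of subgroup summary shown in Table 3. The function names `paired_t` and `mean_change` are hypothetical, and no study data are included. Note that the sign of t depends on the subtraction order: here change is post minus pre, so a decrease in SE-HRI scores gives a negative t.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(pre: Sequence[float], post: Sequence[float]) -> Tuple[float, int]:
    """Return (t, df) for a paired-sample t-test on pre/post scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    # Per-participant change score: post-test minus pre-test.
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all changes are identical; t is undefined")
    # t is the mean change divided by its standard error, sd / sqrt(n).
    t = mean(diffs) / (sd / sqrt(n))
    return t, n - 1


def mean_change(pre: Sequence[float], post: Sequence[float]) -> float:
    """Average post-minus-pre change for one subgroup of participants."""
    return mean(b - a for a, b in zip(pre, post))
```

The same statistic is available from SciPy as `scipy.stats.ttest_rel(a, b)`, which tests `a` minus `b` and therefore reports the opposite sign when called as `ttest_rel(pre, post)`.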

A noteworthy result from triangulating the interview and observation data was that participants with high self-efficacy imagined the chatbot to have extensive capabilities and, when system errors occurred, tended to keep guessing at their cause on their own, leading to overcorrection and frequent changes in their own responses and error handling strategies. In addition, the more confident they felt in carrying out the conversation with the voice chatbots, the more likely they were to give up when they could not complete the task as expected. The “reprompt + suggestion” strategy provided them with enough information to recover from errors and also revealed the limits of the chatbot’s capabilities, thus increasing their confidence in controlling the machine. Therefore, the findings suggest that for high self-efficacy older users, the system’s error handling strategy should still reflect its nature as a machine. On the other hand, low self-efficacy users tended to attribute errors to their own mistakes, such as inappropriate wording or pronunciation, and were more willing to repeat themselves with minor changes. For them, the “reprompt + confirmation” strategy seemed more effective because they felt encouraged when the voice chatbot, a machine, acted like a human in actively handling the errors along with them.
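These observations can be read as a selection rule for an adaptive chatbot. The following minimal Python sketch is an illustration under stated assumptions, not a mechanism evaluated in the study: the function `choose_strategy`, the self-efficacy cut-off `threshold`, and the `max_confirm_errors` escape rule are all hypothetical. It routes higher self-efficacy users to reprompt + suggestion and lower self-efficacy users to reprompt + confirmation, and, because the confirmation condition could trap users in error loops, it switches to suggestions after repeated consecutive errors.

```python
from typing import Optional

SUGGEST = "reprompt+suggestion"
CONFIRM = "reprompt+confirmation"


def choose_strategy(
    se_hri_pre: Optional[float],
    threshold: float,
    consecutive_errors: int = 0,
    max_confirm_errors: int = 2,
) -> str:
    """Select an error-handling strategy for the next repair turn.

    se_hri_pre: the user's pre-test self-efficacy score, or None if unknown.
    threshold, max_confirm_errors: hypothetical parameters, not values from the study.
    """
    # Unknown profile or higher self-efficacy: use explicit suggestions, which
    # produced the fewest errors overall and expose the machine's limits,
    # curbing repeated guessing and overcorrection.
    if se_hri_pre is None or se_hri_pre >= threshold:
        return SUGGEST
    # Lower self-efficacy: confirmation feels like a human partner repairing the
    # error together, but escape to suggestions if the user appears stuck in a loop.
    if consecutive_errors >= max_confirm_errors:
        return SUGGEST
    return CONFIRM
```

Using suggestion as the default for users without a known profile is a choice of this sketch, based on the finding that reprompt + suggestion was the most effective strategy overall; a deployed system might instead ask a short self-efficacy question at the start of the session.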

Conclusion

This study investigated how different error-handling strategies affected older users’ conversational performance and satisfaction with voice chatbots. In summary, voice chatbots that handled conversational errors provided older users, especially those with lower self-efficacy, with more user control, resulting in higher engagement and performance. Comparing combinations of error handling strategies, the results suggested that using multiple strategies, namely suggestion and confirmation in addition to reprompt, helps older users achieve effectiveness and satisfaction by reducing the error rate and improving their self-efficacy. The older users were generally more willing to continue the conversation and explore new tasks, including using ICTs, with the facilitation of the voice chatbots, which supports the effectiveness and importance of error-handling VUIs. Furthermore, the different error handling strategies used by the voice chatbots triggered different expectations among the older users, so that participants with similar backgrounds and experiences responded in different ways. The voice chatbot using confirmation to handle errors was regarded by the older users as more human-like, while the one using suggestion was regarded as more machine-like. More importantly, the older users who were less confident in using ICTs showed a significant increase in self-efficacy after interacting with the error handling voice chatbots using suggestion and confirmation strategies. Moreover, the chatbot using suggestion to handle errors was preferred by high self-efficacy users because it provided sufficient and efficient guidance for them to complete their tasks.

Based on these preliminary findings, we suggest that older users’ self-efficacy toward ICTs be included in the design considerations of VUIs in general, and instructive VUIs specifically, to provide corresponding error handling mechanisms. Methodologically, this study contributes to field studies of gerontechnology by comprehensively examining the different error handling strategies of VUIs in real use contexts with the specific user group of older adults. Although several limitations, including a one-off experimental session and a relatively small sample size, should be noted, this systematic investigation of older users’ conversational behaviors, performance, and experiences from the error handling perspective identified critical elements for designing senior-friendly VUIs and could serve as a basis for designing conversational AI chatbots in the future. The results of this study can further inform the design of any chatbot application providing information services, including living technology and learning technology in formal and informal education.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Research Ethics Committee of National Taiwan University. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

WL: conceptualization, methodology, visualization, investigation, and writing-original draft. H-CC: software and investigation. H-PY: funding acquisition and writing-review and editing. All authors contributed to the article and approved the submitted version.

Funding

This study is supported by the Taiwan Ministry of Science and Technology (MOST107-2923-S002-001-MY3 and MOST106-2410-H-002-093-MY2).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

American Library Association (2017). Guidelines for Library Services with 60+ Audience: Best Practices. Chicago, IL: American Library Association.

Aronson, E., Willerman, B., and Floyd, J. (1966). The effect of a pratfall on increasing interpersonal attractiveness. Psychon. Sci. 4, 227–228. doi: 10.3758/BF03342263

Baba, A., Yoshizawa, S., Yamada, M., Lee, A., and Shikano, K. (2004). Acoustic models of the elderly for large-vocabulary continuous speech recognition. Electron. Commun. Jpn. 87, 49–57. doi: 10.1002/ecjb.20101

Bandura, A. (2006). “Guide for constructing self-efficacy scales,” in Self-Efficacy Beliefs of Adolescents, eds. F. Pajares and T. Urdan (Greenwich, CT: Information Age Publishing), 307–337.

Bohus, D., and Rudnicky, A. I. (2008). “Sorry, I didn’t catch that! An investigation of non-understanding errors and recovery strategies,” in Recent Trends in Discourse and Dialogue, eds. L. Dybkjær and W. Minker (Dordrecht: Springer), 123–154.

Bulyko, I., Kirchhoff, K., Ostendorf, M., and Goldberg, J. (2005). Error-correction detection and response generation in a spoken dialogue system. Speech Comm. 45, 271–288. doi: 10.1016/j.specom.2004.09.009

Clark, H. H., and Brennan, S. E. (1991). “Grounding in communication,” in Perspectives on Socially Shared Cognition, eds. L. B. Resnick, J. M. Levine, and S. D. Teasley (Washington, DC: American Psychological Association), 127–149.

Hajiheydari, N., and Ashkani, M. (2018). Mobile application user behavior in the developing countries: A survey in Iran. Inf. Syst. 77, 22–33. doi: 10.1016/j.is.2018.05.004

Hsiao, C.-H., and Tang, K.-Y. (2015). Investigating factors affecting the acceptance of self-service technology in libraries: The moderating effect of gender. Library Hi Tech. 33, 114–133. doi: 10.1108/LHT-09-2014-0087

Klemmer, S. R., Sinha, A. K., Chen, J., Landay, J. A., Aboobaker, N., and Wang, A. (2000). “Suede: a wizard of oz prototyping tool for speech user interfaces,” in Proceedings of the 13th annual ACM symposium on User interface software and technology; November 1, 2000, 1–10.

Lee, J. Y. (2015). Aging and speech understanding. J. Audiol. Otol. 19, 7–13. doi: 10.7874/jao.2015.19.1.7

Lin, W., Yueh, H. P., Wu, H. Y., and Fu, L. C. (2014). Developing a service robot for a children's library: A design-based research approach. J. Assoc. Inf. Sci. Technol. 65, 290–301. doi: 10.1002/asi.22975

Lohse, M. (2011). The role of expectations and situations in human-robot interaction. New Front. Hum. Robot Int. 2, 35–56. doi: 10.1075/ais.2.04loh

Lu, M.-H., Lin, W., and Yueh, H.-P. (2017). Development and evaluation of a cognitive training game for older people: A design-based approach. Front. Psychol. 8:1837. doi: 10.3389/fpsyg.2017.01837

Myers, C., Furqan, A., Nebolsky, J., Caro, K., and Zhu, J. (2018). “Patterns for how users overcome obstacles in voice user interfaces,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems; April 19, 2018, 1–7.

Opfermann, C., and Pitsch, K. (2017). “Reprompts as error handling strategy in human-agent-dialog? User responses to a system's display of non-understanding,” in 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN); August 28–September 1, 2017, 310–316.

Oulasvirta, A., Engelbrecht, K.-P., Jameson, A., and Möller, S. (2006). “The relationship between user errors and perceived usability of a spoken dialogue system,” in The 2nd ISCA/DEGA Tutorial and Research Workshop on Perceptual Quality of Systems; September 3–5, 2006.

Oviatt, S., Levow, G.-A., Moreton, E., and MacEachern, M. (1998). Modeling global and focal hyperarticulation during human–computer error resolution. J. Acoust. Soc. Am. 104, 3080–3098. doi: 10.1121/1.423888

Paek, T., and Pieraccini, R. (2008). Automating spoken dialogue management design using machine learning: An industry perspective. Speech Comm. 50, 716–729. doi: 10.1016/j.specom.2008.03.010

Parker, C., Scott, S., and Geddes, A. (2019). Snowball Sampling. SAGE Research Methods Foundations. United States: SAGE Publications.

Portet, F., Vacher, M., Golanski, C., Roux, C., and Meillon, B. (2013). Design and evaluation of a smart home voice interface for the elderly: acceptability and objection aspects. Pers. Ubiquit. Comput. 17, 127–144. doi: 10.1007/s00779-011-0470-5

Pütten, A. R. V. D., and Bock, N. (2018). Development and validation of the self-efficacy in human-robot-interaction scale (SE-HRI). ACM Trans. Hum. Robot Int. 7, 1–30. doi: 10.1145/3139352

Rodin, J. (1986). Aging and health: effects of the sense of control. Science 233, 1271–1276. doi: 10.1126/science.3749877

Salem, M., Eyssel, F., Rohlfing, K., Kopp, S., and Joublin, F. (2013). To err is human (−like): effects of robot gesture on perceived anthropomorphism and likability. Int. J. Soc. Robot. 5, 313–323. doi: 10.1007/s12369-013-0196-9

Schmitter-Edgecombe, M., Vesneski, M., and Jones, D. (2000). Aging and word-finding: A comparison of spontaneous and constrained naming tests. Arch. Clin. Neuropsychol. 15, 479–493.

Subasi, Ö., Leitner, M., Hoeller, N., Geven, A., and Tscheligi, M. (2011). Designing accessible experiences for older users: user requirement analysis for a railway ticketing portal. Univ. Access Inf. Soc. 10, 391–402. doi: 10.1007/s10209-011-0223-2

Tariq, S. H., Tumosa, N., Chibnall, J. T., Perry, M. H. III, and Morley, J. E. (2006). Comparison of the Saint Louis university mental status examination and the mini-mental state examination for detecting dementia and mild neurocognitive disorder—a pilot study. Am. J. Geriatr. Psychiatry 14, 900–910. doi: 10.1097/01.JGP.0000221510.33817.86

Tatham, H. (2016). 'First of its kind' humanoid robot joins library staff in North Queensland. ABC News, December 22.

Waldman, L. (2014). “Coming soon to the library: humanoid robots,” in Wall Street Journal; September 29, 2014.

Yankelovich, N. (1996). How do users know what to say? Interactions 3, 32–43. doi: 10.1145/242485.242500

Yueh, H. P., Lin, W., Wang, S. C., and Fu, L. C. (2020). Reading with robot and human companions in library literacy activities: A comparison study. Br. J. Educ. Technol. 51, 1884–1900. doi: 10.1111/bjet.13016

Keywords: human-robot interaction, older users, literacy education, error handling strategies, voice user interfaces, chatbot

Citation: Lin W, Chen H-C and Yueh H-P (2021) Using Different Error Handling Strategies to Facilitate Older Users’ Interaction With Chatbots in Learning Information and Communication Technologies. Front. Psychol. 12:785815. doi: 10.3389/fpsyg.2021.785815

Edited by:

Elvira Popescu, University of Craiova, Romania

Reviewed by:

Jason Bernard, McMaster University, Canada

Sonia Adelé, Université Gustave Eiffel, France

Copyright © 2021 Lin, Chen and Yueh. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hsiu-Ping Yueh, yueh@ntu.edu.tw

Weijane Lin, Hong-Chun Chen¹ and Hsiu-Ping Yueh