Bits from brains for biologically inspired computing

- 1MEG Unit, Brain Imaging Center, Goethe University, Frankfurt am Main, Germany

- 2School of Civil Engineering, The University of Sydney, Sydney, NSW, Australia

- 3CSIRO Digital Productivity Flagship, Marsfield, NSW, Australia

- 4Department of Non-linear Dynamics, Max Planck Institute for Dynamics and Self-Organization, Göttingen, Germany

- 5Bernstein Center for Computational Neuroscience, Göttingen, Germany

Inspiration for artificial biologically inspired computing is often drawn from neural systems. This article shows how to analyze neural systems using information theory with the aim of obtaining constraints that help to identify the algorithms run by neural systems and the information they represent. Algorithms and representations identified this way may then guide the design of biologically inspired computing systems. The material covered includes the necessary introduction to information theory and to the estimation of information-theoretic quantities from neural recordings. We then show how to analyze the information encoded in a system about its environment, and also discuss recent methodological developments on the question of how much information each agent carries about the environment either uniquely or redundantly or synergistically together with others. Last, we introduce the framework of local information dynamics, where information processing is partitioned into component processes of information storage, transfer, and modification – locally in space and time. We close by discussing example applications of these measures to neural data and other complex systems.

1. Introduction

Artificial computing systems are a pervasive phenomenon in today’s life. While traditionally such systems were employed to support humans in tasks that required mere number-crunching, there is an increasing demand for systems that exhibit autonomous, intelligent behavior in complex environments. These complex environments often confront artificial systems with ill-posed problems that have to be solved under constraints of incomplete knowledge and limited resources. Tasks of this kind are typically solved with ease by biological computing systems, as these cannot afford the luxury to dismiss any problem that happens to cross their path as “ill-posed.” Consequently, biological systems have evolved algorithms to approximately solve such problems – algorithms that are adapted to their limited resources and that just yield “good enough” solutions, quickly. Algorithms from biological systems may, therefore, serve as an inspiration for artificial information processing systems to solve similar problems under tight constraints of computational power, data availability, and time.

One naive way to use this inspiration is to copy and incorporate as much detail as possible from the biological into the artificial system, in the hope to also copy the emergent information processing. However, already small errors in copying the parameters of a system may compromise success. Therefore, it may be useful to derive inspiration also in a more abstract way, which is directly linked to the information processing carried out by a biological system. But how can we gain insight into this information processing without caring for its biological implementation?

The formal language to quantitatively describe and dissect information processing – in any system – is provided by information theory. For our particular question, we can exploit the fact that information theory does not care about the nature of variables that enter the computation or information processing. Thus, it is in principle possible to treat all relevant aspects of biological computation, and of biologically inspired computing systems, in one natural framework.

Here, we will begin with a review of information-theoretic preliminaries (Section 2). Then we will systematically present how to analyze biological computing systems, especially neural systems, using methods from information theory and discuss how these information-theoretic results can inspire the design of artificial computing systems. Specifically, we focus on three types of approaches to characterizing the information processing undertaken in such systems and what this tells us about the algorithms they implement. First, we show how to analyze the information encoded in a system (responses) about its environment (stimuli) in Section 3. Second, in Section 4, we describe recent advances in quantifying how much information each response variable carries about the stimuli either uniquely or redundantly or synergistically together with others. Third, in Section 5, we introduce the framework of local information dynamics, which partitions information processing into component processes of information storage, transfer, and modification, and in particular measures these processes locally in space and time. This information dynamics approach is particularly useful in gaining insights into the information processing of system components that are far removed from direct stimulation by the outer environment. We will close in Section 6 by a brief review of studies where this information-theoretic point of view has served the goal of characterizing information processing in neural and other biological information processing systems.

2. Information Theory in Neuroscience

2.1. Information-Theoretic Preliminaries

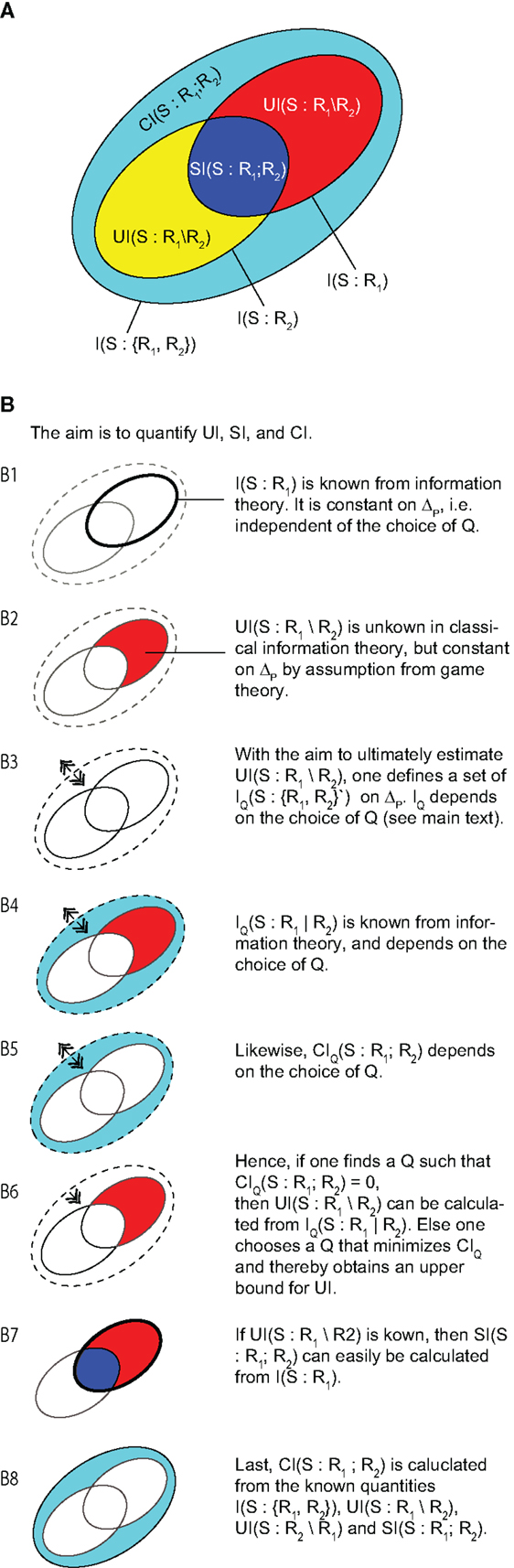

In this section, we introduce the necessary terminology, and notation, and define basic information-theoretic quantities that later analyses build on. Experts in information theory may proceed immediately to Section 2.2, which discusses the use of information theory in neuroscience.

2.1.1. Terminology and notation

To analyze neural systems and biologically inspired computing systems (BICS) alike, and to show how the analysis of one can inspire the design of the other, we have to establish a common terminology. Neural systems and BICS have the common property that they are composed of various smaller parts that interact. These parts will be called agents in general, but we will also refer to them as neurons or brain areas where appropriate. The collection of all agents will be referred to as the system.

We define that an agent 𝒳 in a system produces an observed time series {x1,…, xt,…, xN}, which is sampled at time intervals Δ. For simplicity, we choose Δ = 1, and index our measurements by t ∈{1. . . N}⊆ ℕ. The time series is understood as a realization of a random process

X. The random processes are a collection of random variables (RVs) Xt, sorted by an integer index (t). Each RV Xt, at a specific time t, is described by the set of all its J possible outcomes and their associated probabilities Since the probabilities of an outcome may change with t in non-stationary random processes, we indicate the RV the probabilities belong to by subscript: In sum, the physical agent 𝒳 is conceptualized as a random process X, composed of a collection of RVs Xt, which produce realizations xt, according to the probability distributions When referring to more than one agent, the notation is generalized to 𝒳, 𝒴, 𝒵, … . An overview of the complete notation can be found in Table 1.

2.1.2. Estimation of probability distributions for stationary and non-stationary random processes

In general, the probability distributions of the Xt are unknown. Since knowledge of these probability distributions is essential to computing any information-theoretic measure, the probability distributions have to be estimated from the observed realizations of the RVs, xt. This is only possible if we have some form of replication of the processes we wish to analyze. From such replications, the probabilities are estimated, for example, by counting relative frequencies, or by density estimation (Kozachenko and Leonenko, 1987; Kraskov et al., 2004; Victor, 2005).

In general, the probability to obtain the j-th outcome xt = aj at time t, has to be estimated from replications of the processes at the same time point t, i.e., via an ensemble of physical replications of the systems in question. These replications can often be obtained in BICS via multiple simulation runs or even physical replications if the systems in question are very small and/or simple. For complex physically embodied BICS and neural systems, generating a sufficient number of replications of a process is often impossible. Therefore, one either resorts to repetitions of parts of the process in time, to the generation of cyclostationary processes, or even assumes stationarity. All three possibilities will be discussed in the following.

2.1.2.1. General repetitions in time. If our random process can be repeated in time, then the probability to obtain the value xt = aj can be estimated from observations made at a sufficiently large set ℳ of time points t + k, where we know by design of the experiment that the process repeated itself. That is, we know that RVs X t+ k at certain time points t + k have probability distributions identical to the distribution at t that is of interest to us:

If the set ℳ of times tk that the process is repeated at is large enough, we obtain a reliable estimate of

2.1.2.2. The cyclostationary case. Cyclostationarity can be understood as a specific form of repeating parts of the random process, where the repetitions occur after regular intervals T. For cyclostationary processes X(c) we assume (Gardner, 1994; Gardner et al., 2006) that there are RVs at times t + nT that have the same probability distribution as :

This condition guarantees that we can estimate the necessary probability distributions of the RV by looking at other RVs of the process X(c).

2.1.2.3. Stationary processes. Finally, for stationary processes X(s), we can substitute T in equation (2) by T = 1 and:

In the stationary case, the probability distribution can be estimated from the entire set of measured realizations xt. Thus, we will drop the subscript index indicating the specific RV, i.e., Xt = X and xt = x when the process is stationary, and also when stationarity is irrelevant (e.g., when talking only about a single RV).

2.1.3. Basic information theory

Based on the above definitions we now define the necessary basic information-theoretic quantities. To put a focus on the often neglected local information-theoretic quantities that will become important later on, we will start with the Shannon information content of a realization of a RV.

To this end, we assume a (potentially non-stationary) random process X consisting of X1, X2, …, XN. The law of total probability states that

and the product rule yields

with

All realizations of the process starting with a specific x1 thus together have probability mass

and occupy a fraction of p(x1)/1 in the original probability space. Obtaining x1 can therefore be interpreted as informing us that the full realization lies in this fraction of the space. Thus, the reduction in uncertainty, or the information gained from x1 must be a function of 1/p(x1). To ensure that subsequent realizations from independent RVs yield additive amounts of information, we take the logarithm of this ratio to obtain the Shannon information content (Shannon, 1948) [also see MacKay (2003)], which measures the information provided by a single realization xi of a RV Xi:

Typically, we take log2 giving units in bits.

The average information content of a RV Xi is called the entropy H:

The information content of a specific realization x of X, given we already know the outcome y of another variable Y, which is not necessarily independent of X, is called conditional information content:

Averaging this for all possible outcomes of X, given their probabilities p(x|y) after the outcome y was observed and averaging then over all possible outcomes y that occur with p(y), yields the conditional entropy:

The conditional entropy H(X|Y) can be described from various perspectives: H(X|Y) is the average amount of information that we get from making an observation of X after having already made an observation of Y. In terms of uncertainties, H(X|Y) is the average remaining uncertainty in X once Y was observed. We can also say H(X|Y) is the information in X that can not be directly obtained from Y.

The conditional entropy can be used to derive the amount of information directly shared between the two variables X, Y. This is because the mutual information of two variables X, Y, I(X: Y), is the total average information in one variable [H(X)] minus the average information in this variable that can not be obtained from the other variable [H(X|Y)]. Hence, the mutual information (MI) is defined as:

Similarly to conditional entropy, we can also define a conditional mutual information between two variables X, Y, given the value of a third variable Z is known:

The above measures of mutual information are averages. Although average values are used more often than their localized counterparts, it is perfectly valid to inspect local values for MI (like the information content h, above). This “localizability” was, in fact, a requirement that both Shannon (1948) and Fano (1961) postulated for proper information-theoretic measures, and there is a growing trend in neuroscience (Lizier et al., 2011a) and in the theory of distributed computation (Lizier, 2013, 2014a) to return to local values. For the above measures of mutual information, the localized forms are listed in the following.

The local mutual information i(x : y) is defined as:

while the local conditional mutual information is defined as:

When we take the expected values of these local measures, we obtain mutual and conditional mutual information. These measures are called local, because they allow one to quantify mutual and conditional mutual information between single realizations. Note, however, that the probabilities p(⋅) involved in equations (14) and (15) are global in the sense that they are representative of all possible outcomes. In other words, a valid probability distribution has to be estimated irrespective of whether we are interested in average or local information measures. We also note that local MI and local conditional MI may be negative, unlike their averaged forms (Fano, 1961; Lizier, 2014a). This occurs for the local MI where the measurement of one variable is misinformative about the other variable, i.e., where the realization y lowers the probability p(x|y) below the initial probability p(x). This means that the observer expected x less after observing y than before, but x occurred nevertheless. Therefore, y was misinformative about x.

2.1.4. Estimating information-theoretic quantities from data

Before we advance to specific information-theoretic analyses of neural data, it must be stressed that the estimation of information-theoretic measures from finite data is a difficult task. The naive estimation of probabilities by empirically observed frequencies, followed by plugging of these probabilities into the above definitions almost inevitably leads to serious bias problems (Treves and Panzeri, 1995; Victor, 2005; Panzeri et al., 2007a). This situation can be improved to some degree by using binless density estimators (Kozachenko and Leonenko, 1987; Kraskov et al., 2004; Victor, 2005). However, usually statistical testing against surrogate data or empirical control data will be necessary to judge whether a non-zero value of a measure indicates an effect or just the bias [see, e.g., Lindner et al. (2011)].

2.1.5. Signal representation and state space reconstruction

The random processes that we analyze in the agents of a computing system usually have memory. This means that the RVs that form the process are no longer independent, but depend on variables in the past. In this setting, a proper description of the process requires to look at the present and past RVs jointly. In general, if there is any dependence between the Xt, we have to form the smallest collection of variables with ti < t that jointly make Xt+1 conditionally independent of all with tk < min(ti), i.e.,

A realization xt of such a sufficient collection Xt of past variables is called a state of the random process X at time t.

A sufficient collection of past variables, also called a delay embedding vector, can always be reconstructed from scalar observations for low-dimensional deterministic systems, as shown by Takens (1981). Unfortunately, most real world systems have high-dimensional dynamics rather than being low-dimensional deterministic. For these systems, it is not obvious that a delay embedding similar to Taken’s approach would yield the desired results. In fact, many systems require an infinite number of past random variables when only a scalar observable of the high-dimensional stochastic process is accessible (Ragwitz and Kantz, 2002). Nevertheless, the behavior of scalar observables of most of these systems can be approximated well by a finite collection of such past variables for all practical purposes (Ragwitz and Kantz, 2002); in other words, these systems can be approximated well by a finite order, one-dimensional Markov-process according to equation (16).

Note that without proper state space reconstruction information-theoretic analyses will almost inevitably miscount information in the random process. Indeed, the importance of state space reconstruction cannot be overstated; for example, a failure to reconstruct states properly leads to false positive findings and reversed directions of information transfer as shown in Vicente et al. (2011); imperfect state space reconstruction is also the cause of failure of transfer entropy analysis demonstrated in Smirnov (2013); and has been shown to impede the otherwise clear identification of coherent moving structures in cellular automata as information transfer entities (Lizier et al., 2008c).

In the remainder of the text, we therefore assume proper state space reconstruction. The resulting state space representations are indicated by bold case letters, i.e., Xt and xt refer to the state variables of X.

2.2. Why Information Theory in Neuroscience?

It is useful to organize our understanding of neural (and biologically inspired) computing systems into three major levels, originally proposed by Marr (1982), and to then see at which level information theory provides insights:

• At the level of the task, the neural system or the BICS is trying to solve (task level1) we ask what information processing problem a neural system (or a part of it) tries to solve. Such problems could, for example, be the detection of edges or objects in a visual scene, or maintaining information about an object after the object is no longer in the visual scene. It is important to note that questions at the task level typically revolve around entities that have a direct meaning to us, e.g., objects or specific object properties used as stimulus categories, or operationally defined states, or concepts such as attention or working memory. An example of an analysis carried out purely at this level is the investigation of whether a person behaves as an optimal Bayesian observer [see references in Knill and Pouget (2004)].

• At the algorithmic level, we ask what entities or quantities of the task level are represented by the neural system and how the system operates on these representations using algorithms. For example, a neural system may represent either absolute luminance or changes of luminance of the visual input. An algorithm operating on either of these representations may, for example, then try to identify an object in the input that is causing the luminance pattern either by a brute force comparison to all luminance patterns ever seen (and stored by the neural system). Alternatively, it may try to further transform the luminance representation via filtering, etc., before inferring the object via a few targeted comparisons.

• At the (biophysical) implementation level, we ask how the representations and algorithms are implemented in neural systems. Descriptions at this level are given in terms of the relationship between various biophysical properties of the neural system or its components, e.g., membrane currents or voltages, the morphology of neurons, spike rates, chemical gradients, etc. A typical study at this level might aim, for example, at reproducing observed physical behavior of neural circuits, such as gamma-frequency (>40 Hz) oscillations in local field potentials by modeling the biophysical details of these circuits from ground up (Markram, 2006).

This separation of levels of understanding served to resolve important debates in neuroscience, but there is also growing awareness of a specific shortcoming of this classic view: results obtained by careful study at any of these levels do not constrain the possibilities at any other level [see the after-word by Poggio in Marr (1982)]. For example, the task of winning a game of Tic–Tac–Toe (task level) can be reached by a brute force strategy (algorithmic level) that may be realized in a mechanical computer (implementation level) (Dewdney, 1989). Alternatively, the very same task can be solved by flexible rule use (algorithmic level) realized in biological brains (implementation level) of young children (Crowley and Siegler, 1993).

As we will see, missing relationships between Marr’s levels can be filled in by information theory: in Section 3, we show how to link the task level and the implementation level by computing various forms of mutual information between variables at these two levels. These mutual informations can be further decomposed into the contributions of each agent in a multi-agent system, as well as information carried jointly. This will be covered in Section 4. In Section 5, we use local information measures to link neural activity at the implementation level to components of information processing at the algorithmic level, such as information storage, and transfer. This will be done per agent and time step and thereby yields a sort of information theoretic “footprint” of the algorithm in space and time. To be clear, such an analysis will only yield this “footprint” – not identify the algorithm itself. Nevertheless, this footprint is a useful constraint when identifying algorithms in neural systems, because various possible algorithms to solve a problem will clearly differ with respect to this footprint. Section 4 covers current attempts to define the concept of information modification. We close by a short review of some example applications of information-theoretic analyses of neural data, and describe how they relate to Marr’s levels.

3. Analyzing Neural Coding

3.1. Neural Codes for External Stimuli

As introduced above, information theory can serve to bridge the gap between the task level, where we deal with properties of a stimulus or task that bear a direct meaning to us, and the implementation level, where we recorded physical indices of neural activity, such as action potentials. To this end, we use mutual information [equation (13)] and derivatives thereof to answer questions about neural systems like these:

1. Which (features of) neural responses (R) carry information about which (features of) stimuli (S)?

2. How much does an observer of a specific neural response r, i.e., a receiving brain area, change its beliefs about the identity of a stimulus s, from the initial belief p(s) to the posterior belief p(s|r)) after receiving the neural response r?

3. Which specific neural response r is particularly informative about an unknown stimulus s from a certain set of stimuli?

4. Which stimulus s leads to responses that are informative about this very stimulus, i.e., to responses that can “transmit” the identity of the stimulus to downstream neurons?

The empirical answers to these questions bear important implications for the design of BICS. For example, the encoding of an environment in a BICS may be modeled on that of a neural system that successfully lives in the same environment. In the following paragraphs, we will show how to answer the above questions 1–4 using information theory.

3.1.1. Which neural responses (R) carry information about which stimuli (S)?

This question can be easily answered by computing the mutual information I(S : R) between stimulus identity and neural responses. Despite its deceptive simplicity, computing this mutual information can be very informative about neural codes. This is because both the description of what constitutes a stimulus and a response rely on what we consider to be their relevant features. For example, presenting pictures of fruit as stimulus set, we could compute the mutual information between neural responses and the stimuli described as red versus green fruit or described as apples versus pears. The resulting mutual information will differ between these two descriptions of the stimulus set – allowing us to see how the neural system partitions the stimuli. Likewise, we could extract features Fi(r) of neural responses r, such as the time of the first spike [e.g., Johansson and Birznieks (2004)], or the relative spike times (O’Keefe and Recce, 1993; Havenith et al., 2011). Comparing the mutual information for two features I(S : F1(R)), I(S : F2(R)) allows to identify the feature carrying most information. This feature potentially is the one also read out internally by other stages of the neural system. However, when investigating individual stimulus or response features, one should also keep in mind that several stimulus or response features might have to be considered jointly as they could carry synergistic information (see Section 4, below).

3.1.2. How much does an observer of a specific neural response r, i.e., a receiving neuron or brain area, change its beliefs about the identity of a stimulus s, from the prior belief p(s) to the posterior belief p(s|r) after receiving the neural response r?

This question is natural to ask in the setting of Bayesian brain theories (Knill and Pouget, 2004). Since this question addresses a quantity associated with a specific response (r), we have to decompose the overall mutual information between the stimulus variable and the response variable [I(S : R)] into more specific information terms. As this question is about a difference in probability distributions, before and after receiving r, it is naturally expressed in terms of a Kullback–Leibler divergence between p(s) and p(s|r). The resulting measure is called the specific surprise isp (DeWeese and Meister, 1999):

It can be easily verified that I(S : R) = Σrp(r)isp(S : r). Hence isp is a valid partition of the mutual information into more specific, response dependent contributions. Similarly, we have isp(S : r) = Σsp(s|r)i(s : r), giving the relationship between the (fully) localized MI [equation (14)] and isp(S : r) as a partially localized MI. As a Kullback–Leibler divergence, isp is always positive or zero:

This simply indicates that any incoming response will either update our beliefs (leading to a positive Kullback–Leibler divergence) or not (in which case the Kullback–Leibler divergence will be zero). From this it immediately follows that isp cannot be additive: if of two subsequent responses r1, r2, the first leads us to update our beliefs about s from p(s) to p(s|r), but the second leads us to revert this update, i.e., p(s|r1, r2) = p(s) then isp(S : r1, r2) = 0≠ isp(S : r1) + isp(S : r2|r1). Loosely speaking, a series of surprises and belief updates does not necessarily lead to a better estimate. This fact has been largely overlooked in early applications of this measure in neuroscience as pointed out by DeWeese and Meister (1999). Some caution is therefore necessary when interpreting results from the literature before 1999 that were obtained using this particular partition of the mutual information.

3.1.3. Which specific neural response r is particularly informative about an unknown stimulus from a certain set of stimuli?

This question asks how much the knowledge about r is worth in terms of an uncertainty reduction about s, i.e., an information gain. In contrast to the question about an update of our beliefs above, we here ask whether this update increases or reduces uncertainty about s. This question is naturally expressed in terms of conditional entropies, comparing our uncertainty before the response, H(S), with our uncertainty after receiving the specific response r, H(S|r). The resulting difference is called the (response-) specific information ir(S : r) (DeWeese and Meister, 1999):

where Again it is easily verified that I(S : R) = Σrp(r)ir(S : r). However, here the individual contributions, ir(S : r), are not necessarily positive. This is because a response r can lead from a probability distribution p(s) with a low entropy H(S) to some p(s|r) with a high entropy H(S|r). Accepting such “negative information” terms makes the measure additive for two subsequent responses:

The negative contributions ir(S : r) can be interpreted as responses r that are mis-informative in the sense of an increase in uncertainty about the average outcome of S [compare the misinformation on the fully local scale indicated by negative i(x : y); see Section 2.1.3].

3.1.4. Which stimulus s leads to responses r that are informative about the stimulus itself?

In other words, which stimulus is reliably associated to responses that are relatively unique for this stimulus, so that we know about the occurrence of this specific stimulus from the response unambiguously. Here, we ask about stimuli that are being encoded well by the system, in the sense that they lead to responses that are informative to a downstream observer. In this type of question, a response is considered informative if it strongly reduces the uncertainty about the stimulus, i.e., if it has a large ir(S : r). We then ask how informative the responses for a given stimulus s are on average over all responses that the stimulus elicits with probabilities p(r|s):

The resulting measure iSSI(s : R) is called the stimulus specific information (SSI) (Butts, 2003). Again it can be verified easily that I(S : R) = Σsp(s)iSSI(s : R), meaning that iSSI is another valid partition of the mutual information. Just as the response specific information terms that it is composed of, the stimulus specific information can be negative (Butts, 2003).

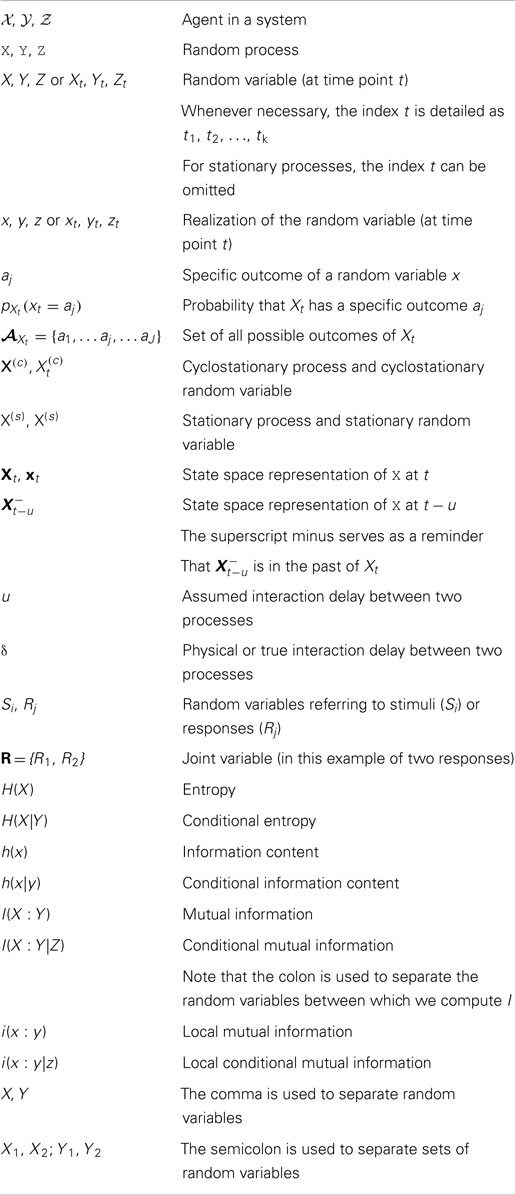

The stimulus specific information has been used to investigate, which stimuli are encoded well in neurons with a specific tuning curve; it was demonstrated that the specific stimuli that were encoded best changed with the noise level of the responses (Butts and Goldman, 2006) (Figure 1). Results of this kind may, for example, be important to consider in the design of BICS that will be confronted with varying levels of noise in their environments.

Figure 1. Stimulus specific surprise (isp) and stimulus specific information (iSSI) of an orientation tuned model neuron under two different noise regimes. (A) Tuning curve: mean firing rate (thick line), SD (thin lines) versus stimulus orientation (Θ). Repeated in for (B,D) for clarity. (B) The stimulus specific information iSSI (indicated as SSI) is maximal in regions of high slope of the tuning curve for the low noise case; (D) for the high noise case iSSI (indicated as SSI) is maximal at the peak of the tuning curve. (C,E) The corresponding values of the stimulus specific surprise isp and the relevant conditional probability distributions. Figure reproduced from Butts and Goldman (2006). Creative Commons (CC BY) Attribution License.

3.2. Importance of the Stimulus Set and Response Features

It may not immediately be visible in the above equations, but central quantities of the above treatment, such as H(S), H(S|r) depend strongly on the choice of the stimulus set 𝒜S. For example, if one chooses to study the human visual systems with a set of “visual” stimuli in the far infrared end of the spectrum, I(S : R) will most likely be very small and analysis futile (although done properly, a zero value of iSSI(s : R) for all stimuli will correctly point out that the human visual system does not care or code for any of the infrared stimuli). Hence, characterizing a neural code properly hinges to a large extent on an appropriate choice of stimuli. In this respect, it is safe to assume that a move from artificial stimuli (such as gratings in visual neuroscience) to more natural ones will alter our view of neural codes in the future. A similar argument holds for the response features that are selected for analysis. If any feature is dropped or not measured at all this may distort the information measures above. This may even happen, if the dropped feature, say the exact spike time variable RST, seems to carry no mutual information with the stimulus variable when considered alone, i.e., I(S : RST) = 0. This is because there may still be synergistic information that can only be recovered by looking at other response variables jointly with RST. For example, it would be possible in principle that neither spike time RST nor spike rate RSR carry mutual information with the stimulus variable when considered individually, i.e., I(S : RST) = I(S : RSR) = 0. Still, when considered jointly they may be informative: I(S : RST, RSR) > 0. The problem of omitted response features is almost inevitable in neuroscience, as the full sampling of all parts of a neural system is typically impossible, and we have to work with sub-sampled data. Considering only a subset of (response) variables may systematically alter the apparent dependency structure in the neural system [see Priesemann et al. (2009) for an example]. Therefore, the effects of subsampling should always be kept in mind when interpreting results of studies on neural coding. For many cases, however, it may in the future be possible to exploit regularities in the system, such as the decay of connection density between neurons, to model at least some missing parts of the overall response activity [e.g., by maximum entropy models (Tkacik et al., 2010; Granot-Atedgi et al., 2013; Priesemann et al., 2013b)].

4. Information in Ensemble Coding – Partial Information Decomposition

In neural systems, information is often encoded by ensembles of agents – as evidenced by the success of various “brain reading” and decoding techniques applied to multivariate neural data [e.g., Kriegeskorte et al. (2008)]. Knowing how this information in the ensemble is distributed over the agents can inform the designer of BICS about strategies to distribute the relevant information about a problem over the available agents. These strategies determine properties like the coding capacity of the system as well as its reliability. For example, reliability can be increased by representing the same information in multiple agents, making their information redundant. In contrast, maximizing capacity would require taking into account the full combinatorial possibilities of states of agents, making their coding synergistic.

Here, we investigate the most basic ensemble of just two agents to introduce the concepts of redundant, synergistic, and unique information (Williams and Beer, 2010; Stramaglia et al., 2012, 2014; Harder et al., 2013; Lizier et al., 2013; Barrett, 2014; Bertschinger et al., 2014; Griffith and Koch, 2014; Griffith et al., 2014; Timme et al., 2014), and note that encoding in larger ensembles is still a field of active research. More specifically, we consider an ensemble of two neurons and their responses {R1, R2}, after stimulation with stimuli s ∈ AS = {s1, s2,…}, and try to answer the following questions:

1. What information does Ri provide about S? This is the mutual information I(S : Ri) between the responses of one neuron i and the stimulus set.

2. What information does the joint variable R = {R1, R2} provide about S? This is the mutual information I(S : R1, R2) between the joint responses of the two neurons and the stimulus set.

3. What information does the joint variable R = {R1, R2} have about S that we cannot get from observing both variables R1, R2 separately? This information is called the synergy, or complementary information, of {R1, R2} with respect to S: CI(S : R1;R2).

4. What information does one of the variables, say R1, hold individually about S that we can not obtain from any other variable (R2 in our case)? This information is the unique information of R1 about S : UI(S : R1∖R2).

5. What information does one of the variables, again say R1, have about S that we could also obtain by looking at the other variable alone? This information is the redundant, or shared, information of R1 and R2 about S: SI(S : R1;R2).

Interestingly, only questions 1 and 2 can be answered using standard tools of information theory such as the mutual information. In fact, the answers to the questions 3–5, i.e., the quantification of unique, redundant and synergistic information, need new mathematical concepts as will be shown below.

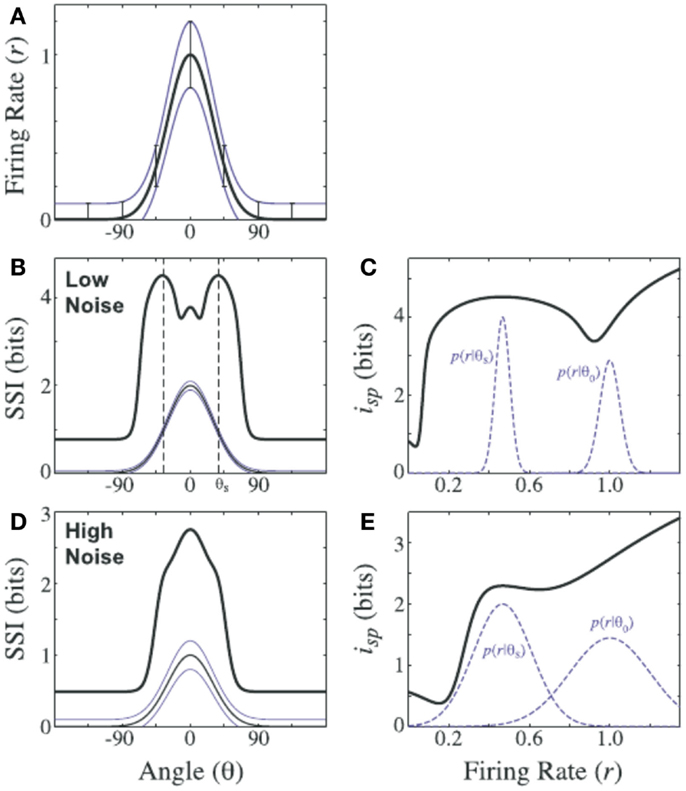

Before we present more details, we would like to illustrate the above questions by a thought experiment where three visual neurons are recorded simultaneously while being stimulated with a set of four stimuli (Figure 2). For simplicity, we will later consider the coding of these neurons with respect to questions 1–5 only in two pairwise configurations: one configuration composed of two neurons with almost identical receptive fields (RF1, RF2), another configuration of two neurons with collinear but spatially displaced receptive fields (RF1, RF3) (Figure 2A). These neurons are stimulated with one of the following stimuli (Figure 2B): s1 does not contain anything at the receptive fields of the three neurons, and the neurons stay inactive; s2 is a short bar in the receptive fields of neurons 1,2; s3 is a similar short bar, but over the receptive field of neuron 3, instead of 1,2; s4 is a long bar covering all receptive fields in the example.

Figure 2. Redundant and synergistic neural coding. (A) Receptive fields (RFs) of three neurons R1, R2, and R3. (B) Set of four stimuli. (C) Circuit for synergistic coding. Responses of neurons R1, R3 determine the response of neuron N via an XOR-function. In the hidden circuit in between R1, R2, and N open circles denote excitatory neurons, filled circles inhibitory neurons. Numbers in circles are activation thresholds, signed numbers at connecting arrows are synaptic weights.

To make things easy, let us encode responses that we get from these three neurons (colored traces in Figure 2B) in binary form, with a “1” simply indicating that there was a response in our response window (boxes with activity traces in Figure 2).

Classic information theory tells us that if we assume the stimuli to be presented with equal probability then the entropy of the stimulus set is H(S) = 2 (bit). Obviously, none of the information terms above can be larger than these 2 bits. We also see that each neuron shows activity (binary response = 1) in half of the cases, yielding an entropy H(Rj) = 1 for the responses of each neuron. The responses of the three neurons fully specify the stimulus, and therefore I(S : R1, R2, R3) = 2. To see the mutual information between an individual neuron’s response and the stimulus we may compute I(S : Ri) = H(S) − H(S|Ri). To do this, we remember H(S) = 2 and use that the number of equiprobable outcomes for S drops by half after observing a single neuron (e.g., after observing a response r1 = 1 of neuron 1, two stimuli remain possible sources of this response – s2 or s4). This gives H(S|Ri) = 1, and I(S : Ri) = 1. Hence, each neuron provides 1 bit of information about the stimulus when considered individually. Already here, we see something curious – although each of the three neurons has 1 bit about the stimulus, together they have only 2, not 3 bits. We can see the reason for this “vanishing bit” when considering responses from pairs of neurons, especially the pair {R1, R2}.

We now turn to questions 3–5, and ask about a decomposition of the information in joint variables formed from pairs of neurons:

• To understand the concept of redundant (or shared) information, consider the responses of neuron 1 and 2. These two neurons show identical responses to the stimuli. Individually, each of the neurons provides 1 bit of information about the stimulus. Jointly, i.e., if we look at them together ({R1, R2}), they still provide only 1 bit: I(S : R1, R2) = 1, not 2 bits. This is because the information carried by their responses is redundant. To see this, note that one cannot decide between stimuli s1 and s3 if one gets the result (r1 = 0, r2 = 0), and similarly one cannot not decide between stimuli s2 and s4 if one gets (r1 = 1, r2 = 1); other combinations of responses do not occur here. We see that neurons 1 and 2 have exactly the same information about the stimulus, and a measure of redundant information should yield the full 1 bit in this case2. We will later see this intuitive argument again as the “Self-Redundancy” axiom (Williams and Beer, 2010).

• To understand the concept of synergy, consider the responses {R1, R3} from the second example pair (i.e., neurons 1,3), and ask how much information they have about the presence of exactly one short bar on the screen [i.e., s2 or s3, in contrast to a long bar (S4) or no bar at all (s1)]. Mathematically, the XOR function indicates whether a short bar is present or not, N = XOR (R1, R2). For a neural implementation of the XOR function, see Figure 2C. To examine synergy, we investigate the mutual information between {R1, R3}, R1, R3, and N. The individual mutual informations of each neuron R1, R3 with the downstream neuron N are zero [I(N : Ri) = 0]. However, the mutual information between these two neurons considered jointly and the downstream neuron N equals 1 bit, because the response of N is fully determined by its two inputs: I(N : R1, R3) = 1. Thus, there is only synergistic information between R1 and R3 about N, in this example about the presence of a single short bar.

• To understand the concept of unique information, consider only the neurons 1, 3 and the two stimuli s1 and s3. (The reduced stimulus set S′ is S′ = {s1, s3}). It is trivial to see that neuron 1 does not respond to either stimulus, thus the mutual information between neuron 1 and the reduced stimulus set is zero, I(S′ : R1) = 0. In contrast, the responses of neuron 3 are fully informative about S′, I(S′ : R3) = 1. Clearly, R3 provides information about the stimulus that is not present in R1. In this example, neuron 3 has 1 bit of unique information about the stimulus set S′.

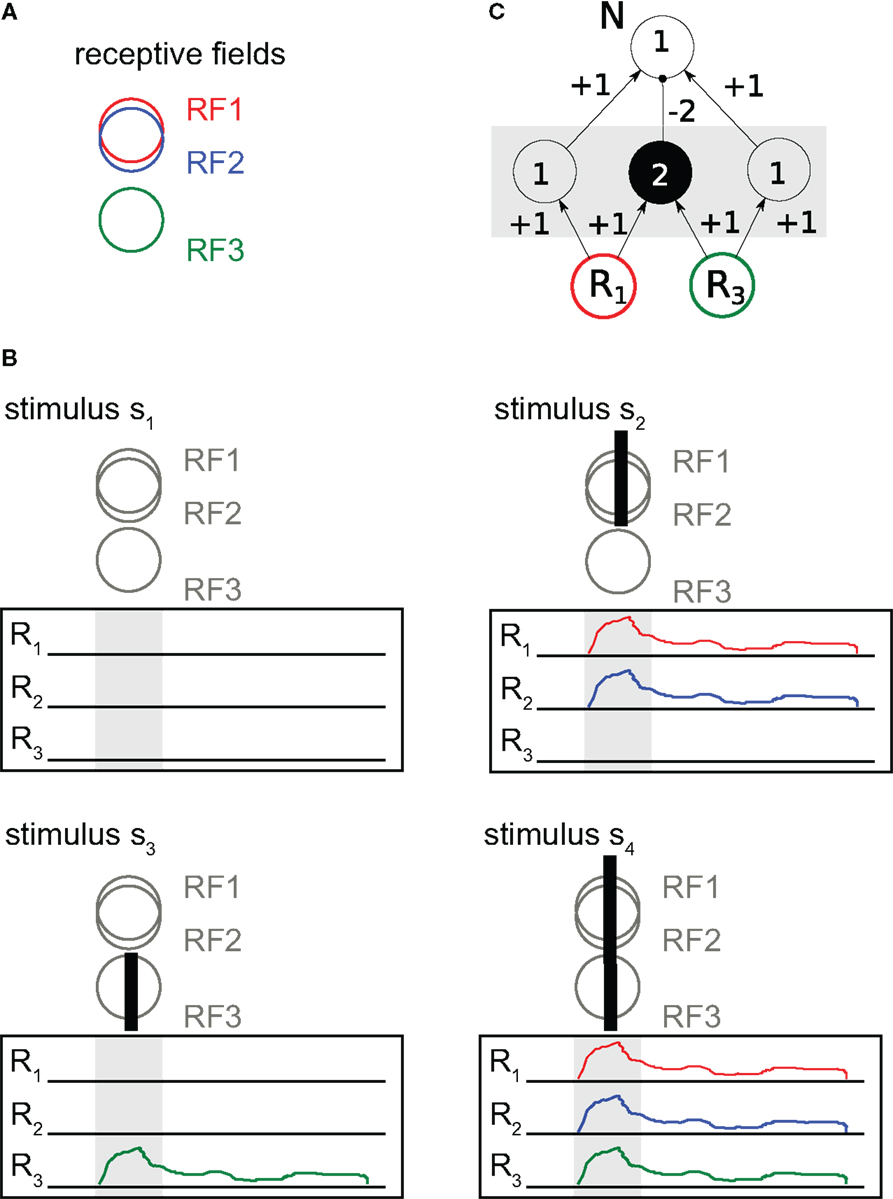

We now introduce the mathematical framework of partial information decomposition that formalizes the intuition in the above examples. We consider a decomposition of the mutual information between a set of two right hand side, or input, variables R1, R2, and a left hand side variable, or output variable S, i.e., I(S : R1, R2). In general, for a decomposition of this mutual information into unique, redundant, and synergistic information to make sense, the total information from any one variable, e.g., I(S : R1), should be decomposable into the unique information term UI(S : R1∖R2) and the redundant, or shared, information term SI(S : R1;R2) that both variables have about S:

Similarly, the total information I(S : R1, R2) from both variables should be decomposable into the two unique information terms UI(S : R1∖R2) and UI(S : R2∖R1) of each Ri about S, the redundant, or shared, information SI(S : R1;R2) that both variables have about S, and the synergistic, or complementary, information CI(S : R1;R2) that can only be obtained by considering {R1, R2} jointly:

Figure 3A shows this so-called partial information decomposition (Williams and Beer, 2010). One sees that the redundant, unique, and synergistic information cannot be obtained by simply subtracting classical mutual information terms. However, if we are given either a measure of redundant, synergistic, or unique information, the other parts of the decomposition can be computed. Hence, classic information theory is insufficient for a partial information decomposition (Williams and Beer, 2010), and a definition of either unique, redundant of synergistic information based on a choice of axioms is needed. A minimal requirement for such axioms, and measures satisfying them, is that they should comply with our intuitive notion of what unique, redundant, and synergistic information should be in some clear cut extreme cases, such as the examples above. The original set of axioms proposed for such a functional definition of redundant (and thereby also unique and synergistic) information comprises three axioms that currently all authors seem to agree on (Williams and Beer, 2010):

1. (Weak) Symmetry: the redundant information that variables R1, R2, …, Rn have about S is symmetric under permutations of the variables R1, R2, …, Rn.

2. Self-redundancy: the redundant information that R1 shares with itself about S is just the mutual information I(S : R1).

3. Monotonicity: the redundant information that variables R1, R2, …, Rn have about S is smaller than or equal to the redundant information that variables R1, R2, …, Rn−1 have about S. Equality holds if Rn−1 is a function of Rn.

Figure 3. (A) Overview of the contributions to a partial information decompositions of the mutual information I(S:R1;R2). (B) (1–8) Schematic derivation of the definition of unique information by Bertschinger et al. (2014). This figure is meant as a guide to the structure of the original work that should be consulted for the rigorous treatment of the topic.

These three axioms also lead to global positivity, i.e., and (Williams and Beer, 2010). As said above, these axioms are uncontroversial, although some authors restrict them to only two input variables R1, R2 as detailed below (Harder et al., 2013; Rauh et al., 2014). These axioms, however, are not sufficient to uniquely define a measure of either redundant, unique or synergistic information. Therefore, various additional axioms, or assumptions, have been proposed (Williams and Beer, 2010; Harder et al., 2013; Lizier et al., 2013; Bertschinger et al., 2014; Griffith and Koch, 2014; Griffith et al., 2014) that are not all compatible with each other (Bertschinger et al., 2013). Here, we exemplarily discuss the recent choice of an assumption by Bertschinger et al. (2014) to define a measure of unique information, which is, in fact, equivalent to another formulation proposed by Griffith and Koch (2014). The reasons for selecting this particular assumption are that at the time of writing it comes with the richest set of derived theorems, and that it has an appealing link to game theory and utility functions, and thus to measures of success of an agent or a BICS. We note at the outset that this is one of the measures that are defined only for two “input” variables R1, R2 and one “output” S (although the Ri themselves may be multivariate RVs). For more details on this restriction see Rauh et al. (2014).

The basic idea of the definition by Bertschinger and colleagues comes from game theory and states that someone (say Alice) who has access to one input variable R1 with unique information about an output variable S must be able to prove that her variable has information not available in the other. To prove this, Alice can design a bet on the output variable (by choosing a suitable utility function) so that someone else (say Bob) who has only access to the other input variable R2 will on average loose this bet. Via some intermediate steps, this leads to the (defining) assumption that the unique information only depends on the two marginal probability distributions P(s, r1) and P(s, r2), but not on the exact full distribution P(s, r1, r2). In other words, the unique information UI should not change when replacing P with a probability distribution Q from the space Δp of probability distributions that share these marginals with P:

where Δ is the space of all probability distributions on the support of S, R1, R1. This motivated the following definition for a measure of unique information:

where IQ(S : R1|R2) is a conditional mutual information computed with respect to the joint distribution Q(s, r1, r2) instead of P(s, r1, r2). Note that this conditional mutual information IQ(S : R1|R2) does change on Δp, and that only its minimum is a measure of the (constant) unique information (see Figure 3). As stated above, knowing one of the three parts UI, SI, CI is enough to compute the others. Therefore, the matching definitions of measures for redundant () and shared information () are:

where CoIQ(S;R1;R2) = I(S : R1) − IQ(S : R1|R2) is the so-called co-information (equivalent to the redundancy minus the synergy) for the distribution Q(s, r1, r2).

Among the notable properties of the measures defined this way is the fact that they can be found by convex optimization, and that all three measures above have been explicitly shown to be positive. Moreover, the above measures are bounds for any definitions of synergistic CI, shared (redundant) SI, and unique information UI that satisfy equations (22) and (23). That is, it can be shown that:

holds (Bertschinger et al., 2014).

The field of information decomposition has seen a rapid development since the initial study of Williams and Beer; however, some major questions remain unresolved so far. Most importantly, the definitions above have acceptable properties, but apply only for the case of decomposing mutual information into contributions of two (sets of) input variables. The structure of such a decomposition for more than two inputs is an active area of research at the moment.

5. Analyzing Distributed Computation in Neural Systems

5.1. Analyzing Neural Coding and Goal Functions in a Domain-Independent Way

The analysis of neural coding strategies presented above relies on our a priori knowledge of the set of task level (e.g., stimulus) features that is encoded in neural responses at the implementation level. If we have this knowledge, information theory will help us to link the two levels. This is somewhat similar to the situation in cryptography where we consider a code “cracked” if we obtain a human-readable plain text message, i.e., we move from the implementation level (encrypted message) to the task level (meaning). However, what happens if the plain text were in a language that one never heard of3? In this case, we would potentially crack the code without ever realizing it, as the plain text still has no meaning for us.

The situation in neuroscience bears resemblance to this example in at least two respects: first, most neurons do not have direct access to any properties of the outside world, rather they receive nothing but input spike trains. All they ever learn and process must come from the structure of these input spike trains. Second, if we as researchers probe the system beyond early sensory or motor areas, we have little knowledge of what is actually encoded by the neurons deeper inside the system. As a result, proper stimulus sets get hard to choose. In this case, the gap between the task- and the implementation level may actually become too wide for meaningful analyses, as noticed recently by Carandini (2012).

Instead of relying on descriptions of the outside world (and thereby involve the task level), we may take the point of view that information processing in a neuron is nothing but the transformation of input spike trains to output spike trains. We may then try to use information theory to link the implementation and algorithmic level, by retrieving a “footprint” of the information processing carried out by a neural circuit. This approach only builds on a very general agreement that neural systems perform at least some kind of information processing. This information processing can be partitioned into the component processes, which determine or predict the next RV of a process Y at time t, Yt: (1) information storage, (2) information transfer, and (3) information modification. A partition of this kind had already been formulated by Turing [see Langton (1990)], and was recently formalized by Lizier et al. (2014) [see also Lizier (2013)]:

• Information storage quantifies the information contained in the past state variable Yt−1 of a process that is used by the process at the next RV at t, Yt (Lizier et al., 2012b). This relatively abstract definition means that an observer will see at least a part of the past information in the process’ past again in its future, but potentially transformed. Hence, information storage can be naturally quantified by a mutual information between the past and the future4 of a process.

• Information transfer quantifies the information contained in the state variables Xt−u (found u time steps into the past) of one source process X that can be used to predict information in the future variable Yt of a target process Y, in the context of the past state variables Yt−1 of the target process (Schreiber, 2000; Paluš, 2001; Vicente et al., 2011).

• Information modification quantifies the combination of information from various source processes into a new form that is not trivially predictable from any subset of these source processes [for details of this definition also see Lizier et al. (2010, 2013)].

Based on Turing’s general partition of information processing (Langton, 1990), Lizier and colleagues recently proposed an information-theoretic framework to quantify distributed computations in terms of all three component processes locally, i.e., for each part of the system (e.g., neurons or brain areas) and each time step (Lizier et al., 2008c, 2010, 2012b). This framework is called local information dynamics and has been successfully applied to unravel computation in swarms (Wang et al., 2011), in Boolean networks (Lizier et al., 2011b), and in neural models (Boedecker et al., 2012) and data (Wibral et al., 2014a) (also see Section 6 for details on these example applications).

Crucially, information dynamics is the perspective of an observer who measures the processes X and Y and tries to partition the information in Yt into the apparent contributions from stored, transferred, and modified information, without necessarily knowing the true underlying system structure. For example, such an observer would label any recurring information in Y as information storage, even where such information causally left the system and re-entered Y at a later time (e.g., a stigmergic process).

Other partitions are possible; James et al. (2011), for example, partition information in the present of a process in terms of its relationships to the semi-infinite past and semi-infinite future. In contrast, we focus on the information dynamics perspective laid out above since it quantifies terms, which can be specifically identified as information storage, transfer, and modification, which aligns with many qualitative descriptions of dynamics in complex systems. In particular, the information dynamics perspective is novel in focusing on quantifying these operations on a local scale in space and time.

In the following we present both global and local measures of information transfer, storage, and modification, beginning with the well established measures of information transfer and ending with the highly dynamic field of information modification.

5.2. Information Transfer

The analysis of information transfer was formalized initially by Schreiber (2000) and Paluš (2001), and has seen a rapid surge of interest in neuroscience5 and general physiology6. Information transfer as measured by the transfer entropy introduced below has recently also been given a thermodynamic interpretation by Prokopenko and Lizier (2014), continuing general efforts to link information theory and thermodynamics (Szilárd, 1929; Landauer, 1961), highlighting the importance of the concept.

5.2.1. Definition

Information transfer from a process X (the source) to another process Y (the target) is measured by the transfer entropy (TE) functional7 (Schreiber, 2000):

where is the conditional mutual information, Yt is the RV of process Y at time t, and X t−u, Y t−1 are the past state-RVs of processes X and Y, respectively. The delay variable u in X t−u indicates that the past state of the source is to be taken u time steps into the past to account for a potential physical interaction delay between the processes. This parameter need not be chosen ad hoc, as it was recently proven for bivariate systems that the above estimator is maximized if the parameter u is equal to the true delay δ of the information transfer from X to Y (Wibral et al., 2013). This relationship allows one to estimate the true interaction delay δ from data by simply scanning the assumed delay u:

The TE functional can be linked to Wiener–Granger type causality (Wiener, 1956; Granger, 1969; Barnett et al., 2009). More precisely, for systems with jointly Gaussian variables, transfer entropy is equivalent8 to linear Granger causality [see Barnett et al. (2009) and references therein]. However, whether the assumption of jointly Gaussian variables is appropriate in a neural setting must be checked carefully for each case (note that Gaussianity of each marginal distribution is not sufficient). In fact, EEG source signals were found to be non-Gaussian (Wibral et al., 2008).

5.2.2. Transfer entropy estimation

When the probability distributions entering equation (28) are known (e.g., in an analytically tractable neural model), TE can be computed directly. However, in most cases, the probability distributions have to be derived from data. When probabilities are estimated naively from the data via counting, and when these estimates are then used to compute information-theoretic quantities such as the transfer entropy, we speak of a “plug in” estimator. Indeed, such plug in estimators has been used in the past, but they come with serious bias problems (Panzeri et al., 2007b). Therefore, newer approaches to TE estimation rely on a more direct estimation of the entropies that TE can be decomposed (Kraskov et al., 2004; Gomez-Herrero et al., 2010; Vicente et al., 2011; Wibral et al., 2014b). These estimators still suffer from bias problems but to a lesser degree (Kraskov et al., 2004). We therefore restrict our presentation to these approaches.

Before we can proceed to estimate TE we will have to reconstruct the states of the processes (see Section 2.1.5). One approach to state reconstruction is time delay embedding (Takens, 1981). It uses past variables Xt− nτ, n = 1, 2,… that are spaced in time by an interval τ. The number of these variables and their optimal spacing can be determined using established criteria (Ragwitz and Kantz, 2002; Small and Tse, 2004; Lindner et al., 2011; Faes et al., 2012). The realizations of the states variables can be represented as vectors of the form:

where d is the dimension of the state vector. Using this vector notation, transfer entropy can be written as:

where the subscript SPO (for self prediction optimal) is a reminder that the past states of the target, have to be constructed such that conditioning on them is optimal in the sense of taking the active information storage in the target correctly into account (Wibral et al., 2013): if one were to condition on with w≠1, instead of then the self prediction for Yt would not be optimal and the transfer entropy would be overestimated.

We can rewrite equation (32) using a representation in the form of four entropies9 H(⋅), as:

Entropies can be estimated efficiently by nearest-neighbor techniques. These techniques exploit the fact that the distances between neighboring data points in a given embedding space are inversely related to the local probability density: the higher the local probability density around an observed data point the closer are the next neighbors. Since next neighbor estimators are data efficient (Kozachenko and Leonenko, 1987; Victor, 2005), they allow to estimate entropies in high-dimensional spaces from limited real data.

Unfortunately, it is problematic to estimate TE by simply applying a naive nearest-neighbor estimator for the entropy, such as the Kozachenko–Leonenko estimator (Kozachenko and Leonenko, 1987), separately to each of the terms appearing in equation (33). The reason is that the dimensionality of the state spaces involved in equation (33) differs largely across terms – creating bias problems. These are overcome by the Kraskov–Stögbauer–Grassberger (KSG) estimator that fixes the number of neighbors k in the highest dimensional space (spanned here by ) and by projecting the resulting distances to the lower dimensional spaces as the range to look for additional neighbors there (Kraskov et al., 2004). After adapting this technique to the TE formula (Gomez-Herrero et al., 2010), the suggested estimator can be written as:

where ψ denotes the digamma function, the angle brackets (⟨⋅⟩t) indicate averaging over time for stationary systems, or over an ensemble of replications for non-stationary ones, and k is the number of nearest neighbors used for the estimation. n(⋅) refers to the number of neighbors, which are within a hypercube that defines the search range around a state vector. As described above, the size of the hypercube in each of the marginal spaces is defined based on the distance to the k-th nearest neighbor in the highest dimensional space.

5.2.3. Interpretation of transfer entropy as a measure at the algorithmic level

TE describes computation at the algorithmic level, not at the level of a physical dynamical system. As such it is not optimal for inference about causal interactions – although it has been used for this purpose in the past. The fundamental reason for this is that information transfer relies on causal interactions, but non-zero transfer entropy can occur without direct causal links, and causal interactions do not necessarily lead to non-zero information transfer (Ay and Polani, 2008; Lizier and Prokopenko, 2010; Chicharro and Ledberg, 2012). Instead, causal interactions may serve active information storage alone (see next section), or force two systems into identical synchronization, where information transfer becomes effectively zero. This might be summarized by stating that transfer entropy is limited to effects of a causal interaction from a source to a target process that are unpredictable given the past of the target process alone. In this sense, TE may be seen as quantifying causal interactions currently in use for the communication aspect of distributed computation. Therefore, one may say that TE measures predictive, or algorithmic information transfer.

A simple thought experiment may serve to illustrate this point: when one plays an unknown record, a chain of causal interactions serve the transfer of information about the music from the record to your brain. Causal interactions happen between the record’s grooves and the needle, the magnetic transducer system behind the needle, and so on, up to the conversion of pressure modulations to neural signals in the cochlea that finally activate your cortex. In this situation, there undeniably is information transfer, as the information read out from the source, the record, at any given moment is not yet known in the target process, i.e., the neural activity in the cochlea. However, this information transfer ceases if the record has a crack, making the needle skip, and repeat a certain part of the music. Obviously, no new information is transferred which under certain mild conditions is equivalent to no information transfer at all. Interestingly, an analysis of TE between sound and cochlear activity will yield the same result: the repetitive sound leads to repetitive neural activity (at least after a while). This neural activity is thus predictable by its own past, under the condition of vanishing neural “noise,” leaving no room for a prediction improvement by the sound source signal. Hence, we obtain a TE of zero, which is the correct result from a conceptual point of view. Remarkably, at the same time the chain of causal interactions remains practically unchanged. Therefore, a causal model able to fit the data from the original situation will have no problem to fit the data of the situation with the cracked record, as well. Again, this is conceptually the correct result, but this time from a causal point of view.

The difference between an analysis of information transfer in a computational sense and causality analysis based on interventions has been demonstrated convincingly in a recent study by Lizier and Prokopenko (2010). The same authors also demonstrated why an analysis of information transfer can yield better insight than the analysis of causal interactions if the computation in the system is to be understood. The difference between causality and information transfer is also reflected in the fact that a single causal structure can support diverse pattern of information transfer (functional multiplicity), and the same pattern of information transfer can be realized with different causal structures (structural degeneracy) as shown by Battaglia (2014b).

5.2.4. Local information transfer

As transfer entropy is formally just a conditional mutual information, we can obtain the corresponding local conditional mutual information [equation (15)] from equation (32). This quantity is called the local transfer entropy (Lizier et al., 2008c). For realizations xt, yt of two processes X, Y at time t it reads:

As said earlier in the section on basic information theory, the use of local information measures does not eliminate the need for an appropriate estimation of the probability distributions involved. Hence, for a non-stationary process, these distributions will still have to be estimated via an ensemble approach for each time point for the RVs involved, e.g., via physical replications of the system, or via enforcing cyclostationarity by design of the experiment.

The analysis of local transfer entropy has been applied with great success in the study of cellular automata to confirm the conjecture that certain coherent spatiotemporal structures traveling through the network are indeed the main carriers of information transfer (Lizier et al., 2008c) (see further discussion at Section 6.4). Similarly, local transfer entropy has identified coherent propagating wave structures in flocks as information cascades (Wang et al., 2012) (see Section 6.5), and indicated impending synchronization among coupled oscillators (Ceguerra et al., 2011).

5.2.5. Common problems and solutions

Typical problems in TE estimation encompass (1) finite sample bias, (2) the presence of non-stationarities in the data, and (3) the need for multivariate analyses. In recent years, all of these problems have been addressed at least in isolation, as summarized below:

• Finite sample bias can be overcome by statistical testing using surrogate data, where the observed realizations of the RVs are reassigned to other RVs of the process, such that the temporal order underlying the information transfer is destroyed [for an example see the procedures suggested in Lindner et al. (2011)]. This reassignment should conserve as many data features of the single process realizations as possible.

• As already explained in the section on basic information theory above, non-stationary random processes in principle require that the necessary estimates of the probabilities in equation (28) are based on physical replications of the systems in question. Where this is impossible, the experimenter should design the experiment in such a way that the processes are repeated in time. If such cyclostationary data are available, then TE should be estimated using ensemble methods as described in Gomez-Herrero et al. (2010) and implemented in the TRENTOOL toolbox (Lindner et al., 2011; Wollstadt et al., 2014).

• So far, we have restricted our presentation of transfer entropy estimation to the case of just two interacting random processes X, Y, i.e., a bivariate analysis. In a setting that is more realistic for neuroscience, one deals with large networks of interacting processes X, Y, Z, …. In this case, various complications arise if the analysis is performed in a bivariate manner. For example, a process Z could transfer information with two different delays δZ→X, δZ→Y to two other processes X, Y. In this case, a pairwise analysis of transfer entropy between X, Y will yield an apparent information transfer from the process that receives information from Z with the shorter delay to the one that receives it with the longer delay (common driver effect). A similar problem arises if information is transferred first from a process X to Y, and then from Y to Z. In this case, a bivariate analysis will also indicate information transfer from X to Z (cascade effect). Moreover, two sources may transfer information purely synergistically, i.e., the transfer entropy from each source alone to the target is zero, and only considering them jointly reveals the information transfer10.

From a mathematical perspective, this problem seems to be easily solved by introducing the complete transfer entropy, which is defined in terms of a conditional transfer entropy (Lizier et al., 2008c, 2010):

where the state-RV Z− is a collection of the past states of one or more processes in the network other than X, Y. We label equation (36) a complete transfer entropy TE(c)(Xt−u →Yt) when we take Z− = V−, the set of all processes in the network other than X, Y.

It is important to note that TE and conditional/complete TE are complementary (see mathematical description of this at Section 5.4) – each can reveal aspects of the underlying dynamics that the other does not and both are required for a full description. While conditional TE removes redundancies and includes synergies, knowing that redundancy is present may be important, and local pairwise TE additionally reveals interesting cases when a source is mis-informative about the dynamics (Lizier et al., 2008b,c).

Furthermore, even for small networks of random processes the joint state space of the variables Yt, Yt−1, Xt−u, V− may become intractably large from an estimation perspective. Moreover, the problem of finding all information transfers in the network, either from single sources variables into the target or synergistic transfer from collections of source variables to the target, is a combinatorial problem, and can therefore typically not be solved in a reasonable time.

Therefore, Faes et al. (2012), Lizier and Rubinov (2012), and Stramaglia et al. (2012) suggested to analyze the information transfer in a network iteratively, selecting information sources for a target in each iteration either based on magnitude of apparent information transfer (Faes et al., 2012) or its significance (Lizier and Rubinov, 2012; Stramaglia et al., 2012). In the next iteration, already selected information sources are added to the conditioning set [Z− in equation (36)], and the next search for information sources is started. The approach of Stramaglia and colleagues is particular here in that the conditional mutual information terms are computed at each level as a series expansion, following a suggestion by Bettencourt et al. (2008). This allows for an efficient computation as the series may truncate early, and the search can proceed to the next level. Importantly, these approaches also consider synergistic information transfer from more than one source variable to the target. For example, a variable transferring information purely synergistically with Z− maybe included in the next iteration, given that the other variables it transfers information with are already in the conditioning set Z−. However, there is currently no explicit indication in the approaches of Faes et al. (2012) and Lizier and Rubinov (2012) as to whether multivariate information transfer from a set of sources to the target is, in fact, synergistic; in addition, redundant links will not be included. In contrast, both redundant and synergistic multiplets of variables transferring information into a target may be identified in the approach of Stramaglia et al. (2012) by looking at the sign of the contribution of the multiplet. Unfortunately, there is also the possibility of cancellation if both types of multivariate information (redundant, synergistic) are present.

5.3. Active Information Storage

Before we present explicit measures of active information storage, a few comments may serve to avoid misunderstanding. Since we analyze neural activity here, measures of active information storage are concerned with information stored in this activity – rather than in synaptic properties, for example11. This is the perspective of what an observer of that activity (not necessarily with any knowledge of the underlying system structure) would attribute as information storage at the algorithmic level, even if the causal mechanisms at the level of a physical dynamical system underpinning such apparent storage were distributed externally to the given variable (Lizier et al., 2012b). As laid out above, storage is conceptualized here as a mutual information between past and future states of neural activity. From this it is clear that there will not be much information storage if the information contained in the future states of neural activity is low in general. If, on the other hand these future states are rich in information but bear no relation to past states, i.e., are unpredictable, again information storage will be low. Hence, large information storage occurs for activity that is rich in information but, at the same time, predictable.

Thus, information storage gives us a way to define the predictability of a process that is independent of the prediction error: information storage quantifies how much future information of a process can be predicted from its past, whereas the prediction error measures how much information can not be predicted. If both are quantified via information measures, i.e., in bits, the error and the predicted information add up to the total amount of information in a random variable of the process. Importantly, these two measures may lead to quite different views about the predictability of a process. This is because the total information can vary considerably over the process, and the predictable and the unpredictable information may thus vary almost independently. This is important for the design of BICS that use predictive coding strategies.

Before turning to the explicit definition of measures of information storage it is worth considering which temporal extent of “past” and “future” states we are interested in: most globally, predictive information (Bialek et al., 2001) or excess entropy (Crutchfield and Packard, 1982; Grassberger, 1986; Crutchfield and Feldman, 2003) is the mutual information between the semi-infinite past and semi-infinite future of a process before and after time point t. In contrast, if we are interested in the information currently used for the next step of the process, the mutual information between the semi-infinite past and the next step of the process, the active information storage (Lizier et al., 2012b) is of greater interest. Both measures are defined in the next paragraphs.

5.3.1. Predictive information/excess entropy

Excess entropy is formally defined as:

where and , indicate collections of the past and future k variables of the process X

12. These collections of RVs in the limit k →∞, span the semi-infinite past and future, respectively. In general, the mutual information in equation (37) has to be evaluated over multiple realizations of the process. For stationary processes, however, is not time-dependent, and equation (37) can be rewritten as an average over time points t and computed from a single realization of the process – at least in principle (we have to consider that the process must run for an infinite time to allow the limit for all t):

Here, is the local mutual information from equation (14), and are realizations of The limit of k →∞ can be replaced by a finite kmax if a kmax exists such that conditioning on renders conditionally independent of any Xl with l ≤ t − kmax.

Even if the process in question is non-stationary, we may look at values that are local in time as long as the probability distributions are derived appropriately (see Section 2.1.2) (Shalizi, 2001; Lizier et al., 2012b):

5.3.2. Active Information Storage

From a perspective of the dynamics of information processing, we might not be interested in information that is used by a process at some time far in the future, but at the next point in time, i.e., information that is said to be “currently in use” for the computation of the next step (the realization of the next RV) in the process (Lizier et al., 2012b). To quantify this information, a different mutual information is computed, namely the active information storage (AIS) (Lizier et al., 2007, 2012b):

AIS is similar to a measure called “regularity” introduced by Porta et al. (2000), and was also labeled as ρu (“redundant portion” of information in Xt) by James et al. (2011).

Again, if the process in question is stationary then and the expected value can be obtained from an average over time – instead of an ensemble of realizations of the process – as:

which can be read as an average over local active information storage (LAIS) values (Lizier et al., 2012b):

Even for non-stationary processes, we may investigate local active storage values, given the corresponding probability distributions are properly obtained from an ensemble of realizations of Xt, :

Again, the limit of k →∞ can be replaced by a finite kmax if a kmax exists such that conditioning on renders Xt conditionally independent of any Xl with l ≤ t − kmax [see equation (16)].

5.3.3. Interpretation of information storage as a measure at the algorithmic level

As laid out above information storage is a measure of the amount of information in a process that is predictable from its past. As such it quantifies, for example, how well activity in one brain area A can be predicted by another area, e.g., by learning its statistics. Hence, questions about information storage arise naturally when asking about the generation of predictions in the brain, e.g., in predictive coding theories (Rao and Ballard, 1999; Friston et al., 2006).

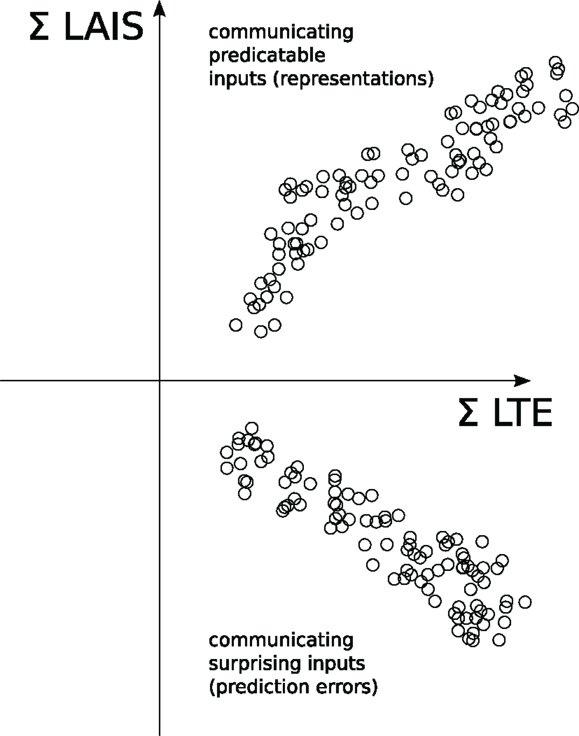

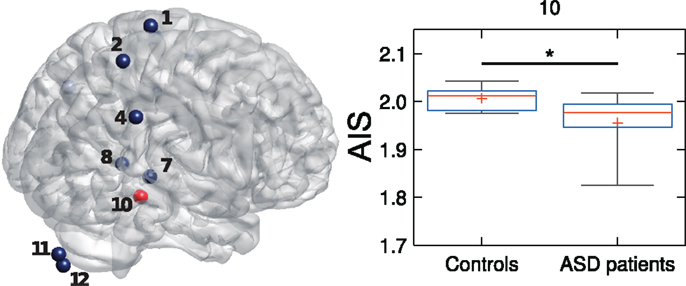

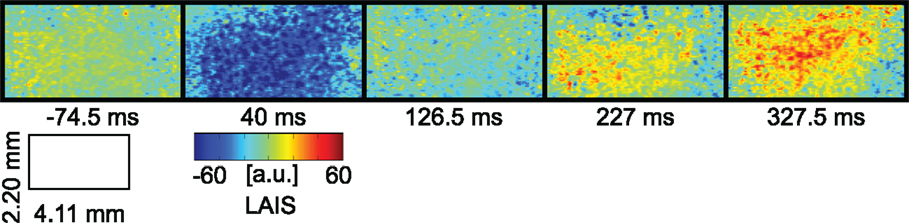

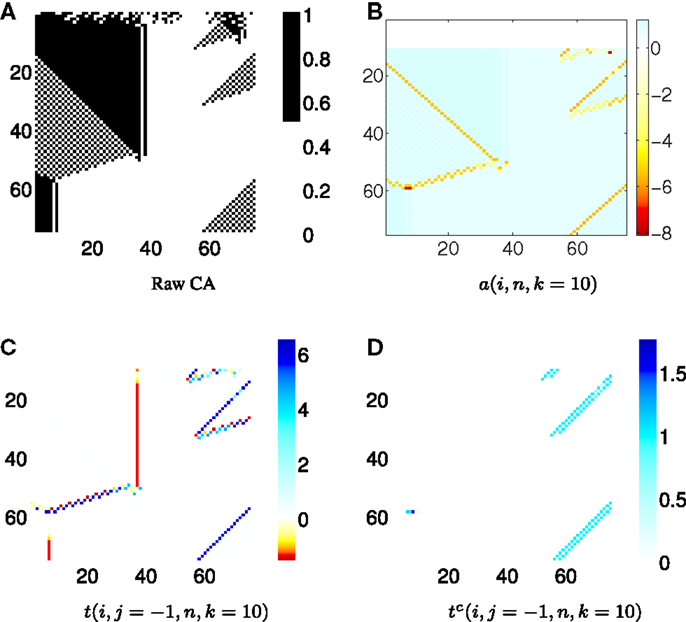

5.4. Combining the Analysis of Local Active Information Storage and Local Transfer Entropy