Bounded Rationality, Abstraction, and Hierarchical Decision-Making: An Information-Theoretic Optimality Principle

- 1Max Planck Institute for Intelligent Systems, Tübingen, Germany

- 2Max Planck Institute for Biological Cybernetics, Tübingen, Germany

- 3Graduate Training Centre of Neuroscience, Tübingen, Germany

Abstraction and hierarchical information processing are hallmarks of human and animal intelligence underlying the unrivaled flexibility of behavior in biological systems. Achieving such flexibility in artificial systems is challenging, even with more and more computational power. Here, we investigate the hypothesis that abstraction and hierarchical information processing might in fact be the consequence of limitations in information-processing power. In particular, we study an information-theoretic framework of bounded rational decision-making that trades off utility maximization against information-processing costs. We apply the basic principle of this framework to perception-action systems with multiple information-processing nodes and derive bounded-optimal solutions. We show how the formation of abstractions and decision-making hierarchies depends on information-processing costs. We illustrate the theoretical ideas with example simulations and conclude by formalizing a mathematically unifying optimization principle that could potentially be extended to more complex systems.

1. Introduction

A key characteristic of intelligent systems, both biological and artificial, is the ability to flexibly adapt behavior in order to interact with the environment in a way that is beneficial to the system. In biological systems, the ability to adapt affects the fitness of an organism and becomes key to survival not only of individual organisms but species as a whole. Both in the theoretical study of biological systems and in the design of artificial intelligent systems, the central goal is to understand adaptive behavior formally. A formal framework for tackling the problem of general adaptive systems is decision-theory, where behavior is conceptualized as a series of optimal decisions or actions that a system performs in order to respond to changes to the input of the system. An important idea, originating from the foundations of decision-theory, is the maximum expected utility (MEU) principle (Ramsey, 1931; Von Neumann and Morgenstern, 1944; Savage, 1954). Following MEU, an intelligent system is formalized as a decision-maker that chooses actions in order to maximize the desirability of the expected outcome of the action, where the desirability of an outcome is quantified by a utility function.

A fundamental problem of MEU is that the computation of an optimal action can easily exceed the computational capacity of a system. It is for example in general prohibitive trying to compute an optimal chess move due to the large number of possibilities. One way to deal with such problems is to study optimal decision-making with information-processing constraints. Following the pioneering work of Simon (1955, 1972) on bounded rationality, decision-making with limited information-processing resources has been studied extensively in psychology (Gigerenzer and Todd, 1999; Camerer, 2003; Gigerenzer and Brighton, 2009), economics (McKelvey and Palfrey, 1995; Rubinstein, 1998; Kahneman, 2003; Parkes and Wellman, 2015), political science (Jones, 2003), industrial organization (Spiegler, 2011), cognitive science (Howes et al., 2009; Janssen et al., 2011), computer science, and artificial intelligence research (Horvitz, 1988; Lipman, 1995; Russell, 1995; Russell and Subramanian, 1995; Russell and Norvig, 2002; Lewis et al., 2014). Conceptually, the approaches differ widely ranging from heuristics (Tversky and Kahneman, 1974; Gigerenzer and Todd, 1999; Gigerenzer and Brighton, 2009; Burns et al., 2013) to approximate statistical inference schemes (Levy et al., 2009; Vul et al., 2009, 2014; Sanborn et al., 2010; Tenenbaum et al., 2011; Fox and Roberts, 2012; Lieder et al., 2012).

In this study, we use an information-theoretic model of bounded rational decision-making (Braun et al., 2011; Ortega and Braun, 2012, 2013; Braun and Ortega, 2014; Ortega and Braun, 2014; Ortega et al., 2014) that has precursors in the economic literature (McKelvey and Palfrey, 1995; Mattsson and Weibull, 2002; Sims, 2003, 2005, 2006, 2010; Wolpert, 2006) and that is closely related to recent advances in the information theory of perception-action systems (Todorov, 2007, 2009; Still, 2009; Friston, 2010; Peters et al., 2010; Tishby and Polani, 2011; Daniel et al., 2012, 2013; Kappen et al., 2012; Rawlik et al., 2012; Rubin et al., 2012; Neymotin et al., 2013; Tkačik and Bialek, 2014; Palmer et al., 2015). The basis of this approach is formalized by a free energy principle that trades off expected utility, and the cost of computation that is required to adapt the system accordingly in order to achieve high utility. Here, we consider an extension of this framework to systems with multiple information-processing nodes and in particular discuss the formation of information-processing hierarchies, where different levels in the hierarchy represent different levels of abstraction. The basic intuition is that information-processing nodes with little computational resources can adapt only a little for different inputs and are therefore forced to treat different inputs in the same or a similar way, that is the system has to abstract (Genewein and Braun, 2013). Importantly, abstractions arising in decision-making hierarchies are a core feature of intelligence (Kemp et al., 2007; Braun et al., 2010a,b; Gershman and Niv, 2010; Tenenbaum et al., 2011) and constitute the basis for flexible behavior.

The paper is structured as follows. In Section 2, we recapitulate the information-theoretic framework for decision-making and show its fundamental connection to a well-known trade-off in information theory (the rate-distortion problem for lossy compression). In Section 3, we show how the extension of the basic trade-off principle leads to a theoretically grounded design principle that describes how perception is shaped by action. In Section 4, we apply the basic trade-off between expected utility and computational cost to a two-level hierarchy and show how this leads to emergent, bounded-optimal hierarchical decision-making systems. In Section 5, we present a mathematically unifying formulation that provides a starting point for generalizing the principles presented in this paper to more complex architectures.

2. Bounded Rational Decision-Making

2.1 A Free Energy Principle for Bounded Rationality

In a decision-making task with context, an actor or agent is presented with a world-state w and is then faced with finding an optimal action out of a set of actions in order to maximize the utility U(w, a):

If the cardinality of the action-set is large, the search for the single best action can become computationally very costly. For an agent with limited computational resources that has to react within a certain time-limit, the search problem can potentially become infeasible. In contrast, biological agents, such as animals and humans, are constantly confronted with picking an action out of a very large set of possible actions. For instance, when planning a movement trajectory for grasping a certain object with a biological arm with many degrees of freedom, the number of possible trajectories is infinite. Yet, humans are able to quickly find a trajectory that is not necessarily optimal but good enough. The paradigm of picking a good enough solution that is actually computable has been termed bounded rational acting (Simon, 1955, 1972; Horvitz, 1988; Horvitz et al., 1989; Horvitz and Zilberstein, 2001). Note that bounded rational policies are in general stochastic and thus expressed as a probability distribution over actions given a world-state p(a|w).

We follow the work of Ortega and Braun (2013), where the authors present a mathematical framework for bounded rational decision-making that takes into account computational limitations. Formally, an agent’s initial behavior (or search strategy through action-space) is described by a prior distribution p0(a). The agent transforms its behavior to a posterior p(a|w) in order to maximize expected utility Σap(a|w)U(w, a) under this posterior policy. The computational cost of this transformation is measured by the KL-divergence between prior and posterior and is upper-bounded in case of a bounded rational actor. Decision-making with limited computational resources can then be formalized with the following constrained optimization problem:

This principle models bounded rational actors that initially follow a prior policy p0(a) and then use information about the world-state w to adapt their behavior to p(a|w) in a way that optimally trades off the expected gain in utility against the transformation costs for adapting from p0(a) to p(a|w). The constrained optimization problem in equation (2) can be rewritten as an unconstrained variational problem using the method of Lagrange multipliers:

where β is known as the inverse temperature. The inverse temperature acts as a conversion-factor, translating the amount of information imposed by the transformation (usually measured in nats or bits) into a cost with the same units as the expected utility (utils). The distribution p⋆(a|w) that maximizes the variational principle is given by

with partition sum Z(w) = Σa p0(a) eβU(w,a). Evaluating equation (3) with the maximizing distribution p⋆(a|w) yields the free energy difference

which is well known in thermodynamics and quantifies the energy of a system that can be converted to work. ΔF(w) is composed of the expected utility under the posterior policy p⋆(a|w) minus information processing cost that is required for computing the posterior policy measured as the Kullback-Leibler (KL) divergence between the posterior p⋆(a|w) and the prior p0(a).

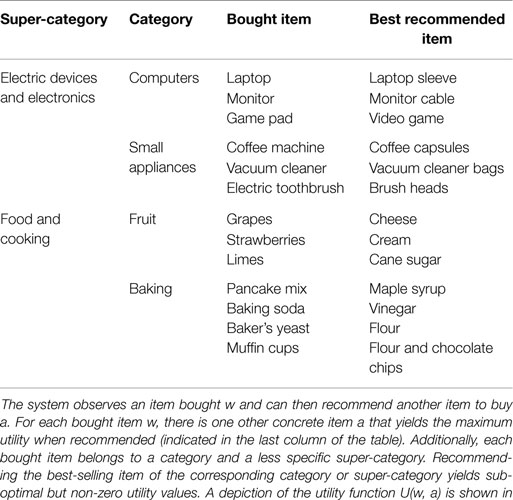

The inverse temperature β governs the influence of the transformation cost and thus the boundedness of the actor which determines the maximally allowed deviation of the final behavior p⋆(a|w) from the initial behavior p0(a). A perfectly rational actor that maximizes its utility can be recovered as the limit case β → ∞where transformation cost is ignored. This case is identical to equation (1) and simply reflects maximum utility action selection, which is the foundation of most modern decision-making frameworks. Note that the optimal policy p⋆(a|w) in this case collapses to a delta over the best action . In contrast, β → 0 corresponds to an actor that has infinite transformation cost or no computational resources and thus sticks with its prior policy p0(a). An illustrative example is given in Figure 1.

Figure 1. Bounded rationality and the free energy principle. Imagine an actor that has to grasp a particular cup w from a table. There are four options a1 to a4 to perform the movement and the utility U(w, a) shown in (A) measures the performance of each option. There are two actions a1 and a4 that lead to a successful grasp without spilling, and a4 is minimally better. Action a3 leads to a successful grasp but spills half the cup, and a2 represents an unsuccessful grasp. (B) Prior distribution over actions p0(a): no preference for a particular action. (C) Posterior p*(a|w) [equation (4)] for an actor with limited computational capacity. Due to the computational limits, the posterior cannot deviate from the prior arbitrarily far, otherwise the KL-divergence constraint would be violated. The computational resources are mostly spent on increasing the chance of picking one of the two successful options and decreasing the chance of picking a2 or a3. The agent is almost indifferent between the two successful options a1 and a4. (D) Posterior for an actor with large computational resources. Even though a4 is only slightly better than a1, the agent is almost unbounded and can deviate a lot from the prior. This solution is already close to the deterministic maximum expected utility solution and incurs a large KL-divergence from prior to posterior. (E) Expected utility Ep*(a|w)[U(w, a)] as a function of the inverse temperature β. Initially, allowing for more computational resources leads to a rapid increase in expected utility. However, this trend quickly flattens out into a regime where small increases in expected utility imply large increases in β. (F) KL-divergence DKL (p*(a|w)||p0(a)) as a function of the inverse temperature β. In order to achieve an expected utility of ≈ 95% of the maximum utility roughly 1 bit suffices [leading to a posterior similar to (C)]. Further increasing the performance by 5% requires twice the computational capacity of 2 bits [leading to a posterior similar to (D)]. A bounded rational agent that performs reasonably well could thus be designed at half the cost (in terms of computational capacity) compared to a fully rational maximum expected utility agent. An interactive version of this plot where β can be freely changed is provided in the Supplementary Jupyter Notebook “1-FreeEnergyForBoundedRationalDecisionMaking.”

Interestingly, the free energy principle for bounded rational acting can also be used for inference problems. In particular if the utility is chosen as a log-likelihood function U(w, a) = log q(w|a) and the inverse temperature β is set to one, Bayes’ rule is recovered as the optimal bounded rational solution [by plugging into equation (4)]:

Importantly, the inverse temperature β can also be interpreted in terms of computational or sample complexity (Braun and Ortega, 2014; Ortega and Braun, 2014; Ortega et al., 2014). The basic idea is that in order to make a decision, the bounded rational decision-maker needs to generate a sample from the posterior p⋆(a|w). Assuming that the decision-maker can draw samples from the prior p0(a), samples from the posterior p⋆(a|w) can be generated by rejecting any samples from p0(a) until one sample is accepted as a sample of p⋆(a|w) according to the acceptance rule u ≤ exp(β(U(w, a) − T(w))), where u is drawn from the uniform distribution over the unit interval [0;1] and T(w) is the aspiration level or acceptance target value with T(w) ≥ maxaU(w, a). This is known as rejection sampling (Neal, 2003; Bishop, 2006). The efficiency of the rejection sampling process depends on how many samples are needed on average from p0(a) to obtain one sample from p⋆(a|w). This average number of samples is given by the mean of a geometric distribution

where the partition sum Z(w) is defined as in equation (4). The average number of samples increases exponentially with increasing resource parameter β when T(w) > maxaU(w, a). It is also noteworthy that the exponential of the Kullback-Leibler divergence provides a lower bound for the required number of samples that is (see Section 6 in the Supplementary Methods for a derivation). Accordingly, a decision-maker with high β can manage high sampling complexity, whereas a decision-maker with low β can only process a few samples.

2.2 From Free Energy to Rate-Distortion: The Optimal Prior

In the free energy principle in equation (3), the prior p0(a) is assumed to be given. A very interesting question is which prior distribution p0(a) maximizes the free energy difference ΔF(w) for all world-states w on average (assuming that p(w) is given). To formalize this question, we extend the variational principle in equation (3) by taking the expectation over w and the arg max over p0(a)

The inner arg max-operator over p(a|w) and the expectation over w can be swapped because the variation is not over p(w). With the KL-term expanded this leads to

The solution to the arg max over p0(a) is given by . [see Section 2.1.1 in Tishby et al. (1999) or Csiszár and Tusnády (1984)]. Plugging in the marginal p(a) as the optimal prior yields the following variational principle for bounded rational decision-making

where I(W; A) is the mutual information between actions A and world-states W. The mutual information I(W; A) is a measure of the reduction in uncertainty about the action a after having observed w or vice versa since the mutual information is symmetric

where H(L) = −Σlp(l)log p(l) is the Shannon entropy of random variable L.

The exact same variational problem can also be obtained as the Langragian for maximizing expected utility with an upper bound on the mutual information

or in the dual point of view, as minimizing the mutual information between actions and world-states with a lower bound on the expected utility. Thus, the problem in equation (7) is equivalent to the problem formulation in rate-distortion theory (Shannon, 1948; Cover and Thomas, 1991; Tishby et al., 1999; Yeung, 2008), the information-theoretic framework for lossy compression. It deals with the problem that a stream of information must be transmitted over a channel that does not have sufficient capacity to transmit all incoming information – therefore some of the incoming information must be discarded. In rate-distortion theory, the distortion d(w, a) quantifies the recovery error of the output symbol a with respect to the input symbol w. Distortion corresponds to a negative utility which thus leads to an arg min instead of an arg max and a positive sign for the mutual information term in the optimization problem. In this case, a maximum expected utility decision-maker would minimize the expected distortion which is typically achieved by a one-to-one mapping between w and a, which implies that the compression is not lossy. From this, it becomes obvious why MEU decision-making might be problematic: if the MEU decision-maker requires a rate of information processing that is above channel capacity, it simply cannot be realized with the given system.

The solution that extremizes the variational problem of equation (7) is given by the self-consistent equations [see Tishby et al. (1999)]

with partition sum Z(w) = Σap(a)eβU(w,a).

In the limit case β → ∞where transformation costs are ignored, is the perfectly rational policy for each value of w independent of any of the other policies and p(a) becomes a mixture of these solutions. Importantly, due to the low price of information processing , high values of the mutual information term in equation (7) will not lead to a penalization, which means that actions a can be very informative about the world-state w. The behavior of an actor with infinite computational resources will thus in general be very world-state-specific.

In the case where β → 0 the mutual information between actions and world-states is minimized to I(W; A) = 0, leading to p*(a|w) = p(a) ∀w, the maximal abstraction where all w elicit the same response. Within this limitation, the actor will, however, emit actions that maximize the expected utility Σw, ap(w)p(a) U(w, a) using the same policy for all world-states.

For values of the rationality parameter β in between these limit cases, that is 0 < β < ∞, the bounded rational actor trades off world-state-specific actions that lead to a higher expected utility for particular world-states (at the cost of an increased information processing rate), against more robust or abstract actions that yield a “good” expected utility for many world-states (which allows for a decreased information processing rate).

Note that the solution for the conditional distribution p⋆(a|w) in the rate-distortion problem [equation (9)] is the same as the solution in the free energy case of the previous section [equation (4)], except that the prior p0(a) is now defined as the marginal distribution p0(a) = p(a) [see equation (10)]. This particular prior distribution minimizes the average relative entropy between p(a|w) and p(a) which is the mutual information between actions and world-states I(W; A).

An alternative interpretation is that the decision-maker is a channel that transmits information from w to a according to p(a|w). The channel has a limited capacity, which could arise from the agent not having a “brain” that is powerful enough, but a limited channel capacity could also arise from noise that is induced into the channel, i.e., an agent with noisy sensors or actuators. For a large capacity, the transmission is not severely influenced and the best action for a particular world-state can be chosen. For smaller capacities, however, some information must be discarded and robust (or abstract) actions that are “good” under a number of world-states must be chosen. This is possible by lowering β until the required rate I(W; A) does no longer exceed the channel capacity. The notion that a decision-maker can be considered as an information processing channel is not new and goes back to the cybernetics movement (Ashby, 1956; Wiener, 1961). Other recent applications of rate-distortion theory to decision-making problems can be found for example in Sims (2003, 2006) and Tishby and Polani (2011).

2.3 Computing the Self-Consistent Solution

The self-consistent solutions that maximize the variational principle in equation (7) can be computed by starting with an initial distribution pinit(a) and then iterating equations (9) and (10) in an alternating fashion. This procedure is well known in the rate-distortion framework as a Blahut-Arimoto-type algorithm (Arimoto, 1972; Blahut, 1972; Yeung, 2008). The iteration is guaranteed to converge to a unique maximum [see Section 2.1.1 in Tishby et al. (1999) and Csiszár and Tusnády (1984) and Cover and Thomas (1991)]. Note that pinit(a) has to have the same support as p(a). Implemented in a straightforward manner, the Blahut-Arimoto iterations can become computationally costly since the iterations involve evaluating the utility function for every action-world-state-pair (w, a) and computing the normalization constant Z(w). In case of continuous-valued random variables, closed-form analytic solutions exist only for special cases. Extending the sampling approach presented at the end of Section 2.1 could be one potential alleviation. A proof-of-concept implementation of the extended sampling scheme is provided in the Supplementary Jupyter Notebook “S1-SampleBasedBlahutArimoto.”

2.4 Emergence of Abstractions

The rate-distortion objective for decision-making [equation (7)] penalizes high information processing demand measured in terms of the mutual information between actions and world-states I(W; A). A large mutual information arises when actions are very informative about the world-state which is the case when a particular action is mostly chosen under a particular world-state and is rarely chosen otherwise. Policies p(a|w) with many world-state-specific actions are thus more demanding in terms of informational cost and might not be affordable by an agent with limited computational capacity. In order to keep informational costs low while at the same time optimizing expected utility, actions that yield a “good” expected utility for many different world-states must be favored. This leads to abstractions in the sense that the agent does not discriminate between different world-states out of a subset of all world-states, but rather responds with the same policy for the entire subset. Importantly, these abstractions are driven by the agent-environment structure encoded through the utility function U(w, a). Limits in computational resources thus lead to abstractions where different world-states are treated as if they were the same.

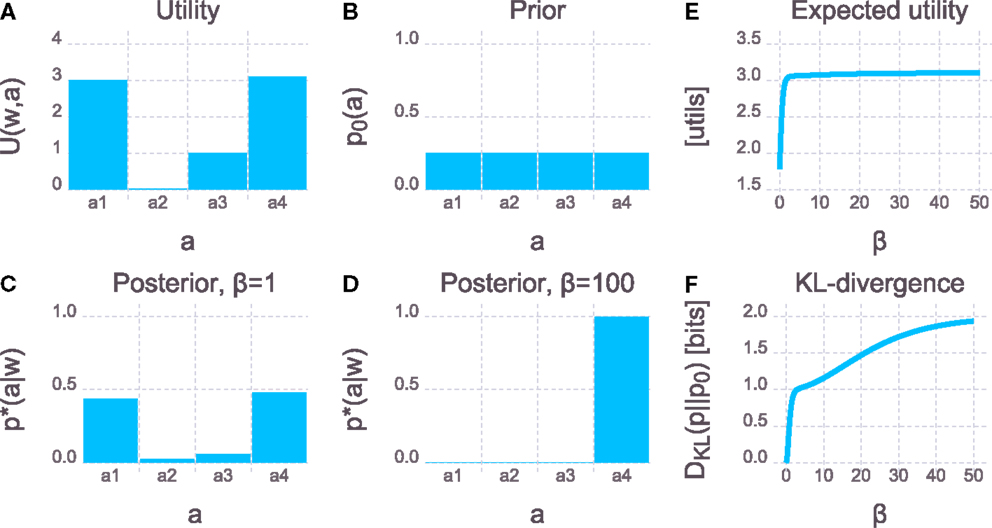

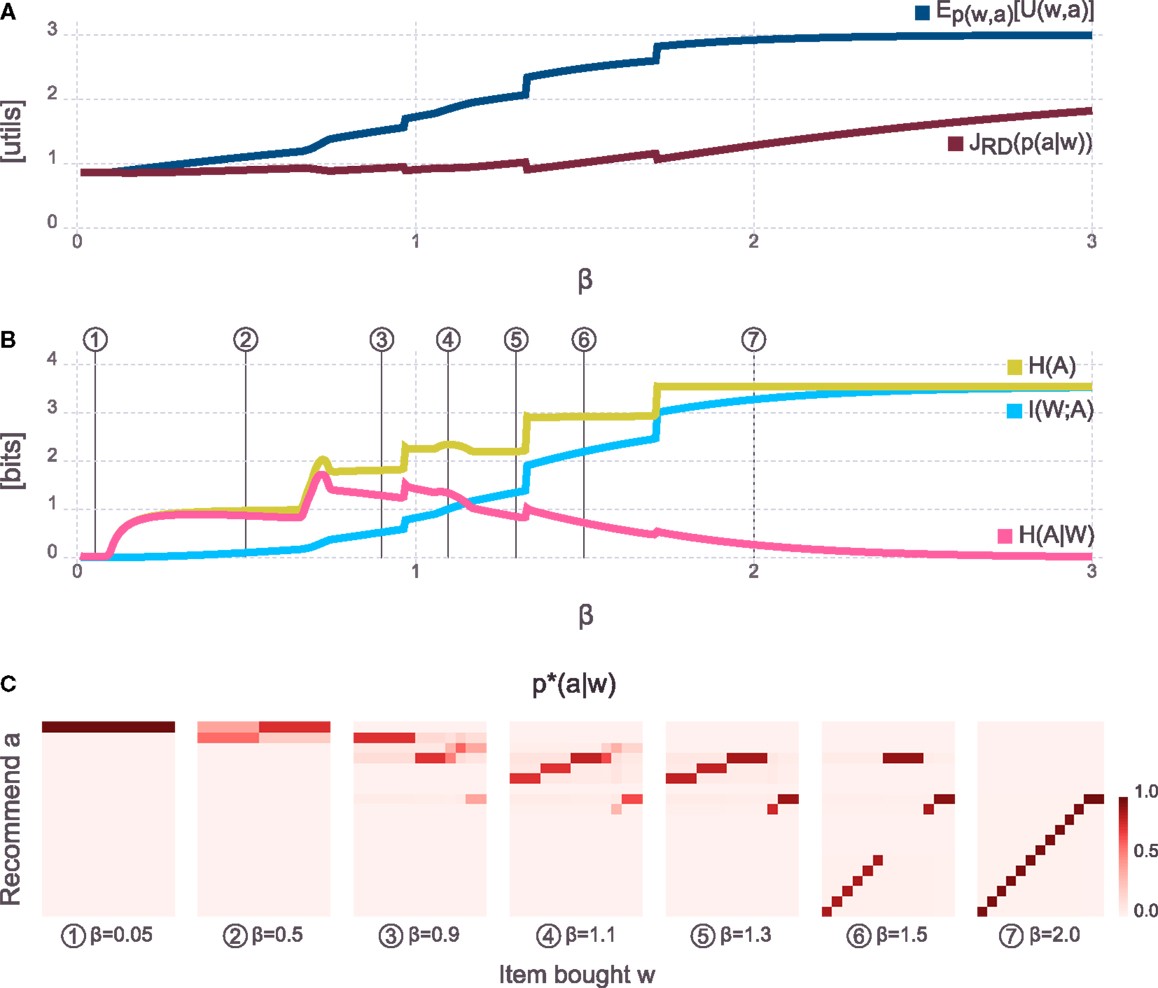

To illustrate the influence of different degrees of computational limits and the resulting emergence of abstractions we constructed the following example. The goal is to design a recommender system that observes an item bought w and then recommends another item a. In this example the system can either recommend another concrete item or the best-selling item of a certain category or the best-selling item of a super-category which subsumes several categories (see Table 1). An illustration of the example is shown in Figure 2A. The possible items bought are shown on the x-axis and possible recommendations are shown on the y-axis. The super-categories and categories as well as the corresponding bought items can be seen in Table 1 where each bought item also indicates the corresponding concrete item that scores highest when recommended.

Figure 2. Task setup and solution p*(a|w) for β = 1.3. (A) Utility function U(w, a) for the recommender system task. The recommender system observes an item w bought by a customer and recommends another item a to buy to the customer. For each item bought there is another concrete item that has a high chance of being bought by the customer. Therefore, recommending the correct concrete item leads to the maximum utility of 3. However, each item also belongs to a category (indicated by capital letters) and recommending the best-selling item of the corresponding category leads to a utility of 2.2. Finally, each item also belongs to a super-category (either “food” or “electronics”) and recommending the best-selling item of the corresponding super-category leads to a utility of 1.6. There is one item (muffin cups) where two concrete items can be recommended and both yield maximum utility. Additionally there is one item (pancake mix) where the recommendation of the best-selling item of both categories “fruit” and “baking” yields the same utility. (B) Solution p*(a|w) [equation (9)] for β = 1.3. Due to the low β, the computational resources of the recommender system are quite limited and it cannot recommend the highest scoring items (except in the last three columns). Instead, it saves computational effort by applying the same policy to multiple items.

The utility of each (w, a)-pair is color-coded in blue in Figure 2A. For each possible world-state there is one concrete item that can be recommended that will (deterministically) yield the highest possible utility of 3 utils. Further, each bought item belongs to a category and recommending the best-selling item of the corresponding category leads to a utility of 2.2 utils. Finally, recommending the best-selling item of the corresponding super-category yields a utility of 1.6 utils. For each world-state there is one specific action that leads to the highest possible utility but zero utility for all other world-states. At the same time there exist more abstract actions that are sub-optimal but still “good” for a set of world-states. See the legend of Figure 2 for more details on the example.

Figure 2B shows the result p*(a|w) obtained through Blahut-Arimoto iterations [equations (9) and (10)] for β = 1.3. For each world-state (on the x-axis) the probability over all actions (y-axis) corresponds to one column in the plot and is color-coded in red. For this particular value of β the agent cannot afford to pick the specific actions for most of the world-states (except for the last three world-states) in order to stay within the limit on the maximum allowed rate. Rather, the agent recommends the best-selling items of the corresponding category which allows for a lower rate by having identical policies (i.e., columns in the plot) for sets of world-states. The optimal policies thus lead to abstractions, where several different world-states elicit identical responses of the agent. Importantly, the abstractions are not induced because some stimuli are more similar than others under some utility-free measure and they are also not the result of a post hoc aggregation or clustering scheme. Rather, the abstractions are shaped by the utility function and appear as a consequence of bounded rational decision-making in the given task.

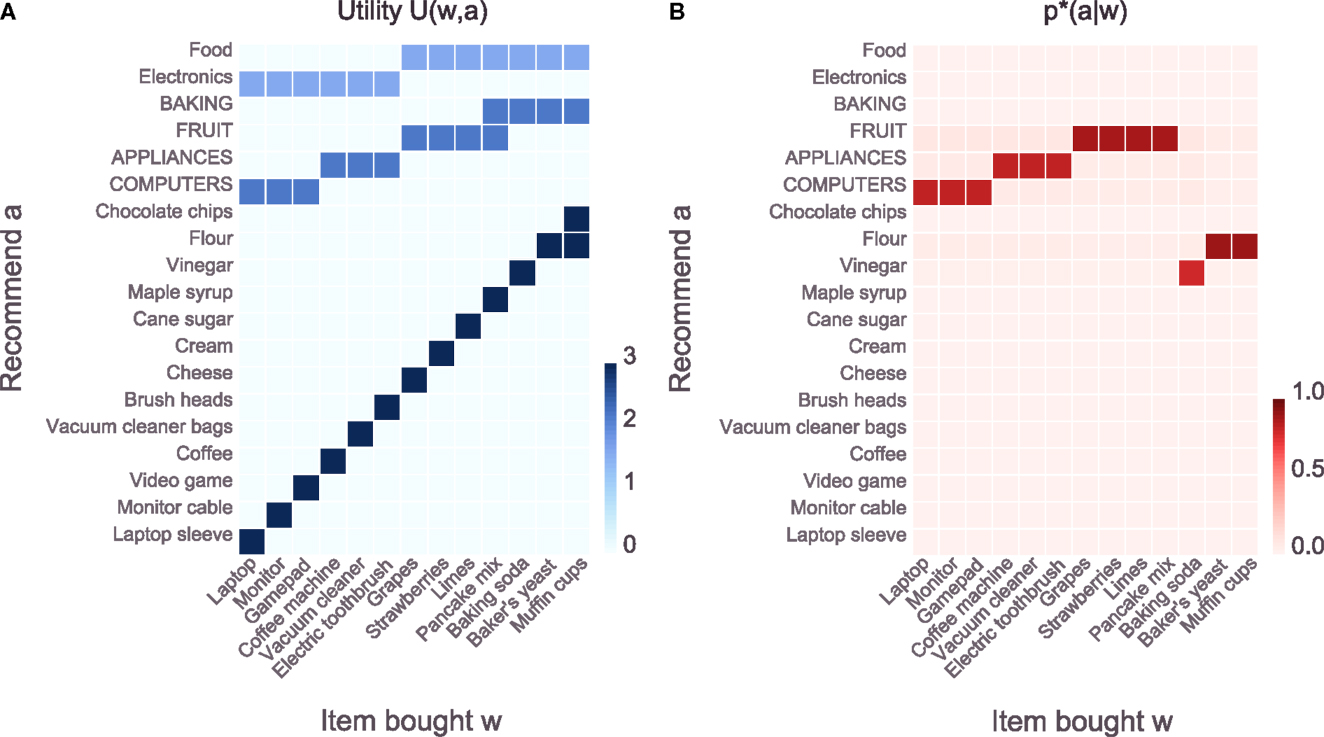

Figure 3A shows the expected utility E p( w, a)[U(w, a)] and the rate-distortion objective JRD(p(a|w)) as a function of the inverse temperature β. The plot shows that by increasing β the expected utility increases monotonically, whereas the objective JRD(p(a|w)) also shows a trend to increase but not monotonically. Interestingly, there are a few sharp transitions at the same points in both curves. The same steep transitions are also found in Figure 3B, which shows the mutual information and its decomposition into the entropic terms I(W, A) = H(A) − H(A|W) as a function of β. The line corresponding to the entropy over actions H(A) shows flat plateaus in between these phase transitions. Figure 3C illustrates solutions p⋆(a|w) for β values corresponding to points on each of the plateaus (labels for bought and recommended items have been omitted for visual compactness but are identical to the plot in Figure 2B). Surprisingly, most of the solutions correspond to different levels of abstraction – from fully abstract for β → 0, then going through several levels of abstraction and getting more and more specific up to the case β → ∞where the conditional entropy H(A|W) goes to zero implying that the conditionals p⋆(a|w) become deterministic and identical to the maximum expected utility solutions. Within a plateau of H(A), the entropy over actions does not change but the conditional entropy H(A|W) tends to decrease with increasing β. This means that qualitatively the behavior along a plateau does not change in the sense that across all world-states the same subset of actions is used. However, the stochasticity within this subset of actions decreases with increasing β (until at some point a phase-transition occurs). Changing the temperature leads to a natural emergence of different levels of abstraction – levels that emerge from the agent-environment interaction structure described by the utility function. Each level of abstraction corresponds to one plateau in H(A).

Figure 3. Sweep overβ values. (A) Evolution of expected utility and value of the objective JRD(p(a|w)) [equation (7)]. The expected utility is monotonically increasing, the objective also increases with increasing β but not monotonically. In both curves, there are some sharp kinks which indicate a change in level of abstraction (see panels below). (B) Evolution of information quantities. The same sharp kinks as in the panel above can also be seen in all three curves. Interestingly, H(A) has mostly flat plateaus in between these kinks which means that the behavior of the agent does not change qualitatively along the plateau. At the same time, H(A|W) goes down, indicating that behavior becomes less and less stochastic until a phase transition occurs where H(A) changes. (C) Solutions p*(a|w) [equation (9)] that illustrate the agents behavior on each of the plateaus of H(A). It can be seen that on each plateau (and with each kink), the level of abstraction changes.

In general, abstractions are formed by reducing the information content of an entity until it only contains relevant information. For a discrete random variable w ∈ , this translates into forming a partitioning over the space where “similar” elements are grouped into the same subset of and become indistinguishable within the subset. In physics, changing the granularity of a partitioning to a coarser level is known as coarse-graining which reduces the resolution of the space in a non-uniform manner. Here, the partitioning emerges in p⋆(a|w) as a soft-partitioning (see Still and Crutchfield, 2007), where “similar” world-states w get mapped to an action a (or a subset of actions) and essentially become indistinguishable. Readers are encouraged to interactively explore the example in the Supplementary Jupyter Notebook “2-RateDistortionForDecisionMaking.”

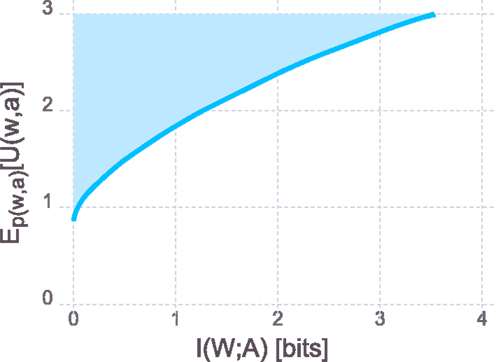

In analogy to rate-distortion theory where the rate-distortion function serves as an information-theoretic characterization of a system, one can define the rate-utility function

where the expected utility is a function of the information processing rate I(W; A). If the decision-maker is conceptualized as a communication channel between world-states and actions, the rate I(W; A) defines the minimally required capacity of that channel. The rate-utility function thus specifies the minimum required capacity for computing actions given a certain expected utility target, or analogously the maximally achievable expected utility given a certain information processing capacity. The rate-utility curve is obtained by varying the inverse temperature β (corresponding to different values of R) and plotting the expected utility as a function of the rate. The resulting plot is shown in Figure 4, where the solid line denotes the rate-utility curve and the shaded region corresponds to systems that are theoretically infeasible and cannot be achieved regardless of the implementation. Systems in the white region are sub-optimal, meaning that they could either achieve the same performance with a lower rate or given their limits on computational capacity they could theoretically achieve higher performance. This curve is interesting for both designing systems as well as characterizing the degree of sub-optimality of given systems.

Figure 4. Rate-utility curve. Analogously to the rate-distortion curve in rate-distortion theory, the rate-utility curve shows the minimally required information processing rate to achieve a certain level of expected utility or dually, the maximally achievable expected utility, given a certain rate. Systems that optimally trade-off expected utility against cost of computation lie exactly on the curve. Systems in the shaded region are theoretically impossible and cannot be realized. Systems that lie in the white region of the figure are sub-optimal in the sense that they could achieve a higher expected utility given their computational resources or they could achieve the same expected utility with lower resources.

3. Serial Information-Processing Hierarchies

In this section, we apply the rate-distortion principle for decision-making to a serial perception-action system. We design two stages: a perceptual stage p(x|w) that maps world-states w to observations x and an action stage p(a|x) that maps observations x to actions a. Note that the world-state w does not necessarily have to be considered as a latent variable but could in general also be an observation from a previous processing stage. The action stage implements a bounded rational decision-maker (similar to the one presented in the previous section) that optimally trades off expected utility against cost of computation [see equation (7)]. Classically, the perceptual stage might be designed to represent w as faithfully as possible, given the computational limitations of the perceptual stage. Here, we show that trading off expected utility against the cost of information processing on both the perceptual and the action stage leads to bounded-optimal perception that does not necessarily represent w as faithfully as possible but rather extracts the most relevant information about w such that the action stage can work most efficiently. As a result, bounded-optimal perception will be tightly coupled to the action stage and will be shaped by the utility function as well as the computational capacity of the action channel.

3.1 Optimal Perception is Shaped by Action

To model a perceptual channel we extend the model from Section 2.2 as follows: The agent is no longer capable of fully observing the state of the world W but using its sensors it is capable to form a percept X as p(x|w) which then allows for adaptation of behavior according to p(a|x). The three random variables for world-state, percept, and action form a serial chain of channels, one channel from world-states to percepts expressed by p(x|w) and another channel from percepts to actions expressed by p(a|x) which implies the following conditional independence

that is also expressed by the graphical model W → X → A. We assume that p(w) is given and the utility function depends on the world-state and the action U(w, a). Note that mathematically, the results are identical for U(w, x, a), but in this paper we consider the utility independent of the internal percept x.

Classically, inference and decision-making are separated – for instance, by first performing Bayesian inference over the state of the world w using the observation x and then choosing an action a according to the maximum expected utility principle. The MEU action-selection principle can be replaced by a bounded rational model for decision-making that takes into account the computational cost of transforming a (optimal) prior behavior p0(a) to a posterior behavior p(a|x) as shown in Section 2.

where U(x, a) = Σwp(w|x)U(w, a) is the expectation of the utility under the Bayesian posterior over w given x. Note that the bounded rational decision-maker in equation (13) is identical to the rate-distortion decision-maker introduced in Section 2 that minimizes the trade-off given by equation (7) by implementing equation (9). It includes the MEU solution as a special case for β2 → ∞. Here, the inverse temperature is denoted by β2 (instead of β as in the previous section) for notational reasons that ensure consistency with later results of this section.

In equation (12), the choice of the likelihood model p(x|w) remains unspecified and the question is where does it come from? In general, it is chosen by the designer of a system and the choice is often driven by bandwidth or memory constraints. In purely descriptive scenarios, the likelihood model is determined by the sensory setup of a given system and p(x|w) is obtained by fitting it to data of the real system. In the following, we present a particular choice of p(x|w) that is fundamentally grounded on the principle that any transformation of behavior or beliefs is costly (which is identical to the assumption of limited-rate information processing channels) and this cost should be traded off against gains in expected utility. Remarkably, equations (12) and (13) drop out naturally from the principle.

Given the graphical model: W → X → A, we consider an information processing channel between W and X and another one between X and A and introduce different rate-limits on these channels, i.e., the information processing price on the perceptual level can be different from the price of information processing on the action level . Formally, we set up the following variational problem:

Similar to the rate-distortion case, the solution is given by the following set of four self-consistent equations:

where Z(w) and Z(x) denote the corresponding normalization constants or partition sums. The conditional probability p(w|x) is given by Bayes’ rule and ΔFser(w, x) is the free energy difference of the action stage:

see also equation (5). More details on the derivation of the solution equations can be found in the Supplementary Methods Section 2.

The bounded-optimal perceptual model is given by equation (15). It follows the typical structure of a bounded rational solution consisting of a prior times the exponential of the utility multiplied by the inverse temperature. Compare equation (9) to see that the downstream free-energy trade-off ΔFser( w, x) now plays the role of the utility function for the perceptual model. The distribution p*(x|w) thus optimizes the downstream free-energy difference in a bounded rational fashion, that is taking into account the computational resources of the perceptual channel. Therefore, the optimal percept becomes tightly coupled to the agent-environment interaction structure as described by the utility function or in other words: the optimal percept is shaped by the embodiment of the agent and, importantly, is not simply a maximally faithful representation of W through X given the limited rate of the perceptual channel. A second interesting observation is that the action stage given by equation (17) turns out to be a bounded rational decision-maker using the Bayesian posterior p(w|x) for inferring the true world-state w given the observation x. This is identical to equation (13) (using the optimal prior p(a) = Σw, x p(w)p*(x|w)p*(a|x)), even though the latter was explicitly modeled by first performing Bayesian inference over the world-state w given the percept x [equation (12)] and then performing bounded rational decision-making [equation (13)], whereas the same principle drops out naturally in equation (17) as a result of optimizing equation (14).

3.2 Illustrative Example

In this section, we design a hand-crafted perceptual model pλ(x|w) with precision-parameter λ, that drives a subsequent bounded rational decision-maker that maps an observation x to a distribution over actions p(a|x) in order to maximize expected utility while not exceeding a constraint on the rate of the action channel. The latter is implemented by following equation (13) and setting β2 according to the limit on the rate I(X; A). We compare the bounded rational actor with hand-crafted perception against a bounded-optimal actor that maximizes equation (14) by implementing the four corresponding self-consistent equations (15)–(18). Importantly, the perceptual model p*(x|w) of the bounded-optimal actor maximizes the downstream free-energy trade-off of the action stage ΔFser( w, x) which leads to a tight coupling between perception and action that is not present in the hand-crafted model of perception. The action stage is identical in both models and given by equation (17).

We designed the following example where the actor is an animal in a predator-prey scenario. The actor has sensors to detect the size of other animals it encounters. In this simplified scenario, animals can only belong to one of three size-groups and their size correlates with their hearing-abilities:

• Small animals (insects): either 2, 3, or 4 size-units cannot hear very well.

• Medium-sized animals (rodents): either 6, 7, or 8 size-units can hear quite well.

• Large animals (cats of prey): either 10, 11, or 12 size-units can hear quite well.

The actor has a sensor for detecting the size of an animal, however, depending on the capacity of the perceptual channel this sensor will either be more or less noisy. To survive, the actor can hunt animals from both the small and the medium-sized group for food. On the other hand, it can fall prey to animals of the large group. The actor has three basic actions:

• Ambush: steadily wait for the other animal to get close and then strike.

• Sneak-up: slowly move closer to the animal and then strike.

• Flee: quickly move away from the other animal.

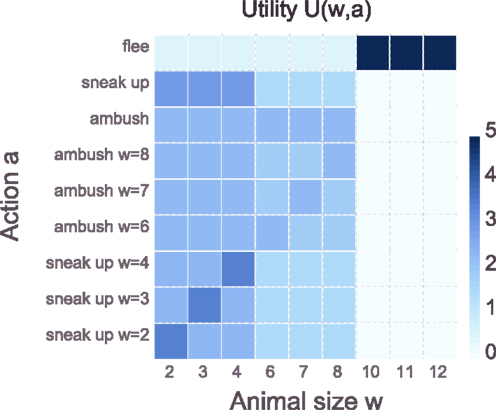

The advantage of the ambush is that it is silent, however, the risk is that the animal might not move toward the position of the ambush – it works equally well on animals from the small and medium-sized group. The sneak-up is not silent but does not rely on the other animal coincidentally getting closer – it works better than the ambush for small-sized animals but the opposite is true for medium-sized animals. If the actor encounters a large animal the only sensible action is to flee in order to avoid falling prey to the large animal. Besides these generic actions, the actor also has a repertoire of more specific hunting patterns – see Figure 5 which shows the full details of the utility function for the predator-prey scenario. The exact numeric values are found in the Supplementary Jupyter Notebook “3-SerialHierarchy.”

Figure 5. Predator-prey utility function U(w, a). The actor encounters different groups of animals – small, medium-sized, and large – and should hunt small and medium-sized animals for food and flee from large animals. Animals within a group have a similar size w: small animals ∈ {2,3,4} size-units, medium-sized animals ∈ {6,7,8} size-units, and large animals ∈ {10,11,12} size-units. For hunting – denoted by the action a – the actor can either ambush the other animal or alternatively sneak up to the other animal and strike once close enough. In general, sneaking up works well on small animals as they cannot hear very well, whereas medium-sized animals can hear quite well which decreases the chance of a successful sneak-up. The ambush works equally well on both groups of prey. Large animals should never be engaged, but the actor should flee in order to avoid falling prey to them. In addition to the generic hunting patterns that work equally well for all members of a group, for small animals there are specific sneak-up patterns that have an increased chance of success if applied to the correct animal but a decreased chance of success when applied to the wrong animal. For medium-sized animals, there are specific ambush behaviors that have the same chance of success as the generic ambush behavior when applied to the correct animal but a decreased chance of success when applied to the wrong animal. Since the specific ambush behaviors for the medium-sized animals have no advantage over the generic ambush behavior but require more information processing, any bounded rational actor should never choose the specific ambush behaviors. This somewhat artificial construction is meant to illustrate that bounded rational actors do not add unnecessary complexity to their behavior. See main text for more details on the example.

The hand-crafted model of perception is specified by pλ(x|w), where the observed size x corresponds to the actual size of the animal w corrupted by noise. The precision-parameter λ governs the noise-level and thus the quality of the perceptual channel which can be measured with I(W; X). In particular, the observation o is a discretized noisy version of w with precision λ:

where the set of world-states is given by all possible animal sizes w ∈ = {2,3,4,6,7,8,10,11,12} and the set of possible observations is given by x ∈ = {1,2,3,…,11,12,13}. To avoid a boundary-bias due to the limited interval we reject and re-sample all values of x that would fall outside of . For λ → ∞, the perceptual channel is very precise, and there is no uncertainty about the true value of w after observing x. However, such a channel incurs a large computational effort as the mutual information I(W; X) is maximal in this case. If the perceptual channel has a smaller capacity than required to uniquely map each w to an x, the rate must be reduced by lowering the precision λ. Medium precision will mostly lead to within-group confusion whereas low precision will also lead to across-group confusion and corresponds to perceptual channels with a very low rate I(W; X).

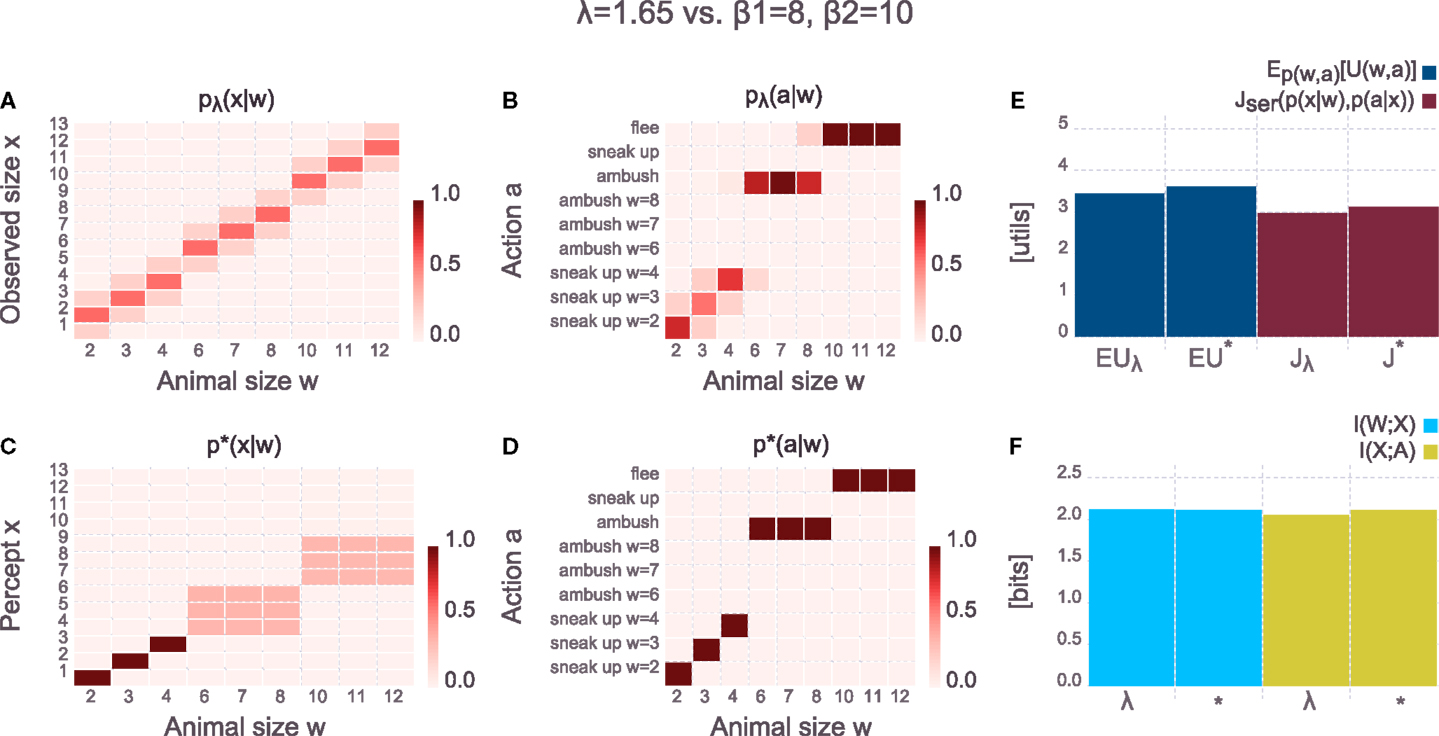

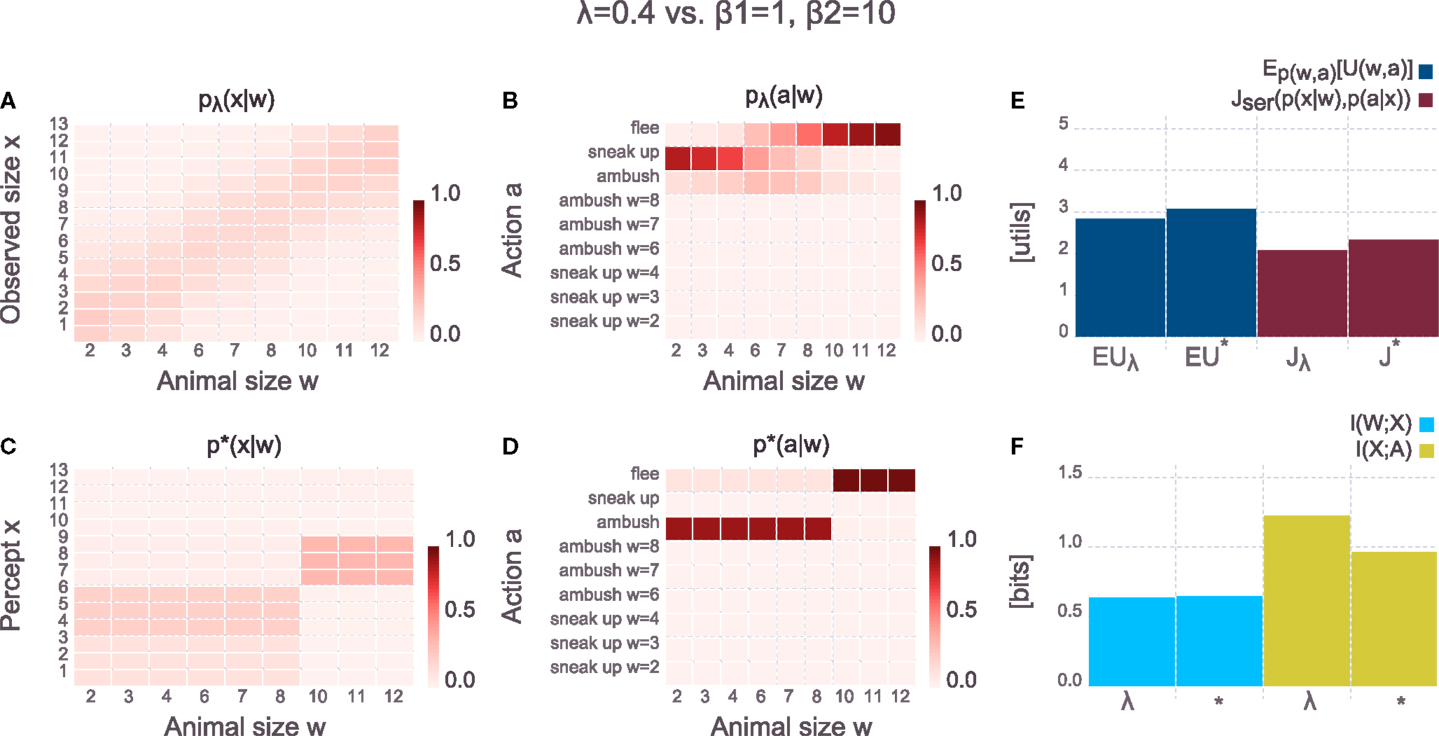

The results in Figure 6 show solutions when having large computational resources on both the perception and action channel. As the figure clearly shows, the hand-crafted model pλ(x|w) looks quite different from the bounded-optimal solution p*(x|w), even though the rate on the perceptual channel is identical in both cases (given by the mutual information I(W; X) ≈ 2 bits). The difference is that the bounded-optimal percept spends the two bits mainly on discriminating between specific animals of the small group and on discriminating between medium-sized and large animals. It does not discriminate between specific sizes within the latter two groups. This makes sense, as there is no gain in utility by applying any specific actions to specific animals in the medium- or large-sized group. Figure 6 also shows the overall-behavior from the point of view of an external observer p(a|w), which is computed as follows

Figure 6. Comparing hand-crafted perception against bounded-optimal perception (parameters are given above the panels). Both the perceptual and the action channel have large computational resources (λ or β1 for the perceptual channel, respectively, and β2 for the action channel). (A) Hand-crafted perceptual model pλ(x|w) [equation (20)] – the observed animal size is a noisy version of the actual size of the animal, but the noise-level is quite low (due to large λ). (B) Overall-behavior of the system pλ(a|w) [equation (21)] with the hand-crafted perception. For small animals, the specific sneak-up patterns are used, but the noisiness of the perception does not allow to deterministically pick the correct pattern. (C) Bounded-optimal perceptual model p*(x|w) [equation (15)] – the bounded-optimal model does not distinguish between the individual medium- or large-sized animals as all of them are treated with the respective generic action. Instead, the computational resources are spent on precisely discriminating between the different small animals. Note that this bounded-optimal perceptual model spends exactly the same computation as the hand-crafted model [see (F), blue bars]. (D) Overall-behavior p*(a|w) [equation (21)] of the bounded-optimal system. Compared to the hand-crafted system, the bounded-optimal perception allows to precisely discriminate between the different small animals which is also reflected in the overall-behavior. The bounded-optimal system thus achieves a higher expected utility (E). (E) Comparison of expected utility (dark blue, first two columns) and value of the objective [equation (14), dark red, last two columns] for the hand-crafted system (denoted by λ) and the bounded-optimal system (denoted by *). Since both systems spend roughly the same amount of computation [see (F)], but the bounded-optimal model achieves a higher expected utility, the overall objective is in favor of the bounded-optimal system. (F) Comparison of information processing effort in the hand-crafted system (denoted by λ) and the bounded-optimal system (denoted by *). Both systems spend (roughly) the same amount of computation, however, the bounded-optimal system spends it in a more clever way – compare (A,C). The distributions pλ(a|x) and p*(a|w) are not shown in the figure but can easily be inspected in the Supplementary Jupyter Notebook “3-SerialHierarchy.”

The overall-behavior in the bounded-optimal case is more deterministic, leading to a higher expected utility in the bounded-optimal case. The distributions pλ(a|x) and p⋆(a|w) are not shown in the figure but can easily be inspected in the Supplementary Jupyter Notebook “3-SerialHierarchy.” If the price of information processing on the perceptual channel in the hand-crafted model is the same as in the bounded-optimal model (given by β1), then the overall objective Jser(p(x|w), p(a|x)) is larger for the bounded-optimal case compared to the hand-crafted case, implying that the bounded optimal actor achieves a better trade-off between expected utility and computational cost. The crucial insight of this example is that the optimal percept depends on the utility function, where in this particular case it does for instance make no sense to waste computational resources on discriminating between the specific animals of the large group because the optimal response (flee with certainty) is identical to all of them. In the Supplementary Jupyter Notebook“3-SerialHierarchy” the utility function can easily be switched while keeping all other parameters identical in order to observe how the bounded-optimal percept changes accordingly. Note that the bounded-optimal behavior p⋆(a|w) shown in Figure 6D yields the highest possible expected utility in this task setup – there is no behavior that would lead to a higher expected utility (though there are other solutions that lead to the same expected utility).

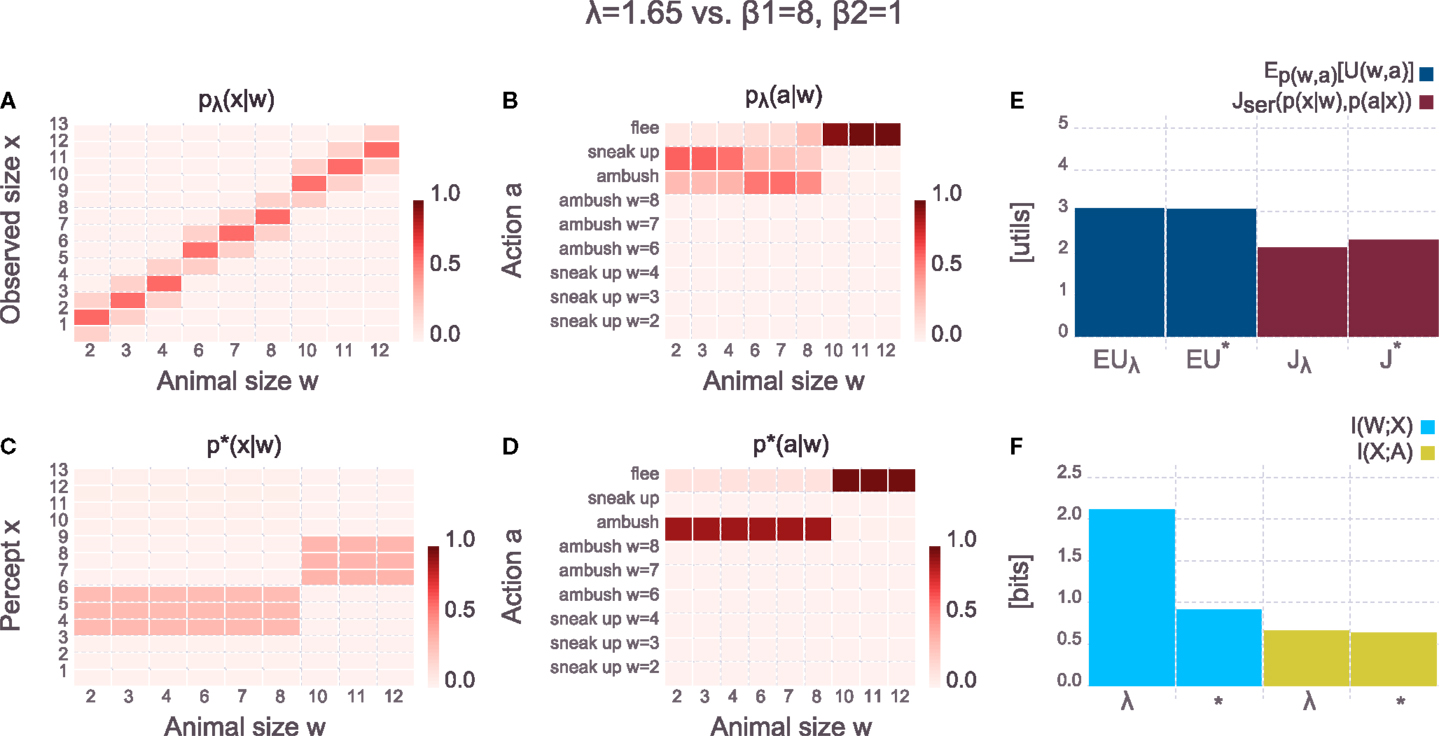

The bounded-optimal percept depends not only on the utility function but also on the behavioral richness of the actor which is governed by the rate on the action channel I(X; A). In Figure 7 we show the results of the same setup as in Figure 6 with the only change being the significantly increased price for information processing in the action stage (as specified by β2 = 1 bit per util whereas it used to be β2 = 10 bits per util in the previous figure). The hand-crafted perceptual model is unaffected by this change of the action stage, but the bounded-optimal model of perception has changed compared to the previous figure and now reflects the limited behavioral richness. As shown in p⋆(a|w) in Figure 7, the actor is no longer capable of applying different actions to animals of the small group and animals of the medium-sized group. Accordingly, the bounded-optimal percept does not waste computational resources for discriminating between small and medium-sized animals since the downstream policy is identical for both groups of animals. In terms of expected utility, both the hand-crafted model as well as the bounded-optimal decision-maker score equally at ≈3 utils. However, the bounded-optimal model does so by using lower computational resources and thus scoring better on the overall trade-off Jser(p(x|w), p(a|x)).

Figure 7. Comparing hand-crafted perception against bounded-optimal perception (parameters are given above the panels). Compared to Figure 6, the action channel of both the hand-crafted and the bounded-optimal system now has low computational resources (through the low β2 for the action channel). (A) Hand-crafted perceptual model pλ(x|w) [equation (20)] – the hand-crafted perception is unaffected by the increased limit in computational resources on the action channel. (B) Overall-behavior of the system pλ(a|w) [equation (21)] with the hand-crafted perception. Even though perception was unaffected by the increased limit on the action channel, the overall-behavior is severely affected. (C) Bounded-optimal perceptual model p*(x|w) [equation (15)] – due to the low information processing rate on the action channel [see (F), yellow bars], the bounded-optimal perception adjusts accordingly and only discriminates between predator and prey animals. (D) Overall-behavior p*(a|w) [equation (21)] of the bounded-optimal system. Compared to the hand-crafted system, the bounded-optimal system distinguishes sharply between predator and prey animals. Note, however, that both systems achieve an identical expected utility [(E), dark blue bars]. (E) Comparison of expected utility (dark blue, first two columns) and value of the objective [equation (14), dark red, last two columns] for the hand-crafted system (denoted by λ) and the bounded-optimal system (denoted by *). Both systems achieve the same expected utility, but the bounded-optimal system does so with requiring less computation on the perceptual channel [see (F), blue bars]. (F) Comparison of information processing effort in the hand-crafted system (denoted by λ) and the bounded-optimal system (denoted by *). Due to the low computational resources on the action channel, the corresponding information processing rate (yellow bars) is quite low. The bounded-optimal perception adjusts accordingly and requires a much lower rate compared to the hand-crafted model of perception that does not adjust. The distributions pλ(a|x) and p*(a|x) are not shown in the figure but can easily be inspected in the Supplementary Jupyter Notebook “3-SerialHierarchy.”

In Figure 8 we again use large resources on the action channel β2 = 10 (as in the first example in Figure 6), but now the resources on the perceptual channel are limited by setting β1 = 1 (compared to β1 = 8 in the first case). Accordingly, the precision of the hand-crafted perceptual model is tuned to λ = 0.4 (compared to λ = 1.65 in the first case) such that it has the same rate I(W; X) as the bounded-optimal model. By comparing the two panels for pλ(x|w) and p⋆(x|w), it can clearly be seen that the bounded-optimal perceptual model now spends its scarce resources to reliably discriminate between large animals and all other animals. The overall behavioral policies p(a|w) reflect the limited perceptual capacity in both cases, however, the bounded-optimal case scores a higher expected utility of ≈3 utils compared to the hand-crafted case. The overall objective Jser(p(x|w), p(a|x)) is also higher for the bounded-optimal model, indicating that this model should be preferred because it finds a better trade-off between expected utility and information processing cost.

Figure 8. Comparing hand-crafted perception against bounded-optimal perception (parameters are given above the panels). Compared to Figure 6, the perceptual channel of both the hand-crafted and the bounded-optimal system now has low computational resources (through the low λ and β1 respectively). (A) Hand-crafted perceptual model pλ(x|w) [equation (20)] – due to the low precision λ, the noise for the hand-crafted perception has increased dramatically. (B) Overall-behavior of the system pλ(a|w) [equation (21)] with the hand-crafted perception. Even though the action-part of the system still has large computational resources the overall-behavior is severely affected due to the bad perceptual channel. (C) Bounded-optimal perceptual model p*(x|w) [equation (15)] – due to the low computational resources on the perceptual channel (β1) the system can only distinguish between predator and prey animal (which is the most important information). Note how the percept in the bounded-optimal case no longer corresponds to some observed animal size but rather to a more abstract concept such as predator or prey animal. The parameters were chosen such that both the hand-crafted and the bounded-optimal system spend the same amount of information processing on the perceptual channel [see (F), blue bars]. (D) Overall-behavior p*(a|w) [equation (21)] of the bounded-optimal system. Compared to the hand-crafted system, the bounded-optimal system distinguishes sharply between predator and prey animals. This is only possible because the bounded-optimal perception spends its scarce resource to exactly perform this distinction. (E) Comparison of expected utility (dark blue, first two columns) and value of the objective [equation (14), dark red, last two columns] for the hand-crafted system (denoted by λ) and the bounded-optimal system (denoted by *). The bounded-optimal system achieves a higher expected utility even though the amount of information processing is not larger compared to the hand-crafted model [see (F)]. Rather, the bounded-optimal model spends its scarce resources more optimally. (F) Comparison of information processing effort in the hand-crafted system (denoted by λ) and the bounded-optimal system (denoted by *). Note that both perceptual channels (blue bars) require the same information processing rate, but the bounded-optimal perceptual model processes the more important information (predator versus prey) which allows the subsequent action channel to perform better [see (E), dark blue bars). The distributions pλ(a|x) and p*(a|x) are not shown in the figure but can easily be inspected in the Supplementary Jupyter Notebook “3-SerialHierarchy.”

Note that in all three examples the optimal percept p⋆(x|w) often leads to a uniform mapping of an exclusive subset of world-states w to the same set of percepts x. Importantly, these percepts do not directly correspond to an observed animal size as in the case of the hand-crafted model of perception. Rather, the optimal percepts often encode more abstract concepts such as medium- or large-sized animal (as in Figure 6) or predator and prey animal (as in Figures 7 and 8). In a sense, abstractions similar to the ones shown in the recommender system example in the previous section (Figure 3) emerge in the predator-prey example as well but now they also manifest themselves in the form of abstract percepts. Crucially, the abstract percepts allow for more efficient information processing further downstream in the decision-making part of the system. The formation of these abstract percepts is driven by the embodiment of the agent and reflects certain aspects of the utility function of the agent. For instance, unlike the actor in Figure 6, the actors in Figures 7 and 8 would not “understand” the concept of medium-sized animals as it is of no use to them: with their very limited resources it is most important for them to have the two perceptual concepts of predator and prey. Note that the cardinality of X in the bounded-optimal model of perception is fixed in all examples in order to allow for easy comparison against the hand-crafted model, but it could be reduced further without any consequences (up to a certain point) – this can be explored in the Supplementary Jupyter Notebook “3-SerialHierarchy.”

The solutions shown in this section were obtained by iterating the self-consistent equations until numerical convergence. Since there is no convergence-proof, it cannot be fully ruled out that the solutions are sub-optimal with respect to the objective. However, the point of the simulation results shown here is to allow for easier interpretation of the theoretical results and highlight certain aspects of the theoretical findings. We discuss this issue in Section 5.2.

4. Parallel Information- Processing Hierarchies

Rational decision-making requires searching through a set of alternatives a and picking the option with the highest expected utility. Bounded rational decision-making replaces the “hard maximum” operation with a soft selection mechanism where the first action that satisfies a certain level of expected utility is picked. A parallel hierarchical architecture allows for a prior partitioning of the search space which reduces the effective size of the search space and thus speeds up the search process. For instance, consider a medical system that consists of general practitioners and specialist doctors. The general doctor can restrict the search space for a particular ailment of a patient by determining which specialist the patient should see. The specialist doctor in turn can determine the exact disease. This leads to a two-level decision-making hierarchy consisting of a high-level partitioning that allows for making a subsequent low-level decision with reduced (search) effort. In statistics, the partitioning that is induced by the high-level decision is often referred to as a model and is commonly expressed as a probability distribution over the search space p(a|m) (where m indicates the model) which also allows for a soft-partitioning. The advantage of hierarchical architectures is that the computation that leads to the high-level reduction of the search space can be stored in the model (or in a set of parameters in case of a parametric model). This computation can later be re-used by using the correct model (or set of parameters) in order to perform the low-level computation more efficiently. Interestingly, it should be most economic to put the most re-usable, and thus more abstract, information into the models p(a|m) which leads to a hierarchy of abstractions. However, in order to make sure that the correct model is used, another deliberation process p(m|w) is required (where w indicates the observed stimulus or data). Another problem is how to chose the partitioning to be most effective. In this section, we address both problems from a bounded rational point of view. We show that the bounded optimal solution p*(a|m) trades off the computational cost for choosing a model m against the reduction in computational cost for the low-level decision.

To keep the notation consistent across all sections of the paper we denote the model m in the rest of the paper with the variable x. This is in contrast to Section 3, where x played the role of a percept. The advantage of this notation is that it allows to easily see similarities and differences of the information terms and solution equations of the different cases. In particular, in Section 5 we present a unifying case that includes the serial and parallel case as special cases – by keeping the notation consistent this can easily be seen.

4.1 Optimal Partitioning of the Search Space

Constructing a two-level decision-making hierarchy requires the following three components: high-level models p(a|x), a model selection mechanism p(x|w) and a low-level decision maker p(a|w, x) (w denotes the observed world-state, x indicates a particular model and a is an action). The first two distributions are free to be chosen by the designer of the system, for p(a|w, x) a maximum expected utility decision-maker is the optimal choice if computational costs are neglected. Here, we take computational cost into account and replace the MEU decision-maker with a bounded rational decision-maker that includes MEU as a special case (β3 → ∞) – the bounded rational decision-maker optimizes equation (7) by implementing equation (9). In the following we show how all parts of the hierarchical architecture:

emerge from optimally trading off computational cost against gains in utility. Importantly, p(a|x) plays the role of a prior distribution for the bounded rational decision-maker and reflects the high-level partitioning of the search space.

The optimization principle that leads to the bounded-optimal hierarchy trades off expected utility against the computational cost of model selection I(W; X) and the cost of the low-level decision using the model as a prior I(W; A|X):

The set of self-consistent solutions is given by

where Z(w) and Z(w, x) denote the corresponding normalization constants or partition sums. p(w|x) is given by Bayes’ rule and ΔFpar( w, x) is the free energy difference of the low-level stage:

see equation (5). More details on the derivation of the solution equations can be found in the Supplementary Methods Section 3. By comparing the solution equations (26)–(29) with equations (22)–(24) the hierarchical structure of the bounded-optimal solution can be seen clearly. The bounded-optimal model selector in equation (26) maximizes the downstream free-energy trade-off ΔFpar( w, x) in a bounded rational fashion and is similar to the optimal perceptual model of the serial case [equation (15)]. This means that the optimal model selection mechanism is shaped by the utility function as well as the computational process on the low-level stage of the hierarchy (governed by β3) but also by the computational cost of model selection (governed by β1). The optimal low-level decision-maker given by equation (28) turns out to be exactly a bounded rational decision-maker with p(a|x) as a prior – identical to the low-level decision-maker that was motivated in equation (24). Importantly, the bounded-optimal solution provides a principled way of designing the models p(a|x) [see equation (29)]. According to the equation, the optimal model p⋆(a|x) is given by a Bayesian mixture over optimal solutions p⋆(a|w, x) where w is known. The Bayesian mixture turns out to be the optimal compressor of actions for unknown w under the belief p(w|x).

4.2 Illustrative Example

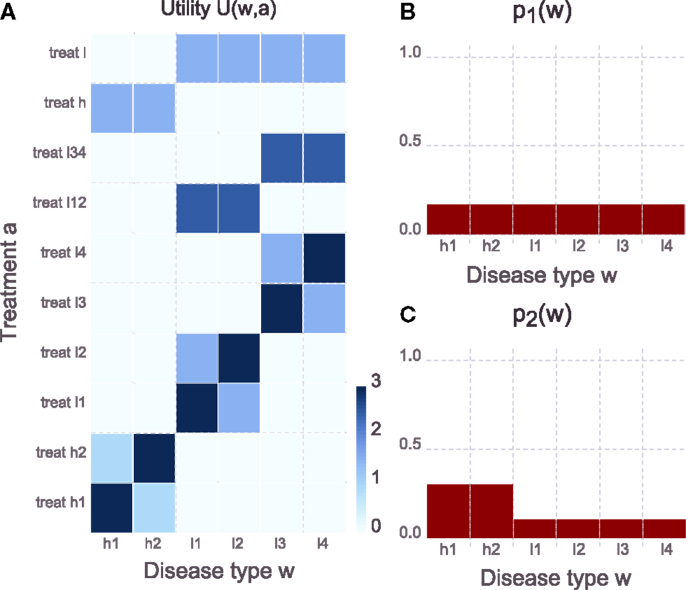

To illustrate the formation of bounded-optimal models, we designed the following example: in a simplified environment, only three diseases can occur – a heart disease or one of two possible lung diseases. Each of the diseases comes in two possible types (e.g., type 1 or type 2 diabetes). Depending on how much information is available on the symptoms of a patient, diseases can be treated according to the specific type (which is most effective) or with respect to the disease category (which is less effective but requires less information). See Figure 9 for a plot of the utility function and a detailed description of the example. The goal is to design a medical analysis hierarchy that initiates the best possible treatment, given its limitations. The hierarchy consists of an automated medical system that can cheaply take standard measurements to partially assess a patient’s disease category. Additionally, the patient is then sent to a specialist who can manually perform more elaborate measurements if necessary to further narrow down the patient’s precise disease type and recommend a treatment. The automated system should be designed in a way that minimizes the additional measurements required by the specialists. More formally, the automated system delivers a first diagnosis x given the patient’s precise disease type w according to p(x|w). The first diagnosis narrows down the possible treatments a according to a model p(a|x). For each x, a specialist can further reduce uncertainty about the correct treatment by performing more measurements p(a|w, x). We compare the optimal design of the automated system and the corresponding optimal treatment recommendations to the specialist p⋆(a|x) according to equation (29) in two different environments: one, where all disease types occur with equal probability (Figure 9B) versus two, where heart diseases occur with increased chance (Figure 9C). For this example, the number of different high-level diagnoses X is set to || = 3 which also means that there can be three different treatment recommendations p(a|x). Since in the example the total budget for performing measurements is quite low (reflected by β1, β3 both being quite low), the whole system (automated plus specialists) can in general not gather enough information about the symptoms to treat every disease type with the correct specific treatment. Rather, the low budget has to be spent on gathering the most important information.

Figure 9. Task setup of the medical system example. (A) Utility function. The disease category heart disease comes in the two types h1 or h2. There are two different categories of lung diseases that come in types l1 or l2 (lung disease A) or types l3 and l4 (lung disease B). The goal of the medical system is to gather information about the disease type and then initiate a treatment. Each of the disease types is best treated with a specific treatment. However, when the specific treatment is applied to the wrong disease type of the same disease category it is less effective. Additionally there are general treatments for the heart- and the two lung diseases that are slightly worse than the specific treatments but can be applied with less knowledge about the disease type. For lung diseases, there is a general treatment that works even if the specific lung disease is not known; however, it is less effective compared to the treatments where the disease but not the precise type is known. (B) Distribution of disease types in environment 1 – all diseases appear with equal probability. (C) Distribution of disease types in environment 2 where the heart diseases have an increased probability and the two corresponding disease types appear with higher chance.

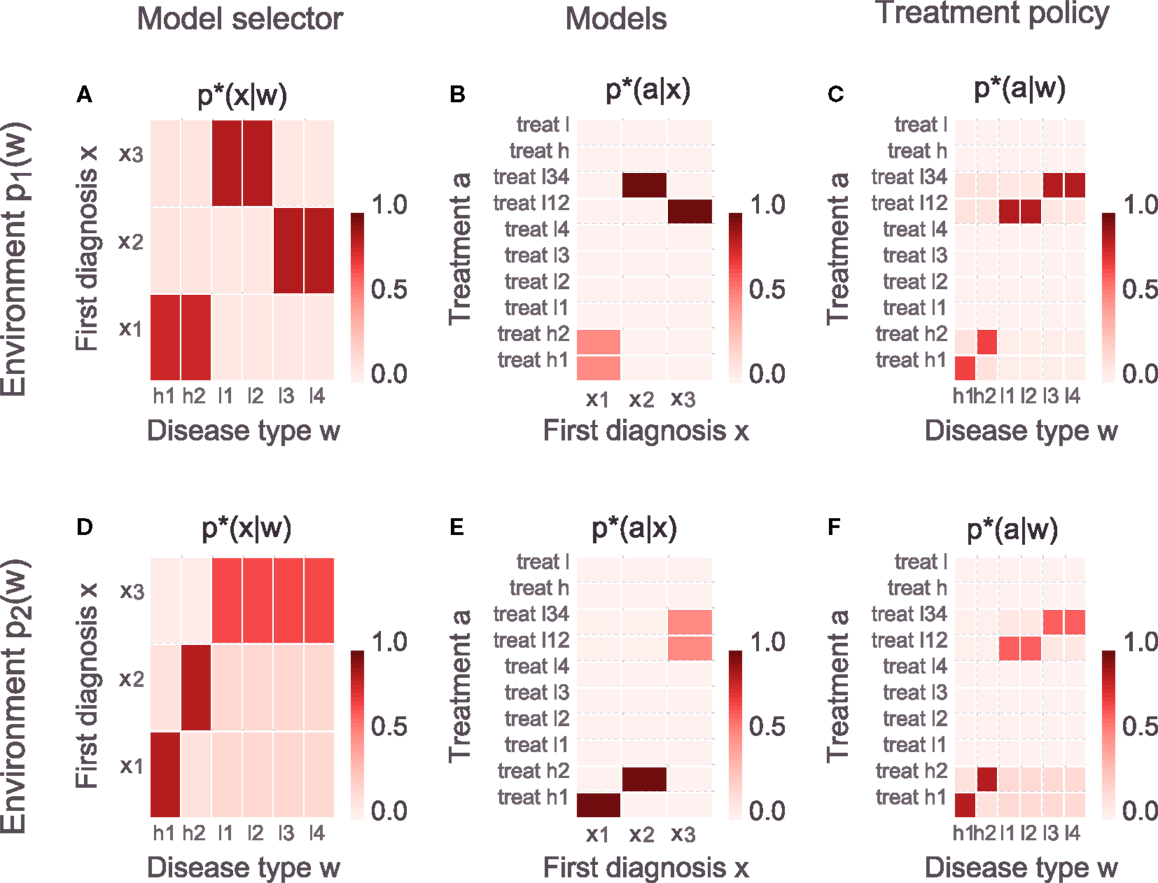

Figure 10 shows bounded-optimal hierarchies for the medical system in both environments. The top row in Figure 10 shows the optimal hierarchy for the environment where all diseases appear with equal probability: the automated system p⋆(x|w) (see Figure 10A) distinguishes between a heart disease, lung disease A and lung disease B, which means that there is one treatment recommendation for heart diseases and one treatment recommendation for each of the two possible lung diseases respectively (see the three columns of p⋆(a|x) in Figure 10B). Since the general treatment for the heart disease works less effective than the general treatments for the two lung diseases, the (very limited) budget of the specialists is completely spent on finding the correct specific heart treatment. Both lung diseases are treated with their respective general treatments since the two lung specialists have no budget for additional measurements. Since the automated system already distinguishes between the two lung diseases, it can narrow down the possible treatments to a delta over the correct general treatment, thus requiring no additional measurements by the lung specialists (shown by the two columns in p⋆(a|x) that have a delta over the treatment).

Figure 10. Bounded-optimal hierarchies for two different environments (different p(w)). Top row: all disease types are equally probable, bottom row: heart diseases and thus h1 and h2 are more probable. (A,D) Optimal model (or specialist) selectors [equation (26)]. In the uniform environment it is optimal to have one specialist for each disease category (heart, lung A, and lung B) and have a model selector that maps specific disease types to the corresponding specialist x. In the non-uniform environment it is optimal to have one specialist for each of the two types of the heart disease (h1, h2) and another one for all lung diseases. The corresponding model selector in the bottom row reflects this change in the environment. (B,E) Optimal models [equation (29), each column corresponds to one specialist]. The resources of the specialists are very limited due to a very low β3, meaning that the average deviation of p*(a|w, x) from p*(a|x) must be small. In the uniform environment it is optimal that the heart specialist spends all the resources for discriminating between the two heart disease types because using the general heart treatment on both types is less effective compared to using one of the lung disease treatments on both corresponding types. In the non-uniform environment this is reversed as the specialists for h1 and h2 do not need any more measurements to determine the correct treatment, however, the remaining budget (of 1 bit) is spent on discriminating between the two categories of lung diseases (but is insufficient for discriminating between the four possible lung disease types). (C,F) Overall-behavior of the hierarchical system p*(a|w) = Σxp*(x|w)p*(a|w, x). The distributions p*(a|w, x) [equation (28)] are not shown in the figure but can easily be investigated in the Supplementary Jupyter Notebook “4-ParallelHierarchy.”

The bottom row in Figure 10 shows the optimal hierarchy for the environment where heart diseases appear with higher probability. In this case it is optimal to redesign the automated system to distinguish between the two types of the heart disease h1, h2, and lung diseases in general (see p⋆(x|w) in Figure 10D of the figure). This means that there are now treatment recommendations p⋆(a|x) for h1 and h2 that do not require any more measurements by the specialists (shown by the delta over a treatment in the first two columns of p⋆(a|x) in Figure 10E) and there is another treatment recommendation for lung diseases. The corresponding specialist can use the limited budget to perform additional measurements to distinguish between the two categories of lung disease (but not between the four possible types as this would require more measurements than the budget allows). The example illustrates how the bounded-optimal decision-making hierarchy is shaped by the environment and emerges from optimizing the trade-off between expected utility and overall information processing cost. Readers can interactively explore the example in the Supplementary Jupyter Notebook “4-ParallelHierarchy” – in particular by changing the information processing costs of the specialists β3 or changing the number of specialists by increasing or decreasing the cardinality of X.

The solutions shown in this section were obtained by iterating the self-consistent equations until numerical convergence. Since there is no convergence-proof, it cannot be fully ruled out that the solutions are sub-optimal with respect to the objective. However, the point of the simulation results shown here is to allow for easier interpretation of the theoretical results and highlight certain aspects of the theoretical findings. We discuss this issue in Section 5.2.

4.3 Comparing Parallel and Serial Information Processing

In order to achieve a certain expected utility, a certain overall rate I(W; A) is needed. In the one-step rate-distortion case (Section 2) the channel from w to a must have a capacity larger or equal to that rate. In the serial case (Section 3) there is a channel from w to x and another channel from x to a. Both serial channels must at least have a capacity of I(W; A) in order to achieve the same overall rate, as the following inequality always holds for the serial case

In contrast, the parallel architecture allows for computing a certain overall rate I(W; A) using channels with a lower capacity because the contribution in reducing uncertainty about a on each level of the hierarchy splits up as follows:

which implies I(W, X; A) ≥ I(X; A). In particular, if the low-level step contributes information then I(W; A|X) > 0 and the previous inequality becomes strict. The same argument also holds when considering I(W; A) (see Section 5.1).

In many scenarios the maximum capacity of a single processing element is limited and it is desirable to spread the total processing load on several elements that require a lower capacity. For instance, there could be technical reasons why processing elements with 5 bits of capacity can easily be manufactured but processing elements with a capacity of 10 bits cannot be manufactured or are disproportionally more costly to produce. In the one-step case and the serial case the only way to stay below a certain capacity limit is by tuning β until the required rate is below the capacity – however, in both cases this also decreases the overall rate I(W; A). In the parallel hierarchical case several building blocks with a limited capacity can be used to produce an overall rate I(W; A) larger than the capacity of each processing block.

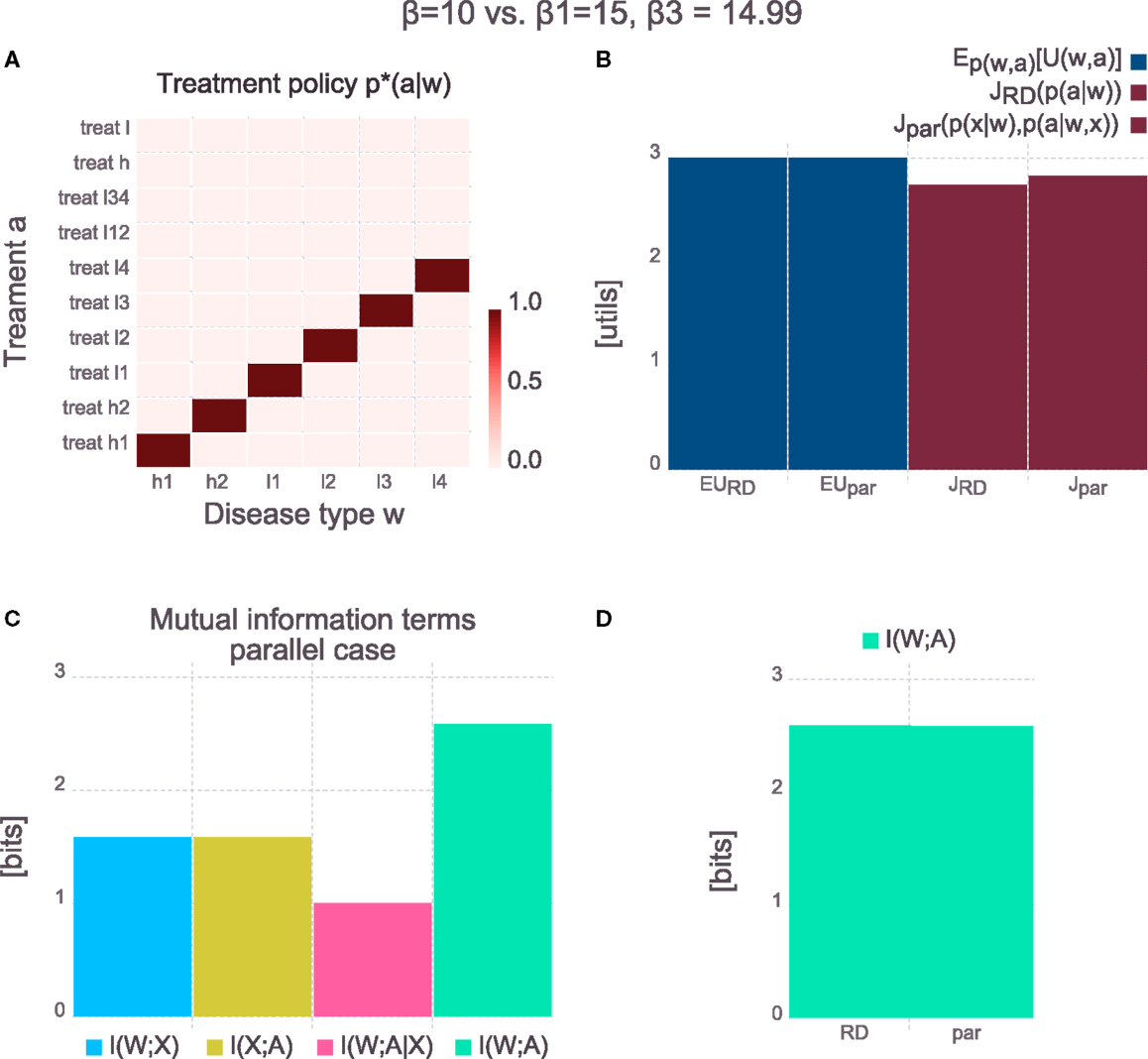

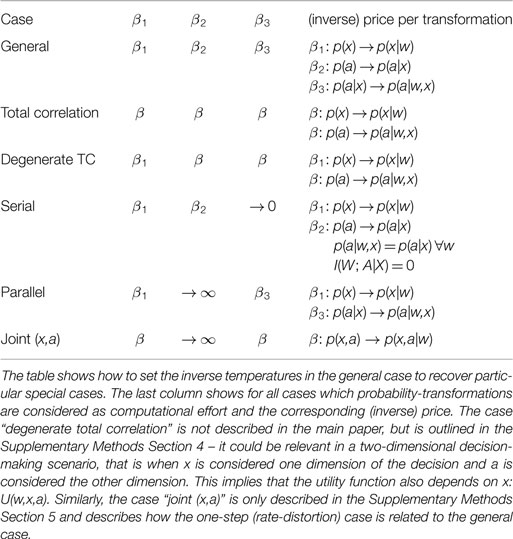

Splitting of information processing load onto several processing blocks is illustrated in Figure 11, where the one-step and parallel hierarchical solutions to the medical example are compared. In this example, the price of information processing in the one-step case is quite low (β = 10 bits per util) such that the corresponding solution leads to a deterministic mapping of each w to the best a (see Figure 11A). Doing so requires I(W; A) ≈ 2.6 bits (see Figure 11B). Now assume for the sake of this example that processing elements, where information processing cost is reduced (15 bits per util), could be manufactured, but the maximum capacity of these elements is 1.58 bits. In the one-step case these processing elements can only be used if it is acceptable to reduce the rate I(W; A) to 1.58 bits (by tuning β) which would imply a lower expected utility. However, in the parallel hierarchical case the new processing elements can be used (see Figure 11C) which leads to a reduced price of information processing (β1 = 15, β3 = 14.999). In conjunction the new processing elements process the same effective information I(W; A) (see Figure 11D) and achieve the same expected utility as the one-step case (see Figure 11B). However, since the price for information processing is lower on the more limited elements, the overall trade-off between expected utility and information processing cost is in favor of the parallel hierarchical architecture. Note that in this example it is important that the cardinality of X is limited (in this case || = 3) and β1 > β3. We discuss this in the next paragraphs.