Real Virtuality: A Code of Ethical Conduct. Recommendations for Good Scientific Practice and the Consumers of VR-Technology

- Johannes Gutenberg-Universität Mainz, Mainz, Germany

The goal of this article is to present a first list of ethical concerns that may arise from research and personal use of virtual reality (VR) and related technology, and to offer concrete recommendations for minimizing those risks. Many of the recommendations call for focused research initiatives. In the first part of the article, we discuss the relevant evidence from psychology that motivates our concerns. In Section “Plasticity in the Human Mind,” we cover some of the main results suggesting that one’s environment can influence one’s psychological states, as well as recent work on inducing illusions of embodiment. Then, in Section “Illusions of Embodiment and Their Lasting Effect,” we go on to discuss recent evidence indicating that immersion in VR can have psychological effects that last after leaving the virtual environment. In the second part of the article, we turn to the risks and recommendations. We begin, in Section “The Research Ethics of VR,” with the research ethics of VR, covering six main topics: the limits of experimental environments, informed consent, clinical risks, dual-use, online research, and a general point about the limitations of a code of conduct for research. Then, in Section “Risks for Individuals and Society,” we turn to the risks of VR for the general public, covering four main topics: long-term immersion, neglect of the social and physical environment, risky content, and privacy. We offer concrete recommendations for each of these 10 topics, summarized in Table 1.

Preliminary Remarks

Media reports indicate that virtual reality (VR) headsets will be commercially available in early 2016, or shortly thereafter, with offerings from, for example, Facebook (Oculus), HTC and Valve (Vive), Microsoft (HoloLens), and Sony (Morpheus). Considerable attention has been devoted to the exciting possibilities that this new technology and the research behind it have to offer, but less attention has been devoted to novel ethical issues or to the risks and dangers that are foreseeable with the widespread use of VR. Here, we wish to list some of the ethical issues, present a first, non-exhaustive list of those risks, and offer concrete recommendations for minimizing them. Of course, all this takes place in a wider sociocultural context: VR is a technology, and technologies change the objective world. Objective changes are subjectively perceived, and may lead to correlated shifts in value judgments. VR technology will eventually change not only our general image of humanity but also our understanding of deeply entrenched notions, such as “conscious experience,” “selfhood,” “authenticity,” or “realness.” In addition, it will transform the structure of our life-world, bringing about entirely novel forms of everyday social interactions and changing the very relationship we have to our own minds. In short, there will be a complex and dynamic interaction between “normality” (in the descriptive sense) and “normalization” (in the normative sense), and it is hard to predict where the overall process will lead us (Metzinger and Hildt, 2011).

Before beginning, we should quickly situate this article within the larger field of the philosophy of technology. Brey (2010) has offered a helpful taxonomy dividing the philosophy of technology into the classical works from the mid-twentieth century, on the one hand, and more recent developments that follow an “empirical turn” by focusing on the nature of particular emerging technologies, on the other hand. We intend the present article to be a contribution to the latter kind of philosophy of technology. In particular, we are investigating foundational issues in the applied ethics of VR, with a heavy emphasis on recent empirical results. Both authors have been participants in the collaborative project Virtual Embodiment and Robotic Re-Embodiment (VERE), a 5-year research program funded by the European Commission.1 Despite this explicit focus, we do not mean to imply that the issues investigated here will not find fruitful application to themes from classical twentieth century philosophy of technology (see Franssen et al., 2009). Consider, for instance, Martin Heidegger’s influential treatment of the way in which modern technology distorts our metaphysics of the natural world (Heidegger, 1977; also Borgmann, 1984), or Herbert Marcuse’s prescient account of industrial society’s ongoing creation of false needs that undermine our capacities for individuality (Marcuse, 1964). As should become clear from the examples below, immersive VR introduces new and dramatic ways of disrupting our relationship to the natural world (see Neglect of Others and the Physical Environment). Likewise, the newly created “need” to interact using social media will become even more psychologically ingrained as the interactions begin to take place while we are embodied in virtual spaces (see The Effects of Long-Term Immersion and O’Brolcháin et al., 2016). 
In sum, the fact that connections with classical philosophy of technology will remain largely implicit in this article should not be taken to suggest that they are not of great importance.

The main focus will be on immersive VR, in which subjects use a head-mounted display (HMD) to create the feeling of being within a virtual environment. Although our main topic involves the experience of immersion, some of the concerns raised, such as neglect of the physical environment (see Neglect of Others and the Physical Environment), can be applied to extended use of an HMD even when users do not experience immersion, such as when merely using the device for 3D viewing. Many of our points are also relevant for other types of VR hardware, such as CAVE projection. One central area of concern has to do with illusions of embodiment, in which one has the feeling of being embodied other than in one’s actual physical body (Petkova and Ehrsson, 2008; Slater et al., 2010). In VR, for instance, one might have the illusion of being embodied in an avatar that looks just like one’s physical body. Or one might have the illusion of being embodied in an avatar of a different size, age, or skin color. In all of these cases, insight into the illusory nature of the overall state is preserved. The fact that VR technology can induce illusions of embodiment is one of the main motivations behind our investigation into the new risks generated by the use of VR by researchers and by the general public. Traditional paradigms in experimental psychology cannot induce these strong illusions. Similarly, watching a film or playing a non-immersive video game cannot create the strong illusion of owning and controlling a body that is not your own. Although our main focus will be on VR (see Figure 1), many of the risks and recommendations can be extended to augmented reality (Azuma, 1997; Metz, 2012; Huang et al., 2013) and substitutional reality (Suzuki et al., 2012; Fan et al., 2013). In augmented reality (AR, see Figure 2), one experiences virtual elements intermixed with one’s actual physical environment.

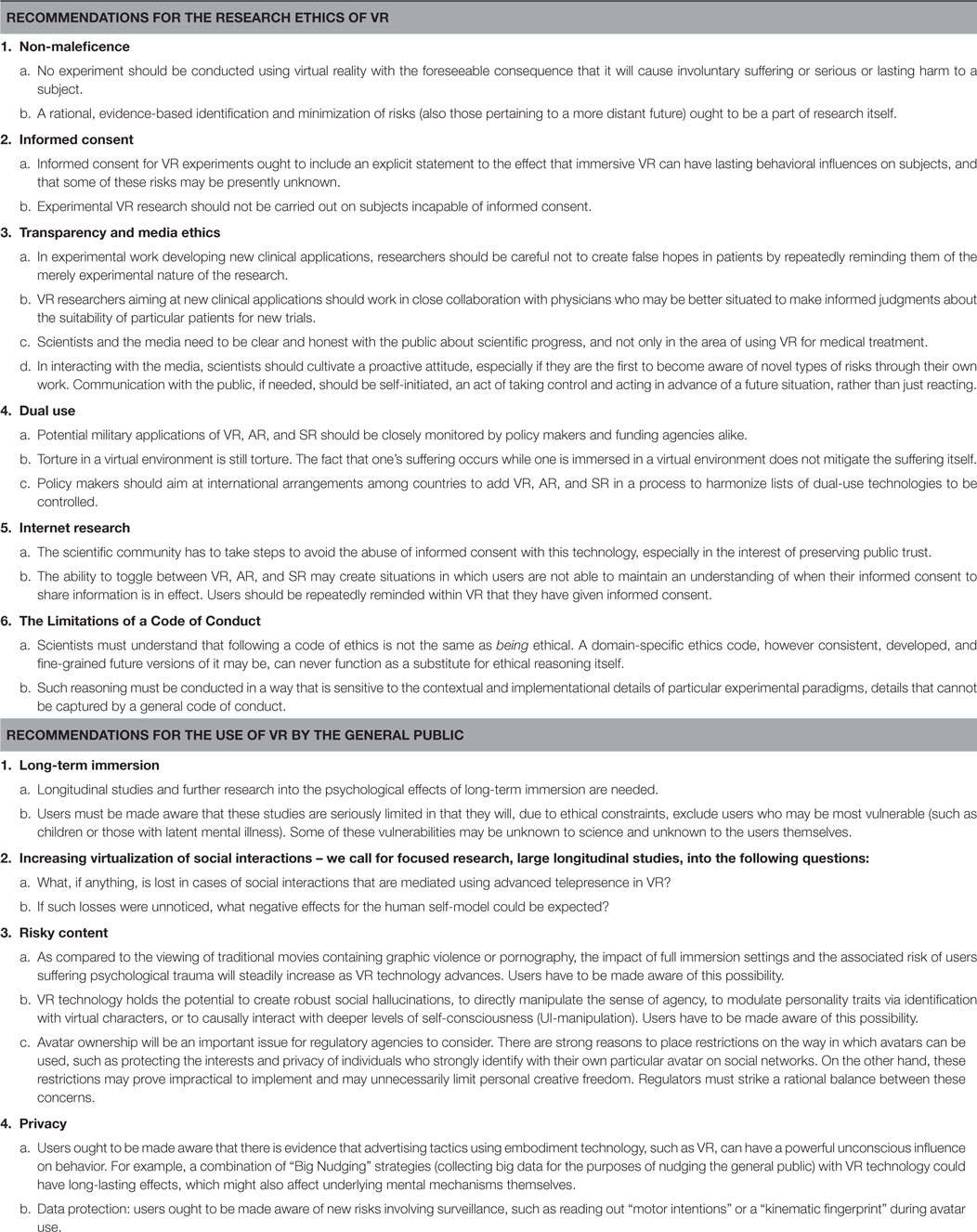

Figure 1. Illusory ownership of an avatar in virtual reality. Here, a subject is shown wearing a head-mounted display and a body tracking suit. The subject can see his avatar in VR moving in synchrony with his own movements in a virtual mirror. In this case, the avatar is designed to replicate Sigmund Freud in order to enable subjects to counsel themselves, thus creating what Freud might have called an instance of avatar-introjection! (Image used with kind permission from Osimo et al., 2015.)

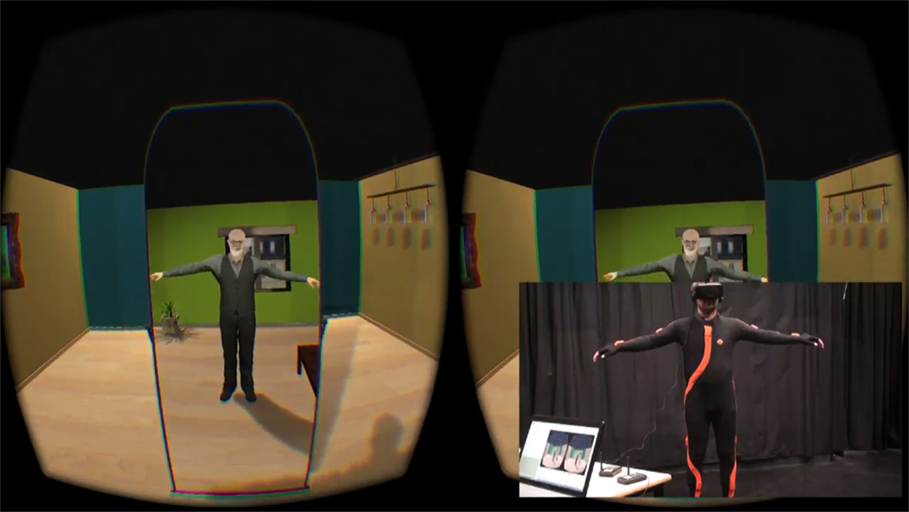

Figure 2. An augmented reality hand illusion. Here, augmented reality is used to show the subject a virtual hand in a biologically realistic location relative to his own body. This case differs from virtual reality due to the fact that the subject sees the virtual hand embedded in his own physical environment rather than in an entirely virtual environment (image used with kind permission from Keisuke Suzuki).

Following Milgram and colleagues (Milgram and Kishino, 1994; Milgram and Colquhoun, 1999), it may be helpful here to consider augmented reality along the Reality–Virtuality Continuum. The real environment is located at one extreme of the continuum and an entirely virtual environment is located at the other extreme. Displays can be placed along the continuum according to whether they primarily represent the real environment while including some virtual elements (augmented reality) or they primarily represent a virtual environment while including some real elements (augmented virtuality). Much of the following discussion will focus on entirely virtual environments, but readers should keep in mind that many of the concerns raised will also apply to environments all along the Reality–Virtuality Continuum.

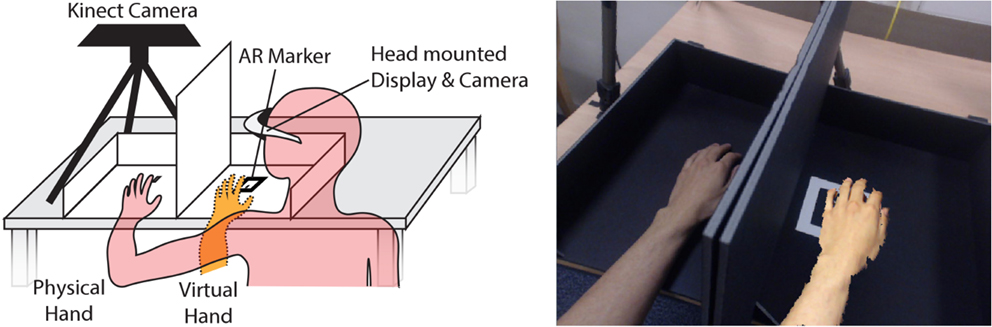

It is foreseeable that there will be ever new extensions and special cases of VR. We return to this theme with some philosophical remarks at the end of the article. For now, let us at least note that the very distinction between the real and the virtual is ripe for further philosophical investigation. One example of such a special, recent extension of VR that does not in itself form a distinct new category is “substitutional reality” (SR, see Figure 3), in which an omni-directional video feed gives one the illusion of being in a different location in space and/or time, and insight may not be preserved. Readers should keep in mind that VR headsets will likely enable users to toggle between virtual, augmented, and substitutional reality, and to adjust their location on the Reality–Virtuality Continuum, thus somewhat blurring the boundaries between kinds of immersive environments.

Figure 3. Immersion in the past using substitutional reality. In this example, substitutional reality is used to allow switching between a live view of the scene and a panoramic recording of that scene from the past. Note that SR could also be used to provide live (or recorded) panoramic input from a distant location, creating the illusion that one is “present” somewhere else (image used with kind permission from Anil Seth).

We divide our discussion into two main areas. First, we will address the research ethics of VR. Then we will turn to issues arising with the use of VR by the general public for entertainment and other purposes. To be clear upfront, we are not calling for general restrictions on an individual’s liberty to spend time (and money) in VR. In open democratic societies, such regulations must be based on rational arguments and available empirical evidence, and they should be guided by a general principle of liberalism: in principle, the individual citizen’s freedom and autonomy in dealing with their own brain and in choosing their own desired states of mind (including all of their phenomenal and cognitive properties) should be maximized. As a matter of fact, we would even argue for a constitutional right to mental self-determination (Bublitz and Merkel, 2014), somewhat limiting the authority of the government, because the above-mentioned values of individual freedom and mental autonomy seem to be absolutely fundamental to the idea of a liberal democracy involving a separation of powers. However, once such a general principle has been clearly stated, the much more interesting and demanding task lies in helping individuals exercise this freedom in an intelligent way, in order to minimize potential adverse effects and the overall psychosocial cost to society as a whole (Metzinger, 2009a; Metzinger and Hildt, 2011). New technologies like VR open a vast space of potential actions. This space has to be constrained in a rational and evidence-based manner.

Similarly, we fully support ongoing research using VR; indeed, we argue below that there are ethical demands to do more research using it, research that is motivated in part by the goal of mitigating harm for the general public. But we do think that it is prudent to anticipate risks, and we wish to spread awareness of how to avoid, or at least minimize, those risks.2 Before entering into the concrete details, we make the case for being especially concerned about VR technology in contrast, say, to television or non-immersive video games. We do so in two steps. First, in Section “Plasticity in the Human Mind,” we cover some of the relevant discoveries from psychology in the past decades, including the scientific foundation for illusions of embodiment. Second, in Section “Illusions of Embodiment and Their Lasting Effect,” we cover the more recent experimental work that has begun to reveal the lasting psychological effects of these illusions. Finally, in Section “Recommendations for the Use of VR by Researchers and Consumers,” we cover the research ethics of VR followed by risks for the general public.

Plasticity in the Human Mind

One central result of modern experimental psychology is that human behavior can be strongly influenced by external factors while the agent is totally unaware of this influence. Behavior is context sensitive and the mind is plastic, which is to say that it is capable of being continuously shaped and re-shaped by a host of causal factors. These results, some of which we present below, suggest that our environment, including technology and other humans, has an unconscious influence on our behavior. Note that the results do not conflict with the manifest fact that most of us have relatively stable character traits over time. After all, most of us spend our time in relatively stable environments. And there may be many aspects of the functional architecture underlying the neurally realized part of the human self-model [for example, of the body model in our brain, e.g., Metzinger (2003), p. 355] that are largely genetically determined. However, we also want to point out that human beings possess a large number of epigenetic traits, that is, stably heritable phenotypes resulting from changes in a chromosome without alterations in the DNA sequence.

Context-Sensitivity All the Way Down

The way in which our behavior is sensitive to environmental features is especially relevant here due to the fact that VR introduces a completely new type of environment, a new cognitive and cultural niche, which we are now constructing for ourselves as a species.

It is not excluded that extended interactions with VR environments may lead to more fundamental changes, not only on a psychological, but also on a biological level.

Some of the most famous experiments in psychology reveal the context sensitivity of human behavior. These include the Stanford Prison Experiment, in which normal subjects playing roles as either prison guards or inmates began to show pathological behavioral traits (Haney et al., 1973); Milgram’s obedience experiments, in which subjects obeyed orders that they believed to cause serious pain and to be immoral (Milgram, 1974); and Asch’s conformity experiments, in which subjects gave obviously incorrect answers to questions after hearing confederates all give the same incorrect answers (Asch, 1951). In a more recent study showing the unconscious impact of environment on behavior, the amount of money placed in a collection box for drinks in a university break room was measured under a condition in which the image of a pair of eyes was posted above the collection box. With the eyes “watching,” coffee drinkers placed nearly three times as much money in the box compared to the control condition with no eyes (Bateson et al., 2006). Effects like this one may be particularly relevant in VR, because the subjective experience of presence and being there is determined not only by functional factors, like the number and fidelity of sensory input and output channels or the ability to modify the virtual environment, but also, importantly, by the level of social interactivity, for example, in terms of actually being recognized as an existing person by others in the virtual world (Heeter, 1992; Metzinger, 2003). As investigations into VR have interestingly shown, a phenomenal reality as such becomes more real, in terms of the subjective experience of presence, as more agents recognizing one and interacting with one are contained in this reality. Phenomenologically, ongoing social cognition enhances both this reality and the self in their degree of “realness.” This principle will also hold if the subjective experience of ongoing social cognition is of a hallucinatory nature.

Potential for Deep Behavioral Manipulation

Whether in physical or virtual environments, human behavior is situated and socially contextualized, and we are often unaware of the causal impact this fact has on learning mechanisms as well as on occurrent behavior. It is plausible to assume that this will be true of novel media environments as well. Importantly, unlike other forms of media, VR can create a situation in which the user’s entire environment is determined by the creators of the virtual world, including “social hallucinations” induced by advanced avatar technology. Unlike physical environments, virtual environments can be modified quickly and easily with the goal of influencing behavior.

The comprehensive character of VR plus the potential for the global control of experiential content introduces opportunities for new and especially powerful forms of both mental and behavioral manipulation, especially when commercial, political, religious, or governmental interests are behind the creation and maintenance of the virtual worlds.

However, the plasticity of the mind is not limited to behavioral traits. Illusions of embodiment are possible because the mind is plastic to such a degree that it can misrepresent its own embodiment. To be clear, illusions of embodiment can arise from normal brain activity alone, and need not imply changes in underlying neural structure. Such illusions occur naturally in dreams, phantom limb experiences, out-of-body experiences, and Body Integrity Identity Disorder (Brugger et al., 2000; Metzinger, 2009b; Hilti et al., 2013; Ananthaswamy, 2015; Windt, 2015), and they sometimes include a shift in what has been termed the phenomenal “unit of identification” in consciousness research (UI; Metzinger, 2013a,b), the conscious content that we currently experience as “ourselves” (please note that in the current paper “UI” does not refer to “user interface,” but always to the specific experiential content of “selfhood,” as explained below). This may be the deepest theoretical reason why we should be cautious about the psychological effects of applied VR: this technology is unique in beginning to target and manipulate the UI in our brain itself.

Direct UI-Manipulation

The UI is the form of experiential content that gives rise to autophenomenological reports of the type “I am this!” For every self-conscious system, there exists a phenomenal unit of identification, such that (1) the system possesses a single, conscious model of reality; (2) the UI is a part of this model; and (3) at any given point in time t, the UI can be characterized by a specific and determinate representational content, which in turn constitutes the system’s phenomenal self-model (PSM; Metzinger, 2003) at t. Please note that the UI does not have to be identical with the content of the conscious body image or a region within it (like a fictitious point behind the eyes). For example, the UI can be moved out of and behind the head as phenomenally experienced in a repeatable and controllable fashion by direct electrical stimulation, while preserving the visual first-person perspective with its origin behind the eyes (de Ridder et al., 2007). For human beings, the UI is dynamic and can be highly variable. There exists a minimal UI, which likely is constituted by pure spatiotemporal self-location (Blanke and Metzinger, 2009; Windt, 2010; Metzinger, 2013a,b); and in some configurations (e.g., “being one with the world”), there is also a maximal UI, likely constituted by the most general phenomenal property available, namely, the integrated nature of phenomenality per se (Metzinger, 2013a,b).

VR technology directly targets the mechanism by which human beings phenomenologically identify with the content of their self-model.

The rubber hand illusion (RHI) is a simple localized illusion of embodiment that can be induced by having subjects look at a visually realistic rubber hand in a biologically realistic position (Botvinick and Cohen, 1998; Tsakiris and Haggard, 2005). When the rubber hand is stroked synchronously with the subject’s physical hand (which is hidden from view), subjects experience the rubber hand as their own.3 While the rubber hand can be used to create a partial illusion of embodiment, the same basic idea can be used to create the full-body illusion, on a global level. Subjects look through goggles through which they see a live video feed of their own bodies (or of a virtual body) located a short distance in front of their actual location. When they see their bodies being stroked on the back, and feel themselves being stroked at the same time, subjects sometimes feel as if the body that they see in front of them is their own (Lenggenhager et al., 2007; see Figure 4). This illusion is much weaker and more fragile than the RHI, but it has given us valuable new insights into the bottom-up construction of our conscious, bodily self-model in the brain (Metzinger, 2014). In more recent work, Maselli and Slater (2013) have found that tactile feedback is not required for an illusion of embodiment. They found that a virtual arm with a realistic appearance co-located with the subject’s actual arm is sufficient to induce the illusion of ownership of the virtual arm. In addition to visual and tactile signals, recent work suggests that manipulations of interoceptive signals, such as heartbeat, can also influence our experience of embodiment (Aspell et al., 2013; Seth, 2013).

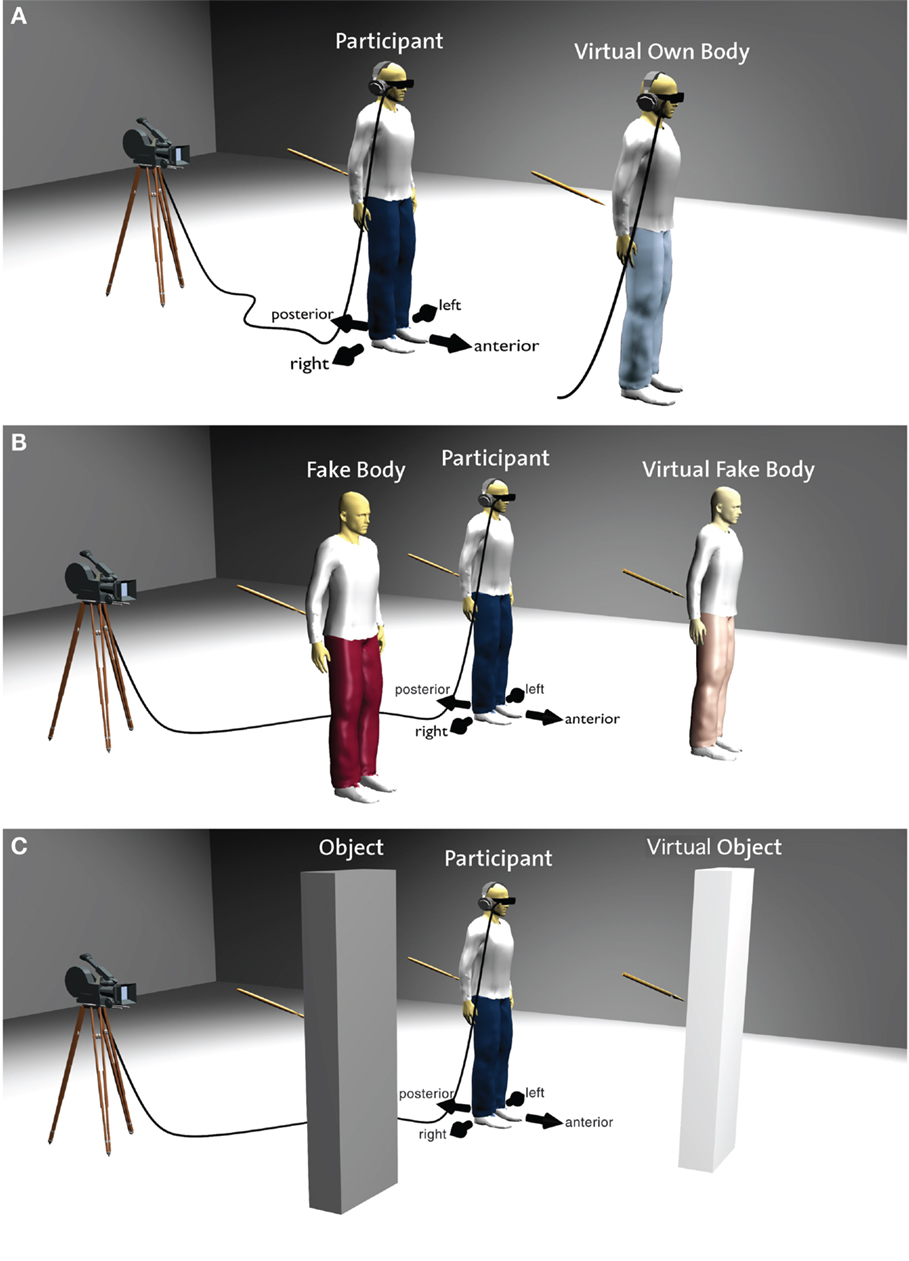

Figure 4. Creating a whole-body analog of the rubber-hand illusion. (A) Participant (dark blue trousers) sees through an HMD his own virtual body (light blue trousers) in 3D, standing 2 m in front of him and being stroked synchronously or asynchronously at the participant’s back. In other conditions, the participant sees either (B) a virtual fake body (light red trousers) or (C) a virtual non-corporeal object (light gray) being stroked synchronously or asynchronously at the back. Dark colors indicate the actual location of the physical body or object, whereas light colors represent the virtual body or object seen on the HMD. (Image used with kind permission from M. Boyer.)

The results sketched in these three sections reveal not only categories of risks but also three ways in which the human mind is plastic. First, there is “context-sensitivity all the way down,” which may involve hitherto unknown kinds of epigenetic trait formation in new environments. Second, there is evidence that behavior can be strongly influenced by environment and context, and in a deep way. Third, illusions of embodiment can be induced fairly easily in the laboratory, directly targeting the human UI itself. These results can be taken together as empirical premises for an argument stating not only that there may be unexpected psychological risks if illusions of embodiment are misused, or used recklessly, but that, if we are interested in minimizing potential damage and future psychosocial costs, these risks are themselves ethically relevant. In the following section, we review initial evidence that connects the three strands of evidence that we have just presented. That is, we review initial evidence that illusions of embodiment can be combined with a change in environment and context in order to bring about lasting psychological effects in subjects.

Illusions of Embodiment and Their Lasting Effect

In the last several years, a number of studies have found a psychological influence on subjects while they are immersed in a virtual environment. These studies suggest that VR poses risks that are novel, that go beyond the risks of traditional psychological experiments in isolated environments, and that go beyond the risks of existing media technology for the general public. A first important result from VR research involves what is known as the virtual pit (Meehan et al., 2002). Subjects are given an HMD that immerses them in a virtual environment in which they are standing at the edge of a deep pit. In one kind of experiment involving the pit, they are instructed to lean over the edge and drop a beanbag onto a target at the bottom. In order to enhance the illusion of standing at the edge, the subject stands on the ledge of a wooden platform in the lab that is only 1.5″ from the ground. Despite their belief that they are in no danger because the pit is “only” virtual, subjects nonetheless show increased signs of stress through increases in heart rate and skin conductance (ibid.). In a variation of the virtual pit, subjects may be told to walk across the pit over a virtual beam. In the lab, a real wooden beam is placed where subjects see the virtual beam. As one might expect, this version of the pit also elicits strong feelings of stress and fear.4 More recently, an experiment reproducing the famous Milgram obedience experiments in VR found that subjects reacted as if the shocks they administered were real, despite believing that they were merely virtual (Slater et al., 2006).

In addition to a strong emotional response from immersion, there is evidence that experiences in VR can also influence behavioral responses. One example of a behavioral influence from VR has been named the Proteus Effect by Nick Yee and Jeremy Bailenson. This effect occurs when subjects “conform to the behavior that they believe others would expect them to have” based on the appearance of their avatar (Yee and Bailenson, 2007, p. 274; Kilteni et al., 2013). They found, for example, that subjects embodied in a taller avatar negotiated more aggressively than subjects in a shorter avatar (ibid.). Changes in behavior while in the virtual environment are of ethical concern, since such behavior can have serious implications for our non-virtual physical lives – for example, as financial transactions take place in a non-physical environment (Madary, 2014).

But perhaps even more concerning for our purposes is evidence that behavior while in the virtual environment can have a lasting psychological impact after subjects return to the physical world. Hershfield et al. (2011) found that subjects embodying avatars that look like aged versions of themselves show a tendency to allocate more money for their retirement after leaving the virtual environment. Rosenberg et al. (2013) had subjects perform tasks in a virtual city. Subjects were allowed to fly through the city either using a helicopter or by their own body movements, like Superman. They found that subjects given the superpower were more likely to show altruistic behavior afterwards – they were more likely to help an experimenter pick up spilled pens. Yoon and Vargas (2014) found a similar result, although not using fully immersive VR. They had subjects play a video game as either a superhero, a supervillain, or a neutral control avatar. After playing the game, subjects were given a tasting task that they were told was unrelated to the gaming experiment. Subjects were given either chocolate or chili sauce to taste, and then told to measure out the amount of food for the subsequent subject to taste. Those who played as heroes poured out more chocolate, while those who played as villains poured out more chili.

The psychological impact of immersive VR has also been explored in a beneficent application. Peck et al. (2013) gave subjects an implicit racial bias test at least 3 days before immersion and then immediately after the immersion. In the experiment, subjects were either embodied in an avatar with light skin, dark skin, or purple skin, or immersed in the virtual world with no body. They found that subjects who were embodied in the dark-skinned avatar showed a decrease in implicit racial bias, at least temporarily.

Recommendations for the Use of VR by Researchers and Consumers

With the results from the first section of the paper in mind as illustrative examples, we now move on to make concrete recommendations for VR in both scientific research (see The Research Ethics of VR) and consumer applications (see Risks for Individuals and Society). Our main recommendations are italicized and listed together in Table 1.

The Research Ethics of VR

In this section, we cover questions about the ethics of conducting research either on VR, or, perhaps more interestingly, research using VR as a tool. For example, it is plausible to assume that in the future there will be many experiments combining real-time fMRI and VR, or ones using animal subjects in VR (Normand et al., 2012), which are not about understanding or improving VR itself but only use it as a research tool. To begin with a short example, Behr et al. (2005) have covered the research ethics of VR from a practical perspective, emphasizing that the risk of motion sickness must be minimized and that researchers ought to assist subjects as they leave the virtual environment and readjust to the real world. In this part of the article, we indicate new issues in the research ethics of VR that were not covered in Behr et al.’s initial treatment. In particular, we will raise the following six issues:

• the limits of experimental environments,

• informed consent with regard to the lasting psychological effects of VR,

• risks associated with clinical applications of VR,

• the possibility of using results of VR research for malicious purposes (dual use),

• online research using VR, and

• a general point about the inherent limitations of a code of conduct for research.

For each of these issues, we offer concrete recommendations for researchers using VR as well as ethics committees charged with evaluating the permissibility of particular experimental paradigms using VR.

Ethical Experimentation

What are the limits to what we can ethically do in VR experiments? We recommend, at the very least, that researchers follow the principle of non-maleficence: do no harm. This principle is a central component of research ethics on human subjects, where it is often discussed with the accompanying principle of beneficence: maximize well-being for the subjects. Note that such a principle applies to all sentient beings capable of suffering, like non-human animals or even potential artificial subjects of experience in the future (Althaus et al., 2015, p. 10). We will return to the principle of beneficence in VR in the following section. The principle of non-maleficence can be found in the codes of ethical conduct of both the American Psychological Association (General Principle A)5 and the British Psychological Society (Principle 2.4). The British Psychological Society offers the following recommendation:

Harm to research participants must be avoided. Where risks arise as an unavoidable and integral element of the research, robust risk assessment and management protocols should be developed and complied with. Normally, the risk of harm must be no greater than that encountered in ordinary life, i.e., participants should not be exposed to risks greater than or additional to those to which they are exposed in their normal lifestyles (The British Psychological Society, 2014, p. 11).

Following this recommendation in the case of VR might raise some novel challenges due to the entirely new nature of the technology. For instance, a well-known domain of application for the principle of non-maleficence has been in clinical trials for new pharmacological agents. Although this domain of research ethics still faces important and controversial issues (Wendler, 2012), thinkers in the debate can avail themselves of the history of medical technology. In many cases, precedents can be quoted. In the case of VR, there is as yet no history that we can use as a source for insight. On the contrary, what is needed is a rational, ethically sound process of precedent-setting.

In its general form, the principle of non-maleficence for VR can be expressed as follows:

No experiment should be conducted using virtual reality with the foreseeable consequence that it will cause serious or lasting harm to a subject.

Although recommending adherence to this principle is nothing new, implementing this principle in VR laboratories may be challenging for the following reason. Attempts to apply non-maleficence in VR can encounter a dilemma of sorts. On the one hand, a goal of the research ought to be, as we suggest below, to gain a better understanding of the risks posed for individuals using VR. For instance, does the duration of immersion pose a greater risk for the user? Might some virtual environments be more psychologically disturbing than others? VR research should seek to answer these and similar questions. In particular, open-ended longitudinal studies will be necessary to assess the risk of long-term usage for the general population, just like with new substances for pharmaceutical cognitive enhancement or medical treatments more generally. On the other hand, it is difficult to assess these risks without running experiments that generate those possible risks, thus raising worries about non-maleficence.

A strict adherence to non-maleficence would require avoiding all experiments using virtual environments for which the risk is unknown. We suggest that this strict interpretation of non-maleficence is not optimal, because substantial ethical assessments should always be evidence-based and necessarily involve the investigation of greater time-windows and larger populations. VR researchers could and should provide a valuable service by informing the public and policy makers of the possible risks of spending large amounts of time in unregulated virtual environments. The principle of non-maleficence should be applied in the sense that experiments should not be conducted if the outcome involves foreseeable harm to the subjects. On the other hand, the same principle implies a sustained striving for rational, evidence-based minimization of risks in the more distant future. We, therefore, suggest that careful experiments designed with the beneficent intention of discovering the psychological impact of immersion in VR are ethically permissible.

In order to adhere to the principle of non-maleficence, researchers (and ethics committees) will need to utilize their knowledge of experimental psychology as well as their knowledge of results specific to VR. The kinds of results sketched in Sections “Plasticity in the Human Mind” and “Illusions of Embodiment and Their Lasting Effect” will be directly relevant for evaluating whether a line of experimentation violates non-maleficence. Similarly, the selection of subjects for VR experiments must be done with special care. New methods of prescreening for individuals with high risk factors must be incrementally developed, and funding for the development of such new methodologies needs to be allocated. We, therefore, urge careful screening of subjects to minimize the risks of aggravating an existing psychological disorder or an undetected psychiatric vulnerability (Rizzo et al., 1998; Gregg and Tarrier, 2007). Many experiments using VR currently seek to treat existing psychiatric disorders. The screening process for such experiments has the goal of selecting subjects who exhibit signs and symptoms of an existing condition. The screening process should also include exclusion criteria specific to possible risks posed by VR. Ideally, the VR research community will seek to establish an empirically motivated standard set of exclusion criteria. As we will discuss in Section “The Effects of Long-Term Immersion” below, of particular concern are vulnerabilities to disorders that could potentially become aggravated by prolonged immersion and illusions of embodiment, such as Depersonalization/Derealization Disorder (DDD; see American Psychiatric Association (2013), DSM-5: 300.14). Standard exclusion criteria may involve, for instance, scoring above a particular threshold on scales testing for dissociative experiences (Bernstein and Putnam, 1986) or depersonalization (Sierra and Berrios, 2000). 
Of course, there may be cases in which experimenters seek to include subjects with experiences of dissociation in order to investigate ways in which VR might be used to treat the underlying conditions, such as treating post-traumatic stress disorder (PTSD) through exposure therapy in VR (Botella et al., 2015). In those special cases, it is important to implement alternative exclusion criteria, such as those used by Rothbaum et al. (2014), who excluded subjects with a history of psychosis, bipolar disorder, or suicide risk.
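The threshold-based exclusion criteria discussed in this section can be illustrated with a minimal sketch. It is not a validated screening protocol: the function name, the result structure, and in particular the cutoff values are hypothetical placeholders, since no specific thresholds are given here. A real study would take its cutoffs from the validated literature for each scale and from its ethics committee.

```python
from dataclasses import dataclass, field

# Hypothetical cutoffs for illustration only; no values are specified in the text.
DISSOCIATION_CUTOFF = 30.0
DEPERSONALIZATION_CUTOFF = 70.0


@dataclass
class ScreeningResult:
    eligible: bool
    reasons: list = field(default_factory=list)


def screen_candidate(dissociation_score, depersonalization_score,
                     dissociation_cutoff=DISSOCIATION_CUTOFF,
                     depersonalization_cutoff=DEPERSONALIZATION_CUTOFF):
    """Return eligibility together with the reason for each exclusion."""
    reasons = []
    if dissociation_score > dissociation_cutoff:
        reasons.append("dissociation score above cutoff")
    if depersonalization_score > depersonalization_cutoff:
        reasons.append("depersonalization score above cutoff")
    # A candidate is eligible only if no exclusion criterion was triggered.
    return ScreeningResult(eligible=not reasons, reasons=reasons)
```

Keeping the cutoffs as parameters reflects the point that studies with special populations may need alternative exclusion criteria rather than one fixed standard.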

Informed Consent

The results presented above clearly suggest that VR experiences can have lasting psychological impact. This new knowledge about the lasting influence of experiences in VR must not be withheld from subjects in new VR experiments.

We recommend that informed consent for VR experiments ought to include an explicit statement to the effect that immersive VR can have lasting behavioral influences on subjects, and that some of these risks may be presently unknown.

Subjects should be made aware of this possibility out of respect for their autonomy (as included, for example, in the American Psychological Association General Principle D)6. That is, if an experiment might alter their behavior without their awareness of this alteration, then such an experiment could be seen as a threat to the autonomy of the subject. A reasonable way to preserve autonomy, we suggest, is simply to inform subjects of possible lasting effects. Please again note the principled problem that research animals are not able to give informed consent; their interests need to be represented by humans. Also note that we are not suggesting that subjects ought to be informed about the particular effects that are being investigated in the experiment. Thus, our recommendation should not raise the concern that informing subjects may compromise researchers’ abilities to test for particular behavioral effects.

Practical Applications: False Hope and Beneficence

Another concern has to do with various applications of VR. One of many promising applications for VR research is in the treatment of disease, damage, and other health-related issues, especially mental health.7 For instance, researchers found that immersing burn victims in an icy virtual environment can mitigate their experience of pain during medical procedures (Hoffman et al., 2011). Here, we wish to raise some concerns about applications of VR. The first concern is that patients may develop false hope with regard to clinical applications of VR. The second concern is that applications of VR may encounter a tension between beneficence and autonomy.

Patients may believe that treatment using VR is better than traditional interventions merely due to the fact that it is a new technology, or an experimental application of existing technology. This sense of false hope is known as the “therapeutic misconception” in the literature on the ethics of clinical research (Appelbaum et al., 1987; Kass et al., 1996; Lidz and Appelbaum, 2002; Chen et al., 2003). Researchers using VR for clinical research must be aware of established techniques for combating the therapeutic misconception in their subjects. For example, one established guideline for investigating new clinical applications is that of “clinical equipoise,” which is the requirement that there be genuine uncertainty in the medical community as to the best form of treatment (Freedman, 1987). It is important that researchers communicate their own sense of this uncertainty in a clear manner to volunteer subjects. Similarly, as Chen et al. (2003) note, physicians who have a lasting relationship with their patients may be better suited to form a judgment as to whether the patient is motivated by a clear understanding of the nature of the research, rather than motivated by false hope or even desperation.

VR researchers aiming at new clinical applications should therefore work slowly and carefully, in close collaboration with physicians who may be better situated to make informed judgments about the suitability of particular patients for new trials.

Therapeutic and clinical applications should be investigated only in the presence of certified medical personnel.

Another relevant concern here is the way in which the general public keeps informed of new developments in science through the popular media. Members of the general public with less interest in science may have a more difficult time gleaning scientific knowledge from the media than those with more interest (Takahashi and Tandoc, 2015). When considering their responsibility as scientists to communicate new results to the public (Fischhoff, 2013; Kueffer and Larson, 2014), VR researchers working in clinical applications must be careful to avoid language that might give false hope to patients.

We should also note here that there are other practical concerns about the use of VR for medical interventions. For instance, once the technology is available for patients to use, who will pay for it? Should medical insurance pay for HMDs and new software? How do we achieve distributive justice and avoid a situation where only privileged members of society benefit from technological advances? We make no recommendation here, but flag this question as something that needs to be considered by policy makers. Similarly, HMDs, CAVE immersive displays, and motion-tracking technology may have to be reclassified as medical devices.

One risk when performing the research necessary for developing such applications is that the patients involved may develop a false sense of hope due to the non-traditional nature of the intervention. As this kind of research progresses, scientists must continue to be honest with patients so as not to generate false hope. There is also an overlap between media ethics and the ethics of VR technology: a related example is that many of the early experiments on full-body illusions (Ehrsson, 2007; Lenggenhager et al., 2007) have been falsely overreported as creating full-blown “out-of-body experiences” (Metzinger, 2003, 2009a,b), and scientists have perhaps not done enough to correct this misrepresentation of their own work in the media.8 While incremental progress has clearly been made, large parts of the public still falsely believe that scientists “have created OBEs in the lab.”

Overall, scientists and the media need to be clear and honest with the public about scientific progress, especially in the area of using VR for medical treatment.

The second concern about applications of VR has to do with the well-known tension between autonomy and beneficence in applied ethics (Beauchamp and Childress, 2013: Chapter 6). As the results surveyed in the first part of this article suggest, VR enables a powerful form of non-invasive psychological manipulation. One obvious application of VR, then, would be to perform such manipulations in order to bring about desirable mental states and behavioral dispositions in subjects. Indeed, early experiments in VR have done just that, making subjects willing to save more for their retirement (Hershfield et al., 2011), perform better on tests for implicit racial bias (Peck et al., 2013), and behave in a more environmentally conscious manner (Ahn et al., 2014). In a paternalistic spirit, such as that of the UK Behavioral Insights Team, one might urge that beneficent VR applications such as these should be put in place among the general populace, perhaps as a new form of “public service announcement” for the twenty-first century. Here, we wish to note that doing so may generate another case of conflict between beneficence and autonomy. If individuals do not seek to alter their psychological profile in the ways intended by the beneficent VR interventions, then such interventions may be considered a violation of their autonomy.

Dual Use

Dual use is a well-known problem in research ethics and the ethics of technology, especially in the life sciences (Miller and Selgelid, 2008). Here, we use it to refer to the fact that technology can be used for something other than its intended purpose, in particular for military applications. In the context of VR technology, one will immediately think not only of drone warfare, teleoperated weapon systems, or “virtual suicide attacks,” but also of interrogation procedures and torture. It is not in the power of the scientists and engineers who develop the technology to police its use, but we can raise awareness about potential misuses of the technology as a way of contributing to precautionary steps.

Here is an example. One possible application of VR would be to rehabilitate violent offenders by immersing them in a virtual environment that induces a strong sense of empathy for their victims. We see no problem at all with voluntary participation in such a promising use of the technology. But it is foreseeable that governments and penal systems might adopt mandatory treatment using similar techniques, calling to mind Anthony Burgess’ A Clockwork Orange. We will not comment on the moral acceptability of such a practice, noting that the details of implementation may be an important – and more controllable – unknown factor.

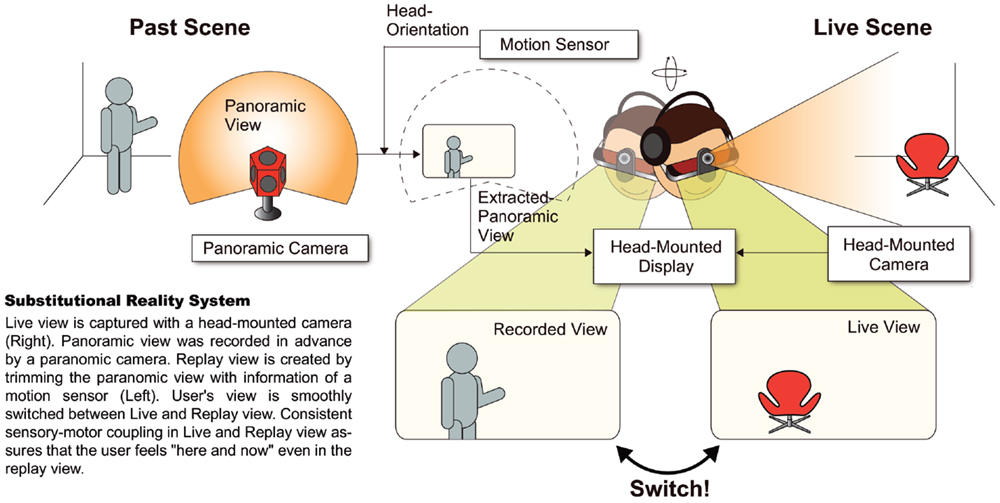

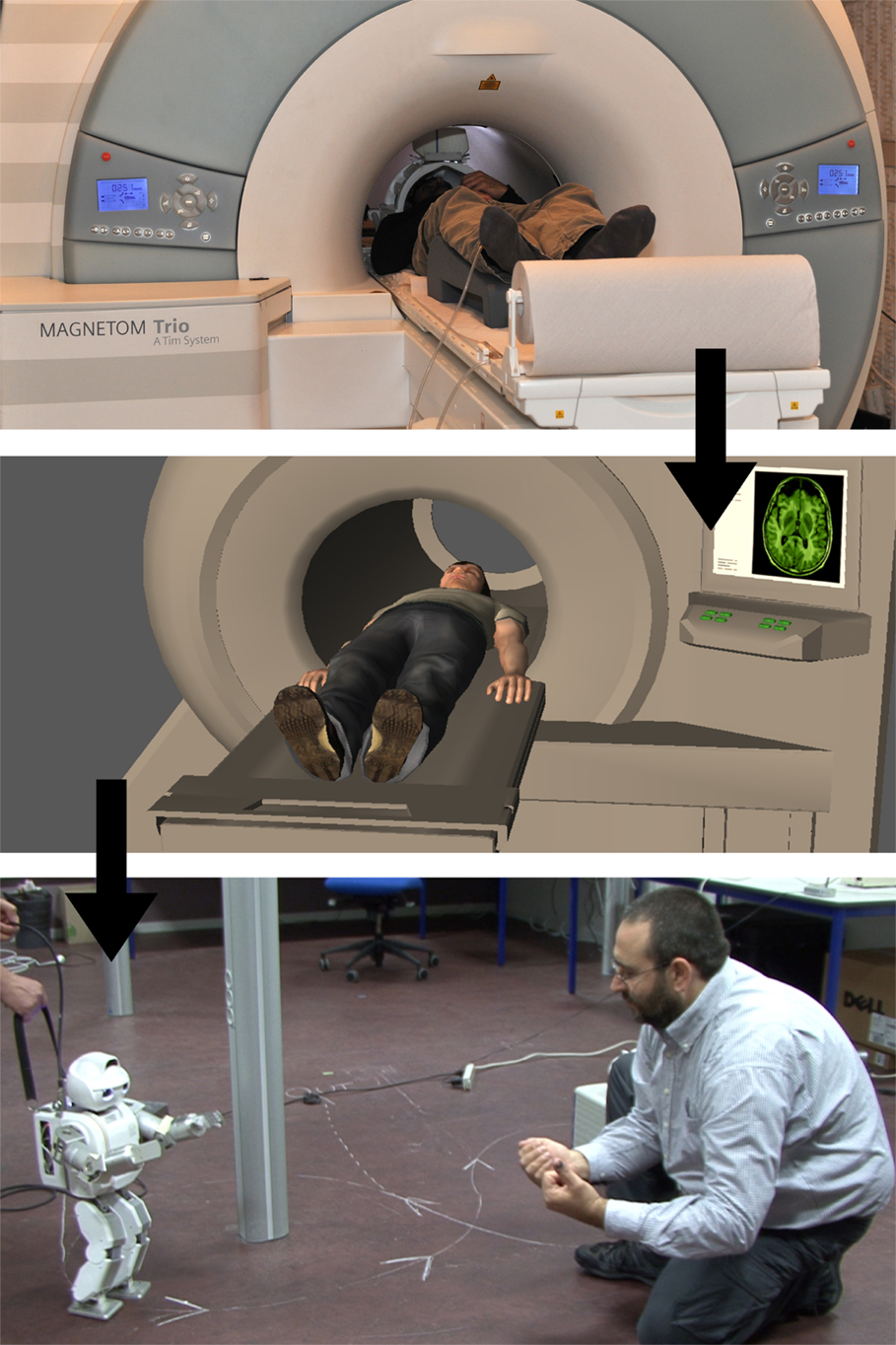

Virtual embodiment constitutes a historically new form of acting. Metzinger (2013c) introduced the notion of a “PSM-action” to describe this new element more precisely. PSM-actions are those actions in which a human being exclusively uses the conscious self-model in her brain to initiate an action, causally bypassing the non-neural body (as in Figure 5). Of course, there will have to be feedback loops for complex actions, for instance, when seeing through the camera eyes of a robot, perhaps adjusting a grasping movement in real-time (which is still far from possible today). But the relevant causal starting point of the entire action is no longer the body made of flesh and bones, but the conscious self-model in our brain. We simulate an action in the self-model, in the inner image of our body, and a machine performs it. PSM-actions are almost purely “mental,” but they may have far-reaching causal consequences in the real world, for example, in combat situations. As the embodiment in avatars and physical robots may be functionally shallow and may provide only weaker and less stable forms of self-control (for example, with regard to spontaneously arising aggressive fantasies, see Metzinger, 2013c for an example), it is not clear how such PSM-actions mediated via brain–computer interfaces should be assessed in terms of accountability and ethical responsibility.9

Figure 5. “PSM-actions”: a test subject lies in a nuclear magnetic resonance tomograph at the Weizmann Institute in Israel. With the aid of data goggles he sees an avatar, also lying in a scanner. The goal is to create the illusion that he is embodied in this avatar. The test subject’s motor imagery is classified and translated into movement commands, setting the avatar in motion. After a training phase, test subjects were able to control a remote robot in France “directly with their minds” via the Internet, while they were able to see the environment in France through the robot’s camera eyes. (Image used with kind permission from Doron Friedman and Ori Cohen, cf. Cohen et al., 2014.)

Just as VR can be used to increase empathy, it can conceivably be used to decrease empathy. Doing so would have obvious military applications in training soldiers to have less empathy for enemy combatants, to feel no remorse about doing violence. We will not go further into the difficult issues regarding the use of new technology in warfare, but we note this possible alternative application of the technology. Apart from increasing or decreasing empathy, the power of VR to induce particular kinds of emotions could be used deliberately to cause suffering. Conceivably, the suffering could be so extreme as to be considered torture. Because of the transparency of the emotional layers in the human self-model (Metzinger, 2003), it will be experienced as real, even if it is accompanied by cognitive-level insight into the nature of the overall situation. Powerful emotional responses occur even when subjects are aware of the fact that they are in a virtual environment (Meehan et al., 2002).

Torture in a virtual environment is still torture. The fact that one’s suffering occurs while one is immersed in a virtual environment does not mitigate the suffering itself.

VR Research and the Internet

A final concern for the research ethics portion of this article has to do with the use of the internet in conjunction with VR research. For instance, scientists may wish to observe the patterns of behavior for users under particular conditions. It is clear that the internet will play a main role in the adoption of VR for personal use. Users will be able to inhabit virtual environments with other users through their internet connections, and perhaps enjoy new forms of avatar-based intersubjectivity. As O’Brolcháin et al. (2016) suggest, we will soon see a convergence of VR with online social networks. The overall ethical risks of this imminent development have been covered in detail by O’Brolcháin et al. (2016); in this section, we will incorporate and expand on their discussion with a focus on questions of research ethics.

There is a sizable body of literature covering the main issues of internet research ethics (Ess and Association of Internet Researchers Ethics Working Committee, 2002; Buchanan and Ess, 2008, 2009). Here, we address the following question: how should these existing issues of internet research ethics be approached for cases of internet research with the use of VR? The two main issues that we will cover here are privacy and obtaining informed consent. We will consider the internet both as a tool and a venue for research (Buchanan and Zimmer, 2015), while noting that virtual environments may place pressure on the distinction between internet as research tool and internet as research venue.

Let us begin with the question of privacy. It is widely accepted that researchers have an ethical obligation to treat confidentially any information that may be used to identify their subjects (see, for example, European Commission, 2013, p. 12). This obligation is based on the general right to privacy outside of a research context (Universal Declaration of Human Rights, article 12, 1948; European Commission Directive 95/46/EC). Practicing this confidentiality may involve, for instance, erasing, or “scrubbing,” personally identifiable information from a data set (O’Rourke, 2007; Rothstein, 2010).
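As a minimal sketch of what “scrubbing” can look like in practice, the following example drops directly identifying fields from each record of a data set. The field names are hypothetical and chosen only for illustration; an actual study would define its own list of identifying fields. Removing direct identifiers alone does not guarantee that records cannot be re-identified from the remaining data.

```python
# Hypothetical field names; a real data set defines its own identifying fields.
IDENTIFYING_FIELDS = frozenset({"name", "email", "ip_address"})


def scrub_record(record, identifying_fields=IDENTIFYING_FIELDS):
    """Return a copy of the record without directly identifying fields."""
    return {key: value for key, value in record.items()
            if key not in identifying_fields}


def scrub_dataset(records, identifying_fields=IDENTIFYING_FIELDS):
    """Scrub every record; the input records are left unmodified."""
    return [scrub_record(record, identifying_fields) for record in records]
```

Returning new dictionaries instead of editing records in place keeps the original data untouched until the researcher explicitly decides to discard it.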

As O’Brolcháin et al. note, immersive virtual environments will involve the recording of new kinds of personal information, such as “eye-movements, emotions, and real-time reactions” (2015, p. 8). We would like to add that immersive VR could eventually incorporate motion capture technology in order to record the details of users’ bodily movements for the purpose of, for example, representing their avatar as moving in a similar fashion. Although implementing this scenario may be beyond the capabilities of the forthcoming commercial hardware, it is plausible and rational to assume that the technology may evolve quickly to include such options. Data regarding the kinematics of users will be useful for researchers from a range of disciplines, especially those interested in embodied cognition (Shapiro, 2014). On the plausible assumption that one’s kinematics is very closely related to one’s personality and the deep functional structure of bodily self-consciousness – only your body moves in precisely this manner – there will be a highly individual “kinematic fingerprint.” This kind of data collection presents a special threat to privacy.

O’Brolcháin et al. (2016) recommend protecting the privacy of users of online virtual environments through legislation and through incentives to develop new ways of protecting privacy. As a complement to these recommendations, we wish to highlight the threat to privacy created by motion capture technology. Unlike eye-movements and emotional reactions, one’s kinematics may be uniquely connected with one’s identity, as indicated above. Researchers collecting such data must be aware of its sensitive nature and the dangers of its misuse. In addition, commercial providers of cloud-based VR-technology will frequently have an interest in “harvesting,” storing, and analyzing such data, and users should be informed about such possibilities and give explicit consent to them.

A second main concern in the ethics of internet research is that of informed consent. In contrast to informed consent for traditional face-to-face experiments, internet researchers may obtain consent by having subjects click “I agree” after being presented with the relevant documentation. There are a number of concerns and challenges regarding the practice of gaining consent for research using the internet as a venue (Buchanan and Zimmer, 2015, see section Privacy, below), including, of course, the fact that actually reading internet privacy policies before accepting them would take far more time than we are willing to allocate – on one estimate, it would take each of us 244 h per year (McDonald and Cranor, 2008).

We suggest that immersive VR will add further complications to these existing issues due to its manipulation of bodily location and its dissolution of boundaries between the real and the virtual. Consider that entering a new internet venue, say a chatroom or a forum, involves a fairly well-defined threshold at which informed consent can be requested before one enters the venue. Due to the centrality of the URL for using the web, one’s own location in cyberspace is fairly easy to track. With VR, by contrast, it is foreseeable that one’s movement through various virtual environments will be controlled by one’s bodily movements, through facial gestures, or simply by the trajectory of visual attention in a way unlike internet navigation using a mouse, keyboard, and navigation bar. In addition, and more interesting, it is also foreseeable that HMDs will incorporate simultaneous combinations of augmented, substitutional, and VR, with the user being able to toggle between elements of the three. Such a situation would add ambiguity, and perhaps confusion, for attempts to determine the user’s location in cyberspace. This ambiguity raises the likelihood that users may give consent for data collection in a particular virtual context but then become unaware of the continued data collection as the user changes context. Such a situation might occur if users of HMDs are able to toggle between, say, an entirely virtual gaming environment, a look out of the window to the busy street below presented through augmented reality, and a family gathering hundreds of kilometers away using substitutional reality through an omni-directional camera set up at the party. This worry can be addressed by giving users continuous reminders (after, of course, they have given informed consent) that their behavior is being recorded for research purposes. Perhaps the visual display could include a small symbol for the duration of the time in which data are being collected.
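The idea of a visible recording indicator can be illustrated with a short sketch. All names here are hypothetical, and the example goes one step beyond the suggestion above by assuming that consent is scoped to a single context, so that toggling to a different environment stops recording until consent is given there as well. That scoping is an assumption of the sketch, not a recommendation made in the text.

```python
class ConsentTracker:
    """Tracks per-context consent and whether a recording indicator is shown."""

    def __init__(self):
        self._consented_contexts = set()
        self.current_context = None

    def give_consent(self, context):
        self._consented_contexts.add(context)

    def withdraw_consent(self, context):
        self._consented_contexts.discard(context)

    def switch_context(self, context):
        # Called whenever the user toggles to another environment.
        self.current_context = context

    def may_record(self):
        # Consent given in one context does not carry over to another.
        return self.current_context in self._consented_contexts

    def indicator_visible(self):
        # The indicator is shown exactly while data may be recorded.
        return self.may_record()
```

For example, a user who consented only in a virtual gaming environment would see the indicator disappear, and recording stop, after toggling to an augmented view of the street outside.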

We leave the implementational details open, but urge the scientific community to take steps to avoid the abuse of informed consent with this technology, especially in the interest of preserving public trust.

A Note on the Limitations of a Code of Ethics for Researchers

We would like to conclude our discussion of the research ethics of VR by noting that the proposed (incomplete) code of conduct is not intended to be sufficient for guaranteeing ethical research in this domain. What we mean here is that following this code should not be considered to be a substitute for ethical reasoning on the part of researchers, reasoning that must always remain sensitive to contextual and implementational details that cannot be captured in a general code of conduct. We urge researchers to conceive of our recommendations here as an aid in their ongoing reflections about the ethical implications and permissibility of their own work, and to proactively support us in developing this ethics code into more detailed future versions. As we emphasized in the beginning of the article, this work is only intended as a first list of possible issues in the research ethics of VR and related technologies. We intend to update and revise this list continuously as new issues arise, although the venue for future revisions is undecided. In any case, we wish to open an invitation for constructive input from researchers in this field regarding issues that should be added or reformulated.

Scientists must understand that following a code of ethics is not the same as being ethical. A domain-specific ethics code, however consistent, developed, and fine grained future versions of it may be, can never function as a substitute for ethical reasoning itself.

Risks for Individuals and Society

Now consider possible issues that may arise with widespread adoption of VR for personal use. Once the technology is available to the general public for entertainment (and other) purposes, individuals will have the option of spending extended periods of time immersed in VR – in a way this is already happening with the advent of smartphones, social networks, increasing time online, etc. Some of the risks and ethical concerns that we have already encountered in the early days of the internet10 will reappear, though with the added psychological impact enabled by embodiment and a strong sense of presence. We all know that internet technology long ago began to change our self-models and consequently our very own psychological structure. The combination with technologies of virtual and robotic re-embodiment may greatly accelerate this development.

For instance, consider the infamous case of virtual rape in LambdaMOO, the text-based multi-user dungeon (MUD). In that virtual world, a player’s character known as “Mr. Bungle” used a “voodoo doll” program to control the actions of other characters in the house. He forced them to perform a range of sexual acts, some of which are especially disturbing (Dibbell, 1993). Users of LambdaMOO were outraged, and at least one user whose character was a victim of the virtual rape reported suffering psychological trauma (ibid.). The relevant point to keep in mind here is that this entire virtual transgression occurred in a world that was entirely text based. We will soon be fully immersed in virtual environments, actually embodying – rather than merely describing – our avatars. The results sketched above in Section “Illusions of Embodiment and Their Lasting Effect” suggest that the psychological impact of full immersion will be great, likely far greater than the impact of text-based role-playing. We must now take steps in order to help users avoid suffering psychological trauma of various kinds. To this end, we will discuss four kinds of foreseeable risks:

• long-term immersion;

• neglect of embodied interaction and the physical environment;

• risky content;

• privacy.

We will offer several concrete recommendations for minimizing all four of these kinds of risks to the general public, a number of which call for focused research initiatives.

The Effects of Long-Term Immersion

First, and perhaps most obviously, we simply do not know the psychological impact of long-term immersion. So far, scientific research using VR has involved only brief periods of immersion, typically on the order of minutes rather than hours. Once the technology is adopted for personal use, there will be no limits on the time users choose to spend immersed. Similarly, most research using VR has been conducted using adult subjects. Once VR is available for commercial use, young adults and children will be able to immerse themselves in virtual environments. The risks that we discuss below are especially troublesome for these younger users who are not yet psychologically and neurophysiologically fully developed.

In order to better understand the risks, we recommend longitudinal studies and further research into the psychological effects of long-term immersion.

Of course, these studies must be conducted according to the principles of informed consent, non-maleficence, and beneficence outlined in Section “The Research Ethics of VR.” There are several possible risks that can be associated with long-term immersion: addiction, manipulation of agency, unnoticed psychological change, mental illness, and lack of what is sometimes vaguely called “authenticity” (Metzinger and Hildt, 2011, p. 253). The risks that are discovered through longitudinal studies must be directly and clearly communicated to users, preferably communicated within VR itself.

Psychologists have long expressed concern about internet use disorder (Young, 1998), and it is a topic of ongoing research (Price, 2011).11 This area of research must now expand in order to include concerns about addiction to immersive VR, both online and offline. Doing so will require monitoring users who prefer to spend long periods of time immersed (see Steinicke and Bruder, 2014 for a first self-experiment). There are two relevant open questions here. First, how might the diagnostic criteria for addiction to VR differ from the established criteria for internet use disorder and related conditions? Note that the neurophysiological underpinnings of VR addiction may differ from those of internet use disorder (Montag and Reuter, 2015) due to the prolonged illusion of embodiment created by VR technology, and because it implies causal interaction with the low-level mechanisms constituting the UI. Second, can we make use of the recommended treatments for internet use disorder for the purpose of helping individuals with VR addiction? For instance, Gresle and Lejoyeux (2011, p. 92) recommend informing users how much time they have spent playing an online game, and including non-player characters in the game to urge players to take breaks. It is plausible that these strategies would be effective for immersive VR as well, but focused research is needed.
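The two strategies mentioned above, telling users how long they have been immersed and prompting them to take breaks, could be combined in a very simple form, as in the following sketch. The function name, the message wording, and the default interval of 60 minutes are assumptions made for illustration; which intervals, if any, are actually effective is precisely the kind of question that focused research would need to answer.

```python
def immersion_notice(elapsed_minutes, break_interval_minutes=60):
    """Return a time-spent notice, adding a break suggestion at each interval."""
    if elapsed_minutes < 0:
        raise ValueError("elapsed_minutes must be non-negative")
    notice = f"You have been immersed for {elapsed_minutes} min."
    # Suggest a break each time a full interval has elapsed.
    if elapsed_minutes > 0 and elapsed_minutes % break_interval_minutes == 0:
        notice += " Consider taking a break."
    return notice
```

In an actual application the same message could be delivered by a non-player character inside the virtual environment rather than as plain text.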

A second concern about long-term immersion has to do with the fact that immersive VR can manipulate the user’s sense of agency (Gallagher, 2005). In order to generate a strong illusion of ownership for the virtual body, the VR technology must track the self-generated movements of the user’s real body and render the virtual body as moving in a similar manner.12 When things are working well, users experience an illusion of ownership of the virtual body (the avatar is my body), as well as an illusion of agency (I am in control of the avatar). Importantly, the sense of agency in VR is always indirect; control of the avatar is always mediated by the technology. To be more precise, the virtual body representation has been causally coupled with and temporarily embedded into the currently active conscious self-model in the user’s brain – it is not that some mysterious “self” leaves the physical body and “enters” the avatar, but rather a novel functional configuration in which two body representations dynamically interact with each other. However, the causal loop in principle enables bidirectional forms of control, or even unnoticed involuntary influence. The fact that the user’s sense of agency in VR is always continuously maintained by the technology is an important one for at least two reasons. First, the technology could be used to manipulate users’ sense of agency. Second, as we discuss in the general context of mental health below, long-term immersion could cause low-level, initially unnoticeable psychological disturbances involving a loss of the sense of agency for one’s physical body.

VR technology could manipulate users’ sense of agency by creating a false sense of agency for movements of the avatar that do not correspond to the actual body movements of the user. The same could be true for “social hallucinations,” i.e., the creation of the robust subjective impression of ongoing social agency, of engaging in a real, embodied form of social interaction, which, however, in reality is only interaction with an unconscious AI or with complex software controlling the simulated social behavior of an avatar. Using only a computer screen, a modified mouse, and headphones, Daniel Wegner’s well-known “I Spy” experiments created a false sense of agency (Wegner and Wheatley, 1999; Wegner, 2002). In those experiments, subjects reported that they felt themselves to be in control of a cursor selecting an icon on a computer screen when in fact the cursor was being controlled by someone else. The illusion of control was induced by auditory priming – subjects heard a word through headphones that had a semantic association with the icon that was subsequently selected by the cursor. It is reasonable to think that Wegner’s method can be implemented rather easily in VR. While immersed in VR, subjects can receive continuous audio and visual cues intended to influence their psychological states. Future experimental work can determine the conditions under which subjects will experience a sense of agency for movements of the avatar that deviate from the subject’s actual body movements (as during an OBE or in the dream state, see Kannape et al., 2010 for an empirical study). Important parameters here will likely be the timing of the false movement, the degree to which the false movement deviates from the actual position of the body, and the context of the movement within the virtual environment (including, for instance, the attentional state of the subject).

Creating a false sense of agency in VR is a clear violation of the user’s autonomy, a violation that becomes especially worrisome as users spend longer and longer periods of time immersed. Here, we will not insist that all cases of violating autonomy in this manner are ethically impermissible, noting that some such violations may be subtle and beneficent, a kind of virtual “nudge” in the right direction (Thaler and Sunstein, 2009). In addition, human beings often willfully choose to decrease their autonomy, as in drinking alcohol or playing games. But we do claim that creating a false sense of agency in VR is an unacceptable violation of individual autonomy when it is non-beneficent, such as when it is done out of avarice. Manipulating the sense of agency for users in VR is a topic that deserves attention from regulatory agencies.

A third concern that we wish to raise about long-term immersion is that of risks for mental health. As stated above, we simply do not know whether long-term immersion poses a threat for mental health. Future research ought to investigate whether factors such as the duration of immersion, the content of the virtual environment (including the user’s own avatar or the way in which the software controls the automatic behavior, facial gestures, or gaze of other avatars), and the user’s pre-existing psychological profile might have lasting negative effects on the mental health of users. As mentioned above (see Ethical Experimentation), we suspect that heavy use of VR might trigger symptoms associated with Depersonalization/Derealization Disorder (DSM-5 300.6). Overall, the disorder can be characterized as having chronic feelings or sensations of unreality. In the case of depersonalization, individuals experience an unreality of the bodily self, and in the case of derealization, individuals experience the external world as unreal. For instance, those suffering from the disorder report feeling as if they are automata (loss of the sense of agency), and feeling as if they are living in a dream (see Simeon and Abugel, 2009 for illustrative reports from individuals suffering from depersonalization).13 Note that Depersonalization/Derealization Disorder involves feelings of unreality but not delusions of unreality: there is a dissociation of the low-level phenomenology of “realness” from high-level cognition. That is, someone suffering from depersonalization may lose the sense of agency, but will not thereby form the false belief that they are no longer in control of their own actions.

Depersonalization/Derealization Disorder is relevant for us here because VR technology manipulates the psychological mechanisms involved in generating experiences of “realness,” mechanisms similar or identical to those that go awry for those suffering from the disorder. Even though users of VR do not believe that the virtual environment is real, or that their avatar’s body is really their own, the technology is effective because it generates illusory feelings as if the virtual world is real (recall the virtual pit from Section “Illusions of Embodiment and Their Lasting Effect” above). What counts is the variable degree of transparency or opacity of the user’s own conscious representations (Metzinger, 2003). Our concern is that long-term immersion could cause damage to the neural mechanisms that create the feeling of reality, of being in immediate contact with the world and one’s own body. Heavy users of VR may begin to experience the real world and their real bodies as unreal, effectively shifting their sense of reality exclusively to the virtual environment.14 We recommend focused longitudinal studies on the impact on mental health of long-term immersion in VR. These studies should especially investigate risks for dissociative disorders, such as Depersonalization/Derealization Disorder.

A final concern for long-term immersion stems from the fact that some may consider experiences in the virtual environment to be “inauthentic,” because those experiences are artificially generated. This concern may remind some readers of Robert Nozick’s well-known thought experiment about an “Experience Machine” that can provide users with any experience they desire (Nozick, 1974, p. 42–45). Nozick uses the thought experiment to raise a problem for utilitarianism, urging his readers to consider reasons why one might not wish to “plug-in” to the machine, claiming that “something matters to us in addition to experience” (Nozick, 1974, p. 44). The interesting question, of course, now becomes what would happen if this “additional something” can be added to the experience itself, for example by advanced VR-technology creating the phenomenal quality of “authenticity,” of direct reference to something “meaningful,” for example by a more robust version of naïve realism on the level of subjective experience itself or by manipulation of the user’s emotional self-model. While Nozick suggests that many of us would not wish to plug-in to the experience machine for the reason just stated, recent work by Felipe de Brigard suggests otherwise. De Brigard (2010) presented students with several variations on the thought experiment all with an important twist on Nozick’s original version. In de Brigard’s version, we are told that we are already plugged-in to an experience machine and we are asked if we would like to unplug in order to return to our “real” lives. Many of de Brigard’s students replied that they would not wish to unplug, leading de Brigard to suggest that our reactions to the thought experiment are influenced more by the status quo bias (Samuelson and Zeckhauser, 1988) than by our valuing of something more than experience. It is the status quo bias, de Brigard suggests, that gives us pause about plugging-in to the machine (in Nozick’s version of the thought experiment) just as it is the status quo bias that gives us pause about unplugging (in de Brigard’s version).

Overall, de Brigard’s results offer initial reasons to be skeptical about Nozick’s supposition that we would not plug-in because we value factors beyond experience alone. Even with this skepticism, though, many of us may still feel that there is something false, “inauthentic,” or undesirable about living large portions of one’s life in an entirely artificial environment, such as VR. Apart from the dubious essentialist metaphysics lurking behind the vague and sometimes ideologically charged notion of an “authentic self,” it is important to note how such intuitions are historically plastic and culturally embedded: they may well change over time as larger parts of the population begin to use advanced forms of VR technology. For example, a considerable number of people today already seem unable to grasp the difference between “friendship” and “friendship on Facebook.” Fully engaging with the issue of losing “authenticity” in virtual environments would likely require entering into some deep philosophical waters, and we are unable to do so here, though we will touch on some of the relevant issues below. Apart from the deeper philosophical issues, there is one important point that we wish to make before moving on.

The point has to do with the way in which we imagine the possibilities of VR for personal use. One reason behind an assertion that long-term immersion would be an inauthentic way of spending one’s time is that one might assume that the content of immersive VR would be unedifying, making people more shallow as they retreat from society in favor of an artificial social world in which their decisions are made for them. Brolcháin et al. raise this sort of concern:

With little exposure to “higher” culture, to great works of art and literature; and without the skills (and maybe the attention spans) to enjoy them; people would be less able to engage with the world at a deep level. People without exposure to great works and ideas might find that [their] inner lives are shaped to a large degree by market-led cultural products rather than works of depth and profundity. (2015, p. 20)

We agree that such a scenario would be undesirable, but wish to counterbalance this concern by reminding readers that it is not unique to VR. It is a concern that can be applied in various degrees to other media technology as well, going all the way back to worries about the written word in Plato (Phaedrus 274d–275e). The printing press, for example, can enable one to disseminate great works of literature, but it can also enable the dissemination of vulgarity – and it certainly changed our minds. Readers with vulgar tastes can “immerse” themselves as they wish. The same goes for photography and motion pictures. The important point is as follows. There is no reason to doubt that works of great depth and profundity can be produced by artists who choose VR as their medium. Just as film emerged as a new predominant art form in the twentieth century, so might VR in the twenty-first century. We predict that immersive VR-technology will gradually lead to the emergence of completely new forms of art (or even architecture, see Pasqualini et al., 2013), which may be hard to conceive today, but which will certainly have cultural consequences, perhaps even in our understanding of what an artistic subject and esthetic subjectivity really are.

Neglect of Others and the Physical Environment

As users spend increasing time in virtual environments, there is also a risk of their neglecting their own bodies and physical environments – just as for many people today posing and engaging in disembodied social interactions via their Facebook account has become more important than what was called “real life” in the past. In extreme cases, individuals refuse to leave their homes for extended periods of time, behavior categorized as “Hikikomori” by the Japanese Ministry of Health. VR will enable us to interact with each other in new ways, not through disembodied interaction, as in the texts, images, and videos of current social media, but rather through what we have called the illusion of embodiment. We will interact with other avatars while embodied in our own avatars. Or perhaps we will use augmented reality through omni-directional cameras that allow us to enjoy the illusion of being in the presence of someone who is far away in space and/or time. To put it more provocatively, we may soon, as Norbert Wiener anticipated many years ago, have the ability to “telegraph” human beings (Wiener, 1954, p. 103–104). Telepresence is likely to become a much more accessible, immediate, comprehensive, and embodied experience.

Our general recommendation on this theme is for focused research into the following question: What, if anything, is lost in cases of social interactions that are mediated using advanced telepresence in VR? If such losses were unnoticed, what negative effects for the human self-model could be expected?

This question has been a major theme in some of Hubert Dreyfus’ work on the philosophy of technology. Dreyfus has emphasized that mediating technologies may not capture something of what is important for real-time interactions in the flesh, what, following Merleau-Ponty, he calls “intercorporeality” (Dreyfus, 2001, p. 57). When we are not present in the flesh with others, the context and mood of a situation may be difficult to appreciate – if only because the bandwidth and the resolution of our internal models are much lower. Perhaps more importantly, there is a concern that mediating technologies will not allow us to pick up on all of the subtle bodily cues that appear to play a major role in social communication through unconscious entrainment (Frith and Frith, 2007), cues that involve ongoing embodied interaction (Gallagher, 2008; de Jaegher et al., 2010).