Social Moments: A Perspective on Interaction for Social Robotics

- School of Information Technology and Electrical Engineering, The University of Queensland, Brisbane, QLD, Australia

During a social interaction, events that happen at different timescales can indicate social meanings. In order to socially engage with humans, robots will need to be able to comprehend and manipulate the social meanings that are associated with these events. We define social moments as events that occur within a social interaction and which can signify a pragmatic or semantic meaning. A challenge for social robots is recognizing social moments that occur on short timescales, which can be on the order of 10² ms. In this perspective, we propose that understanding the range and roles of social moments in a social interaction and implementing social micro-abilities (the abilities required to engage in a timely manner through social moments) is a key challenge for the field of human–robot interaction (HRI) to enable effective social interactions and social robots. In particular, it is an open question how social moments can acquire their associated meanings. Practically, the implementation of these social micro-abilities presents engineering challenges for the fields of HRI and social robotics, including processing sensor data and driving actuators fast enough to meet short timescales. We present a key challenge of social moments as integration of social stimuli across multiple timescales and modalities. We present the neural basis for human comprehension of social moments and review current literature related to social moments and social micro-abilities. We discuss the requirements for social micro-abilities, how these abilities can enable more natural social robots, and how to address the engineering challenges associated with social moments.

1. Introduction

For robots to develop social skills, they need to engage in interaction dynamics that convey social meanings. We term the events that occur within these interaction dynamics social moments. Social interactions occur between social agents at multiple timescales. Conversations and other consciously considered social interactions typically span seconds, minutes, or longer. However, managing social exchanges also relies on the interpretation and manipulation of fast timescales (on the order of 10² ms) upon which the interaction is constructed.

For social robots, it is important to be able to understand the social significance of these fast interaction dynamics when participating in a social interaction. For a robot, social moments must be grounded both in the culture and personality of the interactant and also in the attributes of the interaction (environment), the roles of participants and robot, and the interaction task. The social skills required for a social robot include detecting, creating, and learning the meanings of social moments.

While the fields of human–robot interaction (HRI) and social robotics have investigated aspects of language (e.g., Kollar et al. (2010)), social interaction (e.g., Breazeal (2004)), and social motion (e.g., Hoffman and Ju (2014)), there is little or no investigation of social moments during these interactions or of the short timescales associated with them. In this paper, we introduce and provide a definition for social moments; outline the related literature from psychology, neuroscience, and HRI; and present the practical challenges that need to be addressed to enable fast timescale social abilities.

2. Definition of Social Moments

2.1. Definition

Social moments are brief events that occur during an interaction between two or more agents that have the potential to impact social dynamics.

Social moments have the potential to convey pragmatic and semantic information during an interaction that need not be deliberate or conscious actions. If a tutor gives a lecture to a group of students and briefly looks at one of the students, only to notice the student looking away out of boredom, the behavior of the tutor can be affected by this event, potentially for the rest of the lecture. The tutor might decide to focus more on this student or to make the lecture more interesting to capture attention. Alternatively, the tutor might instead decide to ignore the student and not to look that way again for the rest of the lecture. In practice, the set of events {tutor looks at student; student looks away}, although happening in a very short period of time, carries a social meaning that can potentially affect the rest of the interaction. We refer to such sets of events as social moments.

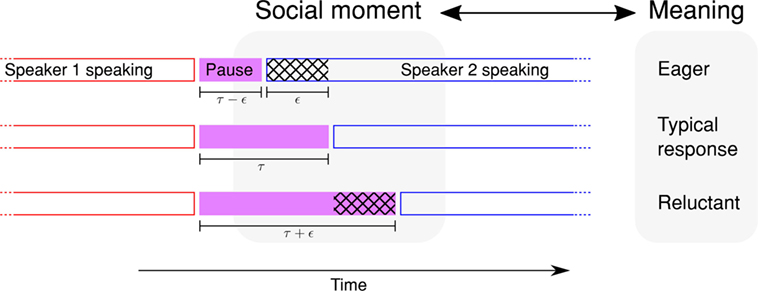

Social moments can evoke performative meanings (Condoravdi and Lauer, 2011) and can convey positive or negative valences. Communicating intentions is considered to be a prerequisite of the acquisition of language abilities in humans (Bates et al., 1975), with performative meanings conveyed in both verbal and non-verbal ways. For example, the speed of response in a social exchange can determine whether a speaker is being answered or a new comment generated (Newman et al., 2010; Maroni, 2011), and small delays can indicate the state of the responder as eager or reluctant to engage with an agent (Bögels et al., 2015) (see Figure 1). Larger delays in response can be considered to violate social norms and lead to the interpretation that an interactant is distracted, anti-social, or offensive. Additionally, movement patterns, particularly those in peripersonal space (within arm's length), can convey social meanings (Ramenzoni et al., 2012). Different motions can indicate different intentions (Blythe et al., 1999), and changes in body posture can indicate different levels of engagement (Sanghvi et al., 2011).

Figure 1. A social moment that occurs between two conversing people from the changing length of a pause. There is a pause between one speaker’s turn ending and the other speaker’s turn starting. The pause length is controlled by speaker 2, and different lengths of the pause can indicate different pragmatic meanings to speaker 1. Given a typical pause length, τ, a pause that is shortened and has length τ – ϵ might indicate eagerness to speak, while a pause that is lengthened and has length τ + ϵ might indicate reluctance to speak. Such an event can be considered a social moment, as the event can have an impact on the interaction dynamics. The social moment is caused by the divergence from the typical behavior by speaker 2. Speaker 1 can interpret the event and change his/her approach to the conversation. Alternatively, speaker 1 could miss or misinterpret the social moment and appear anti-social to speaker 2.
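The comparison described in the Figure 1 caption can be illustrated with a minimal Python sketch. It assumes that a robot already has an estimate of the typical pause length τ and a tolerance ϵ for the current interlocutor and context; the names `interpret_pause`, `typical_ms`, and `tolerance_ms` are hypothetical and introduced only for illustration, and the three-way labeling is a simplification of the range of meanings a pause can carry.

```python
from enum import Enum


class PauseMeaning(Enum):
    EAGER = "eager"
    TYPICAL = "typical"
    RELUCTANT = "reluctant"


def interpret_pause(pause_ms: float, typical_ms: float, tolerance_ms: float) -> PauseMeaning:
    """Label an observed pause relative to the typical pause length.

    typical_ms plays the role of tau and tolerance_ms the role of epsilon
    in Figure 1. Both are hypothetical inputs that would have to be
    estimated per culture, person, and context; they are not constants
    reported in the literature cited here.
    """
    if tolerance_ms < 0:
        raise ValueError("tolerance_ms must be non-negative")
    if pause_ms <= typical_ms - tolerance_ms:
        # Pause shortened to tau - epsilon or less: possible eagerness.
        return PauseMeaning.EAGER
    if pause_ms >= typical_ms + tolerance_ms:
        # Pause lengthened to tau + epsilon or more: possible reluctance.
        return PauseMeaning.RELUCTANT
    return PauseMeaning.TYPICAL
```

For example, with a hypothetical τ of 200 ms and ϵ of 50 ms, a 100 ms pause would be labeled as eager and a 300 ms pause as reluctant. A deployed system would also need to handle the case in which the pause is missed altogether.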

In their foundational work in the field of social robotics, Dautenhahn et al. (2002) hypothesized that as robotic agents become socially embedded, they have to be able to observe, learn from, and adapt to their social environment, but they must also be able to influence it. Accordingly, the authors defined a set of micro-behaviors for hand-annotating the impact of robots on the humans surrounding them at the temporal resolution of 500 ms (Dautenhahn and Werry, 2002). In essence, Dautenhahn’s hypothesis for social robots can be summarized as a need for them to have the ability to detect, interpret, and create social moments, but on a smaller timescale than that of micro-behaviors.

3. Timescales for Social Moments

Although a social moment may occur over any duration, social moments that occur over short timescales are difficult for a robot to detect, but can be just as significant. The importance of timing and short timescale responses is demonstrated in several social interaction studies. For tasks that require joint motor actions to achieve a goal, motor coordination becomes an inherent property of the interaction (Riley et al., 2011; Ramenzoni et al., 2012), requiring participants to be responsive to each other’s actions. Synchronization can also occur between the motions of humans even when there is no joint motor coordination (Richardson et al., 2007), and deliberate synchronicity from an experimenter can increase affiliation from a participant (Hove and Risen, 2009). These studies suggest that synchronization is a key part of social normality.

Another modality of a social interaction that is greatly influenced by timing is that of conversation. Language processing occurs at many different timescales, and different events at different times can result in both changes to perception of the interaction and interlocutors. Consonant transitions can take less than 50 ms (O’Shaughnessy, 1974), and the difference in pronunciation can allow discrimination between a native and non-native speaker. For children, the inability to discriminate between two 43 ms tones is related to speech disorders (Tallal and Piercy, 1974). However, the timescales for each of these processes are below the level of even a single word, which is often the level that social robots work at (e.g., when using speech-to-text algorithms).

Additional challenges for social robots are found at the conversation turn-taking level. Turn-taking requires meeting timescales on the order of 10² ms, but this timescale varies depending on the culture (Stivers et al., 2009). For humans, the apparent time taken to process an utterance can be 500–700 ms, resulting in at least a 200 ms gap where an interlocutor would be expected to respond before having completely processed what was said previously (Stivers et al., 2009; Levinson and Torreira, 2015). Pauses between turns can take on further critical social meanings. Longer pauses have been demonstrated to be associated with non-preferred responses (Bögels et al., 2015), while pauses also reflect opportunities to enter conversation (Newman et al., 2010; Maroni, 2011) (see Figure 1 for an example). For robots, the challenges for conversation turn-taking are to meet required social response times without complete knowledge of what was previously said and to understand the social meanings that are indicated through timing. Again, failing to meet these timing requirements can cause violations of social norms, which can carry a negative pragmatic meaning.

Altogether, a large set of events across multiple modalities can intertwine to create social meanings, given a specific context (Mondada, 2016). A good example is the McGurk effect (McGurk and MacDonald, 1976), where the visual and auditory channels are integrated during language perception. The timing of this multimodal integration is critical (Munhall et al., 1996), as delays of more than 180 ms across one modality can disrupt the perceived social moment. For a social robot, it is then critical to process social moments as spanning multiple timescales and modalities and as part of a broader context.
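As an illustration of the timing constraint on multimodal integration, the following Python sketch checks whether two events from different modalities are close enough in time to be treated as parts of the same social moment. The 180 ms limit is the figure quoted above; applying it as a single symmetric cut-off to any pair of modalities is a simplifying assumption, and the names `ModalityEvent` and `can_fuse` are hypothetical.

```python
from dataclasses import dataclass

# Asynchrony limit taken from the 180 ms figure quoted in the text.
# Using one symmetric cut-off for all modality pairs is an assumption.
MAX_ASYNCHRONY_MS = 180.0


@dataclass(frozen=True)
class ModalityEvent:
    modality: str        # e.g. "audio" or "visual"
    timestamp_ms: float  # time of the event on a shared clock


def can_fuse(a: ModalityEvent, b: ModalityEvent,
             max_asynchrony_ms: float = MAX_ASYNCHRONY_MS) -> bool:
    """Return True if two events from different modalities are close
    enough in time to be integrated into one percept."""
    if a.modality == b.modality:
        return False  # this sketch only considers cross-modal fusion
    return abs(a.timestamp_ms - b.timestamp_ms) <= max_asynchrony_ms
```

The check presupposes that all sensors are timestamped against a shared clock, which is itself an engineering requirement when sensor latencies differ across modalities.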

4. Neural Basis for Social Moments

Social moments can be constructed by societies through social norms, but they can also be grounded directly in human biology. The neural architecture required to detect social moments at multiple timescales and through different modalities is visible in the neural basis of human sensory processing. Processing of sensory (particularly visual) information in human cortical pathways shows differences linked to social information as early as 100 ms after presentation of the stimulus (Meeren et al., 2005; de Gelder, 2006). Other studies have shown that ultra-rapid categorization of visual stimuli is possible due to a likely parallel process in the visual cortex (Thorpe et al., 1996; van Rullen and Thorpe, 2001). As a consequence, the extraction of social information from visual stimuli can be done in less than 150 ms, thus being able to trigger a motor reaction to social stimuli in less than 300 ms (Thorpe et al., 1996).

Similarly, subcortical areas of the brain are believed to play a role in the processing of social stimuli (Morris et al., 1998; LeDoux, 2012), and their close link to motor structures suggests that they could play a role in the establishment of an automatic, reflex-like social behavior (de Gelder, 2006). A recent study on monkeys (Kuraoka et al., 2015) suggested that neurons were maximally informative of emotion and identity about 250 ms after stimulation, which would be consistent with an extremely rapid reaction to strong social stimuli. In contrast, the maximum of information in cortical areas was observed after 300 to 1,000 ms, thus supporting a more elaborate but slower processing for emotion and an extremely rapid reaction to identity in the cortex.

Altogether, the organization of the processing of social stimuli inside human brains is consistent with a multi-scale approach to social moments. Accordingly, human behaviors are driven by the processing of social stimuli along two main scales: a very rapidly generated but very coarse representation of the social context, highly linked to motor structures and responsible for reflex-like social behavior, and a more elaborate but slower processing of social information. Although social robots do not have to implement social behavior in the same way, this organization emphasizes the different timescales and levels of processing that should be considered when designing robots.

5. Perspectives for Social Robots

Awareness of social interactions is a critical component of social robots (de Graaf et al., 2015). In particular, the speed and timing of robot responses during social interactions have been identified as necessary prerequisites to engage users (Robins et al., 2005) and for the acceptance of the robot as a social interaction partner (Lee et al., 2006). Therefore, in a similar way to the mechanisms underlying the human interaction engine (Levinson, 2006), social robots need what we term social micro-abilities. Social micro-abilities are a set of abilities that augment social interactions and provide backchannels of communication (i.e., in parallel with symbolic communication). The following paragraphs describe the requirements of social micro-abilities.

5.1. Social Robots Require Sensitivity to Events at Very Short Timescales

Social moments can happen on the order of 10² ms. The latency and rates of robot sensors directly affect the detection of social moments, as a robot’s response is constrained by hardware. For instance, robots that use standard web-cameras with latencies of 100–200 ms have restricted response times due to the time needed to capture an image during a control loop. A framerate of 30 Hz would lead to a maximum of 6 frames to capture an event of 200 ms length. Faster cameras exist, but their use comes at a cost of additional processing requirements. While there are currently constant advances in processing power, the increase comes at the cost of rethinking processing for each gain (Larus, 2009). Faster cameras have also been limited to physical applications and not considered for social interaction studies. For instance, motion capture cameras can achieve lower latencies and higher framerates and are often used for physical applications where timing is important (e.g., catching objects (Kim et al., 2014)). New event-based sensors such as the Dynamic Vision Sensor (DVS) (Lichtsteiner et al., 2008; Thorpe, 2012) can allow the detection of events at smaller timescales. There are also low-latency sensors for other modalities, such as audio, touch, and proprioception. The event-based silicon cochlea allows audio frequency data to be obtained with low latency and high frequency (Liu et al., 2010). The development of virtual whiskers (Schlegl et al., 2013) with high measurement frequency (1.25 kHz) allows rapid gathering of spatial information in the vicinity of the robot. The use of mechanical sensors such as torque sensors also allows detection of collision events in less than 15 ms (Haddadin et al., 2008). Despite the cost of the paradigm shift associated with using faster sensors, adapting such alternative approaches taken from industrial robotics or physical Human-Robot Interaction (pHRI) could augment the sensing capabilities of social robots.
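The frame-count arithmetic above can be reproduced with a short Python sketch. The function `samples_during_event` counts the full sample periods that fit inside an event, which is the figure used in the text. The second function, `worst_case_detection_delay_ms`, is an added assumption: it models the delay as one sample period (an event that starts just after a sample is taken) plus a fixed sensor latency, and ignores processing time. Both function names are hypothetical.

```python
import math


def samples_during_event(sample_rate_hz: float, event_duration_ms: float) -> int:
    """Number of full sample periods that fit inside an event."""
    if sample_rate_hz <= 0 or event_duration_ms < 0:
        raise ValueError("rate must be positive and duration non-negative")
    return math.floor(sample_rate_hz * event_duration_ms / 1000.0)


def worst_case_detection_delay_ms(sample_rate_hz: float, sensor_latency_ms: float) -> float:
    """Assumed worst case: wait one full sample period for the next
    sample, then wait for the sensor latency. Processing time is ignored."""
    if sample_rate_hz <= 0 or sensor_latency_ms < 0:
        raise ValueError("rate must be positive and latency non-negative")
    return 1000.0 / sample_rate_hz + sensor_latency_ms


# A 30 Hz camera observing a 200 ms event, as in the text.
frames_30hz = samples_during_event(30, 200)
# A 1.25 kHz sensor (the virtual whisker rate quoted above) over the same event.
samples_1250hz = samples_during_event(1250, 200)
```

Under this model, a 30 Hz camera with 100 ms latency could take more than 130 ms to register the onset of an event, which is a large share of a 200 ms social moment before any processing has begun.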

5.2. Social Robots Require Rapid Response Capabilities

Achieving low latency responses to social moments requires processing events rapidly or maintaining a best guess representation of the social environment (Robins et al., 2005; Lee et al., 2006). When using control approaches that constantly update the knowledge of the environment and trigger motor actions from incomplete or uncertain knowledge, robots manage to catch flying objects whose time of flight does not exceed 700 ms (Kim et al., 2014) or react to attenuate collision forces in less than 100 ms (Haddadin et al., 2008), therefore matching or surpassing human capabilities. Such approaches have been restricted to the domain of physical robot dynamics and have not been considered in social robotics. Social robotics requires consideration of social dynamics, and models that take these dynamics into account have the potential to give social robots faster social responsiveness. In particular, a major challenge toward this goal is the difficulty of modeling the dynamics of social events compared to physical events. To achieve this goal, a better understanding of the dynamics of social interactions and social moments is required. Accordingly, using acquired knowledge of human reactions to their social environment can help predict the future occurrence of social events (e.g., Koppula and Saxena (2016)). Prediction of elements of the interaction in an anticipatory control system can also help reduce response times significantly (Huang and Mutlu, 2016) toward meeting fast response timescales. Notably, even if the amount of uncertainty contained in models of social interactions is greater than that in physical systems, interpretation of social interactions does not have to reach perfect accuracy. Rather, occasional misinterpretation of social moments could give social robots human-like fallible traits that could improve their acceptance and long-term relationships with humans (Biswas and Murray, 2015).

In addition to rapid processing of social events, responses at short timescales require fast actuators for robots. Social robots are often restricted to low-speed actuators to avoid harming humans, a trade-off that can restrict social ability.
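The idea of acting on a best guess rather than waiting for complete information can be sketched in Python as a deadline-bounded choice among interpretations that arrive over time. This is an illustrative simplification and not the method of any of the systems cited above; the function name `respond_by_deadline`, the tuple layout, and the example labels and confidence values are all hypothetical.

```python
from typing import Iterable, Optional, Tuple

# (arrival_time_ms, label, confidence) for each successive interpretation.
Estimate = Tuple[float, str, float]


def respond_by_deadline(estimates: Iterable[Estimate], deadline_ms: float) -> Optional[str]:
    """Return the most confident interpretation available at the deadline.

    Estimates are assumed to be sorted by arrival time. Anything that
    arrives after the socially acceptable response window is ignored,
    however accurate it might be.
    """
    best_label: Optional[str] = None
    best_confidence = -1.0
    for arrival_ms, label, confidence in estimates:
        if arrival_ms > deadline_ms:
            break  # too late to influence this response
        if confidence > best_confidence:
            best_label, best_confidence = label, confidence
    return best_label


# Hypothetical stream of interpretations of one utterance.
stream = [
    (50.0, "greeting", 0.4),
    (150.0, "question", 0.6),
    (400.0, "question", 0.9),
]
```

With a 200 ms deadline, the sketch responds to the utterance as a question with moderate confidence instead of waiting 400 ms for a more certain estimate. Returning `None` when nothing has arrived in time leaves room for a default behavior such as a filler or backchannel.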

5.3. Social Robots Require the Ability to Interpret Social Meanings and Maintain Social Awareness during Future Actions

The interaction with humans requires the robot to integrate social moments within its processing of the environment. This includes processing the social information and the context of the events together. As social moments are tied to social norms, detecting social moments will require the ability to predict typical behavior [e.g., motion from DVS, see Gibson et al. (2014)] and highlight deviations. Using neuromorphic processing of visual inputs, studies have achieved categorization of objects in less than 160 ms (Wang et al., 2017) or triggered robot responses in 4 cycles of a periodic event (Wiles et al., 2010). In addition, the interpretation of social moments requires the integration of information across multiple modalities, as multiple modalities contribute to the generation of social meaning (Mondada, 2016). Finally, as the social meanings interpreted from a social moment can affect long-term social interactions, robots need to be able to integrate social information obtained at short timescales into their cognitive architecture [see Lemaignan et al. (2017)] and memory to be able to represent the context against which future responses will occur.
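Predicting typical behavior and highlighting deviations can be illustrated with a deliberately simple statistical stand-in. The Python sketch below keeps a running mean and variance of an observed quantity (for example, pause lengths in ms) and flags values that lie far from the mean. It is not the event-based spiking network approach of Gibson et al. (2014); the class name `TypicalBehaviorModel`, the z-score threshold of 2.0, and the minimum of 5 samples are assumptions chosen for illustration.

```python
import math


class TypicalBehaviorModel:
    """Running estimate of typical behavior using Welford's algorithm."""

    def __init__(self, threshold: float = 2.0, min_samples: int = 5) -> None:
        self.threshold = threshold      # assumed z-score cut-off
        self.min_samples = min_samples  # assumed warm-up length
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared differences from the mean

    def update(self, value: float) -> None:
        """Fold one new observation into the running statistics."""
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    def is_deviation(self, value: float) -> bool:
        """Return True if value differs markedly from typical behavior."""
        if self.count < self.min_samples:
            return False  # not enough history to define "typical"
        std = math.sqrt(self._m2 / (self.count - 1))
        if std == 0.0:
            return value != self.mean
        return abs(value - self.mean) / std > self.threshold


model = TypicalBehaviorModel()
for pause_ms in [200, 210, 190, 205, 195, 200]:  # hypothetical observations
    model.update(pause_ms)
```

After these hypothetical observations, a 400 ms pause would be flagged as a deviation and a 203 ms pause would not. A single scalar statistic ignores context and modality, so this is at most a starting point for the multimodal integration discussed above.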

6. Conclusion

In this paper, we have proposed the concept of a social moment and the social micro-abilities that are necessary for a robot to detect, interpret, predict, and respond to social moments. We believe that social micro-abilities are a fundamental requirement for social robots in order to gain acceptance in human societies. In particular, we believe that social robots need the ability to detect, predict, and respond to interaction dynamics across multiple modalities and at timescales as short as the order of 10² ms.

The implementation of social micro-abilities raises a set of compelling questions for the field of HRI and meets the definition of a new paradigm (Koschmann, 1996). We encourage social roboticists to consider social moments as part of their robot or architecture designs, and we anticipate new developments in robot hardware and cognitive architectures that will feature social micro-abilities. We intend to expand on the concepts of social moments and social micro-abilities and the required topics, tools, and methodologies in our future work.

From this perspective paper, we draw several current recommendations for social robotics:

(i) Although technology for implementing fast sensing and response already exists, use of such technology has been constrained to industrial robotics or pHRI. The interaction dynamics of social robotics needs to be considered just as temporally challenging as physical dynamics, with existing high speed sensors, actuators, and algorithms considered for social interactions.

(ii) Robots need to be able to respond quickly to maintain interaction dynamics even when there is missing or uncertain information about the social environment. For some interactions, there is a socially acceptable window in which a robot can respond, and no further incoming information or processing of information can compensate for responding too slowly.

(iii) Events on very short timescales and across multiple modalities can profoundly impact the current and future interactions, and therefore, it is essential for social robots to detect, predict, interpret, rapidly react to, and maintain long-term knowledge about social moments.

Author Contributions

GD, SH, and JW all contributed to the content and writing of this paper.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The ideas developed in this perspective have their roots in many conversations and collaborations across a spectrum of disciplines around the role of timing in learning and social dynamics. In particular, we thank colleagues from the Temporal Dynamics of Learning Center, the Kavli Institute for Brain and Mind at UCSD, and colleagues in the ARC Centre of Excellence for the Dynamics of Language.

Funding

This research is supported by an Asian Office of Aerospace Research and Development grant (FA2386-14-1-0017) and an Australian Research Council grant ARC Centres of Excellence (CE140100041).

References

Bates, E., Camaioni, L., and Volterra, V. (1975). The acquisition of performatives prior to speech. Merrill Palmer Q. Behav. Dev. 21, 205–226.

Biswas, M., and Murray, J. (2015). “Towards an imperfect robot for long-term companionship: case studies using cognitive biases,” in 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Hamburg: IEEE), 5978–5983.

Blythe, P. W., Todd, P. M., and Miller, G. F. (1999). “How motion reveals intention: categorizing social interactions,” in Simple Heuristics That Make Us Smart (New York, NY: Oxford University Press, Inc.), 257–285.

Bögels, S., Kendrick, K. H., and Levinson, S. C. (2015). Never say no … how the brain interprets the pregnant pause in conversation. PLoS ONE 10:e0145474. doi:10.1371/journal.pone.0145474

Breazeal, C. (2004). Social interactions in HRI: the robot view. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 34, 181–186. doi:10.1109/tsmcc.2004.826268

Condoravdi, C., and Lauer, S. (2011). “Performative verbs and performative acts,” in Proceedings of Sinn und Bedeutung, Vol. 15 (Saarbrücken: Citeseer), 149–164.

Dautenhahn, K., Ogden, B., and Quick, T. (2002). From embodied to socially embedded agents – implications for interaction-aware robots. Cogn. Syst. Res. 3, 397–428. doi:10.1016/S1389-0417(02)00050-5

Dautenhahn, K., and Werry, I. (2002). “A quantitative technique for analysing robot-human interactions,” in 2002 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vol. 2 (Lausanne: IEEE), 1132–1138.

de Gelder, B. (2006). Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 7, 242–249. doi:10.1038/nrn1872

de Graaf, M., Allouch, S. B., and van Dijk, J. (2015). “What makes robots social? A user's perspective on characteristics for social human-robot interaction,” in International Conference on Social Robotics (Paris: Springer), 184–193.

Gibson, T. A., Henderson, J. A., and Wiles, J. (2014). “Predicting temporal sequences using an event-based spiking neural network incorporating learnable delays,” in International Joint Conference on Neural Networks (IJCNN) (Beijing: IEEE), 3213–3220.

Haddadin, S., Albu-Schaffer, A., De Luca, A., and Hirzinger, G. (2008). “Collision detection and reaction: a contribution to safe physical human-robot interaction,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (Nice: IEEE), 3356–3363.

Hoffman, G., and Ju, W. (2014). Designing robots with movement in mind. J. Hum. Robot Interact. 3, 89. doi:10.5898/jhri.3.1.hoffman

Hove, M. J., and Risen, J. L. (2009). It's all in the timing: interpersonal synchrony increases affiliation. Soc. Cogn. 27, 949–960. doi:10.1521/soco.2009.27.6.949

Huang, C.-M., and Mutlu, B. (2016). “Anticipatory robot control for efficient human-robot collaboration,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Christchurch: IEEE), 83–90.

Kim, S., Shukla, A., and Billard, A. (2014). Catching objects in flight. IEEE Trans. Robot. 30, 1049–1065. doi:10.1109/tro.2014.2316022

Kollar, T., Tellex, S., Roy, D., and Roy, N. (2010). “Toward understanding natural language directions,” in 2010 5th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Osaka: IEEE).

Koppula, H. S., and Saxena, A. (2016). Anticipating human activities using object affordances for reactive robotic response. IEEE Trans. Pattern Anal. Mach. Intell. 38, 14–29. doi:10.1109/TPAMI.2015.2430335

Koschmann, T. D. (1996). CSCL, Theory and Practice of an Emerging Paradigm. New York, NY: Routledge.

Kuraoka, K., Konoike, N., and Nakamura, K. (2015). Functional differences in face processing between the amygdala and ventrolateral prefrontal cortex in monkeys. Neuroscience 304, 71–80. doi:10.1016/j.neuroscience.2015.07.047

LeDoux, J. (2012). Rethinking the emotional brain. Neuron 73, 653–676. doi:10.1016/j.neuron.2012.02.004

Lee, K. M., Peng, W., Jin, S.-A., and Yan, C. (2006). Can robots manifest personality? An empirical test of personality recognition, social responses, and social presence in human-robot interaction. J. Commun. 56, 754–772. doi:10.1111/j.1460-2466.2006.00318.x

Lemaignan, S., Warnier, M., Sisbot, E. A., Clodic, A., and Alami, R. (2017). Artificial cognition for social human–robot interaction: an implementation. Artif. Intell. 247, 45–69. doi:10.1016/j.artint.2016.07.002

Levinson, S. C. (2006). “On the human “interaction engine”,” in Wenner-Gren Foundation for Anthropological Research, Symposium, Vol. 134 (Duck, NC: Berg), 39–69.

Levinson, S. C., and Torreira, F. (2015). Timing in turn-taking and its implications for processing models of language. Front. Psychol. 6:731. doi:10.3389/fpsyg.2015.00731

Lichtsteiner, P., Posch, C., and Delbruck, T. (2008). A 128×128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 43, 566–576. doi:10.1109/jssc.2007.914337

Liu, S.-C., van Schaik, A., Minch, B. A., and Delbruck, T. (2010). “Event-based 64-channel binaural silicon cochlea with Q enhancement mechanisms,” in Proceedings of 2010 IEEE International Symposium on Circuits and Systems (Paris: IEEE), 2027–2030.

Maroni, B. (2011). Pauses, gaps and wait time in classroom interaction in primary schools. J. Pragmat. 43, 2081–2093. doi:10.1016/j.pragma.2010.12.006

McGurk, H., and MacDonald, J. (1976). Hearing lips and seeing voices. Nature 264, 746–748. doi:10.1038/264746a0

Meeren, H. K., van Heijnsbergen, C. C., and de Gelder, B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proc. Natl. Acad. Sci. U.S.A. 102, 16518–16523. doi:10.1073/pnas.0507650102

Mondada, L. (2016). Challenges of multimodality: language and the body in social interaction. J. Socioling. 20, 336–366. doi:10.1111/josl.1_12177

Morris, J. S., Öhman, A., and Dolan, R. J. (1998). Conscious and unconscious emotional learning in the human amygdala. Nature 393, 467–470. doi:10.1038/30976

Munhall, K. G., Gribble, P., Sacco, L., and Ward, M. (1996). Temporal constraints on the McGurk effect. Percept. Psychophys. 58, 351–362. doi:10.3758/BF03206811

Newman, W., Button, G., and Cairns, P. (2010). Pauses in doctor–patient conversation during computer use: the design significance of their durations and accompanying topic changes. Int. J. Hum. Comput. Stud. 68, 398–409. doi:10.1016/j.ijhcs.2009.09.001

O’Shaughnessy, D. (1974). Consonant durations in clusters. IEEE Trans. Acoust. 22, 282–295. doi:10.1109/tassp.1974.1162588

Ramenzoni, V. C., Riley, M. A., Shockley, K., and Baker, A. A. (2012). Interpersonal and intrapersonal coordinative modes for joint and single task performance. Hum. Mov. Sci. 31, 1253–1267. doi:10.1016/j.humov.2011.12.004

Richardson, M. J., Marsh, K. L., Isenhower, R. W., Goodman, J. R., and Schmidt, R. (2007). Rocking together: dynamics of intentional and unintentional interpersonal coordination. Hum. Mov. Sci. 26, 867–891. doi:10.1016/j.humov.2007.07.002

Riley, M. A., Richardson, M. J., Shockley, K., and Ramenzoni, V. C. (2011). Interpersonal synergies. Front. Psychol. 2:38. doi:10.3389/fpsyg.2011.00038

Robins, B., Dautenhahn, K., Nehaniv, C. L., Mirza, N. A., François, D., and Olsson, L. (2005). “Sustaining interaction dynamics and engagement in dyadic child-robot interaction kinesics: lessons learnt from an exploratory study,” in ROMAN 2005. IEEE International Workshop on Robot and Human Interactive Communication, Vol. 2005 (Nashville, TN: IEEE), 716–722.

Sanghvi, J., Castellano, G., Leite, I., Pereira, A., McOwan, P. W., and Paiva, A. (2011). “Automatic analysis of affective postures and body motion to detect engagement with a game companion,” in Proceedings of the 6th International Conference on Human-Robot Interaction – HRI’11 (Lausanne: Association for Computing Machinery (ACM)).

Schlegl, T., Kröger, T., Gaschler, A., Khatib, O., and Zangl, H. (2013). “Virtual whiskers – highly responsive robot collision avoidance,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (Tokyo: IEEE), 5373–5379.

Stivers, T., Enfield, N. J., Brown, P., Englert, C., Hayashi, M., Heinemann, T., et al. (2009). Universals and cultural variation in turn-taking in conversation. Proc. Natl. Acad. Sci. U.S.A. 106, 10587–10592. doi:10.1073/pnas.0903616106

Tallal, P., and Piercy, M. (1974). Developmental aphasia: rate of auditory processing and selective impairment of consonant perception. Neuropsychologia 12, 83–93. doi:10.1016/0028-3932(74)90030-X

Thorpe, S., Fize, D., and Marlot, C. (1996). Speed of processing in the human visual system. Nature 381, 520–522. doi:10.1038/381520a0

Thorpe, S. J. (2012). “Spike-based image processing: can we reproduce biological vision in hardware?” in Computer Vision – ECCV 2012. Workshops and Demonstrations (Florence: Springer Nature), 516–521.

van Rullen, R., and Thorpe, S. J. (2001). The time course of visual processing: from early perception to decision-making. J. Cogn. Neurosci. 13, 454–461. doi:10.1162/08989290152001880

Wang, R., Thakur, C. S., Hamilton, T. J., Tapson, J., and van Schaik, A. (2017). Neuromorphic hardware architecture using the neural engineering framework for pattern recognition. IEEE Trans. Biomed. Circuits Syst. 11, 574–584. doi:10.1109/TBCAS.2017.2666883

Keywords: social moments, social robotics, timescales, responsiveness, social interaction, human–robot interaction

Citation: Durantin G, Heath S and Wiles J (2017) Social Moments: A Perspective on Interaction for Social Robotics. Front. Robot. AI 4:24. doi: 10.3389/frobt.2017.00024

Received: 13 January 2017; Accepted: 29 May 2017; Published: 13 June 2017

Edited by:

Bilge Mutlu, University of Wisconsin-Madison, United States

Reviewed by:

Tony Belpaeme, Plymouth University, United Kingdom

Fulvio Mastrogiovanni, Università di Genova, Italy

Copyright: © 2017 Durantin, Heath and Wiles. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gautier Durantin, g.durantin@uq.edu.au

†These authors have contributed equally to this work.

Gautier Durantin, Scott Heath, and Janet Wiles